REVIEW article

Front. Neural Circuits, 28 October 2014

Volume 8 - 2014 | https://doi.org/10.3389/fncir.2014.00127

This article is part of the Research Topic “What can simple brains teach us about how vision works”.

Despite their miniature brains, insects such as flies, bees and wasps are able to navigate by highly aerobatic flight maneuvers in cluttered environments. To accomplish this extraordinary performance, they rely on spatial information contained in the retinal motion patterns induced on the eyes while moving around (“optic flow”). To this end, they employ an active flight and gaze strategy that separates rapid saccade-like turns from translatory flight phases in which the gaze direction is kept largely constant. This behavioral strategy facilitates the processing of environmental information, because information about the distance of the animal to objects in the environment is contained only in the optic flow generated by translatory motion. However, motion detectors of the type widespread in biological systems do not veridically represent the velocity of the optic flow vectors, but also reflect textural information about the environment. This characteristic has often been regarded as a limitation of the biological motion detection mechanism. In contrast, from analyses that challenge insect movement detectors with the image flow generated during translatory locomotion through cluttered natural environments, we conclude that this mechanism represents the contours of nearby objects. Contrast borders are a main carrier of functionally relevant object information in artificial and natural sceneries. The motion detection system thus segregates the environment, in a computationally parsimonious way, into behaviorally relevant nearby objects and, in many behavioral contexts, less relevant distant structures. Hence, by making use of an active flight and gaze strategy, insects are capable of performing extraordinarily well even with a computationally simple motion detection mechanism.

A key function of vision is to extract behaviorally relevant information about the outside world from the activity patterns evoked in the retina. Fast locomotion, in particular, requires information about the spatial layout of the environment to allow for meaningful behavioral decisions. Such spatial information can be obtained from the relative movements of the retinal image, i.e., the optic flow patterns generated on the eyes during locomotion.

Visual motion information is generated on the eyes not only when a moving object crosses the visual field, but also continuously while the animal moves around in the environment. Despite this ongoing movement on the retina, we usually perceive the outside world as static. Nevertheless, retinal motion information is conventionally thought to be important for signaling self-motion. One particular type of response to self-motion has been studied intensively, especially in tethered animals: confronted with a rotating environment, most animals generate eye or body movements that follow this rotation. These rotational responses of the eyes and/or the body to visual motion were monitored and interpreted as compensating for deviations from an intended course of locomotion or an intended gaze direction. In this context, retinal motion is regarded as a disturbance that needs to be compensated (reviews: Götz, 1972; Taylor and Krapp, 2008; Borst, 2014). Although this view may be correct in many behavioral situations, it misses one important point: retinal image motion induced by the animal's self-motion is not just a nuisance, but may also be a highly relevant source of environmental information. In particular, fast-flying animals, such as many insects, rely heavily on environmental information derived from optic flow, for instance, to avoid collisions with obstacles, to find a landing site and control landing maneuvers, to learn the landmark constellation around a goal, and later to navigate back to this previously learnt site. Even sitting animals may perform specific body, head, and eye movements to estimate distances to objects in their environment (for review see Collett and Zeil, 1996; Kral, 2003; Srinivasan, 2011; Egelhaaf et al., 2012; Zeil, 2012).

The working hypothesis of much of our recent research on insects such as flies and bees (Egelhaaf et al., 2012), and thus the assumption underlying this article, is that the output of the motion vision system combines two highly relevant cues of environmental information: nearness and contrast borders. As a consequence, it segments the time-dependent retinal images into potentially relevant nearby structures and, in many behavioral contexts, potentially less relevant distant objects. On the one hand, we will argue that all this is likely to be accomplished by simple computational principles that have been conceptually lumped into a well-established computational model, the correlation-type movement detector (often also termed Hassenstein-Reichardt detector or elementary motion detector, EMD; Reichardt, 1961; Borst and Egelhaaf, 1989; Egelhaaf and Borst, 1993; Borst, 2000). On the other hand, we will sketch the current knowledge about how local motion information is further processed to guide orientation behavior.

The correlation-type motion detection scheme was originally derived as a computational model on the basis of behavioral and electrophysiological experiments on insects (Reichardt, 1961; Egelhaaf and Borst, 1993; Borst et al., 2003; Borst, 2004; Lindemann et al., 2005; Straw et al., 2008; Brinkworth and O’Carroll, 2010; Meyer et al., 2011). Only recently have its computational principles begun to be decomposed at the circuit level. The neural networks and synaptic interactions underlying motion detection are being investigated mainly in the fruit fly Drosophila, using a sophisticated repertoire of novel genetic tools (e.g., Freifeld et al., 2013; Joesch et al., 2013; Maisak et al., 2013; Reiser and Dickinson, 2013; Silies et al., 2013; Tuthill et al., 2013; Behnia et al., 2014; Hopp et al., 2014; Mauss et al., 2014; Meier et al., 2014; Strother et al., 2014). Since we focus here on the overall output of the motion detection system, rather than on the cellular details of its internal structure, our considerations are mainly based on model analyses of EMDs. Variants of this computational model can account for many features of motion detection as they manifest themselves in the activity of output cells of the motion vision pathway and even in the behavioral performance of the entire animal.

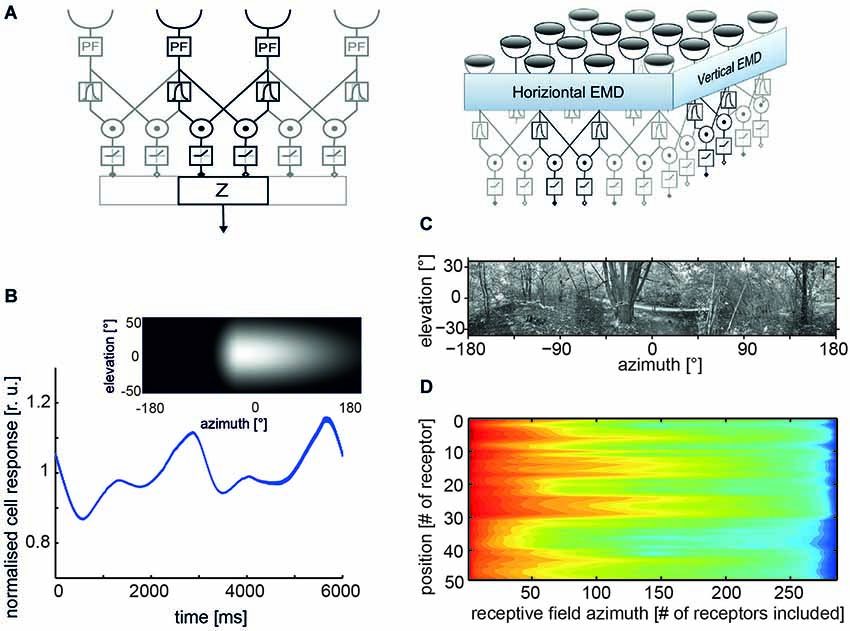

In its simplest form, an EMD is composed of two mirror-symmetrical subunits (Figure 1A). In each subunit, the signals of two neighboring input channels, which receive filtered brightness signals from adjacent points in visual space, are multiplied after one of them has been delayed. The final detector response is obtained by subtracting the outputs of two such subunits with opposite preferred directions, which considerably enhances the direction selectivity of the motion detection circuit. Each motion detector thus responds with a positive signal to motion in its preferred direction and with a negative signal to motion in the opposite direction (reviews: Reichardt, 1961; Borst and Egelhaaf, 1989, 1993). Various elaborations of this basic motion detection scheme have been proposed to account for the responses of insect motion-sensitive neurons under a wide range of stimulus conditions, including even natural optic flow as experienced under free-flight conditions (e.g., Borst et al., 2003; Lindemann et al., 2005; Shoemaker et al., 2005; Brinkworth et al., 2009; Hennig et al., 2011; Hennig and Egelhaaf, 2012).

Figure 1. Properties of correlation-type elementary movement detectors (EMDs). (A) Structure of a basic variant of three neighboring movement detectors including peripheral filtering (PF) in the input lines; signals from each receptor are delayed via the phase delay of a temporal first-order low-pass filter, multiplied and half-wave rectified; spatial pooling of signals is accomplished by the output element Z (left); a two-dimensional EMD array consisting of EMDs most sensitive to horizontal and vertical motion, respectively (right). (B) Time course of pattern-dependent response modulations of a model cell that pools the responses of an array of EMDs with horizontal preferred direction. The spatial sensitivity distribution of the model cell is given by the weight field shown in the inset: the brighter the gray level, the larger the local weight of the corresponding EMDs and, thus, the spatial sensitivity. The frontal equatorial viewing direction is at 0° azimuth and 0° elevation. The model cell was stimulated by horizontal constant-velocity motion of the panoramic high dynamic range image shown in (C). (D) Logarithmic color-coded standard deviation of the mean pattern-dependent modulation for one-dimensional receptive fields differing in vertical receptor position and azimuthal receptive field size (number of receptors included horizontally). The pattern-dependent modulation amplitude decreases with horizontal receptive field extent. The amplitudes also depend on the contrast distribution of the input image, as can be seen when comparing the modulation amplitudes corresponding to different elevations of the input image. (Data from Meyer et al., 2011).
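The basic detector scheme of Figure 1A can be sketched in a few lines of Python. This is a minimal illustration, not the model used in the cited studies: it assumes a first-order low-pass filter as the delay and a sine-grating stimulus with hypothetical parameters (wavelength, receptor spacing, time constant), and it omits the peripheral filtering and half-wave rectification shown in the figure. The function names `lowpass`, `emd_array` and `drifting_grating` are illustrative.

```python
import numpy as np


def lowpass(signal, tau, dt):
    """First-order low-pass filter along the time axis; its phase lag serves as the EMD delay."""
    out = np.empty_like(signal)
    out[0] = signal[0]
    alpha = dt / (tau + dt)
    for t in range(1, signal.shape[0]):
        out[t] = out[t - 1] + alpha * (signal[t] - out[t - 1])
    return out


def emd_array(stimulus, tau, dt):
    """Responses of neighbouring EMDs to a stimulus of shape (time, receptors).

    Each subunit multiplies the delayed signal of one input with the undelayed
    signal of its neighbour; the mirror-symmetrical subunit is subtracted.
    Positive outputs signal motion towards higher receptor indices.
    """
    delayed = lowpass(stimulus, tau, dt)
    return delayed[:, :-1] * stimulus[:, 1:] - delayed[:, 1:] * stimulus[:, :-1]


def drifting_grating(velocity, wavelength=20.0, spacing=2.0, n_receptors=30,
                     contrast=1.0, duration=2.0, dt=1e-3):
    """Sine grating (deg units) moving at `velocity` deg/s, sampled by a row of receptors."""
    t = np.arange(int(round(duration / dt)))[:, None] * dt
    x = np.arange(n_receptors)[None, :] * spacing
    return contrast * np.sin(2 * np.pi * (x - velocity * t) / wavelength)


tau, dt = 0.035, 1e-3  # hypothetical delay time constant and time step (s)
for v in (50.0, -50.0):
    responses = emd_array(drifting_grating(v, dt=dt), tau, dt)
    pooled = responses[500:].mean()  # pooling (output element Z) after the 0.5 s transient
    print(f"velocity {v:+.0f} deg/s -> mean pooled response {pooled:+.3f}")
```

The pooled output has the same magnitude but opposite sign for the two directions of motion, which is the direction selectivity obtained by subtracting the two mirror-symmetrical subunits.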

As a consequence of their computational structure, EMDs and their counterparts in the insect brain have a number of peculiar features that deviate in many respects from those of veridical velocity sensors. These features have therefore often been interpreted as the consequence of a simple but somehow deficient computational mechanism. The most relevant of them are:

• Ambiguous velocity dependence: EMDs do not operate like speedometers. Their mean responses increase with increasing velocity, reach a maximum, and then decrease again. The location of the velocity optimum depends on the spatial frequency composition of the stimulus pattern (Egelhaaf and Borst, 1993), but also on stimulus history and the behavioral state of the animal (Maddess and Laughlin, 1985; Egelhaaf and Borst, 1989; Warzecha et al., 1999; Kurtz et al., 2009; Chiappe et al., 2010; Longden and Krapp, 2010; Maimon et al., 2010; Rosner et al., 2010; Jung et al., 2011; Longden et al., 2014). The pattern dependence of velocity tuning, at least, is reduced if the stimulus pattern contains a broad range of spatial frequencies, as is characteristic of natural scenes (Dror et al., 2001; Straw et al., 2008).

• Contrast dependence: the response of EMDs, at least in their most basic form, depends strongly on contrast, a consequence of the multiplicative interaction between the two EMD input lines (Borst and Egelhaaf, 1989; Egelhaaf and Borst, 1993). This contrast dependence can be reduced to some extent by saturation nonlinearities or more elaborate contrast normalization mechanisms (Egelhaaf and Borst, 1989; Shoemaker et al., 2005; Babies et al., 2011).

• Pattern dependence of time-dependent responses: owing to the small receptive fields of EMDs, their responses are temporally modulated even during pattern motion at a constant velocity. These modulations are a consequence of the texture of the environment. Since neighboring EMDs receive their inputs from different parts of the environment at any given time, their output signals are modulated with different time courses. As a consequence, spatial pooling over EMDs mainly reduces those pattern-dependent response modulations that originate from the high spatial frequencies of the stimulus pattern (Figure 1B). The pattern-dependent response modulations decrease as the spatial pooling range increases (Figures 1C,D; Egelhaaf et al., 1989; Single and Borst, 1998; Meyer et al., 2011; O’Carroll et al., 2011; Schwegmann et al., 2014).

• Motion adaptation: the responses of motion vision systems have been found to depend on stimulus history and to be adjusted to the prevailing stimulus conditions by a variety of mechanisms (reviews: Clifford and Ibbotson, 2002; Egelhaaf, 2006; Kurtz, 2012). These processes are usually regarded as adaptive, although their functional significance is still not entirely clear. Several non-exclusive functional roles have been proposed, such as adjusting the dynamic range of motion sensitivity to the prevailing stimulus dynamics (Brenner et al., 2000; Fairhall et al., 2001), saving energy by adjusting the neural response amplitudes without affecting the overall information conveyed (Heitwerth et al., 2005), and increasing the sensitivity to temporal discontinuities in the retinal input (Maddess and Laughlin, 1985; Liang et al., 2008, 2011; Kurtz et al., 2009).
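The velocity and contrast dependences listed above follow directly from the detector structure. For a sine grating and a first-order low-pass delay, the time-averaged response of the basic EMD has the closed form R = c² sin(2πΔφ/λ) ωτ / (1 + (ωτ)²), with contrast c, receptor spacing Δφ, spatial wavelength λ, delay time constant τ and temporal frequency ω = 2πv/λ. The sketch below evaluates this expression with hypothetical parameter values. It shows that the response peaks at ωτ = 1, so the velocity optimum v = λ/(2πτ) shifts with the spatial wavelength, and that the response scales with the square of contrast. Adaptation effects are not modeled.

```python
import numpy as np


def emd_mean_response(velocity, wavelength, contrast=1.0, spacing=2.0, tau=0.035):
    """Time-averaged response of a basic correlation-type EMD to a drifting sine grating.

    velocity in deg/s, wavelength and spacing in deg, tau in s (hypothetical defaults).
    Valid for wavelength > 2 * spacing; shorter wavelengths are spatially aliased.
    """
    omega_tau = 2 * np.pi * velocity / wavelength * tau
    return (contrast ** 2 * np.sin(2 * np.pi * spacing / wavelength)
            * omega_tau / (1 + omega_tau ** 2))


velocities = np.linspace(1.0, 1000.0, 100_000)
tau = 0.035
for wavelength in (20.0, 40.0):
    best = velocities[np.argmax(emd_mean_response(velocities, wavelength, tau=tau))]
    print(f"wavelength {wavelength:.0f} deg: optimum {best:.1f} deg/s, "
          f"predicted {wavelength / (2 * np.pi * tau):.1f} deg/s")

# Halving the contrast reduces the response fourfold, at any velocity.
ratio = emd_mean_response(50.0, 20.0, contrast=0.5) / emd_mean_response(50.0, 20.0)
print(f"response ratio for half contrast: {ratio:.2f}")
```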

These response features make EMD responses ambiguous with respect to retinal velocity. Because these ambiguities, especially the contrast- and texture-dependent response modulations, degrade the representation of pattern velocity, they have often been discussed as “pattern noise” (Dror et al., 2001; Shoemaker et al., 2005; Rajesh et al., 2006; O’Carroll et al., 2011) and, thus, as a limitation of the biological motion detection mechanism. Here we take an alternative stance by proposing that these pattern-dependent modulations of the movement detector output are not merely noise, as they appear to be from the perspective of velocity coding. Rather, they can be interpreted as functionally relevant, as they reflect potentially useful information about the environment and, thus, may be relevant for visually guided orientation behavior (Meyer et al., 2011; O’Carroll et al., 2011; Hennig and Egelhaaf, 2012; Schwegmann et al., 2014; Ullrich et al., 2014b).
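The pattern-dependent modulations and their reduction by spatial pooling (Figure 1B-D) can be reproduced qualitatively in a toy simulation. The sketch below is an illustration under stated assumptions, not the analysis of Meyer et al. (2011): it uses a basic EMD with a first-order low-pass delay, replaces the natural panorama by a hypothetical 360° texture built from random-phase sinusoids with wavelengths between 10° and 40°, and measures the temporal standard deviation of the pooled response, relative to the mean response of the whole array, for pooling ranges of 1, 10 and 90 neighboring detectors. The helper names are illustrative.

```python
import numpy as np


def lowpass(signal, tau, dt):
    """First-order low-pass filter along the time axis (used as the EMD delay)."""
    out = np.empty_like(signal)
    out[0] = signal[0]
    alpha = dt / (tau + dt)
    for t in range(1, signal.shape[0]):
        out[t] = out[t - 1] + alpha * (signal[t] - out[t - 1])
    return out


def emd_array(stimulus, tau, dt):
    """Basic EMD responses for a stimulus of shape (time, receptors)."""
    delayed = lowpass(stimulus, tau, dt)
    return delayed[:, :-1] * stimulus[:, 1:] - delayed[:, 1:] * stimulus[:, :-1]


def textured_panorama(velocity, spacing=2.0, dt=2e-3, settle=0.5, seed=0):
    """Hypothetical 360-deg texture rotating at `velocity` deg/s, sampled every `spacing` deg.

    The simulation covers a settling period plus exactly one revolution of the pattern.
    """
    rng = np.random.default_rng(seed)
    cycles = np.arange(9, 37)                  # cycles per 360 deg (wavelengths 40..10 deg)
    amplitudes = rng.uniform(0.5, 1.0, cycles.size)
    phases = rng.uniform(0.0, 2 * np.pi, cycles.size)
    n_steps = int(round((settle + 360.0 / velocity) / dt))
    t = np.arange(n_steps)[:, None] * dt
    x = np.arange(0.0, 360.0, spacing)[None, :]
    stimulus = np.zeros((n_steps, x.shape[1]))
    for k, a, ph in zip(cycles, amplitudes, phases):
        stimulus += a * np.sin(2 * np.pi * k * (x - velocity * t) / 360.0 + ph)
    return stimulus


tau, dt, velocity = 0.035, 2e-3, 50.0
responses = emd_array(textured_panorama(velocity, dt=dt), tau, dt)
responses = responses[int(round(0.5 / dt)):]    # discard the filter's settling period
overall_mean = responses.mean()                 # mean response of the whole array

for n in (1, 10, 90):
    pooled = responses[:, :n].mean(axis=1)      # pool n neighbouring EMDs
    print(f"{n:3d} EMDs pooled: relative modulation {pooled.std() / overall_mean:.3f}")
```

Because the texture moves rigidly, pooling neighboring detectors averages time-shifted copies of the same response, which attenuates the modulation components most strongly at high spatial frequencies.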

To use the environmental information provided by EMDs, the animal must be active and move, because movement detectors do not respond in a stationary world if the animal is also stationary. However, not every type of self-motion is equally suitable for the brain to extract useful information about the environment from the image flow and, thus, from the EMD responses. Where spatial information is concerned, only the optic flow component generated by translational self-motion is useful. During pure translational self-motion, the retinal images of objects close to the observer move faster than those of more distant ones. More specifically, for a given translation velocity, the retinal image velocity evoked by an environmental object at a given viewing angle increases linearly with its nearness, i.e., the inverse of its distance. However, the retinal velocity of an object at a given distance also depends on its viewing angle relative to the direction of motion: the optic flow vectors are maximal at 90° relative to the direction of motion and decrease according to a sine function from there towards the direction of self-motion, where they are zero. Hence, at this singular point, i.e., in the direction in which the agent is heading, it is not possible to obtain nearness information. The geometrical situation is quite different for pure rotational self-motion of the agent: the retinal image displacements are then independent of the distance to objects in the environment (Koenderink, 1986).
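The geometry just described can be written down directly. In the sketch below (illustrative function names, SI units, hypothetical values), the angular velocity of an object during pure translation is speed × sin(viewing angle) / distance, so it is proportional to nearness and vanishes in the heading direction, whereas during a pure rotation every point in the plane perpendicular to the rotation axis moves at the rotation rate, irrespective of its distance.

```python
import numpy as np


def translational_flow(speed, distance, angle_deg):
    """Angular speed (rad/s) of a point at `distance` (m) seen at `angle_deg` from the
    heading during pure translation at `speed` (m/s): speed * sin(angle) / distance."""
    return speed * np.sin(np.radians(angle_deg)) / distance


def rotational_flow(rotation_rate, distance):
    """Pure rotation at `rotation_rate` (rad/s): points in the plane perpendicular to the
    rotation axis all move at that angular speed, whatever their distance."""
    return np.full_like(np.asarray(distance, dtype=float), rotation_rate)


speed = 1.0  # hypothetical flight speed (m/s)
print(translational_flow(speed, 0.5, 90.0))    # near object abeam
print(translational_flow(speed, 1.0, 90.0))    # twice as far: half the flow
print(translational_flow(speed, 0.5, 30.0))    # same distance, closer to the heading: sin(30 deg) = 0.5
print(translational_flow(speed, 0.5, 0.0))     # heading direction: zero flow, no nearness information
print(rotational_flow(3.0, [0.5, 1.0, 10.0]))  # identical flow at all distances
```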

If locomotion consists of an arbitrary combination of translation and rotation, the optic flow field is more complex, and information about the spatial structure of the environment cannot readily be derived. Nevertheless, for most realistic situations the optic flow can, at least in principle, be computationally segregated into its rotational and translational components (Longuet-Higgins and Prazdny, 1980; Prazdny, 1980; Dahmen et al., 2000). However, such a computational strategy is demanding, and it is not clear whether it can be pursued by a nervous system. Several insect species, with their tiny brains, appear to employ other, computationally much more parsimonious strategies.

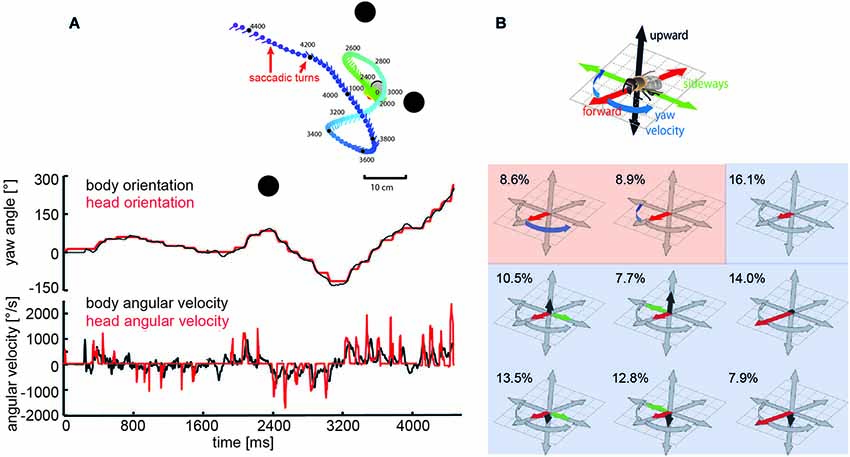

Specific combinations of rotatory and translatory self-motion may generate an optic flow pattern that contains useful spatial information. For instance, when the animal circles around a pivot point while fixating it, the retinal images of objects in front of and behind the pivot point move in opposite directions and, thus, provide distance information relative to the pivot point, rather than to the moving observer (Collett and Zeil, 1996; Zeil et al., 1996). Other insects generate pure translational self-motion to obtain distance information relative to the animal. For instance, mantids, dragonflies, and locusts perform lateral body and head translations and use the resulting optic flow to gain distance information when sitting in ambush to catch prey or preparing for a jump (Collett, 1978; Sobel, 1990; Collett and Paterson, 1991; Kral and Poteser, 1997; Olberg et al., 2005). During flight, flies, wasps and bees exhibit a distinctive behavior characterized by sequences of rapid saccade-like turns of body and head interspersed with virtually pure translational, i.e., straight flight phases (Schilstra and Van Hateren, 1999; van Hateren and Schilstra, 1999; Mronz and Lehmann, 2008; Boeddeker et al., 2010, submitted; Braun et al., 2010, 2012; Geurten et al., 2010; Kern et al., 2012; van Breugel and Dickinson, 2012; Zeil, 2012). Saccadic gaze changes have a rather uniform time course and last less than 100 ms. Angular velocities of up to several thousand °/s can occur during saccades (Figures 2A, 3A). The rotational movements associated with body saccades are shortened for the visual system by coordinated head movements, and roll rotations performed for steering purposes during sideways translations are compensated by counter-directed head movements.
As a consequence, the animal’s gaze direction is kept virtually constant during intersaccades (Schilstra and Van Hateren, 1999; van Hateren and Schilstra, 1999; Boeddeker and Hemmi, 2010; Boeddeker et al., 2010, submitted; Braun et al., 2010, 2012; Geurten et al., 2010, 2012). Hence, turns that are essential to reach behavioral goals are minimized in duration and separated from translational flight phases in which the gaze direction is kept largely constant. This peculiar time structure of insect flight facilitates the processing of distance information from the translational intersaccadic optic flow. With regard to gathering information about the outside world, it is functionally highly relevant that the intersaccadic translational motion phases make up more than 80% of the entire flight time (van Hateren and Schilstra, 1999; Boeddeker and Hemmi, 2010; Boeddeker et al., 2010; Braun et al., 2012; van Breugel and Dickinson, 2012). Still, the individual intersaccadic intervals are short: in blowflies, for example, they usually last only a few tens of milliseconds and are only rarely longer than 100 to 200 ms (Kern et al., 2012). This characteristic dynamic feature of the active flight and gaze strategy of insects thus considerably constrains the timescales on which spatial information can be extracted from the optic flow patterns during flight, a constraint the underlying neuronal mechanisms have to cope with (Egelhaaf et al., 2012).
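A common way to separate saccades from intersaccadic intervals in recorded trajectories is to threshold the angular (yaw) velocity of head or body. The sketch below applies such a threshold to a synthetic yaw-velocity trace. The Gaussian saccade profile, the 300 °/s threshold, the 20 ms minimum interval and the noise level are hypothetical choices, not the criteria used in the cited studies.

```python
import numpy as np


def find_intersaccades(yaw_velocity, dt, threshold=300.0, min_duration=0.02):
    """Return (start, stop) sample indices of runs where |yaw velocity| stays below threshold.

    `stop` is exclusive; runs shorter than `min_duration` (s) are discarded.
    """
    below = (np.abs(yaw_velocity) < threshold).astype(int)
    edges = np.diff(np.concatenate(([0], below, [0])))
    starts = np.flatnonzero(edges == 1)
    stops = np.flatnonzero(edges == -1)
    keep = (stops - starts) * dt >= min_duration
    return list(zip(starts[keep], stops[keep]))


# Synthetic 1 s yaw-velocity trace (deg/s): six saccades of alternating direction, peak 2000 deg/s.
dt = 1e-3
t = np.arange(1000) * dt
rng = np.random.default_rng(0)
yaw = rng.normal(0.0, 20.0, t.size)
for i, centre in enumerate([0.10, 0.25, 0.40, 0.55, 0.70, 0.85]):
    yaw += (-1) ** i * 2000.0 * np.exp(-((t - centre) ** 2) / (2 * 0.008 ** 2))

intervals = find_intersaccades(yaw, dt)
translational_share = sum(stop - start for start, stop in intervals) / yaw.size
print(f"{len(intervals)} intersaccadic intervals, {100 * translational_share:.0f}% of the time")
```

The share of intersaccadic time obtained in this way depends on the chosen threshold, which is why such criteria need to be reported alongside any percentage.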

Figure 2. Saccadic flight strategy and variability of translational self-motion. Saccadic flight and gaze strategy of free-flying bumblebees and honeybees. (A) Inset: Trajectory of a typical learning flight of a bumblebee, seen from above, during a navigational task involving landmarks (black objects). Each line indicates a point in space and the corresponding viewing direction of the bee’s head every 20 ms. The color code indicates time (in ms after the start of the learning flight at the goal). Upper diagram: Angular orientation of the longitudinal axis of the body (black line) and head (red line) in a sample learning flight of a bumblebee after departing from a visually inconspicuous feeder surrounded by three landmarks. Note that step-like, i.e., saccadic direction changes are more pronounced for the head than for the body. Bottom diagram: Angular yaw velocity of body (black line) and head (red line) in the same flight (Boeddeker et al., submitted; Data from Mertes et al., 2014). (B) Translational and rotational prototypical movements of honeybees during local landmark navigation. Flight sequences recorded while the bee was searching for a visually inconspicuous feeder located between three cylindrical landmarks can be decomposed into nine prototypical movements using clustering algorithms in order to reduce the behavioral complexity. Each prototype is depicted in a coordinate system as explained in the inset. The length of each arrow indicates the value of the corresponding velocity component. Percentage values give the relative occurrence of each prototype. More than 80% of flight time corresponds to a varied set of translational prototypical movements (light blue background), and less than 20% has significantly non-zero rotational velocity, corresponding to the saccades (light red background) (Data from Braun et al., 2012).

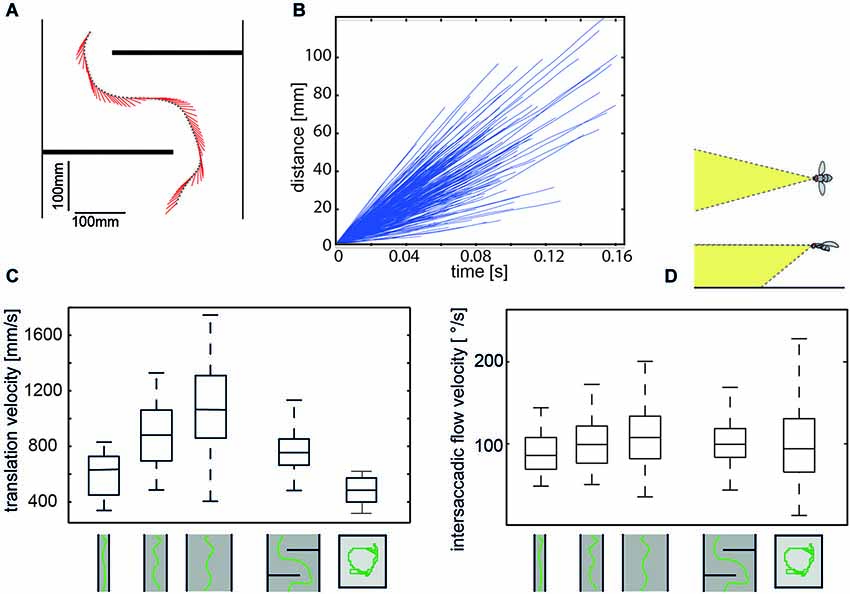

Figure 3. Regulation of intersaccadic retinal velocity by context-dependent control of flight speed. (A) Sample flight trajectory of a blowfly, seen from above, negotiating an obstacle in a flight tunnel (only the middle section is shown); the position of the fly (black dot) and the projection of the orientation of the body long axis onto the horizontal plane (red line) are given every 10 ms. (B) Distance flown within individual intersaccades as a function of the time needed. Shown are incremental distance vs. time plots for 320 intersaccadic intervals obtained from 10 spontaneous flights in a cubic box (see right pictogram in C; flight trajectories provided by van Hateren and Schilstra, 1999). (C) Boxplot of the translational velocity in flight tunnels of different widths, in a flight arena with two obstacles, and in a cubic flight arena (sketched below the data). Translation velocity strongly depends on the geometry of the flight arena (Data from Kern et al., 2012). (D) Boxplot of the retinal image velocities within intersaccadic intervals experienced in the fronto-ventral visual field (see inset above boxplot) in the different flight arenas. In this area of the visual field, the intersaccadic retinal velocities are kept roughly constant by regulating the translation velocity according to the clearance with respect to environmental structures. The upper and lower margins of the boxes in (C) and (D) indicate the 75th and 25th percentiles, and the whiskers the data range (Data from Kern et al., 2012).

Although the translational intersaccadic flight phases are diverse with regard to the direction and velocity of motion, they appear to be adjusted to the respective behavioral context (Figure 2B; Braun et al., 2010, 2012; Dittmar et al., 2010; Geurten et al., 2010). This is especially true for the overall velocity of translational self-motion, although this velocity does not change much within individual intersaccadic intervals (Figure 3B; Schilstra and Van Hateren, 1999; van Hateren and Schilstra, 1999; Boeddeker et al., 2010; Kern et al., 2012). For instance, insects tend to decelerate when their flight path is obstructed, and flight speed is thought to be controlled by the optic flow generated during flight (David, 1979, 1982; Farina et al., 1995; Srinivasan et al., 1996; Kern and Varjú, 1998; Baird et al., 2005, 2006, 2010; Frye and Dickinson, 2007; Fry et al., 2009; Dyhr and Higgins, 2010; Straw et al., 2010; Kern et al., 2012). In this way, they appear to regulate their intersaccadic translational flight velocity so as to keep the retinal velocities in the frontolateral visual field largely constant at a “preset” level (Baird et al., 2010; Portelli et al., 2011; Kern et al., 2012). This level appears to lie within the part of the operating range of the motion detection system where the response amplitude still increases with increasing retinal velocity (Figure 3; see Section Insect Motion Detection Reflects the Properties of the Environment in Addition to Velocity). These features are likely to be of functional significance for spatial vision, because they help to reduce the ambiguities in extracting nearness information from the EMD outputs that represent the optic flow in the visual system.
On the other hand, since insects may adjust their translational velocity to the behavioral context (see above, but also Srinivasan et al., 2000), no absolute nearness cues can be obtained by any mechanism extracting spatial information from optic flow: a given retinal velocity, and thus a given response level of a motion detection system, may result from different combinations of translation velocity and nearness. Hence, nearness information can be extracted only in relative terms, unless translation velocity is known. This implies that translation velocity should be kept constant if nearness information is to be determined from the response modulations of EMDs (see Section Insect Motion Detection Reflects the Properties of the Environment in Addition to Velocity). If the translation velocity also varies, the resulting response modulations are ambiguous with regard to their origin: they could be a consequence of either changes in self-motion or the spatial structure of the surroundings.
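Both points can be illustrated with the translational flow relation flow = speed × sin(angle) / distance. The sketch below uses a simple proportional rule that adjusts speed until the flow seen abeam matches a preset level; the set point, gain and tunnel half-widths are hypothetical, and this is not the controller proposed in the cited studies. The resulting speed scales with clearance, qualitatively as in Figure 3C, so the regulated retinal velocity itself carries no absolute distance information. Ratios of retinal velocities measured in different viewing directions during the same translation still give relative nearness, because the unknown speed cancels.

```python
import numpy as np

PRESET_FLOW = np.radians(300.0)  # hypothetical preset retinal velocity abeam (rad/s)


def regulate_speed(clearance, gain=0.2, steps=200, speed=1.0):
    """Toy proportional speed controller for flight at `clearance` (m) from a lateral wall.

    Each step nudges the speed so that the flow seen at 90 deg, speed / clearance,
    approaches the preset level; the fixed point is speed = PRESET_FLOW * clearance.
    """
    for _ in range(steps):
        error = PRESET_FLOW - speed / clearance
        speed += gain * clearance * error
    return speed


def relative_nearness(flow_a, angle_a_deg, flow_b, angle_b_deg):
    """Nearness ratio of two objects seen during the same pure translation.

    From flow = speed * sin(angle) * nearness, the unknown speed cancels in the ratio.
    """
    nearness_a = flow_a / np.sin(np.radians(angle_a_deg))
    nearness_b = flow_b / np.sin(np.radians(angle_b_deg))
    return nearness_a / nearness_b


for clearance in (0.1, 0.2, 0.4):  # hypothetical tunnel half-widths (m)
    speed = regulate_speed(clearance)
    print(f"clearance {clearance} m: speed {speed:.3f} m/s, "
          f"flow abeam {np.degrees(speed / clearance):.1f} deg/s")

# Ambiguity: 1 m/s at 0.5 m and 2 m/s at 1 m produce the same flow abeam (2 rad/s) ...
print(1.0 / 0.5, 2.0 / 1.0)
# ... but within one translation, whatever its speed, an object at 0.5 m abeam
# appears four times nearer than one at 2 m seen at 45 deg.
for speed in (1.0, 2.0):
    flow_a = speed * np.sin(np.radians(90.0)) / 0.5
    flow_b = speed * np.sin(np.radians(45.0)) / 2.0
    print(f"speed {speed} m/s: relative nearness {relative_nearness(flow_a, 90.0, flow_b, 45.0):.2f}")
```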

Through their active flight and gaze strategy, insects provide the basis for representing environmental information in a computationally efficient way from the optic flow generated during the intersaccadic intervals of largely translational self-motion. However, optic flow information is not explicitly given at the retinal input. Rather, it needs to be computed from the spatiotemporal brightness fluctuations sensed by the array of photoreceptors of the retina. This is accomplished by local neural circuits residing in the visual neuropils. As explained in Section Insect Motion Detection Reflects the Properties of the Environment in Addition to Velocity, the overall performance of these circuits can be lumped together and explained by variants of the correlation-type EMD. Despite the detailed knowledge at the cellular and computational level, the functional significance of the information provided by these movement detectors has not yet been clearly established. Since EMDs are sensitive to velocity, they may exploit the different retinal speeds of objects at different nearnesses during translational self-motion and, thus, may represent information about the depth structure of the environment. However, EMDs are also sensitive to textural features of the environment (see Section Insect Motion Detection Reflects the Properties of the Environment in Addition to Velocity). Is this pattern dependence of the EMD output just an unwanted by-product of a simple computational mechanism, or could it have any functional significance?

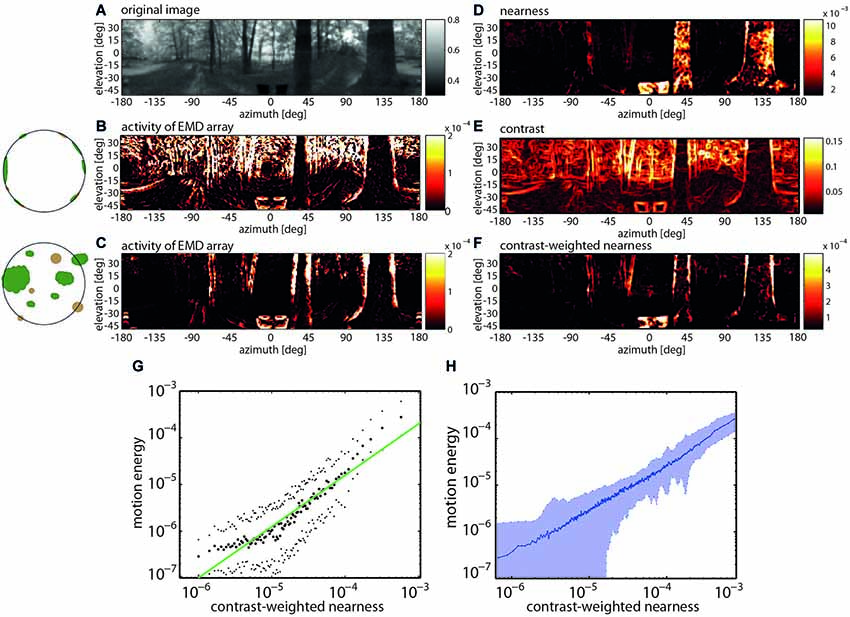

Recent model simulations of arrays of EMDs have provided evidence that their pattern dependence may make sense from a functional perspective during translatory self-motion in cluttered natural environments. Although several experimental and modeling studies had already probed the insect motion vision system with moving natural images, they only employed image sequences that did not contain any depth structure and, thus, differed considerably from what an animal experiences in natural environments (Straw et al., 2008; Wiederman et al., 2008; Brinkworth et al., 2009; Barnett et al., 2010; Meyer et al., 2011; O’Carroll et al., 2011). The potential significance of the combined velocity and pattern dependence of correlation-type EMDs became obvious when comparing the activity profiles of EMD arrays induced by image sequences obtained from constant-velocity translational movements through a variety of cluttered natural environments, once with the full depth information preserved and once after the depth structure of the environment had been removed. Sample activity profiles of EMD arrays for both situations are shown in Figure 4. They differ considerably, because without depth structure all environmental objects move at the same velocity and, thus, evoke responses irrespective of their distance. The activity profile evoked by motion through the environment with its natural depth structure preserved is most similar not to the nearness map per se, but to the contrast-weighted nearness map, i.e., the nearness multiplied by the local contrast. In contrast, the activity profile evoked by the artificially depth-removed image sequences best matches the contrast map (Schwegmann et al., 2014). This exemplary finding is corroborated by correlation analyses based on translatory motion through several different natural environments (Figure 4).

Figure 4. Representation of nearby contours by EMD arrays during translatory self-motion. (A) Panoramic input image with brightness adjusted to the spectral sensitivity of the motion detection system. (B) Activity profile of the EMD array after equalizing the depth structure of the environment (see inset). (C) Activity profile of the EMD array in response to translatory motion in the environment with its natural depth structure. (D) Nearness of environmental structures. (E) Local contrast of environmental structures. (F) Contrast-weighted nearness of environmental structures. (G) Relation between motion energy and contrast-weighted nearness, plotted on double-logarithmic axes, for the center of the track through the forest scenery shown in (A). (H) Relation between motion energy and contrast-weighted nearness for 37 full-depth motion sequences recorded in a wide range of natural environments (Data from Schwegmann et al., 2014).

Hence, EMD arrays do not respond best to the retinal velocity per se and, thus, to the nearness of environmental structures, but to the contrast-weighted nearness. This means that, during translational self-motion in natural environments, arrays of EMDs represent to a large degree the nearness of high-contrast object contours. This conclusion holds as long as the translational velocity varies only little and, thus, does not induce time-dependent response changes of its own (see Section Enhancing the Overall Power of Insect Brains: Reducing Computational Load by Active Vision Strategy). As mentioned above, this condition is largely met during the short time of most intersaccadic intervals (Figure 3B; Schilstra and Van Hateren, 1999; van Hateren and Schilstra, 1999; Kern et al., 2012). By representing the contours of nearby objects, the distinctive property of EMDs of jointly representing contrast and nearness information may make perfect sense from a functional point of view. In this way, cluttered spatial sceneries are segmented, without much computational expenditure, into nearby and distant objects. This finding supports the notion that the mechanism of motion detection has been tuned by evolution to allow the tiny brains of insects to gather behaviorally relevant information in a computationally efficient way.
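Why a multiplicative combination of contrast and nearness should dominate the EMD output can be illustrated with the closed-form steady-state response of the basic detector (response ∝ contrast² × ωτ/(1 + (ωτ)²)). In the toy sketch below, each viewing direction is treated as seeing a single grating whose contrast and nearness are drawn from hypothetical log-normal distributions, and the retinal velocity during translation is taken to scale with nearness. This is not the analysis of Schwegmann et al. (2014), which used EMD arrays on recorded natural image sequences; it only shows that, in such a toy, the logarithm of the response correlates more strongly with contrast × nearness than with either factor alone.

```python
import numpy as np

rng = np.random.default_rng(1)
n_directions = 5000
nearness = np.exp(rng.normal(0.0, 1.0, n_directions))   # hypothetical nearness values (1/m)
contrast = np.exp(rng.normal(-1.0, 0.5, n_directions))  # hypothetical local contrasts


def emd_steady_state(contrast, omega_tau, spatial_phase=0.6):
    """Closed-form mean response of a basic EMD to a grating of the given contrast;
    omega_tau is the temporal frequency times the delay time constant."""
    return contrast ** 2 * np.sin(spatial_phase) * omega_tau / (1.0 + omega_tau ** 2)


# During pure translation viewed abeam, retinal velocity (and thus omega*tau) scales
# with nearness; the factor 0.05 keeps nearly all directions below the velocity optimum.
response = emd_steady_state(contrast, 0.05 * nearness)


def log_correlation(a, b):
    """Pearson correlation of log-transformed values."""
    return np.corrcoef(np.log(a), np.log(b))[0, 1]


for name, reference in (("nearness", nearness),
                        ("contrast", contrast),
                        ("contrast-weighted nearness", contrast * nearness)):
    print(f"{name:>27}: r = {log_correlation(response, reference):.2f}")
```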

However, motion measurements cannot be made instantaneously. As reflected by the time constants that are an integral part of any motion detection mechanism, including correlation-type EMDs, it may take some time until reliable motion information and, thus, spatial cues can be extracted from the detector responses. This may be a challenge, as the uninterrupted translational movement phases during intersaccadic intervals are short, ranging from 30 ms to little more than 100 ms (Figure 3B). After a change from a saccadic rotation to an intersaccadic translational movement, it takes a few milliseconds for the EMD response to reach a kind of steady-state level. This indicates that the initial part of a translational sequence cannot be used by the animal for a reliable estimation of nearness from the EMD responses (Schwegmann et al., 2014). Even under such constraints, the duration of most intersaccadic intervals appears to be long enough to allow spatial information to be extracted from the optic flow patterns on the eyes.
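If the detector's transient after the onset of translation is approximated by the step response of its first-order low-pass delay filter (a simplification that ignores peripheral filtering and adaptation), the settling time and the usable share of an intersaccadic interval follow from t = -τ ln(1 - p), where p is the fraction of the final level reached. The time constant in the sketch below is hypothetical.

```python
import numpy as np


def settling_time(tau, fraction=0.95):
    """Time for a first-order low-pass filter to reach `fraction` of a step: -tau * ln(1 - fraction)."""
    return -tau * np.log(1.0 - fraction)


def usable_share(interval, tau, fraction=0.95):
    """Share of an intersaccadic interval left once the settling period is discarded."""
    return max(0.0, 1.0 - settling_time(tau, fraction) / interval)


tau = 0.005  # hypothetical delay time constant (s)
print(f"settling time: {1000 * settling_time(tau):.1f} ms")
for interval in (0.03, 0.06, 0.1):  # intersaccade durations spanning the range quoted above (s)
    print(f"{1000 * interval:.0f} ms interval: {100 * usable_share(interval, tau):.0f}% usable")
```

Under this approximation, the settling period removes a fixed amount of time, so short intersaccades lose a proportionally larger share than long ones.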

In conclusion, during constant-velocity translatory locomotion, the largest responses of the motion detection system are induced by contrast borders of nearby objects. It therefore appears to be of functional significance that insects such as flies and bees move essentially straight for more than 80% of their flight time and change direction by interspersed saccadic turns of variable amplitude (Figure 2B). Since translational velocity does not change much during intersaccadic intervals, the output of the motion detection system during individual intersaccadic intervals highlights contrast borders of nearby objects. Thus, what has often been conceived as a limitation of the insect motion detection system may turn out to be a means that, in combination with the active flight and gaze strategy, parses the environment into near and far and, at the same time, enhances the representation of object borders in an extremely parsimonious way. By combining contrast edge information and motion-based scene segmentation in a single representation, the insect visual pathway may reflect an elegant mechanism for cue integration.

In computer vision, optic flow is also used for segmentation as well as for solving other spatial vision tasks, such as recovering the shape and relative depth of three-dimensional surface structures, determining the time-to-collision with an obstacle, or locating the focus of expansion to detect the heading direction (Beauchemin and Barron, 1995; Zappella et al., 2008). For quite some time, a variety of approaches to optic flow computation have been proposed and applied in robotics. These algorithms rest on different assumptions about image motion and operate on different image representations, e.g., directly on the gray-level values or on the edges in the image sequences (Beauchemin and Barron, 1995; Fleet and Weiss, 2005). In contrast to EMDs, which jointly provide information about motion and contrast edges during translatory motion, these technical approaches have in common that they attempt to estimate the optic flow field veridically, i.e., to recover each flow vector (up to a scaling factor) according to its velocity in the image plane. When applied to natural image sequences, however, this has proved possible only to some extent, and erroneous velocity estimates are a common result, depending on the pattern properties of the scenes (Barron et al., 1994; McCarthy and Barnes, 2004). Whether segmentation algorithms that compute segment borders from discontinuities in a dense field of optic flow estimates, as provided by the various computer vision algorithms (Zappella et al., 2008), are also applicable to segmenting a motion image computed by EMDs remains to be tested.
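As a point of comparison for the discontinuity-based segmentation mentioned above, the following is a minimal sketch, not any specific published algorithm: it marks segment borders wherever neighboring samples of a dense 1-D horizontal flow profile differ by more than a threshold. The flow values, the noise level, the threshold and the function name `flow_boundaries` are hypothetical. Whether such a rule works on EMD outputs, whose amplitude also depends on contrast, is exactly the open question raised in the text.

```python
import numpy as np


def flow_boundaries(flow, threshold):
    """Indices i at which |flow[i + 1] - flow[i]| exceeds `threshold`,
    i.e. candidate segment borders between samples i and i + 1."""
    return np.flatnonzero(np.abs(np.diff(flow)) > threshold)


# Hypothetical horizontal flow (deg/s) in 12 viewing directions: a near object
# (large flow) in samples 4 to 7 in front of a distant background (small flow).
rng = np.random.default_rng(0)
flow = np.full(12, 10.0)
flow[4:8] = 60.0
flow += rng.normal(0.0, 1.0, size=flow.size)   # small measurement noise

print(flow_boundaries(flow, threshold=20.0))   # borders after samples 3 and 7
```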

Is the environmental information provided by the insect motion detection system during the translational phases of intersaccadic intervals really used by downstream processes in the nervous system, and does it eventually play a role in controlling orientation behavior? Answers to this question can only be tentative so far, although two lines of evidence suggest that the EMD-based environmental information might be functionally relevant. On the one hand, detailed knowledge is available of the computational properties of one neural pathway processing the information provided by the arrays of local motion detectors. On the other hand, behavioral studies and current modeling attempts suggest that the motion-based information about the environment may well be exploited for solving behavioral tasks such as collision avoidance and landmark navigation. Both aspects will be dealt with briefly in the following.

The outputs of the local motion-sensitive elements in insects are spatially pooled to varying degrees in one neural pathway, depending on the computational tasks being solved (Hausen, 1981; Krapp, 2000; Borst and Haag, 2002; Egelhaaf, 2006; Borst et al., 2010). However, spatial pooling inevitably reduces the precision with which a moving stimulus can be localized. Although this might appear, at least at first sight, to be a disadvantage, it is not necessarily one. Determining the animal's self-motion is one obvious task of motion vision systems. In this case, the retinal motion need not be localized; rather, only a few output variables, i.e., the translational and rotational velocities, have to be computed from the global optic flow. Information about self-motion is thought to be relevant for tasks such as attitude control during flight, compensation of involuntary disturbances by corrective steering maneuvers, or determination of the heading direction (Dahmen et al., 2000; Lappe, 2000; Vaina et al., 2004; Taylor and Krapp, 2008; Egelhaaf et al., 2012). Accordingly, spatial pooling of local motion information over relatively large parts of the visual field, as performed by wide-field cells (LWCs) in the lobula complex of insects, enhances the specificity of the system for different types of self-motion (Hausen, 1981; Krapp et al., 1998, 2001; Franz and Krapp, 2000; Horstmann et al., 2000; Dror et al., 2001; Karmeier et al., 2003; Franz et al., 2004; Wertz et al., 2009).
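Why pooling helps self-motion estimation can be illustrated with a sketch assuming a spherical eye sampled along its equator, a uniform pooling weight over the full circle (real LWCs have non-uniform, spatially restricted receptive fields; compare the matched-filter view of Franz and Krapp, 2000), and hypothetical distance profiles. Rotational flow is the same in every viewing direction, whereas translational flow is proportional to nearness and to the sine of the azimuth. In a mirror-symmetric environment the translational components therefore cancel, and the pooled signal recovers the yaw rate. The function names are hypothetical.

```python
import numpy as np


def horizontal_flow(azimuth, yaw_rate, speed, distance):
    """Horizontal angular velocity (rad/s) along the equator of a spherical eye.

    azimuth: viewing directions in rad (0 = heading, positive = left)
    yaw_rate: self-rotation in rad/s (positive = turning left)
    speed: forward translation in m/s; distance: object distance per direction (m)
    """
    translational = speed * np.sin(azimuth) / distance  # depends on nearness
    rotational = -yaw_rate                              # identical everywhere
    return translational + rotational


def pooled_yaw_estimate(flow):
    """Uniform wide-field pooling: minus the mean flow over the full circle."""
    return float(-np.mean(flow))


azimuth = np.linspace(-np.pi, np.pi, 72, endpoint=False)
environments = {
    "symmetric": 1.0 + 0.5 * np.cos(azimuth) ** 2,    # mirror-symmetric layout
    "asymmetric": np.where(azimuth > 0, 0.5, 2.0),    # near objects on the left
}
estimates = {
    name: pooled_yaw_estimate(horizontal_flow(azimuth, 1.0, 1.0, dist))
    for name, dist in environments.items()
}
print(estimates)
```

In the asymmetric environment the estimate (about 0.52 rad/s instead of the true 1.0 rad/s) is biased by the distribution of nearness, a simple instance of how large-field responses can depend on the spatial layout of the surroundings.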

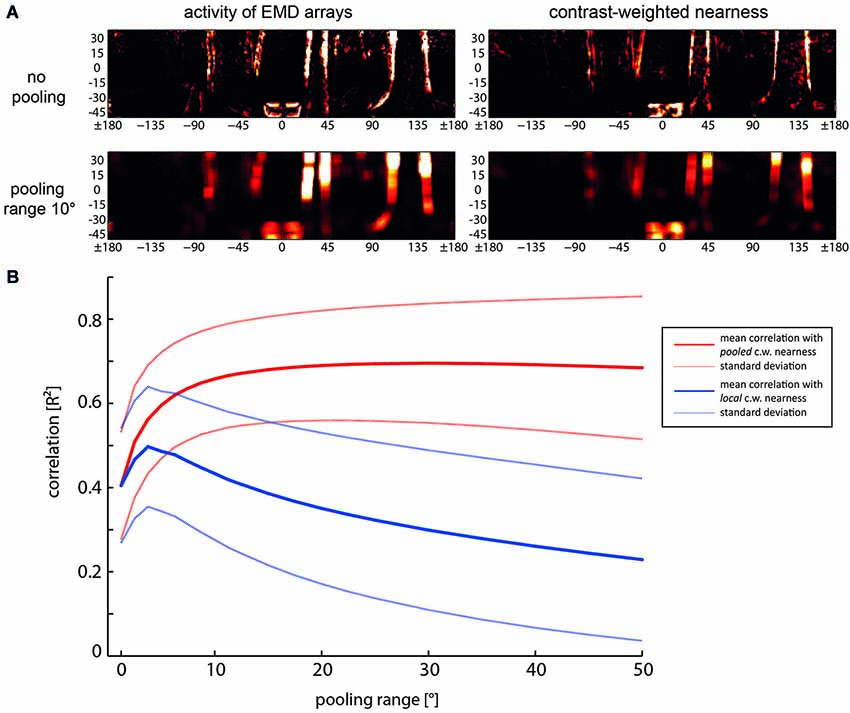

In contrast, if information about the spatial layout of the environment is required, it may be important to localize objects together with their nearness to the animal. Spatial pooling is then acceptable only over a relatively small area. Integrating the outputs of neighboring EMDs was found to increase considerably the reliability with which the boundaries of nearby objects are represented in the activity profile of EMDs; pooling over the direct and second neighbors is already sufficient. Enlarging the pooling area further does not significantly increase the contrast-weighted nearness information, but restricts the localizability of environmental features to the spatial range given by the receptive field size of the pooling neuron (Figure 5; Schwegmann et al., 2014). During translational self-motion, spatial pooling across larger areas of the visual field provides only averaged spatial information for each pooling area, without the ability to localize environmental features within it.

Figure 5. Relationship between spatial pooling of local motion information, the reliability of representing nearby contours and their localizability. (A) Examples of the activity distribution of EMD arrays (left) and the contrast-weighted nearness map (right) for no pooling (upper row) and for a pooling range of 10°, i.e., spatially integrating the output of a square array of 8 × 8 neighboring EMDs (bottom row). (B) Mean correlation (solid lines) and standard deviations (dashed lines) as a function of pooling range. Red lines: correlation of the pooled motion energy profile with the pooled contrast-weighted (c.w.) nearness map. Blue lines: correlation of the pooled motion energy profile with the local, non-pooled contrast-weighted nearness map, indicating the reduction of localizability with increasing pooling range (Data from Schwegmann et al., 2014).
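The localizability side of this trade-off (blue lines in Figure 5B) can be sketched in one dimension, assuming a spatially uncorrelated local map as a hypothetical stand-in for the contrast-weighted nearness map and a circular box filter instead of the two-dimensional 8 × 8 pooling used in the study. The reliability gain from pooling noisy EMD outputs (red lines) is not modeled here, and the function names are hypothetical.

```python
import numpy as np


def box_pool(profile, width):
    """Circular moving average over `width` neighboring samples (odd width)."""
    half = width // 2
    return np.mean([np.roll(profile, k) for k in range(-half, half + 1)], axis=0)


def localizability(profile, width):
    """Correlation of the pooled profile with the original, unpooled profile."""
    return float(np.corrcoef(box_pool(profile, width), profile)[0, 1])


rng = np.random.default_rng(1)
local_map = rng.normal(size=10_000)   # hypothetical, spatially uncorrelated local map
scores = {w: localizability(local_map, w) for w in (1, 3, 9, 27)}
print({w: round(s, 2) for w, s in scores.items()})
```

For such an uncorrelated map the correlation falls off roughly as 1/sqrt(width), i.e., close to 1, 0.58, 0.33 and 0.19 for the four pooling widths.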

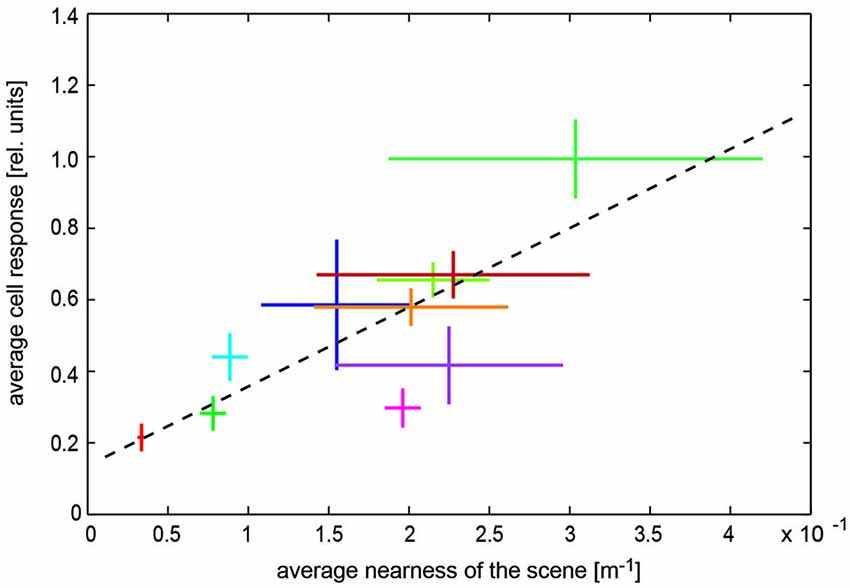

Experimentally, most information about how the spatial layout of the environment might be represented by the visual motion pathway during translational self-motion comes from recent experiments on LWCs, neurons that have usually been conceived as sensors for self-motion estimation because of their relatively large receptive fields (see above). However, individual LWCs are far from ideal for self-motion estimation, as their receptive fields are clearly spatially restricted and show distinct sensitivity peaks. Accordingly, even during constant-velocity motion they show pronounced response modulations resulting from textural features of the environment (Meyer et al., 2011; O’Carroll et al., 2011; Ullrich et al., 2014b). In addition, the responses of LWCs provide information about the spatial layout of the environment, at least on a coarse spatial scale, even on the short timescale of intersaccadic intervals: the intersaccadic response amplitudes evoked by ego-perspective movies were found to depend on the distance to the walls of the flight arena in which the corresponding behavioral experiments were performed, or on objects inserted close to the flight trajectory (Boeddeker et al., 2005; Kern et al., 2005; Karmeier et al., 2006; Liang et al., 2008, 2012; Hennig and Egelhaaf, 2012). Moreover, LWC responses reflect the overall depth structure of different natural environments (Figure 6; Ullrich et al., 2014a). Recently, it has even been shown that the intersaccadic responses of bee LWCs to visual stimuli experienced during navigation flights in the vicinity of a goal depend strongly on the spatial layout of the environment. The spatial landmark constellation that guides the bees to their goal leads to a characteristic time-dependent response profile in LWCs during the intersaccadic intervals of navigation flights (Mertes et al., 2014).

Figure 6. Representation of spatial information by visual wide-field neurons. Dependence of the responses of a blowfly LWC with a large receptive field (H1 neuron) on overall nearness during translatory self-motion in various cluttered natural environments. Data obtained in different environments are indicated by different colors. Horizontal bars: standard deviation of the “time-dependent nearness” during the translation sequence within a given scene, indicating the differences in spatial structure between environments. Vertical bars: standard deviation of the response modulations obtained during the translation sequence in a given scene. Corresponding mean values are given by the crossing points of the horizontal and vertical bars. Black dashed line: regression line illustrating the relation between nearness values and cell responses (Data from Ullrich et al., 2014a).

What is the range within which spatial information is represented on the basis of motion information? Under spatially constrained conditions, with flies flying at translational velocities of only slightly more than 0.5 m/s, the range within which significant distance-dependent intersaccadic responses are evoked amounts to approximately two meters (Kern et al., 2005; Liang et al., 2012). Since the retinal velocity of an object during translation is proportional to the velocity of self-motion and inversely proportional to the object's distance, the spatial range represented by LWCs can be expected to increase with increasing translational velocity. Accordingly, at the higher translational velocities characteristic of flights under spatially less constrained conditions, the range within which environmental objects lead to significant intersaccadic response increments extends to a few more meters (Ullrich et al., 2014a). From an ecological perspective, it appears economical and efficient that the behaviorally relevant spatial range represented by motion detection systems scales with locomotion velocity: a fast-moving animal can thus initiate an avoidance maneuver at a greater distance from an obstacle than when moving slowly.
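The scaling argument can be written out explicitly. For pure translation at speed v, a stationary object at distance d and azimuth θ from the heading moves across the retina at angular velocity v sin θ / d. If one assumes, purely for illustration, that an object is represented only when this velocity exceeds a fixed threshold, the maximal represented distance is v sin θ divided by that threshold and therefore grows linearly with speed. The 10 °/s threshold and the function names below are hypothetical and not fitted to LWC data; real LWC responses are graded rather than thresholded.

```python
import numpy as np


def retinal_velocity(speed, distance, azimuth_deg):
    """Angular velocity (deg/s) of a stationary object during pure translation.

    speed in m/s, distance in m, azimuth in deg measured from the heading.
    """
    return np.degrees(speed * np.sin(np.radians(azimuth_deg)) / distance)


def detection_range(speed, threshold_deg_s, azimuth_deg=90.0):
    """Largest distance (m) at which the retinal velocity still reaches the threshold."""
    return speed * np.sin(np.radians(azimuth_deg)) / np.radians(threshold_deg_s)


for v in (0.5, 1.0, 2.0):   # translational speeds in m/s
    print(f"{v} m/s -> range {detection_range(v, threshold_deg_s=10.0):.2f} m")
```

Because of the sin θ factor, this range is largest for objects at 90° to the flight direction and shrinks toward the focus of expansion, where translational flow vanishes.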

We can conclude from this experimental evidence that, during translational self-motion as is characteristic of the intersaccadic flight phases of flies and bees, even motion-sensitive cells with relatively large receptive fields provide spatial information about the environment. Although it is still unclear to what extent this information is exploited for behavioral control (see below), its potential functional significance is underlined by the fact that the object-induced responses observed during intersaccadic intervals are further increased relative to the background activity of the cell as a consequence of motion adaptation (Liang et al., 2008, 2011, 2012; Ullrich et al., 2014b).

Fast-flying animals, such as many insects, often need to respond to environmental cues at some distance, for instance, when they have to evade a potential obstacle in their flight path or when they use objects as landmarks to guide them to a previously learnt goal location. In such situations, optic flow is likely to be the most relevant source of spatial information. Accordingly, many behavioral analyses have implicated motion cues as decisive in controlling behavioral components of flying insects. Optic flow processing determines several aspects of landing behavior (Wagner, 1982; Lehrer et al., 1988; Srinivasan et al., 1989, 2001; Kimmerle et al., 1996; Evangelista et al., 2010; van Breugel and Dickinson, 2012; Baird et al., 2013), and is used for flower distance estimation and tracking (Lehrer et al., 1988; Kern and Varjú, 1998). Insects also seem to exploit retinal motion for collision avoidance (Tammero and Dickinson, 2002a,b; Reiser and Dickinson, 2003; Lindemann et al., 2008, 2012; Kern et al., 2012; van Breugel and Dickinson, 2012; Lindemann and Egelhaaf, 2013). Moreover, insects such as bees and wasps show a rich repertoire of visual navigation behavior that employs motion cues on a wide range of spatial scales. When a large distance to a goal needs to be covered, odometry, i.e., determining the distance flown, based on optic flow cues is a central constituent of the navigation mechanisms of bees (Srinivasan et al., 1997; Esch et al., 2001; Si et al., 2003; Tautz et al., 2004; Wolf, 2011; Eckles et al., 2012). However, even when the animal is already in the vicinity of its goal, it can use spatial cues based on optic flow to find the goal (Zeil, 1993b; Lehrer and Collett, 1994; Dittmar et al., 2010, 2011), although textural and other cues also play an important role in local navigation (Collett et al., 2002, 2006; Zeil et al., 2009; Zeil, 2012). Bees even seem to orchestrate their flights in specific ways that facilitate gathering spatial information, namely by intersaccadic movements with a strong sideways component (Lehrer, 1991; Zeil et al., 2009; Dittmar et al., 2010; Braun et al., 2012; Collett et al., 2013; Philippides et al., 2013; Riabinina et al., 2014; Boeddeker et al., submitted).

Turns, at least in flies and bees, are thought to be accomplished in a saccadic fashion in most behavioral contexts, including collision avoidance. Hence, understanding the mechanisms underlying collision avoidance means understanding which visual input during an intersaccadic interval elicits evasive saccades. There is consensus that intersaccadic optic flow plays a decisive role in controlling the direction and amplitude of saccades in this behavioral context. Despite discrepancies in detail, all proposed mechanisms for evoking saccades rely on extracting asymmetries between the optic flow patterns in front of the two eyes. Such asymmetries may be due to the location of the expansion focus in front of one eye or to a difference between the overall optic flow in the visual fields of the two eyes (Tammero and Dickinson, 2002b; Lindemann et al., 2008, 2012; Mronz and Lehmann, 2008; Kern et al., 2012; Lindemann and Egelhaaf, 2013). Not all parts of the visual field appear to be involved in saccade control of blowflies during collision avoidance: the intersaccadic optic flow in the lateral parts of the visual field does not play a role in determining saccade direction (Kern et al., 2012). This feature appears to be functional, as blowflies fly mainly forwards during intersaccades, with relatively small sideways components occurring mainly directly after saccades. These sideways components shift the pole of expansion of the flow field slightly towards frontolateral locations (Kern et al., 2012). In contrast, in Drosophila, which often hover and fly sideways (Ristroph et al., 2009), the optic flow, and thus the spatial information sensed in lateral and even rear parts of the visual field, has been concluded to be involved in saccade control during collision avoidance as well (Tammero and Dickinson, 2002b).
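The asymmetry principle can be illustrated with a deliberately simple rule, not any of the specific published models: sum the optic flow magnitude in the left and right frontal visual field and turn away from the side with more flow. Restricting the comparison to the frontal field echoes the blowfly result above, but the 60° limit, the distances and the function name `saccade_direction` are assumptions.

```python
import numpy as np


def saccade_direction(azimuth_deg, flow_magnitude, frontal_limit_deg=60.0):
    """+1 = turn left, -1 = turn right, 0 = no preference.

    Compares the summed optic flow magnitude in the left and right frontal
    visual field (azimuth positive to the left); directions beyond
    +/- frontal_limit_deg are ignored.
    """
    az = np.asarray(azimuth_deg, dtype=float)
    mag = np.asarray(flow_magnitude, dtype=float)
    frontal = np.abs(az) <= frontal_limit_deg
    left = mag[frontal & (az > 0)].sum()
    right = mag[frontal & (az < 0)].sum()
    if np.isclose(left, right):
        return 0
    # Turn away from the side with more flow, i.e. with nearer structures
    return 1 if right > left else -1


azimuth = np.arange(-90.0, 91.0, 5.0)
speed = 1.0                                          # m/s, forward translation
distance = np.full(azimuth.shape, 3.0)               # background at 3 m
distance[(azimuth >= 20) & (azimuth <= 40)] = 0.5    # near obstacle front-left
flow = np.degrees(speed * np.abs(np.sin(np.radians(azimuth))) / distance)
print(saccade_direction(azimuth, flow))              # -1: turn right, away from it
```

An equally near obstacle placed beyond 60° on the left would not change the decision under this rule, because lateral directions are excluded from the comparison.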

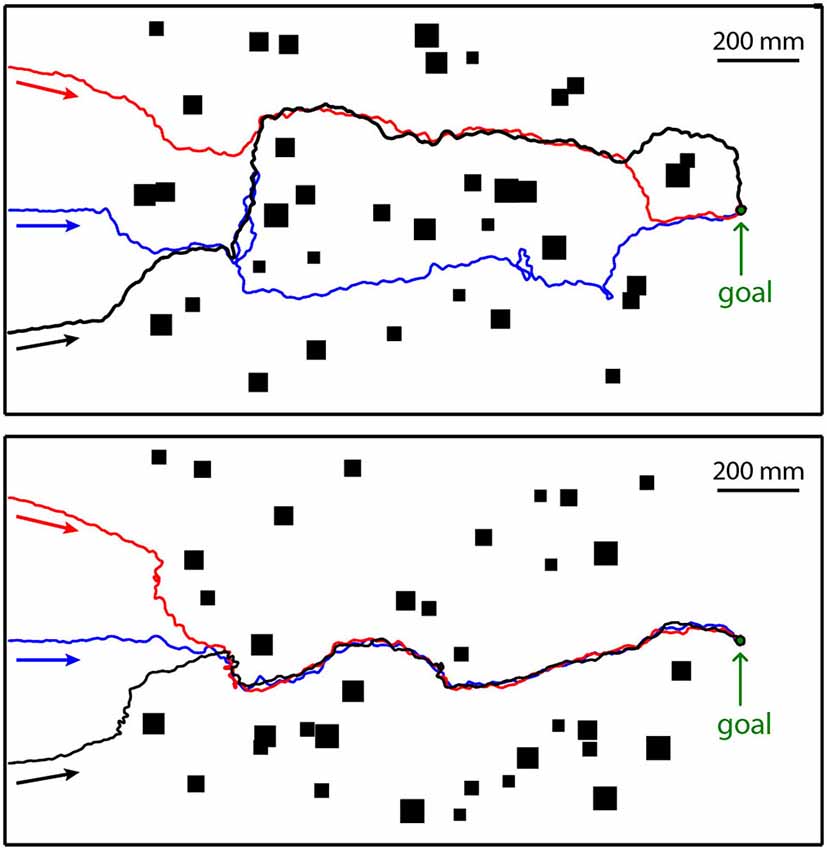

Nonetheless, systematic analyses based on models of LWCs with EMDs as their input revealed difficulties in the collision avoidance performance of a simulated insect that arise from the contrast and texture dependence of the local motion detectors (Lindemann et al., 2008, 2012; Lindemann and Egelhaaf, 2013). These difficulties can be reduced to some extent by implementing contrast normalization in the peripheral visual system (Babies et al., 2011). Recent modeling based on a somewhat different approach indicates an even more robust solution to the problem. Here, a spatial profile of the environment along the horizontal extent of the visual field is determined from local EMD-based motion measurements performed during short intersaccadic translatory flight segments. Although this spatial profile represents not pure nearness but also the contours of nearby environmental structures (Figure 7), it allows a locomotion vector to be determined that points in the direction in which a collision is least likely, and thus makes it possible, under most circumstances, to avoid colliding with obstacles. This holds even when the objects are camouflaged by being covered with the same texture as the background of the environment (Bertrand et al., submitted). If the collision avoidance algorithm is combined with an overall goal direction, leading, for example, to a previously learnt food source or a nest, the model insect tends to move on quite similar trajectories to the goal through a heavily cluttered environment, irrespective of the exact starting conditions, by employing just the local motion-based collision avoidance mechanism and no genuine route knowledge (Figure 7). Interestingly, these trajectories are reminiscent of the routes of ants heading for their nest hole from different starting locations, which are usually interpreted within the conceptual framework of navigation mechanisms (Wehner, 2003; Kohler and Wehner, 2005).

Figure 7. Collision avoidance while heading for a goal. The model insect starts at the left at three different positions (colored arrows) in two different cluttered environments (top and bottom diagrams). The goal is indicated at the right of the environment. The three resulting trajectories in each environment are given in red, blue and black. The objects as seen from above are indicated by black rectangles. The walls enclosing the environment are represented by thick black lines. The walls and the objects were covered with the same random texture. Direction of locomotion is indicated by arrows underneath trajectories (Data from Bertrand et al., submitted).
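A strongly simplified sketch in the spirit of this approach follows. The exact formulation of Bertrand et al. (submitted) is not given here, so the construction of the avoidance vector, its additive combination with the goal direction, the `weight` parameter and the function name `avoidance_heading` are all assumptions. The input is a hypothetical nearness profile over azimuth rather than an EMD-derived contrast-weighted profile.

```python
import numpy as np


def avoidance_heading(azimuth, nearness, goal_azimuth, weight=1.0):
    """Heading (rad, relative to the current heading; positive = left) obtained
    by adding an avoidance vector to the unit vector pointing at the goal.

    The avoidance vector points away from the nearness-weighted mean direction
    of the surroundings, so near structures push the heading away from them.
    """
    nx = np.mean(nearness * np.cos(azimuth))
    ny = np.mean(nearness * np.sin(azimuth))
    avoid = -np.array([nx, ny])
    goal = np.array([np.cos(goal_azimuth), np.sin(goal_azimuth)])
    combined = goal + weight * avoid
    return float(np.arctan2(combined[1], combined[0]))


azimuth = np.radians(np.arange(-180.0, 180.0, 1.0))
nearness = np.full(azimuth.shape, 0.2)                  # distant background (1/m)
obstacle = (azimuth >= np.radians(10)) & (azimuth <= np.radians(30))
nearness[obstacle] = 2.0                                # near object, front-left
heading = avoidance_heading(azimuth, nearness, goal_azimuth=0.0)
print(f"{np.degrees(heading):.1f} deg")                 # negative: deflected to the right
```

The heading is deflected to the right by a few degrees here; larger `weight` values or nearer obstacles increase the deflection, while a uniform surround leaves the goal direction unchanged.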

Whereas collision avoidance and landing are spatial tasks that must be solved by any flying insect, local navigation is especially relevant for particular insects, such as bees, wasps and ants, which care for their brood and therefore have to return to their nest after foraging. Apart from finding a collision-free way towards the area where the goal may reside, motion information may be employed to determine the exact goal location from the spatial configuration of objects, i.e., landmarks, in the vicinity of the goal (Lehrer, 1991; Zeil, 1993a,b; Lehrer and Collett, 1994; Collett and Zeil, 1996; Zeil et al., 2009; Dittmar et al., 2010, 2011; Braun et al., 2012; Collett et al., 2013; Philippides et al., 2013; Boeddeker et al., submitted). Motion information is especially relevant if the landmarks are largely camouflaged by textural properties similar to those of the background (Dittmar et al., 2010). Information about the landmark constellation around the goal is memorized during elaborate learning flights: the animal flies characteristic sequences of ever-increasing arcs while facing the area around the goal. During these learning flights, the animal is thought to gather relevant information about the spatial relationship between the goal and its surroundings. This information is subsequently used to relocate the goal when returning to it after an excursion (Collett et al., 2002, 2006; Zeil et al., 2009; Zeil, 2012). The mechanisms by which information about the landmark constellation is learnt and subsequently used to localize the goal are still controversial. However, optic flow information is likely to be required to detect texturally camouflaged landmarks and to derive the spatial cues that are actively generated during the intersaccadic intervals of translational flight. Textural cues characterizing the landmarks also seem to be relevant for localizing the goal, since bees were found to adjust their flight movements in the vicinity of the landmarks according to the landmarks’ specific textural properties (Dittmar et al., 2010; Braun et al., 2012). It remains to be shown in future behavioral experiments and model analyses whether the optic flow information and textural cues relevant for navigation performance can be accounted for by the joint velocity and texture dependence of biological movement detectors and of EMDs as their model equivalents. Alternatively, mechanisms may be required that process optic flow and environmental texture separately and combine both cues only at a later processing stage.

The nearness of objects is reflected in the optic flow generated on the eyes during translational self-motion as is characteristic of the intersaccades of insect flight. In many behavioral contexts nearby objects are particularly relevant. Examples are obstacles that need to be evaded, landing sites, or landmarks that indicate the location of an inconspicuous goal. The main assumption of this review is that the behaviorally highly relevant spatial information can be gained without sophisticated computational mechanisms from the optic flow generated as a consequence of translational locomotion through the environment.

However, movement detectors as are widespread in biological systems, and as can be modeled by correlation-type EMDs, do not veridically represent the velocity vectors of the optic flow, but also reflect textural information about the environment. This distinguishing feature has often been regarded as nothing but a nuisance of a simple motion detection mechanism. This view has recently been challenged by analyzing motion detectors with image flow as generated during translational movements through a wide range of cluttered natural environments. On this basis, the texture information has been suggested to be potentially of functional significance, because it essentially reflects the contours of nearby objects. Contrast borders have long been thought to be the main carrier of functionally relevant information about objects in artificial and natural scenes. This is evidenced by the well-established finding that contrast borders are enhanced by early visual processing in biological visual systems, including that of primates (e.g., Marr, 1982; van Hateren and Ruderman, 1998; Simoncelli and Olshausen, 2001; Seriès et al., 2004; Girshick et al., 2011; Berens et al., 2012). One major function of this type of peripheral information processing is thought to be the enhancement of contrast borders at the expense of the overall brightness of the image, as well as redundancy reduction in images. Independent of the particular conceptual framework, enhancing contrast borders is seen as advantageous for representing visual environments.
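Contrast-border enhancement at the expense of overall brightness can be illustrated with a difference-of-Gaussians (center-surround) filter, a common textbook model of early visual filtering that is assumed here for illustration; the filter widths and function names are arbitrary choices, not taken from the cited studies. Because both Gaussians are normalized, the kernel sums to zero: uniform regions produce zero output regardless of their brightness, while a luminance step produces opposite-signed peaks on either side of the border.

```python
import numpy as np


def gaussian_kernel(sigma, radius):
    """Normalized 1-D Gaussian sampled at integer offsets -radius..radius."""
    x = np.arange(-radius, radius + 1)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()


def center_surround(signal, sigma_center=1.0, sigma_surround=4.0, radius=16):
    """Difference-of-Gaussians filter: narrow excitatory center minus broad inhibitory surround."""
    dog = gaussian_kernel(sigma_center, radius) - gaussian_kernel(sigma_surround, radius)
    # Edge padding keeps the output the same length and avoids spurious borders
    padded = np.pad(signal, radius, mode="edge")
    return np.convolve(padded, dog, mode="valid")


# Luminance step: a dim region (10) next to a bright region (50)
step = np.concatenate([np.full(50, 10.0), np.full(50, 50.0)])
out = center_surround(step)
print(np.round(out[44:56], 2))
```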

The main conclusion of this paper is that the motion vision system of insects combines nearness and contour information and, during translatory self-motion, preferentially represents contrast borders of nearby environmental structures and/or objects. It simply exploits the fact that, in normal behavioral situations, all this information is required only when an animal is moving. The motion vision system then segregates, in a computationally parsimonious way, the environment into behaviorally relevant nearby objects and, at least in many behavioral contexts, less relevant distant structures. In our view, this characteristic matches one major task of the motion detection system: to provide behaviorally relevant information about the environment, rather than only to extract the velocity of self-motion or of moving objects. Based on this conclusion, motion detection should not be conceptualized exclusively in the context of velocity representation, which is certainly important in many contexts, but also in the context of gathering behaviorally relevant information about the environment.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The research of our group has been generously supported by the Deutsche Forschungsgemeinschaft (DFG). We also acknowledge the support for the publication fee by the Deutsche Forschungsgemeinschaft and the Open Access Publication Funds of Bielefeld University.

Babies, B., Lindemann, J. P., Egelhaaf, M., and Möller, R. (2011). Contrast-independent biologically inspired motion detection. Sensors (Basel) 11, 3303–3326. doi: 10.3390/s110303303

Baird, E., Boeddeker, N., Ibbotson, M. R., and Srinivasan, M. V. (2013). A universal strategy for visually guided landing. Proc. Natl. Acad. Sci. U S A 110, 18686–18691. doi: 10.1073/pnas.1314311110

Baird, E., Kornfeldt, T., and Dacke, M. (2010). Minimum viewing angle for visually guided ground speed control in bumblebees. J. Exp. Biol. 213, 1625–1632. doi: 10.1242/jeb.038802

Baird, E., Srinivasan, M. V., Zhang, S., and Cowling, A. (2005). Visual control of flight speed in honeybees. J. Exp. Biol. 208, 3895–3905. doi: 10.1242/jeb.01818

Baird, E., Srinivasan, M. V., Zhang, S., Lamont, R., and Cowling, A. (2006). “Visual control of flight speed and height in the honeybee,” in From Animals to Animats 9, eds S. Nolfi, G. Baldassarre, R. Calabretta, J. Hallam, D. Marocco, O. Miglino, J. A. Meyer and D. Parisi (Berlin / Heidelberg: Springer), 40–51.

Barnett, P. D., Nordström, K., and O’Carroll, D. C. (2010). Motion adaptation and the velocity coding of natural scenes. Curr. Biol. 20, 994–999. doi: 10.1016/j.cub.2010.03.072

Barron, J. L., Fleet, D. J., and Beauchemin, S. S. (1994). Performance of optic flow techniques. Int. J. Comput. Vis. 12, 43–77. doi: 10.1007/BF01420984

Beauchemin, S. S., and Barron, J. L. (1995). The computation of optical flow. ACM Comput. Surv. 27, 433–466. doi: 10.1145/212094.212141

Behnia, R., Clark, D. A., Carter, A. G., Clandinin, T. R., and Desplan, C. (2014). Processing properties of ON and OFF pathways for Drosophila motion detection. Nature 512, 427–430. doi: 10.1038/nature13427

Berens, P., Ecker, A. S., Cotton, R. J., Ma, W. J., Bethge, M., and Tolias, A. S. (2012). A fast and simple population code for orientation in primate V1. J. Neurosci. 32, 10618–10626. doi: 10.1523/jneurosci.1335-12.2012

Boeddeker, N., Dittmar, L., Stürzl, W., and Egelhaaf, M. (2010). The fine structure of honeybee head and body yaw movements in a homing task. Proc. Biol. Sci. 277, 1899–1906. doi: 10.1098/rspb.2009.2326

Boeddeker, N., and Hemmi, J. M. (2010). Visual gaze control during peering flight manoeuvres in honeybees. Proc. Biol. Sci. 277, 1209–1217. doi: 10.1098/rspb.2009.1928

Boeddeker, N., Lindemann, J. P., Egelhaaf, M., and Zeil, J. (2005). Responses of blowfly motion-sensitive neurons to reconstructed optic flow along outdoor flight paths. J. Comp. Physiol. A Neuroethol. Sens. Neural Behav. Physiol. 191, 1143–1155. doi: 10.1007/s00359-005-0038-9

Borst, A. (2000). Models of motion detection. Nat. Neurosci. 3, 1168. doi: 10.1038/81435

Borst, A. (2004). “Modelling fly motion vision,” in Computational Neuroscience: A Comprehensive Approach, ed J. Feng (Boca Raton, London, New York: Chapman and Hall/CRC), 397–429.

Borst, A. (2014). Fly visual course control: behaviour, algorithms and circuits. Nat. Rev. Neurosci. 15, 590–599. doi: 10.1038/nrn3799

Borst, A., and Egelhaaf, M. (1989). Principles of visual motion detection. Trends Neurosci. 12, 297–306. doi: 10.1016/0166-2236(89)90010-6

Borst, A., and Egelhaaf, M. (1993). “Detecting visual motion: theory and models,” in Visual Motion and its Role in the Stabilization of Gaze, eds F. A. Miles and J. Wallman (Amsterdam: Elsevier), 3–27.

Borst, A., and Haag, J. (2002). Neural networks in the cockpit of the fly. J. Comp. Physiol. A Neuroethol. Sens. Neural Behav. Physiol. 188, 419–437. doi: 10.1007/s00359-002-0316-8

Borst, A., Haag, J., and Reiff, D. F. (2010). Fly motion vision. Annu. Rev. Neurosci. 33, 49–70. doi: 10.1146/annurev-neuro-060909-153155

Borst, A., Reisenman, C., and Haag, J. (2003). Adaptation of response transients in fly motion vision. II: model studies. Vision Res. 43, 1311–1324. doi: 10.1016/s0042-6989(03)00092-0

Braun, E., Dittmar, L., Boeddeker, N., and Egelhaaf, M. (2012). Prototypical components of honeybee homing flight behaviour depend on the visual appearance of objects surrounding the goal. Front. Behav. Neurosci. 6:1. doi: 10.3389/fnbeh.2012.00001

Braun, E., Geurten, B., and Egelhaaf, M. (2010). Identifying prototypical components in behaviour using clustering algorithms. PLoS One 5:e9361. doi: 10.1371/journal.pone.0009361

Brenner, N., Bialek, W., and de Ruyter van Steveninck, R. (2000). Adaptive rescaling maximizes information transmission. Neuron 26, 695–702. doi: 10.1016/s0896-6273(00)81205-2

Brinkworth, R. S. A., and O’Carroll, D. C. (2010). “Bio-inspired model for robust motion detection under noisy conditions,” in Neural Networks (IJCNN), The 2010 International Joint Conference (Barcelona), 1–8. doi: 10.1109/ijcnn.2010.5596502

Brinkworth, R. S. A., O’Carroll, D. C., and Graham, L. J. (2009). Robust models for optic flow coding in natural scenes inspired by insect biology. PLoS Comput. Biol. 5:e1000555. doi: 10.1371/journal.pcbi.1000555

Chiappe, M. E., Seelig, J. D., Reiser, M. B., and Jayaraman, V. (2010). Walking modulates speed sensitivity in Drosophila motion vision. Curr. Biol. 20, 1470–1475. doi: 10.1016/j.cub.2010.06.072

Clifford, C. W. G., and Ibbotson, M. R. (2002). Fundamental mechanisms of visual motion detection: models, cells and functions. Prog. Neurobiol. 68, 409–437. doi: 10.1016/s0301-0082(02)00154-5

Collett, T. S. (1978). Peering—a locust behavior pattern for obtaining motion parallax information. J. Exp. Biol. 76, 237–241.

Collett, T. S., de Ibarra, N. H., Riabinina, O., and Philippides, A. (2013). Coordinating compass-based and nest-based flight directions during bumblebee learning and return flights. J. Exp. Biol. 216, 1105–1113. doi: 10.1242/jeb.081463

Collett, T. S., Graham, P., Harris, R. A., and Hempel-De-Ibarra, N. (2006). Navigational memories in ants and bees: memory retrieval when selecting and following routes. Adv. Study Behav. 36, 123–172. doi: 10.1016/s0065-3454(06)36003-2

Collett, M., Harland, D., and Collett, T. S. (2002). The use of landmarks and panoramic context in the performance of local vectors by navigating honeybees. J. Exp. Biol. 205, 807–814.

Collett, T. S., and Paterson, C. J. (1991). Relative motion parallax and target localization in the locust, Schistocerca gregaria. J. Comp. Physiol. A 169, 615–621. doi: 10.1007/bf00193551

Collett, T. S., and Zeil, J. (1996). Flights of learning. Curr. Dir. Psychol. Sci. 5, 149–155. doi: 10.1111/1467-8721.ep11512352

Dahmen, H. J., Franz, M. O., and Krapp, H. G. (2000). “Extracting ego-motion from optic flow: limits of accuracy and neuronal filters,” in Computational, Neural and Ecological Constraints of Visual Motion Processing, eds J. M. Zanker and J. Zeil (Berlin, Heidelberg, New York: Springer), 143–168.

David, C. T. (1979). Optomotor control of speed and height by free-flying Drosophila. J. Exp. Biol. 82, 389–392.

David, C. T. (1982). Competition between fixed and moving stripes in the control of orientation by flying Drosophila. Physiol. Entomol. 7, 151–156. doi: 10.1111/j.1365-3032.1982.tb00283.x

Dittmar, L., Egelhaaf, M., Stürzl, W., and Boeddeker, N. (2011). The behavioral relevance of landmark texture for honeybee homing. Front. Behav. Neurosci. 5:20. doi: 10.3389/fnbeh.2011.00020

Dittmar, L., Stürzl, W., Baird, E., Boeddeker, N., and Egelhaaf, M. (2010). Goal seeking in honeybees: matching of optic flow snapshots. J. Exp. Biol. 213, 2913–2923. doi: 10.1242/jeb.043737

Dror, R. O., O’Carroll, D. C., and Laughlin, S. B. (2001). Accuracy of velocity estimation by Reichardt correlators. J. Opt. Soc. Am. A Opt. Image. Sci. Vis. 18, 241–252. doi: 10.1364/josaa.18.000241

Dyhr, J. P., and Higgins, C. M. (2010). The spatial frequency tuning of optic-flow-dependent behaviors in the bumblebee Bombus impatiens. J. Exp. Biol. 213, 1643–1650. doi: 10.1242/jeb.041426

Eckles, M. A., Roubik, D. W., and Nieh, J. C. (2012). A stingless bee can use visual odometry to estimate both height and distance. J. Exp. Biol. 215, 3155–3160. doi: 10.1242/jeb.070540

Egelhaaf, M. (2006). “The neural computation of visual motion,” in Invertebrate Vision, eds E. Warrant and D. E. Nilsson (Cambridge, UK: Cambridge University Press), 399–461.

Egelhaaf, M., Boeddeker, N., Kern, R., and Lindemann, J. P. (2012). Spatial vision in insects is facilitated by shaping the dynamics of visual input through behavioral action. Front. Neural Circuits 6:108. doi: 10.3389/fncir.2012.00108

Egelhaaf, M., and Borst, A. (1989). Transient and steady-state response properties of movement detectors. J. Opt. Soc. Am. A 6, 116–127. doi: 10.1364/JOSAA.6.000116

Egelhaaf, M., and Borst, A. (1993). “Movement detection in arthropods,” in Visual Motion and its Role in the Stabilization of Gaze, eds F. A. Miles and J. Wallman (Amsterdam: Elsevier), 53–77.

Egelhaaf, M., Borst, A., and Reichardt, W. (1989). Computational structure of a biological motion detection system as revealed by local detector analysis in the fly’s nervous system. J. Opt. Soc. Am. A 6, 1070–1087. doi: 10.1364/josaa.6.001070

Esch, H. E., Zhang, S., Srinivasan, M. V., and Tautz, J. (2001). Honeybee dances communicate distances measured by optic flow. Nature 411, 581–583. doi: 10.1038/35079072

Evangelista, C., Kraft, P., Dacke, M., Reinhard, J., and Srinivasan, M. V. (2010). The moment before touchdown: landing manoeuvres of the honeybee Apis mellifera. J. Exp. Biol. 213, 262–270. doi: 10.1242/jeb.037465

Fairhall, A. L., Lewen, G. D., Bialek, W., and de Ruyter van Steveninck, R. R. (2001). Efficiency and ambiguity in an adaptive neural code. Nature 412, 787–792. doi: 10.1038/35090500

Farina, W. M., Kramer, D., and Varjú, D. (1995). The response of the hovering hawk moth Macroglossum stellatarum to translatory pattern motion. J. Comp. Physiol. A 176, 551–562. doi: 10.1007/bf00196420

Fleet, D. J., and Weiss, Y. (2005). “Optic flow estimation,” in Mathematical Models in Computer Vision: The Handbook, eds N. Paragios, Y. Chen and O. Faugeras (Berlin, Heidelberg, New York: Springer), 239–258.

Franz, M. O., Chahl, J. S., and Krapp, H. G. (2004). Insect-inspired estimation of egomotion. Neural Comput. 16, 2245–2260. doi: 10.1162/0899766041941899

Franz, M. O., and Krapp, H. G. (2000). Wide-field, motion-sensitive neurons and optimal matched filters for optic flow. Biol. Cybern. 83, 185–197. doi: 10.1007/s004220000163

Freifeld, L., Clark, D. A., Schnitzer, M. J., Horowitz, M. A., and Clandinin, T. R. (2013). GABAergic lateral interactions tune the early stages of visual processing in Drosophila. Neuron 78, 1075–1089. doi: 10.1016/j.neuron.2013.04.024

Fry, S. N., Rohrseitz, N., Straw, A. D., and Dickinson, M. H. (2009). Visual control of flight speed in Drosophila melanogaster. J. Exp. Biol. 212, 1120–1130. doi: 10.1242/jeb.020768

Frye, M. A., and Dickinson, M. H. (2007). Visual edge orientation shapes free-flight behavior in Drosophila. Fly (Austin) 1, 153–154. doi: 10.4161/fly.4563

Geurten, B. R. H., Kern, R., Braun, E., and Egelhaaf, M. (2010). A syntax of hoverfly flight prototypes. J. Exp. Biol. 213, 2461–2475. doi: 10.1242/jeb.036079

Geurten, B. R. H., Kern, R., and Egelhaaf, M. (2012). Species-specific flight styles of flies are reflected in the response dynamics of a homolog motion-sensitive neuron. Front. Integr. Neurosci. 6:11. doi: 10.3389/fnint.2012.00011

Girshick, A. R., Landy, M. S., and Simoncelli, E. P. (2011). Cardinal rules: visual orientation perception reflects knowledge of environmental statistics. Nat. Neurosci. 14, 926–932. doi: 10.1038/nn.2831

Hausen, K. (1981). Monocular and binocular computation of motion in the lobula plate of the fly. Verh. Dtsch. Zool. Ges. 74, 49–70.

Heitwerth, J., Kern, R., van Hateren, J. H., and Egelhaaf, M. (2005). Motion adaptation leads to parsimonious encoding of natural optic flow by blowfly motion vision system. J. Neurophysiol. 94, 1761–1769. doi: 10.1152/jn.00308.2005

Hennig, P., and Egelhaaf, M. (2012). Neuronal encoding of object and distance information: a model simulation study on naturalistic optic flow processing. Front. Neural Circuits 6:14. doi: 10.3389/fncir.2012.00014

Hennig, P., Kern, R., and Egelhaaf, M. (2011). Binocular integration of visual information: a model study on naturalistic optic flow processing. Front. Neural Circuits 5:4. doi: 10.3389/fncir.2011.00004

Hopp, E., Borst, A., and Haag, J. (2014). Subcellular mapping of dendritic activity in optic flow processing neurons. J. Comp. Physiol. A Neuroethol. Sens. Neural Behav. Physiol. 200, 359–370. doi: 10.1007/s00359-014-0893-3

Horstmann, W., Egelhaaf, M., and Warzecha, A.-K. (2000). Synaptic interactions increase optic flow specificity. Eur. J. Neurosci. 12, 2157–2165. doi: 10.1046/j.1460-9568.2000.00094.x

Joesch, M., Weber, F., Eichner, H., and Borst, A. (2013). Functional specialization of parallel motion detection circuits in the fly. J. Neurosci. 33, 902–905. doi: 10.1523/JNEUROSCI.3374-12.2013

Jung, S. N., Borst, A., and Haag, J. (2011). Flight activity alters velocity tuning of fly motion-sensitive neurons. J. Neurosci. 31, 9231–9237. doi: 10.1523/JNEUROSCI.1138-11.2011

Karmeier, K., Krapp, H. G., and Egelhaaf, M. (2003). Robustness of the tuning of fly visual interneurons to rotatory optic flow. J. Neurophysiol. 90, 1626–1634. doi: 10.1152/jn.00234.2003

Karmeier, K., van Hateren, J. H., Kern, R., and Egelhaaf, M. (2006). Encoding of naturalistic optic flow by a population of blowfly motion sensitive neurons. J. Neurophysiol. 96, 1602–1614. doi: 10.1152/jn.00023.2006

Kern, R., Boeddeker, N., Dittmar, L., and Egelhaaf, M. (2012). Blowfly flight characteristics are shaped by environmental features and controlled by optic flow information. J. Exp. Biol. 215, 2501–2514. doi: 10.1242/jeb.061713

Kern, R., van Hateren, J. H., Michaelis, C., Lindemann, J. P., and Egelhaaf, M. (2005). Function of a fly motion-sensitive neuron matches eye movements during free flight. PLoS Biol. 3:e171. doi: 10.1371/journal.pbio.0030171

Kern, R., and Varjú, D. (1998). Visual position stabilization in the hummingbird hawk moth, Macroglossum stellatarum L.: I. Behavioural analysis. J. Comp. Physiol. A 182, 225–237. doi: 10.1007/s003590050173

Kimmerle, B., Srinivasan, M. V., and Egelhaaf, M. (1996). Object detection by relative motion in freely flying flies. Naturwissenschaften 83, 380–381. doi: 10.1007/s001140050305

Koenderink, J. J. (1986). Optic flow. Vision Res. 26, 161–179. doi: 10.1016/0042-6989(86)90078-7

Kohler, M., and Wehner, R. (2005). Idiosyncratic route-based memories in desert ants, Melophorus bagoti: how do they interact with path-integration vectors? Neurobiol. Learn. Mem. 83, 1–12. doi: 10.1016/j.nlm.2004.05.011

Kral, K. (2003). Behavioural-analytical studies of the role of head movements in depth perception in insects, birds and mammals. Behav. Processes 64, 1–12. doi: 10.1016/s0376-6357(03)00054-8

Kral, K., and Poteser, M. (1997). Motion parallax as a source of distance information in locusts and mantids. J. Insect Behav. 10, 145–163. doi: 10.1007/bf02765480

Krapp, H. G. (2000). “Neuronal matched filters for optic flow processing in flying insects,” in Neuronal Processing of Optic Flow, ed M. Lappe (San Diego: Academic Press), 93–120.

Krapp, H. G., Hengstenberg, R., and Egelhaaf, M. (2001). Binocular contribution to optic flow processing in the fly visual system. J. Neurophysiol. 85, 724–734.

Krapp, H. G., Hengstenberg, B., and Hengstenberg, R. (1998). Dendritic structure and receptive-field organization of optic flow processing interneurons in the fly. J. Neurophysiol. 79, 1902–1917.

Kurtz, R. (2012). “Adaptive encoding of motion information in the fly visual system,” in Frontiers in Sensing, eds F. Barth, J. Humphrey and M. V. Srinivasan (Wien, NY: Springer), 115–128. doi: 10.1007/978-3-211-99749-9_8

Kurtz, R., Egelhaaf, M., Meyer, H. G., and Kern, R. (2009). Adaptation accentuates responses of fly motion-sensitive visual neurons to sudden stimulus changes. Proc. Biol. Sci. 276, 3711–3719. doi: 10.1098/rspb.2009.0596

Lehrer, M., and Collett, T. S. (1994). Approaching and departing bees learn different cues to the distance of a landmark. J. Comp. Physiol. A 175, 171–177. doi: 10.1007/bf00215113

Lehrer, M., Srinivasan, M. V., Zhang, S. W., and Horridge, G. A. (1988). Motion cues provide the bee’s visual world with a third dimension. Nature 332, 356–357. doi: 10.1038/332356a0

Liang, P., Heitwerth, J., Kern, R., Kurtz, R., and Egelhaaf, M. (2012). Object representation and distance encoding in three-dimensional environments by a neural circuit in the visual system of the blowfly. J. Neurophysiol. 107, 3446–3457. doi: 10.1152/jn.00530.2011

Liang, P., Kern, R., and Egelhaaf, M. (2008). Motion adaptation enhances object-induced neural activity in three-dimensional virtual environment. J. Neurosci. 28, 11328–11332. doi: 10.1523/JNEUROSCI.0203-08.2008

Liang, P., Kern, R., Kurtz, R., and Egelhaaf, M. (2011). Impact of visual motion adaptation on neural responses to objects and its dependence on the temporal characteristics of optic flow. J. Neurophysiol. 105, 1825–1834. doi: 10.1152/jn.00359.2010
