- 1 BYD Company Limited, Shenzhen, China

- 2 School of Statistics, Renmin University of China, Beijing, China

- 3 School of Computer Science and Technology, Xinjiang University, Urumqi, China

User perception of mobile games is crucial for improving the user experience and thus enhancing game profitability. However, the data captured in a game are sparse, which can make model performance unstable. This paper proposes a new method, the balanced graph factorization machine (BGFM), which builds on existing algorithms and accounts for both data imbalance and important high-dimensional features. The class distribution is first balanced by Borderline-SMOTE oversampling, and the features are then represented as a graph. The key component of BGFM is an interaction mechanism that selects and aggregates beneficial feature interactions, which are represented as edges in the graph. BGFM then combines factorization machine (FM) and graph neural network (GNN) strategies to model feature interactions of arbitrary order in the graph, with an attention mechanism that assigns weights to the individual feature interactions. Experiments were conducted on a collected game perception dataset. The proposed BGFM was compared with eight state-of-the-art models and significantly outperformed all of them in terms of AUC, precision, recall, and F-measure.

1 Introduction

Mobile games have gained a large share of global business, especially during the COVID-induced slump in other entertainment businesses and activities. Game-related services, from start to finish, interact with each other in multiple directions. The complex functionality that a user requires during play depends on multiple services, each providing a different function, working together. The application selects the most appropriate services from many alternatives and combines them. In a real Internet environment, multiple service providers usually offer services with the required functionality. These services are distributed differently and hosted on servers in different user regions, and they are combined through network selection and application invocation to realize the complex functionality the user requires. Therefore, identifying the service with the most suitable quality is the key to improving the game user’s perceived experience.

When investigating how service quality affects user experience, it is necessary to consider user and environmental factors such as the user’s level of play, game mechanics, game team, and individual performance. Existing studies on game user perception generally proceed in four steps: they clarify the multiple interrelated dimensions of game perception; establish an objective, easy-to-measure mapping between service quality indices and experience quality; account for the influence of the other dimensions on that mapping; and finally assess or predict game perception from the objective features in order to optimize it. However, these studies pay too little attention to how features other than service quality affect game perception.

This study aims to mitigate some deficiencies of existing algorithms with an alternative approach. To this end, a new method called the Balanced Graph Factorization Machine (BGFM), which considers data imbalance and the importance of high-dimensional features, is developed and tested. The class distribution is first balanced by Borderline-SMOTE oversampling, and the features are then represented as a graph. The key component of BGFM is an interaction mechanism that selects and aggregates beneficial feature interactions, which are represented as edges in the graph. BGFM then combines factorization machine (FM) and graph neural network (GNN) strategies to model feature interactions of arbitrary order in the graph, with an attention mechanism that assigns weights to the individual feature interactions. The main contributions of this paper are as follows:

- The strengths and weaknesses of FM and GNN in modeling feature interactions are analyzed. To overcome their weaknesses while exploiting their strengths, a new model for feature interaction modeling, BGFM, is proposed, which bridges the gap between GNN and FM. The features are the nodes of the graph, and the pairwise interactions between features are the edges connecting the nodes, which makes it possible to address the weaknesses of FM with the strengths of GNN.

- An attention mechanism that computes the similarity between feature interactions is introduced to ensure the robustness of BGFM. It enhances the positive effect of effective features while reducing the negative effect of biased features.

- Several experiments were conducted on the game QoE dataset. The results show that the proposed BGFM performs well and outperforms the existing methods.

2 Related work

Previous studies of game user perception have focused only on the correspondence between QoS parameters and game QoE (Wattimena et al., 2006; Koo et al., 2007; Denieffe et al., 2007). This was initially done using linear models (e.g., logistic regression and generalized regression) to generate user game perception scores (Pornpongtechavanich et al., 2022). User-perceived assessment models based on machine learning techniques, such as QoE prediction models built with SVM (Suznjevic et al., 2019), have become a research hotspot because they predict user perception effectively. However, these models ignore useful but unobserved feature interactions in the data, whose value is evidenced by the effectiveness of latent variable models (Sun et al., 2013). Factorization machines (Rendle, 2010) provide a general-purpose predictor that efficiently models interactions between features in linear time.

Yang et al. (2021) transformed location information into neighborhood information and added it to a factorization machine, yielding the LBFM model. More recently, Wang et al. (2022) proposed the LDFM model, which uses information entropy and the location projection of users and services. While these algorithms extend the dataset somewhat, they do not distinguish between different feature crosses, which makes the model performance fluctuate. He and Chua (2017) proposed neural factorization machines (NFM) for sparse predictive analytics. Xiao and Ye (2017) added a neural network strategy to the earlier models and proposed the AFM model, which distinguishes between different second-order feature combinations through an attention mechanism. Hong et al. (2019) proposed interaction-aware factorization machines for recommender systems, considering that the sparsity of perception data can lead to fluctuations in model performance. To the best of the authors’ knowledge, the only data-driven study of game user perception that considered the effects of multiple factors is our previous paper (Xie and Jia, 2022), which introduced the location-time-aware factorization machine (LTFM), based on fuzzy set theory, for game perception.

Despite some progress in the relevant research, two major aspects of the problem need further clarification. On the one hand, poor game user perceptions are a minority occurrence, much like the positive samples in transaction fraud risk prediction in banks. This inevitably leads to the problem of data imbalance. The collected game user perception data fall into three evaluation categories, excellent, good, and poor, with an approximate ratio of 5:1:1. In LTFM, the data are divided by tiers to reduce the impact of data imbalance on the overall performance of the model. Although that model outperformed others, there is still much room for improvement in several respects.

On the other hand, a factorization machine is a model of feature interactions. The core of FM is to learn a latent vector for each one-hot encoded feature and then to model the interaction between two features by the inner product of their vectors (Rendle, 2010). FM has been used in Cheng et al. (2016) and Guo et al. (2017), where it exhibited at least two weak points: (i) it failed to capture higher-order feature interactions, and (ii) it assigned the same weight to all feature interactions, so that useless interactions caused the model to overfit (Zhang et al., 2016; Su et al., 2021). Attempts have been made to extend FM to higher-order feature interactions by introducing deep neural networks (DNNs). The Neural Factorization Machine (NFM) combines DNNs with a bi-interaction layer to obtain information about higher-order feature interactions (He and Chua, 2017). The Wide & Deep and DeepFM models combine shallow and deep structures to achieve multi-order feature interactions (Cheng et al., 2016; Guo et al., 2017). However, models that learn interactions implicitly through DNNs are usually weakly interpretable, whereas graph neural networks (GNNs) provide a promising alternative for capturing higher-order interactions between features (Zhang C. et al., 2021; Hamilton et al., 2017). The core idea of a GNN is to stack layers, each of which aggregates the features of related neighboring nodes. As a result, higher-order interactions between features can be explicitly encoded into the embedding, which inspired this study.
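To make the inner-product modeling concrete, the following minimal Python sketch evaluates the second-order FM score of Rendle (2010), $\hat{y}(\mathbf{x}) = w_0 + \sum_i w_i x_i + \sum_{i<j} \langle \mathbf{v}_i, \mathbf{v}_j \rangle x_i x_j$, once with the linear-time reformulation and once with the explicit double sum. The function names, toy dimensions, and random parameters are illustrative assumptions and are not taken from the experiments in this paper.

```python
import random

def fm_predict(x, w0, w, V):
    """Second-order FM score using the O(n*k) reformulation of the pairwise term."""
    n, k = len(V), len(V[0])
    linear = w0 + sum(w[i] * x[i] for i in range(n))
    pairwise = 0.0
    for f in range(k):
        # 0.5 * [(sum_i v_if x_i)^2 - sum_i (v_if x_i)^2] for latent factor f
        s = sum(V[i][f] * x[i] for i in range(n))
        s_sq = sum((V[i][f] * x[i]) ** 2 for i in range(n))
        pairwise += 0.5 * (s * s - s_sq)
    return linear + pairwise

def fm_predict_naive(x, w0, w, V):
    """Same score written as the explicit sum over all feature pairs i < j."""
    n = len(V)
    score = w0 + sum(w[i] * x[i] for i in range(n))
    for i in range(n):
        for j in range(i + 1, n):
            dot = sum(a * b for a, b in zip(V[i], V[j]))
            score += dot * x[i] * x[j]
    return score

rng = random.Random(0)
n_features, k = 6, 3  # hypothetical sizes
V = [[rng.uniform(-1, 1) for _ in range(k)] for _ in range(n_features)]
w = [rng.uniform(-1, 1) for _ in range(n_features)]
x = [1.0, 0.0, 0.0, 1.0, 0.0, 1.0]  # three active one-hot features
fast = fm_predict(x, 0.1, w, V)
slow = fm_predict_naive(x, 0.1, w, V)
print(fast, slow)
```

Both functions return the same value up to rounding. Every pair receives a weight fixed by its inner product, and no term involves more than two features, which corresponds to the two weak points (i) and (ii) noted above.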

All in all, there is a great need to evaluate the perceived experience of game users. The proposed BGFM has two main advantages over previous studies:

- Treating features as nodes and pairwise interactions between features as edges bridges GNN and FM, making it possible to address the weaknesses of FM via GNN.

- The attention mechanism assigns different weights to different feature interactions, which enhances the contribution of effective features and reduces the influence of biased features.

3 Proposed method

To address the above algorithmic pain points in game perception research, we propose the Balanced Graph Factorization Machine (BGFM) model. This section first outlines the overall framework and working principle of BGFM. BGFM first applies Borderline-SMOTE to correct the unbalanced distribution of the training data, which would otherwise cause the model performance to fluctuate. We then focus on how to model higher-order beneficial feature interactions. For this purpose, we design a dedicated mechanism in BGFM with two main parts: the selection of beneficial feature interactions and interaction aggregation. The implementation principles of both parts are described in detail. Finally, model prediction and optimization are discussed.

3.1 BGFM

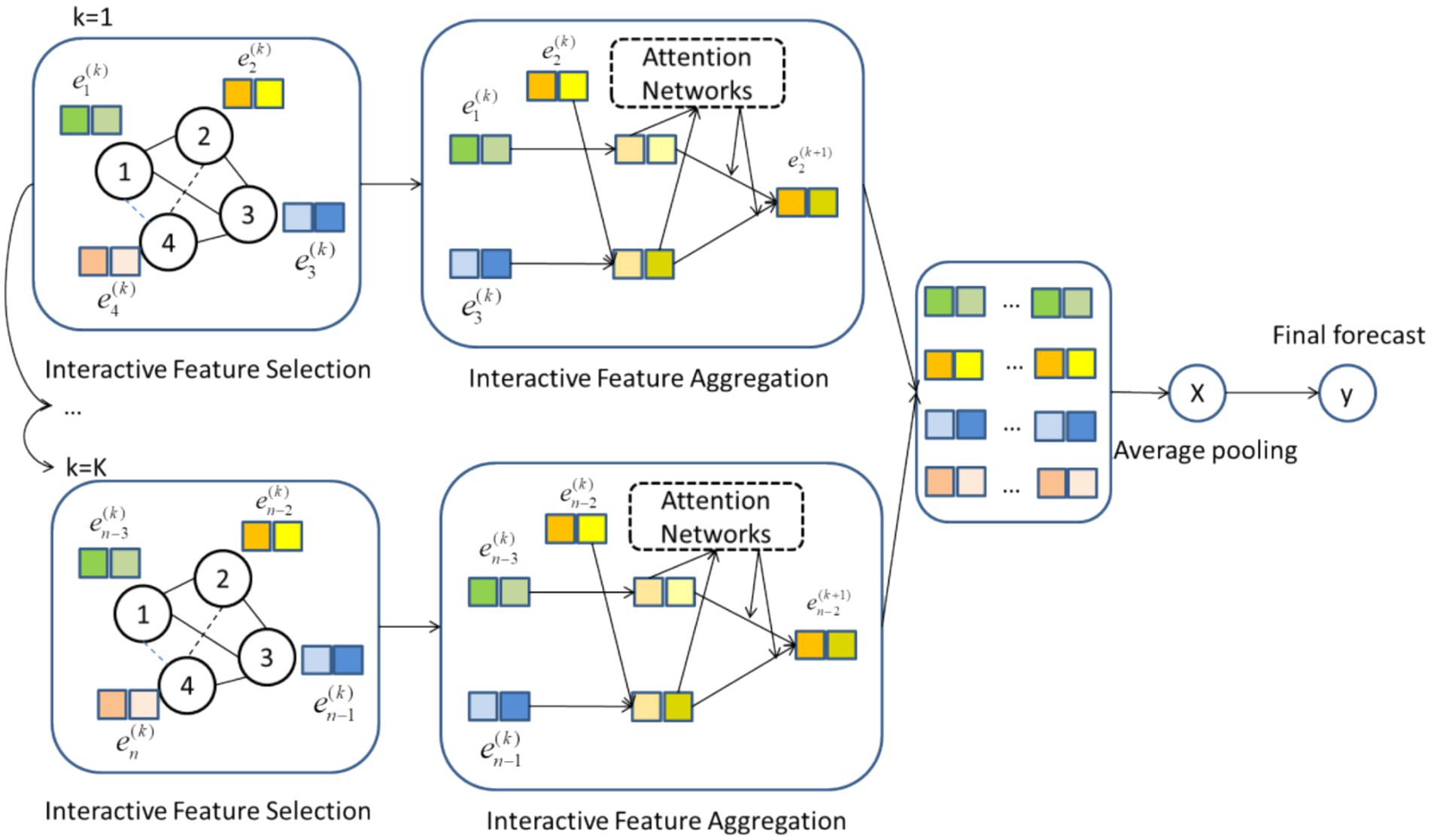

Figure 1 shows the network structure of BGFM. The graph flexibly represents higher-order associations between features. Edges in BGFM are useful feature interactions obtained by model aggregation. After resolving the data imbalance, beneficial feature interactions are selected. After learning by the attention mechanism, different feature interactions are given different weights and jointly output for final prediction.

BGFM updates the network layer by layer. The inputs are passed through a feature embedding layer, and the resulting embeddings serve as the initial node representations of BGFM, $\mathbf{e}_i^{(1)} = \mathbf{e}_i$, where $\mathbf{e}_i^{(k)}$ is the latest embedding of feature $i$ at the $k$-th layer. The model initially has no edge information, so the edges are first obtained by the interaction selection component. The resulting edge information is then aggregated over each node’s neighborhood to update the feature embeddings.

Existing methods for learning from unbalanced data can be divided into three categories. BGFM uses a data-level solution, because the other approaches lack flexibility and robustness. A data-level solution greatly reduces the workload of model training, improves efficiency, and can be combined with various classifiers. Borderline-SMOTE is an extension of SMOTE that takes the effect of noisy samples into account: it generates new samples only from the minority-class samples labeled Danger, that is, those lying on the class border, which yields a balanced distribution of the training set. Once the imbalance of the perception data has been resolved, each layer of BGFM consists of two main components, which are described in detail next.
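The oversampling step can be sketched as follows. This is a minimal pure-Python illustration of the Borderline-SMOTE idea, assuming Euclidean distance and the usual Danger rule (at least half, but not all, of the $k$ nearest neighbors belong to the majority class). The function names, the toy data, and the parameter values are hypothetical and do not reproduce the preprocessing used in the experiments.

```python
import math
import random

def nearest(idx, points, candidates, k):
    """Return the k indices in `candidates` closest to points[idx] (excluding idx)."""
    others = [j for j in candidates if j != idx]
    others.sort(key=lambda j: math.dist(points[idx], points[j]))
    return others[:k]

def borderline_smote(X, y, minority, k=3, n_new=5, seed=0):
    """Create n_new synthetic minority samples, seeded only from Danger points."""
    rng = random.Random(seed)
    all_idx = list(range(len(X)))
    min_idx = [i for i in all_idx if y[i] == minority]
    danger = []
    for i in min_idx:
        n_major = sum(1 for j in nearest(i, X, all_idx, k) if y[j] != minority)
        # Danger: at least half, but not all, of the k neighbours are majority.
        # Points whose neighbours are all majority are treated as noise.
        if k / 2 <= n_major < k:
            danger.append(i)
    synthetic = []
    if not danger or len(min_idx) < 2:
        return danger, synthetic
    for _ in range(n_new):
        i = rng.choice(danger)
        j = rng.choice(nearest(i, X, min_idx, k))  # a minority neighbour
        gap = rng.random()  # interpolation factor in [0, 1)
        synthetic.append([a + gap * (b - a) for a, b in zip(X[i], X[j])])
    return danger, synthetic

# Toy data: six majority points (label 0) and five minority points (label 1).
X = [[0, 0], [1, 0], [0, 1], [1, 1], [2, 1], [1, 2],
     [2, 2], [2.8, 2.8], [3, 4], [4, 3], [4, 4]]
y = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1]
danger, new_points = borderline_smote(X, y, minority=1)
print("danger indices:", danger)
print("synthetic samples:", new_points)
```

In this toy set only the minority point at (2, 2) sits on the class border, so all synthetic samples are interpolated between it and its nearest minority neighbors. Minority points deep inside their own cluster are left untouched.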

3.2 Interaction feature selection

We devised a mechanism to obtain beneficial pairwise feature interactions. The mechanism infers the connections between perceptual features through the graph structure, which models higher-order connections between features. However, an edge between two nodes either exists or does not, so the graph structure is discrete and the selection process cannot be optimized directly with gradient descent-based techniques.

This limitation is resolved by replacing the set of edges with a weighted adjacency matrix $P^{(k)}$, whose entry $p_{ij}^{(k)}$ is interpreted as the likelihood that the edge between features $i$ and $j$ exists, that is, as a measure of how important the interaction between the two features is. A different graph structure is learned at each $k$-th layer instead of reusing a previously derived graph, which provides higher performance. Specifically, if the graph structure of each layer is fixed, the model can only produce fixed-form outputs, whereas our model learns the structure adaptively and can therefore model associations of beneficial features.

This section designs a metric function to identify beneficial feature interactions. The metric function calculates the weights of the edges. An NFM-based function is used to evaluate the edge weights (He and Chua, 2017): the element-wise product of the two feature vectors is converted into a scalar by a multilayer perceptron (MLP) with one hidden layer, as given in Equation 1.

where $W_1$, $W_2$, $b_1$, and $b_2$ are the parameters of the MLP, and the two activation functions are denoted by $\delta$ and $\sigma$, respectively. It is worth noting that the metric function $f$ is invariant to the order of its inputs, that is, $f(\mathbf{e}_i, \mathbf{e}_j) = f(\mathbf{e}_j, \mathbf{e}_i)$, so the same pair of nodes always receives the same edge weight. This continuous modeling of the graph structure allows the gradient to backpropagate. Since there is no ground-truth graph structure, the gradient is driven by the deviation between the model’s estimated and actual values. The interaction of a feature pair is treated as a single input, and its weight is estimated using the MLP. Euclidean distance or other distance metrics can also be chosen (Zhang W. et al., 2021).
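One form of the metric function that is consistent with this description is $f(\mathbf{e}_i, \mathbf{e}_j) = \sigma\big(W_2\, \delta(W_1 (\mathbf{e}_i \odot \mathbf{e}_j) + b_1) + b_2\big)$. The sketch below implements it in plain Python under the assumption that $\delta$ is ReLU and $\sigma$ is the sigmoid; these choices, the dimensions, and the random parameters are assumptions made for illustration only.

```python
import math
import random

def edge_weight(e_i, e_j, W1, b1, w2, b2):
    """Score one feature pair: sigmoid(w2 . relu(W1 (e_i * e_j) + b1) + b2)."""
    prod = [a * b for a, b in zip(e_i, e_j)]  # element-wise product
    hidden = [max(0.0, sum(w * p for w, p in zip(row, prod)) + b)
              for row, b in zip(W1, b1)]      # single hidden layer with ReLU (assumed)
    z = sum(w * h for w, h in zip(w2, hidden)) + b2
    return 1.0 / (1.0 + math.exp(-z))         # sigmoid (assumed) maps to (0, 1)

rng = random.Random(0)
d, hidden_dim = 4, 3  # hypothetical embedding and hidden sizes
W1 = [[rng.uniform(-1, 1) for _ in range(d)] for _ in range(hidden_dim)]
b1 = [0.0] * hidden_dim
w2 = [rng.uniform(-1, 1) for _ in range(hidden_dim)]
b2 = 0.0
e_a = [rng.uniform(-1, 1) for _ in range(d)]
e_b = [rng.uniform(-1, 1) for _ in range(d)]
p_ab = edge_weight(e_a, e_b, W1, b1, w2, b2)
p_ba = edge_weight(e_b, e_a, W1, b1, w2, b2)
print(p_ab, p_ba)
```

Because the element-wise product is commutative, swapping the two inputs gives the same edge weight, and because every operation is differentiable almost everywhere, the weight can be trained by backpropagation.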

3.3 Interaction aggregation

After selecting the beneficial feature interactions, the feature representation is updated by performing an interaction aggregation operation. For the target feature node $i$, the attention coefficient of each feature interaction is measured while aggregating its beneficial interactions with its neighbors. A learnable projection vector $\mathbf{a}$ and a nonlinear activation function are applied to measure the attention coefficients, as given in Equation 2.

The coefficient $c_{ij}$ indicates the importance of the interaction between features $i$ and $j$. In this paper, $c_{ij}$ is computed only for nodes $j \in \mathcal{N}_i$, where $\mathcal{N}_i$ denotes the neighbors of node $i$, that is, the set of features whose interactions with $i$ are beneficial. The coefficients are normalized across all choices of $j$ using the softmax function, as shown in Equation 3.

where $z_i$ is the output value of the $i$-th node, the normalized outputs range from 0 to 1, and $C$ is the number of nodes that the network finally outputs, that is, the number of categories that can be classified. This makes it easy to compare the coefficients obtained between different feature nodes. After obtaining the normalized attention coefficients, the weighted linear combination of the feature interactions, followed by a nonlinearity, is computed as the new feature representation, as given in Equation 4.

where $\alpha_{ij}$ is the attention coefficient of the interaction between feature $i$ and feature $j$, and $p_{ij}$ indicates the probability that this interaction is beneficial. The attention coefficient $\alpha_{ij}$ is computed via the soft-attention mechanism, and $p_{ij}$ is computed by the hard-attention mechanism. Multiplying the two controls how much information from each selected feature interaction is passed on and makes the parameters of the feature interaction selection mechanism learnable by gradient backpropagation.
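The aggregation step can be sketched as follows. The code assumes that the raw attention score is a LeakyReLU of the projection of the element-wise product, that the scores are normalized by softmax over the neighbors, and that the update is a ReLU of the gated sum $\sum_{j} \alpha_{ij}\, p_{ij}\, W (\mathbf{e}_i \odot \mathbf{e}_j)$. The choice of activations, the matrix $W$, and the toy values are assumptions for illustration and may differ from the model used in the experiments.

```python
import math

def leaky_relu(z, slope=0.2):
    return z if z > 0 else slope * z

def softmax(scores):
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [v / total for v in exps]

def aggregate(i, E, neighbours, a, p, W):
    """Update the embedding of node i from its selected neighbours."""
    prods = [[x * y for x, y in zip(E[i], E[j])] for j in neighbours]
    # Soft attention: project each interaction to a scalar, then normalise.
    scores = [leaky_relu(sum(ak * v for ak, v in zip(a, prod))) for prod in prods]
    alpha = softmax(scores)
    out = [0.0] * len(W)
    for j, al, prod in zip(neighbours, alpha, prods):
        gate = al * p[(i, j)]  # soft attention times hard (selection) weight
        for r in range(len(W)):
            out[r] += gate * sum(W[r][c] * prod[c] for c in range(len(prod)))
    return alpha, [max(0.0, v) for v in out]  # ReLU output (assumed)

E = [[1.0, 2.0], [0.5, 1.0], [2.0, -1.0]]  # three toy feature embeddings
a = [0.5, -0.5]                            # projection vector
p = {(0, 1): 0.9, (0, 2): 0.2}             # edge weights from interaction selection
W = [[1.0, 0.0], [0.0, 1.0]]               # identity transform for readability
alpha, new_e0 = aggregate(0, E, [1, 2], a, p, W)
print(alpha, new_e0)
```

The normalized coefficients sum to one over the neighbors of node 0, while the edge weights $p_{ij}$ can still suppress an interaction that attention alone would favor.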

To capture the diverse polysemy of feature interactions in different semantic subspaces and to stabilize the learning process, this paper extends the mechanism with multi-head attention (Li et al., 2017; Marcheggiani and Titov, 2017; Wang et al., 2019). Specifically, $H$ independent attention heads each perform the update of Equation 4, and their outputs are concatenated to produce the output feature representation, as given in Equation 5.

where $\|$ denotes concatenation, $\alpha_{ij}^{h}$ is the normalized attention coefficient computed by the $h$-th attention head, and $W^{h}$ is the linear transformation matrix of that head. Optionally, the feature representation can be updated using average pooling over the heads instead of concatenation, as shown in Equation 6.
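The difference between the two ways of combining heads is mainly one of output dimension, as the following small sketch shows. It operates on already computed head outputs, which is a simplification (in the averaged variant the mean is typically taken before the final nonlinearity), and the values are hypothetical.

```python
def concat_heads(head_outputs):
    """Concatenate H head outputs of size d into one vector of size H * d."""
    return [v for head in head_outputs for v in head]

def average_heads(head_outputs):
    """Average H head outputs of size d into one vector of size d."""
    H = len(head_outputs)
    return [sum(vals) / H for vals in zip(*head_outputs)]

heads = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]  # H = 3 heads, d = 2
concatenated = concat_heads(heads)
averaged = average_heads(heads)
print(len(concatenated), len(averaged))
```

Concatenation keeps the information of each head separate at the cost of a larger representation, whereas averaging keeps the dimension fixed across layers.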

3.4 Forecasting and improvement

The output of the $k$-th layer is $E^{(k)} = \{\mathbf{e}_1^{(k)}, \ldots, \mathbf{e}_n^{(k)}\}$, a collection of feature representation vectors. Because the representations learned at different layers model interactions of different orders, they play different roles in the final result. Thus, they are concatenated to obtain the final representation of every feature (Beck et al., 2018), as given in Equation 7.

Finally, all the feature vectors are average-pooled to obtain the graph-level representation, and the final prediction is made using a projection vector. These results are computed using Equations 8 and 9.
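The prediction step described above can be sketched in a few lines: concatenate each feature’s embeddings across layers, average over features, and project to a scalar. The name `q` for the projection vector and the toy numbers are assumptions; whether an output activation follows the projection is not specified here, so none is applied.

```python
def readout(layer_outputs, q):
    """layer_outputs[k][i] is the embedding of feature i after layer k."""
    n = len(layer_outputs[0])
    # Concatenate each feature's embeddings across all layers.
    per_feature = [[v for layer in layer_outputs for v in layer[i]]
                   for i in range(n)]
    # Average-pool over features to get one graph-level vector.
    graph_vec = [sum(col) / n for col in zip(*per_feature)]
    # Project the graph-level vector to a scalar prediction.
    return sum(qk * g for qk, g in zip(q, graph_vec))

layers = [
    [[1.0, 0.0], [3.0, 2.0]],  # layer 1: two features, dimension 2
    [[0.0, 1.0], [2.0, 1.0]],  # layer 2
]
q = [1.0, 1.0, 1.0, 1.0]       # hypothetical projection vector
score = readout(layers, q)
print(score)
```

With these toy values the per-feature vectors are `[1, 0, 0, 1]` and `[3, 2, 2, 1]`, their mean is `[2, 1, 1, 1]`, and the projected score is 5.0.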

4 Results and discussion

4.1 Research data

The research focuses on exploring the effects of multiple influencing factors (including user, system, and contextual ones) on the perceived QoE of game users. The study draws on a general taxonomy of these factors from the literature and on the taxonomies used in existing game QoE studies, from which an empirical test method is derived (Pornpongtechavanich et al., 2022; Jiang et al., 2019). Specifically, the gaming dataset considers three types of factors: system factors (latency, packet loss rate, jitter, and additional network parameters), user skills (user-personal factors in terms of gaming experience), and context (in terms of action categories and social context). The game under study is Glory of Kings, whose interaction is mainly based on the UDP protocol and which demands real-time responsiveness and a high level of user engagement. Because a dataset of user game perception was lacking, the testing process for the laboratory environment was designed after reviewing the relevant literature.

Through the joint efforts of team members and participants, data from 789 games were collected, with each record covering three dimensions: user, service, and environmental data. Each record has 21 features: 4 user features (player ID, age, gender, and skill level), 16 in-game and post-game service features, and, as the last one, a user-perceived score.

4.2 Comparison algorithms

To demonstrate the effectiveness of the proposed algorithm, we compared it with algorithms from four categories: (A) linear methods, (B) FM-based methods, (C) DNN-based methods, and (D) aggregation-based methods. The eight comparison algorithms are LR (A), standard FM (Rendle, 2010) (B), NFM (He and Chua, 2017) (C), AFM (Xiao and Ye, 2017) (B), AutoInt (Song et al., 2020) (D), Fi-GNN (Cui et al., 2019) (D), InterHat (Li et al., 2020) (D), and LTFM (Xie and Jia, 2022) (B).

LR is a linear model that uses only individual features; standard FM models second-order interactions between features; NFM uses a bi-interaction layer and a DNN to handle nonlinear features and model higher-order feature interactions; AFM introduces an attention mechanism to weight the interactions of different features; AutoInt improves the efficiency of learning higher-order feature interactions through self-attentive networks; Fi-GNN uses gated graph neural networks to model higher-order feature connections on fully connected graphs; InterHat uses an attention mechanism to select features and multiplies raw features to produce higher-order feature interactions; LTFM is an extended FM-based model that considers the effects of temporal and spatial information projections and feature interactions on the final game perception results.

4.3 Evaluation of performance indices

The following four assessment metrics are used in the experiments of game user perception evaluation: AUC (Gospodinova et al., 2023; Li et al., 2022), Precision (Annadurai et al., 2024; Chan et al., 2024), Recall (Wang et al., 2024; Zheng et al., 2024; Hong et al., 2024), and F-measure (Li et al., 2024a; Li et al., 2021; Li et al., 2023a; Guo et al., 2024a; Guo et al., 2024b; Ma and Tong, 2024; Sultan et al., 2024; Li et al., 2024b; Li et al., 2024c; Li et al., 2023b; Li et al., 2023c).

AUC is the area under the ROC curve; the larger the value, the better the model performance. Precision is the proportion of correct predictions among all samples predicted as positive. Recall is the ratio of positive-class samples correctly identified by the classifier to the total number of positive-class samples. Precision and recall usually trade off against each other: raising one tends to lower the other. A composite metric, the F-measure, is therefore introduced to balance precision and recall and to evaluate a classifier more fully. The F-measure is high only when both precision and recall are high.
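These definitions can be made concrete with a short sketch. It assumes a binary setting in which one class (for example, poor perception) is treated as positive, uses the balanced F-measure $F_1 = 2PR/(P+R)$, and computes AUC as the fraction of positive-negative pairs that are ranked correctly, which equals the area under the ROC curve. The labels and scores are made up for illustration.

```python
def precision_recall_f1(y_true, y_pred):
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1

def auc(y_true, scores):
    """Probability that a random positive is scored above a random negative."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

y_true = [1, 1, 0, 0, 1, 0]
scores = [0.9, 0.4, 0.3, 0.6, 0.8, 0.1]
y_pred = [1 if s >= 0.5 else 0 for s in scores]  # threshold at 0.5
precision, recall, f1 = precision_recall_f1(y_true, y_pred)
area = auc(y_true, scores)
print(precision, recall, f1, area)
```

Here two of the three predicted positives are correct and two of the three actual positives are found, so precision, recall, and F-measure all equal 2/3, while eight of the nine positive-negative pairs are ordered correctly, giving an AUC of 8/9.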

4.4 Results and analysis

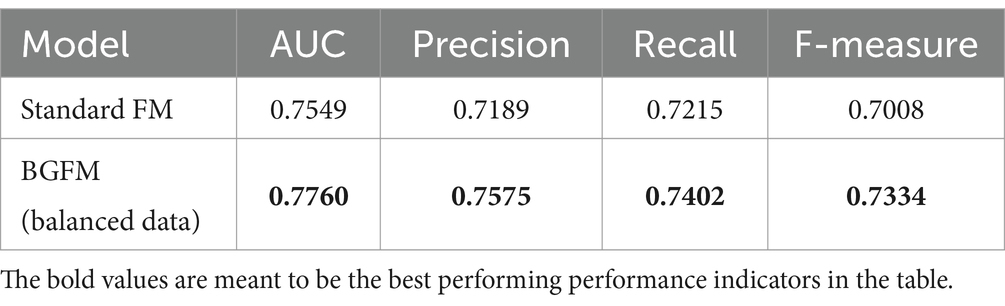

The performance of the BGFM model after balancing the data was first analyzed experimentally, as shown in Table 1. It is concluded that there is an improvement in the BGFM model performance compared to the standard FM.

Table 1 shows that FM performs well on sparse feature data. However, since poor game perception is a minority-class occurrence, FM tends to assign minority-class samples to the majority class, which impairs the correctness of the final judgment. Because the data volume is limited, we preprocess the data and generate new samples from the existing ones so that the classes to be judged are approximately equal in size. The balanced data are then fed into our model. The results show that using Borderline-SMOTE oversampling to balance the class distribution is beneficial for the final perceptual evaluation.

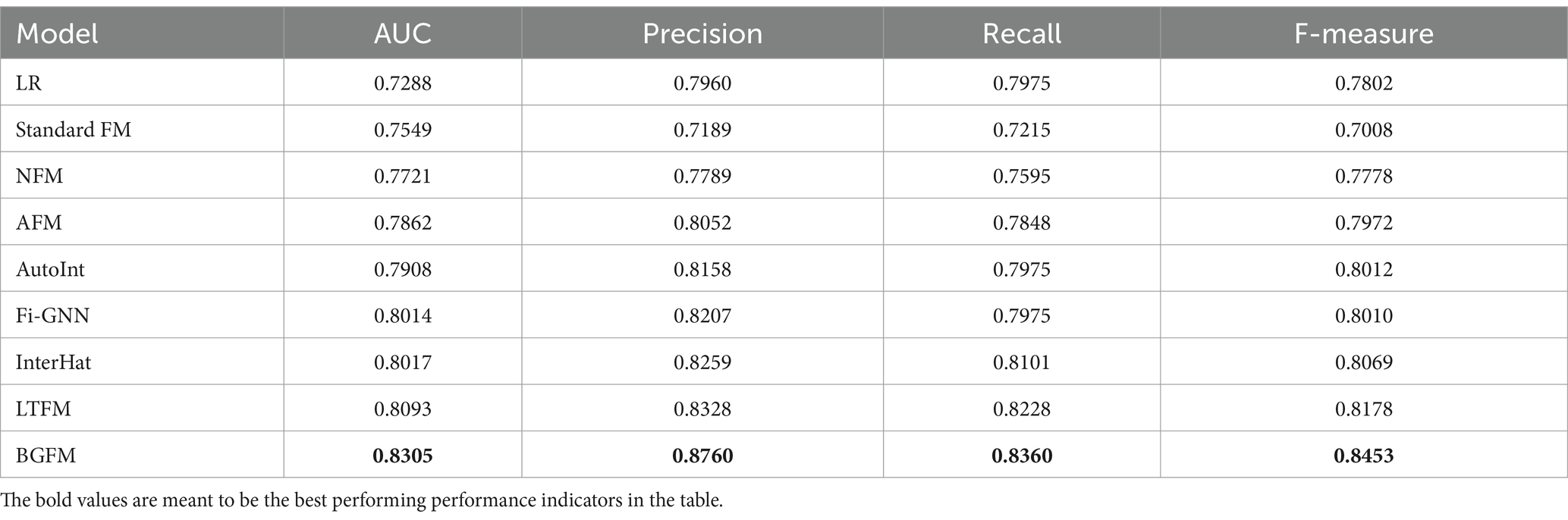

The performance comparison of these methods on the game perception dataset is shown in Table 2, from which the following observations are obtained. The proposed BGFM achieves the best performance on the game perception dataset, and its improvement over the four classes of methods (A, B, C, and D) is particularly significant. BGFM employs a mechanism for selecting and aggregating beneficial feature interactions, which gives it superior performance while keeping the model easy to manage. Taking all aspects together, BGFM is superior to existing algorithms. In the following, the four types of algorithms, A, B, C, and D, are analyzed specifically.

Aggregation-based methods outperform the other three classes of models, demonstrating the advantages of selection strategies in capturing higher-order relationships. Nevertheless, the LTFM model still performs well, suggesting that projecting temporal and spatial information and capturing its interactions with other features benefits the final perceptual evaluation. However, this model only expands the data dimensions and captures hidden feature interactions based on the properties of FM for sparse data; it neither addresses the performance fluctuations caused by class imbalance nor captures higher-order beneficial feature interactions. BGFM addresses both problems.

Compared to the strong aggregation-based baselines AutoInt and Fi-GNN, BGFM still offers a significant performance improvement, which is important for game perception prediction tasks. This enhancement is due to the combination of GNN with FM. By treating features as nodes, pairwise interactions between features as edges, and each input as a graph, the aggregation strategy of GNN addresses two of FM’s problems: suboptimal feature interactions that lead to overfitting and the difficulty of modeling higher-order feature interactions. Adding feature interactions and a beneficial interaction selection method to the GNN greatly improves the model’s performance.

The attention mechanism assigns different weights to interactions. AFM outperforms FM, demonstrating the necessity of considering feature interaction weights. Although NFM uses DNNs to model higher-order interactions, it does not guarantee an improvement over the base FM or over AFM, which adds an attention mechanism, possibly because NFM learns feature interactions implicitly. AutoInt performs better than AFM because its multi-head attention mechanism takes into account the richness of feature interactions in multiple subspaces.

5 Conclusion

This study bridges the FM and GNN approaches, yielding a new BGFM model. It exploits the respective strengths of FM and GNN while compensating for their individual deficiencies. Beneficial feature interactions are selected at each layer of BGFM and treated as edges in the graph. The interactions are then encoded as feature representations using the neighborhood interaction aggregation operation. Each layer raises the order of the feature interactions that are modeled, so the layer depth determines the highest order of interactions that the model can learn. BGFM thus learns higher-order interactions between features and provides high interpretability of the model results. The experimental results show that the proposed BGFM outperforms eight state-of-the-art models by a large margin.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

XX: Conceptualization, Data curation, Investigation, Methodology, Validation, Visualization, Writing – original draft, Writing – review & editing. YJ: Methodology, Resources, Software, Supervision, Validation, Writing – original draft, Writing – review & editing. TM: Funding acquisition, Project administration, Resources, Software, Supervision, Writing – review & editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This research was funded by the Tianshan Talent Training Project-Xinjiang Science and Technology Innovation Team Program (2023TSYCTD).

Conflict of interest

XX was employed by BYD Company Limited.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Annadurai, A., Sureshkumar, V., Jaganathan, D., and Dhanasekaran, S. (2024). Enhancing medical image quality using fractional order denoising integrated with transfer learning. Fractal Fract. 8:511. doi: 10.3390/fractalfract8090511

Beck, D., Haffari, G., and Cohn, T. (2018). Graph-to-sequence learning using gated graph neural networks. Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics. 1, 273–283.

Chan, H., Qiu, X., Gao, X., and Lu, D. (2024). A complex background SAR ship target detection method based on fusion tensor and cross-domain adversarial learning. Remote Sens. 16:3492. doi: 10.3390/rs16183492

Cheng, H., Koc, L., and Harmsen, J. (2016). Wide & deep learning for recommender systems. In Proceedings of the 1st Workshop on Deep Learning for Recommender Systems. pp. 7–10.

Cui, Z., Li, Z., and Wu, S. (2019). Dressing as a whole: outfit compatibility learning based on node-wise graph neural networks. Proceedings of the World Wide Web Conference. pp. 307–317.

Denieffe, D., Carrig, B., and Marshall, D. (2007). A game assessment metric for the online gamer. Adv. Elect. Comput. Eng. 7, 3–6. doi: 10.4316/aece.2007.02001

Gospodinova, E., Lebamovski, P., Georgieva-Tsaneva, G., and Negreva, M. (2023). Evaluation of the methods for nonlinear analysis of heart rate variability. Fractal Fract. 7:388. doi: 10.3390/fractalfract7050388

Guo, F., Ma, H., Li, L., Lv, M., and Jia, Z. (2024a). FCNet: flexible convolution network for infrared small ship detection. Remote Sens. 16:2218. doi: 10.3390/rs16122218

Guo, F., Ma, H., Li, L., Lv, M., and Jia, Z. (2024b). Multi-attention pyramid context network for infrared small ship detection. J. Mar. Sci. Eng. 12:345. doi: 10.3390/jmse12020345

Guo, H., Tang, R., and Ye, Y. (2017). DeepFM: a factorization-machine based neural network for ctr prediction. In Proceedings of the 26th International Joint Conference on Artificial Intelligence. pp. 1725–1731.

Hamilton, W., Ying, R., and Leskovec, J. (2017). Inductive representation learning on large graphs. Adv. Neural Inf. Proces. Syst. 30, 1025–1035.

He, X., and Chua, T. (2017). Neural factorization machines for sparse predictive analytics. In Proceedings of the 40th International ACM SIGIR Conference on Research and Development in Information Retrieval. pp. 355–364.

Hong, F., Huang, D., and Chen, G. (2019). Interaction-aware factorization machines for recommender systems. AAAI Conf. Artif. Intell. 33, 3804–3811. doi: 10.1609/aaai.v33i01.33013804

Hong, L., Lee, S., and Song, G. (2024). CAM-Vtrans: real-time sports training utilizing multi-modal robot data. Front. Neurorobot. 18:1453571. doi: 10.3389/fnbot.2024.1453571

Jiang, B., Zhang, Z., and Lin, D. (2019). Semi-supervised learning with graph learning-convolutional networks. IEEE Conference on Computer Vision and Pattern Recognition. pp. 11305–11312.

Koo, D., Lee, S., and Chang, H. (2007). Experiential motives for playing online games. J. Cyber Psychol. Behav. 2, 772–775.

Li, Z., Cheng, W., and Chen, Y. (2020). Interpretable click-through rate prediction through hierarchical attention. Proceedings of the 13th International Conference on Web Search and Data Mining. pp. 313–321.

Li, L., Lv, M., Jia, Z., Jin, Q., Liu, M., Chen, L., et al. (2023b). An effective infrared and visible image fusion approach via rolling guidance filtering and gradient saliency map. Remote Sens. 15:2486. doi: 10.3390/rs15102486

Li, L., Lv, M., Jia, Z., and Ma, H. (2023c). Sparse representation-based multi-focus image fusion method via local energy in shearlet domain. Sensors 23:2888. doi: 10.3390/s23062888

Li, L., Ma, H., and Jia, Z. (2021). Change detection from SAR images based on convolutional neural networks guided by saliency enhancement. Remote Sens. 13:3697. doi: 10.3390/rs13183697

Li, L., Ma, H., and Jia, Z. (2022). Multiscale geometric analysis fusion-based unsupervised change detection in remote sensing images via FLICM model. Entropy 24:291. doi: 10.3390/e24020291

Li, L., Ma, H., and Jia, Z. (2023a). Gamma correction-based automatic unsupervised change detection in SAR images via FLICM model. J. Indian Soc. Remote Sens. 51, 1077–1088. doi: 10.1007/s12524-023-01674-4

Li, L., Ma, H., Zhang, X., and Zhao, X. (2024a). Synthetic aperture radar image change detection based on principal component analysis and two-level clustering. Remote Sens. 16:1861. doi: 10.3390/rs16111861

Li, L., Shi, Y., Lv, M., and Jia, Z. (2024b). Infrared and visible image fusion via sparse representation and guided filtering in Laplacian pyramid domain. Remote Sens. 16:3804. doi: 10.3390/rs16203804

Li, R., Tapaswi, M., and Liao, R. (2017). Situation recognition with graph neural networks. In Proceedings of the 2017 IEEE International Conference on Computer Vision. pp. 4183–4192.

Li, L., Zhao, X., Hou, H., and Zhang, X. (2024c). Fractal dimension-based multi-focus image fusion via coupled neural P systems in NSCT domain. Fractal Fract. 8:554. doi: 10.3390/fractalfract8100554

Ma, L., and Tong, Y. (2024). TL-CStrans net: a vision robot for table tennis player action recognition driven via CS-transformer. Front. Neurorobot. 18:1443177. doi: 10.3389/fnbot.2024.1443177

Marcheggiani, D., and Titov, I. (2017). Encoding sentences with graph convolutional networks for semantic role labeling. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing. pp. 1506–1515.

Pornpongtechavanich, P., Wuttidittachotti, P., and Daengsi, T. (2022). QoE modeling for audiovisual associated with MOBA game using subjective approach. Multimed. Tools Appl. 81, 37763–37779. doi: 10.1007/s11042-022-12807-1

Rendle, S. (2010). Factorization machines. In Proceedings of the 2010 IEEE International Conference on Data Mining. Sydney. pp. 995–1000.

Song, W., Shi, C., and Xiao, Z. (2020). AutoInt: automatic feature interaction learning via self-attentive neural networks. Proceedings of the 28th ACM International Conference on Information and Knowledge Management. pp. 1161–1170.

Su, Y., Zhang, R., and Erfani, S. (2021). Detecting beneficial feature interactions for recommender systems. AAAI Conf. Artif. Intell. 35, 4357–4365. doi: 10.1609/aaai.v35i5.16561

Sultan, H., Ullah, N., Hong, J. S., and Kim, S. G. (2024). Estimation of fractal dimension and segmentation of brain tumor with parallel features aggregation network. Fractal Fract. 8:357. doi: 10.3390/fractalfract8060357

Sun, H., Zheng, Z., and Chen, J. (2013). Personalized web service recommendation via normal recovery collaborative filtering. IEEE Trans. Serv. Comput. 6, 573–579. doi: 10.1109/TSC.2012.31

Suznjevic, M., Skorin-Kapov, L., and Cerekovic, A. (2019). How to measure and model QoE for networked games? Multimedia Systems 25, 395–420. doi: 10.1007/s00530-019-00615-x

Wang, S., Chen, Y., and Yuan, Y. (2024). TSAE-UNet: a novel network for multi-scene and multi-temporal water body detection based on spatiotemporal feature extraction. Remote Sens. 16:3829. doi: 10.3390/rs16203829

Wang, X., He, X., and Wang, M. (2019). Neural graph collaborative filtering. In Proceedings of the 42nd International ACM SIGIR Conference on Research and Development in Information Retrieval. pp. 165–174.

Wang, Q., Zhang, M., and Zhang, Y. (2022). Location-based deep factorization machine model for service recommendation. Appl. Intell. 52, 9899–9918. doi: 10.1007/s10489-021-02998-9

Wattimena, A., Kooij, R., and Van, V. (2006). Predicting the perceived quality of a first person shooter: the Quake IV G-model. In Proceedings of the 5th ACM SIGCOMM Workshop on Network and System Support for Games, NetGames '06, pp. 42–45.

Xiao, J., and Ye, H. (2017). Attentional factorization machines: learning the weight of feature interactions via attention networks. In Proceedings of the 26th international joint conference on artificial intelligence. pp. 3119–3125.

Xie, X., and Jia, Z. (2022). A location-time-aware factorization machine based on fuzzy set theory for game perception. Appl. Sci. 12:12819. doi: 10.3390/app122412819

Yang, Y., Zheng, Z., and Niu, X. (2021). A location-based factorization machine model for web service QoS prediction. IEEE Trans. Serv. Comput. 14, 1264–1277. doi: 10.1109/TSC.2018.2876532

Zhang, C., Bengio, S., and Hardt, M. (2021). Understanding deep learning (still) requires rethinking generalization. Commun. ACM 64, 107–115. doi: 10.1145/3446776

Zhang, W., Du, T., and Wang, J. (2016). Deep learning over multi-field categorical data: a case study on user response prediction. Lect. Notes Comput. Sci. 9626, 45–57. doi: 10.1007/978-3-319-30671-1_4

Zhang, H., Lu, G., and Zhan, M. (2021). Semi-supervised classification of graph convolutional networks with Laplacian rank constraints. Neural Process. Lett. 54, 2645–2656. doi: 10.1007/s11063-020-10404-7

Keywords: machine learning, mobile game user evaluation, quality of experience, factorization machine, graph neural network

Citation: Xie X, Jia Y and Ma T (2024) An improved graph factorization machine based on solving unbalanced game perception. Front. Neurorobot. 18:1481297. doi: 10.3389/fnbot.2024.1481297

Edited by: Yu Zhang, Beihang University, China

Reviewed by: Yinsheng Li, Henan University of Technology, China; Yongzhi Zhai, Xi’an University of Posts and Telecommunications, China

Copyright © 2024 Xie, Jia and Ma. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Tiande Ma
