- College of Computer Science and Technology, Chongqing University of Posts and Telecommunications, Chongqing, China

High-dynamic-range (HDR) images have a wide range of applications, but they are difficult to acquire. Multi-exposure image fusion (MEF) techniques have attracted wide attention because they can produce images similar to HDR images. To address the loss of detail during image reconstruction in MEF, the evaluation of moderate exposure and relative brightness are combined into a joint weight function. Built on the existing Laplacian pyramid fusion algorithm, the improved weight function captures image details more accurately, so the fused images are more detailed. On 20 multi-exposure image sequences, the proposed method is compared subjectively and objectively with seven existing MEF methods. Both qualitative and quantitative analyses of the experimental results confirm that the proposed multi-scale decomposition fusion method produces high-quality HDR images.

1. Introduction

Because of the limited dynamic range of imaging devices, a single exposure cannot capture all the details of a scene. Underexposure or overexposure therefore often occurs in everyday photography, which seriously degrades the visual quality of images and the display of key information. High-dynamic-range (HDR) imaging techniques overcome this limitation, but most standard monitors in current use are low-dynamic-range (LDR) displays (Ma et al., 2015a), so a tone mapping process is required to compress the dynamic range of an HDR image for display after it is acquired. Multi-exposure image fusion (MEF) offers a cost-effective way to resolve this mismatch between HDR imaging and LDR display. MEF takes a sequence of source images with different exposure levels as input and fuses their brightness information in a manner consistent with the human visual system (Ma et al., 2017), producing HDR-like images that are rich in information and perceptually pleasing.

In recent years, many MEF algorithms have been developed. As with multi-source image fusion (Jin et al., 2021a), MEF algorithms are usually divided into four categories (Liu et al., 2020): spatial-domain methods, transform-domain methods, methods that combine the spatial and transform domains, and deep learning methods (Jin et al., 2021b). This article focuses on spatial-domain MEF methods, which compute the fused image as a weighted sum of the input exposures; different methods differ mainly in how they obtain suitable weight maps. Li et al. (2013) decomposed each source image into a base layer and a detail layer at two scales, processed the two layers separately, and combined them into the final fused image. Liu and Wang (2015) used dense scale-invariant feature transform (SIFT) descriptors (Liu et al., 2016) to compute contrast and spatial-consistency weights from local gradient information. Mertens et al. (2010) fused exposure sequences in a multi-resolution framework based on the Laplacian pyramid: weights determined by contrast, saturation, and well-exposedness were used to compute weighted averages of the pyramid coefficients, and the fused image was obtained by reconstructing the resulting pyramid. Shen et al. (2014) proposed an exposure fusion method based on hybrid exposure weights and an improved Laplacian pyramid; the method considers the gradient vectors between source images with different exposures and uses the improved pyramid to decompose the input signals into base and detail layers. Shen et al. (2011) proposed a probabilistic model for MEF. Based on two quality indicators of the source sequence, local contrast and color consistency, a generalized random walk framework is first used to compute an optimal set of probabilities.
These probabilities are then used as the fusion weights. Fei et al. (2017) applied a weighted-least-squares image smoothing algorithm to MEF to extract the details of HDR scenes, and used the extracted details in a multi-scale exposure fusion algorithm to obtain fused images with rich colors and details. Li and Kang (2012) proposed a weighted-sum fusion method: three image features, local contrast, brightness, and color difference, are measured to estimate the weights, and the weight maps are then refined with a recursive filter. Zhang and Cham (2012) proposed a simple and effective method that uses gradient information to synthesize multi-exposure images of static and dynamic scenes. Given multiple images with different exposures, the method seamlessly combines them under the guidance of a gradient-based quality assessment to produce a pleasing tone-mapped HDR image. Ma et al. (2017) proposed a method based on structural patch decomposition, which represents each image patch by its mean intensity, signal strength, and signal structure, and then performs weighted fusion using the patch intensity and an exposure factor; the method handles both static and dynamic scenes. Moriyama et al. (2019) used a hue- and saturation-preserving luminance conversion to generate new multi-exposure images for fusion, performed brightness conversion based on local color correction, and obtained the fused image by a weighted average whose weights are computed from saturation.
Wang and Zhao (2020) used super-pixel segmentation to divide the input images into non-overlapping patches of pixels with similar visual attributes, decomposed each patch into three independent components (signal strength, image structure, and intensity), and fused the three components separately according to the characteristics of the human visual system and the exposure levels of the input images. Qi et al. (2020) used an exposure-quality prior to select a reference image, used this reference to remove ghosting in dynamic scenes through a structural consistency test, decomposed the images with a guided filter, and proposed a fusion method that combines spatial-domain scale decomposition, patch-based structural decomposition, and moderate-exposure evaluation. Li et al. (2020) proposed an MEF algorithm based on an improved pyramid transform, which enhances the local contrast of the images with adaptive histogram equalization and computes the fusion weights from contrast, image entropy, and exposure. Hayat and Imran (2019) proposed a ghost-free MEF technique based on dense SIFT descriptors and a guided filter. Ulucan et al. (2020) proposed a simple and effective exposure fusion method for static images that extracts weight maps using linear embeddings and watershed masking. Xu et al. (2021) proposed an MEF method based on the tensor product and tensor singular value decomposition (t-SVD). Their fusion strategy, built on the tensor product and t-SVD, fuses the luminance and chrominance channels separately to maintain color consistency and then combines the fused channels into the final image.

The performance of an image fusion framework based on multi-scale decomposition depends on both the decomposition method and the fusion strategy for the multi-scale coefficients. The pyramid transform is a commonly used multi-scale decomposition method; because its layers have different scales and resolutions, each layer carries different image feature information. In addition, the design of the weight function used for feature extraction plays a decisive role in the final fusion result. Therefore, this article proposes a fast and effective image fusion method based on an improved weight function. The fusion weight maps are computed from an evaluation of moderate exposure and from relative brightness, and, combined with pyramid-based multi-scale decomposition, images at different resolutions are fused to generate the desired high-dynamic-range image.

The rest of this article is organized as follows. Section 2 describes the overall workflow of the fusion algorithm. Section 3 explains the weight function in detail. Section 4 describes Gaussian and Laplacian pyramid decomposition. Section 5 presents the experimental results and analysis, and Section 6 concludes the article.

2. Workflow of Image Fusion Algorithm

MEF aims to generate an image containing the best pixel information from a series of images with different exposure levels. Pixel-based MEF performs weighted image fusion as follows:

$$FI(x, y) = \sum_{n=1}^{N} W_n(x, y)\, I_n(x, y) \qquad (1)$$

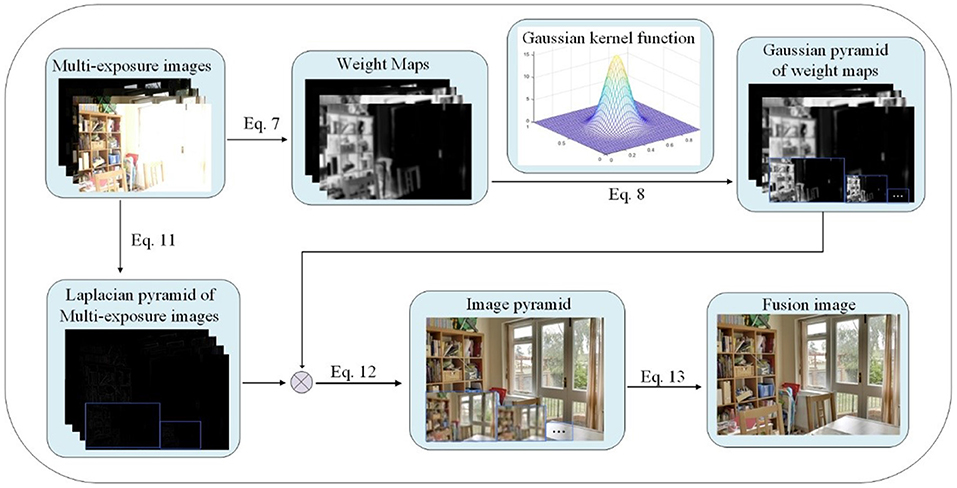

where $FI$ denotes the fused image, $(x, y)$ the pixel coordinates, $N$ the number of images, $I_n$ the pixel intensity of the nth image, and $W_n$ the pixel weight of the nth image. The workflow of the proposed fusion method based on an improved weight function is shown in Figure 1. Labels such as Equation (7) and Equation (8) in Figure 1 refer to the corresponding equations below and mark the step at which each operation is performed; the symbol before Equation (12) in Figure 1 denotes multiplication.
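Equation (1) can be illustrated with a minimal NumPy sketch. It assumes the exposures are stacked as an (N, H, W) array of intensities normalized to [0, 1] and that the weights are normalized to sum to 1 at each pixel; the function name `weighted_fusion` is hypothetical, and this single-scale form is not the full multi-scale method described later.

```python
import numpy as np


def weighted_fusion(images, weights, eps=1e-12):
    """Single-scale pixel-wise fusion, Eq. (1): FI(x, y) = sum_n W_n(x, y) * I_n(x, y).

    images, weights: array-likes of shape (N, H, W) with intensities in [0, 1].
    Weights are normalised to sum to 1 at every pixel (assumption of this sketch).
    """
    images = np.asarray(images, dtype=float)
    weights = np.asarray(weights, dtype=float)
    weights = weights / (weights.sum(axis=0, keepdims=True) + eps)  # per-pixel normalisation
    return (weights * images).sum(axis=0)


# Two 2x2 exposures; the bright one gets three times the weight of the dark one.
dark = np.full((2, 2), 0.2)
bright = np.full((2, 2), 0.8)
fused = weighted_fusion([dark, bright], [np.ones((2, 2)), 3 * np.ones((2, 2))])
print(fused)  # each pixel is about 0.25 * 0.2 + 0.75 * 0.8 = 0.65
```

Applying such weights directly at full resolution can leave visible seams where the weights change abruptly, which is one motivation for the pyramid-based fusion in Section 4.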

3. Weight Function

The weight function is the core of the proposed fusion method, and it is designed on the basis of a well-exposedness assessment (Shen-yu et al., 2015). The gray value is an important measure of the visible information in an image, and fusion weights are commonly determined by the distance between a pixel's gray value and 0.5. Used alone, however, this single index causes information loss in the fused image and leaves some regions too dark. The evaluation of moderate exposure instead determines the fusion weight from both the mean gray value of the multi-exposure images at each pixel and the distance to 0.5, which retains more image information. In addition, relative brightness is used as a second weight measure.
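The single-index weight criticized above can be sketched as a Gaussian of the distance between a gray value and 0.5. The Gaussian form and σ = 0.2 are borrowed from the well-exposedness measure of Mertens et al. (2010) and are an assumption about how this distance is turned into a weight; `well_exposedness` is a hypothetical helper name.

```python
import numpy as np


def well_exposedness(gray, sigma=0.2):
    """Single-index weight: Gaussian of the distance between a gray value and 0.5.

    gray: array of gray values normalised to [0, 1]. sigma = 0.2 follows
    Mertens et al. (2010) and is an assumption here.
    """
    gray = np.asarray(gray, dtype=float)
    return np.exp(-((gray - 0.5) ** 2) / (2.0 * sigma ** 2))


levels = np.array([0.0, 0.25, 0.5, 0.75, 1.0])
w = well_exposedness(levels)
print(np.round(w, 3))
```

Because the target is fixed at 0.5, pixels at 0.25 and 0.75 receive identical weights no matter how bright the rest of the exposure sequence is; the moderate-exposure evaluation addresses this by moving the target toward the per-pixel mean.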

3.1. Evaluation of Moderate Exposure

During the evaluation, the changes in brightness and darkness of different pixels across the limited samples (exposures) of a scene are analyzed, and the value of each pixel under the optimal moderate exposure is estimated. Moderate exposure is then evaluated by comparing the pixel values of each input image with the corresponding optimal pixel values, and the evaluation value can be used directly as a fusion weight. For N images of the same scene with different exposures, $I_n(x, y)$ denotes the pixel value at coordinate (x, y) of the nth image, and the moderate-exposure evaluation indicator serves as one component of the weights used to obtain the fused image.

In Equation (2), $\mu(x, y)$ is the optimal value of the pixel at coordinate (x, y), estimated by Equation (3). On the one hand, $\mu(x, y)$ should be close to 0.5 to ensure a good visual experience for human observers. On the other hand, to reflect the light-dark contrast of the real scene, the scene brightness must be approximated from the limited samples available. Therefore, the per-pixel average over the differently exposed images is computed by Equation (4), and $\mu(x, y)$ is a weighted sum of 0.5 and this average, with the weight factor β balancing detail information against light-dark contrast information.
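A minimal sketch of the moderate-exposure evaluation under stated assumptions: Equation (2) is taken to be a Gaussian of the distance between $I_n(x, y)$ and $\mu(x, y)$ with spread 0.2 (this may correspond to the δ = 0.2 listed in Section 5, but that correspondence is an assumption), and Equation (3) is taken as μ = β·0.5 + (1 − β)·mean, i.e., β is assumed to multiply the 0.5 term. With β = 0.5, as in the experiments, both possible assignments of β coincide. The function name is hypothetical.

```python
import numpy as np


def moderate_exposure_weight(images, beta=0.5, sigma=0.2):
    """Sketch of the moderate-exposure weight (assumed Gaussian form of Eq. 2).

    images: (N, H, W) stack of exposures with values in [0, 1].
    """
    images = np.asarray(images, dtype=float)
    mean_intensity = images.mean(axis=0)                        # Eq. (4): per-pixel mean
    mu = beta * 0.5 + (1.0 - beta) * mean_intensity             # Eq. (3), assumed split of beta
    return np.exp(-((images - mu) ** 2) / (2.0 * sigma ** 2))  # Eq. (2), assumed Gaussian


# One pixel observed in three exposures: short (0.1), medium (0.4), long (0.9).
stack = np.array([0.1, 0.4, 0.9]).reshape(3, 1, 1)
w1 = moderate_exposure_weight(stack)
print(w1.ravel())
```

Here μ ≈ 0.483 rather than 0.5, because the per-pixel mean of the three exposures (about 0.467) pulls the target toward the brightness actually observed in the scene; the medium exposure receives the largest weight.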

3.2. Relative Brightness

The moderate-exposure indicator does not capture well the information in the dark areas of long-exposure images or the bright areas of short-exposure images. Therefore, the relative brightness measure proposed by Lee et al. (2018) is added as a second weight indicator. Specifically, when an image is bright overall (long exposure), its relatively dark areas are given larger weights; conversely, when an image is dark overall (short exposure), its relatively bright areas are given larger weights. Let $m_n$ denote the average pixel intensity of the nth image. When $I_n(x, y)$ is close to $1 - m_n$, the corresponding weight should be relatively large. The relative brightness can therefore be expressed as follows.

In addition, when an input image differs considerably from its adjacent exposures, different objects tend to be well exposed in the two images. Therefore, when the average brightness $m_n$ of the nth image differs considerably from the average brightness $m_{n-1}$ and $m_{n+1}$ of the adjacent images, a larger value of $\delta_n$ is assigned. Here α is a constant with a value of 0.75. The parameter $\delta_n$, which controls the weight according to the $m_n$ values of the images, can be expressed as follows.
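The relative-brightness weight can be sketched in the same way, but both formulas here are assumptions: the weight is modeled as a Gaussian centered at $1 - m_n$ with spread $\delta_n$, and $\delta_n$ is given a hypothetical form, α times the mean absolute difference between $m_n$ and the mean brightness of the adjacent exposures. That form was chosen only because it grows when the neighbors differ, as the text requires; it should not be read as the exact formula of Lee et al. (2018) or of this article. The stack is assumed to be sorted from shortest to longest exposure.

```python
import numpy as np


def relative_brightness_weight(images, alpha=0.75, min_spread=1e-3):
    """Sketch of a relative-brightness weight in the spirit of Lee et al. (2018).

    images: (N, H, W) stack ordered from shortest to longest exposure, values in [0, 1].
    Assumed form:  W2_n = exp(-(I_n - (1 - m_n))**2 / (2 * delta_n**2)).
    HYPOTHETICAL spread: delta_n = alpha * mean |m_n - m_k| over adjacent exposures k.
    """
    images = np.asarray(images, dtype=float)
    n_images = images.shape[0]
    m = images.reshape(n_images, -1).mean(axis=1)  # m_n: mean intensity of each exposure

    delta = np.empty(n_images)
    for n in range(n_images):
        # Compare with the neighbours that exist (first and last images have only one).
        diffs = [abs(m[n] - m[k]) for k in (n - 1, n + 1) if 0 <= k < n_images]
        delta[n] = alpha * (np.mean(diffs) if diffs else 1.0)
    delta = np.maximum(delta, min_spread)  # guard against identical neighbouring exposures

    target = (1.0 - m)[:, None, None]  # bright image -> favour its dark pixels, and vice versa
    return np.exp(-((images - target) ** 2) / (2.0 * delta[:, None, None] ** 2))


# Two 1x2 exposures: a short (dark) one and a long (bright) one.
short = np.array([[0.1, 0.6]])
long_ = np.array([[0.4, 0.95]])
w2 = relative_brightness_weight(np.stack([short, long_]))
print(w2)
```

In the short exposure (mean 0.35) the relatively bright pixel at 0.6 is favored, while in the long exposure (mean 0.675) the relatively dark pixel at 0.4 is favored, matching the behavior described above.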

Therefore, the final weight function can be expressed as follows.
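A common way to form a joint weight from two criteria is a per-pixel product followed by normalization across exposures. The sketch below assumes that form for the final weight function; `combine_weights` is a hypothetical name, and the inputs stand in for the moderate-exposure and relative-brightness weights.

```python
import numpy as np


def combine_weights(w_exposure, w_relative, eps=1e-12):
    """Joint weight: per-pixel product of the two criteria, normalised over exposures.

    Both inputs have shape (N, H, W). The product form is an assumption of this sketch;
    eps only guards against pixels where every joint weight is zero.
    """
    joint = np.asarray(w_exposure, dtype=float) * np.asarray(w_relative, dtype=float)
    return joint / (joint.sum(axis=0, keepdims=True) + eps)


w1 = np.array([0.9, 0.2, 0.5]).reshape(3, 1, 1)
w2 = np.array([0.1, 0.8, 0.5]).reshape(3, 1, 1)
w = combine_weights(w1, w2)
print(w.ravel())  # approximately [0.18 0.32 0.5]
```

A product acts like a logical AND: an exposure receives a large joint weight only where both criteria favor it, whereas a sum would let either criterion dominate on its own.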

4. Multi-Scale Image Decomposition

Because neighboring pixels in an image are closely related, computing the fusion weights over a wider range of pixels is more reliable. In addition, real-world objects have different structures at different scales, so observing the same object at different scales yields different results. Therefore, computing the result image through an image pyramid, that is, through multi-scale decomposition, gives better fusion results.

Gaussian pyramid decomposition is first applied to the weight maps and the multi-exposure image sequences, and Laplacian pyramid decomposition is then applied to the multi-exposure image sequences. After the Gaussian pyramids (of the weights) and the Laplacian pyramids (of the images) are fused layer by layer, each upper layer of the fused pyramid is upsampled and added to the layer below it, until an image of the same size as the source images is obtained.

4.1. Gaussian Pyramid Decomposition

The Gaussian pyramid produces a series of downsampled images through Gaussian smoothing and subsampling. The image at layer l is first convolved with a Gaussian kernel, and then all even rows and columns are deleted to obtain the image at layer l + 1. The decomposition is given as follows.

where $G_l$ is the image at the lth layer of the Gaussian pyramid, $C_l$ and $R_l$ give the total numbers of rows and columns of the lth-layer image, $w(m, n)$ is the value at the mth row and nth column of the Gaussian filter template, and Lev is the number of Gaussian pyramid layers. The maximum number of decomposition layers is $\log_2[\min(C_0, R_0)]$.
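The decomposition step can be sketched in NumPy as follows. The 5 × 5 template w(m, n) is assumed to be the separable binomial kernel [1, 4, 6, 4, 1]/16 (the classic choice of Burt and Adelson), borders are handled by edge replication, and keeping indices 0, 2, 4, … in zero-based indexing corresponds to deleting the even-numbered rows and columns in one-based counting. These choices are assumptions, since the text does not specify them.

```python
import numpy as np

# Assumed Gaussian template w(m, n): outer product of the binomial kernel [1, 4, 6, 4, 1] / 16.
KERNEL_1D = np.array([1.0, 4.0, 6.0, 4.0, 1.0]) / 16.0


def blur(image):
    """Separable 5x5 smoothing; borders are handled by edge replication (an assumption)."""
    h, w = image.shape
    padded = np.pad(image, 2, mode="edge")
    rows = sum(KERNEL_1D[i] * padded[i:i + h, :] for i in range(5))  # vertical pass
    return sum(KERNEL_1D[j] * rows[:, j:j + w] for j in range(5))    # horizontal pass


def max_levels(image):
    """Largest usable number of layers: floor(log2(min(rows, cols)))."""
    return int(np.floor(np.log2(min(image.shape))))


def gaussian_pyramid(image, levels):
    """G_0 is the input; G_{l+1} keeps rows/columns 0, 2, 4, ... of blur(G_l)."""
    pyramid = [np.asarray(image, dtype=float)]
    for _ in range(levels - 1):
        pyramid.append(blur(pyramid[-1])[::2, ::2])
    return pyramid


image = np.random.default_rng(0).random((128, 128))
pyr = gaussian_pyramid(image, max_levels(image))
print(max_levels(image), [level.shape for level in pyr])
```

Because the kernel sums to 1, a constant image stays constant at every layer, which is a quick sanity check for any implementation.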

4.2. Laplacian Pyramid Decomposition

Gaussian convolution and downsampling cause the Gaussian pyramid to lose detailed image information. Mertens et al. (2010) therefore used the Laplacian pyramid to recover this detail. Each layer of the Laplacian pyramid is obtained by subtracting from the corresponding Gaussian layer a prediction computed by upsampling and Gaussian-filtering the next upper (coarser) layer; the resulting difference images form the Laplacian decomposition. The upsampling process is first expressed as follows.

where Z denotes the set of integers, and the expanded image is the result of upsampling the lth layer of the Gaussian pyramid. As shown in Equation (11), subtracting the upsampled version of layer l + 1 from the lth Gaussian layer $G_l$ gives the lth Laplacian layer $L_l$, which contains the detail information.
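The upsampling and difference steps can be sketched with the same assumed kernel: the expand step inserts zeros between samples, smooths, and multiplies by 4 to restore brightness, and each Laplacian layer is $G_l$ minus the expanded layer l + 1. Keeping the coarsest Gaussian layer as the top of the Laplacian pyramid is the usual convention and is assumed here; the function names are hypothetical.

```python
import numpy as np

KERNEL_1D = np.array([1.0, 4.0, 6.0, 4.0, 1.0]) / 16.0  # assumed Gaussian template


def blur(image):
    """Separable 5x5 smoothing with edge replication at the borders."""
    h, w = image.shape
    padded = np.pad(image, 2, mode="edge")
    rows = sum(KERNEL_1D[i] * padded[i:i + h, :] for i in range(5))
    return sum(KERNEL_1D[j] * rows[:, j:j + w] for j in range(5))


def expand(image, shape):
    """Upsample to `shape`: place samples on even positions, fill zeros, smooth, scale by 4."""
    up = np.zeros(shape)
    up[::2, ::2] = image
    return 4.0 * blur(up)


def laplacian_pyramid(image, levels):
    """L_l = G_l - expand(G_{l+1}); the top layer keeps the coarsest Gaussian image."""
    gaussian = [np.asarray(image, dtype=float)]
    for _ in range(levels - 1):
        gaussian.append(blur(gaussian[-1])[::2, ::2])
    detail = [g - expand(g_up, g.shape) for g, g_up in zip(gaussian[:-1], gaussian[1:])]
    return detail + [gaussian[-1]]


flat = np.full((32, 32), 0.5)
lap = laplacian_pyramid(flat, 4)
print([layer.shape for layer in lap])
# A flat image has no detail: away from the borders, the detail layers are ~0.
print(float(np.abs(lap[0][4:-4, 4:-4]).max()))
```

Near the borders the zero-inserted image interacts with edge replication, so small nonzero values can appear there; this does not affect reconstruction, because the same expand step is used when the pyramid is collapsed.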

The Laplacian decomposition of an image is illustrated in Figure 2. In this article, the image pyramid has 7 layers.

4.3. Image Fusion and Reconstruction

Following the above process, the Gaussian pyramids of the weight maps and the Laplacian pyramids of the multi-exposure images are obtained first and then fused layer by layer:

$$FI_l = \sum_{k=1}^{N} W_{k,l}\, L_{k,l}$$

Here, $FI_l$ is the fused image data at the lth layer, $W_{k,l}$ is the lth-layer data of the kth weight map, $L_{k,l}$ is the lth layer of the Laplacian pyramid of the kth multi-exposure image, Lev is the total number of pyramid layers, and N is the number of images. To reconstruct the result, each upper layer of the fused pyramid is upsampled and added to the layer below it, and this is repeated until an image of the same size as the source images is obtained, as follows.
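The layer-wise fusion can be sketched directly from the definitions of $FI_l$, $W_{k,l}$, and $L_{k,l}$. Pyramids are represented as Python lists of arrays ordered from finest to coarsest layer, which is an assumption of this sketch, and `fuse_pyramids` is a hypothetical name.

```python
import numpy as np


def fuse_pyramids(weight_pyramids, laplacian_pyramids):
    """FI_l = sum_k W_{k,l} * L_{k,l} for every layer l.

    weight_pyramids[k][l]:    layer l of the Gaussian pyramid of the k-th weight map.
    laplacian_pyramids[k][l]: layer l of the Laplacian pyramid of the k-th exposure.
    Layers are ordered from finest (index 0) to coarsest, an assumption of this sketch.
    """
    n_layers = len(laplacian_pyramids[0])
    return [
        sum(w_pyr[level] * l_pyr[level] for w_pyr, l_pyr in zip(weight_pyramids, laplacian_pyramids))
        for level in range(n_layers)
    ]


# Toy two-layer pyramids (4x4 and 2x2) for N = 2 exposures with weights 0.25 and 0.75.
w_a = [np.full((4, 4), 0.25), np.full((2, 2), 0.25)]
w_b = [np.full((4, 4), 0.75), np.full((2, 2), 0.75)]
lap_a = [np.ones((4, 4)), np.full((2, 2), 2.0)]
lap_b = [np.zeros((4, 4)), np.full((2, 2), 4.0)]
fused = fuse_pyramids([w_a, w_b], [lap_a, lap_b])
print(fused[0][0, 0], fused[1][0, 0])  # 0.25 3.5
```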

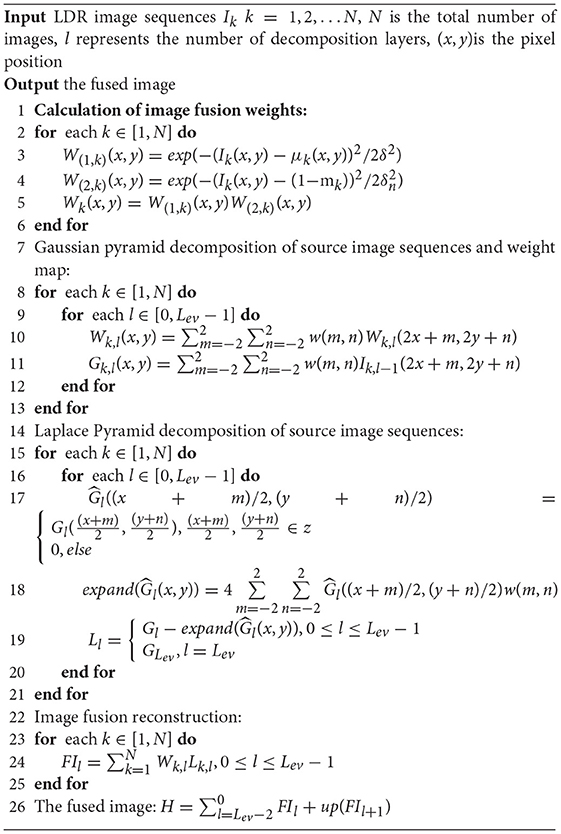

where $FI_l$ is the lth layer of the fused pyramid, up denotes upsampling, Lev is the number of pyramid levels, and H is the final fused image. The overall workflow of the proposed method is summarized in Algorithm 1.
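Putting the steps together, the following self-contained sketch fuses a stack of grayscale exposures given precomputed weight maps: it normalizes the weights, builds Gaussian pyramids of the weights and Laplacian pyramids of the images, fuses them layer by layer, and collapses the result by repeated upsampling and addition. It is an illustrative reconstruction under the same assumed kernel and border handling as above, not the authors' Algorithm 1; color handling and the weight computation itself are omitted, and the default of 7 layers mirrors the setting stated in Section 4.2.

```python
import numpy as np

KERNEL_1D = np.array([1.0, 4.0, 6.0, 4.0, 1.0]) / 16.0  # assumed Gaussian template


def blur(image):
    """Separable 5x5 smoothing with edge replication at the borders."""
    h, w = image.shape
    padded = np.pad(image, 2, mode="edge")
    rows = sum(KERNEL_1D[i] * padded[i:i + h, :] for i in range(5))
    return sum(KERNEL_1D[j] * rows[:, j:j + w] for j in range(5))


def downsample(image):
    return blur(image)[::2, ::2]


def expand(image, shape):
    up = np.zeros(shape)
    up[::2, ::2] = image
    return 4.0 * blur(up)


def gaussian_pyramid(image, levels):
    pyramid = [np.asarray(image, dtype=float)]
    for _ in range(levels - 1):
        pyramid.append(downsample(pyramid[-1]))
    return pyramid


def laplacian_pyramid(image, levels):
    g = gaussian_pyramid(image, levels)
    return [g[i] - expand(g[i + 1], g[i].shape) for i in range(levels - 1)] + [g[-1]]


def collapse(pyramid):
    """Upsample the coarsest layer, add the next finer one, and repeat down to layer 0."""
    result = pyramid[-1]
    for layer in reversed(pyramid[:-1]):
        result = layer + expand(result, layer.shape)
    return result


def pyramid_fusion(images, weights, levels=7, eps=1e-12):
    """Fuse an (N, H, W) grayscale exposure stack using (N, H, W) weight maps."""
    images = np.asarray(images, dtype=float)
    weights = np.asarray(weights, dtype=float)
    weights = weights / (weights.sum(axis=0, keepdims=True) + eps)  # weights sum to 1 per pixel
    fused = [0.0] * levels
    for img, w in zip(images, weights):
        layers = zip(gaussian_pyramid(w, levels), laplacian_pyramid(img, levels))
        for i, (gw, lap) in enumerate(layers):
            fused[i] = fused[i] + gw * lap  # FI_l accumulates W_{k,l} * L_{k,l}
    return collapse(fused)


rng = np.random.default_rng(1)
scene = rng.random((128, 128))
# Sanity check: if every exposure is the same image, fusion must return that image.
same = pyramid_fusion(np.stack([scene] * 3), rng.random((3, 128, 128)))
# With all weight on the first exposure, the result reproduces that exposure.
one_hot = np.zeros((2, 128, 128))
one_hot[0] = 1.0
picked = pyramid_fusion(np.stack([scene, 1.0 - scene]), one_hot)
print(np.abs(same - scene).max(), np.abs(picked - scene).max())
```

Both checks rely on two properties: the smoothed, subsampled weight pyramids still sum to 1 at every layer (the operations are linear and preserve constants), and collapsing a Laplacian pyramid with the same expand step reconstructs the image exactly.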

5. Experimental Results and Analysis

Twenty multi-exposure image sequences, including Arno, Balloons, Cave, and ChineseGarden, are used in the comparative experiments. The proposed method is compared subjectively and objectively with seven existing MEF methods: MESPD (Li et al., 2021), GD-MEF (Zhang and Cham, 2012), Fmmr (Li and Kang, 2012), DSIFT (Liu and Wang, 2015), GFF (Li et al., 2013), SPD-MEF (Ma et al., 2017), and PMEF (Qi et al., 2020). All experiments were run in MATLAB 2019 on a laptop with an Intel Core i7-9750H CPU @ 2.60 GHz and 8.00 GB of RAM. The relevant parameters are set to δ = 0.2, β = 0.5, and α = 0.75.

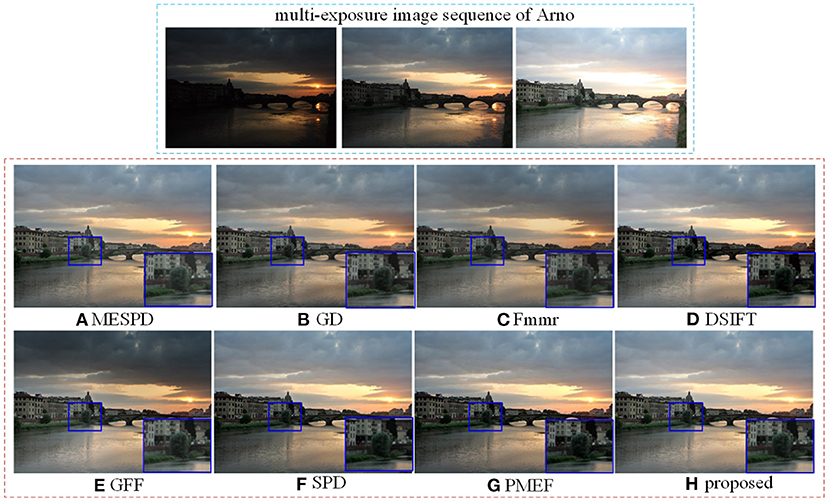

5.1. Subjective Comparison

First, experiments are carried out on the “Arno” scene, and the fusion results of the different algorithms are shown in Figure 3. DSIFT renders the clouds in the right part of the sky too dark and fails to capture their details. GFF and SPD produce low brightness around the bridge, which loses detail and degrades the visual effect. GD, PMEF, and the proposed algorithm keep the overall brightness of the image uniform while retaining more details, giving an excellent visual effect.

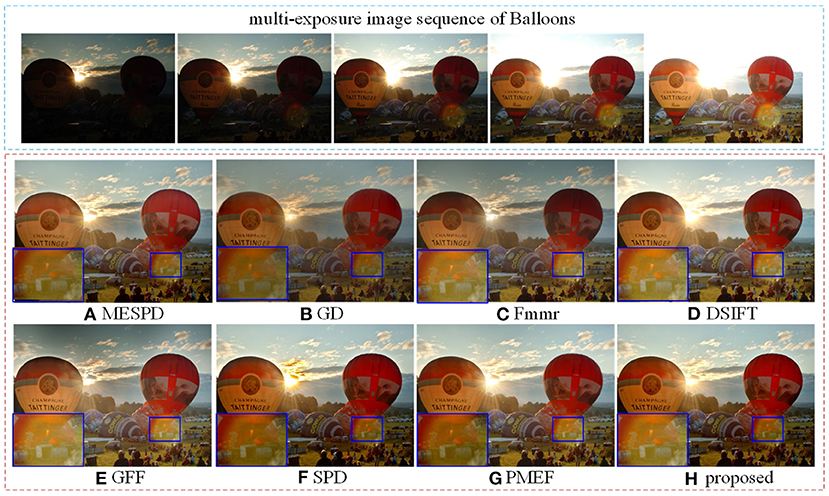

The results for the “Balloons” scene are shown in Figure 4. The fusion results of GD, Fmmr, and PMEF are dark: the details of the clouds at sunset, that is, the overexposed areas, are well captured, but the overall scene is dark, so details in the underexposed areas are lost. The image fused by GFF shows a slight halo along the edge of the hot-air balloon; in addition, part of the sky is dark and the colors are slightly distorted. The sunset area of the image fused by SPD is abnormal and its colors are severely distorted, which seriously degrades the overall quality of the fused image. In the enlarged detail region, MESPD, GD, Fmmr, SPD, and PMEF show low brightness, poor visibility, and severe loss of detail.

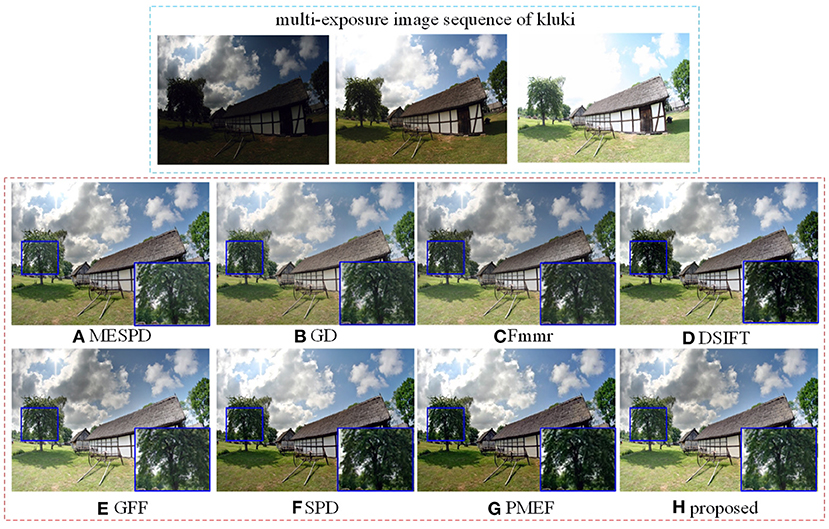

For the “Kluki” scene, shown in Figure 5, the saturation of SPD and PMEF is too high, which distorts the colors of the resulting images and preserves the cloud details poorly; the other algorithms retain the cloud details and give good visual results. In particular, the results of the proposed method and DSIFT account for details in both the bright and dark areas of the scene, so their colors are realistic, their contrast is clear, and their visual quality is good. In the enlarged detail of the trees on the left, DSIFT, SPD, and PMEF suffer from low brightness and high saturation, which leads to poor retention of detail.

5.2. Objective Evaluation Indicator Analysis

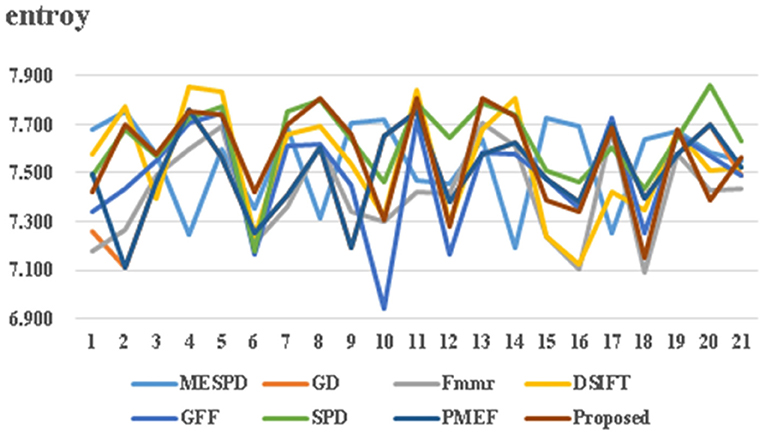

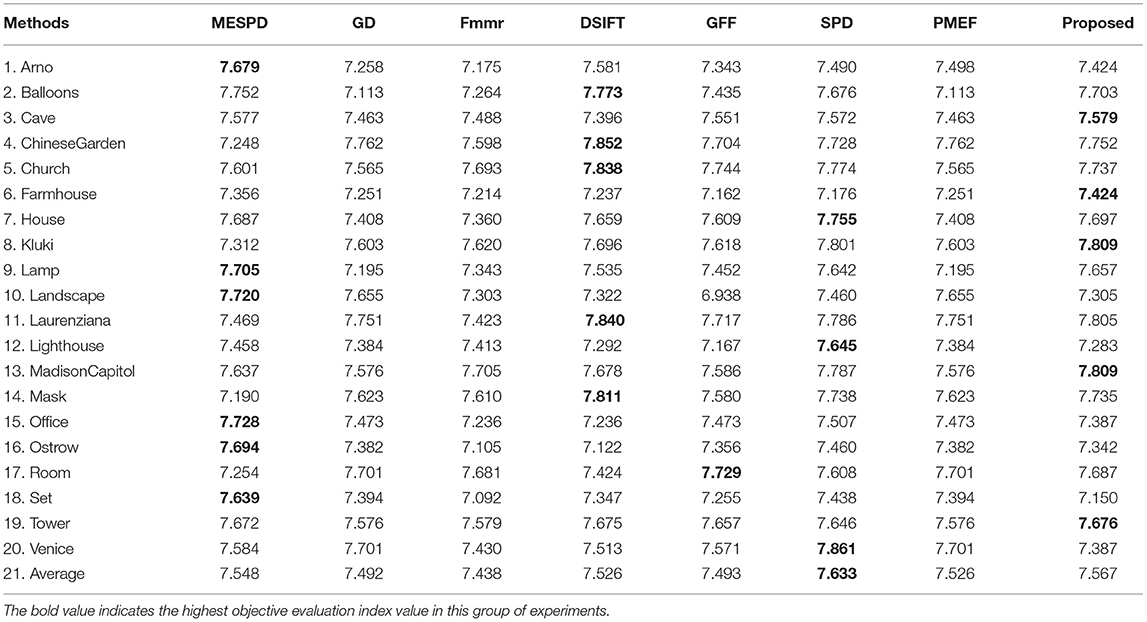

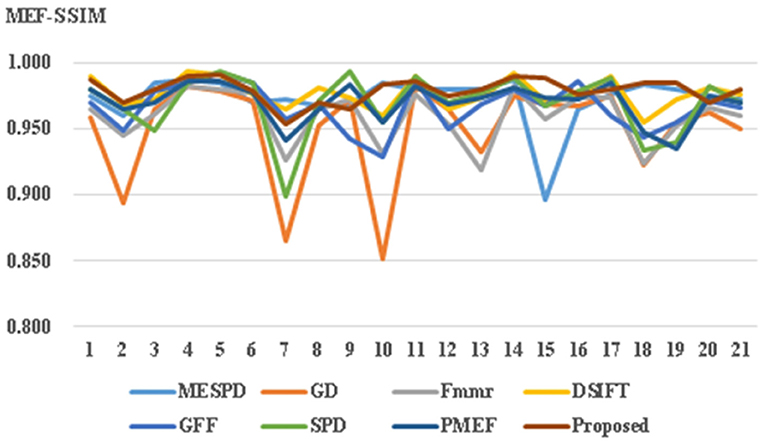

This article uses the structural similarity index (SSIM) and image information entropy for objective evaluation. As shown in Figures 6, 7 and Tables 1, 2, the results confirm that the proposed method performs well in both subjective and objective evaluations. The abscissa of Figure 6 is the information entropy and the abscissa of Figure 7 is the structural similarity; the two figures share the same ordinate, where 1-20 denote the different multi-exposure sequences and 21 denotes the average.

1) Image information entropy indicator comparison

Image information entropy is an important indicator of image fusion quality. The larger the information entropy, the more detail the fused image contains; conversely, the smaller the entropy, the less detail it contains. The evaluation results are shown in Figure 6 and Table 1. The entropy of the proposed multi-scale fusion method is slightly lower than that of SPD, which is based on image-patch decomposition, and higher than those of the other algorithms. This is because SPD avoids the partial information loss caused by downsampling and upsampling in multi-scale decomposition. Image entropy is computed as follows:

$$E = -\sum_{i} P_i \log_2 P_i$$

where $P_i$ is the proportion of pixels with gray value i in the image.
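The entropy formula can be checked with a short sketch. It assumes an 8-bit grayscale image with 256 gray levels (color results would first need to be converted, which is not shown); `image_entropy` is a hypothetical name.

```python
import numpy as np


def image_entropy(gray_u8):
    """E = -sum_i P_i * log2(P_i) for an 8-bit grayscale image, in bits per pixel."""
    counts = np.bincount(np.asarray(gray_u8, dtype=np.uint8).ravel(), minlength=256)
    p = counts / counts.sum()            # P_i: fraction of pixels with gray value i
    p = p[p > 0]                         # empty bins contribute 0 (0 * log 0 := 0)
    return float(np.sum(p * np.log2(1.0 / p)))


flat = np.full((4, 4), 128, dtype=np.uint8)             # a single gray level
halves = np.array([[0, 255]] * 8, dtype=np.uint8)       # two equally frequent levels
ramp = np.arange(256, dtype=np.uint8).reshape(16, 16)   # every level exactly once
print(image_entropy(flat), image_entropy(halves), image_entropy(ramp))  # 0.0 1.0 8.0
```

For 8-bit images the entropy ranges from 0 (a single gray level) to 8 bits (all 256 levels equally frequent), which gives a sense of scale for the values in Table 1.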

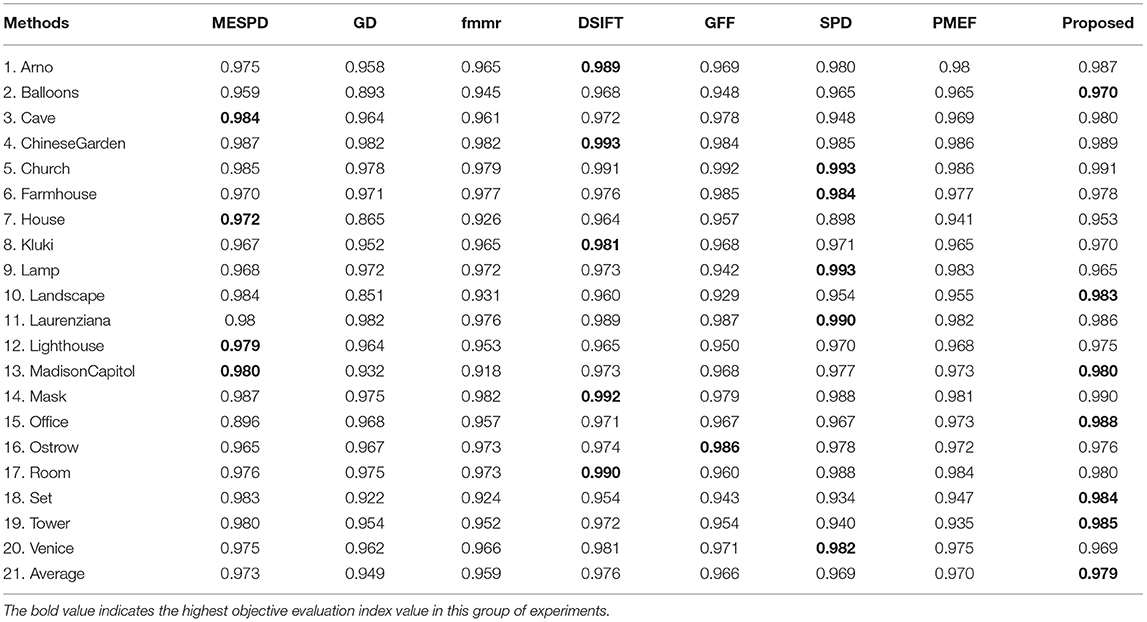

2) MEF-SSIM comparison

This article uses the MEF quality assessment model of Ma et al. (2015b) for evaluation, and the proposed method is objectively compared with seven existing MEF methods. Natural images usually contain objects at different scales, and multi-scale processing preserves the correlation between pixels across scales, which benefits image fusion. Structural similarity is an index that measures the similarity of two images. As shown in Figure 7 and Table 2, the proposed multi-scale MEF method achieves the best SSIM scores.

From the perspective of image formation, structural information is defined as the attributes that reflect the structure of objects in the scene independently of brightness and contrast, and distortion is modeled as a combination of three factors: brightness, contrast, and structure. The mean is used as an estimate of brightness, the standard deviation as an estimate of contrast, and the covariance as a measure of structural similarity. The definitions are as follows:

$$L(x, y) = \frac{2\mu_x \mu_y + c_1}{\mu_x^2 + \mu_y^2 + c_1}$$

$$C(x, y) = \frac{2\delta_x \delta_y + c_2}{\delta_x^2 + \delta_y^2 + c_2}$$

$$S(x, y) = \frac{\delta_{x,y} + c_3}{\delta_x \delta_y + c_3}$$

$$SSIM(x, y) = [L(x, y)]^{\alpha}\,[C(x, y)]^{\beta}\,[S(x, y)]^{\gamma}$$

where $L(x, y)$, $C(x, y)$, and $S(x, y)$ are the brightness, contrast, and structure comparisons, respectively; $\mu_x$ and $\mu_y$ are the mean pixel values of the two images; $\delta_x$ and $\delta_y$ are the standard deviations of their pixel values; $\delta_{x,y}$ is the covariance of x and y; and $c_1$, $c_2$, and $c_3$ are small constants that keep the ratios stable when a denominator approaches 0. The exponents α, β, and γ adjust the relative importance of the three components and are usually set to α = β = γ = 1. The structural similarity index is computed at multiple scales, and the final quality score is obtained through Formula (19):

$$Q = \prod_{l=1}^{L} \left[SSIM_l\right]^{\beta_l} \qquad (19)$$

where L is the total number of scales, $SSIM_l$ is the score at the lth scale, and $\beta_l$ is the weight assigned to the lth scale.
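The three comparison terms and the multi-scale product can be sketched as follows. This is a simplified illustration, not the MEF-SSIM model of Ma et al. (2015b): statistics are computed over the whole image rather than local windows, two images are compared directly instead of a fused image against an exposure sequence, the constants use the conventional choices $c_1 = (0.01D)^2$, $c_2 = (0.03D)^2$, $c_3 = c_2/2$ for dynamic range D, coarser scales come from 2 × 2 averaging, and the scale weights are the commonly used multi-scale SSIM exponents. All of these are assumptions, and the function names are hypothetical.

```python
import numpy as np


def ssim_global(x, y, data_range=1.0, alpha=1.0, beta=1.0, gamma=1.0):
    """L^alpha * C^beta * S^gamma computed from whole-image statistics (simplification)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    c3 = c2 / 2.0
    mu_x, mu_y = x.mean(), y.mean()
    sd_x, sd_y = x.std(), y.std()
    cov_xy = np.mean((x - mu_x) * (y - mu_y))
    lum = (2 * mu_x * mu_y + c1) / (mu_x ** 2 + mu_y ** 2 + c1)  # brightness term L
    con = (2 * sd_x * sd_y + c2) / (sd_x ** 2 + sd_y ** 2 + c2)  # contrast term C
    structure = (cov_xy + c3) / (sd_x * sd_y + c3)               # structure term S
    return lum ** alpha * con ** beta * structure ** gamma


def halve(image):
    """Next coarser scale by 2x2 block averaging (odd trailing row/column dropped)."""
    h, w = image.shape[0] // 2 * 2, image.shape[1] // 2 * 2
    im = image[:h, :w]
    return 0.25 * (im[0::2, 0::2] + im[1::2, 0::2] + im[0::2, 1::2] + im[1::2, 1::2])


def multiscale_score(x, y, scale_weights=(0.0448, 0.2856, 0.3001, 0.2363, 0.1333)):
    """Q = prod_l SSIM_l ** beta_l over len(scale_weights) dyadic scales."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    score = 1.0
    for beta_l in scale_weights:
        score *= max(ssim_global(x, y), 0.0) ** beta_l  # clip so fractional powers stay real
        x, y = halve(x), halve(y)
    return float(score)


rng = np.random.default_rng(2)
ref = rng.random((64, 64))
noisy = np.clip(ref + 0.1 * rng.standard_normal(ref.shape), 0.0, 1.0)
print(multiscale_score(ref, ref), multiscale_score(ref, noisy))
```

Each of the three terms is at most 1, so the product equals 1 only for identical inputs and decreases as brightness, contrast, or structure diverge.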

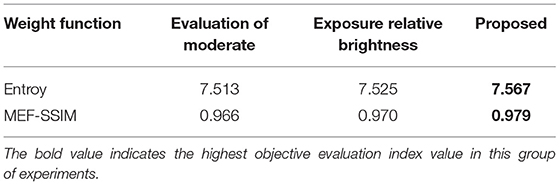

5.3. Ablation Experiment of Weight Function

To verify that combining the two feature indicators, moderate-exposure evaluation and relative brightness, in the weight function improves multi-exposure image fusion, the following ablation experiments were carried out. As shown in Table 3, the fused images obtained with the improved weight function perform well on the objective evaluation indicators.

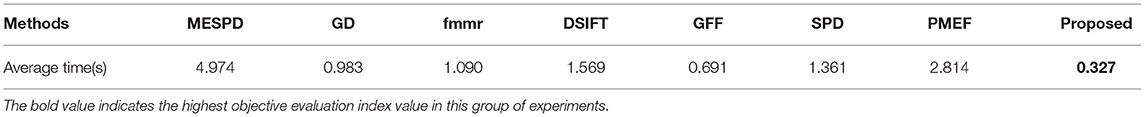

5.4. Comparison and Analysis of Computational Efficiency

As shown in Table 4, the multi-exposure fusion algorithm based on the improved weight function is more computationally efficient than the compared algorithms. Although it uses a Laplacian pyramid, each downsampling step halves the numbers of rows and columns, so every layer has about a quarter of the pixels of the layer below it and the pyramid adds only a modest amount of computation. In addition, the weight computation of this algorithm is simple and requires little extra time. Therefore, the computational efficiency of this algorithm is clearly better than that of the compared algorithms.
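The claim that the pyramid adds little computation can be checked with simple arithmetic: because each layer keeps every other row and column, layer l has roughly $4^{-l}$ times as many pixels as the input, so all layers together stay below $\sum_{l \ge 0} 4^{-l} = 4/3$ of the input size. The sketch below counts pixels for a hypothetical 1024 × 768 input and the 7 layers used in this article; `pyramid_pixel_ratio` is a hypothetical name.

```python
def pyramid_pixel_ratio(rows, cols, levels):
    """Total number of pixels in a `levels`-layer pyramid divided by the input size."""
    total, r, c = 0, rows, cols
    for _ in range(levels):
        total += r * c
        r, c = (r + 1) // 2, (c + 1) // 2  # every other row and column is kept
    return total / (rows * cols)


ratio = pyramid_pixel_ratio(1024, 768, 7)
print(round(ratio, 4))  # 1.3333, just under the geometric-series bound 4/3
```

The cost therefore grows by about a third per image and per weight map, rather than doubling with each additional layer.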

6. Conclusion

In this article, the weight function is improved: the weight maps are computed from an evaluation of moderate exposure and from relative brightness, and pyramid-based multi-scale decomposition is used to fuse images at different resolutions into the final HDR image. The proposed method effectively captures rich image details and addresses issues such as splicing traces and border discontinuities in the fused image, avoiding artifacts. MEF-SSIM and image information entropy are used to evaluate fusion performance, and the experimental results confirm that the proposed method achieves good image fusion performance.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author Contributions

KX: conceptualization, funding acquisition, and supervision. QW: investigation and methodology. HX and KL: software. All authors have read and agreed to the published version of the manuscript. All authors contributed to the article and approved the submitted version.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Fei, K., Zhe, W., Chen, W., Wu, X., and Li, Z. (2017). Intelligent detail enhancement for exposure fusion. IEEE Trans. Multimedia 20, 484–495. doi: 10.1109/TMM.2017.2743988

Hayat, N., and Imran, M. (2019). Ghost-free multi exposure image fusion technique using dense sift descriptor and guided filter. J. Vis. Commun. Image Represent. 62, 295–308. doi: 10.1016/J.JVCIR.2019.06.002

Jin, X., Huang, S., Jiang, Q., Lee, S. J., and Yao, S. (2021a). Semi-supervised remote sensing image fusion using multi-scale conditional generative adversarial network with siamese structure. IEEE J. Sel. Top. Appl. Earth Observations Rem. Sens. doi: 10.1109/JSTARS.2021.3090958

Jin, X., Zhou, D., Jiang, Q., Chu, X., and Zhou, W. (2021b). How to analyze the neurodynamic characteristics of pulse-coupled neural networks? a theoretical analysis and case study of intersecting cortical model. IEEE Trans. Cybern. doi: 10.1109/TCYB.2020.3043233

Lee, S.-H., Park, J. S., and Cho, N. I. (2018). “A multi-exposure image fusion based on the adaptive weights reflecting the relative pixel intensity and global gradient,” in 2018 25th IEEE International Conference on Image Processing (ICIP) (Athens), 1737–1741.

Li, H., Chan, T. N., Qi, X., and Xie, W. (2021). Detail-preserving multi-exposure fusion with edge-preserving structural patch decomposition. IEEE Trans. Circuits Syst. Video Technol. doi: 10.1109/TCSVT.2021.3053405

Li, H., Ma, K., Yong, H., and Zhang, L. (2020). Fast multi-scale structural patch decomposition for multi-exposure image fusion. IEEE Trans. Image Process. 29, 5805–5816. doi: 10.1109/TIP.2020.2987133

Li, S., and Kang, X. (2012). Fast multi-exposure image fusion with median filter and recursive filter. IEEE Trans. Consum. Electron. 58, 626–632. doi: 10.1109/TCE.2012.6227469

Li, S., Kang, X., and Hu, J. (2013). Image fusion with guided filtering. IEEE Trans. Image Process. 22, 2864–2875. doi: 10.1109/TIP.2013.2244222

Liu, C., Yuen, J., and Torralba, A. (2016). SIFT Flow: Dense Correspondence Across Scenes and Its Applications. Springer International Publishing, 15–49. doi: 10.1007/978-3-319-23048-1_2

Liu, Y., Wang, L., Cheng, J., Li, C., and Chen, X. (2020). Multi-focus image fusion: a survey of the state of the art. Inf. Fusion 64, 71–91. doi: 10.1016/j.inffus.2020.06.013

Liu, Y., and Wang, Z. (2015). Dense sift for ghost-free multi-exposure fusion. J. Vis. Commun. Image Represent. 31, 208–224. doi: 10.1016/j.jvcir.2015.06.021

Ma, K., Hui, L., Yong, H., Zhou, W., Meng, D., and Lei, Z. (2017). Robust multi-exposure image fusion: a structural patch decomposition approach. IEEE Trans. Image Process. 26, 2519–2532. doi: 10.1109/TIP.2017.2671921

Ma, K., Yeganeh, H., Zeng, K., and Wang, Z. (2015a). High dynamic range image compression by optimizing tone mapped image quality index. IEEE Trans. Image Process. 24, 3086–3097. doi: 10.1109/TIP.2015.2436340

Ma, K., Zeng, K., and Wang, Z. (2015b). Perceptual quality assessment for multi-exposure image fusion. IEEE Trans. Image Process. 24, 3345. doi: 10.1109/TIP.2015.2442920

Mertens, T., Kautz, J., and Reeth, F. V. (2010). Exposure fusion: a simple and practical alternative to high dynamic range photography. Comput. Graph. Forum 28, 161–171. doi: 10.1111/j.1467-8659.2008.01171.x

Moriyama, D., Ueda, Y., Misawa, H., Suetake, N., and Uchino, E. (2019). “Saturation-based multi-exposure image fusion employing local color correction,” in 2019 IEEE International Conference on Image Processing (ICIP) (Taipei), 3512–3516.

Qi, G., Chang, L., Luo, Y., Chen, Y., Zhu, Z., and Wang, S. (2020). A precise multi-exposure image fusion method based on low-level features. Sensors (Basel, Switzerland) 20, 1597. doi: 10.3390/s20061597

Shen, J., Zhao, Y., Yan, S., and Li, X. (2014). Exposure fusion using boosting laplacian pyramid. IEEE Trans. Cybern. 44, 1579–1590. doi: 10.1109/TCYB.2013.2290435

Shen, R., Cheng, I., Shi, J., and Basu, A. (2011). Generalized random walks for fusion of multi-exposure images. IEEE Trans. Image Process. 20, 3634–3646. doi: 10.1109/TIP.2011.2150235

Shen-yu, J., Kuo, C., Zhi-hai, X., Hua-jun, F., Qi, L., and Yue-ting, C. (2015). Multi-exposure image fusion based on well-exposedness assessment. J. Zhejiang Univ. Eng. Sci. (Hangzhou), 24, 7.

Ulucan, O., Karakaya, D., and Turkan, M. (2020). Multi-exposure image fusion based on linear embeddings and watershed masking. Signal Process. 178, 107791. doi: 10.1016/j.sigpro.2020.107791

Wang, S., and Zhao, Y. (2020). A novel patch-based multi-exposure image fusion using super-pixel segmentation. IEEE Access 8, 39034–39045. doi: 10.1109/ACCESS.2020.2975896

Xu, H., Jiang, G., Yu, M., Zhu, Z., Bai, Y., Song, Y., and Sun, H. (2021). Tensor product and tensor-singular value decomposition based multi-exposure fusion of images. IEEE Trans. Multimedia 1. doi: 10.1109/TMM.2021.3106789

Keywords: high dynamic range image, multi-scale decomposition, multi-exposure images, image fusion, Laplacian pyramid (LP)

Citation: Xu K, Wang Q, Xiao H and Liu K (2022) Multi-Exposure Image Fusion Algorithm Based on Improved Weight Function. Front. Neurorobot. 16:846580. doi: 10.3389/fnbot.2022.846580

Received: 31 December 2021; Accepted: 07 February 2022;

Published: 08 March 2022.

Edited by:

Xin Jin, Yunnan University, China

Reviewed by:

Yu Liu, Hefei University of Technology, China

Huafeng Li, Kunming University of Science and Technology, China

Copyright © 2022 Xu, Wang, Xiao and Liu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ke Xu, xuke@cqupt.edu.cn
