- 1Hangzhou Xinmei Complete Electric Appliance Manufacturing Co., Ltd., Hangzhou, China

- 2School of Electrical and Electronic Engineering, Shandong University of Technology, Zibo, China

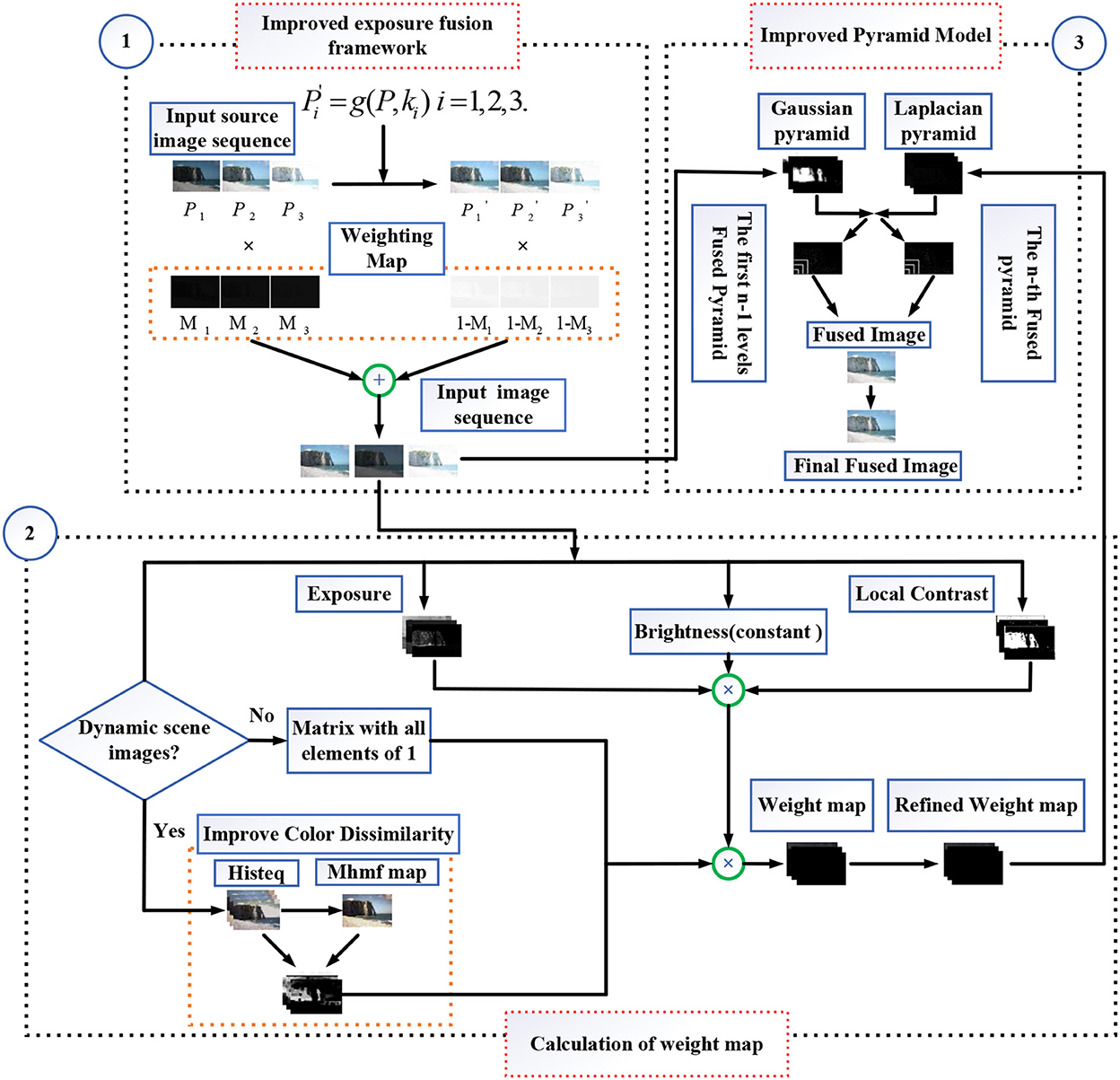

To address ghosting artifacts in multi-scale exposure fusion of dynamic scenes, this study proposes an improved ghost-free multi-exposure fusion method based on an exposure fusion framework and a color dissimilarity feature. The method can be further applied to power system monitoring and unmanned aerial vehicle monitoring. First, an improved exposure fusion framework based on the camera response model is applied to preprocess the input image sequence. Second, the initial weight map is estimated by multiplying four weight terms. For the ghost-removal weight term, an improved color dissimilarity feature is used to detect object motion in dynamic scenes. Finally, an improved pyramid model is adopted to retain detail in poorly exposed areas. Experimental results indicate that the proposed method improves sharpness, detail preservation, and ghosting artifact removal, and that it outperforms five existing multi-exposure image fusion (MEF) methods in quality evaluation.

1. Introduction

Objects in natural scenes are constantly in motion, and the dynamic range of ordinary cameras is very narrow compared with that of most natural scenes (Akçay et al., 2017). Therefore, a single captured image cannot contain all the details of a high dynamic range (HDR) scene at once. Dynamic range refers to the ratio between the brightness of the brightest and darkest areas of an image. To address the limitations of low dynamic range (LDR) images, HDR imaging technology is used to merge LDR images captured at different exposures into HDR images (Debevec and Malik, 2008).

At present, there are two approaches to HDR imaging, namely, MEF and tone mapping. The tone mapping approach requires the camera response function (CRF) for correction during HDR imaging and then uses a tone mapping operator to convert HDR images to LDR images that can be shown on traditional LDR devices. The MEF approach directly fuses images of the same scene taken at different exposure levels to generate an information-rich image. It avoids the shortcomings of tone mapping, because exposure evaluation, CRF correction, and tone mapping operations are not required. Therefore, it saves computation cost and is widely used in high-dynamic-range imaging.

In recent years, many MEF methods have been developed. Depending on whether the objects in the input image sequence are moving, they can be divided into static scene MEF methods (Mertens et al., 2007; Heo et al., 2010; Gu et al., 2012; Zhang and Cham, 2012; Shen et al., 2014; Ma and Wang, 2015; Nejati et al., 2017; Huang et al., 2018; Lee et al., 2018; Ma et al., 2018; Wang et al., 2019; Ulucan et al., 2021; Wu et al., 2021; Hu et al., 2022) and dynamic scene MEF methods (Li and Kang, 2012; Qin et al., 2014; Liu and Wang, 2015; Vanmali et al., 2015; Fu et al., 2016; Ma et al., 2017; Zhang et al., 2017; Hayat and Imran, 2019; Li et al., 2020; Qi et al., 2020; Jiang et al., 2022; Luo et al., 2022; Yin et al., 2022). Mertens et al. (2007) proposed a technique for fusing exposure sequences into high-quality images using multi-scale resolution. It generates natural-color images, but the edge texture details of the fused image are largely lost. Zhang and Cham (2012) proposed a method to process static and dynamic exposure compositions using image gradient information. This method avoids the tedious tone mapping steps but cannot deal with the ghosts caused by the movement of objects and cameras. Gu et al. (2012) proposed a MEF method that uses the Euclidean metric to measure intensity distance in the gradient domain feature space. It produces fused images with rich information. Shen et al. (2014) proposed an advanced exposure fusion method that integrates local, global, and saliency weights into the weight processing problem. Ma and Wang (2015) proposed a patch decomposition MEF method to save running time. It improves the color appearance of the fused image by decomposing image patches into three components, namely, average intensity, signal structure, and signal strength. Later, Ma et al. (2018) combined structural similarity with patch structure and proposed a MEF method that increases perceptual quality by optimizing the color structural similarity index (MEF-SSIMc). Nejati et al. (2017) first decomposed the source input images into base and detail layers and then adopted an exposure function to handle the weight problem. Although this method improves computational efficiency, it cannot remove the ghosts of dynamic scenes. Lee et al. (2018) designed an advanced weight function that increases the weights of the bright regions in underexposed images and the dark regions in overexposed images while suppressing the oversaturation of these regions. Huang et al. (2018) proposed a color multi-exposure image fusion method to enhance the detail of fused images. The method decomposes the images into three weights, namely, intensity adjustment, structure preservation, and contrast extraction, and fuses them separately, which preserves a great deal of detail from the input images. Wang et al. (2019) proposed a multi-exposure image fusion method in YUV color space. Simple detail components are used to strengthen the fused image details, which retains detail in the brightest and darkest areas of the HDR scene.

Several recent studies (Ulucan et al., 2021; Wu et al., 2021; Hu et al., 2022) describe the latest results for MEF. Ulucan et al. (2021) designed a MEF technique to obtain accurate weights for fused images. The weight map is constructed by watershed masking and linear embedding weights and is then fused with the input images. This method produces fused images with abundant detail and a good color appearance. Wu et al. (2021) presented a MEF method based on improved exposure evaluation and a dual-pyramid model. The method can be applied in computer vision as well as in the medical, remote sensing, and electrical fields. Hu et al. (2022) proposed a MEF method for detail enhancement based on homomorphic filtering. For weight map calculation, threshold segmentation and Gaussian curves are used. For detail enhancement, a pyramid model with homomorphic filtering is used to process the weight maps and input image sequences.

In dynamic scene MEF, objects move within the input image sequence, so the ghosting caused by object motion must be removed. Heo et al. (2010) proposed a high-dynamic-range imaging (HDRI) algorithm that uses a global intensity transfer function to remove ghosting artifacts. Li and Kang (2012) proposed a MEF method that removes ghosting using histogram equalization and a color dissimilarity feature computed with median filtering. Qin et al. (2014) used a random walk algorithm to maintain the content of moving objects and provide more details. This method can therefore process dynamic scenes and reduce the ghosting artifacts of fused images. To increase the color brightness of the fused image, Vanmali et al. (2015) proposed a weight-forced MEF method without ghosting. It uses the algorithm of Mertens et al. (2007) to obtain the weight map and applies weight forcing to set the weight of newly detected objects to zero. It can therefore produce ghost-free images with good color and texture details. Liu and Wang (2015) presented a multi-exposure image fusion method based on DSIFT deghosting. It uses the dense SIFT descriptor to extract the local contrast of the source images and to remove ghosting artifacts in dynamic scenes. To enhance the quality of ghost-free fused images, Ma et al. (2017) proposed a MEF method based on structural patch decomposition (SPD-MEF). It uses the direction of the signal structure in the patch vector space to detect motion consistency, which removes ghosts. Zhang et al. (2017) introduced the inter-consistency of pixel intensity similarity across input image sequences and the intra-consistency of the interrelationships between adjacent pixels. To reduce the cost of motion estimation and accelerate MEF, Hayat and Imran (2019) presented a MEF method (MEF-DSIFT) based on dense SIFT descriptors and guided filtering. The method calculates the color dissimilarity feature using histogram equalization and median filtering, which removes ghosting in the MEF of dynamic scenes. Recently, Qi et al. (2020) proposed a MEF method based on feature patches. This method removes ghosts in dynamic scenes through prior exposure quality and structural consistency checking, which improves ghost removal performance. Li et al. (2020) proposed a fast multi-scale SPD-MEF method. It reduces halos in static scenes and ghosting in dynamic scenes.

The available MEF methods are mainly suited to static scene fusion and lack robustness to dynamic scenes, which leads to poor ghost removal. Therefore, this study adopts a multi-exposure image fusion method with weight-term deghosting. Building on the method of Ying et al., an improved exposure fusion framework based on the camera response model is proposed to process input image sequences. Building on the method of Hayat and Imran (2019), an improved color dissimilarity feature is proposed for dynamic scenes to remove the ghosting artifacts caused by object motion. The proposed method generates ghost-free fused images with pleasing naturalness and sharp texture details. The main advantages of the proposed method are summarized as follows:

(1) This study proposes an improved exposure fusion framework based on the camera response model. For the first time, the image sequences processed by the fusion framework are used as the multi-exposure input source sequences. After processing by the fusion framework, the brightness and contrast of the source images are enhanced, and a large amount of detail is retained.

(2) An initial weight map is designed. Four weight terms of the input image are calculated, namely, local contrast, exposure feature, brightness feature, and improved color dissimilarity feature, and the four terms are multiplied together. For dynamic scenes, the improved color dissimilarity feature is based on a hybrid median filter and histogram equalization, which strengthens image sharpness and gives a better deghosting effect.

(3) Weighted guided image filtering (WGIF) is utilized to refine the initial weight map. The improved multi-scale pyramid decomposition model is used to add the Laplacian pyramid information to the highest level of the weighted mapping pyramid to weaken halo artifacts and retain details.

The rest of the study is organized as follows: Section 2 describes the proposed multi-scale fusion deghosting method in detail. Section 3 demonstrates the effectiveness of the proposed method by analyzing the experimental results. Finally, Section 4 concludes this study and outlines future work.

2. Multi-scale image fusion ghosting removal

2.1. Improved exposure fusion framework based on the camera response model

The input image sequence contains overexposed and underexposed areas. Using it directly for multi-scale image fusion may degrade the contrast and sharpness of the fused images. Therefore, we transform the brightness of all images in the exposure sequence and carry out a weighted fusion of each image before and after the brightness transform to enhance image contrast, as in Equation (1).

where g is the brightness transfer function, which uses the β-γ correction model; Pi(x,y), i = 1, 2, 3, …, N, is the input image; applying g to Pi(x,y) gives its brightness-transformed version in the exposure sequence; and ki is the exposure rate of the i-th image. M is the weight map of the input image Pi(x,y); “◦” indicates the element-wise product operator; c is the index of the three color channels; a = −0.3293 and b = 1.1258 are the parameters of the CRF; and Ii(x,y) is the enhancement result.
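The brightness transform and weighted fusion described above can be sketched per pixel as follows. This is a minimal illustration, not the authors' implementation: it assumes the β-γ form commonly given for this camera response model, g(P, k) = β·P^γ with γ = k^a and β = exp(b(1 − γ)), pixel values normalized to [0, 1], and a clip to 1 after the transform. The function names are hypothetical.

```python
import math

A = -0.3293  # CRF parameter a, as given in the text
B = 1.1258   # CRF parameter b, as given in the text

def brightness_transfer(p, k, a=A, b=B):
    """Assumed beta-gamma model: g(p, k) = beta * p**gamma, with p in [0, 1]."""
    gamma = k ** a
    beta = math.exp(b * (1.0 - gamma))
    return beta * p ** gamma

def enhance_pixel(p, k, m):
    """Blend the original pixel (weight m) with its brightened version (weight 1 - m).

    The clip to 1.0 is an assumption of this sketch, not taken from the paper.
    """
    return m * p + (1.0 - m) * min(1.0, brightness_transfer(p, k))
```

With k = 1 the transform leaves the pixel unchanged, and with k > 1 it brightens it, so a weight m close to 1 keeps well-exposed pixels while a small m pulls dark pixels toward the brightened image.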

For low-light images, the image brightness Li(x,y) is taken as the maximum value over the three color channels, as in Equation (2).
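As a small illustration of this step, the brightness map can be computed by taking the per-pixel maximum over the R, G, and B channels. The nested-list image layout and the function name are assumptions of this sketch.

```python
def brightness_map(rgb):
    """Return L(x, y) as the maximum of the three color channels at each pixel.

    rgb is a list of rows, each row a list of (R, G, B) tuples.
    """
    return [[max(pixel) for pixel in row] for row in rgb]
```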

Algorithms for estimating the illumination map T have been extensively studied. This study adopts the morphological closing operation of Fu et al. (2016) to calculate the initial illumination map Ti, as shown in Equation (3).

where Qi(x,y) denotes a structural element, and “⋅” denotes the closing operation. The range is mapped to [0,1] for downstream operations by dividing by 255. Then, weighted guided image filtering (WGIF) (Li et al., 2014) is used to optimize the initial illumination map Ti(x,y); it removes halos better than the existing guided image filter (GIF). The V channel of the input images in the HSV color space is used as the guide image in WGIF.
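The closing and normalization step can be sketched as a grayscale dilation followed by an erosion, then a division by 255. The flat square structuring element and its size are assumptions here, since the text does not specify Qi(x,y), and the WGIF refinement is not included.

```python
def _window_filter(img, size, reduce_fn):
    """Apply reduce_fn (max or min) over a size x size window, truncated at borders."""
    h, w = len(img), len(img[0])
    r = size // 2
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            window = [img[yy][xx]
                      for yy in range(max(0, y - r), min(h, y + r + 1))
                      for xx in range(max(0, x - r), min(w, x + r + 1))]
            row.append(reduce_fn(window))
        out.append(row)
    return out

def initial_illumination(brightness, size=3):
    """Morphological closing (dilation, then erosion) of an 8-bit brightness map,
    scaled to [0, 1] by dividing by 255."""
    dilated = _window_filter(brightness, size, max)
    closed = _window_filter(dilated, size, min)
    return [[v / 255.0 for v in row] for row in closed]
```

Closing fills small dark holes in bright regions, which is why it gives a smoother initial illumination estimate than the raw brightness map.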

The key point of image fusion enhancement is the design of the weight map M(x,y), which is calculated using the method proposed by Ying et al. (2017a), as in Equation (4).

where θ = 0.5 is a parameter that controls the enhancement intensity, and the illumination term is the optimized illumination map. In addition, we used the exposure rate determination method of Ying et al. (2017a) to obtain the best exposure rate k. To obtain images with good sharpness, the non-linear unsharp masking algorithm of Ngo et al. (2020) is used to increase the naturalness and sharpness of the fused images.
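A minimal sketch of the weight map follows, assuming that it is the optimized illumination map raised to the power θ, which is the form used in the exposure fusion framework this section builds on. The exact expression of Equation (4) is not reproduced here, and the function name is hypothetical.

```python
def weight_map(illumination, theta=0.5):
    """Assumed form M = T**theta, with T in [0, 1] at every pixel."""
    return [[t ** theta for t in row] for row in illumination]
```

Under this assumption, well-lit pixels (T near 1) receive weights near 1 and dark pixels receive small weights.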

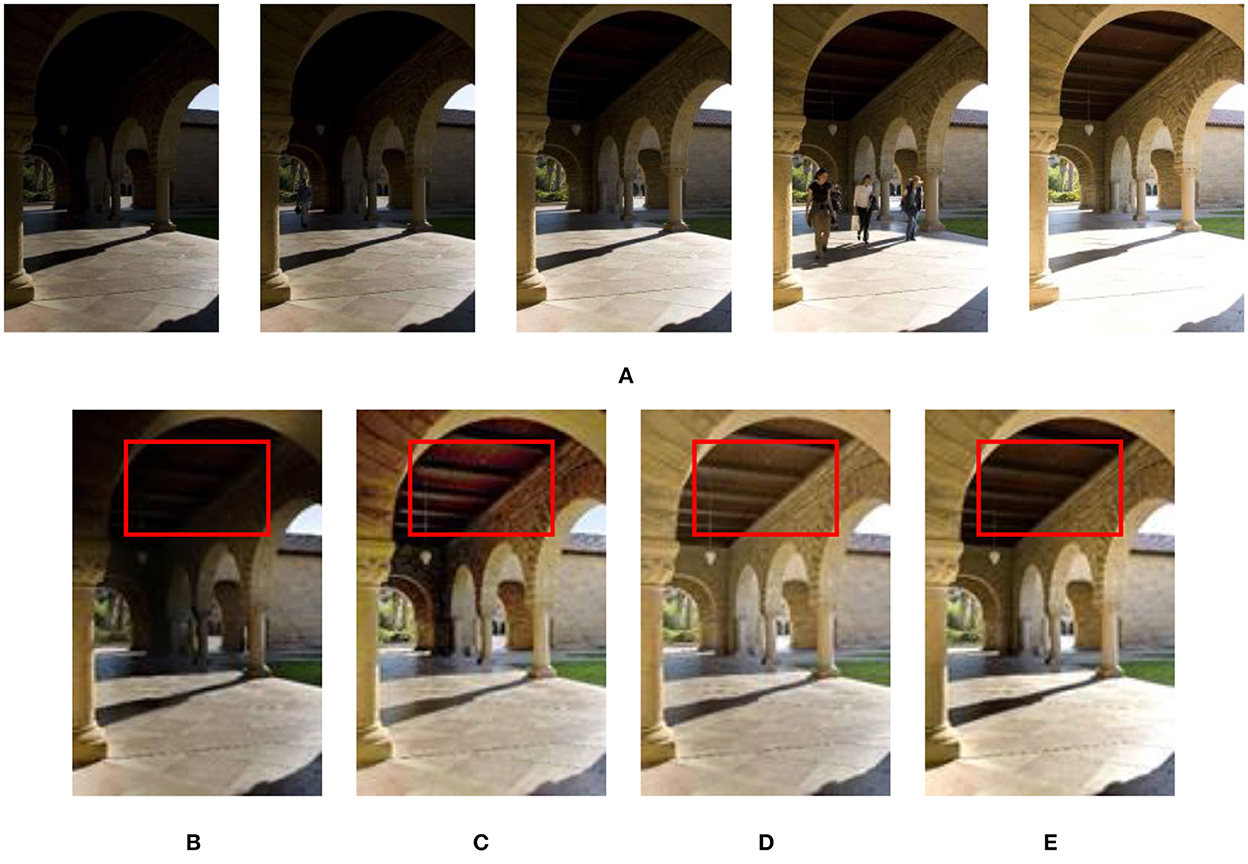

Figure 1 shows the effect of processing with and without the CRF exposure fusion framework. Figure 1B shows the result without the CRF exposure fusion framework. Figures 1C–E show the results with the CRF exposure fusion framework. In Figure 1D, although the contrast of the image is improved, the image suffers from oversaturation distortion. The proposed fusion framework (see Figure 1E) significantly improves the brightness and sharpness of over/underexposed regions in the source input image sequences. Therefore, we used the proposed exposure fusion framework in the experiments that follow.

Figure 1. Results of dynamic scene “Arch” image sequence processed with/without CRF exposure fusion framework. (A) “Arch” image sequence; (B) Without CRF exposure fusion framework processing method (Hayat and Imran, 2019); (C) ICCV image processing method (Ying et al., 2017b); (D) CAIP image processing method (Ying et al., 2017a); (E) The image processing method proposed in this study.

2.2. Multi-exposure image fusion without ghosting based on improved color dissimilarity feature and improved pyramid model

This section proposes an improved multi-exposure image fusion method without ghosting. The proposed method mainly targets scenes with motion in multi-exposure images. Figure 2 shows the flowchart of the proposed method.

2.2.1. Improved color dissimilarity feature

An improved color dissimilarity feature is proposed that builds on the fast multi-exposure image fusion with median filter and recursive filter of Li and Kang (2012). Unlike the method of Li and Kang (2012), the static background image IS of the scene is processed by a hybrid median filter (mHMF) (Kim et al., 2018), as in Equation (5).

where IS represents the static background of the scene and mhmf(·) denotes the hybrid median filter operator. The hybrid median filter (mHMF) (Kim et al., 2018) is applied to the worst-exposed image in the histogram-equalized exposure sequence; it preserves image edges in regions with abrupt changes better than the median filter. In addition, the color dissimilarity feature Di(x,y) of moving objects is calculated between the static background image IS and the histogram-equalized image, as in Li and Kang (2012) and Hayat and Imran (2019).
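To illustrate why a hybrid median filter preserves edges better than a plain median, the sketch below implements the standard 3×3 hybrid median filter: the median of the plus-shaped neighbors, the median of the diagonal neighbors, and the center pixel are combined by a final median. This is the textbook variant and may differ from the mHMF of Kim et al. (2018); the border replication is also an assumption.

```python
from statistics import median

def hybrid_median_3x3(img):
    """Standard 3x3 hybrid median filter on a 2-D list of gray values."""
    h, w = len(img), len(img[0])

    def px(y, x):
        # Replicate border pixels for out-of-range coordinates.
        return img[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]

    out = []
    for y in range(h):
        row = []
        for x in range(w):
            c = px(y, x)
            plus = median([c, px(y - 1, x), px(y + 1, x), px(y, x - 1), px(y, x + 1)])
            diag = median([c, px(y - 1, x - 1), px(y - 1, x + 1),
                           px(y + 1, x - 1), px(y + 1, x + 1)])
            row.append(median([plus, diag, c]))
        out.append(row)
    return out
```

An isolated outlier pixel is removed, while a straight step edge passes through unchanged.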

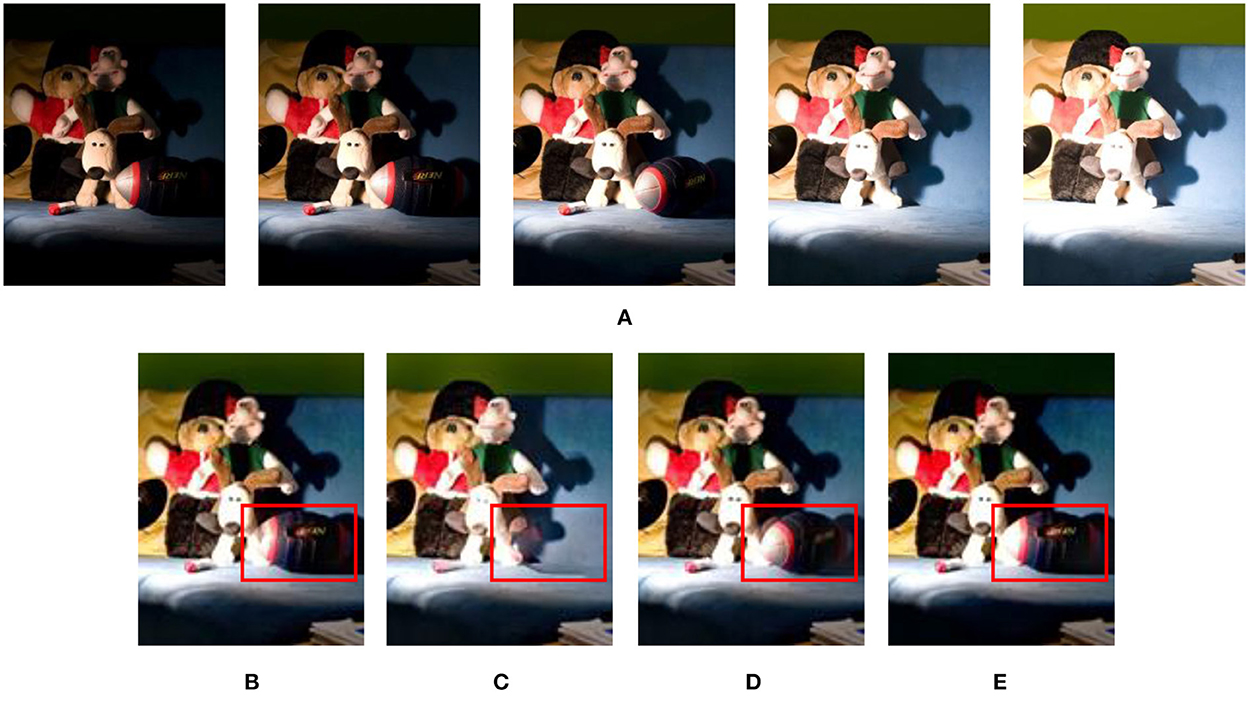

The color dissimilarity feature of Li and Kang (2012) and that of the proposed method are compared in Figure 3. The fused image in Figure 3B, generated by the method of Li and Kang (2012), has ghosting artifacts at the ellipsoid. The proposed algorithm is validated with overexposed, normally exposed, and underexposed source images, as shown in Figures 3C–E. Figure 3E shows that the result generated from the underexposed image has a good deghosting effect and is better than that in Figure 3B. Therefore, in the experiments that follow, we applied the mHMF to underexposed images.

Figure 3. The results of processing the dynamic scene of the “Puppets” image sequence using the original/improved color dissimilarity features. (A) “Puppets” image sequence; (B) using the original color dissimilarity feature (Li and Kang, 2012); (C) hybrid median filter processing the brightest exposure image; (D) hybrid median filter processing the good exposure image; (E) hybrid median filter processing the darkest exposure image.

2.2.2. Exposure feature and brightness feature

Because of the correlation between the three channels in RGB color space, which affects the final multi-scale pyramid decomposition and fusion, the input source image is converted from RGB to YUV color space. The exposure feature weight item Ei(x,y) of the input image is measured in the Y channel as in Equation (6).

where Yi(x,y) is the normalized value of the Y channel, which is used together with its mean value, and σ is a Gaussian kernel parameter set to σ = 0.2. In addition, to increase the SNR of the input image sequence and retain the details of the brightest and darkest regions, this method uses the brightness quality metric of Kou et al. (2018).
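The sketch below illustrates the two ingredients of this step: converting an RGB pixel to a luminance value and scoring it with a Gaussian curve of width σ = 0.2. The BT.601 luma coefficients are a standard choice but an assumption here, and `target` is a hypothetical parameter standing in for the reference level of Equation (6), whose exact dependence on the mean of Yi(x,y) is not reproduced.

```python
import math

def luma(r, g, b):
    """BT.601 luma of an RGB pixel with channels in [0, 1] (assumed conversion)."""
    return 0.299 * r + 0.587 * g + 0.114 * b

def exposure_weight(y, target, sigma=0.2):
    """Gaussian exposure score: 1 at the target level, decaying with distance."""
    return math.exp(-((y - target) ** 2) / (2.0 * sigma ** 2))
```

Pixels whose luminance is close to the reference level receive weights near 1, and strongly over/underexposed pixels receive weights near 0.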

2.2.3. Local contrast using dense SIFT descriptor

The local contrast is measured using Equation (7); it is extracted with the non-normalized dense SIFT descriptor (Liu et al., 2010).

where DSIFT(·) represents the operator that computes the non-normalized dense SIFT map of the source image, Ci(x,y) represents a simple indicator vector for local contrast measurement, and the argument of DSIFT(·) is the grayscale image corresponding to the input image Ii(x,y). At each pixel, the local contrast is taken as the l1 norm of Ci(x,y). In addition, this study uses a winner-take-all weight allocation strategy (Liu and Wang, 2015; Hayat and Imran, 2019) to obtain the final local contrast weight term.
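The winner-take-all allocation can be sketched as follows: at each pixel, the image with the largest local contrast receives weight 1 and all others receive 0. The contrast values are taken as given (for example, the l1 norms of the dense SIFT descriptors). The function name and the tie-breaking rule (first image wins) are assumptions of this sketch.

```python
def winner_take_all(contrast_maps):
    """contrast_maps[i][y][x] is the local contrast of image i at pixel (x, y).

    Returns binary weight maps with a single 1 per pixel across the sequence.
    """
    n = len(contrast_maps)
    h, w = len(contrast_maps[0]), len(contrast_maps[0][0])
    weights = [[[0] * w for _ in range(h)] for _ in range(n)]
    for y in range(h):
        for x in range(w):
            best = max(range(n), key=lambda i: contrast_maps[i][y][x])
            weights[best][y][x] = 1
    return weights
```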

2.2.4. Estimation and refinement of the weight map

First, the following four weight items of the input image sequence are calculated: color dissimilarity feature, exposure feature, brightness feature, and local contrast. Second, weight items are multiplied to generate a weighted mapping, as in Equation (8).

The weight map obtained by Equation (8) is refined and filtered directly using WGIF (Li et al., 2014), which differs from the weight map refinement in Liu and Wang (2015) and Hayat and Imran (2019). During filter refinement, Wi(x,y) is used as both the source image and the guide image. The refined weight maps are then normalized so that they sum to 1 at every pixel. The final weight map is given by Equation (9).

where the numerator term denotes the weight map after WGIF refinement, the left-hand side denotes the final normalized weight map, and ε = 10⁻⁵ is a small positive value that avoids a zero denominator in the calculation.
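The multiplication and normalization steps can be sketched per pixel as shown below. Adding ε to every refined weight before dividing is one common way to avoid a zero denominator and is an assumption about the exact form of Equation (9). The function names are hypothetical, and the WGIF refinement between the two steps is omitted.

```python
EPS = 1e-5  # small positive constant from the text

def initial_weight(dissimilarity, exposure, brightness, contrast):
    """Initial weight at one pixel: the product of the four weight terms."""
    return dissimilarity * exposure * brightness * contrast

def normalize_weights(refined, eps=EPS):
    """Normalize the refined weights of all N images at one pixel to sum to 1."""
    total = sum(w + eps for w in refined)
    return [(w + eps) / total for w in refined]
```

When every image has zero weight at a pixel, this form falls back to a uniform average instead of dividing by zero.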

2.2.5. Improved pyramid decomposition fusion model

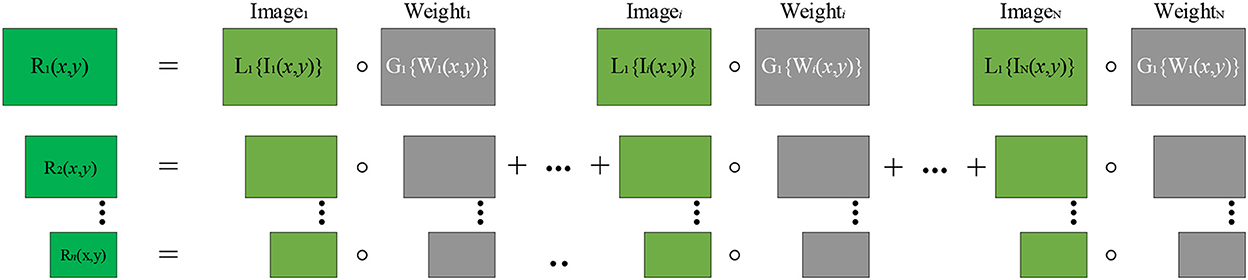

The original multi-scale pyramid model (Mertens et al., 2007) may produce fused images with loss of detail and halo artifacts. Therefore, an improved pyramid fusion model is used. In this model, the Laplacian and Gaussian pyramids are decomposed into n levels, as shown in Figure 4. The total number of levels n is defined by Equation (10).

where ro and co are the number of rows and columns of input image pixels, respectively.
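As a rough illustration, a common choice in multi-scale exposure fusion implementations is to derive the number of levels from the base-2 logarithm of the smaller image dimension. The sketch below follows that convention. It is an assumption and not necessarily the exact form of Equation (10), and `offset` is a hypothetical parameter for variants that use fewer levels.

```python
import math

def pyramid_levels(rows, cols, offset=0):
    """Assumed rule: n = floor(log2(min(rows, cols))) - offset, at least 1."""
    return max(1, int(math.floor(math.log2(min(rows, cols)))) - offset)
```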

Figure 4. General flow of multi-scale exposure fusion. Ii(x,y) is an LDR image. Wi(x,y) is a weighted mapping. The Laplacian pyramid is obtained by LDR image decomposition, and the weighted mapping decomposition obtains the Gaussian pyramid. R1(x,y)–Rn(x,y) is the resulting level of the Laplacian pyramid.

Improper smoothing of edges at the highest level of the Gaussian pyramid is considered the main cause of halos. On the lower levels of the Gaussian pyramid, improper edge smoothing contributes little to halo generation. Therefore, on the n-th level of the RGB color space pyramid, the single-scale fusion algorithm of Ancuti et al. (2016) is used to add the Laplacian pyramid information of the source image to the Gaussian pyramid of the weight map, as in Equation (11).

where Ii is the LDR input image, the left-hand side is the result of fusing the i-th image with the i-th weight map on the n-th level, and the Gaussian term is the n-th Gaussian pyramid level of the weight map. As in Ancuti et al. (2016), n is the maximum number of levels of the Gaussian pyramid, L1{Ii(x, y)} is the first level of the Laplacian pyramid of the input image Ii(x,y), and λ is the coefficient of L1{Ii(x, y)}, which controls the amplitude of the high-frequency signal L1{Ii(x, y)}.

To retain detailed information on overexposed/underexposed areas, on the n-th level, the improved multi-scale exposure fusion algorithm proposed by Wang et al. (2019) is used as in Equation (12).

For underexposed source images, |L1{Ln{Ii(x, y)}}| in Equation (12) is introduced at the n-th level to correct the incorrect weights introduced when the weight map is smoothed by the Gaussian smoothing filter. It also reasonably increases the weight of the well-exposed areas in the underexposed image, which retains the details of the underexposed areas. For overexposed images, the weight map of the n-th level is smoothed with the original Gaussian smoothing filter, which retains the details of the overexposed areas.

For other scales, the improved pyramid fusion is the same as the original pyramid fusion (Mertens et al., 2007). Finally, reconstructing the Laplacian pyramid composed of Rl(x,y) in Equation (13) generates the fused image R.

where l represents the level number of the pyramid. The image detail and brightness enhancement method proposed by Li et al. (2017) is then adopted to enhance the detail of the fused image, which yields the final multi-scale exposure fusion image.
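For reference, the level-wise blending of the original pyramid fusion (Mertens et al., 2007), which the text says is kept for all levels other than the n-th, can be written as below. The notation is an assumption chosen to match the surrounding text: G_l and L_l denote the l-th Gaussian and Laplacian pyramid levels, and \bar{W}_i is used here for the final normalized weight map.

```latex
R_l(x,y) \;=\; \sum_{i=1}^{N} G_l\{\bar{W}_i(x,y)\}\, L_l\{I_i(x,y)\}, \qquad l = 1, \dots, n-1
```

The fused image R is then obtained by collapsing the Laplacian pyramid formed by the levels R_l(x,y).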

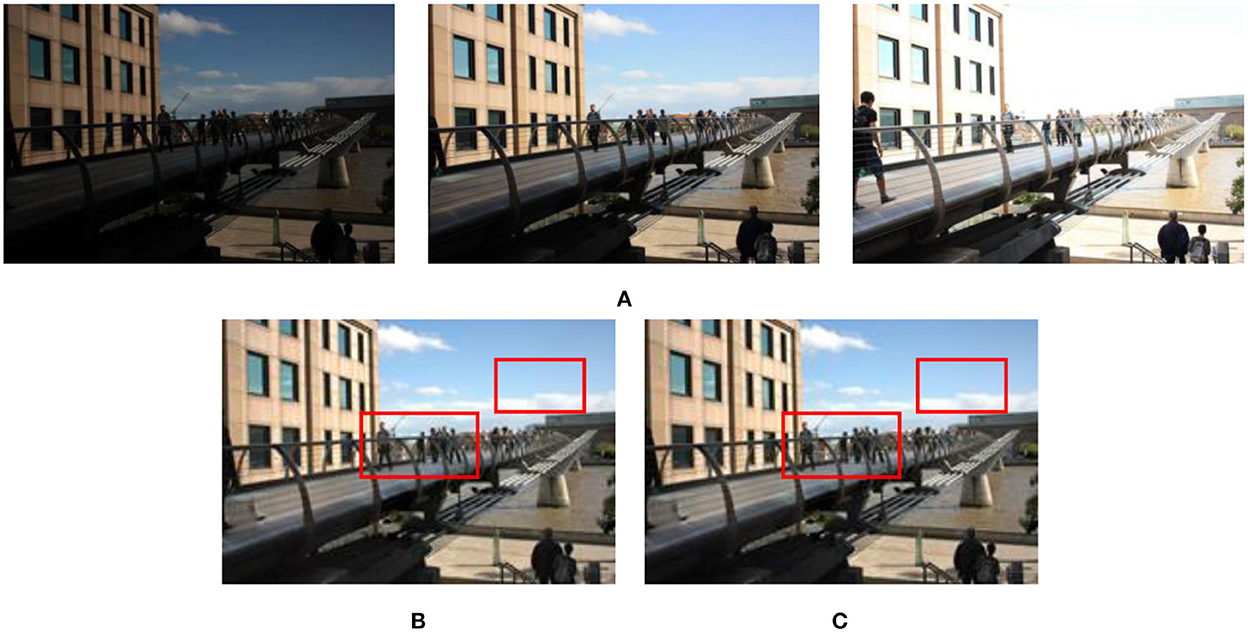

The original and improved pyramid models are compared in Figure 5. Compared with the original pyramid model (see Figure 5B), the image generated by the improved pyramid model (Figure 5C) performs well in contrast and detail preservation, especially in the pedestrian and white cloud areas. Loss of detail and halo artifacts are persistent problems in multi-scale pyramid decomposition and fusion. Therefore, this study selects the improved pyramid model to decompose and fuse the input images.

Figure 5. Experimental results of processing a dynamic scene “Tate” image sequence using the original/improved pyramid model. (A) “Tate” image sequence; (B) using the original pyramid model (Mertens et al., 2007); (C) using the improved pyramid model.

3. Experimental analysis

3.1. Experimental setup

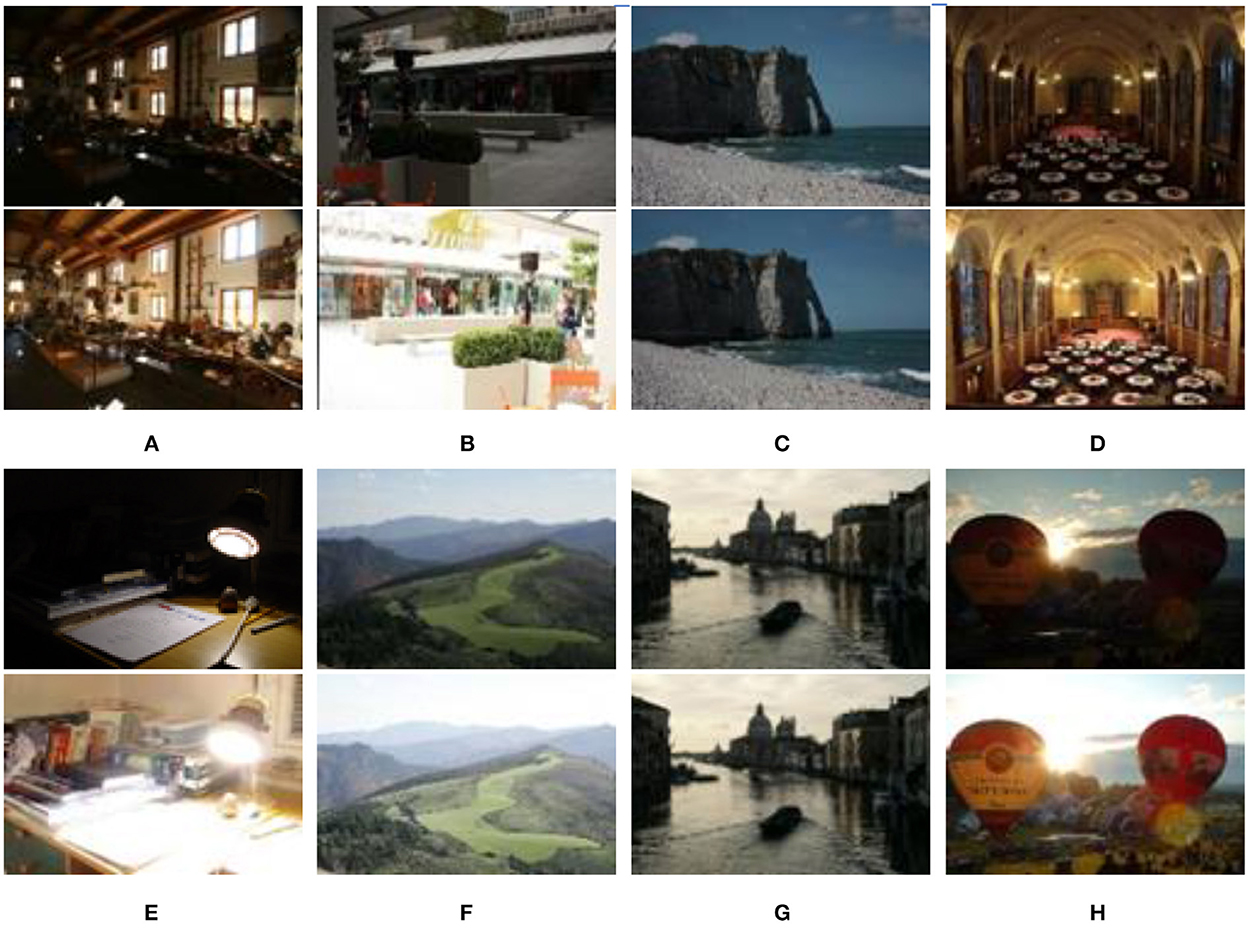

In our experiments, five and six image groups were selected from seventeen static scene (Kede, 2018) and twenty dynamic scene (DeghostingIQADatabase, 2019) image groups, respectively. As shown in Figure 6, two images with different brightnesses are extracted from the above input image sequences. We utilized eleven image groups to test five existing MEF methods and the proposed method. The five MEF methods were presented by Mertens et al. (2007), Li and Kang (2012), Liu and Wang (2015), Lee et al. (2018), and Hayat and Imran (2019), respectively. All experiments are run on MATLAB 2019a [Intel Xeon X5675 3.07 GHz desktop with 32.00 GB RAM].

Figure 6. Source image sequences used in experiments. (A) Farmhouse; (B) Brunswick; (C) Cliff; (D) Llandudno; (E) Cadik; (F) Landscape; (G) Venice; (H) Balloons.

3.2. Subjective evaluation

In this section, to discuss the experimental results thoroughly, we show enlarged close-ups of local regions for most image sequences.

3.2.1. Dynamic scenes

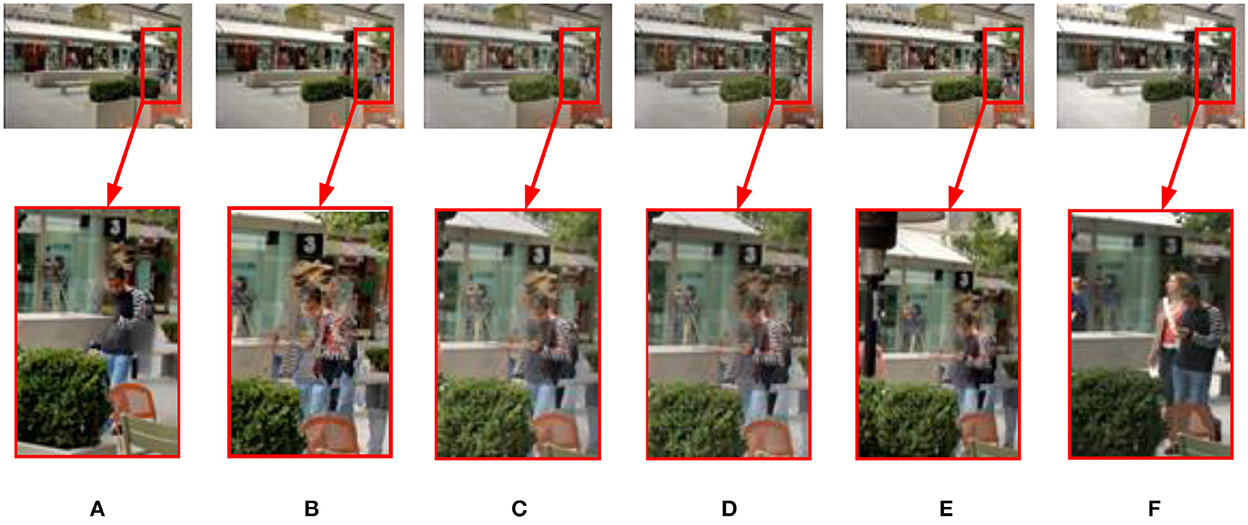

Figure 7 shows the experimental results of different methods on the dynamic Brunswick sequence. In terms of ghost removal, the methods of Mertens et al. (2007), Li and Kang (2012), Liu and Wang (2015), and Lee et al. (2018) (see Figures 7B–E) perform poorly and cannot effectively remove ghosts in pedestrian areas. The pixel oversaturation distortion in Figure 7A significantly reduces the visual quality. The proposed method produces a good result (see Figure 7F): the image contains no ghosting artifacts and looks natural to the human eye.

Figure 7. Comparison results of different methods on the dynamic “Brunswick” image sequence. (A) Hayat and Imran (2019); (B) Mertens et al. (2007); (C) Li and Kang (2012); (D) Liu and Wang (2015); (E) Lee et al. (2018); (F) the proposed method in this study.

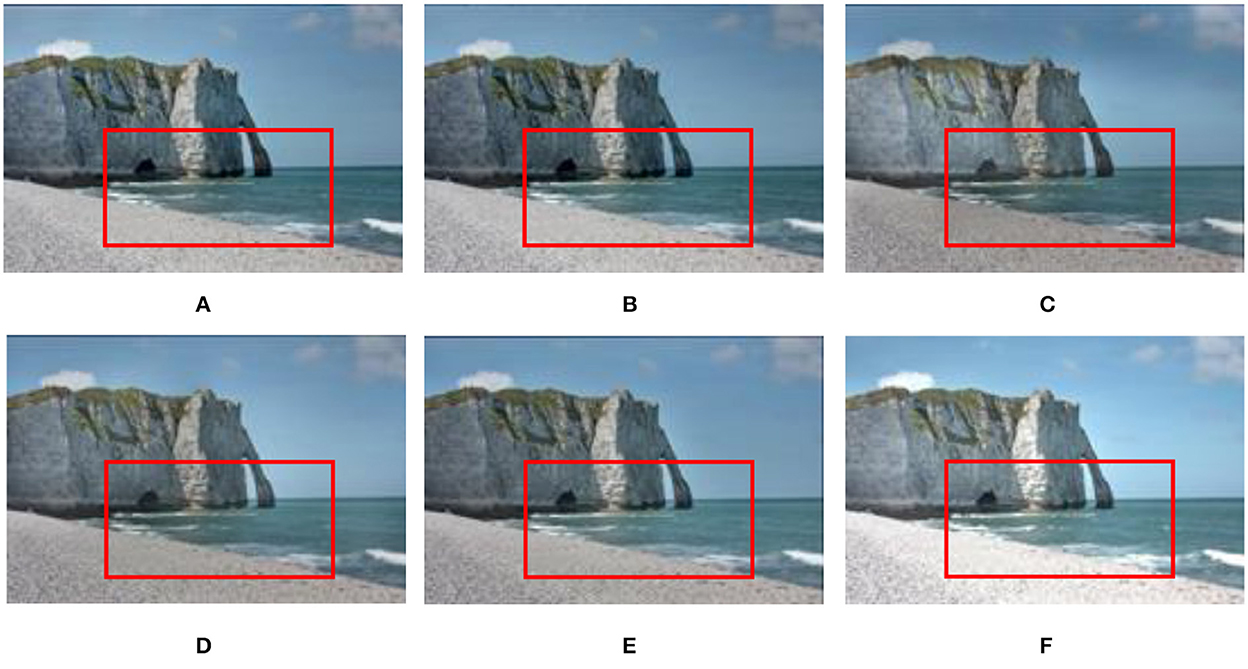

Figure 8 shows the fusion results of different methods on the dynamic Cliff sequence. The images in Figures 8A, B, generated by the methods of Hayat and Imran (2019) and Mertens et al. (2007), are dark in color, their local contrast is low, and ghosting exists in the water waves, which reduces the visual quality to a certain extent. Although the methods of Li and Kang (2012), Liu and Wang (2015), and Lee et al. (2018) (see Figures 8C–E) increase the contrast of the image, dark colors and ghosting remain. Figure 8F shows the result of the method proposed in this study. In comparison, the ghost removal performance is significantly improved. On the waves and beaches, detail, local contrast, and naturalness are maintained, consistent with human visual observation.

Figure 8. Comparison of the available MEF methods with the proposed method on the dynamic “Cliff” image sequence. (A) Hayat and Imran (2019); (B) Mertens et al. (2007); (C) Li and Kang (2012); (D) Liu and Wang (2015); (E) Lee et al. (2018); (F) the proposed method in this study.

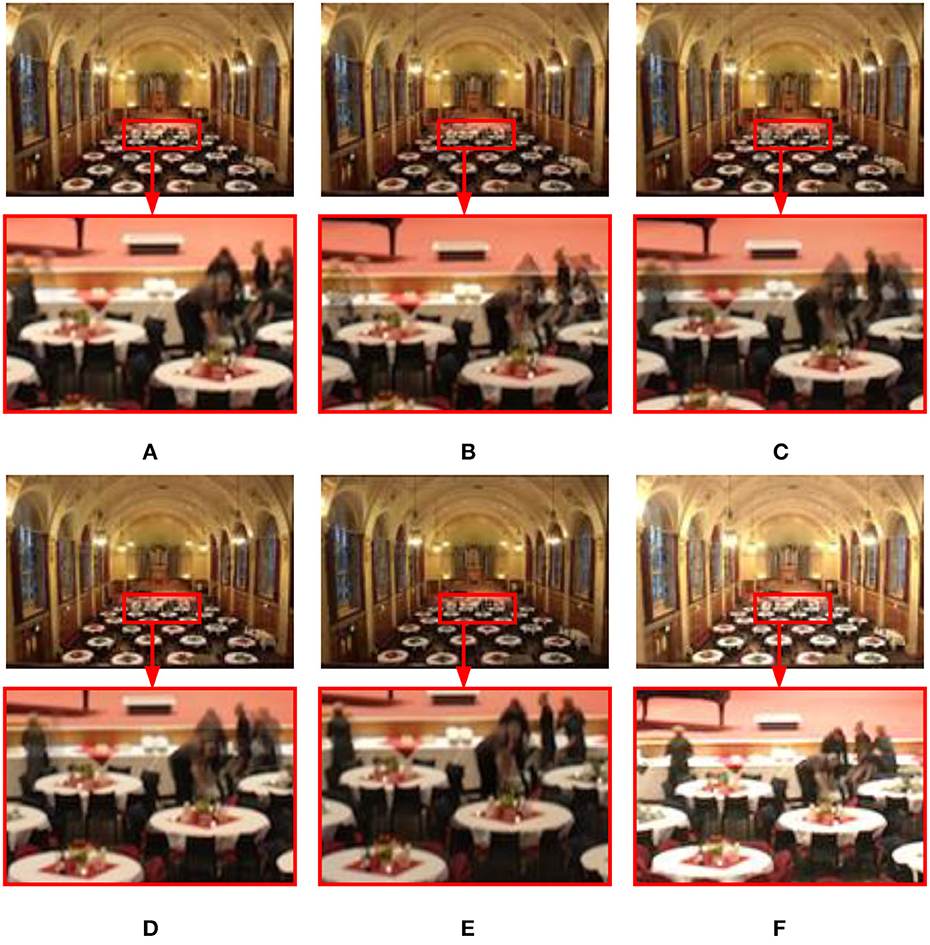

Figure 9 shows the performance comparison of different methods on the dynamic Llandudno sequence. The results of Mertens et al. (2007), Li and Kang (2012), and Liu and Wang (2015) (see Figures 9B–D) show apparent ghosting artifacts in the areas containing people, together with loss of detail and color distortion. In Figure 9A, the overall deghosting effect is good, but the color above the house is dark. The image in Figure 9E is unclear and shows color distortion. The proposed method produces a good result (see Figure 9F). The people in the image have no noticeable ghosting artifacts, details are well preserved, and the exposure level is consistent with human visual observation.

Figure 9. Fusion results of different methods on the dynamic “Llandudno” image sequence. (A) Hayat and Imran (2019); (B) Mertens et al. (2007); (C) Li and Kang (2012); (D) Liu and Wang (2015); (E) Lee et al. (2018); (F) the proposed method in this study.

3.2.2. Static scenes

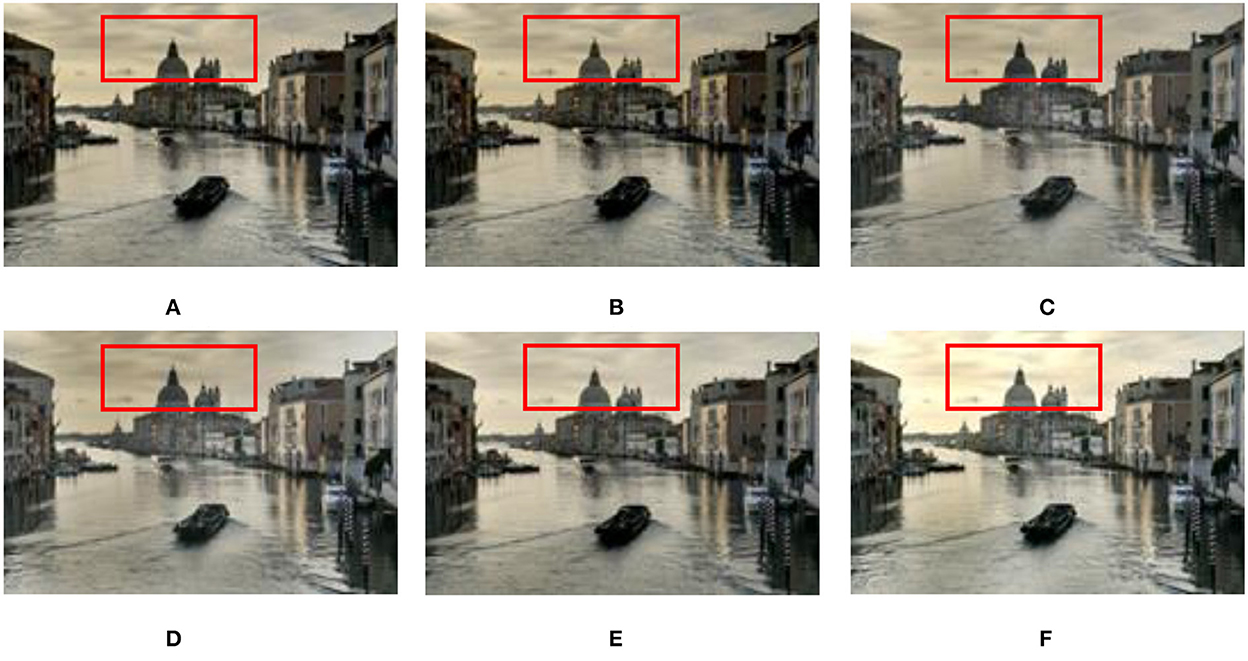

Experimental results on the static Venice sequence using different methods are shown in Figure 10. In terms of image sharpness and detail preservation, the proposed method (see Figure 10F) is superior to the methods of Mertens et al. (2007), Li and Kang (2012), Liu and Wang (2015), Lee et al. (2018), and Hayat and Imran (2019) (see Figures 10A–E). In Figures 10B–D in particular, the sky and church areas show poor exposure and sharpness, low local contrast, and lost detail. In the results of Lee et al. (2018) and Hayat and Imran (2019), the sharpness of the fused image is improved, but local contrast remains low and details are lost (see Figures 10A, E).

Figure 10. Comparison of the proposed method with Mertens et al. (2007), Li and Kang (2012), Hayat and Imran (2019), Liu and Wang (2015), and Lee et al. (2018) in the static “Venice” image sequence. (A) Hayat and Imran (2019); (B) Mertens et al. (2007); (C) Li and Kang (2012); (D) Liu and Wang (2015); (E) Lee et al. (2018); (F) the proposed method in this study.

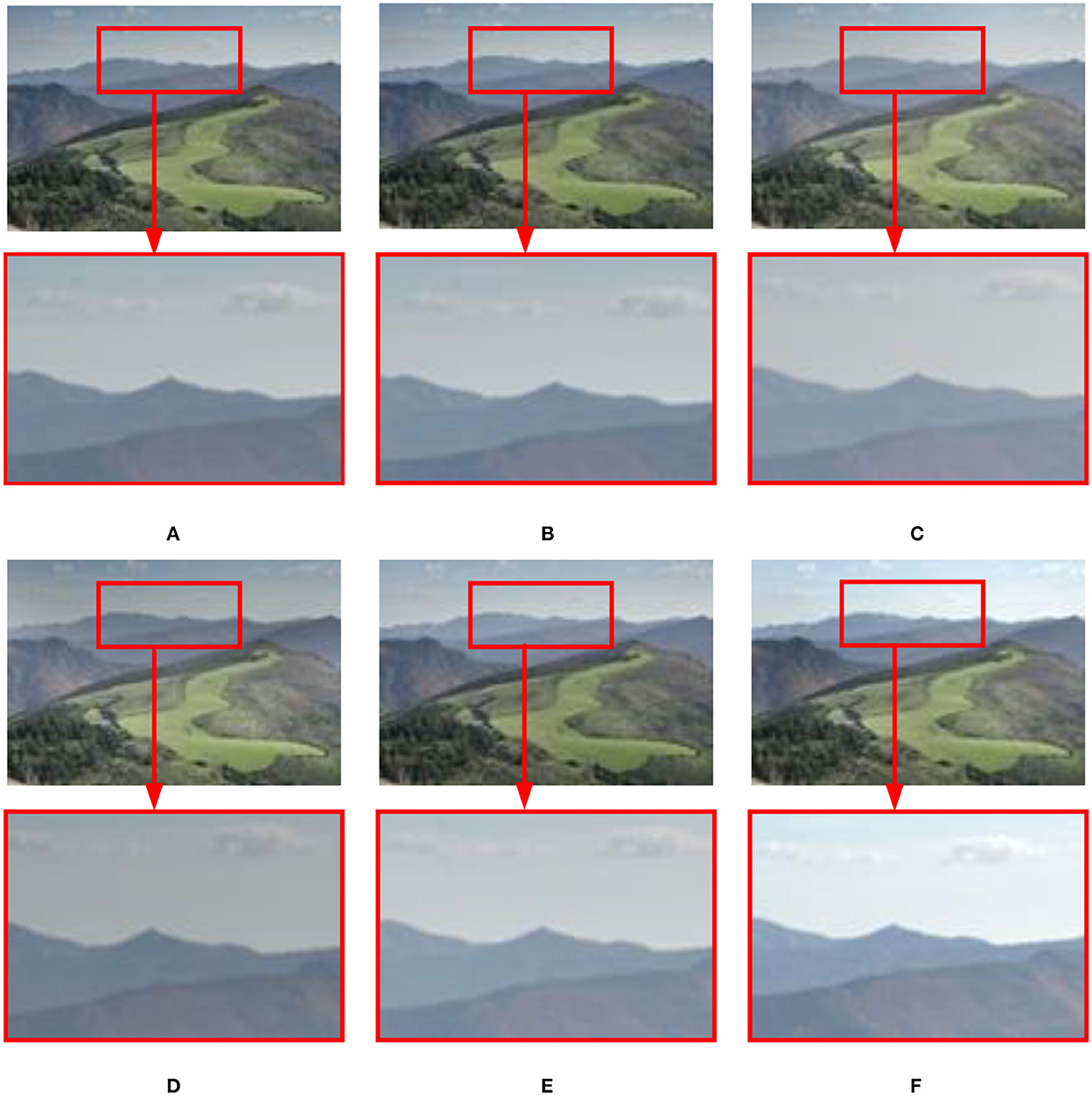

The fusion results of the six MEF methods on the static Landscape sequence are shown in Figure 11. In Figures 11B–E, the sky area (white cloud parts) is not sharp enough. With the method of Hayat and Imran (2019) (see Figure 11A), although the sharpness and naturalness of the image are enhanced in the sky area, the details of the fused image are seriously lost. Compared with the methods of Mertens et al. (2007), Li and Kang (2012), Liu and Wang (2015), Lee et al. (2018), and Hayat and Imran (2019) (see Figures 11A–E), the proposed method (see Figure 11F) has good saturation and contrast in the sky area and retains detail better.

Figure 11. Comparison results of different methods on the static “Landscape” image sequence. (A) Hayat and Imran (2019); (B) Mertens et al. (2007); (C) Li and Kang (2012); (D) Liu and Wang (2015); (E) Lee et al. (2018); (F) the proposed method in this study.

3.3. Objective evaluation

3.3.1. Evaluation using dynamic scene structural similarity index (MEF-SSIMd)

The structural similarity index MEF-SSIMd (Fang et al., 2019) is applied to measure the structural similarity between input image sequences and fused images in dynamic scenes. The overall MEF-SSIMd is defined in Equation (14).

where qd represents MEF-SSIMd of dynamic scenes and qs represents MEF-SSIMd of static scenes.

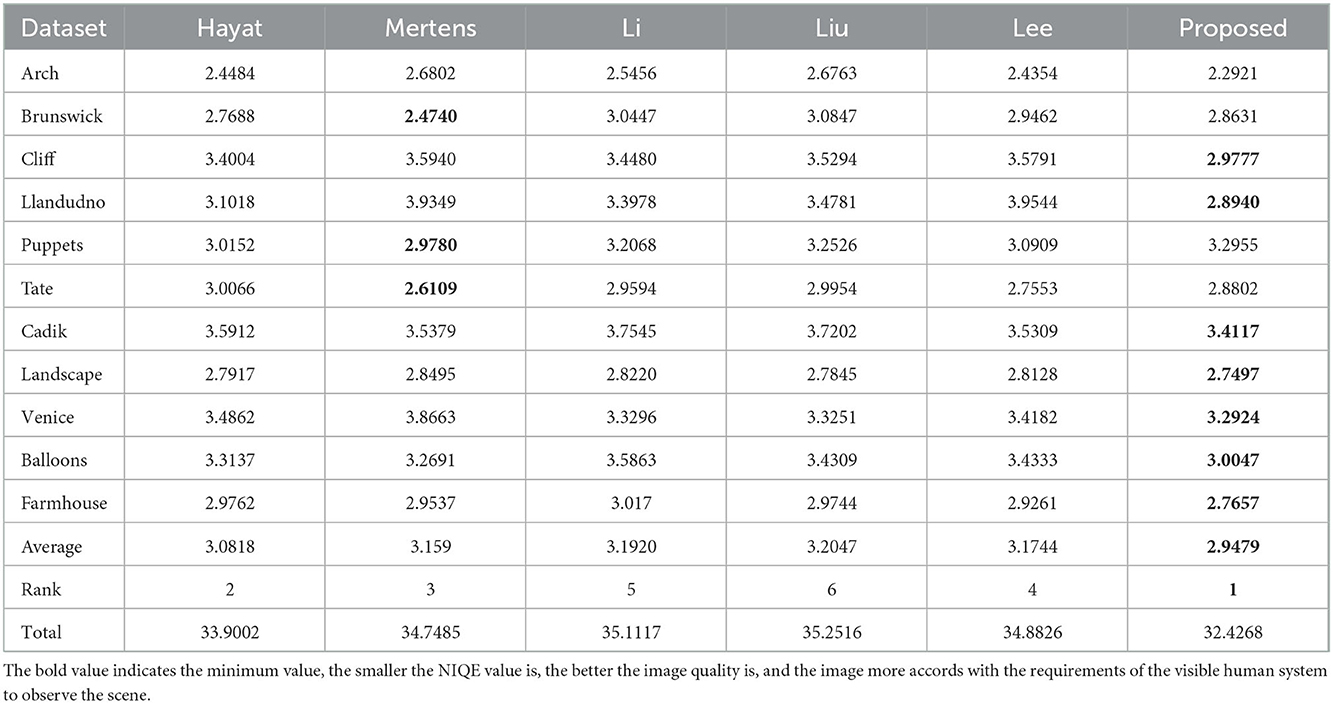

The range of MEF-SSIMd is [0,1]. The greater the value, the better the deghosting performance and the stronger the robustness to dynamic scenes; the smaller the value, the worse they are. As shown in Table 1, MEF-SSIMd is used to objectively evaluate the quality of the fused images generated by the six MEF methods. Overall, the proposed method is superior to the other five existing MEF methods in terms of MEF-SSIMd.

3.3.2. Evaluation using natural image quality evaluator (NIQE)

In multi-exposure image fusion, the fused image should match how the human visual system perceives the scene. Because general-purpose no-reference image quality assessment (IQA) algorithms require extensive training, the no-reference quality metric NIQE (Mittal et al., 2012) was proposed. The smaller the NIQE value, the better the image quality and the more closely the image matches how the human visual system perceives the scene. Conversely, the greater the NIQE value, the less the image conforms to human visual perception of the scene. As shown in Table 2, NIQE is used to evaluate the quality of the fused images produced by different MEF methods. Overall, the proposed method produces images with better naturalness.

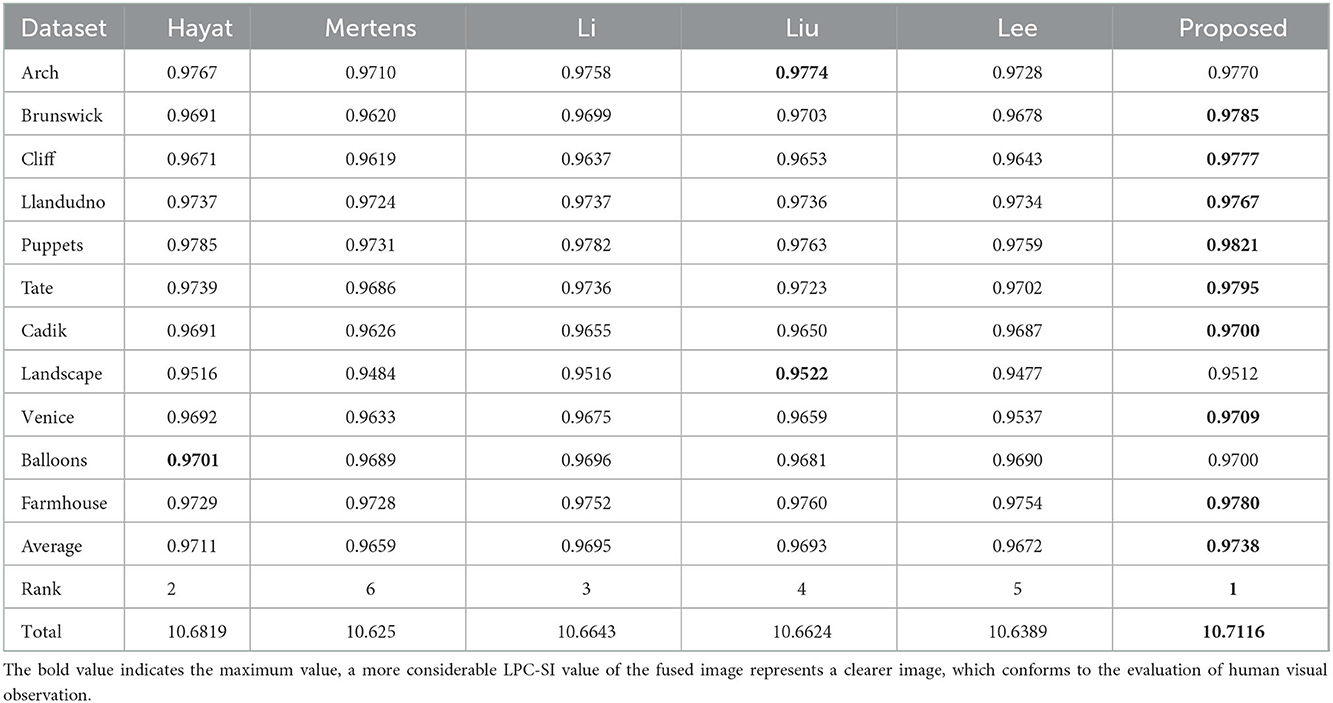

3.3.3. Evaluation of image sharpness using local phase coherence (LPC)

In multi-exposure image fusion, sharpness is a critical factor in the visual evaluation of image quality. The human visual system can effortlessly detect blur and perceive sharpness in images. Accordingly, Hassen et al. (2013) evaluated sharpness as strong local phase coherence (LPC) of image features in the complex wavelet transform domain and proposed the overall LPC sharpness index (LPC-SI). A larger LPC-SI value of the fused image represents a clearer image, in agreement with human visual observation. A smaller LPC-SI value represents a more blurred image. The value range of LPC-SI is [0,100]. Table 3 compares the LPC-SI values of the other five MEF methods and the proposed method. Overall, the proposed method outperforms the other five existing MEF methods.

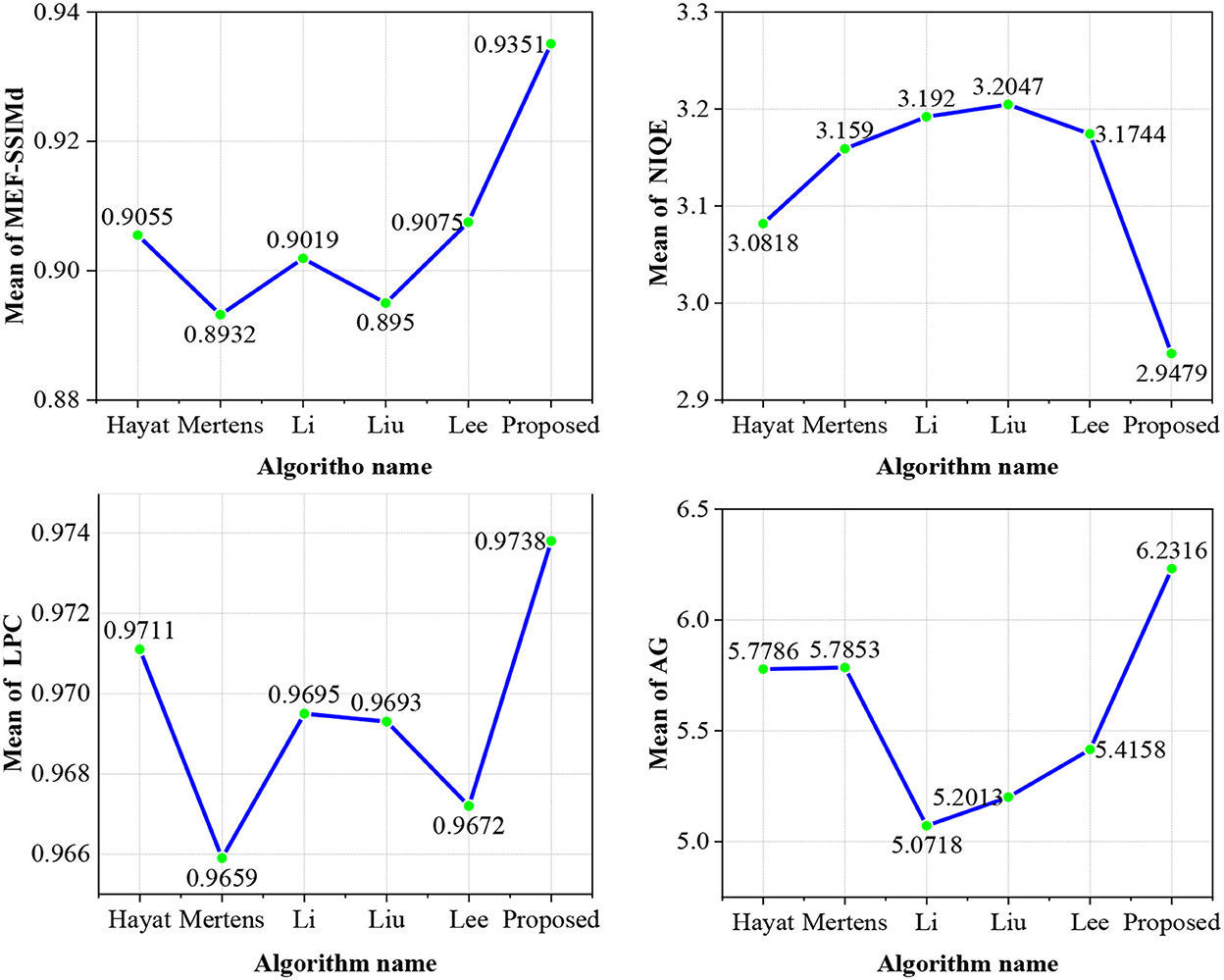

3.3.4. Mean value analysis of objective evaluation indexes

As shown in Figure 12, the proposed method ranks first in the line graphs of the mean values of the full-reference objective evaluation index MEF-SSIMd and of the no-reference objective evaluation indexes NIQE, LPC-SI, and average gradient (AG). These results indicate that the proposed ghost-free MEF method, based on the exposure fusion framework and the color dissimilarity feature, effectively removes ghosting in dynamic scene MEF. It also improves the sharpness and naturalness of the fused image and retains many details.
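The average gradient is not defined in this section, so the sketch below assumes one commonly used definition: the mean over pixels of sqrt((Δx² + Δy²) / 2), where Δx and Δy are forward differences of the gray levels. The function name `average_gradient` and the nested-list image representation are illustrative choices, and the formula actually used for Figure 12 may differ in normalization.

```python
import math


def average_gradient(img):
    """Average gradient of a grayscale image given as a list of rows.

    Assumed definition (not confirmed by the paper): the mean of
    sqrt((dx^2 + dy^2) / 2) over all pixels that have both a right
    and a lower neighbor, using forward differences.
    """
    rows, cols = len(img), len(img[0])
    if rows < 2 or cols < 2:
        raise ValueError("image must be at least 2x2")
    total = 0.0
    for i in range(rows - 1):
        for j in range(cols - 1):
            dx = img[i][j + 1] - img[i][j]  # horizontal difference
            dy = img[i + 1][j] - img[i][j]  # vertical difference
            total += math.sqrt((dx * dx + dy * dy) / 2.0)
    return total / ((rows - 1) * (cols - 1))


flat = [[5, 5, 5, 5] for _ in range(3)]  # no detail at all
ramp = [[0, 1, 2, 3] for _ in range(3)]  # unit horizontal ramp
print(average_gradient(flat), average_gradient(ramp))
```

Under this definition a flat image scores zero and an image with stronger local intensity changes scores higher, which is why a larger AG is usually read as more retained detail. In practice the loops would be replaced by array operations.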

4. Conclusion

This study proposed an improved ghost-free MEF method based on the exposure fusion framework and the color dissimilarity feature. The method generates ghost-free, high-quality images with good sharpness and rich details, and it can be further applied to power system monitoring and unmanned aerial vehicle monitoring. An improved exposure fusion framework based on the camera response model was used to improve the contrast and sharpness of over- and underexposed regions in the input image sequence. A weight map incorporating an improved color dissimilarity feature was refined with WGIF to remove ghosting artifacts, and an improved pyramid model was adopted to retain more image details. In qualitative and quantitative experiments on eleven image groups (five static scene groups and six dynamic scene groups), the proposed method ranked first when compared with five existing MEF methods. However, when objects move frequently or over a large range, the fused results may still contain ghosting artifacts. We hope that future research will address this limitation.

Data availability statement

Publicly available datasets were analyzed in this study. This data can be found here: https://github.com/h4nwei/MEF-SSIMd.

Author contributions

SC and ZL: conceptualization, methodology, software, and validation. DS: data curation. ZL: writing and original draft preparation. YA: writing, review, and editing. JY, BL, and SC: visualization. GZ: funding acquisition. All authors agreed to be accountable for the content of the study. All authors contributed to the article and approved the submitted version.

Funding

This research was funded by the National Natural Science Foundation of China (Grant Number: 51807113).

Acknowledgments

The authors thank the editors and the reviewers for their careful work and valuable suggestions for this study.

Conflict of interest

SC, DS, JY, BL, and GZ were employed by the company Hangzhou Xinmei Complete Electric Appliance Manufacturing Co., Ltd.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Akçay, Ö., Erenoglu, R. C., and Avşar, E. Ö. (2017). The effect of Jpeg compression in close range photogrammetry. Int. J. Eng. Geosci. 2, 35–40. doi: 10.26833/ijeg.287308

Ancuti, C. O., Ancuti, C., De Vleeschouwer, C., and Bovik, A. C. (2016). Single-scale fusion: an effective approach to merging images. IEEE Trans. Image Process. 26, 65–78. doi: 10.1109/TIP.2016.2621674

Debevec, P. E., and Malik, J. (2008). “Rendering high dynamic range radiance maps from photographs,” in Proceedings of the SIGGRAPH 1997: 24th Annual Conference on Computer Graphics and Interactive Techniques (Los Angeles, CA: SIGGRAPH 1997), 369–378.

DeghostingIQADatabase (2019). Available online at: https://github.com/h4nwei/MEF-SSIMd (accessed March 5, 2022).

Fang, Y., Zhu, H., Ma, K., Wang, Z., and Li, S. (2019). Perceptual evaluation for multi-exposure image fusion of dynamic scenes. IEEE Trans. Image Process. 29, 1127–1138. doi: 10.1109/TIP.2019.2940678

Fu, X., Zeng, D., Huang, Y., Liao, Y., Ding, X., and Paisley, J. (2016). A fusion-based enhancing method for weakly illuminated images. Signal Process. 129, 82–96. doi: 10.1016/j.sigpro.2016.05.031

Gu, B., Li, W., Wong, J., Zhu, M., and Wang, M. (2012). Gradient field multi-exposure images fusion for high dynamic range image visualization. J. Vis. Commun. Image Represent. 23, 604–610. doi: 10.1016/j.jvcir.2012.02.009

Hassen, R., Wang, Z., and Salama, M. M. (2013). Image sharpness assessment based on local phase coherence. IEEE Trans. Image Process. 22, 2798–2810. doi: 10.1109/TIP.2013.2251643

Hayat, N., and Imran, M. (2019). Ghost-free multi exposure image fusion technique using dense SIFT descriptor and guided filter. J. Vis. Commun. Image Represent. 62, 295–308. doi: 10.1016/j.jvcir.2019.06.002

Heo, Y. S., Lee, K. M., Lee, S. U., Moon, Y., and Cha, J. (2010). “Ghost-free high dynamic range imaging,” in Proceedings of the 10th Asian Conference on Computer Vision (Queenstown: Springer, Berlin, Heidelberg), 486–500.

Hu, Y., Xu, C., Li, Z., Lei, F., Feng, B., Chu, L., et al. (2022). Detail enhancement multi-exposure image fusion based on homomorphic filtering. Electronics 11, 1211. doi: 10.3390/electronics11081211

Huang, F., Zhou, D., Nie, R., and Yu, C. (2018). A color multi-exposure image fusion approach using structural patch decomposition. IEEE Access 6, 42877–42885. doi: 10.1109/ACCESS.2018.2859355

Jiang, Q., Lee, S., Zeng, X., Jin, X., Hou, J., Zhou, W., et al. (2022). A multi-focus image fusion scheme based on similarity measure of transformed isosceles triangles between intuitionistic fuzzy sets. IEEE Trans. Instrument. Meas. 71, 1–15. doi: 10.1109/TIM.2022.3169571

Kede, M. (2018). Available online at: https://ece.uwaterloo.ca/~k29ma/ (accessed March 15, 2022).

Kim, G. J., Lee, S., and Kang, B. (2018). Single image haze removal using hazy particle maps. IEICE Trans. Fund. Electr. 101, 1999–2002. doi: 10.1587/transfun.E101.A.1999

Kou, F., Li, Z., Wen, C., and Chen, W. (2018). Edge-preserving smoothing pyramid based multi-scale exposure fusion. J. Vis. Commun. Image Represent. 53, 235–244. doi: 10.1016/j.jvcir.2018.03.020

Lee, S. H., Park, J. S., and Cho, N. I. (2018). “A multi-exposure image fusion based on the adaptive weights reflecting the relative pixel intensity and global gradient,” in Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP) (Athens: IEEE), 1737–1741.

Li, H., Ma, K., Yong, H., and Zhang, L. (2020). Fast multi-scale structural patch decomposition for multi-exposure image fusion. IEEE Trans. Image Process. 29, 5805–5816. doi: 10.1109/TIP.2020.2987133

Li, S., and Kang, X. (2012). Fast multi-exposure image fusion with median filter and recursive filter. IEEE Trans. Consum. Electron. 58, 626–632. doi: 10.1109/TCE.2012.6227469

Li, Z., Wei, Z., Wen, C., and Zheng, J. (2017). Detail-enhanced multi-scale exposure fusion. IEEE Trans. Image Process. 26, 1243–1252. doi: 10.1109/TIP.2017.2651366

Li, Z., Zheng, J., Zhu, Z., Yao, W., and Wu, S. (2014). Weighted guided image filtering. IEEE Trans. Image Process. 24, 120–129. doi: 10.1109/TIP.2014.2371234

Liu, Y., and Wang, Z. (2015). Dense SIFT for ghost-free multi-exposure fusion. J. Vis. Commun. Image Represent. 31, 208–224. doi: 10.1016/j.jvcir.2015.06.021

Liu, C., Yuen, J., and Torralba, A. (2010). Sift flow: dense correspondence across scenes and its applications. IEEE Trans. Pattern Anal. Mach. Intell. 33, 978–994. doi: 10.1109/TPAMI.2010.147

Luo, Y., He, K., Xu, D., Yin, W., and Liu, W. (2022). Infrared and visible image fusion based on visibility enhancement and hybrid multiscale decomposition. Optik 258, 168914. doi: 10.1016/j.ijleo.2022.168914

Ma, K., Duanmu, Z., Yeganeh, H., and Wang, Z. (2018). Multi-exposure image fusion by optimizing a structural similarity index. IEEE Trans. Comput. Imaging 4, 60–72. doi: 10.1109/TCI.2017.2786138

Ma, K., Li, H., Yong, H., Wang, Z., Meng, D., and Zhang, L. (2017). Robust multi-exposure image fusion: a structural patch decomposition approach. IEEE Trans. Image Process. 26, 2519–2532. doi: 10.1109/TIP.2017.2671921

Ma, K., and Wang, Z. (2015). “Multi-exposure image fusion: A patch-wise approach,” in Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP) (Quebec, QC: IEEE), 1717–1721.

Mertens, T., Kautz, J., and Van Reeth, F. (2007). “Exposure fusion,” in Proceedings of the 15th Pacific Conference on Computer Graphics and Applications (PG'07) (Maui, HI: IEEE), 382–390.

Mittal, A., Soundararajan, R., and Bovik, A. C. (2012). Making a “completely blind” image quality analyzer. IEEE Signal Process. Lett. 20, 209–212. doi: 10.1109/LSP.2012.2227726

Nejati, M., Karimi, M., Soroushmehr, S. R., Karimi, N., Samavi, S., and Najarian, K. (2017). “Fast exposure fusion using exposedness function,” in Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP) (Beijing: IEEE), 2234–2238.

Ngo, D., Lee, S., and Kang, B. (2020). “Nonlinear unsharp masking algorithm,” in Proceedings of the 2020 International Conference on Electronics, Information, and Communication (ICEIC) (Barcelona: IEEE), 1–6.

Qi, G., Chang, L., Luo, Y., Chen, Y., Zhu, Z., and Wang, S. (2020). A precise multi-exposure image fusion method based on low-level features. Sensors 20, 1597. doi: 10.3390/s20061597

Qin, X., Shen, J., Mao, X., Li, X., and Jia, Y. (2014). Robust match fusion using optimization. IEEE Trans. Cybern. 45, 1549–1560. doi: 10.1109/TCYB.2014.2355140

Shen, J., Zhao, Y., Yan, S., and Li, X. (2014). Exposure fusion using boosting Laplacian pyramid. IEEE Trans. Cybern. 44, 1579–1590. doi: 10.1109/TCYB.2013.2290435

Ulucan, O., Karakaya, D., and Turkan, M. (2021). Multi-exposure image fusion based on linear embeddings and watershed masking. Signal Process. 178, 107791. doi: 10.1016/j.sigpro.2020.107791

Vanmali, A. V., Kelkar, S. G., and Gadre, V. M. (2015). “Multi-exposure image fusion for dynamic scenes without ghost effect,” in Proceedings of the 2015 Twenty First National Conference on Communications (NCC) (Mumbai: IEEE), 1–6.

Wang, Q., Chen, W., Wu, X., and Li, Z. (2019). Detail-enhanced multi-scale exposure fusion in YUV color space. IEEE Trans. Circ. Syst. Video Technol. 30, 2418–2429. doi: 10.1109/TCSVT.2019.2919310

Wu, L., Hu, J., Yuan, C., and Shao, Z. (2021). Details-preserving multi-exposure image fusion based on dual-pyramid using improved exposure evaluation. Results Opt. 2, 100046. doi: 10.1016/j.rio.2020.100046

Yin, W., He, K., Xu, D., Luo, Y., and Gong, J. (2022). Significant target analysis and detail preserving based infrared and visible image fusion. Infrared Phys. Technol. 121, 104041. doi: 10.1016/j.infrared.2022.104041

Ying, Z., Li, G., Ren, Y., Wang, R., and Wang, W. (2017a). “A new image contrast enhancement algorithm using exposure fusion framework,” in Proceedings of the 17th International Conference on Computer Analysis of Images and Patterns (CAIP 2017) (Ystad: Springer, Cham), 36–46.

Ying, Z., Li, G., Ren, Y., Wang, R., and Wang, W. (2017b). “A new low-light image enhancement algorithm using camera response model,” in Proceedings of the IEEE International Conference on Computer Vision Workshops (ICCVW) (Venice: IEEE), 3015–3022.

Zhang, W., and Cham, W. K. (2012). Gradient-directed multiexposure composition. IEEE Trans. Image Process. 21, 2318–2323. doi: 10.1109/TIP.2011.2170079

Keywords: ghosting artifacts, electric power monitoring, camera response model, color dissimilarity feature, pyramid, multi-exposure image fusion

Citation: Chen S, Li Z, Shen D, An Y, Yang J, Lv B and Zhou G (2023) Multi-exposure electric power monitoring image fusion method without ghosting based on exposure fusion framework and color dissimilarity feature. Front. Neurorobot. 16:1105385. doi: 10.3389/fnbot.2022.1105385

Received: 22 November 2022; Accepted: 13 December 2022;

Published: 10 January 2023.

Edited by:

Xin Jin, Yunnan University, China

Reviewed by:

Fang Chunhua, China Three Gorges University, China

Kangjian He, Yunnan University, China

Copyright © 2023 Chen, Li, Shen, An, Yang, Lv and Zhou. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yunzhu An
