
REVIEW article

Front. Neurorobot., 24 November 2022

Volume 16 - 2022 | https://doi.org/10.3389/fnbot.2022.1041108

This article is part of the Research Topic Machine Learning and Applied Neuroscience.

As technology develops, Moore's law is approaching its end, and scientists are looking to brain-like computing for a new way forward. Yet we still know very little about how the brain works. At the present stage of research, brain-like models are structured to mimic the brain in order to reproduce some of its functions, and the theories and models are then improved step by step. This article summarizes the important progress and current status of brain-like computing, reviews the generally accepted and feasible brain-like computing models, introduces, analyzes, and compares the more mature brain-like computing chips, outlines the attempts and challenges of brain-like computing applications at this stage, and looks ahead to the future development of the field. We hope the summarized results will help researchers and practitioners quickly grasp the research progress in brain-like computing and acquire the application methods and related knowledge in this field.

Achieving artificial intelligence, one of humanity's major goals, has long been the subject of heated debate. Since the Dartmouth Conference in 1956 (McCarthy et al., 2006), AI has gone through three waves of development. Its approaches can be roughly divided into four basic schools of thought: symbolism, connectionism, behaviorism, and statism. Each captures some characteristics of “intelligence” from a different angle, but each surpasses the human brain only in certain functions. In recent years, computer hardware has matured and deep learning has revealed its huge potential (Huang Y. et al., 2022; Yang et al., 2022). In 2016, AlphaGo defeated Lee Sedol, a 9-dan professional Go player, marking the peak of the third wave of the AI technology revolution.

In particular, AI has become a focal point of competition between nations. In 2017, China released and implemented its New Generation Artificial Intelligence Development Plan. In June 2019, the United States released the latest version of the National Artificial Intelligence Research and Development Strategic Plan (Amundson et al., 1911). Europe has also identified AI as a development priority: in 2016, the European Commission proposed a legislative motion on AI; in 2018, it submitted a report on European artificial intelligence (Delponte and Tamburrini, 2018) and published the Coordinated Plan on Artificial Intelligence under the theme “Made in Europe with Artificial Intelligence.”

Achieving artificial intelligence requires more powerful information-processing capabilities, and the current classical computer architecture cannot keep up with the enormous amount of data to be computed. Classical computer systems face two major bottlenecks: the memory wall (storage wall) caused by the von Neumann architecture, and the expected end of Moore's law within the next few years. On the one hand, the traditional processor architecture is inefficient and energy intensive. When dealing with intelligent problems in real time, it is impossible to construct suitable algorithms for processing unstructured information. In addition, the mismatch between the rate at which programs and data are transferred back and forth and the rate at which the central processor processes information produces the memory wall effect. On the other hand, as transistor dimensions approach the size of single atoms, devices are nearing the physical limits of miniaturization, so further performance gains will become more expensive and technically more difficult. Researchers have therefore placed their hopes on brain-like computing to break through these technical bottlenecks.

Early research in brain-like computing followed the traditional route of computer development: first understand how the human brain works, then build a neuromorphic computer based on that theory. However, after more than a decade of research, progress in brain science has been very slow, so mainstream brain-like research abandoned the theory-before-technology path. Looking back at human history, many technologies preceded their theories; airplanes, for example, were built before the underlying theory was refined. Following this idea, researchers adopted structural brain analogs: using existing brain-science knowledge and technology to simulate the structure of the human brain, and then refining the theory after success.

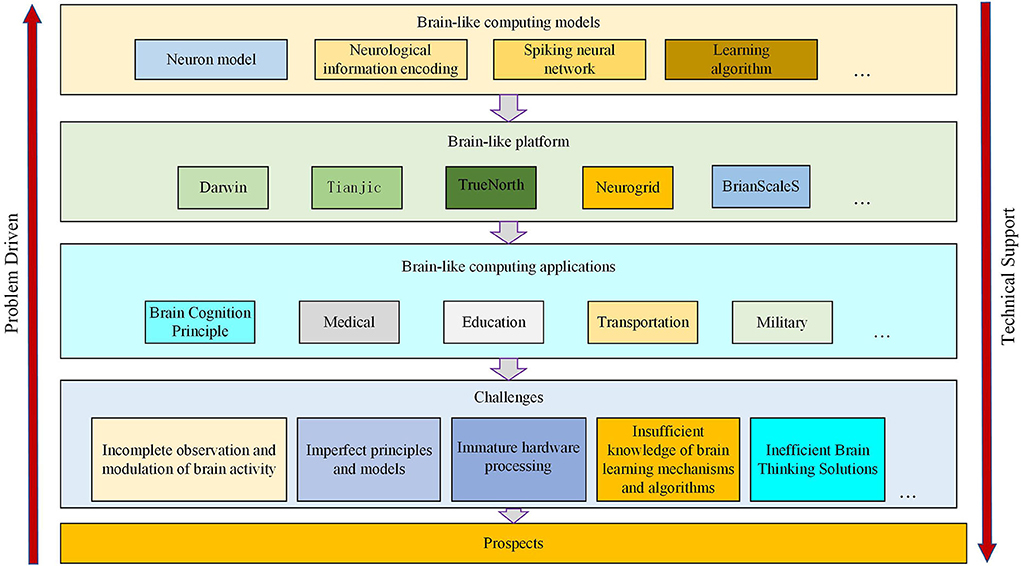

This article first introduces the research significance of brain-like computing in general terms. We then summarize its research history, analyze and compare the current research progress, and give an outlook. The structure of the article is shown in Figure 1.

Figure 1. Structure of the article: analysis of relevant models, establishment of related platforms, implementation of related applications, challenges, and prospects.

Brain-like computers use spiking neural networks (SNNs) instead of the von Neumann architecture of classical computers, and use micro- and nano-scale optoelectronic devices to simulate the information-processing characteristics of biological neurons and synapses (Huang, 2016). Brain-like computers are not a new idea. In 1943, before the invention of the computer, Turing and Shannon debated an imaginary “computer” (Hodges and Turing, 1992). In 1950, Turing touched on the idea in Computing Machinery and Intelligence (Neuman, 1958). In 1958, von Neumann discussed neurons, neural impulses, neural networks, and the information-processing mechanisms of the human brain in The Computer and the Brain (Yon Neumann, 1958). However, because of the technological limitations of the time and the ideal future promised by Moore's law, brain-like computing did not receive enough attention. Around 2005, it was widely believed that Moore's law would end around 2020, and researchers began to shift their focus to brain-like computing, which then entered a period of accelerated development.

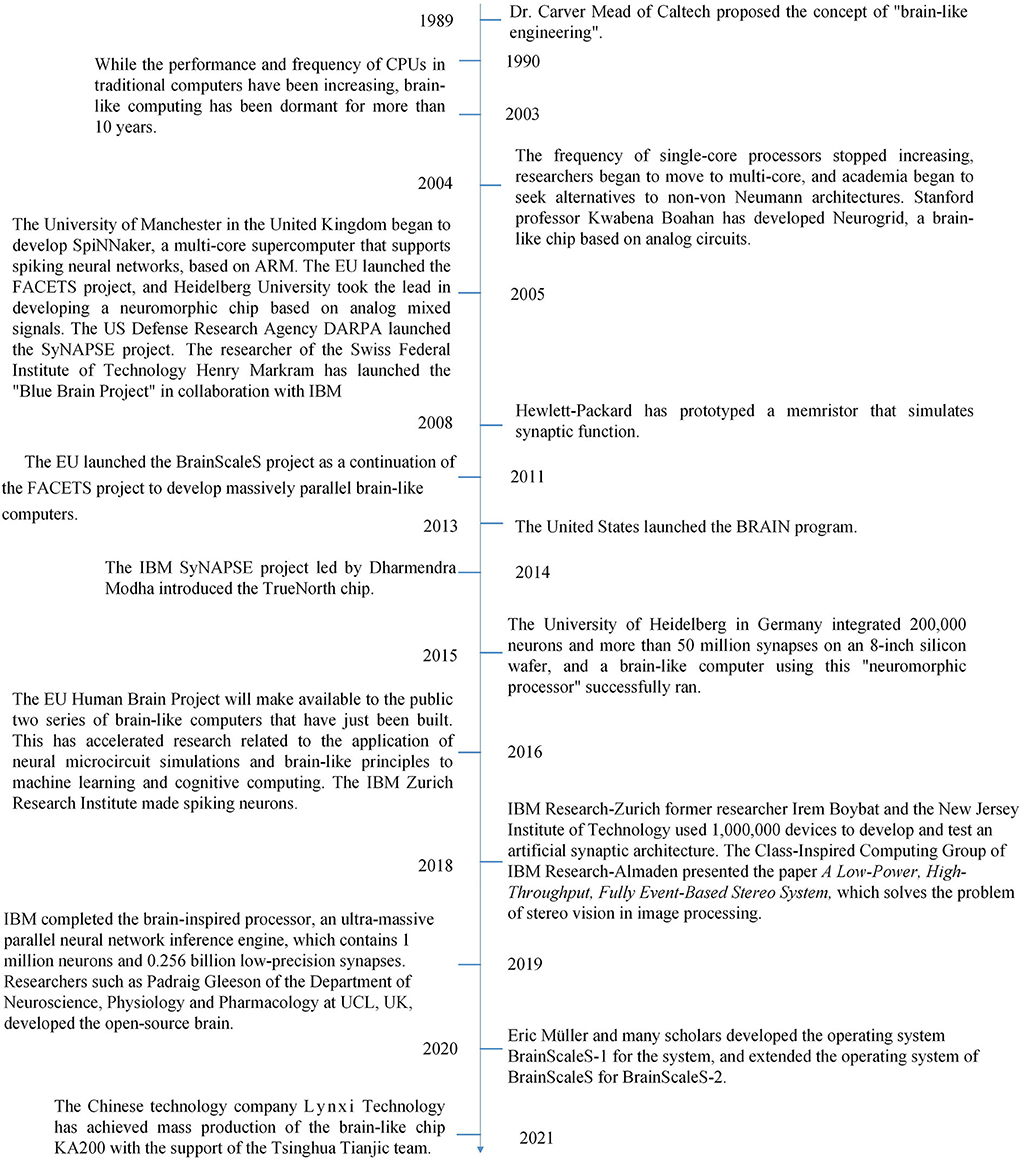

A summary of the evolution of brain-like computing (Mead, 1989; Gu and Pan, 2015; Andreopoulos et al., 2018; Boybat et al., 2018; Gleeson et al., 2019) is shown in Figure 2.

Figure 2. Brain-like computing has evolved from conceptual advancement to technical hibernation to accelerated development due to the possible end of Moore's law.

There are three main aspects of brain-like computing: simulation of neurons, information encoding of neural systems, and learning algorithms of neural networks.

Neurons are the basic structural and functional units of the nervous system. The models most commonly used to build SNNs include the Hodgkin–Huxley (HH) model (Burkitt, 2006), the integrate-and-fire (IF) model (Abbott, 1999; Burkitt, 2006), the leaky integrate-and-fire (LIF) model (Gerstner and Kistler, 2002), the Izhikevich model (Izhikevich, 2003; Valadez-Godínez et al., 2020), and the adaptive exponential integrate-and-fire (AdEx IF) model (Brette and Gerstner, 2005).

1) HH model

The HH model is closest to biological reality in its description of neuronal features and is widely used in computational neuroscience. It can simulate many neuronal phenomena, such as activation, inactivation, action potentials, and ion-channel dynamics. The HH model describes the electrical activity of a neuron in terms of ionic currents. The cell membrane contains sodium, potassium, and leak channels. Each ion channel has different gating proteins that restrict the passage of ions, so the membrane's permeability differs for each kind of ion; this is why neurons show rich electrical activity. Mathematically, the effect of the gating proteins is expressed as the ion-channel conductance, which varies with the channel's activation and inactivation variables. The current through each channel is determined by the channel conductance, the channel's reversal potential, and the membrane potential. The total membrane current consists of the leak, sodium, and potassium currents plus the capacitive current due to changes in membrane potential. The HH model therefore treats the cell membrane as an equivalent electrical circuit.
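To make the equivalent-circuit description concrete, here is a minimal forward-Euler sketch of the HH membrane equation $C_m\,dV/dt = I_{ext} - g_{Na} m^3 h (V - E_{Na}) - g_K n^4 (V - E_K) - g_L (V - E_L)$. The conductances, reversal potentials, and gating-rate functions are the commonly used textbook values (resting potential shifted to about -65 mV); they are assumptions for illustration, not values given in this article, and `simulate_hh` is a hypothetical helper.

```python
import math

# Assumed textbook HH parameters (rest near -65 mV).
C_M = 1.0                                  # membrane capacitance, uF/cm^2
G_NA, G_K, G_L = 120.0, 36.0, 0.3          # maximal conductances, mS/cm^2
E_NA, E_K, E_L = 50.0, -77.0, -54.387      # reversal potentials, mV


def rates(v):
    """Opening (alpha) and closing (beta) rates of the m, h, n gates, in 1/ms."""
    a_m = 0.1 * (v + 40.0) / (1.0 - math.exp(-(v + 40.0) / 10.0))
    b_m = 4.0 * math.exp(-(v + 65.0) / 18.0)
    a_h = 0.07 * math.exp(-(v + 65.0) / 20.0)
    b_h = 1.0 / (1.0 + math.exp(-(v + 35.0) / 10.0))
    a_n = 0.01 * (v + 55.0) / (1.0 - math.exp(-(v + 55.0) / 10.0))
    b_n = 0.125 * math.exp(-(v + 65.0) / 80.0)
    return a_m, b_m, a_h, b_h, a_n, b_n


def simulate_hh(i_ext, t_max=50.0, dt=0.01):
    """Integrate the HH equations for t_max ms; return the number of spikes
    (upward crossings of 0 mV) evoked by a constant current i_ext (uA/cm^2)."""
    v = -65.0
    a_m, b_m, a_h, b_h, a_n, b_n = rates(v)
    # Start every gate at its steady-state value for the resting potential.
    m, h, n = a_m / (a_m + b_m), a_h / (a_h + b_h), a_n / (a_n + b_n)
    spikes = 0
    for _ in range(int(t_max / dt)):
        a_m, b_m, a_h, b_h, a_n, b_n = rates(v)
        i_na = G_NA * m ** 3 * h * (v - E_NA)   # sodium current
        i_k = G_K * n ** 4 * (v - E_K)          # potassium current
        i_l = G_L * (v - E_L)                   # leak current
        v_new = v + dt * (i_ext - i_na - i_k - i_l) / C_M
        # Gate kinetics: dx/dt = alpha * (1 - x) - beta * x
        m += dt * (a_m * (1.0 - m) - b_m * m)
        h += dt * (a_h * (1.0 - h) - b_h * h)
        n += dt * (a_n * (1.0 - n) - b_n * n)
        if v < 0.0 <= v_new:
            spikes += 1
        v = v_new
    return spikes
```

Under these assumptions, a constant 10 µA/cm² input should produce repetitive firing, while zero input leaves the membrane at rest. The small time step keeps forward Euler stable for the fast sodium activation gate.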

2) IF and LIF models

In 1907, the integrate-and-fire neuron model was proposed by Lapicque (1907). Depending on how the neuronal membrane potential evolves over time, the model can be divided into the IF model and the LIF model. The IF model describes the membrane potential of a neuron driven by an input current, as shown in Equation 1:

$$C_m \frac{dV(t)}{dt} = I(t) \tag{1}$$

$C_m$ is the neuronal membrane capacitance, which determines the rate of change of the membrane potential, and $I$ is the neuronal input current. The model is called the leak-free IF model because the membrane potential depends only on the input current: when the input current is zero, the membrane potential remains unchanged. Its discrete form is shown in Equation 2:

$$V(t+\Delta t) = V(t) + \frac{\Delta t}{C_m}\, I(t) \tag{2}$$

where $\Delta t$ is the discrete sampling time step.

In contrast, the LIF model also simulates leakage of the neuron's membrane voltage. When there is no input current for some time, the membrane voltage gradually leaks back to the resting potential, as shown in Equation 3, which extends Equation 1:

$$C_m \frac{dV(t)}{dt} = -g_{leak}\,\bigl(V(t) - E_{rest}\bigr) + I(t) \tag{3}$$

$g_{leak}$ is the leak conductance of the neuron and $E_{rest}$ is its resting potential. Neuroscience studies have shown that the binding of neurotransmitters to receptors in the postsynaptic membrane primarily affects the conductance of the postsynaptic neuron, thereby altering its membrane potential. It is therefore more biologically plausible to expand the input current $I$ in Equation 1 into excitatory and inhibitory currents described by conductances. However, both models reset directly to the resting potential after firing and therefore cannot retain any memory of previous spikes.
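A minimal sketch of Equations 2 and 3 in code: one forward-Euler step per input sample, with a firing threshold and reset added because the equations above describe only the subthreshold dynamics. The threshold, reset value, and default parameters are illustrative assumptions, and `simulate_lif` is a hypothetical helper; setting `g_leak=0` reduces it to the leak-free IF update of Equation 2.

```python
def simulate_lif(currents, c_m=1.0, g_leak=0.1, e_rest=-65.0,
                 v_th=-50.0, v_reset=-65.0, dt=0.1):
    """Forward-Euler LIF neuron (Equation 3 discretised).

    `currents` holds I(t) for each time step. With g_leak = 0 this is the
    IF update of Equation 2. Returns (voltage trace, spike step indices).
    Threshold and reset values are illustrative assumptions.
    """
    v = e_rest
    trace, spikes = [], []
    for step, i_t in enumerate(currents):
        # V(t + dt) = V(t) + dt / C_m * (-g_leak * (V - E_rest) + I(t))
        v += dt / c_m * (-g_leak * (v - e_rest) + i_t)
        if v >= v_th:          # threshold reached: emit a spike, then reset
            spikes.append(step)
            v = v_reset
        trace.append(v)
    return trace, spikes


# IF: after a short input pulse the potential stays where it was left.
if_trace, _ = simulate_lif([1.0] * 10 + [0.0] * 100, g_leak=0.0)
# LIF: after the same pulse the potential decays back towards E_rest.
lif_trace, _ = simulate_lif([1.0] * 10 + [0.0] * 500, g_leak=0.1)
```

Comparing `if_trace` with `lif_trace` shows the difference described above: the IF potential holds its value once the input stops, whereas the LIF potential relaxes towards the resting potential.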

3) Izhikevich model

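In 2003, Eugene M. Izhikevich proposed the Izhikevich model from the perspective of nonlinear dynamical systems (Izhikevich, 2004). It can reproduce the firing behavior of many kinds of biological neurons with a computational cost close to that of the LIF model, as shown in Equation 4:

$$\frac{dV}{dt} = 0.04V^2 + 5V + 140 - U + I \tag{4}$$

$$\frac{dU}{dt} = a\,(bV - U) \tag{5}$$

$$\text{if } V \ge 30\ \text{mV}: \quad V \leftarrow c,\quad U \leftarrow U + d \tag{6}$$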

In Equations 5 and 6, $U$ is an auxiliary (recovery) variable. By adjusting the parameters $a$, $b$, $c$, and $d$, the Izhikevich model can exhibit firing behavior similar to that of the HH model. However, unlike the HH model, in which each parameter has a clear physiological meaning (e.g., ion channels), these parameters have no direct physiological interpretation.
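A minimal sketch of Equations 4 to 6, integrated with forward Euler. The two parameter sets are the "regular spiking" and "fast spiking" values from Izhikevich's original description; the time step, simulation length, and the helper name `simulate_izhikevich` are assumptions for illustration.

```python
def simulate_izhikevich(i_ext, a=0.02, b=0.2, c=-65.0, d=8.0,
                        t_max=1000.0, dt=0.25):
    """Forward-Euler integration of Equations 4-6; returns spike times in ms.

    Defaults are the commonly quoted regular-spiking (RS) parameters.
    """
    v = c
    u = b * v
    spike_times = []
    for step in range(int(t_max / dt)):
        v += dt * (0.04 * v * v + 5.0 * v + 140.0 - u + i_ext)   # Equation 4
        u += dt * a * (b * v - u)                                 # Equation 5
        if v >= 30.0:                                             # Equation 6
            spike_times.append(step * dt)
            v = c
            u += d
    return spike_times


rs = simulate_izhikevich(10.0)                   # regular spiking
fs = simulate_izhikevich(10.0, a=0.1, d=2.0)     # fast spiking
```

Only `a` and `d` differ between the two calls, yet the fast-spiking setting fires far more often under the same input, which illustrates how the four abstract parameters select the firing pattern.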

4) AdEx IF model

The AdEx IF model is a modification of the Izhikevich model. It adds an adaptation mechanism that slows the response of the membrane voltage, so that under constant current stimulation the neuron's firing frequency gradually decreases. This can be thought of as the neuron gradually getting “tired” after sending impulses. This spike-frequency adaptation is an essential feature of the AdEx IF model and brings its firing behavior closer to that of the HH model.
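The "tiredness" described above can be seen in a short simulation. This sketch uses the standard AdEx equations, $C\,dV/dt = -g_L(V - E_L) + g_L \Delta_T e^{(V - V_T)/\Delta_T} - w + I$ and $\tau_w\,dw/dt = a(V - E_L) - w$, with $V \leftarrow V_r$ and $w \leftarrow w + b$ after each spike. The parameter values are the regular-spiking set commonly quoted for Brette and Gerstner (2005) and are assumptions here, as is the helper name `simulate_adex`.

```python
import math


def simulate_adex(i_ext=1000.0, t_max=500.0, dt=0.01):
    """Forward-Euler AdEx neuron under constant current; returns spike times (ms).

    Units: pF, nS, mV, ms, pA. Parameter values are assumed, not from the text.
    """
    c, g_l, e_l = 281.0, 30.0, -70.6       # capacitance, leak, leak reversal
    v_t, delta_t = -50.4, 2.0              # threshold and slope factor
    tau_w, a, b = 144.0, 4.0, 80.5         # adaptation time constant, coupling, jump
    v_reset, v_peak = -70.6, 20.0          # reset value and spike cut-off
    v, w = e_l, 0.0
    spikes = []
    for step in range(int(t_max / dt)):
        dv = (-g_l * (v - e_l)
              + g_l * delta_t * math.exp((v - v_t) / delta_t)
              - w + i_ext) / c
        dw = (a * (v - e_l) - w) / tau_w
        v += dt * dv
        w += dt * dw
        if v >= v_peak:                    # spike: reset V, increase adaptation
            spikes.append(step * dt)
            v = v_reset
            w += b
    return spikes


times = simulate_adex()
isis = [t2 - t1 for t1, t2 in zip(times, times[1:])]
```

Because every spike increments the adaptation current `w`, the inter-spike intervals in `isis` lengthen over time, i.e., the firing frequency falls under constant stimulation.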

The comparison of the above five neuronal models is summarized in Table 1.

Neural information encoding consists of two processes: feature extraction and spike sequence generation. For feature extraction, there is as yet no mature theory or algorithm. For spike sequence generation, researchers commonly use two approaches: rate coding (Butts et al., 2007; Panzeri et al., 2010) and temporal coding. Rate coding expresses all the information in a spike sequence through its spike frequency, so it cannot effectively describe rapidly time-varying perceptual information. Unlike average-rate coding, temporal coding takes into account that precisely timed spikes carry information, and can therefore describe neuronal activity more accurately. Precise spike timing plays an important role in processing visual, auditory, and other perceptual information.

1) Rate coding

Rate coding mainly uses a stochastic process to generate spike sequences. The response function of a neuron suitable for Poisson coding consists of a series of spike functions, as shown in Equation 7:

$$\rho(t) = \sum_{i=1}^{k} \delta(t - t_i) \tag{7}$$

Here $k$ is the number of spikes in a given spike sequence, $t$ is time, and $t_i$ denotes the time at which the $i$-th spike occurs. The unit spike signal is defined as shown in Equation 8:

$$\delta(t) = \begin{cases} \infty, & t = 0 \\ 0, & t \neq 0 \end{cases} \tag{8}$$

The integral of the unit spike signal satisfies $\int_{-\infty}^{+\infty} \delta(t)\,dt = 1$. The times of the neuron's action potentials correspond to the spike times in the spike sequence. From this property of the impulse function, the number of spikes between $t_1$ and $t_2$ can be calculated by integrating the response function $\rho(t)$ over that interval, $n = \int_{t_1}^{t_2} \rho(t)\,dt$. The instantaneous firing rate can thus be defined as the expectation of the neuronal response function. Following statistical estimation theory, the mean of the neuronal response function over a short time interval is used as an estimate of the firing rate (Koutrouvelis and Canavos, 1999; Adam, 2014; Safiullina, 2016; Shanker, 2017; Allo and Otok, 2019), as shown in Equation 9:

$r_M(t)$ is the number of spikes in the entire time window and $\rho_j(t)$ is the spike response of the $j$-th neuron. Neither $r_M(t)$ nor $\rho_j(t)$ is a continuous function; a smooth function can be obtained only in the limit of an infinitely long time window. The encoding rule is therefore crucial for mapping values to spikes.
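A minimal sketch of Poisson rate coding and of estimating the rate back by averaging spike counts. It assumes a linear mapping from an input value to a firing rate and approximates the Poisson process with one Bernoulli draw per time bin (valid while rate × bin width is much smaller than 1). The helper names are hypothetical.

```python
import random


def rate_from_value(value, v_max, max_rate_hz=100.0):
    """Hypothetical linear mapping from an input value (e.g. pixel) to a rate in Hz."""
    return max_rate_hz * value / v_max


def poisson_spike_train(rate_hz, duration_s, dt_s, rng):
    """Bernoulli approximation of a homogeneous Poisson process (one draw per bin)."""
    p = rate_hz * dt_s            # spike probability per bin; must stay well below 1
    n_bins = int(round(duration_s / dt_s))
    return [1 if rng.random() < p else 0 for _ in range(n_bins)]


def estimate_rate(trains, dt_s):
    """Mean spike count per unit time over all trains: an empirical firing rate (Hz)."""
    total_spikes = sum(sum(train) for train in trains)
    total_time = len(trains) * len(trains[0]) * dt_s
    return total_spikes / total_time


rng = random.Random(0)
rate = rate_from_value(127.5, 255)            # 50 Hz for a mid-grey pixel
trains = [poisson_spike_train(rate, 1.0, 0.001, rng) for _ in range(100)]
estimate = estimate_rate(trains, 0.001)       # close to 50 Hz
```

Averaging over many trains (or a longer window) makes the estimate smoother, which mirrors the remark above that a smooth rate function emerges only in the limit.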

2) Temporal coding

Temporal coding generally uses the time-to-first-spike mechanism to determine the moment of spike emission, as shown in Equations 10 and 11:

In pattern recognition, $I$ represents the actual intensity of an image pixel and $I_{max}$ the maximum pixel intensity. A time window of fixed length ensures that every pixel intensity value can be converted into a spike time. $T_s$ is the exact moment at which the spike is emitted; under the time-to-first-spike mechanism, each spike sequence emits only one spike.
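Since Equations 10 and 11 are not reproduced here, the following sketch assumes one common linear latency rule, $T_s = T\,(1 - I / I_{max})$, where $T$ is the length of the time window: brighter pixels fire earlier, and a zero-intensity pixel emits no spike within the window. Both the rule and the helper name `time_to_first_spike` are assumptions.

```python
def time_to_first_spike(intensity, i_max, t_window):
    """Assumed linear latency code: T_s = T * (1 - I / I_max).

    Each input emits at most one spike; None means no spike in the window.
    """
    if not 0 <= intensity <= i_max:
        raise ValueError("intensity must lie in [0, i_max]")
    if intensity == 0:
        return None
    return t_window * (1.0 - intensity / i_max)


# Encode a tiny "image": larger intensities give earlier spikes.
pixels = [0, 51, 128, 255]
spike_times = [time_to_first_spike(p, 255, 100.0) for p in pixels]
```

The design choice is that information sits entirely in the single spike time, so each pixel costs at most one spike.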

3) Population coding

Population coding (Leutgeb et al., 2005; Samonds et al., 2006) represents a stimulus by the joint activity of multiple neurons. Gaussian population coding is the most widely used population coding model. When encoding for an SNN, each pixel intensity, a real value, is represented by a set of neurons with overlapping Gaussian receptive fields. The larger a neuron's response value, the shorter its encoding time and the earlier it generates a spike; together, these neurons form a spike sequence. For $k$ Gaussian receptive field neurons, the centers and widths of the $k$ Gaussian functions are given by Equation 12:

This type of encoding is used for continuous variables. For example, when encoding sound frequencies or joint positions, population coding achieves higher accuracy and realism than the two coding methods above. Because of these characteristics, it can significantly reduce the number of neurons required for a given accuracy. To improve the effectiveness of information encoding, researchers are also trying to introduce different mechanisms into the encoding process (Dennis et al., 2013; Yu et al., 2013).
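Equation 12 is not reproduced here, so the sketch below uses one widely used parameterization from SpikeProp-style encoders (an assumption, not necessarily this article's Equation 12): for $k$ neurons covering $[I_{min}, I_{max}]$, centers $\mu_i = I_{min} + \frac{2i-3}{2}\cdot\frac{I_{max}-I_{min}}{k-2}$ and a shared width $\sigma = \frac{1}{\beta}\cdot\frac{I_{max}-I_{min}}{k-2}$ with $\beta = 1.5$. Each neuron fires once, earlier for a stronger Gaussian response. Helper names are hypothetical.

```python
import math


def gaussian_receptive_fields(k, i_min, i_max, beta=1.5):
    """Centers and shared width of k overlapping Gaussian receptive fields (assumed scheme)."""
    if k < 3:
        raise ValueError("need at least 3 neurons")
    span = (i_max - i_min) / (k - 2)
    centers = [i_min + (2 * i - 3) / 2 * span for i in range(1, k + 1)]
    width = span / beta
    return centers, width


def population_encode(x, k, i_min, i_max, t_window=10.0, beta=1.5):
    """Map a real value x to k spike times; higher response -> earlier spike."""
    centers, width = gaussian_receptive_fields(k, i_min, i_max, beta)
    responses = [math.exp(-((x - c) ** 2) / (2 * width ** 2)) for c in centers]
    return [t_window * (1.0 - r) for r in responses]


centers, width = gaussian_receptive_fields(5, 0.0, 1.0)
times = population_encode(0.5, 5, 0.0, 1.0)
```

For an input of 0.5, the neuron centered on 0.5 spikes almost immediately, its neighbors spike later, and distant neurons spike near the end of the window, so a single value is spread across several precisely timed spikes.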

Over the past decades, researchers have drawn inspiration from biological experiments and findings to develop theories of synaptic plasticity. Bi and Poo proposed the spike-timing-dependent plasticity (STDP) mechanism, which was extended to different spike-based learning mechanisms (Bi and Poo, 1999; Gjorgjieva et al., 2011). STDP adjusts the strength of a neuronal connection according to the order and relative timing of pre- and postsynaptic firing.
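A minimal sketch of the pair-based STDP rule with the usual exponential learning window: a presynaptic spike shortly before a postsynaptic spike strengthens the synapse, and the reverse order weakens it. The amplitudes, time constants, all-to-all pairing, and weight bounds are illustrative assumptions.

```python
import math


def stdp_dw(delta_t, a_plus=0.01, a_minus=0.012, tau_plus=20.0, tau_minus=20.0):
    """Weight change for one spike pair; delta_t = t_post - t_pre in ms."""
    if delta_t > 0:       # pre before post: potentiation
        return a_plus * math.exp(-delta_t / tau_plus)
    if delta_t < 0:       # post before pre: depression
        return -a_minus * math.exp(delta_t / tau_minus)
    return 0.0


def apply_stdp(w, pre_times, post_times, w_min=0.0, w_max=1.0):
    """Sum the changes over all pre/post spike pairs, then clip the weight."""
    for t_pre in pre_times:
        for t_post in post_times:
            w += stdp_dw(t_post - t_pre)
    return min(max(w, w_min), w_max)
```

For example, `apply_stdp(0.5, [10.0], [15.0])` increases the weight, while swapping the two spike times decreases it; pairs that are further apart in time change the weight less.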

To solve the supervised learning problem of SNNs, researchers have combined the STDP mechanism with other weight-adjustment methods, mainly gradient descent and the Widrow–Hoff rule. Based on gradient descent (Shi et al., 1996), Gütig et al. proposed the Tempotron learning algorithm (Gütig and Sompolinsky, 2006), which updates the synaptic weights according to the combined effect of the time difference between pre- and postsynaptic spikes and an error signal. Ponulak et al. proposed the ReSuMe learning method (Florian, 2012), which avoids the gradient computation required by gradient-descent algorithms. The SPAN algorithm was proposed by Mohemmed et al. (2013). It is similar to ReSuMe, except that it uses a spike convolution to convert spikes into analog values before performing its computations; this is computationally intensive and allows only offline learning. Based on gradient descent, the Chronotron algorithm provides an E-learning rule (Victor and Purpura, 1997; van Rossum, 2001), which adjusts the synapses by minimizing an error function defined by the difference between the target and actual output spike sequences. A comparison of neurons with single-spike output (e.g., Tempotron) and multi-spike output (e.g., ReSuMe) found that multi-spike output can greatly improve classification accuracy and learning capacity (Gardner and Gruning, 2014; Gütig, 2014). Neurons with multi-spike input–output mappings are therefore the basis for designing efficient SNNs with large learning capacity. However, although multi-spike input–output mappings can be implemented, they apply only to single-layer SNNs. Researchers have tried to develop algorithms for multilayer SNNs (Ghosh-Dastidar and Adeli, 2009; McKennoch et al., 2009; Sporea and Gruning, 2013; Xu et al., 2013), but these algorithms remain limited, and research on multilayer SNNs is still at an early stage.
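To illustrate how the Tempotron combines spike timing with an error signal, here is a minimal sketch of its binary decision rule. The neuron's potential is a weighted sum of postsynaptic-potential kernels $K(t) = V_0\,(e^{-t/\tau} - e^{-t/\tau_s})$; if the neuron's fire/no-fire decision is wrong, each weight moves by $\pm\lambda \sum_{t_i < t_{max}} K(t_{max} - t_i)$, where $t_{max}$ is the time of maximal potential. The time constants, threshold, learning rate, and time grid are assumptions, and the helpers are hypothetical.

```python
import math

TAU, TAU_S = 15.0, 3.75   # membrane and synaptic time constants in ms (assumed)
# Choose V0 so that the kernel peaks at exactly 1.
T_PEAK = TAU * TAU_S * math.log(TAU / TAU_S) / (TAU - TAU_S)
V0 = 1.0 / (math.exp(-T_PEAK / TAU) - math.exp(-T_PEAK / TAU_S))


def kernel(t):
    """Postsynaptic potential kernel K(t); zero before the input spike."""
    return V0 * (math.exp(-t / TAU) - math.exp(-t / TAU_S)) if t >= 0 else 0.0


def potential(weights, pattern, t):
    """Membrane potential (rest = 0) at time t; pattern[i] lists afferent i's spike times."""
    return sum(w * sum(kernel(t - ti) for ti in spikes)
               for w, spikes in zip(weights, pattern))


def tempotron_step(weights, pattern, label, theta=1.0, lr=0.05, t_max=100.0, dt=1.0):
    """One Tempotron update; weights change only when the decision is wrong."""
    grid = [k * dt for k in range(int(t_max / dt) + 1)]
    t_star = max(grid, key=lambda t: potential(weights, pattern, t))
    fired = potential(weights, pattern, t_star) >= theta
    if fired == label:
        return weights, False
    sign = 1.0 if label else -1.0   # raise V(t_star) for misses, lower it for false alarms
    new_w = [w + sign * lr * sum(kernel(t_star - ti) for ti in spikes if ti < t_star)
             for w, spikes in zip(weights, pattern)]
    return new_w, True


# Train the neuron to fire for one positive pattern with three afferents.
pattern = [[10.0], [20.0], [30.0]]
w = [0.1, 0.1, 0.1]
for _ in range(200):
    w, changed = tempotron_step(w, pattern, True)
    if not changed:
        break
```

Afferents whose spikes arrived shortly before $t_{max}$ receive the largest updates, which is how the rule assigns credit by spike timing rather than by spike count.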

Because training algorithms for SNNs are less mature, some researchers have proposed converting traditional ANNs into SNNs. A deep ANN is trained with comparatively mature ANN training algorithms and then transformed into an SNN through firing-rate encoding (Diehl et al., 2015), which avoids the difficulty of training SNNs directly. Using this conversion mechanism, researchers at HRL Laboratories (Cao et al., 2014) converted a convolutional neural network (CNN) (Liu X. et al., 2021) into a spiking CNN whose recognition accuracy was close to that of the original CNN on the commonly used object recognition datasets Neovision2 and CIFAR-10. Another SNN architecture, the liquid state machine (LSM) (Maass et al., 2002), also avoids direct training of SNNs. As long as the SNN is large enough, it can in theory accomplish any complex input classification task. Because LSMs are recurrent neural networks, they have memory and can effectively analyze temporal information. New Zealand researcher Nikola Kasabov proposed the NeuCube architecture (Kasabov et al., 2016), based on the basic idea of the LSM, for spatiotemporal information processing. In the training phase, NeuCube trains the SNN with STDP, a halo-inspired genetic algorithm, and other methods. In the operation phase, the parameters of the SNN and of the output-layer classification algorithm also change dynamically, which gives NeuCube strong adaptive capability.
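Rate-based ANN-to-SNN conversion rests on the observation that the firing rate of a non-leaky IF neuron driven by a constant input approximates a clipped ReLU of that input. The sketch below checks this numerically. It uses reset-by-subtraction, a common variant chosen here as an assumption (not necessarily the scheme of the cited works), and omits the weight and threshold normalization that a full conversion needs.

```python
def if_neuron_rate(x, steps=1000, threshold=1.0):
    """Firing rate (spikes per step) of a non-leaky IF neuron with constant input x."""
    v, spikes = 0.0, 0
    for _ in range(steps):
        v += x                     # integrate the analog activation as input current
        if v >= threshold:
            spikes += 1
            v -= threshold         # reset by subtraction keeps the residual charge
    return spikes / steps


def clipped_relu(x, threshold=1.0):
    """The ANN activation that the IF firing rate approximates."""
    return min(max(x, 0.0), threshold)


pairs = [(x, if_neuron_rate(x), clipped_relu(x)) for x in [-0.5, 0.0, 0.25, 0.6, 1.0, 1.7]]
```

Because the rate can only take multiples of `1 / steps`, longer simulation windows give a closer match to the ANN activation, which is the usual accuracy/latency trade-off of converted SNNs.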

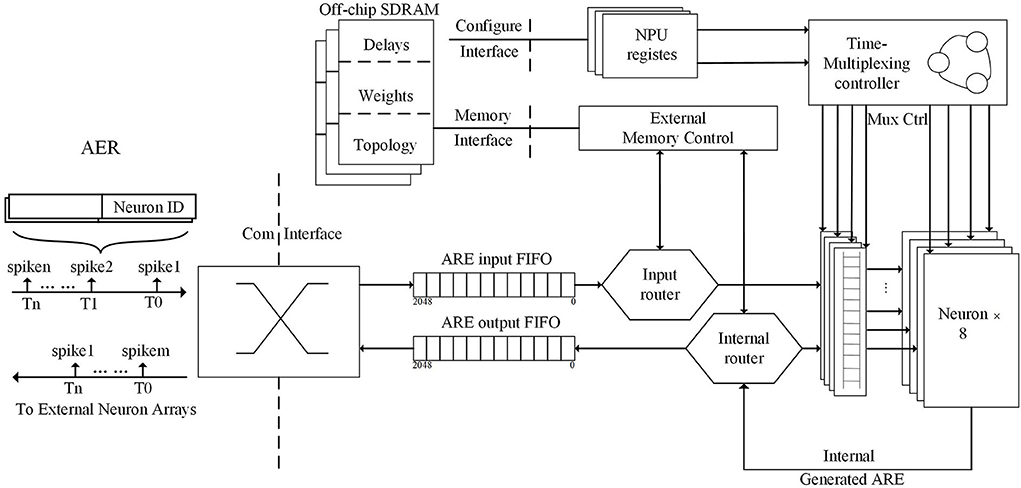

Figure 3 shows the overall microarchitecture of the Darwin Neural Processing Unit (NPU) (Ma et al., 2017). Address-event representation (AER) is the format used to encode input and output spikes. Each AER packet contains the ID of the neuron that generated the spike and the timestamp at which the spike was generated, so one packet represents one spike. Driven by incoming AER packets, the NPU works in an event-triggered manner. Spike routers translate spikes into weight and delay information by accessing on-chip storage and SDRAM (Stankovic and Milenkovic, 2015; Goossens et al., 2016; Ecco and Ernst, 2017; Li et al., 2017; Garrido and Pirsch, 2020; Benchehida et al., 2022).

Figure 3. Overall microarchitecture of the Darwin Neural Processing Unit (NPU), showing how AER packets are processed and output.

The execution steps of the AER connection runtime are shown below:

1) The NPU works on an event-driven basis. When the FIFO receives a spike, it sends an AER packet to the NPU, which checks the packet's timestamp. If the timestamp matches the current time, the packet enters the spike routing process; otherwise, the NPU proceeds to the neuron state update process.

2) Input spike routing: the input spike in each AER packet consists of a timestamp and the source (presynaptic) neuron ID. The source ID is used to look up the target (postsynaptic) neuron IDs and the synaptic properties, including weights and delays, stored in off-chip DRAM.

3) Weight and delay queue: each synapse has an independently configurable delay parameter, which defines the delay from the generation of a presynaptic spike to its reception by the postsynaptic neuron. Each entry of the weight and delay queue holds intermediate (accumulated) weights, which are sent to the neuron after the corresponding delay.

4) Neuron state update: first, the neuron's current state is read from the local state memory. Then, the sum of the weights for the current time step is read from the weight and delay queue. If an output spike is generated, it is sent to the spike router as an AER packet.

5) Output spike routing: this is similar to input spike routing.
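The steps above can be sketched as a small event-driven simulator. Everything here is a hypothetical toy model for illustration, not Darwin's actual design: a dictionary stands in for the synapse table in off-chip DRAM, a per-time-step dictionary plays the weight and delay queue, and neurons are simple integrate-and-fire units with an assumed threshold.

```python
from collections import defaultdict, namedtuple

# Hypothetical AER packet: ID of the neuron that spiked plus its timestamp.
AerPacket = namedtuple("AerPacket", ["neuron_id", "timestamp"])


class ToyNpu:
    """Event-driven toy model of AER spike routing and neuron updates."""

    def __init__(self, synapses, n_neurons, threshold=1.0):
        self.synapses = synapses            # src -> [(dst, weight, delay)]
        self.queue = defaultdict(lambda: defaultdict(float))  # time -> dst -> summed weight
        self.v = [0.0] * n_neurons          # local neuron state memory
        self.threshold = threshold

    def route(self, packet):
        """Spike routing: look up targets and enqueue weights after each delay."""
        for dst, weight, delay in self.synapses.get(packet.neuron_id, []):
            if delay < 1:
                raise ValueError("delays must be at least one time step")
            self.queue[packet.timestamp + delay][dst] += weight

    def update(self, now):
        """Neuron state update: add queued weights, emit AER packets on threshold."""
        out = []
        for dst, w_sum in self.queue.pop(now, {}).items():
            self.v[dst] += w_sum
            if self.v[dst] >= self.threshold:
                self.v[dst] = 0.0
                out.append(AerPacket(dst, now))
        return out

    def run(self, inputs, t_end):
        """Process input packets in timestamp order; output spikes are routed like inputs."""
        pending = sorted(inputs, key=lambda p: p.timestamp)
        fired = []
        for now in range(t_end + 1):
            while pending and pending[0].timestamp == now:
                self.route(pending.pop(0))
            for pkt in self.update(now):
                fired.append(pkt)
                self.route(pkt)
        return fired


npu = ToyNpu({0: [(1, 1.0, 3)], 1: [(2, 0.5, 2)]}, n_neurons=3)
fired = npu.run([AerPacket(0, 0)], t_end=10)
```

In this example the input spike from neuron 0 reaches neuron 1 after a 3-step delay and makes it fire; neuron 1's spike then reaches neuron 2 after 2 more steps but stays below threshold. Work is done only when packets arrive, which is the point of the event-driven design.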

Darwin's NPU is an SNN-based neuromorphic coprocessor. Although it is currently a single-chip system, the standard communication interface defined by the AER format allows it to be expanded in the future to multi-chip distributed systems (Nejad, 2020; Cui et al., 2021; Hao et al., 2021; Ding et al., 2022) connected by an AER bus. As a processing element in a network-on-chip (NoC) architecture, the NPU can use the AER format for its input and output spikes to scale the SNN on the chip to potentially millions of neurons rather than just thousands.

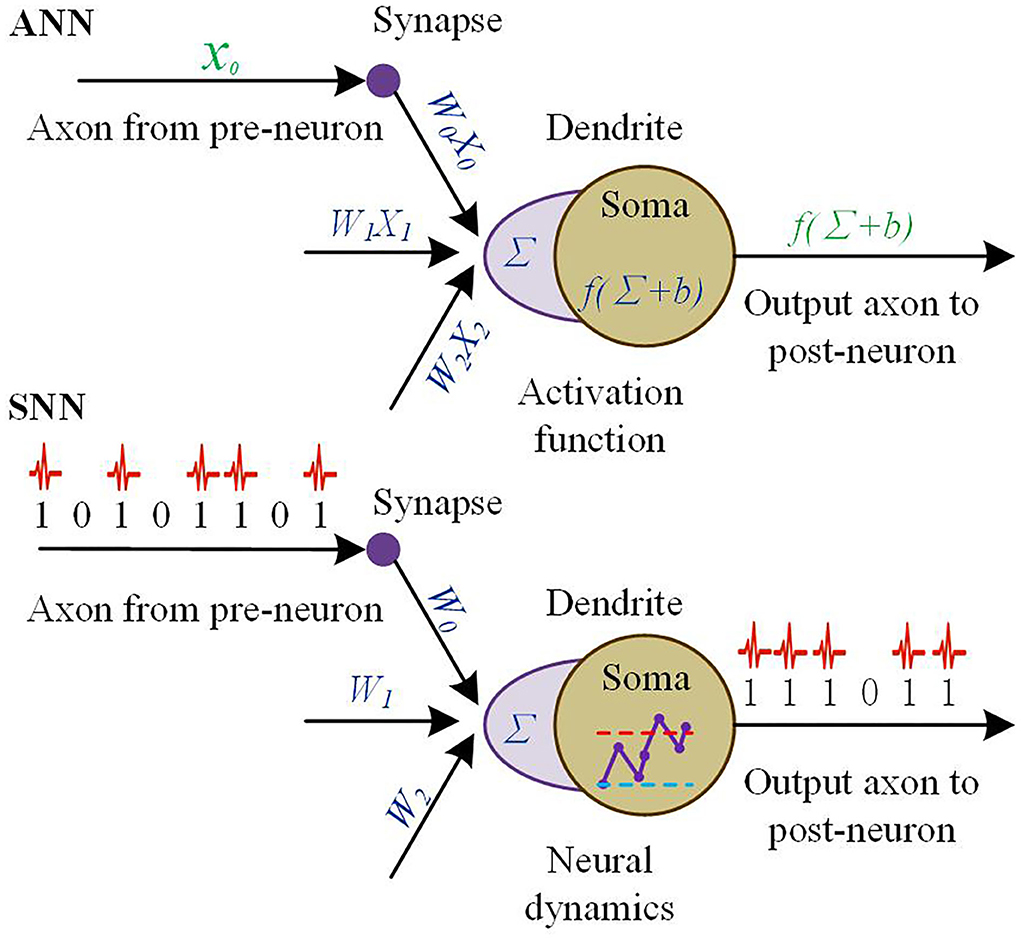

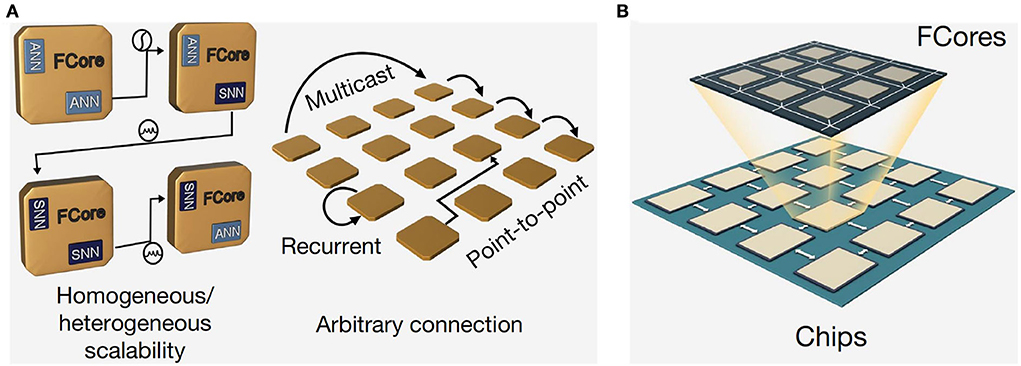

The Tianjic team set out to create a brain-like computing chip that combines the advantages of traditional computers and neuromorphic computation. To this end, the researchers designed the unified functional core (Fcore) of the Tianjic chip (Pei et al., 2019), which consists of four main components: axons, dendrites, soma, and router. Figure 4 shows the architecture of the Tianjic chip.

Figure 4. The unified functional core (Fcore) of the Tianjic chip consists of four main components: axons, dendrites, soma, and router.

In SNN mode, the Tianjic chip uses a small buffer memory to record historical spikes (Yang Z. et al., 2020; Agebure et al., 2021; Liu F. et al., 2021; Syed et al., 2021; Das et al., 2022; Mao et al., 2022). The buffer memory supports reconfigurable spike collection durations and bit access via shift operations.
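A minimal sketch of a spike-history buffer with a configurable collection window and shift-based bit access. The bit layout (bit k holds the spike from k steps ago) and the class name `SpikeHistory` are hypothetical; the text does not specify Tianjic's actual format.

```python
class SpikeHistory:
    """Per-axon spike history packed into an integer (hypothetical layout).

    Bit k of bits[axon] is 1 if that axon spiked k steps ago (k = 0 is now).
    `window` is the reconfigurable collection duration in time steps.
    """

    def __init__(self, n_axons, window):
        self.mask = (1 << window) - 1       # keep only the last `window` steps
        self.bits = [0] * n_axons

    def push(self, spikes):
        """Shift every history left by one step and insert this step's 0/1 spikes."""
        self.bits = [((b << 1) | s) & self.mask for b, s in zip(self.bits, spikes)]

    def spike_at(self, axon, steps_ago):
        """Read a single bit with a shift and a mask."""
        return (self.bits[axon] >> steps_ago) & 1

    def count(self, axon):
        """Number of spikes from this axon inside the window."""
        return bin(self.bits[axon]).count("1")


hist = SpikeHistory(n_axons=2, window=4)
for step_spikes in ([1, 0], [0, 1], [1, 1]):
    hist.push(step_spikes)
```

After these three steps, axon 0 has spiked now and two steps ago; once four more empty steps are pushed, its spikes fall outside the 4-step window and are forgotten.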

Membrane potential integration in SNN mode and multiply-and-accumulate (MAC) operations in ANN mode share the same computing units, which unifies SNN and ANN processing at the same level of abstraction. Specifically, the MAC units multiply and accumulate in ANN mode. In SNN mode, when the time window has length one, the inputs are binary spikes, so a bypass mechanism skips the multiplication to save energy.
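The bypass works because multiplying a weight by a binary spike is either the weight itself or zero. This toy sketch (not Tianjic's circuit; the function name `dendrite` is hypothetical) uses one integration routine for both modes and shows that skipping the multiplication gives the same result for 0/1 inputs.

```python
def dendrite(weights, inputs, mode):
    """Shared integration unit for both modes (toy model).

    ANN mode: multiply-and-accumulate over real-valued activations.
    SNN mode (time window of length one): inputs are 0/1 spikes, so the
    multiplication is bypassed and a weight is added only if its input spiked.
    """
    if mode == "ann":
        return sum(w * x for w, x in zip(weights, inputs))
    if mode == "snn":
        return sum(w for w, s in zip(weights, inputs) if s)
    raise ValueError(f"unknown mode: {mode}")


weights = [0.5, -1.25, 2.0, 0.75]
spikes = [1, 0, 1, 1]
snn_sum = dendrite(weights, spikes, "snn")   # additions only
ann_sum = dendrite(weights, spikes, "ann")   # same value via multiplications
```

In hardware terms, the SNN path replaces a multiplier with a conditional add, which is where the energy saving comes from.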

In SNN mode, the soma can be reconfigured to support membrane potential storage, threshold comparison, deterministic or probabilistic firing, and spike resetting. In ANN mode, fixed or adaptive leakage of the potential value can be used to implement the leakage function of the membrane potential.

To transmit information between neurons, the router receives and sends information and is responsible for transmitting and converting information between the soma and the axons. The design of the Tianjic chip is shown in Figure 5.

To support parallel processing of large networks or multiple networks, the chip uses a multi-core architecture (Chai et al., 2007; Chaparro et al., 2007; Yu et al., 2014; Grassia et al., 2018; Kiyoyama et al., 2021; Kimura et al., 2022; Zhenghao et al., 2022) that allows seamless communication between cores. The FCores of the chip are arranged in a two-dimensional (2D) grid, as shown in Figure 6. The reconfigurable routing tables in the FCore routers allow arbitrary connection topologies.

Figure 6. Arrangement of FCores on the Tianjic chip. (A) The reconfigurable routing tables of the FCore routers allow arbitrary connection topologies. (B) Arrangement of the FCores on the chip.

IBM approached its brain-like computing chip from the level of neuronal composition and principles, mimicking the brain's structure and performing neural simulation based on the conduction of spike signals (Birkhoff, 1940). Starting from neuroscience, neurosynaptic cores are used as the basic building blocks of the entire network (Service, 2014; Wang and Hua, 2016). The designers of TrueNorth treat neurons as the main arithmetic units. Each neuron receives and integrates “1” or “0” pulse signals and issues outputs based on them, which are sent to other neurons through the synapses at the connections between neurons (Abramsky and Tzevelekos, 2010; Russo, 2010). This is shown in Figure 7.

Data transmission is implemented in two stages. First, data between cores are passed along the x-direction and then along the y-direction until they reach the target core. Then, the information is transmitted within the core: it passes through the presynaptic axons (horizontal lines) and the crossbar synapses (binary junctions), and finally reaches the inputs of the postsynaptic neurons (vertical lines).
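The within-core stage can be sketched as one synchronous tick of a binary crossbar: active axons (rows) are read, every connected junction adds 1 to the neuron in that column, and neurons at or above threshold fire and reset. This is a deliberately simplified toy with unit weights and no leak; real TrueNorth neurons have richer configurable parameters, and `core_tick` is a hypothetical name.

```python
def core_tick(crossbar, axon_spikes, potentials, thresholds):
    """One tick of a toy neurosynaptic core.

    crossbar[a][n] is 1 if axon a (row) connects to neuron n (column).
    `potentials` is updated in place; returns the 0/1 output spike vector.
    """
    n_neurons = len(potentials)
    for a, spike in enumerate(axon_spikes):
        if spike:                                  # only active rows are read
            for n in range(n_neurons):
                potentials[n] += crossbar[a][n]    # binary synapse adds 0 or 1
    out = []
    for n in range(n_neurons):
        if potentials[n] >= thresholds[n]:
            out.append(1)
            potentials[n] = 0                      # reset after firing
        else:
            out.append(0)
    return out


crossbar = [[1, 0, 1],
            [1, 1, 0],
            [0, 1, 1]]
potentials = [0, 0, 0]
thresholds = [2, 2, 3]
tick1 = core_tick(crossbar, [1, 1, 0], potentials, thresholds)
tick2 = core_tick(crossbar, [0, 0, 1], potentials, thresholds)
```

On the first tick only neuron 0 collects two inputs and fires; neuron 1 reaches its threshold on the second tick, while neuron 2 is still integrating.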

When a neuron in a core fires, it first looks up the axon delay value and the destination address in local memory, and then encodes this information into a packet. If the destination neuron and the source neuron are in the same core, the router's local channel is used to transmit the packet; otherwise, the channel in the corresponding direction is used. To avoid the limitation imposed by a large number of cores, a merge–split structure is used at the four edges of the network. When leaving a core, spikes leaving the network are tagged with their row (east–west direction) and column (north–south direction). When entering a core, spikes that share an input link are propagated to the corresponding row or column using this tag information, as shown in Figure 8. The global synchronization clock runs at 1 kHz, which ensures exact one-to-one correspondence between the hardware and the software simulation of the cores operating in parallel (Hermeline, 2007).
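The x-then-y order between cores is dimension-order (XY) routing on a 2D mesh. A minimal sketch, assuming cores are addressed by integer (x, y) coordinates and ignoring delays, arbitration, and the chip-edge merge–split logic; `xy_route` is a hypothetical helper.

```python
def xy_route(src, dst):
    """Dimension-order routing on a 2D mesh: travel along x first, then along y.

    Returns the list of core coordinates visited, including src and dst.
    A single-element path means source and destination share a core,
    i.e. the router's local channel is used.
    """
    x, y = src
    path = [(x, y)]
    while x != dst[0]:
        x += 1 if dst[0] > x else -1
        path.append((x, y))
    while y != dst[1]:
        y += 1 if dst[1] > y else -1
        path.append((x, y))
    return path


route = xy_route((0, 0), (2, 1))
```

Fixing the order of dimensions gives each source/destination pair exactly one deterministic path, which keeps the routers simple.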

The Neurogrid chip (Benjamin et al., 2014) consists of axonal, synaptic, dendritic, and somatic parts. Four architectures are distinguished for such chips: fully dedicated (FD) (Boahen et al., 1989; Sivilotti et al., 1990), shared axon (SA) (Sivilotti, 1991; Mahowald, 1994; Boahen, 2000), shared synapse (SS) (Hammerstrom, 1990; Yasunaga et al., 1990), and shared dendrite (SD) (Merolla and Boahen, 2003; Choi et al., 2005). The four elements that make up the chip (axon, synapse, dendrite, and soma) can be classified by architecture and by implementation. As shown in Figure 9, in the analog implementation the four elements are a wire (axon), a switched current source (synapse), another wire (dendrite), and a comparator (soma) (Mead, 1989). A vertical wire (axon) meters charge (synapse) into a horizontal wire (dendrite), and the charge is integrated by the dendrite's capacitance. The comparator (soma) compares the resulting voltage with a threshold and triggers an output spike if the voltage exceeds it. The capacitor is then discharged (reset), and a new cycle starts.

In the simplest all-digital implementation, the switched current source is replaced by a bit cell. The axon and dendrite become the word line and bit line, respectively, and integration and comparison are performed digitally: in a loop, a binary 1 read from the synapse when triggered by the axon increments a counter (dendrite), and the counter's output is compared with a digitally stored threshold (soma). If the threshold is reached, a spike is triggered and the counter is reset, starting a new cycle. The process is shown in Figure 10.
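The digital loop described above is simple enough to sketch directly. In this toy version (hypothetical names; no timing or word-line details), each axon event reads one stored synapse bit, a 1 increments the counter, and the counter is compared with the threshold and reset when a spike is triggered.

```python
def digital_neuron(synapse_bits, axon_events, threshold):
    """Toy all-digital neuron.

    synapse_bits[a] is the stored bit for axon a; axon_events lists which axon
    is active at each step. Returns the steps at which the neuron spiked.
    """
    counter = 0                          # plays the role of the dendrite
    spike_steps = []
    for step, axon in enumerate(axon_events):
        counter += synapse_bits[axon]    # read the bit selected by the axon
        if counter >= threshold:         # soma: digital threshold comparison
            spike_steps.append(step)
            counter = 0                  # reset and start a new cycle
    return spike_steps


spike_steps = digital_neuron([1, 0, 1], [0, 1, 2, 0, 2, 1, 0], threshold=2)
```

Events on axon 1 read a 0 and leave the counter unchanged, so only events on axons 0 and 2 bring the neuron to threshold.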

A neuron's spikes leave its array through a transmitter, pass through a router to the parent and two children of its neural core, and are delivered through a receiver to the recipient neurons. All these digital circuits are event-driven: following Martin's asynchronous circuit design methodology (Martin, 1989; Martin and Nystrom, 2006), their logic switches only when a spike event occurs. The transmitter sends multiple spikes from one row, and the receiver delivers multiple spikes to one row; the shared row address and then the unique column addresses of these spikes are communicated sequentially. This design increases communication throughput.
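A minimal sketch of why sending the shared row address once increases throughput. The word format (tagged `("row", r)` and `("col", c)` entries) is a hypothetical stand-in for Neurogrid's real packet format.

```python
from itertools import groupby


def encode_row_burst(spikes):
    """spikes: (row, col) addresses of neurons that spiked.

    Returns a word stream that sends each shared row address once,
    followed by the unique column addresses in that row.
    """
    words = []
    for row, group in groupby(sorted(spikes), key=lambda rc: rc[0]):
        words.append(("row", row))
        words.extend(("col", col) for _, col in group)
    return words


def decode_row_burst(words):
    """Rebuild (row, col) addresses from the word stream."""
    out, row = [], None
    for kind, value in words:
        if kind == "row":
            row = value
        else:
            out.append((row, value))
    return out


spikes = [(3, 7), (1, 2), (3, 1), (1, 5)]
words = encode_row_burst(spikes)
```

Four spikes in two rows need 6 words instead of the 8 needed to send a full (row, column) pair for each spike; the saving grows with the number of spikes per row.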

The BrainScaleS team released two versions of the BrainScaleS chip design in 2020; in this article, we present BrainScaleS-2 (Schemmel et al., 2003; Grübl et al., 2020). The BrainScaleS-2 architecture relies on tight interaction between analog and digital circuit blocks. Because its main intended function is computing plasticity, the digital processor core is called the plasticity processing unit (PPU). The analog core serves as the main neuromorphic component and includes the synapse and neuron circuits (Aamir et al., 2016, 2018), the PPU interfaces, the analog parameter memory, and all event-related interface components.

The BSS-2 ASIC contains a digital plasticity processor (Friedmann et al., 2016). This microprocessor, which is specialized for highly parallel single instruction, multiple data (SIMD) operations, adds an extra layer of modeling capability. Figure 11 shows the architecture.

The PPU is an embedded microprocessor core with SIMD units. The SIMD units and the analog core are jointly optimized for computing plasticity rules (Friedmann et al., 2016). In the current BSS-2 architecture, two PPUs share one analog core, which allows the neuron circuits to be arranged most efficiently in the center of the analog core. Figure 12 shows the individual functional blocks in the ring core.

To keep the vertical and horizontal lines running through the subarrays as short as possible, the synapses are divided into four blocks of equal size, which reduces their parasitic capacitance (Friedmann et al., 2016; Aamir et al., 2018). Each synaptic array resembles a static memory block, and each synapse has 16 memory cells. The two PPUs are connected to the static memory interfaces of the two adjacent synaptic arrays through a fully parallel connection with 8 × 256 data lines.

Four rows of neuron compartment circuits are placed at the edges of the synaptic blocks. Each pair of dendritic input lines of a neuron compartment connects to 256 synapses. The neuron compartments implement the AdEx neuron model.

A row of analog parameter storage lies between each row of neurons. These capacitive memories hold 24 analog values for each neuron plus another 48 global parameters. The stored values are refreshed automatically with values received from the storage block.

Each digital neuron control block is shared by two rows of neurons; it synchronizes neuron events to the 125-MHz digital system clock and serializes them onto the digital output bus.
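The synchronization and serialization step can be pictured in software as snapping each neuron's spike times to the 8-ns period of a 125-MHz clock and merging all neurons into one time-ordered stream. The sketch below is hypothetical: the helper `serialize_events`, the integer-nanosecond timestamps, and the `(tick, neuron_id)` event format are assumptions for illustration and do not describe the actual BSS-2 bus protocol.

```python
import heapq
from typing import Dict, List, Tuple

TICK_NS = 8  # one period of a 125-MHz clock, in nanoseconds


def serialize_events(spikes_ns: Dict[int, List[int]]) -> List[Tuple[int, int]]:
    """Snap each neuron's spike times (integer ns) to clock ticks and merge all
    neurons into one stream of (tick, neuron_id), ordered by tick, then neuron id."""
    # One already-sorted stream per neuron; integer division assigns each spike
    # to the clock cycle in which it occurred.
    streams = [[(t // TICK_NS, nid) for t in sorted(times)]
               for nid, times in spikes_ns.items()]
    # heapq.merge lazily interleaves sorted inputs into one sorted output.
    return list(heapq.merge(*streams))


# Neuron 0 fires at 0, 17 and 40 ns; neuron 3 fires at 5 and 9 ns.
stream = serialize_events({0: [0, 17, 40], 3: [5, 9]})
```

Spikes that fall into the same 8-ns cycle receive the same timestamp, which is the timing resolution a synchronous digital bus imposes on otherwise continuous-time analog events.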

Synaptic drivers feed presynaptic events into the array. They contain circuits that implement a simplified Tsodyks–Markram model of short-term plasticity (Tsodyks and Markram, 1997; Schemmel et al., 2007). Synaptic drivers can handle single- or multi-valued input signals.
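The short-term plasticity in the synaptic drivers can be illustrated with an event-driven software version of the Tsodyks–Markram model. This sketch keeps only the depression part (a fixed utilization `U`, no facilitation) as one common simplification; we do not claim it matches the exact simplification implemented on BSS-2, and the function name and parameters are illustrative.

```python
import math
from typing import List


def tm_depression(spike_times: List[float], U: float, tau_rec: float) -> List[float]:
    """Depression-only Tsodyks-Markram synapse.

    Returns, for each presynaptic spike, the fraction of synaptic resources
    released (proportional to the postsynaptic response amplitude).
    """
    R = 1.0          # fraction of resources currently available
    last = None
    efficacies: List[float] = []
    for t in spike_times:
        if last is not None:
            # Between spikes, resources recover exponentially toward 1.
            R = 1.0 - (1.0 - R) * math.exp(-(t - last) / tau_rec)
        release = U * R          # each spike uses a fraction U of what is available
        efficacies.append(release)
        R -= release             # released resources are depleted
        last = t
    return efficacies
```

For a regular 100-Hz train, successive efficacies shrink as resources are depleted faster than they recover; after a long pause the response returns to `U`.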

An on-chip random generator feeds directly into the synaptic array through the synaptic drivers, which greatly reduces the external bandwidth required when running stochastic models (Pfeil et al., 2015; Jordan et al., 2019).
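One way to see why a local random source saves bandwidth: a stochastic spike source can be generated on chip by running one Bernoulli trial per clock cycle, so only the rate and a seed, not every background spike, need to cross the external link. The sketch assumes an 8-ns tick and uses Python's `random.Random` as a stand-in for the hardware random generator; `bernoulli_spike_ticks` is a hypothetical helper, and per-tick Bernoulli draws only approximate a Poisson process when `rate_hz * tick_s` is small.

```python
import random
from typing import List


def bernoulli_spike_ticks(rate_hz: float, n_ticks: int, tick_s: float = 8e-9,
                          seed: int = 0) -> List[int]:
    """Return the clock ticks on which a locally generated random spike occurs."""
    p = rate_hz * tick_s                 # spike probability per clock cycle
    if not 0.0 <= p <= 1.0:
        raise ValueError("rate_hz * tick_s must lie in [0, 1]")
    rng = random.Random(seed)            # stand-in for the on-chip random generator
    return [k for k in range(n_ticks) if rng.random() < p]


ticks = bernoulli_spike_ticks(12.5e6, 100_000)  # p = 0.1 per tick
```

In this picture the host supplies two numbers, while roughly ten thousand events are produced and consumed locally without ever using external bandwidth.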

The SIMD units of the PPUs are located at the top and bottom edges of the analog core. Column-parallel ADCs convert analog data from the synaptic array, as well as selected analog signals from the neurons, into the digital representation required by the PPU.
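The division of labor between the column-parallel ADCs and the PPU's SIMD units can be sketched as follows: every column's analog value is digitized in the same step, and the processor then applies one plasticity rule to a whole vector of synapses. Everything specific here is an assumption for illustration: the 8-bit ADC, the 1.2 V reference, the 6-bit weight range 0 to 63, and the rule Δw = η (causal − acausal) on digitized correlation measurements. Because the PPU is a programmable processor, this is only one possible rule, not the one BSS-2 uses.

```python
from typing import List


def column_adc(voltages: List[float], v_ref: float = 1.2, bits: int = 8) -> List[int]:
    """Digitize one analog value per column, all columns in the same step."""
    top = (1 << bits) - 1
    # Scale to the ADC range, round to the nearest code, and clip at both ends.
    return [min(top, max(0, round(v / v_ref * top))) for v in voltages]


def plasticity_update(weights: List[int], causal: List[int], acausal: List[int],
                      eta: float = 0.05, w_max: int = 63) -> List[int]:
    """Apply the same rule to every synapse of a row (SIMD style):
    w <- clip(w + round(eta * (causal - acausal)), 0, w_max)."""
    return [min(w_max, max(0, w + round(eta * (c - a))))
            for w, c, a in zip(weights, causal, acausal)]
```

A full update step would read a row's causal and acausal correlation voltages through `column_adc` and pass the two resulting code vectors to `plasticity_update`.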

Table 2 summarizes the representative domestic and international brain-like computing projects, chips, and hardware platforms described above.

The main advantages of neuromorphic computing over traditional methods are energy efficiency, execution speed, robustness to local failures, and the ability to learn. At present, neuroscientific knowledge of the human brain is still superficial, and the development of neuromorphic computing is not yet guided by theory. Researchers hope to refine models and theories by using brain-like computing to simulate parts of the brain's function and structure (Casali et al., 2019; Rizza et al., 2021).

In 2018, Rosanna Migliore et al. (2018) used a unified data-driven modeling workflow to explore the physiological variability of channel densities in hippocampal CA1 pyramidal cells and interneurons. In 2019, Alice Geminiani et al. (2019) optimized extended generalized leaky integrate-and-fire (E-GLIF) neuron models. In 2020, Paolo Migliore et al. (2018) designed new recurrent spiking neural networks (RSNNs) of brain circuits based on voltage-dependent learning rules. Their model can generate theoretical predictions for experimental validation.

Brain-like computing can help neuroscience understand the human brain more deeply and parse its structure (Amunts et al., 2016; Dobs et al., 2022). Once we understand the operating mechanisms of the human brain well enough, we may be able to act directly on the brain to improve thinking ability and treat brain diseases that are currently incurable. Beyond that, it could raise the level of human intelligence to new heights.

The application of brain-like computing in the medical field relies mainly on the development and application of brain–computer interfaces (Mudgal et al., 2020; Huang D. et al., 2022). It takes four forms: monitoring, improvement, replacement, and enhancement.

Monitoring means that a brain–computer interface system monitors and measures the state of the human nervous system in real time (Mikołajewska and Mikołajewski, 2014; Shiotani et al., 2016; Olaronke et al., 2018; Sengupta et al., 2020). It can help grade consciousness in patients in a deep coma and measure the state of neural pathways in patients with visual or auditory impairment. Improvement means providing recovery training for ADHD, stroke, epilepsy, and other conditions (Cheng et al., 2020). For example, after doctors detect abnormal neuronal discharges through brain–computer interface technology, they can apply appropriate electrical stimulation to the brain to suppress seizures. Replacement is primarily for patients who have lost some function due to injury or disease. For example, people who have lost the ability to speak can express themselves through a brain–computer interface (Ramakuri et al., 2019; Czech, 2021), and people with severe motor disabilities can communicate what they are thinking through a brain–computer interface system. Enhancement refers to strengthening brain functions by implanting chips into the brain (Kotchetkov et al., 2010), for example, to enhance memory or to let a person control mechanical devices directly.

The education and development of children is an important social concern. However, research on children's development and psychological problems has so far been conducted only through dialog and observation. Brain-like computing research aims to observe the corresponding brain waves directly and to decode brain activity.

In the “Brain Science and Brain-like Research” project guidelines, the state calls for using brain-like technology to study the mental health of children and adolescents. This includes studying the interaction between emotional problems and cognitive abilities and its brain mechanisms, developing screening tools and early-warning systems for emotional problems in children and adolescents by combining machine learning (Dwyer et al., 2018; Yang J. et al., 2020; Du et al., 2021; Paton and Tiffin, 2022) with other means, and encouraging the integration of medicine and education. The eventual goals are to develop psychological intervention and regulation tools for the emotional problems of children and adolescents, and to establish a platform for monitoring and intervening in their psychological crises that is built on multi-level systems, such as schools and medical care.

Today, self-driving cars carry many sensors, including radar, infrared sensors, cameras, and GPS, yet they still cannot make the right decisions the way a human driver does. Humans need only vision and hearing among their senses to drive a vehicle safely. The human brain has powerful synchronous and asynchronous processing capabilities for scheduling tasks sensibly, and human visual recognition is more accurate than current camera-based recognition.

Inspired by the way neurons in the biological retina transmit information, Mahowald and Mead proposed an asynchronous signal transmission method called address-event representation (AER) in the early 1990s (Tsodyks et al., 1998; Service, 2014). When a pixel in a pixel array registers an “event,” the pixel's address is output together with the event. Based on this principle, the Dynamic Vision Sensor (DVS) (Amunts et al., 2016) was developed at the University of Zurich, Switzerland, to detect changes in pixel brightness. Because it transmits only these changes, the DVS needs little bandwidth, which gives it a natural advantage in robot vision, and work has been done to use it in autonomous walking robots and autonomous vehicles. Dr. Shoushun Chen of Nanyang Technological University, Singapore, developed an asynchronous sensing chip with a temporal sensitivity of 25 nanoseconds (Schemmel et al., 2003, 2010; Scholze et al., 2012). The brain-like cochlea (Scholze et al., 2012) is a brain-like auditory sensor based on a similar principle that can be used for sound recognition and localization. The results of these studies will accelerate the deployment of autonomous driving and help ensure its safety.
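The DVS principle of emitting an addressed event only where brightness changes can be emulated from ordinary frames. In this minimal sketch each pixel remembers the log intensity at its last event and emits a `(t, x, y, polarity)` address-event when the change exceeds a contrast threshold. The threshold value, the frame-based input, and the one-event-per-change simplification are assumptions; a real DVS pixel operates asynchronously in continuous time.

```python
import math
from typing import List, Tuple

Frame = List[List[float]]          # pixel intensities, all > 0
Event = Tuple[int, int, int, int]  # (t, x, y, polarity)


def dvs_events(frames: List[Frame], threshold: float = 0.2) -> List[Event]:
    """Emit an address-event wherever log intensity changed by at least
    `threshold` since that pixel's last event."""
    # Per-pixel reference level: log intensity at the pixel's last event.
    ref = [[math.log(v) for v in row] for row in frames[0]]
    events: List[Event] = []
    for t, frame in enumerate(frames[1:], start=1):
        for y, row in enumerate(frame):
            for x, v in enumerate(row):
                diff = math.log(v) - ref[y][x]
                if abs(diff) >= threshold:
                    # Output the pixel address with the sign of the change.
                    events.append((t, x, y, 1 if diff > 0 else -1))
                    ref[y][x] = math.log(v)  # reset this pixel's reference
    return events
```

A static scene produces no events at all, and a single pixel that brightens and later dims produces exactly two events, which is why the output bandwidth scales with scene activity rather than with frame rate and resolution.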

Brain-like chips have the technical potential for ultra-low power consumption, massively parallel computing, and real-time information processing. They have unique advantages in military application scenarios, especially under strong constraints on performance, speed, and power consumption. They can be used for ultra-low-latency dynamic visual recognition of military targets in the air and for building cognitive supercomputers that rapidly process massive amounts of data (Czech, 2021). In addition, brain-like computing can be used for intelligent gaming confrontation and decision-making on the future battlefield.

The ultra-low power consumption, ultra-low latency, real-time high-speed dynamic visual recognition and tracking, and sensor information processing of brain-like chips are key technologies at the strategic level of national defense science and technology. Ultra-low-latency, real-time, high-speed dynamic visual recognition in particular plays an extremely important role in high-speed dynamic recognition. In 2014, the U.S. Air Force signed a contract with IBM to use brain-like computing for efficient, low-power processing of high-altitude flying targets. In June 2017, the U.S. Air Force Research Laboratory began developing a brain-like supercomputer based on IBM's TrueNorth brain-like chip. The following year, the laboratory released the world's largest neuromorphic supercomputer, the Blue Jay, which can simultaneously simulate 64 million biological neurons and 16 billion biological synapses while consuming only 40 watts, 100 times less than traditional supercomputers. The laboratory planned to demonstrate an airborne target recognition application developed on the Blue Jay in 2019 and aimed to enable, by 2024, real-time analysis of 10 times more big data than current global Internet traffic. This would turn the big data that constrain the next generation of warplanes from a problem into a resource and greatly shorten the development cycle of defense technology and engineering.

Brain observation and modulation technologies are important technical means of understanding how the brain takes in, transmits, and outputs information, and they are also the core technical support for understanding, simulating, and enhancing the brain. Although in vivo means of acquiring and modulating brain neural information by MRI, optical/optogenetic imaging, and other technologies are becoming more abundant and developing rapidly, current research still faces the following problems: observation and modulation each rely on a single mode, the observed information is only partial, knowledge of brain function is lacking, and brain modulation cannot be synchronized with observation.

Neuroscientists have a clearer understanding of single-neuron models, the principles of information transfer in some neural circuits, and the mechanisms of primary perceptual functions. However, our understanding of global information processing in the brain, especially of higher cognitive functions, is still very sketchy (Aimone, 2021). To build a computational model that can explain how the brain processes information and can perform cognitive tasks, we must understand the mathematical principles of brain information processing.

Using hardware to simulate brain-like computational processes still faces important challenges in brain-like architectures, devices, and chips. On the one hand, CMOS and other traditional processes have hit bottlenecks in on-chip storage density and power consumption (Chauhan, 2019), while new nanodevices still have outstanding problems, such as poor process stability and difficulty in scaling up. Brain-like materials and devices require new technologies to break through the current bottlenecks (Chen L. et al., 2021; Chen T. et al., 2021; Wang et al., 2021; Zhang et al., 2021). On the other hand, brain-like systems require tens of billions of neurons to work together. However, under limited hardware resources and a limited energy budget, existing brain-like chips struggle to achieve large-scale interconnected integration of neurons and efficient real-time transmission of neuronal spike information.

The complexity of the brain and the great differences between brain and machine lead to poor robustness of brain signal acquisition, low efficiency of brain–machine interaction, a lack of means for intervening in brain intelligence, demanding requirements on intervention targets in brain areas, and difficulty in constructing fusion systems. Given the complementary nature of machine intelligence and human intelligence, the main challenges of brain-like research are how to interpret the information transmitted by the human brain efficiently, interconnect biological and machine intelligence, integrate their respective strengths, and create more powerful forms of intelligence (Guo and Yang, 2022).

The study of brain-like computing models (Voutsas et al., 2005) is an important foundation of brain-like computing and sets the upper limit of neuromorphic computing from the bottom up. These models fall mainly into neuron models, neural network models, and their learning methods. We can expect brain-like computational models to develop in the following directions: studying the dynamic coding mechanisms of biological neurons and neural networks, and establishing efficient, biologically plausible spike coding theories and models that support multimodal coordination and joint representation at multiple spatial and temporal scales; studying the coordination mechanisms of multisynaptic plasticity, the mechanisms of cross-scale learning plasticity, and the global plasticity mechanisms of biological neural networks; establishing efficient learning theories and algorithms for deep SNNs to realize intelligent learning, reasoning, and decision-making in brain-like multi-cognitive collaborative tasks; and studying mathematical descriptions of the different levels of brain organization and continual learning strategies across multiple temporal scales of neural computation, to realize rapid association, transfer, and storage of multimodal information.

The current development of artificial neuromorphic devices follows two main technical routes. One builds on mature traditional CMOS technology, such as SRAM or DRAM (Asghar et al., 2021), and the resulting prototype devices store information volatilely; the other builds on non-volatile flexible flash devices or on new memory devices and new materials (Feng et al., 2021; He et al., 2021). Non-volatile neuromorphic devices such as memristors exhibit artificial neuromorphic characteristics and unique nonlinear properties, and they have become new basic information-processing units that mimic biological neurons and synapses (Yang et al., 2013; Prezioso et al., 2015). Future research needs to clarify some basic questions: at what level neural computation must simulate the neural properties of organisms, and which functions are essential in neuromorphic computation. These questions are critical to implementing neural computing.

Artificial neural network chips have made progress in practical applications, whereas spiking neural network chips are still at the exploratory application stage. Future research on neuromorphic computing chips could proceed in several directions: the architecture, operating methods, and peripheral circuit technology of neuromorphic device arrays suited to convolution and matrix operations; hardware description; schemes for mapping neural network algorithms onto new neuromorphic device arrays; algorithmic and circuit methods for compensating the various non-ideal characteristics of new neuromorphic devices; and data routing between arrays.
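As an illustration of mapping a neural network onto a new device array, the sketch below maps a signed weight matrix onto pairs of non-volatile conductances (G⁺, G⁻) with a limited number of programmable levels, then computes the vector-matrix product the way a memristor crossbar does, by summing the currents I_j = Σ_i V_i (G⁺_ij − G⁻_ij) along each column. The differential-pair scheme, the 16 conductance levels, and the 100 µS maximum conductance are illustrative assumptions; rounding to discrete levels stands in for one of the non-ideal factors that compensation methods would have to address.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def map_weights(W: Matrix, g_max: float = 100e-6,
                levels: int = 16) -> Tuple[Matrix, Matrix, float]:
    """Map signed weights W[i][j] (input i, output j) to differential
    conductance pairs. Returns (G_plus, G_minus, weight_scale)."""
    w_scale = max(abs(w) for row in W for w in row) or 1.0
    step = g_max / (levels - 1)   # spacing between programmable device states

    def quantize(g: float) -> float:
        return round(g / step) * step  # snap to the nearest available state

    # Positive weights go to G_plus, negative weights to G_minus.
    g_pos = [[quantize(max(w, 0.0) / w_scale * g_max) for w in row] for row in W]
    g_neg = [[quantize(max(-w, 0.0) / w_scale * g_max) for w in row] for row in W]
    return g_pos, g_neg, w_scale


def crossbar_vmm(v_in: List[float], g_pos: Matrix, g_neg: Matrix) -> List[float]:
    """Column currents by Ohm's law and Kirchhoff's current law:
    I_j = sum_i V_i * (G_plus[i][j] - G_minus[i][j])."""
    n_out = len(g_pos[0])
    return [sum(v * (gp[j] - gn[j]) for v, gp, gn in zip(v_in, g_pos, g_neg))
            for j in range(n_out)]
```

Dividing each column current by `g_max` and multiplying by the returned scale recovers an approximation of Σ_i W_ij V_i; with few conductance levels, weights that fall between levels show up directly as output error.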

However, the results in practical applications are still unsatisfactory. For example, online learning runs much more slowly than neural computation, and SNN learning is less efficient and less accurate than traditional ANN learning. We can study the efficient deployment of neural network training algorithms and methods for compensating the loss of learning performance that accompanies improvements in computing efficiency, and carry out tape-out verification of prototype chips; we can also build a large-scale simulation platform for brain-like computing chips with online learning functions and demonstrate a variety of learning applications based on online brain-like chips. Together, these efforts would develop the potential of neuromorphic computing in platforms, systems, applications, and algorithms.

At present, brain-like computing technology is still some distance from formal industrial production (Zou et al., 2021), but it will certainly be one of the important areas of competition between countries and enterprises over the next 10 years. This is therefore an opportunity for all researchers, and whether brain-like computing can truly be applied in production and daily life depends on researchers achieving key results in certain areas. We hope that researchers will achieve technological breakthroughs that bring brain-like computing into everyday life as soon as possible.

WO: conception and design of the study. CZ: participated in the literature collection and collation of the article, and was responsible for the second and third revisions of the article. SX: acquisition of data. WH: analysis and interpretation of data. QZ: revising the manuscript critically for important intellectual content. All authors contributed to the article and approved the submitted version.

This work was supported in part by the Hainan Provincial Natural Science Foundation of China (621RC508), Henan Key Laboratory of Network Cryptography Technology (Grant/Award Number: LNCT2021-A16), and the Science Project of Hainan University (KYQD(ZR)-21075).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Aamir, S. A., Müller, P., Hartel, A., Schemmel, J., and Meier, K. (2016). “A highly tunable 65-nm CMOS LIF neuron for a large-scale neuromorphic system,” in ESSCIRC Conference 2016, 42nd European Solid-State Circuits Conference (IEEE), p. 71–74. doi: 10.1109/ESSCIRC.2016.7598245

Aamir, S. A., Stradmann, Y., Müller, P., Pehle, C., Hartel, A., Grübl, A., et al. (2018). An accelerated LIF neuronal network array for a large-scale mixed-signal neuromorphic architecture. IEEE Trans. Circuits Syst. I Regular Papers 65, 4299–4312. doi: 10.1109/TCSI.2018.2840718

Abbott, L. F. (1999). Lapicque's introduction of the integrate-and-fire model neuron. Brain Res. Bull. 50, 303–304. doi: 10.1016/S0361-9230(99)00161-6

Abramsky, S., and Tzevelekos, N. (2010). Introduction To Categories and Categorical Logic/New Structures For Physics. Berlin, Heidelberg: Springer, p. 3–94. doi: 10.1007/978-3-642-12821-9_1

Adam, L. (2014). The moments of the Gompertz distribution and maximum likelihood estimation of its parameters. Scand Actuarial J. 23, 255–277. doi: 10.1080/03461238.2012.687697

Agebure, M. A., Wumnaya, P. A., and Baagyere, E. Y. (2021). A survey of supervised learning models for spiking neural network. Asian J. Res. Comput. Sci. 9, 35–49. doi: 10.9734/ajrcos/2021/v9i430228

Aimone, J. B. (2021). A roadmap for reaching the potential of brain-derived computing. Adv. Intell. Syst. 3, 2000191. doi: 10.1002/aisy.202000191

Allo, C. B., and Otok, B. W. (2019). Estimation parameter of generalized poisson regression model using generalized method of moments and its application. IOP Conf. Ser. Mater. Sci. Eng. 546, 50–52. doi: 10.1088/1757-899X/546/5/052050

Amundson, J., Annis, J., Avestruz, C., Bowring, D., Caldeira, J., Cerati, G., et al. (2019). Response to NITRD, NCO, NSF Request for Information on “Update to the 2016 National Artificial Intelligence Research and Development Strategic Plan”. arXiv preprint arXiv:1911.05796. doi: 10.2172/1592156

Amunts, K., Ebell, C., Muller, J., Telefont, M., Knoll, A., Lippert, T., et al. (2016). The human brain project: creating a European research infrastructure to decode the human brain. Neuron 92, 574–581. doi: 10.1016/j.neuron.2016.10.046

Andreopoulos, A., Kashyap, H. J., Nayak, T. K., Amir, A., and Flickner, M. D. (2018). “A low power, high throughput, fully event-based stereo system,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, p. 7532–7542. doi: 10.1109/CVPR.2018.00786

Asghar, M. S., Arslan, S., and Kim, H. (2021). A low-power spiking neural network chip based on a compact LIF neuron and binary exponential charge injector synapse circuits. Sensors 21, 4462. doi: 10.3390/s21134462

Benchehida, C., Benhaoua, M. K., Zahaf, H. E., and Lipari, G. (2022). Memory-processor co-scheduling for real-time tasks on network-on-chip manycore architectures. Int. J. High Perf. Syst. Architect. 11, 1–11. doi: 10.1504/IJHPSA.2022.121877

Benjamin, B. V., Gao, P., McQuinn, E., Choudhary, S., Chandrasekaran, A. R., Bussat, J. M., et al. (2014). Neurogrid: a mixed-analog-digital multichip system for large-scale neural simulations. Proc. IEEE. 102, 699–716. doi: 10.1109/JPROC.2014.2313565

Bi, G., and Poo, M. (1999). Distributed synaptic modification in neural networks induced by patterned stimulation. Nature. 401, 792–796. doi: 10.1038/44573

Birkhoff, G. (1940). Lattice Theory. Rhode Island, RI: American Mathematical Society. doi: 10.1090/coll/025

Boahen, K. A. (2000). Point-to-point connectivity between neuromorphic chips using address events. IEEE Trans. Circuits Syst. II Analog Digital Signal Process. 47, 416–434. doi: 10.1109/82.842110

Boahen, K. A., Pouliquen, P. O., Andreou, A. G., and Jenkins, R. E. (1989). A heteroassociative memory using current-mode MOS analog VLSI circuits. IEEE Trans. Circuits Syst. 36, 747–755. doi: 10.1109/31.31323

Boybat, I., Gallo, M. L., Nandakumar, S. R., Moraitis, T., Parnell, T., Tuma, T., et al. (2018). Neuromorphic computing with multi-memristive synapses. Nat. Commun. 9, 1–12. doi: 10.1038/s41467-018-04933-y

Brette, R., and Gerstner, W. (2005). Adaptive exponential integrate-and-fire model as an effective description of neuronal activity. J. Neurophysiol. 94, 3637–3642. doi: 10.1152/jn.00686.2005

Burkitt, A. N. (2006). A review of the integrate-and-fire neuron model: I. homogeneous synaptic input. Biol. Cybern. 95, 1–19. doi: 10.1007/s00422-006-0068-6

Butts, D. A., Weng, C., Jin, J., Yeh, C. I., Lesica, N. A., Alonso, J. M., et al. (2007). Temporal precision in the neural code and the timescales of natural vision. Nature 449, 92–95. doi: 10.1038/nature06105

Cao, Y., Chen, Y., and Khosla, D. (2014). Spiking deep convolutional neural networks for energy-efficient object recognition. Int. J. Comput. Vis. 113, 1–13. doi: 10.1007/s11263-014-0788-3

Casali, S., Marenzi, E., Medini, C., Casellato, C., and D'Angelo, E. (2019). Reconstruction and simulation of a scaffold model of the cerebellar network. Front. Neuroinform. 13, 37. doi: 10.3389/fninf.2019.00037

Chai, L., Gao, Q., and Panda, D. K. (2007). “Understanding the impact of multi-core architecture in cluster computing: a case study with intel dual-core system,” in Seventh IEEE International Symposium On Cluster Computing and the Grid CCGrid'07 (IEEE), p. 471–478. doi: 10.1109/CCGRID.2007.119

Chaparro, P., Gonzáles, J., Magklis, G., Cai, Q., and González, A. (2007). Understanding the thermal implications of multi-core architectures. IEEE Trans. Parallel Distrib. Syst. 18, 1055–1065. doi: 10.1109/TPDS.2007.1092

Chen, L., Wang, T. Y., Ding, S. J., and Zhang, D. W. (2021). ALD Based Flexible Memristive Synapses for Neuromorphic Computing Application. ECS Meeting Abstracts (Bristol: IOP Publishing), 874. doi: 10.1149/MA2021-0229874mtgabs

Chen, T., Wang, X., Hao, D., Dai, S., Ou, Q., Zhang, J., et al. (2021). Photonic synapses with ultra-low energy consumption based on vertical organic field-effect transistors. Adv. Opt. Mater. 9, 2002030. doi: 10.1002/adom.202002030

Cheng, N., Phua, K. S., Lai, H. S., Tam, P. K., Tang, K. Y., Cheng, K. K., et al. (2020). Brain-computer interface-based soft robotic glove rehabilitation for stroke. IEEE Trans. Bio-Med. Eng. 67, 3339–3351. doi: 10.1109/TBME.2020.2984003

Choi, T. Y., Merolla, P. A., Arthur, J. V., Boahen, K. A., and Shi, B. E. (2005). Neuromorphic implementation of orientation hypercolumns. IEEE Trans. Circuits Syst. I Regular Papers. 52, 1049–1060. doi: 10.1109/TCSI.2005.849136

Cui, G., Ye, W., Hou, Z., Li, T., and Liu, R. (2021). Research on low-power main control chip architecture based on edge computing technology. J. Phys. Conf. Ser. 1802, 31–32. doi: 10.1088/1742-6596/1802/3/032031

Czech, A. (2021). “Brain-computer interface use to control military weapons and tools,” International Scientific Conference on Brain-Computer Interfaces BCI Opole (Cham: Springer), p. 196–204. doi: 10.1007/978-3-030-72254-8_20

Das, R., Biswas, C., and Majumder, S. (2022). “Study of spiking neural network architecture for neuromorphic computing,” in 2022 IEEE 11th International Conference on Communication, Systems and Network Technologies CSNT (IEEE), p. 373–379. doi: 10.1109/CSNT54456.2022.9787590

Delponte, L., and Tamburrini, G. (2018). European artificial intelligence (AI) leadership, the path for an integrated vision. European Parliament.

Dennis, J., Yu, Q., Tang, H., Tran, H. D., and Li, H. (2013). “Temporal coding of local spectrogram features for robust sound recognition,” in 2013 IEEE International Conference on Acoustics, Speech and Signal Processing (IEEE), p. 803–807. doi: 10.1109/ICASSP.2013.6637759

Diehl, P. U., Neil, D., Binas, J., Cook, M., Liu, S. C., Pfeiffer, M., et al. (2015). Fast-Classifying, High-Accuracy Spiking Deep Networks Through Weight and Threshold Balancing. doi: 10.1109/IJCNN.2015.7280696

Ding, C., Huan, Y., Jia, H., Yan, Y., Yang, F., Liu, L., et al. (2022). A hybrid-mode on-chip router for the large-scale FPGA-based neuromorphic platform. IEEE Trans. Circuits Syst. I Regular Papers 69, 1990–2001. doi: 10.1109/TCSI.2022.3145016

Dobs, K., Martinez, J., Kell, A. J. E., and Kanwisher, N. (2022). Brain-like functional specialization emerges spontaneously in deep neural networks. Sci. Adv. 8, eabl8913. doi: 10.1126/sciadv.abl8913

Du, C., Liu, C., Balamurugan, P., and Selvaraj, P. (2021). Deep learning-based mental health monitoring scheme for college students using convolutional neural network. Int. J. Artificial Intell. Tools 30, 06n08. doi: 10.1142/S0218213021400145

Dwyer, D. B., Falkai, P., and Koutsouleris, N. (2018). Machine learning approaches for clinical, psychology and psychiatry. Annual Rev. Clin. Psychol. 14, 91–118. doi: 10.1146/annurev-clinpsy-032816-045037

Ecco, L., and Ernst, R. (2017). Tackling the bus turnaround overhead in real-time SDRAM controllers. IEEE Trans. Comput. 66, 1961–1974. doi: 10.1109/TC.2017.2714672

Feng, C., Gu, J., Zhu, H., Ying, Z., Zhao, Z., Pan, D. Z., et al. (2021). Silicon Photonic Subspace Neural Chip for Hardware-Efficient Deep Learning.

Florian, R. V. (2012). The Chronotron: A Neuron That Learns to Fire Temporally Precise Spike Patterns. doi: 10.1371/journal.pone.0040233

Friedmann, S., Schemmel, J., Grübl, A., Hartel, A., Hock, M., Meier, K., et al. (2016). Demonstrating hybrid learning in a flexible neuromorphic hardware system. IEEE Trans. Biomed. Circuits Syst. 11, 128–142. doi: 10.1109/TBCAS.2016.2579164

Gardner, B., and Gruning, A. (2014). “Classifying patterns in a spiking neural network,” in Proceedings of the 22nd European Symposium on Artificial Neural Networks (ESANN2014) (New York: Springer), 23–28.

Garrido, M., and Pirsch, P. (2020). Continuous-flow matrix transposition using memories. IEEE Trans. Circuits Syst. I Regular Papers 67, 3035–3046. doi: 10.1109/TCSI.2020.2987736

Geminiani, A., Casellato, C., D'Angelo, E., and Pedrocchi, A. (2019). Complex electroresponsive dynamics in olivocerebellar neurons represented with extended-generalized leaky integrate and fire models. Front. Comput. Neurosci. 13, 35. doi: 10.3389/fncom.2019.00035

Gerstner, W., and Kistler, W. M. (2002). Spiking Neuron Models: Single Neurons, Populations, Plasticity. Cambridge: Cambridge University Press. doi: 10.1017/CBO9780511815706

Ghosh-Dastidar, S., and Adeli, H. (2009). A new supervised learning algorithm for multiple spiking neural networks with application in epilepsy and seizure detection. Neural Netw. 22, 1419–1431. doi: 10.1016/j.neunet.2009.04.003

Gütig, R. (2014). To spike, or when to spike. Current Opinion Neurobiol. 25, 134–139. doi: 10.1016/j.conb.2014.01.004

Gjorgjieva, J., Clopath, C., Audet, J., and Pfister, J.-P. (2011). A triplet spike-timing–dependent plasticity model generalizes the Bienenstock–Cooper–Munro rule to higher-order spatiotemporal correlations. Proc. Natl. Acad. Sci. 108, 19383–19388. doi: 10.1073/pnas.1105933108

Gleeson, P., Cantarelli, M., Marin, B., Quintana, A., Earnshaw, M., Sadeh, S., et al. (2019). Open source brain: a collaborative resource for visualizing, analyzing, simulating, and developing standardized models of neurons and circuits. Neuron 103, 395–411. doi: 10.1016/j.neuron.2019.05.019

Goossens, S., Chandrasekar, K., Akesson, B., and Goossens, K. (2016). Power/performance trade-offs in real-time SDRAM command scheduling. IEEE Trans. Comput. 65, 1882–1895. doi: 10.1109/TC.2015.2458859

Grassia, F., Levi, T., Doukkali, E., and Kohno, T. (2018). Spike pattern recognition using artificial neuron and spike-timing-dependent plasticity implemented on a multi-core embedded platform. Artificial Life Robot. 23, 200–204. doi: 10.1007/s10015-017-0421-y

Grübl, A., Billaudelle, S., Cramer, B., Karasenko, V., and Schemmel, J. (2020). Verification and design methods for the brainscales neuromorphic hardware system. J. Signal Process. Syst. 92, 1277–1292. doi: 10.1007/s11265-020-01558-7

Guo, C., and Yang, K. (2022). Preliminary Concept of General Intelligent Network (GIN) for Brain-Like Intelligence.

Gütig, R., and Sompolinsky, H. (2006). The tempotron: a neuron that learns spike timing–based decisions. Nat. Neurosci. 9, 420–428. doi: 10.1038/nn1643

Hammerstrom, D. A. (1990). “VLSI architecture for high-performance, low-cost, on-chip learning,” in 1990 IJCNN International Joint Conference on Neural Networks (IEEE), p. 537–544. doi: 10.1109/IJCNN.1990.137621

Hao, Y., Xiang, S., Han, G., Zhang, J., Ma, X., Zhu, Z., et al. (2021). Recent progress of integrated circuits and optoelectronic chips. Sci. China Inf. Sci. 64, 1–33. doi: 10.1007/s11432-021-3235-7

He, Y., Jiang, S., Chen, C., Wan, C., Shi, Y., Wan, Q., et al. (2021). Electrolyte-gated neuromorphic transistors for brain-like dynamic computing. J. Appl. Phys. 130, 190904. doi: 10.1063/5.0069456

Hermeline, F. (2007). Approximation of 2-D and 3-D diffusion operators with variable full tensor coefficients on arbitrary meshes. Comput. Methods Appl. Mech. Eng. 196, 2497–2526. doi: 10.1016/j.cma.2007.01.005

Huang, D., Wang, M., Wang, J., and Yan, J. A. (2022). Survey of quantum computing hybrid applications with brain-computer interface. Cognit. Robot. 2, 164–176. doi: 10.1016/j.cogr.2022.07.002

Huang, Y., Qiao, X., Dustdar, S., Zhang, J., and Li, J. (2022). Toward decentralized and collaborative deep learning inference for intelligent iot devices. IEEE Network 36, 59–68. doi: 10.1109/MNET.011.2000639

Izhikevich, E. M. (2003). Simple model of spiking neurons. IEEE Trans. Neural Netw. 14, 1569–1572. doi: 10.1109/TNN.2003.820440

Izhikevich, E. M. (2004). Which model to use for cortical spiking neurons? IEEE Trans. Neural Netw. 15, 1063–1070. doi: 10.1109/TNN.2004.832719

Jordan, J., Petrovici, M. A., Breitwieser, O., Schemmel, J., Meier, K., Diesmann, M., et al. (2019). Deterministic networks for probabilistic computing. Sci. Rep. 9, 1–17. doi: 10.1038/s41598-019-54137-7

Kasabov, N., Scott, N. M., Tu, E., Marks, S., Sengupta, N., Capecci, E., et al. (2016). Evolving spatio-temporal data machines based on the NeuCube neuromorphic framework: design methodology and selected applications. Neural Netw. 78, 1–14. doi: 10.1016/j.neunet.2015.09.011

Kimura, M., Shibayama, Y., and Nakashima, Y. (2022). Neuromorphic chip integrated with a large-scale integration circuit and amorphous-metal-oxide semiconductor thin-film synapse devices. Sci. Rep. 12, 1–6. doi: 10.1038/s41598-022-09443-y

Kiyoyama, K., Horio, Y., Fukushima, T., Hashimoto, H., Orima, T., Koyanagi, M., et al. (2021). “Design for 3-D Stacked Neural Network Circuit with Cyclic Analog Computing,” in 2021 IEEE International 3D Systems Integration Conference (3DIC) (IEEE), p. 1–4. doi: 10.1109/3DIC52383.2021.9687608

Kotchetkov, I. S., Hwang, B. Y., Appelboom, G., Kellner, C. P., and Connolly, E. S. (2010). Brain-computer interfaces: military, neurosurgical, and ethical perspective. Neurosurg. Focus 28, E25. doi: 10.3171/2010.2.FOCUS1027

Koutrouvelis, I. A., and Canavos, G. C. (1999). Estimation in the Pearson type 3 distribution. Water Resour. Res. 35, 2693–2704. doi: 10.1029/1999WR900174

Leutgeb, S., Leutgeb, J. K., Moser, M. B., and Moser, E. I. (2005). Place cells, spatial maps and the population code for memory. Curr. Opin. Neurobiol. 15, 738–746. doi: 10.1016/j.conb.2005.10.002

Li, S., Yin, H., Fang, X., and Lu, H. (2017). Lossless image compression algorithm and hardware architecture for bandwidth reduction of external memory. IET Image Process. 11, 376–388. doi: 10.1049/iet-ipr.2016.0636

Liu, F., Zhao, W., Chen, Y., Wang, Z., Yang, T., Jiang, L. S., et al. (2021). SSTDP: Supervised spike timing dependent plasticity for efficient spiking neural network training. Front. Neurosci. 15, 756876. doi: 10.3389/fnins.2021.756876

Liu, X., Yang, J., Zou, C., Chen, Q., Yan, X., Chen, Y., et al. (2021). Collaborative edge computing with FPGA-based CNN accelerators for energy-efficient and time-aware face tracking system. IEEE Trans. Comput. Social Syst. 9, 252–266. doi: 10.1109/TCSS.2021.3059318

Ma, D., Shen, J., Gu, Z., Zhang, M., Zhu, X., Xu, X., et al. (2017). Darwin: a neuromorphic hardware co-processor based on spiking neural networks. J. Syst. Arch. 77, 43–51. doi: 10.1016/j.sysarc.2017.01.003

Maass, W., Natschläger, T., and Markram, H. (2002). Real-time computing without stable states: a new framework for neural computation based on perturbations. Neural Comput. 14, 2531–2560. doi: 10.1162/089976602760407955

Mahowald, M. (1994). An Analog VLSI System for Stereoscopic Vision. Berlin: Springer Science and Business Media. doi: 10.1007/978-1-4615-2724-4

Mao, R., Li, S., Zhang, Z., Xia, Z., Xiao, J., Zhu, Z., et al. (2022). An ultra-energy-efficient and high accuracy ECG classification processor with SNN inference assisted by on-chip ANN learning. IEEE Trans. Biomed. Circuits Syst. 1–9. doi: 10.1109/TBCAS.2022.3185720

Martin, A. J. (1989). Programming in VLSI: From Communicating Processes to Delay-Insensitive Circuits. Pasadena: California Institute of Technology Pasadena Department of Computer Science.

Martin, A. J., and Nystrom, M. (2006). Asynchronous techniques for system-on-chip design. Proc. IEEE 94, 1089–1120. doi: 10.1109/JPROC.2006.875789

McCarthy, J., Minsky, M. L., Rochester, N., and Shannon, C. E. (2006). A proposal for the Dartmouth summer research project on artificial intelligence. AI Magazine 27, 12–12. doi: 10.1609/aimag.v27i4.1904

McKennoch, S., Voegtlin, T., and Bushnell, L. (2009). Spike-timing error backpropagation in theta neuron networks. Neural Comput. 21, 9–45. doi: 10.1162/neco.2009.09-07-610

Merolla, P., and Boahen, K. A. (2003). A Recurrent Model of Orientation Maps with Simple and Complex Cells.

Migliore, R., Lupascu, C. A., Bologna, L. L., Romani, A., Courcol, J.-D., Antonel, S., et al. (2018). The physiological variability of channel density in hippocampal CA1 pyramidal cells and interneurons explored using a unified data-driven modeling workflow. PLoS Comput. Biol. 14, e1006423. doi: 10.1371/journal.pcbi.1006423

Mikołajewska, E., and Mikołajewski, D. (2014). Non-invasive EEG-based brain-computer interfaces in patients with disorders of consciousness. Military Med. Res. 1, 1–6. doi: 10.1186/2054-9369-1-14

Mohemmed, A., Schliebs, S., Matsuda, S., and Kasabov, N. (2013). Training spiking neural networks to associate spatio-temporal input–output spike patterns. Neurocomputing 107, 3–10. doi: 10.1016/j.neucom.2012.08.034

Mudgal, S. K., Sharma, S. K., Chaturvedi, J., and Sharma, A. (2020). Brain computer interface advancement in neurosciences: applications and issues. Interdisciplin. Neurosurg. Adv. Tech. Case Manage. 20, 100694. doi: 10.1016/j.inat.2020.100694

Nejad, M. B. (2020). Parametric evaluation of routing algorithms in network on chip architecture. Comput. Syst. Sci. Eng. 35, 367–375. doi: 10.32604/csse.2020.35.367

Neuman, J. V. (1958). The computer and the brain. Annals History Comput. 11, 161–163. doi: 10.1109/MAHC.1989.10032

Olaronke, I., Rhoda, I., Gambo, I., Oluwaseun, O., and Janet, O. (2018). Prospects and problems of brain computer interface in healthcare. Current J. Appl. Sci. Technol. 23, 1–7. doi: 10.9734/CJAST/2018/44358

Panzeri, S., Brunel, N., Logothetis, N. K., and Kayser, C. (2010). Sensory neural codes using multiplexed temporal scales. Trends Neurosci. 33, 111–120. doi: 10.1016/j.tins.2009.12.001

Paton, L. W., and Tiffin, P. A. (2022). Technology matters: machine learning approaches to personalised child and adolescent mental health care. Child Adolescent Mental Health 27, 307–308. doi: 10.1111/camh.12546

Pei, J., Deng, L., Song, S., Zhao, M., Zhang, Y., Wu, S., et al. (2019). Towards artificial general intelligence with hybrid Tianjic chip architecture. Nature 572, 106–111. doi: 10.1038/s41586-019-1424-8

Pfeil, T., Jordan, J., Tetzlaff, T., Grübl, A., Schemmel, J., Diesmann, M., et al. (2015). “The effect of heterogeneity on decorrelation mechanisms in spiking neural networks: a neuromorphic-hardware study,” in 11th Göttingen Meeting of the German Neuroscience Society. Computational and Systems Neuroscience (FZJ-2015-05833). doi: 10.1103/PhysRevX.6.021023

Prezioso, M., Merrikh-Bayat, F., Hoskins, B. D., Adam, G. C., Likharev, K. K., and Strukov, D. B. (2015). Training and operation of an integrated neuromorphic network based on metal-oxide memristors. Nature 521, 61–64. doi: 10.1038/nature14441

Ramakuri, S. K., Chithaluru, P., and Kumar, S. (2019). Eyeblink robot control using brain-computer interface for healthcare applications. Int. J. Mobile Dev. Wearable Technol. Flexible Electron. 10, 38–50. doi: 10.4018/IJMDWTFE.2019070103

Rizza, M. F., Locatelli, F., Masoli, S., Sánchez-Ponce, D., Muñoz, A., Prestori, F., et al. (2021). Stellate cell computational modeling predicts signal filtering in the molecular layer circuit of cerebellum. Sci. Rep. 11, 3873. doi: 10.1038/s41598-021-83209-w

Russo, C. (2010). Quantale modules and their operators, with applications. J. Logic Comput. 20, 917–946. doi: 10.1093/logcom/exn088

Safiullina, A. N. (2016). Estimation of the binomial distribution parameters using the method of moments and its asymptotic properties. Učënye Zapiski Kazanskogo Universiteta: Seriâ Fiziko-Matematičeskie Nauki 158, 221–230. Available online at: http://www.mathnet.ru/php/archive.phtml?wshow=paper&jrnid=uzku&paperid=1364&option_lang=eng

Samonds, J. M., Zhou, Z., Bernard, M. R., and Bonds, A. B. (2006). Synchronous activity in cat visual cortex encodes collinear and cocircular contours. J. Neurophysiol. 95, 2602–2616. doi: 10.1152/jn.01070.2005

Schemmel, J., Billaudelle, S., Dauer, P., and Weis, J. (2020). Accelerated Analog Neuromorphic Computing. arXiv preprint arXiv:2003.11996. doi: 10.1007/978-3-030-91741-8_6

Schemmel, J., Brüderle, D., Grübl, A., Hock, M., Meier, K., Millner, S. A., et al. (2010). “Wafer-scale neuromorphic hardware system for large-scale neural modeling,” in 2010 IEEE International Symposium on Circuits and Systems (ISCAS) (IEEE), 1947–1950. doi: 10.1109/ISCAS.2010.5536970

Schemmel, J., Brüderle, D., Meier, K., and Ostendorf, B. (2007). “Modeling synaptic plasticity within networks of highly accelerated I&F neurons,” in 2007 IEEE International Symposium on Circuits and Systems (IEEE), p. 3367–3370. doi: 10.1109/ISCAS.2007.378289

Scholze, S., Eisenreich, H., Höppner, S., Ellguth, G., Henker, S., Ander, M., et al. (2012). A 32 GBit/s communication SoC for a waferscale neuromorphic system. Integration 45, 61–75. doi: 10.1016/j.vlsi.2011.05.003

Sengupta, P., Stalin John, M. R., and Sridhar, S. S. (2020). Classification of conscious, semi-conscious and minimally conscious state for medical assisting system using brain computer interface and deep neural network. J. Med. Robot. Res. doi: 10.1142/S2424905X19420042

Shanker, R. (2017). The discrete poisson-akash distribution. Int. J. Probab. Stat. 6, 1–10. doi: 10.5336/biostatic.2017-54834

Shi, Y., Mizumoto, M., Yubazaki, N., and Otani, M. (1996). “A learning algorithm for tuning fuzzy rules based on the gradient descent method,” in Proceedings of IEEE 5th International Fuzzy Systems, Vol. 1 (IEEE), p. 55–61.

Shiotani, M., Nagano, N., Ishikawa, A., Sakai, K., Yoshinaga, T., Kato, H., et al. (2016). Challenges in detection of premonitory electroencephalographic (EEG) changes of drug-induced seizure using a non-human primate EEG telemetry model. J. Pharmacol. Toxicol. Methods 81, 337. doi: 10.1016/j.vascn.2016.02.010

Sivilotti, M. A. (1991). Wiring Considerations in Analog VLSI Systems, with Application to Field-Programmable Networks. California: California Institute of Technology.

Sivilotti, M. A., Emerling, M., and Mead, C. (1990). A Novel Associative Memory Implemented Using Collective Computation.

Sporea, I., and Grüning, A. (2013). Supervised learning in multilayer spiking neural networks. Neural Comput. 25, 473–509. doi: 10.1162/NECO_a_00396

Stankovic, V. V., and Milenkovic, N. Z. (2015). Synchronization algorithm for predictors for SDRAM memories. J. Supercomput. 71, 3609–3636. doi: 10.1007/s11227-015-1452-6

Syed, T., Kakani, V., Cui, X., and Kim, H. (2021). Exploring optimized spiking neural network architectures for classification tasks on embedded platforms. Sensors 21, 3240. doi: 10.3390/s21093240

Tsodyks, M., Pawelzik, K., and Markram, H. (1998). Neural networks with dynamic synapses. Neural Comput. 10, 821–835. doi: 10.1162/089976698300017502

Tsodyks, M. V., and Markram, H. (1997). The neural code between neocortical pyramidal neurons depends on neurotransmitter release probability. Proc. Natl. Acad. Sci. 94, 719–723. doi: 10.1073/pnas.94.2.719

Valadez-Godínez, S., Sossa, H., and Santiago-Montero, R. (2020). On the accuracy and computational cost of spiking neuron implementation. Neural Netw. 122, 196–217. doi: 10.1016/j.neunet.2019.09.026

van Rossum, M. C. W. (2001). A novel spike distance. Neural Comput. 13, 751–763. doi: 10.1162/089976601300014321

Victor, J. D., and Purpura, K. P. (1997). Metric-space analysis of spike trains: theory, algorithms and application. Network Comput. Neural Syst. 8, 127–164. doi: 10.1088/0954-898X_8_2_003

Voutsas, K., Langner, G., Adamy, J., and Ochse, M. (2005). A brain-like neural network for periodicity analysis. IEEE Trans. Syst. Man Cybern. Part B 35, 12–22. doi: 10.1109/TSMCB.2004.837751

Wang, Q. N., Zhao, C., Liu, W., Van Zalinge, H., Liu, Y. N., Yang, L., et al. (2021). “All-solid-state ion doping synaptic transistor for bionic neural computing,” in 2021 International Conference on IC Design and Technology (ICICDT) (IEEE), p. 1–4. doi: 10.1109/ICICDT51558.2021.9626468

Wang, Y. C., and Hua, H. U. (2016). New development of artificial cognitive computation: TrueNorth neuron chip. Comput. Sci. 43, 17–20+24. Available online at: https://www.cnki.com.cn/Article/CJFDTotal-JSJA2016S1004.htm

Xu, Y., Zeng, X., and Zhong, S. (2013). A new supervised learning algorithm for spiking neurons. Neural Comput. 25, 1472–1511. doi: 10.1162/NECO_a_00450

Yang, H., Yuan, J., Li, C., Zhao, G., Sun, Z., Yao, Q., et al. (2022). BrainIoT: brain-like productive services provisioning with federated learning in industrial IoT. IEEE Internet Things J. 9, 2014–2024. doi: 10.1109/JIOT.2021.3089334

Yang, J., Wang, R., Guan, X., Hassan, M. M., Almogren, A., and Alsanad, A. (2020). AI-enabled emotion-aware robot: the fusion of smart clothing, edge clouds and robotics. Future Gener. Comput. Syst. 102, 701–709. doi: 10.1016/j.future.2019.09.029

Yang, J. J., Strukov, D. B., and Stewart, D. R. (2013). Memristive devices for computing. Nat. Nanotechnol. 8, 13–24. doi: 10.1038/nnano.2012.240

Yang, Z., Huang, Y., Zhu, J., and Ye, T. T. (2020). “Analog circuit implementation of LIF and STDP models for spiking neural networks,” in Proceedings of the 2020 on Great Lakes Symposium on VLSI, p. 469–474. doi: 10.1145/3386263.3406940

Yasunaga, M., Masuda, N., Yagyu, M., Asai, M., Yamada, M., Masaki, A., et al. (1990). “Design, fabrication and evaluation of a 5-inch wafer scale neural network LSI composed of 576 digital neurons,” in 1990 IJCNN International Joint Conference on Neural Networks (IEEE), p. 527–535. doi: 10.1109/IJCNN.1990.137618

Yu, Q., Tang, H., Tan, K. C., and Li, H. (2013). Rapid feedforward computation by temporal encoding and learning with spiking neurons. IEEE Trans. Neural Netw. Learn. Syst. 24, 1539–1552. doi: 10.1109/TNNLS.2013.2245677

Yu, Q. I., Hong-bing, P. A., Shu-zhuan, H. E., Li, L. I., Wei, L. I., and Feng, H. A. (2014). Parallelization of NCS and algorithm based on heterogeneous multi-core prototype chip. Microelectron. Comput. 31, 87–91.

Zhang, J., Shi, Q., Wang, R., Zhang, X., Li, L., Zhang, J., et al. (2021). Spectrum-dependent photonic synapses based on 2D imine polymers for power-efficient neuromorphic computing. InfoMat 3, 904–916. doi: 10.1002/inf2.12198

Zhenghao, Z. H., Zhilei, C. H., Xia, H. U., and Cong, X. U. (2022). Design and implementation of NEST brain-like simulator based on heterogeneous computing platform. Microelectron. Comput. 39, 54–62.

Zou, Z., Kim, Y., Imani, F., Alimohamadi, H., Cammarota, R., Imani, M., et al. (2021). “Scalable edge-based hyperdimensional learning system with brain-like neural adaptation,” in Proceedings of the International Conference for High Performance Computing, Networking, Storage and Analysis (St. Louis, MO), 1–15. doi: 10.1145/3458817.3480958

Keywords: brain-like computing, neuronal models, spiking neuron networks, spiking neural learning, learning algorithms, neuromorphic chips

Citation: Ou W, Xiao S, Zhu C, Han W and Zhang Q (2022) An overview of brain-like computing: Architecture, applications, and future trends. Front. Neurorobot. 16:1041108. doi: 10.3389/fnbot.2022.1041108

Received: 10 September 2022; Accepted: 31 October 2022;

Published: 24 November 2022.

Edited by:

Wellington Pinheiro dos Santos, Federal University of Pernambuco, Brazil

Reviewed by: