PERSPECTIVE article

Front. Neurorobot., 09 April 2021

Volume 15 - 2021 | https://doi.org/10.3389/fnbot.2021.661603

This article is part of the Research Topic Embodiment and Co-Adaptation through Human-Machine Interfaces: at the border of Robotics, Neuroscience and Psychology

Jonathon S. Schofield1, Marcus A. Battraw1, Adam S. R. Parker2, Patrick M. Pilarski2, Jonathon W. Sensinger3 and Paul D. Marasco4,5*

During every waking moment, we must engage with our environments, the people around us, the tools we use, and even our own bodies to perform actions and achieve our intentions. There is a spectrum of control that we have over our surroundings that spans the extremes from full to negligible. When the outcomes of our actions do not align with our goals, we have a tremendous capacity to displace blame and frustration onto external factors while forgiving ourselves. This is especially true when we cooperate with machines; they are rarely afforded the level of forgiveness we provide our bodies and often bear much of our blame. Yet our brain readily engages with autonomous processes in controlling our bodies: coordinating complex patterns of muscle contractions, making postural adjustments, and adapting to external perturbations, among many other functions. This acceptance of biological autonomy may provide avenues to promote more forgiving human-machine partnerships. In this perspectives paper, we argue that striving for machine embodiment is a pathway to achieving effective and forgiving human-machine relationships. We discuss the mechanisms that help us identify ourselves and our bodies as separate from our environments, and we describe their roles in achieving embodied cooperation. Using a representative selection of examples in neurally interfaced prosthetic limbs and intelligent mechatronics, we describe techniques to engage these same mechanisms when designing autonomous systems and their potential bidirectional interfaces.

From smartphones to self-driving vehicles to advanced artificial limbs, cooperative machines are becoming increasingly integrated into our society. As they grow in sophistication and autonomy, so does the complexity of human-machine relationships. When we engage with technology, frustration is never far away, and negative emotions may shape our disposition toward using it (Klein et al., 2002). Sometimes these emotions are merited by the poor performance of the technology, but we often misjudge technologies and place unfair expectations on them (Jackson, 2002) simply because of the way they communicate with us. Just as the glimmer of a smile or the touch of a hand can change how we receive hard news, the way that technologies interface with us is critical to whether we accept their capabilities. As humans, we are quick to distinguish between ourselves and cooperating machines, and to blame them for errors (Serenko, 2007). However, the perception that our bodies and our actions are our own is incredibly malleable, and this malleability provides a pathway to improved human-machine interactions. Our brains and bodies host a variety of conscious and non-conscious perceptual mechanisms that let us perceive ourselves as separate from our environments, and these mechanisms may be targeted through bidirectional human-machine interfaces. In doing so, we may assume ownership of cooperative machines and their collaborative actions to promote more forgiving interactions, a concept we call embodied cooperation.

How do we know that we are “ourselves”: autonomous agents that have physical bodies and act within an external environment? Although various forms of this age-old question have long been explored across disciplines including philosophy, phenomenology, psychology, and cognitive neuroscience, a single unifying theory of self-awareness has yet to be developed (Braun et al., 2018). Rather, several neurocognitive theories hypothesize varying degrees of influence from the brain's integration of multisensory information and internal representations of the body (Tsakiris, 2010; Braun et al., 2018). What is clear is that our brains readily and constantly distinguish ourselves as separate from our environments, the tools we use, and the people around us. These distinctions of “self or other” shape our perceptions of nearly every action we perform.

There is a spectrum of control that we have over the outcomes of our actions, spanning the extremes from full to negligible correlation. In between, our perceived role in an outcome is directly linked to the brain's distinction of self or other. As individuals, our locus of control describes the degree to which we believe that we, as opposed to external forces, control the events around us (Rotter, 1966). Many factors may shape this perception, including age, gender, and cultural differences (Strickland and Haley, 1980; Berry et al., 1992; Hovenkamp-Hermelink et al., 2019); however, there are inherent biases in our perceived control of events. The term self-serving bias describes a general tendency to disproportionately credit ourselves for the positive outcomes of our actions while blaming negative outcomes on things beyond our control (Davis and Davis, 1972). This behavior has been observed in numerous contexts including competitive sports (Lau and Russell, 1980; Riess and Taylor, 1984; De Michele et al., 1998), perceptions of one's own employability (Furnham, 1982), and academic performance (McAllister, 1996), among many others (Gray and Silver, 1990; Sedikides et al., 1998; Farmer and Pecorino, 2002). Self-serving bias is highly relevant to human-machine interactions, as people not only tend to blame technology for mistakes but are also less likely to attribute positive outcomes to machine partners, even taking the credit themselves (Friedman, 1995; Moon, 2003; You et al., 2011).

Autonomous machines have an even more troublesome relationship with self-serving biases. This behavior is observed during interactions with artificial intelligence (Vilaza et al., 2014) and is amplified as the degree of machine autonomy increases (Serenko, 2007). Further, when an interaction goes wrong, frustration and emotional consequences are never far away. Negative emotional states are linked to more extreme self-serving behaviors (Jahoda et al., 2006; Coleman, 2011), and frustrating interactions can leave users negatively disposed to technologies (Klein et al., 2002). The result is a difficult “blame cycle” in which systems of increasing autonomy receive increased blame for errors, and these errors can promote negative emotional states that further reinforce the displacement of blame. Rather than blaming and becoming frustrated with our technological partners, we need to develop more forgiving relationships to break this blame cycle. As humans, we do have the capacity to form such relationships. For example, individuals are more inclined to assist a computer in completing a cooperative task if that same computer has previously assisted them (Fogg and Nass, 1997). Further, Mirnig et al. performed a study in which an anthropomorphized social robot gave participants simple task instructions. Participants described the robot as more likable when it made minor non-task-related errors, suggesting that, as with our perceptions of other humans, minor imperfections can increase likability (Mirnig et al., 2017). Therefore, we argue that natural human tendencies and biases provide opportunities, not just barriers, for improving interactions with autonomous machines. We further suggest that if a technology (and/or its actions) can be perceived as belonging to the user, many of our existing biases may be flipped in favor of more effective and forgiving cooperation.

One might think that autonomous machines would be more easily accepted and forgiven, given that our brains are hardwired to cooperate with the autonomous processes in our own bodies. For instance, a single motor task may be achieved by a nearly infinite number of combinations of joint motions and timings (Bernstein, 1967). To complete such tasks without attending to every muscle's action, the central nervous system appears to rely on repertoires of autonomous movement patterns (Bizzi et al., 1991; Wolpert et al., 2001; Giszter and Hart, 2013). Although we feel in complete control of our limbs and bodies, when we move or manipulate objects, the specifics of those motions are executed through autonomous sensorimotor control loops outside of our conscious control. It is this biological-autonomous framework that cooperative machines should seek to engage, and carefully constructed bidirectional interfaces offer one way to do so. Like our biological bodies, these interfaces will need to consistently and accurately trigger a machine to perform cooperative actions while also returning relevant and temporally appropriate information to the user.
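Bernstein's redundancy problem can be made concrete with a toy planar arm. The sketch below is our own illustration, not a model drawn from the cited studies: it assumes a hypothetical three-link arm with made-up link lengths (the `LINKS` constant) and shows that one fingertip target can be reached by a different joint configuration for every chosen hand orientation `phi`, so something (the nervous system, or a machine's controller) must select among them.

```python
import math

# Hypothetical link lengths in metres (roughly upper arm, forearm, hand).
LINKS = (0.30, 0.25, 0.08)


def forward_kinematics(angles, links=LINKS):
    """Return the (x, y) fingertip position of a planar serial arm.

    `angles` are relative joint angles in radians (shoulder, elbow, wrist).
    """
    x = y = 0.0
    heading = 0.0
    for theta, length in zip(angles, links):
        heading += theta  # each joint rotates relative to the previous link
        x += length * math.cos(heading)
        y += length * math.sin(heading)
    return x, y


def inverse_kinematics(target, phi, links=LINKS, flip_elbow=False):
    """Find joint angles placing the fingertip at `target` with hand orientation `phi`.

    `flip_elbow` selects the mirror-image elbow solution.
    Returns None when the wrist point implied by `phi` is out of reach.
    """
    l1, l2, l3 = links
    # Fixing the hand orientation pins down where the wrist must be.
    wx = target[0] - l3 * math.cos(phi)
    wy = target[1] - l3 * math.sin(phi)
    # Standard two-link solution for shoulder and elbow (law of cosines).
    c2 = (wx * wx + wy * wy - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if abs(c2) > 1.0:
        return None
    s2 = math.sqrt(1.0 - c2 * c2) * (-1.0 if flip_elbow else 1.0)
    t2 = math.atan2(s2, c2)
    t1 = math.atan2(wy, wx) - math.atan2(l2 * s2, l1 + l2 * c2)
    t3 = phi - t1 - t2  # the wrist supplies the remaining rotation
    return (t1, t2, t3)


if __name__ == "__main__":
    target = (0.35, 0.20)
    for phi in (-0.5, 0.0, 0.5, 1.0):
        angles = inverse_kinematics(target, phi)
        if angles is None:
            continue
        x, y = forward_kinematics(angles)
        joints = ", ".join(f"{a:+.2f}" for a in angles)
        print(f"hand angle {phi:+.1f} rad -> joints ({joints}) -> tip ({x:.3f}, {y:.3f})")
```

Sweeping `phi` continuously would yield a continuum of valid configurations for the same reach. Real limbs add further redundancy through muscles and timing, which this sketch ignores.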

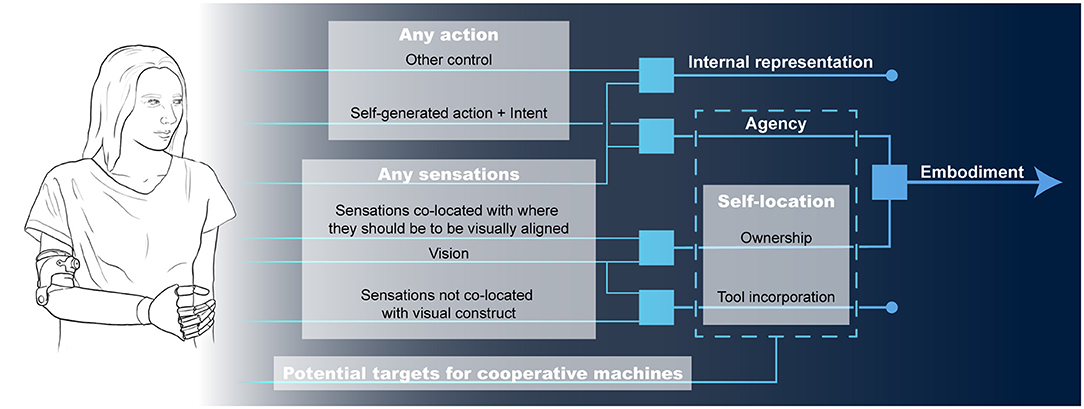

In nearly all interactions with cooperative machines, we perceive ourselves and our actions as separate from the machine. This distinction is a product of our sense of embodiment. Here, we adopt the definition of embodiment as the combined experiences of owning and controlling a body and its parts (Matamala-Gomez et al., 2019; Schettler et al., 2019). Embodiment emerges from the integration of our intentions, motor actions, and sensory outcomes (Braun et al., 2018; Schettler et al., 2019). More specifically, it integrates perceptions and mental constructs built around vision, cutaneous sensation, proprioception, interoception, motor control, and vestibular sensations (Maselli and Slater, 2013). The sense of embodiment is malleable, and manipulating these channels can extend the perceived borders and capabilities of our bodies to include non-bodily objects and even cooperative machines (Botvinick and Cohen, 1998; Braun et al., 2018; Schettler et al., 2019). In this context, a machine may engage three key experiences: (1) the sense of self-location, experienced as the volume in space where one feels their body is located; (2) the sense of ownership, the experience of something being a part of the body; and (3) the sense of agency, the experience of authoring the actions of one's body and the resulting sensory outcomes (De Vignemont, 2011; Kilteni et al., 2015). Figure 1 illustrates the relationship between actions, intentions, sensations, and the experiences that summate to the sense of embodiment. In this paper, we discuss the varying degrees to which machines may engage these experiences, creating a spectrum of perceptions that spans from operating a tool as an extra-personal extension of the body to the complete embodiment of a machine.

Figure 1. The pieces of embodiment and potential targets for cooperative human-machine interactions.

In the literature, the larger concept of embodiment is often confounded with tool-incorporation, which is the extra-personal experience of operating a tool as an extension of the body (rather than a part of the body). Tool-incorporation in autonomous machines may be promoted simply by providing appropriate sensory feedback to the user. In doing so, users may develop a keen awareness of the tool's physicality as the brain adapts its geometric representation of the body and the surrounding workspace (peripersonal space) (Iriki et al., 1996; Schettler et al., 2019). For example, the haptic feedback provided through canes used by visually impaired individuals can promote tool-incorporation. This results in an expansion of peripersonal space and an acute awareness of the area around the cane's tip (Serino et al., 2007). Similar effects are observed with numerous tools ranging in complexity from a rake to an automobile (Iriki et al., 1996; Sposito et al., 2012; Moeller et al., 2016). However, at no point do these users perceive their tools as part of their bodies, because the tools do not engage all the key mechanisms of embodiment. Peripersonal space and the sense of self-location are closely linked and may be influenced by tool use (Noel et al., 2015), and tool use may even promote a sense of external-agency (described below). However, these tools do not provide visually collocated feedback, which is required to form a sense of ownership and is the distinguishing factor in this case. Of further relevance to human-machine cooperation, there appears to be a link between tool-use proficiency and the changes in peripersonal space that accompany tool-incorporation (Sposito et al., 2012; Biggio et al., 2017). For instance, experienced drivers underestimate distances in front of their vehicles (Moeller et al., 2016), and skilled archers perceive their targets as larger (Lee et al., 2012). Therefore, further exploring tool-incorporation, and how cooperative machines may engage the requisite sensorimotor mechanisms, may be an important avenue to accelerating user proficiency.

The sense of ownership is the experience of our body and body parts belonging to ourselves and describes the feeling of “mineness” that we experience (Braun et al., 2018). It is the feeling captured in statements such as “this is ‘my’ hand,” and it often occurs at the fringe of consciousness (De Vignemont, 2011; Braun et al., 2018). There is strong evidence suggesting that the sense of ownership is a product of the integration of visual and (most commonly) tactile sensory channels (Botvinick and Cohen, 1998; Kilteni et al., 2015; Braun et al., 2018; Schettler et al., 2019). Much of our current understanding originates from the rubber hand illusion, in which participants report experiencing a rubber hand as a part of their bodies when what they see and feel is strategically manipulated (Botvinick and Cohen, 1998). This well-known experimental paradigm demonstrates that our experience of body ownership is dynamic, adaptable, and not constrained to our biological body parts. The rubber hand illusion influences not only participants' sense of ownership but also their sense of self-location. When participants are asked to close their eyes and point to the location of their hand, their estimates are typically shifted toward the rubber hand (Botvinick and Cohen, 1998; Tsakiris and Haggard, 2005). This finding suggests that the brain updates its body representation at both conscious and non-conscious levels; other temporary, non-conscious physiological changes have also been observed, including in hand temperature (Moseley et al., 2008), touch and pain sensitivity (Folegatti et al., 2009; Fang et al., 2019), skin conductance (Ehrsson et al., 2007), and cortical excitability (Della Gatta et al., 2016).

The rubber hand illusion is of specific relevance to human-machine embodied cooperation. It is one of the more encouraging pieces of evidence suggesting that non-bodily objects, and even cooperative machines such as a robotic prosthesis (described below), can engage the brain's mechanisms that distinguish self or other. Yet here, the appropriateness of bidirectional human-machine communication becomes a key element. The rubber hand illusion is diminished when visual and tactile stimulation are asynchronous, demonstrating that the congruency of multisensory inputs is vital to the illusion (Botvinick and Cohen, 1998). Therefore, for a cooperative machine to engage one's sense of ownership, the sensory feedback from that system must be strategically designed and tuned. The rubber hand illusion has found many applications throughout the human-machine cooperation literature, and purposefully developing a sense of ownership has been a goal in prosthetic limbs (discussed below) (Niedernhuber et al., 2018), chronic pain treatment (Martini, 2016), and virtual reality avatars (Matamala-Gomez et al., 2019).
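On the design side, the synchrony requirement can be turned into a simple check that an engineer might run on logged stimulus timestamps. This is a minimal sketch under stated assumptions: the `StrokeEvent` type, the 300 ms tolerance window, and the 90% target are hypothetical choices for illustration, the article does not specify any tolerance, and a high score only indicates synchronous feedback, not that ownership was achieved.

```python
from dataclasses import dataclass


@dataclass
class StrokeEvent:
    """One stimulus pair, timestamps in milliseconds.

    visual_ms: when the touch on the artificial hand was seen.
    tactile_ms: when the corresponding touch feedback was delivered.
    """
    visual_ms: float
    tactile_ms: float


def congruency_score(events, window_ms=300.0):
    """Fraction of events whose visual-tactile lag is within `window_ms`.

    The default window is a hypothetical tolerance chosen for illustration.
    """
    if not events:
        return 0.0
    in_window = sum(1 for e in events if abs(e.tactile_ms - e.visual_ms) <= window_ms)
    return in_window / len(events)


def meets_synchrony_target(events, window_ms=300.0, min_fraction=0.9):
    """Design check: is the logged feedback synchronous often enough?"""
    return congruency_score(events, window_ms) >= min_fraction


if __name__ == "__main__":
    # Hypothetical logs: feedback lagging vision by 20 ms versus 600 ms.
    synchronous = [StrokeEvent(t, t + 20) for t in range(0, 10_000, 1_000)]
    delayed = [StrokeEvent(t, t + 600) for t in range(0, 10_000, 1_000)]
    for name, log in (("synchronous", synchronous), ("delayed", delayed)):
        print(name, congruency_score(log), meets_synchrony_target(log))
```

Scoring the fraction of in-window events, rather than the mean lag, keeps occasional large delays from being averaged away.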

The sense of agency is distinct from the sense of ownership and can be thought of as the feeling of “mineness” for our actions. It distinguishes our self-generated actions (and their outcomes) from those generated by others (David et al., 2008). It accounts for the experience of authoring our actions and is captured in statements such as “I moved my leg” or “I pressed the button and made that happen” (Jeannerod, 2003; Braun et al., 2018). The sense of agency emerges when the motor and sensory outcomes of our actions align with our brain's predictions of the body acting in its environment (internal models) (Gallagher, 2000; Wolpert et al., 2001; Van Den Bos and Jeannerod, 2002; Legaspi and Toyoizumi, 2019). There are two levels of agency (Wen, 2019), both of which have implications for cooperation with autonomous machines. The first emerges during the control of our bodies (internal-agency). As humans, we trust our bodies to perform the actions we intend; when this is achieved, we establish an intrinsic sense of agency that is closely coupled with, yet distinct from, the sense of ownership (Gallagher, 2000). This sense is largely influenced by our intentions and the brain's predictive models of a movement, as well as by the sensory experiences generated in our bodies (Gallagher, 2000; Marasco et al., 2018). The second level describes the experience of controlling external events (external-agency) (Wen et al., 2019). Pressing buttons, pulling levers, and even operating complex machinery fall into this category (Wen et al., 2019). Internal- and external-agency are both highly relevant to the perception of authoring outcomes during human-machine cooperation. Importantly, the sense of agency only forms when user actions and internal models align with the sensory information returned from the machine and environment, which makes this alignment an important goal when designing a machine's bidirectional interface.
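The comparator idea behind internal models can be sketched in a few lines. This is a deliberately simplified illustration under stated assumptions, not the model proposed by the cited authors: a one-dimensional linear forward model, a hypothetical machine whose true gain is 1.5, a least-mean-squares (LMS) learning rule, and an arbitrary agency tolerance. It shows how attributing an outcome to oneself can depend on the prediction matching the returned feedback, and how consistent feedback lets the prediction catch up.

```python
class ForwardModel:
    """Toy internal model mapping a motor command to an expected sensory outcome.

    Assumes outcome = gain * command; the gain is learned from feedback
    with a least-mean-squares (LMS) update.
    """

    def __init__(self, gain=1.0, learning_rate=0.2):
        self.gain = gain
        self.learning_rate = learning_rate

    def predict(self, command):
        return self.gain * command

    def update(self, command, observed):
        """Nudge the gain toward the observed outcome; return the prediction error."""
        error = observed - self.predict(command)
        self.gain += self.learning_rate * error * command
        return error


def attribute_agency(model, command, observed, tolerance=0.05):
    """Comparator: claim authorship only when the outcome matches the prediction.

    `tolerance` is a hypothetical threshold chosen for illustration.
    """
    return abs(observed - model.predict(command)) <= tolerance


if __name__ == "__main__":
    model = ForwardModel()
    device_gain = 1.5  # hypothetical true gain of the cooperating machine
    for trial in range(12):
        command = 1.0
        observed = device_gain * command  # consistent, immediate feedback
        agency = attribute_agency(model, command, observed)
        error = model.update(command, observed)
        print(f"trial {trial:2d}: prediction error {error:+.3f}, agency={agency}")
```

If the machine's contribution were inconsistent from trial to trial, the learned gain would keep chasing a moving target and the comparator would rarely report a match, which mirrors the text's emphasis on consistent and accurate machine behavior.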

Promoting a sense of agency during autonomous human-machine cooperation is important because it allows the user to assume authorship over cooperative actions and may therefore promote more forgiving interactions. The sense of agency is heavily influenced by our perceptions of self or other, and when it is achieved, individuals explicitly judge themselves as responsible for the outcomes of actions (Dewey and Knoblich, 2014; Braun et al., 2018; Schofield et al., 2019). It influences not only explicit perceptions but also subconscious processes. When an action produces an appropriate sensory outcome, the action and outcome are perceived as closer together in time, a phenomenon known as intentional binding (Haggard et al., 2002). Of further relevance, a sense of agency may also form during cooperative actions. In human-human cooperation, a joint sense of authorship may be formed (Obhi and Hall, 2011; Sahai et al., 2017). Yet these effects are diminished if the human partner is replaced with a machine (Obhi and Hall, 2011; Sahai et al., 2017; Grynszpan et al., 2019), and increasing autonomy in machine partners reduces the sense of agency (Berberian et al., 2012). For interactions with autonomous machines, we suggest that the communicative potential of cooperating with our own bodies, another human, or a machine is dramatically different, and that this difference is reflected in our brain's models of these partnerships. The sense of agency is important in achieving embodied cooperation, and cooperative machines have the potential to form a joint sense of agency (or even external or internal agency) through careful construction of bidirectional interfaces. Consistent and accurate contributions from the machine will be necessary, and relevant, temporally appropriate sensory feedback will be required to allow the brain to build robust internal models.
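Intentional binding is usually quantified by comparing time judgments across conditions. The sketch below shows one common baseline-subtraction scheme, assuming per-trial judgment errors (judged minus actual time, in ms) have already been collected for baseline conditions (action or outcome alone) and operant conditions (action causes outcome). The function names and the sample numbers are hypothetical, not data from any study.

```python
from statistics import mean


def judgment_errors(judged_ms, actual_ms):
    """Per-trial error (judged minus actual), in ms; positive means perceived late."""
    return [j - a for j, a in zip(judged_ms, actual_ms)]


def binding_effects(baseline_action, operant_action, baseline_outcome, operant_outcome):
    """Return (action shift, outcome shift, interval compression) in ms.

    Each argument is a list of per-trial judgment errors for one condition.
    """
    # > 0: the action is perceived later, i.e. pulled toward its outcome.
    action_shift = mean(operant_action) - mean(baseline_action)
    # < 0: the outcome is perceived earlier, i.e. pulled toward the action.
    outcome_shift = mean(operant_outcome) - mean(baseline_outcome)
    # How much shorter the action-outcome interval feels overall.
    compression = action_shift - outcome_shift
    return action_shift, outcome_shift, compression


if __name__ == "__main__":
    # Hypothetical judgment errors for illustration only.
    print(binding_effects(
        baseline_action=[-10, -5, 0],
        operant_action=[5, 10, 15],
        baseline_outcome=[10, 20, 30],
        operant_outcome=[-30, -20, -10],
    ))
```

A positive compression value corresponds to the action and outcome being perceived as closer together in time, which is the signature the text describes.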

There are numerous examples of bidirectional human-machine interfaces that promote embodied cooperation. Some of the more prominent work has emerged in the active field of advanced artificial limbs [for reviews see (Niedernhuber et al., 2018; Sensinger and Dosen, 2020)]. Robotic upper limb prostheses are computerized machines, and embodiment is an intuitive goal for them because they are often prescribed to augment or restore function after limb loss. As with many other cooperative technologies, control and sensory feedback remain driving factors in device abandonment (Biddiss and Chau, 2007; Østlie et al., 2012; Schofield et al., 2014). However, experimental prosthetic sensory interfaces that provide (most commonly) touch-based feedback have been widely investigated. Studies have shown that various modalities of feedback, including vibration, skin-based pushing forces, and electrical stimulation of relevant nerves, can be integrated into the brain's sensorimotor control loops and even promote a sense of ownership over an artificial limb (Ehrsson et al., 2008; D'Alonzo et al., 2015; Blustein et al., 2018; Graczyk et al., 2018; Valle et al., 2018; Cuberovic et al., 2019).

Recently, bidirectional neural-machine interfaces have been established for robotic prosthesis users. One such example leverages targeted reinnervation (TR) surgery to provide prosthetic control by having users think about moving their missing limbs (Kuiken et al., 2004), and it can even restore the senses of touch (Kuiken et al., 2007) and movement (Marasco et al., 2018). Working with individuals who received TR surgery, Marasco et al. used a modified version of the rubber hand illusion to demonstrate that ownership over a prosthesis can be readily achieved (Marasco et al., 2011). When participants viewed touch to a prosthetic hand while receiving synchronous sensations of touch to their missing hands, a strong sense of ownership was formed and captured across multiple independent measures. Since then, Schofield et al. have reported on a long-term trial of similar touch-enabled prostheses (Schofield et al., 2020). Restoring touch sensation improved participants' grasping abilities, and over time participants tightly integrated touch into their prosthesis control strategies. Participants demonstrated long-term adaptations, developing a strong sense of ownership only when feedback was temporally and spatially appropriate (Schofield et al., 2020). Individuals who received TR surgery can also form an internal sense of agency over their prostheses. Vibration of muscles and/or tendons can induce illusory perceptions of limb movement (Goodwin et al., 1972), and vibration of participants' reinnervated muscles can induce perceptions of missing hand movements (Marasco et al., 2018). Marasco et al. demonstrated that these sensations of missing hand movement can be integrated with visual information of a moving prosthesis to influence perceptions of self-generated actions and develop a strong sense of internal-agency (Marasco et al., 2018).

Beyond these studies, prosthetic embodiment has been a rapidly growing area of interest. In fact, a PubMed search for the terms (prosthetic OR prosthesis OR artificial limb) AND embodiment returned 195 research articles in the 30 years between 1989 and 2019, nearly 80% of which were published in the last 10 years. The field is reaching a critical mass and beginning to reshape the way we view the relationship between a prosthesis and its user. As robotic prostheses continue to advance, we are beginning to move beyond simply evaluating these devices as tools for improved function and to assess their influence on the mechanisms that drive embodiment, an important next step.

The distinction of self or other shapes our perceptions of nearly every action we perform and drives our propensity to blame cooperative technologies when errors are made. Autonomous systems are becoming increasingly integrated into our society, and we need to reframe how we approach our cooperative relationships so that they engage the fundamental mechanisms that distinguish self or other. Neurally interfaced prostheses provide a strong example of how we may begin achieving this goal; however, they are far from the only technology in which embodied cooperation is desirable. Other assistive devices, such as orthotic exoskeletons and powered mobility aids, may also benefit. In these applications, the goal of bidirectional interfaces may be two-fold: first, effective control to improve the user's physical capabilities, and second, embodiment of the technology. If such devices are truly embodied, the capabilities they afford the user become perceived as body function. This is a significant shift for users, who move from feelings of dependence on a machine to feelings of being more independent and physically capable with their own bodies. It is important to note that ethical considerations will grow increasingly important as we move closer to seamless partnerships and even begin to augment human capabilities. We will need to be cognizant of the relationships and dependencies we create with machines; their implications for the user and society; and their accessibility and equity, especially in medical care contexts; among many other considerations.

Full embodiment of every cooperative machine is an incredibly ambitious goal. However, as our society and our relationships with autonomous machines continue to evolve, cooperative embodiment may provide meaningful pathways to promote effective control, foster forgiving interactions, and encourage device adoption. The experience of embodiment arises from the senses of self-location, ownership, and agency, each of which spans a range of workspaces that various cooperative machines may target. Just as in prostheses, we will need to begin shifting how we evaluate interactions with cooperative machines to include assessments of cooperative embodiment. In doing so, we can begin carefully constructing contextually appropriate bidirectional interfaces that leverage our inborn distinctions of self or other and flip our natural biases to accept cooperative machines and their actions as indistinguishable from our own.

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author/s.

JS participated in the preparation of the figure. PM participated in writing, figure generation, and shaping the scientific direction of the manuscript. All authors contributed to the writing, reviewing, and editing processes as well as figure generation.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Berberian, B., Sarrazin, J. C., Le Blaye, P., and Haggard, P. (2012). Automation technology and sense of control: a window on human agency. PLoS ONE 7:e34075. doi: 10.1371/journal.pone.0034075

Berry, J., Poortinga, Y., Segall, M., and Dasen, P. (1992). Cross-Cultural Psychology: Research and Applications. Cambridge: Cambridge University Press.

Biddiss, E., and Chau, T. (2007). Upper limb prosthesis use and abandonment: a survey of the last 25 years. Prosthet. Orthot. Int. 31, 236–257. doi: 10.1080/03093640600994581

Biggio, M., Bisio, A., Avanzino, L., Ruggeri, P., and Bove, M. (2017). This racket is not mine: the influence of the tool-use on peripersonal space. Neuropsychologia 103, 54–58. doi: 10.1016/j.neuropsychologia.2017.07.018

Bizzi, E., Mussa-Ivaldi, F. A., and Giszter, S. (1991). Computations underlying the execution of movement: a biological perspective. Science 253, 287–291. doi: 10.1126/science.1857964

Blustein, D., Wilson, A., and Sensinger, J. (2018). Assessing the quality of supplementary sensory feedback using the crossmodal congruency task. Sci. Rep. 8:6203. doi: 10.1038/s41598-018-24560-3

Botvinick, M., and Cohen, J. (1998). Rubber hands “feel” touch that eyes see. Nature 391:756. doi: 10.1038/35784

Braun, N., Debener, S., Spychala, N., Bongartz, E., Sörös, P., Müller, H. H. O., et al. (2018). The senses of agency and ownership: a review. Front. Psychol. 9:535. doi: 10.3389/fpsyg.2018.00535

Coleman, M. D. (2011). Emotion and the self-serving bias. Curr. Psychol. 30, 345–354. doi: 10.1007/s12144-011-9121-2

Cuberovic, I., Gill, A., Resnik, L. J., Tyler, D. J., and Graczyk, E. L. (2019). Learning of artificial sensation through long-term home use of a sensory-enabled prosthesis. Front. Neurosci. 13:853. doi: 10.3389/fnins.2019.00853

D'Alonzo, M., Clemente, F., and Cipriani, C. (2015). Vibrotactile stimulation promotes embodiment of an alien hand in amputees with phantom sensations. IEEE Trans. Neural Syst. Rehabil. Eng. 23, 450–457. doi: 10.1109/TNSRE.2014.2337952

David, N., Newen, A., and Vogeley, K. (2008). The “sense of agency” and its underlying cognitive and neural mechanisms. Conscious. Cogn. 17, 523–534. doi: 10.1016/j.concog.2008.03.004

Davis, W. L., and Davis, D. E. (1972). Internal-external control and attribution of responsibility for success and failure. J. Pers. 40, 123–136. doi: 10.1111/j.1467-6494.1972.tb00653.x

De Michele, P. E., Gansneder, B., and Solomon, G. B. (1998). Success and failure attributions of wrestlers: further evidence of the self-serving bias. J. Sport Behav. 21, 242–255.

De Vignemont, F. (2011). Embodiment, ownership and disownership. Conscious. Cogn. 20, 82–93. doi: 10.1016/j.concog.2010.09.004

Della Gatta, F., Garbarini, F., Puglisi, G., Leonetti, A., Berti, A., and Borroni, P. (2016). Decreased motor cortex excitability mirrors own hand disembodiment during the rubber hand illusion. eLife 5:e14972. doi: 10.7554/eLife.14972

Dewey, J. A., and Knoblich, G. (2014). Do implicit and explicit measures of the sense of agency measure the same thing? PLoS ONE 9:e110118. doi: 10.1371/journal.pone.0110118

Ehrsson, H. H., Rosén, B., Stockselius, A., Ragnö, C., Köhler, P., and Lundborg, G. (2008). Upper limb amputees can be induced to experience a rubber hand as their own. Brain 131, 3443–3452. doi: 10.1093/brain/awn297

Ehrsson, H. H., Wiech, K., Weiskopf, N., Dolan, R. J., and Passingham, R. E. (2007). Threatening a rubber hand that you feel is yours elicits a cortical anxiety response. Proc. Natl. Acad. Sci. U.S.A. 104, 9828–9833. doi: 10.1073/pnas.0610011104

Fang, W., Zhang, R., Zhao, Y., Wang, L., and Zhou, Y. (2019). Attenuation of pain perception induced by the rubber hand illusion. Front. Neurosci. 13:261. doi: 10.3389/fnins.2019.00261

Farmer, A., and Pecorino, P. (2002). Pretrial bargaining with self-serving bias and asymmetric information. J. Econ. Behav. Organ. 48, 163–176. doi: 10.1016/S0167-2681(01)00236-0

Fogg, B. J., and Nass, C. (1997). “How users reciprocate to computers: an experiment that demonstrates behavior change,” in Conference on Human Factors in Computing Systems – Proceedings (New York, NY: Association for Computing Machinery), 331–332. doi: 10.1145/1120212.1120419

Folegatti, A., de Vignemont, F., Pavani, F., Rossetti, Y., and Farnè, A. (2009). Losing one's hand: visual-proprioceptive conflict affects touch perception. PLoS ONE 4:e6920. doi: 10.1371/journal.pone.0006920

Friedman, B. (1995). “‘It's the computer's fault’: reasoning about computers as moral agents,” in Conference Companion on Human Factors in Computing Systems - CHI '95 (New York, NY: Association for Computing Machinery), 226–227. doi: 10.1145/223355.223537

Furnham, A. (1982). Explanations for unemployment in Britain. Eur. J. Soc. Psychol. 12, 335–352. doi: 10.1002/ejsp.2420120402

Gallagher, S. (2000). Philosophical conceptions of the self: implications for cognitive science. Trends Cogn. Sci. 4, 14–21. doi: 10.1016/S1364-6613(99)01417-5

Giszter, S., and Hart, C. (2013). Motor primitives and synergies in the spinal cord and after injury–the current state of play. Ann. N. Y. Acad. Sci. 1279, 114–126. doi: 10.1111/nyas.12065

Goodwin, G. M., McCloskey, D. I., and Matthews, P. B. C. (1972). The contribution of muscle afferents to kinaesthesia shown by vibration induced illusions of movement and by the effects of paralysing joint afferents. Brain 95, 705–748. doi: 10.1093/brain/95.4.705

Graczyk, E. L., Resnik, L., Schiefer, M. A., Schmitt, M. S., and Tyler, D. J. (2018). Home use of a neural-connected sensory prosthesis provides the functional and psychosocial experience of having a hand again. Sci. Rep. 8:9866. doi: 10.1038/s41598-018-26952-x

Gray, J. D., and Silver, R. C. (1990). Opposite sides of the same coin: former spouses' divergent perspectives in coping with their divorce. J. Pers. Soc. Psychol. 59, 1180–1191. doi: 10.1037/0022-3514.59.6.1180

Grynszpan, O., Sahaï, A., Hamidi, N., Pacherie, E., Berberian, B., Roche, L., et al. (2019). The sense of agency in human-human vs human-robot joint action. Conscious. Cogn. 75:102820. doi: 10.1016/j.concog.2019.102820

Haggard, P., Clark, S., and Kalogeras, J. (2002). Voluntary action and conscious awareness. Nat. Neurosci. 5, 382–385. doi: 10.1038/nn827

Hovenkamp-Hermelink, J. H. M., Jeronimus, B. F., van der Veen, D. C., Spinhoven, P., Penninx, B. W. J. H., Schoevers, R. A., et al. (2019). Differential associations of locus of control with anxiety, depression and life-events: a five-wave, nine-year study to test stability and change. J. Affect. Disord. 253, 26–34. doi: 10.1016/j.jad.2019.04.005

Iriki, A., Tanaka, M., and Iwamura, Y. (1996). Coding of modified body schema during tool use by macaque postcentral neurones. Neuroreport 7, 2325–2330. doi: 10.1097/00001756-199610020-00010

Jackson, M. (2002). Familiar and foreign bodies: a phenomenological exploration of the human-technology interface. J. R. Anthropol. Instit. 8, 333–346. doi: 10.1111/1467-9655.00006

Jahoda, A., Pert, C., and Trower, P. (2006). Frequent aggression and attribution of hostile intent in people with mild to moderate intellectual disabilities: an empirical investigation. Am. J. Ment. Retardat. 111, 90–99. doi: 10.1352/0895-8017(2006)111[90:FAAAOH]2.0.CO;2

Jeannerod, M. (2003). The mechanism of self-recognition in humans. Behav. Brain Res. 142, 1–15. doi: 10.1016/S0166-4328(02)00384-4

Kilteni, K., Maselli, A., Kording, K. P., and Slater, M. (2015). Over my fake body: body ownership illusions for studying the multisensory basis of own-body perception. Front. Hum. Neurosci. 9:141. doi: 10.3389/fnhum.2015.00141

Klein, J., Moon, Y., and Picard, R. W. (2002). This computer responds to user frustration: theory, design, and results. Interact. Comput. 14, 119–140. doi: 10.1016/S0953-5438(01)00053-4

Kuiken, T. A., Dumanian, G. A., Lipschutz, R. D., Miller, L. A., and Stubblefield, K. A. (2004). The use of targeted muscle reinnervation for improved myoelectric prosthesis control in a bilateral shoulder disarticulation amputee. Prosthet. Orthot. Int. 28, 245–253. doi: 10.3109/03093640409167756

Kuiken, T. A., Marasco, P. D., Lock, B. A., Harden, R. N., and Dewald, J. P. A. (2007). Redirection of cutaneous sensation from the hand to the chest skin of human amputees with targeted reinnervation. Proc. Natl. Acad. Sci. U.S.A. 104, 20061–20066. doi: 10.1073/pnas.0706525104

Lau, R. R., and Russell, D. (1980). Attributions in the sports pages. J. Pers. Soc. Psychol. 39, 29–38. doi: 10.1037/0022-3514.39.1.29

Lee, Y., Lee, S., Carello, C., and Turvey, M. T. (2012). An archer's perceived form scales the “hitableness” of archery targets. J. Exp. Psychol. Hum. Percept. Perform. 38, 1125–1131. doi: 10.1037/a0029036

Legaspi, R., and Toyoizumi, T. (2019). A Bayesian psychophysics model of sense of agency. Nat. Commun. 10:4250. doi: 10.1038/s41467-019-12170-0

Marasco, P. D., Hebert, J. S., Sensinger, J. W., Shell, C. E., Schofield, J. S., Thumser, Z. C., et al. (2018). Illusory movement perception improves motor control for prosthetic hands. Sci. Transl. Med. 10:eaao6990. doi: 10.1126/scitranslmed.aao6990

Marasco, P. D., Kim, K., Colgate, J. E., Peshkin, M. A., and Kuiken, T. A. (2011). Robotic touch shifts perception of embodiment to a prosthesis in targeted reinnervation amputees. Brain 134, 747–758. doi: 10.1093/brain/awq361

Martini, M. (2016). Real, rubber or virtual: the vision of “one's own” body as a means for pain modulation. A narrative review. Conscious. Cogn. 43, 143–151. doi: 10.1016/j.concog.2016.06.005

Maselli, A., and Slater, M. (2013). The building blocks of the full body ownership illusion. Front. Hum. Neurosci. 7:83. doi: 10.3389/fnhum.2013.00083

Matamala-Gomez, M., Donegan, T., Bottiroli, S., Sandrini, G., Sanchez-Vives, M. V., and Tassorelli, C. (2019). Immersive virtual reality and virtual embodiment for pain relief. Front. Hum. Neurosci. 13:279. doi: 10.3389/fnhum.2019.00279

McAllister, H. A. (1996). Self-serving bias in the classroom: who shows it? Who knows it? J. Educ. Psychol. 88, 123–131. doi: 10.1037/0022-0663.88.1.123

Mirnig, N., Stollnberger, G., Miksch, M., Stadler, S., Giuliani, M., and Tscheligi, M. (2017). To err is robot: how humans assess and act toward an erroneous social robot. Front. Robot. AI 4:21. doi: 10.3389/frobt.2017.00021

Moeller, B., Zoppke, H., and Frings, C. (2016). What a car does to your perception: distance evaluations differ from within and outside of a car. Psychon. Bull. Rev. 23, 781–788. doi: 10.3758/s13423-015-0954-9

Moon, Y. (2003). Don't blame the computer: when self-disclosure moderates the self-serving bias. J. Consum. Psychol. 13, 125–137. doi: 10.1207/S15327663JCP13-1&2_11

Moseley, G. L., Olthof, N., Venema, A., Don, S., Wijers, M., Gallace, A., et al. (2008). Psychologically induced cooling of a specific body part caused by the illusory ownership of an artificial counterpart. Proc. Natl. Acad. Sci. U.S.A. 105, 13169–13173. doi: 10.1073/pnas.0803768105

Niedernhuber, M., Barone, D. G., and Lenggenhager, B. (2018). Prostheses as extensions of the body: progress and challenges. Neurosci. Biobehav. Rev. 92, 1–6. doi: 10.1016/j.neubiorev.2018.04.020

Noel, J. P., Pfeiffer, C., Blanke, O., and Serino, A. (2015). Peripersonal space as the space of the bodily self. Cognition 144, 49–57. doi: 10.1016/j.cognition.2015.07.012

Obhi, S. S., and Hall, P. (2011). Sense of agency in joint action: influence of human and computer co-actors. Exp. Brain Res. 211, 663–670. doi: 10.1007/s00221-011-2662-7

Østlie, K., Lesjø, I. M., Franklin, R. J., Garfelt, B., Skjeldal, O. H., and Magnus, P. (2012). Prosthesis rejection in acquired major upper-limb amputees: a population-based survey. Disabil. Rehabil. Assist. Technol. 7, 294–303. doi: 10.3109/17483107.2011.635405

Riess, M., and Taylor, J. (1984). Ego-involvement and attributions for success and failure in a field setting. Person. Soc. Psychol. Bull. 10, 536–543. doi: 10.1177/0146167284104006

Rotter, J. B. (1966). Generalized expectancies for internal versus external control of reinforcement. Psychol. Monogr. 80, 1–28. doi: 10.1037/h0092976

Sahai, A., Pacherie, E., Grynszpan, O., and Berberian, B. (2017). “Co-representation of human-generated actions vs. machine-generated actions: impact on our sense of we-agency?,” in RO-MAN 2017 - 26th IEEE International Symposium on Robot and Human Interactive Communication (Lisbon: Institute of Electrical and Electronics Engineers Inc), 341–345. doi: 10.1109/ROMAN.2017.8172324

Schettler, A., Raja, V., and Anderson, M. (2019). The embodiment of objects: review, analysis, and future directions. Front. Neurosci. 13:1332. doi: 10.3389/fnins.2019.01332

Schofield, J. S., Evans, K. R., Carey, J. P., and Hebert, J. S. (2014). Applications of sensory feedback in motorized upper extremity prosthesis: a review. Expert Rev. Med. Dev. 11, 499–511. doi: 10.1586/17434440.2014.929496

Schofield, J. S., Shell, C. E., Beckler, D. T., Thumser, Z. C., and Marasco, P. D. (2020). Long-term home-use of sensory-motor-integrated bidirectional bionic prosthetic arms promotes functional, perceptual, and cognitive changes. Front. Neurosci. 14:120. doi: 10.3389/fnins.2020.00120

Schofield, J. S., Shell, C. E., Thumser, Z. C., Beckler, D. T., Nataraj, R., and Marasco, P. D. (2019). Characterization of the sense of agency over the actions of neural-machine interface-operated prostheses. J. Visual. Exp. 2019:e58702. doi: 10.3791/58702

Sedikides, C., Campbell, W. K., Reeder, G. D., and Elliot, A. J. (1998). The self-serving bias in relational context. J. Pers. Soc. Psychol. 74, 378–386. doi: 10.1037/0022-3514.74.2.378

Sensinger, J. W., and Dosen, S. (2020). A review of sensory feedback in upper-limb prostheses from the perspective of human motor control. Front. Neurosci. 14:345. doi: 10.3389/fnins.2020.00345

Serenko, A. (2007). Are interface agents scapegoats? Attributions of responsibility in human–agent interaction. Interact. Comput. 19, 293–303. doi: 10.1016/j.intcom.2006.07.005

Serino, A., Bassolino, M., Farnè, A., and Làdavas, E. (2007). Extended multisensory space in blind cane users. Psychol. Sci. 18, 642–648. doi: 10.1111/j.1467-9280.2007.01952.x

Sposito, A., Bolognini, N., Vallar, G., and Maravita, A. (2012). Extension of perceived arm length following tool-use: clues to plasticity of body metrics. Neuropsychologia 50, 2187–2194. doi: 10.1016/j.neuropsychologia.2012.05.022

Strickland, B. R., and Haley, W. E. (1980). Sex differences on the Rotter I-E scale. J. Pers. Soc. Psychol. 39, 930–939. doi: 10.1037/0022-3514.39.5.930

Tsakiris, M. (2010). My body in the brain: a neurocognitive model of body-ownership. Neuropsychologia 48, 703–712. doi: 10.1016/j.neuropsychologia.2009.09.034

Tsakiris, M., and Haggard, P. (2005). The rubber hand illusion revisited: visuotactile integration and self-attribution. J. Exp. Psychol. Hum. Percept. Perform. 31, 80–91. doi: 10.1037/0096-1523.31.1.80

Valle, G., Mazzoni, A., Iberite, F., D'Anna, E., Strauss, I., Granata, G., et al. (2018). Biomimetic intraneural sensory feedback enhances sensation naturalness, tactile sensitivity, and manual dexterity in a bidirectional prosthesis. Neuron 100, 37–45.e7. doi: 10.1016/j.neuron.2018.08.033

Van Den Bos, E., and Jeannerod, M. (2002). Sense of body and sense of action both contribute to self-recognition. Cognition 85, 177–187. doi: 10.1016/S0010-0277(02)00100-2

Vilaza, G., Campos, A., Haselager, W., and Vuurpijl, L. (2014). Using games to investigate sense of agency and attribution of responsibility. Proc. SBGames 2014, 393–399. Available online at: http://www.sbgames.org/sbgames2014/files/papers/culture/full/Cult_Full_Using_games_to_investigate.pdf

Wen, W. (2019). Does delay in feedback diminish sense of agency? A review. Conscious. Cogn. 73:102759. doi: 10.1016/j.concog.2019.05.007

Wen, W., Kuroki, Y., and Asama, H. (2019). The sense of agency in driving automation. Front. Psychol. 10:2691. doi: 10.3389/fpsyg.2019.02691

Wolpert, D. M., Ghahramani, Z., and Flanagan, J. R. (2001). Perspectives and problems in motor learning. Trends Cogn. Sci. 5, 487–494. doi: 10.1016/S1364-6613(00)01773-3

Keywords: embodiment, human–machine interaction, autonomous machine, bidirectional interface, perception, cooperation

Citation: Schofield JS, Battraw MA, Parker ASR, Pilarski PM, Sensinger JW and Marasco PD (2021) Embodied Cooperation to Promote Forgiving Interactions With Autonomous Machines. Front. Neurorobot. 15:661603. doi: 10.3389/fnbot.2021.661603

Received: 31 January 2021; Accepted: 15 March 2021;

Published: 09 April 2021.

Edited by:

Claudio Castellini, Helmholtz Association of German Research Centers (HZ), Germany

Reviewed by:

Heather Roff, Johns Hopkins University, United States

Copyright © 2021 Schofield, Battraw, Parker, Pilarski, Sensinger and Marasco. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Paul D. Marasco, marascp2@ccf.org

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
