94% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

ORIGINAL RESEARCH article

Front. Neurorobot. , 29 June 2020

Volume 14 - 2020 | https://doi.org/10.3389/fnbot.2020.00030

This article is part of the Research Topic Advanced Planning, Control, and Signal Processing Methods and Applications in Robotic Systems View all 13 articles

With requirements to improve life quality, smart homes, and healthcare have gradually become a future lifestyle. In particular, service robots with human behavioral sensing for private or personal use in the home have attracted a lot of research attention thanks to their advantages in relieving high labor costs and the fatigue of human assistance. In this paper, a novel force-sensing- and robotic learning algorithm-based teaching interface for robot massaging has been proposed. For the teaching purposes, a human operator physically holds the end-effector of the robot to perform the demonstration. At this stage, the end position data are outputted and sent to be segmented via the Finite Difference (FD) method. A Dynamic Movement Primitive (DMP) is utilized to model and generalize the human-like movements. In order to learn from multiple demonstrations, Dynamic Time Warping (DTW) is used for the preprocessing of the data recorded on the robot platform, and a Gaussian Mixture Model (GMM) is employed for the evaluation of DMP to generate multiple patterns after the completion of the teaching process. After that, a Gaussian Mixture Regression (GMR) algorithm is applied to generate a synthesized trajectory to minimize position errors. Then a hybrid position/force controller is integrated to track the desired trajectory in the task space while considering the safety of human-robot interaction. The validation of our proposed method has been performed and proved by conducting massage tasks on a KUKA LBR iiwa robot platform.

With the continuous development of technology, many traditional industries have been gradually replaced by high-tech products. Among them, the development of robot technology plays an important role. Robot technology integrates multiple disciplines, such as machinery, information, materials, intelligent control, and biomedicine, and intelligent service robots are intelligent equipment that provide humans with necessary services in an unstructured environment. The tasks that a robot can accomplish are divided into two categories. One is non-contact operation: the robot carries and operates the target in a free space, and the simple position control can be used for another type of contact operation. For tasks such as assembly, grinding, polishing, debarring, etc., simple position control is no longer sufficient. In such cases where the contact force is required, the slight positional deviation of the robot end may cause the contact force to damage the robot and the target. Therefore, the contact force control function must be added to the contact robot control system. The traditional robot control system for free space motion cannot meet the requirements of the extended application of robots. The perception and control of the robots of environmental forces are problems that current robot technology needs to solve. The control strategy combined with the end pose and the contact force for a robot is defined as the robotic force/position control. The key technologies of the current robotic force/position control mainly include the following aspects:

1. the robotic impedance control under the condition that the environmental parameter geometry and dynamic model parameters are unknown or changed;

2. the robotic force/position hybrid control under system interference (model error, measurement noise, and external input interference);

3. the robotic collision contact control;

4. the engineering implementation of robotic force/position control.

Service robots mimic humans in shape and behavior design; for instance, they have hands, feet, heads, and a torso. This helps them to adapt to human life and a work environment. Service robots replace human beings to complete various tasks and expand human capabilities in many aspects. Recently, research into robot massaging in smart homes (shown in Figure 1) has attracted widespread attention. Massaging is an important part of people's realization of a healthy lifestyle that can relieve stress and relax the body. By relaxing the muscles and joints, massages improve the flexibility of the body's joints and muscles, thereby reducing muscle pain caused by poor posture. However, for the manual massage tasks, it takes lots of energy and labor cost. Thus, in recent years, robot massaging has become a research hotspot in the field of robots. In 1996, the Mechatronics Research Center of Japan's Sanyo Electric Co., Ltd. designed a mechanical therapy unit and verified the feasibility of developing an intelligent massage robot (Kume et al., 1996). In 2000s, Toyohashi University of Technology and Japan's Gifu Institute of Technology carried out research on humanoid multi-finger massage robots with four fingers and 13 joints (Zhang and Zhang, 2017). Since 2004, Chinese scholars have carried out research on massage robots based on the theory of traditional Chinese massage and systematically discussed the robotic synthesis of various traditional Chinese medical massage techniques (Ma et al., 2005). However, existing massage robots are complicated to operate with limited functions, a large size with bulky equipment, and they are also expensive. Moreover, most of the existing robot massage technology depends on the function of the robot product itself; in other words, these robots cannot be used for operations other than massage, and their tandem structure stiffness is large, the motion inertia is unstable, and the working space and flexibility are limited (Zhang and Zhang, 2017; Field, 2018). To overcome the abovementioned issues, this paper develops a teaching-by-demonstration-based interface using a hybrid position/force control strategy with adjustable stiffness, which can be implemented onto a general robot manipulator with high accuracy, taught by a human operator.

Goradia et al. (2002) studied robotic forces/positions under different surfaces (i.e., unknown to the environment) to better facilitate the polishing or grinding processes. Scherillo et al. (2003) studied the force/position control of multi-finger grasping, Nguyen et al. (2000) used the sliding mode variable structure to control the force and the position of the robot, Ha et al. (2000) and used the impedance control method to control the force/position of the robot. In Fanaei and Farrokhi (2006), authors used a robust adaptive method to control the force and the position of the robot. Research on the force/position intelligent control of the robot is still mainly theoretical, the technical realization is at the stage of exploration, and there is still a certain distance to go in terms of promotion and practicality.

Movement is necessary to directly produce skill effects. The motion model generates a continuous robot space state representation skill offline or online with clear meanings related to the physical system, such as position, attitude, and contact force. Generally, the motion is assumed to be nonlinear. The motion model mainly includes two categories: trajectory encoding modeling and dynamic system modeling. Trajectory encoding is a compact mathematical model that represents the shape, constraints, and other information of the trajectory. When the human operators teach and store one or more specific fixed trajectories in the robot, then the robot can accurately reproduce the trajectory when the motion skills are executed. Calinon and Billard (2007) used trajectory encoding with a multivariate Gaussian mixture model (GMM), which expresses the trajectory in a fixed coordinate system. However, considering reality, the trajectory often needs to be expressed in different reference coordinate systems, so the transformation relationship of the reference coordinate system may also change. Thus, in Silvério et al. (2015), they proposed a task-parameterized Gaussian Mixture Model (TP-GMM) wherein the origin and rotation transformation matrix of the coordinate system are used as the task parameters in the model, which allows the observation and reproduction of the motion trajectory in different reference coordinate systems. However, the trajectory encoding modeling method only explicitly generates a fixed trajectory. When the robot is disturbed and deviates from the trajectory, the trajectory cannot be adjusted in real time, and a new trajectory needs to be regenerated.

The dynamic system is automatically evolved according to certain rules. Compared with the trajectory encoding, there are two main differences: the dynamic system does not explicitly depend on the time variable–only the relationship between the spatial state and its time derivative; the dynamic system can be online. The trajectory generated by such a dynamic system obtains the online adaptive ability for disturbance. DMP was proposed by Schaal (2003) in 2002 as a dynamic system model that can generate trajectories of arbitrary shapes where its basic idea is to drive a transform system with a canonical system. Nanayakkara et al. (2004) proposed a Mixture of motor primitives (MoMP) using a Gaiting network to calculate the weight of each DMP in the current state. Ideally, the Gaiting network should only choose one DMP implementation. However, the reality is more complicated; in order to achieve a good generalized performance, MoMP weights the output of all DMPs based on the weight of the output of the gated network to obtain the final output. In Matsubara et al. (2011), the coordination matrix is used to represent the coupling relationship between multiple DMPs corresponding to multiple degrees of freedom, and the iterative dimensionality reduction method is used to reduce the unnecessary degrees of freedom in the cooperation matrix, which is beneficial to enhancing the learning efficiently. DMP mainly depends on the target state and weight coefficient. The former is determined by the environment or setting. The latter can learn from the teaching trajectory based on the linear weighted regression (LWR) method. However, the LWR can only learn the DMP model parameters from a single demonstration. This paper mainly presents two aspects of research: the theory of hybrid force/position control with direct human–robot interaction and the experimental studies on a real robotic platform. The motion planning is performed in 3D task space, where the GMM is employed to evaluate the DMP to learn from multiple demonstrations, and GMR is used for the reproduction of the generalized trajectory with a smaller error. For the force input aspects, a hybrid force/position controller is introduced to ensure the safety of direct human–robot interaction. An overview of the presented control architecture is shown in Figure 2. The contributions of this paper are summarized.

1. This paper employs a teaching interface to perform the robot massaging under the demonstrations of the human operator, and the experimental studies show that, after the teaching process, the robot can generate an even smoother trajectory that strictly adheres to what is requested to be followed.

2. The application of the hybrid force/position control scheme takes care of the safety issues and achieves massage services performing without knowing subject profiles.

3. The generalization functions of our proposed method supplies a more flexible and convenient option with only once teaching for multiple tasks to the carers and patients, which promotes the user experiences.

FD approximates the derivative by the limitable variance and finds an estimated solution for the differential equation (Chelikowsky et al., 1994). The differential form, especially, is to substitute the differential with a finite difference and the derivative with a finite differential quotient so as to roughly change the fundamental formula and limit state (usually the differential equation) into the differential equation (Algebraic equation) (Gear, 1988). The solution of the differential equations problem is updated so that the algebraic equations problem is resolved.

Segmentation of skills is usually a complicated and systematic process that requires more time and efforts on its algorithms designing. For such a complex method, there are often difficulties in setting the priori parameters. Considering the situation that the massaging motion is planed on a horizontal plane, and the force is applied into vertical direction of that plane, during the massaging tasks, it is easy to track for the system at which point the position being massaged has been changed. This is because its changing position represents the value varying in z coordinates. Consequently, a simple segmentation method (FD) is employed in this paper. In view of yi=f(zi) relies upon the zi variables, where zi denotes the coordinate values in the vertical direction at the ith time series, and yi represents the corresponding spatial sequence of the whole dataset. If zi is changed into zi+1, the corresponding changes in the whole dataset is df(zi) = f(zi+1) − f(zi) and d is the differentiation operator. Difference has a differential-like arithmetic value. This displays the following equation (Li et al., 2018):

where one significant massaging aspect is that the endpoint would be lifted once every single massaging task is done. The “z” alignment values of the experimental data are thus viewed as the segmentation reference. We have received, pursuing the FD,

Where ξ is the gaping variable, abd θ is a constant. We could modify the segmented characters, such as, θ, here θ=0.5, by giving different values of θ. Sign is the Signum function, and, for each component of ξ, the formulation could be described as following:

Up to now, the segmented motion trajectories sign(ξ) for massaging have been outputted to different local text files for the use of GMM and DMP generalization, which correspond to the “z” coordinate information in the robot space, suddenly and sharply rising, which implies every time the massaging for one position was done. The flowchart of the segmentation is shown in Figure 3.

The DTW employed in this paper aligned the outputted trajectories curves in the form of W = {w1, w2, ⋯ , wp, ⋯ , w(p)} in which w(p) = (ip, jp) denotes the match variable in Petitjean et al. (2014). In this situation, the skewed characteristic W is required to reduce the discrepancy between the pending trajectory and the reference trajectory. The formula is therefore described as

where d[w(p)] = d[Ti(p), Rj(p)] represents the measured distance from the i(p)th featured point of the pending trajectory to the j(p)th featured point of the reference trajectory, which is normally described by a square measure specified as follows:

We need to construct a matrix grid of m × n in order to coordinate the two specimens; the matrix element (I, j) represents the range d(Ti, Rj) between the two points Ti and Rj, and each matrix element (i, j) represents the alignment of points Rj and Rj. DTW aims to find a direction which reflects the matched points for both samples to be determined via several grid points. First we illustrate, as DAcc(i, j), the total minimum range between the two trajectories, then we consider (Senin, 2008)

where (qi, qj) belongs to the set of points between (1, 1) and (i, j) within a certain direction. From the above components it can be seen that the average cumulative distance of the (i, j) element is linked not only to the regional distance d(Ti, Rj) of the own values Ti, Rj but also to the total cumulative distance earlier than this stage in the coordinate system.

We thus assume that (i, j − 1), (i − 1, j), and (i − 1, j − 1) for any point c(p) = (i, j) within the coordinate system may enter the preceding point of c(p), so the choice of the preceding variable also needs to agree with the above three factors. We may measure the corresponding DTW range between the test pattern vector and the comparison model vector, as shown below, according to the formula.

DMP is an innovative model (Schaal, 2006) dynamic system learned from biological research that learns from motor primitives. The definition of dynamic primitive can be separated into two groups. One is to use different formulas based on dynamic system to represent the state, and the other is to produce the track by interpolation through the interpolation points (Li et al., 2017). DMP is made up of two parts: the transformed model r and the canonical framework h. The equation is shown as follows:

where t and s are the transformed process states and the canonical function, and the canonical system output variable h is referred to as w.

The canonical model is defined by an exponential differential equation, which is given:

where s is a step function varying from 0 to 1, τ > 0, and αf is a temporal scaling variable and a balanced component.

The transformed model consists of two nonlinear term sections and a Cartesian space spring damping mechanism, the formulas are defined as (Schaal, 2006):

where p0 is the starting position, p ∈ R is the Cartesian position, v ∈ R is the end-effector velocity of the robot, g is the goal, k is the spring variable, and c is the damping factor. X is a conversion method of dynamic nonlinear structures capable of transforming the outcomes of the canonical model found in the following formula:

Where the GMM number is N, wi ∈ R is the weights, and l is the uniform radial variable value that can be supplied as follows:

where ci > 0 are the centers, and hi > 0 are the widths of the functions of the Gaussian foundation. N is the number of functions in Gaussian.

In addition, we can use the weight variable to produce motions by specifying the starting point of the canonical process (s=0) X0 and aim g, which is the canonical system integration. The theory of DMP is to measure the nonlinear transition feature X by observing the presenter's movements. Nonetheless, there are drawbacks in developing a multi-demonstration conversion model, which is why the GMM is used to solve the above problems.

GMM's parameter estimation is the method by which the design parameters are obtained under certain conditions. In fact, it is the process of knowing the parameters of the model, namely, the method of resolving to bring the GMM sequence of observation (Tong and Huang, 2008). The most commonly employed parameter estimation is the total probability approximation process. The basic idea is to consider the system parameter λ when the peak likelihood of GMM is obtained by providing the observation sequence X obtained by DMP from the previous chapter, then λ is the model. The optimum function, λ, defines to the maximum extent practicable the distribution of the observed string.

The end goal of the total probability calculation after providing the training information is to seek a template variable that maximizes the GMM's likelihood. For a training vector series of X = {x1, x2…xD} of duration D, it is possible to describe the likelihood of GMM as

The parameter λ is then continuously updated until a set of parameters λ is found to maximize P(X ∣ λ):

For the convenience of analysis, P(X ∣ λ) usually takes its log likelihood, giving us

The Expectation Maximization (EM) algorithm can be used for parameter estimation provided that there is a relatively complex nonlinear interaction between the probability function and the template parameters, and the peak value cannot be determined according to the standard probability estimation process. The EM algorithm is in essence an iterative method for calculating the probability model's maximum likelihood. The process of each iteration is to estimate the unknown data distribution based on the parameters that have been acquired and then calculate the new model parameters under the maximum likelihood condition. Let the initial model parameter be λ, which satisfies

Next, we calculate the new model variable λ′ according to the equation above and then use the λ′ parameter as the original parameter for the next iteration. It iteratively iterates until the state of convergence is met. Here we assume a Q function that represents the E phase of the EM process shown below:

where i is an elusive and unpredictable secret country. Q(λ, λ′) corresponds to all observable data's log likelihood assumptions. Calculating the maximum value of Q(λ, λ′) increasing give the maximum log likelihood of the observed data, which is the M stage of the EM process. It is possible to obtain replacement formulas (15) and (16) for equations (6):

The approximate values of each variable are then computed according to E and M. Phase E calculates the posterior likelihood of the tth test Xt of the training data according to the Bayesian equation in the ith state; phase M first uses the Q method to extract the three parameters independently and then evaluate the corresponding figures. To re-evaluate the variables, we iteratively perform measures E and M. The loop is halted when the peak value of the likelihood function is reached.

The first step when using the EM method to calculate the GMM parameters is to determine the number of Gaussian components in the GMM, such as system M order and model initial parameter λ (Howlett et al., 2009). Based on the actual situation, such as the sum of training data, it is appropriate to choose the order M of the template. The most widely employed approach for the model's initial variable λ is the K-means algorithm. Currently, the K-means algorithm is the simplest and most effective classification algorithm, commonly used in different models (Nazeer and Sebastian, 2009). The GMM used in this paper chooses the basic parameters using the K-means method. The K-means algorithm partitions the information into K clusters according to the in-cluster number of squares in the category theory. After using the K-means method to cluster the feature vectors, the mean and variance of each group are determined and the percentage of each class 'feature vectors is calculated as the blending weight (Tatiraju and Mehta, 2008). The average, variance, and combined weight can be then collected as the predicted values. Finally, by applying the GMR, the same theory as our previous work (Li et al., 2018), the reconstructed motion trajectories can be then obtained.

The robot kinematics model of n DOF robot is presented in the subsequent form

where x(t) ∈ Rn represents the position and direction vector, and Θ ∈ Rn represents the joint angle vector. The inverse kinematics are

The derivative of (23) can thus be rewritten:

where J(Θ) is the Jacobian matrix of the robot. Moreover, differentiating (24), we can get

The relationship between wrench and joint force can be described:

In addition, the robot manipulator dynamics in joint space is

where and are the vectors of velocity and accelerations, respectively. Mq(Θ) ∈ Rn is the inertia matrix; ) is the Coriolis and centripetal torque; GΘ(Θ) is the gravity; T is the robot torque; Tfric is the friction torque and Text is the external torque.

• Property 1: Matrix MΘ(Θ) is bounded above and below and positive definite symmetric.

• Property 2: Matrix is skew symmetric. matrix.

A force-position model of the relationship between the external force and position in joint space is

where and are the desired trajectory generated from GMR algorithm and virtual desired trajectory, respectively. The Mg, Cg, and Kg are gain matrix of the mass, damping and stiffness matrices designed by the controller, respectively.

• Assumption 1: c1; c2; and c3 are positive constants, and both qg and qr are differentiable and bounded: .

• Remark 1: In the specific cases, force-position models such as the damping-stiffness model and stiffness model are applied

In the case that the desired manipulator's motion is free and no external collisions are generated, we can get qr = qg; Text = 0. In the opposite, while there is an external collision, the robot will generate and follow the new trajectory, which is the adaptation to the force-position model and the external torque specified in Bernhardt et al. (2005) illustrates the relationship.

Regarding to the safety consideration, a moveable limit has been added to the KUKA iiwa platform in both of the Cartesian spaces to make sure the manipulator can only reach the areas in front of it with a radian of 0.6 m; in this case, the robot manipulator cannot fully stretch. Meanwhile, the stiffness of its endpoint in all directions is set upon a reasonable level, hence participants can easily afford the force from the robot. Furthermore, by adjusting the threshold in SafetyConfiguration.sconf of the KUKA controller, the Collision Detection Framework can be activated. It is relevant to decide at what levels the external force is to lock down the robot to protect the participants. Here, we set up this parameter at a low level, which avoids all the touching with high force.

A KUKA LBR iiwa robot, which has 7 DOFs of flexible joints, is implemented in our experimental studies to validate the proposed method. It is controlled utilizing the KUKA Smartpad. A massage glove was attached to the end-effector of the robot. As can be seen from Figure 4, there is a master computer lying by the robotic controller for offline data training purposes, and it is linked to the robot controller by an Ethernet cable. The labels A1–A7 in Figure 4 show the joint actuator's position with force sensor each. In addition, there is a treat table placed in front of the robot base, which is in the workspace of the robot manipulator.

The control frequency is set as 10Hz for the KUKA LBR iiwa manipulator, and the running time is limited to 30 s for the massage path tracking, and thus 300 time samplings are executed for the control loop. The endpoint stiffness in X, Y, and Z directions, Roll, Pitch, and Yaw orientations are set as 1,000, 1,000, 100, 300, 300, and 300 N/m, respectively. The control gains Mg, Cg and Kg are gain matrix of the mass, damping and stiffness and respectively set as diag[1.0], diag[1.0], and 0.5. The reason for setting those values are that we planned the motion path in horizontal plane using GMM-evoluted DMP algorithms with implementing the force in Z directions, which resulted in the impedance effectiveness in the vertical direction of the patient's back.

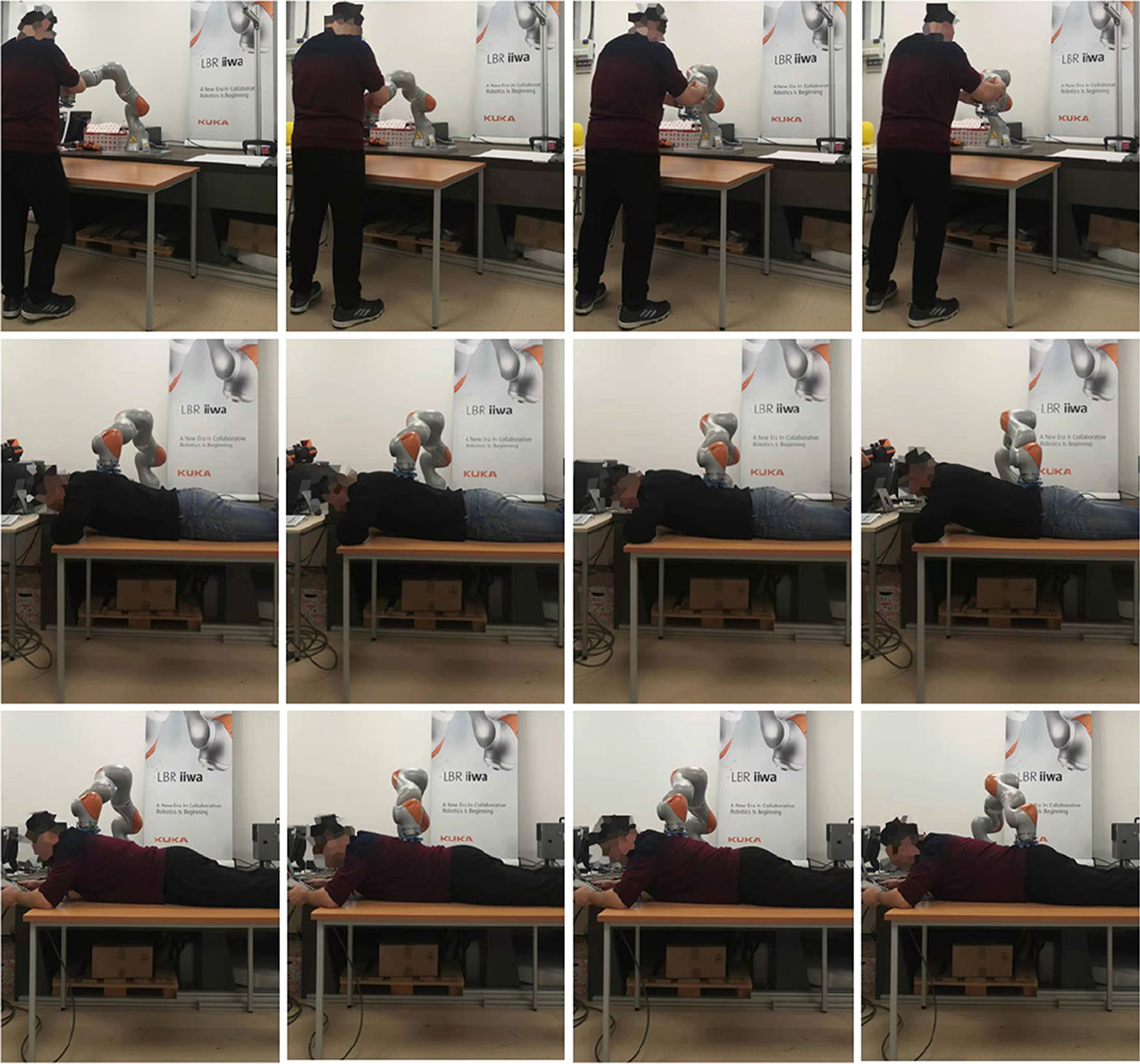

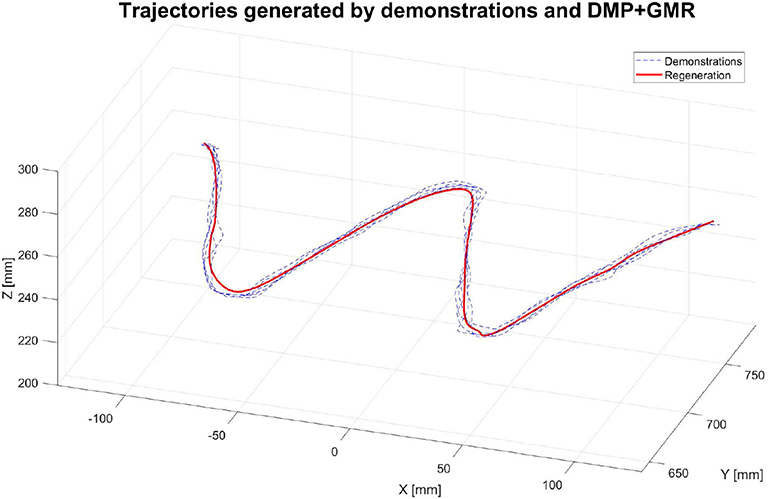

First of all, we conducted an experiment to test the accuracy of our proposed robotic teaching interface where only position control was considered. As in some particular situations during the massage, the service robot may require to manipulate as accurately as our human carer; there are specific areas of the patients required to be massaged. This is the reason behind the accuracy of the teaching interface matters. In order to test the accuracy, firstly the position of the KUKA iiwa robot end-effector in the Cartesian space was chosen as the performance index. The human operator physically guided the robot to draw a sine curve for five times in the treat table by holding its end-effector. After training process, the robot could regenerate a new smooth trajectory. For the second experiment, one human operator physically taught the robot to perform the massage movements on the first participant by holding the end-effector of the robot. After the robot was taught, a participant as well as the operator himself were massaged by the robot; the participants slowly and smoothly lifted their back up and down. Figure 5 shows the teaching-based massage process and we can observe that the KUKA LBR iiwa robot successfully accomplished the desired massage task with only one time teaching. Consequently, our proposed massage system could automatically fit different body shapes. Meanwhile, the instantaneous external force and torque of the robot endpoint in Cartesian space were outputted to the master PC for data analysis. The real position in both the Joint space and Cartesian space were plotted by MATLAB.

Figure 5. Experiment snapshots of the Kuka LBR iiwa manipulator for massage tasks by the proposed hybrid position/force control method.

In addition, the third experiment has been conducted to validate the spatial generalization functions of our proposed robotic teaching interface. To do this, three participants were sought to be undertaken the massage services of their shoulder by seating under the robot manipulator. The operator taught the robot to do the massage task on all the three participants with only once teaching. All the parameters kept the same as those from the second experiment. The massage services were reproduced by the robot for all the participants one by one with different orders.

The first group of experiments aimed to verify the learning performance of our proposed teaching interface when the demonstrations are defective. To verify the learning performance of the modified DMP better, we designed a drawing task for the robot, and the experiment setup is shown in Figure 5. In this experiment, the robot is required to draw an image of sinusoid on the paper after the human operator demonstrates the task five times. The parameters of the DMP model are set as τ = 1, k = 25, c = 10, and αf = 8. As is shown in Figure 6, the demonstrations are defective and the curves are irregular. One of the reasons is that the demonstrator is drawing on the paper indirectly by holding the wrist of the robot, which affects the exertion of drawing skill. The demonstrations are modeled in the task space. As is shown in Figure 6, comparing the performance index of the demonstrations and the generated trajectory, a smooth curve is accurately retrieved from multiple demonstrations using the modified DMP without any unexpected drawing. The robot performs the drawing task after learning, and the curve that the robot draws is smoother than the demonstrations.

Figure 6. The trajectories of the robot endpoint in Cartesian space generated by demonstrations and the proposed teaching interface.

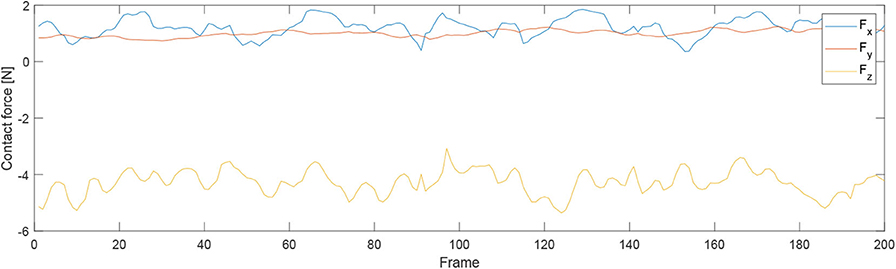

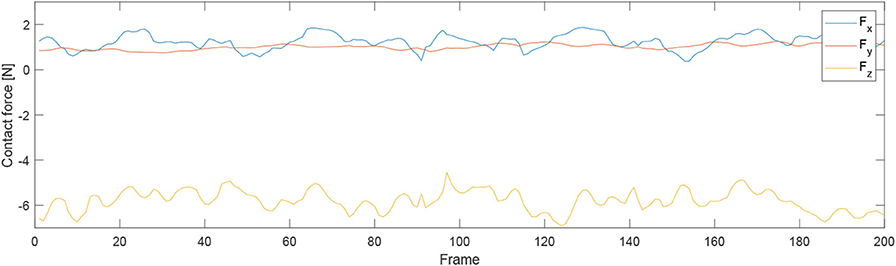

For the second experiment, two participants are massaged by the robot by only teaching once. During the massaging, the A7 joint of the robot is set as fixed value because it is only related to the end-effector's orientation. Figures 7, 8 illustrate the robot's angular data of all the seven joints when the robot was reproducing the massage on the first and second participant, respectively. Because the massage task was first along the direction of the back of the participants and then pounded the back; from the figures, we can thus see that the first joint of the robot A1 was firstly fluctuating and then kept stable; the A2, A4, and A6 joints were firstly kept stable and then fluctuated. This is because the robot via A1 joint moves left and right; via A2, A4, and A6, joints move up and down. Comparing Figures 7, 8, we can notice that the A2 and A4 joints were increasing, and the A6 joint was decreasing during the whole massage process. This is caused by the fact that the second participant has a thicker body shape, and when the robot is in a lift-up configuration, its 6th joint will be more folded. Figures 9, 10 show the contact force variables of the end-effector of the robot in X, Y, and Z directions during the massage for the first and second participants. Here we define the moving direction is the positive direction of the robot endpoint. We can notice that the contact forces in X and Y directions are the positive values while those in Z direction are negative values with bigger figures. This is caused by the fact that, during the massage paths playback process, the endpoint of the robot in Z direction met resistance comparing to its original teaching configuration. In addition, the contact force vertical to the massage paths (Z direction) also differed with two participants. Contact force variables when massaging the first participant are in the interval [−3.5 −5] N, while the variables when the robot was massaging the second participant are in [−5 −6.5] N. This is owing to the two participants differing in body thickness. The third test has validated the generalization ability of our proposed massaging system. The training results are shown in Figure 11. The motions of the robot are regenerated from an one time teaching based demonstration, which synthesize the features of the demonstration and enable the robot to perform the massage task successfully as shown in Figure 11. The target order of the massage service is then modulated to be changed. A conclusion can be drawn from the video abstract; the profile of the reproduction is obtained.

Figure 9. Contact force variables of the end-effector of the robot in X Y Z directions during the massage for the first participant.

Figure 10. Contact force variables of the end-effector of the robot in X Y Z directions during the massage for the second participant.

Through the above conducted three experiments, we can note several things.

1. Our proposed hybrid position/force mode teaching interface was able to generate an accurate path after being taught, which reduces the errors in 3D space.

2. Our proposed hybrid position/force mode teaching interface was able to automatically and adaptively fit all body shapes with smooth force implementing.

3. The spatial generalization ability was validated, where the whole massage tasks can be segmented into several unit movement primitives, which can be regrouped into different orders with only one-time teaching, and it promoted the working flexibility.

In this paper, an enhanced force-sensing and robotic-learning algorithm-based robotic teaching interface has been developed to perform the massaging tasks. In the motion generation part, the discrete DMP is selected as the basic motion model, which can generalize the motions. To improve the learning performance of the DMP model, the GMM, and GMR are employed for the estimation of the unknown function of the motion model. With this modification, the DMP model is able to retrieve a better motion from multiple demonstrations of a specific task. For the force input aspects, a hybrid force/position controller is introduced to ensure the safety of direct human-robot interaction. Several experiments have been performed on the KUKA LBR iiwa robot to test the performance of our proposed methods, which has proven that our proposed method can be used to establish a novel robot learning framework for massaging and facilitate the robot learning at a higher level. Our future work will focus on combining with visual monitoring technology, where the acupuncture point and bones of the patient's back will be clearly recognized and tracked, which results in better demonstrations for the robot to learn from.

All datasets presented in this study are included in the article/Supplementary Material.

The written informed consent was obtained from the individuals for the publication of any potentially identifiable images or data included in this article.

CL, AF, JS, and SL: conceptualization. CL and AF: methodology and writing–review and editing. CL: validation and formal analysis. AF and JS: resources. CL and SL: writing–original draft preparation. JS and SL: supervision and funding acquisition. AF: project administration. All authors contributed to the article and approved the submitted version.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnbot.2020.00030/full#supplementary-material

Bernhardt, M., Frey, M., Colombo, G., and Riener, R. (2005). “Hybrid force-position control yields cooperative behaviour of the rehabilitation robot lokomat,” in 9th International Conference on Rehabilitation Robotics, 2005 (Chicago, IL), 536–539. doi: 10.1109/ICORR.2005.1501159

Calinon, S., and Billard, A. (2007). “Incremental learning of gestures by imitation in a humanoid robot,” in Proceedings of the ACM/IEEE International Conference on Human-Robot Interaction (Washington, DC: ACM), 255-262. doi: 10.1145/1228716.1228751

Chelikowsky, J. R., Troullier, N., and Saad, Y. (1994). Finite-difference-pseudopotential method: electronic structure calculations without a basis. Phys. Rev. Lett. 72:1240. doi: 10.1103/PhysRevLett.72.1240

Fanaei, A., and Farrokhi, M. (2006). Robust adaptive neuro-fuzzy controller for hybrid position/force control of robot manipulators in contact with unknown environment. J. Intell. Fuzzy Syst. 17, 125–144.

Field, T. (2018). Pain and massage therapy: a narrative review. Curr. Res. Complement. Altern. Med. doi: 10.29011/CRCAM-125/100025

Gear, C. W. (1988). Differential-algebraic equation index transformations. SIAM J. Sci. Stat. Comput. 9, 39–47. doi: 10.1137/0909004

Goradia, A., Xi, N., and Tan, J. (2002). “Hybrid force/position control in moving hand coordinate frame,” in 7th International Conference on Control, Automation, Robotics and Vision, 2002. ICARCV 2002, Vol. 3 (Guangzhou), 1126–1131. doi: 10.1109/ICARCV.2002.1234931

Ha, Q., Nguyen, Q., Rye, D., and Durrant-Whyte, H. (2000). Impedance control of a hydraulically actuated robotic excavator. Autom. Constr. 9, 421–435. doi: 10.1016/S0926-5805(00)00056-X

Howlett, P. G., Pudney, P. J., and Vu, X. (2009). Local energy minimization in optimal train control. Automatica 45, 2692–2698. doi: 10.1016/j.automatica.2009.07.028

Kume, M., Morita, Y., Yamauchi, Y., Aoki, H., Yamada, M., and Tsukamoto, K. (1996). “Development of a mechanotherapy unit for examining the possibility of an intelligent massage robot,” in Proceedings of IEEE/RSJ International Conference on Intelligent Robots and Systems. IROS'96, Vol. 1 (Osaka), 346–353. doi: 10.1109/IROS.1996.570698

Li, C., Yang, C., Ju, Z., and Annamalai, A. S. (2018). An enhanced teaching interface for a robot using DMP and GMR. Int. J. Intell. Robot. Appl. 2, 110–121. doi: 10.1007/s41315-018-0046-x

Li, C., Yang, C., Wan, J., Annamalai, A., and Cangelosi, A. (2017). “Neural learning and kalman filtering enhanced teaching by demonstration for a baxter robot,” in 2017 23rd International Conference on Automation and Computing (ICAC) (Huddersfield, UK), 1–6. doi: 10.23919/IConAC.2017.8081985

Ma, L.-Z., Yao, G.-Y., Ni, Q.-L., and Zhu, Z. (2005). Study of the traditional Chinese medicine (TCM) massage robot based on the hybrid mechanism to obtain rolling treatment. Mach. Des. Res. 21, 43–46.

Matsubara, T., Hyon, S.-H., and Morimoto, J. (2011). Learning parametric dynamic movement primitives from multiple demonstrations. Neural Netw. 24, 493–500. doi: 10.1016/j.neunet.2011.02.004

Nanayakkara, T., Watanabe, K., Kiguchi, K., and Izumi, K. (2004). Evolving a multiobjective obstacle avoidance skill of a seven-link manipulator subject to constraints. Int. J. Syst. Sci. 35, 167–178. doi: 10.1080/00207720410001684530

Nazeer, K. A., and Sebastian, M. (2009). “Improving the accuracy and efficiency of the K-means clustering algorithm,” in Proceedings of the World Congress on Engineering, Vol. 1, 1–3 (London, UK: Association of Engineers).

Nguyen, Q., Ha, Q., Rye, D., and Durrant-Whyte, H. (2000). “Force/position tracking for electrohydraulic systems of a robotic excavator,” in Proceedings of the 39th IEEE Conference on Decision and Control (Cat. No. 00CH37187), Vol. 5 (Sydney, NSW), 5224–5229. doi: 10.1109/CDC.2001.914787

Petitjean, F., Forestier, G., Webb, G. I., Nicholson, A. E., Chen, Y., and Keogh, E. (2014). “Dynamic time warping averaging of time series allows faster and more accurate classification,” in 2014 IEEE International Conference on Data Mining (Shenzhen), 470–479. doi: 10.1109/ICDM.2014.27

Schaal, S. (2003). “Movement planning and imitation by shaping nonlinear attractors,” in Proceedings of the 12th Yale Workshop on Adaptive and Learning Systems (New Haven, CT: Citeseer).

Schaal, S. (2006). Dynamic movement primitives-a framework for motor control in humans and humanoid robotics, Adaptive Motion of Animals and Machines (Springer), 261–280. doi: 10.1007/4-431-31381-8_23

Scherillo, P., Siciliano, B., Zollo, L., Carrozza, M., Guglielmelli, E., and Dario, P. (2003). “Parallel force/position control of a novel biomechatronic hand prosthesis,” in Proceedings 2003 IEEE/ASME International Conference on Advanced Intelligent Mechatronics (AIM 2003) (Kobe), Vol. 2, 920–925. doi: 10.1109/AIM.2003.1225465

Senin, P. (2008). Dynamic Time Warping Algorithm Review. Honolulu, HI: Information and Computer Science Department University of Hawaii, 855.

Silvério, J., Rozo, L., Calinon, S., and Caldwell, D. G. (2015). “Learning bimanual end-effector poses from demonstrations using task-parameterized dynamical systems,” in 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (Hamburg), 464–470. doi: 10.1109/IROS.2015.7353413

Tatiraju, S., and Mehta, A. (2008). Image segmentation using K-means clustering, EM and normalized cuts. Depart. EECS 1, 1–7.

Tong, K.-F., and Huang, J. (2008). New proximity coupled feeding method for reconfigurable circularly polarized microstrip ring antennas. IEEE Trans. Antenn. Propag. 56, 1860–1866. doi: 10.1109/TAP.2008.924736

Keywords: hybrid force/position, teaching by demonstration, dynamic motion primitive, dynamic time warping, gaussian mixture regression

Citation: Li C, Fahmy A, Li S and Sienz J (2020) An Enhanced Robot Massage System in Smart Homes Using Force Sensing and a Dynamic Movement Primitive. Front. Neurorobot. 14:30. doi: 10.3389/fnbot.2020.00030

Received: 19 March 2020; Accepted: 04 May 2020;

Published: 29 June 2020.

Edited by:

Yangming Li, Rochester Institute of Technology, United StatesReviewed by:

Hang Su, Fondazione Politecnico di Milano, ItalyCopyright © 2020 Li, Fahmy, Li and Sienz. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Shaoxiang Li, bGVlc2hhb3hpYW5nQDEyNi5jb20=

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.

Research integrity at Frontiers

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.