95% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

ORIGINAL RESEARCH article

Front. Neurorobot. , 13 February 2020

Volume 14 - 2020 | https://doi.org/10.3389/fnbot.2020.00006

Christopher A. Harris1*

Christopher A. Harris1* Lucia Guerri2

Lucia Guerri2 Stanislav Mircic1

Stanislav Mircic1 Zachary Reining1

Zachary Reining1 Marcio Amorim1

Marcio Amorim1 Ðorđe Jović1

Ðorđe Jović1 William Wallace3

William Wallace3 Jennifer DeBoer4

Jennifer DeBoer4 Gregory J. Gage1

Gregory J. Gage1Understanding the brain is a fascinating challenge, captivating the scientific community and the public alike. The lack of effective treatment for most brain disorders makes the training of the next generation of neuroscientists, engineers and physicians a key concern. Over the past decade there has been a growing effort to introduce neuroscience in primary and secondary schools, however, hands-on laboratories have been limited to anatomical or electrophysiological activities. Modern neuroscience research labs are increasingly using computational tools to model circuits of the brain to understand information processing. Here we introduce the use of neurorobots – robots controlled by computer models of biological brains – as an introduction to computational neuroscience in the classroom. Neurorobotics has enormous potential as an education technology because it combines multiple activities with clear educational benefits including neuroscience, active learning, and robotics. We describe a 1-week introductory neurorobot workshop that teaches high school students how to use neurorobots to investigate key concepts in neuroscience, including spiking neural networks, synaptic plasticity, and adaptive action selection. Our do-it-yourself (DIY) neurorobot uses wheels, a camera, a speaker, and a distance sensor to interact with its environment, and can be built from generic parts costing about $170 in under 4 h. Our Neurorobot App visualizes the neurorobot’s visual input and brain activity in real-time, and enables students to design new brains and deliver dopamine-like reward signals to reinforce chosen behaviors. We ran the neurorobot workshop at two high schools (n = 295 students total) and found significant improvement in students’ understanding of key neuroscience concepts and in students’ confidence in neuroscience, as assessed by a pre/post workshop survey. Here we provide DIY hardware assembly instructions, discuss our open-source Neurorobot App and demonstrate how to teach the Neurorobot Workshop. By doing this we hope to accelerate research in educational neurorobotics and promote the use of neurorobots to teach computational neuroscience in high school.

Understanding the brain is necessary to understand ourselves, treat brain disorders and inspire new scientists. Insights from neuroscience are also facilitating rapid progress in artificial intelligence (Hassabis et al., 2017; Zador, 2019). Nevertheless, most students receive almost no education in neuroscience and the public’s understanding of the brain is lacking (Frantz et al., 2009; Labriole, 2010; Fulop and Tanner, 2012; Sperduti et al., 2012; Dekker and Jolles, 2015). The reasons cited are that the brain is perceived to be too complex, and that the tools needed to study it are too expensive and hard to use. Although neuroscience is not yet an independent component of typical high school curricula, some schools are adopting neuroscience courses or steering their biology or psychology classes in the direction of neuroscience to satisfy growing interest in the brain (Gage, 2019).

An important cause of the increasing prominence and appeal of neuroscience in recent years is its powerful synergy with computer technology. Brain imaging and visualization techniques have given neuroscientists and the public unprecedented access to the complex structures and dynamics of brains. Computer modeling is enabling researchers to go beyond theorizing about brain function to actually implementing those functions in silico. Large networks of simple neurons connected by plastic synapses and subject to biologically inspired forms of learning can now perform feats of prediction and control previously thought to be the sole purview of the human brain, and increasingly permeate all aspects of digital life (Krizhevsky et al., 2012; Hassabis et al., 2017; Levine et al., 2018). Neurorobotics - the study of robots controlled by artificial nervous systems - leverages much of this synergy and is proving a powerful method for developing and validating computational models of brain function (Falotico et al., 2017; Krichmar, 2018; Antonietti et al., 2019; Tieck et al., 2019; Zhong et al., 2019).

Neurorobotics also has enormous and largely untapped potential as a neuroscience education technology because it combines multiple activities with clear educational benefits. (1) Robotics is a highly motivating and effective framework for teaching STEM in schools (Barker, 2012; Benitti, 2012; Karim et al., 2015), including to underrepresented students (Weinberg et al., 2007; Ludi, 2012; Rosen et al., 2012; Yuen et al., 2013). (2) The process of designing, testing and modifying neurorobot brains with interesting behavioral and psychological capacities engages students in active learning, which has been shown to improve STEM outcomes (Freeman et al., 2014), especially among disadvantaged students (Kanter and Konstantopoulos, 2010; Haak et al., 2011; Cervantes et al., 2015). (3) Finally, neurorobotics combines robotics and active learning with neuroscience, a highly multidisciplinary subject that presents itself in a wide array of real-life situations and readily appeals to the public (Frazzetto and Anker, 2009; Sperduti et al., 2012). The aim of neurorobotics is convincing robotic embodiment of attention, emotion, decision-making and many other mental capacities that are inherently interesting to students. Given user-friendly and affordable robot hardware, intuitive brain design and visualization software, and well-researched curriculum, educational neurorobotics has the potential to revolutionize neuroscience tuition, STEM education and the understanding of the brain.

Educational neurorobotics is a small but growing area of research and development. Iguana Robotics developed perhaps the first neurorobot for education – an inexpensive four-legged robot that uses capacitors and resistors to emulate neural networks and move (Lewis and Rogers, 2005). Middle and high-school students were readily motivated to modify these neural networks in order to change the robot’s gait, and demonstrated improved neuroscience attitudes as a result. NeuroTinker has more recently developed LED-equipped hardware modules that emulate individual neurons and can be connected into small neural circuits and attached to sensors and motors. Undergraduate students demonstrated improved understanding of neuroscience concepts after using the modules (Petto et al., 2017). Robert Calin-Jageman’s Cartoon Network is an educational neural network simulator that can connect via USB to the Finch Robot (BirdBrain Technologies LLC), a mobile robot with temperature-, light- and touch sensors, motorized wheels, lights, and buzzers. Cartoon Network allows students to use different types of neurons and synapses to build neural circuits and control the Finch Robot, and generated promising results in workshops with undergraduates and teachers (Calin-Jageman, 2017, 2018). Asaph Zylbertal’s NeuronCAD is a Raspberry Pi-based neurorobot that uses simulated neurons to control motors and process input from a camera (Zylbertal, 2016) but the project’s educational aims have not yet been implemented. Finally, Martin Sanchez at University Pompeu Fabra organizes an annual educational neurorobotics project as part of the Barcelona International Youth Science Challenge (Sanchez, 2016). We set out to expand on these promising developments in educational neurorobotics and extend the range of brain functions and biologically inspired neural networks students are able to create and the ways in which these networks can be visualized, analyzed and modified.

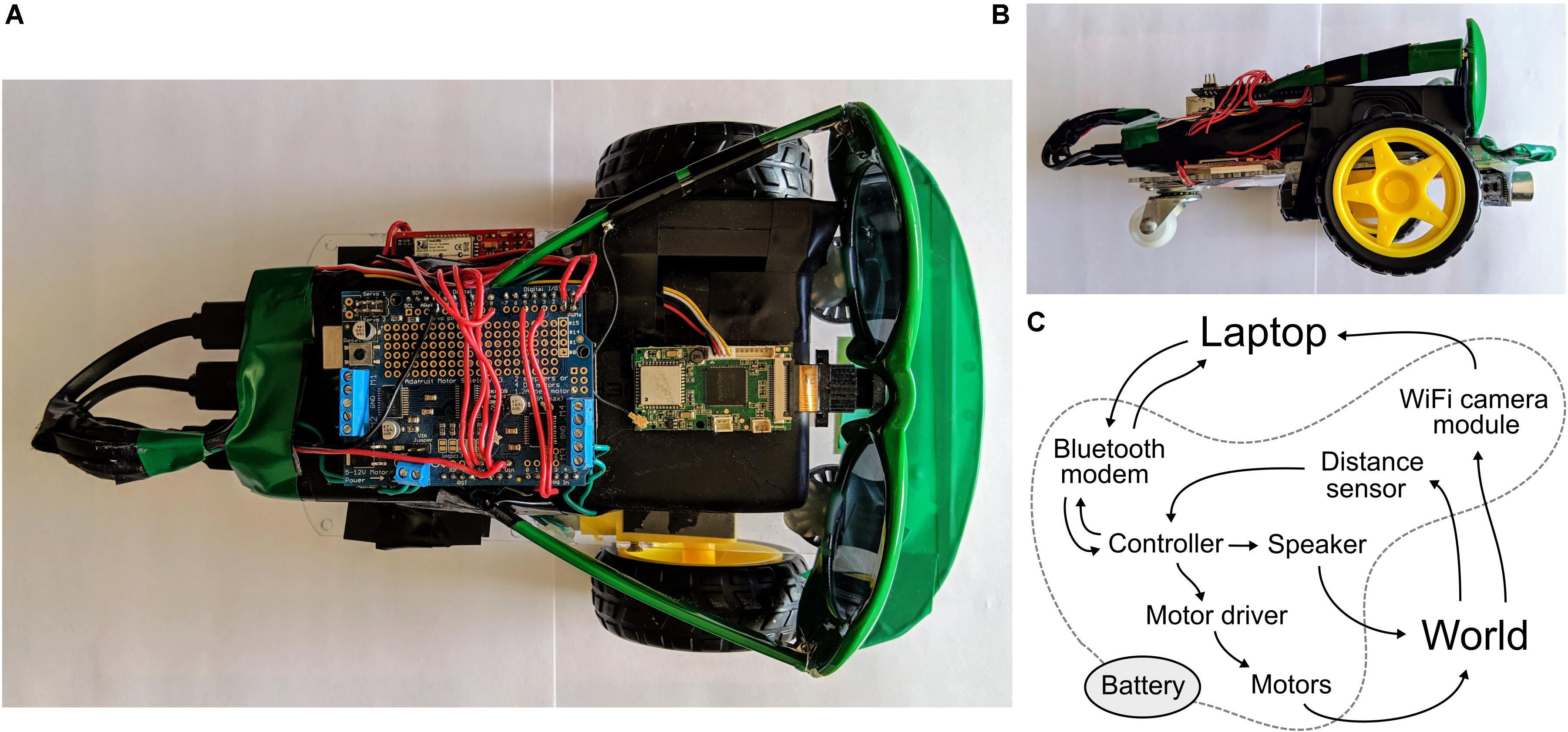

We have developed a neurorobot for high school neuroscience education that combines easy-to-use brain simulation and brain design software with affordable, wireless, camera-equipped do-it-yourself (DIY) hardware. Our DIY neurorobot is a mobile robot that uses wheels, a camera, a speaker and a distance sensor to navigate and interact with its environment (Figure 1). To keep hardware cost low while allowing students to leverage compute-intensive graphical user interface, brain simulation and machine learning functionality, we chose to perform most computations on a wirelessly connected laptop that receives sensory input from the robot, extracts sensory features, simulates user-defined Izhikevich-type neural networks (Izhikevich, 2003) and sends commands back to the robot’s motors and speaker in real-time. The neurorobot consists of generic hardware components that can be purchased online at a total cost of about $170 and assembled in under 4 hrs. with a soldering iron and a glue gun. A parts list and assembly instructions are provided below.

Figure 1. A DIY neurorobot for education. (A,B) Top and sideways views showing the neurorobot’s chassis, battery, controller (with motor shield on top), WiFi camera module, speaker, distance sensor, Bluetooth modem and sunglasses. (C) Schematic showing the flow of signals in the system.

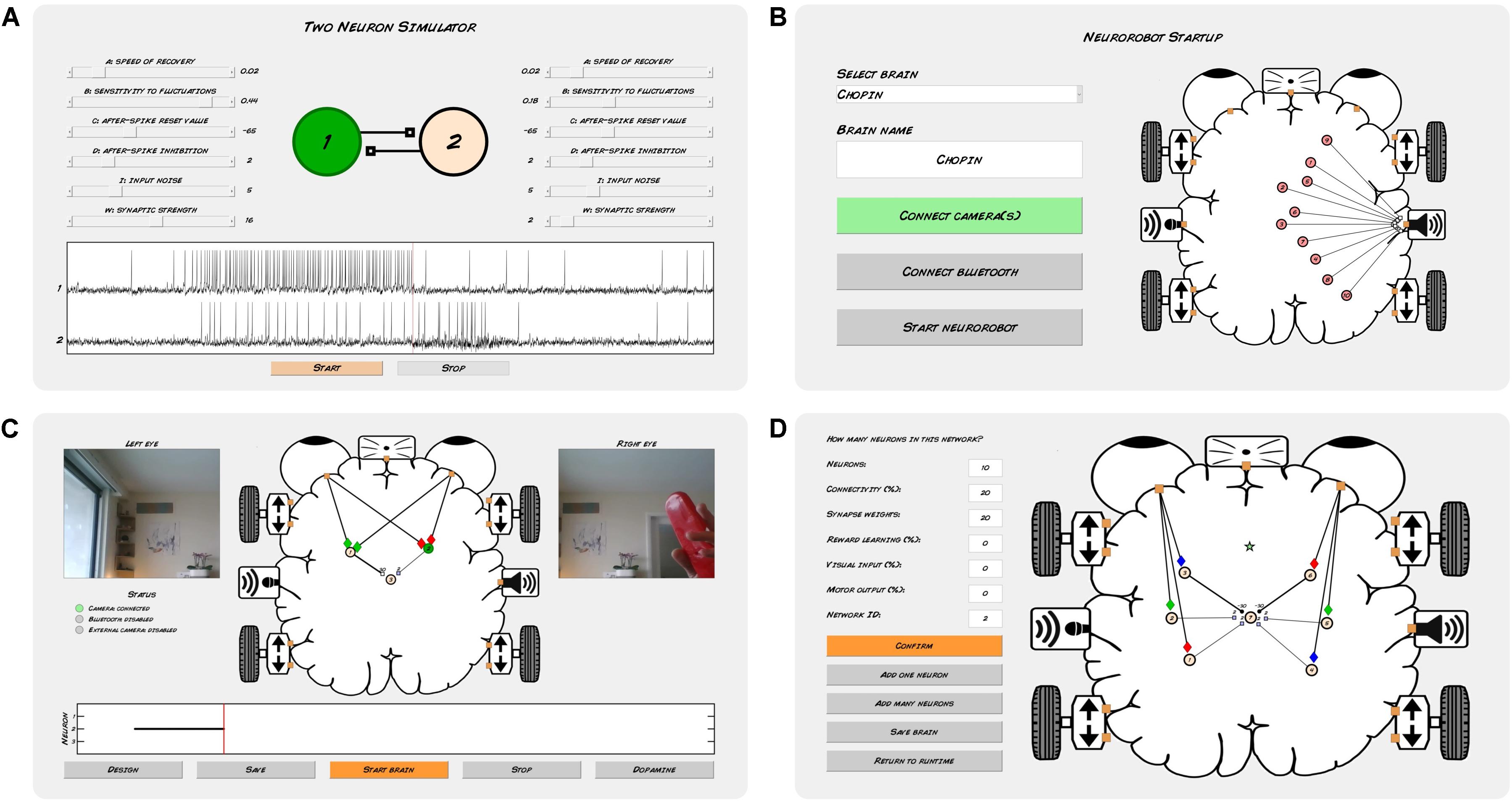

We have also developed a software application that performs real-time simulation and visualization of the neurorobot’s brain and visual input, and allows user-controlled delivery of dopamine-like rewards and other commands (Figure 2). The app includes a brain design environment for building neural networks, either neuron-by-neuron and synapse-by-synapse or by algorithmic definition of larger networks. The Neurorobot App is written in Matlab and is available to download at github.com/backyardbrains/neurorobot. Although we recommend using neurorobot hardware, the app can run without it, and can use a normal web camera to acquire visual input.

Figure 2. Neurorobot app. (A) Two Neuron Simulator. (B) Startup mode. (C) Runtime mode. (D) Brain design mode.

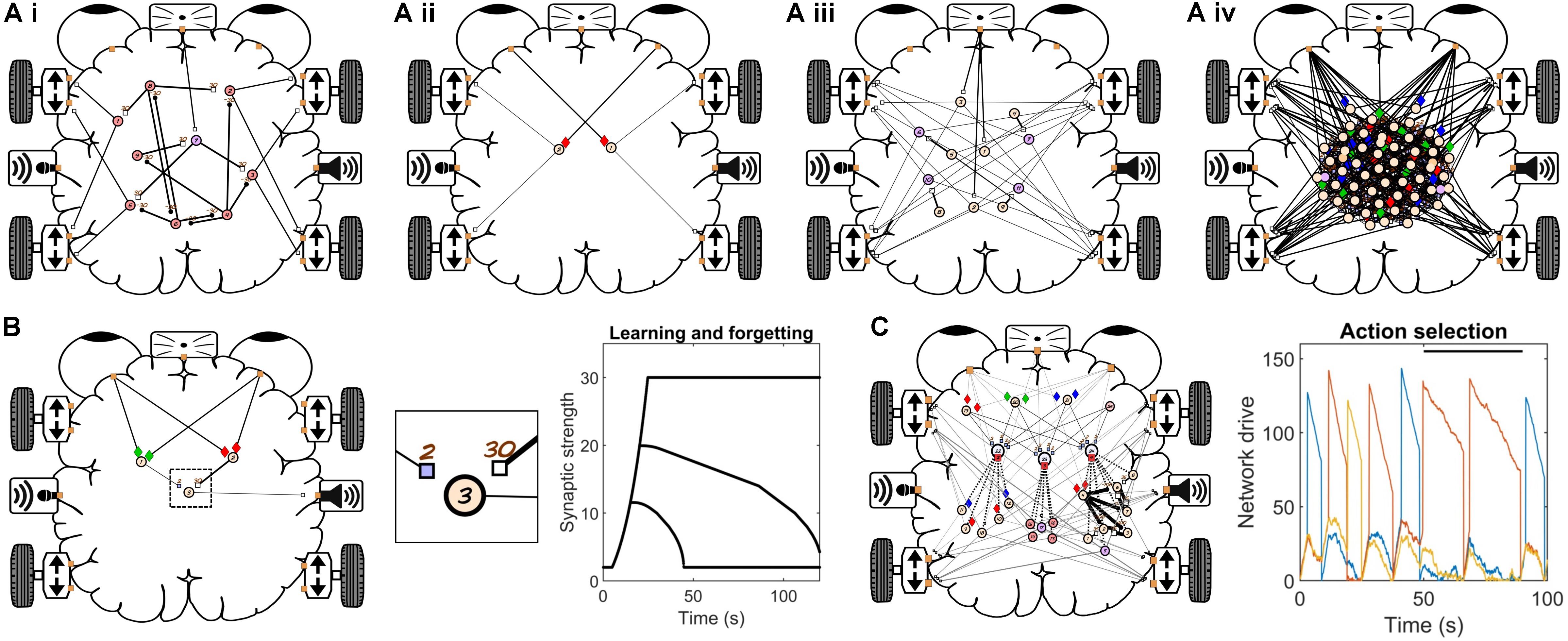

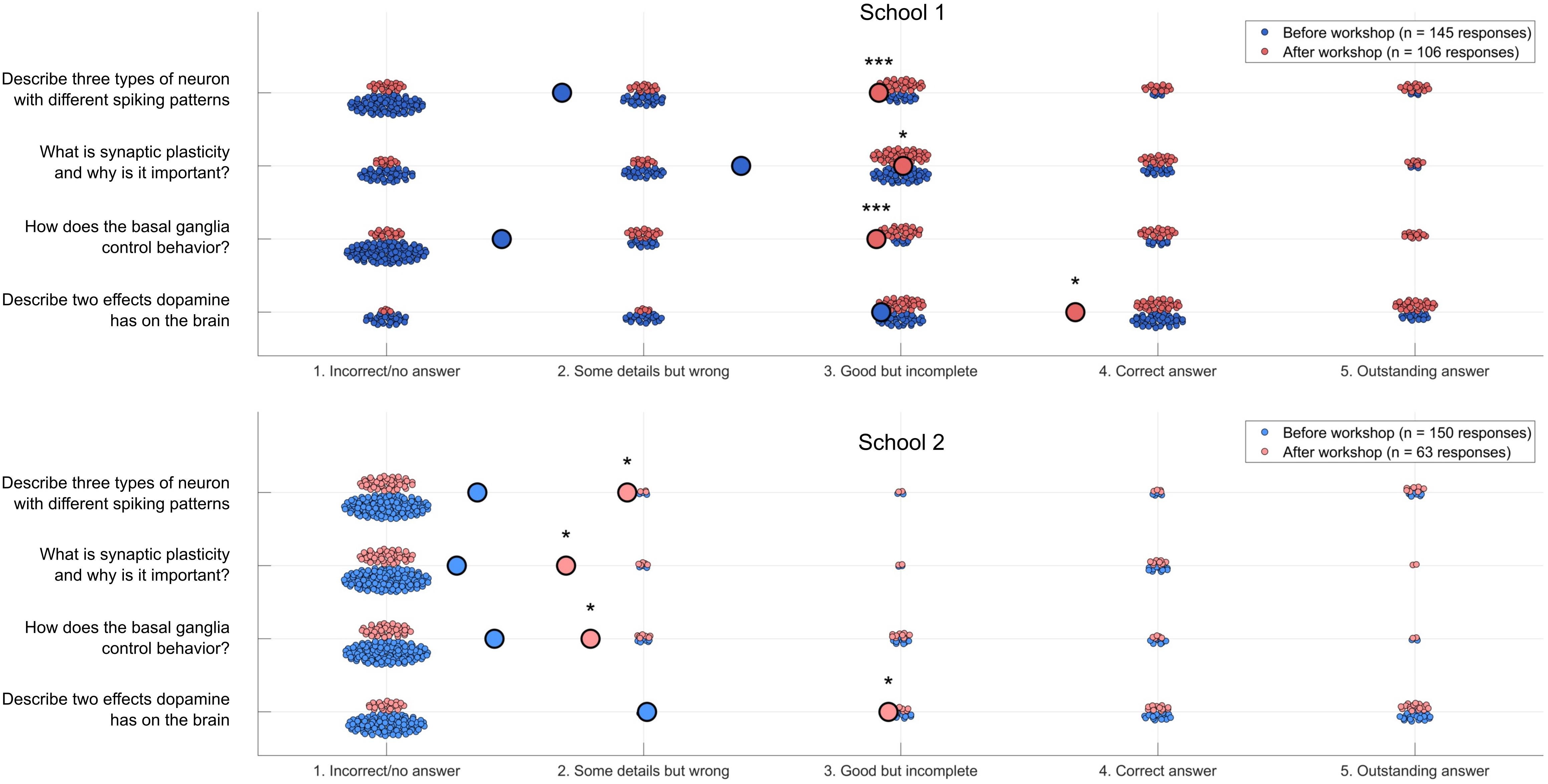

To provide an initial assessment of the educational value of our neurorobots, we developed a 1-week long introductory Neurorobot Workshop for high school students (Figure 3). In this workshop, students are first familiarized with the behavior of the Izhikevich neuron model and shown how to connect such neurons with synapses to produce goal-directed behavior. Students then investigate Hebbian learning as they train their neurorobot to remember new visual stimuli. They also explore action selection and reinforcement learning by using a “dopamine button” to teach their robot how to behave in different sensory contexts. To finish, students design a brain to perform behaviors of their own choosing, and present their results to the rest of the class. We taught the Neurorobot Workshop at two high schools (n = 295 students) and found significant student gains in neuroscience learning and students’ attitudes to neuroscience (Figures 4,5).

Figure 3. Neurorobot workshop. (A) Brains used to investigate spontaneous behavior (Ai), directed navigation (Braitenberg vehicle) (Aii), slow exploration (Aiii) and random networks (Aiv). In a Braitenberg vehicle, detection of a visual feature in either half of the visual field activates motors on the opposite side of the robot, allowing it to approach a target even if the target moves around. (B) Brain used to investigate Hebbian learning. Plot shows synaptic decay rates for three durations of training. (C) Brain used to investigate adaptive action selection. Plot shows network drive for 3 networks during normal and rewarded (black bar) behavior.

Figure 4. Neuroscience content quiz results. Students’ results on a neuroscience quiz (n = 295 pre-workshop responses, 169 post-workshop responses). Large circles indicate average responses. Wilcoxon rank sum: *p < 0.05, ***p < 0.000000000005.

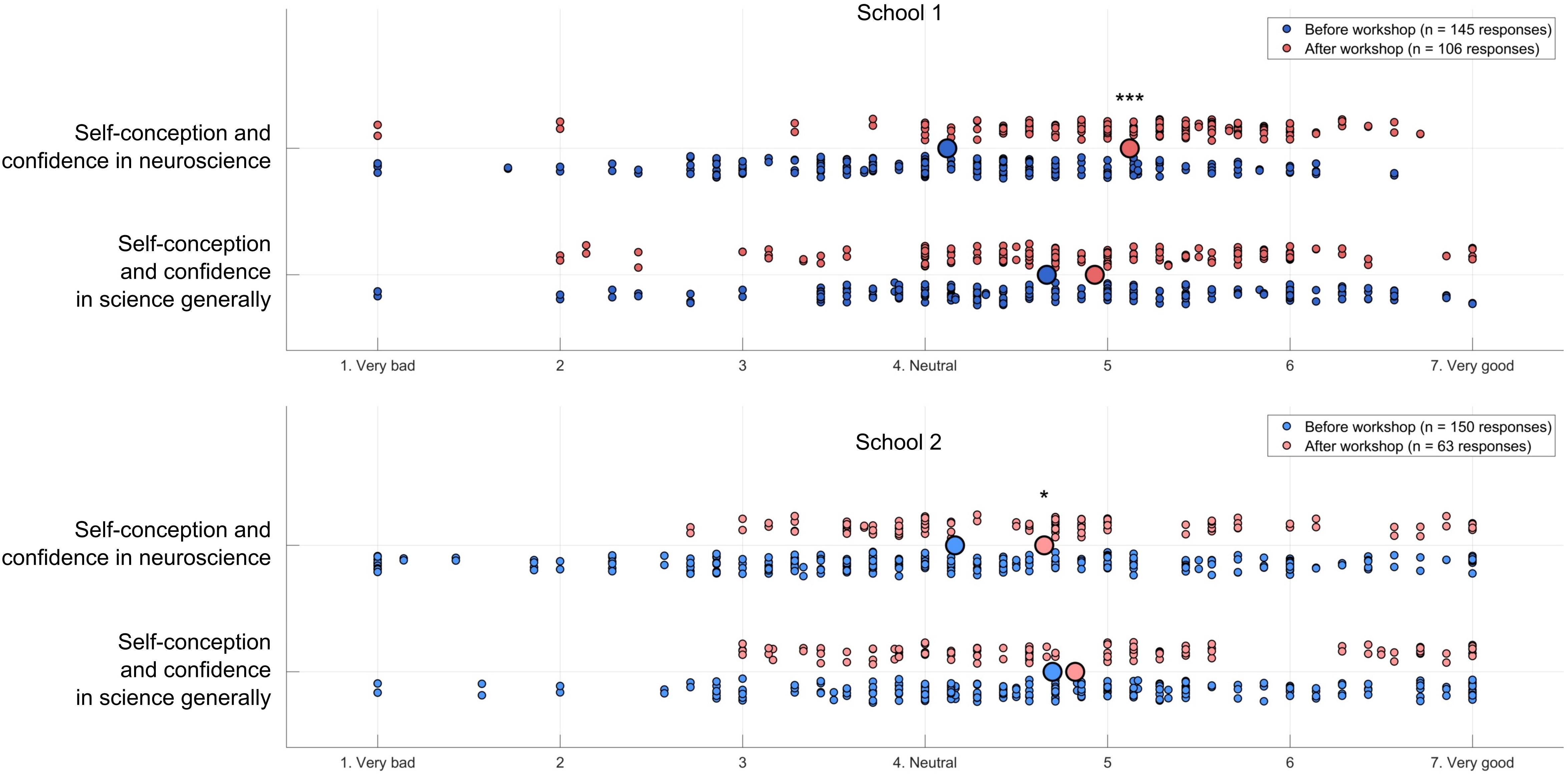

Figure 5. Science attitudes survey results. Students’ results on a self-report science attitudes survey (n = 295 pre-workshop responses, 169 post-workshop responses). Small dots represent individual students’ average level of agreement or disagreement with seven statements about their attitudes to neuroscience or science generally (see Supplementary Material for details). Large circles indicate average responses. Wilcoxon rank sum: * p < 0.05, *** p < 0.000000000005.

We developed our DIY neurorobot hardware design (Figure 1) with the aim of making neurorobotics accessible and appealing to a wide range of learners and learning communities. To accomplish this, we sought to use affordable components that can be purchased online and easily assembled, while at the same time allowing fast, wireless robot mobility, real-time processing of 720p video, audio communication, and simulation of relatively large spiking neural networks. The neurorobot uses a plastic chassis with two motorized wheels and a single swivel wheel. Double-sided tape was used to attach a 22000 mAh battery with 3 USB ports to the chassis. An UNO R3 controller with an Adafruit motor shield was affixed on top of the battery. A RAK5206 WiFi camera module was also attached on top of the battery with the camera attached to the forward-facing side of the battery. An 8 ohm speaker was taped to the side of the battery. A HC-SR04 ultrasonic distance sensor was attached to the forward-facing underside of the chassis with a glue gun. A SparkFun BlueSMiRF Bluetooth modem was attached to the chassis, next to the battery. Finally, to increase the robot’s appeal to high school students, a pair of sunglasses was attached and color-matched tape was used to decorate the front of the chassis. A parts list, wiring diagram, and step-by-step assembly instructions are provided in the Supplementary Material and Supplementary Figure S1.

The neurorobot’s UNO R3 controller firmware is written in C/C++ and is available to download at github.com/backyardbrains/neurorobot. The controller communicates via the Bluetooth modem with the Neurorobot App, which runs on a dedicated laptop (Figure 1C). Every 100 ms the controller looks for a 5 byte package from the laptop representing a speed (0–250) and direction (1 = forward, 2 = backward) for the left motor (bytes 1–2), a speed and direction for the right motor (bytes 3–4) and an output frequency for the speaker (31–4978 Hz, 8-bit resolution). If the package is available, motor and speaker states are updated accordingly. In the same 10 Hz cycle, the controller also uses the ultrasonic sensor to estimate the distance to the nearest object in front of the neurorobot and sends this distance to the laptop (range: 4–300 cm, 32-bit resolution). In parallel, the neurorobot’s RAK5206 WiFi camera module collects 720p color images at 10 frames per second and sends them via WiFi to the dedicated laptop. We are currently developing a Matlab/C++ library that will allow the WiFi camera module to perform all wireless communication, obviating the need for the Bluetooth modem.

We developed a Matlab-based app to enable students with no background in neuroscience or programming to design spiking neural networks for the DIY neurorobot, and to visualize and interact with those neural networks in real-time. Real-time processing is ensured using Matlab timer objects, which report the execution time of each processing step in milliseconds as well as the number of missed cycles. We chose to implement the Izhikevich neuron (Izhikevich, 2003), a spiking neuron model that balances realistic-looking membrane potentials and spike patterns with relatively light compute load. The Neurorobot App (Figure 2) simulates neurons at a speed of 1000 Hz as required by the Izhikevich formalism, while performing all other processes including audiovisual processing, plasticity and brain visualization at a speed of 10 Hz. Synaptic connections between neurons can be either excitatory or inhibitory (measured in mV, range: −30 to 30 mV). Individual excitatory synapses can be made subject to Hebbian learning and will then be strengthened if their presynaptic neuron fires simultaneously with or just before their postsynaptic neuron. This synaptic reinforcement is subject to inverse exponential decay and disappears completely after a few minutes unless the synaptic reinforcement is sufficiently strong (>24 mV) to form long-term memory. Hebbian learning can furthermore be made conditional on simultaneous delivery of dopamine-like reward signals.

To help students familiarize themselves with Izhikevich neurons the Neurorobot App features the Two Neuron Simulator (Figure 2A), which simulates a pair of neurons connected by reciprocal excitatory synapses. Sliders allow students to update the neuronal activity parameters, input noise and synaptic strengths of both neurons in real-time. The Two Neuron Simulator can be launched from the Neurorobot App startup menu.

In addition to simulating neurons and their synaptic connections, the Neurorobot App optionally implements action selection functionality modeled on the dynamics of action selection in the basal ganglia (Grillner, 2006; Prescott et al., 2006; Seth et al., 2011; Bolado-Gomez and Gurney, 2013). Each neuron is assigned a network ID during the brain design process and each such network is associated with a stochastically increasing level of “drive” (or “motivation”) which reflects the network’s likelihood of being selected by the basal ganglia. Only neurons with a selected network ID can fire spikes (i.e., control behavior). If the drive of a network crosses a threshold the network may be selected (the selection process is currently implemented algorithmically, not neuronally). If selected, a network’s drive is significantly increased. While selected, the network will inhibit the drive of all other networks but will also slowly lose its own drive unless excitatory synaptic inputs or dopamine-like reward signals are provided. A special “basal ganglia” neuron type can be used to integrate sensory and other neuronal inputs intended to modulate the drive of a specific network in different contexts, mirroring corticostriatal input to the basal ganglia. Synaptic inputs onto a basal ganglia neuron increase the drive of that neuron’s network proportionally. Network ID 1 is exempt from selection to allow for uninterrupted sensory and other neuronal activity.

In the Neurorobot App, neurons and their connections are represented within a brain-shaped workspace (Figures 2B–D). The workspace is lined with icons representing camera input, distance input, audio input (not yet in use), motor output and sound output. Neurons are represented within this workspace as colored circles connected by axons (black lines) that end in synapses (small rectangles or circles representing excitatory and inhibitory synapses, respectively).

The Neurorobot App has three modes of operation: startup, runtime and design. In startup mode (Figure 2B), students are able to load and preview different brains, and establish their robot’s WiFi and Bluetooth connections. In runtime mode (Figure 2C), students can visualize their robot’s visual input, brain activity and brain structure in real-time, and send dopamine-like reward signals to the brain. Neuronal spikes are indicated by black markers in a continually updated raster plot, and by the spiking cell body briefly turning green. The width of each line representing an axon indicates the absolute strength of that synaptic connection and is also updated in real-time. In design mode (Figure 2D), students are able to add individual neurons to the brain by selecting from a range of pre-specified neuron types with different firing properties, and connect them to other neurons by means of excitatory or inhibitory synapses with different strengths and plasticity rules. Students can also assign neurons a range of sensory preferences (colors, objects, and distances) and motor outputs (speed and direction of movement, sound frequencies) by drawing axonal connections between neurons and the various icons lining the brain-shaped workspace. We use a variety of sensory filters as input to neurons in the brain depending on the content of the lesson and the computational resources available. Each sensory filter indicates the presence or absence of a specific color or object in the video frame. Maximal sensory filter output is 50 mV. Object recognition is accomplished using Matlab’s Deep Learning and Parallel Computing toolboxes and requires a relatively high-performance graphics card (i.e., a CUDA-enabled NVIDIA GPU with compute capability 3.0 or higher). However, colors provide sufficient sensory diversity for all exercises described in the Neurorobot Workshop below. The design mode also allows students to add groups of neurons to the brain by algorithmically defining their properties (Figure 2D). The code needed to run the Neurorobot App is available at github.com/backyardbrains/neurorobot and does not require neurorobot hardware to run.

We developed and performed a 1-week long Neurorobot Workshop with the aim of introducing students to our neurorobot and providing an initial evaluation of its potential as a tool to teach computational neuroscience in high schools (Figures 3–5). Our protocol was approved by IntegReview IRB (March 21, 2018, protocol number 5552). The workshop was conducted in two high schools (Michigan, United States) and consisted of 10 classes (6 at the first school, 4 at the second school) of about 30 students each, and involved a total of 295 students aged 14–19. Each class had 4 or 5 consecutive days (for school 1 and 2, respectively) of 1 hr. neurorobot lessons. During the lessons, students worked in groups of 3–4, with one robot and laptop per group (total of 9 robots and groups per class). Students were recruited to the study as part of their regular teaching in AP (Advanced Placement) biology or psychology. Female students made up 57% of the students in school 1 and 65% in school 2. Laptops were provided by the project team. A school teacher attended each workshop.

The workshop began with the instructor providing a short introduction to the concept of brain-based robots. Students then started the Neurorobot App by navigating to the app folder in Matlab and running the script neurorobot.m. Students began by accessing the Two Neuron Simulator (Figure 2A) and were taught to identify spikes, reduce input noise, increase or decrease spike rate, and trigger postsynaptic spikes by varying synaptic strength. The aim of this exercise was to familiarize students with spiking neurons, with the fact that neurons can be quiet or spontaneously active, and with the synaptic strength needed to reliably trigger spikes in a postsynaptic neuron.

Students were then instructed to use the Neurorobot App to design a brain that would move forward in response to seeing a specific color. (This can be accomplished by adding a single neuron to the brain, assigning it a color preference, and extending axons to the forward-going motors on both sides of the robot.) This required students to learn how to transition between the startup, runtime and design modes of the app, to add a neuron of the correct (i.e., quiet) type to the workspace, and to assign the neuron specific sensory inputs and motor outputs. Students were then encouraged to modify the brain so that the robot would spin around in response to seeing a different color, move backward in response to the distance sensor registering a nearby object, and to produce distinct tones during each behavior. The aim of this exercise was to familiarize students with the Neurorobot App and help them develop an understanding of how functionally distinct neural circuits can co-exist in a single brain.

Students were then encouraged to explore the various pre-configured brains available from the startup menu (e.g., Figures 3Ai–iv), to try to understand how it is possible for those brains to generate distinct spontaneous behaviors in the absence of specific sensory inputs, and to incorporate some of the operative neural mechanisms in their own brain designs. This required students to learn how to load different brains and analyze them in order to identify neuronal properties that contribute to spontaneous behavior (e.g., spontaneously active or bursting neurons). Students were also introduced to the concept of a goal-directed Braitenberg vehicle (Braitenberg, 1986; Figure 3Aii) and to random neural networks generated by defining neuronal and network properties probabilistically (Figure 3Aiv).

In the second lesson of the workshop students were first introduced to Hebbian learning and synaptic plasticity. Students were instructed to load a pre-configured brain consisting of three neurons (“Betsy,” Figure 3B). Neuron 1 was responsive to green and projected a weak but plastic synapse to neuron 3. Neuron 2 was responsive to red and projected a strong but non-plastic synapse to neuron 3. Neuron 3 produced a sound output. Thus, showing the robot a red object led to activation of neurons 2 and 3 and the sound output, whereas showing the robot a green object only activated neuron 1. The challenge was to train the neurorobot to produce a sound in response to seeing the green color alone. The instructor explained the concept of Hebbian learning and showed students how to reinforce the synapse connecting neurons 1 and 3 by presenting the robot with both red and green colors simultaneously. Students were then asked to plot the strength of the plastic synapse in order to quantify how the duration of training (simultaneous stimulus presentation) affected the rate of learning and subsequent forgetting. The aim of the exercise was familiarize students with Hebbian synaptic reinforcement, which depends on simultaneous activation of a pre- and a postsynaptic neuron, to teach them how to read and plot synaptic strengths, and to introduce the idea that sufficient synaptic reinforcement can trigger long-term memory.

In the third lesson students were introduced to the concepts of action selection and reinforcement learning (Figure 3C). The instructor explained how the basal ganglia enables action selection in the vertebrate brain by selective disinhibition of specific behavior-generating neural networks (Grillner, 2006; Prescott et al., 2006; Seth et al., 2011; Bolado-Gomez and Gurney, 2013), and how this process is modulated by dopamine to promote behaviors that lead to reward. Students were shown how this selection process is implemented in the Neurorobot App by means of neural network IDs, thresholded levels of network drive and the basal ganglia neuron type (see section “Neurorobot App”). Students were then instructed to load a preconfigured brain that produces three different behaviors and can be conditioned using the dopamine button (“Merlin,” Figure 3C). Students trained the brain to perform one of its three behaviors in response to seeing a specific color by showing the color to the robot, waiting for it to perform the desired behavior, and then using the dopamine button to reinforce the color-behavior association.

The final 1–2 lessons of the Neurorobot Workshop consisted of a student-led team exercise in which students were challenged to design a brain capable of behaviors of the students’ own choosing, and then present their results to the rest of the class.

To assess the educational value of the Neurorobot Workshop we asked students to complete a survey designed to test neuroscience content learning (4 open-ended questions) and science attitudes (14 Likert scale questions) before and after the workshop. Answers on the neuroscience content quiz were scored 1–5 following a grading grid (1 Incorrect/no answer; 2 Some correct elements with main concept missing or wrong; 3 Correct but incomplete answer; 4 Correct answer; and 5 Outstanding answer) by an evaluator who was blind to the pre/post survey condition.

We perceived the stability and speed of the Neurorobot App to be critical to student engagement during the workshop. The rendering speed of the Neurorobot App depends on the laptop used to run it. We found that laptops with 1.1 GHz dual-core CPUs, 8GB RAM and internal graphics (4165 MB total memory, 128 MB VRAM) were not able to render the app at an acceptable rate. The rendering of buttons and execution of their associated functions in brain design mode were particularly affected, resulting in Matlab errors and crashes as students attempted to start new processes before previously triggered ones had completed. We found that laptops with 2.80 GHz quad-core CPUs, 16GB RAM, and NVIDIA GeForce GTX 1060 graphics (11156 MB total memory, 2987 MB VRAM) were able to render the app with only occasional delays. We used laptops with these specs, running Windows 10 and Matlab 2018a or higher, in the workshops discussed here.

The neurorobot app is configured to run at 10 Hz, with its Izhikevich neurons running at 1000 Hz. With these settings we were able to simulate brains of up to 1000 neurons. Nevertheless, improving our code to allow simulation of much larger brains is a priority. We found that we had to use a slower rendering speed of 5 Hz when simulating larger brains or when using the Deep Learning and Parallel Computing toolboxes to perform object recognition.

Early iterations of the Neurorobot App suffered from regular WiFi connection failures (“crashes”) that often required a system restart and irritated students. The current version of the app is more stable. However, while we experienced almost no problems maintaining WiFi connectivity for all nine neurorobots at the first school, we had significant problems at the second school, where we experienced 30–70 WiFi crashes in each lesson. Although we were able to complete the workshop, students’ ability to work with the robots was severely disrupted. While the first school has only a single floor and is located in a suburban area, the second school is an urban high rise. We have subsequently experienced similar WiFi connectivity problems at other inner city schools, suggesting that such schools present a much more challenging environments for WiFi.

Another persistent problem we encountered during workshops was color detection. In rooms with plenty of natural light our neurorobots were easily able to recognize and distinguish between red, green, and blue objects. However, rooms with limited or amber ceiling lights were difficult to work in, with detection of green and blue being particularly affected. We are currently working to improve the color detection functionality of the Neurorobot App, and recommend testing a range of different color objects in the same room and lighting conditions in which work with the app is to take place.

Two features of the brain design mode were not intuitive to students. First, the app assumed that the presynaptic origin of a synapse would be selected before its postsynaptic target. This was a natural way of establishing directed connections between pairs of neurons. However, it also meant that to make a neuron responsive to sensory input, the sensory input icon had to be selected before the target neuron. Similarly, to enable a neuron to produce motor or speaker output, the neuron had to be selected before the output icon. Although these requirements may reinforce the concept of directed signal flow in neural networks, students found the constraints frustrating. Students also found the process of modifying or deleting synapses confusing. To create a synapse, students had to extend an axon from the presynaptic origin (e.g., a neuron) to the postsynaptic target (e.g., another neuron). However, modifying or deleting an existing synapse also required students to extend an axon from the presynaptic origin to the postsynaptic target. Students repeatedly voiced the opinion that clicking directly on an existing axon or synapse to delete it or edit its properties would be more intuitive. We are currently working to solve both these user interface issues.

To assess whether students learned the workshop’s core concepts, such as the role of synaptic plasticity and dopamine in the brain, we presented students with 4 open-ended neuroscience quiz questions before and after the workshop (Figure 4):

Q1: Describe three types of neuron with different spiking patterns

Q2: What is synaptic plasticity and why is it important?

Q3: How does the basal ganglia control behavior?

Q4: Describe two effects dopamine has on the brain

The answers ranged from no answer or fully incorrect, such as “Axon, myelin, helium” or “What” for Q1, to fully correct answers such as “Quiet neurons, highly active neurons, bursting when activated”. Examples of correct answers for Q2 include “It is training the brain to learn stuff” and “Synaptic plasticity is the ability of neural connections to strengthen or weaken based on how much the connection is used”. Examples of correct answers for Q3 include “It controls decision making by evaluating all options someone has” and “It controls which behavior you choose to do”. Examples of correct answers for Q4: “Motivation, concentration,” “Motivation and will,” “Dopamine helps you make decisions, it also controls what you want and don‘t want,” “It can create bad habits. It creates habits” and “It brings pleasure to the body, and too much dopamine leads to addiction.” We found significant improvement on all content questions, particularly Q1 and Q3 in the first school.

To also assess whether participating in the Neurorobot Workshop improved students’ confidence in science generally and in neuroscience in particular, we asked students to indicate their level of agreement or disagreement with 14 statements (e.g., “I am confident I can understand complex material in neuroscience”) on a 7-point Likert scale (Figure 5). The complete list of 14 statements can be found in the Supplementary Material. We found that participation in the Neurorobot Workshop did not change students’ general science attitudes but significantly improved their attitudes in neuroscience, particularly in the first school.

The end-of-workshop group exercise allowed students to apply the neuroscience concepts and skills learned in order to create brains from scratch that performed behaviors of the students’ choosing. Students were able to present their brain designs to the rest of the class and comment on challenges and unexpected neurorobot behaviors. Examples of students’ brain designs are shown in Supplementary Figure S2.

The discrepancy in the number of responses before and after the workshop is due to final day group presentations running over time, sometimes leaving students without enough time to complete the post-workshop survey. We will improve our scheduling to accommodate both activities in future workshops.

Neurorobotics offers students a unique opportunity to learn neuroscience and computational methods by building and interacting with embodied models of neurons and brains. To promote neurorobot-based neuroscience tuition in schools and educational neurorobotics as an area of research, we have provided here the instructions to build our DIY neurorobot, the Matlab-code of the associated Neurorobot App, and the contents and results of a Neurorobot Workshop for high school students. A pre/post workshop survey revealed significant improvements in students’ understanding of key neuroscience concepts and confidence in neuroscience as a result of the workshop (Figures 4, 5). The gains were larger in the first of the two participating high schools, likely due to the significant WiFi connectivity problems we experienced in the second school.

Development of our educational neurorobotics platform is ongoing. In the near future we will remove the need for the Bluetooth modem by conducting all communication via WiFi. This involves creating a new library for communication between Matlab and the RAK5206 WiFi module, as the HebiCam library we are currently using for this purpose only allows video transmission. We are also working on a lighter, fabricated hardware design with microphone, gyroscope and accelerometer input, motor encoders and multi-color LEDs.

On the software side there are numerous near-term improvements that would enhance usability, including tools to edit neurons and synapses en masse, and methods for working with larger brains (e.g., zooming in and out, hiding different types of brain structure). An efficient search of the space of possible neural networks with the aim of discovering novel, engaging behavioral outputs would allow students to more quickly create and train interesting brains in the classroom. Faster and richer sensory feature extraction would allow students to design brains that can recognize and adapt to key features of their local environment. Perhaps the most important near-term goal is to expand the library of brains available to students, to include interesting, useful, well-understood features such as retinotopy and place cells, and to develop creative pedagogic exercises to introduce these brains to students. We are particularly interested in creating brains that make use of the dopaminergic neuron type, activation of which generates reward signal and enables reinforcement learning without the need for the dopamine button.

As researchers and educators exploring the still nascent field of educational neurorobotics we are faced with numerous interesting questions. What types of sensory features are most useful to students and how should they be made accessible in the Neurorobot App? What types of neurons and neural circuits do students need to be able to deploy with a single click? What types of exercises, brains and behaviors do students prefer to work with, and why? How should existing neurorobotics research and computational brain models be translated into forms suitable for the high-school classroom? How should educational neurorobotics be combined with project-based learning? How can we make teachers confident about using neurorobots to teach neuroscience? What opportunities are presented by virtual environments such as the Human Brain Project’s Neurorobotics Platform? And how should neuromorphic hardware be incorporated into educational neurorobots? It’s an exciting time to bring neurorobots to the classroom!

The datasets generated for this study are available on request to the corresponding author.

The studies involving human participants were reviewed and approved by the IntegReview IRB (Protocol number 5552). Written informed consent to participate in this study was provided by the participants’ legal guardian/next of kin.

CH conceived of the idea. CH, SM, ZR, MA, and GG developed the neurorobot hardware. CH, SM, ÐJ, and GG developed the software application. CH, LG, WW, and JD developed and enacted the workshop. CH and LG analyzed the survey results and wrote the manuscript with support from SM and GG.

This research was supported by a Small Business Innovation Research Grant #1R43NS108850 from the National Institute of Neurological Disorders and Stroke of the National Institutes of Health. CH and GG are Principal Investigators on this grant.

GG is a co-founder and co-owner of Backyard Brains, Inc., a company that manufactures and sells neurorobots that are functionally similar to the hardware described in this manuscript. CH, SM, MA, and ÐJ are employed by Backyard Brains, Inc.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

A previous version of this manuscript is available as a preprint on bioRxiv https://www.biorxiv.org/content/10.1101/597609v1.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnbot.2020.00006/full#supplementary-material

Antonietti, A., Martina, D., Casellato, C., D’Angelo, E., and Pedrocchi, A. (2019). Control of a humanoid NAO robot by an adaptive bioinspired cerebellar module in 3D motion tasks. Comput. Intell. Neurosci. 2019:15. doi: 10.1155/2019/4862157

Barker, B. S. (ed.) (2012). Robots in K-12 Education.: A New Technology for Learning: A New Technology for Learning. Pennsylvania: IGI Global.

Benitti, F. B. V. (2012). Exploring the educational potential of robotics in schools: a systematic review. Comput. Educ. 58, 978–988. doi: 10.1016/j.compedu.2011.10.006

Bolado-Gomez, R., and Gurney, K. (2013). A biologically plausible embodied model of action discovery. Front. Neurorobotics 7:4. doi: 10.3389/fnbot.2013.00004

Calin-Jageman, R. J. (2017). Cartoon network: a tool for open-ended exploration of neural circuits. J. Undergrad. Neurosci. Educa. 16, A41.

Calin-Jageman, R. J. (2018). Cartoon network update: new features for exploring of neural circuits. J. Undergrad. Neurosci. Educ. 16, A195.

Cervantes, B., Hemmer, L., and Kouzekanani, K. (2015). The impact of project-based learning on minority student achievement: implications for school redesign. Educ. Leadersh. Rev. Dr. Res. 2, 50–66.

Dekker, S., and Jolles, J. (2015). Teaching about “brain and learning” in high school biology classes: effects on teachers’ knowledge and students’ theory of intelligence. Front. Psychol. 6:1848.

Falotico, E., Vannucci, L., Ambrosano, A., Albanese, U., Ulbrich, S., Vasquez Tieck, J. C., et al. (2017). Connecting artificial brains to robots in a comprehensive simulation framework: the neurorobotics platform. Front. Neurorob. 11:2. doi: 10.3389/fnbot.2017.00002

Frantz, K. J., McNerney, C. D., and Spitzer, N. C. (2009). We’ve got NERVE: a call to arms for neuroscience education. J. Neurosci. 29, 3337–3339. doi: 10.1523/jneurosci.0001-09.2009

Frazzetto, G., and Anker, S. (2009). Neuroculture. Nat. Rev.. Neuroscie. 10:815. doi: 10.1038/nrn2736

Freeman, S., Eddy, S. L., McDonough, M., Smith, M. K., Okoroafor, N., Jordt, H., et al. (2014). Active learning increases student performance in science, engineering, and mathematics. Proc. Natl. Acade. Sci. U.S.A. 111, 8410–8415. doi: 10.1073/pnas.1319030111

Fulop, R. M., and Tanner, K. D. (2012). Investigating high school students conceptualizations of the biological basis of learning. Adva. Physiol. Educ 36, 131–142. doi: 10.1152/advan.00093.2011

Gage, G. J. (2019). The case for neuroscience research in the classroom. Neuron 102, 914–917. doi: 10.1016/j.neuron.2019.04.007

Grillner, S. (2006). Biological pattern generation: the cellular and computational logic of networks in motion. Neuron 52, 751–766. doi: 10.1016/j.neuron.2006.11.008

Haak, D. C., HilleRisLambers, J., Pitre, E., and Freeman, S. (2011). Increased structure and active learning reduce the achievement gap in introductory biology. Science 332, 1213–1216. doi: 10.1126/science.1204820

Hassabis, D., Kumaran, D., Summerfield, C., and Botvinick, M. (2017). Neuroscience-inspired artificial intelligence. Neuron 95, 245–258. doi: 10.1016/j.neuron.2017.06.011

Izhikevich, E. M. (2003). Simple model of spiking neurons. IEEE Trans. Neural Netw. 14, 1569–1572. doi: 10.1109/TNN.2003.820440

Kanter, D. E., and Konstantopoulos, S. (2010). The impact of a project-based science curriculum on minority student achievement, attitudes, and careers: The effects of teacher content and pedagogical content knowledge and inquiry-based practices. Science Education 94, 855–887. doi: 10.1002/sce.20391

Karim, M. E., Lemaignan, S., and Mondada, F. (2015). “A review: can robots reshape K-12 STEM education?,” in Advanced Robotics and its Social Impacts (ARSO), 2015 IEEE International Workshop on, (Piscataway, NJ: IEEE), 1–8.

Krichmar, J. L. (2018). Neurorobotics—a thriving community and a promising pathway toward intelligent cognitive robots. Front. Neurorob. 12:42. doi: 10.3389/fnbot.2018.00042

Krizhevsky, A., Sutskever, I., and Hinton, G. E. (2012). “Imagenet classification with deep convolutional neural networks,” in Advances in neural information processing systems, eds T. K. Leen, T. G. Dietterich, and V. Tresp, (Cambridge, MA: MIT Press), 1097–1105.

Labriole, M. (2010). Promoting brain-science literacy in the K-12 Classroom. Cerebrum. 2010:15. doi: 10.4135/9781452204062.n2

Levine, S., Pastor, P., Krizhevsky, A., Ibarz, J., and Quillen, D. (2018). Learning hand-eye coordination for robotic grasping with deep learning and large-scale data collection. Int. J. Rob. Res. 37, 421–436. doi: 10.1177/0278364917710318

Lewis, A., and Rogers, J. J. (2005). SlugBug: A tool for neuroscience education developed at Iguana Robotics, Inc. Avaliable at: http://www.iguana-robotics.com/publications/whitepaper.pdf. (accessed January 1, 2018).

Ludi, S. (2012). “Educational robotics and broadening participation in STEM for underrepresented student groups,” in Robots in K-12 Education: A New Technology for Learning, eds Barker Bradley, S., (Pennsylvania: IGI Global), 343–361. doi: 10.4018/978-1-4666-0182-6.ch017

Petto, A., Fredin, Z., and Burdo, J. (2017). The use of modular, electronic neuron simulators for neural circuit construction produces learning gains in an undergraduate anatomy and physiology course. J. Undergrad. Neurosc. Educ. 15:A151.

Prescott, T. J., González, F. M. M., Gurney, K., Humphries, M. D., and Redgrave, P. (2006). A robot model of the basal ganglia: behavior and intrinsic processing. Neural Netwo. 19, 31–61. doi: 10.1016/j.neunet.2005.06.049

Rosen, J., Stillwell, F., and Usselman, M. (2012). “Promoting diversity and public school success in robotics competitions,” in Robots in K-12 education: A New Technology for Learning, eds Barker Bradley, S. (Pennsylvania: IGI Global), 326–342. doi: 10.4018/978-1-4666-0182-6.ch016

Sanchez, M. (2016). Neuro-robotics as. (a)Tool to Understand the Brain. Avaliable at: https://biysc.org/programmes/research-projects/neuro-robotics-tool-understand-brain-0. (accessed March 29, 2019).

Seth, A. K., Prescott, T. J., and Bryson, J. J. (eds) (2011). Modelling Natural Action Selection. Cambridge, MA: Cambridge University Press.

Sperduti, A., Crivellaro, F., Rossi, P. F., and Bondioli, L. (2012). Do octopuses have a brain? Knowledge, Perceptions and Attitudes towards Neuroscience at School. PloS One 7:e47943. doi: 10.1371/journal.pone.0047943

Tieck, J., Schnell, T., Kaiser, J., Mauch, F., Roennau, A., and Dillmann, R. (2019). Generating pointing motions for a humanoid robot by combining motor primitives. Front. Neurorob. 13:77. doi: 10.3389/fnbot.2019.00077

Weinberg, J. B., Pettibone, J. C., Thomas, S. L., Stephen, M. L., and Stein, C. (2007). The impact of robot projects on girls’ attitudes toward science and engineering. Workshop Res. Rob Educ. 3, 1–5.

Yuen, T. T., Ek, L. D., and Scheutze, A. (2013). “Increasing participation from underrepresented minorities in STEM through robotics clubs,” in Teaching, Assessment and Learning for Engineering (TALE), 2013 IEEE International Conference on, (Piscataway, NJ: IEEE), 24–28.

Zador, A. (2019). A critique of pure learning: what artificial neural networks can learn from animal brains. bioRxiv [preprint]. doi: 10.1101/582643

Zhong, J., Peniak, M., Tani, J., Ogata, T., and Cangelosi, A. (2019). Sensorimotor input as a language generalisation tool: a neurorobotics model for generation and generalisation of noun-verb combinations with sensorimotor inputs. Auton. Rob. 43, 1271–1290. doi: 10.1007/s10514-018-9793-7

Zylbertal, A. (2016). Neuron Cad [Video Demonstration Avaliable at: https://www.youtube.com/watch?v=vx5QH23PIk8. (accessed January 1, 2018).

Keywords: neurorobots, neurorobotics, brain-based robots, computational neuroscience, education technology, workshop, active learning, high school

Citation: Harris CA, Guerri L, Mircic S, Reining Z, Amorim M, Jović Ð, Wallace W, DeBoer J and Gage GJ (2020) Neurorobotics Workshop for High School Students Promotes Competence and Confidence in Computational Neuroscience. Front. Neurorobot. 14:6. doi: 10.3389/fnbot.2020.00006

Received: 21 October 2019; Accepted: 27 January 2020;

Published: 13 February 2020.

Edited by:

Jun Tani, Okinawa Institute of Science and Technology Graduate University, JapanReviewed by:

Alberto Antonietti, Politecnico di Milano, ItalyCopyright © 2020 Harris, Guerri, Mircic, Reining, Amorim, Jović, Wallace, DeBoer and Gage. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Christopher A. Harris, Y2hyaXN0b3BoZXJAYmFja3lhcmRicmFpbnMuY29t

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.

Research integrity at Frontiers

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.