
ORIGINAL RESEARCH article

Front. Neurorobot., 29 May 2019

Volume 13 - 2019 | https://doi.org/10.3389/fnbot.2019.00034

This article is part of the Research Topic Multi-Modal Information Fusion for Brain-Inspired Robots.

Min Li1,2*, Bo He1, Ziting Liang1, Chen-Guang Zhao3, Jiazhou Chen1, Yueyan Zhuo1, Guanghua Xu1,2, Jun Xie1,2, Kaspar Althoefer4

Hand rehabilitation exoskeletons still need to improve key features such as simplicity, compactness, bi-directional actuation, low cost, portability, safe human-robotic interaction, and intuitive control. This article presents a brain-controlled hand exoskeleton based on a multi-segment mechanism driven by a steel spring. Active rehabilitation training is realized by using a threshold on the attention value measured by an electroencephalography (EEG) sensor as a brain-controlled switch for the hand exoskeleton. We present a prototype implementation of this rigid-soft combined multi-segment mechanism with active training and provide a preliminary evaluation. The experimental results showed that the proposed mechanism could generate a sufficient range of motion with a single input by distributing an actuated linear motion into the rotational motions of the finger joints during finger flexion/extension. The average attention value in the concentration experiment with visual guidance was significantly higher than that in the experiment without visual guidance. The feasibility of attention-based control with visual guidance was demonstrated with an overall exoskeleton actuation success rate of 95.54% (14 human subjects). In the exoskeleton actuation experiment, the general threshold performed as well as the customized thresholds; therefore, a general threshold of the attention value can be set for a given group of users to activate the hand exoskeleton.

Hand function is essential in daily life (Heo et al., 2012). Even a partial loss of the ability to move the fingers can limit activities of daily living (ADL) and reduce quality of life (Takahashi et al., 2008). Research on robotic training of the wrist and hand has shown that improvements in finger- or wrist-level function can generalize across the arm (Lambercy et al., 2011). Finger muscle weakness is believed to be the main cause of loss of hand function after stroke, especially for finger extension (Cruz et al., 2005; Kamper et al., 2006). Hand rehabilitation requires repetitive task exercises, in which a task is divided into several movements and patients practice those movements to improve hand strength, range of motion, and motion accuracy (Takahashi et al., 2008; Ueki et al., 2012). The high cost of traditional treatment often prevents patients from spending enough time on the necessary rehabilitation (Maciejasz et al., 2014). In recent years, robotic technologies have been applied in motor rehabilitation to provide training assistance and quantitative assessment of recovery. Studies show that intense repetitive movements with robotic assistance can significantly improve patients' hand motor function (Takahashi et al., 2008; Ueki et al., 2008; Kutner et al., 2010; Carmeli et al., 2011; Wolf et al., 2006).

Patients should be actively involved in training to achieve better rehabilitation results (Teo and Chew, 2014; Li et al., 2018). Brain-computer interface (BCI) methods have been used in motor rehabilitation to detect human movement intent and to involve patients actively in the motor training process (Teo and Chew, 2014; Li et al., 2018). Motor imagery-based BCIs (Jiang et al., 2015; Pichiorri et al., 2015; Kraus et al., 2016; Vourvopoulos and Bermúdez I Badia, 2016), movement-related cortical potential-based BCIs (Xu et al., 2014; Bhagat et al., 2016), and steady-state motion visual evoked potential-based BCIs (Zhang et al., 2015) have been used to control rehabilitation robots. However, the high cost and complex preparation these methods require mean that most current BCI devices are more suitable for research than for clinical practice. This matters because ease of use and device cost are two main factors when selecting a BCI-based method of detecting movement intent for practical use (van Dokkum et al., 2015; Li et al., 2018). Therefore, non-invasive, easy-to-install BCIs that are convenient to use and offer acceptable accuracy should be introduced to hand rehabilitation robot control.

Owing to the versatility and complexity of the human hand, developing exoskeleton robots that assist hand movements in rehabilitation is challenging (Heo et al., 2012; Arata et al., 2013). In recent years, hand exoskeleton devices have drawn considerable research attention, with promising results (Heo et al., 2012). Hand exoskeleton devices mainly use linkage-driven, wire-driven, or hydraulically/pneumatically driven mechanisms (Polygerinos et al., 2015a). The rigid mechanical design of linkage-based mechanisms provides robust and reliable power transmission and has been widely applied in hand exoskeletons (Tong et al., 2010; Ito et al., 2011; Arata et al., 2013; Cui et al., 2015; Polygerinos et al., 2015a). However, misalignment between the human finger joints and the exoskeleton joints can create safety problems during rehabilitation movements (Heo et al., 2012; Cui et al., 2015), and the compensation approaches used in current studies make the mechanism more complicated (Nakagawara et al., 2005; Fang et al., 2009; Ho et al., 2011). Pneumatic and hydraulic soft hand exoskeletons, which are made of flexible materials, have been proposed to assist hand opening or closing (Ang and Yeow, 2017; Polygerinos et al., 2015a; Yap et al., 2015b). However, although bi-directional assistance, namely finger flexion and extension, is essential for hand rehabilitation (Iqbal et al., 2014), many current soft hand exoskeleton devices only provide finger flexion assistance (Connelly et al., 2010; Polygerinos et al., 2013, 2015a; Yap et al., 2015a, b). Wire-driven mechanisms also have difficulty transmitting bi-directional movements, since a wire can only transmit force in tension, that is, along one direction (In et al., 2015; Borboni et al., 2016).
To transmit bi-directional movements, Borboni et al. (2016) proposed a tendon-driven hand exoskeleton in which the tendon works in tension during extension and, during flexion, acts as a compressed flexible beam constrained in rectilinear slides mounted on the distal sections of the glove. Arata et al. (2013) attempted to avoid wire extension and its associated issues by proposing a hand exoskeleton with a three-layered sliding spring mechanism. Hand rehabilitation exoskeleton devices still need to achieve key features such as low complexity, compactness, bi-directional actuation, low cost, portability, safe human-robotic interaction, and intuitive control.

In this article, we describe the design and characterization of a novel multi-segment mechanism driven by a single layer of steel spring that can assist both extension and flexion of the finger. Owing to the inherent features of this multi-segment mechanism, joint misalignment between the device and the human finger is no longer a problem, which enhances the simplicity and flexibility of the device. Moreover, its compliance makes the hand exoskeleton safe for human-robotic interaction. The mechanism can generate a sufficient range of motion with a single input by distributing an actuated linear motion into the rotational motions of the finger joints. Active rehabilitation training is realized by using a threshold on the attention value measured by a commercial electroencephalography (EEG) sensor as a brain-controlled switch for the hand exoskeleton. Features of this hand exoskeleton include active patient involvement, low complexity, compactness, bi-directional actuation, low cost, portability, and safe human-robotic interaction. The main contributions of this article are: (1) prototyping and evaluation of a hand exoskeleton with a rigid-soft combined multi-segment mechanism, driven by a single layer of steel spring, with sufficient output force capacity; (2) the use of attention-based BCI control to increase patients’ participation in exoskeleton-assisted hand rehabilitation; and (3) determination of the attention value threshold for our attention-based hand rehabilitation robot control.

The target users are stroke survivors in the flaccid paralysis period who need continuous passive motion training of their hands. They should also be able to focus their attention on motor rehabilitation training for at least a short period of time. For hand rehabilitation, an exoskeleton should interfere minimally with ADL and be able to generate adequate forces to perform hand flexion and extension, with a range of motion similar to, or slightly smaller than, that of a natural finger.

To minimize ADL interference, the device should be confined to the back of the finger, and its width should not exceed the finger width. Here, the width and height constraints of the exoskeleton on the back of the finger are both 20 mm. Low weight is a key requirement for rehabilitation systems to be practical for a wide stroke population (Nycz et al., 2016). Therefore, the exoskeleton should be as light as possible so that patients can wear it comfortably. The typical weight of other hand exoskeletons is in the range of 0.7–5 kg (CyberGlove Systems Inc., 2016; Delph et al., 2013; Polygerinos et al., 2015a; Rehab-Robotics Company Ltd., 2019). In this article, the target weight of the exoskeleton is less than 0.5 kg.

There are 15 joints in the human hand. The thumb has an interphalangeal joint (IPJ), a metacarpophalangeal joint (MPJ), and a carpometacarpal joint (CMJ). Each of the other four fingers has three joints: a metacarpophalangeal joint (MCPJ), a proximal interphalangeal joint (PIPJ), and a distal interphalangeal joint (DIPJ). The hand exoskeleton must have three bending degrees of freedom (DOF) to exercise the three joints of the finger. For some rehabilitation applications, the MCPJ, PIPJ, and DIPJ do not need independent motion as long as the whole range of motion of the finger is covered. Tripod grasping requires the MPJ and IPJ of the thumb to bend around 51° and 27°; the MCPJ, PIPJ, and DIPJ of the index finger to bend around 46°, 48°, and 12°; and those of the middle finger to bend around 46°, 54°, and 12° (In et al., 2015). Regarding execution speed, physiotherapists suggest a low speed, with a flexion/extension cycle of a finger joint taking no less than 20 s (Borboni et al., 2016). Hyperextension of all these joints must always be carefully avoided.
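The tripod-grasp targets above can be encoded as a small lookup table and used as a pass/fail check on measured joint angles. This is a minimal sketch: the dictionary layout and the function name `unmet_requirements` are our own illustration, and the example angles are hypothetical, not measurements from this study.

```python
from __future__ import annotations

# Required flexion angles (degrees) for tripod grasping (In et al., 2015).
TRIPOD_GRASP_DEG = {
    "thumb": {"MPJ": 51.0, "IPJ": 27.0},
    "index": {"MCPJ": 46.0, "PIPJ": 48.0, "DIPJ": 12.0},
    "middle": {"MCPJ": 46.0, "PIPJ": 54.0, "DIPJ": 12.0},
}


def unmet_requirements(achieved_deg: dict[str, dict[str, float]]) -> list[str]:
    """List "finger.joint" entries whose achieved flexion is below the tripod-grasp target.

    `achieved_deg` maps finger -> joint -> achieved flexion in degrees;
    a missing finger or joint is reported as unmet.
    """
    unmet = []
    for finger, targets in TRIPOD_GRASP_DEG.items():
        for joint, required in targets.items():
            achieved = achieved_deg.get(finger, {}).get(joint)
            if achieved is None or achieved < required:
                unmet.append(f"{finger}.{joint}")
    return unmet


# Hypothetical angles, for illustration only (not measured data).
example = {
    "thumb": {"MPJ": 55.0, "IPJ": 30.0},
    "index": {"MCPJ": 50.0, "PIPJ": 50.0, "DIPJ": 15.0},
    "middle": {"MCPJ": 50.0, "PIPJ": 50.0, "DIPJ": 15.0},
}
print(unmet_requirements(example))  # ['middle.PIPJ']
```

Treating a missing joint as unmet keeps the check conservative: an incomplete measurement never passes by default.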

The force exerted on the finger should be sufficient for continuous passive motion training. In addition, the output force should help the patient generate the grasping forces required to manipulate objects in ADL. Pinch forces required to complete functional tasks are typically below 20 N (Smaby et al., 2004). Polygerinos et al. (2015b) estimated that each robot finger should exert a distal tip force of about 7.3 N to achieve a palmar grasp (four fingers against the palm of the hand) to pick up objects lighter than 1.5 kg. Existing devices provide maximum transmission output forces between 7 N and 35 N (Kokubun et al., 2013; In et al., 2015; Polygerinos et al., 2015b; Borboni et al., 2016; Nycz et al., 2016).
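The per-finger palmar-grasp target can be turned into a simple acceptance check on measured maximum tip forces, together with the combined figure for four fingers. This is an illustrative sketch; the function names are hypothetical, and only the 7.3 N value comes from the text.

```python
from __future__ import annotations

PALMAR_TIP_FORCE_N = 7.3  # per-finger distal tip force for a palmar grasp of < 1.5 kg


def fingers_meeting_target(tip_forces_n: dict[str, float],
                           target_n: float = PALMAR_TIP_FORCE_N) -> dict[str, bool]:
    """Map each finger to whether its maximum tip force reaches `target_n`."""
    return {finger: force >= target_n for finger, force in tip_forces_n.items()}


def combined_target_n(n_fingers: int = 4, target_n: float = PALMAR_TIP_FORCE_N) -> float:
    """Total force if each of `n_fingers` exerts `target_n` (4 x 7.3 N = 29.2 N)."""
    return n_fingers * target_n


# Hypothetical tip forces (N), for illustration only.
print(fingers_meeting_target({"index": 8.0, "pinky": 5.0}))
print(combined_target_n())
```

The combined value is only a rough yardstick for a four-finger pulling measurement, since a distal tip force and the force needed to pull an object out of a grasp are not the same quantity.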

The design should allow some customization to hand size and adaptability to different patient conditions and stages of rehabilitation.

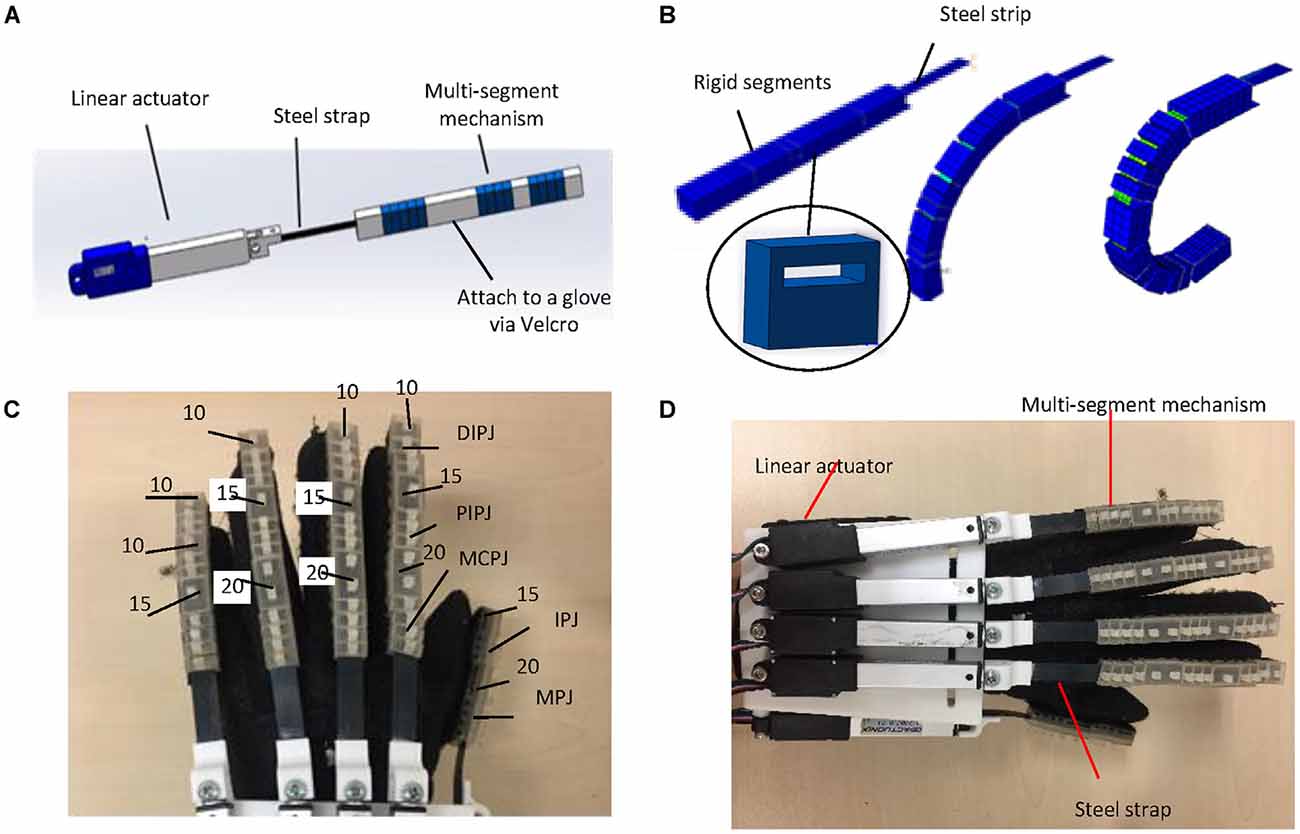

Based on these design requirements, a hand exoskeleton was designed and constructed (see Figure 1). In our design, each finger was driven by a single actuator for both extension and flexion, resulting in a highly compact device. We propose a multi-segment mechanism with a spring layer whose adaptability to the finger avoids joint misalignment problems. A three-dimensional model of a single finger actuator is shown in Figure 1A. Each finger actuator contained a linear motor, a steel strap, and a multi-segment mechanism. As shown in Figure 1B, the spring layer bent and slid under the linear motion input provided by the linear actuator, and the structure took the shape of a circular sector. When attached to a finger, the structure supported finger flexion/extension. Five finger actuators were attached to a fabric glove via Velcro straps, and five linear motors were mounted on a rigid part fixed to the forearm by a Velcro strap. Each steel strap was attached to its motor by a small rigid 3D-printed part. Note that the current structure does not support thumb adduction/abduction.
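The article does not give a kinematic model for how the linear input becomes a circular-sector bend. One common idealization for sliding-layer bending mechanisms, which we assume here purely for illustration, is constant curvature: two parallel layers a distance h apart bend into concentric arcs subtending the same angle θ, so their lengths differ by hθ, and that difference equals the relative slide Δs. Hence θ = Δs / h. The layer offset used below is hypothetical.

```python
import math


def bend_angle_rad(slide_mm: float, layer_offset_mm: float) -> float:
    """Bend angle of an idealized two-layer strip under constant curvature.

    Two parallel layers `layer_offset_mm` apart bend into concentric arcs that
    subtend the same angle, so their arc lengths differ by offset * angle;
    that difference is the relative slide between the layers.
    """
    if layer_offset_mm <= 0:
        raise ValueError("layer offset must be positive")
    return slide_mm / layer_offset_mm


def bend_radius_mm(arc_length_mm: float, angle_rad: float) -> float:
    """Radius of an arc with the given length and angle (infinite when straight)."""
    return math.inf if angle_rad == 0 else arc_length_mm / angle_rad


# Hypothetical numbers: 5 mm layer offset and 7.85 mm slide.
theta = bend_angle_rad(7.85, 5.0)
print(round(math.degrees(theta), 1))  # about 90 degrees
```

The real mechanism bends at the gaps between discrete segments, so per-joint angles also depend on the segment layout; the continuous model only gives a first estimate of the total bend.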

Figure 1. Design of the hand exoskeleton: (A) CAD drawing of the index finger actuator; (B) bending motion generated by the proposed multi-segment mechanism with a spring layer; (C) segment thicknesses (unit: mm); and (D) overview of the hand exoskeleton prototype.

Based on the mechanism design, we developed a prototype hand exoskeleton for the left hand. The finger actuators can be easily replaced since they are attached to the glove with Velcro straps. The segments of the multi-segment mechanism were made of VisiJet Crystal material using a rapid prototyping machine (3D Systems MJP3600). The rigid part that holds the linear actuators was made of PLA using a rapid prototyping machine (D3020, Shenzhen Sundystar Technology Co. Ltd, China). The prototype weighs 401 g, including the glove, multi-segment mechanisms, and motors, which is below the target weight of 0.5 kg. The characteristics of the linear motors are listed in Table 1. The thickness and length of the steel strip are 0.3 mm and 80 mm, respectively. Since the actuator bends more at the joints than along the finger segments, the segments of the multi-segment mechanism above the joints are designed to be thinner than those above the distal phalanx, proximal phalanx, and metacarpal. The segments above the joints measure 12 mm × 10 mm × 5 mm. The thumb IPJ and MPJ have three and four segments, respectively. The DIPJ, PIPJ, and MCPJ of the index, middle, and ring fingers have 3, 3, and 4 segments, respectively; those of the pinky finger have 2, 2, and 4. The thicknesses of the other segments (unit: mm) are shown in Figure 1C. Figure 1D shows an overview of the hand exoskeleton prototype.
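The segment layout and dimensions reported above can be captured as a small configuration table, for example to drive CAD generation or a simulation. The class, field, and function names are our own illustration; the numbers are those given in the text.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class FingerLayout:
    """Segments of the multi-segment mechanism above each joint, listed distal to proximal."""

    joints: tuple[str, ...]
    segments: tuple[int, ...]

    def __post_init__(self) -> None:
        if len(self.joints) != len(self.segments):
            raise ValueError("each joint needs exactly one segment count")

    def total(self) -> int:
        """Total number of joint segments on this finger."""
        return sum(self.segments)


# Segment counts as reported for the left-hand prototype.
LAYOUT = {
    "thumb": FingerLayout(("IPJ", "MPJ"), (3, 4)),
    "index": FingerLayout(("DIPJ", "PIPJ", "MCPJ"), (3, 3, 4)),
    "middle": FingerLayout(("DIPJ", "PIPJ", "MCPJ"), (3, 3, 4)),
    "ring": FingerLayout(("DIPJ", "PIPJ", "MCPJ"), (3, 3, 4)),
    "pinky": FingerLayout(("DIPJ", "PIPJ", "MCPJ"), (2, 2, 4)),
}
JOINT_SEGMENT_MM = (12.0, 10.0, 5.0)  # size of each segment above a joint
STEEL_STRIP_MM = {"thickness": 0.3, "length": 80.0}
PROTOTYPE_G, TARGET_G = 401.0, 500.0


def weight_margin() -> float:
    """Fraction by which the prototype is lighter than the weight target."""
    return (TARGET_G - PROTOTYPE_G) / TARGET_G


print({name: layout.total() for name, layout in LAYOUT.items()})
```

Keeping the counts in one table makes it easy to vary the layout per patient, in line with the customization requirement stated earlier; the prototype's 401 g corresponds to a margin of about 20% below the 0.5 kg target.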

Figure 2 shows a schematic diagram of the brain-controlled switch for the hand exoskeleton. A commercial Brainlink Lite device (Macrotellect Ltd., Shenzhen, China) with EEG sensors (NeuroSky, Inc., San Jose, CA, USA) was used in the brain-controlled switch. The device is easy to wear and does not require conductive gel. It contains a specially designed electronic circuit that senses brain signals, filters out noise and muscle-movement artifacts, and converts the signals into digital form. The EEG sensors are integrated into a headband. The device has three dry electrodes: an EEG signal channel, a reference point (REF), and a ground point (GND). The raw EEG signal was sampled at 512 Hz in the frequency range 3–100 Hz, and EEG band power values for delta, theta, alpha, beta, and gamma were recorded. The device monitors the contact between the electrodes and the skin; if it fails to collect EEG or receives a poor signal, it issues a warning prompting the user to adjust the sensor.

Mirror neurons, which link vision and motion, can be activated when an individual acts, mentally practices an action, or observes the same action performed by another human, a robot, or a virtual character (Oztop et al., 2013). Moreover, visual guidance can enhance motor imagery and thereby promote motor recovery (Li et al., 2015; Pichiorri et al., 2015; Liang et al., 2016). Therefore, a motion demonstration is shown on the screen to provide visual stimulation to the mirror neurons. A graphical user interface (GUI) designed in MATLAB displays the visual guidance. The user looks at the demonstration on the screen and imagines the movement. The intensity of the user’s mental “focus” or “attention” was used as the brain-controlled switch for the hand exoskeleton. The attention value was acquired using the built-in patented Attention Meter algorithm (NeuroSky, 2018) in the ThinkGear AM (TGAM) module (NeuroSky, Inc., San Jose, CA, USA) of the Brainlink Lite device. In recent years, several BCI studies have used the TGAM module and the Attention Meter algorithm (Iordache, 2017; Cui et al., 2018). Unfortunately, the exact attention calculation is not publicly available because it is regarded as a trade secret, although it is presumably derived from the recorded EEG band power values. The Brainlink device transmitted data to a MATLAB program on a computer via Bluetooth. A threshold on the attention value was defined to turn on the hand exoskeleton. Once the user’s attention value reaches the threshold, the hand exoskeleton is activated to perform the same motion as shown on the screen, stimulating motion perception and proprioception. The real-time attention value is also shown on the screen as a bar. An Arduino MEGA 2560 (Arduino, Ivrea, Italy) was used as the controller of the hand exoskeleton.
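The switching rule described above, in which the exoskeleton turns on once the attention value reaches a threshold, can be sketched as a small latching state machine fed with one attention value (0–100) per sample. This is a minimal illustration, not the authors' MATLAB program; the class name, the latching behaviour, and the signal-quality gate (`max_poor_signal`, where 0 indicates good electrode contact on NeuroSky's scale) are our assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class AttentionSwitch:
    """Latching on-switch driven by attention values arriving about once per second."""

    threshold: float
    max_poor_signal: int = 0  # hypothetical gate: ignore samples with worse signal quality
    triggered: bool = False

    def update(self, attention: float, poor_signal: int = 0) -> bool:
        """Feed one sample; return True only on the sample that first reaches the threshold."""
        if self.triggered or poor_signal > self.max_poor_signal:
            return False
        if attention >= self.threshold:
            self.triggered = True
            return True
        return False

    def reset(self) -> None:
        """Re-arm the switch, e.g. after the exoskeleton finishes its motion."""
        self.triggered = False


switch = AttentionSwitch(threshold=60)
print([switch.update(a) for a in [40, 55, 62, 70]])
```

Latching ensures a single activation per attempt, so a user who stays above the threshold does not re-trigger the motion until the switch is explicitly reset.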
A Bluetooth connection was also used between the Arduino and the computer. The Arduino MEGA 2560 controlled the linear motors through their 0–5 V interface mode, in which the input voltage maps linearly onto the motor’s 50 mm stroke.
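The linear 0–5 V to 0–50 mm relation amounts to 0.1 V per millimetre of rod travel. The sketch below converts a target position into a command voltage and builds a slow flex-then-extend profile. The triangular trajectory shape, the travel distance, and the helper names are hypothetical; the article specifies only the linear mapping, and it does not state how the Arduino generated the analog voltage.

```python
from __future__ import annotations

STROKE_MM = 50.0  # full stroke of each linear motor
V_FULL = 5.0      # command voltage corresponding to full stroke


def position_to_voltage(position_mm: float) -> float:
    """Command voltage for a rod position under the linear 0-5 V <-> 0-50 mm mapping."""
    if not 0.0 <= position_mm <= STROKE_MM:
        raise ValueError(f"position must be within 0-{STROKE_MM} mm")
    return position_mm * V_FULL / STROKE_MM


def cycle_voltages(travel_mm: float, cycle_s: float, dt_s: float) -> list[float]:
    """Triangular flex-then-extend profile over one cycle, sampled every `dt_s` seconds.

    The rod moves linearly from 0 to `travel_mm` in the first half of the cycle
    and back to 0 in the second half (a hypothetical trajectory shape).
    """
    n = round(cycle_s / dt_s)
    if n < 2:
        raise ValueError("need at least two steps per cycle")
    half = n / 2
    profile = []
    for i in range(n + 1):
        frac = i / half if i <= half else (n - i) / half
        profile.append(position_to_voltage(frac * travel_mm))
    return profile


# A 20 s cycle with 40 mm of travel, sampled every 5 s.
print(cycle_voltages(40.0, 20.0, 5.0))
```

Rejecting out-of-range positions, rather than clamping them, makes an inconsistent command visible instead of silently driving the motor to an end stop.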

Experiments were conducted to evaluate the proposed rigid-soft combined hand exoskeleton and the attention value-based switch for the hand exoskeleton. Human subjects took part in these experiments, which were undertaken in accordance with the recommendations of the Declaration of Helsinki. The study was approved by the Institutional Review Board of Xi’an Jiaotong University. All subjects provided written informed consent before the start of the experiments.

For preliminary evaluations of the developed prototype, we characterized the finger motion output and force output.

This section describes the experiment evaluating the range of motion under actuation by the hand exoskeleton. Two sessions of hand movements were conducted: an active session (movement driven by the subject’s hand muscles) and a passive session (movement driven by the exoskeleton). The range of motion of the finger joints was compared between the two sessions. A healthy subject (male, 24 years old, right-handed) wore the hand exoskeleton and participated in this experiment. Twenty-one retroreflective hemispheric markers, each 9 mm in diameter, were attached to the subject’s finger joints. First, an active session of hand closing (from the open position, with fingers and palm straightened, to the flexed position) and opening (from the flexed position back to the open position) was conducted to obtain the maximum joint angles the subject could normally reach actively. A passive session was then performed, in which the movements were driven by the actuators: the subject was instructed to relax the fingers while the exoskeleton performed hand closing and opening. Finger movement was recorded with a VICON motion capture system, a 3D system with 10 T40 MX cameras that can record at 500 FPS (OML Co., UK). The joint angles during the motion were calculated from the captured marker positions.
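The article computes joint angles from the captured marker positions but does not give the formula, and its exact angle convention is defined in Figure 4A. A common approach, assumed here, takes three markers (proximal to the joint, on the joint, and distal to it) and reports flexion as 180° minus the included angle between the two segment vectors, so a straight finger reads 0°. This is an illustrative sketch, not the authors' processing pipeline.

```python
from __future__ import annotations

import math


def flexion_angle_deg(proximal: tuple[float, float, float],
                      joint: tuple[float, float, float],
                      distal: tuple[float, float, float]) -> float:
    """Flexion angle at `joint` from three marker positions.

    Assumed convention: 0 deg when the adjacent segments are collinear
    (straight finger), i.e. 180 deg minus the included angle at the joint.
    """
    u = [p - j for p, j in zip(proximal, joint)]
    v = [d - j for d, j in zip(distal, joint)]
    nu = math.sqrt(sum(c * c for c in u))
    nv = math.sqrt(sum(c * c for c in v))
    if nu == 0 or nv == 0:
        raise ValueError("markers must be distinct")
    cos_inc = sum(a * b for a, b in zip(u, v)) / (nu * nv)
    cos_inc = max(-1.0, min(1.0, cos_inc))  # guard acos against rounding error
    return 180.0 - math.degrees(math.acos(cos_inc))


# Straight finger, then a right-angle bend (coordinates in mm).
print(flexion_angle_deg((0, 0, 0), (10, 0, 0), (20, 0, 0)))
print(flexion_angle_deg((0, 0, 0), (10, 0, 0), (10, 10, 0)))
```

Clamping the cosine to [-1, 1] matters in practice: marker noise can push an almost-straight finger slightly outside the domain of `acos`.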

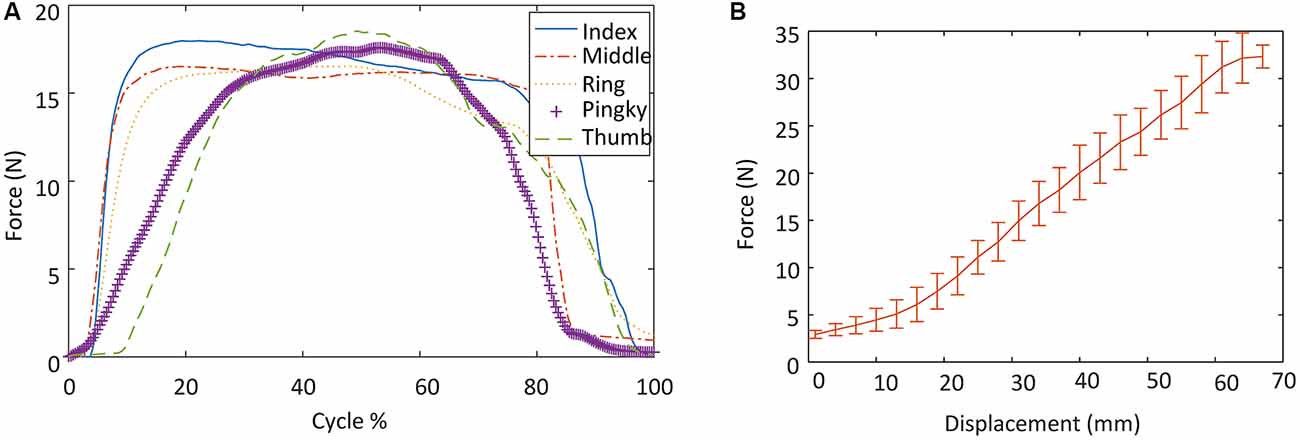

To characterize the force output of the hand exoskeleton prototype, two types of output force measurement experiments were conducted: we measured the force received by the fingertips during exoskeleton actuation, and the maximum pulling force when grasping an object.

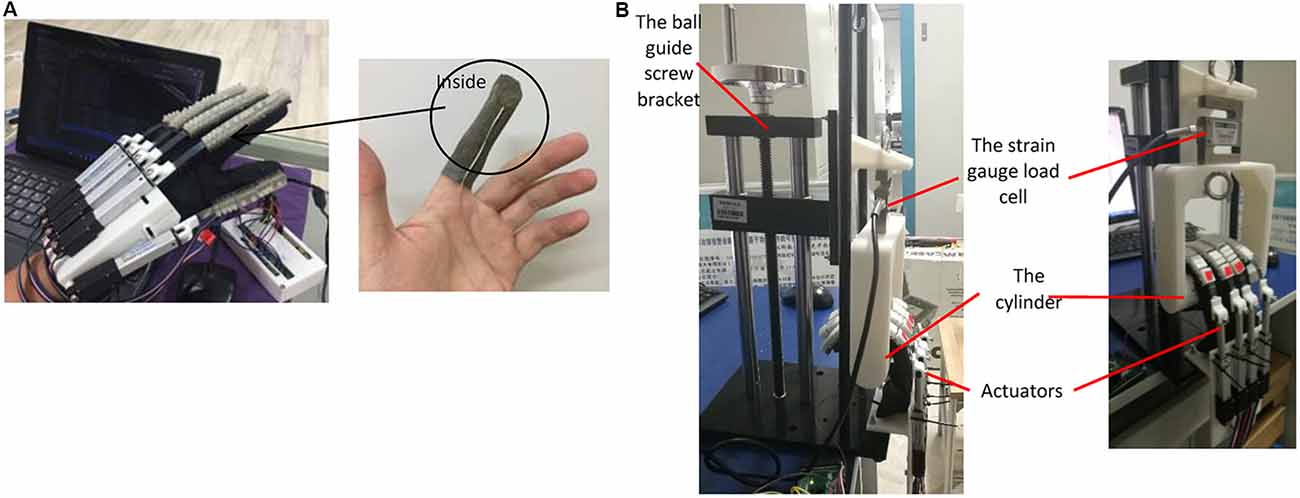

For the first type, the interactive forces between the exoskeleton and the human fingertips were recorded during hand closing and opening. A Finger TPS System (Pressure Profile Systems, Los Angeles, CA, USA) was used to measure the output force of the finger exoskeleton at each fingertip. The technical characteristics of the fingertip force sensor are listed in Table 2, and the experimental set-up is shown in Figure 3A. The same subject as in the range-of-motion experiment wore a Finger TPS sensor on each finger inside the glove of the hand exoskeleton, with the force sensing element placed between the fingertip and the exoskeleton. The subject’s hand was passively driven by the exoskeleton through hand closing and opening while the TPS System recorded the fingertip forces.

Figure 3. Output force measurement experimental set-up: (A) the interactive forces between the exoskeleton and the human fingertips during the exoskeleton actuation; and (B) tension provided by the finger exoskeleton in a grasping motion.

For the second type, the tension provided by the finger exoskeleton in a grasping motion was measured with a strain gauge load cell (Model THL-1, Bengbu, China) with a range of 500 N (error: 0.02% of full scale). The experimental platform is shown in Figure 3B. A 3D-printed cylinder was connected to a ball guide screw bracket through the load cell. The bracket ensured that the cylinder moved up and down smoothly and accurately. Four actuators were fixed at the bottom of the ball guide screw. During the test, the actuators were bent to their maximum extent and held in that posture while the ball guide screw slowly pulled the cylinder upward. Force data collection started when the finger came into contact with the cylinder, and the sensor output was then recorded every 3 mm of cylinder displacement. The experiment was repeated three times.

Experiments involving human subjects were conducted to evaluate the performance of the attention value-based switch for the hand exoskeleton. Fourteen participants (10 males and four females; mean age 23.8 years, SD = 0.89) took part. All experiments were conducted in a quiet room. Participants sat in front of a laptop and wore the Brainlink device.

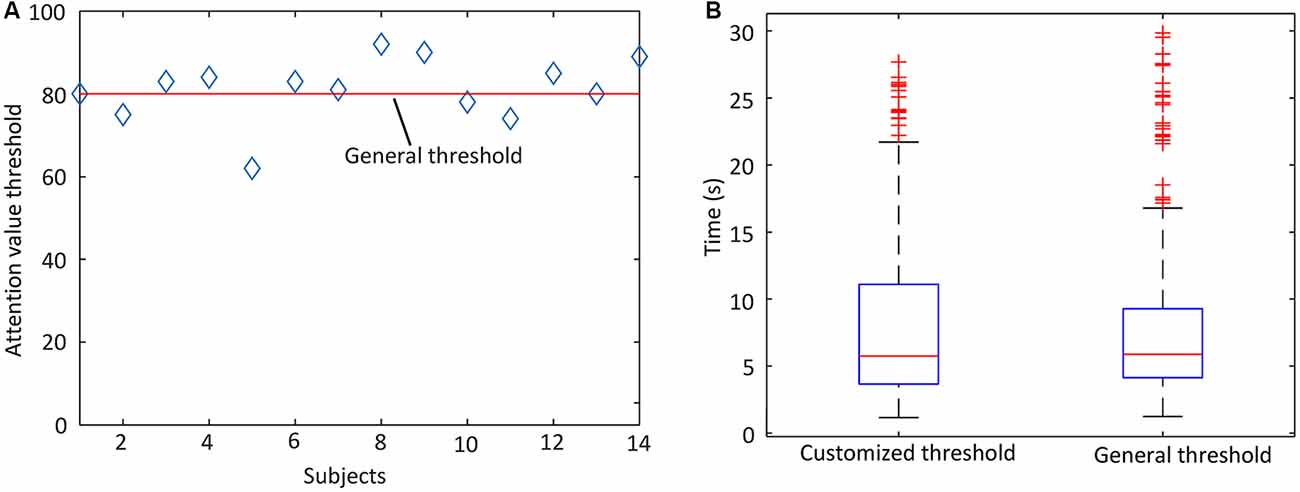

The purpose of this part was to determine the attention value threshold. The experiment measured each subject’s attention values in the resting state, during concentration practice with visual guidance, during concentration practice without visual guidance, and under distraction. In the resting-state experiment, participants were idle and did not imagine any movement; this lasted 50 s. In the concentration practice with visual guidance, participants watched a video clip of a hand grasping motion and focused on the action for at least 50 s; the grasping video was played repeatedly for the entire 50 s. In the distraction experiment, participants listened to simulated collision sounds or factory noise while watching three video clips and, at the same time, answered random questions. In the concentration practice without visual guidance, subjects looked at a blank screen and imagined the grasping action, with no video guidance. The start of each trial was cued to the participant by a voice prompt. Each experiment was repeated three times per participant, and the attention values were recorded. Before the experiment, the procedure was explained to each participant, who then completed one familiarization trial whose data were not recorded. There was a break of at least 1 min between trials.

The purpose of this part was to evaluate the proposed attention-based exoskeleton control method by measuring the response time and success rate of exoskeleton activation using the thresholds determined in the previous part. Since the attention values measured while participants focused on the video were higher than those without visual guidance, visual guidance was applied here. Participants looked at the motion demonstration of hand grasping on the screen and imagined the same motion. If the threshold was reached, the hand exoskeleton was activated to perform the same motion. Each participant repeated this process 20 times, and the response time of each test was recorded. Some subjects need a short time to clear their thoughts and focus on the hand motion; on the other hand, it is difficult to maintain a high attention value for a long time. Therefore, we set a 30 s time limit for each trial: a test that exceeded 30 s was marked as a failure.
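The trial rule above (success if the threshold is reached within 30 s of the cue, otherwise failure) can be sketched as follows. Timestamps are used rather than sample indices because, as noted in the results, some attention samples are lost over Bluetooth. The function names and data layout are illustrative assumptions, not the authors' code.

```python
from __future__ import annotations

TIME_LIMIT_S = 30.0


def response_time_s(samples: list[tuple[float, float]], threshold: float,
                    cue_time_s: float, limit_s: float = TIME_LIMIT_S) -> float | None:
    """Seconds from the cue to the first attention sample at or above `threshold`.

    `samples` are (timestamp_s, attention) pairs in time order. Returns None
    (a failed trial) if the threshold is not reached within `limit_s` of the cue.
    """
    for t, attention in samples:
        if t < cue_time_s:
            continue
        elapsed = t - cue_time_s
        if elapsed > limit_s:
            break
        if attention >= threshold:
            return elapsed
    return None


def summarize(times: list[float | None]) -> tuple[float, float | None]:
    """Success rate and mean response time of the successful trials."""
    ok = [t for t in times if t is not None]
    rate = len(ok) / len(times) if times else 0.0
    mean = sum(ok) / len(ok) if ok else None
    return rate, mean
```

Failed trials are excluded from the mean response time, which is one reasonable reading of how the actuation times in the results were averaged; the article does not state this explicitly.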

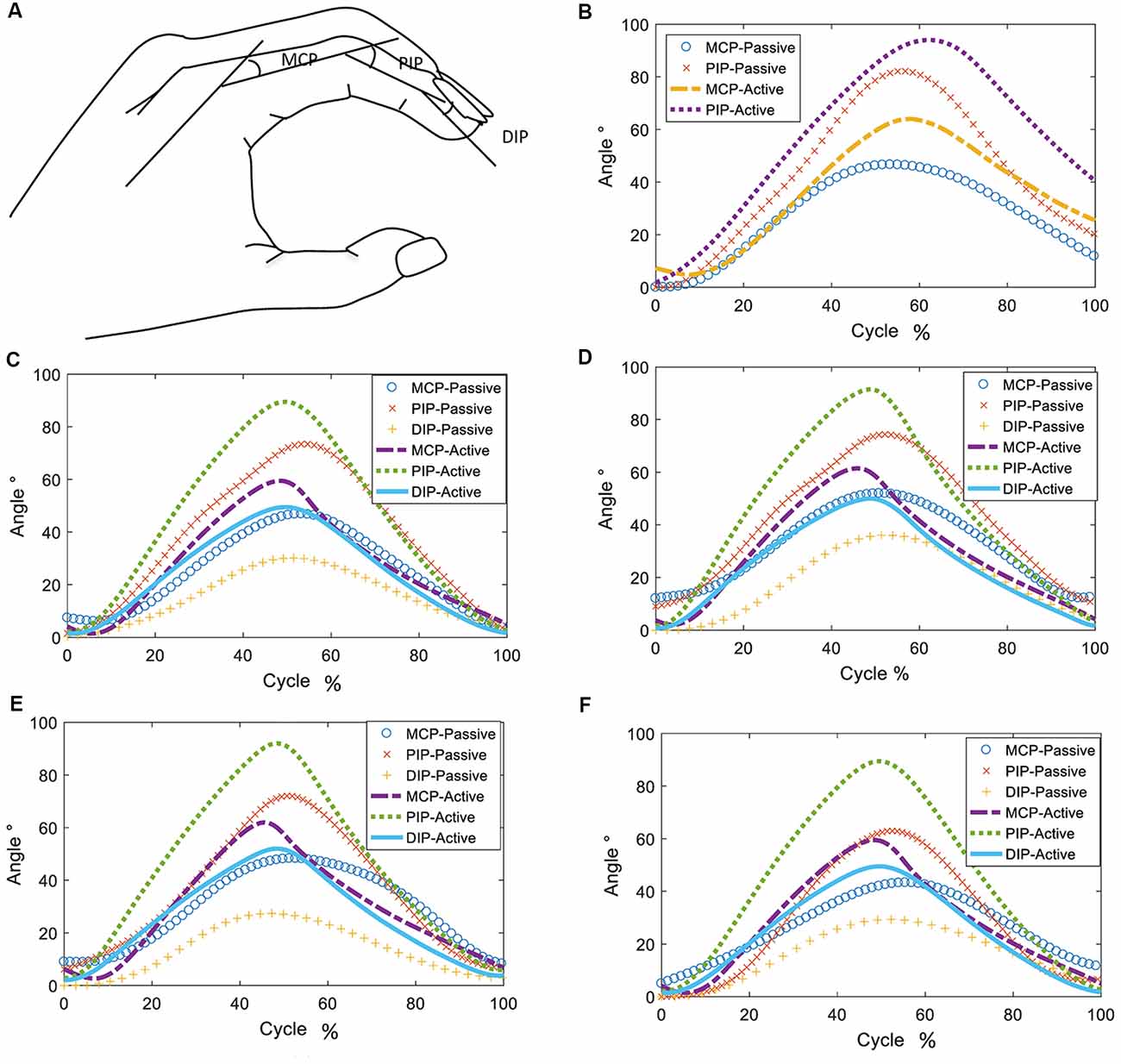

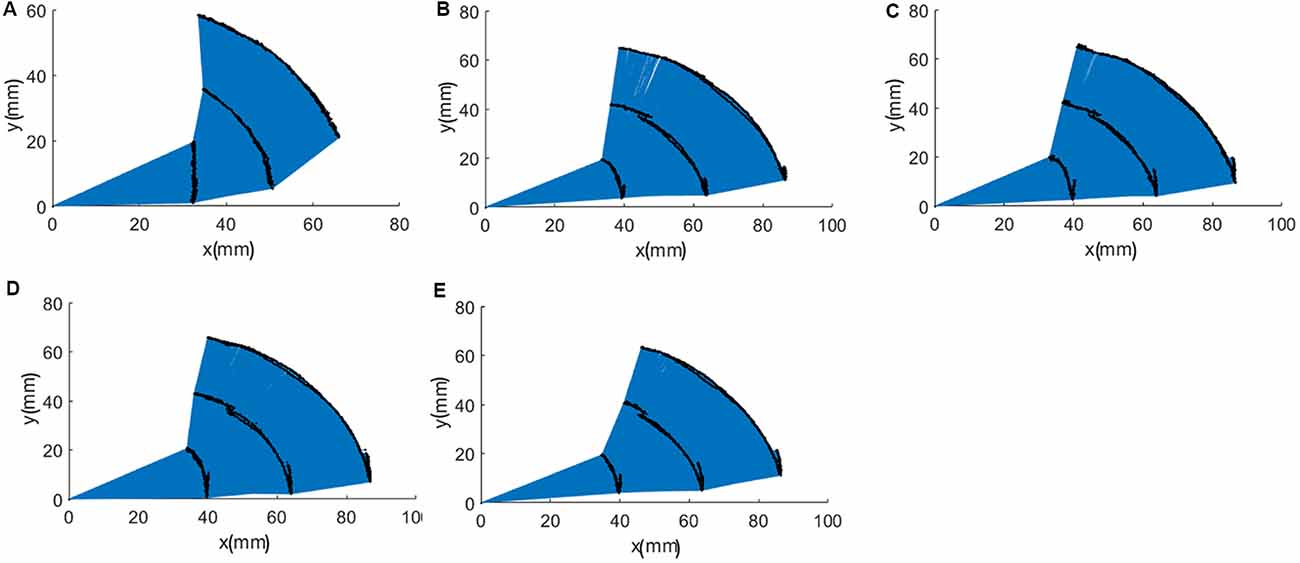

Figure 4A shows the definition of the joint angles. The range of motion of each joint in the passive session was compared with the maximum joint angle in the active session; the results are shown in Figures 4 and 5 and in Table 3. Although the proposed finger exoskeleton achieved smaller joint bending angles, the mechanically guided motion of the prototype corresponded well to the active human finger motion. Since the achieved finger joint angles exceed the tripod-grasping requirements listed in the design requirements (thumb MPJ and IPJ around 51° and 27°; index finger MCPJ, PIPJ, and DIPJ around 46°, 48°, and 12°; middle finger around 46°, 54°, and 12°), we consider the range of motion of the prototype sufficient for ADL assistance and finger rehabilitation.

Figure 4. (A) Definitions of joint angles and changes of joint angles during passive and active hand opening and closing: (B) thumb, (C) index finger, (D) middle finger, (E) ring finger, and (F) pinky finger.

Figure 5. Trajectories of fingertips, distal interphalangeal joint (DIPJ), and proximal interphalangeal joint (PIPJ): (A) thumb, (B) index finger, (C) middle finger, (D) ring finger, and (E) pinky finger.

As shown in Figure 6A, during hand closing and opening, the maximum interactive forces between the exoskeleton and the fingertips were 17.98 N, 16.52 N, 16.56 N, 17.71 N, and 18.70 N for the thumb, index, middle, ring, and pinky fingers, respectively. The results of the pulling force experiment are shown in Figure 6B: the four fingers together provided up to 30.87 ± 0.97 N of pulling force. As described in Section “Exoskeleton Design”, each robot finger should exert a distal tip force of approximately 7.3 N to achieve a palmar grasp (four fingers against the palm of the hand) to pick up objects weighing less than 1.5 kg; four fingers each exerting 7.3 N amount to 29.2 N. Since every fingertip force exceeds 7.3 N and the four-finger pulling force exceeds 29.2 N, we consider the output force of the proposed finger exoskeleton adequate for assistance in ADL.

Figure 6. Output force measurement experimental results: (A) the interactive forces between the exoskeleton and the human fingertips during the exoskeleton actuation; and (B) tension provided by the finger exoskeleton in a grasping motion.

An average attention value was calculated for each trial from a 30 s period of data after the attention value had stabilized. The sample size was 42 (14 subjects × 3 repeats). As shown in Figure 7, the mean attention values were 26.3 (SD = 10.7), 40.2 (SD = 10.70), 76.4 (SD = 8.74), and 81.1 (SD = 9.38) for the distraction, resting, concentration without visual guidance, and concentration with visual guidance conditions, respectively. A Shapiro-Wilk test was used to check normality; none of the conditions departed significantly from a normal distribution (resting: W = 0.988, p = 0.925; concentration without visual guidance: W = 0.981, p = 0.7; distraction: W = 0.951, p = 0.067; concentration with visual guidance: W = 0.963, p = 0.192). A Levene test found no significant difference in variances (p = 0.734 > 0.05). Therefore, a one-way analysis of variance (ANOVA) was used to test whether there were significant differences among the conditions and among the human subjects. The analysis showed significant differences among the conditions (p = 1.71 × 10⁻⁶⁷ < 0.05). Pairwise Student’s t-tests with Bonferroni correction were then performed in R (R Project for Statistical Computing). Attention values in the distraction condition were significantly lower than in the other three conditions (vs. resting: p = 9.31 × 10⁻⁸; vs. concentration with visual guidance: p < 2.2 × 10⁻¹⁶; vs. concentration without visual guidance: p < 2.2 × 10⁻¹⁶). The average attention value during concentration with visual guidance was also significantly higher than that during concentration without visual guidance (p = 4.96 × 10⁻³). Attention values at rest were significantly lower than in both concentration conditions, with and without visual guidance (p < 2.2 × 10⁻¹⁶).
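The one-way ANOVA and Bonferroni steps above can be sketched with the Python standard library. This is a minimal illustration, not the authors' R analysis: it computes only the F statistic and its degrees of freedom (the p-value needs the F distribution, for example `scipy.stats.f.sf(F, df_between, df_within)`), and the Bonferroni helper multiplies each raw p-value by the number of comparisons, six for four conditions, capping at 1. In R, `pairwise.t.test(x, g, p.adjust.method = "bonferroni")` is one way to obtain such adjusted p-values, though the article does not say which function was used.

```python
from __future__ import annotations

from statistics import fmean


def one_way_anova_f(groups: list[list[float]]) -> tuple[float, int, int]:
    """F statistic and (between, within) degrees of freedom of a one-way ANOVA."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand = fmean([x for g in groups for x in g])
    means = [fmean(g) for g in groups]
    ss_between = sum(len(g) * (m - grand) ** 2 for g, m in zip(groups, means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between, df_within = k - 1, n - k
    return (ss_between / df_between) / (ss_within / df_within), df_between, df_within


def bonferroni(p_values: dict[str, float]) -> dict[str, float]:
    """Bonferroni-adjusted p-values: multiply by the number of comparisons, cap at 1."""
    m = len(p_values)
    return {name: min(1.0, p * m) for name, p in p_values.items()}


# Toy data, not the study's attention values.
print(one_way_anova_f([[1, 2, 3], [4, 5, 6]]))
```

Bonferroni is deliberately conservative; with six comparisons a raw p-value must fall below 0.05 / 6 ≈ 0.0083 to remain significant at the 0.05 level.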

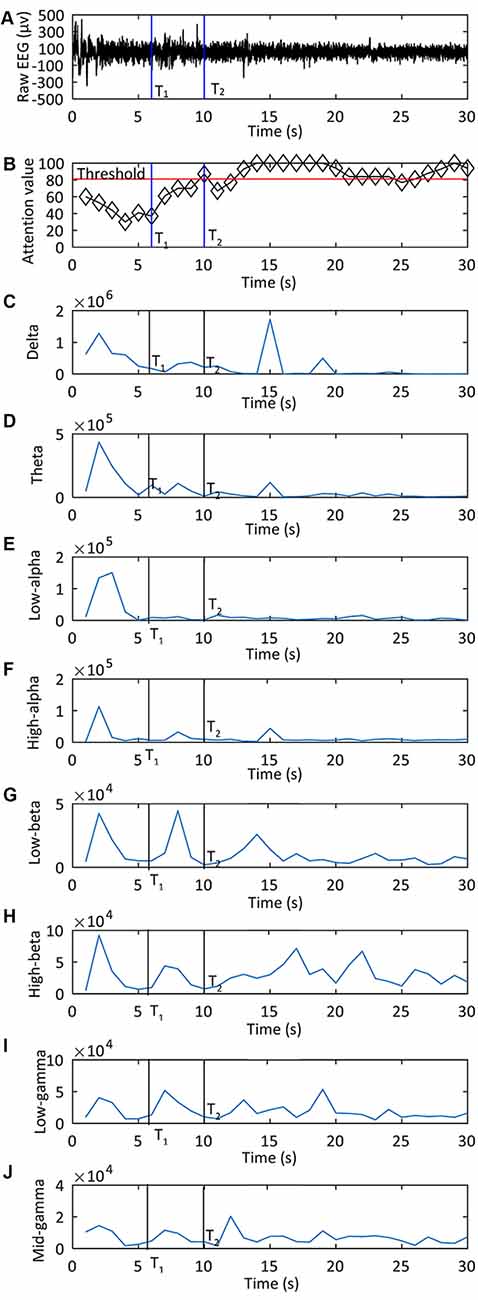

There were 14 subjects and 20 trials per subject. The attention thresholds used in the experiment are shown in Figure 8A, and the hand exoskeleton activation performance of each subject is shown in Table 4. Figure 9 shows one set of EEG signals and attention values extracted from the Brainlink device, illustrating how the attention value changes from the resting state to the focusing state. T1 was the moment the subject heard the voice prompt to focus; T2 was the moment the attention value reached the threshold. Note that the EEG sampling rate of the ThinkGear AM module in the Brainlink Lite device was 512 Hz (NeuroSky, 2019). However, the module computes attention values at a rate of one per second, and the software of the Brainlink Lite device provides the corresponding EEG band power values only once per second. These band power values have no units and are only meaningful relative to each other and over time, that is, for comparing relative magnitudes and temporal fluctuations. Each received frame was error-checked, and any frame with errors was discarded. Data loss over the Bluetooth link was observed in our experiments: about 55–58 data points were received per minute. The data shown in Figure 9 therefore have an interval of about 1 s between consecutive points. Since our threshold-based triggering did not require accurate time intervals between attention values, this data loss was ignored for exoskeleton control. The hand exoskeleton had an overall success rate of 94.64% with customized thresholds and 96.43% with a general threshold, giving an overall actuation success rate of 95.54%.
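The frame-level error checking mentioned above can be illustrated with NeuroSky's documented ThinkGear serial packet format, which, to our understanding, is what the TGAM module streams: two 0xAA sync bytes, a payload length below 170, the payload, and a checksum equal to the bitwise inverse of the low 8 bits of the payload sum. The sketch below assumes that format and decodes only the poor-signal (code 0x02), attention (0x04), and eight-band EEG power (0x83) rows, skipping all others. It is not the Brainlink software or the authors' MATLAB code.

```python
from __future__ import annotations

SYNC = 0xAA
# Band order of the 0x83 row; NeuroSky calls the last band "mid gamma"
# (presumably the band labeled high gamma in Figure 9).
BANDS = ("delta", "theta", "low_alpha", "high_alpha",
         "low_beta", "high_beta", "low_gamma", "mid_gamma")


def checksum_ok(payload: bytes, checksum: int) -> bool:
    """ThinkGear checksum: inverted low byte of the payload sum."""
    return (~sum(payload)) & 0xFF == checksum


def parse_payload(payload: bytes) -> dict:
    """Decode the data rows of one payload; unknown codes are skipped."""
    out: dict = {}
    i = 0
    while i < len(payload):
        while i < len(payload) and payload[i] == 0x55:  # extended-code bytes, ignored
            i += 1
        if i >= len(payload):
            break
        code = payload[i]
        i += 1
        if code < 0x80:  # single-byte value
            value = payload[i:i + 1]
            i += 1
        else:  # multi-byte value preceded by its length
            length = payload[i]
            value = payload[i + 1:i + 1 + length]
            i += 1 + length
        if code == 0x02 and value:
            out["poor_signal"] = value[0]
        elif code == 0x04 and value:
            out["attention"] = value[0]
        elif code == 0x83 and len(value) == 24:  # eight 3-byte big-endian values
            out["bands"] = {name: int.from_bytes(value[3 * k:3 * k + 3], "big")
                            for k, name in enumerate(BANDS)}
    return out


def iter_packets(stream: bytes):
    """Yield decoded payloads; frames with a bad checksum are discarded."""
    i = 0
    while i + 3 <= len(stream):
        if stream[i] != SYNC or stream[i + 1] != SYNC:
            i += 1
            continue
        plength = stream[i + 2]
        if plength >= SYNC:  # 170 or more is not a valid payload length
            i += 1
            continue
        end = i + 3 + plength
        if end >= len(stream):  # incomplete frame at the end of the buffer
            break
        payload, chk = stream[i + 3:end], stream[end]
        if checksum_ok(payload, chk):
            yield parse_payload(payload)
            i = end + 1
        else:
            i += 1  # resynchronize after a corrupted frame
```

Resynchronizing one byte at a time after a bad checksum lets the parser recover from partial frames, which matters on a lossy Bluetooth link.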
An ANOVA showed no significant difference in actuation success rate between the general threshold and the customized thresholds (p = 0.48 > 0.05). Note, however, that the success rates under both the general threshold and the customized thresholds were not normally distributed (Shapiro-Wilk test: general threshold, p < 0.001 < 0.05; customized thresholds, p = 0.002 < 0.05) and that their variances were unequal (Levene test: p = 0.045 < 0.05).
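The three success rates are related by simple pooling. As a hedged check, assuming each threshold condition comprised 280 trials (14 subjects × 20 trials; the text does not state explicitly whether the 20 trials were per condition), the reported percentages correspond to 265 and 270 successful actuations. These counts are inferred from the percentages, not reported in the article. Because both conditions then have the same number of trials, the pooled rate equals the mean of the two rates.

```python
def success_rate(successes: int, trials: int) -> float:
    """Actuation success rate in percent."""
    return 100 * successes / trials


# Hypothetical counts inferred from the reported percentages
# (assumes 280 trials per threshold condition).
customized = (265, 280)
general = (270, 280)
pooled = (customized[0] + general[0], customized[1] + general[1])

for label, (s, n) in [("customized", customized), ("general", general), ("pooled", pooled)]:
    print(f"{label}: {s}/{n} = {success_rate(s, n):.2f}%")
# customized: 265/280 = 94.64%
# general: 270/280 = 96.43%
# pooled: 535/560 = 95.54%
```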

Figure 8. (A) Thresholds used in the attention-based hand exoskeleton control experiment and (B) the time needed for the participants to reach the attention threshold to activate the hand rehabilitation exoskeleton.

Figure 9. One set of data showing electroencephalography (EEG) signals and attention values extracted from the Brainlink device during the experiment of the attention-based control of the hand exoskeleton: (A) the raw EEG signal; (B) the attention value used to control the exoskeleton; (C–J) EEG band power values for delta, theta, low alpha, high alpha, low beta, high beta, low gamma, and high gamma, respectively. T1 was the moment when the subject heard the voice prompt to focus. T2 was the moment when the attention value reached the threshold.

As shown in Figure 8B, the average time before actuation was 8.06 s (SD = 6.15) with a general attention value threshold and 8.08 s (SD = 6.21) with customized attention value thresholds. A Shapiro-Wilk test was used to check whether the activation time was normally distributed. In neither condition was the time before actuation normally distributed (general threshold: W = 0.798, p < 2.2 × 10⁻¹⁶; customized thresholds: W = 0.8377, p = 5.639 × 10⁻¹⁶). Therefore, a Mann-Whitney U test was used to compare the two conditions; the difference in time before actuation was not significant (W = 34734, p = 0.5607).
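The time before actuation is the interval from T1 (voice prompt) to T2 (threshold reached). The article does not specify how T2 was detected, so the sketch below assumes T2 is the first attention sample at or above the threshold after T1, and it uses each sample's arrival time rather than its index so that samples lost over Bluetooth do not distort the result. The function name and all values are hypothetical. The second part compares two conditions with SciPy's two-sided `mannwhitneyu`; R's `wilcox.test` reports the same U statistic under the name W.

```python
from typing import Optional, Sequence

from scipy.stats import mannwhitneyu


def time_to_threshold(times: Sequence[float], attention: Sequence[int],
                      t1: float, threshold: int) -> Optional[float]:
    """Seconds from the focus prompt (T1) to the first sample at or above
    the threshold (T2); None if the threshold is never reached."""
    for t, a in zip(times, attention):
        if t >= t1 and a >= threshold:
            return float(t - t1)
    return None


# Toy 1 Hz attention series (hypothetical values, not study data).
times = [0, 1, 2, 3, 4, 5]
attention = [30, 35, 40, 52, 61, 70]
print(time_to_threshold(times, attention, t1=2, threshold=60))  # 2.0

# Comparing actuation times of two conditions (hypothetical values).
general = [3.0, 5.0, 8.0, 12.0, 20.0]
customized = [4.0, 6.0, 7.0, 11.0, 22.0]
result = mannwhitneyu(general, customized, alternative="two-sided")
print(result.statistic, result.pvalue)
```

Trials that never reach the threshold return `None` and would be counted as unsuccessful rather than entered into the time comparison.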

Compared to the hand exoskeleton in Arata et al. (2013), which uses a three-layered sliding spring mechanism, our proposed mechanism has fewer sliding springs and a higher output force capacity (30.87 ± 0.97 N pull force for four fingers vs. 3 N per finger). Unlike the hand exoskeleton proposed in Borboni et al. (2016), our design does not need an extra portable trolley carrying an air compressor and pneumatic or electronic systems; the entire device is therefore much smaller.
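The force comparison mixes a total for four fingers with a per-finger figure. As a rough normalization, assuming purely for illustration that the pull force is shared equally among the four fingers (the article reports an actuator pull force, not per-finger fingertip forces):

```latex
\frac{30.87 \pm 0.97\ \mathrm{N}}{4} \approx 7.72 \pm 0.24\ \mathrm{N\ per\ finger},
\qquad
\frac{7.72\ \mathrm{N}}{3\ \mathrm{N}} \approx 2.6
```

Even under this crude assumption, the per-finger figure is about 2.6 times the 3 N reported for Arata et al. (2013), although the two quantities were not necessarily measured in the same way.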

Further research on the design of the elements, using kinematic modeling, is needed to optimize the contact force and trajectory. The actuator should also be adapted to different therapy exercises, such as strong grasp, hook grasp, clip grasp, and spherical grasp. Because the actuator is attached to the glove with Velcro straps, it can be exchanged easily.

According to the success rates in the attention-based control experiment, the general threshold performed as well as the customized thresholds, and there was no significant difference in the time before robot actuation in the system evaluation experiment. Therefore, a general attention value threshold for a given group of users can be a good choice for hand exoskeleton activation. Only young, healthy people participated in this experiment. Future studies should include more participants with differing characteristics, such as people with a history of stroke.

Linking the intention to execute a movement with sensorimotor feedback has the potential to promote rehabilitation in stroke patients. In this article, we proposed using the attention value as an input command for controlling the hand exoskeleton. This method is more practical for clinical use than more complex and expensive BCI methods.

In this article, we present the design of a brain-controlled hand exoskeleton for the combined assistance and rehabilitation of finger extension and flexion using a multi-segment mechanism. Its compliance makes the hand exoskeleton safe for human-robot interaction. The device was characterized in terms of its force output and range of motion, and the results showed that it could achieve hand grasping with acceptable force and range of motion. Active rehabilitation training is realized using a threshold of the attention value measured by an EEG sensor as a brain-controlled switch for the hand exoskeleton. In the experiment used to determine the attention value threshold, the average attention value was higher when participants focused on the video motion demonstration than when they focused without visual guidance. A further experiment evaluated the performance of the attention value-based switch for the hand exoskeleton with visual guidance; the overall activation success rate was 95.54%. The success rates in this experiment show that the general threshold performed as well as the customized thresholds, and the time before robot actuation did not differ significantly between the two. Therefore, a general attention value threshold for a given group of users can be a suitable choice for hand exoskeleton activation.

For future studies, the multi-segment mechanism will be further optimized to achieve different bending profiles, with variable stiffness at different locations, so that the device can be highly customized for different therapy exercises. Moreover, additional experiments with stroke patients are required to establish the clinical feasibility of the proposed method.

ML, JC, and BH: designed the hand exoskeleton. ML and ZL: designed the BCI system. ML, BH, C-GZ, ZL, and YZ: analyzed the data. ML: wrote the article. ML, GX, JX, and KA: contributed materials and analysis tools. KA: language correction.

This work was supported by the National Natural Science Foundation of China (approval no. 51505363), the Fundamental Research Funds for the Central Universities (xzy012019012), the China Postdoctoral Science Foundation (2019M653586), and the Natural Science Foundation of Shaanxi Province of China (2019JQ-332).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

We thank the participants of the experiments.

Ang, B. W. K., and Yeow, C. H. (2017). “Print-it-Yourself (PIY) glove: a fully 3D printed soft robotic hand rehabilitative and assistive exoskeleton for stroke patients,” in Proceedings of the IEEE International Conference on Intelligent Robots and Systems (Vancouver, Canada: IEEE), 1219–1223.

Arata, J., Ohmoto, K., Gassert, R., Lambercy, O., Fujimoto, H., and Wada, I. (2013). “A new hand exoskeleton device for rehabilitation using a three-layered sliding spring mechanism,” in Proceedings of the 2013 IEEE International Conference on Robotics and Automation (Karlsruhe, Germany: IEEE), 3902–3907.

Bhagat, N. A., Venkatakrishnan, A., Abibullaev, B., Artz, E. J., Yozbatiran, N., Blank, A. A., et al. (2016). Design and optimization of an EEG-based brain machine interface (BMI) to an upper-limb exoskeleton for stroke survivors. Front. Neurosci. 10:122. doi: 10.3389/fnins.2016.00122

Borboni, A., Mor, M., and Faglia, R. (2016). Gloreha—hand robotic rehabilitation: design, mechanical model, and experiments. J. Dyn. Syst. Meas. Control 138:111003. doi: 10.1115/1.4033831

Carmeli, E., Peleg, S., Bartur, G., Elbo, E., and Vatine, J. J. (2011). HandTutor enhanced hand rehabilitation after stroke—a pilot study. Physiother. Res. Int. 16, 191–200. doi: 10.1002/pri.485

Connelly, L., Jia, Y., Toro, M. L., Stoykov, M. E., Kenyon, R. V., and Kamper, D. G. (2010). A pneumatic glove and immersive virtual reality environment for hand rehabilitative training after stroke. IEEE Trans. Neural Syst. Rehabil. Eng. 18, 551–559. doi: 10.1109/tnsre.2010.2047588

Cruz, E. G., Waldinger, H. C., and Kamper, D. G. (2005). Kinetic and kinematic workspaces of the index finger following stroke. Brain 128, 1112–1121. doi: 10.1093/brain/awh432

Cui, X., Li, Z., Liu, Y., Yu, F., and Sun, H. (2018). Intelligent car housekeeper design based on brain waves. Conf. Mater. Sci. Eng. 397:012128. doi: 10.1088/1757-899X/397/1/012128

Cui, L., Phan, A., and Allison, G. (2015). “Design and fabrication of a three dimensional printable non-assembly articulated hand exoskeleton for rehabilitation,” in Proceedings of the International Conference of the IEEE Engineering in Medicine and Biology Society (Milan, Italy: IEEE), 4627–4630.

CyberGlove Systems Inc. (2016). Cybergrasp. Available online at: http://www.cyberglovesystems.com/cybergrasp. Accessed December 16, 2016.

Delph, M. A., Fischer, S. A., Gauthier, P. W., Luna, C. H. M., Clancy, E. A., and Fischer, G. S. (2013). “A soft robotic exomusculature glove with integrated sEMG sensing for hand rehabilitation,” in Proceedings of the 2013 IEEE 13th International Conference on Rehabilitation Robotics (ICORR) (Seattle, WA, USA: IEEE), 1–7. doi: 10.1109/ICORR.2013.6650426

Fang, H., Xie, Z., and Liu, H. (2009). “An exoskeleton master hand for controlling DLR/HIT hand,” in Proceedings of the 2009 IEEE/RSJ International Conference on Intelligent Robots and Systems, IROS 2009 (St. Louis, USA: IEEE), 3703–3708.

Heo, P., Gu, G. M., Lee, S.-J., Rhee, K., and Kim, J. (2012). Current hand exoskeleton technologies for rehabilitation and assistive engineering. Int. J. Precis. Eng. Manuf. 13, 807–824. doi: 10.1007/s12541-012-0107-2

Ho, N. S. K., Tong, K. Y., Hu, X. L., Fung, K. L., Wei, X. J., Rong, W., et al. (2011). An EMG-driven exoskeleton hand robotic training device on chronic stroke subjects: task training system for stroke rehabilitation. IEEE Int. Conf. Rehabil. Robot. 2011:5975340. doi: 10.1109/icorr.2011.5975340

In, H., Kang, B. K., Sin, M., and Cho, K. (2015). Exo-Glove: a soft wearable robot for the hand using soft tendon routing system. IEEE Robot. Autom. Magaz. 22, 97–105. doi: 10.1109/MRA.2014.2362863

Iordache, B. (2017). Brain-computer interface: the mindwave mobile interactive device for brainwave monitoring. Cent. East. Eur. Online Libr. J. 1–1, 43–52.

Iqbal, J., Khan, H., Tsagarakis, N. G., and Caldwell, D. G. (2014). A novel exoskeleton robotic system for hand rehabilitation—conceptualization to prototyping. Biocybern. Biomed. Eng. 34, 79–89. doi: 10.1016/j.bbe.2014.01.003

Ito, S., Kawasaki, H., Ishigure, Y., Natsume, M., Mouri, T., and Nishimoto, Y. (2011). A design of fine motion assist equipment for disabled hand in robotic rehabilitation system. J. Franklin Inst. 348, 79–89. doi: 10.1016/j.jfranklin.2009.02.009

Jiang, S., Chen, L., Wang, Z., Xu, J., Qi, C., Qi, H., et al. (2015). “Application of BCI-FES system on stroke rehabilitation,” in Proceedings of the International IEEE/EMBS Conference on Neural Engineering, NER (Montpellier, France: IEEE), 1112–1115.

Kamper, D. G., Fischer, H. C., Cruz, E. G., and Rymer, W. Z. (2006). Weakness is the primary contributor to finger impairment in chronic stroke. Arch. Phys. Med. Rehabil. 87, 1262–1269. doi: 10.1016/j.apmr.2006.05.013

Kokubun, A., Ban, Y., Narumi, T., Tanikawa, T., and Hirose, M. (2013). “Visuo-haptic interaction with mobile rear touch interface,” in ACM SIGGRAPH 2013 Posters on—SIGGRAPH ’13 (New York, USA: ACM Press), 38.

Kraus, D., Naros, G., Bauer, R., Khademi, F., Leão, M. T., Ziemann, U., et al. (2016). Brain state-dependent transcranial magnetic closed-loop stimulation controlled by sensorimotor desynchronization induces robust increase of corticospinal excitability. Brain Stimul. 9, 415–424. doi: 10.1016/j.brs.2016.02.007

Kutner, N. G., Zhang, R., Butler, A. J., Wolf, S. L., and Alberts, J. L. (2010). Quality-of-life change associated with robotic-assisted therapy to improve hand motor function in patients with subacute stroke: a randomized clinical trial. Phys. Ther. 90, 493–504. doi: 10.2522/ptj.20090160

Lambercy, O., Dovat, L., Yun, H., Wee, S. K., Kuah, C. W. K., Chua, K. S. G., et al. (2011). Effects of a robot-assisted training of grasp and pronation/supination in chronic stroke: a pilot study. J. Neuroeng. Rehabil. 8:63. doi: 10.1186/1743-0003-8-63

Li, L., Wang, J., Xu, G., Li, M., and Xie, J. (2015). The study of object-oriented motor imagery based on EEG suppression. PLoS One 10:e0144256. doi: 10.1371/journal.pone.0144256

Li, M., Xu, G., Xie, J., and Chen, C. (2018). A review: motor rehabilitation after stroke with control based on human intent. Proc. Inst. Mech. Eng. H 232, 344–360. doi: 10.1177/0954411918755828

Liang, S., Choi, K.-S., Qin, J., Pang, W.-M., Wang, Q., and Heng, P.-A. (2016). Improving the discrimination of hand motor imagery via virtual reality based visual guidance. Comput. Methods Programs Biomed. 132, 63–74. doi: 10.1016/j.cmpb.2016.04.023

Maciejasz, P., Eschweiler, J., Gerlach-Hahn, K., Jansen-Troy, A., and Leonhardt, S. (2014). A survey on robotic devices for upper limb rehabilitation. J. Neuroeng. Rehabil. 11:3. doi: 10.1186/1743-0003-11-3

Nakagawara, S., Kajimoto, H., Kawakami, N., Tachi, S., and Kawabuchi, I. (2005). “An encounter-type multi-fingered master hand using circuitous joints,” in Proceedings of the IEEE International Conference on Robotics and Automation (Barcelona, Spain: IEEE), 2667–2672.

NeuroSky. (2018). Attention algorithm. Available online at: http://neurosky.com/biosensors/eeg-sensor/algorithms. Accessed September 10, 2018.

NeuroSky. (2019). TGAM1 characteristics. Available online at: http://neurosky.com/biosensors/eeg-sensor/?tdsourcetag=s_pctim_aiomsg. Accessed May 7, 2019.

Nycz, C. J., Butzer, T., Lambercy, O., Arata, J., Fischer, G. S., and Gassert, R. (2016). Design and characterization of a lightweight and fully portable remote actuation system for use with a hand exoskeleton. Proc. IEEE Robot. Autom. Lett. 1, 976–983.

Oztop, E., Kawato, M., and Arbib, M. A. (2013). Mirror neurons: functions, mechanisms and models. Neurosci. Lett. 540, 43–55. doi: 10.1016/j.neulet.2012.10.005

Pichiorri, F., Morone, G., Petti, M., Toppi, J., Pisotta, I., Molinari, M., et al. (2015). Brain-computer interface boosts motor imagery practice during stroke recovery. Ann. Neurol. 77, 851–865. doi: 10.1002/ana.24390

Polygerinos, P., Lyne, S., Wang, Z., Nicolini, L. F., Mosadegh, B., Whitesides, G. M., et al. (2013). “Towards a soft pneumatic glove for hand rehabilitation,” in Proceedings of the IEEE International Conference on Intelligent Robots and Systems (Tokyo, Japan: IEEE), 1512–1517.

Polygerinos, P., Wang, Z., Galloway, K. C., Wood, R. J., and Walsh, C. J. (2015a). Soft robotic glove for combined assistance and at-home rehabilitation. Rob. Auton. Syst. 73, 135–143. doi: 10.1016/j.robot.2014.08.014

Polygerinos, P., Wang, Z., Galloway, K. C., Wood, R. J., and Walsh, C. J. (2015b). “Soft robotic glove for hand rehabilitation and task specific training,” in Proceedings of the IEEE International conference on Robotics and Automation ICRA (Washington, USA: IEEE), 2913–2919.

Rehab-Robotics Company Ltd. (2019). Hand of Hope brochure. Available online at: http://www.rehab-robotics.com/hoh/RM-230-HOH3-0001-7%20HOH_brochure_eng.pdf. Accessed March 25, 2019.

Smaby, N., Johanson, M. E., Baker, B., Kenney, D. E., Murray, W. M., and Hentz, V. R. (2004). Identification of key pinch forces required to complete functional tasks. J. Rehabil. Res. Dev. 41, 215–224. doi: 10.1682/jrrd.2004.02.0215

Takahashi, C. D., Der-Yeghiaian, L., Le, V., Motiwala, R. R., and Cramer, S. C. (2008). Robot-based hand motor therapy after stroke. Brain 131, 425–437. doi: 10.1093/brain/awm311

Teo, W. P., and Chew, E. (2014). Is motor-imagery brain-computer interface feasible in stroke rehabilitation? PM R 6, 723–728. doi: 10.1016/j.pmrj.2014.01.006

Tong, K. Y., Ho, S. K., Pang, P. M. K., Hu, X. L., Tam, W. K., Fung, K. L., et al. (2010). An intention driven hand functions task training robotic system. Conf. Proc. IEEE Eng. Med. Biol. Soc. 2010, 3406–3409. doi: 10.1109/iembs.2010.5627930

Ueki, S., Kawasaki, H., Ito, S., Nishimoto, Y., Abe, M., Aoki, T., et al. (2012). Development of a hand-assist robot with multi-degrees-of-freedom for rehabilitation therapy. IEEE/ASME Trans. Mechatronics 17, 136–146. doi: 10.1109/tmech.2010.2090353

Ueki, S., Nishimoto, Y., Abe, M., Kawasaki, H., Ito, S., Ishigure, Y., et al. (2008). Development of virtual reality exercise of hand motion assist robot for rehabilitation therapy by patient self-motion control. Conf. Proc. IEEE Eng. Med. Biol. Soc. 2008, 4282–4285. doi: 10.1109/iembs.2008.4650156

van Dokkum, L. E. H., Ward, T., and Laffont, I. (2015). Brain computer interfaces for neurorehabilitation-its current status as a rehabilitation strategy post-stroke. Ann. Phys. Rehabil. Med. 58, 3–8. doi: 10.1016/j.rehab.2014.09.016

Vourvopoulos, A., and Bermúdez I Badia, S. (2016). Motor priming in virtual reality can augment motor-imagery training efficacy in restorative brain-computer interaction: a within-subject analysis. J. Neuroeng. Rehabil. 13:69. doi: 10.1186/s12984-016-0173-2

Wolf, S. L., Winstein, C. J., Miller, J. P., and Morris, D. (2006). Effect of constraint-induced movement therapy on upper extremity function 3 to 9 months after stroke: the EXCITE randomized clinical trial. JAMA 296, 2095–2104. doi: 10.1001/jama.296.17.2095

Xu, R., Jiang, N., Mrachacz-Kersting, N., Lin, C., Asin Prieto, G., Moreno, J. C., et al. (2014). A closed-loop brain-computer interface triggering an active ankle-foot orthosis for inducing cortical neural plasticity. IEEE Trans. Biomed. Eng. 61, 2092–2101. doi: 10.1109/tbme.2014.2313867

Yap, H. K., Goh, J. C. H., and Yeow, R. C. H. (2015a). “Design and characterization of soft actuator for hand rehabilitation application,” in Proceedings of the 6th European Conference of the International Federation for Medical and Biological Engineering (Switzerland: Springer International Publishing), 367–370.

Yap, H. K., Lim, J. H., Nasrallah, F., Goh, J. C. H., and Yeow, R. C. H. (2015b). “A soft exoskeleton for hand assistive and rehabilitation application using pneumatic actuators with variable stiffness,” in IEEE International Conference on Robotics and Automation (ICRA) (Seattle, WA: IEEE), 4967–4972.

Keywords: hand exoskeleton, hand rehabilitation, brain-controlled rehabilitation, rigid-soft combined robot, EEG

Citation: Li M, He B, Liang Z, Zhao C-G, Chen J, Zhuo Y, Xu G, Xie J and Althoefer K (2019) An Attention-Controlled Hand Exoskeleton for the Rehabilitation of Finger Extension and Flexion Using a Rigid-Soft Combined Mechanism. Front. Neurorobot. 13:34. doi: 10.3389/fnbot.2019.00034

Received: 30 November 2018; Accepted: 15 May 2019;

Published: 29 May 2019.

Edited by:

Yongping Pan, National University of Singapore, Singapore

Reviewed by:

Nikunj Arunkumar Bhagat, University of Houston, United States

Copyright © 2019 Li, He, Liang, Zhao, Chen, Zhuo, Xu, Xie and Althoefer. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Min Li, min.li@mail.xjtu.edu.cn

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
