94% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

METHODS article

Front. Neurorobot., 09 August 2017

Volume 11 - 2017 | https://doi.org/10.3389/fnbot.2017.00037

This article is part of the Research TopicNeuromechanics and Control of Physical Behavior: from Experimental and Computational Formulations to Bio-inspired TechnologiesView all 23 articles

A dynamical model of an animal’s nervous system, or synthetic nervous system (SNS), is a potentially transformational control method. Due to increasingly detailed data on the connectivity and dynamics of both mammalian and insect nervous systems, controlling a legged robot with an SNS is largely a problem of parameter tuning. Our approach to this problem is to design functional subnetworks that perform specific operations, and then assemble them into larger models of the nervous system. In this paper, we present networks that perform addition, subtraction, multiplication, division, differentiation, and integration of incoming signals. Parameters are set within each subnetwork to produce the desired output by utilizing the operating range of neural activity, R, the gain of the operation, k, and bounds based on biological values. The assembly of large networks from functional subnetworks underpins our recent results with MantisBot.

The development of robotic control that can closely match the dexterity and adaptability found in the animal kingdom has so far remained elusive. This is because the control of locomotion is a complex process controlled by dynamic systems which are not fully understood. However, recent advances in neural imaging and recording has lead to an increase in the abundance and detail of our knowledge of how an animal’s nervous system controls its body within the context of its environment (for a recent review, see Buschmann et al. (2015)).

These advances have lead to an explosion of bio-inspired robotic systems in recent years (for a review, see Ijspeert (2014)). These models can be broadly categorized into a range of template and anchor models. In a template model, biological principles are abstracted, such using as a spring-loaded inverted pendulum (SLIP) model to investigate bipedal locomotion (Blickhan, 1989) or using Whegs to investigate insect locomotion (Allen et al., 2003; Schroer et al., 2004). These models seek to explain how specific characteristics of animal locomotion lead to desired behaviors, or they exploit certain principles of animal locomotion for more agile robotic systems. Anchor models, in contrast, seek to directly mimic particular mechanical or control mechanisms from animals, in order to understand how they function. Robots such as Pleurobot (Karakasiliotis et al., 2016), Puppy (Hunt et al., 2017), MantisBot (Szczecinski and Quinn, 2017; Szczecinski et al., 2017a), and others are relevant anchor models, because they seek to use highly articulated robots with central pattern generator controllers to understand how specific animals are capable of providing adaptable locomotion with their unique morphology and physical constraints.

The template versus anchor model distinction is not limited to physical models; it can also be applied to control systems. The majority of robotic controllers so far have been template models, either mathematical abstractions of neural systems, or black box artificial neural networks. This is because effective tools for setting parameters in more realistic, dynamic neural models to produce reliable behavior in a robotic system do not yet exist. In spite of growing knowledge about the neural connectivity that underlies locomotion control, detailed data for tuning these systems (neural time constants, ion channel conductivities, synaptic conductivities, etc.) remain largely unavailable, requiring the modeler or engineer to tune these parameter values. However, this is an inherently difficult task because there are many parameters to be tuned in a model, and likely many different parameter combinations that lead to indistinguishable performance (Prinz et al., 2004; Marder and Taylor, 2011). Thus, the emphasis in choosing parameter values should not be on selecting the singular “correct” values, but rather sufficiently “effective” values. In this work, we tune parameter values in functional subnetworks for addition, subtraction, multiplication, division, differentiation, and integration of incoming signals and use analytical techniques to identify constraints on the parameter values that must be met for the intended calculations to occur. Larger networks can then be assembled from these subnetworks with no additional tuning (Szczecinski and Quinn, 2017; Szczecinski et al., 2017a). In this manuscript, “tuning” refers to selecting the static parameter values for a network; “learning” refers to changing the parameter values while the model performs a task, either in simulation or in a robot, based on its performance; and “adapting” refers to a model qualitatively changing its behavior (e.g., walking more slowly), either with or without “learning.”

Neuromechanical models may be tuned in a supervised or unsupervised way. A supervised tuning method adjusts the parameters of the model until the model replicates animal data. This includes tuning the model by hand (Daun-Gruhn and Tóth, 2010; Szczecinski et al., 2014; Markin et al., 2016), which is a time-consuming and imprecise process. Such imprecision may be acceptable in simulation studies, but provides many difficulties for robots that must interact with real environments. Techniques do exist for tuning controllers based on animal locomotion data (Schilling et al., 2013; Hunt et al., 2015b, 2017; Karakasiliotis et al., 2016). However, collecting kinematic and dynamic data from animals is time-consuming and expensive, and once collected, must be further processed to scale the dynamics of the animal to the robot (Karakasiliotis et al., 2016; Hunt et al., 2017). In addition, using cross-individual average values for tuning dynamical neural models may fail in many cases, because the average may not represent any one individual (Golowasch et al., 2002; Marder and Taylor, 2011). Large amounts of animal data may be used to tune a control network of abstracted artificial neural networks (Schilling et al., 2013). Methods like back-propagation can be used to adjust synaptic weights in the network until it captures the animal data arbitrarily closely, if it has enough connections (Trappenberg, 2009). However, because the control network is abstracted, so are the biological insights gained from the model.

Unsupervised tuning methods, in contrast, tune the model based on how well the model accomplishes a task, such as navigating toward a goal, without comparison to animal data. These methods frequently use genetic algorithms (GAs) (Beer and Gallagher, 1992; Haferlach et al., 2007; Agmon and Beer, 2013; Izquierdo and Beer, 2013) or reservoir computing (RC) (Dasgupta et al., 2015) to test many different networks and parameter values, based on a simulated agent’s performance. GAs can be effective at finding networks that perform specific operations, such as oscillating (Beer and Gallagher, 1992), navigating (Haferlach et al., 2007), or switching between foraging tasks (Agmon and Beer, 2013). However, this approach has some drawbacks. Specifically, the evolution process is slow, requiring the simulation of hundreds or thousands of parameter combinations (Agmon and Beer, 2013), which may take days without great computing power. The speed and likelihood of success can be increased by embedding functional subnetworks in the network (Pasemann et al., 2001; Haferlach et al., 2007), which may be identified by brute-force (Prinz et al., 2003), dynamical systems analysis (Hunt et al., 2017), or constraints on network connectivity and parameter values (Haferlach et al., 2007). In this paper, we analytically derive parameter constraints to eliminate the need for GAs altogether, and guarantee network performance.

RC methods simulate large “reservoirs” of randomly connected dynamical neuron models, and then use optimization methods to map reservoir activity to learned useful values. While this method can produce capable robotic controllers (Dasgupta et al., 2015), the final system is likely more complicated than is ultimately necessary, increasing its computational cost to implement. In addition, the final system is a black box, which does not provide any insights about nervous system function. The methods in this paper enable the direct assembly and tuning of dynamical networks without the need of large reservoirs of neurons.

This work analytically derives constraints that govern the behavior of synthetic nervous systems (SNSs) built from dynamical neural networks. These constraints were derived as a result of our previous network design work (Szczecinski et al., 2017b) and have enabled the rapid assembly and testing of our recent robot control networks (Szczecinski and Quinn, 2017; Szczecinski et al., 2017a). An SNS designer can apply these constraints to find parameter values needed for a functional network. Section 2 presents the neural and synaptic models and explains how the neural system encodes mechanical inputs and outputs. Section 3 derives two basic synapse types, “signal transmission” and “signal modulation,” and uses them to derive constraints on synaptic parameters in networks performing addition, subtraction, multiplication, and division of two incoming signals. Section 4 derives constraints on neural and synaptic parameters in networks that differentiate and integrate incoming signals as a function of time. Results showing that the networks perform as intended are provided throughout the manuscript, and Tables 1 and 2 summarize the design constraints. Finally, Sec. 6 explores how these techniques may be used to tune robot controllers and neuromechanical models of animals, and how they may be improved in the future.

We model neurons as non-spiking Hodgkin–Huxley compartments (Cofer et al., 2010), the same basic model as used in continuous-time recurrent neural networks (Haferlach et al., 2007; Agmon and Beer, 2013). The leaky integrator dynamics capture the most basic behavior of neurons and allow more complex behaviors to be added with additional ion channels, if desired. This work is not concerned with the specifics on how action potentials are generated and have left out Hodgkin–Huxley sodium and potassium currents. The membrane voltage, V, may be seen as a proxy for the spiking frequency of a spiking neuron. V varies according to the differential equation

where

and Iapp is an optional external stimulus. Equations (2) and (3) define the leak and synaptic currents, respectively. Both follow the same basic form of a conductance G multiplied by the difference between the current membrane voltage, V, and a constant reference voltage (i.e., reversal potential), E. Er is the resting potential of the neuron, and Cm and Gm are the capacitance and conductance of the cell membrane, respectively. Unless otherwise noted, all units in this paper are scaled to nA for current, mV for potentials, nF for capacitances, and μS for conductances.

Neurons communicate via synapses. The conductance, Gs,i in equation (3), is a threshold linear function of the ith incoming (i.e., presynaptic) neuron’s voltage. Synapses communicate via piecewise-linear functions described as

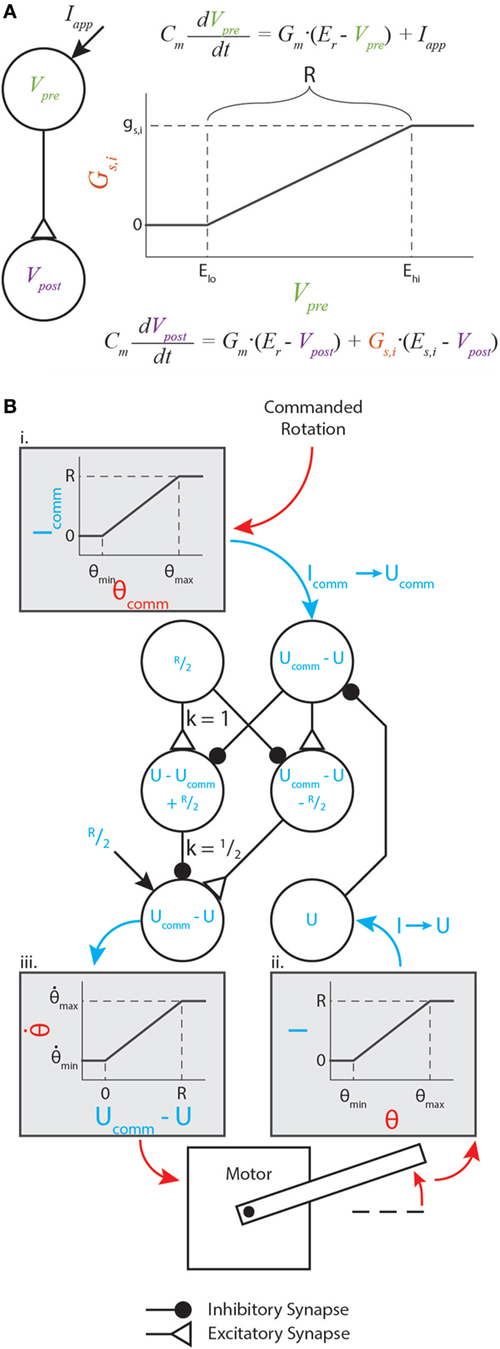

The parameters gs,i, Elo, and Ehi are constants representing the synapse’s maximum conductance, its lower threshold, and its upper threshold, respectively. The relationship between the presynaptic neuron voltage, synaptic conductance, and postsynaptic neuron voltage is illustrated in Figure 1A.

Figure 1. Graphical representation of synaptic dynamics and mapping between mechanical and neural values. (A) Graphical representation of how synapses couple neural dynamics. Note that R is marked on the plot. (B) Enhanced version of the motor control network from Szczecinski et al. (2017b), showing how R relates mechanical and neural values. Mechanical values are drawn in red, and neural values are drawn in blue. Gray shaded boxes map from mechanical to neural values (i. and ii.), or from neural to mechanical values (iii.).

We prefer this piecewise-linear representation better than a sigmoidal function for several reasons. First, the thresholds ensure that for low activations, synapses conduct exactly 0 current. This could represent a reduced model of a spiking neuron, which transmits no information while it is not spiking. Second, equation (4) contains no transcendental terms, facilitating analytical manipulation of the equations. A discontinuous system does complicate traditional gradient-based optimization methods, but this structure can be exploited to make these methods unnecessary. In the following sections, we show how networks of three or four neurons with synapses between them can be constructed and analytically tuned to perform mathematical operations on the input signals, such as addition or differentiating with respect to time.

Instead of analyzing V when designing these networks, we shift the neural activity to simplify analysis. For each neuron, we substitute U = V − Er, the activation level above the resting voltage. A typical value is Er = −60 mV, but using U for analysis rather than V lets us apply the same analysis no matter what Er is. We also set Gm = 1 μS, which is a typical value (Daun-Gruhn et al., 2009; Daun-Gruhn, 2010).

For the synapses, we set Elo = Er of the presynaptic neuron, and introduce a new parameter R = Ehi − Elo. Thus, a synapse’s conductivity rises as the presynaptic neuron’s voltage rises above its resting potential, and exhibits an “operating range” of R mV. The constraints we apply ensure that Upre ∈ [0, R], meaning that the synapse is always active, but never saturates. Thus, we can replace Gs with the second line of equation (4). Applying the substitutions described so far,

For each synapse, we also introduce the parameter ΔEs,i = Es,i − Er,post, where Er,post is the resting potential of the postsynaptic, or receiving neuron.

Making all of these substitutions in equations (1)–(3) gives the response

When U = R, the neuron is fully active, and when U = 0, the neuron is inactive. We can use this knowledge to categorize synapses as excitatory or inhibitory, depending on the sign of ΔEs,i. If ΔEs,i ≥ R, then the ith synapse will always transmit positive current, no matter the instantaneous value of U. Thus, this synapse will cause U to increase and is, therefore, excitatory. Similarly, if ΔEs,i ≤ 0, then the ith synapse will always transmit negative current, no matter the instantaneous value of U. Thus, this synapse will cause U to decrease and is, therefore, inhibitory.

The nervous system encodes physical quantities as neural activity. In insects, the firing rate of sensory neurons encode the stretch of chordotonal organs (Field and Matheson, 1998) and the strain of campaniform sensilla (Zill et al., 2004), among other physical quantities. Typical robot controllers perform operations on these signals to provide meaningful information for control actions. These operations may include the subtraction of measured and reference values, differentiation or integration of error values, or gain adjustments. Neural systems perform these same operations, but in a transformed space. The exact transformation that nervous systems use is not known, but for reliable behavior, it is necessary that sensory information is mapped to neural activity in apredictable way. Thus, we map any sensory input, θ, to an applied current

where R is the “operating range” specified in the previous section. Figure 1B illustrates such a transformation within a diagram of a neural feedback loop that controls the position of a motor. The purpose of this paper is not to analyze how this particular network functions; for a detailed analysis of this network and its function, see Szczecinski et al. (2017b). Instead, the purpose is to show how R and other functional values (time constants, gains, etc.) may be used to constrain neural and synaptic parameter values.

Figure 1B (i,ii) graphically illustrate the mapping in equation (7), and Figure 1B (iii) graphically illustrates the inverse relationship (i.e., neural value to mechanical value). If a sensory neuron has only this applied current and leak current, equation (6) shows that

This means that the sensory neuron acts as a low-pass filter with time constant τ = Cm. It is trivial to show that, when the neuron is at equilibrium (i.e., dU/dt = 0),

where the superscript “∗” specifies the equilibrium value. (Throughout this manuscript, the equilibrium activation of neuron U will be referred to as U*, and the neuron itself will be referred to as U.) Equation (9) means that the neuron’s activation above its rest potential encodes the sensory signal. In addition to perceiving sensory information, commands must be issued in the same transformation. Thus, we map the commanded sensory quantity, θcomm, to the commanded neural activation, Ucomm, with the inverse function of equation (9),

In this way, the nervous system may specify an intended motion, such as the rotation of a joint, encoded in neural activity. In our synthetic nervous systems, R specifies how mechanical quantities and neural activation are related. Thus, the tuning of every functional subnetwork described in this work relies on R, which the designer specifies before tuning the rest of the network. Two other parameters are critical for tuning these subnetworks: the amplification of synaptic transmission, ksyn (discussed in Sec. 3.1), and the synaptic reversal potential, ΔEs. From these values, biological parameters such as synaptic conductance and neural tonic drive can be directly calculated. This makes network design intuitive, enabling the designer to select biological parameter values based on functional ones.

This section describes how to use typical engineering quantities to design neural and synaptic pathways. We can understand how these pathways work by manipulating their equilibria, something that naive optimization does not leverage. The steady-state activation U* is calculated by solving for U when dU/dt = 0

Moving all U* terms to the left hand side

Solving for U*,

This solution is the basis for the remainder of Sec. 3.

The goal of a signal transmission pathway is to cause the postsynaptic neuron’s voltage to be some ratio of the presynaptic neuron’s voltage. We call this ratio ksyn. The Upre,i terms in the denominator of the right hand side of equation (13) mean that ksyn changes as Upre changes, so we approximate ksyn as Upost/Upre when the presynaptic neuron is fully activated (i.e., Upre = R). The steady-state response of a neuron with a single synaptic input and no applied current can be written based on equation (13), as below:

To find ksyn for this synapse, we first divide both sides of equation (14) by Upre,

Next, we want to find ksyn, which can be calculated for any value of Upre. To simplify analysis and improve the clarity of this derivation, we set find ksyn when Upre = R. Then, we show how to set parameter values to keep ksyn nearly constant, even as Upre changes. Making this substitution,

Finally, reducing R/R terms reveals

Rearranging to solve for gs,

Because gs must be positive, and the numerator of equation (18) is always positive, equation (18) is also subject to the constraint

Equation (18) will be used to tune addition, subtraction, multiplication, and division networks (Secs. 3.3 through 3.6).

We may also use synapses to modulate a neuron’s sensitivity to other inputs. Based on equation (13), the steady-state response of a neuron with only an applied current Iapp is simply

if we set Gm = 1. For example, this is the case for a sensory neuron that receives applied current proportional to a sensor’s state, such as a joint angle (Figure 1B), muscle stretch, or touch sensor. However, the nervous system may need to actively increase or reduce the sensitivity of the sensory neuron depending on context. Hyperpolarizing or depolarizing the neuron, however, would cause sensory information to be truncated (i.e., Vpre < Elo). We can change the sensitivity of this neuron without losing sensory information by adding a synaptic input to the response from equation (20):

To quantify how Upre modulates for a given Iapp, we introduce the parameter csyn, which quantifies this degree of modulation. We define csyn as , the same as ksyn, but with the understanding that Upre will decrease in this case. Dividing both sides of equation (21) by Upre and using the definition of csyn,

As in the previous section, we will solve for csyn when Upre = R to simplify analysis. Making this substitution and reducing R/R terms,

Multiplying both sides by the denominator of the right hand side and expanding,

Collecting gs terms on the left hand side,

Solving equation (25) for gs,

Just as in Sec. 3.1, gs > 0 depends only on R, which the designer specifies beforehand, ΔEs, which is limited by biological constraints, and csyn, which the designer picks based on network function. ΔEs should be negative, and as close to 0 as possible to minimize hyperpolarization of the postsynaptic neuron. Equation (26) will be used to tune division and multiplication networks (Secs. 3.5 and 3.6).

A subnetwork that approximates linear addition of the form may underlie positive feedback mechanisms, which increase motor neuron activation proportional to sensory inputs such as force sensing organs (Zill et al., 2004), or used to sum sensory signals from different body segments (Mittelstaedt, 1957). We construct such a network by using two Signal Transmission pathways as presented in Sec. 3.1.

Let us rewrite equation (13) here, for clarity:

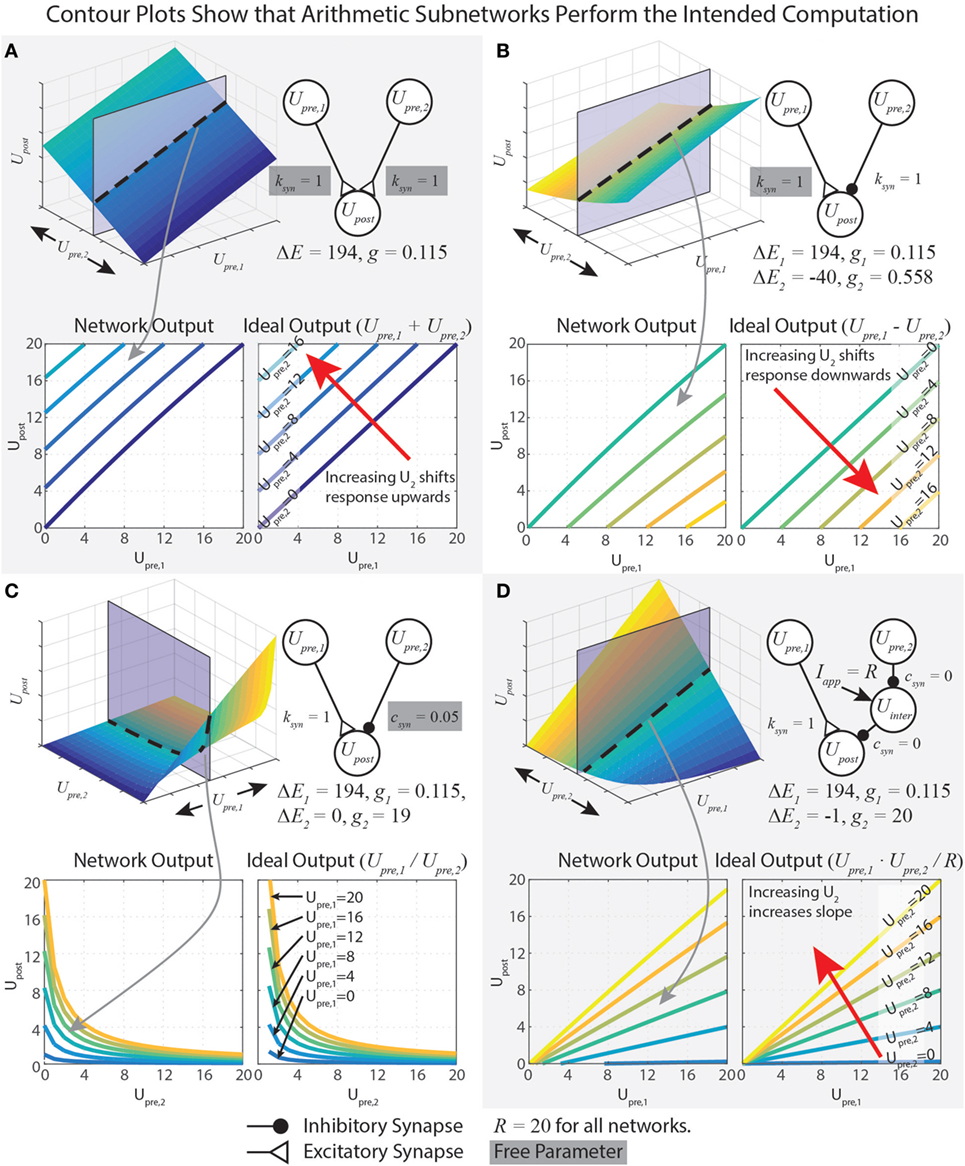

This equation shows Upre,i in both the numerator and denominator. To capture addition, we wish to minimize the impact of Upre,i on the denominator. This is accomplished by minimizing gs. However, if gs = 0, then the network will not function at all. Therefore, we instead maximize ΔEs, which yields a small gs (equation (18)). Mathematically, there is no limit on ΔEs, but synaptic potentials are limited in biological systems. In our work, we choose the reversal potential of calcium (Es = 134 mV), which yields ΔEs = Es − Er = 134 − (−60) = 194 mV, and specify R = 20 mV. To design a pathway where ksyn = 1, for example, we plug these values into equation (18), which gives gs = 115 nS. The contour plots in Figure 2A show that the network matches the ideal behavior very closely over the operating range Usum ∈ [0, R]. These design constraints are summarized in Table 1.

Figure 2. Data demonstrating the function of arithmetic networks. Each contour plot represents cross sections of the response surface, as depicted at the top. The network diagram, relevant parameters, and data are shown for addition (A), subtraction (B), division (C), and multiplication (D). Triangular synaptic terminations stand for excitatory inputs, and filled round terminations stand for inhibitory inputs. For each operation, the contour on the right is the ideal output, and the contour on the left is the actual operation for the parameter values listed. Free parameters from Table 1 are highlighted in gray.

A subnetwork that approximates linear subtraction of the form may underlie negative feedback mechanisms, which are important for controlling many parameters in locomotion (Pearson, 1993; Peterka, 2003; Buschmann et al., 2015). Just as in the previous section, equation (18) is used to find gs for each pathway.

Designing a subtraction network requires that we pay attention to how the two synapses affect one another. Since the reversal potentials of hyperpolarizing ion channels are not much more negative than typical resting potentials, larger gs,2 values are required to transmit information than for depolarizing ion channels. This makes it harder to minimize gs like we did in the previous section. Equation (13) enables us to constrain gs,2 such that when Upre,1 = R and Upre,2 = R, . Starting with the neuron response in equation (13) for two synaptic currents and no applied current,

Substituting in Upre,1 = R, Upre,2 = R, and ,

Substituting equation (18) for gs,1,

To be physically realizable, gs,2 > 0. Because gs,1 > 0 and ΔEs,1 > 0, gs,2 > 0 if and only if ΔEs,2 < 0. Thus, it is critical that ΔEs,2 < 0.

Just as for the addition network, we minimize gs,1 by maximizing ΔEs,1. If R = 20 mV and ksyn = 1, then gs,1 = 115 nS and ΔEs,1 = 194 mV. To tune gs,2, we first select ΔEs,2 = −40 mV, then we solve equation (33) to find gs,2 = 558 nS. These design constraints are summarized in Table 1, and Figure 2B graphically shows the accuracy of the subtraction network.

A subnetwork that approximates division of the form

replicates the function of GABA synapses that regulate activity in the brain. A key reason for this behavior is that the reversal potential of GABA-ergic synapses is about equal to the resting potential of the postsynaptic neuron (Trappenberg, 2009). Equation (26) is used to find gs for the division pathway.

The synapse from Upre,1 to Upost is tuned as an excitatory Signal Transmission pathway with k = 1, as in Sec. 3.1. In our work, R = 20 mV, ΔEs,1 = 194 mV, and equation (18) tells us that gs,1 = 115 nS. Such a small gs ensures that the signal from Upre,1 to Upost is transmitted without greatly affecting the sensitivity of Upost to inputs. That is, the effect of Upre,1 on the denominator of is very nearly 0.

The synapse from Upre,2 to Upost is tuned as a Signal Modulation pathway, as analyzed in Sec. 3.2. Setting ΔEs,2 = 0 will eliminate Upre,2’s influence on the numerator of . Substituting this case into equation (26) and reducing,

where when Upre,1 = Upre,2 = R, their maximal value. Equation (35) also reveals that since gs,2 > 0, 0 < csyn < 1.

The steady-state response of the network is the result of these two synaptic inputs, as written in equation (28). Substituting equation (35), and specifying that ksyn,1 = 1, simplifies to

In our network, we wished when Upre,2 = R, so we set csyn = 1/R = 0.05, which makes gs,2 = 19 μS. When csyn is close to 0, Upre,2 can strongly reduce Upost’s sensitivity to inputs. When csyn is close to 1, Upre,2 can only weakly reduce Upost’s sensitivity to inputs. Figure 2C shows that this network performs the intended division of the signals. Table 1 summarizes these design constraints.

A subnetwork that approximates multiplication of the form can be used to control the gain of a sensory feedback loop, a frequently observed characteristic of neural systems that control locomotion (Cruse, 1981; Gabriel and Büschges, 2007) and posture (Peterka and Loughlin, 2004).

A multiplication network can be assembled by replacing the Modulatory Pathway in the division network with two identical Modulatory Pathways in series, connected into a disinhibitory network (see Figure 2D). This works because the product of two numbers, a⋅b = a/(1/b). However, tuning the Modulatory Pathway for the multiplication network differs from tuning the division network. This is because the right-side pathway of the network in Figure 2D must make , no matter how active Upre,1 becomes (because a⋅0 = 0, no matter the value of a). Thus, according to equation (22), csyn = 0, unlike the division network, for which 0 < csyn < 1. Solving equation (26) when csyn = 0 reveals that

To solve for gs,2, we must first select ΔEs,2. If ΔEs,2 = 0 like for the division network, then equation (37) divides by 0. If ΔEs,2 > 0, then gs,2 < 0, which is physically not realizable. Therefore, we must choose a value ΔEs,2 < 0. The more negative ΔEs,2 is, the more small-amplitude signals are clipped; however, the less negative it is, the larger gs,2 must be. Therefore, gs,2 is the limiting factor to maintain biological realism. We have chosen gs,2 = 20 μS and R = 20 mV, making ΔEs,2 = −1.

Now that we have designed one of the Modulatory synapses, we can calculate the response of the complete multiplication network seen in Figure 2D, which includes two identical Modulatory Pathways in series. When Upre,2 is inactive, then it does not inhibit Uinter, which is tonically active. In this case, Uinter’s activity completely desensitizes Upost to inputs. When Upre,2 is active, then it inhibits Uinter. In this case, Uinter is hyperpolarized, and cannot desensitize Upost to inputs. To show that this is the case, let us find the full response of the system. We first calculate , which has one Modulatory Pathway input and a tonic applied current Iapp = R. Its response is the same as in equation (21), with the constraint from equation (37), which causes terms to cancel:

Upost has two presynaptic neurons, Upre,1 and Uinter. The synapse from Upre,1 is a Signal Transmission synapse, and the synapse from Uinter is a Signal Modulation synapse. Its response is found via equation (13),

We showed in Sec. 3.3 that equation (18) can be used to design a synapse that transmits the presynaptic neuron’s activity to the postsynaptic neuron, while minimizing its impact on the denominator of the postsynaptic neuron’s steady-state response, . This enables us to approximate Upre,1’s effect on as an applied current Iapp ≈ Upre,1. Making this substitution in equation (39),

Because we previously specified that the Modulatory Pathways are identical, we can apply the constraint from equation (37),

We can now substitute equation (38) for Uinter,

This expression can be simplified. First, as noted previously, synapses 2 and 3 are identical, so ΔEs,2 = ΔEs,3 = ΔEs. Second, we can multiply the first term in both the numerator and denominator by the factor (1 − Upre,2/ΔEs), which enables us to combine terms. Performing these simplifications,

Equation (44) contains a lot of information about how the multiplication network functions. First, Upost’s response indeed contains a term that multiplies Upre,1 and Upre,2. When ΔEs = −1, then scales with Upre,1⋅Upre,2 in a 1:1 fashion. Second, the numerator will be ≤ 0 if either Upre,1 = 0 or Upre,2 = 0, . This is because Upre,1 and Upre,2 must each be less than or equal to R. If either input is greater than R, then their synaptic inputs to Upost will saturate (see equation (4)), preventing this condition from being violated. Third, the denominator does not depend on the input values. Technically, because of the approximation made in equation (40), the denominator does change slightly with Upre,1. However, with our chosen values of R (20), ΔEs (−1), and gs,1 (0.115), this change is less than 1%, justifying this approximation. Figure 2D demonstrates that this network multiplies the two inputs.

Table 1 summarizes the function, component pathways, constraint equations, and free parameters of each network from this section. This analysis enables direct construction and parameter selection for functional subnetworks that can be assembled into more complex networks capable of performing real-time robotic control (e.g., Szczecinski and Quinn (2017) and Szczecinski et al. (2017a)). Additionally, one of the key advantages to using dynamic neural systems for motor control is the handling of time varying signals. The next section examines how the dynamics of these neurons can be exploited to perform calculus on signals.

The differential equation for a single neuron’s response (equation (1)) can be solved analytically. Solving an equation dx/dt = f (x) is simplified if the equilibrium state is x* = 0, so as in Sec. 3, the substitution U = V − Er is made. Additionally, the membrane conductance Gm and capacitance Cm can be combined into a new parameter τ = Cm/Gm, which is a more intuitive parameter when discussing dynamic networks. This section uses analysis from the previous section, plus additional analysis, to derive design constraints for networks that differentiate or integrate input signals over time.

One dynamic response neural systems are known to utilize is differentiation of signals. Early examination of neural networks led to the discovery of the Reichardt detector network (Reichardt, 1961), an autocorrelation network with delays that approximates the differential of an incoming signal. Other examples include human balance, which relies on feedback proportional to the position, velocity, and acceleration of the center of mass (Peterka, 2003; Safavynia and Ting, 2012). Also, positive velocity feedback plays an important role in insect muscle control (Cruse, 1981).

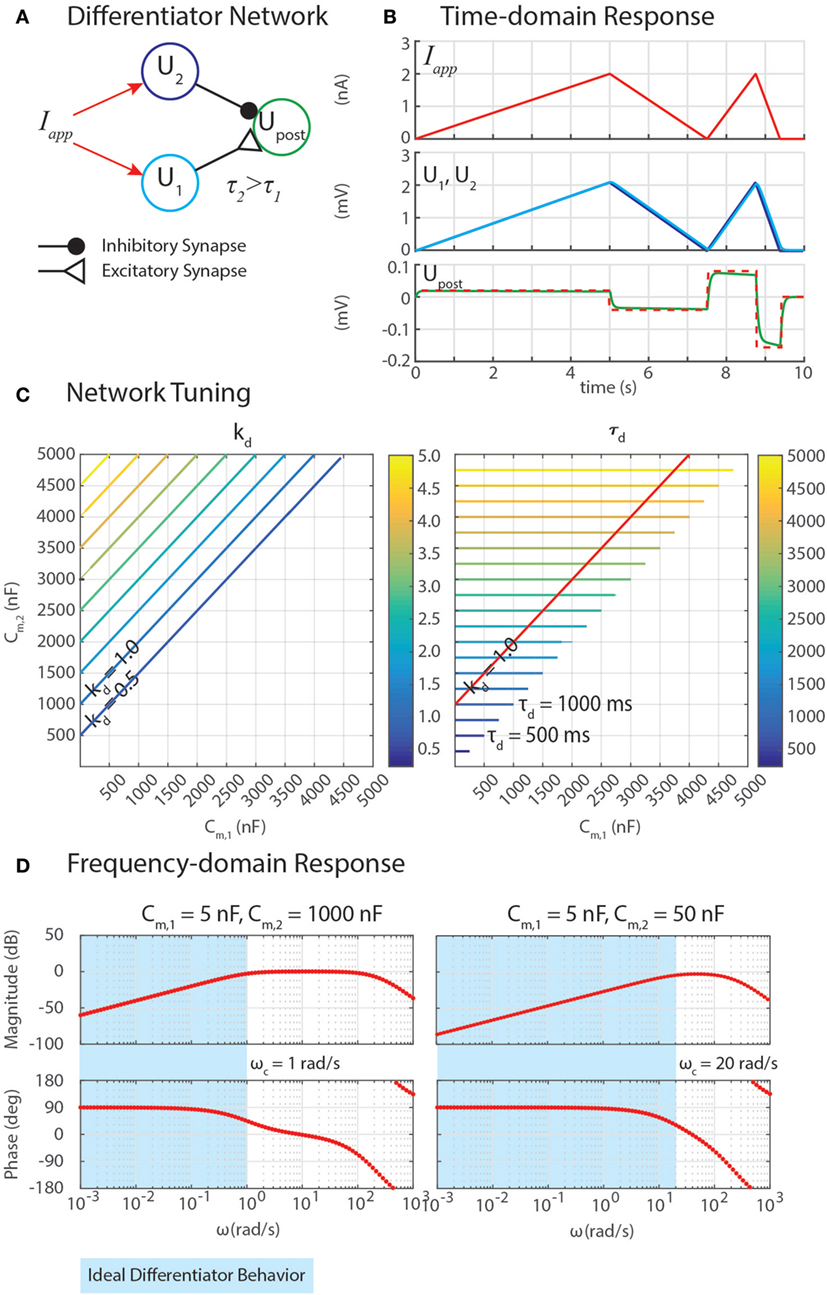

We have developed differentiation networks based on the Reichardt detector network, shown in Figure 3A. We can understand its function by examining a neuron’s response to a ramp input, Iapp = A⋅t, where A is an arbitrary slope of the ramp. The response of the network should be a step with a magnitude proportional to A, as shown in Figure 3B. Inserting this applied current into equation (6), a single neuron’s response is

Figure 3. (A) A network can exploit neural dynamics to compute the differential of an incoming signal. (B) When given an applied current in the form of ramps, the network returns steps whose heights are proportional to the slopes of the ramps. (C) The amplification of the differential, kd, and the time constant of the network, τd, depend on the capacitance of the neurons, Cm,1 and Cm,2. (D) Frequency domain analysis enables the identification of the cutoff frequency ωc, enabling the network to naturally filter out high-frequency noise.

The response of the neuron, U(t), is the sum of the particular and homogeneous solutions to equation (46), Up(t) and Uh(t), respectively. Simulating the dynamics of equation (46) suggests that the particular solution is a ramp of slope A, which lags behind the input with a time constant Cm. To confirm this, we can substitute a candidate solution and its derivative into equation (46), and check for equality. The result is the particular (i.e., steady-state) response,

This means that if the same Iapp were injected into neurons with different Cm values, and then their outputs were subtracted from one another with a network from Sec. 3.4, the network would perform a finite-difference approximation of the derivative of Iapp, once the transient response decays (illustrated in Figures 3A,B).

Calculating the homogeneous solution, Uh(t), informs us how quickly the transient response decays. The homogeneous solution to first-order linear equation like equation (46) is well-known, Uh(t) = b⋅exp(− t/Cm). The constant b is found by plugging the initial condition into the full response, U(t) = Up(t) + Uh(t),

To tune this network, the response of Upost is written as the difference between neuron Upre,1 with Cm,1 and neuron Upre,2 with Cm,2 > Cm,1,

Canceling the terms that are linear in t and expanding,

Properly tuning a differentiator network requires tuning Cm,1 and Cm,2 to obtain the intended gain of the network, kd, and an appropriately high cutoff frequency, ωc. Equation (50) reveals how these may be tuned. First, the steady-state response of this network to a ramp input defines kd = (Cm,2 − Cm,1). Second, the cutoff frequency ωc = 1/τd quantifies the frequency of incoming signals (i.e., Iapp = A⋅sin(ω⋅t)) above which the network’s response has less than half the energy of a lower-frequency signal. This is especially useful because although differential calculations amplify high-frequency noise, this network filters out noise with a frequency ω > ωc. Because Cm,2 > Cm,1, the time constant τd = Cm,2.

Figure 3C shows contours of kd and τd as Cm,1 and Cm,2 change. The plots show that increasing Cm,2 relative to Cm,1 increases kd, which may be valuable for amplifying signals. However, this also increases τd, making ωc impractically low, which will cause the network’s output to lag behind the input substantially. The contour for kd = 1 is drawn on the contour of τd, showing that the smallest τd achievable for this gain value is 1,000 ms, which would filter out all incoming signals for which ω > ωc = 1/(1 s) = 1 rad/s (0.159 Hz).

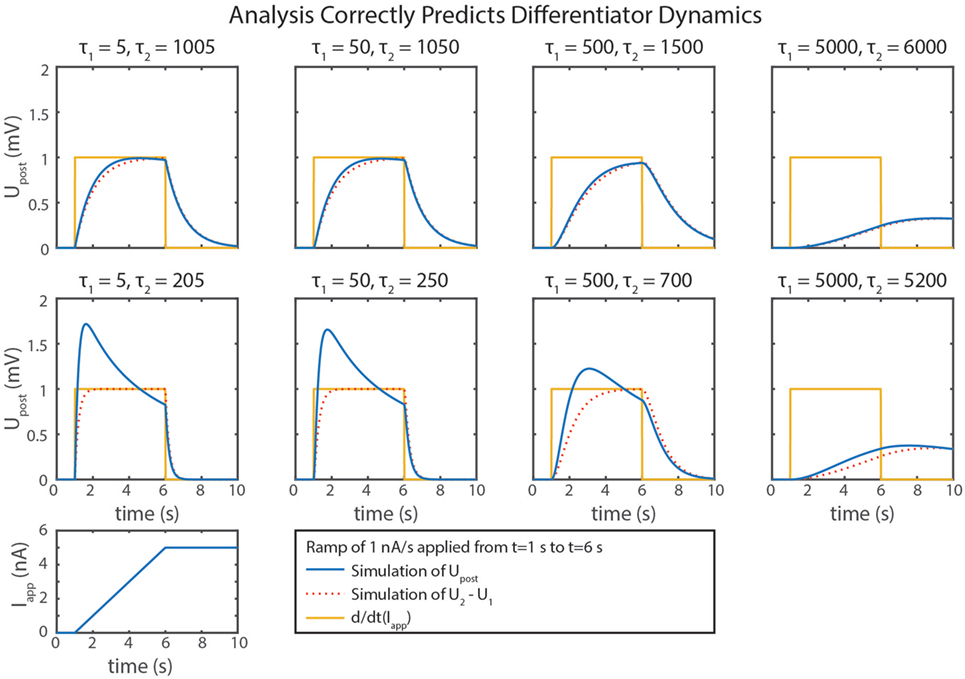

We can gain further insight into tuning τd using our FeedbackDesign tool (Szczecinski et al., 2017b). Figure 3D shows Bode plots for this network’s response, given two different values for Cm,2. When Cm,2 = 1,000 nF, like in Figure 3B, the network functions properly for inputs with ω < 1 rad/s, as predicted in the previous paragraph. Lowering Cm,2 to 50 nF increases ωc to 20 rad/s (3.18 Hz). Lowering Cm,2 also lowers the magnitude response as a function of ω, that is, it decreases kd. To regain this lost gain, we may increase ksyn in the subtraction network. Figure 4 shows simulation data that explores this tradeoff. Table 2 lists how to use τd and kd to tune the entire differentiation network.

Figure 4. Simulation data from eight trials with the differentiator network are shown. Different values of Cm,1 and Cm,2 were used in each. is plotted in blue, U2 − U1 is plotted in dotted red, and the actual rate of change of the input, d/dt(Iapp), is plotted in gold.

Our neuron model is a leaky integrator, which means that the membrane voltage will integrate an applied current, but “leak” current to return to its resting potential. As a result, data cannot be stored in individual neurons, because neurons only have one stable equilibrium point. A network that is constructed to have a marginally stable equilibrium curve (or subspace) will not leak. A network will have this property if the determinant of the Jacobian matrix is 0, or in other words, if it is not full rank (Khalil, 2002). Instead of leaking, it will maintain its activation when no external currents are applied; when currents are applied, the state of the system will change continuously. This is analogous to the position of a box on a table with friction; it will remain wherever it is placed indefinitely, unless an external force is applied. In this section, we expand on previous work (Szczecinski et al., 2017a) to show how to construct a network that is marginally stable by applying constraints to reduce the rank of its Jacobian matrix; demonstrate that such a network can be used to integrate signals over time; and relate the integration rate, ki, to the parameter values of the network, such that .

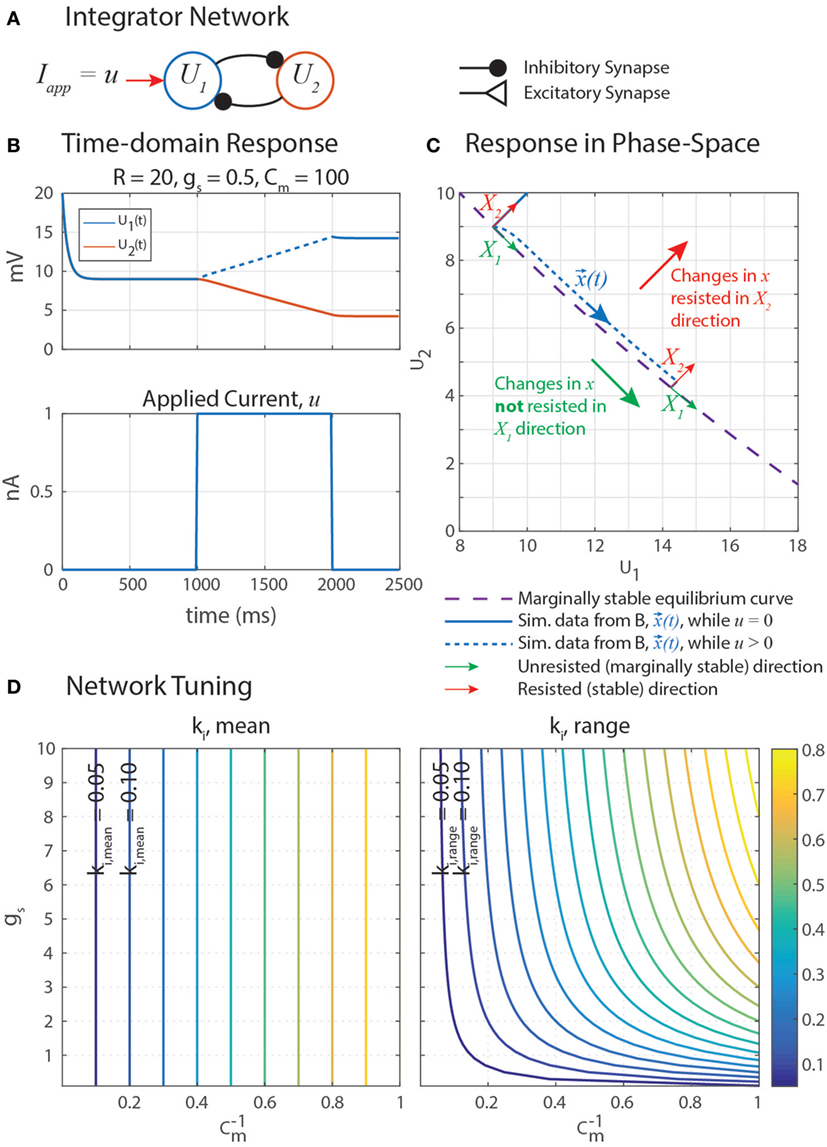

Marginally stable networks are hypothesized to play an important role in navigation (Haferlach et al., 2007) and the regulation of muscle forces in posture (Lévy and Cruse, 2008). Some memory models use carefully tuned self-excitation to cancel the leak current with excitatory synaptic current (Seung et al., 2000). In a similar vein, our network uses self-disinhibition (Figure 5A) to produce a line-attractor network in which a continuum of marginally stable equilibrium states exist. Simulation data in Figure 5B shows that stimulating U1 with an applied constant current u causes U1 to increase at an apparently constant rate, and when u is removed, neither U1 nor U2 leak to their rest potentials. This is the behavior of an integrator, as described in the previous paragraph.

Figure 5. (A) A disinhibitory network can exploit neural dynamics to compute the integral of an incoming signal. (B) When given an applied current in the form of a step, the network response is a ramp whose slope is proportional to the amplitude of the step. (C) A plot of this data in the (U1, U2) phase space shows that when stimulated by applied current u, the system state, x(t) = [U1(t), U2(t)]T (blue), moves in the X1 direction (green) while maintaining a constant distance from the equilibrium subspace (dashed violet) in the X2 direction (red). This difference in behavior in each direction is because the eigenvalue associated with eigenvector X1, λ1 = 0, and the eigenvalue associated with eigenvector X2, λ2 < 0. X1 and X2 are drawn in multiple places because they depend on x(t), as shown in Appendix. (D) The mean rate of integration, ki,mean (left), and the range of the rate of integration, ki,range (right), depend on the synaptic conductance of mutual inhibition, gs, and the membrane capacitance of the neurons, Cm. Note that the x-axis of these plots are 1/Cm, to better space the contour lines.

Let us write the response of the integrator network as shown in Figure 5A to find its equilibrium states. Each neuron has leak current, synaptic current, and a constant applied current. Let all parameter values be symmetrical between the two neurons. We make the same substitutions as before; U = V − Er, Er = Elo, ΔEs = Es − Er, and R = Ehi − Elo. If Iapp = R,

Moving dynamical terms to the left hand side, and applied current to the right hand side,

Solving equation (53) when dU1/dt = 0 reveals the equilibrium curve

Solving equation (54) when dU2/dt = 0 reveals the equilibrium curve

which can be algebraically rearranged to be the same as equation (55) as long as gs and ΔEs are constrained such that

Multiplying both sides of equation (56) by the denominator of the right hand side, and expanding,

Collecting multiples of U2 and applying equation (57),

Thus, equations (55) and (56) are the same equilibrium curve if gs and ΔEs satisfy equation (57). This curve, drawn on the phase-space diagram in Figure 5C, describes every equilibrium state that this network can have. In other words, a [U1, U2] pair is an equilibrium state of the system if and only if it satisfies equation (55). In the coming paragraph, we will use eigenvalue analysis to show that this network always functions as an integrator, as long as equation (57) is satisfied.

To find the system’s eigenvalues, let us write equations (53) and (54) together in matrix form,

in which the square matrix is J, the system Jacobian. Because J contains U1 and U2 terms, it is not constant, but still describes the stability of the system, given specific values of U1 and U2. To construct a marginally stable equilibrium subspace for the network, we must show that J has insufficient rank (i.e., the rows are identical) when U1 and U2 are at equilibrium (i.e., equation (55) is satisfied). However, the rows are identical, no matter the values of U1 and U2, if we apply the constraint from equations (57) to (60),

Thus, the system will always have one null direction, and we do not need to calculate J for specific equilibrium conditions to determine the system’s stability. To make notation more compact, let us define

These expressions let us write equation (61) as simply

Plotting the simulation data of the network’s forced response from Figure 5B on a phase-space diagram (Figure 5C) suggests that u causes U1 and U2 to change in such a way that the state of the system (, blue) moves tangent to the equilibrium curve (dashed violet), with some constant distance away from it. These curves do not overlap because the forced response is not the same as the equilibrium condition while the external current u is applied. Motion in the X2 direction is resisted by the neural dynamics, much how a spring resists the translation of an object with an applied force.

Nonetheless, these direction-dependent responses suggest that the state can be generalized into two decoupled degrees of freedom in the phase-space: unresisted, marginally stable motion parallel to the equilibrium curve (X1, green in Figure 5C); and resisted, stable motion away from the equilibrium curve (X2, red). The natural coordinates, , are transformed into generalized coordinates, , by a matrix X comprised of the eigenvectors of J. This same transformation matrix is used to transform J into the generalized coordinate system, yielding Jq. Jq is diagonal, decoupling the dynamics of the generalized coordinates and enabling us to quantify how quickly moves parallel to the equilibrium curve.

Appendix shows the calculation of X, with q1 representing the marginally stable mode and q2 representing the stable mode. Using X, we can transform the system into generalized coordinates. First, we write the dynamics from equation (64) in a compact format.

where J is the square matrix in equation (64) and

The generalized coordinates, , are defined as

To transform equation (65) into generalized coordinates, premultiply both sides of equation (65) by X−1,

where Jq = X−1JX and . The top and bottom rows of equation (68) are decoupled because Jq is a diagonal matrix. Furthermore, , meaning that , so the system simplifies even further.

To find the particular solution of this system, we can guess the form of qp,1 and qp,2, and substitute those in to equation (68). We observe that in steady state, where B is a constant that relates and u. q1(t) would be the integral of , but because the top row of Jq is zeros, it will not appear in the particular solution, and thus need not be explicitly included. We also observe that in steady state, so q2(t) = D, a constant. We can calculate using X−1, which is calculated in Appendix (equation (A9)). Solving for the particular solution of this system, ,

where d is defined in equation (A5). B describes how quickly qp,1 varies with u, but we want to know how quickly U1 varies with u. Therefore, we use equation (67) to transform into natural coordinates to find ,

Recall that a and b are functions of U1 and U2, respectively. This means that ki, the integral gain of the network, is not a constant. To place bounds on ki, let us substitute equations (62) and (63) into equation (74),

We can now plug in different values of U1 and U2 to see how ki varies. Using equations (55) and (56), we find that the most extreme cases are when [U1, U2] = [0, R] and [U1, U2] = [R, 0]. We can plug these cases into equation (75) to find the minimum and maximum values for ki,

and

The difference between ki,min and ki,max:

To find the mean rate of integration, we can calculate ki,mean = (ki,min + ki,max)/2,

This is the same value of ki obtained from computing ki when U1 = U2. This simple expression is a useful relationship for tuning the integrator network. One may select Cm to obtain the intended mean integration rate, and then minimize the variation of the integration rate by minimizing gs, as long as equation (57) is satisfied.

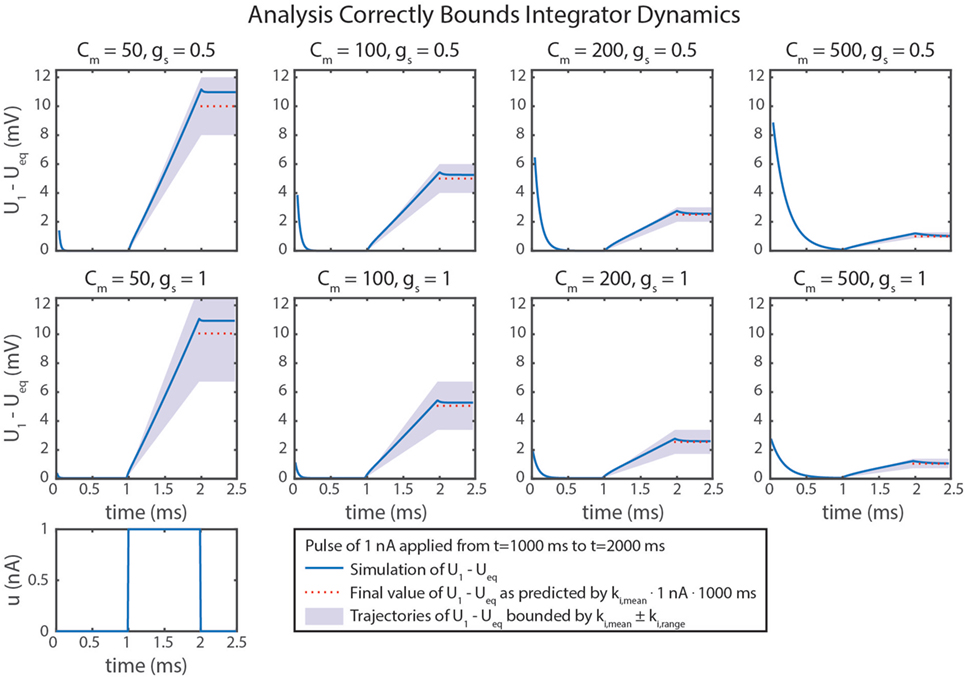

Figure 5D graphically demonstrates how ki,mean and ki,range determine Cm and gs. Just as in equation (79), ki,mean is a function only of Cm. Therefore, the contour only shows vertical lines. The value of ki,range is minimized by decreasing either gs or (i.e., increasing Cm). Figure 6 shows simulation data of the integrator’s response to a step input with eight different parameter value combinations. In every case, the change in U1 is bounded by the values of ki,mean and ki,range. As shown in Figure 5D, increasing Cm decreases the integration rate, and increasing gs increases the variation in the integration rate.

Figure 6. Simulation data from eight trials are shown. Different values of Cm and gs were used in each. Neural dynamics are plotted as blue lines. The expected final values of the simulations are plotted in dotted red lines. Regions bounded by ki,mean ± ki,range are shaded in violet. In every case, the actual outcome is correctly bounded. As demonstrated mathematically in the text, ki,mean only depends on Cm. In addition, ki,range depends on gs, leading to more variation in ki, as indicated by larger shaded areas.

Table 2 summarizes the design approach for this integrator network. The mean and range of the integration rate are free parameters that are determined by the intended network performance. Using these values and the constraint in equation (57), the neurons’ Cm value and the synapses’ gs and ΔEs values can be fully specified.

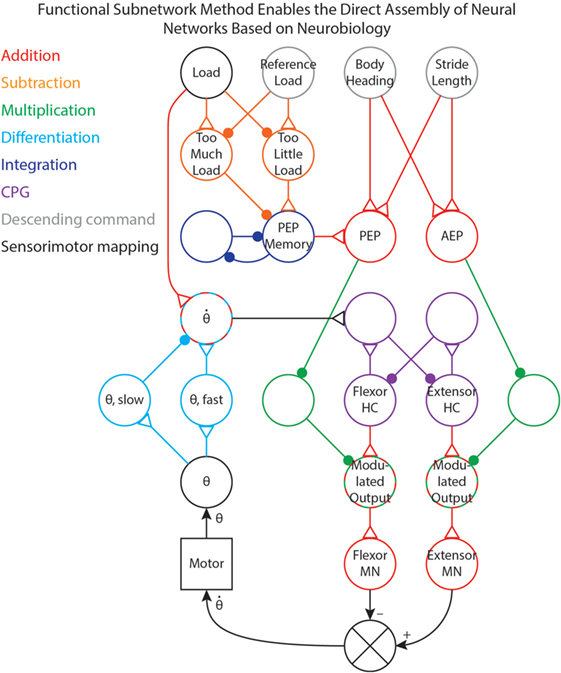

We have used the methods in this paper to tune (i.e., select parameter values for) several different networks that control robotic stepping (Szczecinski and Quinn, 2017; Szczecinski et al., 2017a) and visual tracking (Szczecinski et al., 2017a). Once a network layout is determined, whether hypothetical or based on neurobiological findings, individual subnetworks can be identified and tuned to work together. Figure 7 shows a simplified joint-control network in which different functional pathways are color-coded. This illustrates how these functional subnetworks enable the direct assembly of control networks based on neurobiology. The neurobiological inspiration for these networks and the results of robotic experiments are presented in Szczecinski and Quinn (2017) and Szczecinski et al. (2017a), and so are omitted here.

Figure 7. A simplified joint-control network from our previous work (Szczecinski and Quinn, 2017; Szczecinski et al., 2017a), with pathways color-coded based on the functional subnetwork.

The joint network in Figure 7 uses three simple descending commands (body heading, stride length, and reference leg load) to control the walking motion of one joint of a leg. The descending commands modulate the output of a central pattern generator (CPG) to control the speed of the motion, and sensory feedback is used to adjust both the timing and amplitude of motor output. Addition pathways are drawn in red. These include the mapping between body heading and stride length (i.e., descending commands, drawn in gray) to the PEP and AEP (Szczecinski and Quinn, 2017). The PEP can also be modulated by force feedback, which compares the load on the leg to a reference value (Szczecinski and Quinn, 2017, in review). This requires a subtraction network, drawn in orange, to compute if there is too much or too little load on the leg. The difference is used to adjust the PEP Memory network, which is an integration network, drawn in blue. This network adjusts the PEP over time, and remembers the motor command that produces the intended force.

The output of the CPG, drawn in purple, excites the motor neurons. Tuning CPG dynamics is discussed in our previous work (Szczecinski et al., 2017b). The PEP and AEP neurons adjust the motor output via multiplication pathways, drawn in green, which scale CPG output to the motor neurons based on the intended range of motion. Motor neuron activity controls the motor velocity, and the θ neuron receives position feedback from the motor via the mappings in Figure 1B. The motor velocity, computed by the cyan differentiation pathway, reinforces ongoing CPG behavior through the neuron (Szczecinski et al., 2017b). A division pathway (not shown) can be used to normalize the velocity feedback to the joint’s commanded range of motion, simplifying the control of stepping frequency. The neuron also receives some input from the Load neuron, ensuring that stance phase is stable (Szczecinski and Quinn, 2017, accepted). By using the functional subnetworks and the design constraints presented in this paper, we can rapidly and directly assemble models of neural systems that perform as intended without hand-tuning or optimization methods.

How are the “Free Parameters” in Tables 1 and 2 chosen? The free parameters fall into two classes: reversal potentials (i.e., ΔEs) and dynamical constants (e.g., k, τ, etc.). The reversal potentials are informed by biology. In this paper, we kept −40 < ΔEs < 194 mV (i.e., −100 < Es < 134 mV). The modeler could use reversal potentials from specific synapses if that data were available. The dynamical constants are informed by the function of the robot. For example, the ksyn of the subtraction network in Figure 1B controls the stiffness of the controller, and may destabilize the system if not tuned to match the mechanical properties of the robot (Szczecinski et al., 2017b).

As another example, τd and kd of the differentiator network in Figure 7 determines the robustness of CPG rhythms, and how well it entrains to sensory feedback (Szczecinski et al., 2017b). A slow, adaptively-walking robot may want a high kd to regularize CPG oscillations, whereas a fast running robot may want a low kd to be less sensitive to sensory feedback. Picking specific values for these free parameters ultimately depends on the intended behavior of the robot. The constraints in this paper enable the designer to think in terms of more traditional robotics quantities, and use these to set neural and synaptic parameter values, which may be less intuitive.

In this paper, we presented analytical methods for setting parameters in dynamical neural networks that can add, subtract, multiply, divide, differentiate, and integrate incoming signals. Such operations are at the core of control, and these techniques enable control networks to be assembled rapidly and tuned directly. This work primarily identifies constraint equations, not unique values, that govern how parameters should be tuned. Thus, many different networks may perform the same function with different parameter values, as observed in real neural circuits (Prinz et al., 2004). Since these results are analytical, not based on machine learning or optimization, there is no concern about these networks over- or under-fitting training data, and their behavior is provable. These techniques build on our previous analysis of synthetic nervous systems (Szczecinski et al., 2017b) and have been validated through several studies with our robot, MantisBot (Szczecinski and Quinn, 2017; Szczecinski et al., 2017a).

All of the results from this paper make it easier to tune neuromechanical models of animals, as well. Many such models have been created to study the principles underlying insect (Daun-Gruhn and Tóth, 2010; Szczecinski et al., 2014) and mammalian (Hunt et al., 2015a; Markin et al., 2016) locomotion alike. Oftentimes, parameters of these models are tuned by hand to obtain the intended motion, which is a painstaking, slow, and imprecise process. The analysis in this paper can make neuromechanical models come together more quickly, and have more predictable behavior, leading to more thorough scientific investigations. More precise tuning methods enable more thorough validation or invalidation of hypotheses. Faster tuning methods enable more rapid validation or invalidation of hypotheses. For example, these methods could be used to improve the coordination our previous cockroach model (Szczecinski et al., 2014). In the model, curve walking of varying radii was achieved by modulating muscle activations with broad descending commands. However, the coordination, reliability, and repeatability of such motion could be improved with the methods of this paper, enabling us to improve or reject the model.

Some of the calculations in this paper are based on approximations, which lead to inaccuracies in the calculations of the subnetworks. One example is that the subtraction network does not produce linear output. This non-linearity occurs because the reversal potentials of synapses are rarely much lower than the resting potentials of neurons, requiring large values of gs,2 to build a subtractor where ksyn = 1. A large gs,2 value increases Upre,2’s effect on the denominator of ’s response, causing the synaptic input to reduce Upost’s sensitivity to inputs. This is particularly noticeable in the differentiator’s response (Figure 4), especially as ksyn increases.

Another example of a simplification we made is that our calculation of ki only used the particular solution of the system. This means that a transient response also exists, which we did not compute. In addition, ki is a function of U1 and U2. This means that ki is not a constant for this network. However, the impact of U1 and U2 on ki can be minimized by minimizing gs and maximizing R, as we showed in Sec. 4.2.

However, the developed networks are not intended to act as perfect analogs to their mathematical counterparts. These networks are intended to act as representations of real neural circuits, which likely do not act as perfect adders, multipliers, differentiators, etc. Dynamic and transient effects are a real part of biological control systems, and effective neural controllers have developed around these idiosyncrasies and have likely evolved to even exploit many of these aspects. In spite of these issues, the methods in this paper are valuable. Our recent robotics work (Szczecinski and Quinn, 2017; Szczecinski et al., 2017a), as well as related work in progress, is proof of the effectiveness of this approach.

The methods in this paper enable the direct construction of networks that perform arithmetic and dynamic calculations. Why bother building neural networks just to recreate mathematical operators? We believe there are several reasons to take this approach. From a neurobiology perspective, the constraints that we have identified may help explain why certain structures are common in the nervous system (David Friel, personal correspondence). For instance, mutually inhibitory parallel pathways are common in the thoracic control of insect locomotion (Büschges and Wolf, 1995), which may function as subtraction networks in negative feedback loops. As another example, networks in the retina of the rabbit are selectively sensitive to motion in one direction or the other (Barlow and Levick, 1965). Such a network could be constructed by using adjacent cells in the retina as inputs to differentiator networks. This would be consistent with both the function of direction-sensitivity, as well as the laterally inhibitive structure. Even though such consistency does not guarantee that the animal’s nervous system functions precisely this way, the design methods in this paper may aid in understanding the function of neural networks found in animals.

Additionally, the constraints that we identified may be used to constrain parameter values in large brain models. Rather than using global search techniques to understand the dynamics of a large pool of neurons, we believe it may be faster to begin with a number of functional subnetworks, and then use local search techniques to tune the connections between them. In this way, the designer is certain that parts of the network perform specific, useful computations, rather than naively optimizing a large network (Haferlach et al., 2007; Agmon and Beer, 2013; Izquierdo and Beer, 2013). The end result is something like a genetic program, but in a neuroscience context.

NS led research on functional subnetworks and led the preparation of the manuscript. AH aided in the preparation of the manuscript. RQ provided critical oversight of the research and aided in the preparation of the manuscript.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

This work was supported by NASA Space Technology Research Fellowship NNX12AN24H, as well as a GAANN Fellowship.

Agmon, E., and Beer, R. D. (2013). The evolution and analysis of action switching in embodied agents. Adapt. Behav. 22, 3–20. doi: 10.1177/1059712313511649

Allen, T., Quinn, R., Bachmann, R., and Ritzmann, R. (2003). “Abstracted biological principles applied with reduced actuation improve mobility of legged vehicles,” in Proceedings of the 2003 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vol. 2 (Las Vegas, NV, USA: IEEE), 1370–1375.

Barlow, H. B., and Levick, W. R. (1965). The mechanism of directionally selective units in rabbit’s retina. J. Physiol. 178, 477–504. doi:10.1113/jphysiol.1965.sp007638

Beer, R. D., and Gallagher, J. C. (1992). Evolving dynamical neural networks for adaptive behavior. Adapt. Behav. 1, 91–122. doi:10.1177/105971239200100105

Blickhan, R. (1989). The spring-mass model for running and hopping. J. Biomed. 22, 1217–1227. doi:10.1016/0021-9290(89)90224-8

Büschges, A., and Wolf, H. (1995). Nonspiking local interneurons in insect leg motor control. I. Common layout and species-specific response properties of femur-tibia joint control pathways in stick insect and locust. J. Neurophysiol. 73, 1843–1860.

Buschmann, T., Ewald, A., Twickel, A. V., and Büschges, A. (2015). Controlling legs for locomotion – insights from robotics and neurobiology. Bioinspir. Biomim. 10, 41001. doi:10.1088/1748-3190/10/4/041001

Cofer, D. W., Cymbalyuk, G., Reid, J., Zhu, Y., Heitler, W. J., and Edwards, D. H. (2010). AnimatLab: a 3D graphics environment for neuromechanical simulations. J. Neurosci. Methods 187, 280–288. doi:10.1016/j.jneumeth.2010.01.005

Cruse, H. (1981). Is the position of the femur-tibia joint under feedback control in the walking stick insect?: I. Force measurements. J. Exp. Biol. 92, 87–95.

Dasgupta, S., Goldschmidt, D., Wörgötter, F., and Manoonpong, P. (2015). Distributed recurrent neural forward models with synaptic adaptation and CPG-based control for complex behaviors of walking robots. Front. Neurorobot. 9:10. doi:10.3389/fnbot.2015.00010

Daun-Gruhn, S. (2010). A mathematical modeling study of inter-segmental coordination during stick insect walking. J. Comput. Neurosci. 30, 255–278. doi:10.1007/s10827-010-0254-3

Daun-Gruhn, S., Rubin, J. E., and Rybak, I. A. (2009). Control of oscillation periods and phase durations in half-center central pattern generators: a comparative mechanistic analysis. J. Comput. Neurosci. 27, 3–36. doi:10.1007/s10827-008-0124-4

Daun-Gruhn, S., and Tóth, T. I. (2010). An inter-segmental network model and its use in elucidating gait-switches in the stick insect. J. Comput. Neurosci. 31, 43–60. doi:10.1007/s10827-010-0300-1

Field, L. H., and Matheson, T. (1998). Chordotonal organs of insects. Adv. In Insect Phys. 27, 1–56, C1–C2, 57–228. doi:10.1016/S0065-2806(08)60013-2

Gabriel, J. P., and Büschges, A. (2007). Control of stepping velocity in a single insect leg during walking. Philos. Trans. A Math. Phys. Eng. Sci. 365, 251–271. doi:10.1098/rsta.2006.1912

Golowasch, J., Goldman, M. S., Abbott, L. F., and Marder, E. (2002). Failure of averaging in the construction of a conductance-based neuron model. J. Neurophysiol. 87, 1129–1131. doi:10.1152/jn.00412.2001

Haferlach, T., Wessnitzer, J., Mangan, M., and Webb, B. (2007). Evolving a neural model of insect path integration. Adapt. Behav. 15, 273–287. doi:10.1177/1059712307082080

Hunt, A., Szczecinski, N., and Quinn, R. (2017). Development and training of a neural controller for hind leg walking in a dog robot. Front. Neurorobot. 11:18. doi:10.3389/fnbot.2017.00018

Hunt, A. J., Schmidt, M., Fischer, M. S., and Quinn, R. D. (2015a). A biologically based neural system coordinates the joints and legs of a tetrapod. Bioinspir. Biomim. 10, 055004. doi:10.1088/1748-3190/10/5/055004

Hunt, A. J., Szczecinski, N. S., Andrada, E., Fischer, M. S., and Quinn, R. D. (2015b). “Using animal data and neural dynamics to reverse engineer a neuromechanical rat model,” in Biomimetic and Biohybrid Systems, Vol. 9222 (Barcelona, Spain), 211–222.

Ijspeert, A. J. (2014). Biorobotics: using robots to emulate and investigate agile locomotion. Science 346, 196–203. doi:10.1126/science.1254486

Izquierdo, E. J., and Beer, R. D. (2013). Connecting a connectome to behavior: an ensemble of neuroanatomical models of C. elegans klinotaxis. PLoS Comput. Biol. 9:e1002890. doi:10.1371/journal.pcbi.1002890

Karakasiliotis, K., Thandiackal, R., Melo, K., Horvat, T., Mahabadi, N. K., Tsitkov, S., et al. (2016). From cineradiography to biorobots: an approach for designing robots to emulate and study animal locomotion. J. R. Soc. Interface 13, 1–15. doi:10.1098/rsif.2015.1089

Lévy, J., and Cruse, H. (2008). Controlling a system with redundant degrees of freedom: II. Solution of the force distribution problem without a body model. J. Comp. Physiol. A Neuroethol. Sens. Neural. Behav. Physiol. 194, 735–750. doi:10.1007/s00359-008-0348-9

Marder, E., and Taylor, A. L. (2011). Multiple models to capture the variability in biological neurons and networks. Nat. Neurosci. 14, 133–138. doi:10.1038/nn.2735

Markin, S. N., Klishko, A. N., Shevtsova, N. A., Lemay, M. A. M. A., Prilutsky, B. I., and Rybak, I. A. (2016). “A neuromechanical model of spinal control of locomotion,” in Neuromechanical Modeling of Posture and Locomotion, eds B. I. Prilutsky, and D. H. Edwards (New York: Springer), 197–223.

Mittelstaedt, H. (1957). “Prey capture in mantids,” in Recent Advances in Invertebrate Physiology, ed. B. T. Scheer (Eugene, Oregon: University of Oregon Publications), 51–72.

Pasemann, F., Steinmetz, U., Hülse, M., and Lara, B. (2001). Robot control and the evolution of modular neurodynamics. Theory Biosci. 120, 311–326. doi:10.1007/s12064-001-0025-9

Pearson, K. G. (1993). Common principles of invertebrates. Annu. Rev. Neurosci. 16, 265–297. doi:10.1146/annurev.ne.16.030193.001405

Peterka, R. J. (2003). Simplifying the complexities of maintaining balance. IEEE Eng. Med. Biol. Mag. 22, 63–68. doi:10.1109/MEMB.2003.1195698

Peterka, R. J., and Loughlin, P. J. (2004). Dynamic regulation of sensorimotor integration in human postural control. J. Neurophysiol. 91, 410–423. doi:10.1152/jn.00516.2003

Prinz, A. A., Billimoria, C. P., and Marder, E. (2003). Alternative to hand-tuning conductance-based models: construction and analysis of databases of model neurons. J. Neurophysiol. 90, 3998–4015. doi:10.1152/jn.00641.2003

Prinz, A. A., Bucher, D., and Marder, E. (2004). Similar network activity from disparate circuit parameters. Nat. Neurosci. 7, 1345–1352. doi:10.1038/nn1352

Reichardt, W. (1961). “Autocorrelation, a principle for the evaluation of sensory information by the central nervous system,” in Sensory Communication, ed. W. A. Rosenblith (Cambridge: The MIT Press), 303–317.

Safavynia, S. A., and Ting, L. H. (2012). Task-level feedback can explain temporal recruitment of spatially fixed muscle synergies throughout postural perturbations. J. Neurophysiol. 107, 159–177. doi:10.1152/jn.00653.2011

Schilling, M., Hoinville, T., Schmitz, J., and Cruse, H. (2013). Walknet, a bio-inspired controller for hexapod walking. Biol. Cybern. 107, 397–419. doi:10.1007/s00422-013-0563-5

Schroer, R., Boggess, M., Bachmann, R., Quinn, R., and Ritzmann, R. (2004). “Comparing cockroach and Whegs robot body motions,” in IEEE International Conference on Robotics and Automation, Vol. 4 (New Orleans, LA, USA: IEEE), 3288–3293.

Seung, H. S., Lee, D. D., Reis, B. Y., and Tank, D. W. (2000). The autapse: a simple illustration of short-term analog memory storage by tuned synaptic feedback. J. Comput. Neurosci. 9, 171–185. doi:10.1023/A:1008971908649

Szczecinski, N. S., Brown, A. E., Bender, J. A., Quinn, R. D., and Ritzmann, R. E. (2014). A neuromechanical simulation of insect walking and transition to turning of the cockroach Blaberus discoidalis. Biol. Cybern. 108, 1–21. doi:10.1007/s00422-013-0573-3

Szczecinski, N. S., Getsy, A. P., Martin, J. P., Ritzmann, R. E., and Quinn, R. D. (2017a). MantisBot is a robotic model of visually guided motion in the praying mantis. Arthropod Struct. Dev. doi:10.1016/j.asd.2017.03.001

Szczecinski, N. S., Hunt, A. J., and Quinn, R. D. (2017b). Design process and tools for dynamic neuromechanical models and robot controllers. Biol. Cybern. 111, 105–127. doi:10.1007/s00422-017-0711-4

Szczecinski, N. S., and Quinn, R. D. (2017). Template for the neural control of directed walking generalized to all legs of MantisBot. Bioinspir. Biomim. 12, 045001. doi:10.1088/1748-3190/aa6dd9

Trappenberg, T. (2009). Fundamentals of Computational Neuroscience, 2nd Edn. Oxford: Oxford University Press.

Zill, S. N., Schmitz, J., and Büschges, A. (2004). Load sensing and control of posture and locomotion. Arthropod Struct. Dev. 33, 273–286. doi:10.1016/j.asd.2004.05.005

We find the eigenvalues λ1 and λ2 and the associated eigenvectors X1 and X2 of the Jacobian matrix by the eigenvalue problem,

where i is the index of the eigenvalue (1 or 2), is an identity matrix, and J is the square matrix from equation (64). Solving for λ,

Because J is on the same side of the equation as (see equation (65)), λ2 > 0 indicates a stable system (e.g., as the stiffness matrix of a physical system). , because a > 0 and b > 0. The definition of a in equation (62) shows that a > 0 because gs > 0 (it is a physical quantity) and U1/R ∈ [0, 1]. The same reasoning applies to b.

We use the eigenvalues to find their associated eigenvectors,

Normalizing X1 to 1,

Next, we calculate

Normalizing X2 to 1,

We now know the transformation matrix between the natural coordinates, , and the generalized coordinates, :

We will also make use of X−1 when transforming between natural and generalized coordinates. We can analytically invert X from equation (A8),

Keywords: synthetic nervous system, design tools, functional subnetworks, leaky integrator, arithmetic, differentiator, memory

Citation: Szczecinski NS, Hunt AJ and Quinn RD (2017) A Functional Subnetwork Approach to Designing Synthetic Nervous Systems That Control Legged Robot Locomotion. Front. Neurorobot. 11:37. doi: 10.3389/fnbot.2017.00037

Received: 30 December 2016; Accepted: 17 July 2017;

Published: 09 August 2017

Edited by:

Manish Sreenivasa, Heidelberg University, GermanyReviewed by:

Shinya Aoi, Kyoto University, JapanCopyright: © 2017 Szczecinski, Hunt and Quinn. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Nicholas S. Szczecinski, bmljaG9sYXMuc3pjemVjaW5za2lAY2FzZS5lZHU=

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.

Research integrity at Frontiers

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.