ORIGINAL RESEARCH article

Front. Behav. Neurosci., 23 January 2025

Sec. Emotion Regulation and Processing

Volume 18 - 2024 | https://doi.org/10.3389/fnbeh.2024.1464888

Introduction: Everyday life constantly requires the correct processing of emotions, part of which occurs unconsciously. This study aims to clarify the effect of emotion perception on two event-related potentials (ERPs; P100, N170). The P100 and N170 are tested for their suitability as electrophysiological markers of unconscious processing.

Methods: Using a modified backward masking paradigm, 52 healthy participants evaluated emotional facial expressions (happy, sad, or neutral) during EEG recording. While varying primer presentation time (16.7 ms for unconscious; 150 ms for conscious perception), either congruent or incongruent primer / target emotions were displayed.

Results: The N170 was significantly larger in trials with conscious compared to unconscious primer presentation, while the P100 showed opposite results displaying higher amplitudes in unconscious versus conscious trials. The N170 amplitude was modulated by emotion.

Discussion: Both P100 and N170 were modulated by stimulus presentation time, demonstrating their suitability as potential biomarkers and for systematic research on conscious and unconscious face processing.

Facial expressions are a vital part of non-verbal communication between humans and require fast and accurate cognitive processing (Batty and Taylor, 2003). Since the human capacity of processing information consciously is limited (Miller, 1956), some stimuli detected by the sensory system are perceived without awareness (Merikle et al., 2001). For clarity in our study, we specifically define “unconscious” processing as occurring when participants are exposed to a stimulus but lack conscious awareness of its specific content. This definition implies that while the stimulus is processed by the brain, participants do not have conscious access to the details of what was presented. By using this definition, we aim to distinguish our focus on processing that occurs below the threshold of conscious perception from related, yet distinct, concepts in the field.

Although highly relevant, many emotional stimuli are not consciously perceived and processed (Tamietto and de Gelder, 2010). Since especially emotional facial expressions as omnipresent stimuli of high relevance influence human behavior (Fox, 2002), understanding the mechanisms of early facial expression processing can provide a reference foundation for future research on unconscious emotion processing. Although brain anatomy and neural models of unconscious emotional processing have been discussed (for a review see Smith and Lane, 2016), the underlying electrophysiological correlates of unconscious emotional processing have yet to be explored further. Via a modified backward mask emotional conflict task, we specifically aim to establish suitable associative electrophysiological markers that can be used to differentiate conscious from unconscious emotion processing. Understanding these differences contributes to broader theories of emotional processing pathways, which suggest that unconscious perception may primarily rely on rapid subcortical pathways (Gainotti, 2012), whereas conscious perception engages more detailed cortical processing, particularly in regions such as the fusiform gyrus (Andrews et al., 2002) and prefrontal cortex (Michel, 2022). In addition to this, a core aim of the study is to evaluate which ERP component, P100 or N170, is more suitable for capturing the neural differences between conscious and unconscious emotional face processing. This will allow us to identify which electrophysiological marker best differentiates the two levels of perceptual awareness, thus providing a more precise tool for future research in unconscious emotional processing. Specifically, we emphasize that unconscious processing of emotional stimuli is a crucial mechanism to evaluate our everyday surroundings, encode social cues and perform social interactions (Prabhakaran and Gray, 2012; Schütz et al., 2020). 
Furthermore, individuals with mental disorders such as depression or panic disorder show alterations in this rapid, unaware processing of social cues such as facial expressions (Baroni et al., 2021; Hahn and Goedderz, 2020; Schräder et al., 2024). Altered unconscious processing can therefore contribute to the development and maintenance of mental disorders (Disner et al., 2011).

Event-related potentials (ERPs) are described as neural responses induced by specific stimuli (Blackwood and Muir, 1990). They allow a time-precise analysis of cognitive processes (Woodman, 2010), which has been considered particularly beneficial in researching emotion processing (Dickey et al., 2021). In this study, different ERP components (P100, N170) were considered as face sensitive early processing potentials.

The positive P100 component peaking at around 100 ms after stimulus onset reflects very early basic visual processing activity. Some research suggests that visual attention has an effect on the P100 (Mangun and Hillyard, 1991; Mangun, 1995). Attended stimuli elicit larger P100 amplitudes than non-attended stimuli (Di Russo and Spinelli, 1999; Hillyard et al., 1998). Other studies report that early face processing is not modulated by attention (Lueschow et al., 2004; Furey et al., 2006; Biehl et al., 2013). The P100 is considered sensitive to faces, with non-facial stimuli evoking smaller P100 amplitudes than facial stimuli (Herrmann et al., 2005a, 2005b; Biehl et al., 2013). Research is not consistent whether P100 amplitudes show variations contingent upon the specific facial emotions presented. For example, one study reports increased P100 amplitudes in response to happy compared to neutral facial expressions (Zhang et al., 2016). Other studies found no significantly different P100 amplitudes between any of the analyzed emotions (sadness, fear, disgust, anger, neutral, surprise, happiness; Batty and Taylor, 2003), or documented an absence of specific emotional effects on the P100 (Smith, 2012). Using different primer presentation times (17 vs. 200 ms), the P100 was additionally found to be sensitive to conscious versus unconscious processing of faces showing higher amplitudes in the conscious state (Zhang et al., 2012). Here, a presentation time of 16.7 ms is considered optimal for unconscious processing (Schräder et al., 2023).

The N170 is an ERP component with a negative peak at around 170 ms post stimulus onset and is considered specific to face stimuli (Bentin et al., 1996; Itier and Taylor, 2004; Hinojosa et al., 2015). Although not all studies report significant effects of emotional facial expressions on the N170 (Herrmann et al., 2002; Eimer and Holmes, 2002; Eimer et al., 2003; Ashley et al., 2004; Brunet, 2023), several studies found the component to be modulated by emotional expression (e.g., Batty and Taylor, 2003; Miyoshi et al., 2004; Blau et al., 2007; Hendriks et al., 2007; Zhang et al., 2012, 2016). Variation in referencing methods could explain the differing results observed in previous literature (Rellecke et al., 2013). However, a thorough review summarized evidence for varying N170 amplitudes, indicating a heterogeneous sensitivity towards emotional cues (for review see Hinojosa et al., 2015). In healthy controls, joyful or happy faces elicit larger N170 amplitudes than sad faces (Jaworska et al., 2012; Zhang et al., 2016). Enhanced N170 amplitudes have also been found with subliminally presented emotional stimuli (Smith, 2012; Zhang et al., 2012). Furthermore, the N170 is modulated by stimulus presentation time, as stimuli presented for 200 ms elicited larger N170 amplitudes than stimuli presented for 17 ms (Zhang et al., 2012).

A previous study examined the effect of emotion (happy, sad, and neutral) on different ERPs in an established backward masking paradigm (Zhang et al., 2016), yet without comparing conscious versus unconscious processing. We here investigate the effect of the same emotions on the P100 and N170 comparing conscious versus unconscious processing (primary aim). Since supraliminal face stimuli induce larger N170 amplitudes than subliminally presented faces (Zhang et al., 2012; Navajas et al., 2013), we hypothesize that N170 amplitudes will be larger in conscious than in unconscious trials, as conscious perception of faces allows for more detailed and prolonged engagement of cortical regions such as the fusiform face area (FFA), which are critical for fine-grained facial feature analysis (Zhang et al., 2012). In contrast, unconscious presentation, which primarily engages rapid subcortical pathways (e.g., amygdala), may not provide sufficient time for full cortical engagement, resulting in attenuated N170 amplitudes. For the P100, we expected higher amplitudes in unconscious trials, reflecting early attentional mechanisms that may be enhanced due to the brief and automatic nature of unconscious face processing (Zhang et al., 2012). As a secondary aim (additional to pre-registered aims), a sensitivity to emotions is assumed. Trials with happy or sad faces are expected to elicit larger P100 and N170 amplitudes compared to neutral faces.

The target stimulus analysis investigating the effect of emotional conflict on different ERPs (N400, late positive potential (LPP)) can be found in the supplements as exploratory analyses, additional to pre-registered aims.

As face processing is a complex neurological task, several factors may modulate ERPs. Therefore, brain hemisphere/electrode location, sex and age of the participants are examined in this study in addition to presentation time and emotion. Sex differences in face processing were previously reported, e.g., while presenting emotional infants’ faces (Proverbio et al., 2006). This study aims to investigate whether sex differences occur when presenting emotionally expressive adult faces as stimuli. Since previous research suggested right hemisphere dominance during face processing (Bentin et al., 1996; Bötzel et al., 1995; Haxby et al., 1999; Kanwisher et al., 1997; Nguyen and Cunnington, 2014), we hypothesize a lateralization with larger P100 and N170 amplitudes over the right hemisphere. Finally, previous research revealed differences in N170 amplitudes depending on the age of the participants (Taylor et al., 1999; for a review see Taylor et al., 2004).

The backward masking paradigm is often used to prevent awareness and conscious perception of stimuli (Esteves and Ohman, 1993; Liddell et al., 2004; Morris et al., 1998, 1999; Whalen et al., 1998). Previous literature has demonstrated the suitability of backward masking specifically for researching unconscious emotion processing by analyzing different ERPs (Balconi and Mazza, 2009). The paradigm makes it possible to investigate differences between conscious and unconscious processing depending on the masking, as well as the impact of emotional conflict on emotion processing.

The task analyzed here was part of a simultaneous EEG-fMRI study examining unconscious emotional conflict; the other tasks and the fMRI data will be published elsewhere. The complete study is preregistered at the Open Science Framework (doi: 10.17605/OSF.IO/37XD2).

Healthy participants (N = 52; 29 women) aged from 18 to 55 years (M = 26.67, SD = 6.92) were recruited through public advertisements and flyers. All participants were required to be right-handed, have normal or corrected-to-normal vision and fulfill MR-scanning criteria. To ensure that all questionnaires could be answered, fluency in German was required. Participants with a current or past neurological illness, substance-related disorder or psychiatric disorder were excluded.

Due to poor EEG signal quality, one participant had to be excluded from the EEG data analysis reducing the final sample to 51 participants (28 women) with a mean age of 26.73 years (SD = 6.98).

The study protocol was conducted in accordance with the Declaration of Helsinki (World Medical Association, 2013) and was approved by the Ethics committee of the RWTH Aachen Medical Faculty. All participants gave written informed consent and received a financial compensation of 45 Euro.

Data collection took place over a period of 18 months at the Department of Psychiatry, Psychotherapy, Psychosomatics, Medical Faculty RWTH Aachen University.

Included participants were invited to a 3.5-h measurement appointment. First, participants completed questionnaires. A 64-channel EEG system (BrainAmp MR Amplifier, Brain Products GmbH, Gilching, Germany; BrainAmp [Apparatus], 2023) with an MR-compatible electrode cap was then placed on participants’ heads. The 64 electrodes (5 kΩ resistors) included an electrocardiogram (ECG) electrode placed on the back, left of the spine; the vertex electrode (Cz) in the middle of the scalp was used as the reference electrode.

For the simultaneous EEG-fMRI measurement, participants were positioned in a 3 Tesla MR scanner (Siemens MAGNETOM Prisma®, Siemens Medical Systems, Germany) while their right hand was placed on an MRI compatible button device. For synchronization of EEG and MRI recording a synchronizing box (SyncBox MainUnit, BrainProducts, Germany) was used. To stabilize the head position, a cervical collar was placed.

The measurement session started with performing anatomical brain measurements. After completing two tasks in the scanner participants were asked to fill in a task evaluation questionnaire.

Basic demographic data and past and present medical history of psychiatric disorders were collected using a German version of the Structured Clinical Interview for DSM-5® Disorders (SCID-5; Beesdo-Baum et al., 2019). The Trail Making Test (TMT; Reitan, 1971) was used to evaluate visual processing speed, working memory and executive functions (Tischler and Petermann, 2010). To assess intelligence, a multiple-choice word test (Mehrfachwahl-Wortschatz-Intelligenztest, MWT-B) was used (Lehrl, 1977). Intelligence level was determined by age- and sex-matched norms. Short-term memory was tested using a subtest (digit span) of the Wechsler Memory Scale (WMS-R; Härting and Wechsler, 2000). The Bermond-Vorst Alexithymia Questionnaire (BVAQ; Vorst and Bermond, 2001) was applied to test for possible alexithymia symptoms. To evaluate individual perception of the task, participants received an additional questionnaire after completing the tasks. This questionnaire assessed the subjective difficulty of evaluating emotion (1 = easy; 2 = rather easy; 3 = moderate; 4 = rather difficult; 5 = difficult). Due to missing data, two participants had to be excluded from the statistical analysis of this questionnaire.

In the modified backward masked emotional conflict task, images of facial expressions showing three different types of emotion (happy, sad, or neutral) were presented in line with previous research (Zhang et al., 2016; Stuhrmann et al., 2013; Chen et al., 2014; Xu et al., 2013). In total, 36 faces (12 faces for each of the three emotions) with an equal number of men and women per emotion were taken from the FACES database (Ebner et al., 2010). A scrambled checkerboard pattern mask was created in Adobe Photoshop® based on a picture selected from the FACES database to ensure equal luminance between mask and stimuli. Images were shown against a grey background at the center of an LCD monitor (120 Hz refresh rate) using PsychoPy3 software (Peirce, 2007).

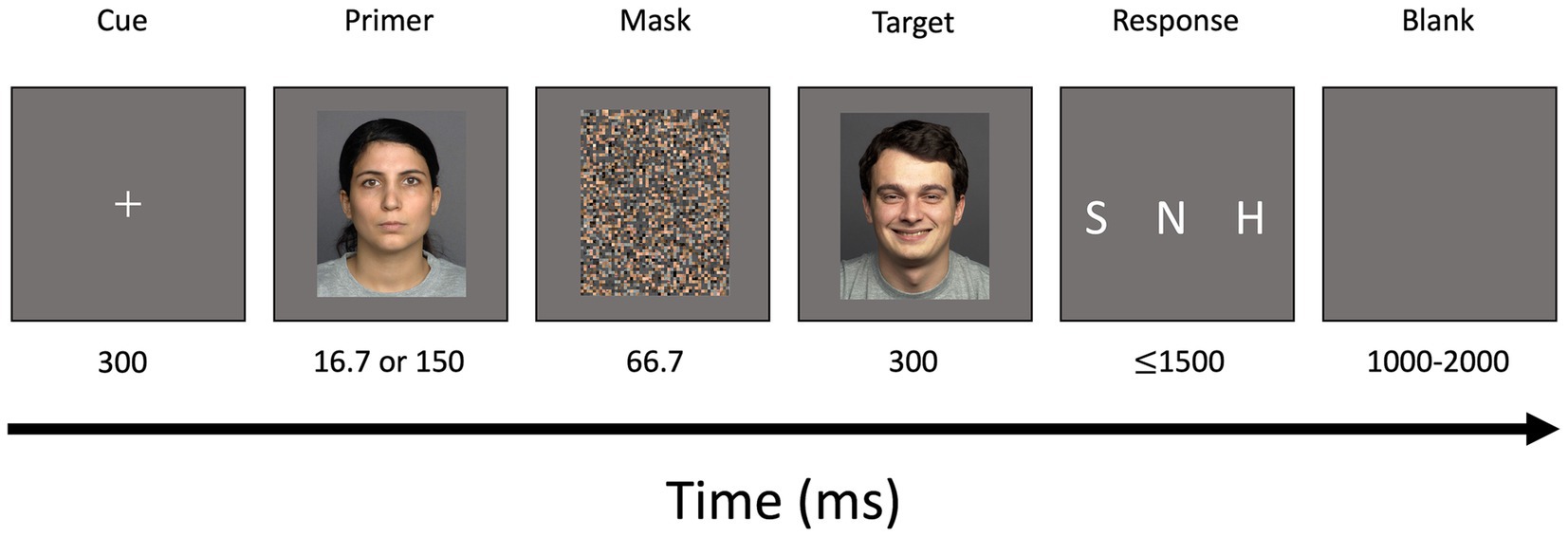

Each trial started with a white fixation cross for a duration of 300 ms (36 frames) which was followed by a primer image. Since presentation time of 16.7 ms was found to be ideal for unconsciously perceived stimuli (Schräder et al., 2023), unconscious primers were shown for 16.7 ms (2 frames) while conscious primers were presented for 150 ms (18 frames). Prime stimuli were followed by the mask which was displayed for 66.7 ms (8 frames). The mask was followed by a target image presented for 300 ms.
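The stated durations follow directly from the 120 Hz refresh rate, since each frame lasts 1000/120 ≈ 8.33 ms. The following minimal Python sketch reproduces that arithmetic; the function and variable names are illustrative and are not taken from the study's PsychoPy script.

```python
REFRESH_RATE_HZ = 120  # monitor refresh rate reported in the text


def frames_to_ms(n_frames, refresh_rate_hz=REFRESH_RATE_HZ):
    """Duration in milliseconds of n_frames screen refreshes."""
    return n_frames * 1000.0 / refresh_rate_hz


def ms_to_frames(duration_ms, refresh_rate_hz=REFRESH_RATE_HZ):
    """Nearest whole number of frames for a requested duration."""
    return round(duration_ms * refresh_rate_hz / 1000.0)


# Frame counts as stated in the trial description (names are hypothetical).
trial_frames = {
    "fixation": 36,
    "unconscious_primer": 2,
    "conscious_primer": 18,
    "mask": 8,
}

for event, n_frames in trial_frames.items():
    print(f"{event}: {n_frames} frames = {frames_to_ms(n_frames):.1f} ms")
```

Specifying stimulus durations in whole frames rather than milliseconds is a common way to keep very short presentations aligned with the display's refresh cycle.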

Afterwards, a response screen appeared for 1.5 s. Participants were asked to decide whether the facial expression of the target image was “happy,” “sad,” or “neutral” as fast and as accurately as possible using three response buttons operated with the right hand. The index finger was used to indicate “sad,” the middle finger “neutral” and the ring finger “happy” faces. The response screen was followed by a blank screen serving as a jitter for a random duration between 1 and 2 s (Figure 1).

Figure 1. Schematic illustration of modified backward masked emotional conflict task (incongruent trial). A centered white fixation cross was displayed for a duration of 300 ms. Primers depicting happy, sad, or neutral facial expressions were presented for a duration of either 16.7 ms (unconscious trials) or 150 ms (conscious trials). A scrambled mask was shown for 66.7 ms followed by a target image also depicting happy, sad, or neutral facial expressions. A response screen was presented for 1,500 ms followed by a blank screen for a duration of 1,000–2,000 ms. S, sad; N, neutral; H, happy.

The task consisted of 3 blocks of 120 trials each in pseudorandomized order with a matching number of emotion-congruent and incongruent prime-target pairs. Each face served a maximum of 12 times as a target stimulus. Two practice trials were performed at the beginning.

The experimental set-up created a 2 × 2 × 3 factorial design (unconscious vs. conscious primer; primer-target emotion congruent vs. incongruent; emotion happy vs. sad vs. neutral) resulting in 30 trials for each of the 12 conditions.
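As a quick consistency check, the 12 conditions and the 30 trials per condition can be derived from the factor levels and the block structure given above. This is a sketch with illustrative level labels, assuming trials are distributed evenly across conditions.

```python
from itertools import product

# Factor levels of the 2 x 2 x 3 design (labels are illustrative).
presentation = ["unconscious", "conscious"]
congruency = ["congruent", "incongruent"]
emotion = ["happy", "sad", "neutral"]

conditions = list(product(presentation, congruency, emotion))

n_blocks = 3
trials_per_block = 120
total_trials = n_blocks * trials_per_block

# Assumes an even split of trials across all conditions.
trials_per_condition = total_trials // len(conditions)

print(len(conditions), total_trials, trials_per_condition)
```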

The task lasted about 30 min.

EEG data were recorded with the BrainVision Recorder software (BrainVision Recorder, Vers. 1.23.0003, Brain Products GmbH, Gilching, Germany; BrainVision Recorder (Vers. 1.23.0003) [Software], 2023) at a 1,000 Hz sampling rate (0.01–250 Hz analog band-pass filter). The BrainVision Analyzer software (BrainVision Analyzer, Version 2.2.0, Brain Products GmbH, Gilching, Germany; BrainVision Analyzer (Version 2.2.0) [Software], 2019) was used for EEG data preprocessing. The simple gradient method was used to remove MR scanner artifacts (Allen et al., 2000). An infinite impulse response filter (IIR, 70 Hz cut-off, 48 dB slope) was applied and data were down-sampled to 500 Hz, which acts as a low-pass filter. Cardioballistic pulse artifacts were then detected and corrected (Allen et al., 1998). Linear topographic interpolation was performed on EEG channels selected after visual inspection. After down-sampling to 250 Hz, data were filtered (0.01–45 Hz, 24 dB slope; ECG channel excluded from filter). Independent component analysis (ICA) was used to identify artifacts such as eye blinks, which were removed from the data. All datasets were visually inspected, and intervals of bad quality were semi-automatically detected and rejected. EEG data were exported for further analyses via EEGLAB (MATLAB®).

Further EEG data processing was done in MATLAB (version 9.9.0.1467703 (R2020b); The MathWorks Inc., Natick, Massachusetts, 2020) with the EEGLAB toolbox (version 2022.1; Delorme and Makeig, 2004). The preprocessed EEG data were segmented into epochs ranging from −200 to 800 ms relative to primer onset (Nguyen and Cunnington, 2014). For target analysis, epochs were extended to a range from −200 to 1,200 ms relative to primer onset. Baseline correction was carried out using the 200 ms interval prior to primer onset (Nguyen and Cunnington, 2014; Zhang et al., 2016).
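To make the segmentation and baseline step concrete, here is a minimal single-channel sketch in plain Python. It is not the EEGLAB routine used in the study: it assumes a 250 Hz sampling rate (the rate after the second down-sampling step), treats the 200 ms before onset as the baseline without the onset sample itself, and uses hypothetical function names.

```python
def ms_to_samples(ms, sfreq_hz):
    """Convert a time offset in milliseconds to a whole number of samples."""
    return int(round(ms * sfreq_hz / 1000.0))


def extract_epoch(signal, onset_index, sfreq_hz=250, tmin_ms=-200, tmax_ms=800):
    """Cut one epoch around onset_index and subtract the pre-onset mean."""
    start = onset_index + ms_to_samples(tmin_ms, sfreq_hz)
    stop = onset_index + ms_to_samples(tmax_ms, sfreq_hz)  # inclusive end sample
    if start < 0 or stop >= len(signal):
        raise ValueError("epoch exceeds the bounds of the recording")
    epoch = signal[start:stop + 1]
    n_baseline = onset_index - start  # number of samples before onset
    baseline_mean = sum(epoch[:n_baseline]) / n_baseline
    return [value - baseline_mean for value in epoch]


# Toy signal: a constant offset of 5 before onset, 7 afterwards.
toy_signal = [5.0] * 100 + [7.0] * 300
toy_epoch = extract_epoch(toy_signal, onset_index=100)
print(len(toy_epoch), toy_epoch[0], toy_epoch[50])
```

With these settings an epoch spans 251 samples (50 before onset, the onset sample, and 200 after), and the pre-onset segment averages to zero after correction.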

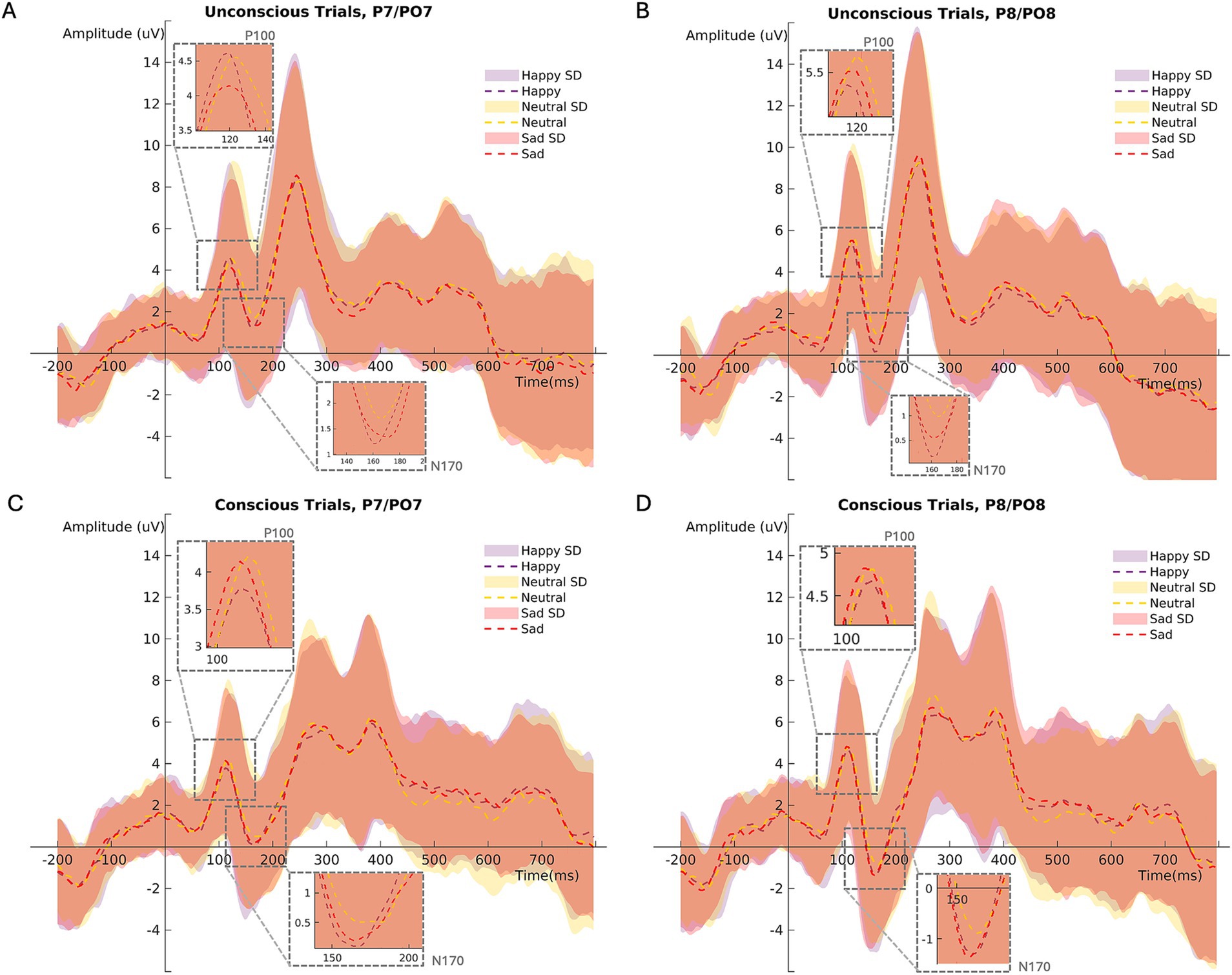

For the ERP analysis referring to the primer (P100 and N170), the channels P7, PO7, P8 and PO8 were chosen (Nguyen and Cunnington, 2014). Mean ERPs of the channels P7 and PO7 (left hemisphere) as well as the channels P8 and PO8 (right hemisphere) were created for every participant. For the primer analysis, P100 and N170 were computed for every subject by averaging the segments for each primer condition (happy, sad, and neutral primer emotion × unconscious and conscious primer presentation; Figure 2).
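The averaging described above can be sketched as two nested means: across the trials of one condition for each channel, then across the two channels of each hemisphere. The data layout (one dict of channel name to epoch per trial) and the function names are assumptions made for illustration only.

```python
HEMISPHERE_CHANNELS = {"left": ("P7", "PO7"), "right": ("P8", "PO8")}


def mean_waveform(waveforms):
    """Point-by-point mean of equally long waveforms."""
    n = len(waveforms)
    return [sum(samples) / n for samples in zip(*waveforms)]


def hemisphere_erps(trials):
    """Average trials per channel, then channels per hemisphere.

    trials: list of dicts mapping channel name -> epoch (list of floats),
    all belonging to one participant and one primer condition.
    """
    erps = {}
    for hemisphere, channels in HEMISPHERE_CHANNELS.items():
        per_channel = [
            mean_waveform([trial[channel] for trial in trials])
            for channel in channels
        ]
        erps[hemisphere] = mean_waveform(per_channel)
    return erps


# Two toy trials with three samples per channel.
toy_trials = [
    {"P7": [1.0, 2.0, 3.0], "PO7": [3.0, 4.0, 5.0],
     "P8": [0.0, 0.0, 0.0], "PO8": [2.0, 2.0, 2.0]},
    {"P7": [3.0, 4.0, 5.0], "PO7": [5.0, 6.0, 7.0],
     "P8": [2.0, 2.0, 2.0], "PO8": [4.0, 4.0, 4.0]},
]
toy_erps = hemisphere_erps(toy_trials)
print(toy_erps)
```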

Figure 2. Mean ERP (P100/N170) amplitudes at channels PO7/P7 (left hemisphere) during (A) unconscious, (C) conscious trials. Mean ERP amplitudes at channels PO8/P8 (right hemisphere) during (B) unconscious, (D) conscious trials. SD = standard deviation.

The N170 peak component (negative) was estimated between 152 and 200 ms after primer stimulus onset (Hinojosa et al., 2015). Following previous studies, the P100 time interval was initially set to 80–120 ms (Nguyen and Cunnington, 2014). After visual data inspection, the P100 peak component (positive) was estimated in an extended time interval ranging from 80 to 132 ms after primer stimulus onset.
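Peak estimation within these windows amounts to taking the most positive sample (P100) or the most negative sample (N170) inside the respective interval. The sketch below illustrates this on a synthetic waveform built from two Gaussians; the waveform, its parameters and the function names are made up for demonstration and do not represent study data.

```python
import math

P100_WINDOW_MS = (80, 132)   # extended P100 window from the text
N170_WINDOW_MS = (152, 200)  # N170 window from the text


def peak_in_window(epoch, times_ms, window_ms, polarity):
    """Return (amplitude, latency_ms) of the extreme value inside the window."""
    low, high = window_ms
    candidates = [(amp, t) for amp, t in zip(epoch, times_ms) if low <= t <= high]
    if not candidates:
        raise ValueError("no samples fall inside the requested window")
    pick = max if polarity == "positive" else min
    return pick(candidates, key=lambda pair: pair[0])


def gaussian(t, center, width, amplitude):
    return amplitude * math.exp(-((t - center) / width) ** 2 / 2)


# Synthetic ERP sampled every 4 ms (250 Hz) from -200 to 800 ms.
times_ms = list(range(-200, 801, 4))
toy_erp = [gaussian(t, 108, 15, 4.0) + gaussian(t, 172, 15, -6.0) for t in times_ms]

p100_amp, p100_latency = peak_in_window(toy_erp, times_ms, P100_WINDOW_MS, "positive")
n170_amp, n170_latency = peak_in_window(toy_erp, times_ms, N170_WINDOW_MS, "negative")
print(p100_latency, n170_latency)
```

Peak picking differs from mean-amplitude measures, which average all samples in the window; the two approaches can yield different values for the same waveform.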

The identified negative (N170) and positive (P100) peaks within the time windows were subjected to statistical analysis using R (R Core Team, 2022) and RStudio (version 2022.12.0.353; Posit team, 2022).

The data obtained through the questionnaires were analyzed using IBM SPSS statistics [version 28.0.0.0 (190)]. To compare participants regarding sex differences, independent samples t-tests were conducted at a significance level of α = 0.05. Spearman’s Rho was computed to assess the relationship between the N170 and subjective difficulty of evaluating emotions in the paradigm. An analysis of possible relationships between different questionnaires and the ERP components can be found in the supplements (Supplementary Tables S1, S2).
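For readers unfamiliar with Spearman's rho, it is the Pearson correlation computed on ranks, with tied values receiving their average rank. The following plain-Python sketch shows the computation on invented toy values; it is not the SPSS procedure used in the study, and the ratings and amplitudes are made up.

```python
def average_ranks(values):
    """Rank values from 1..n, giving tied values their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = sum((a - mean_x) ** 2 for a in x) ** 0.5
    sd_y = sum((b - mean_y) ** 2 for b in y) ** 0.5
    if sd_x == 0 or sd_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (sd_x * sd_y)


def spearman_rho(x, y):
    return pearson(average_ranks(x), average_ranks(y))


# Invented example: difficulty ratings (1-5) and N170 peak amplitudes.
toy_difficulty = [1, 2, 2, 3, 4, 5]
toy_n170 = [-6.1, -5.0, -5.4, -4.2, -3.9, -3.0]
print(round(spearman_rho(toy_difficulty, toy_n170), 3))
```

Because the N170 is a negative deflection, a positive rho here means that higher difficulty ratings go with less negative, i.e., smaller, N170 peaks.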

Linear mixed models (LMMs) fitted with the lme4 package (Bates et al., 2015) in R were used to conduct the EEG data analysis. LMMs account for individual differences among participants as well as correlations between repeated measurements within individuals. Employing LMMs instead of a traditional ANOVA increases the degrees of freedom in the analysis, enhancing the power and sensitivity to detect small main effects and making our design more robust than what would be achieved with ANOVA (Matuschek et al., 2017; de Melo et al., 2022).

For primer analysis, P100 (Model1) and N170 (Model2) served as dependent variables in their respective models. Set as fixed effects were presentation time (mask; conscious or unconscious), emotion (primer happy, sad, or neutral), sex (male or female), hemisphere (left or right) and age. A random intercept for each participant was included.

Model1 <- lmer(P100 ~ mask + primer_emotion + sex + hemisphere + age + (1 | participant))

Model2 <- lmer(N170 ~ mask + primer_emotion + sex + hemisphere + age + (1 | participant))

During the model selection process, linear mixed models without interactions between variables were chosen because they showed better model quality criteria (AIC/BIC; see Supplementary material). Effect sizes were calculated using the r.squaredGLMM and powerSim functions in R.
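Written out, the additive random-intercept models above take the following form for observation i of participant j. This is a sketch under stated assumptions: treatment (dummy) coding with neutral as the reference level of primer emotion is assumed here and is not specified in the text, and the coefficient labels are illustrative.

```latex
y_{ij} = \beta_0
       + \beta_1\,\mathrm{mask}_{ij}
       + \beta_2\,\mathrm{happy}_{ij}
       + \beta_3\,\mathrm{sad}_{ij}
       + \beta_4\,\mathrm{sex}_{j}
       + \beta_5\,\mathrm{hemisphere}_{ij}
       + \beta_6\,\mathrm{age}_{j}
       + u_j + \varepsilon_{ij},
\qquad
u_j \sim \mathcal{N}(0, \sigma_u^2), \quad
\varepsilon_{ij} \sim \mathcal{N}(0, \sigma^2)
```

Here y is the P100 (Model1) or N170 (Model2) peak amplitude, u_j is the participant-specific random intercept, and the absence of product terms reflects the choice of models without interactions.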

Using the MASS package (Venables and Ripley, 2002) from R (https://cran.r-project.org/), a generalized linear mixed model (GLMM) was fitted via penalized quasi-likelihood.

For analysis of participants’ accuracy (Model5) in evaluating the target emotion, the accuracy served as the dependent variable. Target emotion (happy, sad, or neutral), primer presentation time (consciousness; conscious or unconscious perception), congruency (primer-target emotion congruent or incongruent), age and sex were included as fixed effects. A random intercept was included for each participant. Using the emmeans package (Lenth, 2023) estimated marginal means were obtained and subsequently used for pairwise comparisons as post hoc tests.

Model5 <- glmmPQL(accuracy ~ target_emotion + consciousness + congruency + age + sex, random = ~ 1 | participant, family = quasipoisson(link = "log"))

No statistically significant differences in age or questionnaire scores were found between men and women (Table 1).

For an exploratory analysis of effects of different questionnaire scores on the ERP components as well as participants’ accuracy, see Supplementary material.

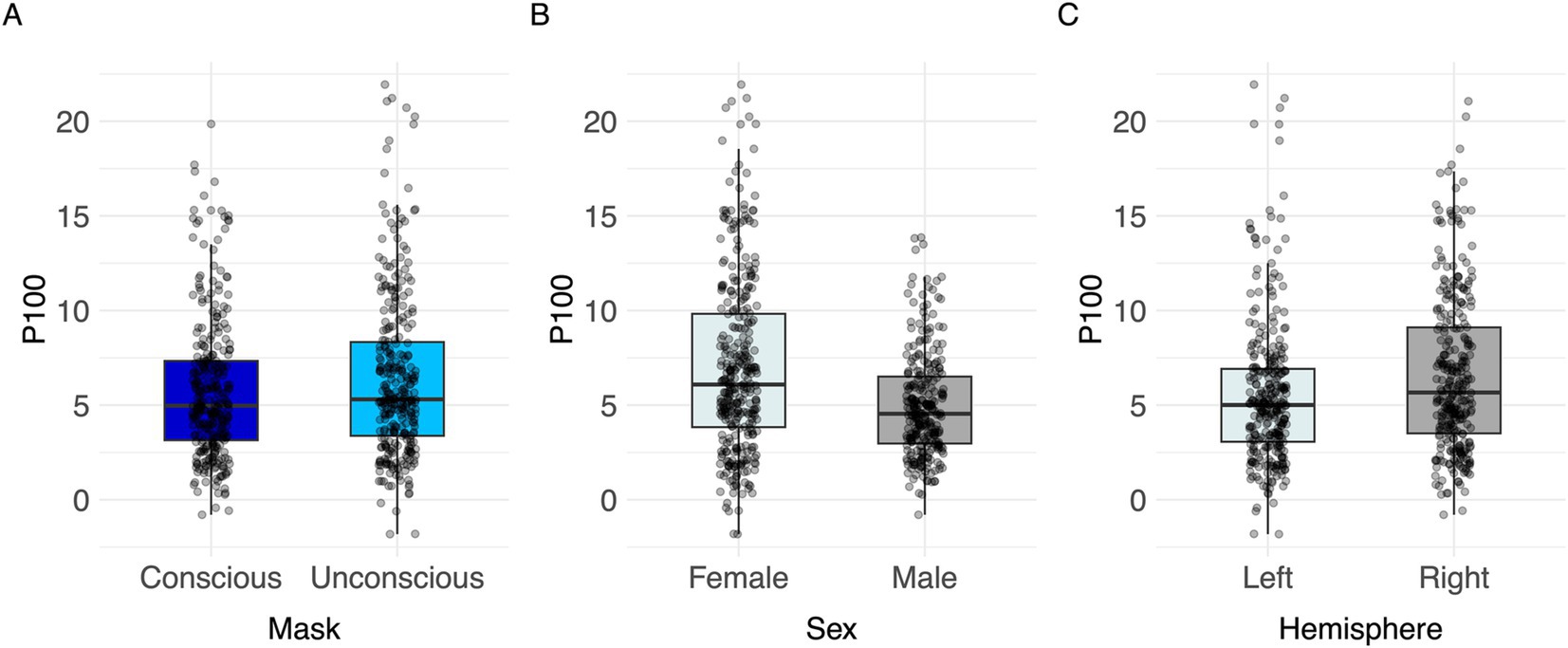

The linear mixed model (LMM) testing task effects on the P100 revealed a significant effect of mask (F(1,557) = 14.78, p < 0.001, partial η² = 0.03, CI [0.01, 1.00]), sex (F(1,48) = 4.08, p = 0.049, partial η² = 0.08, CI [0.00, 1.00]) and brain hemisphere (F(1,557) = 40.64, p < 0.001, partial η² = 0.07, CI [0.04, 1.00]). No significant effect was found for primer emotion or age (Table 2).

Table 2. Effect on P100 values: Type II analysis of variance table with Kenward-Roger’s method for P100 model.

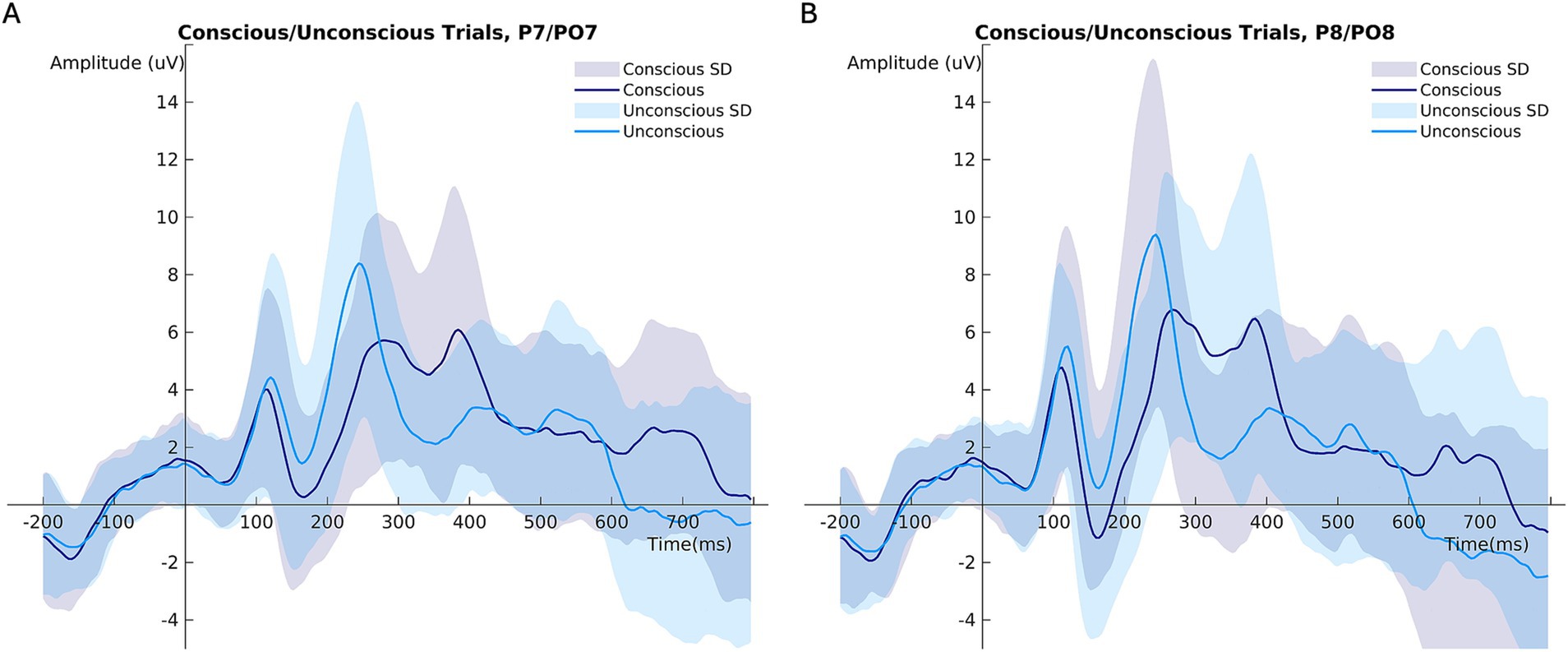

In unconscious trials, the P100 amplitude was larger than in conscious trials (Figure 3A; Table 2). The right brain hemisphere (channels P8/PO8) showed a significantly larger P100 in comparison to the left hemisphere (channels P7/PO7) (Figures 3C, 4, 5). Women had larger P100 values than men (Figure 3B; Table 2). The model explained 8.21% of variance via the fixed factors and 77.89% via the random intercept.

Figure 3. Visualization of parameter effects for P100. Boxplot of (A) mask, (B) sex, (C) brain hemisphere side.

Figure 4. Mean ERP amplitudes comparing conscious and unconscious trials at (A) channels P7/PO7 (left hemisphere), (B) channels P8/PO8 (right hemisphere). ***p < 0.001.

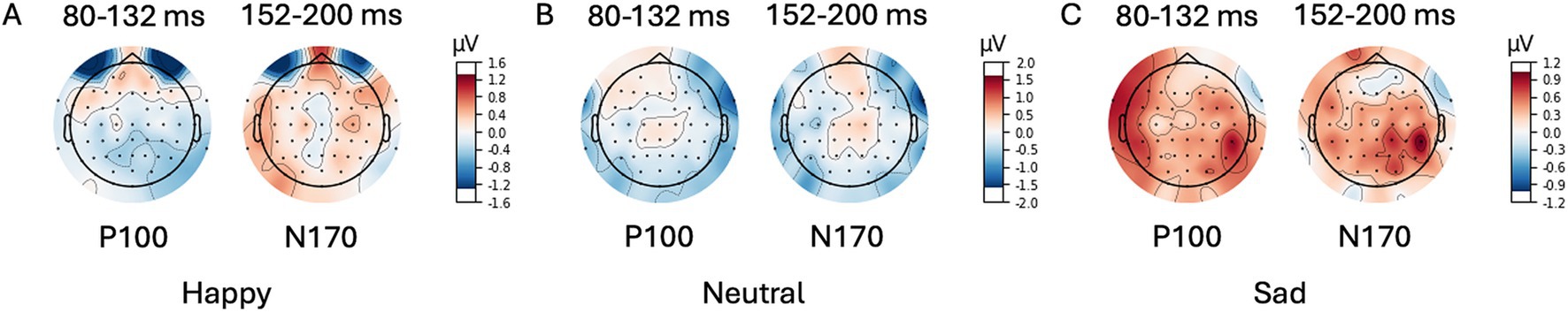

Figure 5. Scalp topography maps for P100/N170. Difference (unconscious voltage minus conscious voltage) between average amplitude in time intervals (80–132 ms post primer for P100; 152–200 ms post primer for N170). Primer emotion (A) happy, (B) neutral, and (C) sad.

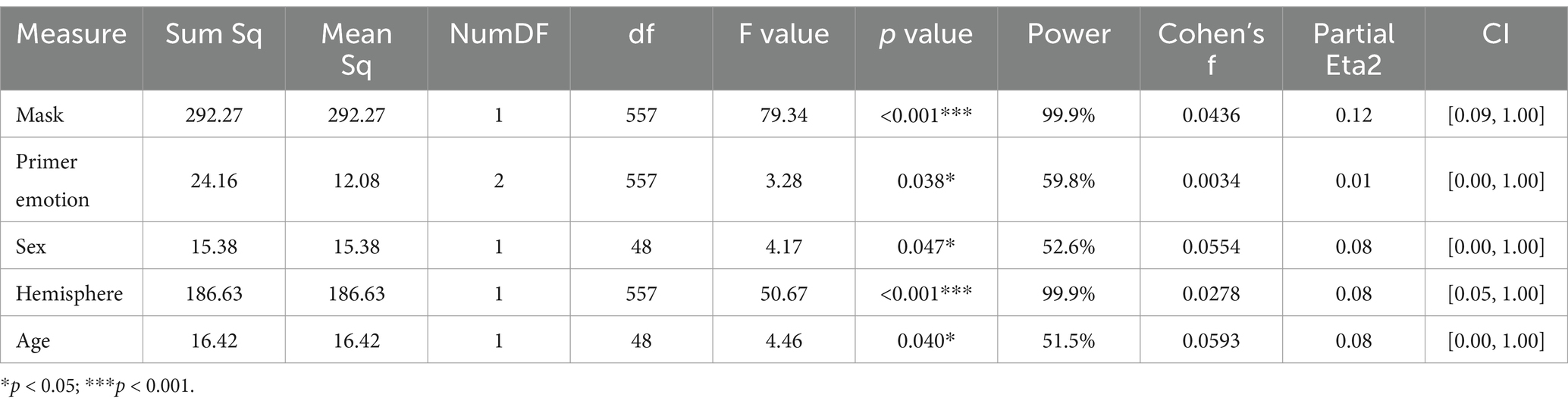

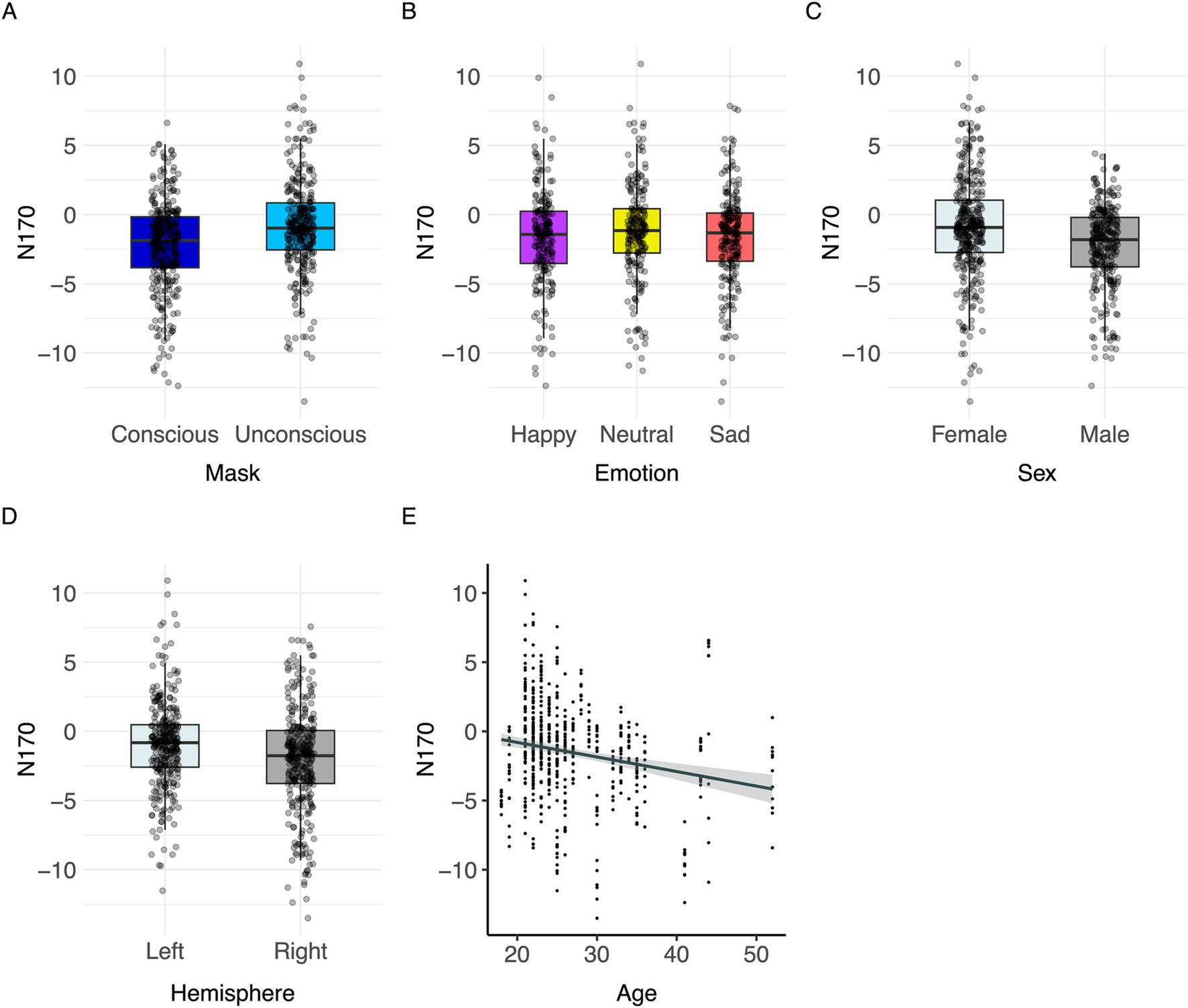

Significant effects of mask (F(1,557) = 79.34, p < 0.001, partial η² = 0.12, CI [0.09, 1.00]), primer emotion (F(2,557) = 3.28, p = 0.038, partial η² = 0.01, CI [0.00, 1.00]), hemisphere (F(1,557) = 50.67, p < 0.001, partial η² = 0.08, CI [0.05, 1.00]), sex (F(1,48) = 4.17, p = 0.047, partial η² = 0.08, CI [0.00, 1.00]) and age (F(1,48) = 4.46, p = 0.040, partial η² = 0.08, CI [0.00, 1.00]) were found (Table 3).

Table 3. Effect on N170 value: Type II analysis of variance table with Kenward-Roger’s method for N170 model.

Conscious trials elicited a significantly larger N170 than unconscious trials (Figure 6A). Men showed higher N170 amplitudes than women (Figure 6C; Table 3). The right hemisphere showed higher N170 amplitudes than the left hemisphere (Figures 2, 6D; Table 3). Post hoc comparisons showed no significant difference between emotions (Figure 6B; Table 4). The model explained 15.34% of variance via the fixed factors and 71.54% via the random intercept.

Figure 6. Visualization of parameter effects for N170. Boxplot of (A) mask, (B) emotion, (C) sex, (D) brain hemisphere, and (E) age.

Spearman’s correlation between N170 values and subjective difficulty of evaluating the target emotions shows a significant positive correlation [Spearman’s ρ(588) = 0.242, p < 0.001].

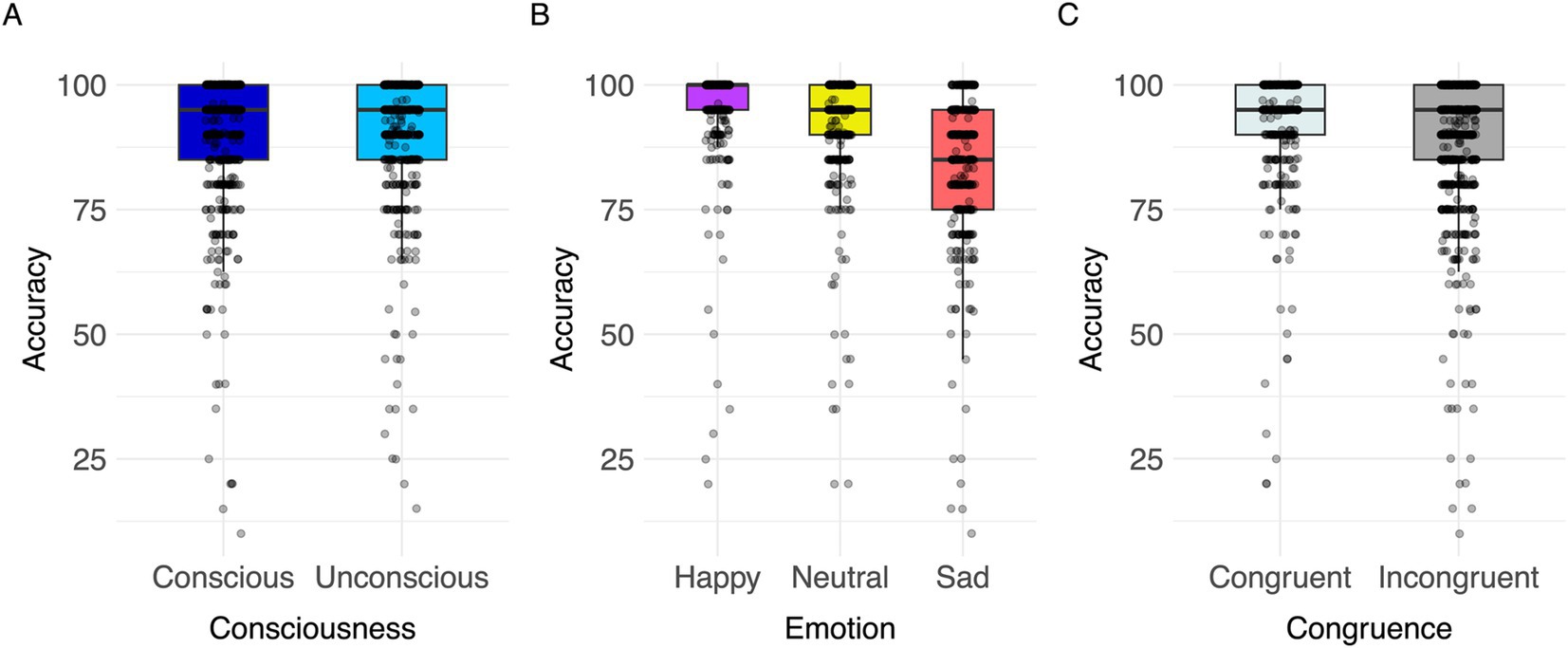

A significant effect of congruence was found [F(1,863) = 4.74, p < 0.001, Cohen’s f = 1.93; for all results see Table 5]. Response accuracy was greater in congruent than in incongruent trials (Figure 7). Post hoc comparisons indicated significant differences between all three target emotions [happy-neutral t(863) = 6.31, p < 0.001; happy-sad t(863) = 17.92, p < 0.001; neutral-sad t(863) = 11.63, p < 0.001; Table 5]. Participants’ responses were most accurate in happy target faces (happy > neutral > sad; Figure 7). The model explained 10.47% of variance via the fixed factors and 79.46% via the random intercept.

Figure 7. Visualization of parameter effects for accuracy. Boxplot of (A) congruence, (B) emotion, (C) consciousness.

The current study investigated the influence of conscious versus unconscious emotional face processing on electrophysiological markers. The P100 and N170 components were selected to analyze differences in conscious and unconscious emotional face processing. We observed higher accuracy in happy target trials compared to neutral and sad target trials. Stimulus presentation time influenced the P100 and N170 amplitudes. While P100 amplitudes were larger in trials with unconscious compared to conscious primer presentation, the N170 showed the opposite pattern. The N170 was modulated by emotion, although post hoc comparisons were not significant. The N170 was lower when participants reported higher subjective difficulty of evaluating emotions.

The result of larger P100 amplitudes in unconscious than conscious trials is in line with past research. For example, larger P100 amplitudes were reported in trials where facial stimuli were presented for 17 ms (unconscious) in comparison to 200 ms (conscious) presentation time (Zhang et al., 2012). Assuming a modulation of P100 amplitudes by attention as reported in some literature showing higher P100 in trials with increased attention (Mangun and Hillyard, 1991; Mangun, 1995), briefly presented stimuli may elicit higher attention than trials with longer presentation times. This may lead to higher P100 amplitudes due to the increased attentional demand (Hillyard et al., 1998). A review proposed that some unconsciously perceived emotional facial expressions may attract higher attention, aiming to gain awareness of the emotional face (Eastwood and Smilek, 2005). Therefore, attention effects may be highly relevant especially for shortly presented facial expressions and are reflected by P100. Additionally, unconsciously perceived stimuli may have been processed only partly at this early stage which could increase the attentional demand resulting in higher P100 values.

N170 was larger in conscious compared to unconscious trials. The N170 is sensitive to face stimuli (Hinojosa et al., 2015) and to fixation on specific face parts, e.g., fixation on the eyes elicited larger N170 amplitudes than fixation on the mouth (de Lissa et al., 2014). Therefore, trials with longer stimulus presentation times may enable more detailed face processing, resulting in a larger N170. Our findings are in line with previous research proposing sensitivity of the N170 component to the visibility of faces (Zhang et al., 2012). Our results demonstrate a clear modulation of P100 and N170 amplitudes by stimulus presentation time, reflecting differential neural processing in conscious versus unconscious perception. The larger P100 amplitudes in unconscious trials suggest an early, rapid attentional mechanism that is activated by brief, unconsciously perceived stimuli. This heightened P100 response may indicate that the visual system allocates more attentional resources to quickly process stimuli that are only partially perceived, aligning with the hypothesis that unconscious emotional stimuli can attract higher attention (Eastwood and Smilek, 2005). On the other hand, the N170 amplitude was larger in conscious trials, consistent with the notion that conscious face perception engages more detailed and prolonged visual analysis, allowing for full engagement of face-specific cortical regions such as the fusiform gyrus (Zhang et al., 2012). The N170 has also been found to be altered in depression at an unconscious level (Zhang et al., 2016), which illustrates how this ERP can be used to further understand the mechanisms of mental disorders. Furthermore, the N170 and P100 can be used to classify unconscious versus conscious processing. They could be used to study more lifelike situations involving subliminal facial expression processing, e.g., with dynamic stimulus material, and could therefore be adapted to investigate disease-specific alterations in unconscious emotion processing.
Unconscious processing has practical relevance in daily interactions, where subtle emotional cues in faces may be registered unconsciously, influencing reactions before conscious awareness. This type of rapid processing could assist in social situations by helping to assess emotions or detect threats without requiring full awareness. Neuroscientific evidence supports the biological plausibility of processing complex stimuli like faces without awareness. Studies indicate that brain regions like the amygdala and subcortical pathways respond to faces even when not consciously perceived, suggesting an evolutionary adaptation for rapid, unconscious assessment of socially relevant cues (Pessoa, 2005; Tamietto and de Gelder, 2010). These findings align with our study’s aim to explore unconscious processing, showing its importance beyond controlled settings.

We hypothesized that the P100 component might be larger in trials displaying happy or sad faces than in trials with neutral faces. However, the P100 was not significantly affected by the presented emotion in our study, which could be due to a small effect size or to limited power of our model including the factor “emotion.” Since we observed wide confidence intervals that indicate large data variability, we assume that there are additional sources of variance that are not captured in our model. With a larger sample size, we could estimate the effect more precisely and report more reliably reproducible effects. Other studies likewise did not find an emotion effect on the P100 (Batty and Taylor, 2003; Smith, 2012; Lee et al., 2017). Deviating results may be explained by different methods of calculating ERP amplitudes, e.g., averaging the amplitude in a given time window (Zhang et al., 2016) as opposed to selecting the peak within the time window. Another possible explanation may be differences in experimental design, e.g., a longer stimulus presentation time of 500 ms or the absence of a mask (Moradi et al., 2017).
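The distinction between mean and peak amplitude measures can be illustrated with a minimal sketch. It uses a synthetic waveform, not data from this study, and the time windows, function names, and waveform parameters are assumptions chosen for illustration only.

```python
import numpy as np

def mean_amplitude(erp, times, t_start, t_end):
    """Mean voltage over all samples with t_start <= t <= t_end (seconds)."""
    window = erp[(times >= t_start) & (times <= t_end)]
    return float(window.mean())

def peak_amplitude(erp, times, t_start, t_end, polarity):
    """Most extreme voltage in the window: maximum if polarity > 0, else minimum."""
    window = erp[(times >= t_start) & (times <= t_end)]
    return float(window.max() if polarity > 0 else window.min())

# Synthetic waveform (illustration only, not data from the study):
# a positive deflection near 100 ms and a negative deflection near 170 ms.
times = np.linspace(-0.1, 0.4, 251)  # seconds, 2 ms steps
erp = (4.0 * np.exp(-0.5 * ((times - 0.10) / 0.015) ** 2)
       - 6.0 * np.exp(-0.5 * ((times - 0.17) / 0.020) ** 2))  # microvolts

# Hypothetical analysis windows; the windows used in the study may differ.
P100_WINDOW = (0.08, 0.13)
N170_WINDOW = (0.13, 0.20)

p100_mean = mean_amplitude(erp, times, *P100_WINDOW)
p100_peak = peak_amplitude(erp, times, *P100_WINDOW, polarity=+1)
n170_mean = mean_amplitude(erp, times, *N170_WINDOW)
n170_peak = peak_amplitude(erp, times, *N170_WINDOW, polarity=-1)

print(f"P100 mean {p100_mean:.2f} uV, peak {p100_peak:.2f} uV")
print(f"N170 mean {n170_mean:.2f} uV, peak {n170_peak:.2f} uV")
```

For the same waveform, the peak measure is more extreme than the mean measure in both windows, so amplitudes obtained with the two approaches are not directly comparable across studies.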

As indicated by previous results (Hinojosa et al., 2015; Batty and Taylor, 2003; Miyoshi et al., 2004; Blau et al., 2007; Hendriks et al., 2007; Pegna et al., 2011; Smith, 2012; Zhang et al., 2012, 2016), the N170 component was influenced by different emotions. While the main effect of emotion on N170 was significant, post hoc comparisons between primer emotions were not significant. Since confidence intervals were wide and included the null value, large data variability might be considered as an influencing factor on the results. A meta-analysis underlines the effect of the emotion ‘happy’ on the N170 amplitude compared to neutral faces, whereas it does not substantiate the effect of the emotion ‘sad’ compared to neutral faces (Hinojosa et al., 2015).
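What it means for a confidence interval to include the null value can be made concrete with a short sketch. It assumes a symmetric Wald-type 95% interval (estimate ± 1.96 × standard error), which may not be the interval type reported for the models in this study, and all numbers are hypothetical.

```python
def wald_ci(estimate, std_error, z=1.96):
    """Symmetric Wald-type interval: estimate +/- z * standard error."""
    half_width = z * std_error
    return estimate - half_width, estimate + half_width

def includes_null(ci, null_value=0.0):
    """True if the null value lies inside the interval (bounds included)."""
    lower, upper = ci
    return lower <= null_value <= upper

# Hypothetical contrast estimates in microvolts, invented for illustration.
narrow = wald_ci(estimate=-0.80, std_error=0.20)
wide = wald_ci(estimate=-0.80, std_error=0.60)

print("narrow interval:", narrow, "includes 0:", includes_null(narrow))
print("wide interval:", wide, "includes 0:", includes_null(wide))
```

With an identical point estimate, the larger standard error widens the interval until it covers zero, which is the situation described for the post hoc comparisons.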

Despite the robust effect of happy emotions, there are also contradictory findings, with larger N170 amplitudes for sad faces than for happy or neutral faces (Lynn and Salisbury, 2008). There might be interindividual differences and preferences towards a specific emotion that facilitate its processing. If such a bias existed, it would result in increased ERPs for that emotion. Our results do not support a bias towards one specific emotion, as no significant difference between happy and sad emotional faces was found. However, others have found biases towards emotional information (for a review see Kauschke et al., 2019).

We found a positive correlation between the N170 and the subjective difficulty of evaluating emotion within the task paradigm. Participants who reported emotion assessment to be easier elicited larger N170 amplitudes. Therefore, the emotion effect on the N170 may be dependent on participants’ general sensitivity and ability to discriminate emotions.
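Because the N170 is a negative-going component, the sign of such a correlation depends on whether signed amplitudes or their magnitudes are correlated. The sketch below illustrates one possible reading, under the assumption that signed amplitudes were used and that higher ratings denote greater difficulty; the values are hypothetical and are not taken from the study.

```python
import numpy as np

# Hypothetical per-participant values, invented for illustration only.
difficulty = np.array([1.0, 2.0, 2.0, 3.0, 4.0, 5.0])         # higher = harder
n170_signed = np.array([-7.5, -6.8, -6.1, -5.0, -4.2, -3.1])  # microvolts

def pearson_r(x, y):
    """Pearson correlation coefficient between two 1-D arrays."""
    return float(np.corrcoef(x, y)[0, 1])

# Correlating signed amplitudes: a more negative N170 goes with lower difficulty.
r_signed = pearson_r(difficulty, n170_signed)

# Correlating magnitudes flips the sign because every amplitude is negative.
r_magnitude = pearson_r(difficulty, np.abs(n170_signed))

print(f"r (signed amplitude): {r_signed:.2f}")
print(f"r (amplitude magnitude): {r_magnitude:.2f}")
```

Under these assumptions, a positive correlation with signed amplitudes corresponds to larger (more negative) N170 responses in participants who found the task easier.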

Early ERPs were found to be associated with lower-level processing, whereas later ERPs reflected more complex cognitive processes (Sur and Sinha, 2009; Portella et al., 2012). Even though the temporal distance between P100 and N170 is short, this is a substantial duration in the context of brain processing. This trend may be reflected by the finding that the N170 amplitude was modulated by presentation time as well as emotion, while the earlier P100 component was only influenced by stimulus presentation time.

The present study found significantly larger P100 amplitudes in women than in men, whereas the N170 showed the opposite pattern. Previous work investigating the effect of sex on ERPs related to emotional face processing found that women elicited larger P100 amplitudes than men in response to subthreshold fearful faces (Lee et al., 2017). Using an oddball paradigm, one study found that women elicited larger N170 amplitudes in response to emotional compared to neutral faces, whereas no such effect was found in men (Choi et al., 2015). Since previous study designs differed in experimental paradigm and emotions presented, it is unclear whether these findings can be applied to our results. As research on the effect of sex on the P100 and N170 components is still insufficient, further research is needed for conclusive results. Additionally, it should be considered that the confidence intervals were wide, indicating large data variability, and included the null value, highlighting the need for more extensive research on the influence of the factor “sex” on the ERP components.

Both the P100 and the N170 amplitudes were larger on the right compared to the left hemisphere. In accordance with this, past findings suggest right hemisphere dominance for face processing in the N170, whereas results on the P100 are not fully conclusive yet (e.g., Leehey et al., 1978; Levine et al., 1988; Bötzel et al., 1995; Bentin et al., 1996; Kanwisher et al., 1997; Haxby et al., 1999; Rossion et al., 2003; Itier and Taylor, 2004; Zhang et al., 2012; Nguyen and Cunnington, 2014).

The N170 increased with age, which is in line with previous studies that reported a trend towards larger N170 amplitudes in older participants. However, these studies mainly focused on children and adolescents (e.g., Taylor et al., 1999; for a review see Taylor et al., 2004). One study reported no significant difference in N170 amplitudes between young and middle-aged participants (Shi et al., 2022). Considering the face sensitivity of the N170 (Bentin et al., 1996; Hinojosa et al., 2015), it could be hypothesized that face processing continues to develop and mature over the course of adult life. Given the width of the confidence intervals for the effect of age on the N170 and their inclusion of the null effect, our results should be applied to other populations only with caution, and more research on the effect of age on P100 and N170 amplitudes in adult populations is required to establish conclusive results.

To avoid introducing noise into the ERP data caused by possible exhibition of atypical P100 and N170 responses in neurodivergent participants, only healthy participants were included. However, investigating a larger and more diverse sample could make the results more reliable.

Emotions in the paradigm were limited to happy, sad, and neutral facial expressions. Using a wider variety, e.g., additionally including angry and fearful faces, could broaden the results. Additionally, our experiment could not assess the actual perceived awareness of a stimulus in each trial and can only refer to mean ratings obtained retrospectively.

Our results revealed that the P100 and the N170 components were modulated by conscious versus unconscious presentation times. While briefly presented faces elicited larger P100 amplitudes, N170 amplitudes showed opposite results. Consequently, P100 and N170 may be used as sensitive electrophysiological markers for investigating differences between conscious and unconscious emotional face processing. The N170 component was found to be enhanced in response to happy and sad facial expressions compared to neutral, which suggests a non-specific emotion effect.

All acquired data are electronically stored in a pseudonymous form for a duration of 10 years on the server and external hard drives in the Brain Imaging core facility of the IZKF Aachen (Interdisciplinary Centre for Clinical Research).

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found at: https://github.com/LennardHerzberg/MarkersUnconsciousProcessing.

The studies involving humans were approved by Ethics committee of the Medical Faculty, Uniklinik RWTH Aachen University, Germany. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

LH: Data curation, Formal analysis, Investigation, Software, Visualization, Writing – original draft. JS: Formal analysis, Investigation, Software, Writing – review & editing. H-GJ: Conceptualization, Funding acquisition, Methodology, Writing – review & editing. UH: Conceptualization, Funding acquisition, Resources, Writing – review & editing. LW: Conceptualization, Formal analysis, Project administration, Supervision, Validation, Writing – review & editing.

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. This study was funded by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) 667892/JO 1453/2-1.

The authors thank all subjects for their participation. This work was supported by the Brain Imaging Facility of the Interdisciplinary Center for Clinical Research (IZKF) Aachen within the Faculty of Medicine at RWTH Aachen University. This study was supported by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) –SFB-TRR 379– 512007073 and the International Research Training Group (IRTG2150)—269953372/GRK2150. This work was also supported by the FZJ-NST Bilateral Cooperation Programme funded by the Forschungszentrum Jülich and the National Research Council of Science & Technology (Global-22-001). We would like to thank Marie Kranz and Fynn Wagels very much for their assistance with the measurements.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnbeh.2024.1464888/full#supplementary-material

Allen, P. J., Josephs, O., and Turner, R. (2000). A method for removing imaging artifact from continuous EEG recorded during functional MRI. NeuroImage 12, 230–239. doi: 10.1006/nimg.2000.0599

Allen, P. J., Polizzi, G., Krakow, K., Fish, D. R., and Lemieux, L. (1998). Identification of EEG events in the MR scanner: the problem of pulse artifact and a method for its subtraction. NeuroImage 8, 229–239. doi: 10.1006/nimg.1998.0361

Andrews, T. J., Schluppeck, D., Homfray, D., Matthews, P., and Blakemore, C. (2002). Activity in the fusiform gyrus predicts conscious perception of Rubin’s vase-face illusion. NeuroImage 17, 890–901. doi: 10.1006/nimg.2002.1243

Ashley, V., Vuilleumier, P., and Swick, D. (2004). Time course and specificity of event-related potentials to emotional expressions. Neuroreport 15, 211–216. doi: 10.1097/00001756-200401190-00041

Balconi, M., and Mazza, G. (2009). Consciousness and emotion: ERP modulation and attentive vs. pre-attentive elaboration of emotional facial expressions by backward masking. Motiv. Emot. 33, 113–124. doi: 10.1007/s11031-009-9122-8

Baroni, M., Frumento, S., Cesari, V., Gemignani, A., Menicucci, D., and Rutigliano, G. (2021). Unconscious processing of subliminal stimuli in panic disorder: a systematic review and meta-analysis. Neurosci. Biobehav. Rev. 128, 136–151. doi: 10.1016/j.neubiorev.2021.06.023

Bates, D., Mächler, M., Bolker, B., and Walker, S. (2015). Fitting linear mixed-effects models using lme4. J. Stat. Softw. 67, 1–48. doi: 10.18637/jss.v067.i01

Batty, M., and Taylor, M. J. (2003). Early processing of the six basic facial emotional expressions. Brain Res. Cogn. Brain Res. 17, 613–620. doi: 10.1016/S0926-6410(03)00174-5

Beesdo-Baum, K., Zaudig, M., and Wittchen, H.-U. (2019). SCID-5-CV Strukturiertes Klinisches Interview für DSM-5-Störungen–Klinische Version: Deutsche Bearbeitung des Structured Clinical Interview for DSM-5 Disorders–Clinician Version von Michael B. First, Janet BW Williams, Rhonda S. Karg, Robert L. Spitzer. Hogrefe.

Bentin, S., Allison, T., Puce, A., Perez, E., and McCarthy, G. (1996). Electrophysiological studies of face perception in humans. J. Cogn. Neurosci. 8, 551–565. doi: 10.1162/jocn.1996.8.6.551

Biehl, S. C., Ehlis, A. C., Müller, L. D., Niklaus, A., Pauli, P., and Herrmann, M. J. (2013). The impact of task relevance and degree of distraction on stimulus processing. BMC Neurosci. 14:107. doi: 10.1186/1471-2202-14-107

Blackwood, D. H., and Muir, W. J. (1990). Cognitive brain potentials and their application. Br. J. Psychiatry Suppl. 9, 96–101.

Blau, V. C., Maurer, U., Tottenham, N., and McCandliss, B. D. (2007). The face-specific N170 component is modulated by emotional facial expression. Behav. Brain Funct. 3:7. doi: 10.1186/1744-9081-3-7

Bötzel, K., Schulze, S., and Stodieck, S. R. (1995). Scalp topography and analysis of intracranial sources of face-evoked potentials. Exp. Brain Res. 104, 135–143. doi: 10.1007/BF00229863

Brunet, N. M. (2023). Face processing and early event-related potentials: replications and novel findings. Front. Hum. Neurosci. 17:1268972. doi: 10.3389/fnhum.2023.1268972

Chen, J., Ma, W., Zhang, Y., Wu, X., Wei, D., Liu, G., et al. (2014). Distinct facial processing related negative cognitive bias in first-episode and recurrent major depression: evidence from the N170 ERP component. PLoS One 9:e109176. doi: 10.1371/journal.pone.0109176

Choi, D., Egashira, Y., Takakura, J., Motoi, M., Nishimura, T., and Watanuki, S. (2015). Gender difference in N170 elicited under oddball task. J. Physiol. Anthropol. 34:7. doi: 10.1186/s40101-015-0045-7

de Lissa, P., McArthur, G., Hawelka, S., Palermo, R., Mahajan, Y., and Hutzler, F. (2014). Fixation location on upright and inverted faces modulates the N170. Neuropsychologia 57, 1–11. doi: 10.1016/j.neuropsychologia.2014.02.006

de Melo, M. B., Daldegan-Bueno, D., Menezes Oliveira, M. G., and de Souza, A. L. (2022). Beyond ANOVA and MANOVA for repeated measures: advantages of generalized estimated equations and generalized linear mixed models and its use in neuroscience research. Eur. J. Neurosci. 56, 6089–6098. doi: 10.1111/ejn.15858

Delorme, A., and Makeig, S. (2004). EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods 134, 9–21. doi: 10.1016/j.jneumeth.2003.10.009

Di Russo, F., and Spinelli, D. (1999). Electrophysiological evidence for an early attentional mechanism in visual processing in humans. Vis. Res. 39, 2975–2985. doi: 10.1016/S0042-6989(99)00031-0

Dickey, L., Politte-Corn, M., and Kujawa, A. (2021). Development of emotion processing and regulation: insights from event-related potentials and implications for internalizing disorders. Int. J. Psychophysiol. 170, 121–132. doi: 10.1016/j.ijpsycho.2021.10.003

Disner, S. G., Beevers, C. G., Haigh, E. A., and Beck, A. T. (2011). Neural mechanisms of the cognitive model of depression. Nat. Rev. Neurosci. 12, 467–477. doi: 10.1038/nrn3027

Eastwood, J. D., and Smilek, D. (2005). Functional consequences of perceiving facial expressions of emotion without awareness. Conscious. Cogn. 14, 565–584. doi: 10.1016/j.concog.2005.01.001

Ebner, N. C., Riediger, M., and Lindenberger, U. (2010). FACES--a database of facial expressions in young, middle-aged, and older women and men: development and validation. Behav. Res. Methods 42, 351–362. doi: 10.3758/BRM.42.1.351

Eimer, M., and Holmes, A. (2002). An ERP study on the time course of emotional face processing. Neuroreport 13, 427–431. doi: 10.1097/00001756-200203250-00013

Eimer, M., Holmes, A., and McGlone, F. P. (2003). The role of spatial attention in the processing of facial expression: an ERP study of rapid brain responses to six basic emotions. Cogn. Affect. Behav. Neurosci. 3, 97–110. doi: 10.3758/CABN.3.2.97

Esteves, F., and Ohman, A. (1993). Masking the face: recognition of emotional facial expressions as a function of the parameters of backward masking. Scand. J. Psychol. 34, 1–18. doi: 10.1111/j.1467-9450.1993.tb01096.x

Fox, E. (2002). Processing emotional facial expressions: the role of anxiety and awareness. Cogn. Affect. Behav. Neurosci. 2, 52–63. doi: 10.3758/CABN.2.1.52

Furey, M. L., Tanskanen, T., Beauchamp, M. S., Avikainen, S., Uutela, K., Hari, R., et al. (2006). Dissociation of face-selective cortical responses by attention. Proc. Natl. Acad. Sci. USA 103, 1065–1070. doi: 10.1073/pnas.0510124103

Gainotti, G. (2012). Unconscious processing of emotions and the right hemisphere. Neuropsychologia 50, 205–218. doi: 10.1016/j.neuropsychologia.2011.12.005

Hahn, A., and Goedderz, A. (2020). Trait-unconsciousness, state-unconsciousness, preconsciousness, and social miscalibration in the context of implicit evaluation. Soc. Cogn. 38, S115–S134. doi: 10.1521/soco.2020.38.supp.s115

Härting, C., and Wechsler, D. (2000). Wechsler-Gedächtnistest - revidierte Fassung: WMS-R; Manual; deutsche Adaptation der revidierten Fassung der Wechsler Memory scale. Huber.

Haxby, J. V., Ungerleider, L. G., Clark, V. P., Schouten, J. L., Hoffman, E. A., and Martin, A. (1999). The effect of face inversion on activity in human neural systems for face and object perception. Neuron 22, 189–199. doi: 10.1016/S0896-6273(00)80690-X

Hendriks, M. C., van Boxtel, G. J., and Vingerhoets, A. J. (2007). An event-related potential study on the early processing of crying faces. Neuroreport 18, 631–634. doi: 10.1097/WNR.0b013e3280bad8c7

Herrmann, M. J., Aranda, D., Ellgring, H., Mueller, T. J., Strik, W. K., Heidrich, A., et al. (2002). Face-specific event-related potential in humans is independent from facial expression. Int. J. Psychophysiol. 45, 241–244. doi: 10.1016/S0167-8760(02)00033-8

Herrmann, M. J., Ehlis, A. C., Ellgring, H., and Fallgatter, A. J. (2005a). Early stages (P100) of face perception in humans as measured with event-related potentials (ERPs). J. Neural Trans. 112, 1073–1081. doi: 10.1007/s00702-004-0250-8

Herrmann, M. J., Ehlis, A. C., Muehlberger, A., and Fallgatter, A. J. (2005b). Source localization of early stages of face processing. Brain Topogr. 18, 77–85. doi: 10.1007/s10548-005-0277-7

Hillyard, S. A., Vogel, E. K., and Luck, S. J. (1998). Sensory gain control (amplification) as a mechanism of selective attention: electrophysiological and neuroimaging evidence. Philos. Trans. R. Soc. Lond. Ser. B Biol. Sci. 353, 1257–1270. doi: 10.1098/rstb.1998.0281

Hinojosa, J. A., Mercado, F., and Carretié, L. (2015). N170 sensitivity to facial expression: a meta-analysis. Neurosci. Biobehav. Rev. 55, 498–509. doi: 10.1016/j.neubiorev.2015.06.002

Itier, R. J., and Taylor, M. J. (2004). N170 or N1? Spatiotemporal differences between object and face processing using ERPs. Cereb. Cortex 14, 132–142. doi: 10.1093/cercor/bhg111

Jaworska, N., Blier, P., Fusee, W., and Knott, V. (2012). The temporal electrocortical profile of emotive facial processing in depressed males and females and healthy controls. J. Affect. Disord. 136, 1072–1081. doi: 10.1016/j.jad.2011.10.047

Kanwisher, N., McDermott, J., and Chun, M. M. (1997). The fusiform face area: a module in human extrastriate cortex specialized for face perception. J. Neurosci. 17, 4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997

Kauschke, C., Bahn, D., Vesker, M., and Schwarzer, G. (2019). The role of emotional valence for the processing of facial and verbal stimuli-positivity or negativity Bias? Front. Psychol. 10:1654. doi: 10.3389/fpsyg.2019.01654

Lee, S. A., Kim, C. Y., Shim, M., and Lee, S. H. (2017). Gender differences in neural responses to perceptually invisible fearful face-an ERP study. Front. Behav. Neurosci. 11:6. doi: 10.3389/fnbeh.2017.00006

Leehey, S., Carey, S., Diamond, R., and Cahn, A. (1978). Upright and inverted faces: the right hemisphere knows the difference. Cortex 14, 411–419. doi: 10.1016/S0010-9452(78)80067-7

Lenth, R. (2023). Emmeans: estimated marginal means, aka least-squares means. R package version 1.8.4-1. Available at: https://CRAN.R-project.org/package=emmeans (Accessed January 29, 2024).

Levine, S. C., Banich, M. T., and Koch-Weser, M. P. (1988). Face recognition: a general or specific right hemisphere capacity? Brain Cogn. 8, 303–325. doi: 10.1016/0278-2626(88)90057-7

Liddell, B. J., Williams, L. M., Rathjen, J., Shevrin, H., and Gordon, E. (2004). A temporal dissociation of subliminal versus supraliminal fear perception: an event-related potential study. J. Cogn. Neurosci. 16, 479–486. doi: 10.1162/089892904322926809

Lueschow, A., Sander, T., Boehm, S. G., Nolte, G., Trahms, L., and Curio, G. (2004). Looking for faces: attention modulates early occipitotemporal object processing. Psychophysiology 41, 350–360. doi: 10.1111/j.1469-8986.2004.00159.x

Lynn, S. K., and Salisbury, D. F. (2008). Attenuated modulation of the N170 ERP by facial expressions in schizophrenia. Clin. EEG Neurosci. 39, 108–111. doi: 10.1177/155005940803900218

Mangun, G. R. (1995). Neural mechanisms of visual selective attention. Psychophysiology 32, 4–18. doi: 10.1111/j.1469-8986.1995.tb03400.x

Mangun, G. R., and Hillyard, S. A. (1991). Modulations of sensory-evoked brain potentials indicate changes in perceptual processing during visual-spatial priming. J. Exp. Psychol. Hum. Percept. Perform. 17, 1057–1074. doi: 10.1037/0096-1523.17.4.1057

Matuschek, H., Kliegl, R., Vasishth, S., Baayen, H., and Bates, D. (2017). Balancing type I error and power in linear mixed models. J. Mem. Lang. 94, 305–315. doi: 10.1016/j.jml.2017.01.001

Merikle, P. M., Smilek, D., and Eastwood, J. D. (2001). Perception without awareness: perspectives from cognitive psychology. Cognition 79, 115–134. doi: 10.1016/s0010-0277(00)00126-8

Michel, M. (2022). Conscious perception and the prefrontal cortex: a review. J. Conscious. Stud. 29, 115–157. doi: 10.53765/20512201.29.7.115

Miller, G. A. (1956). The magical number seven plus or minus two: some limits on our capacity for processing information. Psychol. Rev. 63, 81–97. doi: 10.1037/h0043158

Miyoshi, M., Katayama, J., and Morotomi, T. (2004). Face-specific N170 component is modulated by facial expressional change. Neuroreport 15, 911–914. doi: 10.1097/00001756-200404090-00035

Moradi, A., Mehrinejad, S. A., Ghadiri, M., and Rezaei, F. (2017). Event-related potentials of bottom-up and top-down processing of emotional faces. Basic Clin. Neurosci. 8, 27–36. doi: 10.15412/J.BCN.03080104

Morris, J. S., Ohman, A., and Dolan, R. J. (1998). Conscious and unconscious emotional learning in the human amygdala. Nature 393, 467–470. doi: 10.1038/30976

Morris, J. S., Ohman, A., and Dolan, R. J. (1999). A subcortical pathway to the right amygdala mediating “unseen” fear. Proc. Natl. Acad. Sci. USA 96, 1680–1685. doi: 10.1073/pnas.96.4.1680

Navajas, J., Ahmadi, M., and Quiroga, R. Q. (2013). Uncovering the mechanisms of conscious face perception: a single-trial study of the n170 responses. J. Neurosci. 33, 1337–1343. doi: 10.1523/JNEUROSCI.1226-12.2013

Nguyen, V. T., and Cunnington, R. (2014). The superior temporal sulcus and the N170 during face processing: single trial analysis of concurrent EEG-fMRI. NeuroImage 86, 492–502. doi: 10.1016/j.neuroimage.2013.10.047

Pegna, A. J., Darque, A., Berrut, C., and Khateb, A. (2011). Early ERP modulation for task-irrelevant subliminal faces. Front. Psychol. 2:88. doi: 10.3389/fpsyg.2011.00088

Peirce, J. W. (2007). PsychoPy--psychophysics software in Python. J. Neurosci. Methods 162, 8–13. doi: 10.1016/j.jneumeth.2006.11.017

Pessoa, L. (2005). To what extent are emotional visual stimuli processed without attention and awareness? Curr. Opin. Neurobiol. 15, 188–196. doi: 10.1016/j.conb.2005.03.002

Portella, C., Machado, S., Arias-Carrión, O., Sack, A. T., Silva, J. G., Orsini, M., et al. (2012). Relationship between early and late stages of information processing: an event-related potential study. Neurol. Int. 4:e16. doi: 10.4081/ni.2012.e16

Posit team (2022). RStudio: Integrated development environment for R. Posit Software, PBC, Boston, MA. Available at: http://www.posit.co/ (Accessed October 31, 2022).

Prabhakaran, R., and Gray, J. R. (2012). The pervasive nature of unconscious social information processing in executive control. Front. Hum. Neurosci. 6:105. doi: 10.3389/fnhum.2012.00105

Proverbio, A. M., Brignone, V., Matarazzo, S., Del Zotto, M., and Zani, A. (2006). Gender differences in hemispheric asymmetry for face processing. BMC Neurosci. 7:44. doi: 10.1186/1471-2202-7-44

R Core Team (2022). R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. Available at: https://www.R-project.org/ (Accessed October 31, 2022).

Reitan, R. M. (1971). Trail making test results for Normal and brain-damaged children. Percept. Mot. Skills 33, 575–581. doi: 10.2466/pms.1971.33.2.575

Rellecke, J., Sommer, W., and Schacht, A. (2013). Emotion effects on the n170: a question of reference? Brain Topogr. 26, 62–71. doi: 10.1007/s10548-012-0261-y

Rossion, B., Joyce, C. A., Cottrell, G. W., and Tarr, M. J. (2003). Early lateralization and orientation tuning for face, word, and object processing in the visual cortex. NeuroImage 20, 1609–1624. doi: 10.1016/j.neuroimage.2003.07.010

Schräder, J., Habel, U., Jo, H. G., Walter, F., and Wagels, L. (2023). Identifying the duration of emotional stimulus presentation for conscious versus subconscious perception via hierarchical drift diffusion models. Conscious. Cogn. 110:103493. doi: 10.1016/j.concog.2023.103493

Schräder, J., Herzberg, L., Jo, H. G., Hernandez-Pena, L., Koch, J., Habel, U., et al. (2024). Neurophysiological pathways of unconscious emotion processing in depression: insights from a simultaneous electroencephalography-functional magnetic resonance imaging measurement. Biol. Psychiatry Cogn. Neurosci. Neuroimaging 9, 1121–1131. doi: 10.1016/j.bpsc.2024.07.005

Schütz, C., Güldenpenning, I., Koester, D., and Schack, T. (2020). Social cues can impact complex behavior unconsciously. Sci. Rep. 10:21017. doi: 10.1038/s41598-020-77646-2

Shi, H., Sun, G., and Zhao, L. (2022). The effect of age on the early stage of face perception in depressed patients: an ERP study. Front. Psychiatry 13:710614. doi: 10.3389/fpsyt.2022.710614

Smith, M. L. (2012). Rapid processing of emotional expressions without conscious awareness. Cereb. Cortex 22, 1748–1760. doi: 10.1093/cercor/bhr250

Smith, R., and Lane, R. D. (2016). Unconscious emotion: a cognitive neuroscientific perspective. Neurosci. Biobehav. Rev. 69, 216–238. doi: 10.1016/j.neubiorev.2016.08.013

Stuhrmann, A., Dohm, K., Kugel, H., Zwanzger, P., Redlich, R., Grotegerd, D., et al. (2013). Mood-congruent amygdala responses to subliminally presented facial expressions in major depression: associations with anhedonia. J. Psychiatry Neurosci. 38, 249–258. doi: 10.1503/jpn.120060

Sur, S., and Sinha, V. K. (2009). Event-related potential: an overview. Ind. Psychiatry J. 18, 70–73. doi: 10.4103/0972-6748.57865

Tamietto, M., and de Gelder, B. (2010). Neural bases of the non-conscious perception of emotional signals. Nat. Rev. Neurosci. 11, 697–709. doi: 10.1038/nrn2889

Taylor, M. J., Batty, M., and Itier, R. J. (2004). The faces of development: a review of early face processing over childhood. J. Cogn. Neurosci. 16, 1426–1442. doi: 10.1162/0898929042304732

Taylor, M. J., McCarthy, G., Saliba, E., and Degiovanni, E. (1999). ERP evidence of developmental changes in processing of faces. Clin. Neurophysiol. 110, 910–915. doi: 10.1016/S1388-2457(99)00006-1

The MathWorks Inc (2020). MATLAB version: 9.9.0.1467703 (R2020b), Natick, Massachusetts: The MathWorks Inc. Available at: https://www.mathworks.com (Accessed October 13, 2022).

Tischler, L., and Petermann, F. (2010). Trail making test (TMT). Z. Psychiatr. Psychol. Psychother. 58, 79–81. doi: 10.1024/1661-4747.a000009

Venables, W. N., and Ripley, B. D. (2002). Modern applied statistics with S. Fourth Edn. New York: Springer.

Vorst, H. C. M., and Bermond, B. (2001). Validity and reliability of the Bermond-Vorst alexithymia questionnaire. Personal. Individ. Differ. 30, 413–434. doi: 10.1016/S0191-8869(00)00033-7

Whalen, P. J., Rauch, S. L., Etcoff, N. L., McInerney, S. C., Lee, M. B., and Jenike, M. A. (1998). Masked presentations of emotional facial expressions modulate amygdala activity without explicit knowledge. J. Neurosci. 18, 411–418. doi: 10.1523/JNEUROSCI.18-01-00411.1998

Woodman, G. F. (2010). A brief introduction to the use of event-related potentials in studies of perception and attention. Atten. Percept. Psychophys. 72, 2031–2046. doi: 10.3758/BF03196680

World Medical Association (2013). World Medical Association Declaration of Helsinki: ethical principles for medical research involving human subjects. JAMA 310, 2191–2194. doi: 10.1001/jama.2013.281053

Xu, Q., Yang, Y., Wang, P., Sun, G., and Zhao, L. (2013). Gender differences in preattentive processing of facial expressions: an ERP study. Brain Topogr. 26, 488–500. doi: 10.1007/s10548-013-0275-0

Zhang, D., He, Z., Chen, Y., and Wei, Z. (2016). Deficits of unconscious emotional processing in patients with major depression: an ERP study. J. Affect. Disord. 199, 13–20. doi: 10.1016/j.jad.2016.03.056

Keywords: event-related potentials, unconsciousness, emotion processing, P100, N170

Citation: Herzberg L, Schräder J, Jo H-G, Habel U and Wagels L (2025) Identifying P100 and N170 as electrophysiological markers for conscious and unconscious processing of emotional facial expressions. Front. Behav. Neurosci. 18:1464888. doi: 10.3389/fnbeh.2024.1464888

Received: 15 July 2024; Accepted: 30 December 2024;

Published: 23 January 2025.

Edited by:

Joao Miguel Castelhano, University of Coimbra, Portugal

Reviewed by:

Peter De Lissa, Université de Fribourg, Switzerland

Copyright © 2025 Herzberg, Schräder, Jo, Habel and Wagels. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Lennard Herzberg, lherzberg@ukaachen.de
