
METHODS article

Front. Behav. Neurosci., 21 October 2021

Sec. Pathological Conditions

Volume 15 - 2021 | https://doi.org/10.3389/fnbeh.2021.755812

This article is part of the Research Topic Looking at the Complete Picture: Tackling Broader Factors Important for Advancing the Validity of Preclinical Models in Disease

Annesha Sil1, Anton Bespalov2, Christina Dalla3, Chantelle Ferland-Beckham4, Arnoud Herremans5, Konstantinos Karantzalos6, Martien J. Kas7, Nikolaos Kokras3,8, Michael J. Parnham9, Pavlina Pavlidi3, Kostis Pristouris6, Thomas Steckler10, Gernot Riedel1†, Christoph H. Emmerich2*†

Laboratory workflows and preclinical models have become increasingly diverse and complex. Confronted with a multitude of information of ambiguous relevance to their specific experiments, scientists run the risk of overlooking critical factors that can influence the planning, conduct and results of studies and that should have been considered a priori. To address this problem, we developed “PEERS” (Platform for the Exchange of Experimental Research Standards), an open-access online platform built to help scientists determine which experimental factors and variables are most likely to affect the outcome of a specific test, model or assay and therefore ought to be considered during the design, execution and reporting stages. The PEERS database is categorized into in vivo and in vitro experiments and provides lists of factors, derived from the scientific literature, that have been deemed critical for experimentation. The platform is based on a structured and transparent system for rating the strength of evidence related to each identified factor and its relevance for a specific method/model. The rating procedure will not be limited to the PEERS Working Group but will also allow community-based grading of evidence. Here we describe a working prototype using the Open Field paradigm in rodents and present the selection of factors specific to each experimental setup and the rating system. PEERS not only lets users search for information that facilitates experimental rigor, but also draws on the engagement of the scientific community to actively expand the information contained within the platform.
Collectively, by helping scientists search for specific factors relevant to their experiments and share experimental knowledge in a standardized manner, PEERS will serve as a collaborative exchange and analysis tool to enhance data validity and robustness as well as the reproducibility of preclinical research. PEERS offers a vetted, independent tool by which to judge the quality of information available on a certain test or model, identifies knowledge gaps and provides guidance on the key methodological considerations that should be prioritized to ensure that preclinical research is conducted to the highest standards and best practice.

Biomedical research, particularly in the preclinical sphere, has come under scrutiny for the persistently low reproducibility of findings across laboratories (Ioannidis, 2005). Reproducibility in this context refers to the ability to corroborate the results of a previous study by conducting new experiments with the same experimental design but collecting new and independent data sets. Reproducibility checks are common in fields such as physics (CERN, 2018) but rarer in biological disciplines such as neuroscience and pharmacotherapy, which increasingly face a “reproducibility crisis” (Bespalov et al., 2016; Bespalov and Steckler, 2018; Botvinik-Nezer et al., 2020). Although a high risk of failing to repeat experiments between laboratories is inherent in developing innovative therapies, some of this risk can be greatly reduced or avoided by adhering to evidence-based research practices that use clearly identified measures to improve research rigor (Vollert et al., 2020; Bespalov et al., 2021; Emmerich et al., 2021). Other initiatives have been introduced to improve data reporting and harmonization across laboratories [ARRIVE 2.0 (Percie du Sert et al., 2020); EQUATOR network (Simera, 2008); The International Brain Laboratory (The International Brain Laboratory, 2017); FAIRsharing Information Resource (Sansone et al., 2019)], to improve data management and analysis [Pistoia Alliance Database (Makarov et al., 2021); NINDS Common Data Elements (Stone, 2010); FITBIR: Traumatic Brain Injury network (Tosetti et al., 2013); FITBIR: Preclinical Traumatic Brain Injury Common Data Elements (LaPlaca et al., 2021)], or to publish novel methods and their refinements (Norecopa; Current Protocols in Neuroscience; protocols.io; The Journal of Neuroscience Methods).
However, the extrinsic and intrinsic factors that affect study outcomes in biomedical research have not yet been systematically considered or weighted. These factors are the subject of “PEERS” (Platform for the Exchange of Experimental Research Standards), which makes PEERS a unique addition to this eclectic list of well-established resources.

The rationale for PEERS is as follows: laboratory workflows and preclinical models have become increasingly diverse and complex. Although the mechanics of many experimental paradigms are well explored and usually repeatable across laboratories and even across national/continental boundaries (Robinson et al., 2018; Aguillon-Rodriguez et al., 2021), the resulting data can be highly variable and are often inconsistent. Multiple attempts have been made to overcome this issue, but even efforts in which experimental conditions were fully standardized between laboratories have not been completely successful. This may not be surprising given that behavioral testing, for example, is sensitive to environmental factors such as housing conditions (background noise, olfactory cues), experimenter interactions, and the sex or strain of the animal under investigation (Sousa et al., 2006; Bohlen et al., 2014; Riedel et al., 2018; Pawluski et al., 2020; Butlen-Ducuing et al., 2021). Many multi-laboratory studies have also observed significant differences between mouse strains and significant genotype × laboratory interactions despite efforts to rigorously standardize both housing conditions and experimental design (Wolfer et al., 2004; Richter et al., 2011). Taken together, many variables or factors can affect an experiment and the outcome of a study.

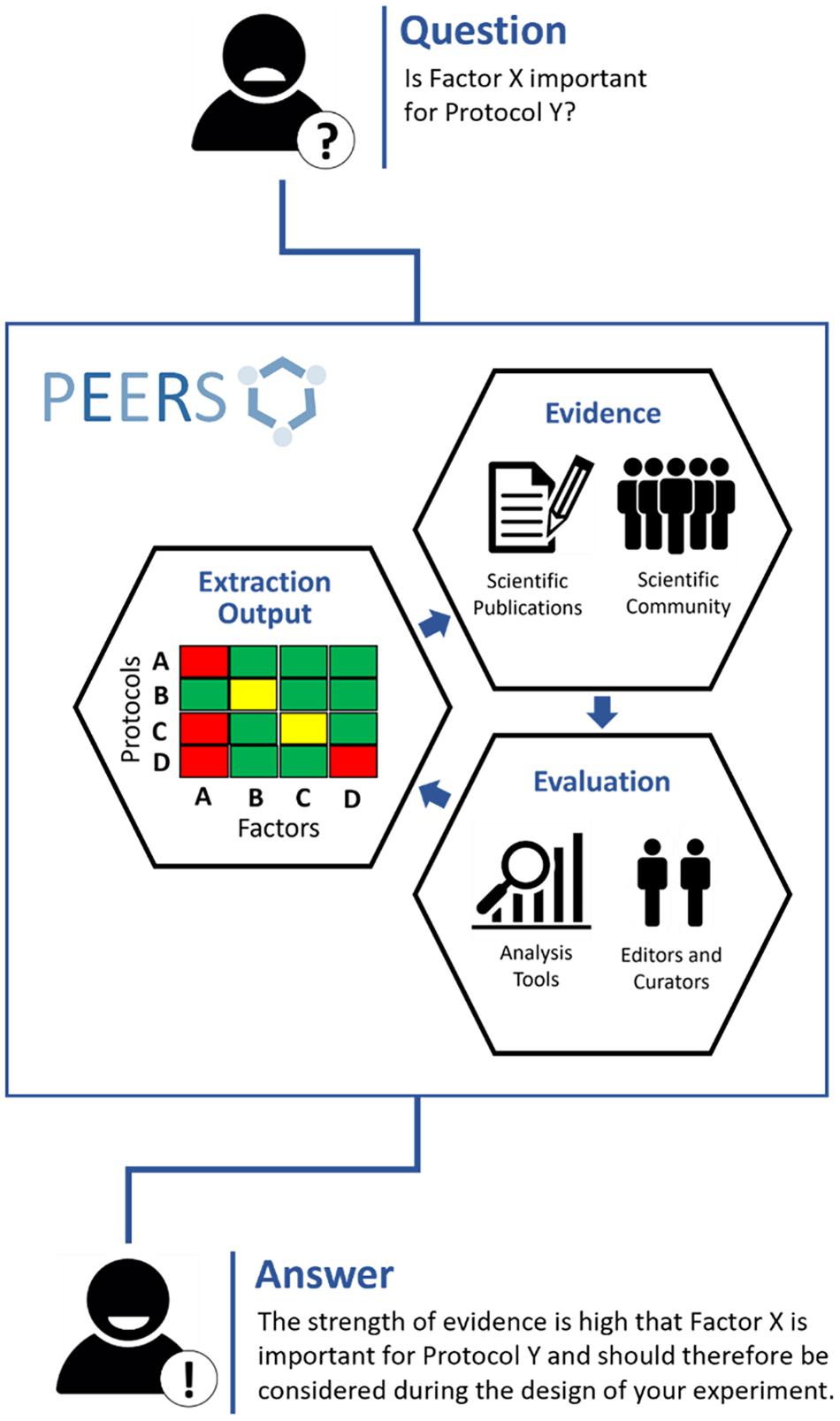

A proper catalog of these influencing factors, together with the supporting scientific evidence and a rating of its strength, is still missing. PEERS seeks to fill this gap and aims to guide scientists by advising which factors need to be monitored, recorded or reported. Figure 1 illustrates the overarching concept of the PEERS platform.

Figure 1. Outline of the PEERS concept and workflow (the 3Es). To understand whether specific factors are relevant for certain methods/models (“protocols”), the PEERS workflow is based on different steps to collect information about selected factors/protocols from publications or the scientific community (“Evidence”); rate the strength of this information and provide mechanisms for editing, curating and maintaining the information/database (“Evaluation”); and present the outcome in a user-friendly and digestible form (“Extraction Output”) so that users will be provided with an answer helpful for their planned experiments.

To mitigate some of the above issues, we have developed PEERS, an open-access online platform that seeks to help scientists determine which experimental factors (or variables) most likely affect the outcome of a specific test, model or assay and therefore deserve consideration prior to study design, execution and reporting. Our overarching ambition is to develop PEERS into a one-stop exchange and reporting tool for the extrinsic and intrinsic factors that underlie variability in study outcomes and thereby undermine scientific progress. At the same time, PEERS offers a vetted, independent perspective from which the quality of information available on a certain test or model can be judged. It will also identify knowledge gaps and provide guidance on the key methodological considerations that should be prioritized to ensure that preclinical research is conducted to the highest standards and incorporates best practice.

The PEERS project can be traced back to the Global Preclinical Data Forum (GPDF),1 a network financially and organizationally supported by the European College of Neuropsychopharmacology (ECNP; PMID: 26073278, doi: 10.1016/j.euroneuro.2015.05.011) and Cohen Veterans Bioscience (CVB). The GPDF focuses on the robustness, reproducibility, translatability and transparency of reporting of preclinical data and consists of a multinational consortium of specialist researchers from research institutions, universities, pharmaceutical companies, small and medium-sized enterprises and publishers. Originating from the GPDF, the PEERS Working Group (authors AS, CD, CF-B, AH, KK, MK, NK, KP, GR, CE) is currently funded by CVB during its initiation phase. The Working Group broadly consists of a “scientific arm” with long-standing expertise in neuroscience, reproducibility and improvements in data quality across academic and industrial preclinical biomedical research. The scientific arm is complemented by the strong software and machine learning expertise of the “software arm,” which translates the scientific input into an easy-to-navigate, open-access online platform.

Given this expertise, our initial focus is on in vivo and in vitro methods commonly used in neuroscience research. Since the inaugural meeting on 10 September 2020, a principal concept for implementation has been agreed, and partners have contributed to different work packages.

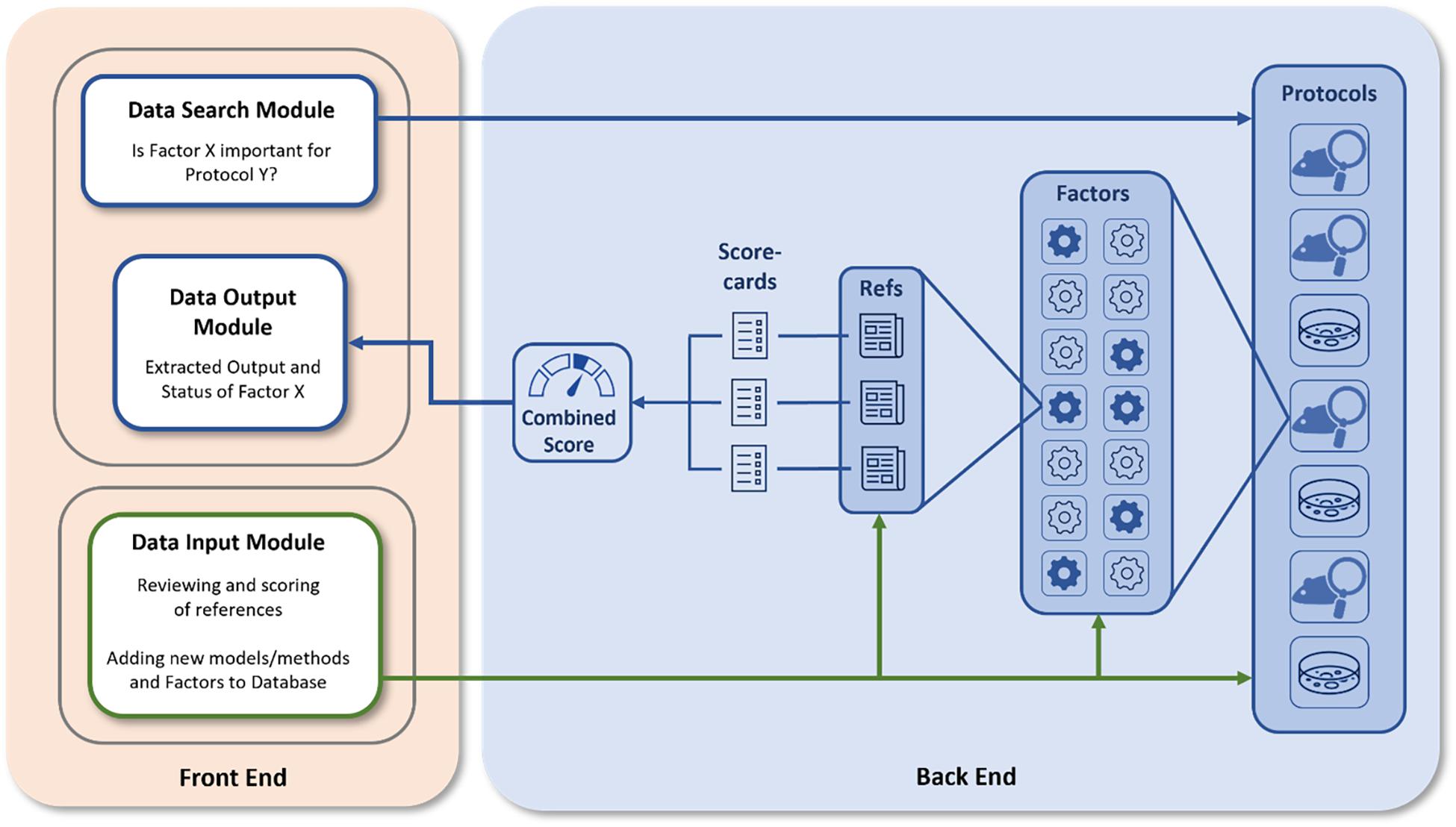

Figure 2 shows the overall structure of the platform, including its front-end and back-end functionalities. The front end contains a data input module, which allows registered users to add new methods/models (here termed “protocols”) or to add information to existing protocols, as well as the data search and data extraction modules that a typical user will use to query the database and retrieve information. The back end of the PEERS database contains the processes that collect and analyze information related to the selected protocols. The relevance of specific factors for the outcome of these protocols is analyzed using a detailed scoring system, which is a central element of the PEERS working prototype. The steps involved in setting up this platform are discussed in the sections below, following the 3Es identified in Figure 1.

Figure 2. PEERS platform structure. Users can interact with the PEERS platform (blue arrows) by searching for or adding information (Front End Modules). The PEERS database (Back End) consists of various protocols, for which generic and specific factors and related references have been identified. The Quality of Evidence for the importance of certain factors is evaluated using scorecards and a summary is presented by visualizing results in the user interface. Users can contribute by adding new protocols or factors and by scoring relevant references (green arrows).

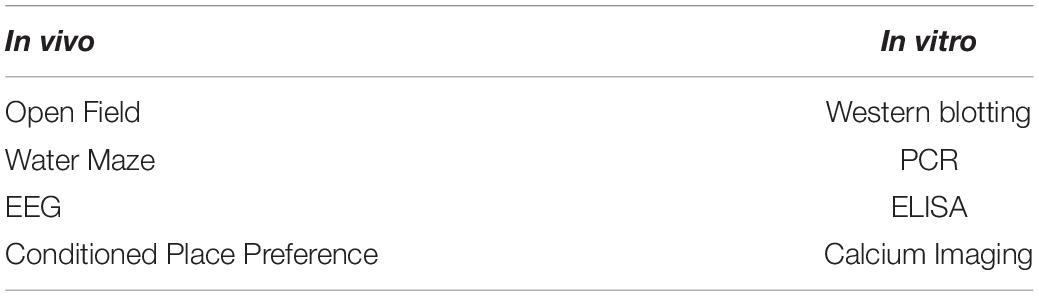

For the working prototype and as a proof-of-concept, four in vivo and four in vitro protocols were identified (see Table 1), based on (1) how commonly they are used in neuroscience (and by extension the literature available on them), and (2) the expertise of the core group.

Table 1. The four initial in vivo and in vitro neuroscience protocols selected for the PEERS platform.

The central elements of PEERS are called “factors,” defined as any aspect of a study that can affect the study outcome. Researchers therefore need information on how to handle each factor in their study design (e.g., whether to ignore or control, monitor, or report it). Factors were divided into two categories based on expert opinion within the PEERS consortium: (1) generic factors relevant to all protocols (e.g., strain of animals) and (2) specific factors that are relevant to, and affect, specific protocols only (e.g., water temperature for the water maze). Users of the platform can search for these factors according to the protocols and outcomes they are interested in. Representative tables of factors for one in vivo method (Open Field) and one in vitro method (Western blotting) can be found in Supplementary Tables 1, 2.
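As a minimal sketch of the generic/specific distinction, assuming a simple in-memory catalog, a factor lookup for a given protocol could work as follows. The `Factor` class, `CATALOG` list and `factors_for` function are hypothetical names for illustration, not part of the PEERS code base; the example factors are taken from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Factor:
    name: str
    # "generic" factors apply to every protocol; "specific" ones only to those listed.
    kind: str
    protocols: frozenset = frozenset()


# Hypothetical catalog entries, using examples mentioned in the text.
CATALOG = [
    Factor("Strain of animals", "generic"),
    Factor("Sex", "generic"),
    Factor("Water temperature", "specific", frozenset({"Water maze"})),
    Factor("Illumination level of arena", "specific", frozenset({"Open Field"})),
]


def factors_for(protocol: str, catalog=CATALOG) -> list:
    """Return the names of all factors that apply to `protocol`."""
    return [f.name for f in catalog
            if f.kind == "generic" or protocol in f.protocols]


print(factors_for("Open Field"))
# ['Strain of animals', 'Sex', 'Illumination level of arena']
```

Keeping generic factors in the same catalog as specific ones means a single query returns everything a user should consider for a protocol.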

To identify and collate references that examine the importance of a specific factor for a selected protocol and its outcome, an extensive review of the published literature in the PubMed and EMBASE databases was conducted. The references included studies in which manipulating a factor of interest either did or did not affect the protocol outcome. A representative table of factors and the associated references for the Open Field protocol is provided in Supplementary Table 1.

Within the PEERS database, we provide references for each factor that has been scrutinized. We have gone one step further by grading the strength of this evidence, so that examining a specific factor in the database provides the user with an extracted summary of all relevant papers and their scores from one or more assessors (scorecards). This required the development of a generic “checklist” to determine the quality of each paper, the details of which are described in the following section.

In parallel with the identification of experimental factors and the literature review, novel, detailed “scorecards” for evaluating the quality of scientific evidence were refined through multiple Delphi rounds within the PEERS Working Group. These scorecards contain a checklist with two main domains: Methods and Results. The elements of these domains were based on the ARRIVE 2.0 “Essential 10” and the recommendations of the EQIPD consortium (Percie du Sert et al., 2020; Vollert et al., 2020). The Methods domain assesses adherence to these guidelines, with a maximum score of 10: one point for each of the 10 items, a fraction of a point for items that are only partially covered, and zero points for items that are not covered at all. The Results domain evaluates the quality and suitability of the results and analyses; again, a score of 10 is awarded if all items are sufficiently addressed. The scorecards are a unique feature of the PEERS database because they evaluate not only how the methods of a paper are reported but also the suitability and strength of the results presented. Table 2 details the checklist used to score the in vivo protocols.
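The scorecard arithmetic can be sketched as below. This assumes each checklist item is credited with a value between 0 and 1. Because the article does not state how many items the Results domain contains, the sketch rescales each domain to a maximum of 10, which is an assumption; for the 10-item Methods domain this equals the plain sum. The function names are illustrative only.

```python
def domain_score(items: list) -> float:
    """Score one domain on a 0-10 scale from per-item credits in [0, 1]."""
    if not items:
        raise ValueError("a domain needs at least one item")
    if any(not 0.0 <= x <= 1.0 for x in items):
        raise ValueError("item credits must lie in [0, 1]")
    # Assumption: rescale so that a fully covered domain scores 10.
    return 10.0 * sum(items) / len(items)


def scorecard_total(methods: list, results: list) -> float:
    """Methods (max 10) + Results (max 10) = total out of 20."""
    return domain_score(methods) + domain_score(results)


# Example: 8 Methods items fully covered, 1 partially (0.5), 1 missing;
# a hypothetical 4-item Results domain with one item covered halfway.
methods = [1, 1, 1, 1, 1, 1, 1, 1, 0.5, 0]
results = [1, 1, 1, 0.5]
print(scorecard_total(methods, results))  # 17.25
```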

As mentioned above, scorecards provide a means to objectively evaluate the strength of evidence that a given factor does or does not affect a specific protocol. Assessors/users are first asked to indicate whether a particular reference supports or negates the influence of a factor on a certain protocol. After the scorecard is completed, the system automatically calculates a total score. If only one assessor has scored a reference, that assessor’s score is presented. Ideally, however, each reference is evaluated by two or more assessors to minimize individual bias; in that case, the average score across all scorecards is presented, together with its standard deviation, standard error and confidence interval. This information gives the user a better understanding of the degree of consensus the assessors reached when evaluating the quality of the reference.
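A minimal sketch of this multi-assessor summary follows, assuming the statistics are computed over the assessors’ scorecard totals for one reference. The 95% interval uses a normal approximation (z ≈ 1.96), which is an assumption; with only a few assessors, a t-based interval would be wider. The function name is hypothetical.

```python
import math
from statistics import NormalDist, mean, stdev


def summarize(scores: list, level: float = 0.95) -> dict:
    """Summarize several assessors' scorecard totals for one reference."""
    n = len(scores)
    if n == 0:
        raise ValueError("no scorecards for this reference")
    m = mean(scores)
    if n == 1:
        # A single assessor: only that score is reported.
        return {"n": 1, "mean": m}
    sd = stdev(scores)                         # sample SD (n - 1 denominator)
    se = sd / math.sqrt(n)                     # standard error of the mean
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for 95%
    return {"n": n, "mean": m, "sd": sd, "se": se,
            "ci": (m - z * se, m + z * se)}


print(summarize([16.0, 14.0, 15.0]))  # mean 15.0, sd 1.0, CI roughly 13.87 to 16.13
```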

In addition to the number of assessors per reference, the number of references identified for each factor must also be considered when calculating the final score. Once at least two assessors have scored two references, the system automatically starts a meta-analytic calculation: based on previously described equations (Neyeloff et al., 2012), a fixed-effect model is used and the I² statistic is applied to evaluate heterogeneity between assessors and between references, thereby testing both the consensus of the assessors for each reference and the consensus of the references regarding the factor of interest. In addition, forest plots with confidence intervals are generated, providing a bird’s-eye view of all scores/scorecards in the PEERS database for a certain factor or protocol.
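The meta-analytic step can be sketched as an inverse-variance fixed-effect model of the kind described by Neyeloff et al. (2012), treating each reference’s mean assessor score as the effect size and its standard error as the measure of precision. This sketch covers only between-reference heterogeneity (Cochran’s Q and I²); how PEERS combines assessor-level and reference-level heterogeneity is not specified here, and the function name and input layout are assumptions.

```python
import math
from statistics import mean, stdev


def fixed_effect(references: dict) -> dict:
    """Inverse-variance fixed-effect pooling of per-reference mean scores.

    `references` maps a reference ID (e.g. a DOI) to the scorecard totals
    (0-20) given by its assessors.
    """
    effects, weights = [], []
    for ref, scores in references.items():
        if len(scores) < 2:
            raise ValueError(f"{ref}: at least two assessors are needed")
        se = stdev(scores) / math.sqrt(len(scores))
        if se == 0:
            # Identical scores give infinite weight; a real system needs a rule here.
            raise ValueError(f"{ref}: zero variance, weight undefined")
        effects.append(mean(scores))
        weights.append(1.0 / se**2)
    if len(effects) < 2:
        raise ValueError("at least two references are needed")

    total_w = sum(weights)
    pooled = sum(w * e for w, e in zip(weights, effects)) / total_w
    pooled_se = math.sqrt(1.0 / total_w)
    # Cochran's Q and I^2 quantify heterogeneity between references.
    q = sum(w * (e - pooled) ** 2 for w, e in zip(weights, effects))
    df = len(effects) - 1
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return {"pooled": pooled, "se": pooled_se,
            "ci95": (pooled - 1.96 * pooled_se, pooled + 1.96 * pooled_se),
            "Q": q, "I2": i2}


result = fixed_effect({"ref-A": [16, 15, 17], "ref-B": [12, 14, 13]})
print(round(result["pooled"], 2), round(result["I2"], 1))  # 14.5 92.6
```

A high I² such as this one signals that the references disagree substantially, which is exactly the situation a forest plot makes visible.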

The grading system then simplifies the overall score for each factor into high (>14/20), medium (5–13/20), or low (<5/20) quality of evidence (or no evidence), which is displayed to users on the front end of the platform. For transparency, all of the information described above is freely accessible on the PEERS platform.
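Mapping a combined score onto these bands can be sketched in a few lines. As stated, the bands leave scores above 13 and up to 14 unassigned; the sketch assumes they fall in the medium band, which is an assumption rather than a documented rule. The function name is hypothetical.

```python
from typing import Optional


def grade(score: Optional[float]) -> str:
    """Map a combined 0-20 evidence score onto the PEERS quality bands."""
    if score is None:
        return "NO EVIDENCE"  # no scored references for this factor
    if not 0 <= score <= 20:
        raise ValueError("score must lie on the 0-20 scale")
    if score > 14:
        return "HIGH"
    if score >= 5:
        return "MEDIUM"       # assumption: also covers scores in (13, 14]
    return "LOW"


print(grade(17.25), grade(13.5), grade(None))  # HIGH MEDIUM NO EVIDENCE
```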

All first-time users of the PEERS platform need to register and accept the PEERS Code of Conduct (CoC; see Section “Proposed Curation Mechanisms and Community Engagement” below) in order to use PEERS. Registration includes a short profile page on which users provide details of their current affiliation, level of expertise and areas of interest. Users can come from academia or industry and be at any stage of their scientific career, from early-career researchers (Ph.D. students, research assistants and fellows) to established researchers. The profile also allows users to be guided to areas of interest that match it. Optionally, and only if users wish, the platform will display active users together with details of their involvement in and contributions to different protocols. Over time, these measurable contributions can serve as metrics with which PEERS users demonstrate their effort, time commitment and value.

Until a critical number of users is reached, the scoring of publications by new (and inexperienced) users will be moderated by experienced members of the PEERS Working Group to ensure that data quality is verified. As mentioned in Section “Checklist for Grading of Evidence/Publications - The Evaluation”, the scorecard checklists are kept as simple and intuitive as possible (e.g., by adhering to the ARRIVE 2.0 guidelines for methods reporting), so that scoring publications is neither time-consuming nor difficult.

Once registered, users can ask questions about the relevance of specific factors through the “Data Search Module” (Figure 2). Users can also contribute by reviewing and scoring references using the “Data Input Module.” Ultimately, PEERS extracts an output for the user that summarizes the “status” of the factor of interest and details the strength of the available scientific evidence that the factor may influence the design, conduct and reporting phases of the chosen protocol.

The current PEERS prototype is a web application in which any registered user may insert data and review existing protocol datasets. The central entities collected and stored in the PEERS database are the in vivo and in vitro protocols, together with all related factors, references and scorecards. The prototype is built with the popular ReactJS library, with a simple and effective design provided by the Semantic UI framework. Its relational schema makes the data easy to transform into semi-structured formats such as JSON and XML, ready for sharing with other applications or systems through an Application Programming Interface (API). The prototype will also include a user management, authentication and authorization module so that each user’s access and contributions can be tracked and presented in the final dataset. This feature facilitates the collaborative elements that PEERS seeks to integrate. The application will be accessible from any web browser via the GPDF’s website.2
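To illustrate how a relational schema supports export to a semi-structured format, the sketch below uses Python’s built-in `sqlite3` and `json` modules as stand-ins. The table and column names are hypothetical, and the DOI is a placeholder; the actual PEERS back end, database engine and API are not described in this article.

```python
import json
import sqlite3

# Hypothetical relational schema: protocols, factors, publications (evidence
# linking a factor to a protocol) and per-assessor scorecards.
SCHEMA = """
CREATE TABLE protocol (id INTEGER PRIMARY KEY, name TEXT UNIQUE,
                       kind TEXT CHECK (kind IN ('in vivo', 'in vitro')));
CREATE TABLE factor (id INTEGER PRIMARY KEY, name TEXT UNIQUE, generic INTEGER);
CREATE TABLE publication (id INTEGER PRIMARY KEY, doi TEXT,
                          protocol_id INTEGER REFERENCES protocol(id),
                          factor_id INTEGER REFERENCES factor(id));
CREATE TABLE scorecard (id INTEGER PRIMARY KEY,
                        publication_id INTEGER REFERENCES publication(id),
                        assessor TEXT, supports INTEGER,
                        methods REAL, results REAL);
"""


def export_json(con: sqlite3.Connection, protocol: str, factor: str) -> str:
    """Serialize all scorecards for one protocol/factor pair as JSON."""
    rows = con.execute(
        """SELECT p.doi, s.assessor, s.supports, s.methods + s.results
           FROM publication AS p
           JOIN protocol AS pr ON pr.id = p.protocol_id
           JOIN factor AS f ON f.id = p.factor_id
           JOIN scorecard AS s ON s.publication_id = p.id
           WHERE pr.name = ? AND f.name = ?
           ORDER BY p.doi, s.assessor""",
        (protocol, factor),
    ).fetchall()
    refs = {}
    for doi, assessor, supports, total in rows:
        refs.setdefault(doi, []).append(
            {"assessor": assessor, "supports": bool(supports), "total": total})
    return json.dumps({"protocol": protocol, "factor": factor,
                       "references": refs}, indent=2)


con = sqlite3.connect(":memory:")
con.executescript(SCHEMA)
con.execute("INSERT INTO protocol VALUES (1, 'Open Field', 'in vivo')")
con.execute("INSERT INTO factor VALUES (1, 'Sex', 1)")
# Placeholder DOI, not a real publication.
con.execute("INSERT INTO publication VALUES (1, '10.0000/placeholder', 1, 1)")
con.executemany("INSERT INTO scorecard VALUES (?, 1, ?, 1, ?, ?)",
                [(1, "assessor-1", 8.5, 8.75), (2, "assessor-2", 7.0, 8.0)])
print(export_json(con, "Open Field", "Sex"))
```

The same query result could equally be serialized to XML; keeping the data normalized in tables is what makes such alternative exports cheap.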

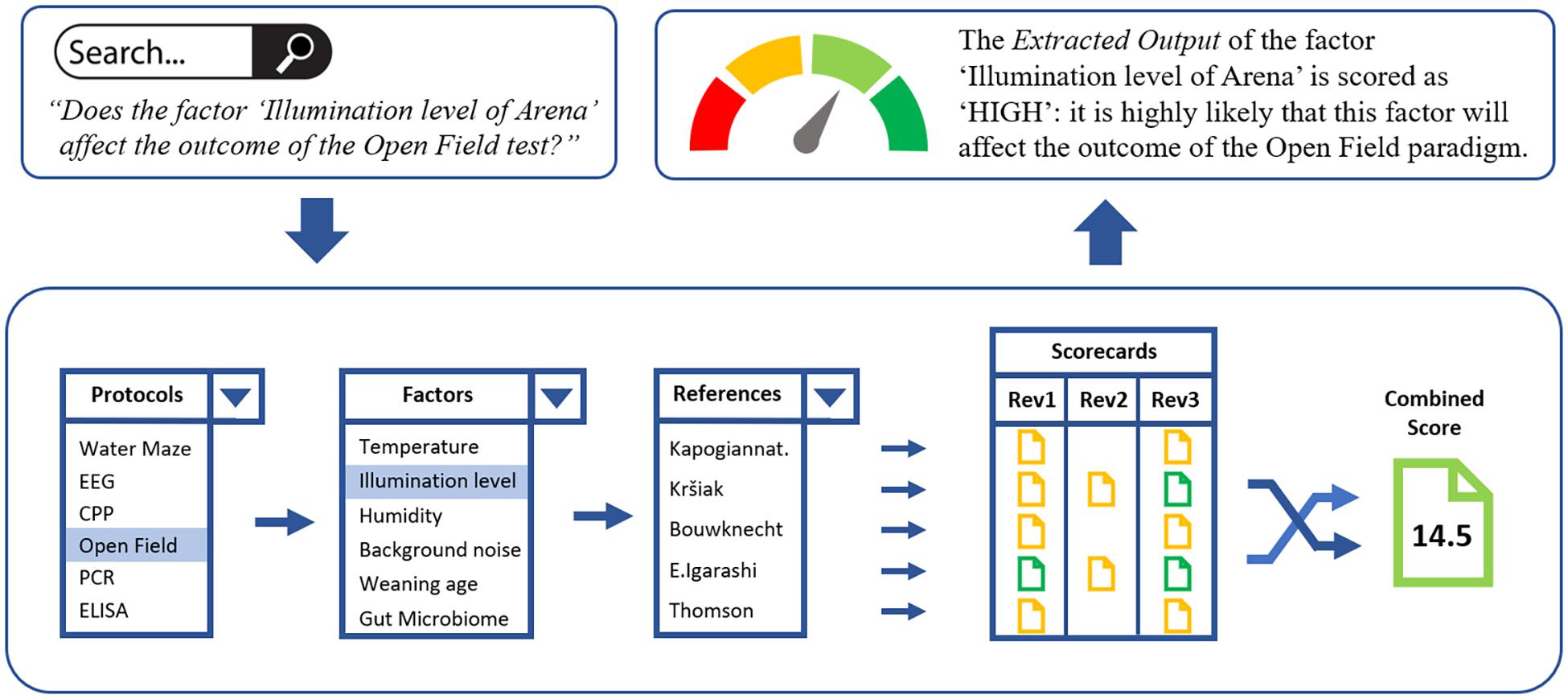

The following example demonstrates how the different back-end functionalities of PEERS will translate into the front-end “Extracted Output” displayed on the PEERS platform when users aim to retrieve information about specific factors for the Open Field in vivo protocol (Figure 3).

Figure 3. The “Open Field” protocol example, demonstrating how the different back-end functionalities of PEERS will translate into the “Extracted Output” presented to PEERS users. A search query for a specific factor/protocol will select all relevant references in the PEERS database dealing with the factor of interest (e.g., the “illumination level of the arena”). The combined score is calculated from the scorecards for these references and translated into the overall extracted output for the selected factor/protocol combination. This status is then presented to the user. Users also have access to all scorecards to understand how the overall grading of evidence was achieved.

Accessing the Open Field test for the first time, users of PEERS might want to ask the question: “Do the factors ‘Sex’ (generic) and ‘Illumination level of arena’ (specific) affect the outcome of the Open Field test?”

a) As a first step, users accessing the platform can input their query into the search module such as: Will the factor “Sex” affect the outcome of an Open Field test? or Will the factor “Illumination level of arena” affect the outcome of an Open Field test? and click Search. All factors and tests will be displayed in drop-down menus.

b) Subsequently, the PEERS database will (i) locate all the factors pertaining to the Open Field protocol and select “Sex” or “Illumination level of arena” from these, (ii) generate the list of references (via DOIs) for the two factors, (iii) provide the scorecards completed by reviewers for each of the references, (iv) use the mathematical model to resolve any discrepancies between reviewers/multiple references, and (v) generate the overall extracted output of the evidence for “Sex” or “Illumination level of arena” as either high (>14/20), medium (5–13/20), or low (<5/20) quality (or no evidence).

c) In this case, the extracted output status of both factors would be displayed as “HIGH,” meaning that it is highly likely that both “Sex” and “Illumination level of arena” can affect the outcome of the Open Field paradigm. To ensure full transparency, users will also have access to all scorecards and related references for each paper scored and can follow each step to understand how the overall grading of evidence was achieved.
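Steps (i) to (v) can be summarized in a compact, self-contained sketch. The in-memory `DB` dictionary, the DOI labels and the score values are invented placeholders, not real PEERS content, and step (iv) is simplified to a plain average of per-reference means rather than the fixed-effect model described above.

```python
from statistics import mean

# Invented placeholder data, not real PEERS content:
# protocol -> factor -> DOI -> scorecard totals (0-20), one per assessor.
DB = {
    "Open Field": {
        "Sex": {"doi-A": [16.0, 15.5], "doi-B": [14.5, 15.0]},
        "Illumination level of arena": {"doi-C": [17.0, 16.0]},
    }
}


def extracted_output(protocol: str, factor: str) -> dict:
    # (i)-(ii): locate the factor for this protocol and list its references
    refs = DB.get(protocol, {}).get(factor, {})
    if not refs:
        return {"factor": factor, "status": "NO EVIDENCE", "references": []}
    # (iii): summarize each reference's scorecards by their mean
    per_ref = {doi: mean(scores) for doi, scores in refs.items()}
    # (iv): simplified combination, a plain average across references
    overall = mean(list(per_ref.values()))
    # (v): map the combined score onto the quality bands
    if overall > 14:
        status = "HIGH"
    elif overall >= 5:
        status = "MEDIUM"
    else:
        status = "LOW"
    return {"factor": factor, "score": overall, "status": status,
            "references": sorted(per_ref)}


for f in ("Sex", "Illumination level of arena"):
    print(extracted_output("Open Field", f))
```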

To involve the wider scientific community in building and growing the PEERS database, a wiki-like functionality has been adopted to allow collaborative modification and addition of content and structure. The wiki concept ensures that all stakeholders (including early-career researchers, such as Ph.D. students, research assistants and fellows, as well as established professionals) can actively participate in curating and reviewing the evidence pertaining to a protocol, using a standardized approach to evaluate that evidence. Having multiple reviewers for each factor and protocol will help minimize bias, while the meta-analytic approach described above will be used to resolve disagreements between reviewers and to adjust the strength of evidence as new information becomes available.

Editing, curating and maintaining the PEERS platform are integral to the process, and the Working Group is proposing several measures to credit contributors to the reviewing, editing and curating process. These proposals include making PEERS recommendations citable like conventional publications or developing the platform so that contributors’ names appear on the protocols they have contributed to. In addition to voluntary contributions, qualified staff will be responsible for maintenance, quality management of the database content, sustainability and project management where needed.

The PEERS platform aims to be a community-driven resource that will be curated and updated regularly in an open fashion. We expect biomedical researchers from academia and industry all over the world to become members of, and contributors to, this community. Above all, we expect this community to be respectful and engaging, so that PEERS reaches a broader audience and is helpful to scientists at different career stages and in different research environments. We will therefore implement a PEERS Code of Conduct (CoC), which all users must accept before becoming contributors or active users. The CoC will be formulated as a guide to make the community-driven nature of the platform productive and welcoming.

Violations of the CoC will restrict the user’s ability to contribute to the PEERS database, to score papers and to engage with the wider PEERS community. Because users will often be scoring the quality of the methods and results presented in a scientific paper, and these scores are bound to have consequences for other users, colleagues or the authors of that paper, it is important to be respectful, fair and open. Users must not allow personal prejudices or preferences to influence their scoring and must judge a paper purely on its content.

Disagreements should be dealt with professionally and, where possible, informally. If informal processes prove inadequate to resolve a conflict, PEERS will establish a structured procedure for handling complaints or reports about problematic users. The full CoC will be published on the platform when it goes live.

To measure community engagement directly during the testing and validation phase of the platform, the so-called “Voice of the Customer” approach has been, and will continue to be, used. This is one of the most popular Agile techniques for capturing product functionality and user needs; it connects the PEERS platform directly with those who are likely to engage with it and takes their valuable feedback on board. We aim to do this in several ways: (i) obtaining user feedback after the product launch via short surveys and offering users the opportunity to beta-test new protocols before their release on the platform; (ii) interviewing users about their research problems, how they address them and how PEERS could meet their needs; and (iii) listening to users and implementing new features and functionalities in the PEERS platform. This approach will help to identify the most important protocols and to develop PEERS together with its users, maximizing its usefulness. Initial feedback from end-user interviews and a small survey suggests a willingness not only to use the PEERS platform to search for information but also to act as a contributor to complete and update relevant protocols.

Finally, PEERS will establish a presence on social media (e.g., ResearchGate, Twitter, LinkedIn and others) to update the scientific community regularly on new developments and to recruit reviewers for newly added protocols via “call-to-action” announcements. To monitor uptake and adoption by the wider scientific community and to measure success, PEERS will also use indirect metrics common to online publications, such as the number of hits, user access for each protocol and factor, the number of downloads of the evidence related to each factor and the number of downloads of the cited publications. This information will be displayed graphically on the platform and updated in real time. We will use this approach to identify (a) popular protocols, (b) protocols with low engagement and (c) popular modes of engagement with the different protocols. The results will reveal areas of global interest for researchers who use the platform and, at the same time, help us identify specific knowledge gaps, which we will then seek to close.
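As a rough illustration of how such usage metrics could flag popular and under-engaged protocols, the sketch below counts hypothetical access events. The event format, the `low_threshold` and `top_n` parameters and the function name are assumptions, not part of the PEERS platform.

```python
from collections import Counter


def engagement_report(events, protocols, low_threshold=1, top_n=2):
    """Summarize access events given as (protocol, action) pairs."""
    events = list(events)  # allow generators; the events are iterated twice
    hits = Counter(protocol for protocol, _ in events)
    modes = Counter(events)  # (protocol, action) -> count
    return {
        # (a) most-accessed protocols
        "popular": [p for p, _ in hits.most_common(top_n)],
        # (b) protocols accessed no more than `low_threshold` times
        "low_engagement": sorted(p for p in protocols if hits[p] <= low_threshold),
        # (c) how users engage with each protocol
        "modes": modes,
    }


events = [
    ("Open Field", "view"), ("Open Field", "download"),
    ("Western blotting", "view"), ("Open Field", "view"),
    ("Western blotting", "score"),
]
report = engagement_report(events, ["Open Field", "Western blotting", "Water maze"])
print(report["popular"], report["low_engagement"])
# ['Open Field', 'Western blotting'] ['Water maze']
```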

Because PEERS has existed for only a short time, the contents of the database are currently limited, but PEERS aims to scale up by continually adding new protocols. Given the composition of the Working Group, the list of protocols will be expanded to include other commonly employed as well as newly developed neuroscience methods, such as in vivo/in vitro electrophysiology, cell culture (2D and 3D), optogenetics, the elevated plus maze, qPCR, flow cytometry, the light-dark box and conditioned fear. The database will continue to be curated and updated for protocols that have already been published.

In the longer term, PEERS aims to attract a broader user base and therefore, the ambition is to branch out and include protocols from other biological disciplines such as infection, inflammation, immunity, cardiovascular sciences, microbial research, etc. Furthermore, the inclusion of expert unpublished data and information related to the importance of specific factors may also be warranted. However, strict rules would need to be set out to ensure proper management, quality control and utility of such unpublished data.

As one of the next steps to aid this expansion, we seek to establish a “Board of Editors” of PEERS akin to an editorial board of a scientific journal, in which all biological disciplines will be represented. Novel protocols can be commissioned accordingly, and the wiki-like structure of the platform would then persist with the Board of Editors reviewing contributions to ensure the extraction of evidence for specific factors is appropriate. The members of the Board of Editors alongside the contributors will be displayed on the platform to make everyone’s contribution transparent.

Most importantly, as PEERS does not compete with existing initiatives for the reporting of results or with guidelines for scientific conduct, we envisage interactions with initiatives such as ARRIVE, EQIPD, FAIR and others to be fruitful in increasing the quality and reproducibility of research in the future.

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author.

All members of the PEERS Working Group were involved in the preparation of this manuscript with AS, CD, CF-B, AH, MK, NK, GR, and CE responsible for the scientific aspects (“scientific arm”) and KK and KP responsible for the technical aspects (“software arm”). AS, GR, and CE were responsible for the main text of the manuscript, while CE was responsible for the production of the figures. All other authors (AB, MP, PP, and TS) provided valuable input to early PEERS concepts and edits to the manuscript. All authors reviewed the final version of the manuscript.

The PEERS Consortium was funded by Cohen Veterans Bioscience Ltd. and grant COH-0011 from Steven A. Cohen.

AB and CE are employees and shareholders of PAASP GmbH and PAASP US LLC. AB was an employee and shareholder of Exciva GmbH and Synventa LLC. CF-B was employed by Cohen Veterans Bioscience, which has funded the initial stages of the PEERS project development. MP was an employee of EpiEndo Pharmaceutical EHF and previously of Fraunhofer IME-TMP and GSK. TS was an employee of Janssen Pharmaceutica. AH was employed by Y47 Consultancy.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

We would like to thank IJsbrand Jan Aalbersberg, Natasja de Bruin, Philippe Chamiot-Clerc, Anja Gilis, Lieve Heylen, Martine Hofmann, Patricia Kabitzke, Isabel Lefevre, Janko Samardzic, Susanne Schiffmann, and Guido Steiner for their valuable input and discussions during the conceptualization of PEERS and the initial phase of the project.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnbeh.2021.755812/full#supplementary-material

Aguillon-Rodriguez, V., Angelaki, D., Bayer, H., Bonacchi, N., Carandini, M., Cazettes, F., et al. (2021). Standardized and reproducible measurement of decision-making in mice. Elife 10:e63711. doi: 10.7554/eLife.63711

Bespalov, A., Bernard, R., Gilis, A., Gerlach, B., Guillen, J., Castagne, V., et al. (2021). Introduction to the EQIPD quality system. Elife 10:e63294. doi: 10.7554/eLife.63294

Bespalov, A., and Steckler, T. (2018). Lacking quality in research: is behavioral neuroscience affected more than other areas of biomedical science? J. Neurosci. Methods 300, 4–9. doi: 10.1016/j.jneumeth.2017.10.018

Bespalov, A., Steckler, T., Altevogt, B., Koustova, E., Skolnick, P., Deaver, D., et al. (2016). Failed trials for central nervous system disorders do not necessarily invalidate preclinical models and drug targets. Nat. Rev. Drug Discov. 15, 516. doi: 10.1038/nrd.2016.88

Bohlen, M., Hayes, E. R., Bohlen, B., Bailoo, J. D., Crabbe, J. C., and Wahlsten, D. (2014). Experimenter effects on behavioral test scores of eight inbred mouse strains under the influence of ethanol. Behav. Brain Res. 272, 46–54. doi: 10.1016/j.bbr.2014.06.017

Botvinik-Nezer, R., Holzmeister, F., Camerer, C. F., Dreber, A., Huber, J., Johannesson, M., et al. (2020). Variability in the analysis of a single neuroimaging dataset by many teams. Nature 582, 84–88. doi: 10.1038/s41586-020-2314-9

Butlen-Ducuing, F., Balkowiec-Iskra, E., Dalla, C., Slattery, D. A., Ferretti, M. T., Kokras, N., et al. (2021). Implications of sex-related differences in central nervous system disorders for drug research and development. Nat. Rev. Drug Discov. doi: 10.1038/d41573-021-00115-6 [Epub ahead of print].

Emmerich, C. H., Gamboa, L. M., Hofmann, M. C. J., Bonin-Andresen, M., Arbach, O., Schendel, P., et al. (2021). Improving target assessment in biomedical research: the GOT-IT recommendations. Nat. Rev. Drug Discov. 20, 64–81. doi: 10.1038/s41573-020-0087-3

Ioannidis, J. P. A. (2005). Why most published research findings are false. PLoS Med. 2:e124. doi: 10.1371/journal.pmed.0020124

LaPlaca, M., Huie, J., Alam, H., Bachstetter, A., Bayir, H., Bellgowan, P., et al. (2021). Pre-Clinical common data elements for traumatic brain injury research: progress and use cases. J. Neurotrauma 38, 1399–1410. doi: 10.1089/neu.2020.7328

Makarov, V. A., Stouch, T., Allgood, B., Willis, C. D., and Lynch, N. (2021). Best practices for artificial intelligence in life sciences research. Drug Discov. Today 26, 1107–1110. doi: 10.1016/j.drudis.2021.01.017

Neyeloff, J. L., Fuchs, S. C., and Moreira, L. B. (2012). Meta-analyses and Forest plots using a Microsoft Excel spreadsheet: step-by-step guide focusing on descriptive data analysis. BMC Res. Notes 5:52. doi: 10.1186/1756-0500-5-52

Pawluski, J. L., Kokras, N., Charlier, T. D., and Dalla, C. (2020). Sex matters in neuroscience and neuropsychopharmacology. Eur. J. Neurosci. 52, 2423–2428. doi: 10.1111/ejn.14880

Percie du Sert, N., Hurst, V., Ahluwalia, A., Alam, S., Avey, M. T., Baker, M., et al. (2020). The ARRIVE guidelines 2.0: updated guidelines for reporting animal research. PLoS Biol. 18:e3000410. doi: 10.1371/journal.pbio.3000410

Richter, S. H., Garner, J. P., Zipser, B., Lewejohann, L., Sachser, N., Touma, C., et al. (2011). Effect of population heterogenization on the reproducibility of mouse behavior: a multi-laboratory study. PLoS One 6:e16461. doi: 10.1371/journal.pone.0016461

Riedel, G., Robinson, L., and Crouch, B. (2018). Spatial learning and flexibility in 129S2/SvHsd and C57BL/6J mouse strains using different variants of the Barnes maze. Behav. Pharmacol. 29, 688–700. doi: 10.1097/FBP.0000000000000433

Robinson, L., Spruijt, B., and Riedel, G. (2018). Between and within laboratory reliability of mouse behaviour recorded in home-cage and open-field. J. Neurosci. Methods 300, 10–19. doi: 10.1016/j.jneumeth.2017.11.019

Sansone, S.-A., McQuilton, P., Rocca-Serra, P., Gonzalez-Beltran, A., Izzo, M., Lister, A. L., et al. (2019). FAIRsharing as a community approach to standards, repositories and policies. Nat. Biotechnol. 37, 358–367. doi: 10.1038/s41587-019-0080-8

Simera, I. (2008). The EQUATOR Network: facilitating transparent and accurate reporting of health research. Serials 21, 183–187. doi: 10.1629/21183

Sousa, N., Almeida, O. F. X., and Wotjak, C. T. (2006). A hitchhiker’s guide to behavioral analysis in laboratory rodents. Genes, Brain Behav. 5, 5–24. doi: 10.1111/j.1601-183X.2006.00228.x

Stone, K. (2010). NINDS common data element project: a long-awaited breakthrough in streamlining trials. Ann. Neurol. 68, A11–A13. doi: 10.1002/ana.22114

The International Brain Laboratory (2017). An international laboratory for systems and computational neuroscience. Neuron 96, 1213–1218. doi: 10.1016/j.neuron.2017.12.013

Tosetti, P., Hicks, R. R., Theriault, E., Phillips, A., Koroshetz, W., and Draghia-Akli, R. (2013). Toward an international initiative for traumatic brain injury research. J. Neurotrauma 30, 1211–1222. doi: 10.1089/neu.2013.2896

Vollert, J., Schenker, E., Macleod, M., Bespalov, A., Wuerbel, H., Michel, M., et al. (2020). Systematic review of guidelines for internal validity in the design, conduct and analysis of preclinical biomedical experiments involving laboratory animals. BMJ Open Sci. 4:e100046. doi: 10.1136/bmjos-2019-100046

Keywords: reproducibility, study design, neuroscience, transparency, quality rating, animal models, study outcome, platform

Citation: Sil A, Bespalov A, Dalla C, Ferland-Beckham C, Herremans A, Karantzalos K, Kas MJ, Kokras N, Parnham MJ, Pavlidi P, Pristouris K, Steckler T, Riedel G and Emmerich CH (2021) PEERS — An Open Science “Platform for the Exchange of Experimental Research Standards” in Biomedicine. Front. Behav. Neurosci. 15:755812. doi: 10.3389/fnbeh.2021.755812

Received: 09 August 2021; Accepted: 29 September 2021;

Published: 21 October 2021.

Edited by:

Tim Karl, Western Sydney University, Australia

Reviewed by:

Terence Y. Pang, University of Melbourne, Australia

Copyright © 2021 Sil, Bespalov, Dalla, Ferland-Beckham, Herremans, Karantzalos, Kas, Kokras, Parnham, Pavlidi, Pristouris, Steckler, Riedel and Emmerich. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Christoph H. Emmerich, christoph.emmerich@paasp.net

†These authors have contributed equally to this work and share senior authorship

