
ORIGINAL RESEARCH article

Front. Behav. Neurosci., 14 June 2016

Sec. Individual and Social Behaviors

Volume 10 - 2016 | https://doi.org/10.3389/fnbeh.2016.00117

Sleep has been related to emotional functioning. However, the extent to which emotional salience is processed during sleep is unknown. To address this question, we investigated brain reactivity during night sleep in healthy adults to the meaningless syllables dada spoken with emotional (happy, fearful) prosody, along with correspondingly synthesized nonvocal sounds. Electroencephalogram (EEG) signals were continuously acquired throughout an entire night of sleep while a passive auditory oddball paradigm was applied. Mismatch negativity (MMN) in response to emotional syllables, an index of emotional salience processing of voices, was detected during all stages of sleep. In contrast, MMN to acoustically matched nonvocal sounds was not detected during Sleep Stages 2 and 3 or during rapid eye movement (REM) sleep. Relative to wakefulness, post-MMN positivity (PMP) showed larger amplitudes during Stage 3 and earlier latencies during REM sleep. These findings demonstrate the neural dynamics of emotional salience processing across the stages of sleep.

The sleeping brain is currently considered an active processor that reacts to the external world (Perrin et al., 1999, 2002; Bastuji et al., 2002; Ruby et al., 2008). It would therefore be expected to process emotions as well. However, the extent to which emotional salience is processed during the stages of sleep remains to be determined.

A large body of research, using similar paradigms to deliver sensory stimuli to sleeping vs. awake subjects, has provided converging evidence of neural responsiveness during sleep (Coenen, 1995; Born et al., 2002; Coenen and Drinkenburg, 2002; Lavigne et al., 2004; Hennevin et al., 2007; Karakaş et al., 2007; Ibáñez et al., 2009). In particular, the auditory system continues to work during sleep (Atienza et al., 2001a). For instance, the N400, an event-related potential (ERP) component elicited by semantically unrelated information between two words or between a context and a word, has corroborated semantic discrimination during Stage 2 and rapid eye movement (REM) sleep (Brualla et al., 1998; Ibáñez et al., 2006). The P3 was enhanced by the subject’s own name, and K-complexes were evoked by all first names (Perrin et al., 1999, 2000). Sleeping subjects were awakened substantially faster by their own names than by other names (Oswald et al., 1960). The amygdala has a purported role in the rapid, automatic, and non-conscious processing of emotional and social stimuli (Pessoa and Adolphs, 2010; Tamietto and de Gelder, 2010). During Stage 2, the subject’s own name, relative to tones, produced stronger activation in the left amygdala and prefrontal cortex (Portas et al., 2000). Even learned fear is represented during sleep: a conditioned stimulus (CS), initially neutral, that has acquired behavioral significance through pairing with a biologically relevant unconditioned stimulus (US) is still identified during sleep (Maho et al., 1991; Hennevin et al., 1993; Maho and Hennevin, 1999). Thus, the sleeping brain can discriminate relevant from irrelevant stimuli, particularly when the stimuli are highly salient and intrinsically meaningful.

However, existing knowledge regarding emotional salience processing in the sleeping brain draws mainly on the modulation of responses to a learned stimulus (the CS; Maho and Hennevin, 1999) or to an intrinsically meaningful stimulus (e.g., the subject’s own name; Portas et al., 2000), or on comparisons of behavioral and neuroimaging observations made immediately before and after sleep (Yoo et al., 2007; Gujar et al., 2011a,b; van der Helm et al., 2011). Sensitivity to emotional facial expressions was reported to change after REM sleep (Gujar et al., 2011a). Amygdala activity during REM sleep was associated with the emotional intensity of dreams (Maquet et al., 1996). Overnight sleep attenuated amygdala activity in response to previously encountered emotional stimuli (van der Helm et al., 2011). Sleep deprivation enhanced the amygdala response to negative emotional stimuli (Yoo et al., 2007) and amplified neural reactivity to rewarding stimuli (Gujar et al., 2011b). Hence, direct evidence for the processing of emotional salience during sleep is still needed (Hennevin et al., 2007). Specifically, to what extent are the neurophysiological indices of sleep functionally equivalent to their waking counterparts? Is emotional salience processed as efficiently during sleep as during wakefulness?

The passive oddball paradigm enables the investigation of automatic auditory processing during wakefulness and sleep through the neurophysiological indices of the N1-P2 complex, mismatch negativity (MMN), and post-MMN positivity (PMP). The N1-P2 complex, an index of sensory processing, falls within a time window of 100–300 ms during wakefulness (Doellinger et al., 2011) and shifts to approximately 70–225 ms during sleep (Nordby et al., 1996). The MMN, which reflects automatic discrimination of auditory changes in the human auditory cortex (Näätänen et al., 2011), has been identified during REM sleep (Nashida et al., 2000) as well as in Stage 1 and Stage 3 (Ruby et al., 2008). MMN in response to emotional syllables has recently been used to index emotional salience processing of voices at the preattentive stage (Cheng et al., 2012; Fan et al., 2013; Hung and Cheng, 2014). The MMN in response to meaningless syllables spoken with disgusted prosody elicited activity in the anterior insular cortex (Chen et al., 2014). Emotional MMN is atypical in individuals with empathy deficits (Hung et al., 2013; Fan and Cheng, 2014). Furthermore, testosterone had an impact on emotional MMN (Chen et al., 2015), suggesting involvement of the amygdala in generating early ERP components of emotional perception (Sabatinelli et al., 2013). The PMP, a P3a-like wave recorded during the transition to sleep and also during sleep (Cote, 2002), is elicited when novel stimuli are sufficiently salient to intrude into consciousness (Putnam and Roth, 1990; Niiyama et al., 1994; Bastuji et al., 1995).

To elucidate the neural dynamics of emotional salience processing of vocal stimuli across sleep stages, this study used the passive oddball paradigm with the emotional syllables dada and correspondingly synthesized nonvocal sounds as stimuli. We hypothesized that if the sleeping brain were able to process emotional salience per se, MMN and PMP, particularly during REM sleep, would respond to emotional syllables rather than to nonvocal sounds. In contrast, if the sleeping brain were insensitive to emotions, MMN and PMP in response to emotional syllables would not be identified during sleep.

Twelve healthy subjects (6 females) aged 23–27 years (mean ± SD = 24 ± 1.3 years) volunteered to participate in this study. All were self-reported good sleepers and were not taking any medication. All participants had normal peripheral hearing bilaterally (pure tone average thresholds <15 dB HL) and normal middle ear function at the time of testing. None had a history of neurological or psychiatric problems. The local Ethics Committee (Yang-Ming University Hospital) approved this study. In accordance with the Declaration of Helsinki, all participants provided informed consent and received instructions regarding all experimental details, as well as their right to withdraw at any time. In addition, participants refrained from caffeine and alcohol for 24 h before the experiment and on the experimental days.

The stimuli consisted of two categories: emotional syllables and acoustically matched nonvocal sounds. For the emotional syllables, a female speaker from a performing arts school produced the meaningless syllables dada with two sets of emotional (happy, fearful) prosody (see Cheng et al., 2012; Hung et al., 2013; Chen et al., 2015 for validation). The emotional syllables were edited to equal duration (550 ms) and loudness (min: 57 dB; max: 62 dB; mean: 59 dB) using Cool Edit Pro 2.0 and Sound Forge 9.0. Each syllable set was rated for emotionality on a 5-point Likert scale. For the fearful set, 120 listeners rated each stimulus from “extremely fearful” to “not fearful at all”. For the happy set, listeners rated each stimulus from “extremely happy” to “not happy at all”. These listeners did not overlap with the participants recruited for the ERP experiment. The two emotional syllables consistently identified as “extremely fearful” and “extremely happy” were selected as the stimuli. The Likert ratings (mean ± SD) of the happy and fearful syllables were 4.34 ± 0.65 and 3.93 ± 0.97, respectively.

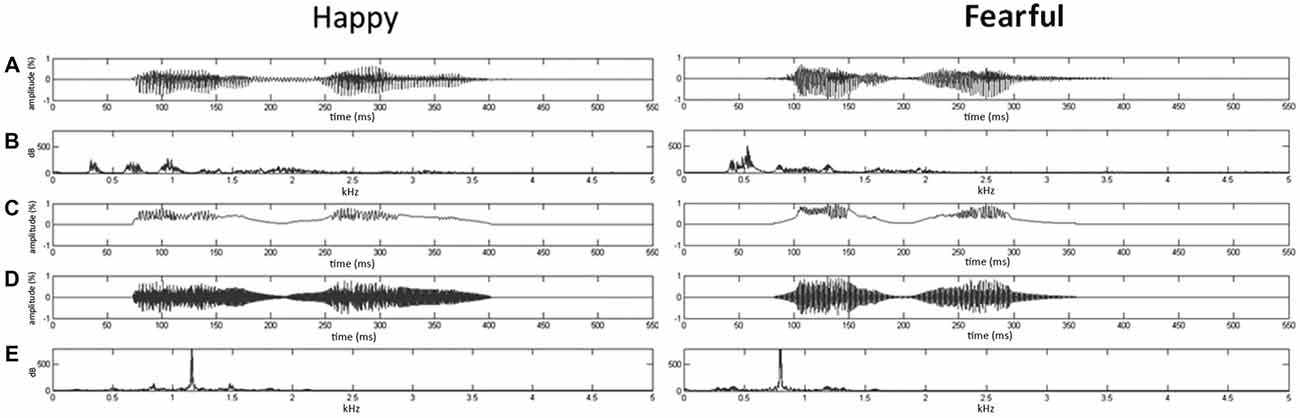

Because strictly controlling the spectral power distribution might disrupt the temporal flow associated with formant content in voices (Belin et al., 2000), synthesis based on the temporal envelope and core spectral elements of voices allows maximal control of both temporal and spectral features (Remedios et al., 2009). Here, using Praat (Boersma, 2001) and MATLAB (The MathWorks, Inc., MA, USA), we synthesized nonvocal sounds that retained acoustic correspondence with the emotional syllables. The center of gravity of the frequency spectrum (spectral centroid, $f_n$) of each original syllable was defined as $f_n = \frac{\int |X(f)|^2 \, f \, df}{\int |X(f)|^2 \, df}$, where $X(f)$ is the Fourier spectrum of the emotional syllable. The $f_n$ of the fearful and happy syllables was 797.2 Hz and 1159.27 Hz, respectively. The nonvocal sounds were then produced by multiplying a sine wave at $f_n$ by two Hamming windows, each temporally centered on one syllable of dada: $s(t) = \sin(2\pi f_n t) \times w(t)$, where $w(t)$ denotes the Hamming windows. Nonvocal sounds constructed in this way have been used to control for the temporal envelope and core spectral elements of emotional syllables (Fan et al., 2013; Chen et al., 2014; Hung and Cheng, 2014). The time course and frequency spectrum of the emotional syllables and corresponding nonvocal sounds are illustrated in Figure 1. In addition, a previous study using the same stimuli showed that emotional syllables, but not nonvocal sounds, yielded above-chance hit rates in an emotional categorization task, indicating that the acoustic controls were emotionally neutral (Chen et al., 2014).
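The centroid definition and the envelope-shaped sine described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' Praat/MATLAB pipeline: the 16-kHz sampling rate, the use of a discrete FFT power spectrum in place of the integrals, and the choice of two equal-length, back-to-back Hamming windows (one per syllable of dada) are assumptions made here for illustration.

```python
import numpy as np


def spectral_centroid(x, fs):
    """Discrete analogue of f_n = sum(f * |X(f)|^2) / sum(|X(f)|^2)."""
    spectrum = np.fft.rfft(x)                    # one-sided Fourier spectrum X(f)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)  # bin frequencies in Hz
    power = np.abs(spectrum) ** 2                # |X(f)|^2
    return float(np.sum(freqs * power) / np.sum(power))


def synthesize_nonvocal(fn, duration_s=0.55, fs=16000):
    """Sine at the centroid fn multiplied by two back-to-back Hamming windows.

    Assumption (hypothetical): the 550-ms token is split into two equal halves,
    with one Hamming window per syllable of 'dada'.
    """
    n = int(round(duration_s * fs))
    t = np.arange(n) / fs
    half = n // 2
    envelope = np.concatenate([np.hamming(half), np.hamming(n - half)])
    return np.sin(2 * np.pi * fn * t) * envelope


fs = 16000
fearful_like = synthesize_nonvocal(797.2, fs=fs)
print(len(fearful_like), round(spectral_centroid(fearful_like, fs), 1))
```

Because the Hamming envelope spreads energy roughly symmetrically around the carrier, the centroid of the synthetic sound stays close to the target $f_n$; applied to a recorded syllable, the exact value would depend on analysis choices such as trimming and sampling rate.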

Figure 1. Oscillogram and spectrogram of emotional (happy and fearful) syllables and nonvocal sounds. (A) Oscillogram of emotional syllables [x axis: time (ms); y axis: amplitude (%)]. (B) Spectrogram of emotional syllables [x axis: kHz; y axis: dB]. (C) Sound envelope of emotional syllables and nonvocal sounds [x axis: time (ms); y axis: amplitude (%)]. (D) Oscillogram of nonvocal sounds [x axis: time (ms); y axis: amplitude (%)]. (E) Spectrogram of nonvocal sounds [x axis: kHz; y axis: dB]. Nonvocal sounds retain the spectral centroid (fn) as well as the temporal envelope of emotional syllables. The fn of fearful and happy syllables was 797.2 Hz and 1159.27 Hz, respectively.

Before EEG recordings, each participant completed the Chinese version of the Pittsburgh Sleep Quality Index (CPSQI; Buysse et al., 1989; Tsai et al., 2005) and the Chinese version of the Epworth Sleepiness Scale (CESS; Johns, 1991; Chen et al., 2002). The CPSQI and CESS are self-report questionnaires used to assess sleep quality and the degree of somnolence, respectively.

The subjects arrived at approximately 10:00 PM on two consecutive nights for two experimental sessions, one with emotional syllables and one with nonvocal sounds. The order of the emotional and nonvocal sessions was randomized and counterbalanced across subjects. We conducted EEG recordings in the examination room of Wang-Fang Hospital, which contained a separate double-walled, sound-attenuated testing chamber.

Each experimental session contained one waking block and one sleeping block for comparison. During the waking block (11:00 PM to 12:00 AM), subjects watched a silent movie with subtitles while task-irrelevant emotional syllables or nonvocal sounds were presented in oddball sequences. Stimulus presentation in the sleeping block was identical to that in the waking block, except that subjects did not watch a movie. The subjects then went to sleep at approximately 12:00 AM, with the lights off, for about 6 h. Rather than presenting physically identical stimuli as both standards and deviants (Schirmer et al., 2007), we followed the control approach of previous work on the mismatch paradigm (Čeponiene et al., 2003; Chen et al., 2014). The passive oddball paradigm for emotional syllables used happy syllables as standards and fearful syllables as deviants. The corresponding nonvocal sounds were presented in the same oddball paradigm, but in a separate session, so that the relative acoustic features between standards and deviants were controlled across sessions. During each block, 80% of the auditory stimuli were happy syllables or happy-derived sounds, and the remaining 20% were fearful syllables or fearful-derived sounds. Deviants were presented in random order within the sequence, which was generated in MATLAB (The MathWorks, Inc., USA), with a minimum of two standards always presented between any two deviants. The stimulus-onset asynchrony was 1200 ms, comprising a stimulus duration of 550 ms and an inter-stimulus interval of 650 ms.
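The sequence constraints stated above (80% standards, 20% deviants, at least two standards between any two deviants) can be satisfied in several ways, and the authors' MATLAB procedure is not described. The following is a hypothetical Python sketch: it reserves the two mandatory standards after every deviant except the last and spreads the remaining standards uniformly at random over the gaps. The function name, labels, and seed are illustrative only.

```python
import random

STANDARD, DEVIANT = "standard", "deviant"  # e.g., happy vs. fearful (or their derived sounds)


def oddball_sequence(n_trials, p_deviant=0.2, min_standards_between=2, seed=None):
    """Hypothetical helper: a random oddball order with a fixed deviant count and
    at least `min_standards_between` standards between any two deviants."""
    rng = random.Random(seed)
    n_dev = round(n_trials * p_deviant)
    n_std = n_trials - n_dev
    # Standards left after reserving the mandatory gaps between consecutive deviants.
    spare = n_std - min_standards_between * (n_dev - 1)
    if n_dev < 1 or spare < 0:
        raise ValueError("deviant probability and spacing cannot both be satisfied")
    # Stars and bars: a uniformly random split of `spare` standards into n_dev + 1 gaps.
    bars = sorted(rng.sample(range(spare + n_dev), n_dev))
    gaps = [bars[0]] + [b - a - 1 for a, b in zip(bars, bars[1:])]
    gaps.append(spare + n_dev - 1 - bars[-1])
    seq = [STANDARD] * gaps[0]
    for i in range(1, n_dev + 1):
        seq.append(DEVIANT)
        mandatory = min_standards_between if i < n_dev else 0
        seq.extend([STANDARD] * (gaps[i] + mandatory))
    return seq


SOA_MS = 1200                           # 550-ms stimulus + 650-ms ISI
n_per_hour = 60 * 60 * 1000 // SOA_MS   # 3000 stimuli in a 1-h block
seq = oddball_sequence(n_per_hour, seed=7)
print(seq.count(DEVIANT), "deviants out of", len(seq))  # 600 deviants out of 3000
```

Reserving the mandatory gaps first, rather than reshuffling until the constraint happens to hold, guarantees the exact 20% deviant rate on every run.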

During the sleeping block, we recorded EEG for an entire night with the auditory stimuli playing continuously. The following morning, immediately after waking, participants were asked whether they had consciously heard any sound during sleep, to verify that they had been unaware of the sounds. The recorded duration of the sleeping session ranged from 299.5 to 366.5 min (mean = 343.5 ± 19.5 min).

We used compatible electroencephalography (EEG) and polysomnography (PSG) systems to record auditory ERPs and to monitor sleep, respectively. EEG was continuously recorded at 600 Hz (band-pass 0.1–100 Hz) from four electrodes (F3, Fz, F4, and Cz) mounted on an elastic cap according to a modified 10–20 system, with two mastoid electrodes (A1, A2) as reference and a ground electrode on the forehead. Eye blinks and eye movements were monitored with two electro-oculogram (EOG) electrode pairs, one placed vertically above and below the left eye and the other horizontally at the outer canthi of both eyes. Electrode/skin impedance was maintained at <10 kΩ. EEG was epoched into 600-ms trials, including a 100-ms prestimulus baseline. Trials with changes exceeding ±70 μV at the recording electrodes or exceeding 100 μV at the EOG channels were excluded by an automatic rejection procedure. Trials with visually identified K-complexes exceeding ±120 μV were also removed (Cote, 2002). To ensure the quality of the ERP traces, we applied zero-phase-shift digital band-pass filtering (0.1–50 Hz, 24 dB/octave) and visually inspected the data from every subject and every trial. The ERP traces confirmed that muscle artifacts did not appreciably contaminate any electrode. Submental electromyography (EMG) was recorded from two electrodes placed on either side of the geniohyoid muscle, with impedance maintained at <10 kΩ; EMG is crucial for correctly identifying REM sleep, because the EEG during REM sleep closely resembles that during wakefulness. The electrocardiogram (ECG) was recorded from two electrodes placed beneath the clavicles, with impedance at 30 kΩ, to obtain heart rate variability, which assisted with sleep stage scoring. ERPs were processed and analyzed using Neuroscan 4.3 (Compumedics Ltd., Australia). Notably, we applied comparable settings to record, preprocess, and segment the data from the waking and sleeping blocks.
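A minimal NumPy sketch of the epoching and automatic rejection described above: 600-ms epochs with a 100-ms baseline at 600 Hz, and the ±70 μV (EEG) and 100 μV (EOG) limits. It is not Neuroscan's implementation; applying the limits as absolute amplitudes after baseline correction, and the function and argument names, are assumptions. Band-pass filtering and the visual K-complex removal are omitted.

```python
import numpy as np


def epoch_and_reject(eeg, eog, onsets, fs=600, pre_ms=100, post_ms=500,
                     eeg_limit_uv=70.0, eog_limit_uv=100.0):
    """Cut baseline-corrected epochs and drop trials exceeding amplitude limits.

    eeg: (n_eeg_channels, n_samples) in microvolts; eog: (n_eog_channels, n_samples);
    onsets: stimulus-onset sample indices. Returns (epochs, kept_onsets), where
    epochs has shape (n_kept, n_eeg_channels, n_times).
    """
    pre = int(round(pre_ms * fs / 1000))    # 60 samples at 600 Hz
    post = int(round(post_ms * fs / 1000))  # 300 samples at 600 Hz
    kept, kept_onsets = [], []
    for onset in onsets:
        start, stop = onset - pre, onset + post
        if start < 0 or stop > eeg.shape[1]:
            continue  # epoch would extend past the recording
        seg = eeg[:, start:stop]
        seg = seg - seg[:, :pre].mean(axis=1, keepdims=True)  # baseline correction
        ocular = eog[:, start:stop]
        ocular = ocular - ocular[:, :pre].mean(axis=1, keepdims=True)
        if np.abs(seg).max() > eeg_limit_uv or np.abs(ocular).max() > eog_limit_uv:
            continue  # automatic artifact rejection
        kept.append(seg)
        kept_onsets.append(onset)
    if not kept:
        return np.empty((0, eeg.shape[0], pre + post)), []
    return np.stack(kept), kept_onsets


rng = np.random.default_rng(0)
eeg = rng.normal(0, 5, size=(4, 60_000))  # F3, Fz, F4, Cz (synthetic noise)
eog = rng.normal(0, 5, size=(2, 60_000))
onsets = np.arange(600, 59_000, 720)      # 1200-ms SOA = 720 samples at 600 Hz
epochs, kept = epoch_and_reject(eeg, eog, onsets)
print(epochs.shape)
```

Running the same function on waking and sleeping data keeps the preprocessing comparable across blocks, in the spirit of the settings described above.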

Sleep stages were scored according to the standard scoring manual (Rechtschaffen and Kales, 1968). The PSG was recorded, amplified, digitized, and filtered with a polysomnography system (MedCare, USA; 27 channels), using a ground electrode placed at Cz. According to the standard sleep-staging criteria (Rechtschaffen and Kales, 1968), successive 30-s epochs of polysomnographic data were classified in a double-blind manner by two experienced sleep technologists into five stages [wakefulness, Sleep Stage 1, Sleep Stage 2, slow-wave sleep (combined Sleep Stages 3 and 4), and REM]. Mean heart rate decreases from wakefulness to light sleep to deep sleep. During REM sleep, heart rate increases again and shows high variability, which may exceed that observed during wakefulness (Zemaityte et al., 1986). Spectral analysis of heart rate variability identifies specific frequency ranges, attributed to sympathetic and parasympathetic activity, that change with sleep stage (Akselrod et al., 1981; Zemaityte et al., 1986; Berlad et al., 1993).
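The paper does not state how individual trials were assigned to sleep stages. A common approach, assumed here, is to give each stimulus the stage of the 30-s scoring epoch in which its onset falls. The plain-Python sketch below does exactly that; the stage labels ("W", "S1", "S2", "SWS", "REM") and the hypnogram are hypothetical. At a 1200-ms SOA, each 30-s epoch contains 25 stimulus onsets.

```python
from collections import Counter

EPOCH_S = 30  # Rechtschaffen and Kales scoring epoch length in seconds


def stage_for_onsets(onset_times_s, hypnogram, epoch_s=EPOCH_S):
    """Label each stimulus onset (s from recording start) with the stage of the
    30-s scoring epoch that contains it; None if outside the scored record."""
    labels = []
    for t in onset_times_s:
        idx = int(t // epoch_s)
        labels.append(hypnogram[idx] if 0 <= idx < len(hypnogram) else None)
    return labels


# Hypothetical hypnogram: one stage label per 30-s epoch.
hypnogram = ["W", "S1", "S2", "S2", "SWS", "REM"]
onsets = [k * 1200 / 1000 for k in range(150)]  # 1200-ms SOA, onsets in seconds
print(Counter(stage_for_onsets(onsets, hypnogram)))
```

Trials labeled this way can then be averaged separately per stage, which is what makes the stage-wise trial counts reported in the Results possible.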

EEG signals at the lateral electrodes (F3 and F4) were noisier than those at the midline electrodes (Fz and Cz) and more contaminated by motion artifacts due to non-conscious movements during sleep. Because previous studies have shown the largest MMN at these midline sites (Näätänen et al., 2007), we analyzed MMN and PMP amplitudes at Fz and Cz as the mean within a 100-ms time window surrounding the peak latency. We defined the MMN peak as the largest negativity in the deviant-minus-standard difference wave, within 150–300 ms during wakefulness and 100–250 ms during sleep. The N1-P2 complex was measured as the peak-to-peak amplitude between the N1 and P2 components. The PMP peak was defined as the largest positivity within 300–500 ms during wakefulness and 250–450 ms during sleep. Statistical analyses were conducted separately for each experimental session (emotional syllables or nonvocal sounds) using two-way repeated-measures analysis of variance (ANOVA) with stage (wakefulness, Stage 1, Stage 2, Stage 3, and REM) and electrode (Fz, Cz) as within-subject factors. The dependent variables were the amplitudes and peak latencies of the N1-P2 complex, MMN, and PMP at the selected electrode sites. Statistical power (1−β) was estimated with G*Power 3.1 (Faul et al., 2009). Degrees of freedom were corrected using the Greenhouse-Geisser method. Post hoc analyses were conducted only when preceded by significant main effects.
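The peak-picking rule above can be sketched as follows. This NumPy example is hypothetical: it assumes the 100-ms averaging window is centered on the peak (±50 ms) and that PMP is read from the same deviant-minus-standard difference wave as MMN; the ERP waveforms are synthetic Gaussians, not data from the study.

```python
import numpy as np

# Search windows in ms after stimulus onset, as stated in the text.
WINDOWS_MS = {
    ("MMN", "wake"): (150, 300), ("MMN", "sleep"): (100, 250),
    ("PMP", "wake"): (300, 500), ("PMP", "sleep"): (250, 450),
}


def peak_and_mean(diff_wave, times_ms, component, state, half_width_ms=50):
    """Return (peak latency in ms, mean amplitude in a 100-ms window around it).

    diff_wave: deviant-minus-standard ERP at one electrode.
    MMN -> most negative point in its window; PMP -> most positive point.
    """
    lo, hi = WINDOWS_MS[(component, state)]
    in_win = np.flatnonzero((times_ms >= lo) & (times_ms <= hi))
    seg = diff_wave[in_win]
    pick = np.argmin(seg) if component == "MMN" else np.argmax(seg)
    peak_ms = float(times_ms[in_win[pick]])
    around = np.abs(times_ms - peak_ms) <= half_width_ms
    return peak_ms, float(diff_wave[around].mean())


fs = 600
times_ms = -100 + np.arange(360) * 1000 / fs  # 600-ms epoch with 100-ms baseline
deviant_erp = (-2.0 * np.exp(-((times_ms - 170) / 20) ** 2)
               + 3.0 * np.exp(-((times_ms - 350) / 30) ** 2))  # synthetic
standard_erp = np.zeros_like(times_ms)
diff = deviant_erp - standard_erp
print(peak_and_mean(diff, times_ms, "MMN", "sleep"))
print(peak_and_mean(diff, times_ms, "PMP", "sleep"))
```

Keeping the windows in one lookup table makes the wake/sleep shift of the search windows explicit and easy to audit.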

On the CPSQI, participants reported good habitual sleep quality (mean score 5.3 ± 2.2). The average CESS score was 9.9 ± 3.9, indicating that daytime sleepiness was within the normal range.

Table 1 displays the average total recording time, total sleep time, sleep onset latency (time required to initiate sleep), sleep efficiency, and duration of each sleep stage for the emotional-syllable and nonvocal-sound sessions. There were no significant differences between the emotional and nonvocal sessions in total recording time [F(1,11) = 0.25, p = 0.63, ηp² = 0.022, estimated power (1−β) = 10.22%], total sleep time [F(1,11) = 0.38, p = 0.55, ηp² = 0.033, (1−β) = 13%], sleep onset latency [F(1,11) = 0.85, p = 0.38, ηp² = 0.072, (1−β) = 23.52%], or sleep efficiency [F(1,11) = 0.18, p = 0.68, ηp² = 0.016, (1−β) = 8.75%]. Sleep efficiency was calculated for each subject as total sleep time divided by total recording time; it was high in both the emotional session (90.58%) and the nonvocal session (89.58%), with no significant difference between them.
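Two of the quantities above can be reproduced from their definitions. Sleep efficiency is total sleep time divided by total recording time, as stated. The effect sizes reported alongside each F test are consistent with partial eta squared computed as F·df_effect / (F·df_effect + df_error); for example, F(1,11) = 0.25 gives 0.022. The function names are illustrative, and the minute values in the usage lines are made up.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


def sleep_efficiency(total_sleep_min, total_recording_min):
    """Sleep efficiency in percent: total sleep time / total recording time x 100."""
    return 100.0 * total_sleep_min / total_recording_min


print(round(partial_eta_squared(0.25, 1, 11), 3))   # 0.022
print(round(partial_eta_squared(87.06, 3, 33), 2))  # 0.89
print(round(sleep_efficiency(306.0, 340.0), 1))     # 90.0 (hypothetical minutes)
```

Recomputing effect sizes from the reported F values and degrees of freedom is a quick consistency check on the statistics in this section.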

The duration of each sleep stage did not differ significantly between the emotional and nonvocal sessions [F(3,33) = 1.03, p = 0.38, ηp² = 0.086, (1−β) = 34.59%]. Stage 2 was significantly longer than the other stages [F(3,33) = 87.06, p < 0.001, ηp² = 0.89, (1−β) ≈ 100%].

The numbers of accepted standard and deviant trials did not differ significantly between emotional syllables and nonvocal sounds at any stage:

| Stage | Happy (standard) | Happy-derived (standard) | Fearful (deviant) | Fearful-derived (deviant) |
|---|---|---|---|---|
| Wakefulness | 721 ± 209 | 707 ± 63 | 180 ± 53 | 177 ± 18 |
| Stage 1 | 1536 ± 581 | 1485 ± 585 | 386 ± 150 | 328 ± 150 |
| Stage 2 | 5492 ± 783 | 4682 ± 1634 | 1384 ± 202 | 1199 ± 427 |
| Stage 3 | 1175 ± 346 | 1001 ± 448 | 293 ± 90 | 249 ± 118 |
| REM | 1827 ± 375 | 1941 ± 631 | 458 ± 95 | 484 ± 157 |

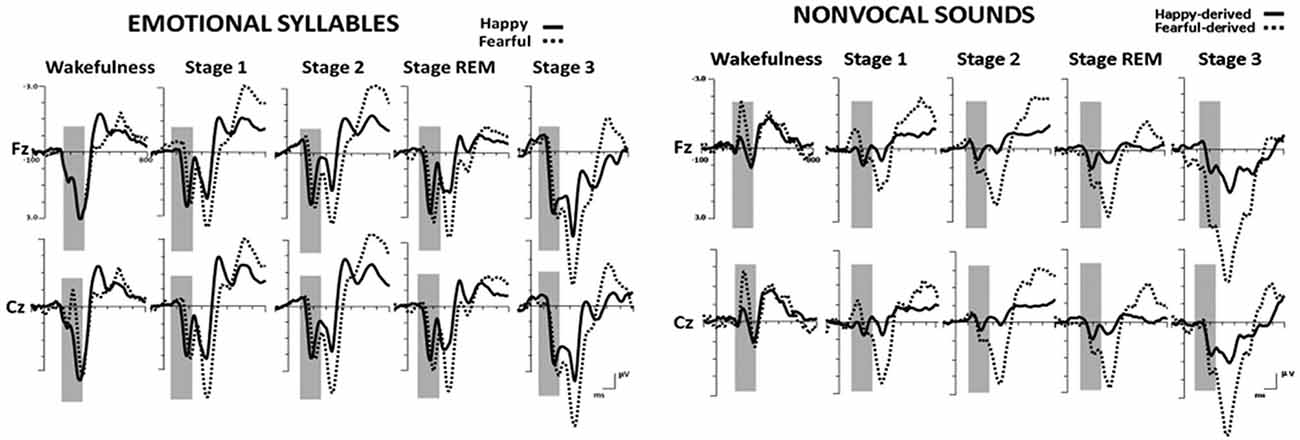

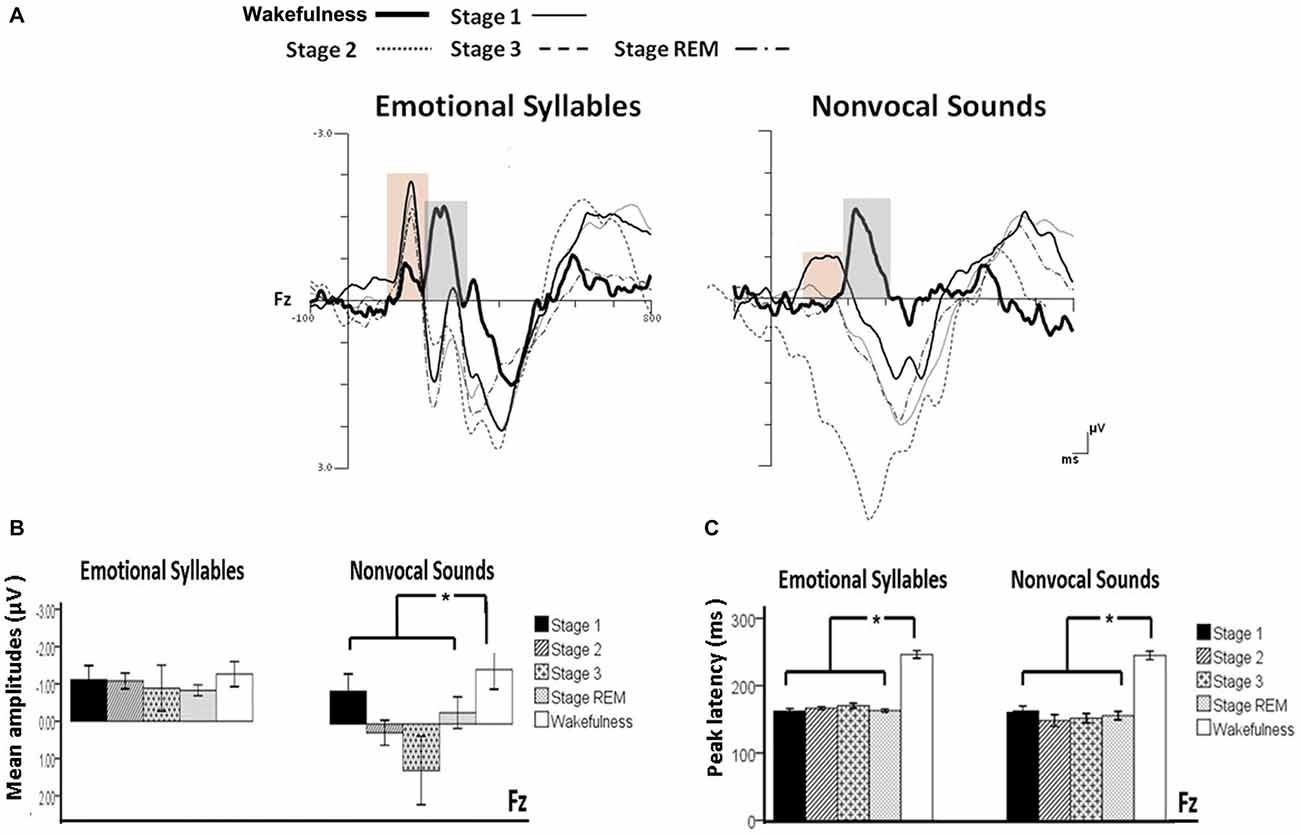

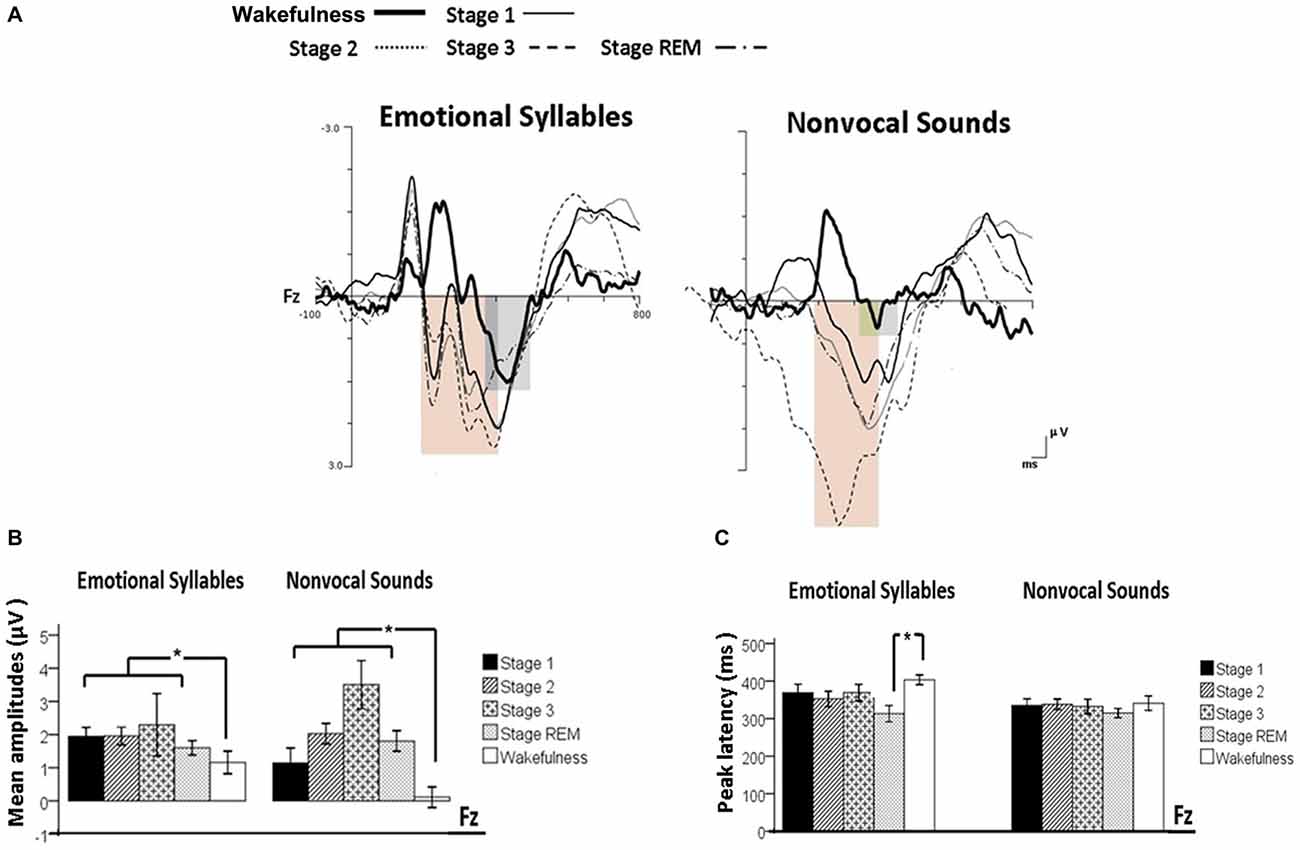

Figure 2 shows the ERPs to standards and deviants. We studied the preattentive processing of emotional salience in voices using MMN, computed by subtracting the ERP to happy standards from the ERP to fearful deviants (Figure 3). PMP in response to emotional syllables and nonvocal sounds at each stage of sleep is shown in Figure 4. Tables 2 and 3 list the mean amplitudes and peak latencies of the MMN and PMP components during each sleep stage.

Figure 2. Grand-average standard (happy) and deviant (fearful) event-related potential (ERP) waveforms in response to emotional syllables and nonvocal sounds at each sleep stage.

Figure 3. Mismatch negativity (MMN) in response to emotional syllables and nonvocal sounds at each stage of sleep. Emotional MMN was detected during all sleep stages, with amplitudes comparable to those during wakefulness (p = 0.59), whereas nonvocal MMN was reduced during several sleep stages relative to wakefulness (p = 0.044). For both emotional syllables (p < 0.001) and nonvocal sounds (p < 0.001), MMN latencies were earlier during all sleep stages than during wakefulness. (A) Grand-average MMN waveforms at electrode site Fz; for clarity, the gray and orange areas highlight the MMN time windows during wakefulness and sleep, respectively. The bar graphs present the (B) mean amplitudes and (C) peak latencies of MMN across electrodes. *P < 0.05.

Figure 4. Post-MMN positivity (PMP) in response to emotional syllables and nonvocal sounds at each stage of sleep. For both emotional syllables (p = 0.027) and nonvocal sounds (p < 0.001), PMP was stronger during all sleep stages than during wakefulness. Emotional PMP peaked significantly earlier during rapid eye movement (REM) sleep than during wakefulness (p = 0.025), whereas nonvocal PMP showed no such pattern (p = 0.07). (A) Grand-average PMP waveforms at electrode site Fz; for clarity, the gray and orange areas highlight the PMP time windows during wakefulness and sleep, respectively. The bar graphs present the mean amplitudes (B) and peak latencies (C) of PMP across electrodes. *P < 0.05.

N1-P2 complex amplitudes were subjected to an ANOVA with stimulus (standard: happy; deviant: fearful), stage (wakefulness, Stage 1, Stage 2, Stage 3, and REM), and electrode (Fz, Cz) as repeated-measures factors, separately for each experimental session (emotional syllables or nonvocal sounds). For emotional syllables, the ANOVA on N1-P2 amplitudes revealed main effects of electrode [F(1,11) = 10.54, p = 0.008, ηp² = 0.489, (1−β) = 99.03%] and stimulus [F(1,11) = 10.71, p = 0.007, ηp² = 0.493, (1−β) = 99.11%]. The N1-P2 complex was stronger at Fz than at Cz, and the deviant induced a significantly larger N1-P2 complex than did the standard. For nonvocal sounds, the ANOVA on N1-P2 amplitudes showed a main effect of stimulus [F(1,11) = 43.14, p < 0.001, ηp² = 0.797, (1−β) ≈ 100%]; again, the deviant induced a significantly larger N1-P2 complex than did the standard. Notably, N1-P2 amplitudes did not vary across sleep stages for either emotional syllables [F(4,44) = 1.66, p = 0.22] or nonvocal sounds [F(4,44) = 1.26, p = 0.31]. For both emotional syllables and nonvocal sounds, the N1-P2 complex was undiminished from wakefulness to sleep.

The ANOVA on MMN amplitudes to emotional syllables revealed no significant effects [stage: F(4,44) = 0.71, p = 0.59, ηp² = 0.061, (1−β) = 26.75%; electrode: F(1,11) = 0.15, p = 0.71, ηp² = 0.013, (1−β) = 8.6%; stage × electrode: F(4,44) = 1.75, p = 0.21, ηp² = 0.13, (1−β) = 57.91%]. To test whether the MMN amplitude effect for fearful deviants vs. happy standards during sleep stemmed from differences in acoustic features rather than from emotional content, an additional MMN analysis was conducted by subtracting the happy-derived ERP from the fearful-derived ERP. The analysis of nonvocal MMN revealed a main effect of stage [F(4,44) = 2.68, p = 0.044, ηp² = 0.196, (1−β) = 81.55%]. Post hoc analyses revealed that, compared with wakefulness, nonvocal MMN amplitudes were comparable at Stage 1 (Bonferroni-corrected p = 0.52) but were reduced at Stage 2 (p = 0.038) and REM sleep (p = 0.041), with a nonsignificant trend toward reduction at Stage 3 (p = 0.082). These results indicate that emotional MMN was detected during all sleep stages, as during wakefulness. By contrast, nonvocal MMN amplitudes during wakefulness (mean ± SE: −1.19 ± 0.45 μV) were decreased during sleep (Stage 2: 0.31 ± 0.28 μV; Stage 3: 0.70 ± 0.69 μV; REM: −0.22 ± 0.34 μV).

The ANOVA on MMN peak latencies to emotional syllables indicated a main effect of stage [F(4,44) = 118.97, p < 0.001, ηp² = 0.915, (1−β) ≈ 100%] without effects of electrode [F(1,11) = 0.81, p = 0.39, ηp² = 0.069, (1−β) = 22.68%] or electrode × stage [F(4,44) = 1.28, p = 0.29, ηp² = 0.104, (1−β) = 46.31%]. Follow-up analyses indicated that emotional MMN peak latencies during wakefulness (mean ± SE: 244.75 ± 5.23 ms) were significantly shorter during sleep (Stage 1: 164.33 ± 2.47 ms; Stage 2: 166.42 ± 2.28 ms; Stage 3: 167.08 ± 3.02 ms; REM: 161.17 ± 2.83 ms). For MMN peak latencies to nonvocal sounds, there was a main effect of stage [F(4,44) = 42.20, p < 0.001, ηp² = 0.793, (1−β) ≈ 100%] and an electrode × stage interaction [F(4,44) = 3.63, p = 0.023, ηp² = 0.248, (1−β) = 92.41%]. Follow-up analyses indicated that nonvocal MMN peak latencies during wakefulness (237.67 ± 5.24 ms) were shorter during sleep (Stage 1: 157.83 ± 6.15 ms; Stage 2: 151.17 ± 7.6 ms; Stage 3: 157.67 ± 6.56 ms; REM: 160.58 ± 5.24 ms). Both Fz [F(4,44) = 42.73, p < 0.001, ηp² = 0.795, (1−β) ≈ 100%] and Cz [F(4,44) = 24.09, p < 0.001, ηp² = 0.687, (1−β) ≈ 100%] exhibited the stage effect, albeit with different effect sizes. Regardless of whether emotional syllables or nonvocal sounds were presented, MMN latencies were shorter during sleep than during wakefulness.

The ANOVA on PMP amplitudes to emotional syllables revealed a main effect of stage [F(4,44) = 3.03, p = 0.027, ηp² = 0.216, (1−β) = 86.53%] and a stage × electrode interaction [F(4,44) = 4.01, p = 0.007, ηp² = 0.267, (1−β) = 94.84%]. All sleep stages exhibited larger PMP amplitudes than wakefulness (Stage 1: 2.16 ± 0.26 μV; Stage 2: 2.14 ± 0.29 μV; Stage 3: 2.81 ± 0.57 μV; REM: 1.87 ± 0.18 μV; wakefulness: 1.08 ± 0.31 μV). Post hoc analysis indicated larger emotional PMP amplitudes during sleep (Stage 2: 1.96 ± 0.27 μV; Stage 3: 3.29 ± 0.62 μV; REM: 1.93 ± 0.19 μV) than during wakefulness (1.16 ± 0.34 μV) at Fz [F(4,44) = 4.23, p = 0.006, ηp² = 0.278, (1−β) = 95.93%], but not at Cz [F(4,44) = 2.2, p = 0.08, ηp² = 0.16, (1−β) = 69.93%]. For PMP amplitudes to nonvocal sounds, there was a main effect of stage [F(4,44) = 8.31, p < 0.001, ηp² = 0.430, (1−β) = 99.97%] and a stage × electrode interaction [F(4,44) = 5.92, p = 0.001, ηp² = 0.350, (1−β) = 99.39%]. PMP amplitudes were larger during sleep (Stage 1: 1.47 ± 0.45 μV; Stage 2: 2.12 ± 0.33 μV; Stage 3: 3.04 ± 0.66 μV; REM: 1.86 ± 0.28 μV) than during wakefulness (0.17 ± 0.32 μV). Post hoc analysis indicated that both Fz [F(4,44) = 10.08, p < 0.001, ηp² = 0.478, (1−β) ≈ 100%] and Cz [F(4,44) = 5.83, p = 0.001, ηp² = 0.346, (1−β) = 99.31%] exhibited a stage effect, albeit with different effect sizes. Regardless of whether emotional syllables or nonvocal sounds were presented, PMP at electrode Fz was augmented during sleep relative to wakefulness.

For PMP peak latencies to emotional syllables, we observed a main effect of stage [F(4,44) = 3.09, p = 0.025, ηp² = 0.219, (1−β) = 87.19%]. Follow-up analyses indicated that emotional PMP peaked significantly earlier during Stage 2 (359 ± 18.13 ms) and REM sleep (326.17 ± 18 ms) than during wakefulness (402.75 ± 14.08 ms). However, PMP peak latencies to nonvocal sounds showed no significant effects [stage: F(4,44) = 0.531, p = 0.71, ηp² = 0.046, (1−β) = 20.42%; electrode: F(1,11) = 1.2, p = 0.30, ηp² = 0.09, (1−β) = 28.63%; stage × electrode: F(4,44) = 0.71, p = 0.59, ηp² = 0.061, (1−β) = 26.75%].

In this study, we investigated how emotional salience is processed during the various sleep stages. Using a passive oddball paradigm with emotional syllables and corresponding acoustic controls, we measured MMN and PMP, considered indices of emotional salience processing and attention switching, respectively, while recording EEG throughout an entire undisturbed night of sleep. The results indicated that emotional MMN was detected at all sleep stages, whereas nonvocal MMN was diminished during Stage 2, Stage 3, and REM sleep. The N1-P2 complex was stronger in response to emotional syllables than to nonvocal sounds. For both emotional syllables and nonvocal sounds, the transition from wakefulness to sleep shortened MMN latencies and enhanced PMP amplitudes. Specifically, emotional PMP showed larger amplitudes during Stage 3 and earlier latencies during REM sleep relative to wakefulness, whereas nonvocal PMP exhibited no such pattern. These findings suggest that emotional salience is processed during all sleep stages.

The N1-P2 complex was identified at all sleep stages, supporting the hypothesis that the sleeping brain is able to process auditory stimuli. The N1-P2 complex is presumably generated by a network of neural populations in the primary and secondary auditory cortex (Eggermont and Ponton, 2002). It is thought to reflect the intensity of simple tones during Stage 2 (Liu and Sheth, 2009), cortical arousal (Bastien et al., 2008), and sensory sensitivity associated with involuntary orienting during REM sleep (Atienza et al., 2001b). Consistent with the view that the sleeping brain continues to evaluate auditory salience (Perrin et al., 1999; Pratt et al., 1999), the N1-P2 complex during sleep was found to be stronger in response to emotional syllables than to nonvocal sounds.

Remarkably, we detected MMN in response to emotional syllables at all sleep stages, whereas MMN in response to nonvocal sounds, the acoustic controls, was diminished during Stage 2, Stage 3, and REM sleep. Our findings during wakefulness agree with previous findings obtained with a similar paradigm in healthy awake adults (Cheng et al., 2012; Fan et al., 2013; Hung et al., 2013; Chen et al., 2014; Fan and Cheng, 2014; Hung and Cheng, 2014), supporting the validity of the current experimental design for examining emotional processing during sleep stages. In this respect, MMN amplitude decreases with increasing deviant-stimulus probability but not with the absolute number of deviant stimuli (Näätänen et al., 2007), so the different numbers of accepted deviants across stages, at a constant deviant probability, should not by themselves alter MMN amplitude. Furthermore, considering that affective discrimination was selectively driven by voice processing rather than by low-level acoustic features, we hypothesized that emotional salience processing is underpinned by cerebral specialization for human voices. The detection of nonvocal MMN at Stage 1 corroborates the automatic detection of sound changes during sleep (Ruby et al., 2008). Relative to wakefulness, sleep involves a reallocation of resources that alters brain activity, for example, focal sensory cortical activation with limited long-range interaction with the prefrontal cortices (Maquet, 2000; Portas et al., 2000; Drummond et al., 2004; Kaufmann et al., 2006). The attenuation of MMN and PMP to nonvocal sounds during sleep might be in line with altered neural responses to nonconsciously perceived acoustic features (Palva et al., 2005).

Emotional MMN was identified not only in REM sleep but also in the other stages of sleep, indicating that the processing of emotional salience might continue throughout the entire night of sleep. There appears to be a general consensus that REM sleep plays a decisive role in the formation of emotional memory (Wagner et al., 2001; Hu et al., 2006; Holland and Lewis, 2007; Nishida et al., 2009). Nocturnal sleep rich in REM sleep produced a priming-like enhancement of emotional reactivity (Wagner et al., 2002). REM sleep de-potentiated amygdala reactivity to previous emotional experiences (van der Helm et al., 2011). It is worth mentioning that the amygdala was activated in a similar oddball paradigm involving the perception of emotional syllables in healthy awake adults (Schirmer et al., 2008). Using the same stimuli in an oddball paradigm, a mismatch response to angry and fearful syllables was identified in sleeping human neonates (Zhang et al., 2014). An acute effect of testosterone on emotional MMN further suggests the involvement of the amygdala in the automatic stage of emotional salience processing (Chen et al., 2015). Together with the absence of nonvocal MMN during REM sleep, the present results suggest that emotional MMN during sleep is selectively driven by emotional salience per se rather than by acoustic changes.

Emotional PMP peaked earlier during REM sleep relative to wakefulness, whereas nonvocal PMP exhibited no such pattern. The PMP most likely reflects automatic attention orienting toward salient deviants (Friedman et al., 2001), associated with active processing of the deviant tone, possibly as part of dream consciousness (Ruby et al., 2008). As expected from previous results with simple tones, the PMP was present during Sleep Stage 1 (Bastuji et al., 1995; Cote, 2002), Stage 2 (Ruby et al., 2008), and REM sleep (Niiyama et al., 1994; Bastuji et al., 1995; Sallinen et al., 1996; Perrin et al., 1999; Pratt et al., 1999; Cote, 2002). Remarkably, emotional PMP latency was shortest during REM sleep. One explanation that may reconcile previous mixed PMP findings during sleep is that the emotionally significant stimuli used in some previous studies, such as the subject's own name (Perrin et al., 1999; Pratt et al., 1999), rendered the deviants particularly salient and therefore effective at eliciting PMP, whereas less salient stimuli, such as simple tones, may not always elicit it (Bastuji et al., 1995; Sabri et al., 2000). Another possibility is that our design increased the sensitivity and specificity of PMP detection by collecting a large amount of data and by pairing emotional syllables with corresponding acoustic controls (293 ± 90 and 458 ± 95 emotional deviants, and 249 ± 118 and 484 ± 157 nonvocal deviants recorded at Sleep Stage 3 and during REM sleep, respectively). This strategy may have made it possible to identify a PMP component too weak to be detected in previous studies. Furthermore, Stage 3 is known as slow-wave sleep, during which sleepers tend to be unresponsive to many environmental stimuli. Stimuli with affective significance are likely to activate the amygdala to a greater extent during non-REM sleep (Portas et al., 2000). Consistent with this, emotional (fearful vs. happy) syllables, but not nonvocal sounds, elicited larger PMP amplitudes at Stage 3 relative to wakefulness. From an evolutionary perspective, the automatic capture of attention by emotional voices, even during deep sleep, might be related to survival.
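The argument that more trials increase detection sensitivity rests on signal averaging: if background EEG noise is independent across trials with a constant standard deviation (an idealization that sleep EEG only approximates), the noise remaining in an N-trial average shrinks as 1/√N, so the signal-to-noise ratio (SNR) grows as √N. The sketch applies this to the mean deviant counts reported above. The function names and the 100-trial comparison baseline are hypothetical and chosen only for illustration.

```python
import math


def residual_noise_sd(single_trial_sd: float, n_trials: int) -> float:
    """SD of the noise left in an N-trial average, assuming i.i.d. trial noise."""
    if n_trials < 1:
        raise ValueError("n_trials must be at least 1")
    return single_trial_sd / math.sqrt(n_trials)


def snr_gain(n_trials: int, n_reference: int) -> float:
    """Factor by which averaging n_trials rather than n_reference improves SNR."""
    return math.sqrt(n_trials / n_reference)


# Mean numbers of deviants per condition, as reported in the text.
mean_deviants = {
    "emotional, Stage 3": 293,
    "emotional, REM": 458,
    "nonvocal, Stage 3": 249,
    "nonvocal, REM": 484,
}
HYPOTHETICAL_REFERENCE = 100  # illustrative comparison only, not taken from any study

for label, n in mean_deviants.items():
    gain = snr_gain(n, HYPOTHETICAL_REFERENCE)
    print(f"{label}: {n} deviants -> SNR x{gain:.2f} relative to {HYPOTHETICAL_REFERENCE}")
```

Under this idealization, the REM counts give roughly a 2.1 to 2.2 fold SNR gain over the hypothetical 100-trial reference. Because real sleep EEG noise is neither stationary nor fully independent across trials, the practical gain is likely smaller.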

Importantly, presenting non-awakening voices to sleeping subjects and recording the neural dynamics elicited by changes in emotional salience help clarify the following. First, emotional MMN detected during sleep appears functionally equivalent to its waking counterpart. From wakefulness into sleep, shorter MMN latencies and larger PMP amplitudes may result from resource reallocation. Given that the MMN is mainly generated in bilateral supratemporal cortices, where its signal is maximal (Näätänen et al., 2007), reallocated focal activation within auditory cortex might enhance not only acoustic feature detection but also emotional salience processing. Second, emotional salience processing occurs during every stage of sleep. The double peaks within the time window of emotional MMN during sleep (see Figure 2) might be attributed to the dissociation of two subcomponents reflecting acoustic feature processing and emotional salience processing, respectively; however, this remains to be tested in future work. Finally, the close and extensive auditory-limbic connectivity provides a platform for acoustic experience to induce structural or functional changes in the corresponding cortices (Kraus and Canlon, 2012), which, in turn, may boost the automatic processing of emotional salience in all stages of sleep.
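The double-peak observation can be made concrete with a simple check: count the negative local minima of the difference wave inside the MMN window. The sketch below is a hypothetical illustration with an assumed 100-300 ms window and invented data; on real recordings one would first smooth the waveform or require a minimum peak prominence, because sample-level noise alone produces spurious minima.

```python
from typing import List, Sequence, Tuple


def negative_local_minima(
    wave: Sequence[float],
    times_ms: Sequence[float],
    window_ms: Tuple[float, float] = (100.0, 300.0),  # assumed MMN window
) -> List[Tuple[float, float]]:
    """Return (latency, amplitude) of negative samples lower than both neighbours."""
    lo, hi = window_ms
    minima = []
    for i in range(1, len(wave) - 1):  # skip endpoints, which lack two neighbours
        t = times_ms[i]
        if (
            lo <= t <= hi
            and wave[i] < 0
            and wave[i] < wave[i - 1]
            and wave[i] < wave[i + 1]
        ):
            minima.append((t, wave[i]))
    return minima


# Invented difference wave (microvolts) sampled every 25 ms.
times = [100, 125, 150, 175, 200, 225, 250]
diff = [0.0, -1.0, -3.0, -1.0, -2.5, -1.0, 0.0]
peaks = negative_local_minima(diff, times)
is_double_peaked = len(peaks) >= 2
print(peaks)             # -> [(150, -3.0), (200, -2.5)]
print(is_double_peaked)  # -> True
```

Detecting two troughs only describes the waveform shape; whether they reflect separate acoustic and emotional subcomponents would require the kind of targeted follow-up the text calls for.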

In conclusion, our study provides electrophysiological evidence for the processing of emotional salience during an entire night of sleep. MMN in response to emotional (happy vs. fearful) syllables was detected at all sleep stages. Emotional syllables elicited stronger N1-P2 complexes than did corresponding nonvocal sounds. Emotional PMP was identified with larger amplitudes at Stage 3, and at earlier latencies during REM sleep, relative to wakefulness.

CC, J-YS, and YC designed the study. CC and YC undertook data analysis, managed the literature search, and wrote the first draft of the manuscript. All authors contributed to and approved the manuscript.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

We thank Shun-Ju Tsai for assisting with data collection. The study was funded by the Ministry of Science and Technology (MOST 103-2410-H-010-003-MY3; 104-2420-H-010-001-; 105-2420-H-010-003-), National Yang-Ming University Hospital (RD2015-004; RD2016-004), Ministry of Education (Aim for the Top University Plan), Department of Health, Taipei City Government (10401-62-023), and Wan Fang Hospital (104swf09), Taipei Medical University, Taipei, Taiwan.

Akselrod, S., Gordon, D., Ubel, F. A., Shannon, D. C., Berger, A. C., and Cohen, R. J. (1981). Power spectrum analysis of heart rate fluctuation: a quantitative probe of beat-to-beat cardiovascular control. Science 213, 220–222. doi: 10.1126/science.6166045

Atienza, M., Cantero, J. L., and Escera, C. (2001a). Auditory information processing during human sleep as revealed by event-related brain potentials. Clin. Neurophysiol. 112, 2031–2045. doi: 10.1016/s1388-2457(01)00650-2

Atienza, M., Cantero, J. L., and Gomez, C. M. (2001b). The initial orienting response during human REM sleep as revealed by the N1 component of auditory event-related potentials. Int. J. Psychophysiol. 41, 131–141. doi: 10.1016/s0167-8760(00)00196-3

Bastien, C. H., St-Jean, G., Morin, C. M., Turcotte, I., and Carrier, J. (2008). Chronic psychophysiological insomnia: hyperarousal and/or inhibition deficits? An ERPs investigation. Sleep 31, 887–898.

Bastuji, H., García-Larrea, L., Franc, C., and Mauguière, F. (1995). Brain processing of stimulus deviance during slow-wave and paradoxical sleep: a study of human auditory evoked responses using the oddball paradigm. J. Clin. Neurophysiol. 12, 155–167. doi: 10.1097/00004691-199503000-00006

Bastuji, H., Perrin, F., and Garcia-Larrea, L. (2002). Semantic analysis of auditory input during sleep: studies with event related potentials. Int. J. Psychophysiol. 46, 243–255. doi: 10.1016/s0167-8760(02)00116-2

Belin, P., Zatorre, R. J., Lafaille, P., Ahad, P., and Pike, B. (2000). Voice-selective areas in human auditory cortex. Nature 403, 309–312. doi: 10.1038/35002078

Berlad, I. I., Shlitner, A., Ben-Haim, S., and Lavie, P. (1993). Power spectrum analysis and heart rate variability in Stage 4 and REM sleep: evidence for state-specific changes in autonomic dominance. J. Sleep Res. 2, 88–90. doi: 10.1111/j.1365-2869.1993.tb00067.x

Born, A. P., Law, I., Lund, T. E., Rostrup, E., Hanson, L. G., Wildschiodtz, G., et al. (2002). Cortical deactivation induced by visual stimulation in human slow-wave sleep. Neuroimage 17, 1325–1335. doi: 10.1006/nimg.2002.1249

Brualla, J., Romero, M. F., Serrano, M., and Valdizán, J. R. (1998). Auditory event-related potentials to semantic priming during sleep. Electroencephalogr. Clin. Neurophysiol. 108, 283–290. doi: 10.1016/s0168-5597(97)00102-0

Buysse, D. J., Reynolds, C. F. III, Monk, T. H., Berman, S. R., and Kupfer, D. J. (1989). The Pittsburgh Sleep Quality Index: a new instrument for psychiatric practice and research. Psychiatry Res. 28, 193–213. doi: 10.1016/0165-1781(89)90047-4

Čeponiene, R., Lepistö, T., Shestakova, A., Vanhala, R., Alku, P., Näätänen, R., et al. (2003). Speech-sound-selective auditory impairment in children with autism: they can perceive but do not attend. Proc. Natl. Acad. Sci. U S A 100, 5567–5572. doi: 10.1073/pnas.0835631100

Chen, C., Chen, C. Y., Yang, C. Y., Lin, C. H., and Cheng, Y. (2015). Testosterone modulates preattentive sensory processing and involuntary attention switches to emotional voices. J. Neurophysiol. 113, 1842–1849. doi: 10.1152/jn.00587.2014

Chen, N. H., Johns, M. W., Li, H. Y., Chu, C. C., Liang, S. C., Shu, Y. H., et al. (2002). Validation of a Chinese version of the Epworth sleepiness scale. Qual. Life Res. 11, 817–821. doi: 10.1023/A:1020818417949

Chen, Y., Lee, Y. H., and Cheng, Y. (2014). Anterior insular cortex to emotional salience of voices in a passive oddball paradigm. Front. Hum. Neurosci. 8:743. doi: 10.3389/fnhum.2014.00743

Cheng, Y., Lee, S. Y., Chen, H. Y., Wang, P. Y., and Decety, J. (2012). Voice and emotion processing in the human neonatal brain. J. Cogn. Neurosci. 24, 1411–1419. doi: 10.1162/jocn_a_00214

Coenen, A. M. (1995). Neuronal activities underlying the electroencephalogram and evoked potentials of sleeping and waking: implications for information processing. Neurosci. Biobehav. Rev. 19, 447–463. doi: 10.1016/0149-7634(95)00010-c

Coenen, A. M., and Drinkenburg, W. H. (2002). Animal models for information processing during sleep. Int. J. Psychophysiol. 46, 163–175. doi: 10.1016/s0167-8760(02)00110-1

Cote, K. A. (2002). Probing awareness during sleep with the auditory odd-ball paradigm. Int. J. Psychophysiol. 46, 227–241. doi: 10.1016/s0167-8760(02)00114-9

Doellinger, M., Burger, M., Hoppe, U., Bosco, E., and Eysholdt, U. (2011). Effects of consonant-vowel transitions in speech stimuli on cortical auditory evoked potentials in adults. Open Neurol. J. 5, 37–45. doi: 10.2174/1874205X01105010037

Drummond, S. P., Smith, M. T., Orff, H. J., Chengazi, V., and Perlis, M. L. (2004). Functional imaging of the sleeping brain: review of findings and implications for the study of insomnia. Sleep Med. Rev. 8, 227–242. doi: 10.1016/j.smrv.2003.10.005

Eggermont, J. J., and Ponton, C. W. (2002). The neurophysiology of auditory perception: from single units to evoked potentials. Audiol. Neurootol. 7, 71–99. doi: 10.1159/000057656

Fan, Y. T., and Cheng, Y. (2014). Atypical mismatch negativity in response to emotional voices in people with autism spectrum conditions. PLoS One 9:e102471. doi: 10.1371/journal.pone.0102471

Fan, Y. T., Hsu, Y. Y., and Cheng, Y. (2013). Sex matters: n-back modulates emotional mismatch negativity. Neuroreport 24, 457–463. doi: 10.1097/WNR.0b013e32836169b9

Faul, F., Erdfelder, E., Buchner, A., and Lang, A. G. (2009). Statistical power analyses using G*Power 3.1: tests for correlation and regression analyses. Behav. Res. Methods 41, 1149–1160. doi: 10.3758/BRM.41.4.1149

Friedman, D., Cycowicz, Y. M., and Gaeta, H. (2001). The novelty P3: an event-related brain potential (ERP) sign of the brain’s evaluation of novelty. Neurosci. Biobehav. Rev. 25, 355–373. doi: 10.1016/s0149-7634(01)00019-7

Gujar, N., McDonald, S. A., Nishida, M., and Walker, M. P. (2011a). A role for REM sleep in recalibrating the sensitivity of the human brain to specific emotions. Cereb. Cortex 21, 115–123. doi: 10.1093/cercor/bhq064

Gujar, N., Yoo, S. S., Hu, P., and Walker, M. P. (2011b). Sleep deprivation amplifies reactivity of brain reward networks, biasing the appraisal of positive emotional experiences. J. Neurosci. 31, 4466–4474. doi: 10.1523/JNEUROSCI.3220-10.2011

Hennevin, E., Huetz, C., and Edeline, J. M. (2007). Neural representations during sleep: from sensory processing to memory traces. Neurobiol. Learn. Mem. 87, 416–440. doi: 10.1016/j.nlm.2006.10.006

Hennevin, E., Maho, C., Hars, B., and Dutrieux, G. (1993). Learning-induced plasticity in the medial geniculate nucleus is expressed during paradoxical sleep. Behav. Neurosci. 107, 1018–1030. doi: 10.1037/0735-7044.107.6.1018

Holland, P., and Lewis, P. A. (2007). Emotional memory: selective enhancement by sleep. Curr. Biol. 17, R179–R181. doi: 10.1016/j.cub.2006.12.033

Hu, P., Stylos-Allan, M., and Walker, M. P. (2006). Sleep facilitates consolidation of emotional declarative memory. Psychol. Sci. 17, 891–898. doi: 10.1111/j.1467-9280.2006.01799.x

Hung, A. Y., Ahveninen, J., and Cheng, Y. (2013). Atypical mismatch negativity to distressful voices associated with conduct disorder symptoms. J. Child Psychol. Psychiatry 54, 1016–1027. doi: 10.1111/jcpp.12076

Hung, A. Y., and Cheng, Y. (2014). Sex differences in preattentive perception of emotional voices and acoustic attributes. Neuroreport 25, 464–469. doi: 10.1097/WNR.0000000000000115

Ibáñez, A., López, V., and Cornejo, C. (2006). ERPs and contextual semantic discrimination: degrees of congruence in wakefulness and sleep. Brain Lang. 98, 264–275. doi: 10.1016/j.bandl.2006.05.005

Ibáñez, A. M., Martín, R. S., Hurtado, E., and López, V. (2009). ERPs studies of cognitive processing during sleep. Int. J. Psychol. 44, 290–304. doi: 10.1080/00207590802194234

Johns, M. W. (1991). A new method for measuring daytime sleepiness: the Epworth sleepiness scale. Sleep 14, 540–545.

Karakaş, S., Bekçi, B., Çakmak, E. D., Erzengin, Ö. U., and Aydin, H. (2007). Information processing in sleep based on event-related activities of the brain. Sleep Biol. Rhythms 5, 28–39. doi: 10.1111/j.1479-8425.2006.00254.x

Kaufmann, C., Wehrle, R., Wetter, T. C., Holsboer, F., Auer, D. P., Pollmächer, T., et al. (2006). Brain activation and hypothalamic functional connectivity during human non-rapid eye movement sleep: an EEG/fMRI study. Brain 129, 655–667. doi: 10.1093/brain/awh686

Kraus, K. S., and Canlon, B. (2012). Neuronal connectivity and interactions between the auditory and limbic systems. Effects of noise and tinnitus. Hear. Res. 288, 34–46. doi: 10.1016/j.heares.2012.02.009

Lavigne, G., Brousseau, M., Kato, T., Mayer, P., Manzini, C., Guitard, F., et al. (2004). Experimental pain perception remains equally active over all sleep stages. Pain 110, 646–655. doi: 10.1016/j.pain.2004.05.003

Liu, S., and Sheth, B. R. (2009). Discrimination of behaviorally irrelevant auditory stimuli in stage II sleep. Neuroreport 20, 207–212. doi: 10.1097/WNR.0b013e328320a6c0

Maho, C., and Hennevin, E. (1999). Expression in paradoxical sleep of a conditioned heart rate response. Neuroreport 10, 3381–3385. doi: 10.1097/00001756-199911080-00023

Maho, C., Hennevin, E., Hars, B., and Poincheval, S. (1991). Evocation in paradoxical sleep of a hippocampal conditioned cellular response acquired during waking. Psychobiology 19, 193–205.

Maquet, P. (2000). Functional neuroimaging of normal human sleep by positron emission tomography. J. Sleep Res. 9, 207–231. doi: 10.1046/j.1365-2869.2000.00214.x

Maquet, P., Péters, J., Aerts, J., Delfiore, G., Degueldre, C., Luxen, A., et al. (1996). Functional neuroanatomy of human rapid-eye-movement sleep and dreaming. Nature 383, 163–166. doi: 10.1038/383163a0

Näätänen, R., Kujala, T., and Winkler, I. (2011). Auditory processing that leads to conscious perception: a unique window to central auditory processing opened by the mismatch negativity and related responses. Psychophysiology 48, 4–22. doi: 10.1111/j.1469-8986.2010.01114.x

Näätänen, R., Paavilainen, P., Rinne, T., and Alho, K. (2007). The mismatch negativity (MMN) in basic research of central auditory processing: a review. Clin. Neurophysiol. 118, 2544–2590. doi: 10.1016/j.clinph.2007.04.026

Nashida, T., Yabe, H., Sato, Y., Hiruma, T., Sutoh, T., Shinozaki, N., et al. (2000). Automatic auditory information processing in sleep. Sleep 23, 821–828.

Niiyama, Y., Fujiwara, R., Satoh, N., and Hishikawa, Y. (1994). Endogenous components of event-related potential appearing during NREM stage 1 and REM sleep in man. Int. J. Psychophysiol. 17, 165–174. doi: 10.1016/0167-8760(94)90032-9

Nishida, M., Pearsall, J., Buckner, R. L., and Walker, M. P. (2009). REM sleep, prefrontal theta and the consolidation of human emotional memory. Cereb. Cortex 19, 1158–1166. doi: 10.1093/cercor/bhn155

Nordby, H., Hugdahl, K., Stickgold, R., Bronnick, K. S., and Hobson, J. A. (1996). Event-related potentials (ERPs) to deviant auditory stimuli during sleep and waking. Neuroreport 7, 1082–1086. doi: 10.1097/00001756-199604100-00026

Oswald, I., Taylor, A. M., and Treisman, M. (1960). Discriminative responses to stimulation during human sleep. Brain 83, 440–453. doi: 10.1093/brain/83.3.440

Palva, S., Linkenkaer-Hansen, K., Näätänen, R., and Palva, J. M. (2005). Early neural correlates of conscious somatosensory perception. J. Neurosci. 25, 5248–5258. doi: 10.1523/JNEUROSCI.0141-05.2005

Perrin, F., Bastuji, H., and Garcia-Larrea, L. (2002). Detection of verbal discordances during sleep. Neuroreport 13, 1345–1349. doi: 10.1097/00001756-200207190-00026

Perrin, F., Bastuji, H., Mauguière, F., and García-Larrea, L. (2000). Functional dissociation of the early and late portions of human K-complexes. Neuroreport 11, 1637–1640. doi: 10.1097/00001756-200006050-00008

Perrin, F., García-Larrea, L., Mauguière, F., and Bastuji, H. (1999). A differential brain response to the subject’s own name persists during sleep. Clin. Neurophysiol. 110, 2153–2164. doi: 10.1016/s1388-2457(99)00177-7

Pessoa, L., and Adolphs, R. (2010). Emotion processing and the amygdala: from a “low road” to “many roads” of evaluating biological significance. Nat. Rev. Neurosci. 11, 773–783. doi: 10.1038/nrn2920

Portas, C. M., Krakow, K., Allen, P., Josephs, O., Armony, J. L., and Frith, C. D. (2000). Auditory processing across the sleep-wake cycle: simultaneous EEG and fMRI monitoring in humans. Neuron 28, 991–999. doi: 10.1016/s0896-6273(00)00169-0

Pratt, H., Berlad, I., and Lavie, P. (1999). ‘Oddball’ event-related potentials and information processing during REM and non-REM sleep. Clin. Neurophysiol. 110, 53–61. doi: 10.1016/s0168-5597(98)00044-6

Putnam, L. E., and Roth, W. T. (1990). Effects of stimulus repetition, duration and rise time on startle blink and automatically elicited P300. Psychophysiology 27, 275–297. doi: 10.1111/j.1469-8986.1990.tb00383.x

Rechtschaffen, A., and Kales, A. (1968). A Manual of Standardized Terminology, Techniques and Scoring System for Sleep Stages of Human Subjects. Washington, DC: Public Health Service.

Remedios, R., Logothetis, N. K., and Kayser, C. (2009). An auditory region in the primate insular cortex responding preferentially to vocal communication sounds. J. Neurosci. 29, 1034–1045. doi: 10.1523/JNEUROSCI.4089-08.2009

Ruby, P., Caclin, A., Boulet, S., Delpuech, C., and Morlet, D. (2008). Odd sound processing in the sleeping brain. J. Cogn. Neurosci. 20, 296–311. doi: 10.1162/jocn.2008.20023

Sabatinelli, D., Keil, A., Frank, D. W., and Lang, P. J. (2013). Emotional perception: correspondence of early and late event-related potentials with cortical and subcortical functional MRI. Biol. Psychol. 92, 513–519. doi: 10.1016/j.biopsycho.2012.04.005

Sabri, M., De Lugt, D. R., and Campbell, K. B. (2000). The mismatch negativity to frequency deviants during the transition from wakefulness to sleep. Can. J. Exp. Psychol. 54, 230–242. doi: 10.1037/h0087343

Sallinen, M., Kaartinen, J., and Lyytinen, H. (1996). Processing of auditory stimuli during tonic and phasic periods of REM sleep as revealed by event-related brain potentials. J. Sleep Res. 5, 220–228. doi: 10.1111/j.1365-2869.1996.00220.x

Schirmer, A., Escoffier, N., Zysset, S., Koester, D., Striano, T., and Friederici, A. D. (2008). When vocal processing gets emotional: on the role of social orientation in relevance detection by the human amygdala. Neuroimage 40, 1402–1410. doi: 10.1016/j.neuroimage.2008.01.018

Schirmer, A., Simpson, E., and Escoffier, N. (2007). Listen up! Processing of intensity change differs for vocal and nonvocal sounds. Brain Res. 1176, 103–112. doi: 10.1016/j.brainres.2007.08.008

Tamietto, M., and de Gelder, B. (2010). Neural bases of the non-conscious perception of emotional signals. Nat. Rev. Neurosci. 11, 697–709. doi: 10.1038/nrn2889

Tsai, P. S., Wang, S. Y., Wang, M. Y., Su, C. T., Yang, T. T., Huang, C. J., et al. (2005). Psychometric evaluation of the Chinese version of the Pittsburgh Sleep Quality Index (CPSQI) in primary insomnia and control subjects. Qual. Life Res. 14, 1943–1952. doi: 10.1007/s11136-005-4346-x

van der Helm, E., Yao, J., Dutt, S., Rao, V., Saletin, J. M., and Walker, M. P. (2011). REM sleep depotentiates amygdala activity to previous emotional experiences. Curr. Biol. 21, 2029–2032. doi: 10.1016/j.cub.2011.10.052

Wagner, U., Fischer, S., and Born, J. (2002). Changes in emotional responses to aversive pictures across periods rich in slow-wave sleep versus rapid eye movement sleep. Psychosom. Med. 64, 627–634. doi: 10.1097/00006842-200207000-00013

Wagner, U., Gais, S., and Born, J. (2001). Emotional memory formation is enhanced across sleep intervals with high amounts of rapid eye movement sleep. Learn. Mem. 8, 112–119. doi: 10.1101/lm.36801

Yoo, S. S., Gujar, N., Hu, P., Jolesz, F. A., and Walker, M. P. (2007). The human emotional brain without sleep–a prefrontal amygdala disconnect. Curr. Biol. 17, R877–R878. doi: 10.1016/j.cub.2007.08.007

Zemaityte, D., Varoneckas, G., Plauska, K., and Kaukenas, J. (1986). Components of the heart rhythm power spectrum in wakefulness and individual sleep stages. Int. J. Psychophysiol. 4, 129–141. doi: 10.1016/0167-8760(86)90006-1

Keywords: sleep, emotional salience, voices, mismatch negativity (MMN), rapid eye movement (REM)

Citation: Chen C, Sung J-Y and Cheng Y (2016) Neural Dynamics of Emotional Salience Processing in Response to Voices during the Stages of Sleep. Front. Behav. Neurosci. 10:117. doi: 10.3389/fnbeh.2016.00117

Received: 04 September 2015; Accepted: 25 May 2016;

Published: 14 June 2016.

Edited by:

Nuno Sousa, University of Minho, Portugal

Reviewed by:

Eric Murillo-Rodriguez, Universidad Anahuac Mayab, Mexico

Copyright © 2016 Chen, Sung and Cheng. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution and reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yawei Cheng, ywcheng2@ym.edu.tw

† These authors have contributed equally to this work.

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
