- Beijing Key Laboratory of Fundamental Research on Biomechanics in Clinical Application, School of Biomedical Engineering, Capital Medical University, Beijing, China

Improving the accuracy of Alzheimer's disease (AD) recognition using structural MRI is of potential clinical value. We proposed a reparametrized convolutional neural network (Re-CNN) to discriminate AD from healthy controls (NC) by applying morphological metrics and deep semantic features. The deep semantic features were extracted through Re-CNN on structural MRI. Considering the high redundancy in deep semantic features, we constrained the similarity of the features and retained the most distinguishing features using the reparametrized module. The Re-CNN model was trained in an end-to-end manner on structural MRI from the ADNI dataset and tested on structural MRI from the AIBL dataset. Our proposed model outperforms some existing structural MRI-based AD recognition models. The experimental results show that morphological metrics along with the constrained deep semantic features can improve AD recognition performance. Our code is available at: https://github.com/czp19940707/Re-CNN.

1. Introduction

Alzheimer's disease (AD) is an irreversible neurodegenerative disease (Jagust, 2013) arising from progressive neuron and synapse loss, with resulting brain tissue atrophy. As seen from the pathological and clinical manifestations of AD, significant atrophy can be observed in early disease stages in the hippocampus and entorhinal cortex (Pennanen et al., 2004). This atrophy may be visible on high-resolution structural MRI, and structural MRI measures can discriminate AD from healthy controls (NC). Schmitter et al. (2015) used voxel-based morphometry (VBM) to extract the hippocampus volume to discriminate AD from NC and achieved an accuracy of 83%. Koikkalainen et al. (2011) used deformation-based morphometry (DBM) to obtain structural MRI features and reached an accuracy of 86%. Park et al. (2012) computed cortical thickness (CTH) and sulcus depth (SD) with surface-based morphometry (SBM) and attained an accuracy of 85%. Ma et al. (2020) integrated gray matter volume (GMV), Jacobian determinant (JDV), CTH, SD, gyrification index (GI), and fractal dimension (FD) to discriminate mild cognitive impairment (MCI) from NC with random forest and achieved an accuracy of 80%. Long et al. (2018) proposed a comparative atlas-based approach to recognize MCI from NC; GMV, white matter volume (WMV), and cerebrospinal fluid volume (CRFV) were calculated, and accuracies of 83, 92, and 89% were reported with the AAL-90, BN-246, and AAL-1024 atlases, respectively. These studies had to define regions of interest (ROIs) for computing morphological metrics, at either the voxel level or the region level. Neither voxel-level nor region-level approaches can cover pathological regions across the whole brain.

Deep semantic features may play an important role in AD recognition, and convolutional neural networks (CNNs) are commonly used as backbone structures. Aderghal et al. (2018) applied deep CNNs to structural MRI using transfer learning, used 188 AD and 228 NC subjects, and achieved an accuracy of 90%. Pan et al. (2020) proposed a novel model for structural MRI combining CNN and ensemble learning for AD recognition, collected 137 AD and 162 NC subjects, and reached an accuracy of 84%. Qiu et al. (2020) proposed an interpretable deep learning framework on structural MRI for AD recognition, chose 188 AD subjects and 229 NC subjects, and attained an accuracy of 83%. Lian et al. (2018) proposed a hierarchical fully convolutional network, employed 358 AD subjects and 429 NC subjects, and achieved an accuracy of 90%.

CNN-based models apply a large number of convolutional kernels for feature extraction, so the produced features are often redundant and correlated. An adaptive global pooling layer has been used to filter the features (He et al., 2016; Huang et al., 2017; Tan and Le, 2019), replacing each feature map with its mean value to achieve dimensionality reduction. However, after the pooling layer, the dimensionality of the produced features is still high. The accuracy of structural MRI-based AD recognition (both morphology-based and CNN-based) is hard to improve because of the structural similarity between samples and the limited training data. How to filter redundant information effectively and extract the most distinguishing features from structural MRI scans remains a challenge.

To improve the accuracy of AD recognition from structural MRI, we built an end-to-end deep learning model, Re-CNN. The main innovations of this article are as follows: (1) we combined deep semantic features with morphological metrics (derived from VBM) for AD recognition; (2) we created a reparametrized module that imposes similarity constraints on deep semantic features, retains the most distinguishing features, reduces feature dimensionality, and improves AD recognition performance.

2. Method

In this article, a reparametrized convolutional neural network (Re-CNN) model was proposed on structural MRI to identify AD by applying morphological metrics and deep semantic features. The reparametrized module was designed to constrain the similarity of deep semantic features and thereby obtain the most distinguishing features. The morphological metrics were derived from VBM (Jing et al., 2018; Zhao et al., 2021), where GMV, white matter volume, and cerebrospinal fluid volume were computed using CAT-12.

2.1. Data Collection

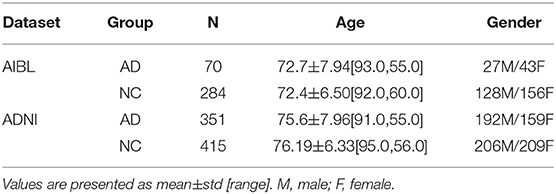

We collected data from the Alzheimer's Disease Neuroimaging Initiative (ADNI) and the Australian Imaging, Biomarker & Lifestyle (AIBL) datasets for model training and testing, respectively. ADNI is a longitudinal multicenter study that aims to explore clinical, imaging, genetic, and biochemical biomarkers for the early detection and tracking of AD (Mueller et al., 2005). AIBL is the largest study of its kind in Australia, designed to detect biomarkers, cognitive characteristics, and lifestyle factors that affect the progression of symptomatic AD. The data from ADNI were randomly divided into two groups for training and validation, and the AIBL data were used for multi-site testing. The inclusion criteria were age ≥55 and the availability of T1-weighted MRI scans. Cases with Alzheimer's disease with mixed dementia, non-Alzheimer's disease dementia, severe depression, stroke, brain tumors, a history of severe traumatic brain injury, or incident major systemic illnesses were excluded. The inclusion and exclusion criteria followed the baseline recruitment protocol developed by the ADNI study, and the same selection criteria were applied in another study (Qiu et al., 2020). Finally, 415 NC samples and 351 AD samples were selected from the ADNI dataset, and 284 NC samples and 70 AD samples were selected from the AIBL dataset. An overview of the sample statistics is shown in Table 1.

2.2. Structural MRI Scan Processing

All the structural MRI data in this study were in NIFTI format. We adopted the FSL package (Woolrich et al., 2009) for data processing: the FSLReorient2STD tool was used for reorientation, the BET tool for skull removal, and the FLIRT tool for registering the structural MRI to the MNI152 standard brain template [181*217*181]. After registration, we normalized the intensities of all voxels and then clipped the intensities, including outliers, to the range [-1, 2.5], following the method in Qiu et al. (2020). It is well known that Alzheimer's disease leads to significant structural changes in hippocampus-related regions. Wen et al. (2020) chose the hippocampus as the ROI and showed that a cubic patch [50*50*50] enclosing the hippocampus region can be used for training. In our study, we manually cropped a larger [80*100*80] patch to make sure that it included the whole hippocampus (left and right).
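
The normalize-then-clip step can be sketched as follows. This is a minimal illustration, not the authors' code: it assumes the normalization is a z-score over the voxels of one scan (the text only says the intensities were "normalized"), works on a flat list rather than a 3D volume, and uses a hypothetical function name and toy intensities.

```python
import statistics

def normalize_and_clip(voxels, low=-1.0, high=2.5):
    """Z-score a flat list of voxel intensities, then clip to [low, high].

    ASSUMPTION: z-score normalization; the source does not name the method.
    """
    mean = statistics.fmean(voxels)
    std = statistics.pstdev(voxels)
    if std == 0:
        # A constant image carries no contrast; map every voxel to 0.
        return [0.0 for _ in voxels]
    return [min(max((v - mean) / std, low), high) for v in voxels]

# Toy intensities with one bright outlier (illustrative values only).
toy = [10.0, 12.0, 11.0, 13.0, 9.0, 10.0, 11.0, 12.0, 10.0, 200.0]
clipped = normalize_and_clip(toy)
```

In this toy case the outlier has a z-score of about 3 and is pulled down to the upper bound of 2.5, while the remaining voxels stay inside the range untouched.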

2.3. Morphological Metrics

The morphological metrics derived from ADNI and AIBL in this study included gray matter volume, white matter volume, and cerebrospinal fluid volume, and were extracted using CAT-12. First, the structural MRI scans were manually reoriented so that the anterior commissure was aligned with the MNI template. Second, the structural MRI data were skull-stripped and corrected for bias-field inhomogeneity. Third, the images were segmented into GM, WM, and CSF using a unified segmentation method and then normalized into MNI space with the DARTEL algorithm. Specifically, an integrated modulation step was applied to preserve volume information at each tissue voxel. Finally, the segmented scans were smoothed with an isotropic 4 mm full width at half maximum (FWHM) Gaussian kernel. The GM, WM, and CSF volumes were then extracted from the smoothed images using the Hammers atlas, which is available within CAT-12.

2.4. Re-CNN

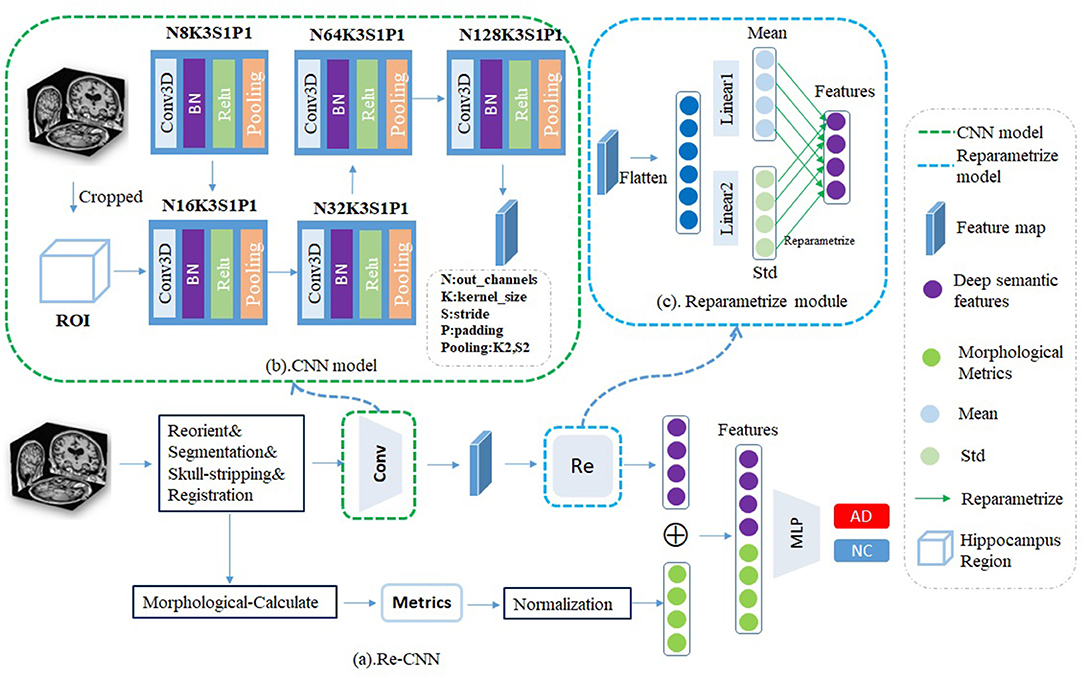

Our Re-CNN model consists of the CNN model and the reparametrized module (as shown in Figure 1). The CNN model takes the cropped hippocampus patches as input and generates the original deep semantic features; the reparametrized module constrains the similarity of these features and generates the most distinguishing features. Finally, we concatenate the deep semantic features and the morphological metrics as inputs to a multi-layer perceptron classifier for AD recognition.

Figure 1. (A) Our reparametrized convolutional neural network (Re-CNN) consists of two components: (B) the CNN model and (C) the reparametrized module.

The CNN model includes four convolution modules, each module containing Conv3D, BatchNorm3D, ReLU, and MaxPooling3D. Conv3D is used for local feature extraction, BatchNorm3D for feature normalization, and ReLU to add nonlinearity. To capture global semantic features, we use the PadMaxPool3d layer (Wen et al., 2020) to reduce the dimension of the input, so that each pixel on the feature map has a larger receptive field in the original patches. The CNN model is shown in Figure 1B. After passing k = 5 convolution modules, the output size of patches is [128,3,4,3], which means that we have obtained 128 feature maps of size [3,4,3]. The receptive field size corresponding to layer k is calculated as follows:

$$l_k = l_{k-1} + \left[(f_k - 1)\prod_{i=1}^{k-1} s_i\right] \tag{1}$$

where $l_{k-1}$ represents the size of the receptive field corresponding to layer k−1, $f_k$ represents the kernel size of layer k, and $s_i$ represents the stride of layer i. Each pixel in the feature map therefore corresponds to a 32*32*32 region of the original patch. A similar size was also used by Wen et al. (2020), who showed that a receptive field of this size can effectively distinguish AD from NC.
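
The sizes quoted above can be checked with a short calculation. The sketch below rests on two assumptions that the text does not state explicitly: each module halves the spatial size with ceil rounding (as a padded max pool with kernel 2 and stride 2 would), and only those pooling layers are counted in the receptive field recursion. The function names are illustrative.

```python
import math

def pooled_size(size, num_modules):
    """Spatial size after num_modules stride-2 poolings with ceil rounding."""
    for _ in range(num_modules):
        size = math.ceil(size / 2)
    return size

def receptive_field(kernels, strides):
    """Apply l_k = l_{k-1} + (f_k - 1) * prod(s_1..s_{k-1}), starting at l_0 = 1."""
    field, jump = 1, 1
    for kernel, stride in zip(kernels, strides):
        field += (kernel - 1) * jump
        jump *= stride  # product of strides of all layers seen so far
    return field

patch = (80, 100, 80)
output_shape = tuple(pooled_size(s, 5) for s in patch)
# ASSUMPTION: five pooling layers with kernel 2 and stride 2, convolutions ignored.
rf = receptive_field(kernels=[2] * 5, strides=[2] * 5)
```

Under these assumptions, five halvings of the [80*100*80] patch give (3, 4, 3) and a receptive field of 32, matching the figures in the text; four halvings would instead give (5, 7, 5).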

The reparametrized module was designed to obtain the most distinguishing features. First, we flatten the features (size from [128,3,4,3] to [128*3*4*3]) and send them into two different fully connected layers, fm and fs. The outputs of fm and fs can be viewed as the mean value μ and the SD σ of the distribution of the features, respectively; the features are then randomly sampled according to μ and σ. Assuming there are n distributions in the hidden space, the reparametrized process can be expressed by the following formula:

$$Z = \mu + \varepsilon \times \sigma \tag{2}$$

Here, Z represents the features after the reparametrized process, and ε represents the n values drawn from the standard normal distribution, ε ~ N(0, 1). The SD σ can be formulated as:

Here, h represents the initial features, and fs(h) represents the linear transformation by the fully connected layer fs. The mean value μ can be formulated as:

$$\mu = f_m(h) \tag{4}$$

Here, fm(h) represents the linear transformation by the fully connected layer fm. According to Equation 2, the processed features are a linear function of the standard normal variable ε ~ N(0, 1). We can achieve dimension reduction by changing the number of neurons in the fully connected layers fm and fs. Because the proposed reparametrized module predicts the distribution of the features instead of obtaining the features directly, it can increase the diversity of the features.
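
The sampling step of Equation 2 can be illustrated with a small sketch. It is not the authors' implementation: plain lists stand in for the outputs of fm and fs, the input values are hypothetical, and, as in the usual variational autoencoder parametrization, the output of fs is assumed to be a log-variance so that σ = exp(fs(h)/2). The text does not confirm that form.

```python
import math
import random

def reparametrize(mu, log_var, rng):
    """Sample Z = mu + eps * sigma with eps ~ N(0, 1), one draw per feature."""
    z = []
    for m, lv in zip(mu, log_var):
        sigma = math.exp(0.5 * lv)  # ASSUMPTION: f_s(h) is read as log-variance
        eps = rng.gauss(0.0, 1.0)
        z.append(m + eps * sigma)
    return z

rng = random.Random(0)
mu = [0.5, -1.0, 2.0]        # hypothetical outputs of f_m(h)
log_var = [0.0, -2.0, -4.0]  # hypothetical outputs of f_s(h)
z = reparametrize(mu, log_var, rng)
```

Because the randomness enters only through ε, Z stays a deterministic, differentiable function of μ and σ, which is what allows such a module to be trained end to end. The length of `mu` and `log_var` plays the role of the reduced feature dimension.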

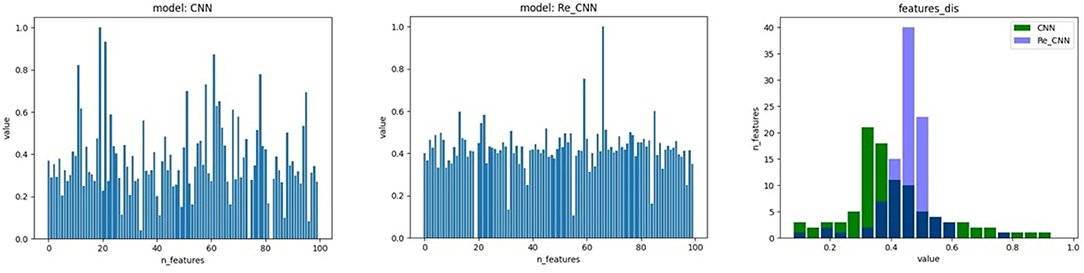

2.5. Loss Function

In this study, we proposed a loss function based on cross entropy and KL divergence. The cross entropy loss lce is used to increase the difference in the features. With cross entropy alone, the feature distribution in hidden space becomes discontinuous (as shown in Figure 2A). Therefore, the KL divergence loss lkl was used to restrict the feature distribution to the standard normal distribution. Our loss function can be expressed as:

$$l = l_{ce} + \alpha\, l_{kl} \tag{5}$$

Here, α is an adjustable hyper-parameter that balances the two loss terms. The KL divergence loss makes the feature distribution in hidden space more continuous (as shown in Figure 2B). The cross entropy loss lce can be formulated as:

$$l_{ce} = -\frac{1}{N}\sum_{i=1}^{N}\sum_{c=1}^{M} y_{ic}\log(p_{ic}) \tag{6}$$

Here, N is the number of samples, M is the number of classes, $y_{ic}$ is set to 1 if class c is the label of sample i and to 0 otherwise, and $p_{ic}$ is the predicted probability that sample i belongs to class c. The KL divergence loss lkl can be formulated as:

Here, p(x) is the standard normal distribution N(0, 1), and q(x) is the feature distribution of the hidden space. All feature distributions in hidden space are constrained toward N(0, 1) through the KL divergence loss. The KL divergence loss can be simplified in terms of μ and σ of the hidden space feature distribution:

$$l_{kl} = -\frac{1}{2}\sum_{j=1}^{J}\left(1 + \log\sigma_j^{2} - \mu_j^{2} - \sigma_j^{2}\right) \tag{8}$$

Here, μ and σ have the same meaning as in Equations 3 and 4, J denotes the feature dimension of the hidden space, and j denotes the jth feature. Our loss function ensures that the features' similarity is maximized while the differential features are retained.
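
A compact numeric sketch of the combined loss is given below. It assumes the standard closed-form KL divergence between N(μ, σ²) and N(0, 1) and a cross entropy averaged over samples. The inputs are hypothetical, the KL term is shown for a single sample, and `log_var` stands for log σ².

```python
import math

def cross_entropy(probs, labels):
    """Mean negative log-probability assigned to the true class."""
    return -sum(math.log(p[y]) for p, y in zip(probs, labels)) / len(labels)

def kl_to_standard_normal(mu, log_var):
    """Closed-form KL(N(mu, sigma^2) || N(0, 1)), summed over the J features."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv)
                      for m, lv in zip(mu, log_var))

def total_loss(probs, labels, mu, log_var, alpha):
    """l = l_ce + alpha * l_kl, with alpha weighting the KL term."""
    return cross_entropy(probs, labels) + alpha * kl_to_standard_normal(mu, log_var)

# Hypothetical two-sample, two-class predictions and one hidden feature.
probs = [[0.9, 0.1], [0.2, 0.8]]
labels = [0, 1]
loss = total_loss(probs, labels, mu=[1.0], log_var=[0.0], alpha=10.0)
```

The KL term is zero exactly when μ = 0 and σ = 1, so a larger α pulls every hidden feature more strongly toward the standard normal distribution.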

Figure 2. The feature distribution of hidden space. (A) The feature distribution of conventional CNN. (B) The feature distribution of Re-CNN. (C) The feature distribution histogram of the two models.

2.6. Experiments

A total of 415 NC samples and 351 AD samples were selected from the ADNI dataset for the experiments; we used 80% of the samples for model training and 20% for model validation. We fixed the random seed to make sure that the same samples were used in the training and validation of all models, and we changed the seed value to implement random cross-validation. To address the imbalance between the AD and NC groups in the training set, we adopted an oversampling method to give more weight to the AD group. AIBL was used directly for testing as external multi-site data.
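
The seeded split and oversampling can be sketched as follows. The text does not say which oversampling method was used, so this sketch assumes random duplication of minority-class training samples until the classes are balanced. The function name and label strings are illustrative.

```python
import random

def split_and_oversample(labels, train_fraction=0.8, seed=0):
    """Return (train_idx, val_idx) for a seeded 80/20 split.

    ASSUMPTION: the training minority class is oversampled by random duplication.
    """
    rng = random.Random(seed)
    indices = list(range(len(labels)))
    rng.shuffle(indices)
    cut = int(train_fraction * len(indices))
    train, val = indices[:cut], indices[cut:]

    # Group training indices by class and pad each class up to the largest one.
    by_class = {}
    for i in train:
        by_class.setdefault(labels[i], []).append(i)
    target = max(len(members) for members in by_class.values())
    balanced = []
    for members in by_class.values():
        extra = [rng.choice(members) for _ in range(target - len(members))]
        balanced.extend(members + extra)
    rng.shuffle(balanced)
    return balanced, val

labels = ["NC"] * 415 + ["AD"] * 351
train_idx, val_idx = split_and_oversample(labels, seed=0)
```

Calling the function again with the same seed reproduces the same split for every model, and changing the seed yields a new random split, which is how the random cross-validation described above could be run.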

Our Re-CNN was implemented in Python with the PyTorch package on a computer with a single GPU (NVIDIA RTX 3090, 24 GB). We trained Re-CNN from scratch with randomly initialized weights, using the Adam optimizer with a learning rate of 0.0005 and a mini-batch size of 32. The Re-CNN was trained for 120 epochs, which took around 7 min (i.e., 3.54 s per epoch). The initial learning rate was set to 0, and a learning rate warm-up was used in the first two epochs, during which the rate increased according to the amount of training data processed. After the second epoch, the learning rate remained at 0.0005. We then adjusted the learning rate according to the number of epochs (the learning rate was reduced by a factor of 5 every 40 epochs) to achieve better convergence.
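
One way to read this schedule is sketched below as a plain function. The linear shape of the warm-up and the exact decay boundaries (epochs 40 and 80, counted from 0) are assumptions, and `steps_per_epoch` is a hypothetical parameter for the number of mini-batches per epoch.

```python
def learning_rate(epoch, step, steps_per_epoch, base_lr=0.0005,
                  warmup_epochs=2, decay_every=40, decay_factor=5.0):
    """Learning rate at a given (epoch, step), both counted from 0."""
    progress = epoch + step / steps_per_epoch
    if progress < warmup_epochs:
        # ASSUMPTION: linear warm-up from 0 to base_lr over the first two epochs.
        return base_lr * progress / warmup_epochs
    # Step decay: divide by decay_factor once per completed block of decay_every epochs.
    return base_lr / (decay_factor ** (epoch // decay_every))

# Rates at the start of selected epochs, with a hypothetical 20 steps per epoch.
schedule = {e: learning_rate(e, 0, 20) for e in (0, 1, 2, 39, 40, 80, 119)}
```

Under these assumptions the rate climbs from 0 to 0.0005 during the first two epochs, stays there until epoch 39, and then drops to 0.0001 and 0.00002 for the second and third blocks of 40 epochs.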

2.7. Performance Metrics

The AD recognition performance was evaluated by four metrics: accuracy (ACC), sensitivity (SEN), specificity (SPE), and area under the receiver operating characteristic curve (AUC). The first three metrics are defined as $ACC = \frac{TP + TN}{TP + TN + FP + FN}$, $SEN = \frac{TP}{TP + FN}$, and $SPE = \frac{TN}{TN + FP}$, where TP, TN, FP, and FN denote the true positive, true negative, false positive, and false negative values, respectively.
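
These metrics can be computed as in the sketch below. The confusion counts and scores are made-up illustrative values, not results from the study, and the AUC is computed with the pairwise-ranking definition, which is one common equivalent of the area under the ROC curve.

```python
def confusion_metrics(tp, tn, fp, fn):
    """Return (ACC, SEN, SPE) from confusion-matrix counts."""
    acc = (tp + tn) / (tp + tn + fp + fn)
    sen = tp / (tp + fn)
    spe = tn / (tn + fp)
    return acc, sen, spe

def auc(scores, labels):
    """Probability that a random positive outranks a random negative (ties count half)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Illustrative inputs only: AD is the positive class (label 1).
acc, sen, spe = confusion_metrics(tp=8, tn=9, fp=1, fn=2)
toy_auc = auc(scores=[0.9, 0.8, 0.3, 0.2], labels=[1, 0, 1, 0])
```

For these toy inputs the sketch gives ACC = 0.85, SEN = 0.8, SPE = 0.9, and AUC = 0.75.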

3. Results

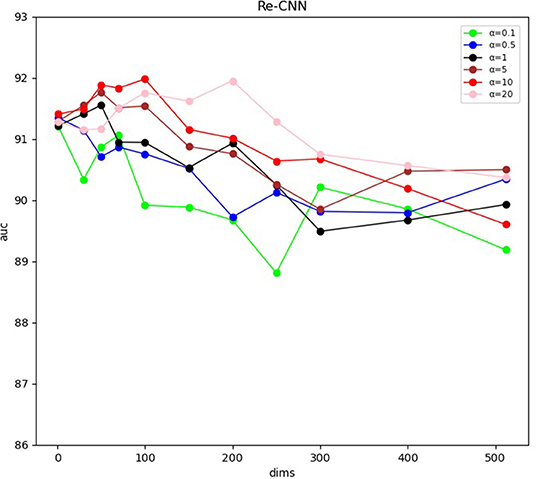

Our Re-CNN model can differentiate AD from NC by applying morphological metrics and the most distinguishing deep semantic features. The experimental results are shown in Figure 3 and Table 2. Table 2 shows the AUC of the model at different semantic feature dimensions and values of the hyperparameter α; all results were validated five times to reduce randomness, and the mean and SD of the AUC are reported. Figure 3 visualizes the AUC at different semantic feature dimensions and values of α. Our model achieved the optimal AUC when α was 10 and the feature dimension was 100.

Figure 3. Area under the receiver operating characteristic curve at different semantic features dimension and hyperparameter α.

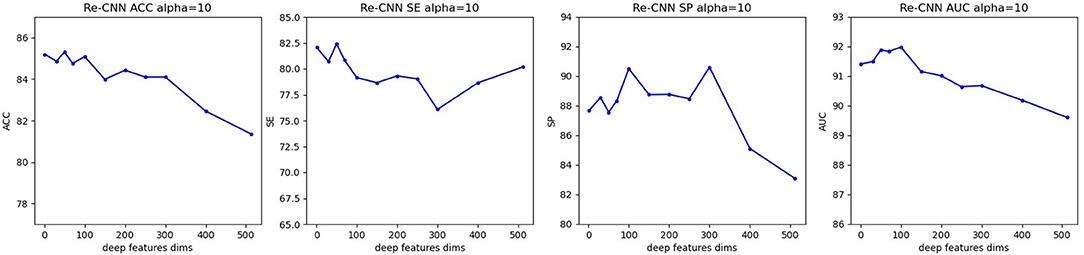

With the hyperparameter α equal to 10, the precision, sensitivity, specificity, and AUC of our model at different semantic feature dimensions are shown in Figure 4.

Figure 4. Precision, sensitivity, specificity, and AUC of our model (when α equals 10, semantic features dimension increases from 0 to 512).

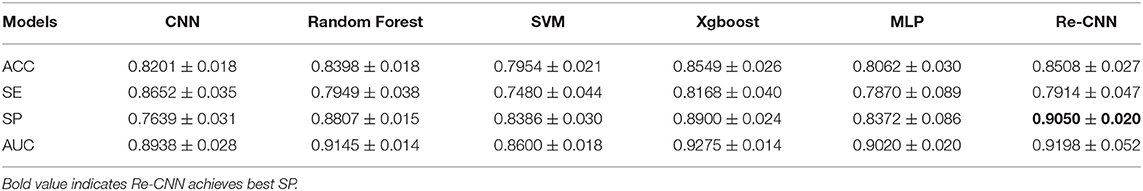

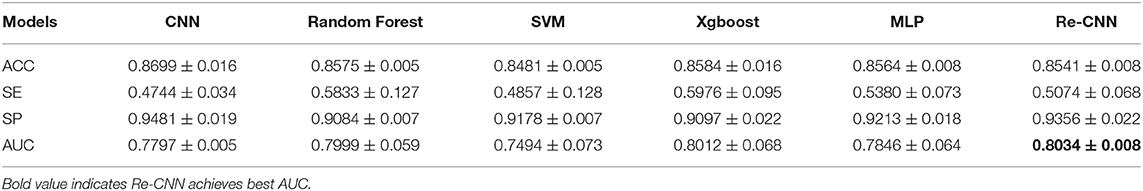

We compared our model with conventional CNN, MLP, SVM, random forest, and Xgboost; descriptive information about these five models is provided in the Supplementary Materials. All the models used the same training and validation data as our Re-CNN, as described in Section 2.6. All the results in Tables 3, 4 were validated five times (random cross-validation based on different seeds), and the mean and SD of ACC, SP, SE, and AUC of all the models are shown in Tables 3, 4.

Table 2. Area under the receiver operating characteristic curve (AUC) of our Re-CNN model at different semantic features dimension and hyperparameter α.

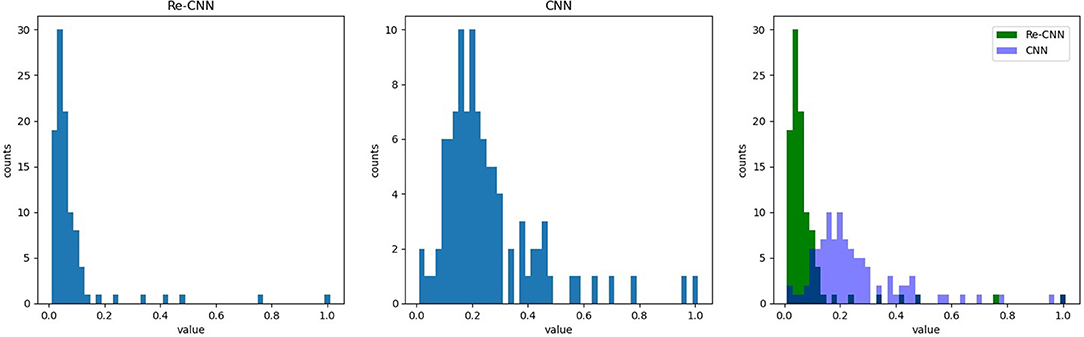

In addition, we compared the weights of the MLP neurons corresponding to deep semantic features in the conventional CNN and in our Re-CNN. The results showed that the conventional CNN had many more large neuron weights than our Re-CNN, as shown in Figure 5. The left figure shows the first 100 neuron weights corresponding to conventional CNN. The middle figure shows the weights for Re-CNN when α equals 1 and the deep semantic feature dimension equals 100. The right figure shows the neuron weights histogram of the two models.

Figure 5. The neuron weights of the first layer of MLP. Neuron weights corresponding to the semantic features of Re-CNN (α equals 1, semantic features dimension equals 100) are shown in the left figure. The first 100 neuron weights from CNN are shown in the middle figure. The right figure shows the neuron weights histogram of the two models.

4. Discussion

To improve the accuracy of discriminating AD from NC by structural MRI, we built an end-to-end model Re-CNN that integrates morphological metrics and deep semantic features from structural MRI for AD recognition. To address the problem of redundancy and correlation of the deep semantic features, we designed a reparametrized module to impose similarity constraints on features and retain the most distinguishing features.

We constructed a new loss function by integrating the cross entropy and KL divergence losses. The weight coefficient α of the KL divergence is an adjustable hyper-parameter that balances the two losses. When α was set to small values, i.e., under a weak similarity constraint, a large number of redundant features were extracted by the CNN model, and the recognition performance of our model could not be improved further [the AUC is about 0.90, as shown in Figure 3 (α = 0.1)]. As α increased, the redundant features were filtered out and the most distinguishing features were extracted by the model. It should be noted that the performance of our Re-CNN does not improve consistently as α increases, as shown in Figure 3 (α = 5, 10, 20). When α was very large, the deep semantic features were over-constrained: most of the deep semantic feature distributions were restricted to the standard normal distribution, and the distinguishing ability of the deep semantic features was gradually lost. When α was set to 10, our Re-CNN model achieved the best performance (AUC = 0.9198 ± 0.020).

In our study, the dimension of the deep semantic features also affected the performance of our Re-CNN model. When the number of deep semantic features was set to 0 or 1, no deep semantic features were adopted and the Re-CNN model degenerated to an MLP (the AUC is about 0.90, as shown in Table 2, mf+0,1). As the number of deep semantic features increased, recognition performance declined when α was set to 0.1 or 0.5 (as shown by the blue and green lines in Figure 3), since small α values cannot constrain a large number of deep semantic features. However, the performance increased when α was set to 5, 10, or 20 (as shown by the brown, red, and pink lines in Figure 3), since an appropriate number of deep semantic features were constrained and the most distinguishing features were extracted. When the number of deep semantic features was set to 100 and α was set to 10, our Re-CNN model achieved the best result.

In addition, the MLP module responds to different features with different weights. Many neuron weights in the Re-CNN almost converge to 0, while the neuron weights in the conventional CNN are more scattered (as shown in Figure 5). The reason is that highly discriminative features correspond to larger weights, whereas the weights corresponding to redundant features tend to 0. Redundant features play little role in the recognition task, so the weights of the neurons connected to them converge to 0. The conventional CNN did not impose similarity constraints on the features, so a large number of redundant features were fed into the classifier, resulting in low recognition accuracy.

Most deep learning-based AD recognition models have achieved relatively high recognition accuracy on structural MRI. It is difficult to compare the performance of various models because different models used different data sets. On the same structural MRI data set, we compared the recognition performance of our Re-CNN model, which imposes similarity constraints on features and retains the most distinguishing features, with that of a conventional CNN. For the ADNI random cross-validation (as shown in Table 3), the ACC, SP, and AUC of our Re-CNN model (with α = 10 and a deep semantic feature dimension of 100) were higher than those of the conventional CNN model. For the AIBL multi-site testing (as shown in Table 4), the SE and AUC of our Re-CNN model were superior to those of the conventional CNN model. The results showed that deep semantic features have limitations in improving AD recognition performance, but deep semantic features constrained with the reparametrized module can improve recognition performance.

We compared the Re-CNN model with SVM, random forest, MLP, and Xgboost on morphological metrics extracted from the same structural MRI data set (as shown in Tables 3, 4). Compared to SVM, our Re-CNN had better ACC, SE, SP, and AUC on both ADNI and AIBL. These results showed the effectiveness of our Re-CNN. Re-CNN uses the same classifier structure as the MLP but combines deep semantic features with morphological metrics; relative to the MLP, it achieved better ACC, SP, SE, and AUC on ADNI and better SP and AUC on AIBL. The results demonstrated that recognition performance can be improved by applying constrained deep semantic features with the same classifier (MLP). Compared with some stronger classifiers, such as random forest and Xgboost, our model adopted a relatively weak classifier (MLP) and reached comparable results (the best SP on ADNI and the best AUC on AIBL), which suggests that the features extracted with Re-CNN are effective.

5. Conclusion

We proposed an end-to-end neural network model Re-CNN, applying morphological metrics and deep semantic features from structural MRI to distinguish AD from NC. The reparametrized module imposed similarity constraints on deep semantic features and retained the most distinguishing features. Our model worked with relatively high recognition and generalization performance and was superior to the conventional CNN model.

Data Availability Statement

The original contributions presented in the study are included in the article/Supplementary Material, further inquiries can be directed to the corresponding author. The datasets analyzed for this study can be found in the ADNI [http://adni.loni.usc.edu/] and AIBL [https://aibl.csiro.au/].

Author Contributions

ZC: methodology, architecture design, and writing the original manuscript. XM and RC: data collection and preprocessing. PF: programming. HL: conceptualization and manuscript revision. All the authors read and approved the final manuscript.

Funding

This study was supported by Beijing Natural Science Foundation no. L192044.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnagi.2022.856391/full#supplementary-material

References

Aderghal, K., Khvostikov, A., Krylov, A., Benois-Pineau, J., Afdel, K., and Catheline, G. (2018). “Classification of alzheimer disease on imaging modalities with deep cnns using cross-modal transfer learning,” in 2018 IEEE 31st International Symposium on Computer-Based Medical Systems (CBMS) (Karlstad: IEEE), 345–350.

He, K., Zhang, X., Ren, S., and Sun, J. (2016). “Deep residual learning for image recognition,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (Las Vegas, NV: IEEE), 770–778.

Huang, G., Liu, Z., Van Der Maaten, L., and Weinberger, K. Q. (2017). “Densely connected convolutional networks,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (Honolulu, HI: IEEE), 4700–4708.

Jagust, W. (2013). Vulnerable neural systems and the borderland of brain aging and neurodegeneration. Neuron 77, 219–234. doi: 10.1016/j.neuron.2013.01.002

Jing, B., Liu, B., Li, H., Lei, J., Wang, Z., Yang, Y., et al. (2018). Within-subject test-retest reliability of the atlas-based cortical volume measurement in the rat brain: a voxel-based morphometry study. J. Neurosci. Methods 307, 46–52. doi: 10.1016/j.jneumeth.2018.06.022

Koikkalainen, J., Lötjönen, J., Thurfjell, L., Rueckert, D., Waldemar, G., Soininen, H., et al. (2011). Multi-template tensor-based morphometry: application to analysis of Alzheimer's disease. Neuroimage 56, 1134–1144. doi: 10.1016/j.neuroimage.2011.03.029

Lian, C., Liu, M., Zhang, J., and Shen, D. (2018). Hierarchical fully convolutional network for joint atrophy localization and alzheimer's disease diagnosis using structural mri. IEEE Trans. Pattern. Anal. Mach. Intell. 42, 880–893. doi: 10.1109/TPAMI.2018.2889096

Long, Z., Huang, J., Li, B., Li, Z., Li, Z., Chen, H., et al. (2018). A comparative atlas-based recognition of mild cognitive impairment with voxel-based morphometry. Front. Neurosci. 12:916. doi: 10.3389/fnins.2018.00916

Ma, Z., Jing, B., Li, Y., Yan, H., Li, Z., Ma, X., et al. (2020). Identifying mild cognitive impairment with random forest by integrating multiple mri morphological metrics. J. Alzheimers Dis. 73, 991–1002. doi: 10.3233/JAD-190715

Mueller, S. G., Weiner, M. W., Thal, L. J., Petersen, R. C., Jack, C., Jagust, W., et al. (2005). The Alzheimer's disease neuroimaging initiative. Neuroimaging Clin. North Am. 15, 869. doi: 10.1016/j.nic.2005.09.008

Pan, D., Zeng, A., Jia, L., Huang, Y., Frizzell, T., and Song, X. (2020). Early detection of alzheimer's disease using magnetic resonance imaging: a novel approach combining convolutional neural networks and ensemble learning. Front. Neurosci. 14:259. doi: 10.3389/fnins.2020.00259

Park, H., Yang, J.-J., Seo, J., and Lee, J.-m. (2012). Dimensionality reduced cortical features and their use in the classification of Alzheimer's disease and mild cognitive impairment. Neurosci. Lett. 529, 123–127. doi: 10.1016/j.neulet.2012.09.011

Pennanen, C., Kivipelto, M., Tuomainen, S., Hartikainen, P., Hänninen, T., Laakso, M. P., et al. (2004). Hippocampus and entorhinal cortex in mild cognitive impairment and early ad. Neurobiol. Aging 25, 303–310. doi: 10.1016/S0197-4580(03)00084-8

Qiu, S., Joshi, P. S., Miller, M. I., Xue, C., Zhou, X., Karjadi, C., et al. (2020). Development and validation of an interpretable deep learning framework for Alzheimer's disease classification. Brain 143, 1920–1933. doi: 10.1093/brain/awaa137

Schmitter, D., Roche, A., Maréchal, B., Ribes, D., Abdulkadir, A., Bach-Cuadra, M., et al. (2015). An evaluation of volume-based morphometry for prediction of mild cognitive impairment and Alzheimer's disease. Neuroimage Clin. 7, 7–17. doi: 10.1016/j.nicl.2014.11.001

Tan, M., and Le, Q. (2019). “Efficientnet: rethinking model scaling for convolutional neural networks,” in International Conference on Machine Learning (PMLR), 6105–6114.

Wen, J., Thibeau-Sutre, E., Diaz-Melo, M., Samper-González, J., Routier, A., Bottani, S., et al. (2020). Convolutional neural networks for classification of alzheimer's disease: overview and reproducible evaluation. Med. Image Anal. 63, 101694. doi: 10.1016/j.media.2020.101694

Woolrich, M. W., Jbabdi, S., Patenaude, B., Chappell, M., Makni, S., Behrens, T., et al. (2009). Bayesian analysis of neuroimaging data in fsl. Neuroimage 45, S173–S186. doi: 10.1016/j.neuroimage.2008.10.055

Keywords: reparametrized CNN, Alzheimer's disease, structural MRI, multiple morphological metrics, deep semantic features

Citation: Chen Z, Mo X, Chen R, Feng P and Li H (2022) A Reparametrized CNN Model to Distinguish Alzheimer's Disease Applying Multiple Morphological Metrics and Deep Semantic Features From Structural MRI. Front. Aging Neurosci. 14:856391. doi: 10.3389/fnagi.2022.856391

Received: 17 January 2022; Accepted: 11 April 2022;

Published: 26 May 2022.

Edited by:

Ramon Casanova, Wake Forest School of Medicine, United States

Reviewed by:

Goo-Rak Kwon, Chosun University, South Korea

Martin Dyrba, Helmholtz Association of German Research Centers (HZ), Germany

Copyright © 2022 Chen, Mo, Chen, Feng and Li. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Haiyun Li, haiyunli@ccmu.edu.cn

Zhenpeng Chen, Xiao Mo, Rong Chen, Pujie Feng, Haiyun Li*