- ¹Department of Nuclear Medicine, Asan Medical Center, University of Ulsan College of Medicine, Songpa-gu, South Korea

- ²Department of Biomedical Engineering, Asan Medical Center, University of Ulsan College of Medicine, Songpa-gu, South Korea

- ³Department of Health Sciences and Technology, Samsung Advanced Institute for Health Sciences & Technology (SAIHST), Sungkyunkwan University, Songpa-gu, South Korea

- ⁴Department of Intelligent Precision Healthcare Convergence, Sungkyunkwan University, Suwon-si, South Korea

- ⁵Department of Convergence Medicine, Asan Medical Center, University of Ulsan College of Medicine, Songpa-gu, South Korea

Although skull-stripping and brain region segmentation are essential for precise quantitative analysis of positron emission tomography (PET) of mouse brains, unified deep learning (DL)-based solutions, particularly for spatial normalization (SN), remain a challenging problem in DL-based image processing. In this study, we propose a DL-based approach to resolve these issues. We generated both skull-stripping masks and individual brain-specific volumes-of-interest (VOIs: cortex, hippocampus, striatum, thalamus, and cerebellum) based on inverse spatial normalization (iSN) and deep convolutional neural network (deep CNN) models. We applied the proposed methods to a mouse model of Alzheimer’s disease carrying mutated amyloid precursor protein and presenilin-1. Eighteen mice underwent T2-weighted MRI and 18F-FDG PET scans twice, before and after the administration of human immunoglobulin or antibody-based treatments. For training the CNN, manually traced brain masks and iSN-based target VOIs were used as labels. We compared our CNN-based VOIs with conventional (template-based) VOIs in terms of the correlation of the standardized uptake value ratio (SUVR) obtained by both methods and two-sample t-tests of the SUVR % changes in target VOIs before and after treatment. Our deep CNN-based method successfully generated brain parenchyma masks and target VOIs, which showed no significant difference from conventional VOI methods in the SUVR correlation analysis, thus establishing a template-based VOI method that does not require SN.

Introduction

18F-fluorodeoxyglucose positron emission tomography (18F-FDG PET) is a useful technique for glucose metabolism-based functional imaging not only of human brains but also of mouse brains (Som et al., 1980; Bascunana et al., 2019).

Because individual mouse brains differ in shape and size, spatial normalization (SN) of individual brain PET and/or magnetic resonance imaging (MRI) onto a standard anatomical space is required for objective statistical evaluation (Ma et al., 2005). Moreover, common volume-of-interest (VOI) templates can then be applied directly to the spatially normalized individual brain PET and MR images without labor-intensive manual tracing, and individual brain-specific VOIs can be defined using the inverse transformation of SN.

SN can be conducted using PET alone (i.e., by normalizing individual PET images onto a ligand-specific PET template), but PET-based SN may be vulnerable to local uptake changes and disease-specific uptake patterns and may therefore be suboptimal compared with SN based on anatomical information. Accordingly, MR-based SN has been preferred for projecting individual brain PET and/or MR images into a template space (Ashburner and Friston, 1999; Gispert et al., 2003), because MRI is independent of disease-related changes in PET uptake patterns and offers greater anatomical precision.

In human brain studies, many neuroimage analysis tools, including statistical parametric mapping (SPM), the FMRIB Software Library (FSL; Woolrich et al., 2009; Jenkinson et al., 2012), and Elastix (Klein et al., 2010; Shamonin et al., 2014), have been widely used to perform SN. However, these tools have limitations in mouse brain research because human and mouse brains differ in scale, shape, and image contrast (including the distribution of gray matter).

In some human brain studies, the template used for SN was skull-stripped to avoid potential spatial misregistration due to soft tissues around the skull and brain (Acosta-Cabronero et al., 2008). Because most mouse brain templates have been based on skull-stripped images, skull-stripping has been considered a prerequisite for the SN of mouse brain MRI and/or PET images (Fein et al., 2006; Feo and Giove, 2019).

Template-based (also known as atlas-based) skull-stripping and brain VOI segmentation methods for mouse brain MRI have shown accurate segmentation performance in prior studies (Nie and Shen, 2013; Feo and Giove, 2019). In these studies, PET or MR images of individual brains were registered onto predefined average mouse brain templates using affine transformations, occasionally followed by non-linear registration for more precise alignment. The skull-stripping results of the template brain were then used as starting (or seed) points to define skull-stripping and segmentation of the individual brain. Although these methods were relatively accurate, most were applied semiautomatically, which not only reduced the reliability of SN owing to inter- and intrarater variability (Yasuno et al., 2002; Kuhn et al., 2014) but also made the process time-consuming. In addition, because SN spatially transforms images onto a template, which requires interpolation of image intensities, it can degrade image quality, that is, the integrity of the original voxel intensities.
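To illustrate how interpolation can alter the original voxel intensities, the toy sketch below (an illustration only, not part of the authors' pipeline) shifts a hypothetical 1D uptake profile by half a voxel using linear interpolation. Real SN resamples in 3D and may use other interpolation kernels; the profile values and the edge handling (replicating the last voxel) are assumptions.

```python
def linear_resample(signal, shift):
    """Resample a 1D intensity profile at positions i + shift (0 <= shift < 1)
    using linear interpolation, replicating the last voxel at the edge."""
    n = len(signal)
    out = []
    for i in range(n):
        x = i + shift
        left = int(x)                  # index of the voxel to the left of x
        right = min(left + 1, n - 1)   # neighbour to the right (edge replicated)
        w = x - left                   # fractional distance from the left voxel
        out.append((1 - w) * signal[left] + w * signal[right])
    return out

# A hypothetical profile with a single hot voxel (e.g., a small high-uptake region).
profile = [0.0, 0.0, 10.0, 0.0, 0.0]
shifted = linear_resample(profile, 0.5)
print(shifted)                     # [0.0, 5.0, 5.0, 0.0, 0.0]
print(max(profile), max(shifted))  # 10.0 5.0
```

The total intensity is preserved in this example, but the hot voxel is spread over two voxels and its peak value is halved, which is the kind of change to voxel intensities that resampling during SN can introduce.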

Recently, several deep learning (DL)-based skull-stripping methods have been studied. Hsu et al. (2020) devised a DL-based framework to automatically segment mouse brains in MR images, in which brain masks were generated by a 2D U-Net using randomly cropped MR image patches as input. To evaluate the model, the brain masks it generated were compared with masks manually traced by an anatomical expert using the Dice coefficient, Jaccard index, positive predictive value, and sensitivity; the model outperformed conventional methods (Chou et al., 2011; Oguz et al., 2014; Liu et al., 2020). Similarly, De Feo et al. (2021) proposed a multitask U-Net (MU-Net) for skull-stripping and region segmentation. They trained and validated MU-Net on 128 T2-weighted MR images from 32 mice at four different ages, using five manually traced masks (cortex, hippocampus, ventricles, striatum, and whole brain) as labels. They demonstrated that MU-Net effectively reduced the inter- and intrarater variability that occurs when skull-stripping and region segmentation are performed manually, and that it outperformed state-of-the-art multiatlas segmentation methods (Jorge Cardoso et al., 2013; Ma et al., 2014).

By contrast, DL-based SN has remained a very challenging problem among DL-based medical image preprocessing steps. Recently, an interesting DL-based method for generating a “pseudo” PET template (as preprocessing for amyloid PET SN) was developed using a convolutional autoencoder and a generative adversarial network (Kang et al., 2018). Nonetheless, this class of methods typically uses a convolutional neural network (CNN) to generate a “pseudo” linearly or non-linearly registered individual brain on the template instead of estimating the actual spatial transformation of the individual images onto the template, and thus inevitably requires additional effort for the final SN. Another class of CNN-based spatial registration methods, called spatial transformer networks (STNs; Jaderberg et al., 2015), has also been developed. However, most of these have been demonstrated only for 2D registration with linear (rigid-body or affine) transformations. Although a diffeomorphic transformer network based on continuous piecewise affine transformations was recently developed (Detlefsen et al., 2018), it has not yet been validated as an SN tool for PET or MR images. Notably, a DL-based SN method for tau PET that repeatedly estimates sets of rigid and affine transformations using a CNN has been developed (Alvén et al., 2019). Although rigid and affine transformations were implemented and evaluated in that work, non-rigid deformation was not. Moreover, this class of methods requires a complicated CNN architecture consisting of cascades of many regression and spatial transformer layers, that is, numerous CNN parameters to be estimated. Because considerable amounts of data are then required for effective training, the clinical feasibility of these methods is reduced.
Taken together, the SN problem itself has not yet been fully resolved, even in recent DL-based literature, which includes not only image segmentation (Delzescaux et al., 2010; Bai et al., 2012; Nie and Shen, 2013; Feo and Giove, 2019) but also apparently more challenging image generation methods that support SN, such as PET-based MR generation (Choi et al., 2018). Because DL-based image generation has been able to resolve such challenging problems in medical image preprocessing, we were motivated to reformulate the difficult SN problem as a more tractable image generation problem, namely VOI segmentation. To bridge SN and segmentation, we used iSN.

iSN involves generating the inverse of a deformation field and resampling a spatially normalized image back into the original space (i.e., an individual brain space; Ashburner et al., 2000). By applying iSN to VOI templates, inversely normalized VOIs (iVOIs) can be generated in an individual brain space, as in many neuroimaging studies. For example, neuroimage analysis tools such as MarsBar and the deformation toolbox of SPM perform iSN-based VOI analysis in an individual (native) brain space and have been frequently used in brain PET quantification studies (Kim et al., 2010, 2015; Cho et al., 2014). Specifically, two previous studies used computed tomography (CT)-based SN for 18F-fluoro-propyl-carbomethoxy-iodophenyl-tropane (18F-FP-CIT) PET analysis with an inverse-transformed automated anatomical labeling (AAL) VOI template (Cho et al., 2014; Kim et al., 2015). Similarly, iVOIs have been defined by iSN of Brodmann area or callosal template VOIs and used as seed points for diffusion tensor tractography (Oh et al., 2007, 2009). Whereas attenuation correction (AC) can be performed directly using CT transmission data in conventional PET/CT, AC is more difficult in PET/MR. To resolve this issue, several studies have employed template-based AC (Hofmann et al., 2009, 2011; Wollenweber et al., 2013; Sekine et al., 2016). In particular, Sekine et al. (2016) generated a single head atlas from multiple CT head images and projected the resulting atlas-based pseudo-CT into a patient-specific PET/MR space using iSN.
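A minimal 1D sketch of the iSN idea follows, under simplifying assumptions that are ours rather than SPM's: the deformation field is one-dimensional and strictly increasing, it is inverted by linear interpolation, and template VOI labels are pulled into individual space with nearest-neighbour interpolation so that label values are never blended. The helper names and the example field are hypothetical; SPM's deformation toolbox operates on 3D fields with its own algorithms.

```python
def invert_monotonic_field(field, n_out):
    """Invert a 1D, strictly increasing deformation field.

    field[j] is the (fractional) individual-space position of template voxel j.
    Returns inv, where inv[i] is the template-space position of individual
    voxel i, found by linear interpolation between neighbouring field samples.
    """
    inv = []
    for i in range(n_out):
        # Clamp positions outside the sampled range to the field's end points.
        if i <= field[0]:
            inv.append(0.0)
            continue
        if i >= field[-1]:
            inv.append(float(len(field) - 1))
            continue
        # Find the template interval [j, j + 1] whose image contains i.
        j = 0
        while field[j + 1] < i:
            j += 1
        t = (i - field[j]) / (field[j + 1] - field[j])
        inv.append(j + t)
    return inv

def pull_labels(template_labels, inv):
    """Nearest-neighbour resampling of integer VOI labels into individual space."""
    last = len(template_labels) - 1
    return [template_labels[min(max(int(p + 0.5), 0), last)] for p in inv]

field = [0, 1, 2, 4, 6, 8]         # hypothetical: template voxel j -> individual position
template_voi = [0, 1, 1, 2, 2, 0]  # hypothetical VOI labels (0 = background)
inv = invert_monotonic_field(field, 9)
print(pull_labels(template_voi, inv))  # [0, 1, 1, 2, 2, 2, 2, 0, 0]
```

In this hypothetical individual brain, the posterior part is stretched, so the label-2 VOI covers four individual voxels instead of two template voxels; only label geometry changes, and the individual image itself is never resampled.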

Inspired by these concepts, we developed a new method for generating iVOI labels in an individual brain space for training a deep CNN. Consequently, we could reduce the abovementioned complicated problem of SN for the final VOI quantification to a much simpler problem of VOI segmentation in an individual brain space, which is straightforwardly tractable for modern deep CNNs such as U-Net.

Recently, individual brain-specific VOI generation methods such as FreeSurfer (Lehmann et al., 2010), which can produce VOIs highly concordant with manually traced ground-truth VOIs, have been frequently used in neuroimaging studies. By contrast, template-based VOI approaches such as SPM perform as well as or better than individual brain space VOIs in terms of test–retest reproducibility (Palumbo et al., 2019). Considering this, our iSN-based template VOI defined in the individual brain space leverages the strengths of both approaches. Moreover, by employing iSN, we avoid deforming the PET images themselves; such deformation, whose local magnitude is described by the Jacobian determinant of the deformation field (Ashburner and Friston, 2000), changes the effective voxel size of PET images between the individual brain and template spaces.
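The Jacobian determinant mentioned above can be estimated numerically. The sketch below assumes a 2D deformation sampled on a unit-spaced grid and uses central differences; values above 1 indicate local expansion and values below 1 local contraction, that is, a change in the effective voxel size. The example maps are hypothetical.

```python
def jacobian_determinant_2d(phi_x, phi_y, i, j):
    """Jacobian determinant of a 2D deformation at interior grid point (i, j).

    phi_x[i][j] and phi_y[i][j] hold the mapped x- and y-coordinates of the
    grid point with x = j and y = i (unit spacing); derivatives use central
    differences.
    """
    dxdx = (phi_x[i][j + 1] - phi_x[i][j - 1]) / 2.0  # d(phi_x)/dx
    dxdy = (phi_x[i + 1][j] - phi_x[i - 1][j]) / 2.0  # d(phi_x)/dy
    dydx = (phi_y[i][j + 1] - phi_y[i][j - 1]) / 2.0  # d(phi_y)/dx
    dydy = (phi_y[i + 1][j] - phi_y[i - 1][j]) / 2.0  # d(phi_y)/dy
    return dxdx * dydy - dxdy * dydx

size = 5
# Hypothetical anisotropic scaling: 20% stretch in x, 10% shrink in y.
phi_x = [[1.2 * x for x in range(size)] for y in range(size)]
phi_y = [[0.9 * y for x in range(size)] for y in range(size)]
print(jacobian_determinant_2d(phi_x, phi_y, 2, 2))  # approximately 1.08
```

A determinant of about 1.08 means that each unit area around this point is mapped to roughly 8% more area, so resampling an image through this map would change its effective voxel size by the same factor.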

In this regard, we propose a unified deep CNN framework that not only performs mouse brain parenchyma segmentation (i.e., skull-stripping) but also generates target VOIs (i.e., cortex, hippocampus, striatum, thalamus, and cerebellum) in an individual brain space. This is achieved using VOI templates defined on mouse MR or PET templates, without SN of individual images onto a template, to facilitate automatic, precise 18F-FDG PET analysis with MR-based iVOIs generated by a deep CNN. Consequently, the relatively complicated generation of brain VOIs (via skull-stripping and SN) for precise PET quantification is reduced to a more tractable problem of DL-based iVOI segmentation in an individual brain space, without SN. This approach avoids the complicated preprocessing steps of skull-stripping and SN for target VOI generation, eliminating the time-consuming semiautomatic skull-stripping process and reducing inter- and intrarater variability. In addition, our DL-based method enables precise PET image analysis without the complicated network structures used for DL-based SN (Kang et al., 2018; Alvén et al., 2019).

Materials and Methods

Data

Eighteen transgenic mice of an Alzheimer’s disease (AD) model expressing mutant amyloid precursor protein (APP) and presenilin-1 (PS1) underwent brain 18F-FDG PET and T2-weighted MR (T2 MR) imaging. PET images were acquired using a nanoScan PET/MRI 1 Tesla system (nanoPM; Mediso Medical Imaging Systems, Budapest, Hungary). The mice were anesthetized with isoflurane (1.5–2%) and received an intravenous injection of 18F-FDG (0.15 mCi/0.2 cc). After T2-weighted fast spin-echo MRI was acquired on the nanoPM, static PET images were acquired for 20 min in list mode and reconstructed with the ordered subset maximum-likelihood algorithm, using the abovementioned T2-weighted MR-based AC, in the Nucline software (Reinvent Systems For Science & Discovery, Mundolsheim, France) with the following parameters: energy window, 250–750 keV; coincidence mode, 1–3; Tera-Tomo 3D full detector; regularization, normal; iterations × subsets, 8 × 6; voxel size, 0.4 mm. The T2 MR images were acquired using a Bruker 7.0T MRI Small Animal Scanner (Bruker, Massachusetts, United States) with the following parameters: repetition time, 4,500 ms; echo time, 38.52 ms; field of view (FOV), 20 mm × 20 mm; slice thickness, 0.8 mm; matrix, 256 × 256; respiration gating applied.

Preprocessing

All image preprocessing was performed using SPM12 (Wellcome Trust Centre for Neuroimaging, London, United Kingdom) implemented in MATLAB R2018a (The MathWorks Inc.) and MRIcro (Chris Rorden, Columbia, South Carolina, United States). First, the acquired T2 MR and 18F-FDG PET images were converted from DICOM to Analyze format using MRIcro. PET images were coregistered onto the MR images to account for their different FOVs and slice thicknesses. To create labels for the brain parenchyma mask model, the entire mouse brain was manually traced using in-house software (Asan Medical Center Nuclear Medicine Toolkit for Image Quantification of Excellence, ANTIQUE; Han et al., 2016). To achieve more precise SN of the PET images, the deformation fields generated by spatially normalizing the T2 MR images to the T2 MR template were applied to the corresponding PET images. In addition, to obtain the label images for the “iVOI template” (i.e., VOI template-based and iSN-based VOIs in an individual brain space) generation model, an inverse deformation field was generated and used to resample the VOI template from the template space into each individual image space. This was done with the deformation toolbox of SPM by inverting the deformation fields that map the individual brains onto the template brain. PET quantification using the VOI template in template space was used as the gold standard.

Deep Convolutional Neural Network

A CNN is a DL model that is trained while preserving spatial information, which allows efficient learning with a lighter architecture than a conventional fully connected network. For image feature extraction, the filters (also known as kernels) in the convolution layers transform the input images into filtered output images, termed feature maps. A feature map F is calculated using the following equation:

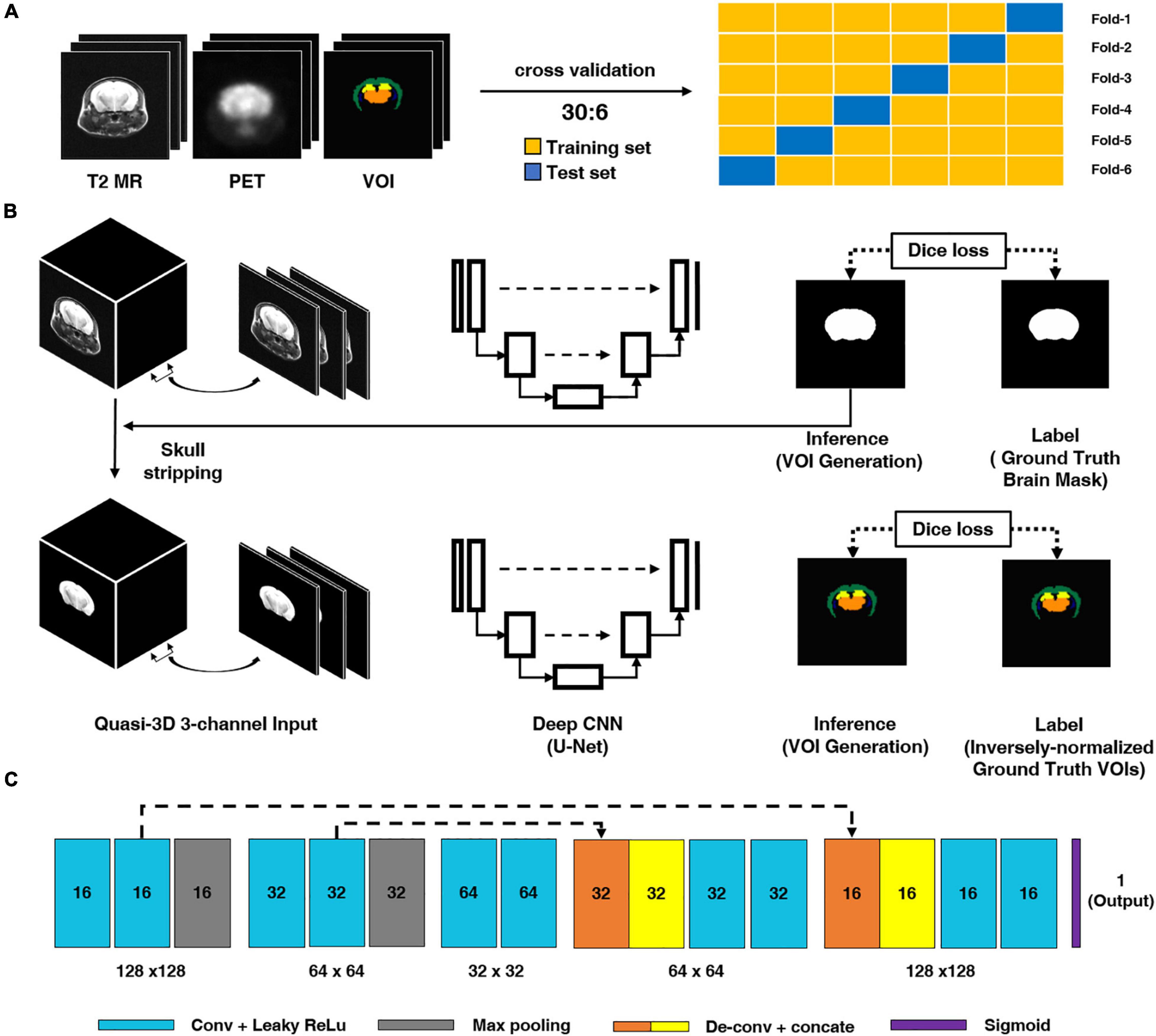

where I is the input image, k is a kernel, and m and n are the row and column indices of the resulting matrix, respectively. For each convolution layer, the shape of the output depends on the filter size, stride, and max-pooling size and on whether zero-padding is applied. In this study, we used a quasi-3D U-Net as the deep CNN architecture, with inputs comprising three consecutive T2 MR slices, to generate a brain mask for skull-stripping and an iVOI template in an individual space, as illustrated in Figure 1. Specifically, three consecutive slices were used as a multichannel input to generate each VOI slice; this 2D-like approach yields as much training data as a purely 2D approach (see Supplementary Material for a comparison of the quasi-3D and 2D approaches). We believe that this approach can additionally mitigate the limitations of small mouse datasets. Moreover, the information from consecutive slices allows the U-Net to generate VOIs that are highly continuous across slices. U-Net is an end-to-end CNN architecture consisting of a contracting path that captures image context and an expanding path for localization that leads to the output layer. The contracting path consists of 3 × 3 convolutions with leaky rectified linear unit (leaky ReLU) activations, each followed by 2 × 2 max pooling. The expanding path consists of a 2 × 2 deconvolution for upsampling, concatenation with the context captured in the contracting path to increase localization accuracy, and a 3 × 3 convolution with a leaky ReLU. To train the CNN for skull-stripping mask generation, the abovementioned consecutive axial T2 MR slices were used as multichannel input, and manually traced binary brain masks were used as labels.
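As a rough illustration of one contracting-path step, the sketch below computes a feature map with a "valid" 2D operation (no zero-padding, stride 1), applies a leaky ReLU, and then applies 2 × 2 max pooling, showing how the output shape shrinks from 6 × 6 to 4 × 4 to 2 × 2. It uses the cross-correlation form that most DL frameworks implement as "convolution"; whether the equation above flips the kernel cannot be confirmed from this text, and the leaky ReLU slope of 0.01 and the example arrays are assumptions.

```python
def conv2d_valid(image, kernel):
    """'Valid' 2D cross-correlation, stride 1, no zero-padding:
    F(m, n) = sum_j sum_k I(m + j, n + k) * K(j, k)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[m + j][n + k] * kernel[j][k]
                 for j in range(kh) for k in range(kw))
             for n in range(out_w)]
            for m in range(out_h)]

def leaky_relu(fmap, alpha=0.01):
    """Element-wise leaky ReLU; alpha is an assumed slope (not reported in the text)."""
    return [[v if v > 0 else alpha * v for v in row] for row in fmap]

def max_pool_2x2(fmap):
    """2 x 2 max pooling with stride 2 (an odd trailing row/column is dropped)."""
    return [[max(fmap[m][n], fmap[m][n + 1], fmap[m + 1][n], fmap[m + 1][n + 1])
             for n in range(0, len(fmap[0]) - 1, 2)]
            for m in range(0, len(fmap) - 1, 2)]

image = [[6 * r + c for c in range(6)] for r in range(6)]  # hypothetical 6 x 6 input
kernel = [[1] * 3 for _ in range(3)]                        # hypothetical 3 x 3 kernel
fmap = leaky_relu(conv2d_valid(image, kernel))
print(len(fmap), len(fmap[0]))  # 4 4
print(max_pool_2x2(fmap))       # [[126, 144], [234, 252]]
```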
A similar quasi-3D approach was also used to train the iVOI template generation model, with the iVOI template in individual space as the label. As U-Net inputs, the skull-stripping mask generation model and the iVOI template generation model used 70 transaxial slices of the MR image and of the corresponding skull-stripped MR image, respectively, so the input dimension was (70 × N, 128, 128, 3), that is, 70 × N slices of 128 × 128 pixels with three channels, where N is the number of mice in the training set.
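The three-slice multichannel input can be assembled as sketched below. The channel order (previous, current, next) and the replication of the edge slice at the first and last positions are our assumptions, since the text does not state how boundary slices were handled; each sample would be paired with the label of its centre slice.

```python
def make_quasi3d_inputs(volume):
    """Stack each axial slice with its two neighbours as three channels.

    volume: list of 2D slices (each a list of rows). Returns one (H, W, 3)
    sample per slice with channels (previous, current, next). At the first and
    last slice, the missing neighbour is replaced by the edge slice itself
    (an assumed boundary rule).
    """
    n = len(volume)
    samples = []
    for z in range(n):
        prev_s = volume[max(z - 1, 0)]
        cur_s = volume[z]
        next_s = volume[min(z + 1, n - 1)]
        sample = [[[prev_s[r][c], cur_s[r][c], next_s[r][c]]
                   for c in range(len(cur_s[0]))]
                  for r in range(len(cur_s))]
        samples.append(sample)
    return samples

volume = [[[z, z], [z, z]] for z in range(4)]  # hypothetical 4-slice, 2 x 2 volume
inputs = make_quasi3d_inputs(volume)
print(len(inputs))      # 4
print(inputs[0][0][0])  # [0, 0, 1]
print(inputs[3][1][1])  # [2, 3, 3]
```

With 70 slices per scan, concatenating the samples of all N training scans gives the (70 × N, 128, 128, 3) input array described above.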

Figure 1. Schematic diagram of the proposed deep CNN model. (A) We conducted six-fold crossvalidation for training and test set separation. (B) Brain parenchymal mask generation deep CNN. For model training, T2-weighted magnetic resonance (T2 MR) imaging was used as an input and manually traced brain mask was used as a label. (B) Inversely normalized volumes-of-interest (iVOIs) in individual brain space generation through deep CNN. For training the deep CNN model, skull-stripped T2 MR was used as the model input and iVOI template was used as the VOIs in individual space (the label for the model). (C) Deep CNN (i.e., U-Net) structure used in this study. The light blue box represents a 3 × 3 convolution and a leaky ReLU followed by max pooling, expressed in gray boxes. The orange and yellow boxes denote the 2 × 2 deconvolution and concatenation operations to increase the accuracy of localization. The dash arrow represents the copying skip connections. deep CNN, deep convolutional neural network.

To compensate for the small amount of training data, data augmentation was performed using shift, rotation, and shear transformations. We used a Dice loss function and the adaptive moment estimation (Adam) optimizer to fit the deep CNN parameters. Training was performed with an initial learning rate of 1e-5 and 25 batches. Our deep CNN model was implemented in Python using Keras (version 2.2.4) with a TensorFlow (version 1.12.0) backend, running on an NVIDIA GeForce GTX 1080 GPU and an Intel® Xeon® E5-2640 CPU. To regularize the masks generated by the deep CNN, we applied a few simple postprocessing steps, unlike other studies that used more complicated postprocessing methods such as graph cuts (Jimenez-Carretero et al., 2019; Kim et al., 2020; Hu et al., 2021). First, the mask predicted by the DL model was binarized, and erosion and dilation were performed sequentially (i.e., a morphological “opening”) to remove noisy false positives (FPs). Subsequently, 3D connected component analysis-based island removal (“kill islands”) and hole filling (“fill holes”) were performed to reduce FPs and false negatives (FNs), respectively.
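The postprocessing chain and the Dice loss can be sketched as follows. This is a simplified 2D analogue of the 3D steps described above, written under assumptions of our own: a 0.5 binarization threshold, a 3 × 3 structuring element for the opening, 4-connectivity for the connected-component steps, and a common "soft Dice" form of the loss with a small smoothing term. It is not the authors' Keras code, and the helper names are hypothetical.

```python
from collections import deque

def soft_dice_loss(pred, label, eps=1e-6):
    """1 - soft Dice between flattened probabilities and a binary label.
    eps (an assumed smoothing term) avoids division by zero for empty masks."""
    inter = sum(p * g for p, g in zip(pred, label))
    return 1.0 - (2.0 * inter + eps) / (sum(pred) + sum(label) + eps)

NEIGHBOURS_4 = ((-1, 0), (1, 0), (0, -1), (0, 1))

def _morph(mask, reduce_fn):
    """3 x 3 erosion (reduce_fn=all) or dilation (reduce_fn=any); pixels outside
    the image are treated as 0."""
    h, w = len(mask), len(mask[0])
    def window(r, c):
        return [mask[r + dr][c + dc] if 0 <= r + dr < h and 0 <= c + dc < w else 0
                for dr in (-1, 0, 1) for dc in (-1, 0, 1)]
    return [[1 if reduce_fn(window(r, c)) else 0 for c in range(w)] for r in range(h)]

def _components(mask, value):
    """4-connected components of pixels equal to `value`, as lists of (r, c)."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    comps = []
    for r0 in range(h):
        for c0 in range(w):
            if mask[r0][c0] != value or seen[r0][c0]:
                continue
            seen[r0][c0] = True
            comp, queue = [], deque([(r0, c0)])
            while queue:
                r, c = queue.popleft()
                comp.append((r, c))
                for dr, dc in NEIGHBOURS_4:
                    rr, cc = r + dr, c + dc
                    if (0 <= rr < h and 0 <= cc < w and not seen[rr][cc]
                            and mask[rr][cc] == value):
                        seen[rr][cc] = True
                        queue.append((rr, cc))
            comps.append(comp)
    return comps

def postprocess(prob, threshold=0.5):
    h, w = len(prob), len(prob[0])
    mask = [[1 if v >= threshold else 0 for v in row] for row in prob]  # binarize
    mask = _morph(_morph(mask, all), any)                                 # opening
    out = [[0] * w for _ in range(h)]
    foreground = _components(mask, 1)
    if foreground:                                   # kill islands: keep the
        for r, c in max(foreground, key=len):        # largest component only
            out[r][c] = 1
    for comp in _components(out, 0):                 # fill holes: background regions
        if not any(r in (0, h - 1) or c in (0, w - 1) for r, c in comp):
            for r, c in comp:                        # not touching the border
                out[r][c] = 1
    return out
```

Applied to a probability map containing a large region with a one-pixel hole, a separate small blob, and an isolated noisy pixel, this chain keeps only the large region and fills its hole.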

Performance Evaluation

To reduce overfitting and improve the generalization ability of the deep CNN, we used six-fold crossvalidation to separate the training and test sets. Of the 36 samples obtained from 2 scans (before and after treatment) in each of the 18 mice, 30 samples (15 mice × 2 scans) were used as the training set and the remaining 6 samples (3 mice × 2 scans) as the test set in each fold, so that slices from the same mouse were never split between the training and test sets. To assess the concordance between predicted and label masks, we measured the Dice similarity coefficient (DSC), average symmetric surface distance (ASSD), sensitivity (SEN), specificity (SPE), and positive predictive value (PPV). The DSC, the most widely used indicator for evaluating image segmentation, directly compares two segmentations and quantifies their overlap. The ASSD is the average of the distances from each point on the boundary of the predicted region to the nearest point on the boundary of the ground truth, and vice versa. SEN is the proportion of true positives (TP) among TP and false negatives (FN); SPE is the proportion of true negatives (TN) among TN and false positives (FP); and PPV is the proportion of TP among TP and FP. The formulas for the five metrics are as follows, where P is the mask predicted by our network, G is the label used for the deep CNN, ∂P and ∂G are their boundaries, and d(x, ∂S) is the distance from point x to the nearest point on ∂S:

$$\mathrm{DSC} = \frac{2\,|P \cap G|}{|P| + |G|}$$

$$\mathrm{ASSD} = \frac{1}{|\partial P| + |\partial G|}\left(\sum_{p \in \partial P} d(p, \partial G) + \sum_{g \in \partial G} d(g, \partial P)\right)$$

$$\mathrm{SEN} = \frac{TP}{TP + FN}, \qquad \mathrm{SPE} = \frac{TN}{TN + FP}, \qquad \mathrm{PPV} = \frac{TP}{TP + FP}$$
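The segmentation metrics can be computed as in the sketch below. For brevity it works on 2D masks, defines a boundary pixel as a mask pixel with at least one 4-neighbour outside the mask, and computes ASSD by brute force with isotropic pixel spacing; these choices are assumptions, since the exact surface definition used in the study is not stated.

```python
import math

def confusion_counts(pred, label):
    """TP, FP, TN, FN for two flattened binary masks of equal length."""
    pairs = list(zip(pred, label))
    tp = sum(1 for p, g in pairs if p and g)
    fp = sum(1 for p, g in pairs if p and not g)
    tn = sum(1 for p, g in pairs if not p and not g)
    fn = sum(1 for p, g in pairs if not p and g)
    return tp, fp, tn, fn

def overlap_metrics(pred, label):
    tp, fp, tn, fn = confusion_counts(pred, label)
    return {
        "DSC": 2 * tp / (2 * tp + fp + fn),  # equals 2|P ∩ G| / (|P| + |G|)
        "SEN": tp / (tp + fn),
        "SPE": tn / (tn + fp),
        "PPV": tp / (tp + fp),
    }

def boundary(points):
    """Mask pixels with at least one 4-neighbour outside the mask."""
    s = set(points)
    return [(r, c) for r, c in s
            if any((r + dr, c + dc) not in s
                   for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)))]

def assd(pred_points, label_points, spacing=1.0):
    """Average symmetric surface distance between two 2D masks given as lists
    of (row, col) pixel coordinates, using brute-force Euclidean distances."""
    bp, bg = boundary(pred_points), boundary(label_points)
    def nearest(a, others):
        return min(math.hypot(a[0] - b[0], a[1] - b[1]) for b in others)
    total = sum(nearest(p, bg) for p in bp) + sum(nearest(g, bp) for g in bg)
    return spacing * total / (len(bp) + len(bg))

print(overlap_metrics([1, 1, 1, 0, 0, 0], [1, 1, 0, 1, 0, 0]))
square = [(r, c) for r in range(3) for c in range(3)]
shifted = [(r, c + 1) for r, c in square]
print(assd(square, shifted))  # 0.5
```

Because both boundaries contribute to the average, the ASSD stays symmetric: swapping the predicted and label masks gives the same value.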

The standardized uptake value (SUV), the simplest and most representative measure of activity in PET images, is widely used for PET (semi)quantitative analysis. SUV is calculated as follows:

$$\mathrm{SUV} = \frac{C_{\mathrm{PET}}(t)}{\mathrm{ID}/\mathrm{BW}}$$

where $C_{\mathrm{PET}}(t)$ is the radioactivity concentration measured in an image acquired at time t, ID is the injected dose at t = 0, and BW is the animal’s body weight. The ratio of the SUV of a target region to that of a reference region within the same PET image is called the standardized uptake value ratio (SUVR). In 18F-FDG PET, images are acquired after sufficient time has elapsed following tracer injection for the image to reflect glucose uptake. The SUVR is then calculated by dividing the SUV of target regions, whose glucose metabolism may change with disease, by that of a reference region whose glucose uptake is assumed to be constant regardless of disease. In this study, SUVR was evaluated in four major regions: the cortex, hippocampus, thalamus, and striatum. We chose the cerebellum as the reference region because it appears to be free of plaques in this AD mouse model.
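A small sketch of the SUV and SUVR calculations follows. It assumes that the measured activity is already decay-corrected to injection time, uses the 0.15 mCi (about 5.55 MBq) dose from the Data section, and uses a hypothetical 25 g body weight and hypothetical regional activities. Because ID and BW appear in both the target and reference SUVs, they cancel in the SUVR, which is why SUVR can equally be computed from mean counts.

```python
def suv(activity_kbq_per_ml, injected_dose_mbq, body_weight_g):
    """SUV = C_PET / (ID / BW). With kBq/mL, MBq and g, SUV has units of g/mL
    (dimensionless if tissue density is taken as 1 g/mL)."""
    dose_kbq = injected_dose_mbq * 1000.0
    return activity_kbq_per_ml / (dose_kbq / body_weight_g)

def suvr(target_suv, reference_suv):
    """Ratio of a target-region SUV to a reference-region (e.g., cerebellar) SUV."""
    return target_suv / reference_suv

dose, weight = 5.55, 25.0             # 0.15 mCi ~ 5.55 MBq; weight is hypothetical
cortex = suv(30.0, dose, weight)      # hypothetical mean cortical activity (kBq/mL)
cerebellum = suv(25.0, dose, weight)  # hypothetical mean cerebellar activity (kBq/mL)
print(round(suvr(cortex, cerebellum), 6))  # 1.2
```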

To assess our DL-based method, mean counts and SUVRs were calculated with three different VOI sets: the DL-generated mask (VOIDL), the DL label mask (i.e., inversely normalized template (ground-truth) VOIs, VOIiGT), and the template-based ground-truth VOI (VOIGT). Correlation analyses were then conducted between these methods. In addition, we compared the percentage change (% change) in SUVR from before to after treatment in the 18 mice across the three VOI sets, both qualitatively and quantitatively, using paired and unpaired two-sample t-tests.
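The % change and a paired t statistic can be computed as in the following sketch, pairing, for example, the % changes obtained for the same mice with two different VOI sets. The SUVR values are hypothetical and only the t statistic (not the p-value) is computed; for real analyses, SciPy's `scipy.stats.ttest_rel` (paired) and `scipy.stats.ttest_ind` (unpaired) return both the statistic and the p-value.

```python
import math
from statistics import mean, stdev

def percent_change(baseline, follow_up):
    """% change from baseline (BL) to follow-up (FU)."""
    return 100.0 * (follow_up - baseline) / baseline

def paired_t_statistic(a, b):
    """Paired t statistic on differences d = a - b (len(d) - 1 degrees of freedom)."""
    d = [x - y for x, y in zip(a, b)]
    return mean(d) / (stdev(d) / math.sqrt(len(d)))

# Hypothetical cortical SUVRs (BL, FU) for three mice, measured with two VOI sets.
dl = [percent_change(bl, fu) for bl, fu in [(1.10, 1.15), (1.05, 1.12), (1.20, 1.22)]]
gt = [percent_change(bl, fu) for bl, fu in [(1.08, 1.13), (1.04, 1.10), (1.18, 1.21)]]
print(paired_t_statistic(dl, gt))
```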

Results

Deep Convolutional Neural Network for Automatic Generation of Brain Mask for Skull-Stripping

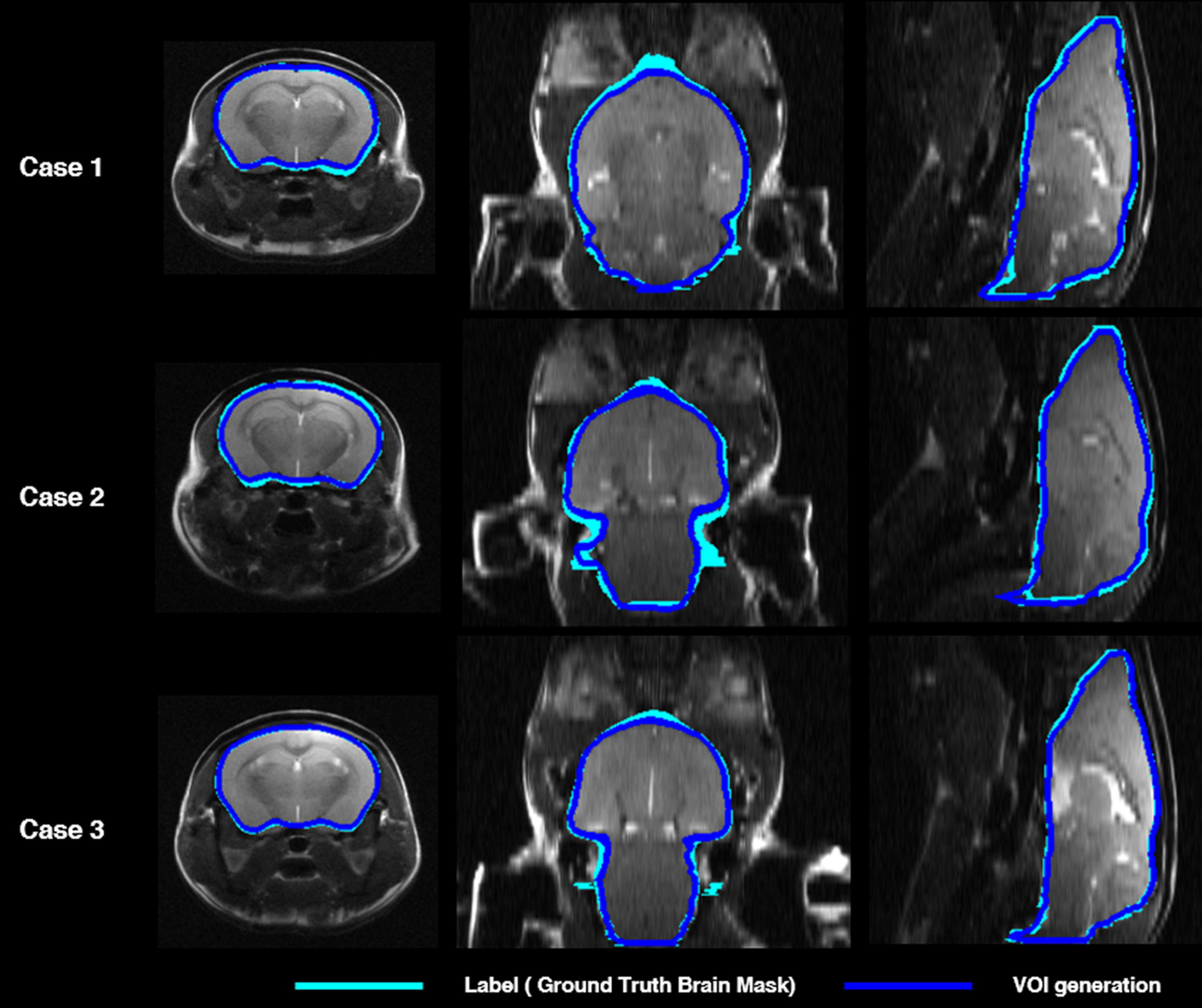

Figure 2 compares the brain skull-stripping masks generated by the proposed deep CNN (i.e., U-Net; blue contour) with the manually traced brain masks (light blue contour) in three mice. In all three mice, the two contours were visually highly consistent.

Figure 2. Comparison of mouse brain parenchyma mask contours between the manually traced brain mask (light blue) and the deep CNN-predicted brain mask (blue) in three cases. The left, middle, and right columns show the axial, coronal, and sagittal planes, respectively. deep CNN, deep convolutional neural network.

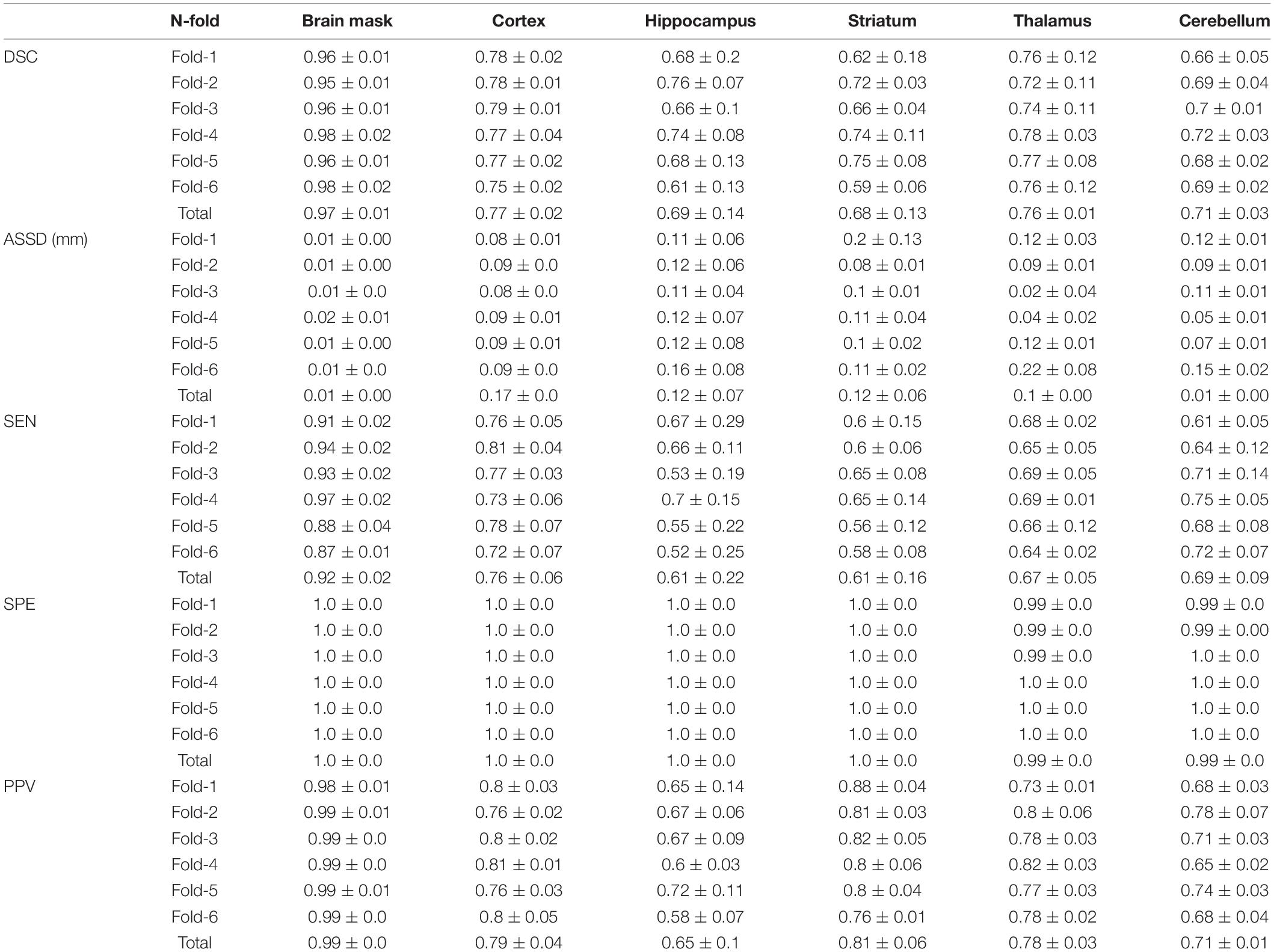

Table 1 shows the concordance between the brain masks generated by the deep CNN and the manually traced masks in terms of DSC, ASSD, SEN, SPE, and PPV. The mean DSC reached 0.97, the mean ASSD was less than 0.01 mm, and the mean SEN reached 0.92. In addition, both the mean SPE and the mean PPV were greater than 0.99. In summary, the brain masks generated by our DL-based method agreed closely with the manually traced masks across the six-fold crossvalidation.

Table 1. Assessment of our unified deep neural network: mean and standard deviation of the Dice similarity coefficient (DSC), average symmetric surface distance (ASSD, mm), sensitivity (SEN), specificity (SPE), and positive predictive value (PPV) between the DL labels and the DL-generated brain masks and inversely normalized target VOIs (cortex, hippocampus, striatum, thalamus, and cerebellum) in six-fold crossvalidation.

Deep Convolutional Neural Network for Automatic Generation of Inversely Normalized VOI Template in Individual Brain Space

We conducted qualitative visual and quantitative assessments of VOIDL and VOIiGT to evaluate the performance of the proposed deep CNN model. We also analyzed the correlations among the mean counts, and among the mean SUVRs, obtained in each VOI using VOIDL, VOIiGT, and VOIGT. Finally, the % change in SUVR between PET images before and after treatment was compared across the VOI methods using two-sample t-tests.

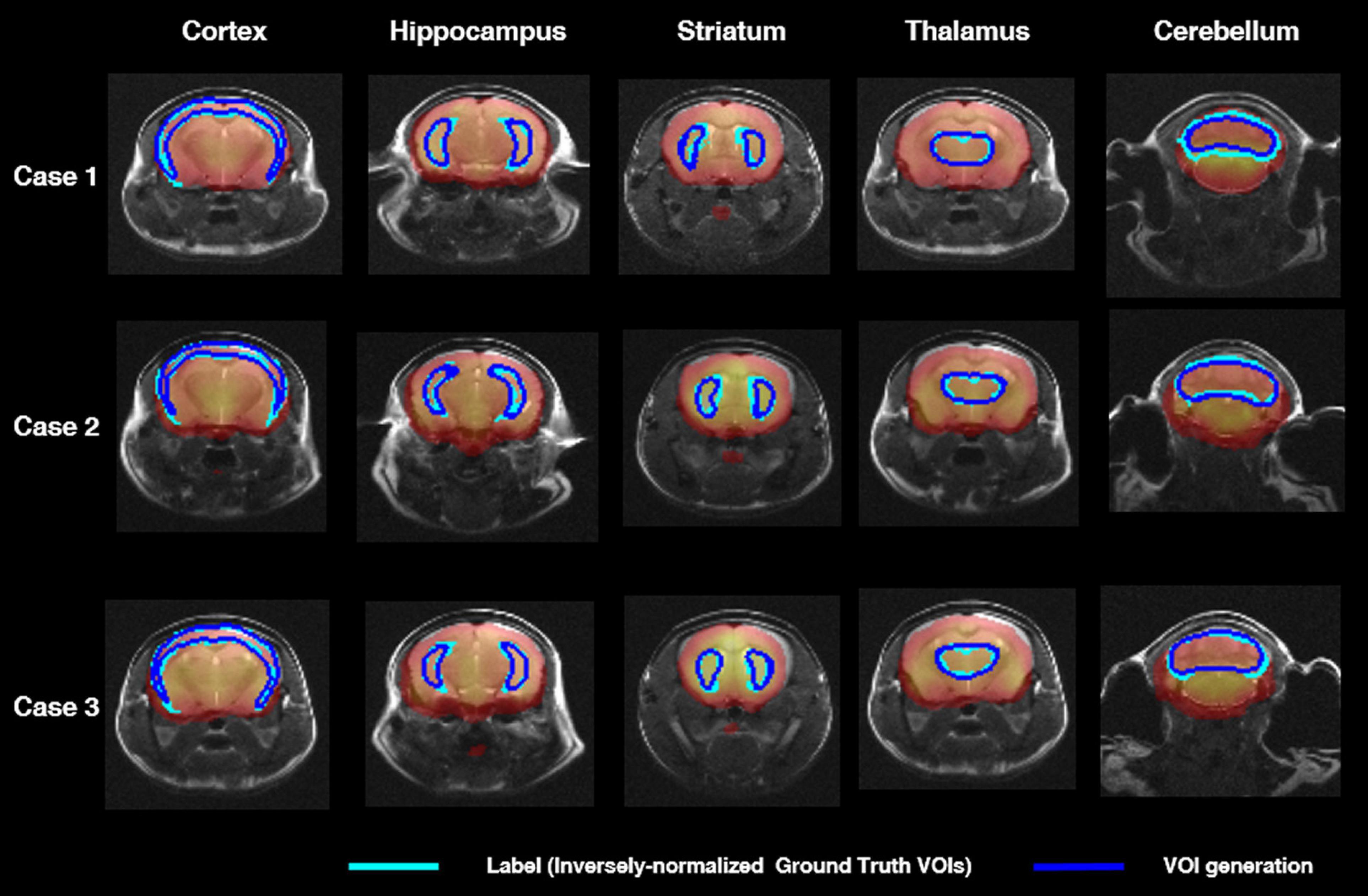

Figure 3 shows axial slices of VOIDL (blue contour) and VOIiGT (light blue contour) for the target VOIs (cortex, hippocampus, striatum, thalamus, and cerebellum). VOIDL and VOIiGT were visually consistent not only in the cortex, cerebellum, and thalamus but also in the relatively small hippocampus and striatum.

Figure 3. Segmentation comparison of target VOIs (i.e., cortex, hippocampus, striatum, thalamus, and cerebellum) in the axial plane of three mice. The light blue contour represents the deep learning label masks (iVOI templates). The blue contour represents the iVOI template in individual space generated by the proposed deep CNN. iVOI, inversely normalized VOI; deep CNN, deep convolutional neural network.

In Table 1, the agreement between VOIDL and VOIiGT for the target VOIs (cortex, hippocampus, striatum, thalamus, and cerebellum) over the 36 samples (18 mice × 2 scans) was evaluated using the mean DSC, ASSD, SEN, SPE, and PPV. For the five target VOIs, the DSCs were 0.77, 0.69, 0.68, 0.76, and 0.69; the ASSDs were 0.17, 0.12, 0.12, 0.1, and 0.01 mm; the SENs were 0.76, 0.61, 0.61, 0.67, and 0.69; the SPEs were 1.0, 1.0, 1.0, 0.99, and 0.99; and the PPVs were 9, 0.65, 0.81, 0.78, and 0.71, respectively, indicating that our DL model generated the target VOIs well.

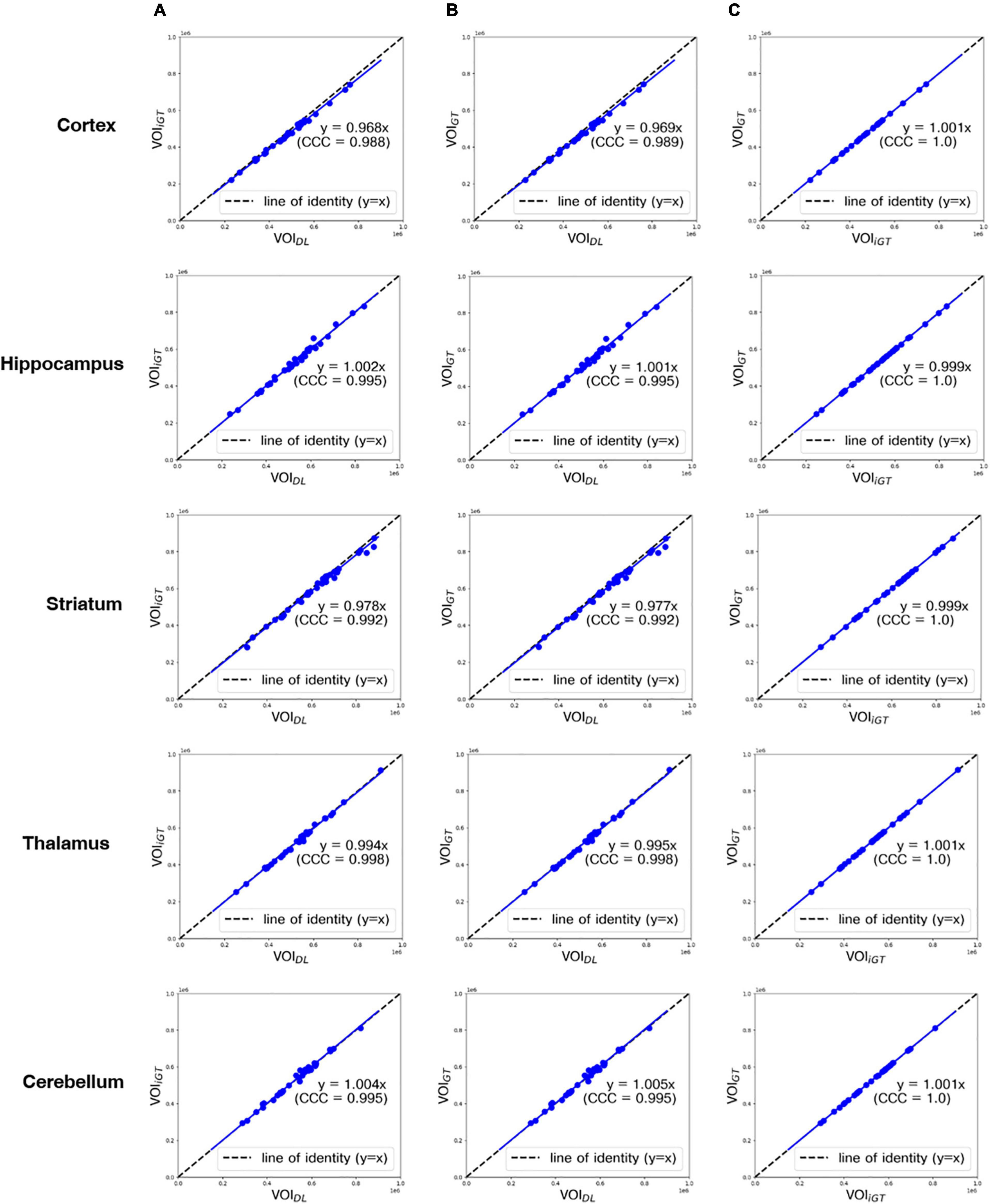

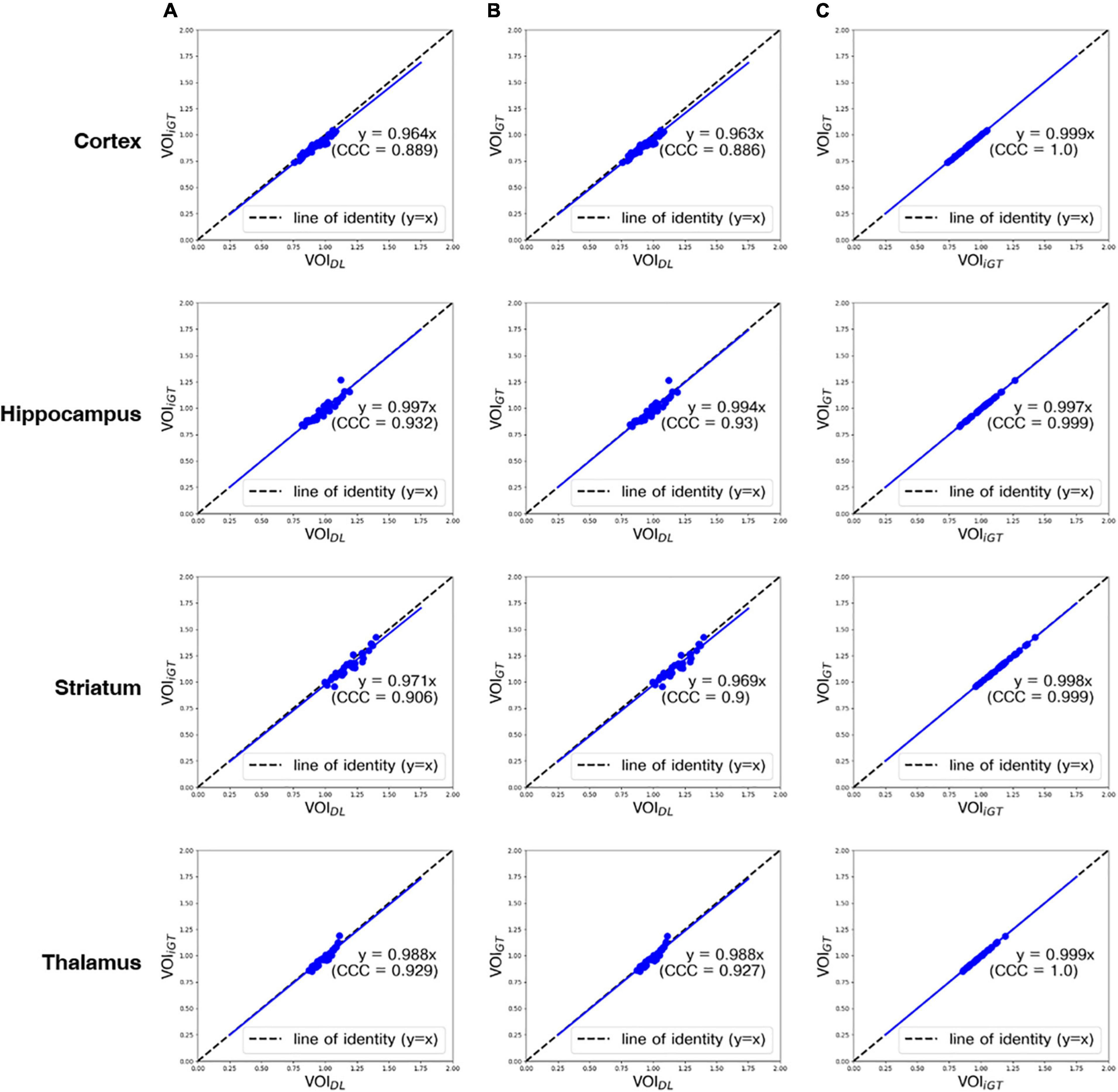

As shown in Figure 4, the mean counts obtained by VOIDL, VOIiGT, and VOIGT were significantly (p < 0.001) correlated with one another in all target VOIs. As shown in Figure 4B, the mean count obtained by VOIGT in template space tended to be slightly underestimated compared with that obtained by VOIDL in individual space. We also analyzed the correlations among the mean SUVRs obtained using VOIDL, VOIiGT, and VOIGT in all target VOIs (Figure 5). For SUVR analysis, the cerebellum was used as the reference region; that is, the SUV (or mean count) of each target region was normalized by that of the cerebellum. In all target VOIs, the mean SUVRs obtained with VOIDL, VOIiGT, and VOIGT were significantly (p < 0.001) correlated with one another. As shown in Figure 5B, the mean SUVRs obtained by VOIGT in template space and by VOIDL in individual space were almost identical in the hippocampus and thalamus, whereas in the cortex and striatum, the mean SUVR obtained by VOIGT was underestimated compared with that obtained by VOIDL.

Figure 4. Correlation analysis between the mean counts (blue dots) obtained using VOIDL, VOIiGT, and VOIGT in all target VOIs; the first to fifth rows show the cortex, hippocampus, striatum, thalamus, and cerebellum, respectively. CCC represents a measure of reliability based on covariation and correspondence. The line of identity (dashed line) is shown as a reference. (A) Correlation analysis between the mean counts obtained using VOIDL and VOIiGT. (B) Correlation analysis between the mean counts obtained using VOIDL and VOIGT. (C) Correlation analysis between the mean counts obtained using VOIiGT and VOIGT. VOIDL, deep learning-generated VOI; VOIiGT, deep learning (inversely normalized ground-truth) label VOI; VOIGT, template-based ground-truth VOI; CCC, concordance correlation coefficient.

Figure 5. Correlation analysis between the mean SUVRs (blue dots) obtained using VOIDL, VOIiGT, and VOIGT in all target VOIs; the first to fourth rows show the cortex, hippocampus, striatum, and thalamus, respectively. The concordance correlation coefficient represents a measure of reliability based on covariation and correspondence. The line of identity (dashed line) is shown as a reference. (A) Correlation analysis between the mean SUVRs obtained using VOIDL and VOIiGT. (B) Correlation analysis between the mean SUVRs obtained using VOIDL and VOIGT. (C) Correlation analysis between the mean SUVRs obtained using VOIiGT and VOIGT. VOIDL, deep learning-generated VOI; VOIiGT, deep learning label VOI; VOIGT, template-based VOI; CCC, concordance correlation coefficient.
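Lin's concordance correlation coefficient (CCC) reported in Figures 4 and 5 can be computed as sketched below, using population (1/n) moments as in Lin's original definition. Unlike Pearson's r, the CCC is penalized by a systematic offset between methods, such as the slight underestimation by VOIGT noted above; the data in the example are hypothetical.

```python
from statistics import mean

def lins_ccc(x, y):
    """Lin's CCC: rho_c = 2 s_xy / (s_x^2 + s_y^2 + (mean_x - mean_y)^2),
    computed with population (1/n) variances and covariance."""
    n = len(x)
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    sx2 = sum((a - mx) ** 2 for a in x) / n
    sy2 = sum((b - my) ** 2 for b in y) / n
    return 2 * sxy / (sx2 + sy2 + (mx - my) ** 2)

x = [1.0, 2.0, 3.0, 4.0]
print(lins_ccc(x, x))                     # 1.0
print(lins_ccc(x, [v + 1.0 for v in x]))  # ≈ 0.714, although Pearson r is 1.0
```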

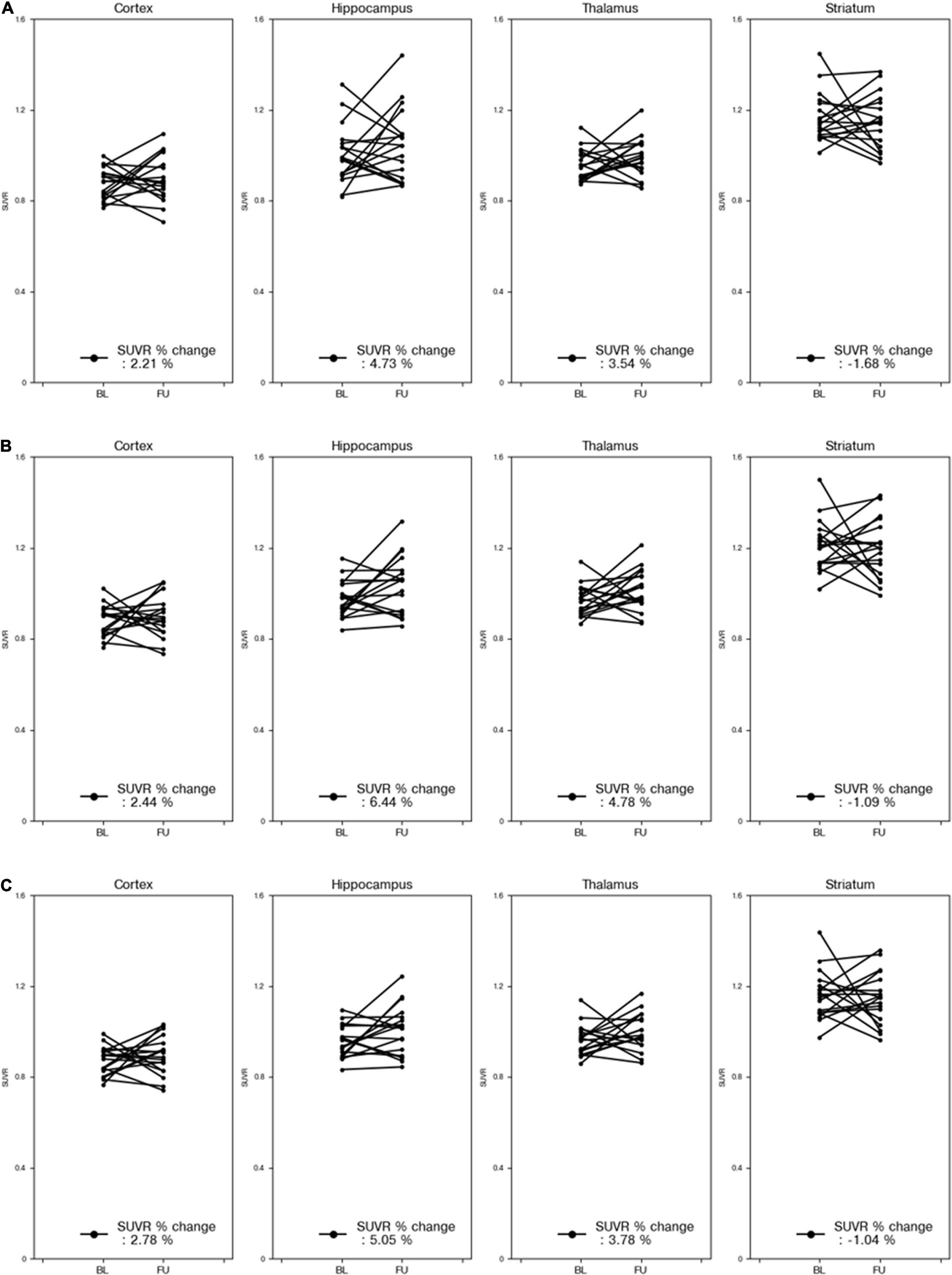

Figure 6 compares the % changes in SUVR in each VOI before and after treatment obtained using VOIDL, VOIiGT, and VOIGT for the 18 mice. The mean SUVR % changes did not differ significantly among the three types of masks. Moreover, with all three masks, the mean SUVRs increased to a similar degree in the cortex, hippocampus, and thalamus and decreased to a similar degree in the striatum. Neither paired nor unpaired two-sample t-tests showed significant differences in the SUVR % changes of any target VOI among the three methods (the DL-based method and the SN/iSN-based conventional methods) (p > 0.3 and p > 0.8, respectively).

Figure 6. Percent changes in SUVR in each region of 18 mice before and after treatment. The reference region for SUVR was the cerebellum. (A) Percent change in SUVR obtained using VOIDL. (B) Percent change in SUVR obtained using VOIiGT. (C) Percent change in SUVR obtained using VOIGT. Results for the cortex, hippocampus, striatum, and thalamus are presented sequentially from the left column. VOIDL, deep learning-generated VOI; VOIiGT, deep learning label VOI; VOIGT, template-based VOI; BL, baseline (before treatment); FU, follow-up (after treatment).

Discussion and Conclusion

Spatial normalization of individual brain PET images onto a standard anatomical space is required for objective statistical evaluation (Feo and Giove, 2019). However, several preprocessing steps are semiautomatic, which makes them time-consuming and subject to inter- and intrarater variability. DL-based methods for precise SN have also been devised and have recently attracted considerable attention. However, despite their complicated network structures, these methods still have difficulty performing complete SN, from affine to non-linear transformation (Alvén et al., 2019), and they require either an additional SN process to generate a PET pseudotemplate (Kang et al., 2018) or an additional method for generating MR images from PET images (Choi et al., 2018). In addition, many studies have considered the use of iSN for PET quantitative analysis (Kim et al., 2010, 2015; Cho et al., 2014). Inspired by these studies, we generated an iVOI template to reformulate the complicated and difficult-to-implement SN problem into the relatively simpler problem of VOI segmentation for PET quantification. Overall, we have devised a unified CNN framework that generates brain masks for skull-stripping and iVOI templates in individual space, enabling precise PET quantification without additional skull-stripping or SN.
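The iSN idea of bringing template VOIs into individual space can be illustrated with an affine-only sketch. This is an assumption-laden simplification: the study's SN also includes non-linear deformation, and the 4x4 matrix `indiv_to_template` (mapping individual voxel indices to template voxel indices) and the function name are hypothetical. SciPy's `ndimage.affine_transform` samples its input at `matrix @ output_coord + offset`, so passing the individual-to-template mapping pulls each template label back onto the individual grid, which amounts to warping the template VOIs by the inverse of SN.

```python
import numpy as np
from scipy import ndimage


def inverse_normalize_labels(template_labels, indiv_to_template, individual_shape):
    """Bring a template VOI label map into individual space (affine-only sketch).

    indiv_to_template: hypothetical 4x4 homogeneous matrix mapping individual
    voxel indices to template voxel indices (the estimated SN transform).
    Each individual voxel takes the label found at its SN-mapped template
    location, i.e. the template labels are warped by the inverse of SN.
    """
    A = indiv_to_template[:3, :3]
    t = indiv_to_template[:3, 3]
    # order=0 (nearest neighbour) keeps integer label values intact;
    # voxels mapping outside the template receive label 0 (background)
    return ndimage.affine_transform(
        template_labels, A, offset=t, output_shape=individual_shape,
        order=0, mode="constant", cval=0,
    )


# Toy example: a cubic "VOI" (label 3) and an SN that is a 2-voxel shift
template = np.zeros((12, 12, 12), dtype=np.int16)
template[4:8, 4:8, 4:8] = 3
sn = np.eye(4)
sn[0, 3] = 2.0  # individual index i maps to template index i + 2 along axis 0
ivoi = inverse_normalize_labels(template, sn, (12, 12, 12))
```

Nearest-neighbour interpolation is the natural choice for label maps, because higher-order interpolation would create non-integer values that belong to no VOI.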

With regard to DL for brain parenchyma segmentation, as shown in Figure 2, the manually traced brain mask contours (light blue) and the DL-based contours (blue) were almost identical on visual assessment. Quantitatively, the two masks also agreed well: DSC reached 0.97, ASSD was less than 0.01 mm, and SEN and PPV were higher than 0.92 and 0.99, respectively (Table 1). With regard to DL for iVOI template segmentation, the mean counts and SUVRs obtained using VOIiGT and VOIDL were significantly correlated (p < 0.001) in all target VOIs (Figures 3A, 4A). In addition, the mean counts and SUVRs obtained with the template-based VOI (i.e., VOIGT) and with the individual space VOIs (i.e., VOIDL and VOIiGT) were significantly correlated (p < 0.001) (Figures 3B,C, 4B,C). However, the mean counts and SUVRs obtained using VOIGT tended to be slightly lower than those obtained using VOIiGT and VOIDL in the hippocampus and striatum. We believe that this difference arises because our method avoids the image intensity degradation that may occur during preprocessing steps such as SN. Nonetheless, the SUVR % changes in the target VOIs showed the same tendency with all three methods. In summary, the proposed DL-based method, which uses an iVOI template in individual space and does not require SN, is a new method of PET quantitative assessment that avoids the intra- and interrater variability that can arise when drawing brain masks or during preprocessing. In addition, we achieved segmentation performance comparable to that of previous mouse brain studies (Hsu et al., 2020; De Feo et al., 2021) using lighter neural network structures (i.e., CNNs with fewer training parameters owing to fewer channels and simple convolutional blocks).
Furthermore, our DL-based iVOI method reformulates the PET SN problem, which remains challenging even with complicated networks that require estimating a considerable number of parameters (Choi et al., 2018; Kang et al., 2018; Alvén et al., 2019), as an easy-to-handle image generation task for target VOIs using inversely normalized template VOIs.
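The mask-agreement metrics reported in Table 1 (DSC, SEN, PPV, ASSD) can be computed from two binary masks as in the sketch below. It assumes the standard overlap definitions, defines surface voxels as mask voxels removed by a one-voxel binary erosion, and takes voxel spacing in mm as a parameter; the function names are hypothetical and this is not necessarily the exact implementation used in the study.

```python
import numpy as np
from scipy import ndimage


def overlap_metrics(pred, truth):
    """Dice similarity coefficient, sensitivity, and positive predictive value."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = np.logical_and(pred, truth).sum()        # voxels in both masks
    dsc = 2.0 * tp / (pred.sum() + truth.sum())
    sen = tp / truth.sum()                        # fraction of the manual mask recovered
    ppv = tp / pred.sum()                         # fraction of the DL mask that is correct
    return dsc, sen, ppv


def _surface(mask):
    """Boundary voxels: inside the mask but removed by a one-voxel erosion."""
    return mask & ~ndimage.binary_erosion(mask)


def assd(pred, truth, spacing=(1.0, 1.0, 1.0)):
    """Average symmetric surface distance, in the units of `spacing` (e.g., mm)."""
    sp = _surface(np.asarray(pred, dtype=bool))
    st = _surface(np.asarray(truth, dtype=bool))
    # distance_transform_edt gives each nonzero voxel its distance to the nearest
    # zero voxel, so inverting a surface yields distance-to-that-surface maps
    dist_to_truth = ndimage.distance_transform_edt(~st, sampling=spacing)
    dist_to_pred = ndimage.distance_transform_edt(~sp, sampling=spacing)
    d = np.concatenate([dist_to_truth[sp], dist_to_pred[st]])
    return d.mean()


# Toy masks: a 4x4x4 cube versus the same cube extended by one slice
cube = np.zeros((8, 8, 8), dtype=bool)
cube[2:6, 2:6, 2:6] = True
longer = np.zeros_like(cube)
longer[2:6, 2:6, 2:7] = True
print(overlap_metrics(cube, longer), assd(cube, longer))
```

Overlap metrics summarize volume agreement, whereas ASSD captures boundary agreement; reporting both, as in Table 1, guards against masks that overlap well but have irregular contours.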

This study has several limitations. First, our dataset was small, consisting of only 18 mice. Although we enlarged the effective training data by using consecutive axial T2-weighted MR slices as multichannel input to the deep CNN and by applying data augmentation, this was not sufficient to fully compensate for the small sample size. Second, because this study used only in-house mice of a single disease model (i.e., Alzheimer's disease), the trained model may be specialized to our data. Therefore, as in the study by De Feo et al. (2021), the model should be validated with more data and various types of mice in future work. Third, our method depends on MR images, because the iVOI templates used as labels for deep CNN training were generated using parameters obtained from SN of MR images onto an MR template. However, corresponding MR images are not always available in PET image analyses, so further studies should consider generating iVOI templates from PET images alone. Finally, because we used a PET/MRI system, registration of PET to MR images was inherently more straightforward than in existing methods, which is a strength of this study. Nonetheless, we believe that our approach may also be readily applied to ordinary PET or PET/CT scanners when additional MR images are available. In this regard, a more unified deep CNN that takes PET images as input and uses MR-defined iVOIs warrants further study.
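The limitation above mentions using consecutive axial slices as multichannel CNN input plus data augmentation. A minimal sketch of that input construction follows, under stated assumptions: the axial axis is first, `k` neighbouring slices are taken on each side (the value used in the study is not given here, so `k=1` is hypothetical), edge slices are padded by clamping, and a random left-right flip stands in for whatever augmentation the authors actually applied.

```python
import numpy as np


def stack_adjacent_slices(volume, k=1):
    """Build multichannel 2D inputs from consecutive axial slices.

    volume: (Z, H, W) array with the axial axis first (assumption).
    Returns a (Z, 2k+1, H, W) array: sample z holds slices z-k..z+k as channels,
    with out-of-range indices clamped to the first/last slice.
    """
    z = volume.shape[0]
    offsets = np.arange(-k, k + 1)
    idx = np.clip(np.arange(z)[:, None] + offsets[None, :], 0, z - 1)  # (Z, 2k+1)
    return volume[idx]


def augment_flip(x, y, rng):
    """Hypothetical augmentation: random left-right flip of image and label together."""
    if rng.random() < 0.5:
        x, y = x[..., ::-1], y[..., ::-1]
    return x, y


# Toy T2-weighted volume with 5 axial slices of 2x2 voxels
vol = np.arange(5 * 2 * 2).reshape(5, 2, 2)
inputs = stack_adjacent_slices(vol, k=1)  # shape (5, 3, 2, 2)
```

Each 2D training sample then carries some through-plane context at little extra cost, which is one way to make more of a small dataset, although it does not add new subjects.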

In conclusion, we proposed a unified deep CNN-based model that can generate mouse brain parenchyma masks and iVOI templates in individual brain space without additional skull-stripping or SN. In qualitative and quantitative evaluations, the regional glucose metabolism quantified by our model (i.e., mean SUV and SUVR of each VOI) closely followed the line of identity against the ground truth (conventional template-based) methods (Figures 4, 5, respectively), and the patterns of treatment-induced change in mean SUVR were concordant between the ground truth and DL methods. These results show that the proposed approach constitutes a new method for PET image analysis that reformulates the SN problem, which has been difficult to implement despite recent advances in DL techniques, as a segmentation problem using iVOI template generation in individual brain space.

Data Availability Statement

The datasets presented in this article are not readily available because this study cohort is not open for public use. Requests to access the datasets should be directed to JO, jungsu.oh@gmail.com.

Ethics Statement

The animal study was reviewed and approved by the Institutional Animal Care and Use Committee (IACUC), Asan Institute for Life Sciences, Asan Medical Center.

Author Contributions

SS, S-JK, and JO contributed to study conceptualization, data acquisition, data analysis, data interpretation, writing, and editing of the manuscript. JC, S-YK, and SO contributed to data acquisition and data interpretation. SJ and JK contributed to study conceptualization, data interpretation, and editing of the manuscript. All authors contributed to the article and approved the submitted version.

Funding

This work was supported by the National Research Foundation of Korea (NRF) grant funded by the Korean Government (Ministry of Science and ICT; nos. NRF-2020M2D9A1094074 and 2021R1A2C3009056) and by a grant from the Korea Health Technology R&D Project through the Korea Health Industry Development Institute (KHIDI), funded by the Ministry of Health & Welfare, Republic of Korea (HI18C2383).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

We thank Neuracle Science Co., Ltd. for allowing us to use the collaborative research data of the Center for Bio-imaging of New Drug Development (C-BiND), Asan Institute for Life Sciences, to demonstrate the strength of our DL-based VOI generation method in an Alzheimer's disease mouse model in this original article; our thanks go to Jae Keun Lee, Bong Cheol Kim, and colleagues.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnagi.2022.807903/full#supplementary-material

References

Acosta-Cabronero, J., Williams, G. B., Pereira, J. M., Pengas, G., and Nestor, P. J. (2008). The impact of skull-stripping and radio-frequency bias correction on grey-matter segmentation for voxel-based morphometry. Neuroimage 39, 1654–1665. doi: 10.1016/j.neuroimage.2007.10.051

Alvén, J., Heurling, K., Smith, R., Strandberg, O., Schöll, M., Hansson, O., et al. (2019). “A Deep Learning Approach to MR-less Spatial Normalization for Tau PET Images,” in 22nd International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI 2019), (Shenzhen: MICCAI).

Ashburner, J., Andersson, J. L., and Friston, K. J. (2000). Image registration using a symmetric prior–in three dimensions. Hum. Brain Mapp. 9, 212–225. doi: 10.1002/(SICI)1097-0193(200004)9:4<212::AID-HBM3>3.0.CO;2-#

Ashburner, J., and Friston, K. J. (1999). Nonlinear spatial normalization using basis functions. Hum. Brain Mapp. 7, 254–266.

Ashburner, J., and Friston, K. J. (2000). Voxel-based morphometry–the methods. Neuroimage 11, 805–821. doi: 10.1006/nimg.2000.0582

Bai, J., Trinh, T. L., Chuang, K. H., and Qiu, A. (2012). Atlas-based automatic mouse brain image segmentation revisited: model complexity vs. image registration. Magn. Reson. Imaging 30, 789–798. doi: 10.1016/j.mri.2012.02.010

Bascunana, P., Thackeray, J. T., Bankstahl, M., Bengel, F. M., and Bankstahl, J. P. (2019). Anesthesia and Preconditioning Induced Changes in Mouse Brain [(18)F] FDG Uptake and Kinetics. Mol. Imaging Biol. 21, 1089–1096. doi: 10.1007/s11307-019-01314-9

Cho, H., Kim, J. S., Choi, J. Y., Ryu, Y. H., and Lyoo, C. H. (2014). A computed tomography-based spatial normalization for the analysis of [18F] fluorodeoxyglucose positron emission tomography of the brain. Korean J. Radiol. 15, 862–870. doi: 10.3348/kjr.2014.15.6.862

Choi, H., Lee, D. S., and Alzheimer’s Disease Neuroimaging Initiative (2018). Generation of Structural MR Images from Amyloid PET: application to MR-Less Quantification. J. Nucl. Med. 59, 1111–1117. doi: 10.2967/jnumed.117.199414

Chou, N., Wu, J., Bai Bingren, J., Qiu, A., and Chuang, K. H. (2011). Robust automatic rodent brain extraction using 3-D pulse-coupled neural networks (PCNN). IEEE Trans. Image Process. 20, 2554–2564. doi: 10.1109/TIP.2011.2126587

De Feo, R., Shatillo, A., Sierra, A., Valverde, J. M., Grohn, O., Giove, F., et al. (2021). Automated joint skull-stripping and segmentation with Multi-Task U-Net in large mouse brain MRI databases. Neuroimage 229:117734. doi: 10.1016/j.neuroimage.2021.117734

Delzescaux, T., Lebenberg, J., Raguet, H., Hantraye, P., Souedet, N., and Gregoire, M. C. (2010). Segmentation of small animal PET/CT mouse brain scans using an MRI-based 3D digital atlas. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2010, 3097–3100. doi: 10.1109/IEMBS.2010.5626106

Detlefsen, N. S., Freifeld, O., and Hauberg, S. (2018). “Deep Diffeomorphic Transformer Networks,” in 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, (Salt Lake City: IEEE), 4403–4412. doi: 10.1016/j.neuroimage.2020.117161

Fein, G., Landman, B., Tran, H., Barakos, J., Moon, K., Di Sclafani, V., et al. (2006). Statistical parametric mapping of brain morphology: sensitivity is dramatically increased by using brain-extracted images as inputs. Neuroimage 30, 1187–1195. doi: 10.1016/j.neuroimage.2005.10.054

Feo, R., and Giove, F. (2019). Towards an efficient segmentation of small rodents’ brain: a short critical review. J. Neurosci. Methods 323, 82–89. doi: 10.1016/j.jneumeth.2019.05.003

Gispert, J. D., Pascau, J., Reig, S., Martínez-Lázaro, R., Molina, V., García-Barreno, P., et al. (2003). Influence of the normalization template on the outcome of statistical parametric mapping of PET scans. Neuroimage 19, 601–612. doi: 10.1016/s1053-8119(03)00072-7

Han, S., Oh, M., Oh, J. S., Lee, S. J., Oh, S. J., Chung, S. J., et al. (2016). Subregional Pattern of Striatal Dopamine Transporter Loss on 18F FP-CIT Positron Emission Tomography in Patients With Pure Akinesia With Gait Freezing. JAMA Neurol. 73, 1477–1484. doi: 10.1001/jamaneurol.2016.3243

Hofmann, M., Bezrukov, I., Mantlik, F., Aschoff, P., Steinke, F., Beyer, T., et al. (2011). MRI-based attenuation correction for whole-body PET/MRI: quantitative evaluation of segmentation- and atlas-based methods. J. Nucl. Med. 52, 1392–1399. doi: 10.2967/jnumed.110.078949

Hofmann, M., Pichler, B., Schölkopf, B., and Beyer, T. (2009). Towards quantitative PET/MRI: a review of MR-based attenuation correction techniques. Eur. J. Nucl. Med. Mol. Imaging 36, 93–104. doi: 10.1007/s00259-008-1007-7

Hsu, L. M., Wang, S., Ranadive, P., Ban, W., Chao, T. H., Song, S., et al. (2020). Automatic Skull Stripping of Rat and Mouse Brain MRI Data Using U-Net. Front. Neurosci. 14:568614. doi: 10.3389/fnins.2020.568614

Hu, Y. C., Mageras, G., and Grossberg, M. (2021). Multi-class medical image segmentation using one-vs-rest graph cuts and majority voting. J. Med. Imaging 8:034003. doi: 10.1117/1.Jmi.8.3.034003

Jaderberg, M., Simonyan, K., Zisserman, A., and Kavukcuoglu, K. (2015). “Spatial transformer networks,” in Proceedings of the 28th International Conference on Neural Information Processing Systems - Volume 2, (Montreal: MIT Press).

Jenkinson, M., Beckmann, C. F., Behrens, T. E., Woolrich, M. W., and Smith, S. M. (2012). FSL. Neuroimage 62, 782–790. doi: 10.1016/j.neuroimage.2011.09.015

Jimenez-Carretero, D., Bermejo-Peláez, D., Nardelli, P., Fraga, P., Fraile, E., San José Estépar, R., et al. (2019). A graph-cut approach for pulmonary artery-vein segmentation in noncontrast CT images. Med. Image Anal. 52, 144–159. doi: 10.1016/j.media.2018.11.011

Jorge Cardoso, M., Leung, K., Modat, M., Keihaninejad, S., Cash, D., Barnes, J., et al. (2013). STEPS: similarity and Truth Estimation for Propagated Segmentations and its application to hippocampal segmentation and brain parcellation. Med. Image Anal. 17, 671–684. doi: 10.1016/j.media.2013.02.006

Kang, S. K., Seo, S., Shin, S. A., Byun, M. S., Lee, D. Y., Kim, Y. K., et al. (2018). Adaptive template generation for amyloid PET using a deep learning approach. Hum. Brain Mapp. 39, 3769–3778. doi: 10.1002/hbm.24210

Kim, H., Jung, J., Kim, J., Cho, B., Kwak, J., Jang, J. Y., et al. (2020). Abdominal multi-organ auto-segmentation using 3D-patch-based deep convolutional neural network. Sci. Rep. 10:6204. doi: 10.1038/s41598-020-63285-0

Kim, J. S., Cho, H., Choi, J. Y., Lee, S. H., Ryu, Y. H., Lyoo, C. H., et al. (2015). Feasibility of Computed Tomography-Guided Methods for Spatial Normalization of Dopamine Transporter Positron Emission Tomography Image. PLoS One 10:e0132585. doi: 10.1371/journal.pone.0132585

Kim, J. S., Lee, J. S., Park, M. H., Kim, K. M., Oh, S. H., Cheon, G. J., et al. (2010). Feasibility of template-guided attenuation correction in cat brain PET imaging. Mol. Imaging Biol. 12, 250–258. doi: 10.1007/s11307-009-0277-1

Klein, S., Staring, M., Murphy, K., Viergever, M. A., and Pluim, J. P. (2010). elastix: a toolbox for intensity-based medical image registration. IEEE Trans. Med. Imaging 29, 196–205. doi: 10.1109/TMI.2009.2035616

Kuhn, F. P., Warnock, G. I., Burger, C., Ledermann, K., Martin-Soelch, C., and Buck, A. (2014). Comparison of PET template-based and MRI-based image processing in the quantitative analysis of C11-raclopride PET. EJNMMI Res. 4:7. doi: 10.1186/2191-219X-4-7

Lehmann, M., Douiri, A., Kim, L. G., Modat, M., Chan, D., Ourselin, S., et al. (2010). Atrophy patterns in Alzheimer’s disease and semantic dementia: a comparison of FreeSurfer and manual volumetric measurements. Neuroimage 49, 2264–2274. doi: 10.1016/j.neuroimage.2009.10.056

Liu, Y., Unsal, H. S., Tao, Y., and Zhang, N. (2020). Automatic Brain Extraction for Rodent MRI Images. Neuroinformatics 18, 395–406. doi: 10.1007/s12021-020-09453-z

Ma, D., Cardoso, M. J., Modat, M., Powell, N., Wells, J., Holmes, H., et al. (2014). Automatic structural parcellation of mouse brain MRI using multi-atlas label fusion. PLoS One 9:e86576. doi: 10.1371/journal.pone.0086576

Ma, Y., Hof, P. R., Grant, S. C., Blackband, S. J., Bennett, R., Slatest, L., et al. (2005). A three-dimensional digital atlas database of the adult C57BL/6J mouse brain by magnetic resonance microscopy. Neuroscience 135, 1203–1215. doi: 10.1016/j.neuroscience.2005.07.014

Nie, J., and Shen, D. (2013). Automated segmentation of mouse brain images using multi-atlas multi-ROI deformation and label fusion. Neuroinformatics 11, 35–45. doi: 10.1007/s12021-012-9163-0

Oguz, I., Zhang, H., Rumple, A., and Sonka, M. (2014). RATS: rapid Automatic Tissue Segmentation in rodent brain MRI. J. Neurosci. Methods 221, 175–182. doi: 10.1016/j.jneumeth.2013.09.021

Oh, J. S., Kubicki, M., Rosenberger, G., Bouix, S., Levitt, J. J., McCarley, R. W., et al. (2009). Thalamo-frontal white matter alterations in chronic schizophrenia: a quantitative diffusion tractography study. Hum. Brain Mapp. 30, 3812–3825. doi: 10.1002/hbm.20809

Oh, J. S., Song, I. C., Lee, J. S., Kang, H., Park, K. S., Kang, E., et al. (2007). Tractography-guided statistics (TGIS) in diffusion tensor imaging for the detection of gender difference of fiber integrity in the midsagittal and parasagittal corpora callosa. Neuroimage 36, 606–616. doi: 10.1016/j.neuroimage.2007.03.020

Palumbo, L., Bosco, P., Fantacci, M. E., Ferrari, E., Oliva, P., Spera, G., et al. (2019). Evaluation of the intra- and inter-method agreement of brain MRI segmentation software packages: a comparison between SPM12 and FreeSurfer v6.0. Phys. Med. 64, 261–272. doi: 10.1016/j.ejmp.2019.07.016

Sekine, T., Buck, A., Delso, G., Ter Voert, E. E., Huellner, M., Veit-Haibach, P., et al. (2016). Evaluation of Atlas-Based Attenuation Correction for Integrated PET/MR in Human Brain: application of a Head Atlas and Comparison to True CT-Based Attenuation Correction. J. Nucl. Med. 57, 215–220. doi: 10.2967/jnumed.115.159228

Shamonin, D., Bron, E., Lelieveldt, B., Smits, M., Klein, S., and Staring, M. (2014). Fast Parallel Image Registration on CPU and GPU for Diagnostic Classification of Alzheimer’s Disease. Front. Neuroinform. 7:50. doi: 10.3389/fninf.2013.00050

Som, P., Atkins, H. L., Bandoypadhyay, D., Fowler, J. S., MacGregor, R. R., Matsui, K., et al. (1980). A fluorinated glucose analog, 2-fluoro-2-deoxy-D-glucose (F-18): nontoxic tracer for rapid tumor detection. J. Nucl. Med. 21, 670–675.

Wollenweber, S. D., Ambwani, S., Delso, G., Lonn, A. H. R., Mullick, R., Wiesinger, F., et al. (2013). Evaluation of an Atlas-Based PET Head Attenuation Correction Using PET/CT & MR Patient Data. IEEE Trans. Nucl. Sci. 60, 3383–3390. doi: 10.1109/TNS.2013.2273417

Woolrich, M. W., Jbabdi, S., Patenaude, B., Chappell, M., Makni, S., Behrens, T., et al. (2009). Bayesian analysis of neuroimaging data in FSL. Neuroimage 45, S173–S186. doi: 10.1016/j.neuroimage.2008.10.055

Keywords: mouse brain, deep convolutional-neural-network (CNN), inverse-spatial-normalization (iSN), skull-stripping, template-based volume of interest (VOI)

Citation: Seo SY, Kim S-J, Oh JS, Chung J, Kim S-Y, Oh SJ, Joo S and Kim JS (2022) Unified Deep Learning-Based Mouse Brain MR Segmentation: Template-Based Individual Brain Positron Emission Tomography Volumes-of-Interest Generation Without Spatial Normalization in Mouse Alzheimer Model. Front. Aging Neurosci. 14:807903. doi: 10.3389/fnagi.2022.807903

Received: 02 November 2021; Accepted: 17 January 2022;

Published: 04 March 2022.

Edited by:

Woon-Man Kung, Chinese Culture University, Taiwan

Copyright © 2022 Seo, Kim, Oh, Chung, Kim, Oh, Joo and Kim. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jungsu S. Oh, jungsu_oh@amc.seoul.kr; jungsu.oh@gmail.com

†These authors have contributed equally to this work and share first authorship

Seung Yeon Seo, Soo-Jong Kim1,2,3,4†, Jungsu S. Oh, Jinwha Chung, Segyeong Joo, Jae Seung Kim