ORIGINAL RESEARCH article

Front. Aging Neurosci., 30 May 2022

Sec. Neurocognitive Aging and Behavior

Volume 14 - 2022 | https://doi.org/10.3389/fnagi.2022.773692

This article is part of the Research Topic: Insights into Mechanisms Underlying Brain Impairment in Aging, Volume II

Background: Cognitive decline remains highly underdiagnosed despite efforts to find novel cognitive biomarkers. Machine-learning (ML)-based electroencephalography (EEG) features may offer a non-invasive, low-cost approach for identifying cognitive decline. However, most studies use cumbersome multi-electrode systems. This study aims to evaluate the ability to assess cognitive states using ML-based EEG features extracted from a single-channel EEG recorded during an auditory cognitive assessment.

Methods: This study included data collected from senior participants in different cognitive states (n = 60) and healthy controls (n = 22), who performed an auditory cognitive assessment while being recorded with a single-channel EEG. Mini-Mental State Examination (MMSE) scores were used to designate groups, with cutoff scores of 24 and 27. EEG data processing included wavelet-packet decomposition and ML to extract EEG features. Data analysis included Pearson correlations and generalized linear mixed models on several EEG variables: the Delta and Theta frequency bands and three ML-based EEG features (VC9, ST4, and A0) that were previously extracted from a different dataset and shown to be associated with cognitive load.

Results: MMSE scores significantly correlated with reaction times and EEG features A0 and ST4. The features also showed significant separation between study groups: A0 separated between the MMSE < 24 and MMSE ≥ 28 groups, in addition to separating between young participants and senior groups. ST4 differentiated between the MMSE < 24 group and all other groups (MMSE 24–27, MMSE ≥ 28 and healthy young groups), showing sensitivity to subtle changes in cognitive states. EEG features Theta, Delta, A0, and VC9 showed increased activity with higher cognitive load levels, present only in the healthy young group, indicating different activity patterns between young and senior participants in different cognitive states. Consistent with previous reports, this association was most prominent for VC9, which significantly separated between all levels of cognitive load.

Discussion: This study successfully demonstrated the ability to assess cognitive states with an easy-to-use single-channel EEG using an auditory cognitive assessment. The short set-up time and novel ML features enable objective and easy assessment of cognitive states. Future studies should explore the potential usefulness of this tool for characterizing changes in EEG patterns of cognitive decline over time, for detection of cognitive decline on a large scale in every clinic to potentially allow early intervention.

Trial Registration: NIH Clinical Trials Registry [https://clinicaltrials.gov/ct2/show/results/NCT04386902], identifier [NCT04386902]; Israeli Ministry of Health registry [https://my.health.gov.il/CliniTrials/Pages/MOH_2019-10-07_007352.aspx], identifier [007352].

Cognitive decline is characterized by impairments in various cognitive functions such as memory, orientation, language, and executive functions, expressed more than is anticipated for an individual’s age and education level (Plassman et al., 2010). Cognitive decline with memory deficit indications is associated with a high-risk for developing dementia and Alzheimer’s disease (AD) (Ritchie and Touchon, 2000). Dementia is recognized as one of the most significant medical challenges of the future. So much so, that it has already reached epidemic proportions, with prevalence roughly doubling every 5 years in populations over the age of 65 (van der Flier and Scheltens, 2005). This rate is expected to increase unless therapeutic approaches are found to prevent or stop disease progression (Hebert et al., 2013). Since AD is the most prevalent form of dementia, responsible for about 60–70% of cases (Qiu et al., 2009), it remains the focus of clinical trials. To date, most clinical trials that include a disease-modifying treatment fail to demonstrate clinical benefits in symptomatic AD patients. This could be explained by the late intervention that occurs after neuropathological processes have already resulted in substantial brain damage (Galimberti and Scarpini, 2011). Hence, the discovery of predictive biomarkers for preclinical or early clinical stages, such as cognitive decline, is imperative (Jack et al., 2011). Cognitive decline may be detected several years before dementia onset with known validated tools (Hadjichrysanthou et al., 2020). Interventions starting early in the disease process, before substantial neurodegeneration has taken place, can change the progression of the disease dramatically (Silverberg et al., 2011). Yet, there is still no universally recommended screening tool that satisfies all needs for early detection of cognitive decline (Cordell et al., 2013).

The most commonly used screening tool for cognitive assessment in the elderly population is the Mini-Mental State Examination (MMSE) (Folstein et al., 1975). The MMSE evaluates cognitive function, with a total possible score of 30 points. Patients who score below 24 would typically be suspected of cognitive decline or early dementia (Tombaugh and McIntyre, 1992). However, several studies have shown that sociocultural variables, age, and education, as well as tester bias, could affect individual scores (Brayne and Beardsall, 1990; Crum et al., 1993; Shiroky et al., 2007). Furthermore, studies report a short-term practice effect for subjects in AD trials and diagnostic studies resulting from repeated exposure to the MMSE (Chapman et al., 2016).

Objective cognitive assessment based on brain activity measurements would be preferable to subjective clinical evaluations using pen-and-paper assessment tools like the MMSE. However, such objective methods are often cumbersome and expensive. Electroencephalography (EEG) offers a non-invasive and relatively inexpensive screening tool for cognitive assessment (Cassani et al., 2018). Frontal asymmetry between activity in the left and right hemispheres, peak frequency in resting-state EEG, and response time in sensory ERP have been found to correlate with MMSE scores (Doan et al., 2021). EEG studies investigating cognitive decline highlight the role of Theta power as a possible indicator for early detection of cognitive decline (Missonnier et al., 2007; Deiber et al., 2015). For example, it was found that frontal Theta activity differs substantially in cognitively impaired subjects performing cognitive tasks, compared to healthy seniors. A lack of increased Theta activity was shown to serve as a predictor of cognitive decline progression (Deiber et al., 2009). When performing tasks involving working memory, frontal Theta activity increases with the expected increase of the cognitive load levels (Jensen and Tesche, 2002). Working memory manipulation is one of the ways to modify cognitive load, as explained by the Cognitive Load Theory (CLT). Since working memory capacity is limited, performing a more difficult task results in simultaneous processing of information elements, which leads to higher cognitive load (Sweller, 2011). Studies using EEG repeatedly show frontal Theta increase with higher cognitive load and task difficulty (Antonenko et al., 2010). Studies examining resting-state EEG found that the Alpha-to-Theta ratio decreased as the MMSE scores decreased (Choi et al., 2019). A recent study suggests that novel diagnostic classification based on EEG signals could be even more useful than frontal Theta for differentiating between clinical stages (Farina et al., 2020).

The development of machine learning (ML), alongside advancement in signal processing, has largely contributed to the extraction of useful information from the raw EEG signal (Dauwels et al., 2010a). Novel techniques are capable of exploiting the large amount of information on time-frequency processes in a single recording (Pritchard et al., 1994; Babiloni et al., 2004). Recent studies demonstrated novel measures of EEG signals for identification of cognitive impairment with high accuracy, using classifiers based on neural networks, wavelets, and principal component analysis (PCA), indicating the relevance of such methods for cognitive assessment (Cichocki et al., 2005; Melissant et al., 2005; Lehmann et al., 2007; Ahmadlou et al., 2010; Meghdadi et al., 2021).

However, most studies in this field have several constraints. Most commonly, such studies use multichannel EEG systems to characterize cognitive decline. The difficulty with multichannel EEG is the long setup time, the requirement of specially trained technicians, as well as the need for professional interpretation of the results. This makes the systems costly and not portable, thus not suitable for wide-range screening in community clinics. Consequently, these systems are not included in the usual clinical protocol for cognitive decline detection. This emphasizes the need for additional cost-effective tools with easy setup and short assessment times, to possibly allow earlier detection of cognitive decline in the community.

A recent study (Khatun et al., 2019) examined differences in responses to auditory stimuli between cognitively impaired and healthy subjects and concluded that cognitive decline can be characterized using data from a single EEG channel. Specifically, using data from frontal electrodes, the authors extracted features that were later used in classification models to identify subjects with cognitive impairments. Additional studies (Choi et al., 2019; Doan et al., 2021) found prefrontal EEG effective for screening dementia and, specifically, frontal asymmetry as a potential EEG variable for dementia detection. These results contribute to the notion that a prefrontal single-channel EEG can be used as an efficient and convenient way of assessing cognitive decline. However, the risk of overfitting the data in such classification studies should be addressed to ensure generalization capabilities, especially with a small sample size. Studies that use the same dataset for training as well as feature extraction (Kashefpoor et al., 2016; Cassani et al., 2017; Khatun et al., 2019) increase the risk of overfitting the data. To support generalization, the features should be examined in different datasets and shown to yield consistent results on new data. Furthermore, measuring the correlations of the extracted features with standard clinical measurements (like the MMSE score) or behavioral results of cognitive tasks [like reaction times (RTs) and accuracy] may be highly valuable for validation of novel EEG features.

In this study, we evaluated the ability of an easy-to-use single-channel EEG system to potentially detect cognitive decline in an elderly population. The EEG signal was decomposed using mathematical models of harmonic analysis, and machine-learning (ML) methods were used to extract EEG features. The pre-extracted EEG features used in this study were validated in previous studies performed on young healthy subjects (Maimon et al., 2020, 2021, 2022; Bolton et al., 2021). A short auditory cognitive assessment utilizing auditory stimuli was used. The auditory cognitive assessment included a simple auditory detection task with two difficulty levels (low and high), and a resting-state task. Previous findings show that recording EEG during active engagement in cognitive and auditory tasks offers distinct features and may lead to better discrimination power of brain states (Ghorbanian et al., 2013). Furthermore, using auditory stimulation detection is linked directly to attentional processes of the working memory system and can be used to manipulate WM load, as shown by EEG studies (Berti and Schröger, 2001; Lv et al., 2010). To continue this notion, we used an auditory assessment battery with musical stimuli. It was previously shown that musical stimuli elicit stronger activity than using visual cues such as digits and characters (Tervaniemi et al., 1999).

This pilot study aims to evaluate the ability of a frontal single-channel EEG system to assess cognitive decline in an elderly population, recognizing the importance of providing an accurate, low-cost alternative for cognitive decline assessment. Several hypotheses were formed in this study: (1) The EEG features activity will correlate to the MMSE scores (in the senior groups); (2) The EEG features that show correlation to the MMSE scores will also differentiate between the lower MMSE groups and the healthy young group; (3) Some of the EEG features will correlate to cognitive load (elicited by the tasks); and (4) This pattern of activity, which refers to the difficulty of the task, could be absent in patients with low MMSE scores.

Ethical approval for this study was granted by the Ethics Committee (EC) of Dorot Geriatric Medical Center on July 01, 2019. Israeli Ministry of Health (MOH) registry number MOH_2019-10-07_007352. NIH Clinical Trials Registry number NCT04386902, URL: https://clinicaltrials.gov/ct2/show/NCT04386902.

Sixty patients from the inpatient rehabilitation department at Dorot Geriatric Medical Center were recruited for this study. For the full demographic details, see Table 1. The overall mean age was 77.55 (9.67) years old. There was a wide range of ages for each group, with no significant age difference between the groups. Participant groups consisted of 47% females and 53% males. Among the patients, 82% were hospitalized for orthopedic rehabilitation, and 18% due to various other causes. Among the patients who had surgery, an average of 27 (16.3) days had passed since the surgery. Potential subjects were identified by the clinical staff during their admissions to the inpatient rehabilitation department. All subjects were hospitalized at the center and were chosen based on inclusion criteria specified in the study protocol. The patients underwent an MMSE administered by an occupational therapist upon hospital admission, and this score was used to select patients with scores between 10 and 30. All subjects were also evaluated for their abilities to hear, read, and understand instructions for the discussion of the Informed Consent Form (ICF), as well as for the auditory task. Patients who spoke English, Hebrew, or Russian were provided with the appropriate ICF and auditory task in the language they could read and understand. All participants provided informed consent according to the guidelines outlined in the Declaration of Helsinki. Patients who showed any verbal or non-verbal form of objection were not included in the study. Other exclusion criteria included an MMSE score lower than 10; the presence of several neurological comorbidities (intended to exclude patients with other neurological conditions that could affect the results); damage to the integrity of the scalp and/or skull, and skin irritation in the facial and forehead area; significant hearing impairments; and a history of drug abuse.

In total, 50 of the 60 recruited patients completed the auditory task, and their EEG data was used. Ten patients signed the ICF and were included in the overall patient count but were excluded from data analysis due to their desire to stop the study, or because of technical problems during the recording.

Twenty-two healthy students participated in this study for course credit. The overall mean age was 24.09 (2.79) years old. The participant group consisted of 60% females and 40% males. Ethical approval for this study was granted by Tel-Aviv University Ethical Committee 27.3.18.

Electroencephalography recordings were performed using the Neurosteer ® single-channel EEG Recorder. A three-electrode medical-grade patch was placed on each subject’s forehead, using dry gel for optimal signal transduction. The non-invasive monopolar electrodes were located at the prefrontal regions; the difference between Fp1 and Fp2 in the International 10/20 electrode system produced the single EEG channel, with a reference electrode at Fpz. The input range was ±25 mV (input noise < 30 nVrms). EEG electrode contact impedances were maintained below 12 kΩ, as measured by a portable impedance meter (EZM4A, Grass Instrument Co., West Warwick, RI, United States). The data were digitized in continuous recording mode at a 500-Hz sampling frequency. For further details, see Supplementary Appendix A.

A trained operator monitored each subject during recordings to minimize muscle artifacts and instructed each subject to avoid facial muscle movement during recordings, as well as alerted the subjects whenever they showed increased muscle or ocular movement. It should be noted that the differential input and the high common-mode rejection ratio (CMRR) assist in the removal of motion artifacts as well as line noise (Hoseini et al., 2021). The EEG power spectrum was obtained by fast Fourier transform (FFT) of the EEG signals within a 4-s window.

The time-frequency approach to analyzing EEG data has been used in the past years to characterize brain behavior in AD (Jeong, 2004; Bibina et al., 2018; Nimmy John et al., 2019). Following this notion, we are using a novel time-frequency approach to analyze the EEG signal in this study. Full technical specifications regarding the signal analysis are provided in Supplementary Appendix A. In brief, the Neurosteer ® signal-processing algorithm interprets the EEG data using a time/frequency wavelet-packet analysis, creating a presentation of 121 components composed of time-varying fundamental frequencies and their harmonics.

To demonstrate this process, let g and h be a set of biorthogonal quadrature filters created from the filters G and H, respectively. These are convolution-decimation operators where, in a simple Haar wavelet, g is a set of averages, and h is a set of differences.

Let ψ1 be the mother wavelet associated with the filters s ∈ H, and d ∈ G. Then, the collection of wavelet packets ψn is given by:
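In the standard wavelet-packet construction of Coifman and Wickerhauser (1992), which this passage appears to follow, the recursion can be written as below. The square-root-of-two normalization and the summation over all integers j are assumptions taken from that standard form rather than from the text here.

```latex
\begin{aligned}
\psi_{2n}(t)   &= \sqrt{2}\,\sum_{j \in \mathbb{Z}} s(j)\,\psi_{n}(2t - j), \\
\psi_{2n+1}(t) &= \sqrt{2}\,\sum_{j \in \mathbb{Z}} d(j)\,\psi_{n}(2t - j).
\end{aligned}
```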

The recursive form provides a natural arrangement in the form of a binary tree. The functions ψn have a fixed scale. A library of wavelet packets of any scale s, frequency f, and position p is given by
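A common way to write such a library, assuming the usual dyadic dilation and integer translation of the fixed-scale packet with index f, is:

```latex
\psi_{sfp}(t) = 2^{-s/2}\,\psi_{f}\!\left(2^{-s}t - p\right)
```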

The wavelet packets {ψsfp:p ∈ Z} include a large collection of potential orthonormal bases. An optimal basis can be chosen by the best-basis algorithm (Coifman and Wickerhauser, 1992). Furthermore, an optimal mother wavelet together with an optimal basis can also be found (Neretti and Intrator, 2002). Following robust statistics methods to prune some of the basis functions using Coifman and Donoho’s denoising method (Coifman and Donoho, 1995), an output of 121 basis functions is received, termed “Brain Activity Features” (BAFs). Based on a given labeled-BAF dataset (collected by Neurosteer ®), various models can be created for different discriminations of these labels. In the (semi-) linear case, these models are of the form:
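Consistent with the description of w and Ψ in the surrounding text, one way to write this form is shown below. The symbol V for the model output and the symbol x_i for the activation of the i-th BAF are assumed here for illustration.

```latex
V(w, x) = \Psi\!\left(\sum_{i} w_i\, x_i\right)
```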

where w is a vector of weights and Ψ is a transfer function that can either be linear, e.g., Ψ(y) = y, or sigmoidal for logistic regression, Ψ(y) = 1/(1 + e^(−y)).
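As a minimal sketch of the (semi-)linear model described here, the Python snippet below applies a weight vector to one window of BAF values and passes the weighted sum through either transfer function. The function name `feature_activity`, the toy weights, and the toy BAF values are hypothetical; the actual Neurosteer weights are not given in the text.

```python
import math

def feature_activity(weights, bafs, transfer="linear"):
    """Return Psi(sum_i w_i * x_i) for one window of BAF values."""
    if len(weights) != len(bafs):
        raise ValueError("weights and BAF vector must have the same length")
    y = sum(w * x for w, x in zip(weights, bafs))
    if transfer == "linear":
        return y                            # Psi(y) = y
    if transfer == "sigmoid":
        return 1.0 / (1.0 + math.exp(-y))   # Psi(y) = 1 / (1 + e^(-y))
    raise ValueError("unknown transfer function")

# Toy example with 121 BAFs (the count stated in the text); values are made up.
N_BAFS = 121
toy_weights = [0.01] * N_BAFS
toy_bafs = [1.0] * N_BAFS
linear_out = feature_activity(toy_weights, toy_bafs)
sigmoid_out = feature_activity(toy_weights, toy_bafs, "sigmoid")
```

Because the weights are fixed in advance, the same function can be applied unchanged to a new dataset, which is how the text describes reusing previously derived weight matrices.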

The BAFs are calculated over a 4-s window that is advanced in 1-s steps. Each BAF has 2,048 components, a power of 2 chosen to span the 4-s window (2,000 samples at the 500-Hz sampling frequency). Within this 4-s window, the BAF is a time/frequency atom. Thus, it allows for a signal whose frequency varies over the 4-s window, such as a chirp. The window is then advanced by 1 s, as in a spectrogram with 75% overlap, and the BAF is calculated again over the new 4-s window.
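The windowing arithmetic in this paragraph can be sketched in a few lines of Python. The constants come from the text; the helper names are hypothetical, and reading 2,048 as the smallest power of two that covers the 2,000-sample window is an interpretation, not something the text states explicitly.

```python
FS = 500        # sampling frequency in Hz (from the text)
WINDOW_S = 4    # window length in seconds (from the text)
STEP_S = 1      # window advance in seconds (from the text)

def next_power_of_two(n):
    """Smallest power of two that is >= n."""
    power = 1
    while power < n:
        power *= 2
    return power

def window_starts(n_samples, fs=FS, window_s=WINDOW_S, step_s=STEP_S):
    """Start indices of every full window that fits in the recording."""
    window = fs * window_s
    step = fs * step_s
    return list(range(0, n_samples - window + 1, step))

samples_per_window = FS * WINDOW_S                      # 4 s at 500 Hz
padded_length = next_power_of_two(samples_per_window)   # assumed origin of 2048
overlap = 1 - STEP_S / WINDOW_S                         # fraction shared by consecutive windows
starts = window_starts(10 * FS)                         # hypothetical 10-s recording
```

For the hypothetical 10-s recording, seven full windows fit, so a feature value is produced once per second after the first 4 s.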

The data were tested for artifacts due to muscle and eye movement in the prefrontal EEG signals (Fp1, Fp2). The standard methods for removing non-EEG artifacts are all based on variants of the Independent Components Analysis (ICA) algorithm (Urigüen and Garcia-Zapirain, 2015). These methods could not be applied here, as only single-channel EEG data were used. As an alternative, artifact identification exploits the fact that strong muscle artifacts have higher amplitudes than regular EEG signals, mainly in the high frequencies; thus, they are clearly observable in many of the BAFs that are tuned to high frequencies. Minor muscle activity is filtered out by the time/frequency nature of the BAFs and thus causes no disturbance to the processed signal. Similarly, eye movements are detected in specific BAFs and are taken into account during signal processing and data analysis.

Several linear combinations were obtained using ML techniques on labeled datasets previously collected by Neurosteer ® using the described BAFs. Specifically, EEG features VC9 and A0 were calculated using the linear discriminant analysis (LDA) technique (Hastie et al., 2007). LDA technique is intended to find an optimal linear transformation that maximizes the class separability. LDA models on imaging data were found successful in predicting development of cognitive decline up to 4 years prior to displaying symptoms of decline (Rizk-Jackson et al., 2013). EEG feature VC9 was found to separate between low and high difficulty levels of an auditory detection task within healthy participants (ages 20–30). EEG feature A0 was found to separate between resting state with music and auditory detection task within healthy participants (ages 20–30).

EEG feature ST4 was calculated using PCA (Rokhlin et al., 2009). Principal component analysis is a method used for feature dimensionality reduction before classification. Studies show that features extracted using PCA show significant correlation to MMSE score and distinguish AD from healthy subjects (López et al., 2009; Meghdadi et al., 2021), as well as show good performance for the diagnosis of AD using imaging (Choi and Jin, 2018). Here, the fourth principal component was found to separate between low and high difficulty levels of an auditory n-back task for healthy participants (ages 30–70). Most importantly, all three EEG features were derived from different datasets than the data analyzed in the present study. Therefore, the same weight matrices that were previously found were used to transform the data obtained in the present study.

The frequency approach has been extensively researched in the past decade, leading to a large body of evidence regarding the association of frequency bands to cognitive functions (Herrmann et al., 2016). In this study, we introduce a novel time-frequency approach for signal analysis and compare it to relevant frequency band results. The EEG features presented here are produced by a secondary layer of ML on top of the BAFs. These BAFs were created as an optimal orthogonal decomposition of time/frequency components following the application of the Best Basis Algorithm (Coifman and Wickerhauser, 1992) on the full wavelet packet tree that was created from a large collection of EEG recordings (see full details in the Supplementary Material). Therefore, they are composed of time-varying fundamental frequencies and their harmonics. As a result of this dynamic nature, and due to the fact that the EEG features are created as linear combinations of multiple BAFs, each feature potentially includes a wide range of frequencies and dynamic varying characteristics. If the time variant characteristic was not present, the spectral envelope of each feature would have represented the full characteristic of each EEG feature. As the time varying component of each BAF is in the millisecond range (sampled at 500 Hz), it is not possible to characterize the dynamics with a spectrogram representation which averages the signal over 4-s windows. Thus, though characterizing the frequency representations of the novel features may be of interest, it is not applicable in this case, much like with EEG-produced ERPs (Makeig et al., 2002; Fell et al., 2004; Popp et al., 2019). We do observe, however, that EEG feature VC9 includes predominantly fundamental frequencies that belong to the Delta and Theta range (and their harmonics), while EEG features ST4 and A0 are broader combinations of frequencies spanning the whole spectrum (up to 240 Hz).

Other studies conducted on young healthy participants (a different study population than that previously mentioned) showed that EEG feature VC9 activity increased with increasing levels of cognitive load, as manipulated by numeric n-back task (Maimon et al., 2020). Additionally, VC9 activity during the performance of an arithmetic task decreased with external visual interruptions (Bolton et al., 2021). VC9 activity was also found to decrease with the repetition of a motor task in a surgery simulator performed by medical interns and was correlated with their individual performance (Maimon et al., 2021). These studies found that VC9 showed higher sensitivity than Theta, especially for lower-difficulty cognitive loads, which are more suitable for clinical and elderly populations. Within the clinical population, VC9 was found to correlate with auditory mismatch negativity (MMN) ERP component of minimally responsive patients (Maimon et al., 2022). EEG feature ST4 was found to correlate to individual performance of the numeric n-back task. That is, the difference between high and low load in RTs per participant was correlated to the difference between high and low load in ST4 activity (Maimon et al., 2020).

The recording room was quiet and illuminated. The research assistant set up the sanitized system equipment (electrode patch, sensor, EEG monitor, clicker) and provided general instructions to the participants before starting the task. Then the electrode was placed on the subject’s forehead, and the recording was initiated. Each participant was seated during the assessment and heard instructions through a loudspeaker connected to the EEG monitor. The entire recording session typically lasted 20–30 min. The cognitive assessment battery was pre-recorded and included two tasks: a detection task as well as a series of true/false questions answered by pressing a wireless clicker. Further explanations for the task were kept at a minimum to avoid bias. A few minutes of baseline activity were recorded per participant to ensure accurate testing. Each auditory cognitive assessment lasted 18 min.

Figure 1 illustrates the detection task used in the study. In each block, participants were presented with a sequence of melodies (played by a violin, a trumpet, and a flute). Each participant was given a clicker to respond to the stimuli. In the beginning of each block, auditory instructions indicated an instrument, to which the participant responded by clicking once. The click response was only to “yes” trials when the indicated instrument melody played. The task included two difficulty levels to test increasing cognitive load. In level 1, each melody was played for 3 s, and the same melody repeated throughout the entire block. The participant was asked to click once as fast as possible for each repetition of the melody. This level included three 90-s trials (one for each instrument), with 5–6 instances of each melody, and with 10–18 s of silence in between. In level 2, the same melodies were played for 1.5 s, and all three instruments appeared in the block. The participants were asked to click only for a specific instrument within the block and to ignore the rest of the melodies. Each trial consisted of 6-8 melodies, with 8–14 s of silence in between, and 2–3 instances of the target stimulus.

Figure 1. An example of six trials of detection level 1 (Top) and detection level 2 (Bottom). Both examples show a “trumpet block” in which the participant reacts to the trumpet melody. Red icons represent trials in which the participant was required to respond with a click when hearing the melody, indicating a “yes” response.

The behavioral dependent variables included mean response accuracy and mean reaction times (RTs) per participant.

The electrophysiological dependent variables included the power spectral density. Absolute power values were converted to logarithm base 10 to produce values in dB. Out of the frequency bands, the following were included: Delta (0.5–4 Hz) and Theta (4–7 Hz). Pretests showed that the other frequency bands, namely Alpha (8–15 Hz), Beta (16–31 Hz), and lower Gamma (32–45 Hz), did not show any significant correlation or differences on the current data.
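To make the band-power computation concrete, the sketch below estimates Delta and Theta power for one 4-s window and converts it to dB. It is a standard-library illustration only: it evaluates a direct DFT on the bins inside each band (a real pipeline would use an FFT routine), treats each band as half-open so the 4-Hz bin is counted once, and assumes 10·log10 scaling and a power normalization of |X|²/n², none of which are specified in the text. The test signal is synthetic.

```python
import cmath
import math

FS = 500  # sampling frequency in Hz (from the text)
BANDS = {"delta": (0.5, 4.0), "theta": (4.0, 7.0)}  # Hz, from the text

def band_power_db(samples, band, fs=FS):
    """Mean spectral power of `samples` inside `band`, expressed in dB."""
    n = len(samples)
    low_hz, high_hz = band
    resolution = fs / n                          # Hz per DFT bin
    first_bin = math.ceil(low_hz / resolution)
    end_bin = math.ceil(high_hz / resolution)    # exclusive: band is [low, high)
    powers = []
    for k in range(first_bin, end_bin):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(samples))
        powers.append(abs(coeff) ** 2 / n ** 2)  # assumed normalization
    mean_power = sum(powers) / len(powers)
    return 10 * math.log10(mean_power + 1e-20)   # small offset avoids log(0)

# Synthetic 4-s window containing a pure 6-Hz (Theta-range) oscillation.
n_samples = FS * 4
signal = [math.sin(2 * math.pi * 6 * t / FS) for t in range(n_samples)]
theta_db = band_power_db(signal, BANDS["theta"])
delta_db = band_power_db(signal, BANDS["delta"])
```

With a pure 6-Hz input, nearly all power falls in the Theta band, so `theta_db` is far larger than `delta_db`.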

The analysis also included activity of the three selected EEG features: VC9, ST4, and A0, normalized to a scale of 0–100. The EEG variables were calculated each second from a moving window of 4 s, and mean activity per condition was factored into the analyses.

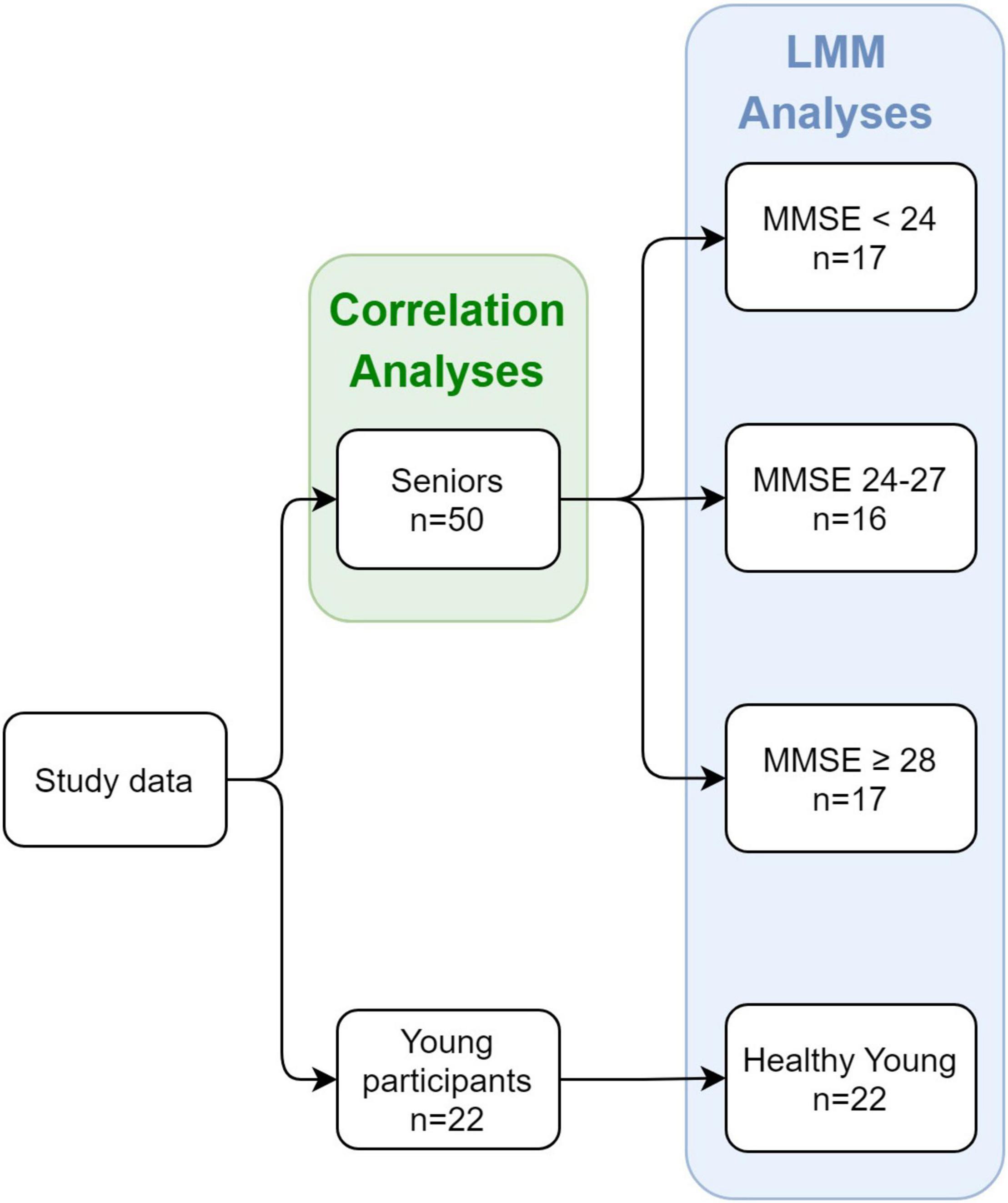

Statistical analyses were performed on data from 50 senior participants and 22 healthy young participants (72 participants in total). Groups were allocated as follows (see Figure 2). The senior participants were divided into three groups according to their MMSE scores: (1) Patients with a score of 17–23 in the MMSE < 24 group (n = 17); (2) Patients with a score of 24–27 in the MMSE 24–27 group (n = 16); and (3) Patients with a score of 28–30 in the MMSE ≥ 28 group (n = 17). This was done in order to obtain relatively balanced group sizes. We used MMSE score cutoffs of 24 and 27 in allocating the groups, as we were mostly interested in detecting cognitive decline as early as possible and found previous indications that a higher cutoff score would achieve optimal evaluations of diagnostic accuracy (Crum et al., 1993). Furthermore, it was argued that educated individuals who score below 27 are at greater risk of being diagnosed with dementia (O’Bryant et al., 2008). The fourth group included in the analysis consisted of the 22 young healthy participants (healthy young group). To ensure that groups were well adjusted in terms of age and sex, we compared the mean ages of each MMSE group in total, and for males and females separately. Additionally, we compared the age and MMSE scores of each MMSE group between males and females. These comparisons were done with Welch Two Sample t-test. See Table 1 for the descriptive and statistical results.
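The group allocation and the Welch comparison described above can be sketched as follows. The group labels mirror the text; the function names and the age values are hypothetical, and the sketch stops at the t statistic and the Welch-Satterthwaite degrees of freedom (the p-value would then be read from the t distribution, for example with a statistics package).

```python
import math
from statistics import mean, variance

def mmse_group(score):
    """Assign a senior participant to a group using cutoffs of 24 and 27."""
    if score < 24:
        return "MMSE < 24"
    if score <= 27:
        return "MMSE 24-27"
    return "MMSE >= 28"

def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    va = variance(a) / len(a)   # squared standard error of mean(a)
    vb = variance(b) / len(b)   # squared standard error of mean(b)
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df

# Hypothetical ages for two groups (not study data).
ages_a = [71, 75, 80, 84, 90]
ages_b = [68, 77, 79, 85, 88]
t_stat, dof = welch_t(ages_a, ages_b)
```

Welch's test does not assume equal variances, which suits groups with wide and possibly different age ranges.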

Figure 2. Study design and groups at each stage. The study included both seniors and young healthy participants as controls. For the senior participants, an MMSE score was obtained, and division into groups was based on the individual MMSE score.

The analyses included correlation models between EEG variables and MMSE scores of senior participants, and mixed linear models measuring the associations between the EEG variables and MMSE score/group. The significance level for all analyses was set to p < 0.05. Post-hoc comparisons with Tukey corrections were performed following significant main effects and interactions. All analyses were conducted with RStudio version 1.4.1717 (RStudio Team, 2020).

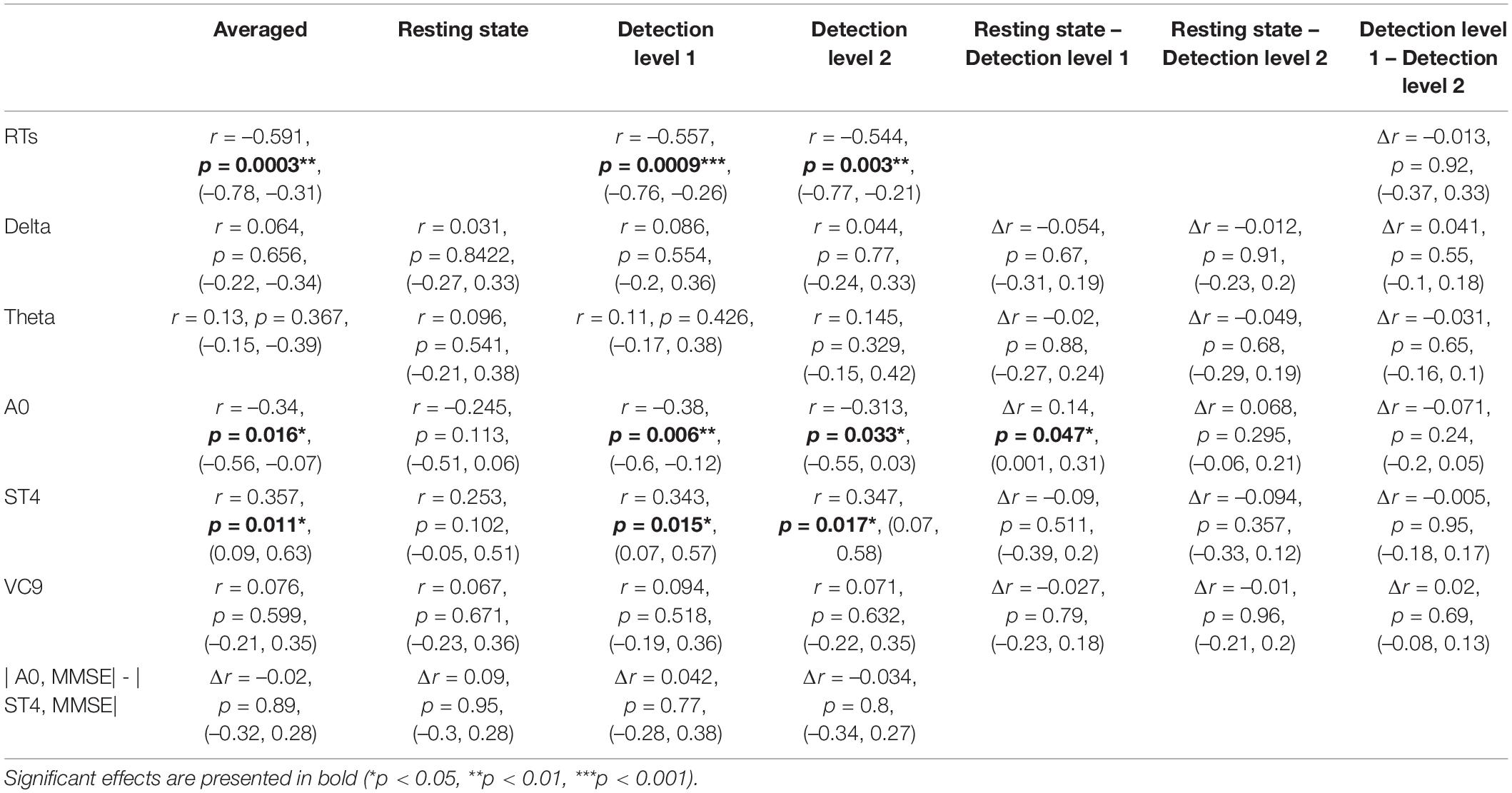

As an initial validation of the cognitive assessment method and based on previous studies (Silverberg et al., 2011), we expected that the RTs in the cognitive detection task would be greater for participants with lower MMSE scores. This was tested by calculating the Pearson correlation coefficient between mean RTs in detection levels 1 and 2, and the individual MMSE score of each participant. Estimated correlation coefficient, corresponding 95% Confidence Interval (CI), and p-values are presented in Table 2 and Figure 3.
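A Pearson coefficient with a Fisher-transform confidence interval, as reported for the RT analysis, can be computed as in the sketch below. The MMSE and RT values are invented for illustration, and the function names are hypothetical; the study itself ran these analyses in R.

```python
import math
from statistics import NormalDist, mean

def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def fisher_ci(r, n, level=0.95):
    """Confidence interval for r via the Fisher z transform (needs n > 3)."""
    z = math.atanh(r)
    half_width = NormalDist().inv_cdf(0.5 + level / 2) / math.sqrt(n - 3)
    return math.tanh(z - half_width), math.tanh(z + half_width)

# Hypothetical values: slower reaction times at lower MMSE scores.
mmse = [18, 21, 24, 26, 28, 30]
rt_ms = [910, 880, 760, 790, 650, 600]
r = pearson_r(mmse, rt_ms)
ci_low, ci_high = fisher_ci(r, len(mmse))
```

A negative `r` here corresponds to the expectation stated in the text: longer RTs for participants with lower MMSE scores.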

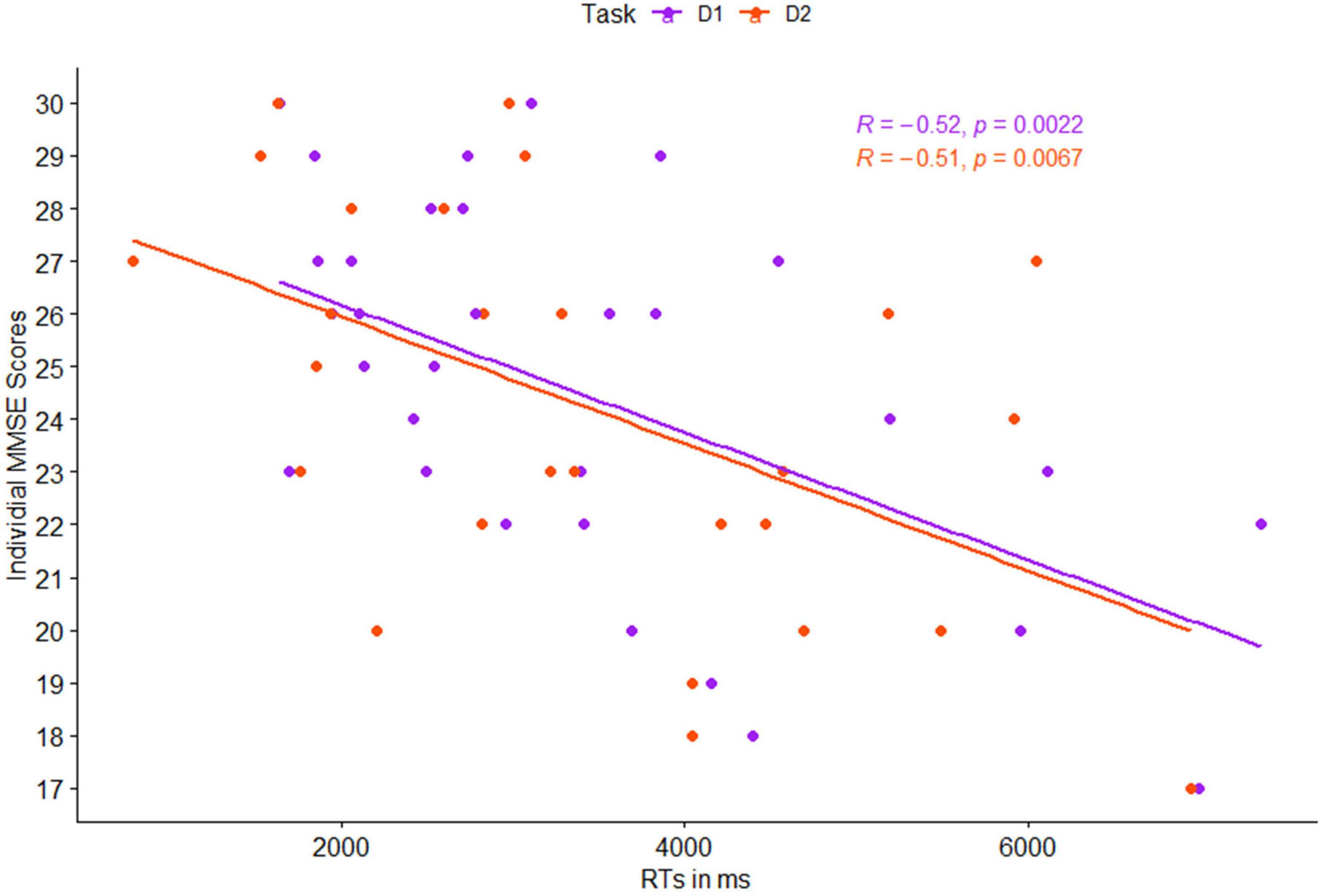

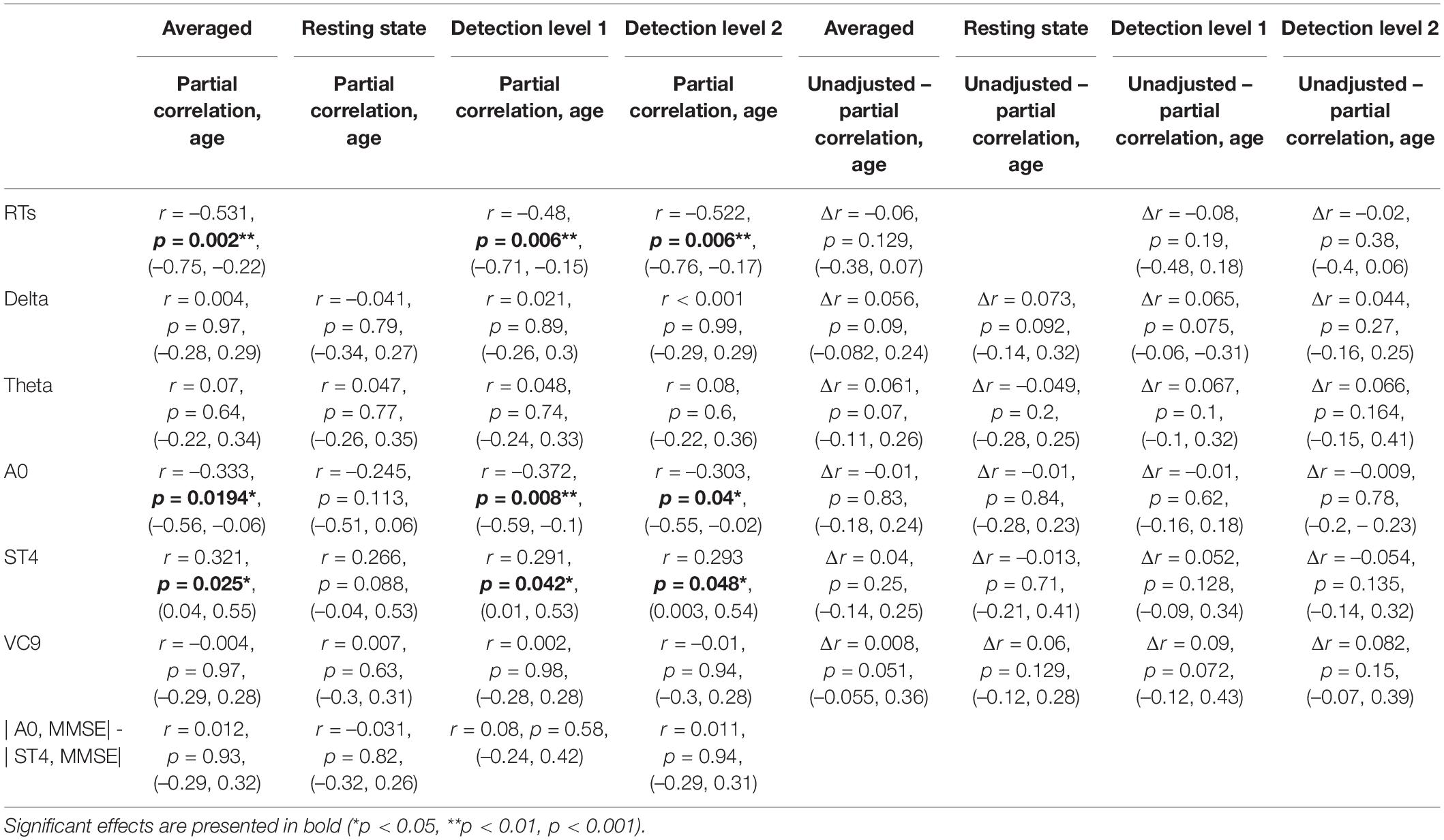

Table 2. Pearson r coefficients (first row of each cell), p-values (second row), and 95% CI (third row) of the correlations between individual MMSE scores and EEG features (and reaction times) as a function of task (averaged across tasks, resting state, detection level 1, and detection level 2); and the difference between correlation coefficients and CIs between tasks, as calculated by Meng et al. (1992) z extension of the Fisher Z transform (Dunn and Clark, 1969), including a test of the confidence interval for comparing two correlations.

Figure 3. Pearson correlation between individual mean reaction times (RTs) and individual MMSE scores, as a function of task level: detection 1 (purple) and detection 2 (red).

Next, following our first hypothesis regarding the correlation between EEG activity and MMSE scores, we calculated the Pearson correlation between each of the EEG features and individual MMSE scores. Each feature’s activity was averaged across the three task conditions (i.e., detection level 1, detection level 2, and resting state), and also averaged within each condition separately. We then compared the Pearson correlations between the different tasks. This was done using Meng et al. (1992) z extension of the Fisher Z transform (Dunn and Clark, 1969), which includes a test of the confidence interval for comparing two correlations. We also compared significant correlations between the different features. All comparisons were done using the cocor (Diedenhofen and Musch, 2015) library in RStudio. Estimated correlation coefficients, 95% CIs, p-values, and their comparisons are summarized in Table 2 and visually presented in Figure 4.
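For readers unfamiliar with the comparison of two dependent correlations that share one variable (here, MMSE), the z statistic of Meng et al. (1992) can be sketched as below. The function names are hypothetical. This is a plain re-implementation of the published formula rather than the cited library's code, so its output may differ in details such as the confidence interval.

```python
import math


def meng_z(r1, r2, r_x, n):
    """z statistic for H0: two overlapping dependent correlations are equal.

    r1, r2: correlations of each predictor with the shared variable (e.g., MMSE)
    r_x:    correlation between the two predictors
    n:      sample size
    """
    z1, z2 = math.atanh(r1), math.atanh(r2)  # Fisher z transforms
    r_sq_mean = (r1 ** 2 + r2 ** 2) / 2.0
    # f is capped at 1, as specified by Meng et al. (1992)
    f = min((1.0 - r_x) / (2.0 * (1.0 - r_sq_mean)), 1.0)
    h = (1.0 - f * r_sq_mean) / (1.0 - r_sq_mean)
    return (z1 - z2) * math.sqrt((n - 3) / (2.0 * (1.0 - r_x) * h))


def two_sided_p(z):
    """Two-sided p-value of a standard normal z statistic."""
    return math.erfc(abs(z) / math.sqrt(2.0))
```

Here `r1` and `r2` could be the correlations of one EEG feature with MMSE under two task conditions, and `r_x` the correlation of that feature's activity between the two conditions.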

Figure 4. Pearson correlation between individual MMSE scores and (A) EEG frequency bands Delta and Theta, and (B) EEG features A0, ST4, and VC9, as a function of task level: resting state (blue), detection level 1 (purple), and detection level 2 (red).

Finally, we calculated partial Pearson product-moment correlations controlling for age, with confidence intervals built on the asymptotic confidence interval of the correlation coefficient based on Fisher’s Z transform, using the RVAideMemoire (Maxime, 2017) library in RStudio. Each partial correlation between EEG variables and MMSE controlled for age was compared to the unadjusted correlation using the bootstrap method (Efron and Tibshirani, 1993). The bias-corrected and accelerated (BCa) bootstrap method was used to test whether the difference between the overlapping adjusted and unadjusted correlations is equal to zero. We used the pzcor function in the zeroEQpart library with k = 1000 bootstrap samples, and the pzconf function to calculate confidence intervals for the unadjusted correlation minus the partial correlation (Table 3).
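The idea of a first-order partial correlation can be sketched in a few lines of Python. The function names are hypothetical, the CI uses the usual Fisher z approximation with one covariate, and the BCa bootstrap comparison described above is not reproduced here.

```python
import math


def partial_r(r_xy, r_xz, r_yz):
    """Partial correlation of x and y controlling for a single covariate z."""
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


def partial_ci(r_partial, n, k=1, z_crit=1.959964):
    """Approximate 95% CI via Fisher z; k is the number of covariates."""
    z = math.atanh(r_partial)
    se = 1.0 / math.sqrt(n - 3 - k)  # each covariate costs one degree of freedom
    return math.tanh(z - z_crit * se), math.tanh(z + z_crit * se)
```

With x as an EEG feature, y as the MMSE score, and z as age, `partial_r` returns the feature-MMSE correlation after removing the linear contribution of age from both variables.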

Table 3. Partial Pearson r coefficients (first row of each cell), p-values (second row), and 95% CI (third row) of the correlations between individual MMSE scores and EEG features (and reaction times), controlled for age, as a function of task (averaged across tasks, resting state, detection level 1, and detection level 2); and the difference in correlation coefficients and CIs between the partial correlations and the unadjusted correlations, as calculated by the bias-corrected and accelerated (BCa) bootstrap method.

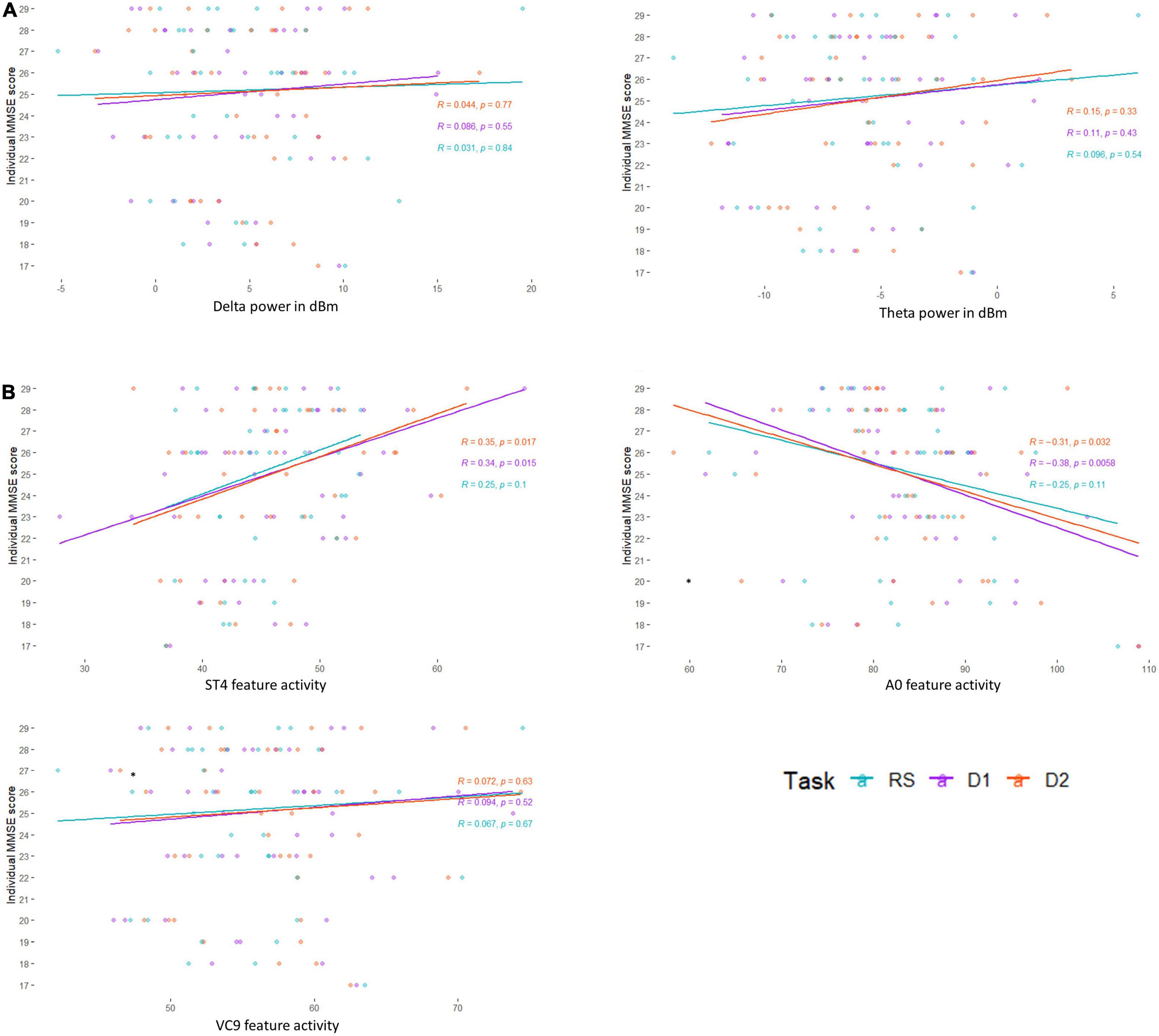

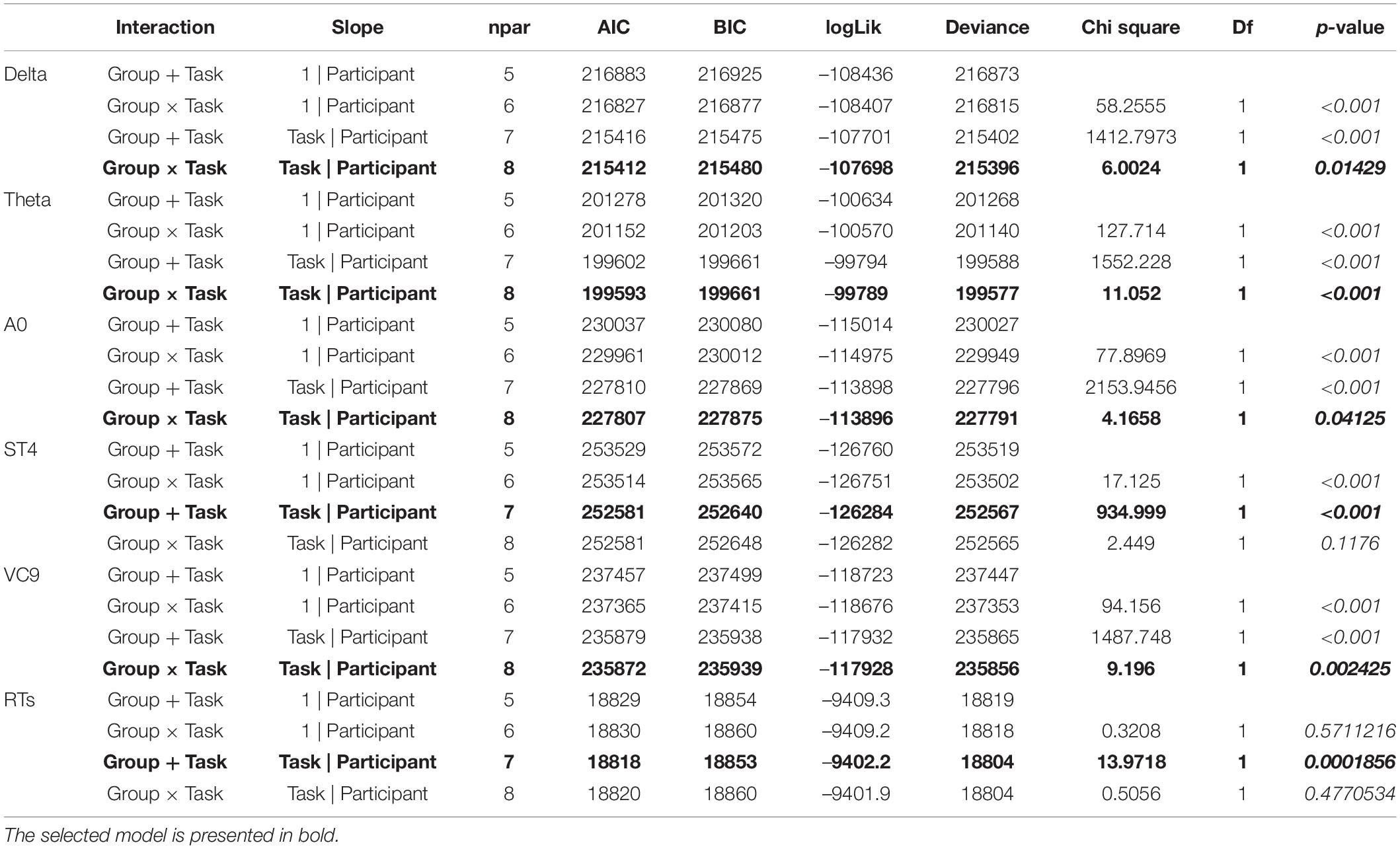

To create analyses detecting differences between groups, we added a group of young participants in addition to the three MMSE groups of the senior participants, all performing the same tasks. We fitted a general linear mixed model (GLMM) (Cnaan et al., 1997) that incorporated both fixed- and random-effect terms in a linear predictor expression from which the conditional mean of the response can be evaluated, using the lmer function in the lme4 package (Bates et al., 2015). This model was chosen over the simple GLM due to the relatively small sample size, as the GLMM takes into consideration the random slope for each participant. Age was not inserted as a covariate since it was part of the analysis: the young healthy group is inherently different due to their ages. The model included the fixed within-participant variable of task level (resting state vs. detection level 1 vs. detection level 2), and group as a between-participants variable (MMSE < 24 vs. MMSE 24–27 vs. MMSE ≥ 28 vs. healthy young). Both variables were coded as linear variables. Task: resting state = 0; detection level 1 = 1; and detection level 2 = 2. Group: MMSE < 24 = 0; MMSE 24–27 = 1; MMSE ≥ 28 = 2; healthy young = 3. The model included the samples per participant per task (i.e., samples per second of activation) as a random slope. The interaction between the two fixed variables and participants’ random slopes were fit to the EEG variable data in a stepwise step-up procedure using Chi-square tests: the initial model included group and task level without the interaction between them; the second model included both variables and their interaction; and afterwards, the random factor was fitted to the data to include task | participant as a random slope (all model comparison parameters are summarized in Table 4).
Fixed effects were calculated according to the selected model (i.e., the interaction between the variables was included only for features whose selected model contained it). The fixed-effects estimates, standard errors, DFs, t and p-values of the best-fitting models for each of the EEG variables are summarized in Table 5 and presented in Figure 5. For models that showed a significant main effect of either group or task, post hoc analyses were conducted, comparing all possible pairs of the main effect levels (i.e., comparing between groups and between task levels), using Tukey HSD correction. This was done using the PostHocTest function from the DescTools library in RStudio. All pairwise differences, 95% CIs, and p-values are summarized in Table 6.
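The Chi-square comparison of nested models in the step-up procedure amounts to a likelihood-ratio test. A minimal Python sketch is given below, assuming the log-likelihoods and parameter counts of the two fitted models are already available (for example, from the model-comparison output). The function names are hypothetical and the model fitting itself is not shown.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability of a chi-square variable with integer df >= 1."""
    if x <= 0:
        return 1.0
    half = x / 2.0
    if df % 2 == 0:
        # Even df: closed-form finite sum
        term, total = 1.0, 1.0
        for k in range(1, df // 2):
            term *= half / k
            total += term
        return math.exp(-half) * total
    # Odd df: erfc term plus a finite sum over odd double factorials
    total = 0.0
    term = math.sqrt(2.0 * x / math.pi)
    for k in range(1, (df - 1) // 2 + 1):
        if k > 1:
            term *= x / (2 * k - 1)
        total += term
    return math.erfc(math.sqrt(half)) + math.exp(-half) * total


def likelihood_ratio_test(loglik_reduced, loglik_full, n_par_reduced, n_par_full):
    """Compare nested models: returns (chi-square statistic, df, p-value)."""
    stat = 2.0 * (loglik_full - loglik_reduced)
    df = n_par_full - n_par_reduced
    return stat, df, chi2_sf(stat, df)
```

A small p-value indicates that the added term (for instance, the group-by-task interaction) improves the fit enough to justify the more complex model.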

Table 4. Model type, number of parameters, AIC, BIC, loglik, deviance, Chi square, df and p-values of GLMM models selection for each of the EEG features.

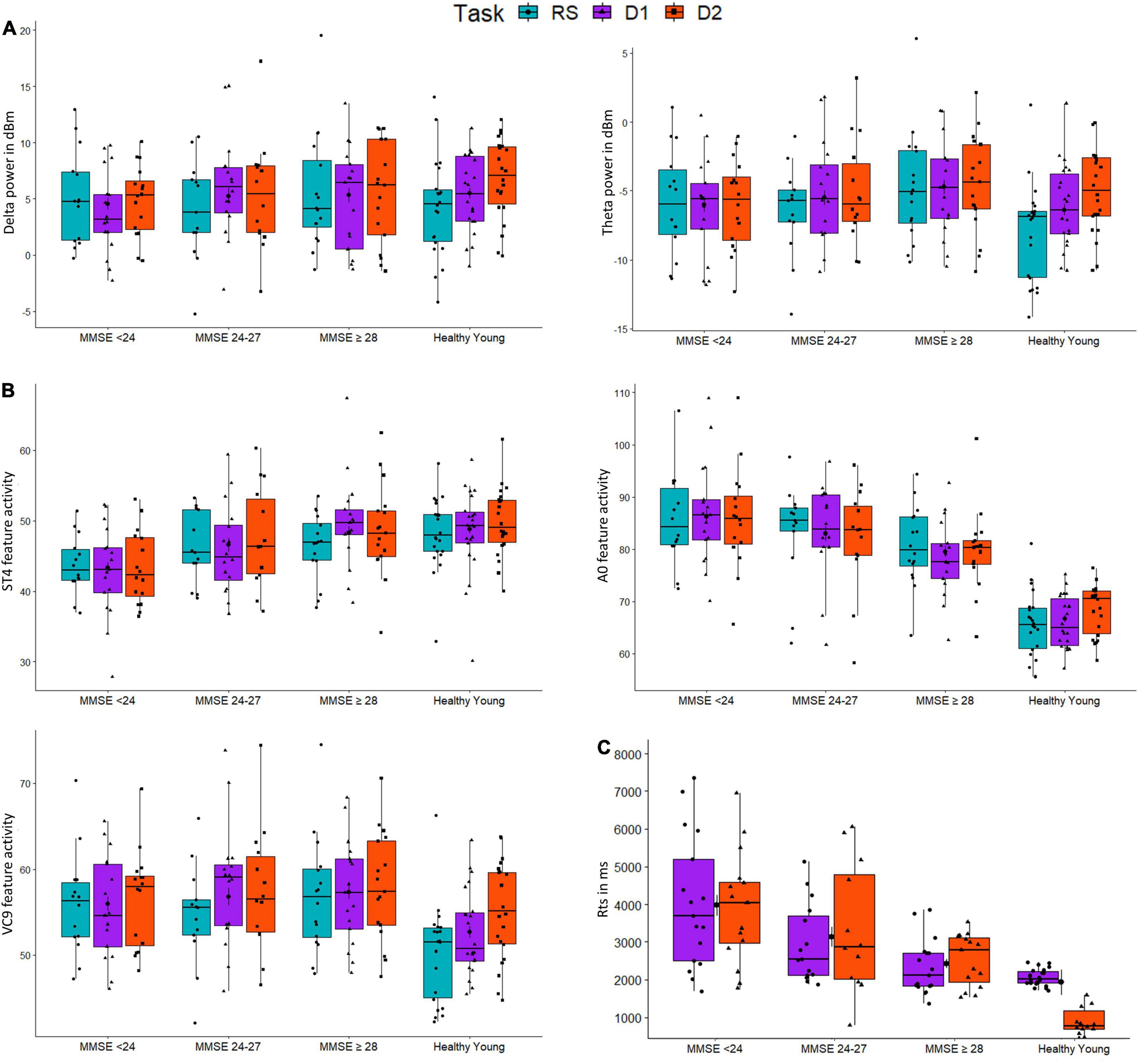

Figure 5. The distributions of groups (MMSE < 24, MMSE 24–27, MMSE ≥ 28, and healthy young) for activity of EEG features (A) Delta and Theta; (B) A0, ST4 and VC9; and (C) reaction times (RTs), as a function of task: resting-state (blue), detection level 1 (purple), and detection level 2 (red).

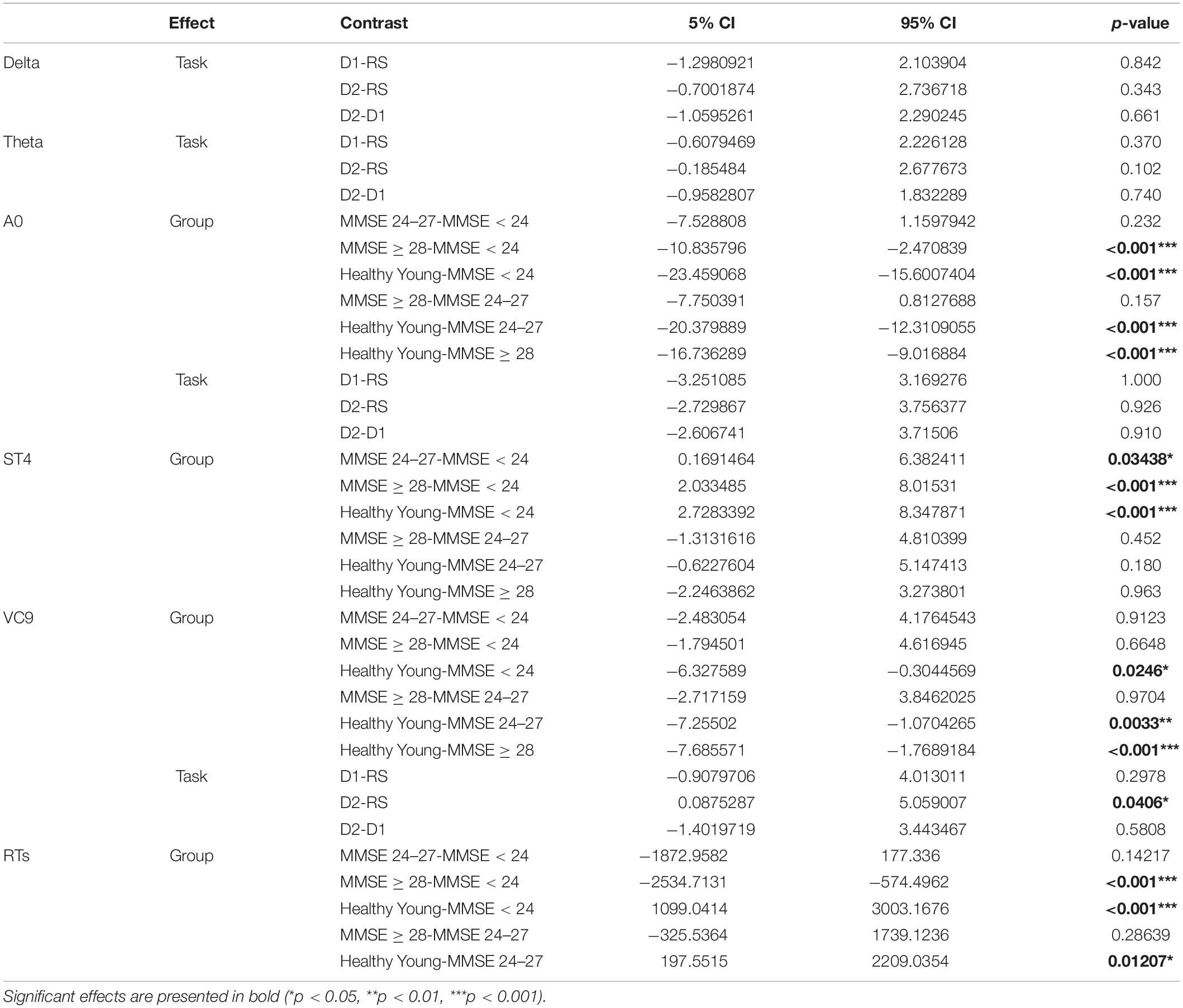

Table 6. Difference, 95% CI, and p-values of the post hoc comparisons for significant main effects of group and task.

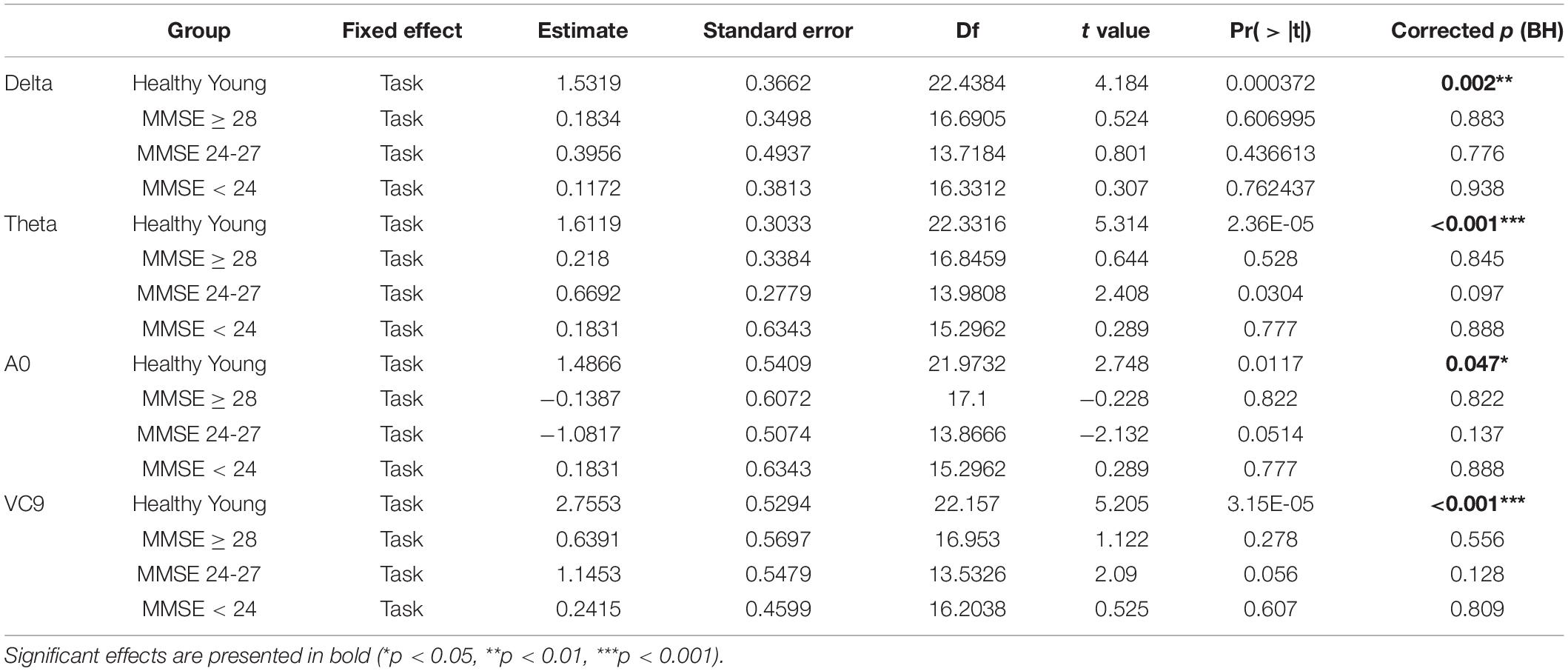

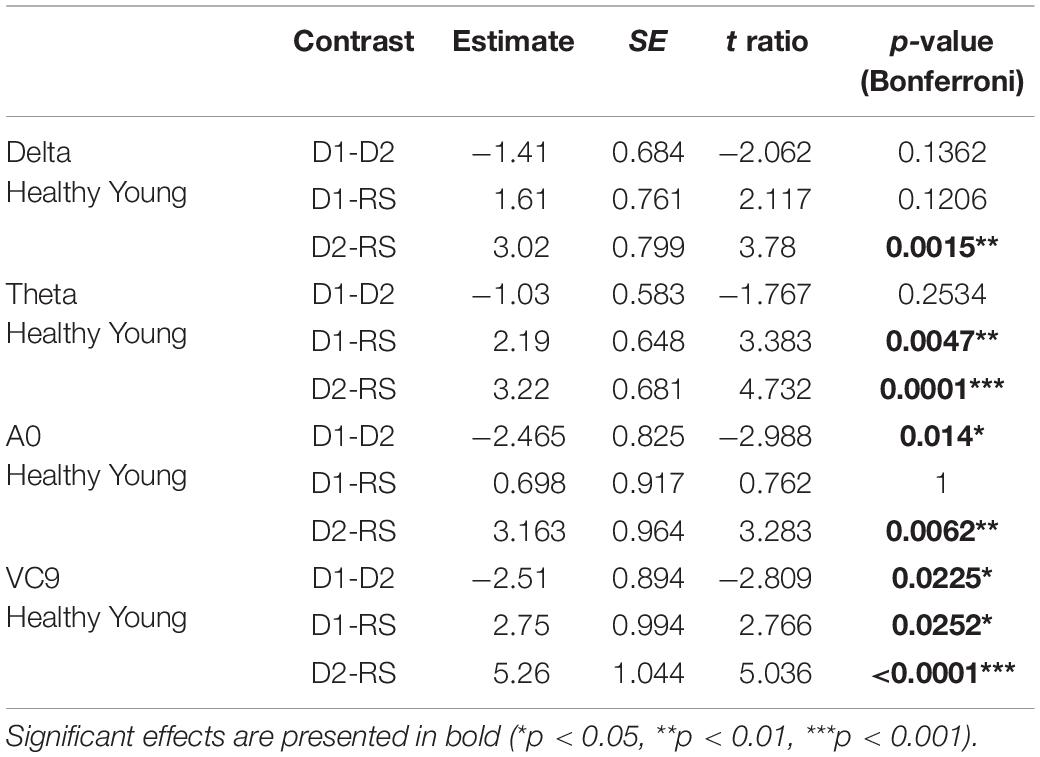

Finally, to explore whether the differences between task levels vary between the groups (hypothesis 4), we conducted separate GLMMs for each group, with task as a within-participants variable (coded as a linear variable, as in the main analysis). These included only the EEG features that exhibited a significant interaction between group and task (i.e., only features whose selected model included the interaction and whose interaction effect was significant in the main GLMM). Taking all fixed effects together, we corrected the p-values using the Benjamini–Hochberg (BH) correction. For features that exhibited a corrected significant main effect of task using GLMM, we further compared the task levels using post hoc analyses with Tukey correction. The coefficients of the main effect of task using GLMMs are presented in Table 7, and post hoc comparisons of significant features are presented in Table 8.
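The Benjamini-Hochberg adjustment applied to the pooled p-values can be sketched as follows. The function name is hypothetical, and the example p-values are illustrative rather than values from the study.

```python
def benjamini_hochberg(p_values):
    """Return BH-adjusted p-values in the same order as the input."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices by ascending p
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down, enforcing monotonicity
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, p_values[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


# Illustrative p-values only
adjusted = benjamini_hochberg([0.01, 0.04, 0.03, 0.005])
```

Each adjusted value is then compared against the 0.05 significance level. The procedure controls the false discovery rate across the whole family of tests rather than the family-wise error rate.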

Table 7. Estimate, Standard errors, df, t-values and p-values, and BH-corrected p-values of the separate GLMM conducted on each group, with task level as a within-participants variable.

Table 8. Estimate, SE, t and p-values of the post hoc comparisons for significant main effects of task in the sub GLMM conducted on healthy young participants.

T-test results between the mean ages of each MMSE group in total, and for males and females separately as well as between the age and MMSE scores of each MMSE group between males and females were calculated. Means, standard deviations, t and p-values of the comparisons are presented in Table 1. The mean age of the participants did not differ between the three MMSE groups for all participants and for males and females separately (all ps > 0.05). Additionally, MMSE mean scores and mean age of each MMSE group were similar in males and females (all ps > 0.05).

Correlations between individual MMSE scores and participants’ RTs in both levels of the detection task were significant, both for each level separately, as well as the mean activation in the entire cognitive detection task (p < 0.01 for all, see Figure 3).

Pearson r, 95% CIs and p-values of the correlations between individual MMSE scores and each EEG variable (averaged and separated for each task level) and their comparisons are presented in Table 2 and Figure 4. The activity of ST4 increased with higher MMSE scores both as averaged across tasks, and separately during detection 2, and was highest during detection 1 (p = 0.011, p = 0.017, and p = 0.015, respectively). ST4 activity under the resting-state task did not correlate with MMSE (p = 0.102), and the comparison between the correlations during the different tasks did not yield significant differences. The activity of A0 increased with lower MMSE scores both as averaged across tasks and separately during detection 2, and was highest during detection 1 (p = 0.016, p = 0.033 and p = 0.006, respectively). A0 activity under the resting state task did not correlate with MMSE and was significantly lower than the correlation during detection level 1 (p = 0.113 and p = 0.047, respectively). There was no difference between the correlations of ST4 and MMSE and A0 and MMSE. No other features were found to be correlated with individual MMSE scores.

The partial Pearson correlations between the EEG features and MMSE scores controlled for age demonstrated fully comparable results to the unadjusted correlations: A0 and ST4 showed significant partial correlations with MMSE both for the averaged activity and separately during detection level 1 and detection level 2. All partial correlations controlling for age were not significantly different from the unadjusted correlation, and partial correlations between MMSE and A0 were no different from the partial correlations between MMSE and ST4 (see Table 3).

For EEG features Delta, Theta, A0, and VC9, the best-fitting model included the fixed effects of group, task, and the interaction between them, with task | participant as the random slope. For ST4 and reaction times, the best-fitting model included the fixed effects of group and task without their interaction, with task | participant as the random slope (see Table 4 for model selection and Chi-square test results).

The distribution of participants’ EEG feature activity under the three tasks is presented in Figure 5. All fixed-effects estimates, standard errors, DFs, and t and p-values are presented in Table 5. The main linear effect of group was significant for EEG features ST4, A0, and VC9 (p < 0.001, p < 0.001, and p = 0.003, respectively), and for RTs (p < 0.001). Post hoc comparisons using Tukey corrections revealed that for EEG feature A0, significant differences were found between young healthy participants and all the senior groups, as well as between the senior group with MMSE ≥ 28 and the senior group with MMSE < 24 (all ps < 0.001). EEG feature ST4 differentiated between the senior group with MMSE < 24 and each of the other groups: the senior group with MMSE between 24 and 27, the senior group with MMSE ≥ 28, and the healthy young participants (p = 0.034, p < 0.001, and p < 0.001, for the comparisons between senior group with MMSE < 24 and MMSE 24–27, MMSE ≥ 28 and healthy young participants, respectively). EEG feature VC9 showed significant differences between the young healthy group and all senior groups (p = 0.024, p = 0.003, and p < 0.001 for the comparisons between healthy young group and senior group MMSE < 24, MMSE 24–27, and MMSE ≥ 28, respectively). Finally, RTs differed significantly between the healthy young participants and the senior groups with MMSE < 24, and MMSE between 24 and 27 (p < 0.001 and p = 0.012, respectively), as well as between senior group with MMSE ≥ 28 and the senior group with MMSE < 24 (p < 0.001). For all significant pairwise comparisons results, see Table 6.

The main effect of task was significant for Delta, Theta, A0, and VC9 (p < 0.001, p < 0.001, p = 0.042, and p < 0.00, respectively). However, post hoc comparisons of task main effect levels revealed that the difference between detection level 2 and resting state was significant only for VC9 (p = 0.041).

Finally, the interaction between group and task level was significant for Delta, Theta, A0 and VC9 (p = 0.015, p < 0.001, p = 0.042, and p = 0.003, respectively). To unfold this interaction for each variable separately, we conducted GLMMs per each group with task as a within-participants variable. Results revealed that for all the variables (after BH correction) the main linear effect of task was significant only for the healthy young participants group (p = 0.002, p < 0.001, p = 0.047, and p < 0.001 for Delta, Theta, A0 and VC9, respectively). See Table 7 for the separate GLMMs per each group. Post-hoc pairwise comparisons with Bonferroni correction revealed that in the young healthy group, the difference between detection level 2 and resting state was significant for all features (p = 0.002, p < 0.001, p = 0.006, and p < 0.001 for Delta, Theta, A0 and VC9, respectively). The difference between detection level 1 and resting state was significant only for Theta and VC9 (p = 0.004, and p = 0.025, respectively). The difference between the detection levels 1 and 2 was significant for A0 and VC9 (p = 0.014, and p = 0.025, respectively). See Table 8 for all pairwise comparisons.

Cognitive decline remains highly underdiagnosed (Lang et al., 2017). Improving the detection rate in the community to allow early intervention is therefore imperative. The aim of this study was to evaluate the ability of a single-channel EEG system with an interactive assessment tool to detect cognitive decline with correlation to known assessment methods. We demonstrate that objective EEG features extracted from a wearable EEG system with an easy setup, together with a short evaluation, may provide an assessment method for cognitive state.

Fifty seniors and twenty-two healthy young control participants completed a short auditory cognitive assessment battery. Classical EEG frequency bands as well as pre-defined ML features were used in the analysis of the data. ML applied to EEG signals is increasingly being examined for detection of cognitive deterioration. The biomarkers that are extracted using ML approaches show accurate separation between healthy and cognitively impaired populations (Cichocki et al., 2005; Melissant et al., 2005; Amezquita-Sanchez et al., 2019; Schapkin et al., 2020; Doan et al., 2021; Meghdadi et al., 2021). Our approach utilizes wavelet-packet analysis (Coifman and Wickerhauser, 1992; Neretti and Intrator, 2002; Intrator, 2018; Intrator, 2019) as pre-processing to ML. The EEG features used here were calculated using a different dataset to avoid the risks associated with classification studies, such as overfitting (Mateos-Pérez et al., 2018). This is unlike other studies that use classifiers trained and tested via cross validation on the same dataset (Deiber et al., 2015; Kashefpoor et al., 2016; Khatun et al., 2019). Specifically, the pre-extracted EEG features used here, VC9 and ST4, were previously validated further in studies performed on healthy young subjects. Results showed a correlation of VC9 to working memory load (Maimon et al., 2020, 2021; Bolton et al., 2021) and a correlation of ST4 to individual performance (Maimon et al., 2020).

The wearable single-channel EEG system was previously used in several studies to assess cognition (Maimon et al., 2020, 2021, 2022; Bolton et al., 2021). A novel cognitive assessment based on auditory stimuli with three cognitive load levels (high, low, and rest) was used to probe different cognitive states. Individual response performance (RT) was correlated to the MMSE score in both difficulty levels of the cognitive task, which further validates the cognitive assessment tool. The auditory stimuli included a simple detection task involving musical stimuli. We chose this particular detection task because it is one of the most commonly used tasks to measure differences in EEG activity between cognitive decline groups (Paitel et al., 2021), and it requires relatively low cognitive load levels, which is well suited for cognitive decline states (Debener et al., 2005). We used this cognitive assessment on senior participants in different cognitive states (from healthy seniors to cognitive decline patients, as determined by MMSE score independently obtained by clinicians), and on healthy young participants.

Verifying our first hypothesis, activity of EEG features A0 and ST4 and RTs significantly correlated with individual MMSE scores for both levels of the auditory detection task. These correlations persisted when controlling for age, thus eliminating a possible confounding effect. Additionally, the correlations between MMSE scores and EEG features ST4 and A0 activity were significant for both difficulty levels of the cognitive task (i.e., detection levels 1 and 2). Comparison of the correlations showed that the low difficulty level of a detection task elicited the highest correlation to MMSE scores, specifically for A0. These correlation analyses indicate a significant initial association between the novel EEG features and cognitive states as previously determined by clinical screening tools.

To continue exploring this association, further analysis compared the senior groups with the addition of a control group of healthy young participants. Results demonstrated the ability of the EEG features A0 and ST4, as well as RTs, to significantly differentiate between groups of seniors with high vs. low MMSE scores. High MMSE scores are associated with healthy cognition, while low MMSE scores tend to indicate a cognitive impairment. In allocating the groups, we used the common cutoff score of 24 to divide between low-functioning (MMSE < 24) and high-functioning seniors. However, we divided the high-functioning group further using a cutoff score of 27 to get a notion of possible separability between cognitive states in high-functioning seniors. Results showed that EEG features ST4 and A0 separated between the group of seniors with high-MMSE scores and the low-MMSE group, with the common cutoff score of 24, comparable to previous reports in the field (Lehmann et al., 2007; Kashefpoor et al., 2016; Khatun et al., 2019). Additionally, ST4 showed differences between the low MMSE group (MMSE < 24) and the young healthy group, which is expected, but it also showed a significant difference to the group of seniors with MMSE 24–27 scores. Finally, although RTs exhibited some significant differences between the groups (i.e., between the healthy young participants and the senior groups with MMSE 24–27 and MMSE < 24, and between the group with MMSE ≥ 28 and MMSE < 24), EEG features ST4 and A0 show additional more subtle differences between the groups that were not detectable using behavioral performance alone (e.g., the difference in ST4 activity between MMSE < 24 and MMSE 24–27).

These results suggest a detection of more delicate differences between seniors with MMSE scores under 24 and seniors that are considered healthy to date, but are at a greater risk for developing cognitive decline (with MMSE scores below 27 but above 24). The results may further indicate a different cognitive functionality between seniors that are already considered to have experienced a certain decline (MMSE < 24) and seniors that score lower in the initial screening test but are not considered as suffering from cognitive decline (MMSE 24–27). This result contributes to the debate in the literature over cognitive functionality of patients with scores below 27 (Shiroky et al., 2007; O’Bryant et al., 2008). Finally, EEG features A0 and VC9 showed significant differences between the young healthy group and all of the senior groups. While a separation between healthy controls and low-MMSE score groups is expected, these results also suggest different cognitive patterns between healthy young participants and seniors considered healthy (based on their MMSE scores), consistent with reports from previous studies (Vlahou et al., 2014).

Confirming our third hypothesis that EEG activity will correlate with cognitive load levels, results further demonstrated that the task variable modulated the activity of EEG features A0, VC9, and Theta, which tracked cognitive load level. Activity of these features increased with higher cognitive load only within the healthy young group and not in the senior groups, who did not exhibit such activity patterns, corroborating our fourth hypothesis. Although all features exhibited a significant difference between the two cognitive load extremes, only the VC9 feature showed significant effects for all of the comparisons between the different levels of cognitive load. The load-related increase in Theta is in line with previous reports of frontal Theta showing an increase during cognitively demanding tasks (Jensen and Tesche, 2002; Scheeringa et al., 2009). This difference was not present in the senior population, supporting the notion that Theta may be indicative of cognitive state and serve as a predictor of cognitive decline, consistent with previous findings (Missonnier et al., 2007; Deiber et al., 2015). Results of VC9 activity are also consistent with previous work, supporting the association of VC9 with working memory load in the healthy population (Maimon et al., 2020, 2021; Bolton et al., 2021). Altogether, these results provide an initial indication of the ability of the proposed tool to assess cognitive states and detect cognitive decline in the elderly population.

Taking these new results together with previous reports, EEG feature VC9 shows a clear association with frontal brain functions involving cognitive load, closely related to frontal Theta (Maimon et al., 2020, 2021, 2022; Bolton et al., 2021). Further, EEG feature ST4, previously shown to correlate with individual performance of healthy young participants undergoing a highly demanding cognitive load task (n-back task with 3 levels; Maimon et al., 2020), was found in the present study to correlate with the MMSE scores of seniors in different states of cognition, showing specific sensitivity for lower MMSE scores. As such, this feature may be related to general cognitive abilities, and may be most sensitive to a declining cognitive state. Finally, EEG feature A0 exhibited a correlation with MMSE score within senior participants, as well as an ability to differentiate between groups of cognitive decline and healthy participants, with higher sensitivity to the higher levels of cognitive state (i.e., the healthy young participants and senior participants with no cognitive decline). In addition, it also correlated with cognitive load within the healthy young participants. Therefore, we conclude that A0 might be related to brain functions involving cognitive load and abilities within the cognitively healthy population and can detect subtle changes of brain activity in the slightly impaired population.

The ability to differentiate between cognitive states with the EEG features shown here relies solely on a single EEG channel together with a short auditory assessment, unlike most studies attempting to assess cognitive states with multichannel EEG systems (Dauwels et al., 2010b; Moretti et al., 2011). It has been argued that the long setup time of multichannel EEG systems may cause fatigue, stress, or even change mental states, affecting EEG patterns and, subsequently, study outcomes (Cassani et al., 2017). This suggests that cognitive state evaluation using a wearable single-channel EEG with a quick setup time may not only make the assessment more affordable and accessible, but also potentially reduce the effects of pretest time on the results. Using a single EEG channel was previously shown to be effective in detection of cognitive decline (Khatun et al., 2019); however, here we demonstrate results obtained using features that were extracted from an independent dataset to avoid overfitting the data. The assessment method offered here may potentially enable detection of cognitive decline in earlier stages, before major dementia symptoms arise.

While this pilot study shows promising initial results, more work is needed. Specifically, additional studies should include a longer testing period to quantify the variability within subjects and to potentially increase the predictive power. Due to the small sample size, generalization of the results is limited. Thus, larger cohorts of patients that are quantified by extensive screening methods would offer an opportunity to get more sensitive separation between earlier stages of cognitive decline using the suggested tool, and potentially reduce the subjective nature of the MMSE. Furthermore, thorough diagnostic batteries such as the Petersen criteria (Petersen, 2004), Clinical Dementia Rating (Morris, 1993), and NINCDS-ADRDA (Albert et al., 2011) would assist in determining the patient’s clinical stage (i.e., MCI/dementia) and may provide further diagnostic predictions in addition to screening. Moreover, a longitudinal study could assess cognitive state in asymptomatic senior patients and follow participants’ cognition over an extended period of time, validating the predictive power of the EEG features. Education levels of the senior participants were not collected in the present study, presenting a key limitation. Education level was previously shown to affect individual MMSE scores (Crum et al., 1993), and including such data could improve the models if taken as a covariate in the statistical methods (Choi et al., 2019). Further studies with the novel EEG features should include education level data and explore their correspondences to MMSE scores. Finally, our approach utilizes wavelet-packet analysis as pre-processing to ML, creating components composed of time-varying fundamental frequencies and their harmonics. As a result, analyzing the features only in terms of frequency range takes away two important properties of these components: their fine-temporal nature and their reliance on harmonics of the fundamental frequency.
The best analogy to this can be found in the visual cortex; simple cells in the visual cortex respond to bars at a certain orientation while complex cells respond to a collection of moving bars at different orientations and velocity, which are formed from collections of simple cells (Hubel and Wiesel, 1962), rendering the complex cells crucial to representation of 3D structure (Edelman and Intrator, 2000). Our approach is similar, where the complex time/frequency components of this dynamic nature are instrumental in the interpretation of the EEG signal. Future research should explore the usefulness of this approach in cognitive assessment. Furthermore, exploring the potential usefulness of the novel EEG features presented here in controlled studies characterizing EEG psychogeography in seniors may contribute to understanding the association of these features to basic brain function.

This pilot study successfully demonstrated the ability to assess cognitive states using a wearable single-channel EEG and machine-learning EEG features that correlate to well-validated clinical measurements for detection of cognitive decline. Using such a low-cost approach to allow objective assessment may provide consistency in assessment across patients and between medical facilities clear of tester bias. Furthermore, due to a short setup time and the short cognitive evaluation, this tool has the potential to be used on a large scale in clinics in the community to detect deterioration before clinical symptoms emerge. Future studies should explore potential usefulness of this tool in characterizing changes in EEG patterns of cognitive decline over time, for early detection of cognitive decline to potentially allow earlier intervention.

The datasets generated and analyzed during the current study are not publicly available due to patient privacy, but may be available from the corresponding author under restrictions. Requests to access the datasets should be directed to NI, nathan@neurosteer.com.

Ethical approval for this study was granted by the Ethics Committee of Dorot Geriatric Medical Center (for seniors) and Tel Aviv University Ethics Committee (for young healthy participants). Registration: Israeli Ministry of Health (MOH) registry number MOH_2019-10-07_007352. NIH Clinical Trials Registry number NCT04386902.

LM, NM, NI, and AS: conception and study design. LM, NR-P, and SR: data acquisition. NI and AS: supervision. LM, NM, and NI: data analysis and writing. All authors read and approved the final manuscript.

LM, NM, and NI have equity interest in Neurosteer, which developed the Neurosteer system.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

We wish to thank Talya Zeimer for her support while writing this manuscript and Narkiss Pressburger for her great contribution to data acquisition. We also thank the study participants and the supporting staff for their contributions to this study.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnagi.2022.773692/full#supplementary-material

Ahmadlou, M., Adeli, H., and Adeli, A. (2010). New diagnostic EEG markers of the Alzheimer’s disease using visibility graph. J. Neural Transm. 117, 1099–1109. doi: 10.1007/s00702-010-0450-3

Albert, M. S., DeKosky, S. T., Dickson, D., Dubois, B., Feldman, H. H., Fox, N. C., et al. (2011). The diagnosis of mild cognitive impairment due to Alzheimer’s disease: recommendations from the National Institute on Aging-Alzheimer’s Association workgroups on diagnostic guidelines for Alzheimer’s disease. Alzheimers Dement. 7, 270–279. doi: 10.1016/j.jalz.2011.03.008

Amezquita-Sanchez, J. P., Mammone, N., Morabito, F. C., Marino, S., and Adeli, H. (2019). A novel methodology for automated differential diagnosis of mild cognitive impairment and the Alzheimer’s disease using EEG signals. J. Neurosci. Methods 322, 88–95. doi: 10.1016/j.jneumeth.2019.04.013

Antonenko, P., Paas, F., Grabner, R., and van Gog, T. (2010). Using electroencephalography to measure cognitive load. Educ. Psychol. Rev. 22, 425–438. doi: 10.1007/s10648-010-9130-y

Babiloni, C., Binetti, G., Cassetta, E., Cerboneschi, D., Dal Forno, G., Del Percio, C., et al. (2004). Mapping distributed sources of cortical rhythms in mild Alzheimer’s disease. A multicentric EEG study. Neuroimage 22, 57–67. doi: 10.1016/j.neuroimage.2003.09.028

Bates, D. M., Maechler, M., Bolker, B., and Walker, S. (2015). lme4: linear mixed-effects models using S4 classes. J. Stat. Softw. 67, 1–48.

Berti, S., and Schröger, E. (2001). A comparison of auditory and visual distraction effects: behavioral and event-related indices. Cogn. Brain Res. 10, 265–273. doi: 10.1016/S0926-6410(00)00044-6

Bibina, V. C., Chakraborty, U., Regeena, M. L., and Kumar, A. (2018). Signal processing methods of diagnosing Alzheimer’s disease using EEG a technical review. Int. J. Biol. Biomed. Eng. 12.

Bolton, F., Te’Eni, D., Maimon, N. B., and Toch, E. (2021). “Detecting interruption events using EEG,” in Proceedings of the 3rd Global Conference on Life Sciences and Technologies (LifeTech) (Nara: IEEE), 33–34.

Brayne, C., and Beardsall, L. (1990). Estimation of verbal intelligence in an elderly community: an epidemiological study using NART. Br. J. Clin. Psychol. 29, 217–223. doi: 10.1111/j.2044-8260.1990.tb00872.x

Cassani, R., Estarellas, M., San-Martin, R., Fraga, F. J., and Falk, T. H. (2018). Systematic review on resting-state EEG for Alzheimer’s disease diagnosis and progression assessment. Dis. Mark. 2018:5174815. doi: 10.1155/2018/5174815

Cassani, R., Falk, T. H., Fraga, F. J., Cecchi, M., Moore, D. K., and Anghinah, R. (2017). Towards automated electroencephalography-based Alzheimer’s disease diagnosis using portable low-density devices. Biomed. Signal Process. Control 33, 261–271. doi: 10.1016/j.bspc.2016.12.009

Chapman, K. R., Bing-Canar, H., Alosco, M. L., Steinberg, E. G., Martin, B., Chaisson, C., et al. (2016). Mini mental state examination and logical memory scores for entry into Alzheimer’s disease trials. Alzheimers Res. Ther. 8:9. doi: 10.1186/s13195-016-0176-z

Choi, H., and Jin, K. H. (2018). Predicting cognitive decline with deep learning of brain metabolism and amyloid imaging. Behav. Brain Res. 344, 103–109. doi: 10.1016/j.bbr.2018.02.017

Choi, J., Ku, B., You, Y. G., Jo, M., Kwon, M., Choi, Y., et al. (2019). Resting-state prefrontal EEG biomarkers in correlation with MMSE scores in elderly individuals. Sci. Rep. 9:10468. doi: 10.1038/s41598-019-46789-2

Cichocki, A., Shishkin, S. L., Musha, T., Leonowicz, Z., Asada, T., and Kurachi, T. (2005). EEG filtering based on blind source separation (BSS) for early detection of Alzheimer’s disease. Clin. Neurophysiol. 116, 729–737. doi: 10.1016/j.clinph.2004.09.017

Cnaan, A., Laird, N. M., and Slasor, P. (1997). Using the general linear mixed model to analyse unbalanced repeated measures and longitudinal data. Stat. Med. 16, 2349–2380.

Coifman, R. R., and Donoho, D. L. (1995). “Translation-invariant de-noising,” in Wavelets and Statistics. Lecture Notes in Statistics, Vol. 103, eds A. Antoniadis and G. Oppenheim (New York, NY: Springer). doi: 10.1007/978-1-4612-2544-7_9

Coifman, R. R., and Wickerhauser, M. V. (1992). Entropy-based algorithms for best basis selection. IEEE Trans. Inf. Theory 38, 713–718. doi: 10.1109/18.119732

Cordell, C. B., Borson, S., Boustani, M., Chodosh, J., Reuben, D., Verghese, J., et al. (2013). Alzheimer’s association recommendations for operationalizing the detection of cognitive impairment during the medicare annual wellness visit in a primary care setting. Alzheimers Dement. 9, 141–150. doi: 10.1016/j.jalz.2012.09.011

Crum, R. M., Anthony, J. C., Bassett, S. S., and Folstein, M. F. (1993). Population-based norms for the mini-mental state examination by age and educational level. JAMA J. Am. Med. Assoc. 269, 2386–2391. doi: 10.1001/jama.1993.03500180078038

Dauwels, J., Vialatte, F., and Cichocki, A. (2010a). Diagnosis of Alzheimer’s disease from EEG signals: where are we standing? Curr. Alzheimer Res. 7, 487–505. doi: 10.2174/1567210204558652050

Dauwels, J., Vialatte, F., Musha, T., and Cichocki, A. (2010b). A comparative study of synchrony measures for the early diagnosis of Alzheimer’s disease based on EEG. Neuroimage 49, 668–693. doi: 10.1016/j.neuroimage.2009.06.056

Debener, S., Makeig, S., Delorme, A., and Engel, A. K. (2005). What is novel in the novelty oddball paradigm? Functional significance of the novelty P3 event-related potential as revealed by independent component analysis. Cogn. Brain Res. 22, 309–321. doi: 10.1016/j.cogbrainres.2004.09.006

Deiber, M. P., Ibañez, V., Missonnier, P., Herrmann, F., Fazio-Costa, L., Gold, G., et al. (2009). Abnormal-induced theta activity supports early directed-attention network deficits in progressive MCI. Neurobiol. Aging 30, 1444–1452. doi: 10.1016/j.neurobiolaging.2007.11.021

Deiber, M. P., Meziane, H. B., Hasler, R., Rodriguez, C., Toma, S., Ackermann, M., et al. (2015). Attention and working memory-related EEG markers of subtle cognitive deterioration in healthy elderly individuals. J. Alzheimers Dis. 47, 335–349. doi: 10.3233/JAD-150111

Diedenhofen, B., and Musch, J. (2015). Cocor: a comprehensive solution for the statistical comparison of correlations. PLoS One 10:e0121945. doi: 10.1371/journal.pone.0121945

Doan, D. N. T., Ku, B., Choi, J., Oh, M., Kim, K., Cha, W., et al. (2021). Predicting dementia with prefrontal electroencephalography and event-related potential. Front. Aging Neurosci. 13:659817. doi: 10.3389/fnagi.2021.659817

Dunn, O. J., and Clark, V. (1969). Correlation coefficients measured on the same individuals. J. Am. Stat. Assoc. 64, 366–377. doi: 10.1080/01621459.1969.10500981

Edelman, S., and Intrator, N. (2000). (Coarse coding of shape fragments)+(retinotopy)≈representation of structure. Spat. Vis. 13, 2–3. doi: 10.1163/156856800741072

Efron, B., and Tibshirani, R. J. (1993). An Introduction to the Bootstrap. London: Chapman and Hall.

Farina, F. R., Emek-Savaş, D. D., Rueda-Delgado, L., Boyle, R., Kiiski, H., Yener, G., et al. (2020). A comparison of resting state EEG and structural MRI for classifying Alzheimer’s disease and mild cognitive impairment. Neuroimage 215:116795. doi: 10.1016/j.neuroimage.2020.116795

Fell, J., Dietl, T., Grunwald, T., Kurthen, M., Klaver, P., Trautner, P., et al. (2004). Neural bases of cognitive ERPs: more than phase reset. J. Cogn. Neurosci. 16, 1595–1604. doi: 10.1162/0898929042568514

Folstein, M. F., Folstein, S. E., and McHugh, P. R. (1975). “Mini-mental state”. A practical method for grading the cognitive state of patients for the clinician. J. Psychiatr. Res. 12, 189–198. doi: 10.1016/0022-3956(75)90026-6

Galimberti, D., and Scarpini, E. (2011). Disease-modifying treatments for Alzheimer’s disease. Ther. Adv. Neurol. Disord. 4, 203–216. doi: 10.1177/1756285611404470

Ghorbanian, P., Devilbiss, D. M., Verma, A., Bernstein, A., Hess, T., Simon, A. J., et al. (2013). Identification of resting and active state EEG features of Alzheimer’s disease using discrete wavelet transform. Ann. Biomed. Eng. 41, 1243–1257. doi: 10.1007/s10439-013-0795-5

Hadjichrysanthou, C., Evans, S., Bajaj, S., Siakallis, L. C., McRae-McKee, K., de Wolf, F., et al. (2020). The dynamics of biomarkers across the clinical spectrum of Alzheimer’s disease. Alzheimers Res. Ther. 12:74. doi: 10.1186/s13195-020-00636-z

Hastie, T., Buja, A., and Tibshirani, R. (2007). Penalized discriminant analysis. Ann. Stat. 23, 73–102. doi: 10.1214/aos/1176324456

Hebert, L. E., Weuve, J., Scherr, P. A., and Evans, D. A. (2013). Alzheimer disease in the United States (2010–2050) estimated using the 2010 census. Neurology 80, 1778–1783. doi: 10.1212/WNL.0b013e31828726f5

Herrmann, C. S., Strüber, D., Helfrich, R. F., and Engel, A. K. (2016). EEG oscillations: from correlation to causality. Int. J. Psychophysiol. 103, 12–21. doi: 10.1016/j.ijpsycho.2015.02.003

Hoseini, Z., Nazari, M., Lee, K. S., and Chung, H. (2021). Current feedback instrumentation amplifier with built-in differential electrode offset cancellation loop for ECG/EEG sensing frontend. IEEE Trans. Instrum. Meas. 70:2001911. doi: 10.1109/TIM.2020.3031205

Hubel, D. H., and Wiesel, T. N. (1962). Receptive fields, binocular interaction and functional architecture in the cat’s visual cortex. J. Physiol. 160, 106–154. doi: 10.1113/jphysiol.1962.sp006837

Intrator, N. (2018). Systems and methods for brain activity interpretation. U.S. Patent No. 2016/0235351 A1. Washington, DC: U.S. Patent and Trademark Office.

Intrator, N. (2019). Systems and methods for analyzing brain activity and applications thereof. U.S. Patent No. 2017/0347906 A1. Washington, DC: U.S. Patent and Trademark Office.

Jack, C. R. Jr., Albert, M. S., Knopman, D. S., McKhann, G. M., Sperling, R. A., Carrillo, M. C., et al. (2011). Introduction to revised criteria for the diagnosis of Alzheimer’s disease: National Institute on Aging and the Alzheimer Association workgroups. Alzheimers Dement. 7, 257–262. doi: 10.1016/j.jalz.2011.03.004

Jensen, O., and Tesche, C. D. (2002). Frontal theta activity in humans increases with memory load in a working memory task. Eur. J. Neurosci. 15, 1395–1399. doi: 10.1046/j.1460-9568.2002.01975.x

Jeong, J. (2004). EEG dynamics in patients with Alzheimer’s disease. Clin. Neurophysiol. 115, 1490–1505. doi: 10.1016/j.clinph.2004.01.001

Kashefpoor, M., Rabbani, H., and Barekatain, M. (2016). Automatic diagnosis of mild cognitive impairment using electroencephalogram spectral features. J. Med. Signals Sens. 6, 25–32. doi: 10.4103/2228-7477.175869

Khatun, S., Morshed, B. I., and Bidelman, G. M. (2019). A single-channel EEG-based approach to detect mild cognitive impairment via speech-evoked brain responses. IEEE Trans. Neural Syst. Rehabil. Eng. 27, 1063–1070. doi: 10.1109/TNSRE.2019.2911970

Lang, L., Clifford, A., Wei, L., Zhang, D., Leung, D., Augustine, G., et al. (2017). Prevalence and determinants of undetected dementia in the community: a systematic literature review and a meta-analysis. BMJ Open 7:e011146. doi: 10.1136/bmjopen-2016-011146

Lehmann, C., Koenig, T., Jelic, V., Prichep, L., John, R. E., Wahlund, L. O., et al. (2007). Application and comparison of classification algorithms for recognition of Alzheimer’s disease in electrical brain activity (EEG). J. Neurosci. Methods 161, 342–350. doi: 10.1016/j.jneumeth.2006.10.023

López, M. M., Ramírez, J., Górriz, J. M., Alvarez, I., Salas-Gonzalez, D., Segovia, F., et al. (2009). SVM-based CAD system for early detection of the Alzheimer’s disease using kernel PCA and LDA. Neurosci. Lett. 464, 233–238. doi: 10.1016/j.neulet.2009.08.061

Lv, J. Y., Wang, T., Qiu, J., Feng, S. H., Tu, S., and Wei, D. T. (2010). The electrophysiological effect of working memory load on involuntary attention in an auditory-visual distraction paradigm: an ERP study. Exp. Brain Res. 205, 81–86. doi: 10.1007/s00221-010-2360-x

Maimon, N. B., Bez, M., Drobot, D., Molcho, L., Intrator, N., Kakiashvilli, E., et al. (2021). Continuous monitoring of mental load during virtual simulator training for laparoscopic surgery reflects laparoscopic dexterity. A comparative study using a novel wireless device. Front. Neurosci. 15:694010. doi: 10.3389/fnins.2021.694010

Maimon, N. B., Molcho, L., Intrator, N., and Lamy, D. (2020). Single-channel EEG features during n-back task correlate with working memory load. arXiv [Preprint] doi: 10.48550/arXiv.2008.04987

Maimon, N. B., Molcho, L., Jaul, E., Intrator, N., Barron, J., and Meiron, O. (2022). “EEG reactivity changes captured via mobile BCI device following tDCS intervention–a pilot-study in disorders of consciousness (DOC) patients,” in Proceedings of the 10th International Winter Conference on Brain-Computer Interface (BCI) (Gangwon-do: IEEE), 1–3.

Makeig, S., Westerfield, M., Jung, T. P., Enghoff, S., Townsend, J., Courchesne, E., et al. (2002). Dynamic brain sources of visual evoked responses. Science 295, 690–694. doi: 10.1126/science.1066168

Mateos-Pérez, J. M., Dadar, M., Lacalle-Aurioles, M., Iturria-Medina, Y., Zeighami, Y., and Evans, A. C. (2018). Structural neuroimaging as clinical predictor: a review of machine learning applications. Neuroimage Clin. 20, 506–522. doi: 10.1016/j.nicl.2018.08.019

Maxime, H. (2017). RVAideMemoire: Diverse Basic Statistical and Graphical Functions. R package 0.9-57. Available online at: https://CRAN.R-project.org/package=RVAideMemoire

Meghdadi, A. H., Stevanović Karić, M., McConnell, M., Rupp, G., Richard, C., Hamilton, J., et al. (2021). Resting state EEG biomarkers of cognitive decline associated with Alzheimer’s disease and mild cognitive impairment. PLoS One 16:e0244180. doi: 10.1371/journal.pone.0244180

Melissant, C., Ypma, A., Frietman, E. E. E., and Stam, C. J. (2005). A method for detection of Alzheimer’s disease using ICA-enhanced EEG measurements. Artif. Intell. Med. 33, 209–222. doi: 10.1016/j.artmed.2004.07.003

Meng, X. L., Rosenthal, R., and Rubin, D. B. (1992). Comparing correlated correlation coefficients. Psychol. Bull. 111, 172–175. doi: 10.1037/0033-2909.111.1.172

Missonnier, P., Deiber, M. P., Gold, G., Herrmann, F. R., Millet, P., Michon, A., et al. (2007). Working memory load-related electroencephalographic parameters can differentiate progressive from stable mild cognitive impairment. Neuroscience 150, 346–356. doi: 10.1016/j.neuroscience.2007.09.009

Moretti, D. V., Frisoni, G. B., Fracassi, C., Pievani, M., Geroldi, C., Binetti, G., et al. (2011). MCI patients’ EEGs show group differences between those who progress and those who do not progress to AD. Neurobiol. Aging 32, 563–571. doi: 10.1016/j.neurobiolaging.2009.04.003

Morris, J. C. (1993). The clinical dementia rating (CDR): current version and scoring rules. Neurology 43, 2412–2414. doi: 10.1212/wnl.43.11.2412-a

Neretti, N., and Intrator, N. (2002). “An adaptive approach to wavelet filters design,” in Proceedings of the Neural Networks for Signal Processing IEEE Workshop, Bangalore, 317–326. doi: 10.1109/NNSP.2002.1030043

Nimmy John, T., Subha Dharmapalan, P., and Ramshekhar Menon, N. (2019). Exploration of time-frequency reassignment and homologous inter-hemispheric asymmetry analysis of MCI-AD brain activity. BMC Neurosci. 20:38. doi: 10.1186/s12868-019-0519-3

O’Bryant, S. E., Humphreys, J. D., Smith, G. E., Ivnik, R. J., Graff-Radford, N. R., Petersen, R. C., et al. (2008). Detecting dementia with the mini-mental state examination in highly educated individuals. Arch. Neurol. 65, 963–967. doi: 10.1001/archneur.65.7.963

Paitel, E. R., Samii, M. R., and Nielson, K. A. (2021). A systematic review of cognitive event-related potentials in mild cognitive impairment and Alzheimer’s disease. Behav. Brain Res. 396:112904. doi: 10.1016/j.bbr.2020.112904

Petersen, R. C. (2004). Mild cognitive impairment as a diagnostic entity. J. Intern. Med. 256, 183–194. doi: 10.1111/j.1365-2796.2004.01388.x

Plassman, B. L., Williams, J. W. Jr., Burke, J. R., Holsinger, T., and Benjamin, S. (2010). Systematic review: factors associated with risk for and possible prevention of cognitive decline in later life. Ann. Intern. Med. 153, 182–193. doi: 10.7326/0003-4819-153-3-201008030-00258

Popp, F., Dallmer-Zerbe, I., Philipsen, A., and Herrmann, C. S. (2019). Challenges of P300 modulation using Transcranial Alternating Current Stimulation (tACS). Front. Psychol. 10:476. doi: 10.3389/fpsyg.2019.00476

Pritchard, W. S., Duke, D. W., Coburn, K. L., Moore, N. C., Tucker, K. A., Jann, M. W., et al. (1994). EEG-based, neural-net predictive classification of Alzheimer’s disease versus control subjects is augmented by non-linear EEG measures. Electroencephalogr. Clin. Neurophysiol. 91, 118–130. doi: 10.1016/0013-4694(94)90033-7

Qiu, C., Kivipelto, M., and von Strauss, E. (2009). Epidemiology of Alzheimer’s disease: occurrence, determinants, and strategies toward intervention. Dialogues Clin. Neurosci. 11, 111–128. doi: 10.31887/DCNS.2009.11.2/cqiu

Ritchie, K., and Touchon, J. (2000). Mild cognitive impairment: conceptual basis and current nosological status. Lancet 355, 225–228. doi: 10.1016/S0140-6736(99)06155-3