- 1 School of Electronic and Computer Engineering, Peking University, Shenzhen, China

- 2 Peng Cheng Laboratory, Shenzhen, China

- 3 College of Engineering, Pennsylvania State University, State College, PA, United States

- 4 Harbin Institute of Technology, Shenzhen, China

- 5 Beijing Normal University-Hong Kong Baptist University United International College, Zhuhai, China

- 6 PLA Strategic Support Force Characteristic Medical Center, Beijing, China

Nuclear segmentation of histopathological images is a crucial step in computer-aided image analysis. Nuclei in these images are complex, diverse, dense, and often overlapping, which makes nuclear segmentation challenging. To overcome this challenge, this paper proposes a hybrid-attention nested UNet (Han-Net), which consists of two modules: a hybrid nested U-shaped network (H-part) and a hybrid attention block (A-part). H-part combines a nested multi-depth U-shaped network and a dense network with full resolution to capture more effective features. A-part is used to explore attention information and build correlations between different pixels. With these two modules, Han-Net extracts discriminative features that allow it to segment the boundaries of not only complex and diverse nuclei but also small and dense nuclei. A comparison on a publicly available multi-organ dataset shows that the proposed model achieves state-of-the-art performance compared to other models.

1 Introduction

Histopathological imaging plays an important role in cancer diagnosis and is known as the “gold standard” for the clinical diagnosis of tumors. Nuclear segmentation of histopathological images is a crucial step in computer-aided image analysis. Accurately segmenting the nuclei in pathological tissue sections provides powerful support for disease diagnosis, cancer staging, and postoperative treatment. However, nuclear segmentation in histopathological images remains challenging because 1) histopathological structures are complex and diverse, and nuclei come in many types with complex appearances; 2) the nuclei are usually small and dense, so they often overlap.

Traditional nuclear segmentation methods, such as Otsu thresholding (Otsu, 1979), the watershed method (Yang et al., 2006), K-means clustering (Filipczuk et al., 2011), and GrabCut (Rother et al., 2004), have contributed to some extent. However, these methods require specific parameters or thresholds to be set. In addition, their limited generalization ability makes them effective for only a few types of histopathological images. With the development of deep learning for image segmentation, these traditional methods are now used only as pre- or post-processing steps.

In recent years, several models based on convolutional neural networks have been proposed for histopathological image analysis (Ronneberger et al., 2015; Zhou et al., 2018; Wollmann et al., 2019; Qu et al., 2019). UNet (Ronneberger et al., 2015) has been successfully applied to segmentation tasks in medical image analysis. The network adopts an encoder-decoder design with skip connections that combine low-level and high-level feature information. This framework effectively captures the contextual information of image features and has become the basic framework of current mainstream segmentation models. However, the UNet framework alone cannot efficiently separate dense or overlapping instances, so several models have been proposed to improve on it. Zhou et al. (2018) proposed UNet++, which reduces the semantic gap between the feature maps of the encoder and decoder subnetworks through a series of nested, dense skip pathways. Wollmann et al. (2019) proposed GRUU-Net, which integrates convolutional neural networks and gated recurrent neural networks over multiple image scales to combine the advantages of both types of networks. In addition to encoder-decoder architectures, dilated convolution has also been applied to segmentation tasks. Yu and Koltun (2016) first proposed a convolutional network module designed specifically for dense prediction, which uses dilated convolution to systematically aggregate multi-scale context information without loss of resolution. Qu et al. (2019) introduced dilated convolution into nuclear segmentation and proposed FullNet, which replaces the encoding-decoding operation with several densely connected layers with different dilation factors, thereby avoiding the loss of feature information. Experiments show that FullNet outperforms the other compared models in cell nuclear segmentation.

In general, although some recent models have addressed the shortcomings of the UNet model and achieved competitive performance, they still have limitations: 1) they fail to effectively identify small or dense objects; 2) they treat each pixel as a separate classification task and thus fail to fully consider global feature information and the relevance between different pixels.

To address these issues, the main contributions of this paper are as follows: 1) We propose a hybrid-attention nested UNet (Han-Net), which consists of two modules: a hybrid nested U-shaped network (H-part) and a hybrid attention block (A-part). 2) In H-part, we integrate a nested multi-depth U-shaped network and a dense network with full resolution to capture more effective feature information. The feature extraction capability of H-part helps segment the boundaries of complex and diverse nuclei. 3) We propose a novel hybrid attention block (A-part) to boost attention information and explore the correlation between different pixels, and thereby capture small or dense nuclei effectively. 4) We compare Han-Net with recently proposed models on a multi-organ segmentation dataset. The results show that the proposed model achieves state-of-the-art performance.

2 Method

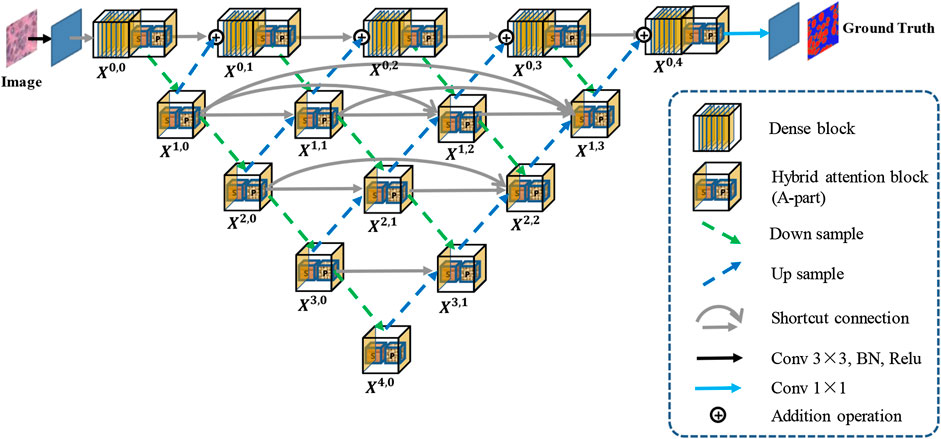

Figure 1 shows an overview of the proposed Han-Net, which consists of the backbone module (H-part) and hybrid attention module (A-part). H-part is proposed to obtain multi-scale feature information and improve the capability for exploring effective features. A-part is proposed to boost attention information and capture the correlation between features. In Figure 1, each different block and process is represented by different icons and arrows. The detailed information of H-part and A-part is described in the following sub-sections.

2.1 Hybrid Nested U-Shaped Network (H-part)

The framework of H-part is similar to a multilayer regular triangle structure, which is composed of multiple encoders and decoders. The framework of the proposed H-part is shown in Figure 2. Inspired by UNet++ (Zhou et al., 2018), we have nested multiple conv blocks in UNet to bridge the possible semantic gap between the corresponding levels of encoder-decoder in classic UNet.

In the top layer, we add a dense network using dilated convolution so that it obtains more global feature information at full resolution. Further, we unify the number of channels of the feature map obtained from up-sampling and of the feature map output by the previous dense block, so that the two can be fused by addition and used as the input of the next dense block. In all layers except the top layer, we set up shortcut connections to prevent the loss of feature information and use a concatenation operation to fuse feature maps. A dense block contains

Moreover, we explain the calculation relationship between each block: let

where

2.2 Hybrid Attention Block (A-Part)

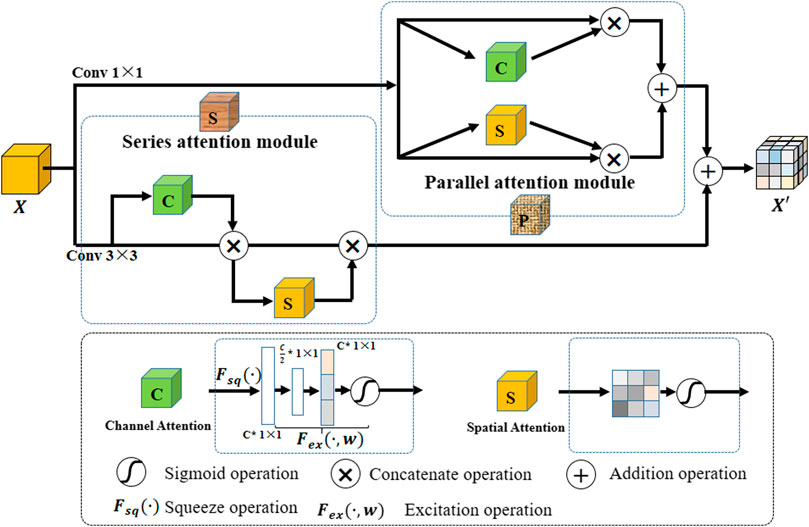

To highlight effective features and explore the correlation between different pixels, we propose a hybrid attention block (A-part). Its structure is shown in Figure 3. In A-part, channel attention and spatial attention are combined in series and in parallel to form a series attention module and a parallel attention module, respectively. Assume that the input feature map are

Channel attention captures the importance of different channels in feature maps, thereby enhancing or suppressing different channels. The operation is as follows: a branch is separated after a normal convolution operation. Squeeze operation is first performed on the branch (i.e.,

Spatial attention aims at exploring the relative importance of each pixel on feature map. It emphasizes related spatial locations and ignores unrelated locations. The operation flow is as follows: First, a 1-channel kernel size of

In this paper, A-part is added after each dense block in H-part to strengthen the effective information while controlling the number of channels. In the nested U-shape network of H-part, we replace the original conv block with A-part to improve extraction capability for effective feature information.
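The idea of combining channel attention and spatial attention in series and in parallel can be illustrated with a minimal pure-Python sketch. It is not the authors' implementation: the learned layers are omitted, the channel gate is assumed to be a sigmoid of the global channel average, the spatial gate is assumed to be a sigmoid of the per-pixel mean over channels, the series order (channel first, then spatial) is assumed, and the parallel branch is assumed to be fused by addition. All function names are hypothetical.

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def channel_attention(x):
    """Scale each channel by a gate derived from its global average (squeeze)."""
    out = []
    for channel in x:
        values = [v for row in channel for v in row]
        gate = sigmoid(sum(values) / len(values))  # assumption: no learned layers
        out.append([[v * gate for v in row] for row in channel])
    return out

def spatial_attention(x):
    """Scale each pixel by a gate derived from the mean over channels there."""
    c, h, w = len(x), len(x[0]), len(x[0][0])
    gate = [[sigmoid(sum(x[k][r][q] for k in range(c)) / c) for q in range(w)]
            for r in range(h)]
    return [[[x[k][r][q] * gate[r][q] for q in range(w)] for r in range(h)]
            for k in range(c)]

def series_attention(x):
    # Assumed order: channel attention first, spatial attention second.
    return spatial_attention(channel_attention(x))

def parallel_attention(x):
    # Assumed fusion: element-wise sum of the two attention branches.
    a, b = channel_attention(x), spatial_attention(x)
    c, h, w = len(x), len(x[0]), len(x[0][0])
    return [[[a[k][r][q] + b[k][r][q] for q in range(w)] for r in range(h)]
            for k in range(c)]

# Toy feature map with 2 channels of size 2 x 2 (channel-first layout).
features = [[[0.0, 0.0], [0.0, 0.0]],
            [[1.0, 1.0], [1.0, 1.0]]]
series_out = series_attention(features)
parallel_out = parallel_attention(features)
```

Both modules preserve the shape of the input feature map, which is what allows such a block to be dropped in after a dense block or in place of a conv block.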

3 Experiments and Results

3.1 Dataset and Evaluation Metrics

We validate the performance of our proposed model in the Multi-Organ nuclear segmentation (MoNuSeg) dataset (Kumar et al., 2017). The dataset consists of 30 hematoxylin and eosin (H & E) stained histopathology images of size

In this paper, four evaluation metrics are used to comprehensively evaluate the performance of the proposed model: F1-score (F1), Dice coefficient (Dice), average Hausdorff distance (H), and Aggregated Jaccard Index (AJI). F1 and Dice are evaluated at the pixel level, whereas Hausdorff distance and AJI are evaluated at the object level.
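To make the difference between a pixel-level and an object-level metric concrete, the following sketch computes Dice on the foreground pixels and AJI on nucleus instances. It is an illustration rather than the evaluation code used in this paper. Instances are represented as sets of (row, column) pixels, the helper `rect` is hypothetical, and the AJI routine follows the usual definition from Kumar et al. (2017), with the added assumption that a ground-truth nucleus with no overlapping prediction contributes its own area to the union.

```python
def rect(r0, c0, r1, c1):
    """Hypothetical helper: pixels of the rectangle [r0, r1) x [c0, c1)."""
    return {(r, c) for r in range(r0, r1) for c in range(c0, c1)}

def dice(pred_pixels, truth_pixels):
    """Pixel-level Dice coefficient between two foreground pixel sets."""
    if not pred_pixels and not truth_pixels:
        return 1.0
    overlap = len(pred_pixels & truth_pixels)
    return 2 * overlap / (len(pred_pixels) + len(truth_pixels))

def aji(truth_instances, pred_instances):
    """Object-level Aggregated Jaccard Index over lists of pixel sets."""
    inter_sum, union_sum, used = 0, 0, set()
    for g in truth_instances:
        best_j, best_iou = None, 0.0
        for j, s in enumerate(pred_instances):
            inter = len(g & s)
            if inter == 0:
                continue
            iou = inter / len(g | s)
            if iou > best_iou:
                best_j, best_iou = j, iou
        if best_j is None:
            union_sum += len(g)  # assumption: missed nucleus counts fully
        else:
            s = pred_instances[best_j]
            inter_sum += len(g & s)
            union_sum += len(g | s)
            used.add(best_j)
    # Predictions that were never matched are penalized through the union.
    union_sum += sum(len(s) for j, s in enumerate(pred_instances) if j not in used)
    return inter_sum / union_sum if union_sum else 1.0

truth = [rect(0, 0, 2, 2), rect(4, 4, 6, 6)]  # two ground-truth nuclei
pred = [rect(0, 0, 2, 3), rect(8, 8, 9, 9)]   # one oversized hit, one false positive
dice_value = dice(set().union(*pred), set().union(*truth))
aji_value = aji(truth, pred)
```

In this toy case the missed nucleus and the false positive lower AJI (4/11) more strongly than Dice (8/15), which is why object-level metrics are reported alongside pixel-level ones.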

3.2 Implementation Details

We implemented the proposed model with PyTorch 1.0. One NVIDIA Tesla V100 with CUDA 10.1 is used for computation. We treat the nuclear segmentation task as a three-class classification problem, including nucleoli, nuclear boundary, and background. During training, we use Adam as the optimizer. The initial learning rate is set as 0.001 and drop rate is set as 0.1. The dilation factors are

Although the model predicts three classes during testing, in post-processing we use the inside class area to recover the boundary class area and then distinguish different nuclei instances according to that boundary area. The final boundary area is obtained in two steps: 1) perform dilation and erosion operations on the inside class area; 2) subtract the area obtained by erosion from the area obtained by dilation to obtain the boundary class area.
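The two post-processing steps can be sketched on a small binary mask as follows. This is a minimal illustration under stated assumptions: the structuring element is assumed to be a 3 × 3 square (the text does not specify it), pixels outside the image are treated as background, and the function names are hypothetical.

```python
def _morph(mask, combine):
    """Apply a 3 x 3 neighborhood rule to a binary mask (list of rows)."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            window = [mask[rr][cc] if 0 <= rr < h and 0 <= cc < w else 0
                      for rr in (r - 1, r, r + 1)
                      for cc in (c - 1, c, c + 1)]
            out[r][c] = int(combine(window))
    return out

def dilate(mask):
    # A pixel is set if any pixel in its 3 x 3 neighborhood is set.
    return _morph(mask, any)

def erode(mask):
    # A pixel is kept only if its whole 3 x 3 neighborhood is set.
    return _morph(mask, all)

def boundary_from_inside(inside):
    """Step 1: dilate and erode the inside mask. Step 2: subtract."""
    grown, shrunk = dilate(inside), erode(inside)
    return [[g - s for g, s in zip(g_row, s_row)]
            for g_row, s_row in zip(grown, shrunk)]

# 7 x 7 image with a 3 x 3 "inside" region in the middle.
inside = [[1 if 2 <= r <= 4 and 2 <= c <= 4 else 0 for c in range(7)]
          for r in range(7)]
boundary = boundary_from_inside(inside)
```

Because the eroded area is always contained in the dilated area, the subtraction yields a binary ring around each nucleus; its thickness depends on the size of the structuring element.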

3.3 Evaluation and Comparison

3.3.1 Ablation Study

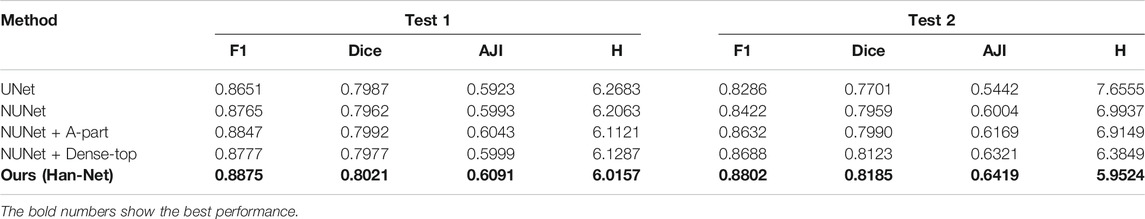

In the experiments, we perform several ablation studies to verify the effectiveness of the proposed method and blocks. We use UNet as the baseline and compare the following strategies: 1) Our nested UNet (NUNet): a nested U-shaped network, that is, UNet with multiple depths, with skip connections added to all layers except the top layer; 2) NUNet + A-part: on the basis of (1), A-part is added without the dense network; 3) NUNet + Dense-top: on the basis of (1), the dense network is added in the top layer; 4) Ours (Han-Net): both H-part and A-part are adopted. The performance comparison results are shown in Table 1.

Comparing the performance in Table 1, several conclusions can be drawn. (1) Compared with UNet, our nested UNet performs better: in Test 1, F1 improves by 1.14% and the other metrics also improve slightly; in Test 2, every metric improves significantly, with AJI improving by 5.62%. These results show that the nested multi-depth U-shaped network effectively improves segmentation performance. (2) Comparing the performance before and after adding A-part, F1 improves by 0.82%, Dice by 0.30%, and AJI by 0.50% in Test 1; in Test 2, F1 improves by 2.10%, Dice by 0.31%, and AJI by 1.65%. The Hausdorff distance is reduced in both test sets. This shows that adding A-part improves segmentation performance. (3) Similarly, replacing the original conv block with a dense network in the top layer improves segmentation performance; in Test 2, AJI improves by 3.17%. These results demonstrate the effectiveness of the dense network in H-part. (4) Han-Net, which combines H-part and A-part, gains a more significant improvement over our nested UNet. It achieves an F1 of 0.8875 and an AJI of 0.6091 in Test 1, and an F1 of 0.8802 and an AJI of 0.6419 in Test 2, which corresponds to improvements of 1.10% for F1 and 0.98% for AJI in Test 1, and 3.80% for F1 and 4.15% for AJI in Test 2. Dice and Hausdorff distance show the same trend. These results show that each of the proposed modules is effective. Furthermore, the improvement in Test 2 is more pronounced than in Test 1, which suggests that with these modules Han-Net is more robust for nuclear segmentation across different organs.

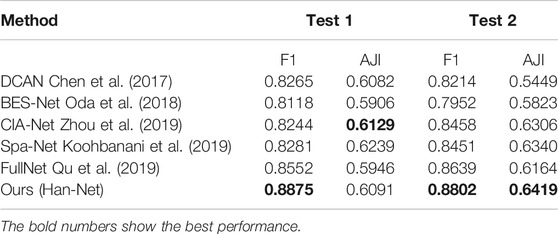

Moreover, we compare Han-Net with methods proposed in previous studies: DCAN (Chen et al., 2017), BES-Net (Oda et al., 2018), CIA-Net (Zhou et al., 2019), Spa-Net (Koohbanani et al., 2019), and FullNet (Qu et al., 2019). Each of them achieved competitive segmentation performance on the MoNuSeg dataset. The performance comparison between the methods under the F1 and AJI metrics is shown in Table 2.

The number of parameters and the inference time are two important aspects for assessing the utility of a new method. To further illustrate the effectiveness of the proposed Han-Net, we compared the number of parameters and the inference time of Han-Net and vanilla UNet. In our experiment, Han-Net and vanilla UNet have the same number of channels in each feature layer Xi (i = 0, 1, 2, 3, 4): 64, 128, 256, 512, and 1024, respectively. The experimental results show that the parameters of Han-Net and vanilla UNet are 31.04 MB and 30.58 MB, respectively. In the experiment, we replaced the original ‘TransposeConv’ upsampling approach in UNet with ‘bilinear interpolation’ to reduce the parameters. In other words, the increase in parameters brought by the proposed modules is close to the decrease in parameters brought by this replacement. In addition, the experimental results show that the inference time required by Han-Net and vanilla UNet is essentially the same, which means that Han-Net does not require additional inference time. These two comparisons also reflect the effectiveness of our proposed Han-Net.
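As a rough illustration of how many learnable parameters are removed by replacing transposed convolution with bilinear interpolation, the following sketch counts the weights of the four upsampling layers of a UNet with the channel widths listed above. It assumes 2 × 2 transposed convolutions with bias that halve the channel count, as in the original UNet design; the exact layer configuration used in this paper is not stated, so the result is only indicative. Bilinear interpolation itself has no learnable parameters.

```python
def transposed_conv_params(in_channels, out_channels, kernel_size=2, bias=True):
    """Learnable parameters of one 2D transposed convolution layer."""
    weights = in_channels * out_channels * kernel_size * kernel_size
    return weights + (out_channels if bias else 0)

# Channel widths of the feature layers X0..X4, taken from the text.
channels = [64, 128, 256, 512, 1024]

# One upsampling layer per decoder stage: 1024->512, 512->256, 256->128, 128->64.
per_layer = [transposed_conv_params(channels[i + 1], channels[i])
             for i in range(len(channels) - 1)]
saved_params = sum(per_layer)

print(per_layer)     # parameters of each transposed convolution, shallow to deep
print(saved_params)  # total parameters removed by switching to bilinear upsampling
```

Under these assumptions, about 2.79 million parameters are freed, most of them in the deepest upsampling layer.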

From the performance comparison in Table 2, our proposed Han-Net achieves state-of-the-art performance for F1 in Test 1 and Test 2 and for AJI in Test 2. It achieves an F1 of 0.8875 in Test 1, and an F1 of 0.8802 and an AJI of 0.6419 in Test 2, which are improvements of 3.23% for F1 in Test 1, and 1.63% for F1 and 0.79% for AJI in Test 2. Han-Net’s AJI in Test 1 is almost the same as that of the best-performing CIA-Net. Therefore, Han-Net can be considered to reach state-of-the-art performance on the MoNuSeg dataset.

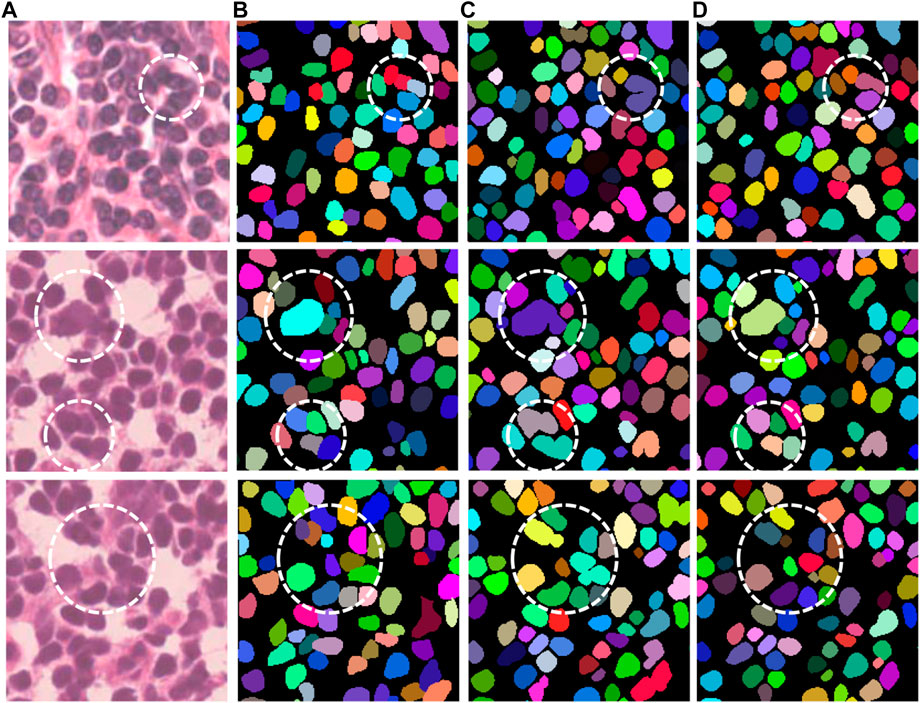

3.3.2 Qualitative Analysis

Figure 4 shows several representative examples of challenging cases from the MoNuSeg dataset, which include irregular and densely distributed nuclei. The relevant regions in Figure 4 are marked with white dotted circles. These images show that, compared with UNet, our proposed Han-Net achieves better segmentation results in some challenging regions.

4 Discussion

In this paper, we propose a hybrid-attention nested UNet (Han-Net) for nuclear segmentation in histopathological images, which consists of H-part and A-part. H-part combines a nested U-shaped network and a dense network with full resolution to obtain more effective multi-scale feature information. A-part is proposed to enhance the effective features and suppress the invalid features, so that the proposed model can learn the morphological information of the nuclei. The experimental results show that Han-Net achieves state-of-the-art segmentation performance on the MoNuSeg dataset. In future work, we will consider pruning Han-Net to make it a lightweight network and try to integrate other methods to decouple the boundary and the inside of nuclei.

Data Availability Statement

The original contributions presented in the study are included in the article/Supplementary Material, further inquiries can be directed to the corresponding authors.

Ethics Statement

Ethical review and approval was not required for the study on human participants in accordance with the local legislation and institutional requirements. Written informed consent for participation was not required for this study in accordance with the national legislation and the institutional requirements.

Author Contributions

HH proposed the method and wrote most of the sections of this paper. CZ and LC wrote most of the code. RG carried out data preprocessing. JC provided support on computing resources and guidance on deep learning techniques. YoL and YaL provided suggestions on model improvement and guidance on paper writing. JW and YX provided guidance on pathological diagnosis and reviewed experimental results. All authors contributed to the article and approved the submitted version.

Funding

This work is supported by the Nature Science Foundation of China (No.62081360152, No.61972217) and the Natural Science Foundation of Guangdong Province in China (No.2019B1515120049, 2020B1111340056).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Chen, H., Qi, X., Yu, L., Dou, Q., Qin, J., and Heng, P. A. (2017). DCAN: deep contour-aware networks for object instance segmentation from histology images. Med. Image Anal. 36, 135–146. doi:10.1016/j.media.2016.11.004

Filipczuk, P., Kowal, M., and Obuchowicz, A. (2011). “Automatic breast cancer diagnosis based on k-means clustering and adaptive thresholding hybrid segmentation”. in Image processing and communications challenges 3 Advances in Intelligent and Soft Computing. Editor R. S. Choraś (Berlin, Heidelberg: Springer). 295–302

Koohbanani, N. A., Jahanifar, M., Gooya, A., and Rajpoot, N. (2019). “Nuclear instance segmentation using a proposal-free spatially aware deep learning framework”. in International Conference on Medical Image Computing and Computer-Assisted Intervention. Shenzhen, China, 13–17 October, (Cham: Springer), 622–630

Kumar, N., Verma, R., Sharma, S., Bhargava, S., Vahadane, A., and Sethi, A. (2017). A dataset and a technique for generalized nuclear segmentation for computational pathology. IEEE Trans. Med. Imaging 36, 1550–1560. doi:10.1109/TMI.2017.2677499

Oda, H., Roth, H. R., Chiba, K., Sokolić, J., Kitasaka, T., Oda, M., et al. (2018). “Besnet: boundary-enhanced segmentation of cells in histopathological images”. in International Conference on Medical Image Computing and Computer-Assisted Intervention (Springer), 228–236

Otsu, N. (1979). A threshold selection method from gray-level histograms. IEEE Trans. Syst., Man, Cybern. 9, 62–66. doi:10.1109/tsmc.1979.4310076

Qu, H., Yan, Z., Riedlinger, G. M., De, S., and Metaxas, D. N. (2019). “Improving nuclei/gland instance segmentation in histopathology images by full resolution neural network and spatial constrained loss”. in International Conference on Medical Image Computing and Computer-Assisted Intervention Shenzhen, China, 13–17 October, (Cham: Springer), 378–386

Ronneberger, O., Fischer, P., and Brox, T. (2015). U-net: Convolutional networks for biomedical image segmentation. In International Conference on Medical image computing and computer-assisted intervention, Munich, Germany, 5–9 October (Cham: Springer), 234–241

Rother, C., Kolmogorov, V., and Blake, A. (2004). "GrabCut" interactive foreground extraction using iterated graph cuts. ACM Trans. Graph. 23, 309–314. doi:10.1145/1015706.1015720

Wollmann, T., Gunkel, M., Chung, I., Erfle, H., Rippe, K., and Rohr, K. (2019). GRUU-Net: integrated convolutional and gated recurrent neural network for cell segmentation. Med. Image Anal. 56, 68–79. doi:10.1016/j.media.2019.04.011

Yang, X., Li, H., and Zhou, X. (2006). Nuclei segmentation using marker-controlled watershed, tracking using mean-shift, and kalman filter in time-lapse microscopy. IEEE Trans. Circuits Syst. I. 53, 2405–2414. doi:10.1109/tcsi.2006.884469

Yu, F., and Koltun, V. (2016). “Multi-scale context aggregation by dilated convolutions”. in International Conference on Learning Representations (ICLR)

Zhou, Y., Onder, O. F., Dou, Q., Tsougenis, E., Chen, H., and Heng, P.-A. (2019). “Cia-net: Robust nuclei instance segmentation with contour-aware information aggregation”. in International Conference on Information Processing in Medical Imaging (Cham: Springer), 682–693

Keywords: Histopathological image, Nuclear segmentation, Nested UNet, Hybrid attention, Dilated convolution

Citation: He H, Zhang C, Chen J, Geng R, Chen L, Liang Y, Lu Y, Wu J and Xu Y (2021) A Hybrid-Attention Nested UNet for Nuclear Segmentation in Histopathological Images. Front. Mol. Biosci. 8:614174. doi: 10.3389/fmolb.2021.614174

Received: 05 October 2020; Accepted: 06 January 2021;

Published: 17 February 2021.

Edited by:

Xin Gao, King Abdullah University of Science and Technology, Saudi Arabia

Copyright © 2021 He, Zhang, Chen, Geng, Chen, Liang, Lu, Wu and Xu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yongjie Xu, YXJteHlqMDA3QGFsaXl1bi5jb20=
