
METHODS article

Front. Med. Technol., 23 September 2022

Sec. Diagnostic and Therapeutic Devices

Volume 4 - 2022 | https://doi.org/10.3389/fmedt.2022.905074

This article is part of the Research Topic Insights in Diagnostic and Therapeutic Devices.

Chun Hon Lau1,2,† Ken Hung-On Yu1,2,† Tsz Fung Yip1,2 Luke Yik Fung Luk1,2 Abraham Ka Chung Wai3 Tin-Yan Sit4 Janet Yuen-Ha Wong4,5* Joshua Wing Kei Ho1,2*

The management of chronic wounds in the elderly, such as pressure injury (also known as bedsore or pressure ulcer), is increasingly important in an ageing population. Accurate classification of the stage of pressure injury is important for wound care planning. Nonetheless, the expertise required for staging is often not available in a residential care home setting. Artificial intelligence (AI)-based computer vision techniques have opened up opportunities to harness the inbuilt camera of modern smartphones to support pressure injury staging by nursing home carers. In this paper, we summarise recent developments in smartphone- or tablet-based applications for wound assessment. Furthermore, we present a new smartphone application (app) that performs real-time detection and staging classification of pressure injury wounds using a deep learning-based object detection system, YOLOv4. On a validation set of 144 photos, our app achieved an overall prediction accuracy of 63.2%. The per-class prediction specificity is generally high (85.1%–100%), but sensitivity is variable: 73.3% (stage 1 vs. others), 37% (stage 2 vs. others), 76.7% (stage 3 vs. others), 70% (stage 4 vs. others), and 55.6% (unstageable vs. others). On another independent test set, the YOLOv4 model predicted 8 out of 10 images correctly. When the app was deployed in a real-life setting at two ambient brightness levels on three Android phone models, its prediction accuracy on the 10 test images ranged from 80% to 90%, which highlights the importance of evaluating mobile health (mHealth) applications in a simulated real-life setting. This study details the development and evaluation process and demonstrates the feasibility of applying such a real-time staging app in wound care management.

Caring for pressure injury (PI) in the community, especially for older adults with limited mobility, has emerged as a growing healthcare burden in recent years owing to population ageing (1–3). These injuries are caused by unrelieved pressure over dependent bony prominences, such as the sacrum, ankle, or heel, together with shear forces and friction (4). Importantly, PI is preventable and treatable with early diagnosis and proper wound management.

To reflect PI condition and healing progress, the National Pressure Injury Advisory Panel (NPIAP) has defined a staging system (stage 1, stage 2, stage 3, stage 4, unstageable, deep tissue pressure injury, and mucosal membrane pressure injury) to guide wound care specialists, clinicians, and nurses in choosing treatment options (5). Tissue types commonly identified in PI include epithelialising tissue, granulation, slough, necrosis, and dry eschar. Accurate and efficient assessment of PI helps healthcare professionals offer appropriate interventions and monitor subsequent progress.

The prevalence of PI is estimated at 10%–25.9% (6). Frail, bedridden, and incontinent older adults are particularly vulnerable. Initial PI assessment is normally performed by specialist wound care physicians or nurses in hospitals or clinics, followed by daily or weekly follow-up management by community nurses or other providers. Because of the financial burden of professional care, patients with PI are usually managed by lay home carers, which increases the risk of wound infection, delayed healing, and hospital admission (7).

Proper PI staging is critical for formulating treatment plans and monitoring progress. We propose that a smartphone-based artificial intelligence (AI) application (app) can provide convenient and effective PI assessment support. Here, we review the state of the art in this field and report our group's effort to develop and evaluate a real-time PI assessment app.

A number of smartphone or tablet apps for wound assessment have been developed, using computer vision algorithms based on mathematical models or AI (Table 1). Apps may include one or more built-in features, such as wound size and depth measurement, wound localisation and segmentation, tissue classification, and manual entry of wound characteristics for documentation and monitoring. Apps are typically designed for assessing chronic wounds, such as PI or diabetic foot ulcers.

Some apps require a portable device or component in addition to the smartphone or tablet itself. Garcia-Zapirain et al. (8) implemented a toroidal decomposition-based segmentation algorithm for PI in a tablet app that optimises the treatment plan. The same team later implemented a convolutional neural network (CNN)-based method in a tablet app to automatically segment PI wounds and measure wound size and depth (9); notably, this app requires a Structure Sensor mounted on an iPad to perform the analysis. The imitoWound app (10) was developed for wound documentation and for calculating the physical properties of a wound, including length, width, surface area, and circumference; these measurements require the vendor's custom paper-based calibration markers. Fraiwan et al. (11) proposed an app that uses a portable thermal camera (FLIR ONE) for early detection of diabetic foot ulcers based on the Mean Temperature Difference (MTD). This app works only when the external thermal camera is attached, and the accuracy of the method was not benchmarked.

Apps can be conveniently and widely used if they are designed to run on a smartphone or tablet alone. Yap et al. (12) developed FootSnap, an app for assessing diabetic foot ulcers; its initial version captured standardised images of the plantar surface of diabetic feet and ran only on iPad. Later versions of the app (13) used a CNN to localise wounds in diabetic foot ulcer images and were deployed on the Android platform, and a cloud-based framework was subsequently proposed to store the images taken with the app (14). Kositzke and Dujovny (15) used a wound analyser software called MOWA to perform tissue classification on Android or iOS smartphones and tablets. However, both the classification method and its accuracy are unknown, and wound segmentation must be done manually. Friesen et al. (16) developed SmartWoundCare, an Android smartphone or tablet app for electronic documentation of chronic wound management, especially for pressure ulcers. Rather than AI, the app focuses on functions such as telemedicine, wound record management, and tutorials for non-specialists. The group also proposed methods for detecting wound size and colour (17), but, as far as public information shows, these are not currently part of the SmartWoundCare app. Orciuoli et al. (18) prototyped a smartphone app for size measurement and staging classification based on deep learning. However, their staging classifier was trained on a limited set of images (62 in total), and it was evaluated only on the training set.

More ambitious apps combine many of these features, with or without an external device. KroniKare (19) provides AI-based wound assessment, but a custom-made scanning device is required in addition to the smartphone to capture a 3D image of the wound. It measures wound size, identifies tissue types, provides an integrated dashboard for documentation, and detects wound complications such as undermining or infection. However, a peer-reviewed evaluation of its performance is lacking. CARES4WOUNDS (20) also provides AI-based wound assessment, including tissue classification, automated wound size and depth measurement, prediction of infection likelihood, and an overall wound score. The app accepts manual entry of wound characteristics for documentation and monitoring, and it uses a decision tree to recommend wound dressing treatment plans based on user-selected treatment objectives. The app works only on later iPhone models (11 and above) with an integrated depth scanner. A clinical study found the wound size measurements of CARES4WOUNDS to be very accurate for diabetic foot ulcers (21), but its other features, such as tissue classification and the various prediction and score-based outputs, have not yet been reported in the literature.

Of the smartphone or tablet-based wound assessment apps reviewed here, only that of Orciuoli et al. (18) directly performs PI staging classification. The other apps mostly focus on size measurement or tissue segmentation, probably because these properties are more readily identifiable from a wound image. Staging a PI requires a trained professional, and even expert staging is not completely free from bias. To build on the existing study, we sought to create our own PI staging classification smartphone app using a larger set of images, further expanded by data augmentation, and to evaluate the classifier more objectively using validation and test sets, neither of which was used in training. The challenges of building and evaluating a smartphone PI staging classifier include the need for an expert wound specialist to generate the ground truth; variation in the lighting, angle, relative wound size, and overall quality of the images; and further variation in these factors once a wound image is captured by a smartphone camera. In this article, we describe the development of an Android smartphone app that automatically detects and classifies PI, and we present a systematic evaluation of its performance.

The main objective of this study is to develop and evaluate a practical, real-time PI assessment smartphone app. As a pilot study, we developed an AI-based app trained on publicly available PI images. The data set was drawn from a publicly available GitHub repository (22) that combines images from the Medetec Wound Database (23) and other public sources. After manual inspection to remove images that do not contain PI, the data set comprises 190 pressure ulcer images: stage 1 (38 photos), stage 2 (35 photos), stage 3 (38 photos), stage 4 (42 photos), and unstageable (38 photos). We manually marked each wound with a bounding box and annotated it according to the NPIAP staging definitions (5). The annotations were reviewed and confirmed by co-author Tin-Yan Sit, a wound nurse with more than 10 years of experience. She is a registered nurse in Hong Kong and an enterostomal therapist who is a member of the WCET (World Council of Enterostomal Therapists), whose members are nurses who provide specialised nursing care for people with ostomy, wound, and continence needs; she is also a member of the Hong Kong Enterostomal Therapist Association.
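Each bounding-box annotation ends up as a plain-text label in the YOLO Darknet format used for training: one line per box holding a class index followed by the box centre, width, and height, each normalised by the image dimensions. The minimal sketch below converts a pixel-space box into such a line. The class order in `STAGE_CLASSES` and the helper `to_yolo_line` are hypothetical; the paper does not state the index mapping it used.

```python
# Hypothetical class-index order for the five stages used in this study;
# the paper does not specify the order used in its label files.
STAGE_CLASSES = ["stage1", "stage2", "stage3", "stage4", "unstageable"]


def to_yolo_line(stage, x_min, y_min, x_max, y_max, img_w, img_h):
    """Convert a pixel-space bounding box to a YOLO Darknet label line.

    The line holds: class_id x_center y_center width height, where the four
    box values are normalised to [0, 1] by the image width and height.
    """
    if not (0 <= x_min < x_max <= img_w and 0 <= y_min < y_max <= img_h):
        raise ValueError("box must lie inside the image and have positive size")
    class_id = STAGE_CLASSES.index(stage)  # raises ValueError for unknown stages
    x_c = (x_min + x_max) / 2 / img_w
    y_c = (y_min + y_max) / 2 / img_h
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{class_id} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"


# Example: a stage 3 wound occupying the centre of a 640 x 480 photo.
print(to_yolo_line("stage3", 160, 120, 480, 360, 640, 480))
# -> 2 0.500000 0.500000 0.500000 0.500000
```

Normalised coordinates keep the labels valid when images are later resized to the network's input resolution.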

Data augmentation was performed using Roboflow (24). Each image in the data set was subjected to three separate transformations (flipping, cropping, and brightening) to create two additional images. We then randomly split the data into training and validation sets such that no image in the training set shares the same source as any image in the validation set. To further enlarge the training set, we performed a second round of data augmentation on the training set with three further transformations: rotation, shear, and exposure. In total, the training set contains 1,278 images and the validation set contains 144 images.
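The source-aware split described above can be sketched as a grouped split: every augmented image carries the ID of the original photo it was derived from, and whole groups are assigned to one side. The helper name `grouped_split`, the 20% validation fraction in the example, and the availability of a source ID for every image are assumptions for illustration; the paper does not state how its split was implemented.

```python
import random
from collections import defaultdict


def grouped_split(image_sources, val_fraction=0.1, seed=0):
    """Split image IDs into training and validation lists by source photo.

    image_sources maps each (possibly augmented) image ID to the ID of the
    original photo it came from. All images derived from one photo land on
    the same side of the split, so augmented copies cannot leak across sets.
    """
    groups = defaultdict(list)
    for image_id, source_id in image_sources.items():
        groups[source_id].append(image_id)

    source_ids = sorted(groups)
    random.Random(seed).shuffle(source_ids)
    n_val = max(1, round(len(source_ids) * val_fraction))
    val_sources = set(source_ids[:n_val])

    train, val = [], []
    for source_id in source_ids:
        target = val if source_id in val_sources else train
        target.extend(groups[source_id])
    return train, val


# Hypothetical IDs: ten original photos, three augmented variants each.
sources = {f"img{i}_aug{j}": f"img{i}" for i in range(10) for j in range(3)}
train, val = grouped_split(sources, val_fraction=0.2)
print(len(train), len(val))  # -> 24 6
```

Splitting by source rather than by image matters because augmented variants of one photo are near-duplicates; letting them straddle the split would inflate validation scores.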

We used YOLOv4 (25), a high-performance open-source object detection and classification system, as the core AI component of our app. You Only Look Once (YOLO) is an object detection system for real-time processing (26) that uses a single neural network to predict a target object's position and class scores in one forward pass. Carrión et al. (27) applied a YOLO model to 256 images of wounded laboratory mice for wound detection. Han et al. (28) proposed real-time detection and classification software for Wagner grading of diabetic foot wounds, and found that YOLO achieved a better trade-off between speed and precision than other wound localisation models such as the Single Shot MultiBox Detector (SSD) and Faster R-CNN. These studies show that YOLO is a suitable model for detecting or locating wounds.
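Because YOLO scores every candidate box in a single pass, its raw output must be post-processed: a class-specific confidence is formed from the box's objectness and class probabilities, low-confidence boxes are discarded, and overlapping boxes are pruned with non-maximum suppression (NMS). The sketch below shows this generic post-processing on already-decoded boxes. The thresholds are illustrative values rather than the app's settings, the function names are hypothetical, and YOLOv4 builds may use a different NMS variant than this plain greedy one.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def postprocess(boxes: List[Box], objectness: List[float],
                class_probs: List[List[float]],
                score_thresh: float = 0.25, iou_thresh: float = 0.45):
    """Return (box, class_id, score) detections after thresholding and NMS."""
    candidates = []
    for box, obj, probs in zip(boxes, objectness, class_probs):
        cls = max(range(len(probs)), key=probs.__getitem__)
        score = obj * probs[cls]  # class-specific confidence
        if score >= score_thresh:
            candidates.append((box, cls, score))

    candidates.sort(key=lambda d: d[2], reverse=True)
    kept = []
    for det in candidates:
        # Greedy NMS: drop a box that heavily overlaps a kept box of the same class.
        if all(k[1] != det[1] or iou(k[0], det[0]) < iou_thresh for k in kept):
            kept.append(det)
    return kept


# Hypothetical raw predictions for five classes (1, 2, 3, 4, U).
boxes = [(10, 10, 110, 110), (15, 12, 112, 108), (300, 300, 360, 380)]
objectness = [0.9, 0.8, 0.6]
class_probs = [
    [0.05, 0.10, 0.80, 0.03, 0.02],  # most likely stage 3
    [0.05, 0.15, 0.70, 0.05, 0.05],  # duplicate of the first box
    [0.70, 0.10, 0.10, 0.05, 0.05],  # most likely stage 1
]
detections = postprocess(boxes, objectness, class_probs)
print([(cls, round(score, 2)) for _, cls, score in detections])
# -> [(2, 0.72), (0, 0.42)]
```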

Here, we designed a real-time wound detection and classification program based on YOLO's deep learning training capability and implemented it in a smartphone app (29). We trained YOLO version 4 (v4) (25) on open-source PI images to perform detection and classification. Following the YOLOv4 implementation steps, we first configured cuDNN, a CUDA-based library for Graphics Processing Unit (GPU) acceleration of deep learning. We then installed Darknet, an open-source neural network framework written in C and CUDA; YOLOv4 computations are performed on the GPU. All annotated wound images in the training set were exported in the YOLO Darknet format, and we trained the YOLOv4 detector using the default training parameter settings. During training, we saved the weights every 1,000 iterations over a total of 10,000 iterations, together with the best and the last weights.
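The 10,000-iteration total is what the rule of thumb in the AlexeyAB Darknet README gives for five classes: `max_batches` is set to `classes × 2000` (but no less than the number of training images and no less than 6,000), `steps` to 80% and 90% of `max_batches`, and `filters = (classes + 5) × 3` in the convolutional layer before each `[yolo]` layer. The hypothetical helper below only does that arithmetic; that the authors derived their settings this way is an assumption, not something the paper states.

```python
def yolov4_custom_cfg(num_classes, num_train_images):
    """Hypothetical helper applying the AlexeyAB Darknet README rules of thumb.

    max_batches = classes * 2000, but not less than the number of training
    images and not less than 6000; steps = 80% and 90% of max_batches;
    filters = (classes + 5) * 3 in the convolutional layer before each [yolo] layer.
    """
    max_batches = max(num_classes * 2000, num_train_images, 6000)
    steps = (max_batches * 8 // 10, max_batches * 9 // 10)
    filters = (num_classes + 5) * 3
    # The paper reports saving a weights checkpoint every 1,000 iterations.
    checkpoints = list(range(1000, max_batches + 1, 1000))
    return {"max_batches": max_batches, "steps": steps,
            "filters": filters, "checkpoints": checkpoints}


cfg = yolov4_custom_cfg(num_classes=5, num_train_images=1278)
print(cfg["max_batches"], cfg["steps"], cfg["filters"], len(cfg["checkpoints"]))
# -> 10000 (8000, 9000) 30 10
```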

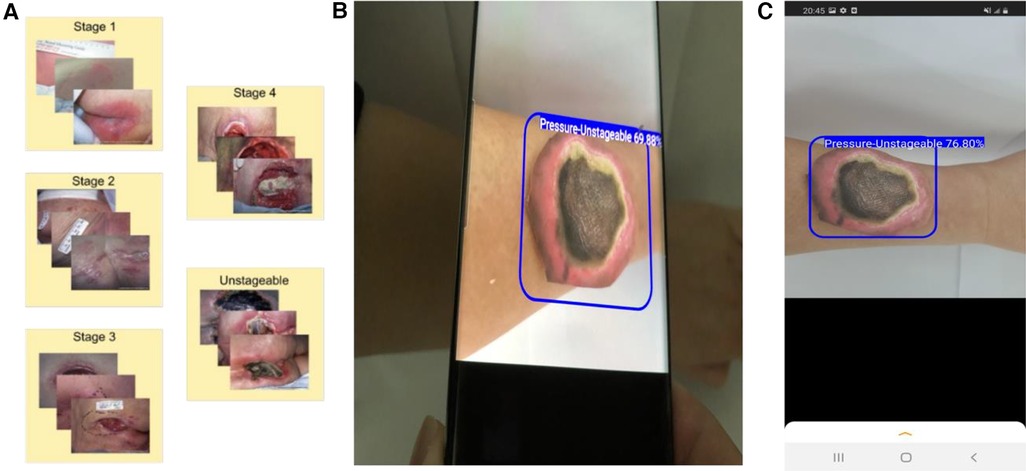

The trained YOLOv4 model was then converted to a TensorFlow Lite (TFLite) model for deployment on an Android device (30). We further built a graphical user interface to make the app user-friendly (Figure 1).

Figure 1. Development of a smartphone app for wound assessment. (A) Collection and annotation of images of pressure injury wounds. (B) Application of our smartphone app to detect and classify PI. (C) A screenshot showing how a printed wound image is automatically detected and classified using the app.

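The accuracy of our model is calculated by:

$$\text{Accuracy} = \frac{\text{number of correctly staged images}}{\text{total number of images}}$$

The Matthews correlation coefficient (MCC) for individual classes (A vs. not A) is calculated by:

$$\text{MCC}_A = \frac{TP_A \cdot TN_A - FP_A \cdot FN_A}{\sqrt{(TP_A + FP_A)(TP_A + FN_A)(TN_A + FP_A)(TN_A + FN_A)}}$$

where $A$ can be stage 1, 2, 3, 4, or unstageable (U), and $TP_A$, $TN_A$, $FP_A$, and $FN_A$ are the numbers of true positives, true negatives, false positives, and false negatives when class $A$ is treated as positive and all other classes as negative.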
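The one-vs-rest metrics can all be read off a multi-class confusion matrix: overall accuracy is the diagonal sum over the total, and for each class the true positives, false negatives, and false positives come from its diagonal cell, row, and column. The sketch below computes accuracy, sensitivity TP/(TP + FN), specificity TN/(TN + FP), and MCC = (TP·TN − FP·FN)/√((TP + FP)(TP + FN)(TN + FP)(TN + FN)). The confusion matrix is hypothetical, not the paper's, and returning 0 when a marginal is empty is a common convention rather than the authors' stated choice.

```python
import math

STAGES = ["1", "2", "3", "4", "U"]


def accuracy(cm):
    """Overall accuracy: correctly staged images divided by all images."""
    total = sum(sum(row) for row in cm)
    correct = sum(cm[k][k] for k in range(len(cm)))
    return correct / total


def one_vs_rest(cm, k):
    """Return (TP, FP, FN, TN) for class k vs. all other classes.

    cm[i][j] counts images whose true stage is i and predicted stage is j.
    """
    n = len(cm)
    tp = cm[k][k]
    fn = sum(cm[k][j] for j in range(n)) - tp  # true k, predicted other
    fp = sum(cm[i][k] for i in range(n)) - tp  # true other, predicted k
    tn = sum(sum(row) for row in cm) - tp - fn - fp
    return tp, fp, fn, tn


def mcc(tp, fp, fn, tn):
    """Matthews correlation coefficient; 0.0 by convention if a marginal is empty."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return (tp * tn - fp * fn) / denom if denom else 0.0


# Hypothetical 5-class confusion matrix (rows: true stage, columns: predicted).
cm = [
    [3, 1, 0, 0, 0],
    [1, 2, 0, 0, 0],
    [0, 0, 2, 1, 0],
    [0, 0, 0, 2, 0],
    [0, 0, 0, 0, 1],
]
print(f"accuracy={accuracy(cm):.3f}")
for k, stage in enumerate(STAGES):
    tp, fp, fn, tn = one_vs_rest(cm, k)
    sens = tp / (tp + fn)
    spec = tn / (tn + fp)
    print(f"{stage}vO: sensitivity={sens:.3f} specificity={spec:.3f} "
          f"MCC={mcc(tp, fp, fn, tn):.3f}")
```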

Bootstrap mean:

Suppose we have an original data set $X = \{x_1, x_2, \ldots, x_n\}$ sorted in ascending order; we calculated its mean $\bar{x}$ and standard deviation (SD).

To create a bootstrap sample, we randomly select $n$ values from the original data set with replacement, obtaining a data set $X^* = \{x_1^*, x_2^*, \ldots, x_n^*\}$. This process is repeated 1,000 times. For each bootstrap sample, we calculated its mean $\bar{x}^*$. Finally, we obtained the SD of the means of these 1,000 bootstrap samples.

The 95% confidence interval is then calculated from $\bar{x}$, the mean of the original data set, and the bootstrap means $\bar{x}^*$.
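The bootstrap procedure described above can be sketched as follows: resample the per-fold values with replacement 1,000 times, record each resample's mean, and take the SD of those means. Because the exact interval formula is not recoverable from the text, the sketch assumes a normal-approximation interval, mean ± 1.96 × SD of the bootstrap means; a percentile interval using the 2.5th and 97.5th percentiles of the bootstrap means is a common alternative. The function name and fold values are hypothetical.

```python
import random
import statistics


def bootstrap_mean_ci(values, n_boot=1000, z=1.96, seed=0):
    """Return (mean, bootstrap SD of the mean, (lower, upper)).

    Each bootstrap sample draws len(values) items with replacement, and its
    mean is recorded. The interval uses an assumed normal approximation,
    mean +/- z * SD(bootstrap means); the paper's exact formula may differ.
    """
    rng = random.Random(seed)
    n = len(values)
    mean = statistics.fmean(values)
    boot_means = [statistics.fmean(rng.choices(values, k=n)) for _ in range(n_boot)]
    boot_sd = statistics.stdev(boot_means)
    return mean, boot_sd, (mean - z * boot_sd, mean + z * boot_sd)


# Hypothetical per-fold accuracies from a 10-fold CV run (not the paper's data).
fold_acc = [0.68, 0.74, 0.71, 0.79, 0.66, 0.73, 0.70, 0.77, 0.72, 0.75]
mean, sd, (lo, hi) = bootstrap_mean_ci(fold_acc)
print(f"mean={mean:.3f} bootstrap SD={sd:.4f} 95% CI=({lo:.3f}, {hi:.3f})")
```

A fixed seed makes the resampling reproducible, which is useful when reporting intervals for several metrics computed on the same folds.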

We estimated the prediction accuracy of our model using ten-fold cross-validation (10-fold CV) on the training data set; in addition, we evaluated our model on a held-out validation set and an independent test set.
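A 10-fold cross-validation partitions the training images into ten near-equal folds and holds each fold out once while training on the other nine. The sketch below generates such index splits for the 1,278 training images. The helper name is hypothetical, and the paper does not say whether its folds were grouped by source photo; since the training set contains augmented copies of the same photo, grouping folds by source would give less optimistic estimates.

```python
import random


def k_fold_indices(n_items, k=10, seed=0):
    """Yield (train_idx, test_idx) for each of k cross-validation folds.

    Items are shuffled once and dealt round-robin into k folds of near-equal
    size; each fold is held out exactly once while the rest are used to train.
    """
    idx = list(range(n_items))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i in range(k):
        train = [j for m, fold in enumerate(folds) if m != i for j in fold]
        yield train, folds[i]


# Example with the 1,278 training images described above.
for fold_no, (train_idx, test_idx) in enumerate(k_fold_indices(1278), start=1):
    print(fold_no, len(train_idx), len(test_idx))
```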

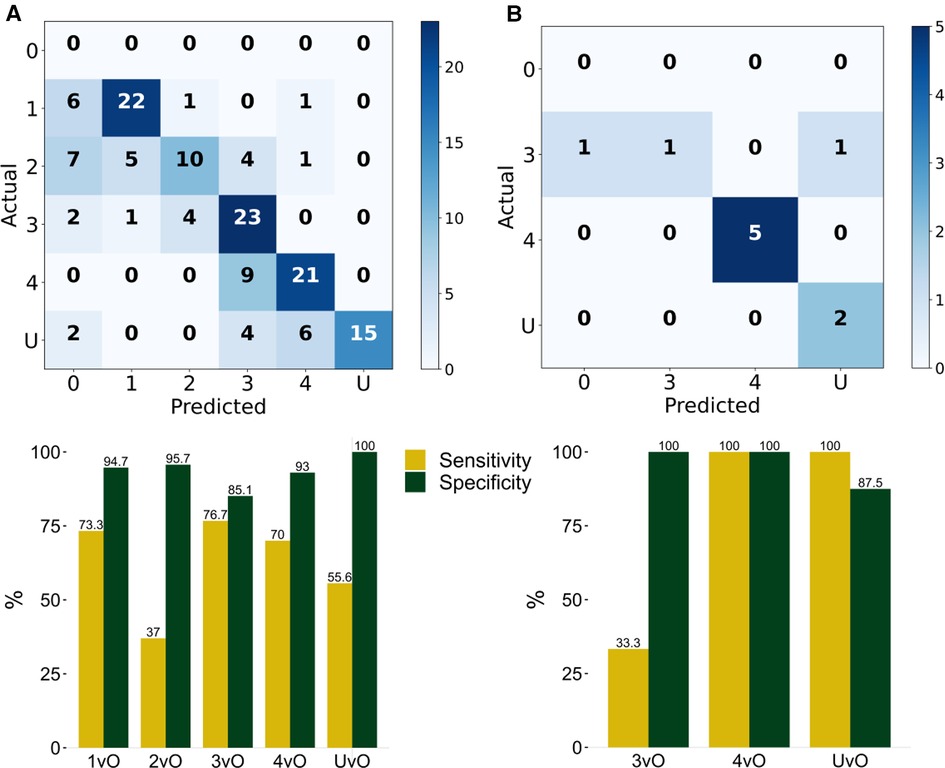

To validate the PI staging classification, we applied our trained YOLOv4 model to the 144 validation PI images. The confusion matrix is shown in Figure 2A. The overall prediction accuracy is 63.2%. We calculated the per-class prediction sensitivity and specificity by comparing each stage against all other stages in the confusion matrix. The per-class prediction sensitivity is variable: 73.3% (stage 1 vs. others), 37% (stage 2 vs. others), 76.7% (stage 3 vs. others), 70% (stage 4 vs. others), and 55.6% (unstageable vs. others). The per-class prediction specificity is consistently high: 94.7% (stage 1 vs. others), 95.7% (stage 2 vs. others), 85.1% (stage 3 vs. others), 93% (stage 4 vs. others), and 100% (unstageable vs. others). We also calculated the per-class Matthews correlation coefficient (MCC) of the model on the same validation set; the mean MCC across classes is 60.5%, which is consistent with the accuracy estimate (Figure 3A).

Figure 2. Evaluation of the trained YOLOv4 model's classification of PI stages: stage 1 (1), stage 2 (2), stage 3 (3), stage 4 (4), and unstageable (U). Confusion matrices and the corresponding sensitivity, TP/(TP + FN), and specificity, TN/(TN + FP), are shown for (A) the validation set of 144 photos and (B) the testing set of 10 photos. 1vO: Stage 1 vs. Others; 2vO: Stage 2 vs. Others; 3vO: Stage 3 vs. Others; 4vO: Stage 4 vs. Others; UvO: Unstageable vs. Others.

Figure 3. Matthews correlation coefficient (MCC) of (A) validation set of 144 photos, and (B) testing set of 10 photos. 1vO: Stage 1 vs. Others; 2vO: Stage 2 vs. Others; 3vO: Stage 3 vs. Others; 4vO: Stage 4 vs. Others; UvO: Unstageable vs. Others.

To further test the predictive performance of the model, we collected an additional 10 test images from our local patients. Eight of the 10 images were correctly classified (accuracy = 80%). The per-class sensitivity ranges from 33.3% to 100%, the specificity from 87.5% to 100% (Figure 2B), and the MCC from 50.9% to 100% (Figure 3B).

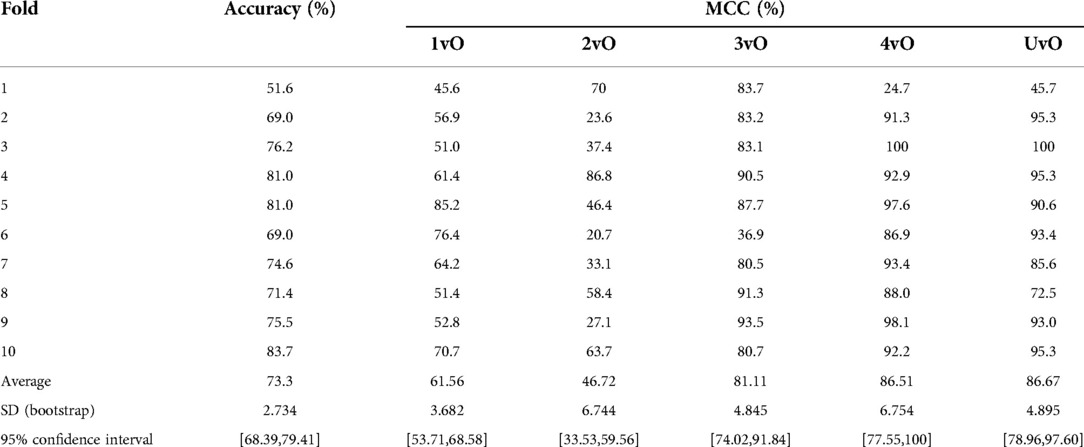

To estimate the reliability of our model's metrics, we performed 10-fold cross-validation on the training set images. For each fold, we computed the prediction accuracy and per-class MCC, and for each metric we computed the mean and 95% confidence interval across the 10 folds (Table 2). The mean 10-fold CV accuracy is 73.3%, and the mean MCCs are 61.56%, 46.72%, 81.11%, 86.51%, and 86.67% in the 1vO, 2vO, 3vO, 4vO, and UvO comparisons, respectively. The MCCs estimated from the validation set are slightly lower than the estimates from the 10-fold CV experiment (Table 2). This may be caused by an underestimate of the confidence intervals due to the large variance of the MCC estimates across the 10 folds. Nonetheless, the overall accuracy and the trend of the per-class MCC estimates are quite consistent between the 10-fold CV and the validation set.

Table 2. Accuracy and Matthews correlation coefficient (MCC) of the training set of 1,278 pressure ulcer images by 10-fold cross-validation.

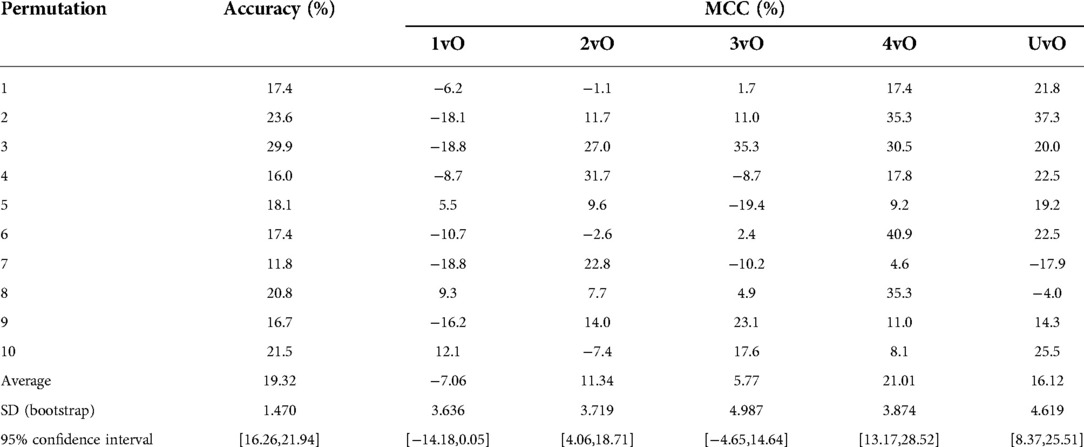

To construct an empirical baseline for comparison, we re-trained the model on the training set images with shuffled class labels (with shuffling done at the level of the original images) and calculated the evaluation metrics on the validation set, repeating this ten times (Table 3). The shuffled models had a mean accuracy of 19.32% and MCC values ranging from −7.06 to 21.01, much poorer than our standard and 10-fold CV results. This indicates that our trained YOLO model performs much better than a random baseline model and has indeed learned from the training data.
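Shuffling "at the level of the original images" means permuting labels among the source photos, so that all augmented variants of one photo still share a single, now random, label. The sketch below shows that permutation, assuming one wound (one label) per photo and that each image's source photo is known; the helper name is hypothetical, and retraining the detector on the shuffled labels is not shown.

```python
import random


def shuffle_labels_by_source(labels, sources, seed=0):
    """Permute stage labels across original photos (hypothetical helper).

    labels[i] is the stage of image i and sources[i] is the original photo it
    was derived from. Labels are permuted among the original photos, and every
    augmented copy inherits its source photo's new label, so the copies of one
    photo never receive conflicting labels.
    """
    source_label = {}
    for label, source in zip(labels, sources):
        source_label.setdefault(source, label)  # first copy defines the label
    source_ids = sorted(source_label)
    permuted = [source_label[s] for s in source_ids]
    random.Random(seed).shuffle(permuted)
    new_label = dict(zip(source_ids, permuted))
    return [new_label[s] for s in sources]


# Hypothetical example: four original photos, three augmented copies each.
sources = [f"photo{i}" for i in range(4) for _ in range(3)]
labels = [stage for stage in ["1", "2", "3", "U"] for _ in range(3)]
print(shuffle_labels_by_source(labels, sources, seed=1))
```

Shuffling per image instead would give augmented copies of the same photo different labels, which makes the baseline artificially hard to fit rather than simply uninformative.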

Table 3. Accuracy and Matthews correlation coefficient (MCC) on the validation set after random label shuffling of the training set of 1,278 pressure ulcer images, repeated ten times.

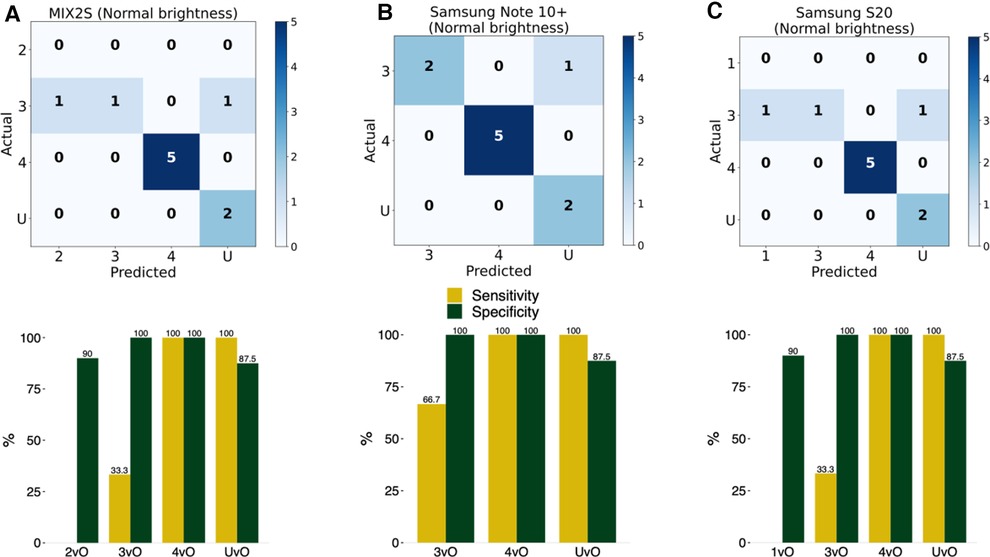

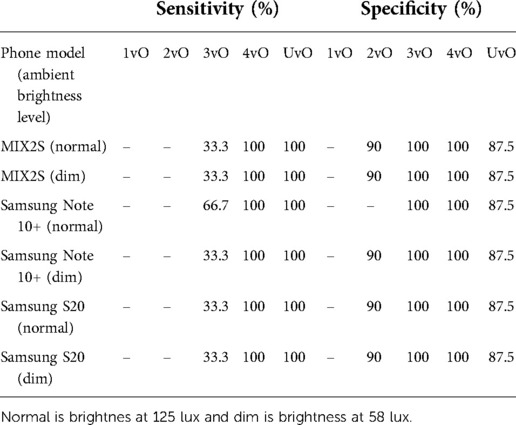

To test the practical utility of the smartphone app, as opposed to only the performance of the ML model, we printed the 10 test PI images on blank white paper and attached the cut-out wound images to the skin of a forearm. This design provides a more realistic evaluation of the app's practical performance, allowing us to test the impact of the ambient environment, such as brightness, as well as the stability of predictions across phones with different cameras. In this test, we held the smartphone at a distance at which the wound was clearly visible on the screen. We tested three Android smartphone models (Figures 4A–C) at two indoor illumination levels (Table 4). The classification accuracy varied from 80% to 90% depending on the phone model and ambient brightness (Figure 4). The estimated sensitivity and specificity are also shown in Figure 4 and Table 4. These findings indicate that the app is reasonably robust under these simulated real-life conditions.

Figure 4. Evaluation of accuracy of the implemented model in an Android smartphone app. The detection and classification of the printed wound images in the 10 photos in the test set using the smartphone app with three different Android phones at normal (125 lux) brightness level: (A) MIX2S, (B) Samsung Note 10+, and (C) Samsung S20. 1vO: Stage 1 vs. Others; 2vO: Stage 2 vs. Others; 3vO: Stage 3 vs. Others; 4vO: Stage 4 vs. Others; UvO: Unstageable vs. Others.

Table 4. Per-class specificity and sensitivity of the implemented model on three different Android phones at two different ambient brightness levels, evaluated on the test set images.

This study reports the development and evaluation of the first AI-enabled smartphone app for real-time pressure injury staging assessment. Other app developers have considered size and depth measurement, wound segmentation, tissue classification, treatment recommendation, and the applicable wound type (PI, diabetic foot ulcer, or others), and we plan to explore some of these features in the future. Nevertheless, our current AI-based app, built on open-source PI images whose annotations were verified by an experienced wound care nurse, provides a reasonable PI staging support tool for lay carers. Early diagnosis and proper management of PI can help prevent wound infection and hospital admission.

We identified several aspects that can be improved. First, testing of the app under realistic conditions was done only on printed images; testing on real patient wounds will be needed to confirm the robustness of the results. Further technical development, such as using the smartphone's flashlight, may further improve the sensitivity and specificity of real-time image capture. Second, more professional use will require high performance in accuracy, sensitivity, and specificity, together with features such as speed, operating efficiency, convenient retrieval of patient medical records, and comparison of PI parameters over time for healing monitoring. Third, our app currently runs only on the Android platform; future versions should support both Android and iOS devices. Fourth, given its real-time function, the app could be further optimised to interoperate with existing telemedicine platforms to support remote medical consultations.

In community settings, AI-based wound assessment on smartphones has the potential to enable early wound diagnosis, optimise wound management plans, reduce healthcare costs, and, in the hands of carers, improve the quality of life of patients in residential care homes. Our newly developed app can perform real-time PI assessment without additional hardware (e.g., a thermal camera or structure sensor) or internet access, making it easy to deploy in the community. Nevertheless, the practicality of AI-assisted wound assessment may depend on how readily health workers accept such technology.

As a feasibility study, we mostly used publicly available data to train and validate the machine learning system, and we collected a small set of additional test photos for independent validation. However, the number of images is still relatively small. In the future, we will collect further high-quality PI images for training and evaluation. We should also conduct an evaluation study on real patients and a usability study with nurses and wound specialists.

In medical and nursing education and specialist training, such apps have the potential to serve as educational tools for learners to practise with. Because the apps can detect wounds in both photographs and real patients, they expand the resources available to teachers of wound assessment and management. Technology-based pedagogy may also enhance students' motivation and interest in practising, which may improve their learning performance.

We demonstrated the detection of pressure ulcers using the object detection model YOLO. The app staged pressure ulcers in our limited training and test sets, and the results suggest that YOLO can correctly classify the staging level given a sufficiently large training set of pressure ulcer images. We also implemented our trained YOLO model in an Android app, which successfully detects wounds in real time through the phone camera. Wound size, dimensionality, and distance from the phone camera affected the classification results. Overall, in this work, we developed a real-time smartphone or tablet app that detects pressure injuries and classifies their stage. It provides a foundation for pressure injury assessment with an object detection app. Furthermore, our proposed technology for wound assessment may help prevent pressure injuries and support their recovery, reducing the risk of wound infection and hospital admission.

Publicly available datasets were analyzed in this study. These data can be found here: https://github.com/mlaradji/deep-learning-for-wound-care/tree/master/data/google-images-medetec-combined/pressure-ulcer-images.

The studies involving human participants were reviewed and approved by the Institutional Review Board (IRB) of University of Hong Kong and Hospital Authority Hong Kong West Cluster (approval reference UW 21-625). Written informed consent for participation was not required for this study in accordance with the national legislation and the institutional requirements.

JYHW and JWKH conceived the idea and supervised the study. CHL and KHOY designed and developed the app and performed the evaluation. TFY and LYFL provided technical support in smartphone app development. AKCW provided expertise in medical wound care and design of the system. TYS provided expertise in pressure injury staging and provided the test images. CHL, KHOY, JYHW and JWKH wrote the paper. All authors contributed to the article and approved the submitted version.

This work was supported by AIR@InnoHK, administered by the Innovation and Technology Commission.

We thank all the patients who agreed to contribute wound images to this study.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

1. Chen G, Lin L, Yan-Lin Y, Loretta CYF, Han L. The prevalence and incidence of community-acquired pressure injury: a protocol for systematic review and meta-analysis. Medicine (Baltimore). (2020) 99(48):e22348. doi: 10.1097/MD.0000000000022348

2. Li Z, Lin F, Thalib L, Chaboyer W. Global prevalence and incidence of pressure injuries in hospitalised adult patients: a systematic review and meta-analysis. Int J Nurs Stud. (2020) 105:103546. doi: 10.1016/j.ijnurstu.2020.103546

3. Hajhosseini B, Longaker MT, Gurtner GC. Pressure injury. Ann Surg. (2020) 271(4):671–9. doi: 10.1097/SLA.0000000000003567

4. Gist S, Tio-Matos I, Falzgraf S, Cameron S, Beebe M. Wound care in the geriatric client. Clin Interv Aging. (2009) 4:269–87. doi: 10.2147/cia.s4726

5. Edsberg LE, Black JM, Goldberg M, McNichol L, Moore L, Sieggreen M. Revised national pressure ulcer advisory panel pressure injury staging system: revised pressure injury staging system. J Wound Ostomy Cont Nurs Off Publ Wound Ostomy Cont Nurses Soc. (2016) 43(6):585–97. doi: 10.1097/WON.0000000000000281

6. Chaboyer WP, Thalib L, Harbeck EL, Coyer FM, Blot S, Bull CF, et al. Incidence and prevalence of pressure injuries in adult intensive care patients: a systematic review and meta-analysis. Crit Care Med. (2018) 46(11):e1074–81. doi: 10.1097/CCM.0000000000003366

7. Lyder CH, Ayello EA. Pressure ulcers: a patient safety issue. In: Hughes RG, editor. Patient safety and quality: An evidence-based handbook for nurses. Rockville, MD: Agency for Healthcare Research and Quality (US) (2008) [cited 2022 Mar 19]. (Advances in Patient Safety). Available from: http://www.ncbi.nlm.nih.gov/books/NBK2650/

8. Garcia-Zapirain B, Sierra-Sosa D, Ortiz D, Isaza-Monsalve M, Elmaghraby A. Efficient use of mobile devices for quantification of pressure injury images. Technol Health Care. (2018) 26(Suppl 1):269–80. doi: 10.3233/THC-174612

9. Zahia S, Garcia-Zapirain B, Elmaghraby A. Integrating 3D model representation for an accurate non-invasive assessment of pressure injuries with deep learning. Sensors. (2020) 20(10):E2933. doi: 10.3390/s20102933

10. imitoWound — Wound documentation in a breeze, image-centred. imito AG. [cited 2022 Mar 19]. Available from: https://imito.io/en/imitowound

11. Fraiwan L, Ninan J, Al-Khodari M. Mobile application for ulcer detection. Open Biomed Eng J. (2018) 12:16–26. doi: 10.2174/1874120701812010016

12. Yap MH, Chatwin KE, Ng CC, Abbott CA, Bowling FL, Rajbhandari S, et al. A new Mobile application for standardizing diabetic foot images. J Diabetes Sci Technol. (2018) 12(1):169–73. doi: 10.1177/1932296817713761

13. Goyal M, Reeves ND, Rajbhandari S, Yap MH. Robust methods for real-time diabetic foot ulcer detection and localization on mobile devices. IEEE J Biomed Health Inform. (2019) 23(4):1730–41. doi: 10.1109/JBHI.2018.2868656

14. Cassidy B, Reeves ND, Pappachan JM, Ahmad N, Haycocks S, Gillespie D, et al. A cloud-based deep learning framework for remote detection of diabetic foot ulcers. IEEE Pervasive Comput. (2022) 01:1–9. doi: 10.1109/MPRV.2021.3135686

15. Kositzke C, Dujovny M. Pressure injury evolution: mobile wound analyzer review. J Biol Med Res. (2018) 2(2):12.

16. Friesen MR, Hamel C, McLeod RD. A mHealth application for chronic wound care: findings of a user trial. Int J Environ Res Public Health. (2013) 10(11):6199–214. doi: 10.3390/ijerph10116199

17. Poon TWK, Friesen MR. Algorithms for size and color detection of smartphone images of chronic wounds for healthcare applications. IEEE Access. (2015) 3:1799–808. doi: 10.1109/ACCESS.2015.2487859

18. Orciuoli F, Orciuoli FJ, Peduto A. A mobile clinical DSS based on augmented reality and deep learning for the home cares of patients afflicted by bedsores. Procedia Comput Sci. (2020) 175:181–8. doi: 10.1016/j.procs.2020.07.028

19. KroniKare—AI-Driven Chronic Wound Tech. KroniKare. [cited 2022 Mar 19]. Available from: https://kronikare.ai/

20. CARES4WOUNDS Wound Management System. Tetsuyu Healthcare. [cited 2022 Mar 19]. Available from: https://tetsuyuhealthcare.com/solutions/wound-management-system/

21. Chan KS, Chan YM, Tan AHM, Liang S, Cho YT, Hong Q, et al. Clinical validation of an artificial intelligence-enabled wound imaging mobile application in diabetic foot ulcers. Int Wound J. (2022) 19(1):114–24. doi: 10.1111/iwj.13603

22. deep-learning-for-wound-care/data/google-images-medetec-combined at master · mlaradji/deep-learning-for-wound-care. GitHub. [cited 2022 Mar 20]. Available from: https://github.com/mlaradji/deep-learning-for-wound-care

23. Medetec Wound Database stock pictures of wounds. [cited 2022 Mar 20]. Available from: http://www.medetec.co.uk/files/medetec-image-databases.html

24. Roboflow: Give your software the power to see objects in images and video. [cited 2022 Mar 20]. Available from: https://roboflow.ai

25. Bochkovskiy A, Wang CY, Liao HYM. YOLOv4: Optimal Speed and Accuracy of Object Detection. ArXiv200410934 Cs Eess. (2020) [cited 2022 Mar 20]; Available from: http://arxiv.org/abs/2004.10934

26. Redmon J, Divvala S, Girshick R, Farhadi A. You Only Look Once: Unified, Real-Time Object Detection. ArXiv150602640 Cs. (2016) [cited 2022 Mar 20]; Available from: http://arxiv.org/abs/1506.02640

27. Carrión H, Jafari M, Bagood MD, Yang H, Isseroff RR, Gomez M. Automatic wound detection and size estimation using deep learning algorithms. PLOS Comput Biol. (2022) 18(3):e1009852. doi: 10.1371/journal.pcbi.1009852

28. Han A, Zhang Y, Li A, Li C, Zhao F, Dong Q, et al. Efficient refinements on YOLOv3 for real-time detection and assessment of diabetic foot Wagner grades. ArXiv200602322 Cs. (2020) [cited 2022 Mar 20]. Available from: http://arxiv.org/abs/2006.02322

29. Anisuzzaman DM, Patel Y, Niezgoda J, Gopalakrishnan S, Yu Z. A Mobile App for Wound Localization using Deep Learning. ArXiv200907133 Cs. (2020) [cited 2022 Mar 20]. Available from: http://arxiv.org/abs/2009.07133

30. Convert Darknet YOLOv4 or YOLOv3 to TensorFlow Model. GitHub. [cited 2022 Mar 20]. Available from: https://github.com/haroonshakeel/tensorflow-yolov4-tflite

Keywords: wound assessment, pressure injury, object detection, mHealth, digital health, bedsore, artificial intelligence, deep learning

Citation: Lau CH, Yu KH, Yip TF, Luk LY, Wai AKC, Sit T, Wong JY and Ho JWK (2022) An artificial intelligence-enabled smartphone app for real-time pressure injury assessment. Front. Med. Technol. 4:905074. doi: 10.3389/fmedt.2022.905074

Received: 26 March 2022; Accepted: 1 September 2022;

Published: 23 September 2022.

Edited by:

Luke E Hallum, The University of Auckland, New Zealand

Reviewed by:

Mh Busra Fauzi, Centre for Tissue Engineering and Regenerative Medicine (CTERM), Malaysia

© 2022 Lau, Yu, Yip, Luk, Wai, Sit, Wong and Ho. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Janet Yuen-Ha Wong, janetyh@hku.hk; Joshua Wing Kei Ho, jwkho@hku.hk

†These authors have contributed equally to this work

Specialty Section: This article was submitted to Diagnostic and Therapeutic Devices, a section of the journal Frontiers in Medical Technology
