ORIGINAL RESEARCH article

Front. Med. , 21 November 2024

Sec. Healthcare Professions Education

Volume 11 - 2024 | https://doi.org/10.3389/fmed.2024.1424024

Su Min Joyce Tan1,2, Michael J. Coffey2,3, Katrina Blazek4, Neela Sitaram1, Isabella Dobrescu5, Alberto Motta5, Sandra Chuang2,6, Chee Y. Ooi2,3*

Background and aim: The COVID-19 pandemic necessitated the transition to online medical education. This study evaluated the efficacy of online case-based tutorials using a serious game tutorial [PlayMed™ (PM)], as compared to a traditional slideshow tutorial (TT).

Methods: We performed a prospective, mixed-methods, randomised controlled trial on undergraduate medical students during the COVID-19 pandemic, from May 2020 to January 2021. Students were block randomised into the PM or TT groups. Tutors conducted online teaching on bronchiolitis and gastroenteritis cases using PM or TT to facilitate the presentation. Educational experience was assessed using a continuous interval scale (0–100; with pre-defined categories) and free text responses. Immediate and long-term knowledge acquisition was assessed using 6 multiple-choice questions (MCQ) for each case (total of 12 MCQ). A modified intention-to-treat mixed methods and a sensitivity per-protocol analysis were performed to compare outcomes between PM and TT groups.

Results: In total, 80 PM and 73 TT participants attended at least one tutorial. Sixty-five (81%) PM and 52 (71%) TT participants completed at least one survey and were included for analysis. PlayMed™ students had an increased likelihood of completing the surveys, which included the MCQ [odds ratio (95% CI) of 2.4 (1.6–3.8), p < 0.00006]. Regarding the immediate reactions post bronchiolitis and gastroenteritis cases, several responses were significantly more positive in the PM group compared to the TT group; e.g. ‘The learning activity was engaging’ [medium effect size: d (95% CI) = 0.58 (0.32–0.85), p < 0.0001]. Higher proportions of participants in the PM group reported feeling safe in the gastroenteritis and bronchiolitis tutorials (96 and 89%), compared to the TT group (76 and 74%). PlayMed™ participants significantly outperformed TT participants on the bronchiolitis MCQs done immediately post tutorial, 4.1 (1.0) vs. 3.5 (1.0), respectively, p = 0.004 [medium effect size: d (95% CI) = 0.54 (0.16–0.91)].

Conclusion: This study demonstrates the utility of a serious game (PlayMed™) as an online teaching tool for medical education. Students exposed to PM demonstrated superior engagement and feelings of safety. Utilisation of serious games may also facilitate knowledge acquisition, at least in the short term.

In recent years, hastened by the COVID-19 pandemic, digital and information technology has transformed society and various sectors of life, including the delivery of medical education and clinical care. During the pandemic, particularly in regions where lockdowns were implemented strictly, medical teaching moved online while face-to-face and bedside clinical teaching were heavily restricted (1, 2).

Traditional case-based tutorials are widely used in online medical education. These typically use clinical cases and scenarios to explore learning objectives of the curriculum such as generation of differential diagnoses, using investigations to support or refute differentials and development of clinical management plans. For online traditional case-based tutorials, tutors and students meet over virtual platforms. A slideshow presentation is commonly used by tutors. Students participate via live sharing of questions, answers and comments via microphone audio or chat functions. Immersive technologies and virtual medical education continue to play an important role in medical education with key benefits in accessibility, flexibility, cost-effectiveness and standardisation (3).

Serious games are educational tools, typically online, that aim to supply users with skills, knowledge, or attitudes useful in reality (4) without the high resource demands associated with traditional classroom or face-to-face teaching environments (5, 6). We had previously developed a highly immersive serious game for paediatric medical education called PlayMed™ (PM) (7). PlayMed™ provided a unique platform which allowed the application of Knowles’ theory of andragogy to facilitate adult learners exploring and solving real-world problems (7). This theory provides a framework for adult learning by focusing on the principles of autonomy, self-direction and evaluation, and experiential and problem-based learning in a simulated workplace (8–10). Additionally, Kolb’s experiential learning theory provides a framework for understanding and implementing experiential learning to allow learners to progress through the four stages in sequence: concrete experience, reflective observation, abstract conceptualisation and active experimentation (11). Online serious games have the benefit of immediate in-game feedback and repeatability in a safe environment that facilitate the application of Kolb’s experiential learning theory (11, 12). PlayMed™ was developed using multiple game design theories including self-determination theory (13), the MDA framework (14), flow theory (15), Raph Koster’s theory of fun (16) and, importantly, fiero and failure (17). PlayMed™ facilitates the integration of game and learning theories to provide an innovative and emotive environment. Players are faced with the meaningful challenge of managing virtual patients, a form of ‘Hard Fun’ which creates emotion through a structured and safe experience (17). Difficult cases can be frustrating and may require multiple attempts before succeeding; however, the sense of personal triumph (i.e., Fiero) upon completion can be very rewarding and memorable. Additionally, the difficulty level of cases increases as the player progresses through the simpler cases, thus balancing game difficulty with the anticipated skill level to keep players engaged.

Perron et al. performed an investigator-blinded randomised controlled trial comparing learner attitudes and the educational efficacy of a serious game (PlayMed™) compared to both an adaptive tutorial (computerised, interactive, web-based lessons using the Smart Sparrow™ platform) and a low stimulus control (published paper-based guidelines). Medical students were taught paediatric asthma and seizure assessment and management using one of the three interventions, and then assessed using multiple choice questions and simulated clinical management scenarios in a high-fidelity simulation laboratory. Students allocated to the serious game intervention demonstrated improved translation of knowledge in the simulated clinical scenarios, particularly compared to the low stimulus control (7).

The aim of our study was to assess whether using a serious game as an online teaching tool by the tutor in medical student case-based tutorials [PlayMed™ (PM) group] was more effective than traditional slideshow case-based online tutorials (TT group). The primary aim was to evaluate and compare the student educational experience between the PM and TT groups, including assessment of student satisfaction, engagement and understanding, through surveys. The secondary aim was to evaluate and compare student learning and knowledge acquisition, assessing immediate and long-term retention of knowledge using multiple choice questions (MCQs). We hypothesised that the PM group would have better educational experience, learning and knowledge acquisition compared to the TT group.

This study was approved by the University of New South Wales Human Research Ethics Committee (HC17160).

We performed a prospective, mixed-methods, randomised controlled trial (RCT) on undergraduate medical students attending the Faculty of Medicine at University of New South Wales, Sydney, Australia (UNSW) during the COVID-19 pandemic, from May 2020 to January 2021. This study was designed to assess the efficacy and educational experience of serious game enhanced case-based tutorials (PM group) compared with traditional slideshow case-based tutorials (TT group).

Phase 3 medical students (final years, i.e., Years 5 and 6) at UNSW who were enrolled in the Children’s Health course were eligible. The Children’s Health course is an 8-week core course delivered by the School of Women’s and Children’s Health (SWCH) and completed by metropolitan and rural students during the final 2 years (Phase 3) of the 6-year undergraduate medical programme. There are five course offerings annually with 45–75 students enrolled in each teaching period. Due to the COVID-19 pandemic and strict lockdown measures in place across Australia, the Children’s Health course lectures and tutorials were mostly delivered online with significantly reduced face-to-face student-patient clinical experiences.

One of the study investigators emailed students with information about the study, including the Participant Information Statement, at least 2 weeks prior to starting the Children’s Health course. Students were informed that apart from additional learning opportunities provided by the tutorials, there were no financial or additional incentives to participate in the trial. Participation in the study was voluntary. Informed consent was obtained from the students.

All recruited students were randomly allocated to one of two online tutorial groups: PlayMed™ case-based tutorial (PM group), or traditional slideshow case-based tutorial (TT group). A computer-generated block randomisation list, with block sizes of 4 and 6 and stratified by teaching period, was utilised.1 Students were allocated a unique identification number which was recorded against their student number and group allocation in a secure (password-protected) electronic database on the UNSW SWCH drive. This was done by one of the SWCH Education Support Officers.
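The allocation procedure described above can be illustrated with a minimal sketch. It assumes a 1:1 allocation ratio, random selection between the two block sizes, and one independent list per teaching period; the function names, seed handling and enrolment numbers are hypothetical and are not taken from the study's randomisation tool.

```python
import random


def block_randomise(n_students, block_sizes=(4, 6), arms=("PM", "TT"), seed=None):
    """Return a 1:1 allocation list built from randomly sized permuted blocks."""
    rng = random.Random(seed)
    allocations = []
    while len(allocations) < n_students:
        size = rng.choice(block_sizes)              # pick a block of 4 or 6
        block = list(arms) * (size // len(arms))    # equal numbers of each arm
        rng.shuffle(block)                          # permute within the block
        allocations.extend(block)
    # The final block may be cut short if enrolment is not a multiple of the block size
    return allocations[:n_students]


def stratified_lists(students_per_period, seed=0):
    """Build one independent allocation list per teaching period (stratum)."""
    return {
        period: block_randomise(n, seed=seed + i)
        for i, (period, n) in enumerate(sorted(students_per_period.items()))
    }


# Hypothetical enrolment numbers for two teaching periods
lists = stratified_lists({"TP1": 30, "TP2": 32})
```

Because every complete block contains equal numbers of each arm, the group sizes within a teaching period can differ by at most half of the largest block (three students in this sketch).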

The clinical assessment, diagnosis and management of bronchiolitis and acute gastroenteritis were selected as the focus of learning for the two online tutorials given to each PM or TT group. These two conditions are both addressed in the Children’s Health lecture programme and are frequently encountered in clinical rotations and clerkships. Importantly, acute gastroenteritis and respiratory infections like bronchiolitis are common paediatric emergency presentations in Australasia (18) and junior doctors will likely be involved in management. Australian clinical practice guidelines are freely available on the acute management of bronchiolitis (19) and management of acute gastroenteritis in infants and children (20). The key learning outcomes and case stems for these tutorials were determined using the Children’s Health course objectives and were the same for the PM and TT groups. The MCQs were written by paediatric doctors and reviewed by UNSW Medicine faculty educators to ensure that they were based on the same key learning outcomes.

A single tutor developed and presented all the PM tutorials and a separate single tutor developed and presented all the TT tutorials. Each tutorial was limited to 45 min duration and delivered virtually via a cloud-based video conferencing platform, with students given the option of leaving their webcam turned on or off. Each tutor was a general paediatric fellow (in advanced specialist training for paediatrics) in the Sydney Children’s Hospitals Network with a similar level of paediatric training and medical student teaching experience. Both tutors were instructed to develop content based on the Australian clinical practice guidelines for the acute management of bronchiolitis (19) and the management of acute gastroenteritis in infants and children (20). Each tutor was blinded to the tutorial content of the other group and to the MCQs.

PlayMed™ [developed using the Playconomics™ platform (21)] is a highly immersive role-playing game set in a virtual hospital with the player acting as a doctor (7) (Figure 1; Supplementary Figure 1). It was developed using the principles of Knowles’ theory of andragogy (8) and Kolb’s experiential learning theory (11). Gameplay involves a player first creating a customisable avatar which is able to walk around the virtual hospital. Players then select a case to play, with each case involving a single patient that requires assessment and management. Players must attend the bedside of the patient and perform an assessment in a timely fashion. The player is required to order appropriate investigations, prescribe medications and make appropriate management decisions using the in-game electronic medical record system. Patient status, vital signs and investigations change in real time and are dependent on the decisions made by the player. During the entire case, a simulated ‘attending physician’ provides real-time feedback on the player’s actions. The case is completed once the player has satisfactorily managed the patient. If a player makes inappropriate decisions, they are redirected by the attending physician or can even fail the case. Individual patient cases within the game were designed with complex case-flow algorithms (allowing for different combinations and permutations) and offered the opportunity for replay (7). A typical case would take around 5 to 7 min to complete, with additional feedback and performance rating (out of 5 stars) provided at the end.

Figure 1. Various screenshots of PlayMed including: avatar customisation, gameplay in the hospital, bedside examination, review of vital signs, history and physical examination, management plan, medication prescription and in-game feedback log.

In our study, a case on bronchiolitis and a second case on acute gastroenteritis were designed with complex case-flow algorithms, allowing for different combinations and permutations. The tutor acted as a single proxy player for the students by taking majority consensus suggestions on management from the student audience. Group polls were created using a free web-based poll maker and inserted at key decision points in the game. The majority student vote determined which in-game option was selected, e.g., choice of investigation or treatment option. In addition to real-time tutor facilitation, an in-game simulated ‘attending physician’ provided real-time feedback. For example, the majority of students may select to apply high flow nasal prongs in an infant with increased work of breathing and hypoxia. The virtual patient’s vital signs (i.e., respiratory rate and oxygen saturation) and visible work of breathing would then improve in the game.

The tutor delivered the traditional tutorial (TT) using an online Microsoft PowerPoint slideshow presentation. The tutor went through slides sequentially, outlining the case scenario, with teaching content focused on the learning outcomes of the curriculum while remaining flexible to students’ level of knowledge and clinical needs. The tutor of the TT encouraged students to ask and also answer questions throughout the tutorial. The tutor would facilitate these interactions periodically throughout the tutorial.

An Education Support Officer from SWCH emailed the students individually their unique identification number as well as the date, time, and Microsoft Teams access link to their case tutorial sessions. All tutorials (PM or TT) were delivered live using Microsoft Teams and an instruction sheet for students was provided on how to access the tutorials. The bronchiolitis and gastroenteritis tutorials were given in Week 1–2 of the 8-week Children’s Health course. Where possible, the PM and TT groups had their tutorials given simultaneously by their respective tutors except on one occasion where due to tutor sickness, the TT group had their tutorials rescheduled within 48 h. Immediately after each tutorial (PM or TT), and in Week 8 (last week of the Children’s Health Course), participants were invited to give feedback about their educational experience and have their knowledge assessed as outlined below.

Primary outcomes included a mixed methods analysis of the educational experience of participants, i.e., assessment of satisfaction, engagement and understanding. The students were invited to anonymously complete an online survey using Qualtrics immediately following each tutorial (Qualtrics, Provo, UT, United States). The immediate educational experience response survey is presented in Appendix 1A (Supplementary material). In the survey, students were asked to rate their responses to items on a continuous interval scale (0 to 100) with pre-defined categories: 1–20: Strongly Disagree; 21–40: Disagree; 41–60: Neutral; 61–80: Agree; 81–100: Strongly Agree; 0: No response. Students were also asked for comments (free text responses) regarding their engagement with, and perception of the educational value, of the teaching tool. The time commitment required of participants for each assessment was approximately 5 min.
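The pre-defined categories on the 0 to 100 scale amount to a simple lookup, sketched below for illustration. The function name is hypothetical, and the sketch assumes scores are recorded as whole numbers; how the survey software treated fractional values between category boundaries is not stated in the text.

```python
def scale_category(score):
    """Map a 0-100 survey score to its pre-defined response category."""
    if not 0 <= score <= 100:
        raise ValueError("score must be between 0 and 100")
    if score == 0:
        return "No response"
    # Upper bound of each category, in ascending order
    bands = [
        (20, "Strongly Disagree"),
        (40, "Disagree"),
        (60, "Neutral"),
        (80, "Agree"),
        (100, "Strongly Agree"),
    ]
    for upper, label in bands:
        if score <= upper:
            return label


labels = [scale_category(s) for s in (0, 20, 21, 60, 81, 100)]
```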

A second survey was completed at Week 8 post tutorials to assess the long-term educational experience response (at the conclusion of the Children’s Health Course). This survey aimed to capture the follow-up impact of the tutorials on the student’s learning (Appendix 1B, Supplementary material).

Additional secondary outcomes were the immediate knowledge acquisition and long-term retention of knowledge, assessed using MCQs. All MCQs were completed online using Qualtrics. For immediate knowledge acquisition, participants were assessed immediately after the tutorial (PM or TT) using 6 case-specific MCQs for the bronchiolitis tutorial and 6 case-specific MCQs for the gastroenteritis tutorial (Appendices 2A,B, Supplementary material). The time commitment required of participants for each assessment was approximately 15 min. For long-term retention of knowledge, participants were assessed in a single sitting in Week 8 of the course using the same MCQ questions as before (total of 12 MCQs; six MCQs for bronchiolitis and six MCQs for gastroenteritis). The time commitment required of participants for this assessment was approximately 30 min.

Participant information was collected in a series of electronic data collection forms with the Qualtrics Online Survey Software Tool using the study ID number as the identifier. The surveys were programmed to facilitate a personalised flow depending on the stage of the trial each participant was at, i.e., immediately post intervention (post-tutorial) or end of Children’s Health course (week 8). Post-tutorial and end of course surveys and MCQs were embedded in the survey. Survey links were securely emailed to participants by an Education Support Officer from SWCH.

All study investigators were blinded to the assessment scores and feedback from the surveys.

The required sample size for this study was calculated using the following conditions: (i) based on data from our previous randomised controlled trial (7), 38.9% of serious game (i.e., PlayMed™) and 16.7% of adaptive tutorial (using Smart Sparrow) students ‘strongly agreed’ that the ‘activity provided helped me prepare for, and deal with, real life clinical scenarios’; (ii) relative group sizes of 1:1 for PM and TT; (iii) the sample size is calculated to detect a difference in incidence of the dichotomous outcome (‘strongly agree’ vs. any other response) between the two groups using the results above; (iv) type 1 error probability of 0.05 (two-tailed); (v) desired power of 0.8; and (vi) the ClinCalc Sample Size Calculator (https://clincalc.com/stats/samplesize.aspx) was used (22).

Based on these calculations, a minimum sample size of 63 PM and 63 TT students was required to reject the null hypothesis that the incidence of a ‘strongly agree’ response was equal in the experimental (PM) and control (TT) groups, with probability (power) 0.8 and Type I error of 0.05. Assuming a total refusal and drop-out rate of 10%, 70 subjects were required for each cohort, i.e., 70 PM and 70 TT students.
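The calculation can be approximated with a short sketch. It assumes a pooled-variance normal approximation for comparing two independent proportions with equal group sizes; the text does not state which formula the ClinCalc calculator applies, so the formula choice and the function names here are assumptions rather than a description of that tool.

```python
import math
from statistics import NormalDist


def sample_size_two_proportions(p1, p2, alpha=0.05, power=0.8):
    """Per-group n for a two-sided test of two proportions (1:1 allocation)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)   # two-tailed critical value
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2                           # pooled proportion under the null
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p1 - p2) ** 2)


def inflate_for_dropout(n, dropout_percent):
    """Increase n so that the target remains after the expected drop-out."""
    return math.ceil(n * 100 / (100 - dropout_percent))


# 'Strongly agree' proportions from the previous trial: 38.9% vs. 16.7%
n_per_group = sample_size_two_proportions(0.389, 0.167)
n_recruit = inflate_for_dropout(n_per_group, 10)
```

Under these assumptions the sketch gives 63 students per group, and 70 per group after allowing for 10% refusal and drop-out, in line with the figures reported above.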

Statistical analysis of the quantitative data was performed using descriptive and inferential statistics, calculated according to normality of distribution. Normally distributed data are presented as mean and standard deviation (SD) and non-normally distributed data as median and interquartile range (IQR). Quantitative data were analysed on a modified intention-to-treat (mITT) basis, with participants who answered at least one survey included. Missing quantitative data from the surveys and MCQs immediately post the tutorials (Appendix 1A, 2A,B, Supplementary material) were estimated by group mean imputation using the MICE package (v3.16.0) (23). A sensitivity per-protocol analysis was also performed with missing quantitative data treated as missing. Given that more than 50% of the data from the week 8 survey and MCQs (Appendix 1B, 2A,B, Supplementary material) were missing from both groups, only a per-protocol analysis was performed.
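Group mean imputation replaces each missing value with the mean of the observed values from the same tutorial group. The sketch below shows the idea on hypothetical data in plain Python; the study itself used the MICE package in R, and the record layout and function name here are illustrative assumptions only.

```python
from statistics import mean


def impute_group_mean(records, group_key="group", value_key="score"):
    """Fill missing (None) values with the mean of observed values in the same group."""
    observed = {}
    for record in records:
        if record[value_key] is not None:
            observed.setdefault(record[group_key], []).append(record[value_key])
    group_means = {group: mean(values) for group, values in observed.items()}
    return [
        {
            **record,
            value_key: group_means[record[group_key]]
            if record[value_key] is None
            else record[value_key],
        }
        for record in records
    ]


# Hypothetical survey scores; None marks an unanswered item
records = [
    {"group": "PM", "score": 80},
    {"group": "PM", "score": 60},
    {"group": "PM", "score": None},
    {"group": "TT", "score": 50},
    {"group": "TT", "score": None},
]
imputed = impute_group_mean(records)
```

Because imputed values sit exactly at the group mean, this approach tends to shrink the within-group variance, which is one reason a per-protocol sensitivity analysis is a useful companion.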

Cross-sectional group comparisons (TT vs. PM) were made using quantitative data and performing an unpaired t-test or a Mann–Whitney test for parametric and non-parametric data, respectively. A chi-square test was used to compare categorical data between groups. p values <0.05 were considered statistically significant for all analyses. Effect sizes were calculated for statistically significant variables using Cohen’s d (d) with 95% confidence intervals (95% CI) using the ‘effsize’ package. Effect sizes were considered small if 0.2 ≤ d < 0.5, medium if 0.5 ≤ d < 0.8, and large if d ≥ 0.8. All quantitative analyses were performed by author MC who was blinded to the group allocation.
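For illustration, Cohen's d with a pooled standard deviation and the magnitude thresholds above can be sketched as follows. The data are hypothetical, the label for values below 0.2 is our own placeholder (the text does not name that band), and the confidence interval is omitted because the method used by the 'effsize' package is not described here.

```python
import math
from statistics import mean, stdev


def cohens_d(a, b):
    """Cohen's d for two independent samples using the pooled standard deviation."""
    n_a, n_b = len(a), len(b)
    pooled_var = (
        (n_a - 1) * stdev(a) ** 2 + (n_b - 1) * stdev(b) ** 2
    ) / (n_a + n_b - 2)
    return (mean(a) - mean(b)) / math.sqrt(pooled_var)


def effect_size_label(d):
    """Classify |d| as small (0.2 to <0.5), medium (0.5 to <0.8) or large (>=0.8)."""
    magnitude = abs(d)
    if magnitude >= 0.8:
        return "large"
    if magnitude >= 0.5:
        return "medium"
    if magnitude >= 0.2:
        return "small"
    return "below small"  # placeholder label; this band is not named in the text


# Hypothetical scores for two small groups
d = cohens_d([2, 4, 6], [1, 3, 5])
label = effect_size_label(d)
```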

Qualitative data from open-ended survey questions were coded using a thematic, inductive approach by author KB, who could not feasibly be blinded to group allocation. Data were manually transcribed into NVivo™, which assisted with data immersion. Labels were created and assigned to text, which described key meanings and ideas. After the first pass, and with each successive pass, codes were refined by aggregating common key themes and ideas. Group consensus was sought in cases where the meaning of the response was unclear. The proportions of responses related to each code were compared between tutorial types.

Given the difference in survey response rates between the TT and PM groups, a logistic regression analysis was performed. To determine the likelihood of completing the survey, a conditional logistic regression was performed using a dummy variable for MCQ completion (1 = yes; 0 = no). NVivo 1.7.1, R v4.2.0 and RStudio 2023.09.1 were used for the statistical analyses.

A total of 153 students attended at least one of the online tutorials, consisting of 73 students in the TT group and 80 students in the PM group. Seventy-one percent (52/73) of the students in the TT group and 81% (65/80) of the students in the PM group provided responses to one or more surveys (immediately post tutorial or at the end of the Children’s Health course) and were included in the analysis (Table 1). The TT group had a significantly lower response rate in the post gastroenteritis tutorial survey compared with the PM group (54% vs. 83%, respectively, p = 0.001). Overall, PM students had an increased likelihood of completing the surveys, which included the MCQ [odds ratio (95% CI) of 2.4 (1.6–3.8), p < 0.00006].

Demographic data for the included participants are presented in Table 1. There were no significant differences between the two groups for the demographic variables of age, sex, or year.

Survey completion rates are presented in Table 1.

The primary aim was to evaluate and compare the educational experience of both groups immediately post the bronchiolitis and gastroenteritis tutorials.

An mITT analysis of the immediate reactions to the bronchiolitis and gastroenteritis cases is presented in Table 2.

Regarding the combined reactions from both bronchiolitis and gastroenteritis cases, PM participants reported a significantly more positive response than TT participants to the following statements: (i) ‘I enjoyed the learning activity’ [small effect size: d (95% CI) = 0.35 (0.08–0.61), p = 0.01]; (ii) ‘The learning activity was engaging’ [medium effect size: d (95% CI) = 0.58 (0.32–0.85), p < 0.0001]; (iii) ‘Receiving feedback during the learning activity aided my learning’ [medium effect size: d (95% CI) = 0.52 (0.26–0.78), p = 0.0001]; and (iv) ‘Your learning activity was more engaging than face-to-face teaching’ [large effect size: d (95% CI) = 0.90 (0.63–1.17), p < 0.0001].

A sensitivity, per-protocol analysis of the immediate reactions is presented in Supplementary Table 1.

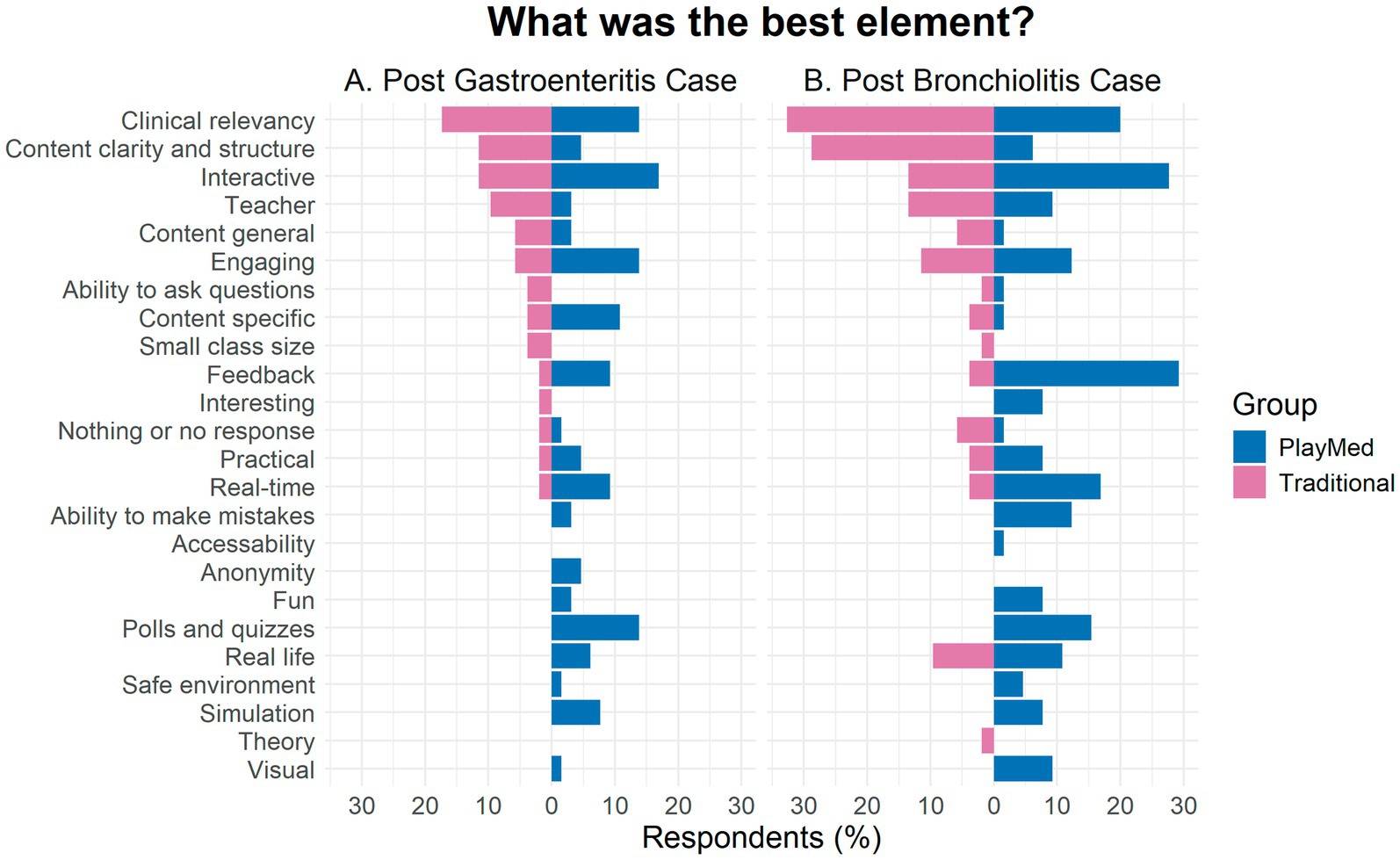

Both groups described both tutorials as clinically relevant, interactive and engaging (Figure 2). In general, the TT group liked content clarity, structure and the teacher, while PM group liked that the learning activity was fun, in real time and real life. They also liked the ability to make mistakes in a safe environment with feedback (Figure 3).

Figure 3. Comparison of best elements of learning post-gastroenteritis and post-bronchiolitis for PlayMed vs. Traditional tutorials (immediate reaction to open-ended questions).

When asked what could be improved, most students did not give a response or said that there was nothing to improve on (Figure 4). In both groups, adding or expanding content was suggested. In the PM group, the majority of suggestions were to improve technology (e.g., videoconferencing glitches, connectivity issues, visuals) or allow direct individual access to the PlayMed™ software. There were also a few suggestions to have more time for the tutorials. In the TT group, suggestions included making the tutorial more engaging or interesting, decreasing or managing downtime, making the scenario more realistic or clinically relevant, and increasing interactivity.

Seventy-two percent and 60% of respondents from the PM group reported being engaged or highly engaged for the bronchiolitis and gastroenteritis tutorials, respectively (Figure 5). In comparison, 44% and 27% of the respondents from the TT group reported being engaged or highly engaged for the respective tutorials.

Immediately post tutorials, both groups described largely positive sentiments (Figure 6).

More than three-quarters of both groups reported that the COVID-19 pandemic affected their education. When asked what form of teaching they preferred, 24% and 21% of respondents from the TT group preferred online learning for the bronchiolitis and gastroenteritis tutorials, respectively, compared with a slightly higher proportion of the PM group (39% and 30%). Similar proportions of both groups preferred a traditional face-to-face learning activity (43% for TT and 39% for PM). Both groups wanted to do this type of online learning again, with 78% and 86% of respondents in the TT group and 84% and 87% of respondents in the PM group saying yes for the bronchiolitis and gastroenteritis tutorials, respectively. Almost all the respondents in the PM group (96% and 89%) reported that they felt safe and comfortable sharing their thoughts, asking questions, or making mistakes in the gastroenteritis and bronchiolitis tutorials, respectively. A smaller proportion of the TT group (76% and 74%) reported similarly. A large majority of the students from both groups felt included in the learning activity.

The secondary aim was to evaluate and compare student learning and knowledge acquisition, assessing immediate and long-term retention of knowledge using MCQs. Additionally, the long-term reactions to the educational experience were assessed.

In an mITT analysis, the PM participants significantly outperformed TT participants on the six bronchiolitis MCQs, 4.1 (1.0) vs. 3.5 (1.0), respectively, p = 0.004 [medium effect size: d (95% CI) = 0.54 (0.16–0.91)]. There was no significant difference between PM and TT participants on the six gastroenteritis MCQs, 3.7 (1.2) vs. 3.8 (0.7), respectively, p = 0.7. A sensitivity, per-protocol analysis of the MCQ results, along with results for individual questions, is presented in Supplementary Table 2.

Twenty-one participants from the PM group (32%) and eight participants from the TT group (15%) completed the week 8 MCQs. In a per-protocol analysis, there was no significant difference in the total MCQ score (out of 12) between the PM and TT groups, 8 (7–9) vs. 7.5 (6.5–8.5), respectively, p = 0.5. There was also no significant difference between the PM and TT groups for the six bronchiolitis MCQs [5 (4–5) vs. 4.5 (3–6), respectively, p = 0.98], or for the six gastroenteritis MCQs [4 (3–4) vs. 3.5 (2–4.3), respectively, p = 0.5]. Results for the individual questions are presented in Supplementary Table 3.

Eleven participants from the TT group (21%) and 25 participants from the PM group (38%) completed the week 8 long term reactions survey. A per protocol analysis was performed with responses to individual questions presented in Supplementary Table 4. Traditional tutorial participants reported significantly more positive responses than PM participants to the statement: ‘I was able to apply the acquired knowledge from my learning activity in my paediatrics term’ [medium effect size: d (95% CI) = 0.76 (−0.08 to 1.6), p = 0.01].

In the week 8 survey, there were more positive perceptions in the PM group vs. the TT group (Figure 7). For the gastroenteritis tutorial, 34% of respondents in the PM group responded positively compared to 12% in the TT group. For the bronchiolitis tutorial, the PM group had 31% of respondents code positively compared to 15% in the TT group.

By utilising a serious game (PlayMed™) to facilitate online medical student education during the COVID-19 pandemic, we demonstrated the ability to improve medical student engagement with a medium to large effect size. This mixed-methods RCT demonstrates that serious games can provide a platform to apply Kolb’s experiential learning theory (11) in a safe environment. We showed that both student reactions (Kirkpatrick Level 1) and student learning (Kirkpatrick Level 2) can be improved with utilisation of a serious game in online medical education. The utility of a serious game in medical education is likely due to the facilitation of experiential learning, which is often limited with traditional slideshow-based tutorials.

In this study, much higher levels of engagement were reported in the PM group compared to the TT group. Consistent with this, survey response rates were significantly higher in the PM group, particularly evident in the end of course surveys, reflecting higher levels of engagement in not only the short term, but also in the longer term. Higher proportions of students in the PM group reported feeling safe, compared to the TT group. These findings combined suggest that PM may relax the threat of testing. This in turn may have positive effects on knowledge acquisition as students are able to identify what they do not know and take corrective action.

Students in the PM group reported having an improved understanding of educating parents regarding oral rehydration at home in gastroenteritis (Kirkpatrick Level 1); however, there was no measurable difference between the two groups in the individual MCQ scores relating to this learning objective (Kirkpatrick Level 2).

For the bronchiolitis tutorial, the PM group outperformed the TT group immediately post tutorial for total MCQ scores and individual MCQ scores for two questions which assessed interpretation of clinical findings, diagnosis, and parental education about bronchiolitis (Kirkpatrick Level 2).

There is a growing body of evidence that using serious games in healthcare professions education can increase engagement, fuel motivation to learn and improve learning outcomes (24). However, there has been little high-quality translational research in this context, especially using a randomised controlled trial to evaluate and compare learner attitudes and knowledge (i.e., Kirkpatrick’s Model of Evaluation Levels 1 and 2) (25). To our knowledge, this is the first randomised controlled trial that has explored using serious games in a case-based group tutorial setting in medical education. In most of the literature, serious games were used in individual player or multiplayer settings where the learner interacts with the game and other learners, without a tutor present (26–31). Integrating PM in the delivery of tutorials uniquely involves live facilitation by the tutor, enabling tutor assessment of student group competency levels and matching of students with appropriate task complexity. This may possibly mitigate the ‘expertise reversal effect’ (32), while still encouraging development of collaborative awareness, clinical reasoning and decision-making skills (9, 33, 34). Feedback in the PM tutorial is delivered in real time, dually by the game programme and the tutor, which aims to achieve timely, specific, and actionable feedback, fundamental for learners’ clinical skill development (35).

In our study, possible reasons for the higher engagement levels in the PM group include the fun dimension added to the learning activity, real-time case evolution dependent on the group’s clinical management decisions, and the simulation of real-life patient scenarios. Polls and quizzes integrated into the teaching session helped structure the exploration of clinical decision making.

A large majority of students in the PM group reported positively on psychological safety, affirming that this is a safe educational intervention. Psychological safety is defined as a shared belief that the team is safe for interpersonal risk taking (36). When it is fostered in education, students have improved engagement, participation and learning experience (37, 38). Factors contributing to psychological safety in the PM tutorials include anonymity in polls and quizzes, which removes the risk of exposure or judgement. Students exercised their personal choice to keep their webcams on or off: keeping them on reinforced social presence, while turning them off reduced the risk of judgement.

Students in the PM group had higher MCQ scores compared to the TT group for the bronchiolitis tutorial, suggesting that the PM group had improved knowledge acquisition and clinical reasoning, which includes interpretation of symptoms, examination findings, formulating diagnoses and initiating appropriate management (39). Similarly, previous trials have demonstrated positive implications of serious games on teaching knowledge (31, 40). Interestingly, the PM group did not show superior MCQ scores for the gastroenteritis tutorial, raising the possibility that some of the benefits conferred when using a serious game like PM may be case-specific. Unfortunately, there is no strong evidence on the role of case specificity in transferability of clinical reasoning (41) and literature is scarce on which components of clinical reasoning are transferable.

This was a single-centre study with a limited sample size that recruited students in their final 2 years of medical school and related to paediatric-specific content, which limits the generalisability and external validity of the results. The drop-out rate from attendance at tutorials to combined survey completion (immediate post tutorial and week 8 surveys) was higher in the TT group (29%) than in the PM group (19%). This may reflect higher participant engagement in the PM group rather than a random effect. We measured outcomes in the short to long term (end of 8-week course) but were not able to measure real-world clinical effects resembling the third and fourth levels of the Kirkpatrick Model.
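
The drop-out percentages quoted above can be reproduced from the participant counts given in the abstract (80 PM and 73 TT students attended at least one tutorial; 65 and 52, respectively, completed at least one survey). The following is a minimal illustrative sketch rather than the study's analysis code; the variable and function names are hypothetical.

```python
# Counts taken from the abstract: students who attended at least one
# tutorial, and those who completed at least one survey, per study arm.
attended = {"PM": 80, "TT": 73}
completed = {"PM": 65, "TT": 52}


def dropout_rate(arm: str) -> float:
    """Percentage of attendees in an arm who did not complete any survey."""
    return 100 * (attended[arm] - completed[arm]) / attended[arm]


for arm in attended:
    # Rounded to whole percentages, as reported in the text.
    print(f"{arm}: {dropout_rate(arm):.0f}% drop-out")
```

Rounded to whole numbers, this gives the 19% (PM) and 29% (TT) figures reported in the text.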

Execution of the PM tutorial was not always smooth, owing to limitations in connectivity and high-resolution visuals. Despite this, a large majority of the PM group reported wanting to do this type of online learning again. Given that students in both groups preferred traditional face-to-face teaching, using PM in a face-to-face setting may bypass these technological challenges and improve the educational experience.

This mixed-methods RCT provides support for the development and utilisation of serious games for online medical student education. We demonstrated that a novel serious game such as PlayMed™ can provide a superior educational experience compared to traditional slideshow-based presentations. Furthermore, a serious game can provide a safe learning environment, facilitating students’ experiential learning. Further research assessing participants’ real-world clinical behaviour in a larger multicentre trial is required.

The original contributions presented in the study are included in the article/Supplementary material; further inquiries can be directed to the corresponding author.

ST: Conceptualization, Data curation, Investigation, Methodology, Project administration, Resources, Software, Validation, Visualization, Writing – original draft, Writing – review & editing. MC: Conceptualization, Data curation, Formal analysis, Methodology, Project administration, Resources, Software, Visualization, Writing – review & editing. KB: Data curation, Formal analysis, Validation, Visualization, Writing – review & editing. NS: Writing – review & editing, Investigation. ID: Writing – review & editing, Software. AM: Software, Writing – review & editing. SC: Writing – review & editing, Project administration. CO: Writing – review & editing, Conceptualization, Funding acquisition, Methodology, Resources, Software, Supervision, Validation, Visualization.

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors. Chee Y. Ooi received fellowship funding from a National Health and Medical Research Council (NHMRC, Australia) Investigator Grant (2020/GNT1194358).

First, the study team would like to extend our sincerest gratitude to the participants of this study. We would also like to thank the Discipline of Paediatrics Education Support Officers, Melinda Bresolin and Deborah Broder, for assisting with enrollment and randomisation of students, and the team at Lionsheart Studios for assisting with game development.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fmed.2024.1424024/full#supplementary-material

1. Kaul, V, Gallo de Moraes, A, Khateeb, D, Greenstein, Y, Winter, G, Chae, J, et al. Medical education during the Covid-19 pandemic. Chest. (2021) 159:1949–60. doi: 10.1016/j.chest.2020.12.026

2. Idris, A, and Edris, B. Virtual medical education during the Covid-19 pandemic: how to make it work. Eur. Heart J. (2021) 42:145–6. doi: 10.1093/eurheartj/ehaa638

3. Ryan, GV, Callaghan, S, Rafferty, A, Higgins, MF, Mangina, E, and McAuliffe, F. Learning outcomes of immersive technologies in health care student education: systematic review of the literature. J. Med. Internet Res. (2022) 24:e30082. doi: 10.2196/30082

5. Gentry, SV, Gauthier, A, L'Estrade Ehrstrom, B, Wortley, D, Lilienthal, A, Tudor Car, L, et al. Serious gaming and gamification education in health professions: systematic review. J. Med. Internet Res. (2019) 21:e12994. doi: 10.2196/12994

6. Wang, R, DeMaria, S Jr, Goldberg, A, and Katz, D. A systematic review of serious games in training health care professionals. Simul. Healthcare. (2016) 11:41–51. doi: 10.1097/sih.0000000000000118

7. Perron, JE, Uther, P, Coffey, MJ, Lovell-Simons, A, Bartlett, AW, McKay, A, et al. Are serious games seriously good at preparing students for clinical practice?: a randomized controlled trial. Med Teach. (2024):1–8. doi: 10.1080/0142159x.2024.2323179

8. Knowles, MS. The Modern Practice of Adult Education; Andragogy Versus Pedagogy. New York: The Association Press (1970). 384 p.

9. Akl, EA, Sackett, KM, Erdley, WS, Mustafa, RA, Fiander, M, Gabriel, C, et al. Educational games for health professionals. Cochrane Database Syst. Rev. (2013) 1:CD006411. doi: 10.1002/14651858.CD006411.pub3

10. Misch, DA. Andragogy and medical education: are medical students internally motivated to learn? Adv. Health Sci. Educ. Theory Pract. (2002) 7:153–60. doi: 10.1023/a:1015790318032

11. Kolb, DA. Experiential Learning: Experience as the Source of Learning and Development. Englewood Cliffs, NJ: Prentice Hall (1984).

12. Wijnen-Meijer, M, Brandhuber, T, Schneider, A, and Berberat, PO. Implementing Kolb’s experiential learning cycle by linking real experience, case-based discussion and simulation. J. Med. Educat. Curri. Develop. (2022) 9:23821205221091511. doi: 10.1177/23821205221091511

13. Deci, E, and Ryan, R. The "what" and "why" of goal pursuits: human needs and the self-determination of behavior. Psychol. Inq. (2000) 11:227–68. doi: 10.1207/S15327965PLI1104_01

14. Hunicke, R, Leblanc, M, and Zubek, R (2004). MDA: a formal approach to game design and game research. AAAI Workshop—Technical Report. 1.

18. Acworth, J, Babl, F, Borland, M, Ngo, P, Krieser, D, Schutz, J, et al. Patterns of presentation to the Australian and New Zealand Paediatric emergency research network. Emerg. Med. Australas. (2009) 21:59–66. doi: 10.1111/j.1742-6723.2009.01154.x

19. Innovation AfC (2018). Infants and children—acute Management of Bronchiolitis (2018) (updated 10 January 2018). Available online at: https://www1.health.nsw.gov.au/pds/ActivePDSDocuments/GL2018_001.pdf (Accessed 16 November 2023).

20. The Royal Children's Hospital M, Australia (2020). Gastroenteritis (December 2020). Available online at: https://www.rch.org.au/clinicalguide/guideline_index/gastroenteritis/ (Accessed November 16, 2023).

21. Dobrescu, L, Greiner, B, and Motta, A. Learning economics concepts through game-play: an experiment. Int. J. Educ. Res. (2014) 69:23–37. doi: 10.1016/j.ijer.2014.08.005

23. van Buuren, S, and Groothuis-Oudshoorn, K. Mice: multivariate imputation by chained equations in R. J. Stat. Softw. (2011) 45:1–67. doi: 10.18637/jss.v045.i03

24. Xu, M, Luo, Y, Zhang, Y, Xia, R, Qian, H, and Zou, X. Game-based learning in medical education. Front. Public Health. (2023) 11:1113682. doi: 10.3389/fpubh.2023.1113682

26. Palee, P, Wongta, N, Khwanngern, K, Jitmun, W, and Choosri, N. Serious game for teaching undergraduate medical students in cleft lip and palate treatment protocol. Int. J. Med. Inform. (2020) 141:104166. doi: 10.1016/j.ijmedinf.2020.104166

27. Chon, SH, Timmermann, F, Dratsch, T, Schuelper, N, Plum, P, Berlth, F, et al. Serious games in surgical medical education: a virtual emergency department as a tool for teaching clinical reasoning to medical students. JMIR Ser. Games. (2019) 7:e13028. doi: 10.2196/13028

28. Tsopra, R, Courtine, M, Sedki, K, Eap, D, Cabal, M, Cohen, S, et al. Antibiogame®: a serious game for teaching medical students about antibiotic use. Int. J. Med. Inform. (2020) 136:104074. doi: 10.1016/j.ijmedinf.2020.104074

29. Hu, L, Zhang, L, Yin, R, Li, Z, Shen, J, Tan, H, et al. Neogames: a serious computer game that improves long-term knowledge retention of neonatal resuscitation in undergraduate medical students. Front. Pediatr. (2021) 9:645776. doi: 10.3389/fped.2021.645776

30. Gerard, JM, Scalzo, AJ, Borgman, MA, Watson, CM, Byrnes, CE, Chang, TP, et al. Validity evidence for a serious game to assess performance on critical pediatric emergency medicine scenarios. Simul. Healthcare. (2018) 13:168–80. doi: 10.1097/sih.0000000000000283

31. Tubelo, RA, Portella, FF, Gelain, MA, de Oliveira, MMC, de Oliveira, AEF, Dahmer, A, et al. Serious game is an effective learning method for primary health care education of medical students: a randomized controlled trial. Int. J. Med. Inform. (2019) 130:103944. doi: 10.1016/j.ijmedinf.2019.08.004

32. Dankbaar, M. Serious games and blended learning; effects on performance and motivation in medical education. Perspect. Med. Educ. (2017) 6:58–60. doi: 10.1007/s40037-016-0320-2

33. Tsoy, D, Sneath, P, Rempel, J, Huang, S, Bodnariuc, N, Mercuri, M, et al. Creating gridlocked: a serious game for teaching about multipatient environments. Acad. Med. (2019) 94:66–70. doi: 10.1097/acm.0000000000002340

34. Min, A, Min, H, and Kim, S. Effectiveness of serious games in nurse education: a systematic review. Nurse Educ. Today. (2022) 108:105178. doi: 10.1016/j.nedt.2021.105178

35. Hovaguimian, A, Joshi, A, Onorato, S, Schwartz, AW, and Frankl, S. Twelve tips for clinical teaching with telemedicine visits. Med Teach. (2022) 44:19–25. doi: 10.1080/0142159x.2021.1880558

36. Edmondson, A. Psychological safety and learning behavior in work teams. Adm. Sci. Q. (1999) 44:350–83. doi: 10.2307/2666999

37. Tsuei, SH-T, Lee, D, Ho, C, Regehr, G, and Nimmon, L. Exploring the construct of psychological safety in medical education. Acad. Med. (2019) 94:S28–35. doi: 10.1097/acm.0000000000002897

38. McLeod, E, and Gupta, S. The role of psychological safety in enhancing medical students’ engagement in online synchronous learning. Med. Sci. Educ. (2023) 33:423–30. doi: 10.1007/s40670-023-01753-8

39. Kassirer, JP. Teaching clinical reasoning: case-based and coached. Acad. Med. (2010) 85:1118–24. doi: 10.1097/acm.0b013e3181d5dd0d

40. Buijs-Spanjers, KR, Hegge, HH, Jansen, CJ, Hoogendoorn, E, and de Rooij, SE. A web-based serious game on delirium as an educational intervention for medical students: randomized controlled trial. JMIR Ser. Games. (2018) 6:e17. doi: 10.2196/games.9886

Keywords: serious games, game-based learning, virtual, tutorial, medical education

Citation: Tan SMJ, Coffey MJ, Blazek K, Sitaram N, Dobrescu I, Motta A, Chuang S and Ooi CY (2024) Serious games vs. traditional tutorials in the pandemic: a randomised controlled trial. Front. Med. 11:1424024. doi: 10.3389/fmed.2024.1424024

Received: 26 April 2024; Accepted: 12 November 2024;

Published: 21 November 2024.

Edited by:

José Manuel Reales, National University of Distance Education (UNED), Spain

Reviewed by:

Dian Puspita Sari, Faculty of Medicine University of Mataram, Indonesia

Copyright © 2024 Tan, Coffey, Blazek, Sitaram, Dobrescu, Motta, Chuang and Ooi. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Chee Y. Ooi, keith.ooi@unsw.edu.au
