ORIGINAL RESEARCH article

Front. Med., 06 February 2024

Sec. Ophthalmology

Volume 11 - 2024 | https://doi.org/10.3389/fmed.2024.1326004

This article is part of the Research Topic Efficient Artificial Intelligence (AI) in Ophthalmic Imaging

Background: Retinal detachment (RD) is a common sight-threatening condition in the emergency department. Early postural intervention based on detachment regions can improve visual prognosis.

Methods: We developed a weakly supervised model with 24,208 ultra-widefield fundus images to localize and coarsely outline the anatomical RD regions. The customized preoperative postural guidance was generated for patients accordingly. The localization performance was then compared with the baseline model and an ophthalmologist according to the reference standard established by the retina experts.

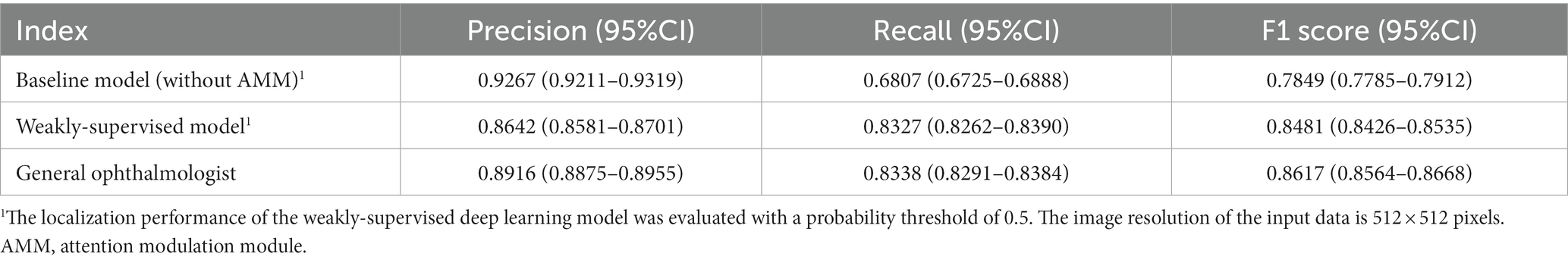

Results: In the 48-partition lesion detection, our proposed model reached a precision of 86.42% (95% confidence interval (CI): 85.81–87.01%) and a recall of 83.27% (95%CI: 82.62–83.90%), with an average precision (AP) of 0.9132. In contrast, the baseline model achieved a precision of 92.67% (95%CI: 92.11–93.19%) but a limited recall of 68.07% (95%CI: 67.25–68.88%). Our holistic lesion localization performance was comparable to the ophthalmologist’s precision of 89.16% (95%CI: 88.75–89.55%) and recall of 83.38% (95%CI: 82.91–83.84%). For four-zone anatomical localization, the unweighted Cohen’s κ coefficients against the ground truth were 0.710 (95%CI: 0.659–0.761) and 0.753 (95%CI: 0.702–0.804) for the weakly supervised model and the general ophthalmologist, respectively.

Conclusion: The proposed weakly-supervised deep learning model showed outstanding performance comparable to that of the general ophthalmologist in localizing and outlining the RD regions. Hopefully, it would greatly facilitate managing RD patients, especially for medical referral and patient education.

Retinal detachment (RD) is a sight-threatening condition that occurs when the neurosensory retina is separated from the retinal pigment epithelium (1). Several population-based epidemiological studies of RD find an annual incidence of around 1 in 10,000 (2). It has been estimated that the lifetime risk of RD is about 0.1% (3, 4). Early intervention helps prevent disease progression and improves prognosis. Clinically, scleral buckle, vitrectomy, and pneumatic retinopexy are the most common surgical approaches to repairing RD (5–7). Before surgery, patients should be instructed to lie in the appropriate position to minimize extension of the detachment and improve visual outcomes (6, 8, 9). Throughout management, postural guidance depends on the location of the lesion. However, the corresponding patient education is often inadequate in busy clinical settings, which may lead to poor patient compliance (10). Therefore, an efficient and reliable method for localizing and estimating the detached retinal regions is fundamental for detailed postural instruction and medical referrals, especially in remote areas with insufficient fundus specialists.

In recent years, artificial intelligence (AI) models for RD detection based on color fundus photography (CFP) and optical coherence tomography (OCT) have been gradually established (11–14). However, the emergence of the ultra-widefield fundus (UWF) imaging system promotes the intelligent diagnosis of fundus diseases to a new height. A panoramic image of the retina with 200° views allows for detailed rendering of the peripheral retina, which compensates for the deficiency of traditional fundus images (15). Ohsugi et al. (16) made a pioneering attempt to diagnose rhegmatogenous RD with a small sample of UWF images based on deep learning algorithms. Later, Li et al. (17) proposed a cascaded deep learning system using UWF images for various RD detection and macula status discerning. Despite promising advancements, their work mainly focused on the presence or absence of the target disease. However, the concrete localization of the RD lesions, a crucial need for therapeutic decision-making including the preoperative posture and surgical options, is not fully emphasized (18–21).

Generally, the extent of a retinal lesion is obtained using supervised models, most of which require elaborate labeling. However, the equivocal boundaries of lesions, together with the lack of expert annotations, considerably hinder the efficient development of such models. In this context, weakly supervised learning, where the model can be trained with incomplete and simplified annotations, has attracted great attention (22). It is well suited to training lesion localization and segmentation models in medical images. For instance, Ma et al. (23) resorted to classification-based Class Activation Maps (CAMs) to segment geographic atrophy in retinal OCT images. Monaro et al. (24) proposed an architectural setting that enabled the weakly supervised coarse segmentation of age-related macular degeneration lesions in color fundus images. The incorporation of lesion-specific activation maps provides more meaningful information for diagnosis with great explainability. In medical imaging, Gradient-weighted CAM (Grad-CAM) (25) is one of the most commonly used techniques to generate coarse localization maps. However, most approaches derived from it focus only on the discriminative image regions and ignore much detailed information. To alleviate this issue, Qin et al. (26) proposed an activation modulation and recalibration (AMR) scheme, which combines a compensation branch and a spotlight branch to achieve better performance on image-level weakly supervised segmentation tasks. Given our purpose of achieving lesion-specific holistic localization, working under coarse image-level annotation instead of bounding box annotation is highly desirable (22, 27–29). Moreover, incorporating the AMR scheme into our approach could generate high-quality activation maps that compensate for the detail-loss issue described above.

Therefore, we proposed a weakly supervised learning model to generate localization maps that outline the RD lesions based on UWF images. Relying on the localization maps, the potential diagnostic evidence can be transmitted instantly to clinicians for reference. Furthermore, individualized postural guidance can be generated for patients as a healthcare reference.

This study was conducted adhering to the tenets of the Declaration of Helsinki. It was approved by the Medical Ethics Committee of the Second Affiliated Hospital of Zhejiang University, School of Medicine.

A total of 30,446 UWF images were retrospectively obtained from visitors presenting for ophthalmic examinations between 1 May 2016 and 15 August 2022 at the Eye Center, The Second Affiliated Hospital, School of Medicine, Zhejiang University. Images insufficient for interpretation were excluded, including (1) poor-view images, referring to images with significant deficiencies in focus, illumination, or visibility of the optic disc, or with more than one-third of the field obscured by the eyelashes or eyelids; and (2) poor-position images, referring to images with a significantly off-center optic disc and macula due to incorrect gaze during image capture. The UWF images were captured using an OPTOS nonmydriatic camera (OPTOS Daytona, Dunfermline, United Kingdom) with a 200-degree field of view. The subjects underwent the examinations without mydriasis. All of the UWF images were anonymized before being included in this study.

A professional image labeling team was recruited to generate the ground truth. The team consisted of two retinal specialists with more than 5 years of clinical experience and one senior specialized ophthalmologist with over 20 years of clinical experience.

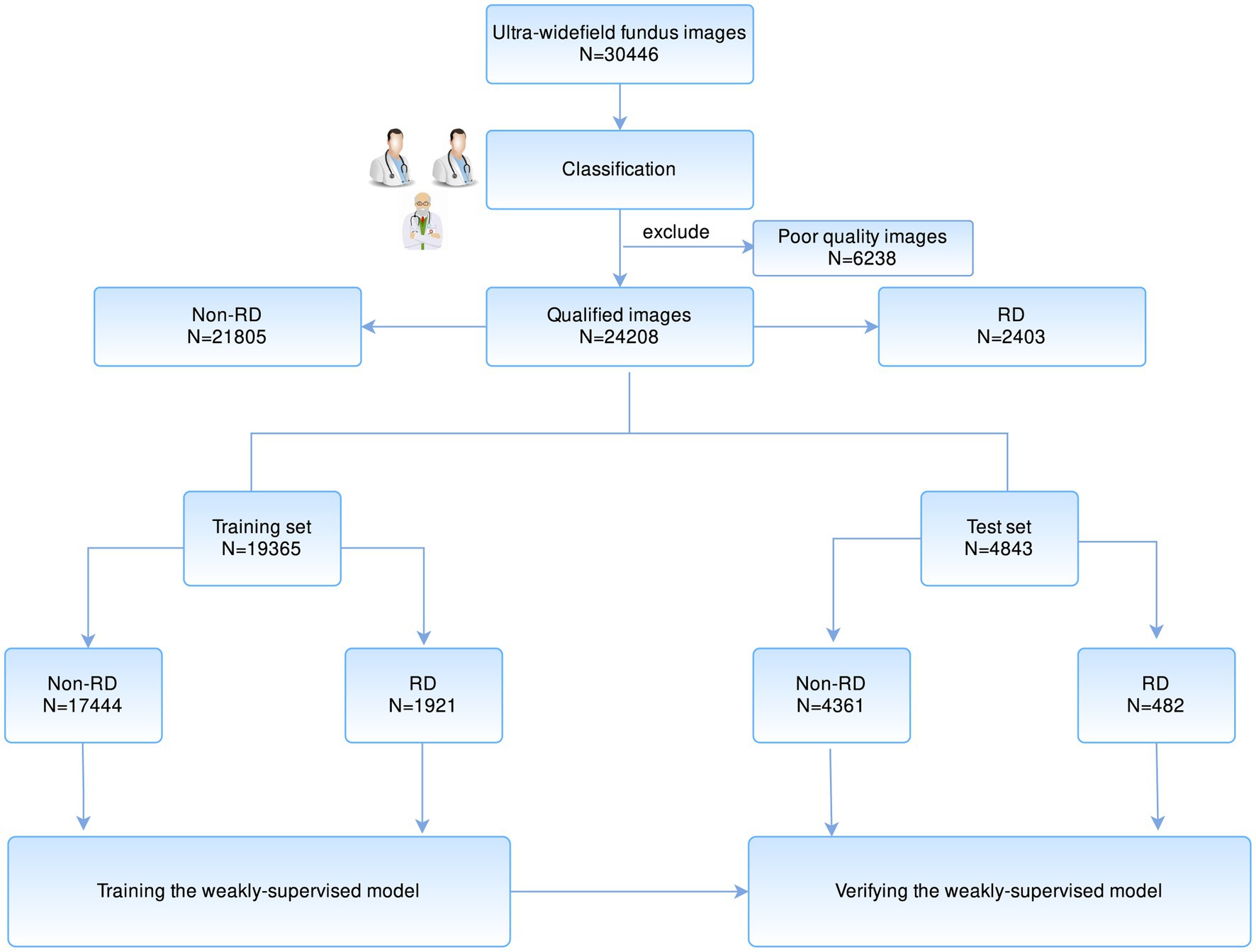

First, the included UWF images were annotated with image-level labels after quality filtration. Two specialists independently classified all images into two types: RD and non-RD. The ground truth was determined based on their consensus. Any disagreements were arbitrated by the senior specialized ophthalmologist. Figure 1 illustrates the workflow of image classification.

Figure 1. The workflow of developing a weakly supervised learning model for the localization of RD region based on UWF images. RD, retinal detachment; UWF, ultra-widefield fundus.

Then, the uninvolved fovea of each RD image (Macula ON) was marked manually to further obtain the specific anatomical zone for postural guidance. Besides, the RD regions of images in the test set were independently contoured by two specialists. The ground truth of the RD region was determined based on the intersection of their labeled areas. Any image with less than 0.9 intersection-over-union (IOU) of the labeled RD regions was confirmed by the senior specialized ophthalmologist.
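The annotation rule above (intersection of the two contours as the reference, with senior review below an IOU of 0.9) can be sketched in a few lines. This is a minimal illustration rather than the authors' pipeline: masks are assumed to be equally sized binary grids, and the names `intersection_mask`, `iou`, and `needs_senior_review` are hypothetical.

```python
from typing import List

Mask = List[List[int]]  # binary mask: 1 = RD pixel, 0 = background


def intersection_mask(a: Mask, b: Mask) -> Mask:
    """Pixel-wise AND of two equally sized binary masks."""
    return [[pa & pb for pa, pb in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]


def iou(a: Mask, b: Mask) -> float:
    """Intersection-over-union of two binary masks (1.0 if both are empty)."""
    inter = sum(pa & pb for ra, rb in zip(a, b) for pa, pb in zip(ra, rb))
    union = sum(pa | pb for ra, rb in zip(a, b) for pa, pb in zip(ra, rb))
    return inter / union if union else 1.0


def needs_senior_review(a: Mask, b: Mask, threshold: float = 0.9) -> bool:
    """Flag an image when the two annotators' IOU falls below the threshold."""
    return iou(a, b) < threshold


# Toy 3 x 3 masks, invented for illustration only.
grader_1 = [[0, 1, 1], [0, 1, 1], [0, 0, 0]]
grader_2 = [[0, 1, 1], [0, 1, 0], [0, 0, 0]]
reference = intersection_mask(grader_1, grader_2)
```

In this toy case the two masks share three of four labeled pixels, so the IOU is 0.75 and the image would be routed to the senior ophthalmologist.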

UWF images incorporate a vast amount of critical information about the profile and distribution of the lesions, which is essential for the healthcare of RD patients. Clinically, typical RD is recognized by an elevated and corrugated retinal appearance accompanied by retinal breaks, and such features can often be recognized by deep learning algorithms. Based on this rationale, we propose a model that enables the localization of RD regions based on weakly supervised training. The design of the model consisted of two sections: localizing the RD lesions and generating postural guidance according to the anatomical zone of the lesion.

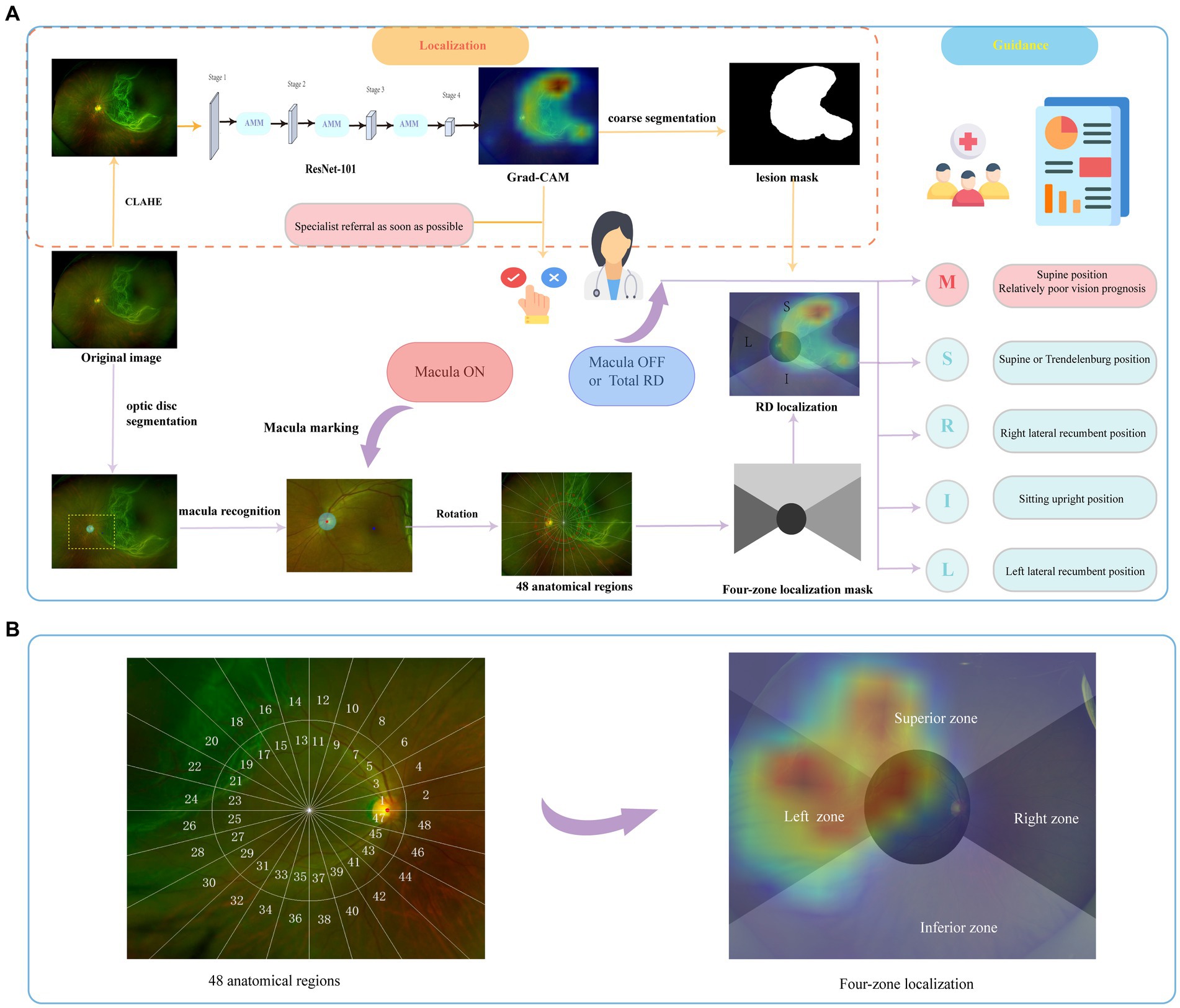

In the localization section, an attention modulation module (AMM) (26) was incorporated into our scheme to realize recalibration supervision and generate lesion-specific activation maps. First, the fundus region of interest (ROI) was extracted. The four corners (top left, top right, bottom left, and bottom right) of a UWF image were regarded as irrelevant areas since they contain no fundus information. These irrelevant regions varied in texture across images but were highly similar in extent. We manually crafted an ROI template to erase pixels in these irrelevant regions. Contrast-limited adaptive histogram equalization (CLAHE), a local contrast enhancement method, was then applied to the images.

A ResNet-101 (30) was pre-trained to identify RD cases with a learning rate of 0.01 and focal loss (alpha was set to 0.65, gamma was set to 1.15). Then, AMM was employed to emphasize region-essential features for the segmentation task between every two stages, as shown in Figure 2. Features from the discriminative regions were considered to be the most sensitive features, and the minor features referred to features that are important but easily ignored (31). The AMM can rearrange the distribution of the feature importance to highlight sensitive and minor activations, which is crucial to generating semantic segmentation masks. The ResNet-101 with AMMs was fine-tuned with a learning rate of 0.001. Probability maps were generated based on feature maps from stage 4 by Grad-CAM and resampled to the original size afterward.
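For readers unfamiliar with focal loss, the following is a minimal per-sample sketch using the alpha and gamma values stated above. The text gives only the two hyperparameters, so the standard binary form, FL = -alpha_t (1 - p_t)^gamma log(p_t), is assumed here, as is the convention that alpha weights the RD (positive) class; the function name is hypothetical.

```python
import math


def binary_focal_loss(p: float, y: int, alpha: float = 0.65, gamma: float = 1.15) -> float:
    """Focal loss for a single sample (standard binary form, assumed).

    p: predicted probability of the positive (RD) class
    y: true label, 1 for RD and 0 for non-RD
    """
    eps = 1e-7
    p = min(max(p, eps), 1.0 - eps)             # clamp to avoid log(0)
    p_t = p if y == 1 else 1.0 - p              # probability given to the true class
    alpha_t = alpha if y == 1 else 1.0 - alpha  # class-balancing weight (assumed convention)
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


easy = binary_focal_loss(0.9, 1)  # confident, correct prediction
hard = binary_focal_loss(0.3, 1)  # poorly classified RD image
```

The modulating factor (1 - p_t)^gamma shrinks the contribution of well-classified samples, so `hard` is much larger than `easy`; this is the usual motivation for focal loss on imbalanced data such as the RD versus non-RD split here.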

Figure 2. The schema of the overall study. The brief illustration of RD region localization and corresponding postural guidance (A). The retina was divided into 48 anatomical regions to evaluate the holistic localization performance. The final four-zone overlaid image was generated for postural guidance (B). RD, retinal detachment; M, macula zone; S, superior zone; R, right zone; I, inferior zone; L, left zone.

In the guidance section, coarse segmentation of the RD region was carried out using pseudo labels obtained from the localization maps with a probability threshold of 0.5. As shown in Figure 2, a coordinate system was constructed based on the manually marked fovea and the optic disc segmented by U-Net (32). Details on the established zoning principles are presented below. The primary zone label was assigned to the zone containing the largest number of RD pixels. Then, the predicted label was output based on the coarse lesion segmentation results to generate the customized postural guidance.

The panoramic retinal view is divided into four zones centered on the manually labeled macula fovea. It is calibrated with a horizontal line through the fovea and optic disc center. In a clockwise direction, we designate 2–4 o’clock as the right zone, 10–2 o’clock as the superior zone, 8–10 o’clock as the left zone, and 4–8 o’clock as the inferior zone. In addition, we pay more attention to the posterior pole, designated as the circle centered on the macula and including the optic disc (33), which is closely associated with the surgical option and visual prognosis (4, 6). To further evaluate the holistic localization performance, each zone was divided clockwise by 15° to obtain 48 anatomical regions defining the entire retina as shown in Figure 2. Each image has a 48-length vector label for 48-partition localization. The label is assigned as 1 when more than 50 RD pixels fall into this partition.
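The zoning rules above can be illustrated with a short sketch. Several simplifications are assumed and are not taken from the study: the image is already rotated so that the fovea–optic disc line is horizontal, the right zone corresponds to the right side of the image, and the posterior pole circle is ignored. Because the exact geometry of the 48 partitions is not fully specified here, `partition_labels` simply takes precomputed per-partition pixel counts. All function names are hypothetical.

```python
import math
from collections import Counter
from typing import Iterable, List, Optional, Tuple


def clock_angle(x: float, y: float, fovea: Tuple[float, float]) -> float:
    """Angle in degrees, clockwise from 12 o'clock, of pixel (x, y) about the fovea.

    Image coordinates are assumed: x grows rightward, y grows downward.
    """
    dx, dy = x - fovea[0], y - fovea[1]
    return math.degrees(math.atan2(dx, -dy)) % 360.0


def zone_of(angle: float) -> str:
    """Map a clock angle to one of the four zones (one clock hour = 30 degrees)."""
    if 60.0 <= angle < 120.0:
        return "right"     # 2-4 o'clock
    if 120.0 <= angle < 240.0:
        return "inferior"  # 4-8 o'clock
    if 240.0 <= angle < 300.0:
        return "left"      # 8-10 o'clock
    return "superior"      # 10-2 o'clock


def primary_zone(rd_pixels: Iterable[Tuple[int, int]],
                 fovea: Tuple[float, float]) -> Optional[str]:
    """Zone containing the largest number of RD pixels, or None if there are none."""
    counts = Counter(zone_of(clock_angle(x, y, fovea)) for x, y in rd_pixels)
    return counts.most_common(1)[0][0] if counts else None


def partition_labels(pixel_counts: List[int], min_pixels: int = 50) -> List[int]:
    """Binary label per partition: 1 when more than min_pixels RD pixels fall in it."""
    return [1 if count > min_pixels else 0 for count in pixel_counts]
```

For example, with the fovea at (100, 100), a pixel at (150, 100) lies at 3 o'clock and falls in the right zone, while a partition holding exactly 50 RD pixels is still labeled 0 because the rule requires more than 50.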

Because differences in the image resolution of the input data may affect the localization outcome, we performed sensitivity analyses at three common image resolutions: 256 × 256, 512 × 512, and 1,024 × 1,024 pixels. We evaluated the 48-partition localization performance of our weakly supervised model at each resolution separately and selected the model with the optimal resolution for further evaluation.

A comparison experiment with the proposed model was conducted using a baseline model without AMM to explore the performance enhancement that comes with the AMR scheme. Meanwhile, to evaluate our weakly-supervised deep learning model in the localization of the RD region, we recruited a general ophthalmologist with 3 years of clinical experience. It is challenging to clearly define the contours of the RD region, considering its equivocal borders, even for clinicians. Given that the final localization is the essential factor for postural instruction, we evaluated their performance of lesion body localization rather than the edge segmentation performance. According to the defined ground truth, we compared the localization performance of the proposed model with that of the baseline model and general ophthalmologist based on the test set, respectively.

The precision, recall, F1 score, sensitivity, specificity, and accuracy of the models and the general ophthalmologist were calculated according to the reference standard. The F1 score is the harmonic mean of precision and recall, which is calculated as:

$$F_1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$
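As an illustration of how these metrics can be computed from the 48-length partition vectors, the sketch below pools true positives, false positives, and false negatives over all partitions of all images. Pooling (micro-averaging) is an assumption made for the example; the averaging scheme is not stated in the text, and the function names are hypothetical.

```python
from typing import Dict, Sequence


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    total = precision + recall
    return 2.0 * precision * recall / total if total else 0.0


def partition_metrics(y_true: Sequence[Sequence[int]],
                      y_pred: Sequence[Sequence[int]]) -> Dict[str, float]:
    """Precision, recall, and F1 pooled over every partition of every image."""
    tp = fp = fn = 0
    for true_vec, pred_vec in zip(y_true, y_pred):
        for t, p in zip(true_vec, pred_vec):
            if p == 1 and t == 1:
                tp += 1
            elif p == 1 and t == 0:
                fp += 1
            elif p == 0 and t == 1:
                fn += 1
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {"precision": precision, "recall": recall, "f1": f1_score(precision, recall)}


# Toy label vectors (4 partitions instead of 48), invented for illustration only.
toy_true = [[1, 1, 0, 0], [1, 0, 0, 0]]
toy_pred = [[1, 0, 1, 0], [1, 0, 0, 0]]
toy = partition_metrics(toy_true, toy_pred)
```

Applying `f1_score(0.8642, 0.8327)` to the precision and recall reported for the proposed model gives approximately 0.848.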

The precision-recall curve was generated to visualize the localization performance of the deep learning models. The Cohen’s Kappa value of the model and general ophthalmologist compared with the reference standard for the four-zone localization was calculated to evaluate the consistency. All statistical analyses for the study were conducted using SPSS 26.0 (Chicago, IL, United States) and Python 3.7 (Wilmington, DE, United States).
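The unweighted Cohen's kappa used for the four-zone comparison can be computed directly from a confusion matrix. The sketch below is a generic implementation of the standard formula, kappa = (p_o - p_e) / (1 - p_e); it is not the SPSS procedure used in the study, and the matrix is made-up toy data.

```python
from typing import List


def cohen_kappa(confusion: List[List[int]]) -> float:
    """Unweighted Cohen's kappa from a square confusion matrix.

    confusion[i][j] counts cases placed in class i by the reference standard
    and in class j by the rater (model or ophthalmologist).
    """
    k = len(confusion)
    n = sum(sum(row) for row in confusion)
    observed = sum(confusion[i][i] for i in range(k)) / n   # observed agreement p_o
    row_totals = [sum(row) for row in confusion]
    col_totals = [sum(confusion[i][j] for i in range(k)) for j in range(k)]
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / (n * n)  # chance agreement p_e
    return (observed - expected) / (1.0 - expected)


# Toy 2 x 2 matrix, invented for illustration only.
toy_kappa = cohen_kappa([[20, 5], [10, 15]])
```

Here the observed agreement is 0.70 and the chance agreement is 0.50, giving a kappa of 0.40. The function is undefined when chance agreement equals 1 (all cases in a single class for both raters), which a production version would need to handle.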

In total, 30,446 images were obtained for preliminary model development. After filtering out 6,238 poor-quality images that were insufficient for interpretation, 24,208 eligible images were annotated. Of these, 2,403 were classified as RD, while the remaining 21,805 images were classified as non-RD. The dataset was randomly split in an 80:20 ratio according to the Pareto principle, with 19,365 (80%) images as the training set and 4,843 (20%) as the test set. The baseline characteristics of the collected images are summarized in Table 1.

In the test set, the associated lesions of 480 RD images are successfully localized with activation maps. Only two cases have been missed due to the inconspicuous shallow detachment. In 467 Macula-ON RD images, the entire retina is divided into 48 anatomical regions based on the location of the optic disc and macula fovea, as illustrated in Figure 2, to evaluate the holistic localization of the RD region in the test set. The following anatomical localization evaluation will be specific to these 467 RD images.

Table 2 shows the holistic localization performance of our weakly supervised model at three image resolutions for sensitivity analysis. The image resolution of 1,024 × 1,024 pixels had the highest precision of 89.14% (95%CI: 88.52–89.73%). However, the image resolution of 512 × 512 pixels achieved the highest recall of 83.27% (95%CI: 82.62–83.90%) and acceptable precision, with an optimal F1 score of 84.81% (95%CI: 84.26–85.35%). As a result, the following localization evaluation uniformly adopted the image resolution of 512 × 512 pixels.

Table 2. The holistic localization performance of our weakly-supervised model with different image resolutions.

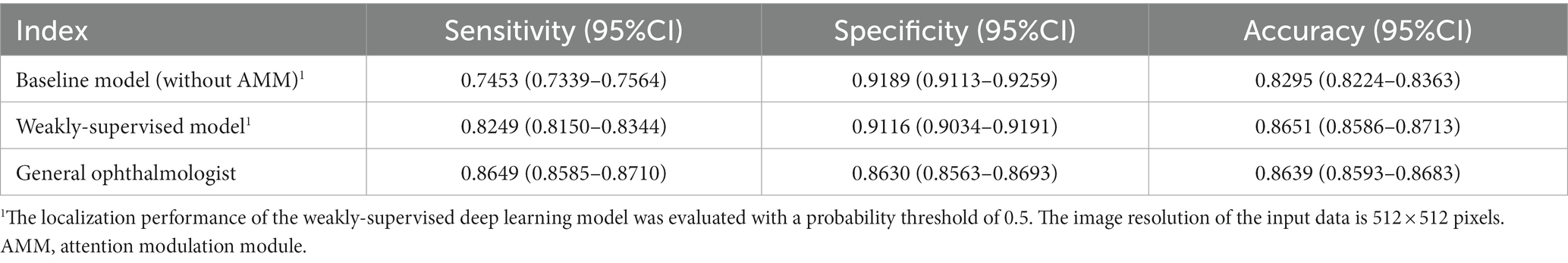

The performance of the baseline model, the proposed model, and general ophthalmologist to identify whether the posterior pole is involved or not is shown in Table 3. The general ophthalmologist had an 86.49% (95%CI: 85.85–87.10%) sensitivity and an 86.30% (95%CI: 85.63–86.93%) specificity, whereas the model had an 82.49% (95%CI: 81.50–83.44%) sensitivity and a 91.16% (95%CI: 90.34–91.91%) specificity with a probability threshold of 0.5. Despite a high specificity of 91.89% (95%CI: 91.13–92.59%) achieved, the baseline model showed limited sensitivity of 74.53% (95%CI: 73.39–75.64%) for early identification.

Table 3. The localization of RD in the posterior pole area by the weakly-supervised deep learning model and the general ophthalmologist compared with the ground truth in the test set.

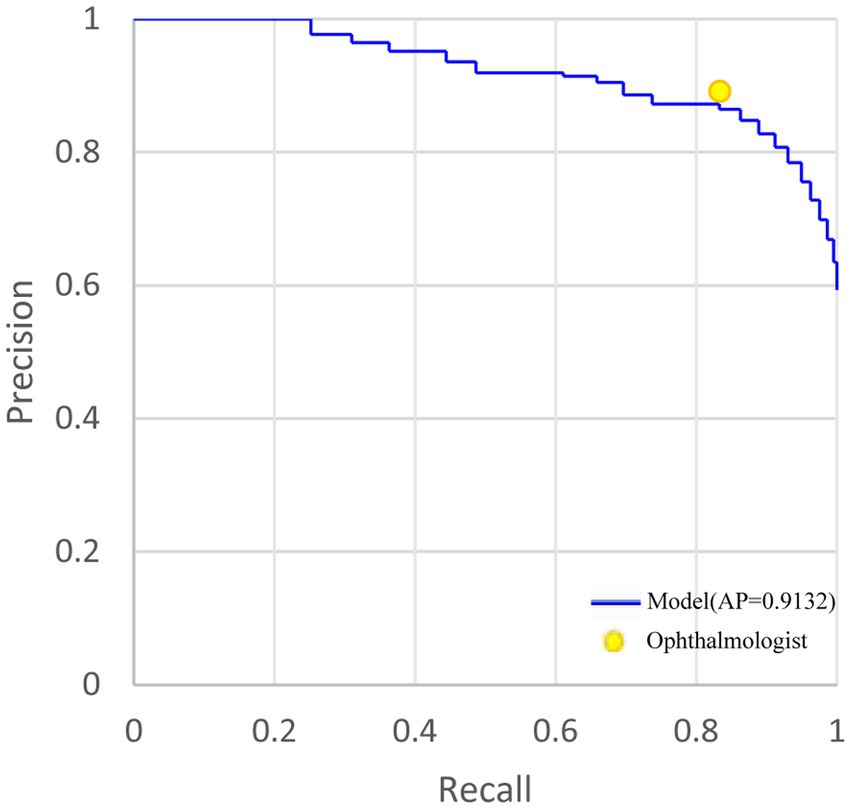

As for localizing RD lesions in 48 anatomical regions, the general ophthalmologist had an 89.16% (95%CI: 88.75–89.55%) precision and 83.38% (95%CI: 82.91–83.84%) recall. In contrast, our model had an 86.42% (95%CI: 85.81–87.01%) precision and an 83.27% (95%CI: 82.62–83.90%) recall with an average precision (AP) of 0.9132. Though the baseline model achieved a 92.67% (95%CI: 92.11–93.19%) precision which could be attributed to the most discriminative response region, it showed limited recall of 68.07% (95%CI: 67.25–68.88%). For visualizing the model performance when different probability thresholds are applied, the precision-recall curve of the model is shown in Figure 3. The performance of localizing RD lesions in all 48 anatomical regions by the proposed model and general ophthalmologist is shown in Table 4.

Figure 3. The precision-recall curve of holistic localization performance of RD region based on the weakly-supervised model and general ophthalmologist, compared with the ground truth in the test set. AP, average precision. RD, retinal detachment. AMM, attention modulation module.

Table 4. The performance of localizing RD lesions in 48 anatomical regions by the baseline model, weakly-supervised model, and the general ophthalmologist, compared with the ground truth in the test set.

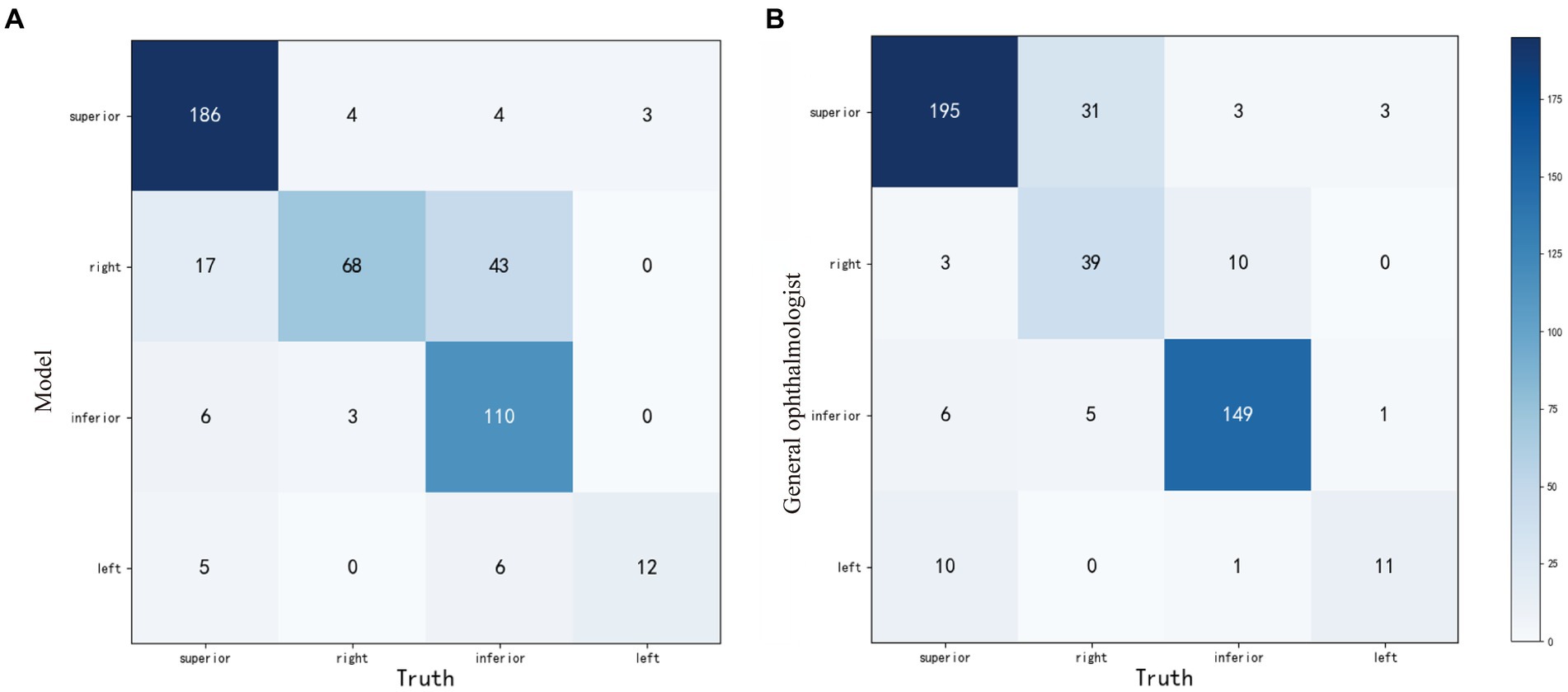

Compared with the ground truth, the unweighted Cohen’s κ coefficients were 0.710 (95%CI: 0.659–0.761) and 0.753 (95%CI: 0.702–0.804) for the weakly-supervised model and the general ophthalmologist, respectively. The four-zone location accuracy of our model is 0.8051 (95%CI: 0.7656–0.8395), which is slightly inferior to the general ophthalmologist’s accuracy of 0.8437 (95%CI: 0.8068–0.8748). The confusion matrixes are shown in Figure 4.

Figure 4. The confusion matrixes of four-zone RD localization performance based on the weakly-supervised model (A) and the general ophthalmologist (B), compared with the ground truth in the test set. RD, retinal detachment.

RD is a typical ophthalmic emergency. Early medical interventions based on the precise localization of lesions could increase the success rate of surgical repair and avoid permanent visual impairment (6, 34). Here, we established a standardized procedure for RD localization from UWF images using a weakly supervised approach. It could provide a corresponding medical reference to both clinicians and RD patients throughout early-stage management. Unlike the baseline model, which focused only on the most discriminative regions and had limited recall, our weakly supervised model incorporated the AMR scheme. For this reason, the generated localization maps yielded a comprehensive presentation of RD lesion-related information. The four-zone anatomical localization performance of our model, which is closely related to posture regimens (6, 35, 36), showed substantial consistency with the specialists, with an unweighted Cohen’s kappa coefficient of 0.710 (95%CI: 0.659–0.761). The human-model comparisons also demonstrated that its localization precision and recall were nearly equal to those of a general ophthalmologist. In general, our model exhibits acceptable performance for the holistic localization of the RD regions. To the best of our knowledge, this is the first attempt to precisely localize the RD regions.

Previously, several deep learning systems for identifying RD in fundus images presented favorable performance (16, 17, 37, 38). Similarly, our model showed a strong capacity to discern RD from UWF images. Nevertheless, previous deep learning models were mainly proposed for classification tasks, and CAMs were employed for post-hoc interpretability. Since such heatmaps were classification-oriented, they tended to highlight a few discriminative regions rather than the full extent of the object. Even though Li et al. (17) attempted to visualize the decisive regions with saliency maps and embedded an arrow according to the hot regions for head positioning guidance, the most decisive regions in the heatmaps may not be the primary location of RD lesions. The classifiers may only focus on a small part of the target lesions (26, 39). Moreover, the limited localization results from true-positive samples had yet to be thoroughly evaluated for general feasibility. In contrast to simply utilizing classification-oriented heatmaps, our model has the advantage of providing lesion-specific holistic activation maps to localize RD regions. To uncover the regions that are essential for lesion segmentation but easily ignored by the weakly supervised algorithm, we introduced AMM into our scheme to provide recalibration supervision and task-specific concepts. The clinically relevant lesion information provided by this interpretable method is consistent with how clinicians reason, so it can serve as a diagnostic reference and be verified easily. Moreover, in the coordinates established above, the model can specify the anatomical zones of the RD lesions. According to the most affected zone, a supine preoperative position is advised for RD in the superior zones and a sitting position for RD in the inferior zones (9). Patients with RD lesions in the right or left zones are positioned flat on the right or left side of the affected eye, respectively (40). Involvement of the posterior pole generally suggests a relatively poor visual prognosis if emergency repair surgery is not available before the macula is involved (4, 7, 41). Patients should maintain a supine position during this time and seek urgent referral.

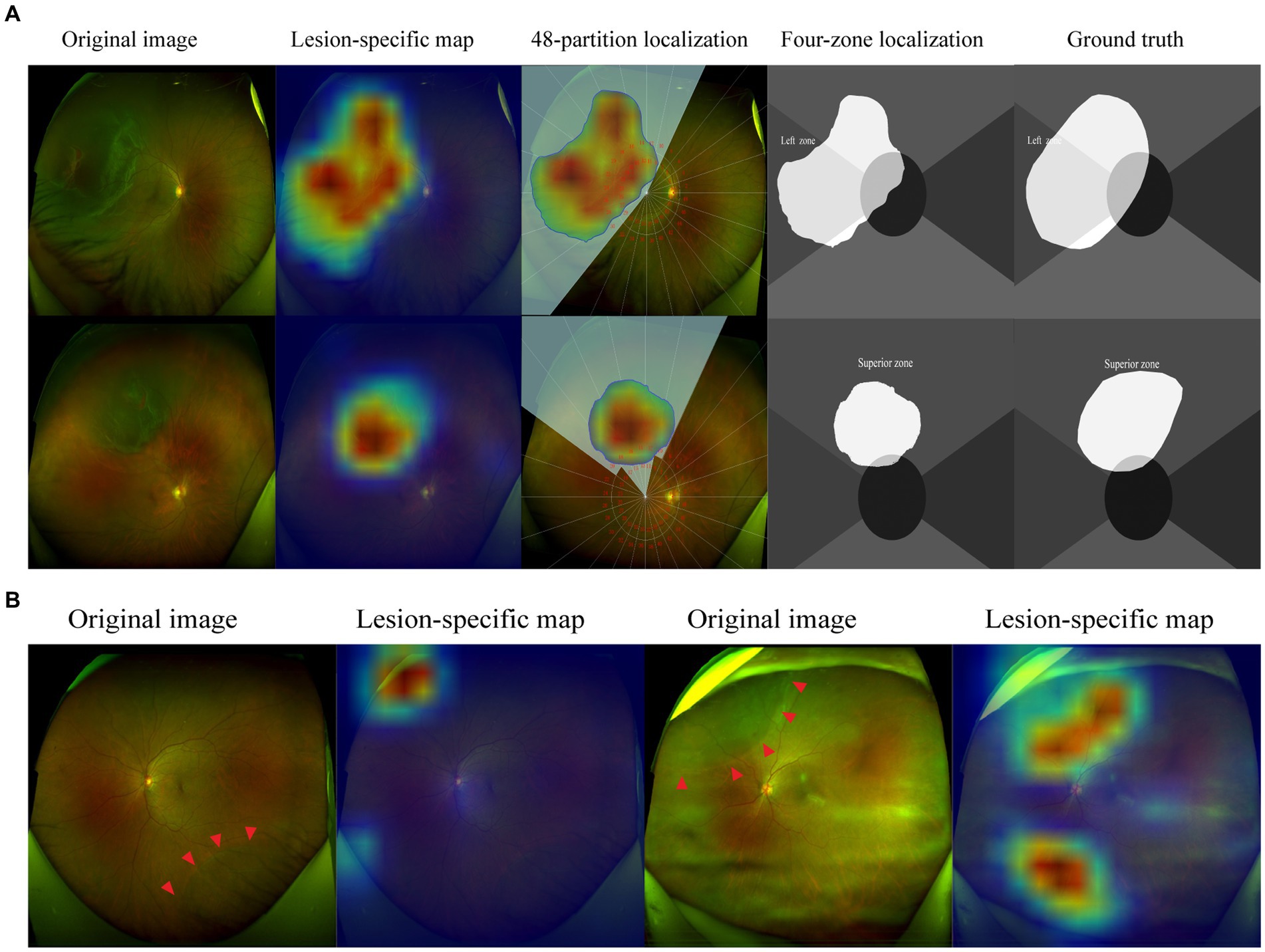

In our research, the model achieved satisfactory holistic localization of RD lesions in most cases. As shown in Figure 5, the corrugated retinal appearance of RD lesions makes them more distinguishable, whereas shallow RDs are easily missed due to their atypical appearance. In addition, interference from irrelevant factors can mislead automatic localization. The OPTOS camera pads and artifacts with RD-like edges may result in mistaken highlights in localization maps. In future work, these problems could be mitigated by further training on large-scale image sets that contain such cases.

Figure 5. Visualization of representative cases. The corrugated retina and the edge of breaks are highlighted in lesion-specific maps, the detached regions were demonstrated in 48-partition localization maps and four-zone localization maps (A). The shallow retinal detachments are not detected in the inferior quadrant, while OPTOS camera pads are highlighted. Artifacts caused by opaque refractive media are highlighted in localization maps (B). The red arrowheads indicate the borders of the RD region.

This study has several limitations. First, blurred borders and differences in texture features within the RD regions made it difficult for the activation maps to highlight the whole area of the lesion. Regions with inconspicuous texture features were easily missed even though the AMM had been incorporated, which may result in some inconsistency in anatomical localization. Strictly speaking, the localization of the breaks in rhegmatogenous RD is more significant for posture instructions. However, small breaks in the retina were not always visible, especially in the peripheral regions. Given that most of the breaks are within the detached retina, the localization of the RD region could extend its clinical applications considerably. In addition, the determination of whether the posterior pole was involved may not represent the status of the macula, especially when the macula was located near the borderline of the RD regions. Hence, further work is warranted to accurately discern the status of the macula for determining operation time and predicting visual prognosis. Furthermore, automatic postural guidance had a relatively limited application range because anatomical localization requires high-quality images. The anatomical localization of RD was highly dependent on a clear presentation of the retina. Fundus images with significantly opaque refractive media, inappropriate illumination, or an invisible optic disc were not eligible for inclusion in this study. Finally, our model was developed based on single-center retrospective datasets with limited generalization. The evaluation of localization accuracy was conducted on a single-disease dataset and was not strictly validated in cases with fundus comorbidities. In the future, we expect to explore more advanced methods to aid the full-stage management of RD, incorporating the medical history and other imaging data. Meanwhile, we will expand the training samples with fundus comorbidity images and carry out the evaluation in large-scale test scenarios.

In this study, we developed a weakly-supervised deep learning model to localize RD regions based on UWF images. The lesion-specific localization maps could be incorporated into the diagnostic process and personalized postural guidance of RD patients for reference. Moreover, the implementation of this task considerably surmounted the current “label-hunger” difficulty. It would greatly facilitate managing RD patients when insufficient specialists are available, especially for medical referral and postural guidance. The application of this model could significantly equilibrate medical resources and improve healthcare efficiency.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving humans were approved by Institutional Review Board of the Second Affiliated Hospital of Zhejiang University, School of Medicine. The studies were conducted in accordance with the local legislation and institutional requirements. Written informed consent for participation was not required from the participants or the participants’ legal guardians/next of kin in accordance with the national legislation and institutional requirements. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

HL: Conceptualization, Data curation, Formal analysis, Methodology, Software, Writing – original draft. JC: Conceptualization, Data curation, Validation, Writing – review & editing, Writing – original draft. KY: Data curation, Formal analysis, Methodology, Software, Writing – original draft. YZ: Resources, Writing – review & editing. JY: Conceptualization, Funding acquisition, Project administration, Resources, Supervision, Writing – review & editing.

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This research was funded by National Natural Science Foundation Regional Innovation and Development Joint Fund, grant number U20A20386, National key research and development program of China, grant number 2019YFC0118400, and Key research and development program of Zhejiang Province, grant number 2019C03020.

KY and YZ were employed by Zhejiang Feitu Medical Imaging Co., Ltd.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

1. Ghazi, NG, and Green, WR. Pathology and pathogenesis of retinal detachment. Eye. (2002) 16:411–21. doi: 10.1038/sj.eye.6700197

2. Mitry, D, Charteris, DG, Fleck, BW, Campbell, H, and Singh, J. The epidemiology of rhegmatogenous retinal detachment: geographical variation and clinical associations. Br J Ophthalmol. (2010) 94:678–84. doi: 10.1136/bjo.2009.157727

3. Gariano, RF, and Kim, CH. Evaluation and management of suspected retinal detachment. Am Fam Physician. (2004) 69:1691–8.

4. Kwok, JM, Yu, CW, and Christakis, PG. Retinal detachment. CMAJ. (2020) 192:E312. doi: 10.1503/cmaj.191337

5. Felfeli, T, Teja, B, Miranda, RN, Simbulan, F, Sridhar, J, Sander, B, et al. Cost-utility of Rhegmatogenous retinal detachment repair with pars Plana vitrectomy, scleral buckle, and pneumatic Retinopexy: a microsimulation model. Am J Ophthalmol. (2023) 255:141–54. doi: 10.1016/j.ajo.2023.06.002

6. Kang, HK, and Luff, AJ. Management of retinal detachment: a guide for non-ophthalmologists. BMJ. (2008) 336:1235–40. doi: 10.1136/bmj.39581.525532.47

8. de Jong, JH, de Koning, K, den Ouden, T, van Meurs, JC, and Vermeer, KA. The effect of compliance with preoperative Posturing advice and head movements on the progression of macula-on retinal detachment. Transl Vis Sci Technol. (2019) 8:4. doi: 10.1167/tvst.8.2.4

9. de Jong, JH, Vigueras-Guillén, JP, Simon, TC, Timman, R, Peto, T, Vermeer, KA, et al. Preoperative Posturing of patients with macula-on retinal detachment reduces progression toward the fovea. Ophthalmology. (2017) 124:1510–22. doi: 10.1016/j.ophtha.2017.04.004

10. Li, Y, Li, J, Shao, Y, Feng, R, Li, J, and Duan, Y. Factors influencing compliance in RRD patients with the face-down position via grounded theory approach. Sci Rep. (2022) 12:20320. doi: 10.1038/s41598-022-24121-9

11. Jin, K, and Ye, J. Artificial intelligence and deep learning in ophthalmology: current status and future perspectives. Adv Ophthalmol Practice Res. (2022) 2:100078. doi: 10.1016/j.aopr.2022.100078

12. Lake, SR, Bottema, MJ, Williams, KA, Lange, T, and Reynolds, KJ. Retinal shape-based classification of retinal detachment and posterior vitreous detachment eyes. Ophthalmol Ther. (2023) 12:155–65. doi: 10.1007/s40123-022-00597-6

13. Li, B, Chen, H, Zhang, B, Yuan, M, Jin, X, Lei, B, et al. Development and evaluation of a deep learning model for the detection of multiple fundus diseases based on colour fundus photography. Br J Ophthalmol. (2022) 106:316290. doi: 10.1136/bjophthalmol-2020-316290

14. Yadav, S, Das, S, Murugan, R, Dutta Roy, S, Agrawal, M, Goel, T, et al. Performance analysis of deep neural networks through transfer learning in retinal detachment diagnosis using fundus images. Sādhanā. (2022) 47:49. doi: 10.1007/s12046-022-01822-5

15. Nagiel, A, Lalane, RA, Sadda, SR, and Schwartz, SD. Ultra-widefield fundus imaging: a review of clinical applications and future trends. Retina. (2016) 36:660–78. doi: 10.1097/IAE.0000000000000937

16. Ohsugi, H, Tabuchi, H, Enno, H, and Ishitobi, N. Accuracy of deep learning, a machine-learning technology, using ultra–wide-field fundus ophthalmoscopy for detecting rhegmatogenous retinal detachment. Sci Rep. (2017) 7:9425. doi: 10.1038/s41598-017-09891-x

17. Li, Z, Guo, C, Nie, D, Lin, D, Zhu, Y, Chen, C, et al. Deep learning for detecting retinal detachment and discerning macular status using ultra-widefield fundus images. Commun Biol. (2020) 3:15. doi: 10.1038/s42003-019-0730-x

18. Alexander, P, Ang, A, Poulson, A, and Snead, MP. Scleral buckling combined with vitrectomy for the management of rhegmatogenous retinal detachment associated with inferior retinal breaks. Eye. (2008) 22:200–3. doi: 10.1038/sj.eye.6702555

19. Chronopoulos, A, Hattenbach, LO, and Schutz, JS. Pneumatic retinopexy: a critical reappraisal. Surv Ophthalmol. (2021) 66:585–93. doi: 10.1016/j.survophthal.2020.12.007

20. Hillier, RJ, Felfeli, T, Berger, AR, Wong, DT, Altomare, F, Dai, D, et al. The Pneumatic Retinopexy versus Vitrectomy for the Management of Primary Rhegmatogenous Retinal Detachment Outcomes Randomized Trial (PIVOT). Ophthalmology. (2019) 126:531–9. doi: 10.1016/j.ophtha.2018.11.014

21. Warren, A, Wang, DW, and Lim, JI. Rhegmatogenous retinal detachment surgery: a review. Clin Experiment Ophthalmol. (2023) 51:271–9. doi: 10.1111/ceo.14205

22. Wang, J, Li, W, Chen, Y, Fang, W, Kong, W, He, Y, et al. Weakly supervised anomaly segmentation in retinal OCT images using an adversarial learning approach. Biomed Opt Express. (2021) 12:4713–29. doi: 10.1364/BOE.426803

23. Ma, X, Ji, Z, Niu, S, Leng, T, Rubin, DL, and Chen, Q. MS-CAM: multi-scale class activation maps for weakly-supervised segmentation of geographic atrophy lesions in SD-OCT images. IEEE J Biomed Health Inform. (2020) 24:3443–55. doi: 10.1109/JBHI.2020.2999588

24. Morano, J, Hervella, ÁS, Rouco, J, Novo, J, Fernández-Vigo, JI, and Ortega, M. Weakly-supervised detection of AMD-related lesions in color fundus images using explainable deep learning. Comput Methods Prog Biomed. (2023) 229:107296. doi: 10.1016/j.cmpb.2022.107296

25. Selvaraju, RR, Cogswell, M, Das, A, Vedantam, R, Parikh, D, and Batra, D. Grad-CAM: visual explanations from deep networks via gradient-based localization. In: 2017 IEEE International Conference on Computer Vision (ICCV). (2017). 618–26.

26. Qin, J, Wu, J, Xiao, X, Li, L, and Wang, X. Activation modulation and recalibration scheme for weakly supervised semantic segmentation. In: Proceedings of the AAAI Conference on Artificial Intelligence, 36. Palo Alto, CA: AAAI Press (2021). 2117–25.

27. Cinbis, RG, Verbeek, J, and Schmid, C. Weakly supervised object localization with multi-fold multiple instance learning. IEEE Trans Pattern Anal Mach Intell. (2017) 39:189–203. doi: 10.1109/TPAMI.2016.2535231

28. Zhang, D, Guo, G, Zeng, W, Li, L, and Han, J. Generalized weakly supervised object localization. IEEE Trans Neural Netw Learn Syst. (2022a) PP:1–12.

29. Zhang, D, Han, J, Cheng, G, and Yang, MH. Weakly supervised object localization and detection: a survey. IEEE Trans Pattern Anal Mach Intell. (2022b) 44:1–5885. doi: 10.1109/TPAMI.2021.3074313

30. He, K, Zhang, X, Ren, S, and Sun, J. Deep residual learning for image recognition. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). New York: IEEE (2016). 770–8.

31. Jiang, P, Hou, Q, Cao, Y, Cheng, M, Wei, Y, and Xiong, H. Integral object mining via online attention accumulation. In: 2019 IEEE/CVF International Conference on Computer Vision (ICCV). Seoul, Korea (South): IEEE (2019). 2070–9.

32. Ronneberger, O, Fischer, P, and Brox, T. U-net: convolutional networks for biomedical image segmentation. In: N Navab, J Hornegger, WM Wells, and AF Frangi, editors. Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015. Lecture Notes in Computer Science. Cham: Springer International Publishing (2015). 234–41.

33. Quinn, N, Csincsik, L, Flynn, E, Curcio, CA, Kiss, S, Sadda, SR, et al. The clinical relevance of visualising the peripheral retina. Prog Retin Eye Res. (2019) 68:83–109. doi: 10.1016/j.preteyeres.2018.10.001

34. Wickham, L, Bunce, C, Wong, D, and Charteris, DG. Retinal detachment repair by vitrectomy: simplified formulae to estimate the risk of failure. Br J Ophthalmol. (2011) 95:1239–44. doi: 10.1136/bjo.2010.190314

35. Reeves, MG, Pershing, S, and Afshar, AR. Choice of primary rhegmatogenous retinal detachment repair method in US commercially insured and Medicare Advantage patients, 2003–2016. Am J Ophthalmol. (2018) 196:82–90. doi: 10.1016/j.ajo.2018.08.024

36. Sverdlichenko, I, Lim, M, Popovic, MM, Pimentel, MC, Kertes, PJ, and Muni, RH. Postoperative positioning regimens in adults who undergo retinal detachment repair: a systematic review. Surv Ophthalmol. (2023) 68:113–25. doi: 10.1016/j.survophthal.2022.09.002

37. Sun, G, Wang, X, Xu, L, Li, C, Wang, W, Yi, Z, et al. Deep learning for the detection of multiple fundus diseases using ultra-widefield images. Ophthalmol Ther. (2023) 12:895–907. doi: 10.1007/s40123-022-00627-3

38. Zhang, C, He, F, Li, B, Wang, H, He, X, Li, X, et al. Development of a deep-learning system for detection of lattice degeneration, retinal breaks, and retinal detachment in tessellated eyes using ultra-wide-field fundus images: a pilot study. Graefes Arch Clin Exp Ophthalmol. (2021) 259:2225–34. doi: 10.1007/s00417-021-05105-3

39. Meng, Q, Liao, L, and Satoh, S. Weakly-supervised learning with complementary heatmap for retinal disease detection. IEEE Trans Med Imaging. (2022) 41:2067–78. doi: 10.1109/TMI.2022.3155154

40. Johannigmann-Malek, N, Stephen, BK, Badawood, S, Maier, M, and Baumann, C. Influence of preoperative posturing on subfoveal fluid height in macula-off retinal detachments. Retina. (2023) 43:1738–44. doi: 10.1097/IAE.0000000000003864

Keywords: weakly supervised, deep learning, localization, retinal detachment, ultra-widefield fundus images

Citation: Li H, Cao J, You K, Zhang Y and Ye J (2024) Artificial intelligence-assisted management of retinal detachment from ultra-widefield fundus images based on weakly-supervised approach. Front. Med. 11:1326004. doi: 10.3389/fmed.2024.1326004

Received: 23 November 2023; Accepted: 19 January 2024; Published: 06 February 2024.

Edited by:

Qiang Chen, Nanjing University of Science and Technology, China

Copyright © 2024 Li, Cao, You, Zhang and Ye. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Juan Ye, yejuan@zju.edu.cn

†These authors have contributed equally to this work and share first authorship

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
