
ORIGINAL RESEARCH article

Front. Med., 18 May 2023

Sec. Ophthalmology

Volume 10 - 2023 | https://doi.org/10.3389/fmed.2023.1162124

This article is part of the Research Topic: Big Data and Artificial Intelligence in Ophthalmology - Clinical Application and Future Exploration

Introduction: Infectious keratitis is a vision-threatening disease. Bacterial and fungal keratitis are often confused in the early stages, so correct diagnosis and treatment optimized for the causative organism are crucial. Antibacterial and antifungal medications are completely different, and the prognosis for fungal keratitis is much worse. Since the identification of microorganisms takes a long time, empirical treatment must be started according to the appearance of the lesion before an accurate diagnosis is available. Thus, we developed an automated deep learning (DL)-based diagnostic system for bacterial and fungal keratitis that uses anterior segment photographs and two proposed modules, the Lesion Guiding Module (LGM) and the Mask Adjusting Module (MAM).

Methods: We used 684 anterior segment photographs from 107 patients with bacterial or fungal keratitis confirmed by corneal scraping culture. Both broad- and slit-beam images were included in the analysis. ResNet-50 was used as the baseline classifier. The LGM was designed to learn the location information of lesions annotated by ophthalmologists, and the slit-beam MAM was applied to extract the correct feature points from the two image types (broad- and slit-beam) during the training phase. Our algorithm was then externally validated using 98 images from Google image search and ophthalmology textbooks.

Results: A total of 594 images from 88 patients were used for training, and 90 images from 19 patients were used for testing. Compared with the baseline network ResNet-50, the proposed method with LGM and MAM showed significantly higher diagnostic accuracy (81.1 vs. 87.8%). The model also achieved a significant improvement in diagnostic performance on the open-source dataset (64.2 vs. 71.4%). In an ablation study, both the LGM and MAM modules had a positive effect.

Discussion: This study demonstrated the potential of a novel DL-based diagnostic algorithm for bacterial and fungal keratitis using two types of anterior segment photographs. The proposed network containing LGM and slit-beam MAM robustly improves diagnostic accuracy and overcomes the limitations of small training data and multiple image types.

Infectious keratitis is a common cause of permanent blindness worldwide and can cause serious complications such as corneal perforation, corneal opacification, and endophthalmitis if not properly treated (1–6). Approximately 2,300,000 cases of microbial keratitis (including those caused by bacteria, fungi, viruses, and Acanthamoeba) occur annually in South Korea, where bacteria still dominate as the causative organisms of the disease (5). The disease is known to show various patterns depending on the region, climate, and country. For example, fungal and mixed infections are more common in tropical and semi-tropical areas than in temperate climates. From an epidemiological point of view, ocular trauma and contact lens-associated keratitis have been increasing in recent years (7, 8).

The selection of an effective antimicrobial agent requires the identification of the causative microorganism. The gold standard for diagnosis is corneal scraping and culture, but it is not always available, and bacterial or fungal growth on culture plates takes several days or weeks (9–11). Even when microorganisms are actually present, the culture result may be negative, and the lesion may worsen while waiting for the result. Therefore, empirical therapy with broad-spectrum antibiotics, antifungals, and antiviral agents should be initiated before culture results are obtained, based on the clinical experience of the ophthalmologist and on the shape, size, depth, and location of the lesion (9–12). However, bacterial and fungal keratitis are not completely distinct from each other. If patients receive unnecessary or late treatment due to an incorrect diagnosis, the result may be poor visual outcomes, poor quality of life, and increased medical expenses.

Because the deep learning approach has shown remarkable performance in various image processing tasks such as classification and object detection, it has been applied in numerous research fields. Deep learning, using various types of medical images, is also used for the accurate diagnosis and treatment of many ocular diseases. With the development of deep learning-based methods, diagnostic performance has become equivalent to, or has even surpassed, that of clinicians (13–15). Therefore, we expect that the application of deep learning in keratitis diagnosis can assist clinicians in reducing misdiagnoses and can improve medical equity and accessibility to medical care.

In this context, we propose a deep learning-based computer-aided diagnosis (CAD) network that classifies bacterial and fungal keratitis. It is combined with two novel modules that improve keratitis diagnosis accuracy and predict lesion areas more accurately than conventional models.

This study was performed at Samsung Medical Center (SMC) and Korea Advanced Institute of Science and Technology (KAIST) according to the tenets of the Declaration of Helsinki. The Institutional Review Board of SMC (Seoul, Republic of Korea) approved this study (SMC 2019-01-014).

We conducted a retrospective analysis of the medical records of patients who had been diagnosed with and treated for infectious keratitis (bacterial and fungal keratitis) at the SMC between January 1, 2002, and December 31, 2018. All the patients underwent corneal scraping and culture; other forms of keratitis, such as viral or acanthamoeba keratitis, were excluded from this study.

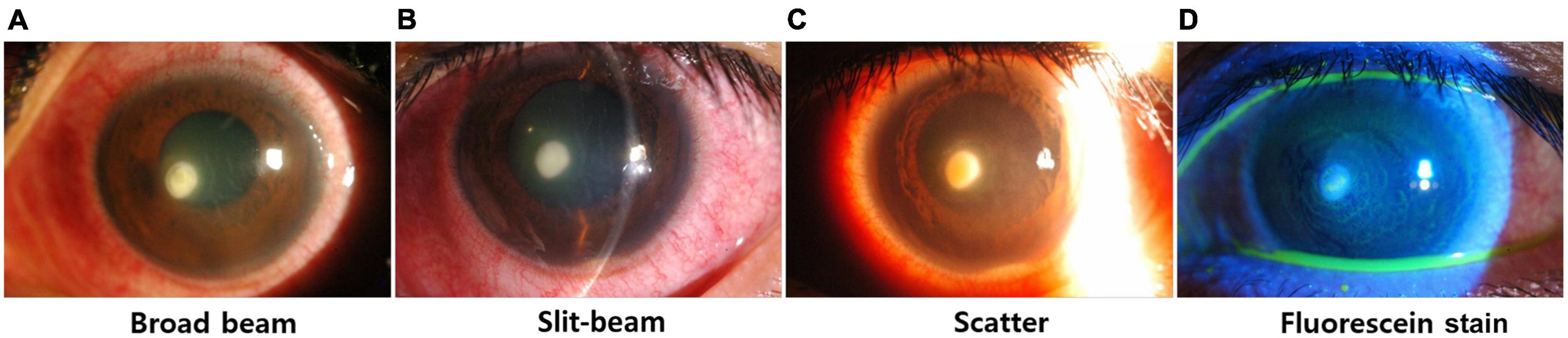

The anterior segment image dataset, called the SMC dataset, is a set of anterior segment images collected from 107 patients. It consists of broad-beam and slit-beam anterior segment images (Figure 1) (16). A total of 594 images from 88 patients were assigned to the training split, and 90 images from 19 patients were assigned to the test split. The training set comprised 361 images from 64 patients with bacterial keratitis and 233 images from 24 patients with fungal keratitis. The test set comprised 46 images from 13 patients with bacterial keratitis and 24 images from 6 patients with fungal keratitis. No patient belonged to both the training and test splits. For the experiment, each image was resized to 500 pixels × 750 pixels. Three ophthalmologists (Y.K., T-YC., and D.H.L.) annotated lesions on images related to the diagnosis of keratitis.
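The patient-level separation described above can be illustrated with a minimal sketch. It assumes a hypothetical mapping from image ID to patient ID and a random assignment of patients to the test split; the study does not state how patients were assigned, so the function name, the toy data, and the `test_fraction` parameter are illustrative assumptions only.

```python
import random
from collections import defaultdict


def split_by_patient(image_to_patient, test_fraction=0.2, seed=0):
    """Split image IDs into train/test so that no patient appears in both."""
    # Group images by the patient they came from.
    by_patient = defaultdict(list)
    for image_id, patient_id in image_to_patient.items():
        by_patient[patient_id].append(image_id)

    # Shuffle patients (not images) with a fixed seed for reproducibility.
    patients = sorted(by_patient)
    random.Random(seed).shuffle(patients)
    n_test = max(1, round(len(patients) * test_fraction))
    test_patients = set(patients[:n_test])

    # Every image follows its patient into exactly one split.
    train, test = [], []
    for patient_id, images in by_patient.items():
        (test if patient_id in test_patients else train).extend(images)
    return sorted(train), sorted(test), test_patients


# Hypothetical toy data: seven images from five patients.
image_to_patient = {
    "img01": "p1", "img02": "p1", "img03": "p2", "img04": "p3",
    "img05": "p3", "img06": "p4", "img07": "p5",
}
train_ids, test_ids, test_patients = split_by_patient(image_to_patient)
```

Splitting on patients rather than on images keeps several photographs of the same eye from appearing on both sides of the split, which would otherwise inflate the measured test accuracy.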

Figure 1. Examples of various types of anterior segment images. (A) Broad-beam image, (B) slit-beam image, (C) scatter image, (D) fluorescein stain.

To verify the performance of the proposed network, we built an open source dataset consisting of 98 anterior segment images collected from Google image search and ophthalmology textbooks (17–19), and used it only as a test split.

The distribution of the images is shown in Table 1.

An overview of the entire study design and the proposed network framework is shown in Figure 2. The framework contains two proposed modules: the lesion guiding module (LGM) and the slit-beam mask adjusting module (MAM). Each module was attached to the main classifier. These two modules were introduced to overcome the aforementioned limitations in classifying the cause of keratitis. In the training stage, LGM makes the network attend to the lesion instead of other details in the anterior segment image, such as reflected light. Because its output has the form of a heat map, the detected lesion location can be obtained. MAM generates a fine-grained mask that points to the slit-beam and to small parts that have less impact on the diagnosis. By comparing the masked and unmasked input images in the learning process, the network distinguishes between the parts of the anterior segment image that are necessary and unnecessary for diagnosis and learns not to pay attention to the unnecessary parts. We set the baseline classifier to ResNet-50 (15), and the architecture of our proposed network was based on ResNet-50. Three LGMs were inserted, one between each pair of adjacent residual blocks of the ResNet-50.

The Lesion Guiding Module (LGM) is designed for deep learning-based diagnostic systems to learn the location information of lesions annotated by ophthalmologists in the anterior segment image. In a classifier with convolutional layers, the LGMs are inserted between the layers, as shown in Figure 3. The bounding boxes annotated by ophthalmologists are converted to a binary mask before being input to LGM. In LGM, the intensity of the intermediate feature maps is multiplied with the binary mask during the training stage. Following this process, an important part of the diagnosis has a negative value and a relatively unrelated part of the diagnosis has a positive value. Therefore, LGM is trained to minimize the loss function and can point to lesions that are correlated to the diagnosis.
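The conversion of annotated bounding boxes to a binary mask, and the element-wise multiplication with a feature map, can be sketched as follows. This is only an illustration under stated assumptions: the box format `(x_min, y_min, x_max, y_max)` with exclusive maxima, the helper names, and the assumption that the mask already has the same resolution as the feature map are not taken from the paper, and the sketch does not reproduce the LGM loss function.

```python
def boxes_to_mask(height, width, boxes):
    """Build a binary mask (list of rows): 1 inside any box, 0 elsewhere.

    Boxes are (x_min, y_min, x_max, y_max) with exclusive maxima (assumed format).
    """
    mask = [[0] * width for _ in range(height)]
    for x_min, y_min, x_max, y_max in boxes:
        # Clip the box to the mask borders before filling it.
        for y in range(max(0, y_min), min(height, y_max)):
            for x in range(max(0, x_min), min(width, x_max)):
                mask[y][x] = 1
    return mask


def apply_mask(feature_map, mask):
    """Multiply a single-channel feature map element-wise with a binary mask."""
    return [
        [value * m for value, m in zip(feature_row, mask_row)]
        for feature_row, mask_row in zip(feature_map, mask)
    ]


# Hypothetical 4 x 6 feature map with one annotated lesion box.
mask = boxes_to_mask(4, 6, [(1, 1, 4, 3)])
feature_map = [[0.5] * 6 for _ in range(4)]
masked = apply_mask(feature_map, mask)
```

In a real network the mask would first have to be resized to the spatial size of each intermediate feature map, since those maps shrink from block to block.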

In contrast to LGM learning information about areas to be focused on, the slit-beam MAM learns information about areas that should not be focused on. By using MAM in a training phase, the single network can efficiently learn slit-beam images and broad-beam images without paying attention to the slit-beam portion of the slit-beam image (Figure 4). In addition, it can prevent the network from focusing on complex textures, such as eyelashes or blood vessels, in the anterior segment image. MAM is a module that allows the main classification network to focus on the important parts for diagnosis, so it is used only in the training phase and not in inference.
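The idea of presenting masked and unmasked versions of an image during training only can be sketched as below. Note the simplification: here the slit-beam mask is given as an input, whereas MAM learns to generate it. The function names, the `fill_value` parameter, and the toy image are hypothetical.

```python
def suppress_region(image, region_mask, fill_value=0):
    """Return a copy of the image with masked pixels replaced by fill_value."""
    return [
        [fill_value if m else pixel for pixel, m in zip(image_row, mask_row)]
        for image_row, mask_row in zip(image, region_mask)
    ]


def make_inputs(image, slit_mask, training):
    """Return (original, masked) during training, and only the original at inference."""
    if training:
        return image, suppress_region(image, slit_mask)
    return (image,)


# Hypothetical 2 x 3 grayscale image whose middle column is the slit-beam.
image = [[10, 20, 30], [40, 50, 60]]
slit_mask = [[0, 1, 0], [0, 1, 0]]
original, masked_image = make_inputs(image, slit_mask, training=True)
inference_inputs = make_inputs(image, slit_mask, training=False)
```

Because the masked copy is produced only when `training` is true, the inference path sees the unmodified image, in line with the statement that the module is not used at inference.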

Details of the proposed network and training procedure are provided in the Supplementary material and Supplementary Figures 1–3.

We compared the diagnostic performance of our deep learning network system with that of the baseline classifier, ResNet-50. To evaluate diagnostic accuracy, the cause with the higher probability score was taken as the final decision, and the simple accuracy and the area under the receiver operating characteristic curve (AUC) were calculated. In addition, the accuracy of the detected lesion location during the diagnosis process was measured using the intersection over union (IOU) metric. The IOU is the ratio of the area of overlap between the predicted bounding box and the ophthalmologist’s manually labeled bounding box to the area of their union. The closer the IOU value is to 1, the more accurate the location of the detected lesion. We also visualized the spatial attention map of LGM. To verify the individual effects of the proposed modules on diagnostic accuracy, we conducted an ablation study.
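The two simplest metrics above, accuracy from the higher probability score and IOU between two bounding boxes, can be written out as a minimal sketch. The box format `(x_min, y_min, x_max, y_max)`, the label coding (0 for bacterial, 1 for fungal), and the function names are assumptions made for illustration.

```python
def box_iou(box_a, box_b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap; zero when the boxes do not intersect.
    inter_w = max(0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - intersection
    return intersection / union if union > 0 else 0.0


def accuracy(prob_pairs, labels):
    """Fraction of correct decisions when the class with the higher score wins.

    prob_pairs holds (p_bacterial, p_fungal); labels use 0 = bacterial, 1 = fungal.
    """
    predictions = [0 if p_b >= p_f else 1 for p_b, p_f in prob_pairs]
    correct = sum(pred == label for pred, label in zip(predictions, labels))
    return correct / len(labels)


# Hypothetical values for illustration only.
iou_value = box_iou((0, 0, 2, 2), (1, 1, 3, 3))
acc_value = accuracy([(0.9, 0.1), (0.3, 0.7), (0.6, 0.4)], [0, 1, 1])
```

For the two example boxes the overlap is one unit square and the union is seven, so the IOU is 1/7; identical boxes give 1 and disjoint boxes give 0.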

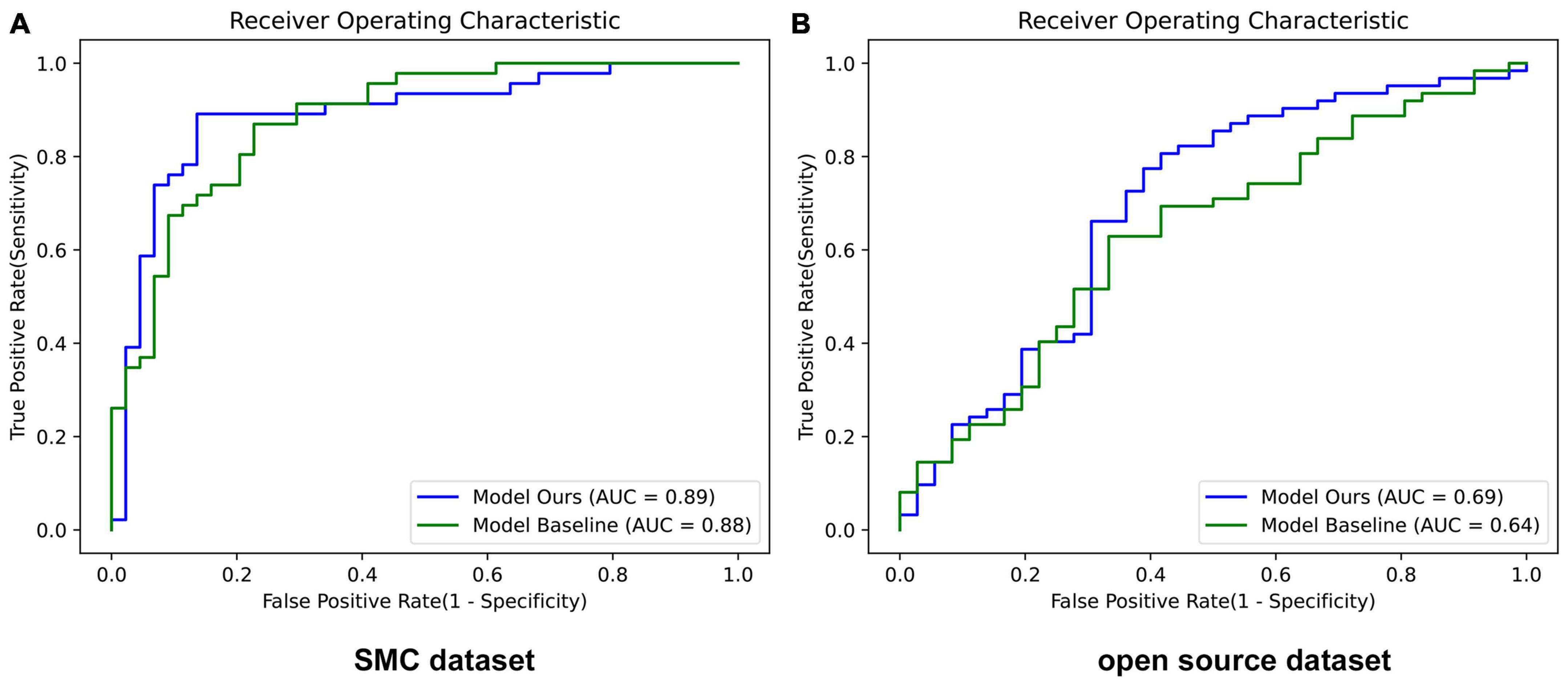

Details of the performance of the baseline (ResNet-50) and the proposed method are shown in Table 2. The proposed method showed higher values than the baseline on all classification metrics, including accuracy, for both the SMC dataset and the open source dataset.

Running inference on the two datasets gave the diagnostic accuracies shown in Table 3. When the baseline network was trained with both broad-beam and slit-beam images instead of broad-beam images only, its accuracy on broad-beam images decreased even though the number of images more than doubled (B:0.818 → B:0.795). This result means that, for different image types, simply increasing the number of images does not improve performance. Furthermore, the network trained with broad-beam images shows low diagnostic accuracy on slit-beam images (S:0.587).

In contrast, the network with the proposed modules showed an increase in diagnostic accuracy of approximately 8% on the SMC dataset and 7% on the open source dataset compared with the baseline network trained with both broad-beam and slit-beam images.
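An accuracy gain can be read either as an absolute difference in percentage points or as a relative increase over the baseline, and the two readings give different numbers. The short sketch below works through both, using the accuracies reported in the abstract (81.1 vs. 87.8% and 64.2 vs. 71.4%); the function names are illustrative.

```python
def absolute_gain(baseline, proposed):
    """Difference in percentage points between two accuracies given in percent."""
    return proposed - baseline


def relative_gain(baseline, proposed):
    """Increase relative to the baseline, expressed in percent."""
    return 100.0 * (proposed - baseline) / baseline


# Accuracies in percent as reported in the abstract: (baseline, proposed).
reported = {"SMC": (81.1, 87.8), "open source": (64.2, 71.4)}

for name, (baseline, proposed) in reported.items():
    print(
        f"{name}: +{absolute_gain(baseline, proposed):.1f} percentage points, "
        f"+{relative_gain(baseline, proposed):.1f}% relative to baseline"
    )
```

With these inputs the absolute gains are about 6.7 and 7.2 percentage points, and the relative gains are about 8.3% and 11.2%.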

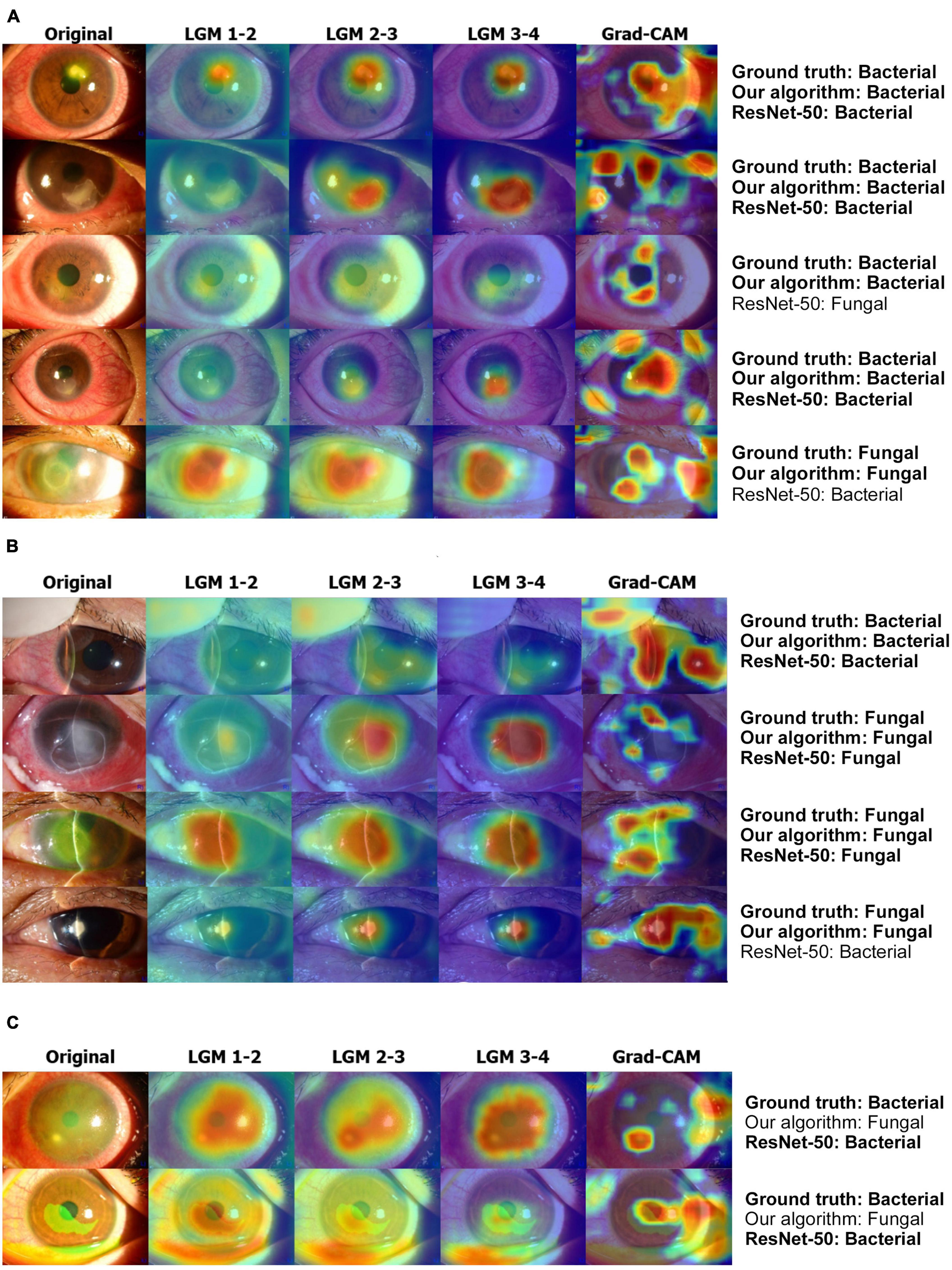

Supplementary Figure 4 shows the calculated IOU at various thresholds. The best value occurred at a threshold of 0.45, as shown in Table 4. This shows that the spatial attention map of the LGM located between ResNet blocks 3 and 4 was more accurate than that of the baseline network with Grad-CAM (20).
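One plausible way to turn a heat map into a lesion box at a given threshold is sketched below. It assumes the heat map is normalized to the range 0 to 1 and that a single box enclosing all cells at or above the threshold is wanted; the paper does not spell out this step, so the function name and these choices are assumptions.

```python
def heatmap_to_box(heatmap, threshold=0.45):
    """Smallest box (x_min, y_min, x_max, y_max), exclusive maxima, that covers
    every cell whose value is at or above the threshold; None if no cell qualifies.
    """
    xs, ys = [], []
    for y, row in enumerate(heatmap):
        for x, value in enumerate(row):
            if value >= threshold:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return (min(xs), min(ys), max(xs) + 1, max(ys) + 1)


# Hypothetical 3 x 4 attention map normalized to [0, 1].
heatmap = [
    [0.1, 0.2, 0.1, 0.0],
    [0.2, 0.6, 0.5, 0.1],
    [0.1, 0.9, 0.4, 0.1],
]
predicted_box = heatmap_to_box(heatmap, threshold=0.45)
```

A lower threshold enlarges the box and a higher one shrinks it, which is why the overlap with the annotated box peaks at an intermediate threshold.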

Table 5 shows that the LGM and MAM modules had a positive effect on accuracy for both the SMC dataset and the open source dataset. The corresponding ROC curves are shown in Figure 5.

Figure 5. Performance of ROC curves in baseline and our proposed network. (A) SMC dataset and (B) Open source dataset. Model Ours is a new deep learning model that combines LGM and MAM with the baseline ResNet-50. Model Baseline is ResNet-50. AUC, area under the curve.

Figure 6A shows that LGM points to more accurate lesion areas regardless of lesion size or shape, whereas the Grad-CAM method has high values for complex textures such as eyelashes and blood vessels (20). LGM also does not attend to the reflected light in the anterior segment images. In the slit-beam images, the Grad-CAM method tends to point not only to lesions but also to the slit-beam (20), whereas LGM attends only to lesions without adjusting the slit-beam (Figure 6B). Among the misdiagnosed images, shown in Figure 6C, most were obtained from stained eyes. In these cases, LGM tends to point to an excessively wide area that includes the lesion site or areas unrelated to diagnosis.

Figure 6. Examples of result visualization. (A) Broad-beam images, (B) slit-beam images, and (C) misdiagnosis cases. LGM 1-2 denotes LGM between ResNet blocks 1 and 2. LGM 2-3 denotes LGM between ResNet blocks 2 and 3. LGM 3-4 denotes LGM between ResNet blocks 3 and 4. LGM, Lesion guiding module; Grad-CAM, gradient-weighted class activation mapping.

We developed a novel deep learning algorithm that specializes in diagnosing bacterial and fungal keratitis by analyzing anterior segment images. A representative convolutional neural network (CNN), ResNet-50, was used as the backbone of the algorithm with the two proposed modules, LGM and MAM, resulting in high performance in distinguishing the images with bacterial keratitis from those with fungal keratitis.

The early and accurate diagnosis of infectious keratitis is essential for resolving the infection and minimizing corneal damage (12). Generally, the presence of an irregular/feathery border, satellite lesions, and endothelial plaque is associated with fungal keratitis, whereas a wreath infiltrate or epithelial plaque is associated with bacterial keratitis (21). However, it is difficult to distinguish exactly based on the characteristics of the infected lesions. Bacterial and fungal keratitis are often confused, especially in the early stages, but the medications used are different, and the prognosis for fungal keratitis is much worse (22–24). If patients with keratitis receive unnecessary or late treatment due to an incorrect diagnosis, complications such as corneal opacity, poor outcome for vision, or endophthalmitis may occur. Therefore, we focused on differentiating between bacterial and fungal keratitis among patients with infectious keratitis in this study.

Deep learning approaches have been used to analyze a variety of medical images. Recent advances in deep learning technology in the ophthalmic field have also allowed rapid and accurate diagnosis of several ocular diseases (14, 25). However, unlike deep learning systems using optical coherence tomography and retinal fundus images, only a few studies dealing with anterior segment images have been published. In the case of infectious keratitis in particular, it is difficult to apply a deep learning algorithm directly because lesions occur in various positions, and it is difficult to identify the causative pathogen without corneal culture.

In this study, we utilized a deep learning CAD network system for the differential diagnosis of bacterial and fungal keratitis based on the different shapes of corneal lesions. There are two major challenges in diagnosing the cause of keratitis by using anterior segment images. First, because of the aspheric shape of the cornea, the depth and extent of the infiltration lesions seen in the actual slit-lamp examination are not clearly visible in the image, and small lesions are often not represented in the image. Additional information such as trauma (dirty water, soil, soft contact lens, etc.) or the past medical history of the patient is very important for diagnosis. Furthermore, the appearance differs depending on the light source; therefore, keratitis is often misdiagnosed. For an accurate diagnostic network, the knowledge of experienced ophthalmologists who can distinguish the lesion related to the diagnosis from other anatomical parts should therefore be transferred to the network. Second, it is difficult to obtain a large number of anterior segment images of keratitis covering the various aspects of each pathogen. It is also problematic that anterior segment images can be captured in different ways. These diverse features make it difficult to train a diagnosis network with a finite number of weights, reducing diagnostic accuracy. To solve these problems, a method for learning the features regardless of the type of anterior segment image is required. We propose two modules to address these two challenges.

The two proposed modules, LGM and MAM, were combined with the main classifier. In the feature extraction process, LGM attends to a suspicious area related to diagnosis. Because its output has the form of a heat map, the detected lesion location can be obtained. The slit-beam MAM is used only in the training stage; it generates an optimal mask pointing to the slit-beam and small parts that have less impact on diagnosis. The unnoticed part of the actual diagnosis process is also suppressed in the learning process of the network so that the anterior segment images with and without the slit-beam can be effectively learned together. Through training a network using this module in the proposed procedure, the network can learn different types of anterior segment image (broad-beam and slit-beam) efficiently, and we obtained a high diagnostic accuracy for infectious keratitis using different types of images.

Similar to our work, several recent studies have applied deep learning models to distinguish fungal keratitis from bacterial keratitis using anterior segment images. The algorithms by Hung et al. (26), based on DenseNet161 and ResNet-50, achieved average accuracies of 0.786 and 0.773, respectively. Ghosh et al. (27) constructed a model called Deep Keratitis based on ResNet-50 with Grad-CAM. However, its precision was 0.57 (95% CI: 0.49−0.65), which was lower than the 0.878 in our results. The model with VGG19 exhibited the highest performance (0.88). Redd et al. (28) reported the highest AUC of 0.86 with MobileNet among 5 CNNs, which was similar to our AUC result (0.89). Our model achieved an overall accuracy of approximately 88%, which is comparable to these previous models. Most previous studies simply emphasized the application of deep learning techniques to the diagnosis of infectious keratitis. Rather than proposing a newly developed deep learning network, they analyzed the performance of existing CNNs such as ResNet, DenseNet, and ResNeXt in diagnosing keratitis. To our knowledge, we are the first to present a novel deep learning framework combined with two proposed modules that is specialized in diagnosing bacterial and fungal keratitis. Furthermore, several studies have been published recently that distinguish infectious keratitis by causative pathogen, including bacterial, fungal, acanthamoeba, and viral keratitis. Zhang et al. (29) and Koyama et al. (14) provided deep learning-based diagnostic models for 4 types of infectious keratitis, and they also showed lower accuracy in diagnosing bacterial and fungal keratitis than acanthamoeba or viral keratitis.

This study had several limitations. First, we only distinguished between bacterial and fungal keratitis. The causes of infectious keratitis are diverse, and it is difficult to discriminate non-infectious immune keratitis in the early stages. In subsequent studies, we need to develop a system that discriminates between the various types of keratitis. Second, the training process of LGM requires hand-labeled bounding box annotations by specialists who are experienced in keratitis diagnosis. Therefore, a significant amount of time and resources is required to train the model. For MAM, a sophisticated pixel-level slit-beam mask is required for learning. Less expertise is required than for lesion annotation, but masks are also difficult to obtain in large quantities because pixel-level labeling demands higher accuracy than bounding boxes. The expensive work required to obtain resources for learning can deter the proposed network from being used in practice. Third, the usability of LGM is limited. The broad-beam anterior segment images with and without the slit-beam used in this experiment had a relatively high similarity to each other compared to other types (scatter and stain images). Therefore, by applying LGM to the original and the image-level modified image, effective learning of the diagnostic system could be achieved regardless of the presence of the slit-beam in this study. However, to apply a similar mechanism to all types of anterior segment images, a method is required that divides image features, at the feature level, into parts that carry diagnostic information and parts that are unnecessary for diagnosis. Fourth, although the LGM technique allowed us to distinguish between bacterial and fungal keratitis by first accurately finding the lesion and then examining its characteristics, our results do not reveal exactly which part of the lesion was used to make the distinction. However, LGM can identify the pathologic areas accurately by focusing only on lesions, with less noise in the heat maps than Grad-CAM. Finally, we combined our two modules with ResNet-50. Because some previous deep learning algorithms tend to show higher performance with CNNs other than ResNet-50, further studies that apply our modules to different CNNs and compare them are required to enhance the diagnostic accuracy of infectious keratitis.

In conclusion, our deep-learning framework for the diagnosis of infectious keratitis was successfully developed and validated. LGM is presented for an accurate diagnosis by emphasizing the lesions associated with the diagnosis. To prevent the less-informative part from affecting the diagnostic result and to efficiently learn two different types of anterior segment images in a single network, we designed a new learning procedure using a masking module, MAM, to control masking in the training phase. The results showed that our proposed module had a meaningful effect in enhancing the diagnostic performance of bacterial and fungal keratitis on different anterior segment image datasets.

The data analyzed in this study is subject to the following licenses/restrictions: The approval process from research data review committee is required and the dataset is not open to the public. Requests to access these datasets should be directed to YW, d3lrOTAwMTA1QGhhbm1haWwubmV0.

DL and YR designed the study, reviewed the design and results, and submitted the draft. YK, T-YC, and DL annotated lesions on images related to the diagnosis of keratitis. YW, HL, YK, and GH analyzed and interpreted the clinical data. YW and HL drafted the manuscript. All authors have read and approved the final manuscript.

This research was supported by the Samsung Medical Center Research and Development Grant #SMO180231, #SMO1230241, and a National Research Foundation of Korea grant funded by the Korean Government’s Ministry of Education (NRF-2021R1C1C1007795; Seoul, Republic of Korea) which was received by DL.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fmed.2023.1162124/full#supplementary-material

1. Green M, Apel A, Naduvilath T, Stapleton F. Clinical outcomes of keratitis. Clin Exp Ophthalmol. (2007) 35:421–6. doi: 10.1111/j.1442-9071.2007.01511.x

2. Austin A, Lietman T, Rose-Nussbaumer J. Update on the management of infectious keratitis. Ophthalmology. (2017) 124:1678–89. doi: 10.1016/j.ophtha.2017.05.012

3. Chirambo M, Benezra D. Causes of blindness among students in blind school institutions in a developing country. Br J Ophthalmol. (1976) 60:665–8. doi: 10.1136/bjo.60.9.665

4. Pascolini D, Mariotti S. Global estimates of visual impairment: 2010. Br J Ophthalmol. (2012) 96:614–8. doi: 10.1136/bjophthalmol-2011-300539

5. Pleyer U, Behrens-Baumann W. [Bacterial keratitis. Current diagnostic aspects]. Ophthalmologe. (2007) 104:9–14. doi: 10.1007/s00347-006-1466-9

6. Thylefors B, Negrel A, Pararajasegaram R, Dadzie K. Global data on blindness. Bull World Health Organ. (1995) 73:115–21.

7. Alexandrakis G, Alfonso E, Miller D. Shifting trends in bacterial keratitis in south Florida and emerging resistance to fluoroquinolones. Ophthalmology. (2000) 107:1497–502. doi: 10.1016/S0161-6420(00)00179-2

8. Garg P, Sharma S, Rao G. Ciprofloxacin-resistant Pseudomonas keratitis. Ophthalmology. (1999) 106:1319–23. doi: 10.1016/S0161-6420(99)00717-4

9. Austin A, Schallhorn J, Geske M, Mannis M, Lietman T, Rose-Nussbaumer J. Empirical treatment of bacterial keratitis: An international survey of corneal specialists. BMJ Open Ophthalmol. (2017) 2:e000047. doi: 10.1136/bmjophth-2016-000047

10. Hsu H, Nacke R, Song J, Yoo S, Alfonso E, Israel H. Community opinions in the management of corneal ulcers and ophthalmic antibiotics: A survey of 4 states. Eye Contact Lens. (2010) 36:195–200. doi: 10.1097/ICL.0b013e3181e3ef45

11. McDonald E, Ram F, Patel D, McGhee C. Topical antibiotics for the management of bacterial keratitis: An evidence-based review of high quality randomised controlled trials. Br J Ophthalmol. (2014) 98:1470–7. doi: 10.1136/bjophthalmol-2013-304660

12. Mun Y, Kim M, Oh J. Ten-year analysis of microbiological profile and antibiotic sensitivity for bacterial keratitis in Korea. PLoS One. (2019) 14:e0213103. doi: 10.1371/journal.pone.0213103

13. Gulshan V, Peng L, Coram M, Stumpe M, Wu D, Narayanaswamy A, et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA. (2016) 316:2402–10. doi: 10.1001/jama.2016.17216

14. Koyama A, Miyazaki D, Nakagawa Y, Ayatsuka Y, Miyake H, Ehara F, et al. Determination of probability of causative pathogen in infectious keratitis using deep learning algorithm of slit-lamp images. Sci Rep. (2021) 11:22642. doi: 10.1038/s41598-021-02138-w

15. Xu Y, Mo T, Feng Q, Zhong P, Lai M, Chang E. Deep learning of feature representation with multiple instance learning for medical image analysis. Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). Florence: (2014). p. 1626–30. doi: 10.1109/ICASSP.2014.6853873

16. Zhang K, Liu X, Liu F, He L, Zhang L, Yang Y, et al. An interpretable and expandable deep learning diagnostic system for multiple ocular diseases: Qualitative study. J Med Internet Res. (2018) 20:e11144. doi: 10.2196/11144

17. Bowling B. Kanski’s Clinical Ophthalmology: A Systematic Approach. 9th ed. Philadelphia, PA: Saunders Ltd (2015).

20. Selvaraju R, Cogswell M, Das A, Vedantam R, Parikh D, Batra D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. Proceedings of the IEEE International Conference on Computer Vision. Venice: (2017). p. 618–26. doi: 10.1109/ICCV.2017.74

21. Dalmon C, Porco T, Lietman T, Prajna N, Prajna L, Das M, et al. The clinical differentiation of bacterial and fungal keratitis: A photographic survey. Invest Ophthalmol Vis Sci. (2012) 53:1787–91. doi: 10.1167/iovs.11-8478

22. Schaefer F, Bruttin O, Zografos L, Guex-Crosier Y. Bacterial keratitis: A prospective clinical and microbiological study. Br J Ophthalmol. (2001) 85:842–7. doi: 10.1136/bjo.85.7.842

23. Toshida H, Kogure N, Inoue N, Murakami A. Trends in microbial keratitis in Japan. Eye Contact Lens. (2007) 33:70–3. doi: 10.1097/01.icl.0000237825.98225.ca

24. Yeh D, Stinnett S, Afshari N. Analysis of bacterial cultures in infectious keratitis, 1997 to 2004. Am J Ophthalmol. (2006) 142:1066–8. doi: 10.1016/j.ajo.2006.06.056

25. Xu C, Zhu X, He W, Lu Y, He X, Shang Z, et al. editors. Fully deep learning for slit-lamp photo based nuclear cataract grading. Proceedings of the Medical Image Computing and Computer Assisted Intervention–MICCAI 2019: 22nd International Conference, Shenzhen, China, October 13–17, 2019, Part IV 22. Berlin: Springer (2019).

26. Hung N, Shih A, Lin C, Kuo M, Hwang Y, Wu W, et al. Using slit-lamp images for deep learning-based identification of bacterial and fungal keratitis: Model development and validation with different convolutional neural networks. Diagnostics. (2021) 11:1246. doi: 10.3390/diagnostics11071246

27. Ghosh A, Thammasudjarit R, Jongkhajornpong P, Attia J, Thakkinstian A. Deep learning for discrimination between fungal keratitis and bacterial keratitis: Deep Keratitis. Cornea. (2022) 41:616–22. doi: 10.1097/ICO.0000000000002830

28. Redd T, Prajna N, Srinivasan M, Lalitha P, Krishnan T, Rajaraman R, et al. Image-based differentiation of bacterial and fungal keratitis using deep convolutional neural networks. Ophthalmol Sci. (2022) 2:100119. doi: 10.1016/j.xops.2022.100119

Keywords: anterior segment image, bacterial keratitis, convolutional neural network (CNN), deep learning (DL), fungal keratitis, infectious keratitis, lesion guiding module (LGM), mask adjusting module (MAM)

Citation: Won YK, Lee H, Kim Y, Han G, Chung T-Y, Ro YM and Lim DH (2023) Deep learning-based classification system of bacterial keratitis and fungal keratitis using anterior segment images. Front. Med. 10:1162124. doi: 10.3389/fmed.2023.1162124

Received: 09 February 2023; Accepted: 24 April 2023;

Published: 18 May 2023.

Edited by:

Tae-im Kim, Yonsei University, Republic of Korea

Reviewed by:

Tae Keun Yoo, B&VIIT Eye Center / Refractive Surgery & AI Center, Republic of Korea

Copyright © 2023 Won, Lee, Kim, Han, Chung, Ro and Lim. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Dong Hui Lim, bGRobHNlQGdtYWlsLmNvbQ==; Yong Man Ro, eW1yb0BrYWlzdC5hYy5rcg==

†Present Address: Tae-Young Chung, Renew Seoul Eye Center, Seoul, Republic of Korea

‡These authors have contributed equally to this work and share first authorship
