ORIGINAL RESEARCH article

Front. Med. , 24 January 2023

Sec. Pathology

Volume 10 - 2023 | https://doi.org/10.3389/fmed.2023.1114673

This article is part of the Research Topic Computational Pathology for Precision Diagnosis, Treatment, and Prognosis of Cancer

Liyu Shi1, Xiaoyan Li2*, Weiming Hu1, Haoyuan Chen1, Jing Chen1, Zizhen Fan1, Minghe Gao1, Yujie Jing1, Guotao Lu1, Deguo Ma1, Zhiyu Ma1, Qingtao Meng1, Dechao Tang1, Hongzan Sun3, Marcin Grzegorzek4,5, Shouliang Qi1, Yueyang Teng1, Chen Li1*

Background and purpose: Colorectal cancer is a common fatal malignancy, the fourth most common cancer in men, and the third most common cancer in women worldwide. Timely detection of cancer in its early stages is essential for treating the disease. Currently, there is a lack of datasets for histopathological image segmentation of colorectal cancer, which often hampers the assessment accuracy when computer technology is used to aid in diagnosis.

Methods: The present study provides a new publicly available Enteroscope Biopsy Histopathological Hematoxylin and Eosin Image Dataset for Image Segmentation Tasks (EBHI-Seg). To demonstrate the validity and breadth of EBHI-Seg, segmentation results on the dataset are evaluated using classical machine learning methods and deep learning methods.

Results: The experimental results showed that deep learning methods achieved better image segmentation performance on EBHI-Seg. The maximum value of the Dice evaluation metric for the classical machine learning methods is 0.948, while that for the deep learning methods is 0.965.

Conclusion: This publicly available dataset contains 4,456 images, comprising histopathology section images of six tumor differentiation stages and the corresponding ground truth images. The dataset can support researchers in developing new segmentation algorithms for the medical diagnosis of colorectal cancer, which can be used in the clinical setting to help doctors and patients. EBHI-Seg is publicly available at: https://figshare.com/articles/dataset/EBHI-SEG/21540159/1.

Colon cancer is a common deadly malignant tumor, the fourth most common cancer in men, and the third most common cancer in women worldwide. Colon cancer is responsible for 10% of all cancer cases (1). According to prior research, colon and rectal tumors share many of the same or similar characteristics. Hence, they are often classified collectively (2). The present study categorized rectal and colon cancers into one colorectal cancer category (3). Histopathological examination of the intestinal tract is both the gold standard for the diagnosis of colorectal cancer and a prerequisite for disease treatment (4).

Intestinal biopsy removes a part of the intestinal tissue for histopathological analysis, which is used to determine the true status of the patient. Its advantage is that it considerably reduces damage to the body and allows rapid wound healing (5). The histopathology sample is then sectioned and processed with Hematoxylin and Eosin (H&E). Treatment with H&E is a common approach when staining tissue sections to show the inclusions between the nucleus and cytoplasm and highlight the fine structures between tissues (6, 7). When a pathologist performs an examination of the colon, they first examine the histopathological sections for eligibility and find the location of the lesion. The pathology sections are then examined and diagnosed using a low magnification microscope. If finer structures need to be observed, the microscope is adjusted to use high magnification for further analysis. However, the following problems usually exist in the diagnostic process: the diagnostic results are subjective and vary between doctors; doctors can easily overlook some information when faced with a large amount of test data; and it is difficult to analyze large amounts of previously collected data (8). Therefore, it is necessary to address these issues effectively.

With the development and popularization of computer-aided diagnosis (CAD), the pathological sections of each case can be accurately and efficiently examined with the help of computers (9). CAD is now widely used in many biomedical image analysis tasks, such as microorganism image analysis (10–18), COVID-19 image analysis (19), histopathological image analysis (20–27), cytopathological image analysis (28–31), and sperm video analysis (32, 33). Therefore, the application of computer vision technology for colorectal cancer CAD provides a new direction in this research field (34).

One of the fundamental tasks of CAD is image segmentation, the results of which can be used as key evidence in the pathologists' diagnostic processes. Along with the rapid development of medical image segmentation methodology, there is a wide demand for its application to identify benign and malignant tumors, tumor differentiation stages, and other related fields (35). Therefore, a multi-class image segmentation method is needed to obtain high segmentation accuracy and good robustness (36).

The present study presents a novel Enteroscope Biopsy Histopathological H&E Image Dataset for Image Segmentation Tasks (EBHI-Seg), which contains 4,456 microscopic images of histopathological colorectal cancer sections that encompass six tumor differentiation stages: normal, polyp, low-grade intraepithelial neoplasia, high-grade intraepithelial neoplasia, serrated adenoma, and adenocarcinoma. The segmentation coefficients and evaluation metrics are obtained by segmenting the images of this dataset using different classical machine learning methods and novel deep learning methods.

The present study analyzed and compared the existing colorectal cancer biopsy dataset and provided an in-depth exploration of the currently known research findings. The limitations of the presently available colorectal cancer dataset were also pointed out.

The following conclusions were obtained in the course of the study. For existing datasets, the data types can be grouped into two major categories: Multi and Dual Categorization datasets. Multi Categorization datasets contain tissue types at all stages from Normal to Neoplastic. In Trivizakis et al. (37), a dataset called “Collection of textures in colorectal cancer histology” is described. It includes 5,000 patches of size 74 × 74 μm and contains seven categories. However, because there are only 10 images, the data sample is too small and lacks generalization capability. In Chen et al. (23), a dataset called “NCT-CRC-HE-100K” is proposed. This is a set of 100,000 non-overlapping image patches of histological human colorectal cancer (CRC) and normal tissue samples stained with H&E that was presented by the National Center for Tumor Diseases (NCT). These image patches are from nine different tissues with an image size of 224 × 224 pixels. The nine tissue categories are adipose, background, debris, lymphocytes, mucus, smooth muscle, normal colon mucosa, cancer-associated stroma, and colorectal adenocarcinoma epithelium. This dataset is publicly available and commonly used. However, because the image sizes are all 224 × 224 pixels, the dataset underperforms on some global details that need to be observed in individual categories. Two datasets are utilized in Oliveira et al. (38): one containing colonic H&E-stained biopsy sections (CRC dataset) and the other consisting of prostate cancer H&E-stained biopsy sections (PCa dataset). The CRC dataset contains 1,133 colorectal biopsy and polypectomy slides grouped into three categories and labeled as non-neoplastic, low-grade and high-grade lesions. In Kausar et al. (39), a dataset named “MICCAI 2016 gland segmentation challenge dataset (GlaS)” is used. This dataset contains 165 microscopic images of H&E-stained colon glandular tissue samples, including 85 training and 80 test images. Each set is grouped into two parts: benign and malignant tumors. The image size is 775 × 522 pixels. Because this dataset has only two types of data and few images, it performs poorly on some multi-type training tasks.

Dual Categorization datasets usually contain only two tissue types: Normal and Neoplastic. In Wei et al. (40), a dataset named “FFPE” is proposed. This dataset obtained its images by extracting 328 Formalin-fixed Paraffin-embedded (FFPE) whole-slide images of colorectal polyps classified into two categories: hyperplastic polyps (HPs) and sessile serrated adenomas (SSAs). This dataset contains 3,125 images with an image size of 224 × 224 pixels and is limited in both the number of types and the number of images. In Bilal et al. (41), two datasets named “UHCW” and “TCGA” are proposed. The first dataset is a colorectal cancer biopsy sequence developed at the University Hospital of Coventry and Warwickshire (UHCW) for internal validation of the rectal biopsy trial. The second dataset is the Cancer Genome Atlas (TCGA) for external validation of the trial. TCGA is commonly used as a publicly available cancer dataset and stores genomic data for more than 20 types of cancers. Both datasets are grouped into two categories: Normal and Neoplastic. The first dataset contains 4,292 slices, and the second dataset contains 731 slices with an image size of 224 × 224 pixels.

All of the information for the existing datasets is summarized in Table 1. The issues associated with the datasets mentioned above include few data types, small amounts of data, and inaccurate ground truth. An open-source, multi-type colonoscopy biopsy image dataset is therefore needed.

The dataset in the present study contained 4,456 histopathology images, including 2,228 histopathology section images and 2,228 ground truth images. These include normal (76 images and 76 ground truth images), polyp (474 images and 474 ground truth images), low-grade intraepithelial neoplasia (639 images and 639 ground truth images), high-grade intraepithelial neoplasia (186 images and 186 ground truth images), serrated adenoma (58 images and 58 ground truth images), and adenocarcinoma (795 images and 795 ground truth images). The basic information for the dataset is described in detail below. EBHI-Seg is publicly available at: https://figshare.com/articles/dataset/EBHI-SEG/21540159/1.
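
The per-class counts above can be checked with a few lines of Python. This is only an illustrative sketch: the dictionary keys and the helper name `class_fractions` are chosen here for readability and are not part of the dataset's file layout.

```python
# Per-class section image counts as reported in the text
# (each section image has exactly one ground truth image).
CLASS_COUNTS = {
    "normal": 76,
    "polyp": 474,
    "low-grade IN": 639,
    "high-grade IN": 186,
    "serrated adenoma": 58,
    "adenocarcinoma": 795,
}


def class_fractions(counts):
    """Return each class's share of the total number of section images."""
    total = sum(counts.values())
    return {name: n / total for name, n in counts.items()}


total_images = sum(CLASS_COUNTS.values())   # section images only
total_files = 2 * total_images              # sections plus ground truth images
fractions = class_fractions(CLASS_COUNTS)

for name, share in sorted(fractions.items(), key=lambda kv: kv[1]):
    print(f"{name:>18}: {CLASS_COUNTS[name]:4d} images ({share:.1%})")
```

The totals reproduce the 2,228 section images and 4,456 files stated above, and the listing makes the class imbalance explicit: the normal and serrated adenoma categories are far smaller than the others.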

In the present paper, H&E-stained histopathological sections of colon tissues are used as data for evaluating image segmentation. The dataset is obtained from two histopathologists at the Cancer Hospital of China Medical University [approved by “Research Project Ethics Certification” (No. 202229)]. It is prepared by 12 biomedical researchers according to the following rules. First, if there is only one differentiation stage in the image and the rest of the image is intact, then that differentiation stage becomes the image label. Second, if there is more than one differentiation stage in the image, then the most obvious differentiation stage is selected as the image label. In general, the most severe and prominent differentiation stage in the image is used as the image label.

Intestinal biopsy was used as the sampling method in this dataset. The magnification of the data slices is 400×, with an eyepiece magnification of 10× and an objective magnification of 40×. A Nissan Olympus microscope and NewUsbCamera acquisition software are used. The image input size is 224 × 224 pixels, and the format is *.png. The data are grouped into five types described in detail in Section 2.2.

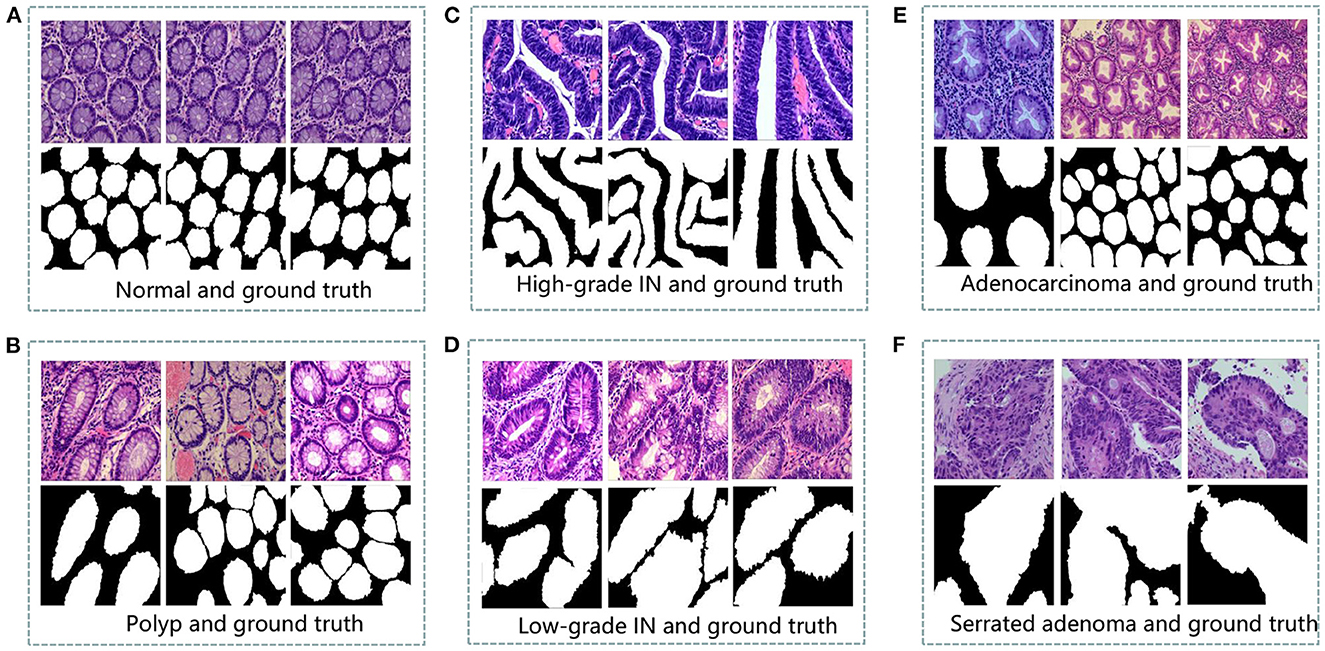

Colorectal tissue sections of the normal category are made up of consistently ordered tubular structures that do not appear infected when viewed under a light microscope (42). Section images with the corresponding ground truth images are shown in Figure 1A.

Figure 1. An example of histopathological images database. (A) Normal and ground truth, (B) Polyp and ground truth, (C) High-grade Intraepithelial Neoplasia and ground truth, (D) Low-grade Intraepithelial Neoplasia and ground truth, (E) Adenocarcinoma and ground truth, and (F) Serrated adenoma and ground truth.

Colorectal polyps are similar in shape to the structures in the normal category, but have a completely different histological structure. A polyp is a redundant mass that grows on the surface of the body's cells. Modern medicine usually refers to polyps as unwanted growths on the mucosal surface of the body (43). The pathological section of the polyp category also has an intact luminal structure with essentially no nuclear division of the cells. Only the nuclear mass is slightly higher than that in the normal category. The polyp category and corresponding ground truth images are shown in Figure 1B.

Intraepithelial neoplasia (IN) is the most critical precancerous lesion. Compared to the normal category, its histological images show increased branching of adenoid structures, dense arrangement, and different luminal sizes and shapes. In terms of cellular morphology, the nuclei are enlarged and vary in size, while nuclear division increases (44). The standard Padova classification currently classifies intraepithelial neoplasia into low-grade and high-grade IN. High-grade IN demonstrates more pronounced structural changes in the lumen and nuclear enlargement compared to low-grade IN. The images and ground truth diagrams of high-grade and low-grade IN are shown in Figures 1C, D.

Adenocarcinoma is a malignant digestive tract tumor with a very irregular distribution of luminal structures. It is difficult to identify its border structures during observation, and the nuclei are significantly enlarged at this stage (45). An adenocarcinoma with its corresponding ground truth diagram is shown in Figure 1E.

Serrated adenomas are uncommon lesions, accounting for 1% of all colonic polyps (46). The endoscopic surface appearance of serrated adenomas is not well characterized but is thought to be similar to that of colonic adenomas with tubular or cerebral crypt openings (47). The image of a serrated adenoma with a corresponding ground truth diagram is shown in Figure 1F.

Six evaluation metrics are commonly used for image segmentation tasks. The Dice ratio metric is a standard metric used in medical images that is often utilized to evaluate the performance of image segmentation algorithms. It is a validation method based on spatial overlap statistics that measures the similarities between the algorithm segmentation output and ground truth (48). The Dice ratio is defined in Equation (1).
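
In its standard set-overlap form, with X the ground truth and Y the predicted segmentation mask, Equation (1) can be written as follows. This is the textbook definition of the Dice ratio, given as a reference rather than a transcription of the authors' typesetting.

```latex
\mathrm{Dice}(X, Y) = \frac{2\,|X \cap Y|}{|X| + |Y|} \tag{1}
```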

In Equation (1), for a segmentation task, X and Y denote the ground truth and segmentation mask prediction, respectively. The range of the calculated results is [0,1], and the larger the result the better.

The Jaccard index is a classical set similarity measure with many practical applications in image segmentation. The Jaccard index measures the similarity of a finite set of samples: the ratio between the intersection and concatenation of the segmentation results and ground truth (49). The Jaccard index is defined in Equation (2).
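
The ratio of intersection to union described above corresponds to the standard Jaccard definition, written here with X as the ground truth and Y as the predicted mask. The typesetting is a reconstruction from that description, not a copy of the authors' equation.

```latex
\mathrm{Jaccard}(X, Y) = \frac{|X \cap Y|}{|X \cup Y|} \tag{2}
```

For a single pair of masks the two overlap measures are monotonically related by J = D / (2 − D), where D is the Dice ratio, so they always rank a pair of segmentations of the same image in the same order.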

The range of the calculated results is [0,1], and the larger the result the better.

Recall and precision are the recall and precision rates, respectively. The range of the calculated results is [0,1]. A higher output indicates a better segmentation result. Recall and precision are defined in Equations (3), (4),

where TP, FP, TN, and FN are defined in Table 2.
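
For reference, the standard forms of recall and precision are given below. They assume the usual confusion-matrix convention for Table 2: TP counts foreground pixels predicted as foreground, FP counts background pixels predicted as foreground, and FN counts foreground pixels predicted as background.

```latex
\mathrm{Recall} = \frac{TP}{TP + FN},
\qquad
\mathrm{Precision} = \frac{TP}{TP + FP}
```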

The conformity coefficient (Confm Index) is a consistency coefficient. It maps the per-pixel binary classification result onto the continuous interval (−∞, 1] by taking the ratio of the number of incorrectly segmented pixels to the number of correctly segmented pixels, which measures the consistency between the segmentation result and ground truth. The conformity coefficient is defined in Equations (5), (6),

where θAE = θFP + θFN represents all errors of the fuzzy segmentation results and θTP is the number of correctly classified pixels. Mathematically, ConfmIndex can be negative infinity if θTP = 0. Such a segmentation result is definitely inadequate and is treated as a failure without the need for any further analysis.
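
The five metrics discussed in this section can be computed from a pair of binary masks as in the following minimal sketch. It assumes NumPy, binary foreground/background masks, and the commonly used conformity form 1 − θAE/θTP for θTP > 0; the function name `segmentation_metrics` is illustrative and is not taken from the authors' code.

```python
import numpy as np


def segmentation_metrics(pred, truth):
    """Compute Dice, Jaccard, precision, recall, and conformity for binary masks.

    pred and truth are array-likes of the same shape; nonzero means foreground.
    Ratios with a zero denominator are returned as NaN, and conformity is
    -inf when no foreground pixel is correctly classified (TP == 0).
    """
    pred = np.asarray(pred).astype(bool)
    truth = np.asarray(truth).astype(bool)
    if pred.shape != truth.shape:
        raise ValueError("pred and truth must have the same shape")

    tp = int(np.sum(pred & truth))    # foreground predicted as foreground
    fp = int(np.sum(pred & ~truth))   # background predicted as foreground
    fn = int(np.sum(~pred & truth))   # foreground predicted as background

    def ratio(num, den):
        return num / den if den > 0 else float("nan")

    errors = fp + fn  # theta_AE in the text
    return {
        "dice": ratio(2 * tp, 2 * tp + fp + fn),
        "jaccard": ratio(tp, tp + fp + fn),
        "precision": ratio(tp, tp + fp),
        "recall": ratio(tp, tp + fn),
        "conformity": 1.0 - errors / tp if tp > 0 else float("-inf"),
    }


# Toy example: 3 true positives, 1 false positive, 1 false negative.
truth_mask = [[1, 1, 0, 0], [1, 1, 0, 0]]
pred_mask = [[1, 1, 1, 0], [1, 0, 0, 0]]
metrics = segmentation_metrics(pred_mask, truth_mask)
```

In the toy example the Dice ratio is 0.75 while the conformity is only about 0.33, which illustrates how the conformity coefficient penalizes errors more strongly than the overlap measures.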

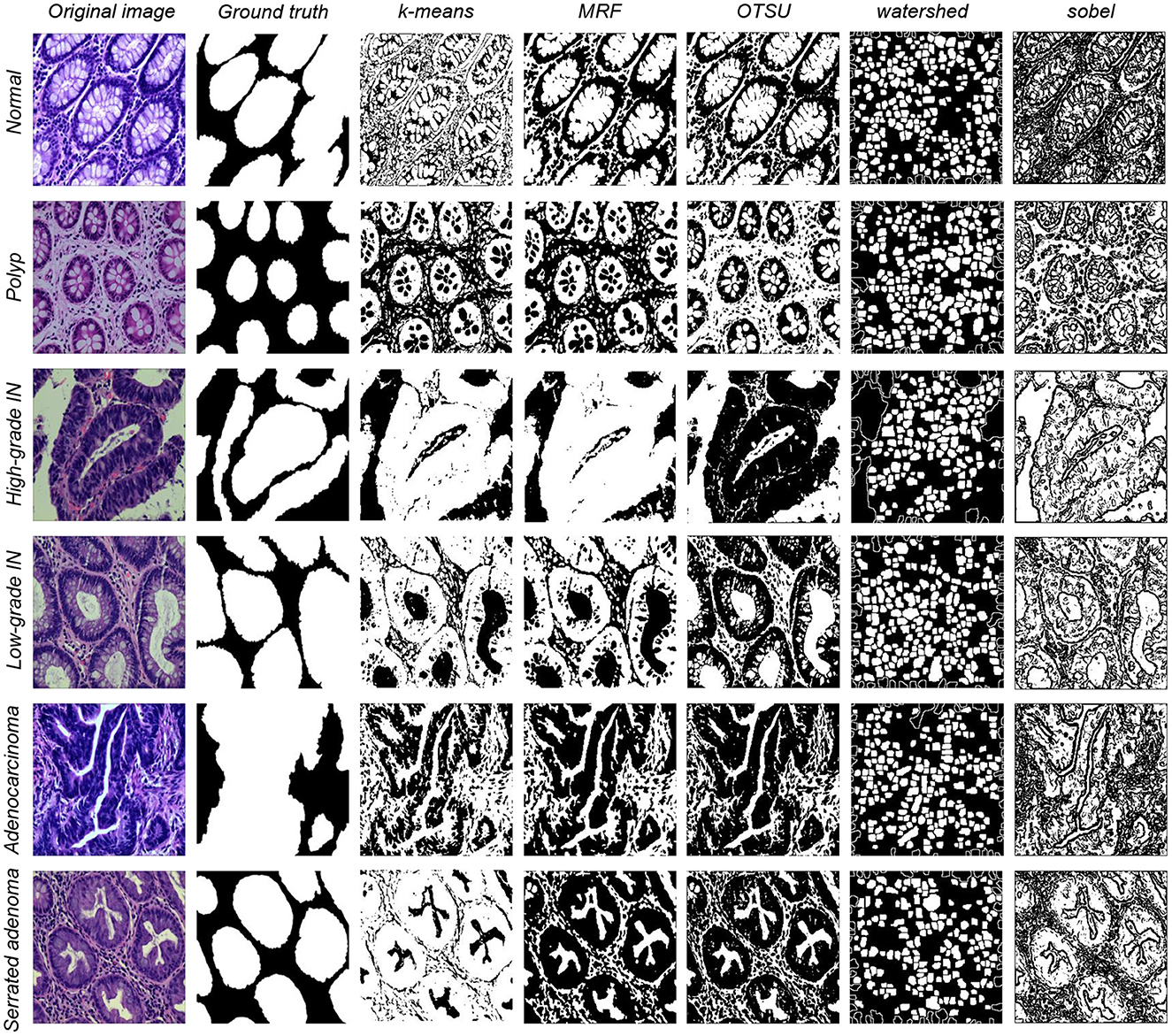

Image segmentation is one of the most commonly used methods for classifying image pixels in decision-oriented applications (50). It groups an image into regions with high pixel similarity within each region and significant contrast between different regions (51). Machine learning methods for segmentation distinguish the image classes using image features. Image segmentation of the present dataset is performed using the following five classical machine learning methods. (1) The k-means algorithm is a classical partition-based clustering algorithm. Image segmentation divides the image into many disjoint regions, which is in essence a clustering process over pixels, and k-means is one of the simplest clustering methods (52). (2) The Markov random field (MRF) is a powerful stochastic tool that models the joint probability distribution of an image based on its local spatial interactions (53). It can extract the texture features of the image and model the image segmentation problem. (3) The OTSU algorithm is a global adaptive binarized threshold segmentation algorithm that uses the maximum inter-class variance between the image background and the target image as the selection criterion (54). The image is grouped into foreground and background parts based on its grayscale characteristics, independent of the brightness and contrast. (4) The watershed algorithm is a region-based segmentation method that takes the similarity between neighboring pixels as a reference and connects pixels with similar spatial locations and grayscale values into a closed contour to achieve the segmentation effect (55). (5) The Sobel algorithm has two operators: one detects horizontal edges and the other detects vertical edges. The Sobel edge detection operator is a set of directional operators that can be used to perform edge detection from different directions (56), and its output is an edge image. The segmentation results are shown in Figure 2.
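
Two of the methods above, OTSU thresholding and k-means clustering, are simple enough to sketch in a few lines of NumPy. The sketch below is illustrative only: the paper does not state its implementation details, so the use of 8-bit grayscale input, k = 2 clusters, evenly spaced initial centers, and the function names are all assumptions made here.

```python
import numpy as np


def otsu_threshold(gray):
    """Return the 8-bit threshold t that maximizes between-class variance.

    Pixels with value <= t form one class and pixels > t the other.
    """
    hist = np.bincount(np.asarray(gray, dtype=np.uint8).ravel(), minlength=256)
    prob = hist / hist.sum()
    levels = np.arange(256)
    w0 = np.cumsum(prob)                  # weight of the class "<= t"
    w1 = 1.0 - w0                         # weight of the class "> t"
    cum_mean = np.cumsum(prob * levels)   # unnormalized mean of the class "<= t"
    total_mean = cum_mean[-1]
    with np.errstate(divide="ignore", invalid="ignore"):
        between = (total_mean * w0 - cum_mean) ** 2 / (w0 * w1)
    # Thresholds that leave one class empty give 0/0; treat them as variance 0.
    between = np.nan_to_num(between, nan=0.0, posinf=0.0, neginf=0.0)
    return int(np.argmax(between))


def kmeans_intensity(gray, k=2, iters=20):
    """Cluster pixel intensities with Lloyd's k-means; return (labels, centers)."""
    pixels = np.asarray(gray, dtype=float).ravel()
    # Deterministic start: centers spread evenly over the intensity range.
    centers = np.linspace(pixels.min(), pixels.max(), k)
    for _ in range(iters):
        labels = np.argmin(np.abs(pixels[:, None] - centers[None, :]), axis=1)
        for j in range(k):
            if np.any(labels == j):       # keep the old center if a cluster empties
                centers[j] = pixels[labels == j].mean()
    labels = np.argmin(np.abs(pixels[:, None] - centers[None, :]), axis=1)
    return labels.reshape(np.shape(gray)), centers


# Toy image with a dark region (top row) and a bright region (bottom row).
toy = np.array([[40, 50, 60], [190, 200, 210]], dtype=np.uint8)
t = otsu_threshold(toy)
otsu_mask = toy > t
kmeans_labels, kmeans_centers = kmeans_intensity(toy, k=2)
```

Both functions operate on intensity alone, which is why blurred foreground-background boundaries are difficult for them: neither uses spatial context, unlike the MRF and watershed methods.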

Figure 2. Five types of data segmentation results obtained by different classical machine learning methods.

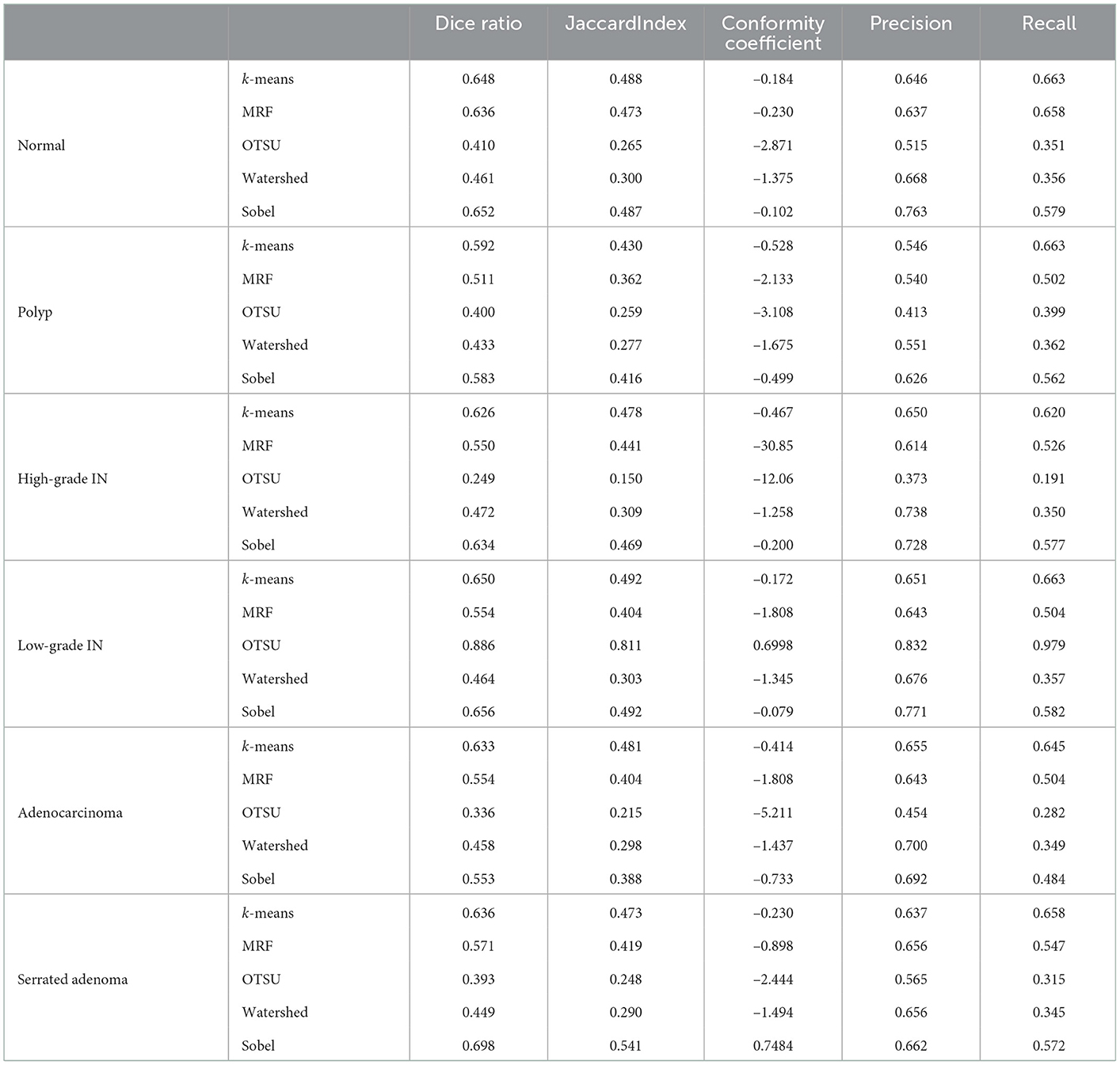

The performance of EBHI-Seg for different machine learning methods is observed by comparing the images segmented using classical machine learning methods with the corresponding ground truth. The segmentation evaluation metrics are shown in Table 3. The Dice ratio is a similarity measure, usually used to compare the similarity of two samples. A value of one indicates the best result, while a value of zero indicates the worst. Table 3 shows that k-means achieves a good Dice ratio of up to 0.650 in each category. The MRF and Sobel segmentation results also achieve a good Dice ratio of around 0.6. In terms of the precision and recall segmentation coefficients, k-means remains at approximately 0.650 in each category. Among the classical machine learning methods, k-means has the best segmentation results, followed by MRF and Sobel. OTSU performs moderately, while the watershed algorithm has coefficients that are much lower than those of the other methods. Moreover, there are apparent differences in the segmentation results when using the above methods.

Table 3. Evaluation metrics for five different segmentation methods based on classical machine learning.

In summary, EBHI-Seg has significantly different results when using different classical machine learning segmentation methods. Different classical machine learning methods have an obvious differentiation according to the image segmentation evaluation metrics. Therefore, EBHI-Seg can effectively evaluate the segmentation performance of different segmentation methods.

Besides the classical machine learning methods tested above, some popular deep learning methods are also tested. (1) Seg-Net is an open source project for image segmentation (57). Its encoder is identical to the convolutional layers of VGG-16, with the fully connected layers removed and max-pooling indices added, resulting in improved boundary delineation. Seg-Net performs better on large datasets. (2) The U-Net network structure was first proposed in 2015 (58) for medical imaging. U-Net is lightweight, and its simultaneous detection of local and global information is helpful for both information extraction and diagnostic results from clinical medical images. (3) MedT is a network published in 2021, which is a transformer structure that applies an attention mechanism to medical image segmentation (59). The segmentation results are shown in Figure 3.

The segmentation effect is tested on the present dataset using three deep learning models. In the experiments, each model is trained using a training set, validation set, and test set ratio of 4:4:2. All of the information for the existing datasets is summarized in Table 4. The model learning rate is set to 3e−6, the number of epochs is set to 100, and the batch size is set to 1. The optimizer is Adam, the loss function is cross-entropy loss, and the activation function is ReLU. The dataset segmentation results using the three different models are shown in Figure 3. The experimental segmentation evaluation metrics are shown in Table 5. Overall, deep learning performs much better than classical machine learning methods. Among them, the evaluation indexes of the training results using the U-Net and Seg-Net models can reach 0.90 on average. The evaluation results of the MedT model are slightly worse, at a level between 0.70 and 0.80. The training time is longer for MedT and similar for U-Net and Seg-Net.
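
The stated split ratio and hyperparameters can be summarized in a short sketch. It uses only the Python standard library; whether the authors split per category or over the whole dataset, and which random seed they used, are not stated, so the global shuffle and `seed=0` below are assumptions, as is the helper name `split_4_4_2`.

```python
import random

# Hyperparameters as stated in the text. The torch class names in the comments
# are the usual PyTorch counterparts and are an assumption about the authors' code.
TRAIN_CONFIG = {
    "learning_rate": 3e-6,
    "epochs": 100,
    "batch_size": 1,
    "optimizer": "Adam",        # e.g. torch.optim.Adam
    "loss": "cross-entropy",    # e.g. torch.nn.CrossEntropyLoss
    "activation": "ReLU",
}


def split_4_4_2(items, seed=0):
    """Shuffle items and split them into train/val/test at a 4:4:2 ratio."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train = len(items) * 4 // 10
    n_val = len(items) * 4 // 10
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]   # remainder, roughly 20%
    return train, val, test


# Applied to the 2,228 section images of EBHI-Seg (indices stand in for file names).
train, val, test = split_4_4_2(range(2228))
print(len(train), len(val), len(test))
```

With integer rounding, a global 4:4:2 split of 2,228 images gives 891 training, 891 validation, and 446 test images. A per-category split would give slightly different counts and would keep the small normal and serrated adenoma classes represented in every subset.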

Based on the above results, EBHI-Seg achieved a clear differentiation using deep learning image segmentation methods. Image segmentation metrics for different deep learning methods are significantly different so that EBHI-Seg can evaluate their segmentation performance.

This section presents the hardware configuration data required for this experiment as well as the software version.

Processor: Intel Core i7-8700 @ 3.20GHz Six Core

Graphics (GPU): NVIDIA GeForce RTX 2080

Graphics (CPU): Intel UHD Graphics 630

Hard Drive: SM961 NVMe SAMSUNG 512GB (Solid State Drive)

Motherboard: Dell 0NNNCT (C246 chipset)

Mainframe: Dell Precision 3630 Tower Desktop Mainframe

Software Versions: CUDA 11.2, torch 1.7.0, torchvision 0.8.0, python 3.8.

Six types of tumor differentiation stage data in EBHI-Seg were analyzed using classical machine learning methods to obtain the results in Table 3. Based on the Dice ratio, k-means, MRF, and Sobel show no significant differences, with all three at around 0.55. In contrast, the Watershed Dice ratio is ~0.45 on average, which is lower than that of the above three methods. The OTSU index is ~0.40 because the boundary between foreground and background is blurred in some experimental samples and OTSU had difficulty extracting a suitable segmentation threshold, which resulted in undifferentiated test results. The Precision and Recall values for k-means, MRF, and Sobel are also around 0.60, which is higher than those for the OTSU and Watershed methods by about 0.20. Among these three methods, k-means and MRF produce visually better segmentations than Sobel. Although Sobel matches these two methods in terms of metrics, it is difficult to distinguish foreground and background in its actual output images. The segmentation results for MRF are clear, but the running time for MRF is much longer than that of the other classical methods. Since classical machine learning methods have a rigorous theoretical foundation and simple ideas, they have been shown to perform well when used for specific problems. However, the performance of different methods varied in the present study.

In general, deep learning models are considerably superior to classical machine learning methods, and even the lowest-performing model, MedT, still outperforms the best classical machine learning method. In EBHI-Seg, the Dice ratio of MedT reaches ~0.75. However, the MedT model is larger and, as a result, its training time is long. U-Net and Seg-Net have higher evaluation indexes than MedT, both about 0.88. Among them, Seg-Net has the shortest training time and the smallest model size. Because the normal category has fewer sample images than other categories, the evaluation metrics of the three deep learning methods in this category are significantly lower than those in other categories. The evaluation metrics of the three segmentation methods are significantly higher in the other categories, with Seg-Net averaging above 0.90 and MedT exceeding 0.80.

The present study introduced a publicly available colorectal pathology image dataset containing 4,456 pathology images at 400× magnification covering six tumor differentiation stages. EBHI-Seg has high segmentation accuracy as well as good robustness. In the classical machine learning approach, segmentation experiments were performed using different methods, and the evaluation metrics were analyzed using the segmentation results. The highest and lowest Dice ratios are 0.65 and 0.30, respectively. The highest Precision and Recall values are 0.70 and 0.90, respectively, while the lowest values are 0.50 and 0.35, respectively. All three models performed well when using the deep learning method, with the highest Dice ratio reaching above 0.95 and both Precision and Recall values reaching above 0.90. The segmentation experiments using EBHI-Seg show that this dataset effectively supports the segmentation task for each of the segmentation methods. Furthermore, there are significant differences among the segmentation evaluation metrics. Therefore, EBHI-Seg is practical and effective in performing image segmentation tasks.

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found below: EBHI-Seg is publicly available at: https://figshare.com/articles/dataset/EBHISEG/21540159/1.

LS: data preparation, experiment, result analysis, and paper writing. XL: data collection and medical knowledge. WH: data collection, data preparation, and paper writing. HC: data preparation and paper writing. JC, ZF, MGa, YJ, GL, DM, ZM, QM, and DT: data preparation. HS: medical knowledge. MGr and YT: result analysis. SQ: method. CL: data collection, method, experiment, result analysis, paper writing, and proofreading. All authors contributed to the article and approved the submitted version.

This work was supported by the National Natural Science Foundation of China (No. 82220108007) and the Beijing Xisike Clinical Oncology Research Foundation (No. Y-tongshu2021/1n-0379).

We thank Miss Zixian Li and Mr. Guoxian Li for their important discussion in this work.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

1. Sung H, Ferlay J, Siegel RL, Laversanne M, Soerjomataram I, Jemal A, et al. Global cancer statistics 2020: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J Clin. (2021) 71:209–49. doi: 10.3322/caac.21660

2. Lee YC, Lee YL, Chuang JP, Lee JC. Differences in survival between colon and rectal cancer from SEER data. PLoS ONE. (2013) 8:e78709. doi: 10.1371/journal.pone.0078709

3. Pamudurthy V, Lodhia N, Konda VJ. Advances in endoscopy for colorectal polyp detection and classification. In: Baylor University Medical Center Proceedings. Vol. 33. Taylor & Francis (2020). p. 28–35. doi: 10.1080/08998280.2019.1686327

4. Thijs J, Van Zwet A, Thijs W, Oey H, Karrenbeld A, Stellaard F, et al. Diagnostic tests for Helicobacter pylori: a prospective evaluation of their accuracy, without selecting a single test as the gold standard. Am J Gastroenterol. (1996) 91:10. doi: 10.1016/0016-5085(95)23623-6

5. Labianca R, Nordlinger B, Beretta G, Mosconi S, Mandalà M, Cervantes A, et al. Early colon cancer: ESMO Clinical Practice Guidelines for diagnosis, treatment and follow-up. Ann Oncol. (2013) 24:vi64–vi72. doi: 10.1093/annonc/mdt354

6. Fischer AH, Jacobson KA, Rose J, Zeller R. Hematoxylin and eosin staining of tissue and cell sections. Cold Spring Harbor Protocols. (2008) 2008:pdb-prot4986. doi: 10.1101/pdb.prot4986

7. Chan JK. The wonderful colors of the hematoxylin-eosin stain in diagnostic surgical pathology. Int J Surg Pathol. (2014) 22:12–32. doi: 10.1177/1066896913517939

8. Gupta V, Vasudev M, Doegar A, Sambyal N. Breast cancer detection from histopathology images using modified residual neural networks. Biocybernetics Biomed Eng. (2021) 41:1272–87. doi: 10.1016/j.bbe.2021.08.011

9. Mathew T, Kini JR, Rajan J. Computational methods for automated mitosis detection in histopathology images: a review. Biocybern Biomed Eng. (2021) 41:64–82. doi: 10.1016/j.bbe.2020.11.005

10. Li C, Wang K, Xu N. A survey for the applications of content-based microscopic image analysis in microorganism classification domains. Artif Intell Rev. (2019) 51:577–646. doi: 10.1007/s10462-017-9572-4

11. Zhang J, Li C, Yin Y, Zhang J, Grzegorzek M. Applications of artificial neural networks in microorganism image analysis: a comprehensive review from conventional multilayer perceptron to popular convolutional neural network and potential visual transformer. Artif Intell Rev. (2022) 2022:1–58. doi: 10.1007/s10462-022-10192-7

12. Zhang J, Li C, Kosov S, Grzegorzek M, Shirahama K, Jiang T, et al. LCU-Net: a novel low-cost U-Net for environmental microorganism image segmentation. Pattern Recogn. (2021) 115:107885. doi: 10.1016/j.patcog.2021.107885

13. Zhao P, Li C, Rahaman MM, Xu H, Yang H, Sun H, et al. A comparative study of deep learning classification methods on a small environmental microorganism image dataset (EMDS-6): from convolutional neural networks to visual transformers. Front Microbiol. (2022) 13:792166. doi: 10.3389/fmicb.2022.792166

14. Kulwa F, Li C, Zhang J, Shirahama K, Kosov S, Zhao X, et al. A new pairwise deep learning feature for environmental microorganism image analysis. Environ Sci Pollut Res. (2022) 2022:1–18. doi: 10.1007/s11356-022-18849-0

15. Ma P, Li C, Rahaman MM, Yao Y, Zhang J, Zou S, et al. A state-of-the-art survey of object detection techniques in microorganism image analysis: from classical methods to deep learning approaches. Artif Intell Rev. (2022) 2022:1–72. doi: 10.1007/s10462-022-10209-1

16. Kulwa F, Li C, Grzegorzek M, Rahaman MM, Shirahama K, Kosov S. Segmentation of weakly visible environmental microorganism images using pair-wise deep learning features. Biomed Signal Process Control. (2023) 79:104168. doi: 10.1016/j.bspc.2022.104168

17. Zhang J, Li C, Rahaman MM, Yao Y, Ma P, Zhang J, et al. A comprehensive survey with quantitative comparison of image analysis methods for microorganism Biovolume measurements. Arch Comput Methods Eng. (2022) 30:639–73. doi: 10.1007/s11831-022-09811-x

18. Zhang J, Li C, Rahaman MM, Yao Y, Ma P, Zhang J, et al. A comprehensive review of image analysis methods for microorganism counting: from classical image processing to deep learning approaches. Artif Intell Rev. (2021) 2021:1–70. doi: 10.1007/s10462-021-10082-4

19. Rahaman MM, Li C, Yao Y, Kulwa F, Rahman MA, Wang Q, et al. Identification of COVID-19 samples from chest X-ray images using deep learning: a comparison of transfer learning approaches. J X-ray Sci Technol. (2020) 28:821–39. doi: 10.3233/XST-200715

20. Chen H, Li C, Wang G, Li X, Rahaman MM, Sun H, et al. GasHis-Transformer: a multi-scale visual transformer approach for gastric histopathological image detection. Pattern Recogn. (2022) 130:108827. doi: 10.1016/j.patcog.2022.108827

21. Li Y, Wu X, Li C, Li X, Chen H, Sun C, et al. A hierarchical conditional random field-based attention mechanism approach for gastric histopathology image classification. Appl Intell. (2022) 2022:1–22. doi: 10.1007/s10489-021-02886-2

22. Hu W, Li C, Li X, Rahaman MM, Ma J, Zhang Y, et al. GasHisSDB: a new gastric histopathology image dataset for computer aided diagnosis of gastric cancer. Comput Biol Med. (2022) 2022:105207. doi: 10.1016/j.compbiomed.2021.105207

23. Chen H, Li C, Li X, Rahaman MM, Hu W, Li Y, et al. IL-MCAM: an interactive learning and multi-channel attention mechanism-based weakly supervised colorectal histopathology image classification approach. Comput Biol Med. (2022) 143:105265. doi: 10.1016/j.compbiomed.2022.105265

24. Hu W, Chen H, Liu W, Li X, Sun H, Huang X, et al. A comparative study of gastric histopathology sub-size image classification: from linear regression to visual transformer. Front Med. (2022) 9:1072109. doi: 10.3389/fmed.2022.1072109

25. Li Y, Li C, Li X, Wang K, Rahaman MM, Sun C, et al. A comprehensive review of Markov random field and conditional random field approaches in pathology image analysis. Arch Comput Methods Eng. (2022) 29:609–39. doi: 10.1007/s11831-021-09591-w

26. Sun C, Li C, Zhang J, Rahaman MM, Ai S, Chen H, et al. Gastric histopathology image segmentation using a hierarchical conditional random field. Biocybern Biomed Eng. (2020) 40:1535–55. doi: 10.1016/j.bbe.2020.09.008

27. Li X, Li C, Rahaman MM, Sun H, Li X, Wu J, et al. A comprehensive review of computer-aided whole-slide image analysis: from datasets to feature extraction, segmentation, classification and detection approaches. Artif Intell Rev. (2022) 2022:1–70. doi: 10.1007/s10462-021-10121-0

28. Rahaman MM, Li C, Wu X, Yao Y, Hu Z, Jiang T, et al. A survey for cervical cytopathology image analysis using deep learning. IEEE Access. (2020) 8:61687–710. doi: 10.1109/ACCESS.2020.2983186

29. Mamunur Rahaman M, Li C, Yao Y, Kulwa F, Wu X, Li X, et al. DeepCervix: a deep learning-based framework for the classification of cervical cells using hybrid deep feature fusion techniques. Comput Biol Med. (2021) 136:104649. doi: 10.1016/j.compbiomed.2021.104649

30. Liu W, Li C, Rahaman MM, Jiang T, Sun H, Wu X, et al. Is the aspect ratio of cells important in deep learning? A robust comparison of deep learning methods for multi-scale cytopathology cell image classification: from convolutional neural networks to visual transformers. Comput Biol Med. (2021) 2021:105026. doi: 10.1016/j.compbiomed.2021.105026

31. Liu W, Li C, Xu N, Jiang T, Rahaman MM, Sun H, et al. CVM-Cervix: a hybrid cervical pap-smear image classification framework using CNN, visual transformer and multilayer perceptron. Pattern Recogn. (2022) 2022:108829. doi: 10.1016/j.patcog.2022.108829

32. Chen A, Li C, Zou S, Rahaman MM, Yao Y, Chen H, et al. SVIA dataset: a new dataset of microscopic videos and images for computer-aided sperm analysis. Biocybern Biomed Eng. (2022) 2022:10. doi: 10.1016/j.bbe.2021.12.010

33. Zou S, Li C, Sun H, Xu P, Zhang J, Ma P, et al. TOD-CNN: an effective convolutional neural network for tiny object detection in sperm videos. Comput Biol Med. (2022) 146:105543. doi: 10.1016/j.compbiomed.2022.105543

34. Pacal I, Karaboga D, Basturk A, Akay B, Nalbantoglu U. A comprehensive review of deep learning in colon cancer. Comput Biol Med. (2020) 126:104003. doi: 10.1016/j.compbiomed.2020.104003

35. Miranda E, Aryuni M, Irwansyah E. A survey of medical image classification techniques. In: 2016 International Conference on Information Management and Technology (ICIMTech). Bandung: IEEE (2016). p. 56–61.

36. Kotadiya H, Patel D. Review of medical image classification techniques. In: Third International Congress on Information and Communication Technology. Singapore: Springer (2019). p. 361–9. doi: 10.1007/978-981-13-1165-9_33

37. Trivizakis E, Ioannidis GS, Souglakos I, Karantanas AH, Tzardi M, Marias K. A neural pathomics framework for classifying colorectal cancer histopathology images based on wavelet multi-scale texture analysis. Sci Rep. (2021) 11:1–10. doi: 10.1038/s41598-021-94781-6

38. Oliveira SP, Neto PC, Fraga J, Montezuma D, Monteiro A, Monteiro J, et al. CAD systems for colorectal cancer from WSI are still not ready for clinical acceptance. Sci Rep. (2021) 11:1–15. doi: 10.1038/s41598-021-93746-z

39. Kausar T, Kausar A, Ashraf MA, Siddique MF, Wang M, Sajid M, et al. SA-GAN: stain acclimation generative adversarial network for histopathology image analysis. Appl Sci. (2021) 12:288. doi: 10.3390/app12010288

40. Wei J, Suriawinata A, Ren B, Liu X, Lisovsky M, Vaickus L, et al. Learn like a pathologist: curriculum learning by annotator agreement for histopathology image classification. In: Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision. Waikoloa, HI: IEEE (2021). p. 2473–83.

41. Bilal M, Tsang YW, Ali M, Graham S, Hero E, Wahab N, et al. AI based pre-screening of large bowel cancer via weakly supervised learning of colorectal biopsy histology images. medRxiv. (2022) doi: 10.1101/2022.02.28.22271565

42. De Leon MP, Di Gregorio C. Pathology of colorectal cancer. Digest Liver Dis. (2001) 33:372–88. doi: 10.1016/S1590-8658(01)80095-5

43. Cooper HS, Deppisch LM, Kahn EI, Lev R, Manley PN, Pascal RR, et al. Pathology of the malignant colorectal polyp. Hum Pathol. (1998) 29:15–26. doi: 10.1016/S0046-8177(98)90385-9

44. Ren W, Yu J, Zhang ZM, Song YK, Li YH, Wang L. Missed diagnosis of early gastric cancer or high-grade intraepithelial neoplasia. World J Gastroenterol. (2013) 19:2092. doi: 10.3748/wjg.v19.i13.2092

45. Jass JR, Sobin LH. Histological Typing of Intestinal Tumours. Berlin; Heidelberg: Springer Science & Business Media (2012). doi: 10.1007/978-3-642-83693-0_2

46. Spring KJ, Zhao ZZ, Karamatic R, Walsh MD, Whitehall VL, Pike T, et al. High prevalence of sessile serrated adenomas with BRAF mutations: a prospective study of patients undergoing colonoscopy. Gastroenterology. (2006) 131:1400–7. doi: 10.1053/j.gastro.2006.08.038

47. Li SC, Burgart L. Histopathology of serrated adenoma, its variants, and differentiation from conventional adenomatous and hyperplastic polyps. Arch Pathol Lab Med. (2007) 131:440–5. doi: 10.5858/2007-131-440-HOSAIV

48. Zou KH, Warfield SK, Bharatha A, Tempany CM, Kaus MR, Haker SJ, et al. Statistical validation of image segmentation quality based on a spatial overlap index: scientific reports. Acad Radiol. (2004) 11:178–89. doi: 10.1016/S1076-6332(03)00671-8

49. Jaccard P. Étude comparative de la distribution florale dans une portion des Alpes et des Jura. Bull Soc Vaudoise Sci Nat. (1901) 37:547–79.

50. Naz S, Majeed H, Irshad H. Image segmentation using fuzzy clustering: a survey. In: 2010 6th International Conference on Emerging Technologies (ICET). Islamabad: IEEE (2010). p. 181–6.

51. Zaitoun NM, Aqel MJ. Survey on image segmentation techniques. Procedia Comput Sci. (2015) 65:797–806. doi: 10.1016/j.procs.2015.09.027

52. Dhanachandra N, Manglem K, Chanu YJ. Image segmentation using K-means clustering algorithm and subtractive clustering algorithm. Procedia Comput Sci. (2015) 54:764–71. doi: 10.1016/j.procs.2015.06.090

53. Deng H, Clausi DA. Unsupervised image segmentation using a simple MRF model with a new implementation scheme. Pattern Recogn. (2004) 37:2323–35. doi: 10.1016/S0031-3203(04)00195-5

54. Huang C, Li X, Wen Y. An OTSU image segmentation based on fruitfly optimization algorithm. Alexandria Eng J. (2021) 60:183–8. doi: 10.1016/j.aej.2020.06.054

55. Khiyal MSH, Khan A, Bibi A. Modified watershed algorithm for segmentation of 2D images. Issues Informing Sci Inf Technol. (2009) 6:1077. doi: 10.28945/1077

56. Zhang H, Zhu Q, Guan XF. Probe into image segmentation based on Sobel operator and maximum entropy algorithm. In: 2012 International Conference on Computer Science and Service System. Nanjing: IEEE (2012). p. 238–41.

57. Badrinarayanan V, Kendall A, Cipolla R. Segnet: a deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans Pattern Anal Mach Intell. (2017) 39:2481–95. doi: 10.1109/TPAMI.2016.2644615

58. Ronneberger O, Fischer P, Brox T. U-net: convolutional networks for biomedical image segmentation. In: International Conference on Medical Image Computing and Computer-Assisted Intervention. Berlin; Heidelberg: Springer (2015). p. 234–41. doi: 10.1007/978-3-662-54345-0_3

Keywords: colorectal histopathology, enteroscope biopsy, image dataset, image segmentation, EBHI-Seg

Citation: Shi L, Li X, Hu W, Chen H, Chen J, Fan Z, Gao M, Jing Y, Lu G, Ma D, Ma Z, Meng Q, Tang D, Sun H, Grzegorzek M, Qi S, Teng Y and Li C (2023) EBHI-Seg: A novel enteroscope biopsy histopathological hematoxylin and eosin image dataset for image segmentation tasks. Front. Med. 10:1114673. doi: 10.3389/fmed.2023.1114673

Received: 02 December 2022; Accepted: 06 January 2023; Published: 24 January 2023.

Edited by: Jun Cheng, Shenzhen University, China

Reviewed by: Wenxu Zhang, City University of Hong Kong, Hong Kong SAR, China

Copyright © 2023 Shi, Li, Hu, Chen, Chen, Fan, Gao, Jing, Lu, Ma, Ma, Meng, Tang, Sun, Grzegorzek, Qi, Teng and Li. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Xiaoyan Li, lixiaoyan@cancerhosp-ln-cmu.com; Chen Li, lichen@bmie.neu.edu.cn

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
