SYSTEMATIC REVIEW article

Front. Med., 09 June 2022

Sec. Healthcare Professions Education

Volume 9 - 2022 | https://doi.org/10.3389/fmed.2022.871957

Medical schools are increasingly incorporating ultrasound into undergraduate medical education. The global integration of ultrasound into teaching curricula and physical examination necessitates a rigorous evaluation of the technology's benefit and the reporting of results. Course structures and assessment instruments vary, and there are as yet no national or international standards. This systematic literature review aims to provide an up-to-date overview of the various formats for assessing ultrasound skills. The key questions were framed in the PICO format (Population, Intervention, Comparator, and Outcome). A literature review using Embase, PubMed, Medline, Cochrane, and Google Scholar was performed up to May 2021 with keywords predetermined by the authors. Inclusion criteria were as follows: prospective as well as retrospective studies, observational or intervention studies, and studies outlining how medical students learn ultrasound. In this study, 101 articles from the literature search matched the inclusion criteria and were investigated. The most frequently used methods were objective structured clinical examinations (OSCE), multiple choice questions, and self-assessments via questionnaires; frequently, more than one assessment method was applied. Determining which assessment method or combination is ideal to measure ultrasound competency remains a difficult task for the future, as does the development of an equitable educational approach that reduces heterogeneity in curriculum design and ensures that students attain equivalent skills.

Ultrasound examinations and the images obtained are highly dependent on the physician's competence. Integrating ultrasound training offers opportunities to provide instruction in novel educational and clinical practice tools, and there is wide support for its incorporation into undergraduate medical education. However, despite growing interest in ultrasound education, course structure and implementation in undergraduate medical education programs differ between universities and countries, and no national standards or guidelines exist (1). A critical difficulty in ultrasound training is allocating time and funds for training programs in overburdened curricula. Early analyses demonstrated that, in small cohorts, medical students were able to develop the psychomotor and interpretative skills required for effective focused ultrasound. For example, first-year medical students were able to use portable ultrasound successfully after six 90-min sessions covering abdominal, cardiovascular, genitourinary, and musculoskeletal applications (2). Recently, the European Federation of Societies for Ultrasound in Medicine and Biology (EFSUMB) and the World Federation for Ultrasound in Medicine and Biology (WFUMB) have promoted undergraduate medical ultrasound education within European medical faculties and have developed measures to accomplish this objective (3, 4). The use of ultrasound in medical education depends on curricular requirements as well as the type of equipment available, the selected educational approach, and faculty skill sets. These determine the type and quality of training delivered to students. Selecting an appropriate assessment method is crucial given the need to closely align learning objectives, instructional methods, and exams. Reliable methods to assess physicians' skills in performing ultrasound are critical for training and for demonstrating the curriculum's quality (5).
The global integration of ultrasound into medical education makes regulated assessment and reporting of results essential (6) in order to estimate and compare the efficacy of different approaches to organizing ultrasound courses in medical education (7). Assessment serves various goals, including optimizing learning and providing direct feedback in order to protect patients from insufficiently trained doctors (8), and re-certifying individuals whose skills may have declined over time (9). However, no standardized method yet exists to evaluate ultrasound knowledge or, more importantly, examination performance (9–11).

It has been proposed that the practical examination should include assessment of accurate machine settings, probe handling, image acquisition as well as documentation (12).

To assess the various examination formats used for ultrasound in medical education, a systematic literature review of the MEDLINE, EMBASE, Cochrane, PubMed, and Google Scholar databases was conducted to identify published literature on ultrasound assessment in undergraduate or graduate medical training.

This systematic literature review was conducted according to the preferred reporting items for systematic reviews and meta-analyses (PRISMA) guidelines (13).

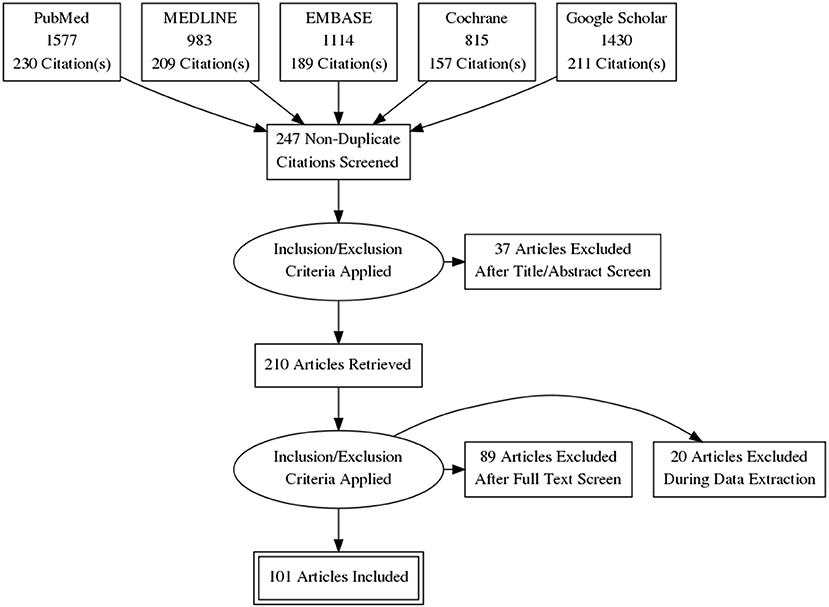

Relevant medical databases, including PubMed, MEDLINE, EMBASE, Cochrane, and Google Scholar, were searched for publications related to the assessment of ultrasound skills up to May 2021, using keywords predetermined by the authors (Figure 1). Titles and abstracts were screened for possible inclusion. In addition, the reference lists of identified articles were searched for further potentially relevant publications. Agreement regarding potential relevance was reached by consensus, and full-text copies of relevant papers were obtained. The key questions of this systematic literature review were framed in the PICOS format (Population, Intervention, Comparator, Outcome, and Study design) as detailed in Table 1.

Figure 1. This systematic literature review was conducted according to the preferred reporting items for systematic reviews and meta-analyses (PRISMA) guidelines. The figure displays the review process; in total, 101 of 247 articles were included.

Articles meeting the following criteria were suitable for inclusion:

• Prospective as well as retrospective studies, observational or intervention studies, and studies outlining different assessment methods.

• The following keywords were combined: (ultrasound or sonography) and (education, medical) or (medical students) and (assessment) or (exam).

Exclusion criteria were:

• No data about the form of assessment or descriptive data only

• Duplicate articles within or between data bases

• Reviews

• Abstracts only

• Newsletters

• Conference presentations

• Expert opinions

• Editorials

The different assessment formats were grouped into the following subclasses: theoretical knowledge (written or online examinations, multiple choice questions (MCQ), or essay questions); practical examination skills [e.g., US image acquisition (US image rating) and observed simulated clinical encounters such as the Objective Structured Clinical Examination (OSCE), the Objective Structured Assessment of Ultrasound Skills (OSAUS), and Direct Observation of Procedural Skills (DOPS)]; and self-assessment (e.g., surveys regarding satisfaction and competence).

Data extracted from the full-text articles are presented in a structured table (Table 2) to illustrate the various assessment formats in ultrasound education and to give selected examples of the outlined evaluation methods.

The following results section provides a general overview of the various assessment forms used in the evaluation of ultrasound skills.

In many cases, evaluation is based on self-assessment using surveys or questionnaires, sometimes in a pre-/post-course design. Self-assessment can help evaluate students' thinking and behavior while learning, and it can identify strategies that promote better understanding and skill improvement.

Further, students can rate their own competence, which might motivate them to improve their skills as they detect discrepancies between their current and desired performance. According to one study, self-evaluation can help students improve their critical thinking skills (52); furthermore, it might encourage reflection on personal performance (53).

Creating surveys is time- and cost-effective, and the evaluator does not necessarily have to be a specialist. Since self-assessment relies on subjective data, there is a risk of discrepancy between actual performance and the answers given in the survey. Students might rate not their actual competence but the effort they put into the course (54).

Self-assessment does not capture genuine knowledge or examination competence, as no objective data are generated. Additionally, validity may be reduced by response bias, which is prevalent in research involving participants' self-reports. For example, study results can be influenced by acquiescence bias, a form of response bias in which survey participants tend to agree with the questions asked.

The objective structured clinical examination (OSCE) was developed by Harden and colleagues in 1975 (55). Students rotate through multiple stations in a simulated clinical setting. Each station challenges the student to solve a specific task within a pre-specified time in order to test clinical skill performance. While carrying out the examination, students are observed by one or two assessors who rate their performance using checklists developed in advance.

OSCE has been widely accepted as an objective form of assessing clinical competences (56) and is used in various specializations and clinical tasks.

It allows the assessment of scanning technique and image interpretation in real time and combines the evaluation of technical skills and theoretical knowledge. Further, it offers the possibility of direct feedback on the student's performance and aims to prepare students for daily clinical practice. Each station has its own assessors, and students rotate so that all of them have the same time and tasks, which promotes fairness.

Within an OSCE, different forms of assessment can be combined. For example, at one station students could diagnose case-based US images, while the next task requires them to examine a patient and obtain their own images so that their probe handling and scanning technique can be observed.

In general, the checklist for ultrasound can include various aspects, which are defined prior to the assessment. Frequently included items are positioning of and interaction with the patient; positioning, handling, and orientation of the ultrasound probe; image adjustment; and interpretation. A different OSCE station is needed for each organ, as the protocols are individually tailored.

Therefore, the large number of stations, the various protocols for testing different organs, and the required trained assessors may exceed the available resources (36). OSCEs require staff, equipment, and clinical laboratories as well as long preparation. Furthermore, to avoid bias, two assessors per station would be preferable, resulting in an even greater investment of cost and time. OSCEs might also not reflect a realistic hospital setting (57).

As the time allotted to each station is predetermined, students may become stressed and be unable to complete the task to their own expectations. However, the time limit may also increase training motivation and encourage students to practice more in order to achieve satisfactory results (7).
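The checklist-based rating used at OSCE stations can be illustrated with a short sketch. It assumes a binary done/not-done rating per item and equal weighting, and the checklist entries are hypothetical phrasings of the items listed for ultrasound stations; real OSCE checklists may use graded scales or weights.

```python
from dataclasses import dataclass


@dataclass
class ChecklistItem:
    """One observable behavior on a hypothetical OSCE ultrasound checklist."""
    description: str
    points: int = 1  # assumption: each item is worth one point unless weighted


def station_score(items: list[ChecklistItem], completed: set[str]) -> float:
    """Return the percentage of available points earned at one station.

    An item is earned only if its description appears in `completed`
    (assumed binary done/not-done rating by the assessor).
    """
    available = sum(item.points for item in items)
    earned = sum(item.points for item in items if item.description in completed)
    return 100.0 * earned / available


# Hypothetical checklist built from the item types named in the text.
liver_station = [
    ChecklistItem("positions the patient"),
    ChecklistItem("interacts with the patient"),
    ChecklistItem("handles and orients the probe correctly"),
    ChecklistItem("adjusts the image"),
    ChecklistItem("interprets the findings"),
]

observed = {
    "positions the patient",
    "interacts with the patient",
    "handles and orients the probe correctly",
    "interprets the findings",
}
print(station_score(liver_station, observed))  # 80.0
```

Where two assessors rate the same station, their percentages could simply be averaged, although that aggregation rule is also an assumption.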

The concept of direct observation of procedural skills (DOPS) was developed by the Royal Medical College of England. DOPS is a workplace-based assessment method: the assessor observes the student performing a clinical procedure on a real patient and gives feedback afterwards.

Further, DOPS combines learning, supervision, rating and feedback (58) and can be both formative and summative (59).

Designing specific protocols for grading facilitates detailed feedback and fairness, since the assessor has the same basis on which to rate different students.

Workplace-based assessment is useful because it determines not only students' learning achievements but also their efforts to assume professional responsibilities (60). Scanning technique and image interpretation can be assessed in real time, so both theoretical knowledge and examination technique can be rated. DOPS has been used for ultrasound assessment, appears to be a reliable and valid method (36), and requires fewer assessment stations and resources than an OSCE format. However, a trained assessor is necessary to evaluate the student, and two independent raters would be preferable. Further, in comparison to the OSCE, DOPS is not widely established in ultrasound assessment, and more studies may be required to demonstrate its efficacy and validity.

The Objective Structured Assessment of Ultrasound Skills (OSAUS) was developed to achieve international consensus across various specialities on an evaluation tool for ultrasound education (9). Based on a Delphi consensus, seven key points were identified and included in the protocol.

These key points are: (1) Indication for the examination, (2) Applied knowledge of ultrasound equipment, (3) Image optimization, (4) Systematic examination, (5) Interpretation of images, (6) Documentation of examination, and (7) Medical decision making.
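Because OSAUS uses the same seven key points regardless of specialty, a single scoring routine can serve every setting. The sketch below sums per-item ratings, assuming each key point is rated on a 1 to 5 scale (an assumption made here; this review does not specify the rating scale), which gives a total between 7 and 35.

```python
# The seven OSAUS key points, as listed in the text.
OSAUS_ITEMS = (
    "Indication for the examination",
    "Applied knowledge of ultrasound equipment",
    "Image optimization",
    "Systematic examination",
    "Interpretation of images",
    "Documentation of examination",
    "Medical decision making",
)

MIN_RATING, MAX_RATING = 1, 5  # assumed per-item rating scale


def osaus_total(ratings: dict[str, int]) -> int:
    """Sum the ratings of all seven key points.

    Raises ValueError if a key point is unrated or a rating is out of range.
    """
    total = 0
    for item in OSAUS_ITEMS:
        if item not in ratings:
            raise ValueError(f"missing rating for: {item}")
        rating = ratings[item]
        if not MIN_RATING <= rating <= MAX_RATING:
            raise ValueError(f"rating {rating} out of range for: {item}")
        total += rating
    return total


# Hypothetical ratings for one student.
example = dict(zip(OSAUS_ITEMS, [4, 3, 4, 5, 3, 4, 2]))
print(osaus_total(example))  # 25
```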

OSAUS can be used to assess US competence in different clinical settings and disciplines. It is time-efficient: because the protocol is intended for universal use, there is no need to develop a new protocol for each specialization or organ. However, OSAUS measures general aspects and is not procedure-specific.

The student is asked to state the indication for the examination as well as how the examination could help in further decision-making and treatment. In this way, the student learns the importance of ultrasound and the practical applications of this imaging method.

Further studies are required to examine the value of the protocol to assess ultrasound competence in different fields and clinical settings.

Examinations in the form of multiple choice questions (MCQ) or written questions are often used in addition to direct observation of scanning technique (26, 27, 61).

MCQs are objective, and the questions can be included in any existing examination.

Further, no trained assessor is required for real-time assessment of skills.

Because no ultrasound examination is performed, scanning technique and image optimization cannot be evaluated. Whereas ultrasound is a technical skill, MCQs primarily test theoretical knowledge.

Image-based questions and case-based assessments offer further opportunities to assess ultrasound knowledge. Here, students have to detect pathological findings in US images and connect them to clinical cases and further clinical applications.

Short, case-based presentations can improve the knowledge retained 2 weeks after learning (37).

Case-based learning (CBL) is a teaching method used in multiple medical fields; it employs case vignettes to convey relevance and to connect theory to practice (62).

It is objective and does not require a specially trained assessor. Just like MCQs, case-based questions can be incorporated into an existing exam.

Since it is a cost- and time-effective tool, it is well suited to settings with limited resources.

However, this format cannot evaluate probe handling, scanning technique, or image adjustment, although assessing these would be necessary to further improve students' competence.

Several studies have shown that simulation-based ultrasound training can lead to better clinical performance, not only in terms of diagnostic accuracy; trained students also appear to need less supervision (63). Ultrasound simulators are important for training in anesthesia and gynecology and can be used to assess competence (41). They are used especially for more advanced exercises, e.g., the insertion of central venous catheters or practicing regional anesthesia (64), as they make it possible to practice complex tasks before performing them on patients. Simulators can offer standardized and valid measurement of skills that can be compared not only nationally but globally (41). However, the clinical setting is missing, and interaction with the patient cannot be evaluated. On the other hand, assessment in a clinical setting requires expenditure that not every university can afford.

When the number of simulators is limited, the assessment can be compromised if students memorize the correct position. Since anatomy differs between individuals, the learning effect when scanning patients might be different.

In this assessment format, students as well as experts were asked to perform the same examination, and the results were compared afterwards.

One study trained students to measure liver size using ultrasound. Experienced physicians were asked to measure liver span with standard physical examination methods. The results were then compared with the students' findings to evaluate whether the course was effective (44). Another study compared the accuracy of cardiovascular diagnoses made by medical students using a mobile ultrasound device with the findings of cardiologists using standard physical examination (43). This assessment form provides a clinical setting, and students get to perform examinations on real patients. However, neither theoretical knowledge nor scanning technique and probe handling can be assessed, since only the result is rated. In addition, students do not receive direct feedback on their performance.
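When students and experts measure the same quantity on the same patients, as in the liver-span comparison, the paired results can be summarized with two simple statistics. The sketch below is illustrative only: the values are hypothetical and not taken from the cited study, and the choice of mean signed and mean absolute difference is an assumption rather than the analysis the original authors used.

```python
from statistics import mean


def compare_measurements(student_cm: list[float], expert_cm: list[float]) -> dict[str, float]:
    """Summarize paired measurements taken on the same patients, in the same order.

    mean_difference: average of (student - expert), i.e. systematic bias.
    mean_absolute_difference: average size of the disagreement.
    """
    if len(student_cm) != len(expert_cm) or not student_cm:
        raise ValueError("need equally long, non-empty paired lists")
    diffs = [s - e for s, e in zip(student_cm, expert_cm)]
    return {
        "mean_difference": mean(diffs),
        "mean_absolute_difference": mean(abs(d) for d in diffs),
    }


# Hypothetical liver-span values (cm) for four patients.
students = [14.0, 15.5, 13.0, 16.0]
experts = [13.0, 16.0, 13.0, 15.0]
print(compare_measurements(students, experts))
# {'mean_difference': 0.375, 'mean_absolute_difference': 0.625}
```

Reporting both values matters because positive and negative differences cancel in the signed mean, so a small bias can hide large individual disagreements.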

In some studies, students' US images were rated according to predetermined criteria. For example, images from the pre-training and post-training scanning examinations were stored and then compared for improvement (46). In other studies, image quality was evaluated live, and students were scored according to their ability to visualize the organ (47, 48).
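In a pre-/post-training design, improvement is naturally computed per student and then averaged. The sketch below assumes each student's images receive a single numeric quality score at each time point (a hypothetical 0 to 5 scale) and simply ignores students who missed one of the two examinations; both choices are assumptions for illustration.

```python
from statistics import mean


def mean_improvement(pre: dict[str, float], post: dict[str, float]) -> float:
    """Average (post - pre) image score over students scored at both time points."""
    paired = pre.keys() & post.keys()  # students missing either exam are skipped
    if not paired:
        raise ValueError("no student has both a pre- and a post-training score")
    return mean(post[s] - pre[s] for s in paired)


# Hypothetical scores; student_d has no pre-training score and is excluded.
pre_scores = {"student_a": 2.0, "student_b": 3.0, "student_c": 1.0}
post_scores = {"student_a": 4.0, "student_b": 4.0, "student_c": 4.0, "student_d": 5.0}
print(mean_improvement(pre_scores, post_scores))  # 2.0
```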

There is as yet no globally accepted rating system for US images, even though the need for a standardized method to evaluate US image quality is well documented. The B-QUIET method, for example, represents such an approach to quantifying the sonographer component of ultrasound images (65) (Table 3).

Since assessment is a crucial part of medical education and plays a major role in the concept of constructive alignment, it should always be considered in curriculum development. It plays a particularly vital role for practical skills such as ultrasound and is essential to ensure efficacious use of this specific technology (66). As ultrasound is a hands-on skill, the examination should ideally not only test theoretical knowledge; it may also be necessary to assess the resulting ultrasound images or the scanning technique. Furthermore, assessment should not rely on subjective outcomes alone, so that study results can be used to compare different teaching methods and identify the best educational approach. By combining different assessment methods, some of the limitations inherent in every examination format can be compensated, learning objectives can be elaborated, and inadequate performance can be detected (67). As the numerous assessment forms presented here show, there is currently no internationally acknowledged assessment tool. Future studies should analyze and develop consensus on when and how ultrasound can be used effectively, as well as how ultrasound assessment should be incorporated into medical school education. Moreover, a common approach to equivalent education across universities and countries, leading to less variability in curriculum design, is needed. There have already been attempts to develop a single general assessment tool that could be used to evaluate ultrasound skills across diverse specializations and contexts (9, 65). Deciding which assessment method or combination is best suited to measure ultrasound competency remains a challenging task for future trials. In addition, standards have to be defined, as does the frequency at which students should be tested.

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Material preparation, data collection, and analysis were performed, and the first draft of the manuscript was written, by EH, FR, and VS. CD and VS helped with manuscript editing. All authors contributed to the study conception and design, commented on previous versions of the manuscript, and read and approved the final manuscript.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

1. Tarique U, Tang B, Singh M, Kulasegaram KM, Ailon J. Ultrasound curricula in undergraduate medical education: a scoping review. J Ultrasound Med. (2018) 37:69–82. doi: 10.1002/jum.14333

2. Rao S, van Holsbeeck L, Musial JL, Parker A, Bouffard JA, Bridge P. A pilot study of comprehensive ultrasound education at the Wayne State University School of Medicine: a pioneer year review. J Ultrasound Med. (2008) 27:745–9. doi: 10.7863/jum.2008.27.5.745

3. Cantisani V, Dietrich CF, Badea R, Dudea S, Prosch H, Cerezo E. EFSUMB statement on medical student education in ultrasound short version. Ultraschall in der Medizin. (2016) 37:100–2. doi: 10.1055/s-0035-1566959

4. Dietrich CF, Hoffmann B, Abramowicz J, Badea R, Braden B, Cantisani V. Medical student ultrasound education: a WFUMB position paper, part I. Ultrasound Med Biol. (2019) 45:271–81. doi: 10.1016/j.ultrasmedbio.2018.09.017

5. Sisley AC, Johnson SB, Erickson W, Fortune JB. Use of an Objective Structured Clinical Examination (OSCE) for the assessment of physician performance in the ultrasound evaluation of trauma. J Trauma. (1999) 47:627–31. doi: 10.1097/00005373-199910000-00004

6. Solomon SD, Saldana F. Point-of-care ultrasound in medical education — stop listening and look. N Engl J Med. (2014) 370:1081–3. doi: 10.1056/NEJMp1311944

7. Hofer M, Kamper L, Sadlo M, Sievers K, Heussen N. Evaluation of an OSCE assessment tool for abdominal ultrasound courses. Ultraschall in der Medizin. (2011) 32:184–90. doi: 10.1055/s-0029-1246049

8. Sultan SF, Iohom G, Saunders J, Shorten G. A clinical assessment tool for ultrasound-guided axillary brachial plexus block. Acta Anaesthesiol Scand. (2012) 56:616–23. doi: 10.1111/j.1399-6576.2012.02673.x

9. Tolsgaard MG, Todsen T, Sorensen JL, Ringsted C, Lorentzen T, Ottesen B. International multispecialty consensus on how to evaluate ultrasound competence: a Delphi consensus survey. PLoS ONE. (2013) 8:e57687. doi: 10.1371/journal.pone.0057687

10. Afonso N, Amponsah D, Yang J, Mendez J, Bridge P, Hays G. Adding new tools to the black bag–introduction of ultrasound into the physical diagnosis course. J Gen Intern Med. (2010) 25:1248–52. doi: 10.1007/s11606-010-1451-5

11. Bahner DP, Adkins EJ, Hughes D, Barrie M, Boulger CT, Royall NA. Integrated medical school ultrasound: development of an ultrasound vertical curriculum. Crit Ultrasound J. (2013) 5:6. doi: 10.1186/2036-7902-5-6

12. Akhtar S, Theodoro D, Gaspari R, Tayal V, Sierzenski P, Lamantia J. Resident training in emergency ultrasound: consensus recommendations from the 2008 Council of Emergency Medicine Residency Directors Conference. Acad Emerg Med. (2009) 16(Suppl.2):S32-6. doi: 10.1111/j.1553-2712.2009.00589.x

13. Liberati A, Altman DG, Tetzlaff J, Mulrow C, Gøtzsche PC, Ioannidis JPA. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate healthcare interventions: explanation and elaboration. BMJ. (2009) 339:b2700. doi: 10.1136/bmj.b2700

14. Bernard S, Richardson C, Hamann CR, Lee S, Dinh V. Head and neck ultrasound education: a multimodal educational approach in the predoctoral setting: a pilot study. J Ultrasound Med. (2015) 34:1437–43. doi: 10.7863/ultra.34.8.1437

15. Hammoudi N, Arangalage D, Boubrit L, Renaud MC, Isnard R, Collet J, et al. Ultrasound-based teaching of cardiac anatomy and physiology to undergraduate medical students. Arch Cardiovasc Dis. (2013) 106:487–91. doi: 10.1016/j.acvd.2013.06.002

16. Hoyer R, Means R, Robertson J, Rappaport D, Schmier C, Jones T. Ultrasound-guided procedures in medical education: a fresh look at cadavers. Intern Emerg Med. (2016) 11:431–6. doi: 10.1007/s11739-015-1292-7

17. Ivanusic J, Cowie B, Barrington M. Undergraduate student perceptions of the use of ultrasonography in the study of “living anatomy”. Anat Sci Educ. (2010) 3:318–22. doi: 10.1002/ase.180

18. Brown B, Adhikari S, Marx J, Lander L, Todd GL. Introduction of ultrasound into gross anatomy curriculum: perceptions of medical students. J Emerg Med. (2012) 43:1098–102. doi: 10.1016/j.jemermed.2012.01.041

19. Keddis MT, Cullen MW, Reed DA, Halvorsen AJ, McDonald FS, Takahashi PY, et al. Effectiveness of an ultrasound training module for internal medicine residents. BMC Med Educ. (2011) 11:75. doi: 10.1186/1472-6920-11-75

20. Rempell JS, Saldana F, DiSalvo D, Kumar N, Stone MB, Chan W, et al. Pilot point-of-care ultrasound curriculum at Harvard Medical School: early experience. West J Emerg Med. (2016) 17:734–40. doi: 10.5811/westjem.2016.8.31387

21. Swamy M, Searle RF. Anatomy teaching with portable ultrasound to medical students. BMC Med Educ. (2012) 12:99. doi: 10.1186/1472-6920-12-99

22. Teichgraber UKM, Meyer JMA, Poulsen Nautrup C, Berens von Rautenfeld D. Ultrasound anatomy: a practical teaching system in human gross anatomy. Med Educ. (1996) 30:296–8. doi: 10.1111/j.1365-2923.1996.tb00832.x

23. Moscova M, Bryce DA, Sindhusake D, Young N. Integration of medical imaging including ultrasound into a new clinical anatomy curriculum. Anat Sci Educ. (2015) 8:205–20. doi: 10.1002/ase.1481

24. Dinh V, Frederick J, Bartos R, Shankel TM, Werner L. Effects of ultrasound implementation on physical examination learning and teaching during the first year of medical education. J Ultrasound Med. (2015) 34:43–50. doi: 10.7863/ultra.34.1.43

25. Duanmu Y, Henwood PC, Takhar SS, Chan W, Rempell JS, Liteplo AS. Correlation of OSCE performance and point-of-care ultrasound scan numbers among a cohort of emergency medicine residents. Ultrasound J. (2019) 11:3. doi: 10.1186/s13089-019-0118-7

26. Knobe M, Münker R, Sellei RM, Holschen M, Mooij SC, Schmidt-Rohlfing B. Peer teaching: a randomised controlled trial using student-teachers to teach musculoskeletal ultrasound. Med Educ. (2010) 44:148–55. doi: 10.1111/j.1365-2923.2009.03557.x

27. Lozano-Lozano M, Galiano-Castillo N, Fernández-Lao C, Postigo-Martin P, Álvarez-Salvago F, Arroyo-Morales M. The ecofisio mobile app for assessment and diagnosis using ultrasound imaging for undergraduate health science students: multicenter randomized controlled trial. J Med Internet Res. (2020) 22:e16258. doi: 10.2196/16258

28. Hofer M, Schiebel B, Hartwig HG, Garten A, Mödder U. Innovative course concept for small group teaching in clinical methods. Results of a longitudinal, 2-cohort study in the setting of the medical didactic pilot project in Dusseldorf. Dtsch Med Wochenschr. (2000) 125:717–23. doi: 10.1055/s-2007-1024468

29. Gogalniceanu P, Sheena Y, Kashef E, Purkayastha S, Darzi A, Paraskeva P. Is basic emergency ultrasound training feasible as part of standard undergraduate medical education? J Surg Educ. (2010) 67:152–6. doi: 10.1016/j.jsurg.2010.02.008

30. Knobe M, Carow JB, Ruesseler M, Leu BM, Simon M, Beckers SK. Arthroscopy or Ultrasound in Undergraduate Anatomy Education: A Randomized Cross-Over Controlled Trial. (2012). Available online at: https://bmcmededuc.biomedcentral.com/track/pdf/10.1186/1472-6920-12-85 (accessed April 16, 2020).

31. Bornemann P. Assessment of a novel point-of-care ultrasound curriculum's effect on competency measures in family medicine graduate medical education. J Ultrasound Med. (2017) 36:1205–11. doi: 10.7863/ultra.16.05002

32. Henwood PC, Mackenzie DC, Rempell JS, Douglass E, Dukundane D, Liteplo AS. Intensive point-of-care ultrasound training with long-term follow-up in a cohort of Rwandan physicians. Trop Med Int Health. (2016) 21:1531–8. doi: 10.1111/tmi.12780

33. Chuan A, Thillainathan S, Graham PL, Jolly B, Wong DM, Smith N. Reliability of the direct observation of procedural skills assessment tool for ultrasound-guided regional anaesthesia. Anaesth Intensive Care. (2016) 44:201–9. doi: 10.1177/0310057X1604400206

34. Nilsson PM, Todsen T, Subhi Y, Graumann O, Nolsøe CP, Tolsgaard MG. Cost-effectiveness of app-based self-study in the "Extended Focused Assessment with Sonography for Trauma" (eFAST): a randomized study. Ultraschall in der Medizin. (2017) 38:642–7. doi: 10.1055/s-0043-119354

35. Royer DF, Kessler R, Stowell JR. Evaluation of an innovative hands-on anatomy-centered ultrasound curriculum to supplement graduate gross anatomy education. Anat Sci Educ. (2017) 10:348–62. doi: 10.1002/ase.1670

36. Heinzow HS, Friederichs H, Lenz P, Schmedt A, Becker JC, Hengst K. Teaching Ultrasound in a Curricular Course According to Certified EFSUMB Standards During Undergraduate Medical Education: A Prospective Study. (2020). Available online at: https://theultrasoundjournal.springeropen.com/track/pdf/10.1007/s13089-011-0052-9 (accessed April 16, 2020).

37. Hempel D, Stenger T, Campo Dell' Orto M, Stenger D, Seibel A, Röhrig S, et al. Analysis of trainees' memory after classroom presentations of didactical ultrasound courses. Crit Ultrasound J. (2014) 6:10. doi: 10.1186/2036-7902-6-10

38. Fox JC, Cusick S, Scruggs W, Henson TW, Anderson CL, Barajas G. Educational assessment of medical student rotation in emergency ultrasound. West J Emerg Med. (2007) 8:84–7.

39. Noble VE, Nelson BP, Sutingco AN, Marill KA, Cranmer H. Assessment of knowledge retention and the value of proctored ultrasound exams after the introduction of an emergency ultrasound curriculum. BMC Med Educ. (2007) 7:40. doi: 10.1186/1472-6920-7-40

40. Syperda VA, Trivedi PN, Melo LC, Freeman ML, Ledermann EJ, Smith TM. Ultrasonography in preclinical education: a pilot study. J Am Osteopath Assoc. (2008) 108:601–5. doi: 10.7556/jaoa.2008.108.10.601

41. Madsen ME, Konge L, Nørgaard LN, Tabor A, Ringsted C, Klemmensen AK. Assessment of performance measures and learning curves for use of a virtual-reality ultrasound simulator in transvaginal ultrasound examination. Ultrasound Obstet Gynecol. (2014) 44:693–9. doi: 10.1002/uog.13400

42. Yoo MC, Villegas L, Jones DB. Basic ultrasound curriculum for medical students: validation of content and phantom. J Laparoendosc Adv Surg Tech A. (2004) 14:374–9. doi: 10.1089/lap.2004.14.374

43. Kobal SL, Trento L, Baharami S, Tolstrup K, Naqvi TZ, Cercek B. Comparison of effectiveness of hand-carried ultrasound to bedside cardiovascular physical examination. Am J Cardiol. (2005) 96:1002–6. doi: 10.1016/j.amjcard.2005.05.060

44. Mouratev G, Howe D, Hoppmann R, Poston MB, Reid R, Varnadoe J. Teaching medical students ultrasound to measure liver size: comparison with experienced clinicians using physical examination alone. Teach Learn Med. (2013) 25:84–8. doi: 10.1080/10401334.2012.741535

45. Angtuaco TL, Hopkins RH, DuBose TJ, Bursac Z, Angtuaco MJ, Ferris EJ. Sonographic physical diagnosis 101: teaching senior medical students basic ultrasound scanning skills using a compact ultrasound system. Ultrasound Q. (2007) 23:157–60. doi: 10.1097/01.ruq.0000263847.00185.28

46. Arger PH, Schultz SM, Sehgal CM, Cary TW, Aronchick J. Teaching medical students diagnostic sonography. J Ultrasound Med. (2005) 24:1365–9. doi: 10.7863/jum.2005.24.10.1365

47. Mullen A, Kim B, Puglisi J, Mason NL. An economical strategy for early medical education in ultrasound. BMC Med Educ. (2018) 18:169. doi: 10.1186/s12909-018-1275-2

48. Tshibwabwa ET, Groves HM. Integration of ultrasound in the education programme in anatomy. Med Educ. (2005) 39:1148. doi: 10.1111/j.1365-2929.2005.02288.x

49. Wittich CM, Montgomery SC, Neben MA, et al. Teaching cardiovascular anatomy to medical students by using a handheld ultrasound device. JAMA. (2002) 288:1062–3. doi: 10.1001/jama.288.9.1062

50. Fernández-Frackelton M, Peterson M, Lewis RJ, Pérez JE, Coates WC. A bedside ultrasound curriculum for medical students: prospective evaluation of skill acquisition. Teach Learn Med. (2007) 19:14–9. doi: 10.1080/10401330709336618

51. Shapiro RS, Ko RP, Jacobson S. A pilot project to study the use of ultrasonography for teaching physical examination to medical students. Comput Biol Med. (2002) 32:403–9. doi: 10.1016/S0010-4825(02)00033-1

52. Austin Z, Gregory P, Chiu S. Use of reflection-in-action and self-assessment to promote critical thinking among pharmacy students. Am J Pharm Educ. (2008) 72:48. doi: 10.5688/aj720348

53. Sullivan K, Hall C. Introducing students to self-assessment. Assess Eval High Educ. (1997) 22:289–305. doi: 10.1080/0260293970220303

54. Evans AW, McKenna C, Oliver M. Self-assessment in medical practice. J R Soc Med. (2002) 95:511–3. doi: 10.1177/014107680209501013

55. Harden RM, Stevenson M, Downie WW, Wilson GM. Assessment of clinical competence using objective structured examination. Br Med J. (1975) 1:447–51. doi: 10.1136/bmj.1.5955.447

57. Touchie C, Humphrey-Murto S, Varpio L. Teaching and assessing procedural skills: a qualitative study. BMC Med Educ. (2013) 13:69. doi: 10.1186/1472-6920-13-69

58. Norcini JJ, McKinley DW. Assessment methods in medical education. Teach Teacher Educ. (2007) 23:239–50. doi: 10.1016/j.tate.2006.12.021

59. Wragg A, Wade W, Fuller G, Cowan G, Mills P. Assessing the performance of specialist registrars. Clin Med. (2003) 3:131–4. doi: 10.7861/clinmedicine.3-2-131

60. Farajpour A, Amini M, Pishbin E, Mostafavian Z, Akbari Farmad S. Using modified direct observation of procedural skills (DOPS) to assess undergraduate medical students. J Adv Med Educ Prof. (2018) 6:130–6. doi: 10.30476/JAMP.2018.41025

61. Wright SA, Bell AL. Enhancement of undergraduate rheumatology teaching through the use of musculoskeletal ultrasound. Rheumatology. (2008) 47:1564–6. doi: 10.1093/rheumatology/ken324

62. McLean SF. Case-based learning and its application in medical and health-care fields: a review of worldwide literature. J Med Educ Curricular Dev. (2016) 3:S20377. doi: 10.4137/JMECD.S20377

63. Tolsgaard MG, Chalouhi GE. Use of ultrasound simulators for assessment of trainee competence: trendy toys or valuable instruments? Ultrasound Obstet Gynecol. (2018) 52:424–6. doi: 10.1002/uog.19071

64. Amini R, Stolz LA, Breshears E, Patanwala AE, Stea N, Hawbaker N. Assessment of ultrasound-guided procedures in preclinical years. Intern Emerg Med. (2017) 12:1025–31. doi: 10.1007/s11739-016-1525-4

65. Bahner DP, Adkins EJ, Nagel R, Way D, Werman HA, Royall NA. Brightness mode quality ultrasound imaging examination technique (B-QUIET): quantifying quality in ultrasound imaging. J Ultrasound Med. (2011) 30:1649–55. doi: 10.7863/jum.2011.30.12.1649

66. Moore CL, Copel JA. Point-of-care ultrasonography. N Engl J Med. (2011) 364:749–57. doi: 10.1056/NEJMra0909487

Keywords: medical education, assessment, ultrasound, undergraduate education, practical skills

Citation: Höhne E, Recker F, Dietrich CF and Schäfer VS (2022) Assessment Methods in Medical Ultrasound Education. Front. Med. 9:871957. doi: 10.3389/fmed.2022.871957

Received: 23 February 2022; Accepted: 11 May 2022;

Published: 09 June 2022.

Edited by:

Ahsan Sethi, Qatar University, Qatar

Reviewed by:

Majed Wadi, Qassim University, Saudi Arabia

Copyright © 2022 Höhne, Recker, Dietrich and Schäfer. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Florian Recker, florian.recker@ukbonn.de

†These authors have contributed equally to this work

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
