METHODS article

Front. Med., 06 July 2022

Sec. Translational Medicine

Volume 9 - 2022 | https://doi.org/10.3389/fmed.2022.795957

This article is part of the Research Topic Managing Healthcare Transformation Towards P5 Medicine.

Health care is shifting toward becoming proactive, in line with the concept of P5 medicine: a predictive, personalized, preventive, participatory and precision discipline. This patient-centered care relies heavily on the latest artificial intelligence (AI) and robotics technologies, which support diagnosis, decision making and treatment. In this paper, we present the role of AI and robotic systems in this evolution, including example use cases. We categorize systems along multiple dimensions, such as the type of system, the degree of autonomy, the care setting where the systems are applied, and the application area. These technologies have already achieved notable results in predicting sepsis or cardiovascular risk, in monitoring vital parameters in intensive care units, and in the form of home care robots. However, although much research is conducted on AI and robotics in health care, adoption in real-world care settings remains limited. To remove adoption barriers, we need to address issues such as safety, security, privacy and ethical principles; detect and eliminate bias that could result in harmful or unfair clinical decisions; and build trust in and societal acceptance of AI.

“Artificial intelligence (AI) is the term used to describe the use of computers and technology to simulate intelligent behavior and critical thinking comparable to a human being” (1). Machine learning enables AI applications to improve their algorithms automatically (i.e., without being explicitly programmed to do so) through experience gained from cognitive inputs or from data. AI solutions provide data and knowledge to be used by humans or other technologies. The possibility of machines behaving in such a way was originally raised by Alan Turing and further explored starting in the 1950s. Medical expert systems such as MYCIN, designed in the 1970s for medical consultations (2), were internationally recognized as a revolution supporting the development of AI in medicine. However, their clinical acceptance remained low. Similar disappointments across multiple domains led to the so-called “AI winter,” in part because rule-based systems do not allow the discovery of unknown relationships and in part because of the limited computing power available at the time. Since then, computational power has increased enormously.

Over the centuries, we have improved our knowledge of the structure and function of the human body, starting with organs and moving on to tissues, cells, sub-cellular components, etc. This knowledge now extends to the molecular and sub-molecular level, including protein-coding genes, DNA sequences and non-coding RNA, and their effects and behavior in the human body. This has resulted in a continuously improving understanding of the biology of diseases and disease progression (3). Nowadays, biomedical research and clinical practice are struggling with the size and complexity of the data produced by sequencing technologies, and with how to derive new diagnoses and treatments from it. Experimental results, often hidden in clinical data warehouses, must be aggregated, analyzed, and exploited to derive new, detailed and data-driven knowledge of diseases and to enable better decision making.

New AI-based tools have been developed to predict disease recurrence and progression (4) or response to treatment, and robotics, often categorized as a branch of AI, plays an increasing role in patient care. In a medical context, AI means, for example, imitating the decision-making processes of health professionals (1). Whereas AI generates data, robotics provides tangible outcomes or performs physical tasks. AI and robotics use knowledge and patient data for tasks such as diagnosis; planning of surgeries; monitoring of patients' physical and mental wellness; and basic physical interventions to improve patient independence during physical or mental deterioration. We review concrete realizations later in this paper.

These advances are causing a revolution in health care, enabling it to become proactive, as called for by the concept of P5 medicine: a predictive, personalized, preventive, participatory and precision discipline (5). AI can help interpret personal health information together with other data to stratify diseases and to predict, stop or treat their progression.

In this paper, we describe the impact of AI and robotics on P5 medicine and introduce example use cases. We then discuss the challenges these developments face. We conclude with recommendations to help AI and robotics transform health ecosystems. We refer extensively to the literature for details on the underlying methods and technologies. Note that we concentrate on applications in the care setting and do not address in detail systems used for the education of professionals, logistics, or facility management, even though there are clearly important applications of AI in these areas.

We can classify the landscape of AI and robotic systems in health care along different dimensions (Figure 1): use, task and technology. Within the “use” dimension, we can further distinguish the application area and the care setting. The “task” dimension is characterized by the system's degree of autonomy. Finally, for the “technology” dimension, we consider the degree of intrusion into a patient and the type of system. Clearly, this is a simplification and aggregation; for example, AI algorithms as such are not located inside a patient.

We can distinguish two types of such systems: virtual and physical (6).

• Virtual systems (relating to AI systems) range from applications such as electronic health record (EHR) systems, or text and data mining applications, to systems supporting treatment decisions.

• Physical systems relate to robotics and include robots that assist in performing surgeries, smart prostheses for people with disabilities, and physical aids for elderly care.

There can also be hybrid systems combining AI with robotics, such as social robots that interact with users or microrobots that deliver drugs inside the body.
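The classification dimensions used in this section could be recorded per system as a small data structure, for example when compiling a mapping such as Table 2. The following Python sketch is illustrative only: the enum and field names are our own, the assistive/autonomous split simplifies what is really a continuum, and dimensions the text does not determine are left empty rather than guessed.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class SystemType(Enum):
    VIRTUAL = "virtual"    # AI software, e.g., EHR text mining, decision support
    PHYSICAL = "physical"  # robotics, e.g., surgical robots, smart prostheses
    HYBRID = "hybrid"      # AI combined with robotics, e.g., social robots


class Autonomy(Enum):
    # Simplification (our assumption): two labels for an assistive-to-autonomous axis.
    ASSISTIVE = "assistive"
    AUTONOMOUS = "autonomous"


class Intrusion(Enum):
    INSIDE_BODY = "inside the body"
    ON_BODY = "on the body"
    OUTSIDE_BODY = "outside the body"


@dataclass(frozen=True)
class HealthSystemProfile:
    """One system described along the use, task and technology dimensions.

    Fields that are not known are left as None instead of being guessed.
    """
    name: str
    system_type: SystemType                  # technology dimension
    intrusion: Optional[Intrusion] = None    # technology dimension
    autonomy: Optional[Autonomy] = None      # task dimension
    care_setting: Optional[str] = None       # use dimension
    application_area: Optional[str] = None   # use dimension


# Drug-delivering microrobots, classified only as far as this paper states.
microrobot = HealthSystemProfile(
    name="drug-delivery microrobot",
    system_type=SystemType.HYBRID,
    intrusion=Intrusion.INSIDE_BODY,
    application_area="targeted therapy",
)
print(microrobot.system_type.value, "/", microrobot.intrusion.value)
```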

All these systems rely on two enabling technologies: data and algorithms (see Figure 2). For example, a robotic system may collect data from different sensors (visual, physical, auditory or chemical). The robot's processor manipulates, analyzes, and interprets the data. Actuators enable the robot to perform different functions, including visual, physical, auditory or chemical responses.

Two kinds of data are required: data that captures the knowledge and experience gained by the system during diagnosis and treatment, usually through machine learning; and individual patient data, which AI can assess and analyze to derive recommendations. Data can be obtained from physical sensors (wearable, non-wearable), from biosensors (7), or from other information systems such as an EHR application. From the collected data, digital biomarkers can be derived that AI can analyze and interpret (8).
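As a concrete illustration of a digital biomarker, the sketch below computes RMSSD (root mean square of successive differences), a standard heart-rate-variability measure, from beat-to-beat (RR) intervals such as a wearable might report. The choice of biomarker and the sample values are our assumptions and are not taken from the cited work.

```python
import math


def rmssd(rr_intervals_ms):
    """Root mean square of successive differences between RR intervals (ms)."""
    if len(rr_intervals_ms) < 2:
        raise ValueError("need at least two RR intervals")
    # Differences between consecutive heartbeat intervals.
    diffs = [b - a for a, b in zip(rr_intervals_ms, rr_intervals_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


# Illustrative RR intervals (ms), e.g., from a chest strap or smartwatch.
rr = [800, 810, 790, 805, 795]
print(round(rmssd(rr), 2))  # 14.36
```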

AI-specific algorithms and methods allow data analysis, reasoning, and prediction. AI consists of a growing number of subfields such as machine learning (supervised, unsupervised, and reinforcement learning), machine vision, natural language processing (NLP) and more. NLP enables computers to process and understand natural language (written or spoken). Machine vision or computer vision extracts information from images. An authoritative taxonomy of AI does not exist yet, although several standards bodies have started addressing this task.

AI methodologies can be divided into knowledge-based AI and data-driven AI (9).

• Knowledge-based AI models human knowledge by asking experts for relevant concepts and knowledge they use to solve problems. This knowledge is then formalized in software (9). This is the form of AI closest to the original expert systems of the 1970s.

• Data-driven AI starts from large amounts of data, which are typically processed by machine learning methods to learn patterns that can be used for prediction. Virtual or augmented reality and other types of visualizations can be used to present and explore data, which helps in understanding relations among data items that are relevant for diagnosis (10).
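The difference between the two approaches can be shown on a single variable. In the hypothetical Python sketch below, the knowledge-based rule hard-codes a threshold supplied by an expert. The data-driven function learns a threshold from labeled examples using a one-feature decision stump, the simplest learned classifier. The 38.0 °C cut-off and the labeled data are illustrative assumptions.

```python
def knowledge_based_flag(temp_c):
    """Knowledge-based: an expert-supplied threshold, hard-coded in software."""
    return temp_c >= 38.0


def learn_threshold(values, labels):
    """Data-driven: pick the cut-off that misclassifies the fewest labeled examples.

    Every observed value is tried as a candidate threshold (a "decision stump").
    """
    best_threshold, best_errors = None, len(values) + 1
    for candidate in sorted(set(values)):
        errors = sum((value >= candidate) != label for value, label in zip(values, labels))
        if errors < best_errors:
            best_threshold, best_errors = candidate, errors
    return best_threshold


# Hypothetical labeled data: body temperature (°C) and whether a condition was confirmed.
temperatures = [36.8, 37.2, 37.9, 38.1, 38.4, 39.0]
confirmed = [False, False, True, True, True, True]

threshold = learn_threshold(temperatures, confirmed)
print(threshold)                                       # 37.9
print(knowledge_based_flag(37.9), 37.9 >= threshold)   # False True
```

On this invented data the learned rule flags a case that the expert rule misses. This shows how data-driven AI can surface relationships that a fixed rule does not encode, but only as reliably as the labels allow.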

To more fully exploit the knowledge captured in computerized models, the concept of digital twin has gained traction in the medical field (11). The terms “digital patient model,” “virtual physiological human,” or “digital phenotype” designate the same idea. A digital twin is a virtual model fed by information coming from wearables (12), omics, and patient records. Simulation, AI and robotics can then be applied to the digital twin to learn about the disease progression, to understand drug responses, or to plan surgery, before intervening on the actual patient or organ, effecting a significant digital transformation of the health ecosystems. Virtual organs (e.g., a digital heart) are an application of this concept (13). A digital twin can be customized to an individual patient, thus improving diagnosis.
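A real digital twin combines many models and data sources. The core idea of trying an intervention on the model before the patient can still be shown with a textbook one-compartment pharmacokinetic model. In this model, drug concentration after an intravenous dose decays as C(t) = (D / V) * exp(-k * t), with k = ln(2) / half-life. The patient parameters below are hypothetical placeholders for values that a patient-specific twin would estimate from data.

```python
import math


def concentration(dose_mg, volume_l, half_life_h, t_h):
    """One-compartment model after an IV bolus: C(t) = (dose / V) * exp(-k * t)."""
    k = math.log(2) / half_life_h  # elimination rate constant (1/h)
    return (dose_mg / volume_l) * math.exp(-k * t_h)


def hours_above(threshold_mg_l, dose_mg, volume_l, half_life_h):
    """Time (h) until the concentration falls below a threshold, solved analytically."""
    c0 = dose_mg / volume_l
    if c0 <= threshold_mg_l:
        return 0.0
    k = math.log(2) / half_life_h
    return math.log(c0 / threshold_mg_l) / k


# Hypothetical parameters that a patient-specific twin would estimate from data.
twin = {"volume_l": 40.0, "half_life_h": 6.0}
for dose in (400, 800):  # candidate doses tried on the model, not on the patient
    print(dose, "mg:", round(hours_above(2.5, dose, **twin), 1), "h above 2.5 mg/L")
```

In this model, doubling the dose extends the time above the threshold by exactly one half-life (from 12 h to 18 h here). A twin could show this kind of trade-off before a dose is given.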

Regardless of the specific kind of AI, there are some requirements that all AI and robotic systems must meet. They must be:

• Adaptive. Transformed health ecosystems evolve rapidly, especially since according to P5 principles they adapt treatment and diagnosis to individual patients.

• Context-aware. They must infer the current activity state of the user and the characteristics of the environment in order to manage information content and distribution.

• Interoperable. A system must be able to exchange data and knowledge with other systems (14). This requires common semantics between systems, which is the purpose of standard terminologies, taxonomies or ontologies such as SNOMED CT. NLP can also help with interoperability (15).
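The role of a shared terminology can be sketched as a mapping from each institution's local codes to common concept identifiers. After translation, records from different sources can be compared directly. In the hypothetical example below, both the local codes and the "CONCEPT:..." identifiers are placeholders; a real system would map to actual SNOMED CT concept IDs.

```python
from typing import Dict, List

# Hypothetical local code systems of two institutions, each mapped to a shared
# concept identifier. The "CONCEPT:..." values are placeholders for codes from a
# standard terminology such as SNOMED CT; they are not real concept IDs.
LOCAL_TO_SHARED: Dict[str, Dict[str, str]] = {
    "hospital_a": {"DM2": "CONCEPT:type_2_diabetes", "HTN": "CONCEPT:hypertension"},
    "hospital_b": {"DIAB-T2": "CONCEPT:type_2_diabetes", "HT-ESS": "CONCEPT:hypertension"},
}


def to_shared(source: str, local_codes: List[str]) -> List[str]:
    """Translate a record's local codes into shared concepts.

    Unmapped codes raise an error instead of being silently dropped, since
    losing a diagnosis during exchange is worse than rejecting the record.
    """
    mapping = LOCAL_TO_SHARED[source]
    missing = [code for code in local_codes if code not in mapping]
    if missing:
        raise KeyError(f"no shared concept for {missing} from {source}")
    return sorted(mapping[code] for code in local_codes)


record_a = to_shared("hospital_a", ["HTN", "DM2"])
record_b = to_shared("hospital_b", ["DIAB-T2", "HT-ESS"])
print(record_a == record_b)  # True: both records now use the same semantics
```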

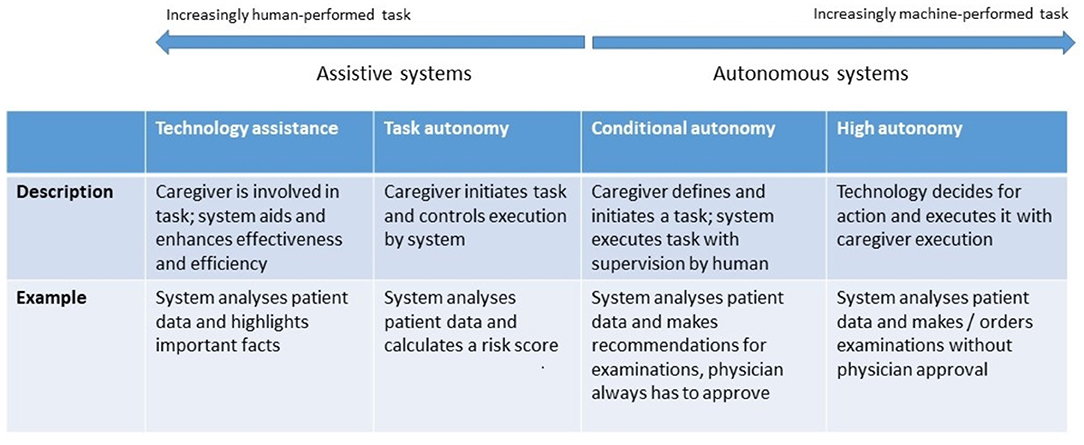

AI and robotic systems can be grouped along an assistive-to-autonomous axis (Figure 3). Assistive systems augment the capabilities of their user by aggregating and analyzing data, performing concrete tasks under human supervision [for example, a semiautonomous ultrasound scanner (17)], or learning how to perform tasks from a health professional's demonstrations. For example, a robot may learn from a physiotherapist how to guide a patient through repetitive rehabilitation exercises (18).

Figure 3. Levels of autonomy of robotic and AI systems. [following models proposed by (16)].

Autonomous systems respond to real-world conditions, make decisions, and perform actions with minimal or no interaction with a human (19). They can be encountered in a clinical setting (autonomous implanted devices), in support functions that provide assistance1 (carrying things around in a facility), or in automating non-physical work, such as a digital receptionist handling patient check-in (20).

The diversity of users of AI and robotics in health care implies an equally broad range of application areas described below.

Robotics-assisted surgery, “the use of a mechanical device to assist surgery in place of a human-being or in a human-like way” (21), is rapidly impacting many common general surgical procedures, especially minimally invasive surgery. Three types of robotic systems are used in surgery:

• Active systems undertake pre-programmed tasks while remaining under the control of the operating surgeon;

• Semi-active systems allow a surgeon to complement the system's pre-programmed component;

• Master–slave systems lack any autonomous elements; they entirely depend on a surgeon's activity. In laparoscopic surgery or in teleoperation, the surgeon's hand movements are transmitted to surgical instruments, which reproduce them.

Surgeons can also be supported by navigation systems, which localize positions in space and help answer a surgeon's anatomical orientation questions. Real-time tracking of markers, realized in modern surgical navigation systems using a stereoscopic camera emitting infrared light, can determine the 3D position of prominent structures (22).
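The localization step can be illustrated with the textbook triangulation relation for a rectified stereo camera pair. A marker's depth is Z = f * B / d, where f is the focal length in pixels, B is the distance between the two cameras, and d is the disparity (the horizontal pixel offset of the marker between the two images). The sketch assumes an ideal, calibrated, distortion-free pinhole setup with hypothetical parameter values. Commercial navigation systems add calibration, filtering and multi-marker pose estimation.

```python
def triangulate(x_left, x_right, y, focal_px, baseline_mm, cx, cy):
    """3D marker position (mm) in the left camera's frame from a rectified stereo pair.

    x_left, x_right, y: marker image coordinates (pixels); cx, cy: principal point.
    """
    disparity = x_left - x_right
    if disparity <= 0:
        raise ValueError("marker must have positive disparity")
    z = focal_px * baseline_mm / disparity  # depth: Z = f * B / d
    x = (x_left - cx) * z / focal_px        # back-project through the pinhole model
    y_mm = (y - cy) * z / focal_px
    return x, y_mm, z


# Hypothetical camera parameters and marker detections.
print(triangulate(x_left=740, x_right=640, y=530, focal_px=1000, baseline_mm=200, cx=640, cy=480))
# (200.0, 100.0, 2000.0)
```

Because depth is inversely proportional to disparity, a one-pixel detection error matters more for distant markers. This is one reason such systems rely on high-contrast infrared markers.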

Various AI and robotic systems support rehabilitation tasks such as monitoring, risk prevention, or treatment (23). For example, fall detection systems (24) use smart sensors placed within an environment or in a wearable device, and automatically alert medical staff, emergency services, or family members if assistance is required. AI allows these systems to learn the normal behavioral patterns and characteristics of individuals over time. Moreover, systems can assess environmental risks, such as household lights that are off or proximity to fall hazards (e.g., stairwells). Physical systems can provide physical assistance (e.g., lifting items, opening doors), monitoring, and therapeutic social functions (25). Robotic rehabilitation applications can provide both physical and cognitive support to individuals by monitoring physiological progress and promoting social interaction. Robots can help patients recover movement after a stroke using exoskeletons (26), or recover or supplement lost function (27). Beyond directly supporting patients, robots can also assist caregivers. An overview of home-based rehabilitation robots is given by Akbari et al. (28). Virtual reality and augmented reality allow patients to become immersed in, and interact with, a 3D model of a real or imaginary world, allowing them to practice specific tasks (29). This has been used for motor function training, recovery after a stroke (30) and pain management (31).
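Learning "normal behavioral patterns" can be as simple as comparing each new observation with a person's own history rather than with a population norm. The sketch below flags values more than k standard deviations from the personal mean. The monitored quantity, the sample data and k = 3 are hypothetical, and deployed systems use richer models.

```python
import statistics


def is_unusual(history, today, k=3.0):
    """Flag today's value if it lies more than k standard deviations from this
    person's own history, i.e., a learned personal baseline rather than a
    population norm."""
    if len(history) < 2:
        return False  # too little data to estimate a baseline yet
    mean = statistics.mean(history)
    sd = statistics.stdev(history)
    if sd == 0:
        return today != mean  # perfectly regular history: any change is unusual
    return abs(today - mean) / sd > k


# Hypothetical nightly counts of bathroom visits recorded by ambient sensors.
history = [2, 1, 2, 3, 2, 2, 1]
print(is_unusual(history, 2), is_unusual(history, 7))  # False True
```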

Telemedicine systems support, among other things, triage, diagnosis, non-surgical and surgical treatment, consultation, monitoring, and the provision of specialty care (32).

• Medical triage assesses current symptoms, signs, and test results to determine the severity of a patient's condition and the treatment priority. An increasing number of mobile health applications based on AI are used for diagnosis or treatment optimization (33).

• Smart mobile and wearable devices can be integrated into “smart homes” using Internet-of-Things (IoT) technologies. They can collect patient and contextual data, assist individuals with everyday functioning, monitor progress toward individualized care and rehabilitation goals, issue reminders, and alert care providers if assistance is required.

• Telemedicine for specialty care includes additional tools to track mood and behavior (e.g., pain diaries). AI-based chatbots can mitigate social isolation in home care environments2 by offering users companionship and emotional support, and by noting if they are not sleeping well, are in pain or are depressed, which could indicate a more complex mental condition (34).

• Beyond this, there are physical systems that can deliver specialty care: the robot DE NIRO can interact naturally, reliably, and safely with humans, autonomously navigate through environments on command, and intelligently retrieve or move objects (35).
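Once a severity level has been assigned, whether by a clinician, a rule set or an AI model, turning it into a treatment priority is a classic priority-queue problem. The sketch below assumes four hypothetical severity categories. These are not an established triage scale.

```python
import heapq
import itertools

# Hypothetical severity categories, ordered from most to least urgent; a real
# service would use an established triage scale.
SEVERITY_RANK = {"critical": 0, "urgent": 1, "standard": 2, "non-urgent": 3}


class TriageQueue:
    """Patients are served by severity first, then by arrival order."""

    def __init__(self):
        self._heap = []
        self._arrival = itertools.count()  # tie-breaker preserving arrival order

    def add(self, patient_id, severity):
        # Tuples compare element-wise: severity rank, then arrival number.
        heapq.heappush(self._heap, (SEVERITY_RANK[severity], next(self._arrival), patient_id))

    def next_patient(self):
        _, _, patient_id = heapq.heappop(self._heap)
        return patient_id

    def __len__(self):
        return len(self._heap)


queue = TriageQueue()
for pid, severity in [("p1", "standard"), ("p2", "critical"), ("p3", "standard"), ("p4", "urgent")]:
    queue.add(pid, severity)
order = [queue.next_patient() for _ in range(len(queue))]
print(order)  # ['p2', 'p4', 'p1', 'p3']
```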

Precision medicine considers individual patients, their genomic variations and contributing factors (age, gender, ethnicity, etc.), and tailors interventions accordingly (8). Digital health applications can also incorporate data such as emotional state, activity, food intake, etc. Given the amount and complexity of the data this requires, AI can learn from comprehensive datasets to predict risks and identify the optimal treatment strategy (36). Clinical decision support systems (CDSS) that integrate AI can provide differential diagnoses, recognize early warning signs of patient morbidity or mortality, or identify abnormalities in radiological images or laboratory test results (37). They can increase patient safety, for example by reducing medication or prescription errors or adverse events, and can increase care consistency and efficiency (38). They can support clinical management by ensuring adherence to clinical guidelines or by automating administrative functions such as clinical and diagnostic encoding (39), patient triage or ordering of procedures (37).
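The prescription-checking part of a CDSS can be sketched as rules applied to each new order. In AI-enabled systems, such checks typically sit alongside learned risk models. In the minimal example below, the drug names, dose limits and interaction pair are hypothetical placeholders, not clinical reference data.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical knowledge base: drug names and limits are placeholders,
# not clinical reference values.
MAX_DAILY_DOSE_MG = {"drug_a": 4000, "drug_b": 20}
INTERACTING_PAIRS = {frozenset({"drug_a", "drug_c"})}


@dataclass
class Order:
    drug: str
    daily_dose_mg: float


def check_orders(orders: List[Order]) -> List[str]:
    """Return warnings for overdoses and known interactions in one prescription."""
    warnings = []
    for order in orders:
        limit = MAX_DAILY_DOSE_MG.get(order.drug)
        if limit is not None and order.daily_dose_mg > limit:
            warnings.append(f"{order.drug}: {order.daily_dose_mg} mg/day exceeds {limit} mg/day")
    drugs = [order.drug for order in orders]
    for i, first in enumerate(drugs):
        for second in drugs[i + 1:]:
            if frozenset({first, second}) in INTERACTING_PAIRS:
                warnings.append(f"interaction: {first} + {second}")
    return warnings


for warning in check_orders([Order("drug_a", 5000), Order("drug_c", 10)]):
    print(warning)
```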

NLP applications, such as voice transcription, have proved helpful for clinical note-taking (40), compiling electronic health records, and automatically generating medical reports from patient-doctor conversations or diagnostic reports (41). AI algorithms can help retrieve context-relevant patient data. Concept-based information retrieval can improve search accuracy and retrieval speed (42). AI algorithms can also improve the use and allocation of hospital resources by predicting patients' length of stay (43) or risk of readmission (44).
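Concept-based retrieval improves on keyword search because notes are indexed by what they mean rather than by the words they use. For example, a query for "myocardial infarction" should also find notes that say "heart attack" or "MI". The sketch below uses a tiny hypothetical synonym table; production systems would rely on terminologies such as SNOMED CT or UMLS together with NLP.

```python
import re
from collections import defaultdict

# Hypothetical synonym table mapping surface terms to a single concept each.
SYNONYMS = {
    "heart attack": "myocardial_infarction",
    "myocardial infarction": "myocardial_infarction",
    "mi": "myocardial_infarction",
    "high blood pressure": "hypertension",
    "hypertension": "hypertension",
}


def concepts_in(text):
    """Concepts mentioned in a text, matching whole words only ('mi' is not found in 'mild')."""
    text = text.lower()
    return {concept for term, concept in SYNONYMS.items()
            if re.search(r"\b" + re.escape(term) + r"\b", text)}


def build_index(notes):
    """Inverted index from concept to the IDs of notes that mention it."""
    index = defaultdict(set)
    for note_id, text in notes.items():
        for concept in concepts_in(text):
            index[concept].add(note_id)
    return index


def search(index, query):
    """Notes mentioning every concept in the query, whatever words they used."""
    hits = [index.get(concept, set()) for concept in concepts_in(query)]
    return set.intersection(*hits) if hits else set()


notes = {
    "n1": "History of MI, on statins.",
    "n2": "Admitted after a heart attack; high blood pressure noted.",
    "n3": "Mild hypertension, no cardiac history.",
}
index = build_index(notes)
print(sorted(search(index, "myocardial infarction")))           # ['n1', 'n2']
print(sorted(search(index, "heart attack with hypertension")))  # ['n2']
```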

Robotic systems can be used inside the body, on the body or outside the body. Those applied inside the body include microrobots (45), surgical robots and interventional robots. Microrobots are sub-millimeter untethered devices that can be propelled, for example, by chemical reactions (46) or physical fields (47). They can move unimpeded through the body and perform tasks such as targeted therapy (localized delivery of drugs) (48).

Microrobots can assist in physical surgery, for example by drilling through a blood clot or by opening up obstructions in the urinary tract to restore normal flow (49). They can provide directed local tissue heating to destroy cancer cells (50). They can be implanted to provide continuous remote monitoring and early awareness of an emerging disease.

Robotic prostheses, orthoses and exoskeletons are examples of robotic systems worn on the body. Exoskeletons are wearable robotic systems that are tightly physically coupled with a human body to provide assistance or enhance the wearer's physical capabilities (51). While they have often been developed for applications outside of health care, they can help workers with physically demanding tasks such as moving patients (52) or assist people with muscle weakness or movement disorders. Wearable technology can also be used to measure and transmit data about vital signs or physical activity (19).

Robotic systems applied outside the body can help avoid direct contact when treating patients with infectious diseases (53), assist in surgery (as already mentioned), including remote surgical procedures that leverage augmented reality (54) or assist providers when moving patients (55).

Another dimension of AI and robotics is the duration of their use, which directly correlates with the location of use. Both can significantly influence the requirements, design, and technology components of the solution. In a longer-term care setting, robotics can be used in a patient's home (e.g., for monitoring of vital signs) or for treatment in a nursing home. Shorter-term care settings include inpatient hospitals, palliative care facilities or inpatient psychiatric facilities. Example applications are listed in Table 1.

Having seen how to classify AI and robotic systems in health care, we turn to recent concrete achievements that illustrate their practical application. This list is by no means exhaustive, but it shows that we are no longer purely at the research or experimentation stage: the technology is starting to bear fruit in a very concrete way, by improving outcomes, even if only in the context of clinical trials prior to regulatory approval for general use.

Sepsis was recently identified as the leading cause of death worldwide, surpassing even cancer or cardiovascular diseases.3 It is also the leading cause of death in hospitals in the United States (Sepsis Fact Sheet4), and timely diagnosis and treatment are difficult in other care settings as well. A key reason is the difficulty of recognizing precursor symptoms early enough to initiate effective treatment. Early prediction of onset therefore promises to save millions of lives each year. Here are four projects in this area:

• Bayesian Health5, a startup founded by a researcher at Johns Hopkins University, applied its model to a test population of hospital patients and correctly identified 82% of the 9,800 patients who later developed sepsis.

• Dascena, a California startup, has been testing its software on large cohorts of patients since 2017, achieving significant improvements in outcomes (63).

• Patchd6 uses wearable devices and deep learning to predict sepsis in high-risk patients. Early studies have shown that this technology can predict sepsis 8 h earlier, and more accurately, than under existing standards of care.

• A team of researchers from Singapore developed a system that combines clinical measures (structured data) with physician notes (unstructured data), resulting in improved early detection while reducing false positives (64).
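A figure such as "82% of the 9,800 patients" (about 8,036 correctly flagged cases) describes sensitivity only. As the Singapore work emphasizes, the number of false alarms matters as well. The Python sketch below computes sensitivity, specificity and positive predictive value from confusion-matrix counts for two hypothetical models. The cohort is invented: 1,000 patients, 100 of whom develop sepsis. None of these counts come from the cited studies.

```python
def screening_metrics(tp, fp, tn, fn):
    """Standard metrics from confusion-matrix counts of an early-warning model."""
    return {
        "sensitivity": tp / (tp + fn),  # share of true sepsis cases flagged
        "specificity": tn / (tn + fp),  # share of non-sepsis patients not flagged
        "ppv": tp / (tp + fp),          # share of alarms that are real cases
    }


# Invented cohort: 1,000 patients, 100 of whom develop sepsis.
models = {
    "model_a": dict(tp=80, fp=180, tn=720, fn=20),
    "model_b": dict(tp=80, fp=60, tn=840, fn=20),
}
for name, counts in models.items():
    m = screening_metrics(**counts)
    print(name, {k: round(v, 2) for k, v in m.items()})
```

Both hypothetical models detect the same share of cases. However, the second raises far fewer false alarms, so more than half of its alerts are real. This difference matters greatly for alarm fatigue on a ward.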

For patients in an ICU, the paradox is that large amounts of data are collected, displayed on monitors, and used to trigger alarms, but these various data streams are rarely used together, nor can doctors or nurses effectively observe all the data from all the patients all the time.

This is an area where much has been written, but most available information points to studies that have not resulted in actual deployments. One survey paper highlighted in particular the challenge of achieving effective collaboration between ICU staff and automated processes (65).

In one application example, machine learning helps resolve the asynchrony between a mechanical ventilator and the patient's own breathing reflexes, which can cause distress and complicate recovery (66).

This is another area where research has provided evidence of the efficacy of AI, generally not employed alone but rather as an advisor to a medical professional, yet there are few actual deployments at scale.

These applications differ based on the location of the tumors, and therefore on the imaging techniques used to observe them. AI makes the interpretation of the images more reliable, generally by pointing radiologists to areas they might otherwise overlook.

• In a study performed in Korea, AI appeared to improve the recognition of lung cancer in chest X-rays (67). AI by itself performed better than unaided radiologists, and the improvement was greater when radiologists used AI as an aid. Note, however, that the sample size was fairly small.

• Several successive efforts aimed to use AI to classify dermoscopic images to discriminate between benign nevi and melanoma (68).

The rapid and tragic emergence of the COVID-19 disease, and its continued evolution at the time of this writing, have mobilized many researchers, including the AI community. This domain naturally divides into two areas: diagnosis and treatment.

An example of AI applied to COVID-19 diagnosis is based on an early observation that the persistent cough, one of the common symptoms of the disease, “sounds different” from the cough caused by other ailments, such as the common cold. The MIT Opensigma project7 has “crowdsourced” sound recordings of coughs from many people, most of whom do not have the disease, while some know that they have it or had it. Several similar projects have been conducted elsewhere (69).

Another effort used AI to read computed tomography (CT) images to provide a rapid COVID-19 test, reportedly achieving over 90% accuracy in 15 s (70). Curiously, after this news was widely circulated in February-March 2020, nothing else was said for several months. Six months later, a blog post8 from the University of Virginia radiology and medical department asserted that “CT scans and X-rays have a limited role in diagnosing coronavirus.” The approach pioneered in China may have been the right solution at a specific point in time (many cases concentrated in a small geographical area, requiring a massive detection effort before other rapid tests were available), which outweighed the drawbacks of equipment cost and patient exposure to radiation.

While the word triage immediately evokes urgent decisions about what interventions to perform on acutely ill patients or accident victims, it can also be applied to remote patient assistance (e.g., telehealth applications), especially in areas underserved by medical staff and facilities.

In an emergency care setting, where triage decisions can determine whether a person survives, there is a natural reluctance to entrust such decisions to machines. However, AI as a predictor of outcomes could serve as an assistant to an emergency technician or doctor. A 2017 study of emergency room triage of patients with acute abdominal pain showed only an “acceptable level of accuracy” (71). More recently, however, the Mayo Clinic introduced an AI-based “digital triage platform” from Diagnostic Robotics9 to “perform clinical intake of patients and suggest diagnoses and hospital risk scores.” These solutions can now be delivered through a website or a smartphone app, and they have evolved from decision trees designed by doctors to incorporate AI.

Google Research announced in 2018 that it had achieved “prediction of cardiovascular risk factors from retinal fundus photographs via deep learning” with a level of accuracy similar to traditional methods such as blood tests for cholesterol levels (72). The novelty lies in the use of a neural network to analyze the retinal image, which gains predictive power at the expense of explainability.

In practice, the future of such a solution is unclear: certain risk factors could be assessed from the retinal scan, but those were often factors that could be measured directly anyway, such as blood pressure.

Many physiological and neurological factors affect how someone walks, given the complex interactions between the sense of touch, the brain, the nervous system, and the muscles involved. Certain conditions, in particular Parkinson's disease, have been shown to affect a person's gait, causing visible symptoms that can help diagnose the disease or measure its progress. Even if an abnormal gait results from another cause, an accurate analysis can help assess the risk of falls in elderly patients.

Compared to other applications in this section, gait analysis has been practiced for a longer time (over a century) and has progressed incrementally as new motion capture methods (film, video, infrared cameras) were developed. In terms of knowledge representation, see for example the work done at MIT twenty years ago (73). Computer vision, combined with AI, can considerably improve gait analysis compared to a physician's simple observation. Companies such as Exer10 offer solutions that physical therapists can use to assess patients, or that can help monitor and improve a home exercise program. This is an area where technology has already been deployed at scale: there are more than 60 clinical and research gait labs11 in the U.S. alone.
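Once a motion-capture or computer-vision pipeline has detected heel strikes, common gait parameters follow from simple arithmetic. The sketch below derives cadence and the coefficient of variation of stride time. Stride-to-stride variability is often studied in relation to fall risk and Parkinson's disease. The sketch assumes heel-strike detection has already been done, and the timestamps are invented.

```python
import statistics


def gait_summary(heel_strikes_s):
    """Stride time, cadence and stride-time variability from successive heel
    strikes of the same foot (timestamps in seconds)."""
    if len(heel_strikes_s) < 3:
        raise ValueError("need at least three heel strikes (two strides)")
    strides = [b - a for a, b in zip(heel_strikes_s, heel_strikes_s[1:])]
    mean_stride = statistics.mean(strides)
    return {
        "stride_time_s": mean_stride,
        "cadence_steps_per_min": 2 * 60 / mean_stride,  # one stride = two steps
        "stride_cv_percent": 100 * statistics.stdev(strides) / mean_stride,
    }


# Hypothetical timestamps, e.g., from a video-based pose tracker.
summary = gait_summary([0.0, 1.05, 2.1, 3.2, 4.2, 5.3])
print({k: round(v, 1) for k, v in summary.items()})
```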

Robots that provide assistance to elderly or sick persons have been the focus of research and development for several decades, particularly in Japan due to the country's large aging population with above-average longevity. “Elder care robots” can be deployed at home (with cost being an obvious issue for many customers) or in senior care environments (74), where they will help alleviate a severe shortage of nurses and specialized workers, which cannot be easily addressed through the hiring of foreign help given the language barrier.

The types of robots used in such settings are proliferating. They range from robots that help patients move or exercise, to robots that help with common tasks such as opening the front door to a visitor or bringing a cup of tea, to robots that provide psychological comfort and even some form of conversation. PARO, for instance, is a robotic baby seal developed to provide therapy for patients with dementia (75).

Biomechatronics combines biology, mechanical engineering, and electronics to design assistive devices that interpret inputs from sensors and send commands to actuators–with both sensors and actuators attached in some manner to the body. The sensors, actuators, control system, and the human subject form together a closed-loop control system.
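The closed loop described above can be sketched in a few lines. The example below assumes the intended joint angle is already known; in practice it might be decoded from muscle signals. It models the limb as a single rigid segment under a proportional-derivative (PD) controller. The gains, units and dynamics are hypothetical and far simpler than those of a real biomechatronic device.

```python
def simulate_joint(target_deg, kp=20.0, kd=6.0, inertia=1.0, dt=0.01, steps=500):
    """Closed-loop simulation of one prosthetic joint driven toward an intended angle.

    Sensor: measured angle and velocity; controller: proportional-derivative law;
    actuator + limb: torque accelerating a rigid segment (semi-implicit Euler).
    All gains and the dynamics model are hypothetical.
    """
    angle, velocity = 0.0, 0.0
    for _ in range(steps):
        error = target_deg - angle           # sensor reading vs. the user's intent
        torque = kp * error - kd * velocity  # controller output to the actuator
        velocity += (torque / inertia) * dt  # actuator accelerates the limb
        angle += velocity * dt               # new state is fed back to the sensor
    return angle


print(round(simulate_joint(30.0), 1))
```

The derivative term damps the motion so that the joint settles on the target instead of oscillating around it. This mirrors the way the sensors, controller and wearer close the loop in the devices described here.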

Biomechatronic applications live at the boundary of prosthetics and robotics, for example helping amputees achieve close-to-normal motion of a prosthetic limb. This work has been demonstrated for many years, with impressive results, at the MIT Media Lab under Prof. Hugh Herr12. However, those applications have rarely left the lab environment because of the device cost. That cost could be lowered by mass production, but coverage by health insurance companies or agencies is likely to remain problematic.

Table 2 shows a mapping of the above use cases to the classification introduced in the first section of this paper.

While the range of opportunities, and the achievements to date, of robotics and AI are impressive as seen above, multiple issues impede their deployment and acceptance in daily practice.

Issues related to trust, security, privacy and ethics are prevalent across all aspects of health care, and many are discussed elsewhere in this issue. We will therefore only briefly mention those challenges that are unique to AI and robotics.

Health care professionals may ignore or resist new technologies for multiple reasons, including actual or perceived threats to professional status and autonomy (76), privacy concerns (77) or the unresolved legal and ethical questions of responsibility (78). The issues of worker displacement by robots are just as acute in health care as in other domains. Today, while surgery robots operate increasingly autonomously, humans still perform many tasks and play an essential role in determining the robot's course of operation (e.g., for selecting the process parameters or for the positioning of the patient) (79). This allocation of responsibilities is bound to evolve.

Explainability is “a characteristic of an AI-driven system allowing a person to reconstruct why a certain AI came up with the presented prediction” (80). In contrast to rule-based systems, AI-based predictions often cannot be explained in a human-intelligible manner, which can hide errors or bias (the “black box problem” of machine learning). The explainability of AI models is an ongoing research area. When information on the reasons for an AI-based decision is missing, physicians cannot judge the reliability of the advice, which puts patient safety at risk.
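For models with a transparent structure, an explanation can be computed exactly. The sketch below shows this for a linear risk score. Each feature's contribution relative to a reference patient is its weight times the feature's deviation, and the contributions sum to the change in score. Deep neural networks offer no such exact decomposition, which is why approximate attribution methods (e.g., SHAP or LIME) are used and remain a research topic. The weights and patient values below are invented.

```python
def explain_linear(weights, bias, patient, reference):
    """Score a patient with a linear model and attribute the score difference
    from a reference patient to individual features.

    For a linear model, weight * (value - reference value) is an exact
    decomposition: the contributions sum to score(patient) - score(reference).
    """
    score = bias + sum(w * patient[name] for name, w in weights.items())
    contributions = {name: w * (patient[name] - reference[name]) for name, w in weights.items()}
    return score, contributions


# Hypothetical weights of a risk score on the log-odds scale; not a validated model.
weights = {"age_years": 0.03, "systolic_bp_mmhg": 0.02, "smoker": 0.8}
bias = -5.0
reference = {"age_years": 50, "systolic_bp_mmhg": 120, "smoker": 0}
patient = {"age_years": 70, "systolic_bp_mmhg": 150, "smoker": 1}

score, contributions = explain_linear(weights, bias, patient, reference)
for name, value in sorted(contributions.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name}: {value:+.2f}")
```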

Who is responsible when the AI or robot makes mistakes or creates harm in patients? Is it the programmer, manufacturer, end user, the AI/robotic system itself, the provider of the training dataset, or something (or someone) else? The answer depends on the system's degree of autonomy. The European Parliament's 2017 Resolution on AI (81) assigns legal responsibility for an action of an AI or robotic system to a human actor, which may be its owner, developer, manufacturer or operator.

Machine learning requires access to large quantities of data regarding patients as well as healthy people. This raises issues regarding the ownership of data, protection against theft, compliance with regulations such as HIPAA in the U.S. (82) or GDPR for European citizens (83), and what level of anonymization of data is necessary and possible. Regarding the last point, AI models could have unintended consequences, and the evolution of science itself could make patient re-identification possible in the future.
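One classical way to quantify the level of anonymization is k-anonymity. A dataset is k-anonymous if every record shares its quasi-identifiers (attributes such as postcode, age and sex, which can be linked to outside data) with at least k - 1 other records. The sketch below measures k and shows how generalizing exact ages into ten-year bands raises it. The records and the choice of quasi-identifiers are fictitious assumptions. k-anonymity alone does not rule out re-identification, for instance when every record in a group shares the same diagnosis.

```python
from collections import Counter


def k_anonymity(records, quasi_identifiers):
    """Size of the smallest group of records sharing the same quasi-identifier values."""
    groups = Counter(tuple(record[q] for q in quasi_identifiers) for record in records)
    return min(groups.values())


def generalize_age(record):
    """Replace an exact age with a ten-year band, e.g., 34 -> '30-39'."""
    low = record["age"] // 10 * 10
    return {**record, "age": f"{low}-{low + 9}"}


# Fictitious records; the choice of quasi-identifiers is an assumption.
records = [
    {"zip3": "021", "age": 34, "sex": "F", "diagnosis": "asthma"},
    {"zip3": "021", "age": 36, "sex": "F", "diagnosis": "diabetes"},
    {"zip3": "021", "age": 52, "sex": "M", "diagnosis": "asthma"},
    {"zip3": "021", "age": 58, "sex": "M", "diagnosis": "sepsis"},
]
qi = ["zip3", "age", "sex"]
print(k_anonymity(records, qi))                               # 1
print(k_anonymity([generalize_age(r) for r in records], qi))  # 2
```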

Currently, the reliability and quality of data received from sensors and digital health devices remain uncertain (84), a fact that future research and development must address. Datasets in medicine are naturally imperfect (due to noise, errors in documentation, incompleteness, differences in documentation granularity, etc.), hence it is impossible to develop error-free machine learning models (80). Furthermore, without a way to quickly and reliably integrate the various data sources for analysis, the potential of AI algorithms for fast diagnosis is lost.

Introducing AI and robotics into the delivery of health care is likely to create new risks and safety issues. These exist even when systems operate normally, i.e., in the absence of any attack, and may stem from design, programming or configuration errors, or from improper data preparation (85).

These issues are compounded by the possibility of cyberattacks:

• Patient data may be exposed or stolen, perhaps by scammers who want to exploit it for profit.

• Security vulnerabilities in robots that interact directly with patients may cause malfunctions that physically threaten the patient or professional. The robot may cause harm directly, or indirectly by giving a surgeon incorrect feedback. In case of unexpected robot behavior, it may be unclear to the user whether the robot is functioning properly or is under attack (86).

The European Commission recently drafted a legal framework (footnote 13) addressing the risks of AI (not only in health care) in order to improve the safety of and trust in AI. The framework distinguishes four levels of risk: unacceptable, high, limited and minimal. AI systems posing unacceptable risk will be prohibited; high-risk systems will have to meet strict obligations before release (e.g., risk assessment and mitigation, traceability of results). Limited-risk applications such as chatbots (which can be used in telemedicine) will require “labeling” so that users are aware that they are interacting with an AI-powered system.

While P5 medicine aims to consider multiple factors (ethnicity, gender, socio-economic background, education, etc.) to arrive at individualized care, current AI implementations often exhibit biases against certain patient groups. The training datasets may have under-represented those groups, or important features may be distributed differently across groups: for example, cardiovascular disease and Parkinson's disease progress differently in men and women (87), so the corresponding features will vary. Such causes result in undesirable bias and “unintended or unnecessary discrimination” of subgroups (88).
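One practical way to detect such undesirable bias is to audit a model's performance separately for each subgroup rather than only in aggregate. The minimal sketch below computes sensitivity (true-positive rate) per group and flags groups that fall well behind the best-performing one. The group labels, predictions and the 0.1 tolerance are hypothetical choices for illustration, and sensitivity is only one of several metrics relevant to fairness.

```python
from collections import defaultdict

def sensitivity_by_group(groups, y_true, y_pred):
    """True-positive rate per subgroup; groups without positive cases are skipped."""
    tp = defaultdict(int)
    pos = defaultdict(int)
    for g, t, p in zip(groups, y_true, y_pred):
        if t == 1:
            pos[g] += 1
            tp[g] += int(p == 1)
    return {g: tp[g] / pos[g] for g in pos}

def flag_gaps(rates, tolerance=0.1):
    """Groups whose sensitivity is more than `tolerance` below the best group."""
    best = max(rates.values())
    return sorted(g for g, r in rates.items() if best - r > tolerance)

# Hypothetical audit data: the model misses more positive cases among women.
groups = ["M"] * 6 + ["F"] * 6
y_true = [1, 1, 1, 1, 0, 0,   1, 1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 1, 0, 0,   1, 1, 0, 0, 0, 0]

rates = sensitivity_by_group(groups, y_true, y_pred)
print(rates)             # {'M': 1.0, 'F': 0.5}
print(flag_gaps(rates))  # ['F']
```

A gap like this does not by itself prove unfair treatment, but it signals that a subgroup may be under-represented in the training data or that its features behave differently, the two causes named above.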

On the flip side, careful implementations of AI could explicitly account for differences in gender, ethnicity, etc., to achieve more effective treatments for patients belonging to those groups. This can be considered “desirable bias” that counteracts the undesirable kind (89) and brings us closer to the goals of P5 medicine.

The relationship between patients and medical professionals has evolved over time, and AI is likely to affect it by inserting itself into the picture (see Figure 4). Although AI and robotics perform well, human oversight is still essential. Robots and AI algorithms operate logically, but health care often requires acting empathically. If doctors become intelligent users of AI, they may retain the trust associated with their role, but most patients, who have a limited understanding of the technologies involved, would find it much harder to trust AI (90). Conversely, reliable and accurate diagnoses, beneficial treatments, and appropriate use of AI and robotics by the physician can strengthen patients' trust (91).

This of course assumes that the designers of those systems adhere to established guidelines for trustworthy AI in the first place, which include requirements such as creating systems that are lawful, ethical, and robust (92, 93).

We can summarize the previous sections as follows:

1. There are many types of AI applications and robotic systems, which can be introduced in many aspects of health care.

2. AI's ability to digest and process enormous amounts of data, and to derive conclusions that are not obvious to a human, holds the promise of more personalized and predictive care, both key goals of P5 medicine.

3. Over the last few years, a number of proof-of-concept and pilot projects have shown promising results for diagnosis, treatment, and health maintenance. They have not yet been deployed at scale, in part because of the time it takes to fully evaluate their efficacy and safety.

4. There is a rather daunting list of challenges to address, most of which are not purely technical. The key one is demonstrating that the systems are effective and safe enough to warrant the confidence of both practitioners and their patients.

Based on this analysis, what is the roadmap to success for these technologies, and how will they succeed in contributing to the future of health care? Figure 5 depicts the convergent approaches that need to be developed to ensure safe and productive adoption, in line with the P5 medicine principles.

First, AI technology is currently undergoing a remarkable revival and is being applied to many domains. Health applications will both benefit from and contribute to further advances. In areas such as image classification and natural language understanding, both of which have obvious utility in health care, progress is particularly rapid. Today's AI techniques may seem obsolete in ten years.

Second, the more technical challenges of AI–such as privacy, explainability, or fairness–are being worked on, both in the research community and in the legislative and regulatory world. Standard procedures for assessing the efficacy and safety of systems will be needed, but in reality, this is not a new concept: it is what has been developed over the years to approve new medicines. We need to be consistent and apply the same hard-headed validation processes to the new technologies.

Third, it should be clear from our exploration of this subject that education–of patients as well as of professionals–is key to the societal acceptance of the role that AI and robotics will be called upon to play. Every invention or innovation–from the steam engine to the telephone to the computer–has gone through this process. Practitioners must learn enough about how AI models and robotics work to build a “working relationship” with those tools and build trust in them–just as their predecessors learned to trust what they saw on an X-ray or CT scan. Patients, for their part, need to understand what AI and robotics can or cannot do, how the physician will remain in the loop when appropriate, and what data is being collected about them in the process. We will have a responsibility to ensure that complex systems that patients do not sufficiently understand cannot be misused against them, whether accidentally or deliberately.

Fourth, health care is also a business, involving financial transactions between patients, providers, and insurers (public or private, depending on the country). New cost and reimbursement models will need to be developed, especially since, when AI is used to assist professionals rather than replace them, the cost of the system adds to the human cost of assessing the data and reviewing the system's recommendations.

Fifth and last, clinical pathways have to be adapted and new role models for physicians have to be built. Clinical pathways already differ between care delivery systems with different capabilities, which makes it harder to provide continuity of care to a patient who moves across them. This issue is being addressed by the BPM+ Health Community (footnote 14) using the business process, case management and decision modeling standards of the Object Management Group (OMG). Integrating AI and robotics will make the issue more complex: every doctor has similar training and a stethoscope, but not every doctor or hospital will have the same sensors, AI programs, or robots.

Eventually, the convergence of these approaches will help to build a complete digital patient model, a digital twin of each individual, generated from all the data gathered by general practitioners, hospitals, laboratories, mHealth apps, and wearable sensors over the patient's entire life. At that point, AI will be able to support superior, fully personalized and predictive medicine, while robotics will automate or support many aspects of treatment and care.

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

KD came up with the classification of AI and robotic systems. CB identified concrete application examples. Both authors contributed equally, identified adoption challenges, and developed recommendations for future work. Both authors contributed to the article and approved the submitted version.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

1. ^https://cmte.ieee.org/futuredirections/2019/07/21/autonomous-systems-in-healthcare/

2. ^https://emag.medicalexpo.com/ai-powered-chatbots-to-help-against-self-isolation-during-covid-19/

3. ^https://www.med.ubc.ca/news/sepsis-leading-cause-of-death-worldwide/

4. ^https://www.sepsis.org/wp-content/uploads/2017/05/Sepsis-Fact-Sheet-2018.pdf

5. ^https://medcitynews.com/2021/07/johns-hopkins-spinoff-looking-to-build-better-risk-prediction-tooing,ls-emerges-with-15m/

6. ^https://www.patchdmedical.com/

8. ^https://blog.radiology.virginia.edu/covid-19-and-imaging/

9. ^https://hitinfrastructure.com/news/diagnostic-robotics-mayo-clinic-bring-triage-platform-to-patients

11. ^https://www.gcmas.org/map

12. ^https://www.media.mit.edu/groups/biomechatronics/overview/

13. ^https://digital-strategy.ec.europa.eu/en/policies/regulatory-framework-ai

1. Amisha, Malik P, Pathania M, Rathaur VK. Overview of artificial intelligence in medicine. J Fam Med Prim Care. (2019) 8:2328–31. doi: 10.4103/jfmpc.jfmpc_440_19

2. van Melle W, Shortliffe EH, Buchanan BG. EMYCIN: a knowledge engineer's tool for constructing rule-based expert systems. In: Buchanan BG, Shortliffe EH, editors. Rule-Based Expert Systems. Reading, MA: Addison-Wesley Publishing Company (1984). p. 302–13.

3. Tursz T, Andre F, Lazar V, Lacroix L, Soria J-C. Implications of personalized medicine—perspective from a cancer center. Nat Rev Clin Oncol. (2011) 8:177–83. doi: 10.1038/nrclinonc.2010.222

4. van't Veer LJ, Dai H, van de Vijver MJ, He YD, Hart AAM, Mao M, et al. Gene expression profiling predicts clinical outcome of breast cancer. Nature. (2002) 415:530–6. doi: 10.1038/415530a

5. Auffray C, Charron D, Hood L. Predictive, preventive, personalized and participatory medicine: back to the future. Genome Med. (2010) 2:57. doi: 10.1186/gm178

6. Hamet P, Tremblay J. Artificial intelligence in medicine. Metabolism. (2017) 69:S36–40. doi: 10.1016/j.metabol.2017.01.011

7. Kim J, Campbell AS, de Ávila BE-F, Wang J. Wearable biosensors for healthcare monitoring. Nat Biotechnol. (2019) 37:389–406. doi: 10.1038/s41587-019-0045-y

8. Nam KH, Kim DH, Choi BK, Han IH. Internet of things, digital biomarker, and artificial intelligence in spine: current and future perspectives. Neurospine. (2019) 16:705–11. doi: 10.14245/ns.1938388.194

9. Steels L, Lopez de Mantaras R. The Barcelona declaration for the proper development and usage of artificial intelligence in Europe. AI Commun. (2018) 31:485–94. doi: 10.3233/AIC-180607

10. Olshannikova E, Ometov A, Koucheryavy Y, Olsson T. Visualizing big data with augmented and virtual reality: challenges and research agenda. J Big Data. (2015) 2:22. doi: 10.1186/s40537-015-0031-2

11. Björnsson B, Borrebaeck C, Elander N, Gasslander T, Gawel DR, Gustafsson M, et al. Digital twins to personalize medicine. Genome Med. (2019) 12:4. doi: 10.1186/s13073-019-0701-3

12. Bates M. Health care chatbots are here to help. IEEE Pulse. (2019) 10:12–4. doi: 10.1109/MPULS.2019.2911816

13. Corral-Acero J, Margara F, Marciniak M, Rodero C, Loncaric F, Feng Y, et al. The “Digital Twin” to enable the vision of precision cardiology. Eur Heart J. (2020) 41:4556–64. doi: 10.1093/eurheartj/ehaa159

14. Montani S, Striani M. Artificial intelligence in clinical decision support: a focused literature survey. Yearb Med Inform. (2019) 28:120–7. doi: 10.1055/s-0039-1677911

15. Oemig F, Blobel B. Natural language processing supporting interoperability in healthcare. In: Biemann C, Mehler A, editors. Text Mining. Cham: Springer International Publishing (2014). p. 137–56. (Theory and Applications of Natural Language Processing). doi: 10.1007/978-3-319-12655-5_7

16. Bitterman DS, Aerts HJWL, Mak RH. Approaching autonomy in medical artificial intelligence. Lancet Digit Health. (2020) 2:e447–9. doi: 10.1016/S2589-7500(20)30187-4

17. Carriere J, Fong J, Meyer T, Sloboda R, Husain S, Usmani N, et al. An admittance-controlled robotic assistant for semi-autonomous breast ultrasound scanning. In: 2019 International Symposium on Medical Robotics (ISMR). Atlanta, GA: IEEE (2019). p. 1–7. doi: 10.1109/ISMR.2019.8710206

18. Tao R, Ocampo R, Fong J, Soleymani A, Tavakoli M. Modeling and emulating a physiotherapist's role in robot-assisted rehabilitation. Adv Intell Syst. (2020) 2:1900181. doi: 10.1002/aisy.201900181

19. Tavakoli M, Carriere J, Torabi A. Robotics, smart wearable technologies, and autonomous intelligent systems for healthcare during the COVID-19 pandemic: an analysis of the state of the art and future vision. Adv Intell Syst. (2020) 2:2000071. doi: 10.1002/aisy.202000071

20. Ahn HS, Yep W, Lim J, Ahn BK, Johanson DL, Hwang EJ, et al. Hospital receptionist robot v2: design for enhancing verbal interaction with social skills. In: 2019 28th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN). New Delhi: IEEE (2019). p. 1–6. doi: 10.1109/RO-MAN46459.2019.8956300

21. Lane T. A short history of robotic surgery. Ann R Coll Surg Engl. (2018) 100:5–7. doi: 10.1308/rcsann.supp1.5

22. Mezger U, Jendrewski C, Bartels M. Navigation in surgery. Langenbecks Arch Surg. (2013) 398:501–14. doi: 10.1007/s00423-013-1059-4

23. Luxton DD, June JD, Sano A, Bickmore T. Intelligent mobile, wearable, and ambient technologies for behavioral health care. In: Artificial Intelligence in Behavioral and Mental Health Care. Elsevier (2016). p. 137–62. Available online at: https://linkinghub.elsevier.com/retrieve/pii/B9780124202481000064

24. Casilari E, Oviedo-Jiménez MA. Automatic fall detection system based on the combined use of a smartphone and a smartwatch. PLoS ONE. (2015) 10:e0140929. doi: 10.1371/journal.pone.0140929

25. Sriram KNV, Palaniswamy S. Mobile robot assistance for disabled and senior citizens using hand gestures. In: 2019 International Conference on Power Electronics Applications and Technology in Present Energy Scenario (PETPES). Mangalore: IEEE (2019). p. 1–6. doi: 10.1109/PETPES47060.2019.9003821

26. Nibras N, Liu C, Mottet D, Wang C, Reinkensmeyer D, Remy-Neris O, et al. Dissociating sensorimotor recovery and compensation during exoskeleton training following stroke. Front Hum Neurosci. (2021) 15:645021. doi: 10.3389/fnhum.2021.645021

27. Maciejasz P, Eschweiler J, Gerlach-Hahn K, Jansen-Troy A, Leonhardt S. A survey on robotic devices for upper limb rehabilitation. J NeuroEngineering Rehabil. (2014) 11:3. doi: 10.1186/1743-0003-11-3

28. Akbari A, Haghverd F, Behbahani S. Robotic home-based rehabilitation systems design: from a literature review to a conceptual framework for community-based remote therapy during COVID-19 pandemic. Front Robot AI. (2021) 8:612331. doi: 10.3389/frobt.2021.612331

29. Howard MC. A meta-analysis and systematic literature review of virtual reality rehabilitation programs. Comput Hum Behav. (2017) 70:317–27. doi: 10.1016/j.chb.2017.01.013

30. Gorman C, Gustafsson L. The use of augmented reality for rehabilitation after stroke: a narrative review. Disabil Rehabil Assist Technol. (2020) 17:409–17. doi: 10.1080/17483107.2020.1791264

31. Li A, Montaño Z, Chen VJ, Gold JI. Virtual reality and pain management: current trends and future directions. Pain Manag. (2011) 1:147–57. doi: 10.2217/pmt.10.15

32. Tulu B, Chatterjee S, Laxminarayan S. A taxonomy of telemedicine efforts with respect to applications, infrastructure, delivery tools, type of setting and purpose. In: Proceedings of the 38th Annual Hawaii International Conference on System Sciences. Big Island, HI: IEEE (2005). p. 147.

33. Lai L, Wittbold KA, Dadabhoy FZ, Sato R, Landman AB, Schwamm LH, et al. Digital triage: novel strategies for population health management in response to the COVID-19 pandemic. Healthc Amst Neth. (2020) 8:100493. doi: 10.1016/j.hjdsi.2020.100493

34. Valtolina S, Marchionna M. Design of a chatbot to assist the elderly. In: Fogli D, Tetteroo D, Barricelli BR, Borsci S, Markopoulos P, Papadopoulos GA, editors. End-User Development. Cham: Springer International Publishing (2021). p. 153–68. (Lecture Notes in Computer Science; Vol. 12724).

35. Falck F, Doshi S, Tormento M, Nersisyan G, Smuts N, Lingi J, et al. Robot DE NIRO: a human-centered, autonomous, mobile research platform for cognitively-enhanced manipulation. Front Robot AI. (2020) 7:66. doi: 10.3389/frobt.2020.00066

36. Bohr A, Memarzadeh K. The rise of artificial intelligence in healthcare applications. In: Bohr A, Memarzadeh K, editors. Artificial Intelligence in Healthcare. Oxford: Elsevier (2020). p. 25–60. doi: 10.1016/B978-0-12-818438-7.00002-2

37. Sutton RT, Pincock D, Baumgart DC, Sadowski DC, Fedorak RN, Kroeker KI. An overview of clinical decision support systems: benefits, risks, and strategies for success. NPJ Digit Med. (2020) 3:17. doi: 10.1038/s41746-020-0221-y

38. Saddler N, Harvey G, Jessa K, Rosenfield D. Clinical decision support systems: opportunities in pediatric patient safety. Curr Treat Options Pediatr. (2020) 6:325–35. doi: 10.1007/s40746-020-00206-3

39. Deng H, Wu Q, Qin B, Chow SSM, Domingo-Ferrer J, Shi W. Tracing and revoking leaked credentials: accountability in leaking sensitive outsourced data. In: Proceedings of the 9th ACM Symposium on Information, Computer and Communications Security. New York, NY: Association for Computing Machinery (2014). p. 425–34. (ASIA CCS'14). doi: 10.1145/2590296.2590342

40. Leventhal R. How Natural Language Processing is Helping to Revitalize Physician Documentation. Cleveland, OH: Healthc Inform (2017). Vol. 34, p. 8–13.

41. Gu Q, Nie C, Zou R, Chen W, Zheng C, Zhu D, et al. Automatic generation of electromyogram diagnosis report. In: 2020 IEEE International Conference on Bioinformatics and Biomedicine (BIBM). Seoul: IEEE (2020). p. 1645–50.

42. Jain V, Wason R, Chatterjee JM, Le D-N, editors. Ontology-Based Information Retrieval For Healthcare Systems. 1st ed. Wiley-Scrivener (2020). doi: 10.1002/9781119641391

43. Awad A, Bader–El–Den M, McNicholas J. Patient length of stay and mortality prediction: a survey. Health Serv Manage Res. (2017) 30:105–20. doi: 10.1177/0951484817696212

44. Mahajan SM, Mahajan A, Nguyen C, Bui J, Abbott BT, Osborne TF. Predictive models for identifying risk of readmission after index hospitalization for hip arthroplasty: a systematic review. J Orthop. (2020) 22:73–85. doi: 10.1016/j.jor.2020.03.045

45. Ceylan H, Yasa IC, Kilic U, Hu W, Sitti M. Translational prospects of untethered medical microrobots. Prog Biomed Eng. (2019) 1:012002. doi: 10.1088/2516-1091/ab22d5

46. Sánchez S, Soler L, Katuri J. Chemically powered micro- and nanomotors. Angew Chem Int Ed Engl. (2015) 54:1414–44. doi: 10.1002/anie.201406096

47. Schuerle S, Soleimany AP, Yeh T, Anand GM, Häberli M, Fleming HE, et al. Synthetic and living micropropellers for convection-enhanced nanoparticle transport. Sci Adv. (2019) 5:eaav4803. doi: 10.1126/sciadv.aav4803

48. Erkoc P, Yasa IC, Ceylan H, Yasa O, Alapan Y, Sitti M. Mobile microrobots for active therapeutic delivery. Adv Ther. (2019) 2:1800064. doi: 10.1002/adtp.201800064

49. Yu C, Kim J, Choi H, Choi J, Jeong S, Cha K, et al. Novel electromagnetic actuation system for three-dimensional locomotion and drilling of intravascular microrobot. Sens Actuators Phys. (2010) 161:297–304. doi: 10.1016/j.sna.2010.04.037

50. Chang D, Lim M, Goos JACM, Qiao R, Ng YY, Mansfeld FM, et al. Biologically Targeted magnetic hyperthermia: potential and limitations. Front Pharmacol. (2018) 9:831. doi: 10.3389/fphar.2018.00831

51. Phan GH. Artificial intelligence in rehabilitation evaluation based robotic exoskeletons: a review. EEO. (2021) 20:6203–11. doi: 10.1007/978-981-16-9551-3_6

52. Hwang J, Kumar Yerriboina VN, Ari H, Kim JH. Effects of passive back-support exoskeletons on physical demands and usability during patient transfer tasks. Appl Ergon. (2021) 93:103373. doi: 10.1016/j.apergo.2021.103373

53. Hager G, Kumar V, Murphy R, Rus D, Taylor R. The role of robotics in infectious disease crises. arXiv:2010.09909 [cs] (2020).

54. Walker ME, Hedayati H, Szafir D. Robot teleoperation with augmented reality virtual surrogates. In: 2019 14th ACM/IEEE International Conference on Human-Robot Interaction (HRI). Daegu: IEEE (2019). p. 202–10. doi: 10.1109/HRI.2019.8673306

55. Ding M, Matsubara T, Funaki Y, Ikeura R, Mukai T, Ogasawara T. Generation of comfortable lifting motion for a human transfer assistant robot. Int J Intell Robot Appl. (2017) 1:74–85. doi: 10.1007/s41315-016-0009-z

56. Mohebali D, Kittleson MM. Remote monitoring in heart failure: current and emerging technologies in the context of the pandemic. Heart. (2021) 107:366–72. doi: 10.1136/heartjnl-2020-318062

57. Blasco R, Marco Á, Casas R, Cirujano D, Picking R. A smart kitchen for ambient assisted living. Sensors. (2014) 14:1629–53. doi: 10.3390/s140101629

58. Valentí Soler M, Agüera-Ortiz L, Olazarán Rodríguez J, Mendoza Rebolledo C, Pérez Muñoz A, Rodríguez Pérez I, et al. Social robots in advanced dementia. Front Aging Neurosci. (2015) 7:133. doi: 10.3389/fnagi.2015.00133

59. Bickmore TW, Mitchell SE, Jack BW, Paasche-Orlow MK, Pfeifer LM, O'Donnell J. Response to a relational agent by hospital patients with depressive symptoms. Interact Comput. (2010) 22:289–98. doi: 10.1016/j.intcom.2009.12.001

60. Chatzimina M, Koumakis L, Marias K, Tsiknakis M. Employing conversational agents in palliative care: a feasibility study and preliminary assessment. In: 2019 IEEE 19th International Conference on Bioinformatics and Bioengineering (BIBE). Athens: IEEE (2019). p. 489–96. doi: 10.1109/BIBE.2019.00095

61. Cecula P, Yu J, Dawoodbhoy FM, Delaney J, Tan J, Peacock I, et al. Applications of artificial intelligence to improve patient flow on mental health inpatient units - narrative literature review. Heliyon. (2021) 7:e06626. doi: 10.1016/j.heliyon.2021.e06626

63. Burdick H, Pino E, Gabel-Comeau D, McCoy A, Gu C, Roberts J, et al. Effect of a sepsis prediction algorithm on patient mortality, length of stay and readmission: a prospective multicentre clinical outcomes evaluation of real-world patient data from US hospitals. BMJ Health Care Inform. (2020) 27:e100109. doi: 10.1136/bmjhci-2019-100109

64. Goh KH, Wang L, Yeow AYK, Poh H, Li K, Yeow JJL, et al. Artificial intelligence in sepsis early prediction and diagnosis using unstructured data in healthcare. Nat Commun. (2021) 12:711. doi: 10.1038/s41467-021-20910-4

65. Uckun S. Intelligent systems in patient monitoring and therapy management. A survey of research projects. Int J Clin Monit Comput. (1994) 11:241–53. doi: 10.1007/BF01139876

66. Gholami B, Haddad WM, Bailey JM. AI in the ICU: in the intensive care unit, artificial intelligence can keep watch. IEEE Spectr. (2018) 55:31–5. doi: 10.1109/MSPEC.2018.8482421

67. Nam JG, Hwang EJ, Kim DS, Yoo S-J, Choi H, Goo JM, et al. Undetected lung cancer at posteroanterior chest radiography: potential role of a deep learning–based detection algorithm. Radiol Cardiothorac Imaging. (2020) 2:e190222. doi: 10.1148/ryct.2020190222

68. Esteva A, Kuprel B, Novoa RA, Ko J, Swetter SM, Blau HM, et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature. (2017) 542:115–8. doi: 10.1038/nature21056

69. Scudellari M. AI Recognizes COVID-19 in the Sound of a Cough. Available online at: https://spectrum.ieee.org/the-human-os/artificial-intelligence/medical-ai/ai-recognizes-covid-19-in-the-sound-of-a-cough (accessed November 4, 2020).

70. Ai T, Yang Z, Hou H, Zhan C, Chen C, Lv W, et al. Correlation of chest CT and RT-PCR testing for coronavirus disease 2019 (COVID-19) in China: a report of 1014 cases. Radiology. (2020) 296:E32–40. doi: 10.1148/radiol.2020200642

71. Farahmand S, Shabestari O, Pakrah M, Hossein-Nejad H, Arbab M, Bagheri-Hariri S. Artificial intelligence-based triage for patients with acute abdominal pain in emergency department; a diagnostic accuracy study. Adv J Emerg Med. (2017) 1:e5. doi: 10.22114/AJEM.v1i1.11

72. Poplin R, Varadarajan AV, Blumer K, Liu Y, McConnell MV, Corrado GS, et al. Prediction of cardiovascular risk factors from retinal fundus photographs via deep learning. Nat Biomed Eng. (2018) 2:158–64. doi: 10.1038/s41551-018-0195-0

73. Lee L. Gait analysis for classification [PhD thesis]. Massachusetts Institute of Technology, Department of Electrical Engineering and Computer Science (2002). Available online at: http://hdl.handle.net/1721.1/8116

74. Foster M. Aging Japan: Robots May Have Role in Future of Elder Care. Healthcare & Pharma. Available online at: https://www.reuters.com/article/us-japan-ageing-robots-widerimage-idUSKBN1H33AB (accessed March 28, 2018).

75. Pu L, Moyle W, Jones C. How people with dementia perceive a therapeutic robot called PARO in relation to their pain and mood: a qualitative study. J Clin Nurs. (2020) 29:437–46. doi: 10.1111/jocn.15104

76. Walter Z, Lopez MS. Physician acceptance of information technologies: role of perceived threat to professional autonomy. Decis Support Syst. (2008) 46:206–15. doi: 10.1016/j.dss.2008.06.004

77. Price WN, Cohen IG. Privacy in the age of medical big data. Nat Med. (2019) 25:37–43. doi: 10.1038/s41591-018-0272-7

78. Lamanna C, Byrne L. Should artificial intelligence augment medical decision making? the case for an autonomy algorithm. AMA J Ethics. (2018) 20:E902–910. doi: 10.1001/amajethics.2018.902

79. Fosch-Villaronga E, Drukarch H. On Healthcare Robots. Leiden: Leiden University (2021). Available online at: https://arxiv.org/ftp/arxiv/papers/2106/2106.03468.pdf

80. The Precise4Q consortium, Amann J, Blasimme A, Vayena E, Frey D, Madai VI. Explainability for artificial intelligence in healthcare: a multidisciplinary perspective. BMC Med Inform Decis Mak. (2020) 20:310. doi: 10.1186/s12911-020-01332-6

81. European Parliament. Resolution with Recommendations to the Commission on Civil Law Rules on Robotics (2015/2103(INL)). (2017). Available online at: http://www.europarl.europa.eu/

82. Mercuri RT. The HIPAA-potamus in health care data security. Comm ACM. (2004) 47:25–8. doi: 10.1145/1005817.1005840

83. Marelli L, Lievevrouw E, Van Hoyweghen I. Fit for purpose? the GDPR and the governance of European digital health. Policy Stud. (2020) 41:447–67. doi: 10.1080/01442872.2020.1724929

84. Poitras I, Dupuis F, Bielmann M, Campeau-Lecours A, Mercier C, Bouyer L, et al. Validity and reliability of wearable sensors for joint angle estimation: a systematic review. Sensors. (2019) 19:1555. doi: 10.3390/s19071555

85. Macrae C. Governing the safety of artificial intelligence in healthcare. BMJ Qual Saf. (2019) 28:495–8. doi: 10.1136/bmjqs-2019-009484

86. Fosch-Villaronga E, Mahler T. Cybersecurity, safety and robots: strengthening the link between cybersecurity and safety in the context of care robots. Comput Law Secur Rev. (2021) 41:105528. doi: 10.1016/j.clsr.2021.105528

87. Miller IN, Cronin-Golomb A. Gender differences in Parkinson's disease: clinical characteristics and cognition. Mov Disord Off J Mov Disord Soc. (2010) 25:2695–703. doi: 10.1002/mds.23388

88. Cirillo D, Catuara-Solarz S, Morey C, Guney E, Subirats L, Mellino S, et al. Sex and gender differences and biases in artificial intelligence for biomedicine and healthcare. NPJ Digit Med. (2020) 3:81. doi: 10.1038/s41746-020-0288-5

89. Wolff RF, Moons KGM, Riley RD, Whiting PF, Westwood M, Collins GS, et al. PROBAST: a tool to assess the risk of bias and applicability of prediction model studies. Ann Intern Med. (2019) 170:51–8. doi: 10.7326/M18-1376

90. LaRosa E, Danks D. Impacts on trust of healthcare AI. In: Proceedings of the 2018 AAAI/ACM Conference on AI, Ethics, and Society. New Orleans, LA: ACM (2018). p. 210–5. doi: 10.1145/3278721.3278771

91. Lee D, Yoon SN. Application of artificial intelligence-based technologies in the healthcare industry: opportunities and challenges. Int J Environ Res Public Health. (2021) 18:271. doi: 10.3390/ijerph18010271

92. Smuha NA. Ethics guidelines for trustworthy AI. Comput Law Rev Int. (2019) 20:97–106. doi: 10.9785/cri-2019-200402

Keywords: artificial intelligence, robotics, healthcare, personalized medicine, P5 medicine

Citation: Denecke K and Baudoin CR (2022) A Review of Artificial Intelligence and Robotics in Transformed Health Ecosystems. Front. Med. 9:795957. doi: 10.3389/fmed.2022.795957

Received: 15 October 2021; Accepted: 15 June 2022;

Published: 06 July 2022.

Edited by:

Dipak Kalra, The European Institute for Innovation Through Health Data, Belgium

Reviewed by:

Marios Kyriazis, National Gerontology Centre, Cyprus

Copyright © 2022 Denecke and Baudoin. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Kerstin Denecke, kerstin.denecke@bfh.ch

