- ICT4SM Group, École Polytechnique Fédérale de Lausanne, Lausanne, Switzerland

Nowadays, the manufacturing industry is constantly changing. Production systems must operate in a highly dynamic environment where unexpected events could occur and create disruption, making rescheduling inevitable for manufacturing companies. Rescheduling models are fundamental to the robustness of production processes. This paper proposes a model to address rescheduling caused by unexpected events, aiming to achieve the zero-defect manufacturing (ZDM) concept. The goal of the model is to incorporate traditional and ZDM–oriented events into one methodology to calculate when the next rescheduling will be performed to effectively react to unexpected events. The methodology relies on the definition of two key time parameters for each event type: event response time (RT) and event delay response time (DRT). Based on these parameters, an event management algorithm is designed to identify the optimal rescheduling solution. The DRT parameter is calculated based on a multi-parametric dynamic formula to capture the dynamics of production. Moreover, ANOM and ANOVA methods are used to analyse the behaviour of the developed method and to assess the level of robustness of the proposed approach. Finally, a case study based on real production scenarios is conducted, a series of simulation experiments are performed, and comparisons with other rescheduling policies are presented. The results demonstrate the effectiveness of the proposed event management algorithm for managing rescheduling.

Introduction

Over the years, the manufacturing industry has seen constant growth and change. The fourth industrial revolution (Industry 4.0) and relevant advanced technologies have reshaped modern manufacturing systems and enhanced their ability to meet customers' higher expectations, such as for more customised products in a shorter time (Khan and Turowski, 2016; Zhou et al., 2017). The frequent changes in the industry environment require production systems to perform in highly dynamic and stochastic scenarios (Ouelhadj and Petrovic, 2009). Under these circumstances, unexpected events may occur, causing the initial schedule to be changed because it does not fit the new scenario (Barták and Vlk, 2017). In such cases, rescheduling is mandatory to mitigate the impact of disruptions and recover the original solution (Salido et al., 2017).

Scheduling and rescheduling problems have been popular in both academic and industrial practice. Different scheduling and rescheduling models have been developed to improve the reliability and efficiency of production systems. However, most existing models work only under ideal conditions, since they do not consider external events (Uhlmann and Frazzon, 2018). Indeed, various unpredictable events could occur at the shop-floor level and introduce inconsistencies into the ongoing schedule. Therefore, it is important to broaden the research area by analysing existing rescheduling models and creating new ones to mitigate the consequences of unpredictable events (Uhlmann and Frazzon, 2018).

A proactive approach may not foresee all possible disruptive events, even if the original schedule is robust (Barták and Vlk, 2017). Thus, rescheduling has a central role in the robustness of production processes under uncertain conditions (Stevenson et al., 2020). Such production processes need to be as reactive and flexible as possible to minimise interruptions to the production flow (Cardin et al., 2017). More specifically, a generated schedule can become infeasible or non-optimal due to the uncertainties of manufacturing systems, such as new orders or machine breakdowns (Baykasoglu and Karaslan, 2017). As an example, the outbreak of the COVID-19 pandemic has heavily impacted almost all manufacturing systems across the globe. During the first surge of the pandemic, there was an explosive demand for relevant medical equipment, such as medical masks and aspirators. This urgent situation required medical equipment manufacturers and resource suppliers to adjust their production plans rapidly and maintain high product quality. According to Lindström et al. (2019), the generic strategy to apply is the following: first, the scheduling solution has to be produced (predictive); then, when an unexpected event occurs, rescheduling should be conducted to generate a new feasible solution (reactive).

In the current highly competitive market, a high-performance quality management system is critical to satisfy customer needs, minimise waste, and increase the sustainability of a production system (Psarommatis et al., 2020c). The most sustainable solution to tackle this problem is to follow the zero-defect manufacturing (ZDM) philosophy. The aim is to achieve higher efficiency and quality in the process by eliminating defective parts (Psarommatis et al., 2020b). The concept of ZDM suggests that for any event in the production there must be a reaction to mitigate the negative effects of the event. For example, in the event of a defective part, repairing the part could be an action to maintain production quality. ZDM is composed of four different strategies: detection, repair, prediction, and prevention (Psarommatis et al., 2020b). The implementation of each of the four ZDM strategies heavily relies on the technologies that Industry 4.0 offers. In other words, ZDM is the only quality assurance philosophy that utilises the full potential of Industry 4.0 technologies.

A previous study (Lindström et al., 2019) has proved that rescheduling a production system is one of the main challenges to be addressed to achieve ZDM. This is because the number of events in production is significantly increased, due to the addition of ZDM–oriented events, and their counter-actions must be integrated within the existing production with as little performance loss as possible. However, to apply ZDM in the rescheduling process, a new category of real-time events must be added: product-oriented unexpected events (Myklebust, 2013). This adds an extra level of complexity when rescheduling. Therefore, attention should be given to the rescheduling policy used (Stevenson et al., 2020).

To broaden the knowledge of the research community and present a reliable solution to industrial practitioners, this research aims to develop a generic method to incorporate both traditional production events, such as new orders or maintenance, and events that arise from the ZDM concept in one model. The purpose of the proposed model is to balance rescheduling frequency and measured Key Performance Indicators (KPIs) and therefore to achieve an efficient ZDM implementation while increasing the sustainability of production. More specifically, the proposed rescheduling model achieves two goals towards sustainable manufacturing: the successful implementation of ZDM, which contributes to minimising negative environmental impact, and an economically sound manufacturing process. This is achieved by evaluating the events at any point in time and automatically deciding when to reschedule and which events to include in this rescheduling. The model was validated, and its performance was tested, using a real industrial case from the semiconductor manufacturing industry that produces printed circuit boards (PCBs) for the healthcare sector. The Taguchi method for the design of experiments and the ANOVA method were used to study the behaviour of each control parameter. Other rescheduling policies were modelled, using the industrial data, and compared with the proposed method.

The rest of this paper is organised as follows. The related work is reviewed in section Related Work. The proposed method is introduced in section Events Management Algorithm. A case study and relevant experiments are presented in section Industrial Use Case. In section Critical Discussion, a critical discussion of the proposed approach and the case study is provided. Finally, we conclude our study in section Conclusion and Future Work.

Related Work

The aim of this study is to develop a rescheduling model to support manufacturing companies to effectively react to unexpected events, aiming at achieving the ZDM vision. A literature review is conducted to summarise existing solutions. We first investigate the rescheduling concept in the ZDM context. We then analyse various existing rescheduling models in different categories. In addition, we compare important rescheduling models that are linked to ZDM, based on which we propose our novel solution.

To cope with disruptions and variabilities, the schedules of manufacturing systems need to be resilient and robust. Such schedules require two main functions: predictive and reactive scheduling (Mehta and Uzsoy, 1999; O'donovan et al., 1999). Predictive scheduling generates a schedule through inserting idle time between pre-scheduled activities. When a disruption's duration exceeds the inserted idle time, reactive scheduling is required, which is also commonly referred to as rescheduling (Salido et al., 2017). Rescheduling has been defined as a process of generating a new executable schedule upon the occurrence of an unforeseen disruption (Vieira et al., 2003). It modifies the existing schedule during processing to adapt to changes in a production or operational environment (Sun and Xue, 2001).

Disruptions to a manufacturing system may be caused by different types of events, such as unexpected orders, unexpected machine breakdown, unexpected product defects, and so on. Correspondingly, existing rescheduling methods can be categorised as order-oriented, machine-oriented, or product-oriented. In addition, there are also studies focusing on generic rescheduling problems that disregard the cause of the disruption. Some important studies in each category are introduced in the following subsections.

Generic Rescheduling

Generic rescheduling solutions aim to address disruptions to manufacturing systems in general, covering multiple types of unexpected events or even disregarding differences in disruption types. Barták and Vlk (2017) propose a back-jumping heuristic algorithm to respond to unexpected resource breakdowns and rush orders. The solution adopted is to replace activities present in the process with other new activities to react as quickly as possible to the disruptive events. Battaïa et al. (2019) present a rescheduling algorithm based on a constraint-programming approach to handle the remaining tasks when unexpected events disrupt a low-volume assembly line. A fast rescheduling decision support tool is implemented to reschedule all the uncompleted tasks. The performance of the tool is evaluated through numerical experiments to check the sustainability of the model. A multi-objective rescheduling methodology is proposed by Rangsaritratsamee et al. that considers both efficiency and stability measures (Rangsaritratsamee et al., 2004). A genetic local search algorithm that uses a multicriteria objective function as the fitness function is utilised to generate schedules at each periodic rescheduling point. This algorithm enables a balance between efficiency and stability in different situations. Mejía and Lefebvre propose a model that uses a timed transition Petri net (TTPN) to address operation interruptions and unreliable resources in flexible manufacturing systems (FMS) in uncertain environments (Mejía and Lefebvre, 2019). The TTPN model includes controllable and uncontrollable transitions. The disruptions caused by operation and resource failures can be represented by the firings of uncontrollable transitions. Based on TTPN, the authors develop an intelligent anytime filtered search algorithm that can incrementally compute control sequences in the event of operation interruptions and resource failures.
Gholami and Zandieh propose integrating a simulation with a genetic algorithm to solve flexible job-shop scheduling problems (Gholami and Zandieh, 2009). This framework minimises expected mean makespan and expected mean tardiness. To analyse the results of the simulation, the ANOVA and ANOM methodologies are applied. It is highlighted that the breakdown level (Ag) and mean time to repair (MTTR) have a strong impact on both objectives. Despite the numerous studies in the literature that address the rescheduling problem, there is no unanimous agreement on how rescheduling frequency affects performance. In some studies, very high and very low rescheduling frequencies affect performance negatively (Gupta and Maravelias, 2016). However, others suggest rescheduling as frequently as possible until a critical point is reached (Pfund and Fowler, 2017).

New Order–Oriented Rescheduling

One of the main unexpected events studied in rescheduling is the new order event. Rahmani and Ramezanian propose a model that addresses the unexpected arrival of a new order in a dynamic flexible flow shop (FFS) (Rahmani and Ramezanian, 2016). It aims to generate a reschedule that is stable despite any unexpected order arrival, since it prioritises generating a stable solution, rather than an optimal solution that neglects disruptions. A variable neighbourhood search (VNS) algorithm is implemented to reduce computational complexity. Three types of performance measures – stability, total weighted tardiness, and the parameter's resistance to change – were considered in this model. Aiming to define a simple rescheduling procedure for SMEs, Villa and Taurino develop a constructive rescheduling method composed of two steps: check the constraints of the work sequence for each order and check the non-overlapping constraints for the operations that each machine must perform (Villa and Taurino, 2018). This model can satisfy both the work sequence constraints of operations for each order and the disjunctive constraints on each machine. This method is simple but effective, and especially suitable for SMEs. Liu (2019) presents a model to solve a two-machine flow shop outsourcing and rescheduling problem (TFSORP) caused by the arrival of an unexpected order. The goal is to optimise the variables makespan and outsourcing cost by using a hybrid variable neighbourhood search (HVNS) algorithm. In addition, a design of experiments is implemented to optimise and calibrate the settings of the HVNS. Liu and Zhou address the parallel-machine rescheduling problem caused by an unexpected order (Liu and Zhou, 2013). The model is based on two dependent factors: the number of disrupted orders and the completion time. The problem is treated as a three bi-criteria scheduling problem, using lexicographical and simultaneous optimisation approaches.
Moghaddam and Saitou propose a rescheduling model for unplanned order arrival (Moghaddam and Saitou, 2019). The tool developed is based on the concept of dynamic pegging in multi-level production and on a mixed integer programming (MIP) model that links dynamic pegging with rescheduling. When unplanned orders arrive, the dynamic pegging reassigns the work in progress (WIP) to the newly arrived orders by optimising rescheduling costs.

Machine-Oriented Rescheduling

Rescheduling because of a machine breakdown event is also a major study focus. Buddala and Mahapatra (2019) propose a model to solve a flexible job-shop scheduling problem due to machine failure by applying a two-stage teaching-learning–based optimisation (2S-TLBO). The target of this approach is to minimise makespan to generate robust and sustainable schedules that mitigate the costs of unexpected machine breakdowns. The results obtained with 2S-TLBO are analysed using a one-way ANOVA test. Qiao et al. (2018) propose a rescheduling model for machine failure in a dynamic semiconductor manufacturing system. The model is based on a novel machine group–oriented match-up rescheduling (NMUR) approach that achieves better results than right shift rescheduling (RSR) in the stability and efficiency of rescheduling. Al-Hinai and Elmekkawy (2011) consider four different types of machine breakdown in a flexible job-shop. The model implements a two-stage hybrid genetic algorithm (HGA) to minimise makespan. Yin et al. (2016) consider a failure of two identical parallel machines. The aim of this model is to reschedule tasks by considering deviation costs and total completion time to minimise excessive schedule disruption. The tool is designed to generate a set of Pareto-optimal solutions that are based on the optimisation of both rescheduling completion time and scheduling disruption factors.

Moreover, some solutions consider environmental objectives without overlooking production objectives. For example, Ferrer et al. (2018) propose a model to solve an unrelated parallel-machine rescheduling problem in a dynamic environment by applying two different approaches: greedy-heuristic and meta-heuristic. The aim is to improve production management, in terms of rescheduling quality and computational time, by limiting energy consumption (so this is an energy-aware scheduling problem). Nouiri et al. (2018) propose a green rescheduling method (GRM) that generates a rescheduling solution for a dynamic flexible job-shop affected by machine breakdowns. The aim of the model is to optimise the makespan and the energy consumption. Li (2019) proposes a model that addresses the rescheduling problem for unexpected machine breakdown. The aim of the model is to minimise the number of reschedules by considering energy consumption and lead time.

Product or Quality-Oriented Rescheduling

Compared with the previous types of rescheduling problems, far fewer studies focus on product or quality-oriented rescheduling. Some research addresses the issue of unexpected product-oriented events without linking it to the ZDM philosophy. Kucharska et al. (2017) propose a model to solve unexpected defects in a flow-shop system with stochastic uncertainties. The model is developed around a hybrid algorithm based on an algebraic-logical metamodel (ALMM) that can remove manufacturing defects detected during quality control. The approach distinguishes itself by adding the possibility of modelling the decision-making process. The results of the experiments are evaluated considering three factors: the cost of the operation of switching between algebraic-logical models, the impact of the number of defect repairs on execution time, and the number of switches between models. Joo et al. (2016) propose a model to solve scheduling problems in a three-stage dynamic flexible flow shop (DFFS). It is mainly based on linking quality feedback to the defect rate: if the defect rate exceeds the limit, quality feedback is generated. A dispatch rule-based scheduling algorithm is adopted to solve quality problems by maximising the quality rate and minimising job tardiness. Levitin et al. (2019) propose a tool based on the Poisson process of shocks that may generate defects in a random environment. If defects are detected, an optimised reschedule is generated with the aim to maximise and optimise, respectively, two performance indicators: mission success probability (MSP) and failure avoidance probability (FAP).

The first model that links a rescheduling problem due to unexpected product-oriented events to ZDM is proposed by Psarommatis and Kiritsis (2018). The model integrates the decision support system (DSS) into a dynamic scheduling tool to comply with ZDM principles. When a disruptive event occurs, the DSS and the dynamic scheduling tool interact to produce a new schedule. This solution was evaluated based on product quality and other KPIs (Psarommatis, 2021). A more generic model that links scheduling with ZDM is proposed by Dreyfus and Kyritsis (2018), who aim to increase production capabilities without large investments. Their model is developed based on the combination of three different strategies: ZDM, predictive maintenance, and scheduling algorithms. Automatic scheduling is considered as the brain of the tool, which considers uncertainty and decides if it is necessary to launch and schedule a maintenance operation based on the probability of failures and the time required to repair them.

To summarise, the literature review results indicate that there is a lack of research into product or quality-oriented rescheduling solutions, compared with machine-oriented and order-oriented solutions. However, product-oriented rescheduling is essential to realising the ZDM concept. In addition, very few studies have investigated how to evaluate the solution's performance according to different parameters and the number of reschedules. Aiming to address the above research gaps, this paper proposes a novel rescheduling model that pays more attention to product-oriented disruptions and provides a method to evaluate the quality of the solution.

Events Management Algorithm

Manufacturing environments are characterised by uncertainty and dynamic behaviour. Therefore, unexpected events that disturb the normal production flow may happen at any time. Traditionally, such events may be new orders, machine breakdowns, or maintenance. In addition, ZDM requires that when there is a quality issue, such as a defect detection or a defect prediction, a mitigation action should occur for each individual quality event (Psarommatis et al., 2020b). There are two types of mitigation actions, depending on the nature of the triggering factor: the repair of a defect or its prevention. More specifically, if a defect is detected, repair of the part is required, if possible. Otherwise, a new product should be made to compensate for the defective one. Another case is when a defect is detected and the defect's data are used to trace the source of the problem and conduct preventive actions to avoid future defects. Furthermore, in the era of Industry 4.0, defect prediction is possible using methods such as machine learning or artificial intelligence. Therefore, if a defect is predicted, the prediction data are analysed to conduct the necessary preventive actions to avoid the predicted defect and future ones. The preventive actions may be small-scale maintenance or machine tuning. Therefore, a manufacturing stage may include multiple events of varying importance and requiring different actions.

A key to the success of a manufacturing company is the efficient scheduling of the mitigation actions proposed by ZDM and traditional events; otherwise, inefficient integration of the mitigation actions may lead to monetary and time losses. The current research work is focused on presenting a generic, flexible method for calculating the most efficient time for rescheduling production to incorporate the new tasks. The goal of the proposed method is to balance the rescheduling frequency and the measured KPIs, simultaneously permitting the implementation of the ZDM concept. This would be impossible to implement without a method for managing the increased number of events that are created because of ZDM. This is achieved by introducing an events management method to calculate the rescheduling time. It does so by considering the dynamics of production at the time of the decision to adapt the decision according to production needs. The proposed events management algorithm uses a custom-made heuristic rule to calculate the next rescheduling time. This renders it suitable for real industrial use and able to perform in real time without requiring high computational time, compared to other optimisation approaches.

The proposed methodology utilises two key parameters for each event action: the response time (RT) and the delay response time (DRT) (Psarommatis et al., 2020a). RT refers to the minimum time that is required from the moment of the event until the time that the mitigation action is ready to be released to the shopfloor, whereas DRT refers to the maximum time that an event action can be delayed until its release to the shopfloor becomes mandatory. Each event action has an RT and a DRT value that constitute the control parameters of the action management method. In the current study, both parameters have a dynamic, rather than a static, nature. More specifically, the RT depends on a manufacturer's operations policy and supplier relationships, and therefore there is no suggested formula for calculating this value. Nevertheless, when the RT is estimated it can be used alongside other parameters to calculate the DRT. Equations (1–3) are responsible for calculating the DRT (CT: current time, OC: Order Criticality, ET: event timestamp, i: event number, j: the order that the event affects). OC is a measure for ranking the importance of each individual customer order, with values between 0 and 10, where 10 is the most important. Events can also be classified into two categories: order-dependent and machine-dependent. Order-dependent events are those that affect only one order, such as a new order or a defective part. Machine-dependent events are those that affect one machine and by extension all the orders that are affected by the action of the event: such events cause maintenance and preventive actions. For machine-dependent events, the DRT is calculated for each affected order and the minimum is selected.
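The rule for machine-dependent events can be illustrated with a minimal sketch. Because Equations (1–3) are not reproduced in this text, the per-order DRT values are taken here as given inputs; the function name `event_drt` and the order identifiers are hypothetical and serve only as an illustration.

```python
def event_drt(affected_order_drts):
    """Return the DRT of an event from the DRTs of the orders it affects.

    An order-dependent event affects a single order, so its DRT is that
    order's DRT. A machine-dependent event affects several orders, and the
    minimum DRT among them is selected, as described in the text.
    """
    if not affected_order_drts:
        raise ValueError("an event must affect at least one order")
    return min(affected_order_drts.values())


# Hypothetical per-order DRT values (in hours), assumed to be already computed.
defect_event = {"order_7": 12.0}                                      # order-dependent
maintenance_event = {"order_3": 20.0, "order_7": 12.0, "order_9": 8.5}  # machine-dependent

print(event_drt(defect_event))
print(event_drt(maintenance_event))
```

Taking the minimum means that the most urgent affected order determines how long the maintenance or preventive action can be postponed.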

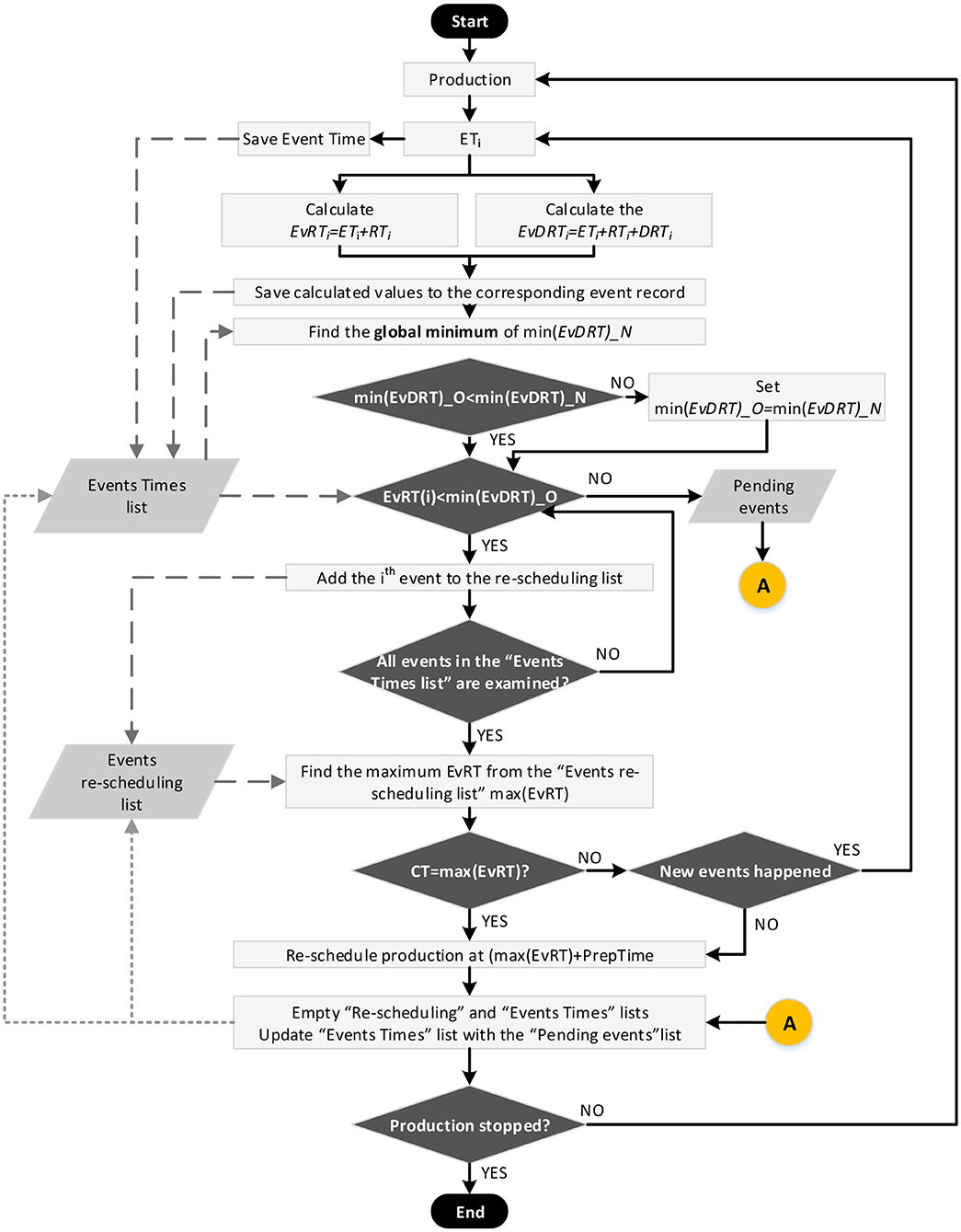

Figure 1 illustrates the flowchart of the developed algorithm, which was designed to make decisions in real time. One key feature of the algorithm is that it is event-driven, which means that every time a new event occurs, the algorithm runs and provides a decision. Each event is accompanied by an ET, which is saved to a list with all the current events under investigation. Using the calculated parameters RT and DRT for each event action, two times are calculated: the event response time (EvRT) and the event delay response time (EvDRT). Then, the minimum EvDRT from the current event list, min(EvDRT)_O, is calculated and compared with each event's EvRT_i. If EvRT_i is smaller than min(EvDRT)_O, then the event is included in the next rescheduling and is saved to the rescheduling event list; otherwise, the event is saved for future rescheduling. If a new event occurs with min(EvDRT)_N < min(EvDRT)_O, then the value of min(EvDRT)_O is replaced by min(EvDRT)_N, and all events are re-examined with the new min(EvDRT)_O value. For example, if the algorithm starts with some events and a new event has been considered and has the smallest EvDRT, then the entire process restarts, and all the events are evaluated using the new smallest EvDRT value. If another event occurs afterwards with an even smaller EvDRT, the same process applies. This process continues until there are no other events in the event list. Once this procedure is finished, the maximum EvRT from the rescheduling events list is found. If the CT is equal to the maximum EvRT, the production is rescheduled at max(EvRT)+PrepTime. PrepTime is the time that the production needs to prepare for rescheduling. The records of the event times and rescheduling lists are deleted, and the event time list is filled with the events from the pending event list.
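The selection step described above can be sketched in a few lines of Python. This is a simplified illustration, not the authors' implementation: it assumes that EvRT and EvDRT are absolute times obtained as ET + RT and ET + DRT, that the comparison is strict, and that the function is simply called again whenever a new event arrives (which re-evaluates all events against the new minimum EvDRT). The names `Event` and `plan_rescheduling` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Event:
    name: str
    et: float   # event timestamp (ET)
    rt: float   # response time (RT)
    drt: float  # delay response time (DRT)

    @property
    def ev_rt(self):
        # Assumption: EvRT is the absolute time ET + RT.
        return self.et + self.rt

    @property
    def ev_drt(self):
        # Assumption: EvDRT is the absolute time ET + DRT.
        return self.et + self.drt


def plan_rescheduling(events, prep_time):
    """Split the current events into the next rescheduling and a pending list.

    Returns (rescheduling_time, selected_events, pending_events).
    rescheduling_time is None when no event qualifies.
    """
    if not events:
        return None, [], []
    min_ev_drt = min(e.ev_drt for e in events)
    # Events that can be ready before the earliest deadline join this rescheduling.
    selected = [e for e in events if e.ev_rt < min_ev_drt]
    pending = [e for e in events if e.ev_rt >= min_ev_drt]
    if not selected:
        return None, [], pending
    reschedule_at = max(e.ev_rt for e in selected) + prep_time
    return reschedule_at, selected, pending


# Hypothetical events; all times share one arbitrary unit.
events = [
    Event("new order", et=0.0, rt=2.0, drt=10.0),
    Event("defect detected", et=1.0, rt=3.0, drt=6.0),
    Event("defect predicted", et=5.0, rt=4.0, drt=12.0),
]
when, selected, pending = plan_rescheduling(events, prep_time=0.5)
print(when, [e.name for e in selected], [e.name for e in pending])
```

In this example the earliest EvDRT is 7.0 (the detected defect), so the new order and the detected defect are rescheduled together at 4.5, while the predicted defect (EvRT 9.0) waits for a later rescheduling.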

Industrial Use Case

The analysis and the performance evaluation of the developed methodology were conducted using a real-life industrial scenario from the semiconductor domain. The scenario concerns the production, for a period of 1 year, of an electronic module that is used in a medical device. The product under investigation is composed of 15 manufacturing tasks and six inspection tasks at the points with the highest defect rates. The layout of the production is configured as a flexible job shop (Pinedo, 2016) and more specifically is composed of five work centres. Each work centre is composed of two or three identical machines capable of performing more than one task. The sixth work centre is responsible for quality inspection in areas that have the highest defect rate, between 5 and 6%. This work centre is composed of six inspection machines capable of performing only one inspection task.

The proposed methodology is integrated with a dynamic ZDM-oriented scheduling tool (Psarommatis and Kiritsis, 2018) to test the developed method. The ZDM-oriented scheduling tool has the four ZDM strategies implemented, and the concept of ZDM is used during the simulation. Therefore, for every defect detection or prediction, the tool assigns a mitigation action to counteract the implications of the triggering factor. Furthermore, preventive maintenance is also considered during the simulations, and therefore there are no breakdowns. Maintenance is performed based on the quality of the produced parts and is required after a threshold value to avoid future low-quality parts. The goal of the scheduling tool is to produce 100% acceptable products with as little performance loss as possible. The problem under investigation is strongly NP-hard, because although there is only one product under investigation, the same tasks of different orders are considered as different tasks. Therefore, the scheduling tool creates the schedule with the goal of serving each individual order in the best way. The scheduling tool utilises a set of six different heuristic rules to solve the scheduling problem (Psarommatis et al., 2020d). Each time it is used, all six rules are executed, and the best solution is selected. Heuristics are selected over other methods, as they require minimum computational time, which is important in an industrial environment. All heuristic rules utilise a function for retrieving information regarding the previous schedule, and the tool schedules the production with minimal alteration to the short-term schedule when possible. Additionally, defects are generated based on a random number from an exponential distribution, presented in Equation (4), which calculates the probability of a part being defected. The model is driven by the variable PT, which represents the total operation time of the machine from the last maintenance point to the point under investigation. 
Each machine has its own unique characteristics, and therefore “a” and “b” are different for each machine. The proposed dynamic scheduling tool was tested using the Shapiro-Wilk test to validate the normality of the results (Öner and Kocakoç, 2017). For this purpose, 30 runs using random parameter values – which were the same for all the simulations – were performed, and the normality of the KPIs was evaluated. The normality test showed that the results are normal, with a p-value of 0.2169.

The industrial case was used to analyse the developed method and to evaluate the performance of the method compared to other rescheduling policies. The analysis of the method was performed using a design of experiments method, specifically the Taguchi method (Phadke, 1995). This analysis is required to gain insights into the influence of each control parameter of the events management algorithm (section Events Management Algorithm Analysis). Section Comparison With Other Rescheduling Policies is devoted to measuring the performance of the method and the comparison with other rescheduling policies.

Performance Indicators

The quality of the solution of each simulation is measured based on five KPIs (Gholami and Zandieh, 2009; Wang et al., 2018; Liu, 2019). More specifically, the solutions are measured based on (i) makespan (MSP), (ii) tardiness (TRS), (iii) rescheduling frequency (RFY), (iv) production cost (PC), and (v) rescheduling cost (RSC). MSP and TRS are time-based measures of schedule efficiency, and their equations are reported in Pinedo (2016). The RFY represents the average length of the intervals between reschedulings. However, time-based performance measures do not directly reflect the economic aspect of the production system. Therefore, it is important also to evaluate scheduling decisions and strategies based on economic KPIs. In this research, two economic KPIs are used: the PC and the RSC. The PC is a function of the operational cost of machines, the cost of raw materials, the setup cost, the labour cost, and the depreciation of the machines. The RSC is calculated based on the number of tasks (NT) to be rescheduled (Equation 5). If there are tasks in the task list that are scheduled for the first time, no cost is calculated for them. RMSCF is the cost, expressed in cost units, of rescheduling one task. It includes the labour and machine transportation costs for re-configuring the production set-up to match the needs of the new schedule. To aggregate the five KPIs into one value, to be able to study the total performance of each simulation, a two-step method is used. The first step is the normalisation of the KPI values. In the second step, the utility value is calculated using a weighted sum formula (Mourtzis et al., 2015). The utility value is within the range [0,1]: the higher it is, the better the solution. Equation (6) illustrates the objective function that is used to aggregate all the KPIs into one value to be maximised. The “∧” represents the normalised values of the KPIs.
Equation (7) presents the relationships among the weights for each KPI: the sum of all the weights must equal one.
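The two-step aggregation described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the multiplicative form of the rescheduling cost (NT times RMSCF), the min-max normalisation with explicit `worst` and `best` reference values, and all function names are assumptions introduced here for illustration.

```python
from typing import Dict

KPIS = ("MSP", "TRS", "RFY", "PC", "RSC")


def rescheduling_cost(nt_rescheduled: int, rmscf: float) -> float:
    """Assumed form of the RSC: cost of rescheduling one task (RMSCF)
    multiplied by the number of rescheduled tasks (NT).
    Tasks scheduled for the first time are not counted in NT."""
    return nt_rescheduled * rmscf


def normalise(value: float, worst: float, best: float) -> float:
    """Assumed min-max normalisation to [0, 1], where 1 is the best.
    Works for KPIs to be minimised (best < worst) or maximised (best > worst)."""
    if worst == best:
        return 1.0
    score = (worst - value) / (worst - best)
    return min(1.0, max(0.0, score))


def utility(normalised: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted sum of the normalised KPIs; the weights must sum to one."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("KPI weights must sum to one")
    return sum(weights[k] * normalised[k] for k in KPIS)


# Illustrative values only (not taken from the case study).
equal_weights = {k: 0.2 for k in KPIS}
scores = {k: normalise(150.0, worst=200.0, best=100.0) for k in KPIS}
total = utility(scores, equal_weights)
```

Because every normalised KPI lies in [0, 1] and the weights sum to one, the resulting utility value also lies in [0, 1].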

Events Management Algorithm Analysis

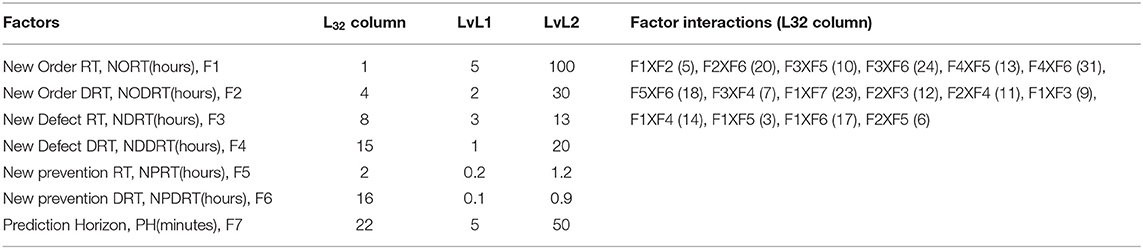

In the current section, a design of experiments is presented, based on the Taguchi method (Phadke, 1995). In this method, a standard orthogonal array is selected based on the number of factors and the levels of each factor. This orthogonal array defines the experiments that must be conducted for the method to produce accurate results. Other design of experiments methods were investigated, such as the central composite design and the Box-Behnken design, but they were rejected due to the high number of experiments that were required. The goal of this analysis is to gain insights into the influence of each of the algorithm's control parameters on the measured KPIs. For this analysis, three types of events were used: new orders, defect detection, and defect prediction. Therefore, in total there are six time-oriented parameters (an RT and a DRT for each event type) based on the definition described in section Events Management Algorithm. One more parameter, the prediction horizon (PH), controls how far ahead the prediction algorithm searches for a defect. Therefore, there are seven parameters to investigate, and two-level factors with interactions were selected. The factors' levels and the corresponding interactions can be seen in Table 1. It should be noted that, for this analysis, the DRTi time is considered static so that its value can be controlled and its impact on the solution measured. The L32 orthogonal array was selected to conduct the experiments, which denotes 32 experiments. By comparison, the other design of experiments methods would require a considerably larger number of experiments, due to their higher number of factor levels. This analysis is not performed to optimise the performance of the algorithm, but to understand the behaviour of each control parameter relative to the KPIs.
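To illustrate what a two-level orthogonal array with 32 runs looks like, the sketch below builds one from five basis columns and all of their mod-2 combinations, giving 31 columns, and checks pairwise orthogonality. It is an illustration only: the column ordering does not follow the standard published L32 table, and the assignment of the seven factors and their interactions to specific columns (as in Table 1) is not reproduced here. The function names are hypothetical.

```python
from itertools import combinations, product


def two_level_orthogonal_array(n_basis: int = 5):
    """Return a list of 2**n_basis runs with 2**n_basis - 1 two-level columns.

    Each column is the mod-2 sum (XOR) of a non-empty subset of the basis
    columns; levels are coded as 1 and 2.
    """
    runs = list(product((0, 1), repeat=n_basis))
    subsets = [
        s for r in range(1, n_basis + 1) for s in combinations(range(n_basis), r)
    ]
    return [[sum(run[i] for i in s) % 2 + 1 for s in subsets] for run in runs]


def is_orthogonal(array) -> bool:
    """Check that every pair of columns contains each of the four level
    combinations equally often."""
    expected = len(array) // 4
    for a, b in combinations(range(len(array[0])), 2):
        counts = {}
        for row in array:
            key = (row[a], row[b])
            counts[key] = counts.get(key, 0) + 1
        if len(counts) != 4 or any(c != expected for c in counts.values()):
            return False
    return True


l32 = two_level_orthogonal_array()
print(len(l32), len(l32[0]), is_orthogonal(l32))
```

In such an array, a column formed from two basis columns carries the interaction of the factors assigned to those basis columns, which is why factors and interactions must be assigned to columns with care.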

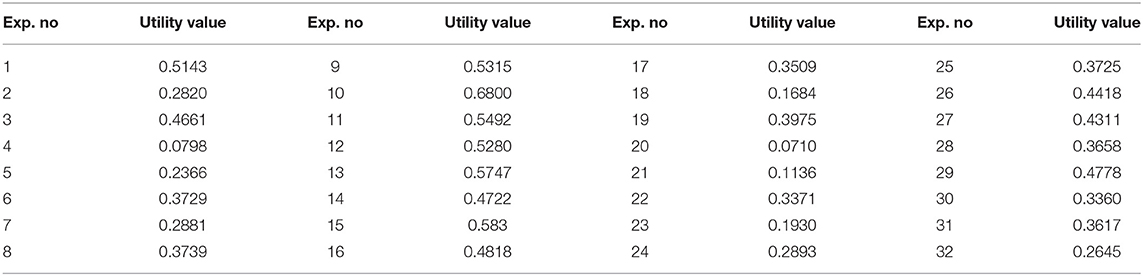

For each of the 32 experiments denoted by the L32, 10 runs were performed to improve the accuracy. The aim was to overcome the inherent randomness of generating defects during simulation. From the 10 simulation runs, the average utility value was taken as the final value for the experiment set. The results from the experiments are presented in Table 2 and analysed using ANOVA and ANOM. More specifically, an initial ANOVA was performed on the results to calculate the significance of each of the 23 terms examined (seven factor main effects and 16 interactions). The initial ANOVA showed that some factors and many interactions were not significant, and therefore a stepwise linear regression model reduction method was used to eliminate non-significant terms and improve the accuracy of the results. In total, 17 terms were eliminated, leaving six terms in the model. The reduced linear regression model showed a good fit, with an R-squared of 0.894, an adjusted R-squared of 0.869, a root mean squared error of 0.0546, and a p-value of 5e−11.
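As a quick consistency check on the reported fit statistics, the adjusted R-squared can be recomputed from the R-squared with the usual formula. The sketch below assumes that the regression was fitted on the 32 experiment averages, that the model includes an intercept, and that the six terms remaining after elimination (23 minus 17) are the only predictors; these assumptions are not stated explicitly in the text.

```python
def adjusted_r_squared(r_squared: float, n_obs: int, n_terms: int) -> float:
    """Standard adjustment of R-squared for the number of model terms
    (intercept not counted in n_terms)."""
    return 1.0 - (1.0 - r_squared) * (n_obs - 1) / (n_obs - n_terms - 1)


n_obs = 32            # one averaged utility value per L32 experiment (assumed)
n_terms = 23 - 17     # terms remaining after stepwise elimination
adj = adjusted_r_squared(0.894, n_obs, n_terms)
print(round(adj, 3))  # 0.869
```

Under these assumptions the recomputed value agrees with the reported adjusted R-squared of 0.869.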

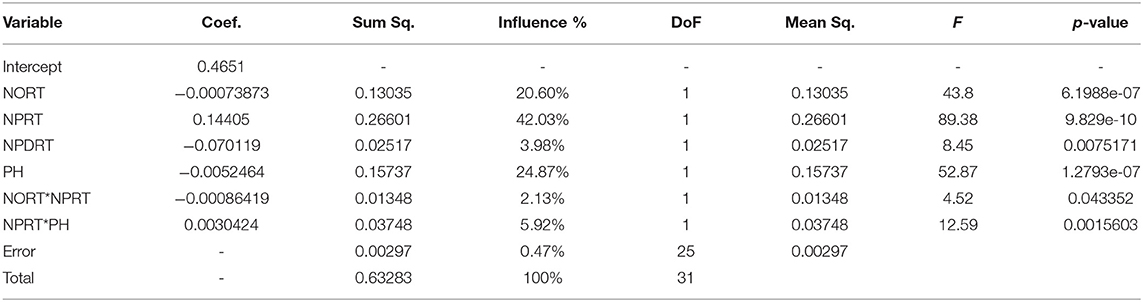

For the remaining significant terms, an ANOVA was performed, and the results are presented in Table 3. An interesting observation is that, in general, the DRT times did not contribute to the final solution, except for the NPDRT, which contributed only 3.98%. At the same time, the RT times showed significant contributions, with 20.60 and 42.03% for the NORT and NPRT, respectively. Furthermore, the PH factor showed a significant influence on the final solution, with the second highest percentage: 24.87%. Of the 16 interactions, only two were significant: the NORT*NPRT and the NPRT*PH, with 2.13 and 5.92%, respectively. The parameters regarding defects (NDRT and NDDRT) seem to have no significant impact on the solution. Next, to analyse the direction in which the quality of the solution moves, the ANOM methodology was used for the factor main effects. In summary, the transition from level 1 to level 2 for factors PH, NORT, and NPDRT affects the solution negatively, by 19.10, 16.19, and 8.20%, respectively. Only NPRT affected the solution positively, with a 24.39% difference from level 1 to level 2.
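The two quantities discussed above, the level means used in ANOM and the percentage contribution reported by ANOVA, can be sketched for a two-level factor as follows. The data are synthetic and chosen only to make the arithmetic easy to follow; they are not the values of Table 2. The percentage contribution is computed here as a plain ratio of sums of squares, which is an assumption; Taguchi-style analyses sometimes subtract an error-variance term first.

```python
from statistics import mean


def level_means(levels, response):
    """ANOM: mean response observed at each level of one factor."""
    return {
        lvl: mean(r for l, r in zip(levels, response) if l == lvl)
        for lvl in sorted(set(levels))
    }


def percent_contribution(levels, response):
    """ANOVA-style contribution: factor sum of squares over total sum of squares."""
    grand = mean(response)
    ss_total = sum((r - grand) ** 2 for r in response)
    means = level_means(levels, response)
    ss_factor = sum((means[l] - grand) ** 2 for l in levels)
    return 100.0 * ss_factor / ss_total


# Synthetic 2x2 full factorial: factor A dominates, factor B barely matters.
factor_a = [1, 1, 2, 2]
factor_b = [1, 2, 1, 2]
utility_values = [0.60, 0.62, 0.40, 0.42]

anom_a = level_means(factor_a, utility_values)            # about {1: 0.61, 2: 0.41}
pc_a = percent_contribution(factor_a, utility_values)     # about 99.0
pc_b = percent_contribution(factor_b, utility_values)     # about 1.0
```

In this toy example, moving factor A from level 1 to level 2 lowers the mean utility, which corresponds to the kind of negative direction reported for PH, NORT, and NPDRT.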

Comparison With Other Rescheduling Policies

The analysis of the developed method revealed some interesting insights regarding the performance of the events management algorithm. In reality, however, it is difficult to optimise parameters, such as how quickly the production can react to an event, mostly because they are the outcomes of many different aspects that are not easy to control. This is due to the dynamic nature of manufacturing systems. Nevertheless, manufacturers have the option to try to remain as close as possible to the values that least affect the solution. The analysis in section Events Management Algorithm Analysis provided the direction and extent to which each factor affects the final solution, providing a starting point that can be used as a reference.

In the current section, the comparison of the performance of the developed method with other rescheduling policies is conducted. For these experiments, the same industrial set-up as in section Events Management Algorithm Analysis is used, with the difference that the DRTi time is calculated dynamically based on the methodology presented in section Events Management Algorithm. Furthermore, for the specific industrial case, the NORT is set at 7 h, the NDRT at 3.5 h, the NPRT at 17 min, and the PH at 30 min. To those values, some stochasticity is introduced to simulate a real production environment. The stochasticity is introduced by using a normal distribution to generate a random number. The values mentioned earlier are used as the mean value of the distribution, and each has its own standard deviation based on the data provided by the specific industrial case. The current industrial scenario was simulated for the period of 1 year, and the demand profile was the average for the past 2 years.
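The way stochasticity is added to the control parameters can be sketched as below. The mean values are those stated above, converted to minutes. The standard deviations are not given in the text, so a hypothetical relative standard deviation of 10% is used, and negative draws are clipped to zero as an additional assumption.

```python
import random

# Mean values from the industrial case, in minutes.
MEANS_MIN = {"NORT": 7 * 60, "NDRT": 3.5 * 60, "NPRT": 17, "PH": 30}

# Hypothetical: the real standard deviations come from the industrial data.
RELATIVE_STD = 0.10


def sample_parameters(rng: random.Random) -> dict:
    """Draw one set of control parameters from normal distributions
    centred on the case-study means; negative values are clipped to zero."""
    sample = {}
    for name, mu in MEANS_MIN.items():
        value = rng.gauss(mu, RELATIVE_STD * mu)
        sample[name] = max(0.0, value)
    return sample


rng = random.Random(42)  # fixed seed for reproducibility of the sketch
one_draw = sample_parameters(rng)
```

Each simulated event would use a fresh draw, so repeated runs of the same scenario differ, which is why several runs per scenario are averaged.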

In total, five alternative rescheduling policies are simulated alongside the proposed method and a benchmark scenario that constitutes the ideal scenario, meaning that there are no defects and the only events that occur are new orders. The five rescheduling policies that are used are considered as fixed periodic rescheduling policies because of the simplicity of their implementation in production systems and their high performance (Stevenson et al., 2020). These policies are divided into two categories: rescheduling occurs after every event and rescheduling occurs at certain time intervals. In the latter category, four different time intervals are tested: rescheduling twice a day, every day, every 2 days, and every 3 days, of which some are widely used (Stevenson et al., 2020). For each rescheduling policy, 10 individual runs were performed, as explained in section Events Management Algorithm Analysis, to overcome the inherent randomness of generating defects and the randomness of the control factors of the developed method.
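The two categories of fixed policies can be illustrated with a short sketch that generates the rescheduling instants of each policy. It assumes a continuous 24-hour calendar and a 365-day horizon, which may differ from the working calendar of the industrial case; the function names are hypothetical.

```python
from math import ceil

# Interval-based policies from the text, expressed in hours (24 h days assumed).
PERIODIC_POLICIES_H = {
    "twice a day": 12,
    "every day": 24,
    "every 2 days": 48,
    "every 3 days": 72,
}


def periodic_reschedule_times(horizon_h: float, interval_h: float) -> list:
    """Rescheduling instants of a fixed-interval policy within the horizon."""
    times = []
    k = 1
    while k * interval_h <= horizon_h:
        times.append(k * interval_h)
        k += 1
    return times


def event_driven_reschedule_times(event_times_h) -> list:
    """Rescheduling after every event: one rescheduling per distinct event time."""
    return sorted(set(event_times_h))


def wait_until_next_reschedule(event_time_h: float, interval_h: float) -> float:
    """Time an event waits before a fixed-interval policy reacts to it."""
    return ceil(event_time_h / interval_h) * interval_h - event_time_h


HORIZON_H = 365 * 24
counts = {
    name: len(periodic_reschedule_times(HORIZON_H, h))
    for name, h in PERIODIC_POLICIES_H.items()
}
```

The waiting time shows why interval-based policies struggle with prediction events: with daily rescheduling, an event arriving 13 h into the day waits 11 h, far longer than a prediction horizon measured in minutes.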

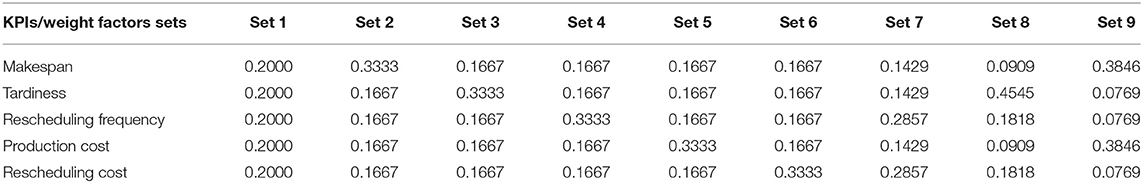

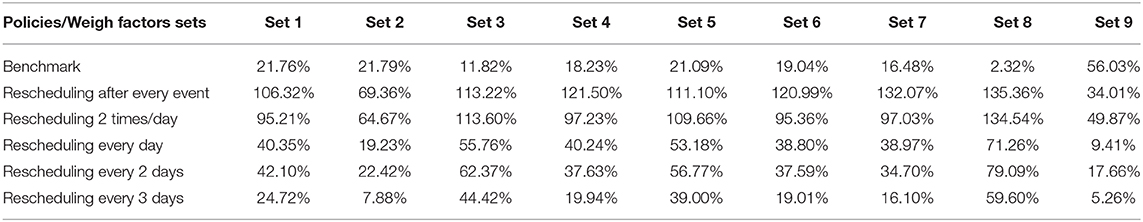

The performance of the seven simulated scenarios was measured using the five defined KPIs, which were aggregated into one value, the utility value (section Performance Indicators). Furthermore, multiple KPI weight factor sets were used to calculate the utility value and study the impact of each rescheduling method on each KPI or on a combination of KPIs. In total, nine weight factor sets were investigated; Table 4 presents the actual weight factor values for each KPI. The weight factor sets belong to three categories: set 1 considers all the KPIs equally; sets 2–6 place importance on one KPI at a time, keeping all the others equal; and sets 7–9 place importance on more than one KPI at a time. The results from the simulation are illustrated in Figure 2, in which the utility values are presented for each weight factor set. Furthermore, the error from the 10 individual simulation runs of each scenario was calculated and is presented using error bars, with a 95% confidence interval. Analysing the results further, the relative difference between the developed method (events management algorithm) and the other six scenarios was calculated and is summarised in Table 5.
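The error bars and relative differences mentioned above can be computed along the following lines. The text does not state how the confidence interval or the relative difference was calculated, so this sketch assumes a Student t interval on the mean of the 10 runs and a relative difference expressed as a percentage of the other scenario's utility value. The run values are synthetic.

```python
from math import sqrt
from statistics import mean, stdev

# Two-sided 95% critical value of Student's t for 9 degrees of freedom (10 runs).
T_CRIT_95_DF9 = 2.262


def ci95_half_width(runs) -> float:
    """Half-width of the 95% confidence interval of the mean of 10 runs."""
    if len(runs) != 10:
        raise ValueError("the critical value used here is only valid for 10 runs")
    return T_CRIT_95_DF9 * stdev(runs) / sqrt(len(runs))


def relative_difference(method_utility: float, other_utility: float) -> float:
    """Assumed definition: percentage by which the method differs from the other scenario."""
    return 100.0 * (method_utility - other_utility) / other_utility


# Synthetic utility values of 10 runs of one scenario.
runs = [0.70, 0.72, 0.69, 0.71, 0.73, 0.70, 0.68, 0.72, 0.71, 0.70]
centre = mean(runs)
half_width = ci95_half_width(runs)
```

A scenario would then be plotted as `centre` with an error bar of plus or minus `half_width`.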

Overall, the results showed that the proposed method outperformed the five other rescheduling policies and, considering the results from all the weight factor sets, was on average within 21.1% of the best solution. The worst solution for almost all the weight factor sets was rescheduling after each event; only in set 9, in which attention was given to the MSP and PC, was this policy the second worst, after rescheduling twice a day. Furthermore, third place is held by rescheduling every 3 days, which was on average 26.2% worse than the proposed method. In Figure 2, three different trends are observed among the different weight factor sets. The first concerns sets 1, 3, 4, 5, and 6, in which the events management algorithm was clearly the second best solution, with a relative difference of 29.4% ahead of the third best and 18.4% behind the benchmark scenario. These weight factor sets are those that either give equal importance to each KPI or give attention to one KPI at a time, keeping all the others at the same weight, except MSP. When attention is given to the MSP, a similar trend is observed in weight factor sets 2 and 9, in which the performance of the developed method is very close to, but 6.6% better than, the next policy. More specifically, in set 2, in which importance is given only to MSP, the performance of rescheduling every 3 days is visibly increased and the performance of the developed method is at its second lowest value. However, in set 9, in which attention is given to MSP and PC, the proposed method reached its lowest value and was 5.3% better than the next policy. The characteristics of set 8 are not observed in other weight factor sets. This set gives significant attention to TRS and less attention to RFY and RSC. For this weight factor set, the events management algorithm provided the best solution, with only a 3.6% relative difference from the benchmark scenario.
The results of the proposed method showed almost double the error between the individual simulation runs compared to the other rescheduling policies, whereas the benchmark scenario had the smallest error.

Critical Discussion

The results from the design of experiments presented in section Events Management Algorithm Analysis revealed some important insights regarding the behaviour of the proposed methodology and the defined control parameters. The most important outcome is that the DRT times do not influence the performance of the solution, except for the NPDRT, which has a 3.98% influence. On the one hand, this result shows that the developed method has a significant level of robustness, because the DRT does not affect the final solution, which provides flexibility for manufacturers. In addition, this result is aligned with the proposed dynamic calculation of the DRT times: if the DRT times affected the solution significantly, the dynamic calculation would be impractical, or would at least require additional boundaries on the calculated DRT times. On the other hand, the small (3.98%) influence of NPDRT is also reasonable and expected, because when an event is predicted, production should act before the predicted event occurs in order to prevent it, as ZDM states. If the DRT is not aligned with the NPRT and PH, then the preventive action might be scheduled after the occurrence of the event, defeating the purpose of the prediction. Additionally, regarding the occurrence of defects and the repairing of parts, it is encouraging that neither NDRT nor NDDRT affects the solution, which also provides some flexibility to manufacturers. This can be explained by the fact that the new repair tasks are inserted into the existing schedule, causing minimal changes to the previous schedule. For the same reason as with the NPDRT, the strongest interaction observed was the NPRT*PH, which was expected since these parameters are tightly connected. The NPRT must be smaller than the PH; otherwise, there is no reason for predicting, because production is unable to act before the occurrence of the event to avoid it. Together, the NORT, NPRT, and PH parameters accounted for 87.5% of the influence on the solution.
This result is encouraging because manufacturers can control these parameters, although it is difficult.
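The relationship between the NPRT and the PH discussed above amounts to a simple feasibility condition, sketched below with hypothetical function names. It only checks whether production can react before a predicted event occurs; it does not model the DRT or the scheduling itself.

```python
def prevention_margin_min(ph_min: float, nprt_min: float) -> float:
    """Time left, in minutes, between the end of the response to a prediction
    and the predicted occurrence of the event."""
    return ph_min - nprt_min


def prediction_is_actionable(ph_min: float, nprt_min: float) -> bool:
    """A prediction is only useful if production can respond before the event."""
    return prevention_margin_min(ph_min, nprt_min) > 0


# Mean values used for the industrial case: PH = 30 min, NPRT = 17 min.
margin = prevention_margin_min(30, 17)
actionable = prediction_is_actionable(30, 17)
```

With the case-study means, the margin is 13 min, so any delay added on top of the response time must stay within that window for the preventive action to precede the event.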

The developed events management algorithm performed strongly compared to the five alternative rescheduling policies, showing that it can efficiently handle events and calculate the next rescheduling time. Furthermore, the heuristic-based approach produced high-quality results very quickly, with a decision time of less than a second. By comparison, an optimisation method developed for reference, which examined all the possible rescheduling scenarios given the same events, required 45 min to calculate the optimal rescheduling scenario and performed only 4.8% better than the proposed events management algorithm.

Zero defect manufacturing is an emerging concept, and therefore not many studies have been conducted on implementing ZDM concepts in the scheduling problem. To the authors' best knowledge, there are no studies combining traditional production disruption events, such as new orders or machine breakdowns, with ZDM-oriented events. More specifically, traditional rescheduling policies do not have the flexibility that is required by ZDM events, particularly the predict–prevent approach. This approach requires by default that rescheduling is performed frequently, because the events require rapid responses to avoid quality issues. This is the main issue with rescheduling at specific time intervals: these methods do not have the required flexibility to handle prediction events or capture the dynamics of production. By contrast, rescheduling after each event could potentially be a good alternative in an ideal manufacturing environment. Nevertheless, the experiments showed that when importance was given to the RSC, this policy produced the worst solution. This is without considering the costs that might arise from mistakes caused by operators' confusion over the high number of reschedules, which are very difficult to calculate. Furthermore, the dynamic behaviour and rapid changes of the market require dynamic approaches and, more importantly, holistic methods that integrate many different aspects into one model, providing the required flexibility to make future modifications without causing problems. Efficient rescheduling is crucial for the competitiveness of a manufacturing company. Although in the event of reliable processes and a low frequency of orders there is no need for rescheduling, and therefore most of the rescheduling policies will converge to the same results, this is not the case in the contemporary manufacturing landscape.

Events that disrupt production are the main causes of disorder at the shop-floor level and could create huge costs if they are not managed correctly. Theoretically, it is better to act immediately when events occur and avoid accumulating them, so that demand is satisfied without increasing supply chain costs (such as inventory costs for raw materials). Furthermore, a quick reaction to events indicates that production is very flexible and has the capability to efficiently handle this type of unexpected event. It also has a positive impact on customers' experience and satisfaction, since manufacturers are able to meet the customers' demands at the agreed time. The proposed methodology would help production to become more resilient and thus to stand out in the market. For instance, during the COVID-19 pandemic, the demand for medical instruments rose sharply, which led companies to receive many new orders per day alongside all the other unexpected events that can arise in a manufacturing environment. A flexible and resilient system for managing events at the production level would help them to meet the demand and increase their market share with minimal performance losses and a more sustainable production system.

Conclusion and Future Work

The current study proposed an events management algorithm to dynamically calculate the next rescheduling time. In an era of high quality standards and a need for sustainable production systems, ZDM is gaining ground rapidly. The proposed method can assist in the successful implementation of ZDM in manufacturing systems, minimising the negative environmental impact of production and thereby contributing to the first pillar of sustainability. Additionally, the proposed methodology contributes to the second pillar of sustainability, the accomplishment of an economically sound manufacturing process, by balancing the events to schedule against the designed KPIs using the dynamic rescheduling model.

The proposed methodology was designed to consider both traditional events, such as new orders or machine breakdowns, and events created by ZDM when calculating the next rescheduling time. Furthermore, the developed methodology is flexible and can be expanded to accommodate other types of events, without modifications to the method, by adding the control parameters of the new event to the algorithm. The algorithm utilises RT and DRT as master control parameters, which are customised for each event. Furthermore, a methodology for dynamically calculating the DRT was presented to minimise rescheduling frequency as much as possible.

The developed methodology was analysed using the Taguchi method to design the experiments. This analysis provided valuable insights into the influence of the algorithm's control parameters on the final solution. More specifically, the method proved sufficiently robust. As DRT times did not influence the solution, a dynamic calculation of the DRT could be used and could provide an extra level of flexibility and ease in utilising the proposed methodology. Additionally, the proposed algorithm was compared to five alternative rescheduling policies, and the results showed that the current approach could effectively and efficiently handle different types of events and at the same time make possible the implementation of the ZDM concept in a production system. Furthermore, such methods can also help the ZDM implementation to handle situations with a high number of orders more efficiently, such as those observed during the COVID-19 pandemic.

Future work will focus on the integration of predictive maintenance events into the scheduling tool and events management algorithm and consider if the same trends for the new control parameters are observed as with those presented in the current work.

Data Availability Statement

The original contributions presented in the study are included in the article/supplementary material, further inquiries can be directed to the corresponding author.

Author Contributions

FP: conceptualisation, experiment design, conducting the experiments, results elaboration, writing, and reviewing. GM: writing and results elaboration. XZ: writing and reviewing. DK: reviewing. All authors contributed to the article and approved the submitted version.

Funding

The present work was supported by EU H2020 funded projects Z-Fact0r (723906) and QU4LITY (825030).

Disclaimer

The paper reflects the authors' views only and not the Commission's.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Al-Hinai, N., and Elmekkawy, T. Y. (2011). Robust and stable flexible job shop scheduling with random machine breakdowns using a hybrid genetic algorithm. Int. J. Prod. Econ. 132, 279–291. doi: 10.1016/j.ijpe.2011.04.020

Barták, R., and Vlk, M. (2017). “Hierarchical task model for resource failure recovery in production scheduling,” in Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics) (Heidelberg: Springer Verlag), 362–378. doi: 10.1007/978-3-319-62434-1_30

Battaïa, O., Sanmartin, L., and Pralet, C. (2019). Dealing with disruptions in low-volume manufacturing: a constraint programming approach. Procedia CIRP 81, 1372–1375. doi: 10.1016/j.procir.2019.04.030

Baykasoglu, A., and Karaslan, F. S. (2017). Solving comprehensive dynamic job shop scheduling problem by using a GRASP-based approach. Int. J. Prod. Res. 55, 3308–3325. doi: 10.1080/00207543.2017.1306134

Buddala, R., and Mahapatra, S. S. (2019). Two-stage teaching-learning-based optimization method for flexible job-shop scheduling under machine breakdown. Int. J. Adv. Manuf. Technol. 100, 1419–1432. doi: 10.1007/s00170-018-2805-0

Cardin, O., Trentesaux, D., Thomas, A., Castagna, P., Berger, T., and Bril El-Haouzi, H. (2017). Coupling predictive scheduling and reactive control in manufacturing hybrid control architectures: state of the art and future challenges. J. Intell. Manuf. 28, 1503–1517. doi: 10.1007/s10845-015-1139-0

Dreyfus, P. A., and Kyritsis, D. (2018). “A framework based on predictive maintenance, zero-defect manufacturing and scheduling under uncertainty tools, to optimize production capacities of high-end quality products,” in IFIP Advances in Information and Communication Technology (New York, NY: LLC Springer), 296–303. doi: 10.1007/978-3-319-99707-0_37

Ferrer, S., Nicolò, G., Salido, M. A., Giret, A., and Barber, F. (2018). “Dynamic rescheduling in energy-aware unrelated parallel machine problems,” in IFIP Advances in Information and Communication Technology (New York, NY: LLC Springer). doi: 10.1007/978-3-319-99707-0_29

Gholami, M., and Zandieh, M. (2009). Integrating simulation and genetic algorithm to schedule a dynamic flexible job shop. J. Intell. Manuf. 20, 481–498. doi: 10.1007/s10845-008-0150-0

Gupta, D., and Maravelias, C. T. (2016). On deterministic online scheduling: major considerations, paradoxes and remedies. Comput. Chem. Eng. 94, 312–330. doi: 10.1016/j.compchemeng.2016.08.006

Joo, B. J., Chua, T. J., and Cai, T. X. (2016). “Dispatching rule-based scheduling algorithm for a dynamic flexible flow shop with time-dependent process defect rate,” in CIE 2016: 46th International Conferences on Computers and Industrial Engineering (Tianjin).

Khan, A., and Turowski, K. (2016). “A survey of current challenges in manufacturing industry and preparation for industry 4.0,” in Advances in Intelligent Systems and Computing (Rostov-on-Don – Sochi). doi: 10.1007/978-3-319-33609-1_2

Kucharska, E., Grobler-Dȩbska, K., and Raczka, K. (2017). Algebraic-logical meta-model based approach for scheduling manufacturing problem with defects removal. Adv. Mech. Eng. 9, 1–18. doi: 10.1177/1687814017692291

Levitin, G., Finkelstein, M., and Huang, H. Z. (2019). Scheduling of imperfect inspections for reliability critical systems with shock-driven defects and delayed failures. Reliab. Eng. Syst. Saf. 189, 89–98. doi: 10.1016/j.ress.2019.04.016

Li, Z. (2019). Multi-task scheduling optimization in shop floor based on uncertainty theory algorithm. Acad. J. Manuf. Eng. 17, 104–112.

Lindström, J., Lejon, E., Kyösti, P., Mecella, M., Heutelbeck, D., Hemmje, M., et al. (2019). “Towards intelligent and sustainable production systems with a zero-defect manufacturing approach in an Industry4.0 context,” in Procedia CIRP (Póvoa de Varzim: Elsevier B.V.), 880–885. doi: 10.1016/j.procir.2019.03.218

Liu, L. (2019). Outsourcing and rescheduling for a two-machine flow shop with the disruption of new arriving jobs: a hybrid variable neighborhood search algorithm. Comput. Ind. Eng. 130, 198–221. doi: 10.1016/j.cie.2019.02.015

Liu, L., and Zhou, H. (2013). On the identical parallel-machine rescheduling with job rework disruption. Comput. Ind. Eng. 66, 186–198. doi: 10.1016/j.cie.2013.02.018

Mehta, S. V., and Uzsoy, R. (1999). Predictable scheduling of a single machine subject to breakdowns. Int. J. Comput. Integr. Manuf. 12, 15–38. doi: 10.1080/095119299130443

Mejía, G., and Lefebvre, D. (2019). Robust scheduling of flexible manufacturing systems with unreliable operations and resources. Int. J. Prod. Res. 58, 1–19. doi: 10.1080/00207543.2019.1682706

Moghaddam, S. K., and Saitou, K. (2019). On optimal dynamic pegging in rescheduling for new order arrival. Comput. Ind. Eng. 136, 46–56. doi: 10.1016/j.cie.2019.07.012

Mourtzis, D., Doukas, M., and Psarommatis, F. (2015). A toolbox for the design, planning and operation of manufacturing networks in a mass customisation environment. J. Manuf. Syst. 36, 274–286. doi: 10.1016/j.jmsy.2014.06.004

Myklebust, O. (2013). Zero defect manufacturing: a product and plant oriented lifecycle approach. Procedia CIRP 12, 246–251. doi: 10.1016/j.procir.2013.09.043

Nouiri, M., Bekrar, A., and Trentesaux, D. (2018). Towards energy efficient scheduling and rescheduling for dynamic flexible job shop problem. IFAC-PapersOnLine 51, 1275–1280. doi: 10.1016/j.ifacol.2018.08.357

O'Donovan, R., Uzsoy, R., and McKay, K. N. (1999). Predictable scheduling of a single machine with breakdowns and sensitive jobs. Int. J. Prod. Res. 37, 4217–4233. doi: 10.1080/002075499189745

Öner, M., and Kocakoç, I. D. (2017). JMASM 49: a compilation of some popular goodness of fit tests for normal distribution: their algorithms and MATLAB codes (MATLAB). J. Mod. Appl. Stat. Methods 16, 547–575. doi: 10.22237/jmasm/1509496200

Ouelhadj, D., and Petrovic, S. (2009). A survey of dynamic scheduling in manufacturing systems. J. Sched. 12, 417–431. doi: 10.1007/s10951-008-0090-8

Pfund, M. E., and Fowler, J. W. (2017). Extending the boundaries between scheduling and dispatching: hedging and rescheduling techniques. Int. J. Prod. Res. 55, 3294–3307. doi: 10.1080/00207543.2017.1306133

Pinedo, M. L. (2016). Scheduling Theory, Algorithms, and Systems. 5th Edn. New York, NY: Springer International Publishing.

Psarommatis, F. (2021). A generic methodology and a digital twin for zero defect manufacturing (ZDM) performance mapping towards design for ZDM. J. Manuf. Syst. 59, 507–521. doi: 10.1016/j.jmsy.2021.03.021

Psarommatis, F., Gharaei, A., and Kiritsis, D. (2020a). Identification of the critical reaction times for re-scheduling flexible job shops for different types of unexpected events. Procedia CIRP 93, 903–908. doi: 10.1016/j.procir.2020.03.038

Psarommatis, F., and Kiritsis, D. (2018). “A scheduling tool for achieving zero defect manufacturing (ZDM): a conceptual framework,” in IFIP Advances in Information and Communication Technology (New York, NY: LLC Springer), 271–278. doi: 10.1007/978-3-319-99707-0_34

Psarommatis, F., May, G., Dreyfus, P.-A., and Kiritsis, D. (2020b). Zero defect manufacturing: state-of-the-art review, shortcomings and future directions in research. Int. J. Prod. Res. 7543, 1–17. doi: 10.1080/00207543.2019.1605228

Psarommatis, F., Prouvost, S., May, G., and Kiritsis, D. (2020c). Product quality improvement policies in industry 4.0: characteristics, enabling factors, barriers, and evolution toward zero defect manufacturing. Front. Comput. Sci. 2, 1–15. doi: 10.3389/fcomp.2020.00026

Psarommatis, F., Vuichard, M., and Kiritsis, D. (2020d). Improved heuristics algorithms for re-scheduling flexible job shops in the era of Zero Defect manufacturing. Procedia Manuf. 51, 1485–1490. doi: 10.1016/j.promfg.2020.10.206

Qiao, F., Ma, Y. M., Zhou, M. C., and Wu, Q. D. (2018). A novel rescheduling method for dynamic semiconductor manufacturing systems. IEEE Trans. Syst. Man Cybern. Syst. 50, 1679–1689. doi: 10.1109/TSMC.2017.2782009

Rahmani, D., and Ramezanian, R. (2016). A stable reactive approach in dynamic flexible flow shop scheduling with unexpected disruptions: a case study. Comput. Ind. Eng. 98, 360–372. doi: 10.1016/j.cie.2016.06.018

Rangsaritratsamee, R., Ferrell, W. G., and Kurz, M. B. (2004). Dynamic rescheduling that simultaneously considers efficiency and stability. Comput. Ind. Eng. 46, 1–15. doi: 10.1016/j.cie.2003.09.007

Salido, M. A., Escamilla, J., Barber, F., and Giret, A. (2017). Rescheduling in job-shop problems for sustainable manufacturing systems. J. Clean. Prod. 162, 121–132. doi: 10.1016/j.jclepro.2016.11.002

Stevenson, Z., Fukasawa, R., and Ricardez-Sandoval, L. (2020). Evaluating periodic rescheduling policies using a rolling horizon framework in an industrial-scale multipurpose plant. J. Sched. 23, 397–410. doi: 10.1007/s10951-019-00627-5

Sun, J., and Xue, D. (2001). A dynamic reactive scheduling mechanism for responding to changes of production orders and manufacturing resources. Comput. Ind. 46, 189–207. doi: 10.1016/S0166-3615(01)00119-1

Uhlmann, I. R., and Frazzon, E. M. (2018). Production rescheduling review: opportunities for industrial integration and practical applications. J. Manuf. Syst. 49, 186–193. doi: 10.1016/j.jmsy.2018.10.004

Vieira, G. E., Herrmann, J. W., and Lin, E. (2003). Rescheduling manufacturing systems: a framework of strategies, policies, and methods. J. Schedul. 6, 39–62. doi: 10.1023/A:1022235519958

Villa, A., and Taurino, T. (2018). Event-driven production scheduling in SME. Prod. Plan. Control 29, 271–279. doi: 10.1080/09537287.2017.1401143

Wang, H., Jiang, Z., Wang, Y., Zhang, H., and Wang, Y. (2018). A two-stage optimization method for energy-saving flexible job-shop scheduling based on energy dynamic characterization. J. Clean. Prod. 188, 575–588. doi: 10.1016/j.jclepro.2018.03.254

Yin, Y., Cheng, T. C. E., and Wang, D. J. (2016). Rescheduling on identical parallel machines with machine disruptions to minimize total completion time. Eur. J. Oper. Res. 252, 737–749. doi: 10.1016/j.ejor.2016.01.045

Keywords: zero-defect manufacturing, rescheduling, unexpected events, events management, design of experiments

Citation: Psarommatis F, Martiriggiano G, Zheng X and Kiritsis D (2021) A Generic Methodology for Calculating Rescheduling Time for Multiple Unexpected Events in the Era of Zero Defect Manufacturing. Front. Mech. Eng. 7:646507. doi: 10.3389/fmech.2021.646507

Received: 27 December 2020; Accepted: 01 April 2021;

Published: 26 April 2021.

Edited by:

Amit Bandyopadhyay, Washington State University, United States

Reviewed by:

Otávio Oliveira, São Paulo State University, Brazil

Henrique de Amorim Almeida, Polytechnic Institute of Leiria, Portugal

Copyright © 2021 Psarommatis, Martiriggiano, Zheng and Kiritsis. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Foivos Psarommatis, foivos.psarommatis@epfl.ch
