- School of Automation and Electrical Engineering, Shenyang Ligong University, Shenyang, China

Underwater images typically suffer from low quality because of complex imaging environments, which impedes the development of the Space-Air-Ground-Sea Integrated Network (SAGSIN). Existing physical models often ignore the absorption and attenuation of light in water, so they fail to recover details and produce results with low contrast. To address this issue, we propose an attenuated incident optical model and combine it with a background segmentation technique for underwater image restoration. Specifically, we first use gradient, chromatic aberration, and area features to separate the foreground region of the image from the background region. We then introduce a background light layer into the underwater imaging model to account for the effects of non-uniform incident light. Next, we employ a new maximum reflection prior to estimate the background light layer and restore the foreground region. Meanwhile, the contrast of the background region is enhanced by stretching its saturation and brightness components. Extensive experiments on four underwater image datasets, comparing our method with classical and state-of-the-art (SOTA) algorithms, demonstrate that it not only restores textures and details but also handles images captured under non-uniform lighting conditions well.

1 Introduction

Underwater images play a significant role in fields such as the exploration of marine resources, environmental protection, and disaster warning systems. In recent years, Space-Air-Ground-Sea Integrated Networks (SAGSIN) have provided new solutions for underwater image processing by integrating various resources, enabling efficient image acquisition, transmission, and processing (Guo et al., 2021; Cheng et al., 2020). However, due to the unique characteristics of the underwater environment, image clarity remains a critical issue to address, and high-quality underwater images are particularly important in applications such as those within the SAGSIN (Deng et al., 2019; Lei et al., 2022; Liu et al., 2022b).

In recent decades, many enhancement (Wang et al., 2023b; Zheng et al., 2024; Bi et al., 2024; Liu et al., 2024; Wang et al., 2023a; Wang et al., 2024b, c) and restoration (Ali and Mahmood, 2022; Zhou et al., 2022a; Li et al., 2022; Liang et al., 2021) methods have been developed to improve underwater image quality. However, current underwater image processing methods still have limitations that require further research. They typically rely on strict assumptions, such as uniform lighting during underwater imaging, so the restored images can have insufficient contrast and inaccurate colors. Furthermore, most existing methods treat the global background light as a constant, which leads to unsatisfactory results for underwater images captured under complex lighting conditions (Raveendran et al., 2021).

This paper improves the classical imaging model by fully considering the absorption and attenuation of light in water, thereby addressing the problem of non-uniformly attenuated incident light. We propose a novel underwater image restoration method to solve the problems caused by the imaging process. The main contributions are as follows:

(1) We propose a new maximum reflection prior for estimating the background light layer, and we introduce the background light layer into the attenuated incident optical model, which fully accounts for the absorption and attenuation of light in water.

(2) We utilize the gradient, chromatic aberration and area features of the image to distinguish between the foreground and background, thereby effectively avoiding the issue of inaccurate color information recovery.

(3) We propose a feature-prior-based depth map estimation model for generating transmission maps. This model effectively enhances details and recovers texture.

The remainder of the paper is organized as follows: Section 2 presents the existing underwater image processing methods. Section 3 provides a detailed description of the proposed approach. Section 4 reports the experimental results, and Section 5 presents the conclusions.

2 Related work

2.1 Underwater imaging model

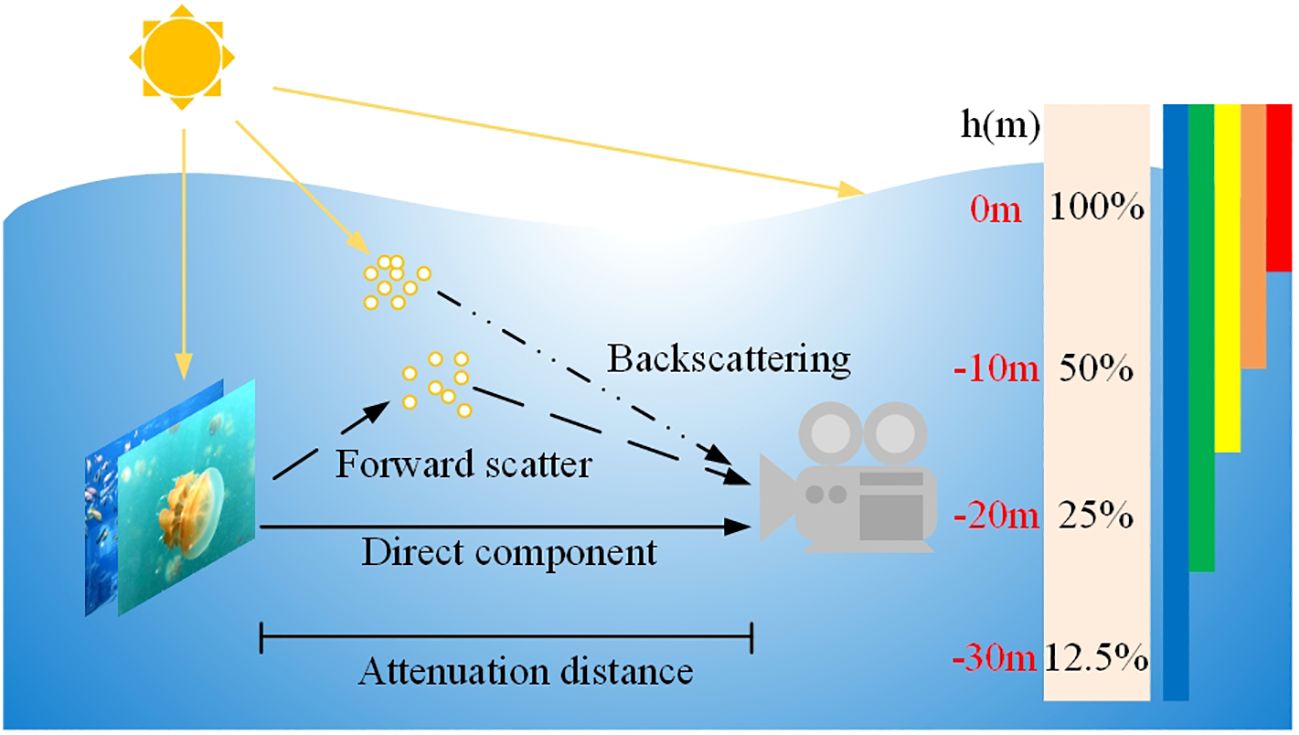

Underwater optical images are attenuated to some degree because of the complexity of the underwater imaging process. The Jaffe-McGlamery underwater imaging model (Hu et al., 2022) describes how light propagates and provides a comprehensive characterization of the imaging process. In this model, the image received by the underwater imaging system is a linear combination of three components: a direct component, a forward scattering component, and a backscattering component. The scattering of ambient light increases with distance because the amount of scattering medium along the line of sight grows (described by the volume scattering function), which strengthens the backscattering component. When the distance between the object and the camera is small, the forward scattering component is negligible. The simplified underwater imaging model therefore consists mainly of the direct component and the backscattering component, and can be expressed as:

$$I^c(x) = J^c(x)\,t^c(x) + B^c\left(1 - t^c(x)\right), \quad c \in \{r, g, b\} \tag{1}$$

where $I^c(x)$ is the degraded image obtained by the imaging device, $J^c(x)$ is the clear image to be restored, $B^c$ is the underwater background light, $t^c(x)$ is the transmittance of the medium, and $x$ denotes the position of a pixel. The goal of underwater image restoration is to recover $J^c(x)$ from $I^c(x)$.
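A minimal per-pixel sketch of this model and its inversion follows, assuming $t^c$ and $B^c$ are already known and intensities are normalized to [0, 1]. The lower bound `t_min` on the transmission is a common safeguard against division by near-zero values and is our assumption, not a parameter stated in the text.

```python
def degrade(J, t, B):
    """Forward model per channel: I = J*t + B*(1 - t)."""
    return [j * tc + b * (1.0 - tc) for j, tc, b in zip(J, t, B)]


def restore(I, t, B, t_min=0.1):
    """Invert the model per channel: J = (I - B) / max(t, t_min) + B.

    t_min is a hypothetical lower bound that keeps the division stable.
    """
    return [(i - b) / max(tc, t_min) + b for i, tc, b in zip(I, t, B)]


# One pixel, channels ordered (r, g, b).
J = [0.2, 0.5, 0.7]
t = [0.3, 0.6, 0.8]
B = [0.1, 0.6, 0.7]
I = degrade(J, t, B)
print(restore(I, t, B))  # recovers J up to floating-point rounding
```

Whenever every $t^c \ge$ `t_min`, the inversion is exact; clamping only matters for very distant, heavily attenuated pixels.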

A schematic diagram of an underwater imaging model is shown in Figure 1.

2.2 Underwater image processing methods

Underwater image processing methods can currently be divided into traditional methods and deep learning methods. Depending on whether they model the imaging process, traditional methods can be further divided into model-free underwater image enhancement methods and model-based underwater image restoration methods.

2.2.1 Model-free method

Model-free underwater image enhancement methods use digital image processing or machine learning techniques to highlight key information in an image while suppressing redundant information according to specific needs. Ghani et al. (Ghani and Isa, 2017) enhanced contrast by recursively partitioning the image and adaptively adjusting the histograms of the sub-blocks, which addresses the over-enhancement and noise amplification of traditional histogram equalization. Zhang et al. (Zhang et al., 2021) compensated the RGB channels using fractional compensation and combined this with background stretching and foreground stretching to enhance image contrast. Yuan et al. (Yuan et al., 2020) enriched image details through multiple morphological operations, stretched the detail-enhanced results, and corrected the distorted colors. Zhou et al. (Zhou et al., 2022b) extracted the illumination component using a multi-scale Retinex algorithm and introduced a color restoration factor to correct the color channels. These enhancement methods improve image color and contrast to a certain extent, but they still cannot fully restore the true colors and are difficult to apply to all types of underwater scenes.

2.2.2 Model-based method

Physical model-based underwater image restoration methods fully consider the propagation characteristics of light in water. They build an imaging model of the underwater degradation process, estimate the model parameters from prior assumptions, and invert the model to obtain a clear image. He et al. (He et al., 2010) proposed the Dark Channel Prior (DCP) algorithm based on statistics of a large number of clear outdoor images. Drews et al. (Drews et al., 2016) proposed the Underwater Dark Channel Prior (UDCP) by verifying two hypotheses: that the blue and green channels of underwater images contain the main visual information, and that the DCP remains valid when applied to the blue and green channels only. Akkaynak et al. (Akkaynak and Treibitz, 2018) verified that the attenuation coefficients of the direct and backscattered components are different, and proposed the Akkaynak-Treibitz model to address the instability of underwater imaging models. Hou et al. (Hou et al., 2020) developed an Underwater Total Variation (UTV) model based on the UDCP to effectively remove underwater noise. Li et al. (Li and Li, 2019) combined a diagonal gradient operator with underwater light attenuation to estimate a scene depth prior, used quadtree subdivision to estimate the background light, and recovered the scene brightness based on an underwater imaging model. Li et al. (Li et al., 2016) estimated the ambient light based on the Minimum Information Loss Prior (MILP) and adjusted the brightness, color and contrast of the image using the histogram of a naturally clear image as a reference. Zhou et al. (Zhou et al., 2023b) combined a comprehensive image formation model with prior knowledge in an unsupervised framework, improving the accuracy of monocular depth estimation and reducing the effects of artificial lighting.
Although these methods improve image visibility and clarity efficiently, their results depend on the accuracy and availability of the prior information, which limits their generalization and adaptability.

2.2.3 Deep learning-based method

Deep learning methods restore and enhance degraded images by training on datasets to learn the non-linear mapping between degraded and clear images. Li et al. (Li et al., 2021) presented the underwater image enhancement network (Water-Net), which feeds three preprocessed versions of the degraded image, obtained by white balance, gamma correction, and histogram equalization, into a gated fusion network and combines them with three confidence maps to produce the fused result. Liu et al. (Liu et al., 2022a) proposed a supervised adaptive learning attention network (LANet) for underwater image enhancement. This network uses a multi-scale fusion module to combine different spatial information and introduces an asynchronous training mode to improve the performance of its multi-term loss function. Chen et al. (Chen et al., 2021) proposed an underwater image enhancement algorithm based on deep learning and imaging models, which reconstructs clear underwater images by using convolutional neural networks to simulate the image formation process, integrating local texture features with depth map features. Zhou et al. (Zhou et al., 2023a) proposed an efficient Fully Guided Information Flow Network (UGIF-Net), which accurately approximates color information by integrating the features of two color spaces within a unified framework while adaptively sensing critical color information. Chen et al. (Chen and Pei, 2022) introduced Underwater Image Enhancement via Content and Style Separation (UIESS), a framework that separates encoded features into content and latent style, distinguishes the latent styles of different domains, and performs domain adaptation and image enhancement. Wang et al. (Wang et al., 2024a) proposed a reinforcement learning-based underwater image enhancement paradigm driven by human visual perception, overcoming limitations of deep models.
Deep learning methods are relatively intuitive, offering high efficiency and interpretability. However, they require large amounts of paired training data. Acquiring paired clear and degraded underwater images is extremely challenging, which may hamper training and limit performance on underwater image enhancement or restoration tasks.

3 Methodology

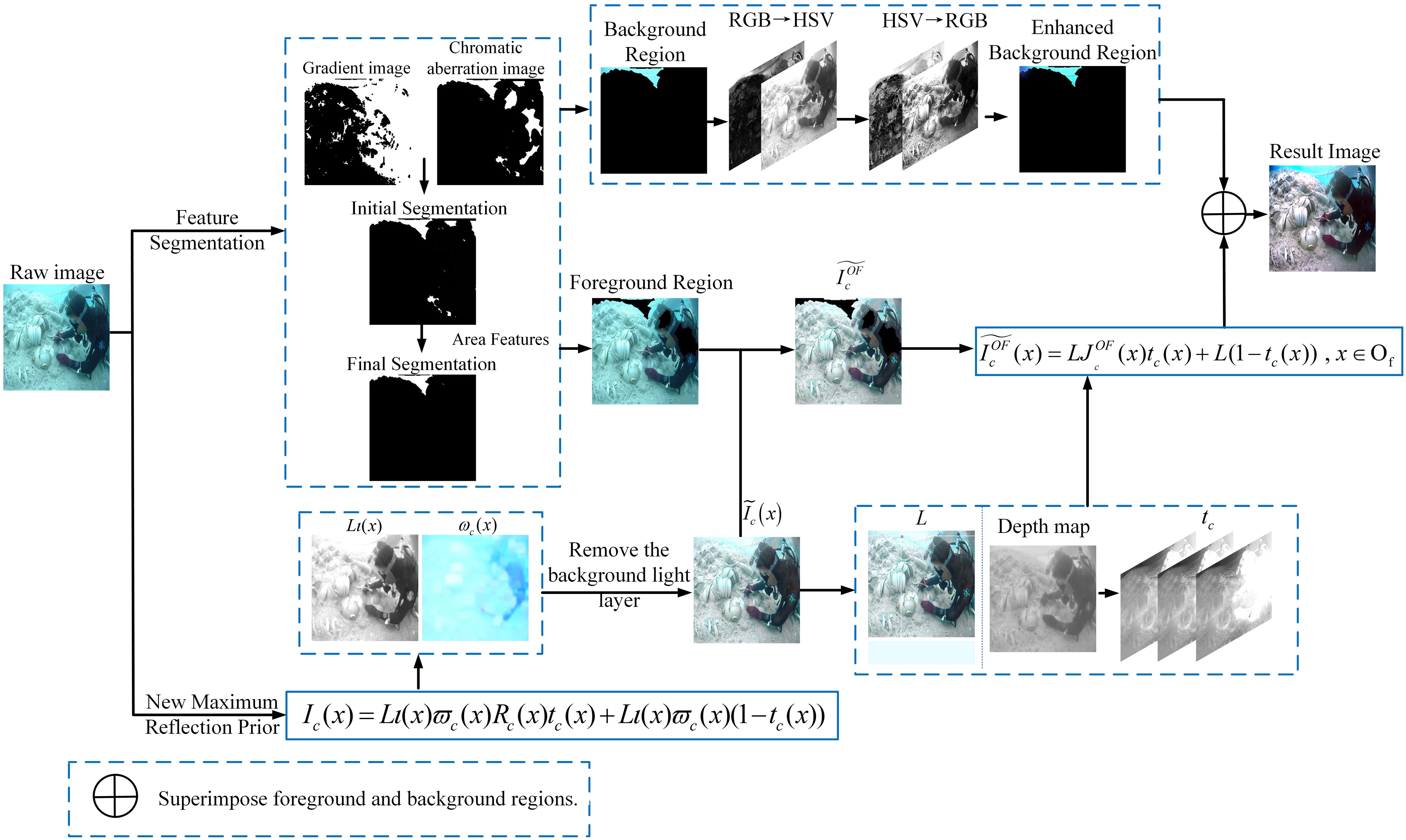

This paper presents an underwater image restoration method that combines an attenuated incident optical model with background segmentation. The proposed method is summarized in Figure 2. Specifically, based on an analysis of the complex underwater light characteristics, the method segments the background and foreground regions using three effective features: gradient, chromatic aberration, and area. Each region is then enhanced or restored separately. The attenuated incident optical model is refined by introducing a background light layer to handle non-uniform incident light, and a new maximum reflection prior is employed to estimate the color and intensity images of the background light layer. Finally, the foreground region, with the background light layer removed, is restored by inverting the model, and the saturation and brightness of the background region are stretched.

3.1 Background segmentation

The background region of an underwater image typically contains fewer scene objects and less texture and edge information than the foreground region. Moreover, because the background region is prone to more severe color deviation, applying the attenuated incident optical model directly to this region can exacerbate the deviation. Therefore, we segment the foreground and background regions based on three effective image features: gradient, chromatic aberration, and area.

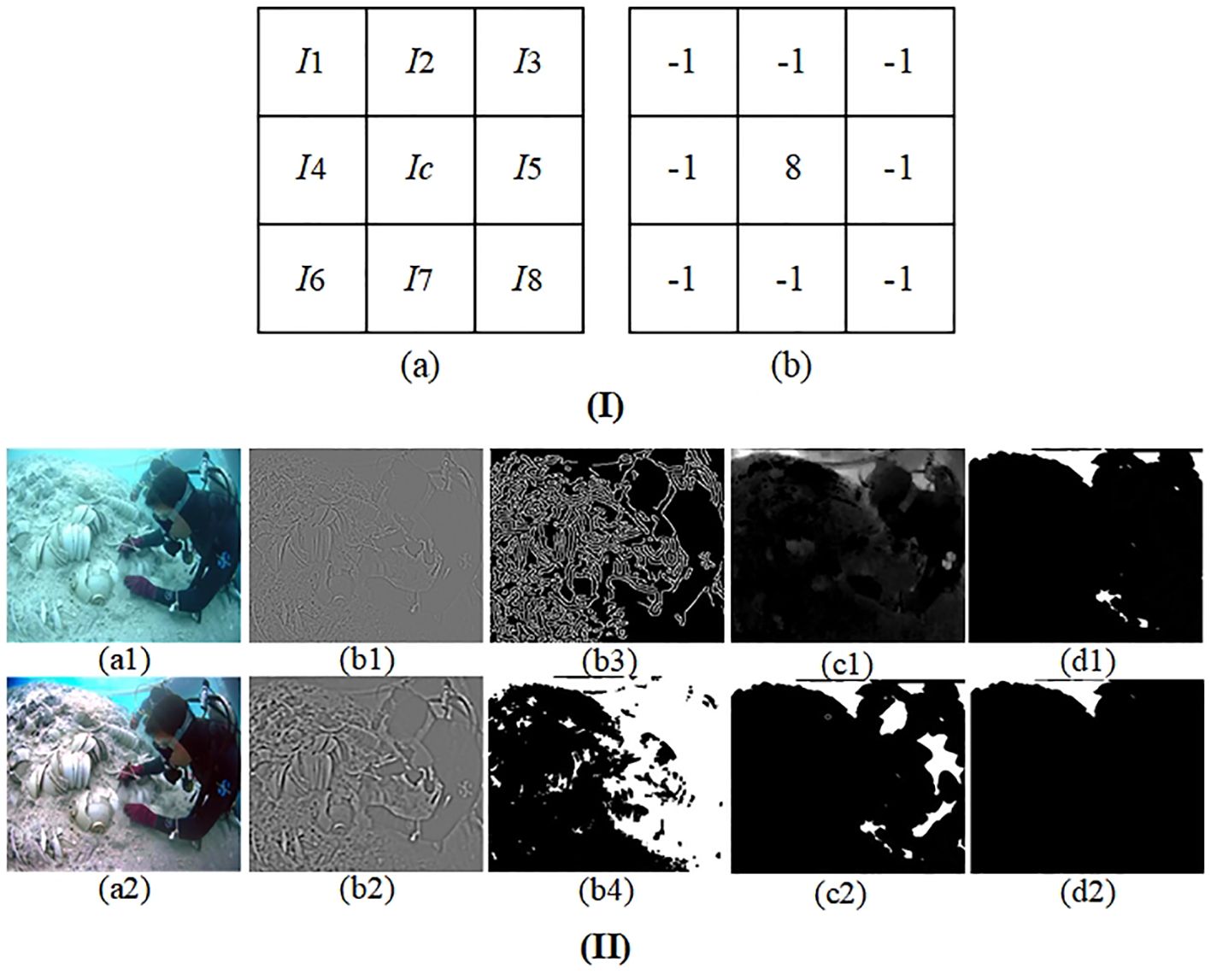

Assuming that the pixel gradient of the background region is smaller than that of the foreground region, the gradient value can be used to determine which region a pixel belongs to. Specifically, assuming that the gradient of the background region is close to 0, edges in all directions are extracted using a multi-directional oblique gradient operator, whose template is shown in Figure 3(I); in addition to horizontal and vertical edges, it accounts for oblique edges. The directional gradient components are obtained by convolving this template with the neighborhood of each pixel of the image.

Figure 3. (I). The multi-directional oblique gradient operator template. (II). Background region segmentation process. (a1) Raw image. (a2) Restored image. (b1) Gradient feature image. (b2) Result after Gaussian filter enhancement. (b3) Edge detection image. (b4) Gradient image after binarization. (c1) Chromatic aberration image. (c2) Chromatic aberration image after binarization. (d1) Area feature image. (d2) Final segmentation.

The gradient channel of the image is then computed from these directional components.

Gaussian filtering is then applied to enhance the gradient channel, and the Canny operator detects edges in the enhanced result. The edge map is binarized and expanded by morphological dilation. The binary result is the gradient segmentation, in which pixels in the background region have value 0 and pixels in the foreground region have value 1.
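The gradient branch can be sketched as follows. This is a minimal pure-Python illustration, not the authors' MATLAB implementation: the four 3x3 directional kernels are hypothetical stand-ins for the template in Figure 3(I), and the Gaussian filtering and Canny edge detection are replaced by a single threshold `thresh` on the gradient magnitude (also hypothetical). The output follows the text's convention of 1 for foreground and 0 for background.

```python
# Hypothetical 3x3 directional kernels (horizontal, vertical, two diagonals).
# The paper's actual oblique-gradient template appears only in Figure 3(I).
KERNELS = [
    [[0, 0, 0], [-1, 0, 1], [0, 0, 0]],   # horizontal
    [[0, -1, 0], [0, 0, 0], [0, 1, 0]],   # vertical
    [[-1, 0, 0], [0, 0, 0], [0, 0, 1]],   # main diagonal
    [[0, 0, -1], [0, 0, 0], [1, 0, 0]],   # anti-diagonal
]


def correlate3(img, k):
    """Apply a 3x3 kernel to a 2-D list, replicating border pixels."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            s = 0.0
            for dy in range(3):
                for dx in range(3):
                    yy = min(max(y + dy - 1, 0), h - 1)
                    xx = min(max(x + dx - 1, 0), w - 1)
                    s += k[dy][dx] * img[yy][xx]
            out[y][x] = s
    return out


def gradient_magnitude(img):
    """Largest absolute directional response at each pixel."""
    responses = [correlate3(img, k) for k in KERNELS]
    h, w = len(img), len(img[0])
    return [[max(abs(r[y][x]) for r in responses) for x in range(w)]
            for y in range(h)]


def dilate(mask):
    """3x3 binary dilation (morphological expansion)."""
    h, w = len(mask), len(mask[0])
    return [[int(any(mask[yy][xx]
                     for yy in range(max(y - 1, 0), min(y + 2, h))
                     for xx in range(max(x - 1, 0), min(x + 2, w))))
             for x in range(w)] for y in range(h)]


def gradient_mask(gray, thresh=0.1):
    """Binary gradient segmentation: 1 = foreground, 0 = background."""
    edges = [[int(v > thresh) for v in row] for row in gradient_magnitude(gray)]
    return dilate(edges)


# A vertical step edge: the columns around the edge become foreground.
step = [[0.0, 0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(6)]
print(gradient_mask(step)[0])  # [0, 1, 1, 1, 1, 0]
```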

Because water absorbs light selectively, light of different wavelengths attenuates to different degrees with increasing depth, and the red channel attenuates faster than the blue and green channels. In the foreground region, channel attenuation is weak and the differences in intensity are small. In the background region, the red channel decays rapidly and its intensity differs greatly from that of the blue-green channels, so the difference among the three channel values can be used to determine which region a pixel belongs to.

A threshold is set on this difference. Pixels in the background region have a difference greater than the threshold and are binarized to 1, whereas pixels in the foreground region have a difference smaller than the threshold and are binarized to 0.
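A sketch of the color-difference test follows. The surviving text does not give the exact difference formula or the threshold value, so both are hypothetical here: the difference is taken as how far the red channel falls below the larger of the green and blue channels, and `tau = 0.3` is an arbitrary illustrative threshold. As in the text, 1 marks background and 0 marks foreground.

```python
def channel_difference(rgb):
    """Hypothetical color-difference map: red deficit relative to max(green, blue).

    rgb is a 2-D list of (r, g, b) tuples with values in [0, 1].
    """
    return [[max(g, b) - r for (r, g, b) in row] for row in rgb]


def chroma_mask(rgb, tau=0.3):
    """Binarize the difference map: 1 = background (large difference), 0 = foreground."""
    return [[int(d > tau) for d in row] for row in channel_difference(rgb)]


img = [[(0.1, 0.7, 0.8), (0.5, 0.55, 0.6)]]
print(chroma_mask(img))  # [[1, 0]]
```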

To avoid errors such as mistaking smooth-surfaced objects for background or highlights for foreground, erroneous regions are excluded based on their share of the total image area.

Finally, the initial segmentation, consisting of pixels with low gradient and large color difference, is processed morphologically to remove scattered pixels and fill gaps. Background regions whose area is at least 5% of the whole image are then retained, giving the final segmentation, in which background pixels have value 1 and foreground pixels have value 0.
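The combination of the two masks and the area criterion can be sketched as below. The 5% minimum area comes from the text; 4-connectivity, the omission of the morphological clean-up, and the helper names are our assumptions. Following the conventions above, the gradient mask uses 0 for background and the color mask uses 1 for background.

```python
from collections import deque


def keep_large_regions(mask, min_frac=0.05):
    """Keep 4-connected regions of 1s whose area is at least min_frac of the image."""
    h, w = len(mask), len(mask[0])
    min_area = min_frac * h * w
    seen = [[False] * w for _ in range(h)]
    out = [[0] * w for _ in range(h)]
    for sy in range(h):
        for sx in range(w):
            if mask[sy][sx] != 1 or seen[sy][sx]:
                continue
            # Breadth-first flood fill of one connected component.
            component, queue = [], deque([(sy, sx)])
            seen[sy][sx] = True
            while queue:
                y, x = queue.popleft()
                component.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < h and 0 <= nx < w
                            and mask[ny][nx] == 1 and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(component) >= min_area:
                for y, x in component:
                    out[y][x] = 1
    return out


def segment(grad_mask, color_mask, min_frac=0.05):
    """Final mask: 1 = background (low gradient AND large color difference), 0 = foreground."""
    h, w = len(grad_mask), len(grad_mask[0])
    initial = [[int(grad_mask[y][x] == 0 and color_mask[y][x] == 1)
                for x in range(w)] for y in range(h)]
    return keep_large_regions(initial, min_frac)
```

A small isolated patch, such as a specular highlight that happens to pass both tests, falls below the area limit and is returned to the foreground.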

A diagram of the segmentation process is shown in Figure 3(II).

3.2 New maximum reflection prior

Non-uniform underwater incident light still requires further research. However, nighttime hazy environments share similarities with underwater environments. The Maximum Reflection Prior (MRP) (Zhang et al., 2017), which is based on the statistics of a large number of clear outdoor images, effectively removes haze under uneven illumination. We therefore improve the MRP to solve the problem of blurring under non-uniform underwater incident light. The MRP states that, in most image patches, each color channel contains pixels with high intensity values. It is defined as:

$$M^c(x) = \max_{y \in \Omega(x)} I^c(y) = \max_{y \in \Omega(x)} L^c(y)\,R^c(y)$$

where $M^c(x)$ is the maximum intensity of color channel $c$ in the neighborhood $\Omega(x)$, $L^c$ is the incident light, and $R^c$ is the reflectance. The incident light of a clear image can be considered uniform (i.e., $L^c = 1$), so the maximum intensity of each color channel is determined mainly by the reflectance $R^c$.
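The maximum reflection map (the maximum intensity of each color channel within a local neighborhood) is a local maximum filter applied channel by channel. A pure-Python sketch follows; the window size is not given in the text, so `radius=7` (a 15x15 window) is a hypothetical default.

```python
def max_reflection_map(channel, radius=7):
    """Maximum of one color channel over a (2r+1)x(2r+1) window around each pixel.

    Windows are clipped at the image border. The filter is separable:
    a row-wise running maximum followed by a column-wise one.
    """
    h, w = len(channel), len(channel[0])
    rows = [[max(row[max(x - radius, 0):x + radius + 1]) for x in range(w)]
            for row in channel]
    return [[max(rows[yy][x]
                 for yy in range(max(y - radius, 0), min(y + radius + 1, h)))
             for x in range(w)] for y in range(h)]


spot = [[0.0] * 5 for _ in range(5)]
spot[2][2] = 1.0
print(max_reflection_map(spot, radius=1)[1])  # [0.0, 1.0, 1.0, 1.0, 0.0]
```

Separability holds because the maximum over a rectangle equals the maximum over its rows' maxima, which keeps the cost linear in the window side rather than quadratic.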

Since light propagating in water is selectively absorbed by the water column, resulting in attenuation of the incident light, direct use of the maximum reflection prior for underwater image restoration will amplify the noise. In light of this, we propose a new maximum reflection prior.

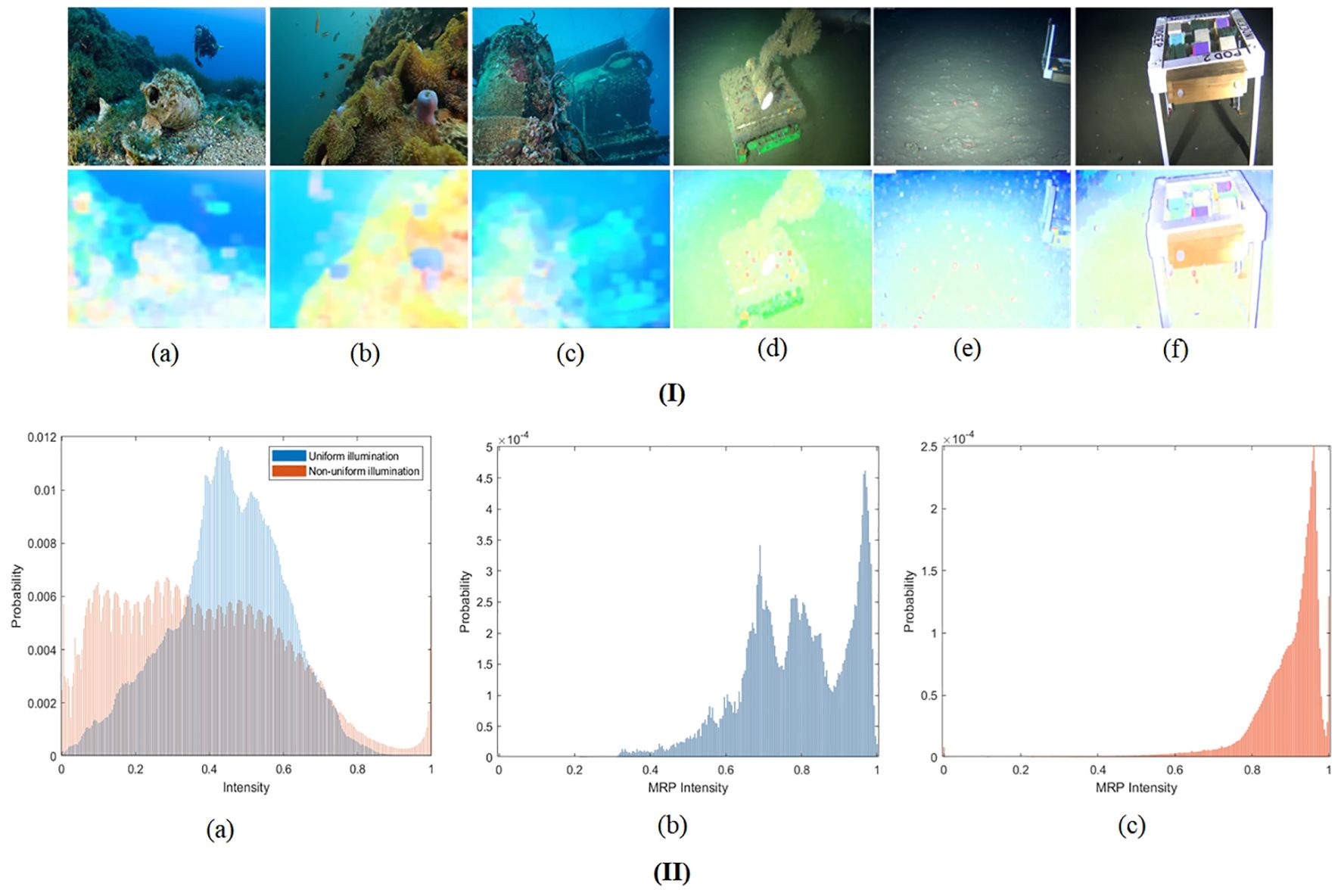

Figure 4(I) shows underwater images and their corresponding maximum reflection maps. The maps have high pixel values and present different color tones. Regardless of whether the lighting is uniform or non-uniform, the maximum reflection intensity is higher in well-illuminated areas. This indicates that the maximum reflection map is strongly correlated with the lighting environment during imaging and reflects variations in both the intensity and the color of non-uniform incident light. The attenuated incident light can therefore be roughly estimated from the maximum reflection map.

Figure 4. (I). Maximum reflection maps of underwater degradation images. (A–C) Uniformly illuminated images. (D–F) Non-uniformly illuminated images. (II). Maximum reflection maps of underwater degradation images. (A–C) Uniformly illuminated images. (D–F) Non-uniformly illuminated images.

For an unattenuated underwater scene, in which the light is uniform and not attenuated, the incident light can be regarded as the background light intensity and set to 1. The underwater maximum reflection map is then defined, as in the MRP, as the maximum intensity of each color channel within the local neighborhood $\Omega(x)$.

For statistical analysis, we selected 400 uniformly illuminated images and 400 non-uniformly illuminated images from currently available public datasets. Figure 4(II) and the above analysis show that, in most normalized images, the maximum intensity of each color channel within an image patch tends to 1. This gives the new maximum reflection prior, which states that the maximum reflectance $R^c$ of each color channel within a local patch $\Omega(x)$ approaches 1:

$$\max_{y \in \Omega(x)} R^c(y) \approx 1$$

3.3 Attenuated incident optical model

The traditional model assumes uniform incident light. However, as light propagates underwater, the degree of spectral absorption and attenuation is affected by factors such as water turbidity and depth. In shallow water, light comes mainly from sunlight above the water surface (uniform illumination), whereas artificial light sources are often used in deep water, where light is severely insufficient. Artificial light sources do not illuminate the underwater scene uniformly, which reduces image contrast and causes varying color deviations.

Therefore, it is inaccurate to assume that the global background light is constant. In complex underwater imaging environments, the lighting is influenced by a combination of natural and artificial light sources, so the background light varies spatially. To account for non-uniform incident light, a background light layer $L^c(x)$ is introduced into the conventional underwater imaging model, and the attenuated incident optical model is defined as:

$$I^c(x) = J^c(x)\,t^c(x) + L^c(x)\left(1 - t^c(x)\right), \qquad J^c(x) = L^c(x)\,R^c(x) \tag{13}$$

where $J^c(x)$ is the scene reflection. According to Retinex theory (Fu et al., 2023), an underwater image can be represented as the product of an object reflection component and an illumination component, so the scene reflection is decomposed into two images: the background light layer $L^c(x)$ and the scene reflection component $R^c(x)$.

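The background light layer can be expressed as the product of the background light color layer and the background light intensity layer:

$$L^c(x) = L_i(x)\,\eta^c(x) \tag{14}$$

where $L_i(x)$ is the background light intensity layer and $\eta^c(x)$ is the background light color layer. Substituting Equation 14 into Equation 13 yields:

$$I^c(x) = L_i(x)\,\eta^c(x)\,R^c(x)\,t^c(x) + L_i(x)\,\eta^c(x)\left(1 - t^c(x)\right) \tag{15}$$

Assuming that $L_i$, $\eta^c$ and $t^c$ are constant within a local pixel block $\Omega(x)$, taking the maximum over each block gives:

$$M^c(x) = \max_{y \in \Omega(x)} I^c(y) = L_i(x)\,\eta^c(x)\,t^c(x) \max_{y \in \Omega(x)} R^c(y) + L_i(x)\,\eta^c(x)\left(1 - t^c(x)\right) \tag{16}$$

By the new maximum reflection prior, $\max_{y \in \Omega(x)} R^c(y) = 1$. Substituting this into Equation 16 yields:

$$M^c(x) = L_i(x)\,\eta^c(x) \tag{17}$$

Thus, the background light color layer can be obtained as:

$$\eta^c(x) = \frac{M^c(x)}{L_i(x)} \tag{18}$$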

The maximum of $M^c(x)$ over the three color channels gives the background light intensity layer $L_i(x)$. The background light color layer obtained by removing this intensity and refining the result with guided filtering is denoted as $\tilde{\eta}^c(x)$. The effect of the background light color layer is removed from the underwater image by:

$$\hat{I}^c(x) = \frac{I^c(x)}{\tilde{\eta}^c(x)}$$

where $\hat{I}^c(x)$ is the image with the background light color layer removed.
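The estimation of the two background light layers can be sketched as follows, assuming the per-channel maximum reflection maps are already computed: the intensity layer is the maximum over the three channels, and the color layer is each channel's map divided by it. Guided-filter refinement is omitted, and removing the color layer by per-pixel division is our reading of the multiplicative decomposition rather than a formula confirmed by the text.

```python
def light_layers(M, eps=1e-6):
    """Split max-reflection maps into intensity and color layers.

    M maps a channel name ('r', 'g', 'b') to a 2-D list of max-reflection values.
    Intensity layer: L_i(x) = max over channels; color layer: eta^c(x) = M^c(x) / L_i(x).
    eps guards against division by zero in completely dark pixels.
    """
    chans = list(M)
    h, w = len(M[chans[0]]), len(M[chans[0]][0])
    L_i = [[max(M[c][y][x] for c in chans) for x in range(w)] for y in range(h)]
    eta = {c: [[M[c][y][x] / max(L_i[y][x], eps) for x in range(w)]
               for y in range(h)] for c in chans}
    return L_i, eta


def remove_color_layer(I, eta, eps=1e-6):
    """Divide each channel by its color layer (assumed form of the removal step)."""
    return {c: [[v / max(e, eps) for v, e in zip(img_row, eta_row)]
                for img_row, eta_row in zip(I[c], eta[c])] for c in I}


M = {"r": [[0.2]], "g": [[0.8]], "b": [[0.4]]}
L_i, eta = light_layers(M)
print(L_i)  # [[0.8]]
```

By construction the strongest channel of the color layer equals 1 at every pixel, so dividing by it leaves the brightest channel unchanged and lifts the attenuated ones.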

Although the background light color layer has been eliminated, the background light intensity layer remains. It is corrected by white balance, which yields an image from which the influence of the whole background light layer has been removed. This image is then restricted to the foreground region.
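The text does not say which white-balance method corrects the intensity layer. As one hypothetical choice, a gray-world white balance can be sketched as below; it is a stand-in for illustration, not necessarily the authors' method.

```python
def gray_world(img, eps=1e-6):
    """Gray-world white balance on a dict of channels (2-D lists in [0, 1]).

    Each channel is scaled so that its mean matches the mean over all
    channels; the results are then clipped to 1.0.
    """
    means = {c: sum(map(sum, ch)) / (len(ch) * len(ch[0])) for c, ch in img.items()}
    target = sum(means.values()) / len(means)
    return {c: [[min(v * target / max(means[c], eps), 1.0) for v in row] for row in ch]
            for c, ch in img.items()}


balanced = gray_world({"r": [[0.2, 0.2]], "g": [[0.6, 0.6]], "b": [[0.4, 0.4]]})
```

Gray-world assumes the scene averages to a neutral gray, which is reasonable for the textured foreground but less so for large uniform regions; that is one more reason to apply it only after segmentation.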

3.4 Transmission map estimation

The classical dark channel prior has a limitation: bright pixels, such as those of white objects, can corrupt the depth information obtained from the dark channel map. We therefore combine low-pass filtering with the UDCP to estimate the background light. Low-pass filtering extracts the low-frequency components related to the water background light, which prevents white objects from being mistaken for background light. The UDCP is then used to obtain the dark channel map, and the background light is computed from the brightest 0.1% of its pixels. This gives a more accurate estimate and prevents overcompensation of the image caused by the absorption and attenuation of light in water.

Here, a Gaussian low-pass filter is used whose convolution kernel averages each center pixel with its neighboring pixels to smooth the image, the dark channel is computed over a rectangular window centered on $x$, and the background light is computed from the set of pixels that satisfy the selection criterion. To prevent block artifacts in the resulting dark channel map, guided filtering is used to refine it.
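The background light estimation can be sketched as below. Assumptions: a box filter stands in for the Gaussian low-pass filter, the same window radius `r` is used for filtering and for the dark channel, the background light is the mean low-passed color of the selected pixels (the text says only that the brightest 0.1% are used), and guided-filter refinement is omitted.

```python
def box_filter(ch, r):
    """Mean over a (2r+1)x(2r+1) window clipped at the border (stand-in for the Gaussian)."""
    h, w = len(ch), len(ch[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            vals = [ch[yy][xx]
                    for yy in range(max(y - r, 0), min(y + r + 1, h))
                    for xx in range(max(x - r, 0), min(x + r + 1, w))]
            row.append(sum(vals) / len(vals))
        out.append(row)
    return out


def udcp_dark_channel(g, b, r):
    """UDCP dark channel: local minimum over the green and blue channels only."""
    h, w = len(g), len(g[0])
    return [[min(min(g[yy][xx], b[yy][xx])
                 for yy in range(max(y - r, 0), min(y + r + 1, h))
                 for xx in range(max(x - r, 0), min(x + r + 1, w)))
             for x in range(w)] for y in range(h)]


def estimate_background_light(img, r=7, top=0.001):
    """Average the low-passed color of the brightest `top` fraction of dark-channel pixels."""
    smooth = {c: box_filter(img[c], r) for c in ("r", "g", "b")}
    dark = udcp_dark_channel(smooth["g"], smooth["b"], r)
    h, w = len(dark), len(dark[0])
    n = max(1, int(top * h * w))  # at least one pixel for small images
    ranked = sorted(((dark[y][x], y, x) for y in range(h) for x in range(w)),
                    reverse=True)[:n]
    return {c: sum(smooth[c][y][x] for _, y, x in ranked) / n for c in smooth}
```

Smoothing before ranking means a small white object, whose bright pixels are averaged with darker neighbors, is less likely to outrank the broad, bright water region.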

Following Zhou et al. (Zhou et al., 2023c), we propose a new depth map estimation method based on an analysis of feature priors, and then obtain the transmission map from the Lambert-Beer law. Because more distant scenes exhibit higher haze density and a higher luminance component, we use the dark channel and the lightness map in the Lab color space to estimate the transmission map.

To obtain a depth map based on the dark channel, we compensate the red channel by inverting its values.

We convert the image from RGB to the Lab color space to obtain a brightness map, namely the lightness channel of the input image in the Lab color space, from which a brightness-related depth map is computed.

We then obtain an adaptive parameter from a sigmoid function to estimate the final depth map.

Because brighter scenes have larger gray values, the adaptive parameter is computed from the grayscale image. We conducted extensive experiments on the UIEB dataset (Li and Li, 2019) to verify the accuracy of the depth map estimation for parameter values ranging from 0.1 to 0.9 (step size 0.1). Figure 5 shows several examples. When the parameter takes values of 0.1-0.4, depth maps (a)-(e) in Figure 5 are estimated inaccurately and some details are lost. Simultaneously, smaller values of lead to excessively large values of . When , the depth maps (g)-(h) in Figure 5 incorrectly estimate the foreground as the background. However, when , both the foreground and the background are correctly described, and the depth maps are more detailed.

Figure 5. Determination of adaptive parameter values. (A) Raw images. (B–J) Depth maps for different parameter values (0.1-0.9, step size 0.1). (K) Restored images.

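The transmission map is obtained from the Lambert-Beer law:

$$t^c(x) = e^{-\beta^c d(x)}$$

where $t^c(x)$ is the transmission map of color channel $c$, $d(x)$ is the estimated depth map, and $\beta^c$ is the attenuation coefficient of channel $c$, whose values are set following (Peng and Cosman, 2017).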
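The Lambert-Beer step maps one depth map to one transmission map per channel. In the sketch below, the coefficients in `BETA` are hypothetical placeholders, not the values the paper takes from Peng and Cosman (2017), and depth is assumed to be in normalized units.

```python
import math

# Hypothetical per-channel attenuation coefficients, for illustration only;
# the paper sets its values following Peng and Cosman (2017).
BETA = {"r": 0.5, "g": 0.2, "b": 0.15}


def transmission(depth, beta=BETA):
    """Lambert-Beer transmission t^c(x) = exp(-beta^c * d(x)) for each channel."""
    return {c: [[math.exp(-b * d) for d in row] for row in depth]
            for c, b in beta.items()}


t = transmission([[0.0, 1.0, 2.0]])
# With the largest coefficient, red transmission falls fastest with depth.
print(t["r"][0], t["b"][0])
```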

After the background light and transmission maps are obtained, the foreground region, with the background light layer removed, is restored according to the attenuated incident optical model.

3.5 Background region stretching and superposition

The contrast of the background region is enhanced by stretching its saturation and brightness components. The final image is obtained by blending the background region with the foreground region, after which the boundary between the two regions is smoothed to make the result more natural. Specifically, the segmented image is first converted into the HSV color space. The saturation and brightness components are then stretched using histogram equalization (Rao, 2020) to improve the visual quality of the background region, and the stretched image is converted back to the RGB color space.
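The background stretching can be sketched with Python's standard `colorsys` module: convert the background pixels to HSV, histogram-equalize the S and V components, and convert back. This is a minimal illustration assuming the background pixels are given as a flat list of RGB tuples in [0, 1]; the 256-bin equalization is our choice, and hue is left unchanged.

```python
import colorsys


def equalize(values, levels=256):
    """Histogram-equalize a list of values in [0, 1]; returns values in [0, 1]."""
    bins = [min(int(v * levels), levels - 1) for v in values]
    hist = [0] * levels
    for b in bins:
        hist[b] += 1
    cdf, total = [], 0
    for count in hist:
        total += count
        cdf.append(total)
    cdf_min = next(c for c in cdf if c > 0)
    n = len(values)
    if n == cdf_min:  # all values share one bin: nothing to stretch
        return list(values)
    return [(cdf[b] - cdf_min) / (n - cdf_min) for b in bins]


def stretch_background(pixels):
    """Equalize saturation and value of background pixels given as (r, g, b) in [0, 1]."""
    hsv = [colorsys.rgb_to_hsv(*p) for p in pixels]
    s_eq = equalize([s for _, s, _ in hsv])
    v_eq = equalize([v for _, _, v in hsv])
    return [colorsys.hsv_to_rgb(h, s, v) for (h, _, _), s, v in zip(hsv, s_eq, v_eq)]


print(equalize([0.2, 0.2, 0.4, 0.4]))  # [0.0, 0.0, 1.0, 1.0]
```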

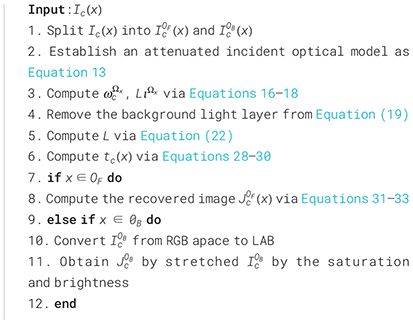

The output image is obtained by superimposing the stretched background and the restored foreground, and bilateral filtering is used to smooth the image while preserving edge sharpness. The complete procedure of the proposed restoration method is presented in Algorithm 1.

Algorithm 1. Underwater image restoration.

4 Experimental results and analysis

4.1 Experiment settings

4.1.1 Test environment

The algorithm was implemented in MATLAB R2022b under the Windows 10 operating system, on a computer with an Intel I5-9300 CPU @ 2.40 GHz and 64 GB of RAM.

4.1.2 Dataset

To validate the performance of the algorithm, we perform comparative experiments on the open Color-Check7 (Lin et al., 2023), RUIE (Liu et al., 2020), UIEB and Ocean-dark (Porto Marques et al., 2019) datasets. The Color-Check7 dataset contains 7 different underwater scenes for evaluating the color deviation correction of underwater images. The UIEB dataset contains 890 raw underwater images of varying quality and 60 challenging images. The RUIE dataset contains three large-scale subsets of real underwater images, UCCS, UIQS, and UTTS; we chose UCCS and UIQS for our evaluation. The Ocean-dark dataset consists of 183 low-light underwater images taken with artificial lighting.

4.1.3 Comparison algorithm

We selected 8 algorithms for comparison, including both classical and state-of-the-art (SOTA) algorithms: UDCP, Fusion (Ancuti et al., 2017), DRDCP (Wang et al., 2019), FUnIE-GAN (Islam et al., 2020), Shallow-uwnet (Naik et al., 2021), MLLE (Zhang et al., 2022), UIESS and ICSP (Hou et al., 2023). Among them, FUnIE-GAN, Shallow-uwnet and UIESS are deep learning algorithms.

4.1.4 Evaluation indexes

Because the open RUIE and Ocean-dark datasets provide no corresponding clear reference images, we use one color-deviation metric and four no-reference metrics to evaluate the proposed algorithm and the compared algorithms: CIEDE2000 (Lin et al., 2021), Underwater Color Image Quality Evaluation (UCIQE) (Yang and Sowmya, 2015), Frequency Domain Underwater Metric (FDUM) (Yang et al., 2021), Information Entropy (IE) (Azmi et al., 2019), and the Colorfulness, Contrast and Fog density index (CCF) (Wang et al., 2018). CIEDE2000 measures the difference between a measured color and the standard color; the lower the value, the better the color restoration. UCIQE quantifies non-uniform color cast, blurring and low contrast in underwater images; higher values indicate better image quality. FDUM fuses color, contrast, and sharpness measurements by weighted summation; higher values indicate better overall performance in terms of color, contrast and deblurring. IE indicates the amount of information contained in the image; larger values indicate more detailed information. CCF fully considers the chromaticity index related to light absorption; the larger its value, the better the overall performance in terms of color, contrast and defogging.
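Of these metrics, information entropy is simple enough to sketch directly: it is the Shannon entropy, in bits, of the gray-level histogram. The snippet assumes an 8-bit grayscale image flattened to a list of integers; the other metrics are not reproduced here.

```python
import math
from collections import Counter


def information_entropy(gray_levels):
    """Shannon entropy (in bits) of the gray-level histogram.

    gray_levels: flat list of 8-bit gray values (0-255).
    """
    n = len(gray_levels)
    counts = Counter(gray_levels)
    return -sum((k / n) * math.log2(k / n) for k in counts.values())


print(information_entropy([0, 255, 0, 255]))  # 1.0
```

For 8-bit images the value lies between 0 (a constant image) and 8 bits (all 256 levels equally frequent).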

4.2 Qualitative comparison

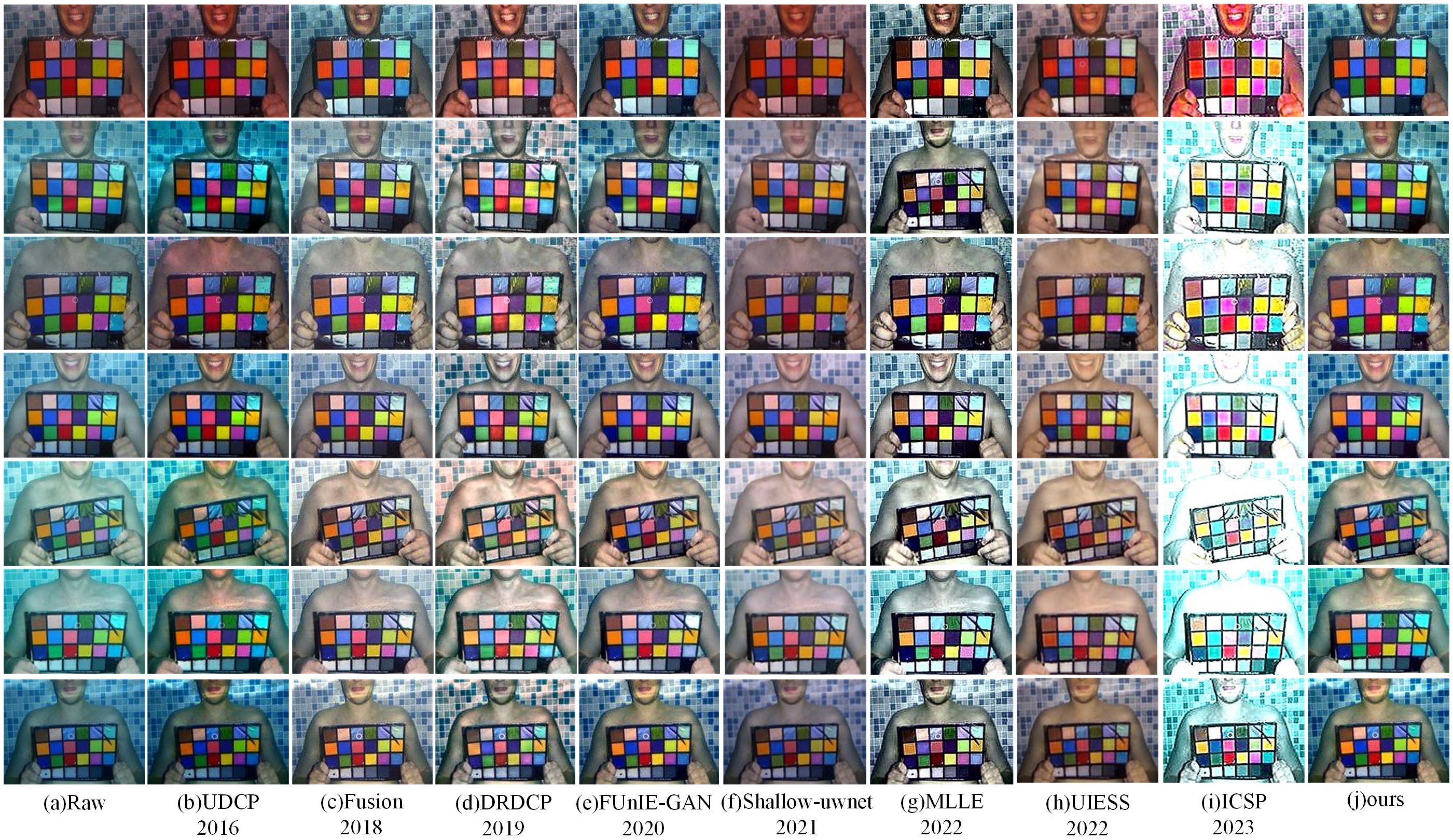

To evaluate the effectiveness of the color correction, the method is tested on the Color-Check7 dataset. Figure 6 shows that the raw images have poor brightness, low contrast and color distortion. The Fusion and MLLE algorithms reduce color distortion, but the brightness of Fusion needs improvement, the saturation of MLLE is poor, and their color recovery on the color charts is not natural, as the results for the D10, T8000 and Z33 charts show. Other compared algorithms, such as DRDCP, FUnIE-GAN and ICSP, fail to address the color distortion and even exacerbate it. The images recovered by the proposed algorithm have more natural colors and better visual quality.

Figure 6. Results of color recovery. From top to bottom: underwater color charts captured by TS1, W60, W80, D10, Z33, T6000 and T8000, and the corresponding processing results of each algorithm.

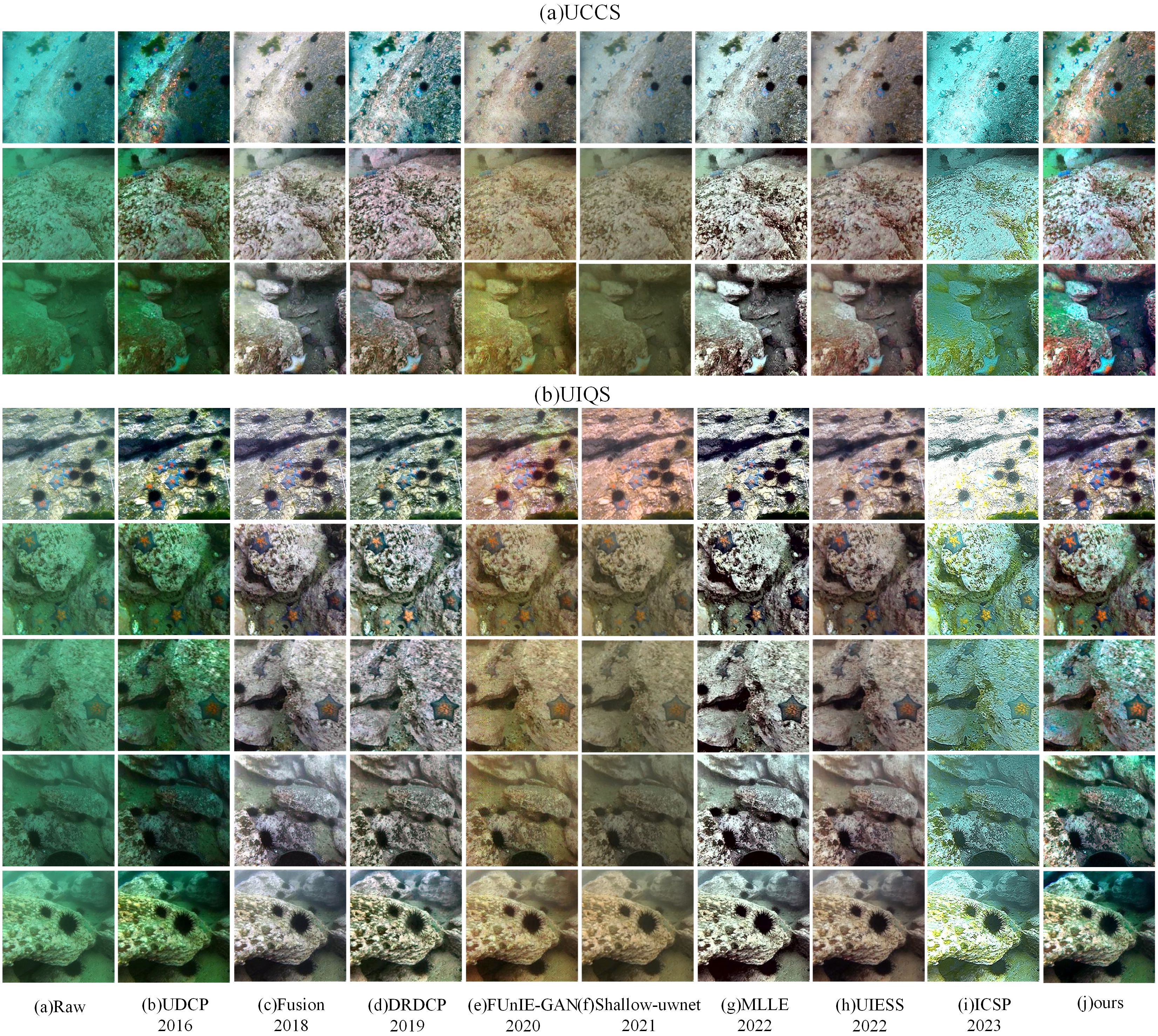

To verify the performance of the algorithm under natural light, a comparative experiment is performed on the RUIE dataset. As shown in Figure 7, UDCP fails to correct the color deviation. The results of DRDCP and Fusion look grayish, and their color recovery is not sufficiently natural. The color recovery of MLLE is unrealistic, and its results need improvement. The results of FUnIE-GAN and Shallow-uwnet show light yellow distortion and low contrast. UIESS introduces red distortion. ICSP fails to completely eliminate the color deviation. The proposed algorithm provides more thorough color correction, higher contrast, clearly improved visibility, and more natural color reproduction.

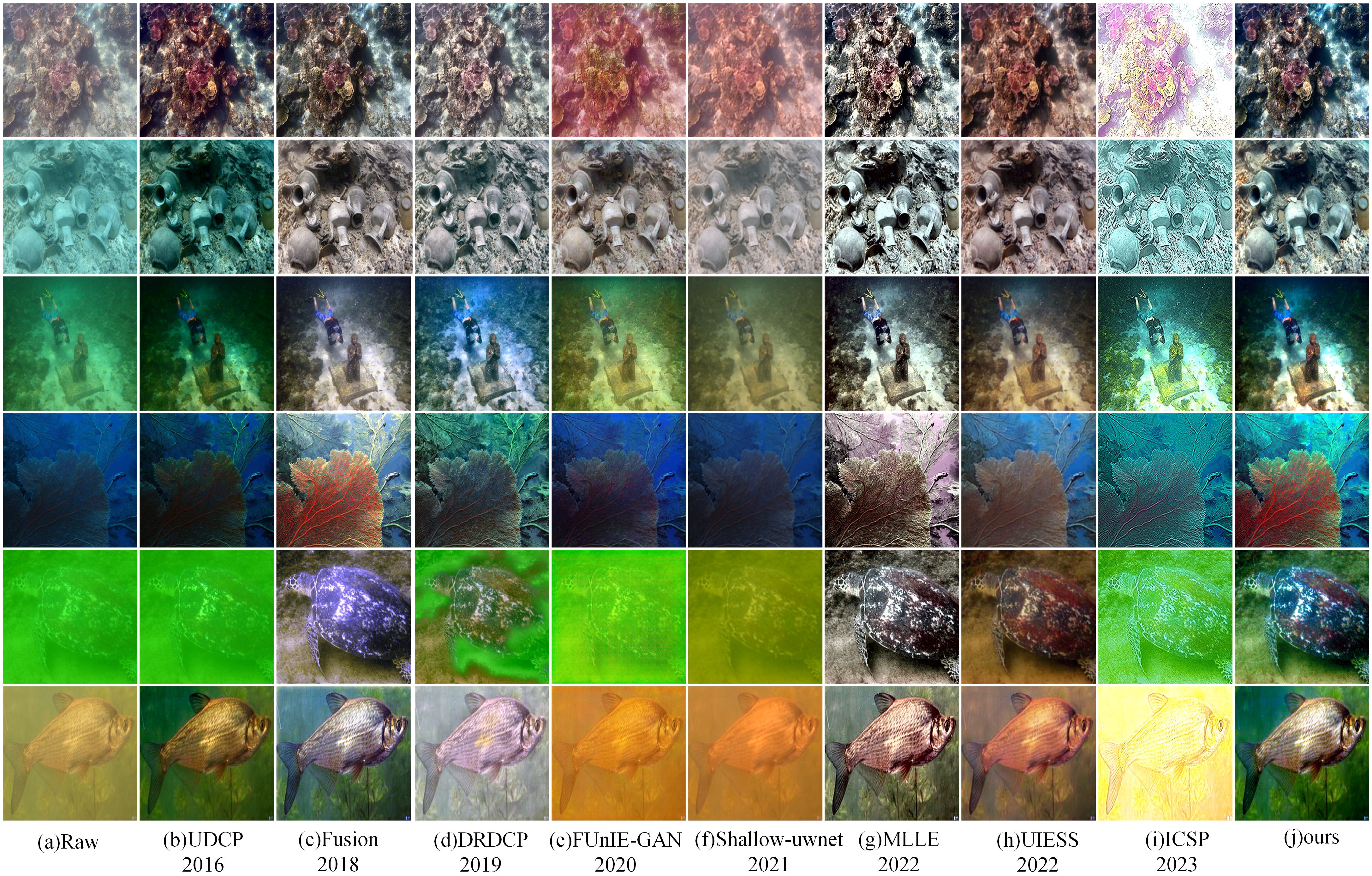

To further validate the robustness of the algorithm under natural light, we conducted comparative experiments on the UIEB dataset. Figure 8 shows degraded UIEB images with several different color deviations. UDCP aggravates the color distortion, and its results are dark. FUnIE-GAN, Shallow-uwnet, and UIESS introduce additional color distortion. DRDCP and Fusion are inadequate for improving the visibility of low-visibility underwater images and removing the hazy appearance. MLLE effectively enhances visibility but introduces local darkness, and its saturation needs to be enhanced. ICSP overcompensates brightness and cannot completely remove color distortion. The proposed algorithm removes color distortion more thoroughly, effectively improves visibility, and recovers texture details better.

4.3 Quantitative comparison

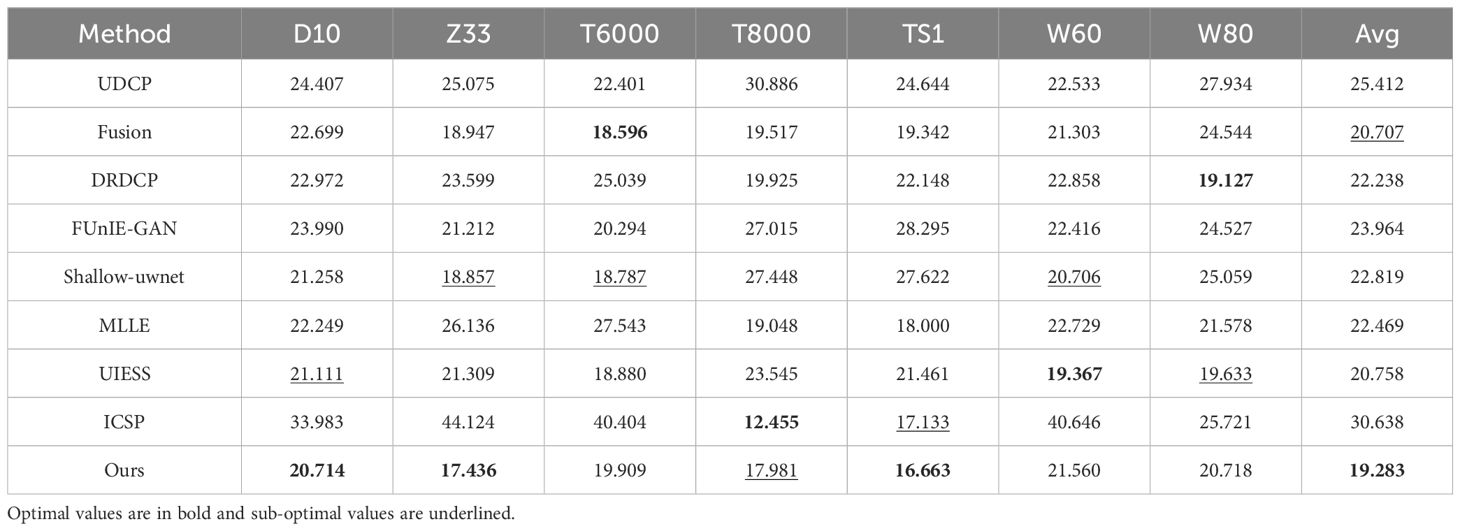

As shown in Table 1, the proposed algorithm achieves the best score on the D10, Z33, and TS1 images, as well as the best average score on the dataset, which further confirms its color correction ability.

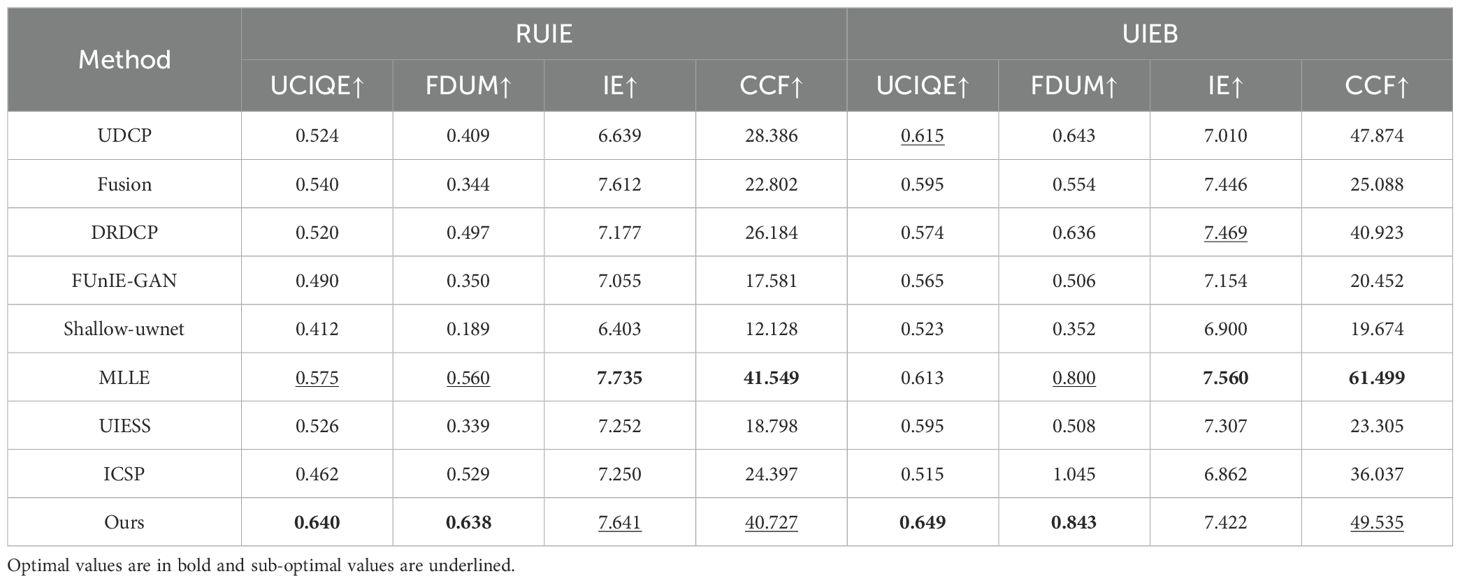

As Table 2 shows, the proposed algorithm achieves the best value on two metrics and the second-best value on two metrics on the RUIE dataset, and the best value on two metrics and the second-best value on one metric on the UIEB dataset. Together, the qualitative and quantitative results show that the proposed algorithm recovers contrast more effectively and produces better results for underwater images of varying quality.
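The "best" and "second-best" counts above are per-metric rankings across all compared methods. The sketch below shows one way such counts can be tallied, assuming scores are stored as a method-to-metric dictionary and that the direction of each metric (higher or lower is better) is known. The method names, metric names, and numbers are hypothetical placeholders, not values from Table 2.

```python
from typing import Dict, Tuple

Scores = Dict[str, Dict[str, float]]  # method -> metric -> score


def rank_counts(scores: Scores, method: str,
                higher_is_better: Dict[str, bool]) -> Tuple[int, int]:
    """Return (#metrics where `method` ranks 1st, #metrics where it ranks 2nd)."""
    best = second = 0
    for metric, hib in higher_is_better.items():
        # Order methods from best to worst on this metric
        # (sorted() is stable, so ties keep the dictionary's insertion order).
        ordered = sorted(scores, key=lambda m: scores[m][metric], reverse=hib)
        position = ordered.index(method)
        if position == 0:
            best += 1
        elif position == 1:
            second += 1
    return best, second


# Hypothetical placeholder scores (NOT the paper's results).
toy = {
    "A": {"m1": 0.60, "m2": 4.1, "m3": 0.30},
    "B": {"m1": 0.55, "m2": 4.5, "m3": 0.25},
    "Proposed": {"m1": 0.62, "m2": 4.3, "m3": 0.28},
}

if __name__ == "__main__":
    print(rank_counts(toy, "Proposed", {"m1": True, "m2": True, "m3": True}))
```

With these toy numbers, "Proposed" ranks first on `m1` and second on `m2` and `m3`. Metrics where lower is better (for example, a color-difference score) are handled by setting their flag to `False`.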

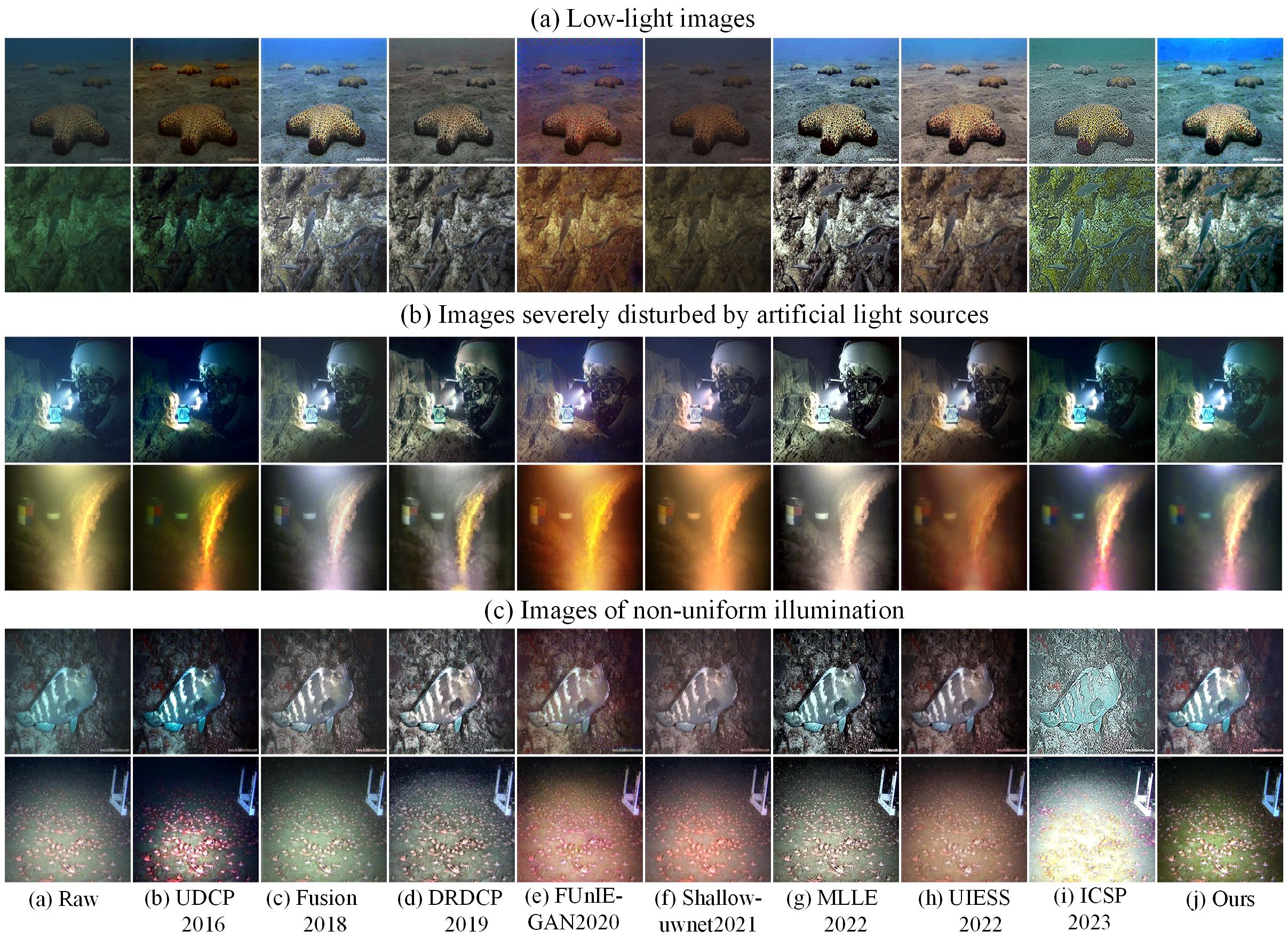

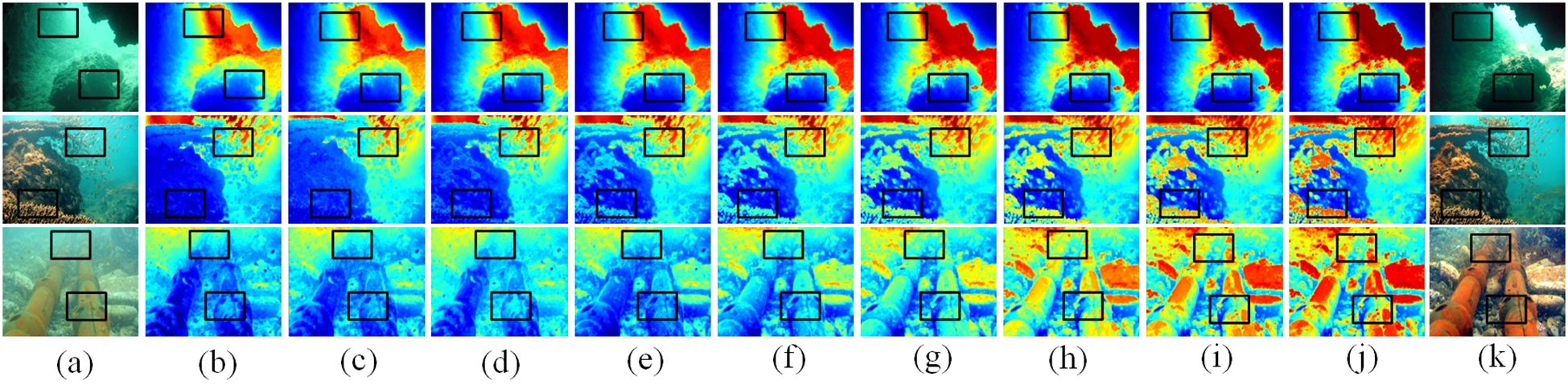

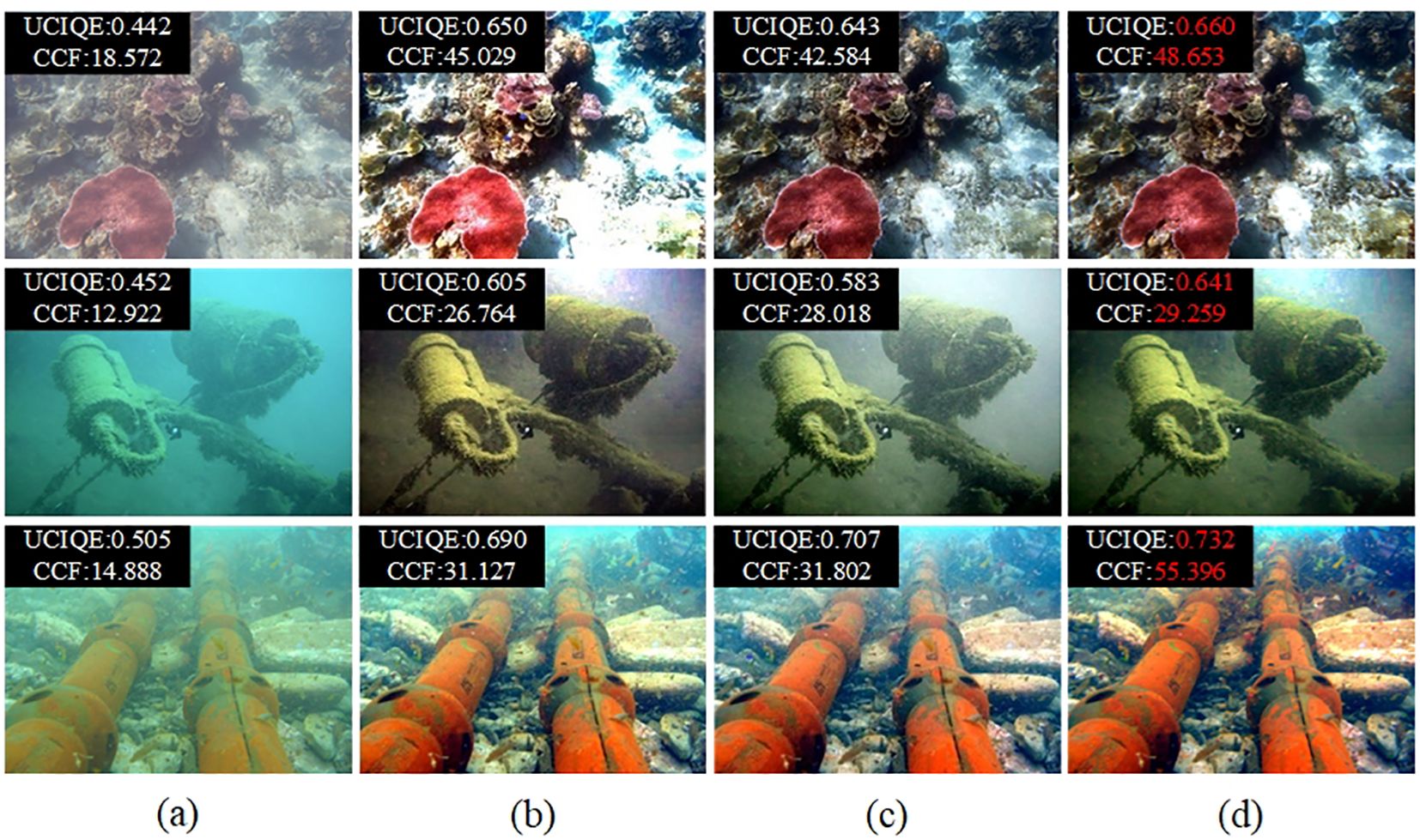

4.4 Experimental results for non-uniform light

To verify the robustness of the algorithm under complex illumination, underwater images captured in challenging lighting conditions, such as low light and artificial lighting, were selected from UIEB, the Ocean-dark dataset, and Treibitz and Schechner (2008) for comparative experiments. Figure 9 shows, from top to bottom, low-light images, images heavily disturbed by artificial light sources, and images with non-uniform illumination, together with the corresponding results. For low-light images, Fusion effectively restores brightness, but its contrast still needs improvement, while the other algorithms fail to restore brightness and still suffer from color deviation. For images severely disturbed by artificial light sources, UDCP and Shallow-uwnet further darken the dark regions, and the other algorithms overexpose the light-source regions. For non-uniformly illuminated scenes that are strongly disturbed by artificial light and have low contrast, the proposed algorithm corrects the color bias and improves contrast more effectively than the other algorithms, yielding better visual results.

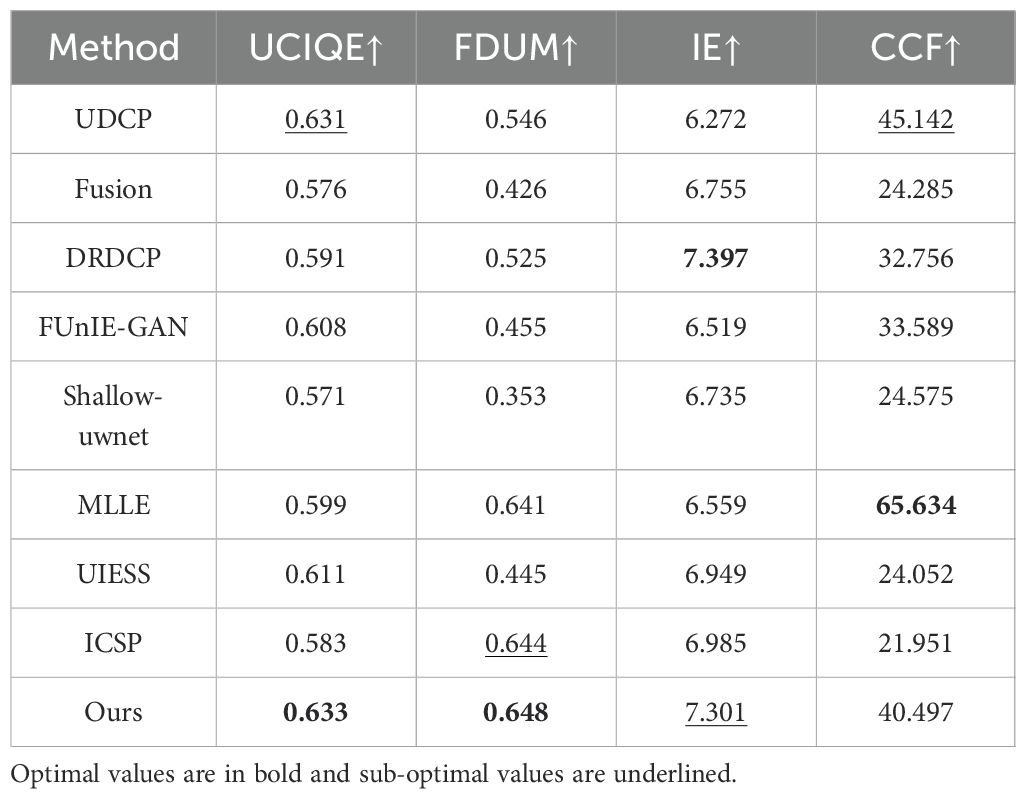

As Table 3 shows, when processing images captured in non-uniform lighting environments, the proposed algorithm achieves the best value on two objective metrics and the second-best value on two others. Together, the qualitative and quantitative results demonstrate the strong robustness of the algorithm.

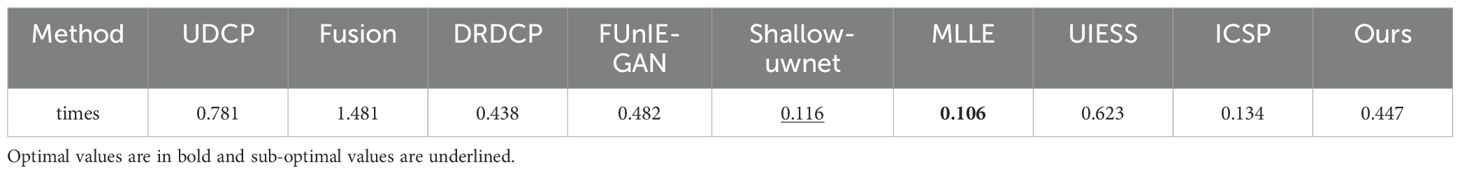

4.5 Running time comparison experiment

To evaluate computational efficiency, we compare the running times of all algorithms; the results are shown in Table 4. Because the proposed algorithm adds segmentation and superposition steps, it runs slightly slower than the other individual algorithms. However, it achieves better qualitative and quantitative results and effectively handles images captured under non-uniform lighting conditions. Overall, the proposed algorithm offers better overall performance.
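The section does not state how running times were measured. A minimal timing harness is sketched below, assuming each method is available as a Python callable that takes one image; the `sum` and `sorted` "methods" and the list "images" are stand-ins for real restoration algorithms and real data, chosen only so the sketch runs on its own.

```python
import statistics
import time
from typing import Any, Callable, Dict, Sequence


def time_methods(methods: Dict[str, Callable[[Any], Any]],
                 images: Sequence[Any], repeats: int = 3) -> Dict[str, float]:
    """Average per-image running time (seconds) for each method.

    Each image is processed `repeats` times and the fastest run is kept,
    which reduces noise from other processes; the per-image minima are
    then averaged over the image set.
    """
    if not images or repeats < 1:
        raise ValueError("need at least one image and one repeat")
    results: Dict[str, float] = {}
    for name, method in methods.items():
        per_image = []
        for image in images:
            runs = []
            for _ in range(repeats):
                start = time.perf_counter()
                method(image)
                runs.append(time.perf_counter() - start)
            per_image.append(min(runs))
        results[name] = statistics.mean(per_image)
    return results


# Usage with stand-in "methods" (placeholders for real restoration algorithms).
if __name__ == "__main__":
    dummy_images = [list(range(10_000)), list(range(20_000))]
    timings = time_methods({"sum": sum, "sorted": sorted}, dummy_images)
    for name, seconds in timings.items():
        print(f"{name}: {seconds * 1e3:.3f} ms")
```

Keeping the best of several runs per image is a common choice for wall-clock comparisons, because background load can only make a run slower, never faster.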

4.6 Ablation experiment

To evaluate the effectiveness of the algorithm and its individual components, images were randomly selected from the datasets for an ablation experiment. The experiment compared three configurations: without correction of the background light intensity layer, without correction of the background light color layer, and the complete algorithm. The results are shown in Figure 10.

Figure 10. Results of the ablation experiment; optimal values are marked in red. From left to right: (A) Raw images. (B) Uncorrected background light intensity layer. (C) Uncorrected background light color layer. (D) Proposed algorithm.

The visual comparison in Figure 10 shows that, without correction of the background light intensity layer, the result has low contrast, is not effectively dehazed, and shows unnatural color correction. Without correction of the background light color layer, contrast improves and the color bias is reduced, but the color correction remains incomplete or introduces other color biases, and some regions are over-enhanced. The complete algorithm, with all modules included, restores natural colors, significantly improves contrast, and produces clear details, giving the best visual result.

4.7 Application test

To verify the practical value of the proposed algorithm, the SURF algorithm (Bay et al., 2006) is employed for feature point matching. Three typical images are selected for comparison in Figure 11(I), with the number of matched feature points shown in the upper right corner of each image. Figure 11(I) shows that the number of matched local feature points increases significantly after restoration. This demonstrates the effectiveness of the proposed method in detail enhancement and provides reliable data support for realizing SAGSIN.
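The match counts in Figure 11(I) depend on how descriptor pairs are accepted, which the section does not specify. The sketch below covers only the counting step, assuming SURF descriptors have already been extracted by an external library and assuming a nearest-neighbor ratio test (in the style of Lowe) with a threshold of 0.7; both the criterion and the threshold are assumptions, not the authors' settings. Low-dimensional toy vectors stand in for real 64-dimensional SURF descriptors.

```python
import math
from typing import List, Sequence

Descriptor = Sequence[float]


def count_ratio_matches(desc_a: List[Descriptor], desc_b: List[Descriptor],
                        ratio: float = 0.7) -> int:
    """Count descriptors in A whose nearest neighbor in B passes the ratio test.

    A match is accepted only if the closest descriptor in B is clearly closer
    than the second-closest one (d1 < ratio * d2), which rejects ambiguous
    correspondences.
    """
    if len(desc_b) < 2:
        return 0  # the ratio test needs at least two candidates
    matches = 0
    for d in desc_a:
        # Euclidean distances from d to every descriptor in B, smallest first.
        dists = sorted(math.dist(d, e) for e in desc_b)
        if dists[0] < ratio * dists[1]:
            matches += 1
    return matches


if __name__ == "__main__":
    image_a = [(0.0, 0.0), (10.0, 10.0), (2.5, 2.5)]
    image_b = [(0.1, 0.0), (5.0, 5.0), (10.0, 10.2)]
    # The first two points have a distinct nearest neighbor; the third lies
    # almost equally far from two candidates and is rejected.
    print(count_ratio_matches(image_a, image_b))
```

A restored image with sharper texture tends to yield more distinctive descriptors, so more of them survive this kind of test, which is the effect the match counts are meant to capture.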

Figure 11. (I) Feature point matching; the white numbers give the number of matched feature points. (II) Failure case analysis: the first two rows show the raw images and their corresponding scatter plots, and the last two rows show the resulting images and their corresponding scatter plots.

4.8 Failure case analysis

Lighting in underwater environments is extremely complex: depth, water quality, and biological communities all affect illumination and cause varying degrees of color deviation. Although our method corrects most color deviations, some residual color casts may remain in certain extreme or unusual underwater environments. A few failure cases occurred during the experiments. As shown in Figure 11(II), these images exhibit low contrast, strong color deviations, and extremely blurred object edges. Subjectively, although our algorithm corrects the color deviations and enhances contrast, the results look unnatural and their edge smoothness needs improvement.

Therefore, we will continue to optimize the algorithm in future research to improve its adaptability and robustness.

5 Conclusion

In this paper, we propose an attenuated incident optical model that accounts for the attenuation of light in water, with a focus on its absorption characteristics. We propose a new maximum reflection prior for estimating the background light layer, which is introduced into the attenuated incident optical model and combined with background segmentation to restore degraded images. Our method successfully restores details and textures, providing reliable data support for SAGSIN to realize cross-domain, multi-dimensional information fusion and sharing. To validate the superiority and effectiveness of our method, we conducted a comprehensive series of experiments with qualitative and quantitative comparisons against eight existing enhancement and restoration methods. We also conducted experiments under non-uniform illumination to show that our method handles such scenes well. Although our method performs well on images with non-uniform incident light, it still faces challenges with images from extreme underwater environments, which we intend to address in future work.
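The authors' new maximum reflection prior is not detailed in this section. For orientation, the sketch below implements only the classical maximum reflectance prior of Zhang et al. (2017) that the name refers to: within a small local window, the brightest value of each color channel is taken as an estimate of the incident illumination in that channel, on the assumption that some pixel in the window reflects close to all of the light in that channel. It is written in pure Python on nested lists so it stays self-contained; the window radius and the toy image are illustrative assumptions, and a practical version would use a vectorized filter such as `scipy.ndimage.maximum_filter` applied per channel.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]
Image = List[List[Pixel]]  # rows of (R, G, B) values in [0, 1]


def max_reflectance_illumination(img: Image, radius: int = 1) -> Image:
    """Estimate per-pixel illumination as the per-channel maximum over a
    (2*radius+1) x (2*radius+1) window (classical maximum reflectance prior)."""
    h, w = len(img), len(img[0])
    out: Image = []
    for y in range(h):
        row: List[Pixel] = []
        for x in range(w):
            # Local window, clipped at the image border.
            ys = range(max(0, y - radius), min(h, y + radius + 1))
            xs = range(max(0, x - radius), min(w, x + radius + 1))
            row.append(tuple(
                max(img[j][i][c] for j in ys for i in xs) for c in range(3)
            ))
        out.append(row)
    return out


if __name__ == "__main__":
    toy = [[(0.2, 0.5, 0.6), (0.4, 0.3, 0.7), (0.1, 0.6, 0.2)]]
    for pixel in max_reflectance_illumination(toy, radius=1)[0]:
        print(pixel)
```

Taking the maximum independently per channel lets the estimate follow a colored, spatially varying light source instead of assuming a single global background light, which is why this family of priors suits non-uniform illumination.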

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

SL: Formal analysis, Methodology, Supervision, Validation, Writing – original draft, Writing – review & editing. YS: Conceptualization, Data curation, Formal analysis, Methodology, Software, Validation, Writing – original draft, Writing – review & editing. NY: Data curation, Formal analysis, Validation, Writing – review & editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This work was in part supported by the Basic Research Project of Higher Education Institutions of the Educational Department of Liaoning Province under Grant No. LJKMZ20220615, the Special Fund for Basic Scientific Research Operations of Undergraduate Universities in Liaoning Province, and the “Guangxuan Plan” project of Shenyang Ligong University.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Akkaynak D., Treibitz T. (2018). “A revised underwater image formation model,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 6723–6732. doi: 10.1109/CVPR.2018.00703

Ali U., Mahmood M. T. (2022). Underwater image restoration through regularization of coherent structures. Front. Mar. Sci. 9, 1024339. doi: 10.3389/fmars.2022.1024339

Ancuti C. O., Ancuti C., De Vleeschouwer C., Bekaert P. (2017). Color balance and fusion for underwater image enhancement. IEEE Trans. Image Process. 27, 379–393. doi: 10.1109/TIP.83

Azmi K. Z. M., Ghani A. S. A., Yusof Z. M., Ibrahim Z. (2019). Natural-based underwater image color enhancement through fusion of swarm-intelligence algorithm. Appl. Soft Computing 85, 105810. doi: 10.1016/j.asoc.2019.105810

Bay H., Tuytelaars T., Van Gool L. (2006). “SURF: Speeded up robust features,” in Proceedings of the 9th European Conference on Computer Vision (ECCV) (Graz, Austria: Springer).

Bi X., Wang P., Guo W., Zha F., Sun L. (2024). RGB/event signal fusion framework for multi-degraded underwater image enhancement. Front. Mar. Sci. 11, 1366815. doi: 10.3389/fmars.2024.1366815

Chen Y.-W., Pei S.-C. (2022). Domain adaptation for underwater image enhancement via content and style separation. IEEE Access 10, 90523–90534. doi: 10.1109/ACCESS.2022.3201555

Chen X., Zhang P., Quan L., Yi C., Lu C. (2021). Underwater image enhancement based on deep learning and image formation model. arXiv preprint. doi: 10.48550/arXiv.2101.00991

Cheng N., Quan W., Shi W., Wu H., Ye Q., Zhou H., et al. (2020). A comprehensive simulation platform for space-air-ground integrated network. IEEE Wireless Commun. 27, 178–185. doi: 10.1109/MWC.7742

Deng X., Wang H., Liu X. (2019). Underwater image enhancement based on removing light source color and dehazing. IEEE Access 7, 114297–114309. doi: 10.1109/Access.6287639

Drews P. L., Nascimento E. R., Botelho S. S., Campos M. F. M. (2016). Underwater depth estimation and image restoration based on single images. IEEE Comput. Graphics Appl. 36, 24–35. doi: 10.1109/MCG.38

Fu H., Zheng W., Meng X., Wang X., Wang C., Ma H. (2023). “You do not need additional priors or regularizers in Retinex-based low-light image enhancement,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 18125–18134.

Ghani A. S. A., Isa N. A. M. (2017). Automatic system for improving underwater image contrast and color through recursive adaptive histogram modification. Comput. Electron. Agric. 141, 181–195. doi: 10.1016/j.compag.2017.07.021

Guo H., Li J., Liu J., Tian N., Kato N. (2021). A survey on space-air-ground-sea integrated network security in 6G. IEEE Commun. Surveys Tutorials 24, 53–87. doi: 10.1109/COMST.2021.3131332

He K., Sun J., Tang X. (2010). Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 33, 2341–2353. doi: 10.1109/TPAMI.2010.168

Hou G., Li J., Wang G., Yang H., Huang B., Pan Z. (2020). A novel dark channel prior guided variational framework for underwater image restoration. J. Visual Commun. Image Represent. 66, 102732. doi: 10.1016/j.jvcir.2019.102732

Hou G., Li N., Zhuang P., Li K., Sun H., Li C. (2023). Non-uniform illumination underwater image restoration via illumination channel sparsity prior. IEEE Trans. Circuits Syst. Video Technol. doi: 10.1109/TCSVT.2023.3290363

Hu K., Weng C., Zhang Y., Jin J., Xia Q. (2022). An overview of underwater vision enhancement: From traditional methods to recent deep learning. J. Mar. Sci. Eng. 10, 241. doi: 10.3390/jmse10020241

Islam M. J., Xia Y., Sattar J. (2020). Fast underwater image enhancement for improved visual perception. IEEE Robotics Automation Lett. 5, 3227–3234. doi: 10.1109/LSP.2016.

Lei X., Wang H., Shen J., Liu H. (2022). Underwater image enhancement based on color correction and complementary dual image multi-scale fusion. Appl. Optics 61, 5304–5314. doi: 10.1364/AO.456368

Li C., Anwar S., Hou J., Cong R., Guo C., Ren W. (2021). Underwater image enhancement via medium transmission-guided multi-color space embedding. IEEE Trans. Image Process. 30, 4985–5000. doi: 10.1109/TIP.2021.3076367

Li C.-Y., Guo J.-C., Cong R.-M., Pang Y.-W., Wang B. (2016). Underwater image enhancement by dehazing with minimum information loss and histogram distribution prior. IEEE Trans. Image Process. 25, 5664–5677. doi: 10.1109/TIP.2016.2612882

Li J., Li Y. (2019). Underwater image restoration algorithm for free-ascending deep-sea tripods. Optics Laser Technol. 110, 129–134. doi: 10.1016/j.optlastec.2018.05.034

Li S., Liu F., Wei J. (2022). Dehazing and deblurring of underwater images with heavy-tailed priors. Appl. Optics 61, 3855–3870. doi: 10.1364/AO.452345

Liang Z., Ding X., Wang Y., Yan X., Fu X. (2021). GUDCP: Generalization of underwater dark channel prior for underwater image restoration. IEEE Trans. Circuits Syst. Video Technol. 32, 4879–4884. doi: 10.1109/TCSVT.2021.3114230

Lin S., Chi K., Wei T., Tao Z. (2021). Underwater image sharpening based on structure restoration and texture enhancement. Appl. Optics 60, 4443–4454. doi: 10.1364/AO.420962

Lin S., Zhang R., Ning Z., Luo J. (2023). TCRN: A two-step underwater image enhancement network based on triple-color space feature reconstruction. J. Mar. Sci. Eng. 11, 1221. doi: 10.3390/jmse11061221

Liu S., Fan H., Lin S., Wang Q., Ding N., Tang Y. (2022a). Adaptive learning attention network for underwater image enhancement. IEEE Robotics Automation Lett. 7, 5326–5333. doi: 10.1109/LRA.2022.3156176

Liu R., Fan X., Zhu M., Hou M., Luo Z. (2020). Real-world underwater enhancement: Challenges, benchmarks, and solutions under natural light. IEEE Trans. Circuits Syst. Video Technol. 30, 4861–4875. doi: 10.1109/TCSVT.76

Liu X., Lin S., Chi K., Tao Z., Zhao Y. (2022b). BOTHS: Super lightweight network-enabled underwater image enhancement. IEEE Geosci. Remote Sens. Lett. 20, 1–5. doi: 10.1109/LGRS.2022.3230049

Liu T., Zhu K., Wang X., Song W., Wang H. (2024). Lightweight underwater image adaptive enhancement based on zero-reference parameter estimation network. Front. Mar. Sci. 11, 1378817. doi: 10.3389/fmars.2024.1378817

Naik A., Swarnakar A., Mittal K. (2021). “Shallow-UWnet: Compressed model for underwater image enhancement (student abstract),” in Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 35, 15853–15854.

Peng Y.-T., Cosman P. C. (2017). Underwater image restoration based on image blurriness and light absorption. IEEE Trans. Image Process. 26, 1579–1594. doi: 10.1109/TIP.2017.2663846

Porto Marques T., Branzan Albu A., Hoeberechts M. (2019). A contrast-guided approach for the enhancement of low-lighting underwater images. J. Imaging 5, 79. doi: 10.3390/jimaging5100079

Rao B. S. (2020). Dynamic histogram equalization for contrast enhancement for digital images. Appl. Soft Computing 89, 106114. doi: 10.1016/j.asoc.2020.106114

Raveendran S., Patil M. D., Birajdar G. K. (2021). Underwater image enhancement: a comprehensive review, recent trends, challenges and applications. Artif. Intell. Rev. 54, 5413–5467. doi: 10.1007/s10462-021-10025-z

Treibitz T., Schechner Y. Y. (2008). Active polarization descattering. IEEE Trans. Pattern Anal. Mach. Intell. 31, 385–399. doi: 10.1109/TPAMI.2008.85

Wang Y., Li N., Li Z., Gu Z., Zheng H., Zheng B., et al. (2018). An imaging-inspired no-reference underwater color image quality assessment metric. Comput. Electrical Eng. 70, 904–913. doi: 10.1016/j.compeleceng.2017.12.006

Wang H., Sun S., Bai X., Wang J., Ren P. (2023a). A reinforcement learning paradigm of configuring visual enhancement for object detection in underwater scenes. IEEE J. Oceanic Eng. 48, 443–461. doi: 10.1109/JOE.2022.3226202

Wang H., Sun S., Chang L., Li H., Zhang W., Frery A. C., et al. (2024a). Inspiration: A reinforcement learning-based human visual perception-driven image enhancement paradigm for underwater scenes. Eng. Appl. Artif. Intell. 133, 108411. doi: 10.1016/j.engappai.2024.108411

Wang G., Tian J., Li P. (2019). Image color correction based on double transmission underwater imaging model. Acta Optica Sin. 39, 0901002. doi: 10.3788/AOS201939.0901002

Wang H., Zhang W., Bai L., Ren P. (2024b). Metalantis: A comprehensive underwater image enhancement framework. IEEE Trans. Geosci. Remote Sens. 62. doi: 10.1109/TGRS.2024.3387722

Wang H., Zhang W., Ren P. (2024c). Self-organized underwater image enhancement. ISPRS J. Photogrammetry Remote Sens. 215, 1–14. doi: 10.1016/j.isprsjprs.2024.06.019

Wang H., Zhong G., Sun J., Chen Y., Zhao Y., Li S., et al. (2023b). Simultaneous restoration and super-resolution GAN for underwater image enhancement. Front. Mar. Sci. 10, 1162295. doi: 10.3389/fmars.2023.1162295

Yang M., Sowmya A. (2015). An underwater color image quality evaluation metric. IEEE Trans. Image Process. 24, 6062–6071. doi: 10.1109/TIP.2015.2491020

Yang N., Zhong Q., Li K., Cong R., Zhao Y., Kwong S. (2021). A reference-free underwater image quality assessment metric in frequency domain. Signal Processing: Image Communication 94, 116218. doi: 10.1016/j.image.2021.116218

Yuan J., Cao W., Cai Z., Su B. (2020). An underwater image vision enhancement algorithm based on contour bougie morphology. IEEE Trans. Geosci. Remote Sens. 59, 8117–8128. doi: 10.1109/TGRS.2020.3033407

Zhang J., Cao Y., Fang S., Kang Y., Chen C. W. (2017). “Fast haze removal for nighttime image using maximum reflectance prior,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 7418–7426.

Zhang W., Pan X., Xie X., Li L., Wang Z., Han C. (2021). Color correction and adaptive contrast enhancement for underwater image enhancement. Comput. Electrical Eng. 91, 106981. doi: 10.1016/j.compeleceng.2021.106981

Zhang W., Zhuang P., Sun H.-H., Li G., Kwong S., Li C. (2022). Underwater image enhancement via minimal color loss and locally adaptive contrast enhancement. IEEE Trans. Image Process. 31, 3997–4010. doi: 10.1109/TIP.2022.3177129

Zheng S., Wang R., Zheng S., Wang L., Liu Z. (2024). A learnable full-frequency transformer dual generative adversarial network for underwater image enhancement. Front. Mar. Sci. 11, 1321549. doi: 10.3389/fmars.2024.1321549

Zhou J., Li B., Zhang D., Yuan J., Zhang W., Cai Z., et al. (2023a). UGIF-Net: An efficient fully guided information flow network for underwater image enhancement. IEEE Trans. Geosci. Remote Sens. 61. doi: 10.1109/TGRS.2023.3293912

Zhou J., Liu Q., Jiang Q., Ren W., Lam K.-M., Zhang W. (2023b). Underwater camera: Improving visual perception via adaptive dark pixel prior and color correction. Int. J. Comput. Vision, 1–19. doi: 10.1007/s11263-023-01853-3

Zhou J., Wang Y., Li C., Zhang W. (2023c). Multicolor light attenuation modeling for underwater image restoration. IEEE J. Oceanic Eng. 48. doi: 10.1109/JOE.2023.3275615

Zhou J., Yang T., Chu W., Zhang W. (2022a). Underwater image restoration via backscatter pixel prior and color compensation. Eng. Appl. Artif. Intell. 111, 104785. doi: 10.1016/j.engappai.2022.104785

Keywords: underwater image restoration, attenuated incident optical model, background segmentation, new maximum reflection prior, background light layer estimation

Citation: Lin S, Sun Y and Ye N (2024) Underwater image restoration via attenuated incident optical model and background segmentation. Front. Mar. Sci. 11:1457190. doi: 10.3389/fmars.2024.1457190

Received: 30 June 2024; Accepted: 16 September 2024;

Published: 10 October 2024.

Edited by:

Takafumi Hirata, Hokkaido University, Japan

Reviewed by:

Akihiko Tanaka, Tokai University, Japan

Peng Ren, China University of Petroleum (East China), China

Copyright © 2024 Lin, Sun and Ye. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Sen Lin, linsen@sylu.edu.cn

†These authors have contributed equally to this work and share first authorship

Sen Lin

Yuanjie Sun†