ORIGINAL RESEARCH article

Front. Mar. Sci., 28 May 2024

Sec. Ocean Observation

Volume 11 - 2024 | https://doi.org/10.3389/fmars.2024.1411920

This article is part of the Research Topic: Best Practices in Ocean Observing

Unmanned surface vehicles (USVs) offer significant value through their capability to undertake hazardous and time-consuming missions across water surfaces. Recently, as the application of USVs has been extended to nearshore waterways, object tracking has become vital to their safe navigation in offshore scenes. However, existing tracking systems for USVs are mainly based on cameras or LiDAR sensors, which suffer from drawbacks such as a lack of depth perception or high deployment costs. In contrast, millimeter-wave (MMW) radar offers low cost and robustness in all weather and lighting conditions. In this work, to construct a robust and low-cost tracking system for USVs in complex offshore scenes, we propose a novel MMW radar-based object tracking method (ROTracker). The proposed ROTracker combines the physical properties of MMW radar with traditional tracking systems. Specifically, we introduce the radar Doppler velocity and a designed motion discriminator to improve the robustness of the tracking system toward low-speed targets. Moreover, we conducted real-world experiments to validate the efficacy of the proposed ROTracker. Compared with other baseline methods, ROTracker achieves a strong multiple object tracking accuracy (MOTA) of 91.9% on our collected dataset. The experimental results demonstrate that the proposed ROTracker has significant application potential for USVs in terms of both accuracy and efficiency, addressing the challenges posed by complex nearshore environments.

In recent years, unmanned surface vehicles (USVs) have become increasingly notable for their capacity to execute hazardous and time-intensive missions (Kang et al., 2024). As early as the end of the 1960s, remote-controlled USVs found utility in various naval applications (Skorski et al., 1970). With the advent of the twenty-first century, significant advancements in USV control systems and navigation technologies have expanded their operational modes (Manley, 2008): USVs equipped with partially or fully autonomous control systems can be remotely controlled by operators stationed on land or aboard nearby vessels. Because USVs in offshore scenes are more closely related to human life and have large potential value in offshore waterway transportation systems, strong commercial, scientific, and environmental demands have driven the expansion of USV applications from wide marine scenes to narrow offshore scenes. However, complex offshore environments also bring new challenges for the autonomous perception systems of USVs, especially for the object tracking task.

Object tracking is a typical perception task for USVs; it provides essential target information and enables safe autonomous navigation. With the advancement of intelligent perception technology, object tracking for USVs has garnered significant attention from researchers. Almeida et al. (2009) and Oleynikova et al. (2010) construct object tracking systems based on marine radar for USVs in wide marine environments. However, as marine radars have low resolution and limited capability in short-range object detection, they are often deemed unsuitable for deployment in offshore environments. Some researchers (Larson et al., 2007; Martins et al., 2007; Xu et al., 2023) have developed visual tracking systems for USVs, leveraging the rich semantic information provided by cameras. Tall et al. (2010) propose an early visual tracking method for USVs that uses traditional image processing and demonstrates the potential of visual tracking systems. Wolf et al. (2010) extend it to a 360-degree visual tracking system that uses multiple cameras to achieve surround-view tracking for USVs. With the emergence of deep learning, the camera has become a versatile and highly relevant sensor in automatic applications, steering ocean exploration research toward computer vision. Kim et al. (2022) utilize improved YOLO-series object detection algorithms to identify maritime targets against waves, light reflection, and water mist on the water surface. Yang et al. (2021) propose an enhanced SiamMask network for visual ship tracking and semi-supervised video object segmentation in the open sea. In these visual tracking systems, a visual detector first detects objects from camera images, and a multi-object tracking method then outputs the track results.
However, these vision-based algorithms can hardly discriminate ships from the background under low-light conditions and suffer from degraded tracking performance in dynamic, shaking environments. Besides, they are not suited to tracking multiple targets simultaneously. Ramos et al. (2019) design an inertial measurement unit (IMU)-aided surface plane fitting algorithm and a depth fingerprint association cue for nautical object tracking. Owing to the limited baseline length of the stereo camera, this algorithm is range constrained and can only perceive obstacles within 20 m. Because cameras are sensitive to light and weather changes, their perception performance is relatively unstable.

To overcome the shortcomings of visual tracking methods, other researchers (De Robertis et al., 2021; Zulkifli et al., 2023) have utilized LiDAR sensors to construct tracking systems for USVs. LiDAR sensors offer accurate measurements and robustness under various lighting conditions. LiDAR-based tracking systems provide valuable spatial information, including the location and velocity of moving objects, which is beneficial for enabling autonomous navigation of USVs in complex water surface traffic scenes. Muhovič et al. (2019) proposed a LiDAR-based simultaneous multi-object tracking and static mapping system tailored for USVs. This approach operates effectively without requiring any training data and exhibits robust performance, particularly in nearshore scenarios. Halterman and Bruch (2010) developed a berthing awareness framework, utilizing a 64-line LiDAR to sense the ego-ship status relative to the dock in real time by registering point clouds across successive scans. While LiDAR-based tracking systems (Yao et al., 2023) offer significant performance benefits for USVs, the high hardware cost of LiDAR poses a barrier to widespread deployment. To address this challenge, single-chip millimeter-wave (MMW) radars have been increasingly utilized in autonomous road vehicles and intelligent traffic monitoring as a cost-effective alternative to LiDAR.

MMW radars offer advantages such as low cost, compact size, and robustness against extreme weather and varying light conditions, making them an attractive option for tracking systems (Wenger, 1998). The adoption of radar-based tracking systems presents great potential for USVs in complex offshore waterways. Some researchers have explored low-cost MMW radar for detecting targets on the water surface. Kiriakidis et al. (2022) explore a radar-based object detection and tracking framework for USVs. Cheng et al. (2021) proposed a radar and camera fusion model for detecting small targets on the water surface, which demonstrates the great potential of radar-camera fusion for the USV detection task. However, due to the low resolution of MMW radar, traditional radar-based detection methods encounter numerous false targets, particularly in offshore waterway scenes. With the expanding application scenarios for offshore waterway USVs, radar-based methods are worth exploring further. There are three primary challenges for the application of radar-based tracking systems in offshore waterways.

(1) Sparse Point Clouds. While LiDAR sensors typically generate tens of thousands of points per scan, MMW radar systems produce only hundreds of reflective points per scan period. This discrepancy arises from the different electromagnetic wavelengths utilized by each sensor type. MMW radar, being more susceptible to specular reflection, yields lower-resolution point clouds. Consequently, these sparse radar point clouds may fail to accurately represent track targets and other static features such as shores, posing a challenge for radar-based tracking systems.

(2) Noisy Radar Point Clouds. MMW radar often generates noisy clutter points in its point clouds due to its reflection characteristics. This noise includes water clutter points and multi-path noise. Water clutter points are caused by inaccurately detecting waves on the water surface, while multi-path noise results from multiple reflections of radar beams. Additionally, radar point clouds are influenced by various factors such as target material, shape, and size characteristics, further contributing to their instability. These noisy and unstable radar point clouds decrease the robustness of radar-based tracking methods.

(3) Low Moving Speed. In offshore waterway environments, USVs and other targets typically operate at low speeds compared to road and marine scenes. In these low-speed tracking scenarios, accurate analysis of point clouds at each frame is crucial, with the quality of point clouds being of paramount importance. However, the noisy radar points mentioned above present significant challenges for radar-based tracking methods, particularly for static or low-speed targets. Traditional radar-based tracking methods are primarily designed for high-speed scenes, making them less suitable for low-speed environments.

To overcome the challenges of radar-based tracking systems in offshore waterway scenes, we propose a novel MMW radar-based object tracking method named ROTracker. Given sparse 4D MMW radar point clouds, our proposed ROTracker accurately outputs tracked objects along with their spatial locations and estimated moving speeds, enabling autonomous navigation of USVs in complex offshore scenarios. Similar to most tracking systems, our ROTracker method consists of a detector and a tracker. First, the radar detector detects objects from sparse and noisy radar point clouds. Then, in the object tracking stage, alongside the common Kalman filtering tracker, we introduce Doppler speed information from MMW radar to address the sparsity of radar points and enhance the accuracy of moving direction for dynamic targets. Furthermore, we introduce a novel motion discriminator in our tracker to improve the robustness of tracking performance. Quantitative experiments are conducted to validate the tracking performance of our ROTracker compared to high-cost LiDAR-based tracking systems. Our ROTracker framework exhibits great deployment potential for offshore waterway USVs. Due to the low number of radar point clouds, our ROTracker system boasts fast running speed and can be efficiently deployed in USV embedded computing platforms.

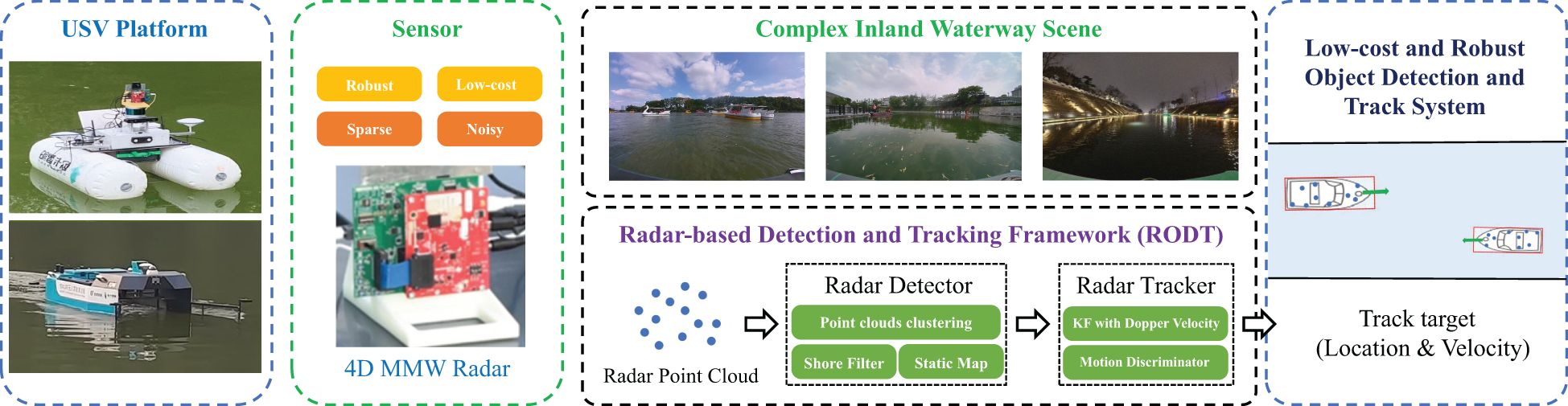

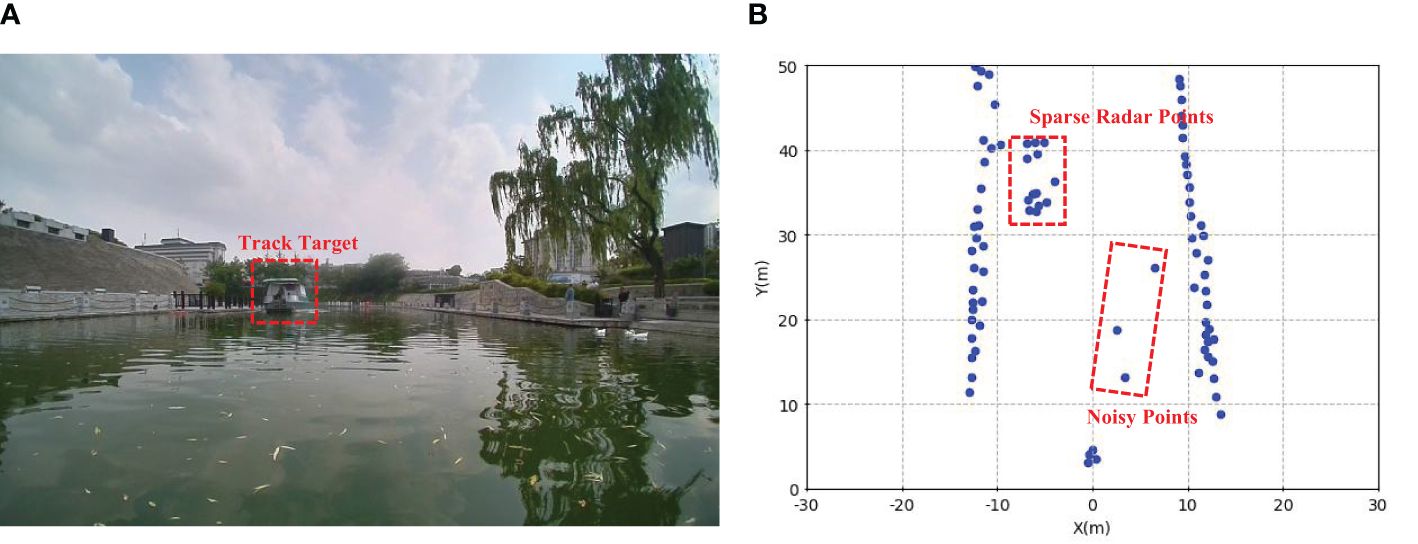

As illustrated in Figure 1, radar-based tracking systems offer a notable advantage in terms of both cost-effectiveness and robustness when compared to visual and LiDAR-based tracking systems. Based on the physical properties of MMW radar, we propose a novel radar-based object tracking method named ROTracker for USVs in complex offshore scenarios. However, as shown in Figure 2, the sparse and noisy radar point clouds in offshore waterway scenes present significant challenges for radar-based tracking systems. Our ROTracker, consisting of a radar detector and a radar tracker, effectively employs spatial and Doppler velocity information from 4D MMW radar to overcome the issues mentioned above. In this section, we introduce each important module of our proposed ROTracker in detail.

Figure 1 A novel millimeter wave radar-based object tracking method for unmanned surface vehicle in offshore waterway environments.

Figure 2 The figures present the challenges of sparse MMW radars in offshore waterway scenes: (A) shows camera images. (B) shows sparse MMW radar point clouds for the detected boat.

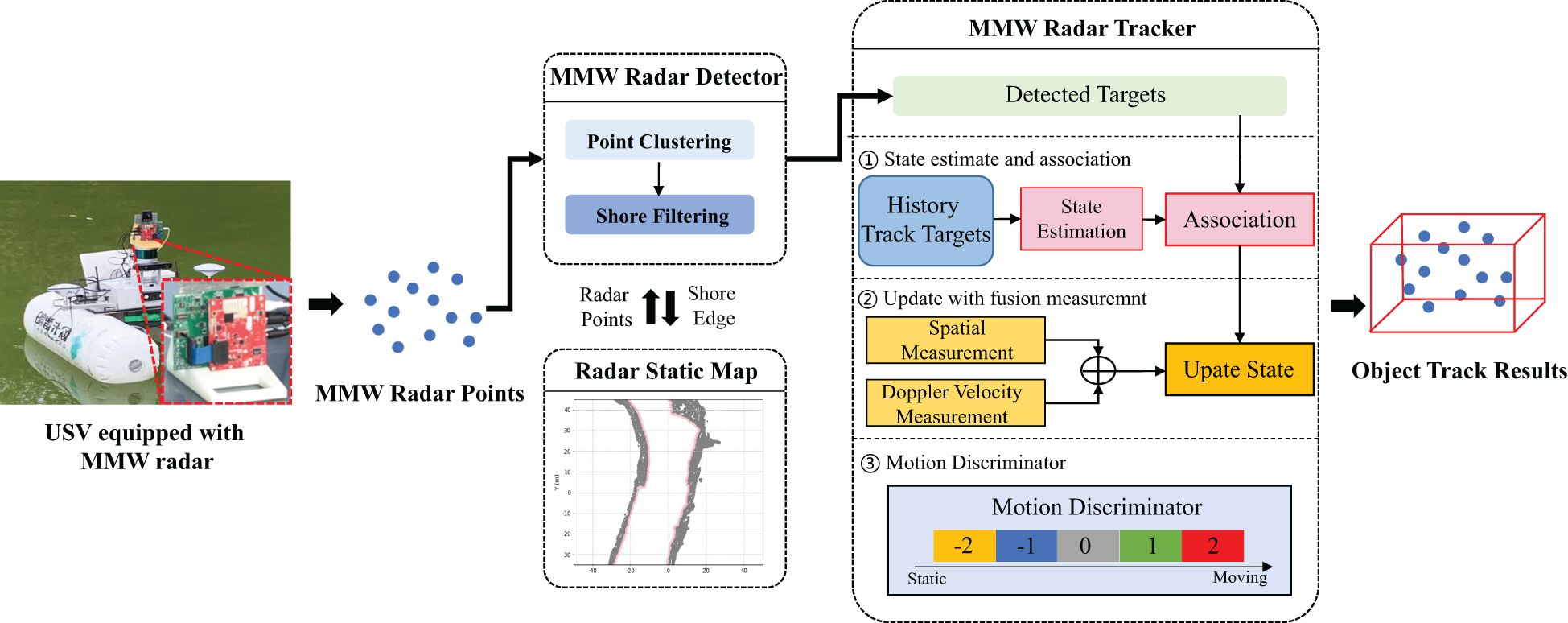

Figure 3 presents the detailed structure of our ROTracker framework. The ROTracker framework comprises a radar detector and a radar tracker. As single-frame radar points are extremely sparse and may lead to false detections from shores, we construct a static map to integrate multi-frame radar points and filter out false detection objects on the shore. Once all detected targets are obtained in the radar detector, the radar tracker calculates the moving speed and track identification (ID). Since Kalman filtering trackers are known for their accuracy and robustness, particularly under noisy sensor inputs, they have been extensively utilized in camera-based, LiDAR-based, and radar-based tracking methods. Therefore, we extend their use in our radar tracker. To address the track errors caused by noisy radar points, we incorporate radar Doppler velocity information into the Kalman Filter tracker module. Moreover, we design a motion discriminator to improve the tracking robustness in low-speed tracking scenes. This motion discriminator categorizes objects into five distinct motion levels, thereby aiding in reducing the impact of MMW radar errors on tracking performance.

Figure 3 The proposed ROTracker architecture consists of an MMW radar detector and an MMW radar tracker.

The radar detector contains two processing steps: point cloud clustering and shore point filtering.

1) Point cloud clustering. The point cloud clustering module detects targets in the spatial dimensions. As MMW radar points have high range resolution in the 2D spatial plane, we can cluster radar points to separate different objects in that plane. The collected point clouds primarily originate from obstacles and the surrounding coastal environment. To focus on relevant objects, we restrict the object tracking range to a region of interest (ROI). The radar points outside the ROI are filtered out using a conditional filter. Next, we apply the density-based spatial clustering of applications with noise (DBSCAN) algorithm to cluster the points within the ROI. This method facilitates the generation of clusters in different scans under the same hyperparameter settings. The predefined search distance and the minimum number of points per cluster serve as the hyperparameters of the DBSCAN algorithm.

2) Shore point filtering. The shore is the most common element encountered by USVs in offshore waterways. After processing in the point clustering module, many clustered point clouds lie on the shore. These shore points can lead to false track results and significantly reduce the efficacy of the tracking algorithm. Hence, the shore point filtering module removes these shore targets. Similar to typical LiDAR-based object tracking systems, we utilize a static map to filter out shore targets. At the start of the ROTracker system, the static map is initialized as a 2D voxel grid matrix in the world coordinate system. Subsequently, at each frame, radar point clouds are fed into the static map, and multi-frame sparse radar point clouds are accumulated to form denser radar points. To populate the static map, a coordinate transformation is applied to the radar point clouds. Then we use an image contour extraction algorithm to extract the shore edge from the static map. According to the shore edge and the USV location, we can filter false targets on the shore. Filtering shore points effectively decreases the number of false targets in the radar detector.
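The two detector steps can be illustrated with a minimal sketch in plain Python. It is not the authors' implementation: the function and class names (`roi_filter`, `dbscan`, `StaticMap`, `drop_shore_targets`) are hypothetical, the radar pose is assumed to be known in the world frame, and the shore test is deliberately simplified to a per-cell persistence threshold (`min_frames`, an assumed parameter) instead of the image contour extraction described above.

```python
import math
from collections import defaultdict

def roi_filter(points, x_range, y_range):
    """Conditional filter: keep (x, y) points inside a rectangular ROI."""
    (x0, x1), (y0, y1) = x_range, y_range
    return [p for p in points if x0 <= p[0] <= x1 and y0 <= p[1] <= y1]

def dbscan(points, eps, min_pts):
    """Plain O(n^2) DBSCAN; returns one label per point, -1 meaning noise."""
    labels = [None] * len(points)           # None = not visited yet

    def neighbours(i):
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbours(i)
        if len(seeds) < min_pts:
            labels[i] = -1                   # provisional noise, may become a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = list(seeds)
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster          # noise reached from a core point: border point
            if labels[j] is not None:
                continue
            labels[j] = cluster
            reach = neighbours(j)
            if len(reach) >= min_pts:        # j is a core point, keep expanding
                queue.extend(reach)
    return labels

class StaticMap:
    """2D grid that counts in how many frames each world cell was hit."""

    def __init__(self, resolution=0.25, size=400, min_frames=5):
        self.resolution, self.size, self.min_frames = resolution, size, min_frames
        self.frames_hit = defaultdict(int)

    def _cell(self, x, y):
        half = self.size // 2                # map is centred on the world origin
        col = math.floor(x / self.resolution) + half
        row = math.floor(y / self.resolution) + half
        inside = 0 <= col < self.size and 0 <= row < self.size
        return (row, col) if inside else None

    def add_frame(self, radar_points, pose):
        """pose = (x, y, yaw) of the radar in the world frame (assumed known)."""
        px, py, yaw = pose
        c, s = math.cos(yaw), math.sin(yaw)
        cells = set()
        for xr, yr in radar_points:
            # rigid 2D transform from radar to world coordinates
            cells.add(self._cell(px + c * xr - s * yr, py + s * xr + c * yr))
        cells.discard(None)
        for cell in cells:                   # count each cell at most once per frame
            self.frames_hit[cell] += 1

    def is_static(self, x, y):
        cell = self._cell(x, y)
        return cell is not None and self.frames_hit.get(cell, 0) >= self.min_frames

def drop_shore_targets(centroids, static_map):
    """Remove cluster centroids (world frame) that fall on persistent static cells."""
    return [c for c in centroids if not static_map.is_static(*c)]
```

In this sketch a moving boat touches a different cell in each frame and never reaches `min_frames`, while a shore return keeps hitting the same cell and is eventually filtered.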

The radar tracker module of our ROTracker framework is developed from the traditional Kalman filter method. However, we have incorporated several key modules to enhance tracking accuracy, particularly in challenging multi-radar tracking scenes and low-speed tracking scenarios. In radar measurement, we dynamically combine spatial measurements and velocity measurements obtained from MMW radar. While traditional radar-based tracking methods may struggle with significant spatial location variations in MMW radar points, potentially leading to tracking direction errors, our approach leverages Doppler velocity information from MMW radar to provide accurate estimates of the target’s moving direction. Furthermore, we have developed a motion discriminator module to enhance the robustness of tracking static or low-speed targets. The radar tracker module consists of three main processing steps: 1) State Estimate and Association, 2) Update with fusion measurement, 3) Motion Discriminator.

1) State estimate and association.

Like other vehicles, the motion of a boat can be approximated as a linear system, so we adopt the Kalman filter (KF) algorithm in the state estimation stage. For each tracked object, we construct a state vector of eight elements:

$$x = [p_x, p_y, v_x, v_y, a_x, a_y, l, w]^T$$

where $p_x$ and $p_y$ are the center position of the target, $v_x$ and $v_y$ are the speeds along the x and y axes, $a_x$ and $a_y$ are the accelerations along the x and y axes, and $l$ and $w$ are the length and width of the target box.

Compared to the state vector of a LiDAR-based tracking system, ours lacks a height estimate, because MMW radar has poor resolution along the z-axis and we therefore ignore it. We can then predict the state of each target. As illustrated in Equation 2, we follow the KF algorithm to linearly predict the states of targets:

$$x_k = A x_{k-1} + \omega_{k-1} \tag{2}$$

where k and k − 1 denote the current frame and the last frame, x denotes the state vector, A denotes the state transition matrix, and ω denotes Gaussian white noise with zero mean and covariance Q.
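As a rough illustration of this prediction step, the sketch below builds one possible transition matrix and propagates the eight-element state and its covariance. The constant-acceleration form of `A`, the time step `dt = 0.1` s (taken from the 10 Hz frame rate), and the helper names are assumptions; the text does not spell out the matrix used by the authors.

```python
def transition_matrix(dt):
    """Constant-acceleration model for [px, py, vx, vy, ax, ay, l, w] (assumed form)."""
    A = [[1.0 if i == j else 0.0 for j in range(8)] for i in range(8)]
    for pos, vel, acc in ((0, 2, 4), (1, 3, 5)):   # x axis, then y axis
        A[pos][vel] = dt
        A[pos][acc] = 0.5 * dt * dt
        A[vel][acc] = dt
    return A                                       # l and w stay unchanged

def matmul(X, Y):
    """Product of two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]

def kf_predict(x, P, A, Q):
    """KF prediction: x_k = A x_{k-1}, P_k = A P A^T + Q."""
    x_pred = [sum(a * xi for a, xi in zip(row, x)) for row in A]
    A_t = [list(col) for col in zip(*A)]
    APAt = matmul(matmul(A, P), A_t)
    P_pred = [[APAt[i][j] + Q[i][j] for j in range(8)] for i in range(8)]
    return x_pred, P_pred
```

With `dt = 0.1`, a target at the origin moving at 1 m/s along x with 2 m/s² acceleration is predicted at px ≈ 0.11 m with vx ≈ 1.2 m/s, while the box size is carried over unchanged.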

Once the target states are confirmed, we employ a data association method to match track results using the intersection-over-union (IOU) metric. We compute the assignment cost matrix based on the IOU metric between each detected target and all predicted targets. Subsequently, the association results are calculated using the Hungarian algorithm to achieve the best association between targets and trajectories.
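The association step can be sketched as follows. Two simplifications are made and should be read as assumptions: boxes are treated as axis-aligned `(cx, cy, length, width)` tuples, and the optimal assignment is found by brute force over permutations, which returns the same minimum-cost matching as the Hungarian algorithm for a handful of targets but does not scale. The `min_iou` gate is a hypothetical parameter.

```python
from itertools import permutations

def iou(a, b):
    """Axis-aligned IoU of two boxes given as (cx, cy, length, width)."""
    iw = min(a[0] + a[2] / 2, b[0] + b[2] / 2) - max(a[0] - a[2] / 2, b[0] - b[2] / 2)
    ih = min(a[1] + a[3] / 2, b[1] + b[3] / 2) - max(a[1] - a[3] / 2, b[1] - b[3] / 2)
    inter = max(0.0, iw) * max(0.0, ih)
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0

def associate(detections, predictions, min_iou=0.1):
    """Return (detection_index, prediction_index) pairs with minimum total cost."""
    n = max(len(detections), len(predictions))
    # Pad to a square matrix; padded entries get the worst cost (IoU = 0).
    cost = [[1.0 - iou(detections[i], predictions[j])
             if i < len(detections) and j < len(predictions) else 1.0
             for j in range(n)] for i in range(n)]
    best = min(permutations(range(n)),
               key=lambda perm: sum(cost[i][perm[i]] for i in range(n)))
    return [(i, j) for i, j in enumerate(best)
            if i < len(detections) and j < len(predictions)
            and cost[i][j] <= 1.0 - min_iou]
```

Detections left without a pair would start new tracks, and predictions left without a pair correspond to tracks that were missed in the current frame.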

2) Update with fusion measurement.

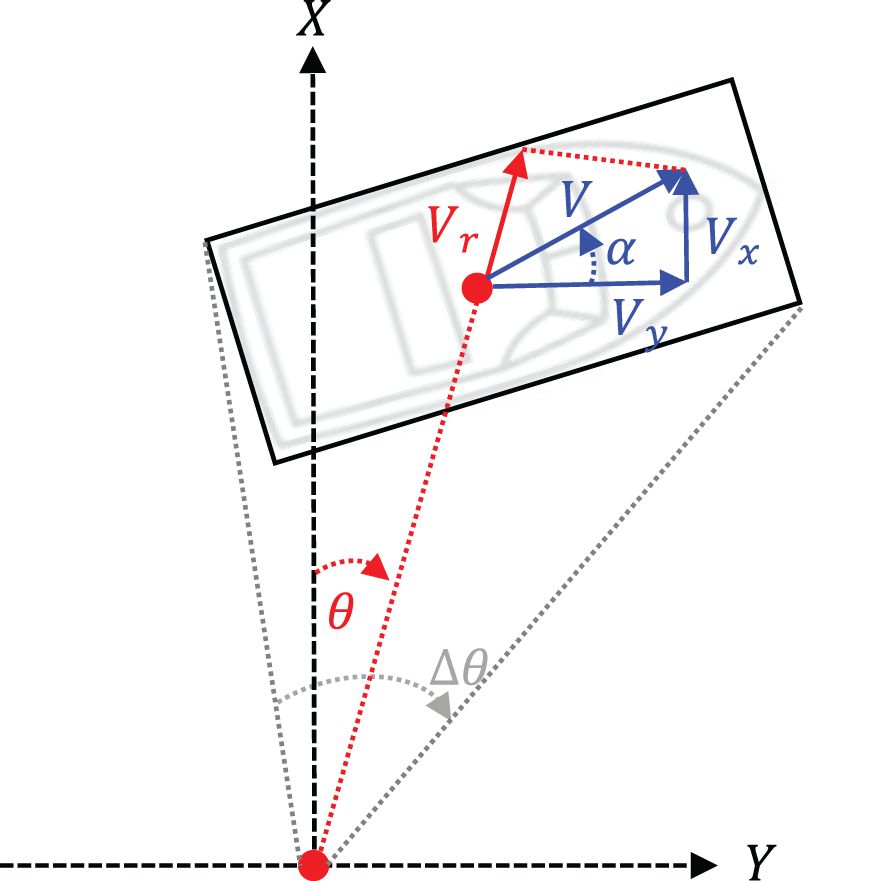

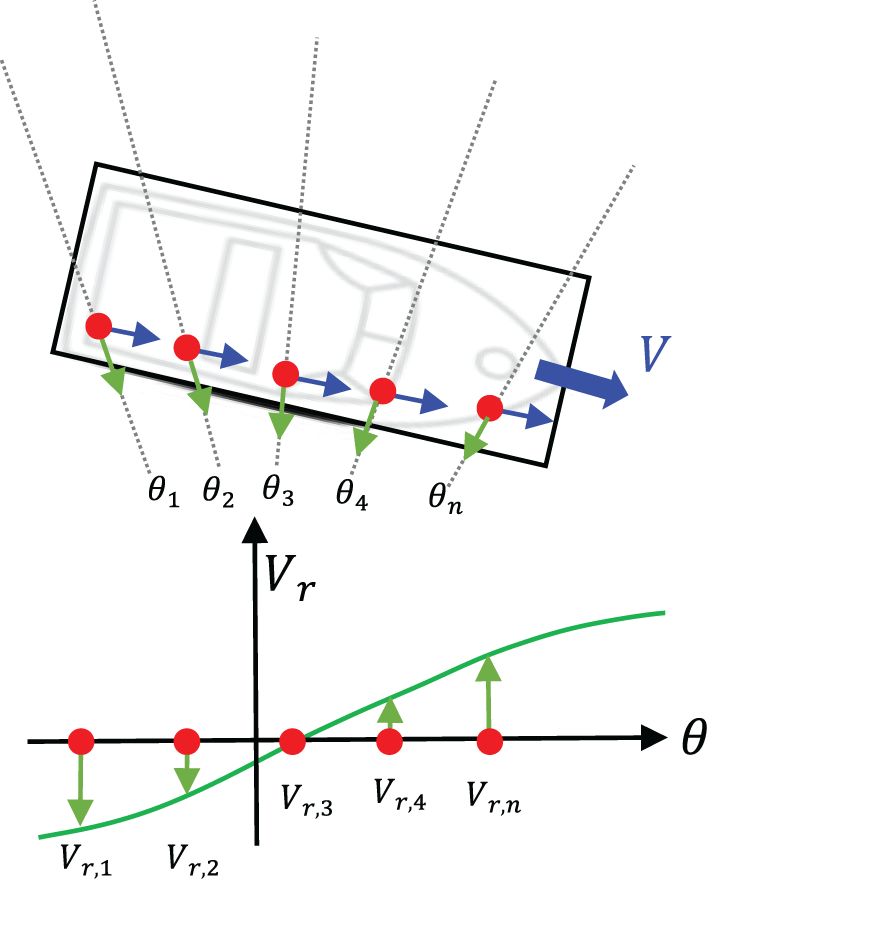

To decrease radar measurement errors, we combine the radar spatial measurement and the radar velocity measurement. The radar spatial measurement can be obtained through the KF state transfer equation. When there are obvious errors in the spatial location measurements, we supplement them with the velocity measurements of the MMW radar. Note that the Doppler velocity is not the actual moving speed of a target but a relative radial velocity. Here we introduce the theoretical background of MMW radar Doppler velocity. As presented in Figure 4, for all radar points (i = 1…N), the Doppler radar measures a radial velocity $v_r$ that depends on the azimuth angle $\theta$. The identical velocity vector $(v_x, v_y)$ in radar coordinates can be formulated as follows:

$$\begin{bmatrix} v_{r,1} \\ \vdots \\ v_{r,N} \end{bmatrix} = \begin{bmatrix} \cos\theta_1 & \sin\theta_1 \\ \vdots & \vdots \\ \cos\theta_N & \sin\theta_N \end{bmatrix} \begin{bmatrix} v_x \\ v_y \end{bmatrix} \tag{3}$$

Figure 4 The figure presents the Doppler velocity measurement values (red) and actual velocity (blue).

where vr,i is the radial velocity of the i-th radar point and θi is the azimuth angle of the i-th radar point.

Then we estimate the velocity of targets using the Doppler velocity. As illustrated in Figure 5, the velocity profile is a linear system that is over-determined when more than two radar points are available, and it can be rewritten as a general cosine with orientation α and absolute velocity v. Using vx = vcos(α) and vy = vsin(α) in Equation 3, the radial velocity vr can be expressed as:

$$v_{r,i} = v\cos(\theta_i - \alpha) \tag{4}$$

Figure 5 The figure presents the velocity profile of multiple radar points on a linearly moving vehicle. Considering one radial cell, the velocity describes a cosine curve (green curve).

As vr and θi can be directly obtained from radar point clouds, to solve Equation 4, we jointly use all radar points (i = 1, 2,…, N) that share the same orientation α and absolute velocity v. Figure 5 shows the velocity profile of all radar points on a linearly moving vehicle. As can be seen, the phase shift of the velocity profile is identical to the negative vehicle orientation and the amplitude is identical to the absolute velocity. Therefore, in our designed Doppler velocity encoder, we first adopt the density-based clustering algorithm DBSCAN to cluster radar points. Then a random sample consensus (RANSAC) algorithm is utilized to calculate the velocity profile of each radar point cluster. RANSAC is normally used to eliminate outliers in grouped data; here we use it to filter abnormal Doppler velocities in radar point clouds. The estimated azimuth angle can be calculated as follows:

where n0 denotes the maximum iterations for RANSAC.

After obtaining the estimated azimuth angle through Equation 5, we can solve for the velocity vector (vx, vy), as formulated in Equation 6:

where vx and vy denote the Doppler-estimated velocity components along the X-axis and Y-axis of the radar coordinate system.
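One way to picture the velocity-profile estimation is the sketch below, which fits the relation v_r = vx·cos(θ) + vy·sin(θ) to the points of one cluster by least squares and wraps the fit in a basic RANSAC loop to reject abnormal Doppler readings. It is a generic illustration under stated assumptions, not the authors' Equations 5 and 6: the function names, the inlier tolerance `tol`, the iteration count `n_iter`, and the fixed random seed are all hypothetical.

```python
import math
import random

def fit_velocity(samples):
    """Least-squares (vx, vy) from (theta, v_r) pairs, v_r = vx*cos(theta) + vy*sin(theta)."""
    scc = sum(math.cos(t) ** 2 for t, _ in samples)
    sss = sum(math.sin(t) ** 2 for t, _ in samples)
    scs = sum(math.cos(t) * math.sin(t) for t, _ in samples)
    bc = sum(vr * math.cos(t) for t, vr in samples)
    bs = sum(vr * math.sin(t) for t, vr in samples)
    det = scc * sss - scs * scs             # determinant of the 2x2 normal matrix
    if abs(det) < 1e-12:
        return None                          # azimuths too similar to resolve direction
    return (bc * sss - bs * scs) / det, (bs * scc - bc * scs) / det

def ransac_velocity(samples, n_iter=50, tol=0.1, seed=0):
    """Fit on random point pairs, keep the largest inlier set, refit on it."""
    rng = random.Random(seed)
    best_inliers = []
    for _ in range(n_iter):
        model = fit_velocity(rng.sample(samples, 2))
        if model is None:
            continue
        vx, vy = model
        inliers = [(t, vr) for t, vr in samples
                   if abs(vr - (vx * math.cos(t) + vy * math.sin(t))) <= tol]
        if len(inliers) > len(best_inliers):
            best_inliers = inliers
    return fit_velocity(best_inliers) if len(best_inliers) >= 2 else None

def speed_and_heading(vx, vy):
    """Absolute velocity v and orientation alpha, with vx = v*cos(alpha), vy = v*sin(alpha)."""
    return math.hypot(vx, vy), math.atan2(vy, vx)
```

Because a single radial velocity only constrains the component along the line of sight, at least two points at different azimuths are needed, which is why the fit is done per cluster.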

The final fusion measurement consists of the radar spatial measurement and the radar velocity measurement. The measurement update result zk for each target is presented in Equation 7.

where xk denotes the state vector, H denotes the radar measurement matrix, d denotes the Euclidean distance of targets, γ denotes the hyper-parameter, v denotes Gaussian white noises with zero mean and covariance R.

3) Motion discriminator.

Compared to other radar-based tracking methods, the motion discriminator is one core contribution of our radar tracker. Since the spatial distribution of MMW radar points varies noticeably under different radar cross-section (RCS) conditions, it is hard to distinguish low-speed or static targets through KF estimation alone, and static targets are often misidentified as dynamic. Therefore, we design a motion discriminator to judge the status of each target. We define five motion levels in the motion discriminator: absolutely static, static, wait, dynamic, and absolutely dynamic. All track targets are initialized with the wait status at the start frame; we then check target statuses with a dynamic-static distinguishing method in the following frames. The dynamic-static distinguishing method computes the intersection over union (IoU) of the convex hulls of the radar point group, which can be expressed as:

where D denotes the motion status of the target, Ct−1 and Ct are the convex hulls of the radar point clouds at frames t − 1 and t, as presented in Figure 6, and c is the IoU threshold.

Figure 6 Convex hull calculation for radar point clouds. (A) shows radar point clouds in frame t-1 and t. (B) shows detailed IoU calculation of convex hull.
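The convex hull IoU test can be sketched in plain Python as below. The hull is built with the monotone chain method and the intersection with Sutherland-Hodgman clipping, both standard techniques chosen here for illustration. The decision rule in `is_dynamic` (IoU below the threshold c means the point group has moved) and the default `c = 0.5` are assumptions, since the exact form of Equation 8 and its threshold value are not given in the text.

```python
def _cross(o, a, b):
    """z-component of (a - o) x (b - o); positive when b is left of the ray o -> a."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

def convex_hull(points):
    """Monotone chain convex hull, returned in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    def half(seq):
        chain = []
        for p in seq:
            while len(chain) >= 2 and _cross(chain[-2], chain[-1], p) <= 0:
                chain.pop()
            chain.append(p)
        return chain[:-1]
    return half(pts) + half(reversed(pts))

def area(poly):
    """Shoelace area of a simple polygon (0 for fewer than 3 vertices)."""
    return 0.5 * abs(sum(x0 * y1 - x1 * y0
                         for (x0, y0), (x1, y1) in zip(poly, poly[1:] + poly[:1])))

def clip(subject, clipper):
    """Sutherland-Hodgman: intersect subject with a convex counter-clockwise clipper."""
    out = list(subject)
    for a, b in zip(clipper, clipper[1:] + clipper[:1]):
        inp, out = out, []
        for p, q in zip(inp, inp[1:] + inp[:1]):
            sp, sq = _cross(a, b, p), _cross(a, b, q)   # signed side of edge a -> b
            if (sp >= 0) != (sq >= 0):                  # segment pq crosses the edge line
                t = sp / (sp - sq)
                out.append((p[0] + t * (q[0] - p[0]), p[1] + t * (q[1] - p[1])))
            if sq >= 0:
                out.append(q)
    return out

def hull_iou(points_prev, points_curr):
    """IoU of the convex hulls of two radar point groups."""
    h_prev, h_curr = convex_hull(points_prev), convex_hull(points_curr)
    if len(h_prev) < 3 or len(h_curr) < 3:
        return 0.0
    inter = area(clip(h_prev, h_curr))
    union = area(h_prev) + area(h_curr) - inter
    return inter / union if union > 0 else 0.0

def is_dynamic(points_prev, points_curr, c=0.5):
    """Assumed rule: low hull overlap between consecutive frames means motion."""
    return hull_iou(points_prev, points_curr) < c
```

For example, a 2 m × 2 m point group shifted by 1 m between frames gives an IoU of 1/3, while an unchanged group gives 1.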

After we obtain multi-frame dynamic-static distinguishing results through Equation 8, we update the status of track targets accordingly. Table 1 presents the dynamic-static distinguishing results according to motion status. At each update, the motion status of a target is only permitted to move to an adjacent state. Finally, the motion statuses of targets are combined with the KF estimated results. For targets with the wait status, the speed is reported as NaN. For targets with the static or absolutely static status, the speed is set to zero. The motion discriminator effectively improves the robustness of the radar tracking system against noisy radar point clouds in low-speed scenes.
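The status update can be pictured as a small state machine over the five levels. The sketch below is one plausible reading, not the authors' rule table: it assumes that each frame's dynamic or static observation moves the status by exactly one level and that the status saturates at the two extremes. The names `Motion`, `step`, and `reported_speed` are hypothetical.

```python
import math
from enum import IntEnum

class Motion(IntEnum):
    ABSOLUTELY_STATIC = 0
    STATIC = 1
    WAIT = 2              # initial status of every new track
    DYNAMIC = 3
    ABSOLUTELY_DYNAMIC = 4

def step(status, observed_dynamic):
    """Move one level toward the observation; only adjacent states are reachable."""
    nxt = int(status) + (1 if observed_dynamic else -1)
    return Motion(min(max(nxt, Motion.ABSOLUTELY_STATIC), Motion.ABSOLUTELY_DYNAMIC))

def reported_speed(status, kf_speed):
    """Combine the motion status with the KF speed estimate."""
    if status == Motion.WAIT:
        return math.nan   # undecided targets report no speed
    if status <= Motion.STATIC:
        return 0.0        # static targets are forced to zero speed
    return kf_speed
```

Under this reading a single noisy frame can shift a track by at most one level, so a target that has reached absolutely static needs several consecutive dynamic observations before it reports a non-zero speed.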

To validate the performance of our proposed ROTracker framework, we compared our method to other baseline methods in the real-world USV platform. First, we introduce the experiment platform and evaluation metrics. Then we compare our method to other baseline methods with quantitative evaluation. Finally, we evaluate the efficiency of our ROTracker framework in each module.

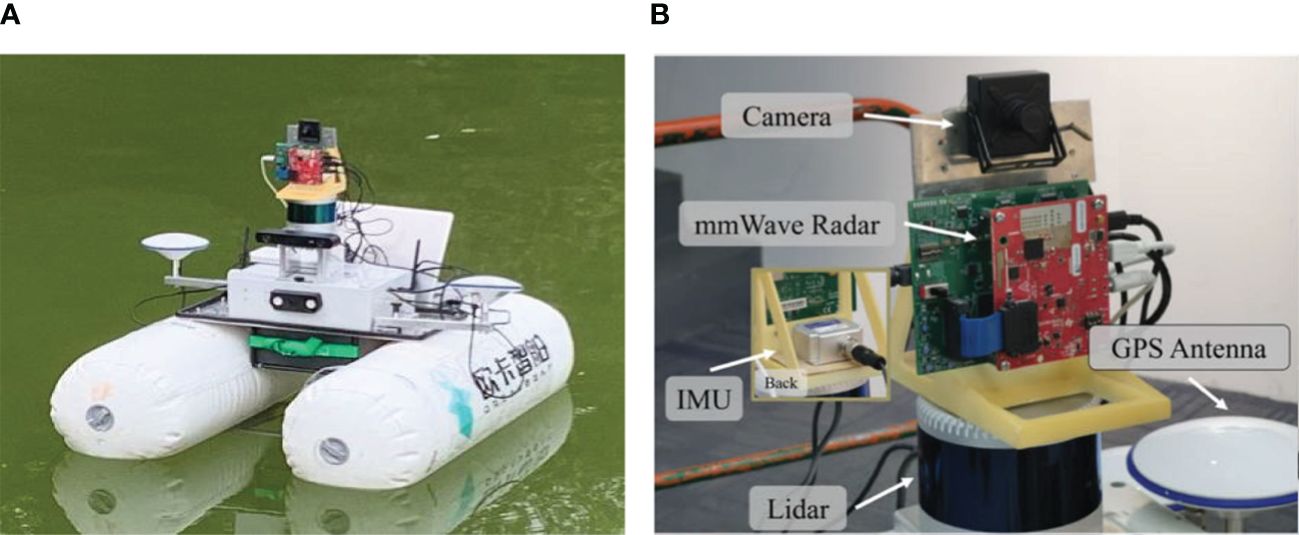

Figure 7 illustrates our custom unmanned surface vehicle (USV), which is equipped with a diverse array of sensors to enable comprehensive environmental perception. These sensors include a LiDAR, a camera, a 4D millimeter-wave (MMW) radar, an inertial measurement unit (IMU), and a global positioning system (GPS). The LiDAR used in our research is a multi-line mechanical LiDAR from LSLIDAR Instrument, featuring 16 laser scan lines covering a 360° horizontal field of view and a 32° vertical field of view. With a range resolution of 2 cm and a horizontal angular resolution of 0.18°, this LiDAR offers precise detection and localization of objects in the horizontal plane. The MMW radar is a Texas Instruments 77 GHz frequency-modulated continuous-wave (FMCW) radar, model AWR1843, configured with a maximum range of 100 m and a range resolution of 0.16 m. Operating at a frame rate of 10 Hz, the MMW radar provides valuable information about the surrounding environment, particularly in challenging weather and lighting conditions. In this experiment, we utilized three types of USVs equipped with GPS location modules as tracking targets, as depicted in Figure 8. These GPS modules employ real-time kinematic (RTK) technology, providing a location accuracy within ±0.1 m. The GPS location modules enable us to obtain ground truth data for the tracked boats. By directly outputting the location of the USV targets, these modules allow accurate calculation of the moving direction and speed of the targets. This ground truth data serves as a reference for evaluating the performance of our tracking framework and the baseline methods.

Figure 7 Our experiment platform. (A) Shows our data collection platform. (B) Shows detailed sensor configuration.

Both sensors operated at a frame rate of 10 Hz. The extrinsic parameters of the MMW radar were obtained through calibration with corner reflectors. Subsequently, we performed coordinate system alignment to ensure consistency between the radar and LiDAR data. Our collected dataset comprises 4 sequences with three kinds of USVs. In total, the dataset contains 12,000 frames of data. For further details on each sequence, refer to Table 2, which outlines the scenes covered in each sequence.

Table 2 Dataset description. S, T, and X in the class column denote “Smurf”, “Titan”, and “Xi”, respectively.

The experimental code for the ROTracker framework is implemented in Python. In the radar detector module, we set the search radius for spatial clustering at 1.2 m and the velocity threshold for velocity clustering at 0.8 m/s. Additionally, the minimum number of points required for inclusion in a cluster is set to 20 for both spatial and velocity clustering. For the radar static map, we employ a resolution of 0.25 m and a size of 400 × 400 pixels for each sub-map. In the multi-path filtering module, the search angle resolution is set to 10 degrees to identify and filter out clutter caused by multi-path reflections. In the radar tracker module, the fusion hyper-parameter γ is set to 0.8 to balance the influence of radar measurements and prediction estimates during the state update process. These parameter settings have been carefully chosen to optimize the performance of the ROTracker framework under various operating conditions and environments encountered in offshore waterways.

For evaluating the performance of our tracking system, we utilize the widely recognized CLEAR MOT metrics, which offer a comprehensive assessment of the algorithm’s effectiveness. The primary metrics employed include: multi-object tracking accuracy (MOTA) and multi-object tracking precision (MOTP). In addition to these primary metrics, we consider other important indicators such as: true positives (TP) and false positives (FP). Moreover, since the speed and direction of moving targets are crucial in tracking applications, we include the following metrics to assess velocity and direction accuracy: mean velocity error (MVE) and mean angle error (MAE). By considering these metrics collectively, we can comprehensively evaluate the performance of our tracking system and gain insights into its strengths and limitations under various real-world scenarios.
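For reference, the metrics named above can be computed as in the short sketch below. MOTA and MOTP follow the standard CLEAR MOT definitions. The velocity and angle errors are written as plain mean absolute errors with the angle difference wrapped to at most 180°, which is an assumption, since the text does not give their exact formulas. All function names are hypothetical.

```python
def mota(num_gt, false_negatives, false_positives, id_switches):
    """CLEAR MOT accuracy: 1 - (FN + FP + IDSW) / GT, with counts summed over all frames."""
    if num_gt <= 0:
        raise ValueError("num_gt must be positive")
    return 1.0 - (false_negatives + false_positives + id_switches) / num_gt

def motp(match_distances):
    """CLEAR MOT precision: mean localisation error over all matched pairs."""
    return sum(match_distances) / len(match_distances)

def mean_velocity_error(est_speeds, gt_speeds):
    """Mean absolute speed error over matched targets (assumed MVE definition)."""
    return sum(abs(e - g) for e, g in zip(est_speeds, gt_speeds)) / len(gt_speeds)

def mean_angle_error(est_deg, gt_deg):
    """Mean absolute heading error in degrees, wrapped to [0, 180] (assumed MAE definition)."""
    def wrapped(e, g):
        d = abs(e - g) % 360.0
        return min(d, 360.0 - d)
    return sum(wrapped(e, g) for e, g in zip(est_deg, gt_deg)) / len(gt_deg)
```

The wrap-around matters for headings near north: an estimate of 350° against a ground truth of 10° is a 20° error, not 340°.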

To validate the performance of our proposed ROTracker framework, we conducted a real-world experiment comparing our method with other baseline methods.

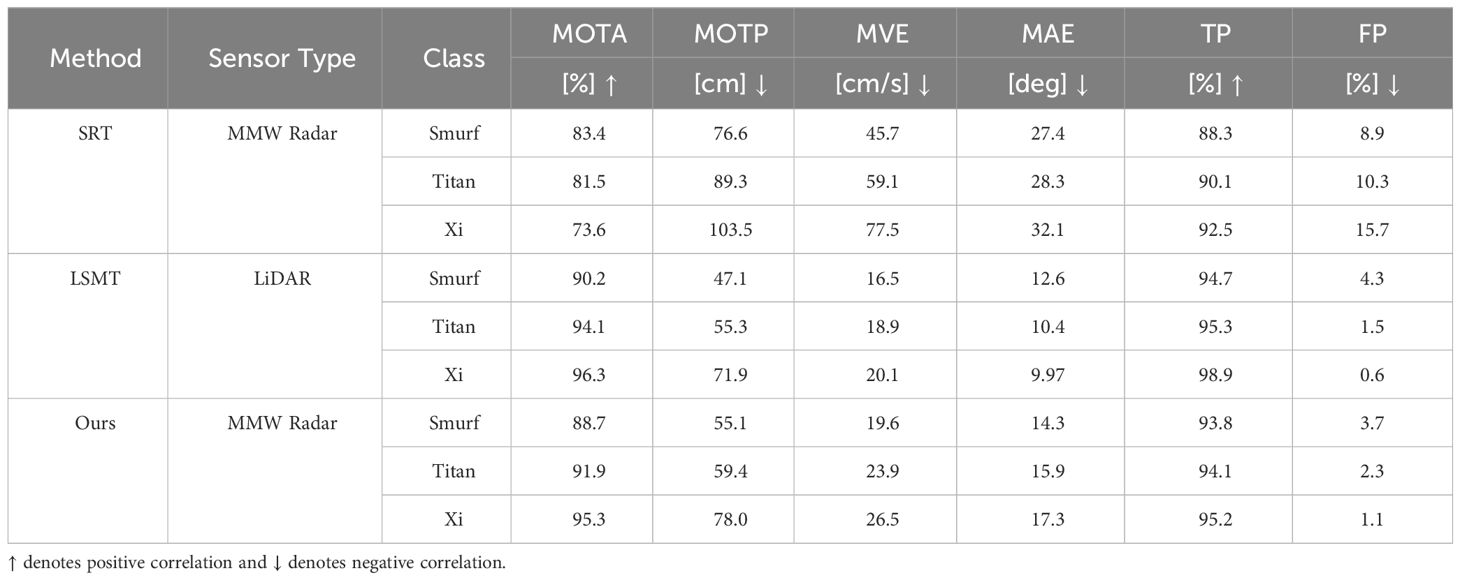

Comparison with other baselines. Two baseline methods are used for the quantitative evaluation. The first is SRT (Tan et al., 2023), a traditional MMW radar-based tracking method that adopts a Kalman tracker. As the LiDAR-based tracking method LSTM (Yao et al., 2023) shows strong performance, we also choose it as a baseline. Table 3 presents the comparison between our method and the baseline methods on the evaluation dataset described above. Owing to its high-precision LiDAR point clouds, the LiDAR-based baseline achieves the best performance in each evaluation metric. Using only sparse and noisy radar point clouds, our ROTracker framework also performs well in complex offshore waterway scenes. Compared to the MMW radar-based tracking method that uses only simple KF state estimation, our ROTracker shows a clear improvement in tracking performance. Figure 9 visualizes the tracking results of our ROTracker framework. Figure 10 presents a per-class tracking performance comparison between our ROTracker and the baseline methods. While LiDAR-based tracking methods demonstrate superior tracking accuracy, our ROTracker, relying solely on low-cost radar technology, strikes a crucial balance between efficiency and accuracy, thus holding considerable potential for widespread deployment across USVs. Furthermore, leveraging the robustness of MMW radar, our ROTracker exhibits enhanced robustness compared to LiDAR-based tracking methods, especially in harsh weather conditions. Moreover, with the continuous advancement of radar sensors, next-generation radar systems offer improved resolution, further bolstering the capabilities of radar-based tracking methods.

Figure 10 Per-class tracking performance comparison using our ROTracker and other baseline methods: (A) shows the “Smurf” class, (B) shows the “Titan” class, (C) shows the “Xi” class.

Table 3 Object track comparison on our collected datasets using the proposed method and other baseline methods.

Ablation evaluation. We conducted an ablation experiment to analyze the effects of key modules in the ROTracker framework. Our framework integrates the traditional Kalman Filter (KF) tracking method with additional modules for velocity measurement and motion discrimination. Table 4 presents the results of this ablation experiment, assessing the effectiveness of these modules. Due to the challenges posed by low-speed scenes for radar-based tracker, we evaluated the performance using the mean velocity error (MVE) and mean angle error (MAE) metrics, with a focus on low-speed conditions (MVEL and MAEL). Interestingly, the results indicate that combining spatial and velocity measurements leads to decreased direction estimation accuracy (MAE), particularly noticeable in low-speed scenes (MAEL). Conversely, the Motion Discriminator module enhances the robustness of the radar tracker against noisy radar point clouds, thereby improving velocity estimation accuracy in low-speed scenes.

Different distances. Distance is an important influence factor for object tracking accuracy. We further analyze the performance of our model under different distance conditions. The experiment results are shown in Table 5. Our ROTracker model has excellent tracking performance at near distances. The introduction of Doppler velocity information provides moving direction and helps the detector to overcome the sparse radar spatial features.

Efficiency analysis. Efficiency is crucial for real-world deployment, particularly in offshore waterways where USVs must process large volumes of point clouds in real-time. To evaluate the efficiency of our proposed radar-based detection and tracking framework, we conducted further analysis using a USV-embedded computing platform, the NVIDIA Xavier NX shown in Figure 11. The NVIDIA Xavier NX features a 6-core central processing unit with a maximum frequency of 1.40 GHz. We selected test data from our single radar USV platform and measured the average running time per period as the evaluation metric. Table 6 presents the efficiency comparison results between our ROTracker framework and LiDAR-based baseline methods using single LiDAR data. The results indicate that our ROTracker framework demonstrates outstanding efficiency, requiring minimal computing resources. In contrast, LiDAR sensors produce a significantly larger volume of point clouds in offshore waterway environments, leading to increased computation time for LiDAR-based tracking systems. Compared to LiDAR-based methods, our ROTracker framework offers lower costs in both sensor and computation expenses, making it highly suitable for deployment in real-world scenarios.

In this work, we propose a novel MMW radar-based object tracking framework called ROTracker. Comprising a radar detector and a radar tracker, the ROTracker framework integrates the unique characteristics of MMW radar with traditional tracking systems. Despite the sparse and noisy nature of MMW radar point clouds, ROTracker leverages radar Doppler velocity and a motion discriminator to make the tracking system more robust, particularly for low-speed targets. Through real-world experiments, we validate the robustness and efficiency of the ROTracker framework and show its significant potential for USVs. In future work, we aim to extend ROTracker to a multi-radar tracking system and to make USV autonomous systems easier to deploy. In summary, the main contributions of this paper are as follows:

(1) We introduce a novel MMW radar-based object tracking method (ROTracker), leveraging the unique characteristics of MMW radars. Our ROTracker framework provides a robust and cost-effective tracking system for USVs navigating complex offshore waterways.

(2) To address the challenges posed by sparse and noisy MMW radar point clouds, our ROTracker integrates traditional Kalman filter tracking with Doppler velocity information from MMW radar, effectively enhancing the robustness of the ROTracker system. Furthermore, a motion discriminator is designed to mitigate errors in low-speed tracking scenarios.

(3) Extensive real-world experiments validate the effectiveness of ROTracker, which achieves a multiple object tracking accuracy of 91.9% on our collected dataset. Moreover, the proposed framework exhibits high efficiency on USV embedded computation platforms.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

HX: Investigation, Methodology, Writing – original draft, Writing – review & editing. JH: Formal analysis, Writing – review & editing. CP: Data curation, Validation, Writing – review & editing. XZ: Resources, Supervision, Writing – review & editing. YY: Funding acquisition, Resources, Supervision, Validation, Writing – review & editing.

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This work is supported by ORCA-Uboat and in part by National Key Research and Development Program (2021YFC2803000, 2021YFC2803001).

Authors HX and CP were employed by company ORCA-Uboat.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The authors declare that this study received funding from ORCA-Uboat. The funder had the following involvement in the study: study design, data collection and analysis, decision to publish, and preparation of the manuscript.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Almeida C., Franco T., Ferreira H., Martins A., Santos R., Almeida J. M., et al. (2009). “Radar based collision detection developments on usv roaz ii,” in Oceans 2009-Europe (IEEE), 1–6. doi: 10.1109/OCEANSE.2009.5278238

Cheng Y., Xu H., Liu Y. (2021). “Robust small object detection on the water surface through fusion of camera and millimeter wave radar,” in Proceedings of the IEEE/CVF international conference on computer vision. IEEE. 15263–15272.

De Robertis A., Levine M., Lauffenburger N., Honkalehto T., Ianelli J., Monnahan C. C., et al. (2021). Uncrewed surface vehicle (usv) survey of walleye pollock, gadus chalcogrammus, in response to the cancellation of ship-based surveys. ICES J. Mar. Sci. 78, 2797–2808.

Halterman R., Bruch M. (2010). “Velodyne hdl-64e lidar for unmanned surface vehicle obstacle detection,” in Unmanned systems technology XII, vol. 7692. (SPIE), 123–130.

Kang Z., Gao M., Liao Z., Zhang A. (2024). Collaborative communication-based ocean observation research with heterogeneous unmanned surface vessels. Front. Mar. Sci. 11, 1388617. doi: 10.3389/fmars.2024.1388617

Kim J.-H., Kim N., Park Y. W., Won C. S. (2022). Object detection and classification based on yolo-v5 with improved maritime dataset. J. Mar. Sci. Eng. 10, 377. doi: 10.3390/jmse10030377

Kiriakidis K., Croteau B., Severson T., Rodriguez-Seda E., Robucci R., Islam R., et al. (2022). “Degradable tracking system based on hardware multi-model estimators,” in 2022 resilience week (RWS) (IEEE), 1–6. doi: 10.1109/RWS55399.2022.9984042

Larson J., Bruch M., Halterman R., Rogers J., Webster R. (2007). Advances in autonomous obstacle avoidance for unmanned surface vehicles. AUVSI unmanned Syst. North America 2007. doi: 10.21236/ADA475524

Manley J. E. (2008). “Unmanned surface vehicles, 15 years of development,” in OCEANS 2008 (IEEE), 1–4. doi: 10.1109/OCEANS.2008.5289429

Martins A., Almeida J. M., Ferreira H., Silva H., Dias N., Dias A., et al. (2007). “Autonomous surface vehicle docking manoeuvre with visual information,” in Proceedings 2007 IEEE International Conference on Robotics and Automation. IEEE. 4994–4999.

Muhovič J., Bovcon B., Kristan M., Perš J. (2019). Obstacle tracking for unmanned surface vessels using 3-d point cloud. IEEE J. Oceanic Eng. 45, 786–798.

Oleynikova E., Lee N. B., Barry A. J., Holler J., Barrett D. (2010). “Perimeter patrol on autonomous surface vehicles using marine radar,” in OCEANS’10 IEEE SYDNEY. IEEE. 1–5.

Ramos M. A., Utne I. B., Mosleh A. (2019). Collision avoidance on maritime autonomous surface ships: Operators’ tasks and human failure events. Saf. Sci. 116, 33–44.

Skorski W., Matejski M., Graczyk T. (1970). Baltic sea waters-aspects of post war military residues. WIT Trans. Built Environ. 27.

Tall M., Rynne P. F., Lorio J., von Ellenrieder K. D. (2010). Visual-based navigation of an autonomous surface vehicle. Mar. Technol. Soc. J. 44, 37–45. doi: 10.4031/MTSJ.44.2.6

Tan B., Ma Z., Zhu X., Li S., Zheng L., Huang L., et al. (2023). Tracking of multiple static and dynamic targets for 4d automotive millimeter-wave radar point cloud in urban environments. Remote Sens. 15, 2923. doi: 10.3390/rs15112923

Wenger J. (1998). Automotive mm-wave radar: Status and trends in system design and technology. doi: 10.1049/ic:19980188

Wolf M. T., Assad C., Kuwata Y., Howard A., Aghazarian H., Zhu D., et al. (2010). 360-degree visual detection and target tracking on an autonomous surface vehicle. J. Field Robotics 27, 819–833. doi: 10.1002/rob.20371

Xu H., Geng Z., He J., Shi Y., Yu Y., Zhang X. (2023). “A multi-task water surface visual perception network for unmanned surface vehicles,” in 2023 IEEE International Conference on Signal Processing, Communications and Computing (ICSPCC). IEEE. 1–5.

Yang X., Wang Y., Wang N., Gao X. (2021). An enhanced siammask network for coastal ship tracking. IEEE Trans. Geosci. Remote Sens. 60, 1–11.

Yao Z., Chen X., Xu N., Gao N., Ge M. (2023). Lidar-based simultaneous multi-object tracking and static mapping in nearshore scenario. Ocean Eng. 272, 113939. doi: 10.1016/j.oceaneng.2023.113939

Keywords: unmanned surface vehicles, millimeter-wave radar, object track, offshore waterway, marine observation

Citation: Xu H, Zhang X, He J, Pang C and Yu Y (2024) ROTracker: a novel MMW radar-based object tracking method for unmanned surface vehicle in offshore environments. Front. Mar. Sci. 11:1411920. doi: 10.3389/fmars.2024.1411920

Received: 03 April 2024; Accepted: 26 April 2024;

Published: 28 May 2024.

Edited by: Jay S. Pearlman, Institute of Electrical and Electronics Engineers, France

Reviewed by: Nitin Agarwala, Centre for Joint Warfare Studies, India

Copyright © 2024 Xu, Zhang, He, Pang and Yu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yang Yu, nwpuyuy@nwpu.edu.cn
