METHODS article

Front. Mar. Sci., 30 January 2023

Sec. Ocean Observation

Volume 10 - 2023 | https://doi.org/10.3389/fmars.2023.1074428

This article is part of the Research Topic: Optics and Machine Vision for Marine Observation

Darkfield imaging enables in situ observation of marine plankton and offers high-resolution, high-contrast, color images for species identification, size measurement, and abundance estimation. However, existing underwater darkfield imagers have a very shallow depth-of-field, which makes seawater sampling for plankton observation inefficient. We develop a data-driven method that algorithmically refocuses planktonic objects in their defocused darkfield images, which is equivalent to extending the focus of the imagers that acquired them. We devise a dual-channel imaging apparatus to quickly capture paired images of live plankton at different degrees of defocus in seawater samples, simulating the settings of in situ darkfield plankton imaging. Through a series of registration and preprocessing operations on the raw image pairs, a dataset of 55 000 pairs of defocused-focused plankter images has been constructed, with an accurate defocus distance label for each defocused image. We use the dataset to train an end-to-end deep convolutional neural network named IsPlanktonFE and verify its focus-extension performance through extensive experiments. The experimental results show that IsPlanktonFE extends the depth-of-field of a 0.5× darkfield imaging system to ~7 times its original value. Moreover, the model exhibits good content and instrument generalizability, and considerably improves the accuracy of a pre-trained ResNet-18 network in classifying defocused plankton images. This focus-extension technology is expected to greatly enhance the sampling throughput and efficiency of future in situ marine plankton observation systems, and to promote the wide application of darkfield plankton imaging instruments in marine ecology research and aquatic environment monitoring programs.

Marine plankton are abundant drifting organisms widely distributed in the world’s oceans (Batten et al., 2019). They comprise mainly phytoplankton and zooplankton with weak swimming ability. They initiate the food, material and energy cycles in the ocean and form the foundation of marine ecosystems and food webs (Steinberg and Landry, 2017; Lombard et al., 2019; Suthers et al., 2019). Their rapid regional proliferation can form blooms, which are often accompanied by negative physical and toxicological effects that threaten nearby aquaculture (Cowen and Sponaugle, 2009), coastal facilities (Zhanhui et al., 2020), and even human health (Suthers et al., 2019). Therefore, the observation of marine plankton is important for understanding the impact of anthropogenic activities and global change on marine ecosystems, and conversely the responses of marine ecosystems to global change (Alvarez-Fernandez et al., 2018). It is also indispensable in operational oceanography applications such as marine environment monitoring, biodiversity investigation, fishery resources assessment, and early warning of harmful organism outbreaks (Lombard et al., 2019).

As early as the era of film photography, researchers tried to use underwater optical imaging for in situ observation of marine plankton (Ortner et al., 1979). With the maturation of solid-state lighting and digital camera technologies since the 1990s, a variety of underwater plankton imagers have been developed (Benfield et al., 1996; Cowen and Guigand, 2008; Schulz et al., 2009; Picheral et al., 2010; Bi et al., 2013; Rotermund and Samson, 2015; Gallager, 2019; Orenstein et al., 2020; Li et al., 2022), which capture digital images of plankters in natural seawater. By further analyzing the obtained images with digital processing and machine learning algorithms, plankton taxonomy and various functional traits can be observed automatically (Orenstein et al., 2022). Compared with traditional methods, in situ imaging offers longer and more continuous observation and higher spatio-temporal resolution. Its non-contact nature also makes it more suitable for observing fragile gelatinous organisms. These advances have greatly expanded our knowledge of the related marine sciences (Gorsky et al., 2000; Hirche et al., 2014; Campbell et al., 2020).

However, in situ plankton imaging has always faced the trade-off between imaging quality and sampling efficiency. On the one hand, the complexity of seawater composition (Davies and Nepstad, 2017) and plankton attributes makes the optical properties of imaging medium and targets variable and heterogeneous, which easily leads to the deterioration of imaging quality (Lombard et al., 2019; Cheng et al., 2020). On the other hand, high magnification is necessary for sufficient resolution to identify and measure tiny plankters. This leads to a shallow depth-of-field (DOF) and a small volume of seawater sampled by a single frame, which in turn leads to low throughput and efficiency in seawater sampling (Lombard et al., 2019).

To increase sampling throughput, existing imagers have adopted different strategies. Imaging flow cytometers actively pump seawater into the instrument and perform microscopy (mainly on microphytoplankton) as the seawater flows through an interrogation volume, which improves the sampling rate (Olson and Sosik, 2007; Göröcs et al., 2018). However, this is no longer a strictly “in situ” strategy and is inefficient for sampling larger and scarcer mesoplankton. Many other underwater imagers use the more direct strategy of imaging targets in the seawater outside their housings through transparent ports to achieve in situ snapshot sampling. For example, silhouette imagers such as ISIIS (Cowen and Guigand, 2008) and ZooVIS (Bi et al., 2013) use parallel light beam illumination and shadowgraphic imaging to enlarge their DOF. Combined with towed deployment, they can raise the sampling throughput to 70 L/s (Cowen and Guigand, 2008). However, shadowgraphy seriously compromises the resolution, texture, and color information of the acquired images, and underwater light scattering easily degrades target edge sharpness and signal-to-background ratio (SBR), which makes plankton detection very difficult in turbid seawater (Cheng et al., 2020; Panaïotis et al., 2022). Digital holography is another typical in situ method, which uses coherent illumination to record holograms and computational refocusing to achieve focus-extended imaging. Known instruments include LISST-HOLO (Graham and Nimmo Smith, 2010), HOLOCAM (Katz and Sheng, 2010), and 4Deep (Rotermund and Samson, 2015), among others. However, this method suffers from computationally intensive reconstruction, speckle noise, and loss of color. More seriously, in turbid seawater the coherence of the illumination is easily reduced, which makes refocusing and target detection difficult (Nayak et al., 2021).

Darkfield imaging is also a popular method for in situ plankton observation. Its images have excellent resolution and rich color information, which represent planktonic targets better and are more intuitive to human vision. Additionally, it often achieves a good image SBR, which facilitates object detection in subsequent processing. These features benefit finer plankton taxonomy and quantification, and are especially favorable for biodiversity studies (Lombard et al., 2019). Therefore, darkfield imaging has been adopted by many underwater plankton cameras (Benfield et al., 1996; Gorsky et al., 2000; Schulz et al., 2009; Gallager, 2019; Orenstein et al., 2020; Li et al., 2022). For example, the Imaging Plankton Probe (IPP) developed by Li et al. (2022) is a darkfield plankton imager that supports long-term near-shore buoy deployment. It features compressed orthogonal white-light illumination to reduce stray light scattered from outside the imaging DOF, enabling high-contrast, true-color in situ imaging of plankton and suspended particles over a wide size range of 200μm-40mm and finer plankton identification.

However, darkfield imaging also has a very shallow DOF. For example, the DOF of the 0.5× lens used in IPP is only ~3mm, corresponding to a sampling volume of just ~1.5 mL per frame. Although low-magnification lenses have a deeper DOF, replacing a high-magnification lens with one sacrifices resolution. To overcome this drawback, Wang et al. (2020) installed lenses of different magnifications on a rotatable nosepiece in their darkfield imager to expand the imaging range, and Merz et al. (2021) put high- and low-magnification imaging optics into one darkfield imager housing to capture a wider size range simultaneously. But both approaches made the instrument cumbersome, expensive, and unreliable. Among other possible hardware modifications, darkfield focus-extension could be attempted with liquid lenses (Cheng et al., 2021), diffractive optical elements (Xu et al., 2019), or light-field cameras (Martínez-Corral and Javidi, 2018). However, these methods essentially sacrifice temporal or spatial freedom and increase the complexity and cost of instrumentation, which is unfavorable for long-term operation in the harsh underwater ocean environment.

It would be very attractive if the focus of an underwater darkfield imager could be effectively extended without hardware modification. This directs us to image restoration algorithms as an alternative, which can be roughly classified into two categories: physical modeling-based methods (Krishnan et al., 2011; Nishiyama et al., 2011; Karaali and Jung, 2018) and data-driven methods (Abuolaim and Brown, 2020; Lee et al., 2021; Luo et al., 2021). In underwater imaging, the extremely complex properties of seawater and targets and the inconsistent imaging characteristics of different instruments make an accurate prior for physical modeling difficult to estimate or measure. Therefore, relevant works were mostly carried out in laboratories (Fan et al., 2010; Makarkin and Bratashov, 2021). Data-driven methods train deep convolutional neural network (DNN) models on large-scale datasets and can achieve end-to-end focus restoration for images with a certain degree of defocus. However, the large-scale, high-quality real-world data needed to train such deep-learning models are difficult to obtain. Among the few successful DNN-training reports, Luo et al. (2021) and Rai Dastidar and Ethirajan (2020) used microscopic image stack datasets collected by expensive precision automatic microscopes, while Abuolaim and Brown (2020) and Lee et al. (2021) relied on a special dual-pixel camera chip to collect natural scene image datasets with embedded defocus distance information. These dataset construction approaches are costly in time and money and are difficult to replicate for building live marine plankton image datasets. Moreover, for DNNs to generalize well in actual ocean observation, the dataset must contain a considerable quantity and diversity of examples. Data availability is therefore a major challenge for learning-based focus-extension in underwater plankton imaging.

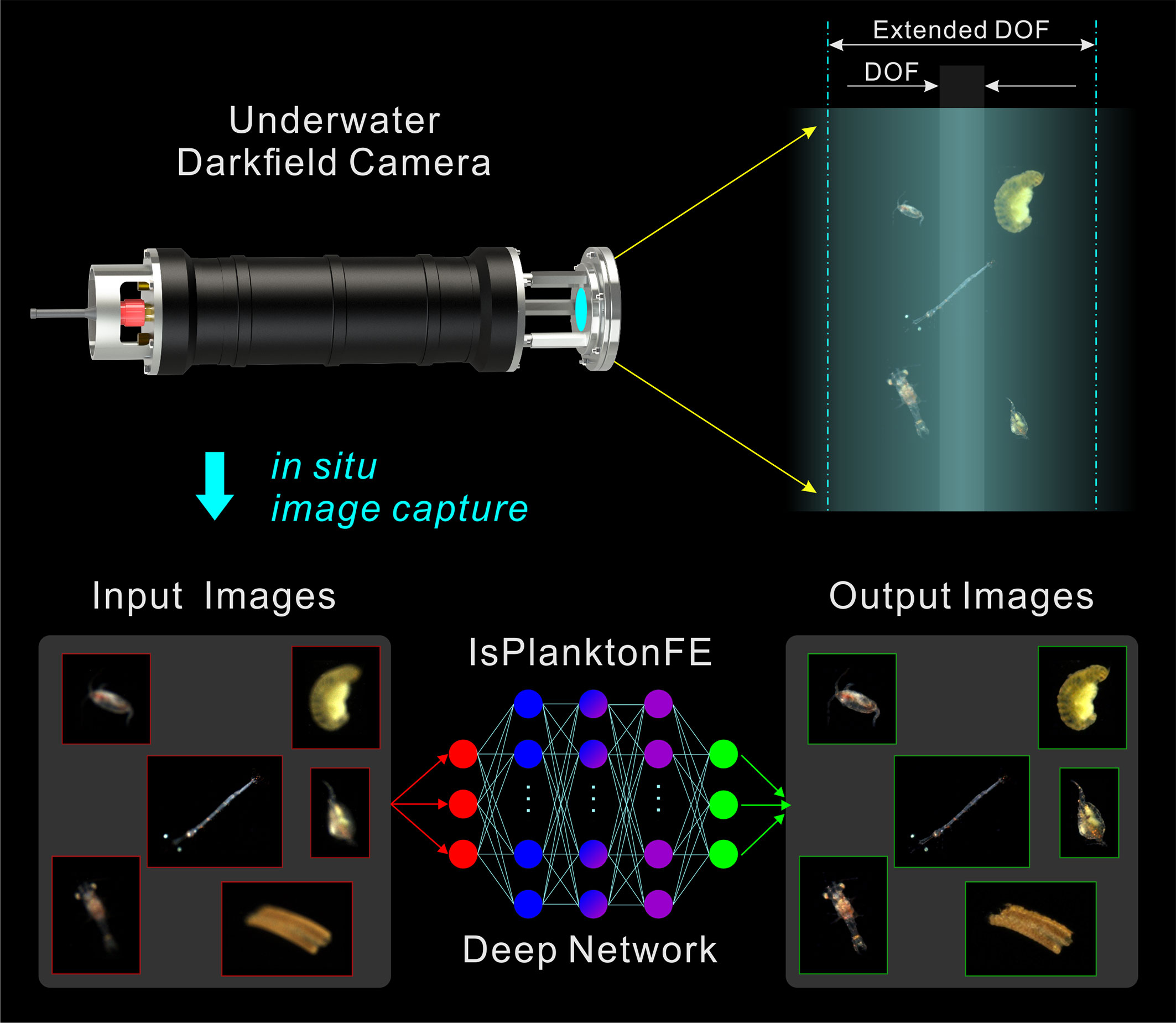

To this end, we used off-the-shelf optical components to build a customized dual-channel darkfield imaging apparatus, which allowed us to efficiently construct a dataset of 55 000 pairs of defocused-focused marine plankter images. The acquisition of this dataset mimicked the settings of real oceanic in situ imaging, and all defocused images are provided with accurate defocus distance labels. Using this dataset, we trained a self-guided focus-extension DNN named IsPlanktonFE, which, to the best of our knowledge, achieves end-to-end defocus restoration of real in situ plankton darkfield images for the first time. The idea of using IsPlanktonFE for darkfield plankton imager focus-extension is shown in Figure 1. We used a standard SiO2 bead and real plankton as targets to test and calibrate its performance. The results show that it can significantly improve the accuracy of plankton image classification and bead size measurement. IsPlanktonFE extended the DOF of a 0.5× darkfield imaging system to a ±10mm range, which is ~7 times its original value of ~3mm. Its performance was further verified on a large number of in situ images collected by different IPPs at actual sea sites. The results show that the network has good generalizability and can significantly improve the efficiency of marine plankton observation in practice.

Figure 1 Concept of a deep learning-based focus-extension for in situ darkfield imaging of marine plankton.

The contributions of this article are summarized as follows.

1. A data-driven method is proposed that achieves focus-extension for single images acquired by underwater darkfield imaging systems, which can greatly improve their efficiency in observing marine plankton and suspended particles in situ.

2. A simple dual-channel imaging apparatus is devised, and a complete protocol is introduced for using the apparatus to conveniently and efficiently build large-scale defocused-focused image pair datasets of live plankton. The accurate defocus distance labels in the datasets provide a quantitative reference for training focus-extension models.

3. A self-guided end-to-end convolution neural network that can effectively extend the DOF of underwater darkfield imaging with proven instrument and content generalizability is designed and trained.

Coastal seawater samples of 10 liters containing live plankton were collected by light trapping from Dapeng Ao Cove (22°34’4’’ N, 114°31’53’’ E), Shenzhen, China, on December 9th, 2021 and July 28th, 2022. The samples were kept in a bucket at ambient temperature and returned to the laboratory within one hour of collection. For each acquisition, we used a pipette to select some active plankters from the samples and added them to the container of the dual-channel darkfield imaging apparatus for dataset construction. According to a plankton expert’s identification from the recorded images, the taxa in the samples were mainly Arthropoda and Annelida.

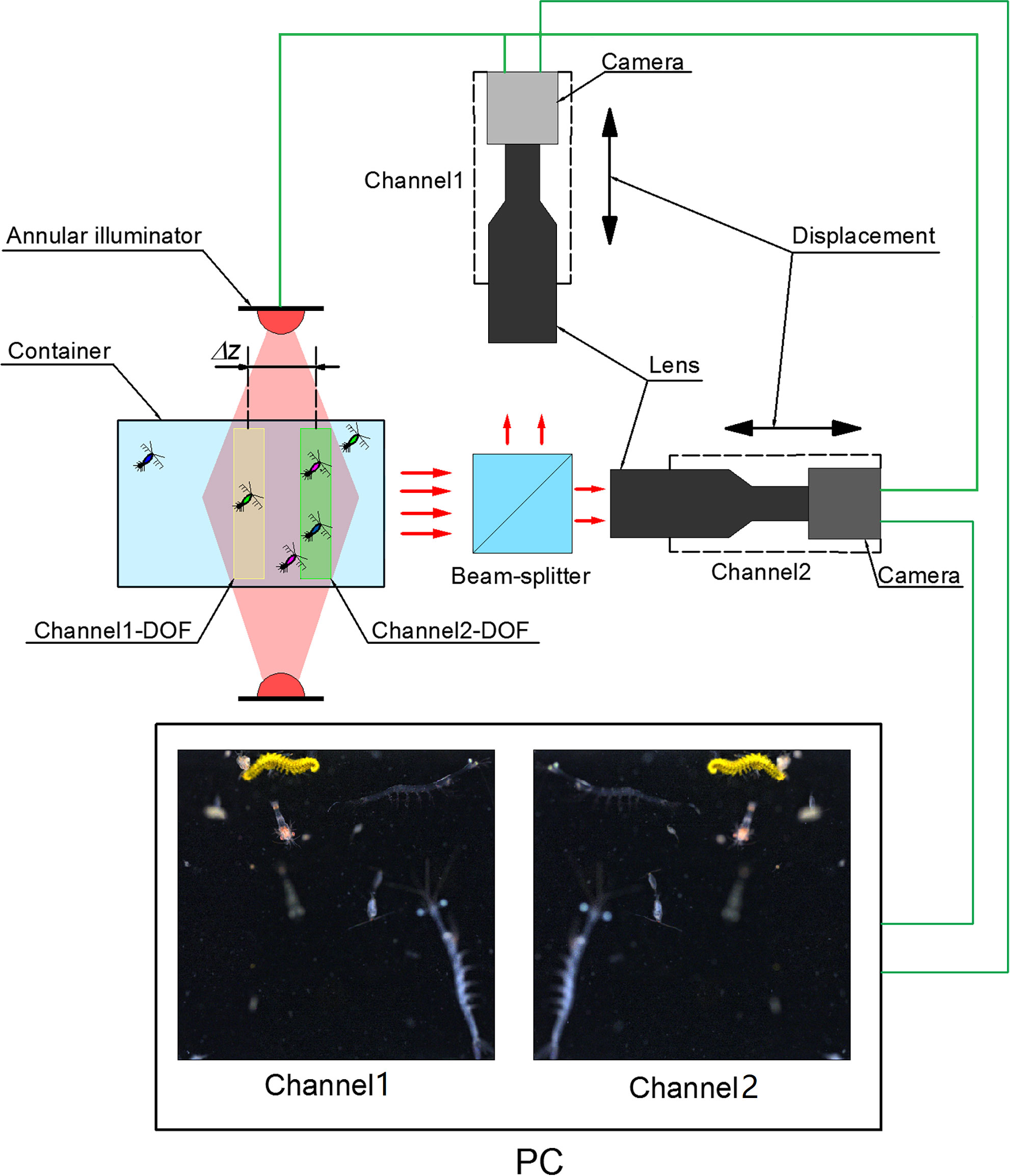

To acquire defocused-focused image pairs of live plankton in their natural state, we built a dual-channel darkfield imaging apparatus in the laboratory to simulate real in situ imaging settings in the ocean. Its composition and principle are shown in Figure 2. Plankters swimming freely through the transparent container are illuminated by a white-light ring LED illuminator. Part of the light scattered or refracted by the plankton is split into two paths by a cube beam-splitter and enters two imaging sub-systems for simultaneous imaging. Each sub-system consists of a telecentric lens (0.5×, DOF~3mm) and a CMOS camera (4096×3000 pixels, FLIR BFS-PGE-122S6C-C) mounted on a high-precision translation stage. During image acquisition, the focal plane of one channel was fixed at a certain position behind the container’s window, and the focal plane of the other channel was adjusted to different axial positions at a spacing Δz from the fixed focal plane. At each Δz, the cameras of the two channels were triggered synchronously to capture paired images with a very short exposure time of 400μs to avoid motion blur, and both frame rates were set to 4fps. After enough images were acquired at one Δz position, the adjustable focal plane was translated by one step (1mm) to the next axial position to capture a new set of image pairs. By repeating this process while Δz was varied over a range of 0mm-10mm, we acquired enough raw darkfield image pairs of live plankton with discrete defocus spacings. Note that multiple sharp and blurry planktonic targets may coexist in any raw image at this stage.

Figure 2 Setup of the dual-channel darkfield imaging apparatus for defocused-focused live plankton darkfield image pair acquisition.

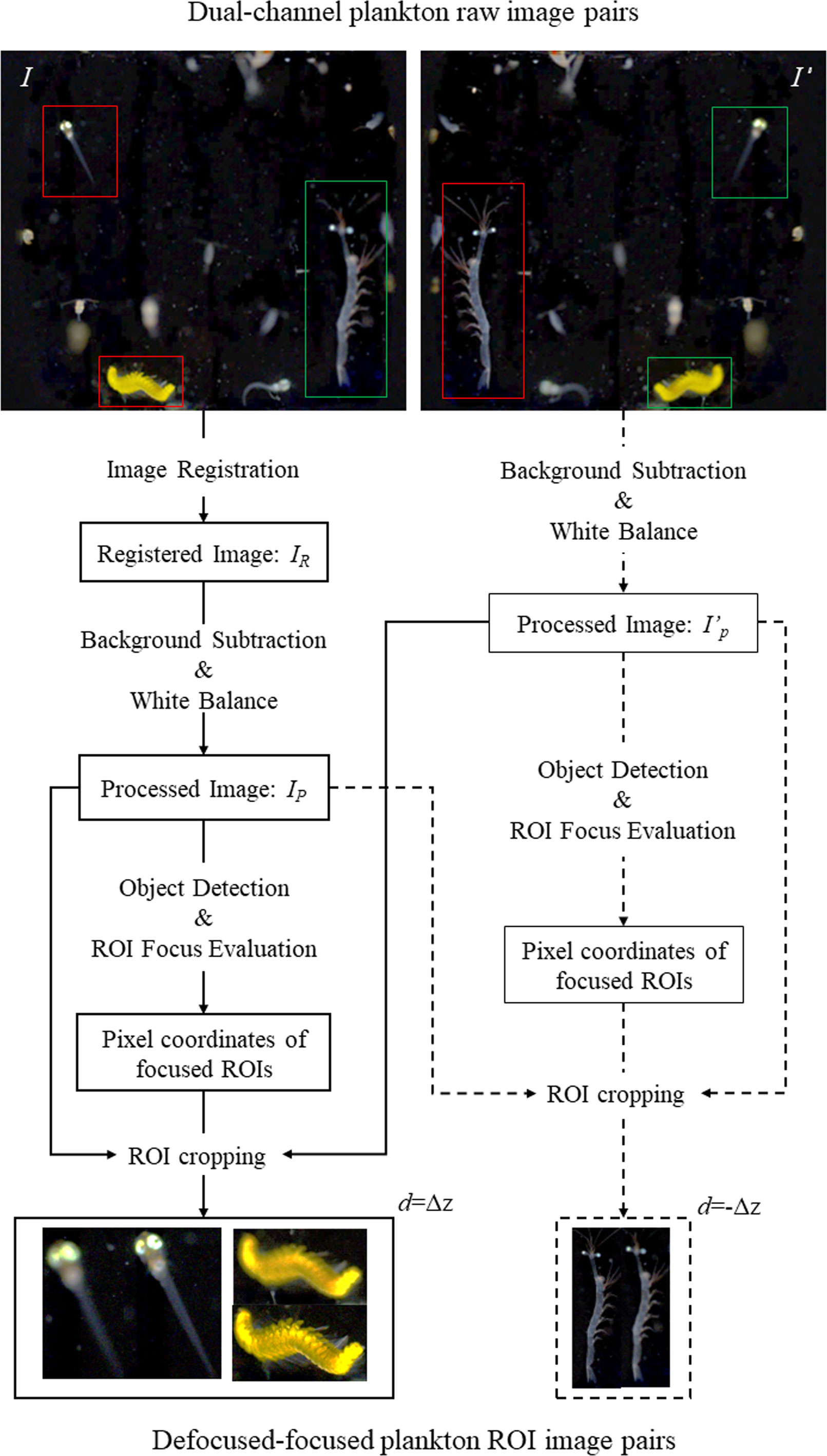

Next, we applied a set of image registration and preprocessing operations to all the raw images. Figure 3 illustrates how multiple defocused-focused plankton region-of-interest (ROI) pairs are generated from one pair of raw images I-I’ with focal spacing Δz between them. The steps are: (1) affine transformation of image I relative to the reference image I’ to obtain a registered image IR (refer to Supplementary Materials for image registration details); (2) background subtraction and white balancing of the registered image pair IR-I’ (Li et al., 2022); (3) ROI extraction by thresholding from the processed image pair IP-I’P (Li et al., 2022); (4) focus evaluation of all ROIs extracted from IP to select the coordinates of all in-focus ROIs (Yang et al., 2021); (5) cropping of defocused-focused ROI pairs from the image pair IP-I’P (all ROIs cropped from IP are in focus as evaluated by the algorithm, and their corresponding ROIs cropped from I’P are defocused with a defocus distance Δz); and (6) repetition of steps (4) and (5) on the ROIs extracted from I’P in step (3) to obtain further defocused-focused ROI pairs (here the ROIs cropped from I’P are in focus, and their corresponding ROIs cropped from IP are defocused with a defocus distance of -Δz). This processing yielded the raw data of defocused-focused ROI pairs with different defocus distances.
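Steps (4) to (6) can be illustrated with a minimal sketch. It assumes the two images are already registered and preprocessed, and it uses the variance of a discrete Laplacian as a stand-in sharpness measure; this is not the focus score of Yang et al. (2021). The function names, the bounding-box format and the threshold are hypothetical.

```python
import numpy as np

def laplacian_variance(gray):
    """Stand-in sharpness measure (NOT the focus score of Yang et al., 2021)."""
    g = np.asarray(gray, dtype=float)
    # 4-neighbour discrete Laplacian evaluated on interior pixels only
    lap = (-4.0 * g[1:-1, 1:-1] + g[:-2, 1:-1] + g[2:, 1:-1]
           + g[1:-1, :-2] + g[1:-1, 2:])
    return float(lap.var())

def crop_pairs(img_focus_side, img_other_side, boxes, defocus_mm, focus_threshold):
    """Crop (defocused, focused, label) triples for ROIs sharp in img_focus_side.

    boxes: list of (row0, row1, col0, col1) extracted from img_focus_side.
    defocus_mm: +dz when the sharp ROIs come from IP, -dz when they come from I'P.
    """
    pairs = []
    for r0, r1, c0, c1 in boxes:
        focused = img_focus_side[r0:r1, c0:c1]
        if laplacian_variance(focused) < focus_threshold:
            continue  # ROI is blurred in this channel, so it yields no pair here
        # After registration the same coordinates address the other channel
        defocused = img_other_side[r0:r1, c0:c1]
        pairs.append((defocused, focused, defocus_mm))
    return pairs
```

Calling `crop_pairs(IP, IP_prime, boxes_from_IP, +dz, t)` corresponds to step (5), and swapping the two images with `-dz` corresponds to step (6).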

Figure 3 Flow chart of generating defocused-focused plankton ROI pairs from processing a pair of dual-channel raw plankton images. Red and green frames represent focused and defocused plankton ROIs, respectively.

Finally, the raw ROI pair data were cleaned by removing unwanted ROIs identified by human visual inspection. From all the ROIs that the algorithm had judged as “clear”, we first selected those that still showed visible blur, those containing multiple objects, and those in which a single planktonic object had both defocused and focused parts. We then discarded all these selected ROIs together with their defocused counterparts. For the ROI pairs remaining after cleaning, we denote by d the defocus distance label of the defocused ROI in the pair. When the target lies between the focal plane and the lens, d=Δz; when the target lies beyond the focal plane, d=-Δz. This completed the construction of a large-scale defocused-focused marine plankton ROI image pair dataset with defocus distance labels d.
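The sign convention for the label d can be written as a small helper. This is only an illustrative sketch; the function and argument names are hypothetical.

```python
def defocus_label(delta_z_mm, target_between_focal_plane_and_lens):
    """Return the signed defocus label d (in mm) for a defocused ROI.

    delta_z_mm: non-negative focal spacing between the two channels.
    target_between_focal_plane_and_lens: True if the target sits on the
        lens side of the focal plane, False if it lies beyond the focal plane.
    """
    if delta_z_mm < 0:
        raise ValueError("delta_z_mm is a spacing and must be non-negative")
    return delta_z_mm if target_between_focal_plane_and_lens else -delta_z_mm
```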

Based on the characteristics of the defocused-focused plankton ROI pair dataset, we designed a self-guided DNN, IsPlanktonFE, to achieve end-to-end focus-extension for underwater darkfield plankton imagers. As Figure 4 shows, IsPlanktonFE comprises a defocus distance estimation sub-network, DDE-Net, and a focus-extension sub-network, FE-Net. DDE-Net estimates the defocus distance of an input ROI image and encodes the estimate to guide the focus-extension of FE-Net. FE-Net extracts the features of the input ROI, then encodes and decodes them with reference to the estimated defocus distance. Finally, it reconstructs and outputs a refocused image.

The DDE-Net is composed of a feature extractor, an aggregator, a regressor and an encoder. A ResNet-34 network is trained as the feature extractor to extract features of the input ROI image patch by patch (refer to Supplementary Materials for details). Then, the mean and standard deviation, the quantiles, and the moments of the extracted features are aggregated into three vectors. Next, a partial least squares regressor (PLSR) is applied to the three vectors, and the mean of the regression values is taken as the estimate of the defocus distance of the input defocused ROI. Finally, the encoder transforms the estimate into two one-dimensional vectors α and β to meet the input requirements of the FE-Net combiner. The encoder consists of five convolutional layers with 1×1 kernels.
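The aggregation step can be sketched as follows. The text does not specify which quantiles or which moment orders are used, so the defaults below (quartiles, third and fourth central moments) are assumptions for illustration only, as is the function name. The PLSR stage that follows is not shown.

```python
import numpy as np

def aggregate_patch_features(features, quantiles=(0.25, 0.5, 0.75),
                             moment_orders=(3, 4)):
    """Summarise per-patch features into three vectors.

    features: array of shape (n_patches, n_features), one row per patch.
    quantiles, moment_orders: ASSUMED choices, not taken from the article.
    """
    f = np.asarray(features, dtype=float)
    mean = f.mean(axis=0)
    std = f.std(axis=0)
    v_stats = np.concatenate([mean, std])                 # mean and std
    v_quant = np.quantile(f, quantiles, axis=0).ravel()   # per-feature quantiles
    centered = f - mean
    v_moment = np.concatenate(                            # central moments
        [(centered ** k).mean(axis=0) for k in moment_orders])
    return v_stats, v_quant, v_moment
```

Each of the three returned vectors would then be passed to the regressor, and the three regression values averaged to give the defocus distance estimate.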

FE-Net is the backbone of IsPlanktonFE and is composed of an extractor, a combiner, and a decoder. The extractor, which consists of four e-blocks each containing three convolutional layers with batch normalization and ReLU, extracts the feature map fmex from the input. The combiner is responsible for injecting α and β into fmex. It consists of two f-blocks, each with a convolutional layer and a FiLM layer (Perez et al., 2018). The FiLM layer implements the function expressed in the equation below, where fmref is a feature map with reference information.

$$\mathrm{fm}_{\mathrm{ref}} = \alpha \odot \mathrm{fm}_{\mathrm{ex}} + \beta$$

The decoder is composed of only one convolutional layer, which performs dimension transformation of the combined feature map fmref to obtain and merge the R, G and B channel values to output the final refocused image.
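As a rough illustration of the combiner, FiLM (Perez et al., 2018) applies a feature-wise affine transform: each channel of the feature map is scaled and shifted by conditioning values. The sketch below uses NumPy rather than the PyTorch implementation of the article, and the assumption that α scales and β shifts each channel follows the standard FiLM definition, not a statement in the text.

```python
import numpy as np

def film(feature_map, alpha, beta):
    """Feature-wise linear modulation of a (C, H, W) feature map.

    alpha, beta: length-C vectors; ASSUMED to act as per-channel scale
    and shift, as in the standard FiLM formulation.
    """
    fm = np.asarray(feature_map, dtype=float)
    a = np.asarray(alpha, dtype=float).reshape(-1, 1, 1)
    b = np.asarray(beta, dtype=float).reshape(-1, 1, 1)
    if a.shape[0] != fm.shape[0] or b.shape[0] != fm.shape[0]:
        raise ValueError("alpha and beta need one entry per channel")
    return a * fm + b  # broadcast over the spatial dimensions
```

Conditioning through a scale and a shift lets a single set of convolutional weights behave differently for different estimated defocus distances.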

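IsPlanktonFE adopts a weighted sum of the contextual loss and the MSE loss to guide its training, as formulated by the equation below, where k1 and k2 are the weights of the two terms:

$$L = k_1 L_{\mathrm{contextual}} + k_2 L_{\mathrm{MSE}}$$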

The contextual loss (Li et al., 2017) is known to be insensitive to image misalignment, but it does not sufficiently account for the global distribution of features and often generates mosaic artifacts in the output images (Odena et al., 2016). Conversely, the MSE loss takes global features into account but is very sensitive to image registration errors. The combined loss is therefore expected to balance the strengths and weaknesses of the two terms, directing IsPlanktonFE training towards more accurate feature recovery and better visual quality, and ultimately better focus-extension performance.
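The weighted combination can be sketched as below. The contextual loss itself is not implemented here; it is passed in as a callable, which is an assumption made to keep the example short. The default weights are the initial values of k1 and k2 reported for training.

```python
import numpy as np

def mse_loss(pred, target):
    """Mean squared error between two images of equal shape."""
    p = np.asarray(pred, dtype=float)
    t = np.asarray(target, dtype=float)
    return float(np.mean((p - t) ** 2))

def combined_loss(pred, target, contextual_loss_fn, k1=0.97, k2=0.03):
    """Weighted sum k1 * contextual + k2 * MSE.

    contextual_loss_fn: hypothetical callable (pred, target) -> float
    standing in for a real contextual-loss implementation.
    """
    return k1 * contextual_loss_fn(pred, target) + k2 * mse_loss(pred, target)
```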

IsPlanktonFE was implemented in PyTorch on a server with 6 NVIDIA GeForce RTX3090 GPUs. Its training was divided into two phases: (1) training DDE-Net with the defocused ROIs in the dataset and the absolute values of their corresponding defocus distances (more details are provided in the Supplementary Materials); (2) training FE-Net using the trained DDE-Net and the defocused-focused ROI pairs in the dataset. In the second phase, the model was trained with the Adam optimizer at an initial learning rate of 6×10⁻³. The initial weights k1 and k2 for the contextual loss and the MSE loss were empirically set to 0.97 and 0.03. The batch size was set to 48 and the patch size to 128. To improve training efficiency, a patch was used for training only if its number of foreground pixels exceeded 820 (an empirical value). After 200 epochs of training, the learning rate decreased to 0.316 times its initial value, and k1 and k2 became 0.95 and 0.05, respectively.
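The patch selection rule can be sketched as follows. How a foreground pixel is defined is not stated in the text, so the intensity test against a background level is an assumption, as are the names. For a 128×128 patch, the threshold of 820 pixels is about 5% of the 16 384 pixels in the patch.

```python
import numpy as np

PATCH_SIZE = 128             # patch edge length in pixels (from the text)
MIN_FOREGROUND_PIXELS = 820  # empirical threshold (from the text)

def keep_patch(patch, background_level=0.0):
    """Return True if the patch has enough foreground pixels for training.

    patch: (H, W) or (H, W, C) array of a background-subtracted image.
    background_level: ASSUMED intensity above which a pixel is foreground.
    """
    gray = np.asarray(patch, dtype=float)
    if gray.ndim == 3:
        gray = gray.max(axis=-1)  # foreground if any colour channel is bright
    n_foreground = int((gray > background_level).sum())
    return n_foreground > MIN_FOREGROUND_PIXELS
```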

A home-built dual-channel darkfield imaging apparatus (Figure 2) was used to capture raw image pairs of live plankton in seawater samples, with the focal distance difference between the channels adjusted over a range of 10 mm in steps of 1 mm. The raw image pairs were then processed to construct a dataset containing 55 000 defocused-focused ROI pairs of marine plankton. We randomly selected 2500 ROI pairs recorded at each defocus distance as the training set and reserved an extra 250 ROI pairs as the test set for testing and validating the IsPlanktonFE and DeblurGAN-v2 deep networks (Kupyn et al., 2019). DeblurGAN-v2 is a state-of-the-art deep-learning model for image restoration, selected here for comparison of focus-extension performance with the proposed IsPlanktonFE network. Its modeling details are provided in the Supplementary Materials.
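The reported split sizes are consistent with the total of 55 000 pairs under one reading of the text, sketched below. It assumes 20 signed defocus labels (±1mm to ±10mm in 1mm steps) and that the 250 test pairs are reserved per defocus distance; neither assumption is stated explicitly.

```python
def dataset_counts(max_defocus_mm=10, step_mm=1,
                   train_per_label=2500, test_per_label=250):
    """Count labels and ROI pairs under the ASSUMED per-label split."""
    # Signed labels from -max to +max, excluding 0 (no defocus)
    labels = [d for d in range(-max_defocus_mm, max_defocus_mm + 1, step_mm)
              if d != 0]
    n_train = len(labels) * train_per_label
    n_test = len(labels) * test_per_label
    return len(labels), n_train, n_test, n_train + n_test
```

Under these assumptions the function returns 20 labels, 50 000 training pairs and 5 000 test pairs, which sum to the 55 000 pairs of the dataset.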

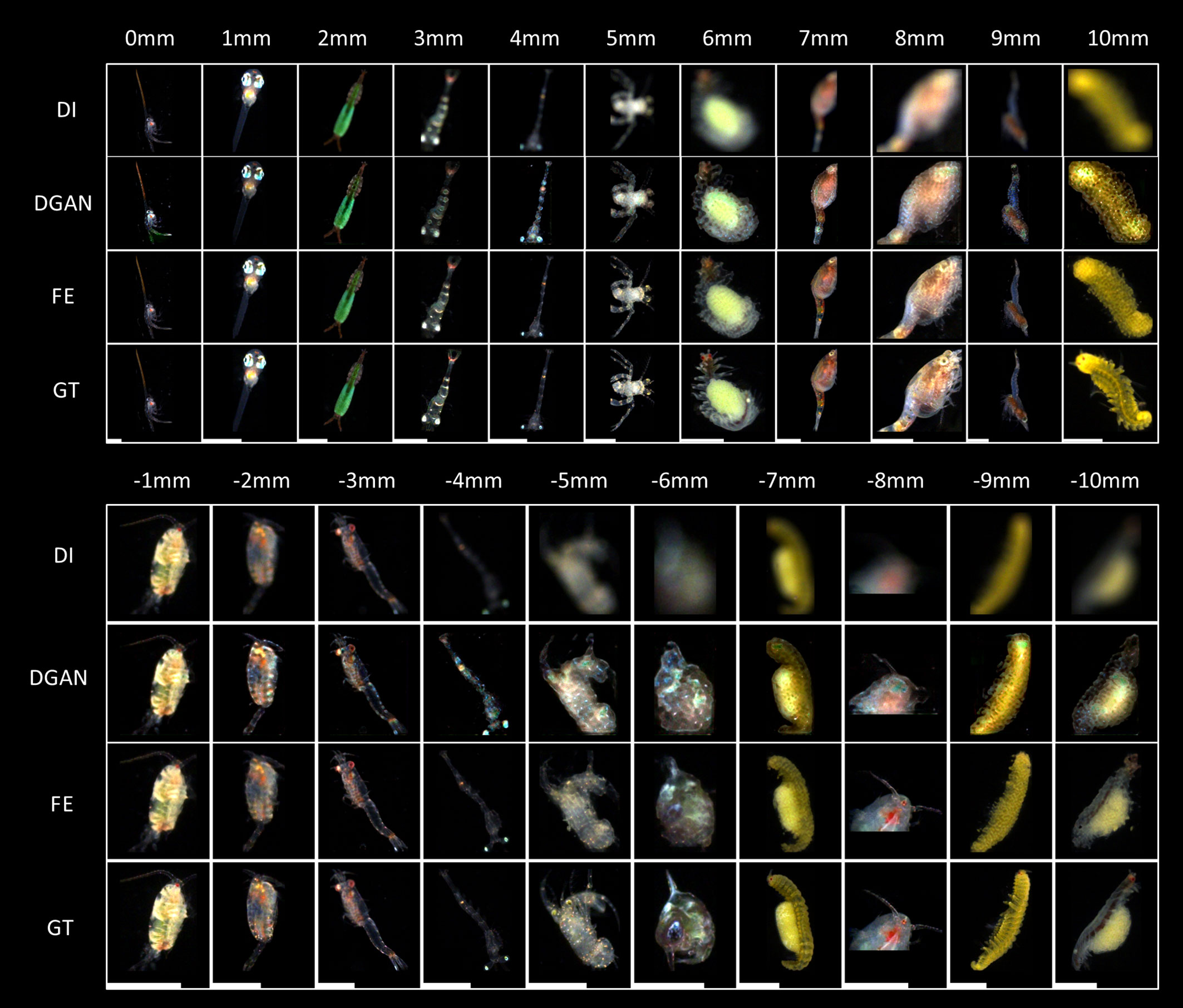

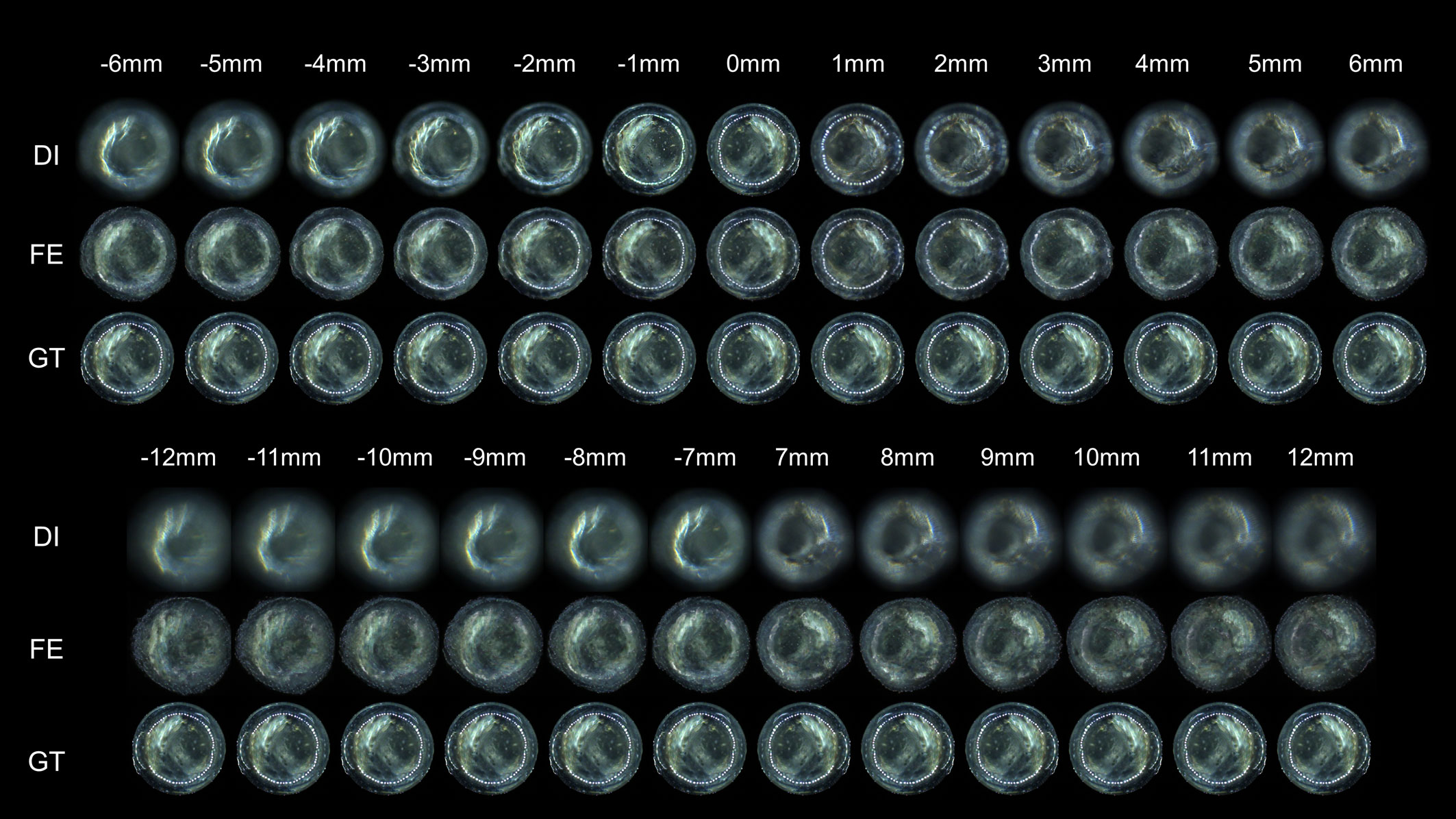

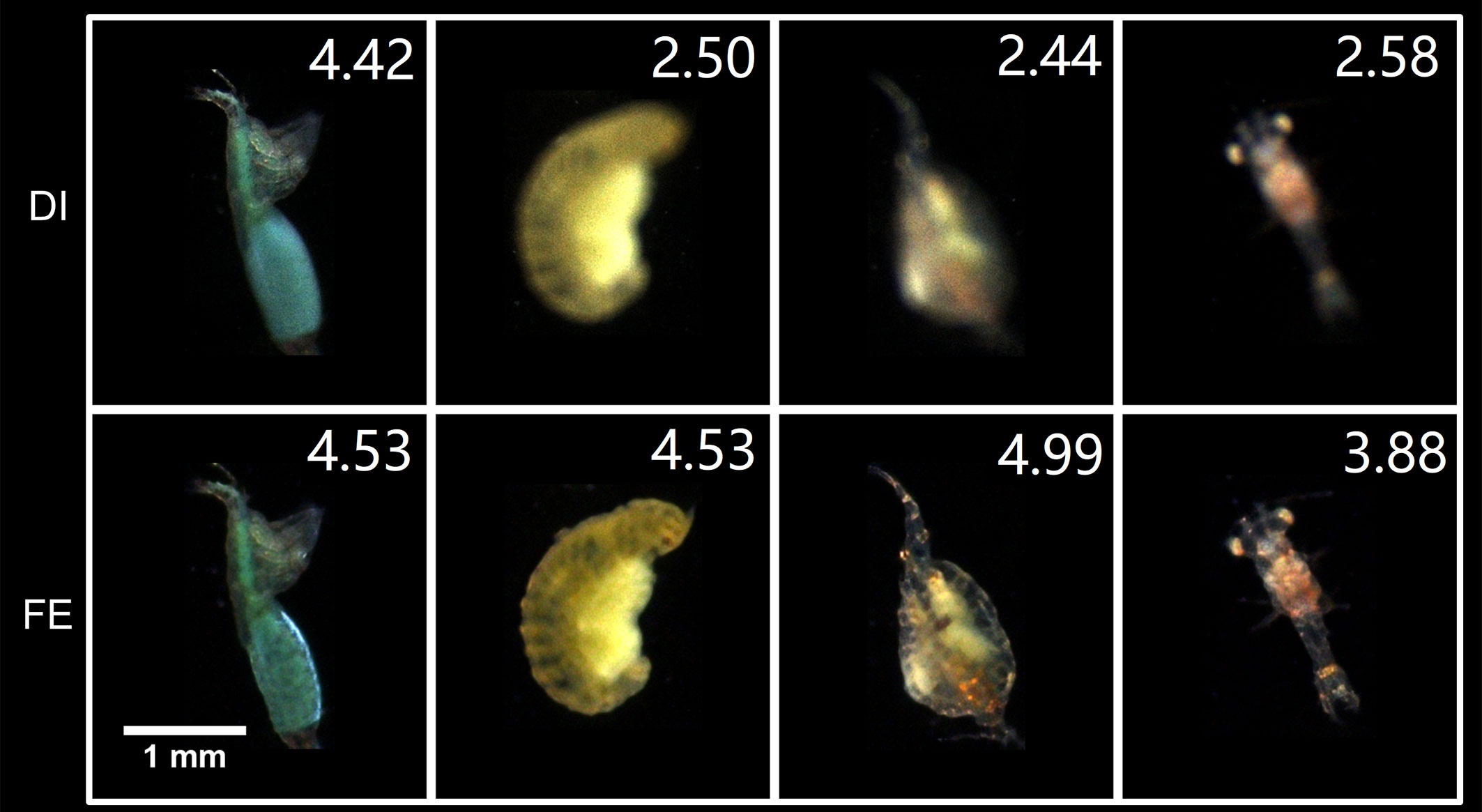

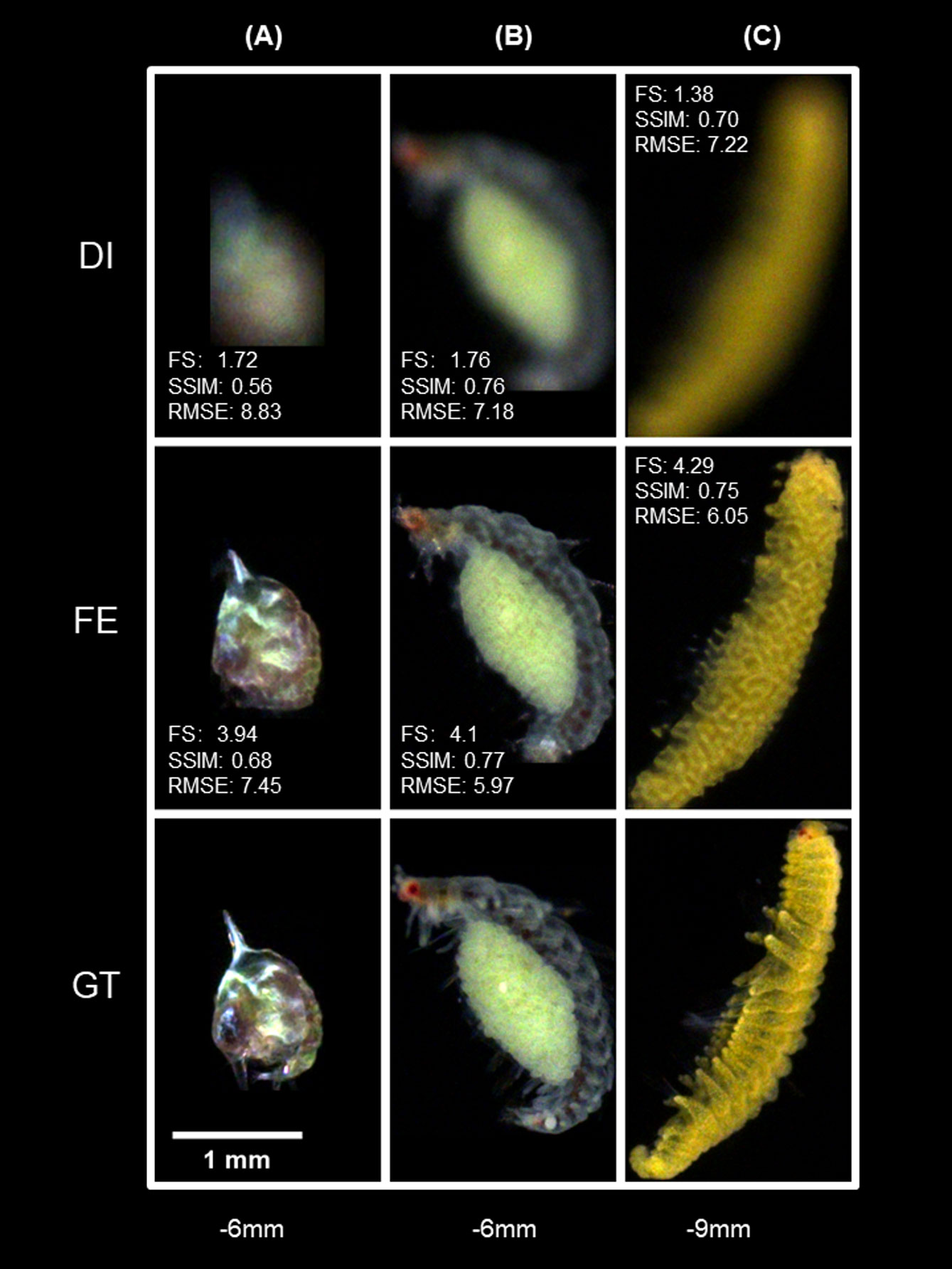

The focus-extension results of the two DNN models are shown in Figure 5. From top to bottom, each column lists an input marine plankton ROI from the test set at a given defocus distance, the output images from the two DNN models, and the in-focus image of the input target (ground truth, GT). Visually, both DNNs restore considerable detail of the targets from their defocused images. As the defocus distance increases, the blur of the input image increases, fewer details are recovered in the two network outputs, and artifacts emerge and gradually become more severe. However, the artifacts in the IsPlanktonFE outputs are much weaker than those from the DeblurGAN-v2 model, and the IsPlanktonFE restorations are visually closer to the corresponding sharp ground truths.

Figure 5 Visual comparisons of the focus-extension performances of two DNNs on representative marine plankton images. DI, DGAN, FE and GT represent the defocused images used as network input, their corresponding restored images by the DeblurGAN-v2 and IsPlanktonFE models, and the focused ground truth images, respectively. The value above each column indicates the defocus distance label of the DI relative to the GT image. The length of the scale bars at the bottom is 1mm. The abbreviations DI, FE, GT and DGAN also apply to other figures in this work.

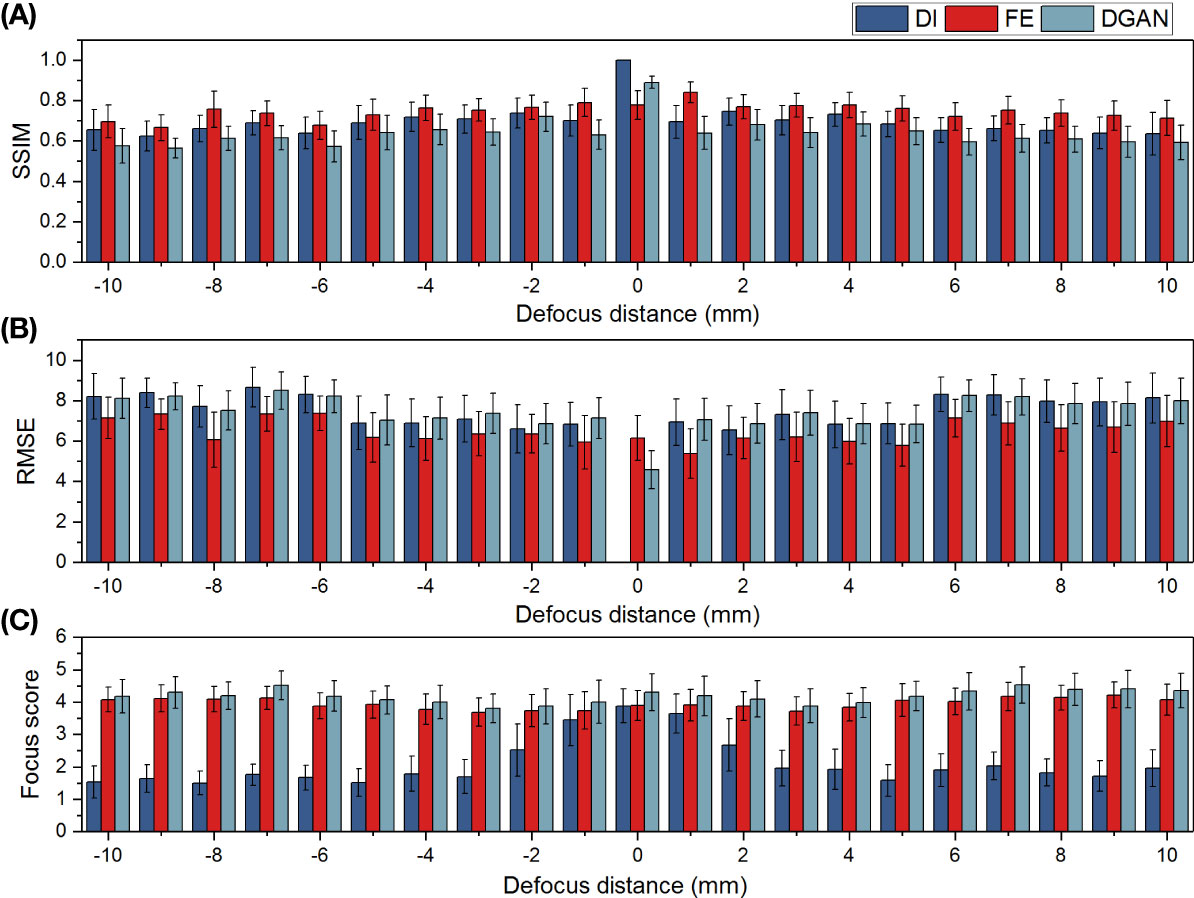

For a more quantitative evaluation of the DNNs’ focus-extension performance, we used structural similarity (SSIM), root mean square error (RMSE) and a self-defined focus score (Yang et al., 2021) as three metrics, and calculated their values for all the input images in the test set and for their outputs from the two DNN models, relative to their in-focus GT images. The mean and standard deviation of these scores at each defocus distance are compared in Figure 6.
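Two of the three metrics can be sketched in a few lines. The SSIM below is a simplified single-window (global) version with the usual constants; standard SSIM averages the same expression over local windows, and the text does not say which variant was used. The focus score of Yang et al. (2021) is not reproduced here.

```python
import numpy as np

def rmse(img, ref):
    """Root mean square error between an image and its reference."""
    x = np.asarray(img, dtype=float)
    y = np.asarray(ref, dtype=float)
    return float(np.sqrt(np.mean((x - y) ** 2)))

def global_ssim(img, ref, data_range=1.0):
    """Single-window SSIM over the whole image (a simplification)."""
    x = np.asarray(img, dtype=float)
    y = np.asarray(ref, dtype=float)
    c1 = (0.01 * data_range) ** 2  # stabilises the luminance term
    c2 = (0.03 * data_range) ** 2  # stabilises the contrast/structure term
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = ((x - mx) * (y - my)).mean()
    return float(((2 * mx * my + c1) * (2 * cov + c2))
                 / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2)))
```

Both functions compare a restored or defocused ROI against its in-focus GT; identical images give an RMSE of 0 and an SSIM of 1.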

Figure 6 Quantitative comparisons of the focus-extension performances of the DeblurGAN-v2 and IsPlanktonFE networks on restoring marine plankton images with the metrics of SSIM (A), RMSE (B) and focus score (C). A larger SSIM (∈[0,1]), a smaller RMSE, and a higher focus score each indicate a sharper focusing state.

On all the metrics, the IsPlanktonFE network achieved clear improvements over its inputs with relatively stable standard deviations, indicating its efficacy in focus-extension. Moreover, IsPlanktonFE achieved better scores than DeblurGAN-v2 on SSIM (Figure 6A) and RMSE (Figure 6B). In comparison, DeblurGAN-v2 showed no RMSE improvement (Figure 6B) and even scored worse than its inputs on SSIM (Figure 6A), though it marginally outperformed IsPlanktonFE on the focus score (Figure 6C). Visual inspection of the DeblurGAN-v2 outputs in Figure 5 shows that these images contain considerable high-frequency artifacts.

Note in Figures 6A, B that the average SSIM and RMSE values of the progressively defocused input images change only slowly as the defocus distance increases. In contrast, as shown in Figure 6C, their focus scores decrease sharply with increasing defocus distance, indicating that the focus score is more consistent with human vision (Yang et al., 2021). This is not surprising, since this metric was designed to incorporate group evaluations from human vision.

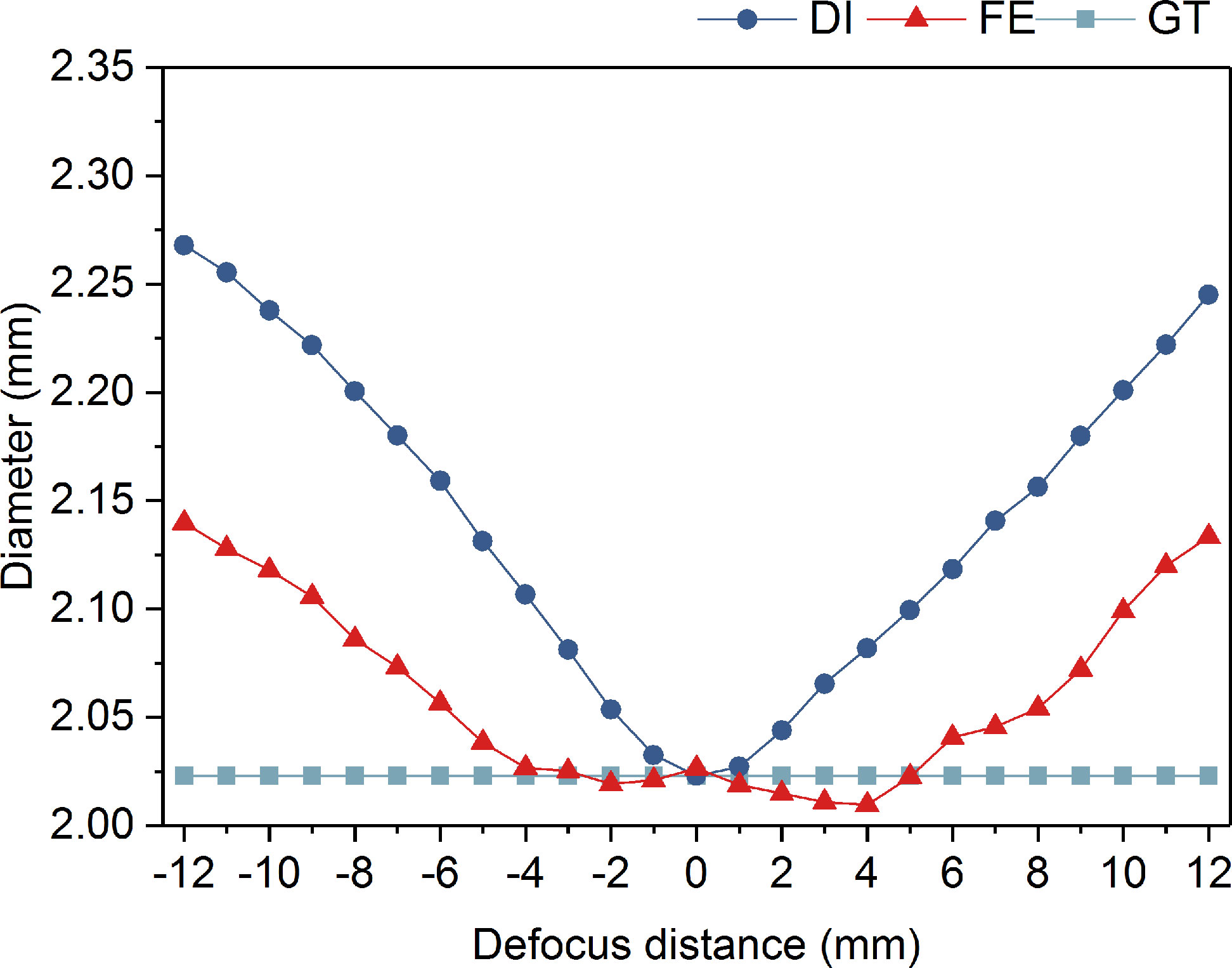

To reduce the evaluation variance caused by the morphological irregularity of diverse real planktonic organisms when characterizing IsPlanktonFE performance, we used a 2mm SiO2 bead as a target to further calibrate the achievable DOF through comparative image analysis of the bead. As shown in Figure 7, we used the dual-channel imaging apparatus to collect a series of focused and defocused image pairs of the bead over the defocus distance range of -12mm to 12mm.

Figure 7 Visual comparisons of the focus-extension performance of the IsPlanktonFE network on darkfield images of a SiO2 bead target. The value above each column indicates the defocus distance between the DI and the GT images. The nominal diameter of the SiO2 bead is 2mm.

It is well known that a defocused target contour leads to inaccurate size measurement. Therefore, we calibrated the range of focus extension achieved by IsPlanktonFE based on the accuracy of the bead diameter measured from its darkfield images. As shown in Figure 8, the diameter measured from the in-focus images of the bead is 2.02mm, which is very close to its nominal value of 2mm. For the defocused images, the measurement error gradually increases with defocus distance and reaches 0.25mm at ±10mm (>10%). In comparison, the measurement error for the focus-extended images is almost negligible within a defocus range of -5mm to 5mm. The error begins to increase gradually only outside this range, and even at a defocus distance of ±10mm it is only 0.1mm (~5%).
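The percentages quoted above follow from dividing the absolute error by the nominal bead diameter of 2mm, as the short sketch below shows. The function name is hypothetical.

```python
NOMINAL_DIAMETER_MM = 2.0  # nominal SiO2 bead diameter (from the text)

def relative_error_percent(abs_error_mm, nominal_mm=NOMINAL_DIAMETER_MM):
    """Absolute diameter error expressed as a percentage of the nominal size."""
    return 100.0 * abs_error_mm / nominal_mm

defocused_at_10mm = relative_error_percent(0.25)  # error on defocused images
extended_at_10mm = relative_error_percent(0.10)   # error after focus-extension
in_focus = relative_error_percent(2.02 - 2.0)     # error on in-focus images
```

These evaluate to about 12.5%, 5% and 1%, matching the ">10%" and "~5%" figures in the text.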

Figure 8 Diameter comparison of a SiO2 bead measured from its defocused images, focus-extended images by IsPlanktonFE, and focused GT images.

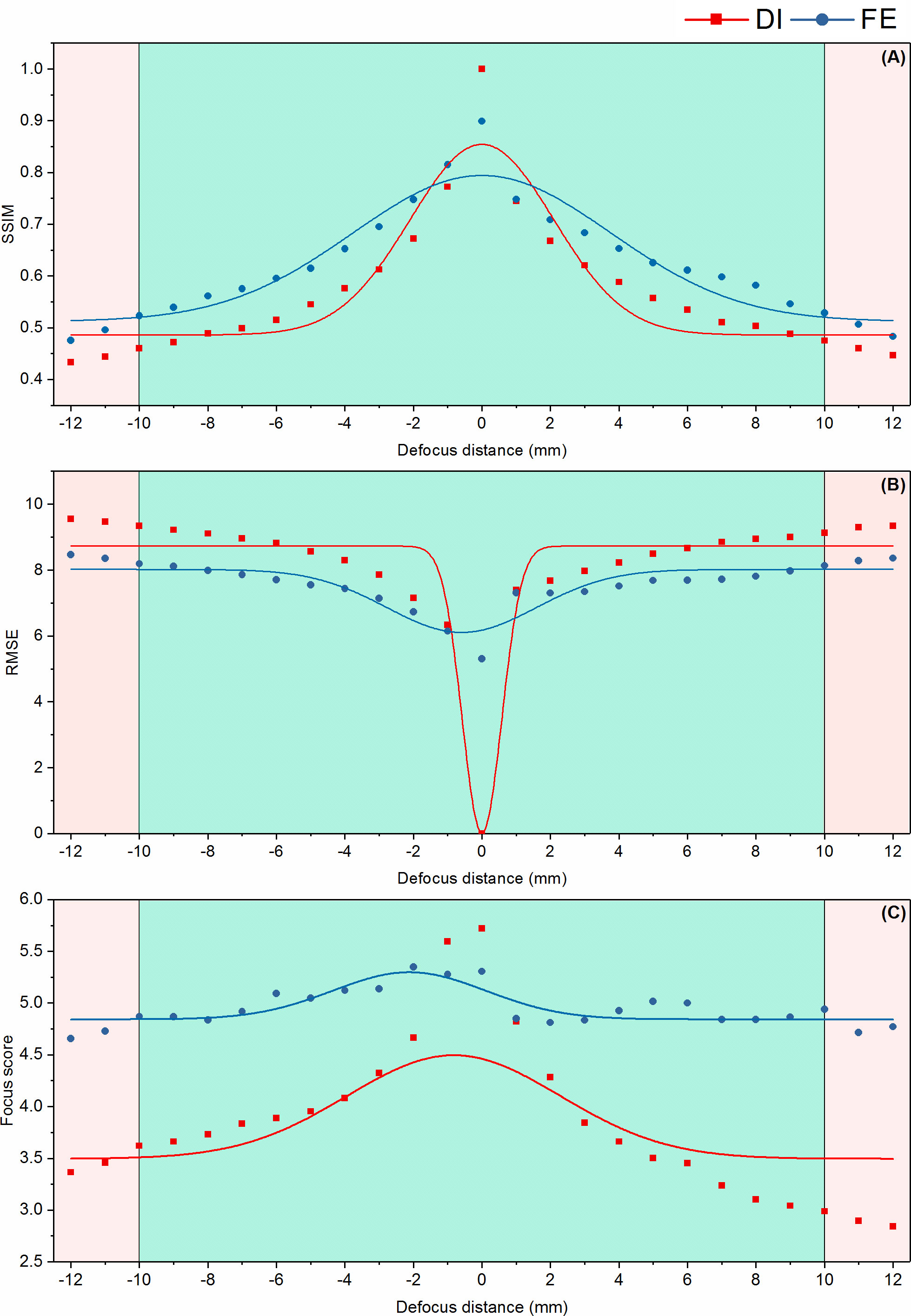

On the other hand, the details inside the contour of a planktonic target, e.g., the high-frequency features of some biological structures, often play a decisive role in its recognition. Defocus blurs such features and seriously reduces the accuracy of subsequent recognition. Therefore, we further calibrated the focus-extension performance of IsPlanktonFE by evaluating its ability to restore the internal features of the bead images. Using the RMSE, SSIM and focus score metrics again, we quantified and compared the features in the defocused and focus-extended images of the bead; the results are shown in Figure 9. The measurements from the focus-extended images were significantly better than those from the defocused images over the range of -12mm to 12mm. IsPlanktonFE not only achieved good restoration in the trained defocus range of -10mm to 10mm, but also considerable improvement outside this range.

Figure 9 Quantitative focus-extension evaluation of the IsPlanktonFE network using a 2mm SiO2 bead by the metrics of SSIM (A), RMSE (B) and focus score (C), respectively. The cyan areas in the panels indicate a defocus distance range from which the recorded SiO2 bead images are used for training the IsPlanktonFE model. The pink areas indicate defocus distance ranges where the recorded SiO2 bead images were NOT used to train the IsPlanktonFE model.

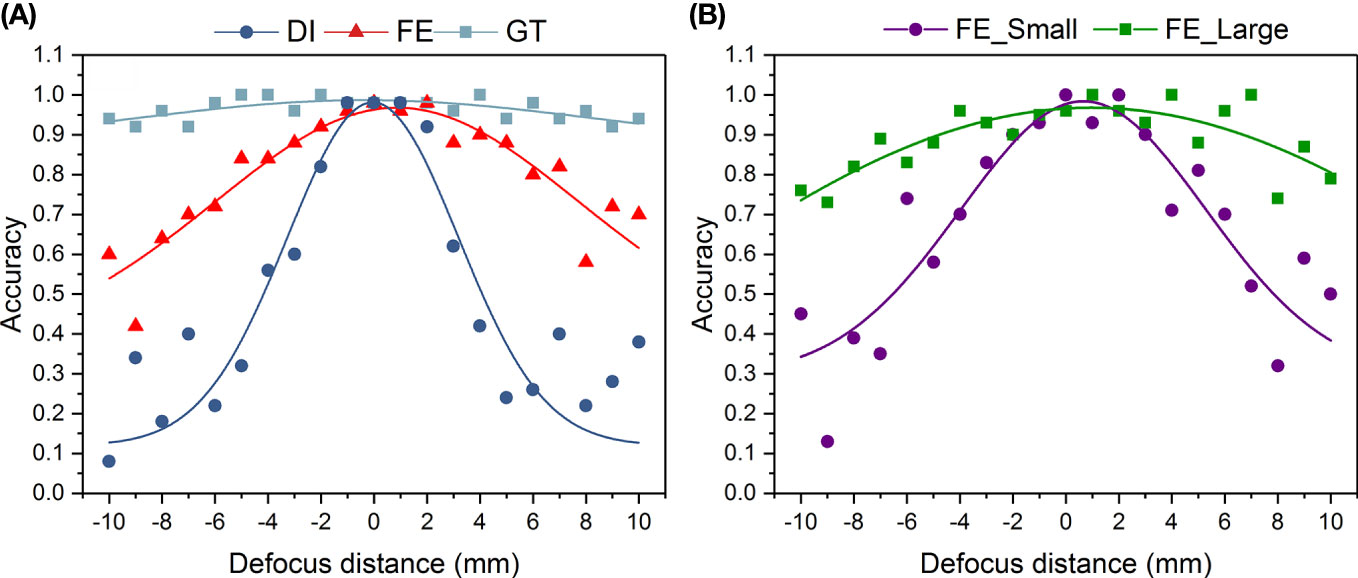

To further confirm the range of focus extension achieved by IsPlanktonFE, we designed another experiment to compare the performance of a ResNet-18 network, pre-trained only on 20 classes of focused plankton ROIs, in classifying marine plankton from their defocused and corresponding focus-extended images. For each class, 200 ROIs were subsampled from the dataset constructed in this work. The results in Figure 10A show that within the defocus range of -10mm to 10mm, the accuracy of the ResNet-18 network in classifying the blurred images decreases significantly as the defocus distance increases, as expected. In contrast, the accuracy in classifying the focus-extended images decreases much more slowly and remains better than 50% even at defocus distances of ±10 mm. Figure 10B further details how the accuracy of ResNet-18 on focus-extended images varies with plankton size. For the images collected by the 0.5× imaging optics, the accuracy in classifying plankton with body length > 2mm decreases very slowly as the defocus distance increases, remaining above 70% within the defocus range of -10mm to 10mm. However, for smaller plankton with body length < 2mm, the accuracy decreases rapidly. Even so, it is still higher than that achieved on the defocused images. These results indicate that within the defocus range of -10mm to 10mm, focus-extension by IsPlanktonFE effectively improves a machine classifier’s performance in recognizing plankton images, and that the improvement is greater for larger targets.

Figure 10 Performance comparison of a trained ResNet-18 deep network on classifying defocused plankton images, their focus-extended counterparts by IsPlanktonFE, and corresponding focused GT images. The ResNet-18 classifier has been trained on focused plankton images before this test. The value of each point is the average accuracy of 50 randomly selected testing plankton images. (A) plots the classification accuracy of the ResNet-18 model versus defocus distance on DI, FE, and GT testing plankton images. (B) further plots the classification accuracy on the FE plankton images grouped in two different sizes. FE_Small refers to plankton length (diagonal of ROI)<2mm. FE_Large refers to plankton length >2mm.
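The curves in Figure 10 amount to grouping test ROIs by defocus distance and averaging classification correctness within each group. A minimal Python sketch of that bookkeeping is given here for illustration. The record layout and the function name `accuracy_by_defocus` are assumptions, not the authors' evaluation code, and the sample records are made up.

```python
from collections import defaultdict

def accuracy_by_defocus(records):
    """Return {defocus_mm: accuracy} from (defocus_mm, true_label, predicted_label) tuples."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for defocus_mm, true_label, predicted_label in records:
        totals[defocus_mm] += 1
        hits[defocus_mm] += int(true_label == predicted_label)
    return {d: hits[d] / totals[d] for d in sorted(totals)}

# Made-up toy records, only to show the call shape (not data from the paper).
toy_records = [
    (0, "copepod", "copepod"),
    (0, "mysid", "mysid"),
    (10, "copepod", "copepod"),
    (10, "mysid", "polychaete"),
]
curve = accuracy_by_defocus(toy_records)
print(curve)
```

Running the same function once on the defocused inputs and once on the focus-extended outputs would give the two curves that are compared in panel (A).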

The above experimental results from the SiO2 bead size measurement, the bead image feature restoration assessment, and the machine classifier performance evaluation on real plankton images show that our trained IsPlanktonFE deep network extended the 3 mm DOF of a 0.5× darkfield imaging system to at least the range of -10 mm to 10 mm, which is equivalent to ~7 times focus-extension.

We selected a batch of ROIs acquired in situ by different IPPs deployed separately at coastal sites near Shenzhen, Pingtan, and Changjiang in China, and Hobart in Australia. To test the generalization performance of IsPlanktonFE on real-world data, we used the focus score metric to evaluate the focus states of these ROIs before and after processing by IsPlanktonFE.

Figure 11 shows the test results of IsPlanktonFE on some ROIs containing in-distribution plankton (i.e., plankton classes already in the training dataset) of Copepod, Polychaete and Mysid, in which the defocused and refocused ROIs of these representative common plankters are given with their focus scores for comparison. Note that IPP and the dual-channel imaging apparatus are both based on darkfield imaging, but their optics differ in lens magnification, pixel size and illumination. As a result, the selected test images have feature variations from those in the training set, although biologically they belong to the same taxa. Judging from visual evaluation and from the quantitative focus score comparisons of the input and output images, IsPlanktonFE has evidently refocused the blurred images.

Figure 11 Focus-extension comparisons of IsPlanktonFE on some representative in situ marine plankton ROIs acquired by IPP. The value at the top-right corner of each ROI indicates its focus score.

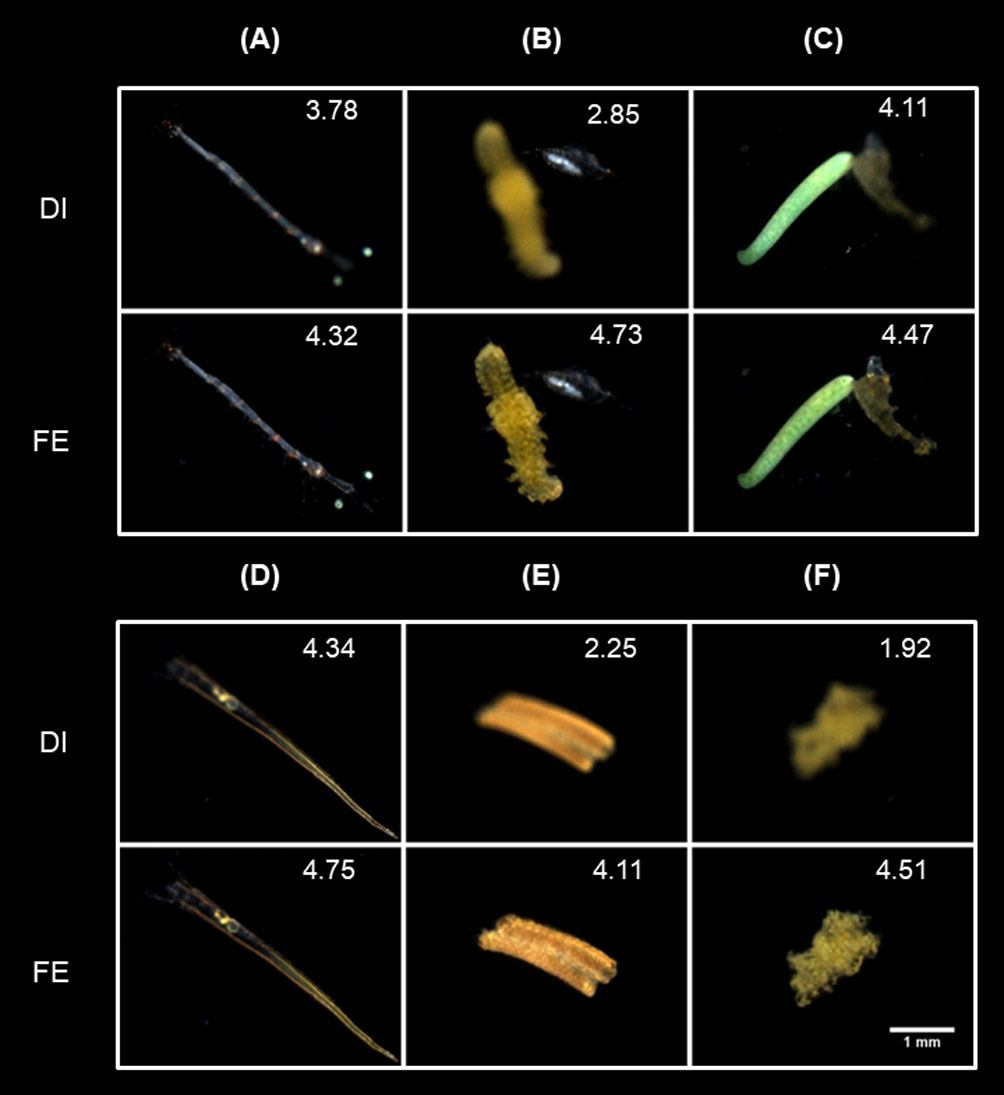

Then we selected another subset of out-of-distribution ROIs (biological classes and/or defocus states not included in the training set) to further validate the generalizability of IsPlanktonFE on different image contents. The test results are shown in Figure 12, in which the Lucifer sp. (Figure 12A) and the Polychaete sp. and the copepod (Figure 12B) exhibit more complex defocus states although their taxa were already included in the training set. Specifically, different parts of the Lucifer sp. are defocused to different degrees, and the Polychaete sp. and the copepod have different defocus degrees. In Figures 12C, D, the defocus states of the Monstrilloid with eggs and the Creseis acicula are also very complex, and their taxa are not included in the training set. Observing the refocused ROIs and their focus scores, we can see that IsPlanktonFE has achieved an excellent refocusing effect on these never-trained contents. Remarkably, as shown in Figures 12E, F and further in Figure S3 in the Supplementary Materials, IsPlanktonFE also turned out to be very effective in refocusing images of suspended particles, which were never included in training and are known to be extremely heterogeneous in morphology.

Figure 12 Focus-extension comparisons of IsPlanktonFE on some special in situ darkfield ROIs acquired by IPP. (A) A ROI containing an in-distribution plankter with different degrees of defocus in different body parts. (B) A ROI containing two different in-distribution plankters with different degrees of defocus. (C) and (D) each contain an out-of-distribution (OOD) plankter. (E) and (F) each contain an OOD suspended particle. The value at the top-right corner of each ROI indicates its focus score.

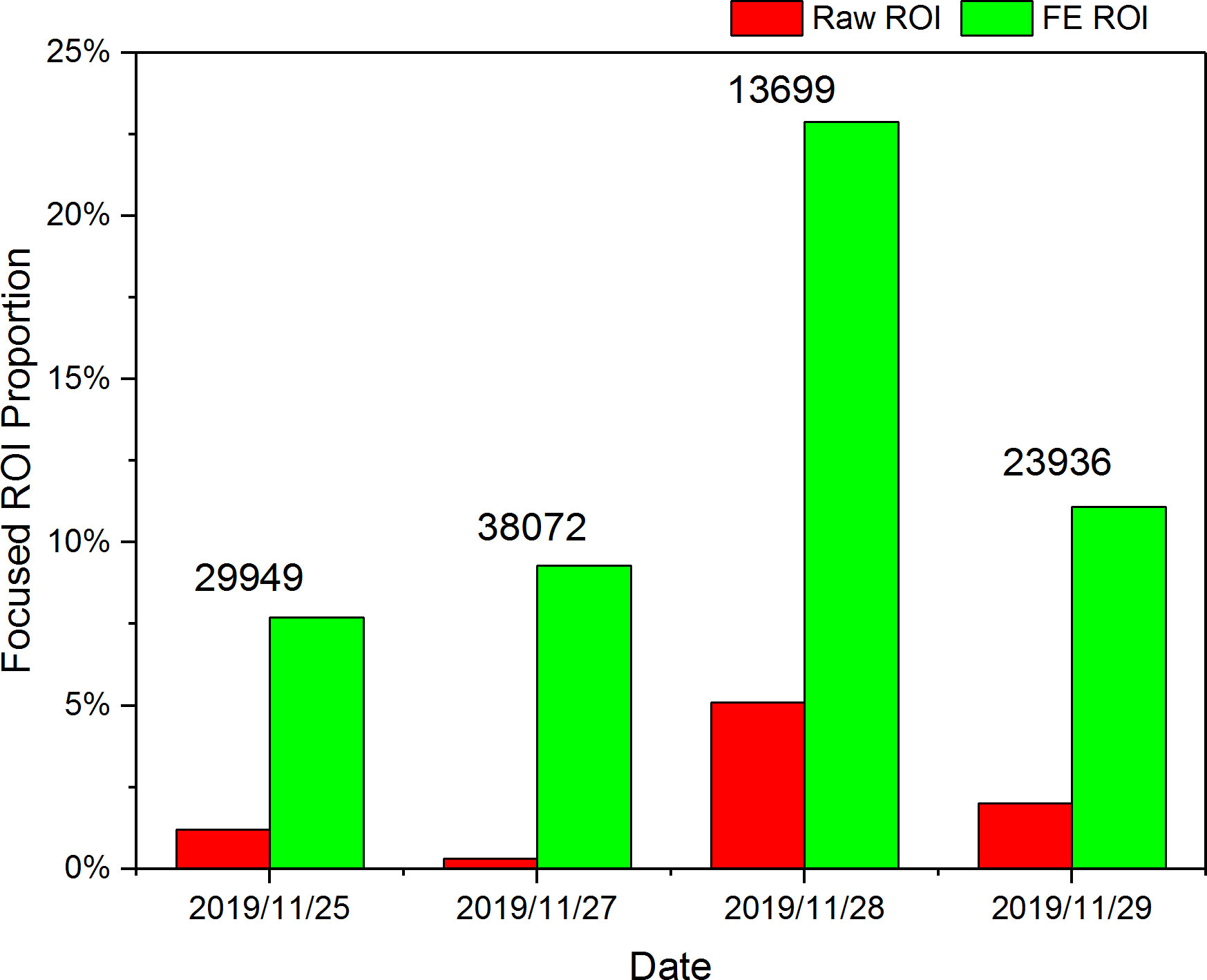

We used the raw ROIs acquired during a trial underwater IPP deployment at the wharf of CSIRO Oceans and Atmosphere, Australia, during December 25-29, 2019 as testing data to verify the efficiency improvement obtained by applying IsPlanktonFE to in situ marine plankton observation. In these data, an average of 26,414 ROIs were taken per day. Because the focus evaluation filtration algorithm (Yang et al., 2021) had not yet been deployed when the data were collected, the data contain many defocused ROIs.

We first screened the focus scores of all the original ROIs and found that the average daily proportion of clear images (focus score > 4.0) in the raw ROIs was only 2.15%. After applying focus-extension by IsPlanktonFE to all the raw ROIs, this proportion rose to 12.75%. The proportions of clear ROIs before and after IsPlanktonFE processing and the absolute number of raw ROIs collected daily are shown in Figure 13. The fluctuations in the proportion of focused ROIs were mainly caused by natural changes over time in the abundance and spatial distribution of plankton and suspended particles. This result further confirms that applying IsPlanktonFE can greatly increase the fraction of in-focus images in the raw data: the observation efficiency of IPP was raised by ~6 times on average.

Figure 13 Proportion change of the clear ROI images in 4 days of in situ image data acquired by an IPP camera deployed underwater, before and after all the raw ROIs were processed by IsPlanktonFE.
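The ~6 times figure follows directly from the two reported proportions. The short Python sketch below reproduces that arithmetic and shows how a clear-ROI fraction could be computed from a list of focus scores. The helper name `clear_fraction` is hypothetical, and only the 4.0 threshold and the two percentages come from the text.

```python
CLEAR_THRESHOLD = 4.0  # focus-score cut-off quoted in the text

def clear_fraction(focus_scores, threshold=CLEAR_THRESHOLD):
    """Fraction of ROIs whose focus score exceeds the threshold."""
    if not focus_scores:
        raise ValueError("need at least one focus score")
    return sum(score > threshold for score in focus_scores) / len(focus_scores)

# Average daily proportions of clear ROIs reported in the text (percent).
before_pct = 2.15
after_pct = 12.75
gain = after_pct / before_pct
print(f"gain in clear-ROI proportion: {gain:.2f}x")
```

The ratio evaluates to about 5.93, which the text rounds to ~6.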

Single image refocusing is an ill-posed problem: there are many possible solutions to the mapping between a defocused image and its focused GT. The DDE-Net essentially provides a unique descriptor for the defocus degree of the input, which helps IsPlanktonFE learn the optimal mapping between blurred and clear images more definitely. The practicality of this module has clearly benefited from our dual-channel imaging apparatus, which provided an accurate defocus distance label for each training image pair. In contrast, DeblurGAN-v2 cannot utilize a defocus distance prior and relies only on image data to learn this complicated mapping. Limited by its network depth and parameter count, DeblurGAN-v2 has difficulty handling the varied defocus situations in the input images, and it generated serious artifacts for many images. This is why the outputs of DeblurGAN-v2 score even worse on SSIM and RMSE than its inputs. The DNN used in the focus score evaluation (Yang et al., 2021) is only sensitive to the content rather than the distribution of high-frequency features, so DeblurGAN-v2 achieved higher scores on this metric because it is prone to generating falsely distributed high-frequency artifacts in the output images.
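For readers less familiar with the two full-reference metrics discussed here, the following Python sketch computes RMSE and a simplified SSIM on flattened grayscale pixel lists. It is an illustration under stated assumptions: the SSIM is evaluated once over the whole image rather than in local sliding windows as in common implementations, the constants follow the usual 0.01 and 0.03 convention, and the function names and toy pixel values are not from the paper.

```python
import math

def rmse(a, b):
    """Root-mean-square error between two equal-length pixel lists."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))

def global_ssim(a, b, dynamic_range=255.0):
    """Single-window SSIM over the whole image (a simplification of windowed SSIM)."""
    n = len(a)
    mu_a = sum(a) / n
    mu_b = sum(b) / n
    var_a = sum((x - mu_a) ** 2 for x in a) / n
    var_b = sum((y - mu_b) ** 2 for y in b) / n
    cov = sum((x - mu_a) * (y - mu_b) for x, y in zip(a, b)) / n
    c1 = (0.01 * dynamic_range) ** 2  # stabilises the luminance term
    c2 = (0.03 * dynamic_range) ** 2  # stabilises the contrast/structure term
    return ((2 * mu_a * mu_b + c1) * (2 * cov + c2)) / (
        (mu_a ** 2 + mu_b ** 2 + c1) * (var_a + var_b + c2)
    )

# Toy 1-D "images": a sharp edge and a smoothed version of it (made-up values).
ground_truth = [0.0, 0.0, 255.0, 255.0]
blurred = [0.0, 85.0, 170.0, 255.0]
print(rmse(ground_truth, blurred), global_ssim(ground_truth, blurred))
```

Both metrics compare an output pixel by pixel against the focused GT, so spurious high-frequency detail placed at the wrong locations is penalised, whereas a no-reference focus score can reward it.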

As the defocus distance increases, each pixel in a defocused image receives contributions from more neighboring object features, which makes it more challenging to remap them correctly to their original pixel locations. In this limit, it gradually becomes difficult for IsPlanktonFE to obtain an optimal solution to the mapping even when it is provided with the defocus distance information.
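The growth in the number of contributing neighbors can be illustrated with a simple geometric-optics picture, in which defocus spreads each object point over a disk whose radius grows roughly linearly with |d|. The sketch below counts the pixels inside such a disk. The linear model and the scale factor `BLUR_PX_PER_MM` are assumptions for illustration only, since the text does not give the actual point spread function of the imaging system.

```python
def disk_footprint(radius_px):
    """Count pixels whose centres lie within a disk of the given radius (in pixels)."""
    r = int(radius_px)
    return sum(
        1
        for dy in range(-r, r + 1)
        for dx in range(-r, r + 1)
        if dx * dx + dy * dy <= radius_px * radius_px
    )

BLUR_PX_PER_MM = 1.0  # hypothetical blur radius per mm of defocus, not a measured value

for d_mm in (0, 2, 5, 10):
    radius = BLUR_PX_PER_MM * abs(d_mm)
    print(f"d = {d_mm:>2} mm -> {disk_footprint(radius)} contributing pixels")
```

Because the footprint scales with the square of the blur radius, the remapping problem becomes harder much faster than the defocus distance itself grows.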

Figure 14 shows the focus-extension effect of IsPlanktonFE on plankton of different sizes at different defocus distances. The comparison between Figures 14A, B shows that at the same defocus distance, the larger plankter, whose defocused image contains more features, achieved a higher SSIM score and better image quality after restoration. The comparison between Figures 14A, C shows that at a larger defocus distance, the model is also more likely to perform better for larger plankton. These results indicate that the focus-extension performance of IsPlanktonFE declines as the size of the planktonic object decreases. The resolution of an optical system with fixed magnification becomes insufficient when imaging smaller targets. In this situation, defocus blur causes further loss of high-frequency features in the low-resolution images of tiny objects, which hampers restoration by any focus-extension model, IsPlanktonFE included. When the resolution of the acquired images is not sufficient to meet the computational requirements of IsPlanktonFE, a higher magnification of the imaging instrument should be considered.

Figure 14 Effects of plankton size on the focus-extension performance of IsPlanktonFE. (A) a Megalopa larva at d=-6mm, (B) a Polychaete with eggs at d=-6mm, (C) a different Polychaete at d=-9mm.

It is worth noting that darkfield ROIs offer IsPlanktonFE relatively simple foreground and background contents compared with more complex natural scene images. On the one hand, it is rare in the ROIs that multiple foreground targets overlap or that a single target has inconsistent defocus states at different focal positions. This means that the label d can describe the defocus distance of the foreground target accurately and uniquely. On the other hand, darkfield ROIs have almost zero background, with little interference to the foreground. These data characteristics all favor the training of IsPlanktonFE. In addition, the dual-channel imaging apparatus has an inherent advantage of reciprocity in acquiring the image pairs: the defocused and focused targets in one channel image are counterparts of those in the other channel image, so 2n image pairs can be acquired by stepping the focal plane of one of the two channels through only n positions. This is very useful for speeding up data acquisition, which helps preserve the bioactivity of fragile planktonic organisms after they are removed from the marine environment. It also saves time when scaling up the live plankton image dataset for IsPlanktonFE training.
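The reciprocity argument can be made concrete with a small enumeration. In the Python sketch below, the channel names "A" and "B", the sign convention for the defocus label, and the offset values are all hypothetical. The sketch only illustrates why n focal-plane offsets yield 2n kinds of defocused-focused pairs.

```python
def reciprocal_pairs(offsets_mm):
    """List (focused_channel, defocused_channel, defocus_label_mm) for each focal offset."""
    pairs = []
    for d in offsets_mm:
        # A target sharp in channel A appears defocused by +d in channel B.
        pairs.append(("A", "B", d))
        # A target sharp in channel B appears defocused by -d in channel A.
        pairs.append(("B", "A", -d))
    return pairs

offsets = [1.0, 2.0, 3.0]  # hypothetical offsets of one focal plane relative to the other
pairs = reciprocal_pairs(offsets)
print(len(offsets), "steps ->", len(pairs), "pair types")
```

Each physical step therefore contributes one positive and one negative defocus label, halving the number of stage movements needed to cover a symmetric defocus range.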

Currently, the IsPlanktonFE dataset has reached a scale of 55 000 ROI pairs, containing at least 20 classes of plankton from the phyla Arthropoda and Annelida. Admittedly, compared with the actual number of plankton in the ocean and the high complexity of the underwater environment, the scale and diversity of this dataset are far from sufficient. Fortunately, IsPlanktonFE learns the low-level pixel-by-pixel feature mapping between defocused-focused image pairs, which is essentially different from an image classifier learning mappings between images and their categorical labels, so it is less sensitive to high-level image semantics such as classes and morphologies. When the ROI pairs in the IsPlanktonFE dataset were acquired, the plankton were alive and kept swimming. Relative to the limited FOV and DOF of the imaging apparatus, their motion within the confined container was far from restricted. In addition, we used only a slow frame rate during the raw image acquisition, so even for the same plankton target, its defocus position, orientation and morphology can vary significantly between temporally close frames and spatially close positions. This gives our dataset a very rich variety of “defocused image-focused image” mappings, i.e., defocus diversity, even though it was constructed from a limited number of taxonomic groups. As a result, even though it was trained and tested with a simple random 10:1 split of the dataset, the resulting IsPlanktonFE model achieved good generalizability on images of untrained organism classes, images collected by different instruments, and even suspended particles, as demonstrated in the results and in Figure S3 in the Supplementary Materials. We can therefore reasonably infer that this generalizability has further potential.
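A simple random 10:1 split of 55 000 pairs can be sketched as follows. This is an illustration only: the text does not describe how the split was implemented, so the seed, the function name `split_10_to_1`, and the reading of 10:1 as train:test are assumptions.

```python
import random

def split_10_to_1(n_pairs, seed=0):
    """Shuffle pair indices and hold out one part in eleven for testing."""
    indices = list(range(n_pairs))
    random.Random(seed).shuffle(indices)  # seeded for reproducibility
    n_test = n_pairs // 11
    return indices[n_test:], indices[:n_test]

train_idx, test_idx = split_10_to_1(55_000)
print(len(train_idx), len(test_idx))
```

With 55 000 pairs this yields 50 000 training and 5 000 testing pairs. A purely random split can place temporally adjacent frames of the same individual on both sides, which is why the generalization tests on other instruments and untrained classes carry more weight than the in-dataset test score.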

Firstly, the good performance of IsPlanktonFE on in situ images acquired by different IPPs suggests its application potential for a variety of other in situ darkfield plankton imagers. Although the optics of the dual-channel imaging apparatus differ in various ways from those of underwater darkfield imagers other than IPP, they all work on the principle of snapshot darkfield macrophotography or microscopy (Benfield et al., 1996; Schulz et al., 2009; Picheral et al., 2010; Gallager, 2019; Orenstein et al., 2020; Li et al., 2022). Their images of plankton and particles exhibit very similar morphological features. Therefore, the modeling process of IsPlanktonFE could be repeated to optimize different hyperparameters for these instruments. In fact, several sets of hyperparameters at different magnifications could also be multiplexed to further improve the universality of the model. This is perhaps the most attractive aspect of IsPlanktonFE: it requires no hardware changes to existing imagers, yet can computationally extend their DOFs and improve their observation efficiency.

Secondly, although marine plankton species are extremely diverse, the number of high-level taxonomic groups is much smaller. It is known that organisms belonging to the same phylum share many morphological features, which differ between phyla (Batten et al., 2019); e.g., Copepoda and Eumalacostraca share many similar exoskeleton features as they both belong to Arthropoda. Owing to this biological property, the training set of IsPlanktonFE might not need to cover all plankton species, but only enough higher-order phyla, after which the model could generalize to images of untrained species within the same phyla. The examples of Monstrilloid and Creseis acicula in Figures 12C, D support this hypothesis. It is known that copepods alone account for 90% of all marine zooplankton abundance (Suthers et al., 2019), so IsPlanktonFE is anticipated to have the potential to generalize to a considerable number of plankton classes. However, the dataset still lacks examples from Chordata, Cnidaria and other phyla. The performance of IsPlanktonFE is likely to drop if it is directly applied to in situ plankton images of these groups. In that case, users can apply the apparatus and methods described in this paper, combined with live sampling of these plankton, to conveniently supplement the training set with their images. The model can then be retrained to quickly gain the focus-extension ability on these images.

Surprisingly, IsPlanktonFE also achieves excellent performance on various suspended particle images, although none of them were used in the training. This may be because the highly varied particles in darkfield images also contain many features similar to the plankton morphology learnt by the model. This speculation is not without scientific basis, as many particulates are the debris of plankton or fecal pellets (smaller planktonic debris). On the other hand, marine plankton might have evolved morphologies resembling the suspended particles in seawater, because this favors their concealment and survival. It is well known that the quantity of suspended particles in the ocean is extremely large [>80% of in situ images are particles (Panaïotis et al., 2022)], and their size and volume are among the measurands of greatest interest in the study of the oceanic carbon cycle (Lombard et al., 2019; Giering et al., 2020). IsPlanktonFE has achieved remarkable performance in refocusing the contours of defocused suspended particles, which should benefit their more accurate measurement.

Generally, the density of plankton in the ocean is low, and larger organisms are less abundant. For more efficient observation, any plankton imaging method should sample as much seawater per unit time as possible, to obtain statistically representative plankton information and to cover more undersea space. Extending the focus of an imaging instrument with fixed magnification (i.e., fixed field of view) is a straightforward way to achieve this. Taking the results of this study as an example, IsPlanktonFE can extend the DOF of a 0.5× darkfield imaging system from ~3 mm to ~20 mm, equivalent to a ~7 times increase in seawater sampling volume. Based on our experience in nearshore IPP deployment, this will enlarge the total imaged water volume from 388.8 L to 2592 L per day at an imaging frame rate of 3 FPS. This is expected to further narrow the gap in seawater sampling throughput and plankton quantification between the traditional methods and the in situ imagers (Barth and Stone, 2022; Le et al., 2022).
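The daily volumes quoted above can be checked with a few lines of arithmetic. The Python sketch below assumes that each frame images a fresh, non-overlapping volume equal to the field-of-view area times the DOF. The FOV area of about 500 mm² is not stated in the text and is inferred here from the 388.8 L figure. The variable and function names are illustrative.

```python
SECONDS_PER_DAY = 24 * 60 * 60
FRAME_RATE_FPS = 3                 # from the text
DOF_ORIGINAL_MM = 3.0              # from the text (~3 mm)
DOF_EXTENDED_MM = 20.0             # from the text (~20 mm)
DAILY_VOLUME_ORIGINAL_L = 388.8    # from the text

frames_per_day = FRAME_RATE_FPS * SECONDS_PER_DAY
# 1 L = 1e6 mm^3, so this is the imaged volume per frame in mm^3.
volume_per_frame_mm3 = DAILY_VOLUME_ORIGINAL_L * 1e6 / frames_per_day
# Inferred (not stated in the text): field-of-view area implied by the figures above.
fov_area_mm2 = volume_per_frame_mm3 / DOF_ORIGINAL_MM

def daily_volume_l(dof_mm):
    """Imaged seawater volume per day in litres for a given depth of field."""
    return fov_area_mm2 * dof_mm * frames_per_day / 1e6

print(f"frames per day: {frames_per_day}")
print(f"inferred FOV area: {fov_area_mm2:.1f} mm^2")
print(f"extended daily volume: {daily_volume_l(DOF_EXTENDED_MM):.1f} L")
print(f"gain: {DOF_EXTENDED_MM / DOF_ORIGINAL_MM:.2f}x")
```

Under these assumptions the extended volume comes out at 2592 L per day, and the exact gain is 20/3 ≈ 6.67, which the text rounds to ~7.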

The value of IsPlanktonFE for plankton observation is also reflected in its relevance to future darkfield imaging instrumentation. When an imager works underwater, the space outside its DOF will inevitably be illuminated, and stray light from this space can enter the camera and reach the imaging chip. This is the fundamental cause of defocus blur in darkfield imaging. Existing darkfield imagers generally assess the focus of the target in a ROI with a simple edge gradient calculation routine, and directly discard the defocused ROIs to retain only the “clear” ones (Gallager, 2019; Orenstein et al., 2020; Li et al., 2022). This makes their high-quality image yield and light energy utilization rate very low. IPP improves this yield by physically compressing the illumination into a layer ~6.89 mm thick to reduce illumination outside the DOF. However, it is not trivial to compress incoherent light beams into a thinner layer. Doing so would not only increase the complexity of the optomechanical structure of the illuminator and reduce instrumentation flexibility, but also greatly waste illumination energy. If the DOF is extended to ~20 mm by IsPlanktonFE, the difficulty of matching the illumination layer to the imaging DOF can be greatly alleviated, which simplifies the instrumentation and can also push the high-quality image yield of darkfield imaging toward its limit. Moreover, the process of establishing IsPlanktonFE provides a reference for users tackling their own research problems, in which they may face different challenges from complicated observational objects and application environments. All of this should help marine scientists explore more of the unknown ocean at lower cost.
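To make the idea of an edge gradient focus routine concrete, the following Python sketch computes a generic mean-squared-gradient sharpness measure on a small grayscale image. It is not the routine of any of the cited instruments, nor the DNN-based focus score of Yang et al. (2021). The function name and the toy images are illustrative.

```python
def gradient_focus_measure(image):
    """Mean squared finite-difference gradient of a 2-D grayscale image (list of rows)."""
    rows, cols = len(image), len(image[0])
    total = 0.0
    count = 0
    for y in range(rows - 1):
        for x in range(cols - 1):
            gx = image[y][x + 1] - image[y][x]  # horizontal difference
            gy = image[y + 1][x] - image[y][x]  # vertical difference
            total += gx * gx + gy * gy
            count += 1
    return total / count

# Toy images: the same edge rendered sharply and spread over several pixels.
sharp_edge = [[0, 0, 255, 255] for _ in range(4)]
soft_edge = [[0, 85, 170, 255] for _ in range(4)]
print(gradient_focus_measure(sharp_edge), gradient_focus_measure(soft_edge))
```

An instrument that thresholds such a measure keeps the sharp ROI and discards the soft one, which is where the low yield described above comes from.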

One shortcoming of IsPlanktonFE lies in its deep network structure, which leads to high computation cost and long training time. Training the model to convergence took 120 hours on six RTX3090 GPU cards. Fortunately, the computation is greatly reduced in the production phase, during which IsPlanktonFE was verified to achieve an average speed of 1-2 ROI/s using a single RTX3090 GPU. This is much faster than the average speed of ROI generation by an IPP deployed nearshore according to our experience (Li et al., 2022). In the future, the model can be made more lightweight by network pruning (Molchanov et al., 2019), quantization (Wang et al., 2022) and knowledge distillation (Yim et al., 2017) techniques to further reduce its computation demand for training and inference. This will enable the deployment of IsPlanktonFE on a cloud computing platform to facilitate next-generation in situ real-time marine plankton observations.
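As a rough plausibility check of the throughput claim, the daily ROI count reported for the Hobart trial (26,414 ROIs per day) can be converted to an average arrival rate and compared with the quoted inference speed. The sketch assumes ROIs arrive evenly over 24 hours, which real deployments will not satisfy exactly. The variable names are illustrative.

```python
SECONDS_PER_DAY = 86_400
ROIS_PER_DAY = 26_414              # average daily ROI count from the Hobart trial
INFERENCE_RATES = (1.0, 2.0)       # ROI/s range quoted for a single RTX3090

roi_rate = ROIS_PER_DAY / SECONDS_PER_DAY  # average ROI generation rate in ROI/s
print(f"average ROI generation rate: {roi_rate:.3f} ROI/s")

headroom = [rate / roi_rate for rate in INFERENCE_RATES]
for rate, factor in zip(INFERENCE_RATES, headroom):
    print(f"inference at {rate:.0f} ROI/s is {factor:.1f}x the generation rate")

keeps_up = all(rate > roi_rate for rate in INFERENCE_RATES)
```

On these numbers the average generation rate is about 0.31 ROI/s, so even the lower inference speed leaves roughly a threefold margin. Bursts of ROIs during plankton patches would still need buffering.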

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

TC and JL conceived the idea and designed the dual-channel darkfield imaging apparatus, and JL supervised the project. TC collected the in situ marine plankton image raw data. TC, WM, GG, ZY and ZL built the IsPlanktonFE dataset. TC, GG and ZY developed computer programming for the IsPlanktonFE model. WM developed computer programming for the DeblurGAN-v2 model and JQ supervised this work. TC and JL designed the experiments, analysed the findings, and drafted the manuscript. All authors contributed to the article and approved the submitted version.

This work was supported by International Partnership Program of Chinese Academy of Sciences No. 172644kysb20210022, Shenzhen Science and Technology Innovation Program No. JCYJ20200109105823170 and Key Project of the Guangdong Basic and Applied Basic Research Foundation No. 2022B1515120030.

The authors are grateful to Mr. Mark Luk and Mr. Tom Xu from Shenzhen Xcube Technology Co. Ltd. for their technical support during IPP deployment at Hobart, Australia, and Pingtan, China, and to Dr. Haifeng Gu from Third Institute of Oceanography, Ministry of Natural Resources, China for his logistics support during IPP deployment at Pingtan. T.C. acknowledges Dr. Tim Yue Him Wong from Shenzhen University for his inspiring discussion and language advice.

TC and JL have three pending China invention patent applications on the presented dual-channel darkfield imaging apparatus and the IsPlanktonFE algorithm.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fmars.2023.1074428/full#supplementary-material

Abuolaim A., Brown M. S. (2020). “Defocus deblurring using dual-pixel data,” in European Conference on computer vision. (Springer, Cham), 111–126. doi: 10.1007/978-3-030-58607-2_7

Alvarez-Fernandez S., Bach L. T., Taucher J., Riebesell U., Sommer U., Aberle N., et al. (2018). Plankton responses to ocean acidification: The role of nutrient limitation. Prog. Oceanogr. 165, 11–18. doi: 10.1016/j.pocean.2018.04.006

Barth A., Stone J. (2022). Comparison of an in situ imaging device and net-based method to study mesozooplankton communities in an oligotrophic system. Front. Mar. Sci. 9. doi: 10.3389/fmars.2022.898057

Batten S. D., Abu-Alhaija R., Chiba S., Edwards M., Graham G., Jyothibabu R., et al. (2019). A global plankton diversity monitoring program. Front. Mar. Sci. 6. doi: 10.3389/fmars.2019.00321

Benfield M. C., Davis C. S., Wiebe P. H., Gallager S. M., Lough R. G., Copley N. J. (1996). Video plankton recorder estimates of copepod, pteropod and larvacean distributions from a stratified region of Georges Bank with comparative measurements from a MOCNESS sampler. Deep-Sea Res. Part 2. Topical Stud. Oceanography. 43 (43), 1925–1945. doi: 10.1016/S0967-0645(96)00044-6

Bi H., Cook S., Yu H., Benfield M. C., Houde E. D. (2013). Deployment of an imaging system to investigate fine-scale spatial distribution of early life stages of the ctenophore Mnemiopsis leidyi in Chesapeake Bay. J. Plankton Res. 35 (2), 270–280. doi: 10.1093/plankt/fbs094

Campbell R. W., Roberts P. L., Jaffe J. (2020). The Prince William Sound plankton camera: a profiling in situ observatory of plankton and particulates. ICES J. Mar. Sci. 77 (4), 1440–1455. doi: 10.1093/icesjms/fsaa029

Cheng Y., Cao J., Tang X., Hao Q. (2021). Optical zoom imaging systems using adaptive liquid lenses. Bioinspir. Biomim. 16 (4), 041002. doi: 10.1088/1748-3190/abfc2b

Cheng X., Cheng K., Bi H. (2020). Dynamic downscaling segmentation for noisy, low-contrast in situ underwater plankton images. IEEE Access. 8, 111012–111026. doi: 10.1109/access.2020.3001613

Cowen R. K., Guigand C. M. (2008). In situ ichthyoplankton imaging system (ISIIS): system design and preliminary results. Limnol. Oceanogr.: Methods. 6 (2), 126–132. doi: 10.4319/lom.2008.6.126

Cowen R. K., Sponaugle S. (2009). Larval dispersal and marine population connectivity. Annu. Rev. Mar. Sci. 1 (1), 443–466. doi: 10.1146/annurev.marine.010908.163757

Davies E. J., Nepstad R. (2017). In situ characterisation of complex suspended particulates surrounding an active submarine tailings placement site in a Norwegian fjord. Regional Stud. Mar. Sci. 16, 198–207. doi: 10.1016/j.rsma.2017.09.008

Fan F., Yang K., Fu B., Xia M., Zhang W. (2010). “Application of blind deconvolution approach with image quality metric in underwater image restoration,” in Proceedings of the International Conference on Image Analysis and Signal Processing. (Zhejiang, China: IEEE), 236–239. doi: 10.1109/IASP.2010.5476122

Gallager S. M. (2019). Continuous particle imaging and classification system. Washington, DC: U.S. Patent and Trademark Office, U.S. Patent No 10222688.

Giering S. L. C., Cavan E. L., Basedow S. L., Briggs N., Burd A. B., Darroch L. J., et al. (2020). Sinking organic particles in the ocean–flux estimates from in situ optical devices. Front. Mar. Sci. 6 (834). doi: 10.3389/fmars.2019.00834

Gorsky G., Picheral M., Stemmann L. (2000). Use of the underwater video profiler for the study of aggregate dynamics in the north Mediterranean. Estuarine Coast. Shelf Sci. 50 (1), 121–128. doi: 10.1006/ecss.1999.0539

Graham G. W., Nimmo Smith W. A. M. (2010). The application of holography to the analysis of size and settling velocity of suspended cohesive sediments. Limnol. Oceanogr.: Methods. 8 (1), 1–15. doi: 10.4319/lom.2010.8.1

Göröcs Z., Tamamitsu M., Bianco V., Wolf P., Roy S., Shindo K., et al. (2018). A deep learning-enabled portable imaging flow cytometer for cost-effective, high-throughput, and label-free analysis of natural water samples. Light Sci. Appl. 7, 66. doi: 10.1038/s41377-018-0067-0

Hirche H. J., Barz K., Ayon P., Schulz J. (2014). High resolution vertical distribution of the copepod Calanus chilensis in relation to the shallow oxygen minimum zone off northern Peru using LOKI, a new plankton imaging system. Deep Sea Res. Part I: Oceanographic Res. Papers. 88, 63–73. doi: 10.1016/j.dsr.2014.03.001

Karaali A., Jung C. R. (2018). Edge-based defocus blur estimation with adaptive scale selection. IEEE Trans. Image Process. 27 (3), 1126–1137. doi: 10.1109/TIP.2017.2771563

Katz J., Sheng J. (2010). Applications of holography in fluid mechanics and particle dynamics. Annu. Rev. Fluid Mechanics. 42 (1), 531–555. doi: 10.1146/annurev-fluid-121108-145508

Krishnan D., Tay T., Fergus R. (2011). “Blind deconvolution using a normalized sparsity measure,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). (Colorado Springs, CO, USA: IEEE), 233–240. doi: 10.1109/CVPR.2011.5995521

Kupyn O., Martyniuk T., Wu J., Wang Z. (2019). “Deblurgan-v2: Deblurring (orders-of-magnitude) faster and better,” in Proceedings of the IEEE/CVF International Conference on Computer Vision. (Seoul, South Korea: IEEE), 8878–8887. doi: 10.1109/ICCV.2019.00897

Lee J., Son H., Rim J., Cho S., Lee S. (2021). “Iterative filter adaptive network for single image defocus deblurring,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. (Nashville, TN, USA: IEEE), 2034–2042. doi: 10.1109/CVPR46437.2021.00207

Le K. T., Yuan Z., Syed A., Ratelle D., Orenstein E. C., Carter M. L., et al. (2022). Benchmarking and automating the image recognition capability of an In situ plankton imaging system. Front. Mar. Sci. 9. doi: 10.3389/fmars.2022.869088

Li J., Chen T., Yang Z., Chen L., Liu P., Zhang Y., et al. (2022). Development of a buoy-borne underwater imaging system for In situ mesoplankton monitoring of coastal waters. IEEE J. Oceanic Eng. 47 (1), 88–110. doi: 10.1109/JOE.2021.3106122

Li D., Jiang T., Jiang M. (2017). “Exploiting high-level semantics for no-reference image quality assessment of realistic blur images,” in Proceedings of the 25th ACM international conference on Multimedia. (Mountain View, California USA: ACM), 378–386. doi: 10.1145/3123266.3123322

Lombard F., Boss E., Waite A. M., Vogt M., Uitz J., Stemmann L., et al. (2019). Globally consistent quantitative observations of planktonic ecosystems. Front. Mar. Sci. 6. doi: 10.3389/fmars.2019.00196

Luo Y., Huang L., Rivenson Y., Ozcan A. (2021). Single-shot autofocusing of microscopy images using deep learning. ACS Photonics. 8 (2), 625–638. doi: 10.1021/acsphotonics.0c01774

Makarkin M., Bratashov D. (2021). State-of-the-Art approaches for image deconvolution problems, including modern deep learning architectures. Micromachines. 12 (12), 1558. doi: 10.3390/mi12121558

Martínez-Corral M., Javidi B. (2018). Fundamentals of 3D imaging and displays: a tutorial on integral imaging, light-field, and plenoptic systems. Adv. Optics Photonics. 10 (3), 512–566. doi: 10.1364/aop.10.000512

Merz E., Kozakiewicz T., Reyes M., Ebi C., Isles P., Baity-Jesi M., et al. (2021). Underwater dual-magnification imaging for automated lake plankton monitoring. Water Res. 203, 117524. doi: 10.1016/j.watres.2021.117524

Molchanov P., Mallya A., Tyree S., Frosio I., Kautz J. (2019). “Importance estimation for neural network pruning,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. (Long Beach, CA, USA: IEEE), 11264–11272. doi: 10.1109/CVPR.2019.01152

Nayak A. R., Malkiel E., McFarland M. N., Twardowski M. S., Sullivan J. M. (2021). A review of holography in the aquatic sciences: In situ characterization of particles, plankton, and small scale biophysical interactions. Front. Mar. Sci. 7. doi: 10.3389/fmars.2020.572147

Nishiyama M., Hadid A., Takeshima H., Shotton J., Kozakaya T., Yamaguchi O. (2011). Facial deblur inference using subspace analysis for recognition of blurred faces. IEEE Trans. Pattern Anal. Mach. Intell. 33 (4), 838–845. doi: 10.1109/TPAMI.2010.203

Odena A., Dumoulin V., Olah C. (2016). Deconvolution and checkerboard artifacts. Distill. 1 (10), e3. doi: 10.23915/distill.00003

Olson R. J., Sosik H. M. (2007). A submersible imaging-in-flow instrument to analyze nano-and microplankton: Imaging FlowCytobot. Limnol. Oceanogr. Methods. 5, 195–203. doi: 10.4319/lom.2007.5.195

Orenstein E. C., Ayata S.-D., Maps F., Becker É.C., Benedetti F., Biard T., et al. (2022). Machine learning techniques to characterize functional traits of plankton from image data. Limnol. Oceanogr. 67 (8), 1647–1669. doi: 10.1002/lno.12101

Orenstein E. C., Ratelle D., Briseño-Avena C., Carter M. L., Franks P. J. S., Jaffe J. S., et al. (2020). The Scripps plankton camera system: A framework and platform for in situ microscopy. Limnol. Oceanogr. Methods 18 (11), 681–695. doi: 10.1002/lom3.10394

Ortner P. B., Cummings S. R., Aftring R. P., Edgerton H. E. (1979). Silhouette photography of oceanic zooplankton. Nature. 277 (5691), 50–51. doi: 10.1038/277050a0

Panaïotis T., Caray–Counil L., Woodward B., Schmid M. S., Daprano D., Tsai S. T., et al. (2022). Content-aware segmentation of objects spanning a Large size range: Application to plankton images. Front. Mar. Sci. 9. doi: 10.3389/fmars.2022.870005

Perez E., Strub F., De Vries H., Dumoulin V., Courville A. (2018). “Film: Visual reasoning with a general conditioning layer,” in Proceedings of the AAAI Conference on Artificial Intelligence. (Hilton New Orleans Riverside, New Orleans, Louisiana, USA: ACM), 3942–3951. doi: 10.1609/aaai.v32i1.11671

Picheral M., Guidi L., Stemmann L., Karl D. M., Iddaoud G., Gorsky G. (2010). The underwater vision profiler 5: An advanced instrument for high spatial resolution studies of particle size spectra and zooplankton. Limnol. Oceanogr. Methods 8 (9), 462–473. doi: 10.4319/lom.2010.8.462

Rai Dastidar T., Ethirajan R. (2020). Whole slide imaging system using deep learning-based automated focusing. BioMed. Opt Express. 11 (1), 480–491. doi: 10.1364/BOE.379780

Rotermund L. M., Samson J. (2015). A submersible holographic microscope for 4-d in-situ studies of micro-organisms in the ocean with intensity and quantitative phase imaging. J. Mar. Science: Res. Dev. 06 (01). doi: 10.4172/2155-9910.1000181

Schulz J., Barz K., Mengedoht D., Hanken T., Lilienthal H., Rieper N., et al. (2009). Lightframe on-sight key species investigation (LOKI). (Bremen, Germany: OCEANS 2009-EUROPE, IEEE), 1–5. doi: 10.1109/OCEANSE.2009.5278252

Steinberg D. K., Landry M. R. (2017). Zooplankton and the ocean carbon cycle. Annu. Rev. Mar. Sci. 9 (1), 413–444. doi: 10.1146/annurev-marine-010814-015924

Suthers I., Rissik D., Richardson A. (2019). “Plankton: A guide to their ecology and monitoring for water quality,” in Plankton: A guide to their ecology and monitoring for water quality. (Boca Raton, FL: CRC Press, CSIRO Publishing). doi: 10.1093/plankt/fbp102

Wang Z., Liu X., Huang L., Chen Y., Zhang Y., Lin Z., et al. (2022). QSFM: Model pruning based on quantified similarity between feature maps for AI on edge. IEEE Internet Things J. 9 (23). doi: 10.1109/jiot.2022.3190873

Wang N., Yu J., Yang B., Zheng H., Zheng B. (2020). Vision-based in situ monitoring of plankton size spectra via a convolutional neural network. IEEE J. Oceanic Eng. 45 (2), 511–520. doi: 10.1109/joe.2018.2881387

Xu J., Kong Y., Jiang Z., Gao S., Xue L., Liu F., et al. (2019). Accelerating wavefront-sensing-based autofocusing using pixel reduction in spatial and frequency domains. Appl. Opt. 58 (11), 3003–3012. doi: 10.1364/AO.58.003003

Yang Z., Chen T., Li J., Sun J. (2021). “Focusing evaluation for in situ darkfield imaging of marine plankton,” in OCEANS 2021: San Diego – Porto. (San Diego, CA, USA: IEEE), 1–8. doi: 10.23919/OCEANS44145.2021.9706029

Yim J., Joo D., Bae J., Kim J. (2017). “A gift from knowledge distillation: Fast optimization, network minimization and transfer learning,” in Proceedings of the IEEE conference on computer vision and pattern recognition. (Honolulu, HI, USA: IEEE), 4133–4141. doi: 10.1109/CVPR.2017.754

Keywords: underwater imaging, deep learning, focus extension, ocean observation, marine plankton

Citation: Chen T, Li J, Ma W, Guo G, Yang Z, Li Z and Qiao J (2023) Deep focus-extended darkfield imaging for in situ observation of marine plankton. Front. Mar. Sci. 10:1074428. doi: 10.3389/fmars.2023.1074428

Received: 19 October 2022; Accepted: 06 January 2023; Published: 30 January 2023.

Edited by: Rizwan Ali Naqvi, Sejong University, South Korea

Reviewed by: Jules S. Jaffe, University of California, San Diego, United States

Copyright © 2023 Chen, Li, Ma, Guo, Yang, Li and Qiao. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jianping Li, jp.li@siat.ac.cn

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
