- 1Faculty of Data Science, City University of Macau, Macau, Macao SAR, China

- 2Key Laboratory of Intelligent Detection in Complex Environment of Aerospace Land and Sea, Beijing Institute of Technology, Zhuhai, China

Clear underwater images are necessary in many underwater applications, but absorption, scattering, and varying water conditions lead to blurring and different color deviations. To overcome the limitations of the available color correction and deblurring algorithms, this paper proposes a fusion-based image enhancement method for various water areas. We propose two novel image processing methods: an adaptive channel deblurring method and a color correction method that limits the histogram mapping interval. Using these two methods, we derive two images from a single underwater image as the inputs of the fusion framework, which yields the enhanced underwater image. To validate the effectiveness of the method, we tested it on public datasets. The results showed that the proposed method can adaptively correct color casts and significantly enhance the details and quality of attenuated underwater images.

1. Introduction

Underwater optical imaging is one of the most direct ways to obtain underwater information. Currently, clear underwater images are urgently needed for marine life research, marine environmental protection, and underwater equipment maintenance, among other fields. Unlike imaging on land, however, underwater imaging tends to suffer from color distortion, blurred details, and low contrast and brightness. These issues hinder the use of unprocessed underwater images for research.

Absorption and scattering are the causes of poor underwater imaging. During the propagation of light underwater, the attenuation rates of light at different wavelengths differ due to water absorption (Adolfson and Berghage, 1974). As the water depth increases, red light is attenuated first, followed by yellow, green and purple, and then blue light. On the other hand, scattering of the underwater medium and particles leads to fogging and the blurring of details (Chao and Wang, 2010). Scattering is classified into forward scattering and backward scattering. Forward scattering is defined as light from the object moving through particles in the same direction before it reaches the camera, while backward scattering is defined as light that is reflected by particles before it reaches the object. Scattering can be considered as chiffon covering the underwater image, reducing its contrast and blurring its details. Therefore, deblurring and color correction are the main challenges to obtaining information from attenuated underwater images.

In this paper, we propose a single underwater image enhancement method based on adaptive correction of channel differential and fusion (ACCDF). In Section 3, we first deblur and enhance the underwater image using an underwater physical imaging model and an image pixel-based method, respectively. We then use the pyramid-based fusion method to combine the two results and obtain the enhanced underwater image. In Section 4, we use the EUVP (Enhancing Underwater Visual Perception) dataset (Islam et al., 2020) and the Raws dataset from the UIEB (Underwater Image Enhancement Benchmark) (Li et al., 2019) for analysis. According to the results, our method can deblur and adaptively correct color. We also compare our method with other methods on the same datasets, validating the higher performance of our method.

The main contributions of this article are as follows:

1) A method of underwater image deblurring was proposed based on the dominant hue of the image combined with the dark channel prior, to better deal with color degradation in different environments.

2) Based on the contrast limited adaptive histogram equalization (CLAHE) method, the contrast stretching of the channel of the dominant hue was restricted to better restore the severely degraded color in the image.

3) It was demonstrated that the fusion of the deblurred and color-corrected images can preserve their respective advantages at the same time.

2. Related work

Underwater image processing methods are either based on an underwater physical imaging model or are non-physical methods based on image pixel processing. Whereas the physical method estimates a reasonable degradation model of the image and restores the image quality through the inverse process, the non-physical method improves visual quality by enhancing the values of the parts of interest and weakening the values of the parts of no interest.

Underwater image degradation, also known as fogging, is caused by the scattering of suspended particles and absorption. He et al. (2010) proposed the dark channel prior (DCP) image recovery method to solve image fogging. The authors observed that, in each local patch of a fog-free picture, at least one color channel has pixel values close to zero, and these darkest points are used to remove fogging evenly. Although DCP performs well on terrestrial images, Chao and Wang (2010) and Chiang and Chen (2011) showed that DCP is ineffective, or even detrimental, when applied directly to underwater scenes due to absorption and the presence of large areas similar to atmospheric light. Considering the attenuation of red light, Galdran et al. (2015) proposed an automatic red channel recovery method to reduce the influence of the red channel and artificial light sources on the estimation of the transmission map. Furthermore, considering that the red channel is almost dark in the underwater environment because of absorption, Drews et al. (2013) proposed the underwater dark channel prior (UDCP) method, in which the modified DCP is only applied to the blue and green channels. Peng et al. (2018) proposed the generalization of the dark channel prior (GDCP) method to estimate the transmission map based on the brightness difference between the environment and the object. Similar to UDCP, the authors processed different color channels in the image formation model. Song et al. (2018) estimated the depth of the underwater scene by means of supervised learning. The background light and the transmission map estimated from the depth map have been proven to effectively improve the image restoration effect.
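The dark channel idea described above can be illustrated with a minimal sketch. It is not the implementation of He et al. (2010): the function name `dark_channel`, the `radius` parameter, and the image layout (rows of (r, g, b) tuples scaled to [0, 1]) are assumptions made for illustration, and plain Python lists are used only to keep the sketch dependency-free.

```python
def dark_channel(image, radius=1):
    """Return the per-pixel dark channel of an RGB image.

    image: list of rows, each row a list of (r, g, b) tuples in [0, 1].
    radius: half-width of the square local patch (hypothetical parameter).
    """
    height, width = len(image), len(image[0])
    # Step 1: minimum across the color channels at every pixel.
    channel_min = [[min(pixel) for pixel in row] for row in image]
    # Step 2: minimum of that map inside the local patch around each pixel.
    dark = []
    for y in range(height):
        row = []
        for x in range(width):
            ys = range(max(0, y - radius), min(height, y + radius + 1))
            xs = range(max(0, x - radius), min(width, x + radius + 1))
            row.append(min(channel_min[j][i] for j in ys for i in xs))
        dark.append(row)
    return dark
```

In a fog-free terrestrial image most values of this map are expected to be close to zero; large values indicate haze or, underwater, regions that resemble the background light.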

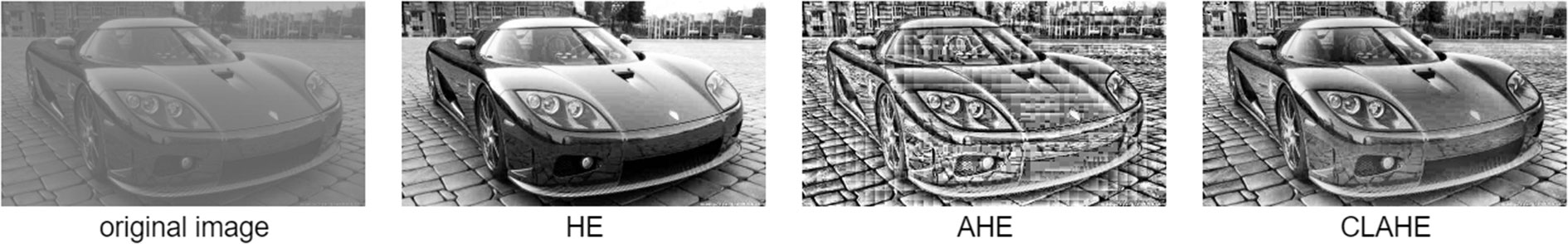

The distribution of the histogram reflects the statistical characteristics of the image gray level. Histogram equalization (HE), as an image enhancement method, can enhance the contrast of the image. The HE method uniformly maps the gray level of the image histogram to the range from 0 to 255. This method can obtain a better contrast enhancement effect when the histogram of the image does not change dramatically. However, it is obvious that roughly stretching the histogram distribution to the range of 0–255 has a limited effect. Based on the HE method, the adaptive histogram equalization (AHE) method was proposed (Ketcham et al., 1974). It uses grids to cut the image into multiple regions, calculates the cumulative distribution function of the region where each pixel is located, and then maps the pixels. However, when the histogram in an area has only one gray level, an obvious “mosaic” phenomenon (Figure 1) will appear in adjacent areas. To avoid tearing caused by the AHE method, Pizer et al. (1987) proposed the contrast limited adaptive histogram equalization (CLAHE) method. This method includes two innovations. The first is to limit the histogram distribution. When the gray level of an image exceeds the specified threshold, the excess part will be evenly distributed to each gray level. The second is to consider the bilinear interpolation of the image boundary. It finds two adjacent windows for one pixel of the boundary window of the image and four adjacent windows for one pixel of the non-boundary window, calculates the mapping value of the adjacent window histogram cumulative distribution function (CDF) to the pixel point, and finally performs linear and bilinear interpolations. Huang et al. (2018) proposed the relative global histogram stretching (RGHS) method to stretch the histogram of the GB channels according to the histogram distribution and absorption of underwater images.

Figure 1 Comparison of the histogram equalization (HE), adaptive histogram equalization (AHE), and contrast limited adaptive histogram equalization (CLAHE) methods.
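The histogram-limiting step of CLAHE and the CDF-based mapping of HE, as described above, can be sketched as follows. This is a simplified illustration under stated assumptions, not the procedure of Pizer et al. (1987): the names `clip_histogram` and `equalization_map` are hypothetical, the tiling and bilinear interpolation are omitted, and the excess is redistributed in a single pass.

```python
def clip_histogram(hist, clip_limit):
    """Clip every bin at clip_limit and spread the excess evenly over all bins.

    hist: list of integer pixel counts, one per gray level.
    The total pixel count is preserved. After a single pass some bins may
    again exceed clip_limit; this sketch does not iterate further.
    """
    excess = sum(max(count - clip_limit, 0) for count in hist)
    clipped = [min(count, clip_limit) for count in hist]
    share, remainder = divmod(excess, len(hist))
    # Every bin receives the same share; the first `remainder` bins get one more.
    return [c + share + (1 if i < remainder else 0) for i, c in enumerate(clipped)]


def equalization_map(hist, levels=256):
    """Map each gray level through the normalized cumulative distribution."""
    total = sum(hist)
    mapping, running = [], 0
    for count in hist:
        running += count
        mapping.append(round((levels - 1) * running / total))
    return mapping
```

Clipping flattens the peaks of the local histogram before the mapping is computed, which limits the slope of the CDF and therefore the amount of contrast amplification in nearly uniform regions.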

The above algorithms have their own advantages in terms of color correction or defogging or image detail enhancement. Combining multiple algorithms to process one underwater image may be a good attempt. In order to combine the advantages of various methods, Ancuti et al. (2012) proposed the FUSION framework to solve the problems of a single attenuated underwater image. This framework has two inputs: one is the image processed by white balance and the other the image processed by CLAHE. In the experiment, the authors found that the direct pyramid fusion technology can make the image glow, but the multi-scale filter did not work well. Therefore, the authors used the multi-scale Laplacian pyramid (Burt and Adelson, 1987) to fuse the two processed images. Lu et al. (2015) performed optimization on the basis of FUSION. In order to further strengthen the image details, the authors changed the original FUSION input to the denoised image obtained using the local adaptive filter (Lu et al., 2017) and the denoised and reflected high-resolution image obtained using the subsample super-resolution method (Huang et al., 2015). Bai et al. (2020) used a multi-scale Gaussian filter to denoise the image on the basis of FUSION, conducted global and local histogram processing according to the different color channels of the image, and then fused the two different processing results.

The machine learning method has strong performance and is a powerful technique for underwater image processing. Since machine learning requires ground truth, Perez et al. (2017) believed that the repaired image has details similar to the ground truth, so a new convolutional neural network (CNN) model was proposed, which uses the repaired image as the ground truth to train the model. However, the constructed model cannot adapt to images at different locations. Anwar et al. (2018) considered that the existing machine learning methods would need ground truth for training, which is extremely challenging; therefore, a fully data-driven and end-to-end model was proposed: the underwater image enhancement convolutional neural network (UWCNN). This model is trained by optimizing the loss of the mean square error (MSE) and structural similarity (SSIM), which was an innovative attempt. Chen et al. (2021) used a CNN model to estimate the backward scattering and direct transmission. Fabbri et al. (2018) proposed generating enhanced underwater images with generative adversarial networks (GANs). The authors also proposed generating degraded images from non-degraded images using GANs, which can be used to build more underwater datasets. Guo et al. (2019) proposed a new GAN model that combines residual learning, dense concatenation, and multi-scaling and uses a variety of loss functions to preserve the textural details.

3. Adaptive correction of channel differential and fusion

In this paper, we propose the enhancement of a single underwater image based on ACCDF. Our main aim was to deblur and correct images based on the degree of color attenuation.

Hitam et al. (2013) stated that red light first degrades in water and is almost absorbed at a depth of 5 m. As the water depth increases, green light is then gradually absorbed, and finally blue light. Due to this phenomenon, it is reasonable to assume that, in most cases, underwater images have the most information on the blue and green channels. Based on a previous work, we have concluded that the main hue of the image correlates with the mean of each channel (Lai et al., 2022) and that, by determining the mean value of the channels, a more appropriate transmission map can be obtained. On the other hand, the channel with the highest mean value also indicates the least degree of absorption; therefore, its maximum should be limited in color correction and the minimum of the other channels should be increased to better correct the color deviations of the image.

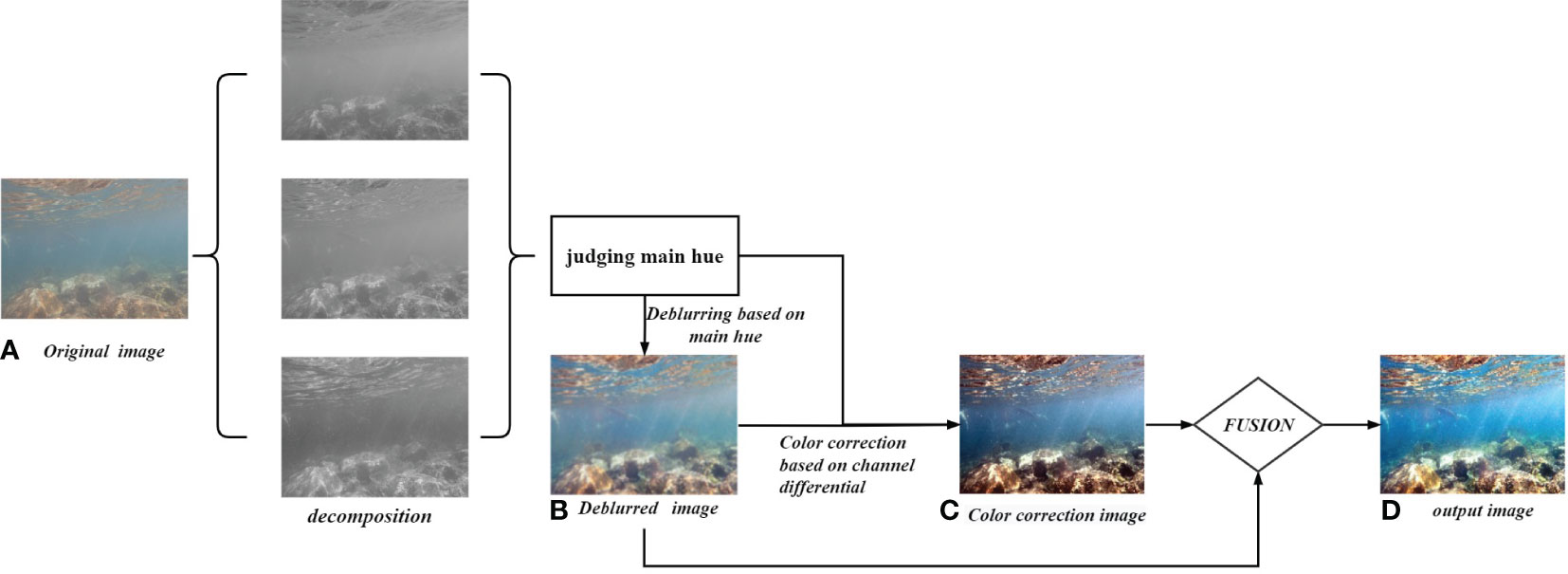

Previous research has proven the good performance of the FUSION method in the field of underwater image enhancement (Ancuti et al., 2012). We therefore assumed that its advantages can be utilized by fusing the deblurred image and the color-corrected image. Because light at different wavelengths attenuates differently in water, the image channels degrade to varying degrees; our method therefore decomposes the image in the RGB (red, green, blue) color space and corrects the degradation of each channel. Firstly, we compared the mean values of the G and B channels in order to determine whether the image is greenish or bluish. For the bluish image, we supposed that the transmission map obtained from the B channel alone is more suitable for the actual underwater imaging model. Conversely, for the greenish image, we assumed that the transmission map obtained from the G channel alone is more appropriate. We then combined the transmission map and the underwater imaging model to realize image deblurring. Subsequently, we stretched the contrast of the main hue of the deblurred underwater image up to a limited maximum, while the contrast of the R channel was also stretched from a raised minimum. Thereafter, we converted the image to the hue saturation value (HSV) color space and stretched the S and V channels to improve the contrast and brightness of the image. Finally, the deblurred and color-corrected images were fused based on the image pyramid to obtain an enhanced underwater image that achieves deblurring and color correction simultaneously. The flowchart of the ACCDF is shown in Figure 2.

Figure 2 Flowchart of adaptive correction of channel differential and fusion (ACCDF). (A) Original image. After decomposition, information on the three channels was extracted. (B, C) Images obtained by deblurring and color correction based on the mean values of each channel. (D) Image obtained from the fusion of (B, C) using the FUSION algorithm.

3.1. Adaptive correction of channel differential for deblurring

The attenuation of light differs across wavelengths in water, which results in the blue–green hue of most underwater images. After extensive experiments, we found that the dominant color of the image is directly related to the mean values of the RGB channels.
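The relation between the dominant color and the channel means can be made concrete with a short sketch. It assumes an image stored as rows of (r, g, b) tuples; the function names and the tie-breaking rule (equal means are treated as bluish) are hypothetical choices for illustration and are not specified in the text.

```python
def channel_means(image):
    """Return the mean of the R, G, and B channels of an image.

    image: list of rows, each row a list of (r, g, b) tuples.
    """
    pixels = [pixel for row in image for pixel in row]
    count = len(pixels)
    return tuple(sum(pixel[c] for pixel in pixels) / count for c in range(3))


def dominant_hue(image):
    """Classify the image as greenish or bluish by comparing the G and B means."""
    _, g_mean, b_mean = channel_means(image)
    # Assumption: ties are assigned to "blue"; the text does not cover this case.
    return "green" if g_mean > b_mean else "blue"
```

The returned label then decides which channel is used for the transmission map in deblurring and which channel has its maximum limited in color correction.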

When the image was bluish, we assumed that the information of channel B in the underwater image can better reflect the real information of the target scene.

In the equations, $C$ denotes the color channel, $\Omega(x,y)$ represents a local patch, $I^C$ is the input image, $J^C$ is the dark channel, $A^C$ is the global atmospheric light, and $t$ is the transmission of the patch.

The opposite is true when the image is green.

The estimation of the background light $A^C$ also followed the DCP method: the top 10% brightest points were selected from the corresponding dark channel, $J^{ACCDF}$. Afterward, the pixels at the same positions as these points in the original image, $I^C(x,y)$, were determined and the maximum value was taken as the estimated value of the background light of channel $C$. Combining the underwater image and the imaging model, the deblurred underwater image can be obtained.
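The background light estimation described above can be sketched as follows. The 10% fraction is taken from the text; the function name `estimate_background_light`, the 2D list layout, and the rule of keeping at least one point are assumptions made for illustration.

```python
def estimate_background_light(dark, channel, fraction=0.10):
    """Estimate the background light of one color channel.

    dark: 2D list holding the dark channel.
    channel: 2D list of the same shape holding one channel of the original image.
    fraction: share of the brightest dark-channel points to inspect (10% in the text).
    """
    positions = [(dark[y][x], y, x)
                 for y in range(len(dark))
                 for x in range(len(dark[0]))]
    positions.sort(reverse=True)  # brightest dark-channel points first
    # Assumption: always keep at least one point, even for very small images.
    keep = max(1, int(len(positions) * fraction))
    # Maximum of the original channel at the selected positions.
    return max(channel[y][x] for _, y, x in positions[:keep])
```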

To avoid over- and underexposure, a threshold for the transmission map was set. According to a large number of engineering experiments, the lower threshold value was set as 0.3 and the upper threshold value as 0.9.

Based on the formulation below, the deblurred underwater image can be obtained.
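The following sketch is not the paper's formulation, which is not reproduced in this text. It assumes the standard haze imaging model $I = J\,t + A\,(1 - t)$ used by DCP-style methods, applied per channel to values in [0, 1], together with the transmission thresholds 0.3 and 0.9 stated above. The function names are hypothetical, and the simplified transmission estimate (without any additional scaling factor) is an assumption.

```python
T_MIN, T_MAX = 0.3, 0.9  # lower and upper transmission thresholds from the text


def estimate_transmission(dark_value, background_light):
    """Standard DCP-style estimate t = 1 - dark / A (simplified assumption)."""
    return 1.0 - dark_value / background_light


def clamp_transmission(t):
    """Keep the transmission inside [T_MIN, T_MAX] to avoid over- and underexposure."""
    return min(max(t, T_MIN), T_MAX)


def recover_pixel(observed, background_light, t):
    """Invert I = J * t + A * (1 - t) for one channel value in [0, 1]."""
    t = clamp_transmission(t)
    restored = (observed - background_light) / t + background_light
    # Clip to the valid intensity range.
    return min(max(restored, 0.0), 1.0)
```

For example, with a scene value of 0.4, background light 0.8, and transmission 0.5, the observed value is 0.6, and `recover_pixel(0.6, 0.8, 0.5)` returns approximately 0.4. The lower threshold prevents the division by a very small transmission from amplifying noise in distant regions.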

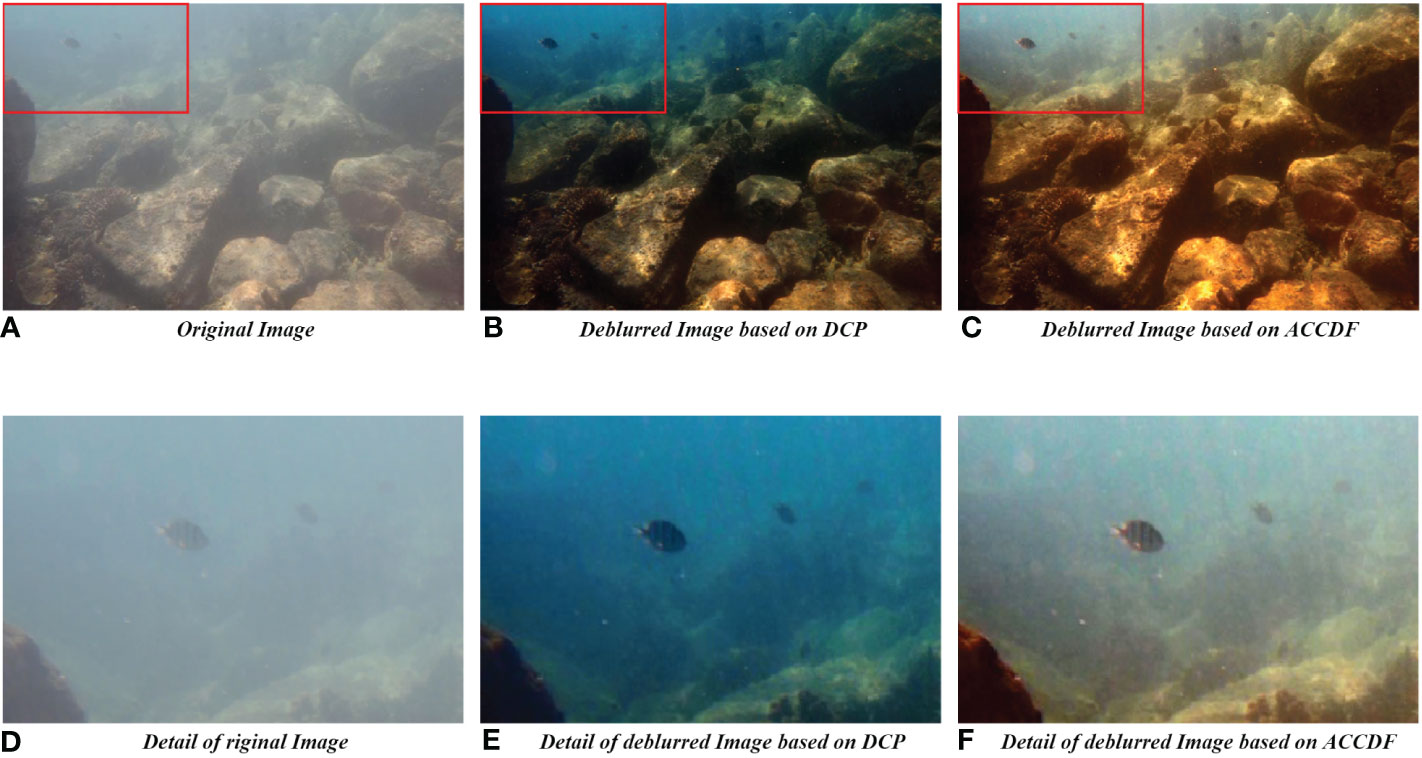

As can be seen in the first row of Figure 3, both the DCP (Figure 3B) and the ACCDF method (Figure 3C) produce a clearer image compared to the original image (Figure 3A). However, the image processed using the DCP method had serious blue distortion, which caused the background to appear unclear. In contrast, the ACCDF method, which estimates the transmission map by considering the dominant hue channel, significantly reduced the color distortion of the underwater image. The second row of Figure 3 displays a comparison of the details for selected parts of the first row. In Figure 3D, the foggy blur obscures the fish, and the textures of the fish and the background appear hidden. Although the outline of the fish and the background in Figure 3E became clear after DCP processing, the details of the texture on the fish were not obvious because the overall color was blue. Figure 3F shows that our method eliminated the overall foggy blur of the image without introducing a blue color cast, yielding bright colors and clear details; in particular, the black stripes on the fish body were well reproduced.

Figure 3 (A–C) Original image and the images deblurred using the dark channel prior (DCP) and adaptive correction of channel differential and fusion (ACCDF) methods, respectively. (D–F) Details of (A–C).

Figure 4 shows that the estimated transmission map of the ACCDF method was obviously different from that of the DCP method. The transmission map corresponding to the stone part had a clearer texture, reflected in the processing results shown in Figure 4D. The color of the stone part in the image processed using the ACCDF method was clearer and the textural detail richer. In particular, the red boxes were obviously different, which also contributed to the better visual effect of the ACCDF method in the comparison of the processed image background details shown in Figure 3.

Figure 4 Comparison of the transmission maps. (A, B) Transmission maps estimated using the dark channel prior (DCP) and the adaptive correction of channel differential and fusion (ACCDF) methods, respectively. (C, D) Details of (A, B).

3.2. Correcting channel differential for correction of the color cast

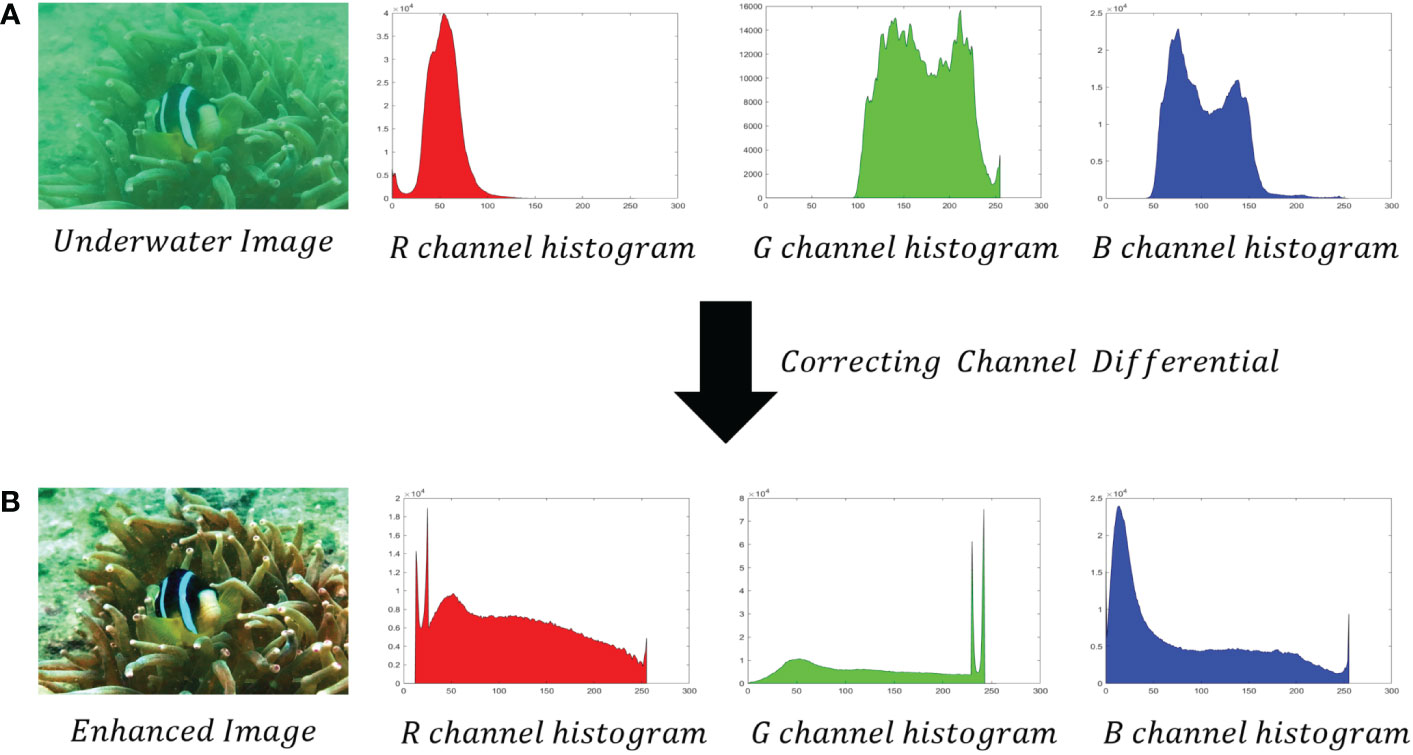

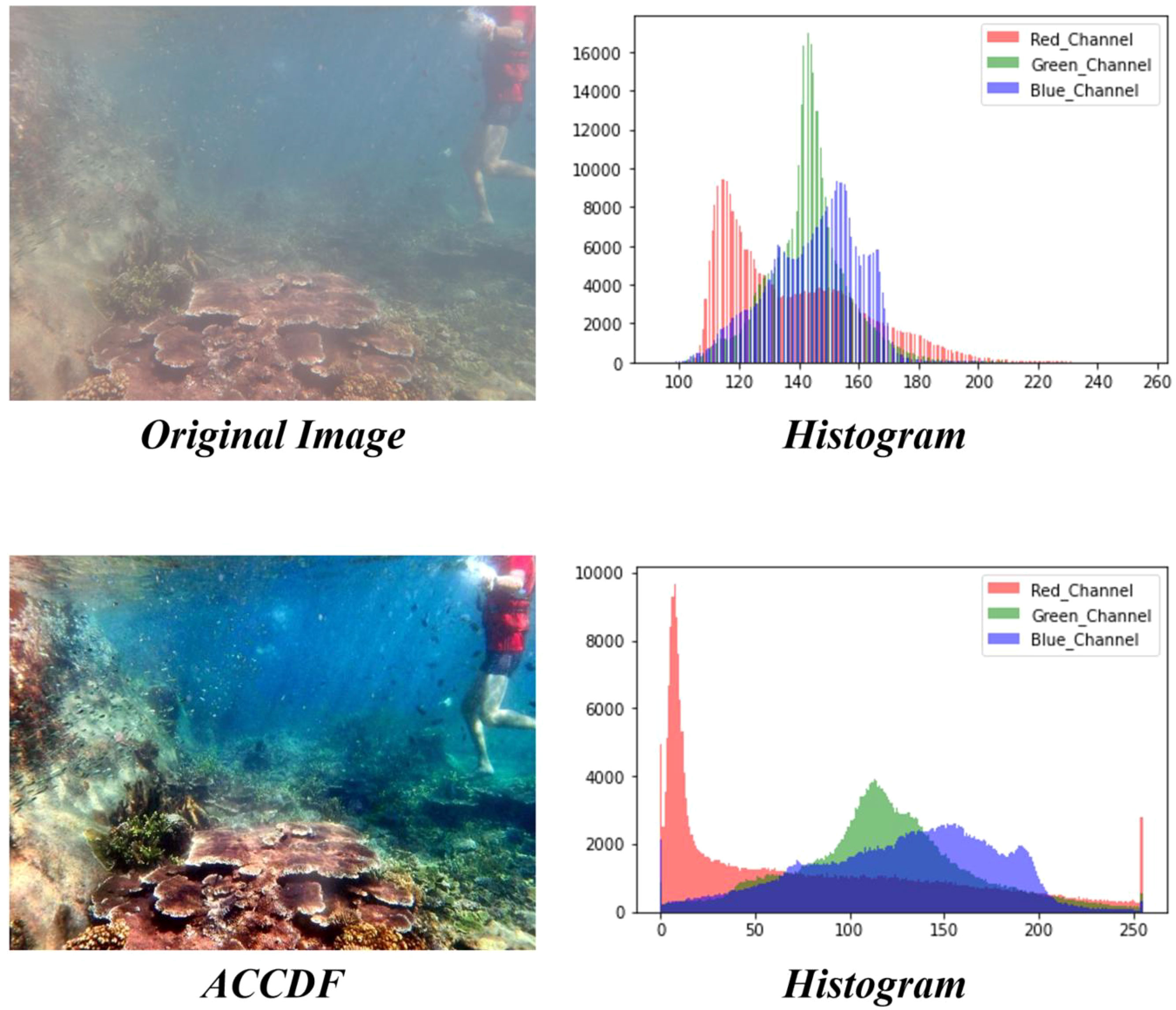

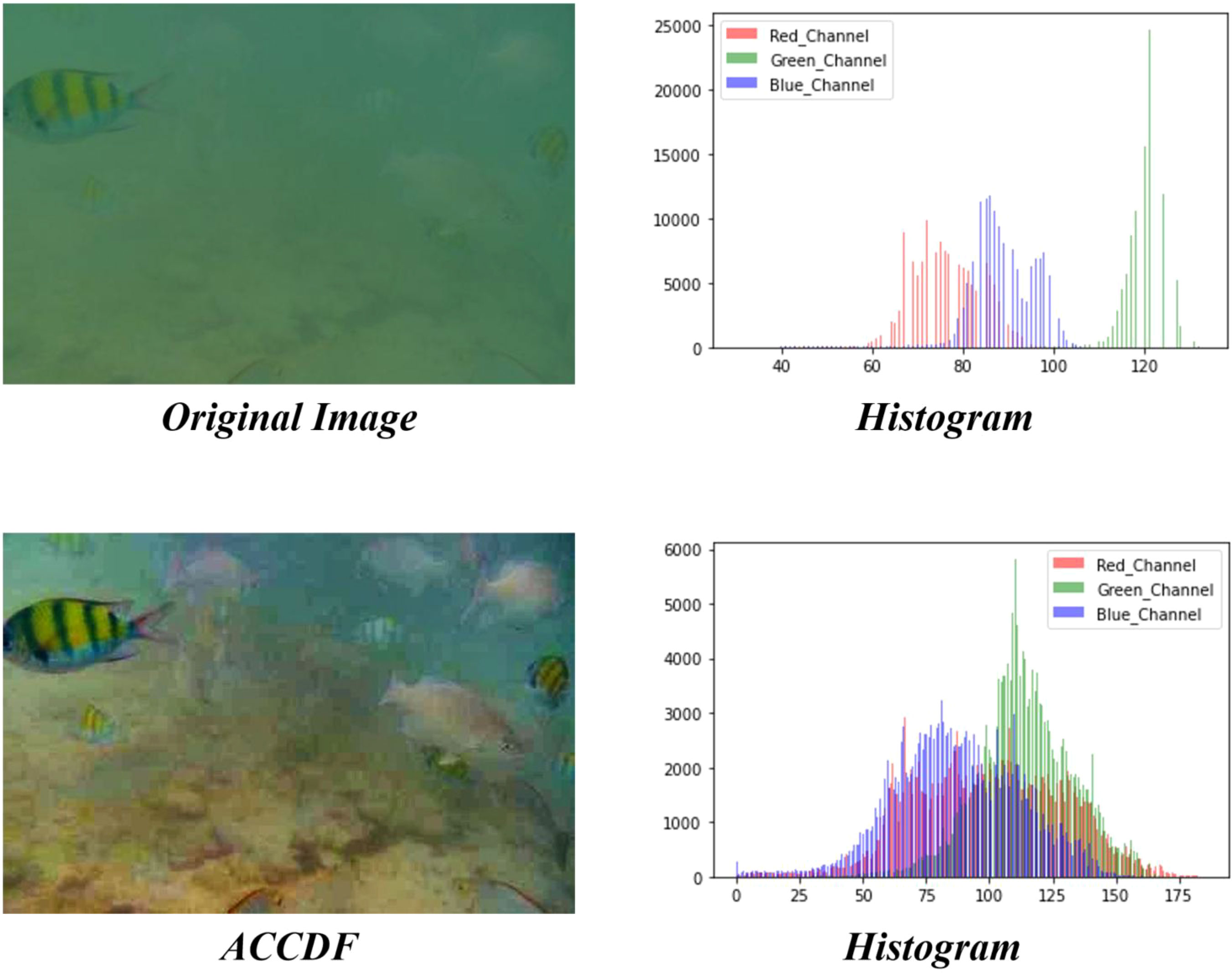

In underwater images, the pixel values of the R channel are mostly distributed in a relatively small range. As mentioned in Section 3.1, underwater images will show the dominant blue or green color, and the pixel values of the dominant color are usually distributed in the range of large pixel values. For the remaining channels corresponding to the non-dominant colors, due to the attenuation of the blue and green lights in the underwater environment being smaller than that of red light, the pixel values of these channels corresponding to the non-dominant colors are mostly distributed in the middle (Figure 5A).

Figure 5 (A) Original underwater image and its histogram of the RGB (red, green, blue) channels. (B) Enhanced image and its histogram of the RGB channels.

We take Figure 5 as an example to show the stretching process of our channels, with the stretching formula as follows:

where $p_{in}$ and $p_{out}$ are the input and output pixels, respectively; $O$ denotes the intensity of the original image, and $D$ is the intensity limited by our method.

The dominant color of the image (Figure 5A) is green: the average value of channel G is $G_{mean}=171.19$, the average value of channel B is $B_{mean}=103.67$, and $G_{mean}>B_{mean}$. Therefore, we limited the maximum value of the G channel to $255 \times 0.95 = 242.25 \approx 242$ and used the CLAHE method to stretch the image within [0,242]. The minimum value of the R channel was also limited to $255 \times 0.05 = 12.75 \approx 13$, and the CLAHE method was used to stretch the image within [13,255]. For channel B, the CLAHE method was used to stretch the image within the range of [0,255]. The stretched image is recorded as $I_S(x,y)$.

where Stre() denotes stretching the limited range of the RGB channels of the image using the CLAHE stretching method. The stretched image is defined as I(x,y).
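The limited stretching ranges of the worked example above can be reproduced with a short sketch. The ratio 0.05 and the green-dominant ranges come from the text; the blue-dominant case is mirrored by assumption, the function names are hypothetical, and a plain linear stretch is used here as a stand-in for the CLAHE-based stretching, which it does not replicate.

```python
def stretch_ranges(g_mean, b_mean, ratio=0.05, max_level=255):
    """Return the output range of each channel for the limited stretch."""
    upper = round(max_level * (1 - ratio))  # 255 * 0.95 = 242.25 -> 242
    lower = round(max_level * ratio)        # 255 * 0.05 = 12.75  -> 13
    ranges = {"R": (lower, max_level), "G": (0, max_level), "B": (0, max_level)}
    # Limit the maximum of the dominant channel (blue case mirrored by assumption).
    dominant = "G" if g_mean > b_mean else "B"
    ranges[dominant] = (0, upper)
    return ranges


def linear_stretch(values, out_range):
    """Linearly map values onto out_range (a stand-in for the CLAHE stretch)."""
    low, high = min(values), max(values)
    out_low, out_high = out_range
    if high == low:
        return [out_low for _ in values]
    scale = (out_high - out_low) / (high - low)
    return [round(out_low + (v - low) * scale) for v in values]
```

With the means of Figure 5A, `stretch_ranges(171.19, 103.67)` gives [13,255] for R, [0,242] for G, and [0,255] for B, which matches the ranges stated above.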

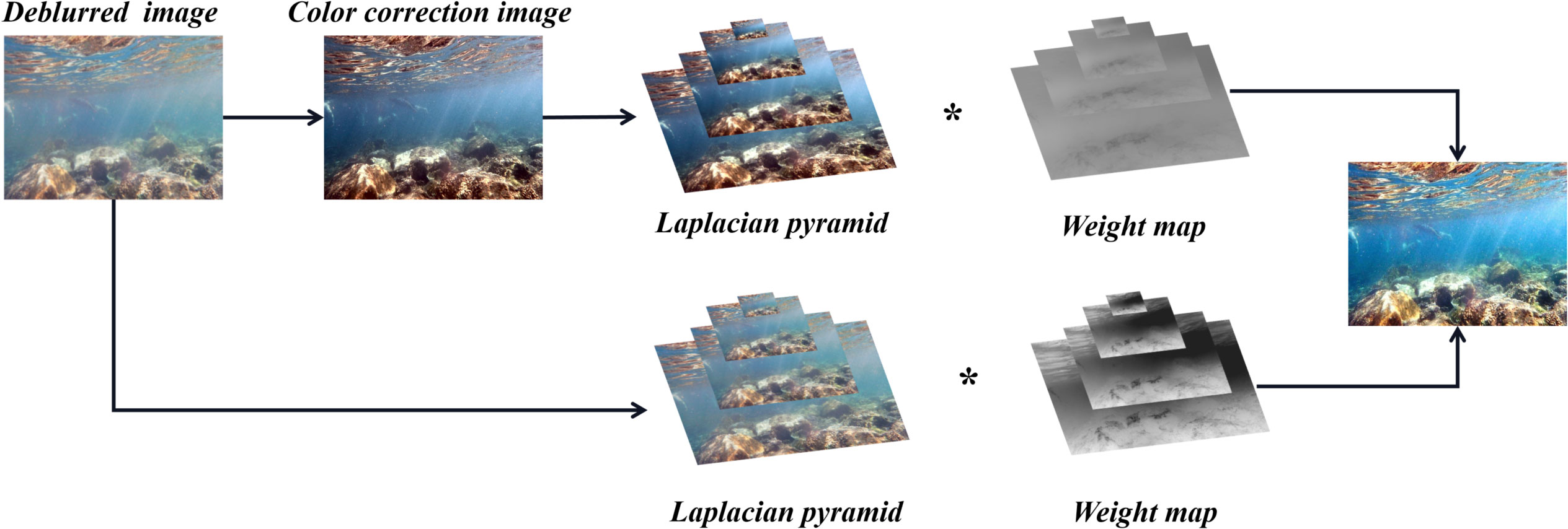

3.3. Image fusion based on the image pyramid

For underwater images, we have implemented deblurring and color correction based on the imaging model and pixel processing, respectively. Unfortunately, the color-corrected image will again lose a part of the textural detail, even if it has been previously deblurred. Considering that there are a lot of details in the deblurred image, we used the image pyramid fusion method to recover the details from the deblurred image after color correction.

where Fusion denotes the image pyramid fusion method.

According to the multi-scale Laplacian pyramid decomposition of the input picture, the Laplacian contrast weights, local contrast weights, brightness weights, and exposure weights are obtained. These weights are normalized to obtain the normalized weight maps, and the output image is obtained by fusing the inputs with the normalized weight maps at every pixel.
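The weight normalization and per-pixel blending described above can be illustrated with a single-scale sketch. It deliberately omits the Laplacian pyramid, so it is not the FUSION algorithm of Ancuti et al. (2012); the function names, the flat-list image layout, and the equal-weight fallback for pixels whose weights sum to zero are assumptions made for illustration.

```python
def normalize_weights(weight_maps):
    """Normalize K weight maps so that they sum to 1 at every pixel.

    weight_maps: list of K flat lists of non-negative weights.
    """
    normalized = [[] for _ in weight_maps]
    for per_pixel in zip(*weight_maps):
        total = sum(per_pixel)
        for k, weight in enumerate(per_pixel):
            # Assumption: fall back to equal weights when all weights are zero.
            normalized[k].append(weight / total if total > 0 else 1.0 / len(per_pixel))
    return normalized


def fuse(inputs, weight_maps):
    """Blend K input images pixel by pixel with their normalized weights."""
    normalized = normalize_weights(weight_maps)
    return [sum(w[i] * image[i] for w, image in zip(normalized, inputs))
            for i in range(len(inputs[0]))]
```

In the multi-scale version, the same weighted sum is applied at every pyramid level before the levels are collapsed, which avoids the artifacts that a direct single-scale blend can produce at sharp weight transitions.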

Although a variety of fusion methods exist, in this paper we chose the original fusion method (Ancuti et al., 2012) to verify our adaptive correction method and validate its performance. The flowchart of the fusion framework is shown in Figure 6.

Figure 6 Flowchart of fusion processing. The output image is obtained by fusing the inputs with the weight maps at every pixel, where the weight maps are obtained by decomposing the input image into a multi-scale Laplacian pyramid.

4. Experiment and analysis

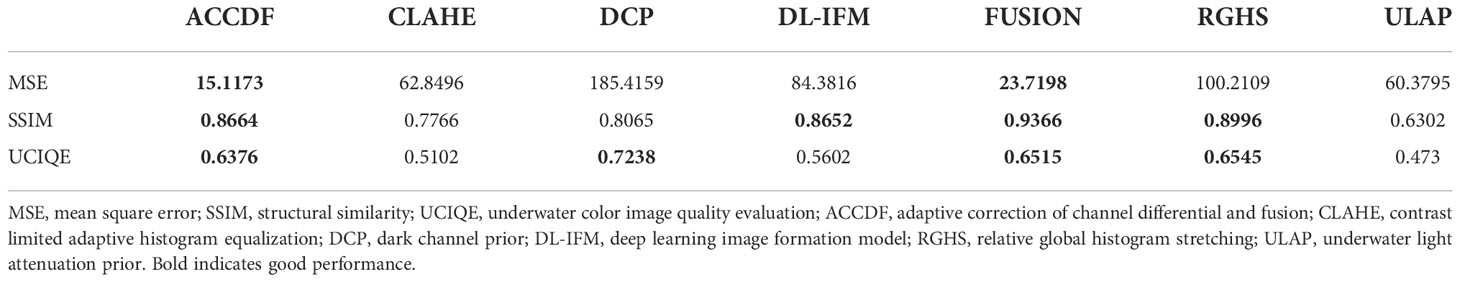

In order to ensure effectiveness, we used the Raws dataset from the UIEB (Li et al., 2019) and the EUVP dataset (Islam et al., 2020) for the experiment. The experimental results were compared with the original images, ground truth images, and the images processed by the DCP, CLAHE, RGHS, underwater light attenuation prior (ULAP), FUSION, and deep learning image formation model (DL-IFM) methods (Chen et al., 2021). The comparison comprised two parts: direct visual effect comparison and image quality evaluation index comparison, with the evaluation indices including MSE, SSIM, and underwater color image quality evaluation (UCIQE).

4.1. Datasets and evaluation index

The UIEB dataset included two sub-datasets: the Raws dataset and the Challenge dataset. The Raws dataset included 890 underwater images, each of which has a corresponding high-quality image as a reference, while the Challenge dataset contained 60 challenging underwater images, but without reference standards. Therefore, in this paper, we used the Raws dataset for the experiment. The EUVP dataset has three sub-datasets: Underwater Dark, Underwater ImageNet, and Underwater Scenes. We used the Underwater Dark dataset, which included 5,550 training images and 570 validation images. Each image had a corresponding ground truth as a reference.

In the comparison of the image quality evaluation index, we used MSE, SSIM, and UCIQE. MSE compared the errors between the processed image and the ground truth. The smaller the error, the closer the generated image is to the ground truth. SSIM (Wang et al., 2004) evaluated the similarity of two images by comparing their luminance, contrast, and structure. The SSIM value range was [0,1]. The larger the value, the smaller the image distortion. UCIQE (Yang and Sowmya, 2015) is a combination of the color concentration, saturation, and contrast. The higher the UCIQE value, the better the visual quality of the image.
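The two full-reference indices can be sketched as follows. MSE is computed directly from its definition. The SSIM function below evaluates the formula of Wang et al. (2004) once over the whole image instead of over local windows, which is a simplification; the function names and the flat-list image layout are assumptions made for illustration.

```python
def mse(reference, processed):
    """Mean squared error between two equally sized flat lists of pixel values."""
    return sum((x - y) ** 2 for x, y in zip(reference, processed)) / len(reference)


def global_ssim(reference, processed, dynamic_range=255):
    """Single-window SSIM: luminance, contrast, and structure in one expression."""
    n = len(reference)
    mu_x = sum(reference) / n
    mu_y = sum(processed) / n
    var_x = sum((x - mu_x) ** 2 for x in reference) / n
    var_y = sum((y - mu_y) ** 2 for y in processed) / n
    cov = sum((x - mu_x) * (y - mu_y) for x, y in zip(reference, processed)) / n
    # Stabilizing constants from Wang et al. (2004).
    c1 = (0.01 * dynamic_range) ** 2
    c2 = (0.03 * dynamic_range) ** 2
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator
```

Identical images give an MSE of 0 and an SSIM of 1; any deviation from the reference increases the former and decreases the latter.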

4.2. Comparison and analysis

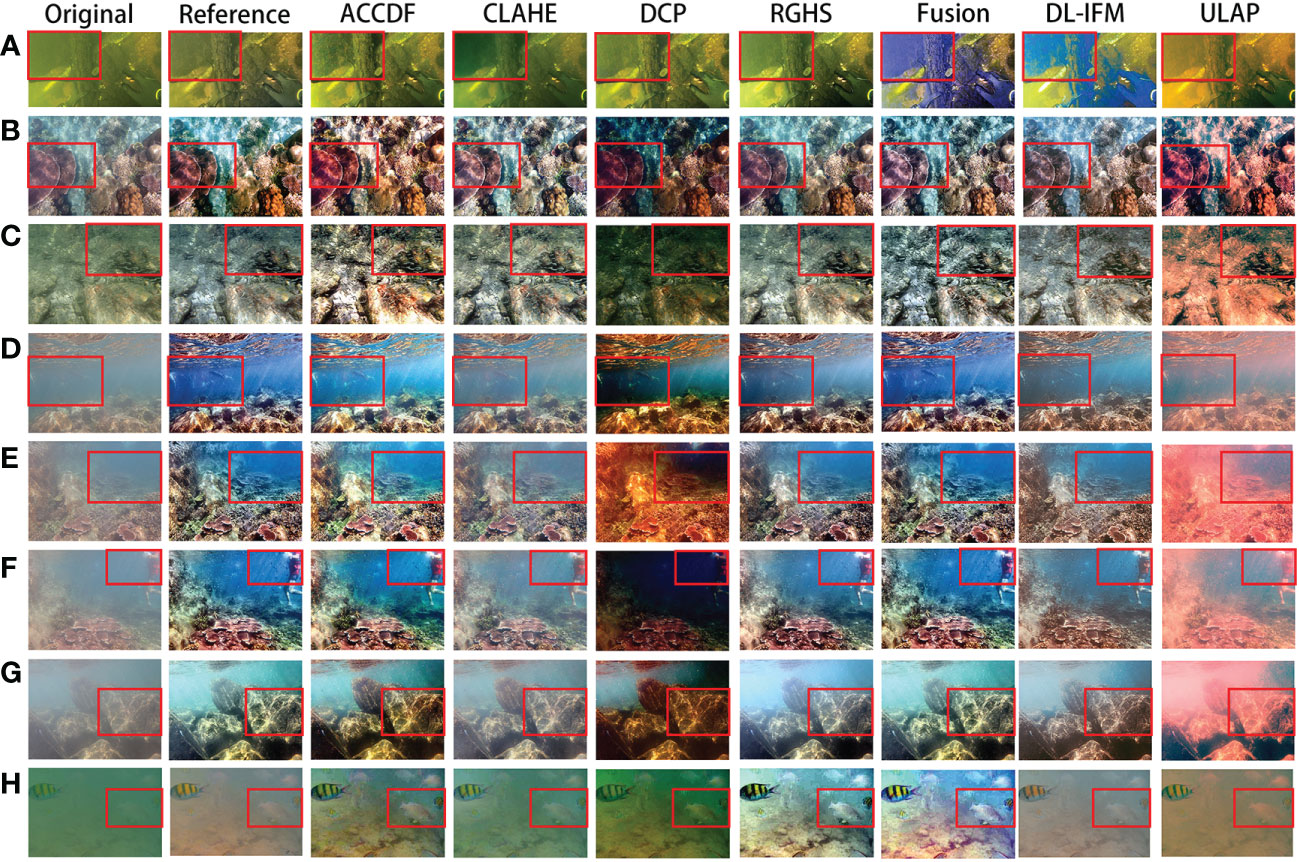

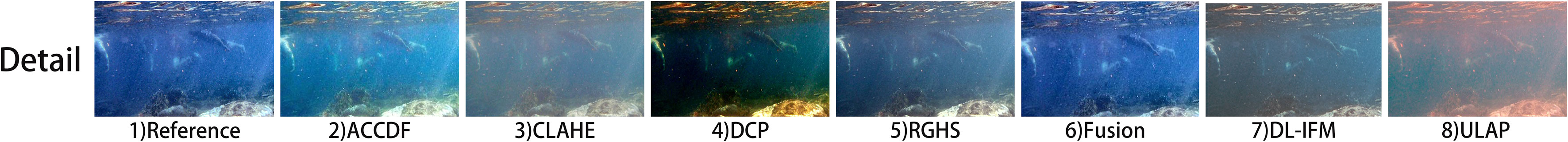

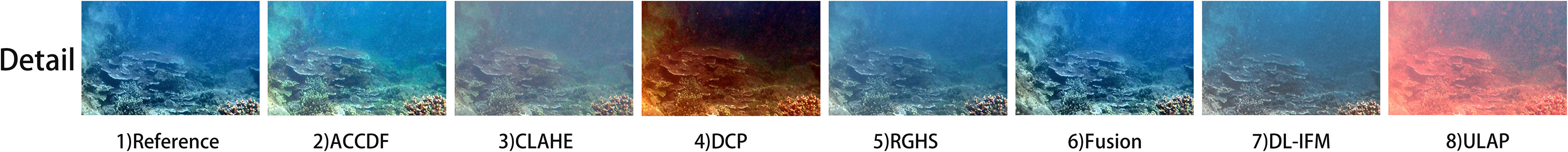

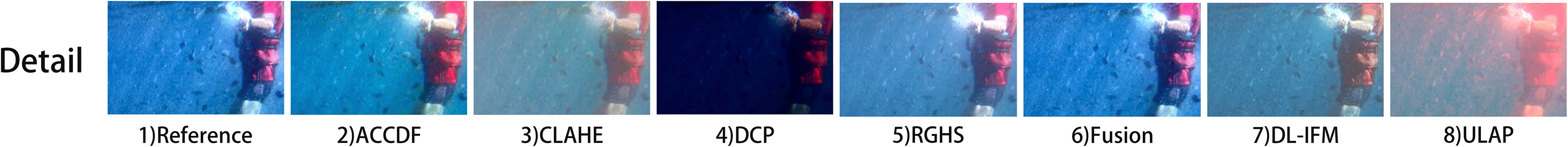

In order to observe the differences among the various methods more intuitively, we divided the experimental results into several parts for elaboration. The experimental results for the Raws dataset are shown in Figure 7. It can be clearly seen that the image quality obtained with the ACCDF method was significantly improved compared with that of the original image. The CLAHE method also performed very well in terms of color correction, but lacked deblurring ability. The deblurring ability of the DCP method was good, but its color correction was poor. Some images processed using the DCP method appeared red or even black, resulting in the loss of detail in the color-distorted parts. Similarly, FUSION performed well, except for the abnormal color in the first image. The DL-IFM method, despite being a deep learning method, showed mediocre performance and was even weak at deblurring. The overall performance of RGHS was good. Although its color correction ability was stronger than that of CLAHE, its deblurring ability was slightly worse than that of ACCDF, and obvious “mosaics” appeared when the original image was fuzzy. Except for its better performance in Figures 7A and 7H, the ULAP method showed abnormal red light in the other figures, which may have been caused by incorrect red channel processing.

Figure 7 Experimental results of the comparison on the Raws dataset. (A–H) Eight images selected from the Raws dataset. From left to right are the original image, the ground truth image, and the images processed by the ACCDF, CLAHE, DCP, RGHS, FUSION, DL-IFM, and ULAP methods. The red boxes indicate the advantages of ACCDF over the other methods.

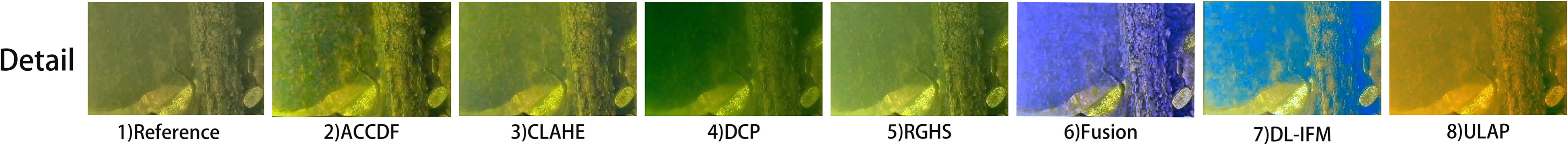

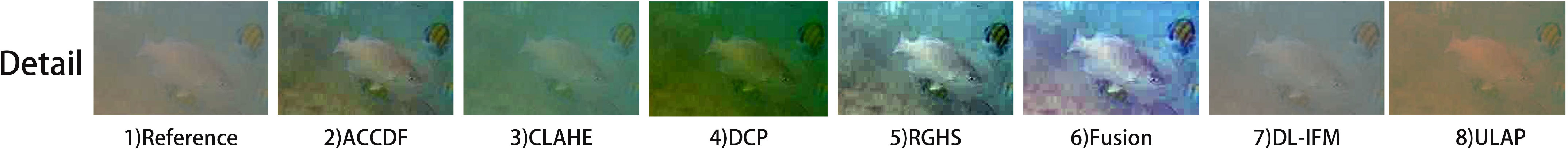

In Figure 7A, the image quality of the ACCDF, DCP, and RGHS methods had been significantly improved. The images processed using these methods showed an obvious deblurring effect, and the texture of the underwater stones, gravel, and wood had been significantly enhanced. In the upper left corner of the original image, i.e., Figure 8, it can be seen that the stone texture of the DCP image is lost, the images by FUSION, DL-IFM, and ULAP are severely distorted, and the deblurring effect of the RGHS is not obvious. Compared with that of CLAHE, the image processed using ACCDF has a clearer texture.

Figure 8 Detail comparison of Figure 7A.

Figure 7B shows that the deblurring effect of ACCDF was obviously better than that of the other methods. On the premise of a similar deblurring effect, the color correction ability of ACCDF was better than that of CLAHE, RGHS, FUSION and DL-IFM. Although we sacrificed the brightness of the shadow by limiting the stretch range of the red channel, the overall color of the image, especially the color saturation of coral, became better and more visually comfortable.

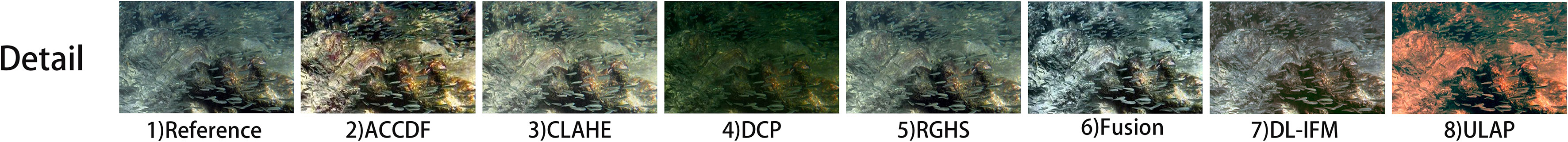

In Figure 7C, the original image tone is gray and the image has a gray fog blur. However, the ACCDF, CLAHE, RGHS, and FUSION methods achieved good deblurring effects. In the upper right corner of Figure 7C, i.e., Figure 9, it can be seen that the color correction ability of ACCDF was better than that of CLAHE and RGHS, even if the original image is fuzzy. Furthermore, the outline of the small fish in the ACCDF image was clearer than in others.

Figure 9 Detail comparison of Figure 7C.

In Figures 7D–F, it can be seen that the blue background of the three pictures is quite large and that the deblurring effect of the other methods is not very good, especially in the blue background. Due to the adaptive processing of different channels, the ACCDF method was much better at deblurring compared to the other methods, and the textural detail was more obvious. Compared with the reef in the lower part of Figure 7D, the ACCDF method was more effective than the other methods at deblurring, and the features of the texture of the reef were also strengthened. The top left of the picture, i.e., the detailed Figure 10, shows that the deblurring effect of the ACCDF method was better than that of others; furthermore, because the color correction was more reasonable, this made the swimmers in the red box clearer, and it can even be seen that they are wearing red life jackets.

Figure 10 Detail comparison of Figure 7D.

Looking at the lower part of Figure 7E, it can be seen that, due to adaptive color correction, the ACCDF method makes the colors of the coral and the reef closer to reality. On the top right of the picture, i.e., the detailed Figure 11, it can also be seen that ACCDF has a better deblurring effect. In terms of color correction, because we have limited the stretching range of the different channels, the coral processed by the ACCDF method appeared more colorful. The background of ACCDF was brighter than that of DCP and more saturated than that of CLAHE.

Figure 11 Detail comparison of Figure 7E.

Figure 7F displays the excellent deblurring effect of the ACCDF method. The reef at the bottom left of the picture does not appear overexposed, unlike that of RGHS and DL-IFM, and the blue ocean background is clearer than that of the other methods. At the top right of the image, i.e., the detailed Figure 12, due to the stretching range of the red channel being limited, the color of the life jacket in the ACCDF image is perfectly restored and brighter than that of the other methods. It can be said that the image processed using the ACCDF method is almost the same as the ground truth.

Figure 12 Detail comparison of Figure 7F.

In order to further examine the effect of the ACCDF method, we compared the histogram of the original image with that of the processed image (Figure 13). The RGB histogram of the original image was concentrated in the range [100,160], with the blue and green areas overlapping seriously, which made the image gray and the color distinction not obvious, what we call a “fog.” After stretching, the “fog” of the image was obviously eliminated, and the blue and green became obvious, with the red also strengthened.

Figure 13 Histogram comparison of Figure 7F.

In the original image of Figure 7G, not only was “fog” present but also the scattering of sunlight penetrating the water surface and suspended particles. The ACCDF and DCP methods had obvious deblurring effects. However, the image color of the DCP method was red, and the upper right corner had missing information. The ACCDF, CLAHE, RGHS, and DL-IFM methods showed good color correction effects, but the CLAHE, RGHS, and DL-IFM methods retained a layer of “mist” in the image.

The original image in Figure 7H is an extremely challenging one. The image showed extremely heavy “fogging.” Except for the fish close to the lens, the overall image hardly displayed other useful information. Surprisingly, all of the methods extracted the ground and fish information in the background from the poor original image. However, the CLAHE method did not remove the blur very well, while the DCP and ULAP methods showed color distortions of green and red. By comparing the details in Figure 14, we found that the RGHS and FUSION methods showed very obvious feature enhancement effects. The features of the fish and reef in the background were very noticeable, but the image appeared as a mosaic, which means that the deblurring ability was slightly weaker. In addition to removing the fog from the original image, the ACCDF method made the fish and the rocks in the upper right corner of the image appear sharper. Furthermore, adaptive color channel processing of the ACCDF method made the colors of the two fish closer to the ground truth.

Figure 14 Detail comparison of Figure 7H.

In order to further examine the effect of the ACCDF method, we compared the histogram of the original image with that of the processed image (Figure 15). The histogram of the original image was sparsely distributed, and the red and blue channel pixels were within the range [60,100], making the image dark as a whole. After stretching using the ACCDF method, the pixel distribution of the RGB channels turned uniform, which allowed the information of the image to be reproduced.

Figure 15 Histogram comparison of Figure 7H.

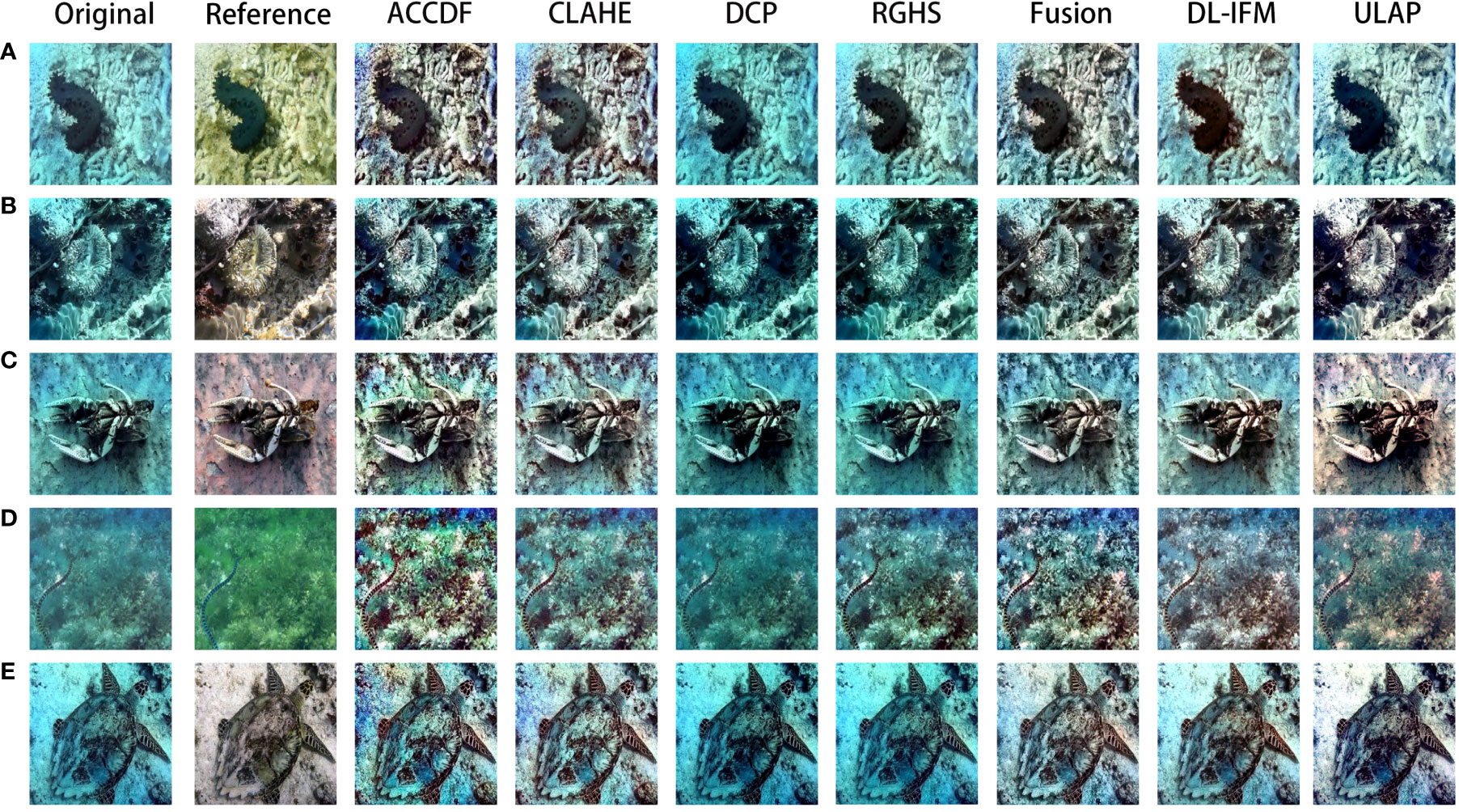

The experimental results of the EUVP dataset are shown in Figure 16. Since the images in this dataset have been seriously attenuated, the original color of the image cannot be restored by non-deep learning methods; therefore, in this part, we mainly compared the deblurring ability of each method.

Figure 16 Images (A–E) are five images selected from the EUVP dataset. From left to right: the original image, the ground truth image, and the images processed by the ACCDF, CLAHE, DCP, RGHS, FUSION, DL-IFM, and ULAP methods.

Figure 16A shows that the ACCDF, CLAHE, and FUSION methods deblurred better than the other methods. The contour of the sea cucumber in the middle of the ACCDF image was clearer, while the sea cucumber in the middle of the DL-IFM image became blurred. In the background, the gravel texture in the ACCDF image was more pronounced and its outline clearer. The background texture of the DCP, RGHS, DL-IFM, and ULAP images was blurred, especially in the upper left corner of the image.

In Figure 16B, the images processed using DCP and RGHS were almost the same as the original image. Details were missing in the upper left corner of the DL-IFM and ULAP images. CLAHE had a good deblurring effect, but its enhancement of textural features was slightly weaker than that of the ACCDF method, especially for the sea anemone in the middle of the image and the coral in the upper left corner.

Figure 16C shows that the ACCDF, CLAHE, and FUSION methods removed most of the blue cast in the original image, resulting in obvious image details. The ULAP method removed most of the blue cast and had a good visual effect, but lost some textural details on the lobster head in the middle of the image. The ACCDF image showed not only enhanced details of the lobster head but also enhanced ground texture in the image background.

In Figures 16D, E, although the ACCDF and FUSION images had abnormal colors compared with the ground truth images, the details of the images were enhanced. For example, in Figure 16D, the seaweed contour in the ACCDF image was clearer than that of the other methods, while the FUSION image showed a mosaic-like blur despite its high contrast. The pattern of the turtle shell in the ACCDF image in Figure 16E had higher contrast and was clearer than that of the other methods. The sand and stone at the bottom of the DL-IFM and ULAP images were partially exposed and became blurred, while the sand and stone texture in the ACCDF image was clearer.

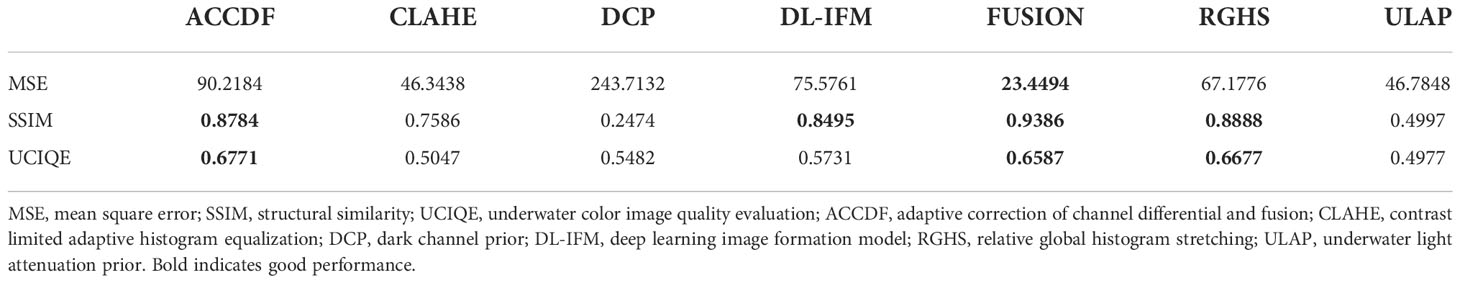

We combined the image quality evaluation indices for analysis. The quality evaluation of Figure 7D is shown in Table 1. CLAHE and DL-IFM performed slightly poorly in the quality evaluation because of their slightly weaker deblurring ability. DCP and ULAP had unusually large MSE values due to severe color distortion and loss of detail in the processed images. The overall performance of ACCDF and FUSION was good. The MSE value of ACCDF was the smallest, while its SSIM and UCIQE values were relatively large. As displayed in Figure 7D, ACCDF and FUSION provided the best deblurred and color-corrected images. However, compared with FUSION, ACCDF had a warmer color orientation; hence, the image details were more easily observed. This difference is not reflected in the image quality metrics, but it resulted in a better visual experience.

Table 1 Quality evaluation of Figure 7D.
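As a reminder of what the full-reference indices measure, the following is a minimal sketch of MSE and a simplified SSIM. It makes several assumptions: the function names are hypothetical, the SSIM variant is computed once over the whole image (the standard index of Wang et al., 2004, averages the same expression over local windows), and the constants follow the commonly used defaults K1 = 0.01 and K2 = 0.03. UCIQE is a no-reference index and is not covered here. The code is not the evaluation script used for the tables.

```python
import numpy as np


def mse(reference, processed):
    """Mean squared error between two images of identical shape (lower is better)."""
    ref = reference.astype(np.float64)
    out = processed.astype(np.float64)
    return float(np.mean((ref - out) ** 2))


def global_ssim(reference, processed, data_range=255.0):
    """Simplified SSIM from global image statistics (assumption: a single window)."""
    x = reference.astype(np.float64).ravel()
    y = processed.astype(np.float64).ravel()
    c1 = (0.01 * data_range) ** 2  # stabilizes the luminance term
    c2 = (0.03 * data_range) ** 2  # stabilizes the contrast/structure term
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = np.mean((x - mu_x) * (y - mu_y))
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(numerator / denominator)


# Hypothetical data: a uniform reference and a copy brightened by 10 gray levels.
ref = np.full((4, 4), 100, dtype=np.uint8)
shifted = np.full((4, 4), 110, dtype=np.uint8)

print(mse(ref, shifted))       # 100.0
print(global_ssim(ref, ref))   # identical images give the maximum score
```

Because MSE penalizes any pixel-wise difference from the reference, a method that removes content present in the reference (for example, bubbles) can score a large MSE even when the result looks clearer.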

The quality evaluation of Figure 7F is shown in Table 2. Based on its evaluation indices, the FUSION method performed very well. It can also be seen in the image that the FUSION method performed well in deblurring and color correction. The CLAHE, RGHS, and DL-IFM methods performed well in the evaluation criteria, but a layer of “mist” can still be seen in the image, which results in slightly poor visual performance. The DCP method performed poorly in the evaluation criteria because the images lost a lot of information. Surprisingly, the ACCDF method had the largest MSE value, which did not correspond to the visual perception of the image, probably because the method removed bubbles from the image during the deblurring process. Comparison of the SSIM and UCIQE values indicated that the ACCDF method performed well. It can be seen that the color saturation of the ACCDF image in Figures 7F, 12 was higher and the textural detail clearer compared to the other images.

Table 2 Quality evaluation of Figure 7F.

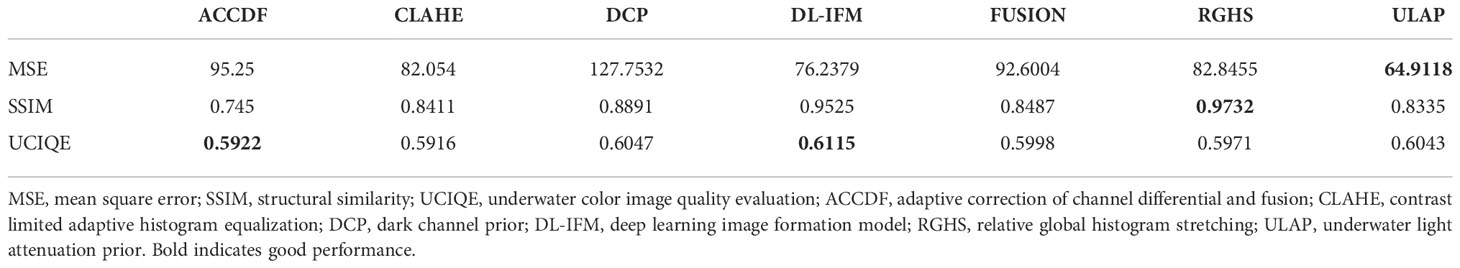

The comparison of the quality evaluation indicators for Figure 16C is shown in Table 3. The MSE value of ULAP was the lowest, and the processed image also showed that the color produced by this method was the closest to the ground truth. The RGHS method had the largest SSIM value, and the DL-IFM method had the largest UCIQE value. The evaluation indices of the ACCDF method were average because the original image had been seriously attenuated, and non-machine learning methods cannot restore such images. The ACCDF method stretched the histogram according to the dominant blue tone of the image, which resulted in color distortion. However, when the textural features were examined closely, it was found that, in the ACCDF image, the details of the lobster head were obviously enhanced, and the textural contrast of the ground sand and stone at the edge of the image was higher and the outline clearer.

Table 3 Quality evaluation of Figure 16C.

5. Conclusion

In this paper, we proposed an adaptive color correction and deblurring method for underwater images with different color casts. This method adaptively deblurs the dark channel and stretches the histogram by perceiving the main hue of the image. It then fuses the images processed by the two techniques into a better image. The results of the experiment showed that this method can handle underwater images with different green and blue distortions and has better deblurring and color correction capabilities compared to other classical algorithms. We also found that the images processed using this method had a warmer tone, which improved the visual experience, especially when red was severely attenuated. In addition, this method enhanced the textural detail extremely well, revealing more hidden details and effectively improving the quality of the blurred underwater image.

On the other hand, when the color of the image had been seriously attenuated, the ACCDF method performed poorly in terms of color correction. This scenario is better suited to color filling using deep learning methods. In the future, we will consider using a deep learning algorithm to supplement and correct the color of images and adding it to the fusion framework to strengthen it.

Data availability statement

Publicly available datasets were analyzed in this study. The data can be found here: EUVP: https://irvlab.cs.umn.edu/resources/euvp-dataset; UIEB: https://li-chongyi.github.io/proj_benchmark.html.

Author contributions

ZeZ completed the main work of this paper. TW provided guidance during the research. ZeZ, ZhZ, YL, TW, SZ, and HC were involved in proofreading and manuscript revision. HX helped complete part of the experiments. All authors contributed to the article and approved the submitted version.

Funding

This research was supported by: National Natural Science Foundation of China and Macao Science and Technology Development Joint Fund (0066/2019/AFJ): Research on knowledge-oriented probabilistic graphical model theory based on multi-source data; the MOST-FDCT Projects (0058/2019/AMJ, 2019YFE0110300): Research and Application of Cooperative Multi-Agent Platform for Zhuhai-Macao Manufacturing Service; the Key Platform and Scientific Research Project for General Universities, Guangdong, 2022 (grant no. 2022ZDZX4061); Science and Technology Program of Social Development, Zhuhai, 2022 (grant no. 2220004000195); Guangdong Provincial Key Laboratory of Interdisciplinary Research and Application for Data Science, BNU-HKBU United International College (project code 2022B1212010006); Guangdong Higher Education Upgrading Plan (2021-2025) with UIC research grant R0400001-22 and R201902; R&D plan project in key fields of Guangdong Province (No. 2021B0707010001); R&D plan project in key fields of Guangdong Province (No. 2018B010109001).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Ancuti C., Ancuti C. O., Haber T., Bekaert P. (2012). “Enhancing underwater images and videos by fusion,” in 2012 IEEE conference on computer vision and pattern recognition (IEEE), 81–88. doi: 10.1109/CVPR.2012.6247661

Anwar S., Li C., Porikli F. (2018). Deep underwater image enhancement. arXiv preprint arXiv:1807.03528. doi: 10.48550/ARXIV.1807.03528

Bai L., Zhang W., Pan X., Zhao C. (2020). Underwater image enhancement based on global and local equalization of histogram and dual-image multi-scale fusion. IEEE Access 8. doi: 10.1109/ACCESS.2020.3009161

Burt P. J., Adelson E. H. (1987). “The laplacian pyramid as a compact image code,” in Readings in computer vision (Elsevier), 671–679. doi: 10.1109/TCOM.1983.1095851

Chao L., Wang M. (2010). “Removal of water scattering,” in 2010 2nd international conference on computer engineering and technology, vol. 2. (IEEE), V2–35. doi: 10.1109/ICCET.2010.5485339

Chen X., Zhang P., Quan L., Yi C., Lu C. (2021). Underwater image enhancement based on deep learning and image formation model. arXiv preprint arXiv:2101.00991. doi: 10.48550/ARXIV.2101.00991

Chiang J. Y., Chen Y.-C. (2011). Underwater image enhancement by wavelength compensation and dehazing. IEEE Trans. image Process. 21, 1756–1769. doi: 10.1109/TIP.2011.2179666

Drews P., Nascimento E., Moraes F., Botelho S., Campos M. (2013). “Transmission estimation in underwater single images,” in Proceedings of the IEEE international conference on computer vision workshops, 825–830. doi: 10.1109/ICCVW.2013.113

Fabbri C., Islam M. J., Sattar J. (2018). “Enhancing underwater imagery using generative adversarial networks,” in 2018 IEEE international conference on robotics and automation (ICRA) (IEEE), 7159–7165. doi: 10.1109/ICRA.2018.8460552

Galdran A., Pardo D., Picón A., Alvarez-Gila A. (2015). Automatic red-channel underwater image restoration. J. Visual Communication Image Representation 26, 132–145. doi: 10.1016/j.jvcir.2014.11.006

Guo Y., Li H., Zhuang P. (2019). Underwater image enhancement using a multiscale dense generative adversarial network. IEEE J. Oceanic Eng. 45, 862–870. doi: 10.1109/JOE.2019.2911447

He K., Sun J., Tang X. (2010). Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 33, 2341–2353. doi: 10.1109/TPAMI.2010.168

Hitam M. S., Awalludin E. A., Yussof W. N. J. H. W., Bachok Z. (2013). “Mixture contrast limited adaptive histogram equalization for underwater image enhancement,” in 2013 international conference on computer applications technology (ICCAT) (IEEE), 1–5. doi: 10.1109/ICCAT.2013.652201

Huang J.-B., Singh A., Ahuja N. (2015). “Single image super-resolution from transformed self-exemplars,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 5197–5206. doi: 10.1109/CVPR.2015.7299156

Huang D., Wang Y., Song W., Sequeira J., Mavromatis S. (2018). “Shallow-water image enhancement using relative global histogram stretching based on adaptive parameter acquisition,” in International conference on multimedia modeling (Springer), 453–465. doi: 10.1007/978-3-319-73603-7_37

Islam M. J., Xia Y., Sattar J. (2020). Fast underwater image enhancement for improved visual perception. IEEE Robotics and Automation Letters 5, 3227–3234. doi: 10.1109/LRA.2020.2974710

Ketcham D. J., Lowe R. W., Weber J. W. (1974). “Image enhancement techniques for cockpit displays,” in Tech. rep (Hughes Aircraft Co Culver City Ca Display Systems Lab).

Lai Y., Zhou Z., Su B., Xuanyuan Z. (2022). Single underwater image enhancement based on differential attenuation compensation. Front. Mar. Sci., 2216. doi: 10.3389/fmars.2022.1047053

Li C., Guo C., Ren W., Cong R., Hou J., Kwong S., et al. (2019). An underwater image enhancement benchmark dataset and beyond. IEEE Trans. Image Process. 29, 4376–4389. doi: 10.1109/TIP.2019.2955241

Lu H., Li Y., Nakashima S., Kim H., Serikawa S. (2017). Underwater image super-resolution by descattering and fusion. IEEE Access 5, 670–679. doi: 10.1109/ACCESS.2017.2648845

Lu H., Li Y., Zhang L., Serikawa S. (2015). Contrast enhancement for images in turbid water. JOSA A 32, 886–893. doi: 10.1364/JOSAA.32.000886

Peng Y.-T., Cao K., Cosman P. C. (2018). Generalization of the dark channel prior for single image restoration. IEEE Trans. Image Process. 27, 2856–2868. doi: 10.1109/TIP.2018.2813092

Perez J., Attanasio A. C., Nechyporenko N., Sanz P. J. (2017). “A deep learning approach for underwater image enhancement,” in International work-conference on the interplay between natural and artificial computation (Springer), 183–192. doi: 10.1007/978-3-319-59773-7_19

Pizer S. M., Amburn E. P., Austin J. D., Cromartie R., Geselowitz A., Greer T., et al. (1987). Adaptive histogram equalization and its variations. Comput. vision graphics image Process. 39, 355–368. doi: 10.1016/S0734-189X(87)80186-X

Song W., Wang Y., Huang D., Tjondronegoro D. (2018). “A rapid scene depth estimation model based on underwater light attenuation prior for underwater image restoration,” in Pacific rim conference on multimedia (Springer), 678–688. doi: 10.1007/978-3-030-00776-8_62

Wang Z., Bovik A. C., Sheikh H. R., Simoncelli E. P. (2004). Image quality assessment: from error visibility to structural similarity. IEEE Trans. image Process. 13, 600–612. doi: 10.1109/TIP.2003.819861

Keywords: underwater image processing, image enhancement, histogram stretching, color correction, fusion

Citation: Zhao Z, Zhou Z, Lai Y, Wang T, Zou S, Cai H and Xie H (2023) Single underwater image enhancement based on adaptive correction of channel differential and fusion. Front. Mar. Sci. 9:1058019. doi: 10.3389/fmars.2022.1058019

Received: 30 September 2022; Accepted: 01 December 2022;

Published: 18 January 2023.

Edited by:

Hong Song, Zhejiang University, China

Reviewed by:

Carlos Pérez-Collazo, University of Vigo, Spain

Jing Lian, Lanzhou Jiaotong University, China

Viacheslav Voronin, Moscow State Technological University “Stankin”, Russia

Copyright © 2023 Zhao, Zhou, Lai, Wang, Zou, Cai and Xie. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Tenghui Wang, dml0by53YW5nNzc3QGdtYWlsLmNvbQ==
