
ORIGINAL RESEARCH article

Front. Mar. Sci., 11 November 2022

Sec. Ocean Observation

Volume 9 - 2022 | https://doi.org/10.3389/fmars.2022.1047053

This article is part of the Research Topic Optics and Machine Vision for Marine Observation.

A correction has been applied to this article in:

Corrigendum: Single underwater image enhancement based on differential attenuation compensation

Yunting Lai1, Zhuang Zhou1*, Binghua Su1, Zhe Xuanyuan2, Jialin Tang1, Jinghui Yan1, Wanxin Liang1, Jiongjiang Chen1

High-quality underwater images and videos are important for exploitation tasks in the underwater environment, but the complexity of the underwater imaging environment generally makes the quality of acquired underwater images low. To correct chromatic aberration and enhance the sharpness of underwater images, we propose a Differential Attenuation Compensation (DAC) method based on the differential attenuation of light underwater. The underwater image is first contrast-stretched to improve its contrast and then denoised. The red channel, which suffers a serious loss of detail information, is compensated with the green or blue channel, which retains more detail information. Finally, the image is restored with the Gray World algorithm to obtain more realistic colors. Our method is compared qualitatively and quantitatively with multiple state-of-the-art methods on the public underwater image datasets Underwater Image Enhancement Benchmark (UIEB) and Enhancing Underwater Visual Perception (EUVP). The results demonstrate that underwater images processed by our method better resolve chromatic aberration and blur, with more realistic color and detail and better scores on underwater image quality evaluation metrics.

The ocean is rich in material resources, but the complex underwater environment makes ocean development tasks challenging. As a key technology for ocean development, underwater imaging can effectively support these tasks. It is an effective way to obtain underwater images and is used in various underwater scenarios, such as laying submarine cables (Ortiz et al., 2002), surveying the deep sea, and observing fish schools (Howell et al., 2021). Obtaining effective information about the underwater environment and targets is a prerequisite for ocean development. However, because of the complex underwater imaging environment, the information collected by common sensors is of low quality and difficult to use for other tasks.

In the marine environment, the complex imaging conditions severely degrade the underwater images collected by ordinary imaging equipment, causing color distortion, reduced contrast, and blurring. During underwater imaging, light of different wavelengths is attenuated at different rates (Clarke and James, 1939). In the visible band, red light has the longest wavelength and the weakest penetration: it is most easily absorbed by water, and its propagation distance underwater is about 4 meters. Blue and green light have shorter wavelengths, are absorbed less by water, and propagate farther. This wavelength-dependent attenuation causes the widespread color distortion and overall blue-green hue of underwater images. Meanwhile, large numbers of suspended particles and plankton in the water scatter both the imaging light and the background light, which blurs underwater images (Wozniak and Dera, 2007).

Therefore, eliminating blurring and chromatic aberration of underwater images caused by differential attenuation and scattering through underwater image processing techniques is the key to obtaining high-quality underwater images.

We propose DAC, a pixel-processing-based underwater image enhancement method built on the differential attenuation of light of different wavelengths in the underwater environment. DAC enhances underwater images through contrast stretching and differential attenuation compensation of the color channels. This paper makes the following contributions:

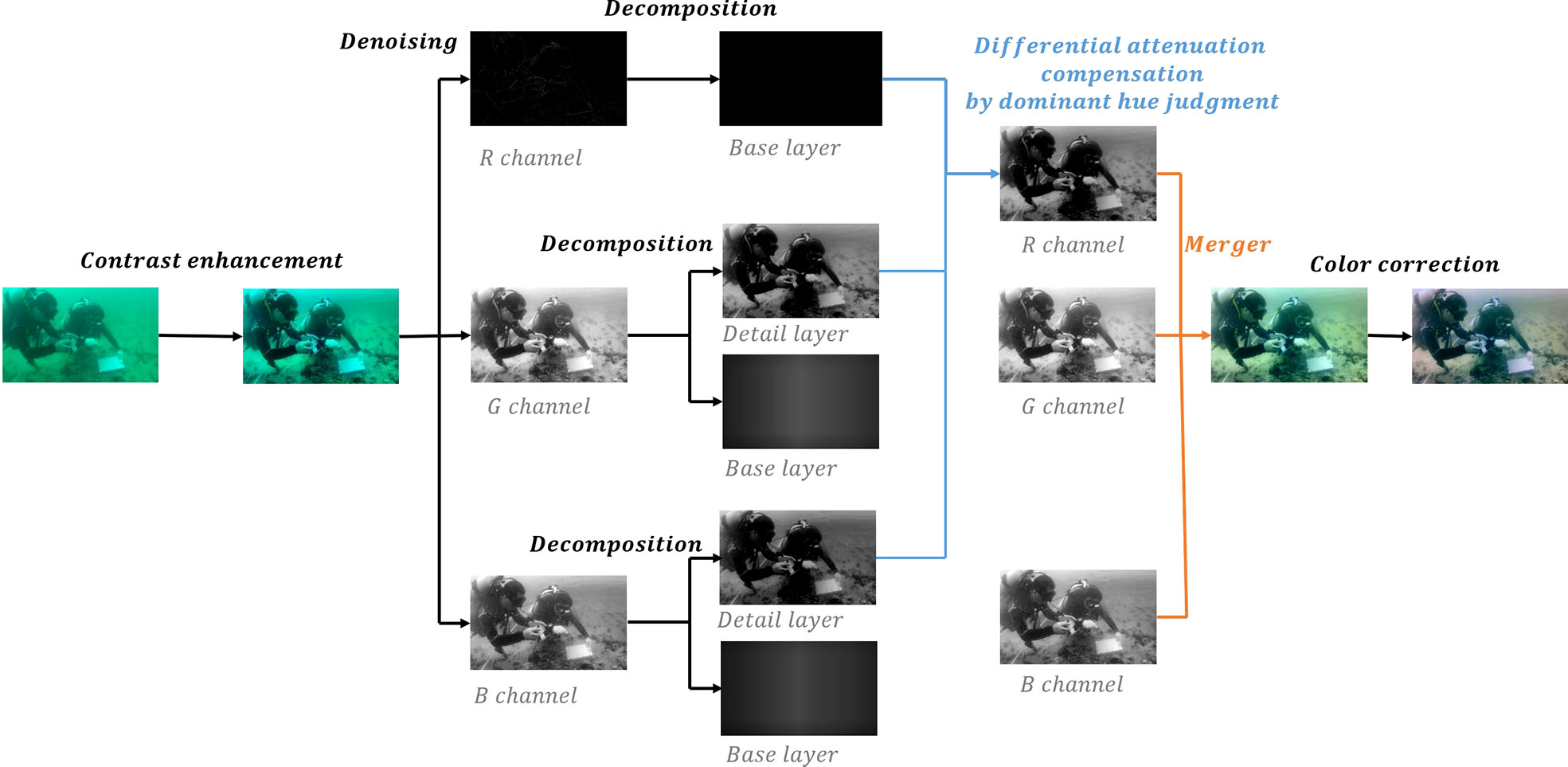

1. Based on the differential attenuation of light of different wavelengths underwater, we decompose the underwater image into its channels in RGB color space, further decompose each channel into a base layer containing image structure information and a detail layer containing image texture information, and propose a method that compensates the detail information of the R channel with the detail information of the G or B channel.

2. Our method is a new approach to underwater image processing that does not rely on an underwater physical imaging model but operates only on image pixels, and it removes image blur and corrects image chromatic aberration simultaneously. The paper also includes extensive pixel-level experiments, such as verifying the association between the mean values of the G and B channels and the image hue.

3. The code for this paper is publicly available at https://github.com/lailaiyun/Single-Underwater-Image-Enhancement-Based-on-Differential-Attenuation-Compensation.

Light scattering by suspended particles and plankton in the water, together with the wavelength-dependent absorption of light by water, causes color attenuation, low contrast, and blurring in underwater images, which seriously hampers the exploitation of the ocean. Improving underwater imaging can provide more useful information for ocean development, and there are two broad approaches. One is to improve the hardware, such as underwater imagers and LIDAR imaging systems (Phillips et al., 2019; Egorenko and Efremov, 2020); unfortunately, hardware improvements usually come at a high cost. The other is to process the acquired underwater information algorithmically (Sahu et al., 2014). Algorithmic underwater image processing methods fall into three categories: physical model-based image enhancement, non-physical model-based image enhancement, and deep learning-based image enhancement.

Non-physical model-based methods enhance the quality of underwater images by directly processing their pixel values. Histogram equalization (Kaur et al., 2011), a classical non-physical model algorithm, performs contrast stretching (Abdullah-Al-Wadud et al., 2007) by spreading the concentrated pixel values of the histogram evenly across the whole gray-level range, which redistributes the pixel values of the image and widens the gaps between histogram gray levels, thereby stretching the contrast. In the deep ocean, where light propagation is obstructed, artificial light sources are often used for image acquisition, but applying histogram equalization to underwater images that are unevenly lit and contain areas that are too dark or too bright can overstretch the contrast of the processed image. Adaptive histogram equalization (Pizer et al., 1987) was proposed to address this limitation of global equalization: it divides the image into equal-sized blocks and stretches the histogram of each block locally to avoid overstretching, but it amplifies noise, which lowers the peak signal-to-noise ratio of the image. Researchers later proposed many improvements and fusion methods. Ancuti et al. (Ancuti et al., 2012; Ancuti et al., 2017) proposed a multi-scale fusion strategy combining contrast enhancement and color correction, which substantially improved the color of underwater images. Frequency-domain methods (Yang et al., 2021) are another class of non-physical model image processing. They transform the spatial pixel and position information into another processing domain, filter the high-frequency and low-frequency components of the image (for example, with high-pass and low-pass filtering), and then transform the result back to the spatial domain to obtain the enhanced underwater image. Huang et al. (Huang et al., 2018) process the image in the RGB and CIE-Lab color spaces and stretch its histogram to enhance image quality. Iqbal et al. (Iqbal et al., 2010) proposed an unsupervised color correction method (UCM) for underwater image enhancement, which stretches the image in the RGB and HSI color spaces to achieve color correction.
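To make the histogram equalization described above concrete, the following is a minimal NumPy sketch of classical global histogram equalization on one 8-bit channel. It is an illustration under our own assumptions (uint8 input; the helper name `equalize_hist` is hypothetical), not code from the cited works: each gray level is mapped through the normalized cumulative histogram, which spreads crowded levels over the full 0 to 255 range.

```python
import numpy as np

def equalize_hist(channel: np.ndarray) -> np.ndarray:
    """Global histogram equalization of a uint8 single-channel image."""
    hist = np.bincount(channel.ravel(), minlength=256)
    cdf = np.cumsum(hist)
    cdf_min = cdf[np.nonzero(cdf)[0][0]]  # CDF value at the darkest occupied level
    total = channel.size
    if total == cdf_min:  # constant image: nothing to stretch
        return channel.copy()
    # Map each gray level so that the output CDF is approximately uniform.
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[channel]
```

Because a single mapping is shared by the whole image, unevenly lit scenes can be overstretched, which is the limitation the adaptive variants address.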

The underwater imaging model proposed by Jaffe and McGlamery (McGlamery, 1980) describes the degradation process of underwater images as a physical model and is the basis of physical model-based underwater image enhancement. According to this model, the light energy of an underwater image consists of three components: forward scattering (light from the underwater scene that is scattered at small angles on its way to the camera), backward scattering (light scattered toward the camera by impurities in the water), and directly transmitted light (Lu et al., 2016). Physical model-based algorithms often enhance underwater images by reducing forward and backward scattering (Li et al., 2018). He et al. (He et al., 2010) proposed the classical dehazing algorithm Dark Channel Prior (DCP), which estimates the depth map of the scene and thereby makes the image clearer. Drews et al. (Drews et al., 2013) proposed the Underwater Dark Channel Prior (UDCP), which incorporates the color-attenuation properties of underwater images to restore degraded underwater images and obtain clearer images with realistic colors. In addition, Akkaynak and Treibitz (Akkaynak and Treibitz, 2018; Akkaynak and Treibitz, 2019) proposed a method for removing water from underwater images and videos based on an underwater imaging model, which realistically restores the colors of underwater images and brings them closer to the colors and true appearance of images taken on land. Song et al. (Song et al., 2018) proposed the underwater light attenuation prior (ULAP) to estimate the model parameters, namely the ambient background light and the transmission.
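The key quantity in DCP is the dark channel: for each pixel, the minimum over the color channels within a local patch. Below is a minimal NumPy sketch, assuming an H × W × 3 image, an odd patch size (the default of 15 is our assumption), and edge padding at the borders; the helper name `dark_channel` is hypothetical. Estimating the transmission and background light from this map, as DCP does, is not shown.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def dark_channel(img: np.ndarray, patch: int = 15) -> np.ndarray:
    """Dark channel of an H x W x 3 image: min over RGB, then min over a patch x patch window."""
    if patch % 2 == 0:
        raise ValueError("patch size must be odd")
    min_rgb = img.min(axis=2)                    # per-pixel minimum over the color channels
    pad = patch // 2
    padded = np.pad(min_rgb, pad, mode="edge")   # keep the output the same size as the input
    windows = sliding_window_view(padded, (patch, patch))
    return windows.min(axis=(-2, -1))            # local minimum filter
```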

In recent years, deep learning has achieved outstanding performance in many fields and has attracted wide attention in underwater image processing. Generative adversarial networks, which are based on game-theoretic ideas, are often used for underwater image enhancement (Engin et al., 2018), but deep learning usually requires paired datasets (degraded images with corresponding high-quality images) to train the network models. Cycle-consistent generative adversarial networks (Cycle-GAN) (Zhu et al., 2017) were proposed to address the lack of paired datasets. Using paired data generated by Cycle-GAN, Wang et al. (Wang et al., 2019) proposed the Underwater Generative Adversarial Network (UWGAN), an underwater color image enhancement algorithm that makes blurred, color-biased underwater images clearer and more colorful. Islam et al. combined supervised and unsupervised learning to propose FUnIE-GAN (Islam et al., 2020), a fully convolutional conditional generative adversarial network with a multimodal objective function for real-time underwater image enhancement; it optimizes the loss function of the generative adversarial network and provides the enhancing underwater visual perception (EUVP) dataset, which supports both paired and unpaired training. Although Cycle-GAN can train networks on synthesized underwater image datasets, a gap remains between synthesized and real underwater images, and the resulting network models are not always well trained. Therefore, compared with traditional algorithms based on physical and non-physical models, deep learning-based underwater image enhancement still has considerable room for improvement in robustness and generalization. Naik et al. (Naik et al., 2021) proposed Shallow-UWnet, a shallow neural network architecture that maintains performance with fewer parameters than state-of-the-art models. Chen et al. (Chen et al., 2021) proposed an underwater image enhancement method based on deep learning and an image formation model, which we refer to as Image Formation. This method works well but lacks interpretability.

The complex imaging environment in the ocean severely degrades the underwater images acquired by underwater imaging systems. In the aqueous medium, water molecules and the various substances contained in the water absorb light; for water itself, absorption in the visible band increases with wavelength, so red light is absorbed most strongly. The absorption of light energy by the water body causes low contrast, color shift, and distortion in underwater images. In addition, impurities in the water blur underwater images and make them look unrealistic. As shown in Figure 1, to address these problems we propose the Differential Attenuation Compensation (DAC) method, which uses a three-step strategy of contrast enhancement, image decomposition, and R-channel attenuation compensation to improve the quality of underwater images without relying on an underwater imaging model.

Figure 1 Algorithm flow chart. First, the image is contrast-stretched; then the base layer and detail layer of the image are extracted and the color attenuation is compensated; finally, the color of the image is restored with the Gray World algorithm.

The underwater environment contains large numbers of suspended particles whose scattering makes underwater images blurred, as if shrouded in fog, and low in contrast. In addition, in environments such as the deep sea that natural light cannot reach, artificial and other concentrated light sources are used, so the target scene is illuminated unevenly and the captured images contain some regions that are brighter and others that are darker. We therefore use Contrast Limited Adaptive Histogram Equalization (CLAHE) (Reza, 2004; kumar Rai et al., 2012) to stretch each channel of the image.

Here C∈{R,G,B} denotes the red, green, and blue channels of the image, I_C denotes the C channel of the original underwater image, and CLAHE(·) denotes processing with Contrast Limited Adaptive Histogram Equalization; applying CLAHE(·) to each I_C yields the corresponding channel of the contrast-enhanced underwater image. CLAHE is an improvement on the adaptive histogram equalization described in the related work above.

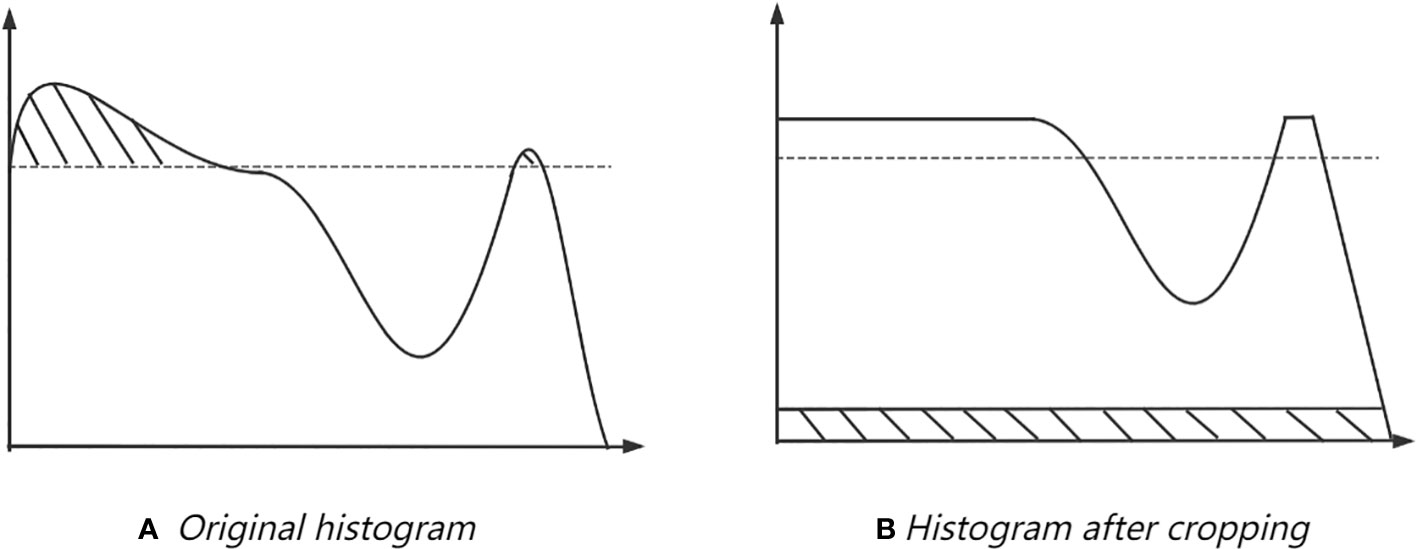

As shown in Figure 2, CLAHE uses a preset threshold to limit the maximum height of the histogram: it clips the histogram before computing the mapping function and redistributes the clipped pixels uniformly across all gray levels of the image, which suppresses the noise amplification of adaptive histogram equalization. Bilinear interpolation is also used to stitch together the image blocks into which adaptive histogram equalization divides the image, removing the boundaries between blocks.

Figure 2 Contrast limiting principle. The clipped pixels are redistributed evenly over the gray levels. (A) is the original histogram; (B) is the histogram after clipping, with the clipped pixels uniformly filled into every gray level.
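The clipping step in Figure 2 can be sketched as follows, assuming an integer histogram and an absolute clip limit expressed in pixel counts. `clip_histogram` is a hypothetical helper, and assigning the leftover counts to the first bins is our simplification so that the total pixel count is preserved exactly. A single redistribution pass can push some bins slightly above the limit again; practical implementations differ in how they handle this.

```python
import numpy as np

def clip_histogram(hist: np.ndarray, clip_limit: int) -> np.ndarray:
    """Clip a histogram at clip_limit and spread the excess uniformly over all bins."""
    hist = hist.astype(np.int64)
    excess = int(np.maximum(hist - clip_limit, 0).sum())  # pixels above the limit
    clipped = np.minimum(hist, clip_limit)
    n_bins = clipped.size
    clipped += excess // n_bins          # equal share for every gray level
    clipped[: excess % n_bins] += 1      # remainder, one count per bin, keeps the total exact
    return clipped
```

For example, clipping `[10, 0, 0, 2]` at 4 removes 6 counts and returns `[6, 2, 1, 3]`, which still sums to 12.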

We divide the underwater image evenly into equal-sized blocks and compute the histogram and the corresponding mapping function for each block separately. Pixels near the image borders are mapped with the mapping function of the adjacent block; for the remaining pixels, the mapped values from the four neighboring blocks are computed separately and combined by bilinear interpolation.
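Putting the tiling, clipping, and interpolation steps together, a compact NumPy sketch of per-channel CLAHE is given below. It is not the implementation used in the paper. It assumes a uint8 RGB image whose sides are divisible by the tile grid, expresses `clip_limit` as a multiple of the mean bin count per tile (similar in spirit to OpenCV's `clipLimit`), and uses the hypothetical names `clahe_channel` and `clahe_rgb`. Each tile gets its own mapping from its clipped histogram, and each pixel's output is bilinearly interpolated from the mappings of the nearest tile centers, collapsing onto the nearest tile near the image borders.

```python
import numpy as np

def clahe_channel(ch: np.ndarray, tiles=(8, 8), clip_limit: float = 2.0) -> np.ndarray:
    """Minimal CLAHE for a uint8 channel whose sides are divisible by the tile grid."""
    h, w = ch.shape
    ty, tx = tiles
    th, tw = h // ty, w // tx
    if th * ty != h or tw * tx != w:
        raise ValueError("this sketch needs image sides divisible by the tile grid")
    # Clip limit as a multiple of the mean bin count per tile (assumed convention).
    limit = max(1.0, clip_limit * th * tw / 256.0)
    luts = np.empty((ty, tx, 256))
    for i in range(ty):
        for j in range(tx):
            tile = ch[i * th:(i + 1) * th, j * tw:(j + 1) * tw]
            hist = np.bincount(tile.ravel(), minlength=256).astype(np.float64)
            excess = np.maximum(hist - limit, 0.0).sum()
            hist = np.minimum(hist, limit) + excess / 256.0  # clip, then redistribute uniformly
            cdf = np.cumsum(hist)
            luts[i, j] = cdf / cdf[-1] * 255.0               # per-tile mapping function
    # Position of each pixel relative to the tile centers, in tile units.
    yc = (np.arange(h) + 0.5) / th - 0.5
    xc = (np.arange(w) + 0.5) / tw - 0.5
    y0 = np.clip(np.floor(yc).astype(int), 0, ty - 1)
    x0 = np.clip(np.floor(xc).astype(int), 0, tx - 1)
    y1 = np.minimum(y0 + 1, ty - 1)
    x1 = np.minimum(x0 + 1, tx - 1)
    # Near the image borders the interpolation collapses onto the nearest tile(s).
    wy = np.clip(yc - y0, 0.0, 1.0)[:, None]
    wx = np.clip(xc - x0, 0.0, 1.0)[None, :]
    v = ch.astype(np.intp)
    r0, r1 = y0[:, None], y1[:, None]
    c0, c1 = x0[None, :], x1[None, :]
    top = (1 - wx) * luts[r0, c0, v] + wx * luts[r0, c1, v]
    bottom = (1 - wx) * luts[r1, c0, v] + wx * luts[r1, c1, v]
    out = (1 - wy) * top + wy * bottom                        # bilinear interpolation
    return np.clip(np.round(out), 0, 255).astype(np.uint8)

def clahe_rgb(img: np.ndarray, **kwargs) -> np.ndarray:
    """Apply CLAHE independently to the R, G and B channels of an H x W x 3 uint8 image."""
    return np.stack([clahe_channel(img[..., c], **kwargs) for c in range(3)], axis=-1)
```

For example, `clahe_rgb(img, tiles=(8, 8), clip_limit=2.0)` stretches each channel independently, as described in the text; images whose sides are not multiples of the grid would need padding or cropping first in this sketch.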

Figure 3 shows an underwater image before and after CLAHE stretching, together with its channel images and the corresponding histograms. The histogram distribution of each channel is similar before and after stretching, but after stretching the R channel has more pixels in the high-value range and the B channel has more pixels in the low-value range. The stretched image visibly removes part of the blue or green hue of the original underwater image.

Image decomposition splits an image into two parts, structure and texture. The structure part consists of the larger-scale base objects in the image, which we refer to as the base layer (BL); the texture part consists of the smaller-scale details, which we refer to as the detail layer (DL). Before decomposition, the image must be denoised so that noise is not treated as detail information and does not distort the decomposition result.

Here C∈{R,G,B}, and equation 2 expresses the C channel of the contrast-enhanced image as the sum of two parts, the base layer BL_C and the detail layer DL_C.

Because plankton and suspended particles affect imaging in the underwater environment, captured underwater images contain strong interference and severe noise. We therefore decompose the image into a base layer and a detail layer, using the image mean value as the threshold. As shown in equation 3, we apply mean filtering to the C channel of the contrast-enhanced image and take the filtered image as the base layer of that channel.

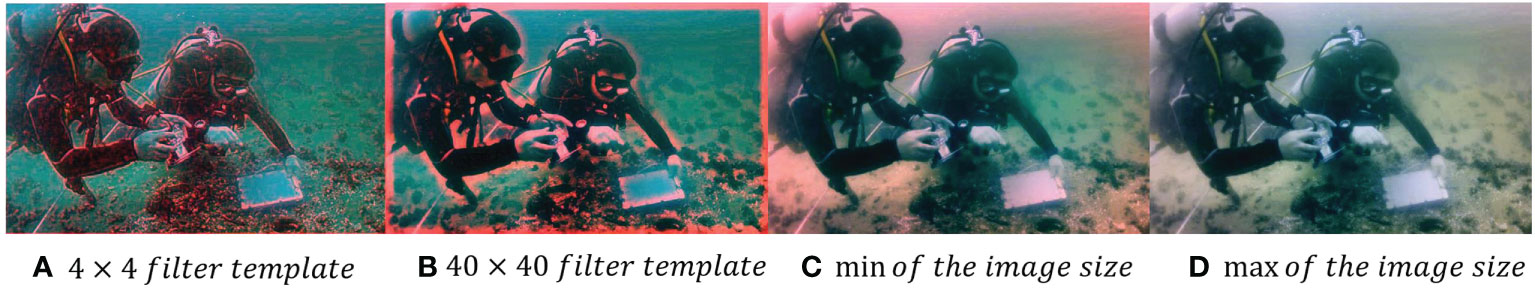

Here Z denotes the mean filter and * denotes the convolution operation. The size of the filter template is adapted to the image resolution. Figure 4 shows the results of filtering an underwater image of size m × n with a 4 × 4 template, a 40 × 40 template, a template whose size equals the smaller image dimension (min{m, n}), and a template whose size equals the larger image dimension (max{m, n}), respectively. A template that is too small makes the underwater image too smooth and produces red edges. In this paper, we use a template whose size equals the larger image dimension, max{m, n}, which smooths the detail regions of the underwater image well while preserving its brightness information, making detail extraction easier. As mentioned above, we regard the image as composed of a base layer and a detail layer, so the detail layer of this channel is the contrast-enhanced channel minus its base layer.

Figure 4 Comparison of the results of processing images with different filters. (A–D) use a 4 × 4 template, a 40 × 40 template, a template of size min{m, n}, and a template of size max{m, n}, respectively.

Figures 4A, B show the images processed with the 4 × 4 and 40 × 40 templates, respectively; the divers, the objects, and the image edges show obvious red lines that distort the images. The images used in our experiments are mostly about 800 × 500, much larger than 4 or 40. Figure 4C shows the result with a template of size min{m, n}: obvious red distortion appears at the image edges, which significantly degrades image quality. After many experiments with different template sizes, we found that a template of size max{m, n} gives the best filtering result, as shown in Figure 4D. The figure shows only a few representative results, but we tested many template sizes.
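The decomposition can be sketched as follows, under our reading of the text: the base layer is the channel mean-filtered with a square template of side max{m, n}, and the detail layer is the channel minus its base layer. The reflect padding at the image borders is our assumption, since the border handling is not stated, and the helper names `box_mean` and `decompose` are hypothetical. An integral image keeps the cost independent of the large template size.

```python
import numpy as np

def box_mean(ch: np.ndarray, size: int) -> np.ndarray:
    """Mean filter with a size x size window and reflect padding, via an integral image."""
    pad_lo = size // 2
    pad_hi = size - 1 - pad_lo
    p = np.pad(ch.astype(np.float64), ((pad_lo, pad_hi), (pad_lo, pad_hi)), mode="reflect")
    # Integral image with a leading row/column of zeros: s[r, c] == p[:r, :c].sum().
    s = np.pad(p.cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))
    h, w = ch.shape
    window_sum = (s[size:size + h, size:size + w] - s[:h, size:size + w]
                  - s[size:size + h, :w] + s[:h, :w])
    return window_sum / float(size * size)

def decompose(ch: np.ndarray):
    """Split one contrast-enhanced channel into base and detail layers."""
    size = max(ch.shape)                    # template side max{m, n}, as chosen in the text
    base = box_mean(ch, size)               # base layer: mean-filtered channel
    detail = ch.astype(np.float64) - base   # detail layer: channel minus base layer
    return base, detail
```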

Compared with red light, blue and green light decay relatively slowly in water and travel farther. We therefore assume that the R channel of an underwater image loses more information, whereas the G and B channels retain more. Inspecting several underwater image datasets widely used by researchers, we found that most underwater images appear blue or green: most blue images were taken in the deep sea, whereas green images were taken in relatively near-shore waters. Our field observations also showed that seawater close to the coastline is generally very turbid because of currents and sediment and normally appears yellow; it gradually becomes green farther from the coast and then blue as the distance from the coast increases further. For an underwater image with a green hue, we assume that the G channel retains the most complete detail information after attenuation, and for an underwater image with a blue hue, the B channel does. This is because, under natural lighting, red light is generally depleted by attenuation at a depth of about 4 m.

Figure 3 shows the images before and after CLAHE stretching, their channel images, and the corresponding histograms. In blue-hue underwater images, the R-channel pixel values are mostly concentrated near 0, and the corresponding R-channel images contain many black dots. During image processing, a pixel value very close to 0 may be treated as 0, so the corresponding image information can be lost and that position in the image becomes a black dot. In addition, when pixel values are small, the human eye has difficulty distinguishing details in the darker image.

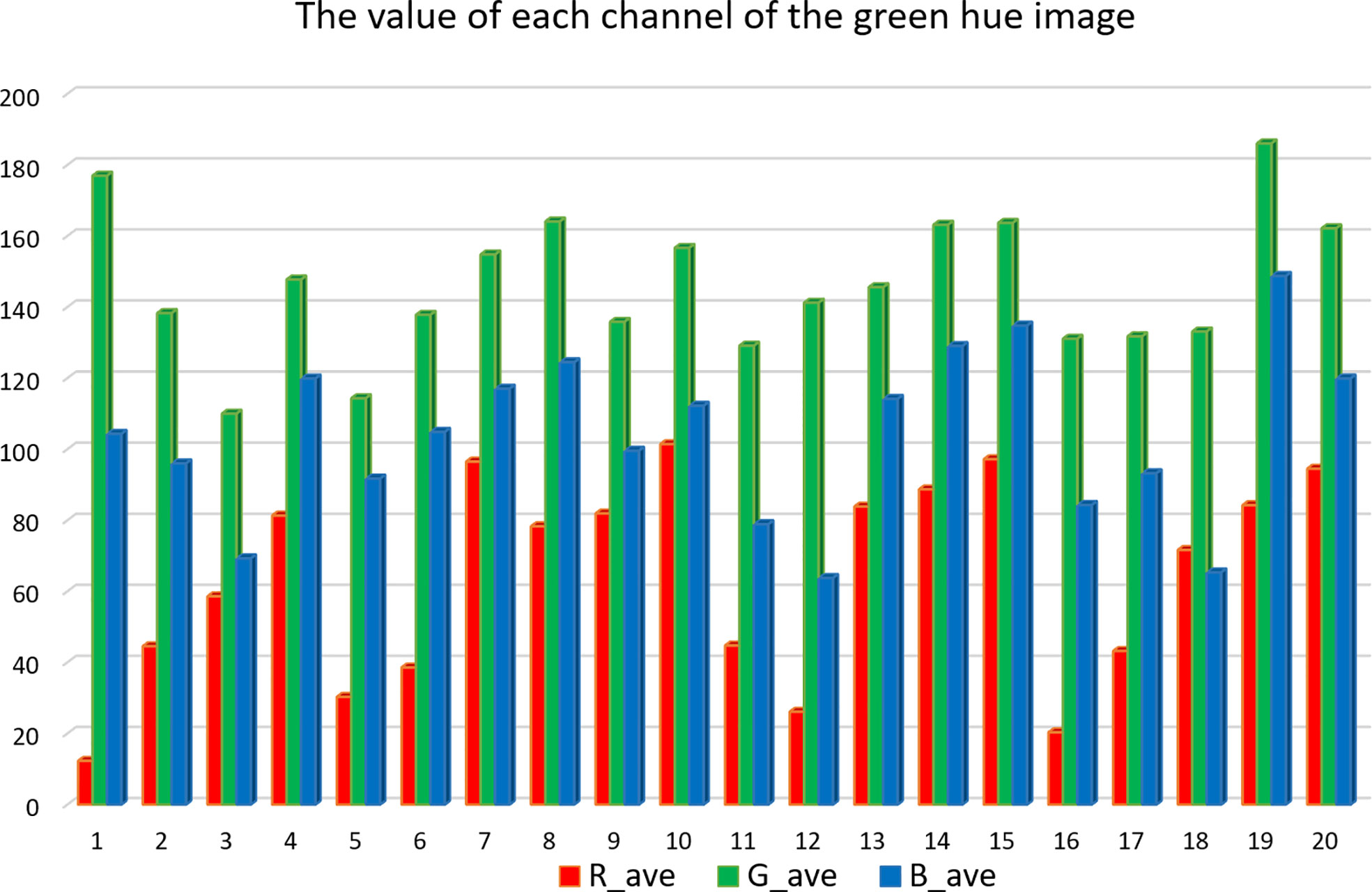

Extensive statistical experiments showed that the R-channel pixel values of blue-hue or green-hue images are relatively small: as shown in Figure 7 and Figure 8, the vast majority of R-channel values are below 50. We therefore conclude that the R channel suffers a serious loss of detail information, which must be compensated with the detail information of the G or B channel.

After a large number of image tests, it was found that the blue underwater images had the largest B channel mean and the green underwater images had the largest G channel mean. The pixel values of the R channel of the blue-green hue underwater images are all relatively small.

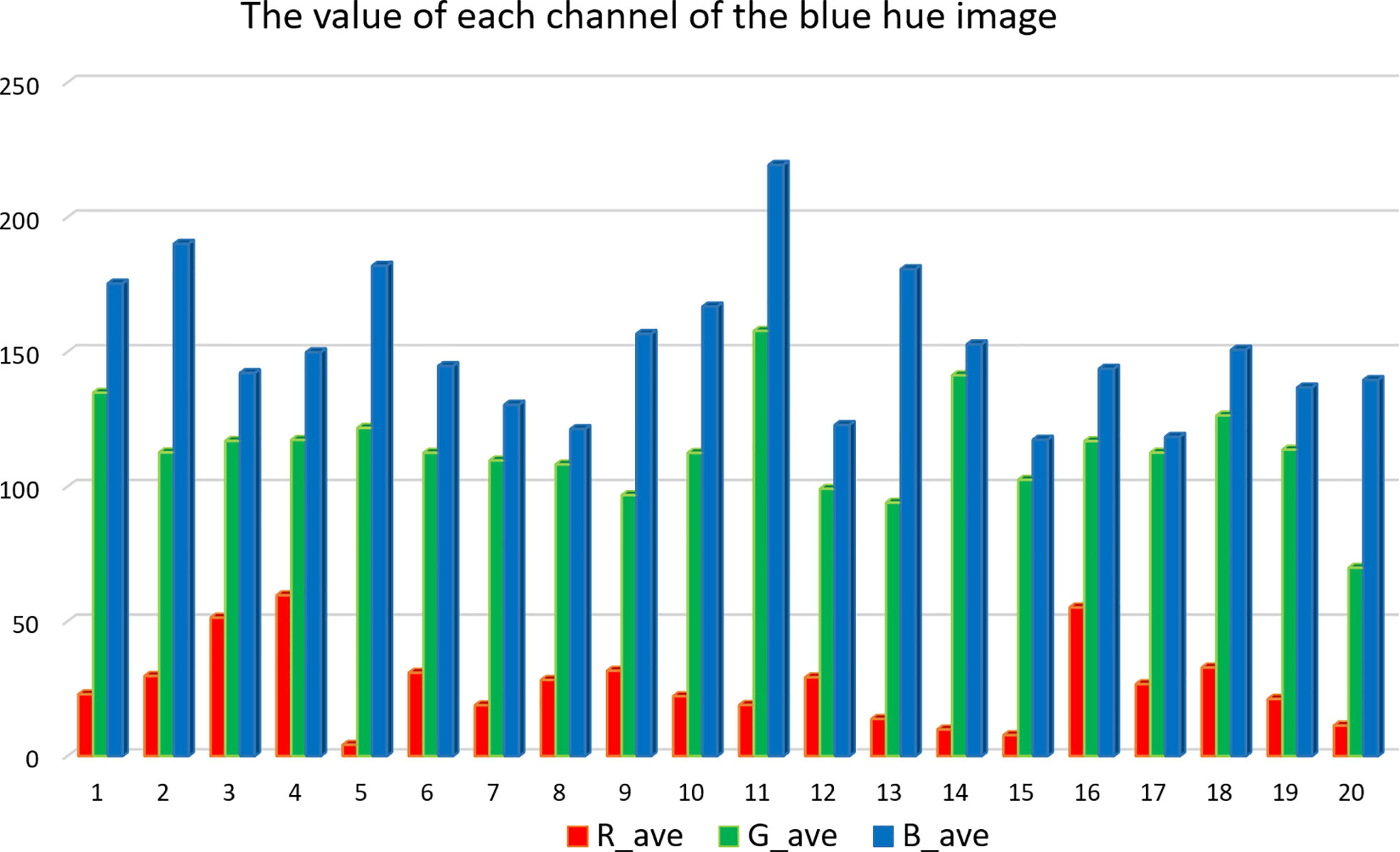

We selected 20 representative green-hue underwater images (Figure 5) and 20 representative blue-hue underwater images (Figure 6) for analysis; the analysis results are shown in Figure 7 and Figure 8. Accordingly, we compare the mean values of the G and B channels of an image to decide whether to compensate the detail information of the R channel with the G channel or the B channel.

Figure 7 Comparison of the per-channel mean values of the green-hue underwater images in Figure 5.

Figure 8 Comparison of mean values of blue hue underwater images in Figure 6 for each channel.

Here G_ave and B_ave are the mean values of the G and B channels of the image, respectively, and R_cp is the R channel after detail compensation. The compensation equation shows that when G_ave > B_ave, the image is green overall and the R channel is compensated with the detail layer of the G channel; when B_ave > G_ave, the image is blue overall and the R channel is compensated with the detail layer of the B channel. Finally, R_cp is combined with the G and B channels to form the underwater image after R-channel attenuation compensation.

As shown in Figure 7 and Figure 8, for green-hue underwater images the G channel has the largest mean among the RGB channels, and for blue-hue underwater images the B channel has the largest mean. The R channel has the smallest mean in both blue-hue and green-hue images, and in the vast majority of cases its mean is below 50. In DAC, we therefore first determine whether the image is dominated by green or blue and then compensate for the lost detail information of the R channel with the detail information of the dominant color channel.
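A sketch of the R-channel compensation rule, assuming an RGB-ordered float image (OpenCV, for instance, loads images in BGR order), detail layers computed as in the decomposition step, and an additive form R_cp = R + DL_G or R + DL_B applied to the contrast-enhanced R channel. The additive form, the clipping to [0, 255], and breaking ties in favor of the B channel are our assumptions, and `compensate_red` is a hypothetical name.

```python
import numpy as np

def compensate_red(img_ce: np.ndarray, detail_g: np.ndarray, detail_b: np.ndarray) -> np.ndarray:
    """Return a float copy of an RGB image whose R channel is detail-compensated."""
    img = img_ce.astype(np.float64)
    g_ave = img[..., 1].mean()
    b_ave = img[..., 2].mean()
    # Green-dominant image: borrow G detail; otherwise (blue-dominant or tie): borrow B detail.
    donor = detail_g if g_ave > b_ave else detail_b
    out = img.copy()
    out[..., 0] = np.clip(img[..., 0] + donor, 0.0, 255.0)  # R_cp (assumed additive form)
    return out
```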

Compensating the R channel partially removes the effect of attenuation on the underwater image, but some deviation from the true colors of the target scene remains. We therefore introduce the Gray World Algorithm (Fu et al., 2017) to further remove the effect of wavelength-dependent attenuation and restore the true colors of the target scene. In our experiments, most results showed an overall red cast, so we apply the Gray World Algorithm with the following restriction on the red channel.

Here, Gray is the mean of the R, G, and B channel means, and α is a weight coefficient in the range [0, 1] that controls the color recovery of the R channel. If α is too small, the red compensation is insufficient, some color information is lost, and the image has an overall blue-green cast. If α is too large, the red channel is overcompensated and the image appears reddish overall; in particular, the background may appear pink or purple. In this paper, we choose α = 0.8 for better performance. R_ave, G_ave, and B_ave are the mean values of the R_cp, G, and B channels, respectively, k_R, k_G, and k_B are the scale parameters of the R, G, and B channels, respectively, and the C channel of the output image is obtained by scaling the corresponding channel with k_C.
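The exact form of the restricted equation is not spelled out in the text above, so the following sketch shows one plausible reading consistent with the description: classical Gray World gains k_C = Gray / C_ave, with the red gain additionally multiplied by α. This placement of α is an assumption, not the paper's confirmed formula; with α = 1 it reduces to the standard Gray World Algorithm, which the text reports tends to produce a red cast. `gray_world_limited` is a hypothetical name.

```python
import numpy as np

def gray_world_limited(img: np.ndarray, alpha: float = 0.8) -> np.ndarray:
    """Gray World color balance with a damped red gain (assumed form)."""
    img = img.astype(np.float64)
    means = img.reshape(-1, 3).mean(axis=0)   # R_ave, G_ave, B_ave
    gray = means.mean()                       # mean of the three channel means
    k = gray / np.maximum(means, 1e-6)        # classical Gray World gains k_R, k_G, k_B
    k[0] *= alpha                             # ASSUMPTION: alpha scales only the red gain
    return np.clip(img * k, 0.0, 255.0)       # output channel C = k_C * input channel C
```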

In our experiments, we use the underwater image enhancement benchmark dataset UIEB (Li et al., 2019), which consists of 950 real-world underwater images covering diverse underwater environments and scenes, such as deep-sea fish, coral reefs, submarine cables, and underwater antiquities. Most images in the dataset have a blue-green cast, which matches the scenario our algorithm was designed for. To verify the effectiveness of our algorithm, we compare it quantitatively and qualitatively with other underwater image enhancement methods on the UIEB dataset.

We use two types of image quality metrics to demonstrate the effectiveness of our algorithm: no-reference metrics and full-reference metrics. As the no-reference metric, we use the underwater color image quality evaluation metric (UCIQE) (Yang and Sowmya, 2015), a linear combination of chroma, saturation, and contrast that evaluates the uneven color casts, blurring, and low contrast of underwater images. As full-reference metrics, we use MSE, PSNR, and SSIM. A smaller MSE indicates that the processed image is closer to the ground truth, and larger PSNR, SSIM, and UCIQE values indicate better image quality.

The CLAHE algorithm stretches the contrast of underwater images in a limited way, which increases their contrast and sharpness and improves their quality. However, its range of application is limited: it can overexpose or underexpose underwater images that are too bright or too dark, and it can lose image detail.
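The two simplest full-reference metrics can be written directly. A minimal sketch, assuming 8-bit images (peak value 255) of identical shape, with the hypothetical helper names `mse` and `psnr`; SSIM and UCIQE involve more machinery and are not shown.

```python
import numpy as np

def mse(a: np.ndarray, b: np.ndarray) -> float:
    """Mean squared error between two images of the same shape."""
    diff = a.astype(np.float64) - b.astype(np.float64)
    return float(np.mean(diff ** 2))

def psnr(a: np.ndarray, b: np.ndarray, peak: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    m = mse(a, b)
    return float("inf") if m == 0 else float(10.0 * np.log10(peak ** 2 / m))
```

For example, two 8-bit images that differ by 10 gray levels everywhere have an MSE of 100 and a PSNR of about 28.13 dB.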

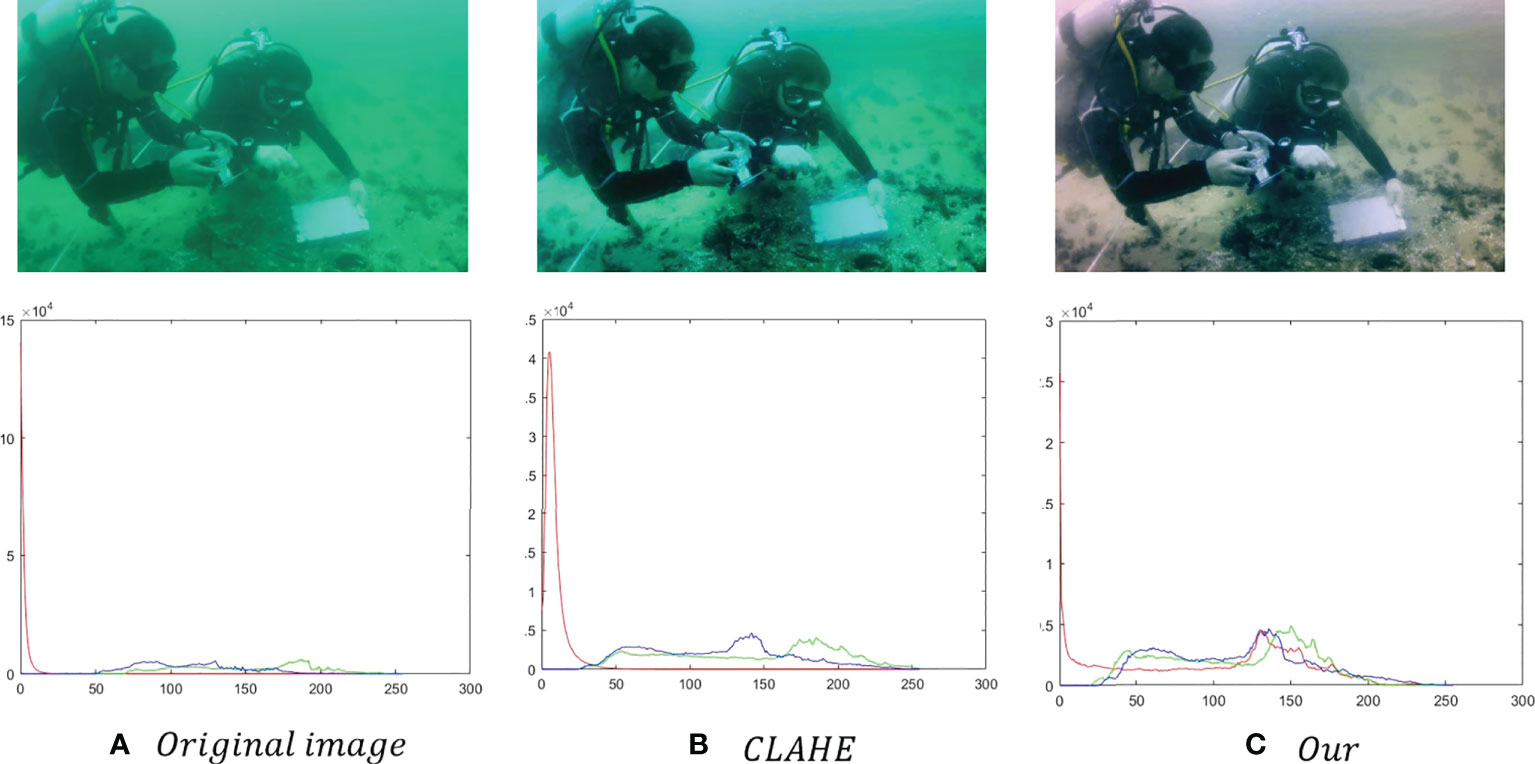

As shown in Figure 9A, the histogram of the original image reveals that the red channel of the unprocessed underwater image has few pixels outside the low-value range. To compensate for the lost detail information in the R channel, we stretch the image with CLAHE to increase the R-channel pixel values. As shown in Figure 9B, after CLAHE stretching every RGB channel has more pixels in the high-value range than in the original image; in particular, the peak of the R-channel histogram shifts clearly to the right, and the R-channel pixel values increase significantly. However, because the R channel carries little information, the stretched image still has little R-channel information, with most values in the range [0, 35]; the CLAHE-processed image therefore shows no obvious red and still has a blue-green hue. Our method, in contrast, compensates the red-channel detail information of the underwater image: as shown in Figure 9C, the histogram of the processed image peaks around a pixel value of 135, with significantly more pixels in the high-value range than in the original and CLAHE-processed images. The results of our method clearly remove the blue-green hue of the original and CLAHE-processed images.

Figure 9 Image Contrast Stretching. (A) is the histogram of the original image, (B) is the histogram of the image stretched by the CLAHE algorithm, (C) is the histogram of the image stretched by our DAC.

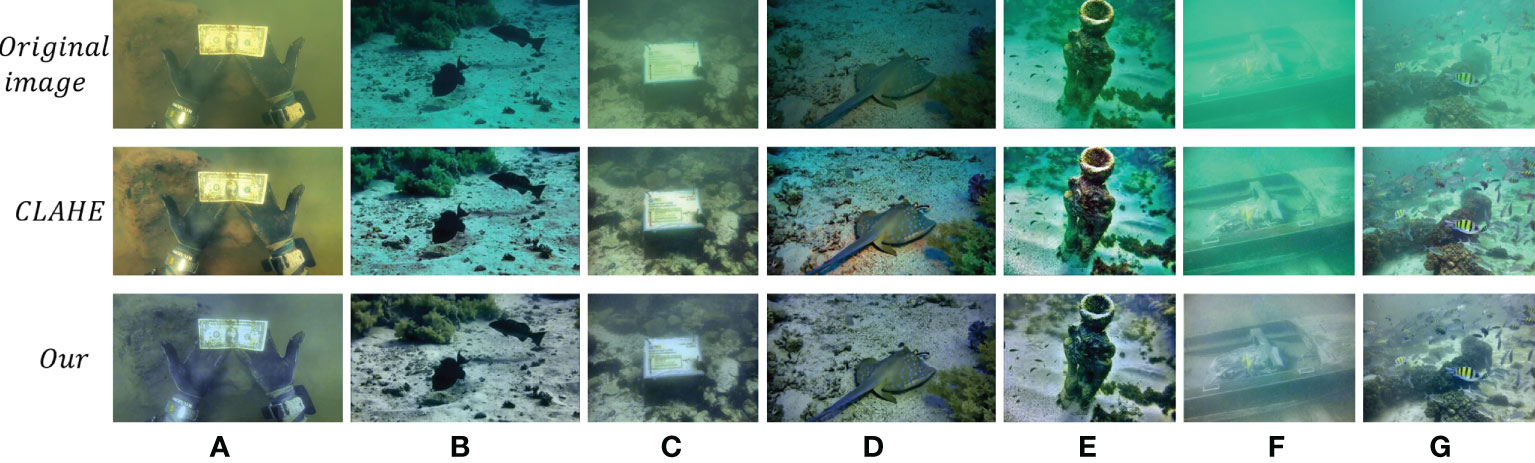

As shown in Figure 10, we compare our algorithm with CLAHE. Our algorithm clearly removes the blue-green hue of the original images and significantly enhances image detail. Figures 10A, C show that our method clearly removes the yellow-green hue of both the original images and the CLAHE results; without this hue interference, the information on the bill held in the diver's hand and on the packaging of the item in Figure 10C is clearer. As shown in Figures 10B, D, our method clearly removes the blue hue of the original images and the CLAHE results, so the outline of the fish and the details of the rocks in the background are clearer; our algorithm also removes the overstretched red shadows produced by CLAHE in Figure 10D, restoring the true shapes in the image. Figures 10E, F show that removing the strong green hue makes the objects in the boat and their outlines clearer, and the upper outlines of the objects are not overexposed as they are after CLAHE processing. Figure 10G shows that removing the green hue makes each fish in the school clearer.

Figure 10 Effect of comparison with CLAHE. (A–H) are underwater images in various scenes processed using the CLAHE algorithm and our DAC algorithm, respectively.

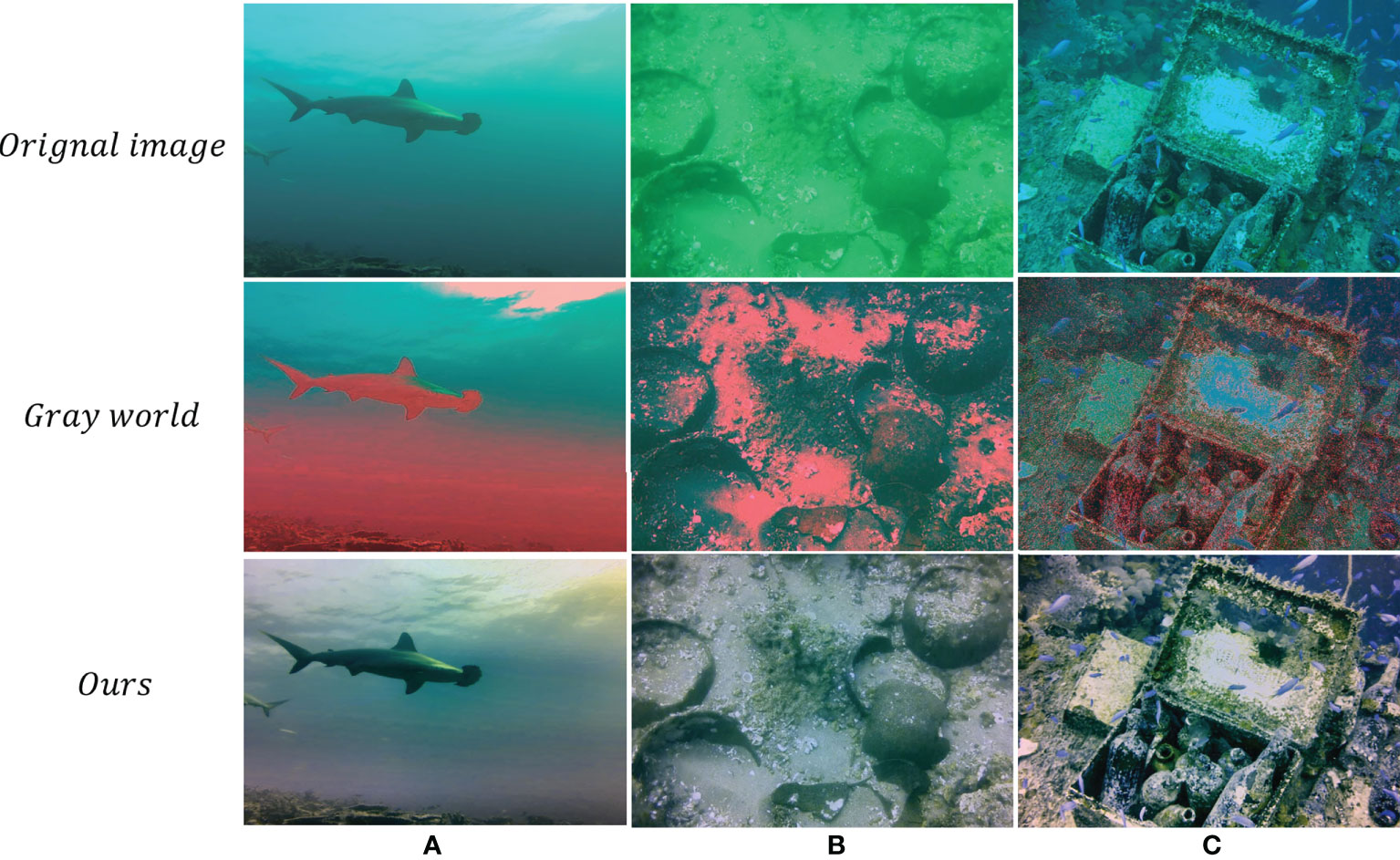

The Gray World Algorithm drives the mean values of the three channels of an underwater image toward the same gray value, which can remove the influence of ambient light on the image and restore its true color. Figure 11 compares our algorithm with the Gray World Algorithm. The color balancing of the Gray World Algorithm introduces serious chromatic aberration and leaves the underwater images reddish overall. In Figures 11A, B, the images processed by the Gray World Algorithm contain too much red information, so they lose their true colors. In addition, Figure 11C shows that the Gray World Algorithm loses detail in the underwater image. By compensating for color attenuation, our method produces images with more uniform colors and richer details.

Figure 11 The result of comparing with Gray World Algorithm. (A–G) are underwater images in various scenes processed using the Gray World algorithm and our DAC algorithm, respectively.

In addition, we quantitatively compare our method with the Gray World algorithm in Table 1, where bold values indicate the better result. Our algorithm outperforms the Gray World algorithm on all three metrics: MSE, PSNR and SSIM (Hore and Ziou, 2010).
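For reference, MSE and PSNR can be computed as below; this is a minimal sketch assuming 8-bit images (peak value 255) of identical shape, with illustrative function names. SSIM relies on local windowed statistics and is more involved; scikit-image provides it as `skimage.metrics.structural_similarity`.

```python
import numpy as np


def mse(reference: np.ndarray, test: np.ndarray) -> float:
    """Mean squared error between two images of identical shape (lower is better)."""
    diff = reference.astype(np.float64) - test.astype(np.float64)
    return float(np.mean(diff ** 2))


def psnr(reference: np.ndarray, test: np.ndarray, data_range: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(data_range^2 / MSE) (higher is better)."""
    err = mse(reference, test)
    if err == 0.0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(data_range ** 2 / err))


ref = np.full((4, 4, 3), 100, dtype=np.uint8)
tst = np.full((4, 4, 3), 110, dtype=np.uint8)
print(mse(ref, tst))   # 100.0
print(psnr(ref, tst))  # about 28.13 dB
```

Casting to float before subtracting avoids the wrap-around that unsigned 8-bit arithmetic would otherwise cause.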

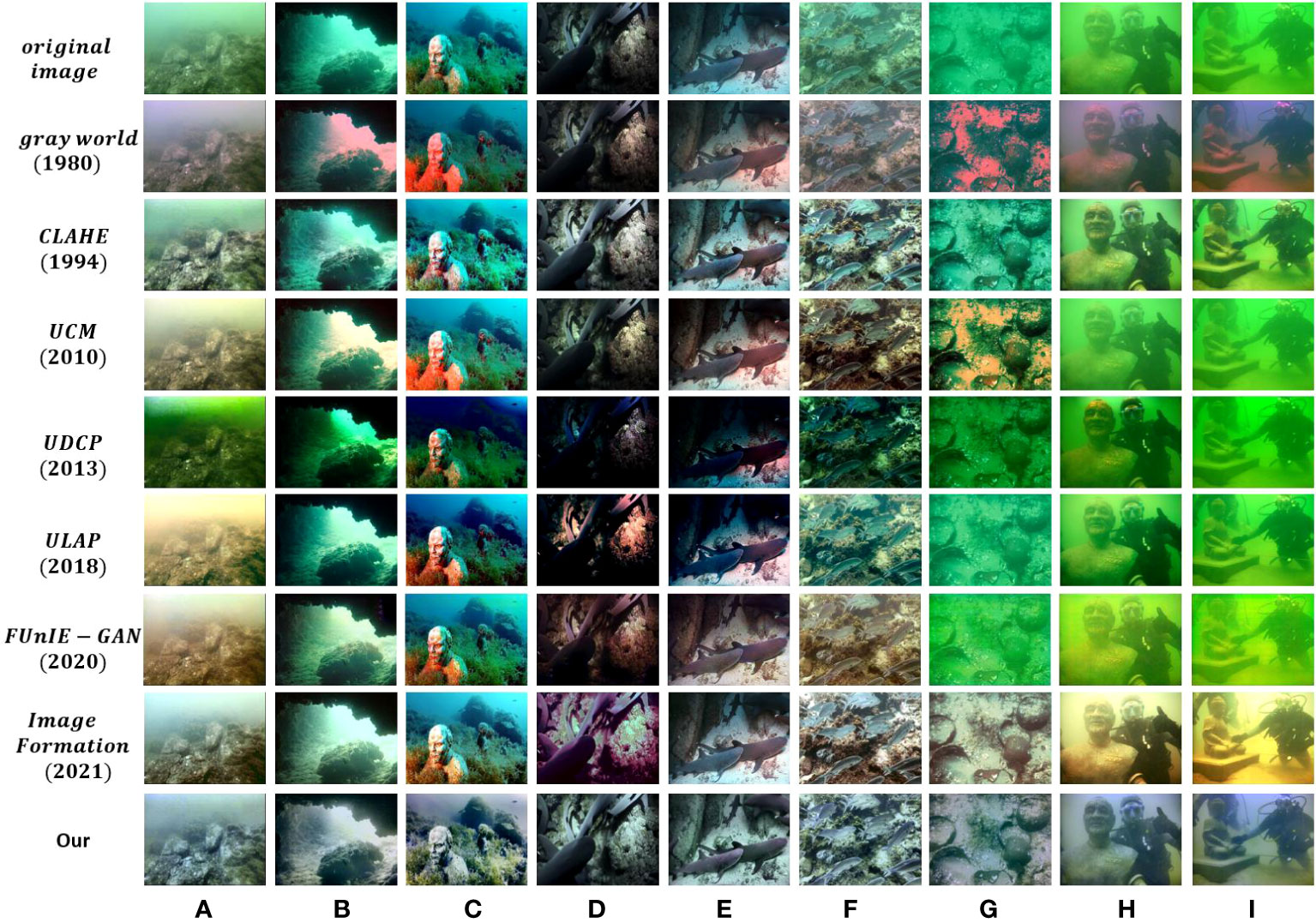

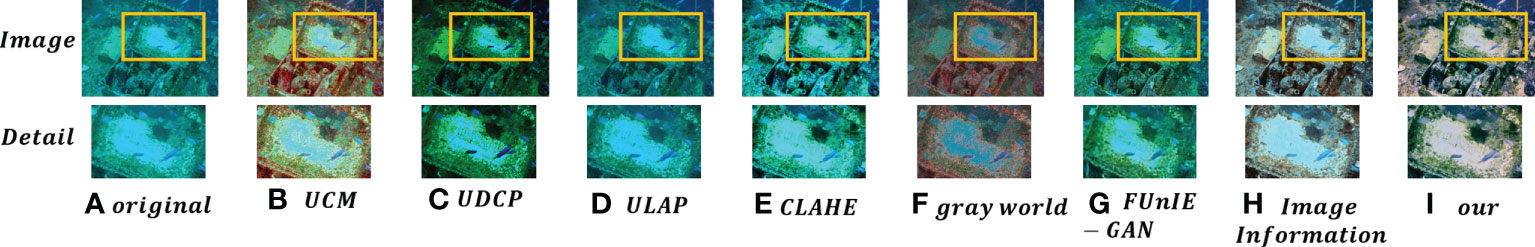

To demonstrate that our algorithm significantly improves underwater images, we qualitatively compare it with UCM (Iqbal et al., 2010), UDCP, ULAP (Song et al., 2018), CLAHE, the Gray World algorithm, Image Formation (Chen et al., 2021) and FUnIE-GAN, which is based on generative adversarial networks. The results are shown in Figure 12.

Figure 12 Comparison of DAC with classical and state-of-the-art methods on the UIEB dataset. (A–I) compare the original image with the results of UCM, UDCP, ULAP, CLAHE, Gray World, FUnIE-GAN, Image Formation and our DAC in each underwater scene.

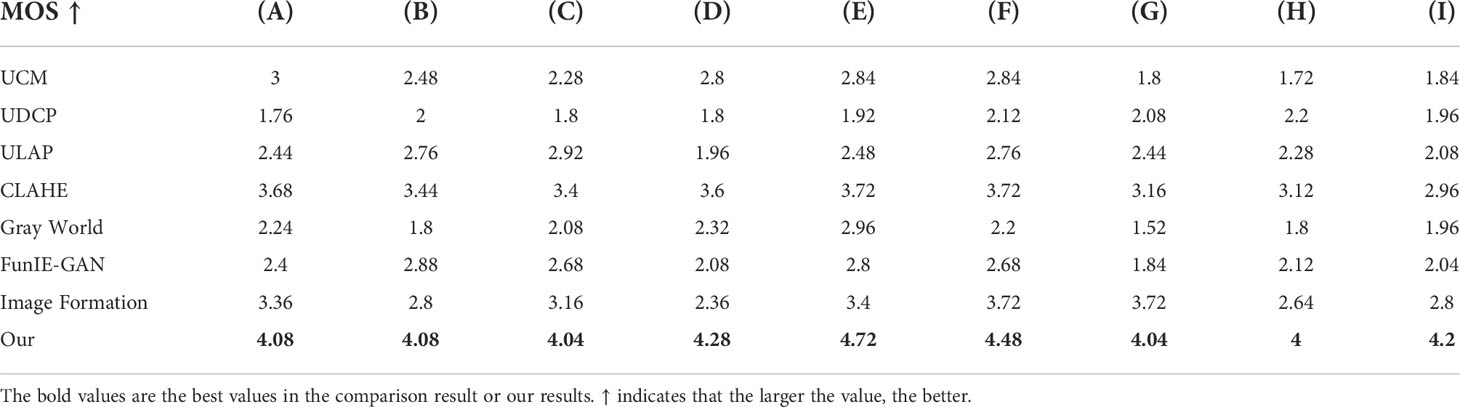

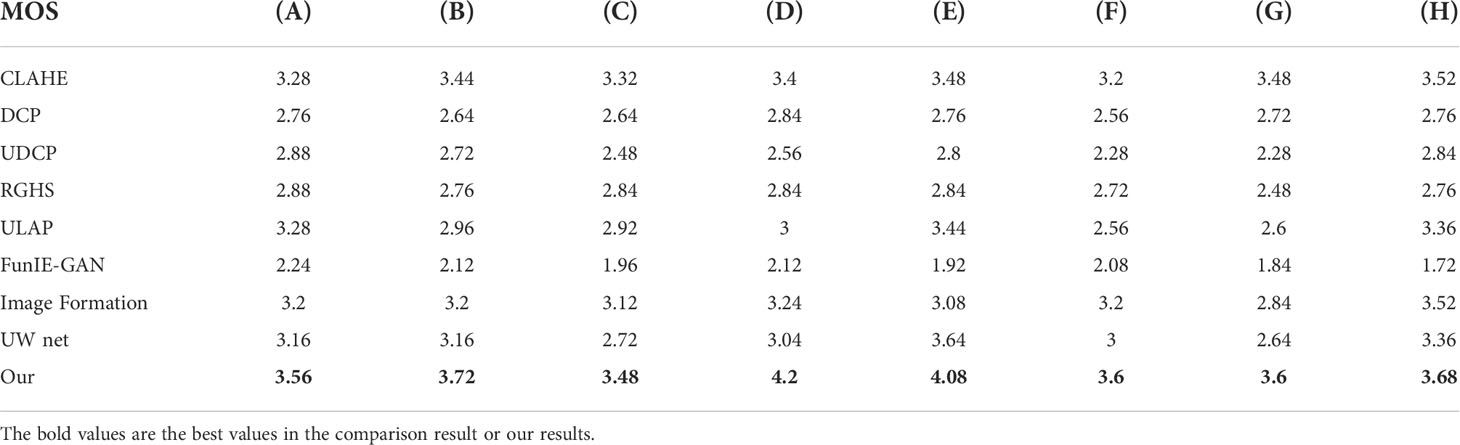

In addition, we conducted a Mean Opinion Score (MOS) test as a subjective evaluation. Twenty-five volunteers, teachers and students recruited on university campuses, rated the images in Figure 12 on a scale with a maximum of 5 points. Table 2 lists the mean scores of the 25 volunteers, with the best values in bold. The images enhanced by our DAC method receive the highest score in every comparison, indicating that they have a clear advantage in the subjective test and better match human visual preferences. The full table of subjective test scores is available at https://github.com/lailaiyun/Single-Underwater-Image-Enhancement-Based-on-Differential-Attenuation-Compensation.
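The MOS values are per-image, per-method averages of the volunteers' scores. The sketch below shows that arithmetic under stated assumptions: ratings are stored in a hypothetical NumPy array of shape (volunteers, images, methods), and the small example data are invented for illustration, not taken from the published score table.

```python
import numpy as np


def mean_opinion_scores(ratings: np.ndarray) -> np.ndarray:
    """Average ratings over volunteers.

    ratings: array of shape (n_volunteers, n_images, n_methods).
    Returns an array of shape (n_images, n_methods).
    """
    ratings = np.asarray(ratings, dtype=np.float64)
    if ratings.ndim != 3:
        raise ValueError("expected shape (volunteers, images, methods)")
    return ratings.mean(axis=0)


def best_method_per_image(mos: np.ndarray, method_names):
    """Name of the highest-scoring method for each image."""
    return [method_names[i] for i in np.argmax(mos, axis=1)]


# Hypothetical ratings: 3 volunteers, 2 images, 3 methods (not the paper's data).
ratings = np.array([
    [[2, 3, 5], [1, 4, 4]],
    [[3, 3, 4], [2, 3, 5]],
    [[2, 4, 5], [2, 4, 5]],
])
mos = mean_opinion_scores(ratings)
print(best_method_per_image(mos, ["CLAHE", "Gray World", "DAC"]))  # ['DAC', 'DAC']
```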

Table 2 MOS of images in Figure 12.

As shown in Figure 12, our algorithm enhances the images markedly compared with the other algorithms. Our processed images show less color shift and less blue-green hue, and their true colors are restored, so they look closer to scenes captured on land. Our method also improves image clarity and detail. UCM enhances image quality by stretching the image in the RGB and HSV color spaces, and FUnIE-GAN and Image Formation both claim to improve image contrast. However, the experimental comparison shows that our DAC method improves the contrast and details of underwater images clearly better than these methods. Our method therefore has obvious advantages over general approaches that improve image quality by stretching.
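Stretching-based methods such as UCM build on linear contrast stretching. As a hedged illustration of that basic operation only (not of UCM's full RGB/HSV pipeline or of DAC's own stretching step), the sketch below maps chosen percentiles of each RGB channel to 0 and 1; the percentile defaults and the name `linear_stretch` are assumptions.

```python
import numpy as np


def linear_stretch(image: np.ndarray, low_pct: float = 1.0, high_pct: float = 99.0) -> np.ndarray:
    """Per-channel linear contrast stretch (illustrative sketch).

    image: float array (H, W, C) in [0, 1]. In each channel, the value at
    low_pct is mapped to 0 and the value at high_pct to 1; values outside
    that range are clipped.
    """
    img = image.astype(np.float64)
    out = np.empty_like(img)
    for c in range(img.shape[2]):
        lo, hi = np.percentile(img[..., c], [low_pct, high_pct])
        if hi - lo < 1e-6:
            out[..., c] = img[..., c]  # flat channel: nothing to stretch
        else:
            out[..., c] = (img[..., c] - lo) / (hi - lo)
    return np.clip(out, 0.0, 1.0)
```

Setting the percentiles to 0 and 100 gives a plain min-max stretch; clipping a small fraction of extreme pixels, as the defaults do, keeps isolated very bright or very dark pixels from dominating the mapping.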

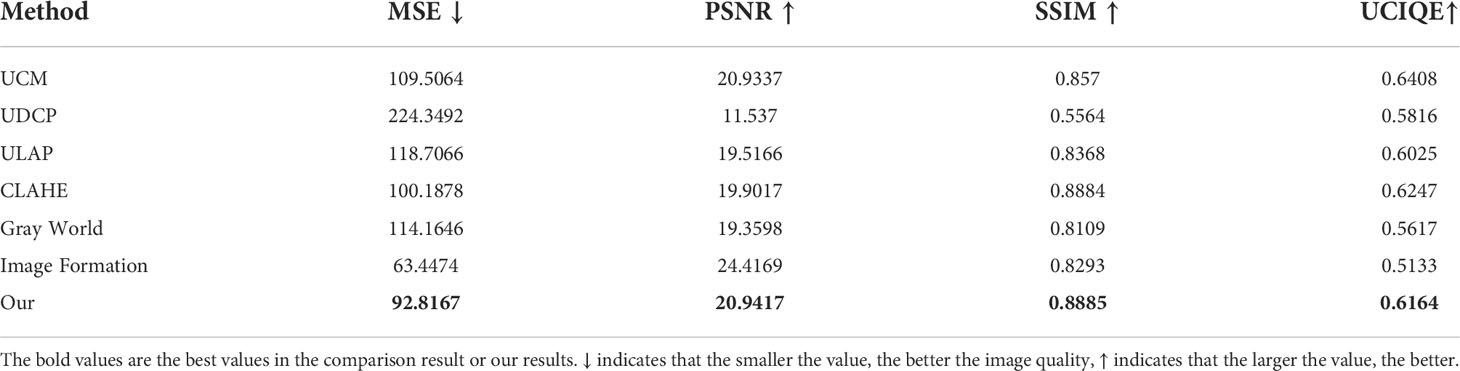

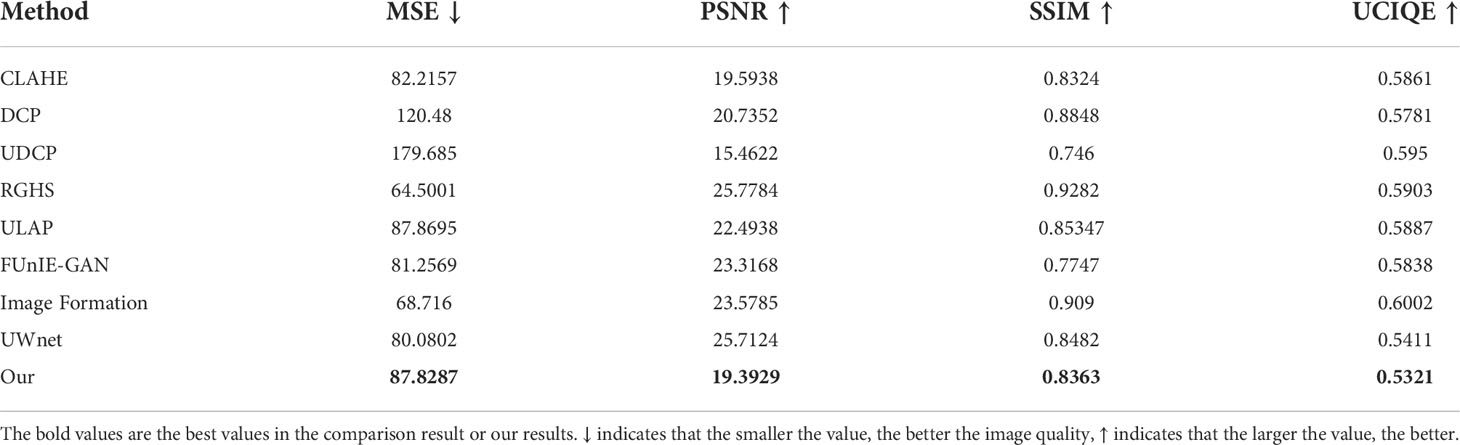

Table 3 reports the quantitative comparison of our method with the other methods on the MSE, PSNR, SSIM and UCIQE metrics, with our results in bold. In MSE, only the Image Formation method obtains a lower (better) value than ours, and in PSNR, only the Image Formation method obtains a higher value; however, our method scores much higher than Image Formation in both SSIM and UCIQE. Our method achieves the highest SSIM of all methods and is second only to UCM in UCIQE. Overall, our method retains a clear advantage over the other methods in the quantitative analysis.
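UCIQE (Yang and Sowmya, 2015) is a weighted sum of the standard deviation of chroma, the contrast of luminance and the mean saturation in CIELab space, with weights c1 = 0.4680, c2 = 0.2745 and c3 = 0.2576. The sketch below is one possible implementation under explicit assumptions: sRGB input in [0, 1], a D65 white point, luminance contrast taken as the spread between the 1st and 99th percentiles of L, and saturation defined as C / sqrt(C² + L²). Published implementations differ in these choices and in normalization, so its values should not be expected to match Tables 3 and 5.

```python
import numpy as np

# Weights reported by Yang and Sowmya (2015).
UCIQE_COEFFS = (0.4680, 0.2745, 0.2576)


def srgb_to_lab(rgb: np.ndarray):
    """Convert sRGB values in [0, 1] (shape (..., 3)) to CIELab (D65)."""
    rgb = np.asarray(rgb, dtype=np.float64)
    # Undo the sRGB transfer curve to obtain linear RGB.
    lin = np.where(rgb <= 0.04045, rgb / 12.92, ((rgb + 0.055) / 1.055) ** 2.4)
    m = np.array([[0.4124564, 0.3575761, 0.1804375],
                  [0.2126729, 0.7151522, 0.0721750],
                  [0.0193339, 0.1191920, 0.9503041]])
    xyz = lin @ m.T
    t = xyz / np.array([0.95047, 1.0, 1.08883])  # normalize by the D65 white point
    delta = 6.0 / 29.0
    f = np.where(t > delta ** 3, np.cbrt(t), t / (3 * delta ** 2) + 4.0 / 29.0)
    L = 116.0 * f[..., 1] - 16.0
    a = 500.0 * (f[..., 0] - f[..., 1])
    b = 200.0 * (f[..., 1] - f[..., 2])
    return L, a, b


def uciqe(rgb: np.ndarray, coeffs=UCIQE_COEFFS) -> float:
    """UCIQE = c1 * sigma_c + c2 * con_l + c3 * mu_s (illustrative sketch)."""
    L, a, b = srgb_to_lab(rgb)
    chroma = np.hypot(a, b)
    sigma_c = chroma.std()                     # spread of chroma
    lo, hi = np.percentile(L, [1, 99])
    con_l = hi - lo                            # luminance contrast
    # Assumed saturation definition, bounded in [0, 1].
    sat = chroma / np.maximum(np.sqrt(chroma ** 2 + L ** 2), 1e-12)
    mu_s = sat.mean()
    c1, c2, c3 = coeffs
    return float(c1 * sigma_c + c2 * con_l + c3 * mu_s)
```

Because every term rewards color spread, contrast or saturation, UCIQE favors vivid images, which is worth keeping in mind when comparing against ground truths whose colors are richer than real underwater scenes.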

Table 3 The quantitative analysis of comparing with other methods on UIEB dataset. Each value is the mean of the processing results of each method in UIEB dataset.

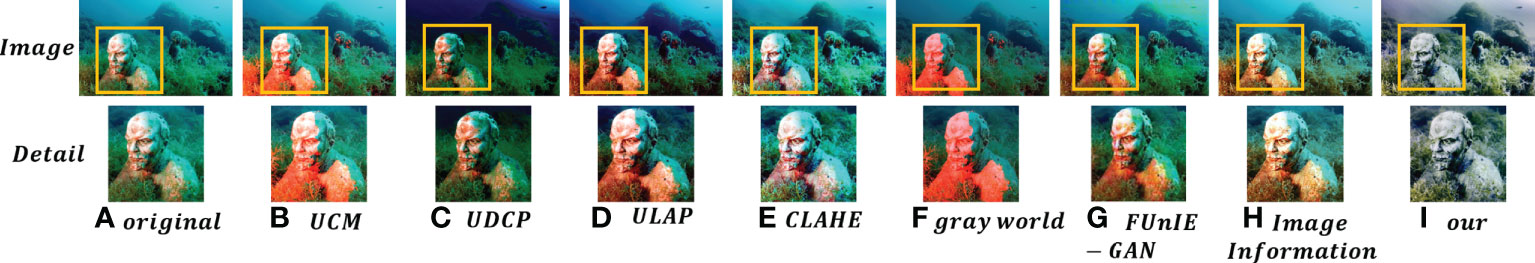

We also compared image details across methods, using the stone statue (Figure 13) and the text on a box (Figure 14). Figure 13 shows that the other algorithms partly overexpose the stone, whereas our algorithm corrects its uneven illumination, brightening the dark areas and toning down the bright areas. Figure 14 shows that the image processed by our algorithm contains more detail and clearer text, demonstrating the improvement in image detail achieved by our algorithm.

Figure 13 Comparison of stone statue details. (A–I) show the details of the stone image in the original image and after processing with UCM, UDCP, ULAP, CLAHE, Gray World, FUnIE-GAN, Image Formation and our DAC, respectively.

Figure 14 Comparison of the text on the box. (A–I) show the text on the box in the original image and after processing with UCM, UDCP, ULAP, CLAHE, Gray World, FUnIE-GAN, Image Formation and our DAC, respectively.

In addition to the UIEB dataset, our experiments also use EUVP, a synthetic underwater image dataset provided by Islam et al. (2020) together with FUnIE-GAN. EUVP consists of unpaired and paired data; we used the Underwater Dark subset of the paired data and randomly selected 8 underwater images from it for qualitative and quantitative analysis.

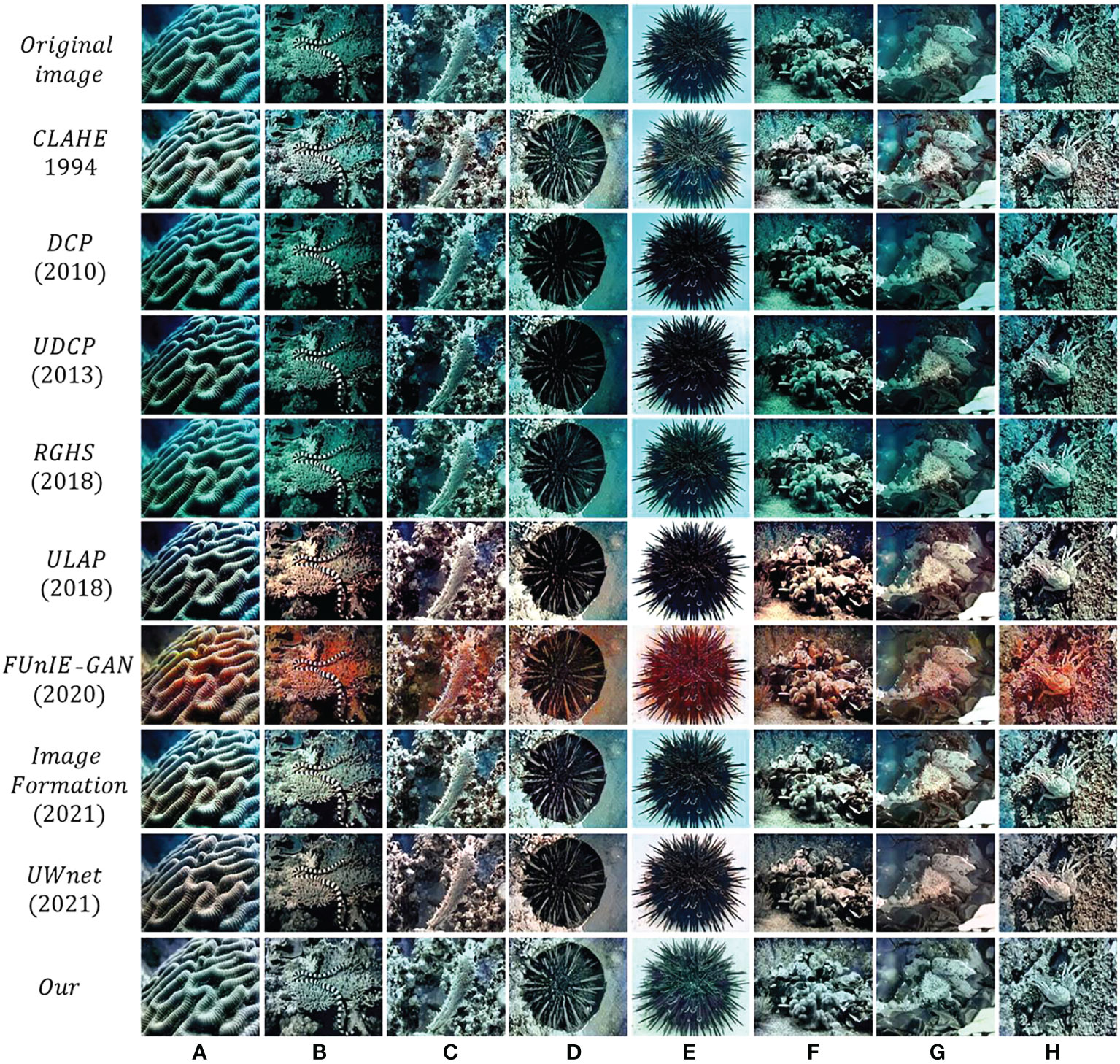

We also conducted an MOS test on the images in Figure 15: 25 volunteers, teachers and students recruited on university campuses, rated the images on a scale with a maximum of 5 points. Table 4 lists their mean scores, with the best values in bold. The images enhanced by DAC receive the highest score in every comparison, which again indicates a clear advantage in the subjective test and a better match with human visual preferences. The full table of subjective test scores is available at https://github.com/lailaiyun/Single-Underwater-Image-Enhancement-Based-on-Differential-Attenuation-Compensation.

Figure 15 Comparison of DAC with classical and state-of-the-art methods on the EUVP dataset. (A–H) show, for each underwater scene, the original image and the results of CLAHE, DCP, UDCP, RGHS, ULAP, FUnIE-GAN, Image Formation, UWnet and our DAC.

Table 4 MOS of images in Figure 15.

Figure 15 compares our results with those of the traditional methods CLAHE and DCP (He et al., 2010); UDCP, which is derived from DCP; the relative global histogram stretching (RGHS) method proposed by Huang et al. (2018); the Underwater Light Attenuation Prior (ULAP) method; and UWnet, a compressed model for underwater image enhancement proposed by Naik et al. (2021).
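DCP and UDCP both start from a dark channel: the per-pixel minimum over color channels, followed by a minimum filter over a local patch. UDCP computes it over the green and blue channels only, because the strongly attenuated red channel would otherwise make almost every underwater pixel look dark. The NumPy sketch below illustrates only this step, not the transmission estimation or restoration of either method; the default patch size of 15 and the function name are assumptions.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def dark_channel(image: np.ndarray, patch: int = 15, channels=(0, 1, 2)) -> np.ndarray:
    """Dark channel of an (H, W, 3) RGB image in [0, 1] (illustrative sketch).

    channels=(0, 1, 2) gives the DCP dark channel; channels=(1, 2), i.e.
    green and blue only, gives the underwater variant used by UDCP.
    """
    if patch % 2 == 0:
        raise ValueError("patch size must be odd")
    per_pixel_min = image[..., list(channels)].min(axis=2)  # minimum over chosen channels
    pad = patch // 2
    padded = np.pad(per_pixel_min, pad, mode="edge")
    windows = sliding_window_view(padded, (patch, patch))   # shape (H, W, patch, patch)
    return windows.min(axis=(-2, -1))                       # minimum filter over each patch
```

For a uniform scene with a weak red channel, the DCP dark channel stays close to the red value, so DCP would treat the scene as nearly haze-free, while the green-blue dark channel remains high.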

CLAHE, DCP, UDCP and RGHS remove the blur from the images but do not eliminate their blue-green hue. The images produced by Image Formation and UWnet are less detailed than those produced by our DAC method; as the comparison in Figures 15D, E shows, the sea urchin spines retain more detail after DAC processing.

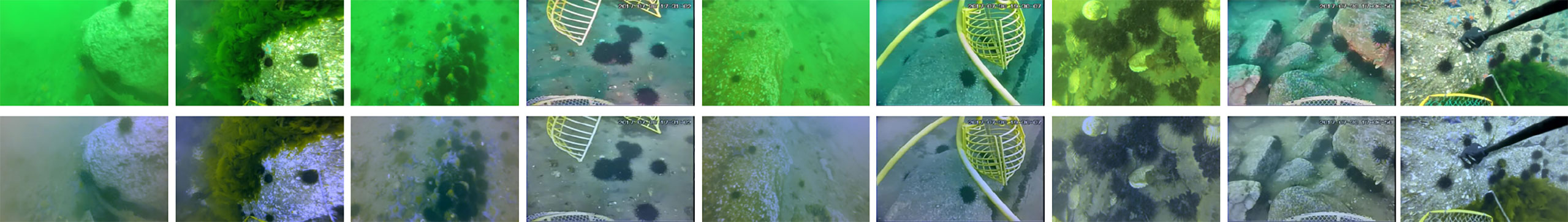

Table 5 compares all methods quantitatively, with our results in bold. Our DAC results achieve good MSE and SSIM values and outperform most other methods, which indicates that they are close to the ground truth. In our experience, a larger PSNR often does not correspond to better visual quality; thus, although the PSNR of the DAC results is not high, their visual quality is better than that of most methods, as the subjective test shows. Our DAC results have the lowest mean UCIQE among the compared methods. We attribute this to UCIQE being a color-related metric: EUVP is a synthetic dataset whose ground truth images have rich colors, whereas real underwater scenes have dull colors, as shown in the first row of Figure 16. The colors of the images processed by our DAC method are more consistent with these real conditions.

Table 5 The quantitative analysis of comparing with other methods on EUVP dataset. Each value is the mean of the processing results of each method in EUVP dataset.

Figure 16 Original underwater images provided by the 2022 China Underwater Robot Professional Contest, and the DAC results of these images.

Underwater image enhancement not only provides high-quality underwater images and videos that suit human visual habits, but also serves as a basis for other underwater tasks: enhancing image and video quality can improve the robustness and accuracy of tasks such as underwater target detection. In recent years, many researchers have combined underwater image enhancement with object detection. Yeh et al. (2021) proposed a lightweight deep neural network (LDN) consisting of a color conversion network and an object detection network, and showed quantitatively that correcting the color of underwater images can improve the accuracy of underwater target detection. Liu et al. (2022) addressed the low contrast and color loss of underwater images with a self-adaptive global histogram method and introduced the convolutional block attention module (CBAM) into YOLO v5 to adapt the network to target detection in underwater environments. Zhao et al. (2021) improved the residual network (ResNet) to design a new composite backbone network (CBresnet) and an enhanced path aggregation network (EPANet), forming a novel composite fish detection framework with strong detection capability for targets in underwater environments.

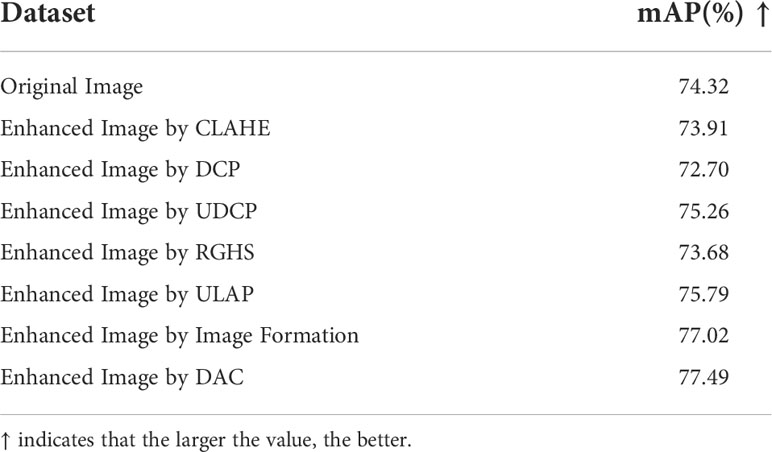

Although this paper does not propose a new underwater target detection method based on image enhancement, we have worked on target detection in underwater environments for many years and have reported our latest progress in other work. Figure 17 and Table 6 briefly demonstrate how the DAC method improves performance on an underwater target detection task.

Table 6 mAPs on the original underwater image dataset and on the datasets enhanced by CLAHE, DCP, UDCP, RGHS, ULAP, Image Formation and DAC, all obtained at the 100th epoch.

We enhanced the underwater images provided by the 2022 China Underwater Robot Professional Contest, which are available at http://www.urpc.org.cn/index.html. After processing, the targets in the images become clearer. We then used the processed images for target detection with YOLO v7 (Wang et al., 2022), and more targets are detected in the enhanced images, as shown in Figure 17. The YOLO v7 experiments were run on an Intel(R) Core(TM) i7-12700KF @ 3.61 GHz CPU with 16 GB RAM and an NVIDIA GeForce RTX 3080 Ti graphics card, under Windows 10 Professional with Python 3.8, PyTorch 1.12.0 and CUDA 11.6. Training used the Adam optimizer with a step learning-rate schedule, a maximum learning rate of 0.001, a batch size of 8 for frozen training and 4 for unfrozen training, and a momentum of 0.937.

In Figure 16, the first row shows the original images and the second row shows the corresponding DAC results. The original images, provided by the 2022 China Underwater Robot Professional Contest, were all collected in real underwater scenes, and their colors are clearly rather monotonous. This reflects the dull colors of real-world underwater images, for which color-related metrics are unlikely to reach high values. This may explain the low values of the color-related UCIQE metric in our quantitative analysis on the synthetic EUVP dataset (Table 5, Section 4.2).

In Figure 17, the top-left image is the original unprocessed image and the bottom-left image is its detection result; the top-right image is the underwater image after DAC processing and the bottom-right image is its detection result. As the yellow boxes show, more sea urchins are identified in the processed image.

In the comparison experiment, we processed the original images provided by the 2022 China Underwater Robot Professional Contest with CLAHE, DCP, UDCP, RGHS, ULAP, Image Formation and our DAC method, respectively, kept the labels unchanged, and trained a YOLO v7 detection model on each resulting dataset. Because FUnIE-GAN and UWnet change the size of the output images, so that the images no longer match the labels, we did not evaluate YOLO v7 on images processed by these two methods. Table 6 lists the model mAP at the 100th epoch for the original dataset and for each enhanced dataset. The datasets enhanced by CLAHE, DCP and RGHS yield a lower mAP than the original images, which may be related to the distortion of some images processed by these methods. The datasets enhanced by UDCP, ULAP, Image Formation and DAC yield significantly higher mAP, and the DAC dataset achieves the highest mAP, an increase of 3.17%. This shows that underwater images enhanced by our DAC method can significantly improve the performance of underwater object detection and outperform the other methods.

Having demonstrated the performance improvement of our DAC method on underwater object detection, we also verified that images processed by our algorithm benefit other vision tasks by applying the Canny edge detector (Canny, 1986) to them. Figure 18 compares the Canny edge detection results of the original images and of the images enhanced by each method.

In Figure 18, the first and third rows show the original images and the results of each method, and the second and fourth rows show the corresponding Canny edge detection results. In the second row, the outline of the background stone is clearer in the image enhanced by our method, and the outline of the seagrass is also clear. In the fourth row, the outlines of the stones are clearer and the background noise is lower in the images processed by our method. This shows that our DAC method improves the quality of underwater images to a certain extent and can improve the performance of related underwater tasks.

In this paper, we propose DAC, a pixel-processing-based underwater image enhancement method. Based on the differential attenuation of light of different wavelengths underwater, DAC decomposes the information in each channel of the underwater image in RGB color space, compensates the detail information of the R channel, removes image blur and corrects chromatic aberration, yielding underwater images with colors closer to the real ones. To verify the effectiveness of the DAC algorithm, we demonstrated its superiority through qualitative and quantitative analyses in the experimental and application sections.
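The idea of compensating the attenuated red channel from a better-preserved channel can be illustrated with the related formula of Ancuti et al. (2017), which adds a green-driven term to the red channel: I_rc(x) = I_r(x) + α (Ī_g - Ī_r)(1 - I_r(x)) I_g(x), where Ī denotes a channel mean. This is not the DAC formulation, which draws on both the blue and green channels; the sketch assumes an RGB float image in [0, 1] and α = 1, and the function name is illustrative.

```python
import numpy as np


def compensate_red(image: np.ndarray, alpha: float = 1.0) -> np.ndarray:
    """Green-based red-channel compensation after Ancuti et al. (2017).

    image: float array (H, W, 3), RGB in [0, 1]. Only the red channel is
    modified; green and blue are returned unchanged.
    """
    img = image.astype(np.float64)
    r, g = img[..., 0], img[..., 1]
    # The boost is larger where red is weak (1 - r) and green is strong (g),
    # scaled by the gap between the green and red channel means.
    r_comp = r + alpha * (g.mean() - r.mean()) * (1.0 - r) * g
    out = img.copy()
    out[..., 0] = np.clip(r_comp, 0.0, 1.0)
    return out
```

The (1 - r) factor keeps pixels whose red value is already high from being pushed further, which limits the over-red results that a global gain such as Gray World can produce.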

Publicly available datasets were analyzed in this study. These data can be found here: UIEB: https://li-chongyi.github.io/proj_benchmark.html; EUVP: https://irvlab.cs.umn.edu/resources/euvp-dataset.

Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

YL completed the main work of this paper. ZZ, BS and ZX guided YL in this research. JT, WL, JC and JY helped complete part of the experiments. All authors were involved in revising and proofreading the manuscript and approved the submitted version.

Our work was supported in part by the Key Platform and Scientific Research Project for General Universities, Guangdong, 2022, grant 2022ZDZX4061, in part by the Science and Technology Program of Social Development, Zhuhai, 2022, grant 2220004000195, in part by the Guangdong Provincial Key Laboratory of Interdisciplinary Research and Application for Data Science, BNU-HKBU United International College, project code 2022B1212010006, and in part by Guangdong Higher Education Upgrading Plan (2021-2025) with UIC research grant R0400001-22 and R201902.

Throughout the writing of this thesis, I have received a great deal of support and assistance, and I would like to express my gratitude to all those who helped me. My deepest gratitude goes first and foremost to my supervisor, Zhuang Zhou, for his constant encouragement and guidance; he has walked me through all the stages of writing this thesis, and without his consistent and illuminating instruction, this thesis could not have reached its present form. Second, I would like to express my heartfelt gratitude to Professor Binghua Su, who provided me with a good platform to complete my thesis. Finally, I am indebted to my parents, the other authors of this paper, and my friends for their continuous support and encouragement.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Abdullah-Al-Wadud M., Kabir M. H., Dewan M. A. A., Chae O. (2007). A dynamic histogram equalization for image contrast enhancement. IEEE Trans. Consum. Electron. 53, 593–600. doi: 10.1109/TCE.2007.381734

Akkaynak D., Treibitz T. (2018). “A revised underwater image formation model,” in Proceedings of the IEEE conference on computer vision and pattern recognition (Salt Lake City, UT, USA: IEEE), 6723–6732. doi: 10.1109/CVPR.2018.00703

Akkaynak D., Treibitz T. (2019). Sea-Thru: A method for removing water from underwater images. Proc. IEEE/CVF Conf. Comput. Vision Pattern Recognit., 1682–1691. doi: 10.1109/CVPR.2019.00178

Ancuti C. O., Ancuti C., De Vleeschouwer C., Bekaert P. (2017). Color balance and fusion for underwater image enhancement. IEEE Trans. image Process. 27, 379–393. doi: 10.1109/TIP.2017.2759252

Ancuti C., Ancuti C. O., Haber T., Bekaert P. (2012). “Enhancing underwater images and videos by fusion,” in 2012 IEEE conference on computer vision and pattern recognition (IEEE), 81–88.

Canny J. (1986). A computational approach to edge detection. IEEE Trans. Pattern Anal. Mach. Intell. 8, 679–698. doi: 10.1109/TPAMI.1986.4767851

Chen X., Zhang P., Quan L., Yi C., Lu C. (2021). Underwater image enhancement based on deep learning and image formation model. arXiv:2101.00991. doi: 10.48550/arXiv.2101.00991

Clarke G. L., James H. R. (1939). Laboratory analysis of the selective absorption of light by sea water. JOSA 29, 43–55. doi: 10.1364/JOSA.29.000043

Drews P., Nascimento E., Moraes F., Botelho S., Campos M. (2013). “Transmission estimation in underwater single images,” in Proceedings of the IEEE international conference on computer vision workshops (Sydney, NSW, Australia: IEEE), 825–830. doi: 10.1109/ICCVW.2013.113

Egorenko M. P., Efremov V. S. (2020). “Mirror-lens camera system for underwater drones,” in 26th international symposium on atmospheric and ocean optics, atmospheric physics (SPIE) 11560, 601–605.

Engin D., Genç A., Kemal Ekenel H. (2018). “Cycle-dehaze: Enhanced cyclegan for single image dehazing,” in Proceedings of the IEEE conference on computer vision and pattern recognition workshops (Salt Lake City, UT, USA: IEEE), 825–833. doi: 10.1109/CVPRW.2018.00127

Fu X., Fan Z., Ling M., Huang Y., Ding X. (2017). “Two-step approach for single underwater image enhancement,” in 2017 international symposium on intelligent signal processing and communication systems (ISPACS) (IEEE), 789–794.

He K., Sun J., Tang X. (2010). Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 33, 2341–2353. doi: 10.1109/TPAMI.2010.168

Hore A., Ziou D. (2010). “Image quality metrics: PSNR vs. SSIM,” in 2010 20th international conference on pattern recognition (IEEE), 2366–2369.

Howell K. L., Hilário A., Allcock A. L., Bailey D., Baker M., Clark M. R., et al. (2021). A decade to study deep-sea life. Nat. Ecol. Evol. 5, 265–267. doi: 10.1038/s41559-020-01352-5

Huang D., Wang Y., Song W., Sequeira J., Mavromatis S. (2018). “Shallow-water image enhancement using relative global histogram stretching based on adaptive parameter acquisition,” in International conference on multimedia modeling. (Springer), 453–465.

Iqbal K., Odetayo M., James A., Salam R. A., Talib A. Z. H. (2010). “Enhancing the low quality images using unsupervised colour correction method,” in 2010 IEEE International Conference on Systems, Man and Cybernetics (IEEE), 1703–1709.

Islam M. J., Xia Y., Sattar J. (2020). Fast underwater image enhancement for improved visual perception. IEEE Robotics Automation Lett. 5, 3227–3234. doi: 10.1109/LRA.2020.2974710

Kaur M., Kaur J., Kaur J. (2011). Survey of contrast enhancement techniques based on histogram equalization. Int. J. Advanced Comput. Sci. Appl. 2. doi: 10.14569/IJACSA.2011.020721

Kumar Rai R., Gour P., Singh B. (2012). Underwater image segmentation using CLAHE enhancement and thresholding. Int. J. Emerg. Technol. Advanced Eng. 2, 118–123.

Li C., Guo C., Ren W., Cong R., Hou J., Kwong S., et al. (2019). An underwater image enhancement benchmark dataset and beyond. IEEE Trans. Image Process. 29, 4376–4389. doi: 10.1109/TIP.2019.2955241

Li Y., Lu H., Li K.-C., Kim H., Serikawa S. (2018). Non-uniform de-scattering and de-blurring of underwater images. Mobile Networks Appl. 23, 352–362. doi: 10.1007/s11036-017-0933-7

Liu Z., Zhuang Y., Jia P., Wu C., Xu H., Liu Z. (2022). A novel underwater image enhancement algorithm and an improved underwater biological detection pipeline. J. Mar. Sci. Eng. 10, 1204. doi: 10.3390/jmse10091204

Lu H., Li Y., Xu X., He L., Li Y., Dansereau D., et al. (2016). “Underwater image descattering and quality assessment,” in Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP) (Phoenix, AZ, USA: IEEE), 1998–2002. doi: 10.1109/ICIP.2016.7532708

McGlamery B. (1980). A computer model for underwater camera systems. In Ocean Optics VI (SPIE) 208, 221–231. doi: 10.1117/12.958279

Naik A., Swarnakar A., Mittal K. (2021). “Shallow-uwnet: Compressed model for underwater image enhancement (student abstract),” in Proceedings of the AAAI conference on artificial Intelligence, Vol. 35. (Salt Lake City, UT, USA), 15853–15854.

Ortiz A., Simó M., Oliver G. (2002). A vision system for an underwater cable tracker. Mach. Vision Appl. 13, 129–140. doi: 10.1007/s001380100065

Phillips B. T., Licht S., Haiat K. S., Bonney J., Allder J., Chaloux N., et al. (2019). Deepi: A miniaturized, robust, and economical camera and computer system for deep-sea exploration. Deep Sea Res. Part I: Oceanographic Res. Papers 153, 103136. doi: 10.1016/j.dsr.2019.103136

Pizer S. M., Amburn E. P., Austin J. D., Cromartie R., Geselowitz A., Greer T., et al. (1987). Adaptive histogram equalization and its variations. Comput. vision graphics image Process. 39, 355–368. doi: 10.1016/S0734-189X(87)80186-X

Reza A. M. (2004). Realization of the contrast limited adaptive histogram equalization (CLAHE) for real-time image enhancement. J. VLSI Signal Process. Syst. signal image video Technol. 38, 35–44. doi: 10.1023/B:VLSI.0000028532.53893.82

Sahu P., Gupta N., Sharma N. (2014). A survey on underwater image enhancement techniques. Int. J. Comput. Appl. 87. doi: 10.5120/15268-3743

Song W., Wang Y., Huang D., Tjondronegoro D. (2018). “A rapid scene depth estimation model based on underwater light attenuation prior for underwater image restoration,” in Pacific rim conference on multimedia (Springer), 678–688.

Wang C.-Y., Bochkovskiy A., Liao H.-Y. M. (2022). Yolov7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. arXiv:2207.02696. doi: 10.48550/arXiv.2207.02696

Wang N., Zhou Y., Han F., Zhu H., Yao J. (2019). Uwgan: underwater gan for real-world underwater color restoration and dehazing. arXiv:1912.10269. doi: 10.48550/arXiv.1912.10269

Wozniak B., Dera J. (2007). Light absorption by suspended particulate matter (SPM) in sea water Vol. 4 (Springer).

Yang M., Sowmya A. (2015). An underwater color image quality evaluation metric. IEEE Trans. Image Process. 24, 6062–6071. doi: 10.1109/TIP.2015.2491020

Yang N., Zhong Q., Li K., Cong R., Zhao Y., Kwong S. (2021). A reference-free underwater image quality assessment metric in frequency domain. Signal Process.: Image Commun. 94, 116218. doi: 10.1016/j.image.2021.116218

Yeh C.-H., Lin C.-H., Kang L.-W., Huang C.-H., Lin M.-H., Chang C.-Y., et al. (2021). Lightweight deep neural network for joint learning of underwater object detection and color conversion. IEEE Trans. Neural Networks Learn. Syst. doi: 10.1109/TNNLS.2021.3072414

Zhao Z., Liu Y., Sun X., Liu J., Yang X., Zhou C. (2021). Composited fishnet: Fish detection and species recognition from low-quality underwater videos. IEEE Trans. Image Process. 30, 4719–4734. doi: 10.1109/TIP.2021.3074738

Keywords: underwater image, image enhancement, contrast stretching, differential attenuation compensation, machine vision

Citation: Lai Y, Zhou Z, Su B, Xuanyuan Z, Tang J, Yan J, Liang W and Chen J (2022) Single underwater image enhancement based on differential attenuation compensation. Front. Mar. Sci. 9:1047053. doi: 10.3389/fmars.2022.1047053

Received: 17 September 2022; Accepted: 17 October 2022;

Published: 11 November 2022.

Edited by:

Hong Song, Zhejiang University, China

Reviewed by:

Yaoming Zhuang, Northeastern University, China

Copyright © 2022 Lai, Zhou, Su, Xuanyuan, Tang, Yan, Liang and Chen. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Zhuang Zhou, jonahzhou199526@gmail.com

