ORIGINAL RESEARCH article

Front. Mar. Sci., 29 March 2018

Sec. Marine Fisheries, Aquaculture and Living Resources

Volume 5 - 2018 | https://doi.org/10.3389/fmars.2018.00106

Predatory open access (OA) journals can be defined as non-indexed journals that exploit the gold OA model for profit, often spamming academics with questionable e-mails promising rapid OA publication for a fee. In aquaculture—a rapidly growing and highly scrutinized field—the issue of such journals remains undocumented. We employed a quantitative approach to determine whether attributes of scientific quality and rigor differed between OA aquaculture journals not indexed in reputable databases and well-established, indexed journals. Using a Google search, we identified several non-indexed OA journals, gathered data on attributes of these journals and articles therein, and compared these data to well-established aquaculture journals indexed in quality-controlled bibliometric databases. We then used these data to determine if non-indexed journals were likely predatory OA journals and if they pose a potential threat to aquaculture research. On average, non-indexed OA journals published significantly fewer papers per year, had cheaper fees, and were more recently established than indexed journals. Articles in non-indexed journals were, on average, shorter, had fewer authors and references, and spent significantly less time in peer review than their indexed counterparts; the proportion of articles employing rigorous statistical analyses was also lower for non-indexed journals. Additionally, articles in non-indexed journals were more likely to be published by scientists from developing nations. Worryingly, non-indexed journals were more likely to be found using a Google search, and their articles superficially resembled those in indexed journals. These results suggest that the non-indexed aquaculture journals identified herein are likely predatory OA journals and pose a threat to aquaculture research and the public education and perception of aquaculture. 
Several points of reference from this study, in combination, may help scientists and the public more easily identify these possibly predatory journals: they were typically established after 2010, published <20 papers per year, charged fees <$1,000, and published articles <80 days after submission. Subsequently checking reputable and quality-controlled databases such as the Directory of Open Access Journals, Web of Science, Scopus, and Thomson Reuters can aid in confirming the legitimacy of non-indexed OA journals and can facilitate avoidance of predatory OA aquaculture journals.
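The four points of reference above can be expressed as a simple screening check. The following is a minimal sketch, not a validated classifier: the `JournalProfile` class, its field names, and the two example journals are hypothetical, and the thresholds are taken directly from the summary sentence above. A journal that trips all four signs and is absent from quality-controlled databases merits closer inspection; it is not thereby shown to be predatory.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class JournalProfile:
    """Hypothetical container for the journal attributes discussed above."""
    year_established: int
    papers_per_year: float
    apc_usd: float
    days_submission_to_publication: float
    indexed: bool  # listed in DOAJ, Web of Science/JCR, or Scopus


def warning_signs(journal: JournalProfile) -> List[str]:
    """Return which of the four reported points of reference a journal matches."""
    signs = []
    if journal.year_established > 2010:
        signs.append("established after 2010")
    if journal.papers_per_year < 20:
        signs.append("fewer than 20 papers per year")
    if journal.apc_usd < 1000:
        signs.append("fee below US$1,000")
    if journal.days_submission_to_publication < 80:
        signs.append("published within 80 days of submission")
    return signs


def needs_closer_look(journal: JournalProfile) -> bool:
    """Flag only non-indexed journals that match all four signs in combination."""
    return (not journal.indexed) and len(warning_signs(journal)) == 4


# Invented example values, for illustration only.
young_journal = JournalProfile(2014, 12.0, 600.0, 45.0, indexed=False)
older_journal = JournalProfile(1972, 95.0, 2900.0, 180.0, indexed=True)
```

Requiring all four signs together, plus the absence of indexing, reflects the caution that these attributes are informative only in combination.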

In addition to dramatic environmental change, the Anthropocene Epoch is also characterized by rapid and drastic human societal changes (Ellis et al., 2016). One such societal shift is in the ways that humans communicate with one another, as advances in technology have created a hyper-connected world in which considerable amounts of information are available at the push of a button. As such, the ways that humans view and interact with the natural world and one another in this time of great change can be impacted by the information that they receive and the ways in which it is perceived (Castree, 2017; Holmes et al., 2017). Unfortunately, however, this hyper-connectivity has resulted in a platform for the dissemination of propagandist information and the onset of a “post-truth era” (e.g., Keyes, 2004; d'Ancona, 2017). Thus, now more than ever, we rely on the adequate dissemination and propagation of objectively truthful information on a global scale. Given that science is arguably the best method to obtain objective truth, the scientific community must endeavor to make scientific information accessible and understandable to mass audiences outside of the scientific community if truth and logic are to prevail in the Anthropocene.

Open access publishing—making academic research freely available to everyone—has emerged as one tool to make science more accessible to all by providing universal free access to scientific articles. The open access approach can have many benefits. For example, the citation counts of open access articles are often higher than those of closed-access articles (Antelman, 2004; Hajjem et al., 2005; Eysenbach, 2006; Craig et al., 2007; Gargouri et al., 2010; Clements, 2017). Open access articles are also reported to attract more media attention and increased author exposure (Adie, 2014; Wang et al., 2015; McKiernan et al., 2016). Most importantly, the general premise of open access publishing allows scholarly works to be legally accessed by anyone with internet access (although illegal means of obtaining scholarly works blocked behind subscription paywalls are available, e.g., SciHub; McNutt, 2016), making scientific information available to those who have historically been restricted (e.g., researchers in developing nations that cannot afford journal subscriptions, the general public; Björk et al., 2014; Swan et al., 2015).

Although there are many benefits of OA publishing, the approach has been linked to the emergence of so-called “predatory OA journals” (Beall, 2012, 2013), which have rapidly increased in recent years (Shen and Björk, 2015). Predatory OA journals are typically not indexed in quality-controlled databases [e.g., Directory of Open Access Journals (DOAJ), Web of Science, Scopus, Thomson Reuters; however, it is important to note that not all non-indexed OA journals are predatory] and exploit the gold OA model for profit. These journals also provide substandard services, accept all submitted articles, and tend to spam academics with disingenuous e-mails and the promise of rapid publication in their fully open access journals for a fee (Jalalian and Mahboobi, 2014; Moher and Srivastava, 2015); many even report bogus and/or alternative impact factors (Gutierrez et al., 2015). Such journals are often run by and recruit non-scientists (e.g., fake editors; Sorokowski et al., 2017), use questionable peer review practices (Bohannon, 2013), and publish low quality science and/or non-scientific information (Bohannon, 2013; “shoddy science indeed, they publish”; Dockrill, 2017). Thus, predatory OA journals can disseminate potentially dubious results that have not received adequate peer review, fundamentally threatening principled scientific integrity (Beall, 2016; Vinny et al., 2016).

Researchers may be naïve to predatory OA publishers (which, for some fields, can be an alarming percentage of researchers; Christopher and Young, 2015) or can be pressured to publish and may thus use predatory OA journals as an outlet for disseminating their research quickly (Van Nuland and Rogers, 2016). For naïve but well-intended researchers, publishing in predatory OA journals might hinder career progression if hiring committees penalize researchers for publishing in such journals. Alternatively, unethical researchers can also exploit predatory OA journals to increase their publication numbers by avoiding rigorous peer review (Shen and Björk, 2015; Berger, 2017), and such practices can make unethical researchers appealing to hiring committees by increasing their publication numbers. Furthermore, predatory OA journals can provide an outlet for the publication of research from nations that are often excluded (intentionally or not) from Western publishing outlets. Indeed it is reported that the majority of authors publishing in these journals are researchers in developing nations who could better use their funds to stimulate research activities or publish in non-predatory open access journals (Shen and Björk, 2015; Xia et al., 2015; Seethapathy et al., 2016; Balehegn, 2017; Gasparyan et al., 2017). Consequently, predatory publishing practices threaten the institution of science, undermine legitimate open access journals, and hinder progression of the utilitarian concept of open science on a global scale.

For areas of research that are highly applicable to the public, it is important to elucidate ways of distinguishing predatory OA journals from legitimate journals. One such area of research that has yet to receive attention regarding predatory OA journals is the field of aquaculture. Since the onset of the Anthropocene, aquaculture (i.e., aquatic farming) activities have become characteristic of many coastal regions around the world. Aquaculture is one of the fastest growing food sectors in the world, providing a source of food for millions of people in both developed and developing nations (FAO, 2016). Aquaculture activities are also a major component of the global economy, with an estimated value of more than US$160 billion (FAO, 2016). Furthermore, while capture fisheries have ceased growth (Pauly and Zeller, 2016), the aquaculture sector continues to increase at an astonishing rate, now accounting for half of all seafood and highlighting its importance for future economic growth and food security (FAO, 2016). Although aquaculture plays a key role in global food systems, public perception of aquaculture activities is often negative and accompanied by many misconceptions, hindering productive and informed discussion (Fisheries and Oceans Canada, 2005; Bacher, 2015; D'Anna and Murray, 2015; Froehlich et al., 2017). It is suggested that clear communication and adequate investigation into the real and perceived threats of aquaculture could help shift public perception and support the growth and sustainability of aquaculture activities (Froehlich et al., 2017). Thus, public accessibility to rigorous, high quality science is critical to stimulate productive dialogue regarding aquaculture activities and sustainability.

While predatory OA journals can provide a seemingly-professional platform for diminutive science and ideas across a number of disciplines, the ways in which such journals threaten the field of aquaculture and influence associated public perception are unknown. Furthermore, the careless and loose attribution of the term “predatory” to many OA journals that are not predatory has recently been heavily criticized (Swauger, 2017). Consequently, an objective understanding of the severity of predatory OA publishing in aquaculture is needed. Characterizing the attributes of predatory OA aquaculture journals more objectively and comparing them to those of well-established aquaculture journals would not only make it easier for aquaculture stakeholders (scientists, fish farmers, policy makers) to identify predatory OA journals with more confidence, but would also provide a measure of the severity of the problem within this highly scrutinized field. Moreover, comparing proxies of scientific rigor between predatory and non-predatory OA journal articles, such as methodological rigor (e.g., statistical rigor) and content quality, would begin to address whether or not such journals really pose a threat to aquaculture research.

The overall goal of this study was to gain a more quantitative and objective understanding of both the quality of the aquaculture journals easily found by internet search engines and the possible associated consequences, and objectively bring to attention the issue of predatory OA publishing within this field of research. More specifically, we attempted to: (1) quantitatively compare characteristics of non-indexed OA aquaculture journals and the articles therein to those of well-established, indexed aquaculture journals (to determine the likelihood of non-indexed OA journals being predatory); (2) determine the probability of the general public encountering non-indexed OA aquaculture journals using a Google search; and (3) use the results of the aforementioned goals to conceptualize potential risks of predatory OA journals to aquaculture research. We hypothesized that non-indexed OA journals would appear more frequently in a Google search, and that articles in those journals would exhibit tendencies of lesser scientific quality than those in well-established, indexed journals based on proxies of scientific rigor.

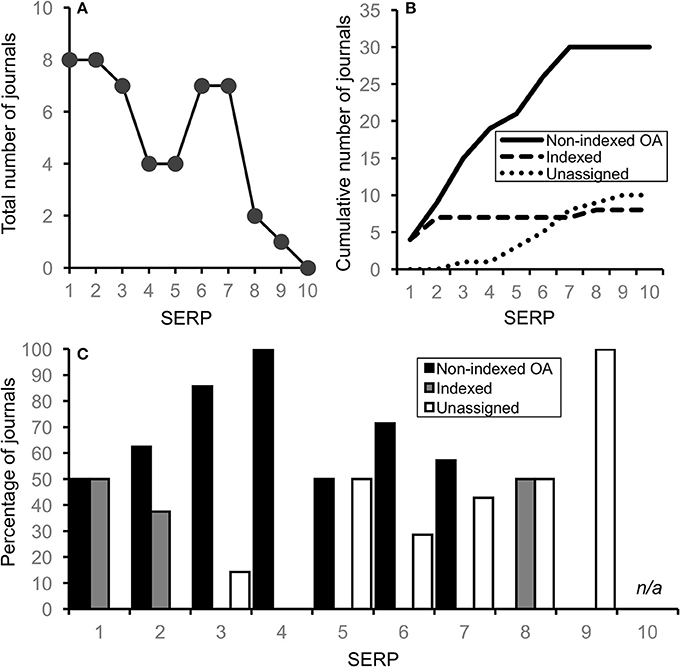

We conducted a simple Google search for potential scholarly aquaculture journals using the keyword string “journal of aquaculture”; advertisements and sponsored links appearing on each search-engine results page (SERP) were excluded. This keyword string was chosen based on what we deemed to be the most likely keyword string that individuals would use when searching for scholarly journals in aquaculture. We also searched keyword strings “journal aquaculture” and “aquaculture journal,” which provided similar proportions of non-indexed vs. indexed journals (13 and 12 non-indexed OA journals, respectively, within the first three search pages, although the order of journal appearance changed with each new keyword string). A Google search was used because non-indexed journals are not referenced in reliable searchable databases (e.g., DOAJ, Web of Science, Scopus, Thomson Reuters), and because the public most likely have access to and use general Google searches to obtain information (as opposed to scholarly databases including Google Scholar; Purcell et al., 2012; Fox and Duggan, 2013; Richter, 2013). We then assessed each hit to determine if the associated webpage presented itself as a scientific journal (i.e., contained apparently scientific research articles pertaining to aquaculture) and limited our search to journals with the term “aquaculture” in the title. We discarded hits that were not scientific journals, and also discarded duplicate journals within and between SERPs.

Due to increasing redundancy in relevant material, we only searched the first 10 SERPs for scientific journals (Figure 1A). Each journal was then categorized as well-established and indexed (hereafter referred to as “indexed”), non-indexed OA, or unassigned (i.e., journals that could not be identified as either indexed or non-indexed OA). We initially identified non-indexed OA journals by searching three quality-controlled databases: the Directory of Open Access Journals (DOAJ), Clarivate Analytics (Journal Citation Reports, JCR), and Scopus. “Indexed” aquaculture journals were characterized as journals that were listed in the DOAJ, Clarivate Analytics JCR, and/or Scopus. Indexed journals could be partially or fully OA; fully OA indexed journals were checked in the DOAJ, Clarivate Analytics JCR, and Scopus to ensure that they were verified as accredited OA journals. Unassigned journals were characterized as journals that were not indexed in the DOAJ, Clarivate Analytics JCR, or Scopus, and were not published under a mandatory OA policy (and could therefore not exploit gold OA for profit).

Figure 1. (A) The total number of scientific journals (n = 48) identified on each search engine results page (SERP; 10 hits page−1). (B) The cumulative number of scientific journals identified in the Google search (across the 10 SERPs) that were categorized as non-indexed OA, indexed, or unassigned. (C) The percentage of journals identified on each SERP that were categorized as non-indexed OA (black), indexed (gray), and unassigned (white).

For each of the 10 SERPs assessed, we recorded the number of journals from each of the three categories. From these data, we calculated the per-SERP and overall totals of each journal type (i.e., indexed, non-indexed OA, and unassigned) found in the Google search. To obtain comparative data on journal attributes between indexed and non-indexed OA aquaculture journals, we analyzed a subsample of journals by choosing the first six journals from each category (ncategory = 6, Ntotal = 12) that had a global scope (i.e., were not restricted to a certain geographic area, e.g., North America); this provided a representative sample of both journal types and a balanced sampling design for statistical analyses. Each selected journal had to have published at least one issue in 2017 (as well as papers in previous years; ensuring at least one issue in 2017 provided confirmation that the journal was still actively publishing, but year of publication was not taken into account when sampling articles because the metrics obtained from each paper would not be affected by publication year), had published a total of >20 articles (to accommodate our chosen article sample size; see next paragraph), and contained a peer review policy on the journal or publisher website. For non-indexed OA journals, there also had to be a clear indication that authors would need to pay a fee for publication.

For each of the six subsampled journals from each category, we collected a variety of journal-level and article-level metrics (Table 1; see Tables S1–S3 for raw data). At the journal level, we recorded the year that the journal was established (i.e., age), the type of peer review reported (single blind or double blind), the APC for open access (where applicable), and the average number of papers published per year (total number of papers ÷ age). Two journals (one non-indexed OA and one indexed) did not report open access APCs, reducing the sample size for this variable from 6 per group to 5 per group.
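The publication-rate metric described above (total number of papers ÷ age) can be sketched as follows. This is a minimal illustration, assuming that journal age is counted in whole years up to 2017, the sampling year mentioned in the text; the function name, the `reference_year` parameter, and the example figures are hypothetical.

```python
def papers_per_year(total_papers: int, year_established: int,
                    reference_year: int = 2017) -> float:
    """Average annual output: total papers divided by journal age in years.

    reference_year defaults to 2017 as an assumption; the source states only
    that the metric is total number of papers divided by age.
    """
    age = reference_year - year_established
    if age <= 0:
        raise ValueError("journal age must be at least one year")
    return total_papers / age


# Invented example: 140 papers from a journal founded in 2010.
example_rate = papers_per_year(140, 2010)
```

Because the denominator is journal age, a long-running journal with a slow start can show a lower average than its current annual output would suggest.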

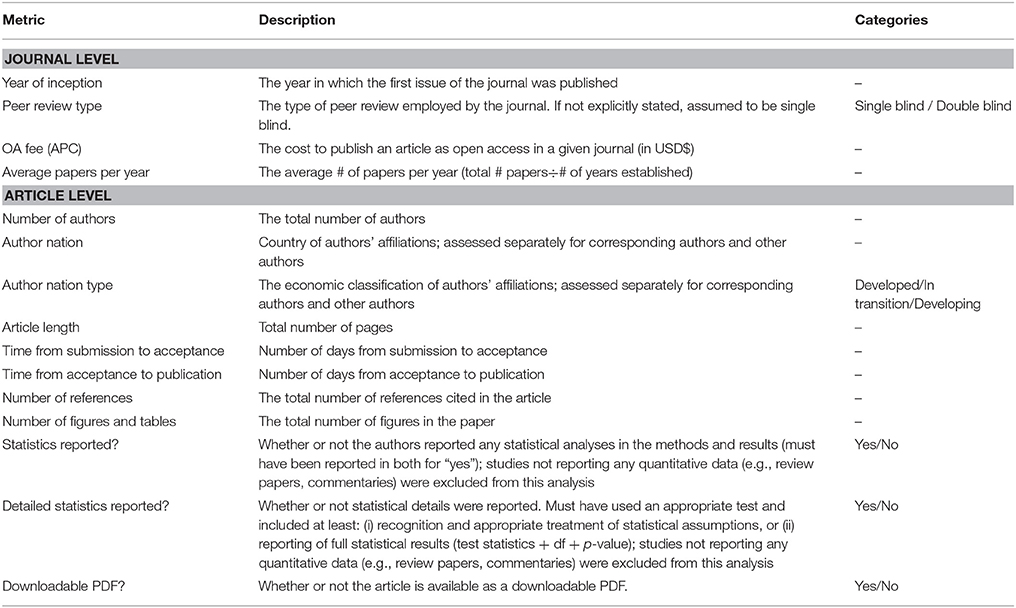

Table 1. Descriptions and associated categories (where applicable) for each journal- and article-level metric collected.

For article-level metrics, we recorded the type of article (research, review, or commentary), the number of authors, the nationality of the corresponding author and all other authors, and the economic classification of an author's country (developed, in transition, or developing, as defined by the UN; United Nations, 2017). To quantitatively determine whether or not non-indexed OA journals published poor science and could thus potentially be predatory, we recorded various proxies of scientific rigor at the article level (see Table 1); it should be noted, however, that these proxies are not necessarily direct indicators of scientific quality and rigor. Some journals did not report the time from submission to acceptance or the time from acceptance to publication, and sample sizes were thus reduced for these metrics (n = 100 for non-indexed OA and 80 for indexed journals).

Since our sampling design included both fixed and random factors, we used linear mixed models to test for the effect of journal type (fixed factor) on journal-level metrics (ncategory = 6) and article level metrics (ncategory = 120 except for proportional data with ncategory = 6). For journal level metrics and proportional article-level metrics, “journal” was included as a random factor to control for any random effects of individual journal; likewise, article nested within journal was included as a random variable for non-proportional article level metrics. AIC/log likelihood tests comparing models with and without the random factors were used to determine whether or not random effects were evident (Burnham and Anderson, 2002). Assumptions of normality and homoscedasticity were assessed visually using Q-Q plots and residual plots, respectively. Journal-level metrics were log transformed prior to analyses to avoid violations of homoscedasticity and all proportion data were arcsin square root transformed prior to analyses [Ahrens et al., 1990; while scrutinized (Warton and Hui, 2011), arcsin transformations allowed the data to meet statistical assumptions]. All statistical analyses were conducted in R version 3.4.1 (R Development Core Team, 2017) using the “nlme” package (Pinheiro et al., 2017) with a significance threshold of α ≤ 0.05. R-code for all analyses can be found on GitHub and has been archived on Zenodo (see Supplementary Material section).
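The two transformations named above can be sketched in a few lines. This is an illustration in Python rather than the R code the authors archived, and it assumes a natural logarithm, since the base of the log transformation is not stated; the function names are hypothetical.

```python
import math
from typing import List


def log_transform(values: List[float]) -> List[float]:
    """Natural-log transform for strictly positive journal-level metrics.

    The base of the logarithm is an assumption; the source says only
    "log transformed".
    """
    if any(v <= 0 for v in values):
        raise ValueError("log transform requires strictly positive values")
    return [math.log(v) for v in values]


def arcsine_sqrt(proportion: float) -> float:
    """Arcsine square-root transform of a proportion in [0, 1], in radians."""
    if not 0.0 <= proportion <= 1.0:
        raise ValueError("proportion must lie between 0 and 1")
    return math.asin(math.sqrt(proportion))
```

The arcsine square-root transform maps proportions from [0, 1] onto [0, π/2] and stretches values near 0 and 1, which is why it is used to stabilize the variance of proportion data.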

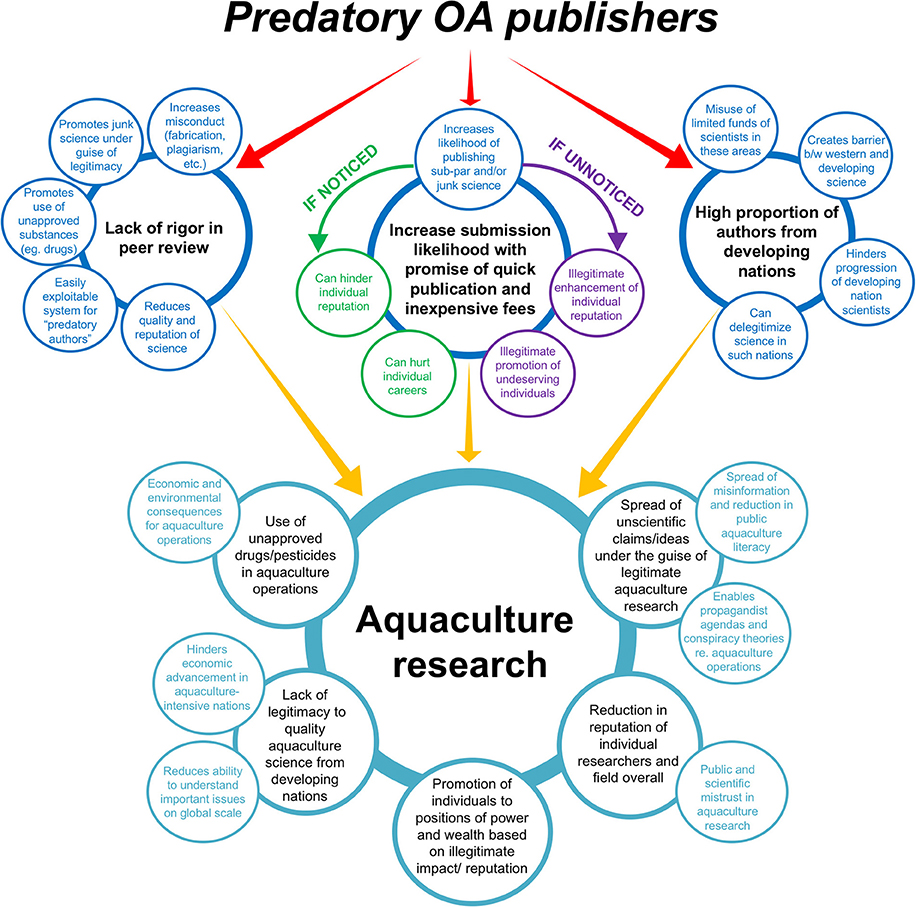

Using the results of the quantitative assessment, we derived a conceptualization of the potential negative impacts of predatory OA journals on aquaculture research. For impact conceptualization, we first identified three potential negative impacts of predatory OA journals and subsequently determined whether or not our results supported or refuted the existence of such impacts in the field of aquaculture: (1) lack of peer review (indicated by significantly shorter durations between submission and acceptance in non-indexed OA journals, along with significantly lower indicators of scientific and statistical rigor); (2) enticing scientists to publish work in predatory OA journals by promising quick publication and comparatively cheap OA fees (indicated by significantly shorter times between submission and publication, and significantly cheaper APCs, in non-indexed OA journals); and (3) exploiting naïve scientists and/or providing an easily exploitable platform for unethical (“predatory”) authors from developing nations, where the pressure to publish is often intense (Butler, 2013). Each of the three negative impacts to science that was found to hold true from our quantitative assessment was then translated into direct effects on aquaculture research.

Non-indexed OA aquaculture journals were ca. three times more numerous and common in a Google search than indexed and unassigned journals (Figure 1). Across the 10 SERPs assessed, we identified a total of 30 non-indexed OA journals, 8 indexed journals, and 10 unassigned journals (Figure 1B). While not strictly OA, the majority of indexed journals were considered hybrid journals (i.e., offered the option of making individual articles OA, but not requiring it); only one indexed journal had a mandatory OA policy and granted waivers and discounts to researchers from underdeveloped nations. Importantly, the proportion of non-indexed OA journals was equal to (3 of 10 SERPs) or higher than (6 of 10 SERPs) that of indexed journals on all SERPs with the exception of SERP eight (Figure 1C).
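The "ca. three times" figure can be checked directly from the reported counts. The short sketch below uses only the totals given above (30 non-indexed OA, 8 indexed, and 10 unassigned journals); the variable names are illustrative.

```python
# Journal counts across the 10 SERPs, as reported in the text.
counts = {"non-indexed OA": 30, "indexed": 8, "unassigned": 10}

total = sum(counts.values())

# Share of all identified journals falling in each category.
shares = {category: n / total for category, n in counts.items()}

# How many times more numerous non-indexed OA journals were than each other category.
ratio_vs_indexed = counts["non-indexed OA"] / counts["indexed"]
ratio_vs_unassigned = counts["non-indexed OA"] / counts["unassigned"]
```

The counts sum to the 48 journals given in the Figure 1 caption, with non-indexed OA journals making up 62.5% of the total; the ratios are 3.75 against indexed journals and 3.0 against unassigned journals.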

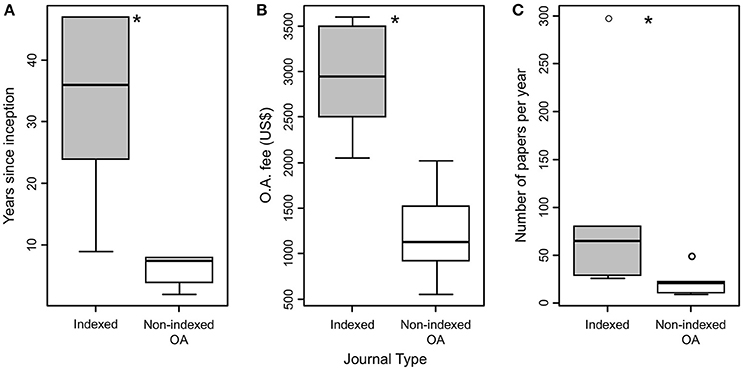

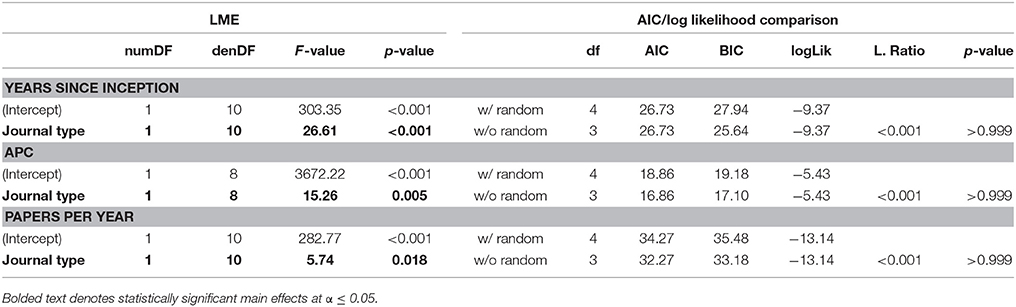

We found significant differences between indexed and non-indexed OA journals for all three journal-level metrics (Figure 2, Table 2), much of it rooted in the longevity of the journals themselves. Relative to indexed journals, non-indexed OA aquaculture journals were characterized by more recent inception years [minimum year of inception: non-indexed OA = 2010, indexed = 1970; F(1, 10) = 26.61, p < 0.001; Table 2, Figure 2A]. In fact, the indexed journal with the most recent inception date (2009) was still older than the oldest non-indexed OA journal (2010). The older age of indexed journals was associated with higher numbers of articles, volumes, and issues than non-indexed OA journals. Non-indexed OA journals were also characterized by cheaper APCs (US$991.50 ± 215.23) when compared to indexed journals (US$2920.60 ± 268.31) [F(1, 8) = 15.26, p = 0.005; Table 2, Figure 2B], and tended to publish fewer papers year−1 than indexed journals [21.4 ± 5.6 papers year−1 in non-indexed OA journals vs. 94.1 ± 42.0 in indexed journals; F(1, 10) = 5.74, p = 0.018; Table 2, Figure 2C]. Interestingly, while all indexed journals surveyed for this study reported a single blind peer review policy (i.e., authors are not made aware of the reviewers' identity), 33% (2/6) of non-indexed OA journals reported double blind peer review, while an additional 33% of non-indexed OA journals reported that double blind review would be made available at the authors' request (i.e., 66% of non-indexed OA journals offered double blind peer review). Statistically, linear mixed effects modeling revealed significant differences between indexed and non-indexed OA journals for all three journal-level metrics, while individual journal had no effect (Table 2).
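The degrees of freedom reported above are consistent with a two-group comparison across journals: 12 journals give (1, 10), and the 10 journals that reported APCs give (1, 8). The sketch below shows where those values come from using a plain one-way F statistic. It is a simplified stand-in, not the linear mixed models the authors fitted with nlme, and the function name and any data passed to it are hypothetical.

```python
from statistics import mean
from typing import Sequence, Tuple


def one_way_f(group_a: Sequence[float],
              group_b: Sequence[float]) -> Tuple[float, Tuple[int, int]]:
    """One-way fixed-effects F statistic for two groups.

    Simplification: ignores the random effects used in the source analysis.
    Returns the F value and its (numerator, denominator) degrees of freedom.
    """
    n_a, n_b = len(group_a), len(group_b)
    mean_a, mean_b = mean(group_a), mean(group_b)
    grand_mean = mean(list(group_a) + list(group_b))

    # Variation of the group means around the grand mean.
    ss_between = n_a * (mean_a - grand_mean) ** 2 + n_b * (mean_b - grand_mean) ** 2
    # Variation of observations around their own group mean.
    ss_within = (sum((x - mean_a) ** 2 for x in group_a)
                 + sum((x - mean_b) ** 2 for x in group_b))

    df_between = 1               # two groups minus one
    df_within = n_a + n_b - 2    # observations minus number of groups
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, (df_between, df_within)
```

With six journals per group the denominator degrees of freedom are 6 + 6 − 2 = 10, and with five per group they are 8, matching the values in the text.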

Figure 2. Boxplot representation of journal-level metrics for indexed (gray boxes) and non-indexed OA (white boxes) journals, including the time since the journal was incepted (A), the cost of publishing open access (APC) (B), and the average number of papers published per year (C). Asterisks denote significant differences between journal types (see Table 2). n = 6 per group.

Table 2. Results of linear mixed effects models and AIC/log likelihood model comparisons for effects of journal type and journal on journal-level metrics.

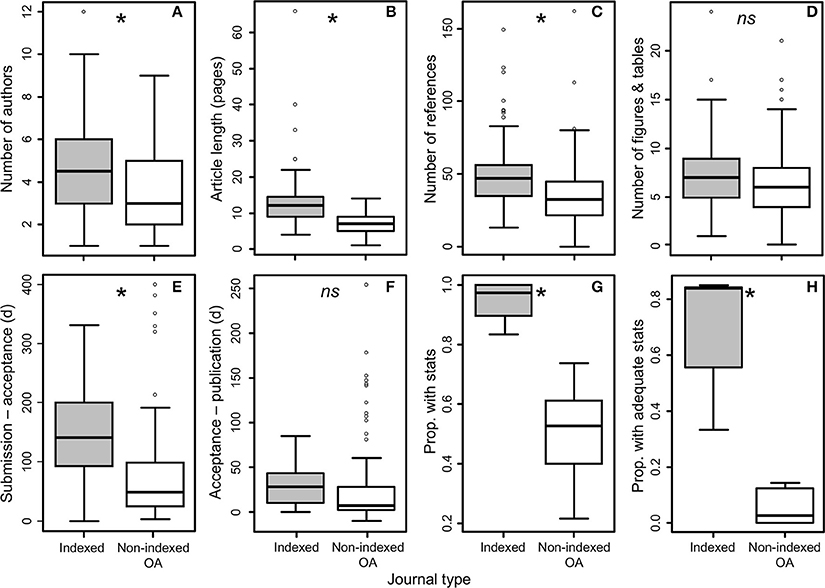

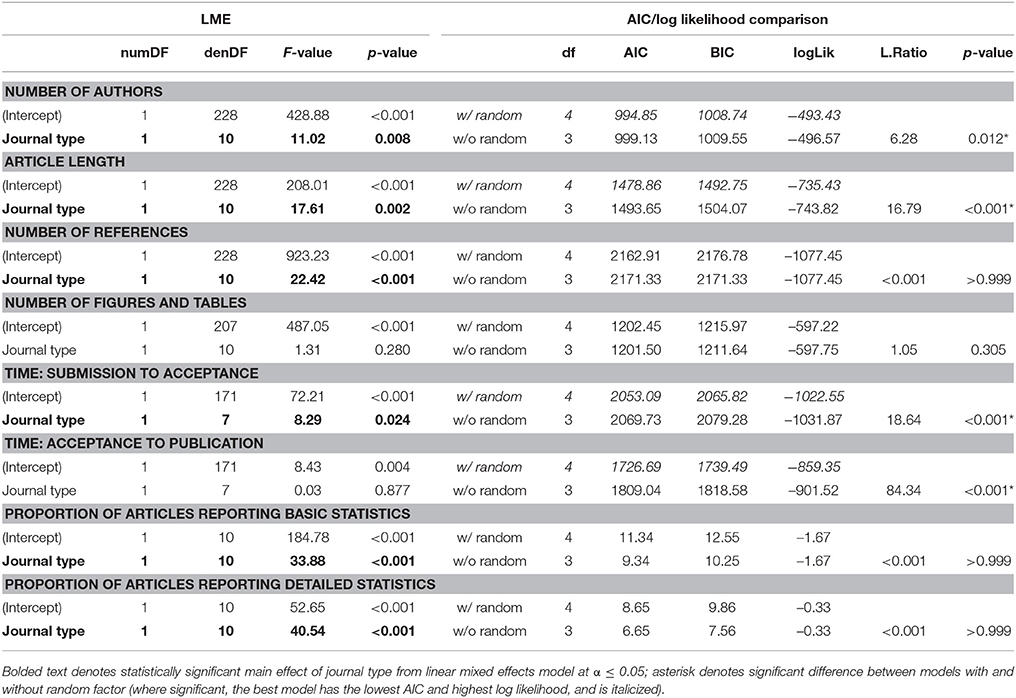

Although non-indexed OA journal articles superficially resembled articles in indexed journals, indicators of scientific rigor in non-indexed OA journals were, in general, lower. Articles in non-indexed OA journals had significantly fewer authors [indexed: 4.69 ± 0.19, non-indexed OA: 3.38 ± 0.42 authors; F(1, 10) = 11.02, p = 0.008], were significantly shorter [indexed: 12.70 ± 0.65, non-indexed OA: 6.99 ± 0.60 pages; F(1, 10) = 17.61, p = 0.002], and were less well referenced [indexed: 49.73 ± 2.03, non-indexed OA: 36.32 ± 4.84 references; F(1, 10) = 22.42, p < 0.001] than articles in indexed journals (Figures 3A–C, Table 3). In contrast, articles from indexed and non-indexed OA journals had similar numbers of figures and tables (Figure 3D, Table 3). While non-indexed OA articles spent significantly less time under peer review than articles in indexed journals [indexed: 150.25 ± 6.91, non-indexed OA: 75.94 ± 17.70 days; F(1, 7) = 8.29, p = 0.024] (Figure 3E, Table 3), the time from acceptance to online publication (i.e., time in production) was statistically similar between articles in indexed and non-indexed OA journals [indexed: 29.77 ± 2.16, non-indexed OA: 26.57 ± 10.16 days; F(1, 7) = 0.03, p = 0.877] (Figure 3F, Table 3). Additionally, the proportion of articles reporting both basic statistics [indexed: 0.95 ± 0.03, non-indexed OA: 0.50 ± 0.08; F(1, 10) = 33.89, p < 0.001] and detailed statistical analyses [indexed: 0.71 ± 0.09, non-indexed OA: 0.05 ± 0.03; F(1, 10) = 40.54, p < 0.001] was far higher in indexed journals than non-indexed OA journals (Figures 3G,H, Table 3). While clear differences between articles in indexed and non-indexed OA journals existed for metrics of scientific rigor, the incorporation of journal as a random variable significantly improved model fit for many of these metrics (number of authors, length, number of tables, and all three measures of time prior to publication; Table 3).

Figure 3. Boxplot representation of non-demographic article level metrics for indexed (gray boxes) and non-indexed OA (white boxes) journals, including the number of authors (A), the article length (in pages) (B), the number of references (C), the number of figures and tables (D), the time (in days) from submission to acceptance (E) and the time from acceptance to publication (F), and the proportion of articles reporting basic (G) and detailed statistical analyses (H). Asterisks denote significant differences between journal types; ns denotes non-significant differences (see Table 3). See Methods section Data collection for details on sample sizes.

Table 3. Results of linear mixed effects models and AIC/log likelihood model comparisons for effects of journal type and journal on non-demographic article-level metrics.

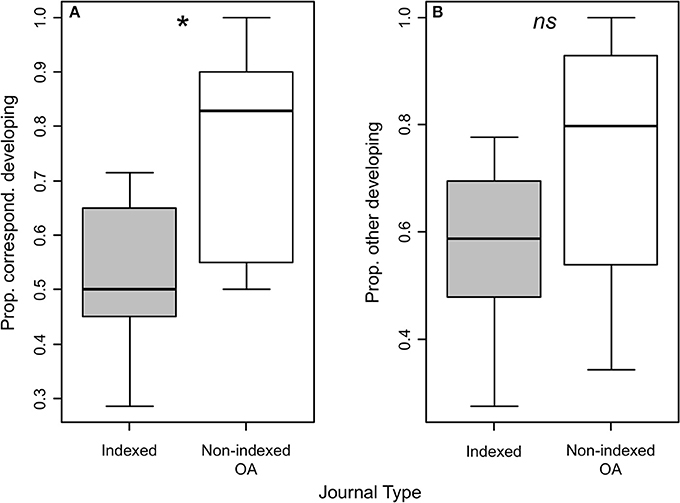

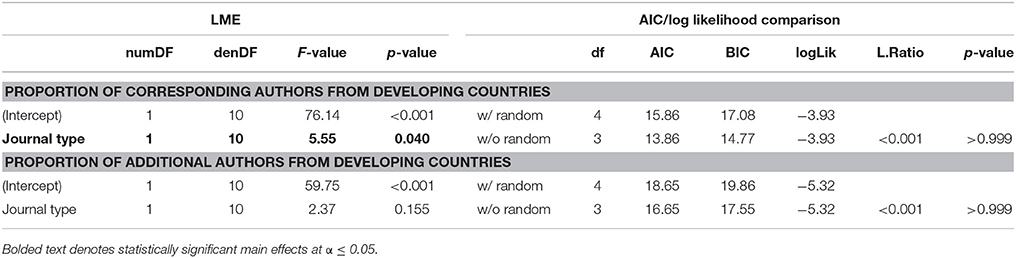

Non-indexed OA journals had a statistically higher number of authors from developing countries than indexed journals. Alongside displaying comparatively weaker scientific rigor, non-indexed OA journals had significantly higher [indexed: 0.51 ± 0.07, non-indexed: 0.78 ± 0.08; F(1, 10) = 5.50, p = 0.04] proportions of corresponding and/or primary authors from developing countries (Figure 4A, Table 4); however, the proportion of additional authors from developing countries was similar between indexed and non-indexed OA journals [indexed: 0.57 ± 0.07, non-indexed OA: 0.73 ± 0.10; F(1, 10) = 2.37, p = 0.155; Figure 4B, Table 4].

Figure 4. Boxplot representation of demographic article level metrics for indexed (gray boxes) and non-indexed OA (white boxes) journals, including the proportion of articles containing corresponding authors (A) and other authors (i.e., all authors other than the corresponding author) (B) with affiliations in developing countries. Asterisks denote significant differences between journal types; ns denotes non-significant differences (see Table 4). n = 6 per group.

Table 4. Results of linear mixed effects models and AIC/log likelihood model comparisons for effects of journal type and journal on demographic article-level metrics.

To our knowledge, this is the first study to measure attributes of journals in aquaculture research to provide a quantitative comparison between non-indexed OA journals and well-established, indexed journals for scientific standards, ethical rigor and possible predatory implications. Broadly, our results suggest that non-indexed OA journals are widespread within aquaculture research and tend to be less established, contain fewer papers, and offer OA at a lower cost. Importantly, these components appear to align with reduced scientific quality (fewer references, shorter peer review) and statistical rigor. Thus, our results suggest that many non-indexed OA aquaculture journals show characteristics of predatory OA journals (as described in the section Introduction), and support the idea that predatory journals often (but perhaps not always) use questionable peer-review practices and publish substandard science and/or non-scientific information (Bohannon, 2013) that can lead to propagation of false information. It is important to note, however, that our results do not show that all non-indexed OA aquaculture journals are predatory OA journals, and individual journals need to be assessed using a combination of criteria outlined below. Furthermore, not all articles published in non-indexed OA journals are necessarily of poor quality. Nonetheless, our results provide evidence that predatory OA journals may be affecting aquaculture science through a lack of proper peer review (i.e., short times between submission and acceptance which could lead to increased pressure on peer reviewers and promote errors in peer review), enticing scientists to publish work in predatory journals by promising quick publication and comparatively cheap OA fees, and by exploiting (either advertently or inadvertently) scientists in developing nations (Figure 5).

Figure 5. Diagrammatic representation of three major ways in which predatory OA publishing threatens science (based on our results), and how such threats apply directly to aquaculture research.

Our results suggest that, while they are likely of lesser quality, non-indexed OA aquaculture journals often mimic legitimate, high-quality scientific journals (e.g., similar names, professional websites, PDF articles). Thus, it is not trivial to distinguish legitimate and reputable journals from those that are not. Theoretically, trained scientists should be able to recognize potentially predatory OA journals based on the quality of science and proceed accordingly. In reality, however, this is not always the case; with approximately 6,800 scientific articles published each day (Ware and Mabe, 2015), possible predatory submissions are bound to be overlooked. Hypothetically, if researchers simply skim a paper for information and do not recognize that the paper is from a potential predatory journal and has not been through rigorous peer review, misinformation can become embedded within the aquaculture literature, as well as other fields. Furthermore, naïve researchers unfamiliar with the shifting landscape of scientific publication and the threats therein may submit and publish work in potentially predatory OA journals, unaware of the consequences. As mentioned previously for researchers in developing nations, this may result in high-quality work being published in a predatory OA journal, ultimately going unrecognized or being dismissed as inadequate. In addition, the reputation of individual researchers can be influenced, depending on whether or not repeated publications in potentially predatory OA journals are noticed and properly critiqued. As such, future studies conducting a deeper evaluation of the scientific content of articles in known predatory aquaculture journals than we provide herein are warranted to better understand the extent of this effect. At present, however, it is unclear just how much of the aquaculture research community (and the industry at large) is naïve to predatory OA journals. A quantitative documentation of the susceptibility of researchers to predatory OA publishing is necessary to fully comprehend how much of a risk predatory publishers pose to aquaculture.

As mentioned, although the non-indexed OA aquaculture journals highlighted in our study used less rigorous peer review (i.e., shorter peer review times, which could put pressure on reviewers to review papers quickly, thus promoting errors and poorer peer review) and published less rigorous scientific work, they superficially resembled well-established, indexed journals. Their existence thus poses a potential threat to the communication of aquaculture research to the public. Although most scientists are trained to critically evaluate individual scientific articles, the same cannot be said for non-scientists, who are typically not trained to distinguish between high- and low-quality science. Astonishingly, the non-indexed OA journals herein were three times more abundant than well-established, indexed journals in our Google search. This is concerning when one considers that a large portion of the public obtains much of its scientific information from Google searches (Purcell et al., 2012; Fox and Duggan, 2013). It is important to recognize, however, that Google searches are personalized, and Google results will vary across users and over time; a more in-depth study assessing Google search results across a broad range of users and time periods is thus necessary to confirm the findings herein. Nonetheless, our search was adequate in identifying non-indexed OA journals for quantitative comparison with indexed journals. Furthermore, fish farmers and other stakeholders in the aquaculture industry rely on sound scientific information to inform optimal practices in order to ensure economic and environmental optimization and stability. For example, the treatment of farmed fish with tested and approved pharmaceuticals can be critical in maintaining healthy and high-quality fish (Shao, 2001; Benkendorff, 2009), and predatory OA journals are known to publish false pharmaceutical findings in other fields (Bohannon, 2013). 
Thus, predatory OA aquaculture journals are not only a potential threat to the academic side of aquaculture, but also to the public perception of aquaculture activities and the economic and ecological sustainability of the industry. It should be noted, however, that well respected, non-predatory publishers can also publish faulty and/or non-scientific content [e.g., the autism-vaccine link (Wakefield et al., 1998), or the recent high-profile case of microplastic effects on perch larvae (Lönnestedt and Eklöv, 2016)] and the spread of misinformation within the field of aquaculture research is not restricted to predatory OA journals. Nonetheless, our results do suggest that predatory OA aquaculture journals have a higher propensity to publish lesser-quality science than their non-predatory counterparts and can potentially contribute to the spread of misinformation in aquaculture.

Our results agree with those of previous studies suggesting that non-indexed OA journals serve a disproportionate number of authors from developing countries (Shen and Björk, 2015; Xia et al., 2015; Seethapathy et al., 2016; Balehegn, 2017; Gasparyan et al., 2017). It thus seems that many aquaculture researchers from these regions are spending a fair proportion of their limited financial resources on publishing in likely predatory OA journals, when such resources could be used in more effective ways (e.g., increasing research infrastructure, publishing in high-quality open access journals, hiring staff). This may be due to a number of factors such as preferential targeting of researchers in developing nations by predatory OA publishers, a shorter cultural tradition of participation in scientific publication, an intense pressure to publish resulting in unethical publishing practices (i.e., predatory authors), and/or economic limitations competing against high APCs in non-predatory OA journals (Harris, 2004; Butler, 2013; Shen and Björk, 2015). It is important to note, however, that the latter factor in particular is not limited to developing nations. It is also likely that good, high-quality research sometimes gets published in questionable journals because authors are unaware of what predatory OA journals are (although a quantitative understanding of naïvety among the aquaculture community is required) and wish to publish their work OA for as cheap a fee as possible. Thus, because predatory OA journals can negatively impact the reputation of individual researchers (Castillo, 2013; although this has yet to be quantitatively assessed) and can be exploited by unethical researchers for personal gain (Shen and Björk, 2015), aquaculture research from such nations may be perceived by the academic community as low quality, even though it may not be. 
As such, the publication of aquaculture research in non-indexed OA journals may hinder the research reputation of nations that publish in such journals often. To combat this, individual researchers should always use the “think, check, submit” method (see next section) when submitting papers to OA journals. In addition, indexed journals and other relevant institutions should explore ways to remove publication barriers for researchers in developing nations and increase incentives for such researchers to publish their work in reputable journals.

Alongside recognizing and avoiding predatory OA journals, there are a number of other approaches that can be used to hinder the progression and spread of predatory OA journals in the field of aquaculture and beyond. For example, Lalu et al. (2017) suggested that educating researchers on how to identify probable predatory OA journals, and providing incentives for publishing in legitimate journals and disincentives for publishing in predatory journals, could help to combat predatory OA publishing. These suggestions could be aided by peer-to-peer and institutional education and outreach, and diligent assessments of researcher publication records by institutions and funding agencies; institutional support and education can also aid graduate students and naïve researchers who wish to ethically publish OA (Beaubien and Eckard, 2014). Institutions and funding agencies could also explore mandatory archiving in institutional repositories, coupled with critical assessments of articles submitted to those repositories to monitor and discourage researchers from publishing in predatory OA journals (Yessirkepov et al., 2015; Seethapathy et al., 2016). Likewise, hiring committees, grant providers, international committees, and similar associations need to be diligent in assessing researcher credentials to avoid hiring and promoting “researchers” that may exploit the lack of rigorous peer review in predatory OA journals to enhance their CV.

An economic shift in the gold OA model could also help to alleviate the impact of predatory OA aquaculture journals. The current state of the gold OA model unfortunately drives the exploitation of scientific publishing for monetary gain, where an author fee-per-paper model incentivizes higher rates of publication and lower rigor. It is important to recognize, however, that traditional subscription-based publishers also exploit scientific publishing for monetary gain (in most cases, much more efficiently than OA journals). Given the financial requirements of OA publishing and the difficulty in removing the associated costs (Hoyt, 2017), removing the financial component of the gold OA model may not be the most realistic solution and may hinder the progression of and transition to open science. Thus, the approaches mentioned in the previous paragraph may be more effective.

While systematic changes will be necessary to eliminate predatory OA publishing, individual researchers can contribute to the cause by being educated about predatory OA journals, and using the “think, check, submit” method when submitting a manuscript to OA journals (http://thinkchecksubmit.org/). This method first encourages researchers to think about the journal they are about to submit their work to and closely consider whether or not the journal seems legitimate and if their work fits in the journal. Next, submitting authors are guided to check the legitimacy of the journal. This can be done using the “think, check, submit” checklist (http://thinkchecksubmit.org/check/) and cross-checking the journal or publisher in the DOAJ and other quality-controlled bibliometric databases. Finally, submitting authors are encouraged to submit their work only if they answered yes to most of the questions in the “think, check, submit” checklist. In addition, aquaculture researchers can use the attributes elucidated herein to assess whether a candidate OA journal for their work may be predatory (see below).

In this study, we found a number of journal- and article-level attributes that statistically differentiated non-indexed OA aquaculture journals from indexed ones and suggested that non-indexed OA journals are likely predatory. However, it is important to recognize that assessing a single attribute (as highlighted here or in other studies) is insufficient for determining whether or not an OA aquaculture journal is predatory (as not all non-indexed OA journals are predatory; Beaubien and Eckard, 2014), particularly given that the random factor of journal had a significant effect on most of our article-level metrics. Thus, researchers need to carefully examine multiple journal attributes in order to determine with confidence whether or not a given OA journal is likely predatory. Based on our analysis, the aquaculture journals that appear most prominently in Google searches use the gold OA model, were established after 2010, publish <20 articles per year, and have an APC under $1,000. In addition, these journals are not included in any of the three quality-controlled bibliometric databases used herein (DOAJ, Clarivate Analytics, and Scopus). We thus suggest that OA aquaculture journals with this combination of attributes are most likely predatory (as defined previously) and we encourage all authors to assess OA publishing options with care. It is critical to stress here, however, that it is the combination of these attributes that characterizes predatory OA aquaculture journals, and any single attribute cannot be used to define a journal as predatory. Taking additional steps such as referring to the DOAJ (https://doaj.org/; and other quality-controlled databases such as Web of Science, Clarivate Analytics, and Scopus) prior to submitting a paper to an OA aquaculture journal would also serve researchers well in avoiding predatory OA journals (Günaydin and Dogan, 2015).
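As an illustration only, the combination of attributes described above can be written as a simple screening check. The sketch below is not part of our analysis (which was conducted in R); the class name, field names, function names, and the example journal are all hypothetical, and the thresholds are simply those stated in the preceding paragraph. A journal matching the full profile should be treated as a candidate for closer scrutiny rather than as conclusively predatory.

```python
from dataclasses import dataclass

# The three quality-controlled databases named in the text.
QUALITY_DATABASES = frozenset({"DOAJ", "Clarivate Analytics", "Scopus"})


@dataclass
class JournalProfile:
    """Hypothetical container for journal-level attributes."""
    name: str
    gold_oa: bool                 # uses the gold OA (author-pays) model
    year_established: int
    articles_per_year: float
    apc_usd: float                # article processing charge in US dollars
    indexed_in: frozenset = frozenset()


def warning_signs(journal):
    """Return each attribute from the text as a separate True/False flag."""
    return {
        "gold_oa": journal.gold_oa,
        "established_after_2010": journal.year_established > 2010,
        "under_20_articles_per_year": journal.articles_per_year < 20,
        "apc_under_1000_usd": journal.apc_usd < 1000,
        "not_indexed": not (set(journal.indexed_in) & QUALITY_DATABASES),
    }


def matches_full_profile(journal):
    """True only when every attribute is present; no single flag suffices."""
    return all(warning_signs(journal).values())


# Hypothetical example journal, invented for illustration.
example = JournalProfile(
    name="Hypothetical Journal of Aquaculture",
    gold_oa=True,
    year_established=2014,
    articles_per_year=12,
    apc_usd=300,
)
print(matches_full_profile(example))
```

Requiring all flags at once mirrors the point made above: a recently established or inexpensive journal is not suspect on that basis alone, and an indexed journal never matches the profile regardless of its other attributes.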

It should also be noted that this study does not suggest that all non-indexed OA aquaculture journals are predatory by nature, or that all articles contained in predatory OA journals are necessarily of low quality. Thus, our statistical approach here is only indicative of potential predatory OA journals. Nonetheless, it is clear that naïve researchers may submit high quality work to predatory OA journals. This does not excuse predatory OA journals on the basis that they sometimes publish good research, but rather highlights that good science may get lost or interpreted as poor science when it is published in such journals. Thus, it is critical for researchers to check attributes of the journals they are submitting to in order to determine whether or not a given OA journal provides an adequate range of scholarly publishing services (e.g., solid peer review, participation in archiving, quality-controlled bibliometric indexation); using the “think, check, submit” method can be useful in this regard (see section Minimizing the Impact of Potential Predatory OA Journals in Aquaculture). Future studies exploring the variation in article scientific quality within predatory and non-predatory OA journals would provide a more detailed understanding of the scientific quality of predatory OA journal articles. Furthermore, experimental studies with blinded reviewers assessing the scientific quality of articles from the non-indexed OA and indexed journals (compared to an assessment of aggregate proxies for scientific rigor) would provide more direct evidence as to whether or not articles in non-indexed OA journals are truly of lesser scientific quality.

While this study documents a variety of quantitative attributes of non-indexed OA aquaculture journals and suggests that they can be predatory, we did not include all potential attributes of predatory OA journals. Indeed, a number of known predatory OA journal attributes were not incorporated into our analysis, including illustrative aspects of journal covers, unusually large editorial boards filled with famous names and/or editors without an online presence, strange/broad subject categories, and poorly designed websites with many typos and no secure payment options for open access fees (Hunziker, 2017). As such, future studies objectively assessing the attributes of non-indexed OA and well-established, indexed journals would benefit from incorporating additional attributes such as those highlighted above.

Lastly, while this paper focuses on aquaculture research, the broader applicability of our results to other fields of research remains to be determined. Given our robust design and the similarities between our results and the documented nature of predatory OA journals (Bohannon, 2013), it seems likely that our results are applicable to other fields of research. Subtle differences in publishing practices, however, could theoretically render our results invalid for other research disciplines. Future studies applying our approach to objectively assess non-indexed OA and well-established, indexed journals in other fields of research are required to determine the breadth of applicability that our results provide.

Our findings suggest that non-scientists are likely to encounter predatory OA aquaculture journals in online searches given that non-indexed OA journals were characteristic of predatory OA journals and were three times more abundant than indexed journals in the first 10 SERPs of our search. Overall, non-indexed OA journals were found to use the gold OA model, were established more recently, and were less scientifically rigorous than indexed aquaculture journals. Taken together, these results suggest that predatory OA publishing may pose a threat to aquaculture research and needs to be considered seriously by the aquaculture research community and industry as a whole. The awareness and recognition of predatory OA aquaculture journals by individual researchers, students, and various aquaculture stakeholders is paramount for minimizing predatory OA journals' impact on aquaculture research and the aquaculture industry at large; this can be achieved through institutional and peer-to-peer education regarding attributes of predatory OA journals (compared to non-predatory journals) and promoting the use of databases such as the DOAJ. Additionally, the removal of the pay-per-article aspect of the gold OA model would eliminate the purpose of predatory OA journals and the negative effects that they inflict on aquaculture. The implementation of such recommendations could help remove the questionable practices of predatory OA publishers from the field of aquaculture research, thus maintaining the integrity of the field, moving toward an appropriate public perception of aquaculture in a post-truth era, and ensuring an adequate and productive contribution of aquaculture to global food security for the future.

JC: Conceived and helped develop the idea, contributed to study design, collected and analyzed data, and wrote and revised the manuscript; RD and HF: Contributed to idea development, study design, data collection, and manuscript revision; RD: Also contributed to data checks and analytical confirmation.

This project was funded by a NSERC Visiting Postdoctoral Fellowship to JC (Grant number 501540-2016) and institutional postdoctoral fellowships of RD and HF.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

We are grateful to Dr. Luc A. Comeau and Rémi Sonier at the Gulf Fisheries Centre (Fisheries and Oceans Canada) for constructive feedback on an earlier draft of this manuscript. We also wish to thank the two reviewers for their substantive comments which helped improve the manuscript.

All raw data and R-code are available in the Supplementary Material, which are hosted on GitHub (https://github.com/remi-daigle/PredPubAquaculture) and archived on Zenodo (https://zenodo.org/record/1188938#.Wp2cXfnwZpg).

Adie, E. (2014). Attention! A study of open access vs. non-open access articles. Figshare. doi: 10.6084/m9.figshare.1213690

Ahrens, W. H., Cox, D. J., and Budhwar, G. (1990). Use of the arcsin and square root transformations for subjectively determined percentage data. Weed Sci. 38, 452–458.

Antelman, K. (2004). Do open-access articles have a greater research impact? Coll. Res. Libr. 65, 372–382.

Bacher, K. (2015). Perception and Misconceptions of Aquaculture: A Global Overview. GLOBEFISH Research Programme, Vol. 120. Rome: Food and Agriculture Organization of the United Nations.

Balehegn, M. (2017). Increased publication in predatory journals by developing countries' institutions: what it entails? And what can be done? Int. Inf. Libr. Rev. 49, 97–100. doi: 10.1080/10572317.2016.1278188

Beall, J. (2012). Predatory publishers are corrupting open access. Nature 489:179. doi: 10.1038/489179a

Beall, J. (2013). Predatory publishing is just one of the consequences of gold open access. Learn. Publ. 26, 79–84. doi: 10.1087/20130203

Beall, J. (2016). Dangerous predatory publishers threaten medical research. J. Korean Med. Sci. 31, 1511–1513. doi: 10.3346/jkms.2016.31.10.1511

Beaubien, S., and Eckard, M. (2014). Addressing faculty publishing concerns with open access journal quality indicators. J. Libr. Schol. Comm. 2:eP1133. doi: 10.7710/2162-3309.1133

Benkendorff, K. (2009). “Aquaculture and the production of pharmaceuticals and nutraceuticals,” in New Technologies in Aquaculture: Improving Production Efficiency, Quality and Environmental Management, eds G. Burnell, and G. Allan (Oxford: Woodhead Publishing), 866–891.

Berger, M. (2017). “Everything you ever wanted to know about predatory publishing but were afraid to ask,” in Association of College and Research Libraries. At the Helm: Leading Transformation: The Proceedings of the ACRL 2017 Conference, March 22–25, 2017 Baltimore, MD, ed D. M. Mueller (Chicago, IL: Association of College and Research Libraries), 206–217.

Björk, B. C., Laakso, M., Welling, P., and Paetau, P. (2014). Anatomy of green open access. J. Assoc. Inf. Sci. Technol. 65, 237–250. doi: 10.1002/asi.22963

Bohannon, J. (2013). Who's afraid of peer review? Science 342, 60–65. doi: 10.1126/science.342.6154.60

Burnham, K. P., and Anderson, D. R. (2002). Model Selection and Multimodel Inference: A Practical Information-Theoretic Approach, 2nd Edition. New York, NY: Springer-Verlag.

Butler, D. (2013). Investigating journals: the dark side of publishing. Nature 495, 433–435. doi: 10.1038/495433a

Castillo, M. (2013). Predators and cranks. Am. J. Neuroradiol. 34, 2051–2052. doi: 10.3174/ajnr.A3774

Christopher, M. M., and Young, K. M. (2015). Awareness of “predatory” open-access journals among prospective veterinary and medical authors attending scientific writing workshops. Front. Vet. Sci. 2:22. doi: 10.3389/fvets.2015.00022

Clements, J. C. (2017). Open access articles receive more citations in hybrid marine ecology journals. FACETS 2, 1–14. doi: 10.1139/facets-2016-0032

Craig, I. D., Plume, A. M., McVeigh, M. E., Pringle, J., and Amin, M. (2007). Do open access articles have greater citation impact? A critical review of the literature. J. Informetr. 1, 239–248. doi: 10.1016/j.joi.2007.04.001

D'Anna, L. M., and Murray, G. D. (2015). Perceptions of shellfish aquaculture in British Columbia and implications for well-being in marine social-ecological systems. Ecol. Soc. 20:57. doi: 10.5751/ES-07319-200157

Dockrill, P. (2017). A Neuroscientist Just Tricked 4 Dodgy Journals into Accepting a Fake Paper on 'Midi-Chlorians'. ScienceAlert. Available online at: https://www.sciencealert.com/a-neuroscientist-just-tricked-4-journals-into-accepting-a-fake-paper-on-midi-chlorians (Accessed July 24, 2017).

Ellis, E., Maslin, M., Boivin, N., and Bauer, A. (2016). Involve social scientists in defining the Anthropocene. Nature 540, 192–193. doi: 10.1038/540192a

Eysenbach, G. (2006). Citation advantage of open access articles. PLoS Biol. 4:e157. doi: 10.1371/journal.pbio.0040157

FAO (2016). The State of the World Fisheries and Aquaculture: Contributing to Food Security and Nutrition for All. Rome: Food and Agriculture Organization of the United Nations.

Fisheries Oceans Canada (2005). Overview: Qualitative Research Exploring Canadians' Perceptions, Attitudes and Concerns Towards Aquaculture. Fisheries and Oceans Canada, Reports and Publications. Available online at: http://www.dfo-mpo.gc.ca/por-rop/focus-aquaculture-eng.htm (Accessed July 10, 2017).

Fox, S., and Duggan, M. (2013). Health Online 2013. Washington, DC: Pew Research Center's Internet & American Life Project.

Froehlich, H. E., Gentry, R. R., Rust, M. B., Grimm, D., and Halpern, B. S. (2017). Public perceptions of aquaculture: evaluating spatiotemporal patterns of sentiment around the world. PLoS ONE 12:e0169281. doi: 10.1371/journal.pone.0169281

Gargouri, Y., Hajjem, C., Larivière, V., Gingras, Y., Carr, L., Brody, T., et al. (2010). Self-selected or mandated, open access increases citation impact for higher quality research. PLoS ONE 5:e13636. doi: 10.1371/journal.pone.0013636

Gasparyan, A. Y., Nurmashev, B., Udovik, E. E., Koroleva, A. M., and Kitas, G. D. (2017). Predatory publishing is a threat to non-mainstream science. J. Korean Med. Sci. 32, 713–717. doi: 10.3346/jkms.2017.32.5.713

Günaydin, G. P., and Dogan, N. Ö. (2015). A growing threat for academicians: fake and predatory journals. J. Acad. Emerg. Med. 14, 94–96. doi: 10.5152/jaem.2015.48569

Gutierrez, F. R., Beall, J., and Forero, D. A. (2015). Spurious alternative impact factors: the scale of the problem from an academic perspective. Bioessays 37, 474–476. doi: 10.1002/bies.201500011

Hajjem, C., Harnad, S., and Gingras, Y. (2005). Ten-year cross disciplinary comparison of the growth of open access and how it increases research citation impact. Bull. IEEE Comp. Soc. Tech. Comm. Data Eng. 28, 39–47.

Harris, E. (2004). Building scientific capacity in developing nations. EMBO Rep. 5, 7–11. doi: 10.1038/sj.embor.7400058

Holmes, G., Barber, J., and Lundershausen, J. (2017). Anthropocene: be wary of social impact. Nature 541:464. doi: 10.1038/541464b

Hoyt, J. (2017). Untitled. Twitter. Available online at: https://twitter.com/jasonHoyt/status/879624241817296896 (Accessed July 14, 2017).

Hunziker, R. (2017). Avoiding predatory publishers in the post-Beall world: tips for writers and editors. AMWA J. 32, 113–115.

Jalalian, M., and Mahboobi, H. (2014). Hijacked journals and predatory publishers: is there a need to re-think how to assess the quality of academic research? Walailak J. Sci. Technol. 11, 389–394. doi: 10.14456/wjst.2014.16

Keyes, R. (2004). The Post-Truth Era: Dishonesty and Deception in Contemporary Life. New York, NY: St. Martin's Press.

Lalu, M. M., Shamseer, L., Cobey, K. D., and Moher, D. (2017). How stakeholders can respond to the rise of predatory journals. Nat. Hum. Behav. 1, 852–855. doi: 10.1038/s41562-017-0257-4

Lönnestedt, O. M., and Eklöv, P. (2016). Environmentally relevant concentrations of microplastic particles influence larval fish ecology. Science 352, 1213–1216. doi: 10.1126/science.aad8828

McKiernan, E. C., Bourne, P. E., Brown, C. T., Buck, S., Kenall, A., Lin, J., et al. (2016). How open science helps researchers succeed. eLife 5:e16800. doi: 10.7554/eLife.16800

Moher, D., and Srivastava, A. (2015). You are invited to submit. BMC Med. 13:180. doi: 10.1186/s12916-015-0423-3

Pauly, D., and Zeller, D. (2016). Catch reconstructions reveal that global marine fisheries catches are higher than reported and declining. Nat. Commun. 7:10244. doi: 10.1038/ncomms10244

Pinheiro, J., Bates, D., DebRoy, S., and Sarkar, D. (2017). nlme: Linear and Nonlinear Mixed Effects Models. Available online at: https://CRAN.R-project.org/package=nlme (Accessed July 11, 2017).

Purcell, K., Brenner, J., and Rainie, L. (2012). Search Engine Use 2012. Washington, DC: Pew Research Center's Internet & American Life Project.

R Development Core Team (2017). R: A Language and Environment for Statistical Computing Version 3.4.1. Vienna: R Foundation for Statistical Computing.

Richter, F. (2013). 1.17 Billion People Use Google Search. Available online at: https://www.statista.com/chart/899/unique-users-of-search-engines-in-december-2012 (Accessed September 28, 2017).

Seethapathy, G. S., Santhosh Kumar, J. U., and Hareesha, A. (2016). India's scientific publication in predatory journals: need for regulating quality of Indian science and education. Curr. Sci. 111, 1759–1764. doi: 10.18520/cs/v111/i11/1759-1764

Shao, Z. J. (2001). Aquaculture pharmaceuticals and biologicals: current perspectives and future possibilities. Adv. Drug Deliv. Rev. 50, 229–243. doi: 10.1016/S0169-409X(01)00159-4

Shen, C., and Björk, B. C. (2015). “Predatory” open access: a longitudinal study of article volumes and market characteristics. BMC Med. 13:230. doi: 10.1186/s12916-015-0469-2

Sorokowski, P., Kulczycki, E., Sorokowska, A., and Pisanski, K. (2017). Predatory journals recruit fake editor. Nature 543, 481–483. doi: 10.1038/543481a

Swan, A., Gargouri, Y., Hunt, M., and Harnad, S. (2015). Open access policy: numbers, analysis, effectiveness. arXiv:1504.02261.

Swauger, S. (2017). Open access, power, and privilege: a response to “What I learned from predatory publishing”. Coll. Res. Libr. News 78, 603–606. doi: 10.5860/crln.78.11.603

Van Nuland, S. E., and Rogers, K. A. (2016). Academic nightmares: predatory publishing. Anat. Sci. Educ. 10, 392–394. doi: 10.1002/ase.1671

Vinny, P. W., Vishnu, V. Y., and Lal, V. (2016). Trends in scientific publishing: dark clouds loom large. J. Neurol. Sci. 363, 119–120. doi: 10.1016/j.jns.2016.02.040

Wakefield, A. J., Murch, S. H., Anthony, A., Linnell, J., Casson, D. M., Malik, M., et al. (1998). Ileal-lymphoid-nodular hyperplasia, non-specific colitis, and pervasive developmental disorder in children. Lancet 351, 637–641. doi: 10.1016/S0140-6736(97)11096-0

Wang, X., Liu, C., Mao, W., and Fang, Z. (2015). The open access advantage considering citation, article usage and social media attention. Scientometrics 103, 555–564. doi: 10.1007/s11192-015-1547-0

Ware, M., and Mabe, M. (2015). The STM Report: An Overview of Scientific and Scholarly Journal Publishing, Fourth Edition. The Hague: International Association of Scientific, Technical and Medical Publishers.

Warton, D. I., and Hui, F. K. C. (2011). The arcsin is asinine: the analysis of proportions in ecology. Ecology 92, 3–10. doi: 10.1890/10-0340.1

Xia, J., Harmon, J. L., Connolly, K. G., Donnelly, R. M., Anderson, M. R., and Howard, H. A. (2015). Who publishes in “predatory” journals? J. Assoc. Inf. Sci. Technol. 66, 1406–1417. doi: 10.1002/asi.23265

Keywords: ethics, journal selection, open access, peer review, scientific publishing, science communication

Citation: Clements JC, Daigle RM and Froehlich HE (2018) Predator in the Pool? A Quantitative Evaluation of Non-indexed Open Access Journals in Aquaculture Research. Front. Mar. Sci. 5:106. doi: 10.3389/fmars.2018.00106

Received: 26 July 2017; Accepted: 14 March 2018;

Published: 29 March 2018.

Edited by:

António V. Sykes, Centro de Ciências do Mar (CCMAR), Portugal

Reviewed by:

Pedro Morais, University of California, Berkeley, United States

Copyright © 2018 Clements, Daigle and Froehlich. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jeff C. Clements

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
