The terms “good science,” “bad science,” and especially “sound science” are frequently used in the policy arena. Most often, this is so parties with interests (usually economic) in the outcome of a political decision can promote certain results and attempt to discredit others. It has been argued that the terms “sound science” and “junk science” have been appropriated by various industries, such as the oil and gas industry and the tobacco industry. “Junk science” is the term used to tar scientific studies that disagree with positions favorable to the industry (Mooney, 2004, 2006; Oreskes and Conway, 2011; Macilwain, 2014). But can science actually be “good” or “bad”?

Science is a process. It's the act of taking observations made in the natural world to test hypotheses, preferably in a rigorous, repeatable way. The tested hypotheses are then rejected if they fall short, rather than accepted if the data are compatible, and the results are ultimately critically reviewed by the scientific community. Concepts that work survive, whereas those that do not fit the observed data die off. Eventually, concepts that survive the frequent and repeated application of enormous amounts of observational data become scientific theory. Such theories become as close to scientific fact as is possible—nothing can be proved absolutely. This process holds for social science as much as for chemistry, physics or biology: it does not matter if the data come from surveys or observational data from humans. A study either follows this protocol or it does not. Put simply, it is science or it isn't science.

That said, what is sometimes referred to as “bad science” is the use of a bad experimental design. This is typically a set-up that has not accounted for confounding variables, so the hypothesis has not been appropriately tested and the inferences based upon this work are flawed and incorrect. These flaws may include the use of an inappropriate sample size or time frame. Use of selective data is another problem, where data that don't fit are simply left out of statistical analyses as “outliers.” In short, “bad science” is a study that does not follow the scientific process. It could also be used to describe studies that have flaws and limitations that are not highlighted by researchers. The term “bad science” has also been applied to inappropriate interpretations of the results. The reason for this, mentioned above, is that science never proves anything. Thus personal opinions can color interpretations of what data actually mean. This is where most of the debate in the scientific community really lies. We may all agree that a given hypothesis has not yet been invalidated, but what if alternative explanations for the observed data are possible? Or, as noted above, there might be limitations and caveats in a particular study: for example, an ex situ experimental study on a small sample of a single species in an aquarium may produce interesting results, but ignoring these limitations and extrapolating the results to draw conclusions about multiple species in multiple ecosystems in the wild over-reaches the real limits of the study in question (see Parsons et al., 2008 for an example related to captive cetacean studies and the impacts of underwater sound). However, when scientific studies are interpreted beyond hyperbole, and are deliberately misinterpreted to fit a particular world view or to favor special interests, this is when science is no longer just “bad,” but becomes ugly.

Government decisions regarding the marine environment are typically required to be based on the “best available science.” The typical tools for aiding decision-making are environmental impact assessments (EIAs). However, such EIAs are typically restricted by a timeline and a tight budget, and frequently focus on simple species descriptions and habitat reviews. Conversely, the marine environment is logistically difficult, complex, and expensive to study (Norse and Crowder, 2005). It is frequently the case that the scientific content of an EIA, due to these limitations, is insufficient to fully ascertain the impacts of a project. However, the EIA's conclusions often do not acknowledge the deficiencies of the assessment. This “bad” science can, moreover, turn ugly if the conclusions of an EIA run contrary to the findings of the actual assessment in order to allow a project to get approval. After all, if an environmental consultant says a project cannot go ahead, they may risk not being awarded any further contracts. Thus there is a major financial incentive not to highlight an EIA's limitations, or even to give the client the determination that they desire, contrary to the data gathered in the assessment (Wright et al., 2013a). It should be noted that the data in an EIA might actually be very rigorously gathered in an appropriate scientific manner, and thus technically be “good science.” However, when the interpretation of the science is not based on the data, but rather on the interests of industry, individuals or politics, it is no longer “good science.” In fact, it ceases to be science at all.

One high-profile example of inappropriate interpretation of marine science data was research conducted to assess the impacts of the Acoustic Thermometry of the Ocean Climate (ATOC) program. This project was designed to detect changes in oceanic temperatures using a high-intensity, low-frequency sound source. After expressions of concern by scientists and NGOs about the possible impact of the high-intensity sound to be used in the project, a field test was conducted in 1991. While the sound source was operating, researchers acoustically monitored an area of nearly 5000 km² of ocean. They found that acoustic detections of long-finned pilot whales (Globicephala melas) and sperm whales (Physeter macrocephalus) were substantially lower when the sound source was operating than when it was not (Bowles et al., 1994). Despite the results of this test, the ATOC project continued, albeit with a quieter (~20 dB) source level than used in the test. Several environmental NGOs subsequently launched a court case, which was settled out of court, but it did lead to a program of marine mammal-oriented studies (McCarthy, 2004; Oreskes, 2004, 2014). Several of these studies noted significant changes in the behavior/distribution of whales around the ATOC sound source (Calambokidis, 1998; Frankel and Clark, 1998, 2000, 2002). A Draft Environmental Impact Statement (DEIS) was released in 2000, which concluded that there was no short- or long-term biologically significant impact from the sound source, a stance that was criticized in a US National Research Council report (National Research Council, 2003). The critique stated that the studies relied on by the DEIS were insufficient to adequately test whether there had, or had not, been short- or long-term effects on marine mammals, or to assess the biological significance of any such effects if they occurred (National Research Council, 2003). That is to say, the hypotheses tested in the various marine mammal studies were not consistent with the conclusions drawn. Sadly, this is a common situation with many EIAs, which have a seemingly supportive case for an impact in the part of the document that presents scientific data, but conclude that there is no significant impact irrespective of the science presented (Wright et al., 2013a).

A second case study on the nature of science in the marine environmental realm is that of the impact of naval sonar on cetaceans. Many scientists were initially convinced that the main concern for injury to cetaceans from high-intensity noise was temporary or permanent deafness or threshold shifts (referred to as TTS and PTS, respectively). However, other scientists were concerned that behavioral changes, such as surfacing too quickly, might lead to injury through “the bends”-like effects (Jepson et al., 2003; Fernández et al., 2004, 2005; Cox et al., 2006; and see review in Parsons et al., 2008). These behavioral effects could potentially occur at levels much lower than those which were known to cause TTS/PTS. The latter hypothesis was criticized by several as being “bad” or “junk science” (pers. obs.), possibly because the hypothesis did not fit with the then-held assumptions about the impacts of sound on marine mammals. Another possibility is that accepting the hypothesis would support the implementation of a more precautionary management regime, with heavier restrictions on noise-producing activities. However, the hypothesis was subsequently tested. Beaked whales and other cetaceans were exposed to military sonar, and potentially problematic behavioral changes were observed (Tyack et al., 2011). This was a good example of using the scientific method to investigate a problem. As a result, we know that there can be important impacts on cetaceans at levels of sound much lower than previously thought, and management regimes can be adjusted accordingly. Prior to these experiments, many complained that the hypothesis whereby behavioral changes induced a “bends”-like effect was not “sound science” (pers. obs.). However, the fact that the majority now accept revised hypotheses that have been tested, and that management recommendations are starting to be proposed based on the latest understanding of sound impacts, is an example of what one might consider “good science.”

This example leads us to another aspect of the scientific method: rejecting previously accepted hypotheses when additional data show that these hypotheses are, in fact, false. Under the scientific method, a “good” scientist's understanding of the environment changes as additional data are acquired, whereas a “bad” scientist sticks stubbornly to previously held beliefs despite being faced with data that suggest an alternative scenario. It is a basic tenet of scientific inquiry, after all, that hypotheses are rejected when not supported by data. Good scientists are willing to change their opinions quickly in the face of new evidence or in response to a valid argument. However, opinions that are not based on data-tested hypotheses do not represent good or bad science; they are simply not scientific at all.

Sticking to an opinion or an idea despite evidence to the contrary is sadly quite common in the science community. One sees “scientists” who stubbornly resist new ideas and studies, especially those that contradict a paper that the “scientists” wrote or concepts that they have publicly supported, or even based their career on. But adapting to new evidence is a key criterion of the scientific method. When scientists stubbornly resist new evidence contrary to their opinion, it really is “bad science,” i.e., refusing to reject a hypothesis that has been shown to be false.

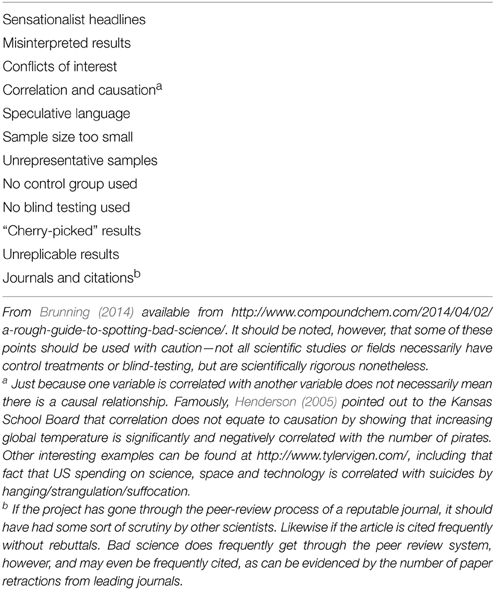

Combating bad science ideally should be done through scientific peer-review, as professional scientists should understand the intricacies of the scientific method, and in an ideal world this happens. However, reviewers with conflicts of interest are sadly too frequent. Moreover, any problems are exacerbated when science meets policy or public opinion. Policy makers and the general public, who are not trained in the scientific method, may not understand the difference between “good” and “bad” science or recognize misrepresentations of science (see Wright et al., 2013b for further discussion). This is not helped by the fact that scientists who may be well trained in the scientific method may not be trained in (or even very good at) the art of communication. Fortunately, some scientists forgo research to become involved in policymaking and management, journalism, and/or teaching. However, there have been concerns that science journalism in traditional media has been in decline (Brumfiel, 2009; Nature, 2009a,b), with few newspapers employing journalists with a scientific background. The result is that articles on science often display a tenuous grip on the scientific method and the real implications of the results (Rose and Parsons, in press). Brunning (2014) provides a checklist to help the lay person to spot “bad science” (Table 1), whether it be in science-related articles, government reports or EIAs (also recommended are McConway and Spiegelhalter, 2012 and www.badscience.net).

Marine scientists should try to avoid the terms “sound” or “junk” science, as these terms have been co-opted by special interests and have become tainted by association, as noted earlier. There can be “good science” or “bad science,” but arguably only because a project uses a scientific methodology in which the experimental design is well thought out, potential confounding variables are addressed, conclusions are appropriate for the hypotheses that were tested and the data that were gathered, and caveats are expressed… or this is not the case. In short, science has been properly conducted or it has not been conducted. There is no middle ground. Then there are situations where lip service is paid to “science” but actual scientific data have been willfully ignored because of dogma, special interest or politics. This is often the realm of those who use the term “sound science” for studies that support their agenda, and “junk science” for those that don't. But to paraphrase Yoda, there are studies where data have been collected in an appropriate scientific fashion and interpreted appropriately, and there are those where they have not; there is no in between.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We wish to thank Amy Bauer for kindly editing draft versions of this manuscript, and we are grateful for the useful comments of two reviewers.

References

Bowles, A. E., Smultea, M., Würsig, B., DeMaster, D. P., and Palka, D. (1994). The relative abundance and behaviour of marine mammals exposed to transmissions from the Heard Island Feasibility Test. J. Acoust. Soc. Am. 96, 2469–2484. doi: 10.1121/1.410120

Brumfiel, G. (2009). Science journalism: supplanting the old media? Nature 458, 274–277. doi: 10.1038/458274a

Brunning, A. (2014). A Rough Guide to Spotting Bad Science. Available online at: http://www.compoundchem.com/2014/04/02/a-rough-guide-to-spotting-bad-science.

Calambokidis, J. (1998). “Effects of the ATOC sound source on the distribution of marine mammals observed from aerial surveys off central California,” in World Marine Mammal Conference, Monte Carlo, Monaco, 20–24th January 1998, (Monte Carlo: European Cetacean Society and Society for Marine Mammalogy), 22.

Cox, T. M., Ragen, T. J., Read, A. J., Vos, E., Baird, R. W., Balcomb, K., et al. (2006). Understanding the impacts of anthropogenic sound on beaked whales. J. Cetacean Res. Manage. 7, 177–187.

Fernández, A., Arbelo, M., Deaville, R., Patterson, I. A. P., Castro, P., Baker, J. R., et al. (2004). Whales, sonar and decompression sickness. Nature 428, 1–2. doi: 10.1038/nature02528a

Fernández, A., Edwards, J. F., Rodríguez, F., Espinosa de los Monteros, A., Herráez, P., Castro, P., et al. (2005). “Gas and Fat Embolic Syndrome” involving a mass stranding of beaked whales (Family Ziphiidae) exposed to anthropogenic sonar signals. Vet. Pathol. 42, 446–457. doi: 10.1354/vp.42-4-446

Frankel, A. D., and Clark, C. W. (1998). Results of low-frequency playback of M-sequence noise to humpback whales, Megaptera novaeangliae, in Hawaii. Can. J. Zool. 76, 521–535.

Frankel, A. D., and Clark, C. W. (2000). Behavioral responses of humpback whales to full-scale ATOC signals. J. Acoust. Soc. Am. 108, 1–8. doi: 10.1121/1.1289668

Frankel, A. D., and Clark, C. W. (2002). ATOC and other factors affecting distribution and abundance of humpback whales (Megaptera novaeangliae) off the north shore of Kauai. Mar. Mamm. Sci. 18, 644–662. doi: 10.1111/j.1748-7692.2002.tb01064.x

Henderson, B. (2005). Open Letter to the Kansas School Board. Available online at: http://web.archive.org/web/20070407182624/http://www.venganza.org/about/open-letter/

Jepson, P. D., Arbelo, M., Deaville, R., Patterson, I. A. P., Castro, P., Baker, J. R., et al. (2003). Gas-bubble lesions in stranded cetaceans: was sonar responsible for a spate of whale deaths after an Atlantic military exercise? Nature 425, 575–576. doi: 10.1038/425575a

Macilwain, C. (2014). Beware of the backroom deals in the name of ‘science’. Nature 508:289. doi: 10.1038/508289a

McCarthy, E. (2004). International Regulation of Underwater Sound: Establishing Rules and Standards to Address Ocean Noise Pollution. New York, NY: Springer.

McConway, K., and Spiegelhalter, D. (2012). Score and ignore. A radio listener's guide to ignoring health stories. Significance 9, 45–48. doi: 10.1111/j.1740-9713.2012.00611.x

Mooney, C. (2004). Beware ‘Sound Science.’ It's Doublespeak for Trouble. Retrieved from Washington Post.

National Research Council. (2003). “Effects of noise on marine mammals,” in Ocean Noise and Marine Mammals, (Washington, DC: National Academies Press), 83–108.

Norse, E., and Crowder, L. B. (2005). “Why marine conservation biology?,” in Marine Conservation Biology, eds E. Norse and L. B. Crowder, (Washington, DC: Island Press), 1–18.

Oreskes, N. (2004). Science and public policy: what's proof got to do with it? Environ. Sci. Policy 7, 369–383. doi: 10.1016/j.envsci.2004.06.002

Oreskes, N. (2014). “Changing the mission: from the cold war to climate change,” in Science and Technology in the Global Cold War, eds N. Oreskes and J. Krige (Cambridge, MA: MIT Press), 141–187.

Oreskes, N., and Conway, E. M. (2011). Merchants of Doubt: How a Handful of Scientists Obscured the Truth on Issues from Tobacco Smoke to Global Warming. New York, NY: Bloomsbury Press.

Parsons, E. C. M., Dolman, S., Wright, A. J., Rose, N. A., and Burns, W. C. G. (2008). Navy sonar and cetaceans: just how much does the gun need to smoke before we act? Mar. Pollut. Bull. 56, 1248–1257. doi: 10.1016/j.marpolbul.2008.04.025

Rose, N. A., and Parsons, E. C. M. (in press). “Back off, man, I'm a scientist!,” in When Marine Conservation Science Meets Policy, Ocean & Coastal Management.

Tyack, P. L., Zimmer, W. M. X., Moretti, D., Southall, B. L., Claridge, D. E., Durban, J. W., et al. (2011). Beaked whales respond to simulated and actual navy sonar. PLoS ONE 6:e17009. doi: 10.1371/journal.pone.0017009

Wright, A. J., Dolman, S. J., Jasny, M., Parsons, E. C. M., Schiedek, D., and Young, S. B. (2013a). Myth and momentum: a critique of environmental impact assessments. J. Environ. Prot. 4, 72–77. doi: 10.4236/jep.2013.48A2009

Keywords: science, good science, bad science, junk science, sound science, policy making, science communication

Citation: Parsons ECM and Wright AJ (2015) The good, the bad and the ugly science: examples from the marine science arena. Front. Mar. Sci. 2:33. doi: 10.3389/fmars.2015.00033

Received: 21 February 2015; Accepted: 18 May 2015;

Published: 04 June 2015.

Edited by:

Trevor John Willis, University of Portsmouth, UK

Reviewed by:

Clive Nicholas Trueman, University of Southampton, UK

Matt Terence Frost, Marine Biological Association, UK

Copyright © 2015 Parsons and Wright. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: E. C. M. Parsons
