REVIEW article

Front. Lang. Sci., 25 May 2023

Sec. Neurobiology of Language

Volume 2 - 2023 | https://doi.org/10.3389/flang.2023.1176233

This article is part of the Research Topic “Syntax, the brain, and linguistic theory: a critical reassessment.”

Theoretical accounts of syntax are broadly divided into lexicalist and construction-based viewpoints: lexicalist traditions argue that a great deal of syntactic information is stored in lexical representations, while construction-based views argue for separate representations of multiword syntactic structures. Moreover, a strict autonomy between syntactic and semantic processing has been posited based on the grammatical well-formedness of nonsense sentences such as This round table is square. In this paper, we provide an overview of these competing conceptions of syntactic structure and the role of syntax in grammar. We review converging neuroimaging, electrophysiological, behavioral, electrocorticographic, and computational modeling evidence that challenges these views. In particular, we show that a temporal lobe ventral stream is crucial in processing phrases involving nouns and attributive adjectives, while a dorsal stream involving left parietal regions, including the angular gyrus, is crucial in processing constructions involving verbs and relational adjectives. We additionally support this interpretation by examining divergent pathways in the visual system for processing object information and event/spatial information, on the basis of integration across visual and auditory modalities. Our interpretation suggests that the combinatorial operations that combine words into phrases cannot be isolated to a single anatomical location, as has been previously proposed. Instead, phrase building is an instantiation of a more general neural computation, one that is implemented across various brain regions and can be utilized in service of constructing linguistic phrases. Based on this orientation, we explore how abstract syntactic constructions, such as the transitive construction, both mirror and could emerge from semantics.
These abstract construction representations are argued to be distinct from, and stored in regions functionally downstream from, lexical representations of verbs. Comprehension therefore involves the integration of both representations via feedforward and feedback connections. We implicate the IFG in communicating across the language network, including correctly integrating nominal phrases with the overall event representation and serving as one interface between processing streams. Overall, this approach accords more generally with conceptions of the development of cognitive systematicity, and further draws attention to a potential role for the medial temporal lobe in syntactic behaviors, often overlooked in current neurofunctional accounts of syntactic processing.

The combinatorial nature of language allows us to make infinite messages from a finite number of smaller elements. However, the mechanisms by which we combine words into phrases and phrases into sentences have been the subject of decades of theorizing, experimentation, and retheorizing, and there is still little consensus. In this article, we briefly review two long-standing debates in the field of theoretical syntax, namely, the relationship between syntax and semantics, and the debate between lexicalist and constructionist theories. This frames our subsequent review of data, predominantly from neuroimaging studies of syntactic processing during comprehension, with particular attention to the issue of combinatorial phrase building. We note an apparent pattern whereby phrases primarily involving nouns appear to be processed in different regions than phrases primarily involving verbs or relational adjectives; we explain this by reference to visual processing of objects vs. actions and events. We therefore argue for a dual-stream model of syntactic processing, divided into a ventral stream specialized for processing phrases involving entity nouns and attributive adjectives and a dorsal stream specialized for processing phrases involving verbs and relational adjectives. The ventral stream culminates in the left anterior temporal lobe (ATL) and the dorsal stream in the left angular gyrus (AG), with the two streams interacting both directly and via the left inferior frontal gyrus (IFG). This suggests a distributed computation for combining phrases, accomplished using a delta-theta-gamma oscillatory “code.” To combine phrases into sentences, we suggest that a type of cognitive map might be involved, which may arise solely from statistical regularities between constituents or may be augmented by event semantics abstracted as schemas. We argue that, as a result of this conceptualization, semantics constrains and scaffolds syntax.
We further argue that this is in line with a construction-based view of syntax, while acknowledging the necessity of integrating lexicalist and constructionist views to more holistically account for the wide range of experimental findings that support both.

Sentences such as Chomsky's infamous Colorless green ideas sleep furiously or This round table is square, discussed decades earlier by Benedetto Croce and Antonio Gramsci, suggest that semantic meaningfulness is not a necessity for syntactic well-formedness. Yet whether such anomalous cases should be seen as representative of language as a whole, and how syntax and semantics relate more generally, remains a matter of debate. Theorists working in the Generative Grammar tradition have typically held that syntactic and semantic knowledge are processed in two autonomous modules, with the syntactic module feeding into the semantic module (Chomsky, 1981, 1995; Lasnik and Lohndal, 2010; Collins and Stabler, 2016). Meanwhile, in theories under the Construction Grammar umbrella, the relationship between syntax and semantics has been seen through the lens of the Saussurean sign-signified relationship, where syntactic constructions are signs signifying particular meanings (Goldberg, 1995, 2003, 2013). Both traditions view syntax as the basis for semantic interpretation, and although they converge on some interesting conclusions, there are important distinctions between them.

Generative theories conceptualize syntax and semantics as processes delineated into separate modules, whereas Construction-based theories view semantics more as something that is represented by specific lexical, morphological, and syntactic signs. In the process-type view, a syntactic parse of a sentence is constructed, and the way the parse is structured influences the ultimate interpretation. For example, in the sentence The Enterprise located the Romulan ship with long-range sensors, the prepositional phrase [PP with long-range sensors] could be “attached” to the verb phrase, giving an interpretation whereby the Enterprise used its long-range sensors to find the Romulan spacecraft. [PP With long-range sensors] could also be “attached” to modify the Romulan spacecraft, such that the interpretation is now that the Enterprise found (through some unspecified means) the Romulan spacecraft that possesses long-range sensors. This basic idea was extended through various iterations of Generative theory to suggest that the underlying configuration of sentences is the same regardless of their surface form. Deviations from standard intransitive, transitive, and ditransitive templates were explained through movement operations, some motivated by different syntactic principles. Although some aspects of semantics were folded into the motivations behind syntactic phenomena, Minimalism still maintains a separation between the syntactic and semantic processing modules: semantic features are interpreted at the Conceptual-Intentional Interface, while syntax is a set of operations applied to linguistic objects in order to render them interpretable at both the Articulatory-Perceptual and Conceptual-Intentional Interfaces (Chomsky, 1995; Collins and Stabler, 2016). Semantics is nonetheless ultimately seen as secondary to syntax, in the sense that the semantic interpretation of a sentence depends on how the sentence is parsed syntactically.

Constructionist theories take the accepted sound-meaning relationship of morphemes a step further and propose that such relationships hold for multi-word constructions as well. Although both types of theory arrive at similar generalizations, namely that semantic interpretations depend on syntactic form, their accounts of how this relationship comes about diverge entirely. Rather than proposing that the relationship is mediated by a certain hierarchical configuration endowed by Universal Grammar or by a set of complex processes, Construction-based theories contend that the relationship between syntactic form and semantic interpretation arises through repeated pairings of form and meaning. Over time, these pairings are abstracted over to conventionalize a grammatical Construction with an associated meaning. For example, sentences like The Enterprise located the Romulan ship are abstracted over together with other sentences such as The dog chased the cat, The CIA smuggled drugs, and The quarterback threw the ball to generate a TRANSITIVE CONSTRUCTION specifying a subject NP followed by a verb and an object NP; this structural form is then associated with a semantic interpretation roughly equivalent to “the subject NP performed an action upon the object NP.” Further subdivisions of these constructions, based on more nuanced semantics and on networks of related grammatical forms, are also proposed. Despite their representational and operational differences, then, both theories propose a tight relationship between syntax and semantics whereby syntactic form determines semantic interpretation from a comprehender's perspective.
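As a toy illustration of this usage-based abstraction, the Python sketch below tallies the category sequences of the four example sentences and pairs the recurring [NP V NP] pattern with a rough meaning template. The hand-assigned constituent labels, the role names, and the functions `abstract_construction` and `interpret` are our own hypothetical simplifications; actual constructionist accounts assume gradual generalization over far larger and messier input.

```python
from collections import Counter

# Hand-segmented versions of the example sentences in the text, as
# (constituent, category) pairs. The segmentation and labels are assumptions.
SENTENCES = [
    [("The Enterprise", "NP"), ("located", "V"), ("the Romulan ship", "NP")],
    [("The dog", "NP"), ("chased", "V"), ("the cat", "NP")],
    [("The CIA", "NP"), ("smuggled", "V"), ("drugs", "NP")],
    [("The quarterback", "NP"), ("threw", "V"), ("the ball", "NP")],
]

TRANSITIVE_FORM = ("NP", "V", "NP")


def abstract_construction(sentences):
    """Return the most frequent category sequence and its frequency."""
    patterns = Counter(tuple(cat for _, cat in s) for s in sentences)
    return patterns.most_common(1)[0]


def interpret(sentence):
    """Pair the abstracted [NP V NP] form with a rough meaning template:
    the subject NP performs an action upon the object NP."""
    if tuple(cat for _, cat in sentence) != TRANSITIVE_FORM:
        raise ValueError("sentence does not match the transitive form")
    (subj, _), (verb, _), (obj, _) = sentence
    return {"actor": subj, "action": verb, "undergoer": obj}


form, count = abstract_construction(SENTENCES)
print(form, count)  # ('NP', 'V', 'NP') 4
print(interpret(SENTENCES[1]))
```

Here the form-meaning pairing is stipulated in `interpret`; a more realistic sketch would have to learn the meaning side from co-occurring event information as well.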

Another point of contention across theoretical frameworks is whether syntactic constructions are driven by lexical items or are independent constructs into which lexical items are inserted. In this section, we briefly examine these two viewpoints; later in this paper, we argue that the evidence supports a unification of both views, and we discuss how syntactic constructions can be abstracted from lexical items and the syntactic distributions they participate in. Differences between the two types of theories are most apparent in their treatment of verb argument structure, so we use such examples for illustration.

Lexicalist theories, including those in the Generative tradition, propose that syntactic structure and information are determined by lexical information. The type and amount of syntactic information specified in the lexical entry may vary from theory to theory, but typically a lexical entry is said to contain information about the types of phrases the lexical item combines with (i.e., the valence information of a word). For example, a lexical entry for cry would specify that it combines with an NP as its subject; a lexical entry for write would specify that it combines with three NPs, one as its subject, one as its indirect object, and one as its direct object. Lexical rules are proposed to alter a lexical item's entry, and thereby its valence, in order to account for alternations in argument structure. For example, passive constructions are said to be derived from a passivization lexical rule that decreases a verb's valency by one, while verbs such as give that undergo the dative alternation are acted upon by a lexical rule governing their realization in the double-object or prepositional-object forms. Abstract syntactic rules are used to combine lexical items into phrases, when licensed by their lexically determined argument structures, as well as to combine phrases into clauses.
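The lexicalist picture can be sketched as a small data structure plus a function that rewrites it. In the minimal Python sketch below, valence is assumed to be a tuple of grammatical-function labels, and `LexicalEntry` and `passivize` are hypothetical names; the rule simply removes one argument slot, as described above, and does not reproduce the formalism of any particular lexicalist theory.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class LexicalEntry:
    """Toy lexicalist entry: a word plus its valence, listed as the grammatical
    functions of the phrases it combines with."""
    form: str
    valence: tuple
    voice: str = "active"


def passivize(entry):
    """Toy passivization lexical rule that reduces valency by one.

    The original subject slot is removed and the direct object is promoted to
    subject, so (subject, indirect object, direct object) becomes
    (subject, indirect object). A by-phrase is treated as an optional adjunct."""
    if "direct object" not in entry.valence:
        raise ValueError(f"{entry.form!r} has no direct object to promote")
    others = tuple(f for f in entry.valence if f not in ("subject", "direct object"))
    return replace(entry, valence=("subject",) + others, voice="passive")


CRY = LexicalEntry("cry", ("subject",))
WRITE = LexicalEntry("write", ("subject", "indirect object", "direct object"))

passive_write = passivize(WRITE)
print(len(WRITE.valence), "->", len(passive_write.valence))  # 3 -> 2
```

Calling `passivize(CRY)` raises an error, mirroring the fact that an intransitive verb has no object to promote.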

Constructionist theories, in contrast, propose a hierarchy of construction forms that includes lexical items as well as more abstract syntactic structures, which (depending on the particular theory in question) may be independent of any particular lexical entry. These syntactic constructions are typically claimed to emerge as abstracted patterns of usage across multiple lexical items. While notational conventions vary widely, an INTRANSITIVE CONSTRUCTION, for example, may be represented as [Subj V], indicating that it is formed by a lexical item or phrase inserted into the Subj slot and a verb inserted into the V slot. This generalization can capture sentences like The graduate student cried, as well as sentences like Immortal Technique's latest single slaps!, which other theories would treat as peripheral or would explain only through potentially undesirable theoretical machinery. Alternations of argument structure are explained by verbs being inserted into different constructions: transform, for example, may be inserted into a TRANSITIVE CONSTRUCTION to yield a sentence such as We transform them into intertwined vectors of struggle, or into a PASSIVE CONSTRUCTION to yield a sentence such as The live green earth is transformed into dead gold bricks. As discussed above, Construction-based theories tend to propose inheritance networks between related constructions. In this framework, the DOUBLE-OBJECT and PREPOSITIONAL-OBJECT constructions may be considered descendants of a more general DITRANSITIVE CONSTRUCTION, with different theories proposing varying amounts of information shared between ancestor and descendant constructions.
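By contrast, a constructionist grammar can be sketched as a set of slot templates that verbs are inserted into, linked by inheritance. In the hypothetical Python sketch below, each `Construction` has ordered slots (capitalized slots are open, lowercase ones are fixed words), and descendants inherit their parent's meaning when they do not specify one. The slot names, meaning strings, the example Riker sentence, and the choice to inherit only meaning are illustrative assumptions, since theories differ in how much ancestors and descendants share.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Construction:
    """A toy construction: ordered slots, a meaning, and an optional parent
    from which unspecified information (here, only the meaning) is inherited."""
    name: str
    slots: tuple = ()
    meaning: str = ""
    parent: Optional["Construction"] = None

    def get_meaning(self):
        # Walk up the inheritance chain to the nearest node that specifies a meaning.
        node = self
        while node is not None:
            if node.meaning:
                return node.meaning
            node = node.parent
        return ""

    def instantiate(self, **fillers):
        """Insert lexical material into open (capitalized) slots; lowercase
        slots are fixed words, such as the preposition 'to'."""
        missing = [s for s in self.slots if s[0].isupper() and s not in fillers]
        if missing:
            raise ValueError(f"{self.name}: unfilled slots {missing}")
        return " ".join(fillers[s] if s[0].isupper() else s for s in self.slots)


INTRANSITIVE = Construction("INTRANSITIVE", ("Subj", "V"), "Subj does V")
TRANSITIVE = Construction("TRANSITIVE", ("Subj", "V", "Obj"), "Subj acts upon Obj")
DITRANSITIVE = Construction("DITRANSITIVE", meaning="Subj causes Recipient to receive Theme")
DOUBLE_OBJECT = Construction("DOUBLE-OBJECT", ("Subj", "V", "Recipient", "Theme"),
                             parent=DITRANSITIVE)
PREP_OBJECT = Construction("PREPOSITIONAL-OBJECT", ("Subj", "V", "Theme", "to", "Recipient"),
                           parent=DITRANSITIVE)

print(INTRANSITIVE.instantiate(Subj="Immortal Technique's latest single", V="slaps"))
print(TRANSITIVE.instantiate(Subj="We", V="transform", Obj="them"))
print(DOUBLE_OBJECT.instantiate(Subj="Picard", V="gave", Recipient="Riker", Theme="the order"))
print(PREP_OBJECT.instantiate(Subj="Picard", V="gave", Theme="the order", Recipient="Riker"))
print(PREP_OBJECT.get_meaning())  # inherited from DITRANSITIVE
```

The same verb (gave) fills both descendant constructions, which is how the alternation is captured here without any lexical rule.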

Investigations specifically of combinatorial processing of phrases have repeatedly shown selective activation of the ATL, as well as of the left AG and occasionally the ventromedial prefrontal cortex (vmPFC). These studies have tended to use similar types of minimal combinatorial units, typically contrasting a two-word condition that forms a phrase with non-word+word pairs that are assumed not to form phrases. Zaccarella and Friederici (2015), for example, report selective activation of the left IFG for determiner+pseudo-word noun pairs, while others, such as Bemis and Pylkkänen (2011), Pylkkänen et al. (2014), Westerlund et al. (2015), Flick et al. (2018), and Phillips and Pylkkänen (2021), have often used adjective+noun and verb+noun pairs. In contrast to the results of Zaccarella and Friederici (2015) and Zaccarella et al. (2017), MEG studies consistently show activation of the left ATL in combinatorial conditions involving adjective+noun pairs compared to non-word+noun pairs (Bemis and Pylkkänen, 2011; Pylkkänen et al., 2014; Westerlund et al., 2015; Flick et al., 2018; Phillips and Pylkkänen, 2021). While these results were initially considered to reflect both syntactic and semantic combinatorial processing, a purely semantic explanation has recently been argued for (Pylkkänen, 2019). To reconcile these findings, we might rely on the distinction between the processing of adjuncts and arguments. This is, of course, a fundamental distinction in linguistic theory, with adjectives generally considered adjuncts within noun phrases, and a noun considered an argument of a determiner in some theories of syntax (and vice versa in others).

However, Westerlund et al. (2015) examined determiner+noun combinations similar to those of Zaccarella and Friederici (2015), as well as verb+noun argument combinations, and still found activation of the left ATL compared to non-word+noun combinations. Granted, the determiner+noun pairs in Westerlund et al. (2015) involved both real determiners and real nouns, rather than the real determiners and pseudo-nouns of Zaccarella and Friederici (2015). While Friederici (2018) and others maintain that determiners are mostly semantically vacuous, the results of Westerlund et al. (2015) could be due to the semantic effect of combining a somewhat semantically meaningful determiner with a fully semantically meaningful noun. For example, the may have little semantic content, especially when combined with a phonologically plausible but meaningless pseudo-word; when combined with manifesto, however, it specifies that a particular manifesto is being referenced. This may be what drives the combinatorial effects of determiner+noun pairs in the left ATL.

The additional whole-brain analysis of Westerlund et al. (2015) also failed to show activation in the left IFG, left vmPFC, or left AG. Again, these findings suggest either that the type of computation Zaccarella and Friederici (2015), Zaccarella et al. (2017), and Friederici (2018) identified as being carried out by the left IFG is not unique to that region, or that its role in phrase building is less straightforward. Nevertheless, consistent MEG results, and even the fMRI meta-analysis conducted in Zaccarella et al. (2017), suggest a preference in the ATL for processing phrases involving nouns. Additional clarity on this matter comes from Murphy et al. (2022b), who investigated the composition of minimal adjective+noun phrases using cortical surface electrocorticography (ECoG). They report a significant increase in high gamma (70–150 Hz) power in the posterior superior temporal sulcus (pSTS) in combinatorial conditions shortly after the onset of the second word (100–300 ms), in addition to increases in power across the 8–30 Hz alpha and beta range in the IFG and ATL, and increased functional connectivity between the pSTS and the IFG and between the pSTS and the ATL. The authors interpret these data as indicating that the pSTS is responsible for phrase composition, which is somewhat at odds with MVPA evidence identifying the ATL as the locus of phrase composition (Baron et al., 2010). Nevertheless, the results of Murphy et al. (2022b) and Baron et al. (2010) are consistent with much of the MEG evidence already reviewed, as well as with Baron and Osherson (2011) and Flick and Pylkkänen (2020). These data suggest a model in which the IFG serves as a working memory store that can reactivate the phonological representations in the pSTS, generating a phrasal constituent through oscillatory activity (explored in more detail in Section 3.5.2.2), which is then communicated to the ATL or AG (as explored in the next section), as appropriate, to perform conceptual combinatorial computations.
This functionality would also be in keeping with well-accepted models of reciprocal feedforward/feedback cortical connectivity (see Flinker et al., 2015, for direct ECoG evidence in linguistic tasks), as well as with evidence that predictions at higher levels of linguistic abstraction (e.g., syntactic category, semantics) inform or constrain predictions at lower levels (e.g., phonemes) (Lyu et al., 2019; Heilbron et al., 2022).
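To make the ECoG measure reported by Murphy et al. (2022b) more concrete: band power is the signal energy falling within a frequency range, such as high gamma (70–150 Hz) or the 8–30 Hz alpha/beta range. The Python sketch below computes it with a naive discrete Fourier transform on synthetic signals. The sampling rate, amplitudes, and the function name `band_power` are assumptions, and a single whole-window spectrum like this discards the timing information (e.g., the 100–300 ms window) that real analyses need time-resolved methods to capture.

```python
import cmath
import math


def band_power(signal, fs, lo, hi):
    """Power in the [lo, hi] Hz band, summed over DFT bins in that range.

    A naive DFT is evaluated only at the bins inside the band; power per bin is
    |X[k]|^2 / N. Only non-negative frequencies up to fs/2 are considered."""
    n = len(signal)
    total = 0.0
    for k in range(n // 2 + 1):
        freq = k * fs / n
        if lo <= freq <= hi:
            coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                        for t, x in enumerate(signal))
            total += abs(coeff) ** 2 / n
    return total


FS = 1000  # sampling rate in Hz (assumed)
times = [i / FS for i in range(FS)]  # 1 s window, so DFT bins fall on whole Hz

# Synthetic signals: both contain a 10 Hz (alpha-range) rhythm; the hypothetical
# "combinatorial" trace adds a weaker 100 Hz (high-gamma-range) component.
baseline = [math.sin(2 * math.pi * 10 * s) for s in times]
combinatorial = [b + 0.5 * math.sin(2 * math.pi * 100 * s)
                 for b, s in zip(baseline, times)]

hg_base = band_power(baseline, FS, 70, 150)
hg_comb = band_power(combinatorial, FS, 70, 150)
ab_base = band_power(baseline, FS, 8, 30)
print(f"high gamma: {hg_base:.3f} -> {hg_comb:.3f}; alpha/beta baseline: {ab_base:.1f}")
```

Only the added 100 Hz component contributes to the high-gamma band here, so the increase in `hg_comb` relative to `hg_base` isolates that component.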

The AG has previously been proposed to process verb argument structure or thematic role assignment. In particular, activation of the AG increases parametrically with verb valency (Thompson et al., 2010), and Meltzer-Asscher et al. (2013) used verbs that alternate in their valency (e.g., bake: Neelix baked vs. Neelix baked pastries) to show that the AG was more active for verbs with higher valencies than for verbs with lower valencies. Adding to this evidence, Boylan et al. (2015) used Multi-Voxel Pattern Analysis (MVPA) to examine activation in bilateral ATL and bilateral AG, using stimulus sets similar to those used in the Pylkkänen group's studies, i.e., verb+noun, verb+adverb, preposition+noun, and noun+adjective pairs, plus control sets of non-word+verb/verb+non-word and non-word+noun/noun+non-word pairs. They found significant similarity between the activation patterns elicited by verb+noun and verb+adverb pairs within the left AG, but not within the left ATL, and concluded that the left AG appears sensitive specifically to verb+noun argument combination, or perhaps to the expectation of a verb+noun argument relationship.
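Pattern-based MVPA compares the spatial pattern of responses across voxels rather than a region's average response. The toy Python sketch below uses Pearson correlation as the similarity measure on made-up voxel values; the numbers, the region, and the choice of correlation as the metric are illustrative assumptions rather than the data or exact pipeline of Boylan et al. (2015).

```python
import math


def pearson(x, y):
    """Pearson correlation between two equal-length activation patterns."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


# Made-up voxel responses (arbitrary units) in one hypothetical region of interest.
patterns = {
    "verb+noun": [1.2, 0.4, 0.9, -0.3, 0.8, 0.1],
    "verb+adverb": [1.0, 0.5, 0.8, -0.2, 0.7, 0.0],
    "noun+adjective": [-0.4, 0.9, -0.1, 0.8, -0.5, 0.6],
}

for a, b in [("verb+noun", "verb+adverb"), ("verb+noun", "noun+adjective")]:
    print(f"{a} vs {b}: r = {pearson(patterns[a], patterns[b]):.2f}")
```

Because correlation ignores overall response level, two conditions can have similar patterns even if one activates the region more strongly than the other.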

These results are not irreconcilable with those of Westerlund et al. (2015), discussed above. Meltzer-Asscher et al. (2015) and Thompson et al. (2007, 2010) showed a response in the left AG and surrounding areas even in the absence of arguments, suggesting that left AG activation may have been roughly the same in the verb+noun and verb+non-word conditions. This contrast may therefore have obscured any overall left AG involvement in Westerlund et al. (2015). Moreover, Westerlund et al. (2015) used MEG whereas Boylan et al. (2015) used fMRI, and Westerlund et al. (2015) note that combinatorial left ATL activation peaks within 250 ms. The BOLD response, by contrast, takes several seconds to materialize; given that the activation observed by Westerlund et al. (2015) distinguished the combinatorial and non-combinatorial conditions only within a narrow window of 200–400 ms, the sluggish BOLD response may render such distinctions unobservable with fMRI. Finally, only a subset of the stimuli in Westerlund et al. (2015) were verb+noun combinations, and the authors were unable to test differences between determiner+noun and verb+noun stimuli. It is therefore unsurprising that Westerlund et al. (2015) did not observe left AG activation even if the left AG is sensitive to verb argument structure processing, as they likely lacked the power to detect such an effect.
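The timing argument can be illustrated by convolving hypothetical neural activity with a haemodynamic response function (HRF). The Python sketch below assumes a commonly used double-gamma HRF (shape 6 for the peak, shape 16 for the undershoot, weighted 1:6), a 1-s shared response to a word pair, and a small extra response from 200 to 400 ms in the combinatorial condition; all amplitudes are arbitrary. It shows only that the brief difference is folded into a response peaking seconds later, with its millisecond-scale timing no longer visible in the BOLD time course; it makes no claim about statistical detectability.

```python
import math

DT = 0.01  # simulation time step in seconds (assumed)


def gamma_pdf(t, shape):
    """Gamma probability density with unit scale; zero for t <= 0."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t) / math.gamma(shape)


def hrf(t):
    """Double-gamma HRF (assumed parameters): a positive lobe peaking near 5 s
    minus a smaller, later undershoot."""
    return gamma_pdf(t, 6) - gamma_pdf(t, 16) / 6


def boxcar(onset, offset, amplitude, n_samples):
    """Neural activity equal to `amplitude` from onset to offset (in seconds)."""
    start, stop = round(onset / DT), round(offset / DT)
    return [amplitude if start <= i < stop else 0.0 for i in range(n_samples)]


def convolve_with_hrf(neural):
    """Predicted BOLD signal: discrete convolution of neural activity with the HRF.
    Only non-zero neural samples are visited, which keeps the loop cheap."""
    kernel = [hrf(i * DT) for i in range(len(neural))]
    active = [(j, v) for j, v in enumerate(neural) if v != 0.0]
    return [DT * sum(v * kernel[i - j] for j, v in active if j <= i)
            for i in range(len(neural))]


N = round(30.0 / DT)  # 30 s of simulated time
# Hypothetical neural time courses: a shared 1-s response to the word pair, and a
# small extra response from 200 to 400 ms present only in the combinatorial condition.
shared = boxcar(0.0, 1.0, 1.0, N)
extra = boxcar(0.2, 0.4, 0.2, N)

bold_shared = convolve_with_hrf(shared)
bold_extra = convolve_with_hrf(extra)

peak_time = max(range(N), key=lambda i: bold_shared[i]) * DT
ratio = max(bold_extra) / max(bold_shared)
print(f"shared BOLD peaks at ~{peak_time:.1f} s; extra/shared peak ratio = {ratio:.3f}")
```

Under these assumptions the 200 ms difference contributes only a few percent of the peak BOLD amplitude, arriving about 5 s after the event.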

Nevertheless, Matchin et al. (2019) do contradict a strict argument-structure-based or thematic-role-assignment-based account of the AG, in favor of a more general conceptual-semantic, event-information account of AG processing. The authors used three-word stimuli consisting of verb+determiner+noun and determiner+adjective+noun trios; crucially, the adjective was the past participle of the same verb used in the verb-based trio (e.g., surprised), giving a VP trio such as surprised the kitten and an NP trio such as the surprised kitten. Using fMRI, they found activation in the AG in both the VP and NP conditions, with no significant difference between them; as in several prior studies, however, AG activation still differed significantly between phrases and lists.

Once again, these results are not necessarily irreconcilable with the previous literature reviewed, especially if we take a construction-based view of argument structure. Of course, the relationship between event semantics and argument structure is not necessarily straightforward, and providing a definitive or exhaustive account is beyond the scope of this paper. However, we suggest that this connection provides a strong starting point for a cognitively grounded understanding of argument structure and of the interaction between syntax and semantics.

The left IFG (i.e., Broca's area) has drawn significant attention for its potential role in syntactic processes. Matchin and Hickok (2020) view the process carried out by the IFG, particularly the pars triangularis, as morphosyntactic linearization of a structure. Given the long history of attempts to localize syntactic processing to the IFG, however, it is worth exploring the broad trends that have led to this conclusion. The IFG's theorized contribution was initially inferred from patients with Broca's aphasia, who routinely omit function words in their production and struggle with syntactically complex sentences (Schwartz et al., 1980; Caplan and Futter, 1986; Hickok et al., 1993; Mauner et al., 1993; Kolk and Weijts, 1996; Kiss, 1997). Due to the broad range of production deficits in these patients, Broca's area was initially viewed as a general language output module.

Numerous studies have also found an increased BOLD response in the left IFG during processing of sentences with non-canonical word order, compared to sentences with canonical word order (Caplan et al., 2000; Ben-Shachar et al., 2003, 2004; Bornkessel et al., 2005; Friederici et al., 2006; Grewe et al., 2007; Shetreet et al., 2007; Kinno et al., 2008; Bornkessel-Schlesewsky et al., 2009; Santi and Grodzinsky, 2010; Burholt Kristensen et al., 2013; Shetreet and Friedmann, 2014, among others). The cited studies found greater activation for sentences like The Stargazer, Picard piloted (grammatical but non-canonical) than for sentences such as Picard piloted the Stargazer (grammatical and canonical), across a small range of languages including English, German, Hebrew, Danish, and Japanese. Based on these studies, as well as others that found involvement of the left IFG during processing of long-distance dependencies, a number of researchers claimed that the IFG was the site of the theoretical Move-α operation from Chomskyan theories of syntax (Ben-Shachar et al., 2003, 2004; Grodzinsky and Friederici, 2006; Grodzinsky and Santi, 2008; Santi and Grodzinsky, 2010). Move-α is an operation that was proposed to account for a variety of syntactic phenomena in which certain constituents are displaced from their canonical positions. The example above (The Stargazer, Picard piloted), for instance, would be explained through a movement that fronts the object from its underlying canonical position after the verb. However, other research groups suggested that these results were actually indicative of increased working memory demands (e.g., Rogalsky and Hickok, 2011, among others).

Additional studies have attempted to control for working memory effects (Röder et al., 2002; Ben-Shachar et al., 2003; Friederici et al., 2006; Kinno et al., 2008; Kim et al., 2009; Obleser et al., 2011; Meyer et al., 2012). Meyer et al. (2012) in particular used adverbial modifiers to dissociate long-distance processing from non-canonical word order processing in German. They reported increased activation of the left IFG for object-first sentences, with no effect of distance detected in the pattern of IFG activation. They further found no correlation between IFG activation and subjects' performance on a digit span working memory test. Together, these results were taken as confirmation that the activity was driven by syntactic ordering effects rather than working memory. Nevertheless, there are several important caveats. First, the digit span task may not be the best measure of verbal working memory, and we might expect a stronger correlation between IFG activation and scores on a measure like the reading span task. Second, the conceptualization of working memory that Meyer et al. (2012) argue against is more of an active, subvocal rehearsal working memory than a more passive temporary storage (which is sometimes distinguished as short-term memory) (Schwering and MacDonald, 2020). Third, this result might be expected under a filler-gap dependency model of movement, where a linguistic item is kept in working memory until the comprehender encounters a syntactic gap, which is then filled with the item held in working memory, allowing for interpretation (Fiebach et al., 2001). Fourth, while Meyer et al. (2012) note that some studies that attempted to control for working memory effects involved English, most have used case-marking languages such as German, Hebrew, and Japanese, because their flexible word order allows distance and ordering effects to be better separated.
That said, case marking may itself influence processing strategies relative to English, so the extent to which results from German, Hebrew, or Japanese generalize is unknown. Finally, an fMRI study contrasting double-object and prepositional-object dative sentences in English found no significant difference in IFG activation between the two sentence types (Allen et al., 2012). Given the additional phrasal embedding required for the prepositional-object construction under a more Generative framework, one might have expected greater IFG activation in this condition.

More specific hypotheses regarding the role of particular subregions of the left IFG have also been proposed, based on differential activation patterns seen in some of these studies. These hypotheses propose that Brodmann areas (BA) 45 and 47 subserve semantic processes, that the frontal operculum builds local adjacent dependencies, and that BA 44 is involved in building non-adjacent syntactic hierarchies (Friederici, 2016). Zaccarella, Friederici, and colleagues argue, based on the evidence discussed above, that BA 44 in particular is the location of the theoretical Merge operation, yet this claim has been challenged on both theoretical and empirical grounds by other researchers. Nevertheless, despite the broad range of specific interpretations, activation of the left IFG during comprehension of non-canonically ordered sentences has been a consistent finding. A more general role for the IFG in language processing may offer a more comprehensive interpretation of these results, and such a role is also suggested by ECoG data showing that the IFG mediates the communication of linguistic information between temporal cortex and motor cortex (Flinker et al., 2015). Taken together, these results suggest a broad role for the IFG in communicating between regions of the language network, which can facilitate a range of linguistic computations, including combinatorial phrase building and encoding motor representations for language production.

In reviewing lesion-symptom mapping studies for evidence of IFG involvement in syntactic processing, Matchin and Hickok (2020) note that findings are inconsistent as to whether damage to the IFG results in comprehension deficits. This does not necessarily mean that the IFG is uninvolved in comprehension; given that language processing involves both bottom-up and top-down mechanisms, relying on a dorsal stream running from primary auditory cortex to the AG and back may be sufficient for comprehension. In the remainder of this section, we argue that part of the IFG's role in comprehending sentences with non-canonical word orders is ensuring that nouns are correctly mapped to the appropriate semantic roles. To make this argument, however, we first need to discuss the role of the posterior superior temporal sulcus (pSTS) and posterior superior temporal gyrus (pSTG).

Especially given their anatomical proximity to primary auditory cortex, these regions have typically been thought to be involved in phonological and lexical representations (Hickok and Poeppel, 2007; Pasley et al., 2012; see Hickok, 2022, for a review). However, portions of these regions have also been implicated in semantic processing by Frankland and Greene (2015, 2020) and by Murphy et al. (2022b), discussed above. Frankland and Greene (2015) used MVPA of fMRI data to suggest that separate areas of left mid-superior temporal cortex act as temporary storage for the agent and patient of a sentence, independent of their syntactic position. The stimuli of Frankland and Greene (2015, 2020) involved pairs of transitive sentences (e.g., Picard evacuated the Romulans/The Romulans evacuated Picard) and their passive forms (e.g., The Romulans were evacuated by Picard/Picard was evacuated by the Romulans), thereby varying which participant was the agent and which the patient, while separately varying which side of the verb the agent and patient appeared on. Frankland and Greene (2015) used a classifier form of MVPA, which tries to divide data points into two or more distinct, predetermined categories. Using this classifier, they identified a region of mid-superior temporal cortex that discriminates between mirror pairs of transitive sentences; within this region, they further identified an upper portion of the left STS and a portion of the posterior STS that respond selectively to agents, and a separate portion of the upper left STS extending to the left lateral STG that responds selectively to patients. Similar follow-up fMRI work in Frankland and Greene (2020) confirmed these results and provided evidence that more verb-specific semantic roles (e.g., a chaser rather than the more general agent) activate anterior-medial prefrontal cortex (i.e., BA 10) and concomitantly deactivate the hippocampus.
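The logic of a classifier-based MVPA can be sketched in a few lines. The Python example below uses a nearest-centroid classifier with leave-one-out cross-validation on made-up three-voxel patterns labelled by which entity is the agent; the classifier type, the data, and the labels are illustrative assumptions, not the method or data of Frankland and Greene (2015). In their design, decoding the agent across active and passive sentences is what supports the claim that a region codes roles independent of syntactic position; the sketch omits that manipulation.

```python
import math

# Made-up trial patterns (3 hypothetical "voxels" each), labelled by the agent.
TRIALS = [
    ([0.9, 0.1, 0.4], "Picard=agent"),
    ([1.1, 0.0, 0.5], "Picard=agent"),
    ([0.8, 0.2, 0.3], "Picard=agent"),
    ([0.1, 0.9, 0.4], "Romulans=agent"),
    ([0.0, 1.0, 0.6], "Romulans=agent"),
    ([0.2, 0.8, 0.5], "Romulans=agent"),
]


def centroid(vectors):
    """Element-wise mean of a list of equal-length patterns."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def classify(pattern, training):
    """Nearest-centroid classifier: return the label whose mean pattern is closest."""
    by_label = {}
    for vec, label in training:
        by_label.setdefault(label, []).append(vec)
    centroids = {label: centroid(vecs) for label, vecs in by_label.items()}
    return min(centroids, key=lambda lab: math.dist(pattern, centroids[lab]))


def leave_one_out_accuracy(trials):
    """Train on all trials but one, test on the held-out trial, and repeat."""
    correct = 0
    for i, (vec, label) in enumerate(trials):
        training = trials[:i] + trials[i + 1:]
        correct += classify(vec, training) == label
    return correct / len(trials)


print(leave_one_out_accuracy(TRIALS))
```

Testing on held-out trials is what makes above-chance accuracy informative: a classifier evaluated on its own training data could succeed by memorization.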

Some complicating results come from Matchin et al. (2019), who reported increased activation of the pSTS for the verb phrase stimuli compared to the noun phrase stimuli. They interpreted this either as evidence that the pSTS encodes syntactic argument structure via lexical subcategorization information or, alternatively, as a possible frequency effect, if the verbal use of the stimulus words is more frequent than their adjectival use. However, given that the boy is assigned a semantic role in the verb phrase condition but not in the noun phrase condition, this result can be seen as partially supporting Frankland and Greene's (2015, 2020) contention that areas of superior temporal cortex represent broad semantic arguments of verbs. Contradicting this interpretation, though, is Frankland and Greene (2019), who re-analyzed their data from Frankland and Greene (2015) and reported a region of left middle temporal gyrus (MTG) that is specifically active in response to verb and patient combinations, but not to agent and verb combinations. Moreover, they suggest that activation in an additional region of left MTG is predicted by the combination of agents with verb+patient combinations. Taken together, these results suggest an asymmetry in verb-argument semantic processing that functionally reproduces a syntactic hierarchy. A further complication is that the pSTS activation reported by Matchin et al. (2019) for VPs involving a verb and a patient does not accord with Frankland and Greene's (2015) identification of this region as agent-selective.

Returning to the IFG's role, the sentence types that patients with Broca's aphasia were reported to have difficulty comprehending were passives, in which the typical semantic role assignment of an active transitive is reversed, and sentences with increased levels of clausal embedding, which similarly require disentangling which noun is assigned to which semantic role (Berndt et al., 1996). Furthermore, patients with damage to regions of the IFG, the arcuate fasciculus, the extreme capsule, and the posterior temporal lobe can show impairments in processing semantically reversible sentences (e.g., Janeway hugged Chakotay/Chakotay hugged Janeway, where the semantics of the sentence allow either entity to be the agent or the patient) (reviewed in Blank et al., 2016). The arcuate fasciculus connects the pars opercularis portion of the IFG to the pSTG, while the extreme capsule connects the pars triangularis portion of the IFG to the pSTG (Makris et al., 2005; Friederici et al., 2006; Frey et al., 2008). The inconsistent pattern of IFG damage leading to syntactic comprehension impairments could then be explained by whether the portions of the IFG connected to the pSTG via the arcuate fasciculus or the extreme capsule are damaged. Given the type of impairment, namely that semantic role assignment seems to be affected, the IFG therefore seems to play a role in correctly mapping nouns to the appropriate portion of the pSTG/pSTS that indexes the semantic roles of a sentence. This could be performed through language-specific processes, through domain-general working memory processes, or through both. This explanation also accounts for the observation that the same impairment is seen when there is a lesion to the connecting fiber tracts or to the pSTG itself, and it is consistent with the broad role of the IFG proposed above.

It is important to note, however, some of the limitations of and contradictions in the evidence for this proposal. Frankland and Greene's (2015, 2019) verb stimuli consisted of items like chase, which may impose weaker semantic selectional restrictions on their agents than a verb like melt does, leaving open the possibility that their results may not generalize to all verbs. Furthermore, it is unclear how to reconcile the interpretations of left STS, MTG, and even parts of STG offered by Matchin et al. (2019) and Frankland and Greene (2015, 2019, 2020) with the involvement in spectro-temporal auditory processing and lexical access³ previously attributed to these regions (Hickok and Poeppel, 2007). Nevertheless, Frankland and Greene (2015, 2019, 2020) and Matchin et al. (2019) are not the only studies to report activation of left pSTS, pSTG, or left MTG during sentence-processing tasks with syntactic manipulations between conditions (e.g., Ben-Shachar et al., 2003, 2004; Bornkessel et al., 2005; Grewe et al., 2007; Assadollahi and Rockstroh, 2008; Kinno et al., 2008; Kalenine et al., 2009; Santi and Grodzinsky, 2010; Pallier et al., 2011).

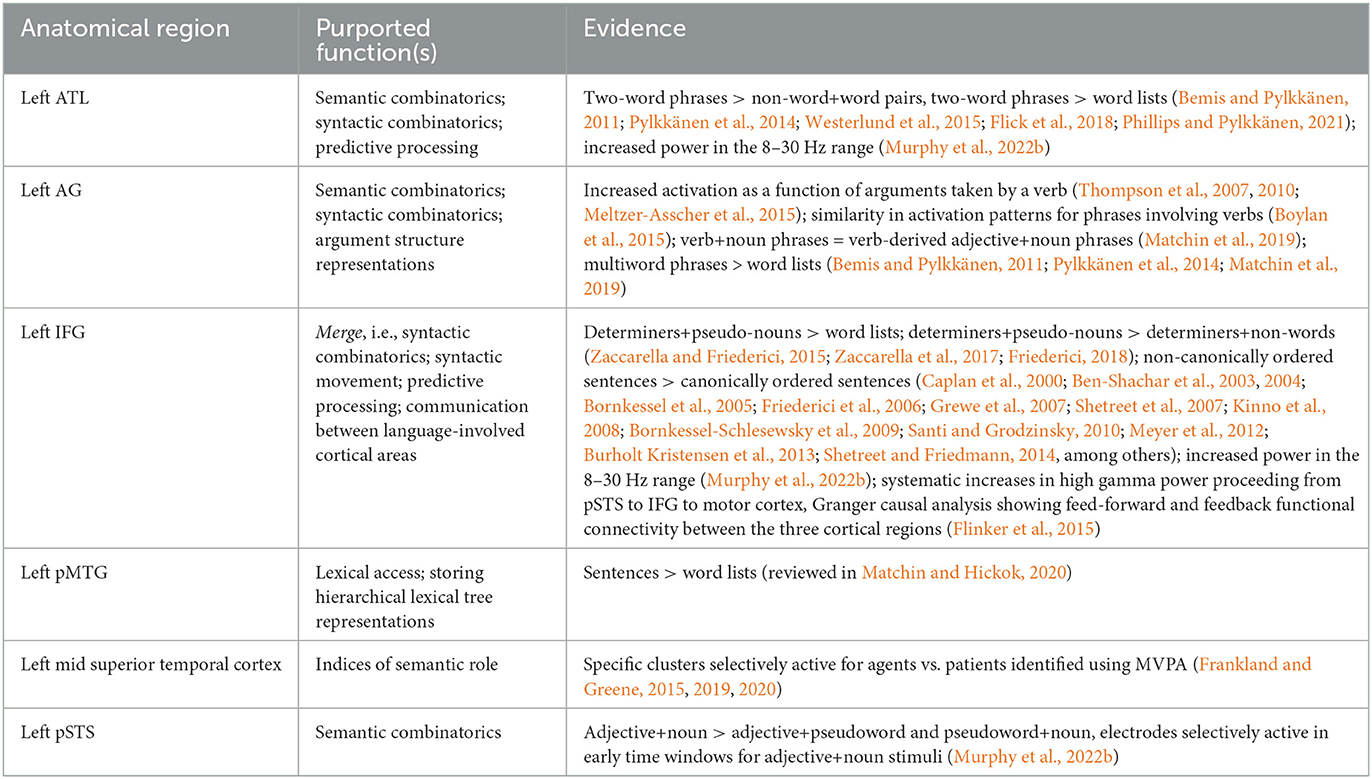

It is useful at this point to provide a brief summary of the evidence and analysis we have reviewed so far. This information is condensed in Table 1, but we sketch it here in narrative form as well. While we are primarily focused on syntactic processing in this paper, some of the results pertaining to semantic processing are pertinent as well, given both the connection between the two and the difficulty of definitively dissociating them experimentally. This is particularly relevant to the discussion surrounding the left ATL and left AG, both of which have been variously claimed to be sensitive primarily to syntactic or primarily to semantic information.

Table 1. Summary of anatomical regions implicated in syntactic or semantic processing, including the function each region is claimed to carry out and the evidence commonly cited to support these interpretations.

With this in mind, on the basis of MEG and fMRI data comparing two-word phrases to pairs of non-words and real words, the left ATL has been argued to contribute primarily to semantic processing by conceptually combining the meanings of multiple words; however, ECoG data using similar types of stimuli have instead implicated the ATL in predictive processing. The left AG has likewise been argued, on the basis of similar fMRI and MEG studies, to be the focal point of semantic-conceptual combinatorial processing. It has also been argued to store verb argument structure representations or to process the thematic roles assigned by verbs, given fMRI studies showing increased activation as a function of verb valency (with and without the accompanying arguments), as well as MVPA evidence of similar activation patterns for phrases involving verbs. Following extensive fMRI and PET studies that manipulated the relative ordering and the size of constituents in a sentence, or that contrasted determiner+pseudo-noun pairs with word lists and with determiner+non-word pairs, the left IFG has been argued variously to be the locus of syntactic phrase building or the locus of a syntactic movement operation. ECoG data have suggested instead that the IFG is involved in predictive processing and in communicating linguistic information between cortical regions, which, it should be noted, are not mutually exclusive functions.

In reviewing some of the aforementioned studies on syntactic processing, Matchin and Hickok (2020) interpret increased activation of the posterior MTG (pMTG) for sentential compared to word list stimuli as indicating that the pMTG stores hierarchical tree structures headed by lexical items, reminiscent of Lexicalized Tree-Adjoining Grammar (Schabes et al., 1988), though this interpretation also accords with the Memory-Unification-Control model of Hagoort (2013). The final major anatomical region we discussed was the mid-superior temporal cortex, within which Frankland and Greene (2015, 2019, 2020) reported small clusters that were preferentially active for nouns functioning as agents or as patients, as evidenced by MVPA of fMRI data, using stimuli in which the same nouns appeared as agents and as patients.

While there have been some consistent findings in this literature, some of the interpretations appear to be conflicting or even contradictory. We therefore turn now to how we might make better sense of these data, drawing on the cognitive neuroscience literature of other domains, especially vision. We do so under two premises: (1) from an evolutionary perspective, anatomy and physiological mechanisms are frequently repurposed for new uses; and (2) from the perspective of neural organizational and resource efficiency, populations of neurons encoding or processing similar information should be in close physical proximity.

Processing of visual sensory information has long been accepted as branching into two “streams”, even if the exact information processed by each has been subject to disagreement (Mishkin et al., 1983; Goodale et al., 1991; Goodale and Milner, 1992). The ventral stream involves a series of brain regions extending ventrally from the occipital cortex along the inferior temporal lobe bilaterally, and appears to process and represent the sensory information of objects (Mishkin et al., 1983; Goodale et al., 1991; Goodale and Milner, 1992). The dorsal stream involves regions of posterior parietal cortex; its function has been somewhat more controversial, but in general it has shown sensitivity to manipulations of actions, events, and spatial relationships between objects (Mishkin et al., 1983; Goodale et al., 1991; Goodale and Milner, 1992). There is also evidence that lexical representations, while still fairly diffusely represented, may follow similar patterns (Yang et al., 2017; Lukic et al., 2021).

While we can certainly use language to talk about more abstract concepts or imaginary things, a great deal of our language use, especially during early childhood acquisition, is centered on our material reality. Indeed, core functions of (spoken) language require binding auditory labels to visual information such as objects, allowing us to communicate about the things in our environment. The relationships between objects and the actions we perform on them are similarly important both for our visual perception of the environment and for how we communicate about it. That said, we wish to be clear that our argument is more in keeping with a “weak” embodied view of language processing: labeling visual information with linguistic information is certainly important for language acquisition, but this is not to say that language comprehension works necessarily (or solely) by activating additional sensory perception processing areas.

Nevertheless, there is some evidence for individual variation in the amount of activation of visual sensory processing areas during linguistic processing (e.g., Humphreys et al., 2013). Humphreys et al. (2013) show greater activation of the posterior superior and middle temporal gyri for visual scenes with motion compared to static visual scenes, activation that is shared with linguistic processing. Visser et al. (2012) also show activation of the pMTG for concordant lexical items and images (though cf. Murphy et al., 2022b, for evidence of these types of stimuli instead activating regions of frontal cortex and insula). This shared neural substrate suggests that these regions serve to associate representations across modalities. At the same time, Pritchett et al. (2018) argued against a strongly embodied account of language on the basis of neuroimaging data showing no activation of brain regions linked to higher-level language processing during action observation and imitation, except for the AG, which, as we will argue in Section 3.5.3, is what we would expect. This does not necessarily rule out a role for visual sensory representations in language comprehension under an embodied cognition view, but it does rule out the most extreme version of the argument, in which lower-level visual sensory processing areas are involved; conversely, it could be taken to suggest that linguistic representations are activated during visual processing, in essence understanding what we see by putting it into language. The model we propose here, though, does not require association of sensory information across the auditory and visual systems to perform the functions we assign to them. Instead, we argue that the brain is organized to facilitate efficient association across modalities when available, and that this intrinsic organization supports the syntactic processing functions we have assigned to brain regions, even in the absence of one or more modalities.
In such situations, the exact anatomical regions performing the various syntactic functions may vary based on the input actually being received and competition among modalities to make use of cortical tissue not otherwise utilized. Yet this variation should still be somewhat constrained by the layout specified genetically and instantiated during development.

As mentioned above, some (e.g., Friederici, 2018) have proposed isolating the syntactic combinatorial operation to the left IFG. Localizing this process is not straightforward, however, given the various brain regions we have reviewed that have been implicated in phrase building. We instead propose that combinatorial operations are subserved by an instantiation of neural processes used in other cognitive domains, and that the search for a single localizable brain region responsible for carrying out these operations may not be the most productive hypothesis space. Viewing phrase building as a computation that may not have a single neural correlate allows us to look for competing putative mechanisms to test that may better explain the current data. A strong candidate mechanism is hierarchically organized cross-frequency coupling of oscillatory activity (Murphy, 2015, 2018, 2020; Benítez-Burraco and Murphy, 2019); one such mechanism that has been extensively studied is the interplay of gamma and theta oscillations. Several studies of this theta-gamma oscillatory coding mechanism have been conducted in rodents, monkeys, and humans, frequently involving the hippocampus and/or entorhinal cortex (Lisman and Idiart, 1995; Skaggs et al., 1996; Tort et al., 2009; Axmacher et al., 2010; Nyhus and Curran, 2010; Quilichini et al., 2010; Friese et al., 2013; Lisman and Jensen, 2013; Heusser et al., 2016; McLelland and VanRullen, 2016; Headley and Pare, 2017; Kikuchi et al., 2018; Zheng et al., 2022). Such studies have implicated the theta-gamma “code” in multi-item working memory (Lisman and Idiart, 1995; Axmacher et al., 2010), memory for navigating mazes (Skaggs et al., 1996; Quilichini et al., 2010), and memory for the order of events within an episode (Nyhus and Curran, 2010; Heusser et al., 2016).
While these represent the prototypical functions believed to be subserved by the hippocampus and entorhinal cortex, the theta-gamma code has also been implicated in sensory processing, including olfactory processing in rats (Woolley and Timiras, 1965) and, most importantly for language, the processing of auditory stimuli in the primary auditory cortex of rhesus monkeys (Lakatos et al., 2005). A delta-theta-gamma cross-frequency coupling of oscillatory activity has been proposed as a potentially domain-general computation that could be implemented in language-processing brain areas for the purpose of phrase building (Murphy, 2015, 2018, 2020; Benítez-Burraco and Murphy, 2019).

In the delta-theta-gamma “code” for phrase building proposed by Murphy (2015), Murphy (2018), Benítez-Burraco and Murphy (2019), and Murphy (2020), low-frequency delta (0.5–4 Hz) activity modulates theta (4–8 Hz) activity, which in turn modulates neural activity at higher frequencies in the gamma (>30 Hz) range, such that gamma activity is “embedded” within particular phases of a theta wave and theta activity is “embedded” within particular phases of a delta wave. Phonetic and phonological information has been suggested to be represented by bursts of neural activity at a particular gamma frequency, with the relative ordering of those items within a lexical item represented by the phase of the modulating theta activity in which the gamma bursts occur. In turn, the relative ordering of lexical items within a constituent is represented by the phase of the modulating delta activity within which the theta activity occurs.
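The nested ordering scheme can be illustrated with a deliberately simplified sketch. It models only the ordinal bookkeeping of the proposal, not oscillatory dynamics: the function names, the use of letters as stand-ins for phonological units, and the even spacing of phases within each cycle are illustrative assumptions rather than details of Murphy's formulation. Given only the two phase labels attached to each unit, the serial order of units within words and of words within the constituent can be recovered.

```python
import math


def nested_phase_code(constituent):
    """Tag each sub-lexical unit with a (delta phase, theta phase) pair in radians.

    `constituent` is a sequence of words, each word a sequence of units (toy
    stand-ins for phonological units). Word order is carried by the phase of the
    slow delta cycle; unit order within a word by the phase of the faster theta
    cycle. Spacing phases evenly over [0, 2*pi) is a simplifying assumption.
    """
    code = []
    for w, word in enumerate(constituent):
        delta_phase = 2 * math.pi * w / len(constituent)
        for u, unit in enumerate(word):
            theta_phase = 2 * math.pi * u / len(word)
            code.append((unit, delta_phase, theta_phase))
    return code


def read_out(code):
    """Recover serial order from phase alone: sort by delta phase, then theta phase."""
    return [unit for unit, _, _ in sorted(code, key=lambda item: (item[1], item[2]))]


code = nested_phase_code(["the", "boy"])
shuffled = code[::-1]  # the order in which tuples are listed carries no information
print(read_out(shuffled))  # ['t', 'h', 'e', 'b', 'o', 'y']
```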

The delta-theta-gamma oscillatory mechanism for phrase building proposed by Murphy (2015), Murphy (2018), Benítez-Burraco and Murphy (2019), and Murphy (2020) has good empirical support, including from ECoG, MEG, and computational modeling studies (Ghitza, 2011; Giraud and Poeppel, 2012; Peelle and Davis, 2012; Ding et al., 2016; Martin and Doumas, 2017; Getz et al., 2018; Kaufeld et al., 2020; Lo et al., 2022). Rather than rehash this proposal, we wish to address the issue of “scope” in phrase building, i.e., how some lexical items in a phrase can combine before others. While this issue has received considerable attention in the literature, Rabagliati et al. (2017) provide insight of particular importance. In a behavioral experiment, Rabagliati et al. (2017) found reaction time differences in processing different types of one-, two-, and three-word phrases with different scope interpretations. Their results suggested that some types of three-word phrases are processed as quickly as two-word phrases but, more importantly, that the time required to process a three-word phrase was related to its complexity and scopal interpretation. The types of two- and three-word phrases they suggest are processed fastest are those that involve a simple structure and what Rabagliati et al. (2017) term “synchronous” activation; more complex phrases involve “asynchronous” activation or a combination of synchronous and asynchronous activation, in which synchronous activation is used to combine some elements of a constituent before others. The synchronous activation strategy is reminiscent of the simultaneous and somewhat separate processing of shape and color information in visual processing, with some indication that synchronized activity between shape- and color-representing neural ensembles contributes to their perception as a unified whole (Milner, 1974; Hopfield and Brody, 2001; Romera et al., 2022; though this has been disputed, cf. Di Lollo, 2012).
The theta-gamma code can encode information represented in this way through multiple simultaneously active gamma oscillations, in that neural ensembles active at the same gamma frequency and within the same portion of the theta cycle are represented together (Lisman and Jensen, 2013). As discussed above, the delta-theta-gamma code can also be used to represent and integrate information processed in a serial manner, i.e., the asynchronous activation strategy described by Rabagliati et al. (2017).

Synthesizing the processing strategies of Rabagliati et al. (2017) with the delta-theta-gamma code, populations of neurons representing different lexical items that are combined using the synchronous strategy may oscillate at the same theta frequency and at the same phase of a modulating delta oscillation, with this synchronous activity serving to bind the items together conceptually and syntactically. Lexical items combined using the asynchronous strategy may oscillate at slightly different theta frequencies but, crucially, at different phases of the modulating delta oscillation; constituents in which lexical items are combined using both strategies can be represented by a combination of multiple lexical items active in the same phase of a delta cycle as well as in different phases. Why might a particular set of words be processed using the synchronous strategy instead of the asynchronous one? One possibility is predictability: words that are highly predictable from preceding words may be preactivated via network effects during the same phase of the delta cycle as the prior word and at approximately the same theta frequency, potentially leading to a synchronization of activity. A thorough discussion of preactivation in language processing is outside the scope of this paper; nevertheless, the theta-gamma code has indeed been implicated in predictive processing in other domains (see Lisman and Jensen, 2013, for review). Another possibility, as briefly discussed above, is that the IFG reactivates lexical representations at an appropriate phase of the modulating delta cycle, such that multiple lexical representations are active simultaneously, leading to their conceptual combination.
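The distinction between synchronous and asynchronous combination can be reduced to a toy slot assignment. In this sketch, which is an illustrative assumption rather than an implementation of the proposal, the grouping of words is supplied by hand as a hypothetical parse, words in the same group share a delta-phase slot (synchronous binding), successive groups occupy different slots (asynchronous combination), and theta frequency is ignored altogether.

```python
import math


def delta_phase_slots(groups):
    """Map each word to a delta-phase slot (radians).

    `groups` is an ordered list of word groups (a hypothetical parse supplied as
    input; nothing here infers it). Words inside one group are combined
    'synchronously' and share a slot; successive groups are combined
    'asynchronously' and occupy different slots. Words are assumed to be unique.
    """
    slots = {}
    for g, group in enumerate(groups):
        phase = 2 * math.pi * g / len(groups)
        for word in group:
            slots[word] = phase
    return slots


def bound_synchronously(slots, a, b):
    """Two words count as synchronously bound if they occupy the same delta phase."""
    return math.isclose(slots[a], slots[b])


# Hypothetical three-word phrase in which A and B combine first, then with C.
slots = delta_phase_slots([["A", "B"], ["C"]])
print(bound_synchronously(slots, "A", "B"))  # True
print(bound_synchronously(slots, "B", "C"))  # False
```

A fully asynchronous phrase would be written as three singleton groups, and a fully synchronous one as a single group.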

However, a number of factors make the synchronous activation mechanism described above slightly less plausible. In visual perception, shape and color information can activate neural populations tuned to their respective stimulus response characteristics simultaneously and in parallel; in auditory speech, only one word can be received at a time. The situation is similar in sign language: hypothetically, two signs that form a constituent could be produced simultaneously, yet we do not see this; they are still produced one after the other. Additional factors such as the frequency of a syntactic structure, pragmatic context, and prior knowledge are also known to influence sentence processing and may require a more flexible representational mechanism than simultaneous activation of neural populations. A mechanism whereby parallel activation necessarily leads to a unified percept would further fail to account for psycholinguistic evidence that we build multiple syntactic parses in parallel.

Among linguists and psycholinguists, there is considerable debate about whether syntactic information is stored in lexical entries or is mentally represented in more abstract constructions such as a TRANSITIVE CONSTRUCTION. With ample evidence on both sides, some indications are emerging that both types of information are in fact mentally stored and utilized (Tooley and Bock, 2014). How we synthesize these views and their associated findings, however, is an open question. A full synthesis of lexicalist and construction-based processing accounts awaits exciting new theoretical advances; however, it is worthwhile to consider how these two forms of representation may arise and how they are processed. Once again, we propose that we can look to other cognitive domains for clues to organizational and processing principles that may carry over into the linguistic domain.

The first such clue comes from visual processing. It is well accepted that visual processing streams are organized hierarchically, with low-level features such as color and the presence of light or darkness processed early in the stream, e.g., in V1. Signals from V1 then feed further up the stream to V2, which processes edges from combinations of signals indicating light and dark. V2 itself feeds into regions that process shape and movement, and so on. The further up this processing hierarchy, the more holistic and abstract the representations are. While a more direct parallel may come from the processing of the fine-grained auditory information of speech, a similar principle may be at play in language, such that more “fine-grained” lexical information is stored and processed lower in language-processing hierarchies, while more abstract constructions, such as the TRANSITIVE CONSTRUCTION, and conjunctions between lexical items are stored and processed further up. This principle may apply to both of the processing streams we propose here for entities and for events/scenes, with the ATL acting as an endpoint in the ventral stream where conjunctions of nouns and attributive adjectives are processed, and the AG acting as an endpoint in the dorsal stream where abstract argument structure is processed. In both instances, the STS, STG, and MTG represent and process information related to individual lexical items. In the remainder of this section, we will focus on the abstraction of argument structure information, as this is a particular point of contention between lexicalist and constructionist theories. The abstract construction representations we propose as being stored and processed in the AG may result from abstraction over many instances of particular grammatical forms, from more semantically based scene schemas, or perhaps from both.
While we believe that both processes are at play, adjudicating which type of abstraction (purely syntactic or purely semantic) is represented awaits further study. Instead, we will speculate as to how these abstractions may emerge and interact.

We will first consider the semantically based scene schema abstractions. It is important to define what we mean by “schema” before proceeding, as the term has been used in many different ways across the relevant literature. We define “schema” here in a similar vein to Gilboa and Marlatte (2017) and Reagh and Ranganath (2018), i.e., as abstract representations of scenes that serve as templates of a sort, built on commonalities among multiple prior experiences. For example, a schema for viewing a film may include the general event sequence of purchasing a ticket, buying concessions, finding a place to watch the film, and finally watching the film on a large screen located at the front. More specific details, such as the layout of a particular cinema, whether one must find seats in a theater or a parking spot at a drive-in, and whether one prefers popcorn or candy, are abstracted over. These schemas may be generated either via “gist” representations of scenes, which evidence suggests are encoded by a proposed posterior-medial (PM) network involving parahippocampal cortex and medial and ventrolateral parietal cortices, including the AG (Ranganath and Ritchey, 2012; Ritchey et al., 2015a,b; Inhoff and Ranganath, 2017; Reagh and Ranganath, 2018; O'Reilly et al., 2022), or via abstraction over episodic scene representations, which evidence suggests involves the aforementioned PM network in addition to a proposed anterior-temporal (AT) network involving perirhinal and ventral temporopolar cortices. Determining which of these proposed network accounts is correct awaits further study, but in either model the AG is an important component; the AG has also been implicated in visual processing of actions and scenes, in line with a proposed role for relational and spatial processing generally (Seghier, 2013; Gilboa and Marlatte, 2017; Fernandino et al., 2022).
The type of information encoded in the model of visuospatial schemas adopted here has been suggested to provide the basis for schemas in other domains, such as time (Summerfield et al., 2020). For example, models of linear navigation through space have been suggested to generalize to neural representations of integer progression in counting and to representations of the progression of time. It should be noted that schemas are only one type of abstract event representation argued to be instantiated by the PM network, with other representations containing more specific details or being more structurally basic (Reagh and Ranganath, 2018). Notwithstanding the potential influence of event representations along a broad spectrum of abstractness, given the consistent findings of AG activation in processing phrases with action, event, or relational meaning, we can synthesize these data to suggest an at least partially semantic basis for verb argument structure representations using scene schemas.
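As a toy illustration of the idea that schemas are built on commonalities among prior experiences, the sketch below reduces the film-viewing example to ordered event lists and keeps only what all episodes share. The episodes, the hand-written mapping from specific details to general event types, and the set-intersection rule are all illustrative assumptions; in particular, the mapping stands in for the similarity-based abstraction that the PM and AT network accounts aim to explain, which the sketch does not model.

```python
def abstract_episode(episode, event_type):
    """Replace specific event details with more general event types."""
    return [event_type.get(event, event) for event in episode]


def extract_schema(episodes, event_type):
    """Keep the abstracted events shared by every episode, in first-episode order."""
    abstracted = [abstract_episode(ep, event_type) for ep in episodes]
    shared = set(abstracted[0]).intersection(*abstracted[1:])
    return [event for event in abstracted[0] if event in shared]


# Hypothetical episodes and detail-to-type mapping for the film-viewing example.
cinema = ["buy ticket", "buy popcorn", "find seat", "watch trailers", "watch film on screen"]
drive_in = ["buy ticket", "buy candy", "find parking spot", "watch film on screen"]
event_type = {
    "buy popcorn": "buy concessions",
    "buy candy": "buy concessions",
    "find seat": "find viewing place",
    "find parking spot": "find viewing place",
}
print(extract_schema([cinema, drive_in], event_type))
# ['buy ticket', 'buy concessions', 'find viewing place', 'watch film on screen']
```

Details that occur in only some episodes (here, watching trailers) drop out, while details that differ superficially but share a type (popcorn vs. candy) survive as the more general event.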

Abstract syntactic representations could emerge in a manner analogous to abstractions over visual scenes, that is, as abstractions over repeated instances of particular constructions (see also Hahn et al., 2022, for computational modeling evidence that similarly connects probabilistic syntactic parsing to visual processing). For example, repeatedly hearing a noun phrase followed by a verb and then another noun phrase, across various lexical instantiations and contexts, could lead to abstraction of a more general transitive construction. Such a process may rely on mechanisms similar to those argued to generate schemas, namely abstracting over repeated visual scenes and extracting their statistically common elements (Summerfield et al., 2020). The level of detail encoded in schemas is a matter of controversy, though it is perhaps likely that schemas at several levels along a detail-abstraction spectrum are represented, as has been argued to occur for syntactic constructions (Goldberg, 2003, 2013; Reagh and Ranganath, 2018; Summerfield et al., 2020). A tenable mechanism for instantiating such representations comes in the form of so-called “cognitive maps,” a framework proposed to unify representations of spatial and non-spatial structural knowledge. Cognitive maps are argued to represent “states” and the transitions between them in a structured but abstract manner, allowing for their flexible use across a variety of different contexts and potentially across sensory or processing domains as well (Behrens et al., 2018; Park et al., 2020; Boorman et al., 2021). Cognitive maps have been implicated as representational structures in several domains, including navigation, reward-based decision making, and tracking social hierarchies (Behrens et al., 2018; Park et al., 2020, 2021; Boorman et al., 2021).
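A toy version of abstracting a transitive template from repeated exposures might look like the following. The hand-coded lexicon, the single chunking rule for noun phrases, and the function names are hypothetical simplifications; a real learner would have to induce the categories themselves, which is exactly the hard part this sketch assumes away. What it does show is how graded frequencies over abstract templates (here, NP V NP versus NP V) can fall out of many lexically different sentences.

```python
from collections import Counter

# Hypothetical toy lexicon; a real learner would have to induce these categories.
LEXICON = {
    "picard": "N", "janeway": "N", "chakotay": "N", "romulans": "N",
    "the": "Det", "evacuated": "V", "hugged": "V", "slept": "V",
}


def to_template(sentence):
    """Abstract a sentence into a category sequence, chunking (Det) N into NP."""
    tags = [LEXICON[word] for word in sentence.lower().split()]
    template, i = [], 0
    while i < len(tags):
        if tags[i] == "Det" and i + 1 < len(tags) and tags[i + 1] == "N":
            template.append("NP")  # determiner + noun
            i += 2
        elif tags[i] == "N":
            template.append("NP")  # bare proper noun
            i += 1
        else:
            template.append(tags[i])
            i += 1
    return " ".join(template)


def count_templates(corpus):
    """Count how often each abstract template occurs across lexically varied input."""
    return Counter(to_template(sentence) for sentence in corpus)


corpus = [
    "Picard evacuated the Romulans",
    "The Romulans evacuated Picard",
    "Janeway hugged Chakotay",
    "Chakotay hugged Janeway",
    "Picard slept",
]
print(count_templates(corpus).most_common())
# [('NP V NP', 4), ('NP V', 1)]
```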
An important feature of cognitive maps for the linguistic use we are suggesting is their ability to represent latent hierarchical structure between states based only on the statistical regularities between them, including inferring relationships between states that have not been directly observed (Behrens et al., 2018; Boorman et al., 2021). While this suggestion is more speculative, this feature could potentially allow cognitive maps to represent the latent hierarchical structure between words and constituents within sentences. Moreover, the structure of these cognitive maps can be constrained by structure derived from sensory features (Behrens et al., 2018), hinting that the visual scene schemas described above could serve to constrain “maps” of the relationships between linguistic constituents in a sentence. Cognitive maps could also potentially be used to implement anaphoric reference: canonical place cells are selectively active when an animal is at a particular spatial location, while canonical grid cells are active when an animal is at any of several different locations (Behrens et al., 2018). In combination with cells coding particular referents (as analogues of place cells), “grid” cells in such a linguistic cognitive map might respond to multiple mentions of a referent made in different ways (e.g., name vs. pronoun) across different linguistic contexts (e.g., different clauses).
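The claim that a cognitive map can support inferences about relationships that were never directly observed can be illustrated with the successor representation, one formalization discussed in the cognitive-map literature (e.g., Behrens et al., 2018). Choosing it here is our assumption, not a claim about which formalism the brain uses, and the abstract states A to D are hypothetical placeholders rather than words or constituents. Each transition is observed only in isolation (A then B, B then C, C then D), yet the learned representation still links A to C and D. Note that this captures chained, multi-step relations; it does not by itself recover hierarchical constituent structure.

```python
import numpy as np


def transition_matrix(sequences, states):
    """Estimate P(next state | current state) from observed state sequences."""
    index = {s: i for i, s in enumerate(states)}
    counts = np.zeros((len(states), len(states)))
    for seq in sequences:
        for a, b in zip(seq, seq[1:]):
            counts[index[a], index[b]] += 1
    totals = counts.sum(axis=1, keepdims=True)
    # States with no observed outgoing transitions keep an all-zero row.
    return np.divide(counts, totals, out=np.zeros_like(counts), where=totals > 0)


def successor_representation(T, gamma=0.9):
    """M = sum_k gamma**k T**k = inv(I - gamma T): discounted expected future occupancy."""
    return np.linalg.inv(np.eye(len(T)) - gamma * T)


states = ["A", "B", "C", "D"]
T = transition_matrix([["A", "B"], ["B", "C"], ["C", "D"]], states)
M = successor_representation(T)
print(T[0, 2], round(M[0, 2], 3))  # 0.0 0.81: A->C never observed, yet linked via B
```

The discount factor `gamma` controls how strongly distant states are linked; with this deterministic chain, the entry for a state k steps ahead is simply gamma to the power k.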

The preceding sections have touched on general principles of cortical organization that bear summarizing and synthesizing, namely, sensory processing hierarchies. Sensory processing proceeds from subcortical structures to primary sensory cortices, to secondary sensory cortices, and to primary and secondary association cortical areas. The pathways between these are of course not strictly serial, with subcortical projections to secondary sensory cortices, feedback loops from higher cortical regions, etc.; however, they do reflect a general principle whereby very finely detailed sensory information, such as the wavelength of light or the frequency of sounds, is processed early in the relevant sensory stream (and consequently very quickly). Cortical regions further up these streams process and encode conjunctions of lower-level information, creating abstractions over this finer detail; association cortices, which include large portions of the temporal, parietal, and frontal lobes, can (though do not always) integrate information from multiple sensory domains. Such organization allows information to be encoded at multiple levels of abstraction simultaneously. Psycholinguistic and neurolinguistic models have long proposed that phonological information and lemma information are encoded separately and simultaneously, but whether even more abstract linguistic forms (e.g., constructions) are as well has been more controversial. However, the encoding of multiword constructions at multiple, simultaneous levels of specificity would be in keeping with these principles of hierarchical processing and the abstractions they afford. While temporal lobe association areas may be more selective than parietal association regions, the fact that both afford mechanisms for computing conjunctions and abstractions over sensory features suggests that a distributed computation is responsible for such combinatorial operations.

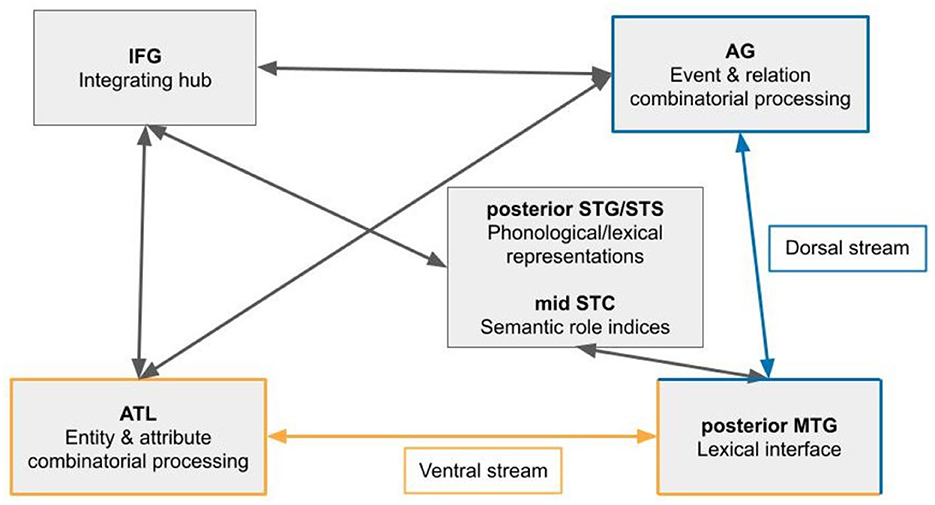

To summarize our proposed model, we divide syntactic processing into two streams: a ventral stream dedicated to combinatorial processing of nominals and attributive adjectives, and a dorsal stream dedicated to combinatorial processing involving verbs and relational adjectives (Figure 1). For processing phrasal constituents, the ventral stream culminates in the ATL while the dorsal stream culminates in the AG; in constructing sentences, the two streams interact both directly, through white matter tracts extending from the temporal lobe to the parietal lobe, and indirectly, through the IFG. Because the model we propose separates phrasal processing into two streams, the combinatorial mechanism involved must be one that can be instantiated along both; we suggest that this is the delta-theta-gamma oscillatory code, which is further used to communicate between the involved brain regions (Murphy, 2015, 2018, 2020; Benítez-Burraco and Murphy, 2019). Regions in the mid superior temporal cortex (STC) serve as indices of semantic role, which the IFG may help assign properly via white matter tracts between the STC and IFG. The IFG may also play a role in generating the low-frequency oscillatory activity used across the language system, including by the pSTS, ATL, and AG for phrase building and long-distance communication (Murphy et al., 2022b).

Figure 1. Proposed dual-stream model of syntactic combinatorial processing. The ventral stream is specialized for building phrases involving nouns and attributive adjectives; the dorsal stream is specialized for building phrases involving verbs and relational adjectives.

The apparent selectivity of the ATL for processing nominal phrases reflects a bias toward multimodal integration, namely with vision (Tanenhaus et al., 1995). For lexical items to have semantic content, we must associate them with objects in the real world (setting aside the issue of more abstract lexical items such as ideas or dreams). One way of doing this is to associate the auditory lexical signal for an object with a corresponding visual signal. Given that the ventral visual stream extends into the posterior temporal gyrus and appears to selectively process objects and object-relevant attribute information (size, shape, color, etc.) (Ishai et al., 1999; Giménez Amaya, 2000), the ATL is a plausible locus for associating nominal and attributive lexical items with visual object representations. Moreover, the temporal lobe has demonstrated hierarchical organization for both visual and auditory stimuli, oriented along dorsal-ventral and anterior-posterior axes, in humans and other primates (Bao et al., 2020; Blazquez Freches et al., 2020; Braunsdorf et al., 2021; Sierpowska et al., 2022). Hierarchical processing of visual stimuli extends from primary visual cortex in the occipital lobe along ventral temporal cortex, with increasingly complex conjunctions of features and increasingly invariant representations computed further anteriorly (Braunsdorf et al., 2021). Auditory processing extending from primary auditory cortex shows a similar hierarchy, but arranged in a more concentric pattern: regions closer to A1 code for low-level features such as frequency and tone, while regions further outward code lexical representations and socially important information such as speaker identity (Braunsdorf et al., 2021).
Importantly, the ATL and the MTG appear to be regions of convergence for both auditory and visual processing, with some arguing that the MTG dynamically codes conjunctions of visual information and information from other modalities (Weiner and Grill-Spector, 2013; Braunsdorf et al., 2021). We return to a similar point as we now shift our attention to the AG and its involvement in event semantics and verb argument structure processing.

Complementary to the ventral visual stream is the dorsal visual stream, extending from primary visual cortex to parietal cortex, which processes spatial and action information (Goodale and Milner, 1992; Orban et al., 2004). Once more, we must bind our lexical items to particular concrete instantiations (again leaving aside the issue of more abstract concepts); in the case of verbs, they must be associated with particular actions, events, or states. By a logic similar to that proposed for nouns being bound to visual representations in the temporal lobe, verbs can be bound to visual action representations in the parietal lobe. The richness with which the visual system represents object information, compared with its sparser representation of spatial information, appears to have a profound influence on language, underscoring the importance of linking object/noun binding to the temporal lobe and action/verb and event/construction binding to the parietal lobe (Landau and Jackendoff, 1993). This may hold more for spoken languages than for signed languages such as ASL: given their primary reliance on a manual-visual modality, signed languages may rely more heavily on parietal regions for certain operations, particularly given the extensively multimodal nature of association cortical regions in the parietal lobe (Corina et al., 2013).

Of course, actions do not occur without other entities either performing them or being affected by them (or both), nor do they occur removed from a particular spatial context. As part of the “where” stream of visual processing, and by integrating object information from the ventral visual stream, the parietal lobe can represent relational information between entities (reviewed in Cloutman, 2013; Ray et al., 2020). The conjunction of action, spatial, relational, and object information constitutes the essential elements of an event. How, though, can we get from the event semantic representations that appear to be processed by the AG to more general argument structures? To begin with, the confluence of multiple sensory inputs in the parietal lobe suggests some manner of abstraction over these inputs in order to build appropriate associations between them (Binder and Desai, 2011), and the many informational components of an event that are combined in the AG are no exception. First, there is ample evidence that people make fine-grained distinctions about the semantics of an event based on changes in argument structure (see Wittenberg, 2018, for review). For example, Wittenberg et al. (2017) provide eye-tracking and behavioral data suggesting that participants who were implicitly trained to classify sentences by the number of semantic roles interpret light verb constructions (e.g., give a kiss) as intermediate between three-role constructions (e.g., the prototypical ditransitive give construction) and two-role constructions (e.g., a prototypical transitive construction like kiss). They take this as evidence that two competing sets of event semantics are activated, one from the light verb and the other from the nominalized action, suggesting that argument structure influences event construals.
Moreover, Ramchand (2019) argues that constrained event representations track systematically with particular argument structures and, importantly, that generalizations of event semantics and generalizations of syntactic argument structures go hand in hand. In particular, she argues that a constrained decomposition of events into their component parts maps consistently onto syntactic argument structures, including embedded clauses as subevents.

This discussion also raises a fundamental question of how we generalize to new linguistic input. Semantics certainly plays some role, but how do we know that in a sentence like Nala glorped the dax to the flort, Nala is likely somehow transferring the dax to the flort entity or location? O'Reilly et al. (2022) argue that more abstract, increasingly content-general representations can be learned in the parietal lobe through an error-driven process in which sensory input is compared to sensory predictions. An abstract syntactic construction may be an example of such a representation, with particular arrangements of slots that are open for different lexical items. What type of learning mechanism would allow abstract construction information to be learned in an error-driven way? A Bayesian learning algorithm could fit the bill. Although we are unaware of a study testing this hypothesis specifically, Perfors et al. (2010) is potentially indicative: the authors implemented a hierarchical Bayesian learning model to simulate how a language learner might successfully cluster verbs into two classes depending on whether or not they alternate between the double-object and prepositional-object forms. Using distributional statistics alone, the model learned how many classes of verbs existed and correctly assigned particular verbs to each class. This model therefore suggests a mechanism by which learners could acquire even more abstract patterns of verb constructions: based on a confluence of the syntactic environments verbs appear in, the semantics of each lexical item, and the semantics of the overall clause, a Bayesian learner could generalize clusters of verbs that occur with a single nominal phrase and share the event semantics of a single entity performing an action, and so on for other constructions. Combined with the Perfors et al. (2010) model, this would allow an abstract construction, the distribution of constructions, and the set of verbs participating in each construction all to be learned, such that encountering a sentence like Nala glorped the dax to the flort allows a Bayesian learner to hypothesize (1) that glorp is part of the set of verbs that participate in the ditransitive construction; (2) that it therefore likely carries a meaning of transfer of one thing to another entity or place; and (3) that it is more likely to occur only in the prepositional-object construction. That said, Bayesian modeling has been criticized as a poor approximation of how the brain operates at the neuronal level, and its approximation of higher-level cognition rests on the assumption that what happens at the neural level can largely be ignored or abstracted away (O'Reilly et al., 2012).
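The kind of inference described for glorp can be sketched with a deliberately simplified Bayesian classifier. This is not the Perfors et al. (2010) model: that model was hierarchical and also learned how many verb classes exist and their class-level parameters, whereas here two classes and their Beta priors over a verb's double-object (DO) rate are fixed by hand. The class names, hyperparameters, and counts are hypothetical.

```python
from math import exp, lgamma, log


def log_beta(a, b):
    """Natural log of the Beta function B(a, b)."""
    return lgamma(a) + lgamma(b) - lgamma(a + b)


def log_marginal(n_do, n_po, a, b):
    """Log marginal likelihood of a verb's observed uses (n_do double-object,
    n_po prepositional-object) under a class whose DO rate theta ~ Beta(a, b),
    with theta integrated out. The binomial coefficient is dropped because it
    is the same for every class and cancels in the posterior."""
    return log_beta(n_do + a, n_po + b) - log_beta(a, b)


# Hypothetical verb classes, each a Beta(a, b) prior over how often a verb uses DO.
CLASSES = {
    "alternating": (2.0, 2.0),   # DO and PO both common
    "po_only": (1.0, 20.0),      # DO very rare
}


def class_posterior(n_do, n_po, classes=CLASSES, prior=None):
    """Posterior probability that a verb belongs to each class, given its counts."""
    if prior is None:
        prior = {c: 1.0 / len(classes) for c in classes}  # uniform over classes
    scores = {c: log(prior[c]) + log_marginal(n_do, n_po, a, b)
              for c, (a, b) in classes.items()}
    top = max(scores.values())  # subtract the max for numerical stability
    unnorm = {c: exp(s - top) for c, s in scores.items()}
    z = sum(unnorm.values())
    return {c: v / z for c, v in unnorm.items()}


# A novel verb heard once, in the prepositional-object frame ("glorped the dax to the flort").
print(class_posterior(n_do=0, n_po=1))
```

With these assumed priors, a single prepositional-object use yields a posterior of about 0.66 for the PO-only class; further PO-only uses push it higher, while a verb heard in both frames (e.g., 3 DO and 2 PO uses) is assigned to the alternating class with high probability.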

To get a more neurobiologically grounded understanding of how such event abstractions may occur (though not quite at the level of individual neurons), we return to the issue of schemas and cognitive maps. Again, we wish to emphasize that we are not making an argument based on a strongly embodied view of language, but one in which sensory perceptions provide a semantic basis and constraint for linguistic structure. Abstraction over sensory perceptions of events to create event schemas or situation models may provide a meaning basis for particular grammatical constructions; for example, abstracting over many individual visual/somatosensory instances of someone handing an object to another person or animate entity may generate a “giving” or “transferral” schema, consisting of an object being transferred from one entity to another. When systematically and regularly paired with a particular grammatical form, such schemas provide a basic general template for meaning, to be more fully fleshed out by the specific lexical items used and the entities they denote. The level of abstraction of schemas is a matter of debate within the literature, mirroring a similar debate in theoretical syntax between lexical and construction-based representations of argument structure. For example, more detailed situation models (as proposed by, e.g., Reagh and Ranganath, 2018) could be viewed as similar to more detailed lexically based argument structures, in that a more specific event may be denoted by both the situation model and a verb. Meanwhile, more abstract schemas (as proposed by, e.g., Summerfield et al., 2020) could be viewed as similar to more abstract construction-based argument structures, in that both represent very general knowledge about entities and their relative spatial locations and interactions.
As in language, these views are not necessarily irreconcilable: multiple levels of abstraction may exist and be drawn upon simultaneously. Abstract representations built upon commonalities between prior sensory experiences, like those proposed here, should result in “fuzzy” category representations, with some instances sharing more commonalities with the category's core features than others. This graded structure is an important aspect of linguistic categories that has been explored in Cognitive Grammar with respect to both lexical semantics and syntactic structures. Despite the critiques noted above, iterative Bayesian algorithms may model these aspects of learning abstract categories quite well.
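The notion of a “fuzzy” category abstracted from prior instances can be illustrated with a simple prototype sketch. This is a non-Bayesian stand-in chosen for brevity, not a model from this article: the feature names, the example events, the use of a feature-wise mean as the abstracted schema, and the use of one minus mean absolute deviation as typicality are all hypothetical choices.

```python
from statistics import fmean

# Hypothetical binary features (column order of each instance tuple below).
FEATURES = ("agent", "recipient", "object_moves", "hand_contact", "possession_changes")

# Hypothetical observed transfer events (1 = feature present).
INSTANCES = {
    "hand over a cup":  (1, 1, 1, 1, 1),
    "pass a plate":     (1, 1, 1, 1, 1),
    "hand over a book": (1, 1, 1, 1, 1),
    "mail a letter":    (1, 1, 1, 0, 1),
    "bequeath a house": (1, 1, 0, 0, 1),
}


def prototype(instances):
    """Abstract a schema as the feature-wise mean over all observed instances."""
    return tuple(fmean(column) for column in zip(*instances.values()))


def typicality(instance, proto):
    """Graded membership: 1 minus the mean absolute distance to the prototype."""
    return 1 - fmean(abs(x - p) for x, p in zip(instance, proto))


proto = prototype(INSTANCES)
for name, features in INSTANCES.items():
    print(f"{name:18s} {typicality(features, proto):.2f}")
```

Every event is a graded rather than all-or-none member: the hand-to-hand transfers score 0.88, mailing a letter 0.84, and bequeathing a house 0.72, so events sharing more features with the abstracted core are more typical than peripheral ones.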

Models of cognitive schemas assign important roles to medial temporal lobe (MTL) structures (including the hippocampus, perirhinal cortex, entorhinal cortex, and parahippocampus) in forming these schemas; extending this model to language acquisition suggests a more active contribution from these brain regions than has previously been appreciated. Some important recent work has begun incorporating MTL structures into neurobiological models of language, providing productive space for further research (Piai et al., 2016; Murphy et al., 2022a). This model similarly implies a more critical contribution of the AG to language acquisition and to connecting linguistic and visual representations, given suggestions that parietal lobe regions act as amodal hubs for compressing the dimensional space of sensory representations (Summerfield et al., 2020; O'Reilly et al., 2022). This model of interaction between visual and linguistic representations can help explain the fairly strong cross-linguistic consistency of argument structures (Nichols, 2011). This is not to say that the interaction is unidirectional: linguistic structure may serve to orient or constrain attention to specific objects or entities in a visual event. For example, using give in a ditransitive argument structure may alert a language learner that there are three entities or objects to which they should pay particular attention in a given visual scene, and that they should ignore or pay less attention to extraneous entities or objects that were not mentioned linguistically.
The interaction between visual representations, linguistic representations, and attention may further help explain argument structure alternations (e.g., the double-object and prepositional-object alternation) and argument structure optionality (e.g., eat is optionally transitive, while devour is obligatorily transitive and dine is intransitive). This possibility awaits further study, although joint eye-tracking and language production data from Pitjantjatjara and Murrinhpatha, two languages spoken by Aboriginal Australians with relatively free word order, are suggestive (Nordlinger et al., 2020).