
ORIGINAL RESEARCH article

Front. Immunol. , 04 October 2022

Sec. Vaccines and Molecular Therapeutics

Volume 13 - 2022 | https://doi.org/10.3389/fimmu.2022.997343

Bilgin Osmanodja1*†, Johannes Stegbauer2†, Marta Kantauskaite2, Lars Christian Rump2, Andreas Heinzel3†, Roman Reindl-Schwaighofer3†, Rainer Oberbauer3†, Ilies Benotmane4†, Sophie Caillard4†, Christophe Masset5†, Clarisse Kerleau5†, Gilles Blancho5†, Klemens Budde1†, Fritz Grunow1, Michael Mikhailov1†, Eva Schrezenmeier1,6†, Simon Ronicke1†

Repeated vaccination against SARS-CoV-2 increases serological response in kidney transplant recipients (KTR) with high interindividual variability. No decision support tool exists to predict SARS-CoV-2 vaccination response to third or fourth vaccination in KTR. We developed and internally and externally validated five different multivariable prediction models of serological response after the third and fourth vaccine dose against SARS-CoV-2 in previously seronegative, COVID-19-naïve KTR. Using 20 candidate predictor variables, we applied statistical and machine learning approaches including logistic regression (LR), least absolute shrinkage and selection operator (LASSO)-regularized LR, random forest, and gradient boosted regression trees. For development and internal validation, data from 590 vaccinations were used. External validation was performed in four independent, international validation cohorts comprising 191, 184, 254, and 323 vaccinations, respectively. LASSO-regularized LR performed on the whole development dataset yielded a 20-variable and a 10-variable model. External validation showed AUC-ROC of 0.840, 0.741, 0.816, and 0.783 for the sparser 10-variable model, yielding an overall AUC-ROC of 0.812. A 10-variable LASSO-regularized LR model predicts vaccination response in KTR with good overall accuracy. Implemented as an online tool, it can guide decisions whether to modulate immunosuppressive therapy before additional active vaccination, or to perform passive immunization to improve protection against COVID-19 in previously seronegative, COVID-19-naïve KTR.

SARS-CoV-2 vaccination offers protection from severe coronavirus disease 2019 (COVID-19) regardless of the causative variant for most healthy individuals (1). In contrast, protection in immunocompromised solid organ transplant (SOT) recipients is limited. The serological response rate after three doses of SARS-CoV-2 vaccine in kidney transplant recipients (KTR) is strongly impaired in comparison to the general population, resulting in insufficient protection and an unacceptably high COVID-19 mortality within this population (2, 3).

Different strategies to induce humoral protection for KTR have been suggested, including repeated vaccination and vaccination under adjusted immunosuppression – besides SARS-CoV-2-specific monoclonal antibody therapy (4). Existing data are helpful to identify factors associated with insufficient vaccination response, but are not easily interpretable for the single patient or vaccination (5–7). Specifically, no tool exists to predict individual response to a vaccination. Risk calculators can help assess the likelihood of vaccination success in an individual and help decide between different possible actions such as passive or active immunization or adjustment of immunosuppressive medication. To date, no such decision support system is available.

For this reason, we aim to develop a classification model to predict serological response to third and fourth SARS-CoV-2 vaccinations in previously seronegative, COVID-19-naïve KTR. The model’s implementation objective is to identify patients that will likely not respond to an additional dose of vaccine, even with changes in immunosuppressive medication, and thus benefit most from passive immunization strategies. Using our previously reported data of vaccination outcomes in KTR, we develop and compare a set of prediction models based on classical statistical methods as well as machine learning. After selecting the most promising models, we validate the resulting prediction models in four independent validation cohorts, and make the result available as an online calculator.

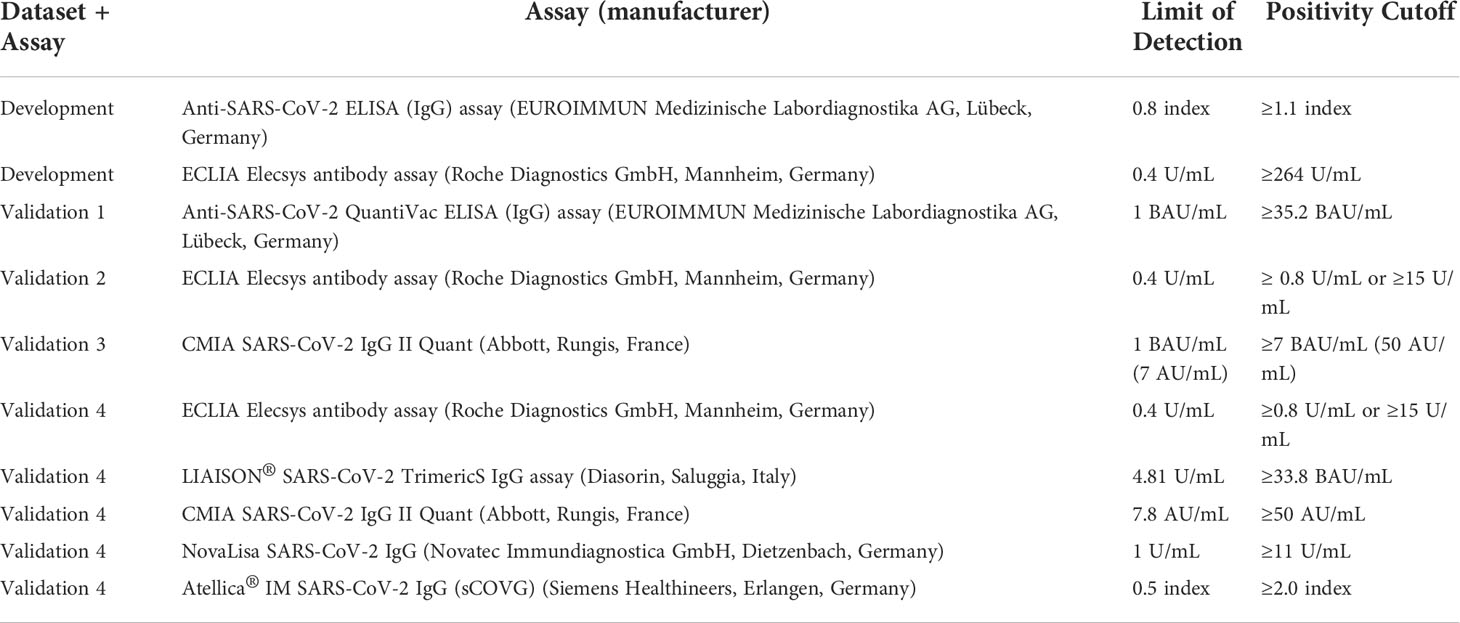

Data from KTR at Charité – Universitätsmedizin Berlin, Germany, were used to form the development cohort. Details of the underlying patient population, as well as the assays and cutoffs used, have been previously reported (5). Briefly, KTR at our institution received up to five doses of SARS-CoV-2 vaccine in case of a sustained lack of sufficient serological response to vaccination, combined with maintaining, reducing, or pausing mycophenolic acid (MPA) for the fourth and fifth vaccination. For the enzyme-linked immunosorbent assay (ELISA) for the detection of IgG antibodies against the S1 domain of the SARS-CoV-2 spike (S) protein in serum (Anti-SARS-CoV-2-ELISA (IgG), EUROIMMUN Medizinische Labordiagnostika AG, Lübeck, Germany), samples with a cutoff index ≥ 1.1 (in comparison to the previously obtained cut-off value of the calibrator) were considered positive, samples with a cutoff index ≥ 0.8 and < 1.1 were considered low positive, and samples with a cutoff index < 0.8 were considered negative, as suggested by the manufacturer.

Alternatively, for the electrochemiluminescence immunoassay (ECLIA) (Elecsys, Anti-SARS-CoV-2, Roche Diagnostics GmbH, Mannheim, Germany) detecting human immunoglobulins, including IgG, IgA and IgM against the spike protein receptor binding domain (RBD), samples with ≥ 264 U/mL were considered positive as recommended by Caillard et al. (8, 9). Any non-zero antibody level below this cutoff was considered low positive, with the limit of detection (LoD) being 0.4 U/mL.
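The two classification rules for the development cohort can be written down compactly. The following Python sketch is illustrative only (the original analysis was performed in R); the function names are hypothetical, and treating "non-zero" ECLIA values as values at or above the LoD is an assumption.

```python
def classify_elisa(cutoff_index: float) -> str:
    """EUROIMMUN ELISA: classify a sample by its cutoff index."""
    if cutoff_index >= 1.1:
        return "positive"
    if cutoff_index >= 0.8:
        return "low positive"
    return "negative"


def classify_eclia(units_per_ml: float,
                   positivity_cutoff: float = 264.0,
                   lod: float = 0.4) -> str:
    """Roche Elecsys ECLIA: classify a sample by its antibody level in U/mL.

    Assumption: a "non-zero" level is read as a level at or above the LoD.
    """
    if units_per_ml >= positivity_cutoff:
        return "positive"
    if units_per_ml >= lod:
        return "low positive"
    return "negative"
```

Passing a different `positivity_cutoff` (for example 0.8 or 15 U/mL) reproduces the alternative cutoff definitions used for this assay in the validation cohorts.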

For predictive modeling, we included data on third and fourth vaccination, since basic immunization has most likely been performed in most KTR patients already, and since only few patients received fifth vaccination so far.

After applying all exclusion criteria summarized in Table 1, the development cohort comprised 590 vaccinations performed between December 2020 and January 2022 in 424 previously seronegative, COVID-naïve adult KTR (Figure 1). The Charité institutional review board approved this retrospective analysis (EA1/030/22).

We used four independent, international validation cohorts from outpatient transplant centers at University Hospital Düsseldorf, Germany (191 vaccinations in 137 KTR) (10, 11), Medical University Vienna, Austria (184 vaccinations in 184 KTR) (12), Strasbourg University Hospital, France (254 vaccinations in 229 KTR) (13, 14), and Hotel Dieu Nantes, France (323 vaccinations in 269 KTR) (15). Detailed information about the validation cohorts is presented in Items S1-S4, and patient selection including outcome frequencies is summarized separately for each validation cohort in Figures S1-S4. No sample size calculation was applicable for this post-hoc analysis.

The single outcome variable was a positive serological response defined by the maximum anti-SARS-CoV-2 spike (S) IgG or antibody level, measured at least 14 days after vaccination and before any further immunization event such as SARS-CoV-2 infection, passive or active immunization. Since different assays were used at different sites, details on the tests and the respective cutoffs used are provided for each validation cohort in Items S1-S4 and are summarized in Table 2. Generally, IgG or antibody positivity was determined based on the local laboratory’s positivity cutoff, mostly the one provided by the manufacturer. For the ECLIA Elecsys assay in particular, different cutoffs were available and used. We chose to assess model performance for two cutoffs for this specific assay. First, we used the 0.8 U/mL cutoff provided by the manufacturer, which yields the highest sensitivity in detecting patients with previous COVID-19. Second, we used a cutoff of 15 U/mL, which was initially suggested by the manufacturer to exhibit a positive predictive value of more than 99% for the presence of neutralizing antibodies against the wild-type virus (12). Contrary to the manufacturer’s designated use, our intention was to provide an alternative positivity cutoff below which no neutralization against the omicron variant occurs, but that is not as close to the LoD (0.4 U/mL) as the positivity cutoff provided by the manufacturer (0.8 U/mL). This alternative positivity cutoff definition was needed to test the hypothesis that the absence or low number of “low-positive” antibody levels before vaccination (below the positivity cutoff, but above the LoD) for this assay led to low performance in validation sets 2 and 4. While the cutoff of 15 U/mL is somewhat arbitrary, it meets both needs. First, it increases the percentage of low positive patients in validation set 4, and second, patients with antibody levels <50 U/mL in this assay show no neutralization against omicron BA.1, which most likely applies to omicron BA.2 as well (16, 17). Hence, adjusting the cutoff to 15 U/mL is compatible with the objective of identifying patients without serological response to an additional vaccine dose, corresponding best with a lack of neutralizing antibodies.

Table 2 Assays, as well as respective limit of detection and positivity cutoff used for each dataset.

Predictor variables in the data sets comprised 20 variables: four vaccination-specific, three demographic, one comorbidity, three transplantation-specific, five encoding medication, and four biomarkers (Table S1). From the initial 27 candidate predictor variables, seven were excluded during revision for the following reasons: treatment with azathioprine, mTOR inhibitor or rituximab in the last year were removed as predictor variable, since each variable was present in less than 5 subjects in the development dataset. Donor-specific anti-HLA antibodies were removed since they highly depend on mismatch status and medication adherence in the past. Anti-HB-S antibodies were removed since they depend on hepatitis B vaccination status, which was not available for most patients in the development cohort. Treatment with mycophenolic acid (MPA) was removed as predictor variable, since MPA dose contained the same information and was already used. White blood cell count was removed, since it is presumably less suitable to predict vaccination response than lymphocyte count, which was already a predictor variable (Table S2).

For the development dataset, preliminary analysis showed that neither including patients without a lymphocyte count (the most common missing laboratory value) nor imputing missing laboratory values by multiple imputation improved predictive accuracy for logistic regression, although both approaches yielded a larger sample size. Neither approach was therefore pursued in the main analysis (Figure S5; Table S3). After applying all exclusion criteria shown in Table 1, no missing values were present in the development dataset and no imputation methods were necessary.

For each validation set, we excluded vaccinations with missing data on serological response, missing information about the SARS-CoV-2 spike IgG or antibody assay used, missing immunosuppressive medication data, or missing estimated glomerular filtration rate (eGFR), lymphocyte count, or hemoglobin. We imputed the remaining variables to reduce the number of omitted cases due to missing values. Instead of multiple imputation, we used a more pragmatic approach and imputed either the most frequent value of the respective variable in the development dataset in case of binary or categorical variables, or the median (or mean) of the respective variable in the development dataset in case of numerical variables, as summarized in Table 3. This is the way a clinician would handle a missing value when using the online risk calculator, since those values are used as presets in the online calculator. In the validation cohorts, no data originating from a time after the respective vaccination was included to make predictions.
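The pragmatic imputation described above amounts to computing presets once on the development dataset and reusing them for every incomplete validation record. A minimal Python sketch, assuming records are dictionaries with `None` for missing values; the variable names are hypothetical and the code is not taken from the authors' R scripts:

```python
from statistics import median, mode


def build_presets(dev_rows, numeric_vars, categorical_vars):
    """Derive imputation presets from the development dataset only."""
    presets = {}
    for var in numeric_vars:
        presets[var] = median(r[var] for r in dev_rows if r[var] is not None)
    for var in categorical_vars:
        presets[var] = mode(r[var] for r in dev_rows if r[var] is not None)
    return presets


def impute(row, presets):
    """Fill missing values of a validation record with the presets."""
    return {
        key: (presets[key] if value is None and key in presets else value)
        for key, value in row.items()
    }


# Hypothetical toy data for illustration.
development = [
    {"bmi": 24.0, "diabetes": 0},
    {"bmi": 26.0, "diabetes": 0},
    {"bmi": 30.0, "diabetes": 1},
]
presets = build_presets(development, ["bmi"], ["diabetes"])
completed = impute({"bmi": None, "diabetes": None, "egfr": 45}, presets)
```

Because the presets never depend on the validation data, each validation record is treated exactly as a single new patient would be.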

Alternatively, we performed multiple imputation by chained equations employing the R package mice after pooling all validation datasets. Performing multiple imputation separately for each dataset was not feasible, since for validation set 2, no data on BMI, time on dialysis and diabetes status were present. Pooling all validation sets and performing multiple imputation thereafter was one possibility to avoid this problem.

To compare median/mean imputation to other possibilities to deal with missing data, we additionally performed complete case analysis for validation sets 1, 3, and 4.

Using the development cohort, we evaluated five models during internal validation. To perform model validation within the development cohort, we used a resampling approach in which the 590 vaccinations were randomly assigned 100 times to training and test sets of 413 and 177 vaccinations, respectively (70:30 split). Each time, hyperparameter tuning, if applicable, and model fitting were performed on the respective training set, and performance metrics were assessed on the respective test set.
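The resampling scheme can be sketched as repeated random 70:30 splits of the vaccination indices. This Python generator is an illustration under the stated sizes; the function name and the seed are assumptions and do not reproduce the authors' R code.

```python
import random


def repeated_splits(n_samples=590, n_train=413, n_repeats=100, seed=0):
    """Yield (train_indices, test_indices) for repeated random splits."""
    rng = random.Random(seed)
    indices = list(range(n_samples))
    for _ in range(n_repeats):
        shuffled = indices[:]
        rng.shuffle(shuffled)
        # The first n_train indices train the model, the rest are held out.
        yield shuffled[:n_train], shuffled[n_train:]


splits = list(repeated_splits())
```

Tuning and fitting would then happen inside each training set only, so that every test set stays unseen.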

First, as baseline, we fit a logistic regression model with all candidate variables using the R function glm.

Second, we fit two logistic regression models with least absolute shrinkage and selection operator (LASSO) regularization using the packages caret and glmnet in R. The LASSO hyperparameter λ, which adjusts the tradeoff between model fit and model sparsity, was optimized for each training cohort with respect to the area under the receiver operating characteristic curve (AUC-ROC) using inner 5-fold cross-validation. We chose two different λ optimization criteria yielding two different models for each training cohort: (1) maximizing AUC-ROC (termed LASSO-Min model), and (2) penalty maximization while keeping the AUC-ROC within one standard error of the maximum AUC-ROC (termed LASSO-1SE model).
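The two λ selection criteria can be illustrated on a table of cross-validation results. The sketch below assumes such a table is already available as `(lambda, mean AUC, standard error)` tuples; the numbers are made up, and the function is a simplified stand-in for what glmnet and caret do internally.

```python
def select_lambdas(cv_results):
    """Return (lambda_min, lambda_1se) from cross-validated AUC-ROC values.

    cv_results: list of (lambda, mean_auc, se_auc) tuples.
    """
    best = max(cv_results, key=lambda r: r[1])
    lambda_min = best[0]
    # Largest penalty whose mean AUC stays within one SE of the maximum.
    floor = best[1] - best[2]
    lambda_1se = max(lam for lam, auc, _ in cv_results if auc >= floor)
    return lambda_min, lambda_1se


# Hypothetical cross-validation results for illustration.
cv_table = [
    (0.001, 0.820, 0.010),
    (0.010, 0.830, 0.012),
    (0.050, 0.822, 0.011),
    (0.100, 0.790, 0.015),
]
lambda_min, lambda_1se = select_lambdas(cv_table)
```

A larger λ shrinks more coefficients to zero, which is why the one-standard-error rule tends to produce the sparser model.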

Third, we fit a random forest regression model using the package randomForest in R. We optimized the hyperparameter mtry by evaluating 15 random parameter combinations during two repeated 5-fold cross-validations within the training set. The value of mtry yielding the highest accuracy during cross-validation was used to fit the random forest on the respective training data.

Fourth, we fit a gradient boosted regression trees (GBRT) model using the gbm package. We used a tune grid with 4*8*3*1 hyperparameter combinations (n.trees: 300, 500, 700, 900; interaction.depth: 2, 4, 6, 8, 10, 12, 14, 16; shrinkage: 0.001, 0.01, 0.1; n.minobsinnode: 10) to optimize hyperparameters during two repeated 5-fold cross-validations within the training set. The combination yielding the highest normalized discounted cumulative gain during cross-validation was used to fit the GBRT on the respective training data.
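The size of the tuning grid follows directly from the Cartesian product of the listed values. A short Python sketch (the parameter names are those of the gbm package as given above; the enumeration itself is only illustrative):

```python
from itertools import product

tune_grid = {
    "n.trees": [300, 500, 700, 900],
    "interaction.depth": [2, 4, 6, 8, 10, 12, 14, 16],
    "shrinkage": [0.001, 0.01, 0.1],
    "n.minobsinnode": [10],
}

# One dictionary per hyperparameter combination.
combinations = [
    dict(zip(tune_grid, values)) for values in product(*tune_grid.values())
]
```

This gives 4 × 8 × 3 × 1 = 96 candidate combinations per training set.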

We calculated median and mean performance during resampling for those five developed models. To evaluate the performance of the binary classification, we used the area under the receiver operating characteristic curve (AUC-ROC), and confidence intervals (CI) in the resampling approach were determined from the empirical 2.5% and 97.5% quantiles of the performance on the 100 different test sets. Based on the threshold determined by the optimization criterion “closest.topleft” as provided in the R package pROC (point with the least distance to [0,1] on the ROC curve) during ROC analysis, we calculated the models’ sensitivity, specificity, accuracy, positive predictive value, and negative predictive value for each resampling step, again yielding median and empirical 95% CI.
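To make the two ROC-based quantities concrete, the following Python sketch computes the AUC-ROC and a "closest to top-left" threshold for a toy example. It is a simplified illustration: candidate thresholds are the observed scores (pROC uses its own threshold grid), and the data are made up.

```python
def auc(scores, labels):
    """AUC-ROC as the probability that a responder outranks a non-responder."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def closest_topleft(scores, labels):
    """Threshold whose ROC point is nearest to sensitivity = specificity = 1."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    best_threshold, best_distance = None, float("inf")
    for t in sorted(set(scores)):
        # Predict "responder" when the score is at or above the threshold.
        tp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 1)
        tn = sum(1 for s, y in zip(scores, labels) if s < t and y == 0)
        distance = (1 - tp / n_pos) ** 2 + (1 - tn / n_neg) ** 2
        if distance < best_distance:
            best_threshold, best_distance = t, distance
    return best_threshold


# Hypothetical predicted scores and observed responses (1 = responder).
toy_scores = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
toy_labels = [0, 0, 1, 0, 1, 0, 1, 1]
toy_auc = auc(toy_scores, toy_labels)
toy_threshold = closest_topleft(toy_scores, toy_labels)
```

In this toy example 13 of the 16 responder/non-responder pairs are ranked correctly, and the threshold 0.6 gives sensitivity and specificity of 0.75 each.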

We chose LASSO-Min and LASSO-1SE for estimation of model coefficients in the entire development cohort, which were then used for external validation. The relationship between the hyperparameter λ that controls model sparsity and the AUC-ROC during inner 5-fold cross-validation is shown in Figure S6. We assessed the decision thresholds for classification by determining the “closest.topleft” threshold on the entire development cohort, each for the final 10-variable and the 20-variable model. These were used for classification during external validation, and are also provided in the online risk calculator after transforming them into risk probabilities according to the formula:

$$p_t = \frac{1}{1 + e^{-x_t}}$$

where $x_t$ is the decision threshold.
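Assuming the transformation is the standard inverse logit used in logistic regression, both a patient's linear predictor and the decision threshold can be mapped to probabilities in the same way. The coefficients below are hypothetical placeholders, not the fitted values from Table 5.

```python
import math


def inverse_logit(x: float) -> float:
    """Map a value on the log-odds scale to a probability."""
    return 1.0 / (1.0 + math.exp(-x))


def predicted_probability(intercept, coefficients, values):
    """Logistic regression prediction from intercept and coefficients."""
    linear_predictor = intercept + sum(
        coefficients[name] * values[name] for name in coefficients
    )
    return inverse_logit(linear_predictor)


# Hypothetical numbers for illustration only.
example_coefficients = {"low_positive_antibody": 1.2, "mpa_dose_g": -0.8}
example_patient = {"low_positive_antibody": 1, "mpa_dose_g": 1.0}
probability = predicted_probability(-0.4, example_coefficients, example_patient)
threshold_probability = inverse_logit(0.0)
```

Comparing `probability` with `threshold_probability` is equivalent to comparing the linear predictor with the threshold on the log-odds scale, because the inverse logit is strictly increasing.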

For external validation, we calculated the aforementioned performance metrics on each validation cohort separately. Furthermore, 95% CIs in the external validation cohorts were determined by performing 1000-fold ordinary nonparametric percentile bootstrap, as the empirical 2.5%, and 97.5% quantiles of AUC, sensitivity, specificity, accuracy, positive predictive value, and negative predictive value based on the thresholds determined within the development cohort.
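A percentile bootstrap for the AUC-ROC can be sketched as follows. This is an illustration in Python rather than the authors' R implementation; the quantiles are taken as simple order statistics, and resamples containing only one outcome class are redrawn, which is an assumption of this sketch.

```python
import random


def auc(scores, labels):
    """AUC-ROC as the probability that a responder outranks a non-responder."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def percentile_bootstrap_ci(scores, labels, metric=auc, n_boot=1000, seed=0):
    """Empirical 2.5% and 97.5% quantiles of a metric over bootstrap samples."""
    rng = random.Random(seed)
    n = len(scores)
    stats = []
    while len(stats) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]  # sample with replacement
        ys = [labels[i] for i in idx]
        if len(set(ys)) < 2:
            continue  # AUC is undefined without both outcome classes
        stats.append(metric([scores[i] for i in idx], ys))
    stats.sort()
    return stats[int(0.025 * (n_boot - 1))], stats[int(0.975 * (n_boot - 1))]


# Hypothetical predicted scores and observed responses (1 = responder).
toy_scores = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
toy_labels = [0, 0, 1, 0, 1, 0, 1, 1]
ci_lower, ci_upper = percentile_bootstrap_ci(toy_scores, toy_labels)
```

Any other metric with the same `(scores, labels)` signature, such as sensitivity at a fixed threshold, can be passed as `metric`.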

Additionally, we fitted LR, RF and GBRT on the development dataset and performed external validation after pooling all validation datasets. Decision thresholds for LR and GBRT were determined within the development cohort as described above, and decision threshold for RF was 0.5.

To make the prediction models publicly available, we created an online tool implementing the LASSO logistic regression models used for external validation, which can be accessed at https://www.tx-vaccine.com. For patients who meet one or more of the exclusion criteria, the risk calculator should not be used.

Statistical analysis was performed using RStudio v.1.2.5042 and R version 4.1.2 (2021-11-01). The underlying code was made available at https://github.com/BilginOsmanodja/tx-vaccine. The datasets can be made available on request from the corresponding author.

This article was prepared according to the transparent reporting of a multivariable prediction model for individual prognosis or diagnosis (TRIPOD) statement and we provided a checklist in the supplement (18).

In total, 590 vaccinations (411 third vaccinations, and 179 fourth vaccinations) were used for development and internal validation, which is summarized together with outcome frequencies and reasons for exclusion in Figure 1.

Baseline characteristics of patients in the development and validation datasets including summary statistics of all variables are shown in Table 3.

Using the resampling approach outlined above, we fit five different models on each training set and evaluated their performance on the respective unseen test set during 100 resampling steps.

A logistic regression model employing all candidate variables served as a baseline. Using the two different λ optimization criteria outlined in “Methods”, the LASSO-Min and LASSO-1SE models were fitted. Additionally, two tree-based machine-learning approaches were studied - random forest (RF) and gradient boosted regression trees (GBRT).

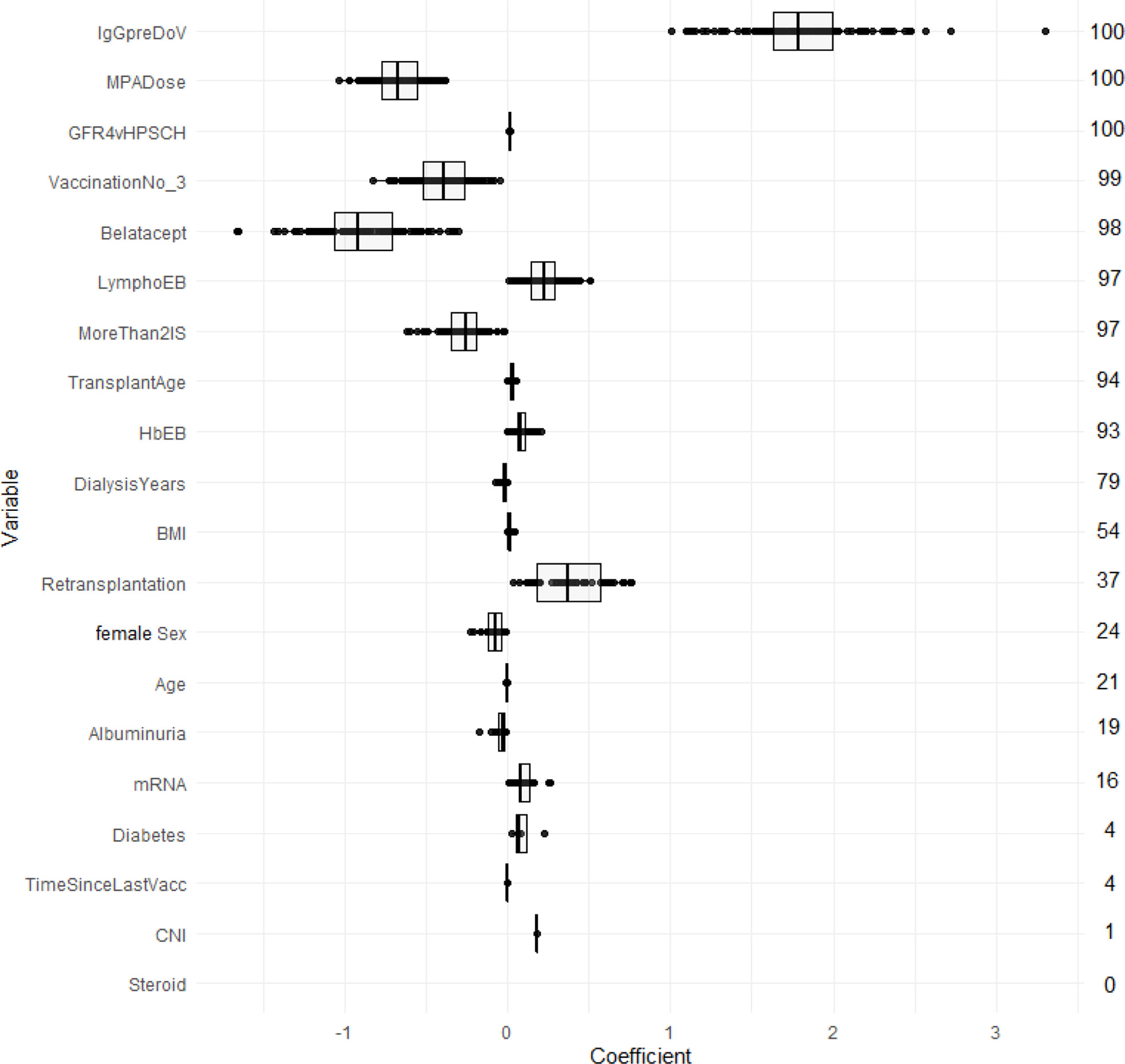

In the majority of resampling runs, LASSO logistic regression selected 20 and 10 out of 20 potential predictors to yield the LASSO-Min and LASSO-1SE models, respectively. The regression coefficients, their variances, and the selection frequency of the predictors are shown in Figure 2 and Figure S7.

Figure 2 Estimated coefficients of the LASSO-1SE models summarized across 100 subsampling runs for unstandardized variables. Numbers on the right indicate the selection frequency (in percent) for the respective variable in 100 subsampling runs. Variables are ordered from top to bottom according to the selection frequency.

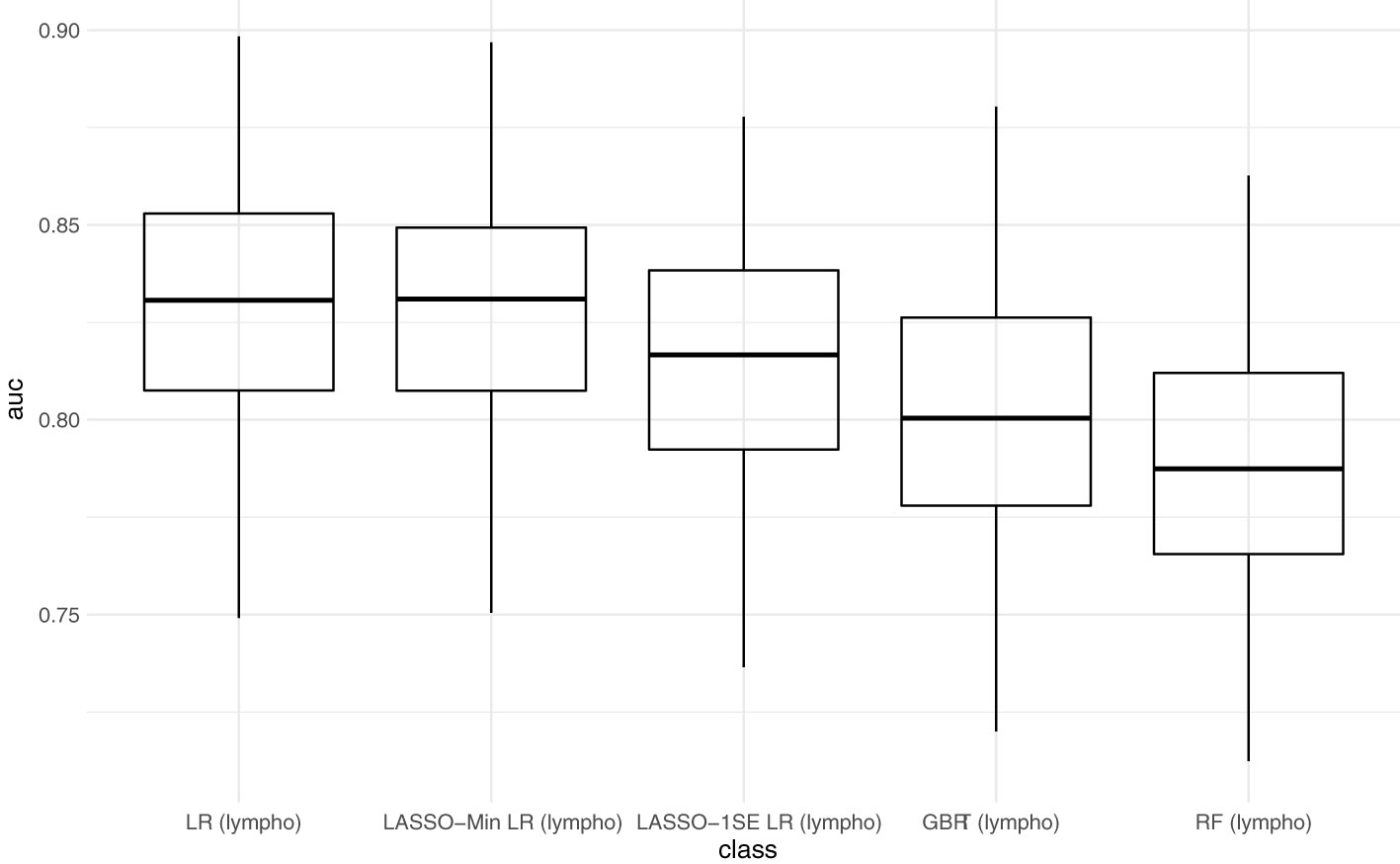

Figure 3 compares AUC-ROC of the 5 models on the unseen test sets during 100 resampling steps, and Table 4 summarizes mean, and median performance metrics as well as 95% confidence intervals determined from empirical 2.5% and 97.5% quantiles during internal validation. Thresholds for binary classification were determined on the respective test set during each resampling step by performing ROC-analysis.

Figure 3 Predictive performance of the developed models (AUC) in internal validation. Each point represents the AUC-ROC during 1 out of 100 resampling steps. Horizontal lines within the box depict the median and the upper and lower horizontal lines depict upper and lower quartiles, respectively. LR - logistic regression, LASSO-Min LR - least absolute shrinkage and selection operator regularized logistic regression with lambda hyperparameter optimized to yield maximum AUC-ROC within an inner 5-fold cross validation in the training set. LASSO-1SE - least absolute shrinkage and selection operator regularized logistic regression with lambda hyperparameter increased from lambda-min, so that AUC-ROC stays within one standard error within an inner 5-fold cross validation in the training set. GBRT - gradient boosted regression trees. RF - random forest. lympho – including lymphocyte count as predictor variable.

With respect to AUC-ROC, the LASSO-Min model, with 0.831 (0.784 - 0.879), and the baseline logistic regression model, with 0.831 (0.786 - 0.879), showed the best performance during internal validation. Since the sparser LASSO-1SE model showed comparable predictive performance of 0.817 (0.742 - 0.873) with fewer variables, we chose to analyze both the LASSO-Min and the LASSO-1SE regularized logistic regression models in depth during external validation.

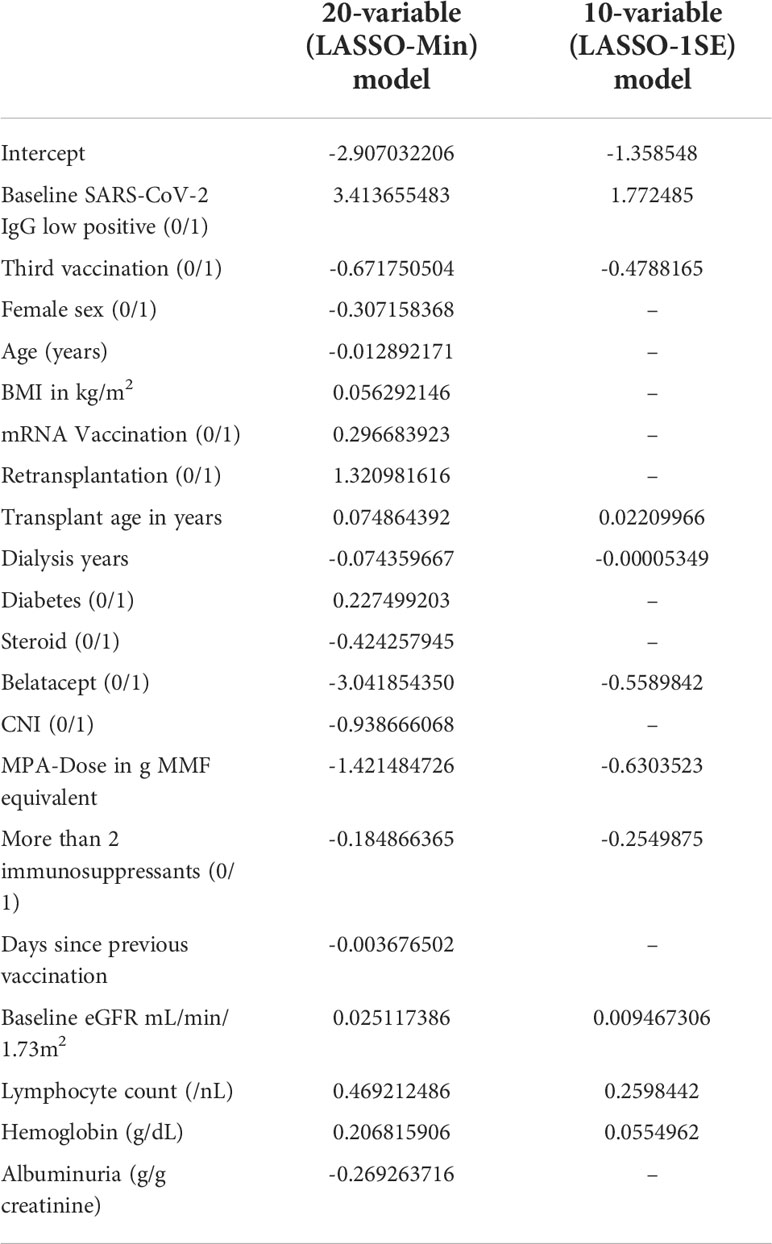

Final risk equations were obtained by fitting the LASSO-Min and LASSO-1SE models on the complete development dataset, yielding a 20-variable and a 10-variable risk equation, respectively. The intercept and regression coefficients of the final LASSO logistic regression models are shown in Table 5. Risk equations are provided in Items S5 and S6, and are implemented as an online tool available at https://www.tx-vaccine.com.

Table 5 Final intercept and coefficients of the 20-variable (LASSO-Min), and 10-variable (LASSO-1SE) logistic regression model fitted on the entire development dataset, both of which are used for external validation.

After applying all exclusion criteria and performing imputation of missing variables, we evaluated both risk equations in the four independent validation datasets. Since predictive performance during external validation was comparable for both models, in the following we report on the sparser 10-variable model. Results of external validation of the 20-variable model are summarized in Table S4 and Figure S7.

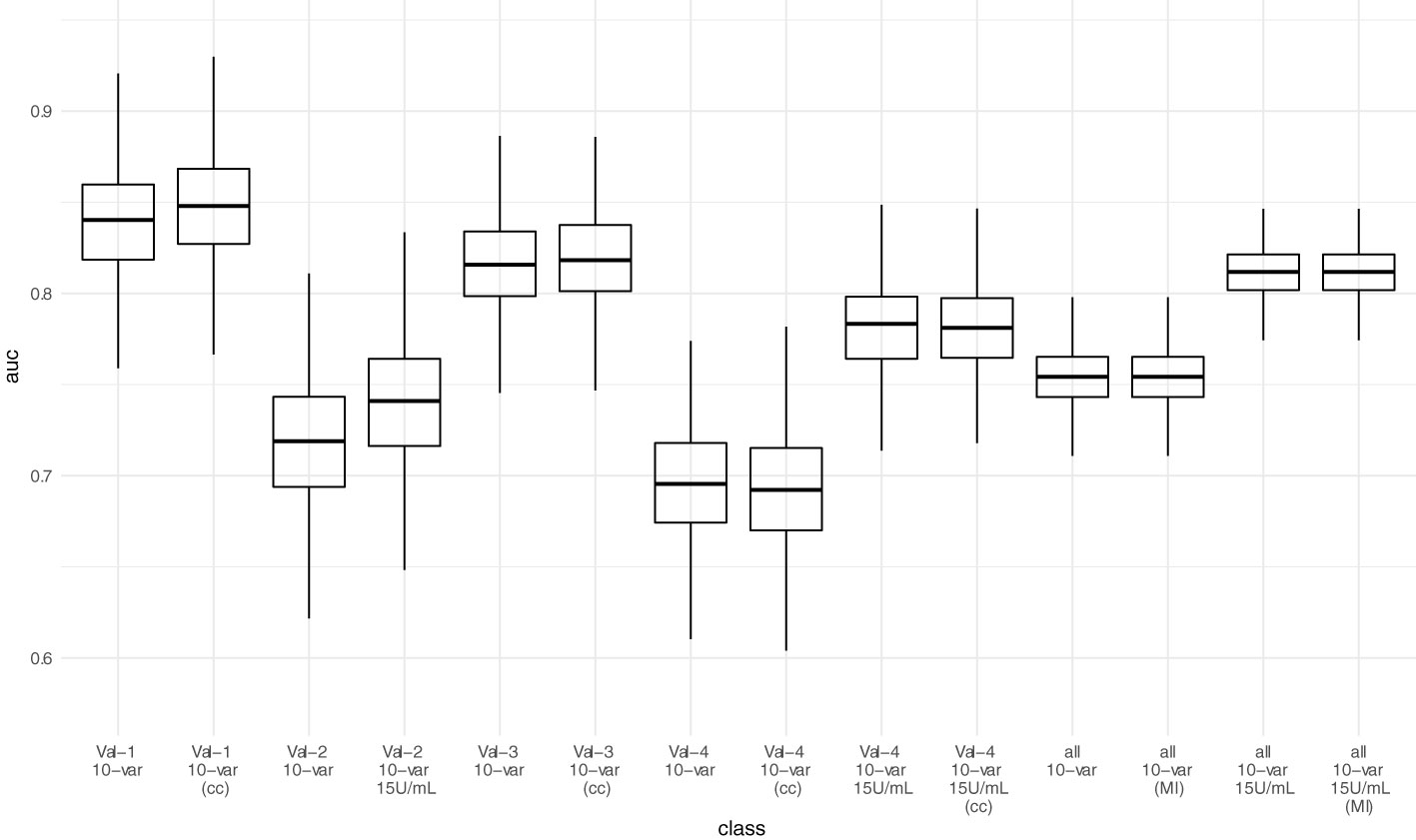

AUC-ROC of the sparser 10-variable model during external validation was 0.840 (0.777 - 0.897) for validation set 1, 0.719 (0.641 - 0.790) for validation set 2, 0.816 (0.763 - 0.862) for validation set 3, and 0.696 (0.629 - 0.758) for validation set 4, yielding an AUC-ROC of 0.754 (0.722 - 0.784) when merging all validation sets (Figure 4). Sensitivity, specificity, accuracy, positive predictive value, and negative predictive value using the thresholds determined during ROC analysis in the development dataset are summarized in Table 6. The decision thresholds used for external validation are provided in the online risk calculator to guide physicians’ decisions, as well as in Items S5 and S6.
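The threshold-dependent metrics follow from the confusion matrix obtained by applying a fixed, previously determined threshold to the validation predictions. A minimal Python sketch with made-up data (the function name is hypothetical):

```python
def classification_metrics(scores, labels, threshold):
    """Confusion-matrix metrics at a fixed decision threshold."""
    tp = fp = tn = fn = 0
    for score, label in zip(scores, labels):
        predicted_responder = score >= threshold
        if predicted_responder and label == 1:
            tp += 1
        elif predicted_responder:
            fp += 1
        elif label == 0:
            tn += 1
        else:
            fn += 1

    def ratio(numerator, denominator):
        # None marks a metric that is undefined for this sample.
        return numerator / denominator if denominator else None

    return {
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "accuracy": ratio(tp + tn, tp + fp + tn + fn),
        "ppv": ratio(tp, tp + fp),
        "npv": ratio(tn, tn + fn),
    }


# Hypothetical predicted scores and observed responses (1 = responder).
toy_scores = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
toy_labels = [0, 0, 1, 0, 1, 0, 1, 1]
toy_metrics = classification_metrics(toy_scores, toy_labels, threshold=0.6)
```

Unlike the AUC-ROC, the predictive values depend on the response rate in the cohort, so they can differ between validation sets even when discrimination is similar.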

Figure 4 Predictive performance (AUC-ROC) of the 10-variable model in external validation. Each point represents the AUC-ROC in 1 out of 1000 bootstrap samples. Horizontal lines within the box depict the median and the upper and lower horizontal lines depict upper and lower quartiles, respectively. To assess the impact of the mean/median imputation method chosen, we also provide model performance when performing complete case analysis (cc) for validation sets 1, 3, and 4. For validation set 2, due to missing variable “Dialysis years” for all patients, no complete case analysis could be performed. Additionally, we performed multiple imputation (MI) in the pooled validation datasets (all) and assessed model performance here as well. Val – Validation cohort, 10-var – 10-variable model, all – all validation sets pooled, cc – complete case analysis, MI – multiple imputation.

Performance in validation sets 2 and 4 was poorer than in the development dataset and in the other two validation sets. We suspected the positivity cutoff of 0.8 U/mL provided by the manufacturer for the ECLIA Elecsys assay as one main reason. Since it is close to the LoD (0.4 U/mL), no or only a small fraction of “low positive” antibody levels (values above the LoD and below the positivity cutoff) before vaccination is present in both validation sets (Table 3), which differs from both other validation sets and the development dataset. Since a low positive antibody level before vaccination is an important predictor of serological response (Table 5), we adjusted the positivity cutoff to 15 U/mL for two reasons. First, to test the hypothesis that the cutoff definition is a reason for lower performance. Second, to provide data showing that an implementation of this model is feasible independent of the assay used. Our proposed implementation strategy for the prediction model is to identify patients who will not respond to an additional vaccine dose, and to offer those patients passive immunization. Hence, using any other cutoff below which no neutralization against omicron occurs is compatible with this strategy under the circumstances of omicron dominance. The alternative positivity cutoff of 15 U/mL for this assay is arbitrary, but it has previously been proposed by the manufacturer.

When adjusting the cutoff to 15 U/mL for the ECLIA Elecsys assay, AUC-ROC increased to 0.741 (0.663 - 0.808) for validation set 2, and 0.783 (0.730 - 0.828) for validation set 4, yielding an overall AUC-ROC of 0.812 (0.784 - 0.836) after merging all validation sets. With the decision threshold assessed in the development dataset, the negative predictive value is 0.75 (0.713 - 0.784).

Neither complete case analysis nor multiple imputation in the pooled validation cohort led to relevant differences in predictive performance for the 20-variable and 10-variable LASSO LR models (Table 6; Table S4).

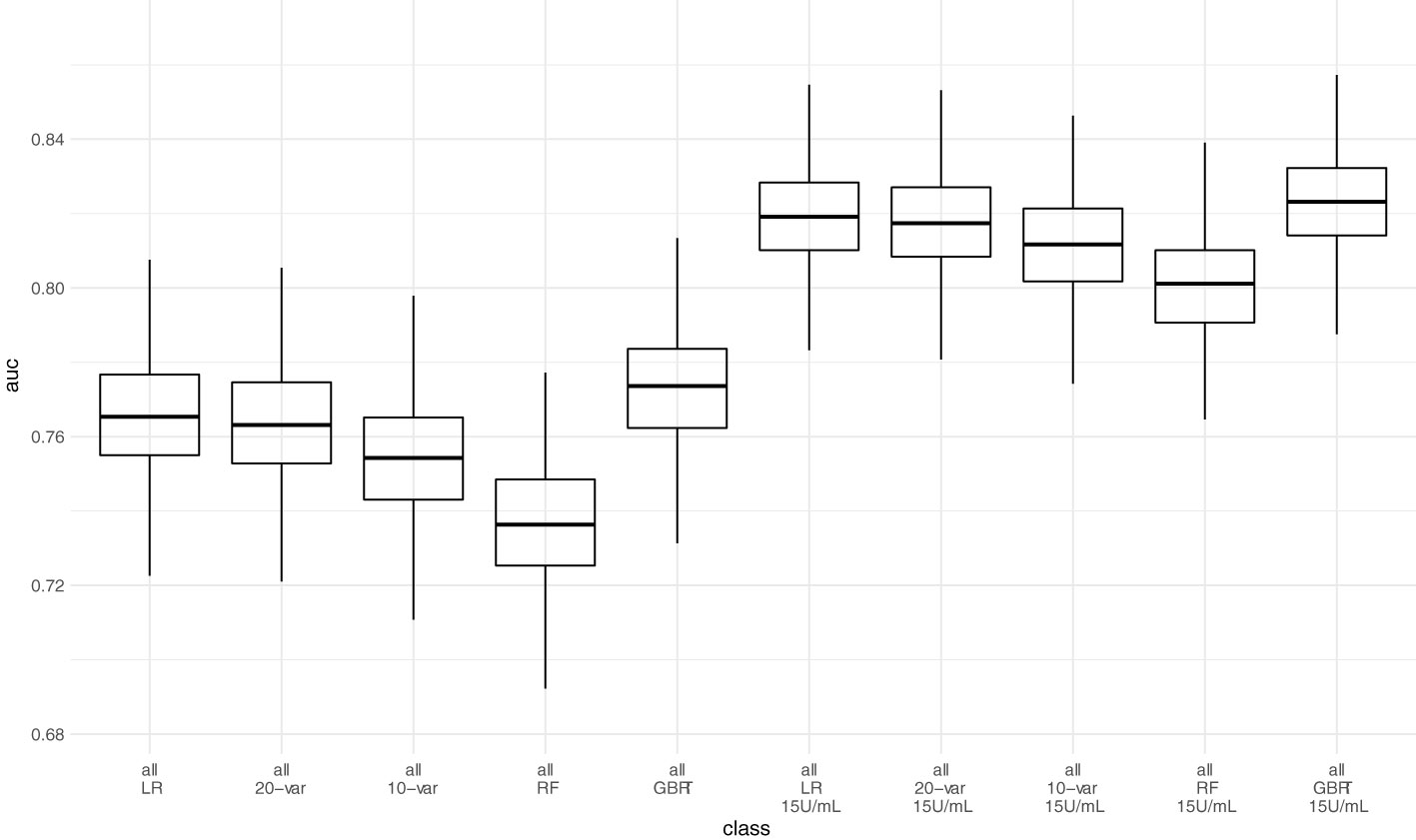

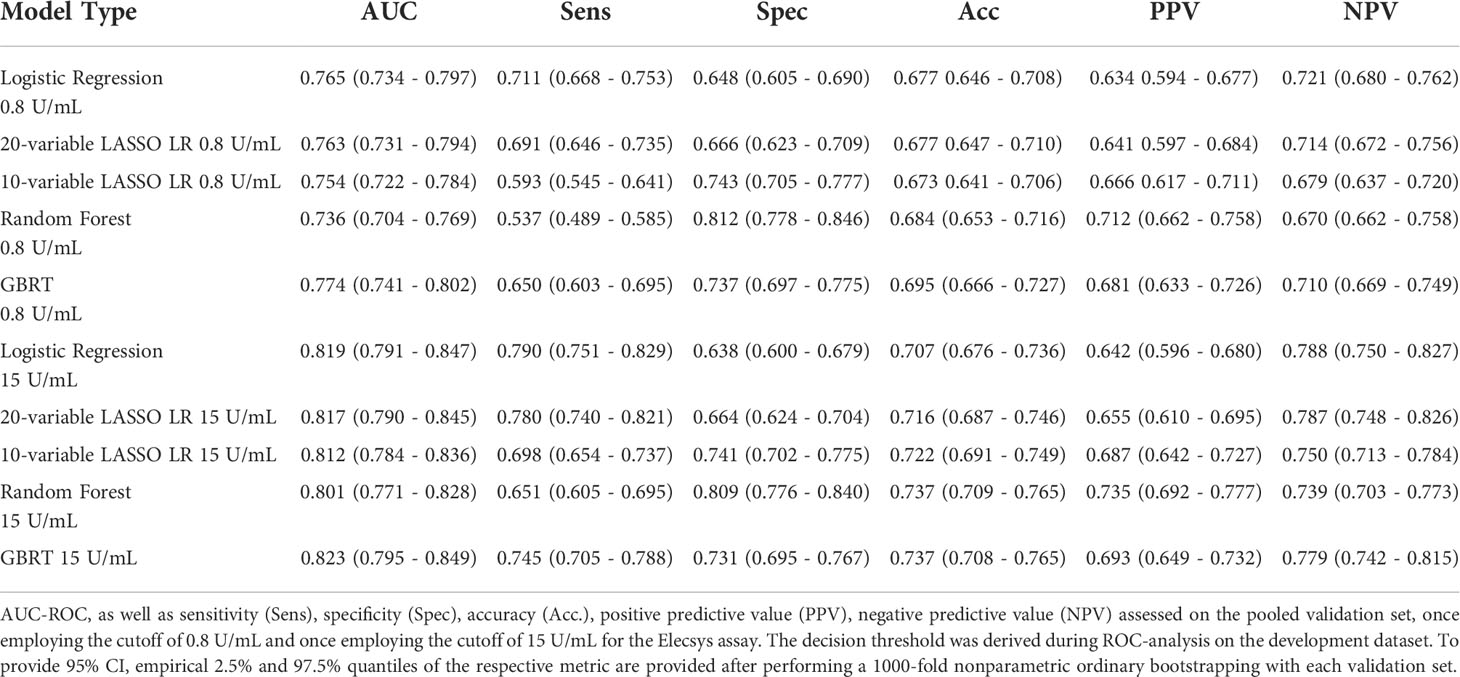

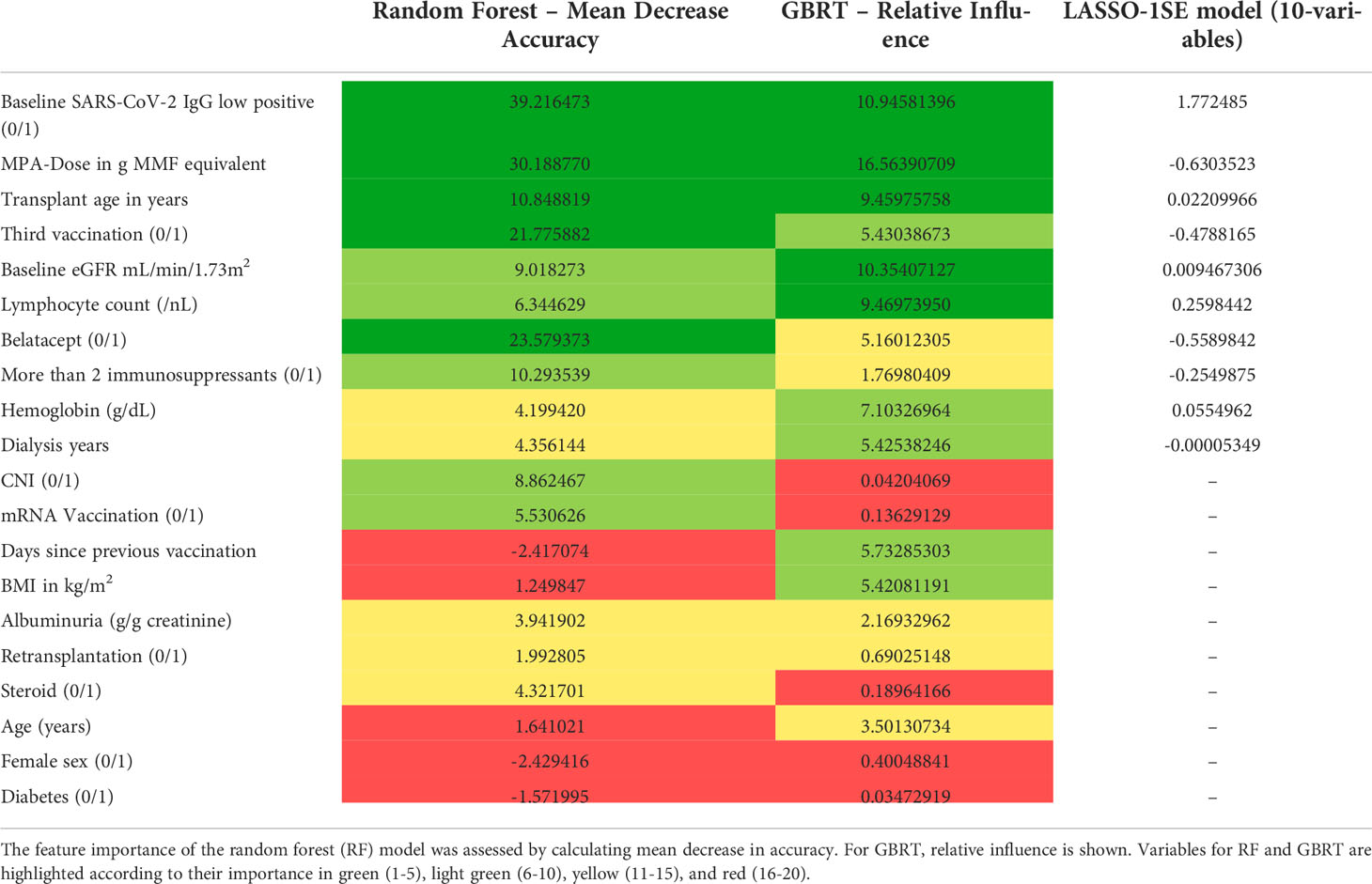

Next, we assessed model performance of all 5 models in the pooled validation set, using both the cutoff of 0.8 U/mL and 15 U/mL for the Elecsys assay, respectively (Figure 5). For the 15 U/mL cutoff, GBRT showed AUC-ROC of 0.823 (0.795 - 0.849), which was slightly better than LR 0.819 (0.791 - 0.847) and the LASSO-Min model 0.817 (0.790 - 0.845). The LASSO-1SE model with 0.812 (0.784 - 0.836) was still better than the RF model - 0.801 (0.771 - 0.828). All results are summarized in Table 7. Analysis of feature importance for the tree-based models revealed that for the RF model, low positive antibody titer, MPA dose, belatacept treatment, vaccination number, and transplant age were the five most important variables, while diabetes, time since vaccination and sex were the least important variables. For the GBRT model, MPA dose, low positive antibody titer, eGFR, lymphocyte count and transplant age were the most important variables, while diabetes, CNI treatment, and mRNA-based vaccine were the least important variables. When comparing the 10 most important variables from RF or GBRT to the LASSO-1SE model, RF as well as GBRT had 8 out of 10 variables in common with the 10-variable LASSO-1SE model (Table 8).

Figure 5 Predictive performance of the 10-variable, 20-variable LASSO logistic regression, logistic regression (LR), random forest (RF) and gradient boosted regression tree (GBRT) models on the pooled validation set comprised of all 4 validation sets with cutoff 0.8 U/mL and 15 U/mL for the Elecsys assay. 10-var – 10-variable LASSO LR model, 20-var – 20-variable LASSO LR model, all – all validation sets pooled.

Table 7 Performance of all five different models during external validation on the pooled validation datasets.

Table 8 Comparison of feature importance of random forest (RF), gradient boosted regression trees (GBRT), and variable selection in the LASSO-1SE model.

To assess how variable selection based on feature importance influences model performance, we selected the 10 most important variables for both RF and GBRT, retrained the models and performed external validation in the pooled validation dataset. Both the 10-variable RF and the 10-variable GBRT yielded the same AUC-ROC during external validation as the respective 20-variable models: 0.823 (0.795 - 0.849) for GBRT, and 0.801 (0.771 - 0.828) for RF.

In this article, we present the development, internal and external validation of a 10-variable LASSO regularized logistic regression model for prediction of serological response to the third and fourth dose of SARS-CoV-2 vaccine in previously seronegative, COVID-19-naïve KTR.

It shows good discrimination of KTR exhibiting serological response both in a rigorous resampling approach in the development cohort and in four independent validation cohorts with an overall AUC-ROC of 0.812, and a negative predictive value of 0.75 based on a decision threshold established within the development dataset. Available online as a risk calculator at https://www.tx-vaccine.com and embedded into the proposed implementation strategy, it can assist physicians in choosing between different immunization strategies, namely, additional vaccination with or without adaption of immunosuppressive therapy, or pre-exposure prophylaxis with monoclonal anti-SARS-CoV-2-(S) antibodies.

While this is the first risk calculator available online to predict seroconversion in response to third and fourth vaccination, there are already models predicting seroconversion after two vaccine doses.

Frölke et al. describe a sparse 6-variable model, in which increased age, lower lymphocyte count, lower estimated glomerular filtration rate (eGFR), shorter time after transplantation, not using steroids and the use of mycophenolate mofetil/mycophenolic acid (MMF/MPA) are predictors of non-seroconversion (with a cutoff of 10 BAU/mL). This is completely in line with our own findings. The performance in the development cohort (n=215) and the rather small validation cohort (n=73) is promising (AUC-ROC 0.83 and 0.84, respectively). In a larger, second validation cohort, for which an adapted model without lymphocyte count was used, the performance drops to AUC-ROC 0.75, which emphasizes that lymphocyte count is an important predictor (19). Still, this model is easy to use, and apparently shows more stable performance than the other available model, which is provided by Alejo et al. They use a gradient boosting algorithm, and identified mycophenolate mofetil (MMF) use, shorter time since transplant, and older age as the strongest predictors of non-seroconversion, which is in line with our findings as well. Since the model shows good predictive performance on the development dataset (AUC-ROC 0.79), but poor performance in an external validation cohort (AUC-ROC 0.67), it can be suspected that the model is overfitted (20). This is further supported when using the online tool the authors provide at http://www.transplantmodels.com/covidvaccine/, where small changes, e.g. in patient age, lead to large changes in vaccine response probability. Another reason for worse performance could be that not only kidney transplant recipients are included. Therefore, not only is the cohort more heterogeneous, but important predictors such as eGFR are missing.

Our own data show that GBRT can achieve comparable performance in internal and external validation, when overfitting is limited by hyperparameter tuning within the development cohort. Nevertheless, since the GBRT model did not substantially outperform LASSO logistic regression models, but is more complex and less transparent, we chose not to implement the GBRT model in the online calculator. These findings are in line with the statistical literature showing no benefit of machine learning methods over logistic regression for clinical prediction models (21).

From a biomedical point of view, serological response is only one half of the immune response to vaccination and is complemented by the T-cell response. However, neutralizing anti-SARS-CoV-2-(S) antibodies are pathophysiologically and epidemiologically established to offer protection from severe disease (9, 22), which is also supported by the protection offered by monoclonal antibodies against SARS-CoV-2 applied for prophylaxis and treatment (23, 24).

Yet, after the emergence of the omicron variants, neutralizing antibody levels against omicron show a 25.7-fold to 58.1-fold reduction in sera of healthy vaccinated subjects in comparison to wild-type (25). Consequently, the antibody levels that ensure neutralization increased from >264 U/mL for the alpha variant (8, 9) to >2000 U/mL for omicron (16), making the interpretation of antibody levels more difficult than before.

Despite these uncertainties, it seems intolerable to leave patients without any humoral protection whatsoever. Hence, in patients without serological response to basic immunization, physicians and patients need to decide between additional active vaccination with or without adapting immunosuppressive medication, and pre-exposure prophylaxis with monoclonal antibodies (26).

Since the negative predictive value was above 0.75 when merging all validation sets, we suggest the following implementation strategy: patients who are unlikely to respond to additional SARS-CoV-2 vaccination according to the prediction model should receive pre-exposure prophylaxis with monoclonal antibodies exhibiting neutralizing capacity against omicron BA.4/5 to ensure timely protection (27–30). Patients who are likely to respond according to the prediction model may still fail to reach antibody levels that ensure neutralizing capacity against omicron variants. For these patients, both repeated vaccination and monoclonal antibody prophylaxis are feasible, and the choice should in our view depend on the risk of a severe disease course.
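The implementation strategy described above can be sketched as a simple decision rule. This is an illustrative sketch only, not clinical guidance. The probability threshold of 0.5, the function names, and the mapping of a high risk of severe disease to monoclonal antibody prophylaxis are assumptions introduced here, not values or rules reported in the article.

```python
def negative_predictive_value(true_negatives, false_negatives):
    """NPV = TN / (TN + FN): share of predicted non-responders who truly did not respond."""
    total = true_negatives + false_negatives
    if total == 0:
        raise ValueError("no negative predictions to evaluate")
    return true_negatives / total


def suggest_strategy(response_probability, high_risk_of_severe_course, threshold=0.5):
    """Map a predicted response probability to a suggested immunization strategy.

    threshold=0.5 is a hypothetical default, not taken from the article.
    """
    if not 0.0 <= response_probability <= 1.0:
        raise ValueError("response_probability must lie in [0, 1]")
    if response_probability < threshold:
        # Predicted non-responder: passive immunization for timely protection.
        return "pre-exposure prophylaxis with monoclonal antibodies"
    if high_risk_of_severe_course:
        # Predicted responder, but antibody levels may still be too low for omicron.
        # Choosing prophylaxis here is an assumption made for this sketch.
        return "consider monoclonal antibody prophylaxis"
    return "consider repeated vaccination"


# Hypothetical counts, chosen only to illustrate the NPV formula.
example_npv = negative_predictive_value(true_negatives=75, false_negatives=25)
```

In practice the threshold would be chosen on the development data so that the resulting negative predictive value is acceptable for the intended use.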

We provide a rigorously developed and validated prediction model as an online risk calculator to support kidney transplant physicians when deciding upon the immunization strategy for their patients. In particular, the estimated effects of adaptations in immunosuppressive medication can be evaluated, e.g. reducing or pausing the MPA dose, or switching from belatacept to a calcineurin inhibitor.

While belatacept treatment and MPA dose have been reported as negative predictors of serological vaccine response throughout the literature (10), it is still unclear whether modulation of immunosuppression, and especially pausing MPA, can increase serological response to SARS-CoV-2 vaccination, since most data originate from observational studies or small non-randomized controlled trials (5, 31–33).

Since the data from the development cohort indicate that pausing MPA increases serological response to fourth vaccination, this hypothetical benefit is introduced into the model. Hence, if used to guide modulation of immunosuppression and specifically pausing MPA, the calculator must be used with caution, since the hypothetical effect on serological response comes at a potential risk of anti-HLA antibody formation and rejection.
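How a logistic regression calculator lets a physician explore such a change can be sketched as follows. All coefficients and variable names below are hypothetical placeholders chosen for illustration; they are not the coefficients of the published 10-variable model.

```python
import math

# Hypothetical coefficients for illustration only; NOT the published model.
HYPOTHETICAL_INTERCEPT = -1.0
HYPOTHETICAL_COEFS = {
    "mpa_paused": 0.8,    # assumed positive effect of pausing MPA
    "belatacept": -1.5,   # assumed negative effect of belatacept treatment
    "egfr_per_10": 0.1,   # assumed effect per 10 mL/min/1.73 m2 of eGFR
}


def predict_probability(features):
    """Logistic regression prediction: sigmoid of the linear predictor."""
    linear = HYPOTHETICAL_INTERCEPT + sum(
        coef * features.get(name, 0.0) for name, coef in HYPOTHETICAL_COEFS.items()
    )
    return 1.0 / (1.0 + math.exp(-linear))


# Compare the same hypothetical patient with and without pausing MPA.
baseline = {"mpa_paused": 0, "belatacept": 0, "egfr_per_10": 4.5}
what_if = dict(baseline, mpa_paused=1)
p_baseline = predict_probability(baseline)
p_what_if = predict_probability(what_if)
```

The difference between `p_what_if` and `p_baseline` reflects only the association learned from the development data. It is not evidence of a causal benefit, which is why the caution expressed above applies.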

Additionally, predictions can be expected to be less accurate in cohorts where no modulation of immunosuppression is performed around the fourth vaccination.

Given the general sparsity of data on vaccine response to the third and fourth dose in KTR, we analyze extensive datasets for development and validation, and thereby provide a representative evaluation of real-life model performance. While performance of the 20-variable model was slightly better, it is also less practical because it requires more variables, which is why we chose to report mainly on the sparser 10-variable model. The same applies to the 20-variable GBRT model, which was not implemented in the online calculator. Compared to other models reported for this purpose, our model is the first to predict serological response to third and fourth vaccination, and shows the most promising performance during external validation.

Several limitations have to be considered. First, this model only predicts serological response and does not include information about T-cell response. However, we have shown before that more than 85% of KTR have a SARS-CoV-2-specific CD4+ T-cell response after three vaccinations, which was not increased by a fourth vaccination, while serological response rates increase with additional vaccinations (4, 5). This is the rationale for focusing on the serological response rate, which can and should be increased to improve protection from SARS-CoV-2 infection in KTR.

Since evidence of antibody level cutoffs that ensure neutralization of or protection from omicron is sparse, we chose not to make any predictions for this endpoint. Instead, we provide an implementation strategy that makes best use of the model’s prediction without making far-reaching assumptions about protective antibody levels against omicron.

Still, one major limitation becomes evident for validation sets 2 and 4, where predictive performance was only moderate when using the positivity cutoff of 0.8 U/mL for the ECLIA Elecsys assay. As outlined above, increasing the positivity cutoff to 15 U/mL is compatible with the proposed implementation, and leads to improved performance for two reasons.

First, since the cutoffs in the development dataset were based on protective levels against the alpha variant for the ECLIA Elecsys assay (264 U/mL), predictive performance is expected to be poorer when predicting positivity with a 0.8 U/mL cutoff. Second, with a cutoff of 0.8 U/mL for the ECLIA Elecsys assay, only a few patients have low positive antibody levels before vaccination (above LoD, but below the positivity cutoff). Since this is an important predictor in both models and provides important information about the actual immunological status, loss of performance can be expected when this information is missing. This is further supported by the effect of adapting the cutoff from 0.8 U/mL to 15 U/mL: in validation set 2, where all pre-vaccination antibody levels were below 0.4 U/mL, the performance increased only slightly (AUC-ROC 0.719 to 0.741), but in validation set 4, where the percentage of low positive patients increased from 14.6% (most of which were due to the other assays used in this dataset) to 33.1%, the performance increased markedly (AUC-ROC 0.696 to 0.783).
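The dependence of the "low positive" predictor on the chosen positivity cutoff can be illustrated with a short sketch. The limit of detection of 0.4 U/mL, the handling of values exactly at the LoD, the function names, and the toy antibody levels are assumptions made for illustration; none of the values below are study data.

```python
ASSUMED_LOD = 0.4  # U/mL; assumed limit of detection for this sketch


def classify_level(level, positivity_cutoff, lod=ASSUMED_LOD):
    """Classify a pre-vaccination antibody level relative to LoD and cutoff."""
    if level >= positivity_cutoff:
        return "positive"
    if level >= lod:  # treating a value exactly at the LoD as detectable is an assumption
        return "low positive"
    return "negative"


def low_positive_fraction(levels, positivity_cutoff, lod=ASSUMED_LOD):
    """Fraction of low positive patients among those below the positivity cutoff."""
    classes = [classify_level(v, positivity_cutoff, lod) for v in levels]
    seronegative = [c for c in classes if c != "positive"]
    if not seronegative:
        raise ValueError("no patients below the positivity cutoff")
    return seronegative.count("low positive") / len(seronegative)


# Hypothetical pre-vaccination levels in U/mL.
toy_levels = [0.2, 0.3, 0.5, 3.0, 10.0, 20.0, 0.1, 0.39]
fraction_at_0_8 = low_positive_fraction(toy_levels, positivity_cutoff=0.8)
fraction_at_15 = low_positive_fraction(toy_levels, positivity_cutoff=15.0)
```

With the higher cutoff, more patients count as previously seronegative and more of them carry a detectable, low positive level, so the predictor becomes informative for a larger share of the cohort.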

Conversely, since the percentage of low positive antibody levels in the development dataset is 6.8%, performance could also worsen in cohorts where this rate is close to 100%.

Another possible reason for the different performance is the study design of validation set 2, which was a randomized clinical trial with outcome assessment between days 29 and 42, whereas in the development set and the other validation sets, the maximum antibody level after the respective vaccination was chosen, independent of the time passed. Additionally, validation set 2 was the one with the highest proportion of adenoviral vaccine, which, however, did not show any difference in serological response in the respective trial (12).

Worth discussing is the mean/median imputation method used, which ensures that performance assessed during external validation is comparable to real-life performance of the risk calculator provided. We show that neither performing complete case analysis nor multiple imputation in the validation cohorts substantially changes predictive performance.
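A minimal sketch of mean/median imputation is given below, assuming that fill values are derived once from a reference cohort and then applied to any patient with missing inputs. The variable names, the choice of mean versus median per variable, and the toy rows are hypothetical and do not reproduce the study's preprocessing.

```python
from statistics import mean, median


def fit_fill_values(rows, strategy_by_variable):
    """Compute one fill value per variable from the observed (non-missing) entries."""
    fill_values = {}
    for name, strategy in strategy_by_variable.items():
        observed = [row[name] for row in rows if row.get(name) is not None]
        if not observed:
            raise ValueError(f"no observed values for {name}")
        if strategy == "mean":
            fill_values[name] = mean(observed)
        elif strategy == "median":
            fill_values[name] = median(observed)
        else:
            raise ValueError(f"unknown strategy: {strategy}")
    return fill_values


def impute(row, fill_values):
    """Return a copy of row with missing variables replaced by the fill values."""
    completed = dict(row)
    for name, fill in fill_values.items():
        if completed.get(name) is None:
            completed[name] = fill
    return completed


# Hypothetical reference rows; None marks a missing value.
reference_rows = [
    {"egfr": 40, "lymphocytes": 1.0},
    {"egfr": 60, "lymphocytes": None},
    {"egfr": None, "lymphocytes": 2.0},
    {"egfr": 50, "lymphocytes": 1.2},
]
fills = fit_fill_values(reference_rows, {"egfr": "mean", "lymphocytes": "median"})
new_patient = impute({"egfr": None, "lymphocytes": 0.9}, fills)
```

Because the fill values are fixed in advance, a calculator can return a prediction even when a user leaves a field empty, which mirrors real-life use more closely than complete case analysis.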

Other limitations arise from the different immunization strategies used, which lead to different seroconversion rates and have influence on model performance as well.

Last, some immunosuppressive regimens have a frequency below 1% in the development cohort (such as rituximab, mTOR inhibitor, and azathioprine treatment), which limits applicability for these patients.

We provide the first calculator available online to predict vaccine response to third or fourth vaccination in previously seronegative, COVID-19-naïve KTR. It can guide decisions on whether to modulate immunosuppressive therapy before additional active vaccination, or to perform passive immunization to improve protection against COVID-19.

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

The studies involving human participants were reviewed and approved by the Ethics Committee of Charité Universitätsmedizin Berlin. Written informed consent for participation was not required for this study in accordance with the national legislation and the institutional requirements.

BO and SR conceived the presented idea. BO performed data analysis and implemented the risk calculator. SR performed data visualization and project coordination. FG and MM assisted in data preparation of the development dataset. JS, MK, LCR, AH, RR-S, RO, IB, SC, CM, CK, and GB provided validation datasets. BO and SR wrote the manuscript. KB, ES, JS, MK, AH, RR-S, IB, SC, and CM provided significant intellectual input during the conception and development of the article. All authors commented on and reviewed the final manuscript.

Eva Schrezenmeier is a participant in the BIH-Charité Clinician Scientist Program funded by the Charité - Universitätsmedizin Berlin and the Berlin Institute of Health. We thank Jürgen Dönitz, Johannes Raffler, Ulla Schultheiss, and Helena U. Zacharias on behalf of the CKDapp team for providing their template for an online risk calculator.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fimmu.2022.997343/full#supplementary-material

AUC-ROC, area under the curve of the receiver operator characteristic; CI, confidence intervals; CNI, calcineurin inhibitor; COVID-19, coronavirus disease 2019; GBRT, gradient boosted regression trees; ECLIA, electrochemiluminescence immunoassay; eGFR, estimated glomerular filtration rate; ELISA, enzyme-linked immunosorbent assays; KTR, kidney transplant recipients; LASSO, least absolute shrinkage and selection operator; LoD, limit of detection; LR, logistic regression; MPA, mycophenolic acid; N, nucleocapsid protein; RBD, receptor binding domain; S, spike protein; SARS-CoV-2, severe acute respiratory syndrome coronavirus 2; SOT, solid organ transplantation; TCMR, T cell mediated rejection; TRIPOD, transparent reporting of a multivariable prediction model for individual prognosis or diagnosis.

1. Andrews N, Tessier E, Stowe J, Gower C, Kirsebom F, Simmons R, et al. Duration of protection against mild and severe disease by covid-19 vaccines. N Engl J Med (2022) 12:340–50. doi: 10.1056/NEJMoa2115481

2. Reischig T, Kacer M, Vlas T, Drenko P, Kielberger L, Machova J, et al. Insufficient response to mRNA SARS-CoV-2 vaccine and high incidence of severe COVID-19 in kidney transplant recipients during pandemic. Am J Transplant (2021) 3:801–12. doi: 10.1111/ajt.16902

3. Osmanodja B, Mayrdorfer M, Halleck F, Choi M, Budde K. Undoubtedly, kidney transplant recipients have a higher mortality due to COVID-19 disease compared to the general population. Transpl Int (2021) 34(5):769–71. doi: 10.1111/tri.13881

4. Schrezenmeier E, Rincon-Arevalo H, Jens A, Stefanski A-L, Hammett C, Osmanodja B, et al. Temporary antimetabolite treatment hold boosts SARS-CoV-2 vaccination-specific humoral and cellular immunity in kidney transplant recipients. JCI Insight (2022) 29. doi: 10.1172/jci.insight.157836

5. Osmanodja B, Ronicke S, Budde K, Jens A, Hammett C, Koch N, et al. Serological response to three, four and five doses of SARS-CoV-2 vaccine in kidney transplant recipients. J Clin Med (2022) 11(9):2565. doi: 10.3390/jcm11092565

6. Liefeldt L, Glander P, Klotsche J, Straub-Hohenbleicher H, Budde K, Eberspächer B, et al. Predictors of serological response to SARS-CoV-2 vaccination in kidney transplant patients: Baseline characteristics, immunosuppression, and the role of IMPDH monitoring. J Clin Med (2022) 11(6):1697. doi: 10.3390/jcm11061697

7. Stumpf J, Siepmann T, Lindner T, Karger C, Schwöbel J, Anders L, et al. Humoral and cellular immunity to SARS-CoV-2 vaccination in renal transplant versus dialysis patients: A prospective, multicenter observational study using mRNA-1273 or BNT162b2 mRNA vaccine. Lancet Reg Health Eur (2021) 9:100178. doi: 10.1016/j.lanepe.2021.100178

8. Caillard S, Thaunat O. COVID-19 vaccination in kidney transplant recipients. Nat Rev Nephrol (2021) 17(12):785–7. doi: 10.1038/s41581-021-00491-7

9. Feng S, Phillips DJ, White T, Sayal H, Aley PK, Bibi S, et al. Correlates of protection against symptomatic and asymptomatic SARS-CoV-2 infection. Nat Med (2021) 27(11):2032–40. doi: 10.1038/s41591-021-01540-1

10. Kantauskaite M, Muller L, Kolb T, Fischer S, Hillebrandt J, Ivens K, et al. Intensity of mycophenolate mofetil treatment is associated with an impaired immune response to SARS-CoV-2 vaccination in kidney transplant recipients. Am J Transplant (2022) 22(2):634–9. doi: 10.1111/ajt.16851

11. Kolb T, Fischer S, Muller L, Lübke N, Hillebrandt J, Andrée M, et al. Impaired immune response to SARS-CoV-2 vaccination in dialysis patients and in kidney transplant recipients. Kidney360 (2021) 2(9):1491–8. doi: 10.34067/KID.0003512021

12. Reindl-Schwaighofer R, Heinzel A, Mayrdorfer M, Jabbour R, Hofbauer TM, Merrelaar A, et al. Comparison of SARS-CoV-2 antibody response 4 weeks after homologous vs heterologous third vaccine dose in kidney transplant recipients: A randomized clinical trial. JAMA Intern Med (2022) 182(2):165–71. doi: 10.1001/jamainternmed.2021.7372

13. Benotmane I, Gautier G, Perrin P, Olagne J, Cognard N, Fafi-Kremer S, et al. Antibody response after a third dose of the mRNA-1273 SARS-CoV-2 vaccine in kidney transplant recipients with minimal serologic response to 2 doses. JAMA (2021) 23:1063–5. doi: 10.1001/jama.2021.12339

14. Benotmane I, Bruel T, Planas D, Fafi-Kremer S, Schwartz O, Caillard S. A fourth dose of the mRNA-1273 SARS-CoV-2 vaccine improves serum neutralization against the delta variant in kidney transplant recipients. Kidney Int (2022) 101(5):1073–6. doi: 10.1016/j.kint.2022.02.011

15. Masset C, Kerleau C, Garandeau C, Ville S, Cantarovich D, Hourmant M, et al. A third injection of the BNT162b2 mRNA COVID-19 vaccine in kidney transplant recipients improves the humoral immune response. Kidney Int (2021) 100(5):1132–5. doi: 10.1016/j.kint.2021.08.017

16. Seidel A, Jahrsdörfer B, Körper S, Albers D, von Maltitz P, Müller R, et al. SARS-CoV-2 vaccination of convalescents boosts neutralization capacity against SARS-CoV-2 delta and omicron that can be predicted by anti-s antibody concentrations in serological assays. medRxiv (2022):22269201. doi: 10.1101/2022.01.17.22269201

17. Bowen JE, Sprouse KR, Walls AC, Mazzitelli IG, Logue JK, Franko NM, et al. Omicron BA.1 and BA.2 neutralizing activity elicited by a comprehensive panel of human vaccines. bioRxiv (2022):484542. doi: 10.1101/2022.03.15.484542

18. Collins GS, Reitsma JB, Altman DG, Moons KG. Transparent reporting of a multivariable prediction model for individual prognosis or diagnosis (TRIPOD): the TRIPOD statement. Ann Intern Med (2015) 162(1):55–63. doi: 10.7326/M14-0697

19. Frölke S, Bouwmans P, Messchendorp L, Geerlings S, Hemmelder M, Gansevoort R, et al. MO184: Development and validation of a multivariable prediction model for nonseroconversion after SARS-COV-2 vaccination in kidney transplant recipients. Nephrol Dialysis Transplant (2022) 37(Supplement_3). doi: 10.1093/ndt/gfac066.086

20. Alejo JL, Mitchell J, Chiang TP-Y, Chang A, Abedon AT, Werbel WA, et al. Predicting a positive antibody response after 2 SARS-CoV-2 mRNA vaccines in transplant recipients: A machine learning approach with external validation. Transplantation (2022). doi: 10.1097/tp.0000000000004259

21. Christodoulou E, Ma J, Collins GS, Steyerberg EW, Verbakel JY, Van Calster B. A systematic review shows no performance benefit of machine learning over logistic regression for clinical prediction models. J Clin Epidemiol (2019) 110:12–22. doi: 10.1016/j.jclinepi.2019.02.004

22. Khoury DS, Cromer D, Reynaldi A, Schlub TE, Wheatley AK, Juno JA, et al. Neutralizing antibody levels are highly predictive of immune protection from symptomatic SARS-CoV-2 infection. Nat Med (2021) 27(7):1205–11. doi: 10.1038/s41591-021-01377-8

23. Group RC. Casirivimab and imdevimab in patients admitted to hospital with COVID-19 (RECOVERY): a randomised, controlled, open-label, platform trial. Lancet (2022) 399(10325):665–76. doi: 10.1016/S0140-6736(22)00163-5

24. O'Brien MP, Hou P, Weinreich DM. Subcutaneous REGEN-COV antibody combination to prevent covid-19. N Engl J Med (2021) 385(20):e70. doi: 10.1056/NEJMc2113862

25. Medigeshi GR, Batra G, Murugesan DR, Thiruvengadam R, Chattopadhyay S, Das B, et al. Sub-Optimal neutralisation of omicron (B.1.1.529) variant by antibodies induced by vaccine alone or SARS-CoV-2 infection plus vaccine (hybrid immunity) post 6-months. EBioMedicine (2022) 78:103938. doi: 10.1016/j.ebiom.2022.103938

26. Weinreich DM, Sivapalasingam S, Norton T, Ali S, Gao H, Bhore R, et al. REGN-COV2, a neutralizing antibody cocktail, in outpatients with covid-19. N Engl J Med (2021) 384(3):238–51. doi: 10.1056/NEJMoa2035002

27. VanBlargan LA, Errico JM, Halfmann PJ, Zost SJ, Crowe JE Jr, Purcell LA, et al. An infectious SARS-CoV-2 B.1.1.529 omicron virus escapes neutralization by therapeutic monoclonal antibodies. Nat Med (2022) 28(3):490–5. doi: 10.1038/s41591-021-01678-y

28. Liu L, Iketani S, Guo Y, Chan JF-W, Wang M, Liu L, et al. Striking antibody evasion manifested by the omicron variant of SARS-CoV-2. Nature (2022) 602(7898):676–81. doi: 10.1038/s41586-021-04388-0

29. McCallum M, Czudnochowski N, Rosen LE, Zepeda SK, Bowen JE, Walls AC, et al. Structural basis of SARS-CoV-2 omicron immune evasion and receptor engagement. Science (2022) 375(6583):864–8. doi: 10.1126/science.abn8652

30. Cameroni E, Bowen JE, Rosen LE, Saliba C, Zepeda SK, Culap K, et al. Broadly neutralizing antibodies overcome SARS-CoV-2 omicron antigenic shift. Nature (2022) 602(7898):664–70. doi: 10.1038/s41586-021-04386-2

31. Regele F, Heinzel A, Hu K, Raab L, Eskandary F, Faé I, et al. Stopping of mycophenolic acid in kidney transplant recipients for 2 weeks peri-vaccination does not increase response to SARS-CoV-2 vaccination-a non-randomized, controlled pilot study. Front Med (Lausanne) (2022) 9:914424. doi: 10.3389/fmed.2022.914424

32. Kantauskaite M, Müller L, Hillebrandt J, Lamberti J, Fischer S, Kolb T, et al. Immune response to third SARS-CoV-2 vaccination in seronegative kidney transplant recipients: possible improvement by mycophenolate mofetil reduction. Clin Transplant (2022). doi: 10.1111/ctr.14790

Keywords: kidney transplantation, COVID-19, vaccination, clinical decision support, immunosuppression therapy

Citation: Osmanodja B, Stegbauer J, Kantauskaite M, Rump LC, Heinzel A, Reindl-Schwaighofer R, Oberbauer R, Benotmane I, Caillard S, Masset C, Kerleau C, Blancho G, Budde K, Grunow F, Mikhailov M, Schrezenmeier E and Ronicke S (2022) Development and validation of multivariable prediction models of serological response to SARS-CoV-2 vaccination in kidney transplant recipients. Front. Immunol. 13:997343. doi: 10.3389/fimmu.2022.997343

Received: 18 July 2022; Accepted: 20 September 2022;

Published: 04 October 2022.

Edited by:

Elke Bergmann-Leitner, Walter Reed Army Institute of Research, United States

Reviewed by:

Pinyi Lu, National Cancer Institute at Frederick (NIH), United States

Copyright © 2022 Osmanodja, Stegbauer, Kantauskaite, Rump, Heinzel, Reindl-Schwaighofer, Oberbauer, Benotmane, Caillard, Masset, Kerleau, Blancho, Budde, Grunow, Mikhailov, Schrezenmeier and Ronicke. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Bilgin Osmanodja, bilgin.osmanodja@charite.de

†ORCID: Bilgin Osmanodja, orcid.org/0000-0002-8660-0722

Johannes Stegbauer, orcid.org/0000-0001-8994-8102

Andreas Heinzel, orcid.org/0000-0002-8447-1795

Roman Reindl-Schwaighofer, orcid.org/0000-0002-4419-6282

Rainer Oberbauer, orcid.org/0000-0001-7544-6275

Ilies Benotmane, orcid.org/0000-0001-9113-2479

Sophie Caillard, orcid.org/0000-0002-0525-4291

Christophe Masset, orcid.org/0000-0002-7442-2164

Clarisse Kerleau, orcid.org/0000-0002-1487-5743

Gilles Blancho, orcid.org/0000-0003-0356-5069

Klemens Budde, orcid.org/0000-0002-7929-5942

Michael Mikhailov, orcid.org/0000-0002-7624-9668

Eva Schrezenmeier, orcid.org/0000-0002-0016-7885

Simon Ronicke, orcid.org/0000-0001-8822-4268

