
CODE article

Front. ICT , 31 January 2020

Sec. Virtual Environments

Volume 7 - 2020 | https://doi.org/10.3389/fict.2020.00001

Virtual environments will deeply alter the way we conduct scientific studies on human behavior. Possible applications range from spatial navigation, through addressing moral dilemmas in a more natural manner, to therapeutic applications for affective disorders. The decisive factor for this broad range of applications is that virtual reality (VR) is able to combine a well-controlled experimental environment with the ecological validity of the immersion of test subjects. Until now, however, programming such an environment in Unity® has required profound knowledge of C# programming, 3D design, and computer graphics. In order to give interested research groups access to a realistic VR environment which can easily adapt to the varying needs of experiments, we developed a large, open source, scriptable, and modular VR city. It covers an area of 230 hectares and contains up to 150 self-driving vehicles, 655 active and passive pedestrians, and thousands of nature assets to make it both highly dynamic and realistic. Furthermore, the repository presented here contains a stand-alone City AI toolkit for creating avatars and customizing cars. Finally, the package contains code to easily set up VR studies. All main functions are integrated into the graphical user interface of the Unity® Editor to ease the use of the embedded functionalities. In summary, the project named Westdrive is developed to enable research groups to access a state-of-the-art VR environment that is easily adapted to specific needs and allows focus on the respective research question.

With the opening of the consumer market in recent years, VR has penetrated many areas of everyday life: there are, for example, applications for marketing, the games industry, and educational purposes (Anthes et al., 2016; Burke, 2018; Miller, 2018). Research on human behavior is also beginning to take an interest in experiments in virtual reality (de la Rosa and Breidt, 2018; Rus-Calafell et al., 2018; Wienrich et al., 2018). For instance, it is possible to embed ethical decision making in a seemingly real context in order to achieve a higher validity of experiments (Sütfeld et al., 2017; Faulhaber et al., 2018). Further, studies based on VR techniques address questions regarding spatial navigation, such as neural correlates of human navigation (Epstein et al., 2017), as well as gender differences in navigation tasks in a well-controlled environment (Castelli et al., 2008). Although tools for creating virtual cities are already available, these applications have not been designed for experiments on human behavior, but rather for planning and simulating urban development (CityEngine, 2013; Botica et al., 2015; FUZOR, 2019; VR-Design Studio, 2019). Furthermore, it is possible to use VR in a variety of psychotherapeutic and clinical scenarios (Riva, 2005; Li et al., 2011). Not only is this cost-efficient and more interactive than classical psychotherapy (Bashiri et al., 2017), it also offers the possibility to use this treatment at home as VR becomes more widespread in the future. This means that VR has the potential to increase access to insights into human behavior as well as to psychological interventions (Slater and Wilbur, 1997; Freeman et al., 2018). Finally, VR can be combined with further technologies, such as EEG (Bischof and Boulanger, 2003) and fMRI, facilitating research on clinical disorders (Reggente et al., 2018). In summary, VR techniques have the potential to substantially advance research in the human sciences.

Still, compared to classical screen experiments, VR-based experiments are complex and require extensive programming, which is an intricate task by itself (Freeman et al., 2018). This causes VR experiments in behavioral research to lag behind their actual potential (Faisal, 2017). Even if already existing experiments are transferred to VR, knowledge of software and hardware must be acquired, meaning a larger expenditure of time and effort (Pan and Hamilton, 2018). Westdrive is developed to eliminate these obstacles in the context of studies on spatial navigation and ethical aspects. It considerably shortens the time required to set up an experiment in VR or to transfer one to VR, either directly, by enabling researchers to use the project scene as it is, or indirectly, by letting them use only the provided assets and code.

Probably the most crucial features of Westdrive are size, modularity and the simple handling of complex environments, since all components of the City AI toolkit can be used independently even without any programming knowledge.

Size is often a critical factor for virtual environments. This is the case with e.g., navigation tasks within VR (König et al., 2019). A distinction is made here between room-sized vista space and large environmental space. Small rooms are easier to grasp and therefore it is only possible in large environments to distinguish between test subjects who navigate using snapshots of landmarks only and those who have learned a true map of their environment (Ekstrom and Isham, 2017).

The modularity of a virtual environment is of equal importance. Not only does building realistic cities require the consideration of many different aspects, but different research projects also depend upon distinctive dynamic objects. For example, an experiment on the trolley dilemma requires driving vehicles and pedestrians (Faulhaber et al., 2018). A therapeutic application for fear of heights requires high buildings and animated characters to make the environment appear real (Freeman et al., 2018). Project Westdrive offers a wide variety of applications due to its modularity, both of the static environment which comprises trees, pavements, buildings, etc. and the dynamic objects like pedestrians or self-driving cars.

Additionally, the managers of the City AI toolkit enable a simple handling of the project. The City AI toolkit facilitates the implementation of paths, pedestrians, and cars, all of which are usable within the Unity® GUI without any experience in coding. All of the components are accessible within the Unity® Editor. All managers can be edited separately according to the respective requirements of an experiment. In this sense, these separately adjustable components also support modularity, as adjustments have to be made only to the components that are needed.

To use the project, only a powerful computer, VR glasses, and the free Unity® program are needed (see footnote 1). If the aforementioned requirements are met, the scene presented here can be changed or manipulated at will. It is also possible to make alterations exclusively in the GUI of the Unity® editor without writing any code. This project offers not only the templates for static models, but also the functions integrated into the GUI for paths, character creation, and the creation of moving cars.

Westdrive and the City AI have been created with simplicity in mind, to relieve users of as much time-consuming preparation and programming as possible. Yet, as this is an open source project under constant development, we also encourage future researchers to further improve the project or change the code based on their specific needs. Through its key features, Westdrive gives the user the possibility to carry out a multitude of investigations on human behavior. For example, the simple routing of pedestrians and cars makes it possible to carry out studies on trolley dilemmas or human-machine interaction. Also, due to the realism of the avatars (Figure 5), it is possible to build therapeutic applications for the treatment of fear of heights or social phobias. However, this is only a very small part of the possible applications.

The Westdrive virtual environment is built in Unity® 2018.3.0f2 (64 bit), a game engine platform by Unity® Technologies. This engine is used together with a graphical user interface (GUI) called the Unity® editor, which supports 2D and 3D graphics as well as scripting in JavaScript and C# to create dynamic objects inside a simulation. Unity® runs on Windows and Mac and a Unity®-built project can be run on almost all common platforms including mobile devices like tablets or smartphones. We have chosen this software due to many available application programming interfaces (APIs) and good compatibility with a variety of VR headsets (Juliani et al., 2018). Moreover, the use of Unity® grants access to an asset store, which offers the option to purchase prefabricated 3D objects or scripts which only need to be imported into an already existing scene. Thus, Westdrive is a modular virtual environment, making it easy to integrate other software now and in the future.

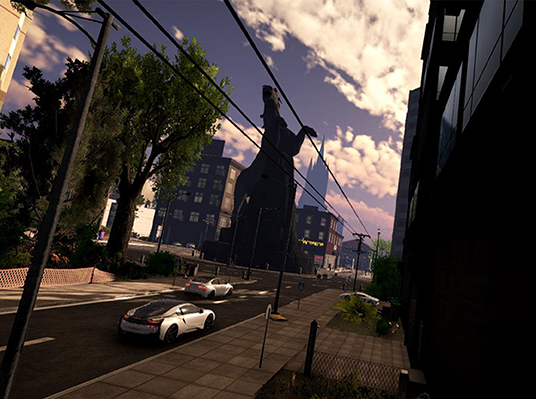

The Westdrive repository contains a city as one completed game scene. All associated assets including driving cars, walking characters, buildings, trees, plants, and a multitude of smaller 3D objects such as lanterns, traffic lights, benches etc. are included and offer a high level of detail (Figure 1). It also contains the relevant code that executes interactions and animations of the mentioned objects. Thus, users have all desirable components for an experiment in one consistent package.

Figure 1. Overview of the level of detail in the simulated city of the project Westdrive in a completed scene.

Westdrive can be divided into two sub-areas. On the one hand there is the static environment and on the other hand there is the code for interactions between dynamic objects. Both will be explained in the following.

The static environment models a large urban area. It includes 93 houses, several kilometers of roads and footpaths, about 10,000 small objects, and about 16,000 trees and plants on a total area of about 230 hectares. A large part of the 3D objects used for this purpose are taken from the Unity® asset store for free. A list of used assets and their licenses can be found in the specified repository. However, the design of the city presented here can be varied at will using the editor and an included mesh separating tool. It is possible to change the size, shape, and number of individual buildings, streets, cars, and pedestrians in the GUI of the Unity® Editor. The same applies to all other assets presented here. The static environment alone can thus be used indirectly for the development of further VR simulations, as the project provides a large number of prefabricated assets (prefabs) that do not have to be created again. Consequently, it is possible to easily develop a broad range of scenarios for realistic VR experiments by simply manipulating the static environment to match respective needs.

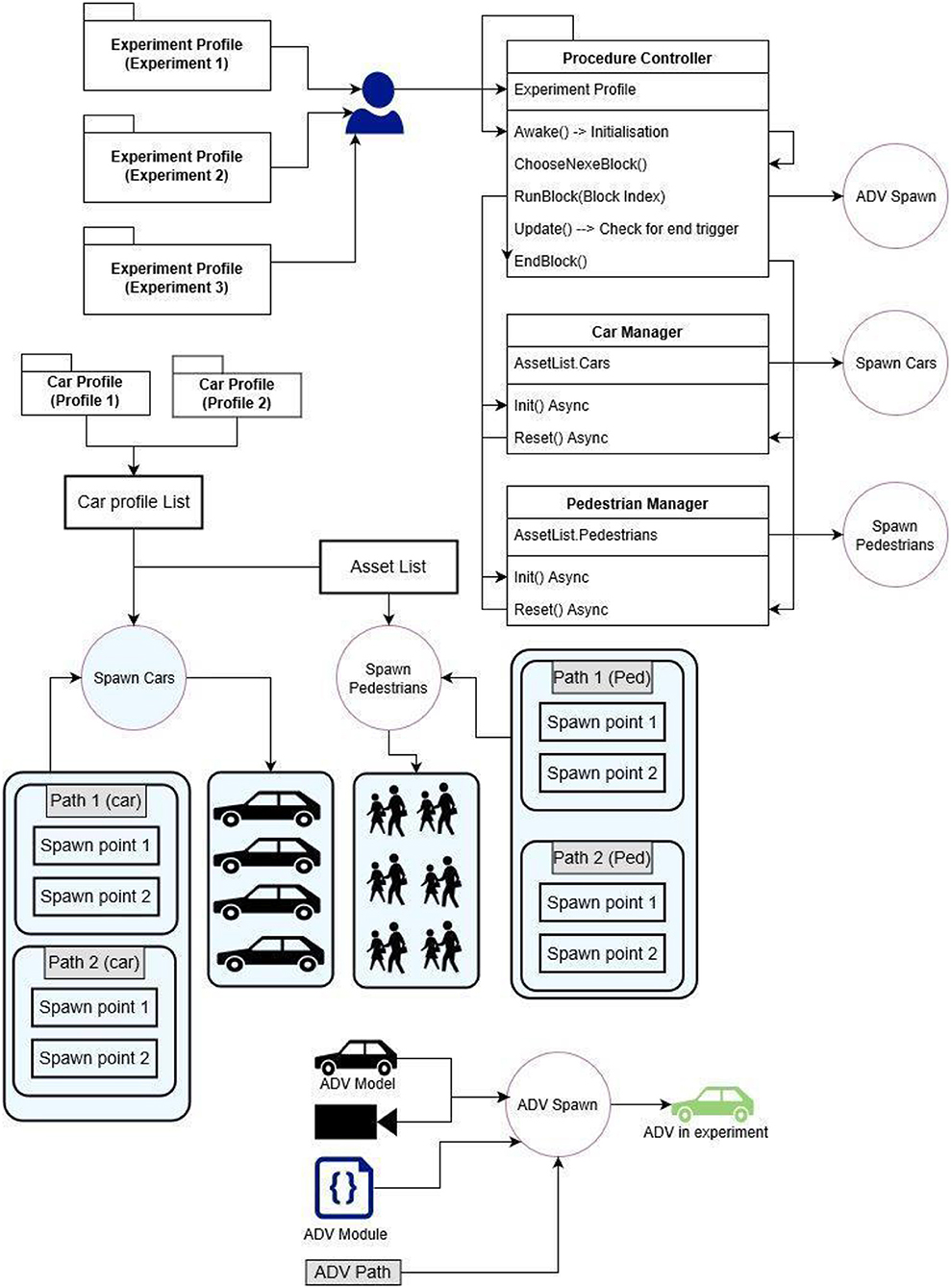

To use Westdrive to its full extent, the code described here is of essential importance. The code is written entirely in C# based on Microsoft's .NET 4.0 API level and encompasses all functions for the stand-alone City AI toolkit (Figure 2). This includes six components developed by us: a Path Manager to create and manipulate paths for pedestrians and cars, a Car Engine script that allows cars to move independently, and a Car Profile Manager to create different profiles for different cars (e.g., the distance maintained to other vehicles, engine sound, and car color). Additionally, there are the Pedestrian Manager and the Character Manager, which control animations and spawn points for moving characters along their defined route, and an Experiment Profile Manager, which defines the experimental context, such as routes, audio files, and scripted events along the path. The City AI works as a stand-alone toolkit in the GUI of the Unity® editor. In short, it is possible to define fixed routes with spawn points along which the non-playable characters (NPCs), such as pedestrians and cars, will move. An external tool is necessary only if the visual appearance of characters is to be changed (see footnote 2).

Figure 2. Scheme of the City AI features in Westdrive. This illustrates the interaction of the different managers of the toolkit to enable spawned cars and pedestrians as well as different experimental setups saved in one scene. These experimental profiles trigger the procedure controller, which takes care of the onset and ending of the experiment and creates the subject's car or avatar. This also triggers the car and pedestrian manager, which are responsible for the spawning of passive cars and pedestrians. In combination with the Car Profiles and the Asset List, the various cars and pedestrians required for the experiment are created in the experiment.
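The flow summarized in Figure 2 can be illustrated with a minimal sketch. It is written in Python for brevity rather than in the project's C#, and every class, field, and method name in it (`ExperimentProfile`, `SpawnManager`, `ProcedureController`, the route labels) is a hypothetical stand-in, not an identifier taken from the Westdrive repository.

```python
from dataclasses import dataclass, field


@dataclass
class ExperimentProfile:
    """Hypothetical experiment profile: which routes one experiment uses."""
    name: str
    subject_route: str
    car_routes: list = field(default_factory=list)
    pedestrian_routes: list = field(default_factory=list)


class SpawnManager:
    """Stand-in for the car or pedestrian manager: one agent per route."""

    def __init__(self, kind):
        self.kind = kind
        self.active = []

    def spawn_all(self, routes):
        self.active = [f"{self.kind}@{route}" for route in routes]

    def clear(self):
        self.active = []


class ProcedureController:
    """Stand-in for the procedure controller: starts and ends an experiment."""

    def __init__(self):
        self.cars = SpawnManager("car")
        self.pedestrians = SpawnManager("pedestrian")
        self.subject = None

    def start(self, profile):
        # Create the subject's vehicle, then trigger both managers.
        self.subject = f"subject@{profile.subject_route}"
        self.cars.spawn_all(profile.car_routes)
        self.pedestrians.spawn_all(profile.pedestrian_routes)

    def end(self):
        # Remove the subject and all passive agents again.
        self.subject = None
        self.cars.clear()
        self.pedestrians.clear()


profile = ExperimentProfile(
    name="demo",
    subject_route="route_A",
    car_routes=["route_B", "route_C"],
    pedestrian_routes=["sidewalk_1"],
)
controller = ProcedureController()
controller.start(profile)
print(controller.subject, controller.cars.active, controller.pedestrians.active)
```

Keeping the profile as plain data and the controller as the only component that reads it mirrors the idea that several experimental setups can be stored in one scene and selected without touching the managers themselves.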

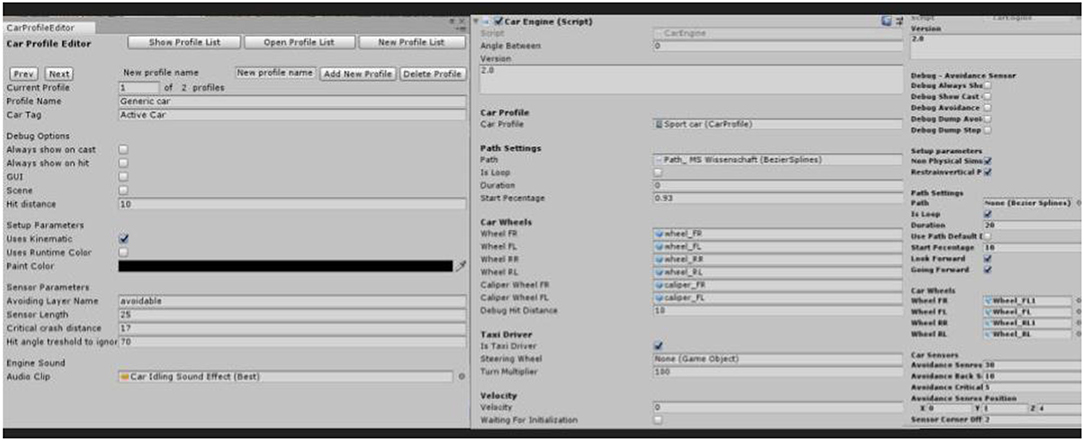

To enable well-controlled movements of cars and pedestrians, we developed a path creation toolkit inside the City AI which incorporates mathematical components of Bezier Splines (Prautzsch et al., 2013). This results in a deterministic and accurate path following system which is only dependent on units of time in a non-physics-based simulation. The users can themselves change the control points of the path inside the editor (see Figure 3). It is also possible to define the duration of the route or the circuit in the Unity® editor. Furthermore, the kinematic path creation facilitates the creation of spawn points for different asset types (cars and pedestrians at the moment, see Figures 4, 5) for each path. All these functions work without programming knowledge. The following components of the City AI are also depicted in Figure 3 to give a better overview of interactions and possibilities within Westdrive:

Figure 3. Overview in the Editor of the Car Profile Manager, the Car Engine and the according parameter bar. These functions allow users to use different types of vehicles in the city. The Car Profile changes the appearance of the vehicles, such as color, engine noise and sensor length. Car Engine allows the vehicles to move independently on the defined routes through the city and to accelerate, brake and steer independently. For each of these functions defaults are provided. An adjustment of these parameters is therefore only necessary for new vehicles.

Path Manager: This is the basis for all moving objects in Westdrive. With just a few clicks in the editor, the user can create new routes for pedestrians and cars or change existing routes. To do so, the control points of the already mentioned Splines can be moved using the mouse only. Afterwards it is possible to set the speed for objects on this route.

Car Engine: This component enables vehicles to steer, brake and accelerate independently both at traffic lights and in the event of an imminent collision with other road users.

Car Profile Manager: This component allows users to create and manage multiple independent profiles for cars. It enables the creation of various types of cars with different characteristics, such as engine sound, color, or the distance kept to preceding vehicles.

Pedestrian and Car Manager System: These systems take care of automatic spawn, restart, and re-spawn of all pedestrians and cars in the scene. They have the ability to load resources in an either synchronous or asynchronous manner, to ensure a smooth-running experiment.

Experiment Profiles and Procedure Controller: These scripts enable users to create different experiments within the environment. The profiles set up parameters such as the routes that cars will follow. They also trigger the beginning and the end of the experiment and of the experiment blocks, and, if needed, they disable dynamic objects that are not necessary in the scene. The Procedure Controller uses the Experiment Profile to automate the experimental procedure, e.g., by ending blocks, altering the appearance of dynamic objects, or excluding them completely.
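The time-based path following that underlies the Path Manager can be sketched as follows. This is an illustration in Python under stated assumptions, not the project's C# code: it uses a single cubic Bezier segment, the function names `cubic_bezier` and `position_at` are hypothetical, and the curve parameter is taken directly from elapsed time, so speed along the curve is not constant unless the path is reparameterized by arc length.

```python
def cubic_bezier(p0, p1, p2, p3, u):
    """Evaluate a cubic Bezier curve at parameter u in [0, 1]."""
    v = 1.0 - u
    # Bernstein basis weights for the four control points.
    b0, b1, b2, b3 = v ** 3, 3 * v * v * u, 3 * v * u * u, u ** 3
    return tuple(
        b0 * a + b1 * b + b2 * c + b3 * d
        for a, b, c, d in zip(p0, p1, p2, p3)
    )


def position_at(control_points, elapsed, duration, loop=True):
    """Position after `elapsed` seconds on a route lasting `duration` seconds.

    The result depends only on time, never on forces or on earlier frames.
    """
    if duration <= 0:
        raise ValueError("duration must be positive")
    u = elapsed / duration
    # A circuit wraps around; an open route stops at its end point.
    u = u % 1.0 if loop else min(max(u, 0.0), 1.0)
    return cubic_bezier(*control_points, u)


# Hypothetical control points (x, y, z) for one U-shaped stretch of road.
route = ((0.0, 0.0, 0.0), (0.0, 0.0, 10.0), (10.0, 0.0, 10.0), (10.0, 0.0, 0.0))
print(position_at(route, elapsed=10.0, duration=20.0))  # halfway: (5.0, 0.0, 7.5)
```

Moving a control point in the editor corresponds to changing one entry of `route`, and changing the route duration corresponds to changing `duration`; in both cases the position at any given time remains fully determined.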

All of these managers assign the correct scripts to objects and move them to a resources folder in order for them to be spawned in runtime when the experiment starts. These toolkits ensure that cars and pedestrians have all the necessary components attached to them.
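How a car profile and the independent braking behavior might interact can be shown with a small sketch. It is written in Python for illustration only; the `CarProfile` fields, the units, and the linear slow-down rule in `target_speed` are assumptions made for this example and are not taken from the Car Engine script in the repository.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CarProfile:
    """Hypothetical per-car settings, loosely following the Car Profile Manager."""
    color: str
    engine_sound: str
    sensor_length: float  # how far the car looks ahead, in metres (assumed unit)
    cruise_speed: float   # speed on a free road, in metres per second (assumed)


def target_speed(profile, gap_ahead, light_is_red, distance_to_light):
    """Return the speed the car should aim for at this moment.

    gap_ahead is the distance to the nearest road user in front,
    or None if the lane is clear.
    """
    obstacles = []
    if gap_ahead is not None:
        obstacles.append(gap_ahead)
    if light_is_red:
        obstacles.append(distance_to_light)
    if not obstacles:
        return profile.cruise_speed
    nearest = min(obstacles)
    if nearest >= profile.sensor_length:
        # Nothing within sensor range: keep cruising.
        return profile.cruise_speed
    # Assumed rule: scale speed linearly down to zero as the obstacle nears.
    return profile.cruise_speed * max(nearest, 0.0) / profile.sensor_length


sedan = CarProfile(color="red", engine_sound="sedan.wav",
                   sensor_length=20.0, cruise_speed=10.0)
print(target_speed(sedan, gap_ahead=10.0, light_is_red=False, distance_to_light=0.0))
```

Because the rule depends only on the profile and on current distances, two cars with different profiles react differently to the same situation without any change to the shared logic.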

As the head-mounted display (HMD), the HTC Vive Pro is used at our department. At the time of writing, this virtual reality device is the most advanced technology available (Ogdon, 2019). In order to transfer the player's head movements into the virtual reality, HTC utilizes two passive laser-emitting “lighthouses” that have to be attached to the ceiling in two opposing corners of the room. The two handheld controllers and the headset use no fewer than 70 combined sensors to calibrate the positions of controllers and headset, measuring the time difference in sending and receiving the emitted signal (Prasuethsut, 2016). To use the HTC Vive Pro and the HTC Setup Software, an account at the online gaming platform Steam is necessary. This requires a stable internet connection; both Steam and the HTC Setup software are free to use. Since this device is one of the most expensive ones on the market, it is used mainly for academic or industrial research rather than private gaming.

It is also worth mentioning that, although Westdrive has been developed for the HTC Vive Pro, it can easily be transferred to other virtual reality HMDs. The last component for the implementation is the Unity® software. Unity® can also be used free of charge as long as a project is not used commercially. Licenses are free for students and researchers. The Unity® editor can be downloaded from the Unity® website. It is then possible to create a project folder and import the files from the repository presented here into Unity®.

A more detailed description of how to set up Westdrive as well as an example of the functionalities can be found as tutorial videos in the repository and in the Supplementary Materials.

Due to the complexity of the project and the differences between a deterministic simulation and a computer game, there are still many possible improvements to be implemented. With current enhancements such as occlusion culling (objects are not rendered when they are not seen by the player), simplified shadows, and mesh combining, an acceptable frame rate of at least 30 fps can be achieved using an NVidia GeForce RTX 2080 Ti in combination with an Intel® Xeon® E5-1607 v4. The desired goal in the course of further research will be to reach the stable 90 Hz suggested by virtual reality technology providers such as HTC and Oculus.
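To make the gap between the two frame rates concrete, the time available for rendering one frame can be computed directly. The short Python sketch below is only a worked calculation; the function name `frame_budget_ms` is ours.

```python
def frame_budget_ms(frames_per_second):
    """Time available to render a single frame, in milliseconds."""
    return 1000.0 / frames_per_second


current = frame_budget_ms(30)  # frame rate reported above
target = frame_budget_ms(90)   # refresh rate suggested by HMD vendors
print(f"{current:.1f} ms -> {target:.1f} ms per frame")  # 33.3 ms -> 11.1 ms per frame
```

Reaching 90 Hz therefore means rendering each frame in roughly one third of the time that is currently available at 30 fps.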

It is important to note that the code does not calculate the motion of the mentioned objects physically, but kinematically, so no physically simulated forces are applied to any moving objects. There are several reasons for this: on the one hand, the computational requirements for the computer on which Westdrive is used are kept as low as possible. On the other hand, exact control of the visual stimuli can be guaranteed, because each object is spatially located at exactly the same place at the same time in every run. Furthermore, it makes targeted changes easy, as no physical interactions have to be reverse engineered.
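The reproducibility argument can be illustrated with a small comparison. The Python sketch below is an assumption-laden illustration, not the project's code: `kinematic_position` evaluates a closed-form function of time, while `integrated_position` uses a simple explicit Euler update as a stand-in for a step-wise, physics-style simulation. Both describe the same uniformly accelerating object.

```python
def kinematic_position(t, acceleration=2.0):
    """Closed-form position at time t: depends on time only."""
    return 0.5 * acceleration * t * t


def integrated_position(total_time, frame_rate, acceleration=2.0):
    """Step-wise (explicit Euler) position: depends on the frame rate too."""
    dt = 1.0 / frame_rate
    position, velocity = 0.0, 0.0
    for _ in range(round(total_time * frame_rate)):
        position += velocity * dt
        velocity += acceleration * dt
    return position


print(kinematic_position(10.0))          # identical on every machine
print(integrated_position(10.0, 30))     # result at 30 frames per second
print(integrated_position(10.0, 90))     # differs slightly at 90 frames per second
```

With the closed-form version, a subject on a slow machine and a subject on a fast machine see an object at the same place at the same time, which is the property the kinematic design relies on.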

Another point is that there is currently no structured software architecture. So far, the priority has been on the simple handling of all functionalities within the editor to facilitate the creation of own experiments. A structured architecture is still under development.

In conclusion, we again want to emphasize the impact Westdrive can have on future VR research. Already over a decade ago, the potential of combining VR with physiological measurements was discussed (Bischof and Boulanger, 2003), but only in recent years, when software became affordable, has there been renewed interest in VR in science (Interrante et al., 2018). The main advantage of the project is a simple implementation of a versatile project which, despite its complexity, can be altered quickly and easily without programming knowledge. Likewise, the experiment in its basic form doubles as an eye-tracking study. The code for this implementation is not included in this version, mainly because it was not written by the two authors, but by the Seahaven research group, which investigates spatial navigation in a virtual environment (König et al., 2019). However, the repository will be constantly updated, and it will also contain the required eye-tracking code for Pupil Labs in the future. Westdrive as a city environment offers many areas of application. Nevertheless, the project is constantly being developed and extended. At least two more scenes are currently planned in order to allow for an even wider application, for example the investigation of trolley dilemmas (Thomson, 1984) using a railway track, or studies on the acceptance of new mobility concepts. All improvements and added scenes will be released via GitLab. Additionally, we are going to further clean up old parts of the code and unused assets, as well as fix any typos or mistakes in the code. At the same time, we will expand the comments and the wiki section to provide a user guide on how to use the project.

Since we are constantly improving the code and adding functionalities, this cleanup is an ongoing process.

In this work, particular importance was attributed to a comprehensible formulation in order to ensure an understandable documentation of the work performed. There is an almost unlimited number of application possibilities for the extension of this project. The authors are looking forward to the many great ideas for the continuation of Westdrive.

This work is licensed under a Creative Commons Attribution 4.0 International License.

The datasets for this project can be found in the project-westdrive repository: https://gitlab.com/farbod69/project-westdrive.

All scripts in the repository can be found under: https://gitlab.com/farbod69/project-westdrive/tree/master/Assets/Scripts.

An executable file of the current experiment can be found under: https://gitlab.com/farbod69/project-westdrive#builded-version.

MW and FN wrote this paper. The initial idea for Westdrive began as a joint Master's thesis. Both authors built and designed the project. PK and GP supervised the project.

We acknowledge support by Deutsche Forschungsgemeinschaft (DFG) and Open Access Publishing Fund of Osnabrück University. This contribution is part of the research training group “va-eva: Vertrauen und Akzeptanz in erweiterten und virtuellen Arbeitswelten” of the University of Osnabrück.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fict.2020.00001/full#supplementary-material

Unity® 3D learning: www.unity.com/learn.

Online Character animation: www.mixamo.com.

1. ^Westdrive recommended system specs:

GPU: NVidia GeForce GTX 1080 Ti, equivalent or better.

CPU: Intel® Xeon® E5-1607 v4, equivalent or better.

RAM: 32 GB

Video Output: HDMI 1.4, DisplayPort 1.2 or newer.

USB Port: 1x USB 2.0 or better port.

Operating System: Windows 8.1 or later, Windows 10.

2. ^Character Creation: To create new characters for the city, two external tools are needed. One is Fuse CC from Adobe and the other is the Mixamo website. In Fuse CC, a free 3D design program by Adobe, it is possible to create figures according to your own imagination. The created model can then be uploaded to Mixamo, which can automatically create animations (De Aguiar et al., 2017). From this website, finished models with animations can be downloaded, which then only need to be imported into the project. All characters created with Fuse CC in combination with animations from Mixamo can be used without licensing or royalty fees.

Anthes, C., García-Hernández, R. J., Wiedemann, M., and Kranzlmüller, D. (2016). “State of the art of virtual reality technology,” in Aerospace Conference, 2016 IEEE (Big Sky, MT: IEEE), 1–19. doi: 10.1109/AERO.2016.7500674

Bashiri, A., Ghazisaeedi, M., and Shahmoradi, L. (2017). The opportunities of virtual reality in the rehabilitation of children with attention deficit hyperactivity disorder: a literature review. Korean J. Pediatr. 60, 337–343. doi: 10.3345/kjp.2017.60.11.337

Bischof, W. F., and Boulanger, P. (2003). Spatial navigation in virtual reality environments: an EEG analysis. Cyber Psychol. Behav. 6, 487–495. doi: 10.1089/109493103769710514

Botica, N., Martins, M., Ribeiro, M. D. C. F., and Magalhães, F. (2015). “3D representation of the urban evolution of Braga using the CityEngine tool,” in Managing Archaeological Heritage: Past and Present 2015, 132–143.

Burke, R. R. (2018). “Virtual reality for marketing research,” in Innovative Research Methodologies in Management, eds L. Moutinho and M. Sokele (Cham: Palgrave Macmillan), 63–82. doi: 10.1007/978-3-319-64400-4_3

Castelli, L., Corazzini, L. L., and Geminiani, G. C. (2008). Spatial navigation in large-scale virtual environments: gender differences in survey tasks. Comput. Human Behav. 24, 1643–1667. doi: 10.1016/j.chb.2007.06.005

De Aguiar, E., Gambaretto, E., and Corazza, S. (2017). Web Platform for Interactive Design, Synthesis and Delivery of 3D Character Motion Data. U.S. Patent Nr. 9,619,914. Mixamo, Inc., San Francisco, CA, United States.

de la Rosa, S., and Breidt, M. (2018). Virtual reality: a new track in psychological research. Br. J. Psychol. 109, 427–430. doi: 10.1111/bjop.12302

Ekstrom, A. D., and Isham, E. A. (2017). Human spatial navigation: representations across dimensions and scales. Curr. Opin. Behav. Sci. 17, 84–89. doi: 10.1016/j.cobeha.2017.06.005

Epstein, R. A., Patai, E. Z., Julian, J. B., and Spiers, H. J. (2017). The cognitive map in humans: spatial navigation and beyond. Nat. Neurosci. 20:1504. doi: 10.1038/nn.4656

Faisal, A. (2017). Computer science: visionary of virtual reality. Nature 551:298. doi: 10.1038/551298a

Faulhaber, A. K., Dittmer, A., Blind, F., Wächter, M. A., Timm, S., Sütfeld, L. R., König, P., et al. (2018). Human decisions in moral dilemmas are largely described by utilitarianism: virtual car driving study provides guidelines for autonomous driving vehicles. Sci. Eng. Ethics 25, 1–20. doi: 10.1007/s11948-018-0020-x

Freeman, D., Haselton, P., Freeman, J., Spanlang, B., Kishore, S., Albery, E., et al. (2018). Automated psychological therapy using immersive virtual reality for treatment of fear of heights: a single-blind, parallel-group, randomised controlled trial. Lancet Psychiatry 5, 625–632. doi: 10.1016/S2215-0366(18)30226-8

FUZOR (2019). Retrieved from: https://www.kalloctech.com/ (accessed December 16, 2019).

Interrante, V., Höllerer, T., and Lécuyer, A. (2018). Virtual and augmented reality. IEEE Comput. Graph. Appl. 38, 28–30. doi: 10.1109/MCG.2018.021951630

Juliani, A., Berges, V. P., Vckay, E., Gao, Y., Henry, H., Mattar, M., et al. (2018). Unity: a general platform for intelligent agents. arXiv [Preprint]. arXiv:1809.02627v1.

König, S., Kakerbeck, V., Nolte, D., Duesberg, L., Kuske, N., and König, P. (2019). Learning of spatial properties of a large-scale virtual city with an interactive map. Front. Hum. Neurosci. 13. doi: 10.3389/fnhum.2019.00240

Li, A., Montaño, Z., Chen, V. J., and Gold, J. I. (2011). Virtual reality and pain management: current trends and future directions. Pain Manage. 1, 147–157. doi: 10.2217/pmt.10.15

Miller, A. (2018). The Effect of Virtual Reality Education Tools on the Retention of Information. South Carolina Junior Academy of Science, 199. Available online at: https://scholarexchange.furman.edu/scjas/2018/all/199

Ogdon, D. C. (2019). HoloLens and VIVE pro: virtual reality headsets. J. Med. Libr. Assoc. 107:118. doi: 10.5195/JMLA.2019.602

Pan, X., and Hamilton, A. F. D. C. (2018). Why and how to use virtual reality to study human social interaction: the challenges of exploring a new research landscape. Br. J. Psychol. 109, 395–417. doi: 10.1111/bjop.12290

Prasuethsut, L. (2016). HTC Vive: Everything you need to know about the SteamVR headset. Berlin; Heidelberg: Springer (Retrieved January 3, 2017).

Prautzsch, H., Boehm, W., and Paluszny, M. (2013). Bézier and B-Spline Techniques. Berlin; Heidelberg: Springer Science & Business Media.

Reggente, N., Essoe, J. K. Y., Aghajan, Z. M., Tavakoli, A. V., McGuire, J. F., Suthana, N. A., et al. (2018). Enhancing the ecological validity of fMRI memory research using virtual reality. Front. Neurosci. 12:408. doi: 10.3389/fnins.2018.00408

Riva, G. (2005). Virtual reality in psychotherapy. Cyberpsychol. Behav. 8, 220–230. doi: 10.1089/cpb.2005.8.220

Rus-Calafell, M., Garety, P., Sason, E., Craig, T. J., and Valmaggia, L. R. (2018). Virtual reality in the assessment and treatment of psychosis: a systematic review of its utility, acceptability and effectiveness. Psychol. Med. 48, 362–391. doi: 10.1017/S0033291717001945

Slater, M., and Wilbur, S. (1997). A framework for immersive virtual environments (FIVE): speculations on the role of presence in virtual environments. Presence 6, 603–616. doi: 10.1162/pres.1997.6.6.603

Sütfeld, L. R., Gast, R., König, P., and Pipa, G. (2017). Using virtual reality to assess ethical decisions in road traffic scenarios: applicability of value-of-life-based models and influences of time pressure. Front. Behav. Neurosci. 11:122. doi: 10.3389/fnbeh.2017.00122

VR-Design Studio (2019). Retrieved from: https://www.forum8.com/technology/vr-design-studio/ (accessed December 16, 2019).

Wienrich, C., Schindler, K., Döllinger, N., Kock, S., and Traupe, O. (2018). “Social presence and cooperation in large-scale multi-user virtual reality-the relevance of social interdependence for location-based environments,” in 2018 IEEE Conference on Virtual Reality and 3D User Interfaces (VR) (Reutlingen: IEEE), 207–214. doi: 10.1109/VR.2018.8446575

Keywords: VR research, virtual environment, Code: C#, VR code repository, VR foundation, open source VR

Citation: Nezami FN, Wächter MA, Pipa G and König P (2020) Project Westdrive: Unity City With Self-Driving Cars and Pedestrians for Virtual Reality Studies. Front. ICT 7:1. doi: 10.3389/fict.2020.00001

Received: 18 February 2019; Accepted: 09 January 2020;

Published: 31 January 2020.

Edited by:

Mark Billinghurst, University of South Australia, Australia

Reviewed by:

Stephan Lukosch, Delft University of Technology, Netherlands

Copyright © 2020 Nezami, Wächter, Pipa and König. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Maximilian A. Wächter, mwaechter@uni-osnabrueck.de

†These authors share first authorship

‡These authors share senior authorship

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.
