- ¹Human Perception, Cognition and Action, Max Planck Institute for Biological Cybernetics, Tübingen, Germany

- ²Perceiving Systems, Max Planck Institute for Intelligent Systems, Tübingen, Germany

- ³Department of Psychology, University of Utah, Salt Lake City, UT, United States

- ⁴Motor Control and Learning, Technical University Darmstadt, Darmstadt, Germany

The creation or streaming of photo-realistic self-avatars is important for virtual reality applications that aim for perception and action to replicate real-world experience. The appearance and recognition of a digital self-avatar may be especially important for applications related to telepresence, embodied virtual reality, or immersive games. We investigated gender differences in the use of visual cues (shape, texture) of a self-avatar for estimating body weight and evaluating avatar appearance. A full-body scanner was used to capture each participant's body geometry and color information, and a set of 3D virtual avatars with realistic weight variations was created based on a statistical body model. Additionally, a second set of avatars was created with an average underlying body shape matched to each participant's height and weight. In four sets of psychophysical experiments, the influence of visual cues on the accuracy of body weight estimation and the sensitivity to weight changes was assessed by manipulating body shape (own, average) and texture (own photo-realistic, checkerboard). The avatars were presented on a large-screen display, and participants indicated whether the avatar's weight corresponded to their own weight. Participants also adjusted the avatar's weight to their desired weight and evaluated the avatar's appearance with regard to similarity to their own body, uncanniness, and their willingness to accept it as a digital representation of the self. The psychophysical experiments revealed no gender difference in the accuracy of estimating body weight in avatars. However, males accepted a larger weight range of the avatars as corresponding to their own. In terms of ideal body weight, females but not males desired a thinner body.
With regard to the evaluation of avatar appearance, the questionnaire responses suggest that own photo-realistic texture was more important to males for higher similarity ratings, while own body shape seemed to be more important to females. These results argue for gender-specific considerations when creating self-avatars.

1. Introduction

Research on space perception in the real world and in virtual realities suggests that the body is important for an accurate perception of spatial layout (Ries et al., 2008; Linkenauger et al., 2010; Mohler et al., 2010; McManus et al., 2011). For example, it has been suggested that the environment is scaled using one's own bodily dimensions, such as eye height (Leyrer et al., 2011), body weight (Piryankova et al., 2014b), hand size (Linkenauger et al., 2011), and leg, foot, and arm length (Mark and Vogele, 1987; Jun et al., 2015; Linkenauger et al., 2015). These results suggest that humans rely on their visual body dimensions for spatial perception and rapidly adapt their spatial interpretation of the surrounding world to new body dimensions. Consequently, for many virtual reality applications that aim to convey spatial information and transfer it from the virtual world to the real world, it may be important to provide an avatar that is personalized in terms of size. Avatars are used in various applications and research fields such as health care (Stevens et al., 2006; Combs et al., 2015), education (Hayes et al., 2013), telecommunication (Slater and Steed, 2002; Garau et al., 2003; Bailenson et al., 2006; Biocca, 2014), immersive games (Christou and Michael, 2014), virtual clothing try-on and animation of realistic clothing (Magnenat-Thalmann et al., 2011; Guan et al., 2012; Pons-Moll et al., 2017), design processes (Sherman and Craig, 2002), and ergonomics (Badler, 1997; Honglun et al., 2007).

Creating or choosing an avatar involves at least three major considerations: the overall shape of the body and face, the particularities of skin and clothing (the texture of the body), and the ability to animate the avatar. In most research, avatars have been created from previously modeled or purchased avatar kits, e.g., from Rocketbox Studios GmbH, Mixamo, Poser, or the open-source software MakeHuman (also see Spanlang et al., 2014). A novel approach is SMPL (Loper et al., 2015), http://smpl.is.tue.mpg.de/, which provides researchers with avatars that clearly do not have the identity of the user but can be personalized with respect to body dimensions and shape. Further, in recent years, several low-cost 3D body scanning systems, such as those based on commodity depth and camera sensors (Shapiro et al., 2014; Malleson et al., 2017) or Microsoft's Kinect sensor, have become available for creating self-avatars.

More sophisticated 3D scanning systems have also been used to create personalized avatars for research on body perception (Piryankova et al., 2014a; Mölbert et al., 2017a; Thaler et al., 2018) and on attitudes toward using 3D body scanning for clothing customization and selection (Lee et al., 2012). These scanning systems can capture both the shape and the texture of the body. However, even with the best 3D scanning capabilities available, it remains an open question how people perceive a personalized self-avatar based on a 3D scan of themselves and whether they accept the avatar as a virtual representation of themselves.

When customizing self-avatars, several questions should be considered: “How is the avatar visually perceived in terms of bodily dimensions?” and “How is the avatar's appearance evaluated with regard to its similarity to one's own physical body and to the desired body? Would users accept it as a digital representation of the self?” This distinction parallels research on body image, which is traditionally described as comprising two largely independent components: a perceptual component, referring to how accurately one's own bodily dimensions are perceived, and a cognitive-affective component, referring to the evaluation of one's own appearance (Gardner and Brown, 2011). Because research on the perception of avatars is a relatively recent area, we argue that insights into how to assess the perception of avatars could come from the longstanding research on body perception. For decades, many different measures for assessing perceptual and attitudinal factors of body image (i.e., for real bodies) have been developed (Thompson, 2004; Gardner and Brown, 2011). The perceptual component of body image is usually assessed in body size estimation tasks that require participants to estimate the dimensions either of their own body parts relative to a spatial measure (e.g., a caliper; metric methods) or of the whole body relative to another body (depictive methods). Depictive methods have the advantage of measuring the perception of the whole body and are more ecologically valid than metric methods. In psychophysical paradigms, participants are usually presented with distinct variations of personalized or non-personalized digital bodies (photographs, videos, and sometimes avatars) varying in bodily dimensions and are asked to identify the bodies that best represent themselves. The results of such experiments provide measures of the accuracy of body size estimation as well as of the sensitivity to changes in body size.
In the current paper we will therefore use similar psychophysical paradigms to assess the perception of self-avatars.

Although body image in men has recently begun to be investigated, the majority of the literature has focused on women's body image. The focus on women is likely due to the higher prevalence of eating disorders in women, as well as to findings suggesting a relationship between these disorders and distortions of body size perception (Thompson et al., 1999). Additionally, healthy females have also been found to estimate their own body weight inaccurately (Piryankova et al., 2014a; Mölbert et al., 2017a; Thaler et al., 2018). Previous studies found that healthy females in the normal body mass index (BMI) range slightly underestimated their body weight and were more willing to accept thinner bodies than fatter bodies as their own (Piryankova et al., 2014a; Mölbert et al., 2017a). Little research has examined body size estimation in males and potential gender differences in these estimates. The few studies on body size estimation in men have focused on how weight status affects the perception of one's own body size by comparing overweight and obese men with normal-weight men (for a review, see Gardner, 2014). However, those studies neither used biometrically plausible body stimuli nor made gender comparisons. In the current study, we explored how men estimate their body size with biometric self-avatars, and we hypothesized that men would not underestimate their body weight as much as women. In support of this hypothesis, the literature suggests that men tend to be more satisfied with their bodies than women (Feingold and Mazzella, 1998) and are more concerned about their muscularity (Blond, 2008), whereas females' dissatisfaction centers on their body weight and the wish to be thinner (Grabe et al., 2008). This “muscles vs. weight” gender difference reflects societal beauty ideals for males and females and is also reflected in people's choices of self-avatars for self-representation in video games (Dunn and Guadagno, 2012; Ducheneaut et al., 2009).

Because body shape, and in large part body weight, is the physical characteristic of adult bodies that varies the most over the lifespan (GBE, 2013), we chose to focus our research on weight perception. Here, using a mirror-like virtual reality (VR) setup, we asked whether there are gender differences in the use of visual cues (shape and texture) of a static self-avatar to estimate body weight and to evaluate the avatar's appearance. To assess the visual perception of the avatar, we used two psychophysical paradigms in combination with 3D body scans and biometrically plausible changes in body weight. The first paradigm was a one-alternative forced choice task (1AFC) in which participants judged whether the presented bodies corresponded to their own body weight. The second was a method of adjustment task (MoA) in which participants adjusted the virtual body until it matched their own in weight. Finally, participants also adjusted the avatar to their desired body weight and evaluated the avatar's appearance in terms of perceived similarity to their own body, uncanniness, and their willingness to accept this avatar as a digital representation of themselves.

The female results presented here were partially published in Piryankova et al. (2014a), where the focus was on the visual cues used to estimate one's own body weight and on establishing psychophysical methods for studying self-body size perception in VR. In the present study, we focus on gender differences¹ in the use of visual cues for perceiving an avatar's weight and on factors related to providing personalized self-avatars in immersive VR, such as the evaluation of the avatar's appearance in terms of similarity to one's own body, the uncanniness of the avatars, and the avatar as a digital self. The male data and the VR-related evaluation factors (uncanniness and digital self measures) have not been published previously.

2. Methods

2.1. Participants

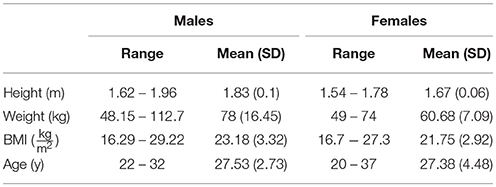

Thirteen male and thirteen female Caucasian participants with normal or corrected-to-normal vision took part in the experiment (see Table 1 for descriptive statistics of the participants). The BMI of both the male and female participants was representative of the German population (GBE, 2013). Participants gave written informed consent and were compensated with € 8 per hour for their participation. The experimental protocol was approved by the local ethics committee of the University of Tübingen and was performed in accordance with the Declaration of Helsinki.

2.2. Visual Stimuli

For each participant, a body scan was collected using a 3D full-body scanning system (3dMD, Atlanta, GA). The system comprises 22 stereo units, each consisting of a pair of black-and-white cameras that observe the textured light pattern projected by speckle projectors, and a 5-megapixel color camera that captures the body texture. The spatial resolution of the body scanning system is approximately one millimeter. To obtain an accurate representation of body shape, all participants wore tight gray shorts and a sleeveless shirt. To reduce distortions caused by hair, participants wore a hair cap. Each participant was scanned in three different poses (neutral, A-pose, T-pose) to reduce data loss in the mesh and the texture caused by occlusion or by body parts falling outside the capture space of the scanner. To generate the body stimuli, the three high-polygon meshes were combined and registered to a statistical body model as described by Hirshberg et al. (2012). The texture was generated from the RGB images of the three body scans.

The statistical body model consists of a template mesh that can be deformed in shape and pose to fit a 3D scan. The shape component of the body model was learned separately for females, from 2,094 female bodies, and for males, from 1,700 male bodies in the CAESAR dataset (Robinette et al., 1999), by applying principal component analysis (PCA) to the triangle deformations of the observed meshes after removing deformations due to pose. This allowed body shape variation to be modeled in a subspace, $U$, spanned by the first 300 principal components, in which the body shape of an individual $j$, $S_j$, is described by a vector of 300 linear coefficients, $\beta_j$, that approximate the shape deformation as $S_j = U\beta_j + \mu$, where $\mu$ is the mean shape deformation in the female or male population.
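The linear shape space $S_j = U\beta_j + \mu$ can be illustrated with a minimal NumPy sketch. It assumes that each body is already available as one flattened, pose-normalized deformation vector (the real model uses triangle deformations of registered CAESAR meshes and 300 components), and it computes the principal components with an SVD of the centered data; the function names and the synthetic data are hypothetical and this is not the authors' implementation.

```python
import numpy as np

def fit_shape_space(deformations, n_components):
    """PCA shape space from pose-normalized deformation vectors.

    deformations: (n_bodies, n_features), one flattened deformation vector per body.
    Returns the mean mu (n_features,) and a basis U (n_features, n_components)
    with orthonormal columns.
    """
    mu = deformations.mean(axis=0)
    # Right singular vectors of the centered data are the principal directions.
    _, _, vt = np.linalg.svd(deformations - mu, full_matrices=False)
    return mu, vt[:n_components].T

def encode(shape, mu, U):
    """Shape coefficients beta = U^T (S - mu); a least-squares fit because U is orthonormal."""
    return U.T @ (shape - mu)

def decode(beta, mu, U):
    """Reconstruct S = U beta + mu."""
    return U @ beta + mu

rng = np.random.default_rng(1)
D = rng.normal(size=(50, 120))            # 50 synthetic "bodies", 120 features each
mu, U = fit_shape_space(D, n_components=10)
beta = encode(D[3], mu, U)                # 10 coefficients describing body 3
approx = decode(beta, mu, U)              # rank-10 approximation of body 3
```

Truncating to fewer components than bodies gives an approximation; keeping all components that span the centered data reproduces each training body exactly.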

The pose component of the body model was trained on approximately 1,200 3D scans of people in different poses and describes deformations due to rotations of body parts. In the registration process, the pose and shape parameters are identified and used to transform the template mesh to the scan by minimizing the distance between the template mesh and the scan. Once the scan is registered, a texture map is computed for each participant's model from the pixels of the 22 calibrated RGB images. The texture map was post-processed in Adobe Photoshop (CS6, 13.0.1) to standardize the color of the textures across participants of each sex and to conceal small artifacts.

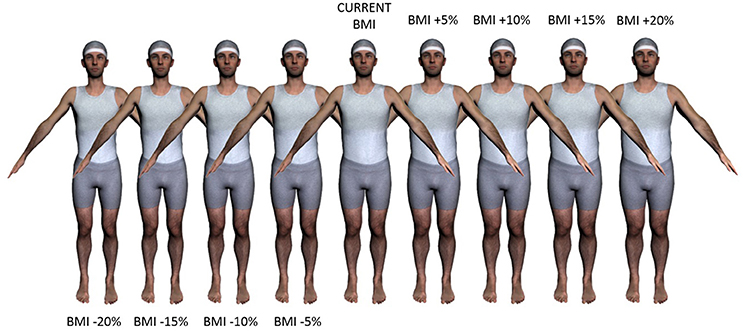

To generate the different weight variations of each avatar, a linear regressor $X$ was learned between the anthropometric measurements $A$ = [weight, height, arm length, inseam] and the shape identity component $\beta$ for the whole CAESAR dataset, such that $\|(A|1)X - \beta\|$ is minimized. This defines a linear relation between shape and measurements for each participant and allowed $\beta$ to be modified in a way that produces intended changes in the anthropometric measurements. Given each participant's weight $w$, height $h$, and registration, nine avatars with varying BMI ($w/h^2$) were generated, with ΔBMI = {0, ±5%, ±10%, ±15%, ±20%} (Figure 1). The BMI was changed by applying a change to the shape vector, $\Delta\beta = [\Delta\mathrm{BMI}\cdot w, 0, 0, 0, 0]\,X$ (i.e., changing the weight by the same proportion as the desired change in BMI while keeping height, arm length, and inseam fixed). Weight variations were defined as percent changes from each participant's BMI so that each participant saw an equal number of bodies larger and smaller than their actual body. This ensured that the weight range of the body stimuli, which Mölbert et al. (2017b) found to influence the accuracy of body size estimation, did not affect estimates differently across individuals. Further, because the just-noticeable difference between two stimuli is proportional to their magnitude, as described by Weber's law (Gescheider, 2013), using percent weight changes allows the sensitivity to weight gain and weight loss to be compared across participants independently of personal body size.
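The regressor and the weight-variation step can be sketched with NumPy, assuming that a weight change $\Delta w$ at fixed height, arm length, and inseam maps to a shape change $\Delta\beta = [\Delta w, 0, 0, 0, 0]\,X$ through the linear regressor, with $\Delta w = \Delta\mathrm{BMI}\cdot w$ because height is held constant. The function names and the synthetic measurement data are hypothetical; only the least-squares objective $\|(A|1)X - \beta\|$ comes from the text.

```python
import numpy as np

# Relative BMI changes for the nine avatars (fractions, not percent).
BMI_STEPS = np.array([-0.20, -0.15, -0.10, -0.05, 0.0, 0.05, 0.10, 0.15, 0.20])

def fit_measurement_regressor(A, betas):
    """Least-squares regressor X minimizing ||(A|1) X - betas||.

    A:     (n, 4) measurements [weight, height, arm length, inseam]
    betas: (n, k) shape coefficients of the same n bodies
    Returns X of shape (5, k); row 0 maps weight to shape coefficients.
    """
    A1 = np.hstack([A, np.ones((A.shape[0], 1))])  # append the constant column
    X, *_ = np.linalg.lstsq(A1, betas, rcond=None)
    return X

def weight_variations(beta, weight, X, steps=BMI_STEPS):
    """Shape vectors for relative BMI changes with height, arm length, inseam fixed.

    At fixed height a relative BMI change equals the same relative weight change,
    so delta_beta = [dw, 0, 0, 0, 0] @ X = dw * X[0].
    """
    dw = steps * weight                                  # (9,) weight changes in kg
    return beta[None, :] + dw[:, None] * X[0][None, :]   # (9, k)

rng = np.random.default_rng(0)
A = rng.normal([70, 1.72, 0.6, 0.8], [12, 0.09, 0.04, 0.05], size=(500, 4))
X_true = rng.normal(size=(5, 10))
betas = np.hstack([A, np.ones((500, 1))]) @ X_true   # synthetic, exactly linear
X = fit_measurement_regressor(A, betas)
participant_beta = betas[0]
avatars = weight_variations(participant_beta, A[0, 0], X)
```

Because the relation is linear, each avatar differs from the participant's own shape by a multiple of the same direction `X[0]`, scaled by the desired weight change.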

Figure 1. Example of a set of personalized avatars with own shape and own photo-realistic texture varying in body mass index (BMI). The individual shown here has provided written informed consent that his body and face can be shown in publications.

In addition, for each participant an additional set of avatars was created with a different overall body shape but with the same height, weight, and sex as the participant (Figure 2). This was achieved by choosing the body of the individual in the CAESAR dataset that was closest to the average female or male body shape (with height $h_{avg}$ and weight $w_{avg}$). The height and weight of this individual's avatar were then matched to the participant's height and to the BMI of each of the participant's nine previously computed avatars by applying a corresponding change Δβ to the deformation coefficients via the regressor $X$. The resulting set of nine avatars had the participant's height and the same BMI steps but different relative body proportions and shape; this set is referred to hereafter as the “average” body shape.

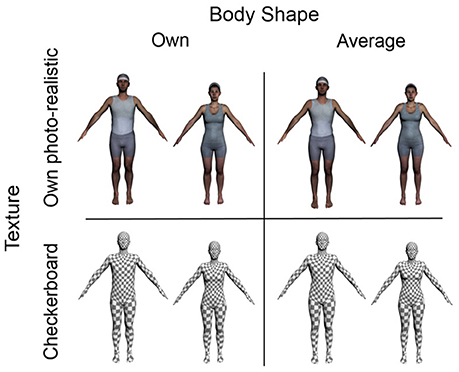

Figure 2. Four different experimental conditions used in the study with different texture-related cues (own photo-realistic, checkerboard) and shape-related cues (own shape, average shape). The individuals shown here have provided written informed consent that their bodies and faces can be shown in publications.

For both sets of avatars (own body shape, average body shape) and for each male and female participant, the nine avatars were combined in Autodesk 3ds Max 2015 such that it was possible to morph between the bodies in steps of 0.05% of the participant's actual BMI. To investigate the influence of color information (texture) on body weight perception, a checkerboard texture was generated in which any color details specific to the participants were removed. This texture also removes low-level visual features of the body and draws attention to the overall body shape (see Figure 2). A checkerboard texture was chosen over a unicolor matte texture because, with a matte texture, lighting would have had a greater influence on the availability of low-level versus holistic features of the body. In this study, all body stimuli were posed identically (by keeping the pose parameters constant) to remove any perceptual effects related to pose. The pose parameter vector was calculated as the average pose parameter vector of all registered scans in the A-pose.
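The text does not say how 3ds Max blends the nine key bodies. As a rough illustration only, the following sketch assumes piecewise-linear per-vertex interpolation between the two nearest key bodies, with the requested offset snapped to the 0.05% grid; `morph` and `quantize` are hypothetical helpers, and the mesh arrays are placeholders.

```python
import numpy as np

KEY_STEPS = np.arange(-20, 21, 5)  # % BMI offsets of the nine key bodies

def quantize(offset, step=0.05):
    """Snap a requested offset (in % of actual BMI) to the 0.05% adjustment grid."""
    return round(offset / step) * step

def morph(vertices, offset):
    """Blend key meshes, assuming piecewise-linear interpolation between neighbors.

    vertices: (9, n_verts, 3) key meshes ordered like KEY_STEPS.
    offset:   desired % BMI offset in [-20, 20].
    """
    if not -20 <= offset <= 20:
        raise ValueError("offset must lie within the +/-20% range")
    i = np.searchsorted(KEY_STEPS, offset, side="right") - 1
    i = min(i, len(KEY_STEPS) - 2)  # offset == 20 uses the last segment
    t = (offset - KEY_STEPS[i]) / (KEY_STEPS[i + 1] - KEY_STEPS[i])
    return (1 - t) * vertices[i] + t * vertices[i + 1]

# Placeholder meshes: key body k has every coordinate equal to k.
V = np.arange(9, dtype=float)[:, None, None] * np.ones((9, 4, 3))
halfway = morph(V, quantize(2.5))  # midway between the 0% and +5% bodies
```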

2.3. Experimental Setup and Virtual Scene

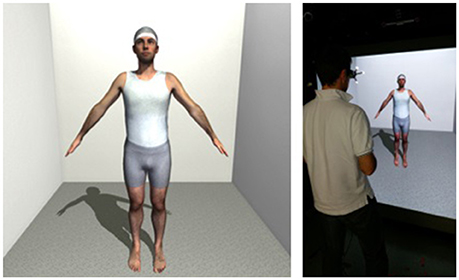

During the experiments, participants stood one meter in front of a flat, large-screen immersive display (LSID) (Figure 3, right). The visual stimuli were projected onto the display using a Christie SX+ stereoscopic video projector with a resolution of 1400 × 1050 pixels. The projection surface covered an area of 2.16 × 1.62 m with a floor offset of 0.265 m, resulting in a field of view of 94.4° (horizontal) × 78° (vertical) of visual angle. A motion tracking system (ART SMARTTRACK), consisting of two tracking cameras and one rigid object with reflective markers attached to the shutter glasses, was connected to the flat LSID. This provided motion parallax and improved the perception of distance to the avatar. The stereoscopic projection was generated using the average inter-pupillary distance (IPD) of 0.065 m (Willemsen et al., 2008). Willemsen et al. (2008) showed that using an average IPD rather than an individual-specific IPD did not affect distance judgments. Although an IPD of 0.065 m is closer to the mean IPD of males than of females (Dodgson, 2004), no participant commented on or complained about their stereo vision experience. Participants wore shutter glasses (NVIDIA) with a field of view of 103° × 62° of visual angle (corresponding to an area of 2.52 × 1.2 m of the flat LSID) in order to see the virtual scene stereoscopically. The virtual scene contained an empty room with a life-size avatar standing in a constant A-pose at a distance of two meters from the participant (Figure 3, left). Participants responded by pressing buttons on a joystick pad.
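The reported visual angles can be checked with the standard formula for a flat extent viewed from distance $d$, $\theta = 2\arctan(s / 2d)$. This sketch assumes that each extent is centered on the line of sight at the 1 m viewing distance; it ignores the floor offset and the participant's eye height, yet it reproduces all four reported values to within rounding.

```python
import math

def visual_angle_deg(extent_m: float, distance_m: float) -> float:
    """Full visual angle of a flat extent centered on the line of sight."""
    return math.degrees(2.0 * math.atan(extent_m / (2.0 * distance_m)))

VIEW_DISTANCE_M = 1.0
display_h = visual_angle_deg(2.16, VIEW_DISTANCE_M)  # ≈ 94.4 (display width)
display_v = visual_angle_deg(1.62, VIEW_DISTANCE_M)  # ≈ 78.0 (display height)
glasses_h = visual_angle_deg(2.52, VIEW_DISTANCE_M)  # ≈ 103.1 (glasses, horizontal)
glasses_v = visual_angle_deg(1.20, VIEW_DISTANCE_M)  # ≈ 61.9 (glasses, vertical)
```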

Figure 3. (Left) Virtual scene viewed by the participants; (Right) Participant views a personalized avatar on a large-screen immersive display. The individual shown here has provided written informed consent that his body and face can be shown in publications.

2.4. Procedure

The experimental procedure was conducted across two sessions. In the first session, the participants' bodies were scanned. In the second session, participants first filled out the Rosenberg self-esteem questionnaire (Rosenberg, 1965), followed by four blocks of two psychophysical experiments. The sessions were separated by m = 13.23 days (sd = 6.39) for the females and by m = 113.31 days (sd = 58.91) for the males.²

The blocks differed in the visual cues of the avatar (own shape with own photo-realistic texture, own shape with checkerboard texture, average shape with own photo-realistic texture, and average shape with checkerboard texture; Figure 2). The order of the blocks was randomized across participants; the order of the two psychophysical experiments within each block remained constant. After each block, participants had a short break and filled out a post-questionnaire in which they rated on a 7-point Likert scale how similar the avatar they had estimated to correspond to their current weight was to their physical body (e.g., “How similar was the avatar to you?” and “How similar were the legs/arms/torso/face of the avatar to your physical body?”). In addition, they evaluated the avatar's appearance in terms of uncanniness and their willingness to accept the avatar as a digital representation of the self. Participants were instructed to ask if they did not understand a question; in those cases, it was explained to them that uncanniness refers to a feeling of strangeness and/or lack of realism when viewing the whole body or body parts. The whole experiment took around 90 min.

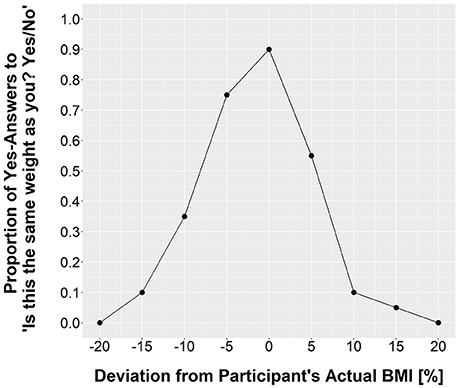

We used two psychophysical measures: the method of constant stimuli in a one-interval forced choice paradigm with two response possibilities (1AFC) and a method of adjustment (MoA) task. In each trial of the 1AFC task, participants were asked to judge whether the presented avatar corresponded to their actual body weight by responding to the question “Is it the same weight as you? Yes/No.” Each of the nine avatars (0, ±5%, ±10%, ±15%, and ±20% of the participant's actual BMI) was presented 20 times, resulting in a total of 180 trials. The trials were divided into 20 bins, each containing the set of nine avatars, such that each avatar was presented once before any avatar was repeated. The order of trials within each bin was randomized. The avatar was visible until a response was given, after which the screen went black for two seconds before the next trial started. After 45 trials, participants were offered a break to avoid fatigue. They were instructed to respond as accurately as possible. Following the 1AFC task, participants completed two MoA tasks in which, by pressing buttons on a joystick pad, they first adjusted the weight of the avatar nine times to match their actual weight and then nine times to match their ideal (desired) body weight. Each of the nine trials started from one of the nine avatars, in randomized order. Participants were free to interactively explore the whole weight range from −20% to +20% of their BMI. Morphing between the nine bodies was possible in steps of 0.05% of the participant's actual BMI. The MoA task for actual weight was used as a converging measure for the 1AFC procedure, given that both tasks index visual body weight estimation. If the MoA task yields results similar to those of the 1AFC task, it might be a useful method for avatar evaluation, because it is much quicker and, owing to its smaller step size, allows for more refined answers.
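The 1AFC trial order (20 bins, each a fresh random permutation of the nine avatars) and the randomized MoA starting points can be sketched in plain Python. The helper names are hypothetical, and the sketch assumes a break is offered after every 45 trials, which is one reading of the text.

```python
import random

OFFSETS = [-20, -15, -10, -5, 0, 5, 10, 15, 20]  # % BMI of the nine avatars

def afc_schedule(n_bins=20, break_every=45, seed=None):
    """Return the 1AFC trial sequence and the trial counts after which a break is offered."""
    rng = random.Random(seed)
    trials = []
    for _ in range(n_bins):
        block = OFFSETS[:]      # every avatar once per bin ...
        rng.shuffle(block)      # ... in a random order within the bin
        trials.extend(block)
    breaks = list(range(break_every, len(trials), break_every))
    return trials, breaks

def moa_start_points(seed=None):
    """Nine MoA trials, each starting from a different avatar in random order."""
    rng = random.Random(seed)
    starts = OFFSETS[:]
    rng.shuffle(starts)
    return starts

trials, breaks = afc_schedule(seed=42)
starts = moa_start_points(seed=1)
```

Shuffling within bins rather than across all 180 trials guarantees that no avatar is repeated before the full set has been shown.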

2.5. Data Analysis

To control for potential biases introduced by using a sex-specific model to generate the avatars with average body shape matched to each participant's height and weight, the body height and weight of each participant's own-shape and average-shape avatars were estimated. While the height of a virtual avatar can easily be measured, an avatar cannot be weighed directly. Therefore, the relation between the volume of a body and its weight was used, and a linear regressor predicting weight from volume was learned separately for females and males. For this purpose, the SMPL body model (Loper et al., 2015) was registered to all female and male subjects in the CAESAR dataset (Robinette et al., 2002), and the volume of each resulting registration was computed. The measured weights of the female and male CAESAR subjects are linearly related to the volume of the SMPL fit, but the relation differs slightly between genders. Both regressors were fit using iteratively reweighted least squares. Based on the estimated height and weight, the %-BMI deviation of each participant's average-shape avatar from the own-shape avatar was calculated. To ensure that any gender differences in body size estimates for avatars with own and average body shape were not caused by differences in the volume of the body stimuli, this %-BMI deviation was then used to correct each participant's body size estimates for the average-shaped body stimuli in the 1AFC and MoA tasks.
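A volume-to-weight regressor fit by iteratively reweighted least squares (IRLS), followed by the %-BMI deviation, might look like the sketch below. The source does not specify the weighting scheme, so Huber weights (tuning constant 1.345) with a median-absolute-deviation scale estimate are assumptions made here; the volumes, weights, and the slope of 1010 kg/m³ are synthetic, and computing mesh volumes from SMPL registrations is not shown.

```python
import numpy as np

def irls_line(x, y, c=1.345, n_iter=50, tol=1e-10):
    """Robust fit of y ~ a + b*x by IRLS (assumed Huber weights, MAD scale)."""
    D = np.column_stack([np.ones_like(x), x])
    coef, *_ = np.linalg.lstsq(D, y, rcond=None)           # ordinary least-squares start
    for _ in range(n_iter):
        r = y - D @ coef
        scale = np.median(np.abs(r - np.median(r))) / 0.6745
        if scale == 0:
            break
        u = np.abs(r) / (c * scale)
        w = np.where(u <= 1.0, 1.0, 1.0 / np.maximum(u, 1e-12))  # Huber weights
        sw = np.sqrt(w)
        new, *_ = np.linalg.lstsq(D * sw[:, None], y * sw, rcond=None)
        converged = np.max(np.abs(new - coef)) < tol
        coef = new
        if converged:
            break
    return coef  # [intercept, slope]

def bmi(weight_kg, height_m):
    return weight_kg / height_m ** 2

def pct_bmi_deviation(bmi_average_shape, bmi_own_shape):
    """Percent deviation of the average-shape avatar's BMI from the own-shape avatar's."""
    return 100.0 * (bmi_average_shape - bmi_own_shape) / bmi_own_shape

rng = np.random.default_rng(2)
volume = rng.uniform(0.05, 0.12, 300)                  # m^3, synthetic
weight = 2.0 + 1010.0 * volume + rng.normal(0, 1.0, 300)
weight[:5] += 30.0                                     # a few gross outliers
a, b = irls_line(volume, weight)
```

Downweighting large residuals keeps a handful of unusual scans from pulling the volume-to-weight line, which is the usual motivation for IRLS over ordinary least squares.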

For each participant, the proportion of yes-answers to the question “Is it the same weight as you?” was determined for each of the nine avatars, separately for each of the four conditions (for an example, see Figure 4). The body with the highest proportion of yes-answers reflected the participant's estimated body weight. To analyze participants' sensitivity to weight changes of bodies thinner and fatter than the estimated body weight, a cumulative Weibull function was fit, following Wichmann and Hill (2001), to the data on each side of the peak for each participant and condition. The position of the psychometric function along the x-axis (alpha), the slope steepness (beta), and the peak (lambda, which sets the upper asymptote at 1 − lambda) were free to vary. The lower asymptote (gamma) was fixed at zero. The accuracy of estimating body weight and the sensitivity to weight changes are independent of one another; for example, sensitivity to weight changes can be high even when one's own weight is under- or overestimated. In the MoA task, the average of the nine responses was calculated for each participant per condition and question (current body weight, ideal body weight).
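A minimal SciPy sketch of the two-sided fit, using the Wichmann and Hill parameterization $\psi(x) = \gamma + (1 - \gamma - \lambda)\,(1 - e^{-(x/\alpha)^\beta})$ with $\gamma = 0$. Because a Weibull function is defined only for positive $x$, this sketch assumes a hypothetical shift of the % BMI axis (offset + 25 for the thinner side, 25 − offset for the mirrored fatter side) and a minimum of four points per side; neither choice is taken from the source. In real data, `p_yes` would be the yes counts divided by 20 presentations.

```python
import numpy as np
from scipy.optimize import curve_fit

OFFSETS = np.arange(-20.0, 21.0, 5.0)  # % BMI offsets of the nine avatars

def weibull(x, alpha, beta, lam, gamma=0.0):
    """Wichmann & Hill form: gamma + (1 - gamma - lam) * F(x; alpha, beta)."""
    return gamma + (1.0 - gamma - lam) * (1.0 - np.exp(-(x / alpha) ** beta))

def fit_side(x, p):
    """Fit alpha, beta, lam to one side of the peak; gamma stays fixed at 0."""
    model = lambda xx, a, b, l: weibull(xx, a, b, l)
    p0 = [float(np.median(x)), 3.0, 0.1]
    bounds = ([1e-6, 0.1, 0.0], [np.inf, 50.0, 1.0])
    popt, _ = curve_fit(model, x, p, p0=p0, bounds=bounds, maxfev=20000)
    return popt  # [alpha, beta, lam]

def fit_both_sides(p_yes, shift=25.0, min_points=4):
    """Return the peak offset and the fits for the thinner and fatter sides."""
    peak = int(np.argmax(p_yes))
    thin_x = OFFSETS[: peak + 1] + shift   # rises toward the peak
    fat_x = shift - OFFSETS[peak:]         # mirrored so it also rises toward the peak
    thin = fit_side(thin_x, p_yes[: peak + 1]) if thin_x.size >= min_points else None
    fat = fit_side(fat_x, p_yes[peak:]) if fat_x.size >= min_points else None
    return OFFSETS[peak], thin, fat

x = np.array([5.0, 10.0, 15.0, 20.0, 25.0])
side = weibull(x, 20.0, 6.0, 0.1)
p_yes = np.concatenate([side, side[-2::-1]])  # symmetric synthetic data, peak at 0%
peak, thin, fat = fit_both_sides(p_yes)
```

Returning `None` for a side with too few points mirrors the situation described in the text, where some fits could not be obtained.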

All analyses were done in R v3.3.2. To analyze how the estimated own body weight in the 1AFC task (peak value) and the MoA task (average response) varied with body shape (own, average), texture (own photo-realistic, checkerboard), and participant gender (male, female), repeated-measures analyses of variance (ANOVAs) were conducted with body shape and texture as within-subject factors and participant gender as a between-subjects factor. To analyze how the slope steepness of the fitted psychometric functions varied with shape, texture, and participant gender, a multi-level regression analysis was conducted. Multi-level modeling was chosen because, for some participants and conditions, the Weibull function could not be fit due to an insufficient number of data points; this analysis can be performed despite data loss, whereas an ANOVA handles missing data poorly. The analysis was done using the lmer function of the lme4 package in R (Bates et al., 2015). Slope steepness (beta values) was regressed onto shape, texture, slope side (thinner, fatter), and participant gender, and all factors were allowed to interact. Specifically, the following mixed-effects model was fitted [in Wilkinson notation (Wilkinson and Rogers, 1973)]: Slope steepness ~ shape * texture * slope side * participant gender + (shape + texture + slope side | participant). It therefore included by-subject random slopes for shape, texture, and slope side. The reported results use the Satterthwaite approximation for degrees of freedom. For pairwise comparisons, the lsmeans package in R (Lenth, 2016) was used.

3. Results

The predicted BMI of the avatars with average body shape was 4.25% (se = 0.4) lower for the males and 0.35% (se = 0.3) lower for the females than the predicted BMI of the avatars with own body shape. For each participant, the body size estimates for the average-shaped avatars were therefore corrected in both the 1AFC and the MoA task.

3.1. Visual Perception of the Avatar

3.1.1. Estimation of the Avatar's Body Weight

We examined whether the influence of the visual cues (body shape, texture) on participants' estimates of the avatar's body weight differed for males and females. As a measure of the accuracy of body weight estimation, we first considered the %-BMI deviation of the avatar with the highest proportion of yes-answers to the question “Is this the same weight as you? Yes/No” from participants' actual BMI. Note that %-BMI deviation is equivalent to %-weight deviation, as the height of the body stimuli was kept constant. A repeated-measures ANOVA was conducted on the estimated body weight with shape (own, average) and texture (own photo-realistic, checkerboard) as within-subject factors and participant gender (male, female) as a between-subjects factor.
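Because every body stimulus kept the participant's height $h$, the equivalence between %-BMI and %-weight deviation follows in one line from $\mathrm{BMI} = w/h^2$:

$$
\frac{\mathrm{BMI}' - \mathrm{BMI}}{\mathrm{BMI}} = \frac{w'/h^2 - w/h^2}{w/h^2} = \frac{w' - w}{w}
$$

For example, for a 70 kg participant, the avatar at −5% BMI corresponds to a body weight of 66.5 kg.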

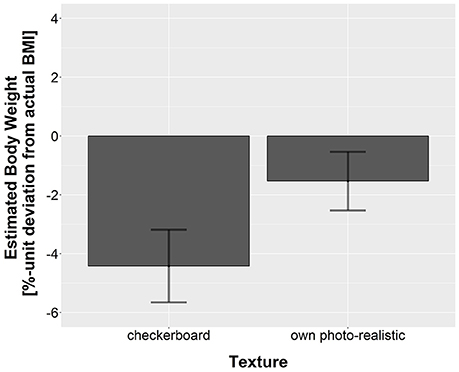

The ANOVA revealed that texture significantly influenced the estimated body weight, F(1, 24) = 13.54, p = 0.001, η² = 0.05. The mean estimated body weight in the 1AFC task in raw units (%-unit deviation from participants' actual BMI) for avatars with own photo-realistic and checkerboard texture is shown in Figure 5. Participants reported that an avatar with a significantly lower body weight corresponded to their actual weight for the checkerboard texture (m = −4.42%, se = 1.24) than for the own photo-realistic texture (m = −1.54%, se = 0.99). One-sample t-tests revealed that the estimated body weight differed significantly from 0 (participants' actual BMI) for the checkerboard texture, t(25) = −3.57, p = 0.001, but not for the own photo-realistic texture, t(25) = −1.54, p = 0.14. Thus, participants reported that their body weight corresponded to the weight of an avatar with a significantly lower BMI when the avatar was displayed with a checkerboard texture than when it was displayed with their own photo-realistic texture.
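The one-sample t-tests against 0 use n − 1 = 25 degrees of freedom (13 male and 13 female participants). As a rough consistency check, a minimal SciPy sketch can recompute t and a two-sided p from the reported means and standard errors; small discrepancies from the published t values are expected because the summary statistics are themselves rounded. The helper name is hypothetical.

```python
from scipy import stats

def one_sample_t_from_summary(mean, se, n):
    """Two-sided one-sample t-test of H0: mu = 0 from a sample mean and its SE."""
    t = mean / se
    df = n - 1
    p = 2.0 * stats.t.sf(abs(t), df)
    return t, df, p

N = 26  # 13 male + 13 female participants
checker = one_sample_t_from_summary(-4.42, 1.24, N)  # checkerboard texture
photo = one_sample_t_from_summary(-1.54, 0.99, N)    # own photo-realistic texture
```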

Figure 5. Mean estimated body weight in the 1AFC task in terms of percent BMI deviation from participants' actual BMI for own photo-realistic and checkerboard texture averaged across body shape of the avatar (own, average) and participant gender (male, female). Error bars represent one standard error of the mean.

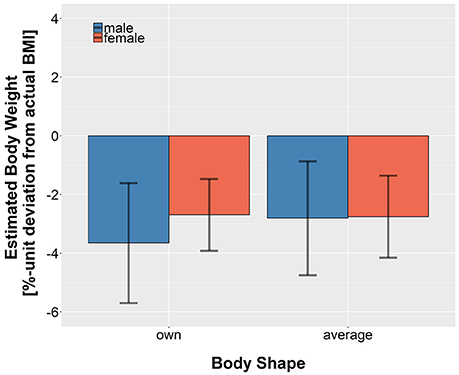

There was no main effect of body shape or participant gender, and no significant interactions. The mean estimated body weight in the 1AFC task in raw units (%-unit deviation from participants' actual BMI) for avatars with own shape and average shape for males and females is shown in Figure 6. On average, participants judged an avatar with their own body shape and a BMI deviation of −3.17% (se = 1.17) from their actual BMI, and an avatar with average body shape and a BMI deviation of −2.78% (se = 1.17), to be equivalent to their actual weight. The estimated body weight was significantly different from 0 both for own shape, t(25) = −2.71, p = 0.01, and for average shape, t(25) = −2.37, p = 0.03. Thus, participants reported that their body weight corresponded to the weight of an avatar that was significantly lighter than themselves, both for their own and for the average body shape.

Figure 6. Mean estimated body weight in the 1AFC task in terms of percent BMI deviation from participants' actual BMI for own shape and average shape averaged across texture for male and female participants. Error bars represent one standard error of the mean.

In addition to the 1AFC task, the MoA task for actual weight provided another measure of the accuracy of body weight estimation. To test whether the visual cues (shape and texture) affected the body weight estimates for actual weight in the MoA task in the same way as in the 1AFC task, a repeated-measures ANOVA was conducted on the adjusted body weight (%-unit deviation from participants' actual BMI) with shape and texture as within-subject factors and participant gender as a between-subjects factor. One female participant was excluded from the analysis because the data from one condition (own shape, checkerboard texture) were lost due to technical problems.

As in the 1AFC task, texture had a significant effect on the estimated body weight in the MoA task, F(1, 23) = 21.55, p < 0.001. With the checkerboard texture (m = −3.09%, se = 1.55), participants adjusted the avatar to a significantly lower body weight when matching their actual weight than with their own photo-realistic texture (m = 0.36%, se = 1.32). There was no main effect of shape or gender, and no significant interactions. On average, when estimating their actual weight, participants adjusted the avatar with their own body shape to a BMI 2.16% (se = 1.67) below their actual BMI, and the avatar with average body shape to a BMI 0.57% (se = 1.41) below it. The estimated body weight did not differ significantly from 0 for either own shape, t(25) = −1.29, p = 0.2, or average shape, t(25) = −0.41, p = 0.69. Thus, in contrast to the 1AFC task, participants accurately estimated their own body weight in the MoA task. This difference could be caused by the finer step size used in the MoA task, which allows for more variability in the responses.

3.1.2. Acceptance Range of the Avatar's Body Weight

We hypothesized that weight gain and weight loss might be emotionally loaded in different ways, e.g., with more negative emotions associated with weight gain. Therefore, the willingness to accept thinner and fatter bodies as corresponding to one's own body weight could also differ. In the present experiment, we suggest that the willingness to accept a body as corresponding to one's own weight might be reflected in the sensitivity to weight changes (the steepness of the psychometric curve). The steeper the slope, the fewer avatars would be accepted as corresponding to one's own body weight.
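The link between slope steepness and the accepted weight range can be made concrete with a sketch. The functional form of the psychometric function (section 2.5) is not part of this excerpt, so the code assumes a hypothetical two-sided curve p(x) = exp(−β(x − μ)²), with a separate β below and above the estimated body weight μ. The accepted range is taken as the set of BMI deviations where p ≥ 0.5, i.e., [μ − √(ln 2/β₋), μ + √(ln 2/β₊)]. The β values in this sketch are illustrative and are not on the scale of the beta values reported below.

```python
import math

def p_yes(x, mu, beta_neg, beta_pos):
    """Hypothetical two-sided response curve: probability of judging an avatar
    with BMI deviation x (%-units) as matching one's own weight."""
    beta = beta_neg if x < mu else beta_pos
    return math.exp(-beta * (x - mu) ** 2)

def acceptance_range(mu, beta_neg, beta_pos, criterion=0.5):
    """BMI deviations where p_yes >= criterion, solved separately for each side."""
    k = -math.log(criterion)
    return mu - math.sqrt(k / beta_neg), mu + math.sqrt(k / beta_pos)

# Same peak, one shallow and one steep curve (illustrative parameters only)
shallow = acceptance_range(mu=-2.0, beta_neg=0.01, beta_pos=0.01)
steep = acceptance_range(mu=-2.0, beta_neg=0.04, beta_pos=0.04)
print(shallow, steep)  # quadrupling beta halves the width of the accepted range
```

Under this assumed form, the width of the accepted range scales with 1/√β, so a steeper fall-off on either side directly shrinks the set of avatars judged to match one's own weight.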

To examine potential gender differences in the sensitivity to weight changes, we examined the fall-off rates for positive and negative changes in weight relative to the estimated body weight in each condition. As described in section 2.5, psychometric functions were fit to the data of each participant and condition separately. The mean fit of the psychometric functions to the data was good (mean R² = 0.996; se = 0.001). Of the 208 slopes in total (26 participants × 4 conditions × 2 slope sides), 13 could not be fit because there were too few data points (males: 9, females: 4).
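The fitting step and the R² criterion can be illustrated with a minimal sketch. Because the exact function and fitting procedure of section 2.5 are not given here, the code assumes a hypothetical two-sided curve p(x) = exp(−β(x − μ)²), holds μ fixed, fits β separately on each side by least-squares grid search, and reports R² = 1 − SS_res/SS_tot. The `min_points` threshold for declaring a side unfittable is also hypothetical. The data are simulated and noise-free, so the fit recovers the generating slopes exactly.

```python
import math

def p_yes(x, mu, beta_neg, beta_pos):
    """Hypothetical two-sided curve exp(-beta * (x - mu)^2) with side-specific beta."""
    beta = beta_neg if x < mu else beta_pos
    return math.exp(-beta * (x - mu) ** 2)

def fit_side(xs, ps, mu, beta_grid, min_points=3):
    """Least-squares grid search for one side's slope; None if too few points.
    min_points is a hypothetical threshold, not the criterion used in the study."""
    if len(xs) < min_points:
        return None
    def sse(beta):
        return sum((p - math.exp(-beta * (x - mu) ** 2)) ** 2 for x, p in zip(xs, ps))
    return min(beta_grid, key=sse)

def r_squared(observed, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean) ** 2 for o in observed)
    return 1 - ss_res / ss_tot

# Simulated, noise-free proportions at 5%-unit BMI steps with a known peak mu
mu = -2.0
xs = [-20, -15, -10, -5, 0, 5, 10, 15, 20]
ps = [p_yes(x, mu, 0.02, 0.05) for x in xs]
grid = [i / 1000 for i in range(1, 201)]
left = [(x, p) for x, p in zip(xs, ps) if x < mu]
right = [(x, p) for x, p in zip(xs, ps) if x >= mu]
beta_neg = fit_side([x for x, _ in left], [p for _, p in left], mu, grid)
beta_pos = fit_side([x for x, _ in right], [p for _, p in right], mu, grid)
fitted = [p_yes(x, mu, beta_neg, beta_pos) for x in xs]
print(beta_neg, beta_pos, r_squared(ps, fitted))
```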

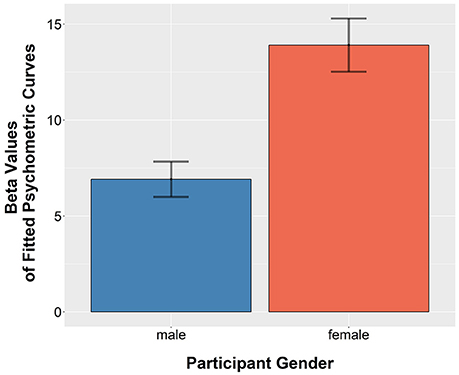

The multi-level regression analysis revealed that participant gender had a significant effect on the slope steepness, F(1, 22.35) = 6.32, p = 0.02. On average, male participants had lower beta values (m = 6.92, se = 0.92) than female participants (m = 13.91, se = 1.39), as shown in Figure 7. High beta values indicate a steeper fall-off rate and thus a higher sensitivity to weight changes. Thus, males were willing to accept a wider range of bodies as corresponding to their actual body weight as compared to females.

Figure 7. Mean beta values (indexing the slope steepness) of the psychometric function for male and female participants. Higher values indicate steeper slopes and thus higher sensitivity to weight changes. Error bars represent one standard error of the mean.

Further, there was a significant interaction between body shape and the direction of weight change, F(1, 133.66) = 4.55, p = 0.03. However, planned comparisons showed only a marginal difference between negative and positive changes in weight for the average body shape, t(47.25) = −1.94, p = 0.06, and no significant difference for own body shape, t(50.76) = 0.75, p = 0.45. There was also a significant interaction between texture and the direction of weight change, F(1, 132.31) = 5.8, p = 0.02. However, planned comparisons showed no significant difference between weight increase and decrease for either the checkerboard texture, t(52.14) = 0.6, p = 0.55, or the own photo-realistic texture, t(45.74) = −1.81, p = 0.08.

3.2. Evaluation of Avatar Appearance

3.2.1. Ideal Body Weight

To examine potential gender differences in how satisfied participants were with their body weight, we analyzed the discrepancy between the ideal weight adjusted in the MoA task and actual weight. A repeated-measures analysis of variance (ANOVA) was conducted on the adjusted ideal weight (in %-unit deviation from participants' actual BMI), with shape (own, average) and texture (own photo-realistic, checkerboard) as within-subject factors and participant gender (male, female) as a between-subjects factor.

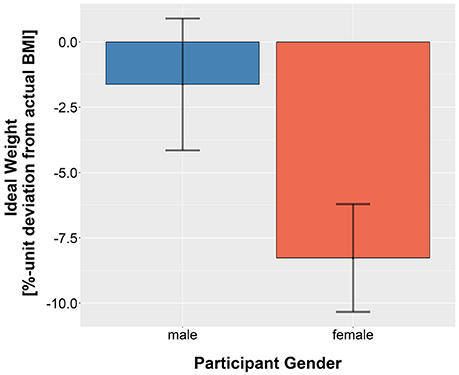

There was a marginal effect of gender, F(1, 23) = 4.07, p = 0.05. The adjusted ideal body weight was lower for females (m = −8.27%, se = 2.06) than for males (m = −1.62%, se = 2.52), as shown in Figure 8. One-sample t-tests revealed that the adjusted ideal body weight differed significantly from 0 for females, t(11) = −4.01, p = 0.002, but not for males, t(12) = −0.64, p = 0.53. Thus, males adjusted their ideal weight to approximately their actual weight, whereas females desired a thinner body, with a BMI on average about 8% below their actual BMI.

Figure 8. Mean adjusted BMI for ideal weight in terms of percent BMI deviation from participants' actual BMI for male and female participants. Error bars represent one standard error of the mean.
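Because BMI = weight/height² and height is fixed for a given participant, a relative change in BMI corresponds to the same relative change in body weight. Assuming that the %-unit deviation is the relative difference 100 · (BMI_avatar − BMI_actual)/BMI_actual, the sketch below converts the females' mean ideal-weight deviation of −8.27% into kilograms for a hypothetical participant (65 kg, 1.70 m; not data from the study).

```python
def bmi(weight_kg, height_m):
    """Body mass index in kg/m^2."""
    return weight_kg / height_m ** 2

def pct_bmi_deviation(avatar_bmi, actual_bmi):
    """Relative BMI deviation in %-units (assumed definition)."""
    return 100 * (avatar_bmi - actual_bmi) / actual_bmi

def weight_at_deviation(actual_weight_kg, pct):
    """Body weight at the same height whose BMI deviates by pct %-units."""
    return actual_weight_kg * (1 + pct / 100)

# Hypothetical participant, not data from the study
ideal_kg = weight_at_deviation(65.0, -8.27)
print(round(ideal_kg, 2))  # about 5.4 kg below 65 kg
print(round(pct_bmi_deviation(bmi(ideal_kg, 1.70), bmi(65.0, 1.70)), 2))  # height cancels out
```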

As for the estimated body weight in the 1AFC and MoA actual-weight tasks, texture also influenced the adjusted ideal body weight, F(1, 23) = 21.86, p < 0.001. Participants reduced the weight of the avatar with checkerboard texture (m = −6.38%, se = 1.81) more than that of the avatar with own photo-realistic texture (m = −3.25%, se = 1.75). Unlike in the 1AFC and MoA tasks, there was a significant interaction between shape and texture, F(1, 23) = 4.83, p = 0.04: the effect of texture was larger for own shape than for average shape.
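In a 2 × 2 within-subject design, the shape × texture interaction asks whether the texture effect differs between the two shapes, i.e., it tests the contrast (own_checkerboard − own_photo) − (average_checkerboard − average_photo). The per-cell means are not reported in this section, so the values in the sketch below are placeholders chosen only to show the computation.

```python
def texture_effect(cells, shape):
    """Texture effect (checkerboard minus own photo-realistic) for one body shape."""
    return cells[(shape, "checkerboard")] - cells[(shape, "photo")]

def interaction_contrast(cells):
    """Shape x texture interaction: difference between the two texture effects."""
    return texture_effect(cells, "own") - texture_effect(cells, "average")

# Placeholder cell means (%-unit BMI deviation); NOT values from the study
cells = {
    ("own", "photo"): -3.0, ("own", "checkerboard"): -7.0,
    ("average", "photo"): -3.5, ("average", "checkerboard"): -5.5,
}
print(interaction_contrast(cells))  # negative: texture lowered ideal weight more for own shape
```

Computed on each participant's four cell means, the same contrast gives one score per participant; for a one-degree-of-freedom within-subject effect such as this, the repeated-measures interaction test is equivalent to testing whether the mean of these scores differs from zero.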

It has been suggested that the discrepancy between the avatar's body and a user's physical body is related to self-esteem (Dunn and Guadagno, 2012). To examine whether self-esteem differed between males and females, we analyzed the results of the Rosenberg self-esteem questionnaire, which contains 10 statements rated on a 4-point scale (strongly agree, agree, disagree, strongly disagree) (Rosenberg, 1965). Scores between 15 and 25 (out of 30) indicate self-esteem in the normal range. On average, males scored 23.23 (sd = 3.61) and females 21.46 (sd = 5.44); two females and one male scored below 15, and three females and two males scored above 25. Since the scores were not normally distributed, a Wilcoxon rank-sum test was used to test for a difference in self-esteem between genders. Self-esteem scores did not differ between males (Mdn = 24) and females (Mdn = 22), W = 65.5, p = 0.34, r = −0.19, and thus cannot explain the gender difference in desired body weight. The sample size of 13 per gender was too small for correlation analyses between the self-esteem scores and the results of the psychophysical experiments.
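The 0 to 30 range implies that each of the 10 items is scored from 0 to 3. The sketch below shows the commonly used scoring scheme; since the items are not listed here, it assumes the standard item order, in which items 2, 5, 6, 8, and 9 are negatively worded and reverse-scored. The normal-range cut-offs (15 to 25) are the ones stated above.

```python
# Assumed standard item order: items 2, 5, 6, 8, 9 are negatively worded
REVERSED_ITEMS = {2, 5, 6, 8, 9}
POINTS = {"strongly agree": 3, "agree": 2, "disagree": 1, "strongly disagree": 0}

def rosenberg_score(responses):
    """Total score (0-30) from ten answers given in item order."""
    if len(responses) != 10:
        raise ValueError("the scale has exactly 10 items")
    total = 0
    for item, answer in enumerate(responses, start=1):
        points = POINTS[answer]
        # Reverse-scored items: strongly agree -> 0, ..., strongly disagree -> 3
        total += 3 - points if item in REVERSED_ITEMS else points
    return total

def classify(score):
    """Classify a total score using the 15-25 normal range."""
    if score < 15:
        return "below normal range"
    if score > 25:
        return "above normal range"
    return "normal range"

print(classify(rosenberg_score(["agree"] * 10)))
```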

3.3. Perceived Similarity of the Avatar to the Own Body

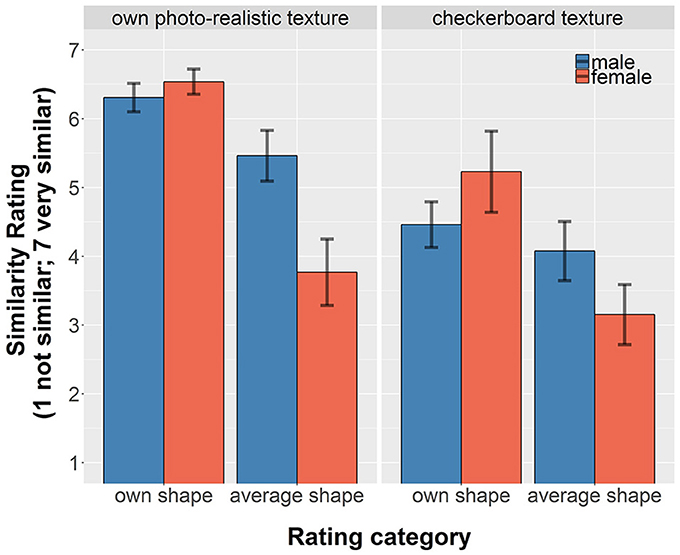

Perceived similarity of the avatar to the participants' own physical body could have influenced the results of the psychophysical experiments. Figure 9 shows the participants' ratings of the similarity of the avatars' overall appearance, as assessed by the question “How similar was the avatar to you?” after each block of psychophysical experiments.

Figure 9. Mean similarity ratings for the overall appearance of the avatar as assessed by the post-questionnaires for all four conditions for male and female participants. Error bars represent one standard error of the mean.

To investigate potential gender differences in the influence of the visual cues on the perceived similarity of the avatar to participants' own physical body, we compared the similarity ratings statistically. Since the data were not normally distributed, they were analyzed using non-parametric tests: Wilcoxon rank-sum tests to examine gender differences, and Wilcoxon signed-rank tests to examine the influence of visual cues on perceived similarity separately for male and female participants. Because non-parametric tests operate on the ranks of the data rather than on the raw values, medians rather than means are reported below together with the statistical results.
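Both tests reduce the data to ranks. The sketch below computes the rank-sum statistic in the form R's `wilcox.test` reports as W (rank sum of the first group minus n₁(n₁ + 1)/2), the signed-rank statistic that R reports as V for paired data (summed ranks of the positive differences), and an effect size r = z/√N from the normal approximation with continuity correction. The paper does not state how r was computed, so r = z/√N is an assumption; the tie correction of the variance is omitted for brevity. With this assumption, W = 65.5 and 13 participants per gender give r ≈ −0.19, close to the value reported for self-esteem. A signed-rank statistic of 0 means that every nonzero paired difference had the same sign.

```python
import math

def average_ranks(values):
    """Ranks 1..n; tied values share the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # sorted positions i..j hold ranks i+1..j+1
        i = j + 1
    return ranks

def rank_sum_w(x, y):
    """Rank-sum W as R's wilcox.test reports it: rank sum of x minus n_x(n_x + 1)/2."""
    ranks = average_ranks(list(x) + list(y))
    return sum(ranks[:len(x)]) - len(x) * (len(x) + 1) / 2

def signed_rank_v(x, y):
    """Signed-rank statistic: summed ranks of |x - y| over positive differences (zeros dropped)."""
    d = [a - b for a, b in zip(x, y) if a != b]
    ranks = average_ranks([abs(v) for v in d])
    return sum(r for r, v in zip(ranks, d) if v > 0)

def rank_sum_r(w, n1, n2, continuity=True):
    """Normal-approximation z for W (no tie correction) and effect size r = z / sqrt(N)."""
    mu = n1 * n2 / 2
    sigma = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    diff = w - mu
    if continuity and diff != 0:
        diff -= math.copysign(0.5, diff)  # shift half a unit toward the mean
    z = diff / sigma
    return z, z / math.sqrt(n1 + n2)

z, r = rank_sum_r(65.5, 13, 13)
print(round(z, 2), round(r, 2))
```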

Averaged across conditions, males and females gave similarly high similarity ratings for the overall appearance of the avatar (females: Mdn = 4.75; males: Mdn = 5), W = 63, p = 0.28 (Figure 9). Avatars with own body shape received higher similarity ratings than avatars with average shape, both for males (own shape: Mdn = 4.9, average shape: Mdn = 4.4; W = 13, p = 0.045, r = −0.39) and for females (own shape: Mdn = 6.2, average shape: Mdn = 3.5; W = 1, p < 0.001, r = −0.68). The texture of the avatar also influenced the similarity ratings: avatars with own photo-realistic texture received higher similarity ratings than avatars with checkerboard texture, both for males (own photo-realistic texture: Mdn = 4.9, checkerboard texture: Mdn = 4.4; W = 0, p = 0.002, r = −0.62) and for females (own photo-realistic texture: Mdn = 6.2, checkerboard texture: Mdn = 3.5; W = 0, p < 0.001, r = −0.72). As can be seen in Figure 9, males rated the avatars as more similar to their physical body when they had their own photo-realistic texture, whereas females gave higher ratings to avatars with their own body shape, independent of whether they had their own or the checkerboard texture.

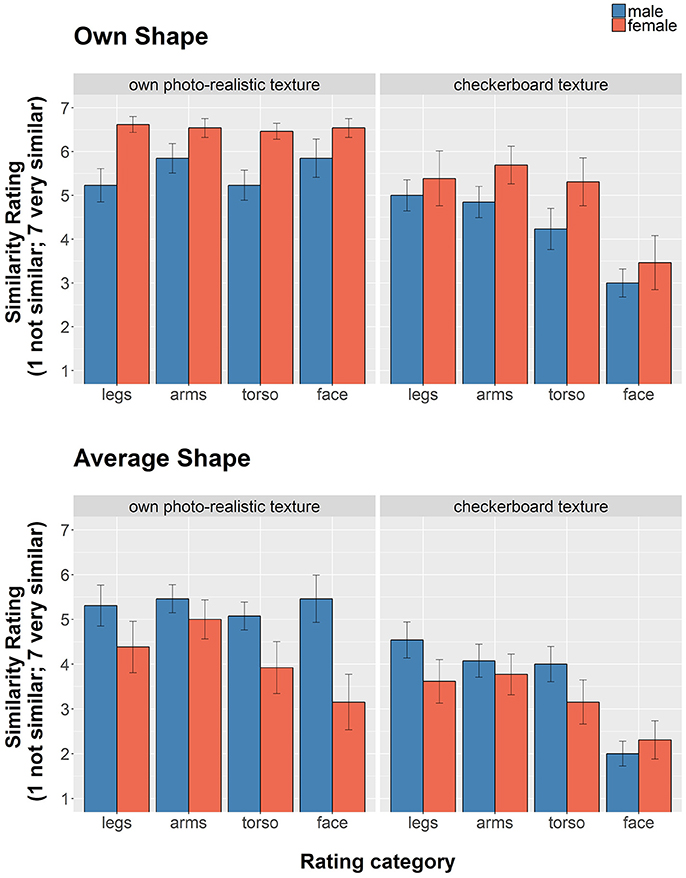

We further examined the similarity ratings for individual body parts (Figure 10). Not surprisingly, the largest drop in similarity ratings when the personalized features (shape, texture) were removed occurred for the face. For the body parts, the same general pattern of cue importance held: own body shape was more important for females, and own photo-realistic texture was more important for males.

Figure 10. Mean similarity ratings for body parts of the avatar as assessed by the post-questionnaires for all four conditions for male and female participants. Error bars represent one standard error of the mean.

3.4. Questionnaire Responses

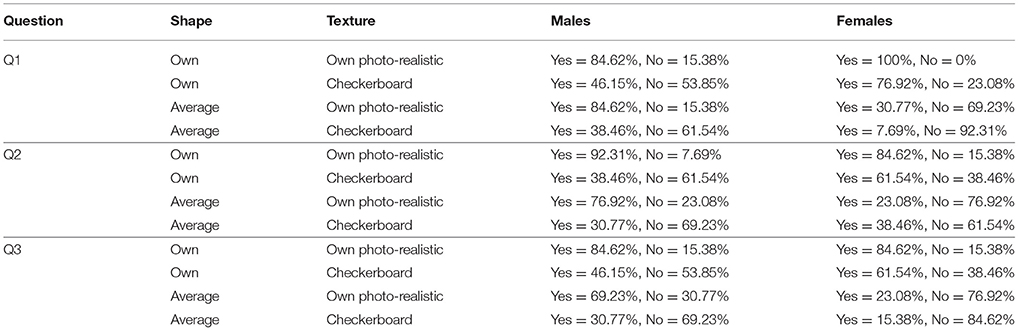

In addition, participants were asked whether they knew the avatar and whether they felt that the avatar represented them in a virtual environment (Table 2). In line with the similarity ratings, males and females perceived the avatar with their own shape and own photo-realistic texture as very familiar and indicated that they felt the avatar represented them in a virtual environment. Further, as for the similarity ratings, own photo-realistic texture was more important for males, whereas body shape was more important for females. Males perceived the avatar with their own photo-realistic texture and an average body shape as being as familiar as the avatar with their own body shape. For females, body shape had a greater influence than texture: avatars with own shape were perceived as more familiar than avatars with average shape, independent of texture.

Table 2. Responses to Q1: “Do you know this avatar? Yes/No,” Q2: “Do you feel the avatar was representing you in the virtual environment? Yes/No,” Q3: “Do you agree with the statement? When I was looking at the avatar, sometimes I had the feeling that I was looking at myself.”

We also asked participants whether looking at the avatars elicited any feeling of uncanniness (eeriness) and, if so, which body part they thought looked most strange. It has been suggested that people's responses to human-like entities such as virtual humans (avatars) shift from empathy to revulsion when they approach a lifelike appearance but fail to attain it (Mori et al., 2012). Independent of condition, fewer females than males reported uncanny feelings, and males reported strangeness especially for avatars with their own photo-realistic texture. Specifically, 69.23% of the men and 23.08% of the women reported feelings of uncanniness for own shape and own photo-realistic texture, 30.77% of the men and 7.69% of the women for own shape and checkerboard texture, 61.54% of the men and 23.08% of the women for average shape and own photo-realistic texture, and 30.77% of the men and 15.38% of the women for average shape and checkerboard texture. The responses to the open question “Which part of the avatar felt the most strange to you?” were as follows (percentages refer to all 13 participants of each gender; participants could name more than one body part). Males answered: torso (53.85%), arms (53.85%), legs (38.46%), belly (30.77%), eyes (23.08%), hair (7.69%), ears (7.69%), head in general (7.69%), shoulders (7.69%), clothing/lighting (7.69%), neck (7.69%), other: “all, because it's too static” (7.69%); females answered: eyes (38.46%), nose (15.38%), legs (15.38%), face/head (7.69%), mouth (7.69%), torso (7.69%), arms (7.69%).
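With 13 participants per gender, each participant accounts for about 7.69 percentage points, so the uncanniness percentages above correspond to small counts. The sketch below converts the reported percentages back to numbers of participants and checks that each value is a rounded multiple of 1/13.

```python
N_PER_GENDER = 13  # participants per gender, as stated in the text

def to_count(percent, n=N_PER_GENDER):
    """Convert a reported percentage back to a whole number of participants."""
    count = round(percent * n / 100)
    # Sanity check: the reported value should be a rounded fraction of n
    if abs(100 * count / n - percent) > 0.01:
        raise ValueError(f"{percent}% is not a multiple of 1/{n}")
    return count

# Conditions in order: own/photo, own/checkerboard, average/photo, average/checkerboard
uncanny_males = [69.23, 30.77, 61.54, 30.77]
uncanny_females = [23.08, 7.69, 23.08, 15.38]
print([to_count(p) for p in uncanny_males], [to_count(p) for p in uncanny_females])
```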

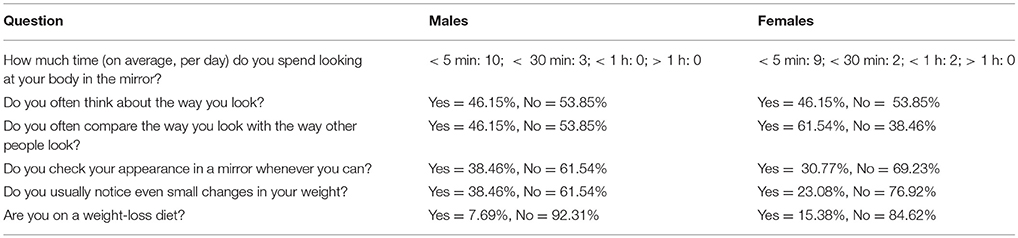

Finally, to check for possible confounds, participants were asked general questions related to body image in the post-questionnaire (Table 3). Overall, males and females did not differ in their concerns about their appearance.

4. Discussion

The current study investigated potential gender differences in the use of visual cues (shape and texture) of a static avatar to estimate body weight and to evaluate the avatar's appearance. With regard to the visual perception of the avatars, we found no gender difference in the accuracy of body weight estimation. As previously shown for females (Piryankova et al., 2014a), males' perception of the avatar's body weight was also influenced by the texture displayed on the avatar: bodies with a checkerboard texture were perceived as heavier than bodies with one's own photo-realistic texture. Luminance variation due to shading is an important indicator of an object's shape. Unlike the checkerboard texture, which contains no directional lighting information, the photo-realistic texture contains some lighting information, which provides shape from shading as an additional cue to body shape. Other studies have found that garments (horizontal and/or vertical stripes) influence body size perception (Thompson and Mikellidou, 2011; Swami and Harris, 2012), but with conflicting results, which suggests that the effect of garments may vary with individual body size (Ashida et al., 2013).
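The shape-from-shading argument can be illustrated with a minimal sketch that assumes a Lambertian surface and a single distant light shining from the viewer's direction; these are simplifications, not the rendering used in the study. Across a horizontal cross-section of a cylinder (a crude stand-in for a torso), brightness falls off as the surface normal turns away from the light, so the luminance profile encodes the curvature of the surface. A texture with baked-in lighting, such as the scan-derived photo-realistic texture, carries this kind of gradient, whereas a flat checkerboard pattern does not.

```python
import math

def lambert(normal, light, albedo=1.0):
    """Lambertian intensity: albedo * max(0, n . l) for unit vectors n and l."""
    dot = sum(n * l for n, l in zip(normal, light))
    return albedo * max(0.0, dot)

def cylinder_profile(radius, samples, light=(0.0, 1.0)):
    """Intensity across the visible half of a circular cross-section.
    x runs from -radius to +radius; the unit normal at (x, y) is (x, y) / radius,
    and the default light direction points from the surface toward the viewer."""
    profile = []
    for i in range(samples):
        x = -radius + 2 * radius * i / (samples - 1)
        y = math.sqrt(max(0.0, radius ** 2 - x ** 2))
        profile.append(lambert((x / radius, y / radius), light))
    return profile

shaded = cylinder_profile(radius=1.0, samples=5)
print([round(v, 3) for v in shaded])  # bright at the centre, dark at the silhouette
```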

The body shape of the avatar (own, average) had no influence on participants' body weight estimates. In the current study, two psychophysical tasks were used to assess body weight estimation: a one-alternative forced choice task (1AFC), in which participants judged whether the presented bodies varying in BMI corresponded to their own body weight, and a method of adjustment task (MoA), in which participants adjusted the body weight until it matched their own. The two methods produced slightly different results. In the 1AFC task, both males and females judged avatars with a significantly lower BMI than their own to match their actual weight, whereas in the MoA task their adjustments did not differ significantly from their actual weight. This difference could be due to the finer step size in the MoA task, which allows more precise adjustments. Nevertheless, the MoA task may be a useful alternative to the 1AFC task for measuring perceived weight, as it is much faster to administer. Additionally, the MoA task lends itself well to avatar creation, because users could adjust a body shape to their own perceived bodily dimensions before using it in virtual reality.

We found gender differences in the weight range of the avatar that participants accepted as corresponding to their own body. Males accepted a wider weight range as corresponding to their own weight than females did. The higher sensitivity to weight changes in females is in line with literature showing that females' body image concerns center on body weight (Feingold and Mazzella, 1998). Future studies should investigate the visual perception of other aspects of the body, such as muscularity, masculinity, strength (Wellerdiek et al., 2015), confidence, and attractiveness, and how these relate to personality traits and body acceptance.

With regard to the evaluation of the avatars in terms of the desired body, we found no difference between the actual and desired body weight for males, whereas females desired a body with a significantly lower weight than their own. This finding is in line with previous research showing that females wish for a significantly thinner body (Grabe et al., 2008). Importantly, however, our results do not necessarily imply that males were satisfied with their body overall; rather, changing the avatar's weight in either direction did not produce a body shape that they desired more than their own.

Viewing their own photo-realistic texture was more important to males for rating the avatar's appearance as similar to their own body. Females, on the other hand, rated avatars with their own underlying body shape as more similar to their own body, independent of the texture. These results suggest that both texture and shape are important for creating self-avatars. Thus, if self-avatars are intended to elicit strong identification, it may be more important for women's avatars to reproduce their own body shape (e.g., with tools such as Body Talk (Streuber et al., 2016): http://bodytalk.is.tue.mpg.de/, and Body Visualizer: http://bodyvisualizer.com/), whereas for men's avatars it may be more important to focus on the realism of the texture. This might be because one's own body shape carries the relevant information about weight, whereas the photo-realistic texture carries pertinent information about one's muscularity. However, more research is needed on the rapid creation of self-avatars and on the relative influence of shape and texture on the many factors of self-identification (e.g., self-identity, weight, muscularity, posture, motion, agency). Future work should also test these implications with different textures.

Men reported more uncanniness when looking at the avatars, especially when the avatars had their own photo-realistic texture. Possibly, the higher uncanniness ratings in males are partly due to a higher sense of similarity. Future studies should additionally employ measures of perceived realism and embodiment. In this regard, it would be interesting to examine how animating the avatar to replicate the user's motion would influence the perception and evaluation of the avatar's appearance. Future research could also investigate possible interactions between the visual perception and the evaluation of self-avatars. While the body image literature suggests that these components are largely independent of each other, it would be worth investigating whether attentional mechanisms play a role. Specifically, if uncanniness is perceived in certain body parts, it could draw attention to those body parts, thereby over-emphasizing them and possibly biasing overall body perception.

5. Conclusion

We showed that there are some gender differences in the visual perception of self-avatars and in the evaluation of their appearance. With regard to visual perception, we found no gender difference in the accuracy of body weight estimation. Men, however, were less sensitive than women to weight changes in the avatars and accepted a larger weight range as corresponding to their own weight. With regard to the evaluation of the avatars' appearance, we found no difference between actual and desired body weight for men, whereas women's desired body weight was lower than their actual weight. Additionally, viewing their own photo-realistic texture was more important to men for rating the avatar's appearance as similar to their own body, whereas own body shape seemed to be more important to women. Men also reported more uncanniness when viewing the avatars. These gender differences suggest that when self-avatars are created for scenarios in which it matters more that the avatar matches users' perception of themselves than that it is metrically accurate, the design should take these differences into account if both men and women are expected to use the VR setup. Likewise, if the aim is to create a highly similar self-avatar for users of only one gender, this research suggests focusing on shape when creating female avatars and on texture when creating male avatars.

Author Contributions

IP, JS, SdlR, and BM conceived the study and the experimental protocol. MB provided the body scanning facilities and resources. IP, JR, and SS processed the body scans and generated the avatars. IP ran the experiment. AT analyzed and visualized the data, wrote the manuscript, and addressed the revisions. AT and BM prepared the body shape analysis; SP analyzed the shape differences. JS and BM helped revise the manuscript. All authors provided final revisions and approval.

Funding

This research was supported by the Alexander von Humboldt Foundation, Fellowship for Experienced Researchers for JS, and the National Science Foundation (NSF IIS-11-16636), the EC FP7 project VR-HYPERSPACE (AAT-2011-RTD-1-285681), and the Centre for Integrative Neuroscience Tübingen through the German Excellence Initiative (EXC307) Pool-Projekt-2014-03.

Conflict of Interest Statement

MB is a co-founder, investor, and member of the board of Body Labs Inc.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We wish to thank Naureen Mahmood for useful suggestions and discussions related to the features of the body scans; Emma-Jayne Holderness, Sophie Lupas, and Andrea Keller for scanning the participants; Joachim Tesch for help with Unity programming; Trevor Dodds and Aurelie Saulton for suggestions and discussions about the psychophysical experiment and perception of body size; and Eric Rachlin and Jessica Purmort for early discussions.

Footnotes

1. ^None of the participants indicated a difference between their biological sex and gender; we therefore use the words interchangeably.

2. ^The time interval was significantly longer for males than for females, but because our analyses of the accuracy of body size estimation in the 1AFC task across conditions do not show more variability in males than in females, we think it is unlikely that this time difference between testing males and females influenced our results. Further, given the 5%-unit BMI steps of the avatars, males' weight would have had to change considerably within those 3–4 months to alter their body size estimates.

References

Ashida, H., Kuraguchi, K., and Miyoshi, K. (2013). Helmholtz illusion makes you look fit only when you are already fit, but not for everyone. i-Perception 4, 347–351. doi: 10.1068/i0595rep

Badler, N. (1997). “Virtual humans for animation, ergonomics, and simulation,” in Proceedings of the IEEE Nonrigid and Articulated Motion Workshop (San Juan: IEEE), 28–36.

Bailenson, J. N., Yee, N., Merget, D., and Schroeder, R. (2006). The effect of behavioral realism and form realism of real-time avatar faces on verbal disclosure, nonverbal disclosure, emotion recognition, and copresence in dyadic interaction. Presence 15, 359–372. doi: 10.1162/pres.15.4.359

Bates, D., Mächler, M., Bolker, B., and Walker, S. (2015). Fitting linear mixed-effects models using lme4. J. Stat. Softw. 67, 1–48. doi: 10.18637/jss.v067.i01

Biocca, F. (2014). “Connected to my avatar,” in International Conference on Social Computing and Social Media (Heraklion: Springer), 421–429.

Blond, A. (2008). Impacts of exposure to images of ideal bodies on male body dissatisfaction: a review. Body Image 5, 244–250. doi: 10.1016/j.bodyim.2008.02.003

Christou, C., and Michael, D. (2014). “Aliens versus humans: do avatars make a difference in how we play the game?,” in 2014 6th International Conference on Games and Virtual Worlds for Serious Applications (VS-GAMES) (Valletta: IEEE), 1–7.

Combs, C. D., Sokolowski, J. A., and Banks, C. M. (2015). The Digital Patient: Advancing Healthcare, Research, and Education. Hoboken, NJ: John Wiley & Sons.

Dodgson, N. A. (2004). “Variation and extrema of human interpupillary distance,” in Stereoscopic Displays and Virtual Reality Systems XI, Vol. 5291 (San Jose, CA: International Society for Optics and Photonics), 36–46.

Ducheneaut, N., Wen, M.-H., Yee, N., and Wadley, G. (2009). “Body and mind: a study of avatar personalization in three virtual worlds,” in Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (Boston, MA: ACM), 1151–1160.

Dunn, R. A., and Guadagno, R. E. (2012). My avatar and me – Gender and personality predictors of avatar-self discrepancy. Comput. Hum. Behav. 28, 97–106. doi: 10.1016/j.chb.2011.08.015

Feingold, A., and Mazzella, R. (1998). Gender differences in body image are increasing. Psychol. Sci. 9, 190–195.

Garau, M., Slater, M., Vinayagamoorthy, V., Brogni, A., Steed, A., and Sasse, M. A. (2003). “The impact of avatar realism and eye gaze control on perceived quality of communication in a shared immersive virtual environment,” in Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (Ft. Lauderdale, FL: ACM), 529–536.

Gardner, R., and Brown, D. (2011). “Measurement of the perceptual aspects of body image,” in Body Image: Perceptions, Interpretations and Attitudes, ed S. B. Greene (New York, NY: Nova Science), 81–102.

Gardner, R. M. (2014). Weight status and the perception of body image in men. Psychol. Res. Behav. Manage. 7, 175–184. doi: 10.2147/PRBM.S49053

GBE (2013). Gesundheitsberichterstattung des Bundes - Distribution of the German Population to Groups in Terms of Body Mass Index in Percent. (Accessed 2018-03-07).

Grabe, S., Ward, L. M., and Hyde, J. S. (2008). The role of the media in body image concerns among women: a meta-analysis of experimental and correlational studies. Psychol. Bull. 134, 460–476. doi: 10.1037/0033-2909.134.3.460

Guan, P., Reiss, L., Hirshberg, D. A., Weiss, A., and Black, M. J. (2012). DRAPE: DRessing Any PErson. ACM Trans. Graph. 31, 35–41. doi: 10.1145/2185520.2185531

Hayes, A. T., Straub, C. L., Dieker, L. A., Hughes, C. E., and Hynes, M. C. (2013). Ludic learning: exploration of TLE TeachLivE™ and effective teacher training. Int. J. Gaming Comput. Mediat. Simul. 5, 20–33. doi: 10.4018/jgcms.2013040102

Hirshberg, D. A., Loper, M., Rachlin, E., and Black, M. J. (2012). Coregistration: simultaneous alignment and modeling of articulated 3D shape. Lecture Notes Comput. Sci. 7577 (Pt 6), 242–255. doi: 10.1007/978-3-642-33783-3_18

Honglun, H., Shouqian, S., and Yunhe, P. (2007). Research on virtual human in ergonomic simulation. Comput. Indus. Eng. 53, 350–356. doi: 10.1016/j.cie.2007.06.027

Jun, E., Stefanucci, J. K., and Creem-regehr, S. H. (2015). Big Foot: using the size of a virtual foot to scale gap width. ACM Trans. Appl. Percept. 12, 1–16. doi: 10.1145/2811266

Lee, Y.-A., Damhorst, M. L., Lee, M.-S., Kozar, J. M., and Martin, P. (2012). Older women's clothing fit and style concerns and their attitudes toward the use of 3D body scanning. Cloth Text. Res. J. 30, 102–118. doi: 10.1177/0887302X11429741

Lenth, R. V. (2016). Least-squares means: the R package lsmeans. J. Stat. Softw. 69, 1–33. doi: 10.18637/jss.v069.i01

Leyrer, M., Linkenauger, S. A., Bülthoff, H. H., Kloos, U., and Mohler, B. (2011). “The influence of eye height and avatars on egocentric distance estimates in immersive virtual environments,” in Proceedings of the ACM SIGGRAPH Symposium on Applied Perception in Graphics and Visualization - APGV '11, Vol. 1 (Toulouse), 67–74.

Linkenauger, S. A., Bülthoff, H. H., and Mohler, B. J. (2015). Virtual arm's reach influences perceived distances but only after experience reaching. Neuropsychologia 70, 393–401. doi: 10.1016/j.neuropsychologia.2014.10.034

Linkenauger, S. A., Ramenzoni, V., and Proffitt, D. R. (2010). Illusory shrinkage and growth: body-based rescaling affects the perception of size. Psychol. Sci. 21, 1318–1325. doi: 10.1177/0956797610380700

Linkenauger, S. A., Witt, J. K., and Proffitt, D. R. (2011). Taking a hands-on approach: Apparent grasping ability scales the perception of object size. J. Exp. Psychol. Hum. Percept. Perform. 37, 1432–1441. doi: 10.1037/a0024248

Loper, M., Mahmood, N., Romero, J., Pons-Moll, G., and Black, M. J. (2015). SMPL: A Skinned Multi-person Linear Model. ACM Trans. Graph. 34, 1–16. doi: 10.1145/2816795.2818013

Magnenat-Thalmann, N., Kevelham, B., Volino, P., Kasap, M., and Lyard, E. (2011). 3D Web-based virtual try on of physically simulated clothes. Comput. Aided Design Appl. 8, 163–174. doi: 10.3722/cadaps.2011.163-174

Malleson, C., Kosek, M., Klaudiny, M., Huerta, I., Bazin, J.-C., Sorkine-Hornung, A., et al. (2017). “Rapid one-shot acquisition of dynamic VR avatars,” in IEEE Virtual Reality (VR) (Los Angeles, CA: IEEE), 131–140.

Mark, L. S., and Vogele, D. (1987). A biodynamic basis for perceived categories of action: a study of sitting and stair climbing. J. Motor Behav. 19, 367–384.

McManus, E. A., Bodenheimer, B., Streuber, S., De La Rosa, S., Bülthoff, H. H., and Mohler, B. J. (2011). “The influence of avatar (self and character) animations on distance estimation, object interaction and locomotion in immersive virtual environments,” in Proceedings of the ACM SIGGRAPH Symposium on Applied Perception in Graphics and Visualization (Toulouse: ACM), 37–44.

Mohler, B. J., Creem-Regehr, S. H., Thompson, W. B., and Bülthoff, H. H. (2010). The effect of viewing a self-avatar on distance judgments in an HMD-based virtual environment. Presence 19, 230–242. doi: 10.1162/pres.19.3.230

Mölbert, S. C., Thaler, A., Mohler, B. J., Streuber, S., Romero, J., Black, M. J., et al. (2017a). Assessing body image in anorexia nervosa using biometric self-avatars in virtual reality: attitudinal components rather than visual body size estimation are distorted. Psychol. Med. 48, 642–653. doi: 10.1017/S0033291717002008

Mölbert, S. C., Thaler, A., Streuber, S., Black, M. J., Karnath, H.-O., Zipfel, S., et al. (2017b). Investigating body image disturbance in anorexia nervosa using novel biometric figure rating scales: A pilot study. Eur. Eat. Disord. Rev. 25, 607–612. doi: 10.1002/erv.2559

Mori, M., MacDorman, K. F., and Kageki, N. (2012). The uncanny valley [from the field]. IEEE Robot. Autom. Mag. 19, 98–100. doi: 10.1109/MRA.2012.2192811

Piryankova, I. V., Stefanucci, J. K., Romero, J., De La Rosa, S., Black, M. J., and Mohler, B. J. (2014a). Can I recognize my body's weight? The influence of shape and texture on the perception of self. ACM Trans. Appl. Percept. 11:13. doi: 10.1145/2641568

Piryankova, I. V., Wong, H. Y., Linkenauger, S. A., Stinson, C., Longo, M. R., Bülthoff, H. H., et al. (2014b). Owning an overweight or underweight body: distinguishing the physical, experienced and virtual body. PLoS ONE 9:e103428. doi: 10.1371/journal.pone.0103428

Pons-Moll, G., Pujades, S., Hu, S., and Black, M. J. (2017). ClothCap: seamless 4D clothing capture and retargeting. ACM Trans. Graph. 36, 1–15. doi: 10.1145/3072959.3073711

Ries, B., Interrante, V., Kaeding, M., and Anderson, L. (2008). "The effect of self-embodiment on distance perception in immersive virtual environments," in Proceedings of the 2008 ACM Symposium on Virtual Reality Software and Technology - VRST '08 (Bordeaux), 167.

Robinette, K. M., Blackwell, S., Daanen, H., Boehmer, M., and Fleming, S. (2002). Civilian American and European Surface Anthropometry Resource (CAESAR), Final Report. Vol. 1: Summary. Technical Report, Sytronics Inc., Dayton, OH.

Robinette, K. M., Daanen, H., and Paquet, E. (1999). "The CAESAR project: a 3-D surface anthropometry survey," in Second International Conference on 3D Digital Imaging and Modeling, Cat. No. PR00062 (Ottawa, ON), 380–386.

Rosenberg, M. (1965). Society and the Adolescent Self-Image. Princeton, NJ: Princeton University Press.

Shapiro, A., Feng, A., Wang, R., Li, H., Bolas, M., Medioni, G., et al. (2014). Rapid avatar capture and simulation using commodity depth sensors. Comput. Anim. Virtual Worlds 25, 201–211. doi: 10.1002/cav.1579

Sherman, W. R., and Craig, A. B. (2002). Understanding Virtual Reality: Interface, Application, and Design. San Francisco, CA: Elsevier Science.

Slater, M., and Steed, A. (2002). “Meeting people virtually: Experiments in shared virtual environments,” in The Social Life of Avatars: Presence and Interaction in Shared Virtual Environments, ed R. Schroeder (London: Springer Science & Business Media), 146–171.

Spanlang, B., Normand, J.-M., Borland, D., Kilteni, K., Giannopoulos, E., Pomés, A., et al. (2014). How to build an embodiment lab: achieving body representation illusions in virtual reality. Front. Robot. AI 1:9. doi: 10.3389/frobt.2014.00009

Stevens, A., Hernandez, J., Johnsen, K., Dickerson, R., Raij, A., Harrison, C., et al. (2006). The use of virtual patients to teach medical students history taking and communication skills. Am. J. Surg. 191, 806–811. doi: 10.1016/j.amjsurg.2006.03.002

Streuber, S., Quiros-Ramirez, M. A., Hill, M. Q., Hahn, C. A., Zuffi, S., O'Toole, A., et al. (2016). Body Talk: crowdshaping realistic 3D avatars with words. ACM Trans. Graph. 35, 1–14. doi: 10.1145/2897824.2925981

Swami, V., and Harris, A. S. (2012). The effects of striped clothing on perceptions of body size. Soc. Behav. Pers. 40, 1239–1244. doi: 10.2224/sbp.2012.40.8.1239

Thaler, A., Geuss, M. N., Mölbert, S. C., Giel, K. E., Streuber, S., Romero, J., et al. (2018). Body size estimation of self and others in females varying in BMI. PLoS ONE 13:e0192152. doi: 10.1371/journal.pone.0192152

Thompson, J. K. (2004). The (mis)measurement of body image: ten strategies to improve assessment for applied and research purposes. Body Image 1, 7–14. doi: 10.1016/S1740-1445(03)00004-4

Thompson, J. K., Heinberg, L. J., and Tantleff-Dunn, S. (1999). Exacting Beauty: Theory, Assessment and Treatment of Body Image Disturbance. Washington, DC: American Psychological Association.

Thompson, P., and Mikellidou, K. (2011). Applying the Helmholtz illusion to fashion: horizontal stripes won't make you look fatter. i-Perception 2, 69–76. doi: 10.1068/i0405

Wellerdiek, A. C., Breidt, M., Geuss, M. N., Streuber, S., Kloos, U., Black, M. J., et al. (2015). “Perception of strength and power of realistic male characters,” in Proceedings of the ACM SIGGRAPH Symposium on Applied Perception (Tübingen: ACM), 7–14.

Wichmann, F. A., and Hill, N. J. (2001). The psychometric function: I. Fitting, sampling, and goodness of fit. Percept. Psychophys. 63, 1293–1313. doi: 10.3758/BF03194544

Wilkinson, G., and Rogers, C. (1973). Symbolic description of factorial models for analysis of variance. Appl. Stat. 22, 392–399.

Keywords: biometric self-avatars, immersive virtual reality, body weight estimation, avatar appearance, gender differences

Citation: Thaler A, Piryankova I, Stefanucci JK, Pujades S, de la Rosa S, Streuber S, Romero J, Black MJ and Mohler BJ (2018) Visual Perception and Evaluation of Photo-Realistic Self-Avatars From 3D Body Scans in Males and Females. Front. ICT 5:18. doi: 10.3389/fict.2018.00018

Received: 03 January 2018; Accepted: 16 August 2018;

Published: 04 September 2018.

Edited by:

Maria V. Sanchez-Vives, Consorci Institut D'Investigacions Biomediques August Pi I Sunyer, Spain

Reviewed by:

Victoria Interrante, University of Minnesota Twin Cities, United States

Tabitha C. Peck, Davidson College, United States

Copyright © 2018 Thaler, Piryankova, Stefanucci, Pujades, de la Rosa, Streuber, Romero, Black and Mohler. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Anne Thaler, anne.thaler@tuebingen.mpg.de
