- 1Human Media Interaction, University of Twente, Enschede, Netherlands

- 2Perceptual and Cognitive Systems, TNO, Soesterberg, Netherlands

Social touch forms an important aspect of the human non-verbal communication repertoire, but is often overlooked in human–robot interaction. In this study, we investigated whether robot-initiated touches can induce physiological, emotional, and behavioral responses similar to those reported for human touches. Thirty-nine participants were invited to watch a scary movie together with a robot that spoke soothing words. In the Touch condition, these words were accompanied by a touch on the shoulder. We hypothesized that this touch, as compared with no touch, could (H1) attenuate physiological [heart rate (variability), skin conductance, cortisol, and respiration rate] and subjective stress responses that were caused by the movie. Moreover, we expected that a touch could (H2) decrease aversion toward the movie, (H3) increase positive perceptions of the robot (e.g., its appearance and one’s attitude toward it), and (H4) increase compliance with the robot’s request to make a monetary donation. Although the movie did increase arousal as intended, none of the hypotheses could be confirmed. Our findings suggest that merely simulating a human touching action with the robot’s limbs is insufficient to elicit physiological, emotional, and behavioral responses in this specific context and with this number of participants. To inform future research on the opportunities and limitations of robot-initiated touch, we reflect on our methodology and identify dimensions that may play a role in physical human–robot interactions: e.g., the robot’s touching behavior, its appearance and behavior, the user’s personality, the body location where the touch is applied, and the (social) context of the interaction. Social touch can only become an integral and effective part of a robot’s non-verbal communication repertoire when we better understand whether, and under which boundary conditions, such touches can elicit responses in humans.

Introduction

As the term implies, “social robots” behave socially toward their users. They emulate human interaction through speech, gaze, gesture, intonation, and other non-verbal modalities (Cramer et al., 2009). As a consequence, interaction with a social robot becomes more natural and intuitive for the user. Due to their embodiment, social robots allow for physical interaction (Lee et al., 2006). But even though touch is one of the most prominent forms of human non-verbal communication, research on social touch in human–robot interaction is only just emerging (van Erp and Toet, 2015). It is still unclear to what extent people’s physiological, emotional, and behavioral responses to a robot-initiated touch are similar to their responses to human touch.

Social touches form a prominent part of our non-verbal communication repertoire (Field, 2010; Gallace and Spence, 2010). These touches, such as a comforting pat on the back, systematically change another’s perceptions, thoughts, feelings, and/or behavior in relation to the context in which they occur (Hertenstein, 2002; Gallace and Spence, 2010; Cranny-Francis, 2011). Touch is, for instance, the most commonly used method to comfort someone who experiences stress or negative arousal (Dolin and Booth-Butterfield, 1993). Receiving social touches has also resulted in decreased cortisol levels [i.e., the “stress-hormone” (Heinrichs et al., 2003)], blood pressure, and heart rate (HR) in a variety of stressful contexts. Examples include several forms of physical contact prior to a public speaking task (Grewen et al., 2003; Ditzen et al., 2007), holding hands while being threatened with physical pain (Coan et al., 2006) or while watching unpleasant videos (Kawamichi et al., 2015), or a simple touch from a nurse to a patient prior to surgery (Whitcher and Fisher, 1979). It is also thought that social touches can deflect one’s attention from aversive stimuli (Bellieni et al., 2007; Kawamichi et al., 2015). Besides eliciting physiological responses, touch can be applied to emphasize the affective content of a message (App et al., 2011), and discrete emotions can be conveyed by touch alone (Hertenstein et al., 2006, 2009). Moreover, social touches can enhance the bond between two people in terms of attachment, trust, and pro-social behavior [e.g., the “Midas Touch” effect (Crusco and Wetzel, 1984)]. This Midas Touch (i.e., a brief, casual touch on the arm or shoulder) results in increases in helpful behavior and/or the willingness to comply with a request [a meta-analysis is provided by Guéguen and Joule (2008)]. Social touches thus have a strong impact on our behavior and on our physiological and emotional well-being, in a plethora of contexts. For extensive overviews, we refer to Field (2010) and Gallace and Spence (2010).

A human social touch is a complex composition of physical parameters and qualities (Hertenstein, 2002), and it is therefore nearly impossible to fully reproduce one by means of haptic actuators. It is suggested that a simulated touch should as closely as possible resemble a human touch in order to be processed without ambiguity or increases in cognitive load (Rantala et al., 2011). Preliminary research, however, demonstrates that a simulated touch—even when it constitutes a highly degraded representation of a human touch—can induce physiological, emotional, and behavioral responses similar to human touch [for overviews, see Haans and IJsselsteijn (2006) and van Erp and Toet (2015)]. When applied in mediated interpersonal communication, haptic actuators can decrease physiological stress responses [for instance, while watching a sad movie (Cabibihan and Chauhan, 2017)], convey discrete emotions (e.g., Bailenson et al., 2007; Smith and MacLean, 2007), and enhance pro-social behavior (Haans and IJsselsteijn, 2009; Haans et al., 2014). It is suggested that in cases of highly degraded representations of touch, people have lower expectations of it. People would mostly rely on the symbolic meaning that is attributed to the simulated touch, rather than on the actual feeling (Haans and IJsselsteijn, 2006). The actual underlying mechanisms are, however, not yet understood, as research on simulated touch in interpersonal communication is still sparse and inconclusive (van Erp and Toet, 2015).

Inherent to their embodiment, robots allow for physical interaction, but this is oftentimes limited to passive touch in which the robot is the receiver of a person’s touch (e.g., Argall and Billard, 2010; Bainbridge et al., 2011). Active, robot-initiated touch is much more complex and hardly considered yet in human–robot interaction research. Since robots employ haptic technologies—similar to those in mediated interpersonal communication—to emulate human touch, it seems plausible that a touch by a robot can induce responses that are similar to those induced by human touch (van Erp and Toet, 2013, 2015). Preliminary research indeed suggests that touches by an embodied agent are consequently associated with specific affect and arousal levels (Bickmore et al., 2010). Moreover, a robot’s touch can increase one’s perceived level of friendship with, and trust in, the robot (Bickmore et al., 2010; Nakagawa et al., 2011; Fukuda et al., 2012; Nie and Park, 2012). The increase in trust is also reflected in increases in pro-social behavior and associated brain activity. People were more willing to carry out a monotonous task (Nakagawa et al., 2011) and to accept an unfair monetary offer (Fukuda et al., 2012) after a robot’s touch. Despite these promising findings, there is no coherent understanding about the plethora of factors that may play a role in physical human–robot interaction. A robot’s touch requires careful consideration with regard to the design and context (Cramer et al., 2009). Since the robot is embodied, there is an inherent interplay between perceptions of the touch and of other anthropomorphic characteristics such as the shape, movements, gaze, and speech (Breazeal, 2003). The robot’s personality and accompanying (social touch) behavior [e.g., pro-actively touching (Cramer et al., 2009)] in relation with perceived intentions of the robot’s touch (Chen et al., 2011) can affect one’s responses to the touch. 
The richness—i.e., the extent to which it facilitates the conveyance of immediate feedback, multiple verbal and non-verbal communication channels, and contextual information (Daft and Lengel, 1986)—of this physical human–robot interaction leads to higher expectations with regard to the quality of the interaction. It may thus be the case that users rely less on the symbolic meaning of the touch and more on the actual feel in relation to the contextual factors. When the touching behavior does not meet one’s expectations, a psychological discrepancy may arise that results in null or even negative social and behavioral effects (Lee et al., 2006): the Uncanny Valley (Mori, 1970; Mori et al., 2012).

Preliminary research thus suggests that robot-initiated touches can—under specific circumstances—induce physiological, emotional, and behavioral responses, similar to those reported for human touch. In order to be able to develop meaningful human–robot social touch interactions, a coherent understanding of these specific circumstances and the underlying mechanisms is necessary, but currently lacking (van Erp and Toet, 2015). We set out to advance the general understanding of this multidimensional design space by conducting a study in which participants, together with a robot, watched a scary movie in order to induce arousal. The robot tried to soothe the participant verbally, and either did or did not accompany these words with a touch on the shoulder. There is substantial evidence that human touches have beneficial effects in stressful contexts; watching a scary movie is just one instantiation of such a context (Field, 2010; Gallace and Spence, 2010; van Erp and Toet, 2015). We decided to apply this paradigm since both human (e.g., Kawamichi et al., 2015) and simulated touches (e.g., Cabibihan and Chauhan, 2017; Nie and Park, 2012) are considered to be beneficial in a movie context. Moreover, visual stimuli are a widely applied approach to induce arousal in a controlled lab-setting. Since the primary focus was on the effects of the touches, other (social) cues were deliberately kept very basic or omitted. On the premise that even highly degraded haptic representations of human touch can already induce responses in people, we hypothesized that:

H1: Being touched by a robot will have beneficial effects on the participant’s arousal level in stressful circumstances, as compared with not being touched. This will be reflected in subjective self-report measures (e.g., an attenuation in the increase in subjective arousal and less negative affect after receiving touches), as well as in attenuations in the objective physiological responses.

H2: People who are touched by a robot will perceive the stressor—i.e., the scary movie—as less aversive, as compared with people who are not touched.

H3: A robot’s touch will induce more positive perceptions of the physical appearance of the robot and one’s relation with it and will decrease one’s negative attitude toward the robot.

H4: A robot-initiated touch can induce a Midas Touch effect: i.e., an increased willingness to comply with the robot’s request to donate (a part of) a monetary bonus to charity; both in the proportion of participants willing to donate and in the amount of money donated.

Materials and Methods

Participants

Participants were invited via the participant database of TNO when they met the inclusion criteria for the study. Participants had to be at least 18 years of age and should not suffer from hearing or vision problems. A total of 40 participants started the experiment, of which one person did not finish the entire session. The mean age of the remaining 39 participants was 35.72 (SD: 9.12, range: 19–52) and 21 of them (53.8%) were female. Participants were randomly assigned to either the control condition [19 people (8 female), mean age: 34.68] or the touch condition [20 people (13 female), mean age: 36.7]. The study was reviewed and approved by the TNO internal review board (TNO, Soesterberg, the Netherlands) and was in accordance with the Helsinki Declaration of 1975, as revised in 2013 (World Medical Association, 2013). Participants were financially compensated for their participation.

Setting and Apparatus

In the experiment, a Wizard-of-Oz setup was applied, in which the robot behavior was controlled by the experimenter. In order to facilitate touching behavior, two Aldebaran Nao robots (v4, NAOqi 1.12.5) were wirelessly connected in a master-slave setup. The slave robot (located in the lab, on the right-hand side of the participant) reproduced movements that were carried out with the master robot’s limbs and head (in the control room). For the slave robot’s pre-programmed (Dutch) utterances, the Acapela Femke Dutch Female 22 kHz Text-to-Speech converter was utilized. The default speech velocity and pitch were increased by 20% in order to create a robot-like voice (i.e., no information regarding age or sex of the robot could directly be derived from the speech). The participant was recorded and observed via a video-connection throughout the experimental session. A trained experimenter utilized the video-feed to apply the robot’s touches in the desired manner. Over the course of approximately 5 s, the robot’s left arm extended toward the participant’s right shoulder, on which the robot’s hand (with the fingers fully extended) was put to rest. The duration of the eight physical contacts during the experiment varied between 10 and 40 s. To conclude the touching action, the arm was returned to its initial position in approximately 10 s.

The lab (approximately 3 m × 4 m) was furnished as a cozy home-like environment, with a couch, small tables, and decorations such as paintings, flowers, and a table lamp (which was on throughout the movie). The participant would sit on the right-hand side of the couch next to the robot, who sat on the right armrest (see Figure 1). A small table was placed on the couch, on the left side of the participant, to make sure the participant would stay within reach of the robot’s arms. A side table containing a monetary bonus (in a small gift box) and an official, sealed Red Cross money box was standing at the right-hand side of the couch; not in the focus of the participant. The movie was projected on a 2.5 m × 1.5 m screen approximately 3 m in front of the viewer by means of a Sanyo PLC-WL 2500 A Projector (1,280 px × 1,024 px) with speakers on either side of the screen.

Figure 1. (A) Overview of the experimental setting. (B) Manipulation of the master-robot. (C) The robot applying the touch on the shoulder. The images are intended to provide an impression of the setting; the person depicted is not an actual participant.

Two short movies were displayed in succession: “The Descendent” (Anderson and Glickert, 2006) and “Red Balloon” (Trounce et al., 2010). These movies continuously build up excitement and contain several scenes that are likely to cause a startle response. Moreover, the movies did not contain possibly disturbing explicit scenes. The introductory credits of “Red Balloon” were removed in order to maintain the excitement between the two movies. The combined duration of the movies was 26 min and 36 s. Custom-built software on a PC in the adjacent control room displayed the movie and informed the experimenter about when to execute the robot behavior. During the interaction moments, synchronization markers were placed in the physiological recordings. For the physiological measures, the BioSemi ActiveTwo system was used, in combination with flat Ag-AgCl electrodes (to measure cardiac activity), passive Nihon Kohden electrodes [Galvanic Skin Response (GSR)], and the SleepSense 1387-kit to measure respiration. Physiological data were recorded by means of Actiview software (v7.03), with a sampling rate of 2,048 Hz.

Measures

Arousal

To investigate the participant’s arousal level, we recorded the following physiological and subjective responses.

Galvanic Skin Response

The GSR is a measure of the conductivity of the skin, of which the changes are linearly correlated with arousal (Lang, 1995). As such, GSR reflects both emotional responses and cognitive activity. The electrodes were located at the palm and on top of the first lumbrical muscle of the left hand, and as suggested by Lykken and Venables (1971), range correction was applied on the GSR data for each individual participant. We divided each GSR data point by the maximum GSR value for that specific individual and computed the mean GSR subsequently.
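The range correction described above can be sketched in a few lines of Python. This is only an illustration: the function name and the sample values are hypothetical, and it assumes that the individual maximum is taken over the participant's complete recording rather than over the interval being averaged.

```python
from statistics import mean


def range_corrected_mean(interval_samples, participant_max):
    """Divide each GSR sample by the participant's maximum, then average.

    Assumption: participant_max is the largest GSR value observed for this
    participant over the whole recording, not only within this interval.
    """
    if participant_max <= 0:
        raise ValueError("participant_max must be positive")
    return mean(sample / participant_max for sample in interval_samples)


# Hypothetical values for one interval, for illustration only.
interval = [2.0, 4.0, 8.0]
print(range_corrected_mean(interval, participant_max=8.0))
```

Dividing by the individual maximum puts every participant on a 0 to 1 scale, so that mean values can be compared across people with different absolute conductance levels.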

Electrocardiography

An electrocardiogram (ECG) was made by means of two electrodes that were placed on the right clavicle and the left floating rib. From the ECG, HR and heart rate variability (HRV; i.e., the temporal differences between successive inter-beat intervals in the ECG wave; The North American Society of Pacing Electrophysiology – Task Force of the European Society of Cardiology, 1996) were derived. HR is associated with emotional intensity: when one is more aroused, the HR increases (Mandryk et al., 2006). Moreover, HRV decreases when participants are under stress and increases when they are relaxed. We utilized the root mean square of successive differences (RMSSD) as a measure of HRV.
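As an illustration of the two cardiac measures, the following sketch computes mean HR and RMSSD from a list of inter-beat intervals. The function names and the interval values are hypothetical, and the intervals are assumed to be given in milliseconds.

```python
import math


def mean_heart_rate(ibi_ms):
    """Mean heart rate in beats per minute from inter-beat intervals (ms)."""
    return 60000.0 / (sum(ibi_ms) / len(ibi_ms))


def rmssd(ibi_ms):
    """Root mean square of successive differences between intervals (ms)."""
    diffs = [later - earlier for earlier, later in zip(ibi_ms, ibi_ms[1:])]
    if not diffs:
        raise ValueError("at least two inter-beat intervals are required")
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


# Hypothetical inter-beat intervals, for illustration only.
intervals = [800, 810, 790, 800]
print(mean_heart_rate(intervals))  # 75.0 beats per minute
print(rmssd(intervals))            # about 14.14 ms
```

Because RMSSD depends only on beat-to-beat differences, it mainly reflects short-term variability, which is why it is sensitive to the length of the interval over which it is computed.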

Respiration

The respiration rate was measured with an elastic belt around the thorax, directly below the sternum. Respiration rate decreases in relaxation whereas it increases during emotional arousal (Stern et al., 2001).

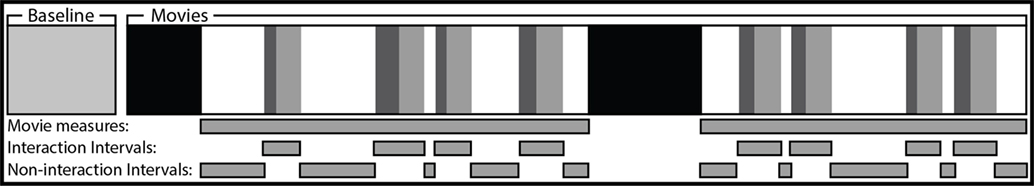

We determined the mean GSR, HR, HRV, and respiration rate for (1) a 75-s baseline period prior to the experiment, (2) the entire movie session excluding the non-scary introductory scenes (21 min 6 s), (3) the (eight) interaction moments, including the first 45 s following each interaction (9 min 35 s), and (4) the intervals between these successive interaction moments and accompanying 45 s, excluding the recordings made during the introductory scenes of each movie (11 min 31 s). Considering the short duration of some of the intervals, we decided to determine one aggregated score for the eight interaction intervals and one for the non-interaction intervals, in order to provide the most reliable representation of the participant’s physiological state. This is of particular importance for the HR(V) measures (The North American Society of Pacing Electrophysiology – Task Force of the European Society of Cardiology, 1996). The physiological values of the aforementioned recording intervals provided the opportunity to investigate whether a robot’s touch can induce direct physiological responses, as well as longer-term effects. A schematic overview of the recording intervals is provided in Figure 2.

Figure 2. Schematic overview of the physiological measurement intervals. The solid black intervals represent the introductory scenes of both movies, which were omitted from the analyses. Each of the eight interaction intervals consists of the actual interaction (darker gray) and the 45 s thereafter (lighter gray).

Cortisol

Four saliva samples per participant were collected to measure free cortisol (Vining and McGinley, 1987). To compensate for varying onset times (i.e., cortisol levels tend to peak approximately 15–20 min after the stressor), we collected one baseline sample and three samples after the movie (approximately 3, 10, and 15 min after the movie). As the cortisol onset moment differs per individual, the highest cortisol value of the latter three was considered to be the best approximation of the actual cortisol peak caused by the movie, and therefore used for analysis. The salivary samples were labeled and stored at −18°C throughout the time-span of all experimental sessions, after which they were collectively sent to an external lab for analysis.

Self-Reports

To measure the participant’s current emotional state, we applied the Self-Assessment Manikin (SAM) (Bradley and Lang, 1994) and a Dutch translation (Peeters et al., 1996) of the Positive and Negative Affect Schedule (PANAS) (Watson et al., 1988). The SAM is a 9-point pictorial scale to measure Valence, Arousal, and Dominance, and the PANAS indicates one’s self-reported levels of Positive and Negative Affect by means of ratings of 20 adjectives related to affective state.

Experience of the Stressor

We applied items of both the Fear Arousal Scale (FAS) and Disgust Arousal Scale (DAS) (Rooney et al., 2012), for each movie separately in order to investigate how supposedly comforting touches affect the perception of the movie itself. For each scale, four questions such as “I found the fragment very scary” were answered on a 5-point Likert scale. Participants were also asked whether they were familiar with either of the fragments (Davydov et al., 2011).

Perceptions of the Robot

The measures regarding one’s perceptions of the robot were divided into three categories.

Attitude toward the Robot

Attitude toward the robot was measured with the Negative Attitude toward Robots Scale (NARS) (Nomura et al., 2008), both prior to (as a baseline) and after the movie. Participants assessed 14 statements—for instance, “I would feel uneasy if robots really had emotions”—on a 5-point Likert scale [“strongly disagree” (1) to “strongly agree” (5)]. The responses were aggregated into scores for one’s negative attitude toward interaction with, social influence of, and emotional interactions with robots.

Perceptions of the Social Relationship with the Robot

Four items of the Affective Trust Scale [adopted from Johnson and Grayson (2005), as applied by Kim et al. (2012)] and a selection of items of the Perceived Trust (PTR) Scale [as described by Kidd (2003) and Rubin et al. (2009)] were applied to measure how participants perceived the robot’s attitude toward them. Statements such as “the robot displayed a warm and caring attitude toward me” and semantic differentials (e.g., “distant–close”) were to be assessed on a 7-point Likert scale. The answers were aggregated into scores for PTR toward the robot (2 items), reliability of the robot (3), immediacy of the robot (4), and credibility of the robot (8). Moreover, we used subscales of the Perceived Friendship (PF) toward the Robot scale [derived from Pereira et al. (2011), as used earlier by Nie and Park (2012)] and an adaptation of the Attachment scale by Schifferstein and Zwartkruis-Pelgrim (2008) (five 5-point Likert scale items) to investigate how socially close participants felt to the robot. With the PF scale, three dimensions of friendship [i.e., Help (2 items), Intimacy (2), and Emotional Security (2)] were measured by means of 10 items on a 7-point Likert scale (e.g., “The robot showed sensibility toward my affective state”).

Physical Appearance of the Robot

To investigate whether touches of the robot increased the perceptions of human-like behavior, four items of the Human Likeness Scale (Hinds et al., 2004) and the three Perceived Human Likeness semantic differential scales [as applied by MacDorman (2006)] were applied. Questions such as “To what extent does the robot have human-like attributes?” were to be answered on a 7-point scale.

Dutch translations of all questionnaire items were used after being verified by a translator and back-translation procedure.

Midas Touch

Participants were asked by the robot to donate (a part of) a monetary bonus of five 1 Euro coins to the Red Cross. To investigate a potential Midas Touch effect, we recorded both the proportion of the participants who were willing to donate and the amount of money that actually was donated (i.e., 0–5 Euro). The Red Cross money box was official and therefore sealed; donations were real. We did not ask participants how much they donated, as the risk of socially desirable responses was deemed too high. Instead, we weighed the money box after each experimental session in order to deduce how many 1 Euro coins were donated.
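Deducing the donation from the weight of the money box amounts to a simple division. The sketch below is an assumption about how this could be done, not the authors' procedure: it uses the nominal mass of a 1 Euro coin (7.5 g), and the function name and weights are hypothetical.

```python
EURO_COIN_MASS_G = 7.5  # nominal mass of a 1 Euro coin, in grams


def coins_donated(mass_before_g, mass_after_g, max_coins=5):
    """Estimate how many 1 Euro coins were added to the money box.

    Assumption: only 1 Euro coins from the bonus were inserted, so the mass
    difference is a whole multiple of the coin mass up to weighing error.
    """
    added_mass = mass_after_g - mass_before_g
    count = round(added_mass / EURO_COIN_MASS_G)
    if not 0 <= count <= max_coins:
        raise ValueError("mass difference is outside the expected range")
    return count


# Hypothetical scale readings before and after one session.
print(coins_donated(250.0, 272.4))  # 3
```

Rounding to the nearest whole coin absorbs small weighing errors, and the range check flags readings that cannot correspond to a donation of 0 to 5 coins.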

Covariates

The participant’s preconceptions with regard to interacting with robots may affect the outcomes of the experiment. To be able to statistically control for this, we applied the Robot Anxiety Scale (RAS) (Nomura et al., 2008). The RAS consists of 11 statements such as “I’m afraid of how fast the robot will move,” with answers ranging from “I do not feel anxiety at all” (1) to “I feel very anxious” (6). The scores were aggregated into three subscale scores (communication capabilities, behavioral characteristics, and discourse), which in turn were utilized as possible covariates in the statistical analyses. Moreover, as earlier research suggests that males respond differently to social touches than females (e.g., Derlega et al., 1989), gender was also included as a possible covariate.

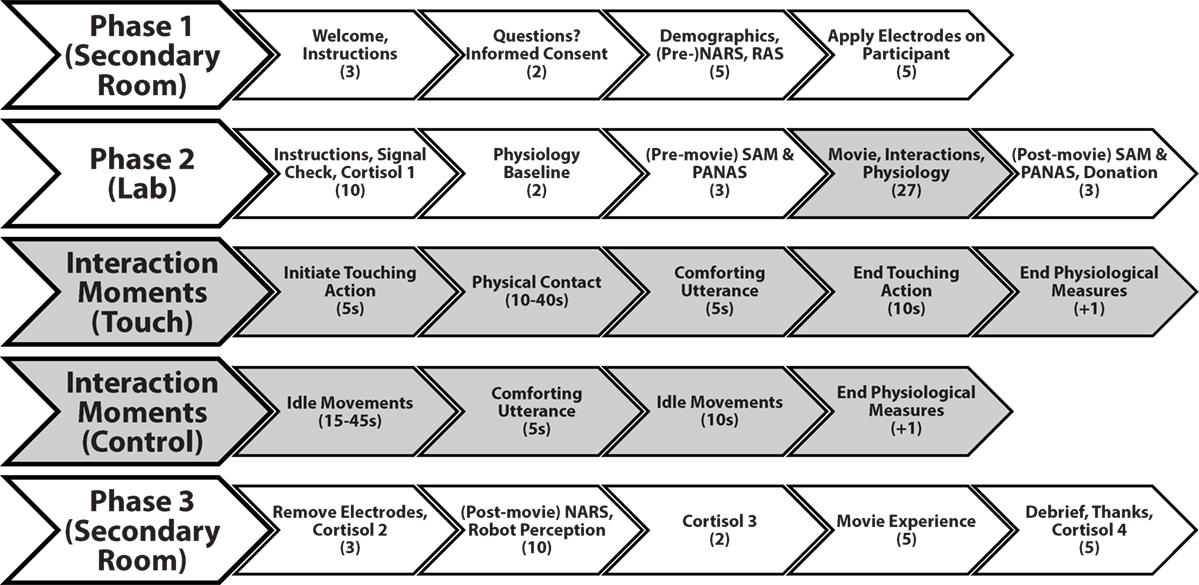

Procedure

After receiving written and verbal instructions about the ostensible aim of the study and the procedure (paraphrased: “We will test whether our robot can detect your emotions and can adjust its behavior accordingly”) and signing a consent form, the participant answered the demographic, RAS, and the baseline NARS questions online (utilizing Google Forms). Thereafter, the participant changed into a white t-shirt (to enhance contrast in the video images and to decrease variations in perceived touch intensity due to different clothing). The electrodes were attached subsequently.

When the participant entered the lab, the robot looked and waved at the participant while uttering “Hello.” This was followed, in order, by verification of the physiological signal, collection of the first saliva sample, and additional verbal instructions. Next, the experimenter left the room, and a 75-s recording of the physiological signals was made to serve as baseline. When the robot uttered: “you can now fill out the questionnaire,” the participant filled out the pre-movie SAM and PANAS (on paper). The movie started after the robot uttered: “We are going to watch a movie together, are you ready?” During eight predetermined interaction moments, the robot spoke calming words to the participant (e.g., “Luckily, it’s just a movie”). These were either accompanied by a touch on the shoulder or by calm movement of the limbs and head of the robot (i.e., without physical contact). We decided to include these idle movements in the Control condition, rather than no activity at all, in order to minimize possible biasing effects of perceived differences in natural behavior and/or sudden sounds of the robot’s motors. The duration of the interaction moments varied between 30 and 55 s. In between the interactions, the robot displayed idle movements.

After the movie sequence, an on-screen message referred the participant to a monetary bonus he or she could obtain from the side table. Thereafter, the participant filled out the post-movie SAM and PANAS (when necessary, reminded by the robot). When the participant had finished, the robot asked whether he or she was willing to donate a part of the bonus in the Red Cross money box. After the participant had been given some time to make the donation, the experimenter entered the lab and escorted the participant to another room. The second saliva sample was collected while the electrodes were detached from the participant’s body, after which the final questionnaires (i.e., robot perceptions and movie experiences) were filled out. Halfway through these questionnaires, the third saliva sample was collected. After the questionnaires, administrative details were arranged and finally, the experimenter initiated a funneled debriefing to verify whether the participant was aware of the actual purpose of the experiment. During the debriefing, the final saliva sample was collected. A schematic overview of the entire experiment can be found in Figure 3.

Figure 3. Schematic overview of the experimental procedure. The interaction moments for both the Touch and Control condition are highlighted in gray.

Results

None of the participants reported being familiar with either of the two movie fragments, and therefore, data from all 39 participants were analyzed and reported, unless stated otherwise. The effects of a robot’s touch on the dependent variables were not affected when the three subscales of the RAS were included as covariates. Gender as covariate did not affect the interpretation of the results either. The analyses including these covariates are therefore not further reported. Analyses were carried out with IBM SPSS 23, and significance is reported at the p = 0.05 level.

Pre-processing

The physiological measurements were processed with Mathworks MATLAB R2013b and imported with the FieldTrip toolbox (Oostenveld et al., 2011). The low-frequency components in the ECG were removed (i.e., changed to zero) by means of a Fast Fourier Transform. Subsequently, a peak-detection algorithm was applied on the filtered ECG, from which the HR and HRV (RMSSD) were derived for the baseline period, the movie, the interaction moments, and the non-interaction moments. Range correction, as suggested by Lykken and Venables (1971), was applied on the GSR data before the mean scores were computed for the different intervals. With regard to the respiration data, first, a second-order low-pass Butterworth filter was applied to remove the high frequency component of the signal. Next, a peak-detection algorithm was applied on the filtered signal, to identify the moments of breathing. Subsequently, the respiration rates were computed for the aforementioned intervals.
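The peak-detection step is not specified further in the text. As a minimal sketch, assuming a simple threshold with a minimum distance between peaks (both hypothetical parameters, as are the function names), a rate per minute could be derived from an already filtered signal as follows. The synthetic sine wave only stands in for a filtered respiration trace.

```python
import math


def detect_peaks(signal, fs, threshold, min_interval_s):
    """Return indices of local maxima above threshold.

    Peaks closer together than min_interval_s are merged by keeping the
    higher one. This is an illustrative rule, not the authors' algorithm.
    """
    min_gap = int(min_interval_s * fs)
    peaks = []
    for i in range(1, len(signal) - 1):
        is_local_max = signal[i - 1] < signal[i] >= signal[i + 1]
        if not is_local_max or signal[i] < threshold:
            continue
        if peaks and i - peaks[-1] < min_gap:
            if signal[i] > signal[peaks[-1]]:
                peaks[-1] = i  # replace the earlier, lower peak
            continue
        peaks.append(i)
    return peaks


def rate_per_minute(peaks, fs):
    """Events per minute, based on the time between first and last peak."""
    if len(peaks) < 2:
        raise ValueError("at least two peaks are required")
    duration_s = (peaks[-1] - peaks[0]) / fs
    return 60.0 * (len(peaks) - 1) / duration_s


# Synthetic 60-s signal at 0.25 Hz (one breath every 4 s), sampled at 32 Hz.
fs = 32
signal = [math.sin(2 * math.pi * 0.25 * i / fs) for i in range(60 * fs)]
peaks = detect_peaks(signal, fs, threshold=0.5, min_interval_s=1.0)
print(rate_per_minute(peaks, fs))  # about 15 breaths per minute
```

The same two functions apply to the filtered ECG, where the peak indices additionally yield the inter-beat intervals from which the RMSSD is computed.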

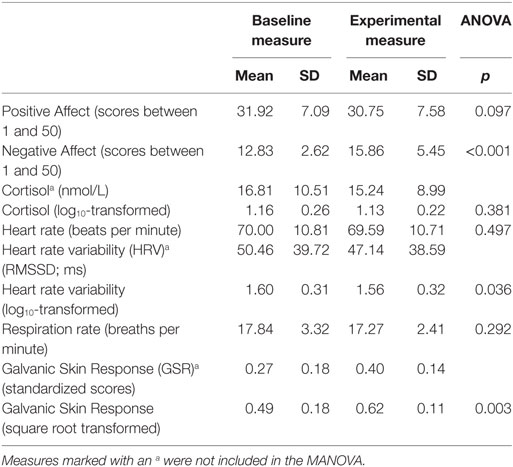

Manipulation Check

To verify whether the movie indeed increased arousal, a repeated measures MANOVA was carried out with the physiological data (baseline responses and responses throughout the movie) and the scores for positive and negative affect (pre- and post-movie measures) as dependent variables. The physiological data included cortisol, HR, HRV, GSR, and respiration rate. The cortisol and HRV values were log10-transformed, whereas the GSR values were square root transformed because of violations of the normality assumption. Moreover, cortisol and HR(V) data from three participants were deemed invalid and therefore omitted from the analyses. The MANOVA was carried out on data from 36 participants; 19 in the Touch and 17 in the Control condition. The analysis demonstrated a significant main effect of the measuring moments: Wilks’ λ = 0.305, F(7, 29) = 9.46, p < 0.001, partial η2 = 0.695. Subsequent investigation of the repeated measures ANOVAs of each dependent variable demonstrated that both Negative Affect and GSR were significantly higher during the movies than during the baseline, whereas the HRV was significantly lower. Moreover, a negative trend was visible in the Positive Affect scores, albeit not significant. The movies thus induced arousal, which means that the manipulation was successful. The mean scores and p-values of the repeated measures ANOVAs can be found in Table 1.
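The reported multivariate statistics can be related to one another. Assuming the exact F statistic that applies when the effect has a single hypothesis degree of freedom (as is the case for a factor with two levels), the effect size and the F value follow directly from Wilks’ λ. The worked numbers below use the values reported for the manipulation check; the small deviation from the reported F is expected, because λ is given to three decimals only.

```latex
\eta_p^2 = 1 - \Lambda,
\qquad
F(df_1, df_2) = \frac{1 - \Lambda}{\Lambda} \cdot \frac{df_2}{df_1}

% Manipulation check: \Lambda = 0.305, df_1 = 7, df_2 = 29
\eta_p^2 = 1 - 0.305 = 0.695,
\qquad
F(7, 29) \approx \frac{0.695}{0.305} \cdot \frac{29}{7} \approx 9.44
```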

Affect and Arousal

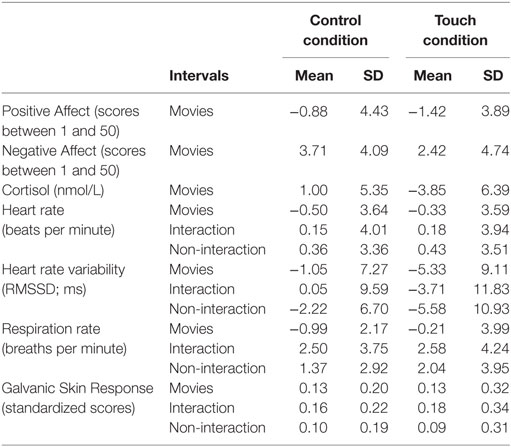

To investigate whether a robot’s touch can indeed attenuate physiological and psychological stress responses (H1), a one-way MANOVA was carried out with experimental condition (Touch, Control) as independent variable. The differences between the (untransformed) physiological data as measured during (or after) the movies (excluding the introductory scenes), and their baseline counterparts were computed. Moreover, the differences between post- and pre-movie Positive and Negative Affect scores were computed. The computed differences were included as dependent variables in the MANOVA. The invalid data of the same participants were excluded again. The MANOVA did not yield a significant difference between conditions: Wilks’ λ = 0.735, F(7, 28) = 1.44, p = 0.23, partial η2 = 0.265.

The differences between the physiological responses during the interaction moments (aggregated value of the eight moments) and the associated baseline measure were computed for the GSR, HR, HRV, and respiration rate. These (untransformed) values of the same 36 participants were included as dependent variables in a one-way MANOVA with experimental condition as independent variable. No significant difference between the conditions was found: Wilks’ λ = 0.96, F(4, 31) = 0.327, p = 0.858, partial η² = 0.040.

Although no differences in physiological responses between the conditions were found during the interaction moments, it may have been the case that the interaction in itself temporarily increased the arousal levels. To investigate a potential longer-term effect of robot touch, we included the differences between the aggregated values of the physiological signals throughout the non-interaction moments and their baseline counterparts in a one-way MANOVA. The aforementioned invalid data were again excluded. We did not find a significant difference between the Touch and Control conditions: Wilks’ λ = 0.952, F(4, 31) = 0.387, p = 0.816, partial η² = 0.048. An overview of the physiological responses for the different intervals can be found in Table 2.

The differences between post- and pre-movie Self Assessment Manikin scores (i.e., Valence, Arousal, Dominance) were computed and used as dependent variables. These differences violated the normality assumption and were therefore analyzed with non-parametric tests. For each of the three variables, a Mann–Whitney U test was carried out, with experimental condition as independent variable. The analyses did not demonstrate any significant differences between the experimental conditions: all ps > 0.491. The results from the affect and arousal analyses do not provide support for H1.
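The non-parametric comparison described above can be sketched in plain Python. This is an illustrative implementation of the Mann–Whitney U test with midranks and a tie-corrected normal approximation (no continuity correction), not the SPSS procedure used in the study. The function name and the difference scores in the usage example are hypothetical.

```python
import math


def mann_whitney_u(x, y):
    """Return (U, z, two-sided p) for two independent samples.

    Uses midranks for ties and a tie-corrected normal approximation.
    Raises ZeroDivisionError if all pooled values are identical.
    """
    n1, n2 = len(x), len(y)
    pooled = sorted((v, g) for g, sample in enumerate((x, y)) for v in sample)
    ranks = [0.0] * len(pooled)
    tie_term = 0.0
    i = 0
    while i < len(pooled):
        j = i
        while j < len(pooled) and pooled[j][0] == pooled[i][0]:
            j += 1
        midrank = (i + 1 + j) / 2.0  # mean of the 1-based ranks i+1 .. j
        for k in range(i, j):
            ranks[k] = midrank
        t = j - i
        tie_term += t ** 3 - t
        i = j
    r1 = sum(r for r, (_, g) in zip(ranks, pooled) if g == 0)
    u1 = r1 - n1 * (n1 + 1) / 2.0
    u = min(u1, n1 * n2 - u1)
    n = n1 + n2
    mean_u = n1 * n2 / 2.0
    var_u = n1 * n2 / 12.0 * ((n + 1) - tie_term / (n * (n - 1)))
    z = (u - mean_u) / math.sqrt(var_u)
    p = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided p from the normal tail
    return u, z, p


# Hypothetical post-minus-pre Valence difference scores per condition.
touch = [0, -1, 1, 0, -2, 1]
control = [-1, 0, 0, 1, -1, 2]
print(mann_whitney_u(touch, control))
```

With small samples an exact test would be preferable to the normal approximation; the approximation is shown here only because it makes the relation between U and z explicit.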

Movie Experiences

To investigate whether people who were touched by the robot experienced the stressor as less aversive, as hypothesized (H2), the FAS and DAS scores for each of the movies were included as dependent variables in a MANOVA. No significant main effect of experimental condition was found: Wilks’ λ = 0.907, F(4, 34) = 0.87, p = 0.49, partial η² = 0.093. H2 was thus not supported by the findings.

Perception of the Robot

Attitude toward the Robot

We carried out a one-way MANOVA on the differences between the post- and pre-measures of the three subscales of the NARS, with the experimental condition as independent variable. No statistical differences between the two conditions were found: Wilks’ λ = 0.900, F(3, 35) = 1.30, p = 0.291, partial η² = 0.100.

Perceived Social Relationship with the Robot

The possible effects of a robot’s touch on one’s perceptions of the social relationship with the robot were analyzed with a one-way MANOVA in which the Affective Trust, Attachment, PTR, and PF scales and subscales were included as dependent variables. The Immediacy subscale of the PTR and all three subscales of the PF (i.e., Help, Intimacy, and Emotional Security) were log10-transformed in order to make the data distribution normal. No main effect of experimental condition was found in the MANOVA: Wilks’ λ = 0.777, F(9, 29) = 0.93, p = 0.518, partial η² = 0.223.

Physical Appearance

The appreciation of the physical appearance of the robot was analyzed with a one-way MANOVA with the experimental condition as between-subjects independent variable. The Human Likeness scale (log10-transformed) and the Perceived Human Likeness scales (of which the Eeriness subscale was log10-transformed) were included as dependent variables. The MANOVA did not demonstrate any significant effect of experimental condition: Wilks’ λ = 0.884, F(4, 34) = 1.12, p = 0.366, partial η² = 0.116. Since no differences between the conditions were found in any of the three categories, H3 could not be supported.

Midas Touch

To verify whether a touch would, as expected, increase one’s pro-social behavior (H4), we compared the two groups on the amount of money that was donated to the Red Cross by means of a Mann–Whitney U test. No significant difference between the Touch (Mdn: 3.0) and the Control (Mdn: 2.0) conditions was found: U = 175.5, z = −0.421, p = 0.674. A chi-square test on the number of people who actually donated in each condition did not demonstrate any effect either: χ²(1, N = 39) = 0.003, p = 0.957 (2-sided). These findings do not provide support for H4.
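The chi-square comparison of donors versus non-donors can be illustrated with a small stand-alone sketch. It computes the Pearson statistic for a 2 × 2 table without continuity correction and obtains the p-value from the identity for one degree of freedom, P(χ² > x) = erfc(√(x/2)). The cell counts below are hypothetical: the paper reports only the test statistic and that nearly every participant donated, so the counts were chosen to be of the same order as that result and are not taken from the study's data.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for [[a, b], [c, d]].

    Returns (chi2, p) with p the upper-tail probability for 1 df.
    """
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    p = math.erfc(math.sqrt(chi2 / 2.0))
    return chi2, p


# Hypothetical counts: rows = Touch, Control; columns = donated, did not donate.
chi2, p = chi_square_2x2(18, 2, 17, 2)
print(round(chi2, 3), round(p, 3))
```

With expected cell counts this small, an exact test (e.g., Fisher's exact test) is often recommended over the asymptotic chi-square; the sketch only shows how the reported statistic is formed.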

Discussion

We devised and conducted an experiment to investigate whether a robot-initiated social touch could decrease physiological and subjective stress responses (H1), deflect one’s attention from aversive stimuli (H2), increase positive perceptions of the robot (H3), and induce a Midas Touch effect (H4); all similar to effects reported for human-initiated touch. With this variety of objective physiological responses and (validated) subjective measures, we expected to be able to provide a coherent impression of the effects of a robot’s touch. Moreover, since the sample size of our study was similar to that of related experiments in which effects have been found, and because we applied multivariate analyses, the statistical power to detect real effects was deemed sufficient. Nonetheless, replications are necessary to further substantiate (or disprove) our findings, in particular because the research area is still in an embryonic stage (van Erp and Toet, 2015). Our research protocol can be considered a valuable contribution to the field, as no standard research protocols are available yet (van Erp and Toet, 2015). However, the operationalization of the Midas Touch measure (i.e., the donation) should be reconsidered. The fact that the donations came from an unexpected bonus, rather than from one’s own assets, could have made it easier to comply with the robot’s request (note that nearly every participant donated). The supposedly soothing interaction can, when disregarding the lab setting, be considered relevant, as social robots are likely to become supportive companions (Breazeal, 2011). Moreover, our setup allows for further investigations of other robot appearances and behaviors, in particular variations in touching behavior.

The fact that no support for any of the four hypotheses was found could suggest that a robot-initiated touch in its current form has neither added value over soothing speech alone (or even over the mere presence of a robot) nor negative effects. This would contrast with human touch, which has been demonstrated to be beneficial in a variety of stressful contexts (Grewen et al., 2003; Coan et al., 2006; Ditzen et al., 2007; App et al., 2011). Another possible explanation is that the effects of robot-initiated touch are considerably smaller than those of human touch, and therefore difficult to detect with the sample size of our study. On the premise that a robot’s touch can, under specific circumstances, induce responses that are similar to those to human touches, our results at least demonstrate that these circumstances are not trivial. It is therefore essential to advance the understanding of the multidimensional design space of robotic social touch, in order to be able to develop meaningful physical human–robot interactions. In the remainder of this section, we critically reflect on our study and identify several dimensions that may play a role in physical human–robot interaction. We do not, however, intend to suggest that the specific dimensions we propose are exhaustive and/or relevant in every context in which physical human–robot interaction may occur. Our study and the following discussion should be considered a first step on the pathway to socially touching robots and could inform future research that addresses the question of whether it actually is possible to elicit responses to robot-initiated touch, and if so, under which specific circumstances.

The Parameters and Qualities of a Touch

The meaning of a touch is formed by the combination of different parameters (e.g., duration, location, and number of touches) and qualities (i.e., the features of the actual physical stimulus) of a touch (Hertenstein, 2002). Here, we first suggest how the parameters may have influenced the findings. Next, we address the physical qualities of the robot’s touch.

Stress Responses and Stimuli Aversion (H1 and H2)

Contrary to expectations, being touched by a robot seemed to lead neither to an attenuation of physiological and subjective stress responses nor to a deflection of one’s attention from the stressor. It could be the case that the robot’s touch on the shoulder was too limited in contact area and duration. Some literature indeed suggests that relatively extensive touching actions such as hugs or massages (contact area) or holding hands (duration) can decrease (physiological) stress responses (e.g., Grewen et al., 2003; Coan et al., 2006; Ditzen et al., 2007). On the other hand, it is claimed that briefly touching one’s arm, or providing a supportive pat on the back (e.g., Whitcher and Fisher, 1979; Drescher et al., 1980), can already decrease stress. The duration and contact area may thus influence the responses to a human touch, but the underlying mechanisms are not yet fully understood (Field, 2010; van Erp and Toet, 2015). However, since the robot simulated a human touching action for which effects have been found before, it is unlikely that the contact area and duration parameters fully explain the absence of the anticipated effects. It seems plausible that there are additional aspects that underlie the responses to a robot-initiated social touch.

Social Perceptions of the Robot (H3)

A robot’s touch in the form applied in our study was not able to significantly alter one’s perceptions of the robot. One’s attitude toward the robot did not become less negative, the robot was not considered more humanlike, and relational aspects were not assessed more positively after a touch. Bickmore et al. (2010) found that both the number and duration of the touches are related to the valence of the agent who initiated the touches. Although our findings do not indicate that a robot’s touch can directly (i.e., after a touch) or indirectly (i.e., in the longer term) alter objective responses, it may have been the case that the overall duration (i.e., there was physical contact during approximately 13.5% of the total duration of the movies, or 17% when the introductory scenes are disregarded) and the number (i.e., 8) of touches in our experiment were too limited to alter the subjective responses.

Pro-social Behavior (H4)

Contrary to our expectations and findings in related work (Nakagawa et al., 2011; Fukuda et al., 2012), a robot-initiated touch did not induce a Midas Touch effect; a touch did not change the willingness to donate money. One parameter that may have influenced these findings is the body location to which the touch was administered. Due to physical limitations of the robot, the touches were applied to the shoulder of the participants. According to Paulsell and Goldman (1984), however, touches on the shoulder do not necessarily lead to increases in compliant behavior; touches on the upper arm are relatively more effective. Future investigations could thus take the body location of the touch into account.

The Physical Qualities of the Robot’s Touch

The body location to which the touch is applied is not merely important with regard to its influence on compliant behavior. The body location can strongly influence the perceptions of the touch and in turn the associated physiological, emotional, and behavioral responses (Nguyen et al., 1975; Hertenstein, 2002; Hertenstein et al., 2009). Whereas receiving a touch on the shoulder while watching a movie may be perceived as socially acceptable, a similar touch on the head or thigh may be perceived as highly inappropriate and could lead to aversive feelings.

Although parameters such as duration, frequency, and location of a human touch can easily be reproduced by a robot, the physical composition of a human touch will remain vastly different from a robot’s (Gallace and Spence, 2010). In general, a robot’s touch appears and feels more mechanical, which may have affected the findings with regard to all four hypotheses. It is suggested that a specific class of fibers, the C-Tactile (CT) afferents, constitutes the neurobiological substrate for the affective properties of touch in humans (McGlone et al., 2014). This pathway appears to be unrelated to the somatosensory pathway (i.e., the discriminative touch system that responds to, for instance, pressure, skin stretch, and vibration) (Olausson et al., 2008). The firing rate of the CT-afferents in the hairy skin increases when soft stroking touches are applied with a velocity of circa 3 cm/s, and this firing rate is strongly correlated with subjective ratings of pleasantness (Morrison et al., 2011). Moreover, the firing rate of the CT-afferent fibers is also affected by the temperature of the touch (Ackerley et al., 2014), with human skin temperature (around 32°C) as optimum. Since the robot’s touch had neither the optimal stroking velocity nor the optimal temperature, it is possible that it mainly activated the discriminative system rather than the affective system. The robot’s touches were mainly applied as a pat on the shoulder (as opposed to a stroke with the optimal speed of 3 cm/s). Moreover, the robot’s hands were at room temperature (ca. 20°C), as opposed to the supposedly optimal 32°C. This may have attenuated the anticipated affective responses. In addition, neural thermoregulatory systems (that serve the adjustment of body temperature to external temperatures) are linked with cognitive and affective functions (Raison et al., 2015): physical warmth increases positive social perceptions (Williams and Bargh, 2008; IJzerman and Semin, 2009). Earlier research on touching robots suggests, albeit without referring to the underlying neurobiological pathways, that the temperature of a robot’s touch indeed affects perceptions of friendship with and trust in the robot (Nie and Park, 2012). A more advanced physical composition of the touch could thus help to induce more pronounced responses.

Richness

The touch as applied in the current study could thus be considered relatively poor (i.e., physically). Our four hypotheses were, however, based on the premise that physiological, emotional, and social responses can be induced by haptic technologies, even when these constitute highly degraded representations of human touch (e.g., Bailenson et al., 2007; Smith and MacLean, 2007; Rantala et al., 2013; van Erp and Toet, 2015). This should thus also apply to robot-initiated touches. It is suggested that when the touch is low in richness, people rely more on the symbolic meaning than on the actual physical perception (Haans and IJsselsteijn, 2006); people may have lower expectations. The lack of clear-cut effects in our study may suggest that the mere symbolic meaning of the robotic touch is insufficient to induce physiological, emotional, or social responses. Additional aspects seem to be at stake. In order to better understand these aspects, it seems logical to adopt a more integrative view of the robot and its touching behavior. A robot’s touch should in that case not be considered a mere physical stimulus, but an element of the robot’s entire appearance and social behavior. Addressing our robot’s touching behavior from this integrative perspective provides two additional possible explanations for the lack of anticipated effects in our study: the robot could be considered either too rich or not rich enough.

The first series of possible explanations involves the relative richness of the robot (due to for instance its anthropomorphic appearance and ability to speak), as compared with other devices that simulate human touch with merely haptic technologies. The mere presence of a social entity in the same room as the participant—i.e., “having someone with him or her”—may have provided enough comfort in itself; thereby occluding the assumed calming effect of the touch. It seems unlikely that the presence of the robot actually increased the stress levels, as one would expect a negative effect of the robot’s touches in that case. Future research should consider the role that the actual presence of the robot plays under stressful circumstances. Another consideration related to the richness of the robot is that people may have had higher expectations of the touch and that the rather mechanical appearance and feel of the touch may not have been in line with these expectations. This discrepancy between expectations and actual perceptions could have nullified the anticipated responses to the touch (Lee et al., 2006). When a robot approaches, but fails to attain, a lifelike appearance, this could result in feelings of strong revulsion; i.e., the “Uncanny Valley” (Mori, 1970; Mori et al., 2012). An example of the uncanny valley as provided in the literature is that of a prosthetic hand: whereas it may look like a real human hand, the actual feel (cold temperature and lack of soft tissue) can be unfamiliar and as a consequence uncanny (Mori, 1970; Mori et al., 2012).

A second possible explanation with regard to the richness of the touching robot is that the robot as a whole was not rich enough. Social robots employ their embodiment (Jung and Lee, 2004), physical interaction with the user (Lee et al., 2006), and other human-like characteristics (Breazeal, 2003) to enhance the so-called social presence. Social Presence is a “psychological state in which virtual (para-authentic or artificial) actors are experienced as actual social actors in either sensory or non-sensory ways” (Lee, 2004). A higher social presence can positively affect the perceptions of the robot (Hinds et al., 2004; Lee et al., 2006), as well as the relationship with the robot (Gonsior et al., 2012; Schneider et al., 2012). Media low in richness usually fail to provide the user with a sense of social presence (Haans and IJsselsteijn, 2006). Since the social cues of the robot in our study were deliberately limited, the robot may not have been perceived as being socially present. As a consequence, the social presence may have been too low for a robot’s touch to have social meaning and thus to induce physiological, emotional, or behavioral responses. Whereas we expected that robot-initiated physical contact with the user would positively affect the perceived human likeness of and relationship with the robot, it may in fact be the other way around. It could be that a higher social presence is a prerequisite for a robot’s touch to have effects, rather than a consequence of a robot’s touch. That is, people may need to consider the robot as an actual social actor first, in order to let the robot’s touch actually induce responses.

Closely related to the richness of the robot is the interplay between the several social cues. A robot-initiated touch may only become appropriate and effective when it corresponds with other non-verbal social cues such as intonation, facial expressions, or gestures (Breazeal, 2003; Eyssel et al., 2012). As there was no coherent interplay between the several social cues, the touch, and the participant’s feelings and behavior, the touch may have become ineffective: “Social interaction is not just a scheduled exchange of content, it is a fluid dance between the participants,” as Breazeal (2003) puts it. It could be the case that soothing (touching) behavior should not simply be scripted but should be interactive and personalized.

Relationship Status

Related to the issue of whether the robot is considered an actual social actor or not, is the acquaintance one has with the robot, either as a technology, or as an actual social actor. When people interact with new technologies for the first time, novelty effects (i.e., first responses to a technology that usually differ from the sustained use patterns that are established over time) may occur. In the context of touching robots, this could mean that users first may have to get used to the robot and its behavior, before the touches may actually induce responses. When the novelty effect of the robot wears off, a comparison between a touch and no touch condition may provide more nuanced insights.

Taking time to get acquainted with a technology that emulates human behavior could eventually evolve into a social relationship with that technology. Robots can emulate human strategies for the formation and maintenance of relationships; ongoing relationships between human and robot can thus exist (e.g., Bickmore and Picard, 2005; Gonsior et al., 2012; Kim et al., 2013; Kühnlenz et al., 2013; Leite et al., 2013). Some human interpersonal touches are only appropriate between people in a close relationship (e.g., Burgoon et al., 1992; Thompson and Hampton, 2011; Camps et al., 2012). Moreover, the effectiveness of touch for stress reduction also appears to depend on the strength of the interpersonal relationship (e.g., Grewen et al., 2003; Coan et al., 2006; Ditzen et al., 2007). It has been suggested that simulated touches are also only appropriate between actors in a close relationship (Gooch and Watts, 2010; Rantala et al., 2013) and that simulated touches from a stranger may even cause discomfort (Smith and MacLean, 2007). Social touching behavior of a robot may thus only be appropriate and effective when a person already has a strong social bond with the robot. In the current experiment, we deliberately focused on the social and physiological responses to a robot’s touch, while minimizing or omitting other social cues. Since the robot did not show an outspoken personality, express personal feelings, or show tailored empathy (i.e., all behavior was scripted), it seems unlikely that a relatively intimate bond was formed during the experiment. As a consequence, the robot’s touch may have been ineffective or even inappropriate.

Personal Characteristics

Although controlling for the gender of the participant and one’s anxiety toward robots did not affect the interpretation of the results, the role of user characteristics should not be underestimated. Research has for instance demonstrated that one’s Immersive Tendency and Need to Belong are essential in the formation of relationships with robots (Kim et al., 2012), as they affect perceptions of Social Presence (Lee, 2004) of the robot. Moreover, one’s Extraversion (Erk et al., 2015) and Touch Receptivity (Bickmore et al., 2010; Erk et al., 2015) may also determine perceptions of a simulated social touch and associated social responses. Future research should therefore investigate which personality characteristics interact with the robot’s (touching) behavior and how.

Conclusion

The results of the study did not provide support for the suggestion that people, when in a specific stressful context, will respond similarly (i.e., physiologically, emotionally, or behaviorally) to a simulated touch applied by a robot as to a human touch. This could mean that it is not possible to induce responses to a robot-initiated touch, but this is not necessarily the case. It could also suggest that robot-initiated touches can only elicit responses when specific boundary conditions apply. More investigations, including replications of the study as reported here and research on robot-initiated touch in different contexts, under different boundary conditions, and perhaps with larger samples, are necessary to advance the understanding of the opportunities and limitations of robot-initiated social touch. Our study can be considered a first step toward this aim and could serve as a pointer for further investigations. To that end, our research paradigm may prove useful, as it has been shown to increase the participants’ stress levels as intended. On the premise that it actually is possible, under specific circumstances, to make a robot’s touch truly social, we highlighted several aspects that could mediate or moderate the responses to robot-initiated touch: e.g., the physical composition and body location of the touch, the other social cues as provided by the robot, the social context of the interaction, one’s personality and expectations, and the potential interplay between these aspects. We do not, however, intend to suggest that the proposed aspects are exhaustive and/or that every aspect is applicable in every context. Future research should paint a more comprehensive picture of how and when robot-initiated touches can be applied effectively.

Our initial perspective was that relatively simple haptic technologies can already induce responses, and that a robot-initiated touch should therefore do so as well. However, physical human–robot interaction may require a more integrative approach. In our study, the robot’s behavior may have been too rich to let people solely rely on the symbolic meaning of the touch, but too poor to let people actually consider the robot as a social actor. Earlier research suggests that increases in human-like behavior, and thus in touching behavior, result in increased social presence and thus increased positive feelings toward the robot. Our results may indicate that a relatively high social presence is a prerequisite for a robot’s touch to have social effects, rather than a consequence of the touch. This suggestion, like the others, remains speculative until it is scrutinized in future research. With a more thorough understanding of the boundary conditions within which robot-initiated touch may induce physiological, emotional, and behavioral responses, social touch could become an integral part of a robot’s non-verbal communication repertoire.

Ethics Statement

The study was reviewed and approved by the TNO internal review board (TCPE, Toetsings-Commissie Proefpersoon Experimenten; assessment-committee Human Participant Experiments, TNO, Soesterberg, the Netherlands) and was carried out in accordance with the Helsinki Declaration of 1975, as revised in 2013 (World Medical Association, 2013). Written informed consent was obtained from all individual participants included in the study.

Author Contributions

Conceived and designed the experiments; contributed reagents/materials/analysis tools; wrote the paper: CW, AT, and JE. Performed the experiments: CW. Analyzed the data: CW and JE.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We would like to thank Camiel Jalink for helping in performing the study and Pjotr van Amerongen, Ingmar Stel, and Bert Bierman for providing us with the necessary software. Moreover, we would like to thank Ellen Wilschut and Maarten Hogervorst for their help regarding the collection and analyses of the physiological data.

Footnotes

- http://www.aldebaran.com

- http://www.acapela-group.com

- Electrodes were attached to the face to record eye movements and the startle reflex. Due to technical problems, these data were invalid and therefore not reported.

- http://www.biosemi.com

- http://www.ibm.com/software/products/en/spss-statistics

- http://www.mathworks.com

References

Ackerley, R., Backlund Wasling, H., Liljencrantz, J., Olausson, H., Johnson, R. D., and Wessberg, J. (2014). Human C-tactile afferents are tuned to the temperature of a skin-stroking caress. J. Neurosci. 34, 2879–2883. doi:10.1523/JNEUROSCI.2847-13.2014

App, B., McIntosh, D. N., Reed, C. L., and Hertenstein, M. J. (2011). Nonverbal channel use in communication of emotion: how may depend on why. Emotion 11, 603–617. doi:10.1037/a0023164

Argall, B. D., and Billard, A. G. (2010). A survey of tactile human–robot interactions. Rob. Auton. Syst. 58, 1159–1176. doi:10.1016/j.robot.2010.07.002

Bailenson, J. N., Yee, N., Brave, S., Merget, D., and Koslow, D. (2007). Virtual interpersonal touch: expressing and recognizing emotions through haptic devices. Hum. Comput. Interact. 22, 325–353. doi:10.1080/07370020701493509

Bainbridge, W., Nozawa, S., Ueda, R., Okada, K., and Inaba, M. (2011). “Robot sensor data as a means to measure human reactions to an interaction,” in 2011 11th IEEE-RAS International Conference on Humanoid Robots (Bled: IEEE), 452–457.

Bellieni, C. V., Cordelli, D. M., Marchi, S., Ceccarelli, S., Perrone, S., Maffei, M., et al. (2007). Sensorial saturation for neonatal analgesia. Clin. J. Pain 23, 219–221. doi:10.1097/AJP.0b013e31802e3bd7

Bickmore, T. W., Fernando, R., Ring, L., and Schulman, D. (2010). Empathic touch by relational agents. IEEE Trans. Affect. Comput. 1, 60–71. doi:10.1109/T-AFFC.2010.4

Bickmore, T. W., and Picard, R. W. (2005). Establishing and maintaining long-term human-computer relationships. ACM Trans. Comput. Hum. Interact. 12, 293–327. doi:10.1145/1067860.1067867

Bradley, M. M., and Lang, P. J. (1994). Measuring emotion: the Self-Assessment Manikin and the semantic differential. J. Behav. Ther. Exp. Psychiatry 25, 49–59. doi:10.1016/0005-7916(94)90063-9

Breazeal, C. (2003). Toward sociable robots. Rob. Auton. Syst. 42, 167–175. doi:10.1016/S0921-8890(02)00373-1

Breazeal, C. (2011). Social robots for health applications. Conf. Proc. IEEE Eng. Med. Biol. Soc. 2011, 5368–5371. doi:10.1109/IEMBS.2011.6091328

Burgoon, J. K., Walther, J. B., and Baesler, E. J. (1992). Interpretations, evaluations, and consequences of interpersonal touch. Hum. Commun. Res. 19, 237–263. doi:10.1111/j.1468-2958.1992.tb00301.x

Cabibihan, J.-J., and Chauhan, S. (2017). Physiological responses to affective tele-touch during induced emotional stimuli. IEEE Trans. Affect. Comput. 8, 108–118. doi:10.1109/TAFFC.2015.2509985

Camps, J., Tuteleers, C., Stouten, J., and Nelissen, J. (2012). A situational touch: how touch affects people’s decision behavior. Soc. Influence 8, 237–250. doi:10.1080/15534510.2012.719479

Chen, T. L., King, C.-H., Thomaz, A. L., and Kemp, C. C. (2011). “Touched by a robot: an investigation of subjective responses to robot-initiated touch categories and subject descriptors,” in 6th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Lausanne, 457–464.

Coan, J. A., Schaefer, H. S., and Davidson, R. J. (2006). Lending a hand: social regulation of the neural response to threat. Psychol. Sci. 17, 1032–1039. doi:10.1111/j.1467-9280.2006.01832.x

Cramer, H., Kemper, N. A., Amin, A., Wielinga, B., and Evers, V. (2009). ‘Give me a hug’: the effects of touch and autonomy on people’s responses to embodied social agents. Comput. Anim. Virtual Worlds 20, 437–445. doi:10.1002/cav

Cranny-Francis, A. (2011). Semefulness: a social semiotics of touch. Soc. Semiotic. 21, 463–481. doi:10.1080/10350330.2011.591993

Crusco, A. H., and Wetzel, C. G. (1984). The midas touch: the effects of interpersonal touch on restaurant tipping. Pers. Soc. Psychol. Bull. 10, 512–517. doi:10.1177/0146167284104003

Daft, R. L., and Lengel, R. H. (1986). Organizational information requirements, media richness and structural design. Manage. Sci. 32, 554–571. doi:10.1287/mnsc.32.5.554

Davydov, D. M., Zech, E., and Luminet, O. (2011). Affective context of sadness and physiological response patterns. J. Psychophysiol. 25, 67–80. doi:10.1027/0269-8803/a000031

Derlega, V. J., Lewis, R. J., Harrison, S., Winstead, B. A., and Costanza, R. (1989). Gender differences in the initiation and attribution of tactile intimacy. J. Nonverbal Behav. 13, 83–96. doi:10.1007/BF00990792

Ditzen, B., Neumann, I. D., Bodenmann, G., von Dawans, B., Turner, R. A., Ehlert, U., et al. (2007). Effects of different kinds of couple interaction on cortisol and heart rate responses to stress in women. Psychoneuroendocrinology 32, 565–574. doi:10.1016/j.psyneuen.2007.03.011

Dolin, D. J., and Booth-Butterfield, M. (1993). Reach out and touch someone: analysis of nonverbal comforting responses. Commun. Q. 41, 383–393. doi:10.1080/01463379309369899

Drescher, V. M., Horsley Gantt, W., and Whitehead, W. E. (1980). Heart rate response to touch. Psychosom. Med. 42, 559–566. doi:10.1097/00006842-198011000-00004

Erk, S. M., Toet, A., and Van Erp, J. B. F. (2015). Effects of mediated social touch on affective experiences and trust. PeerJ 3, e1297. doi:10.7717/peerj.1297

Eyssel, F., Kuchenbrandt, D., Hegel, F., and De Ruiter, L. (2012). “Activating elicited agent knowledge: how robot and user features shape the perception of social robots,” in IEEE RO-MAN: The 21st IEEE International Symposium on Robot and Human Interactive Communication, Paris, 851–857.

Field, T. (2010). Touch for socioemotional and physical well-being: a review. Dev. Rev. 30, 367–383. doi:10.1016/j.dr.2011.01.001

Fukuda, H., Shiomi, M., Nakagawa, K., and Ueda, K. (2012). “‘Midas touch’ in human-robot interaction: evidence from event-related potentials during the ultimatum game,” in Proceedings of the Seventh Annual ACM/IEEE International Conference on Human-Robot Interaction (Boston, MA: IEEE), 131–132.

Gallace, A., and Spence, C. (2010). The science of interpersonal touch: an overview. Neurosci. Biobehav. Rev. 34, 246–259. doi:10.1016/j.neubiorev.2008.10.004

Gonsior, B., Sosnowski, S., Buß, M., Wollherr, D., and Kühnlenz, K. (2012). “An emotional adaption approach to increase helpfulness towards a robot,” in IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura, 2429–2436.

Gooch, D., and Watts, L. A. (2010). “Communicating social presence through thermal hugs,” in Proceedings of the First Workshop on Social Interaction in Spatially Separated Environments (SISSI 2010), Copenhagen, 11–20.

Grewen, K. M., Anderson, B. J., Girdler, S. S., and Light, K. C. (2003). Warm partner contact is related to lower cardiovascular reactivity. Behav. Med. 29, 123–130. doi:10.1080/08964280309596065

Guéguen, N., and Joule, R.-V. (2008). Contact tactile et acceptation d’une requête: une méta-analyse. Les Cahiers Internationaux de Psychologie Sociale 80, 39–58. doi:10.3917/cips.080.0039

Haans, A., de Bruijn, R., and IJsselsteijn, W. A. (2014). A virtual Midas touch? Touch, compliance, and confederate bias in mediated communication. J. Nonverbal Behav. 38, 301–311. doi:10.1007/s10919-014-0184-2

Haans, A., and IJsselsteijn, W. A. (2006). Mediated social touch: a review of current research and future directions. Virtual Real. 9, 149–159. doi:10.1007/s10055-005-0014-2

Haans, A., and IJsselsteijn, W. A. (2009). The virtual Midas touch: helping behavior after a mediated social touch. IEEE Trans. Haptics 2, 136–140. doi:10.1109/TOH.2009.20

Heinrichs, M., Baumgartner, T., Kirschbaum, C., and Ehlert, U. (2003). Social support and oxytocin interact to suppress cortisol and subjective responses to psychosocial stress. Biol. Psychiatry 54, 1389–1398. doi:10.1016/S0006-3223(03)00465-7

Hertenstein, M. J. (2002). Touch: its communicative functions in infancy. Hum. Dev. 45, 70–94. doi:10.1159/000048154

Hertenstein, M. J., Holmes, R. M., McCullough, M., and Keltner, D. (2009). The communication of emotion via touch. Emotion 9, 566–573. doi:10.1037/a0016108

Hertenstein, M. J., Keltner, D., App, B., Bulleit, B. A., and Jaskolka, A. R. (2006). Touch communicates distinct emotions. Emotion 6, 528–533. doi:10.1037/1528-3542.6.3.528

Hinds, P. J., Roberts, T. L., and Jones, H. (2004). Whose job is it anyway? A study of human-robot interaction in a collaborative task. Hum. Comput. Interact. 19, 151–181. doi:10.1207/s15327051hci1901&2_7

IJzerman, H., and Semin, G. R. (2009). The thermometer of social relations: mapping social proximity on temperature. Psychol. Sci. 20, 1214–1220. doi:10.1111/j.1467-9280.2009.02434.x

Johnson, D., and Grayson, K. (2005). Cognitive and affective trust in service relationships. J. Bus. Res. 58, 500–507. doi:10.1016/S0148-2963(03)00140-1

Jung, Y., and Lee, K. M. (2004). “Effects of physical embodiment on social presence of social robots,” in Proceedings of PRESENCE 2004, Valencia, 80–87.

Kawamichi, H., Kitada, R., Yoshihara, K., Takahashi, H. K., and Sadato, N. (2015). Interpersonal touch suppresses visual processing of aversive stimuli. Front. Hum. Neurosci. 9:164. doi:10.3389/fnhum.2015.00164

Kidd, C. D. (2003). Sociable Robots: The Role of Presence and Task in Human-Robot Interaction. Master’s thesis, Massachusetts Institute of Technology, Cambridge.

Kim, K. J., Park, E., and Shyam Sundar, S. (2013). Caregiving role in human–robot interaction: a study of the mediating effects of perceived benefit and social presence. Comput. Human Behav. 29, 1799–1806. doi:10.1016/j.chb.2013.02.009

Kim, K. J., Park, E., Shyam Sundar, S., and del Pobil, A. P. (2012). “The effects of immersive tendency and need to belong on human-robot interaction,” in Proceedings of the Seventh Annual ACM/IEEE International Conference on Human-Robot Interaction – HRI ’12 (New York, NY: ACM Press), 207–208.

Kühnlenz, B., Sosnowski, S., Buß, M., Wollherr, D., Kühnlenz, K., and Buss, M. (2013). Increasing helpfulness towards a robot by emotional adaption to the user. Int. J. Soc. Rob. 5, 457–476. doi:10.1007/s12369-013-0182-2

Lang, P. J. (1995). The emotion probe: studies of motivation and attention. Am. Psychol. 50, 372–385. doi:10.1037/0003-066X.50.5.372

Lee, K. M., Jung, Y., Kim, J., and Kim, S. R. (2006). Are physically embodied social agents better than disembodied social agents? The effects of physical embodiment, tactile interaction, and people’s loneliness in human–robot interaction. Int. J. Hum. Comput. Stud. 64, 962–973. doi:10.1016/j.ijhcs.2006.05.002

Leite, I., Martinho, C., and Paiva, A. (2013). Social robots for long-term interaction: a survey. Int. J. Soc. Rob. 5, 291–308. doi:10.1007/s12369-013-0178-y

Lykken, D. T., and Venables, P. H. (1971). Direct measurement of skin conductance: a proposal for standardization. Psychophysiology 8, 656–672. doi:10.1111/j.1469-8986.1971.tb00501.x

MacDorman, K. F. (2006). “Subjective ratings of robot video clips for human likeness, familiarity, and eeriness: an exploration of the uncanny valley,” in ICCS/CogSci-2006 long symposium: Toward social mechanisms of android science, Vancouver, 26–29.

Mandryk, R. L., Inkpen, K. M., and Calvert, T. W. (2006). Using psychophysiological techniques to measure user experience with entertainment technologies. Behav. Inf. Technol. 25, 141–158. doi:10.1080/01449290500331156

McGlone, F., Wessberg, J., and Olausson, H. (2014). Discriminative and affective touch: sensing and feeling. Neuron 82, 737–755. doi:10.1016/j.neuron.2014.05.001

Mori, M., MacDorman, K. F., and Kageki, N. (2012). The uncanny valley. IEEE Rob. Autom. Mag. 19, 98–100. doi:10.1109/MRA.2012.2192811

Morrison, I., Löken, L. S., Minde, J., Wessberg, J., Perini, I., Nennesmo, I., et al. (2011). Reduced C-afferent fibre density affects perceived pleasantness and empathy for touch. Brain 134, 1116–1126. doi:10.1093/brain/awr011

Nakagawa, K., Shiomi, M., Shinozawa, K., Matsumura, R., Ishiguro, H., and Hagita, N. (2011). “Effect of robot’s active touch on people’s motivation,” in Proceedings of the 6th International Conference on Human-Robot Interaction – HRI ’11 (New York, NY: ACM Press), 465–472.

Nguyen, T., Heslin, R., and Nguyen, M. L. (1975). The meanings of touch: sex differences. J. Commun. 25, 92–103. doi:10.1111/j.1460-2466.1975.tb00610.x

Nie, J., and Park, M. (2012). Can you hold my hand? Physical warmth in human-robot interaction. Proc. Hum. Rob. Interact. 2012, 201–202. doi:10.1145/2157689.2157755

Nomura, T., Kanda, T., Suzuki, T., and Kato, K. (2008). Prediction of human behavior in human-robot interaction using psychological scales for anxiety and negative attitudes toward robots. IEEE Trans. Robot. 24, 442–451. doi:10.1109/TRO.2007.914004

Olausson, H. W., Cole, J., Vallbo, Å, McGlone, F., Elam, M., Krämer, H. H., et al. (2008). Unmyelinated tactile afferents have opposite effects on insular and somatosensory cortical processing. Neurosci. Lett. 436, 128–132. doi:10.1016/j.neulet.2008.03.015

Oostenveld, R., Fries, P., Maris, E., and Schoffelen, J.-M. (2011). FieldTrip: open source software for advanced analysis of MEG, EEG, and invasive electrophysiological data. Comput. Intell. Neurosci. 2011, 1–9. doi:10.1155/2011/156869

Paulsell, S., and Goldman, M. (1984). The effect of touching different body areas on prosocial behavior. J. Soc. Psychol. 122, 269–273. doi:10.1080/00224545.1984.9713489

Peeters, F. P. M. L., Ponds, R. W. H. M., and Vermeeren, M. T. G. (1996). Affectiviteit en zelfbeoordeling van depressie en angst. Tijdschr. Psychiatr. 38, 240–250.

Pereira, A., Leite, I., Mascarenhas, S., Martinho, C., and Paiva, A. (2011). “Using empathy to improve human-robot relationships,” in Human-Robot Personal Relationships, Lecture Notes of the Institute for Computer Sciences, Social Informatics and Telecommunications Engineering, Vol. 59, eds M. H. Lamers and F. J. Verbeek (Berlin; Heidelberg: Springer), 130–138. doi:10.1007/978-3-642-19385-9_17

Raison, C. L., Hale, M. W., Williams, L. E., Wager, T. D., and Lowry, C. A. (2015). Somatic influences on subjective well-being and affective disorders: the convergence of thermosensory and central serotonergic systems. Front. Psychol. 5:1580. doi:10.3389/fpsyg.2014.01580

Rantala, J., Raisamo, R., Lylykangas, J., Ahmaniemi, T., Raisamo, J., Rantala, J., et al. (2011). The role of gesture types and spatial feedback in haptic communication. IEEE Trans. Haptics 4, 295–306. doi:10.1109/TOH.2011.4

Rantala, J., Salminen, K., Raisamo, R., and Surakka, V. (2013). Touch gestures in communicating emotional intention via vibrotactile stimulation. Int. J. Hum. Comput. Stud. 71, 679–690. doi:10.1016/j.ijhcs.2013.02.004

Rooney, B., Benson, C., and Hennessy, E. (2012). The apparent reality of movies and emotional arousal: a study using physiological and self-report measures. Poetics 40, 405–422. doi:10.1016/j.poetic.2012.07.004

Rubin, R. B., Rubin, A. M., Graham, E. E., Perse, E. M., and Seibold, D. R. (2009). Communication Research Measures II: A Sourcebook. New York, NY: Routledge.

Schifferstein, H. N. J., and Zwartkruis-Pelgrim, E. P. H. (2008). Consumer-product attachment: measurement and design implications. Int. J. Des. 2, 1–14.

Schneider, S., Berger, I., Riether, N., Wrede, S., and Wrede, B. (2012). “Effects of different robot interaction strategies during cognitive tasks,” in Social Robotics. ICSR 2012. Lecture Notes in Computer Science, Vol. 7621, eds S. Ge, O. Khatib, J.-J. Cabibihan, R. Simmons, and M. A. Williams (Berlin; Heidelberg: Springer-Verlag), 496–505.

Smith, J., and MacLean, K. E. (2007). Communicating emotion through a haptic link: design space and methodology. Int. J. Hum. Comput. Stud. 65, 376–387. doi:10.1016/j.ijhcs.2006.11.006

Stern, R. M., Ray, W. J., and Quigley, K. S. (2001). Psychophysiological Recording. New York, NY: Oxford University Press.

The North American Society of Pacing Electrophysiology – Task Force of the European Society of Cardiology. (1996). Heart rate variability: standards of measurement, physiological interpretation, and clinical use. Circulation 93, 1043–1065. doi:10.1161/01.CIR.93.5.1043

Thompson, E. H., and Hampton, J. A. (2011). The effect of relationship status on communicating emotions through touch. Cogn. Emot. 25, 295–306. doi:10.1080/02699931.2010.492957

van Erp, J. B. F., and Toet, A. (2013). “How to touch humans: guidelines for social agents and robots that can touch,” in Humaine Association Conference on Affective Computing and Intelligent Interaction, Geneva, 780–785.

van Erp, J. B. F., and Toet, A. (2015). Social touch in human-computer interaction. Front. Digit. Humanit. 2:1–14. doi:10.3389/fdigh.2015.00002

Vining, R. F., and McGinley, R. A. (1987). The measurement of hormones in saliva: possibilities and pitfalls. J. Steroid Biochem. 27, 81–94. doi:10.1016/0022-4731(87)90297-4

Watson, D., Clark, L. A., and Tellegen, A. (1988). Development and validation of brief measures of positive and negative affect: the PANAS scales. J. Pers. Soc. Psychol. 54, 1063–1070. doi:10.1037/0022-3514.54.6.1063

Whitcher, S. J., and Fisher, J. D. (1979). Multidimensional reaction to therapeutic touch in a hospital setting. J. Pers. Soc. Psychol. 37, 87–96. doi:10.1037/0022-3514.37.1.87

Williams, L. E., and Bargh, J. A. (2008). Experiencing physical warmth promotes interpersonal warmth. Science 322, 606–607. doi:10.1126/science.1162548

Keywords: human–robot interaction, robot-initiated touch, social touch, stress reduction, haptic technology, physiological stress responses, Midas Touch, robot perception

Citation: Willemse CJAM, Toet A and van Erp JBF (2017) Affective and Behavioral Responses to Robot-Initiated Social Touch: Toward Understanding the Opportunities and Limitations of Physical Contact in Human–Robot Interaction. Front. ICT 4:12. doi: 10.3389/fict.2017.00012

Received: 19 September 2016; Accepted: 02 May 2017; Published: 22 May 2017

Edited by: Stefan Kopp, Bielefeld University, Germany

Reviewed by: Michael D. Coovert, University of South Florida, USA; Amit Kumar Pandey, Aldebaran Robotics, France

Copyright: © 2017 Willemse, Toet and van Erp. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Christian J. A. M. Willemse, c.j.a.m.willemse@utwente.nl
