SYSTEMATIC REVIEW article

Front. Hum. Dyn. , 12 August 2021

Sec. Digital Impacts

Volume 3 - 2021 | https://doi.org/10.3389/fhumd.2021.689856

This article is part of the Research Topic Trust, Safety and Passenger Experience in Intelligent Mobility

While virtual reality (VR) interfaces have been researched extensively over the last decades, studies on their application in vehicles have only recently advanced. In this paper, we systematically review 12 years of VR research in the context of automated driving (AD), from 2009 to 2020. Due to the multitude of possibilities for studies with regard to VR technology, at present, the pool of findings is heterogeneous and non-transparent. We investigated N = 176 scientific papers from relevant journals and conferences with the goal of analyzing the status quo of existing VR studies in AD, and of classifying the related literature into application areas. We provide insights into the utilization of VR technology that is applicable at specific levels of vehicle automation and for different users (drivers, passengers, pedestrians) and tasks. Results show that most studies focused on designing automotive experiences in VR, safety aspects, and vulnerable road users. Trust, simulator and motion sickness, and external human-machine interfaces (eHMIs) also accounted for a significant portion of the published papers; however, a wide range of different parameters was investigated by researchers. Finally, we discuss a set of open challenges, and give recommendations for future research in automated driving at the VR side of the reality-virtuality continuum.

The advancement of automated driving (AD) systems and corresponding human-machine interfaces (HMI) currently marks one of the biggest transformations in transportation research and development. In 2018, the Society of Automotive Engineers (SAE On-Road Automated Vehicle Standards Committee, 2021) published the latest version of their definition of vehicle automation and its different levels, addressing challenges and laying the foundation for future standardization, as well as establishing a common language. With SAE Level 2 (L2) driving automation already on the road, it is only a matter of time until SAE Level 3 (L3) automated vehicles, or even higher levels, become commercially available. In the automotive domain specifically, the progress of both automated driving and mixed reality technology is pushing academia and industry to explore new vehicular user interface concepts. More and more HMI concepts investigate the reality-virtuality (RV) continuum (Milgram et al., 1995), from conventional 2D screens towards augmented reality (AR) and virtual reality (VR). Application areas include automotive, aeronautics, logistics, production, education, cultural heritage, and others (Dey et al., 2018). In AR, digital virtual images are overlaid on the real world, such that the digital content is aligned with real-world objects (Azuma, 1997). In contrast, in VR, environments consist solely of 3D digital objects (Steuer, 1992). Mixed reality (MR), in turn, encompasses all potential positions on the RV continuum, i.e., between the real world and the virtual environment. Specifically, in the automotive domain, MR technology has been adopted for a variety of use cases. 
For example, head-up displays (HUDs) and larger windshield displays (WSDs) utilize AR technology to increase drivers’ situational awareness by keeping their gaze on the road, and even going further by displaying work- or entertainment-related content for performing non-driving related tasks (NDRTs) for more highly automated vehicles in prototype studies.

VR can be categorized according to the level of immersion provided to the users. Non-immersive visualizations, such as desktop monitor setups, are easy and cost-efficient to implement. Semi-immersive VR is accomplished with head-up displays (HUDs) that provide an augmentation of the real world with a digital overlay. In contrast, head-mounted displays (HMDs) and cave automatic virtual environments (CAVEs), which consist of projections cast on walls, provide a high level of immersion to the user, as the real environment recedes into the background.

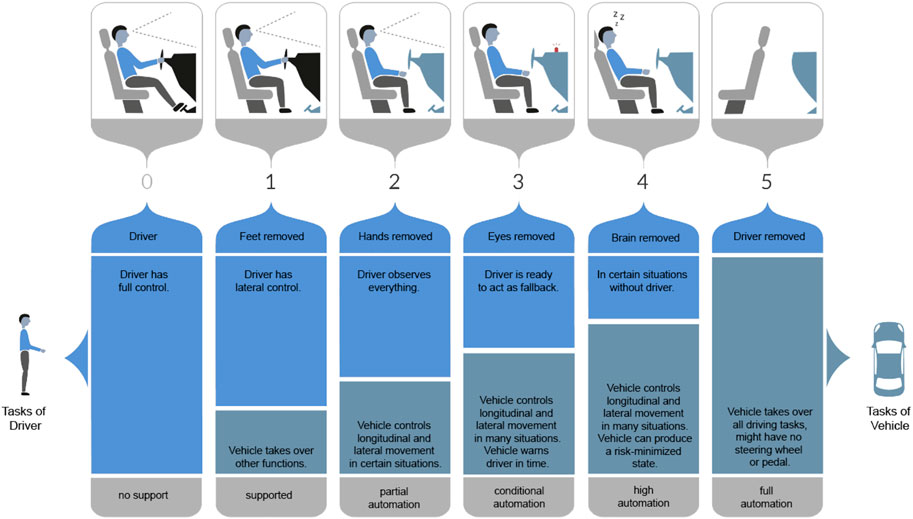

The advancement of vehicle automation from manual and assisted driving towards highly and fully automated vehicles requires a classification for these different automation levels, as mixed traffic, where manual and automated vehicles will share the road, will be more and more common. Therefore, the SAE On-Road Automated Vehicle Standards Committee (2021) has introduced a common language to identify these automation levels (see Figure 1). Each level of vehicle automation provides both opportunities and challenges for researchers and practitioners. While for the lower levels, situation awareness and safety concerns need to be addressed, higher automation levels could bring new interaction and passenger experiences to the car.

FIGURE 1. SAE levels of vehicle automation (SAE On-Road Automated Vehicle Standards Committee, 2021).

Consequently, VR technology has been used to research automated driving features and experiences, while remaining in a safe and controlled environment. Additionally, the current limited availability of highly automated vehicles drives researchers to investigate AD scenarios by other means, such as virtual reality simulations. Furthermore, technological advancements and increased affordability of VR equipment facilitate its utilization. Studies show that participants’ subjective and psychological responses in virtual reality environments are closely coupled to their experience and behaviors in a real-world setup (Slater et al., 2006). Additionally, VR setups allow for controlled, reproducible, and safe experiments (De Winter et al., 2012). Furthermore, data collection (e.g., driving performance) is less cumbersome than with real vehicles (De Winter et al., 2012). Pettersson et al. (2019) compared a VR setup to a field study, and found that while VR studies do not fully replace field studies, they can provide added value to driving simulators and desktop testing. In particular, VR can be used for conveying the overall principles, or “the story” behind a user study. Another comparison study conducted by Langlois et al. (2016) revealed that the virtual environment is able to replicate relative distance perception between the virtual items (e.g., vehicles, pedestrians, road objects) and their overlays. Therefore, Langlois et al. (2016) recommend that user experiments can be conducted in virtual environments with simulated AR HUDs or WSDs. Further comparison studies (e.g., Weidner et al., 2017; Bolder et al., 2018; Rhiu et al., 2020) also show the viability and applicability of using VR for driving research.

Additionally, VR technology for driving research is not only used as a “means to an end” (i.e., to conduct user studies), but increasingly, the use of VR HMDs for drivers/passengers is also being explored. As our intention of this survey is to give a holistic overview of user studies conducted with VR in the automotive domain, we include both aspects.

As a result, there is a wide range of challenges that need to be overcome, and a multitude of papers addressing these timely issues have been published over the last years. However, it is often hard to integrate and/or compare the obtained findings, as topics are often investigated differently. Furthermore, due to the sheer amount of results, it is hard for researchers to identify gaps where they could build upon. Thus, we claim that the time has come to systematically review which topics in research on virtual reality applications in driving automation received the most attention, and which methods have been applied to investigate these. To help the automotive UI community improve usability and user experience of VR applications, this paper provides an overview of 12 years of VR studies carried out in the automotive domain. We chose to start with the year 2009, as this was the year the AutomotiveUI community (AutomotiveUI, 2009) was established, with particular focus on user interface design and HCI research in the automotive domain. The overarching aim of this paper is to provide an overview of current and past use of VR in automated vehicles, with respect to their level of vehicle automation, to support researchers and practitioners finding example papers encompassing related studies, and to help determine current research gaps that should be filled with future studies. Hence, this survey holds a mirror to the automotive UI community on what has been accomplished and which future aspects need more attention.

The focus of this survey is on the combination of two emerging technologies: 1) virtual reality applications and usage, and 2) automated driving systems. Gartner’s hype cycle (Gartner, Inc., 2021), an indicator for common patterns that occur with emerging technologies, visualizes the current maturity status of these technologies, which is an estimator for growth or depletion of a given technology. In 2014, both augmented and virtual reality were in the phase of “Trough of Disillusionment”, indicating that these technologies started to be rigorously tested with experiments and implementations (Gartner, Inc., 2014). Five years later, both AR and VR were removed from the Gartner hype cycle for emerging technologies (Gartner, Inc., 2019), meaning that they had left the experimental status and had matured, i.e., become usable and useful. Autonomous driving at SAE level 4 has not yet reached this status. The implication for automotive HCI researchers and practitioners is that MR will enter conditionally automated vehicles (AVs) in the near term. Accordingly, Gabbard et al. (2014) state that manufacturers are expected to build upon these technologies and provide AR applications in the foreseeable future. For example, Daimler has recently previewed an AR HUD which enables the visualization of world-relative navigational cues and vehicle-related information to the driver (Daimler, 2020). In the meantime, VR technology is used to simulate AR applications, as technical and cost constraints have not yet enabled a wide adoption of pure AR systems (e.g., Riegler et al., 2019b; Gerber et al., 2019). We therefore want to review the role that virtuality plays, and inherently investigate the VR side of the RV continuum (Milgram et al., 1995).

We also want to reflect that MR technology could be viewed as a “means to an end”, that is, to investigate how this technology can be utilized to calibrate trust and acceptance in automated driving. Therefore, application areas are another important topic we intend to evaluate. We are interested in the evolution of early VR applications for non-automated vehicles (up to SAE L2) towards futuristic concepts and studies for fully automated vehicles (SAE L5). This includes route navigation, system status feedback, and performing work- and entertainment-related activities, among others. For example, early research efforts in automated driving have often focused on take-over request (TOR) scenarios, where the vehicle exceeds its operational design domain or encounters an emergency, and prompts the driver to interrupt their non-driving related task (NDRT) and to transition to manual vehicle control. VR application research therefore aims at investigating underlying mechanisms needed to substitute former driving experiences with other, potentially meaningful activities, and appropriate user interfaces which carve out advantages and balance negative effects of automation. With increasing vehicle automation, vehicles will transform more and more into mobile work and living spaces (Schartmüller et al., 2018).

As automated driving systems advance, traditional drivers evolve into passive passengers. Consequently, another focus of this survey is on (potential) users of VR technology in and around vehicles, such as drivers, passengers, pedestrians, and other road users. New paradigms are needed for communicating intent between automated vehicles and pedestrians, for example using external human-machine interfaces (eHMIs). MR technology can be applied to investigate corresponding scenarios, e.g. using simulated environments. Hence, we provide an overview of utilized devices for research in virtual reality combined with automated driving. This includes head-mounted displays (HMDs), HUDs, WSDs, cave automatic virtual environments (CAVEs), 2D monitor setups and projections, among others.

In contrast to the recent survey on user studies on automated driving conducted by Frison et al. (2020), this work focuses on user studies on vehicles with different levels of driving automation conducted within virtual reality environments. Riegler et al. (2020b) presented workshop results on grand challenges in mixed reality applications for intelligent vehicles, and thus provide a good overview for future user studies in the form of a research agenda, whereas our view is more focused on VR as a subtopic of mixed reality, and covers both the main research themes of recent user studies and how these studies were conducted. Gabbard et al. (2014) focus on driver challenges and opportunities for in-vehicle AR applications. We recognize that VR should also receive attention for in-vehicle applications, both by using VR as a tool to conduct user studies, and as an end product (e.g., HMDs). McGill and Brewster (2019), in their review, only look at user studies focusing on passenger experiences with VR HMDs, while we take a holistic approach towards VR usage for driving-related user studies. Another subtopic of VR in AD research was investigated by Rangelova and Andre (2019) in their survey on simulation sickness caused by VR HMDs. In the present literature review, we provide an overview of all potential VR devices causing simulator sickness, including, for example, HMDs and CAVEs. Additionally, Rouchitsas and Alm (2019) provide a review of external human-machine interface (eHMI) research for vehicle-pedestrian communication, both in VR and non-VR setups. However, their focus was on topics covered by the reviewed user studies, while we emphasize methodological approaches, the measures and VR devices used, and discuss implications and potential future eHMI research directions. In our opinion, this wealth of recent surveys in adjacent areas emphasizes the importance and timeliness of the topic.

To capture the latest trends in VR in AD research, we have conducted a thorough, systematic review of 12 years of relevant papers published between 2009 and 2020. We classified these papers based on their application areas, methodologies used, and their utilized levels of vehicle automation. Moreover, we intend to provide impetus towards a standardization of methodology concerning self-report, behavioral, and physiological measures in VR driving studies.

The main contribution of this work is four-fold, concerning the status quo and derivation of future research directions:

• Identify the primary application areas for automotive user interface research using VR technology.

• Provide an overview of the methodologies and VR environments commonly utilized in driving automation research.

• Identify open challenges and opportunities, and propose recommendations for the research of VR in AD.

• Provide a set of recommendations and emerging possibilities for VR study design in driving automation research.

The rest of the paper is organized as follows: Section 2 details the method and process we followed to find, rate and review the relevant papers. Section 3 then gives a high-level overview of the core relevant papers and studies, and establishes the classifications, followed by detailed reports on each of the classifications. Section 4 details each of the classifications, highlighting some of the most impactful user studies as well as challenges for each application area. Section 5 concludes by summarizing the review and identifying challenges, opportunities, and recommendations for future research. Finally, in the appendix, we have included a list of all papers reviewed in each of the application areas.

Considering the current research status, topics of interest in virtual reality HCI research in automated driving, and the authors’ experience in VR and AD research, we defined the reviewing process for this literature review. For the analysis of related literature, we reviewed publications at the intersection of augmented and virtual reality, human-computer interaction, and automated driving.

We followed a process of three stages, which are search (find relevant candidate publications), rate (determine the relevance of all candidates) and code (extract important details of the core relevant papers) in our literature review. Although these steps were mainly conducted in sequence, we performed several re-iterations and overlaps to ensure their validity.

Search: For exploring the body of work in VR in AD, we conducted a thorough literature research using the standard scientific search engines and databases ACM Digital Library, IEEE Xplore, Science Direct, Wiley Online Library, and Google Scholar. Furthermore, besides being a rich source of VR and AD research, they contain influential conferences and highly ranked journals such as CHI, DIS and TOCHI, VRST, AutomotiveUI, IV, and VR. We based our search on combinations of the terms “augmented reality”, “virtual reality”, “mixed reality”, “driving”, “automated vehicle”, and “vehicle automation”:

“augmented reality” OR “virtual reality” OR “mixed reality”

AND

“driving” OR “automated vehicle” OR “vehicle automation”
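The search string above can be assembled programmatically from the two term groups. The following is a minimal sketch only: the helper name `build_query` and the parenthesized output format are assumptions for illustration, and each database (e.g., ACM Digital Library, IEEE Xplore) has its own query syntax that may require adaptation.

```python
# Term groups taken from the search description above.
TECHNOLOGY_TERMS = ["augmented reality", "virtual reality", "mixed reality"]
DRIVING_TERMS = ["driving", "automated vehicle", "vehicle automation"]


def build_query(*groups):
    """OR the quoted terms within each group, then AND the groups together.

    Hypothetical helper; the output is a generic boolean string, not the
    syntax of any specific search engine.
    """
    clauses = []
    for group in groups:
        quoted = " OR ".join(f'"{term}"' for term in group)
        clauses.append(f"({quoted})")
    return " AND ".join(clauses)


query = build_query(TECHNOLOGY_TERMS, DRIVING_TERMS)
print(query)
```

Keeping the term groups as separate lists makes it easy to document exactly which keywords were used, which supports the reproducibility of the search stage.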

Additionally, we focused our search on contributions integrating aspects of VR in AD, which were introduced in top-level AR/VR visualization, human-computer interaction, and automotive user interfaces conferences and journals. The basis for the selection process was research papers that were published in the respective venues between 2009 and 2020 (inclusive). The literature corpus also encompassed literature from a pre-existing list based on prior work by the authors. The initial determination of inclusion in the list of candidates was mainly based on keywords, title, and abstract, in order to reduce the number of papers in a reasonable way while at the same time ensuring that no potentially relevant paper would be excluded. We further excluded the current year (2021), since the publication year was not yet finished and thus not all possibly relevant papers were available. In addition to papers found in this initial search, some papers were later rated as relevant, e.g., by analyzing the related work of reviewed publications. Finally, this stage resulted in a total of 293 candidate papers which were identified as interesting for further analysis.

Rate: For an initial classification of the found literature, the full set of 293 candidate papers was rated on their relevance for VR in AD. Prior to the rating process, the authors discussed and established initial relevance criteria. After determining this initial set of criteria, every paper was analyzed to determine whether it merited further consideration for the list of core relevant papers. Continuous meetings during the rating stage also helped to further refine and substantiate the relevance criteria, and to unify the way in which they were used among the authors. For the entire literature corpus, the criteria for inclusion depended on quality and the provision of user study details. For example, we excluded publications where only an abstract or no detailed description was provided. Further criteria for relevance were based on the type of paper, such that, for example, papers covering pedestrian safety using augmented reality technology were rated relevant based on whether the system was considered to be utilized in the context of automated driving. Papers focusing on interaction techniques or devices, as well as user studies and other evaluations or benchmarks, were considered with regard to the quality or usefulness of the particular interaction modality or study in the context of VR in AD. Furthermore, we disregarded literature reviews, as well as juridical, theoretical, or ethical papers (e.g., Inners and Kun, 2017). Out of the set of candidate papers, 176 core relevant papers were finally identified.
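The mechanical part of these criteria can be expressed as a simple screening filter. The sketch below is illustrative only: the `Candidate` record, its field names, and the example entries are hypothetical, and the actual rating additionally relied on discussion and judgment among the authors, which a filter like this cannot capture.

```python
from dataclasses import dataclass

# Paper types that the rating stage disregarded, as listed above.
EXCLUDED_TYPES = {"literature review", "juridical", "theoretical", "ethical"}


@dataclass
class Candidate:
    """Hypothetical record for one candidate paper."""
    title: str
    year: int
    paper_type: str
    has_full_text: bool        # False if only an abstract is available
    reports_user_study: bool   # True if user study details are provided


def passes_screening(paper: Candidate) -> bool:
    """Apply the year window and the explicit exclusion criteria."""
    in_window = 2009 <= paper.year <= 2020
    return (
        in_window
        and paper.has_full_text
        and paper.reports_user_study
        and paper.paper_type not in EXCLUDED_TYPES
    )


# Made-up entries for demonstration only.
candidates = [
    Candidate("HMD take-over study", 2019, "user study", True, True),
    Candidate("Survey of eHMI concepts", 2019, "literature review", True, False),
    Candidate("Early simulator abstract", 2008, "user study", False, True),
]
screened = [p.title for p in candidates if passes_screening(p)]
print(screened)
```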

Code: After selecting the papers, we established a reviewing strategy for literature analysis aimed at developing a standardized reviewing procedure. We used the PRISMA flow diagram (Moher et al., 2010), shown in Figure 2, to decide in a standardized and step-wise manner whether to record the individual papers. Additionally, in several brainstorming sessions, we introduced a categorization of sub-topics of interest to be investigated. Based on the keywords and abstracts of the respective papers, as well as recent and historic paper tracks at the AutomotiveUI conference, the “premier forum for UI research in the automotive domain” (AutomotiveUI, 2009), we categorized our literature corpus into twelve main application areas. These sub-topics are reflected by the sub-sections in Section 3. If a paper covered multiple application areas (e.g., navigation and trust), we determined its classification by the core intent of that paper. For example, if the effect of various navigational cues on driver trust and acceptance was investigated, we classified that paper into the Trust, Acceptance, and Ethics application area (e.g., Wintersberger et al., 2017), rather than Vehicle Navigation and Routing. Following the categorization, we coded the core relevant papers using keywords derived from the analyzed papers, or based on already existing taxonomies on the respective topic. During the coding stage, we removed 35 non-referenced papers from the list of core relevant papers, which we attribute to the evolving process of relevance assessment. Furthermore, an additional 20 papers were added to the list of core relevant papers in this stage of the process, as we deemed them to be relevant to a certain sub-topic.

Although we made every effort to be methodical and rigorous in our selection and review of these 176 papers, we acknowledge several limitations and validity issues with our approach. First, we might have missed bibliographic sources. While the use of the aforementioned bibliographic databases has the advantage of including a variety of publication venues and topics, it is possible that we have overlooked venues and papers that should have been added. Second, the search parameters we used may have been too narrow. Although we selected the search terms conscientiously, there may be papers that did not use these keywords when describing AR, VR, or MR applications and usages in the automotive domain. For example, papers might have described driving behavior research evaluated with a VR driving simulator, yet did not include VR in their keywords, title, or abstract. Finally, we did not measure the average citation count (ACC) for each paper. While this filter was applied in previous literature reviews (e.g., Dey et al., 2018), we refrained from excluding papers based on their impact, as some papers with seemingly narrow use cases, and maybe no immediately generalizable application area for VR in AD research, might soon prove influential.

In total, we investigated the user studies reported in 176 papers. Table 1 provides summary statistics for these papers, and Table 2 gives an overview of the user study details.

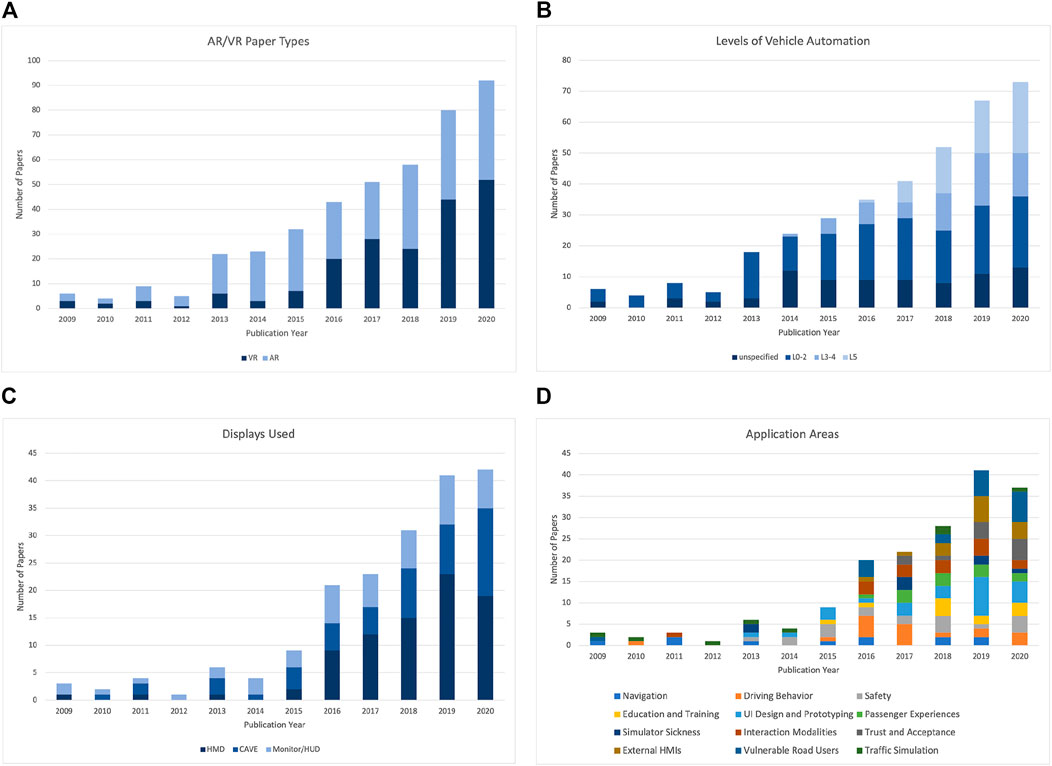

Figure 3A visualizes the total number of VR papers published between 2009 and 2020 with automotive context, categorized by papers at different stages of the RV continuum. The figure shows that the number of AR and VR papers published in 2020 is more than ten times that published in 2009. Overall, the number of papers utilizing mixed reality technology is growing rapidly.

FIGURE 3. High-level overview of the analyzed papers (A) AR/VR papers utilized in automotive research (B) Levels of vehicle automation (C) VR displays/devices used (D) Categorization of the papers into twelve application areas.

As displayed in Table 2, most of the papers (127, or 76%) employed a within-subjects design, 29 papers (17%) used a between-subjects design, and 12 papers (7%) used a mixed-factorial design. For eight studies, it was not reported or clear from the study details which study design they applied.
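The percentages above appear to be computed relative to the 168 papers that reported a study design, rather than all 176 papers. The following sketch reproduces this arithmetic; the choice of denominator is an inference from the reported numbers, not something the text states explicitly.

```python
# Counts as reported in the paragraph above.
design_counts = {
    "within-subjects": 127,
    "between-subjects": 29,
    "mixed-factorial": 12,
}
NOT_REPORTED = 8


def shares(counts):
    """Return each category's share (in whole percent) of the category total."""
    total = sum(counts.values())
    return {name: round(100 * n / total) for name, n in counts.items()}


design_shares = shares(design_counts)
total_papers = sum(design_counts.values()) + NOT_REPORTED

print(design_shares)   # shares among papers that reported a design
print(total_papers)    # papers with a reported design plus those without
```

Under this assumption, the three designs plus the eight unclear cases add up to the 176 reviewed papers.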

We found that it was uncommon for researchers to provide details on pilot studies before their main study. Only 21 papers (11%) reported conducting a pilot study in their experimentation process. Only six papers report just the results of the pilot study, without further main studies, which indicates that the importance of pilot studies is not well acknowledged. Most studies (156, or 83%) were performed in controlled laboratory environments, while only 10 studies (6%) were conducted in a natural environment or as a field study. In the Traffic Simulation application area, we found that only two user studies were conducted, as simulations usually employ heuristics for evaluation. The fact that most of the user studies were formal user studies with participants and, with the exception of Traffic Simulation studies, employed no heuristic evaluation might be an indication that heuristics and standardizations (Dey et al., 2018) need to be developed for future VR applications and use cases in the automotive domain. Due to design limitations, many VR studies use simplified traffic scenes with reduced visual fidelity for pedestrians and other road users, among others. While most papers provide screenshots of the VR environment, it is often unclear to what extent the VR scene resembles the real world and real-world situations. Consequently, this circumstance has a negative impact on the external validity of the user study findings, as there is no guarantee that they can be transferred to real environments, since VR driving simulators may not be realistic enough due to the limited field of view or lack of motion feedback. As Yeo et al. (2020) found, mixed reality setups in real vehicles with motion feedback achieve the highest level of presence among automated driving simulators. 
However, conditions in realistic driving experiments are not as controlled or replicable as in a lab environment, and might differ in terms of surrounding traffic, weather conditions or vehicle speeds. Therefore, as computing and graphics power increase, we see the need to create “digital twins” of the real world (e.g., a section of a highway, a city borough) that feature content-rich real-world representations. Our review results also show that most studies conducted single sessions of interaction, and therefore only display snapshots of first time use. Even though usability has been shown to provide reliable information about user engagement within a single session experiment (Forster et al., 2019), user experience, and trust may require a longer time period to reach a robust behavior and attitude level (Lee and Moray, 1992). Longitudinal studies should therefore address these issues.

We investigated the use of qualitative (subjective) and quantitative (objective) data types, and found that a total of 95 papers (56%) collected both quantitative and qualitative data, 34 (20%) papers only quantitative, and 41 (24%) only qualitative. The predominantly used quantitative data involved driving performance (e.g., reaction time, braking distance), gaze and glance behavior, and physiological measurements, such as heart rate variability and breathing metrics. Further, task performance (error/accuracy) was measured. As for subjective ratings, workload, user experience, simulator sickness, as well as custom self-ratings were reported. The concrete data types to collect often depend on the respective application area. For example, quantitative metrics such as braking and take-over performance are necessary for safety applications, while self-rated qualitative data such as acceptance and user experience metrics are more relevant for evaluating drivers’/passengers’ trust and well-being. In VR setups, we identify the need to include at least one subjective measurement, namely simulator sickness, which affects the internal validity of the user study. Additionally, quantitative data such as eye or head tracking data can nowadays more easily be acquired using VR HMDs (such as the HTC Vive Pro Eye). Generally, we recommend using both quantitative and qualitative data types for conditionally automated driving studies (SAE level 3 and 4), as they should include take-over requests (i.e., measuring the take-over performance). Additionally, task performance and situation awareness can be quantitatively determined (effectiveness and efficiency according to ISO 9241; ISO, 2018). Encompassing user experience aspects, satisfaction as an attitudinal component (ISO, 2018) should be included using qualitative measurements such as self-rating questionnaires and interviews. 
For fully automated driving setups (SAE level 5), the focus of user studies will shift to in-vehicle experiences such as transforming the vehicle into a mobile office or entertainment platform (Schartmüller et al., 2018), and hence subjective ratings might be of higher importance. In general, we recommend using method triangulation in user studies as described by Pettersson et al. (2018), including questionnaires (both standardized and custom), interviews, and activity logging. While the research by Pettersson et al. (2018) focuses on user experience, we encourage the use of method triangulation for other application areas as well (see Section 4).

Further, the majority of the user studies was conducted in an indoor environment (156, or 92%), while outdoor studies were made in 10 cases (6%), and 3 studies reported a combination of both settings (2%). These results seem plausible, as the use of virtual reality technology is currently still constrained by the lack of outdoor capabilities, such as power consumption and tracking devices. With the advances of wireless, battery-powered VR HMDs, however, coupled with the need to determine the external validity of VR indoor studies, we encourage researchers and practitioners to conduct user studies with this novel VR technology in outdoor settings.

The analysis of demographic data of the participants provided by the studies revealed that most studies were run with young participants from university populations. The mean age of participants in the user studies was 29 years. While most user studies also included female participants (82%, some studies did not explicitly report on gender), the share of female participants was quite low (34% of total participants where a distinction between male and female subjects was specified). Regarding the recruitment source, we found that the largest share of participants was recruited at universities (45%), while public (10%) and private company (3%) recruitments were relatively rare. The remaining studies did not explicitly report the source of recruitment, which is an indication that many researchers may not be aware of the importance of reporting user study details, which limits reproducibility and validity. The participant analysis shows that many VR user studies in the automotive domain are conducted with young male university students, as opposed to a more diverse and representative sample of the population. Our review provides a distinction between male and female participants only, as we did not identify any papers in our literature corpus that report additional genders. However, we encourage researchers and practitioners to include and report other genders as well to support inclusiveness. As most reported participants were young, male students, an inherent bias is created in the study results, and thus, the external validity of the findings is limited. Additionally, the technical and technological affinity of these participants tends to be much higher than that of the general public, and its impact on their performance may be significant. 
We therefore urge experimenters to invest more effort in attracting female and other participants, as well as different age groups, cultural backgrounds, and levels of technological affinity to diminish the bias in the results, and foster their generalizability (Howard and Borenstein, 2018).

Figure 3C gives an overview of devices used in the reported user studies, categorized into immersive HMDs and CAVEs, as well as non- to semi-immersive monitor/projection/HUD setups. While the usage of immersive displays has increased steadily since 2015, the usage of less immersive VR devices decreased. This suggests an improved availability of advanced VR devices to researchers. Overall, approx. 82 papers (47%) reported using HMDs, 54 (31%) utilized CAVEs, and less immersive setups were used by 48 papers (27%). These shares add up to more than 100% because several studies utilized multiple VR setups. Overall, the vast majority of user studies were carried out by utilizing VR as a tool for prototyping different scenarios (94%), while only 6% of the selected research papers investigated the actual use of VR in the vehicle. A more detailed overview of utilized devices can be found in each application area in Section 4. While HMDs have cost and portability benefits, CAVEs are not constrained by a limited field of view, as projections are utilized to generate the VR environment. Over the last decade, VR technology has become more affordable and easier to set up. Consequently, non-immersive VR setups, including monitors, should be avoided, especially for continuous-depth applications such as simulated AR head-up or windshield displays. Additionally, the use and continuous improvement of open-source VR driving simulators (see Section 4.13) should be encouraged to steadily increase their fidelity and to democratize scenarios and study setups.
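The device shares above can be checked with a few lines of arithmetic. The following is a minimal sketch, assuming that the percentages are computed against the 176 reviewed papers and that each paper reported at least one setup; the variable and function names are illustrative and not taken from the review.

```python
# Counts as reported in the text; the denominator of 176 papers is an assumption.
TOTAL_PAPERS = 176
papers_per_setup = {"HMD": 82, "CAVE": 54, "monitor/projection/HUD": 48}


def share(count, total=TOTAL_PAPERS):
    """Return the percentage of papers, rounded to the nearest whole number."""
    return round(100 * count / total)


# Percentage of papers per device category.
shares = {setup: share(n) for setup, n in papers_per_setup.items()}

# Total number of setup mentions across all papers.
mentions = sum(papers_per_setup.values())

# Mentions beyond one per paper, under the assumption stated above.
overlap = mentions - TOTAL_PAPERS

print(shares)
print(mentions, overlap)
```

Under these assumptions, the three categories account for 184 setup mentions across 176 papers, which is why the rounded shares exceed 100%.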

Figure 3B visualizes the total number of papers we investigated for the years 2009–2020, categorized by the researched level of vehicle automation, from manual (SAE L0) to fully automated driving (SAE L5). VR research on conditionally automated driving (SAE L3–4) was reported starting in 2015 with the emergence of VR HMDs of adequate quality for research. Manual driving without vehicle automation (SAE L0) was investigated by 89 papers (50%). Conditionally automated driving (SAE L3–4) was researched by 28 papers (16%), fully automated driving (SAE L5) by 22%, and research where the level of vehicle automation was not critical, and therefore not reported, was covered by 12% of the reviewed papers. The utilization of AR/VR technology (Figure 3A) and research on higher levels of vehicle automation appear to go hand in hand, which seems to correspond to Gartner’s hype cycle for emerging technologies (Gartner, Inc., 2014).

We categorized the 176 papers into a total of twelve application areas, which shows that there is a wide and open field for automotive researchers utilizing VR technology. Figure 3D gives an overview of the advancement of these application areas over time. Initial research with VR in the automotive domain concerned mostly navigation and driving behavior, as well as safety aspects. More recently, passenger experiences, vulnerable road users (such as pedestrians and cyclists), as well as trust and acceptance of automated vehicles have become the focus of research. Additionally, interaction concepts inside the vehicle and outside (external HMIs) are being investigated.

As shown in Table 1, most application areas had a similar mean number of authors for each paper, ranging between 3.00 (Simulator Sickness) and 3.88 (Interaction Modalities). However, papers in the Passenger Experiences area had the highest average number of authors (4.92), which highlights the interdisciplinary nature of this research field (automotive, human-computer interaction, and AR/VR visualization). Most papers were published at conference venues, while for some application areas (Driving Behavior, Safety, and Trust and Acceptance) many studies (approx. 50%) were published in journals.

Overall, a total of 187 studies were reported in these 176 papers (Table 1). Most papers (150, or 85%) reported only one user study. The remaining papers reported two or more studies, including pilot studies. Regarding the median number of participants, we found that studies in Education and Training (52) and Safety (32) included the highest number of participants per study. Other application areas used between 20 and 30 participants per user study. Therefore, 20 to 30 participants per user study seems to be a typical range in the VR automotive community.

In the following section, we review user studies and simulations in each of the twelve application areas separately. We further present overview tables, and discuss each application area by examining already conducted research, so that readers can get more detailed insights into user studies from that domain, as well as research gaps to find potential areas for future research.

A total of 11 studies were reported in the Vehicle Navigation and Routing application area. The majority of the studies investigated navigational aids for manual driving (see Table 3), although Sawitzky et al. (2019) presented an AR interface for fully automated vehicles. Interestingly, out of the 11 studies, six reported using CAVEs, three used HMDs, and two used some form of monitor/HUD display. This makes sense, as navigational aids usually require a full view of the surrounding (virtual) environment, which CAVEs and HMDs offer. Eight studies used a within-subject design, and two studies used a mixed (within and between) design. One pilot study did not specify the study design. On average, 20 participants joined a within-subject study, with approx. one third of the subjects being female. All studies were carried out in indoor locations. 75% of studies reported both objective (quantitative) and subjective (qualitative) data, while the rest focussed on qualitative results. Regarding objective data, error/accuracy and gaze metrics were reported prominently, while subjective feedback included self-ratings, NASA TLX, and perceived safety. Furthermore, all studies used VR as a tool for conducting the experiment rather than as an end product.

Research in this application area mostly considers manual driving and low levels of vehicle automation. With advancements in HMD and CAVE technology, navigation and routing tasks can be researched more immersively than with monitors. Work needs to be done with regard to higher levels of vehicle automation, as motion sickness (covered in Section 4.7) needs to be investigated further, and navigational aids (in combination with AR HUDs or WSDs) could prove helpful (e.g., Topliss et al., 2018). Further, the advent of AR technology can help to transition traditional, two-dimensional direction instructions towards world-relative three-dimensional instructions that blend into the outside environment (e.g., Ying and Yuhui 2011; Palinko et al., 2013; Bolton et al., 2015). Navigation is one area where AR technology could offer substantial advantages due to its ability to overlay virtual cues on top of the simulated world with continuous depth. This is becoming more and more relevant as AR displays become increasingly common in cars (e.g., head-up displays on the windshield) (Jose et al., 2016) and consumers begin to wear head-mounted displays outdoors. A novel future research direction for automated vehicles would be to integrate navigation tasks with passenger experiences. Navigation studies will also need to address the spatial capabilities of the vehicle and driver/passengers, the communication of depth cues, and spatial information display modalities (Gabbard et al., 2014). Further, future navigation studies should consider outdoor user studies in a realistic test environment and should collect a range of qualitative and quantitative data.

A total of 18 studies were reported in the Driving Behavior application area. As expected, the majority of the studies investigated lower levels of vehicle automation (see Table 4), although three studies concentrated on conditionally automated driving (SAE L3–4). Out of the 18 studies, seven reported using CAVEs, seven used HMDs, and four used some form of monitor/HUD setup. Only one study collected solely subjective data, while the majority of studies reported objective or mixed data. Among the quantitative data, distance, steering, acceleration/braking, and gaze metrics were reported frequently, while subjective data included workload (NASA TLX), self-ratings, and SSQ (simulator sickness questionnaire). For the conditionally automated driving studies, take-over reaction times were additionally collected. Fifteen studies used a within-subject design, two a between-subject design, and one a mixed design. On average, 21 participants joined a within-subject study, with approx. one fourth of the subjects being female. Two of the studies were carried out in outdoor locations. Additionally, all studies used VR as a tool for conducting the experiment rather than as an end product.

Driving behavior is strongly associated with manual driving, focussing on the primary driving task. However, more recent concepts and studies show that non-driving related tasks are being investigated more extensively. As such, take-over requests (TORs), where the automated vehicle surrenders control to the human driver, will have to be researched more thoroughly. Keeping the driver “in the loop” is therefore one of the main tasks for driving behavior research. Gamification can be a great enabler in this area (Ali et al., 2016). A novel research approach would be to equip simulated automated vehicles with certain behaviors, such as “eco-driver” or “sporty driver”. For example, novel interfaces could give drivers constant feedback on their driving style (e.g., “eco-friendly”). In this regard, personalization concepts should be explored further, as user experience aspects will become more important in future vehicles (e.g., Riegler et al., 2020b; Lee et al., 2020). Furthermore, the inclusion of elderly drivers should receive more attention, and future in-vehicle interfaces should be tailored to this growing target group (Robertson and Vanlaar, 2008).

A total of 19 studies were reported in the Safety application area. The majority of the studies investigated safety aspects for manual driving (see Table 5), although six studies examined highly automated driving, and two studies evaluated mixed traffic situations, where both manual and automated vehicles share the road. Interestingly, only one study (Lindemann et al., 2018b) evaluated safety in the context of fully automated vehicles. Most studies addressed situation awareness and crash warnings, but smartphone usage and teleoperated driving were also investigated. Out of the 19 studies, ten reported using CAVEs, four used HMDs, and five used some form of monitor/HUD display. Eleven studies used a within-subject design, six a between-subject design, and two a mixed design. On average, 32 participants joined a within-subject study, with approx. one third of the subjects being female. All studies were carried out in indoor locations. About half of all studies reported both objective and subjective data, while the rest focussed on either quantitative or qualitative results. Regarding objective data, error/accuracy, collisions, distance, steering reversal rate (SRR), times such as reaction times and time to collision (TTC), as well as gaze metrics were reported prominently. Physiological measures encompassed galvanic skin response (GSR), skin conductance response time (SCR), and heart rate (HR). Subjective feedback included user experience (AttrakDiff) and workload (NASA TLX), alongside custom questionnaires. Almost all reviewed studies reported using VR as the study setup. One study evaluated the use of VR HMDs for supporting teleoperations of shared automated vehicles, and one study used HMDs to alert drivers to safety-critical issues when using their smartphone while driving.

Similar to the Navigation and Routing, and Driving Behavior application areas, the Safety category is dominated by research on manual driving. However, as mixed traffic situations will occur more prominently in the future, we believe that research interest will increase for highly automated vehicles, for example, when it comes to cooperative driving and teleoperated driving. VR plays an important role in safety research, as AR awareness/attention cues can be simulated. As such, new opportunities will emerge encompassing both AR and VR in automotive safety research, including gamification concepts (Schroeter and Steinberger, 2016). As all reviewed papers in this category reported user studies being conducted indoors, opportunities emerge to research safety aspects in outdoor environments with VR HMDs, for example on test tracks (e.g., Frison et al., 2019). Additionally, several VR HMDs nowadays offer video/AR see-through capabilities, which could be researched for conditionally and fully automated vehicles as a modality to keep the driver in the loop. The review also shows that qualitative measurements are not as common as quantitative ones. When conducting further research on safety aspects, such as contact-analogue warnings on a HUD, we encourage researchers and practitioners to go beyond traditional measurements such as reaction, braking, and take-over times, and to also include user experience metrics using self-rating questionnaires (e.g., User Experience Questionnaire, AttrakDiff). Additionally, VR HMDs as tools for immersive teleoperations should be researched further, as initial research (e.g., Bout et al., 2017) shows promising results in spatial awareness for remote operators.

We assessed 11 studies in the Driving Education and Training application area. Many of the studies investigated lower levels of vehicle automation (see Table 6), as educational driving simulators are evaluated for their application in driving schools or health centers. Nonetheless, four studies concentrated on highly automated driving. HMDs and monitors/HUDs were most commonly used, while a CAVE environment was only utilized in one study. All studies except one, which only gathered quantitative data, collected subjective data, and four studies (including all higher-level automation studies) additionally evaluated objective criteria, mostly TOR times. Physiological measurements included electrocardiogram signals (ECG) as well as galvanic skin response (GSR) data. As for qualitative data, self-ratings using custom questionnaires were used most extensively. Additionally, the simulator sickness questionnaire (SSQ) and the IGroup Presence Questionnaire (IPQ) were utilized. Six studies were designed as within-subject studies, and five as between-subject studies. The within-subject studies were conducted with 40 participants on average, with 43% of the subjects being female. With respect to the location, all studies took place in an indoor environment. Additionally, all studies used VR as a tool for conducting the experiment rather than as an end product.

As VR environments and scenarios can easily be tailored to their users’ needs, VR systems are well suited for education purposes. For example, particular real-world situations that would be difficult to find, or replicate, can be modeled in VR, and even replicated as digital twins. Sportillo et al. (2018b) explored different educational simulators, and found that the acquisition of driving skills in conditionally automated vehicles was most highly rated for the VR solution, as compared to a user manual and a fixed-base simulator. Driving training in the context of mixed traffic might be especially useful for learner drivers who have to consider cooperative driving situations with vehicles of different automation levels, and driving simulators can help them achieve this paradigm shift (Sportillo et al., 2019). In addition, driving training can provide great benefits to people with visual and mental impairments to improve their orientation and mobility in road environments (Thevin et al., 2020). Therefore, we encourage automotive researchers to investigate health aspects as part of the education and training process in VR driving simulations. Moreover, we also see the need to conduct longitudinal studies in this domain, such as the one reported by Sibi et al. (2020). For example, future research could investigate longitudinal effects of future mixed traffic scenarios for novice drivers, and the effect on trust and acceptance of AVs, which shows the interdisciplinary nature of this application area.

A total of 26 studies were reported in the UI Design and Prototyping application area. We found that HUD and WSD concepts were most prominently researched (see Table 7). Regarding vehicle automation, manual, conditionally automated, and fully automated driving scenarios were all examined. Further, there was no clear preference towards utilized devices, as HMDs, CAVEs, and monitors/HUDs were employed. Eighteen studies used a within-subject design, four a between-subject design, and four a mixed design. On average, 24 participants joined a within-subject study, with 36% of the subjects being female. With the exception of two studies, all studies were carried out in indoor locations. The majority of all studies reported both objective and subjective data, while the rest focussed on quantitative results only. Regarding objective data, reaction times, error/accuracy, and gaze metrics were reported prominently, while subjective feedback included NASA TLX, SSQ, Toronto Mindfulness Scale (TMS), and self-ratings. Additionally, most studies used VR as a tool for conducting the experiment. However, three studies evaluated VR as an end product, such as a passenger wearing an HMD for content overlays synchronized with the vehicle’s movements, and HMDs used as immersive instruments for vehicle interior and exterior design.

Another application area of VR is the rapid UI design and prototyping process, which can be achieved immersively and cost-effectively (Goedicke et al., 2018). For example, Morra et al. (2019), Riegler et al. (2020c), Charissis et al. (2013), and Gerber et al. (2020) use VR simulation to iteratively design AR HUDs or WSDs. Generally, vehicle interiors, surface quality, and shapes can be inspected in VR by using certain materials and shaders (Tesch and Dörner, 2020), and aid in the manufacturing process (Gong et al., 2020). Merenda et al. (2019a) and Merenda et al. (2019b) emphasize the level of detail and visual fidelity the virtual environment must have, as driving performance and gaze behavior are influenced by these parameters. Similarly, Paredes et al. (2018) suggest that VR experiences require a degree of sophistication even early on in the prototyping phase, which includes the use of high-fidelity content. We found that both qualitative and quantitative assessments were conducted for this application area, a practice we encourage researchers to continue, as both the performance of HMIs and their subjective ratings must be considered. In this regard, personalization should be researched further, as the emergence of more and more digital interfaces inside vehicles, and the concurrent discontinuation of hardware UIs (e.g., buttons, sliders) will allow novel tailored UIs to be crafted for any user.

We investigated a total of 12 studies in the Passenger Experiences application area. Most of the studies investigated higher levels of vehicle automation (see Table 8), with special focus on fully automated driving reported by five studies. HMDs and monitors/HUDs were commonly used, while a CAVE environment was only utilized in two studies. All studies collected qualitative data, and two studies additionally evaluated quantitative criteria, such as TOR times, and physiological data, including heart rate variability (HRV) and breathing rate (BR). Regarding subjective data, self-ratings using custom questionnaires were used most extensively, alongside questionnaires such as the SSQ and the Engagement, Enjoyment, and Immersion (E2I) questionnaire. Nine studies were designed as within-subject studies, two as between-subject studies, and one as mixed-design. One study did not report the study design. The within-subject studies were conducted with 27 participants on average, with almost one third of the subjects being female. With respect to the location, two of the studies took place in an outdoor environment, while the remaining studies were conducted in indoor lab environments. Nearly half of all papers utilized VR as an end product for investigating the use of HMDs for passengers, while the remaining papers employed VR as a tool, for example to simulate AR content.

VR technology can further be employed to investigate passenger activities and experiences inside vehicles. Equivalent to windshield displays, side windows can also be used as displays utilizing corresponding technology to visualize content for side and back-seat passengers. In particular, Wang et al. (2016) investigated displaying entertainment and games on the side windows intended to keep younger passengers entertained on longer trips. Li J. et al. (2020) investigated back-seat commuters’ preferences for activities in VR. Preferences indicate increased interest in productivity work, as being immersed in VR was perceived as less distracting and more focussed. However, passengers should also be aware of physical borders to avoid bumping into the vehicle interior or other passengers, which should be researched further (proxemics, Li J. et al., 2020). VR HMDs can further enable passengers to “dive” into new (virtual) worlds in otherwise mundane drives (Paredes et al., 2018). To this end, Paredes et al. (2018) found that the proper match between VR content and the movement of the car can lead to significantly lower levels of motion sickness. Therefore, it is essential to match the speed of the VR content with that of the real vehicle. For highly automated driving, when drivers become passengers, this research will be especially valuable, as vehicles will be used as mobile entertainment or office platforms. We therefore encourage the automotive research community to investigate comfort, anxiety, entertainment, and work aspects, using virtual reality simulations for autonomous vehicles. As VR HMDs increase in portability, performance, and affordability, we believe that future in-vehicle experiences will include AR content being displayed on the windshield and side windows, thereby superimposing digital objects onto the real world, as well as VR headsets providing immersive virtual content for rear-seat passengers.
For the former, VR can be used to simulate augmentation concepts (e.g., Wang et al., 2017), while the latter uses VR as an end product.

A total of 8 studies were reported in the Simulator and Motion Sickness application area. We found that research focussed on visual cues to tackle simulator sickness (see Table 9). Regarding vehicle automation, manual, conditionally automated, and fully automated driving scenarios were all examined. Further, most user studies reported using HMDs, while two user studies used a CAVE or monitor/HUD setup, respectively. Similarly, an analysis of the collected data types shows that subjective criteria were evaluated most commonly, followed by mixed data types (3) and objective-only (1). Regarding objective data, driving times, error/accuracy, acceleration/braking, vection, and speed metrics were reported, while subjective feedback included SSQ, Presence Questionnaire (PQ), Motion Sickness Assessment Questionnaire (MSAQ), and self-ratings. All but one study reported using a within-subject design, while the remaining study reported a between-subject design. On average, 29 participants joined a within-subject study, with only 23% of the subjects being female. Moreover, all studies were carried out in indoor locations, and most used the VR technology as a tool rather than as an end product. In three studies, VR HMDs were utilized to investigate their applicability for displaying diminished or additional virtual content.

When conducting user studies in VR in general, and on automated driving in particular, as active drivers become passive passengers, simulator and motion sickness must be considered. Simulator sickness is visually induced motion sickness (Rangelova and Andre, 2019). Motion sickness can occur when stationary users perform self-motion or when there are delays between head movements and the visual display presentation (Hettinger and Riccio, 1992). Known symptoms caused by motion sickness include nausea, disorientation, headache, and general discomfort, among others (Kennedy and Fowlkes, 1992). McGill and Brewster (2019) and McGill et al. (2017) explore the use of VR HMDs in vehicles worn by passengers. Motion sickness can occur through sensory mismatch, which arises when the eyes see that the digital content in VR does not conform to the vehicular movements registered by the vestibular system (Reason and Brand, 1975). Moreover, McGill et al. (2019) suggest that postural sway/head position changes should be kept to a minimum to reduce simulator sickness in case no positional tracking can be ensured by the simulator. Sawabe et al. (2017) use vection illusion to invoke pseudo-acceleration which leads to reduced acceleration stimulus, thereby helping passengers to counter simulator and motion sickness. A visual solution is proposed by Hanau and Popescu (2017) as they display visual cues of the acceleration to passengers, thus reducing the sensory conflict between the perceived acceleration and the absence of corresponding visuals. Williams et al. (2020) propose a multisensory setting, i.e., visuo-haptic feedback in VR driving simulation, and Lucas et al. (2020) explore seat vibrations and their effect on simulator sickness. Their findings show that feedback congruency and simulated road vibrations lead to more immersion for the driver, thereby creating a positive impact on simulator sickness.
We see a wide range of proposed solutions to simulator/motion sickness being researched. It should be noted that both physiological and subjective ratings (Simulator Sickness Questionnaire - SSQ, Presence Questionnaire - PQ) should be assessed and reported for user studies. Further, it is recommended to consider parameters such as study duration and visual fidelity in VR simulations, which influence the level of immersion and presence (Bowman and McMahan, 2007). Furthermore, as future in-vehicle experiences might include VR HMDs, we encourage researchers to explore their viability and applicability as end products, for example for rear-seat passengers (McGill and Brewster, 2019). To this end, outdoor driving studies should be conducted, and the congruency of visual and motion information as well as lag effects should be researched.

We examined a total of 16 studies in the Interaction Modalities application area. We found that research was conducted for both lower and higher levels of vehicle automation (see Table 10), and two studies reported details for all levels of vehicle automation. All VR devices (HMDs, CAVEs, monitor/HUDs) were utilized. Further, all but two studies collected both qualitative and quantitative data. Quantitative criteria included TOR times, error/accuracy, gaze, and steering metrics, among others. Qualitative data included NASA TLX, SSQ, self-ratings, and interviews, among others. Fifteen studies were designed as within-subject studies, and one as a between-subject study. The within-subject studies were conducted with 19 participants on average, with about one third of the subjects being female. With respect to the location, all studies were conducted in indoor lab environments. Furthermore, all papers reported using VR as a tool for conducting the study rather than as an end product.

Future intelligent vehicles will need tailored modalities for interacting with their UIs. Instead of more traditional buttons, knobs, sliders and touch screens, novel forms of user interfaces (e.g., touch, speech, gestures, handwriting, and vision; Norman, 2010) are being researched further in the automotive domain. As Bottone and Johnsen (2016) state, one of the goals of VR driving simulation is to achieve high fidelity of interaction, maximizing the overall presence of the user in the simulation. This can be attained by integrating real objects (e.g., pedals, steering wheel etc.) into the virtual environment. Manawadu et al. (2016) evaluate hand gestures as an alternative to the steering wheel in semi-automated vehicles. However, the majority of research on driver-vehicle interaction focuses on NDRTs instead of the primary driving task. For example, Riegler et al. (2020a) explore head gaze-based interactions with WSDs to interoperate with an infotainment system. Eye-tracking within VR has also been explored (Makedon et al., 2020). Riegler et al. (2020c) utilize speech-based interactions to perform an NDRT in semi-automated vehicles. Pan et al. (2017) used brain signals (i.e., electroencephalography, or EEG) to let drivers navigate a vehicle in VR. Moreover, joysticks and similar hardware controllers already established to navigate 3D worlds were investigated (Chia and Balash, 2020). While we recognize that many interaction modalities are being researched separately, we encourage the utilization of sensor and interaction fusion, i.e., integrating multiple interaction modalities that are enabled according to the current context of being in a highly automated vehicle (e.g., work/leisure trip, entertainment/work, private/public transport, time of day). Additionally, different mobility concepts (e.g., shared public vehicles) should be evaluated in combination with accepted interaction types and their proxemics.
In the areas of automotive context awareness and personalization, in combination with mixed reality, we believe that research is still at an early stage and should be expanded.

A total of 12 studies were reported in the Trust, Acceptance, and Ethics application area. We found that fully automated vehicles were most prominently researched (see Table 11), although manual driving was also researched in the area of ethical decision making. Further, HMDs and CAVE environments were employed in most studies, while monitor/HUD setups were utilized for two user studies. Eight studies used a within-subject design, and four studies used a between-subject design. On average, 25 participants joined a within-subject study, with less than one third of the subjects being female. All studies were carried out in indoor locations. All studies reported subjective data, and half of the studies included objective data as well. Regarding objective data, HRV, decision times, and gaze metrics were reported prominently, while subjective feedback included Trust Scale (TS), Technology Acceptance Model (TAM), User Experience Questionnaire (UEQ), NASA TLX, and self-ratings. Moreover, all studies used VR as a tool for conducting the experiment rather than as an end product.

A number of research studies were carried out in VR environments to investigate trust in AD systems. Shahrdar et al. (2019) use a VR autonomous vehicle simulator to measure the evolution of trust between humans and self-driving cars. To this end, they developed a real-time trust measurement to explore trust levels according to different driving scenarios. Similarly, Ha et al. (2020) examine the impact of explanation types and perceived risk on trust in AVs. Simple explanations, or feedback, such as descriptions of the vehicle’s tasks, led to elevated trust in AVs, while too much explanation led to potential cognitive overload, and did not increase trust (Ha et al., 2020). Djavadian et al. (2020) evaluate the acceptance of automated vehicles and found that especially heavy traffic situations lead to a higher acceptance as the vehicle would choose faster and less congested routes. Further, trust and acceptance should not only be evaluated from the driver’s perspective, but also from an outsider’s view. For example, Holländer et al. (2019b) look into communication concepts between AVs and pedestrians and their impact on trust by using VR simulations. In particular, they look at crossing scenarios and how AVs can let pedestrians know when it is safe to cross the street. Additionally, Colley et al. (2020) explore trust in AVs by detecting and recognizing pedestrians’ intent in order to calibrate drivers’ trust. As challenges in this application area, we see the need to measure trust over longer periods of time, and the possibility of decreasing and subsequently re-building trust in automated vehicles. Context awareness that is influenced by the driver’s physiological metrics (e.g., stress) could be used to adapt (i.e., increase or decrease) system feedback and information display.

We examined a total of 15 studies in the External HMI application area. We found that research was conducted almost exclusively for fully automated vehicles (see Table 12). All studies utilized HMDs for their HMI concepts. Further, all studies collected qualitative data. Additionally, quantitative data was reported by eight studies. Quantitative criteria included time measurements, error/accuracy, and gaze metrics, among others. Qualitative data included NASA TLX, User Experience Questionnaire (UEQ-S), self-ratings, and interviews, among others. Most studies were designed as within-subjects studies, and were conducted with 32 participants on average, with 43% of the subjects being female. With respect to the location, all studies were conducted in indoor environments. In addition, all of these studies utilized VR as a tool for conducting the research rather than using it as an end product.

Several studies in automotive research focus on external car bodies as a design space. Since the technical implementation for real vehicles is cumbersome and costly, the majority of research utilizes VR to explore external HMI (eHMI) concepts. External automotive interfaces provide services to different road users, such as pedestrians, passengers, and drivers of other vehicles. In mixed traffic situations, where automated and non-automated vehicles share the road, eHMIs can provide additional information, such as the driving state and intent, to other road users. In a VR simulator study, Singer et al. (2020) explore a communication interface between an automated vehicle intending to park and pedestrians. The user study results reveal that additional signals achieve a better perception of the vehicle’s intention, and improve perceived safety. As such, external HMIs provide a novel design space for pedestrians. Asha et al. (2020) investigate various eHMI concepts, such as public displays, warning systems, and malfunction alerts. Additionally, eHMIs can be used as ambient displays by blending into the environment to reduce visual pollution in the cityscape (Asha et al., 2020). However, such eHMIs are not limited to visualization only (e.g., Chang et al., 2017; Stadler et al., 2019); indeed, they can be used for interaction purposes, such as active route navigation for pedestrians using gesture interaction (Gruenefeld et al., 2019). We encourage researchers to investigate eHMIs not only for pedestrians, but also for cyclists to enable safe interactions, and to foster social connections between automated vehicles and vulnerable road users, for example by defining a common understanding, or guidelines, between them (Kaß et al., 2020).
Additionally, our review shows that most eHMI research is focused on SAE L5 fully automated vehicles. However, conditionally automated vehicles need to communicate with pedestrians as well, as the driver might not be fully attentive to the road environment while still being present in the vehicle. For example, SAE L3 and L4 vehicles could monitor the driver’s attentiveness towards the outside environment, and accordingly visualize the eHMI to pedestrians.

A total of 20 studies were reported in the Vulnerable Road Users (VRU) application area. We found that pedestrian behavior, especially crossing behavior with automated vehicles, was most prominently researched. In addition, the driver perspective regarding pedestrian safety was also extensively researched. As such, the research in this application area was applied to all levels of vehicle automation (see Table 13). Further, HMDs and CAVE environments were employed in most studies, while monitor/HUD setups were utilized for three user studies. Sixteen studies were designed as within-subjects studies, and four as between-subjects studies. On average, 32 participants joined a within-subjects study, with approx. 43% of the subjects being female. All but one of the studies were carried out in indoor locations; one of these additionally included an outdoor setup, and the remaining study was solely outdoor-based. All studies reported objective data, and about half of the studies included subjective ratings as well. Regarding objective data, time and distance measurements, speed, acceleration/braking, and gaze metrics were reported prominently, while subjective feedback included NASA TLX, System Usability Scale (SUS), Trust in Automation Questionnaire, and self-ratings. Furthermore, all studies made use of VR as a tool, rather than as an end product.

Vulnerable road users, including pedestrians and cyclists, are being researched from two perspectives: 1) the perspective of the driver, who is responsible for being aware of the surroundings in manual driving (e.g., Bozkir et al., 2019; Frémont et al., 2019), and 2) the perspective of the VRU, who needs to understand the driver/vehicle intentions (e.g., Doric et al., 2016; Velasco et al., 2019). As such, speed perceptions and crossing behaviors have to be thoroughly evaluated. With the emergence of highly automated vehicles, we recognize that drivers might be engaged in NDRTs, such as entertainment or work activities (Pfleging et al., 2016). The communication of situation awareness, even at higher levels of vehicle automation, must therefore be researched further, including feedback strategies and visual, auditory, or multimodal transitions from the NDRT to the attention-required task. Therefore, take-overs need to be carefully explored, and we encourage researchers to investigate both quantitative and qualitative measures. Particularly, augmented reality head-up and windshield displays visualizing hazard cues to the driver could prove beneficial for the safety of VRUs (e.g., Phan et al., 2016). The possibility to simulate AR cues in VR environments gives researchers new ways to explore VRU scenarios in safe and controlled study settings.

In this application area, virtual reality is used to generate and analyze traffic scenarios (e.g., traffic jams, throughput at intersections, among others). Throughout the analysis, we identified 11 out of 176 papers that focus on traffic simulations, and examined them in more detail. We omitted an overview table, since only two of these papers included user study findings. Ayres and Mehmood (2009) developed an open-source traffic simulator capable of generating many permutations and adjustments of parameters to simulate real-life highway situations. The advantages of using VR are 1) visualizing the virtual road traffic environment in real time in an immersive manner from different perspectives, and 2) displaying the data generated from the virtual sensors and creating graphical renderings, such as graphs and charts. In the context of highway scenarios, Xu et al. (2020) investigate merging conflicts visualized in the VR environment rather than in traditional microscopic simulations for a more immersive analysis. Traffic simulations focusing on city traffic are conducted by Grasso et al. (2010), who evaluate commute times as well as eco-friendliness of various traffic simulations. Luo et al. (2012) developed a VR traffic simulation with emphasis on weather effects, such as cloudy and icy conditions, as numerous traffic accidents can be traced back to harsh climate or complicated terrain conditions. Yu et al. (2014) further consider wind effects in their microscopic VR traffic simulator by calculating aerodynamic parameters in order to optimize intelligent traffic throughput. Additionally, Yu et al. (2013) investigate overtaking behaviors in their VR traffic simulator by modeling lane changing behavior. In order to verify the simulation correctness and effectiveness of their model, Yu et al. (2013) evaluated complex traffic maneuvers. A user study on lane-change behavior conducted by Zimmermann et al. (2018) showed that game-theoretic approaches have the potential to power local cooperation between traffic participants using social interaction concepts. Mixing the virtual and real world, using so-called digital twins, is accomplished by Szalai et al. (2020) in the form of testing a real vehicle in a virtual environment. Because traffic simulation provides a large number of possible scenarios, a test vehicle with its full control algorithm can be evaluated in various traffic situations. With the mixed reality or digital twin approach, Szalai et al. (2020) state that simulation environments can greatly support the automated vehicle development process, and facilitate validation procedures for such systems.

We see great potential for digital twin approaches in mixed traffic simulations and user studies, where study participants can experience close-to-reality scenarios. Potential application areas are driving education and training, as metrics such as fuel consumption and energy efficiency can be calculated in real time. We further see the need to develop heuristics in order to evaluate scenarios according to a set of parameters, and allow for reproducibility and validity evaluation of simulations. To this end, we believe it is essential to share datasets and make evaluation results openly available to foster synergies between researchers.

Overall, a rise in VR user studies for a growing number of use cases can be identified. Accordingly, the role, importance, and responsibility of VR driving simulators are increasing as well. While early VR driving simulators were monitor-based (e.g., Weinberg et al., 2011; Yang et al., 2016), more recent research is conducted with more immersive solutions, such as HMDs and CAVEs. For example, Ihemedu-Steinke et al. (2017) and Grasso et al. (2020) provide details on the conceptualization, architectural design, modeling, and implementation phases of creating a VR driving simulator using HMDs. More details on a CAVE infrastructure for VR driving simulation with focus on investigating AR HUDs are presented by Gabbard et al. (2019). While most VR driving simulators are placed in indoor lab environments, Ghiurãu et al. (2020), for example, investigate see-through AR concepts in a real vehicle while the driver is wearing a VR HMD. A recent comparative study between six different autonomous driving simulation platforms with varying levels of visual and motion fidelity conducted by Yeo et al. (2020) shows that MR and VR HMDs surpass simple monitor setups for driving simulators, even more so when applied in a real vehicle. Additional research is being conducted on the use of hardware controllers (Sportillo et al., 2017), and natural interaction metaphors (Moehring and Froehlich, 2011) within VR driving simulator setups.

Moving forward, we recommend that readers consider the use of open-source VR driving simulators, because of their research community support and the reproducibility and validity they offer for user studies. Several solutions exist, which we outline in the following:

• AutoWSD (Riegler et al., 2019a): focus on rapid prototyping of HCI concepts (interaction modalities, windshield displays) in automated driving (SAE L3–5) using customizable scenarios.

• CARRS-Q IVAD Simulator (Schroeter and Gerber, 2018; Gerber et al., 2019): focus on rapid prototyping of HCI concepts for SAE L3–5 AVs based on pre-recorded 180/360° videos.

• VR-OOM (Goedicke et al., 2018): focus on evaluating HCI concepts in VR in a real vehicle.

• OpenROUTS3D (Neumeier et al., 2019): focus on teleoperated driving to help overcome the problems of automated driving.

• AirSim (Shah et al., 2017): focus on physically and visually realistic simulations for autonomous vehicles and drones.

• GENIVI (GENIVI Vehicle Simulator, 2016): focus on development and testing of in-vehicle infotainment systems.

In this paper, we reported on the status quo of VR applications utilized in human factors research in driving automation, from 2009 to 2020. We reviewed 176 papers from a wide range of journals and conferences. We followed a structured approach to give an overview of the research domain by selecting relevant papers, and reviewing them in a standardized manner. Across the continuously growing number of papers over the years 2009–2020, our exploration shows that as higher levels of vehicle automation are researched, the use of VR applications increases accordingly. A substantial body of research addresses different aspects of VR utilization in driving automation, indicating that researchers in the community are working on developing and improving human-machine interfaces for automated vehicles and the entities affected by them, such as drivers, passengers, pedestrians, and other road users. When researchers and practitioners plan to engage in research and development of automated driving, the present work provides them with an overview of the current landscape. Thus, one can derive information about the main research areas, as well as emerging trends that have not been studied extensively so far.