- 1Department of Systems Biology, Columbia University Irving Medical Center, New York, NY, United States

- 2Academy of Mathematics and Systems Science, Chinese Academy of Sciences, Beijing, China

- 3School of Mathematical Sciences, University of Chinese Academy of Sciences, Beijing, China

- 4Institute of Zoology, Chinese Academy of Sciences, Beijing, China

Robust Principal Component Analysis (RPCA) offers a powerful tool for recovering a low-rank matrix from highly corrupted data, with growing applications in computational biology. Biological processes commonly form intrinsic hierarchical structures, such as tree structures of cell development trajectories and tumor evolutionary history. The rapid development of single-cell sequencing (SCS) technology calls for the recovery of embedded tree structures from noisy and heterogeneous SCS data. In this study, we propose RobustTree, a unified framework to reconstruct the inherent topological structure underlying high-dimensional data with noise. By extending RPCA to handle tree structure optimization, RobustTree leverages data denoising, clustering, and tree structure reconstruction. It solves the tree optimization problem with an adaptive parameter selection scheme that we proposed. In addition to recovering real datasets, RobustTree can reconstruct continuous topological structure and discrete-state topological structure of underlying SCS data. We apply RobustTree on multiple synthetic and real datasets and demonstrate its high accuracy and robustness when analyzing high-noise SCS data with embedded complex structures. The code is available at https://github.com/ucasdp/RobustTree.

1 Introduction

Cell fate decisions and tumorigenesis are complex biological processes that experience many state transitions, such as cell differentiation and somatic cell evolution. The rapid development of single-cell sequencing (SCS) technology makes it possible to unveil the dynamics of such biological processes. However, the limited genetic content of a single cell and the stochastic nature of sequencing techniques can result in high rates of gene dropout and various sequencing errors, leading to noisy SCS data (Gawad et al., 2016; Chen et al., 2022; Wen et al., 2022). It poses additional challenges to reconstruct the potential hierarchical topological structure or dynamics of cells from such noisy high-dimensional SCS data.

In recent years, recovering intrinsic structure from high-dimensional data has become a central topic in the data science and machine learning community. Pioneering works generally seek to perform data dimensionality reduction or associate data with certain structured objects (Mao et al., 2016). For example, principal curve (Hastie and Stuetzle, 1989) and its successors (Tibshirani, 1992; Bishop et al., 1998; Kégl et al., 2000; Smola et al., 2001; Sandilya and Kulkarni, 2002; Olivas et al., 2009), fits/maps an infinitely differentiable curve with a finite length to pass through the middle of data. However, these methods cannot handle self-intersection data. To address this gap, principal graph method uses a collection of piecewise smooth curves to approximate the data structures with self-intersection (Kégl and Krzyzak, 2002). Topological data analysis, which performs graph representation of high-dimensional datasets, provides another way to handle self-intersection structure (Carlsson, 2009). Ge et al. (2011) develops an efficient topological data analysis algorithm, which uses Reeb graphs (Dey and Wang, 2011) to extract a one-dimensional skeleton from unorganized data. Another tool, Mapper (Singh et al., 2007), builds simplicial complexes to preserve certain topological structures from the original dataset, and has been applied on single-cell data to reconstruct the dynamical structures of cell states (Rizvi et al., 2017). In addition, Mao et al. (2016) proposes a principal graph and structure learning framework based on reversed graph embedding (RGE) to capture the local information of the underlying graph structure. RGE is subsequently equipped by Monocle2 (Qiu et al., 2017) for single-cell trajectory inference.

The high noise in SCS data, which is brought by either technological or/and experimental issues, could possibly affect downstream clustering or distort the reconstruction of intrinsic structures. A number of computational methods have been proposed to retrieve lost and corrupted information from SCS data (Chen Z. et al., 2020; Patruno et al., 2021). Among them, methods based on matrix decomposition are computationally more efficient, especially extensions of robust principle component analysis (RPCA) algorithms. RPCA is an efficient low-rank matrix decomposition method for recovering low-dimensional subspace from corrupted data (Lin et al., 2010; Candes et al., 2011; Hsu et al., 2011; Vidal et al., 2016), which has been applied to denoise either DNA or RNA profiles of SCS data (Chen C. et al., 2020; Chen Z. et al., 2020; Su et al., 2022). Since the assayed cells often come from a few states in cell development or clones in tumor, and cells of same state or clone have similar or identical expression or genomic profile. In addition, noise in observed SCS data generally introduced by technologies can be random and sparse (Chen Z. et al., 2020; Su et al., 2022). Therefore, SCS data well suits the low-rank plus sparse matrices assumptions of RPCA.

In this study, we propose a unified framework, termed RobustTree, to reconstruct the inherent topological structure underlying high-dimensional data with high noise. The framework involves a matrix decomposition process that recovers the latent data points in a low-rank space. And these data points are used directly to reconstruct a tree to represent the inherent single-cell evolutionary trajectory. We also introduce a discriminative and compact feature representation for clustering problems with an assumption that the cluster centers should be close to each other when connected on the learned tree structure, otherwise they should be distant (Mao et al., 2015). More specifically, the optimization objective function of the framework consists of the following three basic components, including 1) denoising using robust principal component analysis (RPCA) and extended RPCA method; 2) performing data clustering with a soft assignment strategy; 3) reconstructing the minimum spanning tree (MST) among cluster centers as the potential topological structure. RobustTree leverages data denoising, clustering, and tree structure reconstruction and solves the tree optimization problem with an adaptive parameter selection scheme. Based on adaptive trade-off parameters, RobustTree not only can reconstruct continuous topological structure, e.g., cell development trajectory based on single-cell RNA sequencing gene expression data, but also display discrete-state topological structure, e.g., tumor evolution history based on single-cell DNA sequencing genetic variant data, including single-cell single nucleotide variation (scSNV) data and single-cell copy number alteration (scCNA) data. By using multiple simulated and real datasets, we demonstrate that RobustTree is accurate and robust on high-noise data with complex structures.

2 Materials and methods

Let

In the following, we introduce three components of the proposed framework, including 1) RPCA and extended RPCA algorithms (Section 2.1), which are used to recover low-rank subspace from data matrix with corrupted and/or missing entries, 2) data clustering (Section 2.2) and 3) the minimum spanning tree (MST) optimization problem (Section 2.3). Finally, we describe our RobustTree framework, which is a low-rank matrix recovery framework coupled with tree structure optimization (Section 2.4).

2.1 RPCA and extended RPCA

2.1.1 RPCA

The celebrated dimensionality reduction method, PCA (Vidal et al., 2005), which assumes that noise follows a Gaussian distribution, is unrobust to in-sample outliers. As a consequence, the robust PCA (RPCA) (Lin et al., 2010; Candes et al., 2011; Hsu et al., 2011; Vidal et al., 2016) emerges to recover the potential low-rank matrix from data with sharp and sparse noise.

Assume that the observed data matrix XM×N is generated by the sum of two matrices X = A0 + E0, where A0 is a low-rank matrix, and E0 represents the intra-sample outliers affected by random sparse noise, then the RPCA problem can be formulated as:

where ‖ ⋅‖0 denotes 0-norm of the matrix (i.e., the number of non-zero entries in the matrix), and λ is a trade-off parameter. However, solving Problem Eq. 1 is generally NP-hard (Vidal et al., 2005; Chen Z. et al., 2020). Therefore, in order to reduce the above computation burden, Problem Eq. 1 can be convexly relaxed as

where ‖ ⋅‖* and ‖⋅‖1 represent the nuclear norm and the ℓ1 norm of the matrix, respectively. We refer to Problem Eq. 2 as a relaxed version of RPCA, which can be efficiently solved by augmented Lagrange multipliers (ALM) (Lin et al., 2010).

2.1.2 Extended RPCA

In practice, dropout/missing events can occur frequently in the observed matrix, except for corrupted entries. In order to model the missing entries, we first define a linear mapping

which aims to decompose X into a low-rank matrix A and sparse component E based only on the observed data PΦ(X). The optimization Problem Eq. 3 is shown to be equivalent to solving the following constrained optimization problem (Shang et al., 2014):

which can also be solved by the ALM algorithm (Shang et al., 2014; Chen Z. et al., 2020). It is worth noting that when Φ is the index set of all entries in X, Problem Eq. 4 can be transformed into Problem Eq. 2; that is, Problem Eq. 2 can be regarded as a special case of Problem Eq. 4. Thus, we can solve these problems in a unified form provided by Problem Eq. 4.

2.2 Data clustering

The second component of our proposed framework is clustering of the recovered low-rank matrix. Since RPCA-based recovery of low-rank matrices may not fully guarantee an error-free state, we cannot obtain cluster centers by simply merging rows with the same features in the low-rank matrix A (Chen Z. et al., 2020). Assume there exist K clusters in the data points. Then we denote Ck as the kth cluster centroid of A (k ∈ {1, …, K}). We minimize the following quantization error (Smola et al., 2001) to get the optimal cluster centroids:

When K < M, we introduce an indicator matrix Δ ∈ [0,1]M×K, where the (i, k)th element δi,k = 1 indicates that data point Ai is assigned to the kth cluster, and δi,k = 0 otherwise. Then, we get the equivalent optimization objective as follows:

where

where σ > 0 is a regularization parameter. When R ∈ [0,1]M×K is a left stochastic matrix with each column summing to one, optimization Problem Eq. 7 is equivalent to mean shift clustering, and when R ∈ [0,1]M×K is a right stochastic matrix with each row summing to one, optimization Problem Eq. 7 is equivalent to the Gaussian mixture model with uniform weights (Mao et al., 2016).

2.3 Minimum spanning tree (MST)

MST is a common graphical representation applied in the reconstruction of dynamic biological processes, such as cell developmental trajectory reconstruction and tumor evolutionary history recovery (Gawad et al., 2014; Yuan et al., 2015; Ross and Markowetz, 2016; Qiu et al., 2017; Chen et al., 2019; Chen Z. et al., 2020). MST characterizes lineage tracing path between different cell states or tumor clones and can explicitly reflect the process of cell development or the progression of subclones (Chen Z. et al., 2020).

We follow the MST optimization scheme proposed by Mao et al. (2015). Let

Let B = [bi,j] ∈ {0,1}K×K; Then the integer linear programming formulation of MST can be represented as follows (Mao et al., 2015):

Worth noting that there are three constraints applied to set

where bi,j ≥ 0, the set of linear constraints is given by

2.4 Low-rank matrix recovery coupled with tree structure optimization

Based on the three building blocks described in Sections 2.1, Sections 2.2, and Sections 2.3, we are ready to formulate our unified framework to learn the latent topological structure hidden underneath noisy SCS data. We use the alternating direction multiplier method (ADMM) to solve the proposed optimization objective function by simultaneously recovering real data points and learning a tree-like structure with guaranteed convergence.

2.4.1 RobustTree framework

Given observed input SCS data XM×N, our goal is to reveal the underlying tree structure that generates X. Since the observed data may be corrupted by noise, it is not appropriate to recover the underlying topology directly from the observation matrix. Instead, to unveil the underlying structure, we assume that a latent low-rank matrix AM×N can be recovered from XM×N, and we focus on learning a tree structure

where λ, θ, γ and σ are trade-off parameters, ri,k indicates the (i, k)th entry in matrix

2.4.2 RobustTree framework optimization algorithm

We solve optimization Problem Eq. 10 by alternating direction multiplier method (ADMM). We divide variables into two disjoint groups as {A, E, C} and {B, R}, and then solve each subproblem iteratively until convergence is achieved. We show details of the two following subproblems below.

• Fix {B, R} and update {A, C, E}

When fixing {B, R}, Problem Eq. 10 can be simplified into the following subproblem:

The corresponding augmented Lagrange function is:

Optimization Problem Eq. 12 can be transformed into the following form after some matrix manipulations:

Updating C.

Let the partial derivative of

Substituting CA into

Updating E.

By retaining items related only to E in Problem Eq. 14, we have

Solution E of Problem Eq. 15 can be written as (Shang et al., 2014; Chen Z. et al., 2020):

Updating A.

After removing items unrelated to A in Problem Eq. 14, we have

Then Problem Eq. 17 is equivalent to

where

then

Owing to

we have

Then, we can set Lf = ‖(μ + 2γ)I − 2γSST‖. Considering the quadratic approximation function of

since formula (19) is a strong convex function, a primal solution exists for

Let

Then, we obtain pseudocode for the subproblems of solving A, E, C with fixed B, R, as shown in Algorithm 1.

Algorithm 1. The Algorithm of solution for A, E, C with fixed B, R

fixing {B, R}

while not converged do

while not converged do

• Fix {A, C, E} and update {B, R}

Given {A, C, E}, Problem Eq. 10 with respect to B and R is a jointly convex optimization problem, which can be solved independently.

Updating R.

When fixing {A, E, C}, the optimization function related to R can be written as follows:

The KKT condition is ‖Ai − Ck‖2 + σ(1 + log (ri,k)) + α = 0 and ∑kri,k = 1, ri,k ≥ 0, ∀k ∈ {1, …, K}. Then we have the analytic solution of R given by ri,k = exp (‖Ai − Ck‖2/σ − (1 + α/σ)). Owing to ∑kri,k = 1, we can get

Updating B.

The term associated with B in optimization function (10) is to find the minimum spanning tree among cluster centers in C, which can be solved via Kruskal’s algorithm (Kruskal, 1956; Cormen et al., 2022). Then we can sort out the pseudocode for the subproblem of solving B, R with fixed A, C, E as shown in Algorithm 2.

Algorithm 2. The Algorithm of solution for B, R with fixed A, C, E

fixing {A, C, E}

dk,k′ = ‖Ck − Ck′‖2, ∀k, ∀k′

Obtain B by solving optimization Problem Eq. 9 via Kruskal’s algorithm

Compute R with each element as

Finally, combining the two subproblems above, we formulate the complete pseudocode of exact RobustTree algorithm in Algorithm 3. Fortunately, as it turns out, in the kth iteration of B and R, we do not have to solve the subproblem

Algorithm 3. The exact RobustTree algorithm

Input: X, λ, θ, γ, and σ

Output: A, C, E, B, R

Initialize A by ZF(X), K, C

While not converged do

dk,k′ = ‖Ck − Ck′‖2, ∀k, ∀k′

Obtain B by solving Problem Eq. 9 via Kruskal’s algorithm

Compute R with each element as

While not converged do

While not converged do

Algorithm 4. The inexact RobustTree algorithm

Input: X, λ, θ, γ, and σ

Output: A, C, E, B, R

Initialize A by ZF(X), K, C

while not converged do

dk,k′ = ‖Ck − Ck′‖2, ∀k, ∀k′

Obtain B by solving Eq. 9 via Kruskal’s algorithm

Compute R with each element as

2.4.3 Convergence analysis

Since optimization Problem Eq. 10 is non-convex, many local optimal solutions are possible. We perform theoretical convergence analysis as shown in Theorem 1.

Theorem 1. Let {Bl, Rl, Al, Cl, El} be the solution of Problem (10) in the lth iteration, and let

1.

2. Sequences {Bl, Rl, Al, Cl, El} and

Then, sequence

2.4.4 Adaptive parameter selection

We denote missing rate as s in the observed input SCS data. Then we select the hyper-parameters in Problem Eq. 10 as follows:

where the selection of λ refers to Chen Z. et al. (2020). And we choose the other parameters to coordinate the value of each single item in Problem 10 with a similar magnitude during the optimization process.

We initialize K as following:

Each cell i is assigned to cluster k, which has the maximum value ri,j, ∀j ∈ {1, …, K}, i.e., k = arg maxj∈{1,…,K}ri,j, and finally remove the repeated cluster centers to get the final cluster centers. With the above parameter settings, RobustTree can be applied to the reconstruction of continuous trajectory and discrete-state topological structure.

2.5 Evaluations

To evaluate cluster assignment and data recovery performance, we adopt the following measurements, including 1) adjusted rand index (ARI) (Rand, 1971; Qiu et al., 2017; Chen et al., 2019); 2) the error rate of the recovered matrixes to the ground truth (Chen Z. et al., 2020); 3) the percentage of missing entries imputed correctly (Miura et al., 2018; Chen Z. et al., 2020) and 4) the false positives and false negatives (FPs + FNs) ratios of output genotype matrix to input genotype matrix for scSNV data (Miura et al., 2018; Chen Z. et al., 2020).

3 Results

3.1 RobustTree reconstructs continuous trajectories on noisy simulation data with high accuracy

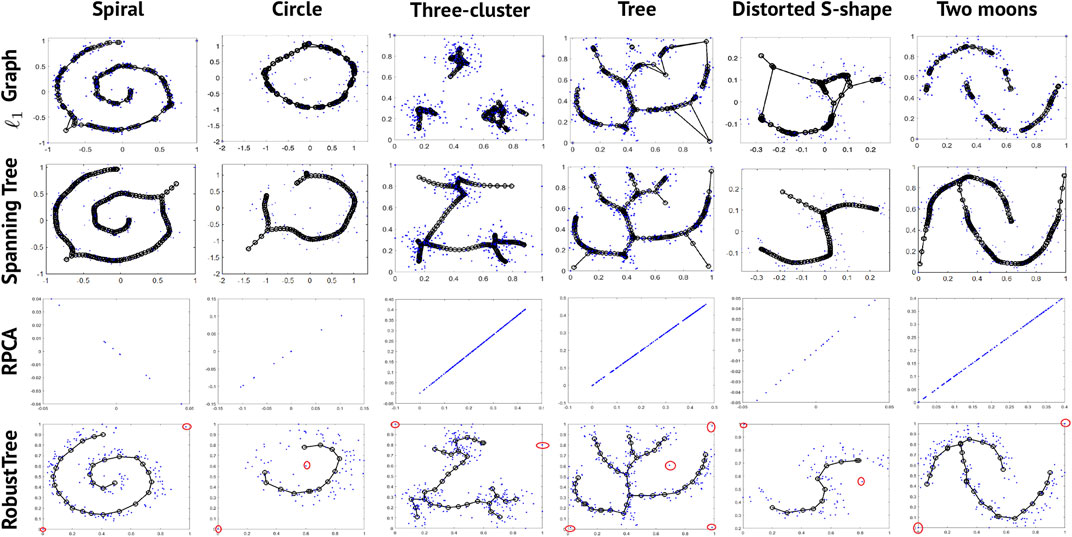

To demonstrate that RobustTree can preserve the global structure and handle high-noise data with continuous topology, we apply RobustTree to 6 simulated datasets with continuous trajectories. The original data are taken from Mao et al. (2016), which contain 200 (Spiral), 100 (circle), 300 (Three-cluster), 300 (Tree), 100 (Distorted S-shape), and 200 (Two moons) data points, respectively. To test the robustness of RobustTree to noise, we add 1 to 4 sharp noise points (points in the red circle in the fourth row of Figure 1) to each datum. We compare the results of RobustTree with l1 Graph and Spanning Tree, which are two algorithms performing principal graph and structure learning based on inverse graph embedding (Mao et al., 2016), as well as RPCA/RobustClone (Chen Z. et al., 2020).

FIGURE 1. Results of 4 algorithms on 6 synthetic datasets with continuous topological structure. The red circle points in the fourth row are the artificially added noise points.

Although l1 Graph and Spanning Tree show better effectiveness and stability than the Polygonal Line method (Kégl et al., 2000), SCMS (Ozertem and Erdogmus, 2011), and Mapper (Singh et al., 2007) algorithms in the results of Figure 5 in Mao et al. (2016), they are unstable to sharp noise. As shown in Figure 1, l1 Graph and Spanning Tree will generate redundant bifurcations or edges to connect noise points with parameters tuned for the original dataset by Mao et al. (2016), highlighting the obviously ineffective identification or removal of noise. When we directly apply RPCA, which is the first step in RobustClone algorithm, to this synthetic dataset, it tends to optimize the continuous topological structure into a straight line. This is probably due to the fact that the original two dimensional data matrices do not have the relatively low-rank property required by the RPCA method.

In contrast, since RobustTree optimizes denoising, clustering and tree reconstruction in a unified framework, it shows stronger robustness than other methods. As shown in Figure 1, RobustTree effectively extracts the sharp noise into E, clusters the recovered latent low-rank matrix, and reconstructs the intrinsic continuous trajectory with multiple types of data, including structures with linear or simple bifurcations.

3.2 RobustTree reconstructs continuous multi-branch trajectory effectively

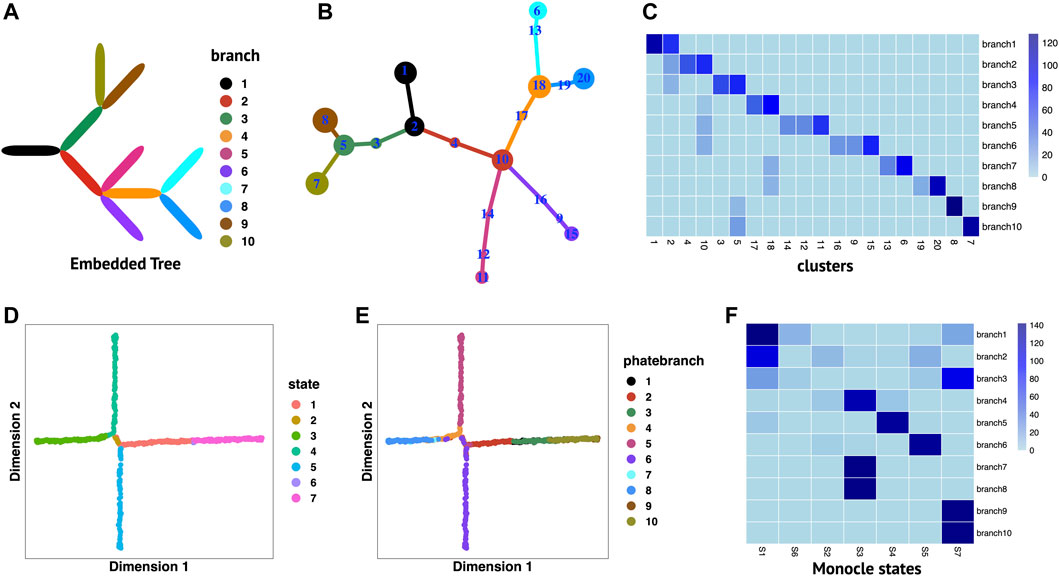

We perform RobustTree on simulated PHATE data to demonstrate its ability to handle data with continuous multi-branch development structures. The original data contain 1440 single cells and 60 genes (Moon et al., 2019), which imply an embedded continuous tree structure with 10 uniform branches to model a system where development along a given branch corresponds to increased expression of several genes (Figure 2A) (Chen et al., 2019; Moon et al., 2019).

FIGURE 2. RobustTree reconstructs continuous multi-branch trajectory on PHATE data. (A) The real embedded tree structure of simulated PHATE data. (B) Tree trajectory reconstructed by RobustTree and visualized by R package igraph. The size of the cluster is proportional to the number of cells it contains, and the branch length is proportional to the distance between connected clusters. (C) Heatmap shows the percentage of cells in cluster (x-axis) distributed into real branch (y-axis). (D) Monocle2 reduces dimension on PHATE data. (E) Truth branch assignment on 2D embedding of Monocle2. (F) Heatmap shows the percentage of cells in Monocle states (x-axis) distributed into real branch (y-axis).

RobustTree identifies 20 clusters and accurately reconstructs continuous trajectory with multi-branch on PHATE data, which contains three bifurcating events and one trifurcating event (Figure 2B). Figure 2C displays the distribution of clusters over branches. Clusters identified by RobustTree are almost exactly divided into a certain branch, except for clusters 2, 5, 10, and 18, which are located at branching points. The ARI between the branches identified by RobustTree and truth branch assignment is 0.6765. Since clusters at branching point contain cells from different branches, when excluding these clusters in ARI computation, we can achieve 0.9383.

We compare the RobustTree to Monocle2 (Qiu et al., 2017), a method that resolves complex single-cell trajectories using RGE, on this dataset. Monocle2 identifies 7 cell states, denoted as S1–S7, (Figures 2D, E, F), where real branches 1, 2 and 3, real branches 7, 8 and real branches 9, 10, are merged into S1, S3, and S7, respectively, leading to 6 main Monocle2 branches (Figures 2D, E, F). The ARI between the cell states identified by Monocle2 and the truth branch assignment is 0.4427.

3.3 RobustTree recovers discrete cell evolutionary history accurately on simulation data

Tumor evolution has been a subject with longstanding discussion (Nowell, 1976; Chen Z. et al., 2020). In tumors, cells form subpopulations (subclones) with nearly or completely identical genetic compositions, usually making the number of subclones much smaller than the number of cells or the number of mutational sites. In practice, the observed single-cell data are often incorporated with random noise caused by technical errors, including sequencing errors and dropout events. Accordingly, an important topic in tumor single-cell data analysis involves recovering subclonal genotypes and reconstructing the evolutionary history of subclones from corrupted data. In this study, we can also perform RobustTree on tumor single-cell DNA sequencing data to study the above problems.

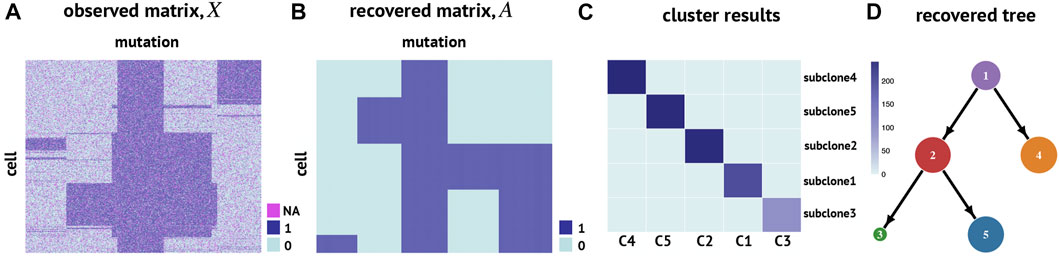

We first apply RobustTree to simulated scSNV data, which contain 1000 cells and 300 mutation sites, along with a sequencing error rate of 30% and a dropout rate of 20% (Chen Z. et al., 2020) (Figure 3A). There are 5 subclones along the real cell evolution tree, containing 193, 235, 93, 241, and 238 cells, respectively (Supplementary Figure S1). We use RobustTree to recover real cell genotypes and reconstruct the subclone evolutionary tree, and compare the performance of RobustTree to a state-of-the-art method, SCG, on the dataset.

FIGURE 3. RobustTree reconstructs the tumor evolutionary tree on simulated single-cell DNA sequencing data. (A) Observed cell genotype matrix. (B) Recovered cell genotype matrix by RobustTree. (C) Heatmap shows the percentage of cells in each subclone (y-axis) distributed into real subclones (x-axis). (D) Subclone evolutionary tree reconstructed by RobustTree.

RobustTree shows more accuracy than SCG on this simulation dataset. Specifically, for subclone identification, RobustTree identifies 5 subclone assignments on the tree, where the subclone assignment is exactly the same as the true subclone assignment (Figure 3C), that is, the ARI between the subclones identified by RobustTree and the true clone assignment is 1. However, SCG identifies 4 clusters, containing 278, 93, 391, and 238 cells, respectively. The ARI between the SCG clusters and the real clone assignment is 0.7245. For genotype recovery, RobustTree recovers the true genotype matrix with 100% accuracy (Figure 3B) with error rate and FPs + FNs(output/input) as 0, missing imputed correctly rate as 1. And the recovered tree structure (Figure 3D) exactly coincides with the real evolutionary history (Supplementary Figure S1). In contrast, the FPs + FNs(output/input) and missing imputed correctly rate are 0.2484, and 0.9603, respectively, leading to the total error rate of 3.77% in SCG results.

3.4 RobustTree recovers scSNV genotype and infers subclonal tree on high-grade serous ovarian cancer data

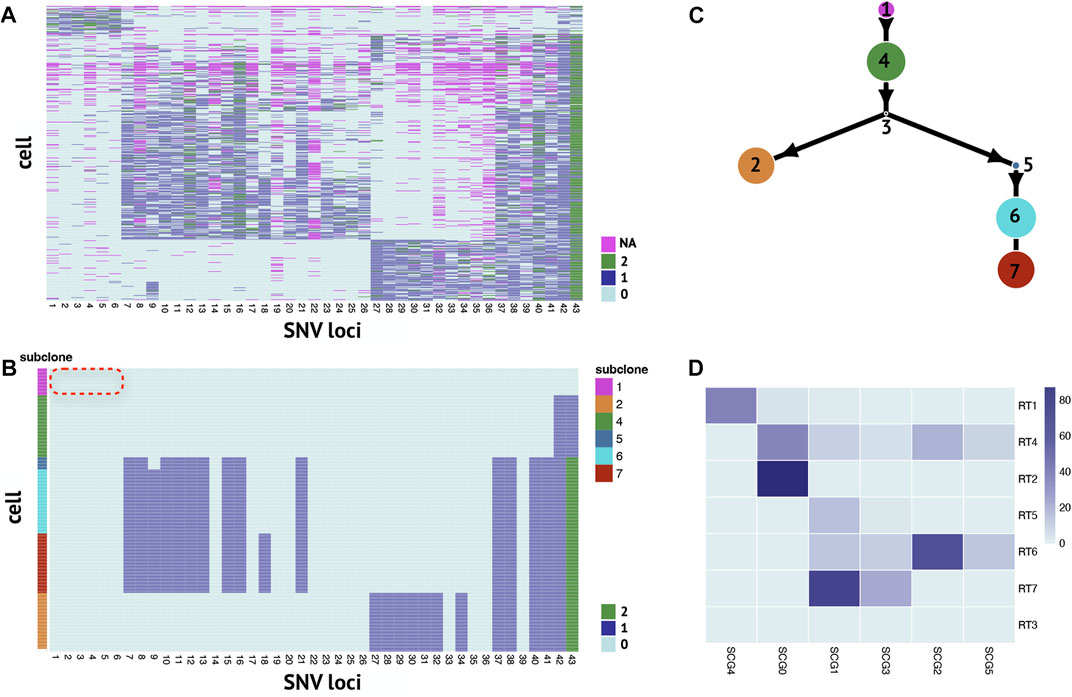

We apply RobustTree on the single-cell high-grade serous ovarian cancer (abbreviated as HGSOC) data (McPherson et al., 2016; Roth et al., 2016; Chen Z. et al., 2020), which contain 420 cells and 43 selected SNV sites with a missing rate of 10.7% (Figure 4A). RobustTree efficiently recovers the real genotype by imputing the missing data and correcting the noisy entries (Figure 4B) and identifies 7 subclones on the reconstructed MST, which contain 40, 87, 0, 92, 18, 95, and 88 cells, respectively (Figure 4C). Since subclone1 does not contain any mutations, it is assigned as the root subclone (Figure 4C).

FIGURE 4. RobustTree reconstructs the tumor evolutionary tree on single-cell high-grade serous ovarian cancer data. (A) Observed noisy SNV genotype matrix. (B) Recovered SNV genotype matrix by RobustTree. (C) Subclone evolutionary tree reconstructed by RobustTree. (D) Heatmap shows the percentage of cells in each subclone (y-axis) distributed into SCG subclones (x-axis).

Along the phylogenetic trees reconstructed along RobustTree, heterozygous mutations first occur at loci 42 and 43 in subclone4. Followed by homozygous mutation at locus 43 and heterozygous mutations at loci 37, 38, 40 and 41, all descendant subclones inherit these mutations. In addition, based on the observable ancestor subclone4, subclone2 accumulates mutations at loci 27-34, on the other branch, mutations mostly occur at loci 7–21.

We compare RobustTree to SCG (Roth et al., 2016) on this dataset. SCG identifies 6 clusters, where one main branch in SCG results, SCG0, contains cells from both subclone2 and subclone4 on RobustTree, and another main branch consisting of clusters SCG1, SCG3, SCG2, SCG5 corresponds to the branch comprised of subclones 5, 6, 7 on RobustTree. The cells of root cluster SCG4 dominate subclones1 of RobustTree, which can be interpreted as normal subclone (Figure 4D). However, SCG recovers some precancerous mutations in the normal cluster. In general, these precancerous mutations are expected to be carried in subsequent subclones, but they are completely absent in all progeny subclones (Figure 3 in Roth et al. (2016)). In contrast, RobustTree and RobustClone identify these mutations as false positive issues and recover the genotype without these mutations (Figure 4B), which seems more reasonable.

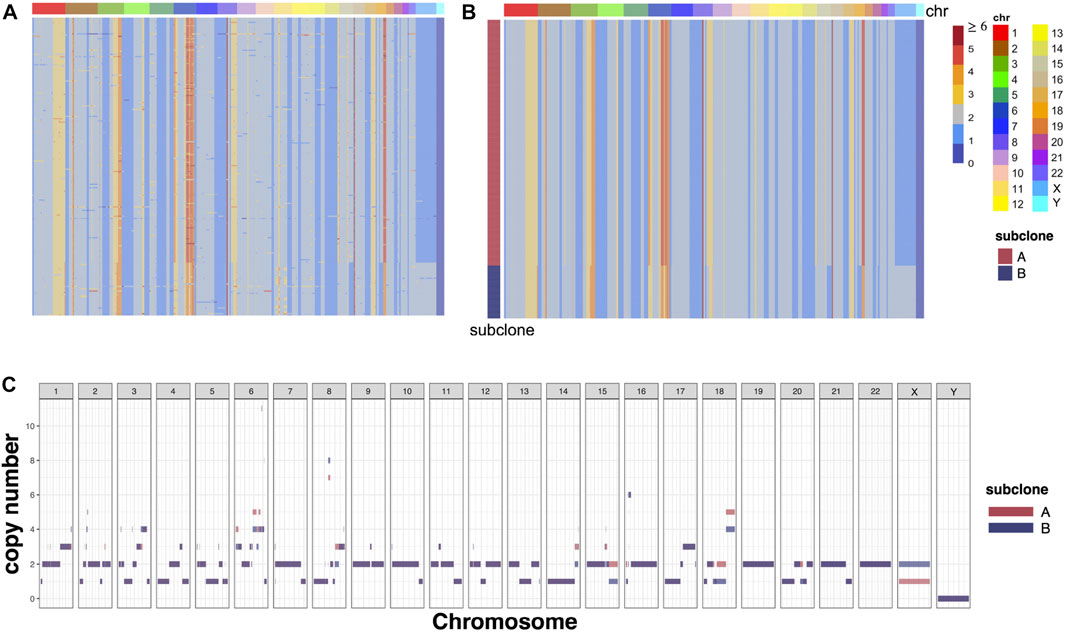

3.5 RobustTree recovers scCNA genotype on SA501X3F data

RobustTree can also detect copy number heterogeneity and identify subclones in scCNA data. To demonstrate this, the RobustTree algorithm was applied to the cell copy number profile from primary triple-negative breast cancer (TNBC) xenograft passages, denoted as SA501X3F data (Zahn et al., 2017; Campbell et al., 2019). The data consist of the copy number states with 260 single cells and 20,651 genomic bins, as shown in Figure 5A. By leveraging data denoising, clustering, and tree structure reconstruction, RobustTree identifies two subclones (Figure 5B), containing 214 cells (subclone A) and 46 cells (subclone B), respectively. RobustTree recovers true cell genotypes (Figure 5B) and subclonal genotypes (Figure 5C), where the difference between the genotypes of the two subclones lies in the large fragment variation on the X chromosome, and small fragment variants on the chromosomes 6, 8, 15, and 18 (Figure 5BC).

FIGURE 5. RobustTree reconstructs the tumor evolutionary tree on SA501X3F data. (A) Observed noisy CNA genotype matrix. (B) Recovered CNA genotype matrix by RobustTree. (C) Genotypes of subclones recovered by RobustTree.

This result is completely consistent with the result of RobustClone, that is, the ARI value between RobustTree and RobustClone classification is 1. And the cell assignment of subcloneA is also completely consistent with the major subclone identified in Zahn et al. (2017); Campbell et al. (2019). In the results of Zahn et al. (2017), there are two subclones derived from the subcloneB of RobustTree, which contain 28 and 18 cells, respectively. Since Zahn et al. (2017) identifies clones without explicitly correction for noise, there exists some uncertainty of assignment between these two minor subclones (Campbell et al., 2019). Therefore, classification results with two subclones are more robust (Chen Z. et al., 2020).

4 Conclusion

Computational methods based on SCS data to reconstruct inherent structure can provide important insight into the understanding of cell development and tumor progression. In this study, we propose a unified framework, RobustTree, which can recover corrupted entries and reconstruct the intrinsic structure underlying data. By coupling RPCA with tree structure optimization, RobustTree can leverage data denoising, clustering and tree structure reconstruction, as well as solve the tree optimization problem using adaptive parameter selections. By comparing to some other state-of-the-art methods, experimental results demonstrate the effectiveness of RobustTree on various types of datasets with different topological structures, including continuous cellular complex development trajectory and discrete cell evolutionary tree.

Data availability statement

Publicly available datasets were analyzed in this study. This data can be found here: https://liwang8.people.uic.edu/PAMI2016-PGSL.html; https://github.com/KrishnaswamyLab/PHATE; https://github.com/ucasdp/RobustClone; https://static-content.springer.com/esm/art%3A10.1038%2Fnmeth.3867/MediaObjects/41592_2016_BFnmeth3867_MOESM262_ESM.xls; https://zenodo.org/record/2363826#.Y_Wn1OzMKdY.

Author contributions

ZC, BZ, FG, LM, and LW conceived the model. ZC and BZ conducted the experiments. ZC, BZ, LM, and LW analyzed the results. ZC, BZ, FG, LM, and LW wrote and reviewed the manuscript.

Funding

This work is supported by the National Key R&D Program of China under Grant 2019YFA0709501, NSFC grants (Nos.11971459, 12071466), NCMIS of CAS, and LSC of CAS.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fgene.2023.1110899/full#supplementary-material

References

Bishop, C. M., Svensén, M., and Williams, C. K. (1998). Gtm: The generative topographic mapping. Neural Comput. 10, 215–234. doi:10.1162/089976698300017953

Campbell, K. R., Steif, A., Laks, E., Zahn, H., Lai, D., McPherson, A., et al. (2019). clonealign: statistical integration of independent single-cell rna and dna sequencing data from human cancers. Genome Biol. 20, 54–12. doi:10.1186/s13059-019-1645-z

Candes, E. J., Li, X., Ma, Y., and Wright, J. (2011). Robust principal component analysis? J. AMC 58, 1–37. doi:10.1145/1970392.1970395

Carlsson, G. (2009). Topology and data. Bull. Am. Math. Soc. 46, 255–308. doi:10.1090/s0273-0979-09-01249-x

Chen, C., Wu, C., Wu, L., Wang, X., Deng, M., and Xi, R. (2020). scRMD: imputation for single cell RNA-seq data via robust matrix decomposition. Bioinformatics 36, 3156–3161. doi:10.1093/bioinformatics/btaa139

Chen, Z., An, S., Bai, X., Gong, F., Ma, L., and Wan, L. (2019). DensityPath: An algorithm to visualize and reconstruct cell state-transition path on density landscape for single-cell RNA sequencing data. Bioinformatics 35, 2593–2601. doi:10.1093/bioinformatics/bty1009

Chen, Z., Gong, F., Wan, L., and Ma, L. (2022). BiTSC2: Bayesian inference of tumor clonal tree by joint analysis of single-cell SNV and CNA data. Briefings Bioinforma. 23, bbac092. doi:10.1093/bib/bbac092

Chen, Z., Gong, F., Wan, L., and Ma, L. (2020). RobustClone: A robust PCA method for tumor clone and evolution inference from single-cell sequencing data. Bioinformatics 36, 3299–3306. doi:10.1093/bioinformatics/btaa172

Cormen, T. H., Leiserson, C. E., Rivest, R. L., and Stein, C. (2022). Introduction to algorithms. Massachusetts: MIT press.

Dey, T. K., and Wang, Y. (2011). “Reeb graphs: Approximation and persistence,” in Proceedings of the twenty-seventh annual symposium on Computational geometry, Paris, France, June 13-15, 2011, 226–235.

Gawad, C., Koh, W., and Quake, S. R. (2014). Dissecting the clonal origins of childhood acute lymphoblastic leukemia by single-cell genomics. Proc. Natl. Acad. Sci. 111, 17947–17952. doi:10.1073/pnas.1420822111

Gawad, C., Koh, W., and Quake, S. R. (2016). Single-cell genome sequencing: Current state of the science. Nat. Rev. Genet. 17, 175–188. doi:10.1038/nrg.2015.16

Ge, X., Safa, I., Belkin, M., and Wang, Y. (2011). “Data skeletonization via reeb graphs,” in Advances in neural information processing systems (Massachusetts: Massachusetts Institute of Technology Press), 24.

Hastie, T., and Stuetzle, W. (1989). Principal curves. J. Am. Stat. Assoc. 84, 502–516. doi:10.1080/01621459.1989.10478797

Hsu, D., Kakade, S. M., and Zhang, T. (2011). Robust matrix decomposition with sparse corruptions. IEEE Trans. Inf. Theory 57, 7221–7234. doi:10.1109/tit.2011.2158250

Kégl, B., Krzyzak, A., Linder, T., and Zeger, K. (2000). Learning and design of principal curves. IEEE Trans. Pattern Analysis Mach. Intell. 22, 281–297. doi:10.1109/34.841759

Kégl, B., and Krzyzak, A. (2002). Piecewise linear skeletonization using principal curves. IEEE Trans. Pattern Analysis Mach. Intell. 24, 59–74. doi:10.1109/34.982884

Kruskal, J. B. (1956). On the shortest spanning subtree of a graph and the traveling salesman problem. Proc. Am. Math. Soc. 7, 48–50. doi:10.1090/s0002-9939-1956-0078686-7

Lin, Z., Chen, M., and Ma, Y. (2010). The augmented Lagrange multiplier method for exact recovery of corrupted low-rank matrices. arXiv preprint arXiv:1009.5055.

Mao, Q., Wang, L., Goodison, S., and Sun, Y. (2015). “Dimensionality reduction via graph structure learning,” in Proceedings of the 21th ACM SIGKDD international conference on knowledge discovery and data mining. Editor L. Cao (New York: Association for Computing Machinery), 765–774.

Mao, Q., Wang, L., Tsang, I. W., and Sun, Y. (2016). Principal graph and structure learning based on reversed graph embedding. IEEE Trans. Pattern Analysis Mach. Intell. 39, 2227–2241. doi:10.1109/tpami.2016.2635657

McPherson, A., Roth, A., Laks, E., Masud, T., Bashashati, A., Zhang, A. W., et al. (2016). Divergent modes of clonal spread and intraperitoneal mixing in high-grade serous ovarian cancer. Nat. Genet. 48, 758–767. doi:10.1038/ng.3573

Miura, S., Huuki, L. A., Buturla, T., Vu, T., Gomez, K., and Kumar, S. (2018). Computational enhancement of single-cell sequences for inferring tumor evolution. Bioinformatics 34, i917–i926. doi:10.1093/bioinformatics/bty571

Moon, K. R., van Dijk, D., Wang, Z., Gigante, S., Burkhardt, D. B., Chen, W. S., et al. (2019). Visualizing structure and transitions in high-dimensional biological data. Nat. Biotechnol. 37, 1482–1492. doi:10.1038/s41587-019-0336-3

Nowell, P. C. (1976). The clonal evolution of tumor cell populations: Acquired genetic lability permits stepwise selection of variant sublines and underlies tumor progression. Science 194, 23–28. doi:10.1126/science.959840

Olivas, E. S., Guerrero, J. D. M., Martinez-Sober, M., Magdalena-Benedito, J. R., Serrano, L., Jos, A., et al. (2009). Handbook of research on machine learning applications and trends: Algorithms, methods, and techniques: Algorithms, methods, and techniques. Pennsylvania: IGI global.

Ozertem, U., and Erdogmus, D. (2011). Locally defined principal curves and surfaces. J. Mach. Learn. Res. 12, 1249–1286.

Patruno, L., Maspero, D., Craighero, F., Angaroni, F., Antoniotti, M., and Graudenzi, A. (2021). A review of computational strategies for denoising and imputation of single-cell transcriptomic data. Briefings Bioinforma. 22, bbaa222. doi:10.1093/bib/bbaa222

Qiu, X., Mao, Q., Tang, Y., Wang, L., Chawla, R., Pliner, H. A., et al. (2017). Reversed graph embedding resolves complex single-cell trajectories. Nat. Methods 14, 979–982. doi:10.1038/nmeth.4402

Rand, W. M. (1971). Objective criteria for the evaluation of clustering methods. J. Am. Stat. Assoc. 66, 846–850. doi:10.1080/01621459.1971.10482356

Rizvi, A. H., Camara, P. G., Kandror, E. K., Roberts, T. J., Schieren, I., Maniatis, T., et al. (2017). Single-cell topological rna-seq analysis reveals insights into cellular differentiation and development. Nat. Biotechnol. 35, 551–560. doi:10.1038/nbt.3854

Ross, E. M., and Markowetz, F. (2016). Onconem: Inferring tumor evolution from single-cell sequencing data. Genome Biol. 17, 69–14. doi:10.1186/s13059-016-0929-9

Roth, A., McPherson, A., Laks, E., Biele, J., Yap, D., Wan, A., et al. (2016). Clonal genotype and population structure inference from single-cell tumor sequencing. Nat. Methods 13, 573–576. doi:10.1038/nmeth.3867

Sandilya, S., and Kulkarni, S. R. (2002). Principal curves with bounded turn. IEEE Trans. Inf. Theory 48, 2789–2793. doi:10.1109/tit.2002.802614

Shang, F., Liu, Y., Cheng, J., and Cheng, H. (2014). “Robust principal component analysis with missing data,” in Proceedings of the 23rd ACM International Conference on Conference on Information and Knowledge Management, Shanghai, China, November 3 - 7, 2014, 1149–1158.

Singh, G., Mémoli, F., and Carlsson, G. E. (2007). “Topological methods for the analysis of high dimensional data sets and 3d object recognition,” in Eurographics Symposium on Point-Based Graphics, Mario Botsch, ETH Zurich, 2-3 Sept 2007.

Smola, A., Mika, S., Schölkopf, B., and Williamson, R. (2001). Regularized principal manifolds. Massachusetts: MIT Press.

Su, Y., Wang, F., Zhang, S., Liang, Y., Wong, K.-C., and Li, X. (2022). scWMC: weighted matrix completion-based imputation of scRNA-seq data via prior subspace information. Bioinformatics 38, 4537–4545. doi:10.1093/bioinformatics/btac570

Tibshirani, R. (1992). Principal curves revisited. Statistics Comput. 2, 183–190. doi:10.1007/bf01889678

Vidal, R., Ma, Y., and Sastry, S. (2016). Generalized principal component analysis. Berlin: Springer.

Vidal, R., Ma, Y., and Sastry, S. (2005). Generalized principal component analysis (gpca). IEEE Trans. Pattern Analysis Mach. Intell. 27, 1945–1959. doi:10.1109/TPAMI.2005.244

Wen, L., Li, G., Huang, T., Geng, W., Pei, H., Yang, J., et al. (2022). Single cell technologies: From research to application. Innovation 3, 100342. doi:10.1016/j.xinn.2022.100342

Wright, J., Ganesh, A., Min, K., and Ma, Y. (2013). Compressive principal component pursuit. Inf. Inference A J. IMA 2, 32–68. doi:10.1093/imaiai/iat002

Yuan, K., Sakoparnig, T., Markowetz, F., and Beerenwinkel, N. (2015). Bitphylogeny: A probabilistic framework for reconstructing intra-tumor phylogenies. Genome Biol. 16, 36–16. doi:10.1186/s13059-015-0592-6

Keywords: single-cell sequencing, robust principal component analysis, data denoising, clustering, tree structure reconstruction

Citation: Chen Z, Zhang B, Gong F, Wan L and Ma L (2023) RobustTree: An adaptive, robust PCA algorithm for embedded tree structure recovery from single-cell sequencing data. Front. Genet. 14:1110899. doi: 10.3389/fgene.2023.1110899

Received: 29 November 2022; Accepted: 13 February 2023;

Published: 08 March 2023.

Edited by:

Lin Hou, Tsinghua University, ChinaReviewed by:

Nayang Shan, Capital University of Economics and Business, ChinaJianyong Sun, Xi’an Jiaotong University, China

Copyright © 2023 Chen, Zhang, Gong, Wan and Ma. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Lin Wan, bHdhbkBhbXNzLmFjLmNu; Liang Ma, bWFsaWFuZ0Bpb3ouYWMuY24=

†These authors have contributed equally to this work and share first authorship

Ziwei Chen

Ziwei Chen Bingwei Zhang

Bingwei Zhang Fuzhou Gong2,3

Fuzhou Gong2,3 Lin Wan

Lin Wan Liang Ma

Liang Ma