- ¹College of Mechanical Engineering, Dalian University of Technology, Dalian, China

- ²School of Innovation and Entrepreneurship, Dalian University of Technology, Dalian, China

- ³The First Affiliated Hospital, Dalian Medical University, Dalian, China

- ⁴International Business School, Dongbei University of Finance and Economics, Dalian, China

In bioinformatics, understanding protein secondary structure is important for studying diseases and finding new treatments. Because experimental methods for determining protein secondary structure are time-consuming and expensive, pattern recognition and machine learning methods have been proposed. However, most of these methods achieve quite similar performance, which suggests that they have reached a model capacity bottleneck. Since both the model design and the learning process affect a model's learning capacity, we focus on the latter. To this end, we propose a framework called the Multistage Combination Classifier Augmented Model (MCCM) for the protein secondary structure prediction task. First, a feature extraction module extracts features with different levels of learning difficulty. Second, multistage combination classifiers learn decision boundaries for easy and hard samples, respectively; the latter penalizes the loss of the hard samples and thereby improves the prediction performance on them. Third, based on the Dirichlet distribution and information entropy, a sample difficulty discrimination module assigns samples with different learning difficulty levels to these classifiers. The experimental results on the publicly available benchmark CB513 dataset show that our method outperforms most state-of-the-art models.

Introduction

Genes control the traits of an organism by directing protein synthesis, through which their genetic information is expressed. Since the completion of the Human Genome Project, the study of protein structure has continued unabated. Understanding protein secondary structure is important for studying diseases and finding new treatments (Huang et al., 2016; Li et al., 2021), and protein structure prediction is an important research topic in bioinformatics. Proteins are the material basis of life, the basic organic constituents of cells, and the main carriers of life activities. Proteins fold into different structures or conformations, which make the various biological processes in organisms possible. Because the structure of a protein determines its function, protein structure prediction has great research value. It is difficult to predict the spatial structure of a protein from its primary structure, so the prediction of protein secondary structure has attracted much attention (Zhang, 2008; Källberg et al., 2012). Protein secondary structure refers to the local spatial structure of the polypeptide backbone, without considering the conformation of the side chains or the spatial arrangement of the whole peptide chain. Secondary structures are stabilized by hydrogen bonds on the backbone and are considered the link between primary sequences and tertiary structures (Myers and Oas, 2001). According to their distinct hydrogen bonding patterns, three types of secondary structure are generally identified, namely helix (H), strand (E), and coil (C), of which the helix and strand structures are the most common in nature (Pauling et al., 1951). In the classification computed by the DSSP algorithm, these three states are extended to eight: α-helix (H), 3₁₀-helix (G), π-helix (I), β-strand (E), β-bridge (B), β-turn (T), bend (S), and coil (C) (Kabsch and Sander, 1983), among which the α-helix and β-strand are the principal structural features (Lyu et al., 2021).
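The relationship between the eight DSSP states and the three coarse states can be written as a lookup table. The sketch below assumes one commonly used reduction (H, G, I to helix; E, B to strand; T, S, C to coil); other reductions exist, and the names `Q8_TO_Q3` and `q8_to_q3` are ours for illustration, not part of any cited tool.

```python
# One common (assumed) reduction from the eight DSSP states to three states.
Q8_TO_Q3 = {
    "H": "H", "G": "H", "I": "H",   # alpha-, 3-10-, and pi-helix -> helix
    "E": "E", "B": "E",             # beta-strand and beta-bridge -> strand
    "T": "C", "S": "C", "C": "C",   # turn, bend, and coil -> coil
}


def q8_to_q3(labels):
    """Map a string of 8-state labels to the corresponding 3-state labels."""
    return "".join(Q8_TO_Q3[state] for state in labels)
```

For example, `q8_to_q3("HGIEBTSC")` yields one label per residue in the reduced alphabet, so Q3 and Q8 accuracies can be computed from the same 8-state annotation.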

In genetics and bioinformatics, protein structure prediction aims to predict the three-dimensional structure of a protein from its amino acid sequence (Drozdetskiy et al., 2015). It is important for understanding the relationship between protein structure and function. Experimental methods for determining protein structure include X-ray crystallography, nuclear magnetic resonance spectroscopy, and electron microscopy. However, all of these methods are time-consuming and expensive and require expertise. Moreover, the number of known protein sequences is currently growing much faster than structures can be determined by chemical or biological experiments (Fang et al., 2020). Hence, methods for predicting protein secondary structure are urgently needed. Although the three-dimensional structure of a protein cannot be accurately predicted directly from its amino acid sequence, the predicted secondary structure helps in understanding it. The secondary structure retains part of the three-dimensional structural information and helps reveal the three-dimensional arrangement of the amino acids in the primary structure (Hanson et al., 2019).

Owing to the high application value of protein secondary structure prediction in many areas of biology, many deep learning-based algorithms have been proposed over the years (Li and Yu, 2016; Wang et al., 2016; Heffernan et al., 2017; Drori et al., 2018; Fang et al., 2018; Zhang et al., 2018; Uddin et al., 2020; Guo et al., 2021; Lyu et al., 2021). Current methods mainly use convolutional and recurrent neural networks to extract different protein features and then apply them to protein secondary structure prediction. For example, Li and Yu (2016) proposed an end-to-end deep network that predicts protein secondary structure from integrated local and global context features; it uses convolutional neural networks with different kernel sizes to extract multiscale local contextual features and a bidirectional network of gated recurrent units to capture global contextual features. Wang et al. (2016) presented Deep Convolutional Neural Fields (DeepCNF), which model not only the complex sequence-structure relationship through a deep hierarchical architecture but also the interdependency between adjacent secondary structure labels, making them much more powerful than traditional Conditional Neural Fields. Lyu et al. (2021) presented a reductive deep learning model, MLPRNN, to predict either 3-state or 8-state protein secondary structures. Uddin et al. (2020) incorporated a self-attention mechanism into the Deep Inception-Inside-Inception network (Fang et al., 2018) to capture both short- and long-range interactions among amino acid residues. Guo et al. (2021) integrated and developed multiple advanced deep learning architectures (DNSS2) to further improve secondary structure prediction. As described above, most researchers currently focus on exploring complex deep learning models, and few approach the protein secondary structure prediction task from the perspective of model learning or training methods, as is done for long-tailed classification by “ELF: An Early-Exiting Framework for Long-Tailed Classification” (Duggal et al., 2020).

Real data usually follow a long-tailed distribution, in which most samples are concentrated in only a few classes. On datasets with this distribution, neural networks usually cannot handle all classes (majority and rare classes) well. A model that performs well on the majority classes tends to perform poorly on the rare classes and vice versa, which degrades overall performance. The protein secondary structure prediction task shows a similar problem. We visualized the CB6133-filtered and CB513 datasets (Zhou and Troyanskaya, 2014) and found that the distribution of secondary structure labels is imbalanced: the labels α-helix (H), β-strand (E), and coil (C) are far more numerous than the other labels. This imbalance problem has traditionally been addressed by resampling the data (e.g., undersampling and oversampling) (Chawla et al., 2002; Minlong et al., 2019) or reshaping the loss function (e.g., loss reweighting and regularization) (Cao et al., 2019; Cui et al., 2019). However, by treating each example within a class equally, these methods fail to account for the important notion of example hardness: within each class, some examples are easier to classify than others (Duggal et al., 2020). “ELF: An Early-Exiting Framework for Long-Tailed Classification” was proposed to overcome these limitations and address the challenge of data imbalance. ELF incorporates the notion of hardness into the learning process and induces the neural network to focus increasingly on hard examples, because they contribute more to the overall network loss. It thereby frees up additional model capacity to distinguish difficult examples and can improve the classification performance of the model.
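To make the traditional loss-reweighting baseline concrete, the following minimal sketch counts label frequencies and derives inverse-frequency class weights. The weighting rule (n / (k × count)) is one common heuristic that we assume here, the function name `class_weights` is hypothetical, and the label string in the usage note is a toy input rather than data from CB513.

```python
from collections import Counter


def class_weights(labels):
    """Inverse-frequency class weights: n / (k * count_c) for each class c.

    n is the number of residues and k the number of observed classes, so a
    perfectly balanced label set gives every class a weight of 1.0.
    """
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {label: n / (k * count) for label, count in counts.items()}
```

On the toy string `"HHHHEEC"`, the rare label C receives the largest weight and the frequent label H the smallest. Such weights act per class only, which is exactly the limitation noted above: every example within a class is still treated equally.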

To our knowledge, few studies approach the protein secondary structure prediction task from the perspective of the model learning process. To fill this gap, this study proposes a framework called the Multistage Combination Classifier Augmented Model (MCCM). We first introduce a feature extraction module to extract features with different learning difficulty levels. Then, we design two classifiers that learn the decision boundaries for easy and hard samples, respectively. Finally, we propose a sample learning difficulty discrimination module and explore two strategies for it. The first strategy is label-dependent: a sample is assumed to be hard if it is misclassified. However, real data lack labels. Hence, the second strategy is label-independent and uses the Dirichlet distribution and information entropy. The experimental results obtained with the first strategy on the benchmark CB513 dataset show that our framework outperforms other state-of-the-art models by a large margin, indicating that the framework can achieve state-of-the-art performance if the multilevel samples discriminating module is designed effectively. The results obtained with the second strategy show that our model also outperforms most state-of-the-art models. In this work, we make the following key contributions:

• We are the first to develop a Multistage Combination Classifier Augmented Framework for the protein secondary structure prediction task. It consists of a multilevel (easy or hard level in this study) features extraction module, multistage combination classifiers, and a multilevel samples discrimination module. The last module is realized with a label-dependent method and a label-independent method, respectively.

• For our core multilevel samples discrimination module, we are the first to explore a label-independent measurement standard for discriminating easy and hard samples, based on the Dirichlet distribution and information entropy theory. The Dirichlet distribution is used to measure the model confidence according to subjective logic theory. The information entropy is used to evaluate whether the Dirichlet distribution is highly confident and thus to capture the easy samples that tend to be classified accurately by the easy classifier.

• The results based on the label-independent method show that our model outperforms most state-of-the-art methods, indicating that the designed multilevel samples discrimination module herein is effective. The excellent result based on the label-dependent method means that our framework can obtain a state-of-the-art performance if the multilevel samples discriminating module is designed appropriately. Hence, our work not only offers a new idea to deal with the protein secondary structure prediction task but also leaves room for further research focusing on how to design a more effective multilevel samples discrimination module.

Methods and Materials

Benchmark Datasets

In protein secondary structure prediction, the CB6133-filtered and CB513 datasets (Zhou and Troyanskaya, 2014) are two widely used benchmark datasets (Li and Yu, 2016; Fang et al., 2018; Zhang et al., 2018; Guo et al., 2021; Lyu et al., 2021). The CB6133-filtered dataset is used to train the model, and the CB513 dataset is used to test it; CB6133-filtered has been filtered to remove redundancy with CB513 so that performance can be tested on CB513. The CB6133-filtered training dataset is a large set of non-homologous sequences and structures containing 5,600 training sequences. It was produced with the PISCES Cull PDB server, a public server for culling sets of protein sequences from the Protein Data Bank (PDB) according to sequence identity and structural quality criteria (Wang and Dunbrack, 2003). The CB513 test dataset was introduced by Cuff and Barton (1999, 2000) and contains 514 sequences. Both benchmark datasets can be obtained from Zhou’s website.

Input Features

Considering the difficulty of protein secondary structure prediction, we use four types of features to characterize each residue in a protein sequence. The first two are the 21-dimensional one-hot coding of the amino acid residue and the 21-dimensional sequence profile, which is obtained from the PSI-BLAST (Altschul et al., 1997) log file and rescaled by a logistic function (Jones, 1999). The third is the seven-dimensional physical property feature (Jens et al., 2001), which has previously been used for protein structure and property prediction with good performance (Heffernan et al., 2017). The physical properties are the steric parameter (graph-shape index), polarizability, normalized van der Waals volume (VDWV), hydrophobicity, isoelectric point, helix probability, and sheet probability; the feature values can be obtained from Meiler’s study (Jens et al., 2001). Finally, the one-dimensional conservation score is obtained by applying the method of Quan et al. (2016):

The residues are transformed according to the frequency distribution of amino acids in the corresponding column of the multiple sequence alignment of homologous proteins. The score information in the profile features is calculated from this probability. The residue score in the i-th column is calculated as follows (Altschul et al., 1997):

where
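As a rough illustration of how the four feature groups could be assembled for one residue, the sketch below builds a 50-dimensional vector (21 + 21 + 7 + 1). Several details are assumptions rather than the authors' implementation: the residue alphabet and its ordering, the entropy-based form of the conservation score (log 21 plus the sum of q·log q over the column frequencies), and all function names.

```python
import math

# Assumed residue alphabet: 20 standard amino acids plus 'X' for any other
# symbol, giving the 21 dimensions mentioned in the text. The ordering is
# arbitrary and chosen only for illustration.
ALPHABET = "ACDEFGHIKLMNPQRSTVWYX"


def one_hot(residue):
    """Return the 21-dimensional one-hot coding of a residue."""
    index = ALPHABET.find(residue)
    if index < 0:                      # unknown symbols fall back to 'X'
        index = ALPHABET.index("X")
    return [1.0 if i == index else 0.0 for i in range(len(ALPHABET))]


def rescale_profile(pssm_row):
    """Squash raw PSSM scores into (0, 1) with the logistic function."""
    return [1.0 / (1.0 + math.exp(-score)) for score in pssm_row]


def conservation_score(frequencies):
    """Assumed entropy-based conservation: log(21) + sum_i q_i * log(q_i).

    Zero-frequency terms contribute nothing, since q * log(q) -> 0.
    """
    return math.log(len(frequencies)) + sum(
        q * math.log(q) for q in frequencies if q > 0.0
    )


def residue_features(residue, pssm_row, physical, frequencies):
    """Concatenate the four feature groups: 21 + 21 + 7 + 1 = 50 values."""
    return (one_hot(residue) + rescale_profile(pssm_row)
            + list(physical) + [conservation_score(frequencies)])
```

Under this assumed definition, a column with uniform amino acid frequencies has a conservation score of zero, and a fully conserved column reaches the maximum of log 21.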

Model Design

The proposed model for protein secondary structure prediction consists of a feature extraction module and a Multistage Combination Classifier Module. This section first introduces the two modules separately and then explains the overall architecture in detail.

Multilevel Features Extraction

We use a multilevel features extraction module to extract easy (low-level) and hard (high-level) features. The easy feature is obtained through four linear layers and multiscale one-dimensional convolution layers. First, we apply the four linear layers to the amino acid residue one-hot coding, sequence profile, physical property, and conservation score features, respectively. We then concatenate their outputs to obtain a denser and more informative feature representation. We define the concatenated outputs as

where

where

where

Then, based on the obtained easy feature

where

where

Finally, the easy feature

Multistage Combination Classifier Module

Predicting protein secondary structure is a challenging task, which we try to solve from the perspective of the model learning method. On the one hand, existing research points out that, within each class (whether a majority or a minority class), some examples are easier than others (Duggal et al., 2020). On the other hand, just as different people may be suited to different work, different classifiers may be suited to classifying different samples. Following this theory and intuition, we design two classifier branches in the model to deal with samples of different difficulty levels. The first classifier branch comprises a simple linear layer, which aims to deal with the simple samples (easy to classify). The second classifier branch comprises a multi-layer perceptron (

where

Sample Difficulty Discrimination Module

We have designed the easy and hard classifiers to deal with different samples. However, we still need a measurement standard to discriminate between the easy and hard samples. Labels are the ideal tool for this purpose: if a sample is classified correctly according to its label, we regard it as an easy sample; otherwise, we regard it as a hard sample and send it to the hard classifier. In this way, each classifier is assigned suitable samples, and our model can be trained well. However, real data lack labels. Hence, we can only design a measurement standard that approaches this ideal effect as closely as possible. In this study, we design a measurement standard based on subjective logic (SL) and Dirichlet distribution with Z parameters

where

where

where B is the batch size of the samples,
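A minimal sketch of how a subjective-logic opinion can be derived from classifier outputs is given below. It assumes the common evidential formulation in which non-negative evidence e_k for each of the Z classes gives Dirichlet parameters α_k = e_k + 1, belief masses b_k = e_k / S, and uncertainty u = Z / S with S = Σ α_k. Whether MCCM uses exactly this parameterization is an assumption, and the function name `dirichlet_opinion` is hypothetical.

```python
def dirichlet_opinion(evidence):
    """Turn non-negative per-class evidence into a subjective-logic opinion.

    Returns (alpha, belief, uncertainty, expected_probability), where the
    belief masses and the uncertainty sum to one.
    """
    num_classes = len(evidence)
    alpha = [e + 1.0 for e in evidence]          # Dirichlet parameters
    strength = sum(alpha)                        # Dirichlet strength S
    belief = [e / strength for e in evidence]    # b_k = e_k / S
    uncertainty = num_classes / strength         # u = Z / S
    expected = [a / strength for a in alpha]     # mean of the Dirichlet
    return alpha, belief, uncertainty, expected
```

With no evidence at all, the opinion is maximally uncertain (u = 1) and the expected class probabilities are uniform; as evidence for one class grows, u shrinks and the expected probability concentrates on that class.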

Finally, we use the information entropy (IE) to know whether the easy classifier has a highly motivated subjective opinion on the samples. Given the predicted Dirichlet distribution parameters

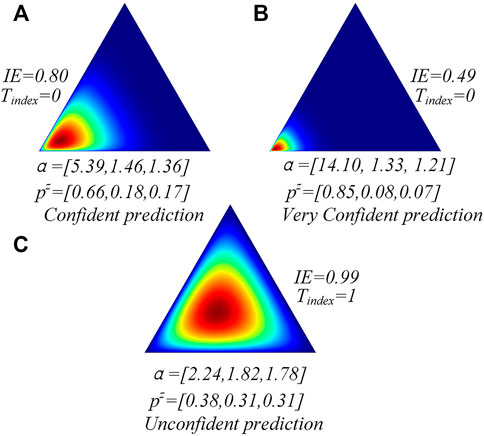

As shown in Figure 1, if the easy classifier is very confident that it classifies a sample accurately, its information entropy tends to be lower than in other conditions. A classifier with low information entropy holds a highly motivated subjective opinion on the current samples and classifies them accurately (Figures 1A,B). In contrast, a classifier with high information entropy holds a nearly uniform subjective opinion over the labels of the current sample and classifies it incorrectly. Hence, the information entropy can be used to help the model discriminate between the easy and hard samples. We define the discriminating process as

where
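The label-independent discrimination step can be sketched as an entropy threshold on the easy classifier's Dirichlet output. The sketch assumes that the entropy is the Shannon entropy (in nats) of the expected class probabilities α_k / S, and that a sample counts as easy when this entropy falls below a threshold. The threshold value and the names `entropy` and `route_sample` are hypothetical.

```python
import math


def entropy(probabilities):
    """Shannon entropy in nats; zero-probability terms contribute nothing."""
    return -sum(p * math.log(p) for p in probabilities if p > 0.0)


def route_sample(alpha, threshold):
    """Label a sample 'easy' or 'hard' from Dirichlet parameters alpha.

    The expected class probabilities alpha_k / S are used as the opinion;
    a low entropy means the easy classifier holds a peaked, confident opinion.
    """
    strength = sum(alpha)
    probabilities = [a / strength for a in alpha]
    return "easy" if entropy(probabilities) < threshold else "hard"
```

For eight classes, a uniform opinion such as `[1.0] * 8` has the maximum entropy log 8, so it is routed to the hard classifier for any threshold at or below that value, whereas a strongly peaked `alpha` is kept by the easy classifier.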

Hence, the final loss function of our model can be obtained by uniting Eqs. 16, 22:

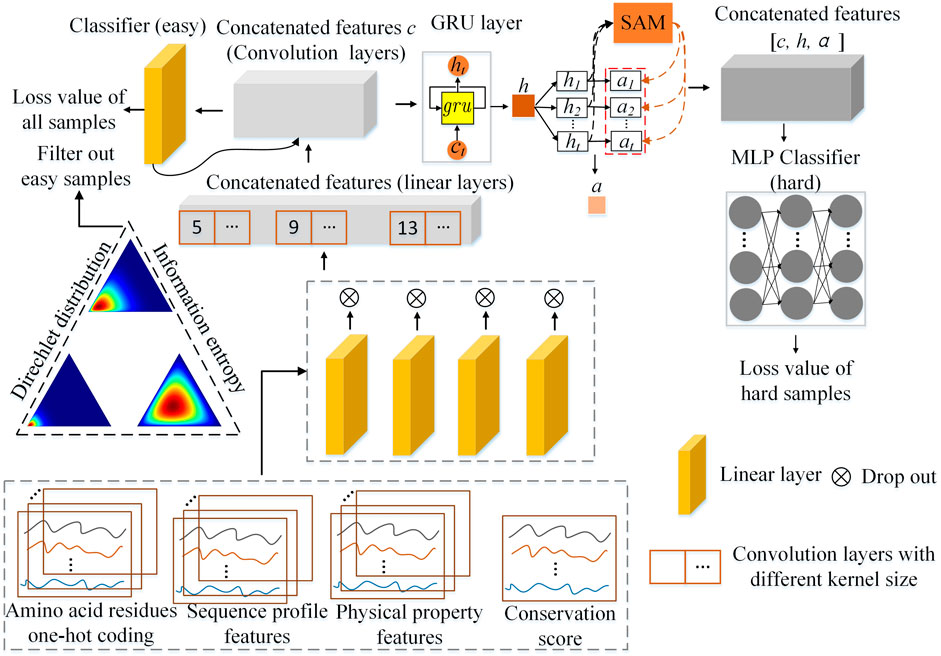

According to Eq. 22,

The architecture of the Multistage Combination Classifier Augmented Model (MCCM) is shown in Figure 2. After preprocessing the dataset, 50-dimensional features are obtained and taken as the input features, including the 21-dimensional amino acid residues one-hot coding, 21-dimensional sequence profile, 7-dimensional physical property, and 1-dimensional conservation score. The features are first preprocessed into easy ones through four linear layers and multiscale one-dimensional convolution layers. Based on the Dirichlet distribution and information entropy, the samples are divided into easy and hard ones by an easy classifier (a simple linear layer). Then, the easy feature is further preprocessed into a hard one through

FIGURE 2. The overall architecture of the Multistage Combination Classifier Augmented Model (MCCM) for protein secondary structure prediction in genetics and bioinformatics.

Implementation Details

The hidden sizes of the four linear layers used for the 21-dimensional amino acid residues one-hot coding, 21-dimensional sequence profile, 7-dimensional physical property, and 1-dimensional conservation score features are 64, 128, 32, and 16, respectively. The hidden size of the multiscale one-dimensional convolution layers is 64 and the corresponding kernel sizes are 5, 9, and 13, respectively. The GRU layer is bidirectional and the hidden size is 256. The hidden size of the linear layer used in the attention mechanism is 256. The hidden sizes of the first two layers used in MLP are 512 and 1,024. The models are optimized by Adam optimizer, and the learning rates are set to 0.0005. During training, the dropout function can randomly zero some of the elements of the input tensor with probability
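The settings listed above can be collected into a single configuration, which also makes the layer widths easy to check. This is only a sketch: the dictionary keys are our own naming, the dropout probability is left out because its value is not given here, and the "same" padding rule is an assumption that the convolutions keep one output position per residue.

```python
# Hyperparameters as stated in the text; key names are illustrative only.
MCCM_HYPERPARAMETERS = {
    "input_dims": {"one_hot": 21, "profile": 21, "physical": 7, "conservation": 1},
    "linear_hidden": {"one_hot": 64, "profile": 128, "physical": 32, "conservation": 16},
    "conv_channels": 64,
    "conv_kernel_sizes": (5, 9, 13),
    "gru_hidden": 256,
    "gru_bidirectional": True,
    "attention_hidden": 256,
    "mlp_hidden": (512, 1024),
    "optimizer": "adam",
    "learning_rate": 5e-4,
}


def same_padding(kernel_size):
    """Padding that preserves sequence length for an odd kernel at stride 1."""
    return (kernel_size - 1) // 2


# Width of the raw per-residue input and of the concatenated linear outputs.
INPUT_WIDTH = sum(MCCM_HYPERPARAMETERS["input_dims"].values())
CONCAT_WIDTH = sum(MCCM_HYPERPARAMETERS["linear_hidden"].values())
PADDINGS = [same_padding(k) for k in MCCM_HYPERPARAMETERS["conv_kernel_sizes"]]
```

The input width works out to 21 + 21 + 7 + 1 = 50, matching the 50-dimensional input features, and the concatenation of the four linear outputs is 64 + 128 + 32 + 16 = 240 values wide.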

Performance Evaluation

In the field of protein secondary structure prediction in genetics and bioinformatics, the Q score measurement formulated as Eq. 6 has been widely used to evaluate the performance of the proposed models. It measures the percentage of residues for which the predicted secondary structures are correct (Wang et al., 2016):

where
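The Q score described above, the percentage of residues whose predicted state matches the true state, can be computed as in the following sketch. The function name `q_score` is ours; the same function gives Q3 or Q8 depending on whether 3-state or 8-state label strings are passed in.

```python
def q_score(true_labels, predicted_labels):
    """Percentage of residues whose predicted secondary structure is correct."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label sequences must have the same length")
    if not true_labels:
        raise ValueError("label sequences must not be empty")
    correct = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return 100.0 * correct / len(true_labels)
```

For instance, with true labels `"HHEECC"` and predictions `"HHECCC"`, five of the six residues agree, so the score is 5/6 of 100%.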

Results and Discussion

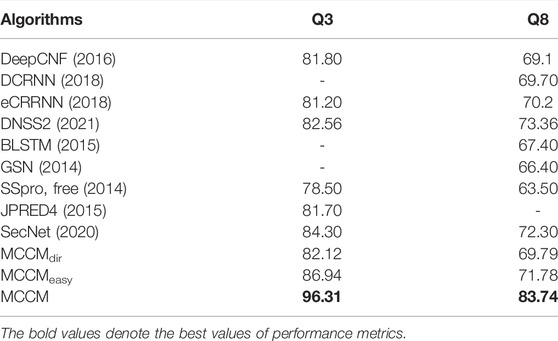

Experimental Results of Evaluating Indicators

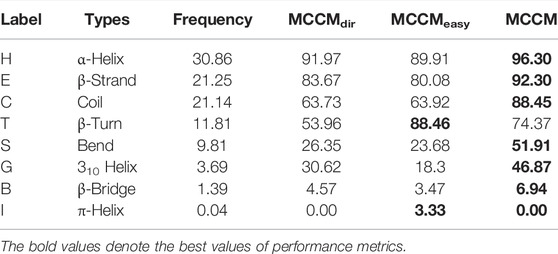

The evaluation results of the proposed model based on the public CB513 test dataset are shown in Table 1. MCCMdir means using the Dirichlet distribution and information entropy to divide the samples into easy and hard ones. In MCCMeasy and MCCM, we use label information to divide the samples, an ideal measurement method that can help us explore the theoretical best performance. Besides, MCCMeasy means there is no backpropagation operation through the loss function

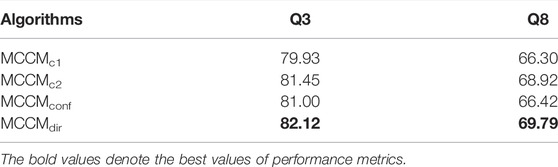

Ablation Study

This section gives a more comprehensive analysis regarding the effectiveness of the proposed framework. The different levels of classifiers equipped with the multilevel samples discriminating module can improve the performance of the prediction task. The compared variants are as follows:

MCCMc1: the variant is the front part of our proposed model (without the multilevel classifiers and sample difficulty discrimination module), which only uses the concatenated features c and easy classifier (see Figure 2) to deal with the prediction task. This model only uses the convolution layer to extract the features and then conducts the classification task based on the low-level features.

MCCMc2: the variant is the part of our proposed model (without the multilevel classifiers and sample difficulty discrimination module) that uses the concatenated features [c,h,a] and hard classifier (see Figure 2) to deal with the prediction task. This model uses not only the convolution layer but also

MCCMconf: this variant is designed based on ELF (Duggal et al., 2020), which uses the classifier confidence to separate the samples into easy and hard ones. In the training process, if a sample is classified correctly and its classifier confidence is not lower than the threshold (0.9 in ELF and in this study), it is not sent to the hard classifier. Otherwise, the sample is sent on to the hard classifier. In the test process, the samples with low classifier confidence are sent to the hard classifier for the final prediction result.
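The confidence-based routing of the MCCMconf variant can be sketched as follows. The sketch assumes the ELF-style rule as we understand it: during training a sample stays with the easy classifier only if it is classified correctly and its softmax confidence reaches the threshold, and at test time (no label available) only the confidence is checked. The function names are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def exits_early(logits, threshold=0.9, true_label=None):
    """Return True if the sample stays with the easy classifier.

    Training (true_label given): confident AND correctly classified.
    Testing (true_label is None): confident only.
    """
    probabilities = softmax(logits)
    predicted = max(range(len(probabilities)), key=probabilities.__getitem__)
    confident = probabilities[predicted] >= threshold
    if true_label is None:
        return confident
    return confident and predicted == true_label
```

A sample for which `exits_early` returns `False` is forwarded to the hard classifier. Unlike the Dirichlet and entropy criterion of MCCMdir, this rule looks only at the single largest softmax probability.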

The experimental results are shown in Table 2. MCCMc1 performs worst, and MCCMc2 performs better, which means that adding the high-level feature extractors (the GRU and the attention mechanism) is effective. MCCMdir performs better than MCCMc2, which means that our proposed framework is effective: the multilevel classifiers and the sample difficulty discrimination module help the model pay more attention to the hard samples and improve its performance. We expect that increasing the depth of the network and using more classifier branches could improve the performance further. Moreover, MCCMdir performs better than MCCMconf, which means that our sample difficulty discrimination module is better than that proposed in ELF (Duggal et al., 2020). Dividing the samples into hard and easy ones with the Dirichlet distribution combined with the information entropy works better than using the simple classifier confidence.

Analysis of the Training Process

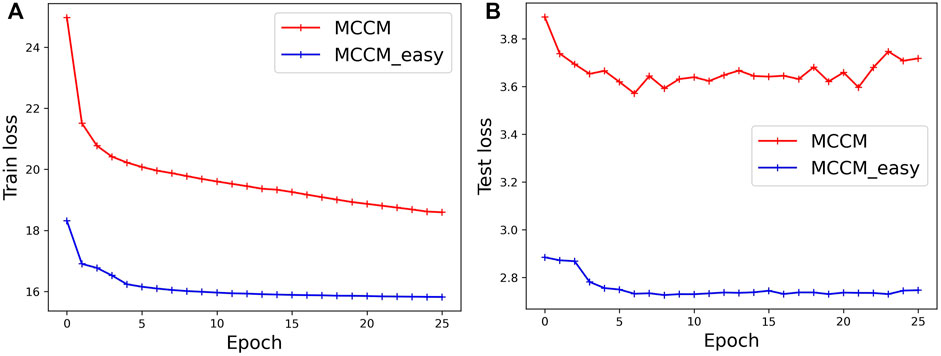

The training loss computed on the CB6133-filtered dataset and the test loss computed on the CB513 dataset are shown in Figure 3. Label-dependent MCCM and label-independent MCCMdir are all optimized by the loss functions

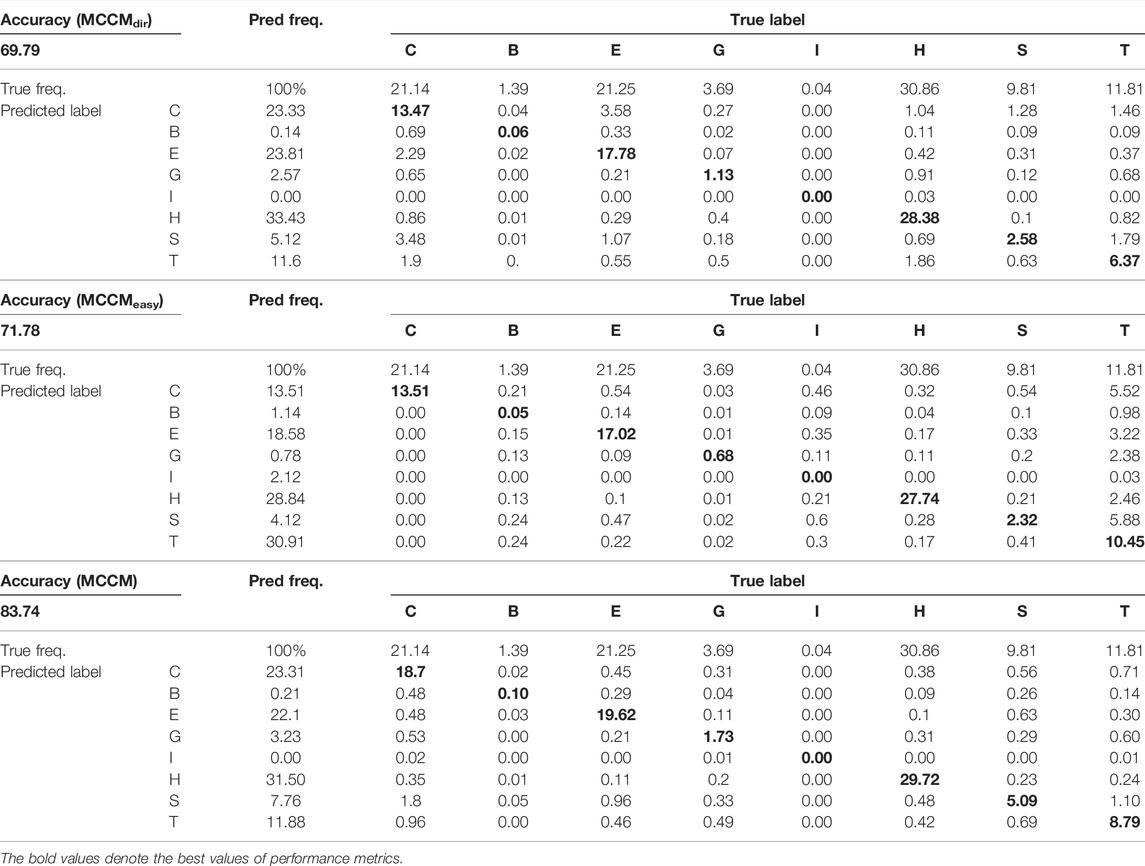

Analysis of the Prediction of Q8 States and Confusion Matrix

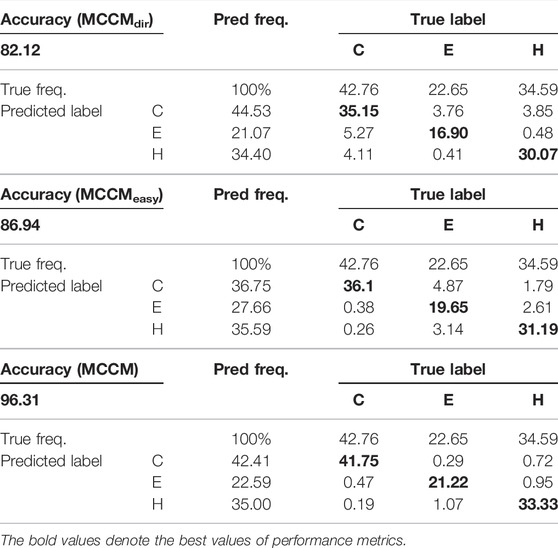

In the field of protein secondary structure prediction in genetics and bioinformatics, the predictive precision for each class of Q8 would provide more useful information, and we compute the prediction accuracy of each label in the Q8 states based on the CB513 dataset. At the same time, we compute the confusion matrix to further explore the model performance. Table 3 shows the prediction accuracy (MCCMdir, MCCMeasy, and MCCM) of each label in the Q8 states. Tables 4, 5 show the Q3 and Q8 prediction confusion matrix, respectively. Associating the three tables, we can find that although MCCMeasy is only optimized by the loss function

Existing research points out that, within each class (whether a majority or a minority class), some examples are easier than others (Duggal et al., 2020). Comparing the performance of MCCMeasy and MCCM, we can find that the former based on loss function

Comparing the performance of MCCMdir and MCCM, we can infer that if a method can be designed to distinguish the samples well (with a discrimination effect close to that of using label information), our method can obtain state-of-the-art performance. Future research can focus on this point.

Conclusion

In bioinformatics, understanding protein secondary structure is important for studying diseases and their treatments. This study proposes a framework for predicting protein secondary structure that consists of a multilevel features extraction module, multistage combination classifiers, and a multilevel samples discriminating module. In the multilevel features extraction module, we design different backbone networks to extract multilevel features (easy and hard levels in this study) from the original data. In the multistage combination classifiers module, we design two classifiers to deal with samples of different difficulty levels. In the multilevel samples discriminating module, we design a measurement standard based on the Dirichlet distribution and information entropy to assign suitable samples to the classifiers of different levels. The first classifier learns and classifies the easier samples and filters them out so that they are not sent to the second classifier; the remaining harder samples are sent to the second classifier. Because we compute the loss of both classifiers, the loss of the harder samples accumulates and is always greater than that of the easier ones. This induces the model to pay more attention to the harder samples and improves the classification performance. The experimental results on the publicly available benchmark CB513 dataset show the superior performance of the proposed method.

However, the experimental results show that the current multilevel samples discriminating module in this study is not designed well, which limits the performance of our framework. The related experiments show that if this module is designed well, our framework can obtain state-of-the-art performance. In addition, the depth of our network and the number of classifier branches can be increased further to raise the performance of our framework. Hence, future work can focus on designing a more effective multilevel samples discriminating module, a deeper network, and more classifier branches to further improve the model performance.

Data Availability Statement

The original contributions presented in the study are included in the article/Supplementary Material, further inquiries can be directed to the corresponding author.

Author Contributions

Conceptualization: XZ, LZ, and BJ; methodology, XZ, YL, LZ and YW; software, XZ; validation, YL, YW and LF; investigation, HZ, YW and LF; writing—original draft preparation, XZ and HZ; writing—review and editing, LZ, YL, BJ and LF; supervision, BJ; funding acquisition, BJ. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (Grant no. 61772110) and the Key Program of Liaoning Traditional Chinese Medicine Administration (Grant no. LNZYXZK201910).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations or those of the publisher, the editors, and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Altschul, S. F., Madden, T. L., Schäffer, A. A., Zhang, J., Zhang, Z., Miller, W., et al. (1997). Gapped BLAST and PSI-BLAST: a New Generation of Protein Database Search Programs. Nucleic Acids Res. 25 (17), 3389–3402. doi:10.1093/nar/25.17.3389

Cao, K., Wei, C., Gaidon, A., Arechiga, N., and Ma, T. (2019). “Learning Imbalanced Datasets with Label Distribution-Aware Margin Loss,” in Advances in Neural Information Processing Systems, 1565–1576.

Chawla, N. V., Bowyer, K. W., Hall, L. O., and Kegelmeyer, W. P. (2002). SMOTE: Synthetic Minority Over-sampling Technique. J. Artif. Intelligence Res. 16, 321–357. doi:10.1613/jair.953

Cuff, J. A., and Barton, G. J. (2000). Application of Multiple Sequence Alignment Profiles to Improve Protein Secondary Structure Prediction. Proteins 40, 502–511. doi:10.1002/1097-0134(20000815)40:3<502:AID-PROT170>3.0.CO;2-Q

Cuff, J. A., and Barton, G. J. (1999). Evaluation and Improvement of Multiple Sequence Methods for Protein Secondary Structure Prediction. Proteins 34, 508–519. doi:10.1002/(SICI)1097-0134(19990301)34:4<508:AID-PROT10>3.0.CO;2-4

Cui, Y., Jia, M., Lin, T. Y., Song, Y., and Belongie, S. (2019). “Class-balanced Loss Based on Effective Number of Samples,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 9268–9277. doi:10.1109/cvpr.2019.00949

Dempster, A. P. (2008). “A Generalization of Bayesian Inference,” in Classic Works of the Dempster-Shafer Theory of Belief Functions (Berlin, Germany: Springer), 73–104. doi:10.1007/978-3-540-44792-4_4

Drori, I., Dwivedi, I., Shrestha, P., Wan, J., Wang, Y., He, Y., et al. (2018). High Quality Prediction of Protein Q8 Secondary Structure by Diverse Neural Network Architectures. arXiv [Preprint]. arXiv:1811.07143.

Drozdetskiy, A., Cole, C., Procter, J., and Barton, G. J. (2015). JPred4: a Protein Secondary Structure Prediction Server. Nucleic Acids Res. 43, W389–W394. doi:10.1093/nar/gkv332

Duggal, R., Freitas, S., Dhamnani, S., Chau, D. H., and Sun, J. (2020). ELF: An Early-Exiting Framework for Long-Tailed Classification. arXiv [Preprint]. arXiv: 2006.11979.

Fang, C., Li, Z., Xu, D., and Shang, Y. (2020). MUFold-SSW: a New Web Server for Predicting Protein Secondary Structures, Torsion Angles and Turns. Bioinformatics 36, 1293–1295. doi:10.1093/bioinformatics/btz712

Fang, C., Shang, Y., and Xu, D. (2018). MUFOLD-SS: New Deep Inception-Inside-Inception Networks for Protein Secondary Structure Prediction. Proteins 86, 592–598. doi:10.1002/prot.25487

Feng, F. L., Chen, H. M., He, X. L., Ding, J., Sun, M., and Chua, T. S. (2019). Enhancing Stock Movement Prediction with Adversarial Training. California: IJCAI, 5843–5849.

Guo, Z., Hou, J., and Cheng, J. (2021). DNSS2: Improved Ab Initio Protein Secondary Structure Prediction Using Advanced Deep Learning Architectures. Proteins 89, 207–217. doi:10.1002/prot.26007

Hanson, J., Paliwal, K., Litfin, T., Yang, Y., and Zhou, Y. (2019). Improving Prediction of Protein Secondary Structure, Backbone Angles, Solvent Accessibility and Contact Numbers by Using Predicted Contact Maps and an Ensemble of Recurrent and Residual Convolutional Neural Networks. Bioinformatics 35, 2403–2410. doi:10.1093/bioinformatics/bty1006

Heffernan, R., Yang, Y., Paliwal, K., and Zhou, Y. (2017). Capturing Non-local Interactions by Long Short-Term Memory Bidirectional Recurrent Neural Networks for Improving Prediction of Protein Secondary Structure, Backbone Angles, Contact Numbers and Solvent Accessibility. Bioinformatics 33, 2842–2849. doi:10.1093/bioinformatics/btx218

Huang, Y., Chen, W., Dotson, D. L., Beckstein, O., and Shen, J. (2016). Mechanism of pH-dependent Activation of the Sodium-Proton Antiporter NhaA. Nat. Commun. 7, 12940. doi:10.1038/ncomms12940

Jens, M., Michael, M., Anita, Z., and Felix, S. (2001). Generation and Evaluation of Dimension-Reduced Amino Acid Parameter Representations by Artificial Neural Networks. J. Mol. Model. 7 (9), 360–369.

Jones, D. T. (1999). Protein Secondary Structure Prediction Based on Position-specific Scoring Matrices. J. Mol. Biol. 292 (2), 195–202. doi:10.1006/jmbi.1999.3091

Josang, A. (2016). Subjective Logic: A Formalism for Reasoning under Uncertainty. Berlin, Germany: Springer. ISBN 978-3-319-42337-1.

Kabsch, W., and Sander, C. (1983). Dictionary of Protein Secondary Structure: Pattern Recognition of Hydrogen-Bonded and Geometrical Features. Biopolymers 22, 2577–2637. doi:10.1002/bip.360221211

Källberg, M., Wang, H., Wang, S., Peng, J., Wang, Z., Lu, H., et al. (2012). Template-based Protein Structure Modeling Using the RaptorX Web Server. Nat. Protoc. 7, 1511–1522. doi:10.1038/nprot.2012.085

Li, X., Zhong, C.-Q., Wu, R., Xu, X., Yang, Z.-H., Cai, S., et al. (2021). RIP1-dependent Linear and Nonlinear Recruitments of Caspase-8 and RIP3 Respectively to Necrosome Specify Distinct Cell Death Outcomes. Protein Cell 12, 858–876. doi:10.1007/s13238-020-00810-x

Li, Z., and Yu, Y. (2016). Protein Secondary Structure Prediction Using Cascaded Convolutional and Recurrent Neural Networks. arXiv [Preprint]. arXiv:1604.07176.

Lyu, Z., Wang, Z., Luo, F., Shuai, J., and Huang, Y. (2021). Protein Secondary Structure Prediction with a Reductive Deep Learning Method. Front. Bioeng. Biotechnol. 9, 687426. doi:10.3389/fbioe.2021.687426

Minlong, P., Zhang, Q., Xing, X. Y., Gui, T., Huang, X. J., Jiang, Y. G., et al. (2019). Trainable Undersampling for Class-Imbalance Learning. Proc. AAAI Conf. Artif. Intelligence 33, 4707–4714.

Myers, J. K., and Oas, T. G. (2001). Preorganized Secondary Structure as an Important Determinant of Fast Protein Folding. Nat. Struct. Biol. 8, 552–558. doi:10.1038/88626

Pauling, L., Corey, R. B., and Branson, H. R. (1951). The Structure of Proteins: Two Hydrogen-Bonded Helical Configurations of the Polypeptide Chain. Proc. Natl. Acad. Sci. 37, 205–211. doi:10.1073/pnas.37.4.205

Quan, L., Lv, Q., and Zhang, Y. (2016). STRUM: Structure-Based Prediction of Protein Stability Changes upon Single-point Mutation. Bioinformatics 32 (19), 2936–2946. doi:10.1093/bioinformatics/btw361

Shapovalov, M., Dunbrack, R. L., and Vucetic, S. (2020). Multifaceted Analysis of Training and Testing Convolutional Neural Networks for Protein Secondary Structure Prediction. PLOS ONE 15 (5), e0232528. doi:10.1371/journal.pone.0232528

Uddin, M. R., Mahbub, S., Rahman, M. S., and Bayzid, M. S. (2020). SAINT: Self-Attention Augmented Inception-Inside-Inception Network Improves Protein Secondary Structure Prediction. Bioinformatics 36, 4599–4608. doi:10.1093/bioinformatics/btaa531

Wang, G., and Dunbrack, R. L. (2003). PISCES: a Protein Sequence Culling Server. Bioinformatics 19, 1589–1591. doi:10.1093/bioinformatics/btg224

Wang, S., Peng, J., Ma, J., and Xu, J. (2016). Protein Secondary Structure Prediction Using Deep Convolutional Neural Fields. Sci. Rep. 6, 1–11. doi:10.1038/srep18962

Zhang, B., Li, J., and Lü, Q. (2018). Prediction of 8-state Protein Secondary Structures by a Novel Deep Learning Architecture. BMC Bioinformatics 19, 293. doi:10.1186/s12859-018-2280-5

Zhang, Y. (2008). I-TASSER Server for Protein 3D Structure Prediction. BMC Bioinformatics 9, 40. doi:10.1186/1471-2105-9-40

Keywords: genetics, biology, protein secondary structure, deep learning, combination classifier, amino acid sequence

Citation: Zhang X, Liu Y, Wang Y, Zhang L, Feng L, Jin B and Zhang H (2022) Multistage Combination Classifier Augmented Model for Protein Secondary Structure Prediction. Front. Genet. 13:769828. doi: 10.3389/fgene.2022.769828

Received: 02 September 2021; Accepted: 25 January 2022;

Published: 23 May 2022.

Edited by:

Jiajie Peng, Northwestern Polytechnical University, China

Reviewed by:

Pengyang Wang, University of Macau, China

Ping Zhang, The Ohio State University, United States

Copyright © 2022 Zhang, Liu, Wang, Zhang, Feng, Jin and Zhang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Bo Jin, jinbo@dlut.edu.cn
