- ¹Department of Natural Resources Science, University of Rhode Island, Kingston, RI, United States

- ²Department of Nutrition and Food Sciences, University of Rhode Island, Kingston, RI, United States

- ³Department of English, University of Wisconsin-Madison, Madison, WI, United States

- ⁴College of Arts & Sciences, University of Rhode Island, Kingston, RI, United States

There is an urgent need for scientists to improve how they communicate with the public, especially scientists who apply their work to conservation or human health problems. However, little research has assessed the effectiveness of science communication training for applied scientists. We responded to this gap by developing a new, interdisciplinary training model, “SciWrite,” based on three central tenets from scholarship in writing and rhetoric: 1) habitual writing, 2) multiple genres for multiple audiences, and 3) frequent review. We also created an interdisciplinary rubric based on these tenets to evaluate writing products across genres. We used this rubric to assess three different genres written by 12 SciWrite-trained graduate science students and 74 non-SciWrite-trained graduate science students at the same institution. Written work from SciWrite students scored higher than that from non-SciWrite students in all three genres, and the difference was statistically significant for thesis/dissertation proposals. The rubric results also suggest that variation in writing quality was best explained by graduate students’ grasp of higher-order writing skills (e.g., thinking about audience needs and expectations, clearly describing research goals, and making an argument for the significance of their research). Future programs would benefit from adopting similar training activities and goals, as well as assessment tools that take a rhetorically informed approach.

Introduction

Institutions in the United States and Europe, such as the National Science Foundation, the Council of Graduate Schools, and the European Commission, have recently called for Science, Technology, Engineering, and Mathematics (STEM) graduate programs to incorporate more communication training to better prepare future scientists to communicate with a variety of audiences (Linton, 2013; Kuehne et al., 2014; Druschke et al., 2018; Costa et al., 2019). Such calls are driven by the recognition that scientists should be involved in effectively conveying scientific information to a broad cross-section of society (Roux et al., 2006; National Research Council, 2008; Nisbet and Scheufele, 2009; Meyer et al., 2010; Smith et al., 2013; Taylor and Kedrowicz, 2013; Kuehne and Olden, 2015). Despite this expressed need for broader impacts and improved communication training for scientists, little research has been conducted on the most effective ways to implement and assess communication training programs for science graduate students (Kuehne et al., 2014; Skrip, 2015; Druschke et al., 2018; National Alliance for Broader Impacts, 2018). We responded to this gap by developing, implementing, and assessing a new, interdisciplinary model for developing more effective graduate science writers at the University of Rhode Island (URI): the SciWrite@URI program (hereafter “SciWrite”). The program was designed to be adaptable to a wide range of science disciplines and to scientists and communicators at institutions worldwide.

The goal of the SciWrite program was to better equip science graduate students with the tools necessary to write effectively for any audience. What distinguishes SciWrite from other programs with similar goals is its foundation in rhetoric-based theories and practices. Although the term rhetoric often circulates in everyday discourse to mean “political spin,” rhetorical studies is a field of research, at least 2,000 years old, dedicated to better understanding the ways that humans communicate, in speaking, writing, and other modes, for a variety of audiences and a variety of ends. SciWrite drew on theoretical work from the field of writing and rhetoric to create tangible and practical learning outcomes for our SciWrite graduate students. We developed three primary learning outcomes based on three central rhetorical tenets often taught in writing and rhetoric courses: habitual writing, multiple genres for multiple audiences, and frequent review (Bruffee, 1981; Porter, 1986; DiPardo and Freedman, 1988; Lunsford, 1991; Lundstrom and Baker, 2009; Crowley and Hawhee, 2012). Upon successful completion of the SciWrite program, we expected students to meet the following primary learning outcomes associated with each rhetorical tenet:

1) Habitual writing–students will produce high quality writing earlier and more frequently in their graduate school tenure

2) Multiple genres for multiple audiences–students will demonstrate effective command of writing in multiple genres for multiple audiences

3) Frequent review–students will evaluate peer drafts in order to provide helpful writing feedback and to improve their own writing skills

In this article, we focus on assessment of student writing for Learning Outcome Two (Multiple genres for multiple audiences), which required the development of a flexible rubric for assessing written products of different genres. For the purposes of this article, we define genre as “a category of writing” (e.g., scientific manuscripts, proposals, and news articles). This rubric allowed us to evaluate whether students demonstrated effective command of writing in multiple genres for multiple audiences and also provided an effective, holistic framework for feedback. Learning Outcomes One and Three (Habitual writing and Frequent review) were assessed using SciWrite Fellows’ self-reported surveys rather than the rubric. Because that assessment methodology differs substantially from our rubric assessment, we will report on that portion of the study in a separate article.

Science communication training informed by rhetoric reorients the assessment and revision of writing, and this assessment and revision process is crucial for learning and improving academic and public writing. As a case in point, science faculty often disagree about the most helpful strategies for providing writing feedback, and many attribute this confusion to a lack of adequate instruction in the teaching of writing and/or inadequate support in developing their own writing skills (Pololi et al., 2004; Reynolds et al., 2009). Because of this lack of training in writing and rhetoric, common feedback approaches consist either of copious sentence-level edits or of almost no feedback at all (Reynolds et al., 2009). However, decades of research in writing and rhetoric (Bruffee, 1981; Lunsford, 1991; Chinn and Hilgers, 2000; Bell, 2001; Lerner, 2009; Nordlof, 2014) show that, to help students improve their writing over the long term, several feedback strategies matter more than directive, sentence-level editing (Neman, 1995; Straub, 1996). In particular, focusing on “higher-order concerns” is much more effective than focusing on “lower-order concerns,” so most assessment and feedback should emphasize higher-order concerns (Elbow, 1981; North, 1984).

Higher-order concerns deal with matters such as thinking about audience needs and expectations, developing clear arguments, and adhering to genre conventions. Such writing practices are critical when science students must determine how best to convey their results in writing (Groffman et al., 2010; Druschke et al., 2018). For example, conventional wisdom holds that when science students write about their research for a scientific audience, they should establish credibility by being explicit yet concise about their methodology and deliberate in citing previous studies relevant to their research. However, when they write about the same research for a public audience, methodological detail and formal citation of sources are not relevant to their readers (Baron, 2010; Heath et al., 2014; Kuehne et al., 2014). Instead, they can best establish credibility with a public audience by making clear why the results are important and how they may affect society at large. Writing and rhetoric scholars refer to this as an awareness of the “rhetorical situation” (Bitzer, 1968; Fahnestock, 1986; Druschke and McGreavy, 2016). For the purposes of this article, the rhetorical situation can be defined simply as the context within which scientists communicate their research to others. Key elements of this rhetorical situation include the audience, that audience’s needs and expectations, and the purpose for communicating the research. When writers can identify the purpose of their writing project and the needs and expectations of their audience, they have a strong awareness of their rhetorical situation. Writing and rhetoric studies stress the importance of feedback that takes a more holistic approach than simply proofreading; the most useful writing feedback for graduate science writers focuses on higher-order concerns and helps students better understand their rhetorical situation (North, 1984; Neman, 1995; Straub, 1996).
Our study helps evaluate the utility of such skills. Here we present and use an evaluation rubric that emphasizes this holistic approach and that can be used to assess the written work of graduate science students writing for multiple audiences with a variety of needs and expectations.

The aim of this study was to determine whether SciWrite students were able to demonstrate effective command of writing in multiple genres for multiple audiences and, if so, which factors contributed most to their ability to meet this learning outcome. We also tested whether rubric scores differed between SciWrite-trained and non-SciWrite-trained students. First, we developed, and present here, a rubric that assesses students’ writing on both higher- and lower-order concerns and helps science faculty give their students more effective writing feedback. We then used this SciWrite rubric to assess two important types of writing products that many science graduate students at URI produce as part of their program requirements: 1) a thesis/dissertation proposal that outlines the rationale, study design, and planned outcomes of their graduate project, and 2) relevant written assignments from graduate-level science courses that included writing training. For all genres of writing, we compared the writing of SciWrite-trained graduate students with that of non-SciWrite-trained science graduate students. This study design helps us better determine how rhetorical tenets can contribute to other science writing programs and evaluate the potential utility of the SciWrite program for other institutions with similar writing program goals.

Materials and Methods

Below we describe the comparison groups of science graduate students in more detail, the training experienced by SciWrite-trained students, the SciWrite rubric used to assess the writing products, and the assessment process.

Recruitment

In 2016 and 2017, two cohorts of SciWrite fellows were recruited through departmental and university announcements, faculty and staff recommendations, and word of mouth. Eligibility was limited to URI graduate students enrolled in a graduate science program with at least 2 years remaining in their program. Candidates were chosen based on their perceived dedication to, and ability to participate in, the intensive 2-year writing program rather than on their writing ability. This criterion was intended to avoid biasing selection toward candidates whose writing skills were already above those of the average science graduate student.

Study Design

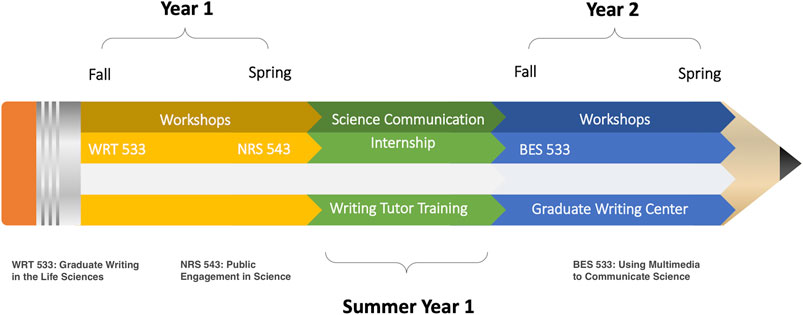

The overall 2-year timeline of the SciWrite program for each cohort of students consisted of regularly scheduled workshops (four over the 2 years), two writing-intensive courses, and a summer science communication internship in the first year, followed by a multimedia journalism class, writing tutor training, and writing tutor work at the URI Graduate Writing Center in the second year (Figure 1). Two separate cohorts of six students completed this timeline from 2016 to 2019. All of these activities were designed with program learning outcomes in mind. We describe the components of the SciWrite program, and the ways in which SciWrite differs from other writing programs, in more detail in Druschke et al. (2018); the description here is therefore abbreviated.

FIGURE 1. Timeline of the 2-year SciWrite Program used to train each of the two cohorts of graduate students from 2016 to 2019: Progression through three courses (WRT 533 Graduate Writing in the Life Sciences, NRS 543 Public Engagement in Science, and BES 533 Using Multimedia to Communicate Science), simultaneous workshops, a summer internship and writing tutor training, and working as writing tutors at the Graduate Writing Center.

In our courses and trainings, SciWrite fellows engaged in habitual writing, wrote in multiple genres for multiple audiences, and participated in frequent review. For example, assignments were scaffolded into simpler, shorter assignments rather than entire drafts (Druschke et al., 2018). This approach made writing projects less daunting and lower stakes, which made it easier for students to get into the habit of writing early and often (Coe, 2011; Petersen et al., 2020). SciWrite fellows practiced writing in multiple genres (e.g., manuscripts, blog posts, news articles, editorials, white papers, proposals) for academic and nonacademic audiences (e.g., lay readers, technicians, practitioners, and scientists). After working on assignments in their courses and workshops, SciWrite fellows entered a process of review and revision in one-on-one and small-group tutorials in classrooms, in online forums, and while working as writing tutors at the Graduate Science Writing Center that we opened in fall 2017. SciWrite fellows learned to provide facilitative rather than directive feedback and to focus their feedback on higher-order rather than lower-order concerns (Elbow, 1981; North, 1984; Neman, 1995; Straub, 1996). This allowed SciWrite fellows to practice giving and receiving peer feedback in a structured, holistic way. Three genres of writing that SciWrite students practiced during their tenure with SciWrite were used for assessment: 1) a thesis/dissertation proposal submitted to the graduate school, 2) one final “Writing in the Life Sciences” assignment, and 3) one final “Public Engagement in Science” assignment.

The three writing products (i.e., the thesis/dissertation proposal and one assignment from each of two courses) produced by SciWrite fellows and other science graduate students were assessed over the course of the SciWrite program from 2016 to 2020. Proposals from non-SciWrite students were selected using stratified random sampling: proposals were stratified by department to ensure a departmental composition roughly equivalent to that of the SciWrite fellows, and individual proposals were then randomly selected from each stratum. Final course assignments for both SciWrite and non-SciWrite students were assessed at the end of each course.
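The stratified random selection described above can be sketched as follows. This is a minimal illustration, not the procedure the authors ran: the department codes, proposal IDs, and the proportional-allocation rule used to turn the fellows' departmental mix into per-department targets are all hypothetical.

```python
import random
from collections import Counter


def proportional_targets(reference_departments, total):
    """Per-department sample sizes mirroring a reference group's composition.

    Hypothetical allocation rule: each department gets a share of `total`
    proportional to its share of the reference group, rounded to the nearest
    integer, so the targets may not sum exactly to `total`.
    """
    counts = Counter(reference_departments)
    n = sum(counts.values())
    return {dept: round(total * c / n) for dept, c in counts.items()}


def stratified_sample(pool, targets, seed=None):
    """Randomly draw proposals within each department stratum.

    pool:    department -> list of available proposal IDs
    targets: department -> number of proposals to draw from that stratum
    """
    rng = random.Random(seed)
    sample = []
    for dept, k in targets.items():
        candidates = pool.get(dept, [])
        if k > len(candidates):
            raise ValueError(f"{dept}: requested {k}, only {len(candidates)} available")
        # Simple random sampling without replacement inside the stratum
        sample.extend(rng.sample(candidates, k))
    return sample


# Hypothetical example: fellows come from three departments
fellow_departments = ["NRS", "NRS", "BIO", "CHEM"]
targets = proportional_targets(fellow_departments, total=8)
pool = {
    "NRS": [f"NRS-{i}" for i in range(10)],
    "BIO": [f"BIO-{i}" for i in range(5)],
    "CHEM": [f"CHEM-{i}" for i in range(3)],
}
non_sciwrite_sample = stratified_sample(pool, targets, seed=2016)
```

Stratifying first fixes the departmental mix, while the random draw within each stratum avoids hand-picking particular proposals; a fixed seed makes the selection reproducible.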

Assessment of Three Genres of Writing

Trained assessors used the SciWrite rubric to assess three written products: the thesis/dissertation proposal, the public science writing piece, and the public engagement in science project report. Assessors were seven graduate students in the Writing and Rhetoric graduate program, one graduate student in the Biological and Environmental Sciences graduate program, and one faculty member with a joint appointment in the Writing and Rhetoric and Biological and Environmental Sciences programs. All assessors had previously been trained in writing program assessment best practices. Assessors convened for shared norming sessions and discussed sample essays and baseline scores. Where necessary, norming sessions included definitions of key terms and reference guides to aid assessors in scoring. In total, norming comprised roughly 10 h of training across sessions in 2017, 2019, and 2020.

Proposal

Identifying information was removed from all thesis/dissertation proposals, so assessors were unaware of each author’s identity and SciWrite participation. Each of the 49 graduate proposals (n = 10 for SciWrite and n = 39 for non-SciWrite) was randomly assigned to two different assessors, and the assessors’ rubric scores were averaged. Assessors gave each of the eight rubric items a score between 1 and 3 (i.e., “does not meet expectations,” “approaches expectations,” and “meets expectations,” Table 1). We compared assessors’ scores of the proposals to assess inter-rater reliability of the rubric. When the two assessors’ scores on any rubric item differed by more than one point (i.e., one assessor gave a 1 and the other a 3), the written product was assessed by a third assessor and the scores were averaged. Total proposal scores could therefore range from 8 (all items scored 1) to 24 (all items scored 3).
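The scoring rules above (two blind assessors, a third when any item differs by more than one point, averaged scores, totals of 8 to 24 for eight items) can be expressed as a few small functions. This sketch assumes that, when a third assessor is used, all three scores for each item are averaged; the text says only that "scores were averaged," and the example scores are hypothetical.

```python
from statistics import mean

MIN_ITEM, MAX_ITEM = 1, 3  # does not meet / approaches / meets expectations


def score_range(n_items):
    """Lowest and highest possible total score for a rubric with n_items."""
    return n_items * MIN_ITEM, n_items * MAX_ITEM


def needs_third_assessor(a, b):
    """True if any rubric item differs by more than one point (i.e., 1 vs. 3)."""
    return any(abs(x - y) > 1 for x, y in zip(a, b))


def averaged_item_scores(a, b, c=None):
    """Average each item across assessors, adding a third when required.

    Assumption: with a third assessor, all three scores are averaged.
    """
    if len(a) != len(b):
        raise ValueError("assessors scored different numbers of items")
    raters = [a, b]
    if needs_third_assessor(a, b):
        if c is None:
            raise ValueError("disagreement > 1 point: third assessment required")
        if len(c) != len(a):
            raise ValueError("third assessor scored a different number of items")
        raters.append(c)
    return [mean(item) for item in zip(*raters)]


# Hypothetical scores for one eight-item proposal
rater_a = [3, 3, 2, 2, 3, 2, 3, 3]
rater_b = [3, 2, 2, 3, 3, 2, 3, 2]
total = sum(averaged_item_scores(rater_a, rater_b))  # 20.5, within score_range(8)
```

Averaging item by item gives the same total as averaging the assessors' totals, but it keeps item-level means available for the per-item comparisons and the PCA.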

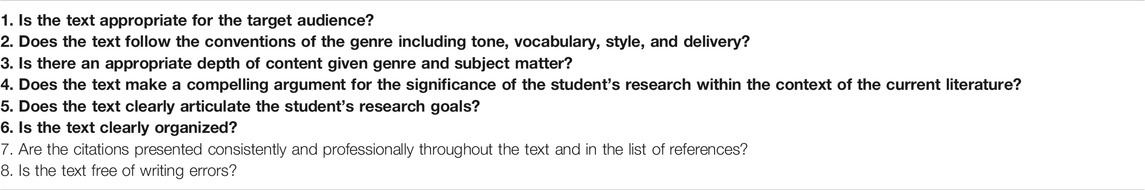

TABLE 1. Rubric items used to assess SciWrite and non-SciWrite academic written products, arranged from “higher-order concerns” to “lower-order concerns.” Rubric items in bold address higher-order concerns. Written products were assessed on a scoring scale from 1 to 3: “does not meet expectations,” “approaches expectations,” and “meets expectations.”

Public Science Writing Piece and Public Engagement Project Report

In the first year of the SciWrite program, course assignments were assessed by the course instructor. There were 14 Public science writing pieces (n = 6 for SciWrite and n = 8 for non-SciWrite) and 15 Public engagement project reports (n = 5 for SciWrite and n = 10 for non-SciWrite). For the second cohort of the SciWrite program, each of the 13 Public science writing pieces (n = 5 for SciWrite and n = 8 for non-SciWrite) and 15 Public engagement project reports (n = 6 for SciWrite and n = 9 for non-SciWrite) was randomly assigned to one assessor. All assessors gave each rubric item a score between 1 and 3 (Table 2). Total scores for the Public engagement project report could range from 8 to 24 points. Because in-text citations are inappropriate for public science writing, the citations and references item was removed from the Public science writing rubric, so total scores for that genre could range from 7 to 21 points.

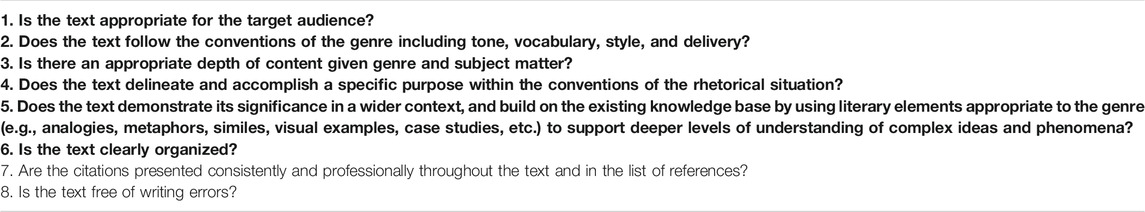

TABLE 2. Rubric items used to assess SciWrite and non-SciWrite written products for public audiences, arranged from “higher-order concerns” to “lower-order concerns”. Rubric items in bold address higher-order concerns. Written products were assessed on a scoring scale from 1 to 3: “does not meet expectations,” “approaches expectations,” and “meets expectations.”

Because we were most interested in differences between SciWrite and non-SciWrite students in both courses (rather than differences between years and assessors), we combined rubric scores across years for each course. This resulted in a comparison of 11 SciWrite and 16 non-SciWrite students for the Public science writing piece, and 10 SciWrite and 19 non-SciWrite students for the Public engagement project report. The final count of SciWrite Public science writing pieces was 11 because one student in the second cohort left the program after the first year. The final count of SciWrite Public engagement project reports was 10 because one student in the second cohort left the program after the first year and because another SciWrite student had already taken the Public Engagement in Science course the year before the SciWrite program began.

Intended Audiences and Genre Expectations of All Three Products

Because this rubric is deliberately adaptable to a variety of audiences, we used it in conjunction with the assignment guidelines of the written product being assessed. This ensured that assessors understood the genre conventions and specific expectations of each assignment. The conventions and expectations of each written product are summarized below.

The thesis/dissertation proposal submitted to the graduate school was a research project proposal of the kind standard in most science graduate programs. The intended audience was an academic audience that did not necessarily have science training specific to that discipline (see Supplementary Materials for further description of genre expectations). This written product was assessed with these genre expectations and rhetorical situation in mind.

The Writing in the Life Sciences course assignment was a writing piece intended for a public audience, in which each student was required to write about a specific scientific study in an engaging and accessible way for an audience with no scientific background (see Supplementary Materials for further description of genre expectations). The Public Engagement in Science course assignment was a project report in which each student evaluated the public engagement project they had created for the course. The intended audience was a professional and/or academic audience that did not necessarily have a scientific background (see Supplementary Materials for further description of genre expectations). Each of these written products was assessed with these genre expectations and rhetorical situations in mind.

Rubric Background

During the first year of the project, the SciWrite team developed a rubric to assess all written products created by SciWrite program participants. This was one primary result of the SciWrite program, and the rubric is now being used in the URI Graduate Writing Center and in some URI graduate courses as a helpful feedback framework. The SciWrite rubric was adapted from Duke University’s BioTAP rubric for scientific writing (Reynolds et al., 2009). The BioTAP rubric placed emphasis on creating a flexible and adaptable assessment tool for science students and faculty that could be used across a diversity of writing products, genres, audiences, and subjects. In addition, the BioTAP rubric encourages faculty to give holistic, “reader-based” feedback (Reynolds et al., 2009). This emphasis on adaptability and holistic feedback aligned closely with the goals of the SciWrite program.

The original BioTAP rubric was designed to evaluate success based on both the standards of writing and rhetoric and the goals of the biology department at Duke University. To ensure that the rubric language lent itself to accurate evaluation, the rubric was based on best practices from foundational academic writing courses, and its developers consulted with Duke’s Writing in the Disciplines department and Office of Assessment and collaborated with biology faculty (Reynolds et al., 2009). The authors designed the rubric to serve as a model for other STEM departments. Furthermore, the rubric was tested on a large sample (190 written products), and each written product was evaluated by two separate assessors; the researchers found moderate to strong agreement between raters. Because this rubric was designed as a model for other STEM departments, and because of its tested reliability as an assessment tool, we adopted it for our assessment process with only a few important additions (see “Development of Rubric” section below).

Reynolds et al. (2009) highlighted the standards addressed in each section of the rubric, and we modeled our assessment on these standards as well. The first section (questions 1–5 in our rubric) addresses higher-order concerns such as targeting the intended audience, contextualizing the research within the scientific literature, and communicating research aims (Reynolds et al., 2009). The second section (questions 6–8 in our rubric) addresses organization, mechanics, and citations. For example, to receive a score of “approaches expectations” for question 1, the written product must include appropriate definitions or explanations of key terms and concepts, with minor lapses that do not prevent the primary intended audience from accessing or engaging with the research/text (Supplementary Materials, SciWrite rubric). In comparison, to receive a score of “meets expectations,” the written product must make the research not only accessible but also engaging for the intended audience. To adequately define the intended audience and genre conventions of the written product they were assessing, assessors always referred to the assignment sheet for that product. (For further explanation of assessment standards, see Reynolds et al. (2009) and our rubric in the Supplementary Materials.)

Development of Rubric

We collaborated with departments in the College of the Environment and Life Sciences, Writing and Rhetoric faculty, and program assessment experts to specifically tailor the rubric to the needs of the SciWrite program (Tables 1, 2). The rubric was slightly adapted to incorporate multiple criteria that assessed students’ ability to meet our program learning outcomes. For example, items 1–3 and 9–10 addressed Learning Outcome Two, related to Multiple genres for multiple audiences (Tables 1, 2). To determine if students were demonstrating effective command of their writing in multiple genres for multiple audiences, the rubric evaluated whether the writing was audience appropriate, followed genre conventions, and used techniques appropriate to the genre and rhetorical situation. We added one additional item for the academic writing rubric, and two additional items for the public writing rubric, in order to more fully assess Learning Outcome Two. The additional items were: 1) Is there an appropriate depth of content given genre and subject matter? 2) Does the text delineate and accomplish a specific purpose within the conventions of the rhetorical situation? and 3) Does the text demonstrate its significance in a wider context, and build on the existing knowledge base by using literary elements appropriate to the genre (e.g., analogies, metaphors, similes, visual examples, case studies, etc.) to support deeper levels of understanding of complex ideas and phenomena? (See Supplementary Materials)

The final rubric consisted of 10 items that addressed both higher-order and lower-order concerns, and we created two versions of the rubric, one for academic and one for public audiences (Tables 1, 2). Items were ordered from higher-order to lower-order concerns.

Statistical Analysis

We examined rubric items for potential correlations using Spearman’s correlation tests. We found no strong correlations (r ≥ 0.70) between rubric items and thus retained all rubric items in our analyses, except as explained below. For the thesis/dissertation proposals, we used a parametric t-test to compare the total rubric scores for proposals written by SciWrite versus non-SciWrite students. Data conformed to normality assumptions, and we detected no outliers. Because we were comparing relatively few thesis/dissertation proposals written by SciWrite students with many more written by non-SciWrite students, we bootstrapped the data with 1,000 resamples and computed bias-corrected and accelerated (BCa) confidence intervals (Hall, 1988; Lehtonen and Pahkinen, 2004). In addition, we conducted a principal component analysis (PCA) to determine which of the eight rubric items contributed most to the variation in writing quality scores of thesis/dissertation proposals. We used a Varimax rotation with Kaiser normalization to simplify interpretation of the resulting PCA loadings for each rubric item (Abdi, 2003). For the two course assignments, we used separate Mann-Whitney tests to compare writing quality between SciWrite and non-SciWrite students for the Public science writing piece (n = 11 and 16, respectively) and for the Public engagement project report (n = 10 and 19, respectively). We used this non-parametric test because the course data were not normally distributed. We used a paired t-test to detect potential improvement over time in course-based writing assignment scores for SciWrite students from their first course (Writing in the Life Sciences) to their second course (Public Engagement in Science). To make the total possible points for the two course rubric datasets equivalent, we removed the citations and references score from the Public Engagement in Science data before conducting this paired t-test.
All statistical analyses were performed using SPSS (IBM SPSS Statistics Version 26).
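Two of the preliminary steps above, screening rubric items for redundancy and attaching BCa bootstrap confidence intervals to the group difference, can be approximated in Python with SciPy rather than SPSS. This is a sketch on hypothetical data, not a re-analysis: the 0.70 threshold and the 1,000 resamples come from the text, but the scores are invented, and SciPy's resampling will not reproduce SPSS output exactly.

```python
import itertools

import numpy as np
from scipy import stats


def strongly_correlated_items(item_scores, item_names, threshold=0.70):
    """Flag rubric-item pairs whose Spearman correlation reaches the threshold.

    item_scores: array of shape (n_products, n_items)
    """
    flagged = []
    for i, j in itertools.combinations(range(item_scores.shape[1]), 2):
        rho, _ = stats.spearmanr(item_scores[:, i], item_scores[:, j])
        if abs(rho) >= threshold:
            flagged.append((item_names[i], item_names[j], rho))
    return flagged


def mean_difference(x, y, axis=-1):
    """Difference in group means; the axis argument lets SciPy vectorize."""
    return np.mean(x, axis=axis) - np.mean(y, axis=axis)


def bca_interval(group_a, group_b, n_resamples=1000, confidence_level=0.95):
    """BCa bootstrap CI for the difference in mean total score (a minus b)."""
    result = stats.bootstrap(
        (group_a, group_b),          # two independent samples
        mean_difference,
        n_resamples=n_resamples,     # 1,000 resamples, as in the text
        confidence_level=confidence_level,
        method="BCa",
        vectorized=True,
    )
    return result.confidence_interval


# Hypothetical total proposal scores (not the study's data)
sciwrite = np.array([21, 22, 20, 23, 22, 21, 24, 20, 22, 21], dtype=float)
other = np.array([19, 18, 20, 17, 19, 21, 18, 20, 19, 18, 22, 17], dtype=float)
ci = bca_interval(sciwrite, other)
```

BCa adjusts a percentile interval for bias and skewness in the bootstrap distribution, which is the kind of correction the text motivates for an unbalanced comparison of a small group against a much larger one.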

Results

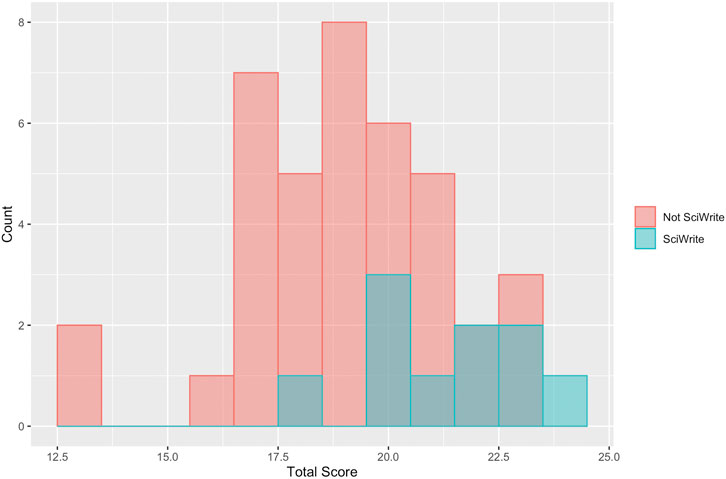

Writing Quality of Proposals

Writing quality of thesis/dissertation proposals differed significantly between SciWrite and non-SciWrite students. Total rubric score (mean ± SE) for SciWrite proposals (21.55 ± 0.58) was 2.4 points higher than that of non-SciWrite proposals (19.15 ± 0.38, t47 = −2.98, p = 0.005), a mean difference that would equate to an entire letter grade if the rubric were used for grading. All SciWrite proposals received scores between 18 and 24, whereas non-SciWrite proposals received scores between 13 and 23 (Figure 2). One SciWrite proposal received the maximum score; no non-SciWrite proposal did.
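Because the reported means and standard errors are enough to recompute a pooled-variance (Student's) t-test, readers can sanity-check the proposal comparison without the raw data. The sketch below assumes SE = SD/√n and equal variances; it is a check on the published summary, not the SPSS analysis itself. With n = 10 and n = 39 proposals, a pooled test has 10 + 39 − 2 = 47 degrees of freedom.

```python
import math

from scipy import stats


def pooled_t_from_summary(mean1, se1, n1, mean2, se2, n2):
    """Student's two-sample t-test recomputed from means and standard errors."""
    # Recover sample standard deviations, assuming SE = SD / sqrt(n)
    sd1 = se1 * math.sqrt(n1)
    sd2 = se2 * math.sqrt(n2)
    result = stats.ttest_ind_from_stats(mean1, sd1, n1, mean2, sd2, n2, equal_var=True)
    return result.statistic, result.pvalue, n1 + n2 - 2


# Reported values: SciWrite 21.55 ± 0.58 (n = 10); non-SciWrite 19.15 ± 0.38 (n = 39)
t, p, df = pooled_t_from_summary(21.55, 0.58, 10, 19.15, 0.38, 39)
```

This gives t ≈ 2.97 on 47 degrees of freedom with a two-sided p of about 0.005, matching the reported statistic up to rounding of the standard errors; the sign is positive here only because the SciWrite group is passed first.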

FIGURE 2. Frequency distribution of total rubric scores for thesis/dissertation proposals written by graduate students trained in the SciWrite program vs. those not trained in this program (“Not SciWrite”).

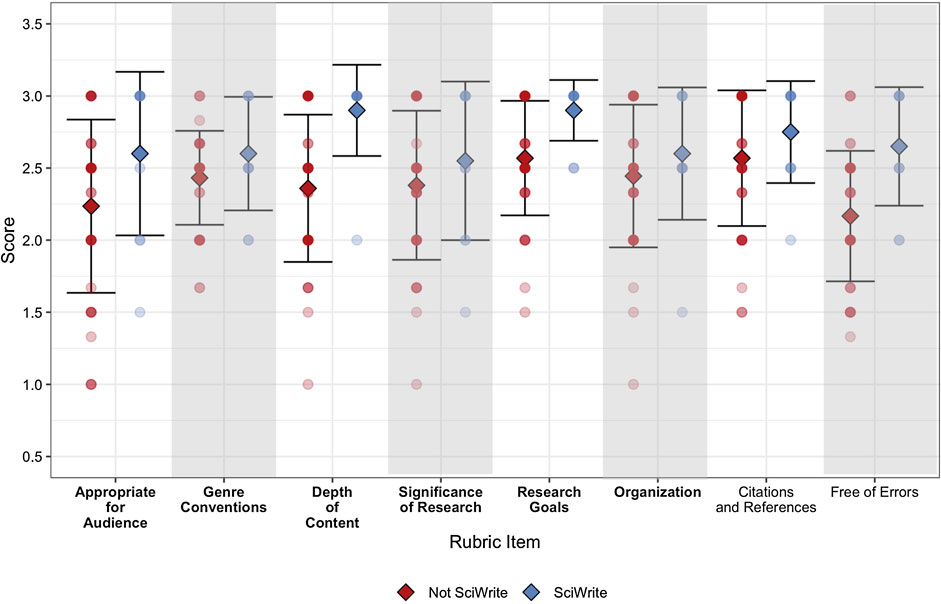

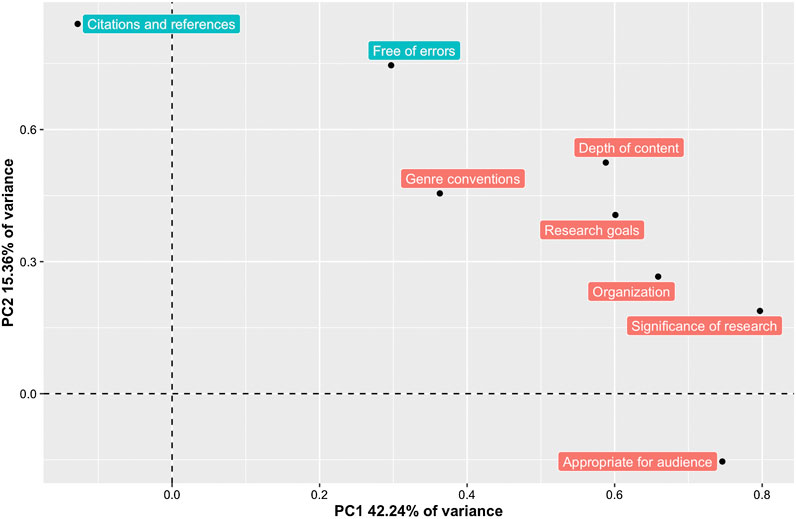

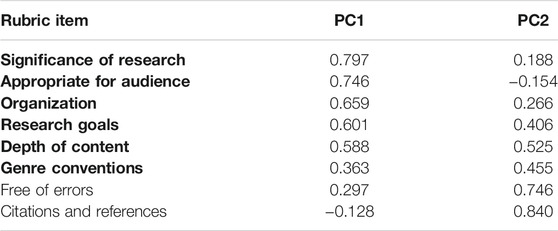

The mean score for each rubric item was consistently higher for thesis/dissertation proposals produced by SciWrite students than for those produced by non-SciWrite graduate students, and the range of scores was always smaller for SciWrite participants (Figure 3). Two components had eigenvalues >1 and were sufficient to explain variation in rubric scores, and higher-order concerns on the rubric were a better predictor of variation in proposal writing quality than lower-order concerns (Figure 4). PC1 explained 42.2% of the variation in rubric scores for the proposal (Figure 4). PC1 had relatively large positive loadings on all rubric items addressing higher-order concerns (e.g., Appropriate for audience, Argument for significance of research, Research goals) except Genre conventions, and its lowest loadings were on the two lower-order concerns (i.e., Citations and references, Free of errors), suggesting that PC1 primarily reflects higher-order concerns (Table 3). PC2 explained 15.4% of the variation in rubric scores for the thesis/dissertation proposals (Figure 4) and had large positive loadings on the rubric items addressing lower-order concerns (i.e., Free of errors and Citations and references), suggesting that this component primarily reflects lower-order concerns (Table 3). The PCA also suggests that a proposal that was free of errors and contained appropriate citations and references (lower-order concerns) was not necessarily appropriate for its intended audience (Figure 4).
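The extraction and rotation steps behind Figure 4 and Table 3 can be sketched with NumPy: principal components of the item correlation matrix, retention of components with eigenvalues > 1, and an orthogonal varimax rotation with Kaiser (row) normalization. This is a generic textbook implementation run on simulated data, not SPSS's routine, so loadings may differ in sign, column order, or small numerical details.

```python
import numpy as np


def varimax(loadings, max_iter=100, tol=1e-6):
    """Orthogonal varimax rotation (standard SVD-based iteration)."""
    p, k = loadings.shape
    rotation = np.eye(k)
    criterion = 0.0
    for _ in range(max_iter):
        rotated = loadings @ rotation
        target = rotated**3 - rotated @ np.diag(np.sum(rotated**2, axis=0)) / p
        u, s, vt = np.linalg.svd(loadings.T @ target)
        rotation = u @ vt
        new_criterion = s.sum()
        # Stop once the criterion no longer improves by more than tol
        if criterion != 0 and new_criterion < criterion * (1 + tol):
            break
        criterion = new_criterion
    return loadings @ rotation


def varimax_kaiser(loadings):
    """Varimax with Kaiser normalization: rotate row-normalized loadings."""
    h = np.sqrt(np.sum(loadings**2, axis=1, keepdims=True))  # sqrt of communalities
    return varimax(loadings / h) * h


def pca_kaiser_varimax(scores):
    """PCA on the correlation matrix, keeping components with eigenvalue > 1."""
    corr = np.corrcoef(scores, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(corr)            # ascending order
    order = np.argsort(eigvals)[::-1]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    keep = eigvals > 1.0
    loadings = eigvecs[:, keep] * np.sqrt(eigvals[keep])
    explained = eigvals[keep] / eigvals.sum()           # unrotated variance shares
    return explained, varimax_kaiser(loadings)


# Simulated scores: five "higher-order" items share one latent trait,
# three "lower-order" items share another (not the study's data)
rng = np.random.default_rng(0)
n = 200
higher, lower = rng.normal(size=n), rng.normal(size=n)
scores = np.column_stack(
    [higher + 0.5 * rng.normal(size=n) for _ in range(5)]
    + [lower + 0.5 * rng.normal(size=n) for _ in range(3)]
)
explained, rotated_loadings = pca_kaiser_varimax(scores)
```

Keeping only components with eigenvalues above 1 mirrors the retention rule stated for Table 3; the orthogonal rotation redistributes variance across the retained components but leaves each item's communality unchanged.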

FIGURE 3. Each of the eight rubric item scores (mean ± SD) for proposals written by SciWrite and non-SciWrite students. Mean scores are diamonds. Scores of individual students on each rubric item are the circular points, with darker points for scores earned by at least five students and lighter points for scores earned by fewer than five students. Rubric items in bold address higher-order concerns.

FIGURE 4. Plot of principal component scores after Varimax rotation with Kaiser normalization for the eight rubric items used to evaluate thesis/dissertation proposals written by SciWrite and non-SciWrite students. Rubric items that addressed higher-order concerns are labeled in red, rubric items that addressed lower-order concerns are labeled in blue.

TABLE 3. Component weights from the Principal Component Analysis after Varimax rotation with Kaiser normalization for all eight rubric items for both SciWrite and non-SciWrite proposals. Rubric items addressing higher-order concerns are in bold, and the order is determined by the first principal component (PC1) weights. The first two principal components are presented because only their eigenvalues were >1.
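To make the retention rule and rotation described above more concrete, the following is a minimal sketch in Python (NumPy) of a PCA on the correlation matrix of rubric item scores that keeps components with eigenvalues >1 (the Kaiser criterion) and then applies a varimax rotation with Kaiser normalization. It is not the authors' analysis code, which is not specified here; the function names are hypothetical, and the simulated scores (continuous values for 200 hypothetical documents, with six items driven by one latent "higher-order" factor and two by a "lower-order" factor) are illustrative assumptions rather than the study's ordinal rubric data.

```python
import numpy as np


def pca_kaiser(X):
    """PCA on the correlation matrix, retaining components with eigenvalue > 1."""
    C = np.corrcoef(np.asarray(X, dtype=float), rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(C)          # eigh returns ascending order
    order = np.argsort(eigvals)[::-1]             # sort descending
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    keep = eigvals > 1.0                          # Kaiser criterion
    loadings = eigvecs[:, keep] * np.sqrt(eigvals[keep])
    explained = eigvals / eigvals.sum()           # proportion of variance per component
    return eigvals, explained, loadings


def varimax(loadings, normalize=True, max_iter=100, tol=1e-6):
    """Varimax rotation; normalize=True applies Kaiser normalization."""
    L = np.asarray(loadings, dtype=float)
    if normalize:
        # Scale each item's loadings to unit length before rotating
        h = np.sqrt((L ** 2).sum(axis=1, keepdims=True))
        h[h == 0] = 1.0
        L = L / h
    p, k = L.shape
    R = np.eye(k)
    d = 0.0
    for _ in range(max_iter):
        d_old = d
        LR = L @ R
        # Standard SVD-based update toward the varimax optimum
        G = L.T @ (LR ** 3 - LR @ np.diag((LR ** 2).sum(axis=0)) / p)
        U, s, Vt = np.linalg.svd(G)
        R = U @ Vt
        d = s.sum()
        if d_old != 0 and d < d_old * (1 + tol):
            break                                 # criterion has stopped improving
    rotated = L @ R
    if normalize:
        rotated = rotated * h                     # undo Kaiser normalization
    return rotated


# Hypothetical scores: 200 documents x 8 rubric items
rng = np.random.default_rng(42)
n = 200
f_high = rng.normal(size=(n, 1))                  # latent "higher-order" quality
f_low = rng.normal(size=(n, 1))                   # latent "lower-order" quality
X = np.hstack([0.8 * f_high + 0.6 * rng.normal(size=(n, 6)),
               0.8 * f_low + 0.6 * rng.normal(size=(n, 2))])

eigvals, explained, loadings = pca_kaiser(X)
rotated = varimax(loadings)
print("components retained:", loadings.shape[1])
print("percent variance (unrotated):", np.round(100 * explained[: loadings.shape[1]], 1))
print("rotated loadings:\n", np.round(rotated, 2))
```

Because the rotation is orthogonal, each item's communality (its sum of squared loadings across the retained components) is unchanged; rotation only redistributes variance among the retained components, so per-component percentages after rotation can differ from the unrotated values printed by this sketch.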

Writing Quality of Public Science Writing Pieces and Public Engagement Reports

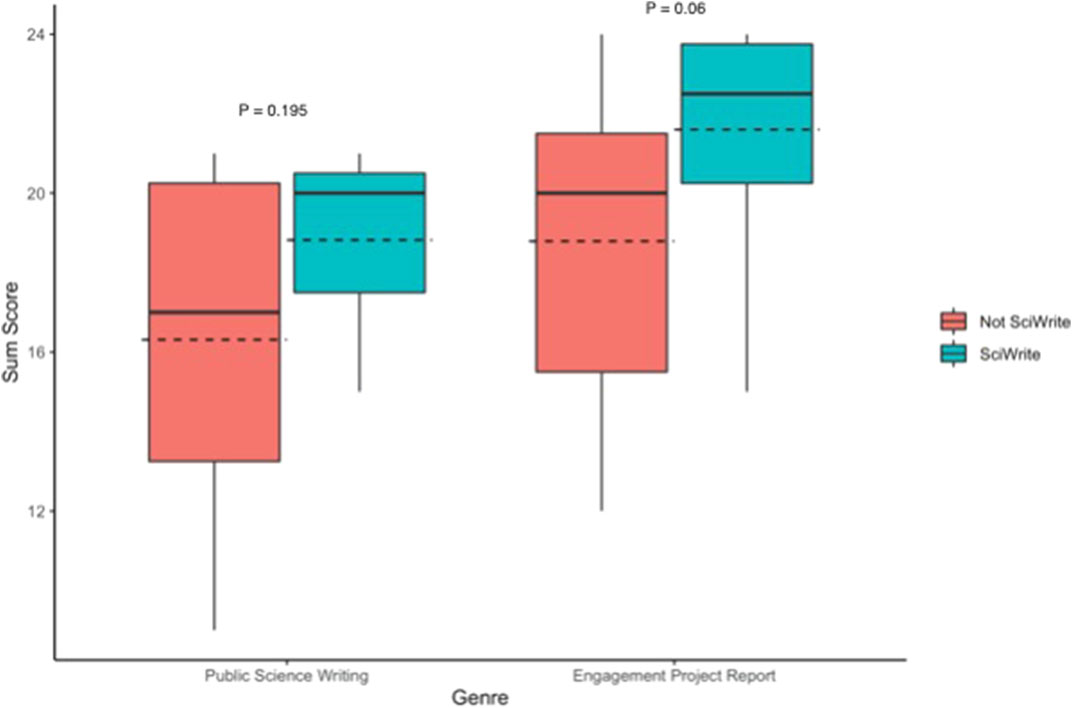

For both genres, the mean total rubric score of SciWrite students was more than two points higher than that of non-SciWrite students (Figure 5). For the Public science writing piece, SciWrite students scored higher (mean ± SE: 18.82 ± 0.64, range: 15–21, n = 11) than non-SciWrite students (16.31 ± 1.09, range: 9–21, n = 16), but the difference was not statistically significant (Mann-Whitney U = 114.5, p = 0.195). For the Public engagement project report, the difference between SciWrite students (21.6 ± 0.88, range: 15–24, n = 10) and non-SciWrite students (18.79 ± 0.92, range: 12–24, n = 19) approached statistical significance (Mann-Whitney U = 136.0, p = 0.062).

FIGURE 5. Box and whisker plot of total rubric scores for SciWrite versus non-SciWrite students on the Public science writing piece and the Public engagement project report. The minimum possible score was eight for both genres; the maximum possible score was 21 for the public science writing pieces and 24 for the public engagement project reports. Boxes show the interquartile range (the middle 50% of scores), solid lines within boxes show the median score, dashed lines show the mean score, and whiskers show the minimum and maximum reported scores. P-values are from Mann-Whitney U tests comparing scores of SciWrite and non-SciWrite students for each genre.
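As an illustration of how U statistics like those reported above are computed, here is a minimal pure-Python sketch of a two-sided Mann-Whitney U test. It assigns average ranks to tied scores (common with integer rubric totals) and uses a tie-corrected normal approximation for the p-value. It omits the continuity correction and exact small-sample p-values that some statistical packages apply, so its p-values need not match the values reported here; the function names and example scores are hypothetical.

```python
import math


def rank_with_ties(values):
    """Return 1-based ranks, giving tied values the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values equal to values[order[i]]
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Two-sided Mann-Whitney U test (tie-corrected normal approximation).

    Returns (u_x, p): u_x counts the (x, y) pairs in which x is larger,
    with ties counted as one half.
    """
    x, y = list(x), list(y)
    n1, n2 = len(x), len(y)
    n = n1 + n2
    pooled = x + y
    ranks = rank_with_ties(pooled)
    u_x = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    # Tie correction term: sum of (t^3 - t) over groups of tied values
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    tie_term = sum(t ** 3 - t for t in counts.values())
    variance = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    z = (u_x - n1 * n2 / 2) / math.sqrt(variance)
    p = math.erfc(abs(z) / math.sqrt(2))   # two-sided normal tail probability
    return u_x, p


# Hypothetical rubric totals (not the study's data)
sciwrite = [15, 17, 18, 19, 19, 20, 21, 21]
non_sciwrite = [9, 12, 14, 16, 17, 18, 19, 20, 21]
u, p = mann_whitney_u(sciwrite, non_sciwrite)
print(f"U = {u}, p = {p:.3f}")
```

The two groups' U values always sum to n1 × n2, and software differs in which of the two it reports, so it helps to state which group a reported U refers to.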

Total rubric score (mean ± SE) for SciWrite students on the second of their two course-based writing assignments did not differ statistically from that of their first assignment (18.6 ± 0.67 vs. 18.9 ± 0.71, t(9) = −0.605, p = 0.560, n = 10).
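The within-student comparison above is a paired t-test on each student's difference between the two assignments. A minimal sketch of the test statistic follows. It computes differences as second minus first, so a lower second score gives a negative t, as in the value reported above. The function name and scores are hypothetical. The p-value would then come from a t distribution with n − 1 degrees of freedom, for example via SciPy's `scipy.stats.ttest_rel` if SciPy is available.

```python
import math
import statistics


def paired_t(first, second):
    """Paired t statistic for (second - first) and its degrees of freedom."""
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [b - a for a, b in zip(first, second)]
    n = len(diffs)
    sd = statistics.stdev(diffs)          # sample SD (n - 1 denominator)
    if sd == 0:
        raise ValueError("differences have zero variance; t is undefined")
    t = statistics.mean(diffs) / (sd / math.sqrt(n))
    return t, n - 1


# Hypothetical totals for the same five students on two assignments
first = [17, 19, 18, 20, 21]
second = [18, 18, 19, 19, 20]
t, df = paired_t(first, second)
print(f"t({df}) = {t:.3f}")
```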

Inter-Rater Reliability

Rubrics used to assess writing quality should ideally produce scores that are repeatable and consistent across trained assessors (Jonsson and Svingby, 2007; Rezaei and Lovorn, 2010; Cockett and Jackson, 2018). We compared assessors’ scores of the proposals to evaluate the inter-rater reliability of the rubric. Raters disagreed on only 14 of 49 proposals, for a total of 22 of 392 rubric item scores (5.6%). After a third assessor scored the proposals with disagreements, the number of rubric item scores in disagreement fell to 15 of 392 (3.8%). Two rubric items, Citations and references and Free of errors, accounted for nearly half (44%) of the disagreements between assessors. Thus, disagreements were concentrated disproportionately in the two rubric items that addressed lower-order concerns.
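The disagreement rates above are simple item-level percentages (e.g., 22 / 392 ≈ 5.6%, where 392 = 49 proposals × 8 rubric items). A minimal sketch of that tally for two raters follows. The data layout (one list of item scores per document, in the same item order for both raters) and the function name are assumptions for illustration. The per-item counts show which rubric items account for most disagreements.

```python
def disagreement_summary(rater_a, rater_b):
    """Tally item-level disagreements between two raters.

    Each argument is a list of documents; each document is a list of rubric
    item scores in the same item order for both raters.
    """
    if not rater_a or len(rater_a) != len(rater_b):
        raise ValueError("raters must score the same, non-empty set of documents")
    n_items = len(rater_a[0])
    per_item = [0] * n_items        # disagreements for each rubric item
    docs_with_disagreement = 0
    for doc_a, doc_b in zip(rater_a, rater_b):
        if len(doc_a) != n_items or len(doc_b) != n_items:
            raise ValueError("every document needs one score per rubric item")
        mismatches = [i for i in range(n_items) if doc_a[i] != doc_b[i]]
        for i in mismatches:
            per_item[i] += 1
        if mismatches:
            docs_with_disagreement += 1
    total_scores = n_items * len(rater_a)
    disagreements = sum(per_item)
    return {
        "documents_with_disagreement": docs_with_disagreement,
        "disagreements": disagreements,
        "total_scores": total_scores,
        "percent": 100 * disagreements / total_scores,
        "per_item": per_item,
    }


# Hypothetical scores for three documents on a four-item rubric
rater_a = [[3, 2, 3, 1], [2, 2, 3, 3], [3, 3, 2, 2]]
rater_b = [[3, 2, 2, 1], [2, 2, 3, 3], [3, 3, 2, 3]]
print(disagreement_summary(rater_a, rater_b))
```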

Discussion

The rubric developed as part of SciWrite allowed us to evaluate how well students wrote in multiple genres for multiple audiences (Learning Outcome Two) and also provided an effective, holistic framework for feedback. Higher-order concerns best explained the variation in rubric scores for the proposals, and a number of these higher-order rubric items were developed specifically to assess writers’ command of genre and audience. This indicates that using such a rubric to assess Learning Outcome Two was an effective choice. Our findings also support what Reynolds et al. (2009) found with their BioTAP rubric and what other studies have found about writing feedback geared towards long-term learning (Nordrum et al., 2013; Panadero and Jonsson, 2013). Writing and rhetoric scholars report that heavy sentence-level revision focused on “errors” rarely helps students learn to assess and revise their own writing, a point that has become common knowledge among writing and rhetoric practitioners (Neman, 1995). Furthermore, scholars have found that when faculty members heavily revise their students’ papers and provide mostly directive (rather than facilitative) comments, it becomes difficult for students to see that there are a variety of choices to make in their revision process, especially if these edits are made without any feedback framework that gives students the reasoning behind those revisions (Neman, 1995; Straub, 1996; Reynolds et al., 2009). Such directive approaches prevent students from having autonomy over their own writing and revision process (Neman, 1995; Straub, 1996). Studies have found that rubrics, if constructed with higher-order concerns in mind, can reduce the potential negative impact of directive feedback and help students learn to assess their own writing, which can promote writing autonomy and long-term learning (Nordrum et al., 2013; Panadero and Jonsson, 2013; Fraile et al., 2017).
Therefore, this rubric, if implemented in concert with rhetorically informed courses and workshops, may be especially useful for science faculty and graduate programs with limited experience in providing holistic writing feedback on both higher- and lower-order concerns.

The rhetoric-based SciWrite training program required graduate students to write early and often, for multiple audiences, and to frequently review their own and others’ written work, and we maintain that such training helped SciWrite students improve their writing skills. Graduate students who received SciWrite training scored higher on average on three different genres of writing than students who did not receive the training, and most notably produced higher quality proposals. The largest differences in scores on specific rubric items between SciWrite and non-SciWrite thesis/dissertation proposals were primarily on higher-order concerns (e.g., the Depth of content and Student’s research goals rubric items). Furthermore, our PCA results indicated that higher-order concerns (e.g., Appropriate for audience and Argument for significance of research) were a better predictor of writing quality for the proposal. These findings indicate that SciWrite students better met audience expectations and therefore had a better awareness of their rhetorical situation. Other programs and courses similar to SciWrite have also focused their curricula on higher-order rather than lower-order concerns (Smith et al., 2013; Heath et al., 2014; Kuehne et al., 2014; Clarkson et al., 2018). However, none of these programs emphasized learning outcomes designed specifically to help students better understand the rhetorical situation of their writing projects. SciWrite is unique in that we developed specific learning outcomes and best practices based on rhetorical tenets that, according to our assessment, better prepared students to write in different genres for a variety of audiences.
Research has shown that genre conventions and communication strategies within the sciences, and in other fields of scholarship, can be highly discipline-specific; students will be successful communicators in their field only if they are adequately prepared to adapt to the discipline-specific conventions and audiences for which they are writing and communicating (Darling, 2006; Dannels, 2009). Given that all science graduate students must produce proposals as well as other writing for a variety of audiences, and must adapt to discipline-specific modes of communication, we maintain that such rhetoric-based training may help improve students’ scientific writing for both academic and public audiences across a broad cross-section of science disciplines.

The written assignments from the two courses did not differ as clearly between SciWrite and non-SciWrite students as the proposals did. As expected, both groups of students in the first course (Graduate Writing in the Life Sciences) produced assignments with similar total rubric scores. We believe this is because SciWrite students took Graduate Writing in the Life Sciences during their first few months in the SciWrite program. In the second course (Public Engagement in Science), SciWrite student assignments tended to score higher on average than non-SciWrite student assignments (p = 0.062). It is important to note that the intended audience for the Public science writing piece was a general public audience, whereas the intended audience for the Public engagement project report was a specialized professional audience that may or may not have had an academic background. Although we found no definitive evidence of individual improvement over a year in the SciWrite program, writing performance may have varied with genre and intended audience, and SciWrite students were able to successfully compose assignments intended for highly specialized audiences and rhetorical situations. Other studies have suggested that teaching science communication for different types of audiences may require different courses and methods of instruction (Heath et al., 2014). And although many experts advocate a genre approach to writing instruction, almost no studies have investigated how an individual student’s writing abilities may vary depending on the genre at hand (Rakedzon and Baram-Tsabari, 2017). We were not able to investigate the factors that may contribute to variation in writing quality by genre, so we recommend that future programs investigate them.

Our findings corroborate what practitioners in writing and rhetoric have emphasized as perhaps the most important tool for helping students improve their writing: following a hierarchy of concerns when giving feedback (Elbow, 1981; North, 1984; Reynolds et al., 2009). Writing and rhetoric scholars have long argued that higher- and lower-order concerns are two different components of writing that should not necessarily be given equal weight when helping students improve their writing (Elbow, 1981; North, 1984). According to our analyses, a written product that shows strong awareness of its rhetorical situation is more likely to be of high quality than one that is merely free of errors. Put another way, and true to an argument often made in the field of writing and rhetoric, “free of errors” does not necessarily equate to “good” writing. These findings are especially pertinent to the SciWrite program, whose learning outcomes and design were framed around writing and rhetoric best practices, so that SciWrite training placed virtually all of its focus on higher-order rather than lower-order concerns.

Recommendations for Use of the Rubric and Implementation in Courses

One goal of the SciWrite program was to assist faculty members and other institutions in providing their students with holistic writing feedback that helps students improve their writing skills over time. As such, we wish to provide readers with recommendations from well-established, evidence-based writing and rhetoric best practices that will help readers use this rubric in their own programs and courses.

As mentioned previously, science faculty mentors are usually equipped to help their students only with less complex, lower-order writing concerns, and they rarely receive training in giving holistic writing feedback. Adapting the BioTAP rubric allowed us to create a writing rubric for science students and faculty that encourages faculty to give “reader-based” feedback (Brannon and Knoblauch, 1982) and to comment on drafts from the perspective of a member of the target audience rather than merely as an editor or grader (Elbow, 1981; Reynolds et al., 2009; Druschke and McGreavy, 2016). For example, instead of using a rubric to take off points for typographical errors, faculty members could use this rubric to encourage their students to think deeply about their rhetorical situations. Faculty members could pose facilitative questions (rather than directive statements) standard in the writing and rhetoric field, such as: “who is the intended audience?”, “what strategies did you use to make this text engaging and persuasive, given the intended audience?”, and “as a reader I’m confused by … because … how could you explain this more clearly?” (Straub, 1996; Reynolds et al., 2009). Faculty members could also use the rubric as a feedback framework to suggest ways to make the text more engaging and the tone more appropriate for the audience. In addition, we recommend that faculty assign multiple drafts for writing projects and use this rubric to provide feedback on earlier drafts rather than saving feedback for one final draft (Reynolds et al., 2009). This scaffolded, reader-based feedback approach not only helps students see writing as a long-term, complex process but also reduces the time a faculty member must invest in making copious sentence-level edits on the final product (Reynolds et al., 2009).

We recommend that faculty not simply add this rubric to a course that was not designed with rhetorical tenets in mind. For those interested in building on the SciWrite model, there are a number of writing and rhetoric best practices one can incorporate into a course in addition to using our rubric (Petersen et al., 2020). For example, writing assignments can be scaffolded into simpler, shorter assignments; assigning “chunks” of lower-stakes writing, rather than complete drafts, helps students get into the habit of writing early and often (Petersen et al., 2020). In addition, students can be assigned different genres of writing with different intended audiences to help them learn how to adjust their approaches to the needs and expectations of different audiences (Druschke et al., 2018). Lastly, students can be encouraged to engage in peer review that emphasizes higher-order rather than lower-order concerns, and facilitative rather than directive feedback (Elbow, 1981; North, 1984; Neman, 1995; Straub, 1996). Because we could not discuss these strategies extensively in this article, we recommend that readers consult Reynolds et al. (2009) and Druschke et al. (2018) for more detailed program design suggestions.

Lessons Learned

Despite the success of the SciWrite program, there is still much progress to be made with helping science graduate students improve their writing and communication skills. Further collaboration between science departments and Writing and Rhetoric departments is highly recommended as this will allow for development of comprehensive and interdisciplinary program learning outcomes. Such an interdisciplinary approach allowed us to create a holistic writing rubric that could assess written products of multiple genres, so this approach will likely allow other programs to develop a broader variety of program outcomes and assessment strategies as well.

Our assessment approach had limitations that future programs should address. First, using course data for overall program assessment may have complicated our results. Because courses last only 3 months, it may be difficult to quantify writing growth over such a short time span (Heritage and The Council of Chief State School Officers, 2010; Panadero and Jonsson, 2013). Had we assessed a writing sample from every student before each course, we would have had a baseline for each student against which to compare their final writing assignment in that course, and this repeated-measures design might have allowed us to quantify differences between SciWrite and non-SciWrite students more effectively. Furthermore, rather than merely looking for statistically significant differences in rubric scores over relatively short time periods, future programs would be wise to supplement this information with additional assessment strategies that are formative, student-centered, and qualitative in approach (Samuels and Betts, 2007; Panadero and Jonsson, 2013; Cockett and Jackson, 2018).

Our results suggest that some genres of public science writing may be more difficult than others for individual students, depending on a variety of factors. We were not able to investigate what those factors may be (in part because we did not develop a formative, qualitative assessment method for looking at individual growth over time). Future programs similar to SciWrite could use our rubric to determine whether an individual’s writing performance does in fact vary with the genre at hand. We recommend that future programs use formative, qualitative self-assessment methodologies, such as rubric-guided self-assessment activities and portfolio self-assessment, to investigate learning on a more individual level (Panadero and Jonsson, 2013; Reynolds and Davis, 2014; Fraile et al., 2017). This study design would allow an investigation of individual growth over time in a formative, student-centered, and qualitative way (Samuels and Betts, 2007; Cockett and Jackson, 2018). Investigating individual students’ learning progress, and potential contributing factors to such learning, could then help programs develop more pointed, research-informed training strategies with a variety of learning outcomes and best practices depending on the rhetorical situation in which students are engaging.

We recommend that future programs like SciWrite that wish to assess students’ written products use an adaptable rubric, such as ours, that prioritizes higher-order concerns. Such a rubric is crucial for assessing multiple genres of writing for a variety of audiences, because it allows assessors to evaluate whether students’ writing was appropriate for its audience, followed genre conventions, and used techniques suited to the genre and rhetorical situation. We were somewhat surprised that writing quality appeared to differ by genre; different genres of public science writing may require different types of training, with their own learning outcomes and assessment.

In addition, we recommend that future programs take a similarly interdisciplinary approach to program development, because this will encourage novel program design and a wider variety of assessment approaches. Programs with similar goals to SciWrite would likely benefit from creating learning outcomes and program assessment rubrics with this interdisciplinary, rhetoric-based approach in mind.

Conclusion

It is imperative that scientists improve their communication skills with the public, and one way to address this need is to better prepare graduate science students for the many kinds of writing their future careers will require. Our research suggests that our graduate fellows benefited from the SciWrite program, in large part because they are now better prepared to communicate science effectively to a variety of audiences. After successfully implementing our program for 3 years, it seems that what likely had the most impact was focusing program activities and writing feedback on higher-order concerns such as thinking about audience needs and expectations, clearly describing research goals, and making an argument for the significance of the research (rather than on “fixing” SciWrite fellows’ writing). Somewhat unexpectedly, many fellows reported anecdotally how helpful it was to their writing process that their SciWrite cohort created a supportive community of practice. Some fellows also reported that instructors in other classes who focused on directly “fixing” student writing left them feeling discouraged, and that this approach was less helpful than the supportive, facilitative feedback their fellow SciWrite members gave. Perhaps the long-term nature of the program helped to slowly build a sense of trust and community among the fellows, and being in a community of practice gave them confidence in their writing process and ultimately helped them learn more about writing from one another. Lastly, the rhetoric-based approach of our program is likely what helped prepare fellows to write skillfully in a variety of genres for a variety of audiences. We believe the experiences in the SciWrite program will help our fellows in their future careers and prepare them to respond flexibly and adeptly in any rhetorical situation.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics Statement

The studies involving human participants were reviewed and approved by University of Rhode Island Institutional Review Board. The participants provided their written informed consent to participate in this study.

Author Contributions

SM, IEL, CGD, NK, and NR conceived and designed research and acquired research funding; SM, IEL, CGD, NK, and NR developed SciWrite rubric; EH collected data; EH and IEL supervised assessment; EH analyzed data; EH and SM interpreted results; EH prepared figures; EH drafted article; EH, SM, IEL, CGD, NK, and NR edited and revised article; EH, SM, IEL, CGD, NK, and NR approved final version of article.

Funding

This research was funded by a National Science Foundation NRT-IGE Grant (#1545275) to SM, IEL, CGD, NK, and NR. Additional funding was also provided by the URI Graduate School and URI’s College of the Environment and Life Sciences.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

This project was truly an interdisciplinary and collaborative effort, and therefore we are indebted to many individuals and institutions across multiple departments and universities! We gratefully acknowledge the help and support provided by Cara Mitnick, Esq., Dr. Alycia Mosley Austin, Dr. Andrea Rusnock, and the Graduate School. We are indebted to Dr. Ashton Foley-Schramm for her hard work building and coordinating the Graduate Writing Center (GWC), and to Cara Mitnick for serving as the Administrative Director of the GWC. We are also incredibly thankful to Director Dennis Bennett and Coordinator Chris Nelson of Oregon State University’s Graduate Writing Center for providing us with materials and advice for training our SciWrite fellows and developing a Graduate Writing Center program. We would like to thank Director Heather Price of URI’s undergraduate Writing Center for her helpful counsel as well. We would also like to thank Dr. Sunshine Menezes and Dr. Kendall Moore for their help with designing and teaching SciWrite courses. We are grateful for Dr. Jenna Morton-Aiken’s help with creating the SciWrite@URI project and for assisting us with assessment, and also for the help from all of our trained assessors. We would also like to thank Dr. Melissa Meeks of Eli Review for providing us with so much helpful advice and collaborating with us for numerous SciWrite workshops and projects and Dr. Kate Webster of URI’s Department of Psychology for helping us with some of our statistical analyses. Lastly, we would like to thank University of Rhode Island Institutional Review Board for approving our research protocol.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fenvs.2021.715409/full#supplementary-material

References

Abdi, H. (2003). in The SAGE Encyclopedia of Social Science Research Methods. Editors M. S. Lewis-Beck, A. Bryman, and T. F. L. Futing (Thousand Oaks, Calif: SAGE).

Baron, N. (2010). Escape from the Ivory tower: A Guide to Making Your Science Matter. Washington, DC: Island Press.

Brannon, L., and Knoblauch, C. H. (1982). On Students’ Rights to Their Own Texts: A Model of Teacher Response. Coll. Compos. Commun. 33, 157–166.

Chinn, P. W. U., and Hilgers, T. L. (2000). From Corrector to Collaborator: The Range of Instructor Roles in Writing-Based Natural and Applied Science Classes. J. Res. Sci. Teach. 37, 3–25. doi:10.1002/(SICI)1098-2736(200001)37:1<3::AID-TEA2>3.0.CO;2-Z

Clarkson, M. D., Houghton, J., Chen, W., and Rohde, J. (2018). Speaking about Science: a Student-Led Training Program Improves Graduate Students' Skills in Public Communication. JCOM 17, A05–A20. doi:10.22323/2.17020205

Cockett, A., and Jackson, C. (2018). The Use of Assessment Rubrics to Enhance Feedback in Higher Education: An Integrative Literature Review. Nurse Edu. Today 69, 8–13. doi:10.1016/j.nedt.2018.06.022

Costa, E., Davies, S. R., Franks, S., Jensen, A. M., Villa, R., Wells, R., et al. (2019). D4.1: Science Communication Education and Training across Europe.

Crick, N. (2017). The Rhetorical Situation. Philos. Rhetoric 1, 137–159. doi:10.4324/9781315232522-7

Crowley, S., and Hawhee, D. (2012). Ancient Rhetorics for Contemporary Students. 5th ed. Boston: Pearson.

Dannels, D. P. (2001). Time to Speak up: A Theoretical Framework of Situated Pedagogy and Practice for Communication across the Curriculum. Commun. Edu. 50, 144–158. doi:10.1080/03634520109379240

Darling, A. L. (2005). Public Presentations in Mechanical Engineering and the Discourse of Technology. Commun. Edu. 54, 20–33. doi:10.1080/03634520500076711

DiPardo, A., and Freedman, S. W. (1988). Peer Response Groups in the Writing Classroom: Theoretic Foundations and New Directions. Rev. Educ. Res. 58, 119–149. doi:10.3102/00346543058002119

Druschke, C. G., and McGreavy, B. (2016). Why Rhetoric Matters for Ecology. Front. Ecol. Environ. 14, 46–52. doi:10.1002/16-0113.1

Druschke, C. G., Reynolds, N., Morton-Aiken, J., Lofgren, I. E., Karraker, N. E., and McWilliams, S. R. (2018). Better Science through Rhetoric: A New Model and Pilot Program for Training Graduate Student Science Writers. Tech. Commun. Q. 27, 175–190. doi:10.1080/10572252.2018.1425735

Elbow, P. (1981). Writing with Power: Techniques for Mastering the Writing Process. New York: Oxford University Press.

Fahnestock, J. (1986). Accommodating Science. Written Commun. 3, 275–296. doi:10.1177/0741088386003003001

Fraile, J., Panadero, E., and Pardo, R. (2017). Co-creating Rubrics: The Effects on Self-Regulated Learning, Self-Efficacy and Performance of Establishing Assessment Criteria with Students. Stud. Educ. Eval. 53, 69–76. doi:10.1016/j.stueduc.2017.03.003

Groffman, P. M., Stylinski, C., Nisbet, M. C., Duarte, C. M., Jordan, R., Burgin, A., et al. (2010). Restarting the Conversation: Challenges at the Interface between Ecology and Society. Front. Ecol. Environ. 8, 284–291. doi:10.1890/090160

Hall, P. (1988). Theoretical Comparison of Bootstrap Confidence Intervals. Ann. Stat. 16, 927–953. doi:10.1214/aos/1176350933

Heath, K. D., Bagley, E., Berkey, A. J. M., Birlenbach, D. M., Carr-Markell, M. K., Crawford, J. W., et al. (2014). Amplify the Signal: Graduate Training in Broader Impacts of Scientific Research. BioScience 64, 517–523. doi:10.1093/biosci/biu051

Heritage and The Council of Chief State School Officers (2010). Formative Assessment and Next-Generation Assessment Systems: Are We Losing an Opportunity?

Jonsson, A., and Svingby, G. (2007). The Use of Scoring Rubrics: Reliability, Validity and Educational Consequences. Educ. Res. Rev. 2, 130–144. doi:10.1016/j.edurev.2007.05.002

Kuehne, L. M., and Olden, J. D. (2015). Opinion: Lay Summaries Needed to Enhance Science Communication. Proc. Natl. Acad. Sci. USA 112, 3585–3586. doi:10.1073/pnas.1500882112

Kuehne, L. M., Twardochleb, L. A., Fritschie, K. J., Mims, M. C., Lawrence, D. J., Gibson, P. P., et al. (2014). Practical Science Communication Strategies for Graduate Students. Conservation Biol. 28, 1225–1235. doi:10.1111/cobi.12305

Lehtonen, R., and Pahkinen, E. (2004). Practical Methods for Design and Analysis of Complex Surveys. 2nd ed. Wiley.

Lerner, N. (2009). The Idea of a Writing Laboratory. 1st ed. Carbondale: Southern Illinois University Press.

Linton, R. (2013). Hong Kong's War Crimes Trials. Grad. Edge 2, 1–4. doi:10.1093/acprof:oso/9780199643288.001.0001

Lundstrom, K., and Baker, W. (2009). To Give Is Better Than to Receive: The Benefits of Peer Review to the Reviewer's Own Writing. J. Second Lang. Writing 18, 30–43. doi:10.1016/j.jslw.2008.06.002

Meyer, J. L., Frumhoff, P. C., Hamburg, S. P., and De La Rosa, C. (2010). Above the Din but in the Fray: Environmental Scientists as Effective Advocates. Front. Ecol. Environ. 8, 299–305. doi:10.1890/090143

National Alliance for Broader Impacts (2018). The Current State of Broader Impacts. Advancing science and benefiting society. Available at: https://vcresearch.berkeley.edu/sites/default/files/wysiwyg/filemanager/BRDO/Current%20state%20of%20Broader%20Impacts%202018.pdf.

National Research Council (2008). Public Participation in Environmental Assessment and Decision Making. Washington, DC: The National Academies Press. Available at: https://www.nap.edu/catalog/12434/public-participation-in-environmental-assessment-and-decision-making#rights.

Nisbet, M. C., and Scheufele, D. A. (2009). What's Next for Science Communication? Promising Directions and Lingering Distractions. Am. J. Bot. 96, 1767–1778. doi:10.3732/ajb.0900041

Nordlof, J. (2014). Vygotsky, Scaffolding, and the Role of Theory in Writing Center Work. Writing Cent. J. 34, 45–65.

Nordrum, L., Evans, K., and Gustafsson, M. (2013). Comparing Student Learning Experiences of In-Text Commentary and Rubric-Articulated Feedback: Strategies for Formative Assessment. Assess. Eval. Higher Edu. 38, 919–940. doi:10.1080/02602938.2012.758229

Panadero, E., and Jonsson, A. (2013). The Use of Scoring Rubrics for Formative Assessment Purposes Revisited: A Review. Educ. Res. Rev. 9, 129–144. doi:10.1016/j.edurev.2013.01.002

Petersen, S. C., McMahon, J. M., McFarlane, H. G., Gillen, C. M., and Itagaki, H. (2020). Mini-Review - Teaching Writing in the Undergraduate Neuroscience Curriculum: Its Importance and Best Practices. Neurosci. Lett. 737, 135302. doi:10.1016/j.neulet.2020.135302

Pololi, L., Knight, S., and Dunn, K. (2004). Facilitating Scholarly Writing in Academic Medicine. J. Gen. Intern. Med. 19, 64–68. doi:10.1111/J.1525-1497.2004.21143.X

Porter, J. E. (1986). Intertextuality and the Discourse Community. Rhetoric Rev. 5, 34–47. doi:10.1080/07350198609359131

Rakedzon, T., and Baram-Tsabari, A. (2017). To Make a Long Story Short: A Rubric for Assessing Graduate Students' Academic and Popular Science Writing Skills. Assessing Writing 32, 28–42. doi:10.1016/j.asw.2016.12.004

Reynolds, J., Smith, R., Moskovitz, C., and Sayle, A. (2009). BioTAP: A Systematic Approach to Teaching Scientific Writing and Evaluating Undergraduate Theses. BioScience 59, 896–903. doi:10.1525/bio.2009.59.10.11

Reynolds, N., and Davis, E. (2014). Portfolio Keeping: A Guide for Students. 3rd ed. Boston/New York: Bedford/St. Martin’s.

Rezaei, A. R., and Lovorn, M. (2010). Reliability and Validity of Rubrics for Assessment through Writing. Assessing Writing 15, 18–39. doi:10.1016/j.asw.2010.01.003

Roux, D. J., Rogers, K. H., Biggs, H. C., Ashton, P. J., and Sergeant, A. (2006). Bridging the Science–Management Divide: Moving from Unidirectional Knowledge Transfer to Knowledge Interfacing and Sharing. E&S 11. doi:10.5751/es-01643-110104

Samuels, M., and Betts, J. (2007). Crossing the Threshold from Description to Deconstruction and Reconstruction: Using Self‐assessment to Deepen Reflection. Reflective Pract. 8, 269–283. doi:10.1080/14623940701289410

Skrip, M. M. (2015). Crafting and Evaluating Broader Impact Activities: A Theory-Based Guide for Scientists. Front. Ecol. Environ. 13, 273–279. doi:10.1890/140209

Smith, B., Baron, N., English, C., Galindo, H., Goldman, E., McLeod, K., et al. (2013). COMPASS: Navigating the Rules of Scientific Engagement. Plos Biol. 11, e1001552. doi:10.1371/journal.pbio.1001552

Straub, R. (1996). The Concept of Control in Teacher Response: Defining the Varieties of “Directive” and “Facilitative” Commentary. Coll. Compos. Commun. 47, 223. doi:10.2307/358794

Keywords: science communication, STEM, graduate training, program assessment, SciWrite, rubric development, science writing, writing and rhetoric

Citation: Harrington ER, Lofgren IE, Gottschalk Druschke C, Karraker NE, Reynolds N and McWilliams SR (2021) Training Graduate Students in Multiple Genres of Public and Academic Science Writing: An Assessment Using an Adaptable, Interdisciplinary Rubric. Front. Environ. Sci. 9:715409. doi: 10.3389/fenvs.2021.715409

Received: 26 May 2021; Accepted: 10 August 2021;

Published: 06 December 2021.

Edited by:

Stacey Sowards, University of Texas at Austin, United States

Reviewed by:

Carlos Anthony Tarin, The University of Texas at El Paso, United States

Emma Frances Bloomfield, University of Nevada, Las Vegas, United States

Copyright © 2021 Harrington, Lofgren, Gottschalk Druschke, Karraker, Reynolds and McWilliams. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Erin R. Harrington, e_harrington@uri.edu
