- The Seventh Research Division, Beihang University, Beijing, China

In this paper, a stochastic model predictive control (MPC) scheme is proposed for a wheeled mobile robot to track a reference trajectory within a finite task horizon. The wheeled mobile robot is assumed to be subject to additive stochastic disturbances with a known probability distribution, as well as to soft probability constraints on its states and control inputs. The nonlinear mobile robot model is linearized and discretized into a linear time-varying (LTV) model, so that linear time-varying MPC can be applied to forecast and control its future behavior. In the proposed stochastic MPC, the cost function is designed to penalize both the tracking error and the energy consumption. Based on quantile techniques, a learning-based approach is applied to transform the probability constraints into deterministic constraints and to calculate the terminal constraint that guarantees recursive feasibility. It is proved that, with the proposed stochastic MPC, the tracking error of the closed-loop system is asymptotically bounded on average. A simulation example is provided to support the theoretical results.

1 Introduction

Model predictive control (MPC) is a useful tool for stabilization and tracking problems with constraints. It solves a finite-horizon optimal control problem in a receding-horizon manner and implements only the first element of the optimal control sequence at each time step. Please see the survey paper Mayne et al. (2000) for a more detailed overview of MPC. Recent results on MPC include (but are not limited to) adaptive MPC (Zhu et al., 2020), distributed MPC (Zhu et al., 2018; Wang et al., 2020), robust MPC (Yang et al., 2021), and stochastic MPC (Hewing and Zeilinger, 2018). Owing to its ability to improve system performance and handle constraints, MPC has been applied to motion control (Alcalá et al., 2020), process control (Wu et al., 2020), energy systems (Stadler et al., 2018; Rodas et al., 2021), etc. Several robust MPC approaches for wheeled mobile robots have been studied in Gonzalez et al. (2011) and Sun et al. (2018). However, robust MPC tends to produce conservative results, because it has to account for the worst-case disturbance (Mayne, 2016).

Stochastic MPC addresses the trade-off between safety and economy by exploiting the known distributions of the uncertainties. In addition, constraint violations are permitted up to a predetermined probability level, so that soft constraints can balance system performance against the limits on the states. This characteristic makes stochastic MPC a promising method for a wide range of applications in energy and underactuated systems (Farina et al., 2016), such as energy scheduling (Rahmani-andebili and Shen, 2017; Scarabaggio et al., 2021; Jørgensen et al., 2016), energy management for vehicles (Cairano et al., 2014), temperature control in buildings (Hewing and Zeilinger, 2018), racing cars (Carrau et al., 2016), quadrotors (Yang et al., 2017), overhead cranes (Wu et al., 2015), automated driving vehicles (Suh et al., 2018), and mobile robots (Goncalves et al., 2018). The majority of stochastic MPC approaches are developed for linear time-invariant (LTI) systems (Mesbah, 2016). Several attempts in motion control have been made for linear time-varying (LTV) or nonlinear systems, but without addressing recursive feasibility (Yang et al., 2017; Goncalves et al., 2018).

This paper presents a stochastic MPC method for wheeled mobile robots based on the framework of Hewing et al. (2020). The proposed method remains valid for other LTV systems, or for nonlinear systems after linearization. The main contribution is a stochastic MPC method that forecasts and controls the wheeled mobile robot so that it tracks its reference trajectory subject to constraints and additive random disturbances with known distributions. In the proposed stochastic MPC, the cost function is designed to penalize both the tracking error and the energy consumption. A learning-based technique and probabilistic reachable sets are applied, so that the probability constraints can be transformed into deterministic constraints, and the terminal constraint can be calculated to ensure recursive feasibility and asymptotic average boundedness. A simulation example, together with statistical data from repeated simulation runs, is provided to validate the theoretical results.

The rest of the paper is arranged as follows. In Section 2, the LTV kinematic model for the mobile robot is introduced, and the control problem is formulated. In Section 3, the proposed stochastic MPC is designed in detail. In Section 4, recursive feasibility of optimization and asymptotic average boundedness are analysed in detail. In Section 5, the simulation result is provided to show the efficacy of the method.

2 Problem Statement

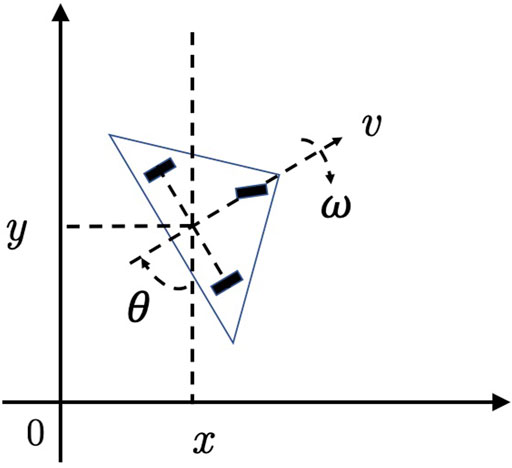

The wheeled mobile robot consists of two differential driving wheels and a guide wheel, as illustrated in Figure 1. The translational and angular velocities of the robot can be expressed in terms of the rotation rates of the driving wheels as

where q = [x, y, θ]T denotes the position and orientation of the mobile robot, and u = [v, ω]T denotes the translational and angular velocities, which are treated as the system inputs.
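The kinematic equation itself is not reproduced in this version of the text. As a minimal sketch, assuming the standard unicycle kinematics ẋ = v cos θ, ẏ = v sin θ, θ̇ = ω that match the definitions of q and u above, one forward-Euler step of the robot motion can be written as follows (the function name `unicycle_step` is hypothetical):

```python
import numpy as np

def unicycle_step(q, u, T):
    """One forward-Euler step of the assumed unicycle kinematics.

    q = [x, y, theta] is the pose, u = [v, omega] holds the translational
    and angular velocity, and T is the sampling interval in seconds.
    """
    x, y, theta = q
    v, omega = u
    return np.array([
        x + T * v * np.cos(theta),
        y + T * v * np.sin(theta),
        theta + T * omega,
    ])

# Drive straight along the x-axis at 1 m/s for 10 steps of 0.1 s.
q = np.zeros(3)
for _ in range(10):
    q = unicycle_step(q, [1.0, 0.0], 0.1)
print(q)  # close to [1, 0, 0]
```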

The reference model to be tracked is given by

where xr, yr, θr are the reference position and orientation, respectively.

Define the tracking error by

where

If the sampling interval T is chosen small enough, Euler discretization can be applied to obtain the LTV discrete-time model:

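where T is the sampling period and k is the sampling instant; the system matrices are time-varying because they depend on the reference trajectory at instant k.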
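The system matrices of the discrete-time model are not reproduced here. The sketch below assumes that the tracking error is q − qr, that the input error is u − ur, and that the kinematics are linearized along the reference pose angle θr(k) and reference speed vr(k); the paper's own error coordinates may differ, and the helper name `error_model` is hypothetical. A small numerical check compares the linear prediction with the nonlinear Euler step.

```python
import numpy as np

def error_model(theta_r, v_r, T):
    """Euler-discretised LTV tracking-error model at one sampling instant.

    Assumes error = q - q_r and input error = u - u_r, linearised about the
    reference angle theta_r and reference speed v_r. Returns (A_k, B_k) such
    that the next error is approximately A_k @ dq + B_k @ du.
    """
    A = np.eye(3) + T * np.array([
        [0.0, 0.0, -v_r * np.sin(theta_r)],
        [0.0, 0.0,  v_r * np.cos(theta_r)],
        [0.0, 0.0,  0.0],
    ])
    B = T * np.array([
        [np.cos(theta_r), 0.0],
        [np.sin(theta_r), 0.0],
        [0.0,             1.0],
    ])
    return A, B

# Sanity check against the nonlinear Euler step for a small deviation.
T = 0.1
q_r, u_r = np.array([0.0, 0.0, 0.3]), np.array([1.0, 0.2])
dq, du = np.array([0.01, -0.02, 0.01]), np.array([0.05, -0.03])

def f(q, u):
    return q + T * np.array([u[0] * np.cos(q[2]), u[0] * np.sin(q[2]), u[1]])

A, B = error_model(q_r[2], u_r[0], T)
exact = f(q_r + dq, u_r + du) - f(q_r, u_r)
approx = A @ dq + B @ du
print(np.max(np.abs(exact - approx)))  # small: the mismatch is second order
```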

It is assumed that the mobile robot is subject to the following probability constraints:

where Vq and Vu are constant matrices of appropriate dimensions, and px and pu are constant vectors of appropriate dimensions. It can be seen from (6) and (7) that, if px = 1 or pu = 1, the corresponding constraints become deterministic (hard) constraints.
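Section 5 estimates px by repeating the simulation. A minimal sketch of that kind of empirical check, assuming each row j of (6) has the form Pr(Vq,j q̃ ≤ hj) ≥ px,j with some right-hand side hj (the exact right-hand side of (6) is not reproduced here), counts the fraction of sampled states that satisfy each row. The helper name and the Gaussian samples are hypothetical.

```python
import numpy as np

def empirical_satisfaction(V, h, samples):
    """Fraction of samples satisfying each row of V x <= h.

    V: (m, n) constraint matrix, h: (m,) right-hand side,
    samples: (S, n) sampled states, e.g. collected from repeated runs.
    """
    return np.mean(samples @ V.T <= h, axis=0)

rng = np.random.default_rng(0)
samples = rng.normal(0.0, 0.5, size=(500, 3))   # hypothetical error samples
V = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
h = np.array([1.0, 1.0])
p_x = np.array([0.9, 0.9])                       # required probability levels
p_hat = empirical_satisfaction(V, h, samples)
print(p_hat, np.all(p_hat >= p_x))
```

Each entry of `p_hat` is a row-wise frequency, so it can be compared directly with the corresponding entry of px.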

The objective of this paper is to design a model predictive controller such that the mobile robot, subject to stochastic disturbance, tracks its reference trajectory within a finite task horizon while satisfying the probability constraints (6) and (7).

3 Stochastic Linear Time-Varying MPC

In this section, the dual-mode structure of robust MPC is adopted as the basic framework. At time k, the predicted control input is set as

where the notation (⋅)(i|k) denotes the i-th step prediction made at time k, and N is the prediction horizon. In this paper, the control horizon is set equal to the prediction horizon. The predicted tracking error is decomposed into a nominal part s and a stochastic error part e:

where v is the nominal control input optimized by the MPC for the nominal state s, and K is obtained by solving the following Riccati equation at every time instant:

where Q and R are the positive-definite weighting matrices of the MPC design, and S is the solution of the Riccati equation for the given Q and R. In (14) and (15), the time index k is dropped for brevity.
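Because (14) and (15) are not reproduced here, the following sketch assumes the standard discrete algebraic Riccati equation with the sign convention u = Kx, solved at one time instant with the system matrices frozen. The frozen matrices come from an assumed linearization of the error model along the reference, the weights are hypothetical, and plain value iteration is used only to keep the example dependency-free (a dedicated DARE solver would normally be preferred).

```python
import numpy as np

def lqr_gain(A, B, Q, R, tol=1e-10, max_iter=10_000):
    """Frozen-time discrete algebraic Riccati equation solved by value
    iteration. Returns (K, S) with u = K x and K = -(R + B'SB)^{-1} B'SA."""
    S = Q.copy()
    for _ in range(max_iter):
        BtS = B.T @ S
        K = -np.linalg.solve(R + BtS @ B, BtS @ A)
        # S <- Q + A'SA - A'SB (R + B'SB)^{-1} B'SA, written using K
        S_next = Q + A.T @ S @ A + A.T @ S @ B @ K
        if np.max(np.abs(S_next - S)) < tol:
            BtS = B.T @ S_next
            return -np.linalg.solve(R + BtS @ B, BtS @ A), S_next
        S = S_next
    raise RuntimeError("Riccati iteration did not converge")

# Frozen matrices at one instant (theta_r = 0.3 rad, v_r = 1 m/s, T = 0.1 s).
T, th, vr = 0.1, 0.3, 1.0
A = np.eye(3) + T * np.array([[0.0, 0.0, -vr * np.sin(th)],
                              [0.0, 0.0,  vr * np.cos(th)],
                              [0.0, 0.0,  0.0]])
B = T * np.array([[np.cos(th), 0.0], [np.sin(th), 0.0], [0.0, 1.0]])
Q, R = np.eye(3), 0.1 * np.eye(2)
K, S = lqr_gain(A, B, Q, R)
print(np.abs(np.linalg.eigvals(A + B @ K)).max())  # below 1: stabilising gain
```

Recomputing K at every instant, as the text describes, amounts to calling such a routine with A(k) and B(k) evaluated along the reference.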

3.1 Cost Function

The cost function of the MPC is designed as

where Q and R are the positive-definite weighting matrices, and p(k) is obtained by solving the following Lyapunov equation at every time step k − 1:

In (16), the first term penalizes the tracking error, the second term penalizes the control power (which reflects energy efficiency), and the third term is the terminal cost, which is included to ensure overall feasibility and average performance.
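Assuming the Lyapunov equation takes the common form (A + BK)ᵀP(A + BK) − P + Q + KᵀRK = 0 for the closed loop under the Riccati gain (an assumption, since the equation is not reproduced here), the terminal weight p(k), written P in the code, can be computed with a Kronecker-product linear solve. The double-integrator matrices and the gain below are hypothetical.

```python
import numpy as np

def terminal_weight(Acl, W):
    """Solve the discrete Lyapunov equation Acl' P Acl - P + W = 0 for P.

    Uses vec(Acl' P Acl) = kron(Acl', Acl') vec(P) for NumPy's row-major
    reshape, which is adequate for small state dimensions.
    """
    n = Acl.shape[0]
    M = np.eye(n * n) - np.kron(Acl.T, Acl.T)
    P = np.linalg.solve(M, W.reshape(-1)).reshape(n, n)
    return 0.5 * (P + P.T)   # symmetrise against round-off

A = np.array([[1.0, 0.1], [0.0, 1.0]])
B = np.array([[0.005], [0.1]])
K = np.array([[-1.0, -1.5]])        # hypothetical stabilising gain
Q, R = np.eye(2), np.array([[0.1]])
Acl = A + B @ K
P = terminal_weight(Acl, Q + K.T @ R @ K)
print(np.linalg.eigvalsh(P))        # all positive for a stable Acl
```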

The prediction equation for the nominal part of the system dynamics can be written in the compact form:

where

Then the cost function can be written as

where

The cost function can be further rewritten as

where

and

Note that d(k) is independent of v(k) and therefore does not affect the optimal solution.
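The compact forms themselves are not reproduced here. Assuming nominal dynamics s(i + 1|k) = A(k + i)s(i|k) + B(k + i)v(i|k) and a cost with the tracking, control-power and terminal terms described for (16), the stacked prediction and the resulting quadratic form in the nominal input sequence can be sketched as below. The names `Phi`, `Gamma`, `H`, `f` and both helpers are hypothetical; `d` plays the role of the input-independent part, mirroring the remark about d(k).

```python
import numpy as np

def prediction_matrices(A_seq, B_seq):
    """Stack S = [s(0); ...; s(N)] = Phi s(0) + Gamma V for the nominal LTV
    dynamics s(i+1) = A_i s(i) + B_i v(i), with V = [v(0); ...; v(N-1)]."""
    N = len(A_seq)
    n, m = B_seq[0].shape
    Phi = np.zeros(((N + 1) * n, n))
    Gamma = np.zeros(((N + 1) * n, N * m))
    Phi[:n] = np.eye(n)
    for i in range(N):
        cur, nxt = slice(i * n, (i + 1) * n), slice((i + 1) * n, (i + 2) * n)
        Phi[nxt] = A_seq[i] @ Phi[cur]
        Gamma[nxt] = A_seq[i] @ Gamma[cur]          # propagate earlier inputs
        Gamma[nxt, i * m:(i + 1) * m] += B_seq[i]   # direct effect of v(i)
    return Phi, Gamma

def condensed_cost(Phi, Gamma, s0, Q, R, P):
    """Return (H, f, d) with J(V) = V'HV + 2 f'V + d for
    J = sum_{i<N} [s(i)'Q s(i) + v(i)'R v(i)] + s(N)'P s(N)."""
    n, N = Q.shape[0], Gamma.shape[1] // R.shape[0]
    Qbar = np.kron(np.eye(N + 1), Q)
    Qbar[N * n:, N * n:] = P                         # terminal weight on s(N)
    Rbar = np.kron(np.eye(N), R)
    H = Gamma.T @ Qbar @ Gamma + Rbar
    f = Gamma.T @ Qbar @ Phi @ s0
    d = float(s0 @ Phi.T @ Qbar @ Phi @ s0)          # does not depend on V
    return H, f, d

# Scalar check with N = 2, s(i+1) = s(i) + v(i), Q = R = 1, P = 2, s(0) = 2.
Phi, Gamma = prediction_matrices([np.eye(1)] * 2, [np.eye(1)] * 2)
H, f, d = condensed_cost(Phi, Gamma, np.array([2.0]), np.eye(1), np.eye(1), 2 * np.eye(1))
V = np.array([0.5, -1.0])
print(V @ H @ V + 2 * f @ V + d)  # 16.0 = 4 + 6.25 + 4.5 + 1.25 summed directly
```

Since `d` does not depend on `V`, the unconstrained minimiser would be −H⁻¹f; with constraints, `H` and `f` are what a QP solver receives.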

3.2 Constraints

In the MPC design, the constraints (6) and (7) must be handled.

Let

Here, we apply the technique of probabilistic reachable sets (Hewing and Zeilinger, 2018). The k-step probabilistic reachable sets can be calculated as

where

for

The distribution of e(k) can be computed from the distribution of w(k) through the prediction error dynamics.
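One way to realize the quantile-based, learning-based tightening mentioned in the abstract is sketched below; the paper's exact procedure may differ. It assumes error dynamics e(i + 1) = (A + BK)e(i) + w(i) from e(0) = 0, propagates many disturbance samples, and uses the empirical px,j-quantile of Vq,j e(i) as the offset by which row j of the nominal state constraint is tightened at step i. For input constraints the same routine would be applied with Vu K in place of Vq. The closed-loop matrix, noise level, and helper name are hypothetical.

```python
import numpy as np

def tightening_offsets(Acl_seq, V, p, sample_w, n_samples=5000, seed=0):
    """Sample-based constraint tightening via empirical quantiles.

    Propagates e(i+1) = Acl_i e(i) + w(i) from e(0) = 0 for every sample and
    returns offsets[i, j] = p_j-quantile of V_j e(i). Up to sampling error,
    V_j s(i) <= h_j - offsets[i, j] then gives Pr(V_j (s + e) <= h_j) >= p_j.
    """
    rng = np.random.default_rng(seed)
    n = Acl_seq[0].shape[0]
    e = np.zeros((n_samples, n))
    offsets = [np.zeros(V.shape[0])]            # e(0) = 0: no tightening
    for Acl in Acl_seq:
        e = e @ Acl.T + sample_w(rng, n_samples)
        proj = e @ V.T                          # (n_samples, rows of V)
        offsets.append(np.array([np.quantile(proj[:, j], p[j])
                                 for j in range(V.shape[0])]))
    return np.array(offsets)

Acl = np.array([[0.9, 0.1, 0.0], [0.0, 0.9, 0.1], [0.0, 0.0, 0.8]])
V = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
p = np.array([0.9, 0.95])
offsets = tightening_offsets([Acl] * 10, V, p,
                             lambda rng, S: rng.normal(0.0, 0.05, size=(S, 3)))
print(offsets.shape)  # (11, 2): steps 0..10, one column per constraint row
```

The offsets grow with the prediction step because the error variance accumulates, which is why later predicted nominal states face tighter constraints.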

The constraints on the nominal states s(k) can then be calculated as

or specifically

where j = 1, …, nV indexes the rows of the constraint matrix. The constraint (37) can be rewritten in compact form as

The constraints on the nominal inputs v(k) can be calculated in a similar way, namely,

where

or specifically

To guarantee recursive feasibility of the MPC optimization, terminal state constraints are imposed on the nominal states:

where ST is a positively invariant set for the nominal system.

The terminal state set ST can be defined based on the terminal control set:

where Vinf is the smallest (i.e., the most tightened) of the nominal input constraint sets Vk in (40).

An iterative learning-based procedure (Gonzalez et al., 2011) can be applied to calculate the terminal constraint:

where the sets are nested, i.e., Z(i) ⊆ Z(i − 1) ⊆ ⋯ ⊆ Z(0), and

where

The calculation continues with Z(i + 1) = Z(i) ∩ Q(i), where

Then the terminal set can be approximated by ST = Z(i) once Z(i + 1) = Z(i).
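The fixed-point idea behind Z(i + 1) = Z(i) ∩ Q(i) can be illustrated with the standard maximal positively invariant set computation for a frozen closed-loop matrix Acl = A + BK and polytopic constraints H s ≤ h (for instance, the tightened state constraints stacked with Vinf mapped through K). This is a simpler, swapped-in LTI stand-in: the paper's procedure handles the time-varying case with a learning-based step that this sketch does not reproduce. Redundancy of each new constraint is tested with SciPy's `linprog` (HiGHS method); the matrices are hypothetical.

```python
import numpy as np
from scipy.optimize import linprog

def max_invariant_set(Acl, H, h, max_iter=100):
    """Maximal positively invariant set {x : H Acl^i x <= h for all i >= 0}
    of x+ = Acl x, returned as (Hs, hs) with Hs x <= hs.

    Constraints H Acl^(t+1) x <= h are appended until all of them are
    redundant on the current set, which is checked with one LP per row.
    """
    Hs, hs = H.copy(), h.copy()
    At = Acl.copy()                       # holds Acl^(t+1)
    for _ in range(max_iter):
        Hn = H @ At
        redundant = True
        for j in range(H.shape[0]):
            # maximise Hn[j] x over the current set (linprog minimises)
            res = linprog(-Hn[j], A_ub=Hs, b_ub=hs, bounds=(None, None),
                          method="highs")
            if res.status != 0 or -res.fun > h[j] + 1e-9:
                redundant = False
                break
        if redundant:
            return Hs, hs                 # fixed point: Z(i+1) = Z(i)
        Hs = np.vstack([Hs, Hn])
        hs = np.concatenate([hs, h])
        At = Acl @ At
    raise RuntimeError("no finite determination within max_iter")

Acl = np.array([[0.9, 0.2], [0.0, 0.8]])
H = np.vstack([np.eye(2), -np.eye(2)])    # box |x_i| <= 1
h = np.ones(4)
Hs, hs = max_invariant_set(Acl, H, h)
print(Hs.shape[0], "inequalities")
```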

3.3 Optimization and Implementation

Based on the above cost function and constraints, the optimization problem solved at each time instant of the MPC is

subject to

The nominal MPC is implemented in a receding-horizon manner:

and the stochastic MPC for the mobile robot is implemented as
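Since the implementation equations are not reproduced here, the following sketch assumes a structure along the lines of the indirect feedback of Hewing et al. (2020): solve the nominal problem, apply only the first nominal input v∗(0|k), feed back the deviation of the measured tracking error from the nominal state through K, and propagate the nominal state with the noise-free nominal dynamics. Only box bounds on the nominal inputs are handled, by projected gradient; the state and terminal constraints of the full problem would need a general QP solver. All names and numbers are hypothetical.

```python
import numpy as np

def solve_box_qp(H, f, lb, ub, iters=500):
    """Minimise V'HV + 2 f'V subject to lb <= V <= ub (projected gradient)."""
    step = 1.0 / (2.0 * np.linalg.eigvalsh(H).max())   # 1 / Lipschitz constant
    V = np.clip(np.zeros_like(f), lb, ub)
    for _ in range(iters):
        V = np.clip(V - step * (2.0 * H @ V + 2.0 * f), lb, ub)
    return V

def smpc_step(H, f, lb, ub, K, A, B, s, err):
    """One receding-horizon step: returns the plant input and next nominal state."""
    m = B.shape[1]
    v0 = solve_box_qp(H, f, lb, ub)[:m]   # only the first nominal input is applied
    u = K @ (err - s) + v0                # feedback on the deviation from nominal
    s_next = A @ s + B @ v0               # nominal state propagated without noise
    return u, s_next

H, f = np.eye(4), np.array([-2.0, 0.5, 0.0, 3.0])   # N = 2 steps, 2 inputs
K = np.zeros((2, 3))
A, B = np.eye(3), 0.1 * np.array([[1.0, 0.0], [0.0, 0.0], [0.0, 1.0]])
u, s_next = smpc_step(H, f, -1.0, 1.0, K, A, B, np.zeros(3), np.array([0.1, 0.0, 0.0]))
print(u, s_next)  # u = [1, -0.5] (clipped optimum), s_next = [0.1, 0, -0.05]
```

In a closed loop, `H` and `f` would be rebuilt at every instant from the current nominal state and the frozen A(k + i), B(k + i).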

4 Analysis on the Closed-Loop System

4.1 Feasibility of the Optimization

In this paper, recursive feasibility differs slightly from the conventional notion in MPC: the aim is to prove that the optimization (48) remains feasible throughout the finite task horizon.

To prove recursive feasibility within the task horizon, the “tail” method can be applied. Suppose that the optimization is feasible at time k. At time k + 1, a feasible solution can be constructed from the “tail” of the optimal solution at time k, namely,

which satisfies the input constraint (50). Moreover, in (54), K(k + N)s(N|k) ∈ Vinf ⊆ Vk+N, which indicates that the terminal constraint (51) is satisfied. Satisfaction of the state constraint (49) can be checked in a similar way.

Satisfaction of constraints (49)–(51) implies that the original probability constraints (6) and (7) are satisfied. Consequently, if the optimization is feasible at time k, at least one feasible solution exists at time k + 1; that is, the optimization (48) is recursively feasible.
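The candidate solution used in the “tail” argument can be sketched as follows, assuming the stacked-input convention V = [v(0|k); …; v(N − 1|k)] and the terminal feedback input K(k + N)s(N|k) mentioned above; the helper name and the numbers are hypothetical.

```python
import numpy as np

def shifted_tail(V_opt, s_N, K_N):
    """Candidate input sequence at time k+1 built from the optimum at time k.

    V_opt: stacked v*(0|k), ..., v*(N-1|k); s_N: s*(N|k); K_N: K(k+N).
    Drops v*(0|k), shifts the remaining inputs forward by one step and
    appends the terminal feedback input K(k+N) s*(N|k).
    """
    m = K_N.shape[0]
    return np.concatenate([V_opt[m:], K_N @ s_N])

V_opt = np.arange(6.0)                 # N = 3 steps of a 2-dimensional input
s_N = np.array([1.0, 2.0, 3.0])
K_N = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
print(shifted_tail(V_opt, s_N, K_N))   # [2. 3. 4. 5. 1. 2.]
```

The candidate has the same length as the original sequence, so it can be checked directly against the constraints of the problem at time k + 1.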

4.2 Average Asymptotic Boundedness of the Closed-Loop System

In this section, the conventional concept of asymptotic stability is not applicable, since only the performance within the task horizon is of concern. Instead, we aim to prove that the states and inputs satisfy all constraints and that the states are asymptotically bounded on average.

Let J∗(k) denote the optimal cost at time step k, and let J−(k) denote the feasible cost at time step k obtained from the “tail” of the optimal solution at time k − 1. Specifically,

where the superscript ∗ denotes an optimal control or state sequence, and

It follows that

It then follows from (17) that

Summing both sides of the above inequality for

where J∗(0) is finite, and

where

The limit (56) indicates that the tracking error of the closed-loop system is asymptotically bounded on average.
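The summation step can be made explicit under an assumed form of the decrease inequality. Suppose the inequality obtained from (17) reads E[J∗(k + 1) | k] − J∗(k) ≤ −ℓ(k) + c, with a nonnegative stage cost ℓ(k) and a constant c ≥ 0 collecting the disturbance contribution; ℓ and c are placeholders, since the exact expressions are not reproduced here. Taking total expectations, summing from k = 0 to M − 1, and using J∗(M) ≥ 0 and the finiteness of J∗(0) gives:

```latex
\begin{aligned}
\mathbb{E}\big[J^*(M)\big] - J^*(0)
  &\le -\sum_{k=0}^{M-1} \mathbb{E}\big[\ell(k)\big] + M c, \\
\frac{1}{M}\sum_{k=0}^{M-1} \mathbb{E}\big[\ell(k)\big]
  &\le c + \frac{J^*(0) - \mathbb{E}\big[J^*(M)\big]}{M}
   \le c + \frac{J^*(0)}{M}, \\
\limsup_{M \to \infty} \frac{1}{M}\sum_{k=0}^{M-1} \mathbb{E}\big[\ell(k)\big]
  &\le c .
\end{aligned}
```

The telescoping sum removes all intermediate optimal costs, which is why only J∗(0) and the constant c appear in the average bound.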

5 Simulation

A simulation example is presented to validate the performance of the proposed method. The sampling interval is T = 0.1 s, and the task horizon is 13 s. The initial conditions of the mobile robot and the reference model are set to

The control inputs are subject to the following constraints:

where

The probability constraints on the states are given by (6), where

In the stochastic MPC, the prediction horizon is N = 10, and the weighting matrices are chosen as

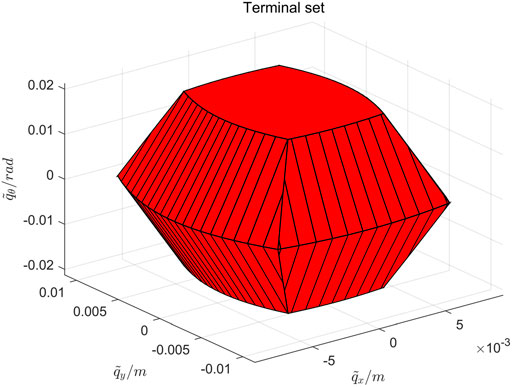

The terminal constraint is calculated by the learning-based algorithm (42)–(47) and is illustrated in Figure 2.

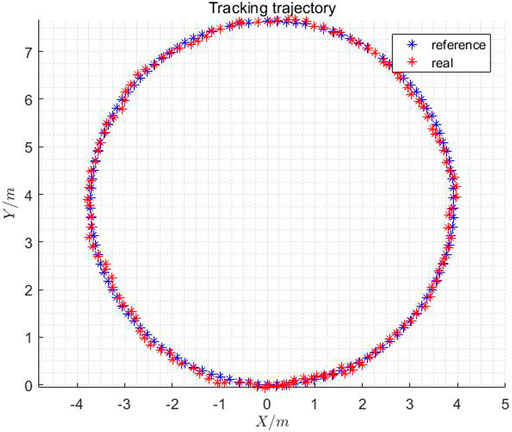

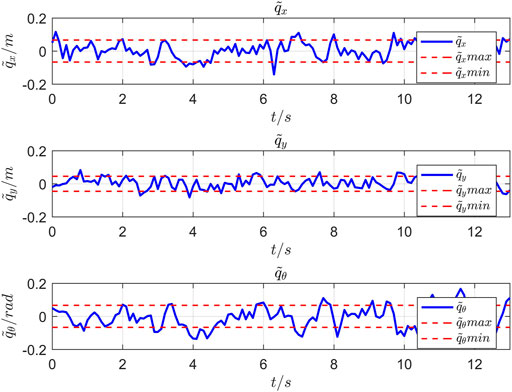

Simulation results are displayed in Figures 3–5. In Figure 3, it can be seen that, with the proposed stochastic MPC, the wheeled mobile robot is capable of tracking its reference trajectory within the task horizon. Only limited tracking errors are witnessed in Figure 4. This simulation example has been run for 500 times with stochastic w, where px in (33) can be calculated approximately by

and px in (6) can be calculated approximately by

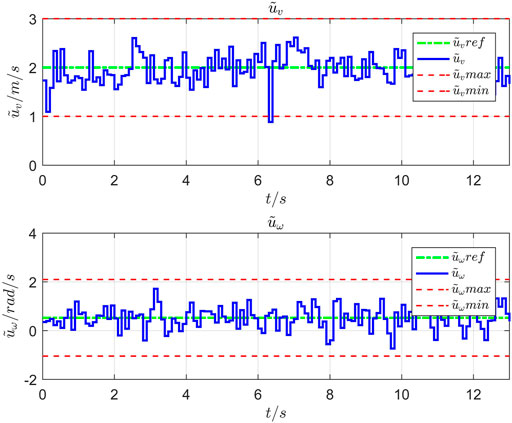

which is in well accordance with the value given in (60). It can be seen from Figure 5 that the control inputs almost always satisfy their constraints, where the constraint on

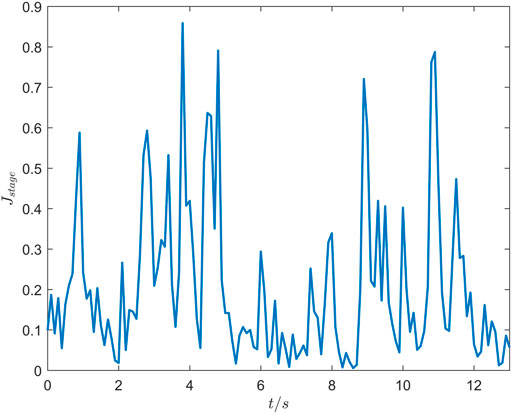

where the first term reflects the tracking accuracy and the second term reflects the energy consumption (i.e., the control power spent to eliminate the tracking error). Figure 6 shows that, under stochastic disturbances, the running stage cost remains small. This implies that the tracking error remains asymptotically bounded on average while the energy consumption stays small.
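The two terms of the running stage cost can be evaluated from logged trajectories as sketched below, assuming quadratic terms with the weights Q and R of (16) applied to the tracking error and the control input at each step; the helper name and the sample numbers are hypothetical.

```python
import numpy as np

def stage_costs(errors, inputs, Q, R):
    """Running stage cost terms for each step k.

    errors: (K, 3) tracking errors, inputs: (K, 2) control inputs.
    Returns the tracking term e(k)'Q e(k) and the control-power term
    u(k)'R u(k) separately, so both can be plotted over time.
    """
    tracking = np.einsum("ki,ij,kj->k", errors, Q, errors)
    power = np.einsum("ki,ij,kj->k", inputs, R, inputs)
    return tracking, power

errors = np.array([[0.2, -0.1, 0.05], [0.1, 0.0, 0.0]])
inputs = np.array([[0.5, 0.1], [0.4, 0.0]])
tracking, power = stage_costs(errors, inputs, np.eye(3), 0.1 * np.eye(2))
print(tracking, power)  # tracking = [0.0525, 0.01], power = [0.026, 0.016]
```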

6 Conclusion

A stochastic MPC is proposed for a wheeled mobile robot subject to probability constraints and stochastic disturbances to track its reference trajectory within the task horizon. The motion of the mobile robot is forecast and optimized using its linearized, discretized model. The cost function of the proposed MPC is designed to penalize both the tracking error and the energy consumption. By tightening the constraints with probabilistic reachable sets, the probability constraints are transformed into deterministic constraints, which facilitates the MPC design. The terminal constraint is calculated with a learning-based algorithm. It is proved that the optimization is recursively feasible, and that the tracking error of the closed-loop system under the proposed stochastic MPC is asymptotically bounded on average within the task horizon. The tracking errors and statistical data from the simulation indicate that the proposed stochastic MPC performs satisfactorily.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author Contributions

WZ designed the main algorithm, implemented the simulation example, produced the main results, and wrote the main parts of the paper. BZ initiated the main idea and revised and polished the paper.

Funding

This work was supported by the National Natural Science Foundation of China under Grant No. 62073015.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Alcalá, E., Puig, V., Quevedo, J., and Rosolia, U. (2020). Autonomous Racing Using Linear Parameter Varying-Model Predictive Control (LPV-MPC). Control. Eng. Pract. 95, 104270. doi:10.1016/j.conengprac.2019.104270

Cairano, S. D., Bernardini, D., Bemporad, A., and Kolmanovsky, I. V. (2014). Stochastic MPC with Learning for Driver-Predictive Vehicle Control and its Application to HEV Energy Management. IEEE Trans. Contr. Syst. Technol. 22, 1018–1031. doi:10.1109/TCST.2013.2272179

Carrau, J. V., Liniger, A., Zhang, X., and Lygeros, J. (2016). “Efficient Implementation of Randomized MPC for Miniature Race Cars,” in 2016 European Control Conference (ECC), Aalborg, Denmark, June 29–July 1, 2016, 957–962. doi:10.1109/ECC.2016.7810413

Farina, M., Giulioni, L., and Scattolini, R. (2016). Stochastic Linear Model Predictive Control with Chance Constraints - A Review. J. Process Control. 44, 53–67. doi:10.1016/j.jprocont.2016.03.005

Goncalves, L., Basso, G. F., Dorea, C., and Nascimento, T. P. (2018). “Stochastic Nonlinear Model Predictive Mobile Robot Motion Control,” in 2018 Latin American Robotic Symposium, 2018 Brazilian Symposium on Robotics (SBR) and 2018 Workshop on Robotics in Education (WRE), João Pessoa, Brazil, November 6–10, 2018.

Gonzalez, R., Fiacchini, M., Alamo, T., Guzmán, J. L., and Rodríguez, F. (2011). Online Robust Tube-Based MPC for Time-Varying Systems: A Practical Approach. Int. J. Control. 84, 1157–1170. doi:10.1080/00207179.2011.594093

Hewing, L., Wabersich, K. P., and Zeilinger, M. N. (2020). Recursively Feasible Stochastic Model Predictive Control Using Indirect Feedback. Automatica 119, 109095. doi:10.1016/j.automatica.2020.109095

Hewing, L., and Zeilinger, M. N. (2018). “Stochastic Model Predictive Control for Linear Systems Using Probabilistic Reachable Sets,” in 2018 IEEE Conference on Decision and Control (CDC), Miami, FL, December 17–19, 2018, 5182–5188. doi:10.1109/cdc.2018.8619554

Jørgensen, J. B., Sokoler, L. E., Standardi, L., Halvgaard, R., Hovgaard, T. G., Frison, G., et al. (2016). “Economic MPC for a Linear Stochastic System of Energy Units,” in 2016 European Control Conference (ECC), Aalborg, Denmark, June 29–July 1, 2016, 903–909. doi:10.1109/ecc.2016.7810404

Kouvaritakis, B., Cannon, M., Raković, S. V., and Cheng, Q. (2010). Explicit Use of Probabilistic Distributions in Linear Predictive Control. Automatica 46, 1719–1724. doi:10.1016/j.automatica.2010.06.034

Mayne, D. Q., Rawlings, J. B., Rao, C. V., and Scokaert, P. O. M. (2000). Constrained Model Predictive Control: Stability and Optimality. Automatica 36, 789–814. doi:10.1016/s0005-1098(99)00214-9

Mayne, D. (2016). Robust and Stochastic Model Predictive Control: Are We Going in the Right Direction? Annu. Rev. Control. 41, 184–192. doi:10.1016/j.arcontrol.2016.04.006

Mesbah, A. (2016). Stochastic Model Predictive Control: An Overview and Perspectives for Future Research. IEEE Control. Syst. Mag. 36, 30–44. doi:10.1109/MCS.2016.2602087

Rahmani-andebili, M., and Shen, H. (2017). “Cooperative Distributed Energy Scheduling for Smart Homes Applying Stochastic Model Predictive Control,” in 2017 IEEE International Conference on Communications (ICC), Paris, France, May 21–25, 2017, 1–6. doi:10.1109/icc.2017.7996420

Rodas, J., Gonzalez-Prieto, I., Kali, Y., Saad, M., and Doval-Gandoy, J. (2021). Recent Advances in Model Predictive and Sliding Mode Current Control Techniques of Multiphase Induction Machines. Front. Energ. Res. 9, 445. doi:10.3389/fenrg.2021.729034

Scarabaggio, P., Carli, R., Jantzen, J., and Dotoli, M. (2021). “Stochastic Model Predictive Control of Community Energy Storage under High Renewable Penetration,” in 2021 29th Mediterranean Conference on Control and Automation (MED), Puglia, Italy, June 22–25, 2021, 973–978. doi:10.1109/MED51440.2021.9480353

Stadler, P., Girardin, L., Ashouri, A., and Maréchal, F. (2018). Contribution of Model Predictive Control in the Integration of Renewable Energy Sources within the Built Environment. Front. Energ. Res. 6, 22. doi:10.3389/fenrg.2018.00022

Suh, J., Chae, H., and Yi, K. (2018). Stochastic Model-Predictive Control for Lane Change Decision of Automated Driving Vehicles. IEEE Trans. Veh. Technol. 67, 4771–4782. doi:10.1109/TVT.2018.2804891

Sun, Z., Dai, L., Liu, K., Xia, Y., and Johansson, K. H. (2018). Robust MPC for Tracking Constrained Unicycle Robots with Additive Disturbances. Automatica 90, 172–184. doi:10.1016/j.automatica.2017.12.048

Wang, Q., Duan, Z., Lv, Y., Wang, Q., and Chen, G. (2020). Distributed Model Predictive Control for Linear-Quadratic Performance and Consensus State Optimization of Multiagent Systems. IEEE Trans. Cybern. 51, 2905–2915. doi:10.1109/TCYB.2020.3001347

Wu, Z., Rincon, D., and Christofides, P. D. (2020). Process Structure-Based Recurrent Neural Network Modeling for Model Predictive Control of Nonlinear Processes. J. Process Control. 89, 74–84. doi:10.1016/j.jprocont.2020.03.013

Wu, Z., Xia, X., and Zhu, B. (2015). Model Predictive Control for Improving Operational Efficiency of Overhead Cranes. Nonlinear Dyn. 79, 2639–2657. doi:10.1007/s11071-014-1837-8

Yang, W., Xu, D., Jiang, B., and Shi, P. (2021). A Novel Dual-Mode Robust Model Predictive Control Approach via Alternating Optimizations. Automatica 133, 109857. doi:10.1016/j.automatica.2021.109857

Yang, Y., Chen, Y., Tang, C., and Chai, L. (2017). “Quadrotor Helicopters Trajectory Tracking with Stochastic Model Predictive Control,” in 2017 Australian and New Zealand Control Conference (ANZCC), Gold Coast, QLD, December 17–20, 2017 (IEEE), 166–171. doi:10.1109/anzcc.2017.8298505

Zhu, B., Guo, K., and Xie, L. (2018). A New Distributed Model Predictive Control for Unconstrained Double-Integrator Multiagent Systems. IEEE Trans. Automat. Contr. 63, 4367–4374. doi:10.1109/tac.2018.2819429

Keywords: model predictive control, mobile robot, probability constraint, linear time-varying systems, optimization

Citation: Zheng W and Zhu B (2021) Stochastic Time-Varying Model Predictive Control for Trajectory Tracking of a Wheeled Mobile Robot. Front. Energy Res. 9:767597. doi: 10.3389/fenrg.2021.767597

Received: 31 August 2021; Accepted: 11 October 2021;

Published: 18 November 2021.

Edited by:

Xianming Ye, University of Pretoria, South Africa

Reviewed by:

Bo Wang, Huazhong University of Science and Technology, China

Zhou Wu, Chongqing University, China

Copyright © 2021 Zheng and Zhu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Bing Zhu, zhubing@buaa.edu.cn
