- 1 International Seismic Safety Organization (ISSO), Arsita, Italy

- 2 Oregon Earthquake Awareness, Portland, OR, United States

- 3 Institute of Earthquake Prediction Theory and Mathematical Geophysics, Russian Academy of Sciences (IEPT RAS), Moscow, Russia

- 4 Accademia Nazionale dei Lincei, Rome, Italy

- 5 Institute of Geophysics, China Earthquake Administration, Beijing, China

- 6 Accademia Nazionale delle Scienze detta dei XL, Rome, Italy

- 7 Beijing University of Civil Engineering and Architecture (BUCEA), Beijing, China

- 8 Associate to the National Institute of Oceanography and Applied Geophysics - OGS, Trieste, Italy

Neo-Deterministic Seismic Hazard Assessment (NDSHA), dating back to the turn of the Millennium, is the new multi-disciplinary scenario- and physics-based approach for the evaluation of seismic hazard and safety, guaranteeing “prevention rather than cure.” When earthquakes occur, the shaking certainly does not depend on sporadic occurrences within the study area, nor on anti-seismic (earthquake-resistant) design parameters that are instead scaled to probabilistic models of earthquake return period and likelihood, as adopted in the widespread application of the model-driven Probabilistic Seismic Hazard Analysis (PSHA). Therefore, from a policy perspective of prevention, coherent and compatible with the most advanced theories in Earth Science, it is essential that at least infrastructure installations and public structures be designed to resist future strong earthquakes. The evidence and case histories detailed in the newly published book Earthquakes and Sustainable Infrastructure present a new paradigm for Reliable Seismic Hazard Assessment (RSHA) and seismic safety, comprehensively detailing in one volume the ‘state-of-the-art’ scientific knowledge on earthquakes and their related seismic risks, and the actions that can be taken to ensure greater safety and sustainability. The book is appropriately dedicated to the centenary of Russian geophysicist Vladimir Keilis-Borok (1921–2013), whose mathematical-geophysical insights have been seminal for the innovative paradigm of Neo-Deterministic Seismic Hazard Assessment. This review focuses on Hazard, Risks, and Prediction, the subject of the introductory Chapter 1, an understanding of which is essential in applying the state-of-the-art knowledge presented in the book’s 29 following chapters.

Introduction

The newly published book Earthquakes and Sustainable Infrastructure (Panza et al., 2021) presents a new paradigm for seismic safety, comprehensively detailing in one volume the ‘state-of-the-art’ scientific knowledge on earthquakes and their related seismic risks, and the actions that can be taken to reliably ensure greater safety and sustainability. The book is appropriately dedicated to the centenary of Russian geophysicist Vladimir Keilis-Borok (1921–2013), whose mathematical insights have been seminal for the innovative paradigm of Neo-Deterministic Seismic Hazard Assessment (NDSHA). Dating back to the turn of the Millennium, NDSHA is the new multi-disciplinary scenario- and physics-based approach for the evaluation of seismic hazard and safety, guaranteeing “prevention rather than cure.”

When earthquakes of a given magnitude (M) occur, the shaking certainly does not depend on sporadic occurrences within the study area, nor on anti-seismic (earthquake-resistant) design parameters that are instead scaled to probabilistic models of earthquake return period and likelihood, as adopted in the widespread application of the model-driven Probabilistic Seismic Hazard Analysis (PSHA), e.g., by the Global Earthquake Model (GEM) project and its recent spinoff Modello di Pericolosità Sismica (MPS19) for Italy.

An earthquake compatible with the seismogenic characteristics of a certain area, even if sporadic and therefore labelled as “unlikely”, can occur at any time, and the anti-seismic design parameters must take into account the magnitude values defined according to both the seismic history and the seismotectonics. Therefore, from a policy perspective of prevention, coherent and compatible with the most advanced theories in Earth Science, it is essential that at least the infrastructure installations and public structures are designed so as to resist (or sustain) future strong earthquakes and continue operation in their original capacities.
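The return-period reasoning criticized above can be made concrete. The following is a minimal sketch, assuming (as many PSHA implementations do) that earthquakes at a site follow a stationary Poisson process; it computes the chance that an event with a given mean return period occurs at least once during a structure's exposure time. The function name and the 475-year and 50-year figures are illustrative choices, not values taken from the book.

```python
import math


def prob_at_least_one(return_period_years: float, exposure_years: float) -> float:
    """Chance of at least one event within `exposure_years`.

    Assumes a stationary Poisson process with mean return period
    `return_period_years` (an assumption of this sketch, not of NDSHA).
    """
    if return_period_years <= 0 or exposure_years < 0:
        raise ValueError("return period must be > 0 and exposure time >= 0")
    annual_rate = 1.0 / return_period_years
    return 1.0 - math.exp(-annual_rate * exposure_years)


# An "unlikely" event with a 475-year mean return period:
print(f"next year:      {prob_at_least_one(475, 1):.4f}")
print(f"50-year design: {prob_at_least_one(475, 50):.4f}")
```

Under this model the 475-year event has roughly a 10% chance of occurring within 50 years, and because the Poisson process is memoryless, a long quiet period does not lower the chance of it occurring next year. The probability says nothing, however, about how strong the shaking will be when the event does occur.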

Thirty chapters of the book provide comprehensive reviews and updates of NDSHA research and applications so far in Africa, America, Asia, and Europe: a collection of evidence and case histories that will hopefully persuade responsible people and authorities to consider these more reliable procedures for seismic hazard analysis and risk evaluation. Since the use of PSHA may result in the design of unsafe buildings, NDSHA evaluations must be considered in the next versions of earthquake-resistant design standards and explicitly taken as the reference approach for both safety and sustainability.

The book fulfils essential needs of geophysicists, geochemists, seismic engineers, and all those working in disaster preparation and prevention, and it is the only book to cover earthquake prediction and civil preparedness measures from a Neo-Deterministic (NDSHA) approach. In this review we focus on the lead chapter, Hazard, Risks, and Prediction, by Vladimir Kossobokov (2021), an understanding of which is essential in applying the state-of-the-art knowledge presented in the book’s 29 following chapters.

Science should be able to warn people of looming Disaster

« Science should be able to warn people of looming disaster, Vladimir Keilis-Borok believes. “My main trouble,” he says, “is my feeling of responsibility.” » (Los Angeles Times, 9 July 2012)

Nowadays, in our Big Data World, Science can disclose Natural Hazards, assess Risks, and deliver the state-of-the-art Knowledge of Looming Disasters in advance of catastrophes, along with useful Recommendations on the level of risks for decision-making with regard to engineering design, insurance, and emergency management. Science cannot yet overcome people’s preference for fable and illusion over reality, nor political denial, sincere ignorance, and conscientious negligence among decision-makers. Application and testing against Earthquake Reality confirm the general conclusion above: the innovative methodology of Neo-Deterministic Seismic Hazard Assessment (NDSHA) “Guarantees Prevention Rather Than Cure.” NDSHA results are based on reliable seismic evidence, Pattern Recognition of Earthquake Prone Areas (PREPA), implications of the Unified Scaling Law for Earthquakes (USLE), and exhaustive scenario-based modeling of ground shaking.

The UN World Conference on Disaster Reduction, held 18–22 January 2005 in Kobe, Hyogo, Japan, formally adopted the Hyogo Framework for Action 2005–2015: “Building the Resilience of Nations and Communities to Disasters”, less than a month after the 26 December 2004, Mw 9.2 Great Indian Ocean mega-earthquake and tsunami. During the Conference, a Statement (Kossobokov, 2005a) at the “Special Session on the Indian Ocean Disaster: risk reduction for a safer future” insisted on the possibility that a few mega-earthquakes of about the same magnitude, Mw 9.0, could occur globally within the next 5–10 years. This prediction was unfortunately confirmed by both the 27 February 2010, Mw 8.8 mega-thrust offshore Maule, Chile, and the 11 March 2011, Mw 9.1 mega-thrust and tsunami off the Pacific coast of Tōhoku, Japan (Kossobokov, 2011; Ismail-Zadeh and Kossobokov, 2020).

An opportunity to reduce the impacts of both these earthquake and tsunami disasters was missed. Davis et al. (2012) showed how the prediction information on the world’s largest expected earthquakes provided by the M8 and MSc algorithms (Keilis-Borok and Kossobokov, 1990; Kossobokov et al., 1990), although limited to an intermediate-term span of years and a middle-range location accuracy of about a thousand km, can be used to reduce future impacts from the world’s largest earthquakes.

The primary reasons for not using the prediction to improve preparations in advance of the Tōhoku earthquake “included: 1) inadequate links between emergency managers and the earthquake prediction information; and 2) no practiced application of existing methodologies to guide emergency preparedness and policy development on how to make important public safety decisions based on information provided for an intermediate-term and middle-range earthquake prediction having limited but known accuracy.” The Tōhoku case study exemplifies how reasonable, prudent, and cost-effective decisions can be made to reduce damaging effects in a region when a reliable Time of Increased Probability (TIP) is given for the occurrence of a large earthquake and associated phenomena such as tsunami, landslides, liquefaction, floods, fires, etc.

The Sendai Framework for Disaster Risk Reduction 2015–2030, the successor of the Hyogo Framework for Action, is a set of agreed-upon commitments to proactively ensure the prevention of “new” Disasters through the timely implementation of integrated economic, structural, legal, social, health, cultural, educational, environmental, technological, political, and institutional measures (Briceño, 2014; Mitchell, 2014). Years after the 2005 Hyogo and 2015 Sendai Frameworks for Disaster Risk Reduction, countries are following a range of different approaches and mitigation strategies, owing to the variety of both societal systems and hazards. However, Gilbert White’s (2005) observation, made at the tragic, tsunami-marked beginning of this heightened awareness, that it was “important to recognize that no country in the world has achieved a completely effective policy for dealing with the rising tide of costs from natural hazards” is still largely true today.

Our beliefs in models and myths can contradict real-world observations

Moreover, the ongoing COVID-19 pandemic is a troubling global example of how public policies based presumably on both “the best science available” and high-quality data can nonetheless prove extremely difficult and uneven, and may sometimes lead to Disaster even in countries that were supposedly well prepared for such an emergency. In fact, the pandemic (https://coronavirus.jhu.edu/map.html) had a rapidly growing, less-than-a-year death toll of 1,820,841 and 83,579,767 global cases reported on 1 January 2021; by 13 February 2021 these numbers had alarmingly risen to 2,385,203 and 108,289,000, respectively. The pandemic thereby casts a sobering light on our existing, unperturbed, and unchallenged myths about disasters (Mitchell, 2014). As of 2 September 2021, the totals had more than doubled, rising to 4,702,119 deaths and 229,159,687 cases, despite enormous vaccination efforts. JHU stopped collecting data on 10 March 2023, when the death toll had reached 6,881,955, total cases 676,609,955, and total vaccine doses administered 13,338,833,198.

In one disastrous outcome, “the anzen shinwa (‘safety myth’) image portrayed by the Japanese government and electric power companies tended to stifle honest and open discussion of the risks” from nuclear power in the years leading up to the 2011 Fukushima disaster (Nöggerath et al., 2011). Kaufmann and Penciakova (2011) illustrate how “countries with good governance” (for example, Chile in 2010) “can better prepare for and mitigate the devastating effects of natural disasters” through leadership and transparency. In exploring “Japan’s governance in an international context and its impact on the country’s crisis response,” they reveal how failures in the nuclear plant regulatory environment (including regulatory capture, wherein “the rulemaking process also appears to be riddled with conflict of interest”) led to an unmitigated disaster that was totally avoidable. See also Saltelli et al. (2022).

Can nothing be done to stop the increasing number of disasters?

Is there any reason, when estimating long-term trends, to invent the Myth that “fewer people are dying in disasters” now (Mitchell, 2014), if a pandemic like COVID-19 (or even a single deadly event like the 2004 Great Indian Ocean mega-earthquake and tsunami, which killed 227,898 people) can significantly push up the expected average rate of death tolls? Is Climate Change now the biggest cause of disasters, when vulnerable populations and infrastructure are already widespread in areas exposed to extreme catastrophic events of different kinds?

Is it true that nothing can be done to stop the increasing number of disasters, when, alternatively, a country can radically reduce its risks from disasters by appropriate investments, incentives, and political leadership? Unlike 30 years ago, Science presently does have the know-how to reduce damage from even major hazardous events to the level of incidents rather than disasters.

Evidently, we do not live in a black-and-white disaster world, and our beliefs, i.e., our mental models, or the “conceptualizations” that we “bring to the task” (pages 2–3 in Chu, 2014) in “initial basic principles”, may unfortunately lead us to prefer models that contradict our real-world observations. We know quite well George Box’s (1979) famous quotation that “all models are wrong, but some are useful”, but too often we forget that some models are useless and others are really harmful, especially when viewed as complete substitutes for the original natural phenomenon (Gelfand, 1991).

Nowadays, in our Big Data World, where the global information storage capacity routinely surpasses 6 Zettabytes (6 × 10²¹ optimally compressed bytes) per year, “open data”, together with the enormous amount of fast, user-friendly software available, provide unprecedented opportunities for the development and enhancement of pattern recognition studies, in particular those applied to Earth System processes. However, a Big Data World also opens up many wide avenues, narrow pathways, and even rabbit holes for finding and/or imagining deceptive associations (i.e., Quixote-like patterns that are not really there) in both inter- and trans-disciplinary data, which can then give rise to misleading inventions, predictions, and, regrettably, wrong decisions that may eventually lead to different kinds of disasters.

The core seed of disaster is risk

The “common language vocabulary” by itself often confuses ordinary people’s understanding of well-intentioned messages about dangers and their likelihood, even though it is generally both thought-provoking and quite instructive: see the Cambridge Dictionary entries for Disaster, Hazard, Risk, Vulnerability, and Prediction (https://dictionary.cambridge.org/us/).

“Although ‘hazard’ and ‘risk’ are commonly regarded as synonyms, it is useful to distinguish between them. Hazard can be thought of as the possibility that a dangerous phenomenon might occur, whereas risk is a measure of the loss to society that would result from the occurrence of the phenomenon. More concisely, ‘risk is a measure of the probability and severity of adverse effects’ (Lowrance, 1976; Peterson, 1988).” Seismic hazard refers to the natural phenomenon of earthquakes, ground motion in particular, which can cause harm. Seismic risk refers to the possibility of loss or injury caused by a seismic hazard.

We are all living in a risky world, and Figure 1 further illustrates our appetite for all the essential intertwined loops of Risk, defined in common language as “the chance of injury, damage, or loss.” The figure complements the four components presented by Boissonnade and Shah (1984), who define Risk “as the likelihood of loss”, with a fifth basic component: Time. In insurance studies, a) Exposure is defined as “the value of structures and contents, business interruption, lives, etc.”; and b) Vulnerability as the sensitivity to Hazard(s) at certain Location(s), i.e., “the position of the exposure relative to the hazard.” Since a Hazard causes damage and losses only sometimes, the origin Time and duration of any hazardous event may become critical in its transformation into a Disaster, as illustrated later.

FIGURE 1. A knot that symbolically intertwines hazard, location, time, exposure, and vulnerability — all around Risk.

Natural hazards

In the natural hazard realm, these dangerous and damaging phenomena may include earthquake, tsunami, flood, landslide, volcanic eruption, hurricane, tornado, wildfire, etc. Hazards (or possibilities that dangerous phenomena might occur) are especially “risky” when they are only thought of in terms of the perceived probabilities for their occurrence (i.e., low hazard or high hazard) — because here we really need to consider the components of Location, Time, and Exposure versus Vulnerability as well.

We also know quite well from experience that hazardous events may cascade: under certain circumstances, a primary event may initiate or cause further secondary, tertiary, etc. damages, disruptions, and losses. An example is Hurricane Ida in 2021, a Category 4 storm that blasted ashore in Louisiana at midday on 29 August, “knocking out power to all of New Orleans, blowing roofs off buildings and reversing the flow of the Mississippi River as it rushed from the Louisiana coast into one of the nation’s most important industrial corridors”, in the middle of increasing Delta variant infections and hospitalizations due to the ongoing COVID-19 pandemic. Thus, depending on both the particular risky situation and our response, a hazardous event scenario may or may not cause a Disaster.

Can uncertainty be computed?

While Risk can be computed, uncertainty cannot. Regrettably, therefore, the following statement, originally made about seismic hazard assessment some four decades ago, has not lost its relevance and still applies to present-day situations we face in analyzing other potential damages and losses, with a view to the timely implementation of integrated economic, structural, legal, social, health, cultural, educational, environmental, technological, political, and institutional measures:

However, ignorance still exists on the seismic severity (usually expressed in intensity values) a site may expect in the future as well on the damage a structure may sustain for a given seismic intensity. (Boissonnade and Shah, 1984, p. 233)

And while prediction is “the act of saying what you think will happen in the future: e.g., ‘I wouldn’t like to make any predictions about the result of this match.’”, even advanced tools of data analysis may lead to wrong assessments when inappropriately used to describe the phenomenon under study. A (self-)deceptive conclusion can be avoided by verifying candidate models against (reproducible) experiments on empirical data, and in no other way.
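One way to carry out such a verification for a prediction method, sketched here under stated assumptions, is to ask how often random guessing would do as well. If alarms (for example, TIPs) cover a fraction τ of the space-time under test, and each target earthquake fell into an alarm purely by chance with probability τ, independently of the others, the number of successful predictions would follow a binomial distribution. The function name and the numbers in the usage line are hypothetical.

```python
from math import comb


def chance_of_success_by_guessing(n_events: int, n_predicted: int,
                                  alarm_fraction: float) -> float:
    """P(at least n_predicted of n_events fall inside alarms by pure chance).

    Null hypothesis of this sketch: each target event lands in an alarm
    independently with probability equal to the alarm fraction tau.
    """
    if not 0.0 <= alarm_fraction <= 1.0:
        raise ValueError("alarm_fraction must be in [0, 1]")
    if not 0 <= n_predicted <= n_events:
        raise ValueError("need 0 <= n_predicted <= n_events")
    tau = alarm_fraction
    return sum(comb(n_events, k) * tau**k * (1.0 - tau) ** (n_events - k)
               for k in range(n_predicted, n_events + 1))


# Hypothetical record: 8 of 10 target events predicted, alarms cover 30%.
print(chance_of_success_by_guessing(10, 8, 0.3))
```

With these hypothetical numbers the chance of doing at least as well by guessing is about 0.0016. A real evaluation must also specify how the space-time volume is measured, which this sketch leaves out.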

Risk communication in disaster planning

When decisions are made about required actions in response to the prediction of a disaster, the choices are usually based on a comparison of expected “black eyes” (risks/costs) and “feathers in caps” (benefits). If the latter exceed the former, it is reasonable to go forward. But individual decision-makers may have rather different opinions on hazards, risks, and the outcomes of different decisions and, as is well known, even two experts (scientists, in particular) may have three or more opinions!
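The comparison of “black eyes” and “feathers in caps” described above can be written as the classical cost-loss rule: take a protective action when the probability of the event times the loss that action would avert exceeds the action's cost. The sketch below is a textbook simplification, not a procedure from the book; the class, the function names, and all numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Action:
    name: str
    cost: float          # certain cost of taking the action ("black eyes")
    averted_loss: float  # loss avoided if the predicted event occurs ("feathers in caps")


def worth_taking(action: Action, event_probability: float) -> bool:
    """Cost-loss rule: act when the expected benefit exceeds the cost."""
    return event_probability * action.averted_loss > action.cost


def recommended(actions, event_probability):
    """Names of the actions that pass the cost-loss rule, in input order."""
    return [a.name for a in actions if worth_taking(a, event_probability)]


actions = [
    Action("stock emergency supplies", cost=1.0, averted_loss=30.0),
    Action("retrofit schools", cost=10.0, averted_loss=100.0),
    Action("evacuate the region", cost=50.0, averted_loss=150.0),
]

# Two decision-makers reading the same prediction with different probabilities:
print(recommended(actions, event_probability=0.2))
print(recommended(actions, event_probability=0.05))
```

The same three options yield different recommendations when the perceived event probability changes from 0.2 to 0.05, which is one concrete reason why decision-makers holding different opinions on hazard and risk reach different decisions.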

Therefore, actual decisions are sometimes (if not always) not optimal, especially when there are alternative ways of gaining personal benefits or avoiding personal guilt. In many practical cases, decision-makers do not have any opinion due to: i) ignorance in beyond-design circumstances; ii) denial of hazard and risk based on misconceptions; and iii) a sense of personal responsibility for an impending disaster when it is too late to take effective countermeasures. As a result, since Prediction is, again, “the act of saying what you think will happen in the future: e.g., ‘I wouldn’t like to make any predictions about the result of this match.’”, mimicking this view in policy decisions becomes a common way to avoid responsibility.

Common language is flexible enough to justify the following disclaimer: “Any opinions in the examples do not represent the opinion of the Cambridge Dictionary editors or of Cambridge University Press or its licensors.” We note that many people, including scientists, do not clearly distinguish between ‘unpredictable’, ‘random’, and ‘haphazard’, although these distinctions are crucial for scientific reasoning and conclusions. In particular, Stark (2017, 2022) emphasizes that “‘Random’ is a very precise statistical term of art” and that notions of probability can only apply “if the data have a random component.”

Risk Modeling (Michel, 2018) is about the future of Exposure and necessarily convolves Hazard (where possibility now ≈ likelihood of an event) with the components of Location, Time, and Vulnerability (Cannon, 1993; McEntire et al., 2002; McEntire, 2004). Fischhoff and Kadvany (2011) informatively note that Risk “shows how to evaluate claims about facts (what might happen) and about values (what might matter)”, and further observe that “societies define themselves by how they define and manage dangers”, an observation borne out by the global COVID-19 pandemic, officially declared by the World Health Organization (WHO) on 11 March 2020. See also Wiggins (1972), Bolt (1991), Berke and Beatley (1992), May (2001), Scawthorn (2006), Wang (2008), SISMA-ASI (2009), Kaufmann and Penciakova (2011), and Tierney (2014).
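As a simplified illustration of how a risk model combines these components, the sketch below computes an expected annual loss at a single Location as a sum, over hazard scenarios, of annual rate × mean damage ratio × exposed value. This multiplicative form is a common textbook simplification, not the model of any of the cited authors, and the vulnerability curve, rates, intensities, and exposure value are all hypothetical.

```python
from typing import Callable, Iterable, Tuple

Scenario = Tuple[float, float]  # (annual rate of the scenario, intensity at the site)


def damage_ratio(intensity: float) -> float:
    """Hypothetical vulnerability curve: no damage below intensity 5, total loss at 10."""
    return min(1.0, max(0.0, (intensity - 5.0) / 5.0))


def expected_annual_loss(scenarios: Iterable[Scenario],
                         vulnerability: Callable[[float], float],
                         exposure_value: float) -> float:
    """Sum of rate x damage ratio x exposed value over all scenarios at one Location."""
    return sum(rate * vulnerability(i) * exposure_value for rate, i in scenarios)


def worst_scenario_loss(scenarios: Iterable[Scenario],
                        vulnerability: Callable[[float], float],
                        exposure_value: float) -> float:
    """Loss if the most damaging scenario occurs, regardless of its rate."""
    return max(vulnerability(i) * exposure_value for _, i in scenarios)


scenarios = [(0.1, 6.0), (0.01, 8.0), (0.002, 10.0)]
print(expected_annual_loss(scenarios, damage_ratio, 1_000_000))
print(worst_scenario_loss(scenarios, damage_ratio, 1_000_000))
```

Here the rarest scenario contributes least to the expected annual loss (about 2,000 of about 28,000), yet it would destroy the entire exposed value. An averaged figure of this kind can therefore hide exactly the outcome that matters most.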

Thus, an earthquake hazard with a presumed low likelihood (or low probability) can nevertheless represent a high or even unacceptable risk (Berke and Beatley, 1992; May, 2001; Marincioni et al., 2012; Bela, 2014; Tanner et al., 2020; see also the references cited in the previous paragraph). Particularly for the cases noted in Earthquakes and Sustainable Infrastructure (Panza et al., 2021), the state-of-the-art approaches are “aimed at the level of natural risks for decision-making in regard to engineering design, insurance, and emergency management”.

And while insurance can repair the damage, and catastrophe reinsurance can further spread the risk and keep primary insurers solvent, lives can only be saved, and infrastructure installations and public structures can only “resist (or sustain) future strong earthquakes and continue to operate in their original capacity”, if they can withstand the shaking. An often unappreciated and complicating factor is that “earthquake risk is characteristically seen as ‘remote’”, with naturally rare earthquake events “resulting in low risk awareness and low risk reward” (Michel, 2014).

Volcanic disasters: Nyiragongo and Mt. St. Helens

The 22 May 2021 flank eruption of Nyiragongo volcano (DR Congo) is a telling illustration of a volcanic disaster. Just 19 years after the catastrophic January–February 2002 flank eruption, a new flank eruption began on 22 May 2021 (coincidentally the anniversary of the Mw 9.5 1960 Chile earthquake, the largest recorded earthquake of the 20th century). As of 27 May 2021, “More than 230,000 displaced people are crowding neighboring towns and villages. Lack of clean water, food and medical supplies, as well as electricity in parts of Goma, are creating catastrophic conditions in many places. To add to all this misery, health authorities are worried about outbreaks of cholera — at least 35 suspected cases have been found so far.” (https://www.volcanodiscovery.com/nyira-gongo/eruption-may-2021/activity-update.html).

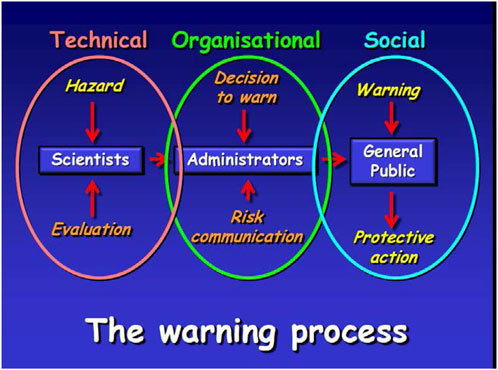

USGS volcanologist Donald Peterson, who witnessed first-hand the catastrophic 1980 eruption of Mt. St. Helens in southwestern Washington state, United States, observed in a comprehensive review of “Volcanic Hazards and Public Response” (Peterson, 1988) that “although scientific understanding of volcanoes is advancing, eruptions continue to take a substantial toll of life and property.” And although “scientists sometimes tend to feel that the blame for poor decisions in emergency management lies chiefly with officials or journalists because of their failure to understand the threat,” he argued otherwise: “however, the underlying problem embraces a set of more complex issues comprising three pervasive factors: 1) the first factor is the volcano: signals given by restless volcanoes are often ambiguous and difficult to interpret, especially at long-quiescent volcanoes; 2) the second factor is people: people confront hazardous volcanoes in widely divergent ways, and many have difficulty in dealing with the uncertainties inherent in volcanic unrest; 3) the third factor is the scientists: volcanologists correctly place their highest priority on monitoring and hazard assessment, but they sometimes fail to explain clearly their conclusions to responsible officials and the public, which may lead to inadequate public response.” He concluded that “of all groups in society, volcanologists have the clearest understanding of the hazards and vagaries of volcanic activity; they thereby assume an ethical obligation to convey effectively their knowledge to benefit all of society.”

Explaining uncertainty; miscommunication and disasters

Common language vocabulary issues aside, “it is not easy to explain the uncertainties of volcanic hazards to people not familiar with volcanoes, and often these difficulties lead to confusion, misunderstanding, and strained relations between scientists and persons responsible for the public welfare, such as civil officials, land managers, and journalists” (Peterson, 1988). Notably, the fatal 6 April 2009 Mw 6.3 earthquake in the Abruzzi region of Central Italy, which killed more than 300 people and wrecked the medieval heart of the city of L’Aquila, is just such a case in point, showing that this miscommunication reality applies mutatis mutandis to earthquakes and other hazards. The 2009 L’Aquila earthquake had been preceded by much seismic activity beginning in October 2008, analogous to the preparatory rumblings of an awakening volcano. But even though it occurred in a zone mapped as having high seismic hazard, high vulnerabilities combined with major failures in Disaster Risk Mitigation to produce both tragically large losses and the ensuing legal prosecution of six scientists and one government official, “the L’Aquila Trial” (see Marincioni et al., 2012; Panza and Bela, 2020, and the Supplementary Material therein).

Effective communication

In comprehensively addressing the public response, Peterson “advanced the view that volcanologists should regard the development of effective communications with the public just as important a challenge as that of monitoring and understanding the volcanoes. We must apply the same degree of creativity and innovation to improving public understanding of volcanic hazards,” he believed, “as we apply to the problems of volcanic processes. Only then will our full obligation to society be satisfied.”

To help develop effective communication with the public (all people or groups not involved in the scientific study of volcanoes, earthquakes, etc.), Peterson offered the following insights, systematically researched in the social sciences, which “deal with the interaction of people with all kinds of hazards.”

Sorensen and Mileti (1987, pages 14–53) showed that the response to a warning by a person (Figure 2) or group includes a series of steps that involve hearing a message, understanding it, believing it, personalizing it (that is, being convinced that it really applies to the individual), and finally taking action. Different people and different societies react in individual ways as they progress through these steps. The style of a warning message greatly influences the response it produces, and warnings are most effective if they are specific, consistent, accurate, certain, and clear (Sorensen and Mileti, 1987, page 20). If one or more of these attributes is missing, the message is more likely to be ignored or disbelieved.
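The sequence of steps identified by Sorensen and Mileti can be illustrated with a deliberately simple model, which is an assumption of this sketch rather than a result of theirs: if each step is completed by a given fraction of those who completed the previous one, the fraction who finally act is the product of those fractions. The per-step values are hypothetical; the second function simply checks a message against the five attributes listed above.

```python
from math import prod

STEPS = ("hear", "understand", "believe", "personalize", "act")
ATTRIBUTES = ("specific", "consistent", "accurate", "certain", "clear")


def fraction_taking_action(step_fractions: dict) -> float:
    """Product of the conditional completion fractions along the warning-response chain."""
    return prod(step_fractions[step] for step in STEPS)


def missing_attributes(message_attributes: set) -> list:
    """Attributes of an effective warning that a message lacks, in the listed order."""
    return [a for a in ATTRIBUTES if a not in message_attributes]


# Even if 90% of people get through each step, only about 59% end up acting.
print(fraction_taking_action({step: 0.9 for step in STEPS}))
print(missing_attributes({"specific", "clear"}))
```

Under this simple model a weakness at any single step, for example a message that is heard but not believed, caps the final response regardless of how well the other steps perform.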

What (we think) we know about earthquakes

For a reliable seismic hazard assessment, a specialist must understand seismic effects:

• An earthquake is a sudden movement that generates seismic waves inside the Earth and shakes the ground surface.

• Although historical records of earthquakes date back to 2100 B.C., most earthquakes before the middle of the 18th century lack a reliable description. A possible exception is the Catalogo Parametrico dei Terremoti Italiani (Gasperini et al., 2004), an Italian earthquake catalog based on both historical and instrumental data and spanning more than a thousand years.

• Earthquakes are complex phenomena. Their extreme catastrophic nature has been known for centuries, due to resulting devastations recorded from many of them.

• Their abruptness, along with their sporadic, irregular, and apparently rare occurrence, fosters the common perception that earthquakes are random and unpredictable phenomena.

However, modern advances in seismology show that, in a number of important respects, this perceived random and unpredictable behavior is not really the case (Kossobokov, 2021).

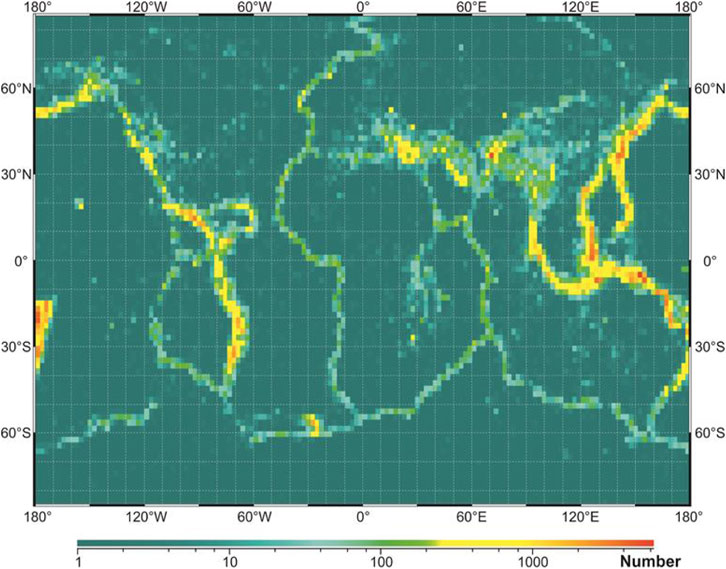

Nowadays, earthquake-prone sites are accurately mapped (Figure 3) thanks to precise hypocenter determinations, along with estimates of source size. The “seismic effects” of earthquakes needed for a Seismic Hazard Assessment (SHA) can be characterized both from physically felt and observed effects (Macroseismic Intensity) and from instrumental records: a) seismograms and b) records of the actual ground shaking in terms of acceleration, velocity, and displacement (see the relevant chapters in the Encyclopedia of Solid Earth Geophysics (Gupta, 2020)).

FIGURE 3. Locations of earthquake prone sites: Global map showing the numbers of the M ≥ 4 earthquake epicenters within 2.4°×2.0° grid cells during the period 1963–2020.
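Counts like those in Figure 3 can be produced by binning catalog epicenters into fixed longitude-latitude cells. The following is a minimal sketch, assuming cells aligned to 180°W and 90°S (the source does not say how the grid is anchored) and a catalog given as (longitude, latitude, magnitude) tuples; the function name and the sample events are hypothetical.

```python
import math
from collections import Counter


def count_epicenters(events, cell_lon=2.4, cell_lat=2.0, min_magnitude=4.0):
    """Number of epicenters with magnitude >= min_magnitude per (column, row) cell.

    events: iterable of (longitude_deg, latitude_deg, magnitude).
    Cells start at longitude -180 and latitude -90 (an assumption of this sketch).
    """
    n_cols = round(360.0 / cell_lon)  # 150 columns for 2.4-degree cells
    n_rows = round(180.0 / cell_lat)  # 90 rows for 2.0-degree cells
    counts = Counter()
    for lon, lat, mag in events:
        if mag < min_magnitude:
            continue
        col = math.floor((lon + 180.0) / cell_lon) % n_cols         # wrap 180E onto 180W
        row = min(math.floor((lat + 90.0) / cell_lat), n_rows - 1)  # keep 90N in the top row
        counts[(col, row)] += 1
    return counts


sample = [(142.4, 38.3, 9.1), (142.9, 38.9, 7.0), (13.4, 42.3, 6.3), (13.5, 42.4, 3.2)]
print(count_epicenters(sample))
```

The last sample event is discarded by the M ≥ 4 threshold. Cells of equal angular size shrink in area toward the poles, so raw counts per cell are not densities per unit area.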

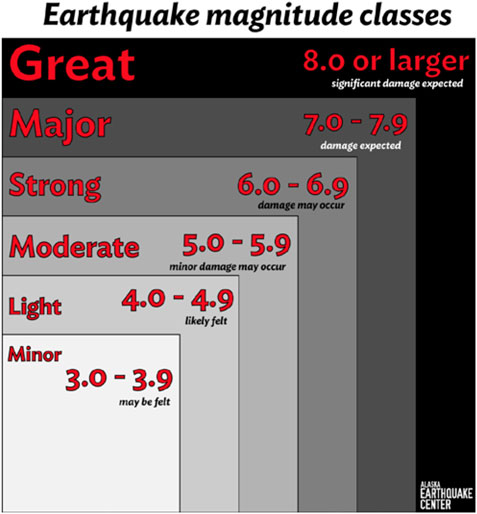

Detailed historical reviews of the earliest seismological attempts to quantify the size of earthquake sources through the energy they radiate as seismic waves, which developed in parallel with the concept of earthquake magnitude, are supplied by Gutenberg and Richter (1949), Panza and Romanelli (2001), and Okal (2019). Figure 4 illustrates the commonly accepted notation of earthquake magnitude classes.
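To give a sense of scale for the energy-based view of magnitude, the widely quoted Gutenberg-Richter energy-magnitude relation, log₁₀ E = 1.5 M + 4.8 with E in joules, can be evaluated directly. It is an empirical approximation and, as the caveats that follow stress, magnitude alone cannot fully characterize an event; the function names are hypothetical.

```python
def radiated_energy_joules(magnitude: float) -> float:
    """Empirical Gutenberg-Richter relation: log10 E = 1.5 M + 4.8 (E in joules)."""
    return 10.0 ** (1.5 * magnitude + 4.8)


def energy_ratio(m_larger: float, m_smaller: float) -> float:
    """How many times more energy the larger event radiates, under the same relation."""
    return 10.0 ** (1.5 * (m_larger - m_smaller))


print(f"M 6 radiates about {radiated_energy_joules(6.0):.1e} J")
print(f"one magnitude unit  -> x{energy_ratio(7.0, 6.0):.1f}")
print(f"two magnitude units -> x{energy_ratio(8.0, 6.0):.0f}")
```

One magnitude unit thus corresponds to roughly a 32-fold increase in radiated energy, and two units to a 1000-fold increase.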

Ellsworth (1990) offers these important caveats whenever performing a systematic SHA: a) “earthquakes are complex physical processes generated by sudden slip on faults, and as such they can only be grossly characterized by simple concepts”; and b) “Magnitude, as commonly used to compare the sizes of different earthquakes, also represents an extreme simplification (cf Felt Intensity: center of energy; Instrumental Seismometer: point of first rupture) of the earthquake process and by itself cannot fully characterize the size of any event. Traditionally, seismologists have developed a suite of magnitude scales, each with its own purpose and range of validity to measure an earthquake. Because no single magnitude scale can be systematically applied to the entire historical record, a summary magnitude, M, is introduced here to facilitate comparisons between events.”

Many shaking intensity scales have been developed over the past few centuries to measure the damaging effects of earthquakes, of which the Modified Mercalli Intensity (MMI) scale is among the most commonly used. This scale, which for pre-instrumental records maps the center of energy release, qualitatively classifies the effects of an earthquake on the Earth’s surface: ranging from “not felt” (intensity I), to “extreme” (intensity X), when most masonry and frame structures are destroyed together with their foundations, and finally to “total destruction” (intensity XII), when rolling waves are seen on the ground surface and objects are thrown upward into the air. It is also worth mentioning that, for the Mercalli-Cancani-Sieberg (MCS) intensity scale, a doubling of Peak Ground Acceleration (PGA) corresponds in practice to a one-unit increment of Macroseismic Intensity (Cancani, 1904).
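Taken at face value, the rule that a doubling of PGA corresponds to one MCS intensity unit makes intensity increments proportional to log₂ of PGA ratios. A minimal sketch, assuming the relation holds exactly (in reality it is approximate and scattered); because no absolute calibration is given here, only ratios and increments are computed, and the function names are hypothetical.

```python
import math


def intensity_increment(pga: float, pga_reference: float) -> float:
    """MCS intensity units gained when PGA grows from pga_reference to pga."""
    if pga <= 0 or pga_reference <= 0:
        raise ValueError("PGA values must be positive")
    return math.log2(pga / pga_reference)


def pga_factor(intensity_units: float) -> float:
    """PGA multiplication factor implied by a given intensity increment."""
    return 2.0 ** intensity_units


print(intensity_increment(0.4, 0.1))  # four times the PGA: about two intensity units
print(pga_factor(3))                  # three units higher: eight times the PGA
```

Because the rule is logarithmic, any PGA unit (g, m/s², cm/s²) gives the same increments, as long as both values use the same unit.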

Numerous approaches to determining the size of an earthquake source have produced a number of quantitative magnitude determinations M based on instrumental, macroseismic, and other data (Bormann, 2020). Charles Richter (1935) defined his magnitude M on a physically dimensionless logarithmic scale, because displacement amplitudes differ enormously between events of different size. A logarithmic scale also appears naturally appropriate given the apparent hierarchical organization of the lithosphere, which contains mobile blocks ranging from the size of a grain, ∼10⁻³ m across, up to the scale of tectonic plates, ∼10⁶ m (Keilis-Borok, 1990; Sadovsky, 2004; Ranguelov, 2011; Ranguelov and Ivanov, 2017).

It is not surprising, then, that for shallow earthquakes the magnitude M (originally determined by Richter from the ground displacement recorded on a seismogram) increases by about two-thirds of a unit per unit of MMI intensity at the epicenter, I0, following M = ⅔ I0 + 1 (Gutenberg and Richter, 1956). Accordingly, a strong (M = 6.0) shallow earthquake may cause only negligible damage to buildings of good design and construction near the epicenter, but considerable damage to poorly built or badly designed structures.
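The Gutenberg and Richter (1956) relation can be inverted to read the expected epicentral intensity from magnitude. A sketch for shallow events only, with hypothetical helper names:

```python
def magnitude_from_intensity(i0):
    """Gutenberg and Richter (1956), shallow events: M = (2/3) I0 + 1."""
    return 2.0 * i0 / 3.0 + 1.0

def intensity_from_magnitude(m):
    """Algebraic inverse of the same relation: I0 = 1.5 (M - 1)."""
    return 1.5 * (m - 1.0)

print(intensity_from_magnitude(6.0))   # 7.5
print(magnitude_from_intensity(9.0))   # 7.0
```

For M = 6.0 the inverse gives I0 = 7.5, between VII and VIII; the damage wording in the paragraph above matches the MMI VII descriptor. The relation is empirical and averaged, so individual events may depart from it.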

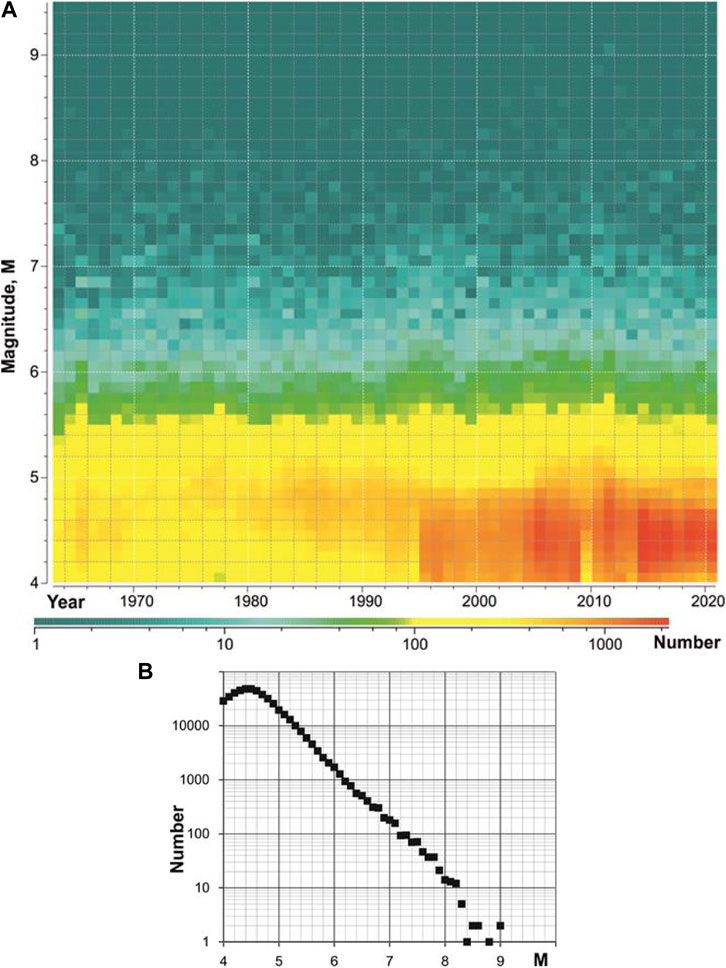

Figure 5 shows (A) the global magnitude distribution by year for 1963–2020, i.e., after installation of the analog World-Wide Standardized Seismograph Network (WWSSN) (https://science.sciencemag.org/content/174/4006/254), and (B) the empirical non-cumulative Gutenberg-Richter plot of N(M) for the entire 58 years of record, with a b-value of 0.998 (R² = 0.977) for the best fit of log₁₀N(M) = a + b × (8 − M).

FIGURE 5. The global non-cumulative magnitude distribution by year in 1963–2020 (A) and the Gutenberg-Richter plot (B).
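The fit quoted for Figure 5 regresses log₁₀N(M) on (8 − M), so b appears as a positive slope. The text does not state the fitting method; the sketch below assumes ordinary least squares on the logarithm of non-empty bin counts. The counts in the usage lines are synthetic, chosen to follow b = 1 exactly, and are not the 1963–2020 catalog data:

```python
import math

def fit_gr(mags, counts, m_ref=8.0):
    """Least-squares fit of log10 N(M) = a + b * (m_ref - M).

    Returns (a, b, r2). Bins with zero count are skipped because
    log10(0) is undefined."""
    xs, ys = [], []
    for m, n in zip(mags, counts):
        if n > 0:
            xs.append(m_ref - m)
            ys.append(math.log10(n))
    k = len(xs)
    mx, my = sum(xs) / k, sum(ys) / k
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = my - b * mx
    r2 = sxy ** 2 / (sxx * syy)   # coefficient of determination
    return a, b, r2

mags = [5.0, 6.0, 7.0, 8.0]
counts = [20000, 2000, 200, 20]   # synthetic: ten-fold drop per magnitude unit
a, b, r2 = fit_gr(mags, counts)
print(f"a = {a:.3f}, b = {b:.3f}, R^2 = {r2:.3f}")
# a = 1.301, b = 1.000, R^2 = 1.000
```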

The coastline of Britain and the seismic locus of earthquake epicenters

The set of earthquake epicenters or, in other words, the seismic locus, has the same fractal properties as the coastline of Britain. Benoit Mandelbrot (1967) notes: “Geographical curves are so involved in their detail that their lengths are often infinite or, rather, undefinable. However, many are statistically ‘self-similar’, meaning that each portion can be considered a reduced-scale image of the whole. Indeed, self-similarity methods are a potent tool in the study of chance phenomena, wherever they appear, from geostatistics to economics and physics. Therefore, scientists ought to consider dimension as a continuous quantity ranging from 0 to infinity.”

Following the pioneering works of Mikhail A. Sadovsky (Sadovsky et al., 1982) and Keiiti Aki (Okubo and Aki, 1987), our understanding of the fractal nature of earthquakes and seismic processes has grown steadily (Kossobokov, 2020), along with a concurrent, revolutionary improvement in the understanding and mapping of both the Earth’s interior (Stacey and Davis, 2020) and the geophysics of seismic wave propagation (Florsch et al., 1991; Fäh et al., 1993; La Mura et al., 2011; Iturrarán-Viveros and Sánchez-Sesma, 2020).

As might be expected from the hierarchical organization of the lithosphere (i.e., the size-related distribution of geographical and seismological phenomena), the number of earthquakes, globally or within a region, scales with magnitude according to the Gutenberg-Richter Frequency-Magnitude (FM) relation (Gutenberg and Richter, 1944; 1954). Globally, for the 58-year period shown in Figure 5, the slope of the plot (the so-called b-value) is about 1, so that each unit change in magnitude between M = 5 and M = 9 corresponds to an approximately 10-fold change in the number of earthquakes: there were approximately two hundred M = 7 earthquakes, compared to about twenty M = 8 and 20,000 M = 5 earthquakes.

The Gutenberg-Richter relation can be written as log₁₀ N = a − bM. Because it is exponential in magnitude, and magnitude is itself logarithmic in energy, it is a power law in seismic energy, i.e., a Pareto distribution with a “fat” tail (Figure 5). This serves as a reminder that, in SHA, outliers always exist as “possibilities” and must therefore be duly recognized and accounted for in seismic hazard [see, e.g., Kanamori (2014; 2021)].
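The step from an exponential law in magnitude to a Pareto (power-law) tail goes through the energy-magnitude relation. A short derivation, assuming the classical Gutenberg-Richter energy relation log₁₀ E = 1.5 M + 4.8 (E in joules), in which only the slope 1.5 matters:

```latex
\begin{aligned}
\log_{10} N &= a - bM, \qquad \log_{10} E = 1.5\,M + 4.8 \\
\Longrightarrow\quad N &= 10^{\,a - b\,(\log_{10} E - 4.8)/1.5} \;\propto\; E^{-2b/3}, \\
\frac{N(M)}{N(M+1)} &= 10^{\,b} \approx 10 \quad \text{for } b \approx 1 .
\end{aligned}
```

When N is read as the cumulative number of events at or above M, the middle line is the survival function of a Pareto law in E with index 2b/3 (about 2/3 for b ≈ 1), which decays far more slowly than an exponential tail.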

Generalized Gutenberg-Richter relationship and unified scaling law for earthquakes

The Gutenberg-Richter relationship shown above was further generalized by Kossobokov and Mazhkenov (1988, 1994) to the following fractal form:

$$\log_{10} N(M, L) = A + B\,(5 - M) + C\,\log_{10} L, \qquad M_{-} \le M \le M_{+},$$

where: i) N(M,L) is the expected annual number of main shocks of magnitude M within an area of linear size L; ii) the similarity coefficients A and B are analogous to the a- and b-values of the classical Gutenberg-Richter law; iii) the newly added similarity coefficient C is the fractal dimension (D, in Mandelbrot’s notation) of the set of epicenters; and iv) M− and M+ are the limits of the magnitude range where this relationship holds. The three frequency-magnitude-spatial coefficients provide insight into the scaling properties of actual seismicity, and are therefore of specific interest to seismologists working on seismic zonation and risk assessment.

It was shown that C differs significantly from 2 and correlates with the geometry of tectonic structures: i) high values of C (∼1.5) correspond to regions with complex, dense patterns of faults of different strikes and a high degree of fracturing, whereas ii) lower values of C (∼1) are found in regions dominated by a single linear major fault zone (consistent with rectifiable curves and straight lines, for which D = 1).

For example, in the specific case of the Lake Baikal region in the mountainous Russian region of Siberia, north of the Mongolian border (area 1,500,000 km², C = 1.25), it was demonstrated (Kossobokov and Mazhkenov, 1988; Kossobokov and Mazhkenov, 1994) that: i) the inclusion of aseismic areas leads to underestimation of seismic activity in an area of 1,000 km² by a factor of 15; and ii) computing a characteristic of seismic activity over 1,000 km² on a 10 km × 10 km grid leads to overestimation by a factor greater than 2.
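The two Baikal bias figures can be reproduced from the scaling exponents alone. This is a sketch under stated assumptions: the generalized relation is taken to have the form log₁₀ N(M, L) = A + B·(5 − M) + C·log₁₀ L with L in km, and the two cases are read (our interpretation, not necessarily the original method) as i) rescaling a regional rate by area, i.e., with exponent 2 instead of C, and ii) summing per-cell rates over (L/ℓ)² cells of side ℓ. Both reduce to (L_big/L_small)^(2 − C), so A and B cancel. All function names and the values of A and B are hypothetical:

```python
import math

def usle_rate(A, B, C, M, L):
    """Expected annual number of main shocks of magnitude M in an area of
    linear size L (km), assuming log10 N = A + B*(5 - M) + C*log10(L)."""
    return 10 ** (A + B * (5.0 - M) + C * math.log10(L))

def scaling_bias(L_big, L_small, C):
    """Factor by which area (exponent 2) scaling departs from USLE
    (exponent C) scaling between linear sizes L_big > L_small."""
    return (L_big / L_small) ** (2.0 - C)

C = 1.25                              # Baikal value quoted in the text
L_region = math.sqrt(1_500_000.0)     # km, side of a 1,500,000 km^2 square
L_target = math.sqrt(1_000.0)         # km, side of a 1,000 km^2 square
L_cell = 10.0                         # km, 10 km x 10 km grid cell

# Case i): regional rate rescaled to the target by area -> too low by:
under = scaling_bias(L_region, L_target, C)
# Case ii): cell rates summed over (L_target / L_cell)^2 cells -> too high by:
over = scaling_bias(L_target, L_cell, C)
print(round(under, 1), round(over, 1))   # 15.5 2.4

A, B = -1.0, 0.9  # hypothetical coefficients; they cancel in the ratio
naive = usle_rate(A, B, C, 6.0, L_region) * (L_target / L_region) ** 2
fractal = usle_rate(A, B, C, 6.0, L_target)
print(round(fractal / naive, 1))         # 15.5, same as `under`
```

With C = 1.25 both factors land close to the values quoted above (about 15 and a little over 2), which suggests the bias comes entirely from the mismatch between the area exponent 2 and the fractal exponent C.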

Earlier, in order to avoid just such a seismic activity bias in a pilot study assessing seismic risk for 76 of the largest cities of the world in active seismic regions, Keilis-Borok et al. (1984) compared two integral estimates, 1) the number of cities with a population of one million or more affected in 30 years by strong motion of intensity I ≥ VIII and 2) the total population of these cities, with the actual aftermath of past earthquakes. This “validation of the results” showed specifically that: a) “available data may be sufficient to estimate the seismic risk for a large set of objects, while not for each separate object”; and b) “it indicates, that global seismic risk is rapidly increasing, presenting new unexplored problems.”

The Unified Scaling Law for Earthquakes (USLE) got its name later, when Bak et al. (2002) presented an alternative formulation that uses the inter-event time between earthquakes instead of their annual number. Using the USGS/NEIC Global Hypocenters Data Base for 1964–2001 and a robust box-counting algorithm, Nekrasova and Kossobokov (2002) mapped the values of A, B, and C in every 1° × 1° box on the Earth with recorded earthquakes, wherever the catalog of shallow earthquakes of M ≥ 4 permitted a reliable estimate. The results of this global mapping are available at the data repository of the International Seismological Centre (Nekrasova and Kossobokov, 2019).
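To illustrate what box counting measures, here is a generic estimate of the fractal dimension C of a point set. It is a sketch only: it assumes planar coordinates (ignoring the Earth’s curvature) and a plain least-squares slope, and it is not the SCE algorithm or the Nekrasova and Kossobokov implementation. The test sets are synthetic: points on a straight line should give about 1, and a uniformly filled square about 2:

```python
import math

def box_count(points, size):
    """Number of distinct square boxes of side `size` containing at least one point."""
    return len({(math.floor(x / size), math.floor(y / size)) for x, y in points})

def box_counting_dimension(points, sizes):
    """Least-squares slope of log10 N(size) against log10(1/size)."""
    xs = [math.log10(1.0 / s) for s in sizes]
    ys = [math.log10(box_count(points, s)) for s in sizes]
    k = len(xs)
    mx, my = sum(xs) / k, sum(ys) / k
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return sxy / sxx

# Synthetic "epicenters": a straight fault trace and a uniformly filled square.
line = [(i / 10000, 0.5) for i in range(10000)]
square = [(i / 200, j / 200) for i in range(200) for j in range(200)]
print(round(box_counting_dimension(line, [0.1, 0.05, 0.025, 0.0125]), 3))  # 1.0
print(round(box_counting_dimension(square, [0.1, 0.05, 0.025]), 3))        # 2.0
```

Real epicenter sets fall between these two extremes, which is why C is reported in the range ∼1 to ∼1.5 in the text.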

The distribution of the number of seismic events by magnitude, i.e., the Gutenberg-Richter frequency-magnitude relation, is of paramount importance for the seismic hazard assessment of a territory. Accordingly, the generalization of the original Gutenberg-Richter relation into the Unified Scaling Law for Earthquakes (USLE), first proposed in 1988, also makes it possible to take into account the pattern of epicentral distribution of seismic events whenever the spatial scale of the analysis changes. This is extremely important for adequately downscaling the frequency of occurrence to a smaller target area within any territory under study (e.g., a megalopolis).

At the time when Per Bak (Bak et al., 2002) suggested a dual formulation of USLE based on the time between seismic events, the Institute of Earthquake Prediction Theory and Mathematical Geophysics of the Russian Academy of Sciences developed a modified algorithm for statistically improved, confident Scaling Coefficients Estimation (SCE) of the USLE parameters, to be used for producing seismic hazard maps of earthquake-prone territories. An updated brief review, focused on the use of USLE in assessing seismic hazard and associated risks, is provided by Nekrasova et al. (2020).

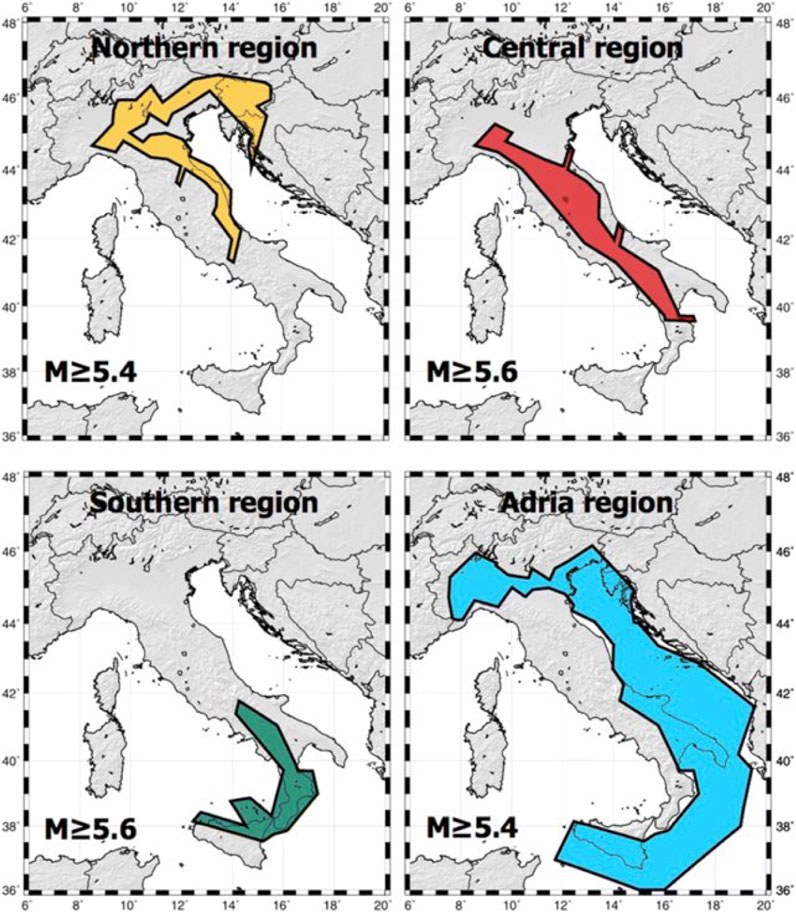

Multi-scale seismicity model

Complementary to USLE is the Multi-scale Seismicity Model (MSM) of Molchan et al. (1997). To make general use of the classical frequency-magnitude relation in seismic risk assessment, they formulated a multi-scale seismicity model based on the hypothesis that “only the ensemble of events that are geometrically small, compared with the elements of the seismotectonic regionalization, can be described by a log-linear FM relation.” It follows that seismic zonation must be performed at several scales, depending on the self-similarity of the seismic events and on the log-linearity of the frequency-magnitude relation within the magnitude range of interest. Analysis of worldwide seismicity using the Global Centroid Moment Tensor (GCMT) Project catalog (which characterizes earthquake size by seismic moment) corroborates the observation that a single FM relation is not universally applicable. The MSM of the FM relation has been tested in the Italian region and is considered one of the appropriate ingredients of NDSHA.

Earthquake catalogs show clear patterns in the space-time-energy distribution of seismic events: i) earthquakes do not happen everywhere, but preferentially in tectonically well-developed, highly fractured fault zones within the Earth’s lithosphere; ii) earthquake sizes follow the Gutenberg-Richter relationship, a surprisingly robust power law (such that, within a large enough area, for every magnitude M event there are ∼10 events of magnitude M − 1); and iii) earthquakes cluster in time; in particular, seismologists observe: a) surges and swarms of earthquakes; b) seismically driven, decreasing cascades of aftershocks; and c) a less evident inverse cascade (energy increase), or crescendo of rising activity in foreshocks premonitory to the main shock.

Since earthquake-related observations are generally limited to the most recent decades (centuries in a few rare cases), obtaining reasonable confidence limits on an objective estimate of the occurrence rate, or of the inter-event times, of strong earthquakes at any particular location would require a geologic span of time that is unfortunately beyond the reach of instrumental, or even historical, seismology [see, e.g., (Ellsworth, 1990; Beauval et al., 2008; Stark, 2017; 2022)]. That is why probability estimates in Probabilistic Seismic Hazard Analysis (PSHA) remain subjective values between 0 and 1, derived from evidently imaginary (though enticingly tractable, both analytically and numerically), unrealistic models of seismicity.
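To see how little a short record constrains a rate, take the most favourable case, one the paragraph itself argues against: events forming a stationary Poisson process. The sketch computes an exact two-sided Poisson confidence interval by bisection (helper names are hypothetical); the input of one event in 100 years is illustrative and not taken from any catalog:

```python
import math

def poisson_cdf(k, mu):
    """P(X <= k) for X ~ Poisson(mu), summed term by term."""
    term = math.exp(-mu)
    total = term
    for i in range(1, k + 1):
        term *= mu / i
        total += term
    return total

def bisect_root(f, lo, hi, iters=200):
    """Root of a continuous monotone f on [lo, hi] whose end values differ in sign."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if (f(mid) > 0) == (f(lo) > 0):
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def poisson_rate_ci(n_events, years, conf=0.95):
    """Exact two-sided confidence interval for an annual rate, given n_events in `years`."""
    alpha = 1.0 - conf
    top = 10.0 * (n_events + 10)  # generous upper bracket for the expected count
    upper = bisect_root(lambda mu: poisson_cdf(n_events, mu) - alpha / 2, 0.0, top)
    lower = 0.0 if n_events == 0 else bisect_root(
        lambda mu: 1.0 - poisson_cdf(n_events - 1, mu) - alpha / 2, 0.0, top)
    return lower / years, upper / years

# Illustrative input: a single strong event in a 100-year record.
lo, hi = poisson_rate_ci(1, 100.0)
print(f"{lo:.2e} to {hi:.2e} per year, ratio {hi / lo:.0f}")
# 2.53e-04 to 5.57e-02 per year, ratio 220
```

Even under this idealized model, a single observed event leaves the rate uncertain by more than two orders of magnitude at the 95% level.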

Seismic Roulette: Nature spins the wheel!

“Look deep into nature, and then you will understand everything better.” – Albert Einstein

Regretfully, most, if not all, earthquake prediction claims can be characterized as “invented” windmills, wherein we see the Earth “not as it is” but “as it should be”, on the basis of very small, if any, samples of clearly defined evidence! Many prediction claims are hampered from the start by misuse of the Error Diagram and its analogues, which ignores the evident heterogeneity of earthquake distributions in space as well as in time. See, e.g., Bela and Panza (2021).

A rigorous mathematical formulation of a natural spatial measure of seismicity is given in (Kossobokov et al., 1999). This “Seismic Roulette null-hypothesis” (Kossobokov and Shebalin, 2003) (or the hypothesis that chance alone in a random process is responsible for the results) is a nice analogy for using the simple recipe that accounts for this spatial patternicity (Kossobokov, 2006a) using statistical tools available since Blaise Pascal (1623–1662):

i) consider a roulette “wheel” with as many sectors as the number of events in the best available catalog of earthquakes, one sector per earthquake epicenter;

ii) make your best bet according to any prediction strategy, determining which events are inside a projected space-time “area of alarm”; and then

iii) place one chip upon each of the corresponding sectors.

Nature then spins the “wheel” before introducing an energized, target-seeking earthquake “ball”. If you play seismic roulette systematically, then you win and lose systematically (Figure 6). If you are smart enough, and your predictions are effective, your wins will outscore your losses.
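The roulette recipe can be scored with a binomial tail. If the chips cover a fraction τ of the wheel’s sectors, then under the null hypothesis each target earthquake lands on a chip with probability τ, so the chance of at least h hits out of n targets is a binomial tail probability. The pair (τ, ν), with ν the miss rate, is also one point on Molchan’s error diagram, where random guessing lies on average on the diagonal ν = 1 − τ. A sketch under these assumptions, with hypothetical function names and made-up numbers (not the M8/MSc results):

```python
from math import comb

def alarm_fraction(sectors_with_chips, total_sectors):
    """Share of the roulette wheel covered by chips (one sector per catalog epicenter)."""
    return sectors_with_chips / total_sectors

def roulette_p_value(n_targets, n_hits, tau):
    """Probability of at least n_hits successes out of n_targets when each
    target independently lands on a chip with probability tau (null hypothesis)."""
    return sum(comb(n_targets, k) * tau ** k * (1.0 - tau) ** (n_targets - k)
               for k in range(n_hits, n_targets + 1))

def error_diagram_point(n_targets, n_hits, tau):
    """(tau, nu), with nu the miss rate; random guessing averages nu = 1 - tau."""
    return tau, 1.0 - n_hits / n_targets

# Hypothetical bet: chips on 300 of 1,000 sectors, 8 of 10 targets hit.
tau = alarm_fraction(300, 1000)
p = roulette_p_value(10, 8, tau)
t, nu = error_diagram_point(10, 8, tau)
print(f"tau = {t:.2f}, nu = {nu:.2f}, P(>= 8 hits by chance) = {p:.4f}")
# tau = 0.30, nu = 0.20, P(>= 8 hits by chance) = 0.0016
```

Weighting sectors by the number of catalog epicenters, rather than by area, is what makes the null hypothesis account for the spatial heterogeneity of seismicity.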

However, if Seismic Roulette is not perfect in confirming your betting strategy (and thus alternatively is nullifying your hypothesis), and still you are smart enough to choose an effective strategy, then your wins will outscore your losses! And after a while . . . you can then use your best wisdom, or even now an “antipodal strategy”, wherein the earthquake “prediction problem” is examined from the standpoint of decision theory and goal optimization per Molchan (2003) — so as to win both systematically and statistically self-similarly in the future bets!

The results of just such a global “betting” test of the prediction algorithms M8 and MSc confirmed this “imperfection” of Seismic Roulette (Seismic Roulette is not perfect!) in the recurrence of earthquakes in Nature (Ismail-Zadeh and Kossobokov, 2020); yet the same results suggest that placing future bets can be useful if done knowledgeably for the benefit of the populations exposed to seismic hazard. Their accuracy is already sufficient for undertaking earthquake preparedness measures that would prevent a considerable part, although far from all, of the damage and human loss. Fortunately, the methodology linking prediction with disaster management strategies already exists (Molchan, 1997).

Pattern recognition of earthquake prone areas

In the absence of local seismic observations long enough for trustworthy and reliable SHA, one may instead use Pattern Recognition of Earthquake-Prone Areas (PREPA), based on the appropriate geological and geophysical data sets that are available. This geomorphological pattern recognition approach (Gelfand et al., 1972; Kossobokov and Soloviev, 2018) is an especially useful preparedness and mitigation tool in seismic regions where its results have passed validation: i) first, by exhaustive retrospective testing; and then ii) by the decisive check afforded by strong earthquakes that actually occurred. The validity of the PREPA methodology has been established by the overall statistics of strong earthquake occurrences following numerous publications of pattern recognition results covering many seismic regions and magnitude ranges (see Gorshkov et al., 2003; Gorshkov and Novikova, 2018 and references therein).

Those who can’t model are doomed to reality!

One application of PREPA deserves a special comment. Regional pattern recognition problems solved by Gelfand et al. (1976) treated two different sets of natural recognition objects for the two overlapping regions: i) regularly spaced points along major strike-slip faults in California; and ii) intersections of morphostructural lineaments in California and adjacent territories of Nevada (Figure 7). They then drew from these the qualitative conclusion that areas prone to M ≥ 6.5 are characterized by proximity to the ends (or to intersections) of major faults, in association with both: a) low relief; and b) often also with some kind of downward neotectonic movement expressed in regional topography and geology — with their conclusion further supported by both PREPA classifications: i) points; and ii) intersections — wherein the same five groups of earthquake-prone areas show up in both cases. Slight differences are due to the fact that the study of intersections covers a larger territory. This supports the idea derived from recognition of points — that strong earthquake-prone intersections often associate with neotectonic subsidence on top of a background weak uplift.

FIGURE 7. Circular 40-km radius outlines of the D-intersections of morphostructural lineaments in California and Nevada and epicenters of magnitude 6.5+ earthquakes before (black stars) and after (red stars with names) publication in 1976 (Gelfand et al., 1976).

As evident from Figure 7, the PREPA termless prediction for California and Nevada has been statistically justified by the subsequent occurrence of 16 out of 17 magnitude 6.5+ earthquakes within a narrow vicinity of the 73 dangerous D-intersections of morphostructural lineaments (union of yellow circles in Figure 7) that Gelfand et al. (1976) determined to be prone to seismic events that large. The target earthquakes include the most recent one, the 15 May 2020 M6.5 Monte Cristo Range (NV) earthquake, while the 6 July 2019 M7.1 Ridgecrest (CA) main shock is the one exceptional near-miss within the study area since 1976. In fact, the first-day cascade of aftershocks of this event, as well as the entire 2019 Ridgecrest earthquake sequence, extend to the D-intersection. It is also notable that the Puente Hills thrust fault beneath metropolitan Los Angeles coincides exactly (Kossobokov, 2013) with the lineament drawn back in 1976, decades in advance of its “rediscovery” by the 1994 Northridge Earthquake (Shaw and Shearer, 1999).

Finally (and importantly for seismic hazard assessment), PREPA is a readily available hazard-related quantity that can be naturally included in NDSHA, while so far, no comparable way exists to formulate a direct use for it within PSHA — wherein earthquake “possibilities” are instead viewed temporally by Senior Seismic Hazard Analysis Committee (SSHAC, 1997) of the United States Nuclear Regulatory Commission as “annual frequencies of exceedance of earthquake-caused ground motions [that, however] can be attained only with significant uncertainty.” Therefore, ahem . . . those who can’t model are doomed to reality!

Seismic hazard and associated risks

“At half-past two o'clock of a moonlit morning in March, I was awakened by a tremendous earthquake, and though I had never before enjoyed a storm of this sort, the strange thrilling motion could not be mistaken, and I ran out of my cabin, both glad and frightened, shouting, ‘A noble earthquake! A noble earthquake!’ feeling sure I was going to learn something.” (John Muir, The Yosemite, Chapter 4)

Ground shaking may be frightening, but it does not necessarily kill people. For example, the earliest reported earthquake in California, on 28 July 1769, was documented in the diaries of the exploring expedition of Gaspar de Portola, en route from San Diego to chart a land route to Monterey. While the party was camped along the Santa Ana River, about 50 km southeast of Los Angeles, “a sharp earthquake was felt that ‘lasted about half-as-long as an Ave Maria.’” Based on descriptions of the quake, it was likely a moderate or strong earthquake. Some diarists described the shaking as violent and recurring over the next several days, suggesting aftershocks. Although the magnitude and epicenter are unclear, comparison of these descriptions with more recent events suggests that the quake may have been similar to the M 6.4 1933 Long Beach or the M 5.9 1987 Whittier Narrows earthquake (https://geologycafe.com/california/pp1515/chapter6.html#history).

The exploring party, personally uninjured and unimpeded by this M 5–6 event, noted not that the region portended high seismic hazard and landslide risk, but rather, benignly, that it appeared to be a good place for agriculture!

“Earthquakes do not kill people, buildings do!” has long been a refrain in the world of seismic hazard preparedness and earthquake engineering. But is it true? While inadequately designed and poorly constructed buildings, infrastructure, and lifeline systems can kill people (Gere and Shah, 1984; Bilham, 2009), tsunamis and landslides directly triggered by earthquakes tragically kill people as well!

Therefore, to reliably assess the hazard and estimate the risk to which a population is exposed, one needs to know the possible distribution of earthquakes large enough to cause primary damage. The global map of the maximum magnitude (Mmax) observed during the last 57 years in 2.4° × 2.0° grid cells (Figure 8) could serve this purpose as a very rough approximation.
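A sketch of how a catalog could be reduced to a maximum-magnitude grid of this kind: each epicenter is binned into a 2.4° × 2.0° cell and the largest magnitude per cell is kept. The grid origin at (−180°, −90°), the wrapping and clamping at the grid edges, and the sample events are assumptions for illustration; they are not taken from Figure 8:

```python
import math

def max_magnitude_grid(events, dlon=2.4, dlat=2.0):
    """Map each cell index (i, j) to the largest magnitude observed in it.

    events: iterable of (lon, lat, mag) in degrees. Cell (i, j) covers
    longitudes [-180 + i*dlon, -180 + (i+1)*dlon) and latitudes
    [-90 + j*dlat, -90 + (j+1)*dlat)."""
    n_lat = math.ceil(180.0 / dlat)
    grid = {}
    for lon, lat, mag in events:
        i = math.floor(((lon + 180.0) % 360.0) / dlon)        # lon = 180 wraps onto -180
        j = min(math.floor((lat + 90.0) / dlat), n_lat - 1)   # lat = 90 stays in the top row
        if mag > grid.get((i, j), float("-inf")):
            grid[(i, j)] = mag
    return grid

# Hypothetical events (lon, lat, magnitude), for illustration only.
events = [(10.0, 45.0, 6.0), (11.0, 45.5, 6.8), (-119.0, 36.5, 7.1)]
print(max_magnitude_grid(events))
# {(79, 67): 6.8, (25, 63): 7.1}
```

Cells with no recorded events are simply absent from the result, which matches the idea that Mmax is an observed, not an inferred, quantity.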

Earthquake vulnerability, intensity and disaster

An earthquake of M ∼ 5 (intensity VI on the MMI scale) may cause slight damage, if any, to an ordinary structure near the epicenter, and therefore cannot produce any significant loss. On the other hand, a strong earthquake (M 6.0–6.9) may result in a real disaster, as has happened on several occasions in the past; see, e.g., the M 6.3 L’Aquila Earthquake of 6 April 2009 (Alexander, 2010).

For example, the 21 July 2003 M 6.0 Yunnan (China) earthquake and the landslides it induced: i) destroyed 264,878 buildings; ii) damaged 1,186,000 houses; iii) killed at least 16 people; and iv) injured 584. Moreover, v) a power station was damaged; vi) roads were blocked; and vii) 1,508 livestock were killed in the province. The direct and indirect losses from this earthquake were estimated at ∼75 million United States dollars. This is a rare case in which a shallow M 6 earthquake (at the threshold of potentially hazardous earthquakes) occurred at a location and at a time (23:16 local time) that together, unfortunately, maximized the Vulnerability × Exposure of the province (i.e., slopes prone to failure; buildings and houses that could not withstand the shaking; a dense population at home; and livestock still sheltered in their facilities).

Figure 9 shows half of a clock face on Modenesi’s Towers of Finale Emilia, Ferrara, Italy, destroyed by the earthquake and aftershocks of 20–29 May 2012. Felt intensities exceeded VII, as depicted on the clock face after the main shock. It was the first strong earthquake “anywhere nearby” since the Ferrara quake of 1570. The relatively small number of fatalities (only 7) when this strong and unusually shallow M 6 earthquake struck the Emilia Romagna region of northern Italy is connected with its occurrence just after 4 a.m., fortuitously very early on Sunday morning, 20 May 2012, given that “the affected region is home to countless historic churches, castles, and towers”, many of which were damaged or toppled. With so many vulnerable churches collapsed or severely damaged, an origin time in the late morning might easily have claimed hundreds of victims among worshipers attending religious ceremonies (Panza et al., 2014).

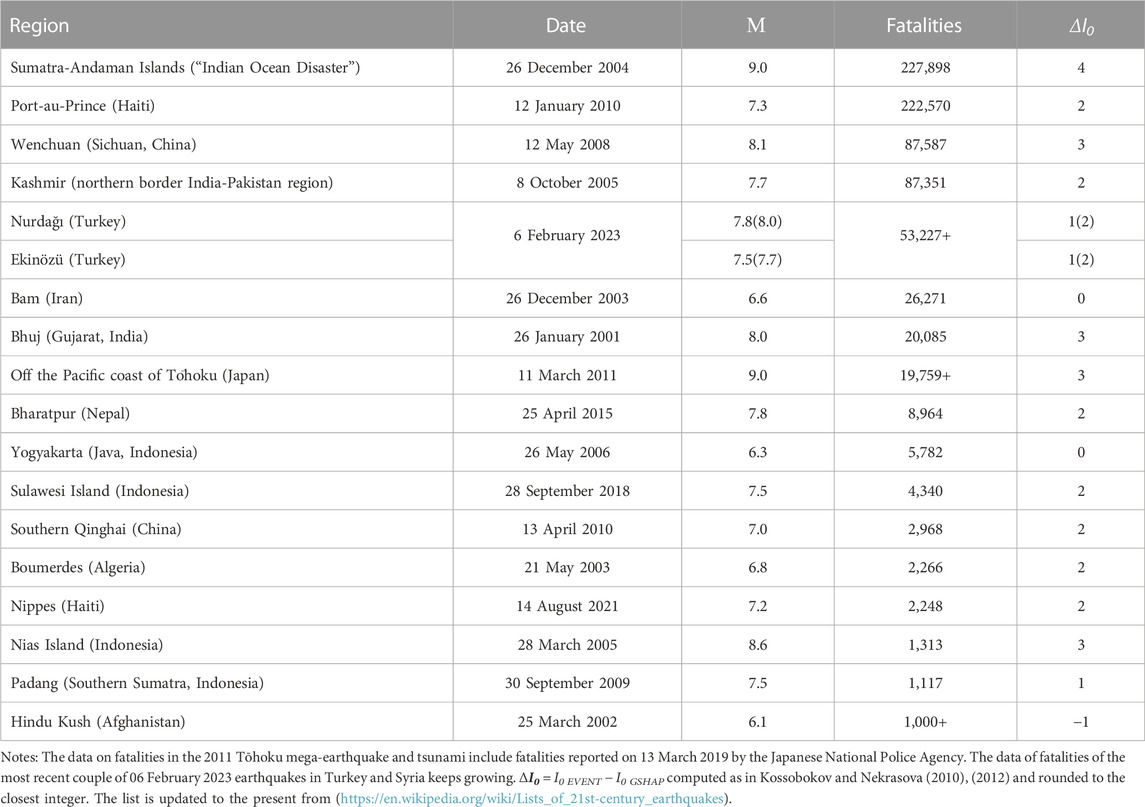

The world’s deadliest earthquakes since 2000

Table 1 lists all eighteen of the world’s deadliest earthquakes since the year 2000, each with more than one thousand fatalities. Recalling Ellsworth’s (1990) comment that “earthquakes are complex physical processes generated by sudden slip on faults, and as such they can only be grossly characterized by simple concepts,” we note that magnitude correlates poorly with loss of life in these disastrous events: i) the two deadliest earthquakes, the M 9.0 Indian Ocean disaster of 2004 and the M 7.3 Haiti earthquake of 2010, differ in seismic energy by a factor >350, yet resulted in roughly the same death toll of more than 200,000 people; while ii) the death toll of the later M 9.1 mega-thrust earthquake and tsunami off the coast of Tōhoku (Japan) in 2011 was half that of the strong crustal earthquake of only M 6.6 in Bam (Iran). See also Bela (2014) and references therein.
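The energy factor quoted for the Indian Ocean and Haiti events follows from the classical Gutenberg-Richter energy-magnitude relation log₁₀ E = 1.5 M + 4.8 (E in joules), in which the additive constant cancels in a ratio. A sketch, treating the listed magnitudes as interchangeable with the magnitude in that relation (an approximation, since magnitude types may differ):

```python
def energy_ratio(m1, m2):
    """E1 / E2 from log10 E = 1.5 M + 4.8; the additive constant cancels."""
    return 10 ** (1.5 * (m1 - m2))

print(round(energy_ratio(9.0, 7.3)))   # 355  (Indian Ocean 2004 vs Haiti 2010)
print(round(energy_ratio(9.1, 6.6)))   # 5623 (Tohoku 2011 vs Bam)
```

By the same estimate, the Tōhoku event radiated several thousand times the energy of the Bam earthquake, yet caused fewer deaths.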

TABLE 1. Top deadliest earthquakes since 2000 of at least 1,000+ fatalities including victims of tsunami and other associated effects.

The last column in Table 1 shows the difference ΔI0 = I0 EVENT − I0 GSHAP between the real Macroseismic Intensity I0 EVENT and that predicted by the GSHAP map, I0 GSHAP. These values are positive in all but one case, with a median of 2. The single negative ΔI0 refers to the smallest of these 18 deadliest earthquakes: the M 6.1 earthquake that struck the Hindu Kush (Afghanistan) on 25 March 2002, causing 1,000+ fatalities. Less than a month earlier, on 3 March 2002, a larger M 7.4 deep earthquake (200+ km depth) had occurred more than 150 km away, causing at least 150 fatalities.

Seismic hazard mapping

An accurate characterization of seismic hazard at local scale requires use of detailed geologic maps of both active faults and past earthquake epicenter determinations. The typical seismic hazard assessment undertaken strives to provide a preventive determination of the ground motion characteristics that may be associated with future earthquakes — at regional, local, and even urban scales (Panza et al., 2013).

The first scientific seismic hazard assessment maps were deterministic in scope, and they were based on the observations that primary damage: i) decreases generally with the distance away from the earthquake source; and ii) is often correlated with the physical properties of underlying soils at a particular site, e.g., rock and gravel. In the 1970s, after publication of Engineering Seismic Risk Analysis by Alin Cornell (1968), the development of probabilistic seismic hazard maps became first fashionable, then preferred, and finally “required” — so that in the 1990s the probabilistic mapping of seismic hazard came to prevail over the heretofore deterministic cartography. For a chronologic history (and a Bibliographic Journey of that history), see in particular (Nishioka and Mualchin, 1996; 1997; Hanks, 1997; Bommer and Abrahamson, 2006; McGuire, 2008; Mualchin, 2011; Panza and Bela, 2020, especially Supplementary Material therein).

Global Seismic Hazard Assessment Program (GSHAP) 1992–1999

In particular, a widespread application of PSHA began when the Global Seismic Hazard Assessment Program (GSHAP) was launched three decades ago in 1992 by the International Lithosphere Program (ILP) with the support of the International Council of Scientific Unions (ICSU), and also endorsed as a demonstration program within the framework of the United Nations International Decade for Natural Disaster Reduction (UN/IDNDR). The GSHAP project terminated in 1999 (Giardini, 1999) with publication of the final GSHAP Global Seismic Hazard Map (Giardini et al., 1999).

However, following a number of publications critical of the PSHA technoscience paradigm (e.g., Krinitzsky, 1993a; Krinitzsky, 1993b; Krinitzsky, 1995; Castanos and Lomnitz, 2002; Klügel, 2007; see also Udias, 2002) and, pivotally, the catastrophic 2010 Haiti earthquake, a systematic comparison of the GSHAP peak ground acceleration (PGA) estimates with those related to the earthquakes that actually occurred disclosed the gross inadequacy of this “probabilistic” product (Kossobokov, 2010a). The discrepancy between a) the PGA on the GSHAP map and b) the accelerations at the epicenters of 1,320 strong (M ≥ 6.0) earthquakes that happened after publication of the 1999 map turned out to be a disservice to the seismic zonation and associated building codes adopted in many countries on national or regional scales (see Bommer and Abrahamson, 2006 and references therein). For fully half of these earthquakes, the PGA values on the map were surpassed by 1.7 m/s² (about 0.17 g) or more within just 10 years of publication. Exceeding the map values at more than 50% of these epicenters within just 10 years evidently contradicts the “10% chance of exceedance in 50 years” that GSHAP attaches to the ground motion contours displayed on the map.
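PSHA maps of this kind are usually built on a Poisson (memoryless) occurrence model. Under that assumption, the map’s “10% in 50 years” can be converted to other exposure windows. The sketch below (hypothetical helper names) shows that it implies a return period of about 475 years and only about a 2% chance of exceedance within 10 years, a rough yardstick for the 10-year observation above:

```python
import math

def annual_rate(p_exceed, years):
    """Annual exceedance rate of a Poisson process that has probability
    p_exceed of at least one exceedance in `years` years."""
    return -math.log(1.0 - p_exceed) / years

def prob_within(rate, years):
    """Probability of at least one exceedance within `years` years."""
    return 1.0 - math.exp(-rate * years)

lam = annual_rate(0.10, 50.0)
print(f"return period ~ {1 / lam:.0f} yr")                            # ~ 475 yr
print(f"P(exceedance within 10 yr) ~ {prob_within(lam, 10.0):.3f}")   # ~ 0.021
```

The same conversion gives a return period of about 2,475 years for the 2%-in-50-years level.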

These problematic GSHAP results were naturally reported to a wide geophysical community at the Euroscience Open Forum (ESOF 2010) session on “Disaster prediction and management: Breaking a seismo-ill-logical circulus vitiosus”, and also at the Union sessions of the Meeting of the Americas and the American Geophysical Union (AGU) 2010 Fall Meeting (Kossobokov, 2010b; Kossobokov, 2010c; Kossobokov and Nekrasova, 2010; Soloviev and Kossobokov, 2010); and later at the EGI Community Forum (EGICF12) by Peresan et al. (2012a). Then, with finally the 11 March 2011, Mw 9.0 Tōhoku mega-earthquake and tsunami disaster, it became absolutely clear that the GSHAP Probabilistic Seismic Hazard Analysis—despite parascientific apologetics of its “legacy” advocated by Danciu and Giardini (2015) — is UNACCEPTABLE FOR ANY KIND OF RESPONSIBLE SEISMIC RISK EVALUATION AND KNOWLEDGEABLE DISASTER PREVENTION (Kossobokov and Nekrasova, 2012). See, e.g., (Wyss et al., 2012; Mulargia et al., 2017).

Unsurprising surprises

As Wang (2012) put it, “like Sumatra in 2004, the power of the Tōhoku earthquake in 2011 took us by surprise”, and made us ask: “After decades of scientific research, how well or how badly are we doing in understanding subduction earthquakes?” In retrospect, however, the Tōhoku earthquake and its tsunami were consistent with what had been learned from comparative studies of different subduction zones. Therefore, “despite its wrenching pain”, the cascading 2011 Tōhoku Mw 9.0 megathrust earthquake, tsunami, and Fukushima disaster was, from both an earth and a tsunami science perspective, an “Unsurprising Surprise!”

Returning to Table 1, the ΔI0 values in its last column (the real Macroseismic Intensity I0 EVENT minus the GSHAP prediction I0 GSHAP) are positive in all but one case, with average and median values of about 2! The same holds for all M ≥ 7.5 earthquakes, including the most recent coupled earthquakes of 6 February 2023 in Turkey. An underestimation by two units on the MMI scale can mean experiencing “severe damage in substantial buildings with partial collapse” instead of the GSHAP-forecast “highly likely” intensity of “slight damage to an ordinary structure.”

Moreover, it should be noted that such a poor performance of the GSHAP product could already have been detected at the time of its 1999 publication, and common sense says that the contributors to the Program should have done so as the first-order validation test of the final GSHAP map! The claim of a 10% chance of exceedance in 50 years is violated already in 1990–1999 for more than 40% of 2,200 strong M ≥ 6.0 earthquakes, for 94% of 242 significant M ≥ 7.0 earthquakes, and for 100% of major M ≥ 7.5 earthquakes (Kossobokov and Nekrasova, 2012)! Note also that GSHAP overlapped directly with the time when the earthquake engineering community openly recognized and discussed that “10% probability of exceedance in 50 years was too risky for a life-safety criterion” in the United States Building Codes (Frankel et al., 1996), because earthquake-resistant design standards scaled to 10%-in-50-years hazard curve ground motions provided insufficient protection when damaging major and great earthquakes did inevitably occur!

Synthetic seismograms: Increasing the reliability of seismic hazard assessment

On the other hand, with our current knowledge of the physical processes of both earthquake generation and seismic wave propagation in anelastic attenuating media, we can increase the reliability of seismic hazard assessments by basing them instead on the computation of synthetic seismograms, which provide a more realistic model of ground motion (see, e.g., Panza, 1985; Fäh et al., 1993; Panza et al., 1996; Panza et al., 2001; Panza and Romanelli, 2001; Paskaleva et al., 2007; Peresan et al., 2012b).

NDSHA, which is immediately falsifiable by the occurrence of a damaging event with magnitude exceeding the predicted threshold, has so far been validated in all regions where NDSHA hazard maps existed at the time of subsequent strong earthquakes. PSHA, by contrast, provides a threshold ground motion that, in its hazard model, has a (commonly 10% or 2%) chance of being exceeded in 50 years; it is therefore not falsifiable by any single event whose shaking far exceeds this threshold value, as shown in Table 1.
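To make the “10% or 2% chance of exceedance in 50 years” levels concrete, the following minimal sketch converts them into mean return periods, assuming the Poisson (time-independent) model conventionally used in PSHA. The function name is illustrative only.

```python
import math

def return_period(p_exceed: float, exposure_years: float) -> float:
    """Mean return period T (years) of a Poisson process for which the
    probability of at least one exceedance in `exposure_years` equals
    `p_exceed`, i.e. p_exceed = 1 - exp(-exposure_years / T)."""
    return -exposure_years / math.log(1.0 - p_exceed)

# The two exceedance levels most commonly mapped in PSHA
for p in (0.10, 0.02):
    t = return_period(p, 50.0)
    print(f"{p:.0%} in 50 years -> return period of about {t:.0f} years")
```

The two levels correspond to roughly 475 and 2,475 years. Because each is a probability statement, a single event that exceeds the mapped value does not formally contradict it.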

Furthermore, such ambiguity (authoritatively calculated and endorsed) also provides administrators, politicians, engineers, and even scientists with a legal shield against both “unsurprising surprises” and any responsibility for ensuring satisfactory outcomes for civil populations when such presumably unlikely and rare events occur (e.g., “the L’Aquila Trial,” as previously mentioned).

Finally, existing algorithms for diagnosing “times of increased probability” (TIPs) have also proven reliable in the long-lasting, ongoing earthquake prediction experiment that began in 1985 (Kossobokov et al., 1999; Kossobokov and Shebalin, 2003; Kossobokov, 2013). These algorithms can add an intermediate-term (time) and middle-range (space) component to the newer neo-deterministic NDSHA approach, enabling a more targeted, public-safety-centered evaluation of seismic hazard (Peresan et al., 2011; Peresan et al., 2012a). In some cases, additional geophysical observations can further reduce the spatial uncertainty to a narrow range of about tens of kilometers (e.g., Crespi et al., 2020).

Advanced seismic hazard assessment

The results of Wyss et al. (2012) regarding “Errors in expected human losses due to incorrect seismic hazard estimates” are fully consistent with the two topical volumes Advanced Seismic Hazard Assessment, edited by Panza et al. (2011), which provide multifaceted information on modern tools for Seismic Hazard Assessment (SHA).

The contributors to these special issues clarify the significant differences between hazard, risk, hazard mitigation, and risk reduction (Klügel, 2011; Peresan et al., 2011; Wang, 2011; Zuccolo et al., 2011). These distinctions are of paramount importance as critical arguments for fundamentally revising our existing hazard maps, risk estimates, and engineering practices.

All ideas have consequences. Therefore, any Standard Method must be Reliable in the first place! That is, it must be: a) good; b) right; and c) true! It must: i) consider the fragility of the local built environment; ii) consider soil conditions; and iii) furnish far more informative risk/resiliency assessments of cities and metropolitan territories (Paskaleva et al., 2007; Trendafiloski et al., 2009). The consequences of the Maximum Credible Earthquake (MCE) should be the criterion for Reliable Seismic Hazard Assessment (RSHA), because “what can happen” is a more important consideration than “what gets approved” based on a hazard model (see again Kanamori, 2014, 2021). Incidentally, the MCE as defined in NDSHA (Rugarli et al., 2019) yields an enveloping magnitude of M 9.3 for Japan.

Backward into the future!

In spite of both i) the numerous documented shortcomings of PSHA (see Stein et al., 2012; Wyss and Rosset, 2013 for a comprehensive discussion) and ii) its unreliable and poor performance, PSHA (now emboldened by 50 years of dangerous “sincere ignorance and conscientious stupidity”) is still widely applied at regional and global scales “to continue the vision of a global seismic hazard model” (Danciu and Giardini, 2015; Gerstenberger et al., 2020; Meletti et al., 2021). Regrettably, the Global Earthquake Model project (GEM, http://www.globalquakemodel.org/) is evidently still following the preferred “circulus vitiosus,” a situation in which the solution to a problem creates another problem. Recently, the GEM Foundation released the Global Seismic Hazard Map (version 2018.1), which depicts the geographic distribution of the PGA “with a 10% probability of being exceeded in 50 years” and thereby repeats the fatal errors of the 1999 GSHAP PSHA map (see also “Development of a global seismic risk model,” Silva et al., 2020).

In their recent AGU Reviews of Geophysics article entitled “Probabilistic Seismic Hazard Analysis at Regional and National Scales: State of the Art and Future Challenges,” Gerstenberger et al. (2020): i) continue to advocate the evident misuse of statistics by attributing any exposure of the fundamental flaws of PSHA (e.g., Castanos and Lomnitz, 2002; Klügel, 2007; Mulargia et al., 2017; Stark, 2017, 2022; Panza and Bela, 2020, etc.) to “subjective experts’ judgment”; and ii) continue to ignore both a) the systematic failures to predict the magnitude of exceedance (Kossobokov and Nekrasova, 2012; Wyss et al., 2012; Wyss and Rosset, 2013; Wyss, 2015) and b) the much more reliable neo-deterministic alternative, which has already existed for two decades (Panza et al., 2012; Panza et al., 2014; Kossobokov et al., 2015a; Nekrasova et al., 2015a; Kossobokov et al., 2015b).

The PSHA “State of the Art and Future Challenges” review (which would more correctly have been released under the technoscience warning label “Reviews in Risk Modeling for Hazards and Disasters” rather than in the scientifically oriented Reviews of Geophysics) purposely (and “sincerely”) failed to cite “NDSHA: A new paradigm for reliable seismic hazard assessment” (Panza and Bela, 2020), which was published online about 2 months before the acceptance date of Gerstenberger et al. (2020) (10 January 2020). It also ignored Advanced Seismic Hazard Assessment, published in Pure and Applied Geophysics 9 years earlier (Panza et al., 2011).

Furthermore, Jordan et al. (2014) cited Peresan et al. (2012a), a paper that fully sets out the qualities, attributes, and applicability of NDSHA to “Operational earthquake forecast/prediction,” with its Abstract drawing direct attention to the “very unsatisfactory global performance of Probabilistic Seismic Hazard Assessment at the occurrence of most of the recent destructive earthquakes.” Peresan et al. (2012a, p. 135) also discuss in detail the “Existing operational practice in Italy,” which has been “following an integrated neo-deterministic approach” since 2005.

The Supplementary Material of Panza and Bela (2020), “Bibliographic Journey To A New Paradigm,” lists the relevant references in chronological order, so that one can follow PSHA and NDSHA publications side by side over more than two decades, as NDSHA effectively “built a new model that made the existing model obsolete!” Finally, one of the few references critical of PSHA that Gerstenberger et al. (2020) surprisingly did include states with absolute clarity: “Reliance on PSHA for decisions that affect public safety should cease” (Wyss and Rosset, 2013)!

Seismic Roulette is a game of chance

Seismic Roulette is a game of chance! It is true that we gamble against our will, but that does not make it any less of a game! Disastrous earthquakes are low-probability events locally; however, in every earthquake-prone area worldwide, they recur as “unsurprising surprises” with certainty, i.e., with 100% probability, sooner or later! Should we then synchronize our watches and historic clock towers, and wait another decade, while “Nature spins the wheel,” to find out that GEM is as wrong as GSHAP?
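The roulette argument rests on elementary probability: a small local annual probability p still gives P = 1 − (1 − p)^n, which approaches 1 as the number n of years (or of independent earthquake-prone zones) grows. The sketch below illustrates this with a hypothetical annual probability of 1/475 and assumes independent, identical years and zones, a simplification chosen only for illustration.

```python
def prob_at_least_one(p_annual: float, years: int, zones: int = 1) -> float:
    """P(at least one event) over `years` years in `zones` independent zones,
    each with the same annual probability `p_annual` (illustrative assumption)."""
    return 1.0 - (1.0 - p_annual) ** (years * zones)

p = 1.0 / 475.0  # hypothetical annual probability for a single locality
for years, zones in [(1, 1), (50, 1), (500, 1), (50, 100)]:
    print(f"{years:>4} years, {zones:>3} zone(s): {prob_at_least_one(p, years, zones):.3f}")
```

A locally “rare” event becomes a near certainty once the time span or the number of exposed zones is large enough.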

The Neo-Deterministic Seismic Hazard Assessment (NDSHA) methodology (Fäh et al., 1993; Panza et al., 2012; Peresan et al., 2012a; Bela and Panza, 2021; Panza and Bela, 2020 and references therein) has demonstrated its ability to serve as the Standard Method for Reliable Seismic Hazard Assessment (RSHA). NDSHA, proposed some 20 years ago (Panza et al., 1996; Panza and Romanelli, 2001), has proven able to simulate, reliably and realistically, comprehensive sets of hazardous earthquake ground motions in many regions worldwide. By making use of i) our present-day comprehensive physical knowledge of seismic source structures and processes, ii) the propagation of earthquake waves in heterogeneous anelastic media, and iii) site conditions, NDSHA effectively accounts for the complex, essentially tensorial nature of earthquake ground motions in the affected area. NDSHA applications therefore provide realistic synthetic time series of ground shaking at a given site, provided that the best available distribution of potential earthquake sources is used for scenario modelling.

Conservative estimates of the maximum credible seismic hazard are obtained when they are based on the actual empirical distribution of earthquake characteristics, further supplemented with i) the existing geologic, tectonic, macro- and paleo-seismic evidence; ii) the results of PREPA; and iii) the implications of USLE, which accounts for the local fractal structure of the lithosphere. Because USLE allows a comparison between PSHA and NDSHA by providing reliable estimates of the PGA values associated with model earthquakes of the maximal magnitude expected within 50 years (Nekrasova et al., 2014; Parvez et al., 2014; Nekrasova et al., 2015a; Nekrasova et al., 2015b; Kossobokov et al., 2020), it has been demonstrated comparatively that NDSHA maps using such estimates outscore GSHAP-generated PSHA maps in correctly identifying the sites of moderate, strong, and significant earthquakes.
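The USLE step can be sketched numerically. The Unified Scaling Law for Earthquakes is commonly written as log10 N(M, L) = A + B·(5 − M) + C·log10 L, where N is the expected annual number of earthquakes of magnitude M in an area of linear size L. The sketch below assumes that the “maximal expected magnitude within T years” is the magnitude at which T·N(M, L) = 1; that convention, the coefficient values, and the function names are hypothetical, and the subsequent conversion to PGA (which requires scenario modelling or a ground-motion model) is not shown.

```python
import math

def usle_annual_rate(magnitude: float, length: float, a: float, b: float, c: float) -> float:
    """USLE: log10 N(M, L) = A + B*(5 - M) + C*log10(L)."""
    return 10.0 ** (a + b * (5.0 - magnitude) + c * math.log10(length))

def max_expected_magnitude(t_years: float, length: float, a: float, b: float, c: float) -> float:
    """Solve t_years * N(M, L) = 1 for M (assumed convention)."""
    return 5.0 + (a + c * math.log10(length) + math.log10(t_years)) / b

# Hypothetical coefficients for a single cell of linear size L = 1 (in fitting units)
A, B, C = -1.0, 0.9, 1.2
m50 = max_expected_magnitude(50.0, 1.0, A, B, C)
print(f"Maximal expected magnitude in 50 years: {m50:.2f}")
```

Here C plays the role of the local fractal dimension of the epicenter distribution, which is how USLE reflects the fractal structure of the lithosphere mentioned above.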

Specifically, the number of unacceptable errors (cases in which the PGA on a hazard map at the epicenter of a real earthquake is lower, by a factor of 2 or more, than the PGA attributed to this earthquake) is several times larger for the GSHAP map than for the USLE-derived NDSHA map (e.g., PGA is 11.4, 1.7, and 2.5 times larger for strong earthquakes in the Himalayas and surroundings, the Lake Baikal region, and Central China, respectively, than on the GSHAP PGA hazard map). This cannot be attributed solely to the difference between the empirical probability distributions of the model PGA values within a region, although the USLE model evidently favors larger estimates in the Baikal and Central China regions. Note that, at the regional scale of investigation, GSHAP can grossly underestimate seismic hazard in areas where seismically active faults are sparsely explored, such as those east of the upper segment of the Baikal rift zone.
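The “unacceptable error” criterion defined above is simple to state in code. The sketch below counts the earthquakes for which the observed (attributed) PGA is at least twice the mapped PGA at the epicenter; the function name and the sample values (in g) are hypothetical and are not taken from the cited studies.

```python
from typing import Sequence

def count_unacceptable_errors(map_pga: Sequence[float],
                              observed_pga: Sequence[float],
                              factor: float = 2.0) -> int:
    """Count earthquakes whose mapped PGA at the epicenter is lower than the
    PGA attributed to the earthquake by `factor` or more."""
    if len(map_pga) != len(observed_pga):
        raise ValueError("map_pga and observed_pga must have the same length")
    return sum(1 for mapped, observed in zip(map_pga, observed_pga)
               if observed >= factor * mapped)

# Hypothetical example: three epicenters, PGA in units of g
print(count_unacceptable_errors([0.10, 0.20, 0.30], [0.25, 0.30, 0.90]))
```

Applying the same function to two maps over the same earthquake catalog gives the error counts whose ratio is compared in the text.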

Earthquake prediction

“Science has not yet mastered prophecy. We predict too much for the next year and yet far too little for the next ten.” Neil Armstrong (Speech to a joint session of Congress, 16 September 1969)

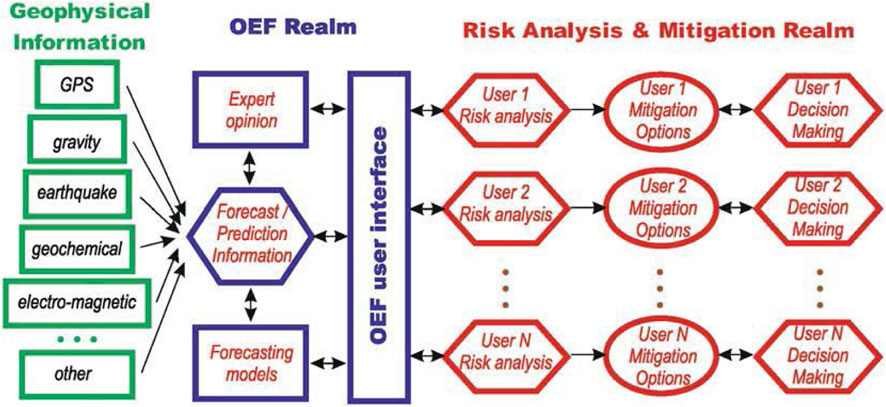

As used in this section, the terms “Earthquake Forecast/Prediction” focus primarily on Operational Earthquake Forecasting (OEF) and mean: i) specifying the time, place, and energy (as a rule, in terms of magnitude) of an anticipated seismic event with sufficient accuracy and precision to ii) provide authoritative warning to those responsible for undertaking civil preparedness actions intended to a) reduce loss of life and damage to property; and b) mitigate disruption to lifelines and the social fabric (i.e., harden community resilience).
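The three elements of such a prediction (time, place, and magnitude) can be captured in a small data structure. The sketch below is a hypothetical representation, not the format of any operational system: the area is simplified to a latitude/longitude box that does not cross the 180th meridian, and an event counts as predicted only if it falls inside all three ranges.

```python
from dataclasses import dataclass
from datetime import date

@dataclass(frozen=True)
class Prediction:
    """Hypothetical alarm: a time window, an area (a lat/lon box that does not
    cross the 180th meridian) and a minimum magnitude."""
    start: date
    end: date
    lat_min: float
    lat_max: float
    lon_min: float
    lon_max: float
    min_magnitude: float

    def covers(self, when: date, lat: float, lon: float, magnitude: float) -> bool:
        """True if the event falls inside the time window, the area and the magnitude range."""
        return (
            self.start <= when <= self.end
            and self.lat_min <= lat <= self.lat_max
            and self.lon_min <= lon <= self.lon_max
            and magnitude >= self.min_magnitude
        )

# Illustrative values only (not an actual issued alarm)
alarm = Prediction(date(2024, 1, 1), date(2028, 12, 31), 40.0, 44.0, 12.0, 16.0, 6.0)
print(alarm.covers(date(2026, 5, 3), 42.3, 13.4, 6.2))  # inside all three ranges
print(alarm.covers(date(2026, 5, 3), 42.3, 13.4, 5.5))  # below the magnitude threshold
```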

Some authors define a forecast as a prediction supplemented with a probability of occurrence (Allen, 1976; NEPEC, 2016). In everyday language, however, the public treats “forecast” and “prediction” of earthquakes as synonyms, at least from a practical, actionable point of view. Note also that estimates of earthquake probability or likelihood result from one’s (usually subjective) deterministic choice of a probability model, a choice that may lead personal belief away from the actual phenomena under study (Gelfand, 1991).
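The dependence of a “probability of occurrence” on the chosen model can be shown with a toy calculation. The sketch below uses hypothetical numbers (a mean recurrence interval of 200 years and 150 years elapsed since the last event) and compares the 50-year probability from a time-independent Poisson model with the conditional probability from a lognormal renewal model with the same mean. Both models and their parameters are illustrative assumptions, not taken from the cited works.

```python
import math

def poisson_probability(mean_recurrence: float, window: float) -> float:
    """Time-independent model: P(at least one event in the next `window` years)."""
    return 1.0 - math.exp(-window / mean_recurrence)

def lognormal_cdf(t: float, mu: float, sigma: float) -> float:
    """CDF of a lognormal distribution with log-mean mu and log-std sigma."""
    return 0.5 * (1.0 + math.erf((math.log(t) - mu) / (sigma * math.sqrt(2.0))))

def lognormal_conditional_probability(mean_recurrence: float, sigma: float,
                                      elapsed: float, window: float) -> float:
    """Renewal model: P(event in (elapsed, elapsed + window] | none up to `elapsed`)."""
    mu = math.log(mean_recurrence) - 0.5 * sigma ** 2  # makes the lognormal mean equal mean_recurrence
    f_now = lognormal_cdf(elapsed, mu, sigma)
    f_later = lognormal_cdf(elapsed + window, mu, sigma)
    return (f_later - f_now) / (1.0 - f_now)

# Hypothetical fault: mean recurrence 200 years, 150 years since the last event
p_poisson = poisson_probability(200.0, 50.0)
p_renewal = lognormal_conditional_probability(200.0, 0.5, 150.0, 50.0)
print(f"Poisson: {p_poisson:.3f}   Lognormal renewal: {p_renewal:.3f}")
```

The same data summary (one mean recurrence interval) yields noticeably different probabilities depending solely on the model selected.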

In J.R.R. Tolkien’s fantasy adventure “The Hobbit: An Unexpected Journey,” the “necessity of identifying risk in any thorough plans in life” is underscored by reference to the actual phenomenon: “It does not do to leave a live dragon out of your calculations, if you live near him.”

Earthquake forecast/prediction is thus neither an easy nor a straightforward task (but rather an unexpected journey), and it requires an application of statistics that is both informed and delicate (Vere-Jones, 2001). Whenever problems are very broad, as they particularly are in the earthquake realm of geophysics and the earth sciences, it is always very important to distinguish facts from assumptions, so that one can fully understand the limitations of the assumptions, as a scientific safeguard against committing the equivalent of geophysical malpractice (see P.B. Stark’s “Thoughts on applied statistics” at https://www.stat.berkeley.edu/users/stark/other.htm).

Regrettably, in many cases of Seismic Hazard Assessment (SHA), ranging from i) time-independent (term-less) and ii) time-dependent probabilistic (PSHA) approaches to iii) deterministic ones (DSHA, NDSHA) and iv) Short-term Earthquake Forecasting (StEF), the claims of high potential success of a prediction method are based on a flawed application of statistics, and they are therefore hardly suitable for communication to responsible decision makers.
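One basic safeguard against such flawed claims is to compare a method’s success with what random guessing would achieve. The sketch below assumes a simple binomial model: alarms cover a fraction τ of the relevant space-time, N target earthquakes occur independently, and the function returns the probability that random alarms would catch at least n of them. The function name and the numbers are hypothetical.

```python
from math import comb

def chance_probability(n_predicted: int, n_targets: int, alarm_fraction: float) -> float:
    """P(random alarms covering `alarm_fraction` of space-time catch at least
    `n_predicted` of `n_targets` independent target events), binomial model."""
    return sum(
        comb(n_targets, k) * alarm_fraction ** k * (1.0 - alarm_fraction) ** (n_targets - k)
        for k in range(n_predicted, n_targets + 1)
    )

# Hypothetical records: a high success rate means little if alarms cover almost everything
print(f"8 of 10 with 30% alarm coverage: {chance_probability(8, 10, 0.3):.4f}")
print(f"9 of 10 with 90% alarm coverage: {chance_probability(9, 10, 0.9):.4f}")
```

The second record looks more “successful” yet is what chance alone would produce most of the time, which is why success rates must be reported together with the space-time fraction of alarms.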