- 1 Department of Psychology, University of Cambridge, Cambridge, United Kingdom

- 2 Department of Pure Mathematics and Mathematical Statistics, University of Cambridge, Cambridge, United Kingdom

- 3 UCL Institute for Risk and Disaster Reduction, London, United Kingdom

- 4 Anglia Ruskin University, Chelmsford, United Kingdom

- 5 Swiss Seismological Service, ETH Zurich, Zurich, Switzerland

- 6 Department of Public Health, University of Otago Wellington, Wellington, New Zealand

- 7 United States Geological Survey, Earthquake Science Center, California, CA, United States

- 8 Euro-Mediterranean Seismological Centre, Arpajon, France

- 9 Joint Centre for Disaster Research, Massey University, Wellington, New Zealand

Misinformation carries the potential for immense damage to public understanding of science and for evidence-based decision making at an individual and policy level. Our research explores the following questions within seismology: which claims can be considered misinformation, which are supported by a consensus, and which are still under scientific debate? Consensus and debate are important to quantify, because where levels of scientific consensus on an issue are high, communication of this fact may itself serve as a useful tool in combating misinformation. This is a challenge for earthquake science, where certain theories and facts in seismology are still being established. The present study collates a list of common public statements about earthquakes and provides, to the best of our knowledge, the first elicitation of the opinions of 164 earth scientists on the degree of verity of these statements. The results provide important insights for the state of knowledge in the field, helping identify those areas where consensus messaging may aid in the fight against earthquake-related misinformation and areas where there is currently a lack of consensus. We highlight the necessity of using clear, accessible, jargon-free statements with specified parameters and precise wording when communicating with the public about earthquakes, as well as of transparency about the uncertainties around some issues in seismology.

Introduction

Misinformation is considered one of the most pervasive threats to individuals and societies worldwide (Lewandowsky et al., 2017; Lewandowsky and van der Linden, 2021), impacting topics from politics (Allcott and Gentzkow, 2017; Guess et al., 2019; Lee, 2019; Mosleh et al., 2020) to pandemics (e.g. COVID-19) (Jolley and Paterson, 2020; Lobato et al., 2020; Roozenbeek et al., 2020), and seismology is no exception. From false earthquake “predictions” during the L’Aquila (Alexander, 2014) and Christchurch (New Zealand Herald, 2011a; 2011b; Griffin, 2011; Wood and Johnston, 2011; Johnson and Ronan, 2014) sequences, to terrorist plots in the United States (Hernandez, 2016), misinformation about earthquakes has been demonstrated to have severe, real-world consequences.

Several methods of combating misinformation have been proposed, including the use of algorithms to prevent misinformation from appearing on social media platforms (Calfas, 2017; Elgin and Wang, 2018; van der Linden and Roozenbeek, 2020), correcting misinformation via fact checking or “debunking” approaches (see Lewandowsky et al. (2020) for a best practice guide), building psychological resilience to misinformation via psychological inoculation or “prebunking” (e.g., McGuire, 1970; Compton, 2013; van der Linden and Roozenbeek, 2020), and legislative approaches that regulate the content that media outlets post online (e.g., the United Kingdom’s Online Safety Bill (Woodhouse, 2021); Germany’s Network Enforcement Act (Bundesministerium, 2017)). Further, Dallo et al. (2022) recently published a communication guide, available in English and Spanish, on how to fight the most common myths about earthquakes specifically.

Key to being able to implement many of these approaches, however, is an understanding of the types of potentially misinformative statements that are common in public discourse, and a clear scientific consensus (which we define following Myers et al. (2021) and in line with the Cambridge, Merriam Webster and Oxford dictionaries as ‘general agreement’) on the state of knowledge regarding the “real” truthfulness or reliability of these statements in the domain in question (Dallo et al., 2022). Indeed, where levels of scientific consensus on an issue are high, communication of this fact may itself serve as a useful tool in combating misinformation. Maibach and van der Linden (2016) write that perceptions of scientific agreement act as an important determinant of public opinion and that “communicating the scientific consensus about societally contested issues [...] has a powerful effect on realigning public views of the issue with expert opinions” (p. 2).

This is a challenge for earthquake science (e.g., seismology and geology). Some domains have relatively high and, indeed, quantified degrees of scientific consensus on the likelihood of a hypothesis being true, for example the high scientific consensus that climate change is anthropogenic (Oreskes, 2004; Cook et al., 2013; Maibach et al., 2014; Myers et al., 2021) or that COVID-19 is not caused by 5G phone masts (Grimes, 2021). Earthquake science, however, is a fairly young and active field where certain theories and facts are still being established. Scholars' opinions on certain aspects of earthquake science are still actively and openly debated. Additionally, different ontologies, paradigms and epistemologies within science itself mean that scholars come to the table with a range of backgrounds and ways of collecting and interpreting data, which ultimately influence the way they communicate their science (McBride, 2017). What complicates earthquake communication further is the diversity of phenomenology and terms used, e.g., tremors, quakes, shocks, seismic events. Further, there are semantic differences between languages. In English, for example, prediction is used for precise, deterministic statements and forecast for probabilistic ones. In comparison, in Nepali, only one word exists, and this refers to deterministic predictions (Michael and McBride, 2019). Thus, a precise distinction between deterministic prediction and probabilistic forecast can be made in some languages, but not others.

As such, a key first step to combating misinformation about earthquakes is to understand the range of perceptions scientists have about earthquake science, why they hold these views, and what the level of scientific agreement or consensus on these topics is. The present study collates a list of common publicly-made statements about earthquakes from our daily experiences communicating with the public and workshops with the earth science community [see Dryhurst et al. (2022) for workshop synthesis]. It provides, to the best of our knowledge, the first elicitation of the opinions of 164 earth scientists on the degree of “truthfulness” or otherwise of these statements. It therefore addresses the research question, “what is the expert consensus regarding 13 common statements about earthquakes?”

Materials and methods

Using a combination of desk research, workshops with the earth scientist community and exchanges on daily communication experiences between the authors [see Dryhurst et al. (2022) for workshop synthesis], we first collated thirteen statements about earthquakes that are commonly queried and/or misunderstood by the public (Figure 1).

FIGURE 1. Overview of the project activities that the online survey was embedded in, as part of Phase 3 of the project. Includes a literature review and expert interviews (Phase 1), a virtual workshop with earth science community members (Phase 2), an online survey with the earth science community (Phase 3), a virtual workshop with the earth science community (Phase 4), and a communication guide (Phase 5).

Between 15th and 22nd March 2021, we then surveyed 164 earth scientists (n = 75 geophysicists, n = 47 geologists, n = 26 seismologists, n = 13 engineers, n = 1 science communicator, n = 1 physicist) studying earthquakes occurring across six continents. The survey was hosted on Qualtrics (Qualtrics, 2017) and participants were recruited via a selective snowball sampling method (Parker et al., 2019); the authors contacted expert colleagues via the mailing lists of two European Horizon 2020 projects (RISE and TURNkey) and via personal networks in the United States, New Zealand and Europe, asking if they would fill in an online survey and pass it on to further expert earth scientist colleagues upon completion.

To measure their level of consensus about the truth or falsehood of the thirteen statements, participants were asked to rate each statement on a Likert scale (Joshi et al., 2015) from 1 = Completely false to 7 = Completely true, with an eighth option available for “Undecided”. Percentage consensus ratings of truth or falsehood for each statement were calculated as the proportion of participants choosing each answer option. Mean ratings of truth or falsehood and associated standard deviations for each statement were also calculated, excluding participants who answered “Undecided”.
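The summary statistics described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' analysis code: the function name `summarise`, the numeric code 8 for “Undecided”, the example ratings, and the use of the sample (n − 1) standard deviation are all assumptions made for the sketch.

```python
from collections import Counter
from statistics import mean, stdev

UNDECIDED = 8  # assumed numeric code for the "Undecided" option


def summarise(ratings):
    """Summarise one statement's ratings (1-7 scale plus UNDECIDED)."""
    counts = Counter(ratings)
    n_total = len(ratings)
    # Percentage of all respondents choosing each answer option,
    # including "Undecided".
    percentages = {
        option: 100 * counts.get(option, 0) / n_total
        for option in range(1, 9)
    }
    # Mean and SD are computed only over respondents who gave a 1-7 rating.
    decided = [r for r in ratings if r != UNDECIDED]
    return {
        "percentages": percentages,
        "mean": mean(decided),
        "sd": stdev(decided),  # sample SD; an assumption of this sketch
        "n_decided": len(decided),
    }


# Hypothetical ratings for a single statement (not data from the survey).
example = [1, 1, 2, 1, 7, 8, 2, 1, 3, 8]
summary = summarise(example)
print(summary["percentages"][1], summary["mean"], summary["n_decided"])
```

Keeping “Undecided” in the denominator for the percentages but out of the mean mirrors the description above, where the proportion of undecided respondents is reported alongside the ratings.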

Several of these statements were purposefully ambiguous in aspects of their phrasing, to keep them true to the way such statements are commonly phrased in public discourse. This allowed us both to garner the responses of earth scientists to misinterpretations that have “real-world” validity, and raise awareness amongst this community of the nature of such misinterpretations.

Participants were also given the option to add written comments about their rating of truth or falsehood for each statement in turn. These qualitative data lent important context to the quantitative ratings, helping identify those statements for which there is a reasonable level of consensus, and the qualifiers and caveats that reveal issues about which there is still open debate and uncertainty amongst the scientific community. The qualitative analysis of these data for the purposes of this paper is based on one round of coding using an emic/inductive process, as outlined by Daymon and Holloway (2002), to which all authors contributed. Statements 1, 2, 4, and 5 were coded in NVivo (Bazeley and Jackson, 2013); statements 3, 6, 7, 10, 11, 12, and 13 were coded in Excel (Meyer and Avery, 2009); and statements 8 and 9 were coded using QDA Miner (Lewis and Maas, 2007). Different programmes were used because multiple team members undertook the coding. A master spreadsheet was then provided so that all coders could upload their data, cross-check the codes other people were using, and apply those codes to their own coding schedule if applicable.

This project was reviewed by the University of Cambridge Psychology Research Ethics Committee (No: PRE. 2021.018).

Results and discussion

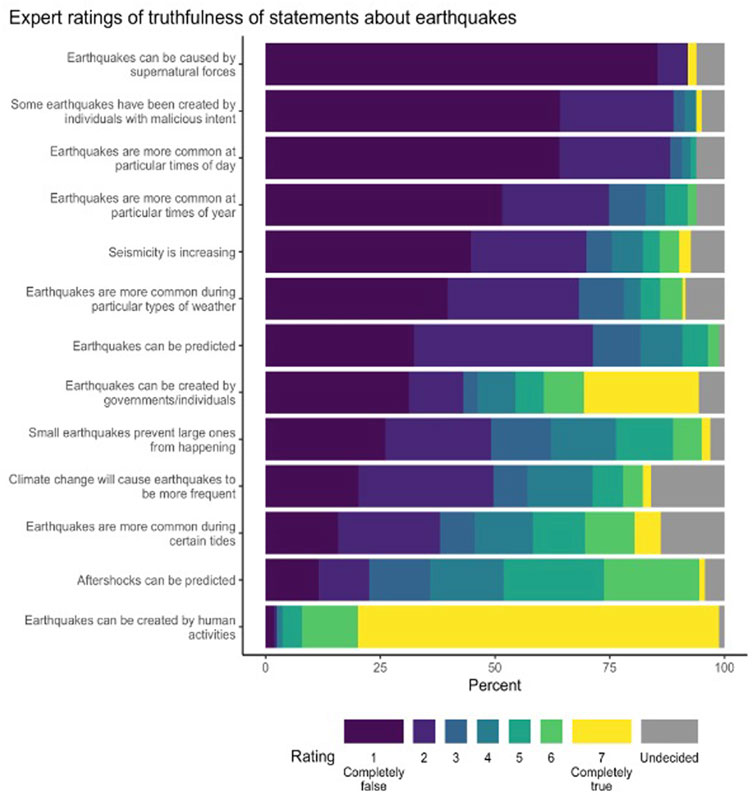

A visual summary of the proportion of participants choosing each rating of truth or falsehood for each statement can be seen in Figure 2.

FIGURE 2. Proportion of participants choosing each rating of truth or falsehood for each statement in turn, from 1 = Completely false to 7 = Completely true, including in grey the proportion choosing “Undecided”. Statements are ordered by the proportion of participants choosing answer option 1 = Completely false.

Statements about earthquake creation

- “Earthquakes can be created by human activities”

- “Earthquakes can be created by governments/individuals”

- “Earthquakes can be created by individuals with malicious intent”

The results relating to the three statements about earthquake “creation” suggest high levels of consensus on the reality of some earthquakes being triggered by human activities such as fracking, waste-water disposal and mining (Ellsworth, 2013) (90.9%, n = 149 chose rating 6 or 7 at the completely true end of the 1–7 rating scale). There was also substantial doubt over the prevalence of such triggering being used with malicious intent. Relatedly, the results highlighted the importance of avoiding intent-based and potentially conspiratorial language such as “created” in communications about human-induced earthquakes and moving towards more neutral wording such as triggered or induced. This would reduce the ambiguity of the statements and consequent variation in their (mis)interpretation. For example, one participant noted “Earthquakes can be induced (directly produced) or triggered by human activities … I would not use the word “create” in this context though”.

The statement “Earthquakes can be created by governments/individuals” was particularly ambiguous to participants, as demonstrated by some interpreting it to mean something nefarious (e.g. “I don’t think that government or individuals would dare to use seismicity as a kind of weapon, and it would be very hard”), and others simply as another way of saying that human activities, including those that governments can commission, (e.g., wastewater injection) can trigger earthquakes [e.g., “I do not understand the question. It seems to be the same question as the previous question (about human activities)”].

Statements about prevalence and causes of earthquakes

- “Earthquakes can be caused by supernatural forces”

- “Earthquakes are more common at particular times of day”

- “Earthquakes are more common at particular times of year”

- “Earthquakes are more common during particular types of weather”

- “Seismicity is increasing”

- “Small earthquakes prevent larger ones from happening”

- “Earthquakes are more common during certain tides”

- “Climate change will cause earthquakes to be more frequent”

Overall, the perceptions of statements about the prevalence and causes of earthquakes were varied among our participants. There was a high level of consensus that earthquakes are not caused by supernatural forces (92.1%, n = 151 chose rating 1 or 2 at the completely false end of the 1–7 scale), although the proportion of participants who were undecided in their rating of this statement was not insubstantial, at 6.1% (n = 10). Looking in more depth at the qualitative comments from participants, several (11) found the term “supernatural forces” ambiguous. One wrote, for example, “I do not even know what you are talking about”, whilst another wrote “Supernatural forces???? What does it mean?”. Another flagged that human activities such as fluid injection might be considered supernatural in that they are “man-made”. These comments again highlight the need for clarity in the language used in communication.

It is notable that several (7) respondents detailed that the supernatural does not fall within the remit of science. One wrote, for example, “supernatural forces are not within the tools and scope of science. If someone believes in supernatural forces – science cannot overrule his belief”. This separation of science from the supernatural may explain why some scientists rated the statement as truthful, or indicated that they were undecided in their response. One participant who rated the statement as completely true explicitly stated their religious beliefs: “As a Christian I believe in a creator who controls the physical laws at all times and in all places”. Another participant who recorded their response as undecided also touched on religious ideas, but more as reasoning for why some might invoke supernatural forces such as an act of God in their search for an explanation (evidenced in Joffe et al., 2013), especially during a period of trauma such as loss during an earthquake: “Although seismologists declare knowledge of the inner workings of a quake, it does not change the fact that, for people at the site of a quake, there is a feeling of supernatural power and, arguably, fury and wrath, especially given the destruction and loss of life that quakes are capable of. So, I do not know that you can honestly falsify the statement “earthquakes can be caused by supernatural forces” without simply asserting that there are no supernatural forces, to which someone who just watched their apartment building fall down and crush their whole family is going to say, “Yeah, then who is responsible for that?!” (*who* not *what*)”. Another “undecided” participant described how “supernatural forces are unknown unknowns [and] it is unknown how they interact with earthquakes”. All these comments suggest that a separation between science and faith in discussions and communications about earthquakes might be useful.

There was also a reasonably high level of consensus in the falsehood of the statement “earthquakes are more common at particular times of day” (88.2%, n = 142 chose rating 1 or 2, 6.2%, n = 10 were undecided), although some participants did note possible links with tides (see below) and the fact that induced seismicity is more likely to occur during the day. The size of this consensus on falsehood dropped to 74.8% (n = 122) as the timeframe over which this statement was expressed increased to a year; whilst the majority still think the statement is false, some acknowledge that the statement is plausible, although several note that if such effects exist, they will be small and not of concern. For example, “I would say this is false for large earthquakes, but seasonal loading from e.g., rain in the monsoon can affect stresses which have been shown to modulate small scale seismicity”. This highlights that qualifications, including specifying the parameters of each statement (e.g., timeframe, size of geographic area, size of effect), will be important to lend clarity to communication of earthquake related information, although it is important that such qualifications are in formats that will be interpretable by public audiences without domain expertise.

A similar majority rating of falsehood without a clear consensus also occurred for the statements “earthquakes are more common during particular types of weather” (68.3%, n = 112 chose rating 1 or 2, 8.5%, n = 14 were undecided) and “seismicity is increasing” (70%, n = 114 chose rating 1 or 2, 7.4%, n = 12 were undecided). For the latter, qualitative comments suggest some of this variability in expert opinion again comes down to ambiguity in how the statement is phrased. Some participants noted that their answer would be different depending on whether one is talking about shorter timeframes, where there may be increases in seismicity due to periods of heightened seismic activity, or longer timeframes, where there is likely no such pattern (e.g. “In order to define an increase in seismicity one needs to be aware of the relevant time window of analysis.”). It was also noted that the answer would differ if talking about human triggered versus “natural” earthquakes, where for the former there may be an increase in local seismicity where activities such as fracking are taking place (e.g. “Natural seismicity varies, but is not increasing. Man-made seismicity has increased significantly due to fracking and geothermal operations.”). These are all important parameters to specify in order to improve clarity when communicating to the public about this particular issue (for example that an individual may experience an increase in the number of earthquakes in their location because of an earthquake event “triggering” further events, but that this is not the case on average globally, or over much longer geological timeframes), and again highlights the importance of such precision and specification in the wording of these communications more generally. It is interesting to note that several participants thought that people might perceive that seismicity is increasing due to improved recording of, and communication about, earthquake events in recent years.

There was a low level of consensus about the statement “small earthquakes prevent larger ones from happening” (49.1%, n = 79 chose rating 1 or 2, 3.1%, n = 5 were undecided), although this may again stem from imprecision in the phrasing of the statement, where no detail on the specifics of what constitutes a “small” earthquake (e.g., magnitude) or on time frames was given. Interestingly, some noted that the word “prevent” regarding the incidence of earthquakes incorrectly implies that it is possible to reduce seismic hazard levels to zero and suggested using terms such as “delay” instead, to reduce the likelihood of such misinterpretation of communications (e.g., “They do not “prevent”. Having frequent small earthquakes may decrease the probability of observing a larger one in certain tectonic settings, but we cannot speak of “preventing”, and speaking of “preventing” gives the public the wrong impression.”).

Arguably, one of the lowest levels of consensus for any of the statements considered was for the statement “earthquakes are more common during certain tides”, where ratings were distributed more evenly across answer choices than for most other statements. This lack of consensus was further evidenced by a substantial minority of participants who were undecided in their rating (13.9%, n = 22). Several qualitative comments suggested that tides can cause stress changes in the earth’s crust, but that these would only trigger small events (e.g., “Holds true I think for smaller earthquakes – not large”). Since the statement itself was not specific about the nature of the earthquakes in question (e.g., size), this might have resulted in variation in interpretation of the statement, and thus could explain some of the variation in participant responses. Some comments also suggest that this is a topic still debated within the community and that evidence is contradictory (e.g., “Trick question. This is still being debated in the community. Tides do cause tiny stress changes in the Earth crust, and local variations in earthquake activity have been found that appear to correlate with tidal changes. But does correlation mean causality -- the debate continues!”), which may also help explain the lack of consensus on the statement. Openness about this uncertainty in communications with the public on this topic will likely be key, especially where the state of knowledge may be set to change (van der Bles et al., 2020; Batteux et al., 2021; Kerr et al., 2021; Schneider et al., 2021).

Participants appeared most uncertain about the statement “climate change will cause earthquakes to be more frequent”, with 16% (n = 26) remaining undecided in their rating. There was also a low consensus on falsehood (49.6%, n = 81 chose rating 1 or 2). The level of uncertainty about this statement was also apparent through the qualitative comments, where hedge words such as “might” and “can” were used by some [although others were more deterministic in their language (“will”)]. This appears to be an area that is still actively debated and researched. However, these comments suggested a variety of indirect links between climate change and earthquakes, such as increased rainfall, changes in lithostatic pressure and an increase in geological pressures from alternative energy use such as geothermal. Nevertheless, many participants suggested that climate change induced earthquakes would relate to local stresses and not larger tectonic processes [e.g., “Not generally. However, some localised consequences (small quakes) associated with isostatic rebound in polar areas (due to large/broad-scale loss of ice cover) could occur.”]. Once again, clarity about parameters and size of effects, and transparency about the uncertainty in expert opinion, will likely be key to public communication on this issue.

Statements about earthquake prediction

- “Earthquakes can be predicted”

- “Aftershocks can be predicted”

There was a lack of strong consensus on the truth or falsehood of the statement “earthquakes can be predicted”, although the majority of participants did choose ratings 1 and 2 at the “completely false” end of the rating scale (71.3%, n = 117). The statement “aftershocks can be predicted” had lower levels of consensus, with answers distributed more evenly across options than for the former statement. For example, 22.6% (n = 37) chose ratings 1 and 2 at the “completely false” end of the 1–7 scale, whilst 21.9% (n = 36) chose ratings 6 and 7 at the “completely true” end of the scale. In both cases, several qualitative comments highlighted that it is not possible to predict earthquakes or aftershocks in a deterministic way that gives exact information about upcoming earthquake events, but that probabilistic forecasting of such events, notably aftershocks, is possible (e.g., “We can’t currently predict earthquakes but we can forecast earthquakes.”). It should be noted that some participants took issue with the word “aftershock” when it comes to forecasting, since such a determination cannot be attributed a priori.

In turn, several comments for both statements highlighted the ambiguity in the meaning of the word “prediction” (whether it was probabilistic or deterministic), which likely explains the variation in quantitative ratings even where qualitative comments seem to imply a reasonable level of agreement (e.g., “I interpreted your use of the term “prediction” as the deterministic establishment before the event actually takes place of its exact place, date and time. If, instead, by “prediction” you meant a probabilistic estimation, then my answers above would have been very different.”). Forecasting versus prediction has a rich and complex history in earthquake science, as explored in Michael and McBride (2019), and our results here underline the necessity of clearly explaining the meaning of such words, and perhaps even avoiding the word “predict” entirely in communication. Nevertheless, communicating probabilistic information in a comprehensible way is challenging; everyone, whether or not they have high levels of domain expertise, has a propensity towards bias in judgments involving statistical information that is presented in certain ways (e.g., Tversky and Kahneman, 1974; Kahneman and Tversky, 1979; Freeman and Parker, 2021). As such, communications of probabilistic information need to be carefully designed, for example making use of formats that aid comprehension in certain circumstances, such as natural frequencies (Gigerenzer and Hoffrage, 1995; Gigerenzer et al., 2007) and risk comparisons (Dryhurst et al., 2021; Freeman and Kerr, 2021). Since different formats help in different circumstances, communications need to be co-designed with their audiences, and evaluated carefully to ensure they support understanding and decision-making (Becker et al., 2019).
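As a rough illustration of one such format, the sketch below restates a probability as a simple “X in N” frequency statement, in the spirit of the frequency formats mentioned above. The function name `as_frequency`, the default reference class of 1,000, and the example probabilities are hypothetical choices for this sketch and are not taken from any forecast or from the survey; a full natural-frequency presentation of conditional probabilities would need more than this single conversion.

```python
def as_frequency(probability, reference_class=1000):
    """Restate a probability as an 'about X in N' frequency phrase."""
    if not 0 <= probability <= 1:
        raise ValueError("probability must be between 0 and 1")
    # Round to a whole count so the phrase refers to countable cases.
    count = round(probability * reference_class)
    return f"about {count} in {reference_class}"


# Hypothetical values, for illustration only.
print(as_frequency(0.03))          # a 3% probability
print(as_frequency(0.005, 10000))  # a 0.5% probability, larger reference class
```

Whether such a format actually helps depends on the audience and context, which is why evaluation with the intended audience remains necessary.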

Conclusion

Our analysis suggests that some statements commonly seen in the public realm about earthquakes, and which might be considered by some to be “misinformation”, are actually still debated within the expert community, and that evidence around them can be contradictory (e.g., “Climate change will cause earthquakes to be more frequent”; “Earthquakes are more common during certain tides”). This active debate helps explain some of the lack of expert consensus on these statements. To ensure that the expert community is trustworthy in its communication with the public, openness about this uncertainty in communication of these topics will likely be key (e.g., Doyle et al., 2019; Padilla, 2021; Schneider et al., 2021).

Our analysis further suggests, however, that some of the uncertainty and overall lack of consensus in experts’ ratings of many of the statements put to them may come down to the way these statements were phrased by the researchers and thus to variation in their interpretation. In our survey, we phrased our statements in the way that lay people might, e.g., “earthquakes can be predicted”. However, our expert respondents indicated that they needed the statements to be more precise to rate them meaningfully, and in instances where experts agreed with statements we put to them, such agreement was often framed with “it depends”; definitive support for statements without caveats was rare. This may illustrate that while our participants view these statements in complexity, non-experts may perceive these to be yes or no questions.

Several comments from participants indicated that 1) it is necessary to provide clarity on whether statements relating to earthquake prediction refer to deterministic predictions or probabilistic forecasts; 2) the magnitude and other key parameters of the earthquakes the statements relate to (e.g., induced vs. naturally occurring) should be specified; 3) intent-based and potentially conspiratorial language such as “created” in communications about human-induced earthquakes should be avoided and more neutral wording such as “triggered” or “induced” used instead; 4) individual and cultural context may determine belief in information (e.g., more religious people placing greater belief in supernatural forces).

The disconnect between the public’s phrasing of statements about earthquakes and the increased demand for precision and context by experts can be understood via the lens of the Mental Models approach (Bostrom et al., 1992), which posits that those with expert knowledge view issues with more complexity and higher risk than those with non-expert knowledge, a difference that can complicate risk communication initiatives and campaigns (Bostrom et al., 1994). This indicates that careful consideration of wording and providing qualifications (e.g., specifying the parameters of each statement) might be necessary when communicating about earthquake-related information, both to experts and the public.

This research was intended to be exploratory and informative, rather than conclusive and generalizable. It constitutes an important first step in establishing degrees of consensus within earthquake science, understanding how divergence in consensus might be managed, and opening discussions about the framing of statements about earthquakes in public discourse. The results underline the importance of clarity and precision in communication about earthquakes to both experts and publics, and provide important insights for the state of knowledge in the field. This should aid understanding of what may be classified as earthquake “misinformation”, help identify where consensus exists and could be communicated in order to fight such misinformation, and highlight where scientific debate continues and could be openly communicated with the public to aid understanding of where and why, at present, a clear true or false answer cannot be given on certain aspects of earthquake science.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving human participants were reviewed by the University of Cambridge Psychology Research Ethics Committee (No: PRE. 2021.018). The patients/participants provided their written informed consent to participate in this study.

Author contributions

SD, ID, and LF designed the study. All authors contributed to coding and data analysis. SD wrote the manuscript, with contributions from FM, JK, SM, and ID. All authors edited the manuscript, and read and approved the submitted manuscript.

Acknowledgments

The authors would like to thank all the earth scientists who completed the survey and participated in the online workshops. This investigation is part of two EU Horizon-2020 projects: RISE (grant agreement No 821115) and TURNkey (grant agreement No 821046). JB’s time was supported by QuakeCORE, a New Zealand Tertiary Education Commission-funded Centre (publication 0755). SM’s time was supported by the U.S. Geological Survey. Any use of trade, firm, or product names is for descriptive purposes only and does not imply endorsement by the U.S. Government. The research described in this article was not conducted on behalf of or funded by the U.S. Geological Survey. Open access funding provided by ETH Zurich. We thank our internal reviewer in the U.S. Geological Survey for providing valuable and constructive feedback on our manuscript. We also thank our external peer reviewers for their insightful comments and suggestions, which helped improve the quality of our manuscript.

Conflict of interest

SD was formerly employed by Frontiers.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Alexander, D. E. (2014). Communicating earthquake risk to the public: The trial of the “L’Aquila Seven”. Nat. Hazards (Dordr). 72 (2), 1159–1173. doi:10.1007/s11069-014-1062-2

Allcott, H., and Gentzkow, M. (2017). Social media and fake news in the 2016 election. J. Econ. Perspect. 31 (2), 211–236. doi:10.1257/jep.31.2.211

Batteux, E., Bilovich, A., Johnson, S. G. B., and Tuckett, D. (2021). The negative consequences of failing to communicate uncertainties during a pandemic: The case of COVID-19 vaccines. Cold Spring Harb. Lab. Press, 201. doi:10.1101/2021.02.28.21252616

Bazeley, P., and Jackson, K. (2013). Qualitative data analysis with NVivo. Thousand Oaks, CA: Sage Publications.

Becker, J. S., Potter, S. H., McBride, S. K., Wein, A., Doyle, E. E. H., and Paton, D. (2019). When the Earth doesn’t stop shaking: How experiences over time influenced information needs, communication, and interpretation of aftershock information during the Canterbury Earthquake Sequence, New Zealand. Int. J. Disaster Risk Reduct. 34, 397–411. doi:10.1016/j.ijdrr.2018.12.009

Bostrom, A., Atman, C. J., Fischhoff, B., and Morgan, M. G. (1994). Evaluating risk communications: Completing and correcting mental models of hazardous processes, Part II. Risk Anal. 14 (5), 789–798. doi:10.1111/j.1539-6924.1994.tb00290.x

Bostrom, A., Fischhoff, B., and Morgan, M. G. (1992). Characterizing mental models of hazardous processes: A methodology and an application to radon. J. Soc. Issues 48 (4), 85–100.

Bundesministerium, D. J. (2017). Network Enforcement Act (Netz DG), bmj.de. Available at: https://www.bmj.de/DE/Themen/FokusThemen/NetzDG/NetzDG_EN_node.html;jsessionid=864F8FEF9DBE269382993ADE0D400149.2_cid297 (Accessed: 6 April 2022).

Calfas, J. (2017). Google is changing its search algorithm to combat fake news. Fortune. Available at: https://fortune.com/2017/04/25/google-search-algorithm-fake-news/.

Compton, J. (2013). “Inoculation theory,” in The SAGE handbook of persuasion: Developments in theory and practice. 2nd ed. (Thousand Oaks, CA: Sage Publications), 220–236.

Cook, J., Nuccitelli, D., Green, S. A., Richardson, M., Winkler, B., Painting, R., et al. (2013). Quantifying the consensus on anthropogenic global warming in the scientific literature. Environ. Res. Lett. 8, 024024. doi:10.1088/1748-9326/8/2/024024

Dallo, I., Corradini, M., Fallou, L., and Marti, M. (2022). How to fight misinformation about earthquakes? A communication guide. ETH Res. Collect. doi:10.3929/ethz-b-000559288

Daymon, C., and Holloway, I. (2002). Qualitative research methods in public relations and marketing communications. Abingdon, OX: Psychology Press.

Doyle, E. E., Johnston, D. M., Smith, R., and Paton, D. (2019). Communicating model uncertainty for natural hazards: A qualitative systematic thematic review. Int. J. Disaster Risk Reduct. 33, 449–476. doi:10.1016/j.ijdrr.2018.10.023

Dryhurst, S., Luoni, G., Dallo, I., Freeman, A. L. J., and Marti, M. (2021). Designing & implementing the seismic portion of dynamic risk communication for long-term risks, variable short-term risks, early warnings. RISE Deliv. Available at: http://static.seismo.ethz.ch/rise/deliverables/Deliverable_5.3.pdf.

Dryhurst, S., Mulder, F., Dallo, I., Kerr, J. R., Fallou, L., and Becker, J. S. (2022). Output from workshops with Earth scientists about earthquake related misinformation. ETH Res. Collect. doi:10.3929/ethz-b-000566193

Elgin, B., and Wang, S. (2018). Facebook’s battle against fake news notches an uneven scorecard. Bloomberg. Available at: https://www.bloomberg.com/news/articles/2018-04-24/facebook-s-battle-against-fake-news-notches-an-uneven-scorecard#xj4y7vzkg.

Ellsworth, W. L. (2013). Injection-induced earthquakes. Science 341 (6142), 1225942. doi:10.1126/science.1225942

Freeman, A. L. J., Kerr, J., Recchia, G., Schneider, C. R., Lawrence, A. C. E., Finikarides, L., et al. (2021). Communicating personalized risks from COVID-19: Guidelines from an empirical study. R. Soc. open Sci. 8, 201721. doi:10.1098/rsos.201721

Freeman, A. L. J., Parker, S., Noakes, C., Fitzgerald, S., Smyth, A., Macbeth, R., et al. (2021). Expert elicitation on the relative importance of possible SARS-CoV-2 transmission routes and the effectiveness of mitigations. BMJ Open 11 (12), e050869. doi:10.1136/bmjopen-2021-050869

Gigerenzer, G., Gaissmaier, W., Kurz-Milcke, E., Schwartz, L. M., and Woloshin, S. (2007). Helping doctors and patients make sense of health statistics: Toward an evidence-based society. Psychol. Sci. Public Interest 8 (2), 53–96. doi:10.1111/j.1539-6053.2008.00033.x

Gigerenzer, G., and Hoffrage, U. (1995). How to improve Bayesian reasoning without instruction: Frequency formats. Psychol. Rev. 102 (4), 684–704. doi:10.1037/0033-295X.102.4.684

Grimes, D. R. (2021). Medical disinformation and the unviable nature of COVID-19 conspiracy theories. PLoS One 16 (3), e0245900. doi:10.1371/journal.pone.0245900

Guess, A., Nagler, J., and Tucker, J. (2019). Less than you think: Prevalence and predictors of fake news dissemination on Facebook. Sci. Adv. 5 (1), eaau4586. doi:10.1126/sciadv.aau4586

Hernandez, S. (2016). Two men accused of plotting attack on Alaska facility they believed trapped souls. BuzzFeed News 35, 4345.

Joffe, H., Rossetto, T., Solberg, C., and O'Connor, C. (2013). Social representations of earthquakes: A study of people living in three highly seismic areas. Earthq. Spectra 29 (2), 367–397. doi:10.1193/1.4000138

Johnson, V. A., and Ronan, K. R. (2014). Classroom responses of New Zealand school teachers following the 2011 Christchurch earthquake. Nat. Hazards (Dordr). 72 (2), 1075–1092. doi:10.1007/s11069-014-1053-3

Jolley, D., and Paterson, J. L. (2020). Pylons ablaze: Examining the role of 5G COVID-19 conspiracy beliefs and support for violence. Br. J. Soc. Psychol. 59 (3), 628–640. doi:10.1111/bjso.12394

Joshi, A., Kale, S., Chandel, S., and Pal, D. K. (2015). Likert scale: Explored and explained. Br. J. Appl. Sci. Technol. 7 (4), 396–403. doi:10.9734/bjast/2015/14975

Kahneman, D., and Tversky, A. (1979). Prospect theory: An analysis of decision under risk. Econometrica 47 (2), 263–292. doi:10.2307/1914185

Kerr, J. R., van der Bles, A. M., Schneider, C., Dryhurst, S., Chopurian, V., Freeman, A. L. J., et al. (2021). The effects of communicating uncertainty around statistics on public trust: An international study, Psychol. COGNITIVE Sci., 117(14). 7672–7683. doi:10.1073/pnas.1913678117

Lee, T. (2019). The global rise of “fake news” and the threat to democratic elections in the USA. Public Adm. Policy 22 (1), 15–24. doi:10.1108/pap-04-2019-0008

Lewandowsky, S., Cook, J., Ecker, U. K. H., Albarracín, D., Amazeen, M. A., Kendeou, P., et al. (2020). The debunking handbook. Available at: https://sks.to/db2020. doi:10.17910/b7.1182

Lewandowsky, S., Ecker, U. K. H., and Cook, J. (2017). Beyond misinformation: Understanding and coping with the “post-truth” era. J. Appl. Res. Mem. Cognition 6 (4), 353–369. doi:10.1016/j.jarmac.2017.07.008

Lewandowsky, S., and van der Linden, S. (2021). Countering misinformation and fake news through inoculation and prebunking. Eur. Rev. Soc. Psychol. 32 (2), 348–384. doi:10.1080/10463283.2021.1876983

Lewis, R. B., and Maas, S. M. (2007). QDA Miner 2.0: Mixed-model qualitative data analysis software. Field methods 19 (1), 87–108. doi:10.1177/1525822x06296589

Lobato, E. J. C., Powell, M., Padilla, L. M. K., and Holbrook, C. (2020). Factors predicting willingness to share COVID-19 misinformation. Front. Psychol. 11 (76), 566108. doi:10.3389/fpsyg.2020.566108

Maibach, E. W., Myers, T., and Leiserowitz, A. (2014). Climate scientists need to set the record straight: There is a scientific consensus that human-caused climate change is happening. Earth's Future 2 (5), 295–298. doi:10.1002/2013ef000226

Maibach, E. W., and van der Linden, S. L. (2016). The importance of assessing and communicating scientific consensus. Environ. Res. Lett. 11 (9), 091003. doi:10.1088/1748-9326/11/9/091003

McBride, S. K. (2017). The Canterbury Tales: An insider’s lessons and reflections from the Canterbury Earthquake Sequence to inform better communication models. Palmerston North, New Zealand: Massey University.

Meyer, D. Z., and Avery, L. M. (2009). Excel as a qualitative data analysis tool. Field methods 21 (1), 91–112. doi:10.1177/1525822x08323985

Michael, A. J., McBride, S. K., Hardebeck, J. L., Barall, M., Martinez, E., Page, M. T., et al. (2019). Statistical seismology and communication of the USGS operational aftershock forecasts for the 30 November 2018 Mw 7.1 Anchorage, Alaska, earthquake. Seismol. Res. Lett. 91 (1), 153–173. doi:10.1785/0220190196

Mosleh, M., Pennycook, G., and Rand, D. G. (2020). Self-reported willingness to share political news articles in online surveys correlates with actual sharing on Twitter. PLoS ONE 15 (2), e0228882–e0228889. doi:10.1371/journal.pone.0228882

Myers, K. F., Doran, P. T., Cook, J., Kotcher, J. E., and Myers, T. A. (2021). Consensus revisited: Quantifying scientific agreement on climate change and climate expertise among earth scientists 10 years later. Environ. Res. Lett. 16, 104030. doi:10.1088/1748-9326/ac2774

New Zealand Herald, (2011a). Christchurch earthquake: Ring’s tip sends families fleeing. N. Z. Her. Available at: https://www.nzherald.co.nz/nz/christchurch-earthquake-rings-tip-sends-families-fleeing/ICTUZ7ZRR65QSS3KPCJGISIAKM/.

New Zealand Herald, (2011b). Ken Ring coverage wins skeptics’ bent spoon award. N. Z. Her. Available at: https://www.nzherald.co.nz/nz/ken-ring-coverage-wins-skeptics-bent-spoon-award/4S7J2BZ7DYX45BZB4UYQQMW2RE/.

Oreskes, N. (2004). The scientific consensus on climate change. Science 306 (5702), 1686. doi:10.1126/science.1103618

Padilla, E. (2021). Multiple hazard uncertainty visualization: Challenges and paths forward. Front. Psychol. 12, 579207. doi:10.3389/fpsyg.2021.579207

Parker, C., Scott, S., and Geddes, A. (2019). “Snowball Sampling,” in SAGE Research Methods Foundations. Editors P. Atkinson, S. Delamont, A. Cernat, J. W. Sakshaug, and R. A. Williams. doi:10.4135/9781526421036831710

Roozenbeek, J., Schneider, C. R., Dryhurst, S., Kerr, J., Freeman, A. L. J., Recchia, G., et al. (2020). Susceptibility to misinformation about COVID-19 around the world. R. Soc. open Sci. 7 (10), 201199. doi:10.1098/rsos.201199

Schneider, C. R., Freeman, A. L. J., Spiegelhalter, D., and van der Linden, S. (2021). The effects of quality of evidence communication on perception of public health information about COVID-19: Two randomised controlled trials. Plos One 16 (11), e0259048. doi:10.1371/journal.pone.0259048

Tversky, A., and Kahneman, D. (1974). Judgment under uncertainty: Heuristics and biases. Science 185 (4157), 1124–1131. doi:10.1126/science.185.4157.1124

van der Bles, A. M., van der Linden, S., Freeman, A. L. J., and Spiegelhalter, D. J. (2020). The effects of communicating uncertainty on public trust in facts and numbers. Proc. Natl. Acad. Sci. U. S. A. 117 (14), 7672–7683. doi:10.1073/pnas.1913678117

Van der Linden, S., and Roozenbeek, J. (2020). “Psychological inoculation against fake news,” in The Psychology of fake news: Accepting, sharing, and correcting misinformation, 147–169. doi:10.4324/9780429295379-11

Wood, S., and Johnston, K. (2011). Ken Ring’s Christchurch earthquake claims “terrifying” people. stuff.co.nz. Available at: https://www.stuff.co.nz/dominion-post/news/4776904/Ken-Rings-Christchurch-earthquake-claims-terrifying-people.

Woodhouse, J. (2021). Regulating online harms. House Commons Libr. (8743), 1–25. Available at: https://commonslibrary.parliament.uk/research-briefings/cbp-8743/.

Keywords: misinformation, Earth science, seismology, earthquakes, risk communication, scientific consensus, expert elicitation, crisis communication

Citation: Dryhurst S, Mulder F, Dallo I, Kerr JR, McBride SK, Fallou L and Becker JS (2022) Fighting misinformation in seismology: Expert opinion on earthquake facts vs. fiction. Front. Earth Sci. 10:937055. doi: 10.3389/feart.2022.937055

Received: 05 May 2022; Accepted: 14 November 2022;

Published: 16 December 2022.

Edited by:

Anna Scolobig, Université de Genève, Switzerland

Reviewed by:

Serguei Bychkov, University of British Columbia, Canada

Gemma Musacchio, Istituto Nazionale di Geofisica e Vulcanologia (INGV), Italy

Iain Stewart, Royal Scientific Society, Jordan

Copyright © 2022 Dryhurst, Mulder, Dallo, Kerr, McBride, Fallou and Becker. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Sarah Dryhurst, s.dryhurst@ucl.ac.uk; Irina Dallo, irina.dallo@sed.ethz.ch
