REVIEW article

Front. Dent. Med., 20 February 2023

Sec. Dental Materials

Volume 4 - 2023 | https://doi.org/10.3389/fdmed.2023.1085251

This article is part of the Research Topic: Artificial Intelligence in Modern Dentistry: From Predictive to Generative Models

Artificial Intelligence (AI) is the ability of machines to perform tasks that normally require human intelligence. AI is not a new term; the concept can be dated back to 1950. However, it did not become a practical tool until two decades ago. Owing to the rapid development over the past two decades of the three cornerstones of current AI technology (big data coming through digital devices, computational power, and AI algorithms), AI applications have started to provide convenience to people's lives. In dentistry, AI has been adopted in all dental disciplines, i.e., operative dentistry, periodontics, orthodontics, oral and maxillofacial surgery, and prosthodontics. The majority of AI applications in dentistry are for diagnosis based on radiographic or optical images, while other tasks are less amenable to AI than image-based tasks, mainly due to constraints on data availability, data uniformity, and computational power for handling 3D data. Evidence-based dentistry (EBD) is regarded as the gold standard for decision making by dental professionals, while AI machine learning (ML) models learn from human expertise. ML can be seen as another valuable tool to assist dental professionals in multiple stages of clinical cases. This review describes the history and classification of AI, summarizes AI applications in dentistry, discusses the relationship between EBD and ML, and aims to help dental professionals better understand AI as a tool to support their routine work with improved efficiency.

The fourth industrial revolution is opening a new era, and one of its most important contributions is Artificial Intelligence (AI). With more and more electronic devices assisting people in every aspect of life, it has become possible to use and analyze the data from these devices through AI. AI is blossoming and expanding rapidly in all sectors. It can learn from human expertise and undertake work that typically requires human intelligence. One of its definitions (1) is “the theory and development of computer systems able to perform tasks normally requiring human intelligence, such as visual perception, speech recognition, decision making, and translation between languages”.

AI has been adopted in many fields of industry, such as robotics, automobiles, smart cities, and financial analysis. It has also been used in medicine and dentistry, for example, in medical and dental imaging diagnostics, decision support, precision and digital medicine, drug discovery, wearable technology, hospital monitoring, and robotic and virtual assistants. In many cases, AI can be regarded as a valuable tool to help dentists and clinicians reduce their workload. Besides diagnosing diseases using a single information source directed at a specified disease, AI can learn from multiple information sources (multi-modal data) to diagnose beyond human capabilities. For example, fundus photographs together with other medical data such as age, gender, BMI, smoking habits, blood pressure, and the likelihood of diabetes have been used to predict heart disease (2). Thus, AI can discover from fundus photography not only eye diseases such as diabetic retinopathy, but also heart disease. Image-based analysis using AI therefore appears sound and successful. All of this relies on the rapid development of computing capacity (hardware), algorithmic research (software), and large databases (input data). Given these, there is great potential for the use of AI in the dental and medical fields.

Many studies on AI applications in dentistry are underway or have even been put into practice in areas such as diagnosis, decision-making, treatment planning, prediction of treatment outcome, and disease prognosis. Many reviews regarding dental AI (3–8) have been published; this review aims to narrate the development of AI from its incipient stages to the present, describe the classifications of AI, summarise the current advances of AI research in dentistry, and discuss the relationship between Evidence-based dentistry (EBD) and AI. Limitations of current AI development in dentistry are also discussed.

Artificial intelligence is not a new term. Alan Turing wrote in his paper “Computing Machinery and Intelligence” (9) in the 1950 issue of Mind:

“I believe that at the end of the century (20th), the use of words and general educated opinion will have altered so much that one will be able to speak of machines thinking without expecting to be contradicted.”

Back then, there was no term for AI; Turing described it as “machines thinking”. He mathematically investigated the feasibility of AI and explored how to construct intelligent machines and assess machine intelligence. He proposed that, since humans solve problems and make decisions by utilising available information and inference, machines can do the same.

In the paper (9), Turing proposed a test of whether a machine can achieve human-level intelligence. This test is known as the Turing Test. It runs along the following lines: a human evaluator judges natural language conversations between a human test taker and a machine. The human evaluator knows that the conversation is between a human and a machine, and the human evaluator, human test taker and machine are separated from one another. The conversation between the human test taker and the machine is limited to plain text, i.e., keyboard input, instead of speech. This makes the test focus only on the machine's ability to answer questions logically rather than on its ability to interpret speech. If the human evaluator cannot distinguish the human test taker from the machine, the machine can be viewed as having passed the Turing Test, and such a machine is said to have “machine intelligence”.

Later, in 1955, the term AI was first proposed in the proposal for a 2-month workshop, the Dartmouth Summer Research Project on Artificial Intelligence (10), led by John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon and held in the summer of 1956. However, the concept was only on paper. Certain restrictions stopped researchers from developing real AI machines in the 1950s. Firstly, computers before 1949 lacked a fundamental prerequisite for AI tasks: there was no storage function, which means that programs could not be stored, only executed. Secondly, computers were costly at that time. Lastly, funding sources had conservative attitudes towards this new field back then (11).

From 1957 to 1974, the AI field grew quickly because of the growth of computer power, its accessibility, and AI algorithms. Examples include ELIZA, a computer program that could interpret natural language and solve problems via text (12). Two “AI Winters” arrived after the first wave of development, in the mid-1970s and late 1980s, due to insufficient practical applications and reductions in research funding (13). However, AI had a breakthrough between these two periods of limited progress. In the 1980s, it developed along two paths: machine learning (ML) and expert systems. Theoretically, these are two opposite approaches to AI. ML allows computers to learn by experience (14); expert systems, on the contrary, simulate the decision-making process of human experts (15). In other words, ML finds the solution by learning from and summarizing experience by itself, while expert systems need human experts to input all possible situations and solutions in advance. Expert systems have been widely used in industry since then. One example is the R1 (Xcon) program, an expert system with around 2,500 rules for assisting component selection for computer assembly, which was developed (16) for and used by DEC, a computer manufacturer.

Two important time points in computer vision are 2012 and 2017. In 2012, a graphics processing unit (GPU)-implemented deep learning (DL) network with eight layers was developed (17). The work won the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) with a top-5 classification error of 15.3%, more than 10.8 percentage points lower than that of the runner-up. In 2017, SENet further lowered the top-5 error to 2.25%, surpassing the human top-5 error (5.1%) (18).
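The top-5 error quoted above counts an image as misclassified only when the true class is absent from the model's five highest-scoring predictions. The following is a minimal sketch of that calculation; the function name `top_k_error` and the toy scores are illustrative assumptions, not taken from the cited works, and the toy example uses k = 2 so that it stays small.

```python
def top_k_error(scores_per_image, true_labels, k=5):
    """Fraction of images whose true label is not among the k highest-scoring classes."""
    misses = 0
    for scores, label in zip(scores_per_image, true_labels):
        # Rank class indices by descending score and keep the first k.
        top_k = sorted(range(len(scores)), key=lambda c: scores[c], reverse=True)[:k]
        if label not in top_k:
            misses += 1
    return misses / len(true_labels)


# Hypothetical scores for 4 images over 6 classes (made-up numbers).
toy_scores = [
    [0.10, 0.50, 0.20, 0.05, 0.10, 0.05],  # true class 1 is ranked first
    [0.30, 0.10, 0.40, 0.10, 0.05, 0.05],  # true class 0 is ranked second
    [0.05, 0.05, 0.10, 0.60, 0.15, 0.05],  # true class 0 is outside the top 2
    [0.25, 0.15, 0.10, 0.10, 0.35, 0.05],  # true class 2 is outside the top 2
]
toy_labels = [1, 0, 0, 2]

toy_error = top_k_error(toy_scores, toy_labels, k=2)
```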

Later famous AI examples include Deep Blue, a chess-playing expert system, which defeated the then world chess champion Garry Kasparov in 1997 (19); 20 years later, in 2017, Google's AlphaGo, a DL program, defeated the world No. 1 ranked player Ke Jie in a Go match (20). Most recently, in late 2022, OpenAI launched ChatGPT (Chat Generative Pre-trained Transformer), a text-generation model that can generate human-like responses based on text input; the model has been discussed extensively since its launch (21). These examples used different AI approaches to operate.

There are many approaches to achieving AI: different types of AI can achieve different tasks, and researchers have created different AI classification methods.

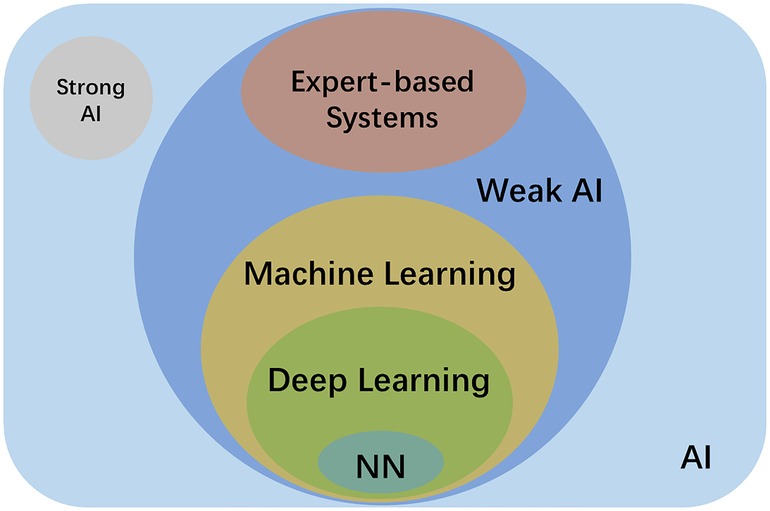

AI is a generic term for all non-human intelligence. As Figure 1 shows, AI can be further classified as weak AI and strong AI. Weak AI, also called narrow AI, uses a program trained to solve single or specific tasks. The AI of today is mostly weak AI. Examples include reinforcement learning, e.g., AlphaGo and automated manipulation robots; natural language processing, e.g., Google Translate and Amazon chat robots; computer vision, e.g., Tesla Autopilot and face recognition; and data mining, e.g., market customer analysis and personalised content recommendation on social media (22). Strong AI refers to AI whose ability and intelligence equal those of humans: it has its own awareness, and its behaviour is as flexible as that of humans (23). Strong AI aims to create a multi-task algorithm to make decisions in multiple fields. Research on strong AI has to be very cautious as there might be ethical issues, and it could be dangerous. Thus, there are no strong AI applications up to now.

Figure 1. Schematic diagram of the relationship between AI, strong AI, weak AI, expert-based systems, machine learning, deep learning and neural network (NN).

ML and expert systems are two different subgroups of weak AI. As shown in Table 1, ML can be further classified as supervised, semi-supervised and unsupervised learning based on the theory of the methods. Supervised learning uses labelled datasets for training, and these labelled datasets are the “supervisor” of the algorithm. The algorithm learns from the labelled input, and extracts and identifies the common features of the labelled input to make predictions about unlabelled input (24). Examples of supervised learning include k-nearest neighbors, logistic regression, random forest, and support-vector machines (25). Unsupervised learning, on the contrary, works on its own to find the various features of unlabelled data (26). Semi-supervised learning lies between those two and utilises a small amount of labelled data together with a large amount of unlabelled data during training (27). Recently, a new method called weakly-supervised learning has become increasingly popular in the AI field as a way to alleviate labelling costs. For example, an object segmentation task can be trained using only image-level labels (i.e., only knowing what objects are in the images) instead of object boundary or location information (28).
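As an illustration of supervised learning, k-nearest neighbors (one of the methods listed above) predicts the label of a new sample by a majority vote among the closest labelled training samples. The sketch below is a minimal pure-Python version; the feature values and the labels "sound" and "carious" are made up for illustration and do not come from any study cited here.

```python
import math
from collections import Counter


def knn_predict(train_points, train_labels, query, k=3):
    """Predict the label of `query` by majority vote among its k nearest labelled points."""
    # Pair every training point's Euclidean distance to the query with its label.
    distances = sorted(
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    )
    # The labelled data act as the "supervisor": the k closest labels decide the prediction.
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]


# Hypothetical two-feature samples (e.g. two image-derived measurements).
train_points = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (3.0, 3.2), (3.1, 2.9), (2.8, 3.0)]
train_labels = ["sound", "sound", "sound", "carious", "carious", "carious"]

prediction_a = knn_predict(train_points, train_labels, (1.1, 1.0))
prediction_b = knn_predict(train_points, train_labels, (3.0, 3.0))
```

An unsupervised method would receive only `train_points`, without `train_labels`, and would have to find the two groups on its own.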

Deep learning is currently a very prominent research area and forms a subset of ML. It can involve both supervised and unsupervised learning. As Figure 2 shows, “deep” represents an artificial “neural network” consisting of a minimum of three nodal layers (input, multiple “hidden”, and output layers), such that each layer consists of various numbers of interconnected nodes (artificial neurons). Each node receives inputs $x_i$, each with an associated weight ($w_i$), together with a biased threshold ($t$), from $m$ decisive factors, given by its own (simplified) linear regression model $\sum_{i=1}^{m} w_i x_i + t$. The weight is assigned when there is an input to the node. If $\sum_{i=1}^{m} w_i x_i + t \geq 0$, then the output = 1, meaning the data is passed to another node in the next layer. The process of passing data from one layer to the next defines the neural network as a feedforward network, similar to a decision tree model.
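The node model described above can be sketched in a few lines. This is a minimal illustration under the assumption that a node outputs 1 when the weighted sum of its inputs plus the threshold term t is non-negative and 0 otherwise; the function names and the numbers are hypothetical.

```python
def node_output(inputs, weights, t):
    """Single artificial neuron: weighted sum of m inputs plus threshold term t, then a step."""
    activation = sum(w * x for w, x in zip(weights, inputs)) + t
    # Assumed firing rule: pass the signal on (1) when the activation is non-negative.
    return 1 if activation >= 0 else 0


def layer_output(inputs, weight_rows, thresholds):
    """One feedforward layer: every node sees the same inputs but has its own weights and t."""
    return [node_output(inputs, w, t) for w, t in zip(weight_rows, thresholds)]


# A layer of two nodes, each with m = 2 inputs (made-up weights and thresholds).
hidden = layer_output([1, 1], [[0.6, 0.4], [-1.0, 0.2]], [-0.5, 0.0])
# The output of one layer becomes the input of the next, which is what "feedforward" means.
final = node_output(hidden, [1.0, 1.0], -1.0)
```

In a trained network the weights and thresholds are learned from data rather than written by hand, and smooth activation functions usually replace the hard step used here.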

As mentioned above, a deep neural network can extract features from the imported data without human intervention; it learns those features from large datasets. Expert systems, on the other hand, require human intervention to learn, which in effect means tuning $w_i$ and $t$ manually, so less data is required.

Neural networks (NNs) are biologically inspired networks that can be regarded as the pillars of deep learning algorithms. There are different variations of NNs, among which the most important types are artificial neural networks (ANNs), convolutional neural networks (CNNs), and generative adversarial networks (GANs).

ANN comprises a group of neurons and layers, as illustrated in Figure 2. As mentioned above, this model is a basic model for deep learning, consisting of a minimum of three layers. The inputs are processed only in the forward direction. Input neurons extract features of input data from the input layer and send data to hidden layers, and the data goes through all the hidden layers successively. Finally, the results are summarised and shown in the output layer. All the hidden layers in ANN can weigh the data received from previous layers and make adjustments before sending the data to the next layer. Each hidden layer acts as an input and output layer, allowing the ANN to understand more complex features (29).

CNN is a type of deep learning model mainly used for image recognition and generation. The main difference between ANN and CNN is that the hidden layers of a CNN include convolution layers in addition to pooling layers and fully connected layers. Convolution layers generate feature maps of the input data using convolution kernels, which are slid across the entire input image. Because the same kernel weights are shared across all positions, convolution reduces the complexity of processing images. A pooling layer usually follows each group of convolution layers and reduces the dimension of the feature maps for further feature extraction. The fully connected layer is used after the convolution and pooling layers. As the name indicates, the fully connected layer connects to all activated neurons in the previous layer and transforms the 2D feature maps into 1D. The 1D feature maps are then associated with nodes of categories for classification (30, 31). By using these functional hidden layers, CNN has shown higher efficiency and accuracy in image recognition than ANN.
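The three building blocks described above (convolution, pooling, and flattening before the fully connected layer) can be sketched without any library. The code below is a simplified illustration, not an implementation from the cited works: the kernel is hand-written rather than learned, there is a single channel, and, as in most deep learning frameworks, the "convolution" is computed without flipping the kernel.

```python
def convolve2d(image, kernel):
    """Slide one shared kernel over the image (no padding, stride 1) to build a feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def max_pool2d(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size window."""
    return [
        [
            max(feature_map[r + i][c + j] for i in range(size) for j in range(size))
            for c in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for r in range(0, len(feature_map) - size + 1, size)
    ]


def flatten(feature_map):
    """Turn the 2D feature map into the 1D vector a fully connected layer expects."""
    return [value for row in feature_map for value in row]


# Toy 5x5 grayscale image with a vertical edge between the second and third columns.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hand-written 2x2 kernel that responds to dark-to-bright vertical edges.
kernel = [[-1, 1], [-1, 1]]

feature_map = convolve2d(image, kernel)   # 4x4 map, non-zero only at the edge
pooled = max_pool2d(feature_map, size=2)  # 2x2 map after pooling
vector = flatten(pooled)                  # 1D input for a fully connected layer
```

The same four kernel weights are reused at every image position, which is the weight sharing that keeps the number of parameters small.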

GAN is one kind of deep learning algorithm designed by Goodfellow et al. (32) in 2014. It is an unsupervised learning method designed to automatically discover patterns from the input data and generate new data with similar features or patterns compared with the input data. GAN consists of two neural networks: a generator and a discriminator. The ultimate goal for the generator is to generate data such that the discriminator cannot determine whether the data is generated by the generator or from the original input data. The ultimate goal for the discriminator is to distinguish the generator-generated data from the original input data as much as possible. The two networks compete with each other in GAN, and both networks improve themselves during the competition.
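The competition between the two networks is commonly written as the minimax objective introduced by Goodfellow et al. (32). The symbols are not defined elsewhere in this review and are introduced here for illustration only: $D(x)$ is the discriminator's estimated probability that a sample $x$ is real, $G(z)$ is a sample produced by the generator from random noise $z$, and $p_{\text{data}}$ and $p_z$ are the distributions of the real data and of the noise.

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right]
$$

The discriminator tries to make both terms large by assigning high probability to real data and low probability to generated data, while the generator tries to make the second term small by producing samples that the discriminator accepts as real.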

Since GANs were introduced, they have spread rapidly in AI applications. They are mainly applied to image-to-image translation and to generating plausible photos of objects, scenes, and people (33, 34). Wu et al. (35) proposed a new 3D-GAN framework in 2016 based on a traditional GAN network. 3D-GAN generates 3D objects from a given 3D space by combining recent advances in GAN and volumetric convolutional networks. Unlike a traditional GAN network, it can generate objects in 3D directly or from 2D images. It offers a broader range of possible applications in 3D data processing compared with its 2D form.

As in other industries, AI in dentistry has started to blossom in recent years. From a dental perspective, applications of AI can be classified into diagnosis, decision-making, treatment planning, and prediction of treatment outcomes. Among all the AI applications in dentistry, the most popular one is diagnosis. AI can make more accurate and efficient diagnoses, thus reducing dentists' workload. On one hand, dentists are increasingly relying on computer programs for making decisions (36, 37). On the other hand, computer programs for dental use are becoming more and more intelligent, accurate, and reliable. Research on AI has spread over all fields in dentistry.

Although a large number of journal articles regarding dental AI have been published, it is still difficult to compare articles in terms of study design, data allocation (i.e., training, test, and validation sets), and model performance (i.e., accuracy, sensitivity, specificity, F1, area under the receiver operating characteristic (ROC) curve (AUC), and recall). Most articles did not fully report the information mentioned above. Thus, the MI-CLAIM (Minimum Information about Clinical Artificial Intelligence Modeling) checklist has been advocated to bring similar levels of transparency and utility to the application of AI in medicine (38).
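Several of the performance measures listed above can be derived from the four cells of a binary confusion matrix, which is one reason why reporting those counts makes studies easier to compare. The sketch below uses the standard definitions; the counts are invented for illustration, and note that recall is the same quantity as sensitivity.

```python
def classification_metrics(tp, fp, tn, fn):
    """Standard binary metrics from true/false positive and negative counts.

    Assumes every denominator is non-zero; a production version would guard against that.
    """
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    sensitivity = tp / (tp + fn)   # also called recall: diseased cases correctly found
    specificity = tn / (tn + fp)   # healthy cases correctly cleared
    precision = tp / (tp + fp)     # positive calls that were truly diseased
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {
        "accuracy": accuracy,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": f1,
    }


# Hypothetical test set of 100 radiographs (made-up counts).
metrics = classification_metrics(tp=40, fp=10, tn=45, fn=5)
```

AUC is not included because it requires the model's continuous scores across all decision thresholds rather than a single confusion matrix.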

Traditionally, dentists diagnose caries by visual and tactile examination or by radiographic examination according to a detailed criterion. However, detecting early-stage lesions is challenging when deep fissures, tight interproximal contacts, and secondary lesions are present. Eventually, many lesions are detected only in the advanced stages of dental caries, leading to a more complicated treatment, i.e., dental crown, root canal therapy, or even implant. Although dental radiography (whether panoramic, periapical, or bitewing views) and explorer (or dental probe) have been widely used and regarded as highly reliable diagnostic tools detecting dental caries, much of the screening and final diagnosis tends to rely on dentists' experience.

In operative dentistry, there has been research on the detection of dental caries, vertical root fractures, apical lesions, pulp space volumetric assessment, and evaluation of tooth wear (39–44) (Table 2). In a two-dimensional (2D) radiograph, each pixel of the grayscale image has an intensity, i.e., brightness, which represents the density of the object. By learning from the above-mentioned characteristics, an AI algorithm can learn the pattern and give predictions to segment the tooth, detect caries, etc. For example, Lee et al. (45) developed a CNN algorithm to detect dental caries on periapical radiographs. Kühnisch et al. (46) proposed a CNN algorithm to detect caries on intraoral images. Schwendicke et al. (47) compared the cost-effectiveness of AI for proximal caries detection with dentists' diagnosis; the results showed that AI was more effective and less costly.

Several studies mentioned above showed that AI has promising results in early lesion detection, with the same accuracy or even better compared with dentists. This achievement requires interdisciplinary cooperation between computer scientists and clinicians. The clinicians manually label the radiographic images with the location of caries while the computer scientists prepare the dataset and ML algorithm. Finally, clinicians and computer scientists jointly check and verify the accuracy and precision of the training results (48).

Periodontitis is one of the most widespread diseases. It is a burden for billions of individuals and, if untreated, can lead to tooth mobility and even tooth loss (49). To prevent severe periodontitis, early detection and treatment are needed. In clinical practice, periodontal disease diagnosis is based on evaluating pocket probing depths and gingival recession. The Periodontal Screening Index (PSI) is frequently used to quantify clinical attachment loss. However, this clinical evaluation has low reliability: the screening for periodontal disease is still based on the experience of dentists, and they may miss localized periodontal tissue loss (50).

In periodontics, AI has been utilised to diagnose periodontitis and classify plausible periodontal disease types (51, 52). In addition, Krois et al. (50) adopted CNN in the detection of periodontal bone loss (PBL) on panoramic radiographs. Lee et al. (53) evaluated the potential usefulness and accuracy of a proposed CNN algorithm to detect periodontally compromised teeth automatically. Yauney et al. (54) claimed that periodontal conditions could be examined by a CNN algorithm developed by their research group using systemic health-related data (Table 3).

Orthodontic treatment planning is usually based on the experience and preference of the orthodontists. As every patient and orthodontist is unique, the treatment is decided mutually by both sides. Traditionally, it takes a lot of effort for orthodontists to diagnose malocclusion, as many variables need to be considered in the cephalometric analysis, such that it is difficult to determine the treatment plan and predict the treatment outcome (55). AI is an ideal tool for solving orthodontic problems. In orthodontics, AI has applications (Table 4) in treatment planning and prediction of treatment results, such as simulating the changes in the appearance of pre- and post-treatment facial photographs. The impact of orthodontic treatment, the skeletal patterns, and the anatomic landmarks in lateral cephalograms (67) can be clearly seen with the aid of AI algorithms, greatly assisting communication between patients and dentists.

A Bayesian-based decision support system was developed by Thanathornwong (57) to diagnose the need for orthodontic treatment based on orthodontics-related data as input. Xie et al. (58) proposed an ANN model to evaluate whether extractions are needed from lateral cephalometric radiographs; a similar evaluation system was proposed by Jung et al. (59). Apart from predicting the extractions needed for orthodontic purposes, AI has been adopted to locate cephalometric landmarks. Park et al. (60, 61) demonstrated a DL algorithm for automatically identifying cephalometric landmarks on radiographs with high accuracy. Bulatova et al. (68) and Kunz et al. (69) developed similar AI algorithms, with accuracies comparable with those of human examiners in identifying those landmarks. An automatic system for skeletal classification using lateral cephalometric radiographs was proposed by Yu et al. (63).

Besides locating multiple cephalometric landmarks and classification, AI systems have been used in orthodontic treatment planning. Choi et al. (64) proposed an AI model to judge whether surgery is needed using lateral cephalometric radiographs. Most of the orthodontic applications appear to concern landmark identification and treatment planning, which are tedious procedures for orthodontists. A basic task for orthodontic treatment planning is to segment and classify the teeth. AI has also been used for these purposes on multiple sources, such as radiographs and full-arch 3D digital optical scans (65, 66). Cui et al. proposed several AI algorithms to automatically segment teeth on a digital teeth model scanned by a 3D intraoral scanner (65) and on CBCT images (66, 70). In addition to tooth segmentation, they also segmented alveolar bone, with an efficiency that exceeded the radiologists' work (i.e., 500 times faster). The paper also claimed that the algorithm works well in challenging cases with variable dental abnormalities (66).

Oral and Maxillofacial Pathology (OMFP) is a specialty for examining pathological conditions and diagnosing diseases in the oral and maxillofacial region. The most severe type of OMFP is oral cancer. Statistics from the World Health Organization (WHO) show that every year there are over 657,000 patients diagnosed with oral cancer globally, among which there are more than 330,000 deaths (71). In OMFP, as shown in Table 5, AI has been researched mostly for tumour and cancer detection based on radiographic, microscopic and ultrasonographic images. In addition, AI can be used to detect abnormal sites on radiographs (72), such as nerves in the oral cavity, interdigitated tongue muscles, and parotid and salivary glands. CNN algorithms were demonstrated to be a suitable tool for automatically detecting cancers (73, 78). It is worth mentioning that AI also plays a role in managing cleft lip and palate in risk prediction, diagnosis, pre-surgical orthopaedics, speech assessment, and surgery (79).

Early detection and diagnosis of various mucosal lesions are essential to classify them as benign or malignant. Surgical resection is required for malignant lesions. However, some lesions are similar in appearance, so their diagnosis requires biopsy slides and radiographs. Pathologists diagnose disease by observing the morphology of stained specimens on glass slides using a microscope (80). It is tedious work that requires much effort from pathologists. Of all the biopsies that need to be examined, only around 20% are found to be malignancies. Thus, AI can be a suitable tool for aiding pathologists in this task.

Warin et al. (74) used a CNN approach to detect oral potentially malignant disorders (OPMDs) and oral squamous cell carcinoma (OSCC) in intraoral optical images. In addition to intraoral optical images, optical coherence tomography (OCT) has been used to identify benign and malignant lesions in the oral mucosa. James et al. (75) used ANN and SVM models to distinguish malignant and dysplastic oral lesions. Heidari et al. (76) used a CNN network, AlexNet (17), to distinguish normal and abnormal head and neck mucosa. Aubreville et al. (73) used a CNN algorithm to automatically diagnose oral squamous cell carcinoma (SCC) from confocal laser endomicroscopy images; the study showed that the CNN algorithm used was especially suitable for early diagnosis of SCC. Poedjiastoeti et al. (77) also used a CNN algorithm to identify and distinguish ameloblastoma and keratocystic odontogenic tumour (KCOT), two oral tumours with similar features in radiographic images. By comparing the computer-generated results with the biopsy results, the accuracy of the CNN algorithm was found to be 83% and the diagnostic time 38 s. These values were similar to those of oral and maxillofacial specialists.

In prosthodontics, a typical treatment process to prepare a dental crown includes tooth preparation, impression taking, cast trimming, restoration design, fabrication, try-in, and cementation. The application of AI in prosthodontics mainly lies in the restoration design (Table 6). CAD/CAM has digitalised the design work in commercialized products, including CEREC, Sirona, 3Shape, etc. Although this has dramatically increased the efficiency of the design process by utilising a tooth library for crown design, it still cannot achieve a custom-made design for individual patients (81). With the development of AI, Hwang et al. (82) and Tian et al. (83) proposed novel approaches based on 2D-GAN models to generate a crown by learning from technicians' designs. The training data were 2D depth maps converted from 3D tooth models. Ding (84) reported a 3D-DCGAN network for crown generation, which utilised 3D data directly in the generation process; the morphology of the generated crowns was similar to that of natural teeth. Integrating AI with CAD/CAM or 3D/4D printing can achieve a more desirable workflow with high efficiency (88). AI has also been used in shade matching (85) and debonding prediction of CAD/CAM restorations (86).

Apart from fixed prosthodontics, the design in removable prosthodontics is more challenging as more factors and variables need to be considered. No ML algorithm is available for the purpose of designing removable dentures while several expert (knowledge based) systems have been introduced (89–91). Current ML algorithms are more focused on assisting the design process of removable dentures, e.g., classification of dental arches (87), and facial appearance prediction in edentulous patients (92).

Given the success of AI, it has been shown that AI can learn beyond human expertise. In fact, the development of AI cannot be achieved without the development of computer technology (software), computing capacity (hardware), and large databases (input data). ML tasks involving 3D models require high computational power to train the algorithm. Current computational power may still be insufficient to work directly on 3D data to perform classification or regression tasks, compared with well-studied 2D image and video-based tasks. The millions of points or mesh elements in a 3D model cannot be loaded onto a GPU at once. Sampling and alternative representations of a 3D model (i.e., depth map, voxels, point cloud, and mesh) are often used to reduce the computational burden, at the cost of sacrificing detail during the conversion. In addition to the massive amount of digitalised medical data used for training ML models, which did not exist previously, the development of wearable devices also contributes to the acquisition of medical big data. Thus, the evolution of AI applications is greatly dependent on the AI algorithm, computational power, and digitalised training data.
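The trade-off between computational burden and detail can be illustrated with one of the representations mentioned above. The sketch below converts a point cloud into a set of occupied voxels; it is a simplified illustration with made-up coordinates and a hypothetical function name, not a method taken from the cited studies.

```python
def voxelize(points, voxel_size):
    """Map each 3D point to the integer index of the cubic voxel that contains it."""
    occupied = set()
    for x, y, z in points:
        # Floor division assigns the point to a grid cell of edge length voxel_size.
        occupied.add((int(x // voxel_size), int(y // voxel_size), int(z // voxel_size)))
    return occupied


# Four hypothetical surface points (arbitrary units).
points = [(0.10, 0.10, 0.10), (0.20, 0.15, 0.05), (0.90, 0.10, 0.10), (1.40, 0.20, 0.30)]

coarse = voxelize(points, voxel_size=0.5)  # the first two points merge into one voxel
fine = voxelize(points, voxel_size=0.1)    # a finer grid keeps all four points distinct
```

A larger voxel size gives fewer cells to store and process, but points that fall into the same cell can no longer be told apart, which is the loss of detail described above.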

Evidence-Based Dentistry (EBD), a more specific branch of Evidence-Based Medicine (EBM), is defined as “an approach to oral health care that requires the judicious integration of systematic assessments of clinically relevant scientific evidence, relating to the patient's oral and medical condition and history, with the dentist's clinical expertise and the patient's treatment needs and preferences” (93). Both EBM and EBD are regarded as the gold standard for the decision-making of health professionals. While ML models learn from human expertise, this can be seen as another useful tool for health professionals in multiple stages of clinical cases.

On one hand, ML could assist clinicians in storing and analysing constantly updated medical knowledge and patient-related data. ML algorithms are adept at finding patterns in patients' diagnostic data, improving current medical treatment, discovering new drugs, supporting precision medicine, and minimising human error. EBD has a similar aim, but ML can achieve it more quickly as it uses existing data, while EBD usually needs randomized controlled trials. On the other hand, medical data are challenging to handle since the diagnosis is usually based on multiple sources. ML requires a large amount of data for training, which may be subject to systematic bias or be inaccessible; either could influence the ultimate result. It is not easy to improve the precision of an ML model by only increasing the amount of training data without also increasing its quality. Also, ML cannot account for the differing diagnoses made by different clinicians using different data sources.

In addition, medical data are often stored within isolated, individualised systems with limited interoperability due to concerns such as ethical problems, data protection, and organisational barriers. Research on federated learning (94) is a potential way to solve data privacy protection problems. Besides, professional personnel are usually required to label dental and medical data. These limitations leave the datasets unstructured and insufficient in size, at least when compared with other AI fields (95). Few-shot learning has been studied to tackle this problem (96).
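The idea behind federated learning is that each institution trains on its own records and shares only model parameters, never the patient data. One common aggregation rule is a sample-weighted average of the clients' parameters (often called federated averaging); whether reference (94) uses exactly this rule is an assumption, and the function name and numbers below are hypothetical.

```python
def federated_average(client_weights, client_sizes):
    """Combine locally trained parameter vectors, weighting each client by its sample count."""
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(weights[i] * size for weights, size in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]


# Two hypothetical clinics share only their model parameters and dataset sizes.
clinic_a = [1.0, 2.0]  # parameters after local training on 100 records
clinic_b = [3.0, 4.0]  # parameters after local training on 300 records

global_model = federated_average([clinic_a, clinic_b], [100, 300])
```

In practice this averaging step is repeated over many rounds, with the combined model sent back to the clients for further local training.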

To use dental and medical data for ML training, one must take great care, because such data are complex and sensitive and the methods for validating them are limited (97). Dental and medical data from electronic records are usually of low integrity. The data are often neither systematically allocated nor randomly sampled: e.g., data from hospitals risk over-representing sick patients, whereas data collected from wearable devices risk over-representing healthy individuals. Furthermore, the level of the healthcare system differs between countries and regions. Training on data from a single country or region could yield results that are precise but not accurate and that cannot be applied to countries with different healthcare system conditions. AI applications trained on such data will be biased (95). Methods for learning from such long-tailed data have been studied to minimise this influence (98). In addition, the outcomes of AI are often not readily applicable. The single output provided by most contemporary medical AI applications only partially informs the complex decision-making required in clinical practice. Unlike EBD, ML does not have a system to monitor the quality of the input medical data and the degree of bias. EBD has a more macroscopic awareness, and decisions are usually made based on several data sources to minimise bias. Because of these constraints, some clinicians have reserved their opinion on ML, citing its “black box” mechanism, in which the rationale for reaching a specific result cannot be explained. Although explainable AI has been studied for this purpose (99), EBD is straightforward and has a more transparent mechanism (100).
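The distinction between precise and accurate results under sampling bias can be illustrated with a small simulation. This is a sketch under stated assumptions, not an analysis of real data: the true prevalence (30%) and the share of diseased records in hospital data (60%) and wearable-device data (10%) are made-up values, and the function name is hypothetical.

```python
import random
import statistics


def simulate_prevalence_estimates(true_prevalence: float = 0.30,
                                  n_per_sample: int = 1000,
                                  n_repeats: int = 100,
                                  seed: int = 0) -> dict:
    """Compare disease-prevalence estimates from differently biased sources.

    All sampling probabilities below are hypothetical illustration values.
    """
    rng = random.Random(seed)
    # Assumed chance that a sampled record belongs to a diseased person.
    sources = {
        "hospital": 0.60,                  # over-represents sick patients
        "wearable": 0.10,                  # over-represents healthy users
        "random_sample": true_prevalence,  # unbiased reference
    }
    summary = {}
    for name, p_disease in sources.items():
        estimates = []
        for _ in range(n_repeats):
            cases = sum(rng.random() < p_disease for _ in range(n_per_sample))
            estimates.append(cases / n_per_sample)
        summary[name] = {
            "mean": statistics.mean(estimates),    # accuracy: closeness to the truth
            "stdev": statistics.stdev(estimates),  # precision: spread across repeats
        }
    return summary


for source, stats in simulate_prevalence_estimates().items():
    print(f"{source:14s} mean={stats['mean']:.3f} stdev={stats['stdev']:.3f}")
```

Under these assumptions every source gives a tightly clustered (precise) estimate, but only the random sample is centred on the true value (accurate). Collecting more records from the biased sources would narrow the spread further without removing the offset.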

EBD and ML each have their own advantages and disadvantages. ML is a new approach in the medical field that improves diagnosis and predicts treatment outcomes by discovering patterns and associations within medical datasets. Although current ML applications mainly rely on a single type of dataset, ML is capable of acquiring information from EBD, which uses different kinds of data for diagnosis. EBD can in turn benefit from ML, which facilitates the discovery of underlying connections between medical data and disease and supports a better, more individualised diagnosis. EBD and ML are therefore complementary; clinicians can draw on both to maximise their advantages and apply them in medical practice.

New technologies are developed and adopted rapidly in the dental field. AI is among the most promising, offering high accuracy and efficiency provided that unbiased training data are used and the algorithm is properly trained. Dental practitioners can regard AI as a supplemental tool to reduce their workload and improve precision and accuracy in diagnosis, decision-making, treatment planning, prediction of treatment outcomes, and disease prognosis.

HD: Methodology, Investigation, Visualization, Writing—original draft. JW: Writing—review & editing. WZ: Writing—review & editing. JPM: Writing—review & editing. MFB: Writing—review & editing. JKHT: Conceptualization, Investigation, Writing—review & editing. All authors agree to be accountable for the content of the work. All authors have contributed to the article and have approved the submitted version.

This study was submitted in partial fulfilment of the requirements for the PhD degree of the first author at the University of Hong Kong. This work was supported by the General Research Fund (grant no. 17120220) of Research Grants Council of Hong Kong and the Innovation and Technology Fund (MHKJFS/075/20) of Hong Kong Special Administrative Region Government, China.

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

2. Poplin R, Varadarajan AV, Blumer K, Liu Y, McConnell MV, Corrado GS, et al. Prediction of cardiovascular risk factors from retinal fundus photographs via deep learning. Nat Biomed Eng. (2018) 2(3):158–64. doi: 10.1038/s41551-018-0195-0

3. Khanagar SB, Al-ehaideb A, Maganur PC, Vishwanathaiah S, Patil S, Baeshen HA, et al. Developments, application, and performance of artificial intelligence in dentistry—a systematic review. J Dent Sci. (2021) 16(1):508–22. doi: 10.1016/j.jds.2020.06.019

4. Khanagar SB, Al-Ehaideb A, Vishwanathaiah S, Maganur PC, Patil S, Naik S, et al. Scope and performance of artificial intelligence technology in orthodontic diagnosis, treatment planning, and clinical decision-making—a systematic review. J Dent Sci. (2021) 16(1):482–92. doi: 10.1016/j.jds.2020.05.022

5. Mahmood H, Shaban M, Indave BI, Santos-Silva AR, Rajpoot N, Khurram SA. Use of artificial intelligence in diagnosis of head and neck precancerous and cancerous lesions: a systematic review. Oral Oncol. (2020) 110:104885. doi: 10.1016/j.oraloncology.2020.104885

6. Farook TH, Jamayet NB, Abdullah JY, Alam MK. Machine learning and intelligent diagnostics in dental and orofacial pain management: a systematic review. Pain Res Manag. (2021) 2021:6659133. doi: 10.1155/2021/6659133

7. AbuSalim S, Zakaria N, Islam MR, Kumar G, Mokhtar N, Abdulkadir SJ. Analysis of deep learning techniques for dental informatics: a systematic literature review. Healthcare. (2022) 10(10):1892. doi: 10.3390/healthcare10101892

8. Mohammad-Rahimi H, Motamedian SR, Pirayesh Z, Haiat A, Zahedrozegar S, Mahmoudinia E, et al. Deep learning in periodontology and oral implantology: a scoping review. J Periodont Res. (2022) 57(5):942–51. doi: 10.1111/jre.13037

10. McCarthy J, Minsky M, Rochester N, Shannon CE. A proposal for the Dartmouth summer research project on artificial intelligence. AI Mag. (2006) 27(4):12–14. http://jmc.stanford.edu/articles/dartmouth/dartmouth.pdf

11. Tatnall A. History of computers: hardware and software development. In: Encyclopedia of Life Support Systems (EOLSS), Developed under the Auspices of the UNESCO. Paris, France: Eolss. (2012). https://www.eolss.net

12. Weizenbaum J. ELIZA—a computer program for the study of natural language communication between man and machine. Commun ACM. (1966) 9(1):36–45. doi: 10.1145/365153.365168

13. Hendler J. Avoiding another AI winter. IEEE Intell Syst. (2008) 23(02):2–4. doi: 10.1109/MIS.2008.20

14. Schmidhuber J. Deep learning. Scholarpedia. (2015) 10(11):32832. doi: 10.4249/scholarpedia.32832

15. Liebowitz J. Expert systems: a short introduction. Eng Fract Mech. (1995) 50(5–6):601–7. doi: 10.1016/0013-7944(94)E0047-K

16. McDermott JP. R1: an expert in the computer systems domain. AAAI Conference on artificial intelligence (1980).

17. Krizhevsky A, Sutskever I, Hinton GE. Imagenet classification with deep convolutional neural networks. Commun ACM. (2017) 60(6):84–90. doi: 10.1145/3065386

18. Russakovsky O, Deng J, Su H, Krause J, Satheesh S, Ma S, et al. Imagenet large scale visual recognition challenge. Int J Comput Vis. (2015) 115(3):211–52. doi: 10.1007/s11263-015-0816-y

19. Campbell M, Hoane Jr AJ, Hsu F-h. Deep blue. Artif Intell. (2002) 134(1–2):57–83. doi: 10.1016/S0004-3702(01)00129-1

20. Chao X, Kou G, Li T, Peng Y. Jie ke versus AlphaGo: a ranking approach using decision making method for large-scale data with incomplete information. Eur J Oper Res. (2018) 265(1):239–47. doi: 10.1016/j.ejor.2017.07.030

21. OpenAI. ChatGPT: Optimizing language models for dialogue. Available at: https://openai.com/blog/chatgpt/ (accessed on 7 February 2023).

22. Fang G, Chow MC, Ho JD, He Z, Wang K, Ng T, et al. Soft robotic manipulator for intraoperative MRI-guided transoral laser microsurgery. Sci Robot. (2021) 6(57):eabg5575. doi: 10.1126/scirobotics.abg5575

23. Flowers JC. Strong and weak AI: deweyan considerations. AAAI Spring symposium: towards conscious AI systems (2019).

24. Hastie T, Tibshirani R, Friedman J. Overview of supervised learning. In: Hastie T, Tibshirani R, Friedman J, editors. The elements of statistical learning. New York, NY, USA: Springer (2009). p. 9–41. doi: 10.1007/978-0-387-84858-7_2

25. Ray S. A quick review of machine learning algorithms. International conference on machine learning, big data, cloud and parallel computing (COMITCon); 2019 14–16 Feb (2019).

26. Hastie T, Tibshirani R, Friedman J. Unsupervised learning. In: Hastie T, Tibshirani R, Friedman J, editors. The elements of statistical learning. New York, NY, USA: Springer (2009). p. 485–585. doi: 10.1007/978-0-387-84858-7_14

27. Zhu X, Goldberg AB. Introduction to semi-supervised learning. Synth Lect Artif Intell Mach Learn. (2009) 3(1):1–130. doi: 10.1007/978-3-031-01548-9

28. Zhou Z-H. A brief introduction to weakly supervised learning. Natl Sci Rev. (2017) 5(1):44–53. doi: 10.1093/nsr/nwx106

29. Agatonovic-Kustrin S, Beresford R. Basic concepts of artificial neural network (ANN) modeling and its application in pharmaceutical research. J Pharm Biomed Anal. (2000) 22(5):717–27. doi: 10.1016/S0731-7085(99)00272-1

30. LeCun Y, Bengio Y, Hinton G. Deep learning. Nature. (2015) 521(7553):436–44. doi: 10.1038/nature14539

31. Nam CS. Neuroergonomics: Principles and practice. Gewerbestrasse, Switzerland: Springer Nature (2020). doi: 10.1007/978-3-030-34784-0

32. Goodfellow I, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, et al. Generative adversarial nets. Adv Neural Inf Process Syst. (2014) 27:2672–80. doi: 10.5555/2969033.2969125

33. Gui J, Sun Z, Wen Y, Tao D, Ye J. A review on generative adversarial networks: algorithms, theory, and applications. arXiv preprint arXiv:2001.06937 (2020).

34. Aggarwal A, Mittal M, Battineni G. Generative adversarial network: an overview of theory and applications. Int J Inf Manage Data Insights. (2021) 33(1):100004. doi: 10.1016/j.jjimei.2020.100004

35. Wu J, Zhang C, Xue T, Freeman WT, Tenenbaum JB. Learning a probabilistic latent space of object shapes via 3d generative-adversarial modeling. Proceedings of the 30th international conference on neural information processing systems (2016).

36. Schleyer TK, Thyvalikakath TP, Spallek H, Torres-Urquidy MH, Hernandez P, Yuhaniak J. Clinical computing in general dentistry. J Am Med Inform Assoc. (2006) 13(3):344–52. doi: 10.1197/jamia.M1990

37. Chae YM, Yoo KB, Kim ES, Chae H. The adoption of electronic medical records and decision support systems in Korea. Healthc Inform Res. (2011) 17(3):172–7. doi: 10.4258/hir.2011.17.3.172

38. Norgeot B, Quer G, Beaulieu-Jones BK, Torkamani A, Dias R, Gianfrancesco M, et al. Minimum information about clinical artificial intelligence modeling: the MI-CLAIM checklist. Nat Med. (2020) 26(9):1320–4. doi: 10.1038/s41591-020-1041-y

39. Huang Y-P, Lee S-Y. An Effective and Reliable Methodology for Deep Machine Learning Application in Caries Detection. medRxiv (2021).

40. Fukuda M, Inamoto K, Shibata N, Ariji Y, Yanashita Y, Kutsuna S, et al. Evaluation of an artificial intelligence system for detecting vertical root fracture on panoramic radiography. Oral Radiol. (2020) 36(4):337–43. doi: 10.1007/s11282-019-00409-x

41. Vadlamani R. Application of machine learning technologies for detection of proximal lesions in intraoral digital images: in vitro study. Louisville, Kentucky, USA: University of Louisville (2020). doi: 10.18297/etd/3519

42. Setzer FC, Shi KJ, Zhang Z, Yan H, Yoon H, Mupparapu M, et al. Artificial intelligence for the computer-aided detection of periapical lesions in cone-beam computed tomographic images. J Endod. (2020) 46(7):987–93. doi: 10.1016/j.joen.2020.03.025

43. Jaiswal P, Bhirud S. Study and analysis of an approach towards the classification of tooth wear in dentistry using machine learning technique. IEEE International conference on technology, research, and innovation for betterment of society (TRIBES) (2021). IEEE.

44. Shetty H, Shetty S, Kakade A, Shetty A, Karobari MI, Pawar AM, et al. Three-dimensional semi-automated volumetric assessment of the pulp space of teeth following regenerative dental procedures. Sci Rep. (2021) 11(1):21914. doi: 10.1038/s41598-021-01489-8

45. Lee J-H, Kim D-H, Jeong S-N, Choi S-H. Detection and diagnosis of dental caries using a deep learning-based convolutional neural network algorithm. J Dent. (2018) 77:106–11. doi: 10.1016/j.jdent.2018.07.015

46. Kühnisch J, Meyer O, Hesenius M, Hickel R, Gruhn V. Caries detection on intraoral images using artificial intelligence. J Dent Res. (2021) 101(2). doi: 10.1177/00220345211032524

47. Schwendicke F, Rossi J, Göstemeyer G, Elhennawy K, Cantu A, Gaudin R, et al. Cost-effectiveness of artificial intelligence for proximal caries detection. J Dent Res. (2021) 100(4):369–76. doi: 10.1177/0022034520972335

48. Chen Y-w, Stanley K, Att W. Artificial intelligence in dentistry: current applications and future perspectives. Quintessence Int. (2020) 51(3):248–57. doi: 10.3290/j.qi.a43952

49. Tonetti MS, Jepsen S, Jin L, Otomo-Corgel J. Impact of the global burden of periodontal diseases on health, nutrition and wellbeing of mankind: a call for global action. J Clin Periodontol. (2017) 44(5):456–62. doi: 10.1111/jcpe.12732

50. Krois J, Ekert T, Meinhold L, Golla T, Kharbot B, Wittemeier A, et al. Deep learning for the radiographic detection of periodontal bone loss. Sci Rep. (2019) 9(1):1–6. doi: 10.1038/s41598-019-44839-3

51. Kim E-H, Kim S, Kim H-J, Jeong H-o, Lee J, Jang J, et al. Prediction of chronic periodontitis severity using machine learning models based on salivary bacterial copy number. Front Cell Infect. (2020) 10:698. doi: 10.3389/fcimb.2020.571515

52. Huang W, Wu J, Mao Y, Zhu S, Huang GF, Petritis B, et al. Developing a periodontal disease antibody array for the prediction of severe periodontal disease using machine learning classifiers. J Periodontol. (2020) 91(2):232–43. doi: 10.1002/JPER.19-0173

53. Lee J-H, Kim D-H, Jeong S-N, Choi S-H. Diagnosis and prediction of periodontally compromised teeth using a deep learning-based convolutional neural network algorithm. J Periodontal Implant Sci. (2018) 48(2):114–23. doi: 10.5051/jpis.2018.48.2.114

54. Yauney G, Rana A, Wong LC, Javia P, Muftu A, Shah P. Automated process incorporating machine learning segmentation and correlation of oral diseases with systemic health. 41st Annual international conference of the IEEE engineering in medicine and biology society (EMBC) (2019). IEEE.

55. Proffit WR. The evolution of orthodontics to a data-based specialty. Am J Orthod Dentofacial Orthop. (2000) 117(5):545–7. doi: 10.1016/S0889-5406(00)70194-6

56. Tanikawa C, Yamashiro T. Development of novel artificial intelligence systems to predict facial morphology after orthognathic surgery and orthodontic treatment in Japanese patients. Sci Rep. (2021) 11(1):1–11. doi: 10.1038/s41598-020-79139-8

57. Thanathornwong B. Bayesian-based decision support system for assessing the needs for orthodontic treatment. Healthc Inform Res. (2018) 24(1):22–8. doi: 10.4258/hir.2018.24.1.22

58. Xie X, Wang L, Wang A. Artificial neural network modeling for deciding if extractions are necessary prior to orthodontic treatment. Angle Orthod. (2010) 80(2):262–6. doi: 10.2319/111608-588.1

59. Jung S-K, Kim T-W. New approach for the diagnosis of extractions with neural network machine learning. Am J Orthod Dentofacial Orthop. (2016) 149(1):127–33. doi: 10.1016/j.ajodo.2015.07.030

60. Park J-H, Hwang H-W, Moon J-H, Yu Y, Kim H, Her S-B, et al. Automated identification of cephalometric landmarks: part 1—comparisons between the latest deep-learning methods YOLOV3 and SSD. Angle Orthod. (2019) 89(6):903–9. doi: 10.2319/022019-127.1

61. Hwang H-W, Park J-H, Moon J-H, Yu Y, Kim H, Her S-B, et al. Automated identification of cephalometric landmarks: part 2-might it be better than human? Angle Orthod. (2020) 90(1):69–76. doi: 10.2319/022019-129.1

62. Wei G, Cui Z, Zhu J, Yang L, Zhou Y, Singh P, et al. Dense representative tooth landmark/axis detection network on 3D model. Comput Aided Geom Des. (2022) 94:102077. doi: 10.1016/j.cagd.2022.102077

63. Yu H, Cho S, Kim M, Kim W, Kim J, Choi J. Automated skeletal classification with lateral cephalometry based on artificial intelligence. J Dent Res. (2020) 99(3):249–56. doi: 10.1177/0022034520901715

64. Choi H-I, Jung S-K, Baek S-H, Lim WH, Ahn S-J, Yang I-H, et al. Artificial intelligent model with neural network machine learning for the diagnosis of orthognathic surgery. J Craniofac Surg. (2019) 30(7):1986–9. doi: 10.1097/SCS.0000000000005650

65. Cui Z, Li C, Chen N, Wei G, Chen R, Zhou Y, et al. TSegnet: an efficient and accurate tooth segmentation network on 3D dental model. Med Image Anal. (2021) 69:101949. doi: 10.1016/j.media.2020.101949

66. Cui Z, Fang Y, Mei L, Zhang B, Yu B, Liu J, et al. A fully automatic AI system for tooth and alveolar bone segmentation from cone-beam CT images. Nat Commun. (2022) 13(1):1–11. doi: 10.1038/s41467-022-29637-2

67. Junaid N, Khan N, Ahmed N, Abbasi MS, Das G, Maqsood A. Development, application, and performance of artificial intelligence in cephalometric landmark identification and diagnosis: a systematic review. Healthcare. (2022) 10(12):2454. doi: 10.3390/healthcare10122454

68. Bulatova G, Kusnoto B, Grace V, Tsay TP, Avenetti DM, Sanchez FJC. Assessment of automatic cephalometric landmark identification using artificial intelligence. Orthod Craniofac Res. (2021) 24:37–42. doi: 10.1111/ocr.12542

69. Kunz F, Stellzig-Eisenhauer A, Zeman F, Boldt J. Artificial intelligence in orthodontics: evaluation of a fully automated cephalometric analysis using a customized convolutional neural network. J Orofac Orthop. (2020) 81(1):52–68. doi: 10.1007/s00056-019-00203-8

70. Cui Z, Zhang B, Lian C, Li C, Yang L, Wang W, et al. Hierarchical morphology-guided tooth instance segmentation from CBCT images. International conference on information processing in medical imaging (2021), Springer.

71. World Health Organization. Cancer Prevention. Available at: https://www.who.int/cancer/prevention/diagnosis-screening/oral-cancer/en/

72. Choi E, Lee S, Jeong E, Shin S, Park H, Youm S, et al. Artificial intelligence in positioning between mandibular third molar and inferior alveolar nerve on panoramic radiography. Sci Rep. (2022) 12(1):1–7. doi: 10.1038/s41598-021-99269-x

73. Aubreville M, Knipfer C, Oetter N, Jaremenko C, Rodner E, Denzler J, et al. Automatic classification of cancerous tissue in laserendomicroscopy images of the oral cavity using deep learning. Sci Rep. (2017) 7(1):1–10. doi: 10.1038/s41598-017-12320-8

74. Warin K, Limprasert W, Suebnukarn S, Jinaporntham S, Jantana P, Vicharueang S. AI-based analysis of oral lesions using novel deep convolutional neural networks for early detection of oral cancer. PLoS One. (2022) 17(8):e0273508. doi: 10.1371/journal.pone.0273508

75. James BL, Sunny SP, Heidari AE, Ramanjinappa RD, Lam T, Tran AV, et al. Validation of a point-of-care optical coherence tomography device with machine learning algorithm for detection of oral potentially malignant and malignant lesions. Cancers. (2021) 13(14):3583. doi: 10.3390/cancers13143583

76. Heidari AE, Pham TT, Ifegwu I, Burwell R, Armstrong WB, Tjoson T, et al. The use of optical coherence tomography and convolutional neural networks to distinguish normal and abnormal oral mucosa. J Biophotonics. (2020) 13(3):e201900221. doi: 10.1002/jbio.201900221

77. Poedjiastoeti W, Suebnukarn S. Application of convolutional neural network in the diagnosis of jaw tumors. Healthc Inform Res. (2018) 24(3):236–41. doi: 10.4258/hir.2018.24.3.236

78. Xu B, Wang N, Chen T, Li M. Empirical evaluation of rectified activations in convolutional network. arXiv preprint arXiv:1505.00853 (2015).

79. Dhillon H, Chaudhari PK, Dhingra K, Kuo R-F, Sokhi RK, Alam MK, et al. Current applications of artificial intelligence in cleft care: a scoping review. Front Med. (2021) 8:1–14. doi: 10.3389/fmed.2021.676490

80. Chang HY, Jung CK, Woo JI, Lee S, Cho J, Kim SW, et al. Artificial intelligence in pathology. J Pathol Transl Med. (2019) 53(1):1–12. doi: 10.4132/jptm.2018.12.16

81. Chen Y, Lee JKY, Kwong G, Pow EHN, Tsoi JKH. Morphology and fracture behavior of lithium disilicate dental crowns designed by human and knowledge-based AI. J Mech Behav Biomed Mater. (2022) 131:105256. doi: 10.1016/j.jmbbm.2022.105256

82. Hwang J-J, Azernikov S, Efros AA, Yu SX. Learning beyond human expertise with generative models for dental restorations. arXiv preprint arXiv:1804.00064 (2018).

83. Tian S, Wang M, Dai N, Ma H, Li L, Fiorenza L, et al. DCPR-GAN: dental crown prosthesis restoration using two-stage generative adversarial networks. IEEE J Biomed Health Inform. (2021) 26(1):151–60. doi: 10.1109/JBHI.2021.3119394

84. Ding H, Cui Z, Maghami E, Chen Y, Matinlinna JP, Pow EHN, et al. Morphology and mechanical performance of dental crown designed by 3D-DCGAN. Dent Mater. (2023) doi: 10.1016/j.dental.2023.02.001

85. Wei J, Peng M, Li Q, Wang Y. Evaluation of a novel computer color matching system based on the improved back-propagation neural network model. J Prosthodont. (2018) 27(8):775–83. doi: 10.1111/jopr.12561

86. Yamaguchi S, Lee C, Karaer O, Ban S, Mine A, Imazato S. Predicting the debonding of CAD/CAM composite resin crowns with AI. J Dent Res. (2019) 98(11):1234–8. doi: 10.1177/0022034519867641

87. Takahashi T, Nozaki K, Gonda T, Ikebe K. A system for designing removable partial dentures using artificial intelligence. Part 1. Classification of partially edentulous arches using a convolutional neural network. J Prosthodont Res. (2021) 65(1):115–8. doi: 10.2186/jpr.JPOR_2019_354

88. Rokaya D, Kongkiatkamon S, Heboyan A, Dam VV, Amornvit P, Khurshid Z, et al. 3D-Printed Biomaterials in biomedical application. In: Jana S, Jana S, editors. Functional biomaterials: drug delivery and biomedical applications. Singapore: Springer Singapore (2022). p. 319–39.

89. Sporring J, Hommelhoff Jensen K. Bayes Reconstruction of missing teeth. J Math Imaging Vis. (2008) 31(2):245–54. doi: 10.1007/s10851-008-0081-6

90. Zhang J, Xia JJ, Li J, Zhou X. Reconstruction-Based digital dental occlusion of the partially edentulous dentition. IEEE J Biomed Health Inform. (2017) 21(1):201–10. doi: 10.1109/JBHI.2015.2500191

91. Chen Q, Lin S, Wu J, Lyu P, Zhou Y. Automatic drawing of customized removable partial denture diagrams based on textual design for the clinical decision support system. J Oral Sci. (2020) 62(2):236–8. doi: 10.2334/josnusd.19-0138

92. Cheng C, Cheng X, Dai N, Jiang X, Sun Y, Li W. Prediction of facial deformation after complete denture prosthesis using BP neural network. Comput Biol Med. (2015) 66:103–12. doi: 10.1016/j.compbiomed.2015.08.018

93. American Dental Association. Policy on Evidence-Based Dentistry (2001). Available at: https://www.ada.org/en/about-the-ada/ada-positions-policies-and-statements/policy-on-evidence-based-dentistry

94. Rieke N, Hancox J, Li W, Milletarì F, Roth HR, Albarqouni S, et al. The future of digital health with federated learning. NPJ Digit Med. (2020) 3(1):119. doi: 10.1038/s41746-020-00323-1

95. Schwendicke F, Samek W, Krois J. Artificial intelligence in dentistry: chances and challenges. J Dent Res. (2020) 99(7):769–74. doi: 10.1177/0022034520915714

96. Ge Y, Guo Y, Yang Y-C, Al-Garadi MA, Sarker A. Few-shot learning for medical text: a systematic review. arXiv preprint arXiv:2204.14081 (2022).

97. Vest JR, Gamm LD. Health information exchange: persistent challenges and new strategies. J Am Med Inform Assoc. (2010) 17(3):288–94. doi: 10.1136/jamia.2010.003673

98. Zhang Y, Kang B, Hooi B, Yan S, Feng J. Deep long-tailed learning: a survey. arXiv preprint arXiv:2110.04596 (2021).

99. Hagras H. Toward human-understandable, explainable AI. Computer. (2018) 51(9):28–36. doi: 10.1109/MC.2018.3620965

Keywords: artificial intelligence (AI), machine learning, neural network, dentistry, evidence-based dentistry

Citation: Ding H, Wu J, Zhao W, Matinlinna JP, Burrow MF and Tsoi JKH (2023) Artificial intelligence in dentistry—A review. Front. Dent. Med. 4:1085251. doi: 10.3389/fdmed.2023.1085251

Received: 31 October 2022; Accepted: 31 January 2023;

Published: 20 February 2023.

Edited by:

Ziyad S. Haidar, University of the Andes, Chile

Reviewed by:

Artak Heboyan, Yerevan State Medical University, Armenia

© 2023 Ding, Wu, Zhao, Matinlinna, Burrow and Tsoi. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: James K. H. Tsoi, jkhtsoi@hku.hk

Specialty Section: This article was submitted to Dental Materials, a section of the journal Frontiers in Dental Medicine

