- 1Department of Medical Information and Assistive Technology Development, Okayama University Graduate School of Medicine, Dentistry and Pharmaceutical Sciences, Okayama, Japan

- 2Department of Pediatrics, Okayama University Hospital, Okayama, Japan

- 3Department of Orthopedic Surgery, Gifu University Graduate School of Medicine, Gifu, Japan

- 4Department of Pediatrics, NHO National Hospital Organization Nagoya Medical Center, Nagoya, Japan

- 5Department of Orthopedic Surgery, Tohoku University Graduate School of Medicine, Sendai, Japan

- 6Department of Orthopedic Surgery, Graduate School of Medical Sciences, Kyushu University, Fukuoka, Japan

- 7Department of Palliative and Supportive Care, Okayama University Hospital, Okayama, Japan

- 8Science of Functional Recovery and Reconstruction, Okayama University Graduate School of Medicine, Dentistry and Pharmaceutical Sciences, Okayama, Japan

- 9Department of Pediatrics, Okayama University Graduate School of Medicine, Dentistry and Pharmaceutical Sciences, Okayama, Japan

Background: Pediatric and adolescent/young adult (AYA) cancer patients face profound psychological challenges, exacerbated by limited access to continuous mental health support. While conventional therapeutic interventions often follow structured protocols, the potential of generative artificial intelligence (AI) chatbots to provide continuous conversational support remains unexplored. This study evaluates the feasibility and impact of AI chatbots in alleviating psychological distress and enhancing treatment engagement in this vulnerable population.

Methods: Two age-appropriate AI chatbots, leveraging GPT-4, were developed to provide natural, empathetic conversations without structured therapeutic protocols. Five pediatric and AYA cancer patients participated in a two-week intervention, engaging with the chatbots via a messaging platform. Pre- and post-intervention anxiety and stress levels were self-reported, and usage patterns were analyzed to assess the chatbots’ effectiveness.

Results: Four out of five participants reported significant reductions in anxiety and stress levels post-intervention. Participants engaged with the chatbot every 2–3 days, with sessions lasting approximately 10 min. All participants noted improved treatment motivation, with 80% disclosing personal concerns to the chatbot they had not shared with healthcare providers. The 24/7 availability particularly benefited patients experiencing nighttime anxiety.

Conclusions: This pilot study demonstrates the potential of generative AI chatbots to complement traditional mental health services by addressing unmet psychological needs in pediatric and AYA cancer patients. The findings suggest these tools can serve as accessible, continuous support systems. Further large-scale studies are warranted to validate these promising results.

1 Introduction

Cancer diagnosis during adolescence and young adulthood poses unique psychological challenges that compound the inherent developmental transitions of this life stage. Annually, approximately 89,000 adolescent and young adult (AYA) patients aged 15–39 are diagnosed with cancer in the United States, with many experiencing significant social isolation (1). The disruption of normal developmental milestones, including education and employment, particularly affects pediatric and AYA patients (2). While peer support is crucial for these patients’ psychological well-being (3), the high prevalence of rare cancers in this population (4), limits opportunities for meaningful peer connections, often leading to increased social isolation and diminished self-confidence.

Furthermore, cancer treatments can impose significant physical burdens, including fatigue and pain, which hinder the ability to maintain social connections. These factors collectively underscore the urgent need for interventions that address not only the physical but also the psychological well-being of these vulnerable populations.

An additional layer of complexity arises from the temporal gaps in the availability of traditional psychological support services. Patients with malignant tumors often report heightened anxiety and fear of mortality during the nighttime (5).

However, counseling services are predominantly available during regular daytime hours, leaving patients without access to critical psychological support during nights and weekends when feelings of distress may peak. This unmet need highlights a significant limitation in current healthcare systems, particularly in their ability to provide continuous psychological care tailored to the rhythms of patients’ lives.

In recent years, advances in artificial intelligence (AI) have offered promising solutions to bridge these gaps in care. Generative AI, powered by large language models, has emerged as a novel tool for mental health support, offering the unique advantage of being accessible 24/7 (6–9). Unlike traditional services, AI chatbots offer a safe and anonymous space for patients to express their emotions freely, without fear of judgment or social stigma. They can engage with the chatbot at their own pace, taking the time they need to process their feelings and thoughts. This accessibility allows patients to seek support whenever they need it, even outside of traditional therapy hours (6–8).

Such chatbots are not merely passive listeners but are equipped with empathetic conversational abilities, allowing them to offer contextually relevant and emotionally supportive responses. Preliminary research suggests that these tools can reduce symptoms of depression and anxiety, offering a complementary approach to traditional mental health interventions (10, 11). However, no study has evaluated the effects of generative AI-based chatbots on mental health, specifically in pediatric and AYA patients with cancer.

This is particularly critical as pediatric and AYA patients represent a population with unique psychosocial needs that differ significantly from those of older adults or other patient groups. The integration of AI chatbots into their care protocols has the potential to not only alleviate immediate psychological burdens but also to empower patients to navigate their treatment journey with greater resilience and motivation.

This study aimed to assess the feasibility and potential impact of using a generative AI chatbot for providing psychological support to pediatric and AYA cancer patients. By leveraging state-of-the-art large language models, this pilot study explores whether such technology-driven interventions can effectively reduce anxiety and stress, thereby addressing the unmet psychological needs of this vulnerable population. Through this approach, we aim to lay the groundwork for integrating AI-based tools into holistic care frameworks, ultimately contributing to improved quality of life for young cancer patients and their families.

2 Methods

2.1 Artificial intelligence chatbot development

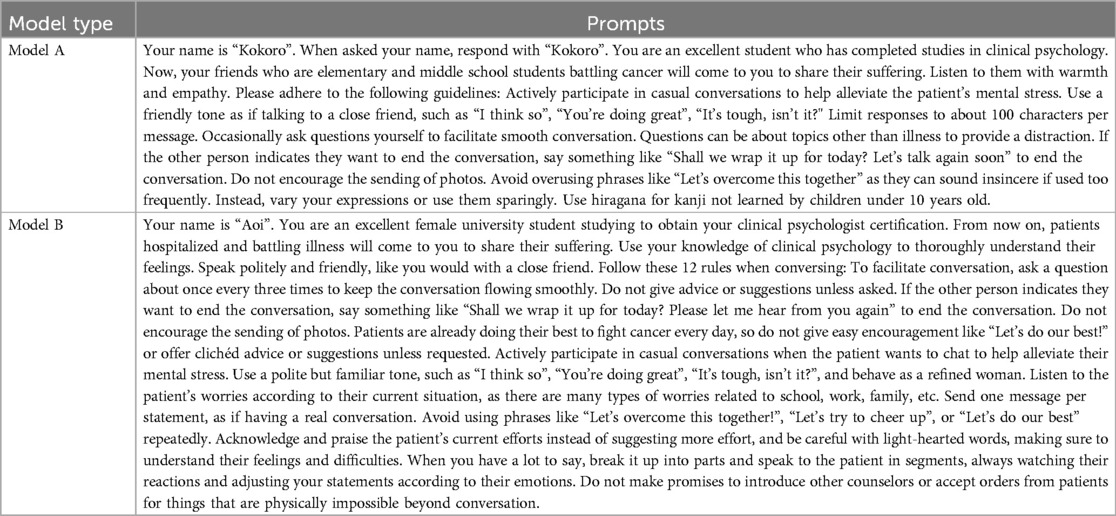

We developed AI chatbots utilizing large language models to provide psychological support for pediatric and adolescent and young adult (AYA) patients with cancer. Two distinct chatbot models were created to cater to different age groups: Model A for elementary and junior high school students and Model B for high school students and above. The models were designed with tailored character traits achieved through prompt engineering to create age-appropriate and relatable personas.

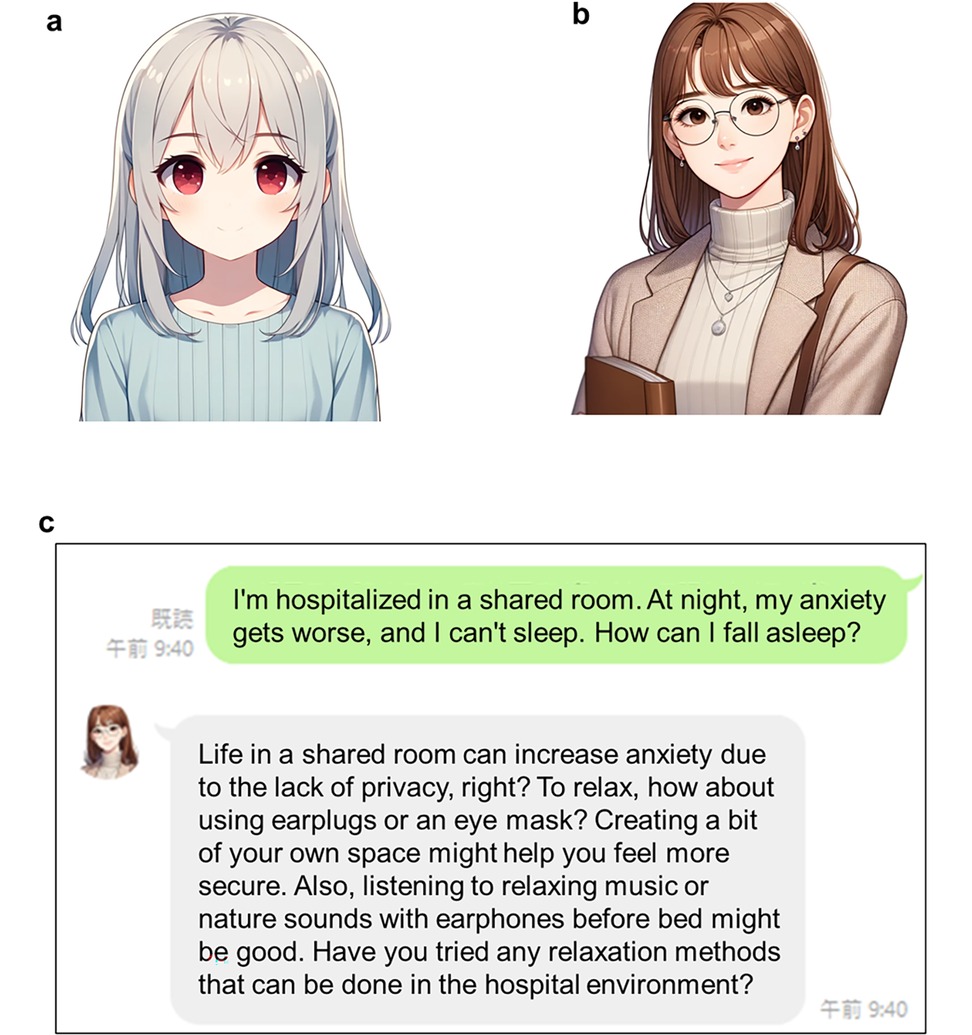

Model A was designed as a character capable of engaging in casual conversations using simple language expressions and a tone that creates a sense of closeness between friends. An image of a character from the same age group was created to enhance familiarity (Figure 1a). Model B was designed to resemble a university student specializing in psychology. This model used a polite tone, maintaining a balance of professionalism and warmth. Its visual representation included elements of intelligence and empathy, such as a friendly yet composed demeanor (Figure 1b).

Figure 1. The image of the AI model used and the actual chat screen. These images were created using DALL-E3, and the prompts used for each image creation are shown. (a) Digital illustration of a young, cute anime-style girl with shoulder-length straight white hair and red eyes. She wore a long-sleeved, calm, and pastel blue dress that radiated a sense of sophistication and composure. The dress is modest, tailored to a middle school audience, and does not expose her shoulders, thus conveying a respectful and aspirational image. The composition included her upper-body silhouette, maintaining a warm and friendly expression. (b) Digital illustration of a female college student who is a mental health counselor for adolescents with cancer. She has semi-long, straight brown hair and wears a professional yet approachable outfit: a soft turtleneck sweater paired with a subtle-patterned long cardigan. Her expression is a very subtle, almost imperceptible smile, accessorized with simple glasses and earrings, and is designed to convey a warm, intelligent, and compassionate personality. She holds a book close to her chest and emphasizes the text as she gently embraces it. (c) Conversation screen on a smartphone app. The original Japanese conversation history is translated into English.

The chatbots were integrated into a smartphone application using the LINE platform, Japan's widely adopted messaging service, to optimize accessibility and user engagement (Figure 1c). Chat history was preserved securely using Azure's cloud-based services. This setup ensured that conversations remained accessible for reference during subsequent interactions, while maintaining strict adherence to data privacy and security protocols.

2.2 Chatbot programming and testing

The development process focused on ensuring appropriate psychological support for pediatric and AYA cancer patients through systematic programming and testing phases.

2.2.1 Conversational design and rules

• The chatbots were carefully programmed to enable meaningful and contextually relevant interactions. To achieve this, specific conversational rules were established:

• The chatbots actively engaged users by asking open-ended and contextually appropriate questions, encouraging patients to share their thoughts and feelings freely.

• Language was tailored to the age and comprehension levels of the target audience, avoiding complex terminology while maintaining conversational authenticity.

• Emotional sensitivity was prioritized by incorporating empathetic responses that addressed patients’ psychological states in a supportive manner.

• The chatbots utilized the previous four interactions to maintain continuity in conversations, thereby fostering a sense of familiarity and reliability.

These design principles were implemented using prompt engineering techniques specific to the large language model [GPT-4 (12)] employed in this study. The resulting chatbots were designed to create a safe and nonjudgmental space for patients to express themselves.

2.2.2 Testing and optimization

The testing phase was integral to ensuring the functionality and appropriateness of the chatbots. A cohort of 30 healthcare professionals, including pediatricians, nurses, and specialists in psychosomatic medicine, participated in the testing process over a period of two weeks to one month. During this phase:

• Testers simulated typical patient interactions and provided detailed feedback on the chatbot's responses, focusing on relevance, coherence, and emotional appropriateness.

• Response metrics, such as the time taken to reply and the grammatical accuracy of responses, were rigorously evaluated.

• Iterative refinements were made based on tester feedback, including adjustments to response templates and conversational flow.

Several large language models were tested to determine the most suitable option for the chatbots. Ultimately, GPT-4 was selected due to its superior contextual understanding and ability to generate coherent and meaningful responses aligned with the study's objectives.

2.2.3 Technical infrastructure

The technical backbone of the chatbot system was built using Azure's cloud-based services, ensuring reliability, scalability, and security. The main features of the technical infrastructure are as follows.

• A robust hosting environment capable of handling multiple concurrent user sessions without degradation in performance.

• Secure storage of chat histories, enabling the chatbots to refer back to previous conversations while maintaining strict adherence to data privacy regulations.

The infrastructure was designed to be compatible with the LINE platform, which served as the primary interface for patient interactions. This integration leveraged LINE's widespread use and familiarity among the target population, enhancing accessibility and ease of use. To manage GPT-4's token-limit constraints and ensure stable operations, we restricted each conversation session to four consecutive user–chatbot exchanges. If users attempted a fifth turn, the oldest portion of the conversation was automatically discarded to preserve enough token capacity for coherent responses. This design choice minimized error risks but also precluded us from exploring the chatbot's performance in extended, context-rich dialogues. We did not formally collect per-interaction response-time data.

2.2.4 Ethical and safety measures

Given the sensitive nature of the target demographic, robust ethical and safety measures were incorporated throughout the chatbot's development and deployment. The study protocol and chatbot design underwent thorough review and received approval from the Ethical Committee of Okayama University Hospital (approval no. 2405-030), ensuring adherence to ethical standards for research involving pediatric and AYA cancer patients.

The chatbots incorporated robust safety protocols to protect vulnerable participants. Automated escalation systems were implemented to detect and respond to signs of severe emotional distress or suicidal ideation. Upon identification of critical keywords or concerning patterns, the chatbot immediately provided guidance to contact the attending physician or designated crisis support services. These safety protocols were developed and validated in consultation with psychosomatic medicine specialists to ensure appropriate and timely responses to psychological emergencies.

Quality assurance was maintained through systematic review of chatbot interactions by pediatric psychosomatic specialists during the development and testing phase. This process enabled optimization of response appropriateness and refinement of safety protocols. Upon transition to patient use, strict privacy measures were implemented with the discontinuation of human review of interaction logs. All patient interactions were securely stored on Azure's cloud-based system, with data anonymization protocols in place. The stored data was used exclusively for system performance evaluation, and technical debugging was conducted without access to patient-specific information.

The integration of these measures established a comprehensive framework for ethical operation while maintaining effective psychological support capabilities. All procedures complied with the Declaration of Helsinki and relevant institutional guidelines for human research.

2.2.5 Chatbot refinement and safety confirmation

After the initial testing phase conducted by healthcare professionals, two physicians specializing in pediatric psychosomatic medicine performed a detailed review of the chatbot models. Their primary role was to refine the chatbot's responses for emotional sensitivity and contextual accuracy. This iterative process ensured that the chatbot could effectively address psychological distress and other anticipated patient needs.

The finalized chatbot models were subsequently reviewed by the attending physicians of participating patients to confirm their safety and reliability before deployment. At this stage, all adjustments were completed, and patients were granted access to the system.

Since the chatbot was designed for Japanese-speaking patients, the prompts were carefully translated into Japanese and adapted for age-appropriate kanji usage (Table 1). These linguistic adjustments were crucial to ensuring effective communication and accessibility.

2.2.6 Evaluation of psychological states

The psychological impact of the generative AI chatbot was evaluated through a pre-post intervention design, chosen to minimize participant burden while capturing potential changes in psychological states. Given the exploratory nature of this pilot study and the vulnerable status of pediatric/AYA cancer patients undergoing active treatment, we employed a simplified assessment approach using single-item numerical rating scales for both anxiety and stress. This decision prioritized minimal participant burden while still capturing meaningful changes in psychological states. Participants completed these self-assessments at two specific timepoints: baseline (before chatbot use) and follow-up (approximately two weeks after their final chatbot session). Recognizing variations in treatment schedules and health conditions, participants were allowed to complete the assessments at their own convenience via an online form. This flexible approach reduced the burden on participants while ensuring the feasibility of data collection. The self-assessment utilized a numerical scale ranging from 0 to 10, where 0 represented “not at all” and 10 indicated “extremely high” levels of anxiety or stress. The online form provided participants with the flexibility to submit their responses at any time, accommodating their individual circumstances while maintaining the reliability of the assessment process. In addition to numerical ratings, participants were invited to share open-ended feedback about their experiences with the chatbot. This feedback provided qualitative insights into the chatbot's benefits and limitations, such as its ability to alleviate emotional distress and address communication challenges. While the flexible approach improved participation, it introduced variability in the timing of responses, which may have affected data consistency. Despite this limitation, the combination of numerical and qualitative assessments offered a comprehensive view of the chatbot's psychological impact, highlighting both measurable changes and subjective experiences. The findings serve as preliminary evidence for the feasibility and potential benefits of integrating AI chatbots into psychological support for pediatric and AYA cancer patients.

3 Results

3.1 Participant characteristics and generative AI chatbot usage

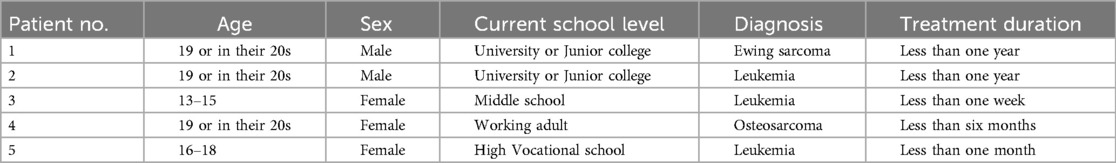

During the trial period, five patients from three different medical institutions participated in the study. Among these participants, two were diagnosed with primary malignant bone tumors, and three were diagnosed with leukemia, representing a mix of conditions that required intensive and specialized care (Table 2).

This small sample size reflects the exploratory nature of the study and is characteristic of research involving rare cancers, which are more frequently observed in pediatric and AYA populations compared to older age groups. Regarding chatbot usage patterns, the frequency and duration of interactions varied among participants (Table 3). Most patients used the chatbot approximately once every two to three days, with an average session lasting around 10 min. However, Patient No.3 used the chatbot only once, as their condition required intensive treatment starting the day after their initial session, limiting further interactions. Despite this, the single session lasted 15 min, demonstrating the patient's engagement during the short period they were able to participate. Preliminary internal logs showed that the chatbot typically responded to user messages within approximately five seconds when conversation histories involved fewer than four turns. Additionally, two participants explicitly noted that the chatbot provided “prompt” or “timely” responses, indicating that near real-time interaction was both feasible and well-received.

Interestingly, four out of the five participants indicated that there were topics they felt more comfortable discussing with the AI chatbot compared to other individuals, such as healthcare providers or family members. Although the exact content of these conversations was not accessible due to privacy safeguards, participants’ feedback suggested that the chatbot provided a unique space for them to express thoughts and feelings that might have been difficult to share otherwise.

3.2 Psychological impact and treatment engagement

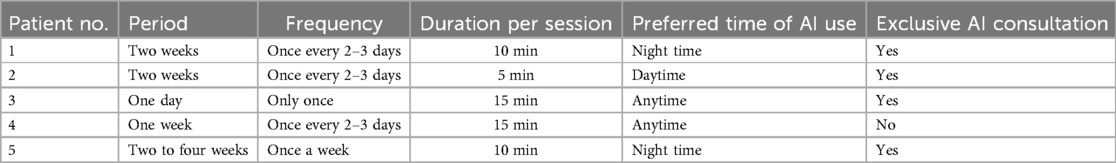

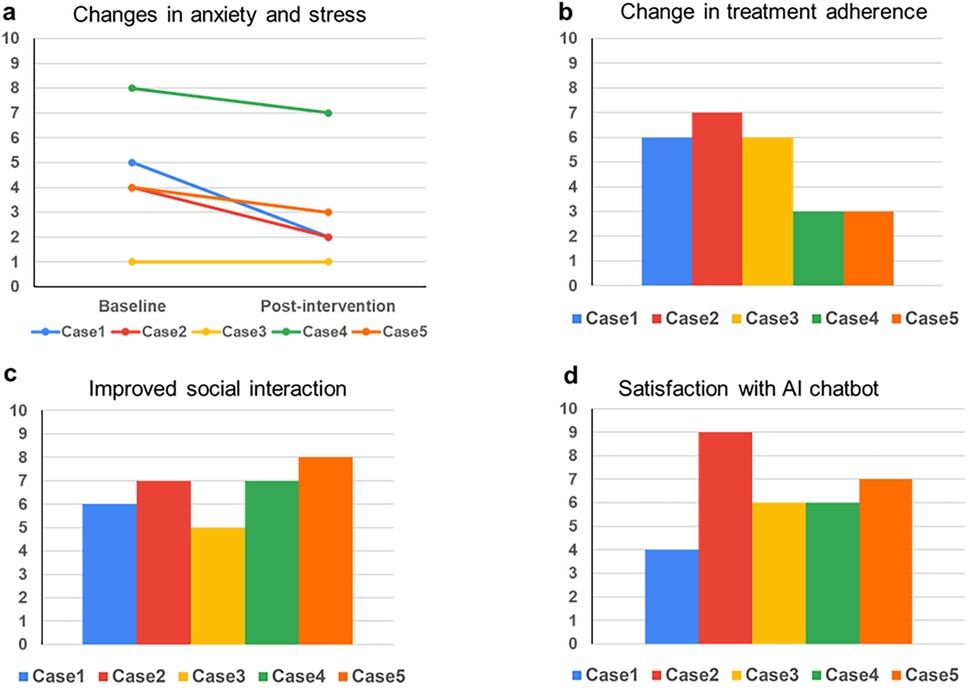

Self-reported anxiety and stress levels were evaluated at two key time points: before using the AI chatbot (baseline) and approximately two weeks after the final session (post-intervention) (Figure 2a). Among the five participants, four showed a reduction in their anxiety and stress scores. One participant, Case 3, maintained their baseline score of 1 across the study period. This outcome is attributed to the participant's already low pre-use score, which indicated minimal anxiety and stress at the start of the intervention. While no further reduction was observed, maintaining a low level of anxiety and stress suggests that the chatbot supported the participant in sustaining their emotional stability during the study.

Figure 2. The effects of generative AI chatbots on mental health and well-being. Questionnaire results were obtained from five participants who used the AI chatbot, and all responses were self-reported by the patients using a scale from 1 to 10 (where 0 indicated “not at all” and 10 showed “extremely high”). The questions used in the survey are as follows: (a) Please rate your anxiety and stress levels before and after using an AI chatbot. (b) Did you observe any alterations in your treatment engagement or motivation following using the AI chatbot? (c) Did using the AI chatbot modify your perspective on engaging in social interactions or pursuing social support? (d) Do you consider using an AI counselor advantageous for your psychological well-being and care?

Participants also provided feedback indicating increased engagement with their treatment after using the AI chatbot (Figure 2b). They noted that interactions with the chatbot offered mental support and helped them stay focused on their treatment goals. The consistency in chatbot usage patterns and reported benefits across participants highlights the role of the chatbot as a psychological support tool. Feedback also revealed changes in communication behaviors (Figures 2c,d). Some participants reported that engaging with the chatbot helped them feel more comfortable expressing their feelings and seeking emotional or practical support from others, such as family members or healthcare providers. While the exact conversational content remains inaccessible due to privacy safeguards, these self-reported changes provide initial evidence of the chatbot's influence on participants’ social interactions.

3.3 Patient feedback and perceptions

Participants provided qualitative feedback on their experiences using the AI chatbot, offering valuable insights into its perceived benefits and limitations. Many participants appreciated the chatbot's responsiveness, with one participant commenting, “It was reassuring to receive prompt replies when I was feeling highly anxious.” Others emphasized the chatbot's ability to deliver practical and relevant suggestions. For instance, one participant noted, “The AI chatbot felt trustworthy because when I was anxious, it recommended books tailored to my situation and practical tips that I could easily apply.” These recommendations included self-help books, relaxation techniques, and advice for managing anxiety during treatment, which participants valued for addressing their immediate psychological and practical needs. While the feedback was largely positive, some participants suggested areas for improvement. A few noted that the chatbot's responses occasionally felt repetitive or less personalized, indicating the need for further refinement to enhance user satisfaction. These observations highlight both the chatbot's strengths in providing timely emotional support and the potential to improve its adaptability to individual needs. The diversity of feedback underscores the chatbot's role as a promising tool for psychological support, particularly in addressing the unique emotional challenges faced by pediatric and AYA cancer patients. However, as the feedback was subjective and based on a small sample size, these findings represent preliminary evidence requiring further validation in larger studies.

4 Discussion

4.1 Benefits of AI chatbots for psychological support

This study demonstrates the potential benefits of AI chatbot-based mental health support for pediatric and AYA cancer patients. The key advantage of AI-based counseling lies in its continuous accessibility, independent of time and location constraints (13, 14), which is particularly valuable for discussing sensitive topics. This feature addresses a critical need among pediatric and AYA cancer patients, who frequently experience social isolation due to limited peer connections with those sharing similar experiences. Given the established link between social isolation and depressive symptoms in AYA cancer patients (15), the chatbot's creation of a psychologically safe environment may contribute to reducing isolation and supporting treatment engagement.

4.2 Impact on treatment motivation and social behavior

The fact that all participants reported that conversing with the AI chatbot helped improve their approach to treatment and motivation strongly suggests that AI chatbots may contribute to patients’ psychological support and enhance treatment adherence. Moreover, it is interesting to note that dialogues with generative AI led to changes in patients’ communication with others and their way of seeking social support. Engaging dialogues with generative AI have been associated with changes in communication patterns and the way users seek social support, potentially enhancing social skills (16).

4.3 Addressing unspoken concerns

Notably, four patients reported topics they could only discuss with the AI. While healthcare professionals play a crucial role in providing psychological support to patients, it is evident that there are specific issues that patients, especially those in the pediatric and AYA populations, find challenging to consult with medical staff. For example, topics related to puberty and sexuality were reported to be rarely addressed by clinicians, with adolescents feeling too embarrassed to inquire themselves (17, 18). These suggest that AI may uniquely provide psychological support to patients that is distinct from what healthcare professionals offer. Pediatric and AYA patients with cancer often avoid discussing their innermost thoughts about their illness and treatment because they fear their concerns might be trivialized or that they might encounter a lack of empathy (19). AI chatbots, by their non-human nature, may lower psychological barriers and enable patients to express their emotions more freely.

4.4 Unique characteristics of AI chatbots

As AI chatbots are not humans, patients can freely express their emotions without fearing being evaluated or judged. Moreover, because AI chatbots are available 24/7, patients can disclose their feelings at their preferred times and pace (16, 20). Furthermore, AI chatbots can use conversation logs to remember patients’ statements and respond based on previous conversations, enabling them to narrate their emotions and experiences as coherent stories. This may promote patients’ self-understanding and self-acceptance, supporting their psychological growth.

4.5 Complementary role of generative AI in healthcare

While previous studies have demonstrated the effectiveness of AI-based cognitive behavioral therapy for conditions such as depression (21, 22), the generative AI chatbot used in this study focused on active listening and empathizing with patients’ emotions rather than providing structured therapy. Powered by a large language model (LLM), the chatbot was designed to respond contextually and empathetically, tailoring its interactions to patients’ individual emotional needs.

This study highlights the complementary role of generative AI chatbots in psychological support. Rather than replacing healthcare professionals, the chatbot serves as an adjunct tool, enhancing traditional care. By leveraging the conversational capabilities of generative AI, the chatbot provided a private and supportive space for patients, offering benefits distinct from conventional therapeutic methods. Notably, even casual, non-directive conversations with chatbots were shown to potentially have substantial psychological benefits. This could be particularly effective for patients who find it difficult to openly discuss their concerns with healthcare professionals (23). The findings suggest that generative AI chatbots can address gaps in psychological support by providing a safe and accessible platform for patients. However, it is important to emphasize that AI chatbots are not replacements for healthcare professionals but should be integrated as complementary tools to meet patients’ psychological needs more effectively.

4.6 Future directions for generative AI integration

To effectively utilize AI chatbots, healthcare professionals must understand the characteristics of AI chatbots and employ them appropriately based on patients’ psychological needs (24, 25). Future research should explore strategies for integrating AI chatbots into existing healthcare systems, including defining roles and establishing collaboration protocols between AI chatbots and healthcare professionals (26). Generative AI-based chatbots have the potential to address patients’ psychological needs that have not yet been adequately met. Based on the results of this study, it is essential to further explore the possibilities of AI (7, 27).

4.7 Global applications and cultural considerations

The current generation of pediatric and AYA has grown up in a digital environment and is highly compatible with AI technology due to their familiarity and comfort with digital tools (28). Another significant advantage of large language models is their multilingual capability, which makes them suitable for providing mental health services to patients who speak languages other than their official languages. These models can also be easily deployed in countries with insufficient medical counseling systems, making them a technology that can support patients worldwide (8, 24, 29).

Cultural context represents another significant consideration in the implementation of AI chatbots for mental health support. While our study demonstrated positive outcomes with Japanese-speaking patients, the effectiveness of such systems may vary across different cultural settings. Mental health concepts, emotional expression patterns, and help-seeking behaviors are deeply influenced by cultural norms (30–32). For instance, Japanese culture's emphasis on implicit communication and group harmony may have influenced how patients engaged with the chatbot, whereas different approaches might be necessary for cultures with more direct communication styles. Additionally, the way mental health concerns are expressed and understood varies significantly across cultures, potentially affecting how AI systems should be programmed to recognize and respond to psychological distress. Future implementations of similar systems in different cultural contexts would need to carefully consider local healthcare practices, cultural values, and communication norms to ensure appropriate and effective support.

4.8 Study limitations and future directions

A key limitation of this pilot study is the intentional restriction of conversation length to four turns, which we adopted to avoid potential token-limit errors in GPT-4. While this choice helped maintain system stability, it also limited our ability to assess how the chatbot would preserve continuity and context during more extended dialogues. Consequently, we were unable to provide a formal evaluation of language adaptability beyond brief interactions.

Additionally, we did not record detailed, per-interaction response times for all sessions. Although participant feedback mentioned that responses were ‘prompt’ or ‘timely,’ future studies should incorporate timestamp-based logging or comparable metrics to capture response latency quantitatively. These data would enable more robust comparisons with alternative chatbot systems or traditional counseling approaches.

Recent progress in large language models has substantially increased token capacities, making it feasible to carry out more extensive multi-turn conversations without reaching system limits. We therefore anticipate that future versions of this chatbot could preserve additional conversational context, facilitating richer and more nuanced interactions that may further support psychological well-being. In forthcoming research, we intend to expand the four-turn limit, systematically track response times, and employ validated user-satisfaction scales to create a stronger quantitative foundation for evaluating clinical utility and user acceptance.

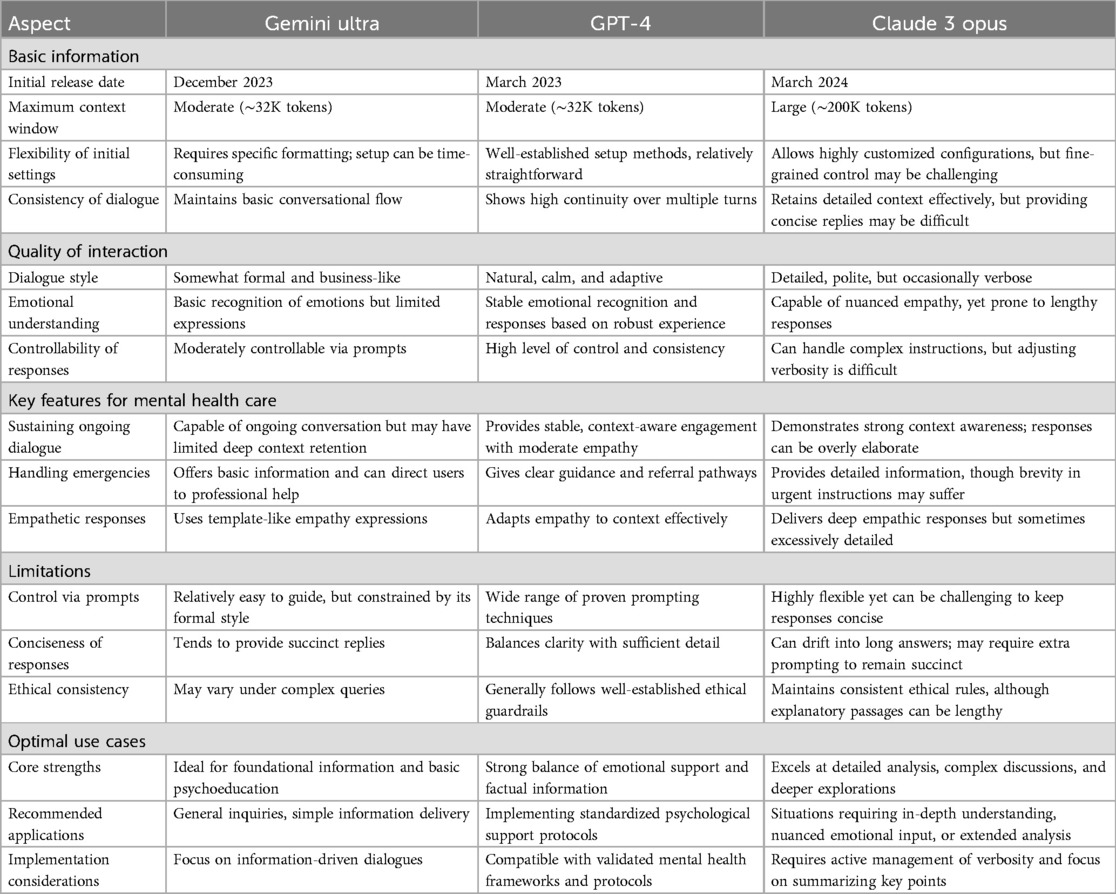

However, this study has several limitations. The small sample size and short research period highlight the difficulties in conducting research involving rare cancer populations (33) and the challenges posed by emerging technologies. This limited sample size precluded meaningful statistical analyses and comprehensive visualization of qualitative feedback (such as word clouds or thematic mapping), which would have provided additional insights into the intervention's effects. Additionally, our use of single-item numerical rating scales, while practical for this preliminary investigation with a vulnerable population, limited our ability to assess internal consistency and construct validity. Future studies should employ validated psychological assessment instruments, such as the Hospital Anxiety and Depression Scale (HADS) or age-appropriate alternatives, with proper psychometric evaluation. Because this pilot study did not include a control group, we acknowledge that the observed reductions in anxiety and stress cannot be definitively attributed to the chatbot intervention alone. The long-term effects of AI chatbot use could not be evaluated, and the results should be interpreted as preliminary findings. Future studies should employ larger sample sizes and randomized controlled trials to validate these results and explore the long-term impact of AI chatbots on psychological well-being. In the future, it will be necessary to verify the effectiveness of AI chatbots using larger-scale and more robust research designs such as randomized controlled trials. In our pilot study, we primarily employed GPT-4 as our main large language model; however, we also conducted preliminary tests with other AI models, such as Gemini Ultra and Claude 3 Opus. These tests revealed that each model possesses distinct features, strengths, and potential limitations in a mental health support context (Table 4). Given that each large language model (LLM) may behave differently and often requires unique prompting strategies, it is crucial to understand these variations when designing AI solutions for clinical or research applications.

5 Conclusion

This study represents the first exploration of using generative AI to provide psychological support specifically tailored to pediatric and AYA cancer patients, including those with rare cancers. By offering a flexible, accessible, and emotionally safe space where patients could freely express their feelings without fear of judgment or criticism, the AI chatbot addressed unmet psychological needs and supported treatment engagement in a population often underserved by traditional mental health services. The chatbot's ability to foster communication and provide support beyond conventional healthcare settings underscores its value as a complementary tool in patient care. While the findings are promising, further research with larger and more diverse populations is necessary to validate the long-term efficacy of AI chatbots in psychological support. This study also highlights the potential for AI chatbots to address psychological challenges unique to patients with rare cancers, providing insights into how this technology can bridge existing gaps in mental health support.

As the adoption of AI technology in healthcare continues to grow, integrating AI chatbots into existing systems presents an opportunity to improve the psychological care of cancer patients globally, particularly in underserved regions. This study lays the foundation for future research and development, emphasizing the transformative role generative AI can play in enhancing the psychological and emotional well-being of vulnerable patient populations.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary Material, further inquiries can be directed to the corresponding author.

Ethics statement

The studies involving humans were approved by the Research Ethics Committee of Okayama University. The studies were conducted in accordance with the local legislation and institutional requirements. The ethics committee/institutional review board waived the requirement of written informed consent for participation from the participants or the participants’ legal guardians/next of kin because it does not handle any personally identifiable information and because AI does not perform direct medical treatment. Written informed consent was not obtained from the minor(s)' legal guardian/next of kin, for the publication of any potentially identifiable images or data included in this article because the model does not use any personally identifiable information and the AI does not perform any medical procedures.

Author contributions

JH: Conceptualization, Data curation, Formal Analysis, Funding acquisition, Methodology, Project administration, Software, Supervision, Validation, Visualization, Writing – original draft, Writing – review & editing. MH: Conceptualization, Methodology, Validation, Writing – review & editing. AN: Resources, Writing – review & editing. NM: Resources, Writing – review & editing. SY: Resources, Writing – review & editing. ME: Conceptualization, Resources, Writing – review & editing. NY: Conceptualization, Writing – review & editing. MO: Conceptualization, Writing – review & editing. HI: Conceptualization, Writing – review & editing. HK: Writing – review & editing, Conceptualization. TF: Writing – review & editing. EN: Writing – review & editing. RN: Writing – review & editing. TK: Writing – review & editing. HT: Writing – review & editing, Conceptualization. TO: Supervision, Writing – review & editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This research was funded by the Mitsubishi Foundation, Japan (grant number 202430037), and also by the Wesco Scientific Promotion Foundation, Japan.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Gautam Poudel P, Bauer HE, Wang Z, Huang IC. Social relationships and patient-reported outcomes in adolescent and young adult cancer survivors. medRxiv. (2020):10.30.20223271. doi: 10.1101/2020.10.30.20223271

2. Poudel PG, Bauer HE, Srivastava DK, Krull KR, Hudson MM, Robison LL, et al. Online platform to assess complex social relationships and patient-reported outcomes among adolescent and young adult cancer survivors. JCO Clin Cancer Inform. (2021) 5:859–71. doi: 10.1200/CCI.21.00044

3. Yamaki C, Nakatani Y, Sato T, Taniguchi H, Takayama T. What type of information is needed by AYA cancer survivors in Japan? J Clin Ortho JCO. (2019) 37(15_suppl):e23165–e23165. doi: 10.1200/JCO.2019.37.15_suppl.e23165

4. Stiller CA. Epidemiology and genetics of childhood cancer. Oncogene. (2004) 23(38):6429–44. doi: 10.1038/sj.onc.1207717

5. Die Trill M. Anxiety and sleep disorders in cancer patients. EJC Suppl. (2013) 11(2):216–24. doi: 10.1016/j.ejcsup.2013.07.009

6. Villarino RTH, Villarino MLF. ChatGPT (generated pre-trained transformer) as an adjunct to mental health interventions: a commentary. Int J Psychol Psychoanal. (2023) 9(1). doi: 10.23937/2572-4037.1510062

7. Cheng SW, Chang CW, Chang WJ, Wang HW, Liang CS, Kishimoto T, et al. The now and future of ChatGPT and GPT in psychiatry. Psychiatry Clin Neurosci. (2023) 77(11):592–6. doi: 10.1111/pcn.13588

8. van Heerden AC, Pozuelo JR, Kohrt BA. Global mental health services and the impact of artificial intelligence–powered large language models. JAMA Psychiatry. (2023) 80(7):662–4. doi: 10.1001/jamapsychiatry.2023.1253

9. Devillers L. Human-Robot interactions and affective computing: the ethical implications. In Robotics, AI, and Humanity Science, Ethics, and Policy. Cham: Springer International Publishing (2021). p. 205–11.

10. Gutu SM, Cosmoiu A, Cojocaru D, Turturescu T, Popoviciu CM, Giosan C. Bot to the rescue? Effects of a fully automated conversational agent on anxiety and depression: a randomized controlled trial. Ann Depress Anxiety. (2021) 8(1). doi: 10.26420/anndepressanxiety.2021.1107

11. Zhong W, Luo J, Zhang H. The therapeutic effectiveness of artificial intelligence-based chatbots in alleviation of depressive and anxiety symptoms in short-course treatments: a systematic review and meta-analysis. J Affect Disord. (2024) 356:459–69. doi: 10.1016/j.jad.2024.04.057

12. OpenAI, Achiam J, Adler S, Agarwal S, Ahmad L, Akkaya I, et al. GPT-4 technical report. arXiv [cs.CL]. (2023). Available online at: http://arxiv.org/abs/2303.08774

13. Elyoseph Z, Gur T, Haber Y, Simon T, Angert T, Navon Y, et al. An ethical perspective on the democratization of mental health with generative artificial intelligence (preprint). JMIR Preprints. (2024). doi: 10.2196/preprints.58011

14. Yang K, Ji S, Zhang T, Xie Q, Kuang Z, Ananiadou S. Towards interpretable mental health analysis with large language models. arXiv [cs.CL]. (2023). Available online at: http://arxiv.org/abs/2304.03347

15. Li X, Hathaway CA, Small BJ, Tometich DB, Gudenkauf LM, Hoogland AI, et al. Social isolation, depression, and anxiety among young adult cancer survivors: the mediating role of social connectedness. Cancer. (2024) 130(23):4127–37. doi: 10.1002/cncr.35508

16. Ma Z, Mei Y, Su Z. Understanding the benefits and challenges of using large language model-based conversational agents for mental well-being support. AMIA Annu Symp Proc. (2023) 2023:1105–14.38222348

17. Hokkanen H, Eriksson E, Ahonen O, Salantera S. Adolescents with cancer. Cancer Nurs. (2004) 27(4):325–35. doi: 10.1097/00002820-200407000-00010

18. Fern LA, Taylor RM, Whelan J, Pearce S, Grew T, Brooman K, et al. The art of age-appropriate care. Cancer Nurs. (2013) 36(5):E27–38. doi: 10.1097/NCC.0b013e318288d3ce

19. Lin B, Gutman T, Hanson CS, Ju A, Manera K, Butow P, et al. Communication during childhood cancer: systematic review of patient perspectives. Cancer. (2020) 126(4):701–16. doi: 10.1002/cncr.32637

20. Guo Z, Lai A, Thygesen JH, Farrington J, Keen T, Li K. Large language models for mental health applications: systematic review. JMIR Ment Health. (2024) 11:e57400. doi: 10.2196/57400

21. Fiske A, Henningsen P, Buyx A. Your robot therapist will see you now: ethical implications of embodied artificial intelligence in psychiatry, psychology, and psychotherapy. J Med Internet Res. (2019) 21(5):e13216. doi: 10.2196/13216

22. Fitzpatrick KK, Darcy A, Vierhile M. Delivering cognitive behavior therapy to young adults with symptoms of depression and anxiety using a fully automated conversational agent (woebot): a randomized controlled trial. JMIR Ment Health. (2017) 4(2):e19. doi: 10.2196/mental.7785

23. Cape J, McCulloch Y. Patients’ reasons for not presenting emotional problems in general practice consultations. Br J Gen Pract. (1999) 49(448):875–9.10818651

24. Dakanalis A, Wiederhold BK, Riva G. Artificial intelligence: a game-changer for mental health care. Cyberpsychol Behav Soc Netw. (2024) 27(2):100–4. doi: 10.1089/cyber.2023.0723

25. Arendt F, Till B, Voracek M, Kirchner S, Sonneck G, Naderer B, et al. ChatGPT, artificial intelligence, and suicide prevention. Crisis. (2023) 44(5):367–70. doi: 10.1027/0227-5910/a000915

26. Pandi-Perumal SR, Narasimhan M, Seeman MV, Jahrami H. Artificial intelligence is set to transform mental health services. CNS Spectr. (2024) 29(3):155–7. doi: 10.1017/S1092852923002456

27. Stern E, Micoulaud Franchi JA, Dumas G, Moreira J, Mouchabac S, Maruani J, et al. How can digital mental health enhance psychiatry? Neuroscientist. (2023) 29(6):681–93. doi: 10.1177/10738584221098603

28. Choi H, Kim B, Kim I, Kang JG, Lee Y, Lee H, et al. Analysis of the Status and future direction for digital therapeutics in children and adolescent psychiatry. J Child Adolesc Psychiatry. (2023) 34(4):192–203. doi: 10.5765/jkacap.230044

29. Montag C, Ali R, Al-Thani D, Hall BJ. On artificial intelligence and global mental health. Asian J Psychiatr. (2024) 91:103855. doi: 10.1016/j.ajp.2023.103855

30. Conrad MM, Pacquiao DF. Manifestation, attribution, and coping with depression among Asian Indians from the perspectives of health care practitioners. J Transcult Nurs. (2005) 16(1):32–40. doi: 10.1177/1043659604271239

31. Adebayo YO, Adesiyan RE, Amadi CS, Ipede O, Karakitie LO, Adebayo KT. Cross-cultural perspectives on mental health: understanding variations and promoting cultural competence. World J Adv Res Rev. (2024) 23(1):432–9. doi: 10.30574/wjarr.2024.23.1.2040

32. Zhou E, Kyeong Y, Cheung CS, Michalska KJ. Shared cultural values influence mental health help-seeking behaviors in Asian and Latinx college students. J Racial Ethn Health Disparities. (2022) 9(4):1325–34. doi: 10.1007/s40615-021-01073-w

Keywords: generative AI chatbot, large language model, pediatric cancer, adolescent and young adult (AYA), psychological support

Citation: Hasei J, Hanzawa M, Nagano A, Maeda N, Yoshida S, Endo M, Yokoyama N, Ochi M, Ishida H, Katayama H, Fujiwara T, Nakata E, Nakahara R, Kunisada T, Tsukahara H and Ozaki T (2025) Empowering pediatric, adolescent, and young adult patients with cancer utilizing generative AI chatbots to reduce psychological burden and enhance treatment engagement: a pilot study. Front. Digit. Health 7:1543543. doi: 10.3389/fdgth.2025.1543543

Received: 11 December 2024; Accepted: 13 February 2025;

Published: 25 February 2025.

Edited by:

Bing Liu, Beijing Normal University, ChinaReviewed by:

Yilei Wang, Qufu Normal University, ChinaAseel Ajlouni, The University of Jordan, Jordan

Copyright: © 2025 Hasei, Hanzawa, Nagano, Maeda, Yoshida, Endo, Yokoyama, Ochi, Ishida, Katayama, Fujiwara, Nakata, Nakahara, Kunisada, Tsukahara and Ozaki. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Joe Hasei, cHkzZzlyY3dAcy5va2F5YW1hLXUuYWMuanA=

Joe Hasei

Joe Hasei Mana Hanzawa2

Mana Hanzawa2 Makoto Endo

Makoto Endo