- 1Biomedical Robotics Lab, Advanced Robotics, Istituto Italiano di Tecnologia, Genoa, Italy

- 2Department of Computer Science, Bioengineering, Robotics and Systems Engineering (DIBRIS), University of Genoa, Genova, Italy

- 3Simulation and Advanced Education Center, University of Genova, Genova, Italy

- 4Humanoid and Human Centred Mechatronics (HHCM), Istituto Italiano di Tecnologia, Genoa, Italy

- 5Department of Information Engineering and Mathematics, University of Siena, Siena, Italy

- 6Fos SpA, Genova, Italy

- 7Unit of Surgical Oncology, IRCCS Policlinico San Martino, Genoa, Italy

Introduction: The use of robotic systems in surgery has been groundbreaking for patients and surgeons over the last decades. As the annual number of robotic surgical procedures continues to increase rapidly, it is essential to offer surgeons innovative training courses alongside the standard specialization path. To this end, simulators play a fundamental role. Currently, the high cost of the leading VR simulators limits their accessibility to educational institutions. The challenge lies in balancing high-fidelity simulation with cost-effectiveness, and few cost-effective options exist for robotic surgery training.

Methods: This paper proposes the design, development and user-centered usability study of an affordable user interface to control a surgical robot simulator. It consists of a cart equipped with two haptic interfaces, a VR visor and two pedals. The simulations were created using Unity, which offers versatility for expanding the simulator to more complex scenes. An intuitive teleoperation control of the simulated robotic instruments is achieved through a high-level control strategy.

Results and Discussion: The system’s affordability and resemblance to real surgeon consoles make it well suited to robotic surgery training programs in medical schools, extending access to a broader audience. This is supported by the results of a usability study involving expert surgeons who use surgical robots regularly, expert surgeons without robotic surgery experience, and a control group. The study, based on a traditional Peg Board exercise and a Camera Control task, shows the simulator’s high usability and intuitive control across diverse user groups, including those with limited experience. This provides evidence that this affordable system is a promising solution for expanding robotic surgery training.

1 Introduction

The annual number of robotic surgical procedures performed worldwide continues to increase rapidly (1). Robotic surgery combines the benefits of minimally invasive access to the surgical field with increased manoeuvrability, precise movements, an immersive 3D view and ergonomic teleoperated procedures executed from a remote console (2). However, the core of the surgery still relies on the surgeon’s expertise and experience, so the outcome varies with the surgeon’s skills. Robotic surgical skills are unique and not derived from open or laparoscopic surgery (3). As demonstrated in laparoscopy, moving the acquisition of essential skills from the operating room to the simulation laboratory offers notable benefits for trainees, hospitals, and patients (4).

Challenges associated with using real surgical robotic systems for training include cost, low availability due to extensive clinical use, and the risk of equipment damage. One solution is computer-based or virtual reality (VR) simulators designed specifically for robotic surgery. VR training for robotic skills acquisition is described in the literature as early as 2007 (5, 6). In recent years, simulation has expanded into the surgical area as a safe and cost-effective training method (7), providing a VR environment in which novice robotic surgeons can practise surgical skills without compromising patient safety (8). While a simulated procedure cannot fully replicate the intraoperative experience, it is a crucial component in the training of surgical professionals. The primary goal remains skill acquisition, which, when optimized, could improve outcomes and patient safety (9).

As stated in (7), there are five VR simulators available for robot-assisted surgery in the field of urology: the Da Vinci Skills Simulator (89,000 USD), the Mimic dV Trainer (158,000 USD), the Simsurgery Educational Platform simulator (62,000 USD), the Robotic Surgical Simulator (120,000 USD) and the RobotiX Mentor (137,000 USD). Additionally, a more affordable solution has recently been introduced, namely BBZ’s LEO simulator (40,000 USD) (10). However, most of these simulators cannot fully meet demand due to price barriers (11). While the average cost of a simulator may be affordable for hospitals, the same may not hold true for all universities or medical schools aiming to offer training in robotic surgery. Indeed, as remarked in (7), the cost-effectiveness of simulators merits consideration. A systematic review of the effectiveness of simulation in urology indicates that certain low-fidelity simulators are considered more cost-effective than their high-fidelity counterparts (12).

As noted by (13), low-cost simulation has been in practice for several years. One of the first exercises developed was knot tying (14), for which the authors used low-cost, easily accessible materials to build a cost-effective curriculum, demonstrating construct validity. Recently, (15) introduced a cost-effective chest tube simulator, using a 3D printer to replicate the human chest cavity and support the practice of closed chest drainage techniques. (16) developed a simulator for neurosurgery, specifically for cerebrovascular bypass surgery: the authors built a low-cost, reusable, high-fidelity simulator from an anatomical skull and brain model, artificial vessels, and a water pump mimicking extracranial and intracranial circulation. An interesting example in open surgery, costing less than 1 USD, is (17): a training simulator for open dismembered pyeloplasty built from a catheter-tip syringe filled with 30 mL of air, tape, a 260 modeling balloon (the ureter), and an 11-inch party balloon (the dilated renal pelvis). Turning to laparoscopy, while numerous low-cost box trainers are available for traditional, non-robotic laparoscopy (18, 19), the same does not hold for robotic laparoscopy.

Few attempts have been made to develop cost-effective robotic surgery simulators, possibly because commercial solutions are available. The architecture proposed by (6) uses a PC screen and two Phantom Omni devices (an earlier version of the Geomagic Touch) equipped with an electromechanical gripper to simulate the surgeon’s master console. The simulated robotic arms are controlled through inverse kinematics equations, and the authors created a bean drop task visualized with the OpenSceneGraph rendering library. (20, 21) employed a similar architecture, using a VR visor and two Phantom Omni devices with the standard stylus; with V-REP (Coppelia), they designed various surgical training tasks, including pick and place, peg board, and suturing. Lastly, (22) proposes a different approach, using two Razer Hydra game controllers to control the simulated robotic arms within a Unity environment, with a bead move task as the exercise. None of these solutions incorporates pedals, which surgical robots commonly use to control robotic arm functions. Moreover, none aims to accurately replicate the console’s ergonomics so that the surgeon’s head, shoulders, and arms maintain the same positioning as in actual surgery. Finally, none reports a usability study with surgeons and a control group to evaluate the effectiveness of the proposed solution.

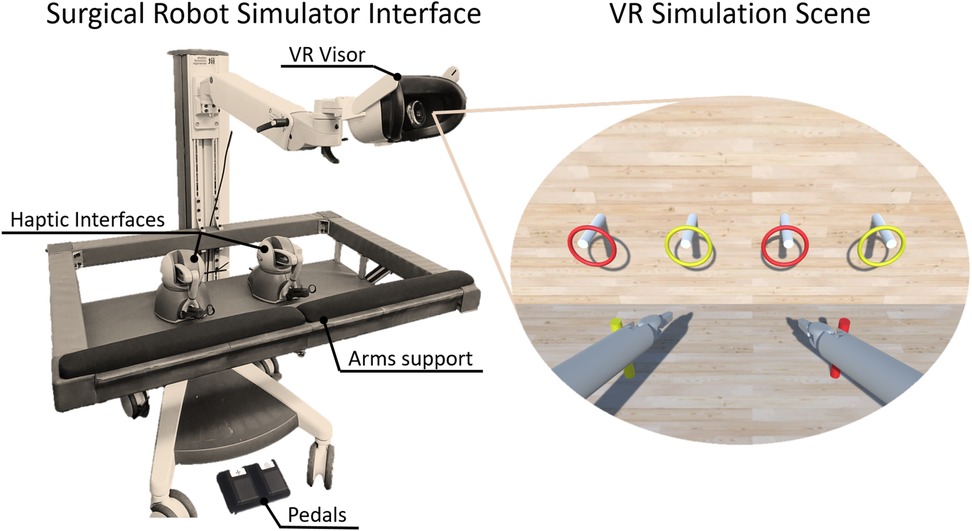

To address these needs, and inspired by (21), we propose the design, development and user-centered usability evaluation of an affordable user interface to control a surgical robot simulator, shown in Figure 1. The system was designed to be simple, scalable and cost-effective. A cart was structured to integrate all the devices needed to control the robotic surgical simulator: two haptic interfaces, two pedals and a VR visor. A software interface was developed to enable teleoperation of the simulated robotic instruments. In addition, we introduced the option of enabling haptic feedback for collisions between the simulated grippers and the scene elements. Finally, we developed two basic exercise tasks in Unity, whose flexible development environment enables the creation of intricate scenes incorporating diverse components, such as robots, tools, physics, and organs, to further enhance the simulator.

Figure 1 The proposed surgical robot simulator. The interface consists of two haptic devices, two USB pedals and a VR headset displaying the simulation scene.

The major contribution of this work lies in its validation through a comprehensive usability study involving three distinct groups of subjects: controls, robotic surgeons and non-robotic surgeons. The study’s primary goal was to evaluate performance differences between controls and robotic surgeons. For this, we recruited 13 trained surgeons experienced in robotic surgery and, for the control group, 23 subjects without any previous experience in surgery or robotics. We then sought to determine whether performance differed between robotic and non-robotic surgeons; to explore this hypothesis, we recruited a further 6 surgeons with no prior experience in robotic surgery. Lastly, although haptic feedback is absent from current robotic surgery, debates on its utility remain active and unresolved in the literature (23, 24). Therefore, since our simulator can include haptic feedback, we integrated it into one exercise as a pilot study, with the objective of assessing whether performance differs when the task is executed with or without it.

In summary, we have maintained the advantages of previously proposed surgical robot simulators while incorporating the missing parts, such as the physical interface and pedals. Then, we evaluated it with a comprehensive usability study involving both surgeons and controls.

The article is structured as follows. The main technical features of the system are detailed in Section 2. The experimental validation and usability study are presented in Section 3. The results and discussions are outlined in Section 4. Finally, the conclusions are reported in Section 5.

2 Material and methods

2.1 Surgical robot simulator console cart

A cost-effective console was developed to integrate all the devices required to control the surgical robot simulator, as shown in Figure 2. The design was based on the regulations for Video-Display-Terminal workstations (ISO 9241) to guarantee an ergonomic seating position, with (i) a desk or support for the surgeon’s arms at the height of , (ii) an arm-support depth from 10 cm to 20 cm, (iii) an adjustable chair to allow regulation of the seat height, and (iv) a distance of about 50–70 cm between the eyes and the 2D/3D monitor, or an adjustable arm holding the VR visor. Although the two visualization setups differ in terms of ergonomics, both are used by surgical robots on the market: the Da Vinci uses an immersive visual interface similar to VR, while Hugo (Medtronic) and Versius (CMR Surgical) use a display. The footprint and workspace of the haptic devices were taken into account so that the grippers can be handled at a distance of 15 cm from the arm support while keeping the wrist free to move. To meet these specifications, a standard commercial cart was customized by adding arm supports and a shelf made of aluminium bars. The console was equipped with:

• Two haptic interfaces used as master manipulators to teleoperate the simulated robotic instruments and the endoscope. Each haptic device was outfitted with a gripper interface (Twee, BBZ s.r.l, Italy) to provide an additional degree of freedom to control the forceps of the robotic surgical instruments;

• A VR visor or a 3D monitor for stereoscopic visualization of the simulated scene;

• Two USB pedals for control of the instruments and the camera motion.

Figure 2 CAD design of the surgical robot simulator interface, which can be equipped with a 2D/3D monitor or with a VR visor.

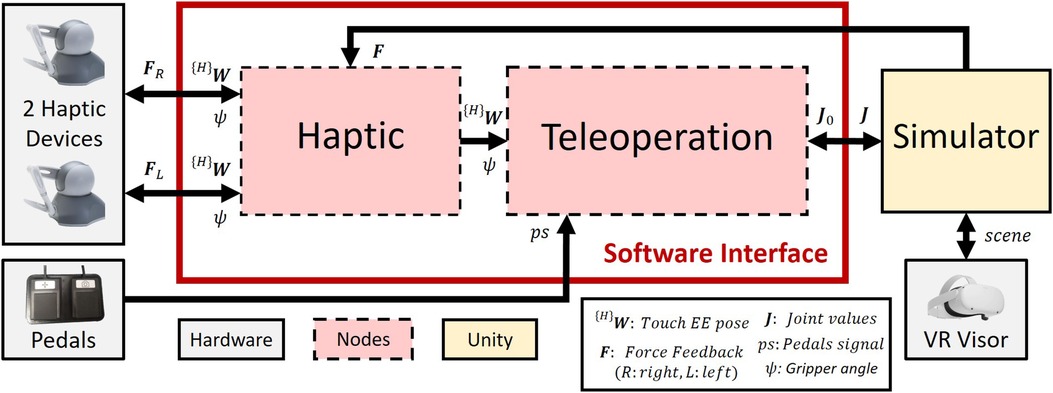

2.2 Surgical robot simulator software architecture

Figure 3 shows the Surgical Robot Simulator architecture. A software interface node, Haptic, retrieves position and orientation data from the two haptic devices. Using these data together with the pedal signals, the Teleoperation node performs the high-level control of a robotic surgical system simulated in Unity (25) and displayed through the visor. Finally, the Haptic node receives the interaction-force information from the simulation and provides haptic feedback to the manipulators. The two nodes are kept separate for greater modularity, which allows parts of the architecture to be reused for other purposes, as demonstrated in (26).

Figure 3 Surgical robot simulator architecture. The Haptic node retrieves the haptic device data ({H}W and the gripper opening angle) and sends them to the Teleoperation node, which controls the robotic arms’ movements in the simulation (Section 2.2.3). The Unity scene is shown through the VR visor, and the haptic feedback forces (Section 2.3.1) are sent directly to the Haptic node.

2.2.1 Pedals

Foot pedals are required to switch between control of the robotic arms and control of the camera. The pedal set of a Da Vinci robot consists of 7 pedals (Figure 4A). The yellow and blue pedals on the right are used for cutting (yellow) and coagulation (blue). Since this project does not currently simulate these functionalities, only the two black pedals (Figure 4B) have been replicated, in particular:

• The pedal with the camera symbol activates the robotic camera control: through this pedal, the simulator lets the user control the camera with the movements of the haptic devices.

• The pedal with the compass symbol is a clutch that deactivates the two instruments, allowing the surgeon to reposition the manipulators in the preferred position. To activate this feature, the user needs to press the pedal.

As shown in Figure 3, when the user presses either pedal, a corresponding signal is sent to the Teleoperation node.
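The pedal handling can be sketched as a small mode selector. The following Python sketch is an illustration under stated assumptions, not the simulator’s code: both pedals are treated as active while held, the clutch is given priority if both are pressed, and the names `Mode`, `PedalLogic` and the `reanchor` flag are hypothetical. The flag marks mode changes, after which an incremental teleoperation scheme would typically take the next HIP sample as its new reference, so that no jump is transmitted to the simulated arms.

```python
from enum import Enum


class Mode(Enum):
    """What the haptic devices currently drive (hypothetical names)."""
    INSTRUMENTS = "instruments"
    CAMERA = "camera"
    CLUTCH = "clutch"  # instruments disengaged, manipulators can be repositioned


def select_mode(camera_pressed: bool, clutch_pressed: bool) -> Mode:
    # Assumption: the clutch wins if both pedals are pressed at once.
    if clutch_pressed:
        return Mode.CLUTCH
    if camera_pressed:
        return Mode.CAMERA
    return Mode.INSTRUMENTS


class PedalLogic:
    """Tracks the active mode and flags mode changes."""

    def __init__(self) -> None:
        self.mode = Mode.INSTRUMENTS
        self.reanchor = False

    def update(self, camera_pressed: bool, clutch_pressed: bool) -> Mode:
        new_mode = select_mode(camera_pressed, clutch_pressed)
        # On any transition, the next HIP pose should become the new
        # reference for incremental motion (no jump on re-engagement).
        self.reanchor = new_mode is not self.mode
        self.mode = new_mode
        return self.mode
```

For example, pressing and then releasing the clutch pedal yields `CLUTCH` followed by `INSTRUMENTS`, with `reanchor` set on both transitions.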

2.2.2 Haptic device software interface

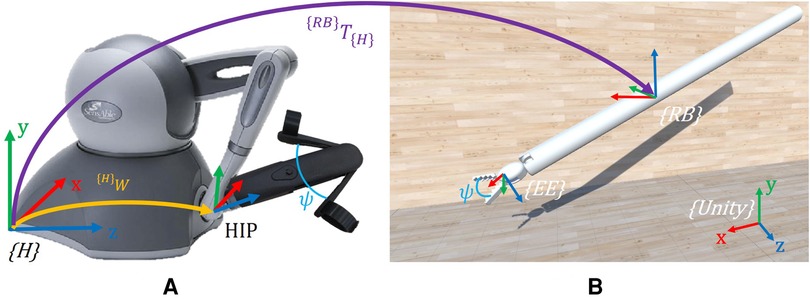

As shown in Figure 3, the Haptic node is the software interface that retrieves the raw position and orientation of the haptic device’s end-effector, as well as the gripper’s opening angle, and sends them to the Teleoperation node. Moreover, the Haptic node receives force feedback reference signals from the Simulator node, which are then sent to and rendered by the haptic devices. Figure 5A shows the homogeneous matrix {H}W representing the pose of the end-effector, also called Haptic Interface Point (HIP), in the haptic interface reference system {H}, together with the gripper’s opening angle. To eliminate noise in the opening angle measurement, a software calibration was implemented to remove the angle offset, setting the minimum value of the angle when the gripper is closed.
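A minimal sketch of such an offset calibration, assuming the offset is estimated as the mean of a few raw readings taken with the gripper closed and that the calibrated angle is clamped at zero. The class name, the averaging and the zero minimum are illustrative assumptions, not details reported for the actual Haptic node.

```python
from statistics import fmean


class GripperCalibration:
    """Removes the constant offset from raw gripper opening-angle readings."""

    def __init__(self) -> None:
        self.offset = None  # raw reading that corresponds to a closed gripper

    def calibrate_closed(self, closed_readings) -> None:
        # Averaging several samples taken with the gripper closed reduces
        # the influence of measurement noise on the estimated offset.
        if not closed_readings:
            raise ValueError("need at least one closed-gripper reading")
        self.offset = fmean(closed_readings)

    def angle(self, raw: float) -> float:
        if self.offset is None:
            raise RuntimeError("calibrate_closed() must be called first")
        # Subtract the offset; readings below it (noise) clamp to 0.
        return max(0.0, raw - self.offset)
```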

Figure 5 Description of the reference systems adopted. (A) Haptic interface reference system {H}. (B) Simulated robotic instrument. Here, only the Endo-Wrist part of the Patient Side Manipulator (PSM) is present in the simulation. The reference systems shown in (B) are the Unity world frame, the robot base frame {RB}, and the end-effector frame. {RB}T{H} is the transformation matrix between {RB} and {H}. {H}W is the pose of the HIP in {H}. The gripper opening angle is reproduced in the simulated instrument. Note that in Unity, the reference systems are left-handed.

2.2.3 High-level teleoperation control

The Teleoperation node is implemented to replicate the movements of the haptic interfaces on the robotic surgical instruments. In the simulation, in order to simplify and generalize the representation of the surgical robotic system, two generic instruments, similar to the Endo-Wrist of the Da Vinci Patient Side Manipulator (PSM) (27), are used (Figure 5B).

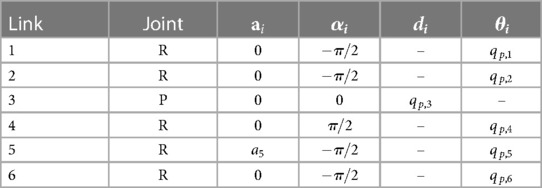

Resembling the Da Vinci, each PSM is a 7-DoF actuated arm that moves a surgical instrument around a Remote Center of Motion (RCM). The first 6 DoFs correspond to revolute (R) or prismatic (P) joints combined in an RRPRRR sequence. The last DoF corresponds to the opening and closing of the gripper. The end-effector (EE) is defined as the simulated gripper’s fulcrum (Figure 5B). The standard Da Vinci DH parameters (21) are used to define the transformation between the robot base and the EE (see Table 1).

The robotic tool is initialized with the initial joint values sent by the Simulator node (Figure 3), and its teleoperation is governed by the pedal logic described in Section 2.2.1 and the associated signal. The pose of the EE is updated incrementally: the real-time EE pose is continuously adjusted by adding the relative movements of the HIP, namely the variations in its position and orientation, as follows:

where the two homogeneous matrices describe the final and current pose of the EE, respectively, with respect to the robot base reference system {RB}. The increment matrix describes the pose variation of the HIP expressed in {RB} and is defined as:

where {RB}T{H} is the rigid transformation between {RB} and {H}, while the remaining two matrices are the final and initial poses of the HIP in {H}. All variables are expressed as homogeneous matrices.
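The incremental update can be written out explicitly. The block below is one consistent reading of these definitions, not necessarily the authors’ exact formulation: it assumes that the HIP position increment is added to the EE position and that the HIP orientation increment is composed on the left of the EE orientation, both re-expressed in {RB} through the rotation part R_H of {RB}T{H}. The symbols p, R and the subscripts i, f, c (initial, final, current) are introduced here for illustration, with {H}W_i = [R^H_i, p^H_i; 0, 1] and {H}W_f = [R^H_f, p^H_f; 0, 1].

```latex
\begin{aligned}
\Delta\mathbf{p} &= \mathbf{R}_{H}\left(\mathbf{p}^{H}_{f}-\mathbf{p}^{H}_{i}\right), &
\Delta\mathbf{R} &= \mathbf{R}_{H}\,\mathbf{R}^{H}_{f}\left(\mathbf{R}^{H}_{i}\right)^{\top}\mathbf{R}_{H}^{\top},\\[4pt]
\mathbf{p}^{EE}_{f} &= \mathbf{p}^{EE}_{c}+\Delta\mathbf{p}, &
\mathbf{R}^{EE}_{f} &= \Delta\mathbf{R}\,\mathbf{R}^{EE}_{c},\\[4pt]
{}^{RB}\mathbf{T}^{EE}_{f} &=
\begin{bmatrix}
\Delta\mathbf{R}\,\mathbf{R}^{EE}_{c} & \mathbf{p}^{EE}_{c}+\Delta\mathbf{p}\\
\mathbf{0}^{\top} & 1
\end{bmatrix}.
\end{aligned}
```

If the HIP does not move ({H}W_f = {H}W_i), then Δp = 0 and ΔR = I, so the EE pose is unchanged. With this decoupled form, a rotation of the HIP turns the instrument about its own EE point rather than about the origin of {RB}.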

Using a software library for inverse kinematics and specifying the final EE pose as the goal, we determine the desired joint values, which are then transmitted to the Unity simulation.
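The incremental update can be sketched in Python with plain nested lists. This sketch assumes a decoupled form: the HIP position increment, rotated into {RB} by the rotation part R_H of {RB}T{H}, is added to the EE position, and the HIP orientation increment R_f R_i^T, re-expressed in {RB} by a change of basis, is composed on the left of the EE orientation. The function name, argument layout and the translation-only `scale` parameter (standing in for the motion-scaling factor described in Section 2.2.4) are hypothetical. The inverse kinematics step that follows in the real system is left to a library and is not reproduced here.

```python
import math


def matmul3(a, b):
    """Product of two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def transpose3(a):
    return [[a[j][i] for j in range(3)] for i in range(3)]


def matvec3(a, v):
    return [sum(a[i][k] * v[k] for k in range(3)) for i in range(3)]


def rot_z(angle):
    """Rotation matrix about the z-axis (right-handed, radians)."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def incremental_update(R_c, p_c, R_i, p_i, R_f, p_f, R_H, scale=1.0):
    """Return the new EE pose (R, p) in {RB} after one HIP increment.

    R_c, p_c : current EE orientation and position in {RB}
    R_i, p_i : HIP orientation and position in {H} at the previous sample
    R_f, p_f : HIP orientation and position in {H} at the current sample
    R_H      : rotation part of {RB}T{H}
    scale    : hypothetical motion-scaling factor, applied to translation only
    """
    # Translation increment of the HIP, rotated into {RB} and scaled.
    dp = matvec3(R_H, [p_f[k] - p_i[k] for k in range(3)])
    p_new = [p_c[k] + scale * dp[k] for k in range(3)]
    # Rotation increment R_f R_i^T, re-expressed in {RB} by a change of basis,
    # then applied on the left so the instrument turns about its own EE point.
    dR = matmul3(matmul3(R_H, matmul3(R_f, transpose3(R_i))), transpose3(R_H))
    R_new = matmul3(dR, R_c)
    return R_new, p_new
```

For example, with all rotations equal to the identity, a HIP displacement of 1 cm along x with `scale=0.5` moves the EE by 5 mm along x. The resulting pose would then be passed to the inverse kinematics solver as the goal.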

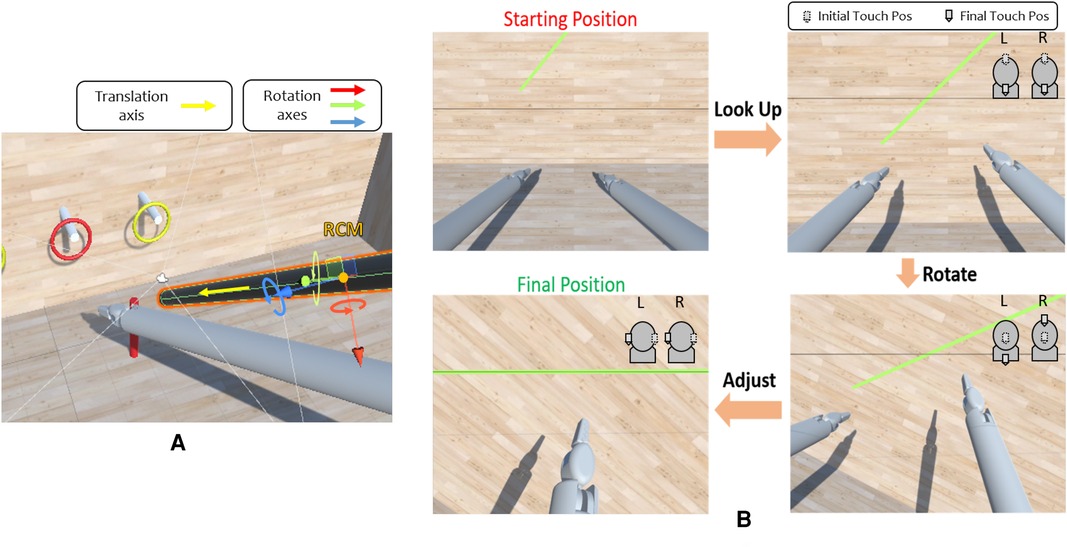

2.2.4 Robotic camera control

The Robotic Camera (RCam) is a 4-DoF actuated arm that moves the camera about its RCM through revolute and prismatic joints combined in an RRPR sequence. The Da Vinci Endoscopic Camera Manipulator requires both master manipulators to be used simultaneously to move the camera, resembling the action of “dragging” the scene. As for the surgical instruments, only a subset of the RCam joints is represented in the Unity simulation, not the entire robot. The RCam rotates around its RCM axes (Figure 6A) based on the delta displacements of the two haptic devices moving in the same direction (Figure 6B). This allows the user to control 3 of the 4 DoF, i.e., to move the camera right/left and up/down and to zoom in/out. The last DoF is the camera roll, which is controlled by moving the two controllers in opposite directions along the same axis: for example, as shown in the bottom right image of Figure 6B, one controller moves up while the other moves down.

Figure 6 (A) Robotic camera degrees of freedom. The camera can rotate around the three axes of its RCM and move along its own body axis. (B) Example of camera control task. These are possible camera movements to perform the exercise. The task is completed when the user is satisfied with the obtained alignment. At the top right of each image, there is an example of how the left (L) and right (R) Touch end-effectors can be moved to achieve the desired camera orientation. The dotted EE indicates the starting position. For instance, in the top right image, moving both the EEs down leads the camera to look up. In the bottom right image, moving one EE up and the other down results in a rotational movement.

Thus, the camera rotation control is achieved by (i) comparing the delta displacements to verify that they are parallel and discordant, (ii) using the delta displacement as the rotation magnitude, and (iii) computing cross-products to find the direction of rotation. This is described by the following formulas:

where the delta displacement is the difference between the Cartesian positions (3×1 vectors) of the right Geomagic Touch at two consecutive time instants, and the result of the cross-product is also a 3×1 vector. The same quantities are computed for the left device from its positions at the same two instants. The cross product of two three-dimensional vectors, such as the positions at two consecutive instants, is a vector perpendicular to the plane they define, and it can be interpreted as the axis of the rotation that brings the first vector onto the second. The z-component of this vector therefore indicates the direction of rotation: if the z-components obtained for both devices are positive, the rotation is counterclockwise; otherwise, it is clockwise. Both the inverse kinematics control of the surgical instruments and the camera control include a scaling factor that sets the motion speed in the simulation. This factor can be set from an external tablet communicating with the node via MQTT (28).
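Steps (i) to (iii) can be illustrated with a short Python sketch. It is an illustration under assumptions rather than the simulator’s implementation: the cosine tolerance `cos_tol` used to decide that the two delta displacements are parallel, the idle threshold `eps`, the use of the mean of the two delta norms as roll magnitude, and the returned `("drag", ...)`/`("roll", ...)` commands are hypothetical choices. The sign rule (counterclockwise only when the z-components of both cross products are positive) follows the description above. Mapping a drag command onto actual RCM rotations and zoom is not shown.

```python
import math


def _sub(a, b):
    return [a[k] - b[k] for k in range(3)]


def _dot(a, b):
    return sum(a[k] * b[k] for k in range(3))


def _cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def camera_command(pR_prev, pR, pL_prev, pL, cos_tol=0.9, eps=1e-6):
    """Classify one pair of haptic increments into a camera command.

    pX_prev, pX : Cartesian positions (3-element lists) of the right (R) and
                  left (L) device at two consecutive time instants.
    Returns ("drag", mean_delta), ("roll", signed_magnitude) or ("idle", None).
    cos_tol and eps are hypothetical tuning parameters.
    """
    dR, dL = _sub(pR, pR_prev), _sub(pL, pL_prev)
    nR, nL = math.sqrt(_dot(dR, dR)), math.sqrt(_dot(dL, dL))
    if nR < eps or nL < eps:
        return ("idle", None)
    cos = _dot(dR, dL) / (nR * nL)
    if cos > cos_tol:
        # Parallel and concordant: drag the scene (pan/tilt/zoom).
        return ("drag", [(dR[k] + dL[k]) / 2.0 for k in range(3)])
    if cos < -cos_tol:
        # Parallel and discordant: roll. Magnitude from the delta displacements,
        # direction from the z-components of the cross products.
        magnitude = (nR + nL) / 2.0
        czR = _cross(pR_prev, pR)[2]
        czL = _cross(pL_prev, pL)[2]
        sign = 1.0 if (czR > 0.0 and czL > 0.0) else -1.0  # +1: counterclockwise
        return ("roll", sign * magnitude)
    return ("idle", None)
```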

2.3 Simulator

The training scene is implemented using Unity Engine (25), one of the most widely used cross-platform game engines. Since Unity, unlike Coppelia (V-REP), does not natively support robot simulation, we used empty GameObjects to build the virtual kinematic chain following the Da Vinci DH tables (Table 1).
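Building a kinematic chain from a DH table amounts to chaining one homogeneous transform per link. The Python sketch below uses the classic (distal) DH convention and a hypothetical row layout; it does not reproduce the values of Table 1, and if the table follows the modified (Craig) convention, the per-link transform must be changed accordingly. It also works in right-handed coordinates, so mapping the result onto Unity’s left-handed frames would require an additional axis conversion.

```python
import math


def dh_transform(theta, d, a, alpha):
    """Classic DH link transform: Rot_z(theta) Trans_z(d) Trans_x(a) Rot_x(alpha)."""
    ct, st = math.cos(theta), math.sin(theta)
    ca, sa = math.cos(alpha), math.sin(alpha)
    return [[ct, -st * ca, st * sa, a * ct],
            [st, ct * ca, -ct * sa, a * st],
            [0.0, sa, ca, d],
            [0.0, 0.0, 0.0, 1.0]]


def matmul4(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def forward_kinematics(dh_rows, q):
    """Chain the link transforms from the base to the last frame.

    dh_rows : list of (joint_type, theta_offset, d_offset, a, alpha),
              joint_type being "R" (revolute) or "P" (prismatic)
    q       : joint values; added to theta for "R" joints, to d for "P" joints
    """
    if len(dh_rows) != len(q):
        raise ValueError("one joint value per DH row is required")
    T = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    for (jtype, theta0, d0, a, alpha), qi in zip(dh_rows, q):
        if jtype == "R":
            link = dh_transform(theta0 + qi, d0, a, alpha)
        elif jtype == "P":
            link = dh_transform(theta0, d0 + qi, a, alpha)
        else:
            raise ValueError(f"unknown joint type {jtype!r}")
        T = matmul4(T, link)
    return T
```

For an RRPRRR chain like the PSM, `dh_rows` would hold six rows with types R, R, P, R, R, R; each intermediate product corresponds to the pose of one empty GameObject in the chain.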

Whereas (21) simulates and visualizes the entire Da Vinci robot, our simulator simulates the whole robot but visualizes only the last segment of the surgical instrument (Figure 5B). Specifically, we group the first four joints (3 revolute and 1 prismatic) into a single frame, as illustrated in Figure 5B. These joints enforce the RCM constraint, with the RCM positioned at the origin of this frame. This simplifies the simulation by reducing the number of components to be rendered.

To control the whole virtual kinematic chain, we obtain the six joint values by solving the inverse kinematics equations within the Teleoperation node (Section 2.2.3). These values are used in the Unity simulation as inputs for the corresponding joint GameObjects.

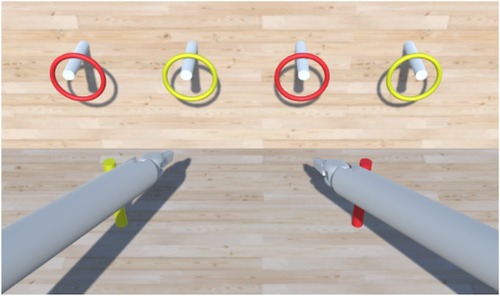

To test and assess the system’s usability, we implemented a Peg Board scene. As in other existing simulators, such as (29–31), the Peg Board is one of the basic surgical training exercises for object manipulation. As shown in Figure 7, the exercise consists of picking up the rings from the pegs on the wall and placing them on the pegs of the corresponding colour on the floor. The scene is implemented using GameObjects and Colliders, which are essential to detect collisions between two or more GameObjects. Unity lacks a built-in collider shape resembling a hollow ring, so multiple colliders had to be carefully arranged for each ring to achieve the desired shape and collision behaviour.
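Approximating a hollow ring with primitive colliders reduces to a small geometry problem: place N elongated boxes along the ring’s centre circle so that adjacent boxes meet at the vertices of a regular polygon. The Python sketch below computes, for each segment, its centre, its tangent direction and its length (the chord of the circle). The number of segments and the choice of box primitives are assumptions for illustration, since the exact collider arrangement used in the scene is not specified. In Unity, the tangent could be turned into an orientation with `Quaternion.LookRotation`, with the box’s z-size set to the returned length.

```python
import math


def ring_box_colliders(ring_radius, n_segments):
    """Return (center, tangent, length) for n boxes approximating a ring.

    The ring lies in its local XZ plane (Y up, as in Unity). Box i spans the
    chord between polygon vertices at angles i*step and (i+1)*step.
    """
    if n_segments < 3:
        raise ValueError("at least 3 segments are needed to close the ring")
    step = 2.0 * math.pi / n_segments
    length = 2.0 * ring_radius * math.sin(step / 2.0)  # chord length
    r_mid = ring_radius * math.cos(step / 2.0)  # distance of each chord midpoint
    segments = []
    for i in range(n_segments):
        phi = (i + 0.5) * step  # angle of the chord midpoint
        center = (r_mid * math.cos(phi), 0.0, r_mid * math.sin(phi))
        tangent = (-math.sin(phi), 0.0, math.cos(phi))  # along the chord
        segments.append((center, tangent, length))
    return segments
```

More segments approximate the circle better at the cost of more colliders to test; the total segment length tends to the ring circumference as N grows.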

Figure 7 Unity scene showing the Peg Board exercise. The task is completed when all the rings are on the corresponding pegs on the floor.

The Unity simulation needs the data computed by the Teleoperation node to control the joints’ movements, and these data are sent via ROS2 messages. The Ros2ForUnity plugin (32) is used to make Unity interact with ROS2 publishers and subscribers.

One of the major advantages of adopting Unity is its capacity to facilitate the creation of complex scenes, including various components such as robots, tools, objects, devices, physics and organs. This flexibility enables the rapid expansion of the simulator’s capabilities, making it a valuable choice for diverse and advanced simulations.

2.3.1 Haptic feedback

The effectiveness of haptic feedback in robotic surgery is a highly debated topic (23, 24, 33, 34). Several commercial surgical robotic platforms, such as the Da Vinci system, do not include haptic feedback, so the surgeon relies solely on vision to perform the surgery. Since this work aims to lay the foundations for a training platform for young surgeons, haptic feedback could add value during the learning phase (35, 36). An initial implementation of haptic feedback was therefore incorporated, limited to interactions between the grippers and scene elements. In detail:

1. Vibration when a ring is gripped;

2. Preventing the user from crossing surfaces with surgical instruments. These surfaces are the simulation floor, the walls and pegs;

3. Interaction feedback between the gripped ring and the pegs.

Feedback (1) is implemented by sending a constant 1 N force when both jaws of an instrument’s gripper touch a ring. The force is applied for 3 ms in the positive direction of the x-axis (Figure 5A).

Feedback (2) and (3), in contrast, follow a more detailed model: a Mass-Spring-Damper model (5).

All the vectors are expressed in the simulation reference system. The model combines a stiffness coefficient, which multiplies the displacement from the equilibrium position (i.e., how deep the EE penetrates the surface of the object), and a viscosity coefficient, which multiplies the velocity of the EE. The displacement and the velocity (both 3×1 vectors) are provided by Unity, while the stiffness and viscosity coefficients were tuned empirically so that the sensation closely resembles contact with a rigid surface. All feedback signals are sent via ROS2 messages to the Haptic node, which renders the forces after transforming them into the haptic interface reference system {H}. Some feedback has not been implemented, such as when the two surgical instruments collide or when the body of an instrument makes contact with a scene element. In Unity, collisions are handled through Collider components.
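The two kinds of feedback can be sketched in a few lines of Python. This is a minimal illustration, not the Haptic node’s code: it assumes the usual spring-damper form F = −k·x − b·v, with x the penetration vector and v the EE velocity, returns zero force when there is no penetration, and renders the grip cue as the 1 N, 3 ms pulse along +x described above. The function names and the optional saturation `max_force` are hypothetical, and the empirically tuned values of k and b are not reported, so any numbers used with this sketch are placeholders. The transformation into the haptic interface frame is omitted.

```python
import math


def spring_damper_force(penetration, velocity, k, b, max_force=None):
    """Contact force F = -k*x - b*v (3-element lists, SI units).

    penetration : displacement of the EE beyond the surface (zero if no contact)
    velocity    : EE velocity
    k, b        : stiffness [N/m] and viscosity [N*s/m] coefficients
    max_force   : optional saturation of the force magnitude [N]
    """
    if all(x == 0.0 for x in penetration):
        return [0.0, 0.0, 0.0]  # no contact: no force
    force = [-k * x - b * v for x, v in zip(penetration, velocity)]
    if max_force is not None:
        magnitude = math.sqrt(sum(f * f for f in force))
        if magnitude > max_force:
            # Scale the vector down, keeping its direction.
            force = [f * max_force / magnitude for f in force]
    return force


def grip_pulse(time_since_grip_s, amplitude_n=1.0, duration_s=0.003):
    """Constant pulse along +x while the grip event is younger than duration_s."""
    if 0.0 <= time_since_grip_s < duration_s:
        return [amplitude_n, 0.0, 0.0]
    return [0.0, 0.0, 0.0]
```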

3 Experimental validation

3.1 Experimental setup

Figure 1 illustrates the experimental setup. We chose the Pro-Cart (ITD GmbH, Germany) (5,500 USD) as the commercial cart because it was available in the lab; a lower-cost cart can easily be found (e.g., the Medical Trolley OC-1T by Likaymo, 400 USD). The selected haptic devices are the Geomagic Touch (3DSystems, USA) (5,000 USD), with each stylus replaced by the Twee gripper interface (BBZ Srl, Italy) (1,600 USD), which offers an additional degree of freedom to control the surgical instrument’s forceps. Although the system can display interleaved images for 3D viewing with polarized glasses, or standard 2D images, we opted for the Meta Quest 2 VR headset (Meta, USA) (350 USD) to replicate a visual mode closely resembling the surgical one. A Windows PC (1,000 USD) was chosen for its compatibility with the VR visor. The cost of each element of the Surgical Robot Simulator interface is given in parentheses; the estimated total cost of the components is thus approximately 8,400 USD if the lower-cost cart is selected.
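A quick check of the cost arithmetic: summing each listed price once, which is the reading that reproduces the stated approximate total, and excluding the USB pedals, whose price is not given. The dictionary keys and the helper name are illustrative.

```python
# Listed component prices in USD, each counted once; the USB pedals are not
# priced in the text and are left out.
COMPONENTS_USD = {
    "cart": 400,              # Medical Trolley OC-1T (lower-cost option)
    "haptic_devices": 5_000,  # Geomagic Touch
    "gripper_styluses": 1_600,  # Twee
    "vr_headset": 350,        # Meta Quest 2
    "pc": 1_000,              # Windows PC
}
PRO_CART_USD = 5_500  # cart actually used in the lab


def total_cost(components, cart_override=None):
    """Sum the component prices, optionally replacing the cart price."""
    total = sum(components.values())
    if cart_override is not None:
        total += cart_override - components["cart"]
    return total


print(total_cost(COMPONENTS_USD))                # 8350, i.e. about 8,400 USD
print(total_cost(COMPONENTS_USD, PRO_CART_USD))  # 13450 with the Pro-Cart
```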

All nodes are implemented in C++ using the ROS2 framework (37), which manages communication between the packages. The libraries used are (i) a ROS2 package to compute the joint values through inverse kinematics and (ii) the 3DSystems (USA) library to retrieve the data from the Geomagic Touch.

3.2 Teleoperation accuracy assessment

The teleoperation accuracy was assessed through a displacement analysis comparing the input displacements of the haptic devices with the output displacements of the simulated instruments. The main objective is to estimate the error introduced by our teleoperation architecture (Figure 3), which comprises input extraction, data processing, inverse kinematics and application of the results to the tools simulated in Unity. The idea is therefore to compare the input displacement of the HIP, controlled by the Geomagic Touch, with the output displacement of the simulated EE. Both linear and angular variations were checked by moving the Geomagic Touch HIP along its axes and measuring a set of initial and final poses in both reference systems, that of the haptic interface and that of the simulated instrument. The homogeneous matrix describing the pose error can be defined in compact notation as:

where the translational component is a vector representing the linear error, while the rotational component is a matrix representing the angular error. The error matrix can be further expanded as:

where the first term is the current pose variation of the simulated EE and the second is the desired pose variation, each expressed in its reference system.
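One common way to reduce a pose-error matrix to two scalar accuracy figures is to take the norm of its translational part and the rotation angle of its rotational part, obtained from the trace. The Python sketch below assumes the error is formed as the inverse of the current pose variation multiplied by the desired one, so that the rotational part is R_cur^T R_des and the translational part, R_cur^T (p_des − p_cur), has the same norm as p_des − p_cur. The function and argument names are hypothetical.

```python
import math


def pose_error(R_cur, p_cur, R_des, p_des):
    """Return (linear error, angular error in radians) between two pose variations.

    R_*, p_* : 3x3 rotation (nested lists) and 3-element translation.
    """
    linear = math.sqrt(sum((d - c) ** 2 for c, d in zip(p_cur, p_des)))
    # Rotational part of the error: R_e = R_cur^T R_des.
    R_e = [[sum(R_cur[k][i] * R_des[k][j] for k in range(3)) for j in range(3)]
           for i in range(3)]
    # Rotation angle from the trace: tr(R_e) = 1 + 2 cos(angle).
    cos_angle = (R_e[0][0] + R_e[1][1] + R_e[2][2] - 1.0) / 2.0
    cos_angle = max(-1.0, min(1.0, cos_angle))  # guard against rounding
    return linear, math.acos(cos_angle)
```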

3.3 Usability study

A usability study was conducted in order to evaluate the accuracy, intuitiveness and usability of the surgical robot simulator interface. This study was approved by the Regional Ethics Committee of Liguria under the protocol IIT_ADVR_TELE01, number 229/2019 - ID 4621.

3.3.1 Subjects

We enrolled a total of 42 subjects. Initially, we recruited 13 expert surgeons in Robotic Surgery (RS Surg: age mean ± std: 41.7 ± 10 years, age range 32–69 years, 1 woman) and 23 controls (CTRL: age mean ± std: 49.3 ± 11.2 years, age range 32–69 years, 10 women). Then, we enrolled a new set of 6 surgeons without experience in robotic surgery (NO RS Surg: age mean ± std: 55 ± 8.7 years, age range 32–69 years, 1 woman). Inclusion criteria were: (i) not having any medical experience (i.e., no healthcare-related studies) for CTRL, (ii) being specialized surgeons with experience in robotic surgery for RS Surg, and (iii) being specialized surgeons with no experience in robotic surgery for NO RS Surg. All subjects completed the experimental protocol except for one control, who wore glasses with progressive lenses that prevented the VR headset image from being correctly focused. Consequently, this subject was unable to perform the tasks, and the experiment was suspended.

3.3.2 Experimental protocol

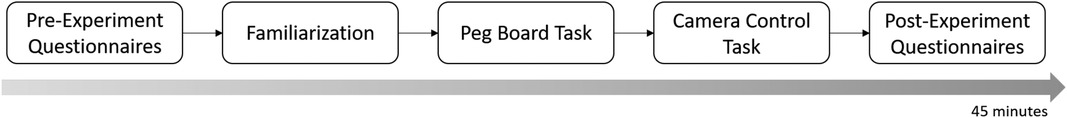

The experimental protocol was divided into five distinct phases (Figure 8):

• Pre-experiment questionnaires;

• Familiarization;

• Peg board task;

• Camera movement task;

• Post-experiment questionnaires.

Figure 8 Experimental Pipeline. Each experiment consists of four macro-phases: two pre-experiment questionnaires, a familiarization phase, the execution of the two main tasks and the completion of further questionnaires after them. The average execution time of the complete pipeline is approximately 45 min.

The overall duration was about 45 min.

3.3.2.1 Pre-experiment questionnaires and familiarization

The pre-experiment questionnaires consist of two surveys: a demographic questionnaire and the Simulation Sickness Questionnaire (SSQ) (38). The demographic questionnaire collected general information about the subject (gender and age) and their frequency of use of technological devices such as smartphones and video game consoles. Additional questions, reserved for surgeons, assessed their confidence with laparoscopy and robotic surgery. The SSQ is a standard validated questionnaire that measures the discomfort resulting from exposure to a VR environment. It lists 16 symptoms typically related to “Simulation Sickness” and asks the user to rate their severity on a scale from 0 to 3. The SSQ was administered before and after the experimental phase, so that any change in reported discomfort could be attributed to the simulation. After the pre-experiment questionnaires, the subjects started the familiarization phase, in which each user had 5 to 10 min to get used to the system. At the end of the familiarization, the subject performed the two tasks described below.

3.3.2.2 Peg board task

The Peg Board task was performed twice by each participant: once with haptic feedback enabled and once without it. The order was randomized for each user to mitigate potential bias introduced by the condition performed first. As shown in Figure 7, the task consists of picking the four rings from the cylinders on the wall and placing them on the two corresponding cylinders on the floor. This experiment aimed to determine whether users can reliably pick objects up from one location and place them at another using the gripper end-effector. Moreover, comparing the conditions with and without haptic feedback aims to investigate its utility and any performance differences between the two cases.

3.3.2.3 Camera control task

In the camera control task (Figure 6B), the user was asked to move the camera, using all 4 DoF, so as to align a horizontal grey line fixed on the camera plane with a green rectangle placed on the front wall of the simulated scene. The goal was to assess the accuracy and intuitiveness of the camera movements. The task was performed without haptic feedback, as it is purely visual and does not involve contact with any element of the scene.

3.3.2.4 Post-experiment questionnaires

The post-experiment questionnaires consist of four surveys:

• SSQ: administered first, to evaluate the subject’s symptoms immediately after using the simulator.

• User Experience Questionnaire (UEQ) (39, 40): The UEQ provides a comprehensive assessment of user experience, covering both traditional usability aspects such as effectiveness, controllability, and learnability, and non-goal-oriented (hedonic) aspects such as stimulation, fun, novelty, emotions, and aesthetics. It comprises 26 items grouped into 6 scales: attractiveness, efficiency, perspicuity, dependability, originality, and stimulation. These scales are interrelated: attractiveness captures the user’s overall impression and is influenced by the values of the other 5 scales (41).

• SIM-TLX (42): The SIM-TLX is an adaptation of the NASA-TLX (43) designed specifically for VR simulations. It evaluates the workload associated with a task across several dimensions, including mental, physical, and temporal demands, task complexity, distraction, and frustration.

• Face Validity Questionnaire: a custom questionnaire assessing face validity, i.e., the superficial appearance or “face value” of an instrument such as a test, a device, or an assessment tool. It allowed us to gather subjective opinions on whether the simulator appears useful at first glance. Each item is rated on a five-point Likert scale ranging from “very bad” to “very good.” Four general items concern the realism of the simulated instruments, the quality of the images, the comfort of the hardware interface, and whether the haptic feedback helps or hinders. Four additional items, reserved for surgeons, concern: (i) the ability to triangulate an object in the simulation, (ii) the realism of hand movements compared to robotic surgery, (iii) the comfort of the interface compared to commercial ones, and (iv) the usefulness of the simulation for acquiring hand-eye coordination.
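UEQ scale values are typically obtained by mapping each 7-point answer onto the −3 to +3 range and averaging the items of each scale. The sketch below assumes the answers have already been re-oriented so that 7 always denotes the positive pole (item polarity is handled by the official UEQ analysis material and is not reproduced here); the item-to-scale mapping in the example is hypothetical, not the official UEQ assignment.

```python
from statistics import mean
from typing import Dict, List


def to_ueq_range(answer: int) -> int:
    """Map a 7-point answer (1..7) onto the UEQ range -3..+3."""
    if not 1 <= answer <= 7:
        raise ValueError(f"UEQ answers must be in 1..7, got {answer}")
    return answer - 4


def ueq_scale_means(answers: Dict[int, int], scales: Dict[str, List[int]]) -> Dict[str, float]:
    """Average the transformed answers of the items belonging to each scale.

    `answers` maps item number -> answer (assumed oriented so that 7 is positive);
    `scales` maps scale name -> list of item numbers.
    """
    return {
        name: float(mean(to_ueq_range(answers[item]) for item in items))
        for name, items in scales.items()
    }


# Hypothetical two-scale mapping, for illustration only.
example_scales = {"perspicuity": [1, 2], "originality": [3]}
print(ueq_scale_means({1: 7, 2: 5, 3: 4}, example_scales))  # {'perspicuity': 2.0, 'originality': 0.0}
```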

3.3.3 Analysis

The performance data recorded during the Peg Board Task include:

• Time required to perform the task (Peg Board execution time)

• Number of rings transferred correctly

• Number of rings dropped

• Number of instrument collisions

We defined the number of errors in the Peg Board task as the sum of the rings dropped and the instrument collisions.
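A minimal sketch of these Peg Board metrics, with errors defined as above (rings dropped plus instrument collisions) and group results summarized as mean ± standard deviation, as in the Results. The `PegBoardTrial` record and its field names are hypothetical, not the authors' logging format.

```python
from dataclasses import dataclass
from statistics import mean, stdev
from typing import Dict, Sequence


@dataclass(frozen=True)
class PegBoardTrial:
    execution_time_s: float  # Peg Board execution time, in seconds
    rings_transferred: int   # rings placed correctly (4 = task completed)
    rings_dropped: int
    collisions: int          # instrument collisions

    @property
    def errors(self) -> int:
        """Number of errors: dropped rings plus instrument collisions."""
        return self.rings_dropped + self.collisions


def summarize(trials: Sequence[PegBoardTrial]) -> Dict[str, float]:
    """Group mean and sample standard deviation (requires at least two trials)."""
    times = [t.execution_time_s for t in trials]
    errors = [t.errors for t in trials]
    return {
        "time_mean": float(mean(times)),
        "time_std": stdev(times),
        "errors_mean": float(mean(errors)),
        "errors_std": stdev(errors),
    }


# Hypothetical data for two participants.
group = [PegBoardTrial(60.0, 4, 1, 2), PegBoardTrial(80.0, 4, 0, 1)]
print(summarize(group))
```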

Regarding the Camera Control Task we recorded:

• Time required to perform the task (camera execution time)

• Alignment error

Referring to Figure 6B, we estimated the alignment error as the angle between the green rectangle and the grey line, which serves as the horizontal baseline; the alignment error therefore corresponds to the slope of the green rectangle. A binary mask is computed by segmenting the green colour in the view of the virtual camera. The coordinates of two points on the upper side of the rectangle mask are then identified automatically and used to compute the gradient of the straight line passing through them. The alignment error is expressed in degrees: the gradient (angular coefficient) is converted into the corresponding slope angle with respect to the abscissa axis through the arctangent.
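The measurement described above can be sketched as follows, assuming the camera view is available as rows of RGB pixels. The colour thresholds, the choice of the two sample columns, and the helper names are illustrative assumptions; the authors' segmentation may differ.

```python
import math
from typing import List, Optional, Sequence, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0..255


def green_mask(
    image: Sequence[Sequence[Pixel]], g_min: int = 150, rb_max: int = 100
) -> List[List[bool]]:
    """Segment 'green' pixels with simple (assumed) channel thresholds."""
    return [[g >= g_min and r <= rb_max and b <= rb_max for (r, g, b) in row] for row in image]


def top_edge_row(mask: Sequence[Sequence[bool]], col: int) -> Optional[int]:
    """Row index of the topmost mask pixel in a column (None if the column is empty)."""
    for row_idx, row in enumerate(mask):
        if row[col]:
            return row_idx
    return None


def alignment_error_deg(image: Sequence[Sequence[Pixel]], col_a: int, col_b: int) -> float:
    """Slope angle (degrees) of the rectangle's upper side w.r.t. the image horizontal."""
    if col_a == col_b:
        raise ValueError("the two sample columns must differ")
    mask = green_mask(image)
    y_a, y_b = top_edge_row(mask, col_a), top_edge_row(mask, col_b)
    if y_a is None or y_b is None:
        raise ValueError("no green pixels found in a sample column")
    # Image rows grow downwards, so negate the row difference to obtain a
    # conventional (y-up) gradient, then convert it to an angle with arctan.
    gradient = -(y_b - y_a) / (col_b - col_a)
    return math.degrees(math.atan(gradient))


# Synthetic 10x10 view: the green region's upper edge rises one row per column.
size = 10
view = [
    [(0, 200, 0) if r >= size - 1 - c else (128, 128, 128) for c in range(size)]
    for r in range(size)
]
print(round(alignment_error_deg(view, 0, size - 1), 6))  # 45.0
```

A perfectly aligned rectangle (horizontal upper edge) yields 0°; the sign indicates the direction of the residual tilt.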

Questionnaire and performance data were compared between groups using the non-parametric Wilcoxon-Mann-Whitney (rank-sum) test, as the data were not normally distributed. Results were considered significant for p < 0.05. All analyses were conducted in MATLAB 2022b.
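For illustration only, a plain-Python sketch of the Wilcoxon-Mann-Whitney test using the usual normal approximation with tie and continuity corrections. It is not the MATLAB code used in the study; MATLAB's `ranksum` may use an exact method for small samples, so p-values can differ slightly. The data in the usage line are hypothetical.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple


def midranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    return ranks


def mann_whitney(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Return (U for x, two-sided p) using the normal approximation."""
    n1, n2 = len(x), len(y)
    if n1 == 0 or n2 == 0:
        raise ValueError("both samples must be non-empty")
    pooled = list(x) + list(y)
    n = n1 + n2
    u_x = sum(midranks(pooled)[:n1]) - n1 * (n1 + 1) / 2
    mu = n1 * n2 / 2
    # Variance of U with the correction for tied observations.
    ties = sum(t ** 3 - t for t in Counter(pooled).values())
    var = n1 * n2 / 12 * ((n + 1) - ties / (n * (n - 1)))
    if var <= 0:  # every observation tied: no evidence of a difference
        return u_x, 1.0
    z = max(abs(u_x - mu) - 0.5, 0.0) / math.sqrt(var)  # 0.5 = continuity correction
    return u_x, math.erfc(z / math.sqrt(2))  # two-sided p = 2 * (1 - Phi(z))


# Hypothetical error counts for two groups.
u, p = mann_whitney([3, 4, 5, 6, 4], [1, 2, 1, 0, 2])
print(f"U = {u}, p = {p:.4f}, significant: {p < 0.05}")
```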

4 Results and discussion

4.1 Teleoperation accuracy results

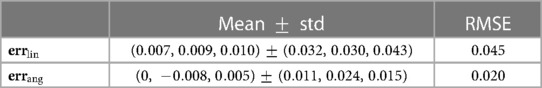

The accuracy of the software chain is reported in Table 2. The experiments show that the position errors are in the order of magnitude of mm, which is reasonable considering the simulation context and the fact that the resolution of the Touch’s position sensors is 0.055 mm. The orientation error is computed as a rotation matrix and converted to the ZYX Euler angle convention to simplify the interpretation of the actual angular error. The order of magnitude is degrees.
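To make these error definitions concrete, the sketch below computes a Euclidean position error and converts a relative rotation matrix into ZYX Euler angles, i.e., R = Rz(yaw)·Ry(pitch)·Rx(roll). Defining the rotation error as R_target^T·R_actual is our assumption, since the paper states only that the error is a rotation matrix converted to the ZYX convention; the helper names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def rot_x(a: float) -> Matrix:
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_y(a: float) -> Matrix:
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rot_z(a: float) -> Matrix:
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def transpose(a: Matrix) -> Matrix:
    return [list(row) for row in zip(*a)]


def zyx_euler_deg(r: Matrix) -> Tuple[float, ...]:
    """(yaw, pitch, roll) in degrees for R = Rz(yaw) @ Ry(pitch) @ Rx(roll)."""
    yaw = math.atan2(r[1][0], r[0][0])
    pitch = math.atan2(-r[2][0], math.hypot(r[2][1], r[2][2]))
    roll = math.atan2(r[2][1], r[2][2])
    return tuple(math.degrees(v) for v in (yaw, pitch, roll))


def orientation_error_deg(r_target: Matrix, r_actual: Matrix) -> Tuple[float, ...]:
    """ZYX Euler angles of the relative rotation R_target^T @ R_actual (assumed definition)."""
    return zyx_euler_deg(matmul(transpose(r_target), r_actual))


def position_error(p_target: Sequence[float], p_actual: Sequence[float]) -> float:
    """Euclidean distance between target and reached positions (same units as the input)."""
    return math.dist(p_target, p_actual)


identity = rot_z(0.0)
reached = matmul(rot_z(math.radians(0.5)), matmul(rot_y(math.radians(0.2)), rot_x(math.radians(0.1))))
print([round(v, 3) for v in orientation_error_deg(identity, reached)])  # [0.5, 0.2, 0.1]
print(position_error((0.0, 0.0, 0.0), (0.3, 0.4, 0.0)))
```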

4.2 Peg board task

All subjects completed the task, successfully transferring all four rings.

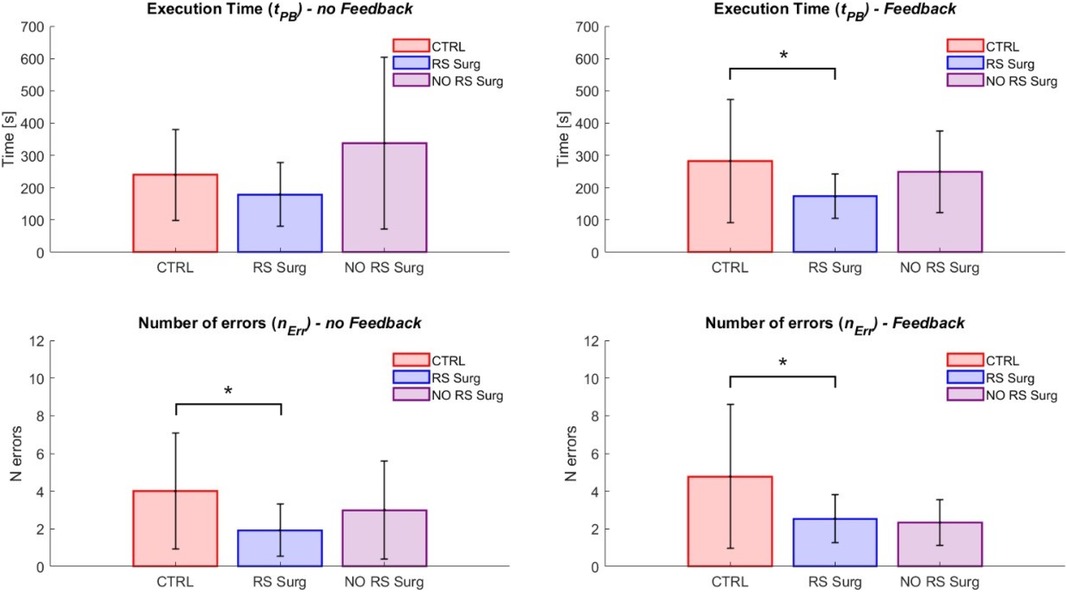

Figure 9 shows the execution times and numbers of errors in the Peg Board task. We first examined potential differences between experts (RS Surg) and controls (CTRL) in the condition without haptic feedback. No significant difference was observed in the time required to complete the task (Figure 9, left). However, a significant difference was found in the number of errors (p < 0.05). In surgery, execution time is crucial, as it affects the total duration of the procedure and therefore its costs (44). Nonetheless, the number of errors carries even more weight, as errors directly affect patient health and can lead to complications and procedural delays. In this regard, the results are promising and reflect the higher proficiency and attentiveness of the RS Surg group. Robotic surgeons already have hands-on experience with the robot, which gives them an edge when using a simulator designed to emulate it. This enabled them to complete the task with significantly fewer errors than the control group and with shorter average completion times. The superior performance of the RS Surg group is therefore a good indication of the fidelity of our simulator, as it suggests that their expertise transfers to it.

Figure 9 Peg board task results. The graphs show the mean and standard deviation of the execution times and of the number of errors. The two graphs on the left show the outcomes without haptic feedback, and the two on the right those with haptic feedback. (*) indicates a statistically significant difference (p < 0.05).

Given the significant difference between CTRL and RS Surg, we investigated whether we could also distinguish between the performances of robotic and non-robotic surgeons. We therefore compared RS Surg with NO RS Surg and found no significant differences in either time or number of errors. RS Surg performed better than NO RS Surg on average, although the difference did not reach significance. One potential factor could be the simplicity of the task; evaluating both groups on a more demanding task would therefore be of interest.

In the haptic feedback condition, we assessed the performance of both CTRL and RS Surg (Figure 9, right) and found significant differences in both time and number of errors (p < 0.05). In contrast, no significant differences were found for these two parameters when comparing robotic and non-robotic surgeons. These results confirm those observed in the condition without feedback. Finally, we found no significant differences within any group between the tasks performed with and without feedback. This suggests that haptic feedback has little impact on the execution of fundamental tasks, and that its role should be re-evaluated on more complex tasks.

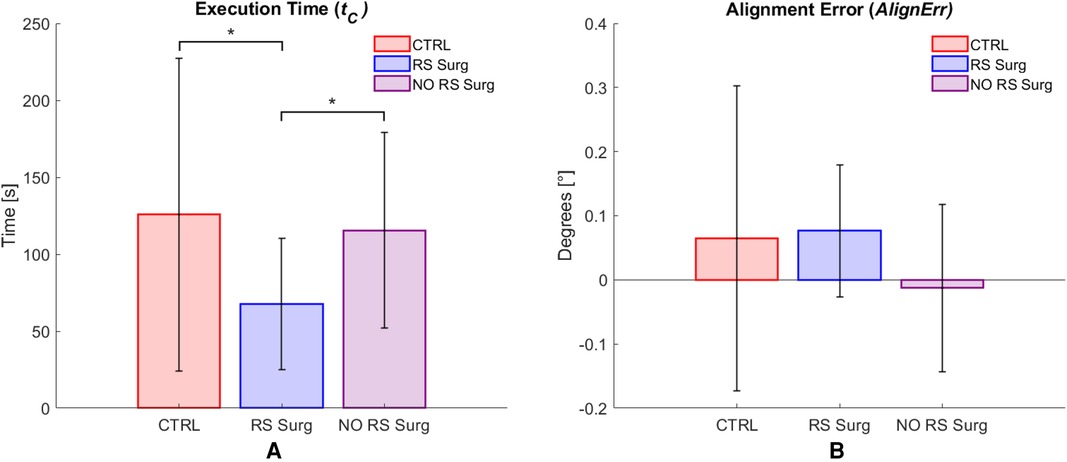

4.3 Camera control task

Figure 10 presents the results of the camera control task. When testing whether robotic surgeons and controls could be distinguished, we found a significant difference in execution time (CTRL vs. RS Surg, p < 0.05) but not in alignment error. Comparing robotic and non-robotic surgeons, we likewise found a significant difference in execution time (NO RS Surg vs. RS Surg, p < 0.05) but not in alignment error. We expected no significant differences in alignment error in either comparison, since the task (Figure 6B) was to achieve the best possible alignment without any time constraint; some subjects therefore obtained excellent alignment at the cost of more time. Hence, we consider execution time the most informative variable for this task. As the tests show, the RS Surg group was faster than the other groups, probably thanks to their prior experience.

Figure 10 Camera control task results. Average values per group and standard deviations are reported. (A) Execution time results; (B) alignment error results. (*) indicates a statistically significant difference (p < 0.05).

4.4 Questionnaires

The analysis of the SSQ data did not reveal any discomfort caused by the use of the simulator: none of the participants reported a worsening of any of the 16 tracked symptoms.
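The per-symptom check reported above amounts to comparing each participant's pre- and post-session ratings. This sketch assumes the answers are stored as symptom-to-rating dictionaries and simply flags any increase; it does not compute the weighted SSQ subscale scores of (38), and the symptom subset in the example is hypothetical data.

```python
from typing import Dict, List

SSQ_MIN, SSQ_MAX = 0, 3  # SSQ severity ratings: 0 (none) to 3 (severe)


def worsened_symptoms(pre: Dict[str, int], post: Dict[str, int]) -> List[str]:
    """Symptoms whose severity rating increased from the pre- to the post-session SSQ."""
    if pre.keys() != post.keys():
        raise ValueError("pre and post questionnaires must rate the same symptoms")
    for ratings in (pre, post):
        for symptom, value in ratings.items():
            if not SSQ_MIN <= value <= SSQ_MAX:
                raise ValueError(f"{symptom}: rating {value} outside {SSQ_MIN}..{SSQ_MAX}")
    return sorted(s for s in pre if post[s] > pre[s])


# Hypothetical answers for three of the 16 symptoms.
before = {"general discomfort": 0, "headache": 1, "nausea": 0}
after = {"general discomfort": 0, "headache": 0, "nausea": 0}
print(worsened_symptoms(before, after))  # []
```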

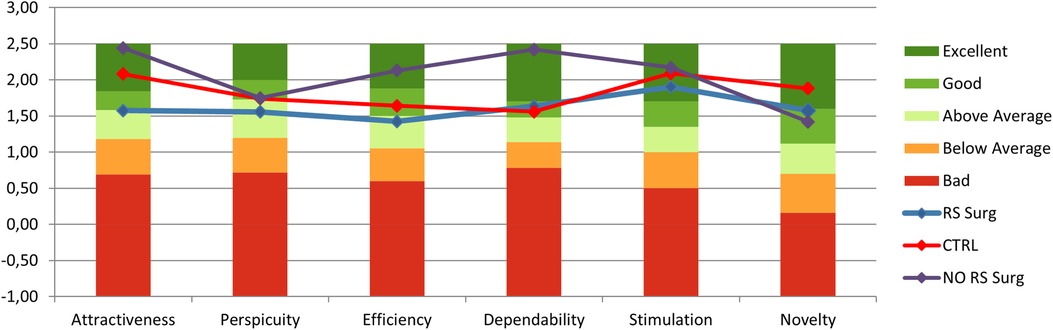

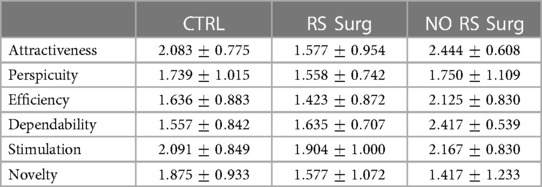

The outcomes of the User Experience Questionnaire (Figure 11) show values exceeding 1.5 (on a scale from −3 to +3), indicating a favorable user experience with the simulator. No significant differences were found between CTRL and RS Surg (Table 3), with both groups reporting a positive user experience. Similarly, no significant differences emerged when comparing RS Surg with NO RS Surg (Table 3), confirming a consistently positive user experience across both comparisons. Overall, RS Surg proved to be the most critical group, giving the lowest ratings on average, likely because of certain limitations of the simulator discussed in the following paragraphs. Despite this, the scores almost always fall into the “Good” range.

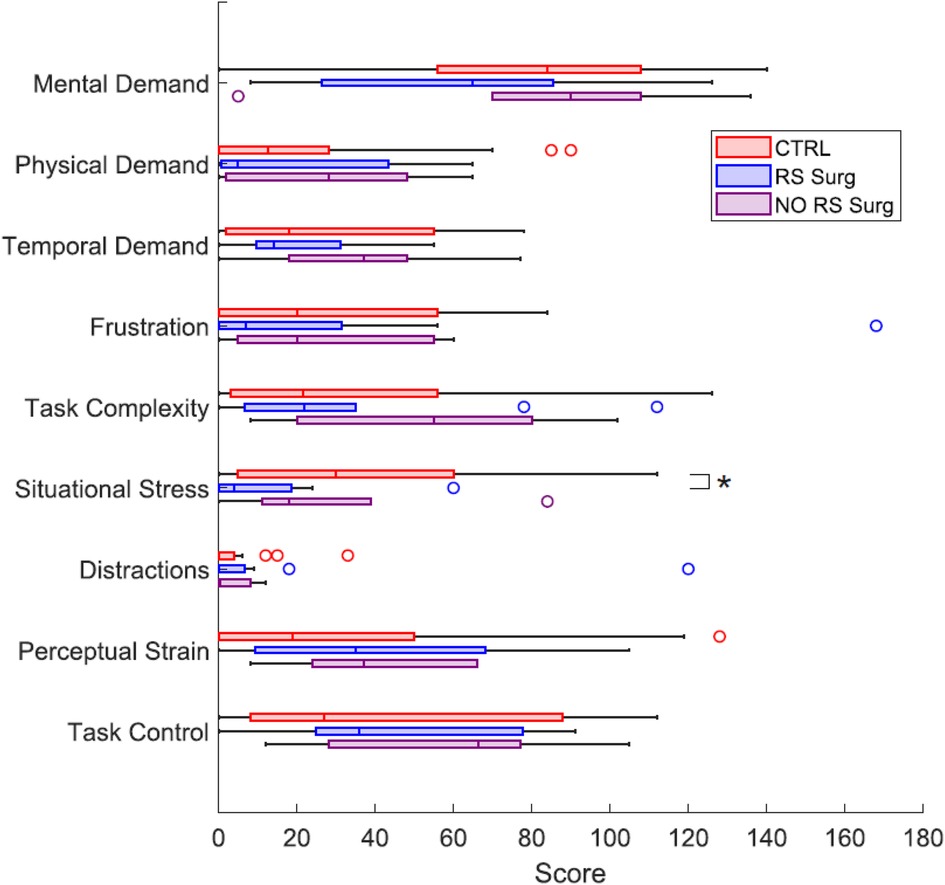

For the SIM-TLX, we computed the workload associated with each dimension and generated the corresponding box plots (Figure 12). We first evaluated potential differences between CTRL and RS Surg in each dimension using the Wilcoxon rank-sum test, which suits the discrete nature of the scores. The test revealed a significant difference in the “Situational Stress” dimension (p < 0.05). This difference is reasonable, considering that this is a simple task for RS Surg, who are accustomed to far more demanding circumstances in their work. We would also have expected a difference between the two groups in the “Mental Demand” dimension; however, this difference was not significant. A significant difference might emerge with a more complex task. The other dimensions did not reveal significant differences, although the average workloads of RS Surg tend to be lower than those of CTRL, except for “Perceptual Strain.” Then, as for the Peg Board and camera tasks, we explored the effect of experience on workload by comparing RS Surg and NO RS Surg with the Wilcoxon test; in this case, we found no significant difference in any dimension. This suggests that the simulator is equally accessible to all subjects, with or without previous experience.

Figure 12 Box plots representing the workloads of the 9 dimensions of the SIM-TLX questionnaire, compared between CTRL, RS Surg and NO RS Surg. (*) indicates a statistically significant difference (p < 0.05).

We analysed the Face Validity Questionnaire qualitatively, computing the median score of each item separately for the three groups. For the items “realism of the simulated tools” and “comfort of the hardware interface,” all three groups gave a median score of 4 (“good”) out of 5. For “image quality,” RS Surg gave a median of 3, while CTRL and NO RS Surg gave 4. Among the items specific to RS Surg participants, a low median of 2 (“bad”) out of 5 was recorded for “realism of hand movements compared to robotic surgery.” Here, surgeons complained about the movement range of the Geomagic Touch, which is much narrower than that of the Da Vinci they are used to; indeed, the horizontal range of motion of the Geomagic Touch is approximately half that of the Da Vinci. In our setup, the clutch pedal is therefore used more often to compensate for this difference in range, whereas, according to the surgeons, it is used less with the Da Vinci system. For the “ability to triangulate an object in the simulation,” they gave a median of 3, which reflects the same issue. For the “comfort level of the interface compared to commercial ones,” the median was also 3. Some participants found the tweezers more slippery and harder to adjust than those of the Da Vinci, and others noted the absence of gravity compensation on the controllers. These factors probably also influenced the UEQ, where RS Surg gave slightly lower scores than the other two groups. Despite these limitations, they rated the “usefulness of simulation in the acquisition of hand-eye coordination” with a median of 4, which suggests that they consider the simulator useful for training.
Finally, all three groups found the haptic feedback beneficial, with a median score of 4. This may seem counterintuitive, as we found no significant performance differences with or without feedback. Nevertheless, users appear to appreciate the additional help during the tasks. Further investigations with more challenging exercises and a larger number of subjects would be valuable.
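The clutch pedal discussed in the face validity results lets users reposition the Geomagic Touch without moving the simulated instrument, which is how a smaller workspace can cover a larger one. A generic, position-only sketch of such a clutched mapping follows; it ignores orientation and motion scaling, and the `ClutchedMapping` class is hypothetical, not the authors' Teleoperation node.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


class ClutchedMapping:
    """Position-only master-to-instrument mapping with a clutch (hypothetical sketch)."""

    def __init__(self) -> None:
        self.offset: Vec3 = (0.0, 0.0, 0.0)  # instrument = master + offset
        self.last_tool: Vec3 = (0.0, 0.0, 0.0)
        self.clutch_engaged = False

    def update(self, master: Vec3, clutch_pressed: bool) -> Vec3:
        if clutch_pressed:
            # Clutch held: master motion is ignored and the instrument stays put.
            self.clutch_engaged = True
            return self.last_tool
        if self.clutch_engaged:
            # Clutch released: re-anchor so the current master position maps to the
            # instrument's held position, avoiding a jump.
            self.offset = tuple(t - m for t, m in zip(self.last_tool, master))
            self.clutch_engaged = False
        self.last_tool = tuple(m + o for m, o in zip(master, self.offset))
        return self.last_tool


mapping = ClutchedMapping()
mapping.update((10.0, 0.0, 0.0), False)  # instrument follows the master to x = 10
mapping.update((0.0, 0.0, 0.0), True)    # clutch held: master returns, instrument holds
mapping.update((0.0, 0.0, 0.0), False)   # clutch released: offset re-anchored
print(mapping.update((5.0, 0.0, 0.0), False))  # (15.0, 0.0, 0.0)
```

Each clutch cycle adds the master's return stroke to the offset, so a narrow device range is compensated by more frequent clutching, consistent with the surgeons' remarks.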

5 Conclusions

This paper presents an affordable user interface integrated with a surgical robot simulator. As detailed in Section 3.1, the total cost of the architecture is approximately 8,400 USD, making it comparable to other affordable solutions. Regarding the evaluation of effectiveness, we relied on the results of the usability study.

Our system is designed to be generic and could simulate the user interfaces of a wide range of commercially available surgical robots. The simulator can be used with either a VR headset or a monitor; in monitor mode, users can choose between 2D images and interleaved images for 3D viewing with polarized glasses. In this study, we used Unity to simulate the Da Vinci robot, the most widely used system in robotic surgery. The flexibility of this platform allows the development of increasingly complex and realistic scenes, encouraging further development of the simulator. Moreover, leveraging the modularity of ROS2 nodes, we have previously used the interface to command a real robotic arm (26) simply by adjusting the robot kinematics within the Teleoperation node.

The system accuracy assessment demonstrates that the proposed interface complies with the positioning error requirements (<1 mm) for a surgical robot platform, with errors in the order of magnitude of mm. The usability study, which involved three groups (controls, robotic surgeons, and non-robotic surgeons), supports the validity of the simulator. As shown in Sections 4.2 and 4.3, the robotic surgeons performed significantly better than the control group, indicating that their expertise transfers to our simulator. Comparing robotic and non-robotic surgeons, we found no significant differences, although RS Surg generally performed better on average. Since robotic surgical skills are highly specialized, more challenging tasks need to be designed to showcase these skills, and new metrics will be needed to evaluate different levels of surgeon experience during training. This will also provide an opportunity to reassess the haptic feedback, as the current experiments did not reveal significant performance differences. On the other hand, proposing two basic tasks allowed us to demonstrate the usability and intuitive control of the simulator: even users with minimal experience could complete both tasks after becoming familiar with the system. In support of this, the SSQ results (no reported discomfort) and the positive UEQ scores (Section 4.4) indicate that users were comfortable with and satisfied by the simulator.

It is important to acknowledge that the simulator has drawbacks. Robotic surgeons pointed out that the range of motion of the Geomagic Touch is limited compared to that of the Da Vinci, and that the tweezers have different handles. Consequently, the experience is less realistic than using the actual robot in the operating room. However, the face validity results (Section 4.4) suggest that they are willing to accept the compromises associated with low-cost technologies. They believe this simulator can train novice surgeons in fundamental robotic surgery manoeuvres, such as wrist movements, coordination with the clutch pedal, and camera control. In conclusion, the surgeons endorsed our idea that an affordable simulator is needed to establish robotic surgery training programs in medical schools and universities and thereby make such training accessible to a broader audience.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by Comitato Etico Territoriale – Liguria. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

AN: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Software, Validation, Visualization, Writing – original draft, Writing – review & editing. MC: Conceptualization, Data curation, Formal analysis, Investigation, Validation, Visualization, Writing – original draft, Writing – review & editing. VP: Investigation, Methodology, Project administration, Supervision, Visualization, Writing – original draft, Writing – review & editing, Conceptualization, Formal analysis. AS: Conceptualization, Investigation, Methodology, Visualization, Writing – review & editing. AO: Data curation, Writing – review & editing. EnT: Software, Supervision, Writing – review & editing. MP: Software, Writing – review & editing. ET: Software, Writing – review & editing. DS: Resources, Supervision, Visualization, Writing – review & editing. FB: Funding acquisition, Project administration, Resources, Writing – review & editing. SR: Data curation, Formal analysis, Project administration, Resources, Supervision, Visualization, Writing – review & editing. LSM: Conceptualization, Formal analysis, Funding acquisition, Methodology, Project administration, Resources, Software, Supervision, Visualization, Writing – review & editing.

Funding

The authors declare financial support was received for the research, authorship, and/or publication of this article. This work was carried out in the framework of the project “ELVIS - Educational Laparoscopy with Virtual Instructive Simulations and robotics,” which was partially funded by the Programma Operativo Regionale (POR) 2014–2020, Obiettivo “Investimenti in favore della crescita e dell'occupazione” and co-financed by the Fondo Europeo di Sviluppo Regionale (F.E.S.R.).

Conflict of interest

MP, ET, FB were employed by Fos SpA.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Sheetz KH, Claflin J, Dimick JB. Trends in the adoption of robotic surgery for common surgical procedures. JAMA Network Open. (2020) 3(1):e1918911. doi: 10.1001/jamanetworkopen.2019.18911

2. Leal Ghezzi T, Campos Corleta O. 30 Years of robotic surgery. World J Surg. (2016) 40:2550–7. doi: 10.1007/s00268-016-3543-9

3. Bric JD, Lumbard DC, Frelich MJ, Gould JC. Current state of virtual reality simulation in robotic surgery training: a review. Surg Endosc. (2016) 30:2169–78. doi: 10.1007/s00464-015-4517-y

4. Scott DJ, Dunnington GL. The new ACS/APDS skills curriculum: moving the learning curve out of the operating room. J Gastrointest Surg. (2008) 12:213–21. doi: 10.1007/s11605-007-0357-y

5. Albani JM, Lee DI. Virtual reality-assisted robotic surgery simulation. J Endourol. (2007) 21:285–7. doi: 10.1089/end.2007.9978

6. Sun L, Van Meer F, Schmid J, Bailly Y, Thakre A, Yeung C. Advanced da Vinci surgical system simulator for surgeon training and operation planning. Int J Med Rob Comput Assist Surg. (2007) 3:245–51. doi: 10.1002/rcs.139

7. MacCraith E, Forde JC, Davis NF. Robotic simulation training for urological trainees: a comprehensive review on cost, merits and challenges. J Rob Surg. (2019) 13:371–7. doi: 10.1007/s11701-019-00934-1

8. Moglia A, Ferrari V, Morelli L, Ferrari M, Mosca F, Cuschieri A. A systematic review of virtual reality simulators for robot-assisted surgery. Eur Urol. (2016) 69:1065–80. doi: 10.1016/j.eururo.2015.09.021

9. Hanks JB. Simulation in surgical education: influences of and opportunities for the southern surgical association. J Am Coll Surg. (2019) 228:317–28. doi: 10.1016/j.jamcollsurg.2018.12.029

10. Bovo F, De Rossi G, Visentin F. Surgical robot simulation with BBZ console. J Vis Surg. (2017) 3:57. doi: 10.21037/jovs.2017.03.16

11. Liu M, Curet M. A review of training research and virtual reality simulators for the da Vinci surgical system. Teach Learn Med. (2015) 27:12–26. doi: 10.1080/10401334.2014.979181

12. Ahmed K, Jawad M, Abboudi M, Gavazzi A, Darzi A, Athanasiou T, et al. Effectiveness of procedural simulation in urology: a systematic review. J Urol. (2011) 186:26–34. doi: 10.1016/j.juro.2011.02.2684

13. Perone JA, Anton NE, Gardner AK, Steinemann S. Simulation training in surgical education. Curr Surg Rep. (2017) 5:20. doi: 10.1007/s40137-017-0182-5

14. Scott DJ, Goova MT, Tesfay ST. A cost-effective proficiency-based knot-tying and suturing curriculum for residency programs. J Surg Res. (2007) 141:7–15. doi: 10.1016/j.jss.2007.02.043

15. Bettega AL, Brunello LFS, Nazar GA, De-Luca GYE, Sarquis LM, Wiederkehr HDA, et al. Chest tube simulator: development of low-cost model for training of physicians and medical students [simulador de dreno de tórax: desenvolvimento de modelo de baixo custo para capacitação de médicos e estudantes de medicina.]. Rev Col Bras Cir. (2019) 46:e2011. doi: 10.1590/0100-6991e-20192011

16. Cikla U, Sahin B, Hanalioglu S, Ahmed AS, Niemann D, Baskaya MK. A novel, low-cost, reusable, high-fidelity neurosurgical training simulator for cerebrovascular bypass surgery. J Neurosurg. (2019) 130:1663–71. doi: 10.3171/2017.11.JNS17318

17. Rod J, Marret J-B, Kohaut J, Aigrain Y, Jais JP, de Vries P, et al. Low-cost training simulator for open dismembered pyeloplasty: development and face validation. J Surg Educ. (2018) 75:188–94. doi: 10.1016/j.jsurg.2017.06.010

18. Li MM, George J. A systematic review of low-cost laparoscopic simulators. Surg Endosc. (2017) 31:38–48. doi: 10.1007/s00464-016-4953-3

19. Sharma D, Agrawal V, Biyani CS. Low-cost simulation in urology. (2022). doi: 10.1007/978-3-030-88789-6_16

20. Ferro M, Brunori D, Magistri F, Saiella L, Selvaggio M, Fontanelli GA. A portable da Vinci simulator in virtual reality. (2019). p. 447–8.

21. Fontanelli G, Selvaggio M, Ferro M, Ficuciello F, Vendiuelli M, Siciliano B. A V-REP simulator for the da Vinci Research Kit robotic platform. (2018). p. 1056–61.

22. Grande K, Jensen RS, Kibsgaard M, Kraus M. Low-cost simulation of robotic surgery. (2013). doi: 10.1145/2466816.2466823

23. Koehn JK, Kuchenbecker KJ. Surgeons and non-surgeons prefer haptic feedback of instrument vibrations during robotic surgery. Surg Endosc. (2015) 29:2970–83. doi: 10.1007/s00464-014-4030-8

24. Meccariello G, Faedi F, AlGhamdi S, Montevecchi F, Firinu E, Zanotti C, et al. An experimental study about haptic feedback in robotic surgery: may visual feedback substitute tactile feedback? J Rob Surg. (2016) 10:57–61. doi: 10.1007/s11701-015-0541-0

25. Unity Technologies (2005). Available online at: https://unity.com/ (accessed April 28, 2024).

26. Penza V, Neri A, Koskinopoulou M, Turco E, Soriero D, Scabini S, et al. Augmented reality navigation in robot-assisted surgery with a teleoperated robotic endoscope. (2023). p. 4621–6.

27. Freschi C, Ferrari V, Melfi F, Ferrari M, Mosca F, Cuschieri A. Technical review of the da Vinci surgical telemanipulator. Int J Med Rob Comput Assist Surg. (2013) 9:396–406. doi: 10.1002/rcs.1468

28. Light RA. Mosquitto: server and client implementation of the MQTT protocol. J Open Source Softw. (2017) 2:265. doi: 10.21105/joss.00265

29. Schreuder HWR, Persson JEU, Wolswijk RGH, Ihse I, Schijven MP, Verheijen RHM. Validation of a novel virtual reality simulator for robotic surgery. Sci World J. (2014) 2014:507076. doi: 10.1155/2014/507076

30. Smith R, Truong M, Perez M. Comparative analysis of the functionality of simulators of the da Vinci surgical robot. Surg Endosc. (2015) 29:972–83. doi: 10.1007/s00464-014-3748-7

31. Sridhar AN, Briggs TP, Kelly JD, Nathan S. Training in robotic surgery–an overview. Curr Urol Rep. (2017) 18(8):58. doi: 10.1007/s11934-017-0710-y

32. Robotec.ai. ROS2 For Unity (2021). Available online at: https://robotec.ai/open-source-release-of-ros2-for-unity/ (accessed April 28, 2024).

33. Gani A, Pickering O, Ellis C, Sabri O, Pucher P. Impact of haptic feedback on surgical training outcomes: a randomised controlled trial of haptic versus non-haptic immersive virtual reality training. Ann Med Surg. (2022) 83:104734. doi: 10.1016/j.amsu.2022.104734

34. Patel RV, Atashzar SF, Tavakoli M. Haptic feedback and force-based teleoperation in surgical robotics. Proc IEEE. (2022) 110:1012–27. doi: 10.1109/JPROC.2022.3180052

35. Okamura AM. Haptic feedback in robot-assisted minimally invasive surgery. Curr Opin Urol. (2009) 19:102–7. doi: 10.1097/MOU.0b013e32831a478c

36. Rangarajan K, Davis H, Pucher PH. Systematic review of virtual haptics in surgical simulation: a valid educational tool? J Surg Educ. (2020) 77:337–47. doi: 10.1016/j.jsurg.2019.09.006

37. Macenski S, Foote T, Gerkey B, Lalancette C, Woodall W. Robot operating system 2: design, architecture, and uses in the wild. Sci Rob. (2022) 7:eabm6074. doi: 10.1126/scirobotics.abm6074

38. Kennedy RS, Lane NE, Berbaum KS, Lilienthal MG. Simulator sickness questionnaire: an enhanced method for quantifying simulator sickness. Int J Aviat Psychol. (1993) 3:203–20. doi: 10.1207/s15327108ijap0303_3

39. Schrepp M, Hinderks A, Thomaschewski J. Applying the user experience questionnaire (UEQ) in different evaluation scenarios. In: Design, User Experience, and Usability. Theories, Methods, and Tools for Designing the User Experience: Third International Conference, DUXU 2014, Held as Part of HCI International 2014, Heraklion, Crete, Greece, June 22–27, 2014, Proceedings, Part I. Springer (2014). p. 383–92.

40. UEQ. Available online at: https://www.ueq-online.org/ (accessed April 28, 2024).

41. Schrepp M, Thomaschewski J, Hinderks A. Construction of a benchmark for the user experience questionnaire (UEQ). (2017).

42. Harris D, Wilson M, Vine S. Development and validation of a simulation workload measure: the simulation task load index (SIM-TLX). Virtual Real. (2020) 24:557–66. doi: 10.1007/s10055-019-00422-9

43. Hart SG. NASA-task load index (NASA-TLX); 20 years later. In: Proceedings of the Human Factors and Ergonomics Society Annual Meeting. Los Angeles: Sage Publications (2006). Vol. 50. p. 904–8.

Keywords: surgical simulator, surgical robot, virtual reality, teleoperation, medical training

Citation: Neri A, Coduri M, Penza V, Santangelo A, Oliveri A, Turco E, Pizzirani M, Trinceri E, Soriero D, Boero F, Ricci S and Mattos LS (2024) A novel affordable user interface for robotic surgery training: design, development and usability study. Front. Digit. Health 6: 1428534. doi: 10.3389/fdgth.2024.1428534

Received: 6 May 2024; Accepted: 16 July 2024;

Published: 30 July 2024.

Edited by:

Premananda Indic, University of Texas at Tyler, United States

Reviewed by:

Arnaud Leleve, Institut National des Sciences Appliquées de Lyon (INSA Lyon), France

Vincenzo Ferrari, University of Pisa, Italy

© 2024 Neri, Coduri, Penza, Santangelo, Oliveri, Turco, Pizzirani, Trinceri, Soriero, Boero, Ricci and Mattos. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Alberto Neri, alberto.neri@iit.it
