- 1Division of Pediatric Emergency Medicine, UPMC Children’s Hospital of Pittsburgh, University of Pittsburgh School of Medicine, Pittsburgh, PA, United States

- 2Department of Emergency Medicine, University of Pittsburgh School of Medicine, Pittsburgh, PA, United States

- 3School of Computing and Information, University of Pittsburgh, Pittsburgh, PA, United States

- 4Division of General Academic Pediatrics, UPMC Children’s Hospital of Pittsburgh, University of Pittsburgh School of Medicine, Pittsburgh, PA, United States

- 5Division of Health Informatics, UPMC Children’s Hospital of Pittsburgh, University of Pittsburgh School of Medicine, Pittsburgh, PA, United States

- 6Division of Emergency Medicine, Department of Pediatrics, Ann & Robert H. Lurie Children's Hospital of Chicago, Northwestern University Feinberg School of Medicine, Chicago, IL, United States

Objective: Chest radiographs are frequently used to diagnose community-acquired pneumonia (CAP) for children in the acute care setting. Natural language processing (NLP)-based tools may be incorporated into the electronic health record and combined with other clinical data to develop meaningful clinical decision support tools for this common pediatric infection. We sought to develop and internally validate NLP algorithms to identify pediatric chest radiograph (CXR) reports with pneumonia.

Materials and methods: We performed a retrospective study of encounters for patients from six pediatric hospitals over a 3-year period. We utilized six NLP techniques: word embedding, support vector machines, extreme gradient boosting (XGBoost), light gradient boosting machines (LightGBM), Naïve Bayes, and logistic regression. We evaluated the performance of each model on a validation sample of 1,350 chest radiographs, developed as a stratified random sample of 35% admitted and 65% discharged patients, using both expert consensus and diagnosis codes as reference standards.

Results: Of 172,662 encounters in the derivation sample, 15.6% had a discharge diagnosis of pneumonia in a primary or secondary position. The median patient age in the derivation sample was 3.7 years (interquartile range, 1.4–9.5 years). In the validation sample, 185/1,350 (13.7%) and 205/1,350 (15.3%) were classified as pneumonia by content experts and by diagnosis codes, respectively. Compared to content experts, Naïve Bayes had the highest sensitivity (93.5%) and XGBoost had the highest F1 score (72.4). Compared to a diagnosis code of pneumonia, the highest sensitivity was again with Naïve Bayes (80.1%), and the highest F1 score was with the support vector machine (53.0%).

Conclusion: NLP algorithms can accurately identify pediatric pneumonia from radiography reports. Following external validation and implementation into the electronic health record, these algorithms can facilitate clinical decision support and inform large database research.

1. Introduction

Pneumonia is a significant cause of morbidity among children, resulting in a large proportion of unscheduled healthcare visits worldwide (1). In the United States, pneumonia accounts for 1%–4% of all emergency department (ED) visits in children and leads to greater than 100,000 hospitalizations annually (2–5). Among patients in children’s hospitals with possible pneumonia, greater than 80% receive a chest radiograph (CXR), which is frequently used, in addition to clinical presentation, to determine the need for antimicrobial therapy (6, 7). Previous studies have demonstrated wide variation in management strategies, including variable use of guideline-concordant antibiotics and inconsistent severity-adjusted hospitalization rates among this patient population (8–11). Electronic clinical decision support (CDS) tools have emerged as a way to align patient care with guideline-concordant therapy and management strategies (12–14). The utility of CDS tools in the pneumonia literature has been limited by the difficulty of incorporating free-text data, including CXR reports, into the electronic algorithm. Natural language processing (NLP), a class of machine learning which uses rule-based algorithms to convert unstructured text into encoded data, may overcome this limitation by interpreting and classifying large volumes of unstructured electronic text. Use of NLP in a comprehensive CDS tool that incorporates the chief complaint, historical data, vital signs, and laboratory values may allow for the rapid and accurate identification of disease, assist with guideline-concordant recommendations, and minimize unnecessary alert fatigue.

As the electronic health record (EHR) evolves, clinicians and researchers are increasingly able to query and utilize large volumes of electronic data to generate electronic CDS tools and to inform large dataset research. A CDS tool for pneumonia, for example, would take clinical data in combination with radiology data (such as CXRs and their interpretation) to calculate a predicted probability for this outcome. When this probability occurs within certain stakeholder-defined risk parameters, CDS tools may inform the clinician with respect to the best course of action (Supplementary Figure) (15). NLP offers a mechanism by which to rapidly interpret CXR reports and incorporate the encoded result into a CDS tool. The reported use of NLP in the pediatric pneumonia literature has been sparse, with prior studies limited to a small number of radiology reports and variable diagnostic performance (16, 17).

In this study, our objective was to develop and internally validate a novel NLP tool capable of rapidly identifying pediatric pneumonia from CXR reports with comparable accuracy to content experts and diagnosis codes.

2. Methods

We used a multicenter dataset of pediatric CXR reports to develop and internally validate multiple models capable of automatically identifying radiographic pneumonia based on radiologists’ interpretation. Model programming was performed using Python (version 3.9.1). Data management, calculation of Cohen’s Kappa coefficient, and assessment of model performance were performed in R (version 3.6.3; R Foundation for Statistical Computing, Vienna, Austria).

2.1. Data source

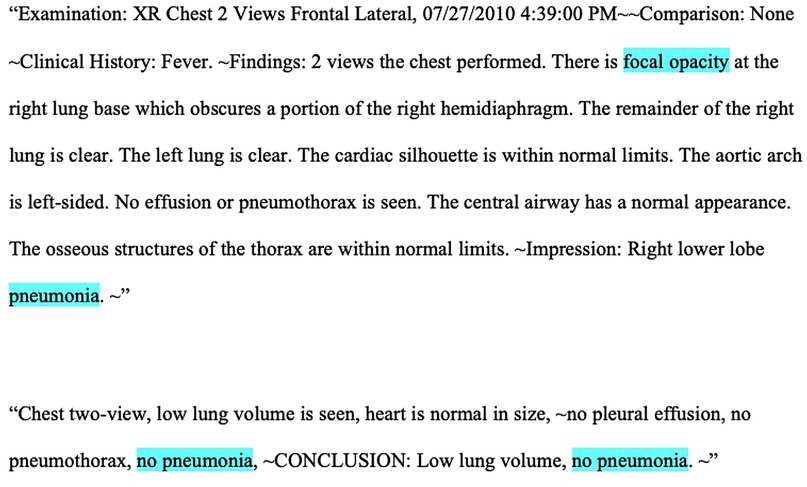

We performed a retrospective study of pediatric CXR reports from the Pediatric Health Information System plus (PHIS+) database, a federated collection of clinical and administrative data from six large pediatric hospitals, collected between January 1, 2010 and December 31, 2012, and which has previously been employed for research using granular data not found within administrative datasets (18, 19). Figure 1 shows two examples of typical CXR reports. These generally consisted of an explanation of the image type, a clinical prompt, findings, and an interpretation, though exact formats varied. This study was deemed as exempt research by the investigators’ Institutional Review Boards.

2.2. Inclusion criteria

From the PHIS+ dataset, we identified all encounters for children ages 3 months to 18 years with a CXR performed in the ED and for which corresponding clinical data were identifiable within PHIS+. We only included children whose ED visits resulted in discharge or admission, thus excluding ambulatory or surgical encounters. For encounters with multiple CXRs during the same encounter, we retained the first. All types of CXR series were included, including portable, single-view, two-view, multiple-view, and foreign body aspiration series, regardless of the imaging study indication. Records with incomplete or missing CXR reports were excluded.

2.3. Data abstraction

For included encounters, we abstracted the attending radiologist CXR report and patient demographic data, including age, sex, race, ethnicity, hospital, and season of visit. We also abstracted relevant clinical characteristics, including complex chronic conditions (using a previously published diagnosis code-based classification system) (20), inpatient admission status, intensive care unit (ICU) status, need for extracorporeal membrane oxygenation (ECMO), need for mechanical ventilation, and mortality. Observation status was considered equivalent to inpatient status (21). These demographic data were not utilized in the development of the NLP tool but were provided to better describe the sample of pediatric patients with suspected CAP in the study population. We abstracted all (primary, associated and admission) discharge diagnosis codes.

2.4. Definition of pneumonia

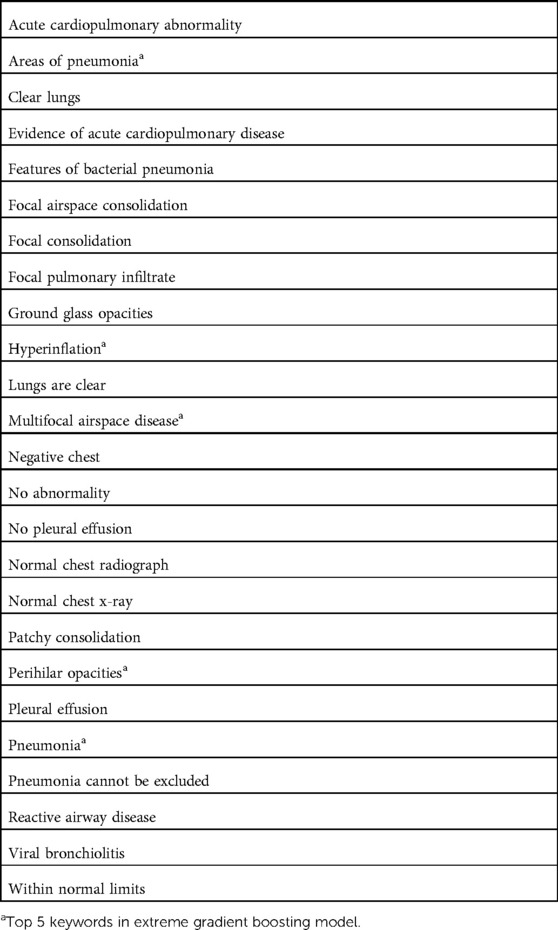

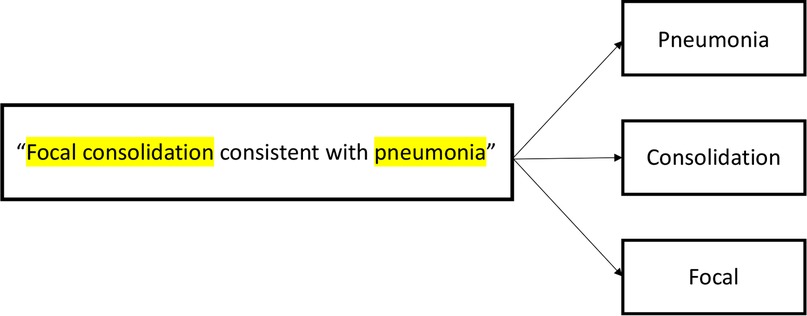

To train the models, three content experts (NR, SR, AF) reviewed 200 randomly selected CXR reports and generated a mutually agreed upon list of the keywords most frequently used to denote pneumonia (Table 1). Negative combinations of the keywords (e.g., “no infiltrate”) were also included to represent the absence of pneumonia. These keywords were then converted into tokens (i.e., broken down into their most basic components) (Figure 1) and used to train the models.
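The keyword and negation step described above can be sketched in a few lines of Python. This is a minimal illustration and not the authors' code: the keyword set, the negator set, the `no_` prefix for negated features, and the function names are all assumptions made for the example (the study's actual keyword list is given in Table 1).

```python
import re

# Hypothetical keyword and negator lists, for illustration only.
KEYWORDS = {"pneumonia", "infiltrate", "consolidation"}
NEGATORS = {"no", "without"}

def tokenize(report):
    """Lowercase a report and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", report.lower())

def keyword_features(report):
    """Count plain and negated keyword mentions, e.g. 'infiltrate' vs 'no_infiltrate'."""
    tokens = tokenize(report)
    counts = {}
    for i, tok in enumerate(tokens):
        if tok not in KEYWORDS:
            continue
        # A keyword counts as negated when the token just before it is a negator.
        negated = i > 0 and tokens[i - 1] in NEGATORS
        name = "no_" + tok if negated else tok
        counts[name] = counts.get(name, 0) + 1
    return counts
```

Checking only the immediately preceding token is a deliberate simplification; phrases such as "no focal infiltrate" would need a wider negation scope.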

2.5. Derivation and validation sample

To assess the performance of the NLP models, we first set aside a random sample of approximately 1% of the included encounters as the validation sample. We selected this proportion because the derivation sample exceeded 170,000 radiology reports, and manual review of more than 1% of such a large number of CXR reports would have been prohibitively time- and labor-intensive. To generate the validation sample, we performed stratified random sampling of equal proportions of chest radiographs positive and negative for pneumonia, which included approximately 1/3 from admitted patients to ensure similar disease acuity. Two authors (NR and SR) independently reviewed and classified all CXR reports within the validation sample as either positive or negative for pneumonia based on clinical expertise. We calculated a Cohen's kappa coefficient to assess interrater reliability between the primary reviewers. A third, independent content expert (JR) reviewed the discrepant records and assigned a final code of pneumonia or no pneumonia. The remaining 99% of CXR reports that were not included in the validation sample were retained for the derivation sample.
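For readers unfamiliar with the agreement statistic, the unweighted Cohen's kappa between two reviewers can be computed as sketched below. The study calculated kappa in R; this Python function and its name are assumptions for illustration only.

```python
from collections import Counter

def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two raters' labels on the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must label the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items on which both raters gave the same label.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal label frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1 - expected)
```

Kappa corrects raw agreement for the agreement expected by chance, which matters here because most reports are negative and two reviewers would often agree on "no pneumonia" by chance alone.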

2.6. Outcome measures

For the purposes of model validation, we used two outcomes: any diagnosis code of pneumonia (including the principal, admission, and any associated diagnosis) and diagnosis by expert consensus. A diagnosis of pneumonia was defined by an International Classification of Disease, 9th edition (ICD-9) discharge diagnosis code of pneumonia (Supplementary Table). While content expert annotation was considered more clinically applicable, we also evaluated its performance in the prediction of ICD-9 codes, as models were trained using these parameters. We used a previously validated list of diagnosis codes for CAP (4).

2.7. Development of natural language processing tool

To derive the NLP algorithms, we used six frequently employed NLP methods: word embedding, extreme gradient boosting (XGBoost), light gradient boosting machine (LightGBM), Support Vector Machine (SVM), Naïve Bayes, and logistic regression. We chose these representative algorithms on the basis of their prior use (Naïve Bayes, Support Vector Machines), frequent use in other domains (word embedding, logistic regression), and novelty (XGBoost and LightGBM).

2.7.1. Word embedding

Word embedding refers to a classification technique in unsupervised machine learning that mathematically embeds a word or phrase from a space with potentially infinite meanings per word into a numerical vector space with fewer meanings in order to create the simplest numerical classification for complex language using an artificial neural network (22). Put another way, word embedding maps related words to nearby points in a shared vector space. For example, using word embedding, the words “happy” and “joyful” would be mapped to similar numerical vectors. We chose this machine learning technique, in part, because of its applicability to medicine, which often has many words that have similar meanings (for example, pneumonia, infiltrate, and consolidation).

We first trained specific word embeddings on pneumonia-related clinical text. We then used sample phrases constructed by the authors for pneumonia. We converted these phrases into embeddings by calculating the average word embedding for all tokens in a given phrase. Next, to apply word embedding to the dataset for classification, each CXR report was broken up into tokens. A skip-gram model, using a window of words of size 10 with a dimension of 100, was utilized to predict context words from the input, or target, words (Figure 2). A window of 10 is considered to be the reference standard size to train skip-gram models (24). We used 100 as the dimension of the vector because it was sufficient to capture an adequate amount of semantic information from words without requiring prolonged training time. The window of words with the highest cosine similarity score for each keyword was used to classify a CXR report as “pneumonia” or “no pneumonia.” We used the Gensim Word2Vec library to train the word embedding from the given clinical documents (23).
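The phrase-averaging and cosine-similarity scoring described above can be illustrated with a small sketch. It assumes the embeddings have already been trained and are available as a plain dictionary; the toy 2-dimensional vectors, the function names, and the use of a sliding token window for scoring are assumptions for the example, not the study's implementation (which used 100-dimensional skip-gram vectors).

```python
import math

def average_embedding(tokens, vectors):
    """Mean of the vectors of the tokens that have an embedding; None if none do."""
    known = [vectors[t] for t in tokens if t in vectors]
    if not known:
        return None
    dim = len(known[0])
    return [sum(v[i] for v in known) / len(known) for i in range(dim)]

def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def best_window_score(report_tokens, phrase_tokens, vectors, window=10):
    """Highest cosine similarity between the phrase and any sliding window of the report."""
    phrase_vec = average_embedding(phrase_tokens, vectors)
    if phrase_vec is None:
        return 0.0
    best = None
    last_start = max(len(report_tokens) - window, 0)
    for start in range(last_start + 1):
        win_vec = average_embedding(report_tokens[start:start + window], vectors)
        if win_vec is not None:
            score = cosine(phrase_vec, win_vec)
            best = score if best is None else max(best, score)
    return 0.0 if best is None else best

# Toy vectors, hypothetical and not learned from any data.
TOY_VECTORS = {
    "infiltrate": [0.9, 0.1],
    "consolidation": [0.8, 0.2],
    "clear": [0.1, 0.9],
    "lungs": [0.5, 0.5],
}
```

With these toy vectors, a report mentioning "consolidation" scores much closer to the phrase "infiltrate" than a report reading "lungs clear", which is the behavior the averaging approach relies on. Any cut-off used to turn the score into a "pneumonia" label would be a further assumption.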

2.7.2. XGBoost and LightGBM

Gradient boosting is a form of meta-analytic machine learning that combines weak, individual prediction models to generate a more accurate aggregate target model that can perform regression and classification analyses. XGBoost is an open-source software library designed to improve gradient boosting by performing parallelized decision tree building, tree-pruning using a depth-first approach, and employing regularization to avoid overfitting the data. XGBoost was utilized primarily for its recent emergence as the optimal technique in many machine learning applications (24). From our training dataset, each keyword and its potentially negative expansion were used as initial inputs (24). We set the maximum tree depth to 3 and the learning rate to 0.3 based on the optimal model performance with these hyperparameters (25). Similarly, LightGBM is a gradient boosting framework based on decision tree algorithms which develops asymmetric trees, but differs from XGBoost through the development of more selective (i.e., “leaf-wise”) growth instead of level-wise growth. The LightGBM model was set to have 31 leaves and a learning rate of 0.05. As with word embedding, both gradient boosting techniques use an artificial neural network to calculate the embedding of the input text.
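To make the idea of combining weak learners concrete, the sketch below implements a bare-bones gradient boosting classifier in plain Python. It is not XGBoost or LightGBM and omits their regularization, pruning, and parallelism. It uses depth-1 stumps on binary keyword features rather than the depth-3 trees described above; only the learning rate of 0.3 is taken from the text, and the function names and the number of rounds are assumptions.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit_stump(X, residuals):
    """Pick the binary feature whose split best fits the residuals (least squares)."""
    best = None
    for j in range(len(X[0])):
        left = [r for x, r in zip(X, residuals) if x[j] == 0]
        right = [r for x, r in zip(X, residuals) if x[j] == 1]
        if not left or not right:
            continue
        lmean, rmean = sum(left) / len(left), sum(right) / len(right)
        sse = sum((r - lmean) ** 2 for r in left) + sum((r - rmean) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, j, lmean, rmean)
    return best[1:] if best else None  # (feature index, value if 0, value if 1)

def boost(X, y, rounds=20, learning_rate=0.3):
    """Gradient boosting for log-loss: each stump fits the residual y - p."""
    scores = [0.0] * len(X)
    stumps = []
    for _ in range(rounds):
        # y - p is the negative gradient of the log-loss with respect to the score.
        residuals = [yi - sigmoid(s) for yi, s in zip(y, scores)]
        stump = fit_stump(X, residuals)
        if stump is None:
            break
        j, lval, rval = stump
        # Store leaf values already shrunk by the learning rate.
        stumps.append((j, learning_rate * lval, learning_rate * rval))
        scores = [s + learning_rate * (rval if x[j] else lval) for x, s in zip(X, scores)]
    return stumps

def predict_proba(stumps, x):
    """Probability of the positive class: sigmoid of the summed stump outputs."""
    return sigmoid(sum(rval if x[j] else lval for j, lval, rval in stumps))
```

Each round adds a small correction in the direction that most reduces the current error, which is why a low learning rate generally needs more rounds to reach the same fit.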

2.7.3. SVM

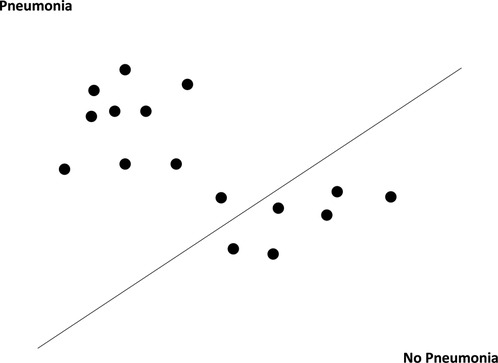

SVM is a classification technique in supervised machine learning that maps data points (or support vectors) onto an N-dimensional hyperplane (where N equals the number of features) in order to classify them. For our SVM model, we implemented the “linear kernel” method, which classifies support vectors in a linear decision plane (Figure 3) (26, 27). Previous studies have utilized SVM to extract free text information from CXR reports with reasonable sensitivity and specificity (28).
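A linear-kernel SVM learns a weight vector and bias that define the separating hyperplane. The sketch below trains one by sub-gradient descent on the regularized hinge loss. It is an illustrative stand-in, not the library implementation used in the study, and the function names, learning rate, regularization strength, and epoch count are assumptions.

```python
def train_linear_svm(X, y, epochs=200, learning_rate=0.1, reg=0.01):
    """Sub-gradient descent on the L2-regularised hinge loss; labels must be -1 or +1."""
    w = [0.0] * len(X[0])
    b = 0.0
    for _ in range(epochs):
        for x, label in zip(X, y):
            margin = label * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            # The regulariser always shrinks w; the hinge term acts only inside the margin.
            w = [wi - learning_rate * reg * wi for wi in w]
            if margin < 1:
                w = [wi + learning_rate * label * xi for wi, xi in zip(w, x)]
                b += learning_rate * label
    return w, b

def svm_predict(w, b, x):
    """Return +1 or -1 according to the side of the separating hyperplane."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1
```

In a report-classification setting, each feature would be a vectorized text feature (for example, a keyword count) and the labels would encode pneumonia versus no pneumonia.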

2.7.4. Naïve Bayes

Naïve Bayes is an NLP technique which relies on Bayes’ theorem and assumes independence of predictor variables when calculating the probability that predictor variables are related to the target variable (29). Naïve Bayes has served as a foundational NLP technique in studies examining both neonatal and adult pneumonia (16). For our Naïve Bayes model, we used the default configuration of a “Gaussian Naïve Bayes” model from the scikit-learn (sklearn) Python package (30).
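The independence assumption can be seen directly in a hand-written Gaussian Naïve Bayes classifier, where the log posterior is a class prior plus a sum of per-feature terms. This is a simplified sketch for illustration, not the scikit-learn implementation; the function names and the `variance_floor` parameter (a small constant added to avoid zero variance) are assumptions.

```python
import math
from collections import defaultdict

def fit_gaussian_nb(X, y, variance_floor=1e-9):
    """Estimate the class prior and per-feature mean/variance for each class."""
    by_class = defaultdict(list)
    for x, label in zip(X, y):
        by_class[label].append(x)
    model = {}
    for label, rows in by_class.items():
        n, dim = len(rows), len(rows[0])
        means = [sum(r[j] for r in rows) / n for j in range(dim)]
        variances = [sum((r[j] - means[j]) ** 2 for r in rows) / n + variance_floor
                     for j in range(dim)]
        model[label] = (math.log(n / len(X)), means, variances)
    return model

def predict_gaussian_nb(model, x):
    """Return the class with the highest log posterior under feature independence."""
    def log_posterior(params):
        log_prior, means, variances = params
        # Independence: the joint log-likelihood is a sum over features.
        return log_prior + sum(
            -0.5 * math.log(2 * math.pi * v) - (xi - m) ** 2 / (2 * v)
            for xi, m, v in zip(x, means, variances)
        )
    return max(model, key=lambda label: log_posterior(model[label]))
```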

2.7.5. Logistic regression

Logistic regression is commonly applied in machine learning contexts and uses Maximum Likelihood Estimation to classify the probability of a dichotomous outcome using predictor data. In NLP contexts, logistic regression is applied following feature extraction of vectorized text, similar to SVM and Naïve Bayes.
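As an illustration of maximum likelihood estimation for a dichotomous outcome, the sketch below fits a logistic regression by batch gradient ascent on the log-likelihood. It is a teaching example under assumed settings (function names, learning rate, epoch count, no regularization), not the implementation used in the study.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic(X, y, epochs=500, learning_rate=0.1):
    """Batch gradient ascent on the log-likelihood; returns (weights, bias)."""
    w = [0.0] * len(X[0])
    b = 0.0
    n = len(X)
    for _ in range(epochs):
        # The gradient of the log-likelihood is the sum of (y - p) * x over all rows.
        errors = [yi - sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)
                  for x, yi in zip(X, y)]
        for j in range(len(w)):
            w[j] += learning_rate * sum(e * x[j] for e, x in zip(errors, X)) / n
        b += learning_rate * sum(errors) / n
    return w, b

def predict_proba(w, b, x):
    """Predicted probability of the positive class for one feature vector."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)
```

On a toy dataset in which two of three reports containing a feature are positive, the fitted probability for that feature approaches 2/3, the observed proportion, which is what the maximum likelihood solution predicts.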

2.8. Statistical analysis

Study demographics and clinical characteristics were summarized using proportions for categorical variables and median and interquartile range for continuous variables. Characteristics of CXR reports that were positive versus negative for pneumonia were compared using χ2 tests for categorical variables and Wilcoxon rank sum tests for continuous variables. We compared demographic and clinical characteristics of encounters in the derivation and validation datasets. Differences were considered statistically significant at a p value of <0.05. In the validation dataset, we calculated the sensitivity and specificity of any ICD-9 diagnosis code of pneumonia compared to a reference standard of consensus content expert interpretation. For radiograph reports in the validation set, we calculated the unweighted Cohen's Kappa to measure inter-rater agreement for the manual CXR report review between the two primary reviewers. We calculated the performance of each NLP model on both the derivation and validation datasets. For each of the machine learning techniques, we calculated the sensitivity (recall), specificity, positive predictive value (precision), negative predictive value, and positive and negative likelihood ratios with corresponding 95% confidence intervals (CI). Additionally, we calculated the accuracy (the proportion of all reports classified correctly) and the F1 score (the harmonic mean of precision and recall), measures of model performance frequently used in machine learning applications.
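The performance measures listed above all derive from the four cells of a 2×2 confusion table. The sketch below computes their point estimates using the standard definitions. The study performed these calculations in R; the function name and the example counts are hypothetical, and confidence intervals are not computed here.

```python
def diagnostic_metrics(tp, fp, fn, tn):
    """Point estimates of common diagnostic accuracy measures from a 2x2 table.

    Assumes every denominator is non-zero (e.g. at least one false positive
    for the positive likelihood ratio).
    """
    sensitivity = tp / (tp + fn)   # recall
    specificity = tn / (tn + fp)
    ppv = tp / (tp + fp)           # precision
    npv = tn / (tn + fn)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "ppv": ppv,
        "npv": npv,
        "lr_positive": sensitivity / (1 - specificity),
        "lr_negative": (1 - sensitivity) / specificity,
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
        "f1": 2 * ppv * sensitivity / (ppv + sensitivity),
    }

# Hypothetical counts for illustration only; they are not taken from the study.
example = diagnostic_metrics(tp=80, fp=10, fn=20, tn=890)
```

Because the F1 score ignores true negatives, it is less flattered than accuracy by a sample in which most reports are negative for pneumonia.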

3. Results

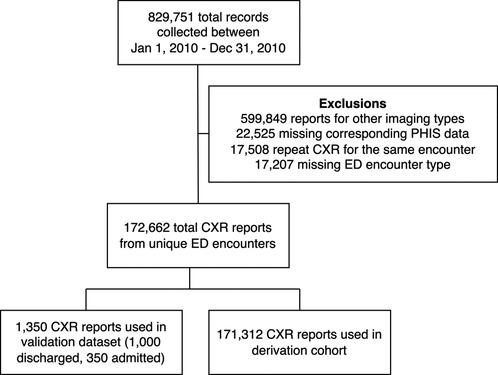

3.1. Study inclusion

A total of 829,751 encounters with imaging were collected from the six children’s hospitals within the PHIS+ database between January 1, 2010 and December 31, 2012. We excluded 599,849 (72%) imaging studies that were not CXRs, 22,525 (2.7%) that lacked a discharge diagnosis code, 17,508 (2.1%) that were duplicate CXRs within the same encounter, and 17,207 (2.1%) from non-ED encounters. A total of 172,662 (21%) CXR reports from unique ED encounters were included in the final analysis. These were divided into a derivation sample consisting of 171,312 (99.2%) CXR reports and a validation sample consisting of 1,350 (0.8%) CXR reports (Figure 4).

3.2. Descriptive data

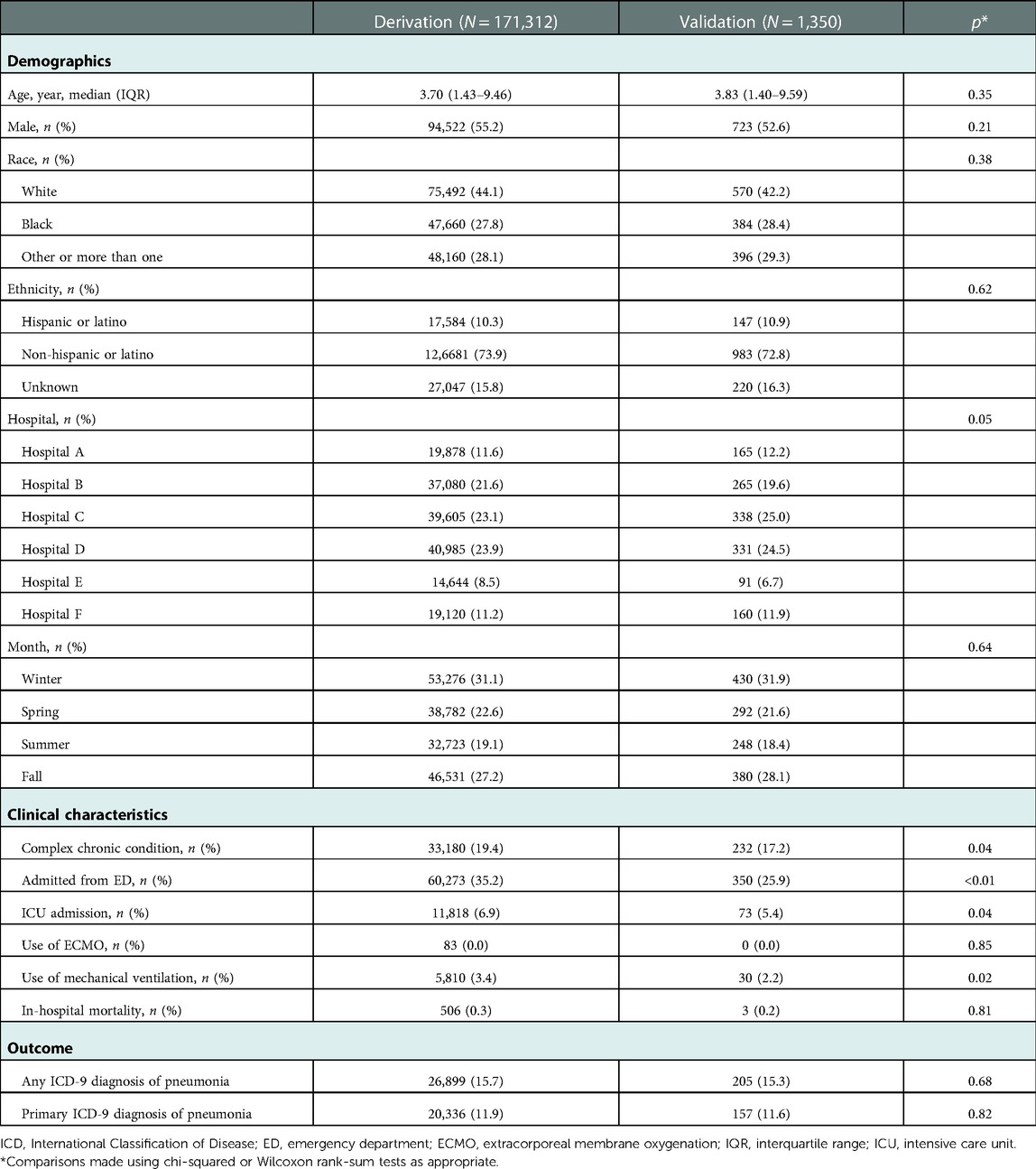

The median patient age for all included encounters was 3.7 years (IQR 1.6–8.7); 55% of encounters represented male patients. A median of 28,694 (IQR 19,280–39,943) CXR reports were collected from each hospital. A total of 33,412 (19.3%) encounters contained at least one diagnosis code of a complex chronic condition. A total of 27,105 (15.6%) encounters contained any ICD-9 diagnosis code of pneumonia and 20,493 (11.9%) encounters had a primary diagnosis code of pneumonia (Table 2).

3.3. Validation sample

In the validation sample, 185/1,350 (13.7%) of CXR reports were classified by reviewers as positive for pneumonia. Concordance between reviewers demonstrated a kappa of 0.86. A total of 205 (15.3%) encounters had any ICD-9 diagnosis of pneumonia, and 157 (11.6%) encounters had a primary ICD-9 diagnosis code of pneumonia (Table 3). There were statistically significant differences between encounters in the derivation and validation samples with regard to hospital location, complex chronic condition, admission from the ED, admission to the ICU, and the need for mechanical ventilation. There were no significant differences in gender, race, ethnicity, need for ECMO, in-hospital mortality, or any primary ICD-9 diagnosis codes of pneumonia. When comparing the presence of an ICD-9 diagnosis code for pneumonia to annotation of radiographs by content experts (as the reference standard), ICD-9 diagnosis codes had a sensitivity of 93.3%, specificity of 69.2%, positive predictive value of 95.0%, and negative predictive value of 62.1%.

Table 3. Demographics and clinical characteristics of encounters in the derivation and validation samples.

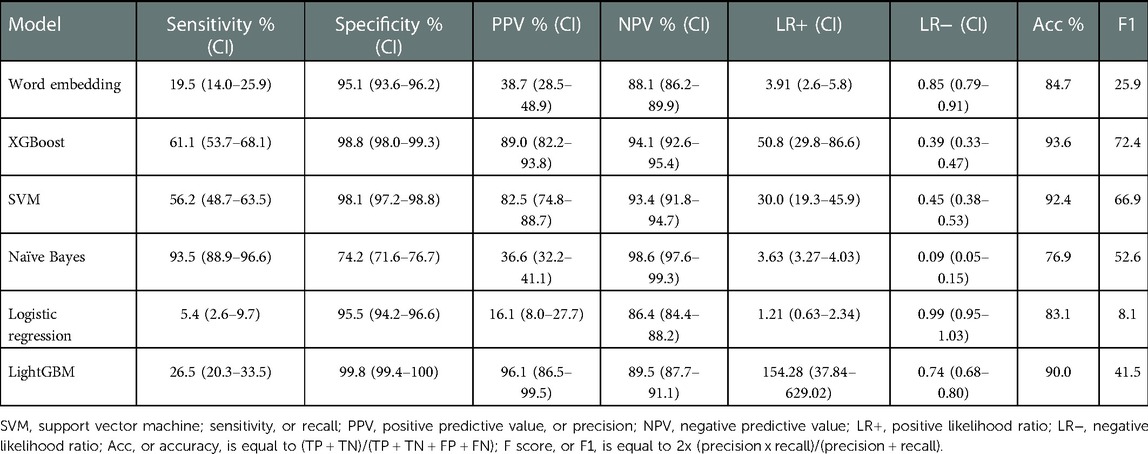

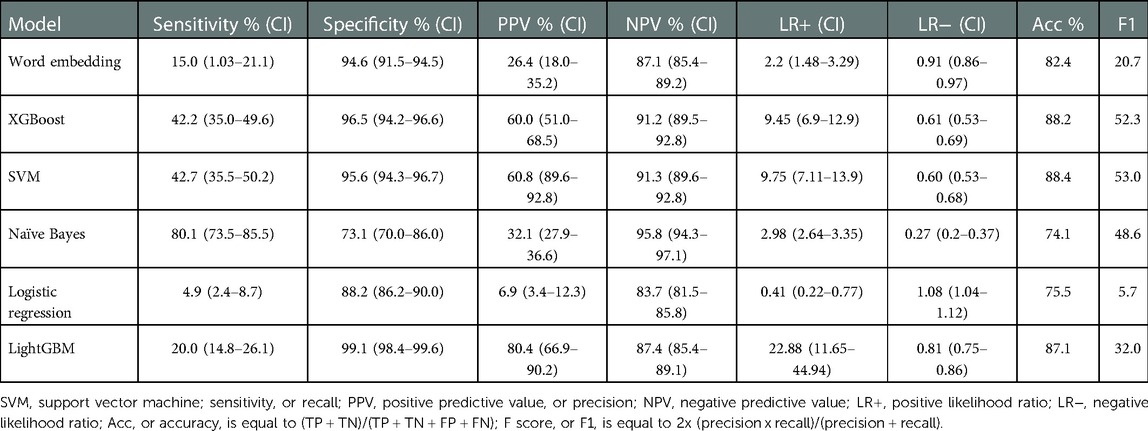

3.4. Model performance on validation sample

When evaluating model performance on the validation sample compared to manual review, the Naïve Bayes model had the highest sensitivity of 93.5% (Table 4). The XGBoost model had the highest specificity (98.8%), positive predictive value (89.0%), and positive likelihood ratio (50.8), as well as the best overall performance with an F1 score of 72.4. When evaluating the validation sample using an outcome of ICD-9 diagnosis codes of pneumonia, the performance of all models was lower (Table 5). The Word Embedding, SVM, XGBoost, and LightGBM models each retained a specificity greater than 90% when assessed with ICD-9 diagnosis codes.

Table 4. Model characteristics of the validation cohort based on manual review by three content experts.

Table 5. Model characteristics of the validation cohort when using an outcome based on any ICD-9 diagnosis code of pneumonia.

3.5. False positives and false negatives

Each NLP algorithm was subject to false positives and false negatives. Within the validation sample, the Naïve Bayes model demonstrated the lowest false positive rates of 6.5% and 19.1% when compared to expert consensus and ICD-9 codes, respectively. The LightGBM method demonstrated the lowest false negative rate (0.2% when compared to expert consensus and 0.9% when compared to ICD-9 codes). There was no significant overlap between the models in the tokens that triggered false negative and false positive results.

4. Discussion

In this investigation, we evaluated six NLP models to interpret pediatric CXRs with pneumonia. The Naïve Bayes model was the most sensitive and the XGBoost model was the most specific and had the overall best performance when compared to manual expert review. The incorporation of this type of machine learning tool into electronic CDS algorithms, in combination with other EHR data, carries the potential to augment the rapid identification of patients with pneumonia and to facilitate the real-time application of evidence-based clinical guidelines. In addition, this type of NLP tool may expedite large database research by reducing the time needed for manual review.

An accurate NLP model for the identification of pneumonia can be used in “non-knowledge” [or statistically/machine-learning derived (31)]-based CDS in order to decrease unnecessary variation in care, improve antibiotic stewardship, and improve prognostication. Used in conjunction with additional historical and clinical factors, a comprehensive CDS for pneumonia may be best able to identify patients for whom imaging may be indicated, followed by interpretation of radiograph findings in the context of clinical data in order to provide guidance with respect to antimicrobial utilization and ED disposition. Our findings are demonstrative of the utility of NLP-based interpretation of radiography reports to improve the evidence-based management of pediatric pneumonia. These expand upon prior research on the use of NLP for the identification of pediatric pneumonia in several ways, including in the use of a multicenter dataset, comparing multiple NLP algorithms, and evaluating two forms of internal validation (content experts and diagnosis codes). NLP results in optimal model performance when the quantity of the input data is large, which reduces the effect of noise, or unexplainable variation, in the data. Furthermore, dividing large volumes of input data into a training and test dataset mitigates this risk of over-fitting the model to the training dataset (32). Previous investigations into the role of NLP in the classification of pediatric CXR reports with pneumonia have used training datasets with several hundred CXR reports and, in some cases, used the same dataset for training and testing (16, 17, 33). Meystre, et al., used a sampling of 282 pediatric annotated CXR reports from the PHIS+ dataset using Textractor, a library included within the Apache Unstructured Information Management Architecture framework, to develop a model with a sensitivity of 52.7% and specificity of 96.6% (16). 
Mendonça, et al., evaluated the performance of a multimodular clinical NLP algorithm (called MedLEE) to assist in the interpretation of neonatal radiographs and reported a sensitivity of 71% and a specificity of 99% (17). More recently, Smith, et al., used a random forest classifier trained using 10,000 chest radiographs performed among children hospitalized at a single institution and implemented it using a clinical decision support system (34). This model had high sensitivity (89.9%) and specificity (94.9%) during implementation and demonstrates the potential of these applications for identifying pneumonia, though its performance across clinical settings has not yet been reported. By training our models using more than 170,000 CXR reports from a multicenter database, we increase the scale of prior work by several orders of magnitude. In addition, we retained a separate validation dataset, which was interpreted by independent content experts with excellent concordance. Following external validation, our models may have potentially greater generalizability and applications to other populations.

Prior NLP studies of both adult and pediatric CXR reports have frequently relied on Bayesian methodology to determine the presence or absence of pneumonia (35, 36). While Bayesian logic is considered simple, fast and effective, it relies on the premise that all features in the dataset are both equally important and independent, a potentially inaccurate assumption in the highly variable interpretation of chest radiographs (37, 38). In this investigation, different NLP algorithms provided differing diagnostic accuracy. Importantly, the highest performing algorithm utilized XGBoost, a relatively novel machine learning technique which has been trialed in a wide variety of applications, functions well on multiple operating systems, supports all major programming languages and has consistently shown superior performance in comparison to other NLP methodologies (24).

The robust performance of our NLP models supports their incorporation into electronic CDS tools to efficiently integrate patient information with clinical guidelines. Implementation of and compliance with CDS tools vary widely between hospital systems, practice settings, EHRs and individual users (39). Factors associated with low utilization rates of CDS tools include complexity of the system and difficulty integrating the tool into the workflow of the EHR (40).

XGBoost has recently emerged as one of the highest-performing NLP algorithms across a variety of machine learning platforms, currently making it the most widely applicable NLP technology. Not surprisingly, our XGBoost model demonstrated the best overall performance (as measured by the F1 score). The accuracy of our XGBoost model may be further improved by the incorporation of other structured elements within the EHR that are known to be associated with pneumonia (including age, fever, and hypoxemia) (41, 42). Used in combination with these clinical factors, automated radiograph interpretation may allow for improved diagnostic accuracy, decreasing both false alarms (i.e., false positives) and missed cases (i.e., false negatives). As such, this NLP model may offer a standardized approach to building and implementing CDS tools, with the goals of improving antimicrobial stewardship and risk severity prediction and reducing unnecessary hospitalizations. The successful deployment of these algorithms will depend on an engaged stakeholder base and should follow principles recently identified by Shortliffe, et al., including (a) transparent reasoning, (b) seamless integration, (c) intuitive utilization, (d) clinical relevance, (e) recognition of the expertise of the clinician, and (f) construction on a sound evidence base (43).

Our results additionally support the use of NLP to facilitate large database research. By automatically finding actionable insight within large and complex volumes of electronic health data, a robust NLP model provides a natural solution to the time-intensive manual review usually required by large database research (44). All models demonstrated tradeoffs between sensitivity and specificity to varying extents, which may impact the clinical applicability of these algorithms when embedded into a decision support system model. For example, the Naïve Bayes model demonstrated the highest sensitivity at 93.5%, which is likely due to its reliance on simple probabilities. When trained with domain experts, we theorize that Naïve Bayes would be best utilized to accurately capture true positives while reducing the time burden associated with manually reviewing and classifying thousands of unstructured imaging reports. In contrast, the XGBoost model demonstrated a higher PPV, as reflected in its higher F1 score; this model also demonstrated extremely high specificity. In combination with a highly specific model like XGBoost, integration of the Naïve Bayes algorithm into the EHR could facilitate the rapid and accurate identification of pediatric patients with pneumonia for prospective enrollment in clinical studies and allow for the provision of automatic disease-specific management guidelines. Logistic regression demonstrated poor performance which favored the majority (i.e., negative) class, suggesting its limited potential to meaningfully identify true positives with correspondingly poor sensitivity and positive predictive value.

It is worth noting that each of the four models demonstrated superior measures of diagnostic accuracy when validated with domain experts versus ICD-9 diagnosis codes of pneumonia. As such, our findings likely reflect the inherent difference in specificity between screening for pneumonia based solely on a radiograph report and the impact of incorporating additional clinical data like vital signs, physical exam findings, laboratory results, comorbid conditions and response to initial treatments, all of which are integrated into a final diagnosis code. We surmise that incorporation of an XGBoost-based model into a cohesive CDS tool, in combination with other clinical electronic data, would potentially result in enhanced performance for specific clinical applications. Other applications of machine learning with radiography have focused on direct image interpretation. Within pediatric research, these applications have been trialed for the diagnosis of pneumonia using Naïve Bayes, SVM, K-nearest neighbor (45), and transfer learning techniques (46), among others (47). These algorithms may be combined with radiologist interpretation to potentially work together in a complementary fashion to improve the overall accuracy of the predictive model.

Our findings are subject to several limitations. First, the PHIS+ database did not contain the actual radiographs, so we could not verify the radiologists’ interpretations. The interpretation of chest radiography is complex: prior work, for example, has demonstrated wide interrater variability between radiology assessments of infiltrate versus atelectasis in pediatric imaging (38, 40). Second, our manual review process was based solely on domain expert interpretation and did not utilize a standardized set of agreed-upon words or phrases to dichotomize the CXR reports into positive or negative. Despite this, the two primary reviewers retained a high degree of inter-rater agreement, comparable to that in prior studies that used a predetermined set of words to define positive and negative reports (18). We believe our approach is more generalizable and accurately reflects the natural variation between providers when interpreting free-text clinical data. Third, our validation dataset was small, containing 1% of the original sample. Given the large size of the derivation sample, however, this amounted to a total of 1,350 CXR reports, which remained a sizable sample. In addition, PHIS+ is primarily an administrative database and, as such, does not include time-based data or clinical notes. Although we were able to take advantage of the large repository of radiological data within PHIS+, the lack of other clinical data (e.g., clinical notes) makes the development of a deep learning-based tool less applicable and represents an area for future research. Finally, most CXR reports in both the training and test datasets were negative for pneumonia, reflecting the relative paucity of pneumonia in the pediatric population compared to viral lower respiratory tract disease. This imbalance in the training data, a commonly encountered phenomenon in healthcare-related machine learning applications, has the potential to artificially increase precision and decrease recall (48). Despite this, the F1 score, a summary measure of overall performance, of our XGBoost model was robust, indicating an acceptable balance between precision and recall. Future work should focus on external validation and on enhancing the performance of this model through interactive learning, including manual review of the false positive and false negative reports, in order to mitigate the effects of the skewed input data and optimize model performance.
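As an illustration of why F1, rather than raw accuracy, is informative when most reports are negative, the sketch below computes precision, recall, and F1 (the harmonic mean of precision and recall) from confusion-matrix counts. The counts are hypothetical (1,000 reports, 150 of them positive) and the helper names are assumptions made for this example, not values or code from the study.

```python
from typing import Tuple


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Return (precision, recall, F1); each is 0.0 when its denominator is zero."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


def accuracy(tp: int, fp: int, fn: int, tn: int) -> float:
    """Fraction of all reports classified correctly."""
    return (tp + tn) / (tp + fp + fn + tn)


# Hypothetical classifier on an imbalanced sample: 150 positive, 850 negative reports.
model = {"tp": 120, "fp": 60, "fn": 30, "tn": 790}
# Trivial baseline that labels every report as negative.
all_negative = {"tp": 0, "fp": 0, "fn": 150, "tn": 850}

for name, c in (("model", model), ("all-negative baseline", all_negative)):
    p, r, f1 = precision_recall_f1(c["tp"], c["fp"], c["fn"])
    acc = accuracy(c["tp"], c["fp"], c["fn"], c["tn"])
    print(f"{name}: accuracy={acc:.3f} precision={p:.3f} recall={r:.3f} F1={f1:.3f}")
```

With these assumed counts the all-negative baseline still reaches 85% accuracy while its F1 is zero, whereas the hypothetical model's F1 reflects both its missed cases and its false alarms.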

In this investigation, we used supervised and unsupervised learning techniques to generate novel NLP algorithms capable of identifying pediatric CXR reports positive for pneumonia with accuracy comparable to that of content experts and with robust measures of diagnostic accuracy. Our results suggest that, following external validation, such tools could be integrated into comprehensive electronic CDS systems to enhance the automatic identification of pediatric patients with radiographic pneumonia and to inform large database research. Future research is needed to refine the NLP algorithms so that they can be applied to specific clinical settings.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author.

Ethics statement

The studies involving human participants were reviewed and approved by Lurie Children's Hospital Institutional Review Board. Written informed consent from the participants' legal guardian/next of kin was not required to participate in this study in accordance with the national legislation and the institutional requirements.

Author contributions

NR designed the study, acquired the data, analyzed the data, and drafted the article. ZW analyzed the data and revised it critically for important intellectual content. JM, SS, and TF interpreted the data and revised it critically for important intellectual content. SR conceived and designed the study, analyzed the data, and revised it critically for important intellectual content. All authors contributed to the article and approved the submitted version.

Acknowledgments

We would like to thank Jeffrey Rixe for his contributions to this study.

Conflicts of interest

JM received funding from Merck for an unrelated study. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fdgth.2023.1104604/full#supplementary-material.

References

1. Bryce J, Boschi-Pinto C, Shibuya K, Black R. WHO estimates of the causes of death in children. Lancet. (2005) 365(9465):1147–52. doi: 10.1016/S0140-6736(05)71877-8

2. Katz S, Williams D. Pediatric community-acquired pneumonia in the United States: changing epidemiology, diagnostic and therapeutic challenges, and areas for future research. Infect Dis Clin North Am. (2018) 32(1):47–63. doi: 10.1016/j.idc.2017.11.002

3. Williams DJ, Zhu Y, Grijalva CG, Self WH, Harrell FE, Reed C, et al. Predicting severe pneumonia outcomes in children. Pediatrics. (2016) 138(4):1–11. doi: 10.1542/peds.2016-1019

4. Williams DJ, Shah SS, Myers A, Hall M, Auger K, Queen MA, et al. Identifying pediatric community-acquired pneumonia hospitalizations: accuracy of administrative billing codes. JAMA Pediatr. (2013) 167(9):851–8. doi: 10.1001/jamapediatrics.2013.186

5. Brogan TV, Hall M, Williams DJ, Neuman MI, Grijalva CG, Farris RWD, et al. Variability in processes of care and outcomes among children hospitalized with community-acquired pneumonia. Pediatr Infect Dis J. (2012) 31(10):1036. doi: 10.1097/INF.0b013e31825f2b10

6. Lipsett SC, Monuteaux MC, Bachur RG, Finn N, Neuman MI. Negative chest radiography and risk of pneumonia. Pediatrics. (2018) 142(3):1–8. doi: 10.1542/peds.2018-0236

7. Geanacopoulos AT, Porter JJ, Monuteaux MC, Lipsett SC, Neuman MI. Trends in chest radiographs for pneumonia in emergency departments. Pediatrics. (2020) 145(3):1–9. doi: 10.1542/peds.2019-2816

8. Williams DJ, Edwards KM, Self WH, Zhu Y, Ampofo K, Pavia AT, et al. Antibiotic choice for children hospitalized with pneumonia and adherence to national guidelines. Pediatrics. (2015) 136(1):44–52. doi: 10.1542/peds.2014-3047

9. Williams DJ, Hall M, Shah SS, Parikh K, Tyler A, Neuman MI, et al. Narrow vs broad-spectrum antimicrobial therapy for children hospitalized with pneumonia. Pediatrics. (2013) 132(5):e1141–8. doi: 10.1542/peds.2013-1614

10. Neuman MI, Hall M, Hersh AL, Brogan TV, Parikh K, Newland JG, et al. Influence of hospital guidelines on management of children hospitalized with pneumonia. Pediatrics. (2012) 130(5):e823–30. doi: 10.1542/peds.2012-1285

11. Florin TA, Brokamp C, Mantyla R, Depaoli B, Ruddy R, Shah SS, et al. Validation of the pediatric infectious diseases society-infectious diseases society of America severity criteria in children with community-acquired pneumonia. Clin Infect Dis. (2018) 67(1):112–9. doi: 10.1093/cid/ciy031

12. Bright TJ, Wong A, Dhurjati R, Bristow E, Bastian L, Coeytaux RR, et al. Effect of clinical decision-support systems: a systematic review. Ann Intern Med. (2012) 157(1):29–43. doi: 10.7326/0003-4819-157-1-201207030-00450

13. Karwa A, Patell R, Parthasarathy G, Lopez R, McMichael J, Burke CA. Development of an automated algorithm to generate guideline-based recommendations for follow-up colonoscopy. Clin Gastroenterol Hepatol. (2020) 18(9):2038–45. doi: 10.1016/j.cgh.2019.10.013

14. Hou JK, Imler TD, Imperiale TF. Current and future applications of natural language processing in the field of digestive diseases. Clin Gastroenterol Hepatol. (2014) 12(8):1257–61. doi: 10.1016/j.cgh.2014.05.013

15. Ramgopal S, Sanchez-Pinto LN, Horvat CM, Carroll MS, Luo Y, Florin T. Artificial intelligence-based clinical decision support in pediatrics. Pediatr Res. (2022):1–8. doi: 10.1038/s41390-022-02226.5

16. Brown SM, Jones BE, Jephson AR, Dean NC. Validation of the infectious disease society of America/American thoracic society 2007 guidelines for severe community-acquired pneumonia. Crit Care Med. (2009) 37(12):2–15. doi: 10.1097/CCM.0b013e3181b030d9

17. Meystre S, Gouripeddi R, Tieder J, Simmons J, Srivastava R, Shah S. Enhancing comparative effectiveness research with automated pediatric pneumonia detection in a multi-institutional clinical repository: a PHIS+ pilot study. J Med Internet Res. (2017) 19(5):e162. doi: 10.2196/jmir.6887

18. Mendonça EA, Haas J, Shagina L, Larson E, Friedman C. Extracting information on pneumonia in infants using natural language processing of radiology reports. J Biomed Inform. (2005) 38(4):314–21. doi: 10.1016/j.jbi.2005.02.003

19. Narus SP, Srivastava R, Gouripeddi R, Livne OE, Mo P, Bickel JP, et al. Federating clinical data from six pediatric hospitals: process and initial results from the PHIS+ consortium. AMIA Annual symposium proceedings (2011). American Medical Informatics Association. p. 994

20. Pelletier JH, Ramgopal S, Au AK, Clark RSB, Horvat CM. Maximum Pao2 in the first 72 h of intensive care is associated with risk-adjusted mortality in pediatric patients undergoing mechanical ventilation. Crit Care Explor. (2020) 2(9):1–4. doi: 10.1097/CCE.0000000000000186

21. Feudtner C, Feinstein JA, Zhong W, Hall M, Dai D. Pediatric complex chronic conditions classification system version 2: updated for ICD-10 and complex medical technology dependence and transplantation. BMC Pediatr. (2014) 14(1):2–7. doi: 10.1186/1471-2431-14-199

22. Macy ML, Hall M, Shah SS, Hronek C, Del Beccaro MA, Hain PD, et al. Differences in designations of observation care in US freestanding children’s hospitals: are they virtual or real? J Hosp Med. (2012) 7(4):287–93. doi: 10.1002/jhm.949

23. Mikolov T, Chen K, Corrado G, Dean J. Efficient estimation of word representations in vector space. arXiv (2013).

24. Řehůřek R. Gensim: topic modelling for humans (2022). Available at: https://radimrehurek.com/gensim/index.html (Accessed January 2, 2023).

25. Chen T, Guestrin C. XGBoost: a scalable tree boosting system. Proceedings of the 22nd ACM SIGKDD international conference on knowledge discovery and data mining; New York, NY, USA: Association for Computing Machinery (2016). p. 785–94

26. Python package introduction: xgboost. Available at: https://xgboost.readthedocs.io/en/latest/python/python_intro.html (Accessed July 26, 2021).

27. sklearn.svm.SVC—scikit-learn 0.24.2 documentation. Available at: https://scikit-learn.org/stable/modules/generated/sklearn.svm.SVC.html (Accessed July 26, 2021).

28. Cortes C, Vapnik V. Support-vector networks. Mach Learn. (1995) 20(3):273–97. doi: 10.1007/BF00994018

30. sklearn.naive_bayes.GaussianNB—scikit-learn 0.24.2 documentation. Available at: https://scikit-learn.org/stable/modules/generated/sklearn.naive_bayes.GaussianNB.html (Accessed July 26, 2021).

31. Sutton RT, Pincock D, Baumgart DC, Sadowski DC, Fedorak RN, Kroeker KI. An overview of clinical decision support systems: benefits, risks, and strategies for success. NPJ Digit Med. (2020) 3(1):17. doi: 10.1038/s41746-020-0221-y

32. Nadkarni PM, Ohno-Machado L, Chapman WW. Natural language processing: an introduction. J Am Med Informatics Assoc. (2011) 18(5):544–51. doi: 10.1136/amiajnl-2011-000464

33. Smith JC, Spann A, McCoy AB, Johnson JA, Arnold DH, Williams DJ, et al. Natural language processing and machine learning to enable clinical decision support for treatment of pediatric pneumonia. AMIA Annu Symp Proc. (2020) 2020:1130. PMID: 33936489

34. Fiszman M, Chapman WW, Aronsky D, Evans RS, Haug PJ. Automatic detection of acute bacterial pneumonia from chest x-ray reports. J Am Med Informatics Assoc. (2000) 7(6):593–604. doi: 10.1136/jamia.2000.0070593

35. Dublin S, Baldwin E, Walker RL, Christensen LM, Haug PJ, Jackson ML, et al. Natural language processing to identify pneumonia from radiology reports. Pharmacoepidemiol Drug Saf. (2013) 22(8):834–41. doi: 10.1002/pds.3418

36. Davies HD, Wang EE-L, Manson D, Babyn P, Shuckett B. Reliability of the chest radiograph in the diagnosis of lower respiratory infections in young children. Pediatr Infect Dis J. (1996) 15(7):600–4. doi: 10.1097/00006454-199607000-00008

37. Johnson J, Kline JA. Intraobserver and interobserver agreement of the interpretation of pediatric chest radiographs. Emerg Radiol. (2010) 17(4):285–90. doi: 10.1007/s10140-009-0854-2

38. Knirsch CA, Jain NL, Pablos-Mendez A, Friedman C, Hripcsak G. Respiratory isolation of tuberculosis patients using clinical guidelines and an automated clinical decision support system. Infect Control Hosp Epidemiol. (1998) 19(2):94–100. doi: 10.2307/30141996

39. Demonchy E, Dufour J-C, Gaudart J, Cervetti E, Michelet P, Poussard N, et al. Impact of a computerized decision support system on compliance with guidelines on antibiotics prescribed for urinary tract infections in emergency departments: a multicentre prospective before-and-after controlled interventional study. J Antimicrob Chemother. (2014) 69(10):2857–63. doi: 10.1093/jac/dku191

40. Moxey A, Robertson J, Newby D, Hains I, Williamson M, Pearson S-A. Computerized clinical decision support for prescribing: provision does not guarantee uptake. J Am Med Informatics Assoc. (2010) 17(1):25–33. doi: 10.1197/jamia.M3170

41. Lipsett SC, Hirsch AW, Monuteaux MC, Bachur RG, Neuman MI. Development of the novel pneumonia risk score to predict radiographic pneumonia in children. Pediatr Infect Dis J. (2021) 41(1):24–30. doi: 10.1097/INF.0000000000003361

42. Ramgopal S, Ambroggio L, Lorenz D, Shah S, Ruddy R, Florin T. A prediction model for pediatric radiographic pneumonia. Pediatrics. (2022) 149(1):47–56. doi: 10.1542/peds.2021-051405

43. Shortliffe EH, Sepúlveda MJ. Clinical decision support in the era of artificial intelligence. J Am Med Assoc. (2018) 320(21):2199–200. doi: 10.1001/jama.2018.17163

44. Cai T, Giannopoulos AA, Yu S, Kelil T, Ripley B, Kumamaru KK, et al. Natural language processing technologies in radiology research and clinical applications. Radiographics. (2016) 36(1):176–91. doi: 10.1148/rg.2016150080

45. Sousa RT, Marques O, Soares FAAMN, Sene IIG Jr, de Oliveira LLG, Spoto ES. Comparative performance analysis of machine learning classifiers in detection of childhood pneumonia using chest radiographs. Procedia Comput Sci. (2013) 18:2579–82. doi: 10.1016/j.procs.2013.05.444

46. Chen Y, Roberts CS, Ou W, Petigara T, Goldmacher GV, Fancourt N, et al. Deep learning for classification of pediatric chest radiographs by WHO’s standardized methodology. PLoS ONE. (2021) 16(6):e0253239. doi: 10.1371/journal.pone.0253239

47. Padash S, Mohebbian MR, Adams SJ, Henderson RDE, Babyn P. Pediatric chest radiograph interpretation: how far has artificial intelligence come? A systematic literature review. Pediatr Radiol. (2022) 42(8):1568–80. doi: 10.1007/s00247-022-05368-1443w

Keywords: chest radiograph, clinical decision support, machine learning, natural language processing, pediatric, pneumonia

Citation: Rixe N, Frisch A, Wang Z, Martin JM, Suresh S, Florin TA and Ramgopal S (2023) The development of a novel natural language processing tool to identify pediatric chest radiograph reports with pneumonia. Front. Digit. Health 5:1104604. doi: 10.3389/fdgth.2023.1104604

Received: 21 November 2022; Accepted: 16 January 2023; Published: 22 February 2023.

Edited by:

Lina F. Soualmia, Université de Rouen, France

Reviewed by:

Terri Elizabeth Workman, George Washington University, United States

Chiranjibi Sitaula, Monash University, Australia

© 2023 Rixe, Frisch, Wang, Martin, Suresh, Florin and Ramgopal. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Sriram Ramgopal, sramgopal@luriechildrens.org

Specialty Section: This article was submitted to Health Informatics, a section of the journal Frontiers in Digital Health
