- ¹Department of Psychology, Stony Brook University, Stony Brook, NY, United States

- ²Wysa Inc., Boston, MA, United States

- ³Department of Clinical Psychology, National Institute of Mental Health and Neurosciences (NIMHANS), Bangalore, India

The present study aims to examine whether users perceive a therapeutic alliance with an AI conversational agent (CA), Wysa, and to observe changes in the therapeutic alliance over a brief time period. Users of the digital mental health application (app) Wysa who screened positive on the PHQ-4 for anxiety or depression symptoms (N = 1,205) were administered the WAI-SR within 5 days of installing the app, and a subset completed a second assessment on the same measure 3 days later (N = 226). Anonymised transcripts of users' conversations with Wysa were also examined through content analysis for unprompted elements of bonding between the user and Wysa (N = 950). Within 5 days of initial app use, the mean WAI-SR score was 3.64 (SD 0.81) and the mean bond subscale score was 3.98 (SD 0.94). Three days later, the mean WAI-SR score increased to 3.75 (SD 0.80) and the mean bond subscale score increased to 4.05 (SD 0.91); however, there was no significant difference in alliance scores between Assessment 1 and Assessment 2. The mean bond subscale scores were comparable to the scores reported in recent literature on traditional outpatient individual CBT, internet CBT, and group CBT. Content analysis of the transcripts of user conversations with the CA (Wysa) also revealed elements of bonding such as gratitude, self-disclosed impact, and personification. Users' therapeutic alliance scores were sustained over time and were comparable to ratings from previous studies on alliance in human-delivered face-to-face psychotherapy with clinical populations. Based on the evidence that an alliance was established, this study provides support for the use of digital mental health services.

Introduction

In recent years, the uptake of digital mental health interventions has increased substantially (1). Digital mental health interventions, including internet and smartphone-delivered services, hold promise for overcoming significant barriers traditionally associated with face-to-face mental health care, such as stigma (2, 3), limited accessibility (4), and cost (5). Despite demonstrated efficacy (6) and the potential to increase the reach of evidence-based care, research shows that digital mental health interventions are associated with relatively poor adoption and adherence (7, 8). This problem is most pronounced for minimally guided and unguided smartphone interventions that involve little or no therapist support (9–13). One reason for poor engagement and adherence with digital mental health interventions may be an insufficient therapeutic alliance (14).

The therapeutic alliance is one of the most robust mechanisms of change in psychotherapy and can be broadly defined as collaboration between the patient and therapist on the tasks and goals of treatment, along with an emotional bond (15–18). The alliance is important because it allows for the exploration of vulnerable issues by decreasing clients' defensiveness and increasing their self-acceptance (19). The therapeutic bond is fostered through trust, acceptance, empathy, and genuineness (20, 21), processes that are difficult to mimic in automated digital interventions. While this issue may be addressed by augmenting self-guided digital interventions with external human support [e.g., internet-based cognitive behavioral therapy (CBT) paired with coaching calls (22)], the amount of human therapist involvement affects the cost and scalability of digital health interventions.

An alternative approach is to mimic human dialogue (23) with users via an artificial intelligence (AI) conversational agent. Conversational agents use machine learning and AI methods to simulate human-like behaviors and support (24, 25). Their potential for application in the digital mental health space is gaining traction, and they have recently been implemented to help track medication and physical activity adherence (26, 27), provide cognitive behavioral therapy (CBT) (28), and deliver healthy lifestyle recommendations (29) across clinical and non-clinical populations. An increasing body of literature has demonstrated that such interventions are effective and feasible for delivering mental wellbeing support to individuals with self-reported anxiety and depressive symptoms (30, 31).

Further, early evidence suggests that a therapeutic relationship may be established between humans and a conversational agent. For example, Bickmore et al. (32) demonstrated that adults established a therapeutic alliance with a health-related automated system while attempting to increase exercise. In another study, researchers found that within 5 days of initial mental health application (app) use, users reported a therapeutic alliance with a conversational agent, measured through the Working Alliance Inventory-Short Revised (WAI-SR), at levels comparable to those in recent studies of traditional outpatient individual and group CBT (33). Recently, Dosovitsky and Bunge (34) examined a chatbot intervention for social isolation and loneliness and found that most chatbot users were satisfied and would recommend the intervention to a friend. Their results showed a pattern of personifying the chatbot and assigning it human traits, such as being helpful, caring, open to listening, and non-judgmental. Additionally, they found that users were able to build a bond with a chatbot through asynchronous, exclusively text-based conversations. While early evidence suggests that conversational agents are capable of establishing a bond with users (33), additional research is needed to understand the perceived aspects of the therapeutic bond with CBT-based conversational agents. The literature on therapeutic alliance discusses the importance of caring, understanding, and acceptance in forging the therapeutic bond (19). Studies also highlight empathy (35), genuineness, and positive regard as important aspects of the bond that influence the client's perception of the relationship (36).

In this study, we examined users' perspectives of the therapeutic bond with a conversational agent through a mixed-methods investigation of the therapeutic alliance between users and a free-text, CBT-based conversational agent (Wysa). Wysa is an AI-based, emotionally intelligent mobile conversational agent app aimed at promoting wellbeing, positive self-expression, and mental resilience through a text-based conversational interface. Wysa is designed for individuals 18 years of age and older. According to the Google Play Store and Apple App Store, ~60% of users are between 18 and 34 years of age, and 55% of users identify as women. In a previous study that examined the real-world effectiveness of and engagement with Wysa, researchers found that high-engagement users had significantly greater improvement in self-reported depressive symptoms than low-engagement users (30).

The present study aims (1) to examine whether conversational agent users perceive a therapeutic alliance and (2) to examine changes in the therapeutic alliance over time. We hypothesize that conversational agent users will perceive a therapeutic alliance comparable to the alliance scores observed in human-delivered face-to-face psychotherapy with clinical populations. We also hypothesize that, as observed in in-person studies, therapeutic alliance scores will improve over time. As an exploratory objective, we also explored users' perceptions of the therapeutic alliance with the conversational agent.

Methods

Application Background

Wysa is an AI-enabled mental health app that leverages evidence-based cognitive-behavioral techniques in its user interface and in the interventions delivered by its conversational agent (CA). It was developed by a company based across India, North America, and the United Kingdom and has been publicly available since 2017.

The app is designed to provide a therapeutic virtual space for user-led conversations through AI-guided listening and support, access to self-care tools and techniques including CBT-based tools, as well as one-on-one human support (37). The app is anonymous, and requires no user registration to ensure safety and privacy.

The techniques utilized include identifying activities that provide energy, scheduling joy activities (38), positive reflection (39), cognitive reframing (40), gratitude exercises (41), finding acceptance (42), and sleep meditations (43). These comprise a mix of positive psychology, acceptance and commitment therapy (ACT), and CBT techniques that encourage the development of therapeutic skills.

Users converse with a free-text conversational agent (also called Wysa) that can handle complex and diverse user input, using adaptive AI to understand users' conversations and respond with interventive techniques. Users engage with the conversational agent to discuss their emotions and the events in their lives. The conversational agent provides empathetic support, a space to vent, and CBT-based tools and techniques specific to the user's concerns.

Wysa's AI models are trained in-house by clinicians to ensure that conversations are conducted in a clinically safe manner. Wysa does not use AI-generated responses; instead, it uses interventive conversations created by an internal team. The AI models are capable of understanding emotions, uncertainty, disagreement, confusion, alignment, and the domain of discussion, among other features of the user's text (44). The models are regularly improved through analysis of, and insights gathered from, user data.

Measures

Patient Health Questionnaire (PHQ-4)

The PHQ-4 is a 4-item questionnaire and an established, valid ultra-brief screener for detecting both anxiety and depressive disorders (45).

Working Alliance Inventory-Short Revised (WAI-SR)

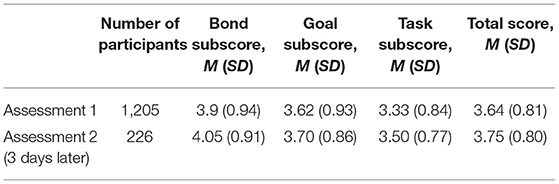

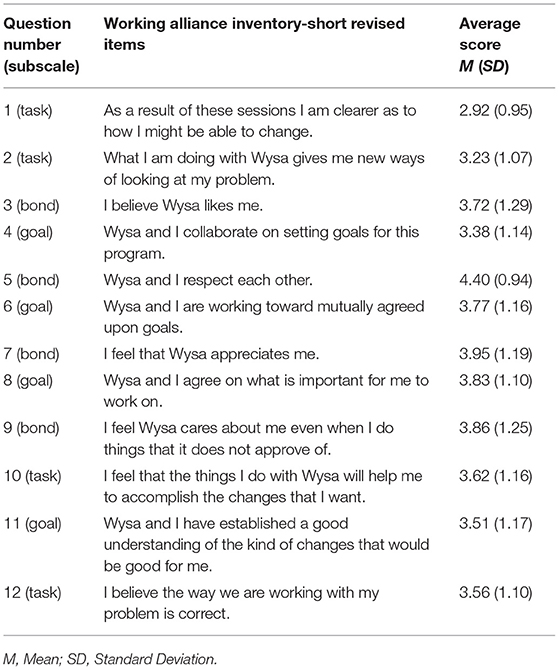

This is a well-established measure of the therapeutic alliance that yields a total score and three subscales: bond, goal, and task. The WAI-SR (46) was administered via the app's conversational interface, with the word “therapist” replaced by “Wysa” (Table 1). The measure demonstrates high internal consistency [Cronbach's α > 0.90; (47)].

Table 1. Adapted WAI-SR items and item-level descriptive statistics for Assessment 1 (N = 1,205).
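As a minimal sketch of the item-mean scoring described here and in the Analysis section (raw subscale sums divided by the number of items), the Python function below turns 12 WAI-SR ratings into bond, goal, task, and total scores. The item-to-subscale key (bond: items 3, 5, 7, 9; goal: 4, 6, 8, 11; task: 1, 2, 10, 12) and the 1–5 rating range are assumptions based on the commonly used WAI-SR version and should be checked against the published scoring key; the function and dictionary names are hypothetical.

```python
# Assumed WAI-SR scoring key (12 items, 4 per subscale); verify against the
# published key before use.
WAI_SR_SUBSCALES = {
    "bond": (3, 5, 7, 9),
    "goal": (4, 6, 8, 11),
    "task": (1, 2, 10, 12),
}


def score_wai_sr(responses):
    """Return item-mean bond, goal, task, and total scores on the 1-5 metric.

    `responses` maps item number (1-12) to a rating from 1 to 5. Dividing each
    raw sum by its number of items puts every score on the same 1-5 scale.
    """
    if sorted(responses) != list(range(1, 13)):
        raise ValueError("expected ratings for items 1-12")
    if any(not 1 <= rating <= 5 for rating in responses.values()):
        raise ValueError("ratings must be between 1 and 5")
    scores = {
        name: sum(responses[i] for i in items) / len(items)
        for name, items in WAI_SR_SUBSCALES.items()
    }
    # Item-mean total across all 12 items.
    scores["total"] = sum(responses.values()) / len(responses)
    return scores
```

For example, rating every item 4 except the four bond items rated 5 gives bond = 5.0, goal = task = 4.0, and a total of about 4.33. Because each subscale has four items, the item-mean total equals the mean of the three subscale means; the Assessment 1 subscale means (3.98, 3.62, 3.33) average to about 3.64, matching the reported total.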

Textual Snippets From Users

Content analysis was conducted on messages exchanged between users and Wysa, focusing on unprompted user statements, in anonymous transcripts of user conversations (N = 950) (48). Initially, researchers used secondary literature to finalize possible keywords indicative of users' perceptions of the bond. The consolidated framework included gratitude and dissatisfaction, perceived impact (positive and negative), and personification of the conversational agent.

Data were extracted using keywords for each domain of this framework. For instance, gratitude was identified by keywords such as “thank you,” “love you,” “grateful,” “happy talking,” “like you,” and “thankful.” For dissatisfaction, keywords such as “not*understand,” “misunderstand,” “not get *,” and “repeat” were used. Personification of the conversational agent was identified through direct addresses (“you,” “your,” “yours”) or through users addressing the conversational agent by its name, “Wysa.” For perceived limitations of the conversational agent, keywords such as “computer,” “robot,” and “not *human” were used. Positive impact of the conversational agent was identified through statements containing terms such as “helped,” “feel better,” “enlightening,” “helpful,” and “relax,” while negative impact was identified through keywords such as “not *helping*” and “not *working.” The analysis thus covered both elements that may support the bond and those that may weaken it (dissatisfaction, perceived limitations of the conversational agent, and negative impact).
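The keyword screen above can be sketched as follows. This is a hypothetical illustration, not the authors' pipeline: it assumes that “*” matches any run of characters within a single message, that matching is case-insensitive, and that keywords are anchored at the start of a word. Only the personification keywords are matched as whole words, so that “you” does not also match words such as “young.” The keyword lists are transcribed from the text, which gives them as examples, so they are incomplete by construction.

```python
import re
from collections import defaultdict

# Keyword lists transcribed from the Methods text (illustrative, not complete).
DOMAIN_KEYWORDS = {
    "gratitude": ["thank you", "love you", "grateful", "happy talking", "like you", "thankful"],
    "dissatisfaction": ["not*understand", "misunderstand", "not get *", "repeat"],
    "personification": ["you", "your", "yours", "wysa"],
    "limitations": ["computer", "robot", "not *human"],
    "positive_impact": ["helped", "feel better", "enlightening", "helpful", "relax"],
    "negative_impact": ["not *helping*", "not *working"],
}

# Assumption: only pronoun/name keywords must match whole words.
WHOLE_WORD_DOMAINS = {"personification"}


def keyword_to_regex(keyword, whole_word=False):
    """Compile a keyword in which '*' stands for any run of characters."""
    body = ".*".join(re.escape(part) for part in keyword.split("*"))
    prefix = r"\b" if keyword[:1].isalnum() else ""  # anchor at a word start
    suffix = r"\b" if whole_word and keyword[-1:].isalnum() else ""
    return re.compile(prefix + body + suffix, re.IGNORECASE)


PATTERNS = {
    domain: [keyword_to_regex(k, domain in WHOLE_WORD_DOMAINS) for k in keywords]
    for domain, keywords in DOMAIN_KEYWORDS.items()
}


def tag_message(text):
    """Return the set of framework domains whose keywords occur in `text`."""
    return {d for d, pats in PATTERNS.items() if any(p.search(text) for p in pats)}


def unique_users_per_domain(messages):
    """Count distinct users per domain from (user_id, message_text) pairs."""
    users = defaultdict(set)
    for user_id, text in messages:
        for domain in tag_message(text):
            users[domain].add(user_id)
    return {domain: len(ids) for domain, ids in users.items()}
```

Keyword hits are best treated as candidate snippets rather than final codes. For example, this sketch tags “I don't think you understand” only for personification, because “don't” does not contain the word “not”; manual review of the extracted snippets can catch such misses.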

Participants and Procedure

The study invited new app users (N = 67,215) to take the first therapeutic alliance assessment within 1–5 days of initial app installation. The study took place in December 2021. Only users of the freely accessible conversational agent who had not made any in-app purchases were included. The app is anonymous and does not collect any demographic information. All measures were administered in the app by the conversational agent. The study comprised two assessments.

Invitations to join the study were issued through an in-app notification. In Assessment 1, consent was sought within the first conversation, with information on the purpose of the study and participants' right to withdraw at any point during the study. Participants completed the Patient Health Questionnaire (PHQ-4), and eligibility was determined by a score of ≥3 on the first two questions (anxiety) or ≥3 on the last two questions (depression). Participants who consented to the study and completed the assessments were included in this study (N = 1,205).
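A minimal sketch of the PHQ-4 eligibility rule stated above, assuming the four items are recorded in administration order and each is rated 0–3 (so each two-item subscale ranges from 0 to 6 and the total from 0 to 12). The function name and return format are illustrative only.

```python
def phq4_screen(items, cutoff=3):
    """Score the PHQ-4 and apply the >=3 subscale eligibility rule.

    `items` holds the four ratings in administration order (each 0-3):
    the first two form the anxiety subscale, the last two the depression
    subscale. A participant is eligible if either subscale reaches `cutoff`.
    """
    if len(items) != 4 or any(not 0 <= x <= 3 for x in items):
        raise ValueError("PHQ-4 expects four item ratings between 0 and 3")
    anxiety = items[0] + items[1]
    depression = items[2] + items[3]
    return {
        "total": anxiety + depression,
        "anxiety": anxiety,
        "depression": depression,
        "eligible": anxiety >= cutoff or depression >= cutoff,
    }
```

For example, ratings of (2, 1, 0, 0) give an anxiety subscale score of 3 and meet the rule, whereas (1, 1, 1, 1) give a total of 4 but neither subscale reaches 3.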

To examine changes after a brief interval, users who completed the first WAI-SR assessment (Assessment 1) were invited to a second administration 3 days later (Assessment 2, N = 226). Once the assessment was completed, Wysa thanked the participants, informed them that the study was complete, and moved on to a regular Wysa conversation, asking them what they would like to do next.

For the qualitative analysis, conversations of users who agreed to participate in the study were scanned for the relevant keywords based on the predefined domains of therapeutic alliance. This yielded a set of users whose conversations contained relevant textual snippets (N = 950). Researchers then analyzed these snippets of users' conversations with the conversational agent to identify elements related to bonding.

Analysis

Across all participants, the composite WAI-SR score and the bond, goal, and task subscores for each assessment were characterized using descriptive statistics. PHQ-4 scores and WAI-SR subscores were examined on their standardized scales and compared using descriptive statistics. For comparison, data were drawn from recent studies (33, 47, 48) that also reported unmodified WAI-SR subscores for other CBT modalities (in-person and group). These studies were chosen because of their similarity in modality (CBT), their representation of technology-delivered interventions, and their use of the WAI-SR to measure the therapeutic alliance. Comparison data were presented descriptively without statistical testing, and raw subscores were scaled by dividing them by the number of items (e.g., the bond subscale has 4 items). Following Jasper and colleagues, bond scores of ≥3.45 were considered high (48). The significance of the difference between the first and second assessments was assessed over 1,000 iterations of stratified random sampling with replacement (49). The Wilcoxon signed-rank test with continuity correction (50) was also used to test the difference between the two assessments.
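The text does not specify which test was run within each resampling iteration or how the strata were defined, so the following Python sketch makes both choices explicit as assumptions: each iteration resamples paired (Assessment 1, Assessment 2) scores with replacement within user-supplied strata, reruns a paired Wilcoxon signed-rank test, and counts the share of iterations with p < 0.05. The test uses the normal approximation with a 0.5 continuity correction and a tie-adjusted variance (zero differences dropped), intended to mirror what R's `wilcox.test` reports as a signed rank test “with continuity correction.” All function names are hypothetical.

```python
import math
import random
from collections import defaultdict


def wilcoxon_signed_rank(x, y):
    """Paired Wilcoxon signed-rank test (normal approximation).

    Zero differences are dropped, tied |differences| get average ranks, the
    variance is tie-adjusted, and a 0.5 continuity correction is applied.
    Returns (W_plus, two-sided p-value).
    """
    diffs = [b - a for a, b in zip(x, y) if b != a]
    n = len(diffs)
    if n == 0:
        return 0.0, 1.0  # no non-zero differences: no evidence of change
    order = sorted(range(n), key=lambda k: abs(diffs[k]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        # Find the run of tied absolute differences starting at position i.
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # mean of 1-based ranks i+1..j+1
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean_w = n * (n + 1) / 4
    var_w = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (abs(w_plus - mean_w) - 0.5) / math.sqrt(var_w)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail probability
    return w_plus, min(1.0, p)


def proportion_significant(pairs, strata=None, n_iter=1000, alpha=0.05, seed=0):
    """Share of resampling iterations in which the paired test has p < alpha.

    `pairs` holds (assessment_1, assessment_2) scores per participant;
    `strata` optionally labels each pair, and resampling with replacement is
    done within each stratum so that stratum sizes are preserved.
    """
    if not pairs:
        raise ValueError("need at least one pair")
    labels = strata if strata is not None else [0] * len(pairs)
    groups = defaultdict(list)
    for pair, label in zip(pairs, labels):
        groups[label].append(pair)
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_iter):
        sample = [rng.choice(g) for g in groups.values() for _ in g]
        x, y = zip(*sample)
        _, p = wilcoxon_signed_rank(x, y)
        hits += p < alpha
    return hits / n_iter
```

With `n_iter=1000`, the returned value is the kind of proportion reported in the Results (the share of resampled tests reaching significance at α = 0.05). A fixed `seed` keeps the resampling reproducible.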

Content analysis (51) was also conducted on the extracted snippets of user conversations with Wysa, based on the predefined domains, to examine unprompted expressions of bonding between users and Wysa. The data were scanned repeatedly to identify similar categories and were gradually condensed into units, categories, and themes. Coding was led by the first and last authors: the authors independently extracted codes and themes and met regularly to finalize them jointly, ensuring consensus on the emerging codes. Coding was thus recursive, in that it took place throughout the analytic stages to represent and organize the data at different levels.

Results

Quantitative Analysis: Change in Therapeutic Alliance Over Time

The final sample included 1,205 participants. The mean PHQ-4 score for included participants was 7.16 (SD 3.01). For Assessment 1, the overall mean WAI-SR scores were as follows: a mean bond subscore of 3.98 (SD 0.94), a mean goal subscore of 3.62 (SD 0.93), a mean task subscore of 3.33 (SD 0.84), and a mean total score of 3.64 (SD 0.81). Cohort-specific mean subscores are presented in Table 2.

Of the participants, 73.8% continued to use the app after completing Assessment 1, with a mean of 6 (SD 3.03) bot conversations in the 14-day observation period. Participants who completed both the first and second assessments had a mean of 7.06 (SD 2.74) bot conversations in the same period.

For the participants who completed Assessment 2 (N = 226), the overall mean WAI-SR scores were as follows: a mean bond subscore of 4.05 (SD 0.91), a mean goal subscore of 3.70 (SD 0.86), a mean task subscore of 3.50 (SD 0.77), and a mean total score of 3.75 (SD 0.80). Resampling comparisons between the first and second assessments indicated no significant differences: across the 1,000 resampling iterations, fewer than 20% of the hypothesis tests were significant at α = 0.05, except for the task subscores, for which 65% of the resampled tests indicated significance; this weak difference cannot be claimed as significant. The Wilcoxon signed-rank test revealed no statistically significant difference between assessments. Cohort-specific mean subscores are presented in Table 2.

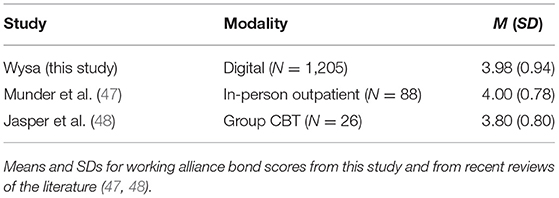

The mean bond subscale score of 3.98 (SD 0.94) among users in their first 5 days of app use was comparable to scores reported in recent studies of traditional CBT delivery modalities in adults. In their study of in-person outpatient therapy, Munder et al. (47) reported a mean bond score of 4.0 (SD 0.78) after multiple therapy sessions (39). Another study reported a mean bond subscore of 3.8 (SD 0.80) for group CBT sessions (Table 3) (48).

Table 3. Comparison of Working Alliance Inventory-Short Revised bond subscale scores across therapeutic modalities.

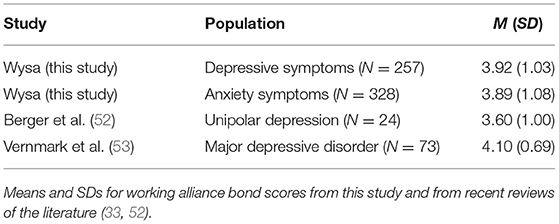

Bond scores were also examined by PHQ-4 level. Participants who scored the maximum of 6 on the first two questions (indicative of anxiety symptoms) had a mean bond subscale score of 3.89, and participants who scored the maximum of 6 on the last two questions (indicative of depressive symptoms) had a mean bond subscale score of 3.92.

Wysa's bond subscale scores were also comparable to those reported in other studies with adult clinical populations. Berger and colleagues assessed face-to-face psychotherapy in patients with unipolar depression and found a mean bond score of 3.6 (SD 1) (52). In another study using a blended-CBT approach for individuals with major depressive disorder, the mean bond subscale score was 4.1 (SD 0.69) (Table 4) (53).

Table 4. Comparison of Working Alliance Inventory-Short Revised bond subscale scores across clinical populations.

Qualitative Analysis: Content Analysis of User's Conversations

The content analysis identified perceived elements of bonding: gratitude, positive impact, and personification of the AI conversational agent. Researchers also looked for themes that may weaken the bond, such as negative impact, perceived limitations of the conversational agent, and dissatisfaction.

Gratitude for the conversational agent was observed in 212 unique users, with one user saying “thank you a lot for this. I appreciate talking to you.” Another user said “...thanks for being here and always listening to me.”

Personification of the conversational agent was observed in 87 unique user conversations in which users directly addressed Wysa. For instance, one user wrote, “You don't have any emotions yet you go out of your way to help others.” Another user said:

I just wanted to ask and tell you that I'm so grateful you're here with me. You're the only person that helps me and listens to my problems and I'm so happy you always help me out.

While personifying the conversational agent, a few users (N = 3) also expressed awareness of its limitations, namely that the conversational agent was not human and therefore did not have human qualities. However, these users also expressed understanding and acceptance of this limitation. For instance, one user responded to the conversational agent with, “You don't compute all human emotions. Humans are beyond computer understanding. Being human is very complex.”

A small number of users (N = 15) expressed frustration and dissatisfaction with the conversational agent's inability to understand, or its misunderstanding of, their responses. Such responses were expressed as “I don't think you understand what I'm saying” or “You don't get it.” Some users also expressed dissatisfaction by stating, “You need to not repeat yourself” or “you reply with something else that doesn't make sense.” While these users expressed dissatisfaction, they also acknowledged that the AI-based conversational agent may not be able to follow conversations as well as humans do, and that it tries to help. For instance, one user said, “Don't worry. I will figure it out by myself and you're amazing!” while another user said:

You are a computer. You will never understand what it is to be human. But you are ok.... You can learn. Have faith. Faith is a human skill that even humans struggle with.

Positive impact of the conversational agent was disclosed by 49 unique users, with one user saying, “It helps me relax when I have someone like you to talk to.” Another user wrote, “You always help me look at things differently,” while yet another mention included, “... You have also contributed to my success in the exam by guiding me and helping me to know myself better.”

Very few users (N = 6) expressed a negative impact, and these expressions were specific to certain techniques the users were reluctant to try. For instance, one user mentioned, “meditation is scary,” while another user expressed, “Deep breathing makes me mad.” Rather than a negative impact, some users felt that they were not making any real progress, expressing this ineffectiveness as “We're still where we started” or “Honestly, you are not really helping, so talk tomorrow.”

Discussion

The present study offers a mixed-methods approach to understanding the therapeutic alliance among users of a conversational agent for mental health. The app studied (Wysa) leverages evidence-based CBT techniques to build mental resilience and promote mental wellbeing. In line with our first hypothesis, users' therapeutic alliance scores were comparable to ratings from previous studies on alliance in human-delivered face-to-face psychotherapy with clinical populations (Tables 3, 4) (47, 48, 52); the scores were also sustained over the 3-day interval, although the increase was not statistically significant. These findings support recent studies suggesting that a therapeutic relationship can be established between humans and conversational agents in the context of mental health (33). While some mobile mental health applications allow free-text responses from users, few can handle complex and diverse free-text input. In this setting, the alliance may thrive on the same relational principles that have been observed in human dyadic interactions (54). In a free-text setting, users can communicate their sense of a bond, or their goals and tasks, to the conversational agent. This can take place as a constant, dynamic interaction, mirroring the capacity for reciprocity, engagement, and acknowledgment within human interactions (55). By contrast, in a smartphone-based CBT trial for pain self-management, patients criticized the lack of free-text answers and an overly static flow of interaction (56). Such findings suggest that individuals may prefer interacting with free-text mental health CAs, perhaps because of the flexibility and autonomy offered by diverse free-text input, similar to conversing with humans (57). Fostering individual autonomy is considered central to the development of the therapeutic relationship, and research indicates that fostering clients' autonomy is central to good outcomes in psychotherapy (58). Although speculative, our finding of WAI-SR scores comparable to those in in-person therapy may be due, in part, to users' feelings of autonomy in the free dialogue.

Qualitative research has also found that clients' experiences of honesty, safety, and authentic caring play a critical role in alliance development, especially through therapists' supportive behaviors, such as encouraging statements, friendliness, respect, and validation (59–63). More specifically, clients report that a relational connection with the therapist facilitated vulnerable exploration and self-disclosure (19). Similar to these meta-analytic qualitative findings on traditional in-person therapy, recent research suggests that Wysa also offers therapeutic elements of a comfortable, safe, and supportive environment (37). Likewise, the free-text input of Wysa users in the present study indicates experiences of honesty, safety, and comfort with Wysa.

We found that users expressed gratitude and appreciation for the conversational agent. These findings are in line with past research suggesting that expressions of safety and comfort in a medical setting indicate the development of a therapeutic alliance (64). Additionally, past research in a mental health context found that the therapeutic alliance and therapeutic bond are associated with a continued experience of positive emotion, such as feelings of gratitude when thinking about therapy or the therapist (65). The qualitative analysis also revealed themes of perceived positive impact, which is consistent with a meta-analysis demonstrating the link between alliance and outcomes in adult psychotherapy (15).

The findings also indicated aspects that may negatively affect the bond, such as dissatisfaction, perceived limitations of the conversational agent, and perceived negative impact of the conversational agent. The dissatisfaction and perceived ineffectiveness are consistent with extant literature suggesting that users express frustration when conversational agents misunderstand them or give irrelevant responses (66). This indicates the need to further improve the AI algorithms and to strike a balance in achieving human-like qualities.

A notable finding of this study is that users accepted the limitations of the conversational agent while conversing with it, suggesting an experienced relational bond. Much like ruptures in a therapeutic alliance, which offer critical indicators for exploration and the potential for strengthening the relationship (67), these ruptures with conversational agents point to opportunities for improvement in future iterations.

Notably, in the present study, many participants also made comments that personified the bot. These findings are aligned with results indicating that users of a chatbot intervention for social isolation and loneliness personified the chatbot and assigned human traits to it, such as being helpful, caring, open to listening, and non-judgmental (34). It is possible that users' personification of Wysa also indicates greater engagement. For example, one study found that as users' social interactions with a conversational agent increased, a greater level of personification occurred, which was associated with increased product satisfaction (68). It is also possible that Wysa's flexible conversational interface increased relational capacity building with the bot, leading to greater user engagement and resultant personification of the conversational agent.

The findings of this study should be interpreted in the context of its limitations. To maintain complete privacy and confidentiality, Wysa is an anonymous app and our data were anonymized; thus, we did not collect demographic data, such as age or gender, from participants. Future work should investigate whether therapeutic alliance with a CBT conversational agent varies between adolescent and adult populations. Participants were not required to complete the follow-up assessment, which resulted in a large number of participants dropping out; the follow-up sample was therefore small (N = 226). As digital mental health is a nascent field, few studies were available for comparison, and we had to rely on studies that focused on more specific health conditions. Future studies should use controlled quantitative designs with comparison groups. The study was also limited by its short timeframe; future studies could employ a longitudinal approach to assess changes in outcomes in conjunction with changes in the therapeutic alliance. Finally, although the qualitative analysis is a strength of this study and contributes to the field, the possibility of researcher bias remains, even though we attempted to mitigate this through a balance of internal and external researchers within the team.

Conclusions

The present study provides insight into the establishment of a therapeutic alliance with a conversational agent, its course over a brief period, and what a therapeutic alliance with a conversational agent looks like. This provides direction for user-bot partnerships in future digital mental health initiatives. One opportunity for more effective mental health interventions would be to set tasks for each session and to establish weekly and overall treatment goals. This may further enhance the goal and task subscores of the therapeutic alliance, thereby contributing to a more impactful therapeutic experience.

Data Availability Statement

The datasets presented in this article are not readily available: the assessment data can be reviewed, but the conversational data used for the content analysis are protected under the app's Privacy Policy, to which users consented. Requests to access the datasets should be directed to chaitali@wysa.io.

Ethics Statement

Ethical review and approval was not required for the study on human participants in accordance with the local legislation and institutional requirements. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

TM and CS designed the study on the app. CS and SM worked on the quantitative and qualitative data analysis aspects of the study, contributed to the review, and final presentation of the manuscript. CB and TM wrote the first and final versions of the manuscript. CS completed and prepared the final review of the manuscript. All authors contributed to the article and approved the submitted version.

Conflict of Interest

TM and CS are employed by Wysa Inc.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Firth J, Torous J, Yung AR. Ecological momentary assessment and beyond: the rising interest in e-mental health research. J Psychiatr Res. (2016) 80:3–4. doi: 10.1016/j.jpsychires.2016.05.002

2. Hinshaw SP, Stier A. Stigma as related to mental disorders. Annu Rev Clin Psychol. (2008) 4:367–93. doi: 10.1146/annurev.clinpsy.4.022007.141245

3. Jung H, von Sternberg K, Davis K. The impact of mental health literacy, stigma, and social support on attitudes toward mental health help-seeking. Int J Ment Health Promot. (2017) 19:252–67. doi: 10.1080/14623730.2017.1345687

4. Harvey AG, Gumport NB. Evidence-based psychological treatments for mental disorders: modifiable barriers to access and possible solutions. Behav Res Ther. (2015) 68:1–12. doi: 10.1016/j.brat.2015.02.004

5. Ho KP, Hunt C, Li S. Patterns of help-seeking behavior for anxiety disorders among the Chinese speaking Australian community. Soc Psychiatry Psychiatr Epidemiol. (2008) 43:872–7. doi: 10.1007/s00127-008-0387-0

6. Andrews G, Cuijpers P, Craske MG, McEvoy P, Titov N. Computer therapy for the anxiety and depressive disorders is effective, acceptable and practical health care: a meta-analysis. PLoS ONE. (2010) 5:e13196. doi: 10.1371/journal.pone.0013196

7. Waller R, Gilbody S. Barriers to the uptake of computerized cognitive behavioural therapy: a systematic review of the quantitative and qualitative evidence. Psychol Med. (2009) 39:705–12. doi: 10.1017/S0033291708004224

8. Christensen H, Griffiths KM, Farrer L. Adherence in internet interventions for anxiety and depression: systematic review. J Med Internet Res. (2009) 11:e1194. doi: 10.2196/jmir.1194

9. Baumel A, Muench F, Edan S, Kane JM. Objective user engagement with mental health apps: systematic search and panel-based usage analysis. J Med Internet Res. (2019) 21:e14567. doi: 10.2196/14567

10. Baumel A, Kane JM. Examining predictors of real-world user engagement with self-guided ehealth interventions: analysis of mobile apps and websites using a novel dataset. J Med Internet Res. (2018) 20:e11491. doi: 10.2196/11491

12. Linardon J, Fuller-Tyszkiewicz M. Attrition and adherence in smartphone-delivered interventions for mental health problems: a systematic and meta-analytic review. J Consult Clin Psychol. (2020) 88:1–13. doi: 10.1037/ccp0000459

13. Pratap A, Neto EC, Snyder P, Stepnowsky C, Elhadad N, Grant D, et al. Indicators of retention in remote digital health studies: a cross-study evaluation of 100,000 participants. NPJ Digit Med. (2020) 3:21. doi: 10.1038/s41746-020-0224-8

14. Hollis C, Sampson S, Simons L, Davies EB, Churchill R, Betton V, et al. Identifying research priorities for digital technology in mental health care: results of the James Lind Alliance Priority Setting Partnership. Lancet Psychiatry. (2018) 5:845–54. doi: 10.1016/S2215-0366(18)30296-7

15. Martin DJ, Garske JP, Davis MK. Relation of the therapeutic alliance with outcome and other variables: a meta-analytic review. J Consult Clin Psychol. (2000) 68:438–50. doi: 10.1037/0022-006X.68.3.438

16. Horvath AO, Symonds BD. Relation between working alliance and outcome in psychotherapy: a meta-analysis. J Couns Psychol. (1991) 38:139–49. doi: 10.1037/0022-0167.38.2.139

17. Flückiger C, Del Re AC, Wampold BE, Horvath AO. The alliance in adult psychotherapy: a meta-analytic synthesis. Psychotherapy. (2018) 55:316–40. doi: 10.1037/pst0000172

18. Bordin ES. The generalizability of the psychoanalytic concept of the working alliance. Psychother Theory Res Pract. (1979) 16:252–60. doi: 10.1037/h0085885

19. Levitt HM, Pomerville A, Surace FI. A qualitative meta-analysis examining clients' experiences of psychotherapy: a new agenda. Psychol Bull. (2016) 142:801–30. doi: 10.1037/bul0000057

20. Nienhuis JB, Owen J, Valentine JC, Winkeljohn Black S, Halford TC, Parazak SE, et al. Therapeutic alliance, empathy, and genuineness in individual adult psychotherapy: a meta-analytic review. Psychother Res. (2018) 28:593–605. doi: 10.1080/10503307.2016.1204023

21. Horvath AO, Greenberg LS. Development and validation of the working alliance inventory. J Couns Psychol. (1989) 36:223–33. doi: 10.1037/0022-0167.36.2.223

22. Gilbody S, Littlewood E, Hewitt C, Brierley G, Tharmanathan P, Araya R, et al. Computerised cognitive behaviour therapy (cCBT) as treatment for depression in primary care (REEACT trial): large scale pragmatic randomised controlled trial. BMJ. (2015) 351:h5627. doi: 10.1136/bmj.h5627

23. Følstad A, Brandtzaeg PB. Users' experiences with chatbots: findings from a questionnaire study. Qual User Exp. (2020) 5:3. doi: 10.1007/s41233-020-00033-2

24. Laranjo L, Dunn AG, Tong HL, Kocaballi AB, Chen J, Bashir R, et al. Conversational agents in healthcare: a systematic review. J Am Med Inform Assoc. (2018) 25:1248–58. doi: 10.1093/jamia/ocy072

25. Ramesh K, Ravishankaran S, Joshi A, Chandrasekaran K. A survey of design techniques for conversational agents. In: Kaushik S, Gupta D, Kharb L, Chahal D, editors. Information, Communication and Computing Technology. Singapore: Springer (2017). p. 336–50. doi: 10.1007/978-981-10-6544-6_31

26. Bickmore TW, Mitchell SE, Jack BW, Paasche-Orlow MK, Pfeifer LM, O'Donnell J. Response to a relational agent by hospital patients with depressive symptoms. Interact Comput. (2010) 22:289–98. doi: 10.1016/j.intcom.2009.12.001

27. Bickmore TW, Puskar K, Schlenk EA, Pfeifer LM, Sereika SM. Maintaining reality: relational agents for antipsychotic medication adherence. Interact Comput. (2010) 22:276–88. doi: 10.1016/j.intcom.2010.02.001

28. Ly KH, Ly A-M, Andersson G. A fully automated conversational agent for promoting mental well-being: a pilot RCT using mixed methods. Internet Interv. (2017) 10:39–46. doi: 10.1016/j.invent.2017.10.002

29. Gardiner PM, McCue KD, Negash LM, Cheng T, White LF, Yinusa-Nyahkoon L, et al. Engaging women with an embodied conversational agent to deliver mindfulness and lifestyle recommendations: a feasibility randomized control trial. Patient Educ Couns. (2017) 100:1720–9. doi: 10.1016/j.pec.2017.04.015

30. Inkster B, Sarda S, Subramanian V. An empathy-driven, conversational artificial intelligence agent (Wysa) for digital mental well-being: real-world data evaluation mixed-methods study. JMIR Mhealth Uhealth. (2018) 6:e12106. doi: 10.2196/12106

31. Fitzpatrick KK, Darcy A, Vierhile M. Delivering cognitive behavior therapy to young adults with symptoms of depression and anxiety using a fully automated conversational agent (Woebot): a randomized controlled trial. JMIR Ment Health. (2017) 4:e19. doi: 10.2196/mental.7785

32. Bickmore T, Gruber A, Picard R. Establishing the computer-patient working alliance in automated health behavior change interventions. Patient Educ Couns. (2005) 59:21–30. doi: 10.1016/j.pec.2004.09.008

33. Darcy A, Daniels J, Salinger D, Wicks P, Robinson A. Evidence of human-level bonds established with a digital conversational agent: cross-sectional, retrospective observational study. JMIR Form Res. (2021) 5:e27868. doi: 10.2196/27868

34. Dosovitsky G, Bunge EL. Bonding with bot: user feedback on a chatbot for social isolation. Front Digit Health. (2021) 3:735053. doi: 10.3389/fdgth.2021.735053

35. Elliott R, Bohart AC, Watson JC, Greenberg LS. Empathy. Psychotherapy. (2011) 48:43–9. doi: 10.1037/a0022187

37. Malik T, Ambrose AJ, Sinha C. User feedback analysis of an AI-enabled CBT mental health application (Wysa). F1000Res. (2021) 10:511. doi: 10.2196/preprints.35668

38. Vallerand RJ. On the psychology of passion: in search of what makes people's lives most worth living. Can Psychol. (2008) 49:1–13. doi: 10.1037/0708-5591.49.1.1

39. Bono JE, Glomb TM, Shen W, Kim E, Koch AJ. Building positive resources: effects of positive events and positive reflection on work stress and health. Acad Manage J. (2013) 56:1601–27. doi: 10.5465/amj.2011.0272

40. Clauw DJ, Essex MN, Pitman V, Jones KD. Reframing chronic pain as a disease, not a symptom: rationale and implications for pain management. Postgrad Med. (2019) 131:185–98. doi: 10.1080/00325481.2019.1574403

41. Harbaugh CN, Vasey MW. When do people benefit from gratitude practice? J Posit Psychol. (2014) 9:535–46. doi: 10.1080/17439760.2014.927905

42. Burckhardt R, Manicavasagar V, Batterham PJ, Hadzi-Pavlovic D. A randomized controlled trial of Strong Minds: a school-based mental health program combining acceptance and commitment therapy and positive psychology. J Sch Psychol. (2016) 57:41–52. doi: 10.1016/j.jsp.2016.05.008

43. Nagendra RP, Maruthai N, Kutty BM. Meditation and its regulatory role on sleep. Front Neurol. (2012) 3:54. doi: 10.3389/fneur.2012.00054

44. Kuchlous S, Kadaba M. Short text intent classification for conversational agents. In: 2020 IEEE 17th India Council International Conference (INDICON). New Delhi (2020). p. 1–4. doi: 10.1109/INDICON49873.2020.9342516

45. Kroenke K, Spitzer RL, Williams JBW, Löwe B. An ultra-brief screening scale for anxiety and depression: the PHQ-4. Psychosomatics. (2009) 50:613–21. doi: 10.1016/S0033-3182(09)70864-3

46. Hatcher RL, Gillaspy JA. Development and validation of a revised short version of the working alliance inventory. Psychother Res. (2006) 16:12–25. doi: 10.1080/10503300500352500

47. Munder T, Wilmers F, Leonhart R, Linster HW, Barth J. Working alliance inventory-short revised (WAI-SR): psychometric properties in outpatients and inpatients. Clin Psychol Psychother. (2010) 17:231–9. doi: 10.1002/cpp.658

48. Jasper K, Weise C, Conrad I, Andersson G, Hiller W, Kleinstäuber M. The working alliance in a randomized controlled trial comparing internet-based self-help and face-to-face cognitive behavior therapy for chronic tinnitus. Internet Interv. (2014) 1:49–57. doi: 10.1016/j.invent.2014.04.002

50. Rey D, Neuhäuser M. Wilcoxon-signed-rank test. In: Lovric M, editor. International Encyclopedia of Statistical Science. Berlin, Heidelberg: Springer Berlin Heidelberg (2011). p. 1658–9. doi: 10.1007/978-3-642-04898-2_616

51. Erlingsson C, Brysiewicz P. A hands-on guide to doing content analysis. Afr J Emerg Med. (2017) 7:93–9. doi: 10.1016/j.afjem.2017.08.001

52. Berger T, Krieger T, Sude K, Meyer B, Maercker A. Evaluating an e-mental health program (“deprexis”) as adjunctive treatment tool in psychotherapy for depression: results of a pragmatic randomized controlled trial. J Affect Disord. (2018) 227:455–62. doi: 10.1016/j.jad.2017.11.021

53. Vernmark K. Therapeutic alliance and different treatment formats when delivering internet-based CBT for depression.

54. Duck S, Miell D. Charting the development of personal relationships. In: Gilmour R, Duck S, editors. The Emerging Field of Personal Relationships. London: Routledge (2021). p. 133–43. doi: 10.4324/9781003164005-11

56. Hauser-Ulrich S, Künzli H, Meier-Peterhans D, Kowatsch T. A smartphone-based health care chatbot to promote self-management of chronic pain (SELMA): pilot randomized controlled trial. JMIR Mhealth Uhealth. (2020) 8:e15806. doi: 10.2196/15806

57. Ravichander A, Black AW. An empirical study of self-disclosure in spoken dialogue systems. In: Proceedings of the 19th Annual SIGdial Meeting on Discourse and Dialogue. Melbourne: Association for Computational Linguistics (2018). p. 253–63. Available online at: https://aclanthology.org/W18-5030 doi: 10.18653/v1/W18-5030

58. McElvaney J, Timulak L. Clients' experience of therapy and its outcomes in “good” and “poor” outcome psychological therapy in a primary care setting: an exploratory study. Couns Psychother Res. (2013) 13:246–53. doi: 10.1080/14733145.2012.761258

59. Bachelor A. Clients' perception of the therapeutic alliance: a qualitative analysis. J Couns Psychol. (1995) 42:323–37. doi: 10.1037/0022-0167.42.3.323

60. Bedi RP. Concept mapping the client's perspective on counseling alliance formation. J Couns Psychol. (2006) 53:26–35. doi: 10.1037/0022-0167.53.1.26

61. Bedi RP, Richards M. What a man wants: the male perspective on therapeutic alliance formation. Psychotherapy. (2011) 48:381–90. doi: 10.1037/a0022424

62. Bedi RP, Duff CT. Prevalence of counselling alliance type preferences across two samples. Can J Couns Psychother. (2009) 43:151–64. Retrieved from https://files.eric.ed.gov/fulltext/EJ849872.pdf (accessed December 15, 2021).

63. Mohr JJ, Woodhouse SS. Looking inside the therapeutic alliance: assessing clients' visions of helpful and harmful psychotherapy. Psychother Bull. (2001) 36:15–6. Retrieved from https://societyforpsychotherapy.org/psychotherapy-bulletin-archives/ (accessed December 15, 2021).

64. Berger BA. Building an effective therapeutic alliance: competence, trustworthiness, and caring. Am J Hosp Pharm. (1993) 50:2399–403. doi: 10.1093/ajhp/50.11.2399

65. Hartmann A, Orlinsky D, Zeeck A. The structure of intersession experience in psychotherapy and its relation to the therapeutic alliance. J Clin Psychol. (2011) 67:1044–63. doi: 10.1002/jclp.20826

66. Shumanov M, Johnson L. Making conversations with chatbots more personalized. Comput Human Behav. (2021) 117:106627. doi: 10.1016/j.chb.2020.106627

67. Eubanks CF, Burckell LA, Goldfried MR. Clinical consensus strategies to repair ruptures in the therapeutic alliance. J Psychother Integr. (2018) 28:60–76. doi: 10.1037/int0000097

68. Purington A, Taft JG, Sannon S, Bazarova NN, Taylor SH. “Alexa is my new BFF”: social roles, user satisfaction, and personification of the amazon echo. In: Proceedings of the 2017 CHI Conference Extended Abstracts on Human Factors in Computing Systems. New York, NY: Association for Computing Machinery (2017). p. 2853–9. (CHI EA'17). doi: 10.1145/3027063.3053246

Keywords: therapeutic alliance, conversational agent (CA), mobile mental health, chatbot, depression, anxiety, digital health

Citation: Beatty C, Malik T, Meheli S and Sinha C (2022) Evaluating the Therapeutic Alliance With a Free-Text CBT Conversational Agent (Wysa): A Mixed-Methods Study. Front. Digit. Health 4:847991. doi: 10.3389/fdgth.2022.847991

Received: 03 January 2022; Accepted: 04 March 2022;

Published: 11 April 2022.

Edited by: Thomas Berger, University of Bern, Switzerland

Reviewed by: Rodrigo T. Lopes, University of Bern, Switzerland; Eduardo L. Bunge, Palo Alto University, United States

Copyright © 2022 Beatty, Malik, Meheli and Sinha. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Chaitali Sinha, chaitali@wysa.io
