94% of researchers rate our articles as excellent or good

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.

Find out more

ORIGINAL RESEARCH article

Front. Big Data, 25 June 2021

Sec. Big Data and AI in High Energy Physics

Volume 4 - 2021 | https://doi.org/10.3389/fdata.2021.665783

This article is part of the Research TopicHeterogeneous Computing for AI and Big Data in High Energy PhysicsView all 6 articles

The High Energy Physics (HEP) experiments, such as those at the Large Hadron Collider (LHC), traditionally consume large amounts of CPU cycles for detector simulations and data analysis, but rarely use compute accelerators such as GPUs. As the LHC is upgraded to allow for higher luminosity, resulting in much higher data rates, purely relying on CPUs may not provide enough computing power to support the simulation and data analysis needs. As a proof of concept, we investigate the feasibility of porting a HEP parameterized calorimeter simulation code to GPUs. We have chosen to use FastCaloSim, the ATLAS fast parametrized calorimeter simulation. While FastCaloSim is sufficiently fast such that it does not impose a bottleneck in detector simulations overall, significant speed-ups in the processing of large samples can be achieved from GPU parallelization at both the particle (intra-event) and event levels; this is especially beneficial in conditions expected at the high-luminosity LHC, where extremely high per-event particle multiplicities will result from the many simultaneous proton-proton collisions. We report our experience with porting FastCaloSim to NVIDIA GPUs using CUDA. A preliminary Kokkos implementation of FastCaloSim for portability to other parallel architectures is also described.

The ATLAS detector at the Large Hadron Collider (LHC) relies heavily on massive samples of simulated proton-proton collision events for detector modeling, upgrade studies and to deliver high-quality physics results. However, standard simulations–using Geant4 (Agostinelli and et al., 2003) for accurate and detailed modeling of physics processes and detector geometry–are extremely CPU-intensive, and its use for all Monte Carlo-based studies is impractical due to the formidable size of the LHC dataset. In particular, simulating electromagnetic and hadronic shower developments in the ATLAS calorimeters is extremely CPU-intensive, comprising more than 90% of the overall simulation time (The ATLAS Collaboration, 2010).

To reduce the significant processing time required to simulate the calorimeters, ATLAS developed FastCaloSim (The ATLAS Collaboration, 2018; ATLAS Software Repository, 2020), a primarily C++ package that uses a simplified detector geometry and parameterizations of shower development initiated by particles traversing the calorimeter volumes. The parameterizations are derived using single particles–electrons, photons and charged pions–with initial energy between 1 GeV and 4 TeV, uniformly distributed in pseudorapidity, η. During a simulation, the optimal parameterization is selected based on the particle type, energy deposit and location in the detector in order to best model the corresponding electromagnetic or hadronic shower. The parameterizations are then used to create calorimeter hits, which contain information about the location, and amount of energy deposited in the detector. Thus, the more initial and secondary particles produced in a shower, the larger the number of hits. Following the simulation, hits are then processed and used for event reconstruction. Therefore, it is the number of hits, not necessarily the number of initial particles, that determines the length of time required for a particular simulation.

A parameterized simulation can reduce the CPU time by a factor of 10 to 25–depending on the process being modeled–relative to that of fully Geant4-based simulations. However, with respect to detector simulation workflows combining Geant4 for the Inner Detector and Muon Spectrometer with FastCaloSim, simulation of the calorimeters is no longer the speed-limiting component. Nevertheless, there are a number of advantages of porting FastCaloSim to accelerators, in particular, GPUs. First, an intelligent port of the serial CPU code to GPU-friendly code permits parallelization over events (event-level parallelization) and also over particles within a single event (intra-event, or particle-/hit-level parallelization). The ability to parallelize in these ways becomes exceedingly important when simulating not only a single proton-proton collision (the “hard-scatter” event) but when additional collisions within the same bunch-crossing are also simulated (“pile-up” events). For example, at the LHC there are on average 30 (The ATLAS Collaboration, 2020) pile-up events for every one hard-scatter; in the conditions of the High-Luminosity LHC (HL-LHC) (Apollinari et al., 2017), there are expected to be more than 200 pile-up events for every hard-scatter. ATLAS foresees utilizing fast simulation for 60-90% of its simulation workload for various HL-LHC scenarios (Calafiura et al., 2020), making highly-parallelizable codes of this nature extremely desirable. Lastly, an initial port of a set of codes such as those comprising FastCaloSim, that makes significant use of GPUs and demonstrates their computational ability, can be invaluable to the high-energy physics community in general. It can serve as a proof of concept and motivation for other groups and collaborations to design and implement their own codes to execute on accelerator hardware, and prompt investigations into alternative portable solutions to exploit the wide range of modern architectures that are becoming increasingly pervasive.

The inputs to FastCaloSim are tuples containing information about the incoming particle: the particle type and associated four-vector, which consists of the particle’s energy and momentum. Additional details–e.g., calorimeter layer, cell and amount of energy deposited–are also included to determine the correct parameterization as the particle traverses the detector.

For the studies presented here, several different inputs were used; single-particle samples–electrons, photons and charged pions–with various initial energies between 16 GeV and 4 TeV, and η within the acceptance of the calorimeter; and a sample consisting of

In the ATLAS production runs, FastCaloSim is part of the Athena software framework (ATLAS Software Repository, 2020). In order to make it easy to experiment with the GPU porting, we used the standalone version of FastCaloSim to reduce the number of dependencies that are typically needed in the Athena framework. The version of the standalone FastCaloSim package we used consists of roughly 40,000 lines of C/C++ code.

In order to determine where the computational hotspots are located in FastCaloSim, we ran performance profiling using the test data described in Test Data for electrons with an energy of 65.536 GeV and

For porting to the GPU we focused on the function which, other than data unpacking, consumed the majority of the execution time: TFCSLateralShapeParametrizationHitChain::Simulate_hit() (see Box 1). This function performs the hit simulation in a calorimeter layer for a passing particle. In this function there is a loop over the number of hits which can range from 1 to 15,000 depending on particle type, energy, angle and calorimeter layer. For the input sample file of electrons with

Box 1 | Hit simulation function.

TFCSLateralShapeParametrizationHitChain::simulate(

TFCSSimulationState& simulstate

const TFCSTruthState* truth,

const TFCSExtrapolationState* extrapol ) {

int nhit = get_number_of_hits( simulstate, truth, extrapol );

float Ehit = simulstate.E( calosample() ) / nhit;

for ( int i = 0; i < nhit; ++i ) { // hit simulation loop

TFCSLateralShapeParametrizationHitBase::Hit hit;

hit.E() = Ehit;

for ( TFCSLateralShapeParametrizationHitBase* hitsim : m_chain ) {

hitsim->simulate_hit( hit, simulstate, truth, extrapol );

}

}

}

The hit simulation calculation requires detector geometry data and parametrization tables. To offload the calculation, this data first needs to be loaded onto the GPU.

For the data used, the detector geometry included 187,652 calorimeter elements and 24 layers or samples, located in 78 regions. However, on the host side, the detector geometry data structures make heavy use of the C++ Standard Template Library (STL) and pointers. For example, the host region data structures include std::vector<std::vector<CaloDetDescrElements *>>. These kinds of data structures are not supported by CUDA - in general, STL data structures will automatically re-allocate memory as their size changes, a feature not supported on GPUs due to their very limited memory resources. Furthermore, copying many small chunks of non-contiguous data generated with new() from CPU to GPU is very inefficient. We thus flattened the data, using arrays instead of pointers, and moved scattered data together before copying them to the GPU. After being copied to the GPU, all calorimeter detector element data as well as all region data were kept together as array of structs. The total size of the geometry data was about 20 MB.

Similarly, the parametrization tables, which are held in an std::vector, were transformed into a simple array before being copied to the GPU. Since the complete parametrization data is very large, only the needed tables were loaded onto the GPU during simulation. Furthermore, the same table is only loaded once, so if subsequent events require the same tables, they will not be loaded again. The total size of tables loaded to GPU depends on the type of simulations. Single particle simulations have a small footprint, while the data size for multi-particle simulations can be big. In our benchmark study for electrons with

Hit simulation requires a large amount of random numbers. Each hit needs 3 random numbers. The cuRand library function was used to generate random numbers on the GPU. However this will only produce statistically equivalent and not binary identical result when compared to CPU-only calculations. For code and physics validation, random numbers were also generated on the host, and copied to the GPU for calculations. This technique was only used for validation, and not for performance benchmarks, as it is much slower.

Other than the random number generator, there are 3 CUDA kernels in the code. The first, clean, resets the workspace. The number of threads is the same as the total number of detector cells. The second or main kernel, sim_a, does the hit simulation, which calculates which cell gets the hit, and deposits energy into it. Each thread simulates one hit. Atomic operations were chosen to accumulate the energy deposits, as the number of hits is relatively small. A block reduction method was also tried, but did not demonstrate any performance gain, and introduced more kernels. The last kernel, sim_ct, counts the hit cells and gathers the result for more efficient data transfer back to the host. The total number of threads is again the same as the total number of detector cells. The kernels are launched each time the code enters the hit simulation loop, i.e., for each calorimeter sample layer a particle is passing through. After the third kernel executes, the results, an array of struct {long long cell_id; float E } are copied back to host. For all 3 kernels, we used block size 256, i.e., 256 threads per block, in our benchmark. Since the algorithmic processing is sequential in nature, the kernels are launched synchronously. Using asynchronous kernel launches and pre-loading data for the next event would have required large scale reconfiguration of the code, and was beyond the scope of this study.

To avoid many small kernel launches that would be required if the random numbers were generated on demand, GPU memory was pre-allocated, and a large number of random numbers were generated before hand, so that they would not need to be generated in every hit simulation loop. Regeneration of random numbers is only triggered when they are all consumed. In our benchmark test, we generate 200,000 random numbers each time. The infrequent generation of these random numbers places a very small burden on the GPU, and does not significantly affect performance.

We noticed that for some combinations of (particle type, energy, η), the numbers of hits were very small. Since we only offloaded the simulation to the GPU when there were a minimum of 500 hits, most of the calculation could still be done on the host. To solve this problem, we explored the method of grouping hit simulations. We combined different layers, particles, events together so that each GPU simulation kernel launch can process more hits, with tasks of multiple entries to the simulation function as shown in Box 1. In order to combine these tasks, every time the hit simulation part is executed, instead of directly doing the calculations on either the GPU or the CPU, we only collect the parameters needed for simulation, such as particle type, position, layer, number of hits, energy etc. The simulation calculation is delayed until enough hits have been accumulated. This information is then loaded onto the GPU and the simulation kernel is launched with more threads. Each thread can discover its own parameters needed for the hit it simulates and perform proper simulation. Our preliminary results in Performance Benchmarks show that this technique can lead to positive gains.

To perform the benchmarks, we used the GPU nodes on the Brookhaven National Lab Institutional Cluster (BNL-IC) and the NERSC Cori GPU cluster. The BNL-IC node has 2 Intel® Xeon® CPU E5-2695 processors, each with 18 cores. It also has 2 NVIDIA P100 GPUs. Only one GPU was used when running a single instance of the code. Two GPUs were used only when we tested multiple instances of the program running with nvidia-cuda-mps-server.

Cori GPU nodes have two Intel® Xeon® Gold 6148 CPU and 8 NVIDIA V100 GPUs. Our benchmark job used only one CPU core and one GPU.

The program starts by reading parametrization data files, unpacking them, and setting up the simulation chains. It then goes through an event loop to perform simulation for each event. The accumulated results are saved after event loop is completed. Other than the event loop, the majority of the time was spent on unpacking data which doesn’t change much with different input sample files.

Our benchmarks collect time spent in the event loop and for the complete run by wrapping the code sections with calls to std::chrono::system_clock::now().

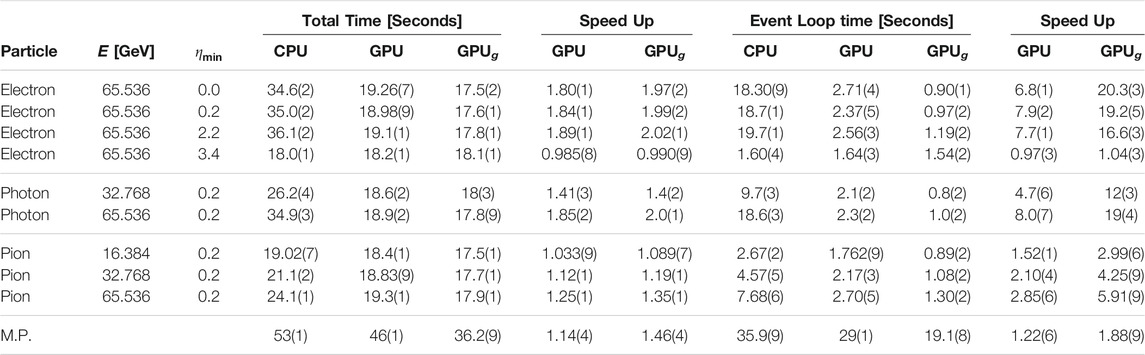

Table 1 shows the result from running on the BNL-IC, comparing the original CPU code and GPU accelerated code run time for a few different particles and pseudorapidities with an energy of less than or equal to

TABLE 1. Execution time for the complete job and for only the event loop part of the job, for various input files. M.P. stands for multi-particle. GPUg is for GPU group simulation code. Numbers in the parentheses are the significant digits of the standard deviations. For example, 34.6(2) means

In Table 1, the CPU code event loop time roughly reflects the total number of hits. It is important to note that the ATLAS forward calorimeters, with acceptance

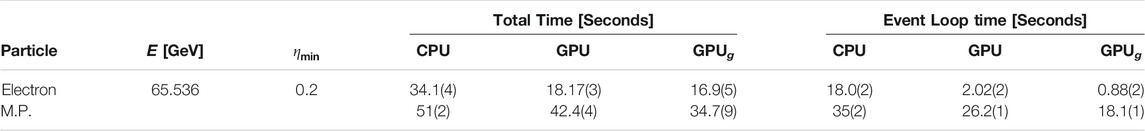

Table 2 shows the results of running on the NERSC Cori GPU node which are consistent with the BNL-IC results. Note that Cori has slightly faster CPUs and GPUs.

TABLE 2. Execution time of complete run and event loop on Cori GPU node. M.P. stands for multi-particle.

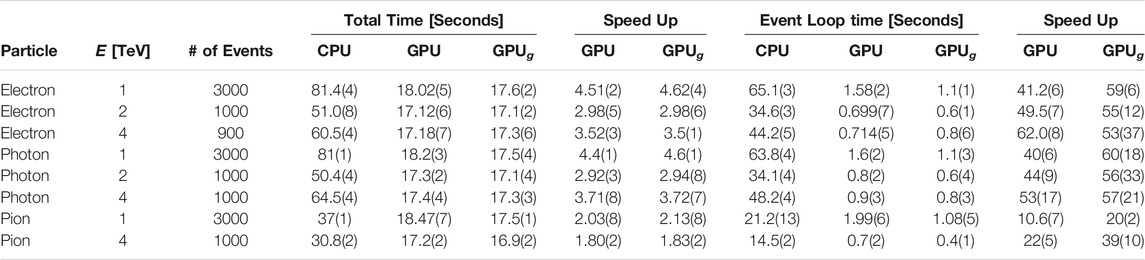

Simulations with higher energies at

TABLE 3. Execution time of complete run, event loop and speed up over CPU only code for high energy input files. All simulations are with

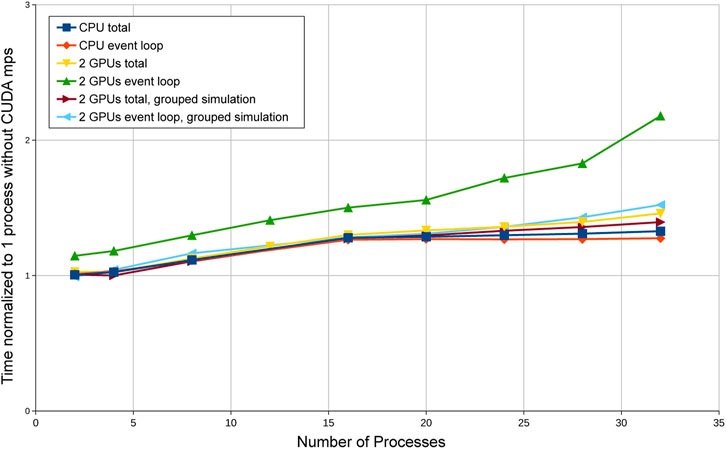

In all cases above, a single instance of the job will only use a small portion of the available GPU resources. Current high energy physics production calculations are performed in the High Throughput Computing (HTC) environments where many jobs are run on each multi-core node at the same time, usually with one thread or one process per core. To emulate this environment, we started multiple concurrent instances of the same job, and shared the GPUs on the node between them by means of nvidia-cuda-mps-server (NVIDIA multi-process service, 2020).

Figure 1 shows the result of running the benchmark on the same BNL-IC node, launching 2-32 processes of the program with CPU, GPU and group simulation codes. The input sample data consisted of single-electrons with

FIGURE 1. Normalized timing of multiple concurrent jobs running on the same node sharing GPUs with nvidia-cuda-mps-server.

Since GPU ported code uses the cuRAND random number generator, which creates a different random number set from the CPU simulation, we do not expect that the simulation results should be exactly comparable between the CPU and GPU. We should, however, be able to produce statistically consistent results, which we have checked and confirmed. In order to further validate the GPU code, we used the option of copying the same set of CPU generated random numbers to the GPU, performing the simulation on the GPU, and comparing the results with the simulation performed solely on the CPU.

We compared the results of around 62 million hits in the Electron 65.536 GeV run, and found that in the GPU simulation, there were two hits whose calculations ended up in different calorimeter cells compared to the CPU calculation. Further investigation showed that this was due to floating point rounding error differences between CPU and GPU. The two mismatches can be eliminated by using double precision (double) variables for certain calculations instead of single precision float. We can thus conclude that the GPU ported code is correctly performing the same calculations as the original CPU code.

Kokkos (Carter et al., 2014) is a C++ library, developed at Sandia National Laboratory, with a rich history and a strong user base. It is a portable, performant, single source shared-memory programming model that abstracts away the hardware, allowing developers to write algorithms once and run on any supported backend architectures. Currently supported backends are serial processing on a traditional CPU, parallel processing on multi and many-core CPUs via pThreads and OpenMP, and NVIDIA and AMD GPUs via CUDA and HIP. An Intel GPU backend is currently under development as well. A common API that hides the architecture-specific implementation details greatly reduces the intimate device knowledge that would otherwise be needed by developers. At compile time, Kokkos permits the selection of one host serial backend (e.g., Intel Haswell architecture), one host parallel backend (e.g., OpenMP), and one device parallel backend (e.g., NVIDIA V100). If different hardware backends are desired, both the user code and Kokkos runtime library must be recompiled.

Kokkos has a number of different parallel abstractions available, such as parallel_for, parallel_reduce, and parallel_scan. It also provides utilities such as random number generators, and supports atomic operations. Data access is accomplished via multi-dimensional structures that are templated in the type of the data called Kokkos::Views. The sizes of the structures can be specified at either run or compile time. Kokkos usage of C++ language features in kernels are only limited by the compilers necessitated by the selected backends–e.g., if the CUDA backend is chosen for NVIDIA hardware–and the available version of the nvcc compiler is 10.2, then C++14 is the latest standard that can be used in a kernel.

FastCaloSim uses CMake as its build tool. Modifying the CMake configuration files to pick up the Kokkos installation was relatively simple, as Kokkos provides the infrastructure support for CMake, with find_package(Kokkos). Configuring Kokkos was more complicated. There are three build parameters of Kokkos that must be selected when installing the library, which can affect how the FastCaloSim code is organized:

• The hardware backends (host serial, host parallel, device parallel)

• The use of shared libraries

• The use of relocatable device code

For testing purposes, the choice of the hardware backends is not problematic, as the developer knows where the code will run. It is more of an issue in a production environment where binaries might be distributed to a broad range of hardware architectures, requiring multiple different configurations to be built. The choice of using shared vs static libraries is usually dependent on what already exists in the code base that will be using Kokkos. In this case, FastCaloSim uses shared libraries, and modifying it to use static libraries would have made re-integration to the original code base much more challenging, so we decided to stay with shared libraries. Unfortunately, enabling shared libraries and relocatable device code are mutually exclusive in the Kokkos build. Disabling relocatable device code has significant implications for the structure of the kernel sources - all symbols in a kernel must be visible and resolvable within a single compilation unit.

In order to keep all source files as synchronized as possible, we chose to keep the serial, CUDA and Kokkos codes in the same git branch, selecting which feature to build at CMake configuration time via command line switches, such as -DENABLE_GPU=on and -DUSE_KOKKOS=on.

If the CUDA backend of Kokkos is selected as the device parallel backend, CUDA and Kokkos code can freely interoperate, with Kokkos kernels having the ability to call CUDA, and access data allocated via cudaMalloc. This feature allows for the incremental migration of CUDA kernels to Kokkos, simplifying testing and reproducibility. One by one, we rewrote the CUDA kernels as Kokkos lambda expressions and inlined functions, to be executed as parallel_for loops, but maintaining the CUDA memory allocations, and accessing the bare device pointers in the kernels. At each step, we validated the outputs to make sure that the results were consistent with the original CUDA code.

Once all kernels had been migrated to Kokkos, we tackled the data structures. Most are simple scalars, 1D and 2D arrays. These were easy to translate to Kokkos::Views. A couple of data structures, however, are jagged 2D arrays, i.e., arrays of arrays where the sizes of the inner arrays are not all the same. While it is technically possible to create Kokkos::View< Kokkos::View< T >>, this is not advised by the Kokkos developers, and requires a significant amount of extra boiler-plate code to create and access. Instead we chose to flatten the jagged arrays, either by adding a new array which indexed the starting locations of the inner arrays in a 1D structure when there was significant variation in the sizes of the inner arrays, or by padding to the largest size of the inner arrays, when they were close in size.

Since Kokkos use of relocatable device code was disabled, we had to structure the source files so that all symbols were visible within a single compilation unit. This was accomplished by creating a wrapper file that merely included the various individual kernel source files. Since the majority of the kernel device functions were identical for the Kokkos and CUDA implementations, in order to share as much of the code as possible, the shared code was in a single file, with CUDA and Kokkos specific features triggered via preprocessor macros (see Box 2).

Box 2 | Language functionality selection.

# ifdef USE_KOKKOS

# include <Kokkos_Core.hpp>

# include <Kokkos_Random.hpp>

# define __DEVICE__ KOKKOS_INLINE_FUNCTION

#else

# define __DEVICE__ __device__

# endif

__DEVICE__ void HitCellMapping_d ( Hit& hit, Chain0_Args args ) {

long long cellele = getDDE ( args.geo, args.cs, hit.eta(), hit.phi() );

# ifdef USE_KOKKOS

Kokkos::View<float*> cellE_v ( args.cells_energy, args.ncells );

Kokkos::atomic_fetch_add ( &cellE_v ( cellele ), hit.E() );

#else

atomicAdd( &args.cells_energy [cellele], hit.E () );

#end if

…

}

Implementations that were very different between the Kokkos and CUDA versions were kept in separate files, and included by wrappers appropriately.

Another complication is that Kokkos will compile source files differently depending on the suffix of the file name. If the suffix is .cu, the NVIDIA compiler nvcc will be used for the compilation with a specific set of command line switches that is different than if the same file had a .cpp or .cxx extension. Compilation errors will occur if Kokkos core headers are exposed to nvcc in .cu files, which required some careful organization of file names and wrappers.

A large number of random numbers are required during the execution of FastCaloSim. They are used in code that is executed on the device, and are not needed on the host. Thus it would make sense to generate them on the device, to avoid large memory transfers. Both CUDA and Kokkos provide libraries to generate high quality random numbers. However, in order to verify that different implementations produce the same results, it is very useful to be able to always use the same set of random numbers. We thus provided the ability to generate random numbers either on the host, and transferred to the device as needed, or directly on the device using either the CUDA or Kokkos RNGs.

Once we had fully migrated all kernels and data structures to Kokkos, we were able to test the performance of the code on multiple different parallel devices. The hardware platform was a dual Intel Xeon Gold 5220 CPU with 18 physical cores per CPU, for a total of 72 hyperthreaded cores. The host OS was Centos 7, and packages were compiled with GCC 8.2. Also installed on the server were a NVIDIA RTX 2080 Super GPU, and an AMD Vega 56 GPU. We used Kokkos version 3.1 for testing purposes, which was configured to target the CPU with Kokkos_ARCH_SKX, and the GPUs with Kokkos_ARCH_TURING75 and Kokkos_ARCH_VEGA900. The installed version of CUDA was 10.2.89, and of the ROCm libraries was 3.5.1. We also converted the CUDA code directly to HIP using hipify-perl. For the sake of brevity, when we refer to HIP in the subsequent sections, we mean the hcc backend of HIP that targets AMD devices, as opposed to the nvcc backend that targets NVIDIA devices.

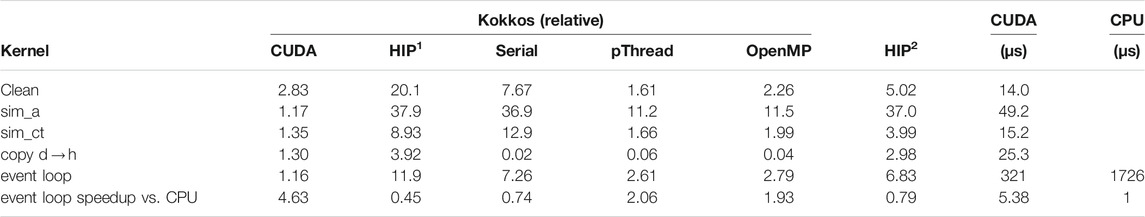

In Table 4 we show the relative execution times of various kernels with different Kokkos backends, using the CUDA implementation as a baseline, and reporting times as a factor slower than that of the CUDA implementation.

TABLE 4. Relative kernel average execution times with various Kokkos backends, as a factor of the pure CUDA implementation execution time. HIP1 is for Kokkos with the HIP backend, whereas HIP2 is a direct port from CUDA to HIP. The last two columns show the absolute kernel runtimes for the original CUDA code, and for the native CPU implementation, averaged over 10,000 events. The last row shows the relative speedup of the event loop versus the native CPU implementation.

The aspects that we measured were the time it takes to re-initialize a large array of approximately 200,000 floats (clean) at the start of each event, the time to execute the main kernel (sim_a), the time to execute the reduction kernel (sim_ct, the time it takes to copy the results back from the device to the host (copy d

We also directly measured the performance of the kernels using nvprof and rocprof, as show in Table 5.

TABLE 5. Relative kernel average execution times with CUDA and HIP1 Kokkos backends, and a direct HIP2 port, as a factor of the pure CUDA implementation execution time, as measured by GPU profiling tools nvprof and rocprof.

From these measurements, we can extract several important conclusions. First, using Kokkos to initialize memory on the device does impose some significant penalties as compared to a simple CUDA operation. This is due to extra steps required to create the host views before the device memory is reset with a Kokkos::deep_copy. In general though, while we do see some overhead from using Views and some small penalties from launching Kokkos kernels, the performance of the CUDA backend of Kokkos is reasonably close to that of the pure CUDA implementation. As the kernels get smaller and simpler, e.g., comparing the performance of sim_ct to that of sim_a, the Kokkos overhead become more noticeable. Furthermore, the performance of the serial backend of Kokkos performs considerably worse than the native CPU implementation (about 35% slower). As well, using the OpenMP or pThread backend with 15 threads (where the greatest speedup was achieved) would only be cost effective if there was no available work for the other CPUs on the host, otherwise throughput was about a factor of 7.5 worse than an equivalent number of single threaded (native CPU) jobs.

Second, the performance of AMD GPU is noticeably worse than that of the NVIDIA GPU. While it is hard to do a direct comparison as the architectures are very different, the clock speeds and number of computation units are within 25% of each other, yet we see timing differences in some cases of one and a half orders of magnitudes. Further tests of simple kernels seem to suggest that the kernel launch latencies on the AMD GPU are significantly higher than that of the NVIDIA GPUs (see Table 6). The clock frequency of the CPU on the host also has a significant impact on the launch latency.

TABLE 6. Kernel launch latencies in microseconds as a function of CPU clock frequency, measured by timing the execution of 10,000 empty kernels.

It is also worth noting that when the job was pinned to a single CPU core, the timings for the CUDA and Kokkos/CUDA did not change appreciably. However the time to execute the full event loop when utilizing the HIP backend of Kokkos increased by 30%, suggesting that scheduling and launching kernels on AMD devices places significant burden on the CPU.

The method that we chose to translate the CUDA code to Kokkos was obviously non-optimal, and in some ways deliberately so. Part of the exercise was to understand the issues involved in porting a project which may have a large amount of existing code from serial C++ to CUDA, and from CUDA to Kokkos, while attempting to leave existing serial C++ and CUDA code unmodified as much as possible. Given the relatively small size of the FastCaloSim code base, it would have been easier to completely rewrite the entire code base, refactoring to optimize for Kokkos data structures and kernels. This would no doubt have improved the performance of the Kokkos version. However, ultimate performance is not the end goal of our study, but rather performant portability, reproducibility, and the burden of code maintenance are more important metrics. With a large code base, it is very important to make sure that it is easy to maintain compatibility between all implementation flavours, and also to ease migration of code from serial C++

We have successfully ported the ATLAS fast calorimeter simulation code, FastCaloSim, to use GPUs. The code was first ported to NVIDIA GPUs using the proprietary CUDA API. As with many HTC applications, the compute-to-I/O ratio of FastCaloSim is not very large, limiting the potential gains of using GPUs. Several techniques have been incorporated in our implementations to increase the speed and efficiency of the computations on the GPUs, as well as the GPU’s overall utilization. The performance gains on the GPUs are greatly dependant on the types of simulations (particle type, energy, angle etc.). For the test cases we ran, we observed overall speed-ups by as much as a factor of five. But if we compare only the timing of the simulation part, the speedups on the GPUs were much more significant, as much as 60 times relative to executing with a single thread on a CPU core. The GPU was found to be underutilized, and a single GPU may be shared among many concurrent CPU jobs of FastCaloSim via nvidia-cuda-mps-server with very little loss of performance.

We have also shown a preliminary Kokkos implementation that supports running on multiple architectures, including multicore CPUs, NVIDIA GPUs and AMD GPUs. Kokkos has proven to be a powerful tool for performant portability on a number of different architectures. While there is some overhead with utilizing its data structures as compared with raw memory allocation, these are relatively small and offset by the gains in portability. Direct comparisons of kernel execution time running on both NVIDIA and AMD GPUs show reasonably similar measurements between Kokkos and device specific CUDA/HIP implementations. Since the Kokkos implementation was designed as a port from CUDA, and not rewritten from the ground up, it is very likely that further optimization of the Kokkos code would be possible. The ability to run on both NVIDIA and AMD GPUs, as well as multicore CPUs via OpenMP or pThreads, with minimal changes to user code, vastly outweighs any small performance losses.

Publicly available datasets were analyzed in this study. This data can be found here: https://github.com/cgleggett/FCS-GPU.

Original CPU code developed under guidance of HG. Port to CUDA performed by ML, ZD and KY. Port to Kokkos performed by CL and VP.

This work was supported by the DOE HEP Center for Computational Excellence at Brookhaven National Laboratory, and Lawrence Berkeley National Laboratory under B&R KA2401045. This work was also done as part of the offline software research and development programme of the ATLAS Collaboration, and we thank the collaboration for its support and cooperation. This research used resources of the National Energy Research Scientific Computing Center (NERSC), a U.S. Department of Energy Office of Science User Facility located at Lawrence Berkeley National Laboratory, operated under Contract No. DE-AC02-05CH11231. We gratefully acknowledge the use of the computing resources provided by Brookhaven National Laboratory. A git repository for the source code is available (FastCaloSim Software Repository, 2020).

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Agostinelli, S., Allison, J., Amako, K., Apostolakis, J., Araujo, H., Arce, P., et al. (2003). GEANT4–a Simulation Toolkit. Nucl. Instrum. Meth. A. 506, 250–303. doi:10.1016/S0168-9002(03)01368-8

Apollinari, G., Béjar Alonso, I., Brüning, O., Fessia, P., Lamont, M., Rossi, L., et al. (2017). High-Luminosity Large Hadron Collider (HL-LHC): Technical Design Report V. 0.1. Geneva: Tech. rep. doi:10.23731/CYRM-2017-004

ATLAS Software Repository (2020). Available at: https://gitlab.cern.ch/atlas/athena.

Calafiura, P., Catmore, J., Costanzo, D., and Di Girolamo, A. (2020). ATLAS HL-LHC Computing Conceptual Design Report. Tech. Rep. CERN-LHCC-2020-015. LHCC-G-178. Geneva: CERNAvailable at: https://cds.cern.ch/record/2729668.

Carter, H., Trott, C., and Sunderland, D. (2014). Kokkos: Enabling Manycore Performance Portability through Polymorphic Memory Access Patterns. J. Parallel Distributed Comput. 74, 3202–3216. doi:10.1016/j.jpdc.2014.07.003

FastCaloSim Software Repository (2020). Available at: https://github.com/cgleggett/FCS-GPU.

NVIDIA multi-process service (2020). Available at: https://docs.nvidia.com/deploy/pdf/CUDA\_Multi\_Process\_Service\_Overview.pdf.

The ATLAS Collaboration (2020). ATLAS Data Quality Operations and Performance for 2015–2018 Data-Taking. J. Instrumentation. 15, P04003. doi:10.1088/1748-0221/15/04/p04003

The ATLAS Collaboration (2008). The ATLAS experiment at the CERN Large Hadron Collider. J. Instrumentation. 3, S08003. doi:10.1088/1748-0221/3/08/s08003

The ATLAS Collaboration (2010). The ATLAS Simulation Infrastructure. The Eur. Phys. J. C. 70, 823–874. doi:10.1140/epjc/s10052-010-1429-9

The ATLAS Collaboration (2018). The New Fast Calorimeter Simulation in ATLAS. Tech. Rep. ATL-SOFT-PUB-2018-002. Geneva: CERN.

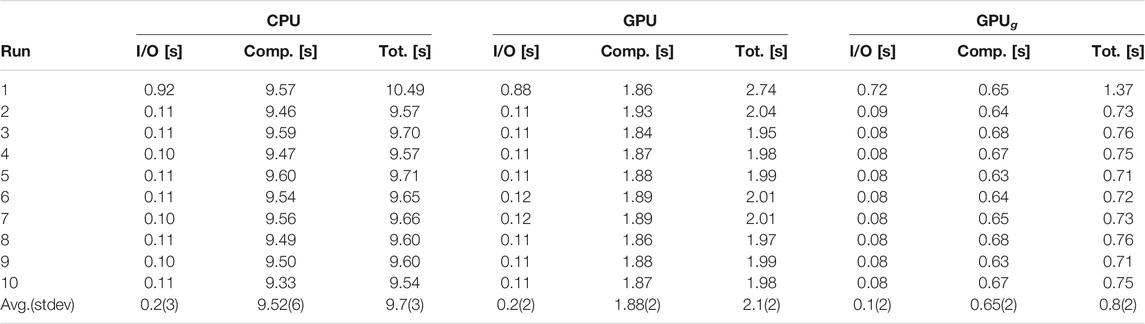

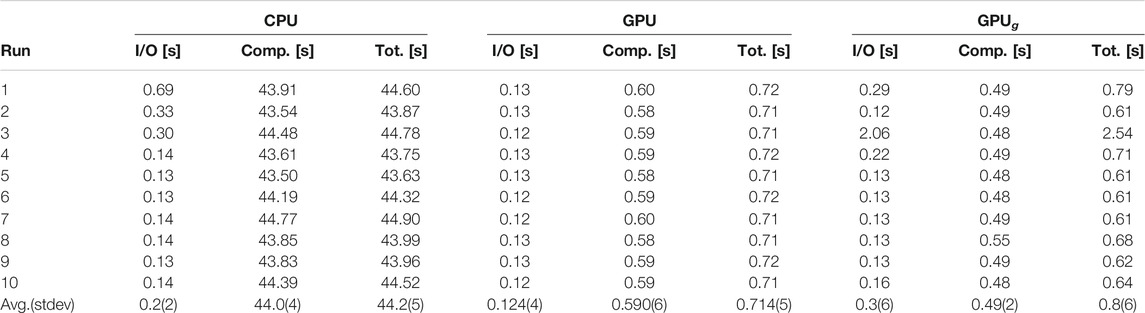

Here we show some representative examples of the detailed breakdown of the I/O time and compute time inside the event loop for the three implementations, original CPU (denoted as CPU), CUDA code (denoted as GPU) and CUDA group simulation code (denoted as GPUg). Table A1 shows the detailed timing breakdown for I/O and compute time during the event loop for the simulation of Photons with

TABLE A1. Breakdown of the Event Loop time (Tot.) into I/O time (I/O) and compute time (Comp.) for the simulation of Photons with

TABLE A2. Breakdown of the Event Loop time (Tot.) into I/O time (I/O) and compute time (Comp.) for the simulation of Electrons with

Keywords: large hadron collider, high performance computing, gpu, CUDA, kokkos, performance portability, particle physics

Citation: Dong Z, Gray H, Leggett C, Lin M, Pascuzzi VR and Yu K (2021) Porting HEP Parameterized Calorimeter Simulation Code to GPUs. Front. Big Data 4:665783. doi: 10.3389/fdata.2021.665783

Received: 08 February 2021; Accepted: 07 May 2021;

Published: 25 June 2021.

Edited by:

Daniele D'Agostino, National Research Council (CNR), ItalyReviewed by:

Felice Pantaleo, European Organization for Nuclear Research (CERN), SwitzerlandCopyright © 2021 Dong, Gray, Leggett, Lin, Pascuzzi and Yu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Charles Leggett, Y2dsZWdnZXR0QGxibC5nb3Y=

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.

Research integrity at Frontiers

Learn more about the work of our research integrity team to safeguard the quality of each article we publish.